Effective fine-grain synchronization for automatically parallelized programs using optimist

- 格式:pdf

- 大小:594.35 KB

- 文档页数:32

Building Worthy Parallel Applications Using Networked Computers -- A TutorialFor Synergy V3.0Yuan Shishi@(215) 204-6437 (Voice)(215) 204-5082 (Fax)January 1995@1995 Temple UniversityTable of Contents1. Introduction________________________________________________41.1 Synergy Philosophy_______________________________________42. Synergy V3.0 Architecture_____________________________________54. Setup Synergy Runtime Environment_____________________________85. Parallel Application Development_______________________________9 5.1 Coarse Grain Parallelism___________________________________9 5.2 Adaptive Parallel Application Design (CTF)___________________10 5.3 POFP Program Development_______________________________115.3.1 Tuple Space Object Programming________________________135.3.2 Pipe Object Programming______________________________215.3.3 File Object Programming______________________________245.3.4 Compilation_________________________________________24 5.4 Parallel Program Configuration_____________________________245.4.1 Configuration in CSL_________________________________25 5.5 Parallel Execution________________________________________275.5.1 Parallel Debugging___________________________________285.5.2 Fault Tolerance______________________________________285.6 Graphic User Interface (License required)_____________________286. System Maintenance_________________________________________30 6.1 Utility Daemon Tests_____________________________________30 6.2 Object Daemon Tests_____________________________________30 6.3 Integrated Object Tests___________________________________30 6.4 Example Synergy Applications and Sub-directories_____________318. Concluding Remarks________________________________________329. Acknowledgments__________________________________________3310. References_______________________________________________35ABSTRACTSince the down falls of leading supercomputer giants the parallel processing field has been under a bearish atmosphere. We are forced to learn from our failures, not only about the high performance computing market place but also the fundamental problems in parallel processing. One of the problems is how can we make parallel processors effective for all applications?We propose a coarse grain parallel processing method called Passive Object-Flow Programming (POFP). POFP is a dataflow method. It advocates decoupling parallel programs from processors and functional programming from parallel processing, resource management and process management. This method is based on the observation that inter-processor communication requirements of multiple processors will out-pace any inter-networking designs.Decoupled parallel programs can be written in any language and processed using any combination of operating systems and communication protocols. Assuming the use of multiple high performance uni-processors and an automatic client/server software generation method, Synergy V3.0 offers automatic processor assignments, automatic load balancing and some fault tolerance benefits.Information disclosed in this documentation is part of an approved patent (U.S. Patent#5,381,534). Commercial use of the information and the system without written consent from the Technology Transfer Office of Temple University may constitute an infringement to the patent.1. IntroductionThe emergence of low cost, high performance uni-processors forces the enlargement of processing grains in all multi-processor systems. Consequently, individual parallel programs have increased in length and complexities. However, like reliability, parallel processing of any multiple communicating sequential programs is not really a functional requirement.Separating pure functional programming concerns from parallel processing and resource management concerns can greatly simplify the conventional “parallel programming” tasks. For example, the use of dataflow principles can facilitate automatic task scheduling. Smart tools can automate resource management. As long as the application dependent parallel structure is uncovered properly, we can even automatically assign processors to parallel programs in all cases.Synergy V3.0 is an implementation of above ideas. It supports parallel processing using multiple “Unix computers” mounted on multiple file systems (or clusters) using TCP/IP. It allows parallel processing of any application using mixed languages, including parallel programming languages. Synergy may be thought of as a successor to Linda1, PVM2 and Express®3.1.1 Synergy PhilosophyFacilitating the best use of computing and networking resources for each application is the key philosophy in Synergy. We advocate competitive resource sharing as opposed to “cycle stealing.” The tactic is to reduce processing time for each application. Multiple running applications would fully exploit system resources. The realization of the objectives, however, requires both quantitative analysis and highly efficient tools.It is inevitable that parallel programming and debugging will be more time consuming than single thread processing regardless how well the application programming interface (API) is designed. The illusive parallel processing results taught us that we must have quantitatively convincing reasons to processing an application in parallel before committing to the potential expenses (programming, debugging and future maintenance.)1 Linda is a tuple space parallel programming system lead by Dr. David Gelenter, Yale University. Its commercial version is distributed by the Scientific Computing Associates, New Heaven, NH. (See [2] for details)2 PVM is a message-passing parallel programming system by Oak Ridge National Laboratory, Unversity of Tennassee and Emory University. (See [4] for details)3 Express is a commercial message-passing parallel programming system by ParaSoft, CA. (See [4] for details)We use Timing Models to evaluate the potential speedups of a parallel program using different processors and networking devices [13]. Timing models capture the orders of timing costs for computing, communication, disk I/O and synchronization requirements. We can quantitatively examine an application’s speedup potential under various processor and networking assumptions. The analysis results delineate the limit of hopes. When applied to practice, timing models provide guidelines for processing grain selection and experiment design.Efficiency analysis showed that effective parallel processing should follow an incremental coarse-to-fine grain refinement method. Processors can be added only if there are unexplored parallelism, processors are available and the network is capable of carrying the anticipated load. Hard-wiring programs to processors will only be efficient for a few special applications with restricted input at the expense of programming difficulties. To improve performance, we took an application-oriented approach in the tool design. Unlike conventional compilers and operating systems projects, we build tools to customize a given processing environment for a given application. This customization defines a new infrastructure among the pertinent compilers, operating systems and the application for effective resource exploitation. Simultaneous execution of multiple parallel applications permits exploiting available resources for all users. This makes the networked processors a fairly real “virtual supercomputer.”An important advantage of the Synergy compiler-operating system-application infrastructure is the higher level portability over existing systems. It allows written parallel programs to adapt into any programming, processor and networking technologies without compromising performance.An important lesson we learned was that mixing parallel processing, resource management and functional programming tools in one language made tool automation and parallel programming unnecessarily difficult. This is especially true for parallel processors employing high performance uni-processors.2. Synergy V3.0 ArchitectureTechnically, the Synergy system is an automatic client/server software generation system that can form an effective parallel processor for each application using multiple distributed "Unix-computers." This parallel processor is specifically engineered to process programs inter-connected in an application dependent IPC (Inter-Program Communication/ Synchronization) graph using industry standard compilers, operating systems and communication protocols. This IPC graph exhibits application dependent coarse grain SIMD (Single Instruction Multiple Data), MIMD (Multiple Instruction Multiple Data) and pipeline parallelisms.Synergy V3.0 supports three passive data objects for program-to-program communication and synchronization:•Tuple space (a FIFO ordered tuple data manager)•Pipe (a generic location independent indirect message queue)•File (a location transparent sequential file)A passive object is any structured data repository permitting no object creation functions. All commonly known large data objects, such as databases, knowledge bases, hashed files, and ISAM files, can be passive objects provided the object creating operators are absent. Passive objects confine dynamic dataflows into a static IPC graph for any parallel application. This is the basis for automatic customization.POFP uses a simple open-manipulate-close sequence for each passive object. An one-dimensional Coarse-To-Fine (CTF) decomposition method (see Adaptable Parallel Application Development section for details) can produce designs of modular parallel programs using passive objects. A global view of the connected parallel programs reveals application dependent coarse grain SIMD, MIMD and pipeline potentials. Processing grain adjustments are done via the work distribution programs (usually called Masters). These adjustments can be made without changing codes. All parallel programs can be developed and compiled independently.Synergy V3.0 kernel includes the following elements:a) A language injection library (LIL)b)An application launching program (prun)c) An application monitor and control program (pcheck)d)*A parallel program configuration processor (CONF)e)*A distributed application controller (DAC)f)**Three utility daemons (pmd, fdd and cid)g)*Two object daemons (tsh and fsh)h) A Graphics User Interface (mp)* User transparent elements.** Pmd and fdd are user transparent. Cid is not user transparent.A parallel programmer must use the passive objects for communication and synchronization purposes. These operations are provided via the language injection library (LIL). LIL is linked to source programs at compilation time to generate hostless binaries that can run on any binary compatible platforms.After making the parallel binaries the interconnection of parallel programs (IPC graph) should be specified in CSL (Configuration Specification Language). Program “prun” starts a parallel application. Prun calls CONF to process the IPC graph and to complete the program/object-to-processor assignments automatically or as specified. It then activates DAC to start appropriate object daemons and remote processes (via remote cid’s). It preserves the process dependencies until all processes are terminated.Program “pcheck” functions analogously as the “ps” command in Unix. It monitors parallel applications and keeps track of parallel processes of each application. Pcheck also allows killing running processes or applications if necessary.To make remote processors listening to personal commands, there are two light weight utility daemons:•Command Interpreter Daemon (cid)•Port Mapper Daemon (pmd)Cid interprets a limited set of process control commands from the network for each user account. In other words, parallel users on the same processor need different cid’s. Pmd (the peer leader) provides a "yellow page" service for locating local cid’s. Pmd is automatically started by any cid and is transparent to all users.Fdd is a fault detection daemon. It is activated by an option in the prun command to detect worker process failures at runtime.Synergy V3.0 requires no root privileged processes. All parallel processes assume respective user security and resource restrictions defined at account creation. Parallel use of multiple computers imposes no additional security threat to the existing systems.Theoretically, there should be one object daemon for each supported object type. For the three supported types: tuple space, pipe and files, we saved the pipe daemon by implementing it directly in LIL. Thus, Synergy V3.0 has only two object daemons:•Tuple space handler (tsh)•File access handler (fah)The object daemons, when activated, talk to parallel programs via the LIL operators under the user defined identity (via CSL). They are potentially resource hungry. However they only "live" on the computers where they are needed and permitted.To summarize, building parallel applications using Synergy requires the following steps:a) Parallel program definitions. This requires, preferably, establishing timing models for agiven application. Timing model analysis provides decomposition guidelines. Parallel programs and passive objects are defined using these guidelines.b) Individual program composition using passive objects.c) Individual program compilation. This makes hostless binaries by compiling the sourceprograms with the Synergy object library (LIL). It may also include moving thebinaries to the $HOME/bin directory when appropriate.d) Application synthesis. This requires a specification of program-to-programcommunication and synchronization graph (in CSL). When needed, user preferred program-to-processor bindings are to be specified as well.e) Run (prun). At this time the program synthesis information is mapped on to a selectedprocessor pool. Dynamic IPC patterns are generated (by CONF) to guide thebehavior of remote processes (via DAC and remote cid’s). Object daemons are started and remote processes are activated (via DAC and remote cid’s).f) Monitor and control (pcheck).The Synergy Graphics User Interface (mp) integrates steps d-e-f into one package making the running host the console of a virtual parallel processor.4. Setup Synergy Runtime EnvironmentIn addition to installing Synergy V3.0 on each computer cluster, there are four requirements for each “parallel” account:a) An active SNG_PATH symbol definition pointing to the directory where SynergyV3.0 is installed. It is usually /usr/local/synergy.b) An active command search path ($SNG_PATH/bin) pointing to the directory holding the Synergy binaries.c) A local host file ($HOME/.sng_hosts). Note that this file is only necessary for a host to be used as an application submission console.d) An active personal command interpreter (cid) running in the background. Note that the destination of future parallel process’s graphic display should be defined before starting cid.Since the local host file is used each time an application is started, it needs to reflect a) all accessible processors; and b) selected hosts for the current application.The following commands are for creating and maintaining a list of accessible hosts:• %shosts [ default | ip_addr ]This command creates a list of host names either using the prefix of the currenthost IP address or a given IP address by searching the /etc/hosts file.• %addhost host_name [-f]This command adds a host entry in the local host file. The -f option forcesentry even if the host is not Synergy enabled.• %delhost host_name [-f]This command removes an entry from the local host file. The -f option forces the removal if the host is Synergy enabled.• %dhostsThis command allows entry removal in batches.The local host file has a simple format : <IP Addr, IP Name, Protocol, OS, login, Cluster>. A typical file has the following lines:129.32.32.102 tcp unix shi fsys1129.32.1.100 tcp unix shi fsys2155.247.182.16 tcp unix shi fsys3Manual modification to this file may be necessary if one uses different login names.The “chosts” command is for selecting and de-selecting a subset of processors from the above pool for running a parallel application.The “cds” command is for viewing processor availability for all selected hosts.5. Parallel Application Development5.1 Coarse Grain ParallelismAs single processor’s speed racing to the 100 MIPS range, exploiting parallelism at instruction level should be deemed obsolete. Execution time differences of assigning different processors to a non-computing intense program are diminishing for all practical purposes. The processing grain, however, is a function of the average uni-processor speed and the interconnection network speed [13]. For building scaleable parallel applications, a systematic coarse-to-fine application partitioning method is necessary.There are three program-to-program dependency topologies (Flynn’s taxonomy) that can facilitate simultaneous execution of programs : SIMD, MIMD and pipeline. Figure 1illustrates their spatial arrangements and respective speedup factors.D2D3SIMDMIMD Pipeline Speedup = 3Speedup = 3Speedup = (3 x 3)/(3 + 1 + 1) = 9/5Figure 1. Parallel Topologies and SpeedupsIn the pipeline case, the speedup approaches 3 (the number of pipe stages) when the number of input data items becomes large.An application will be effectively processed in parallel using multiple high performance uni-processors only if its potential coarse grain topologies are uncovered. Efficiency analysis showed that finer grain partitions necessarily cause larger inter-processor traffic volume leading to lower computing efficiency [13]. To build scaleable parallel applications, however, we need a coarse-to-fine grain development method.5.2 Adaptive Parallel Application Design (CTF)The basic assumption here is that the user has identified the computing intense program segments (or pseudo codes). Assuming high performance uni-processors, these program segments must be repetitive either iteratively or recursively.CTF has the following steps:a. Linear cut. This pass cuts a program into segments according to time consuming loops and recursive function calls. It produces segments of three types: Sequential (S), Loop (L) and Recursive (R).b. Inter-segment data dependency analysis. This pass analyzes the data dependencies between decomposed (S, L and R) segments. This results in an acyclic dataflow graph.c. L-segment analysis. The objective of this analysis is to identify one independent and computation intensive core amongst all nested loops. In addition to conventional loop dependency analysis, one may have to perform some program transformations to remove "resolvable dependencies." The depth of the selected loop is dependent on the number of parallel processors and interconnection network quality. A deeper independent loop should be selected if large number processors are connected via high speed networks.The selected loop will be cut into three-pieces: loop header (containing pre-processing statements and the parallel loop header), loop body (containing the computing intensive statements) and the loop tail (containing the post-processing statements). The loop master is formed using a loop header and a loop tail. The loop body is all that is needed for a loop worker.d. R-segment analysis. The objective is to identify if the recursive function is dependent on the previous activation of the same function. If no dependency is found, the result of the analysis is a two-piece partition of the R-segment: a master that recursively generates K*P independent partial states, where K is a constant and P is the number of pertinent SIMD processors, packages them to working tuples and waits for results; and a worker that fetches any working tuple, unwraps it, recursively investigates all potential paths and returns results appropriately.For applications using various branch-and-bound methods, there should be a tuple recording the partial optimal results. This tuple should be updated at most the same frequency as a constant time of the initial number of independent states (working tuples). The smaller the constant the more effective in preventing data bleeding. However, a larger constant would improve convergence speed (less redundant work done elsewhere).e. Merge. If pipelines are to be suppressed, this pass merges linearly dependent (or not parallelizable) segments to a single segment. This is to save communication costs between segments.f. Selecting IPC objects. We can use tuple space objects to implement parallelizable L and R segments (coarse grain SIMDs); pipes to connect all other segments. File objects are used in all file accesses.g. Coding (see Section 5.3).Note that the CTF method only partitions one dimension for nested loops. This simplifies program object interface designs making CTF a candidate for future automation. Computational experiments also suggested that multi-dimensional (domain) decomposition performs poorly in practice due to synchronization difficulties.Adhering to the CTF method one can produce a network of modular parallel programs exhibiting application dependent coarse grain SIMD, MIMD and pipeline potentials.5.3 POFP Program DevelopmentA Synergy program can be coded in any convenient programming language. To communicate and synchronize with other processes (or programs), however, the program must use the following passive object operations:a) General operations:obj_id = cnf_open(“name”, 0);This function creates a thread to the named object. For non-file objects, thesecond argument is not used. For file objects, the second argument can be“r”, “r+”, “w”, “w+” or “a.” This function returns an integer forsubsequent operations.cnf_close(obj_id);This function removes a thread to an object.var = cnf_getf();This function returns the non-negative value defined in “factor=“ clause inthe CSL specification.var = cnf_getP();This function returns the number of parallel SIMD processors at runtime.var = cnf_gett();This function returns the non-negative value defined in the “threshold=“clause as specified in the CSL file.cnf_term();This function removes all threads to all objects in the caller and exits thecaller.b) Tuple Space objects.length = cnf_tsread(obj_id,tpname_var,buffer,switch);It reads a tuple with a matching name as specified in "tpname_var" into"buffer". The length of the read tuple is returned to "length" and the actualtuple name is stored in "tpname_var". When "switch" = 0 it performsblocking read. A “-1” value assumes non-blocking read.length = cnf_tsget(obj_id, tpname_var,buffer,switch);It read a tuple with matching name as in "tpname_var" into "buffer" anddeletes the tuple from the object. The length of the read tuple is returned to"length" and the name of the read tuple is stored in "tpname_var". When"switch" = 0 it performs blocking get. A -1 switch value instructs non-blocking get.status =cnf_tsput(obj_id, "tuple_name",buffer,buffer_size);It inserts a tuple from "buffer" into "obj_id" of "buffer_size" bytes. Thetuple name is defined in "tuple_name". The execution status is returned in"status" (status < 0 indicates a failure and status = 0 indicates that anoverwrite has occurred).c) Pipe objects.status = cnf_read(obj_id, buffer, size);It reads "size" bytes of the content in the pipe into "buffer". Status < 0reports a failure. It blocks if pipe is empty.status = cnf_write(obj_id, buffer, size);It writes "size" bytes from "buffer" to "obj_id". Status < 0 is a failure.d) File objects:cnf_fgets(obj_id, buffer)cnf_fputs(obj_id, buffer)cnf_fgetc(obj_id, buffer)cnf_fputc(obj_id, buffer)cnf_fseek(obj_id, pos, offset)cnf_fflush(obj_id)cnf_fread(obj_id, buffer, size, nitems)cnf_fwrite(obj_id, buffer, size, nitems)These functions assume the same semantics as ordinary Unix file functionsprovided that the location and name of the file are transparent to the programmer.Note that these Synergy functions are effective only after at least one cnf_open call. This is because that the first cnf_open initializes the caller's IPC pattern and inherits any information specified at the application configuration. These information is used in all subsequent operations throughout the caller's lifetime.5.3.1 Tuple Space Object ProgrammingIn addition to the tuple binding method differences, the Synergy tuple space object differs from Linda's [2] (and Piranha's [3]) tuple space in the following:• Unique tuples names. A Synergy tuple space object holds only unique tuples.• FIFO ordering. Synergy tuples are stored and retrieved in an implicit First-In-First-Out order.• Typeless. Synergy tuples do not contain type information. The user programs are responsible for data packing and unpacking.Note that the cnf_tsread and cnf_tsget calls do not require a buffer length but a tuple name variable. The actual length of a retrieved tuple is returned after the call. The tuple name variable is for recording the name filter and is over-written by the retrieved tuple's name.A coarse grain SIMD normally consists of one master and one worker. The worker program will be running on many hosts (SI) communicating with two tuple space objects (MD). Figure 2 illustrates its typical configuration.Figure 2. Coarse Grain SIMD Using Tuple Space Objects Following is a sample tuple “get” program:============================================================tsd = cnf_open("TS1", 0); /* Open a tuple space object */ . . .strcpy(tpname,"*");/* Define a tuple name */length = cnf_tsget( tsd, tpname, buffer, 0 );/* Get any tuple from TS1. Block, if non-existent. Actual tuple namereturned in "tpname". Retrieved tuple size is recorded in "length".*//* Unpacking */ . . .============================================================A typical tuple “put” program is as follows.============================================================ double column[XRES];. . .main(){. . .tsd = cnf_open("TS1", 0);/* Packing */for (i=0; i<XRES; i++)column[i] = ...;/* Send to object */sprintf(tpname,"result%d",x);/* Making a unique tuple name */tplength = 8*XRES;if ( ( status = cnf_tsput( tsd, tpname, column, tplength ) ) < 0 ) {printf(" Cnf_tsput ERROR at: %s ", tpname );exit(2);}. . .}============================================================ SIMD Termination ControlIn an SIMD cluster, the master normally distributes the working tuples to one tuple space object (TS1) then collects results from another tuple space object (TS2, see Figure 2). The workers are replicated to many computers to speed up the processing. They receive "anonymous" working tuples from TS1 and return the uniquely named results to TS2.They must be informed of the end of working tuples to avoid endless wait. Using the FIFO property, we can simply place a sentinel to signal the end. Each worker returns the end tuple to the space object upon recognition of the sentinel. It then exits itself (see program frwrk in Examples section).SIMD Load BalancingGraceful termination of parallel programs does not guarantee a synchronized ending. An application is completed if and only if all its sub-components are completed. Thus, we must minimize the waiting by synchronizing the termination points of all components. This is the classic "load balancing problem." Large wait penalty is caused not only by the heterogeneous computing and communication components but also by the varying amount of work loads embedded in the distributed working tuples .In all our experiments, we found that SIMD synchronization penalty is more significant than commonly feared communication overhead. In an Ethernet (1 megabytes per second)environment, the communication costs per transaction is normally in seconds while the synchronization points can differ in much greater quantities.The "load balancing hypothesis" is that we can minimize the wait if we can somehow calculate and place the "right" amount work in each working tuple. The working tuple calculation for parallel computers and loop scheduling for vector processors are technically the same problem.Theoretically, ignoring the communication overhead, we can minimize the waiting time if we distribute the work in the smallest possible unit (say one loop per tuple). Assuming that the actual work load is uniformly distributed, "load balancing" should be achieved because the fast computers will automatically fetch more tuples than the slower ones.Considering the communication overhead, however, finding the optimal granularity becomes a non-linear optimization problem [10]. We looked for heuristics. Here we present the following algorithms:•Fixed chunking. Each working tuple contains the same number of data items.Analysis and experiments showed that the optimal performance, assuming uniform work distribution, can be achieved if the work size is N P ii P =∑1, where N is the totalnumber of data items to be computed, P i is the estimated processing powermeasured in relative index values[10] and P is the number of parallel workers.。

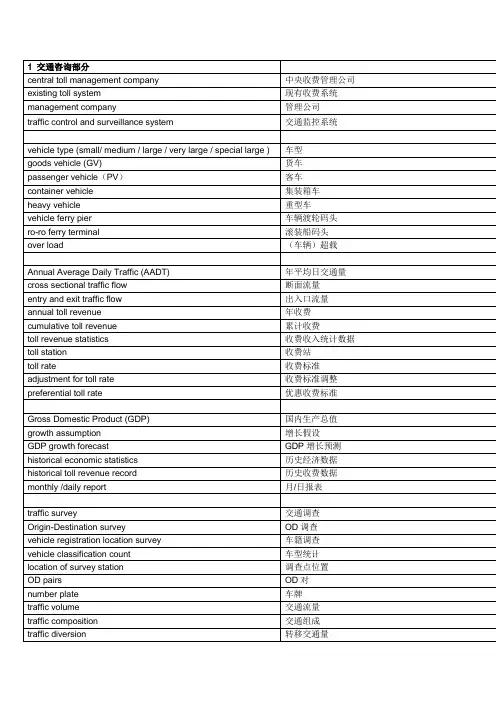

交通安全工程专业英语词汇1 交通规则traffic regulation2 路标guide post3 里程碑milestone4 停车标志mark car stop5 红绿灯traffic light6 自动红绿灯automatic traffic signal light7 红灯red light8 绿灯green light9 黄灯amber light10 交通岗traffic post11 岗亭police box12 交通警traffic police13 打手势pantomime14 单行线single line15 双白线double white lines16 双程线dual carriage-way17 斑马线zebra stripes18 划路线机traffic line marker19 交通干线artery traffic20 车行道carriage-way21 辅助车道lane auxiliary22 双车道two-way traffic23 自行车通行cyclists only24 单行道one way only25 窄路narrow road26 潮湿路滑slippery when wet27 陡坡steep hill28 不平整路rough road29 弯路curve road ; bend road30 连续弯路winding road31 之字路double bend road32 之字公路switch back road33 下坡危险dangerous down grade34 道路交叉点road junction35 十字路cross road36 左转turn left37 右转turn right38 靠左keep left39 靠右keep right40 慢驶slow41 速度speed42 超速excessive speed43 速度限制speed limit44 恢复速度resume speed45 禁止通行no through traffic46 此路不通blocked47 不准驶入no entry48 不准超越keep in line ; no overhead49 不准掉头no turns50 让车道passing bay51 回路loop52 安全岛safety island53 停车处parking place54 停私人车private car park55 只停公用车public car only56 不准停车restricted stop57 不准滞留restricted waiting58 临街停车parking on-street59 街外停车parking off-street60 街外卸车loading off-street61 当心行人caution pedestrian crossing62 当心牲畜caution animals63 前面狭桥narrow bridge ahead64 拱桥hump bridge65 火车栅level crossing66 修路road works67 医院hospital68 儿童children69 学校school70 寂静地带silent zone71 非寂静地带silent zone ends72 交通管理traffic control73 人山人海crowded conditions74 拥挤的人jam-packed with people75 交通拥挤traffic jam76 水泄不通overwhelm77 顺挤extrusion direct78 冲挤extrusion impact79 推挤shoved80 挨身轻推nudging81 让路give way82 粗心行人careless pedestrian83 犯交通罪committing traffic offences84 执照被记违章endorsed on driving license85 危险驾驶dangerous driving86 粗心驾车careless driving87 无教员而驾驶driving without an instructor88 无证驾驶driving without license89 未经车主同意without the owner's consent90 无第三方保险without third-party insurance91 未挂学字牌driving without a L plate92 安全第一safety first93 轻微碰撞slight impact94 迎面相撞head-on collision95 相撞collided96 连环撞 a chain collision97 撞车crash98 辗过run over99 肇事逃跑司机hit-run driver100 冲上人行道drive onto the pavemen智能交通专业英语词汇Glance value 瞥见距离之远近Glare 眩Glare control 眩光控制Glare recovery 眩光消除Glare recovery time 眩光消除时间Glare screen 防眩设施Glare shield 眩光遮蔽物Glare vision 眩光视觉Glass Funnel 玻璃漏斗Global Positioning System GPS 全球定位系统Global System for Mobile Communication GSM 泛欧式数字行动电话系统Goal 目标Goal Driven 目标驱动法Goods delivery problem 货物配送问题Gordon Ray雷伊Government-owned Public Parking Facility 公有公共停车场Gradation Curve 级配曲线Grade 坡度;纵向坡度Grade Crossings 平面交叉Grade I Road 一级路面Grade Line 坡度线Grade resistance 坡度阻力Grade Separation 立体交叉Grade separation bridge 立体交叉桥(结构物)Grade Separation Structure 立体叉结构Graduated Rate 累计费率Grain size analysis 粒径分析Grants 政府奖助Granular Materials 粒状材料Graphical analysis 图解分析法Gravel 砾石Gravel Equivalent Factor 卵石当景因素Gravel Road 砾石路Gravity Model 重力模式Gravity Yard 重力调车场Green Arrow 箭头绿灯Green/cycle ratio G/C 绿灯时间与号志周期之比值Green Extension 绿灯延长时间Green Flashing 闪光绿灯Green wave signal design 绿波式号志设计Greenhouse effect 温室效应Groove Joint 槽形接缝Gross National Product GNP 全国总生产毛额Gross Vehicle Weight GVW 总重Ground 地;地面Ground control point 地面控制点Ground improvement 地盘改良Ground treatment 地基处理Groundwater level 地下水位Group 群组Grouting 灌浆Growth Factor 成长因素Guardrail 护栏Guidance 导引Guidance information 导引信息Guide 导引Guide line 导引线Guide Sign 指示标志Gully control 蚀沟控制Gussasphalt 高温沥青胶浆Gutters 水沟HHalf-Integer Synchronization 半整数同步相位Hand in for Cancellation 缴销Hangar Marker 维护棚厂标记Hazard marker 危险标物Hazardous materials 危险物品Head (of water) 水头Head light 前灯;车前大灯Head On Collision 车头对撞Head Section 头区Head Sight Distance 车灯视矩Head Wall 端墙Header Board 横梁板Heading, Pilot tunnel 隧道导坑Headlight Sight Distance 车灯视距Head-on collision 对撞;正撞Headwater Depth 上水深Headway 时间车距;班距;行进间距(车距)Headway distribution 班距分布Headway Elasticity 班距弹性Headway, Period 班距Hearing 听力Heaving 隆起Heavy Commercial Vehicle Safety Management 重车安全管理Heavy Motorcycle 重型机踏车Heavy duty truck 重型卡车Heavy weight transportation management 大载重运输管理Heel of Switch 转辙轨跟端Heel Spread 跟端展距Height limit 高度限制Heuristic Approach 推理方法Hidden-Dip 躲坑High beam 远光灯High capacity buses 高容量巴士High Data Rate Digital Subscriber Line HDSL 高速数字用户回路High decked bus 高层巴士High oblique photography 平倾斜摄影High Occupancy Vehicle HOV 高乘载车辆High Occupancy Vehicle (HOV) Lane 高乘载车道High or direct gear 高速檔High Truss With Overhead Lateral Connections 高顶式High type 高级型High-mast Lighting 高杆多灯式照明设计High-Occupancy Vehicle Priority Control 高承载率车优先行驶控制High Speed Rail 高速铁路Highway 公路Highway (or street) lighting 公路(或街道)照明Highway Advisory Radio HAR 公路路况广播Highway aesthetics 公路美学Highway alignment design 公路线形设计Highway bridge 公路桥梁Highway Bus Carrier 公路汽车客运业Highway bus industry 公路客运业Highway Capacity Manual HCM 公路容量手册Highway Capacity 公路容量Highway construction 公路施工Highway Construction and Maintenance Cost 公路建设维护成本Highway database 公路数据库Highway Density 公路密度Highway embankment 公路路堤Highway engineering, Road engineering 公路工程Highway geometry, Highway routing 公路路线Highway Guardrail 公路护榈Highway information 公路资料Highway information system 公路信息系统Highway interchange area 交流道地区Highway Intersection 公路交叉Highway maintenance 公路维修与养护Highway passenger vehicle 公路客车Highway performance 公路绩效Highway Performance Monitoring System HPMS 公路绩效监测系统Highway planning 公路规划Highway ramp 公路匝道Highway Research Board (Renamed to beResearch Board) HRB 美国公路研究委员会Highway service standard 公路服务水准Highway Supervision and Administration Statistics 公路监理统计Highway surveillance 公路调查Highway Transit 公路大众运输系统Highway Transportation Statistics 公路运输统计Highway transportation, Road transportation 公路运输Highway Travel Time Survey 公路行驶时间调查Highway Type 公路之型式Highway, Road 公路Hiking trail design 健行步道设计Hill land, Hill slope, Hillslope, Slope, Slope land, Slopeland, Sloping land Hinterland 腹地Histogram 直方统计图Hit-and-run driving 肇事逃逸;闯祸逃逸Holding Line Marker 等候线标记Home interview 家庭访问Home-Based Trip 旅次Hook-Headed Spike 钩头道钉Hopper 进料斗Horizontal Alignment 平面线形Horizontal angle of the cone of vision 水平视锥角Horizontal Clearance 侧向净宽Horizontal control surveys 平面控制测量Horizontal Curve 平曲线Horizontal drain pipe 水平排水管Horizontal Marking 横向标线Host 主机Hot-Mix Asphalt Mixture HMA 热拌沥青混凝土Hourly variation 时变化图Household trip 旅次Human characteristics 人类特性Human factor 人为因素;人事行为因素Hydroplaning, Aquaplaning 水滑作用Hydrophilic 亲水性Hydrophobic 厌水性IIdeal Condition 理想状况Illegal parking 违规停车Illumination 照明;明亮度;照度Illumination, Lighting 照明Image 影像Image element, Picture element, Pixel 像素;像元;像点Image geometry 影像几何Impact 冲击Impact energy 撞(冲)击能量Impact resistance 冲击阻力Improvement plans 改善计画Improving Highway Traffic Order and Safety Projects 道路交通秩序与交通安全改进方案In-Band-Adjacent-Channel IBAC 带内邻频In-Band-On-Channel IBOC 带内同频In Kind, Match Fund 配合款Inbound 往内方向;进城;驶入Incident 事件Incident Management 事件管理Inclinometer 倾斜仪Incremental Delay 渐增延滞Incremental launching method 节块推进工法Indemnity of Damage 损害赔偿Intensity of Rainfall 雨量强度Index 指针Index system 索引系统Index system, Indicator system 指针系统Indigenous 原著民的;本土性Indirect observation 间接观测Individual Communication Subsystem 个别通讯次系统Individual difference 个人禀性的差异Individual spot 独立点Induced Coil 感应圈Inductive loop Detector 环路线圈侦测器Inductive Receiver 感应收讯器Inertia resistance 惯性阻力Information center 服务台Information Flow 信息流Information Level 信息层Information Management Services IMS 信息管理服务Information sign 指示标志;信息标志Information Sign/Guide Sign 指示标志Infrared Beacon 红外线信号柱Infrastructure 内部结构;基础建设Initial Cost 初置成本Initial Daily Traffic 初期每日交通量Injury 伤害Injury rate 受伤率Inlet 集水口Inlet Control 进口控制Inlet Time 流入时间Inner lane 中心车道In-situ 现地Inspection of Vehicle 汽车检验Integrated Ramp Control 整体匝道控制Integrated Service Digital Network ISDN 整体服务数字网络Integrated Services on Lease 整合租用Intellection or identification 运用智能;辨明Intelligent Transportation System ITS智能型运输系统Intelligent vehicle 智能车Intended Running Speed 期望行车速率Intensity 光度;光的强度Intensity and Duration of Rainfall 降雨时间与密度Intercepting Drain 截水管Interchange 交流道Interchangeability 相互交换性Intercity Bus 公路客运汽车Intercity bus industry 长途客运(业)Intercity highway passenger transportation 城际公路客运Interconnectivity 相互连结性Interference 干扰绿灯带Interlocking 连锁控制Interlocking Plant 连锁装置Intermodal 不同运具间的转换Internal Offset 内时差Internal resistance 内部阻力Internal survey 内区调查Internal-externaltrip 内区-外区间旅次International Driver License 国际驾照International Telecommunication Union ITU 国际电信联盟Internet 网际网络Internet Protocol IP 网际网络通讯协议Internet Service Provider ISP 网际网络服务业者Interoperability 操作时之相互连网性Interpolation Method 内插法Interrupted Delay 干扰延滞Interrupted flow 受有干扰车流;间断流通Intersection 交叉路口Intersection Angle 交角Intersection capacity 路口容量Intersection channelization 交叉路口的槽化Intersection characteristics 交叉路口特性Intersection design 交叉路口设计Intersection Point 切线交点Intersectional delay 交叉路口延滞Interstate 州际间的(一般指出入受限的公路)Interstate Commerce Commission ICC 州际商务委员会Interval 时段Interview technique 访问法;访谈法Intoxicated driving 酒后驾车Inventory 数据库JJitney 随停公车;简便公车Job Mix Formula 工地拌合公式Joint Opening 开口宽度;Joint Operation of Transport 联运Jointed Concrete Pavement JCP 接缝式混凝土铺面Jointed Reinforced Concrete Pavement JRCP 接缝式钢筋混凝土铺面Joule 焦耳(能量单位)Journal Resistance 轴颈阻力Jumbo 钻堡Junction 路口Junction box 汇流井KKalman filter algorithm 卡门滤波法Kalman filter, Kalman filtering 卡门滤波Keep right sign 靠右标志Key count station 关键调查站Kinematic Viscosity 动黏度Kink 纽结Kiss-and-Ride 停车转乘Kneading Action 辗挤作用Knot 节(等于每小时1.85公里)Knowledge Base 知识库Kurtosis 峰度LLag 间距Lag time 延迟时间Land Access 可及性Land Expropriation 土地征收Land Transportation 陆路运输Land use 土地使用;土地利用Landfill site 掩埋场Landscape design 景观设计Landslide/Slump 坍方Lane, traffic lane 车道Lane 巷道Lane allocation, Lane layout 车道配置Lane Balance 车道平衡Lane change 变换车道Lane control 车道管制Lane distribution 车道分布Lane Group 车道群Lane headway 车道行进间距Lane Line 车道线Lane Reduction Transition Line 路宽渐变线Lane residual width 巷道剩余宽度Lane Width 车道宽度Lane-direction control signal 车道行车方向管制号志Large Network Grouping 大型网络群组划分Large Passenger Vehicle 大客车Large-area Detector 大区域侦测器Latent travel demand 潜在旅次需求Lateral Acceleration 横向加速率Lateral clearance 侧向净距Lateral Collision Avoidance 侧向防撞Lateral Separation 左右隔离Lateral shift 侧向位移Laws of randomness 随机定理Lay-Down Vehicle Days 停驶延日车数Leading 绿灯早开Leading & lagging design 早开迟闭设计Leading Car 领导车Leading design 早开设计Learning Permit 学习驾照Lease 租赁Left turn 左转Left turn accel-decel & storage lane island 左转加减速-停储车道式分向岛Left turn acceleration lane island 左转加速车道式分向岛Left turn crossing 左转交叉穿越Left turn lane 左转车道Left turn maneuver 左转运行Left turn on red 在红灯时段内进行左转运行Left turn waiting zone 左转待转区Left turning vehicle 左转车辆Leg 路肢Legibility 公认性;易读性Length 长度Length of Economical Haul 经济运距Length of grade 坡长Length of lane change operation 变换车道作业的长度Length of Superelevation Runoff 超高渐变长度Length of time parked 停车时间的久暂Level 水准仪;横坑;水平面Level Crossing 平面交叉Level of illumination 明亮度水准Level of Service 服务水准Level or flat terrain 平原区Level surface 水准面Leveling Course 整平层License Plate 汔车号牌License Plate Method 牌照法License Suspension 吊扣驾照License Termination 吊销License Plate 汽车号牌License plate method 车辆牌照记录法;车辆牌照法License Plate Recognition 车牌辨识License renewal method 换照法Life cycle assessments LCA 生命周期评估Lift-On/Lift-Off 吊上吊下式Light Characteristics 灯质Light List 灯质表Light Motorcycle 轻型机踏车Light on method 亮灯法Light Phase 灯相Light Rail Rapid Transit LRRT 轻轨捷运Light Rail Transit LRT 轻轨运输Light Truck 小货车Lighting System 灯光系统Limited purpose (parking) survey 局部目的(停车)调查Line Capacity 路线容量Line marking 标线Linear Referencing System 线性参考系统Linear Shrinkage 线收缩Linear-Induction Motor LIM 线性感应马达Linear-Synchronous Motor LSM 线性同步马达Link arrival rate 路段流量到达率Link flow 路段流量Link performance function 道路绩效函数Linked or coordinated signal system 连锁号志系统Lip Curb 边石Liquidate 变成液体:偿还:破产Liquidated Damage 违约罚金Load Equivalent Factor LEF 荷重当量系数;载重当量因素Load Factor 负荷指数Load limit 载重限制Load Safety Factor 载重安全因素Loading 载重Loading & unloading 装卸Loading & unloading zone 上下旅客区段或装卸货物区段Loading Island 旅客上下车的车站岛Local Area Network LAN 局域网络Local Controller 路口控制器Local street 地区性街道Local traffic 地区性交通Local transmission network 区域传输网络Localizer 左右定位台Location file 地点档案Location of stop 站台设置位置Locked Joint 连锁接头Log Likelihood Function 对数概似函数Logical Architecture 逻辑架构Logit Model 罗吉特模式Logo 标记;商标Long Loop 长线圈Long tunnel 长隧道Long tunnel system 长隧道系统Long vehicle tunnel 车行长隧道Long-chord 长弦Longitude 经度Longitudinal Collision Avoidance 纵向防撞Longitudinal distribution of vehicle 车辆的纵向分布Longitudinal Drain 纵向排水Longitudinal Grade 纵坡度Longitudinal Joint 纵向接缝Longitudinal Separation 前后隔离Longitudinal Slope For Grade Line 纵断坡度Longitudinal ventilation 纵流式通风Longitudinal Warping 纵向扭曲Long-Range Planning 长程规划Long-term scour depth 长期冲刷深度Loop 环道(公路方面);回路(电路方面)Loop Detector 环路型侦测器Loop inductance 感应回路Los Angeles Abrasion Test 洛杉矶磨耗试验Lost Time 损失时间Louvers of Daylight Screening Structure 遮阳隔板Low beam 近灯Low Heat 低热Low or first gear 低速檔Low Pressure Sodium Lamp 低压钠气灯Low relative speed 低相对速率Low Truss 低架式Lumen 流明Luminance 辉度Luminaire 灯具Luminous Efficiency of a Source 光源效应Luminous flux 光流;光束Luminous Intensity 光度;光强度Lux 勒克斯MMacro or mass analysis 汇总分析;宏观分析Macroscopic 巨观Magnetic detector 电磁(磁性)侦测器Magnetic Levitation Maglev 磁浮运输系统Magnetic loop detector 磁圈侦测器Magnitude 规模Mainline 主线Maintenance Factor 维护系数Maintenance Work 养护工程Major flow 主要车流Major parking survey 主要停车调查Major Phase 主要时相Management Information Base MIB 网管信息库(管理讯息库)Management Information System MIS 管理信息系统Maneuverability 运行性Manhole 人孔;井Man-machine driving behavior 人机驾驶行为Man-machine interaction 人机互动Manual counts 人工调查法Map Matching Method 地图配对法Map scale 图比例尺Marginal vehicle 边际车辆Marker 标物;标记Market Package 产品组合Market Segment 市场区隔Marking 标线Marshaling Yard 货柜汇集场Marshall Test 马歇尔试验Mass Diagram 土积图Mass transportation 大众运输Match Fund 配合款Master 主路口Master controller 主要(总枢纽)控制器Master Node 主控制点Master Plan 主计画Material handling 物料搬运Maturity 成熟程度Max out 绿灯时间完全使用之现象Maximum allowable gradient 最大容许坡度Maximum Allowable Side Friction Factor 最大容许侧向摩擦系数Maximum Arterial Flow Method 最大干道流量法Maximum capacity 最大容量Maximum Density 最大密度Maximum flow rate 最大流率Maximum Grade 最大坡度Maximum individual delay 最大个别延滞Maximum Likelihood Function 最大概似法Maximum Load Section MLS 最大承载区间Maximum Peak Hour Volume 最尖峰小时交通量Maximum possible rate of flow 最大可能车流率Maximum queue 最大等待量(车队长度)Maximum Theoretical Specific Gravity 最大理论比重Mean Absolute Value of Error MAE 平均绝对误差Mean deviation 平均差Mean difference 均互差Mean Square Error MSE 平均平方误差Mean variance 离均差Mean velocity 平均速度Measure of Effectiveness MOE 绩效评估指针Mechanic License 技工执照Mechanical counter 机械式计数器Mechanical garage 机械式停车楼(间)Mechanical Kneading Compactor 揉搓夸压机Mechanical Load-Transfer Devices 机械传重设备Mechanical parking 机械停车Mechanical power 机械动力Median 中央分隔带;中央岛Median (50th percentile) speed 中位数速率;第50百分位数速率Median Bus Lane 设于道路中央之公车专用车道Median conflicts 中央冲突点Median Curing Cutback Asphalt MC 中凝油溶沥青Median island 中央岛Million Vehicles Kilometer MVK 百万车公里Median opening 中央分向岛缺(开)口Medium Distribution 中分布Memorandum of Understanding MOU 备忘录Mental factor 精神因素Mercury Vapor Lamp 水银蒸汽灯Merge 合并;并流;进口匝道;并入Merging area 并流区域Merging behavior 并入行为Merging conflicts 并流冲突点Merging maneuver 并流运行Merging of traffic 交通汇流Merging point 并流点Merging traffic 汇合交通Message Set 讯息集Metadata 诠释资料Metered Freeway Ramp 匝道仪控Metering rate 仪控率Metropolitan Planning Area 大都会规划区Micro or spot analysis 重点分析;微观分析Microwave Beacon 微波信号柱Mid-block (bus) stop 街廓中段公车站台Mid-block delay 街廓中段延滞Mid-Block Flow 中途转入流量Middle Ordinate 中距Mide-Block 街廓中央停靠方式Mile Per Gallon MPG 每加仑油量可跑的英哩数Milled Materials 刨除料Million Vehicles Kilometer MVK 百万车公里Mini car 迷你车Minibus 小型公车Minimum Curve Length 曲线之最短Minimum Design for Turning Roadway 转向道之最小设计Minimum Grade 最小纵断坡度Minimum Green Time 最短绿灯时间Minimum Phase Time 最短时相时间Minimum running time 最短行车时间Minimum separation 最小间距Minimum sight triangle 最小视界三角形Minimum speed limit 最低速率Minimum turning radius 最小转弯半径Minimum-speed curve 最低速率曲线Minor flow 次要车流Minor Phase 次要时相Minor street 次要道路Mix Design 配合设计Mixed fleet 混合车队Mixed fleet operation 混合车队营运Mixed flow, Mixed traffic, Mixed traffic flow 混合车流Mobile Communications 行动通讯Mobile Data 行动数据Mobile Data Network 行动数据网络Mobile Plant 移动式厂拌;活动式厂拌Mobile radio unit 车装式无线电话机Mobility 可行性Modal Split 运具分配Mode 运具Model traffic ordinance 模范交通条例Modem 通讯解调器Modular 模块化Modulus of Elasticity 弹性系数Modulus of Rupture 破裂模数Modulus of Subgrade Reaction 路基反应系数Moment of inertia 惯性力矩Monitoring 监测Monorail 单轨铁路Mortality 死亡数Motivation 动机Motor License 行车执照Motor transport service 汽车运输业Motor vehicle code 机动车辆规范Motorcycle lane 机车道Motorcycle user 机车使用者Motorcycle waiting zone 机车停等区Motorcyclist 机车驾驶人Motorcyclist Safety 机车骑士安全Mountable Curb or Rolled Curbs 可越式绿石Mountain road 山区道路Mountain terrain 山岭区Mounting height 装设高度Movement Distribution 流向分配Movement-Oriented 流动导向Moving belt 输送带Muck 碴Mud 泥浆Multi-Trip Ticket 多程票或回数票Multicommodity flow problem 多商品流量问题Multicommodity network flow problem 多重货物网络流动问题Multi-function Alarm Sign 多功能警示标志Multilane highway 多车道公路Multi-lane rural highway 多车道郊区公路Multilayer 多层Multileg Interchange 多路立体交叉Multileg Intersection 多路交叉Multimodal 多运具的Multimode mixed traffic 多车种车流Multi-parameter detector 多参数侦测器Multi-path effect 多路径效应Multipath Traffic Assignment Model 多重路线指派模式Multiperiod 多时段Multiple commodity network flow problem 多重商品网络流动问题Multiple cordon survey 多环周界调查Multiple network flow problem with side constraint 含额外限制多重网络流动问题Multiple turning lane 多线转向车道Multiple use area 多用途空间Multiple user classes 多种用路人Multiple-ride-ticket 回数票Multistories or multifloor garage 高楼停车间Multi-Tasking 多任务作业NNaphtha 石脑油National Cooperative Highway Research Program NCHRP 美国公路合作研究组织群National freeway 国道National Freeway Construction & Management Fund 国道公路建设管理基金National freeway network 国道路网National System Architecture 国家级架构National System of Interstate and Defense Highway 洲际国防公路Native Asphalt 天然沥育Natural (normal) distribution 自然(常态)分布Natural Disaster Traffic Management 自然灾害交通管理Natural moisture content 自然含水量Natural path 自然迹线Natural Rubber Latex 天然橡胶流质Natural Subgrade 天然路基Natural ventilation 自然通风Natural ventilation effect 自然通风效应Navigation 引导;导航Near-Side 路口近端Near-side bus stop 近端公车停靠站台Neon regulatory sign 霓虹式禁制标志Net Tractive Effort 净牵引力Net Weight 净重Network 网络New Jersey concrete barrier 纽泽西混凝土护栏Night visibility 夜间可见(视)性Night vision 夜间视力Nighttime driving 夜间驾驶Nitrogen Oxides NOX 氧化碳No left turn 不准左转;请勿左转No parking 禁止停车No parking on yellow line 禁止停车黄线No passing zone 不准超车区段No passing zone marking 不准超越地带标线No right turn 不准右转;请勿右转No standing on red line 禁止临时停车红线No turning 不准转向运行No U-turn 不准回转;请勿回转Noise 噪音Noise analysis 噪音分析Noise barrier, Sound insulating wall 隔音墙Noise control 噪音控制Noise induced annoyance 噪音干扰Noise intensity 噪音强度Noise Level 噪音水准Noise pollution 噪音污染Noise prevention 噪音防制Noise sensitive area 噪音敏感地区Nomograph 换算图Non-collision accident 非碰撞性肇事Non-Cutoff 无遮被型Non-Cutoff type NC/O 无遮蔽型Nonfatal Injury 非致命性的伤害Nonhomogeneous flow 不同流向的车流;非均质车流Non-Motorist 非机动车使用者(如行人)Non-Overlap 非重叠时相Nonpassing Sight Distance 不超车视距Non-Recurrent Congestion 非重现性交通壅塞Non-road user 非用路者Nonskid Surface Treatment 防滑处理Nonsynchronous controller 异步控制器No-Passing Zone Markings 禁止超车路段线Normal Crown NC 正常路拱Not approved 认定不应开发Novelty 新奇性Number of accident 肇事件数Number of conflict 冲突点数目Number of fatality 死亡人数Number of injury 受伤人数Number of Operating Vehicles 营业车辆数Number of parking spaces 停车车位数Number of Passengers 客运人数Number of Registered Vehicle 车辆登记数Numbers 要求之重复Numerical speed limit 数值速率限制Nurture room 育婴室OObject marking 障碍物标示(标线)Object or obstacle marking 路障标线Oblique crossing 斜向交叉穿越Obstruction approach marking 近障碍物标线Obstruction Light 障碍标示灯光Occupancy 车辆乘客数(包含驾驶)Occupancy study 乘客研究Occupant 车辆乘客(包含驾驶)Occupational Illness 职业病O-D Table 起讫旅次表Odometer 量距轮Off parking facilities, Off street parking garage 路外停车场Off Season 运输淡季Off street parking 路外停车Off-line 离线Off-ramp 出口匝道Off-set 号志时差Offset Fine-Turning Algorithm 时差微调法Off-set island end 岛端支距Offset transition 时差递移Off-set type intersection 错开式交叉路口Offset, Time lag 时差Off-street Bike Lane 道路外脚踏车车道Off-Street Parking Lot 路外停车场Off-tracking 轮迹内移Old vehicle elimination 车辆汰旧Omnibus (City Bus) 市区公共汽车On street parking, Roadside parking 路边停车On-Board Safety Monitoring 商用车辆车上安全监视On-Board Transit Survey 大众运输车上乘客调查On-Board Unit OBU 车上单元On-duty hour 勤务时间One lane 单车道One-side mounting 单侧配置法One-way arterial street 单向主要干道One-way Downgrade 单向下坡One-way Street 单行道One-way Ticket 单程票On-line 线上On-line Timing Plan Selection 动态查表Open bid 开标Open Geographic Information System 开放式地理信息系统Open GIS Services 开放式地理信息服务模式Open Graded Friction Course OGFC 开放级配摩擦层Open System Interconnection OSI 开放式系统互连架构Open traverse 开放导线Open-Graded Aggregate 开级配粒料Operating Cost 营运成本Operating speed 运行速率;营运速率Operating System OS 操作系统Operating Time 营运时间Operating Vehicle Days 行驶延日车数Operating-Mileage 营业里程Operation Bidding System 营运竞标制度Operation Right 路线经营权取得Operational delay 运行延滞Opposed crossing 反向交流;反向穿越Opposite mounting 双侧并列法Opposite car method 对向车法Optical Beacon 光学信号柱Optical Character Recognition OCR 车牌办识Optimal n-step toll 最佳n阶段收费方式Optimal path 最佳路径Optimal spacing 最适间距Optimum asphalt content 最佳沥青含量Optimum Moisture Content 最佳含水量Optimum signal timing 最佳号志时制Optional lane change 随意性变换车道Optional turning 随意转向Ordinal selection 序数选择法Ordinance 条例Ordinary Driver License 普通驾照Orientation system 导引系统Origin and destination study 起讫点研究Origin zone 起点区Outbound 往外方向;离开市区方向Outlet Control 出口控制Outlet Pipe 放流管Outlying area 外围(或偏远)地区Out-of-Face Replacement 整段抽换Over Haul 付价运矩Over high detector 超高侦测器Over turning 翻车;翻覆Overall parking program 整体停车计划Overall Standard Deviation 总标准偏差Overall Travel Speed 总旅行速率Overall travel time 全程行驶时间Overburden 超载;覆盖Overburden layer 覆土层Overflow Pavement 过水路面Overhaul Distance 付费运距Overhead (overhanging type) sign 悬挂式(横悬式;门架式)标志Overhead sign 架空标志Overlap 重叠Overlap degree 路线重叠指针Overlapping route 重复路线Overlaps 重耍Overlay 加铺路面层Overlay thickness design 加铺厚度设计Overloaded vehicle 超载车辆Overloading experiment 超载实验Overloading sediment 超载运移Overpass 天桥;高架道Overpass Bridge 跨越桥Overpass Highway 天桥公路Over-saturation Delay 过饱和延滞Overtaken 被超车Overtaken Vehicle 被超车辆Owl Rate 午夜费率Owner 业主Oxidation 氧化;硬化Ozone layer 臭氧层PPace 步调;速差间距Pacing violator 追赶违规者Packer 栓塞Packet-Switched Public Data Network PSPDN 分封交换公众数据网络Paging Communication 页码通讯Paging System 无线电叫人系统Paper Location 纸上定线Parabolic Curve 拋物线Parallax 视差Parameter 参数Parameter of Clothoid Curve 克罗梭曲参数Paratransit 副大众运输Parcel distribution industry 包裹配送业Park and ride system 停车转乘系统Park-and-Ride 停车转乘Parking 停车Parking accumulation 停车累积数Parking area 路边停车场Parking area planning 停车场规划Parking area, Parking lot 停车场Parking behavior 停车行为Parking capacity 停车容量Parking demand 停车需求Parking discount 停车折扣Parking duration 停车延时;停车持续时间Parking facility 停车设施Parking fee 停车费Parking garage 停车楼(间)Parking inventory 停车调查台帐或清单之建立Parking load 停车荷量Parking Lot 停车场Parking meter 停车收费表(器)Parking Peak Hour Factor 停车尖峰因素Parking prohibition 禁止路边停车Parking restriction 停车限制Parking Restrictions 禁止停车线Parking selecting behavior 停车选择行为Parking service index model 停车绩效指针模式Parking space 停车空间Parking Space Marking 停车场地标线Parking space requirement index 停车空间需求指针Parking space sharing 停车位共享Parking space, Parking stall 停车位Parking supply 停车供给Parking supply inventory 停车车位供应调查Parking volume 停车数(量)Parking-time limit 停车时间限制Parkway 林荫大道Partial Acceptance 部分验收Partial Clover Leaf 部分苜蓿叶Particulates 空气中微小颗粒Part-time restriction 局部时间设限Pass Ticket 通行票Pass-by Trip 顺道旅次Passed vehicle 被试验车超越的车辆Passenger 旅客;乘员Passenger car 小客车Passenger Car Equivalent PCE 小客车当量Passenger car ownership/usage 小客车持有与使用Passenger Car Unit PCU 小客车当量数Passenger Load Factor 客车乘载率Passenger Vehicles 小型车Passenger Volume 承载量Passenger-Cargo Dual-purpose 客货两用车Passenger-Kilometer 延人公里Passengers Per Bus-Kilometer 每车公里客运密度Passing 超车Passing Sight Distance 超车视距Passing time 通行时间;超车所需时间Passing Vehicle 超车车辆Passive Warning Device 被动警告设施Patching 修补Patrolling 巡逻Pattern of movement 运行移动的型态Paved Ditch 铺砌之水沟Paved road 铺面道路Pavement 铺面;路面Pavement markers 路面标记Pavement striping 路面标线Pavement aggregate 铺面骨材;铺面粒料Pavement aging 铺面老化Pavement Condition 铺面状况Pavement construction 铺面施工Pavement crack 铺面裂缝Pavement Crown 路拱Pavement damage 铺面破坏Pavement design 铺面设计Pavement distress 铺面损坏Pavement Drainage 路面排水Pavement engineering 铺面工程Pavement evaluation 铺面评审Pavement joint 铺面接缝Pavement Life 路面寿命Pavement maintenance 铺面维护Pavement maintenance management 铺面维护管理Pavement maintenance management system 铺面维护管理系统Pavement management and rehabilitation 铺面管理及修护Pavement marking 铺面标线Pavement material 铺面材料Pavement modulus 铺面模数Pavement performance 铺面绩效Pavement recycling 铺面再生Pavement rehabilitation 铺面翻修Pavement repair 铺面整修Pavement roughness 铺面糙度Pavement Serviceability 铺面服务性Pavement serviceability performance 铺面服务绩效Pavement strength 铺面强度Pavement structure 铺面结构Pavement structure evaluation 铺面结构评估Pavement thickness 铺面厚度Pavement, Road surface 路面Pavement-width transition marking 路宽渐变段标线Paver 铺筑机;铺装机Pay Enter 上车时付费Pay Leave 下车时付费Pay load 载重Peak Day (Hour) Volume 尖峰日(小时)流量Peak discharge 尖峰流量Peak hour 尖峰小时;尖峰时间。

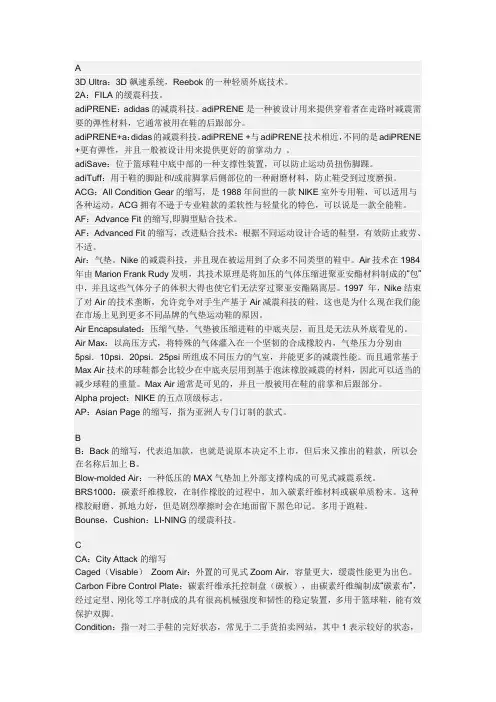

A3D Ultra:3D飙速系统,Reebok的一种轻质外底技术。

2A:FILA的缓震科技。

adiPRENE:adidas的减震科技。

adiPRENE是一种被设计用来提供穿着者在走路时减震需要的弹性材料,它通常被用在鞋的后跟部分。

adiPRENE+a:didas的减震科技。

adiPRENE +与adiPRENE技术相近,不同的是adiPRENE +更有弹性,并且一般被设计用来提供更好的前掌动力。

adiSave:位于篮球鞋中底中部的一种支撑性装置,可以防止运动员扭伤脚踝。

adiTuff:用于鞋的脚趾和/或前脚掌后侧部位的一种耐磨材料,防止鞋受到过度磨损。

ACG:All Condition Gear的缩写,是1988年问世的一款NIKE室外专用鞋,可以适用与各种运动。

ACG拥有不逊于专业鞋款的柔软性与轻量化的特色,可以说是一款全能鞋。

AF:Advance Fit的缩写,即脚型贴合技术。

AF:Advanced Fit的缩写,改进贴合技术:根据不同运动设计合适的鞋型,有效防止疲劳、不适。

Air:气垫。

Nike的减震科技,并且现在被运用到了众多不同类型的鞋中。

Air技术在1984年由Marion Frank Rudy发明,其技术原理是将加压的气体压缩进聚亚安酯材料制成的“包”中,并且这些气体分子的体积大得也使它们无法穿过聚亚安酯隔离层。

1997 年,Nike结束了对Air的技术垄断,允许竞争对手生产基于Air减震科技的鞋,这也是为什么现在我们能在市场上见到更多不同品牌的气垫运动鞋的原因。

Air Encapsulated:压缩气垫。

气垫被压缩进鞋的中底夹层,而且是无法从外底看见的。

Air Max:以高压方式,将特殊的气体灌入在一个坚韧的合成橡胶内,气垫压力分别由5psi.10psi.20psi.25psi所组成不同压力的气室,并能更多的减震性能。

而且通常基于Max Air技术的球鞋都会比较少在中底夹层用到基于泡沫橡胶减震的材料,因此可以适当的减少球鞋的重量。

有关农业的英语作文篇一:有关农业电子商务的特色创新与发展的探析中英文互译有关农业电子商务的特色创新与发展的探析中英文互译About agricultural the analysis of the characteristics of innovation and the development of e-commerce in Chinese and English translation国家一号文件《中共中央国务院关于加快发展现代农业进一步增强农村发展活力的若干意见》强调,要促进工业化、信息化、城镇化、农业现代化同步发展,着力强化现代农业基础支撑,深入推进社会主义新农村建设。

要大力培育现代流通方式和新型流通业态,发展农产品网上交易、连锁分销和农民网店。

继续实施“北粮南运”、“南菜北运”、“西果东送”、万村千乡市场工程、新农村现代流通网络工程,启动农产品现代流通综合示范区创建。

No. 1 country of the central committee of the CCP state council about accelerate the development of modern agriculture to further enhance the vitality of rural development certain opinions ", stressed that to promote industrialization, informatization, urbanization and agricultural modernization synchronization development, focus on strengthening modern agriculture as thefoundation, and thorough going efforts to promote socialist new rural construction. To foster modern ways of distribution and new circulation mode, the development of agricultural product trade on the net, chain store distribution and farmers. We will continue to implement the "north south yun", "south food north luck", "west GuoDong", new market project of thousands of villages and townships, rural modern circulation network engineering, start the creation of integrated demonstration area of modern circulation of agricultural products.一、当前农业电子商务发展中遇到的主要问题A, the main problems in current agricultural electronic commerce development近年来,电子商务产业发展迅猛,逐渐受到企业界和政府部门重视。

abaxial 【光】离中心光轴ABBE number 雅比数值,即相对色散倒数aberration change析光差变化〔因设计及应用光圈产生之光差变化〕aberrations 【光】析光差abrasion marks 〔底片〕花痕abrasive reducer 局部减薄剂absolute temperature 绝对温度absorption 吸收性能absorption curve 吸收曲线absorption filter = frequency filter 色谱滤片AC = alternating current 交流电AC coupler 交流电耦合器accelerator 促进剂accessories 配件accessory shoe 配件插座accumulator 储电器acetate base 醋酸片基acetate film 醋酸质胶片或菲林acetate filter 醋酸质滤光片acetic acid 【化】醋酸〔用于停影、定影、漂白及过调药〕,亦乙酸acetic acid, glacial 【化】冰醋酸〔即结晶如冰状的醋酸,用于急制及定影药〕acetone 【化】丙酮〔有机溶剂,配用于不溶于水的化学物〕achromat = achromatic lens 消色差镜头achromatic 【光】消色差的achromatic lens 消色差镜头acid 【化】酸acid fixer 酸性定影药acid rinse 酸漂acoustic 音响学,音响学的actinic 光化的,由光产生的化学变化action grip 快速手柄Action Photography 动态摄影acutance 明锐度,常指底片结像adapter 转接器adapter cable 转接导线adapter ring 转接环additive color printing method 加色法彩色放相技巧〔参阅附表〕additive synthesis 【光】原色混合〔原色包括红、绿、蓝色,三色相加产生白色,红绿产生黄色,红蓝产生洋红,绿蓝产生青靛色〕adhesive tape 胶纸advance lever 〔相机〕过片杆aerial camera 空中摄影机,或称遥感摄影机aerial film 空中摄影菲林,或称遥感摄影菲林aerial image 空间凝象〔指凝聚在焦点平面位置的影像〕aerial oxidation 氧化〔指与空气接触的氧化〕aerial perspective 透视感〔由气层产生远物模糊的透视现像〕Aerial Photography 空中摄影,或称遥感摄影aerial survey lens 空中测量镜头,应用于在空中测量地面,取景角度达120度,光圈多数固定于f5.6afocal lens 改焦镜头ageing 成熟过程1. 使感光物体成熟的过程2. 光学玻璃性能变为稳定所需的过程agitate 搅动agitation 搅动过程air brush 喷笔,执底或执相之用air lens 空气镜片〔指镜片与镜片之空间,其作用如镜片〕aircraft camera 航空摄影机album 相簿albumen 蛋白albumen pager 蛋白相纸,以蛋白作为乳化剂的相纸albumen print 蛋白相片,以蛋白相纸放成的作品albumin 蛋白质alcohol 酒精alcohol thermometer 酒精温度计alkali 【化】碱alkali earth 【化】碱土〔例如钡barium,钙calcium〕alkali metal【化】碱金属〔例如锂lithium,钠sodium〕Alpine Photography 山景摄影alternating current 交流电amateur 业余amateur photographer 业余摄影师amber 琥珀色Ambrotype 火棉胶正摄影法〔参阅附表〕American National Standard Institute 美国国家标准学会,ANSI 是感光度单位之一American Standards Association 1. 美国标准协会2. ASA 是感光度单位之一amidol 【化】二氨基酚,苯系化合物,俗称克美力,显影剂之一ammonium bichromate 【化】重铬酸铵,感光剂之一ammonium bifluoride 【化】氟化氢铵,用于使感光膜脱离玻璃片基ammonium carbonate 【化】碳酸铵,用于暖调显影药ammonium choloride 【化】氯化铵,用于漂白,过调药及感光剂ammonium persulphate 【化】过硫酸铵,显影剂之一ammonium sulphocyanate 【化】= ammonium thiocyanate 硫氰酸铵,用于过金〔色〕药ammonium thiocyanate 【化】= ammonium sulphocyanate 硫氰酸铵,用于过金〔色〕药Amphitype 正负双性相片amplifier 扩大器anamorphic process 变形拍摄方法anamorphotic lens 变形镜头,可将影像高度或阔度压缩或扩展anastigmat 消像散的anastigmat lens 消像散镜头angle coverage 〔镜头〕取景角度angle finder 量角器angle of gaze 凝视角〔人类视角通常是120 度,当集中注意力时约为五分之一,即25 度〕angle of incidence 【光】入射角angle of lens 镜头涵角angle of reflection 【光】反射角angle of refraction 【光】折射角angle of shooting 拍摄角度angle of view 观景角度Angstrom 〈埃〉长度单位=10-10 公尺anhydrous 无水的animation 动画Animation Photography 动画摄影animation stand 动画台annealing 【光】热炼〔制玻璃〕法〔这个方法是把玻璃在350至600度的电焗炉焗很长的时间,可减低制镜是时产生的扭曲〕ANSI 1. American National Standard Institute 〔美国国家标准学会〕2. 美国国家标准学会订出的感光度单位之一anti-fogging agent 防雾化剂anti-halation backing 防晕光底层anti-reflection coating 防反光膜anti-static wetting agent 消静电湿润剂anti-vignetting filter 消除黑角滤片aperture 光圈aperture display 光圈显示aperture needle 圈指针aperture ring 光圈环aperture scale 光圈刻度apochromatic 【光】复消色差Applied Photography 应用摄影arabic gum 阿拉伯树胶arc lamp 弧光灯Architectural Photography 建筑摄影area masking 局部加网area metering 区域测光artificial light 人造光源ASA 1. American Standards Association〔美国标准协会〕2. 感光度单位之一ASA setting device 感光度调校器asphalt 沥青aspherical lens 非球面镜头astigmatism 【光】像散,结像松散现像Astrophotography 天文摄影attachment 附加器audio 听觉性audio visual 视听auto = automatic 自动的简称automatic 自动化automatic loading 自动上片automatic bellows 自动近摄皮腔,自动回校光圈的近摄皮腔automatic camera 自动化相机automatic extension tube 自动延长管,自动回校光圈的延长管automatic flash 自动闪灯automatic focusing 自动对焦automatic rewinding 自动回卷automatic shooting range 自动拍摄范围automatic tray siphon 自动虹吸器,用于冲盆automatic winding 自动卷片auxiliary lens 附加镜头available light 现场光average gradient 平均倾斜率,平均梯度average metering 平均测光axial 【光】光轴back focal distance 【光】后焦距〔指镜头与菲林间的距离〕back projection 后方投影background 背景backlighting 背光bag bellow 袋型皮腔bar chart 棒形测试图bar static 线形静电纹〔因拉开过度卷紧菲林时产生的现象〕barn doors 遮光掩门barrel distortion 【光】桶形变形〔影像四边线条呈外弯线变形〕bas-relief 浮雕,黑房特技之一base 片基batch number 分批编号battery 电池battery charger 电池充电器battery pack 电池箱bayonet mount 刀环,镜头接环之一battery charger 电池充电器BCPS =beam candlepower second 光束烛光秒bead static珠形静电纹,亦称pearl static,在冲洗未完成前,用手拉擦过而产生的现象beam splitter 分光器bellows 皮腔bellows extension 皮腔延长度,多指近摄benzene 【化】苯benzotriazole【化】苯并三唑〔用于防雾化剂〕between-the-lens-shutter 镜间快门bi-convex 【光】双凸镜片bi-prism 双棱镜bi-prism focusing 双棱镜对焦bichromated albumen process 重铬酸盐蛋白蚀刻法〔参阅附录〕binocular vision 视觉三维效果birefringence =double refraction 双重折射,因镜片结构缺点产生重复折射现象bitumen 沥青bitumen grain process 沥青微粒蚀刻法〔参阅附录〕Black & White Photography 黑白摄影black filter 透紫外光滤片,只让紫外光透过的滤片black light 紫外光灯的俗称black opaqueopague 黑丹,修饰底片颜料bladed shutter 片闸式快门blank 【光】粗模,制镜过程中,经rough shaping 粗铸而成的镜片=dummy filter 空白滤光片,作为对焦等操作的预备,使应用滤镜拍摄时不会产生误差bleach 漂白药bleach-fix 漂定bleach-out process 漂移方法〔参阅附录〕bleaching 漂白bleeding 无边〔相片〕blimp 1. 闪烁2.保温隔音机套blocking 【光】粗磨,制造镜头过程之一,使blank 粗模〔镜片〕磨成Blocking out 遮挡blotch static雀斑形静电纹,亦称moisture static,因在湿度高的环境下回卷菲林而产生的现象。

Spring Semester, 2008PLL DESIGN AND CLOCK/FREQUENCY GENERATION (Lecture 12)Woogeun Rhee Institute of Microelectronics Tsinghua UniversityTerm Project• Design 645MHz fractional-N PLL circuit: - fref = 40MHz, fout = 645MHz with 50% duty cycle - Due date: June 24th40MHzfref(1) (2)645MHzPFD(3)CP(4)VCO(5)2(6)foutPLL BW: ~500kHz16/17 k-bit ACCUM2W. Rhee, Institute of Microelectronics, Tsinghua UniversityTerm Project (continued)• Do followings: 1. Design loop filter for ~500kHz PLL bandwidth. 2. Draw open-loop gain Bode plot based on LPF design from (a). 3. Plot node 3, 4 and check the lock time. 4. Plot node 1, 2 after PLL is fully settled. What is the amount of static phase offset? 5. Plot node 5, 6 for 10 VCO cycles (i.e. zoom-in plot). 6. Estimate the spur level based on VCO gain and waveform at node 3. 7. Verify (f) result by having FFT and measure spur level at VCO output in dBc. E1. Plot eye diagram of VCO output and measure jitter in time domain. (extra) E2. Run phase noise simulation of open-loop VCO and calculate closed-loop RJ. (extra) E3. Calculate closed-loop RJ by defining approximated noise bandwidth. (extra) E4. Tell whether DJ or RJ is dominant. (extra) • Note: Ideal blocks allowed for PFD & LPF design3W. Rhee, Institute of Microelectronics, Tsinghua UniversityV. Applications 3. Clock Multiplier Unit (CMU)4W. Rhee, Institute of Microelectronics, Tsinghua UniversityCMU Design Considerations• Similar to RF frequency synthesizer - Use of frequency divider and PFD/CP - Trade-off between noise and spur • Different from RF frequency synthesizer - Less stringent lock-in time - Ultimately interested in pk-pk jitter. - Noisier supply voltage - Noisier reference clock - Lower supply voltage - Mostly in digital CMOS process - Ring VCO and on-chip LPF preferred.5W. Rhee, Institute of Microelectronics, Tsinghua UniversityJitter• Absolute jitter (long-term jitter) - Phase error w.r.t. ideal referenceΔTabs ,rms = lim1 2 ΔT12 + ΔT22 + ⋅ ⋅ ⋅ + ΔTN N →∞ N• Cycle-to-cycle jitter (short-term jitter) - No need for referenceΔTcc ,rms ≈ lim1 (T2 − T1 )2 + (T3 − T2 )2 + ⋅ ⋅ ⋅ + (TN − TN −1 )2 N →∞ N• Period jitter - For PLL, period jitter = absolute jitter.ΔTp ,rms = lim1 (T − T1 )2 + (T − T2 )2 + ⋅ ⋅ ⋅ + (T − TN −1 )2 N →∞ N*Note:Periodic jitter (PJ) is often considered DJ by sinusoidal modulation, which is different from period jitter.W. Rhee, Institute of Microelectronics, Tsinghua University6Total Jitter (TJ)RJpk-pk (14*σ)• Total jitter (TJpp) - RJpp + DJpp = 14 x RJrms + DJpp • Random jitter (RJ) - Non-systematic jitter - Gaussian distribution • Deterministic jitter (DJ) - Systematic jitter - Coupling and ISI - Duty cycle distortionDJDJ dominant (modulation)RJ dominant (noise)Pspurfo7W. Rhee, Institute of Microelectronics, Tsinghua UniversityRandom Jitter (RJ)Bathtub Curve• For BER = 10-12, RJpp = 14 x RJrmsRef: “Jitter Fundamentals,” Wavecrest Company8W. Rhee, Institute of Microelectronics, Tsinghua UniversityRJ and Noise Integration BandwidthfoCDR tracking BWPDLPFCDRN• CDR tracking BW should be considered for TXPLL design. - SONET: 50kHz – 80MHz - Typically (Baud Rate) / 1667 – (Baud Rate) / 29W. Rhee, Institute of Microelectronics, Tsinghua UniversitySupply Noise EffectfoCDR tracking BWPDLPFCDRN10W. Rhee, Institute of Microelectronics, Tsinghua UniversityJSSC’96, von Kaenel et al. Supply Noise ConsiderationSupply w/o NoiseSupply w/t Noise(f 3dBLess power but needs more careful design for 50% duty cycle.Cascaded PLLsParallel PLLsISSCC’03, Wong et al.Cascaded PLLsV. Applications3. Clock Multiplier Unit (CMU)A. Uniform BW control for PCIe2B. ΔΣPLL for digital clock generationC. S-S clocking for EMI reductionInside PCCurrent ComingFB-DIMM•DDR2 DRAM + high-speed serial linkÆPoint-to-point serial link communication •Overcomes trade-off between speed and capacity.[Li, ITC’04], [PCI-SIG]s o 123H s H s e H s H s −Δτ=−⋅()[()()]()H 1(s)H 3(s)H 2(s)ΔτLC VCORing VCO[Noguchi, ISSCC’02][Herzel, JSSC’03][Moon, JSSC’04][Williams, CICC’04]Dual-Path VCOsTimeTimeVarious Coarse-Tuning Gains(with BW FINE = 1)Various Coarse-Tuning Bandwidths(with BW FINE = 1)(CppSim tool from M.I.T. used for simulation)PLL Behavioral Simulation(<80kHz)(10MHz)VDDINBINBIASOUT OUTBR1R2Narrowbanding (for coarse tuning)4th -pole of PLL (for fine tuning)Resistor noise contribution to PLLH(f)fF RCF BWLinear Amplifier and Noise ConsiderationPhase Noise PerformancesMeasured RJ VariationMeasured VCO Tuning CurvePSRR PerformanceV. Applications3. Clock Multiplier Unit (CMU)A. Uniform BW control for PCIe2B. ΔΣPLL for digital clock generationC. S-S clocking for EMI reductionFractional-N PLL for Wireline Applications?Flexible Frequency Planning with ΔΣPLL•Conventional PLL makes it difficult to accommodate various reference clock frequencies.Digital Clock Generation with <1ppm Resolution•PLL with ring VCO needs wide bandwidth to suppress VCO noise.ÆLow f ref /f bw ratio makes it difficult to implement ΔΣfractional-N PLL.ÆSuffer from cycle-to-cycle jitter problem due to quantization noise.Fractional-N PLL for Digital SystemL f ) d B c /H z )Basic ConceptsEquivalent Discrete-Time Model Frequency ResponseL ) B c H zBehavioral Simulation Results500MHz Output Spectrum(Fref= 14.318MHz, N=37.15603)VCO Control Voltage•FIR-embedded frequency divider reduces output cycle-to-cycle jitter.ISCAS’07, Chi et al.Measured Output SpectraV. Applications3. Clock Multiplier Unit (CMU)A. Uniform BW control for PCIe2B. ΔΣPLL for digital clock generationC. S-S clocking for EMI reductionElectromagnetic Interference (EMI)•Radiation emission is strictly regulated by FCC.•Can be reduced by shielded cables but expensive and bulky.ÆHow about modulating clock to reduce peak power?Modulation Profile Clock SpectrumCarrier(w/o modulation)Spread Spectrum ClockingJSSC’03, Chang et al.By Voltage ModulationBy Divider Modulation By ΔΣModulation ISSCC’99, Li et al.ISSCC’05, Lee et al.。