A general framework for types in graph rewriting

- Format: pdf

- Size: 378.01 KB

- Pages: 26

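Senior-2 English: 50 single-choice questions on software development

1. The main language used in software development is _____.
A. Python
B. Java
C. C++
D. All of the above

Answer: D. Python, Java, and C++ are all commonly used programming languages in software development, so the answer is "All of the above".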

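2. Which one is not a software development tool?
A. Visual Studio
B. IntelliJ IDEA
C. Photoshop
D. Eclipse

Answer: C. Photoshop is image-editing software, not a software development tool. Visual Studio, IntelliJ IDEA, and Eclipse are all commonly used integrated development environments.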

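3. The process of finding and fixing bugs in software is called _____.
A. debugging
B. coding
C. testing
D. designing

Answer: A. Debugging means finding and fixing errors in software. Coding is writing the code, testing is checking its behavior, and designing is planning its structure.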

4. A set of instructions that a computer follows is called a _____.
A. program
B. algorithm
C. data structure
D. variable

Answer: A. A program is a set of instructions that a computer follows. The other options name an algorithm, a data structure, and a variable.

5. Which programming paradigm emphasizes objects and classes?
A. Procedural programming
B. Functional programming
C. Object-oriented programming
D. Logic programming

Answer: C.

Kalman Filtering, Factor Graphs and Electrical Networks

Pascal O. Vontobel, Daniel Lippuner, and Hans-Andrea Loeliger
ISI-ITET, ETH Zurich, CH-8092 Zurich, Switzerland.

Abstract

Factor graphs are graphical models with origins in coding theory. It is well known that Kalman filtering is an instance of the generic sum(mary)-product algorithm on the corresponding factor graph. In this paper, a one-to-one correspondence between such factor graphs and a class of electrical networks is presented. The electrical network "computes" correct Bayesian estimates even for factor graphs with cycles.

Extended Summary

Background

Factor graphs [1] are a unifying framework for a wide variety of system models in coding, signal processing, and artificial intelligence. Many important algorithms in these fields are instances of the generic sum(mary)-product algorithm, which works by message passing in the factor graph. One such instance is Kalman filtering and smoothing; for the scalar case, this was shown in [1], the vector case was discussed in [2].

In the present paper, as in [2], we will use Forney-style factor graphs (FFGs) [3], a variation of factor graphs (in [3] called "normal graphs") with a number of attractive properties. Such a graph expresses the factorization of a function of several variables.
E.g., let $X$, $Y$, and $Z$ be random variables that form a Markov chain. Then their joint probability density $p_{XYZ}(x,y,z)$ can be written as

$$p_{XYZ}(x,y,z) = p_X(x)\, p_{Y|X}(y|x)\, p_{Z|Y}(z|y). \tag{1}$$

This factorization is expressed by the graph of Fig. 1. The edges in the graph correspond to the variables in (1), the nodes in the graph correspond to the factors in (1), and an edge is connected to some node if and only if the variable corresponding to the edge is an argument in the factor corresponding to the node.

One of the attractive properties of Forney-style factor graphs is that they subsume block diagrams as in Figures 2 and 3. These figures illustrate the classical linear state space model

$$X[k] = A X[k-1] + B U[k] \tag{2}$$

$$Y[k] = C X[k] + W[k] \tag{3}$$

where for $k \in \mathbb{Z}$, $U[k]$, $W[k]$, $X[k]$, and $Y[k]$ are real vectors and where $A$, $B$, and $C$ are matrices of appropriate dimensions. For the sake of exposition we will, in this paper, focus on the case where all these variables and matrices are scalars. The nodes in Figures 2 and 3 are then defined to represent factors as shown in Table 1 (left column). In Kalman filtering, both $U[\cdot]$ and $W[\cdot]$ are usually assumed to be white Gaussian ("noise") processes; the corresponding nodes in these figures are then of the type shown in the top row of Table 1.
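As an illustration of the scalar state space model in (2) and (3), the following is a minimal Python sketch that draws one sample path. The function name `simulate`, its parameters, the zero-mean noise, and the fixed seed are assumptions made for this example; they do not come from the paper.

```python
import random


def simulate(a, b, c, sigma_u, sigma_w, steps, x0=0.0, seed=0):
    """Draw one sample path of the scalar model
         X[k] = a*X[k-1] + b*U[k],   Y[k] = c*X[k] + W[k],
    with U[k] and W[k] white, zero-mean Gaussian with standard
    deviations sigma_u and sigma_w (illustrative assumptions)."""
    rng = random.Random(seed)
    x = x0
    xs, ys = [], []
    for _ in range(steps):
        u = rng.gauss(0.0, sigma_u)   # input noise U[k]
        w = rng.gauss(0.0, sigma_w)   # observation noise W[k]
        x = a * x + b * u             # state update, eq. (2)
        y = c * x + w                 # observation, eq. (3)
        xs.append(x)
        ys.append(y)
    return xs, ys


if __name__ == "__main__":
    states, observations = simulate(0.9, 1.0, 2.0, 0.5, 0.2, steps=5)
    for k, (x, y) in enumerate(zip(states, observations), start=1):
        print(f"k={k}: x={x:+.3f}, y={y:+.3f}")
```

Setting both standard deviations to zero makes the recursion deterministic, which is a convenient way to check the update order.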
The overall function whose factorization is expressed by Figures 2 and 3 is the joint probability density of all involved variables.

If the variables $Y[k]$ are observed, say $Y[k] = y_k$, the corresponding branches are "terminated" by nodes corresponding to factors $\delta(y[k] - y_k)$ (the dashed boxes in Fig. 2); such factors may be viewed as degenerate cases of Gaussian distributions (top row of Table 1) with variance zero. The graph then represents (the factorization of) the joint a posteriori density of all involved variables.

As described in [1] and [2], Kalman filtering may be viewed as passing messages along the edges of these graphs; in general, the messages consist of mean vectors and covariance matrices (or inverses of covariance matrices) and are computed according to the rules of the generic sum-product algorithm. In its most narrow sense, Kalman filtering is only the forward sum-product recursion through the graph of Fig. 2 and yields the a posteriori probability distribution of the state $X[k]$ given the observation sequence $Y[\cdot]$ up to time $k$. By computing also the backwards messages, the a posteriori probability of all quantities given the whole observation sequence $Y[\cdot]$ may be obtained.

More generally, Kalman filtering amounts to the sum-product algorithm on any FFG (or part of an FFG) that consists of (the vector/matrix versions of) the linear building blocks listed in Table 1 (left column). As pointed out in [2], Kalman filtering in this sense is equivalent to a general least-squares algorithm.

New Results

Interestingly, the results of Kalman filtering (in its widest sense as defined above) can also be obtained by translating the state space model into an electrical network according to the rules of Table 1. These networks consist of resistors, voltage sources, and transformers.
(The latter are defined by the equations $U_2 = aU_1$ and $I_2 = I_1/a$, where $U_i$ and $I_i$ are the voltage across, and the current into, terminal pair $i$, $i = 1, 2$.) As shown in Table 1, each edge/variable of the factor graph corresponds to a pair of nodes in the electrical network.

Theorem. The voltages between such node pairs are the Bayesian estimates (i.e., the means of the a posteriori marginal distributions) of the corresponding random variables.

The equivalence of (traditional) Kalman filtering with an electrical network as in Table 1 was recognized already by Carter [4]. However, the above theorem holds also if the factor graph has cycles, which goes beyond [4] (and appears to be related to the convergence results of [6] and [7]).

In the electrical network, message passing according to the sum-product algorithm (and thus classical Kalman filtering) can be interpreted as follows. Each message represents a Gaussian distribution as in the top row of Table 1, and can thus be represented by a voltage source (corresponding to the mean) in series with a resistor (corresponding to the variance).
As illustrated in Table 2, the computation of messages out of the other node types in Table 1 then amounts to connecting the incoming messages/circuits to the node circuit and reducing the resulting circuit to its Thévenin equivalent circuit (i.e., a voltage source in series with a resistor).

We conclude with the following remarks:

• The correspondence given in Table 1 can be extended to the vector case.

• There is a dual version of the correspondence of Table 1 where the voltages and the currents are interchanged, as are series and parallel connections of subcircuits.

• The representation of Kalman filtering (and smoothing) as an electrical network allows an easy transition from discrete-time Kalman filtering to continuous-time Kalman filtering.

• A generalization beyond Kalman filtering/least squares was recently discovered by the first author [8]: if $p(x,y,\ldots)$ is any joint probability density function such that $-\log p(x,y,\ldots)$ is convex, then a factor graph of $p$ can be translated into an electrical network that finds the global maximum of $p$. The network consists of voltage sources (or current sources) and nonlinear resistors. Circuit simplifications similar to those in Table 2 correspond to the message passing rules of the max-product version (rather than the sum-product version) of the generic sum(mary)-product algorithm. Moreover, if the voltages are solutions to the primal maximization problem, then the currents are the solutions to the dual problem.

Acknowledgement

The authors thank S. Mitter for pointing out the book by Dennis [5], which inspired this work.

Figure 1: Forney-style factor graph (FFG) of a Markov chain (nodes $p_X$, $p_{Y|X}$, $p_{Z|Y}$; edges $X$, $Y$, $Z$).

Figure 2: State space model.

Figure 3: Details of linear state space model.

Table 1: Correspondence between the nodes in the factor graph (left) and the components of the electrical network (right). All variables are scalars. The circuit drawings of the right column did not survive extraction; the node factors of the left column are:

Top row (Gaussian): $\gamma\, e^{-\frac{(x-m)^2}{2\sigma^2}}$
Row 1 (equality): $\delta(x-y)\,\delta(x-z)$
Row 2 (sum): $\delta(x+y-z)$
Row 3 (gain $a$, transformer $1:a$): $\delta(y-ax)$

Table 2: Computation of messages (left and middle) with circuit interpretation (right). The circuit drawings did not survive extraction; the message update rules are:

Row 1 (equality): $m_Z = \dfrac{m_X/\sigma_X^2 + m_Y/\sigma_Y^2}{1/\sigma_X^2 + 1/\sigma_Y^2}$, $\quad \sigma_Z^2 = \dfrac{1}{1/\sigma_X^2 + 1/\sigma_Y^2}$
Row 2 (sum): $m_Z = m_X + m_Y$, $\quad \sigma_Z^2 = \sigma_X^2 + \sigma_Y^2$
Row 3 (gain $a$): $m_Y = a\, m_X$, $\quad \sigma_Y^2 = a^2 \sigma_X^2$

References

[1] F. R. Kschischang, B. J. Frey, and H.-A. Loeliger, "Factor graphs and the sum-product algorithm," IEEE Trans. Inform. Theory, vol. IT-47, no. 2, pp. 498–519, 2001.

[2] H.-A. Loeliger, "Least squares and Kalman filtering on Forney graphs," to appear in Codes, Graphs, and Systems, festschrift in honour of G. D. Forney, Kluwer, 2001.

[3] G. D. Forney, Jr., "Codes on graphs: normal realizations," IEEE Trans. Inform. Theory, vol. IT-47, pp. 520–548, Feb. 2001.

[4] D. W. Carter, "A circuit theory of the Kalman filter," in Proc. 32nd Conf. on Decision and Control (San Antonio, TX, USA), pp. 1224–1226, Dec. 1993.

[5] J. B. Dennis, Mathematical Programming and Electrical Networks. MIT Press, Cambridge, Mass., 1959.

[6] Y. Weiss and W. T. Freeman, "On the optimality of solutions of the max-product belief-propagation algorithm in arbitrary graphs," IEEE Trans. Inform. Theory, vol. 47, pp. 736–744, Feb. 2001.

[7] P. Rusmevichientong and B. Van Roy, "An analysis of belief propagation on the turbo decoding graph with Gaussian densities," IEEE Trans. Inform. Theory, vol. 47, pp. 745–765, Feb. 2001.

[8] P. O. Vontobel, Kalman Filters, Factor Graphs, and Electrical Networks. Internal report INT/200202, ISI-ITET, ETH Zurich, April 2002.
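The scalar message rules of Table 2 in the Kalman filtering summary above can be sketched in Python as follows. This is an illustrative sketch, not the authors' implementation: the names `Gaussian`, `equality`, `add`, `gain`, and `kalman_step` are hypothetical, and the backward pass through the observation branch assumes a nonzero scalar `c`. One filtering step combines the predicted state message with the message coming back from the observation at an equality node.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gaussian:
    """A scalar Gaussian message: mean and variance."""
    mean: float
    var: float


def equality(x: Gaussian, y: Gaussian) -> Gaussian:
    """Table 2, row 1: precisions add, means are precision-weighted."""
    precision = 1.0 / x.var + 1.0 / y.var
    mean = (x.mean / x.var + y.mean / y.var) / precision
    return Gaussian(mean, 1.0 / precision)


def add(x: Gaussian, y: Gaussian) -> Gaussian:
    """Table 2, row 2: means add and variances add."""
    return Gaussian(x.mean + y.mean, x.var + y.var)


def gain(x: Gaussian, a: float) -> Gaussian:
    """Table 2, row 3: Y = a*X scales the mean by a, the variance by a^2."""
    return Gaussian(a * x.mean, a * a * x.var)


def kalman_step(prev, y_obs, a, b, c, u, w):
    """One forward step for X[k] = a*X[k-1] + b*U[k], Y[k] = c*X[k] + W[k].

    prev is the message on X[k-1]; u and w are the Gaussian messages for
    U[k] and W[k]; y_obs is the observed value of Y[k].  Assumes c != 0.
    """
    predicted = add(gain(prev, a), gain(u, b))
    # Backward through the sum node with Y fixed: c*X = y_obs - W.
    cx = Gaussian(y_obs - w.mean, w.var)
    # Backward through the gain node: X = (c*X) / c.
    from_observation = gain(cx, 1.0 / c)
    return equality(predicted, from_observation)


if __name__ == "__main__":
    posterior = kalman_step(Gaussian(0.0, 1.0), 1.3, a=0.9, b=1.0, c=2.0,
                            u=Gaussian(0.0, 0.5), w=Gaussian(0.0, 0.2))
    print(posterior)
```

In circuit terms, each `Gaussian` plays the role of a voltage source (the mean) in series with a resistor (the variance), and each function returns the Thévenin equivalent seen at the outgoing edge.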

English essay on line graphs

Title: Exploring the Significance of Line Graphs

Introduction:

Line graphs are an indispensable tool in data representation, widely used across various fields such as economics, science, and social sciences. Their simplicity and effectiveness in illustrating trends and patterns make them a preferred choice for conveying information. In this essay, we delve into the importance and utility of line graphs.

Importance of Line Graphs:

Line graphs serve multiple purposes, primarily to visualize and analyze data trends over time or other continuous variables. They offer a clear depiction of how one variable changes in relation to another, aiding in the identification of patterns, correlations, and anomalies.

1. Trend Analysis:

One of the fundamental applications of line graphs is to analyze trends. By plotting data points over time, line graphs provide insights into the direction and magnitude of change in variables. For instance, in economic analysis, line graphs are used to track GDP growth, unemployment rates, or stock market performance over different periods.

2. Comparisons:

Line graphs facilitate easy comparison between different datasets. Multiple lines on the same graph enable viewers to contrast trends and observe any disparities or similarities. This is particularly useful in fields like marketing, where companies can compare sales figures of various products or services over time.

3. Forecasting:

Line graphs can also aid in forecasting future trends based on past data. By extrapolating the existing trend lines, analysts can make informed predictions about future outcomes. This forecasting capability is valuable in financial planning, resource allocation, and risk management.

4. Identification of Patterns:

Line graphs help in identifying underlying patterns within datasets. Whether it's seasonal fluctuations, cyclical trends, or long-term growth trajectories, line graphs provide a visual framework for pattern recognition. This assists researchers and decision-makers in understanding the underlying dynamics driving the data.

5. Communication of Findings:

In addition to their analytical functions, line graphs are powerful communication tools. They simplify complex data into visually appealing representations that are easy to comprehend. Whether presenting to stakeholders, policymakers, or the general public, line graphs facilitate effective communication of findings and insights.

Example Applications:

To illustrate the versatility of line graphs, consider the following examples:

1. Climate Change Analysis:

Line graphs are commonly used to visualize temperature trends over decades. By plotting annual average temperatures, scientists can demonstrate the long-term impact of climate change, highlighting the steady rise in global temperatures.

2. Stock Market Performance:

Investors rely on line graphs to track the performance of stocks, indices, and commodities. A line graph depicting the movement of a stock price over time helps investors identify patterns, assess volatility, and make informed trading decisions.

3. Disease Outbreak Monitoring:

Health organizations utilize line graphs to monitor the spread of diseases such as COVID-19. By plotting daily or weekly case counts, epidemiologists can track the progression of outbreaks, assess the effectiveness of interventions, and forecast future infection rates.

Conclusion:

In conclusion, line graphs play a crucial role in data analysis, interpretation, and communication. Their ability to represent trends, compare datasets, forecast future outcomes, identify patterns, and communicate findings makes them an indispensable tool across various domains. As we continue to navigate an increasingly data-driven world, the importance of line graphs in understanding complex phenomena cannot be overstated.

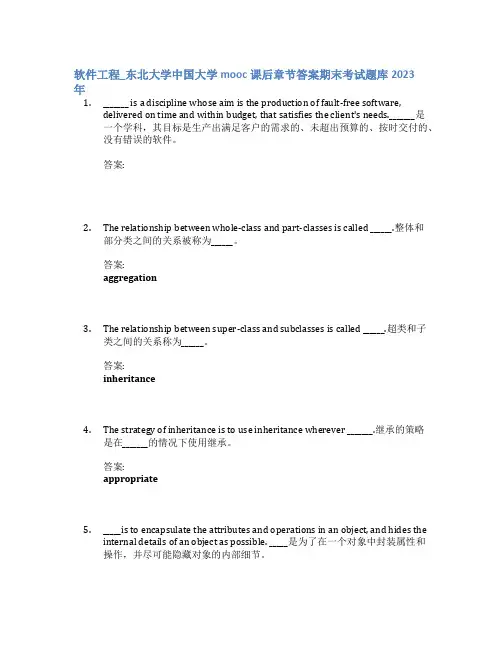

Software Engineering (Northeastern University), China University MOOC: end-of-chapter answers and final exam question bank, 2023

1. _______ is a discipline whose aim is the production of fault-free software, delivered on time and within budget, that satisfies the client's needs.

Answer:

2. The relationship between whole-class and part-classes is called ______.

Answer: aggregation

3. The relationship between super-class and subclasses is called ______.

Answer: inheritance

4. The strategy of inheritance is to use inheritance wherever _______.

Answer: appropriate

5. _____ encapsulates the attributes and operations in an object and hides the internal details of the object as far as possible.

Answer: Data encapsulation

6. Two modules are ________ coupled if they have write access to global data.
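The three terms from questions 2 to 5 above (aggregation, inheritance, data encapsulation) can be illustrated with a minimal Python sketch. The classes `Engine`, `Vehicle`, and `Truck` are hypothetical examples chosen for this illustration and do not come from the course material.

```python
class Engine:
    """Data encapsulation: the power rating is kept in a private-by-convention
    attribute and exposed only through a read-only property."""

    def __init__(self, power_kw: int) -> None:
        self._power_kw = power_kw

    @property
    def power_kw(self) -> int:
        return self._power_kw


class Vehicle:
    """Aggregation: a Vehicle (the whole) holds an Engine (a part)."""

    def __init__(self, engine: Engine) -> None:
        self.engine = engine

    def describe(self) -> str:
        return f"vehicle with {self.engine.power_kw} kW engine"


class Truck(Vehicle):
    """Inheritance: Truck is a subclass of the super-class Vehicle."""

    def __init__(self, engine: Engine, payload_t: int) -> None:
        super().__init__(engine)
        self.payload_t = payload_t

    def describe(self) -> str:
        # Reuse the super-class behaviour and extend it.
        return f"truck ({self.payload_t} t), " + super().describe()


if __name__ == "__main__":
    print(Truck(Engine(300), 12).describe())
```

Here inheritance is used because a truck genuinely is a kind of vehicle, while the engine is modelled as a part rather than a subclass.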

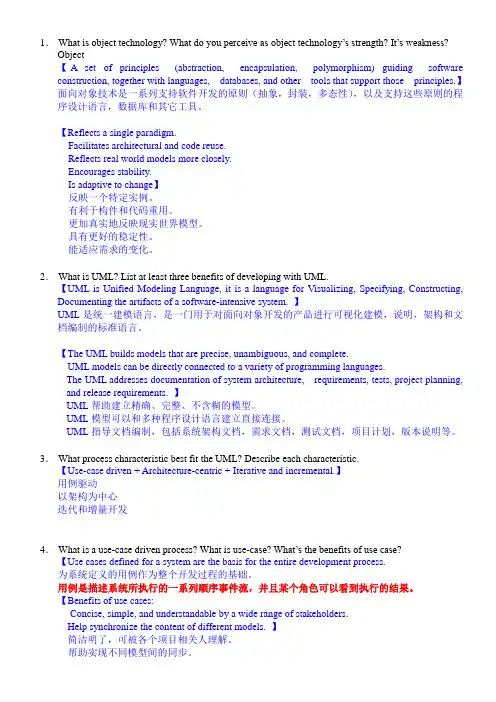

1. What is object technology? What do you perceive as object technology's strengths? Its weaknesses?

Object technology is a set of principles (abstraction, encapsulation, polymorphism) guiding software construction, together with languages, databases, and other tools that support those principles.

Strengths:

Reflects a single paradigm.

Facilitates architectural and code reuse.

Reflects real-world models more closely.

Encourages stability.

Is adaptive to change.

2. What is UML? List at least three benefits of developing with UML.

UML is the Unified Modeling Language. It is a language for visualizing, specifying, constructing, and documenting the artifacts of a software-intensive system.

International Conference on Principles and Practice of Constraint Programming 1995, pp. 88–102

Asynchronous Weak-commitment Search for Solving Distributed Constraint Satisfaction Problems

Makoto Yokoo
NTT Communication Science Laboratories
2-2 Hikaridai, Seika-cho, Soraku-gun, Kyoto 619-02, Japan
e-mail: yokoo@cslab.kecl.ntt.jp

Abstract. A distributed constraint satisfaction problem (Distributed CSP) is a CSP in which variables and constraints are distributed among multiple automated agents, and various application problems in Distributed Artificial Intelligence can be formalized as Distributed CSPs. We develop a new algorithm for solving Distributed CSPs called asynchronous weak-commitment search, which is inspired by the weak-commitment search algorithm for solving CSPs. This algorithm can revise a bad decision without an exhaustive search by changing the priority order of agents dynamically. Furthermore, agents can act asynchronously and concurrently based on their local knowledge without any global control, while guaranteeing the completeness of the algorithm.

The experimental results on various example problems show that this algorithm is by far more efficient than the asynchronous backtracking algorithm for solving Distributed CSPs, in which the priority order is static. The priority order represents a hierarchy of agent authority, i.e., the priority of decision making. Therefore, these results imply that a flexible agent organization, in which the hierarchical order is changed dynamically, actually performs better than an organization in which the hierarchical order is static and rigid.

1 Introduction

Distributed Artificial Intelligence (DAI) is a subfield of AI that is concerned with the interaction, especially the coordination, among artificial automated agents.
Since distributed computing environments are spreading very rapidly due to the advances in hardware and networking technologies, there are pressing needs for DAI techniques, thus DAI is becoming a very vital area in AI.

In [13], a distributed constraint satisfaction problem (Distributed CSP) is formalized as a CSP in which variables and constraints are distributed among multiple automated agents. It is well known that a surprisingly wide variety of AI problems can be formalized as CSPs. Similarly, various application problems in DAI which are concerned with finding a consistent combination of agent actions (e.g., distributed resource allocation problems [3], distributed scheduling problems [9], and multi-agent truth maintenance tasks [5]) can be formalized as Distributed CSPs. Therefore, we can consider a Distributed CSP as a general framework for DAI, and distributed algorithms for solving Distributed CSPs as an important infrastructure in DAI.

It must be noted that although algorithms for solving Distributed CSPs seem to be similar to parallel/distributed processing methods for solving CSPs [2, 14], research motivations are fundamentally different. The primary concern in parallel/distributed processing is the efficiency, and we can choose any type of parallel/distributed computer architecture for solving the given problem efficiently. In contrast, in a Distributed CSP, there already exists a situation where knowledge about the problem (i.e., variables and constraints) is distributed among automated agents. Therefore, the main research issue is how to reach a solution from this given situation. If all knowledge about the problem can be gathered into one agent, this agent can solve the problem alone using normal centralized constraint satisfaction algorithms. However, collecting all information about a problem requires certain communication costs, which could be prohibitively high.
Furthermore, in some application problems, gathering all information to one agent is not desirable or impossible for security/privacy reasons. In such cases, multiple agents have to solve the problem without centralizing all information.

The author has developed a basic algorithm for solving Distributed CSPs called asynchronous backtracking [13]. In this algorithm, agents act asynchronously and concurrently based on their local knowledge without any global control.

In this paper, we develop a new algorithm called asynchronous weak-commitment search, which is inspired by the weak-commitment search algorithm for solving CSPs [12]. The main characteristic of this algorithm is as follows.

– Agents can revise a bad decision without an exhaustive search by changing the priority order of agents dynamically.

In the asynchronous backtracking algorithm, the priority order of agents is determined, and an agent tries to find a value satisfying the constraints with the variables of higher priority agents. When an agent sets a variable value, the agent commits to the selected value strongly, i.e., the selected value will not be changed unless an exhaustive search is performed by lower priority agents. Therefore, in large-scale problems, a single mistake of value selection becomes fatal since doing such an exhaustive search is virtually impossible. This drawback is common to all backtracking algorithms.

On the other hand, in the asynchronous weak-commitment search, when an agent cannot find a value consistent with the higher priority agents, the priority order is changed so that the agent has the highest priority. As a result, when an agent makes a mistake in value selection, the priority of another agent becomes higher; thus the agent that made the mistake will not commit to the bad decision, and the selected value will be changed. We will show that the asynchronous weak-commitment search algorithm can solve problems such as the distributed 1000-queens problem, the distributed graph-coloring problem, and the
network resource allocation problem [8] that the asynchronous backtracking algorithm fails to solve within a reasonable amount of time.

We can assume that the priority order represents a hierarchy of agent authority, i.e., the priority order of decision making. Therefore, these results imply that a flexible agent organization, in which the hierarchical order is changed dynamically, actually performs better than an organization in which the hierarchical order is static and rigid.

In the following, we briefly describe the definition of a Distributed CSP and the asynchronous backtracking algorithm (Section 2). Then, we show the basic ideas and details of the asynchronous weak-commitment search algorithm (Section 3), and empirical results which show the efficiency of the algorithm (Section 4). Finally, we examine the complexity of the algorithm (Section 5).

2 Distributed Constraint Satisfaction Problem and Asynchronous Backtracking

2.1 Formalization

A CSP consists of $n$ variables $x_1, x_2, \ldots, x_n$, whose values are taken from finite, discrete domains $D_1, D_2, \ldots, D_n$ respectively, and a set of constraints on their values. A constraint is defined by a predicate. That is, the constraint $p_k(x_{k1}, \ldots, x_{kj})$ is a predicate which is defined on the Cartesian product $D_{k1} \times \ldots \times D_{kj}$. This predicate is true iff the value assignment of these variables satisfies this constraint. Solving a CSP is equivalent to finding an assignment of values to all variables such that all constraints are satisfied.

A Distributed CSP is a CSP in which variables and constraints are distributed among automated agents. We assume the following communication model.

– Agents communicate by sending messages. An agent can send messages to other agents iff the agent knows the addresses of the agents (footnote 1).

– The delay in delivering a message is finite, though random. For the transmission between any pair of agents, messages are received in the order in which they were sent.

Each agent has some variables and tries to determine their values. However, there exist
inter-agent constraints, and the value assignment must satisfy these inter-agent constraints. Formally, there exist $m$ agents $1, 2, \ldots, m$. Each variable $x_j$ belongs to one agent $i$ (this relation is represented as belongs($x_j$, $i$)). Constraints are also distributed among agents. The fact that an agent $k$ knows a constraint predicate $p_l$ is represented as known($p_l$, $k$).

We say that a Distributed CSP is solved iff the following conditions are satisfied.

– $\forall i$, $\forall x_j$ where belongs($x_j$, $i$), the value of $x_j$ is assigned to $d_j$, and $\forall k$, $\forall p_l$ where known($p_l$, $k$), $p_l$ is true under the assignment $x_j = d_j$.

Footnote 1: This model does not necessarily mean that the physical communication network must be fully connected (i.e., a complete graph). Unlike most parallel/distributed algorithm studies, in which the topology of the physical communication network plays an important role, we assume the existence of a reliable underlying communication structure among agents and do not care about the implementation of the physical communication network. This is because our primary concern is the cooperation of intelligent agents, rather than solving CSPs by certain multi-processor architectures.

2.2 Asynchronous Backtracking

A basic algorithm for solving Distributed CSPs called asynchronous backtracking is developed in [13]. In this algorithm, agents act asynchronously and concurrently, in contrast to traditional sequential backtracking techniques, while guaranteeing the completeness of the algorithm.

Without loss of generality, we make the following assumptions while describing our algorithms for simplicity. Relaxing these assumptions to general cases is relatively straightforward (footnote 2).

– Each agent has exactly one variable.

– All constraints are binary.

– There exists a constraint between any pair of agents.

– Each agent knows all constraint predicates relevant to its variable.

In the following, we use the same identifier $x_i$ to represent an agent and its variable. We assume that each agent (and its variable) has a unique identifier.

In the asynchronous backtracking algorithm, each agent concurrently assigns a value to its variable, and sends the value to other agents. After that, agents wait for and respond to incoming messages. There are two kinds of messages: ok? messages to communicate the current value, and nogood messages to communicate information about constraint violations. The procedures executed at agent $x_i$ by receiving an ok? message and a nogood message are described in Fig. 1. An overview of these procedures is given as follows.

– After receiving an ok? message, an agent records the values of other agents in its agent_view. The agent_view represents the state of the world recognized by this agent (Fig. 1 (i)).

– The priority order of variables/agents is determined by the alphabetical order of the identifiers, i.e., preceding variables/agents in the alphabetical order have higher priority. If the current value satisfies the constraints with higher priority agents in the agent_view, we say that the current value is consistent with the agent_view (footnote 3). If the current value is not consistent with the agent_view, the agent selects a new value which is consistent with the agent_view (Fig. 1 (ii)).

– If the agent cannot find a value consistent with the agent_view, the agent sends nogood messages to higher priority agents (Fig. 1 (iii)). A nogood message contains a set of variable values that cannot be a part of any final solution.

By using this algorithm, if a solution exists, agents will reach a stable state where all constraints are satisfied. If there exists no solution, an empty nogood will be found and the algorithm will terminate (footnote 4).

Footnote 2: In [11], an algorithm in which each agent has multiple variables is described. If there exists no explicit constraint between two agents, there is a chance that an implicit constraint exists between them. In such a case, a new relation between these agents must be generated dynamically. The procedure for adding a new relation is described in [13]. The idea of making originally implicit constraints explicit can be found in the consistency algorithms for CSPs. For example, the adaptive consistency procedure [4] adds links to a constraint network while transforming the constraint network to a backtrack-free constraint network.

Footnote 3: More precisely, the agent must satisfy not only initially given constraint predicates, but also the new constraints communicated by nogood messages.

Footnote 4: A set of variable values that is a superset of a nogood cannot be a final solution. If an empty set becomes a nogood, it means that there is no solution, since any set is a superset of an empty set.

    when received (ok?, (x_j, d_j)) do -- (i)
        add (x_j, d_j) to agent_view;
        check_agent_view;
    end do;

    when received (nogood, x_j, nogood) do
        add nogood to nogood_list;
        check_agent_view;
    end do;

    procedure check_agent_view
        when agent_view and current_value are not consistent do -- (ii)
            if no value in D_i is consistent with agent_view then backtrack; -- (iii)
            else select d ∈ D_i where agent_view and d are consistent;
                current_value ← d;
                send (ok?, (x_i, d)) to other agents;
            end if;
        end do;

    procedure backtrack
        nogoods ← {V | V = inconsistent subset of agent_view};
        when an empty set is an element of nogoods do
            broadcast to other agents that there is no solution,
            terminate this algorithm;
        end do;
        for each V ∈ nogoods do;
            select (x_j, d_j) where x_j has the lowest priority in V;
            send (nogood, x_i, V) to x_j;
        end do;

Fig. 1. Procedures for receiving messages (asynchronous backtracking)

3 Asynchronous Weak-commitment Search

In this section, we briefly describe the weak-commitment search algorithm for solving CSPs [12], and describe how the asynchronous backtracking algorithm can be modified into the asynchronous weak-commitment search algorithm.
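The local decision that an agent makes in the check agent view procedure of Fig. 1 can be sketched in Python for the n-queens setting, where agent i owns the queen of row i and smaller identifiers have higher priority. This is only an illustrative sketch under those assumptions: the function names, the dictionary representation of the agent view and of nogoods, and the omission of all message passing are choices made for this example, not part of the paper.

```python
def violates(i, di, j, dj):
    """Queens constraint between rows i and j: same column or same diagonal."""
    return di == dj or abs(di - dj) == abs(i - j)


def is_consistent(i, value, agent_view, nogoods):
    """True if `value` for agent i satisfies the constraints with all
    higher-priority agents in agent_view and matches no recorded nogood."""
    for j, dj in agent_view.items():
        if j < i and violates(i, value, j, dj):
            return False
    state = dict(agent_view)
    state[i] = value
    # A state that contains every pair of some nogood cannot be a solution.
    # An empty nogood matches every state, so nothing is consistent then.
    return not any(all(state.get(var) == val for var, val in ng.items())
                   for ng in nogoods)


def check_agent_view(i, current_value, domain, agent_view, nogoods):
    """Return the value agent i should hold, or None if no value in the
    domain is consistent (the caller would then run `backtrack`)."""
    if is_consistent(i, current_value, agent_view, nogoods):
        return current_value
    for d in domain:
        if is_consistent(i, d, agent_view, nogoods):
            return d
    return None


if __name__ == "__main__":
    columns = range(1, 5)
    print(check_agent_view(2, 1, columns, {1: 1}, []))        # picks a new column
    print(check_agent_view(3, 1, columns, {1: 1, 2: 3}, []))  # no column fits
```

In the second call no column is consistent with the two higher-priority queens, which is the situation in which Fig. 1 has the agent generate nogoods and send them to the lowest-priority agent involved.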
3.1Weak-commitment Search AlgorithmIn the weak-commitment search algorithm(Fig.2),all variables have tentative initial values.We can execute this algorithm by calling weak-commitment ({(x1,d1),(x2,d2),...,(x n,d n)},{}),where d i is the tentative initial value of x i.In this algorithm,a consistent partial solution is constructed for a subset ofvariables,and this partial solution is extended by adding variables one by one un-til a complete solution is found.When a variable is added to the partial solution, its tentative initial value is revised so that the new value satisfies all constraints between the partial solution,and satisfies as many constraints between variables that are not included in the partial solution as possible.This value ordering heuristic is called the min-conflict heuristic[6].The essential difference between this algorithm and the min-conflict backtracking[6]is the underlined part in Fig.2.When there exists no value for one variable that satisfies all constraints between the partial solution,this algorithm abandons the whole partial solution, and starts constructing a new partial solution from scratch,using the current value assignment as new tentative initial values.This algorithm records the abandoned partial solutions as new constraints, and avoids creating the same partial solution that has been created and aban-doned before.Therefore,the completeness of the algorithm(alwaysfinds a so-lution if one exists,and terminates if no solution exists)is guaranteed.The experimental results on various example problems in[12]show that this algo-rithm is3to10times more efficient than the min-conflict backtracking[6]or the breakout algorithm[7].3.2Basic IdeasThe main characteristics of the weak-commitment search algorithm are as fol-lows.1.The algorithm uses the min-conflict heuristic as a value ordering heuristic.2.It abandons the partial solution and restarts the search process if there existsno consistent value with the partial solution.Introducing 
thefirst characteristic into the asynchronous backtracking algo-rithm is relatively straightforward.When selecting a variable value,if there exist multiple values consistent with the agent view(those that satisfy all constraints with variables of higher priority agents),the agent prefers the value that mini-mizes the number of constraint violations with variables of lower priority agents.In contrast,introducing the second characteristic into the asynchronous back-tracking is not straightforward,since agents act concurrently and asynchronously,procedure weak-commitment(left,partial-solution)when all variables in left satisfy all constraints doterminate the algorithm,current value assignment is a solution;end do (x i,d)←a variable and value pair in left that does not satisfy some constraint;values←the list of x i’s values that are consistent with partial-solution;if values is an empty list;if partial-solution is an empty listthen terminate the algorithm since there exists no solution;else record partial-solution as a new constraint(nogood);remove each element of partial-solution and add to left;call weak-commitment(left,partial-solution);end if;else value←the value within values that minimizesthe number of constraint violations with left;remove(x i,d)from left;add(x i,value)to partial-solution;call weak-commitment(left,partial-solution);end if;Fig.2.Weak-commitment search algorithmand no agent has exact information about the partial solution.Furthermore, multiple agents may try to restart the search process simultaneously.In the following,we show that the agents can commit to their decisions weakly by changing the priority order dynamically.We define the way of establishing the priority order by introducing priority values,and change the priority values by the following rules.–For each variable/agent,a non-negative integer value representing the pri-ority order of the variable/agent is defined.We call this value the priority value.–The order is defined such that any 
variable/agent with a larger priority value has higher priority.
– If the priority values of multiple agents are the same, the order is determined by the alphabetical order of the identifiers.
– For each variable/agent, the initial priority value is 0.
– If there exists no consistent value for x_i, the priority value of x_i is changed to k+1, where k is the largest priority value of other agents.

Furthermore, in the asynchronous backtracking algorithm, agents try to avoid situations previously found to be nogoods. However, due to the delay of messages, an agent view of an agent can occasionally be a superset of a previously found nogood. In order to avoid reacting to unstable situations, and performing unnecessary changes of priority values, each agent performs the following procedure.

– Each agent records the nogoods that it has sent. When the agent view is a superset of a nogood that it has already sent, the agent will not change the priority value and waits for the next message.

3.3 Details of Algorithm

In the asynchronous weak-commitment search, each agent concurrently assigns a value to its variable, and sends the value to other agents. After that, agents wait for and respond to incoming messages (footnote 5). In Fig. 3, the procedures executed at agent x_i by receiving an ok? message and a nogood message are described (footnote 6). The differences between these procedures and the procedures for the asynchronous backtracking algorithm are as follows.

– The priority value, as well as the current value, is communicated through the ok? message (Fig. 3(i)).
– The priority order is determined by the communicated priority values. If the current value is not consistent with the agent view, i.e., some constraint with variables of higher priority agents is not satisfied, the agent changes its value so that the value is consistent with the agent view, and also the value minimizes the number of constraint violations with variables of lower priority agents (Fig. 3(ii)).
– When x_i cannot find a consistent value with its agent view, x_i sends
nogood messages to other agents, and increments its priority value. If x_i has already sent an identical nogood, x_i will not change the priority value and will wait for the next message (Fig. 3(iii)).

    when received (ok?, (x_j, d_j, priority)) do                          -- (i)
      add (x_j, d_j, priority) to agent_view;
      check_agent_view;
    end do;

    when received (nogood, x_j, nogood) do
      add nogood to nogood_list;
      check_agent_view;
    end do;

    procedure check_agent_view
      when agent_view and current_value are not consistent do
        if no value in D_i is consistent with agent_view then backtrack;
        else select d ∈ D_i where agent_view and d are consistent
             and d minimizes the number of constraint violations;        -- (ii)
          current_value ← d;
          send (ok?, (x_i, d, current_priority)) to other agents;
        end if;
      end do;

    procedure backtrack                                                   -- (iii)
      nogoods ← {V | V = inconsistent subset of agent_view};
      when an empty set is an element of nogoods do
        broadcast to other agents that there is no solution,
        terminate this algorithm;
      end do;
      when no element of nogoods is included in nogood_sent do
        for each V ∈ nogoods do;
          add V to nogood_sent
          for each (x_j, d_j) in V do;
            send (nogood, x_i, V) to x_j;
          end do;
        end do;
        p_max ← max over (x_j, d_j, p_j) ∈ agent_view of (p_j);
        current_priority ← 1 + p_max;
        select d ∈ D_i where d minimizes the number of constraint violations;
        current_value ← d;
        send (ok?, (x_i, d, current_priority)) to other agents;
      end do;

Fig. 3. Procedures for receiving messages (asynchronous weak-commitment search)

5 Although the following algorithm is described in a way that an agent reacts to messages sequentially, an agent can handle multiple messages concurrently, i.e., the agent first revises agent_view and nogood_list according to the messages, and performs check_agent_view only once.
6 It must be mentioned that the way to determine that agents as a whole have reached a stable state is not contained in this algorithm. To detect the stable state, agents must use distributed termination detection algorithms such as [1].

3.4 Example of Algorithm Execution

We illustrate the execution of the algorithm using a distributed version of the well-known n-queens problem (where n=4). There exist four agents, each of which corresponds to a queen of each row. The goal of the agents is to find positions on a 4×4 chess board so that they do not threaten one another.

The initial values are shown in Fig. 4(a). Agents communicate these values to one another. The values within parentheses represent the priority values. The initial priority values are 0. Since the priority values are equal, the priority order is determined by the alphabetical order of identifiers. Therefore, only the value of x_4 is not consistent with its agent view, i.e., only x_4 is violating constraints with higher priority agents. Since there is no consistent value, agent x_4 sends nogood messages and increments its priority value. In this case, the value minimizing the number of constraint violations is 3, since it conflicts only with x_3. Therefore, x_4 selects 3 and sends ok? messages to other agents (Fig. 4(b)). Then, x_3 tries to change its value. Since there is no consistent value, agent x_3 sends nogood messages, and increments its priority value. In this case, the value that minimizes the number of constraint violations is 1 or 2. In this example, x_3 selects 1 and sends ok? messages to other agents (Fig. 4(c)). After that, x_1 changes its value to 2, and a solution is obtained (Fig. 4(d)).

In the distributed 4-queens problem, there exists no solution when x_1's value is 1. We can see that a bad decision can be revised without an exhaustive search in the asynchronous weak-commitment search.

[Fig. 4. Example of algorithm execution. Panels (a) to (d) show the positions of x_1 to x_4, with priority values in parentheses.]

3.5 Algorithm Completeness

The priority values are changed if and only if a new nogood is found (footnote 7). Since the number of possible nogoods is finite, the priority values cannot be changed infinitely. Therefore, after a certain time point, the priority values will be stable. Then, we show that the situations described below will not occur when the priority values are stable.

(i) There exist agents that do not satisfy some constraints, and all agents are waiting for
incoming messages.
(ii) Messages are repeatedly sent/received, and the algorithm will not reach a stable state (infinite processing loop).

If situation (i) occurs, there exist at least two agents that do not satisfy the constraint between them. Let us assume that the agent ranking k-th in the priority order does not satisfy the constraint between the agent ranking j-th (where j < k), and all agents ranking higher than k-th satisfy all constraints within them. The only case that the k-th agent waits for incoming messages even though the agent does not satisfy the constraint between the j-th agent is that the k-th agent has sent nogood messages to higher priority agents. This fact contradicts the assumption that higher priority agents satisfy constraints within them. Therefore, situation (i) will not occur.

Also, if the priority values are stable, the asynchronous weak-commitment search algorithm is basically identical to the asynchronous backtracking algorithm. Since the asynchronous backtracking is guaranteed not to fall into an infinite processing loop [13], situation (ii) will not occur.

From the fact that situation (i) or (ii) will not occur, we can guarantee that the asynchronous weak-commitment search algorithm will always find a solution, or find the fact that there exists no solution.

7 To be exact, different agents may find an identical nogood simultaneously.

4 Evaluations

In this section, we evaluate the efficiency of algorithms by discrete event simulation, where each agent maintains its own simulated clock. An agent's time is incremented by one simulated time unit whenever it performs one cycle of computation. One cycle consists of reading all incoming messages, performing local computation, and sending messages. We assume that a message issued at time t is available to the recipient at time t+1. We analyze performance in terms of the amount of cycles required to solve the problem. Given this model, we compare the following three kinds of algorithms: (a) asynchronous backtracking, in which a
variable value is selected randomly from consistent values, and the priority order is determined by alphabetical order, (b) min-conflict only, in which the min-conflict heuristic is introduced into the asynchronous backtracking, but the priority order is statically determined by alphabetical order, and (c) asynchronous weak-commitment search (footnote 8).

We first applied these three algorithms to the distributed n-queens problem described in the previous section, varying n from 10 to 1000. The results are summarized in Table 1. For each n, we generated 100 problems, each of which had different randomly generated initial values, and averaged the results for these problems. For each problem, in order to conduct the experiments in a reasonable amount of time, we set the bound for the number of cycles to 1000, and terminated the algorithm if this limit was exceeded; we counted the result as 1000. The ratio of problems completed successfully to the total number of problems is also described in Table 1.

Table 1. Required cycles for distributed n-queens problem

            asynchronous        min-conflict only   asynchronous
            backtracking                            weak-commitment
    n       ratio    cycles     ratio    cycles     ratio    cycles
    10      100%     105.4      100%     102.6      100%     41.5
    50      50%      662.7      56%      623.0      100%     59.1
    100     14%      931.4      30%      851.3      100%     50.8
    1000    0%       —          16%      891.8      100%     29.6

The second example problem is the distributed graph-coloring problem. The distributed graph-coloring problem is a graph-coloring problem, in which each node corresponds to an agent. The graph-coloring problem involves painting nodes in a graph by k different colors so that any two nodes connected by an arc do not have the same color. We randomly generate a problem with n nodes/agents and m arcs by the method described in [6], so that the graph is connected and the problem has a solution. We evaluate the problem n=60, 90,

8 The amounts of local computation performed in each cycle for (b) and (c) are equivalent. The amounts of local computation for (a) can be smaller since it does not use the min-conflict heuristic, but for the lowest priority agent, the amounts of local computation of these algorithms are equivalent.
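
The weak-commitment procedure of Fig. 2 can be illustrated with a small centralized sketch on the n-queens problem used in the example above. This is only a sketch under stated assumptions, not the authors' implementation: the function names (`conflicts`, `weak_commitment_queens`, `is_solution`) are hypothetical, the recursion of Fig. 2 is written as a loop, the first violating variable is always the one chosen, and "consistent with partial-solution" is taken to include the recorded nogoods. It is the single-process algorithm, not the asynchronous, message-passing variant of Fig. 3.

```python
def conflicts(r1, c1, r2, c2):
    """Queens in two different rows attack each other on a column or a diagonal."""
    return c1 == c2 or abs(c1 - c2) == abs(r1 - r2)


def is_solution(cols):
    """True if no pair of queens (one per row, cols[r] is the column) conflicts."""
    n = len(cols)
    return all(not conflicts(a, cols[a], b, cols[b])
               for a in range(n) for b in range(a + 1, n))


def weak_commitment_queens(n, initial):
    """Weak-commitment search in the spirit of Fig. 2 (hypothetical sketch).

    Row r plays the role of variable x_r; its value is a column in range(n).
    Returns a list of columns, or None if no solution exists.
    """
    left = dict(enumerate(initial))  # variables holding tentative values
    partial = {}                     # the consistent partial solution
    nogoods = set()                  # abandoned partial solutions

    while True:
        assignment = {**left, **partial}
        violating = [
            r for r, c in left.items()
            if any(conflicts(r, c, r2, c2)
                   for r2, c2 in assignment.items() if r2 != r)
        ]
        if not violating:
            # All variables in left satisfy all constraints: a solution.
            return [assignment[r] for r in range(n)]

        row = violating[0]
        committed = set(partial.items())
        # Values consistent with the partial solution and with recorded nogoods.
        values = [
            c for c in range(n)
            if not any(conflicts(row, c, r2, c2) for r2, c2 in partial.items())
            and not any(ng <= committed | {(row, c)} for ng in nogoods)
        ]

        if not values:
            if not partial:
                return None  # no solution exists
            # Record the partial solution as a nogood and abandon all of it.
            nogoods.add(frozenset(partial.items()))
            left.update(partial)
            partial.clear()
        else:
            # Min-conflict choice against the tentative values still in left.
            value = min(
                values,
                key=lambda c: sum(conflicts(row, c, r2, c2)
                                  for r2, c2 in left.items() if r2 != row),
            )
            del left[row]
            partial[row] = value
```

For instance, `weak_commitment_queens(4, [0, 0, 0, 0])` returns a valid placement for the 4-queens problem, and the call returns `None` for n=3, where no placement exists. The whole partial solution is abandoned at once, which is what distinguishes this scheme from chronological backtracking.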

About the Tutorial

Neo4j is one of the popular graph databases, and Cypher Query Language (CQL) is its query language. Neo4j is written in Java. This tutorial explains the basics of Neo4j, Java with Neo4j, and Spring DATA with Neo4j.

The tutorial is divided into sections such as Neo4j Introduction, Neo4j CQL, Neo4j CQL Functions, Neo4j Admin, etc. Each of these sections contains related topics with simple and useful examples.

Audience

This tutorial has been prepared for beginners to help them understand the basic to advanced concepts of Neo4j. It will give you enough understanding of Neo4j from where you can take yourself to a higher level of expertise.

Prerequisites

Before proceeding with this tutorial, you should have basic knowledge of Database, Graph Theory, Java, and Spring Framework.

Copyright & Disclaimer

© Copyright 2018 by Tutorials Point (I) Pvt. Ltd.

All the content and graphics published in this e-book are the property of Tutorials Point (I) Pvt. Ltd. The user of this e-book is prohibited to reuse, retain, copy, distribute or republish any contents or a part of contents of this e-book in any manner without written consent of the publisher.

We strive to update the contents of our website and tutorials as timely and as precisely as possible, however, the contents may contain inaccuracies or errors. Tutorials Point (I) Pvt. Ltd. provides no guarantee regarding the accuracy, timeliness or completeness of our website or its contents including this tutorial. If you discover any errors on our website or in this tutorial, please notify us at **************************

Table of Contents

About the Tutorial
Audience
Prerequisites
Copyright & Disclaimer
Table of Contents

1. Neo4j ─ Overview
    What is a Graph Database?
    Advantages of Neo4j
    Features of Neo4j
2. Neo4j ─ Data Model
3. Neo4j ─ Environment Setup
    Neo4j Database Server Setup with Windows exe File
    Starting the Server
    Working with Neo4j
4. Neo4j ─ Building Blocks
    Node
    Properties
    Relationships
    Labels
    Neo4j Data Browser

NEO4J ─ CQL

5. Neo4j CQL ─ Introduction
    Neo4j CQL Clauses
    Neo4j CQL Functions
    Neo4j CQL Data Types
    CQL Operators
    Boolean Operators in Neo4j CQL
    Comparison Operators in Neo4j CQL
6. Neo4j CQL ─ Creating Nodes
    Creating a Single node
    Creating Multiple Nodes
    Creating a Node with a Label
    Creating a Node with Multiple Labels
    Create Node with Properties
    Returning the Created Node
7. Neo4j CQL ─ Creating a Relationship
    Creating Relationships
    Creating a Relationship Between the Existing Nodes
    Creating a Relationship with Label and Properties
    Creating a Complete Path

NEO4J CQL ─ WRITE CLAUSES

8. Neo4j ─ Merge Command
    Merging a Node with a Label
    Merging a Node with Properties
    OnCreate and OnMatch
    Merge a Relationship
9. Neo4j ─ Set Clause
    Setting a Property
    Removing a Property
    Setting Multiple Properties
    Setting a Label on a Node
    Setting Multiple Labels on a Node
10. Neo4j ─ Delete Clause
    Deleting All Nodes and Relationships
    Deleting a Particular Node
11. Neo4j ─ Remove Clause
    Removing a Property
    Removing a Label From a Node
    Removing Multiple Labels
12. Neo4j ─ Foreach Clause

NEO4J CQL ─ READ CLAUSES

13. Neo4j ─ Match Clause
    Get All Nodes Using Match
    Getting All Nodes Under a Specific Label
    Match by Relationship
    Delete All Nodes
14. Neo4j ─ Optional Match Clause
15. Neo4j ─ Where Clause
    WHERE Clause with Multiple Conditions
    Using Relationship with Where Clause
16. Neo4j ─ Count Function
    Count
    Group Count

NEO4J CQL ─ GENERAL CLAUSES

17. Neo4j ─ Return Clause
    Returning Nodes
    Returning Multiple Nodes
    Returning Relationships
    Returning Properties
    Returning All Elements
    Returning a Variable With a Column Alias
18. Neo4j ─ Order By Clause
    Ordering Nodes by Multiple Properties
    Ordering Nodes by Descending Order
19. Neo4j ─ Limit Clause
    Limit with expression
20. Neo4j ─ Skip Clause
    Skip Using Expression
21. Neo4j ─ With Clause
22. Neo4j ─ Unwind Clause

NEO4J CQL ─ FUNCTIONS

23. Neo4J CQL ─ String Functions
    String Functions List
    Upper
    Lower
    Substring
24. Neo4j ─ Aggregation Function
    AGGREGATION Functions List
    COUNT
    MAX
    MIN
    AVG
    SUM

NEO4J CQL ─ ADMIN

25. Neo4j ─ Backup & Restore
    Neo4j Database Backup
    Neo4j Database Restore
26. Neo4j ─ Index
    Creating an Index
    Deleting an Index
27. Neo4j ─ Create Unique Constraint
    Create UNIQUE Constraint
28. Neo4j ─ Drop Unique

1. Neo4j ─ Overview

Neo4j is the world's leading open source Graph Database which is developed using Java technology. It is highly scalable and schema free (NoSQL).

What is a Graph Database?

A graph is a pictorial representation of a set of objects where some pairs of objects are connected by links. It is composed of two elements: nodes (vertices) and relationships (edges).

A graph database is a database used to model the data in the form of a graph. Here, the nodes of a graph depict the entities while the relationships depict the association of these nodes.

Popular Graph Databases

Neo4j is a popular Graph Database.
Other Graph Databases are Oracle NoSQL Database, OrientDB, HyperGraphDB, GraphBase, InfiniteGraph, and AllegroGraph.

Why Graph Databases?

Nowadays, most data exists in the form of relationships between different objects, and more often the relationship between the data is more valuable than the data itself.

Relational databases store highly structured data, with many records holding the same type of data, so they are well suited to structured data, but they do not store the relationships between the data.

Unlike other databases, graph databases store relationships and connections as first-class entities.

The data model for graph databases is simpler compared to other databases, and they can be used with OLTP systems. They provide features like transactional integrity and operational availability.

RDBMS Vs Graph Database

Following is the table which compares Relational databases and Graph databases.

Advantages of Neo4j

Following are the advantages of Neo4j.

∙ Flexible data model: Neo4j provides a flexible, simple and yet powerful data model, which can be easily changed according to the applications and industries.
∙ Real-time insights: Neo4j provides results based on real-time data.
∙ High availability: Neo4j is highly available for large enterprise real-time applications with transactional guarantees.
∙ Connected and semi-structured data: Using Neo4j, you can easily represent connected and semi-structured data.
∙ Easy retrieval: Using Neo4j, you can not only represent but also easily retrieve (traverse/navigate) connected data faster when compared to other databases.
∙ Cypher query language: Neo4j provides a declarative query language to represent the graph visually, using an ascii-art syntax.
The commands of this language are in human readable format and very easy to learn.
∙ No joins: Neo4j does NOT require complex joins to retrieve connected/related data, as it is very easy to retrieve a node's adjacent nodes or relationship details without joins or indexes.

Features of Neo4j

Following are the notable features of Neo4j:

∙ Data model (flexible schema): Neo4j follows a data model named native property graph model. Here, the graph contains nodes (entities) and these nodes are connected with each other (depicted by relationships). Nodes and relationships store data in key-value pairs known as properties.
  In Neo4j, there is no need to follow a fixed schema. You can add or remove properties as per requirement. It also provides schema constraints.
∙ ACID properties: Neo4j supports full ACID (Atomicity, Consistency, Isolation, and Durability) rules.
∙ Scalability and reliability: You can scale the database by increasing the number of reads/writes, and the volume, without affecting the query processing speed and data integrity. Neo4j also provides support for replication for data safety and reliability.
∙ Cypher Query Language: Neo4j provides a powerful declarative query language known as Cypher. It uses ASCII-art for depicting graphs.
Cypher is easy to learn and can be used to create and retrieve relations between data without using complex queries such as joins.
∙ Built-in web application: Neo4j provides a built-in Neo4j Browser web application. Using this, you can create and query your graph data.
∙ Drivers: Neo4j can work with:
    o REST API to work with programming languages such as Java, Spring, Scala etc.
    o JavaScript to work with UI MVC frameworks such as Node JS.
    o It supports two kinds of Java API: Cypher API and Native Java API to develop Java applications.
  In addition to these, you can also work with other databases such as MongoDB, Cassandra, etc.
∙ Indexing: Neo4j supports indexes by using Apache Lucene.

2. Neo4j ─ Data Model

Neo4j Property Graph Data Model

Neo4j Graph Database follows the Property Graph Model to store and manage its data. Following are the key features of the Property Graph Model:

∙ The model represents data in Nodes, Relationships and Properties
∙ Properties are key-value pairs
∙ Nodes are represented using circles and Relationships are represented using arrows
∙ Relationships have directions: Unidirectional and Bidirectional
∙ Each Relationship contains "Start Node" or "From Node" and "To Node" or "End Node"
∙ Both Nodes and Relationships contain properties
∙ Relationships connect nodes

In the Property Graph Data Model, relationships should be directional. If we try to create relationships without direction, then it will throw an error message.

In Neo4j too, relationships should be directional. If we try to create relationships without direction, then Neo4j will throw an error message saying that "Relationships should be directional".

Neo4j Graph Database stores all of its data in Nodes and Relationships. We neither need any additional RDBMS Database nor any SQL database to store Neo4j database data.
It stores its data in terms of Graphs in its native format.

Neo4j uses Native GPE (Graph Processing Engine) to work with its Native graph storage format.

The main building blocks of Graph DB Data Model are:

∙ Nodes
∙ Relationships
∙ Properties

Following is a simple example of a Property Graph.

Here, we have represented Nodes using Circles. Relationships are represented using Arrows. Relationships are directional. We can represent Node's data in terms of Properties (key-value pairs). In this example, we have represented each Node's Id property within the Node's Circle.

3. Neo4j ─ Environment Setup

In this chapter, we will discuss how to install Neo4j in your system using exe file.

Neo4j Database Server Setup with Windows exe File

Follow the steps given below to download Neo4j into your system.

Step 1: Visit the Neo4j official site using https:///. On clicking, this link will take you to the homepage of neo4j website.

Step 2: As highlighted in the above screenshot, this page has a Download button on the top right hand side. Click it.

Step 3: This will redirect you to the downloads page, where you can download the community edition and the enterprise edition of Neo4j. Download the community edition of the software by clicking the respective button.

Step 4: This will take you to the page where you can download community version of Neo4j software compatible with different operating systems. Download the file respective to the desired operating system.

This will download a file named neo4j-community_windows-x64_3_1_1.exe to your system as shown in the following screenshot.

Step 5: Double-click the exe file to install Neo4j Server.

Step 6: Accept the license agreement and proceed with the installation.
After completion of the process, you can observe that Neo4j is installed in your system.

Starting the Server

Step 1: Click the Windows start menu and start the Neo4j server by clicking the start menu shortcut for Neo4j.

Step 2: On clicking the shortcut, you will get a window for Neo4j Community edition. By default, it selects c:\Users\[username]\Documents\Neo4j\default.graphdb. If you want, you can change your path to a different directory.

Step 3: Click the "Start" button to start the Neo4j server.

Once the server starts, you can observe that the database directory is populated as shown in the following screenshot.

Working with Neo4j

As discussed in the previous chapters, Neo4j provides a built-in browser application to work with Neo4j. You can access Neo4j using the URL http://localhost:7474/

4. Neo4j ─ Building Blocks

Neo4j Graph Database has the following building blocks:

∙ Nodes
∙ Properties
∙ Relationships
∙ Labels
∙ Data Browser

Node

Node is a fundamental unit of a Graph. It contains properties with key-value pairs as shown in the following image.

Here, Node Name = "Employee" and it contains a set of properties as key-value pairs.

Properties

Property is a key-value pair to describe Graph Nodes and Relationships, where Key is a String and Value may be represented using any Neo4j data type.

Relationships

Relationships are another major building block of a Graph Database. A relationship connects two nodes as depicted in the following figure.

Here, Emp and Dept are two different nodes. "WORKS_FOR" is a relationship between Emp and Dept nodes.

As the arrow from Emp to Dept denotes, this relationship describes that Emp works for Dept.

Each relationship contains one start node and one end node. Here, "Emp" is a start node, and "Dept" is an end node.

As the arrow represents a relationship from the "Emp" node to the "Dept" node, this relationship is known as an "Incoming Relationship" to the "Dept" node and an "Outgoing Relationship" to the "Emp" node.

Like nodes, relationships can also contain properties as key-value pairs.

Here, the "WORKS_FOR" relationship has one property as a key-value pair. It represents an Id of this relationship.

Labels

A Label associates a common name with a set of nodes. A node can contain one or more labels, and we can add new labels to existing nodes or remove existing labels from them. A relationship, by contrast, carries exactly one name (its relationship type), which this tutorial also refers to as the relationship's label.

From the previous diagram, we can observe that there are two nodes. The left side node has a Label: "Emp" and the right side node has a Label: "Dept". The relationship between those two nodes is named "WORKS_FOR".

Note: Neo4j stores data in Properties of Nodes or Relationships.

Neo4j Data Browser

Once we install Neo4j, we can access Neo4j Data Browser using the following URL

Neo4j Data Browser is used to execute CQL commands and view the output.

Here, we need to execute all CQL commands at the dollar prompt: "$"

Type commands after the dollar symbol and click the "Execute" button to run your commands. It interacts with Neo4j Database Server, retrieves and displays the results just below the dollar prompt.

Use the "UI View" button to view the results in diagram format. The above diagram shows results in "UI View" format.

Use the "Grid View" button to view the results in Grid View.
The following diagram shows the same results in "Grid View" format.

When we use "Grid View" to view our Query results, we can export them into a file in two different formats.

CSV

Click the "Export CSV" button to export the results in csv file format.

JSON

Click the "Export JSON" button to export the results in JSON file format.

However, if we use "UI View" to see our Query results, we can export them into a file in only one format: JSON

NEO4J ─ CQL

5. Neo4j CQL ─ Introduction

CQL stands for Cypher Query Language. Just as Oracle Database has SQL as its query language, Neo4j has CQL.

Neo4j CQL:

∙ Is a query language for Neo4j Graph Database.
∙ Is a declarative pattern-matching language.
∙ Follows SQL-like syntax.
∙ Has a very simple syntax in human readable format.

Like Oracle SQL:

∙ Neo4j CQL has commands to perform Database operations.
∙ Neo4j CQL supports many clauses such as WHERE, ORDER BY, etc., to write very complex queries in an easy manner.
∙ Neo4j CQL supports some functions such as String, Aggregation. In addition to them, it also supports some Relationship Functions.

Neo4j CQL Clauses

Following are the read clauses of Neo4j Cypher Query Language:

Following are the write clauses of Neo4j Cypher Query Language:

Following are the general clauses of Neo4j Cypher Query Language:

Neo4j CQL Functions

Following are the frequently used Neo4j CQL Functions:

We will discuss the syntax, usage and examples of all Neo4j CQL commands, clauses and functions in detail in the subsequent chapters.
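
The building blocks described in the Data Model and Building Blocks chapters (nodes with labels, directed relationships with a start and an end node, and key-value properties on both) can be pictured with a small in-memory sketch. This is plain Python and does not use Neo4j or any Neo4j driver; the class and method names (`Node`, `Relationship`, `PropertyGraph`, `add_node`, `relate`, `outgoing`, `incoming`) and the property values are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    labels: set                                      # e.g. {"Emp"}
    properties: dict = field(default_factory=dict)   # key-value pairs


@dataclass
class Relationship:
    start: Node                                      # "From Node"
    end: Node                                        # "To Node"
    rel_type: str                                    # e.g. "WORKS_FOR"
    properties: dict = field(default_factory=dict)   # key-value pairs


class PropertyGraph:
    """A toy property graph: a list of nodes and a list of directed relationships."""

    def __init__(self):
        self.nodes = []
        self.relationships = []

    def add_node(self, *labels, **properties):
        node = Node(set(labels), dict(properties))
        self.nodes.append(node)
        return node

    def relate(self, start, rel_type, end, **properties):
        rel = Relationship(start, end, rel_type, dict(properties))
        self.relationships.append(rel)
        return rel

    def outgoing(self, node):
        """Relationships whose start node is the given node."""
        return [r for r in self.relationships if r.start is node]

    def incoming(self, node):
        """Relationships whose end node is the given node."""
        return [r for r in self.relationships if r.end is node]


# The Emp -[WORKS_FOR]-> Dept example; the property values are made up.
graph = PropertyGraph()
emp = graph.add_node("Emp", id=1)
dept = graph.add_node("Dept", id=2)
graph.relate(emp, "WORKS_FOR", dept, id=3)
```

In this sketch the WORKS_FOR relationship appears in `graph.outgoing(emp)` and in `graph.incoming(dept)`, which mirrors the "Outgoing Relationship" and "Incoming Relationship" wording used for the Emp and Dept nodes.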

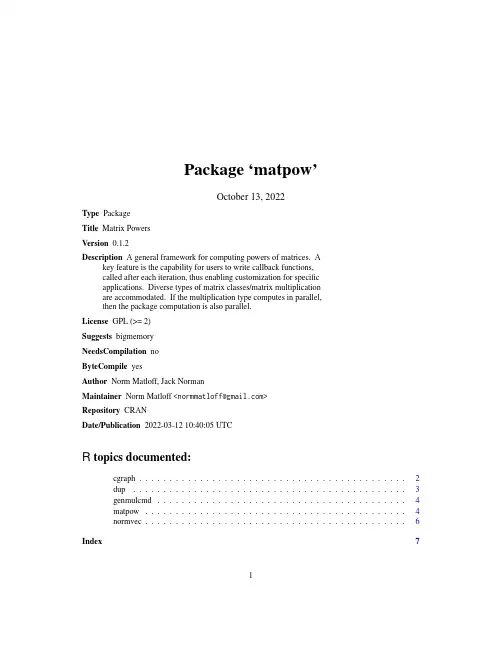

Package ‘matpow’
October 13, 2022

Type Package
Title Matrix Powers
Version 0.1.2
Description A general framework for computing powers of matrices. A key feature is the capability for users to write callback functions, called after each iteration, thus enabling customization for specific applications. Diverse types of matrix classes/matrix multiplication are accommodated. If the multiplication type computes in parallel, then the package computation is also parallel.
License GPL (>= 2)
Suggests bigmemory
NeedsCompilation no
ByteCompile yes
Author Norm Matloff, Jack Norman
Maintainer Norm Matloff <*********************>
Repository CRAN
Date/Publication 2022-03-12 10:40:05 UTC

R topics documented:

    cgraph
    dup
    genmulcmd
    matpow
    normvec
    Index

cgraph                  Callback Examples

Description

    Callback examples for matpow.

Usage

    cgraph(ev, cbinit=FALSE, mindist=FALSE)
    eig(ev, cbinit=FALSE, x=NULL, eps=1e-08)
    mc(ev, cbinit=FALSE, eps=1e-08)
    mexp(ev, cbinit=FALSE, eps=1e-08)

Arguments

    ev        R environment as in the return value of matpow.
    cbinit    matpow will first call the callback with cbinit set to TRUE before iterations begin, then to FALSE during iterations.
    mindist   if TRUE, the matrix of minimum intervertex distances will be calculated.
    x         initial guess for the principal eigenvector.
    eps       convergence criterion.

Details

    Note that these functions are not called directly. The user specifies the callback function (whether one of the examples here or one written by the user) in his/her call to matpow, which calls the callback after each iteration.

    • cgraph: Determines the connectivity of a graph, and optionally the minimum intervertex distance matrix. The matrix m in the call to matpow should be an adjacency matrix, 1s and 0s.
    • eig: Calculates the principal eigenvector of the input matrix.
    • mc: Calculates the long-run distribution vector for an aperiodic, discrete-time Markov chain; the input matrix is the transition matrix for the chain.
    • mexp: Calculates the exponential of the input matrix, as in e.g. expm of the Matrix package.

    In cgraph, it is
    recommended that squaring be set to TRUE in calling matpow, though this cannot be done if the mindist option is used. Use of squaring is unconditionally recommended for eig and mc. Do not use squaring with mexp.

    Restrictions: These functions are currently set up only for ordinary R matrix multiplication or use with gputools.

Value

    Callback functions don't normally return values, but they usually do maintain data in the R environment ev that is eventually returned by matpow, including the following components as well as the application-independent ones:

    • cgraph: Graph connectedness is returned in a boolean component connected. If the mindist option had been chosen, the dists component will show the minimum intervertex distances.
    • eig: The x component will be the principal eigenvector.
    • mc: The pivec component will be the long-run distribution vector.
    • mexp: The esum component will be the matrix exponential.

Examples

    ## Not run:
    m <- rbind(c(1,0,0,1), c(1,0,1,1), c(0,1,0,0), c(0,0,1,1))
    ev <- matpow(m, callback=cgraph, mindist=TRUE)
    ev$connected  # prints TRUE
    ev$dists      # prints, e.g. that min dist from 1 to 2 is 3

    m <- rbind(1:2, 3:4)
    # allow for 1000 iterations max
    ev <- matpow(m, 1000, callback=eig, squaring=TRUE)
    # how many iterations did we actually need?
    ev$i  # only 8
    ev$x  # prints eigenvec; check by calling R's eigen()
    ## End(Not run)

dup                     Deep-Copy

Description

    Functions to perform deep copies of matrices.

Usage

    dup.vanilla(mat)
    dup.bigmemory(mat)

Arguments

    mat       matrix to be copied.

Details

    One of the arguments to matpow is dup, a function to do deep copying of the type of matrix being used. The user may supply a custom one, or use either dup.vanilla or dup.bigmemory.

Value

    The matrix copy.

genmulcmd               Generate Multiplication Command

Description

    Functions to form quoted multiplication commands.

Usage

    genmulcmd.vanilla(a, b, c)
    genmulcmd.bigmemory(a, b, c)

Arguments

    a         a quoted string.
    b         a quoted string.
    c         a quoted string.

Details

    One of the arguments to matpow is genmulcmd, a function to generate a string containing the command to multiply matrices. The string is fed into
    parse and eval for execution. The user may supply a custom function, or use either genmulcmd.vanilla or genmulcmd.bigmemory.

Value

    A quoted string for c = a * b for the given type of matrix/multiplication.

matpow                  Matrix Powers

Description

    Computes matrix powers, with optional application-specific callbacks. Accommodates (external) parallel multiplication mechanisms.

Usage

    matpow(m, k=NULL, squaring=FALSE, genmulcmd=NULL, dup=NULL, callback=NULL, ...)

Arguments

    m          input matrix.
    k          desired power. If NULL, it is expected that the initialization portion of the user's callback function will set k.
    squaring   if TRUE, saves time by first squaring m, then squaring the result and so on, until a power is reached of k or more.
    genmulcmd  function to generate multiplication commands, in quoted string form. For the ordinary R "matrix" class this is function(a,b,c) paste(c,"<-",a,"%*%",b), supplied as genmulcmd.vanilla with the package.
    dup        function to make a deep copy of a matrix.
    callback   application-specific callback function.
    ...        application-specific arguments

Details

    Multiplication is iterated until the desired power k is reached, with these exceptions: (a) If squaring is TRUE, k may be exceeded. (b) The callback function can set stop in ev, halting iterations; this is useful, for instance, if some convergence criterion has been reached.

    One key advantage of using matpow rather than direct iteration is that parallel computation can be accommodated, by specifying genmulcmd. (The word "accommodated" here means the user must have available a mechanism for parallel computation; matpow itself contains no parallel code.)
For instance, if one is using a GPU with gputools, one sets genmulcmd to genmulcmd.gputools, which calls gpuMatMult() instead of the usual %*%. So, one can switch from serial to parallel by merely changing this one argument. If genmulcmd is not specified, the code attempts to sense the proper function by inspecting class(m), in the cases of classes "matrix" and "big.matrix".

Of course, if the user's R is configured to use a parallel BLAS, such as OpenBLAS, this is automatically handled via the ordinary R "matrix" class.

Another important advantage of matpow is the ability to write a callback function, which enables much flexibility. The callback, if present, is called by matpow after each iteration, allowing application-specific operations to be applied. For instance, cgraph determines the connectivity of a graph, by checking whether the current power has all of its entries nonzero.

The call form is callbackname(ev, init, ...) where ev is the R environment returned by matpow (see Value), and init must be set to TRUE on the first call, and FALSE afterward.

Since some types of matrix multiplication do not allow the product to be in the same physical location as either multiplicand, a "red and black" approach is taken to the iteration process: storage space for powers in ev alternates between prod1 and prod2, for odd-numbered and even-numbered iterations, respectively.

Value

An R environment ev, including the following components:

    prod1    matrix, the final power.
    stop     boolean value, indicating whether the iterations were stopped before the final power was to be computed.
    i        number of the last iteration performed.

Application-specific data, maintained by the callback function, can be stored here as well.

Examples

    ## Not run:
    m <- rbind(1:2, 3:4)
    ev <- matpow(m, 16)
    ev$prod1
    # prints
    #              [,1]        [,2]
    # [1,] 115007491351 1.67615e+11
    # [2,] 251422553235 3.66430e+11
    ev$i  # prints 15
    matpow(m, 16, squaring=TRUE)$i  # prints 4, same prod1
    ## End(Not run)
    # see further examples in the callbacks


normvec    Vector Norm

Description

Ordinary L2 vector norm.

Usage

    normvec(x)

Arguments

    x    R vector.

Value

Vector norm.


Index

    cgraph
    dup
    eig (cgraph)
    genmulcmd
    matpow
    mc (cgraph)
    mexp (cgraph)
    normvec
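
The manual's description of genmulcmd (a command string passed to parse and eval) and of the squaring option can be illustrated with a small base-R sketch. This is not the package's code: the names genmulcmd_sketch and matpow_sketch are hypothetical, and the loop is simplified (it copies the product back into prod1 rather than alternating between prod1 and prod2).

```r
# Illustrative sketch only; hypothetical names, not the matpow package source.

# Build the command string "c <- a %*% b", in the form the manual gives
# for the ordinary R "matrix" class.
genmulcmd_sketch <- function(a, b, c) paste(c, "<-", a, "%*%", b)

matpow_sketch <- function(m, k, squaring = FALSE) {
  ev <- new.env()
  ev$m <- m
  ev$prod1 <- m   # current power, starting at m^1
  ev$i <- 0       # number of multiplications performed so far
  pow <- 1        # exponent currently held in ev$prod1
  while (pow < k) {
    if (squaring) {
      # square the current power: exponent doubles
      cmd <- genmulcmd_sketch("ev$prod1", "ev$prod1", "ev$prod2")
      pow <- 2 * pow
    } else {
      # multiply by m once more: exponent grows by one
      cmd <- genmulcmd_sketch("ev$prod1", "ev$m", "ev$prod2")
      pow <- pow + 1
    }
    eval(parse(text = cmd))  # execute the generated multiplication
    ev$prod1 <- ev$prod2     # simplification of the prod1/prod2 alternation
    ev$i <- ev$i + 1
  }
  ev
}

m <- rbind(1:2, 3:4)
matpow_sketch(m, 16)$i                   # 15 multiplications
matpow_sketch(m, 16, squaring = TRUE)$i  # 4 multiplications
```

Because only the string produced by the generator changes, swapping in a different multiplication mechanism would leave the loop itself untouched, which appears to be the design point the manual makes about genmulcmd.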

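The callback convention in the matpow manual above, callbackname(ev, init, ...), with the option of setting stop in ev, can be sketched as follows. Both connected_cb and run_powers are hypothetical names; run_powers is a stand-in driver written for this example, and the real cgraph callback may prepare its input differently. The sketch only applies the test the manual states: all entries of the current power are nonzero.

```r
# Illustrative sketch only; hypothetical names, not the matpow package source.

# Callback in the documented call form: callbackname(ev, init, ...)
connected_cb <- function(ev, init, ...) {
  if (init) {
    ev$connected <- FALSE      # first call: set up application data
    return(invisible(NULL))
  }
  if (all(ev$prod1 != 0)) {    # current power has no zero entries
    ev$connected <- TRUE
    ev$stop <- TRUE            # ask the driver to halt early
  }
  invisible(NULL)
}

# Minimal stand-in for the iteration loop (an assumption for this sketch).
run_powers <- function(m, k, callback) {
  ev <- new.env()
  ev$prod1 <- m
  ev$stop <- FALSE
  ev$i <- 0
  callback(ev, init = TRUE)
  while (ev$i < k - 1 && !ev$stop) {
    ev$prod1 <- ev$prod1 %*% m
    ev$i <- ev$i + 1
    callback(ev, init = FALSE)  # called after each iteration
  }
  ev
}

# Adjacency matrix from the manual's cgraph example
m <- rbind(c(1,0,0,1), c(1,0,1,1), c(0,1,0,0), c(0,0,1,1))
ev <- run_powers(m, 4, connected_cb)
ev$connected  # TRUE
ev$i          # 2, i.e. the third power is the first with no zero entries
```

Keeping all state in the environment ev lets the callback communicate with the driver without return values, which matches the manual's remark that callbacks normally return nothing.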
English II Chart-Based Essay Writing Framework

Chart-based essays are a common type of assignment in English exams, requiring students to interpret data and present it in a coherent, well-structured essay. Mastering this type of essay involves not only understanding the data presented but also being able to articulate trends, comparisons, and insights clearly and effectively. This essay provides a framework for writing a strong chart-based essay in English.

The first step in writing a chart-based essay is to thoroughly understand the chart provided. This involves identifying the type of chart (bar chart, pie chart, line graph, etc.), understanding what each axis represents, and noting any significant trends or data points. Pay attention to the following:

• Title and Labels: The title of the chart and the labels on the axes provide essential information about the data being presented.
• Units of Measurement: Ensure you understand the units of measurement used in the chart.
• Key Data Points: Identify any notable peaks, troughs, or trends in the data.

The introduction of your essay should provide a brief overview of the chart and its significance. This section sets the stage for the rest of your essay and should include:

• Paraphrase the Title: Restate the title of the chart in your own words.
• Describe the Chart: Briefly describe what the chart shows, including the type of data and the time period covered.
• State the Purpose: Explain the purpose of your essay, which is to analyze and interpret the data presented in the chart.

Example:
The bar chart illustrates the average monthly temperatures in three different cities over a one-year period. This essay will analyze the temperature trends in these cities, compare the data, and draw relevant conclusions based on the information presented.

The main body of your essay should be divided into several paragraphs, each focusing on a specific aspect of the chart. Here are some key points to cover:

Begin by describing the overall trend shown in the chart. This could be an upward or downward trend, a cyclical pattern, or a steady state. Use specific data points to support your description.

Example:
Overall, the chart shows a clear seasonal variation in temperatures for all three cities. Temperatures are highest during the summer months (June to August) and lowest during the winter months (December to February).

Next, compare and contrast the data for different categories or time periods. Highlight any significant differences or similarities.

Example:
In City A, the highest average temperature is recorded in July at 30°C, whereas City B reaches its peak temperature of 25°C in August. City C, on the other hand, experiences a more moderate peak temperature of 22°C in July. Despite these variations, all three cities show a similar overall pattern of higher temperatures in the summer and lower temperatures in the winter.

Identify and discuss any anomalies or unusual data points in the chart. Offer possible explanations for these anomalies based on logical reasoning or external knowledge.

Example:
One notable anomaly is the unusually high temperature in City A during January, which deviates significantly from the expected winter trend. This could be attributed to a rare weather event or an error in data recording. Additionally, City C's relatively stable temperature throughout the year suggests a more temperate climate compared to the other two cities.

The conclusion should summarize the key findings from your analysis and reiterate the significance of the chart. It should also offer any final insights or implications based on the data.

Example:
In conclusion, the bar chart provides a clear depiction of the seasonal temperature variations in three different cities. While all cities experience higher temperatures in the summer and lower temperatures in the winter, City A shows the most extreme temperature fluctuations. City C, with its more consistent temperatures, highlights the differences in climate among the three cities. This analysis underscores the importance of understanding regional climate patterns for various practical applications, such as urban planning and climate adaptation strategies.

To keep the quality of your chart-based essay high, keep the following tips in mind:

• Be Clear and Concise: Avoid unnecessary jargon and ensure your writing is easy to understand.
• Use Data to Support Points: Always back up your statements with specific data from the chart.
• Stay Objective: Present the data objectively without inserting personal opinions or biases.
• Proofread: Check for any grammatical or typographical errors to ensure your essay is polished and professional.

By following this framework, you can effectively interpret and present chart data in a clear, structured, and insightful essay. This skill is valuable not only for academic purposes but also in professional contexts where data interpretation is crucial.