ABSTRACT Correlative Multi-Label Video Annotation

- 格式:pdf

- 大小:575.51 KB

- 文档页数:10

MULTI-SCALE STRUCTURAL SIMILARITY FOR IMAGE QUALITY ASSESSMENT Zhou Wang1,Eero P.Simoncelli1and Alan C.Bovik2(Invited Paper)1Center for Neural Sci.and Courant Inst.of Math.Sci.,New York Univ.,New York,NY10003 2Dept.of Electrical and Computer Engineering,Univ.of Texas at Austin,Austin,TX78712 Email:zhouwang@,eero.simoncelli@,bovik@ABSTRACTThe structural similarity image quality paradigm is based on the assumption that the human visual system is highly adapted for extracting structural information from the scene,and therefore a measure of structural similarity can provide a good approxima-tion to perceived image quality.This paper proposes a multi-scale structural similarity method,which supplies moreflexibility than previous single-scale methods in incorporating the variations of viewing conditions.We develop an image synthesis method to calibrate the parameters that define the relative importance of dif-ferent scales.Experimental comparisons demonstrate the effec-tiveness of the proposed method.1.INTRODUCTIONObjective image quality assessment research aims to design qual-ity measures that can automatically predict perceived image qual-ity.These quality measures play important roles in a broad range of applications such as image acquisition,compression,commu-nication,restoration,enhancement,analysis,display,printing and watermarking.The most widely used full-reference image quality and distortion assessment algorithms are peak signal-to-noise ra-tio(PSNR)and mean squared error(MSE),which do not correlate well with perceived quality(e.g.,[1]–[6]).Traditional perceptual image quality assessment methods are based on a bottom-up approach which attempts to simulate the functionality of the relevant early human visual system(HVS) components.These methods usually involve1)a preprocessing process that may include image alignment,point-wise nonlinear transform,low-passfiltering that simulates eye optics,and color space transformation,2)a channel decomposition process that trans-forms the image signals into different spatial frequency as well as orientation selective subbands,3)an error normalization process that weights the error signal in each subband by incorporating the variation of visual sensitivity in different subbands,and the vari-ation of visual error sensitivity caused by intra-or inter-channel neighboring transform coefficients,and4)an error pooling pro-cess that combines the error signals in different subbands into a single quality/distortion value.While these bottom-up approaches can conveniently make use of many known psychophysical fea-tures of the HVS,it is important to recognize their limitations.In particular,the HVS is a complex and highly non-linear system and the complexity of natural images is also very significant,but most models of early vision are based on linear or quasi-linear oper-ators that have been characterized using restricted and simplistic stimuli.Thus,these approaches must rely on a number of strong assumptions and generalizations[4],[5].Furthermore,as the num-ber of HVS features has increased,the resulting quality assessment systems have become too complicated to work with in real-world applications,especially for algorithm optimization purposes.Structural similarity provides an alternative and complemen-tary approach to the problem of image quality assessment[3]–[6].It is based on a top-down assumption that the HVS is highly adapted for extracting structural information from the scene,and therefore a measure of structural similarity should be a good ap-proximation of perceived image quality.It has been shown that a simple implementation of this methodology,namely the struc-tural similarity(SSIM)index[5],can outperform state-of-the-art perceptual image quality metrics.However,the SSIM index al-gorithm introduced in[5]is a single-scale approach.We consider this a drawback of the method because the right scale depends on viewing conditions(e.g.,display resolution and viewing distance). In this paper,we propose a multi-scale structural similarity method and introduce a novel image synthesis-based approach to calibrate the parameters that weight the relative importance between differ-ent scales.2.SINGLE-SCALE STRUCTURAL SIMILARITYLet x={x i|i=1,2,···,N}and y={y i|i=1,2,···,N}be two discrete non-negative signals that have been aligned with each other(e.g.,two image patches extracted from the same spatial lo-cation from two images being compared,respectively),and letµx,σ2x andσxy be the mean of x,the variance of x,and the covariance of x and y,respectively.Approximately,µx andσx can be viewed as estimates of the luminance and contrast of x,andσxy measures the the tendency of x and y to vary together,thus an indication of structural similarity.In[5],the luminance,contrast and structure comparison measures were given as follows:l(x,y)=2µxµy+C1µ2x+µ2y+C1,(1)c(x,y)=2σxσy+C2σ2x+σ2y+C2,(2)s(x,y)=σxy+C3σxσy+C3,(3) where C1,C2and C3are small constants given byC1=(K1L)2,C2=(K2L)2and C3=C2/2,(4)Fig.1.Multi-scale structural similarity measurement system.L:low-passfiltering;2↓:downsampling by2. respectively.L is the dynamic range of the pixel values(L=255for8bits/pixel gray scale images),and K1 1and K2 1aretwo scalar constants.The general form of the Structural SIMilarity(SSIM)index between signal x and y is defined as:SSIM(x,y)=[l(x,y)]α·[c(x,y)]β·[s(x,y)]γ,(5)whereα,βandγare parameters to define the relative importanceof the three components.Specifically,we setα=β=γ=1,andthe resulting SSIM index is given bySSIM(x,y)=(2µxµy+C1)(2σxy+C2)(µ2x+µ2y+C1)(σ2x+σ2y+C2),(6)which satisfies the following conditions:1.symmetry:SSIM(x,y)=SSIM(y,x);2.boundedness:SSIM(x,y)≤1;3.unique maximum:SSIM(x,y)=1if and only if x=y.The universal image quality index proposed in[3]corresponds to the case of C1=C2=0,therefore is a special case of(6).The drawback of such a parameter setting is that when the denominator of Eq.(6)is close to0,the resulting measurement becomes unsta-ble.This problem has been solved successfully in[5]by adding the two small constants C1and C2(calculated by setting K1=0.01 and K2=0.03,respectively,in Eq.(4)).We apply the SSIM indexing algorithm for image quality as-sessment using a sliding window approach.The window moves pixel-by-pixel across the whole image space.At each step,the SSIM index is calculated within the local window.If one of the image being compared is considered to have perfect quality,then the resulting SSIM index map can be viewed as the quality map of the other(distorted)image.Instead of using an8×8square window as in[3],a smooth windowing approach is used for local statistics to avoid“blocking artifacts”in the quality map[5].Fi-nally,a mean SSIM index of the quality map is used to evaluate the overall image quality.3.MULTI-SCALE STRUCTURAL SIMILARITY3.1.Multi-scale SSIM indexThe perceivability of image details depends the sampling density of the image signal,the distance from the image plane to the ob-server,and the perceptual capability of the observer’s visual sys-tem.In practice,the subjective evaluation of a given image varies when these factors vary.A single-scale method as described in the previous section may be appropriate only for specific settings.Multi-scale method is a convenient way to incorporate image de-tails at different resolutions.We propose a multi-scale SSIM method for image quality as-sessment whose system diagram is illustrated in Fig. 1.Taking the reference and distorted image signals as the input,the system iteratively applies a low-passfilter and downsamples thefiltered image by a factor of2.We index the original image as Scale1, and the highest scale as Scale M,which is obtained after M−1 iterations.At the j-th scale,the contrast comparison(2)and the structure comparison(3)are calculated and denoted as c j(x,y) and s j(x,y),respectively.The luminance comparison(1)is com-puted only at Scale M and is denoted as l M(x,y).The overall SSIM evaluation is obtained by combining the measurement at dif-ferent scales usingSSIM(x,y)=[l M(x,y)]αM·Mj=1[c j(x,y)]βj[s j(x,y)]γj.(7)Similar to(5),the exponentsαM,βj andγj are used to ad-just the relative importance of different components.This multi-scale SSIM index definition satisfies the three conditions given in the last section.It also includes the single-scale method as a spe-cial case.In particular,a single-scale implementation for Scale M applies the iterativefiltering and downsampling procedure up to Scale M and only the exponentsαM,βM andγM are given non-zero values.To simplify parameter selection,we letαj=βj=γj forall j’s.In addition,we normalize the cross-scale settings such thatMj=1γj=1.This makes different parameter settings(including all single-scale and multi-scale settings)comparable.The remain-ing job is to determine the relative values across different scales. Conceptually,this should be related to the contrast sensitivity func-tion(CSF)of the HVS[7],which states that the human visual sen-sitivity peaks at middle frequencies(around4cycles per degree of visual angle)and decreases along both high-and low-frequency directions.However,CSF cannot be directly used to derive the parameters in our system because it is typically measured at the visibility threshold level using simplified stimuli(sinusoids),but our purpose is to compare the quality of complex structured im-ages at visible distortion levels.3.2.Cross-scale calibrationWe use an image synthesis approach to calibrate the relative impor-tance of different scales.In previous work,the idea of synthesizing images for subjective testing has been employed by the“synthesis-by-analysis”methods of assessing statistical texture models,inwhich the model is used to generate a texture with statistics match-ing an original texture,and a human subject then judges the sim-ilarity of the two textures [8]–[11].A similar approach has also been qualitatively used in demonstrating quality metrics in [5],[12],though quantitative subjective tests were not conducted.These synthesis methods provide a powerful and efficient means of test-ing a model,and have the added benefit that the resulting images suggest improvements that might be made to the model[11].M )distortion level (MSE)12345Fig.2.Demonstration of image synthesis approach for cross-scale calibration.Images in the same row have the same MSE.Images in the same column have distortions only in one specific scale.Each subject was asked to select a set of images (one from each scale),having equal quality.As an example,one subject chose the marked images.For a given original 8bits/pixel gray scale test image,we syn-thesize a table of distorted images (as exemplified by Fig.2),where each entry in the table is an image that is associated witha specific distortion level (defined by MSE)and a specific scale.Each of the distorted image is created using an iterative procedure,where the initial image is generated by randomly adding white Gaussian noise to the original image and the iterative process em-ploys a constrained gradient descent algorithm to search for the worst images in terms of SSIM measure while constraining MSE to be fixed and restricting the distortions to occur only in the spec-ified scale.We use 5scales and 12distortion levels (range from 23to 214)in our experiment,resulting in a total of 60images,as demonstrated in Fig.2.Although the images at each row has the same MSE with respect to the original image,their visual quality is significantly different.Thus the distortions at different scales are of very different importance in terms of perceived image quality.We employ 10original 64×64images with different types of con-tent (human faces,natural scenes,plants,man-made objects,etc.)in our experiment to create 10sets of distorted images (a total of 600distorted images).We gathered data for 8subjects,including one of the authors.The other subjects have general knowledge of human vision but did not know the detailed purpose of the study.Each subject was shown the 10sets of test images,one set at a time.The viewing dis-tance was fixed to 32pixels per degree of visual angle.The subject was asked to compare the quality of the images across scales and detect one image from each of the five scales (shown as columns in Fig.2)that the subject believes having the same quality.For example,one subject chose the images marked in Fig.2to have equal quality.The positions of the selected images in each scale were recorded and averaged over all test images and all subjects.In general,the subjects agreed with each other on each image more than they agreed with themselves across different images.These test results were normalized (sum to one)and used to calculate the exponents in Eq.(7).The resulting parameters we obtained are β1=γ1=0.0448,β2=γ2=0.2856,β3=γ3=0.3001,β4=γ4=0.2363,and α5=β5=γ5=0.1333,respectively.4.TEST RESULTSWe test a number of image quality assessment algorithms using the LIVE database (available at [13]),which includes 344JPEG and JPEG2000compressed images (typically 768×512or similar size).The bit rate ranges from 0.028to 3.150bits/pixel,which allows the test images to cover a wide quality range,from in-distinguishable from the original image to highly distorted.The mean opinion score (MOS)of each image is obtained by averag-ing 13∼25subjective scores given by a group of human observers.Eight image quality assessment models are being compared,in-cluding PSNR,the Sarnoff model (JNDmetrix 8.0[14]),single-scale SSIM index with M equals 1to 5,and the proposed multi-scale SSIM index approach.The scatter plots of MOS versus model predictions are shown in Fig.3,where each point represents one test image,with its vertical and horizontal axes representing its MOS and the given objective quality score,respectively.To provide quantitative per-formance evaluation,we use the logistic function adopted in the video quality experts group (VQEG)Phase I FR-TV test [15]to provide a non-linear mapping between the objective and subjective scores.After the non-linear mapping,the linear correlation coef-ficient (CC),the mean absolute error (MAE),and the root mean squared error (RMS)between the subjective and objective scores are calculated as measures of prediction accuracy .The prediction consistency is quantified using the outlier ratio (OR),which is de-Table1.Performance comparison of image quality assessment models on LIVE JPEG/JPEG2000database[13].SS-SSIM: single-scale SSIM;MS-SSIM:multi-scale SSIM;CC:non-linear regression correlation coefficient;ROCC:Spearman rank-order correlation coefficient;MAE:mean absolute error;RMS:root mean squared error;OR:outlier ratio.Model CC ROCC MAE RMS OR(%)PSNR0.9050.901 6.538.4515.7Sarnoff0.9560.947 4.66 5.81 3.20 SS-SSIM(M=1)0.9490.945 4.96 6.25 6.98 SS-SSIM(M=2)0.9630.959 4.21 5.38 2.62 SS-SSIM(M=3)0.9580.956 4.53 5.67 2.91 SS-SSIM(M=4)0.9480.946 4.99 6.31 5.81 SS-SSIM(M=5)0.9380.936 5.55 6.887.85 MS-SSIM0.9690.966 3.86 4.91 1.16fined as the percentage of the number of predictions outside the range of±2times of the standard deviations.Finally,the predic-tion monotonicity is measured using the Spearman rank-order cor-relation coefficient(ROCC).Readers can refer to[15]for a more detailed descriptions of these measures.The evaluation results for all the models being compared are given in Table1.From both the scatter plots and the quantitative evaluation re-sults,we see that the performance of single-scale SSIM model varies with scales and the best performance is given by the case of M=2.It can also be observed that the single-scale model tends to supply higher scores with the increase of scales.This is not surprising because image coding techniques such as JPEG and JPEG2000usually compressfine-scale details to a much higher degree than coarse-scale structures,and thus the distorted image “looks”more similar to the original image if evaluated at larger scales.Finally,for every one of the objective evaluation criteria, multi-scale SSIM model outperforms all the other models,includ-ing the best single-scale SSIM model,suggesting a meaningful balance between scales.5.DISCUSSIONSWe propose a multi-scale structural similarity approach for image quality assessment,which provides moreflexibility than single-scale approach in incorporating the variations of image resolution and viewing conditions.Experiments show that with an appropri-ate parameter settings,the multi-scale method outperforms the best single-scale SSIM model as well as state-of-the-art image quality metrics.In the development of top-down image quality models(such as structural similarity based algorithms),one of the most challeng-ing problems is to calibrate the model parameters,which are rather “abstract”and cannot be directly derived from simple-stimulus subjective experiments as in the bottom-up models.In this pa-per,we used an image synthesis approach to calibrate the param-eters that define the relative importance between scales.The im-provement from single-scale to multi-scale methods observed in our tests suggests the usefulness of this novel approach.However, this approach is still rather crude.We are working on developing it into a more systematic approach that can potentially be employed in a much broader range of applications.6.REFERENCES[1] A.M.Eskicioglu and P.S.Fisher,“Image quality mea-sures and their performance,”IEEE munications, vol.43,pp.2959–2965,Dec.1995.[2]T.N.Pappas and R.J.Safranek,“Perceptual criteria for im-age quality evaluation,”in Handbook of Image and Video Proc.(A.Bovik,ed.),Academic Press,2000.[3]Z.Wang and A.C.Bovik,“A universal image quality in-dex,”IEEE Signal Processing Letters,vol.9,pp.81–84,Mar.2002.[4]Z.Wang,H.R.Sheikh,and A.C.Bovik,“Objective videoquality assessment,”in The Handbook of Video Databases: Design and Applications(B.Furht and O.Marques,eds.), pp.1041–1078,CRC Press,Sept.2003.[5]Z.Wang,A.C.Bovik,H.R.Sheikh,and E.P.Simon-celli,“Image quality assessment:From error measurement to structural similarity,”IEEE Trans.Image Processing,vol.13, Jan.2004.[6]Z.Wang,L.Lu,and A.C.Bovik,“Video quality assessmentbased on structural distortion measurement,”Signal Process-ing:Image Communication,special issue on objective video quality metrics,vol.19,Jan.2004.[7] B.A.Wandell,Foundations of Vision.Sinauer Associates,Inc.,1995.[8]O.D.Faugeras and W.K.Pratt,“Decorrelation methods oftexture feature extraction,”IEEE Pat.Anal.Mach.Intell., vol.2,no.4,pp.323–332,1980.[9] A.Gagalowicz,“A new method for texturefields synthesis:Some applications to the study of human vision,”IEEE Pat.Anal.Mach.Intell.,vol.3,no.5,pp.520–533,1981. [10] D.Heeger and J.Bergen,“Pyramid-based texture analy-sis/synthesis,”in Proc.ACM SIGGRAPH,pp.229–238,As-sociation for Computing Machinery,August1995.[11]J.Portilla and E.P.Simoncelli,“A parametric texture modelbased on joint statistics of complex wavelet coefficients,”Int’l J Computer Vision,vol.40,pp.49–71,Dec2000. [12]P.C.Teo and D.J.Heeger,“Perceptual image distortion,”inProc.SPIE,vol.2179,pp.127–141,1994.[13]H.R.Sheikh,Z.Wang, A. C.Bovik,and L.K.Cormack,“Image and video quality assessment re-search at LIVE,”/ research/quality/.[14]Sarnoff Corporation,“JNDmetrix Technology,”http:///products_services/video_vision/jndmetrix/.[15]VQEG,“Final report from the video quality experts groupon the validation of objective models of video quality assess-ment,”Mar.2000./.PSNRM O SSarnoffM O S(a)(b)Single−scale SSIM (M=1)M O SSingle−scale SSIM (M=2)M O S(c)(d)Single−scale SSIM (M=3)M O SSingle−scale SSIM (M=4)M O S(e)(f)Single−scale SSIM (M=5)M O SMulti−scale SSIMM O S(g)(h)Fig.3.Scatter plots of MOS versus model predictions.Each sample point represents one test image in the LIVE JPEG/JPEG2000image database [13].(a)PSNR;(b)Sarnoff model;(c)-(g)single-scale SSIM method for M =1,2,3,4and 5,respectively;(h)multi-scale SSIM method.。

硕士学位论文论 文 题 目 美国与中国视频广告中多模态隐喻的认知语言学研究—以啤酒广告为例 研究生姓名 陈彬彬指导教师姓名 苏晓军专业名称 外国语言学及应用语言学研究方向 认知语言学论文提交日期 2015年5月A Cognitive Linguistic Study of Multimodal Metaphors in Americanand Chinese Advertising: Based on Beer Video AdvertisingbyChen BinbinUnder the Supervision ofProfessor Su XiaojunSubmitted in Partial Fulfillment of the RequirementsFor the Degree of Master of ArtsSchool of Foreign LanguagesSoochow UniversityMay 2015ACKNOWLEDGEMENTSI would like to extend my wholehearted gratitude to all those who have helped me with my postgraduate studies.First of all, my sincere thanks go to my supervisor, Prof. Su Xiaojun, who has granted me great help by carefully scrutinizing every word and every figure of my thesis and offering me invaluable suggestions in conducting my research. Without his insightful comments and knowledgeable instructions I could not have completed the thesis in a satisfactory way. His commitment to academic research and rigorous attitude towards life have exerted great influence on me in terms of both study and life.I also feel deeply grateful to Prof. Zhu Xinfu, who supervised my undergraduate thesis and has long been concerned with my performance. Without his recommendation I could not have been so fortunate as to be guided by Prof. Su Xiaojun. It is his encouragement and inspiration that have driven me to seek for a higher level.My indebtedness goes to Prof. Wang Jun, Prof. Fang Hongmei, Prof. Wang Labao, Prof. Jiang Jin, Prof. Gao Yongchen and all the teaching staff in the School of Foreign Languages, Soochow University. Owing to their enlightening lectures and innovative ideas I have developed great interest in language.In addition, I want to express my heartfelt gratefulness to all the relatives, friends and classmates who have been supporting me not only in thesis writing but also in daily life. Without their solid backup the completion of my thesis would not have been possible.ABSTRACTThanks to globalization, different cultures have been on the way to integration, and the same is true for their advertising. However, the public often feel confused when distinguishing one piece of advertising from another. In response to this call for systemic studies on them scholars from many academies exert their efforts on analyzing metaphors in commercials, in terms of pictures and spoken languages. However, these attempts prove to be one tip of the iceberg as there are too many elements worth noticing in video advertising. It was until recently that public attention has been paid to the multimodal metaphors in commercials, but these scanty researches disperse in their subjects and have not included all the modalities, not to mention that a commercial is rich in carriers of conveying messages.This thesis attempts to make a comparative analysis of American and Chinese beer video advertising from the perspective of multimodal conceptual metaphor with the help of Multimodal Metaphor theory and some concepts borrowed from Conceptual Metaphor Theory. A corpus of 50 video advertising pieces of 10 beer brands, 5 from China and 5 from U.S.A. respectively, is established. By exploring into the mappings and entailments of these metaphors this thesis intends to find out the similarities and differences in using multimodalities, and then the universality and cross-cultural variations between these two countries, which might shed light on the understanding of beer advertising from America and China for potential consumers who want to spot the deep-rooted implications in advertising and for the businessmen who plan to pioneer in another market.Through thorough analyses this thesis concludes that similar conceptual metaphors, i.e. HAPPINESS IS DRINKING BEER and BEER IS A LIVING ORGANISM, exist in advertising from both countries which is rendered a result caused by universal embodiment and cultural congruence. Besides, visual modality remains to be the primary one in elaborating beer qualities. Nonetheless, theirpreference for modalities and the mappings and entailments underlying the conceptual metaphors differ between these two countries, which is under the impact of different ideological assumptions and cultural traits.Key Words: beer video advertising, conceptual metaphor, multimodal, universality, cross-cultural variation摘 要伴随着全球化的进程,不同文化之间相互借鉴和学习,其中之一就是广告的发展。

The ITU-T published J.144, a measurement of quality of service, for the transmission of television and other multimedia digital signals over cable networks. This defines the relationship between subjective assessment of video by a person and objective measurements taken from the network.The correlation between the two are defined by two methods:y Full Reference (Active) – A method applicable when the full reference video signal is available, and compared with the degraded signal as it passes through the network.y No Reference (Passive) – A method applicable when no reference video signal or informationis available.VIAVI believes that a combination of both Active and Passive measurements gives the correct blendof analysis with a good trade off of accuracy and computational power. T eraVM provides both voice and video quality assessment metrics, active and passive, based on ITU-T’s J.144, but are extended to support IP networks.For active assessment of VoIP and video, both the source and degraded signals are reconstituted from ingress and egress IP streams that are transmitted across the Network Under T est (NUT).The VoIP and video signals are aligned and each source and degraded frame is compared to rate the video quality.For passive measurements, only the degraded signal is considered, and with specified parameters about the source (CODEC, bit-rate) a metric is produced in real-time to rate the video quality.This combination of metrics gives the possibility of a ‘passive’ but lightweight Mean Opinion Score (MOS) per-subscriber for voice and video traffic, that is correlated with CPU-expensive but highly-accurate ‘active’ MOS scores.Both methods provide different degrees of measurement accuracy, expressed in terms of correlation with subjective assessment results. However, the trade off is the considerable computation resources required for active assessment of video - the algorithm must decode the IP stream and reconstitute the video sequence frame by frame, and compare the input and outputnframesto determine its score. The passive method is less accurate, but requires less computing resources. Active Video AnalysisThe active video assessment metric is called PEVQ– Perceptual Evaluation of Video Quality. PEVQ provides MOS estimates of the video quality degradation occurring through a network byBrochureVIAVITeraVMVoice, Video and MPEG Transport Stream Quality Metricsanalysing the degraded video signal output from the network. This approach is based on modelling the behaviour of the human visual tract and detecting abnormalities in the video signal quantified by a variety of KPIs. The MOS value reported, lies within a range from 1 (bad) to 5 (excellent) and is based on a multitude of perceptually motivated parameters.T o get readings from the network under test, the user runs a test with an video server (T eraVM or other) and an IGMP client, that joins the stream for a long period of time. The user selects the option to analysis the video quality, which takes a capture from both ingress and egress test ports.Next, the user launches the T eraVM Video Analysis Server, which fetches the video files from the server, filters the traffic on the desired video channel and converts them into standard video files. The PEVQ algorithm is run and is divided up into four separate blocks.The first block – pre-processing stage – is responsible for the spatial and temporal alignment of the reference and the impaired signal. This process makes sure, that only those frames are compared to each other that also correspond to each other.The second block calculates the perceptual difference of the aligned signals. Perceptual means that only those differences are taken into account which are actually perceived by a human viewer. Furthermore the activity of the motion in the reference signal provides another indicator representing the temporal information. This indicator is important as it takes into account that in frame series with low activity the perception of details is much higher than in frame series with quick motion. The third block in the figure classifies the previously calculated indicators and detects certain types of distortions.Finally, in the fourth block all the appropriate indicators according to the detected distortions are aggregated, forming the final result ‒ the mean opinion score (MOS). T eraVM evaluates the quality of CIF and QCIF video formats based on perceptual measurement, reliably, objectively and fast.In addition to MOS, the algorithm reports:y D istortion indicators: For a more detailed analysis the perceptual level of distortion in the luminance, chrominance and temporal domain are provided.y D elay: The delay of each frame of the test signal related to the reference signal.y Brightness: The brightness of the reference and degraded signal.y Contrast: The contrast of the distorted and the reference sequence.y P SNR: T o allow for a coarse analysis of the distortions in different domains the PSNR is provided for theY (luminance), Cb and Cr (chrominance) components separately.y Other KPIs: KPIs like Blockiness (S), Jerkiness, Blurriness (S), and frame rate the complete picture of the quality estimate.Passive MOS and MPEG StatisticsThe VQM passive algorithm is integrated into T eraVM, and when required produces a VQM, an estimation of the subjective quality of the video, every second. VQM MOS scores are available as an additional statistic in the T eraVM GUI and available in real time. In additionto VQM MOS scores, MPEG streams are analysed to determine the quality of each “Packet Elementary Stream” and exports key metrics such as Packets received and Packets Lost for each distinct Video stream within the MPEG Transport Stream. All major VoIP and Video CODECs are support, including MPEG 2/4 and the H.261/3/3+/4.2 TeraVM Voice, Video and MPEG Transport Stream Quality Metrics© 2020 VIAVI Solutions Inc.Product specifications and descriptions in this document are subject to change without notice.tvm-vv-mpeg-br-wir-nse-ae 30191143 900 0620Contact Us +1 844 GO VIAVI (+1 844 468 4284)To reach the VIAVI office nearest you, visit /contacts.VIAVI SolutionsVoice over IP call quality can be affected by packet loss, discards due to jitter, delay , echo and other problems. Some of these problems, notably packet loss and jitter, are time varying in nature as they are usually caused by congestion on the IP path. This can result in situations where call quality varies during the call - when viewed from the perspective of “average” impairments then the call may appear fine although it may have sounded severely impaired to the listener. T eraVM inspects every RTP packet header, estimating delay variation and emulating the behavior of a fixed or adaptive jitter buffer to determine which packets are lost or discarded. A 4- state Markov Model measures the distribution of the lost and discarded packets. Packet metrics obtained from the Jitter Buffer together with video codec information obtained from the packet stream to calculate a rich set of metrics, performance and diagnostic information. Video quality scores provide a guide to the quality of the video delivered to the user. T eraVM V3.1 produces call quality metrics, includinglistening and conversational quality scores, and detailed information on the severity and distribution of packet loss and discards (due to jitter). This metric is based on the well established ITU G.107 E Model, with extensions to support time varying network impairments.For passive VoIP analysis, T eraVM v3.1 emulates a VoIP Jitter Buffer Emulator and with a statistical Markov Model accepts RTP header information from the VoIP stream, detects lost packets and predicts which packets would be discarded ‒ feeding this information to the Markov Model and hence to the T eraVM analysis engine.PESQ SupportFinally , PESQ is available for the analysis of VoIP RTP Streams. The process to generate PESQ is an identical process to that of Video Quality Analysis.。

Linear Algebra and its Applications432(2010)2089–2099Contents lists available at ScienceDirect Linear Algebra and its Applications j o u r n a l h o m e p a g e:w w w.e l s e v i e r.c o m/l o c a t e/l aaIntegrating learning theories and application-based modules in teaching linear algebraୋWilliam Martin a,∗,Sergio Loch b,Laurel Cooley c,Scott Dexter d,Draga Vidakovic ea Department of Mathematics and School of Education,210F Family Life Center,NDSU Department#2625,P.O.Box6050,Fargo ND 58105-6050,United Statesb Department of Mathematics,Grand View University,1200Grandview Avenue,Des Moines,IA50316,United Statesc Department of Mathematics,CUNY Graduate Center and Brooklyn College,2900Bedford Avenue,Brooklyn,New York11210, United Statesd Department of Computer and Information Science,CUNY Brooklyn College,2900Bedford Avenue Brooklyn,NY11210,United Statese Department of Mathematics and Statistics,Georgia State University,University Plaza,Atlanta,GA30303,United StatesA R T I C L E I N F O AB S T R AC TArticle history:Received2October2008Accepted29August2009Available online30September2009 Submitted by L.Verde-StarAMS classification:Primary:97H60Secondary:97C30Keywords:Linear algebraLearning theoryCurriculumPedagogyConstructivist theoriesAPOS–Action–Process–Object–Schema Theoretical frameworkEncapsulated process The research team of The Linear Algebra Project developed and implemented a curriculum and a pedagogy for parallel courses in (a)linear algebra and(b)learning theory as applied to the study of mathematics with an emphasis on linear algebra.The purpose of the ongoing research,partially funded by the National Science Foundation,is to investigate how the parallel study of learning theories and advanced mathematics influences the development of thinking of individuals in both domains.The researchers found that the particular synergy afforded by the parallel study of math and learning theory promoted,in some students,a rich understanding of both domains and that had a mutually reinforcing effect.Furthermore,there is evidence that the deeper insights will contribute to more effective instruction by those who become high school math teachers and,consequently,better learning by their students.The courses developed were appropriate for mathematics majors,pre-service secondary mathematics teachers, and practicing mathematics teachers.The learning seminar focused most heavily on constructivist theories,although it also examinedThe work reported in this paper was partially supported by funding from the National Science Foundation(DUE CCLI 0442574).∗Corresponding author.Address:NDSU School of Education,NDSU Department of Mathematics,210F Family Life Center, NDSU Department#2625,P.O.Box6050,Fargo ND58105-6050,United States.Tel.:+17012317104;fax:+17012317416.E-mail addresses:william.martin@(W.Martin),sloch@(S.Loch),LCooley@ (L.Cooley),SDexter@(S.Dexter),dvidakovic@(D.Vidakovic).0024-3795/$-see front matter©2009Elsevier Inc.All rights reserved.doi:10.1016/a.2009.08.0302090W.Martin et al./Linear Algebra and its Applications432(2010)2089–2099Thematicized schema Triad–intraInterTransGenetic decomposition Vector additionMatrixMatrix multiplication Matrix representation BasisColumn spaceRow spaceNull space Eigenspace Transformation socio-cultural and historical perspectives.A particular theory, Action–Process–Object–Schema(APOS)[10],was emphasized and examined through the lens of studying linear algebra.APOS has been used in a variety of studies focusing on student understanding of undergraduate mathematics.The linear algebra courses include the standard set of undergraduate topics.This paper reports the re-sults of the learning theory seminar and its effects on students who were simultaneously enrolled in linear algebra and students who had previously completed linear algebra and outlines how prior research has influenced the future direction of the project.©2009Elsevier Inc.All rights reserved.1.Research rationaleThe research team of the Linear Algebra Project(LAP)developed and implemented a curriculum and a pedagogy for parallel courses in linear algebra and learning theory as applied to the study of math-ematics with an emphasis on linear algebra.The purpose of the research,which was partially funded by the National Science Foundation(DUE CCLI0442574),was to investigate how the parallel study of learning theories and advanced mathematics influences the development of thinking of high school mathematics teachers,in both domains.The researchers found that the particular synergy afforded by the parallel study of math and learning theory promoted,in some teachers,a richer understanding of both domains that had a mutually reinforcing effect and affected their thinking about their identities and practices as teachers.It has been observed that linear algebra courses often are viewed by students as a collection of definitions and procedures to be learned by rote.Scanning the table of contents of many commonly used undergraduate textbooks will provide a common list of terms such as listed here(based on linear algebra texts by Strang[1]and Lang[2]).Vector space Kernel GaussianIndependence Image TriangularLinear combination Inverse Gram–SchmidtSpan Transpose EigenvectorBasis Orthogonal Singular valueSubspace Operator DecompositionProjection Diagonalization LU formMatrix Normal form NormDimension Eignvalue ConditionLinear transformation Similarity IsomorphismRank Diagonalize DeterminantThis is not something unique to linear algebra–a similar situation holds for many undergraduate mathematics courses.Certainly the authors of undergraduate texts do not share this student view of mathematics.In fact,the variety ways in which different authors organize their texts reflects the individual ways in which they have conceptualized introductory linear algebra courses.The wide vari-ability that can be seen in a perusal of the many linear algebra texts that are used is a reflection the many ways that mathematicians think about linear algebra and their beliefs about how students can come to make sense of the content.Instruction in a course is based on considerations of content,pedagogy, resources(texts and other materials),and beliefs about teaching and learning of mathematics.The interplay of these ideas shaped our research project.We deliberately mention two authors with clearly differing perspectives on an undergraduate linear algebra course:Strang’s organization of the material takes an applied or application perspective,while Lang views the material from more of a“pure mathematics”perspective.A review of the wide variety of textbooks to classify and categorize the different views of the subject would reveal a broad variety of perspectives on the teaching of the subject.We have taken a view that seeks to go beyond the mathe-matical content to integrate current theoretical perspectives on the teaching and learning of undergrad-uate mathematics.Our project used integration of mathematical content,applications,and learningW.Martin et al./Linear Algebra and its Applications432(2010)2089–20992091 theories to provide enhanced learning experiences using rich content,student meta cognition,and their own experience and intuition.The project also used co-teaching and collaboration among faculty with expertise in a variety of areas including mathematics,computer science and mathematics education.If one moves beyond the organization of the content of textbooks wefind that at their heart they do cover a common core of the key ideas of linear algebra–all including fundamental concepts such as vector space and linear transformation.These observations lead to our key question“How is one to think about this task of organizing instruction to optimize learning?”In our work we focus on the conception of linear algebra that is developed by the student and its relationship with what we reveal about our own understanding of the subject.It seems that even in cases where researchers consciously study the teaching and learning of linear algebra(or other mathematics topics)the questions are“What does it mean to understand linear algebra?”and“How do I organize instruction so that students develop that conception as fully as possible?”In broadest terms, our work involves(a)simultaneous study of linear algebra and learning theories,(b)having students connect learning theories to their study of linear algebra,and(c)the use of parallel mathematics and education courses and integrated workshops.As students simultaneously study mathematics and learning theory related to the study of mathe-matics,we expect that reflection or meta cognition on their own learning will enable them to construct deeper and more meaningful understanding in both domains.We chose linear algebra for several reasons:It has not been the focus of as much instructional research as calculus,it involves abstraction and proof,and it is taken by many students in different programs for a variety of reasons.It seems to us to involve important mathematical content along with rich applications,with abstraction that builds on experience and intuition.In our pilot study we taught parallel courses:The regular upper division undergraduate linear algebra course and a seminar in learning theories in mathematics education.Early in the project we also organized an intensive three-day workshop for teachers and prospective teachers that included topics in linear algebra and examination of learning theory.In each case(two sets of parallel courses and the workshop)we had students reflect on their learning of linear algebra content and asked them to use their own learning experiences to reflect on the ideas about teaching and learning of mathematics.Students read articles–in the case of the workshop,this reading was in advance of the long weekend session–drawn from mathematics education sources including[3–10].APOS(Action,Process,Object,Schema)is a theoretical framework that has been used by many researchers who study the learning of undergraduate and graduate mathematics[10,11].We include a sketch of the structure of this framework and refer the reader to the literature for more detailed descriptions.More detailed and specific illustrations of its use are widely available[12].The APOS Theoretical Framework involves four levels of understanding that can be described for a wide variety of mathematical concepts such as function,vector space,linear transformation:Action,Process,Object (either an encapsulated process or a thematicized schema),Schema(Intra,inter,trans–triad stages of schema formation).Genetic decomposition is the analysis of a particular concept in which developing understanding is described as a dynamic process of mental constructions that continually develop, abstract,and enrich the structural organization of an individual’s knowledge.We believe that students’simultaneous study of linear algebra along with theoretical examination of teaching and learning–particularly on what it means to develop conceptual understanding in a domain –will promote learning and understanding in both domains.Fundamentally,this reflects our view that conceptual understanding in any domain involves rich mental connections that link important ideas or facts,increasing the individual’s ability to relate new situations and problems to that existing cognitive framework.This view of conceptual understanding of mathematics has been described by various prominent math education researchers such as Hiebert and Carpenter[6]and Hiebert and Lefevre[7].2.Action–Process–Object–Schema theory(APOS)APOS theory is a theoretical perspective of learning based on an interpretation of Piaget’s construc-tivism and poses descriptions of mental constructions that may occur in understanding a mathematical concept.These constructions are called Actions,Processes,Objects,and Schema.2092W.Martin et al./Linear Algebra and its Applications432(2010)2089–2099 An action is a transformation of a mathematical object according to an explicit algorithm seen as externally driven.It may be a manipulation of objects or acting upon a memorized fact.When one reflects upon an action,constructing an internal operation for a transformation,the action begins to be interiorized.A process is this internal transformation of an object.Each step may be described or reflected upon without actually performing it.Processes may be transformed through reversal or coordination with other processes.There are two ways in which an individual may construct an object.A person may reflect on actions applied to a particular process and become aware of the process as a totality.One realizes that transformations(whether actions or processes)can act on the process,and is able to actually construct such transformations.At this point,the individual has reconstructed a process as a cognitive object. In this case we say that the process has been encapsulated into an object.One may also construct a cognitive object by reflecting on a schema,becoming aware of it as a totality.Thus,he or she is able to perform actions on it and we say the individual has thematized the schema into an object.With an object conception one is able to de-encapsulate that object back into the process from which it came, or,in the case of a thematized schema,unpack it into its various components.Piaget and Garcia[13] indicate that thematization has occurred when there is a change from usage or implicit application to consequent use and conceptualization.A schema is a collection of actions,processes,objects,and other previously constructed schemata which are coordinated and synthesized to form mathematical structures utilized in problem situations. Objects may be transformed by higher-level actions,leading to new processes,objects,and schemata. Hence,reconstruction continues in evolving schemata.To illustrate different conceptions of the APOS theory,imagine the following’teaching’scenario.We give students multi-part activities in a technology supported environment.In particular,we assume students are using Maple in the computer lab.The multi-part activities,focusing on vectors and operations,in Maple begin with a given Maple code and drawing.In case of scalar multiplication of the vector,students are asked to substitute one parameter in the Maple code,execute the code and observe what has happened.They are asked to repeat this activity with a different value of the parameter.Then students are asked to predict what will happen in a more general case and to explain their reasoning.Similarly,students may explore addition and subtraction of vectors.In the next part of activity students might be asked to investigate about the commutative property of vector addition.Based on APOS theory,in thefirst part of the activity–in which students are asked to perform certain operation and make observations–our intention is to induce each student’s action conception of that concept.By asking students to imagine what will happen if they make a certain change–but do not physically perform that change–we are hoping to induce a somewhat higher level of students’thinking, the process level.In order to predict what will happen students would have to imagine performing the action based on the actions they performed before(reflective abstraction).Activities designed to explore on vector addition properties require students to encapsulate the process of addition of two vectors into an object on which some other action could be performed.For example,in order for a student to conclude that u+v=v+u,he/she must encapsulate a process of adding two vectors u+v into an object(resulting vector)which can further be compared[action]with another vector representing the addition of v+u.As with all theories of learning,APOS has a limitation that researchers may only observe externally what one produces and discusses.While schemata are viewed as dynamic,the task is to attempt to take a snap shot of understanding at a point in time using a genetic decomposition.A genetic decomposition is a description by the researchers of specific mental constructions one may make in understanding a mathematical concept.As with most theories(economics,physics)that have restrictions,it can still be very useful in describing what is observed.3.Initial researchIn our preliminary study we investigated three research questions:•Do participants make connections between linear algebra content and learning theories?•Do participants reflect upon their own learning in terms of studied learning theories?W.Martin et al./Linear Algebra and its Applications432(2010)2089–20992093•Do participants connect their study of linear algebra and learning theories to the mathematics content or pedagogy for their mathematics teaching?In addition to linear algebra course activities designed to engage students in explorations of concepts and discussions about learning theories and connections between the two domains,we had students construct concept maps and describe how they viewed the connections between the two subjects. We found that some participants saw significant connections and were able to apply APOS theory appropriately to their learning of linear algebra.For example,here is a sketch outline of how one participant described the elements of the APOS framework late in the semester.The student showed a reasonable understanding of the theoretical framework and then was able to provide an example from linear algebra to illustrate the model.The student’s description of the elements of APOS:Action:“Students’approach is to apply‘external’rules tofind solutions.The rules are said to be external because students do not have an internalized understanding of the concept or the procedure tofind a solution.”Process:“At the process level,students are able to solve problems using an internalized understand-ing of the algorithm.They do not need to write out an equation or draw a graph of a function,for example.They can look at a problem and understand what is going on and what the solution might look like.”Object level as performing actions on a process:“At the object level,students have an integrated understanding of the processes used to solve problems relating to a particular concept.They un-derstand how a process can be transformed by different actions.They understand how different processes,with regard to a particular mathematical concept,are related.If a problem does not conform to their particular action-level understanding,they can modify the procedures necessary tofind a solution.”Schema as a‘set’of knowledge that may be modified:“Schema–At the schema level,students possess a set of knowledge related to a particular concept.They are able to modify this set of knowledge as they gain more experience working with the concept and solving different kinds of problems.They see how the concept is related to other concepts and how processes within the concept relate to each other.”She used the ideas of determinant and basis to illustrate her understanding of the framework. (Another student also described how student recognition of the recursive relationship of computations of determinants of different orders corresponded to differing levels of understanding in the APOS framework.)Action conception of determinant:“A student at the action level can use an algorithm to calculate the determinant of a matrix.At this level(at least for me),the formula was complicated enough that I would always check that the determinant was correct byfinding the inverse and multiplying by the original matrix to check the solution.”Process conception of determinant:“The student knows different methods to use to calculate a determinant and can,in some cases,look at a matrix and determine its value without calculations.”Object conception:“At the object level,students see the determinant as a tool for understanding and describing matrices.They understand the implications of the value of the determinant of a matrix as a way to describe a matrix.They can use the determinant of a matrix(equal to or not equal to zero)to describe properties of the elements of a matrix.”Triad development of a schema(intra,inter,trans):“A singular concept–basis.There is a basis for a space.The student can describe a basis without calculation.The student canfind different types of bases(column space,row space,null space,eigenspace)and use these values to describe matrices.”The descriptions of components of APOS along with examples illustrate that this student was able to make valid connections between the theoretical framework and the content of linear algebra.While the2094W.Martin et al./Linear Algebra and its Applications432(2010)2089–2099descriptions may not match those that would be given by scholars using APOS as a research framework, the student does demonstrate a recognition of and ability to provide examples of how understanding of linear algebra can be organized conceptually as more that a collection of facts.As would be expected,not all participants showed gains in either domain.We viewed the results of this study as a proof of concept,since there were some participants who clearly gained from the experience.We also recognized that there were problems associated with the implementation of our plan.To summarize ourfindings in relation to the research questions:•Do participants make connections between linear algebra content and learning theories?Yes,to widely varying degrees and levels of sophistication.•Do participants reflect upon their own learning in terms of studied learning theories?Yes,to the extent possible from their conception of the learning theories and understanding of linear algebra.•Do participants connect their study of linear algebra and learning theories to the mathematics content or pedagogy for their mathematics teaching?Participants describe how their experiences will shape their own teaching,but we did not visit their classes.Of the11students at one site who took the parallel courses,we identified three in our case studies (a detailed report of that study is presently under review)who demonstrated a significant ability to connect learning theories with their own learning of linear algebra.At another site,three teachers pursuing math education graduate studies were able to varying degrees to make these connections –two demonstrated strong ability to relate content to APOS and described important ways that the experience had affected their own thoughts about teaching mathematics.Participants in the workshop produced richer concept maps of linear algebra topics by the end of the weekend.Still,there were participants who showed little ability to connect material from linear algebra and APOS.A common misunderstanding of the APOS framework was that increasing levels cor-responded to increasing difficulty or complexity.For example,a student might suggest that computing the determinant of a2×2matrix was at the action level,while computation of a determinant in the 4×4case was at the object level because of the increased complexity of the computations.(Contrast this with the previously mentioned student who observed that the object conception was necessary to recognize that higher dimension determinants are computed recursively from lower dimension determinants.)We faced more significant problems than the extent to which students developed an understanding of the ideas that were presented.We found it very difficult to get students–especially undergraduates –to agree to take an additional course while studying linear algebra.Most of the participants in our pilot projects were either mathematics teachers or prospective mathematics teachers.Other students simply do not have the time in their schedules to pursue an elective seminar not directly related to their own area of interest.This problem led us to a new project in which we plan to integrate the material on learning theory–perhaps implicitly for the students–in the linear algebra course.Our focus will be on working with faculty teaching the course to ensure that they understand the theory and are able to help ensure that course activities reflect these ideas about learning.4.Continuing researchOur current Linear Algebra in New Environments(LINE)project focuses on having faculty work collaboratively to develop a series of modules that use applications to help students develop conceptual understanding of key linear algebra concepts.The project has three organizing concepts:•Promote enhanced learning of linear algebra through integrated study of mathematical content, applications,and the learning process.•Increase faculty understanding and application of mathematical learning theories in teaching linear algebra.•Promote and support improved instruction through co-teaching and collaboration among faculty with expertise in a variety of areas,such as education and STEM disciplines.W.Martin et al./Linear Algebra and its Applications432(2010)2089–20992095 For example,computer and video graphics involve linear transformations.Students will complete a series of activities that use manipulation of graphical images to illustrate and help them move from action and process conceptions of linear transformations to object conceptions and the development of a linear transformation schema.Some of these ideas were inspired by material in Judith Cederberg’s geometry text[14]and some software developed by David Meel,both using matrix representations of geometric linear transformations.The modules will have these characteristics:•Embed learning theory in linear algebra course for both the instructor and the students.•Use applied modules to illustrate the organization of linear algebra concepts.•Applications draw on student intuitions to aid their mental constructions and organization of knowledge.•Consciously include meta-cognition in the course.To illustrate,we sketch the outline of a possible series of activities in a module on geometric linear transformations.The faculty team–including individuals with expertise in mathematics,education, and computer science–will develop a series of modules to engage students in activities that include reflection and meta cognition about their learning of linear algebra.(The Appendix contains a more detailed description of a module that includes these activities.)Task1:Use Photoshop or GIMP to manipulate images(rotate,scale,flip,shear tools).Describe and reflect on processes.This activity uses an ACTION conception of transformation.Task2:Devise rules to map one vector to another.Describe and reflect on process.This activity involves both ACTION and PROCESS conceptions.Task3:Use a matrix representation to map vectors.This requires both PROCESS and OBJECT conceptions.Task4:Compare transform of sum with sum of transforms for matrices in Task3as compared to other non-linear functions.This involves ACTION,PROCESS,and OBJECT conceptions.Task5:Compare pre-image and transformed image of rectangles in the plane–identify software tool that was used(from Task1)and how it might be represented in matrix form.This requires OBJECT and SCHEMA conceptions.Education,mathematics and computer science faculty participating in this project will work prior to the semester to gain familiarity with the APOS framework and to identify and sketch potential modules for the linear algebra course.During the semester,collaborative teams of faculty continue to develop and refine modules that reflect important concepts,interesting applications,and learning theory:Modules will present activities that help students develop important concepts rather than simply presenting important concepts for students to absorb.The researchers will study the impact of project activities on student learning:We expect that students will be able to describe their knowledge of linear algebra in a more conceptual(structured) way during and after the course.We also will study the impact of the project on faculty thinking about teaching and learning:As a result of this work,we expect that faculty will be able to describe both the important concepts of linear algebra and how those concepts are mentally developed and organized by students.Finally,we will study the impact on instructional practice:Participating faculty should continue to use instructional practices that focus both on important content and how students develop their understanding of that content.5.SummaryOur preliminary study demonstrated that prospective and practicing mathematics teachers were able to make connections between their concurrent study of linear algebra and of learning theories relating to mathematics education,specifically the APOS theoretical framework.In cases where the participants developed understanding in both domains,it was apparent that this connected learning strengthened understanding in both areas.Unfortunately,we were unable to encourage undergraduate students to consider studying both linear algebra and learning theory in separate,parallel courses. Consequently,we developed a new strategy that embeds the learning theory in the linear algebra。

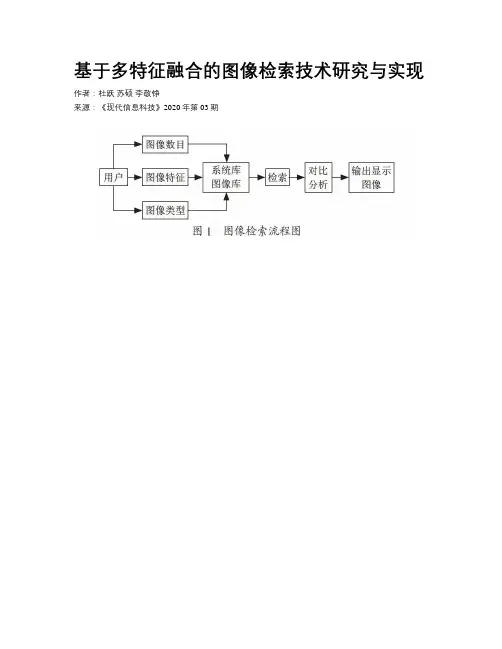

基于多特征融合的图像检索技术研究与实现作者:杜跃苏硕李敬铮来源:《现代信息科技》2020年第03期摘; 要:为了满足精准、智能、高效的图像检索方式,提出了一种基于多特征融合图像检索方法,多特征图像检索技术实现的图像检索使用了图像自身的多特征作为图像检索的依据,提高了图像搜索的精准性和高效性。

通过测试,实验结果证实了多特征融合图像检索技术利用了图像的颜色和纹理等作为检索的特征,多特征融合图像检索系统具有一定的学术意义和现实价值,能够大幅度提高图像检索的精确性,满足了用户的需求,具有良好的实用价值。

关键词:多特征融合;图像检索;纹理;图像特征中图分类号:TP391.41; ; ; ;文献标识码:A 文章编号:2096-4706(2020)03-0083-003Abstract:In order to meet the requirements of accurate,intelligent and efficient image retrieval,a method of image retrieval based on multi feature fusion is proposed. The image retrieval realized by multi feature image retrieval technology uses the multi feature of image itself as the basis of image retrieval,which improves the accuracy and efficiency of image retrieval. Through the test,the experimental results confirm that the multi feature fusion image retrieval technology uses the color and texture of the image as the retrieval features. The multi feature fusion image retrieval system has certain academic significance and practical value,can greatly improve the accuracy of image retrieval,meet the needs of users,and has good practical value.0; 引; 言当前,随着科学技术的发展,互联网技术不断发展,但是图像检索的效率仍然过于低下,互联网的高速发展给人们提供了大量的信息资源,但是不断增加的数据资源也给人们带来了一定的困难,即如何在海量数据资源中实现对信息的检索,提高用户的查询效率,因此目前急需一种更加精准、高效、智能的图像检索技术。