ON ALGORITHMS FOR ORDINARY LEAST SQUARES REGRESSION SPLINE FITTING A COMPARATIVE STUDY

- 格式:pdf

- 大小:459.26 KB

- 文档页数:17

算法导论课程作业答案Introduction to AlgorithmsMassachusetts Institute of Technology 6.046J/18.410J Singapore-MIT Alliance SMA5503 Professors Erik Demaine,Lee Wee Sun,and Charles E.Leiserson Handout10Diagnostic Test SolutionsProblem1Consider the following pseudocode:R OUTINE(n)1if n=12then return13else return n+R OUTINE(n?1)(a)Give a one-sentence description of what R OUTINE(n)does.(Remember,don’t guess.) Solution:The routine gives the sum from1to n.(b)Give a precondition for the routine to work correctly.Solution:The value n must be greater than0;otherwise,the routine loops forever.(c)Give a one-sentence description of a faster implementation of the same routine. Solution:Return the value n(n+1)/2.Problem2Give a short(1–2-sentence)description of each of the following data structures:(a)FIFO queueSolution:A dynamic set where the element removed is always the one that has been in the set for the longest time.(b)Priority queueSolution:A dynamic set where each element has anassociated priority value.The element removed is the element with the highest(or lowest)priority.(c)Hash tableSolution:A dynamic set where the location of an element is computed using a function of the ele ment’s key.Problem3UsingΘ-notation,describe the worst-case running time of the best algorithm that you know for each of the following:(a)Finding an element in a sorted array.Solution:Θ(log n)(b)Finding an element in a sorted linked-list.Solution:Θ(n)(c)Inserting an element in a sorted array,once the position is found.Solution:Θ(n)(d)Inserting an element in a sorted linked-list,once the position is found.Solution:Θ(1)Problem4Describe an algorithm that locates the?rst occurrence of the largest element in a?nite list of integers,where the integers are not necessarily distinct.What is the worst-case running time of your algorithm?Solution:Idea is as follows:go through list,keeping track of the largest element found so far and its index.Update whenever necessary.Running time isΘ(n).Problem5How does the height h of a balanced binary search tree relate to the number of nodes n in the tree? Solution:h=O(lg n) Problem 6Does an undirected graph with 5vertices,each of degree 3,exist?If so,draw such a graph.If not,explain why no such graph exists.Solution:No such graph exists by the Handshaking Lemma.Every edge adds 2to the sum of the degrees.Consequently,the sum of the degrees must be even.Problem 7It is known that if a solution to Problem A exists,then a solution to Problem B exists also.(a)Professor Goldbach has just produced a 1,000-page proof that Problem A is unsolvable.If his proof turns out to be valid,can we conclude that Problem B is also unsolvable?Answer yes or no (or don’t know).Solution:No(b)Professor Wiles has just produced a 10,000-page proof that Problem B is unsolvable.If the proof turns out to be valid,can we conclude that problem A is unsolvable as well?Answer yes or no (or don’t know).Solution:YesProblem 8Consider the following statement:If 5points are placed anywhere on or inside a unit square,then there must exist two that are no more than √2/2units apart.Here are two attempts to prove this statement.Proof (a):Place 4of the points on the vertices of the square;that way they are maximally sepa-rated from one another.The 5th point must then lie within √2/2units of one of the other points,since the furthest from the corners it can be is the center,which is exactly √2/2units fromeach of the four corners.Proof (b):Partition the square into 4squares,each with a side of 1/2unit.If any two points areon or inside one of these smaller squares,the distance between these two points will be at most √2/2units.Since there are 5points and only 4squares,at least two points must fall on or inside one of the smaller squares,giving a set of points that are no more than √2/2apart.Which of the proofs are correct:(a),(b),both,or neither (or don’t know)?Solution:(b)onlyProblem9Give an inductive proof of the following statement:For every natural number n>3,we have n!>2n.Solution:Base case:True for n=4.Inductive step:Assume n!>2n.Then,multiplying both sides by(n+1),we get(n+1)n!> (n+1)2n>2?2n=2n+1.Problem10We want to line up6out of10children.Which of the following expresses the number of possible line-ups?(Circle the right answer.)(a)10!/6!(b)10!/4!(c) 106(d) 104 ·6!(e)None of the above(f)Don’t knowSolution:(b),(d)are both correctProblem11A deck of52cards is shuf?ed thoroughly.What is the probability that the4aces are all next to each other?(Circle theright answer.)(a)4!49!/52!(b)1/52!(c)4!/52!(d)4!48!/52!(e)None of the above(f)Don’t knowSolution:(a)Problem12The weather forecaster says that the probability of rain on Saturday is25%and that the probability of rain on Sunday is25%.Consider the following statement:The probability of rain during the weekend is50%.Which of the following best describes the validity of this statement?(a)If the two events(rain on Sat/rain on Sun)are independent,then we can add up the twoprobabilities,and the statement is true.Without independence,we can’t tell.(b)True,whether the two events are independent or not.(c)If the events are independent,the statement is false,because the the probability of no rainduring the weekend is9/16.If they are not independent,we can’t tell.(d)False,no matter what.(e)None of the above.(f)Don’t know.Solution:(c)Problem13A player throws darts at a target.On each trial,independentlyof the other trials,he hits the bull’s-eye with probability1/4.How many times should he throw so that his probability is75%of hitting the bull’s-eye at least once?(a)3(b)4(c)5(d)75%can’t be achieved.(e)Don’t know.Solution:(c),assuming that we want the probability to be≥0.75,not necessarily exactly0.75.Problem14Let X be an indicator random variable.Which of the following statements are true?(Circle all that apply.)(a)Pr{X=0}=Pr{X=1}=1/2(b)Pr{X=1}=E[X](c)E[X]=E[X2](d)E[X]=(E[X])2Solution:(b)and(c)only。

Chapter 21. A dependent variable is also known as a(n) _____.a. explanatory variableb. control variablec. predictor variabled. response variableAnswer: dDifficulty: EasyBloom’s: KnowledgeA-Head: Definition of the Simple Regression ModelBUSPROG:Feedback: A dependent variable is known as a response variable.2. If a change in variable x causes a change in variable y, variable x is called the _____.a. dependent variableb. explained variablec. explanatory variabled. response variableAnswer: cDifficulty: EasyBloom’s: ComprehensionA-Head: Definition of the Simple Regression ModelBUSPROG:Feedback: If a change in variable x causes a change in variable y, variable x is called the independent variable or the explanatory variable.3. In the equation y = + x + u, is the _____.a. dependent variableb. independent variablec. slope parameterd. intercept parameterAnswer: dDifficulty: EasyBloom’s: KnowledgeA-Head: Definition of the Simple Regression ModelBUSPROG:Feedback: In the equation y = + x + u, is the intercept parameter.4. In the equation y = + x + u, what is the estimated value ofa.b.c.d.Answer: aDifficulty: EasyBloom’s: KnowledgeA-Head: Deriving the Ordinary Least Squares EstimatesBUSPROG:Feedback: The estimated value of is .5. In the equation c = + i + u, c denotes consumption and i denotes income. What is the residual for the 5th observation if =$500 and =$475a. $975b. $300c. $25d. $50Answer: cDifficulty: EasyBloom’s: KnowledgeA-Head: Deriving the Ordinary Least Squares EstimatesBUSPROG:Feedback: The formula for calculating the residual for the i th observation is . In this case, the residual is =$500 -$475= $25.6. What does the equation denote if the regression equation is y = β0+ β1x1 + ua. The explained sum of squaresb. The total sum of squaresc. The sample regression functiond. The population regression functionAnswer: cDifficulty: EasyBloom’s: KnowledgeA-Head: Deriving the Ordinary Least Squares EstimatesBUSPROG:Feedback: The equation denotes the sample regression function of the given regression model.7. Consider the following regression model: y = β0+ β1x1 + u. Which of the following is a property of Ordinary Least Square (OLS) estimates of this model and their associated statisticsa. The sum, and therefore the sample average of the OLS residuals, is positive.b. The sum of the OLS residuals is negative.c. The sample covariance between the regressors and the OLS residuals is positive.d. The point (, ) always lies on the OLS regression line.Answer: dDifficulty: EasyBloom’s: KnowledgeA-Head: Properties of OLS on Any Sample of DataBUSPROG:Feedback: An important property of the OLS estimates is that the point (, ) always lies on the OLS regression line. In other words, if , the predicted value of .8. The explained sum of squares for the regression function, , is defined as _____.a.b.c.d.Answer: bDifficulty: EasyBloom’s: KnowledgeA-Head: Properties of OLS on Any Sample of DataBUSPROG:Feedback: The explained sum of squares is defined as9. If the total sum of squares (SST) in a regression equation is 81, and the residual sum of squares (SSR) is 25, what is the explained sum of squares (SSE)a. 64b. 56c. 32d. 18Answer: bDifficulty: ModerateBloom’s: ApplicationA-Head: Properties of OLS on Any Sample of DataBUSPROG: AnalyticFeedback: Total sum of squares (SST) is given by the sum of explained sum of squares (SSE) and residual sum of squares (SSR). Therefore, in this case, SSE=81-25=56.10. If the residual sum of squares (SSR) in a regression analysis is 66 and the total sum of squares (SST) is equal to 90, what is the value of the coefficient of determinationa.b.c.d.Answer: cDifficulty: ModerateBloom’s: ApplicationA-Head: Properties of OLS on Any Sample of DataBUSPROG: AnalyticFeedback: The formula for calculating the coefficient of determination is . In this case,11. Which of the following is a nonlinear regression modela. y = β0 + β1x1/2 + ub. log y = β0 + β1log x +uc. y = 1 / (β0 + β1x) + ud. y = β0 + β1x + uAnswer: cDifficulty: ModerateBloom’s: ComprehensionA-Head: Properties of OLS on Any Sample of DataBUSPROG:Feedback: A regression model is nonlinear if the equation is nonlinear in the parameters. In this case,y=1 / (β0 + β1x) + u is nonlinear as it is nonlinear in its parameters.12. Which of the following is assumed for establishing the unbiasedness of Ordinary Least Square (OLS) estimatesa. The error term has an expected value of 1 given any value of the explanatory variable.b. The regression equation is linear in the explained and explanatory variables.c. The sample outcomes on the explanatory variable are all the same value.d. The error term has the same variance given any value of the explanatory variable.Answer: dDifficulty: EasyBloom’s: KnowledgeA-Head: Expected Values and Variances of the OLS EstimatorsBUSPROG:Feedback: The error u has the same variance given any value of the explanatory variable.13. The error term in a regression equation is said to exhibit homoskedasticty if _____.a. it has zero conditional meanb. it has the same variance for all values of the explanatory variable.c. it has the same value for all values of the explanatory variabled. if the error term has a value of one given any value of the explanatory variable.Answer: bDifficulty: EasyBloom’s: KnowledgeA-Head: Expected Values and Variances of the OLS EstimatorsBUSPROG:Feedback: The error term in a regression equation is said to exhibit homoskedasticty if it has the same variance for all values of the explanatory variable.14. In the regression of y on x, the error term exhibits heteroskedasticity if _____.a. it has a constant varianceb. Var(y|x) is a function of xc. x is a function of yd. y is a function of xAnswer: bDifficulty: EasyBloom’s: KnowledgeA-Head: Expected Values and Variances of the OLS EstimatorsBUSPROG:Feedback: Heteroskedasticity is present whenever Var(y|x) is a function of x because Var(u|x) = Var(y|x).15. What is the estimated value of the slope parameter when the regression equation, y = β0+ β1x1 +u passes through the origina.b.)c.d.Answer: cDifficulty: EasyBloom’s: KnowledgeA-Head: Regression through the Origin and Regression on a ConstantBUSPROG:Feedback: The estimated value of the slope parameter when the regression equation passes through the origin is .16. A natural measure of the association between two random variables is the correlation coefficient.Answer: TrueDifficulty: EasyBloom’s: KnowledgeA-Head: Definition of the Simple Regression ModelBUSPROG:Feedback: A natural measure of the association between two random variables is the correlation coefficient.17. The sample covariance between the regressors and the Ordinary Least Square (OLS) residuals is always positive.Answer: FalseDifficulty: EasyBloom’s: KnowledgeA-Head: Properties of OLS on Any Sample of DataBUSPROG:Feedback: The sample covariance between the regressors and the Ordinary Least Square (OLS) residuals is zero.18. is the ratio of the explained variation compared to the total variation.Answer: TrueDifficulty: EasyBloom’s: KnowledgeA-Head: Properties of OLS on Any Sample of DataBUSPROG:Feedback: The sample covariance between the regressors and the Ordinary Least Square (OLS) residuals is zero.19. There are n-1 degrees of freedom in Ordinary Least Square residuals.Answer: FalseDifficulty: EasyBloom’s: KnowledgeA-Head: Expected Values and Variances of the OLS EstimatorsBUSPROG:Feedback: There are n-2 degrees of freedom in Ordinary Least Square residuals.20. The variance of the slope estimator increases as the error variance decreases.Answer: FalseDifficulty: EasyBloom’s: KnowledgeA-Head: Expected Values and Variances of the OLS EstimatorsBUSPROG:Feedback: The variance of the slope estimator increases as the error variance increases.。

The Method of Least SquaresHervéAbdi11IntroductionThe least square methods(LSM)is probably the most popular tech-nique in statistics.This is due to several factors.First,most com-mon estimators can be casted within this framework.For exam-ple,the mean of a distribution is the value that minimizes the sum of squared deviations of the scores.Second,using squares makes LSM mathematically very tractable because the Pythagorean theo-rem indicates that,when the error is independent of an estimated quantity,one can add the squared error and the squared estimated quantity.Third,the mathematical tools and algorithms involved in LSM(derivatives,eigendecomposition,singular value decomposi-tion)have been well studied for a relatively long time.LSM is one of the oldest techniques of modern statistics,and even though ancestors of LSM can be traced up to Greek mathe-matics,thefirst modern precursor is probably Galileo(see Harper, 1974,for a history and pre-history of LSM).The modern approach wasfirst exposed in1805by the French mathematician Legendre in a now classic memoir,but this method is somewhat older be-cause it turned out that,after the publication of Legendre’s mem-oir,Gauss(the famous German mathematician)contested Legen-1In:Neil Salkind(Ed.)(2007).Encyclopedia of Measurement and Statistics. Thousand Oaks(CA):Sage.Address correspondence to:HervéAbdiProgram in Cognition and Neurosciences,MS:Gr.4.1,The University of Texas at Dallas,Richardson,TX75083–0688,USAE-mail:herve@ /∼hervedre’s priority.Gauss often did not published ideas when he though that they could be controversial or not yet ripe,but would mention his discoveries when others would publish them(the way he did, for example for the discovery of Non-Euclidean geometry).And in1809,Gauss published another memoir in which he mentioned that he had previously discovered LSM and used it as early as1795 in estimating the orbit of an asteroid.A somewhat bitter anterior-ity dispute followed(a bit reminiscent of the Leibniz-Newton con-troversy about the invention of Calculus),which,however,did not diminish the popularity of this technique.The use of LSM in a modern statistical framework can be traced to Galton(1886)who used it in his work on the heritability of size which laid down the foundations of correlation and(also gave the name to)regression analysis.The two antagonistic giants of statis-tics Pearson and Fisher,who did so much in the early develop-ment of statistics,used and developed it in different contexts(fac-tor analysis for Pearson and experimental design for Fisher).Nowadays,the least square method is widely used tofind or es-timate the numerical values of the parameters tofit a function to a set of data and to characterize the statistical properties of esti-mates.It exists with several variations:Its simpler version is called ordinary least squares(OLS),a more sophisticated version is called weighted least squares(WLS),which often performs better than OLS because it can modulate the importance of each observation in thefinal solution.Recent variations of the least square method are alternating least squares(ALS)and partial least squares(PLS). 2Functionalfit example:regressionThe oldest(and still the most frequent)use of OLS was linear re-gression,which corresponds to the problem offinding a line(or curve)that bestfits a set of data points.In the standard formu-lation,a set of N pairs of observations{Y i,X i}is used tofind a function relating the value of the dependent variable(Y)to the values of an independent variable(X).With one variable and alinear function,the prediction is given by the following equation:ˆY=a+bX.(1) This equation involves two free parameters which specify the in-tercept(a)and the slope(b)of the regression line.The least square method defines the estimate of these parameters as the values wh-ich minimize the sum of the squares(hence the name least squares) between the measurements and the model(i.e.,the predicted val-ues).This amounts to minimizing the expression:E= i(Y i−ˆY i)2= i[Y i−(a+bX i)]2(2) (where E stands for“error"which is the quantity to be minimized). The estimation of the parameters is obtained using basic results from calculus and,specifically,uses the property that a quadratic expression reaches its minimum value when its derivatives van-ish.Taking the derivative of E with respect to a and b and setting them to zero gives the following set of equations(called the normal equations):∂E ∂a =2Na+2bXi−2Yi=0(3)and∂E ∂b =2bX2i+2aXi−2Yi X i=0.(4)Solving the normal equations gives the following least square esti-mates of a and b as:a=M Y−bM X(5) (with M Y and M X denoting the means of X and Y)andb= (Yi−M Y)(X i−M X)(Xi−M X)2.(6)OLS can be extended to more than one independent variable(us-ing matrix algebra)and to non-linear functions.2.1The geometry of least squaresOLS can be interpreted in a geometrical framework as an orthog-onal projection of the data vector onto the space defined by the independent variable.The projection is orthogonal because the predicted values and the actual values are uncorrelated.This is il-lustrated in Figure1,which depicts the case of two independent variables(vectors x1and x2)and the data vector(y),and shows that the error vector(y−ˆy)is orthogonal to the least square(ˆy)es-timate which lies in the subspace defined by the two independent variables.yFigure1:The least square estimate of the data is the orthogonal projection of the data vector onto the independent variable sub-space.2.2Optimality of least square estimatesOLS estimates have some strong statistical properties.Specifically when(1)the data obtained constitute a random sample from a well-defined population,(2)the population model is linear,(3)the error has a zero expected value,(4)the independent variables are linearly independent,and(5)the error is normally distributed and uncorrelated with the independent variables(the so-called homo-scedasticity assumption);then the OLS estimate is the b est l inear u nbiased e stimate often denoted with the acronym“BLUE"(the5 conditions and the proof are called the Gauss-Markov conditions and theorem).In addition,when the Gauss-Markov conditions hold,OLS estimates are also maximum likelihood estimates.2.3Weighted least squaresThe optimality of OLS relies heavily on the homoscedasticity as-sumption.When the data come from different sub-populations for which an independent estimate of the error variance is avail-able,a better estimate than OLS can be obtained using weighted least squares(WLS),also called generalized least squares(GLS). The idea is to assign to each observation a weight that reflects the uncertainty of the measurement.In general,the weight w i,as-signed to the i th observation,will be a function of the variance ofthis observation,denotedσ2i .A straightforward weighting schemais to define w i=σ−1i(but other more sophisticated weighted sch-emes can also be proposed).For the linear regression example, WLS willfind the values of a and b minimizing:E w=i w i(Y i−ˆY i)2=iw i[Y i−(a+bX i)]2.(7)2.4Iterative methods:Gradient descentWhen estimating the parameters of a nonlinear function with OLS or WLS,the standard approach using derivatives is not always pos-sible.In this case,iterative methods are very often used.These methods search in a stepwise fashion for the best values of the es-timate.Often they proceed by using at each step a linear approx-imation of the function and refine this approximation by succes-sive corrections.The techniques involved are known as gradient descent and Gauss-Newton approximations.They correspond to nonlinear least squares approximation in numerical analysis and nonlinear regression in statistics.Neural networks constitutes a popular recent application of these techniques3Problems with least squares,and alternativesDespite its popularity and versatility,LSM has its problems.Prob-ably,the most important drawback of LSM is its high sensitivity to outliers(i.e.,extreme observations).This is a consequence of us-ing squares because squaring exaggerates the magnitude of differ-ences(e.g.,the difference between20and10is equal to10but the difference between202and102is equal to300)and therefore gives a much stronger importance to extreme observations.This prob-lem is addressed by using robust techniques which are less sensi-tive to the effect of outliers.Thisfield is currently under develop-ment and is likely to become more important in the next future. References[1]Abdi,H.,Valentin D.,Edelman,B.E.(1999)Neural networks.Thousand Oaks:Sage.[2]Bates,D.M.&Watts D.G.(1988).Nonlinear regression analysisand its applications.New York:Wiley[3]Greene,W.H.(2002).Econometric analysis.New York:PrenticeHall.[4]Harper H.L.(1974–1976).The method of least squares andsome alternatives.Part I,II,II,IV,V,VI.International Satis-tical Review,42,147–174;42,235–264;43,1–44;43,125–190;43,269–272;44,113–159;[5]Nocedal J.&Wright,S.(1999).Numerical optimization.NewYork:Springer.[6]Plackett,R.L.(1972).The discovery of the method of leastsquares.Biometrika,59,239–251.[7]Seal,H.L.(1967).The historical development of the Gauss lin-ear model.Biometrika,54,1–23.。

CHAPTER 13TEACHING NOTESWhile this chapter falls under “Advanced Topics,” most of this chapter requires no more sophistication than the previous chapters. (In fact, I would argue that, with the possible exception of Section 13.5, this material is easier than some of the time series chapters.)Pooling two or more independent cross sections is a straightforward extension of cross-sectional methods. Nothing new needs to be done in stating assumptions, except possibly mentioning that random sampling in each time period is sufficient. The practically important issue is allowing for different intercepts, and possibly different slopes, across time.The natural experiment material and extensions of the difference-in-differences estimator is widely applicable and, with the aid of the examples, easy to understand.Two years of panel data are often available, in which case differencing across time is a simple way of removing g unobserved heterogeneity. If you have covered Chapter 9, you might compare this with a regression in levels using the second year of data, but where a lagged dependent variable is included. (The second approach only requires collecting information on the dependent variable in a previous year.) These often give similar answers. Two years of panel data, collected before and after a policy change, can be very powerful for policy analysis. Having more than two periods of panel data causes slight complications in that the errors in the differenced equation may be serially correlated. (However, the traditional assumption that the errors in the original equation are serially uncorrelated is not always a good one. In other words, it is not always more appropriate to used fixed effects, as in Chapter 14, than first differencing.) With large N and relatively small T, a simple way to account for possible serial correlation after differencing is to compute standard errors that are robust to arbitrary serial correlation and heteroskedasticity. Econometrics packages that do cluster analysis (such as Stata) often allow this by specifying each cross-sectional unit as its own cluster.108SOLUTIONS TO PROBLEMS13.1 Without changes in the averages of any explanatory variables, the average fertility rate fellby .545 between 1972 and 1984; this is simply the coefficient on y84. To account for theincrease in average education levels, we obtain an additional effect: –.128(13.3 – 12.2) ≈–.141. So the drop in average fertility if the average education level increased by 1.1 is .545+ .141 = .686, or roughly two-thirds of a child per woman.13.2 The first equation omits the 1981 year dummy variable, y81, and so does not allow anyappreciation in nominal housing prices over the three year period in the absence of an incinerator. The interaction term in this case is simply picking up the fact that even homes that are near the incinerator site have appreciated in value over the three years. This equation suffers from omitted variable bias.The second equation omits the dummy variable for being near the incinerator site, nearinc,which means it does not allow for systematic differences in homes near and far from the sitebefore the site was built. If, as seems to be the case, the incinerator was located closer to lessvaluable homes, then omitting nearinc attributes lower housing prices too much to theincinerator effect. Again, we have an omitted variable problem. This is why equation (13.9) (or,even better, the equation that adds a full set of controls), is preferred.13.3 We do not have repeated observations on the same cross-sectional units in each time period,and so it makes no sense to look for pairs to difference. For example, in Example 13.1, it is veryunlikely that the same woman appears in more than one year, as new random samples areobtained in each year. In Example 13.3, some houses may appear in the sample for both 1978and 1981, but the overlap is usually too small to do a true panel data analysis.β, but only13.4 The sign of β1 does not affect the direction of bias in the OLS estimator of1whether we underestimate or overestimate the effect of interest. If we write ∆crmrte i = δ0 +β1∆unem i + ∆u i, where ∆u i and ∆unem i are negatively correlated, then there is a downward biasin the OLS estimator of β1. Because β1 > 0, we will tend to underestimate the effect of unemployment on crime.13.5 No, we cannot include age as an explanatory variable in the original model. Each person inthe panel data set is exactly two years older on January 31, 1992 than on January 31, 1990. This means that ∆age i = 2 for all i. But the equation we would estimate is of the form∆saving i = δ0 + β1∆age i +…,where δ0 is the coefficient the year dummy for 1992 in the original model. As we know, whenwe have an intercept in the model we cannot include an explanatory variable that is constant across i; this violates Assumption MLR.3. Intuitively, since age changes by the same amount for everyone, we cannot distinguish the effect of age from the aggregate time effect.10913.6 (i) Let FL be a binary variable equal to one if a person lives in Florida, and zero otherwise. Let y90 be a year dummy variable for 1990. Then, from equation (13.10), we have the linear probability modelarrest = β0 + δ0y90 + β1FL + δ1y90⋅FL + u.The effect of the law is measured by δ1, which is the change in the probability of drunk driving arrest due to the new law in Florida. Including y90 allows for aggregate trends in drunk driving arrests that would affect both states; including FL allows for systematic differences between Florida and Georgia in either drunk driving behavior or law enforcement.(ii) It could be that the populations of drivers in the two states change in different ways over time. For example, age, race, or gender distributions may have changed. The levels of education across the two states may have changed. As these factors might affect whether someone is arrested for drunk driving, it could be important to control for them. At a minimum, there is the possibility of obtaining a more precise estimator of δ1 by reducing the error variance. Essentially, any explanatory variable that affects arrest can be used for this purpose. (See Section 6.3 for discussion.)SOLUTIONS TO COMPUTER EXERCISES13.7 (i) The F statistic (with 4 and 1,111 df) is about 1.16 and p-value ≈ .328, which shows that the living environment variables are jointly insignificant.(ii) The F statistic (with 3 and 1,111 df) is about 3.01 and p-value ≈ .029, and so the region dummy variables are jointly significant at the 5% level.(iii) After obtaining the OLS residuals, ˆu, from estimating the model in Table 13.1, we run the regression 2ˆu on y74, y76, …, y84 using all 1,129 observations. The null hypothesis of homoskedasticity is H0: γ1 = 0, γ2= 0, … , γ6 = 0. So we just use the usual F statistic for joint significance of the year dummies. The R-squared is about .0153 and F ≈ 2.90; with 6 and 1,122 df, the p-value is about .0082. So there is evidence of heteroskedasticity that is a function of time at the 1% significance level. This suggests that, at a minimum, we should compute heteroskedasticity-robust standard errors, t statistics, and F statistics. We could also use weighted least squares (although the form of heteroskedasticity used here may not be sufficient; it does not depend on educ, age, and so on).(iv) Adding y74⋅educ, , y84⋅educ allows the relationship between fertility and education to be different in each year; remember, the coefficient on the interaction gets added to the coefficient on educ to get the slope for the appropriate year. When these interaction terms are added to the equation, R2≈ .137. The F statistic for joint significance (with 6 and 1,105 df) is about 1.48 with p-value ≈ .18. Thus, the interactions are not jointly significant at even the 10% level. This is a bit misleading, however. An abbreviated equation (which just shows the coefficients on the terms involving educ) is110111kids= -8.48 - .023 educ + - .056 y74⋅educ - .092 y76⋅educ(3.13) (.054) (.073) (.071) - .152 y78⋅educ - .098 y80⋅educ - .139 y82⋅educ - .176 y84⋅educ .(.075) (.070) (.068) (.070)Three of the interaction terms, y78⋅educ , y82⋅educ , and y84⋅educ are statistically significant at the 5% level against a two-sided alternative, with the p -value on the latter being about .012. The coefficients are large in magnitude as well. The coefficient on educ – which is for the base year, 1972 – is small and insignificant, suggesting little if any relationship between fertility andeducation in the early seventies. The estimates above are consistent with fertility becoming more linked to education as the years pass. The F statistic is insignificant because we are testing some insignificant coefficients along with some significant ones.13.8 (i) The coefficient on y85 is roughly the proportionate change in wage for a male (female = 0) with zero years of education (educ = 0). This is not especially useful since we are not interested in people with no education.(ii) What we want to estimate is θ0 = δ0 + 12δ1; this is the change in the intercept for a male with 12 years of education, where we also hold other factors fixed. If we write δ0 = θ0 - 12δ1, plug this into (13.1), and rearrange, we getlog(wage ) = β0 + θ0y85 + β1educ + δ1y85⋅(educ – 12) + β2exper + β3exper 2 + β4union + β5female + δ5y85⋅female + u .Therefore, we simply replace y85⋅educ with y85⋅(educ – 12), and then the coefficient andstandard error we want is on y85. These turn out to be 0ˆθ = .339 and se(0ˆθ) = .034. Roughly, the nominal increase in wage is 33.9%, and the 95% confidence interval is 33.9 ± 1.96(3.4), or about 27.2% to 40.6%. (Because the proportionate change is large, we could use equation (7.10), which implies the point estimate 40.4%; but obtaining the standard error of this estimate is harder.)(iii) Only the coefficient on y85 differs from equation (13.2). The new coefficient is about –.383 (se ≈ .124). This shows that real wages have fallen over the seven year period, although less so for the more educated. For example, the proportionate change for a male with 12 years of education is –.383 + .0185(12) = -.161, or a fall of about 16.1%. For a male with 20 years of education there has been almost no change [–.383 + .0185(20) = –.013].(iv) The R -squared when log(rwage ) is the dependent variable is .356, as compared with .426 when log(wage ) is the dependent variable. If the SSRs from the regressions are the same, but the R -squareds are not, then the total sum of squares must be different. This is the case, as the dependent variables in the two equations are different.(v) In 1978, about 30.6% of workers in the sample belonged to a union. In 1985, only about 18% belonged to a union. Therefore, over the seven-year period, there was a notable fall in union membership.(vi) When y85⋅union is added to the equation, its coefficient and standard error are about -.00040 (se ≈ .06104). This is practically very small and the t statistic is almost zero. There has been no change in the union wage premium over time.(vii) Parts (v) and (vi) are not at odds. They imply that while the economic return to union membership has not changed (assuming we think we have estimated a causal effect), the fraction of people reaping those benefits has fallen.13.9 (i) Other things equal, homes farther from the incinerator should be worth more, so δ1 > 0. If β1 > 0, then the incinerator was located farther away from more expensive homes.(ii) The estimated equation islog()price= 8.06 -.011 y81+ .317 log(dist) + .048 y81⋅log(dist)(0.51) (.805) (.052) (.082)n = 321, R2 = .396, 2R = .390.ˆδ = .048 is the expected sign, it is not statistically significant (t statistic ≈ .59).While1(iii) When we add the list of housing characteristics to the regression, the coefficient ony81⋅log(dist) becomes .062 (se = .050). So the estimated effect is larger – the elasticity of price with respect to dist is .062 after the incinerator site was chosen – but its t statistic is only 1.24. The p-value for the one-sided alternative H1: δ1 > 0 is about .108, which is close to being significant at the 10% level.13.10 (i) In addition to male and married, we add the variables head, neck, upextr, trunk, lowback, lowextr, and occdis for injury type, and manuf and construc for industry. The coefficient on afchnge⋅highearn becomes .231 (se ≈ .070), and so the estimated effect and t statistic are now larger than when we omitted the control variables. The estimate .231 implies a substantial response of durat to the change in the cap for high-earnings workers.(ii) The R-squared is about .041, which means we are explaining only a 4.1% of the variation in log(durat). This means that there are some very important factors that affect log(durat) that we are not controlling for. While this means that predicting log(durat) would be very difficultˆδ: it could still for a particular individual, it does not mean that there is anything biased about1be an unbiased estimator of the causal effect of changing the earnings cap for workers’ compensation.(iii) The estimated equation using the Michigan data is112durat= 1.413 + .097 afchnge+ .169 highearn+ .192 afchnge⋅highearn log()(0.057) (.085) (.106) (.154)n = 1,524, R2 = .012.The estimate of δ1, .192, is remarkably close to the estimate obtained for Kentucky (.191). However, the standard error for the Michigan estimate is much higher (.154 compared with .069). The estimate for Michigan is not statistically significant at even the 10% level against δ1 > 0. Even though we have over 1,500 observations, we cannot get a very precise estimate. (For Kentucky, we have over 5,600 observations.)13.11 (i) Using pooled OLS we obtainrent= -.569 + .262 d90+ .041 log(pop) + .571 log(avginc) + .0050 pctstu log()(.535) (.035) (.023) (.053) (.0010) n = 128, R2 = .861.The positive and very significant coefficient on d90 simply means that, other things in the equation fixed, nominal rents grew by over 26% over the 10 year period. The coefficient on pctstu means that a one percentage point increase in pctstu increases rent by half a percent (.5%). The t statistic of five shows that, at least based on the usual analysis, pctstu is very statistically significant.(ii) The standard errors from part (i) are not valid, unless we thing a i does not really appear in the equation. If a i is in the error term, the errors across the two time periods for each city are positively correlated, and this invalidates the usual OLS standard errors and t statistics.(iii) The equation estimated in differences islog()∆= .386 + .072 ∆log(pop) + .310 log(avginc) + .0112 ∆pctsturent(.037) (.088) (.066) (.0041)n = 64, R2 = .322.Interestingly, the effect of pctstu is over twice as large as we estimated in the pooled OLS equation. Now, a one percentage point increase in pctstu is estimated to increase rental rates by about 1.1%. Not surprisingly, we obtain a much less precise estimate when we difference (although the OLS standard errors from part (i) are likely to be much too small because of the positive serial correlation in the errors within each city). While we have differenced away a i, there may be other unobservables that change over time and are correlated with ∆pctstu.(iv) The heteroskedasticity-robust standard error on ∆pctstu is about .0028, which is actually much smaller than the usual OLS standard error. This only makes pctstu even more significant (robust t statistic ≈ 4). Note that serial correlation is no longer an issue because we have no time component in the first-differenced equation.11311413.12 (i) You may use an econometrics software package that directly tests restrictions such as H 0: β1 = β2 after estimating the unrestricted model in (13.22). But, as we have seen many times, we can simply rewrite the equation to test this using any regression software. Write the differenced equation as∆log(crime ) = δ0 + β1∆clrprc -1 + β2∆clrprc -2 + ∆u .Following the hint, we define θ1 = β1 - β2, and then write β1 = θ1 + β2. Plugging this into the differenced equation and rearranging gives∆log(crime ) = δ0 + θ1∆clrprc -1 + β2(∆clrprc -1 + ∆clrprc -2) + ∆u .Estimating this equation by OLS gives 1ˆθ= .0091, se(1ˆθ) = .0085. The t statistic for H 0: β1 = β2 is .0091/.0085 ≈ 1.07, which is not statistically significant.(ii) With β1 = β2 the equation becomes (without the i subscript)∆log(crime ) = δ0 + β1(∆clrprc -1 + ∆clrprc -2) + ∆u= δ0 + δ1[(∆clrprc -1 + ∆clrprc -2)/2] + ∆u ,where δ1 = 2β1. But (∆clrprc -1 + ∆clrprc -2)/2 = ∆avgclr .(iii) The estimated equation islog()crime ∆ = .099 - .0167 ∆avgclr(.063) (.0051)n = 53, R 2 = .175, 2R = .159.Since we did not reject the hypothesis in part (i), we would be justified in using the simplermodel with avgclr . Based on adjusted R -squared, we have a slightly worse fit with the restriction imposed. But this is a minor consideration. Ideally, we could get more data to determine whether the fairly different unconstrained estimates of β1 and β2 in equation (13.22) reveal true differences in β1 and β2.13.13 (i) Pooling across semesters and using OLS givestrmgpa = -1.75 -.058 spring+ .00170 sat- .0087 hsperc(0.35) (.048) (.00015) (.0010)+ .350 female- .254 black- .023 white- .035 frstsem(.052) (.123) (.117) (.076)- .00034 tothrs + 1.048 crsgpa- .027 season(.00073) (0.104) (.049)n = 732, R2 = .478, 2R = .470.The coefficient on season implies that, other things fixed, an athlete’s term GPA is about .027 points lower when his/her sport is in season. On a four point scale, this a modest effect (although it accumulates over four years of athletic eligibility). However, the estimate is not statistically significant (t statistic ≈-.55).(ii) The quick answer is that if omitted ability is correlated with season then, as we know form Chapters 3 and 5, OLS is biased and inconsistent. The fact that we are pooling across two semesters does not change that basic point.If we think harder, the direction of the bias is not clear, and this is where pooling across semesters plays a role. First, suppose we used only the fall term, when football is in season. Then the error term and season would be negatively correlated, which produces a downward bias in the OLS estimator of βseason. Because βseason is hypothesized to be negative, an OLS regression using only the fall data produces a downward biased estimator. [When just the fall data are used, ˆβ = -.116 (se = .084), which is in the direction of more bias.] However, if we use just the seasonspring semester, the bias is in the opposite direction because ability and season would be positive correlated (more academically able athletes are in season in the spring). In fact, using just theβ = .00089 (se = .06480), which is practically and statistically equal spring semester gives ˆseasonto zero. When we pool the two semesters we cannot, with a much more detailed analysis, determine which bias will dominate.(iii) The variables sat, hsperc, female, black, and white all drop out because they do not vary by semester. The intercept in the first-differenced equation is the intercept for the spring. We have∆= -.237 + .019 ∆frstsem+ .012 ∆tothrs+ 1.136 ∆crsgpa- .065 seasontrmgpa(.206) (.069) (.014) (0.119) (.043) n = 366, R2 = .208, 2R = .199.Interestingly, the in-season effect is larger now: term GPA is estimated to be about .065 points lower in a semester that the sport is in-season. The t statistic is about –1.51, which gives a one-sided p-value of about .065.115(iv) One possibility is a measure of course load. If some fraction of student-athletes take a lighter load during the season (for those sports that have a true season), then term GPAs may tend to be higher, other things equal. This would bias the results away from finding an effect of season on term GPA.13.14 (i) The estimated equation using differences is∆= -2.56 - 1.29 ∆log(inexp) - .599 ∆log(chexp) + .156 ∆incshrvote(0.63) (1.38) (.711) (.064)n = 157, R2 = .244, 2R = .229.Only ∆incshr is statistically significant at the 5% level (t statistic ≈ 2.44, p-value ≈ .016). The other two independent variables have t statistics less than one in absolute value.(ii) The F statistic (with 2 and 153 df) is about 1.51 with p-value ≈ .224. Therefore,∆log(inexp) and ∆log(chexp) are jointly insignificant at even the 20% level.(iii) The simple regression equation is∆= -2.68 + .218 ∆incshrvote(0.63) (.032)n = 157, R2 = .229, 2R = .224.This equation implies t hat a 10 percentage point increase in the incumbent’s share of total spending increases the percent of the incumbent’s vote by about 2.2 percentage points.(iv) Using the 33 elections with repeat challengers we obtain∆= -2.25 + .092 ∆incshrvote(1.00) (.085)n = 33, R2 = .037, 2R = .006.The estimated effect is notably smaller and, not surprisingly, the standard error is much larger than in part (iii). While the direction of the effect is the same, it is not statistically significant (p-value ≈ .14 against a one-sided alternative).13.15 (i) When we add the changes of the nine log wage variables to equation (13.33) we obtain116117 log()crmrte ∆ = .020 - .111 d83 - .037 d84 - .0006 d85 + .031 d86 + .039 d87(.021) (.027) (.025) (.0241) (.025) (.025)- .323 ∆log(prbarr ) - .240 ∆log(prbconv ) - .169 ∆log(prbpris )(.030) (.018) (.026)- .016 ∆log(avgsen ) + .398 ∆log(polpc ) - .044 ∆log(wcon )(.022) (.027) (.030)+ .025 ∆log(wtuc ) - .029 ∆log(wtrd ) + .0091 ∆log(wfir )(0.14) (.031) (.0212)+ .022 ∆log(wser ) - .140 ∆log(wmfg ) - .017 ∆log(wfed )(.014) (.102) (.172)- .052 ∆log(wsta ) - .031 ∆log(wloc ) (.096) (.102) n = 540, R 2 = .445, 2R = .424.The coefficients on the criminal justice variables change very modestly, and the statistical significance of each variable is also essentially unaffected.(ii) Since some signs are positive and others are negative, they cannot all really have the expected sign. For example, why is the coefficient on the wage for transportation, utilities, and communications (wtuc ) positive and marginally significant (t statistic ≈ 1.79)? Higher manufacturing wages lead to lower crime, as we might expect, but, while the estimated coefficient is by far the largest in magnitude, it is not statistically different from zero (tstatistic ≈ –1.37). The F test for joint significance of the wage variables, with 9 and 529 df , yields F ≈ 1.25 and p -value ≈ .26.13.16 (i) The estimated equation using the 1987 to 1988 and 1988 to 1989 changes, where we include a year dummy for 1989 in addition to an overall intercept, isˆhrsemp ∆ = –.740 + 5.42 d89 + 32.60 ∆grant + 2.00 ∆grant -1 + .744 ∆log(employ ) (1.942) (2.65) (2.97) (5.55) (4.868)n = 251, R 2 = .476, 2R = .467.There are 124 firms with both years of data and three firms with only one year of data used, for a total of 127 firms; 30 firms in the sample have missing information in both years and are not used at all. If we had information for all 157 firms, we would have 314 total observations in estimating the equation.(ii) The coefficient on grant – more precisely, on ∆grant in the differenced equation – means that if a firm received a grant for the current year, it trained each worker an average of 32.6 hoursmore than it would have otherwise. This is a practically large effect, and the t statistic is very large.(iii) Since a grant last year was used to pay for training last year, it is perhaps not surprising that the grant does not carry over into more training this year. It would if inertia played a role in training workers.(iv) The coefficient on the employees variable is very small: a 10% increase in employ increases hours per employee by only .074. [Recall:∆≈ (.744/100)(%∆employ).] Thishrsempis very small, and the t statistic is also rather small.13.17. (i) Take changes as usual, holding the other variables fixed: ∆math4it = β1∆log(rexpp it) = (β1/100)⋅[ 100⋅∆log(rexpp it)] ≈ (β1/100)⋅( %∆rexpp it). So, if %∆rexpp it = 10, then ∆math4it= (β1/100)⋅(10) = β1/10.(ii) The equation, estimated by pooled OLS in first differences (except for the year dummies), is4∆ = 5.95 + .52 y94 + 6.81 y95- 5.23 y96- 8.49 y97 + 8.97 y98math(.52) (.73) (.78) (.73) (.72) (.72)- 3.45 ∆log(rexpp) + .635 ∆log(enroll) + .025 ∆lunch(2.76) (1.029) (.055)n = 3,300, R2 = .208.Taken literally, the spending coefficient implies that a 10% increase in real spending per pupil decreases the math4 pass rate by about 3.45/10 ≈ .35 percentage points.(iii) When we add the lagged spending change, and drop another year, we get4∆ = 6.16 + 5.70 y95- 6.80 y96- 8.99 y97 +8.45 y98math(.55) (.77) (.79) (.74) (.74)- 1.41 ∆log(rexpp) + 11.04 ∆log(rexpp-1) + 2.14 ∆log(enroll)(3.04) (2.79) (1.18)+ .073 ∆lunch(.061)n = 2,750, R2 = .238.The contemporaneous spending variable, while still having a negative coefficient, is not at all statistically significant. The coefficient on the lagged spending variable is very statistically significant, and implies that a 10% increase in spending last year increases the math4 pass rate118119 by about 1.1 percentage points. Given the timing of the tests, a lagged effect is not surprising. In Michigan, the fourth grade math test is given in January, and so if preparation for the test begins a full year in advance, spending when the students are in third grade would at least partly matter.(iv) The heteroskedasticity-robust standard error for log() ˆrexpp β∆is about 4.28, which reducesthe significance of ∆log(rexpp ) even further. The heteroskedasticity-robust standard error of 1log() ˆrexpp β-∆is about 4.38, which substantially lowers the t statistic. Still, ∆log(rexpp -1) is statistically significant at just over the 1% significance level against a two-sided alternative.(v) The fully robust standard error for log() ˆrexpp β∆is about 4.94, which even further reducesthe t statistic for ∆log(rexpp ). The fully robust standard error for 1log() ˆrexpp β-∆is about 5.13,which gives ∆log(rexpp -1) a t statistic of about 2.15. The two-sided p -value is about .032.(vi) We can use four years of data for this test. Doing a pooled OLS regression of ,1垐 on it i t r r -,using years 1995, 1996, 1997, and 1998 gives ˆρ= -.423 (se = .019), which is strong negative serial correlation.(vii) Th e fully robust “F ” test for ∆log(enroll ) and ∆lunch , reported by Stata 7.0, is .93. With 2 and 549 df , this translates into p -value = .40. So we would be justified in dropping these variables, but they are not doing any harm.。

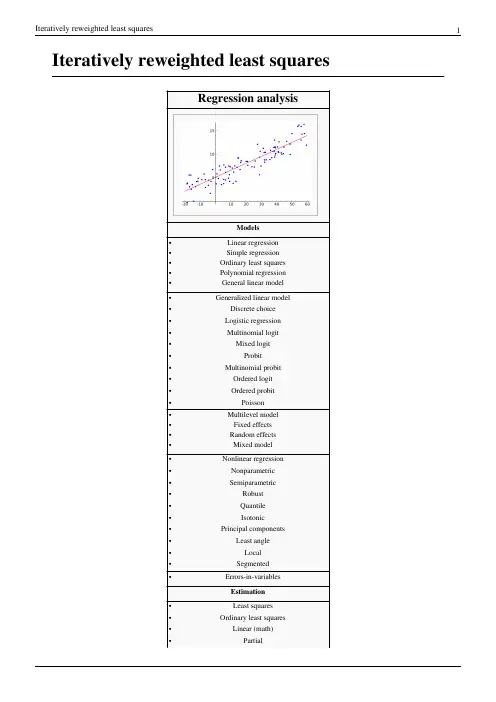

Iteratively reweighted least squaresModelsLinear regressionSimple regressionOrdinary least squaresPolynomial regressionGeneral linear modelGeneralized linear modelDiscrete choiceLogistic regressionMultinomial logitMixed logitProbitMultinomial probitOrdered logitOrdered probitPoissonMultilevel modelFixed effectsRandom effectsMixed modelNonlinear regressionNonparametricSemiparametricRobustQuantileIsotonicPrincipal componentsLeast angleLocalStatistics portalThe method of iteratively reweighted least squares (IRLS) is used to solve certain optimization problems. It solves objective functions of the form:by an iterative method in which each step involves solving a weighted least squares problem of the form:IRLS is used to find the maximum likelihood estimates of a generalized linear model, and in robust regression to find an M-estimator, as a way of mitigating the influence of outliers in an otherwise normally-distributed data set. For example, by minimizing the least absolute error rather than the least square error.Although not a linear regression problem, Weiszfeld's algorithm for approximating the geometric median can also be viewed as a special case of iteratively reweighted least squares, in which the objective function is the sum of distances of the estimator from the samples.One of the advantages of IRLS over linear and convex programming is that it can be used with Gauss–Newton and Levenberg–Marquardt numerical algorithms.ExamplesL 1 minimization for sparse recoveryIRLS can be used for1 minimization and smoothed p minimization, p < 1, in the compressed sensing problems.It has been proved that the algorithm has a linear rate of convergence for1 norm and superlinear fort with t < 1,under the restricted isometry property, which is generally a sufficient condition for sparse solutions.[1][2]L p norm linear regressionTo find the parameters β = (β1, …,βk )T which minimize the L p norm for the linear regression problem,the IRLS algorithm at step t +1 involves solving the weighted linear least squares[3] problem:[4]where W (t ) is the diagonal matrix of weights with elements:In the case p = 1, this corresponds to least absolute deviation regression (in this case, the problem would be better approached by use of linear programming methods).[citation needed ]Notes[3]/%7Edispenser/cgi-bin/dab_solver.py?page=Iteratively_reweighted_least_squares&editintro=Template:Disambiguation_needed/editintro&client=Template:DnReferences•University of Colorado Applied Regression lecture slides (/courses/7400/2010Spr/lecture23.pdf)•Stanford Lecture Notes on the IRLS algorithm by Antoine Guitton (/public/docs/sep103/antoine2/paper_html/index.html)•Numerical Methods for Least Squares Problems by Åke Björck (http://www.mai.liu.se/~akbjo/LSPbook.html) (Chapter 4: Generalized Least Squares Problems.)•Practical Least-Squares for Computer Graphics. SIGGRAPH Course 11 (/~jplewis/lscourse/SLIDES.pdf)Article Sources and Contributors4Article Sources and ContributorsIteratively reweighted least squares Source: /w/index.php?oldid=536800201 Contributors: 3mta3, BD2412, BenFrantzDale, Benwing, Colbert Sesanker, DavidEppstein, Giggy, Grumpfel, Kiefer.Wolfowitz, Lambiam, Lesswire, LutzL, Melcombe, Michael Hardy, Oleg Alexandrov, RainerBlome, Salix alba, Serg3d2, Stpasha, Wesleyyin, 13 anonymous editsImage Sources, Licenses and Contributorsfile:linear regression.svg Source: /w/index.php?title=File:Linear_regression.svg License: Public Domain Contributors: SewaquFile:Fisher iris versicolor sepalwidth.svg Source: /w/index.php?title=File:Fisher_iris_versicolor_sepalwidth.svg License: Creative Commons Attribution-Sharealike 3.0 Contributors: en:User:Qwfp (original); Pbroks13 (talk) (redraw)LicenseCreative Commons Attribution-Share Alike 3.0 Unported///licenses/by-sa/3.0/。

整体最小二乘法及其在测量数据处理中的应用丁克良[1],欧吉坤[2],陈义[2]1北京建筑工程学院 测绘与城市空间信息学院;2中国科学院测量与地球物理研究所3 同济大学 测量与国土信息工程系Ding Ke-liang,OU Ji-kun,Chen yi(1Beijing Institute of Civil Engineering And Architecture, School of Geomatics andurban information, 1000442 Institute of Geodesy and Geophysics, Chinese Academy ofSciences, Wuhan, 4300773. Department of Surveying and Geo-Informatics , Tongji University , Shanghai 200092 , China)摘要在参数求解中,针对参数估计模型的观测向量和系数矩阵都可能存在误差情况,20世纪80年代提出了整体最小二乘方法。

本文系统阐述了整体最小二乘基本原理、思想,基于奇异值分解原理详细阐述了整体最小二乘的解算方法。

在对普通最小二乘和整体最小二乘深入比较的基础上提出要注意整体最小二乘应用条件问题。

关键词:普通最小二乘,整体最小二乘,奇异值分解;测量数据处理Abstract: For parameter solution, the total least square (TLS) method was proposed in early 80’s of twenty century as to deal with some estimation models that both the observation and design matrix are subject to errors. The basic concept of TLS, principle of computational algorithms for TLS based on the singular value decomposition theory are described systematically in this paper. The applicability and limitation of TLS in surveying data process are proposed based on comparisons and analyses between the ordinary least squares and the total least squares.Key words: ordinary least squares; total least squares; singular decomposition; surveying data process1绪论最小二乘法经历了百余年的发展考验,已经成为许多领域数据处理广泛应用的方法。

T his appendix derives various results for ordinary least squares estimation of themultiple linear regression model using matrix notation and matrix algebra (see Appendix D for a summary). The material presented here is much more ad-vanced than that in the text.E.1THE MODEL AND ORDINARY LEAST SQUARES ESTIMATIONThroughout this appendix,we use the t subscript to index observations and an n to denote the sample size. It is useful to write the multiple linear regression model with k parameters as follows:y t ϭ1ϩ2x t 2ϩ3x t 3ϩ… ϩk x tk ϩu t ,t ϭ 1,2,…,n ,(E.1)where y t is the dependent variable for observation t ,and x tj ,j ϭ 2,3,…,k ,are the inde-pendent variables. Notice how our labeling convention here differs from the text:we call the intercept 1and let 2,…,k denote the slope parameters. This relabeling is not important,but it simplifies the matrix approach to multiple regression.For each t ,define a 1 ϫk vector,x t ϭ(1,x t 2,…,x tk ),and let ϭ(1,2,…,k )Јbe the k ϫ1 vector of all parameters. Then,we can write (E.1) asy t ϭx t ϩu t ,t ϭ 1,2,…,n .(E.2)[Some authors prefer to define x t as a column vector,in which case,x t is replaced with x t Јin (E.2). Mathematically,it makes more sense to define it as a row vector.] We can write (E.2) in full matrix notation by appropriately defining data vectors and matrices. Let y denote the n ϫ1 vector of observations on y :the t th element of y is y t .Let X be the n ϫk vector of observations on the explanatory variables. In other words,the t th row of X consists of the vector x t . Equivalently,the (t ,j )th element of X is simply x tj :755A p p e n d i x EThe Linear Regression Model inMatrix Formn X ϫ k ϵϭ .Finally,let u be the n ϫ 1 vector of unobservable disturbances. Then,we can write (E.2)for all n observations in matrix notation :y ϭX ϩu .(E.3)Remember,because X is n ϫ k and is k ϫ 1,X is n ϫ 1.Estimation of proceeds by minimizing the sum of squared residuals,as in Section3.2. Define the sum of squared residuals function for any possible k ϫ 1 parameter vec-tor b asSSR(b ) ϵ͚nt ϭ1(y t Ϫx t b )2.The k ϫ 1 vector of ordinary least squares estimates,ˆϭ(ˆ1,ˆ2,…,ˆk ),minimizes SSR(b ) over all possible k ϫ 1 vectors b . This is a problem in multivariable calculus.For ˆto minimize the sum of squared residuals,it must solve the first order conditionѨSSR(ˆ)/Ѩb ϵ0.(E.4)Using the fact that the derivative of (y t Ϫx t b )2with respect to b is the 1ϫ k vector Ϫ2(y t Ϫx t b )x t ,(E.4) is equivalent to͚nt ϭ1xt Ј(y t Ϫx t ˆ) ϵ0.(E.5)(We have divided by Ϫ2 and taken the transpose.) We can write this first order condi-tion as͚nt ϭ1(y t Ϫˆ1Ϫˆ2x t 2Ϫ… Ϫˆk x tk ) ϭ0͚nt ϭ1x t 2(y t Ϫˆ1Ϫˆ2x t 2Ϫ… Ϫˆk x tk ) ϭ0...͚nt ϭ1x tk (y t Ϫˆ1Ϫˆ2x t 2Ϫ… Ϫˆk x tk ) ϭ0,which,apart from the different labeling convention,is identical to the first order condi-tions in equation (3.13). We want to write these in matrix form to make them more use-ful. Using the formula for partitioned multiplication in Appendix D,we see that (E.5)is equivalent to΅1x 12x 13...x 1k1x 22x 23...x 2k...1x n 2x n 3...x nk ΄΅x 1x 2...x n ΄Appendix E The Linear Regression Model in Matrix Form756Appendix E The Linear Regression Model in Matrix FormXЈ(yϪXˆ) ϭ0(E.6) or(XЈX)ˆϭXЈy.(E.7)It can be shown that (E.7) always has at least one solution. Multiple solutions do not help us,as we are looking for a unique set of OLS estimates given our data set. Assuming that the kϫ k symmetric matrix XЈX is nonsingular,we can premultiply both sides of (E.7) by (XЈX)Ϫ1to solve for the OLS estimator ˆ:ˆϭ(XЈX)Ϫ1XЈy.(E.8)This is the critical formula for matrix analysis of the multiple linear regression model. The assumption that XЈX is invertible is equivalent to the assumption that rank(X) ϭk, which means that the columns of X must be linearly independent. This is the matrix ver-sion of MLR.4 in Chapter 3.Before we continue,(E.8) warrants a word of warning. It is tempting to simplify the formula for ˆas follows:ˆϭ(XЈX)Ϫ1XЈyϭXϪ1(XЈ)Ϫ1XЈyϭXϪ1y.The flaw in this reasoning is that X is usually not a square matrix,and so it cannot be inverted. In other words,we cannot write (XЈX)Ϫ1ϭXϪ1(XЈ)Ϫ1unless nϭk,a case that virtually never arises in practice.The nϫ 1 vectors of OLS fitted values and residuals are given byyˆϭXˆ,uˆϭyϪyˆϭyϪXˆ.From (E.6) and the definition of uˆ,we can see that the first order condition for ˆis the same asXЈuˆϭ0.(E.9) Because the first column of X consists entirely of ones,(E.9) implies that the OLS residuals always sum to zero when an intercept is included in the equation and that the sample covariance between each independent variable and the OLS residuals is zero. (We discussed both of these properties in Chapter 3.)The sum of squared residuals can be written asSSR ϭ͚n tϭ1uˆt2ϭuˆЈuˆϭ(yϪXˆ)Ј(yϪXˆ).(E.10)All of the algebraic properties from Chapter 3 can be derived using matrix algebra. For example,we can show that the total sum of squares is equal to the explained sum of squares plus the sum of squared residuals [see (3.27)]. The use of matrices does not pro-vide a simpler proof than summation notation,so we do not provide another derivation.757The matrix approach to multiple regression can be used as the basis for a geometri-cal interpretation of regression. This involves mathematical concepts that are even more advanced than those we covered in Appendix D. [See Goldberger (1991) or Greene (1997).]E.2FINITE SAMPLE PROPERTIES OF OLSDeriving the expected value and variance of the OLS estimator ˆis facilitated by matrix algebra,but we must show some care in stating the assumptions.A S S U M P T I O N E.1(L I N E A R I N P A R A M E T E R S)The model can be written as in (E.3), where y is an observed nϫ 1 vector, X is an nϫ k observed matrix, and u is an nϫ 1 vector of unobserved errors or disturbances.A S S U M P T I O N E.2(Z E R O C O N D I T I O N A L M E A N)Conditional on the entire matrix X, each error ut has zero mean: E(ut͉X) ϭ0, tϭ1,2,…,n.In vector form,E(u͉X) ϭ0.(E.11) This assumption is implied by MLR.3 under the random sampling assumption,MLR.2.In time series applications,Assumption E.2 imposes strict exogeneity on the explana-tory variables,something discussed at length in Chapter 10. This rules out explanatory variables whose future values are correlated with ut; in particular,it eliminates laggeddependent variables. Under Assumption E.2,we can condition on the xtjwhen we com-pute the expected value of ˆ.A S S U M P T I O N E.3(N O P E R F E C T C O L L I N E A R I T Y) The matrix X has rank k.This is a careful statement of the assumption that rules out linear dependencies among the explanatory variables. Under Assumption E.3,XЈX is nonsingular,and so ˆis unique and can be written as in (E.8).T H E O R E M E.1(U N B I A S E D N E S S O F O L S)Under Assumptions E.1, E.2, and E.3, the OLS estimator ˆis unbiased for .P R O O F:Use Assumptions E.1 and E.3 and simple algebra to writeˆϭ(XЈX)Ϫ1XЈyϭ(XЈX)Ϫ1XЈ(Xϩu)ϭ(XЈX)Ϫ1(XЈX)ϩ(XЈX)Ϫ1XЈuϭϩ(XЈX)Ϫ1XЈu,(E.12)where we use the fact that (XЈX)Ϫ1(XЈX) ϭIk . Taking the expectation conditional on X givesAppendix E The Linear Regression Model in Matrix Form 758E(ˆ͉X)ϭϩ(XЈX)Ϫ1XЈE(u͉X)ϭϩ(XЈX)Ϫ1XЈ0ϭ,because E(u͉X) ϭ0under Assumption E.2. This argument clearly does not depend on the value of , so we have shown that ˆis unbiased.To obtain the simplest form of the variance-covariance matrix of ˆ,we impose the assumptions of homoskedasticity and no serial correlation.A S S U M P T I O N E.4(H O M O S K E D A S T I C I T Y A N DN O S E R I A L C O R R E L A T I O N)(i) Var(ut͉X) ϭ2, t ϭ 1,2,…,n. (ii) Cov(u t,u s͉X) ϭ0, for all t s. In matrix form, we canwrite these two assumptions asVar(u͉X) ϭ2I n,(E.13)where Inis the nϫ n identity matrix.Part (i) of Assumption E.4 is the homoskedasticity assumption:the variance of utcan-not depend on any element of X,and the variance must be constant across observations, t. Part (ii) is the no serial correlation assumption:the errors cannot be correlated across observations. Under random sampling,and in any other cross-sectional sampling schemes with independent observations,part (ii) of Assumption E.4 automatically holds. For time series applications,part (ii) rules out correlation in the errors over time (both conditional on X and unconditionally).Because of (E.13),we often say that u has scalar variance-covariance matrix when Assumption E.4 holds. We can now derive the variance-covariance matrix of the OLS estimator.T H E O R E M E.2(V A R I A N C E-C O V A R I A N C EM A T R I X O F T H E O L S E S T I M A T O R)Under Assumptions E.1 through E.4,Var(ˆ͉X) ϭ2(XЈX)Ϫ1.(E.14)P R O O F:From the last formula in equation (E.12), we haveVar(ˆ͉X) ϭVar[(XЈX)Ϫ1XЈu͉X] ϭ(XЈX)Ϫ1XЈ[Var(u͉X)]X(XЈX)Ϫ1.Now, we use Assumption E.4 to getVar(ˆ͉X)ϭ(XЈX)Ϫ1XЈ(2I n)X(XЈX)Ϫ1ϭ2(XЈX)Ϫ1XЈX(XЈX)Ϫ1ϭ2(XЈX)Ϫ1.Appendix E The Linear Regression Model in Matrix Form759Formula (E.14) means that the variance of ˆj (conditional on X ) is obtained by multi-plying 2by the j th diagonal element of (X ЈX )Ϫ1. For the slope coefficients,we gave an interpretable formula in equation (3.51). Equation (E.14) also tells us how to obtain the covariance between any two OLS estimates:multiply 2by the appropriate off diago-nal element of (X ЈX )Ϫ1. In Chapter 4,we showed how to avoid explicitly finding covariances for obtaining confidence intervals and hypotheses tests by appropriately rewriting the model.The Gauss-Markov Theorem,in its full generality,can be proven.T H E O R E M E .3 (G A U S S -M A R K O V T H E O R E M )Under Assumptions E.1 through E.4, ˆis the best linear unbiased estimator.P R O O F :Any other linear estimator of can be written as˜ ϭA Јy ,(E.15)where A is an n ϫ k matrix. In order for ˜to be unbiased conditional on X , A can consist of nonrandom numbers and functions of X . (For example, A cannot be a function of y .) To see what further restrictions on A are needed, write˜ϭA Ј(X ϩu ) ϭ(A ЈX )ϩA Јu .(E.16)Then,E(˜͉X )ϭA ЈX ϩE(A Јu ͉X )ϭA ЈX ϩA ЈE(u ͉X ) since A is a function of XϭA ЈX since E(u ͉X ) ϭ0.For ˜to be an unbiased estimator of , it must be true that E(˜͉X ) ϭfor all k ϫ 1 vec-tors , that is,A ЈX ϭfor all k ϫ 1 vectors .(E.17)Because A ЈX is a k ϫ k matrix, (E.17) holds if and only if A ЈX ϭI k . Equations (E.15) and (E.17) characterize the class of linear, unbiased estimators for .Next, from (E.16), we haveVar(˜͉X ) ϭA Ј[Var(u ͉X )]A ϭ2A ЈA ,by Assumption E.4. Therefore,Var(˜͉X ) ϪVar(ˆ͉X )ϭ2[A ЈA Ϫ(X ЈX )Ϫ1]ϭ2[A ЈA ϪA ЈX (X ЈX )Ϫ1X ЈA ] because A ЈX ϭI kϭ2A Ј[I n ϪX (X ЈX )Ϫ1X Ј]Aϵ2A ЈMA ,where M ϵI n ϪX (X ЈX )Ϫ1X Ј. Because M is symmetric and idempotent, A ЈMA is positive semi-definite for any n ϫ k matrix A . This establishes that the OLS estimator ˆis BLUE. How Appendix E The Linear Regression Model in Matrix Form 760Appendix E The Linear Regression Model in Matrix Formis this significant? Let c be any kϫ 1 vector and consider the linear combination cЈϭc11ϩc22ϩ… ϩc kk, which is a scalar. The unbiased estimators of cЈare cЈˆand cЈ˜. ButVar(c˜͉X) ϪVar(cЈˆ͉X) ϭcЈ[Var(˜͉X) ϪVar(ˆ͉X)]cՆ0,because [Var(˜͉X) ϪVar(ˆ͉X)] is p.s.d. Therefore, when it is used for estimating any linear combination of , OLS yields the smallest variance. In particular, Var(ˆj͉X) ՅVar(˜j͉X) for any other linear, unbiased estimator of j.The unbiased estimator of the error variance 2can be written asˆ2ϭuˆЈuˆ/(n Ϫk),where we have labeled the explanatory variables so that there are k total parameters, including the intercept.T H E O R E M E.4(U N B I A S E D N E S S O Fˆ2)Under Assumptions E.1 through E.4, ˆ2is unbiased for 2: E(ˆ2͉X) ϭ2for all 2Ͼ0. P R O O F:Write uˆϭyϪXˆϭyϪX(XЈX)Ϫ1XЈyϭM yϭM u, where MϭI nϪX(XЈX)Ϫ1XЈ,and the last equality follows because MXϭ0. Because M is symmetric and idempotent,uˆЈuˆϭuЈMЈM uϭuЈM u.Because uЈM u is a scalar, it equals its trace. Therefore,ϭE(uЈM u͉X)ϭE[tr(uЈM u)͉X] ϭE[tr(M uuЈ)͉X]ϭtr[E(M uuЈ|X)] ϭtr[M E(uuЈ|X)]ϭtr(M2I n) ϭ2tr(M) ϭ2(nϪ k).The last equality follows from tr(M) ϭtr(I) Ϫtr[X(XЈX)Ϫ1XЈ] ϭnϪtr[(XЈX)Ϫ1XЈX] ϭnϪn) ϭnϪk. Therefore,tr(IkE(ˆ2͉X) ϭE(uЈM u͉X)/(nϪ k) ϭ2.E.3STATISTICAL INFERENCEWhen we add the final classical linear model assumption,ˆhas a multivariate normal distribution,which leads to the t and F distributions for the standard test statistics cov-ered in Chapter 4.A S S U M P T I O N E.5(N O R M A L I T Y O F E R R O R S)are independent and identically distributed as Normal(0,2). Conditional on X, the utEquivalently, u given X is distributed as multivariate normal with mean zero and variance-covariance matrix 2I n: u~ Normal(0,2I n).761Appendix E The Linear Regression Model in Matrix Form Under Assumption E.5,each uis independent of the explanatory variables for all t. Inta time series setting,this is essentially the strict exogeneity assumption.T H E O R E M E.5(N O R M A L I T Y O Fˆ)Under the classical linear model Assumptions E.1 through E.5, ˆconditional on X is dis-tributed as multivariate normal with mean and variance-covariance matrix 2(XЈX)Ϫ1.Theorem E.5 is the basis for statistical inference involving . In fact,along with the properties of the chi-square,t,and F distributions that we summarized in Appendix D, we can use Theorem E.5 to establish that t statistics have a t distribution under Assumptions E.1 through E.5 (under the null hypothesis) and likewise for F statistics. We illustrate with a proof for the t statistics.T H E O R E M E.6Under Assumptions E.1 through E.5,(ˆjϪj)/se(ˆj) ~ t nϪk,j ϭ 1,2,…,k.P R O O F:The proof requires several steps; the following statements are initially conditional on X. First, by Theorem E.5, (ˆjϪj)/sd(ˆ) ~ Normal(0,1), where sd(ˆj) ϭ͙ෆc jj, and c jj is the j th diagonal element of (XЈX)Ϫ1. Next, under Assumptions E.1 through E.5, conditional on X,(n Ϫ k)ˆ2/2~ 2nϪk.(E.18)This follows because (nϪk)ˆ2/2ϭ(u/)ЈM(u/), where M is the nϫn symmetric, idem-potent matrix defined in Theorem E.4. But u/~ Normal(0,I n) by Assumption E.5. It follows from Property 1 for the chi-square distribution in Appendix D that (u/)ЈM(u/) ~ 2nϪk (because M has rank nϪk).We also need to show that ˆand ˆ2are independent. But ˆϭϩ(XЈX)Ϫ1XЈu, and ˆ2ϭuЈM u/(nϪk). Now, [(XЈX)Ϫ1XЈ]Mϭ0because XЈMϭ0. It follows, from Property 5 of the multivariate normal distribution in Appendix D, that ˆand M u are independent. Since ˆ2is a function of M u, ˆand ˆ2are also independent.Finally, we can write(ˆjϪj)/se(ˆj) ϭ[(ˆjϪj)/sd(ˆj)]/(ˆ2/2)1/2,which is the ratio of a standard normal random variable and the square root of a 2nϪk/(nϪk) random variable. We just showed that these are independent, and so, by def-inition of a t random variable, (ˆjϪj)/se(ˆj) has the t nϪk distribution. Because this distri-bution does not depend on X, it is the unconditional distribution of (ˆjϪj)/se(ˆj) as well.From this theorem,we can plug in any hypothesized value for j and use the t statistic for testing hypotheses,as usual.Under Assumptions E.1 through E.5,we can compute what is known as the Cramer-Rao lower bound for the variance-covariance matrix of unbiased estimators of (again762conditional on X ) [see Greene (1997,Chapter 4)]. This can be shown to be 2(X ЈX )Ϫ1,which is exactly the variance-covariance matrix of the OLS estimator. This implies that ˆis the minimum variance unbiased estimator of (conditional on X ):Var(˜͉X ) ϪVar(ˆ͉X ) is positive semi-definite for any other unbiased estimator ˜; we no longer have to restrict our attention to estimators linear in y .It is easy to show that the OLS estimator is in fact the maximum likelihood estima-tor of under Assumption E.5. For each t ,the distribution of y t given X is Normal(x t ,2). Because the y t are independent conditional on X ,the likelihood func-tion for the sample is obtained from the product of the densities:͟nt ϭ1(22)Ϫ1/2exp[Ϫ(y t Ϫx t )2/(22)].Maximizing this function with respect to and 2is the same as maximizing its nat-ural logarithm:͚nt ϭ1[Ϫ(1/2)log(22) Ϫ(yt Ϫx t )2/(22)].For obtaining ˆ,this is the same as minimizing͚nt ϭ1(y t Ϫx t )2—the division by 22does not affect the optimization—which is just the problem that OLS solves. The esti-mator of 2that we have used,SSR/(n Ϫk ),turns out not to be the MLE of 2; the MLE is SSR/n ,which is a biased estimator. Because the unbiased estimator of 2results in t and F statistics with exact t and F distributions under the null,it is always used instead of the MLE.SUMMARYThis appendix has provided a brief discussion of the linear regression model using matrix notation. This material is included for more advanced classes that use matrix algebra,but it is not needed to read the text. In effect,this appendix proves some of the results that we either stated without proof,proved only in special cases,or proved through a more cumbersome method of proof. Other topics—such as asymptotic prop-erties,instrumental variables estimation,and panel data models—can be given concise treatments using matrices. Advanced texts in econometrics,including Davidson and MacKinnon (1993),Greene (1997),and Wooldridge (1999),can be consulted for details.KEY TERMSAppendix E The Linear Regression Model in Matrix Form 763First Order Condition Matrix Notation Minimum Variance Unbiased Scalar Variance-Covariance MatrixVariance-Covariance Matrix of the OLS EstimatorPROBLEMSE.1Let x t be the 1ϫ k vector of explanatory variables for observation t . Show that the OLS estimator ˆcan be written asˆϭΘ͚n tϭ1xt Јx t ΙϪ1Θ͚nt ϭ1xt Јy t Ι.Dividing each summation by n shows that ˆis a function of sample averages.E.2Let ˆbe the k ϫ 1 vector of OLS estimates.(i)Show that for any k ϫ 1 vector b ,we can write the sum of squaredresiduals asSSR(b ) ϭu ˆЈu ˆϩ(ˆϪb )ЈX ЈX (ˆϪb ).[Hint :Write (y Ϫ X b )Ј(y ϪX b ) ϭ[u ˆϩX (ˆϪb )]Ј[u ˆϩX (ˆϪb )]and use the fact that X Јu ˆϭ0.](ii)Explain how the expression for SSR(b ) in part (i) proves that ˆuniquely minimizes SSR(b ) over all possible values of b ,assuming Xhas rank k .E.3Let ˆbe the OLS estimate from the regression of y on X . Let A be a k ϫ k non-singular matrix and define z t ϵx t A ,t ϭ 1,…,n . Therefore,z t is 1ϫ k and is a non-singular linear combination of x t . Let Z be the n ϫ k matrix with rows z t . Let ˜denote the OLS estimate from a regression ofy on Z .(i)Show that ˜ϭA Ϫ1ˆ.(ii)Let y ˆt be the fitted values from the original regression and let y ˜t be thefitted values from regressing y on Z . Show that y ˜t ϭy ˆt ,for all t ϭ1,2,…,n . How do the residuals from the two regressions compare?(iii)Show that the estimated variance matrix for ˜is ˆ2A Ϫ1(X ЈX )Ϫ1A Ϫ1,where ˆ2is the usual variance estimate from regressing y on X .(iv)Let the ˆj be the OLS estimates from regressing y t on 1,x t 2,…,x tk ,andlet the ˜j be the OLS estimates from the regression of yt on 1,a 2x t 2,…,a k x tk ,where a j 0,j ϭ 2,…,k . Use the results from part (i)to find the relationship between the ˜j and the ˆj .(v)Assuming the setup of part (iv),use part (iii) to show that se(˜j ) ϭse(ˆj )/͉a j ͉.(vi)Assuming the setup of part (iv),show that the absolute values of the tstatistics for ˜j and ˆj are identical.Appendix E The Linear Regression Model in Matrix Form 764。