FUZZY INTERVAL NUMBER (FIN) TECHNIQUES FOR CROSS LANGUAGE INFORMATION RETRIEVAL


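Fuzzy Comprehensive Evaluation Method

Fuzzy comprehensive evaluation method, part 1. The fuzzy comprehensive evaluation method is a bid-evaluation method based on fuzzy mathematics.

Using the membership-degree theory of fuzzy mathematics, it converts qualitative evaluation into quantitative evaluation; that is, it uses fuzzy mathematics to produce an overall evaluation of a thing or object that is constrained by many factors.

Its results are clear and systematic, it handles fuzzy, hard-to-quantify problems well, and it suits a wide range of problems that involve uncertainty.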

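Fuzzy comprehensive evaluation method, part 2. For ease of description, and following the basic concepts of fuzzy mathematics, the terms used in the fuzzy comprehensive evaluation method are defined as follows.

1. Evaluation factor (F): a specific item on which a bidding project is evaluated (for example price, indicators, parameters, specifications, performance, condition, and so on).

To simplify weight assignment and evaluation, the evaluation factors can be grouped by attribute into several classes (for example commercial, technical, price, accompanying services). Each class is treated as a single evaluation factor and is called a first-level evaluation factor (F1).

A first-level evaluation factor may have subordinate second-level evaluation factors (for example, the first-level factor "commercial" may have the second-level factors delivery date, payment terms and payment method).

A second-level evaluation factor may in turn have subordinate third-level evaluation factors (F3), and so on.

2. Evaluation factor value (Fv): the concrete value of an evaluation factor.

For example, if a bidder's value for some technical parameter is 120, then that bidder's evaluation factor value is 120.

3. Evaluation value (E): the degree to which an evaluation factor is good or bad.

The best evaluation factor has an evaluation value of 1 (100 points on a percentage scale). A less-than-best factor receives, according to how far it falls short, an evaluation value between 0 and 1, that is 0 ≤ E ≤ 1 (0 ≤ E ≤ 100 on a percentage scale).

4. Average evaluation value (Ep): the mean of the evaluation values that the members of the bid evaluation committee give to an evaluation factor.

Average evaluation value (Ep) = sum of the evaluation values of all committee members ÷ number of committee members.

5. Weight (W): the standing and importance of an evaluation factor.

The weights of the first-level evaluation factors sum to 1; the weights of the sub-factors directly under any one evaluation factor also sum to 1.

6. Weighted average evaluation value (Epw): the average evaluation value after weighting.

Weighted average evaluation value (Epw) = average evaluation value (Ep) × weight (W).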
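The definitions of Ep, W and Epw above can be sketched in a few lines of Python. The factor names, scores and weights below are hypothetical, and summing the Epw values into one overall score is an assumption that the text does not state explicitly.

```python
from statistics import mean


def average_evaluation(scores):
    """Ep: mean of the committee members' evaluation values, each in [0, 1]."""
    if not all(0.0 <= s <= 1.0 for s in scores):
        raise ValueError("evaluation values must lie in [0, 1]")
    return mean(scores)


def weighted_evaluations(factor_scores, weights):
    """Return {factor: Epw} with Epw = Ep * W; sibling weights must sum to 1."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights of sibling factors must sum to 1")
    return {name: average_evaluation(scores) * weights[name]
            for name, scores in factor_scores.items()}


# Hypothetical bid: three committee members score three first-level factors.
factor_scores = {
    "commercial": [0.8, 0.9, 0.7],
    "technical": [0.6, 0.7, 0.8],
    "price": [1.0, 0.9, 0.8],
}
weights = {"commercial": 0.3, "technical": 0.5, "price": 0.2}

epw = weighted_evaluations(factor_scores, weights)
total = sum(epw.values())  # overall score; summing Epw is an assumption
print(epw, round(total, 3))
```

The weight check mirrors rule 5: the function refuses weights that do not sum to 1 instead of silently rescaling them.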

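Solving Mixed Interval Linear Programs

Zhu Yongwu; Li Wei

【Abstract】Good algorithms already exist for interval linear programs that contain only inequality constraints. For interval linear programs with equality constraints, the efficiency of existing algorithms is unsatisfactory, and the auxiliary problem may have no feasible solution. This paper discusses how to solve mixed interval-coefficient linear programs, which contain both inequality and equality constraints. Exploiting the geometric structure of the problem, it proposes a new auxiliary problem that effectively reduces the computational complexity, and it gives a way to handle the case where the auxiliary problem is infeasible.

【Journal】Journal of Hangzhou Dianzi University
【Year (Volume), Issue】2010 (030) 001
【Pages】4 (P62-65)
【Keywords】linear programming; interval coefficients; standard form; optimal value interval
【Authors】Zhu Yongwu; Li Wei
【Affiliation】School of Science, Hangzhou Dianzi University, Hangzhou, Zhejiang 310018 (both authors)
【Language】Chinese
【CLC number】O221

0 Introduction

Traditional decision methods focus on building deterministic decision models. Because real problems are complex and the available information is incomplete, the coefficients of a mathematical model cannot be determined exactly.

To describe this uncertainty and to make decisions in an uncertain environment, interval techniques were introduced: the decision model is built from the range over which an uncertain parameter may vary.

Linear Programming with Interval Coefficients (LPIC), a form of flexible linear programming, handles optimization problems in uncertain systems well.

Research on LPIC has proposed different schemes for defining relations between interval inequalities [1-4], and has discussed the optimal value interval of linear programs whose objective function and constraints contain interval numbers [5-7].

Earlier work did not satisfactorily solve for the worst optimal value in the presence of interval equality constraints, and it neither discussed nor resolved the case in which the model for the worst optimal value is infeasible. Solving these two problems further improves and completes the model for computing the optimal value interval of a mixed interval linear program.

1 Determining the optimal value interval of LPIC

Many approaches to uncertain problems convert the uncertain problem into a deterministic one and solve that. Because the parameters of an interval program are intervals, the resulting objective value is also an interval, so we consider how to compute the optimal value interval of an interval linear program.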

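Received: 2020-12-29. Funding: Anhui Provincial Natural Science Foundation Youth Project (1808085QF196); Key Natural Science Research Project of Anhui Higher Education Institutions (KJ2020A0011); Key Teaching Research Project of Anhui University of Finance and Economics (acjyzd201814). About the author: Yin Shishu (born 1973), female, from Bengbu, Anhui; professor and master's supervisor; research interest: management decision optimization.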

Review of Hesitant Fuzzy Set Decision Theory and Method

Yin Shishu, Xin Fang (School of Management Science and Engineering, Anhui University of Finance and Economics, Bengbu, Anhui 233041)

Abstract: A hesitant fuzzy set is a way of expressing information that builds on fuzzy information and uses several membership degrees to describe the original information fully. Compared with fuzzy sets, it describes the decision information given by experts more comprehensively; compared with intuitionistic fuzzy sets, it is more consistent with people's hesitation in decision-making. This paper reviews the theory and methods of hesitant fuzzy set decision making. It first introduces the development of hesitant fuzzy sets, then reviews their information fusion theory, information measure theory, preference relation theory and multi-attribute decision-making theory, and finally summarizes future research directions for decision theory and methods in a hesitant fuzzy environment.

Keywords: multi-attribute decision making; hesitant fuzzy set; information fusion; information measure; preference relation

CLC number: C934. Document code: A. Article ID: 2096-790X(2021)05-0026-09. DOI: 10.19576/j.issn.2096-790X.2021.05.006

0 Introduction

Decision making is present everywhere, in politics, economics, culture, military affairs and every other field.

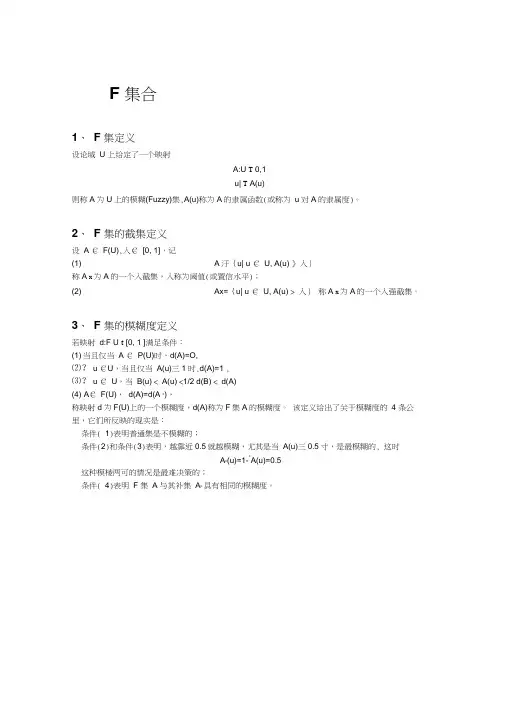

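F Sets

1. Definition of an F set. Let a mapping $A: U \to [0,1]$, $u \mapsto A(u)$, be given on the universe $U$. Then $A$ is called a fuzzy (F) set on $U$, and $A(u)$ is called the membership function of $A$ (or the membership degree of $u$ in $A$).

2. Cut sets of an F set. Let $A \in F(U)$ and $\lambda \in [0,1]$.
(1) $A_\lambda = \{u \mid u \in U,\ A(u) \ge \lambda\}$ is called the $\lambda$-cut of $A$, and $\lambda$ is called the threshold (or confidence level).
(2) $A_{\lambda+} = \{u \mid u \in U,\ A(u) > \lambda\}$ is called the strong $\lambda$-cut of $A$.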
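The two cut sets just defined are easy to compute for a finite universe. The following is a minimal sketch; the F set `A` and the function name `cut` are hypothetical and serve only as an illustration.

```python
def cut(membership, lam, strong=False):
    """Return the lambda-cut (A(u) >= lam) or the strong lambda-cut (A(u) > lam)."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lambda must lie in [0, 1]")
    if strong:
        return {u for u, grade in membership.items() if grade > lam}
    return {u for u, grade in membership.items() if grade >= lam}


# Hypothetical F set on the universe {u1, u2, u3, u4}.
A = {"u1": 0.2, "u2": 0.5, "u3": 0.8, "u4": 1.0}

print(sorted(cut(A, 0.5)))               # ['u2', 'u3', 'u4']
print(sorted(cut(A, 0.5, strong=True)))  # ['u3', 'u4']
```

The only difference between the two cuts is whether elements whose membership equals the threshold exactly are kept.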

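3. Degree of fuzziness of an F set. If a mapping $d: F(U) \to [0,1]$ satisfies the conditions
(1) $d(A) = 0$ if and only if $A \in P(U)$;
(2) $d(A) = 1$ if and only if $A(u) \equiv 1/2$ for all $u \in U$;
(3) for all $u \in U$, $B(u) \le A(u) \le 1/2$ implies $d(B) \le d(A)$;
(4) $d(A) = d(A^c)$ for $A \in F(U)$,
then $d$ is called a degree of fuzziness on $F(U)$, and $d(A)$ is called the degree of fuzziness of the F set $A$.

This definition gives four axioms for the degree of fuzziness. What they express is the following. Condition (1) says that ordinary (crisp) sets are not fuzzy. Conditions (2) and (3) say that the closer the membership is to 0.5, the fuzzier the set; in particular the set is fuzziest when $A(u) \equiv 0.5$, because then $A^c(u) = 1 - A(u) = 0.5$, an ambivalent situation in which deciding is hardest. Condition (4) says that an F set $A$ and its complement $A^c$ have the same degree of fuzziness.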
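The text does not name a concrete mapping d. One common choice for a finite universe, used here purely as an assumption, is the linear index $d(A) = \frac{2}{n}\sum_u \min(A(u), 1 - A(u))$. The sketch below computes it and checks conditions (1), (2) and (4) on small hypothetical sets.

```python
def fuzziness(membership):
    """Linear index of fuzziness: (2/n) * sum of min(A(u), 1 - A(u))."""
    grades = list(membership.values())
    if not grades:
        raise ValueError("the universe must not be empty")
    return 2.0 * sum(min(g, 1.0 - g) for g in grades) / len(grades)


def complement(membership):
    """Complement A^c with A^c(u) = 1 - A(u)."""
    return {u: 1.0 - g for u, g in membership.items()}


crisp = {"u1": 0.0, "u2": 1.0, "u3": 1.0}   # an ordinary set
half = {"u1": 0.5, "u2": 0.5, "u3": 0.5}    # A(u) = 1/2 everywhere
A = {"u1": 0.2, "u2": 0.7, "u3": 1.0}       # hypothetical F set

print(fuzziness(crisp), fuzziness(half))
print(fuzziness(A), fuzziness(complement(A)))
```

Condition (3) also holds for this index, because min(g, 1 - g) is increasing in g on [0, 1/2].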

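II. F Pattern Recognition

1. A typical pattern recognition system

[Figure: block diagram of a typical pattern recognition system, ending in the classification of patterns of unknown class]

2. Closeness of F sets. Let $A, B, C \in F(U)$. If a mapping $N: F(U) \times F(U) \to [0,1]$ satisfies the conditions
(1) $N(A, B) = N(B, A)$;
(2) $N(A, A) = 1$ and $N(U, \varnothing) = 0$;
(3) if $A \subseteq B \subseteq C$, then $N(A, C) \le N(A, B) \wedge N(B, C)$,
then $N(A, B)$ is called the closeness of the F sets $A$ and $B$.

$N$ is called a closeness function on $F(U)$.

Closeness is a measure of how near two F sets are to each other.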

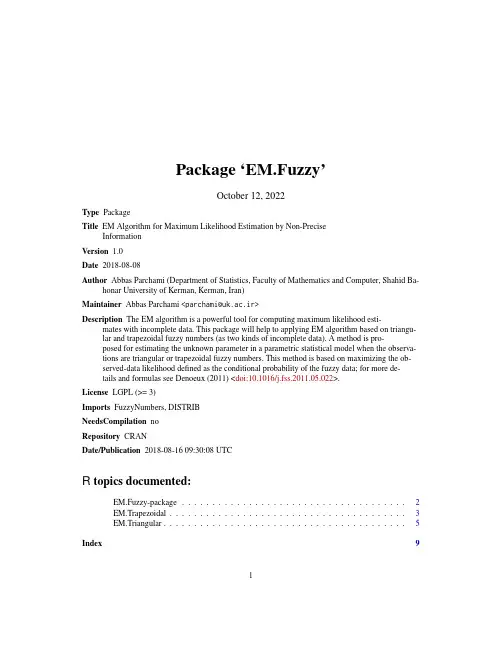

Package 'EM.Fuzzy'
October 12, 2022

Type: Package
Title: EM Algorithm for Maximum Likelihood Estimation by Non-Precise Information
Version: 1.0
Date: 2018-08-08
Author: Abbas Parchami (Department of Statistics, Faculty of Mathematics and Computer, Shahid Bahonar University of Kerman, Kerman, Iran)
Maintainer: Abbas Parchami <**************.ir>
Description: The EM algorithm is a powerful tool for computing maximum likelihood estimates with incomplete data. This package helps to apply the EM algorithm based on triangular and trapezoidal fuzzy numbers (as two kinds of incomplete data). A method is proposed for estimating the unknown parameter in a parametric statistical model when the observations are triangular or trapezoidal fuzzy numbers. This method is based on maximizing the observed-data likelihood, defined as the conditional probability of the fuzzy data; for more details and formulas see Denoeux (2011) <doi:10.1016/j.fss.2011.05.022>.
License: LGPL (>= 3)
Imports: FuzzyNumbers, DISTRIB
NeedsCompilation: no
Repository: CRAN
Date/Publication: 2018-08-16 09:30:08 UTC

R topics documented:
    EM.Fuzzy-package
    EM.Trapezoidal
    EM.Triangular


EM.Fuzzy-package    EM Algorithm for Maximum Likelihood Estimation by Non-Precise Information

Description

The main goal of this package is easy estimation of the unknown parameter of a continuous distribution by the EM algorithm when the observed data are fuzzy rather than crisp. The package contains two major functions: (1) the function EM.Triangular works with Triangular Fuzzy Numbers (TFNs), and (2) the function EM.Trapezoidal works with Trapezoidal Fuzzy Numbers (TrFNs).

Author(s)

Abbas Parchami

References

Denoeux, T. (2011) Maximum likelihood estimation from fuzzy data using the EM algorithm, Fuzzy Sets and Systems 183, 72-91.
Gagolewski, M., Caha, J. (2015) FuzzyNumbers Package: Tools to deal with fuzzy numbers in R. R package version 0.4-1, https:///web/packages=FuzzyNumbers
Gagolewski, M., Caha, J. (2015) A guide to the FuzzyNumbers package for R (FuzzyNumbers version 0.4-1)

Examples

    library(FuzzyNumbers)
    library(DISTRIB, warn.conflicts = FALSE)

    # Estimate the unknown mean of a Normal population with known variance
    # (e.g., sd(X) = 0.5) on the basis of 11 trapezoidal fuzzy numbers, which
    # are simulated below for simplicity.
    n = 11
    set.seed(1000)
    c1 = rnorm(n, 10, .5)
    c2 = rnorm(n, 10, .5)
    # Swap so that c1[i] <= c2[i] for every observation.
    for (i in 1:n) { if (c1[i] > c2[i]) { zarf <- c1[i]; c1[i] <- c2[i]; c2[i] <- zarf } }
    round(c1, 3); round(c2, 3)
    c1 <= c2
    l = runif(n, 0, 1); round(l, 3)
    u = runif(n, 0, 1); round(u, 3)
    EM.Trapezoidal(T.dist = "norm", T.dist.par = c(NA, 0.5), par.space = c(-5, 30),
                   c1, c2, l, u, start = 4, ebs = .0001, fig = 2)


EM.Trapezoidal    MLE by EM algorithm based on Trapezoidal Fuzzy Data

Description

This function obtains the Maximum Likelihood Estimate (MLE) of the unknown one-dimensional parameter on the basis of trapezoidal fuzzy observations.

Usage

    EM.Trapezoidal(T.dist, T.dist.par, par.space, c1, c2, l, u, start, ebs = 0.001, fig = 2)

Arguments

T.dist       the name of the distribution of the random variable, given as a character element. Distribution names follow the stats package.
T.dist.par   a vector of distribution parameters, in the order used by the stats package. If T.dist has only one parameter (which is then the unknown one), use T.dist.par = NA. If T.dist has two parameters, one unknown and one known, use T.dist.par = c(NA, known parameter) when the first parameter is unknown and T.dist.par = c(known parameter, NA) when the second parameter is unknown. See the examples below.
par.space    an interval that is a subset (subinterval) of the parameter space; it must contain the true value of the unknown parameter.
c1           a vector with length(c) = n holding the first points of the core values of the TrFNs.
c2           a vector with length(c) = n holding the last points of the core values of the TrFNs. It follows that c1 <= c2.
l            a vector with length(c) = n holding the left spreads of the TrFNs.
u            a vector with length(c) = n holding the right spreads of the TrFNs.
start        a real number from par.space at which the EM algorithm starts.
ebs          a small positive real number (e.g., 0.01, 0.001 or 0.1^6) that determines the accuracy of the EM algorithm in estimating the unknown parameter.
fig          a numeric argument that can take only the values 0, 1 or 2.
             If fig = 0, the result of the EM algorithm contains no figure.
             If fig = 1, the membership functions of the TrFNs are shown in a figure with different colors.
             If fig = 2, the membership functions of the TrFNs are shown in a figure together with the curve of the estimated probability density function (p.d.f.) based on the maximum likelihood estimate.

Value

The parameter is computed (estimated) separately in each iteration; the following values can also be requested directly.

MLE               the maximum likelihood estimate of the unknown parameter obtained by the EM algorithm from the TrFNs.
parameter.vector  a vector of the estimates of the unknown parameter over the iterations; its first element is start and its last element is MLE.
Iter.Num          the number of EM algorithm iterations.

Note

When using this package, note that:
(1) The sample size of TrFNs must be less than 16. The package works with small sample sizes (n ≤ 15) and can be extended by the user if needed.
(2) Choosing a suitable interval for par.space is very important for obtaining a correct result from the EM algorithm. The interval must be a subinterval of the parameter space, and the user must check the result of the algorithm (MLE): if the MLE obtained by EM.Trapezoidal lies on the boundary of par.space, the result is not acceptable and the EM algorithm must be run again with a wider par.space.
(3) The package works for continuous distributions with one or two parameters of which exactly one is unknown and is to be estimated from TrFNs.

See Also

DISTRIB, FuzzyNumbers

Examples

    library(FuzzyNumbers)
    library(DISTRIB, warn.conflicts = FALSE)

    # Example 1: Estimate the unknown mean of a Normal population with known
    # variance (e.g., var = 0.5^2) based on trapezoidal FNs.
    n = 2
    set.seed(1000)
    c1 = rnorm(n, 10, .5)
    c2 = rnorm(n, 10, .5)
    for (i in 1:n) { if (c1[i] > c2[i]) { zarf <- c1[i]; c1[i] <- c2[i]; c2[i] <- zarf } }
    round(c1, 3); round(c2, 3)
    c1 <= c2
    l = runif(n, 0, 1); round(l, 3)
    u = runif(n, 0, 1); round(u, 3)
    EM.Trapezoidal(T.dist = "norm", T.dist.par = c(NA, 0.5), par.space = c(-5, 30),
                   c1, c2, l, u, start = 4, ebs = .1, fig = 2)

    # Example 2:
    n = 4
    set.seed(10)
    c1 = rexp(n, 2)
    c2 = rexp(n, 2)
    for (i in 1:n) { if (c1[i] > c2[i]) { zarf <- c1[i]; c1[i] <- c2[i]; c2[i] <- zarf } }
    round(c1, 3); round(c2, 3)
    c1 <= c2
    l = runif(n, 0, 1); round(l, 3)
    u = runif(n, 0, 2); round(u, 3)
    EM.Trapezoidal(T.dist = "exp", T.dist.par = NA, par.space = c(.1, 20),
                   c1, c2, l, u, start = 7, ebs = .001)

    # Example 3: Estimate the unknown standard deviation of a Normal population
    # with known mean (here mean = 4) based on trapezoidal FNs.
    n = 10
    set.seed(123)
    c1 = rnorm(n, 4, 1)
    c2 = rnorm(n, 4, 1)
    for (i in 1:n) { if (c1[i] > c2[i]) { zarf <- c1[i]; c1[i] <- c2[i]; c2[i] <- zarf } }
    round(c1, 3); round(c2, 3)
    c1 <= c2
    l = runif(n, 0, .5); round(l, 3)
    u = runif(n, 0, .75); round(u, 3)
    EM.Trapezoidal(T.dist = "norm", T.dist.par = c(4, NA), par.space = c(0, 40),
                   c1, c2, l, u, start = 1, ebs = .0001, fig = 2)

    # Example 4: Estimate the alpha parameter of a Beta distribution.
    n = 4
    set.seed(12)
    c1 = rbeta(n, 2, 1)
    c2 = rbeta(n, 2, 1)
    for (i in 1:n) { if (c1[i] > c2[i]) { zarf <- c1[i]; c1[i] <- c2[i]; c2[i] <- zarf } }
    round(c1, 3); round(c2, 3)
    c1 <= c2
    l = rbeta(n, 1, 1); round(l, 3)
    u = rbeta(n, 1, 1); round(u, 3)
    EM.Trapezoidal(T.dist = "beta", T.dist.par = c(NA, 1), par.space = c(0, 10),
                   c1, c2, l, u, start = 1, ebs = .01, fig = 2)


EM.Triangular    MLE by EM algorithm based on Triangular Fuzzy Data

Description

This function obtains the Maximum Likelihood Estimate (MLE) of the unknown one-dimensional parameter on the basis of triangular fuzzy observations.

Usage

    EM.Triangular(T.dist, T.dist.par, par.space, c, l, u, start, ebs = 0.001, fig = 2)

Arguments

T.dist       the name of the distribution of the random variable, given as a character element. Distribution names follow the stats package.
T.dist.par   a vector of distribution parameters, in the order used by the stats package. If T.dist has only one parameter (which is then the unknown one), use T.dist.par = NA. If T.dist has two parameters, one unknown and one known, use T.dist.par = c(NA, known parameter) when the first parameter is unknown and T.dist.par = c(known parameter, NA) when the second parameter is unknown. See the examples below.
par.space    an interval that is a subset (subinterval) of the parameter space; it must contain the true value of the unknown parameter.
c            a vector with length(c) = n holding the core values of the TFNs.
l            a vector with length(c) = n holding the left spreads of the TFNs.
u            a vector with length(c) = n holding the right spreads of the TFNs.
start        a real number from par.space at which the EM algorithm starts.
ebs          a small positive real number (e.g., 0.01, 0.001 or 0.1^6) that determines the accuracy of the EM algorithm in estimating the unknown parameter.
fig          a numeric argument that can take only the values 0, 1 or 2.
             If fig = 0, the result of the EM algorithm contains no figure.
             If fig = 1, the membership functions of the TFNs are shown in a figure.
             If fig = 2, the membership functions of the TFNs are shown in a figure together with the curve of the estimated probability density function (p.d.f.) based on the maximum likelihood estimate.

Value

The parameter is computed (estimated) separately in each iteration; the following values can also be requested directly.

MLE               the maximum likelihood estimate of the unknown parameter obtained by the EM algorithm from the TFNs.
parameter.vector  a vector of the estimates of the unknown parameter over the iterations; its first element is start and its last element is MLE.
Iter.Num          the number of EM algorithm iterations.

Note

When using this package, note that:
(1) The sample size of TFNs must be less than 16. The package works with small sample sizes (n ≤ 15) and can be extended by the user if needed.
(2) Choosing a suitable interval for par.space is very important for obtaining a correct result from the EM algorithm. The interval must be a subinterval of the parameter space, and the user must check the result of the algorithm (MLE): if the MLE obtained by EM.Triangular lies on the boundary of par.space, the result is not acceptable and the EM algorithm must be run again with a wider par.space.
(3) The package works for continuous distributions with one or two parameters of which exactly one is unknown and is to be estimated from TFNs.

See Also

DISTRIB, FuzzyNumbers

Examples

    library(FuzzyNumbers)
    library(DISTRIB, warn.conflicts = FALSE)

    # Example 1:
    n = 2
    set.seed(131)
    c = rexp(n, 2); round(c, 3)
    l = runif(n, 0, 1); round(l, 3)
    u = runif(n, 0, 2); round(u, 3)
    EM.Triangular(T.dist = "exp", T.dist.par = NA, par.space = c(0, 30),
                  c, l, u, start = 5, ebs = .1, fig = 0)
    EM.Triangular(T.dist = "exp", T.dist.par = NA, par.space = c(0, 30),
                  c, l, u, start = 50, ebs = .001, fig = 1)  # Fast convergence
    EM.Triangular(T.dist = "exp", T.dist.par = NA, par.space = c(0, 30),
                  c, l, u, start = 50, ebs = .1^6, fig = 2)

    # Example 2: Compute the mean and the standard deviation of 21 EM
    # estimates (one per seed in 100:120).
    n = 15
    MLEs = c()
    for (j in 100:120) {
      print(j)
      set.seed(j)
      c = rexp(n, 2)
      l = runif(n, 0, 1)
      u = runif(n, 0, 2)
      MLEs = c(MLEs, EM.Triangular(T.dist = "exp", T.dist.par = NA, par.space = c(0, 30),
                                   c, l, u, start = 5, ebs = .01, fig = 0)$MLE)
    }
    MLEs        # 3.283703 2.475541 3.171026 ...
    mean(MLEs)  # 2.263996
    sd(MLEs)    # 0.4952257
    hist(MLEs)

    # Example 3: Estimate the unknown mean of a Normal population with known
    # variance (e.g., var = 1) based on TFNs.
    n = 5
    set.seed(100)
    c = rnorm(n, 10, 1); round(c, 3)
    l = runif(n, 0, 1); round(l, 3)
    u = runif(n, 0, 1); round(u, 3)
    EM.Triangular(T.dist = "norm", T.dist.par = c(NA, 1), par.space = c(-10, 30),
                  c, l, u, start = 20, ebs = .001, fig = 2)

    # Example 4: Estimate the unknown standard deviation of a Normal population
    # with known mean (e.g., mean = 7) based on TFNs.
    n = 10
    set.seed(123)
    c = rnorm(n, 7, 2); round(c, 3)
    l = runif(n, 0, 2.5); round(l, 3)
    u = runif(n, 0, 1); round(u, 3)
    EM.Triangular(T.dist = "norm", T.dist.par = c(7, NA), par.space = c(0, 10),
                  c, l, u, start = 5, ebs = .0001, fig = 2)

    # Example 5: Estimate the unknown parameter b where X ~ U(a = 0, b).
    n = 15
    set.seed(101)
    c = runif(n, 0, 5); round(c, 3)
    l = runif(n, 0, 1); round(l, 3)
    u = runif(n, 0, 1); round(u, 3)
    b <- EM.Triangular(T.dist = "unif", T.dist.par = c(0, NA), par.space = c(0, 10),
                       c, l, u, start = 5, ebs = .001, fig = 2)$MLE
    print(b)

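A Preliminary Study of a Credibility-Based Method for Solving Interval Number Linear Programs

Shi Yan; Wang Guanmei; Zhou Fangming

【Abstract】This paper makes a preliminary study of solving interval number linear programs from the viewpoint of credibility. The method determines the range of the parameters in a principled way and also yields a larger number of satisfactory solutions. It reduces the amount of computation required by earlier algorithms and thus makes the solution method considerably more effective.

【Journal】Jiangxi Science
【Year (Volume), Issue】2015 (033) 003
【Pages】5 (P362-366)
【Keywords】interval number; credibility; linear programming; interval number linear programming
【Authors】Shi Yan; Wang Guanmei; Zhou Fangming
【Affiliations】Admissions Office, Hezhou University, 542800, Hezhou, Guangxi; School of Applied Technology, Hezhou University, 542800, Hezhou, Guangxi; People's Government of Pumen Town, Babu District, Hezhou, 542815, Hezhou, Guangxi
【Language】Chinese
【CLC number】O221

Interval number linear programming (INLP) is linear programming whose coefficients contain interval numbers; more precisely, some or all of the price coefficients, technical coefficients and resource coefficients are interval numbers.

It is a flexible form of mathematical programming that handles certain optimization problems in uncertain systems well.

Interval number linear programming is currently an active research topic in China and abroad; many scholars have studied it and obtained a series of results.

Reference [4] uses the definition of possibility degree to convert the objective function and the constraints into optimization levels, obtains possibly weakly efficient solutions with the max-min operator, and builds a model for finding several sets of possibly weakly efficient solutions.

Reference [9] studies uncertain propositions whose inequality constraints contain interval numbers, proves the intrinsic unity of several existing systems for evaluating interval inequalities, and proposes an improved μ+ criterion.

Reference [10] proposes a credibility function to describe the relation between two interval numbers, namely the μ-credibility set method.

Reference [8] proposes the Tong method.

Reference [11] proposes the Sengupta method, namely the A-acceptability index set method.

Reference [9] proves the intrinsic unity of these three methods by showing that each of the other two has a formulation equivalent to that of Ishibuchi, and proposes an improved μ+ criterion.

Reference [12] defines the standard form of an interval number linear program and an order relation on interval numbers, and determines the optimal value interval by solving for the best optimal solution and the worst optimal solution of the standard-form program. It also discusses interval equality constraints: they are converted into a deterministic linear program, that is, reduced to the general form of an interval number linear program.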

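Fuzzy Methods (original edition, 3 pieces)

Contents (piece 1)
1. Definition and characteristics of fuzzy methods
2. Application areas of fuzzy methods
3. Advantages and disadvantages of fuzzy methods
4. Development prospects of fuzzy methods

Main text (piece 1)

I. Definition and characteristics of fuzzy methods

A fuzzy method is a mathematical method based on fuzzy logic. It builds on fuzzy sets and uses fuzzy inference and fuzzy operations to handle the uncertainty and fuzziness found in the real world.

Fuzzy methods have the following characteristics.

1. They handle uncertainty and fuzziness: fuzzy methods deal effectively with uncertain and fuzzy problems, where traditional mathematical methods fall short.

2. They emphasize practical application: fuzzy methods focus on solving real problems and on combining mathematical theory with practice.

3. They are general: fuzzy methods apply across many disciplines, such as economics, management and engineering.

II. Application areas of fuzzy methods

Fuzzy methods are widely applied. Some typical areas are the following.

1. Control engineering: fuzzy control uses fuzzy logic to model and control the uncertainty and nonlinearity of a system in order to achieve better control performance.

2. Decision analysis: fuzzy evaluation and fuzzy comprehensive evaluation can be used for multi-factor, multi-objective decision analysis, making decisions more accurate and better founded.

3. Pattern recognition: fuzzy pattern recognition handles real-world uncertainty and fuzziness and improves recognition accuracy.

4. Artificial intelligence: fuzzy methods are widely used in artificial intelligence, for example in fuzzy inference and fuzzy neural networks.

III. Advantages and disadvantages of fuzzy methods

1. Advantages: (1) they handle uncertain and fuzzy problems, where traditional mathematical methods fall short; (2) they emphasize practical application and the link between theory and practice; (3) they are general and apply across many disciplines.

2. Disadvantages: (1) the theory is not yet complete and needs further research and development; (2) the computational complexity is fairly high, so solving a problem requires a certain amount of computing resources.

IV. Development prospects of fuzzy methods

As science and technology advance, fuzzy methods will be applied ever more widely and their theory will continue to mature.

At the same time, growing computing power will help to offset the computational complexity of fuzzy methods and improve their practical effectiveness.

Contents (piece 2)
1. Definition and characteristics of fuzzy methods
2. Application areas of fuzzy methods
3. Strengths and limitations of fuzzy methods

Main text (piece 2)

I. Definition and characteristics of fuzzy methods

A fuzzy method is a mathematical method based on fuzzy set theory. It builds on fuzzy sets and uses tools such as fuzzy logic and fuzzy inference to analyse problems quantitatively or qualitatively.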

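Fuzzy Mathematics

Section 1 Fuzzy cluster analysis
Section 2 Fuzzy pattern recognition
Section 3 Fuzzy similarity priority ratio method
Section 4 Fuzzy comprehensive evaluation
Section 5 Solving fuzzy relation equations

Research in the natural and social sciences involves many concepts that are not strictly defined, or that are fuzzy.

Fuzziness here mainly means the lack of sharpness in the intermediate transition between different states of objective things. For example, an ecological condition may be rated "favourable, fairly favourable, not so favourable, unfavourable" for the survival or adaptation of a pest or a crop; the effect of a damaging frost on agricultural yield may be rated "fairly severe, severe, very severe"; and so on.

Such concepts are inherently fuzzy, and fuzzy set theory arose in order to process and analyse data about them.

Classical set theory requires that an object either belongs to a set or does not; exactly one of the two must hold.

A set theory of this kind cannot by itself handle concrete fuzzy concepts.

The various efforts to handle such concepts gave rise to fuzzy mathematics.

The theoretical foundation of fuzzy mathematics is the fuzzy set.

Fuzzy set theory was first proposed in 1965 by the American automatic-control expert Professor L. A. Zadeh, and it has developed rapidly over the last ten years or so.

Although fuzzy set theory was proposed relatively late, it is now applied very widely in many fields.

In agriculture, fuzzy mathematics is used mainly for pest and disease forecasting, planting zoning and variety breeding; its applications in image recognition, weather forecasting, geology and seismology, transportation, medical diagnosis, information control, artificial intelligence and many other fields have also begun to show results.

Judging from its development trend, the discipline has great vitality and reach.

Application-oriented fuzzy mathematical analysis frequently uses cluster analysis, pattern recognition and comprehensive evaluation.

In the DPS system we separate the fuzzy mathematical methods from the conventional statistical methods and devote a dedicated chapter to their principles and to the operation of the corresponding functional modules, for users' reference.

Section 1 Fuzzy cluster analysis

1. The concept of a fuzzy set

For an ordinary set A and any element x of the space, either x ∈ A or x ∉ A; exactly one of the two holds.

This property can be expressed by the function
$A(x) = \begin{cases} 1, & x \in A \\ 0, & x \notin A \end{cases}$
where A(x) is the characteristic function of the set A.

Extending the characteristic function to fuzzy sets means extending its range from the two values 0 and 1 of an ordinary set to the interval [0, 1].

Definition 1. Let X be the universe. If A is a function on X taking values in [0, 1], then A is called a fuzzy set.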

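Fuzzy Mathematical Methods

In 1965 Professor L. A. Zadeh, a cybernetics expert at the University of California, published the seminal paper "Fuzzy Sets" in the journal Information and Control, which marked the birth of fuzzy mathematics.

Fuzzy mathematics is the body of mathematical methods for studying and handling fuzzy phenomena.

Classical mathematics, as is well known, is characterized by precision.

Yet fuzziness, the opposite of precision, is not entirely negative or worthless.

One can even say that fuzziness is sometimes better than precision.

Suppose, for example, that you are asked to go to a certain place at a certain time to meet "a tall middle-aged man with a big beard, long hair and wide-rimmed black glasses".

Only one piece of precise information is given here (man); all the rest (big beard, tall, long hair, wide-rimmed black glasses, middle-aged) are fuzzy concepts. Nevertheless, once you combine and weigh these fuzzy concepts in your head, you can find the person.

Practical applications of fuzzy mathematics reach almost every field and sector of the national economy; agriculture, forestry, meteorology, the environment, geological exploration, medicine and economic management have all seen wide and successful use of fuzzy mathematics.

§1 Basic concepts of fuzzy sets

To master fuzzy mathematical methods one must first understand the basic concepts of fuzzy sets, in particular how membership functions are constructed.

1.1 Fuzzy subsets and membership functions

Definition 1. Let $U$ be the universe. A mapping $A(x): U \to [0,1]$ determines a fuzzy subset $A$ of $U$; the mapping $A(x)$ is called the membership function of $A$, and it expresses the degree to which $x$ belongs to $A$.

A point with $A(x) = 0.5$ is called a transition point of $A$; it is the fuzziest point.

When the mapping $A(x)$ takes only the values 0 and 1, the fuzzy subset $A$ is a classical subset and $A(x)$ is its characteristic function.

Classical subsets are therefore a special case of fuzzy subsets.

Example 1. Let the universe $U = \{x_1(140), x_2(150), x_3(160), x_4(170), x_5(180), x_6(190)\}$ (unit: cm) represent human heights. The membership function $A(x)$ of a fuzzy set "tall" ($A$) on $U$ can be defined as
$A(x) = \dfrac{x - 140}{190 - 140}$,
or written in Zadeh notation:
$A = \dfrac{0}{x_1} + \dfrac{0.2}{x_2} + \dfrac{0.4}{x_3} + \dfrac{0.6}{x_4} + \dfrac{0.8}{x_5} + \dfrac{1}{x_6}$.
This expression only lists the membership degree of each element of $U$ in the fuzzy set $A$; it does not denote ordinary fractions or ordinary addition.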
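Example 1 can be checked numerically. The sketch below evaluates the membership function on the six heights and prints the result in a plain-text version of Zadeh notation; the function name `tall` and the formatting are hypothetical choices.

```python
# Heights (cm) of the six elements of the universe U from Example 1.
heights_cm = {"x1": 140, "x2": 150, "x3": 160, "x4": 170, "x5": 180, "x6": 190}


def tall(x, lo=140.0, hi=190.0):
    """Membership of height x in the fuzzy set 'tall': (x - 140) / (190 - 140)."""
    return (x - lo) / (hi - lo)


A = {name: round(tall(x), 2) for name, x in heights_cm.items()}

# Zadeh notation lists grade/element pairs; '+' is a separator, not addition.
zadeh = " + ".join(f"{grade:g}/{name}" for name, grade in A.items())
print(zadeh)  # 0/x1 + 0.2/x2 + 0.4/x3 + 0.6/x4 + 0.8/x5 + 1/x6
```

The element x3 (160 cm) has membership 0.4, so the transition point of this set (membership 0.5) would lie at 165 cm, which is not in U.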

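A Fast Algorithm for Fuzzy Cluster Analysis

Zhang Zhongshu

【Journal】Journal of Chengdu Institute of Information Engineering

【Year (Volume), Issue】1992 (000) 003

【Abstract】None

【Pages】1 (P45)

【Author】Zhang Zhongshu

【Affiliation】None

【Language】Chinese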


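Fuzzy System Approximation of Stochastic Systems

Li Hongxing

【Journal】Journal of Sichuan Normal University (Natural Science Edition)

【Year (Volume), Issue】2022 (45) 4

【Abstract】This paper examines the approximation of stochastic systems by fuzzy systems. First, a definition of a stochastic system is given relative to uncertain systems; a stochastic system is regarded as an approximation of an uncertain system. It is then shown that any given stochastic system can always be converted into a set of fuzzy inference rules, from which a fuzzy system can be constructed, and it is proved that the fuzzy system constructed in this way approximates the given stochastic system to any prescribed accuracy. Next, the reducibility of the conversion between fuzzy systems and stochastic systems is discussed. Examples illustrate the quality of the approximation, and further examples show fuzzy system approximations of some special stochastic systems. Finally, the problem of unifying uncertain systems is briefly outlined.

【Pages】25 (P427-451)

【Author】Li Hongxing

【Affiliations】School of Applied Mathematics, Beijing Normal University at Zhuhai; School of Control Science and Engineering, Dalian University of Technology

【Language】Chinese

【CLC number】O159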


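Interval Type-2 Fuzzy Sets in MATLAB

An interval type-2 fuzzy set is a special kind of fuzzy set in which the membership grade of each element is an interval, bounded by a lower and an upper membership function, rather than a single number. In MATLAB, interval type-2 fuzzy sets can be handled with the Fuzzy Logic Toolbox.

First, the membership functions of the interval type-2 fuzzy set have to be defined. A membership function describes the degree to which an element belongs to a fuzzy set. In MATLAB, membership functions are defined and created with the toolbox's membership function routines, for example trimf for a triangular membership function or gauss2mf for a two-sided Gaussian combination membership function.

Next, a fuzzy system object can be used to represent the interval type-2 fuzzy sets. The addvar function adds input and output variables, the addmf function adds membership functions, and the addrule function adds fuzzy rules.

When working with interval type-2 fuzzy sets, MATLAB's fuzzy inference functionality can be used for fuzzy reasoning and fuzzy control. Fuzzy system objects are used to build and evaluate the fuzzy system.

Besides the Fuzzy Logic Toolbox that ships with MATLAB, custom functions and algorithms can be written in MATLAB to handle interval type-2 fuzzy sets. This includes writing the mathematical expressions of the membership functions and implementing the fuzzy inference algorithm.

In short, interval type-2 fuzzy sets can be handled in MATLAB either with the functions and objects of the Fuzzy Logic Toolbox or with custom algorithms.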
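As an illustration of the "custom algorithm" route, the following Python sketch (not MATLAB, and not the Fuzzy Logic Toolbox API) evaluates an interval type-2 membership grade as a pair (lower, upper). The triangular formula is the standard one; deriving the lower membership function by scaling the upper one with the hypothetical factor `lower_scale` is an assumption made only for this example.

```python
def trimf(x, a, b, c):
    """Triangular membership with feet at a and c and peak at b (a < b < c)."""
    return max(min((x - a) / (b - a), (c - x) / (c - b)), 0.0)


def it2_membership(x, a, b, c, lower_scale=0.6):
    """Interval membership grade (lower, upper) of x.

    The upper bound is a triangular membership function; the lower bound is
    assumed to be the same triangle scaled by lower_scale (hypothetical choice).
    """
    if not 0.0 < lower_scale <= 1.0:
        raise ValueError("lower_scale must lie in (0, 1]")
    upper = trimf(x, a, b, c)
    return (lower_scale * upper, upper)


for x in (0.0, 2.5, 5.0, 12.0):
    print(x, it2_membership(x, 0.0, 5.0, 10.0))
```

With lower_scale = 1 the two bounds coincide and the set degenerates to an ordinary (type-1) fuzzy set, which is a convenient sanity check for any custom implementation.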


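Fuzzy Methods (practical edition, 3 pieces)

Contents (piece 1)
1. Introduction
2. Basic principles and characteristics of fuzzy methods
3. Application areas and strengths of fuzzy methods
4. Advantages and disadvantages of fuzzy methods
5. Conclusion

Main text (piece 1)

I. Introduction

With the continuing development of technology, artificial intelligence has become a major trend in today's society.

Within artificial intelligence, fuzzy methods are an important class of algorithms.

This piece introduces the basic principles and characteristics of fuzzy methods and discusses their applications in various fields.

II. Basic principles and characteristics of fuzzy methods

Fuzzy methods are theories and methods based on fuzzy mathematics; their main characteristic is the ability to handle fuzzy, uncertain information.

Fuzzy methods use a mathematical tool called the membership function, which describes the degree to which an object belongs to a set.

A membership function may be linear or nonlinear and can be adjusted to the problem at hand.

III. Application areas and strengths of fuzzy methods

1. Industrial manufacturing: fuzzy methods can be used to solve complex problems such as quality control and production scheduling.

By building a mathematical model, fuzzy methods can optimize the production process automatically and raise production efficiency.

2. Medical diagnosis: fuzzy methods can be used to process large amounts of data, such as medical images and pathology analyses.

With fuzzy methods, doctors can diagnose diseases more accurately and improve treatment outcomes.

3. Natural language processing: fuzzy methods can be used to handle uncertain information in tasks such as semantic understanding and sentiment analysis.

Fuzzy methods can improve the accuracy of machine translation and of sentiment analysis.

4. Traffic management: fuzzy methods can be used to predict traffic flow and to draw up traffic plans.

With fuzzy methods, traffic conditions can be tracked better and traffic management improved.

5. Other fields: fuzzy methods can also be applied in many other fields, such as finance and environmental science.

With fuzzy methods, complex problems can be handled better and decisions made more accurately.

IV. Advantages and disadvantages of fuzzy methods

1. Advantages: (1) they handle fuzzy, uncertain information; (2) they can optimize a problem automatically; (3) they can improve the accuracy of machine translation and sentiment analysis; (4) they give a better picture of traffic conditions and improve traffic management.

2. Disadvantages: (1) they need substantial computing resources; (2) they may suffer from the curse of dimensionality; (3) they require a certain mathematical background; (4) they may overfit.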

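Some Results on the Absolute Value Operation for Interval Numbers

Qin Xiaoli; KHALIL Ahmed; Zhang Chen; Li Shenggang

【Journal】Basic Sciences Journal of Textile Universities
【Year (Volume), Issue】2019 (032) 003
【Pages】9 (P298-306)
【Keywords】interval number; absolute value; pseudo-metric; metric; four arithmetic operations
【Authors】Qin Xiaoli; KHALIL Ahmed; Zhang Chen; Li Shenggang
【Affiliations】School of Mathematics and Information Science, Shaanxi Normal University, Xi'an, Shaanxi 710062; Deyang Senior High School, Chengdu Normal University, Deyang, Sichuan 618000; School of Mathematics and Statistics, Longdong University, Qingyang, Gansu 745000
【Language】Chinese
【CLC number】O159

1 Introduction and preliminaries

The theory and application of interval numbers play an important role in building the theory of interval-valued differential equations [1], interval-valued optimization theory [2] and the differential theory of fuzzy-valued functions [3], so a clear understanding of the properties of interval numbers is of considerable importance.

A number of scholars have studied interval numbers and enriched their theory [4-6].

The basic idea of interval number theory is to compute with interval variables in place of point variables, which is why interval numbers are used so often in applied problems [4-10].

Reference [11] studies the properties of the absolute value of interval numbers, but does not discuss the four arithmetic operations on interval numbers in detail.

References [12-13] study problems concerning a special kind of interval-number value, the fuzzy value, but do not study general interval-number values.

Drawing on the general notions of the four arithmetic operations and of unary operations on numbers, this paper discusses related properties of interval numbers and defines a new absolute value operation for interval numbers.

A bounded closed interval of the set R of real numbers is called an interval number [14-16] (denoted a* or and the interval number 〈a,a〉 is identified with the real number a).

The set of all interval numbers is denoted R.

Interval numbers generalize the real numbers and are of basic importance in representing and handling uncertainty [1-2,8,16-17].

For all k ≥ 0, we have and so R and R are not isomorphic.

The four arithmetic operations can be defined on R as follows: (1) addition (2) subtraction; subtraction can also be defined as (3) multiplication; multiplication can also be defined as (4) division; division can also be defined as. Reference [11] defines an absolute value operation for interval numbers: its author proves that this absolute value operation has many good properties.

This paper defines another absolute value operation for interval numbers: and studies further the properties of these absolute value operations.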
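The formulas for the four operations did not survive in the text above. As a point of reference only, the sketch below implements the standard interval-arithmetic definitions for bounded closed intervals; these are an assumption and may differ from the definitions (and the alternative definitions) that the authors give. The class name `Interval` is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interval:
    """Bounded closed interval [lo, hi] of real numbers (an interval number)."""
    lo: float
    hi: float

    def __post_init__(self):
        if self.lo > self.hi:
            raise ValueError("lo must not exceed hi")

    def __add__(self, other):
        return Interval(self.lo + other.lo, self.hi + other.hi)

    def __sub__(self, other):
        return Interval(self.lo - other.hi, self.hi - other.lo)

    def __mul__(self, other):
        products = [self.lo * other.lo, self.lo * other.hi,
                    self.hi * other.lo, self.hi * other.hi]
        return Interval(min(products), max(products))

    def __truediv__(self, other):
        # Standard definition: only defined when 0 is not in the divisor.
        if other.lo <= 0.0 <= other.hi:
            raise ZeroDivisionError("divisor interval contains 0")
        return self * Interval(1.0 / other.hi, 1.0 / other.lo)


print(Interval(1, 2) + Interval(3, 5))
print(Interval(1, 2) - Interval(3, 5))
print(Interval(-1, 2) * Interval(3, 4))
print(Interval(1, 2) / Interval(2, 4))
```

A degenerate interval such as Interval(3, 3) behaves like the real number 3 under these operations, matching the identification of 〈a,a〉 with a.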

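Fuzzy Methods 【practical edition, 3 pieces】

Piece 1: Contents
1. Introduction
2. Definition and principle of fuzzy methods
3. Application areas of fuzzy methods
4. Advantages and disadvantages of fuzzy methods
5. Conclusion

Piece 1: Main text

1. Introduction

The fuzzy method is a mathematical method based on fuzzy logic, first proposed in 1965 by Lotfi A. Zadeh, a professor at the University of California, Berkeley.

It breaks with the absolutely precise style of description used in traditional mathematics and introduces the notion of fuzziness, so that mathematical models can better describe the uncertainty and vagueness of the real world.

2. Definition and principle of fuzzy methods

The fuzzy method is based on fuzzy set theory. Its core idea is to describe things in the real world as fuzzy sets and to reach conclusions by operating on and reasoning with these fuzzy sets.

A fuzzy set is a set whose elements each carry a membership degree (the degree to which the element belongs to the set) between 0 and 1; this is how it expresses uncertainty and fuzziness.

3. Application areas of fuzzy methods

Since its introduction, the fuzzy method has been applied widely in many fields, such as control theory, information processing, artificial intelligence and management science.

Some typical application areas are the following. (1) Control theory: fuzzy methods can be used to design fuzzy controllers that solve the control problem for uncertain systems.

(2) Information processing: fuzzy methods can be used for tasks such as fuzzy inference, fuzzy evaluation and fuzzy decision making.

(3) Artificial intelligence: fuzzy methods can be used to build fuzzy neural networks, fuzzy expert systems and fuzzy knowledge representations.

(4) Management science: fuzzy methods can be used for fuzzy forecasting, fuzzy optimization and fuzzy evaluation.

4. Advantages and disadvantages of fuzzy methods

The main advantages of fuzzy methods are the following. (1) They handle uncertainty and fuzziness: fuzzy methods deal well with the uncertain and fuzzy problems found in the real world.

(2) They are practical: fuzzy methods have been put to real use in many fields.

(3) They are easy to understand and implement: fuzzy methods rest on fuzzy set theory and are comparatively easy to understand and implement.

Fuzzy methods also have some disadvantages. (1) The theory is incomplete: the theoretical framework of fuzzy methods is not yet mature and needs further research.

(2) The results are hard to interpret: the conclusions produced by fuzzy methods are themselves somewhat fuzzy, so they are harder to explain.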

FUZZY INTERVAL NUMBER (FIN) TECHNIQUES FOR CROSS LANGUAGE INFORMATION RETRIEVAL

Keywords: Fuzzy Interval Number (FIN), Information Retrieval (IR), Cross Language Information Retrieval (CLIR).

Abstract: A new method for handling problems of Information Retrieval (IR) and related applications is proposed. The method is based on Fuzzy Interval Numbers (FINs), which were introduced in fuzzy system applications. The definition, interpretation and a computation algorithm of FINs are presented. The framework for using FINs in IR is given. An experiment showing the anticipated importance of these techniques in Cross Language Information Retrieval (CLIR) is presented.

1 INTRODUCTION

Oard (Oard, 1997) classifies free (full) text Cross Language Information Retrieval (CLIR) approaches into corpus-based and knowledge-based approaches. Knowledge-based approaches encompass dictionary-based and ontology (thesaurus)-based approaches, while corpus-based approaches encompass parallel, comparable and monolingual corpora. Dictionary-based systems translate query terms one by one, using all the possible senses of each term. The main drawbacks of this procedure are:
a) the lack of fully updated Machine Readable Dictionaries (MRDs);
b) the ambiguity of the translations of terms, which results in a 50% loss of precision (Davis, 1996).

Since the machine translation of a query is less accurate than that of a full text, experiments have been conducted with collections that hold machine translations of all the collection texts into all languages of interest. Such systems are really multi-monolingual systems. Parallel and comparable corpora systems are different: the parallel (or comparable) corpora are used to “train” the system, and after that no translations are used for retrieval. One such system, perhaps the most successful, is based on Latent Semantic Indexing (LSI) (Dumais, 1996), (Berry, 1995).
The main problem with this approach is that it is not easy to find training parallel corpora related to an arbitrary collection.

Fuzzy (set) techniques were proposed for Information Retrieval (IR) applications many years ago (Radecki, 1979), (Kraft, 1993), mainly for modeling.

Fuzzy Interval Numbers (FINs) were introduced by Kaburlasos (Kaburlazos, 2004), (Petridis, 2003) in fuzzy system applications.

A FIN may be interpreted as a conventional fuzzy set; additional interpretations are possible, including a statistical interpretation.

The special interest in these objects and the associated techniques for IR stems from their anticipated capability to serve CLIR without the use of dictionaries and translations.

The basic features of the method presented here are:
1) Documents are represented as FINs; a FIN resembles a probability distribution.
2) The FIN representation of documents is based on the use of the collection term frequency as the term identifier.
3) A FIN distance is used instead of a similarity measure.

There are indications, some of which are presented in section 4 below, that a parallel corpora system can be built using FIN techniques.

The remainder of this paper is structured as follows. Section 2 gives a brief introduction to FINs and other relevant concepts. Section 3 presents the “conceptual” transition from document vectors to document FINs. Section 4 presents the special interest of FINs in handling CLIR problems. Conclusions and current work on the subject are presented in section 5.

2 THEORETICAL BACKGROUND

A. Generalized Intervals

A generalized interval of height $h \in (0,1]$ is a mapping $\mu$ given as follows.

If $x_1 < x_2$ (positive generalized interval), then
$\mu_{[x_1,x_2]^h}(x) = \begin{cases} h, & x_1 \le x \le x_2 \\ 0, & \text{otherwise;} \end{cases}$
else if $x_1 > x_2$ (negative generalized interval), then
$\mu_{[x_1,x_2]^h}(x) = \begin{cases} -h, & x_1 \ge x \ge x_2 \\ 0, & \text{otherwise;} \end{cases}$
else if $x_1 = x_2$ (trivial generalized interval), then
$\mu_{[x_1,x_2]^h}(x) = \begin{cases} \{h, -h\}, & x = x_1 \\ 0, & \text{otherwise.} \end{cases}$

In this paper we use the more compact notation $[x_1, x_2]^h$ instead of the $\mu$ notation.

The interpretation of a generalized interval depends on the application; for instance, the presence of a feature could be indicated by a positive generalized interval.

The set of all positive generalized intervals of height $h$ is denoted by $M_+^h$, the set of all negative generalized intervals by $M_-^h$, the set of all trivial generalized intervals by $M_0^h$, and the set of all generalized intervals by $M^h = M_-^h \cup M_0^h \cup M_+^h$.

Two functions that are used in the sequel are defined as follows.

The function support maps a generalized interval to the corresponding conventional interval: support($[x_1, x_2]^h$) = $[x_1, x_2]$ for positive, support($[x_1, x_2]^h$) = $[x_2, x_1]$ for negative, and support($[x_1, x_1]^h$) = $\{x_1\}$ for trivial generalized intervals.

The function sign: $M^h \to \{-1, 0, +1\}$ maps a positive generalized interval to +1, a negative generalized interval to -1 and a trivial generalized interval to 0.
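The definitions of a generalized interval and of the functions support and sign can be sketched as a small Python class. The class and method names are hypothetical; for a trivial interval the support {x1} is returned as the degenerate pair (x1, x1), which is a representation choice of this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GeneralizedInterval:
    """Generalized interval [x1, x2]^h of height h in (0, 1]."""
    x1: float
    x2: float
    h: float = 1.0

    def __post_init__(self):
        if not 0.0 < self.h <= 1.0:
            raise ValueError("height h must lie in (0, 1]")

    def sign(self):
        """+1 if positive (x1 < x2), -1 if negative (x1 > x2), 0 if trivial."""
        return (self.x1 < self.x2) - (self.x1 > self.x2)

    def support(self):
        """Conventional interval (lo, hi); (x1, x1) stands for {x1}."""
        return (min(self.x1, self.x2), max(self.x1, self.x2))

    def mu(self, x):
        """Value of the mapping for positive/negative intervals: +h or -h on the support."""
        lo, hi = self.support()
        return self.sign() * self.h if lo <= x <= hi else 0.0


p = GeneralizedInterval(1.0, 3.0, h=0.5)   # positive
n = GeneralizedInterval(3.0, 1.0, h=0.5)   # negative
print(p.sign(), p.support(), p.mu(2.0))
print(n.sign(), n.support(), n.mu(2.0))
```

The method mu is only meaningful for positive and negative intervals; the trivial case, where the mapping takes the set value {h, -h} at x1, is deliberately not modelled here.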
We now define a metric distance and an inclusion measure function on the set (lattice) $M^h$.

A relation $\le$ on a set $S$ is called a partial ordering relation if and only if it satisfies:
1) $x \le x$ (reflexive);
2) $x \le y$ and $y \le x$ imply $x = y$ (antisymmetric);
3) $x \le y$ and $y \le z$ imply $x \le z$ (transitive).

A partial order relation $\le$ can be defined on the set $M^h$, $h \in (0,1]$, as follows:
1) $[a,b]^h \le [c,d]^h \Leftrightarrow$ support($[a,b]^h$) $\subseteq$ support($[c,d]^h$), for $[a,b]^h, [c,d]^h \in M_+^h$;
2) $[a,b]^h \le [c,d]^h \Leftrightarrow$ support($[c,d]^h$) $\subseteq$ support($[a,b]^h$), for $[a,b]^h, [c,d]^h \in M_-^h$;
3) $[a,b]^h \le [c,d]^h \Leftrightarrow$ support($[c,d]^h$) $\cap$ support($[a,b]^h$) $\ne \varnothing$, for $[a,b]^h \in M_-^h$, $[c,d]^h \in M_+^h$.

The ordering is partial: it does not hold for every pair of generalized intervals.

A lattice $(L, \le)$ is a partially ordered set in which any two elements have a unique greatest lower bound, or lattice meet ($x \wedge_L y$), and a unique least upper bound, or lattice join ($x \vee_L y$).

A valuation $v$ in a lattice $L$ is a real function $v: L \to R$ which satisfies
$v(x) + v(y) = v(x \vee_L y) + v(x \wedge_L y)$, $x, y \in L$;
here it is defined as the area “under” a generalized interval.

A valuation is called monotone if and only if $x \le y$ implies $v(x) \le v(y)$, and positive if and only if $x < y$ implies $v(x) < v(y)$, for $x, y \in L$.

A metric distance in a set $S$ is a real function $d: S \times S \to R$ which satisfies:
1) $d(x,y) \ge 0$, $x, y \in S$;
2) $d(x,y) = 0 \Leftrightarrow x = y$, $x, y \in S$;
3) $d(x,y) = d(y,x)$, $x, y \in S$ (symmetry);
4) $d(x,y) \le d(x,z) + d(z,y)$, $x, y, z \in S$ (triangle inequality).

A metric distance $d: L \times L \to R$ can therefore be defined in the lattice $M^h$, $h \in (0,1]$, by
$d(x, y) = v(x \vee_L y) - v(x \wedge_L y)$, $x, y \in L$.

A lattice is called complete when each of its subsets has a least upper bound and a greatest lower bound.
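The distance d(x, y) = v(x ∨ y) − v(x ∧ y) can be sketched for intervals of a common height h. Two assumptions are made here that the text does not spell out: the valuation is taken to be the signed area h·(x2 − x1), and the join and meet of [a, b] and [c, d] are taken to be [min(a, c), max(b, d)] and [max(a, c), min(b, d)].

```python
def valuation(interval, h=1.0):
    """Signed area under [x1, x2]^h: positive if x1 < x2, negative if x1 > x2."""
    x1, x2 = interval
    return h * (x2 - x1)


def join(p, q):
    """Assumed lattice join: smallest left end, largest right end."""
    return (min(p[0], q[0]), max(p[1], q[1]))


def meet(p, q):
    """Assumed lattice meet: largest left end, smallest right end (may be negative)."""
    return (max(p[0], q[0]), min(p[1], q[1]))


def distance(p, q, h=1.0):
    """d(p, q) = v(p join q) - v(p meet q)."""
    return valuation(join(p, q), h) - valuation(meet(p, q), h)


x, y, z = (-1.0, 1.0), (2.0, 4.0), (0.0, 3.0)
print(distance(x, y))                   # 6.0, i.e. |(-1) - 2| + |1 - 4|
print(distance(x, x))                   # 0.0
print(distance(x, y) <= distance(x, z) + distance(z, y))  # triangle inequality
```

Under these assumptions the distance reduces to h·(|a − c| + |b − d|), so the meet of two disjoint positive intervals, which is a negative interval, contributes a negative area that enlarges the distance.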
In a complete lattice the positive valuation function $v$ can be used to define an inclusion measure function $k: L \times L \to [0,1]$ given by
$k(x, u) = \dfrac{v(u)}{v(x \vee_L u)}$.

The lattice $M^h$ is not complete. Therefore an inclusion measure, which quantifies the degree of inclusion of one lattice element in another, must be defined in a different way.

Definition. An inclusion measure $\sigma$ in a non-complete lattice $L$ is a map $\sigma: L \times L \to [0,1]$ such that for $u, w, x \in L$:
1) $\sigma(x, x) = 1$;
2) $u < w \Rightarrow \sigma(w, u) < 1$;
3) $u \le w \Rightarrow \sigma(x, u) \le \sigma(x, w)$ (consistency property).

The notations $\sigma(x, u)$ and $\sigma(x \le u)$ are used interchangeably, because both indicate a degree of inclusion of $x$ in $u$.

Kaburlasos has proved the following proposition. Let the underlying positive valuation function $f: R \to R$ be a strictly increasing real function. Then the real function $v: M^h \to R$ given by
$v([a,b]^h) = \mathrm{sign}([a,b]^h)\, c(h) \int_a^b [f(x) - f(a)]\,dx$
is a positive valuation function in $M^h$, where $c: (0,1] \to R^+$ is a positive real function used for normalization. A metric distance in $M^h$ is given by
$d_h(x, y) = v(x \vee y) - v(x \wedge y)$.

As an example, consider $f(x) = x$ and $c(h) = h$. Then the distance is given by
$d_h([a,b]^h, [c,d]^h) = h\,(|a - c| + |b - d|)$.
As another example, for $f(x) = x^3$, $h = 1$ and $c(1) = 0.5$, the distance between the intervals $[-1,1]^1$ and $[2,4]^1$ is
$d_h([-1,1]^1, [2,4]^1) = v([-1,1]^1 \vee [2,4]^1) - v([-1,1]^1 \wedge [2,4]^1) = 32.5 + 3.5 = 36$.

The essential role of a positive valuation function $v: L \to R$ is to map a lattice $L$ of semantics to the mathematical field $R$ of real numbers, where computations can be carried out.

B. Fuzzy Interval Numbers: Definition and Interpretation

A positive Fuzzy Interval Number (FIN) is a continuous function $F: (0,1] \to M_+^h$ such that $h_1 \le h_2 \Rightarrow$ support($F(h_1)$) $\supseteq$ support($F(h_2)$), where $0 < h_1 \le h_2 \le 1$.

Figure 1: An 86-value FIN.
In the support(F(0.25)) = [62, 318] there are about 75% of the values.

The set of all positive FINs is denoted by F+. Trivial and negative FINs are defined similarly.

Given a population (a vector) x = [x_1, x_2, …, x_N] of real numbers (measurements), sorted in ascending order, a FIN can be computed by applying the CALFIN algorithm given in the Appendix. Fig. 1 shows a FIN calculated from a population of 86 values. Given a FIN, any “cut” at a given height h ∈ (0,1] defines a generalized interval, denoted by F(h). In Fig. 1, F(0.25) is the generalized interval [a, b]_0.25 represented by acdb.

A consequence of the CALFIN algorithm is the following. Let F(1) = {m_1}; approximately N/2 of the values of x are smaller than m_1 and N/2 are greater than m_1. Let F(0.5) = [p_1/2, q_1/2]_0.5; approximately N/4 of the values of x lie in [p_1/2, m_1] and N/4 in [m_1, q_1/2]. In more general terms, for any h ∈ (0,1] approximately 100(1 − h)% of the N values of x are within support(F(h)).

C. FIN Metrics

Let m_h: R → R+ be a positive real function (a mass function) for h ∈ (0,1] (it may be independent of h) and
$f_h(x) = \int_0^x m_h(t)\,dt$.
Obviously, f_h is strictly increasing. The real function v_h: M_h^+ → R given by
v_h([a, b]_h) = f_h(b) − f_h(a)
is a positive valuation function in the set of positive generalized intervals of height h. The function
d_h([a, b]_h, [c, d]_h) = [f_h(a ∨ c) − f_h(a ∧ c)] + [f_h(b ∨ d) − f_h(b ∧ d)],
where a ∧ c = min{a, c} and a ∨ c = max{a, c}, is a metric distance between the two generalized intervals [a, b]_h and [c, d]_h. Fig. 2 shows an interpretation of a, b, c, d in the case of two FINs.

Figure 2: Two FINs F_1 and F_2 (representing two documents of the CACM test collection).
The points a, b, c, d used to define d_h(F_1(h), F_2(h)) = d_h([a, b]_h, [c, d]_h).

Given two positive FINs F_1 and F_2,
$d(F_1, F_2) = c \int_0^1 d_h(F_1(h), F_2(h))\,dh$,
where c is a user-defined positive constant, is a metric distance (for a proof see (Kaburlasos, 2004)).

3. USING FINS TO REPRESENT DOCUMENTS

In the Vector Space Model (Salton, 1983) for Information Retrieval, a text document is represented by a vector in a space of many dimensions, one for each different term in the collection. In the simplest case, the components of each vector are the frequencies of the corresponding terms in the document:
Doc_k = (f_k1, f_k2, …, f_kn)
where f_kj stands for the frequency of occurrence of term t_j in document Doc_k. Fig. 3 shows one such vector as a histogram.

Figure 3 A document vector as a histogram. Each value on the term axis represents a term (stem), e.g. “51” stands for “industri” and “104” for “research”.

Let ctf_j be the total frequency of occurrence of term t_j in the whole collection. Then ctf_j is equal to
$\sum_k f_{kj}$.
The collection term frequencies are used as term identifiers. To ensure the uniqueness of the identifiers, a multiple of a small ε is added to the ctfs when needed. Fig. 4 shows the new form of the document vector histogram.

Figure 4 The document vector histogram in (modified) ctf abscissae. Each value on the ctf (mod) axis represents a term (stem), e.g. “1.24775” stands for “industri” and “2.09009” for “research”.

The next step is to break the “high bars” into multiple pieces of height 1, placed side by side and separated by some ε′ < ε; this is shown in Fig. 5. The original histogram has now been transformed into a “density graph”.

Figure 5 The “density graph” representation of the document of Fig.
1.

The abscissae vector is exactly the “number population” from which the document FIN (Fig. 6) is computed by the CALFIN algorithm described in the Appendix.

Figure 6 The document FIN along with the median bars. The numbers of terms on the left and right sides of the bar with height 1 are equal.

The FIN distance is used in place of a similarity measure between documents: the smaller the distance, the more similar the documents. This
a) means that a FIN must be calculated for each query, and
b) imposes a serious requirement on the queries: they must have many terms. As can be seen in the CALFIN algorithm (Appendix), at least two terms are needed to calculate a FIN. Moreover, experience shows that documents (queries) with many terms give “better” FINs.

4. FINS FOR CLIR

A. The Hypothesis

Consider a document set {doc(l)1, doc(l)2, …, doc(l)n}, all in language l. Suppose that for each document doc(l)k there exist translations doc(j)k into the languages j = 1, …, m. We then have a multilingual document collection:
{ doc(j)k : 1 ≤ j ≤ m, 1 ≤ k ≤ n }

Assume that:
1. The stopword lists of all languages are translations of each other (partially unrealistic).
2. All translations are done “1 word to 1 word”, i.e. there are no phrasal translations of words (highly unrealistic (Ballesteros, 1997)).
3. There is no different polysemy between any two languages (highly unrealistic (Ballesteros, 1998)).

Under these assumptions, consider a term t(l)j in language l. Let tf(l)jk be the term frequency in doc(l)k and ctf(l)j the total frequency of the term in the collection.
The following equalities hold:
tf(1)jk = tf(2)jk = … = tf(m)jk
ctf(1)j = ctf(2)j = … = ctf(m)j

If docF(l)k is the FIN representing doc(l)k, then the distances between the FINs of the translated documents will be approximately 0:
A1: d(docF(l1)k, docF(l2)k) ≈ 0
The distances can be nullified exactly with the use of a dictionary.

Moreover, let qryF(l) be the FIN of a query submitted to a FIN-based IR system that manages the collection. Then:
A2: d(qryF(l), docF(l)k) ≈ d(qryF(l), docF(j)k), j ∈ 1..m
This means that Cross Language Information Retrieval is achievable without the use of dictionaries.

B. The Experiment

The experiment aims to test statements A1 and A2 in the “real world”.

A small document collection comprising 3 short documents in English and their Greek translations (the documents originate from EU databases) was used. The documents were slightly modified to improve their compliance with the hypotheses of part A. The term content of the documents is shown in Table 1. Evidently, it was not easy to avoid phrasal translations and terms with different polysemy.

Table 1 The term content of the documents

         Total number of terms          Number of distinct terms
         English        Greek           English        Greek
doc1     69             69              67             58
doc2     83             83              50             51
doc3     79             86              65             71
Total    Nt_en = 231    Nt_gr = 238     151            154

The FINs of the documents were calculated taking all terms without exception. The FINs of the English documents are shown in Fig. 7. The steep ascent of the left side of the curves is due to the inclusion of all the terms appearing only once in the collection.

In Fig. 8 the FINs of the Greek and English versions of document 3 are shown. They are almost identical except for the right “tail” of the Greek FIN.
This is a result of different polysemy.

Figure 7 The FINs of the English documents.

Figure 8 The FINs of the English and Greek versions of document 3.

For the distance calculations a bell-shaped mass function is selected:
m_h(t) = 222)max(⎟⎠⎞⎜⎝⎛−++ctf t A hβα
The positive real numbers A, α, β are parameters; max(ctf) is the maximum value of all the collection term frequencies.

This kind of mass function reduces the contribution to the FIN distance of the terms with ctf = 1. The same reduction applies to the high-ctf terms. So the contribution to the distance of the “tail” to the right of the Greek FIN in Fig. 8 is reduced, but the same applies in general to all high-frequency terms, even those that do not have high document frequency.

The verification of A1 and A2 is translated as follows:

A1: d(docF(e)1, docF(g)1), d(docF(e)2, docF(g)2) and d(docF(e)3, docF(g)3) are significantly smaller than the other distances between FINs.

A2: Instead of query FINs, the FINs of the documents of the collection are used. So instead of d(qryF(l), docF(l)k) ≈ d(qryF(l), docF(j)k), j ∈ 1..m, the following is examined:
d(docF(l)m, docF(l)n) ≈ d(docF(l1)m, docF(l2)n), m, n = 1, 2, 3, m ≠ n, l, l1, l2 = e, g

Table 2 shows the FIN distances of all pairs of documents in the collection. As expected, the smallest distances are those between the Greek and English versions of the same document.

If d_1e1g = d(docF(e)1, docF(g)1) = 0.03515, then d(docF(e)3, docF(g)3) ≈ 1.2 d_1e1g and d(docF(e)2, docF(g)2) ≈ 2.06 d_1e1g.

Apart from these, the smallest distance is d(docF(e)1, docF(e)3) = 0.27195 ≈ 7.7 d_1e1g. That is, the largest distance between two versions of the same document is about 3.8 times smaller than the smallest distance between versions of different documents.
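The ratios quoted above can be re-derived from the Table 2 values. The following sketch copies the table into a matrix and recomputes them; the names `LABELS`, `D`, `IDX` and `dist` are hypothetical helpers introduced here. It only checks the arithmetic on the published distances and does not reproduce the FIN computation itself.

```python
# FIN distances copied from Table 2 (rows and columns in the same order).
LABELS = ["e1", "e2", "e3", "g1", "g2", "g3"]
D = [
    [0.00000, 0.34044, 0.27195, 0.03515, 0.32658, 0.29084],
    [0.34044, 0.00000, 0.60588, 0.31314, 0.07230, 0.61259],
    [0.27195, 0.60588, 0.00000, 0.29938, 0.59363, 0.04258],
    [0.03515, 0.31314, 0.29938, 0.00000, 0.30065, 0.31147],
    [0.32658, 0.07230, 0.59363, 0.30065, 0.00000, 0.60035],
    [0.29084, 0.61259, 0.04258, 0.31147, 0.60035, 0.00000],
]
IDX = {name: i for i, name in enumerate(LABELS)}


def dist(a, b):
    """Look up the tabulated FIN distance between two documents."""
    return D[IDX[a]][IDX[b]]


# Distances between the English and Greek versions of the same document.
same = {k: dist(f"e{k}", f"g{k}") for k in (1, 2, 3)}
base = same[1]  # d_1e1g in the text

# Distances between versions of different documents (document numbers differ).
different = [dist(a, b) for a in LABELS for b in LABELS if a[1] != b[1]]
smallest_different = min(different)

ratio_doc3 = same[3] / base                 # document 3 versus document 1
ratio_doc2 = same[2] / base                 # document 2 versus document 1
ratio_smallest = smallest_different / base  # closest pair of different documents
separation = smallest_different / max(same.values())

print(f"{ratio_doc3:.2f} {ratio_doc2:.2f} {ratio_smallest:.2f} {separation:.2f}")
# prints: 1.21 2.06 7.74 3.76
```

The last figure is the margin by which the closest pair of different documents is still farther apart than the most distant pair of translations.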
Moreover:
d(docF(l1)m, docF(l2)n) ≤ 1.15 d(docF(l3)m, docF(l4)n), m, n = 1, 2, 3, m ≠ n, l1, l2, l3, l4 = e, g
That is, for any two different documents, the distance between any two language versions is at most 1.15 times the distance between any other two versions of the same pair of documents.

In conclusion:
1) The distances between different versions (languages) of the same document are considerably smaller than the others.
2) The distance between two different documents is almost the same irrespective of the language of the documents.

In the second phase of the experiment one more English-language document (doc(e)4) is inserted in the collection, but not its Greek version. The number of English terms is increased by ΔNt_en = 58. The distances now change:
d(docF(l1)m, docF(l2)n) ≤ 1.32 d(docF(l3)m, docF(l4)n), m, n = 1, 2, 3, m ≠ n, l1, l2, l3, l4 = e, g

To rebalance the collection characteristics we renormalize the Greek FIN abscissae, multiplying χj by:
⎟⎟⎠⎞⎜⎜⎝⎛Δ+en en j r NT NT ctf x k )max(1
After that:
d(docF(l1)m, docF(l2)n) ≤ d(docF(l3)m, docF(l4)n), m, n = 1, 2, 3, m ≠ n, l1, l2, l3, l4 = e, g

5. CONCLUSIONS AND FUTURE WORK

FIN techniques seem promising for IR and related applications; the prospect of CLIR without dictionaries is very intriguing. Nevertheless, a number of topics need careful consideration. These techniques, for monolingual and cross-language IR, work with long documents and queries that can give “good” FINs. Unfortunately, this is not the case with the queries submitted to Internet search engines; these queries very often have just a couple of terms (Kobayashi, 2000). The FIN techniques can be more successful in problems of document classification, where documents with many terms (and “better” FINs) must be handled.

The quality of a FIN does not depend on the number of terms only; it must be considered together with the mass function for the distance calculation.
In the previous section a “soft” reduction of the contribution to the distance of terms with ctf = 1 was attempted through the mass function. A better idea would probably be to ignore these terms completely in the FIN computation. In FIN construction, term document frequency (df) must be taken into account as well.

The bell-shaped mass function seems to be a reasonable one, but other ideas should be considered in conjunction with FIN computation.

Last but not least is the renormalization scheme: in any multilingual collection the numbers of terms in the different languages are random, and a solid and flexible rebalancing scheme is needed, which is not independent of the FIN construction method and the distance calculation (mass function).

The optimal determination of the parameters A, α, β and k_r is part of the system training process using parallel corpora.

At present, experiments are being conducted along these lines, mainly with two of the standard monolingual collections, namely CACM and WSJ. These collections are of interest because they have relatively longer queries.

In addition, a trilingual (Greek / English / French) test collection is being built for CLIR experimentation. The parallel corpora are created by manual translation (with some mechanical help). Experiments are conducted and records of performance are kept during the various stages of the parallel corpora creation.

ACKNOWLEDGMENTS

This work was co-funded 75% by the E.U. and 25% by the Greek Government under the framework of the Education and Initial Vocational Training Program – Archimedes.

REFERENCES

Ballesteros, L. and W. B. Croft, “Phrasal Translation and Query Expansion Techniques for Cross-Language Information Retrieval” in the Proceedings of the 20th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR-97), pp. 84-91, 1997.

Ballesteros, L. and W. B.
Croft, “Resolving Ambiguity for Cross-language Retrieval” in the Proceedings of the 21st International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR-98), pp. 64-71, 1998.

Berry, M. and P. Young, “Using latent semantic indexing for multi-language information retrieval”, Computers and the Humanities, vol. 29, no 6, pp. 413-429, 1995.

Davis, M., “New experiments in cross-language text retrieval at NMSU’s Computing Research Lab” in D.K. Harman, ed., The Fifth Text Retrieval Conference (TREC-5), NIST, 1996.

Table 2 FIN distances of the documents of the collection

            doc(e)1    doc(e)2    doc(e)3    doc(g)1    doc(g)2    doc(g)3
doc(e)1     0.00000    0.34044    0.27195    0.03515    0.32658    0.29084
doc(e)2     0.34044    0.00000    0.60588    0.31314    0.07230    0.61259
doc(e)3     0.27195    0.60588    0.00000    0.29938    0.59363    0.04258
doc(g)1     0.03515    0.31314    0.29938    0.00000    0.30065    0.31147
doc(g)2     0.32658    0.07230    0.59363    0.30065    0.00000    0.60035
doc(g)3     0.29084    0.61259    0.04258    0.31147    0.60035    0.00000

Dumais, S. T., T.K. Landauer, M.L. Littman, “Automatic cross-linguistic information retrieval using latent semantic indexing” in G. Grefenstette, ed., Working Notes of the Workshop on Cross-Linguistic Information Retrieval. ACM SIGIR.

Kaburlasos, V.G., “Fuzzy Interval Numbers (FINs): Lattice Theoretic Tools for Improving Prediction of Sugar Production from Populations of Measurements,” IEEE Trans. on Man, Machine and Cybernetics – Part B, vol. 34, no 2, pp. 1017-1030, 2004.

Kobayashi, M. and K. Takeda, “Information Retrieval on the Web,” ACM Computing Surveys, vol. 32, no 2, pp. 144-173, 2000.

Kraft, D.H. and D.A. Buell, “Fuzzy Set and Generalized Boolean Retrieval Systems” in Readings in Fuzzy Sets for Intelligent Systems, D. Dubois, H. Prade, R.R. Yager (eds), 1993.

Oard, D.W., “Alternative Approaches for Cross-Language Text Retrieval” in Cross-Language Text and Speech Retrieval, AAAI Technical Report SS-97-05. Available at /dlrg/filter/sss/papers/

Petridis, V. and V.G.
Kaburlasos, “FINkNN: A Fuzzy Interval Number k-Nearest Neighbor Classifier for prediction of sugar production from populations of samples,” Journal of Machine Learning Research, vol. 4 (Apr), pp. 17-37, 2003 (can be downloaded from).

Radecki, T., “Fuzzy Set Theoretical Approach to Document Retrieval” in Information Processing and Management, v. 15, Pergamon Press, 1979.

Salton, G. and M.J. McGill, Introduction to Modern Information Retrieval, McGraw-Hill, 1983.

APPENDIX – FIN COMPUTATION

Consider a vector of real numbers x = [x_1, x_2, …, x_N] such that x_1 ≤ x_2 ≤ … ≤ x_N. A FIN can be constructed according to the following algorithm CALFIN, where dim(x) denotes the dimension of vector x, e.g. dim([2,-1]) = 2, dim([-3,4,0,-1,7]) = 5, etc.

The median(x) of a vector x = [x_1, x_2, …, x_N] is defined to be a number such that half of the N numbers x_1, x_2, …, x_N are smaller than median(x) and the other half are larger than median(x); for instance, median([x_1, x_2, x_3]) with x_1 < x_2 < x_3 equals x_2, whereas median([x_1, x_2, x_3, x_4]) with x_1 < x_2 < x_3 < x_4 is computed here as (x_2 + x_3)/2.

Algorithm CALFIN
1. Let x be a vector of real numbers.
2. Sort the numbers in vector x in ascending order.
3. Initially vector pts is empty.
4. function calfin(x) {
5.   if (dim(x) > 1)
6.     medi := median(x)
7.     insert medi in vector pts
8.     x_left := elements in vector x less than medi
9.     x_right := elements in vector x larger than medi
10.    calfin(x_left)
11.    calfin(x_right)
12.  endif
13. } //function calfin(x)
14. Sort vector pts in ascending order.
15. Store in vector val dim(pts)/2 numbers from 0 up to 1 in steps of 2/dim(pts), followed by another dim(pts)/2 numbers from 1 down to 0 in steps of 2/dim(pts).

The above procedure is repeated recursively log2 N times, until “half vectors” are computed including a single number; the latter numbers are, by definition, median numbers.
The computed median values are stored (sorted) in vector pts, whose entries constitute the abscissae of a positive FIN’s membership function; the corresponding ordinate values are computed in vector val. Note that algorithm CALFIN produces a positive FIN with a membership function μ(x) such that μ(x) = 1 for exactly one number x.
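The CALFIN steps can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a definitive implementation: the recursion stops on any vector with fewer than two elements, the population is assumed to contain no repeated values, and the heights in `val` rise linearly to 1 at the middle point and fall symmetrically, which is one reading of step 15. The function names `calfin` and `support_at` are introduced here for illustration.

```python
from statistics import median


def calfin(values):
    """Return (pts, val): abscissae and heights of a positive FIN."""
    pts = []

    def split(x):
        # Assumption: stop on vectors with fewer than two elements.
        if len(x) < 2:
            return
        m = median(x)
        pts.append(m)
        split([t for t in x if t < m])  # left "half vector"
        split([t for t in x if t > m])  # right "half vector"

    split(sorted(values))
    pts.sort()
    n = len(pts)
    # Assumed height rule: linear rise to 1 at the middle point, then fall.
    val = [2 * min(i, n + 1 - i) / (n + 1) for i in range(1, n + 1)]
    return pts, val


def support_at(pts, val, h):
    """Support of the cut F(h), read only at the computed breakpoints."""
    inside = [p for p, height in zip(pts, val) if height >= h]
    return min(inside), max(inside)


population = [1, 2, 3, 4, 5, 6, 7, 8]
pts, val = calfin(population)
lo, hi = support_at(pts, val, 0.5)
share = sum(lo <= t <= hi for t in population) / len(population)

print(pts)   # [1.5, 2.5, 3.5, 4.5, 5.5, 6.5, 7.5]
print(val)   # [0.25, 0.5, 0.75, 1.0, 0.75, 0.5, 0.25]
print((lo, hi), share)  # (2.5, 6.5) 0.5
```

For this population of eight values the cut at h = 0.5 contains half of the values, in line with the 100(1 − h)% property of FINs computed by CALFIN.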