2 FIELD TEST OPERATIONS ARCHITECTURE


2024 Higher Education Self-Study Examination, "English II": Self-Test Questions and Reference Answers

I. Reading and Judgment (10 points)

Question 1
Read the following passage and then answer the questions below.
The rise of e-learning has transformed the way people access education. With the advent of the internet, individuals now have the opportunity to learn at their own pace and from the comfort of their homes. One of the most popular forms of e-learning is the self-study examination for higher education, commonly known as the Self-study Examination for English Level Two (Self-study Examination for English Two). This examination is designed to test the English proficiency of students who wish to pursue further education or career opportunities.
1. The self-study examination for higher education is gaining popularity due to its convenience and flexibility.
2. The Self-study Examination for English Two is only available online.
3. The examination is specifically for those who want to advance their education or career.
4. The passage mentions that the self-study examination is only for English learning.
5. The self-study examination is meant to be taken at a specific location.
Answers: 1. True  2. False  3. True  4. False  5. False

Question 2: Reading Comprehension and Judgment
Passage:
In today's rapidly evolving world, the role of technology in education cannot be overstated. Technological advancements have not only transformed how knowledge is imparted but also revolutionized the learning experience for students across the globe. The integration of digital tools into classrooms has enabled educators to personalize learning, catering to the diverse needs of individual students. Furthermore, online platforms and digital resources have made education more accessible, breaking down barriers of time and location. However, it is crucial to recognize that these benefits come with challenges such as ensuring equitable access to technology and maintaining the quality of educational content in a digital format.
Despite these hurdles, the potential for technology to enhance teaching and learning is immense, making it an indispensable part of modern educational practices.
Questions:
1. The passage suggests that technology has changed the way education is delivered.
• Answer: True
2. According to the text, personalized learning experiences are now possible due to the use of digital tools.
• Answer: True
3. The integration of technology in education has created insurmountable barriers for learners.
• Answer: False
4. Online platforms have made it difficult for students to access educational materials.
• Answer: False
5. The passage acknowledges both the advantages and challenges of incorporating technology into education.
• Answer: True

II. Reading Comprehension (10 points)
Title: Reading Comprehension
Passage:
The global pandemic has highlighted the importance of digital literacy in today's society. Many countries have implemented online learning platforms to ensure that education continues during times of crisis. One such platform, Edutalk, has gained popularity for its interactive and engaging approach to teaching. Edutalk offers a variety of courses, including a specialized course on digital literacy. This course is designed to teach individuals the skills needed to navigate the digital world effectively and responsibly.
The course covers several key topics, such as online communication, internet safety, and digital privacy. It also includes practical exercises that help students apply their knowledge in real-life situations. One of the most innovative features of the course is its virtual classroom, where students can interact with each other and with the instructor through live video calls and chat forums.
Despite the benefits of online learning, there are challenges that come with it. One major challenge is the potential for isolation and reduced social interaction. However, Edutalk has addressed this by incorporating social features into its platform, such as discussion groups and peer support networks.
These features help to foster a sense of community among the students.
Questions:
1. What is the primary focus of the specialized course offered by Edutalk?
A) Online communication
B) Internet safety
C) Digital privacy
D) All of the above
2. Which of the following is NOT a key topic covered in the digital literacy course?
A) Online communication
B) History of the internet
C) Internet safety
D) Digital privacy
3. What is one innovative feature of Edutalk's digital literacy course?
A) Traditional classroom setting
B) Virtual classroom with live video calls
C) Self-paced learning modules
D) Only text-based lessons
4. What is a potential challenge associated with online learning, according to the passage?
A) High cost of education
B) Reduced social interaction
C) Limited access to resources
D) Lack of hands-on experience
5. How does Edutalk address the challenge of reduced social interaction in online learning?
A) By offering only self-paced courses
B) By incorporating social features such as discussion groups
C) By encouraging students to attend physical classes
D) By providing one-on-one tutoring sessions
Answers:
1. D) All of the above
2. B) History of the internet
3. B) Virtual classroom with live video calls
4. B) Reduced social interaction
5. B) By incorporating social features such as discussion groups

III. Summarizing Paragraph Main Ideas and Completing Sentences (10 points)

Question 1
Reading Passage:
In recent years, the importance of lifelong learning has been increasingly recognized in the field of education. Higher education self-study examinations, such as the National Self-Study Examination for English Level Two, have become a popular method for individuals to enhance their English proficiency independently.
This essay discusses the benefits and challenges of self-study examinations in higher education.
Questions:
1. The passage mainly focuses on the topic of __________.
A) The benefits of traditional classroom learning
B) The challenges of self-study in higher education
C) The role of self-study examinations in enhancing English proficiency
D) The decline of traditional educational methods
2. According to the passage, self-study examinations are particularly beneficial for __________.
A) Students who prefer a more structured learning environment
B) Working professionals seeking to improve their language skills
C) Young learners who are eager to learn new subjects
D) Teachers who want to teach English more effectively
3. The passage suggests that self-study examinations can be challenging due to __________.
A) The lack of immediate feedback from teachers
B) The difficulty of maintaining a consistent study schedule
C) The need for self-discipline and motivation
D) The limited availability of study materials
4. Which of the following is NOT mentioned as a challenge of self-study examinations?
A) The potential for procrastination
B) The risk of not receiving a formal degree
C) The need for a strong support system
D) The difficulty of accessing advanced learning resources
5. The author concludes the passage by suggesting that __________.
A) Self-study examinations should be replaced with traditional classroom learning
B) Self-study examinations can be effective when combined with online resources
C) The challenges of self-study examinations outweigh their benefits
D) Self-study examinations are only suitable for individuals with exceptional self-discipline
Answers: 1. C  2. B  3. C  4. B  5. B

Question 2
Reading passage:
In recent years, online learning has gained immense popularity due to its convenience and flexibility.
This essay discusses the advantages and disadvantages of online learning, comparing it with traditional classroom teaching.
Summarize the paragraph's main idea and complete the sentences:
1. The paragraph mainly focuses on the increasing popularity of online learning, its benefits, and its comparison with traditional classroom teaching.
2. Online learning is popular because it offers convenience and flexibility.
3. One of the advantages of online learning is its accessibility from anywhere.
4. However, one disadvantage of online learning is the lack of face-to-face interaction.
5. Compared to traditional classroom teaching, online learning provides more convenience but may lack some social aspects.

IV. Fill in the Blanks (10 points)
Fill in the blanks with the appropriate options.
Read the following passage:
The ancient Egyptians had a profound influence on the world we live in today. Their achievements in mathematics, architecture, and religion are still celebrated. One of their greatest contributions was the development of the first known calendar. This calendar was based on the observation of the stars and the cycles of the Nile River. The Egyptians divided the year into three seasons: Inundation, Growth, and Harvest. Each season was further divided into four months, with each month consisting of 30 days.
This system was used for agricultural planning and religious festivals.
Choose the correct word to fill in the blanks from the options below:
1. The Egyptians divided the year into three distinct __________.
a) seasons
b) months
c) days
d) nights
2. The calendar they developed was based on __________.
a) the moon's phases
b) the Nile River's flow
c) the Gregorian calendar
d) the Chinese zodiac
3. Each month in the Egyptian calendar had __________ days.
a) 28
b) 30
c) 31
d) 29
4. The __________ season was characterized by the flooding of the Nile.
a) Growth
b) Inundation
c) Harvest
d) Autumn
5. The Egyptian calendar was important for __________.
a) astronomical observations
b) religious ceremonies
c) trade and commerce
d) all of the above
Answer Key:
1. a) seasons
2. b) the Nile River's flow
3. b) 30
4. b) Inundation
5. d) all of the above

V. Word Completion (15 points)
Question 1
Read the following passage and fill in each blank with a suitable word based on the context, one word per blank.

|The 30 Years' Development of Landscape Architecture in Shenzhen Special Economic Zone |

Editor's Note: Qianhai, a formerly quiet and neglected area of Shenzhen, became a focus of national attention when, in the year of the 30th anniversary of the founding of the Shenzhen Special Economic Zone, the State Council of the PRC formally approved the development plan for the Qianhai Shenzhen-Hong Kong Modern Service Industry Cooperation Zone. Qianhai is regarded not only as a "special zone within the Special Economic Zone" and the "Manhattan" of the Greater Pearl River Delta, but is also charged with the historic mission of serving as a key engine of Shenzhen's second round of development. In the International Consultancy on the Conceptual Planning of the Qianhai Region, design firms from around the world each offered their answer to how this strategic site should be planned and designed: how to address sustainable development, livability and vitality, land use and parcel division, the operation of urban systems, and efficiency at each stage of development. Now that the consultancy has concluded, Landscape Architecture has conducted an exclusive interview with Professor James CORNER, principal designer of the first-place winner, Field Operations (USA), and presents the three top-ranked proposals. We hope this multi-perspective feature helps readers understand this major urban event more deeply.

Landscape Architecture: Professor James CORNER, welcome to China! Your visit is a great encouragement to your Chinese colleagues.

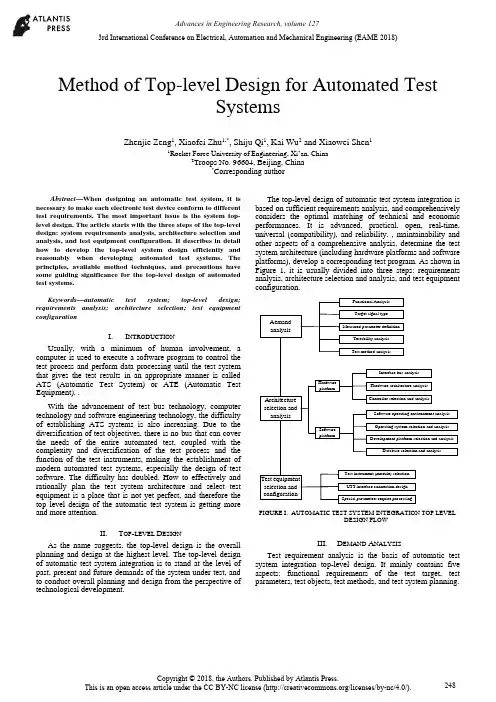

Method of Top-level Design for Automated Test Systems

Zhenjie Zeng (1), Xiaofei Zhu (1,*), Shiju Qi (1), Kai Wu (2) and Xiaowei Shen (1)
(1) Rocket Force University of Engineering, Xi'an, China
(2) Troops No. 96604, Beijing, China
* Corresponding author

Abstract—When designing an automatic test system, every electronic test device must be made to meet different test requirements, and the most important issue is the top-level design of the system. Starting from the three steps of top-level design (system requirements analysis, architecture selection and analysis, and test equipment configuration), this article describes in detail how to carry out the top-level design of an automated test system efficiently and reasonably. The principles, available methods and techniques, and precautions it presents offer guidance for the top-level design of automated test systems.

Keywords—automatic test system; top-level design; requirements analysis; architecture selection; test equipment configuration

I. INTRODUCTION

A test system in which a computer, with minimal human involvement, executes a software program to control the test process and process the data until it delivers the test results in an appropriate form is called an ATS (Automatic Test System) or ATE (Automatic Test Equipment). With the advancement of test bus technology, computer technology, and software engineering, building an ATS has also become increasingly difficult. Because test objectives are diverse, no single bus can cover all automated test needs; combined with the complexity and diversity of test processes and of instrument functions, this has doubled the difficulty of building modern automated test systems, and especially of designing their test software.
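The ATS definition above (a computer program controls the test, processes the data, and reports results without an operator) can be illustrated with a minimal sketch. The `MeasurementStep` structure, the limit-checking rule, and the simulated readings are hypothetical stand-ins for real instrument drivers; they are not taken from the paper.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class MeasurementStep:
    """One measurement with acceptance limits (hypothetical structure)."""
    name: str
    measure: Callable[[], float]  # stands in for a program-controlled instrument reading
    low: float
    high: float


def run_ats(steps: List[MeasurementStep]) -> Dict[str, bool]:
    """Run every step without operator involvement and return pass/fail per step."""
    results: Dict[str, bool] = {}
    for step in steps:
        value = step.measure()                               # control the test process
        results[step.name] = step.low <= value <= step.high  # process the data
    return results


if __name__ == "__main__":
    # Simulated readings replace real instrument drivers in this sketch.
    plan = [
        MeasurementStep("supply_voltage_V", lambda: 5.02, 4.75, 5.25),
        MeasurementStep("output_power_dBm", lambda: -3.1, -3.0, 3.0),
    ]
    for name, ok in run_ats(plan).items():
        print(f"{name}: {'PASS' if ok else 'FAIL'}")  # report the results
```

A real ATS would replace each `measure` callable with a driver call over the chosen bus; the pass/fail loop itself stays the same.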
How to plan the test system architecture and select test equipment effectively and rationally is still an open problem, so the top-level design of automatic test systems is receiving more and more attention.

II. TOP-LEVEL DESIGN

As the name suggests, top-level design is overall planning and design at the highest level. Top-level design for automatic test system integration considers the past, present, and future demands of the system under test and carries out overall planning and design from the perspective of technological development.

It is based on thorough requirements analysis and seeks the optimal match between technical and economic performance. After a comprehensive analysis of advancement, practicality, openness, real-time performance, universality (compatibility), reliability, maintainability, and other aspects, the designer determines the test system architecture (including the hardware and software platforms) and develops a corresponding test program. As shown in Figure 1, the process is usually divided into three steps: requirements analysis, architecture selection and analysis, and test equipment configuration.

[FIGURE I. AUTOMATIC TEST SYSTEM INTEGRATION TOP-LEVEL DESIGN FLOW: demand analysis; architecture selection and analysis (hardware platform, software platform); test equipment selection and configuration]

III. DEMAND ANALYSIS

Test requirement analysis is the basis of top-level design for automatic test system integration.
It mainly covers five aspects: the functional requirements of the test target, the test parameters, the test objects, the test methods, and test system planning.

3rd International Conference on Electrical, Automation and Mechanical Engineering (EAME 2018)

A. Test Target Functional Requirements

The working platform of the test equipment determines the required test speed and whether online or offline testing is needed. The main control method and logic of the equipment under test determine the test procedures and methods. Its input parameters, such as frequency, amplitude, and modulation method, determine the overall requirements on the operating frequency band, small-signal level (minimum leakage), and waveform parameters of the ATS analog signal source. The outputs of the device under test and their content determine how the ATS samples signals and acquires data. The digital communication interfaces of the device under test determine which digital interfaces and protocols the ATS must provide. Finally, the testability interface of the device under test determines the ultimate test capability and fault diagnosis capability of the ATS.

B. Test Parameters

Test parameter analysis covers the form of each measured parameter (electrical or non-electrical, digital or analog, etc.), its range and quantity; performance indices (such as measurement accuracy and speed); and the form and range of the excitation signal. In particular, when analyzing requirements for the top-level design of a general-purpose, comprehensive ATS that must serve multiple systems, multiple protocols, and multiple types of equipment, the test parameters usually have to be analyzed together and consolidated.

C. Test Object

Test objects vary widely.
When analyzing the test objects, the analysis must be carried out together with the test system requirements of those objects. For the test system or subsystem of a specific test object, various forms of description, such as natural language, graphics, and mathematical formulas, can be used to give models of the test system at different levels of simplification. As simplified descriptions, such models express only the basic characteristics of a test system and often ignore irrelevant details to reduce complexity. For the test system of a complex test object, any model is inevitably constrained by assumptions made in its design and use; these assumptions are often somewhat ambiguous and mainly reflect an implicit conceptual idea. Therefore, when analyzing the requirements of a specific test object, it is usually necessary to establish a corresponding test system model.

D. Test Methods

Based on the functional requirements of the test target, a corresponding test method is formulated for either a "face-to-face automatic test system" or an "object-oriented automatic test system".

E. Test System Planning

When developing an automated test system, test-auxiliary tasks such as creating files and programming supporting test software often take a great deal of time. A test application software development platform can standardize all kinds of test processes and integrate an operating system suited to various test and post-processing functions. Because such a platform can take over these auxiliary tasks, using it to conduct tests saves considerable time.

IV. ARCHITECTURE SELECTION AND ANALYSIS

On the basis of sufficient requirements analysis, determining the architecture of the automated test system is the most critical step in the top-level design.
This step determines the test plan from the perspective of top-level design and selects the hardware and software platform architectures of the ATS; the most important decision is the selection of the digital communication interface bus for the test equipment.

A. System Test Plan Selection

The system test plan is the overall concept of product testing. It specifies the types of testing, when testing is performed (continuously or periodically), where it is performed (in the field or in a workshop, or at which maintenance level), and the test methods and means used. System tests can be classified as system-wide or subsystem (departmental) tests, static or dynamic tests, online or offline tests, quantitative or qualitative tests, continuous or periodic tests, and so on. By location, tests fall into three levels: production site, use site, and maintenance base. Test systems (equipment) are generally classified as follows: by mode of operation, as automatic, semi-automatic, or manual; by generality of application, as special-purpose or general-purpose; and by association with the product, as BITE (built-in test equipment) or external test equipment.

Most test methods used in automated testing have so far been modeled on manual tests, from the measurement principles and test techniques to the test procedures performed; the only difference is that a computer replaces manual operation.
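The classification dimensions listed for the system test plan can be captured as a small data model, which makes each plan's choices explicit and comparable. This is a hypothetical sketch: the class and field names are illustrative, and the paper prescribes no such structure.

```python
from dataclasses import dataclass
from enum import Enum


class Level(Enum):
    PRODUCTION_SITE = "production site"
    USE_SITE = "use site"
    MAINTENANCE_BASE = "maintenance base"


class Operation(Enum):
    AUTOMATIC = "automatic"
    SEMI_AUTOMATIC = "semi-automatic"
    MANUAL = "manual"


class Generality(Enum):
    SPECIAL = "special-purpose"
    GENERAL = "general-purpose"


class Association(Enum):
    BITE = "built-in test equipment"
    EXTERNAL = "external test equipment"


@dataclass(frozen=True)
class SystemTestPlan:
    """One choice per classification dimension (hypothetical field names)."""
    scope: str          # "system-wide" or "departmental"
    online: bool        # online vs offline test
    dynamic: bool       # dynamic vs static test
    quantitative: bool  # quantitative vs qualitative test
    continuous: bool    # continuous vs periodic test
    level: Level
    operation: Operation
    generality: Generality
    association: Association

    def describe(self) -> str:
        """Render the plan as a one-line summary."""
        kinds = [
            self.scope,
            "online" if self.online else "offline",
            "dynamic" if self.dynamic else "static",
            "quantitative" if self.quantitative else "qualitative",
            "continuous" if self.continuous else "periodic",
        ]
        return (f"{', '.join(kinds)} test at the {self.level.value}; "
                f"{self.operation.value}, {self.generality.value}, "
                f"{self.association.value}")


if __name__ == "__main__":
    plan = SystemTestPlan("system-wide", False, True, True, False,
                          Level.MAINTENANCE_BASE, Operation.AUTOMATIC,
                          Generality.GENERAL, Association.EXTERNAL)
    print(plan.describe())
```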
Given the characteristics and potential of automatic testing, future research needs to reform the test plan fundamentally.

B. Selection of Test Equipment Digital Communication Interface Bus and ATS Structure

The development of automatic test systems has driven the continuous emergence of general-purpose test equipment interface buses and rapid technological progress: from the early GPIB and CAMAC to the more recent VXI, MXI, PCI, PCIe, PXI, PXIe, cPCI, MMS, IEEE 1394 (FireWire), USB, and others. Although their technical characteristics differ, all of them are widely used.

The structural elements of a modern automated test system are programmable test instruments, a test controller, interconnecting standard digital interfaces, and a software system. Modern automatic testing is now widely applied, and the test objects it faces are large, complex, and diverse, so no automatic test system based on a single bus technology can cover the needs of all test objects.

The structure of a multi-bus fusion automatic test system is shown in Figure 2. It consists of test instruments, the DUT (device under test) and UUT (unit under test) interfaces, a test controller (computer), various general-purpose digital interface buses, and test software. The test controller is connected to the test instruments through the digital interface bus, and the device under test is connected to the input/output terminals of the test instruments through the UUT interface. The bus used may be GPIB, VXI, PXI, LXI, or even an internal computer bus (AT/EISA/PCI), or a combination of these. Once the standard digital interface bus architecture is chosen, the ATS architecture is essentially determined. Whatever the bus architecture, either an external computer or a built-in computer system can serve as the test system controller.
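The multi-bus fusion structure relies on one controller addressing instruments on several buses. A common way to express this in software is a bus-independent instrument interface; the sketch below assumes hypothetical `Instrument`, `SimulatedInstrument`, and `ATSController` classes and canned replies in place of real drivers. `*IDN?` is the standard IEEE 488.2 identification query; in practice a VISA layer (for example PyVISA) plays a similar abstracting role.

```python
from abc import ABC, abstractmethod
from typing import Dict, List


class Instrument(ABC):
    """Bus-independent view of a programmable instrument (hypothetical interface)."""

    def __init__(self, name: str, bus: str) -> None:
        self.name = name
        self.bus = bus  # e.g. "GPIB", "VXI", "PXI", "LXI"

    @abstractmethod
    def query(self, command: str) -> str:
        """Send a command and return the instrument's reply."""


class SimulatedInstrument(Instrument):
    """Stands in for a real bus driver by returning canned replies."""

    def __init__(self, name: str, bus: str, replies: Dict[str, str]) -> None:
        super().__init__(name, bus)
        self._replies = replies

    def query(self, command: str) -> str:
        return self._replies.get(command, "ERR")


class ATSController:
    """One test controller addressing instruments on several buses."""

    def __init__(self) -> None:
        self._instruments: Dict[str, Instrument] = {}

    def attach(self, instrument: Instrument) -> None:
        self._instruments[instrument.name] = instrument

    def query(self, name: str, command: str) -> str:
        # The caller never needs to know which bus the instrument sits on.
        return self._instruments[name].query(command)

    def buses(self) -> List[str]:
        return sorted({inst.bus for inst in self._instruments.values()})


if __name__ == "__main__":
    ctrl = ATSController()
    ctrl.attach(SimulatedInstrument("dmm", "GPIB", {"*IDN?": "ACME,DMM100,0,1.0"}))
    ctrl.attach(SimulatedInstrument("scope", "PXI", {"*IDN?": "ACME,SCOPE5,0,2.1"}))
    print(ctrl.buses())                # ['GPIB', 'PXI']
    print(ctrl.query("dmm", "*IDN?"))  # ACME,DMM100,0,1.0
```

Keeping bus details behind one interface is what lets the controller stay unchanged when a VXI module is swapped for a PXI one.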
The test system controller should be chosen to achieve the best match between technical and economic performance, weighing real-time performance, practicality, reliability, flexibility, and convenience.

[FIGURE II. MULTI-BUS FUSION AUTOMATIC TEST SYSTEM STRUCTURE: CAT test host / master control computer; GPIB, PC-card, VXI, and PXI instruments; UUT interface; UUT]

C. Test Software Platform Mode Selection

In a modern computer-based ATS, hardware is the foundation and software is the soul. Test software has increasingly become the main body of an ATS and determines how advanced, reliable, practical, and real-time the entire system is.

The automatic test software platform mainly refers to the programming languages and software support environment used to design test application software. It is an integrated platform comprising a computer operating system, a test programming language, database software, program diagnosis software, and so on; its key element is the test programming language. Since automatic test systems came into wide use, test programming languages have developed from low-level to high-level languages and then to today's test application development environments.

V. TEST EQUIPMENT CONFIGURATION

After the structure of the test system is determined, the next task is to consolidate the test contents according to the requirements analysis and to match test equipment to those contents. There are three types of test equipment to choose from: general-purpose test equipment, special-purpose equipment, and test interface adapters.

A. Universal Test Equipment

Universal test equipment includes the mainframe (chassis), test controller, main control interface, slot-0 controller, instrument modules, and benchtop instruments.
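The matching task described at the start of Section V (pairing each test requirement with equipment able to cover it) can be sketched as a range-coverage check. The `Requirement` and `Capability` fields, the instrument names, and the rule "an instrument covers a requirement if its range contains the required range" are simplifying assumptions; real selection also weighs accuracy, speed, slots, and power.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Requirement:
    """A measured quantity and the range the test must cover (hypothetical fields)."""
    quantity: str
    low: float
    high: float


@dataclass(frozen=True)
class Capability:
    """One quantity an instrument can measure, with its range."""
    instrument: str
    quantity: str
    low: float
    high: float

    def covers(self, req: Requirement) -> bool:
        return (self.quantity == req.quantity
                and self.low <= req.low and req.high <= self.high)


def match(reqs: List[Requirement], caps: List[Capability]) -> Dict[Requirement, List[str]]:
    """Map each requirement to the instruments whose range covers it."""
    return {r: [c.instrument for c in caps if c.covers(r)] for r in reqs}


if __name__ == "__main__":
    reqs = [Requirement("dc_voltage_V", 0.0, 50.0),
            Requirement("frequency_Hz", 1e3, 40e9)]
    caps = [Capability("PXI DMM", "dc_voltage_V", -300.0, 300.0),
            Capability("LXI counter", "frequency_Hz", 1.0, 3e9)]
    for req, insts in match(reqs, caps).items():
        # An empty list flags a gap that may call for special-purpose equipment.
        print(req.quantity, insts or "NOT COVERED")
```

An uncovered requirement is exactly the situation in which the paper suggests considering special-purpose equipment.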
The following factors should be considered when selecting the type of equipment:
(1) The higher the degree of automation, the shorter the time needed to detect and isolate faults and the less manpower required, but the cost of the test equipment rises and more support is needed.
(2) When choosing between BIT (built-in test) and off-board automatic test equipment, consider the differences in their capabilities.
(3) When BIT is used together with off-board automatic testing, make full use of the BIT capability of each unit under test.
(4) When choosing between dedicated and general-purpose devices, note that a dedicated device is simple, convenient, and efficient to use, but its range of application is narrow.
(5) Instruments and equipment are selected mainly according to the test parameter requirements, the characteristics of the signals to be measured, and the required ranges. When selecting instrument modules, pay attention to the bus module size, power, and number of slots.

B. Special Purpose Equipment

When no suitable equipment can be found among the general-purpose options above, developing special-purpose instruments (modules) may be considered in the following situations: when no existing product meets the test requirements; when a measurement would otherwise need several instruments working together, each with very low utilization, whereas a single instrument could do the job; or when the available equipment is expensive and would be poorly utilized.

C. Test Interface Adapter Design

For different test objects, extracting and feeding the various test signals requires the design and manufacture of various test interfaces and special fixtures.
In automatic test systems, and especially when assembling test systems for complex electronic equipment, test objects of the same type but different models are common, so the test system team often needs to build a relatively universal automatic test platform. With such a platform, different test modules and test methods can be used to set up (configure) the ATS quickly and easily for different test objects. However, the test interface and the dedicated test modules cannot always be matched directly and must follow the device under test; in that case a test interface adapter has to be developed.

VI. CONCLUSION

Starting from the three steps of top-level design (system requirements analysis, architecture selection and analysis, and test equipment configuration), this article has described in detail how to perform top-level design efficiently and reasonably when developing automated test systems, and has analyzed the principles, methods, techniques, and precautions the design must follow, which offer guidance for the top-level design of automated test systems.

BlueScope BL6800B
Handheld multi-functional, multi-technology test platform for Ethernet, IP, SONET/SDH/PDH, OTN & Fibre Channel

Key BlueScope Benefits:
∙ Unmatched market performance
  ▪ Test up to 32 multi-streams, each with a customizable traffic profile.
  ▪ Powerful packet flooding: flood nearly any field of a packet, including MAC, VLAN, and MPLS, with Layer 3 and 4 payload options.
  ▪ Dual-port packet capture and analysis using the Wireshark engine.
  ▪ The most compact OTN tester on the market; supports OTU-1/2/1E/2E/1F/2F.
  ▪ Unparalleled physical-layer testing on all optical transport methods and line rates.
∙ Software-license-controlled features, for upgrades or for keeping up with test standards as they are defined and then certified.
  ▪ Instant do-it-yourself remote or field upgrades via a software-only license key.
  ▪ Never lose a test capability or feature because of lost, forgotten, or damaged hardware modules.
∙ Linux operating system
  ▪ Less susceptible to viruses and malware, and known in the IT industry for being more stable than the alternatives. Linux is the OS of choice for handheld testers and is used in the BlueScope.

BlueScope Highlights:
∙ Handheld test set hardware platform that supports 1/10 GbE Ethernet, SONET/SDH/PDH, Fibre Channel, OTN, VoIP, IPTV, and WLAN.
∙ Rapid boot-up.
∙ Eight hours of battery standby time and approximately four hours of battery test time.
∙ Remote control through VNC and a CLI.
∙ Dual-port operation enables two tests to run simultaneously.
∙ Bluetooth support to easily offload test results or transfer test configurations.
∙ Customizable platform that avoids technology obsolescence. Choose the ports, line rates, and testing options you require now, and invest in more only as you need it. You can upgrade and fully configure the BlueScope yourself whenever your test requirements, testing demands, or financial position change: just add ports, features, and software options in the future.
Truly a "pay as you grow" handheld test platform.

Ethernet & IP

BlueScope Ethernet Standard Features:
∙ Throughput test (single stream)
∙ Packet filtering
∙ IP tools (Ping, DHCP, Traceroute)
∙ In-service traffic monitoring (non-intrusive mode)
∙ MPLS (stacking of up to 3 MPLS labels)
∙ VLAN, Q-in-Q (up to 3 VLANs)
∙ Cable diagnostics
∙ Remote control via VNC
∙ Report generation (PDF, CSV, and TXT)

BlueScope Ethernet SW Options:
∙ (ET-1) Single 1GbE port; (ET-2) Dual 1GbE ports; (ET-3) Single 10GbE port; (ET-4) Dual 1GbE & single 10GbE ports
∙ (ET-5) Loopback mode (L1-L4, auto, filtered)
∙ (ET-6) Throughput multi-streams (4 streams)
∙ (ET-7) RFC 2544 (network equipment benchmarking test)
∙ (ET-8) Packet capture and decode via Wireshark
∙ (ET-9) Throughput with 32 multi-streams
∙ (ET-10) Packet flooding: MAC/VLAN/IP/user-defined field
∙ (ET-11) L1/unframed BERT (cable BERT on RJ45, unframed BERT on SFP optics)
∙ (ET-12) Network discovery
∙ (ET-13) 100 FX/LX (optic)
∙ (ET-14) Y.1564 (EtherSAM)
∙ (ET-15) PBB/PBB-TE (MAC-in-MAC)
∙ (ET-16) SyncE/1588
∙ (ET-17) IPv6
∙ (ET-18) EtherOAM Y.1731 (available early 2013)
∙ (ET-19) Asymmetric testing (available early 2013)
∙ (ET-20) One-way delay measurement using 1588 or GPS (available early 2013)
∙ (ET-21) Web browser and HTTP testing
∙ (ET-22) CLI interface
∙ (WA-1) 10GbE WAN PHY
∙ (WA-2) WLAN 802.11 a/b/g testing
∙ (PT-1) IPTV quality metrics
∙ (PT-2) IPTV decode (available early 2013)
∙ (VO-1) VoIP quality metrics
∙ (VO-2) VoIP signaling and vocoding (available early 2013)

Ethernet IP Applications
∙ Troubleshoots Ethernet/IP networks, captures and analyzes packets, and identifies network problems.
∙ Tests Carrier Ethernet transport to verify class of service (CoS), Triple-Play services, and Ethernet circuit transparency.
∙ Supports Packet Transport Network (PTN) testing with MPLS-TP traffic generation and QoS analysis, along with simultaneous verification of OAM label 13 or 14 operation.
∙ Confirms higher-layer Ethernet data applications and services at 10 Mbps to 1 Gbps rates with IPv4 and IPv6.
∙ Tests Layer 1-4 Ethernet/IP SLAs with RFC 2544 for up to 3 VLAN tags, Q-in-Q, and MPLS encapsulation.
∙ Automatically verifies SLA compliance according to Y.1564, including different traffic profiles per service and concurrent KPI compliance for all committed services.

Ethernet IP Testing Lifecycle
Installation
∙ RFC 2544, including frame delay variation, asymmetric rates, and concurrent results to reduce overall test time.
∙ Y.1564 EtherSAM automated SLA validation, including bandwidth profiles and KPI compliance, for quickly verifying multiple services.
∙ Carrier Ethernet testing with PBB, MPLS, MPLS-TP, VLAN, and Q-in-Q.
Troubleshooting
∙ Line-rate packet capture up to 10 Gbps.
∙ Packet decodes with integrated Wireshark.

Carrier Ethernet Installation Testing
For years Ethernet/IP was transported across carrier networks encapsulated in other data-link layer technologies; it has since evolved into a carrier-grade technology thanks to operations, administration, and maintenance (OAM) standards such as ITU-T Y.1731, IEEE 802.1ag, and IEEE 802.3ah. Ethernet now has many of the characteristics that made SONET/SDH the transport technology of choice: end-to-end circuit transparency, redundancy, and full-featured OAM for circuit-based performance management and alarming. The BlueScope delivers a much-needed tool set for provisioning and troubleshooting Ethernet networks that substantially shortens installation and troubleshooting times, supporting error-free operation and a significant reduction in operating expense.

RFC 2544 Testing
The BlueScope delivers all the Carrier Ethernet testing needed to qualify Ethernet-based transport networks. RFC 2544 is the de facto industry standard for Ethernet circuit installation.
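RFC 2544 defines throughput as the highest offered rate at which the device forwards every frame without loss. Testers commonly find that rate by binary search over repeated fixed-duration trials; the sketch below assumes a `trial(rate)` callback that returns the number of lost frames, which is a hypothetical interface rather than the BlueScope's API.

```python
from typing import Callable


def rfc2544_throughput(trial: Callable[[float], int], line_rate: float,
                       resolution: float = 0.001) -> float:
    """Return the highest offered rate with zero frame loss, found by binary search.

    trial(rate) runs one fixed-duration trial at `rate` and returns the number of
    frames lost; `resolution` is the stopping width as a fraction of line rate.
    """
    if trial(line_rate) == 0:
        return line_rate        # the device forwards everything at full rate
    lo, hi = 0.0, line_rate     # invariant: lo is loss-free (0 trivially), hi loses frames
    while hi - lo > resolution * line_rate:
        mid = (lo + hi) / 2
        if trial(mid) == 0:
            lo = mid
        else:
            hi = mid
    return lo


def simulated_dut(rate_mbps: float) -> int:
    """Hypothetical device that starts dropping frames above 734.5 Mbit/s."""
    return 0 if rate_mbps <= 734.5 else 1


if __name__ == "__main__":
    print(rfc2544_throughput(simulated_dut, line_rate=1000.0))
```

RFC 2544 repeats the measurement for each standard frame size (for Ethernet: 64, 128, 256, 512, 1024, 1280, and 1518 bytes), so a full run calls this search once per size.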
In addition to the tests called out in the RFC, the BlueScope also tests for packet jitter, or frame delay variation (FDV), to ensure the circuit is ready to transport time-sensitive services such as IPTV and VoIP. The RFC tests it supports are:
∙ Ethernet throughput or committed information rate (CIR)
∙ frame delay (FD) or latency
∙ frame loss (FLR)
∙ back-to-back burst testing

Using a pair of test sets and asymmetric RFC testing, users can validate Ethernet Virtual Circuits (EVCs) with different upstream and downstream CIRs. Alternatively, they can test sequentially in both directions to ensure that key performance indicators (KPIs) are met across any connection type.

ITU-T Y.1564 EtherSAM Service Activation Testing
Y.1564 EtherSAM allows fast and easy verification of SLAs for differentiated services. This includes validation of different bandwidth profiles, such as committed information rate (CIR), excess information rate (EIR), and maximum bandwidth. Pass/fail results for KPIs are provided independently for up to 16 services. These KPIs include CIR, frame delay (FD), frame delay variation (FDV or packet jitter), and frame loss ratio (FLR). Out-of-sequence frames and available seconds are reported per Y.1564.

Verifying CoS with Multiple Streams
Multi-stream testing generates several streams of traffic at the Ethernet, IP, and TCP/UDP layers (Layers 2-4). These streams emulate various types of traffic with the appropriate CoS mappings. Users can then assess the impact of traffic prioritization on the overall network architecture while confirming proper network element queuing, policing, and shaping. Up to 32 individually configured streams can be generated and analyzed, each with its own key parameters:
∙ VLAN ID and priority
∙ TOS/DSCP marking
∙ packet size
∙ source/destination IP and MAC address
∙ source/destination TCP/UDP ports

Users can configure constant or ramp traffic to simulate near-real-world traffic before actually delivering a service.
This level of testing confirms the network design and drastically reduces post-installation troubleshooting.

BER and Latency Testing
The BlueScope supports optical Layer 1 (L1) BER testing for stress testing the underlying physical transport link. A standard 2^23-1 pseudo-random (PRBS) pattern is used to obtain key QoS measurements, including bit error rates, pattern sync, latency, line coding, and signal/power levels.

Ethernet OAM, VLAN, Q-in-Q, MPLS and PBB Tunneling Technologies
Ethernet tagging and encapsulation are commonly used to improve the scalability of Ethernet networks by isolating customer traffic. In the case of provider backbone bridging (PBB), they also minimize the number of MAC addresses that equipment must learn. Regardless of the encapsulation and tagging used, the BlueScope tests CoS to confirm KPIs such as CIR, FD, FDV, and FLR. It supports the following, which allows testing at any part of the metro network:
∙ virtual local area network (VLAN) tags
∙ Q-in-Q VLAN tags
∙ PBB (also known as MAC-in-MAC)
∙ multiprotocol label switching (MPLS)

Ethernet Timing Synchronization Verification using 1588v2 PTP and G.826x SyncE
Critical network timing and frequency synchronization testing lets service providers analyze the emerging 1588v2 Precision Time Protocol (PTP) and Synchronous Ethernet (SyncE) protocols. These protocols greatly reduce expenses for mobile backhaul and LTE by eliminating the need for TDM/GPS timing. Wireless backhaul providers can verify whether Ethernet links can transfer PTP. To do so, they connect to a PTP master and measure critical packet parameters, such as packet delay variation (PDV), under simultaneous network traffic loading. SyncE testing recovers the timing of an incoming Ethernet interface for the tester's transmitter.
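The IEEE 1588 delay request-response exchange produces four timestamps. From them, the slave's offset and the mean path delay follow, provided the path is symmetric. The sketch below shows that arithmetic plus one simple floor-based PDV figure: each Sync packet's master-to-slave delay above the fastest one observed. It is illustrative only. The names `PtpExchange` and `pdv_above_floor_ns` are hypothetical, and the datasheet does not state which PDV metrics the BlueScope reports (ITU-T G.8260 defines standardized ones).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PtpExchange:
    """One IEEE 1588 delay request-response exchange, timestamps in nanoseconds."""
    t1: int  # Sync sent (master clock)
    t2: int  # Sync received (slave clock)
    t3: int  # Delay_Req sent (slave clock)
    t4: int  # Delay_Req received (master clock)

    def mean_path_delay_ns(self) -> float:
        # Average of the two one-way measurements; the slave's offset cancels out.
        return ((self.t2 - self.t1) + (self.t4 - self.t3)) / 2

    def offset_from_master_ns(self) -> float:
        # Half the difference; valid only if forward and reverse delays are equal.
        return ((self.t2 - self.t1) - (self.t4 - self.t3)) / 2


def pdv_above_floor_ns(exchanges: List[PtpExchange]) -> List[int]:
    """Master-to-slave delay of each Sync relative to the fastest one observed.

    The constant clock offset is common to every sample, so it drops out of
    the differences.
    """
    delays = [e.t2 - e.t1 for e in exchanges]
    floor = min(delays)
    return [d - floor for d in delays]


if __name__ == "__main__":
    # Slave clock runs 500 ns ahead of the master; each direction takes 1000 ns.
    ex = PtpExchange(t1=0, t2=1_500, t3=2_000, t4=2_500)
    print(ex.mean_path_delay_ns(), ex.offset_from_master_ns())  # 1000.0 500.0
```

If the forward and reverse delays differ, the computed offset is wrong by half the asymmetry. This is why PDV and asymmetry under traffic load matter when qualifying a backhaul link for PTP.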
Capturing and decoding the 1588v2 PTP and Ethernet Synchronization Messaging Channel (ESMC) messages allows operators to verify and troubleshoot the configuration and operation of synchronization networks.

Carrier Ethernet Fault Isolation
In the ever-changing Ethernet and IP world, providers must troubleshoot problems at all layers of the stack quickly, cost-efficiently, and reliably. The BlueScope provides powerful line-rate packet capture at Ethernet speeds up to 1GigE without dropping a single packet. When problems occur intermittently or inconsistently, multiple traffic filters and triggers help isolate the exact problem and minimize the amount of information gathered. These include 16-byte pattern identification.
The BlueScope natively supports WireShark for on-instrument packet decode. Users can also save the captured traffic in the standard pcap file format and export it for further analysis, via USB or via FTP through the management port.

SONET/SDH/PDH
The BlueScope 6800B performs BER testing on all line interfaces in end-to-end or loopback applications. It inserts errors and alarms to verify NE conformance and connectivity, and measures BERs at rates from DS1 (1.5M)/E1 (2.048M) to OC-192/STM-64.

Mappings
SONET/SDH mappings include all intermediate mappings down to VC-4/VC-3, in addition to BERT payload with multiple PRBS choices.

SONET/SDH/PDH Overhead Byte Manipulation and Analysis
Using the overhead byte manipulation and analysis capability, users can modify the K1 and K2 bytes to test automatic protection switching (APS). They can also specify and identify user-configurable path trace messages and payloads. The path overhead (POH) capture feature facilitates troubleshooting end-to-end problems. The BlueScope 6800B supports manual capture, capture on alarm, and capture based on user-defined triggers.

Physical Layer Testing
Perform physical layer testing to verify dark fiber and line continuity across all optical transport methods and line rates.
Supported rates include unframed STM-1/4/16/64, Fibre Channel 1x/2x/4x/10x, OTN OTU-1/2/1E/2E/1F/2F, 1.250G (1GE), 10.313G (10GE) and 3.1G (CPRI).

Service Disruption Measurements
The BlueScope 6800B measures the protection switch times of SONET/SDH rings and their effects on tributaries. By measuring various error and alarm conditions on the tributaries, providers can verify that their transport network provides adequate redundancy to guarantee SLAs.

Multi-Channel View
Drill down to view the entire path hierarchy on one screen. Payload type (concatenated or non-concatenated) is detected automatically for SONET (48x STS-1 and 28x VT2/1.5) and SDH (48x AU-3 and 28x TU12/TU11).

Line Through Mode
Connecting the test unit in-line provides not only monitoring capabilities but also the ability to inject errors, making it an effective tool for service-disruption testing.

SDH/PDH Alarm/Error Generation
Generate alarms for: LOS, LOF, OOF, RS-TIM, MS-AIS, MS-RDI, AU-LOP, AU-AIS, TU-LOP, TU-AIS, HP-UNEQ, HP-PLM, HP-TIM, HP-RDI, HP-SRDI, HP-CRDI, HP-PRDI, HP-TC-UNEQ, HP-TC-LOMF, HP-TC-AIS, HP-TC-RDI, HP-TC-ODI, LP-UNEQ, LP-PLM, LP-TIM, LP-RFI, LP-RDI, LP-SRDI, LP-CRDI, LP-PRDI, LP-TC-UNEQ, LP-TC-LOMF, LP-TC-AIS, LP-TC-RDI, LP-TC-ODI
Generate errors for: FAS, B1, B2, B3, MS-REI, BIT, HP-REI, HP-TC-IEC, HP-TC-REI, HP-TC-OEI, LP-BIP, LP-REI, LP-TC-IEC, LP-TC-REI, LP-TC-OEI

BlueScope SONET/SDH Options:
∙ (SO-1) OC-3/12/48 (STM-1/4/16)
∙ (SO-2) OC-192 (STM-64)
∙ (SO-3) OC-3/12/48/192 (STM-1/4/16/64)
∙ (SO-4) Unframed Line Rate (requires SO-1, 2 or 3)
∙ (SO-5) Multi-Channel View (requires SO-1, 2 or 3)
∙ (SO-6) Signal Delay Emulator (injection of signal delay in unframed line rates; requires SO-4)

BlueScope PDH Options:
∙ (PD-1) E1/T1 (DS1)
∙ (PD-2) E3/T3 (DS3)
∙ (PD-3) E1/T1, E3/T3

OTN
OTN is the next-generation network, designed to combine the benefits of SDH/SONET with the bandwidth expandability of DWDM (dense wavelength division multiplexing). Test end-to-end connectivity by transmitting and receiving OTN signals, with the ability to insert and analyze errors and alarms in network troubleshooting and equipment verification applications.

TCM with Error/Alarm Detection
Verify network element interoperability using the tandem connection monitoring (TCM) bytes. Measurements include count, current rate, and average rate for each error, plus service disruption time (SDT) and round-trip delay (RTD). Verify OTN alarms and errors with injection capabilities such as loss of frame (LOF), alarm indication signal (AIS), and backward defect indication (BDI).

FEC Testing
Transmit and analyze correctable and uncorrectable FEC errors to verify a network element's ability to correct errors on FEC-enabled signals. Correctable and uncorrectable FEC error positions are accumulated and monitored in a graphical hierarchy window, which lets users easily recognize the position of each FEC error. Programmable FEC error generation lets the user define detailed positions for both correctable and uncorrectable FEC errors.

Line Through Mode
Connecting the test unit in-line provides not only monitoring capabilities but also the ability to inject errors, making it an effective tool for service-disruption testing.

BlueScope OTN Options:
∙ (OT-1) OTU-1 (2.66G/STM-16), requires SO-1
∙ (OT-2) OTU-2 (10.7G/STM-64), requires SO-2
∙ (OT-3) OTU-3 (2.66G & 10.7G / STM-16 & STM-64), requires SO-3
∙ (OT-4) OTU-4 (10G Ethernet over OTU-2 [OTU-1e/2e], available early 2013)

Fibre Channel
The BlueScope 6800B tests 1x, 2x, 4x and 10x Gbps Fibre Channel (FC). Users can manipulate various fields of the FC frames to emulate end-customer traffic, and can perform BER measurements on L1 and L2 circuits. The BlueScope supports buffer crediting. This lets providers verify the effect of delay on link throughput and test the link's ability to obtain the optimum buffer credit values.
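The buffer-credit behavior described above comes down to keeping enough frames in flight to cover a round trip. A common rule-of-thumb estimate divides the round-trip propagation time by the time needed to serialize one full-size frame. The sketch below is an estimate under stated assumptions, not the BlueScope's measurement method. It assumes:

- about 5 µs/km propagation delay in fiber;
- a 2148-byte maximum frame on the wire (SOF, 24-byte header, 2112-byte payload, CRC, EOF), with inter-frame idles ignored;
- the nominal 8b/10b line rates for 1, 2 and 4 Gbps Fibre Channel.

The function names are hypothetical.

```python
import math

# Assumed nominal line rates (Gbaud) and 8b/10b coding efficiency.
FC_LINE_RATES_GBAUD = {"1GFC": 1.0625, "2GFC": 2.125, "4GFC": 4.25}
CODING_EFFICIENCY = 0.8          # 8b/10b: 8 data bits per 10 line bits
MAX_FRAME_BYTES = 2148           # SOF + header + 2112-byte payload + CRC + EOF
FIBER_DELAY_S_PER_KM = 5e-6      # roughly 5 microseconds per km of fiber


def frame_time_s(speed: str, frame_bytes: int = MAX_FRAME_BYTES) -> float:
    """Time to serialize one frame at the given FC speed."""
    data_rate_bps = FC_LINE_RATES_GBAUD[speed] * 1e9 * CODING_EFFICIENCY
    return frame_bytes * 8 / data_rate_bps


def bb_credits_needed(distance_km: float, speed: str) -> int:
    """Minimum BB_Credits to keep a link of this length streaming full frames."""
    round_trip_s = 2 * distance_km * FIBER_DELAY_S_PER_KM
    return max(1, math.ceil(round_trip_s / frame_time_s(speed)))


def throughput_fraction(credits: int, distance_km: float, speed: str) -> float:
    """Rough share of line rate reachable when credits cannot cover the round trip.

    Ignores R_RDY processing time and idles: at most `credits` frames can be
    outstanding per round trip.
    """
    round_trip_s = 2 * distance_km * FIBER_DELAY_S_PER_KM
    if round_trip_s == 0:
        return 1.0
    return min(1.0, credits * frame_time_s(speed) / round_trip_s)


if __name__ == "__main__":
    for speed in ("1GFC", "2GFC", "4GFC"):
        print(speed, [bb_credits_needed(km, speed) for km in (10, 50, 100)])
```

The estimate reproduces the familiar rule of thumb of roughly one credit per 2 km at 1 Gbps, with the requirement doubling at each speed step. `throughput_fraction` shows the delay effect a buffer-credit test exposes: a link with too few credits stalls waiting for R_RDYs, even though no frames are lost.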
The BlueScope also allows users to turn up storage area networks (SANs), producing reliable throughput, packet loss, RTD, and burstability results with a consistent test methodology.

BlueScope Fibre Channel Options:
∙ (FC-1) Fibre Channel 1x/2x
∙ (FC-2) Fibre Channel 4x
∙ (FC-3) Fibre Channel 1x/2x/4x
∙ (FC-4) Fibre Channel 10x (available 2013)
∙ (FC-5) Fibre Channel 1x/2x/4x/10x (10x available 2013)

Hardware Specifications:
∙ Ports:
  ▪ (2) SFPs (1000BASE-SX/LX/ZX, 100-FX/LX/SX)
  ▪ (2) 10/100/1000BASE-T (RJ45)
  ▪ (1) XFP
  ▪ (1) BNC connectors (Tx/Rx): 34-45M
  ▪ (1) Bantam (Tx/Rx): 1.5M-2M
  ▪ (1) SMA (Tx): Tx reference clock out
  ▪ (1) SMA: external clock input, 1.544-2.048 Mbps / 1.544M, 2.048M, 10M / any clock speed
  ▪ (1) GPS signal input
  ▪ (1) HDMI output
  ▪ (1) 3.5 mm headset audio jack with mic support
∙ Port Configuration:
  ▪ BL6800-01 (1Gb single port), BL6800-02 (1Gb dual port), BL6800-12 (10Gb single port, 1Gb dual port)
  ▪ BL6800-01P (including PDH), BL6800-02P (including PDH), BL6800-12P (including PDH)
∙ Dimensions:
  ▪ Size: 172.5 (W) x 227 (H) x 58.5 (D) mm
  ▪ Weight: 1.3 kg with battery (battery: 0.3 kg)
∙ Operating Temp: 0 °C to 40 °C
∙ Storage Temp: -20 °C to +70 °C
∙ Display: 5.7-inch color TFT-LCD touch screen
∙ User Interface: touch screen & keypad
∙ Humidity: 10% to 90%
∙ Power:
  ▪ AC adaptor: 100 V to 240 V (50 Hz/60 Hz)
  ▪ Removable/rechargeable lithium-ion battery
  ▪ Battery life: 3 hours typical, 8 hours in standby mode
  ▪ Charging time: 1 to 2 hours
∙ Memory:
  ▪ 16 GB internal flash memory included

Contact Information:
Web:
US & Canada:
Support: ************************
Tel: +1 408 841 9689
Sales: **********************
Fax: +1 408 841 9607

Software Testing Terms (English-Chinese Glossary)

A
Acceptance testing: 验收测试 / 可接受性测试
Accessibility test: 软体适用性测试
actual outcome: 实际结果
Ad hoc testing: 随机测试
Algorithm analysis: 算法分析
algorithm: 算法
Alpha testing: α测试
analysis: 分析
anomaly: 异常
application software: 应用软件
Application under test (AUT): 所测试的应用程序
Architecture: 构架
Artifact: 工件
ASQ: 自动化软件质量 (Automated Software Quality)
Assertion checking: 断言检查
Association: 关联
Audit: 审计
audit trail: 审计跟踪
Automated Testing: 自动化测试

B
Backus-Naur Form: BNF范式
baseline: 基线
Basic Block: 基本块
basis test set: 基本测试集
Behaviour: 行为
Bench test: 基准测试
benchmark: 标杆/指标/基准
Best practice: 最佳实践
Beta testing: β测试
Black Box Testing: 黑盒测试
Blocking bug: 阻碍性错误
Bottom-up testing: 自底向上测试
boundary value coverage: 边界值覆盖
boundary value testing: 边界值测试
Boundary values: 边界值
Boundary Value Analysis: 边界值分析
branch condition combination coverage: 分支条件组合覆盖
branch condition combination testing: 分支条件组合测试
branch condition coverage: 分支条件覆盖
branch condition testing: 分支条件测试
branch condition: 分支条件
Branch coverage: 分支覆盖
branch outcome: 分支结果
branch point: 分支点
branch testing: 分支测试
branch: 分支
Breadth Testing: 广度测试
Brute force testing: 强力测试
Buddy test: 合伙测试
Buffer: 缓冲
Bug: 错误 / 缺陷
Bug bash: 错误大扫除
bug fix: 错误修正
Bug report: 错误报告
Bug tracking system: 错误跟踪系统
Build: 工作版本(内部小版本)
Build Verification tests (BVTs): 版本验证测试
Build-in: 内置

C
Capability Maturity Model (CMM): 能力成熟度模型
Capability Maturity Model Integration (CMMI): 能力成熟度模型整合
capture/playback tool: 捕获/回放工具
Capture/Replay Tool: 捕获/回放工具
CASE: 计算机辅助软件工程 (computer aided software engineering)
CAST: 计算机辅助测试
cause-effect graph: 因果图
certification: 证明
change control: 变更控制
Change Management: 变更管理
Change Request: 变更请求
Character Set: 字符集
Check In: 检入
Check Out: 检出
Closeout: 收尾
code audit: 代码审计
Code coverage: 代码覆盖
Code Inspection: 代码检视
Code page: 代码页
Code rule: 编码规范
Code style: 编码风格
Code Walkthrough: 代码走读
code-based testing: 基于代码的测试
coding standards: 编程规范
Common sense: 常识
Compatibility Testing: 兼容性测试
complete path testing: 完全路径测试
completeness: 完整性
complexity: 复杂性
Component testing: 组件测试
Component: 组件
computation data use: 计算数据使用
computer system security: 计算机系统安全性
Concurrency user: 并发用户
Condition coverage: 条件覆盖
condition outcome: 条件结果
condition: 条件
configuration control: 配置控制
Configuration item: 配置项
configuration management: 配置管理
Configuration testing: 配置测试
conformance criterion: 一致性标准
Conformance Testing: 一致性测试
consistency: 一致性
consistency checker: 一致性检查器
Control flow graph: 控制流程图 / 控制流图
control flow: 控制流
conversion testing: 转换测试
Core team: 核心小组
corrective maintenance: 故障检修
correctness: 正确性
coverage: 覆盖率
coverage item: 覆盖项
crash: 崩溃
criticality analysis: 关键性分析
criticality: 关键性
CRM (change request management): 变更需求管理
Customer-focused mindset: 客户为中心的理念体系
Cyclomatic complexity: 圈复杂度

D
data corruption: 数据污染
data definition C-use pair: 数据定义C-use使用对
data definition P-use coverage: 数据定义P-use覆盖
data definition P-use pair: 数据定义P-use使用对
data definition: 数据定义
data definition-use coverage: 数据定义使用覆盖
data definition-use pair: 数据定义使用对
data definition-use testing: 数据定义使用测试
data dictionary: 数据字典
Data Flow Analysis: 数据流分析
data flow coverage: 数据流覆盖
data flow diagram: 数据流图
data flow testing: 数据流测试
data integrity: 数据完整性
data use: 数据使用
data validation: 数据确认
dead code: 死代码
Debug: 调试
Debugging: 调试
Decision condition: 判定条件
Decision coverage: 判定覆盖
decision outcome: 判定结果
decision table: 判定表
decision: 判定
Defect: 缺陷
defect density: 缺陷密度
Defect Tracking: 缺陷跟踪
Deployment: 部署
Depth Testing: 深度测试
design for sustainability: 可延续性的设计
design of experiments: 实验设计
design-based testing: 基于设计的测试
Desk checking: 桌前检查 / 桌面检查
Determine Usage Model: 确定应用模型
Determine Potential Risks: 确定潜在风险
diagnostic: 诊断
DIF (decimation in frequency): 按频率抽取
dirty testing: 肮脏测试
disaster recovery: 灾难恢复
DIT (decimation in time): 按时间抽取
documentation testing: 文档测试
domain testing: 域测试
domain: 域
DTP (Detail Test Plan): 详细确认测试计划
Dynamic analysis: 动态分析
Dynamic Testing: 动态测试

E
embedded software: 嵌入式软件
emulator: 仿真
End-to-End testing: 端到端测试
Enhanced Request: 增强请求
entity relationship diagram: 实体关系图
Encryption Source Code Base: 加密算法源代码库
Entry criteria: 准入条件
entry point: 入口点
Envisioning Phase: 构想阶段
Equivalence class: 等价类
equivalence partition coverage: 等价划分覆盖
Equivalence partition testing: 等价划分测试
Equivalence Partitioning: 等价划分
Error: 错误
Error guessing: 错误猜测
error seeding: 错误播种/错误插值
Event-driven: 事件驱动
Exception handlers: 异常处理器
exception: 异常/例外
executable statement: 可执行语句
Exhaustive Testing: 穷尽测试
exit point: 出口点
expected outcome: 期望结果
Exploratory testing: 探索性测试

F
Failure: 失效
Fault: 故障
feasible path: 可达路径
feature testing: 特性测试
Field testing: 现场测试
FMEA: 失效模式效果分析 (Failure Modes and Effects Analysis)
FMECA: 失效模式效果关键性分析 (Failure Modes and Effects Criticality Analysis)
Framework: 框架
FTA: 故障树分析 (Fault Tree Analysis)
functional decomposition: 功能分解
Functional Specification: 功能规格说明书
Functional testing: 功能测试

G
G11N (Globalization): 全球化
Gap analysis: 差距分析
Garbage characters: 乱码字符
glass box testing: 玻璃盒测试
Glass-box testing: 白箱测试或白盒测试
Glossary: 术语表
GUI (Graphical User Interface): 图形用户界面

H-M
Hard-coding: 硬编码
Hotfix: 热补丁
I18N (Internationalization): 国际化
Identify Exploratory Tests: 识别探索性测试
IEEE: 美国电气与电子工程师学会 (Institute of Electrical and Electronics Engineers)
Incident: 事故
Incremental testing: 渐增测试
infeasible path: 不可达路径
input domain: 输入域
Inspection: 审查 / 检视
installability testing: 可安装性测试
Installing testing: 安装测试
instrumentation: 插装
instrumenter: 插装器
Integration: 集成
Integration testing: 集成测试
Interface: 接口
interface analysis: 接口分析
interface testing: 接口测试
invalid inputs: 无效输入
isolation testing: 孤立测试
Issue: 问题
Iteration: 迭代
Iterative development: 迭代开发
job control language: 工作控制语言
Job: 工作
Key concepts: 关键概念
Key Process Area: 关键过程区域
Keyword driven testing: 关键字驱动测试
Kick-off meeting: 启动会议
L10N (Localization): 本地化
Lag time: 延迟时间
LCSAJ: 线性代码顺序和跳转 (Linear Code Sequence And Jump)
LCSAJ coverage: LCSAJ覆盖
LCSAJ testing: LCSAJ测试
Lead time: 前置时间
Load testing: 负载测试
Localizability testing: 本地化能力测试
Localization testing: 本地化测试
logic analysis: 逻辑分析
logic-coverage testing: 逻辑覆盖测试
Maintainability: 可维护性
maintainability testing: 可维护性测试
Maintenance: 维护
Master project schedule: 总体项目方案
Measurement: 度量
Memory leak: 内存泄漏
Migration testing: 迁移测试
Milestone: 里程碑
Mock up: 模型,原型
modified condition/decision coverage: 修改条件/判定覆盖
modified condition/decision testing: 修改条件/判定测试
modular decomposition: 模块分解
Module testing: 模块测试
Monkey testing: 跳跃式测试
mouse over: 鼠标在对象之上
mouse leave: 鼠标离开对象
MTBF: 平均失效间隔时间 (mean time between failures)
MTP (Main Test Plan): 主确认计划
MTTF: 平均失效时间 (mean time to failure)
MTTR: 平均修复时间 (mean time to repair)
multiple condition coverage: 多条件覆盖
mutation analysis: 变体分析

N-R
N/A (Not applicable): 不适用的
Negative testing: 逆向测试/反向测试/负面测试
non-functional requirements testing: 非功能需求测试
nominal load: 额定负载
N-switch coverage: N切换覆盖
N-switch testing: N切换测试
N-transitions: N转换
off by one: 差一错误
Off-the-shelf software: 套装软件
operational testing: 可操作性测试
output domain: 输出域
paper audit: 书面审计
Pair Programming: 成对编程
partition testing: 分类测试
Path coverage: 路径覆盖
path sensitizing: 路径敏感性
path testing: 路径测试
path: 路径
Peer review: 同行评审
Performance: 性能
Performance indicator: 性能(绩效)指标
Performance testing: 性能测试
Pilot: 试验
Pilot testing: 引导测试
Portability: 可移植性
portability testing: 可移植性测试
Positive testing: 正向测试
Postcondition: 后置条件
Precondition: 前提条件 / 预置条件
predicate data use: 谓词数据使用
predicate: 谓词
Priority: 优先权
program instrumenter: 程序插装
progressive testing: 递进测试
Prototype: 原型
Pseudo code: 伪代码
pseudo-localization testing: 伪本地化测试
pseudo-random: 伪随机
Quality assurance (QA): 质量保证
Quality Control (QC): 质量控制
Race Condition: 竞争状态
Rational Unified Process (RUP): Rational统一过程
Recovery testing: 恢复测试 / 恢复性测试
Refactoring: 重构
regression analysis and testing: 回归分析和测试
Regression testing: 回归测试
Release: 发布
Release note: 版本说明
Reliability: 可靠性
reliability assessment: 可靠性评价
Requirements management tool: 需求管理工具
Requirements-based testing: 基于需求的测试
Return on Investment (ROI): 投资回报率
review: 评审
Risk assessment: 风险评估
risk: 风险
Robustness: 强健性
Root Cause Analysis (RCA): 根本原因分析

S-Z
safety critical: 严格的安全性
safety: (生命)安全性
Sanity testing: 健全测试 / 理智测试
Schema Repository: 模式库
Screen shot: 抓屏、截图
Security testing: 安全性测试
security: (信息)安全性
serviceability testing: 可服务性测试
Severity: 严重性
Shipment: 发布
simple subpath: 简单子路径
Simulation: 模拟
Simulator: 模拟器
SLA (Service level agreement): 服务级别协议
Smoke testing: 冒烟测试
Software development plan (SDP): 软件开发计划
Software development process: 软件开发过程
software diversity: 软件多样性
software element: 软件元素
software engineering environment: 软件工程环境
software engineering: 软件工程
Software life cycle: 软件生命周期
source code: 源代码
source statement: 源语句
Specification: 规格说明书
specified input: 指定的输入
spiral model: 螺旋模型
SQAP (Software Quality Assurance Plan): 软件质量保证计划
SQL: 结构化查询语言 (structured query language)
Staged Delivery: 分阶段交付方法
state diagram: 状态图
state transition testing: 状态转换测试
state transition: 状态转换
state: 状态
Statement coverage: 语句覆盖
statement testing: 语句测试
statement: 语句
Static Analysis: 静态分析
Static Analyzer: 静态分析器
Static Testing: 静态测试
statistical testing: 统计测试
Stepwise refinement: 逐步优化
storage testing: 存储测试
Stress Testing: 压力测试
structural coverage: 结构化覆盖
structural test case design: 结构化测试用例设计
structural testing: 结构化测试
structured basis testing: 结构化的基础测试
structured design: 结构化设计
structured programming: 结构化编程
structured walkthrough: 结构化走读
stub: 桩
sub-area: 子域
Summary: 总结
SVVP (Software Verification & Validation Plan): 软件验证和确认计划
symbolic evaluation: 符号评价
symbolic execution: 符号执行
symbolic trace: 符号轨迹
Synchronization: 同步
Syntax testing: 语法测试
system analysis: 系统分析
System design: 系统设计
system integration: 系统集成
System Testing: 系统测试
TC (Test Case): 测试用例
TCS (Test Case Specification): 测试用例规格说明
TDS (Test Design Specification): 测试设计规格说明书
technical requirements testing: 技术需求测试
Test: 测试
test automation: 测试自动化
Test case: 测试用例
test case design technique: 测试用例设计技术
test case suite: 测试用例套
test comparator: 测试比较器
test completion criterion: 测试完成标准
test coverage: 测试覆盖
Test design: 测试设计
Test driver: 测试驱动
test environment: 测试环境
test execution technique: 测试执行技术
test execution: 测试执行
test generator: 测试生成器
test harness: 测试用具
Test infrastructure: 测试基础建设
test log: 测试日志
test measurement technique: 测试度量技术
Test Metrics: 测试度量
test procedure: 测试规程
test records: 测试记录
test report: 测试报告
Test scenario: 测试场景
Test Script: 测试脚本
Test Specification: 测试规格
Test strategy: 测试策略
test suite: 测试套
Test target: 测试目标
Testware: 测试工具
Testability: 可测试性
Testing bed: 测试平台
Testing coverage: 测试覆盖
Testing environment: 测试环境
Testing item: 测试项
Testing plan: 测试计划
Testing procedure: 测试过程
Thread testing: 线程测试
time sharing: 时间共享
time-boxed: 固定时间
TIR (Test Incident Report): 测试事故报告
ToolTip: 控件提示或说明
top-down testing: 自顶向下测试
TPS (Test Process Specification): 测试步骤规格说明
Traceability: 可跟踪性
traceability analysis: 跟踪性分析
traceability matrix: 跟踪矩阵
Trade-off: 平衡
transaction: 事务/处理
transaction volume: 交易量
transform analysis: 变换分析
trojan horse: 特洛伊木马
truth table: 真值表
TST (Test Summary Report): 测试总结报告
Tune System: 调试系统
TW (Testware): 测试件
Unit Testing: 单元测试
Usability Testing: 可用性测试
Usage scenario: 使用场景
User acceptance Test: 用户验收测试
User database: 用户数据库
User interface (UI): 用户界面
User profile: 用户信息
User scenario: 用户场景
V&V (Verification & Validation): 验证&确认
validation: 确认
verification: 验证
version: 版本
Virtual user: 虚拟用户
volume testing: 容量测试
VSS (Visual SourceSafe)
VTP (Verification Test Plan): 验证测试计划
VTR (Verification Test Report): 验证测试报告
Walkthrough: 走读
Waterfall model: 瀑布模型
Web testing: 网站测试
White box testing: 白盒测试
Work breakdown structure (WBS): 任务分解结构

Common Computer English Vocabulary (A-Z)
3DNow!〔3D no waiting〕
3DPA〔3D Positional Audio,3D定位音频〕
3DS〔3D SubSystem,三维子系统〕
ABS〔Auto Balance System,自动平衡系统〕
AC〔Audio Codec,音频多媒体数字信号编解码器〕
ACOPS〔Automatic CPU OverHeat Prevention System,CPU过热预防系统〕
ACPI〔Advanced Configuration and Power Interface,高级配置与电源接口〕
AE〔Atmospheric Effects,雾化效果〕
AFR〔Alternate Frame Rendering,交替帧渲染技术〕
AGAS〔Anti Glare Anti Static Coatings,防强光、防静电涂层〕
AGP〔Accelerated Graphics Port,加速图形端口,一种CPU与图形芯片之间的总线结构〕
AGU〔Address Generation Units,地址生成单元〕
AH〔Authentication Header,认证头〕
AHA〔Accelerated Hub Architecture,加速中心架构〕
AL〔Artificial Life,人工生命〕
ALU〔Arithmetic Logic Unit,算术逻辑单元〕
AMR〔Audio/Modem Riser,音效/调制解调器主机板附加直立插卡〕
Anisotropic Filtering〔各向异性过滤〕
API〔Application Programming Interface,应用程序接口〕
APIC〔Advanced Programmable Interrupt Controller,高级可编程中断控制器〕
APM〔Advanced Power Management,高级电源管理〕
APPE〔Advanced Packet Parsing Engine,增强型帧解析引擎〕
ARP〔Address Resolution Protocol,地址解析协议〕
ASC〔Anti Static Coatings,防静电涂层〕
ASC〔Auto-Sizing and Centering,自动调整屏幕尺寸和中心位置〕
ASCII〔American Standard Code for Information Interchange,美国信息交换标准代码〕
ASIC〔Application Specific Integrated Circuit,专用集成电路〕
ASK IR〔Amplitude Shift Keyed Infra-Red,幅移键控红外线〕
ASMO〔Advanced Storage Magneto-Optical,增强型光学存储器〕
ASPI〔Advanced SCSI Program Interface,高级SCSI编程接口〕

China State Construction Second Test Bank
Subject: Architecture and Engineering

1. Q: What is the difference between traditional Chinese architecture and modern architecture?
A: Traditional Chinese architecture is characterized by its use of wood, bricks, and tiles, as well as its distinctive roof structures such as the "dragon" and "tiger" ridges. In contrast, modern architecture often incorporates steel, concrete, and glass, and its design focuses more on functionality and aesthetics.

2. Q: What are the main materials used in the construction of traditional Chinese buildings?
A: The main materials are wood, bricks, tiles, and stone. These materials were chosen for their availability, durability, and ability to withstand the harsh weather conditions in China.

CONTENTS
OTA Test Challenges
Accurate and Much Faster OTA Validation
mmWave OTA Validation Test Hardware
mmWave OTA Validation Test Software
Solution Services

OTA Test Challenges
5G operation at mmWave frequencies relies on beamforming through antenna arrays with many elements. The industry is working to reduce the size and cost of 5G beamforming devices that operate at mmWave. As a result, many of these devices lack conventional external RF connectors and instead become integrated Antenna-in-Package (AiP) and Antenna-in-Module (AiM) devices. This shift is a tough challenge for engineers who characterize and validate integrated beamforming designs, and it prompts them to look for accurate over-the-air (OTA) radiated test solutions.

Test Time Challenges and Measurement Uncertainty
Configuring and running detailed 3D spatial sweeps of 5G beamforming AiP devices can be very time-consuming and expensive, because it must be done in a carefully controlled RF environment inside an anechoic chamber. A typical move → stop → measure test system is point-by-point and software-controlled. Its positioner rotates in two independent axes (azimuth and elevation), and it produces only a handful of RF measurements per second. However, engineers need to measure and validate antenna performance by scanning hundreds or even thousands of points in space. This creates a trade-off. The finer the 3D sampling grid (the smaller the distance between measurement points), the longer the test time but the lower the measurement uncertainty. Conversely, a grid that is too sparse gives faster results but can introduce significant measurement error.
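The trade-off above is easy to quantify for a move-stop-measure system. Every (codebook, power, frequency) combination repeats the whole spatial sweep, so total time grows with the product of grid size and configuration count. The sketch below rests on two assumptions:

- a regular azimuth/elevation grid;
- a fixed rate of about six points per second, a hypothetical figure in line with "a handful of RF measurements per second".

At that rate, 6000 points take 1000 s per sweep, and 45 configurations take 12.5 hours, which matches the first row of Table 1. The function names are illustrative and are not part of NI's software.

```python
from typing import List, Tuple


def spherical_grid(step_deg: float) -> List[Tuple[float, float]]:
    """Regular (azimuth, elevation) grid: azimuth in [0, 360), elevation in [0, 180]."""
    n_az = round(360 / step_deg)
    n_el = round(180 / step_deg) + 1          # include both poles
    return [(i * step_deg, j * step_deg) for i in range(n_az) for j in range(n_el)]


def discrete_test_time_s(n_points: int, points_per_s: float,
                         n_codebooks: int = 1, n_powers: int = 1,
                         n_freqs: int = 1) -> float:
    """Move-stop-measure time: one full spatial sweep per test configuration."""
    sweeps = n_codebooks * n_powers * n_freqs
    return sweeps * n_points / points_per_s


if __name__ == "__main__":
    for step in (10.0, 5.0, 3.0):
        pts = len(spherical_grid(step))
        hours = discrete_test_time_s(pts, 6.0, n_codebooks=3, n_powers=3, n_freqs=5) / 3600
        print(f"{step:>4} deg grid: {pts:5d} points, {hours:5.1f} h for 3x3x5 configurations")
```

A regular angular grid oversamples near the poles, so an equal-area sampling scheme can cut the point count for the same resolution. Either way, the per-point stop time remains the cost that continuous-motion measurement removes.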
Figure 1 illustrates how measuring a DUT's power over a 3D scanning grid produces a 3D antenna pattern. The points must be close enough together to minimize resolution errors.
Figure 1 Selecting a denser 3D scanning grid reduces measurement uncertainty
Test times grow much further when engineers acquire data at multiple frequencies and power levels, and configure the DUT to steer the beam with various codebooks. The table below outlines the effect:

Table 1 OTA Test Times and Measurement Uncertainty for various grid densities
No. of Test Points | TXP EIRP Mean Error (dB) | Typical Single-Scan Test Time (s) | Typical Test Time (3 codes, 3 powers, 5 frequencies)
6000 | 0.02 | 1000 | 12.5 hours
800 | 0.2 | 133 | 1.6 hours
200 | 0.74 | 33 | 25 minutes

Accurate and Much Faster OTA Validation
National Instruments developed the mmWave OTA Validation Test reference architecture to help engineers cut OTA characterization and validation test times for beamforming devices without compromising accuracy.
The reference architecture takes a platform-level approach. It integrates NI's real-time motion control, data acquisition, and PXI triggering and synchronization. This lets it take fast, high-bandwidth RF measurements synchronized with the instantaneous (φ, θ) coordinates of the positioner's motors. Unlike traditional OTA test solutions, NI's approach moves the device under test (DUT) in a smooth, continuous motion through 3D space while the RF engine takes rapid measurements. This eliminates the time wasted moving discretely from point to point.
As a result, engineers can perform 3D spatial sweeps with thousands of points in a fraction of the time, while also reducing measurement uncertainty and error.
Figure 2 Continuous motion while triggering RF measurements
The following table compares the continuous-motion approach to OTA test with traditional start-stop-measure techniques. The continuous-motion approach cuts test times by 5X or more.

Table 2 Test time benchmark comparing discrete vs. continuous motion and measurement
Software-based point-by-point measurements | NI continuous-motion measurements
680 s (11 min) | 84 s

mmWave OTA Validation Test Hardware
As shown in Figure 3 below, the mmWave OTA Validation Test reference architecture includes:
• NI's mmWave Vector Signal Transceiver (VST) for wideband RF signal generation and measurement
• PXI instruments for repeatable, smooth, and precise motion control
• An isolated RF chamber for far-field radiated testing of 5G mmWave AiP devices in a quiet environment
• High-gain antennas, cables, adapters, and other accessories
• mmWave OTA Validation Test software for interactive use and automation
Figure 3 Diagram of mmWave OTA Validation Test reference architecture components

mmWave VST for IF-to-RF and RF-to-RF Measurements
The modular architecture of the NI mmWave VST enables it to scale with the variety and complexity of 5G mmWave devices. With NI VSTs, engineers get fast, lab-grade, high-bandwidth IF and mmWave signal generation and analysis for OTA testing of 5G semiconductor devices.
For RF-to-RF device testing, the reference architecture places the VST's mmWave radio heads, which have high-power bidirectional ports, very close to the RF connectors on the outside of the anechoic chamber.
Engineers can also use the VST's IF ports to interface with IF-to-RF DUTs.
Figure 4 mmWave VST Architecture
This approach provides:
• IF and mmWave signal generation and analysis capabilities for various DUT types
• A future-proof, modular system that engineers can adapt as the 5G standard evolves to include higher frequencies, without changing any other part of the test solution
• A way to move mmWave measurement ports closer to the DUT, minimizing signal losses and boosting signal-to-noise ratio
• A complete test solution with wide data rates and signal processing at the speed of the latest multicore processors
The following examples show how engineers can use the modularity of the mmWave VST to configure various 5G OTA test setups:
IF-to-RF beamformer:
Figure 5 IF-to-RF OTA test configuration using the mmWave VST
RF-to-RF beamformer:
Figure 6 RF-to-RF OTA test configuration using the mmWave VST

Isolated RF Anechoic Chamber
Proper characterization of the beamforming performance of AiP devices requires a controlled, quiet RF environment. This is an anechoic chamber lined with high-quality RF absorbing material that keeps reflections to a minimum. For measurement repeatability, the motion system must also provide fine angular resolution and return to exactly the same point in space every time.
NI's mmWave OTA Validation Test reference architecture includes a carefully specified anechoic chamber with a 2-axis (azimuth and elevation) DUT positioner at the bottom and a fixed measurement antenna at the top.
This chamber incorporates a National Instruments real-time motion controller that enables NI's fast, continuous-motion OTA test approach.
The distance between the DUT positioner and the measurement antenna allows far-field testing of 5G mmWave AiP devices with an antenna aperture of 5 cm or less, following 3GPP TR 38.810 for Category 1 DUTs.
Figure 7 High-isolation mmWave anechoic chamber

mmWave OTA Validation Test Software
The mmWave OTA Validation Test reference architecture includes test software that helps engineers quickly configure extensive spatial sweeps to characterize their device's antenna patterns. Engineers can then produce, visualize, store, or distribute detailed parametric results.
Users can adopt the mmWave OTA Validation Test Software in one of three ways:
• as a complete test framework for OTA validation tests
• by incorporating some of its components into their existing test framework
• by using the separate components as stand-alone utilities
OTA test needs may vary greatly between different applications and DUT types.
To help engineers adapt to different test situations, the mmWave OTA Validation Test Software takes a modular approach that is extensible to various user needs, such as customized DUT control, specific sweep configurations, and signal routing.

Engineers working on both manual and automated validation tests of mmWave OTA devices will greatly benefit from the following components:

mmWave OTA Test Configuration UI

The mmWave OTA Validation Test Software provides an open-source LabVIEW graphical user interface (GUI) that helps users configure the test matrix to run, including measurement parameters, sweep parameters, and connection settings.

Figure 8 Front Panel of the Test Configuration UI

TestStand Template Startup Sequences

The mmWave OTA Validation Test Software installs template test sequences that engineers can use to run the configuration files they create with the mmWave OTA Test Configuration UI.

By running these test sequences in TestStand, an industry-leading test framework software, engineers move quickly from manual configuration to complete automation of their test plans, controlling all aspects of test execution. With TestStand, users can also modify and customize these open-source template sequences to suit their specific DUT needs or validation goals.

mmWave OTA Test Positioner Soft Front Panel

The mmWave OTA Test Positioner soft front panel (SFP) allows users to manipulate the positioner interactively. Users can complete the following tasks with the mmWave OTA Test Positioner SFP:
• Move the positioner in azimuth or elevation independently
• Configure a sweep in both azimuth and elevation
• Configure the Absolute Zero location of the positioner for antenna alignment

Figure 9 mmWave OTA Test Positioner SFP

mmWave OTA Test Visualizer

The mmWave OTA Test Visualizer performs offline configuration and analysis of OTA test data for antenna measurements.
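An "antenna cut" is a one-dimensional slice of the measured pattern, for example EIRP versus azimuth at a fixed elevation. The sketch below shows one way such a cut could be extracted from CSV results and summarized by its half-power span. The column names are a hypothetical layout, not the Visualizer's actual file format, and the span is limited by the sweep step because no interpolation is performed.

```python
"""Extract one antenna cut from gridded OTA results in CSV form.

The column names (azimuth_deg, elevation_deg, eirp_dbm) are a hypothetical
layout, not the Visualizer's actual file format.
"""
import csv
import io
from typing import List, Tuple


def azimuth_cut(csv_text: str, elevation_deg: float,
                tol_deg: float = 1e-6) -> List[Tuple[float, float]]:
    """Return (azimuth, EIRP) pairs measured at one elevation, sorted by azimuth."""
    cut = []
    for row in csv.DictReader(io.StringIO(csv_text, newline="")):
        if abs(float(row["elevation_deg"]) - elevation_deg) <= tol_deg:
            cut.append((float(row["azimuth_deg"]), float(row["eirp_dbm"])))
    return sorted(cut)


def half_power_span_deg(cut: List[Tuple[float, float]]) -> float:
    """Angular span of the contiguous samples within 3 dB of the peak.

    Grid-limited: the edges are sampled points, not interpolated crossings."""
    if not cut:
        raise ValueError("cut is empty")
    peak = max(range(len(cut)), key=lambda i: cut[i][1])
    threshold = cut[peak][1] - 3.0
    lo = hi = peak
    while lo > 0 and cut[lo - 1][1] >= threshold:
        lo -= 1
    while hi < len(cut) - 1 and cut[hi + 1][1] >= threshold:
        hi += 1
    return cut[hi][0] - cut[lo][0]


if __name__ == "__main__":
    data = (
        "azimuth_deg,elevation_deg,eirp_dbm\n"
        "-20,0,5.0\n-10,0,9.5\n0,0,12.0\n10,0,10.0\n20,0,4.0\n0,10,8.0\n"
    )
    cut = azimuth_cut(data, 0.0)
    print(cut)
    print(half_power_span_deg(cut), "deg")
```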
Engineers can use the mmWave OTA Test Visualizer to invoke different result visualizations and analyze antenna-specific measurements and patterns.

The mmWave OTA Test Visualizer takes in measurement results as comma-separated values (CSV) files and displays the data on-screen. Users can select various data sources and plot types, as illustrated below:

Figure 10 3D Antenna Pattern for single and multiple beams
Figure 11 Antenna cut analysis, single beam and multiple beams
Figure 12 Polar plot
Figure 13 Heat map plot for single and multiple beams
Figure 14 Best beam index for single and multiple beams

OTA Measurement Interface

To streamline storing measurement values, importing and exporting measurement data, and interpreting measurement results in the automated TestStand sequences, the mmWave OTA Validation Test Software includes an OTA Measurement Interface (OTAMI). The OTAMI presents engineers with a measurement-oriented API that can get the following measurement results and visualizations:

Furthermore, the OTAMI API lets users add measurements on the fly during sequence execution or retrieve measurements from a CSV file. Once test execution finishes, engineers can use the OTAMI API to export measurement data to a CSV file, making it quick to store and retrieve data.

Antenna Plugin

Engineers who need to implement new DUT control for their devices have a simpler way to automate OTA tests: the mmWave OTA Validation Test Software also supports the creation of custom antenna control modules. By building on simple antenna control code modules, users can create custom DUT “plugins” that integrate readily into the test sequencer. Users can then rapidly perform automated testing of various kinds of DUTs with the same test sequence template, simply invoking different DUT control plugins.

©2019 National Instruments. All rights reserved.
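The antenna plugin idea (one template sequence that drives different DUTs through interchangeable control modules) can be illustrated with a small interface-based sketch. Every class and function name here is hypothetical and is not the NI plugin API; the DUT and its "measurements" are simulated so the example runs without hardware. The sequence also reports the best beam, the quantity the Visualizer plots as a best beam index.

```python
"""Hypothetical DUT-plugin pattern: one template sequence, interchangeable DUT control.

All class and function names are illustrative; they are not the NI plugin API.
"""
from abc import ABC, abstractmethod
from typing import Callable, Dict, Iterable, List


class AntennaPlugin(ABC):
    """Interface every DUT control plugin is assumed to implement."""

    @abstractmethod
    def set_beam(self, beam_id: int) -> None:
        """Steer the DUT to one beam (codebook index)."""


class SimulatedDut(AntennaPlugin):
    """Stand-in for a real DUT driver: records beams and returns canned power."""

    def __init__(self, power_by_beam_dbm: Dict[int, float]) -> None:
        self.power_by_beam_dbm = power_by_beam_dbm
        self.history: List[int] = []

    def set_beam(self, beam_id: int) -> None:
        self.history.append(beam_id)

    def simulated_power_dbm(self) -> float:
        """Fake measurement for the beam most recently set."""
        return self.power_by_beam_dbm[self.history[-1]]


def run_beam_sweep(plugin: AntennaPlugin,
                   beams: Iterable[int],
                   measure_dbm: Callable[[], float]) -> Dict[int, float]:
    """Template sequence: the same steps run for every DUT; only the plugin differs."""
    results: Dict[int, float] = {}
    for beam_id in beams:
        plugin.set_beam(beam_id)
        results[beam_id] = measure_dbm()
    return results


def best_beam(results: Dict[int, float]) -> int:
    """Beam index with the highest measured power."""
    return max(results, key=lambda beam: results[beam])


if __name__ == "__main__":
    dut_a = SimulatedDut({0: -30.0, 1: -24.5, 2: -27.0})
    dut_b = SimulatedDut({0: -22.0, 1: -29.0, 2: -31.5})
    for name, dut in (("DUT A", dut_a), ("DUT B", dut_b)):
        results = run_beam_sweep(dut, [0, 1, 2], dut.simulated_power_dbm)
        print(name, "best beam:", best_beam(results))
```

In a real setup the measurement callable would query the RF instrument rather than the plugin; keeping DUT control behind a narrow interface is what lets the sequence itself stay unchanged.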
National Instruments, NI, LabVIEW, and NI TestStand are trademarks of National Instruments. Other product and company names listed are trademarks or trade names of their respective companies. Information on this flyer may be updated or changed at any time, without notice.

Performing System Calibration

One of the most important factors in getting reliable results with reduced measurement uncertainty is making sure that the test setup is properly calibrated.

NI provides the RF System Calibration Assistant, a free software utility that controls the RF instruments, including an external RF power meter, to perform a system calibration on all OTA hardware components and signal paths for both horizontal and vertical polarizations.

Engineers can configure each path name, as well as the frequency and power of operation. The calibration utility then runs the calibration and creates a calibration file across frequency and power for every signal path.

Figure 15 RF System Calibration utility to measure the losses through all signal paths

Solution Services

Implementing reliable mmWave OTA validation test setups can be a very complex task with several risk factors. Some of the more common ones include measurement uncertainty due to the mechanical placement of DUTs, in-chamber reflections, and system calibration.

As a trusted advisor, NI complements its mmWave OTA Validation Test reference architecture with services from experts around the globe to help users achieve their OTA test goals. Whether your OTA challenges are simple or complex, you can maximize productivity and reduce costs with NI OTA test setup installation, training, technical support, consulting and integration, and hardware services.
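The calibration step described under Performing System Calibration produces loss data across frequency and power for every signal path. As a rough illustration of how such data might be applied, here is a minimal sketch that assumes a hypothetical table of (frequency, loss) pairs for a single path at a single power level, with linear interpolation between calibrated frequencies. It ignores the power dependence and does not reflect the actual calibration file format.

```python
"""Apply per-path loss from a calibration table (hypothetical format)."""
from bisect import bisect_left
from typing import List, Tuple

# Hypothetical table for one signal path at one power level:
# (frequency in Hz, path loss in dB), sorted by frequency.
LossTable = List[Tuple[float, float]]


def interpolate_loss_db(table: LossTable, freq_hz: float) -> float:
    """Linearly interpolate path loss between the nearest calibrated frequencies."""
    if not table:
        raise ValueError("empty calibration table")
    freqs = [f for f, _ in table]
    if not freqs[0] <= freq_hz <= freqs[-1]:
        raise ValueError("frequency outside the calibrated range")
    i = bisect_left(freqs, freq_hz)
    if freqs[i] == freq_hz:
        return table[i][1]
    (f0, loss0), (f1, loss1) = table[i - 1], table[i]
    return loss0 + (loss1 - loss0) * (freq_hz - f0) / (f1 - f0)


def corrected_power_dbm(measured_dbm: float, table: LossTable, freq_hz: float) -> float:
    """Add the path loss back so the reading refers to the calibration reference plane."""
    return measured_dbm + interpolate_loss_db(table, freq_hz)


if __name__ == "__main__":
    path_h = [(24e9, 10.0), (28e9, 12.0), (40e9, 18.0)]  # made-up loss values
    print(corrected_power_dbm(-30.0, path_h, 26e9))
```

Refusing to extrapolate outside the calibrated range is a deliberate choice in this sketch: a reading at an uncalibrated frequency is flagged rather than silently corrected.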