Linear phase transition in random linear constraint satisfaction problems, Probability Theo

- 格式:pdf

- 大小:349.02 KB

- 文档页数:26

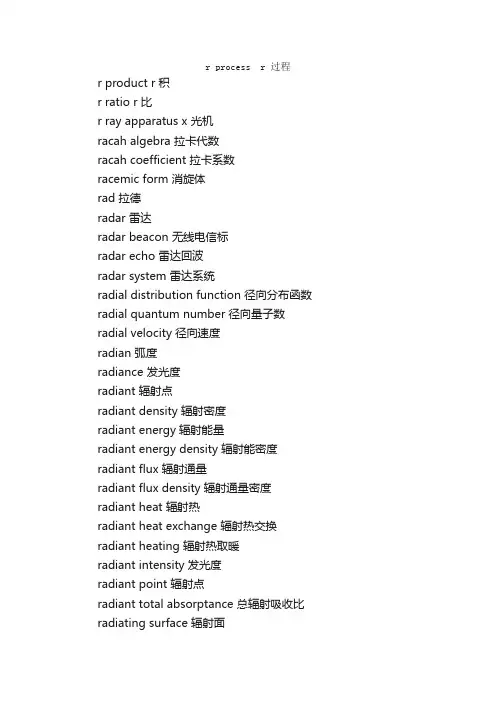

r process r 过程r product r 积r ratio r 比r ray apparatus x 光机racah algebra 拉卡代数racah coefficient 拉卡系数racemic form 消旋体rad 拉德radar 雷达radar beacon 无线电信标radar echo 雷达回波radar system 雷达系统radial distribution function 径向分布函数radial quantum number 径向量子数radial velocity 径向速度radian 弧度radiance 发光度radiant 辐射点radiant density 辐射密度radiant energy 辐射能量radiant energy density 辐射能密度radiant flux 辐射通量radiant flux density 辐射通量密度radiant heat 辐射热radiant heat exchange 辐射热交换radiant heating 辐射热取暖radiant intensity 发光度radiant point 辐射点radiant total absorptance 总辐射吸收比radiating surface 辐射面radiation 辐射radiation balance 辐射平衡radiation belt 范艾伦辐射带radiation camera 辐射照相机radiation chemical reaction 辐射化学反应radiation chemistry 辐射化学radiation control 辐射管理radiation cooling 辐射冷却radiation damage 辐射损伤radiation damping 辐射衰减radiation density 辐射密度radiation detector 辐射探测器radiation dominated universe 辐射为诸宙radiation dose 辐射剂量radiation energy 辐射能量radiation equilibrium 辐射平衡radiation field 辐射场radiation flux 辐射通量radiation formula 辐射公式radiation injury 辐射伤害radiation intensity 辐射强度radiation law 辐射定律radiation length 辐射长度radiation loss 辐射损失radiation measurement 辐射测量radiation monitor 辐射监测器radiation physics 射线物理学radiation pressure 辐射压radiation protection 辐射防护radiation pyrometer 辐射高温计radiation shield 辐射屏蔽层radiation shielding material 辐射屏蔽材料radiation streaming 辐射冲流radiation temperature 辐射温度radiation thermometer 辐射温度计radiation thickness gage 辐射测厚计radiationless transition 无辐射跃迁radiative capture 辐射俘获radiative heat exchange 辐射热交换radiative transfer 辐射转移radiator 辐射体radio 无线电radio beacon 无线电信标radio detecting and ranging 雷达radio direction finding 无线电定向radio emission 射电辐射radio frequency 射频radio frequency acceleration 高频加速radio frequency amplification 射频放大radio frequency amplifier 射频放大器radio frequency capture 射频俘获radio frequency confinement 射频约束radio frequency discharge 射频放电radio frequency heating 射频加热radio frequency probe 射频探针radio frequency source 射电源radio frequency spectroscopy 射频谱学radio galaxy 射电星系radio interferometer 射电干涉仪radio noise 无线电噪声radio observation 射电观测radio observatory 射电天文台radio pulsar 射电脉冲星radio quasar 射电类星体radio radiation 射电辐射radio receiver 收音机radio source 射电源radio spectroscopy 无线电频谱学radio star 无线电星radio telescope 射电望远镜radio wave holography 无线电波全息学radio wave propagation 无线电波传插radio wave spectrum 无线电波谱radio waves 无线电波radioactivation 放射化radioactivation analysis 放射化分析radioactive collector 放射式集电器radioactive decay 放射性衰变radioactive decay law 放射性衰变定律radioactive disintegration 放射性衰变radioactive displacement law 放射性位移定律radioactive element 放射性元素radioactive equilibrium 放射性平衡radioactive fall out 放射性沉降物radioactive family 放射族radioactive gaseous waste 放射性气态废物radioactive isotope 放射性同位素radioactive liquid waste 放射性液态废物radioactive material 放射性物质radioactive nuclide 放射性核素radioactive product 放射性产物radioactive rays 放射线radioactive standard 放射性标准radioactive tracer 放射性指示剂radioactive transformation 放射性转换radioactive unit 放射性单位radioactive waste 放射性废物radioactivity 放射性radioanalysis 放射性分析radioastrometry 射电天体测量学radioastronomy 射电天文学radioastronomy observatory 射电天文台radioastrophysics 射电天体物理学radioautography 放射自显影法radiocarbon 14 dating method 放射性碳14 测定年龄法radiochemistry 放射化学radiocontamination 放射性沾污radioelement 放射性元素radiogeophysics 放射地球物理学radiogram 无线电报radiography 射线照相法radioheliograph 射电日光仪radiointerferometer 射电干涉仪radioisotope 放射性同位素radioisotope assay 放射性同位素成分的定量分析radiolocation 无线电定位radiology 放射学radioluminescence 放射发光radiometer 辐射计radiometric force 辐射力radiomicrometer 微量辐射计radiomicrometry 微量辐射法radionuclide 放射性核素radiophotoluminescence 辐射光致发光radiophysics 无线电物理学radioresistance 抗辐射性radiosensitivity 放射敏感性radiosonde 无线电探空仪radiosonde observation 无线电探空仪观测radiospectrograph 无线电频谱仪radiospectrometer 射电光谱仪radiothorium 放射性钍radiotracer 放射性指示剂radium 镭radium standard 镭标准源radius 半径radius of gyration 回转半径radius vector 位置矢量radon 氡rail gun 轨炮rain 雨rain gauge 雨量器rainbow 虹rainbow hologram 虹全息图rainbow scattering 虹散射ram 随机存取存储器raman active 喇曼激活的raman effect 喇曼效应raman laser 喇曼激光器raman microprobe 喇曼微探针raman microscope 喇曼显微镜raman nath diffraction 喇曼纳什衍射raman scattering 喇曼散射raman shift 喇曼位移raman spectroscopy 喇曼光谱学raman spectrum 喇曼谱ramsauer effect 冉邵尔效应ramsauer townsend effect 冉邵尔汤森效应ramsden eyepiece 冉斯登目镜ramsey resonance 拉姆齐共振random coincidence 偶然符合random error 随机误差random function 随机函数random matrix 随机矩阵random numbers 随机数random phase approximation 随机相位近似random process 随机过程random variable 随机变数random vibration 随机振动random walk 无规行走randomness 随机性range 列range finder 测距仪range straggling 射程岐离rankine cycle 兰金循环rankine hugoniot relations 兰金雨贡纽关系rapidity 快度rare earth elements 稀土元素rare earth nuclei 稀土核rare earths 稀土族rare gas 稀有气体rare gas excimer laser 稀有气体激元激光器rarefied gas dynamics 稀薄气体动力学rate 比率rate meter 剂量率测量计rate of combustion 燃烧率rated output 额定输出ratio 比rationalized system of units 有理化单位制rationalized unit 核理单位ray 半直线ray of light 光线ray optics 光线光学ray representation 射线表示ray surface 光线速度面ray velocity 光线速度rayleigh criterion 瑞利判据rayleigh disc 瑞利圆板rayleigh distribution 瑞利分布rayleigh jeans' law of radiation 瑞利琼斯的辐射定律rayleigh number 瑞利数rayleigh refractometer 瑞利折射计rayleigh scattering 瑞利散射rayleigh taylor instability 瑞利泰勒不稳定性rayleigh wave 瑞利波rayleigh's radiation formula 瑞利辐射公式rbe dose rbe 剂量re emission 再发射reactance 电抗reaction 反应reaction cross section 反应截面reactive current 无功电流reactive power 无效功率reactivity 反应性reactivity coefficient 反应性系数reactivity control 反应性控制reactor 反应堆reactor components 反应堆组元reactor control 反应曝制reactor lifetime 反应堆寿命reactor materials 反应堆材料reactor period 反应堆周期reactor physics 反应堆物理学reactor poison 反应堆毒物reactor simulator 反应堆仿真器反应堆模拟器reactor time constant 反应堆寿命reactor vessel 反应堆容器real gas 真实气体real image 实象real time hologram 实时全息图realistic nuclear force 真实核力rearrangement collision 重排碰撞rearrangement scattering 重排散射receiver 接收机receiving antenna 接收天线reciprocal lattice 倒易点阵reciprocal lattice vector 倒易晶格矢量reciprocal linear dispersion 逆线性色散reciprocal shear ellipsoid 倒切变椭球reciprocal space 倒易空间reciprocal strain ellipsoid 倒应变椭球reciprocity 互易性reciprocity law 倒易律reciprocity theorem 倒易律recoil 反冲recoil atom 反冲原子recoil effect 反冲效应recoil electron 反冲电子recoil force 反冲力recoil momentum 反冲动量recoil nucleus 反冲核recoil particle 反冲粒子recoilless transition 无反冲跃迁recombinant dna technology 重组 dna 工艺recombination 复合recombination center 复合中心recombination coefficient 复合系数recorder 录音机;记录器记录仪recording anemometer 风速计recording device 记录装置recording instrument 记录器recording thermometer 温度自动记录器自记温度计recovery time 恢复时间recrystallization 再结晶rectangular prism 直角棱镜rectification 整流检波;精馏rectifier 整流rectifier tube 整淋rectilinear motion 直线运动recurrence formula 递推公式recurrence phenomenon 重复现象red dwarf 红矮星red giant star 红巨星red shift 红移red spot 红斑reduced equation 还原方程reduced equation of state 简化物态方程reduced eye 简约眼reduced mass 约化质量reduced pressure 换算压力reduced transition probability 约化跃迁几率reduced viscosity 约化粘滞度reduced wave number 约化波数reduced wave number zone 约化波数带reduced width 约化宽度reduction of area 断面收缩率reduction of cross sectional area 断面收缩率reduction to sea level 海平面归算redundancy 多余度reed relay 簧片继电器reentrant cavity 再入式谐振腔reference electrode 参考电极reference frame 参考系reference line 参考线reference star 定标星reference stimulus 参考剌激reference system 参考系reference temperature 参考温度reference thermoelectromotive force 参考温差电动势reference wave 参考波reflectance 反射率reflected light 反射光reflected ray 反射线reflected wave 反射波reflecting goniometer 反射测角计reflecting layer 反射层reflecting losses 反射损失reflecting material 反射物质reflecting medium 反射介质reflecting microscope 反射显微镜reflecting power 反射因数reflecting surface 反射表面reflecting telescope 反射望远镜reflection 反射reflection coefficient 反射系数reflection effect 反射效应reflection electron beam holography 反射电子束全息术reflection electron microscopy 反射式电子显微术reflection factor 反射因数reflection grating 反射光栅reflection nebula 反射星云reflection profile 反射曲线reflectivity 反射率reflectometer 反射计reflector 反射器反射镜reflex camera 反射摄影机refracting medium 折射介质refraction 折射refraction law 折射定律refraction method 折射法refractive error 折射误差refractive index 折射率refractivity 折射本领refractometer 折射计refractometry 量折射术refractor 折射望远镜refractoscope 折射检验仪refrigerant 致冷剂refrigerating effect 致冷效应refrigeration 致冷refrigeration cycle 致冷循环regelation 复冰regeneration 再生regeneration of energy 能量再生regeneration of k k 的再生regenerative detection 再生检波regenerative feedback 正反馈regenerator 回热器regge pole 雷其极点regge theory 雷其理论regge trajectory 雷其轨迹register 寄存器计数器regression analysis 回归分析regular multiplet 正常多重态regular reflection 单向反射regularization 正规化regulator 正规化函数regulator gene 第基因relative acceleration 相对加速度relative aperture 口径比relative atomic weight 相对原子量relative biological effectiveness 相对生物学效应relative biological effectiveness dose rbe 剂量relative error 相对误差relative humidity 相对湿度relative measurement 相对测量relative motion 相对运动relative permeability 相对磁导率relative refractive index 相对折射率relative stopping power 相对阻止本领relative velocity 相对速度relativistic astrophysics 相对论天体物理学relativistic cosmology 相对论宇宙学relativistic dynamics 相对论力学relativistic effect 相对论性效应relativistic electron beam 相对论性电子束relativistic field 相对论性场relativistic formula 相对论性公式relativistic invariance 相对论性不变性relativistic mass equation 相对论性质量方程relativistic mechanics 相对论力学relativistic quantum mechanics 相对论性量子力学relativistic quantum theory 相对论性量子论relativistic velocity 相对论性速度relativity 相对性relativity principle 相对性原理relativity theory 相对论relaxation 张弛relaxation effect 张弛效应relaxation function 弛豫函数relaxation modulus 张弛模量relaxation oscillation 张弛振荡relaxation spectrum 弛豫谱relaxation time 张弛时间relay 继电器reliability 可靠性reliability factor 可靠性因子reluctance 磁阻reluctivity 磁阻率rem 雷姆remanence 顽磁remanent field 剩余磁场remote batch processing 远程成批处理remote control 遥控remote measuring 遥测remote metering 遥测remote synchronization technology 遥测同步技术renormalization 重正化renormalization group 重正化群renormalization of mass and charge 质量与电荷重正化renormalization theory 重正化理论repeated stress 重复应力repetitive error 重复误差replica 复制replica grating 复制光栅replica method 复制方法representation 表现representation of group 群表示representation of the rotation group 转动群的表示reprocessing 后处理reprocessing of spent fuel 烧过的核燃料后处理reproduction factor 增殖比repulsion 推斥;斥力repulsive force 斥力repulsive potential 排斥势research laboratory 研究室research reactor 研究用堆residual charge 剩余电荷residual field 剩余磁场residual gas 残留气体residual gas analyser 剩余气体分析器residual heat 残留热residual image 余象residual induction 剩余感应residual intensity 剩余强度residual interaction 剩余相互酌residual magnetization 剩余磁化强度residual nucleus 剩余核residual rays 剩余射线residual resistance 剩余电阻residual stress 剩余应力residue class group 剩余群resilience 回弹能resistance 阻力resistance attenuator 电阻衰减器resistance box 电阻箱resistance minimum 电阻最小resistance thermometer 电阻温度计resistive attenuator 电阻衰减器resistive state 有阻力状态resistivity 电阻率resistor 电阻器电阻resolution 分解resolving power 分辨率resolving time 分辨时间resonanance curye 共振曲线resonance 共振resonance absorption 共振吸收resonance absorption cross section 共振吸收截面resonance accelerator 共振加速器resonance box 谐振箱resonance capture 共振俘获resonance circuit 共振电路resonance condition 共振条件resonance curve 共振曲线resonance energy 共振能resonance excitation 共振激发resonance fluorescence 共振荧光resonance formula 共振公式resonance frequency 共振频率resonance integral 共振积分resonance level 共振能级resonance line 共振线resonance method 共振法resonance neutron 共振中子resonance peak 共振峰resonance potential 共振电势resonance radiation 共振辐射resonance raman spectroscopy 共振喇曼光谱学resonance reaction 共振反应resonance region 共振区resonance scattering 共振散射resonance spectrum 共振光谱resonant accelerator 共振加速器resonant cavity 共振腔resonant interaction 共振相互酌resonant particle 共振粒子resonator 谐振器共振器resonoscope 共振示波器respect 关系response 响应response function 响应函数response time 响应时间rest energy 静能rest mass 静质量restitution coefficient 恢复系数restoring force 恢复力restricted problem of three bodies 限制三体问题restriction 限制restrictor 节流节力result 结果resultant 合成resultant acceleration 合加速度resultant force 合力retardation 减速;延迟retardation phenomenon 滞后现象retardation spectrum 滞后谱retarded green function 推迟格林函数retarded potential 推迟势retentivity 顽磁性reticular structure 网状结构reticule 分度线reticulum 网座reverberant chamber 混响室reverberation 混响reverberation time 混响时间reverse feedback 负反馈reverse osmosis 逆渗透reverse temperature 转换温度reverse x ray effect 逆 x 射线效应reversed field pinch 反向场箍缩reversibility 可逆性reversible cell 可逆电池reversible change 可逆变化reversible cycle 可逆循环reversible engine 可逆机reversible pendulum 可倒摆reversible permeability 可逆磁导率reversible process 可逆过程reversible reaction 可逆反应reversible system 可逆系统reversible transducer 可逆变换器reversing prism 道威棱镜revolution 旋转;公转revolution ellipsoid 回转椭面revolution paraboloid 回转抛物面revolving field type motor 旋转磁场型电动机reynolds' law of similitude 雷诺相似律reynolds' number 雷诺数rf ion source rf 离子源rgb system of color representation rgb 彩色显示系统rhenium 铼rheogoniometer 锥板龄测角器rheology 龄学rheometer 龄计rheopexy 笼性rheostat 变阻器rheostatatic brake 变阻破动器rho meson 介子rho type doublet 型二重态rhodium 铑rhodium iron resistance thermometer 铑铁电阻温度计rhombic antenna 菱形天线rhombic lattice 斜方晶格rhombic system 斜方系rhombohedral system 菱形系ribonucleic acid 核糖核酸ricci tensor 里奇张量riemann christoffel tensor 黎曼克里斯托菲张量riemann space 黎曼空间riemann tensor 黎曼张量rietveld method 里德伯尔德法righi leduc effect 里齐勒杜克效应right angled coordinates 直角坐标right ascension 赤经right hand rule 右手定则rigid body 刚体rigid dynamics 刚体动力学rigid plastic body 刚性塑料体rigidity 刚性ring counter 环形计数器ring current 环形电流ring cyclotron 环形回旋加速器ring discharge 环形放电ring laser 环形激光器ring laser gyroscope 环形激光陀螺仪ring nebula 环状星云ripple 波纹ripple current 波纹电流弱脉动电流ripple voltage 脉动电压rise time 上升时间risk 危险度ritz combination principle 里兹并合定则ritz formula 里兹公式rms error 均方根误差rna 核糖核酸roberts micromanometer 罗伯兹压力计robertson walker metric 罗伯森沃克度规robinson anemometer 罗宾逊风速计robot 机扑roche limit 洛希极限roche lobe 洛希瓣rochelle salt 罗谢耳盐rock crystal 水晶rocket 火箭rocking curve 摆动曲线rockwell hardness 洛氏硬度roentgen 伦琴roentgen apparatus x 光机roentgen radiation 伦琴辐射roentgen rays 伦琴射线roentgen tube x射线管roentgenmeter x射线计roentgenogramm x 射线照相roentgenography x 射线照相法roentgenoluminescence x 射线发光roentgenometer x 射线计roentgenoscope x 射线透视机roentgenoscopy x 射线透视法rogowski coil 罗果夫斯基线圈rolling 横摇rolling friction 滚动摩擦room temperature 室温root mean square error 均方根误差roots pump 罗茨泵rosenbluth formula 罗森布拉思公式rosenthal oscillation 罗森塔尔振动rosette motion 蔷薇花形运动rossby wave 罗斯贝波rotameter 转子量计rotary power 旋光本领rotary pump 回转泵rotary reflection 旋转反射rotary switch 转动式开关rotary vacuum pump 回转真空泵rotating anode x ray generator 转动阳极 x 射线发生器rotating crystal method 旋转晶体法rotating fluid 旋转铃rotating magnetic field 旋转磁场rotation 转动rotation group 转动群rotation invariance 转动不变性rotation vibration band 转动振动光谱带rotation vibration spectrum 转动振动光谱rotational absorption line 转动吸收线rotational band 转动谱带rotational brownian motion 转动布朗运动rotational constant 转动常数rotational coupling 转动耦合rotational energy 转动能rotational excitation 转动激发rotational excited level 转动激发能级rotational fine structure 转动精细结构rotational isomer 转动同质异构体rotational level 转动能级rotational line 转动谱线rotational partition function 转动配分函数rotational perturbation 转动微扰rotational quantum number 旋转量子数rotational relaxation 转动弛豫rotational spectrum 转动能谱rotational speed 转动速度rotational structure 转动结构rotational temperature 转动温度rotational term 转动项rotational transform angle 转动变换角rotational transition 转动跃迁rotational velocity 转动速度rotational viscosimeter 旋转粘度计rotator 旋转体rotatory dispersion 旋转色散rotatory polarization 转动偏振rotatory power 旋光本领rotatory reflection 旋转反射roton 旋子rotor 转子roughening transition 粗化跃迁roughness coefficient 粗糙系数roundoff error 舍入误差rouse model 劳斯模型routine 程序rowland circle 罗兰圆rowland ghost 罗兰鬼线rubber elasticity 橡胶弹性rubbery state 橡胶态rubidium magnetometer 铷磁强计rubidum 铷ruby laser 红宝石激光器rule 规则ruled diffraction grating 刻线式衍射光栅run of rays 光路rupture 断裂russell saunders coupling 罗素桑德斯耦合rutgers formula 拉特格斯公式ruthenium 钌rutherford 卢瑟福rutherford scattering 卢瑟福散射rutherford scattering spectroscopy 卢瑟福散射光谱学rutile structure 金红石结构rvb rvbrydberg constant 里德伯常数rydberg correction 里德伯校正rydberg potential 里德伯势rydberg series 里德伯系列rydberg series formula 里德伯公式rydberg state 里德伯状态。

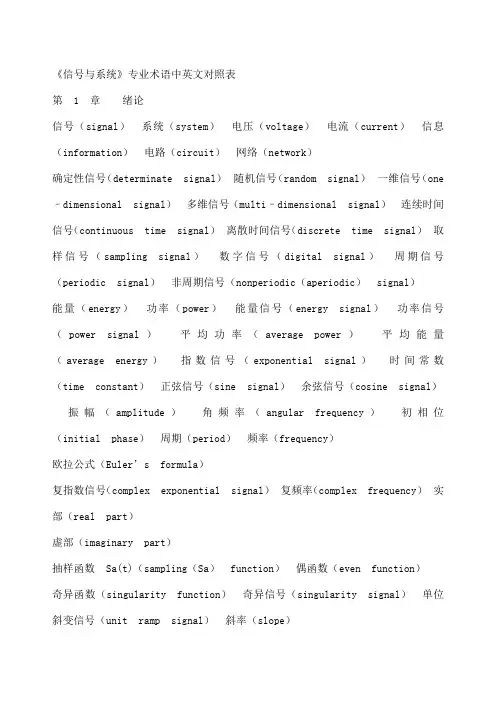

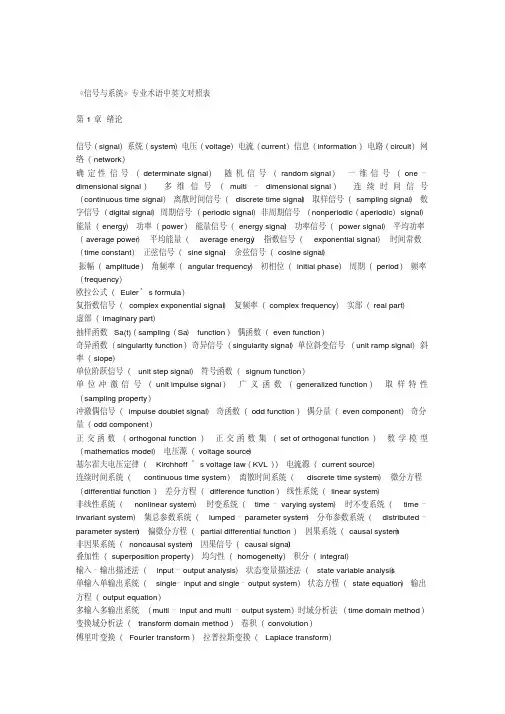

《信号与系统》专业术语中英文对照表第 1 章绪论信号(signal)系统(system)电压(voltage)电流(current)信息(information)电路(circuit)网络(network)确定性信号(determinate signal)随机信号(random signal)一维信号(one –dimensional signal)多维信号(multi–dimensional signal)连续时间信号(continuous time signal)离散时间信号(discrete time signal)取样信号(sampling signal)数字信号(digital signal)周期信号(periodic signal)非周期信号(nonperiodic(aperiodic)signal)能量(energy)功率(power)能量信号(energy signal)功率信号(power signal)平均功率(average power)平均能量(average energy)指数信号(exponential signal)时间常数(time constant)正弦信号(sine signal)余弦信号(cosine signal)振幅(amplitude)角频率(angular frequency)初相位(initial phase)周期(period)频率(frequency)欧拉公式(Euler’s formula)复指数信号(complex exponential signal)复频率(complex frequency)实部(real part)虚部(imaginary part)抽样函数Sa(t)(sampling(Sa)function)偶函数(even function)奇异函数(singularity function)奇异信号(singularity signal)单位斜变信号(unit ramp signal)斜率(slope)单位阶跃信号(unit step signal)符号函数(signum function)单位冲激信号(unit impulse signal)广义函数(generalized function)取样特性(sampling property)冲激偶信号(impulse doublet signal)奇函数(odd function)偶分量(even component)奇分量(odd component)正交函数(orthogonal function)正交函数集(set of orthogonal function)数学模型(mathematics model)电压源(voltage source)基尔霍夫电压定律(Kirchhoff’s voltage law(KVL))电流源(current source)连续时间系统(continuous time system)离散时间系统(discrete time system)微分方程(differential function)差分方程(difference function)线性系统(linear system)非线性系统(nonlinear system)时变系统(time–varying system)时不变系统(time–invariant system)集总参数系统(lumped–parameter system)分布参数系统(distributed–parameter system)偏微分方程(partial differential function)因果系统(causal system)非因果系统(noncausal system)因果信号(causal signal)叠加性(superposition property)均匀性(homogeneity)积分(integral)输入–输出描述法(input–output analysis)状态变量描述法(state variable analysis)单输入单输出系统(single–input and single–output system)状态方程(state equation)输出方程(output equation)多输入多输出系统(multi–input and multi–output system)时域分析法(time domain method)变换域分析法(transform domain method)卷积(convolution)傅里叶变换(Fourier transform)拉普拉斯变换(Laplace transform)第 2 章连续时间系统的时域分析齐次解(homogeneous solution)特解(particular solution)特征方程(characteristic function)特征根(characteristic root)固有(自由)解(natural solution)强迫解(forced solution)起始条件(original condition)初始条件(initial condition)自由响应(natural response)强迫响应(forced response)零输入响应(zero-input response)零状态响应(zero-state response)冲激响应(impulse response)阶跃响应(step response)卷积积分(convolution integral)交换律(exchange law)分配律(distribute law)结合律(combine law)第3 章傅里叶变换频谱(frequency spectrum)频域(frequency domain)三角形式的傅里叶级数(trigonomitric Fourier series)指数形式的傅里叶级数(exponential Fourier series)傅里叶系数(Fourier coefficient)直流分量(direct composition)基波分量(fundamental composition)n 次谐波分量(nth harmonic component)复振幅(complex amplitude)频谱图(spectrum plot(diagram))幅度谱(amplitude spectrum)相位谱(phase spectrum)包络(envelop)离散性(discrete property)谐波性(harmonic property)收敛性(convergence property)奇谐函数(odd harmonic function)吉伯斯现象(Gibbs phenomenon)周期矩形脉冲信号(periodic rectangular pulse signal)周期锯齿脉冲信号(periodic sawtooth pulse signal)周期三角脉冲信号(periodic triangular pulse signal)周期半波余弦信号(periodic half–cosine signal)周期全波余弦信号(periodic full–cosine signal)傅里叶逆变换(inverse Fourier transform)频谱密度函数(spectrum density function)单边指数信号(single–sided exponential signal)双边指数信号(two–sided exponential signal)对称矩形脉冲信号(symmetry rectangular pulse signal)线性(linearity)对称性(symmetry)对偶性(duality)位移特性(shifting)时移特性(time–shifting)频移特性(frequency–shifting)调制定理(modulation theorem)调制(modulation)解调(demodulation)变频(frequency conversion)尺度变换特性(scaling)微分与积分特性(differentiation and integration)时域微分特性(differentiation in the time domain)时域积分特性(integration in the time domain)频域微分特性(differentiation in the frequency domain)频域积分特性(integration in the frequency domain)卷积定理(convolution theorem)时域卷积定理(convolution theorem in the time domain)频域卷积定理(convolution theorem in the frequency domain)取样信号(sampling signal)矩形脉冲取样(rectangular pulse sampling)自然取样(nature sampling)冲激取样(impulse sampling)理想取样(ideal sampling)取样定理(sampling theorem)调制信号(modulation signal)载波信号(carrier signal)已调制信号(modulated signal)模拟调制(analog modulation)数字调制(digital modulation)连续波调制(continuous wave modulation)脉冲调制(pulse modulation)幅度调制(amplitude modulation)频率调制(frequency modulation)相位调制(phase modulation)角度调制(angle modulation)频分多路复用(frequency–division multiplex(FDM))时分多路复用(time –division multiplex(TDM))相干(同步)解调(synchronous detection)本地载波(local carrier)系统函数(system function)网络函数(network function)频响特性(frequency response)幅频特性(amplitude frequency response)相频特性(phase frequency response)无失真传输(distortionless transmission)理想低通滤波器(ideal low–pass filter)截止频率(cutoff frequency)正弦积分(sine integral)上升时间(rise time)窗函数(window function)理想带通滤波器(ideal band–pass filter)第 4 章拉普拉斯变换代数方程(algebraic equation)双边拉普拉斯变换(two-sided Laplace transform)双边拉普拉斯逆变换(inverse two-sided Laplace transform)单边拉普拉斯变换(single-sided Laplace transform)拉普拉斯逆变换(inverse Laplace transform)收敛域(region of convergence(ROC))延时特性(time delay)s 域平移特性(shifting in the s-domain)s 域微分特性(differentiation in the s-domain)s 域积分特性(integration in the s-domain)初值定理(initial-value theorem)终值定理(expiration-value)复频域卷积定理(convolution theorem in the complex frequency domain)部分分式展开法(partial fraction expansion)留数法(residue method)第 5 章策动点函数(driving function)转移函数(transfer function)极点(pole)零点(zero)零极点图(zero-pole plot)暂态响应(transient response)稳态响应(stable response)稳定系统(stable system)一阶系统(first order system)高通滤波网络(high-low filter)低通滤波网络(low-pass filter)二阶系统(second system)最小相移系统(minimum-phase system)维纳滤波器(Winner filter)卡尔曼滤波器(Kalman filter)低通(low-pass)高通(high-pass)带通(band-pass)带阻(band-stop)有源(active)无源(passive)模拟(analog)数字(digital)通带(pass-band)阻带(stop-band)佩利-维纳准则(Paley-Winner criterion)最佳逼近(optimum approximation)过渡带(transition-band)通带公差带(tolerance band)巴特沃兹滤波器(Butterworth filter)切比雪夫滤波器(Chebyshew filter)方框图(block diagram)信号流图(signal flow graph)节点(node)支路(branch)输入节点(source node)输出节点(sink node)混合节点(mix node)通路(path)开通路(open path)闭通路(close path)环路(loop)自环路(self-loop)环路增益(loop gain)不接触环路(disconnect loop)前向通路(forward path)前向通路增益(forward path gain)梅森公式(Mason formula)劳斯准则(Routh criterion)第 6 章数字系统(digital system)数字信号处理(digital signal processing)差分方程(difference equation)单位样值响应(unit sample response)卷积和(convolution sum)Z 变换(Z transform)序列(sequence)样值(sample)单位样值信号(unit sample signal)单位阶跃序列(unit step sequence)矩形序列(rectangular sequence)单边实指数序列(single sided real exponential sequence)单边正弦序列(single sided exponential sequence)斜边序列(ramp sequence)复指数序列(complex exponential sequence)线性时不变离散系统(linear time-invariant discrete-time system)常系数线性差分方程(linear constant-coefficient difference equation)后向差分方程(backward difference equation)前向差分方程(forward difference equation)海诺塔(Tower of Hanoi)菲波纳西(Fibonacci)冲激函数串(impulse train)第7 章数字滤波器(digital filter)单边Z 变换(single-sided Z transform)双边Z 变换(two-sided (bilateral) Z transform) 幂级数(power series)收敛(convergence)有界序列(limitary-amplitude sequence)正项级数(positive series)有限长序列(limitary-duration sequence)右边序列(right-sided sequence)左边序列(left-sided sequence)双边序列(two-sided sequence)Z 逆变换(inverse Z transform)围线积分法(contour integral method)幂级数展开法(power series expansion)z 域微分(differentiation in the z-domain)序列指数加权(multiplication by an exponential sequence)z 域卷积定理(z-domain convolution theorem)帕斯瓦尔定理(Parseval theorem)传输函数(transfer function)序列的傅里叶变换(discrete-time Fourier transform:DTFT)序列的傅里叶逆变换(inverse discrete-time Fourier transform:IDTFT)幅度响应(magnitude response)相位响应(phase response)量化(quantization)编码(coding)模数变换(A/D 变换:analog-to-digital conversion)数模变换(D/A 变换:digital-to- analog conversion)第8 章端口分析法(port analysis)状态变量(state variable)无记忆系统(memoryless system)有记忆系统(memory system)矢量矩阵(vector-matrix )常量矩阵(constant matrix )输入矢量(input vector)输出矢量(output vector)直接法(direct method)间接法(indirect method)状态转移矩阵(state transition matrix)系统函数矩阵(system function matrix)冲激响应矩阵(impulse response matrix)朱里准则(July criterion)。

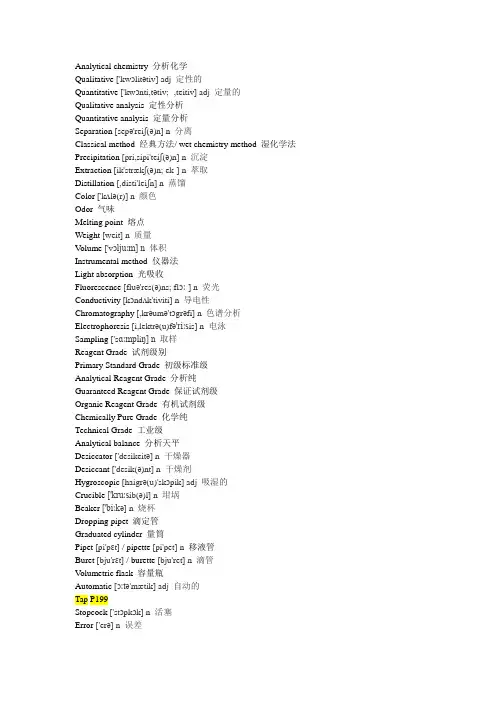

Analytical chemistry 分析化学Qualitative ['kwɔlitətiv] adj 定性的Quantitative ['kwɔnti,tətiv; -,teitiv] adj 定量的Qualitative analysis 定性分析Quantitative analysis 定量分析Separation [sepə'reiʃ(ə)n] n 分离Classical method 经典方法/ wet chemistry method 湿化学法Precipitation [pri,sipi'teiʃ(ə)n] n 沉淀Extraction [ik'strækʃ(ə)n; ek-] n 萃取Distillation [,disti'leiʃn] n 蒸馏Color ['kʌlə(r)] n 颜色Odor 气味Melting point 熔点Weight [weit] n 质量V olume ['vɔljuːm] n 体积Instrumental method 仪器法Light absorption 光吸收Fluorescence [fluə'res(ə)ns; flɔː-] n 荧光Conductivity [kɔndʌk'tiviti] n 导电性Chromatography [,krəumə'tɔgrəfi] n 色谱分析Electrophoresis [i,lektrə(u)fə'riːsis] n 电泳Sampling ['sɑːmpliŋ] n 取样Reagent Grade 试剂级别Primary Standard Grade 初级标准级Analytical Reagent Grade 分析纯Guaranteed Reagent Grade 保证试剂级Organic Reagent Grade 有机试剂级Chemically Pure Grade 化学纯Technical Grade 工业级Analytical balance 分析天平Desiccator ['desikeitə] n 干燥器Desiccant ['desik(ə)nt] n 干燥剂Hygroscopic [haigrə(u)'skɔpik] adj 吸湿的Crucible ['kruːsib(ə)l] n 坩埚Beaker ['biːkə] n 烧杯Dropping pipet 滴定管Graduated cylinder 量筒Pipet [pi'pɛt] / pipette [pi'pet] n 移液管Buret [bju'rɛt] / burette [bju'ret] n 滴管V olumetric flask 容量瓶Automatic [ɔːtə'mætik] adj 自动的Tap P199Stopcock ['stɔpkɔk] n 活塞Error ['erə] n 误差Uncertainty [ʌn'sɜːt(ə)nti; -tinti] n 不确定Mean [miːn] n 平均值Arithmetic mean 算数平均值Media ['miːdiə] n 中间数Accuracy ['ækjurəsi] n 精确度Precision [pri'siʒ(ə)n] n 准确度Absolute error 绝对误差Percent relative error 相对误差百分数Spread [spred] n 扩展度、分布Range [rein(d)ʒ] n值域、范围Standard deviation 标准偏差Variance ['veəriəns] n 方差Coefficient of variation 方差系数Deviation from the mean 与平均值间的差P201Deviation [diːvi'eiʃ(ə)n] n 偏差Absolute standard deviation 绝对标准偏差Relative standard deviation 相对标准偏差Percent relative standard deviation 相对标准偏差百分数Systematic [sistə'mætik] adj 系统的/ determinate [di'tɜːminət] adj 确定的Sampling error 取样误差Method error 方法误差Measurement error 操作误差Personal error 个人误差Random ['rændəm] adj 随机的/ indeterminate [,indi'tɜːminət] adj 不确定的True value 真实值Bia 偏差P201Sample ['sɑːmp(ə)l] n 样品Population [pɔpju'leiʃ(ə)n] n 总体Probability distribution 分布几率Gaussian ['ɡausiən] adj 高斯的Normal didtribution 正态分布Population’ s centralTrue mean valuePopulation standard deviation 总体标准偏差Confidence interval 置信区间Confidence level 置信水平Confidence limit 置信限度t-test t检验F-test F检验Q-test Q检验Detection limit 检出限Gravimetric analysis 质量分析Precipitation method 沉淀法V olatilization method 挥发法Titrimetric method 滴定法V olumetric analysis 体积分析Primary standard 初级标准Secondary standard 二级标准Standard solution 标准溶液Direct method 直接方法Standardization 标定Secondary standard solution 二级标准溶液Titration [tai'treiʃən] n 滴定Equivalence point 等当点,等效点,当量点Back titration 反滴定End point 终点Titration error 滴定偏差Indicator ['indikeitə] n 指示剂Titration curve 滴定曲线Litmus ['litməs] n 石蕊Phenolphthalein [,fiːnɔl'(f)θæliːn; -'(f)θe il-] n 酚酞Bromothymol blue 溴百里酚蓝Acid-base titration 酸碱滴定Complexometric titration 络合滴定Redox titration 氧化还原滴定Precipitation titration 沉淀滴定Potentiometric [pəu,tenʃiə'metrik] adj 电势测定的V oltammetry [vəul'tæmitri] n 伏安法Coulometry [ku'lɑmitri] n 库伦发Conductometry [kən'dʌktəmetri] n 电导测定法Dielectrometry 介电滴定Potentiometric method 电势测定方法Potentiometry 电势测定法Potentiometer 电位计Reference electrode 参考电极Indicator electrode 指示电极Junction potential 接界电势Coulometric method 库伦法Controlled-potential coulometry 控制电位电势法Potential coulometry 恒电位库伦法Controlled-current coulometry 控制电流库伦法Amperostatic coulometry 恒电流库伦法Electroanalysis [i,lektrəuə'næləsis] n 电分析Coulometric titration 库伦滴定Potentiostat [pəu'tenʃiəstæt] n 稳压器Coulometer [ku'lɑmitɚ] n 库伦计Galvanostat / amperostat 恒流器Coulomb ['kuːlɔm] n 库伦V oltammetry [vəul'tæmitri] n 伏安法V oltammogram 伏安图Working electrode 工作电极Reference electrode 参考电极Auxiliary electrode 辅助电极Saturated calomel electrode 饱和甘汞电极Linear-scan voltammetry 线性扫描伏安法Hydrodynamic voltammetry 流体动力学伏安法Polarography [,pəulə'rɔgrəfi] n 极谱法Amperometry 电流滴定法Stripping voltammetry 溶出伏安法Anodic [æn'ɑdik] adj 阳极的Cathodic [kə'θɔdik] adj 阴极的Adsorptive [æd'sɔrptiv] adj 吸附的Cyclic voltammetry 循环伏安法Alternating current 交替电流Chrono-conductometrySpectroscopy [spek'trɔskəpi] n 光谱学Emission [i'miʃ(ə)n] n 发射Scattering ['skætəriŋ] n 散射Spectrometer [spek'trɔmitə] n 分光仪Prism ['priz(ə)m] n 棱镜Diffraction grating 衍射光栅Monochromatic [mɔnə(u)krə'mætik] adj 单色的Half-mirrored 半透明反射的Sample beam 样品光束Reference 参比Electronic excitation 电子激发Transition [træn'ziʃ(ə)n; trɑːn-; -'siʃ-] n 跃迁Molar absorptivity / molar extinction coefficient 摩尔吸光/消光系数Hypsochromic / blue shift 蓝移Bathochromic / red shift 红移Beer-Lam-bert law朗伯比耳定律Absorbance [əb'zɔːb(ə)ns; -'sɔːb(ə)ns] n 吸光度Transmittance [trænz'mit(ə)ns; trɑːnz-; -ns-] n 透射比Infrared spectrophotometer 红外分光光度计Far-infrared 远红外Rotational 振动的Mid-infrared 中红外Rotational-vibrational 旋转振动的Overtone / harmonic vibration 谐振Resonant frequency 共振频率Vibrational mode 振动模式Vibrational degree of freedom 振动自由度Stretching ['stretʃiŋ] n伸缩、拉伸、伸长Bending ['bendiŋ] n 弯曲Scissoring ['sizəriŋ] n剪刀式摆动、剪,剪切Rocking ['rɔkiŋ] n左右摇摆,摇摆, 摇动Wagging [wæg] n上下摇摆,推移,摇摆Twisting ['twistiŋ] n扭摆,扭曲Symmetric [si'metrik] adj 对称的Antisymmetric stretching 反对称伸缩Fourier transform 傅立叶转换Functional group region 官能团区Fingerprint region 指纹区Fourier transform infrared (FTIR)spectroscopy 傅立叶转换红外光谱Inter ferometer 干涉仪Inter ferogram 干涉图Atomic absorption spectroscopy (AAS) 原子吸收光谱Atomization [,ætomi'zeʃən] n 原子化Flame atomization 火焰原子化Furnace ( electrothermal) atomization 炉子原子化Atomic emission 原子发射Inductively coupled plasma (ICP) 感应耦合等离子体Emission line 发射线Flame photometry火焰光谱Atomic fluorescence 原子荧光Nuclear magnetic resonance (NMR) 核磁共振Nuclear magnetic resonance spectroscope 核磁共振光谱Intrinsic magnetic moment固有磁矩Angular moment角动量Nonzero spin 非零自旋P219Resonant frequency 共振频率Nuclear shielding 核屏蔽Chemical shift 化学位移Tetramethylsilane 四甲基硅J-coupling J耦合Scalar coupling标量耦合Spin-spin coupling 自旋耦合Splitting ['splitiŋ] n 分裂Pascal’ s triangle帕斯卡三角Doublet ['dʌblit] n 双重峰Triplet ['triplit] n 三重峰Quartet [kwɔː'tet] n 四重峰One-dimensional technique 一维技术Two-dimensional technique 二维技术Time domain NMR spectroscopic technique 时域核磁共振光谱技术Solid state NMR spectroscopy 固态核磁共振光谱法Mass spectrometry (MS) 质谱学Mass-to-charge ratio 质荷比Molecular ion 分子离子Fragment ion 碎片离子Mass spectrum 质谱Base peak 基峰Mass spectrometer 质谱仪Ion source 离子源Mass analyzer 质量分析器Ion detector 离子检测器Electron impact ( EI ) ionization 电子轰击离子化Chemical ionization 化学电离Electrospray ionization ( ESI) 电喷射离子化Matrix-assisted laser desorption / ionization ( MALDI) 基质辅助激光解吸/电离Inductively coupled plasma (ICP)Sector field mass analyzer扇形磁场质谱分析仪Time-of-flight (TOF) analyzer 飞行时间分析仪Quadrupole mass analyzer 四级杆质量分析器Tandem mass spectrometry 串联质谱法Chromatography [,krəumə'tɔgrəfi] n 色谱分析法Mobile phase 流动相Differential partitioning 差动分隔Stationary phase 稳定相Partition coefficient 分配系数Retention [ri'tenʃ(ə)n] n 保留Elution [i'lju:ʃən] n 洗出液Elute [i'l(j)uːt] v 洗提Eluent['ɛljuənt] n 洗脱液Eluotropic series洗脱序(洗脱液洗脱能力大小的次序)Chromatogram 色谱图Distribution constant 分配常数Retention time 保留时间Retention volume 保留体积Dead time 死时间V oid time 空隙时间V oid volume 空隙体积Baseline width 基线宽度Band broadening 谱带增宽Resolution 分辨率Capacity factor 容量因数,容量因子Selectivity factor选择系数,选择因子Theoretical plate 理论塔板Peak capacity 最高容量Column chromatography 柱层析(法), 柱色谱(法)Planar chromatography平面色谱法Paper chromatography 纸色谱法Thin layer chromatography 薄层色谱Retention factor 保留因子Gas chromatography 气相色谱gas-liquid chromatography 气液色谱Capillary column 毛细管柱Packed column 填料柱,填充柱,填料塔Liquid chromatography (LC) 液相色谱法High performance liquid chromatography (HPLC) 高效液体色谱Normal phase 正相Reverse phase 反相Affinity chromatography (AC) 亲合色谱法Supercritical fluid chromatography 超临界流体色谱法Ion exchange chromatography 离子交换色谱法Ion exchange resin 离子交换树脂Fast protein liquid chromatography (FPLC) 快速蛋白质液相层析Size-exclusion chromatography (SEC) 体积排除色谱法Gel permeation chromatography (GPC) 凝胶渗透色谱法Hydrodynamic diameter 流体力学半径Hydrodynamic volume 流体力学体积Electrophoresis [i,lektrə(u)fə'riːsis] n 电泳Capillary zone electrophoresis 毛细管电泳Electroosmotic flow 电渗透流Micellar electrokinetic capillary chromatography 胶束电动毛细管色谱p226 Capillary gel electrophoresis 毛细管凝胶电泳Capillary electrochromatography 毛细管电色谱Optical microscopy 光学显微镜Electron microscopy 电子显微镜Scanning electron microscopy (SEM) 扫描电子显微术Transmission electron microscopy (TEM) 透射电子显微镜法Scanning probe microscopy (SPM) 扫描探针显微术Atomic force microscopy (AFM) 原子力显微镜Thermogravimetry (TG) 热重分析法Differential thermal analysis (DTA) 热分析Differential scanning calorimetry (DSC) 差热扫描量热计Dynamic mechanical analysis (DMA) 动态力学分析Raman spectroscopy (RS) 拉曼光谱Auger electron spectroscopy (AES) 俄歇电子能谱学Photoelectron spectroscopy (PES) 光电子能谱Electron spectroscopy for chemical analysis (ESCA) 化学分析用电子能谱学X-ray photoelectron spectroscopy (XPS) X射线光电子能谱学X-ray fluorescence (XRF) X射线荧光X-ray powder diffractometry (XRD) x射线粉末衍射Electrochemiluminescence (ECL) 电致化学发光。

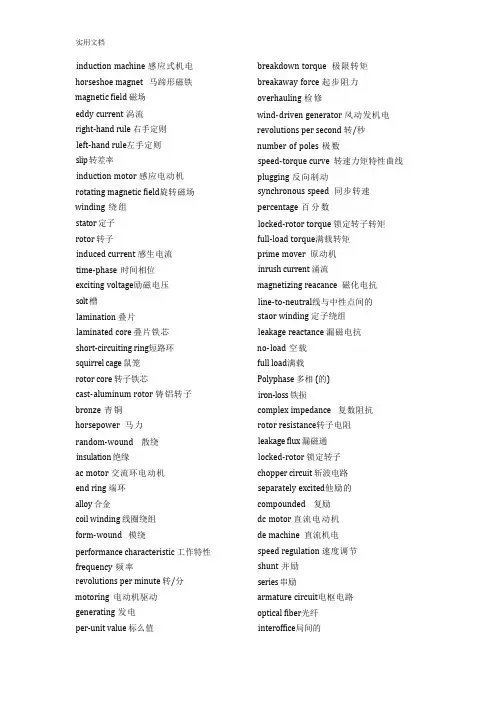

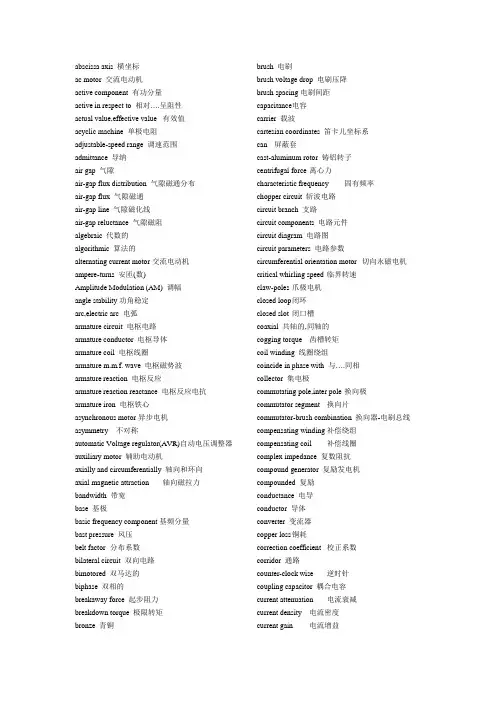

induction machine 感应式机电horseshoe magnet 马蹄形磁铁magnetic field 磁场eddy current 涡流right-hand rule 右手定则left-hand rule左手定则slip 转差率induction motor 感应电动机rotating magnetic field旋转磁场winding 绕组stator 定子rotor 转子induced current 感生电流time-phase 时间相位exciting voltage励磁电压solt 槽lamination 叠片laminated core 叠片铁芯short-circuiting ring短路环squirrel cage 鼠笼rotor core 转子铁芯cast-aluminum rotor 铸铝转子bronze 青铜horsepower 马力random-wound 散绕insulation 绝缘ac motor 交流环电动机end ring 端环alloy 合金coil winding 线圈绕组form-wound 模绕performance characteristic 工作特性frequency 频率revolutions per minute 转/分motoring 电动机驱动generating 发电per-unit value 标么值breakdown torque 极限转矩breakaway force 起步阻力overhauling 检修wind-driven generator 风动发机电revolutions per second 转/秒number of poles 极数speed-torque curve 转速力矩特性曲线plugging 反向制动synchronous speed 同步转速percentage 百分数locked-rotor torque 锁定转子转矩full-load torque满载转矩prime mover 原动机inrush current 涌流magnetizing reacance 磁化电抗line-to-neutral线与中性点间的staor winding 定子绕组leakage reactance 漏磁电抗no-load 空载full load满载Polyphase 多相 (的)iron-loss 铁损complex impedance 复数阻抗rotor resistance转子电阻leakage flux 漏磁通locked-rotor 锁定转子chopper circuit 斩波电路separately excited他励的compounded 复励dc motor 直流电动机de machine 直流机电speed regulation 速度调节shunt 并励series 串励armature circuit电枢电路optical fiber光纤interoffice局间的waveguide 波导波导管bandwidth 带宽light emitting diode发光二极管silica硅石二氧化硅regeneration 再生, 后反馈放大coaxial 共轴的,同轴的high-performance 高性能的carrier载波mature 成熟的Single Side Band(SSB) 单边带coupling capacitor 结合电容propagate 传导传播modulator 调制器demodulator 解调器line trap限波器shunt 分路器Amplitude Modulation(AM) 调幅Frequency Shift Keying(FSK)移频键控tuner 调谐器attenuate 衰减incident 入射的two-way configuration 二线制generator voltage 发电机电压dc generator 直流发机电polyphase rectifier多相整流器boost 增压time constant 时间常数forward transfer function正向传递函数error signal误差信号regulator 调节器stabilizing transformer稳定变压器time delay 延时direct axis transient time constan直t轴瞬变时间常数transient response 瞬态响应solid state固体buck 补偿operational calculus算符演算gain 增益pole 极点feedback signal 反馈信号dynamic response 动态响应voltage control system 电压控制系统mismatch 失配error detector 误差检测器excitation system 励磁系统field current励磁电流transistor晶体管high-gain 高增益boost-buck 升压去磁feedback system 反馈系统reactive power 无功功率feedback loop 反馈回路automatic Voltage regulator(AVR) 自动电压调整器reference Voltage 基准电压magnetic amplifier 磁放大器amplidyne 微场扩流发机电self-exciting自励的limiter限幅器manual control 手动控制block diagram 方框图linear zone 线性区potential transformer 电压互感器stabilization network稳定网络stabilizer稳定器air-gap flux气隙磁通saturation effect饱和效应saturation curve 饱和曲线flux linkage磁链per unit value标么值shunt field并励磁场magnetic circuit磁路load-saturation curve 负载饱和曲线air-gap line气隙磁化线polyphase rectifier多相整流器circuit components 电路元件circuit parameters 电路参数electrical device电气设备electric energy电能primary cell原生电池energy converter 电能转换器conductor 导体heating appliance 电热器direct-current直流time invariant时不变的self-inductor 自感mutual-inductor 互感the dielectric电介质storage battery 蓄电池e.m.f = electromotive fore电动势unidirectional current单方向性电流circuit diagram 电路图load characteristic负载特性terminal voltage 端电压external characteristi外c特性conductance 电导volt-ampere characteristics伏安特性carbon-filament lamp 碳丝灯泡 ideal source 理想电源internal resistance内阻active (passive) circuit element有s (无) 源电路元件leakage current 漏电流circuit branch支路P.D. = potential drop电压降potential distribution电位分布r.m.s values = root mean square values 均方根值effective values有效值steady direct current恒稳直流电sinusoidal time function正弦时间函数complex number 复数Cartesian coordinates 笛卡儿坐标系modulus 模real part实部imaginary part 虚部displacement current 位移电流trigonometric transformations 瞬时值epoch angle 初相角phase displacement 相位差signal amplifier小信号放大器mid-frequency band 中频带bipolar junction transistor (B双JT极)性晶体管field effect transistor ( E )应管electrode 电极电焊条polarity极性gain 增益isolation隔离分离绝缘隔振emitter 发射管放射器发射极collector集电极base 基极self-bias resisto 偏置电阻triangular symbol 三角符号phase reversal 反相infinite voltage gai 穷大电压增益feedback component 反馈元件differentiation微分integration 积分下限impedance 阻抗fidelity保真度summing circuit 总和路线反馈系统中的比较环节Oscillation振荡inverse 倒数admittance 导纳transformer 变压器turns ratio变比匝比ampere-turns 安匝 (数)mutual flux 交互(主)磁通vector equation 向 (相)量方程power frequency 工频capacitance effect 电容效应induction machine 感应机电shunt excited 并励series excited串励separately excited他励self excited自励field winding 磁场绕组励磁绕组 speed-torque characteristic速度转矩特性dynamic-state operation 动态运行salient poles凸极excited by 励磁field coils励磁线圈air-gap flux distributio隙磁通分布direct axis直轴armature coil 电枢线圈rotating commutator 旋转(整流子)换向器commutator-brush combination 换向器-电刷总线mechanical rectifier机械式整流器armature m.m.f. wave 电枢磁势波Geometrical position 几何位置magnetic torque 电磁转矩spatial waveform 空间波形sinusoidal density wave 正弦磁密度external armature circuit电枢外电路instantaneous electric power 瞬时电功率instantaneous mechanical power 瞬时机械功率effects of saturation饱和效应reluctance 磁阻power amplifier 功率放大器compound generator 复励发机电rheostat 变阻器self excitation process 自励过程commutation condition 换向状况cumulatively compounded motor 积复励电动机operating condition 运行状态equivalent T circuit 等值电路 rotor (stator) winding转子(定子绕组) winding loss 绕组(铜)损耗prime motor 原动机active component 有功分量reactive component 无功分量electromagnetic torque 电磁转矩retarding torque 制动转矩inductive component 感性(无功)分量abscissa axis横坐标induction generator 感应发机电synchronous generator 同步发机电automatic station 无人值守电站hydropower station 水电站process of self excitation 自励过程auxiliary motor 辅助电动机 technical specifications技术条件 voltage across the terminals端电压steady state condition 瞬态暂态reactive in respect t相o对….性 active in respect t 对….呈阻性 synchronous condenser 同步进相(调相)机coincide in phase with 与….同相synchronous reactance 同步电抗algebraic 代数的algorithmic 算法的1--master element主要元件,是指控制开关等元件。

《信号与系统》专业术语中英文对照表第 1 章绪论信号(signal)系统(system)电压(voltage)电流(current)信息(information)电路(circuit)网络(network)确定性信号(determinate signal)随机信号(random signal)一维信号(one–dimensional signal)多维信号(multi–dimensional signal)连续时间信号(continuous time signal)离散时间信号(discrete time signal)取样信号(sampling signal)数字信号(digital signal)周期信号(periodic signal)非周期信号(nonperiodic(aperiodic) signal)能量(energy)功率(power)能量信号(energy signal)功率信号(power signal)平均功率(average power)平均能量(average energy)指数信号(exponential signal)时间常数(time constant)正弦信号(sine signal)余弦信号(cosine signal)振幅(amplitude)角频率(angular frequency)初相位(initial phase)周期(period)频率(frequency)欧拉公式(Euler’s formula)复指数信号(complex exponential signal)复频率(complex frequency)实部(real part)虚部(imaginary part)抽样函数 Sa(t)(sampling(Sa) function)偶函数(even function)奇异函数(singularity function)奇异信号(singularity signal)单位斜变信号(unit ramp signal)斜率(slope)单位阶跃信号(unit step signal)符号函数(signum function)单位冲激信号(unit impulse signal)广义函数(generalized function)取样特性(sampling property)冲激偶信号(impulse doublet signal)奇函数(odd function)偶分量(even component)奇分量(odd component)正交函数(orthogonal function)正交函数集(set of orthogonal function)数学模型(mathematics model)电压源(voltage source)基尔霍夫电压定律(Kirchhoff’s voltage law(KVL))电流源(current source)连续时间系统(continuous time system)离散时间系统(discrete time system)微分方程(differential function)差分方程(difference function)线性系统(linear system)非线性系统(nonlinear system)时变系统(time–varying system)时不变系统(time–invariant system)集总参数系统(lumped–parameter system)分布参数系统(distributed–parameter system)偏微分方程(partial differential function)因果系统(causal system)非因果系统(noncausal system)因果信号(causal signal)叠加性(superposition property)均匀性(homogeneity)积分(integral)输入–输出描述法(input–output analysis)状态变量描述法(state variable analysis)单输入单输出系统(single–input and single–output system)状态方程(state equation)输出方程(output equation)多输入多输出系统(multi–input and multi–output system)时域分析法(time domain method)变换域分析法(transform domain method)卷积(convolution)傅里叶变换(Fourier transform)拉普拉斯变换(Laplace transform)第 2 章连续时间系统的时域分析齐次解(homogeneous solution)特解(particular solution)特征方程(characteristic function)特征根(characteristic root)固有(自由)解(natural solution)强迫解(forced solution)起始条件(original condition)初始条件(initial condition)自由响应(natural response)强迫响应(forced response)零输入响应(zero-input response)零状态响应(zero-state response)冲激响应(impulse response)阶跃响应(step response)卷积积分(convolution integral)交换律(exchange law)分配律(distribute law)结合律(combine law)第3 章傅里叶变换频谱(frequency spectrum)频域(frequency domain)三角形式的傅里叶级数(trigonomitric Fourier series)指数形式的傅里叶级数(exponential Fourier series)傅里叶系数(Fourier coefficient)直流分量(direct composition)基波分量(fundamental composition) n 次谐波分量(nth harmonic component)复振幅(complex amplitude)频谱图(spectrum plot(diagram))幅度谱(amplitude spectrum)相位谱(phase spectrum)包络(envelop)离散性(discrete property)谐波性(harmonic property)收敛性(convergence property)奇谐函数(odd harmonic function)吉伯斯现象(Gibbs phenomenon)周期矩形脉冲信号(periodic rectangular pulse signal)周期锯齿脉冲信号(periodic sawtooth pulse signal)周期三角脉冲信号(periodic triangular pulse signal)周期半波余弦信号(periodic half–cosine signal)周期全波余弦信号(periodic full–cosine signal)傅里叶逆变换(inverse Fourier transform)频谱密度函数(spectrum density function)单边指数信号(single–sided exponential signal)双边指数信号(two–sided exponential signal)对称矩形脉冲信号(symmetry rectangular pulse signal)线性(linearity)对称性(symmetry)对偶性(duality)位移特性(shifting)时移特性(time–shifting)频移特性(frequency–shifting)调制定理(modulation theorem)调制(modulation)解调(demodulation)变频(frequency conversion)尺度变换特性(scaling)微分与积分特性(differentiation and integration)时域微分特性(differentiation in the time domain)时域积分特性(integration in the time domain)频域微分特性(differentiation in the frequency domain)频域积分特性(integration in the frequency domain)卷积定理(convolution theorem)时域卷积定理(convolution theorem in the time domain)频域卷积定理(convolution theorem in the frequency domain)取样信号(sampling signal)矩形脉冲取样(rectangular pulse sampling)自然取样(nature sampling)冲激取样(impulse sampling)理想取样(ideal sampling)取样定理(sampling theorem)调制信号(modulation signal)载波信号(carrier signal)已调制信号(modulated signal)模拟调制(analog modulation)数字调制(digital modulation)连续波调制(continuous wave modulation)脉冲调制(pulse modulation)幅度调制(amplitude modulation)频率调制(frequency modulation)相位调制(phase modulation)角度调制(angle modulation)频分多路复用(frequency–division multiplex(FDM))时分多路复用(time–division multiplex (TDM))相干(同步)解调(synchronous detection)本地载波(local carrier)系统函数(system function)网络函数(network function)频响特性(frequency response)幅频特性(amplitude frequency response)相频特性(phase frequency response)无失真传输(distortionless transmission)理想低通滤波器(ideal low–pass filter)截止频率(cutoff frequency)正弦积分(sine integral)上升时间(rise time)窗函数(window function)理想带通滤波器(ideal band–pass filter)第 4 章拉普拉斯变换代数方程(algebraic equation)双边拉普拉斯变换(two-sided Laplace transform)双边拉普拉斯逆变换(inverse two-sided Laplace transform)单边拉普拉斯变换(single-sided Laplace transform)拉普拉斯逆变换(inverse Laplace transform)收敛域(region of convergence(ROC))延时特性(time delay)s 域平移特性(shifting in the s-domain)s 域微分特性(differentiation in the s-domain) s 域积分特性(integration in the s-domain)初值定理(initial-value theorem)终值定理(expiration-value)复频域卷积定理(convolution theorem in the complex frequency domain)部分分式展开法(partial fraction expansion)留数法(residue method)第 5 章策动点函数(driving function)转移函数(transfer function)极点(pole)零点(zero)零极点图(zero-pole plot)暂态响应(transient response)稳态响应(stable response)稳定系统(stable system)一阶系统(first order system)高通滤波网络(high-low filter)低通滤波网络(low-pass filter)二阶系统(second system)最小相移系统(minimum-phase system)维纳滤波器(Winner filter)卡尔曼滤波器(Kalman filter)低通(low-pass)高通(high-pass)带通(band-pass)带阻(band-stop)有源(active)无源(passive)模拟(analog)数字(digital)通带(pass-band)阻带(stop-band)佩利-维纳准则(Paley-Winner criterion)最佳逼近(optimum approximation)过渡带(transition-band)通带公差带(tolerance band)巴特沃兹滤波器(Butterworth filter)切比雪夫滤波器(Chebyshew filter)方框图(block diagram)信号流图(signal flow graph)节点(node)支路(branch)输入节点(source node)输出节点(sink node)混合节点(mix node)通路(path)开通路(open path)闭通路(close path)环路(loop)自环路(self-loop)环路增益(loop gain)不接触环路(disconnect loop)前向通路(forward path)前向通路增益(forward path gain)梅森公式(Mason formula)劳斯准则(Routh criterion)第 6 章数字系统(digital system)数字信号处理(digital signal processing)差分方程(difference equation)单位样值响应(unit sample response)卷积和(convolution sum)Z 变换(Z transform)序列(sequence)样值(sample)单位样值信号(unit sample signal)单位阶跃序列(unit step sequence)矩形序列 (rectangular sequence)单边实指数序列(single sided real exponential sequence)单边正弦序列(single sided exponential sequence)斜边序列(ramp sequence)复指数序列(complex exponential sequence)线性时不变离散系统(linear time-invariant discrete-time system)常系数线性差分方程(linear constant-coefficient difference equation)后向差分方程(backward difference equation)前向差分方程(forward difference equation)海诺塔(Tower of Hanoi)菲波纳西(Fibonacci)冲激函数串(impulse train)第 7 章数字滤波器(digital filter)单边 Z 变换(single-sided Z transform)双边 Z 变换(two-sided (bilateral) Z transform) 幂级数(power series)收敛(convergence)有界序列(limitary-amplitude sequence)正项级数(positive series)有限长序列(limitary-duration sequence)右边序列(right-sided sequence)左边序列(left-sided sequence)双边序列(two-sided sequence) Z 逆变换(inverse Z transform)围线积分法(contour integral method)幂级数展开法(power series expansion) z 域微分(differentiation in the z-domain)序列指数加权(multiplication by an exponential sequence) z 域卷积定理(z-domain convolution theorem)帕斯瓦尔定理(Parseval theorem)传输函数(transfer function)序列的傅里叶变换(discrete-time Fourier transform:DTFT)序列的傅里叶逆变换(inverse discrete-time Fourier transform:IDTFT)幅度响应(magnitude response)相位响应(phase response)量化(quantization)编码(coding)模数变换(A/D 变换:analog-to-digital conversion)数模变换(D/A 变换:digital-to- analog conversion)第 8 章端口分析法(port analysis)状态变量(state variable)无记忆系统(memoryless system)有记忆系统(memory system)矢量矩阵(vector-matrix )常量矩阵(constant matrix )输入矢量(input vector)输出矢量(output vector)直接法(direct method)间接法(indirect method)状态转移矩阵(state transition matrix)系统函数矩阵(system function matrix)冲激响应矩阵(impulse response matrix)朱里准则(July criterion)。

A phase transition for the diameter of the configuration modelRemco van der Hofstad∗Gerard Hooghiemstra†and Dmitri Znamenski‡August31,2007AbstractIn this paper,we study the configuration model(CM)with i.i.d.degrees.We establisha phase transition for the diameter when the power-law exponentτof the degrees satisfiesτ∈(2,3).Indeed,we show that forτ>2and when vertices with degree2are present withpositive probability,the diameter of the random graph is,with high probability,bounded frombelow by a constant times the logarithm of the size of the graph.On the other hand,assumingthat all degrees are at least3or more,we show that,forτ∈(2,3),the diameter of the graphis,with high probability,bounded from above by a constant times the log log of the size of thegraph.1IntroductionRandom graph models for complex networks have received a tremendous amount of attention in the past decade.See[1,22,26]for reviews on complex networks and[2]for a more expository account.Measurements have shown that many real networks share two fundamental properties. Thefirst is the fact that typical distances between vertices are small,which is called the‘small world’phenomenon(see[27]).For example,in the Internet,IP-packets cannot use more than a threshold of physical links,and if the distances in terms of the physical links would be large,e-mail service would simply break down.Thus,the graph of the Internet has evolved in such a way that typical distances are relatively small,even though the Internet is rather large.The second and maybe more surprising property of many networks is that the number of vertices with degree k falls offas an inverse power of k.This is called a‘power law degree sequence’,and resulting graphs often go under the name‘scale-free graphs’(see[15]for a discussion where power laws occur in the Internet).The observation that many real networks have the above two properties has incited a burst of activity in network modelling using random graphs.These models can,roughly speaking,be divided into two distinct classes of models:‘static’models and’dynamic’models.In static models, we model with a graph of a given size a snap-shot of a real network.A typical example of this kind of model is the configuration model(CM)which we describe below.A related static model, which can be seen as an inhomogeneous version of the Erd˝o s-R´e nyi random graph,is treated in great generality in[4].Typical examples of the‘dynamical’models,are the so-called preferential attachment models(PAM’s),where added vertices and edges are more likely to be attached to vertices that already have large degrees.PAM’s often focus on the growth of the network as a way to explain the power law degree sequences.∗Department of Mathematics and Computer Science,Eindhoven University of Technology,P.O.Box513,5600 MB Eindhoven,The Netherlands.E-mail:rhofstad@win.tue.nl†Delft University of Technology,Electrical Engineering,Mathematics and Computer Science,P.O.Box5031,2600 GA Delft,The Netherlands.E-mail:G.Hooghiemstra@ewi.tudelft.nl‡EURANDOM,P.O.Box513,5600MB Eindhoven,The Netherlands.E-mail:znamenski@eurandom.nlPhysicists have predicted that distances in PAM’s behave similarly to distances in the CM with similar degrees.Distances in the CM have attracted considerable attention(see e.g.,[14,16,17,18]), but distances in PAM’s far less(see[5,19]),which makes it hard to verify this prediction.Together with[19],the current paper takes afirst step towards a rigorous verification of this conjecture.At the end of this introduction we will return to this observation,but let usfirst introduce the CM and present our diameter results.1.1The configuration modelThe CM is defined as follows.Fix an integer N.Consider an i.i.d.sequence of random variables D1,D2,...,DN.We will construct an undirected graph with N vertices where vertex j has degreeD j.We will assume that LN =Nj=1D j is even.If LNis odd,then we will increase DNby1.Thissingle change will make hardly any difference in what follows,and we will ignore this effect.We will later specify the distribution of D1.To construct the graph,we have N separate vertices and incident to vertex j,we have D j stubs or half-edges.The stubs need to be paired to construct the graph.We number the stubs in a givenorder from1to LN .We start by pairing at random thefirst stub with one of the LN−1remainingstubs.Once paired,two stubs form a single edge of the graph.Hence,a stub can be seen as the left-or the right-half of an edge.We continue the procedure of randomly choosing and pairing the stubs until all stubs are connected.Unfortunately,vertices having self-loops,as well as multiple edges between vertices,may occur,so that the CM is a multigraph.However,self-loops are scarce when N→∞,as shown e.g.in[7].The above model is a variant of the configuration model[3],which,given a degree sequence,is the random graph with that given degree sequence.The degree sequence of a graph is the vector of which the k th coordinate equals the fraction of vertices with degree k.In our model,by the law of large numbers,the degree sequence is close to the distribution of the nodal degree D of which D1,...,DNare i.i.d.copies.The probability mass function and the distribution function of the nodal degree law are denoted byP(D=k)=f k,k=1,2,...,and F(x)= xk=1f k,(1.1)where x is the largest integer smaller than or equal to x.We pay special attention to distributions of the form1−F(x)=x1−τL(x),(1.2) whereτ>2and L is slowly varying at infinity.This means that the random variables D j obey a power law,and the factor L is meant to generalize the model.We denote the expectation of D byµ,i.e.,µ=∞k=1kf k.(1.3)1.2The diameter in the configuration modelIn this section we present the results on the bounds on the diameter.We use the abbreviation whp for a statement that occurs with probability tending to1if the number of vertices of the graph N tends to∞.Theorem1.1(Lower bound on diameter)Forτ>2,assuming that f1+f2>0and f1<1, there exists a positive constantαsuch that whp the diameter of the configuration model is bounded below byαlog N.A more precise result on the diameter in the CM is presented in[16],where it is proved that under rather general assumptions on the degree sequence of the CM,the diameter of the CM divided by log N converges to a constant.This result is also valid for related models,such as the Erd˝o s-R´e nyi random graph,but the proof is quite difficult.While Theorem1.1is substantially weaker,the fact that a positive constant times log N appears is most interesting,as we will discuss now in more detail.Indeed,the result in Theorem1.1is most interesting in the case whenτ∈(2,3).By[18, Theorem1.2],the typical distance forτ∈(2,3)is proportional to log log N,whereas we show here that the diameter is bounded below by a positive constant times log N when f1+f2>0and f1<1. Therefore,we see that the average distance and the diameter are of a different order of magnitude. The pairs of vertices where the distance is of the order log N are thus scarce.The proof of Theorem 1.1reveals that these pairs are along long lines of vertices with degree2that are connected to each other.Also in the proof of[16],one of the main difficulties is the identification of the precise length of these long thin lines.Our second main result states that whenτ∈(2,3),the above assumption that f1+f2>0is necessary and sufficient for log N lower bounds on the diameter.In Theorem1.2below,we assume that there exists aτ∈(2,3)such that,for some c>0and all x≥1,1−F(x)≥cx1−τ,(1.4) which is slightly weaker than the assumption in(1.2).We further define for integer m≥2and a real numberσ>1,CF =CF(σ,m)=2|log(τ−2)|+2σlog m.(1.5)Then our main upper bound on the diameter when(1.4)holds is as follows:Theorem1.2(A log log upper bound on the diameter)Fix m≥2,and assume that P(D≥m+1)=1,and that(1.4)holds.Then,for everyσ>(3−τ)−1,the diameter of the configuration model is,whp,bounded above by CFlog log N.1.3Discussion and related workTheorem1.2has a counterpart for preferential attachment models(PAM)proved in[19].In these PAM’s,at each integer time t,a new vertex with m≥1edges attached to it,is added to the graph. The new edges added at time t are then preferentially connected to older edges,i.e.,conditionally on the graph at time t−1,which is denoted by G(t−1),the probability that a given edge is connected to vertex i is proportional to d i(t−1)+δ,whereδ>−m is afixed parameter and d i(t−1)is the degree of vertex i at time t−1.A substantial literature exists,see e.g.[10],proving that the degree sequence of PAM’s in rather great generality satisfy a power law(see e.g.the references in [11]).In the above setting of linear preferential attachment,the exponentτis equal to[21,11]τ=3+δm.(1.6)A log log t upper bound on the diameter holds for PAM’s with m≥2and−m<δ<0,which, by(1.6),corresponds toτ∈(2,3)[19]:Theorem1.3(A log log upper bound on the diameter of the PAM)Fix m≥2andδ∈(−m,0).Then,for everyσ>13−τ,and withCG (σ)=4|log(τ−2)|+4σlog mthe diameter of the preferential attachment model is,with high probability,bounded above by CGlog log t,as t→∞.Observe that the condition m≥2in the PAM corresponds to the condition P(D≥m+1)=1 in the CM,where one half-edge is used to attach the vertex,while in PAM’s,vertices along a pathhave degree at least three when m≥2.Also note from the definition of CG and CFthat distancesin PAM’s tend to be twice as big compared to distances in the CM.This is related to the structure of the graphs.Indeed,in both graphs,vertices of high degree play a crucial role in shortest paths. In the CM vertices of high degree are often directly connected to each other,while in the PAM, they tend to be connected through a later vertex which links to both vertices of high degree.Unfortunately,there is no log t lower bound in the PAM forδ>0and m≥2,or equivalently τ>3.However,[19]does contain a(1−ε)log t/log log t lower bound for the diameter when m≥1 andδ≥0.When m=1,results exists on log t asymptotics of the diameter,see e.g.[6,24].The results in Theorems1.1–1.3are consistent with the non-rigorous physics predictions that distances in the PAM and in the CM,for similar degree sequences,behave similarly.It is an interesting problem,for both the CM and PAM,to determine the exact constant C≥0such that the diameter of the graph of N vertices divided by log N converges in probability to C.For the CM,the results in[16]imply that C>0,for the PAM,this is not known.We now turn to related work.Many distance results for the CM are known.Forτ∈(1,2) distances are bounded[14],forτ∈(2,3),they behave as log log N[25,18,9],whereas forτ>3 the correct scaling is log N[17].Observe that these results induce lower bounds for the diameter of the CM,since the diameter is the supremum of the distance,where the supremum is taken over all pairs of vertices.Similar results for models with conditionally independent edges exist,see e.g. [4,8,13,23].Thus,for these classes of models,distances are quite well understood.The authors in [16]prove that the diameter of a sparse random graph,with specified degree sequence,has,whp, diameter equal to c log N(1+o(1)),for some constant c.Note that our Theorems1.1–1.2imply that c>0when f1+f2>0,while c=0when f1+f2=0and(1.4)holds for someτ∈(2,3).There are few results on distances or diameter in PAM’s.In[5],it was proved that in the PAMand forδ=0,for whichτ=3,the diameter of the resulting graph is equal to log tlog log t (1+o(1)).Unfortunately,the matching result for the CM has not been proved,so that this does not allow us to verify whether the models have similar distances.This paper is organized as follows.In Section2,we prove the lower bound on the diameter formulated in Theorem1.1and in Section3we prove the upper bound in Theorem1.2.2A lower bound on the diameter:Proof of Theorem1.1We start by proving the claim when f2>0.The idea behind the proof is simple.Under the conditions of the theorem,one can,whp,find a pathΓ(N)in the random graph such that this path consists exclusively of vertices with degree2and has length at least2αlog N.This implies that the diameter is at leastαlog N,since the above path could be a cycle.Below we define a procedure which proves the existence of such a path.Consider the process of pairing stubs in the graph.We are free to choose the order in which we pair the free stubs,since this order is irrelevant for the distribution of the random graph.Hence,we are allowed to start with pairing the stubs of the vertices of degree2.Let N(2)be the number of vertices of degree2and SN(2)=(i1,...,i N(2))∈N N(2)the collection of these vertices.We will pair the stubs and at the same time define a permutationΠ(N)=(i∗1, (i)N(2))of SN(2),and a characteristicχ(N)=(χ1,...,χN(2))onΠ(N),whereχj is either0or1.Π(N)andχ(N)will be defined inductively in such a way that for any vertex i∗k ∈Π(N),χk=1,if and only if vertex i∗k is connected to vertex i∗k+1.Hence,χ(N)contains a substring ofat least2αlog N ones precisely when the random graph contains a pathΓ(N)of length at least 2αlog N.We initialize our inductive definition by i∗1=i1.The vertex i∗1has two stubs,we consider the second one and pair it to an arbitrary free stub.If this free stub belongs to another vertex j=i∗1 in SN(2)then we choose i∗2=j andχ1=1,otherwise we choose i∗2=i2,andχ1=0.Suppose forsome1<k≤N(2),the sequences(i∗1, (i)k )and(χ1,...,χk−1)are defined.Ifχk−1=1,then onestub of i∗k is paired to a stub of i∗k−1,and another stub of i∗kis free,else,ifχk−1=0,vertex i∗khastwo free stubs.Thus,for every k≥1,the vertex i∗k has at least one free stub.We pair this stubto an arbitrary remaining free stub.If this second stub belongs to vertex j∈SN (2)\{i∗1, (i)k},then we choose i∗k+1=j andχk=1,else we choose i∗k+1as thefirst stub in SN(2)\{i∗1, (i)k},andχk=0.Hence,we have defined thatχk=1precisely when vertex i∗k is connected to vertexi∗k+1.We show that whp there exists a substring of ones of length at least2αlog N in thefirsthalf ofχN ,i.e.,inχ12(N)=(χi∗1,...,χi∗N(2)/2).For this purpose,we couple the sequenceχ12(N)with a sequence B12(N)={ξk},whereξk are i.i.d.Bernoulli random variables taking value1withprobability f2/(4µ),and such that,whp,χi∗k ≥ξk for all k∈{1,..., N(2)/2 }.We write PNforthe law of the CM conditionally on the degrees D1,...,DN.Then,for any1≤k≤ N(2)/2 ,thePN-probability thatχk=1is at least2N(2)−CN(k)LN −CN(k),(2.1)where,as before,N(2)is the total number of vertices with degree2,and CN(k)is one plus thetotal number of paired stubs after k−1pairings.By definition of CN(k),for any k≤N(2)/2,we haveCN(k)=2(k−1)+1≤N(2).(2.2) Due to the law of large numbers we also have that whpN(2)≥f2N/2,LN≤2µN.(2.3) Substitution of(2.2)and(2.3)into(2.1)then yields that the right side of(2.1)is at leastN(2) LN ≥f24µ.Thus,whp,we can stochastically dominate all coordinates of the random sequenceχ12(N)with ani.i.d.Bernoulli sequence B12(N)of Nf2/2independent trials with success probability f2/(4µ)>0.It is well known(see e.g.[12])that the probability of existence of a run of2αlog N ones convergesto one whenever2αlog N≤log(Nf2/2) |log(f2/(4µ))|,for some0< <1.We conclude that whp the sequence B1(N)contains a substring of2αlog N ones.Since whpχN ≥B12(N),where the ordering is componentwise,whp the sequenceχNalso contains the samesubstring of2αlog N ones,and hence there exists a required path consisting of at least2αlog N vertices with degree2.Thus,whp the diameter is at leastαlog N,and we have proved Theorem 1.1in the case that f2>0.We now complete the proof of Theorem1.1when f2=0by adapting the above argument. When f2=0,and since f1+f2>0,we must have that f1>0.Let k∗>2be the smallest integer such that f k∗>0.This k∗must exist,since f1<1.Denote by N∗(2)the total number of vertices of degree k∗of which itsfirst k∗−2stubs are connected to a vertex with degree1.Thus,effectively, after thefirst k∗−2stubs have been connected to vertices with degree1,we are left with a structure which has2free stubs.These vertices will replace the N(2)vertices used in the above proof.It is not hard to see that whp N∗(2)≥f∗2N/2for some f∗2>0.Then,the argument for f2>0can be repeated,replacing N(2)by N∗(2)and f2by f∗2.In more detail,for any1≤k≤ N∗(2)/(2k∗) , the PN-probability thatχk=1is at least2N∗(2)−C∗N(k)LN −CN(k),(2.4)where C∗N(k)is the total number of paired stubs after k−1pairings of the free stubs incident to the N∗(2)vertices.By definition of C∗N(k),for any k≤N∗(2)/(2k∗),we haveCN(k)=2k∗(k−1)+1≤N∗(2).(2.5)Substitution of(2.5),N∗(2)≥f∗2N/2and the bound on LNin(2.3)into(2.4)gives us that the right side of(2.4)is at leastN∗(2) LN ≥f∗24µ.Now the proof of Theorem1.1in the case where f2=0and f1∈(0,1)can be completed as above.We omit further details. 3A log log upper bound on the diameter forτ∈(2,3)In this section,we investigate the diameter of the CM when P(D≥m+1)=1,for some integer m≥2.We assume(1.4)for someτ∈(2,3).We will show that under these assumptions CFlog log N isan upper bound on the diameter of the CM,where CFis defined in(1.5).The proof is divided into two key steps.In thefirst,in Proposition3.1,we give a bound on the diameter of the core of the CM consisting of all vertices with degree at least a certain power of log N.This argument is very close in spirit to the one in[25],the only difference being that we have simplified the argument slightly.After this,in Proposition3.4,we derive a bound on the distance between vertices with small degree and the core.We note that Proposition3.1only relies on the assumption in(1.4),while Proposition3.4only relies on the fact that P(D≥m+1)=1,for some m≥2.The proof of Proposition3.1can easily be adapted to a setting where the degrees arefixed, by formulating the appropriate assumptions on the number of vertices with degree at least x for a sufficient range of x.This assumption would replace(1.5).Proposition3.4can easily be adapted to a setting where there are no vertices of degree smaller than or equal to m.This assumption would replace the assumption P(D≥m+1)=1,for some m≥2.We refrain from stating these extensions of our results,and start by investigating the core of the CM.We takeσ>13−τand define the core CoreNof the CM to beCoreN={i:D i≥(log N)σ},(3.1)i.e.,the set of vertices with degree at least(log N)σ.Also,for a subset A⊆{1,...,N},we define the diameter of A to be equal to the maximal shortest path distance between any pair of vertices of A.Note,in particular,that if there are pairs of vertices in A that are not connected,then the diameter of A is infinite.Then,the diameter of the core is bounded in the following proposition:Proposition3.1(Diameter of the core)For everyσ>13−τ,the diameter of CoreNis,whp,bounded above by2log log N|log(τ−2)|(1+o(1)).(3.2)Proof.We note that(1.4)implies that whp the largest degree D(N)=max1≤i≤N D i satisfiesD(N)≥u1,where u1=N1τ−1(log N)−1,(3.3) because,when N→∞,P(D(N)>u1)=1−P(D(N)≤u1)=1−(F(u1))N≥1−(1−cu1−τ1)N=1−1−c(log N)τ−1NN∼1−exp(−c(log N)τ−1)→1.(3.4)DefineN (1)={i :D i ≥u 1},(3.5)so that,whp ,N (1)=∅.For some constant C >0,which will be specified later,and k ≥2we define recursively u k =C log N u k −1 τ−2,and N (k )={i :D i ≥u k }.(3.6)We start by identifying u k :Lemma 3.2(Identification of u k )For each k ∈N ,u k =C a k (log N )b k N c k ,(3.7)with c k =(τ−2)k −1τ−1,b k =13−τ−4−τ3−τ(τ−2)k −1,a k =1−(τ−2)k −13−τ.(3.8)Proof .We will identify a k ,b k and c k recursively.We note that,by (3.3),c 1=1τ−1,b 1=−1,a 1=0.By (3.6),we can,for k ≥2,relate a k ,b k ,c k to a k −1,b k −1,c k −1as follows:c k =(τ−2)c k −1,b k =1+(τ−2)b k −1,a k =1+(τ−2)a k −1.(3.9)As a result,we obtain c k =(τ−2)k −1c 1=(τ−2)k −1τ−1,(3.10)b k =b 1(τ−2)k −1+k −2 i =0(τ−2)i =1−(τ−2)k −13−τ−(τ−2)k −1,(3.11)a k =1−(τ−2)k −13−τ.(3.12)The key step in the proof of Proposition 3.1is the following lemma:Lemma 3.3(Connectivity between N (k −1)and N (k ))Fix k ≥2,and C >4µ/c (see (1.3),and (1.4)respectively).Then,the probability that there exists an i ∈N (k )that is not directly connected to N (k −1)is o (N −γ),for some γ>0independent of k .Proof .We note that,by definition,i ∈N (k −1)D i ≥u k −1|N (k −1)|.(3.13)Also,|N (k −1)|∼Bin N,1−F (u k −1) ,(3.14)and we have that,by (1.4),N [1−F (u k −1)]≥cN (u k −1)1−τ,(3.15)which,by Lemma 3.2,grows as a positive power of N ,since c k ≤c 2=τ−2τ−1<1τ−1.We use a concentration of probability resultP (|X −E [X ]|>t )≤2e −t 22(E [X ]+t/3),(3.16)which holds for binomial random variables[20],and gives that that the probability that|N(k−1)|is bounded below by N[1−F(u k−1)]/2is exponentially small in N.As a result,we obtain that for every k,and whpi∈N(k)D i≥c2N(u k)2−τ.(3.17)We note(see e.g.,[18,(4.34)]that for any two sets of vertices A,B,we have thatPN (A not directly connected to B)≤e−D A D BL N,(3.18)where,for any A⊆{1,...,N},we writeD A=i∈AD i.(3.19)On the event where|N(k−1)|≥N[1−F(u k−1)]/2and where LN≤2µN,we then obtain by(3.18),and Boole’s inequality that the PN-probability that there exists an i∈N(k)such that i is not directly connected to N(k−1)is bounded byNe−u k Nu k−1[1−F(u k−1)]2L N≤Ne−cu k(u k−1)2−τ4µ=N1−cC4µ,(3.20)where we have used(3.6).Taking C>4µ/c proves the claim. We now complete the proof of Proposition3.1.Fixk∗= 2log log N|log(τ−2)|.(3.21)As a result of Lemma3.3,we have whp that the diameter of N(k∗)is at most2k∗,because thedistance between any vertex in N(k∗)and the vertex with degree D(N)is at most k∗.Therefore,weare done when we can show thatCoreN⊆N(k∗).(3.22)For this,we note thatN(k∗)={i:D i≥u k∗},(3.23)so that it suffices to prove that u k∗≥(log N)σ,for anyσ>13−τ.According to Lemma3.2,u k∗=C a k∗(log N)b k∗N c k∗.(3.24) Because for x→∞,and2<τ<3,x(τ−2)2log x|log(τ−2)|=x·x−2=o(log x),(3.25) wefind with x=log N thatlog N·(τ−2)2log log N|log(τ−2)|=o(log log N),(3.26) implying that N c k∗=(log N)o(1),(log N)b k∗=(log N)13−τ+o(1),and C a k∗=(log N)o(1).Thus,u k∗=(log N)13−τ+o(1),(3.27)so that,by picking N sufficiently large,we can make13−τ+o(1)≤σ.This completes the proof ofProposition3.1. For an integer m≥2,we defineC(m)=σ/log m.(3.28)Proposition 3.4(Maximal distance between periphery and core)Assume that P (D ≥m +1)=1,for some m ≥2.Then,for every σ>(3−τ)−1the maximal distance between any vertex and the core is,whp ,bounded from above by C (m )log log N .Proof .We start from a vertex i and will show that the probability that the distance between i and Core N is at least C (m )log log N is o (N −1).This proves the claim.For this,we explore the neighborhood of i as follows.From i ,we connect the first m +1stubs (ignoring the other ones).Then,successively,we connect the first m stubs from the closest vertex to i that we have connected to and have not yet been explored.We call the arising process when we have explored up to distance k from the initial vertex i the k -exploration tree .When we never connect two stubs between vertices we have connected to,then the number of vertices we can reach in k steps is precisely equal to (m +1)m k −1.We call an event where a stub on the k -exploration tree connects to a stub incident to a vertex in the k -exploration tree a collision .The number of collisions in the k -exploration tree is the number of cycles or self-loops in it.When k increases,the probability of a collision increases.However,for k of order log log N ,the probability that more than two collisions occur in the k -exploration tree is small,as we will prove now:Lemma 3.5(Not more than one collision)Take k = C (m )log log N .Then,the P N -probab-ility that there exists a vertex of which the k -exploration tree has at least two collisions,before hittingthe core Core N ,is bounded by (log N )d L −2N ,for d =4C (m )log (m +1)+2σ.Proof .For any stub in the k -exploration tree,the probability that it will create a collision beforehitting the core is bounded above by (m +1)m k −1(log N )σL −1N .The probability that two stubs will both create a collision is,by similar arguments,bounded above by (m +1)m k −1(log N )σL −1N2.The total number of possible pairs of stubs in the k -exploration tree is bounded by[(m +1)(1+m +...+m k −1)]2≤[(m +1)m k ]2,so that,by Boole’s inequality,the probability that the k -exploration tree has at least two collisions is bounded by (m +1)m k 4(log N )2σL −2N .(3.29)When k = C (m )log log N ,we have that (m +1)m k 4(log N )2σ≤(log N )d ,where d is defined inthe statement of the lemma. Finally,we show that,for k = C (m )log log N ,the k -exploration tree will,whp connect to the Core N :Lemma 3.6(Connecting exploration tree to core)Take k = C (m )log log N .Then,the probability that there exists an i such that the distance of i to the core is at least k is o (N −1).Proof .Since µ<∞we have that L N /N ∼µ.Then,by Lemma 3.5,the probability that there exists a vertex for which the k -exploration tree has at least 2collisions before hitting the core is o (N −1).When the k -exploration tree from a vertex i does not have two collisions,then there are at least (m −1)m k −1stubs in the k th layer that have not yet been connected.When k = C (m )log log N this number is at least equal to (log N )C (m )log m +o (1).Furthermore,the expected number of stubs incident to the Core N is at least N (log N )σP (D 1≥(log N )σ)so that whp the number of stubs incident to Core N is at least (compare (1.4))12N (log N )σP (D 1≥(log N )σ)≥c 2N (log N )2−τ3−τ.(3.30)By(3.18),the probability that we connect none of the stubs in the k th layer of the k-exploration tree to one of the stubs incident to CoreNis bounded byexp−cN(log N)2−τ3−τ+C(m)log m2LN≤exp−c4µ(log N)2−τ3−τ+σ=o(N−1),(3.31)because whp LN /N≤2µ,and since2−τ3−τ+σ>1.Propositions3.1and3.4prove that whp the diameter of the configuration model is boundedabove by CF log log N,with CFdefined in(1.5).This completes the proof of Theorem1.2.Acknowledgements.The work of RvdH and DZ was supported in part by Netherlands Organ-isation for Scientific Research(NWO).References[1]R.Albert and A.-L.Barab´a si.Statistical mechanics of complex networks.Rev.Mod.Phys.74,47-97,(2002).[2]A.-L.Barab´a si.Linked,The New Science of Networks.Perseus Publishing,Cambridge,Mas-sachusetts,(2002).[3]B.Bollob´a s.Random Graphs,2nd edition,Academic Press,(2001).[4]B.Bollob´a s,S.Janson,and O.Riordan.The phase transition in inhomogeneous randomgraphs.Random Structures and Algorithms31,3-122,(2007).[5]B.Bollob´a s and O.Riordan.The diameter of a scale-free random binatorica,24(1):5–34,(2004).[6]B.Bollob´a s and O.Riordan.Shortest paths and load scaling in scale-free trees.Phys.Rev.E.,69:036114,(2004).[7]T.Britton,M.Deijfen,and A.Martin-L¨o f.Generating simple random graphs with prescribeddegree distribution.J.Stat.Phys.,124(6):1377–1397,(2006).[8]F.Chung and L.Lu.The average distances in random graphs with given expected degrees.A,99(25):15879–15882(electronic),(2002).[9]R.Cohen and S.Havlin.Scale free networks are ultrasmall,Physical Review Letters90,058701,(2003).[10]C.Cooper and A.Frieze.A general model of web graphs.Random Structures Algorithms,22(3):311–335,(2003).[11]M.Deijfen,H.van den Esker,R.van der Hofstad and G.Hooghiemstra.A preferential attach-ment model with random initial degrees.Preprint(2007).To appear in Arkiv f¨o r Matematik.[12]P.Erd¨o s and A.R´e nyi.On a new law of large numbers,J.Analyse Math.23,103–111,(1970).[13]H.van den Esker,R.van der Hofstad and G.Hooghiemstra.Universality forthe distance infinite variance random graphs.Preprint(2006).Available from http://ssor.twi.tudelft.nl/∼gerardh/[14]H.van den Esker,R.van der Hofstad,G.Hooghiemstra and D.Znamenski.Distances inrandom graphs with infinite mean degrees,Extremes8,111-141,2006.。