Online large-margin training of dependency parsers

- Format: pdf

- Size: 86.31 KB

- Pages: 8

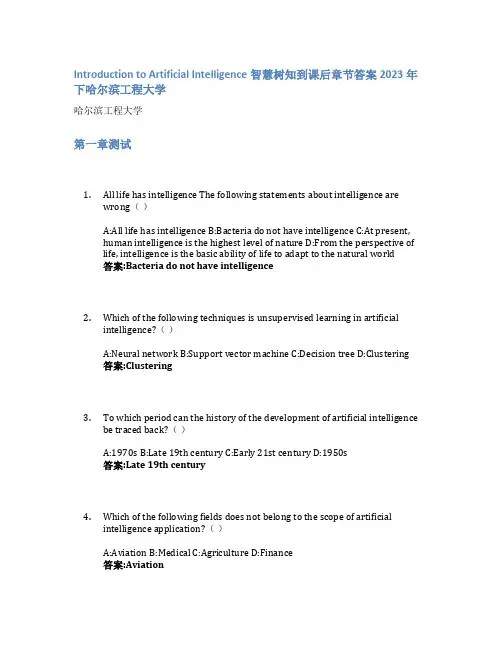

Introduction to Artificial Intelligence: Zhihuishu (智慧树知到) end-of-chapter answers, second half of 2023, Harbin Engineering University

Chapter 1 Test

1. Which of the following statements about intelligence is wrong? ( )
A: All life has intelligence
B: Bacteria do not have intelligence
C: At present, human intelligence is the highest level in nature
D: From the perspective of life, intelligence is the basic ability of life to adapt to the natural world
Answer: Bacteria do not have intelligence

2. Which of the following techniques is unsupervised learning in artificial intelligence? ( )
A: Neural network
B: Support vector machine
C: Decision tree
D: Clustering
Answer: Clustering

3. To which period can the history of the development of artificial intelligence be traced back? ( )
A: 1970s
B: Late 19th century
C: Early 21st century
D: 1950s
Answer: Late 19th century

4. Which of the following fields does not belong to the scope of artificial intelligence application? ( )
A: Aviation
B: Medical
C: Agriculture
D: Finance
Answer: Aviation

5. The first artificial neuron model in human history was the MP model, proposed by Hebb. ( )
A: True
B: False
Answer: False

6. Big data will bring considerable value in government public services, medical services, retail, manufacturing, and personal location services. ( )
A: False
B: True
Answer: True

Chapter 2 Test

1. Which of the following is not a form of human rationality? ( )
A: Value rationality
B: Intellectual rationality
C: Methodological rationality
D: Cognitive rationality
Answer: Intellectual rationality

2. When did life begin? ( )
A: Between 10 billion and 4.5 billion years ago
B: Between 13.8 billion and 10 billion years ago
C: Between 4.5 billion and 3.5 billion years ago
D: More than 13.8 billion years ago
Answer: Between 4.5 billion and 3.5 billion years ago

3. Which of the following statements is true regarding philosophical thinking about artificial intelligence? ( )
A: Philosophical thinking has hindered the progress of artificial intelligence.
B: Philosophical thinking has contributed to the development of artificial intelligence.
C: Philosophical thinking is only concerned with the ethical implications of artificial intelligence.
D: Philosophical thinking has no impact on the development of artificial intelligence.
Answer: Philosophical thinking has contributed to the development of artificial intelligence.

4. What is the rational nature of artificial intelligence? ( )
A: The ability to communicate effectively with humans.
B: The ability to feel emotions and express creativity.
C: The ability to reason and make logical deductions.
D: The ability to learn from experience and adapt to new situations.
Answer: The ability to reason and make logical deductions.

5. Which of the following statements is true regarding the rational nature of artificial intelligence? ( )
A: The rational nature of artificial intelligence includes emotional intelligence.
B: The rational nature of artificial intelligence is limited to logical reasoning.
C: The rational nature of artificial intelligence is not important for its development.
D: The rational nature of artificial intelligence is only concerned with mathematical calculations.
Answer: The rational nature of artificial intelligence is limited to logical reasoning.

6. Connectionism holds that the basic element of human thinking is the symbol, not the neuron, and that human cognition is a self-organizing process of symbol operations rather than of weights. ( )
A: True
B: False
Answer: False

Chapter 3 Test

1. The brain of all organisms can be divided into three primitive parts: forebrain, midbrain and hindbrain. Specifically, the human brain is composed of the brainstem, the cerebellum and the cerebrum (forebrain). ( )
A: False
B: True
Answer: True

2. The neural connections in the brain are chaotic. ( )
A: True
B: False
Answer: False

3. Which of the following statements about the left and right halves of the brain and their functions is wrong? ( )
A: When dictating questions, the left brain is responsible for logical thinking, and the right brain is responsible for language description.
B: The left brain is like a scientist, good at abstract thinking and complex calculation, but lacking rich emotion.
C: The right brain is like an artist, creative in music, art and other artistic activities, and rich in emotion.
D: The left and right hemispheres of the brain have the same shape, but their functions are quite different. They are generally called the left brain and the right brain respectively.
Answer: When dictating questions, the left brain is responsible for logical thinking, and the right brain is responsible for language description.

4. What is the basic unit of the nervous system? ( )
A: Neuron
B: Gene
C: Atom
D: Molecule
Answer: Neuron

5. What is the role of the prefrontal cortex in cognitive functions? ( )
A: It is responsible for sensory processing.
B: It is involved in emotional processing.
C: It is responsible for higher-level cognitive functions.
D: It is involved in motor control.
Answer: It is responsible for higher-level cognitive functions.

6. What is the definition of intelligence? ( )
A: The ability to communicate effectively.
B: The ability to perform physical tasks.
C: The ability to acquire and apply knowledge and skills.
D: The ability to regulate emotions.
Answer: The ability to acquire and apply knowledge and skills.

Chapter 4 Test

1. The feedforward neural network is based on the mathematical model of the neuron and is composed of neurons joined by specific connection methods. Different artificial neural networks generally have different structures, but the basis is still the mathematical model of the neuron. ( )
A: True
B: False
Answer: True

2. In the perceptron, the weights are adjusted by learning so that the network can produce the desired output for any input. ( )
A: True
B: False
Answer: True

3. The convolutional neural network is a feedforward neural network that has many advantages and performs very well on large images. Among the following options, the advantages of the convolutional neural network are ( ).
A: Implicit learning avoids explicit feature extraction
B: Weight sharing
C: Translation invariance
D: Strong robustness
Answer: Implicit learning avoids explicit feature extraction; Weight sharing; Strong robustness

4. In a feedforward neural network, information travels in which direction? ( )
A: Forward
B: Both A and B
C: None of the above
D: Backward
Answer: Forward

5. What is the main feature of a convolutional neural network? ( )
A: They are used for speech recognition.
B: They are used for natural language processing.
C: They are used for reinforcement learning.
D: They are used for image recognition.
Answer: They are used for image recognition.

6. Which of the following is a characteristic of deep neural networks? ( )
A: They require less training data than shallow neural networks.
B: They have fewer hidden layers than shallow neural networks.
C: They have lower accuracy than shallow neural networks.
D: They are more computationally expensive than shallow neural networks.
Answer: They are more computationally expensive than shallow neural networks.

Chapter 5 Test

1. Machine learning refers to how a computer simulates or realizes human learning behavior to obtain new knowledge or skills, and reorganizes its existing knowledge structure to continuously improve its own performance. ( )
A: True
B: False
Answer: True

2. The best decision sequence of a Markov decision process is solved by the Bellman equation, and the value of each state is determined not only by the current state but also by later states. ( )
A: True
B: False
Answer: True

3. AlexNet's contributions include: ( ).
A: Using NVIDIA GTX 580 GPUs to reduce the training time
B: Using the rectified linear unit (ReLU) as the nonlinear activation function
C: Using overlapping max pooling to avoid the averaging effect of average pooling
D: Using Dropout to selectively ignore individual neurons during training to avoid over-fitting the model
Answer: Using NVIDIA GTX 580 GPUs to reduce the training time; Using the rectified linear unit (ReLU) as the nonlinear activation function; Using overlapping max pooling to avoid the averaging effect of average pooling; Using Dropout to selectively ignore individual neurons during training to avoid over-fitting the model

4. In supervised learning, what is the role of the labeled data? ( )
A: To evaluate the model
B: To train the model
C: None of the above
D: To test the model
Answer: To train the model

5. In reinforcement learning, what is the goal of the agent? ( )
A: To identify patterns in input data
B: To minimize the error between the predicted and actual output
C: To maximize the reward obtained from the environment
D: To classify input data into different categories
Answer: To maximize the reward obtained from the environment

6. Which of the following is a characteristic of transfer learning? ( )
A: It can only be used for supervised learning tasks
B: It requires a large amount of labeled data
C: It involves transferring knowledge from one domain to another
D: It is only applicable to small-scale problems
Answer: It involves transferring knowledge from one domain to another

Chapter 6 Test

1. Image segmentation is the technology and process of dividing an image into several specific regions with unique properties and extracting objects of interest. Which of the following statements about image segmentation algorithms is wrong? ( )
A: The region growing method completes the segmentation by calculating the mean vector of the offset.
B: The watershed algorithm, MeanShift segmentation, region growing and Otsu threshold segmentation can all perform image segmentation.
C: The watershed algorithm is often used to segment objects that are connected in the image.
D: Otsu threshold segmentation, also known as the maximum between-class variance method, automatically selects the global threshold T from the histogram characteristics of the entire image.
Answer: The region growing method completes the segmentation by calculating the mean vector of the offset.

2. Camera calibration is a key step when using machine vision to measure objects. Its accuracy directly affects the measurement accuracy. Camera calibration generally involves converting object point coordinates between several coordinate systems. Which coordinate systems are meant by "several coordinate systems" here? ( )
A: Image coordinate system
B: Image plane coordinate system
C: Camera coordinate system
D: World coordinate system
Answer: Image coordinate system; Image plane coordinate system; Camera coordinate system; World coordinate system

3. Commonly used digital image filtering methods: ( ).
A: Bilateral filtering
B: Median filtering
C: Mean filtering
D: Gaussian filtering
Answer: Bilateral filtering; Median filtering; Mean filtering; Gaussian filtering

4. Application areas of digital image processing include: ( )
A: Industrial inspection
B: Biomedical science
C: Scenario simulation
D: Remote sensing
Answer: Industrial inspection; Biomedical science

5. Image segmentation is the technology and process of dividing an image into several specific regions with unique properties and extracting objects of interest. Which of the following statements about image segmentation algorithms is wrong? ( )
A: Otsu threshold segmentation, also known as the maximum between-class variance method, automatically selects the global threshold T from the histogram characteristics of the entire image.
B: The watershed algorithm is often used to segment objects that are connected in the image.
C: The region growing method completes the segmentation by calculating the mean vector of the offset.
D: The watershed algorithm, MeanShift segmentation, region growing and Otsu threshold segmentation can all perform image segmentation.
Answer: The region growing method completes the segmentation by calculating the mean vector of the offset.

Chapter 7 Test

1. Blind search can be applied to many different search problems, but it has not been widely used because of its low efficiency. ( )
A: False
B: True
Answer: True

2. Which of the following search methods uses a FIFO queue? ( )
A: Breadth-first search
B: Random search
C: Depth-first search
D: Generate-and-test method
Answer: Breadth-first search

3. What causes the complexity of the semantic network? ( )
A: There is no recognized formal representation system
B: The quantifier network is inadequate
C: The means of knowledge representation are diverse
D: The relationship between nodes can be linear, nonlinear, or even recursive
Answer: The means of knowledge representation are diverse; The relationship between nodes can be linear, nonlinear, or even recursive

4. In the knowledge graph that takes Leonardo da Vinci as an example, the entity of the character represents a node, and the relationship between the artist and the character represents an edge. Search is the process of finding the action sequence of an intelligent system. ( )
A: True
B: False
Answer: True

5. Which of the following statements about common path search methods is wrong? ( )
A: With the artificial potential field method, obstacles at any distance around the target point easily make the path unreachable
B: The A* algorithm occupies too much memory during the search, the search efficiency is reduced, and the optimal result cannot be guaranteed
C: The artificial potential field method can quickly find a collision-free path with strong flexibility
D: The A* algorithm can solve the shortest path problem in state space search
Answer: With the artificial potential field method, obstacles at any distance around the target point easily make the path unreachable

Chapter 8 Test

1. The languages of human communication, including spoken language, written language, sign language and the Python language, are all natural languages. ( )
A: True
B: False
Answer: False

2. Which of the following statements about machine translation is wrong? ( )
A: The analysis stage of machine translation is mainly lexical analysis and pragmatic analysis
B: The essence of machine translation is the discovery and application of bilingual translation laws.
C: The four stages of machine translation are retrieval, analysis, conversion and generation.
D: At present, natural language machine translation generally takes sentences as the translation unit.
Answer: The analysis stage of machine translation is mainly lexical analysis and pragmatic analysis

3. Which of the following fields does machine translation belong to? ( )
A: Expert system
B: Machine learning
C: Human sensory simulation
D: Natural language system
Answer: Natural language system

4. The following statements about language are wrong: ( )

Operating Capability Analysis: Foreign-Language Source with Chinese and English Versions

Original text

Operating capability analysis
Source: China Securities Net, 05/17/20XX
Author: Techever

Operating capability is the ability to make full use of existing resources to create social wealth. It can be used to evaluate how well a company uses its own resources and how capable its operating activities are. In essence, it means using as few resources and as short a turnover time as possible to produce as many products and generate as much sales revenue as possible; achieving this requires raising the company's level of operating capability.

Operating capability measures the efficiency of asset utilization through the turnover of a company's assets. Indicators of asset turnover speed include inventory turnover, current asset turnover, and total asset turnover. The faster the turnover, the faster the company's assets move through the stages of operation, the shorter the cycle for generating revenue and profit, and the higher the operating efficiency.

Operating capability refers to a company's ability to turn over its assets. Five financial ratios are usually used to analyze it layer by layer: total asset turnover, fixed asset turnover, current asset turnover, inventory turnover, and accounts receivable turnover. Operating capability analysis helps investors understand a company's business conditions and the quality of its management. Below we use Suning Appliance (stock code 0020XX) as an example to show how investors can analyze a company's operating capability.

Total asset turnover is the ratio of a company's sales revenue to the average balance of its total assets. Suning's 20XX sales revenue was 91.1 billion yuan and its average total assets were 14 million yuan; in 20XX sales revenue increased to 160.4 billion yuan, while average total assets grew 2.3 times to 31.9 million yuan. Because total assets grew faster than sales revenue, total asset turnover fell to 5.
The decline in 20XX is directly related to the large number of new stores Suning opened. To complete its strategic "nationwide network" layout, Suning opened 65 new stores in 20XX and entered 20 new cities. Because the reach of its existing logistics and service systems was limited, Suning had to build many new management platforms to support the logistics and management of stores opened later in the same cities. This made the initial cost of Suning's expansion strategy relatively high.

Current asset turnover is the ratio of a company's sales revenue to the average balance of its current assets. This ratio gives a further view of changes in the company's short-term operating capability. The statements show that Suning's 20XX sales revenue grew to nearly 1.6 billion yuan, while average current assets more than doubled. The increase in current assets did not bring an equally large increase in sales revenue, so current asset turnover fell from 7.36 in 20XX to 5.61 in 20XX, which indicates that Suning used its current assets less efficiently.

Fixed asset turnover is mainly used to analyze how efficiently fixed assets such as plant and equipment are used. The higher the ratio, the higher the utilization and the better the management. If a company's fixed asset turnover is low compared with the industry average, its fixed assets are underused, which may hurt profitability. The ratio reflects the degree of asset utilization.

Fixed asset turnover = sales revenue / average net value of fixed assets
Average net value of fixed assets = (opening net value + closing net value) / 2

The ratio of main business revenue over a given period to the average balance of current assets is another important indicator for evaluating a company's asset utilization.
It reflects the turnover speed of current assets. It analyzes asset utilization efficiency from the perspective of current assets, the most liquid of all a company's assets, and so further reveals the main factors affecting asset quality. Current asset turnover is the ratio of main business revenue over a period (usually one year) to the average balance of total current assets. Changes in current asset turnover can therefore be analyzed further through inventory turnover and accounts receivable turnover. Suning sells mainly from stock for immediate payment, so accounts receivable make up only 4.75% of current assets, while inventory makes up 50%.

Inventory turnover is the ratio of cost of sales to the average inventory balance. It is a key indicator for the real estate industry, which is a special case. Normally, the faster inventory turns over the better, but in real estate, the larger the inventory and the slower the turnover, the stronger the company. In other industries inventory typically turns over six or seven times a year; in real estate it turns over about once a year. A real estate company whose inventory turns over six or seven times a year is a very small company. Strong real estate companies have very low inventory turnover because they must hold large land reserves. Land reserves are their inventory, and houses that have been built but not yet sold also count as assets; sales are realized from these.

Inventory turnover can also be expressed in days, that is, the time required for inventory to turn over once. The fewer the days, the faster the turnover. Suning's inventory turnover was 15.05 in 20XX; in 20XX the ratio dropped to 10.33. Accordingly, inventory turnover days lengthened from 24 days to 35 days. Inventory was converted to cash noticeably more slowly, which suggests that Suning's sales ability may have weakened or that it holds excess inventory.
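The relationship between the turnover ratio and turnover days quoted above can be checked with a short calculation. This is a minimal sketch, not the article's own method: the function name `inventory_turnover_days` is hypothetical, and a 365-day year is an assumption (the text does not say which day count it uses).

```python
def inventory_turnover_days(turnover_ratio: float, days_in_year: int = 365) -> float:
    """Return the days needed for inventory to turn over once.

    Assumption: a 365-day year; the source text does not state its day count.
    """
    if turnover_ratio <= 0:
        raise ValueError("turnover_ratio must be positive")
    return days_in_year / turnover_ratio


# Turnover ratios quoted in the text for the two years being compared.
for ratio in (15.05, 10.33):
    days = inventory_turnover_days(ratio)
    print(f"turnover {ratio:.2f} -> about {round(days)} days")
```

Under that assumption the two ratios round to 24 and 35 days, which matches the figures given in the text.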
Accounts receivable turnover is the ratio of a company's credit sales revenue over a given period to the average balance of accounts receivable. It reflects how long it takes from the moment the company obtains the right to collect a receivable until the money is received and converted into cash. The ratio can be used to estimate how quickly receivables are converted and how efficiently they are managed. Fast collection saves funds and also indicates that the company's credit standing is good and that bad debts are unlikely. It is generally held that the higher the turnover, the better. Because credit sales figures are not easy to obtain, in practice total sales revenue is often used in place of credit sales revenue. Suning's customers are mainly individual consumers who pay on delivery, so credit sales make up a very small share of revenue, and the receivables turnover calculated from sales revenue is therefore very high. In general, the higher the ratio, the faster the company collects its receivables. This reduces bad-debt losses, improves liquidity, and strengthens short-term solvency, which to some extent compensates for the adverse effect of a low current ratio. If receivables turnover is too low, collection is inefficient or credit policy is too loose, which hampers the use and normal turnover of the company's funds.

A financial analysis framework for the operating capability of real estate companies can be built mainly on three aspects: a building management capability index, accounts receivable turnover, and working capital turnover. Within these three aspects, appropriate financial indicators are selected according to the characteristics of real estate companies in order to evaluate their operating capability.
The analysis framework proposed in this paper is generally applicable and gives managers of real estate companies and other companies a quantitative basis and analytical methods for operating decisions. The case analysis shows that, because of the influence of asset turnover, the return on total assets does not necessarily move in line with the gross margin on main business revenue. Commercial and industrial real estate are more profitable than residential real estate. Vanke's gross margin on sales rose year by year but was still only about 41% at its highest, whereas Lujiazui's gross margin on income from supplying land can be as high as 80%, and COFCO Property's gross margin on materials processing income can reach 75%. The gross margins of all three companies are trending upward. But because their operating efficiency is low, Lujiazui and COFCO Property show low returns on total assets despite their high profit margins. The three real estate companies share a common problem: working capital turns over too slowly.

Differences in scale and in type of business cause inventory turnover and working capital turnover to differ among real estate companies. Residential real estate turns over faster than commercial and industrial real estate, so Vanke's inventory turnover is faster than Lujiazui's; COFCO Property, because of its small scale, has a turnover rate close to Vanke's. In recent years, however, as land prices have continued to rise, real estate companies have sought higher profit margins by hoarding large amounts of land, extending project development periods, and similar means. As a result, real estate companies in China currently suffer from large inventories and slow turnover.

Translation

Operating capability analysis
Source: China Securities Net, 05/17/20XX
Author: Techever

Operating capability is the ability to make full use of existing resources to create social wealth. It can be used to evaluate how well a company uses the resources it holds and how capable its operating activities are.

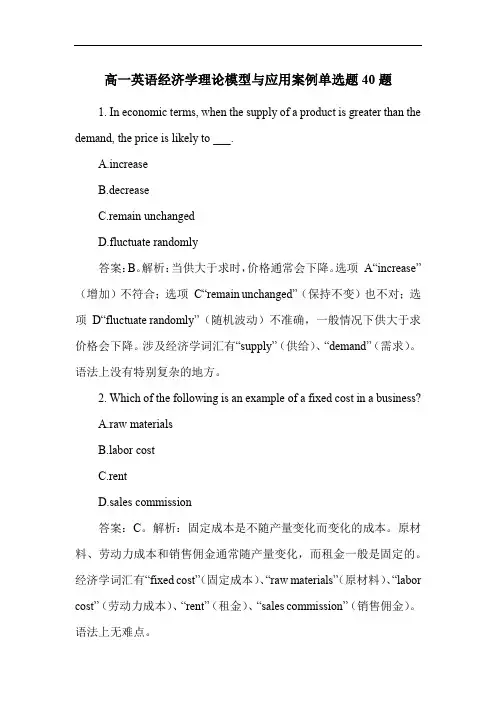

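Senior 1 English: Economic Theory Models and Applied Cases, 40 Single-Choice Questions

1. In economic terms, when the supply of a product is greater than the demand, the price is likely to ___.
A. increase
B. decrease
C. remain unchanged
D. fluctuate randomly
Answer: B.

Explanation: When supply exceeds demand, the price usually falls.

Option A, "increase", does not fit; option C, "remain unchanged", is also wrong; option D, "fluctuate randomly", is inaccurate, because prices generally fall when supply exceeds demand.

Economics vocabulary involved: "supply" and "demand".

There is nothing particularly complex in the grammar.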

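2. Which of the following is an example of a fixed cost in a business?
A. raw materials
B. labor cost
C. rent
D. sales commission
Answer: C.

Explanation: A fixed cost is a cost that does not change with the level of output.

Raw materials, labor cost, and sales commission usually vary with output, whereas rent is generally fixed.

Economics vocabulary involved: "fixed cost", "raw materials", "labor cost", "rent", and "sales commission".

There are no grammatical difficulties.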

3. If a country's GDP is growing rapidly, it usually indicates ___.
A. high unemployment
B. economic recession
C. economic prosperity
D. deflation
Answer: C.

Explanation: Rapid growth in a country's GDP usually indicates economic prosperity.

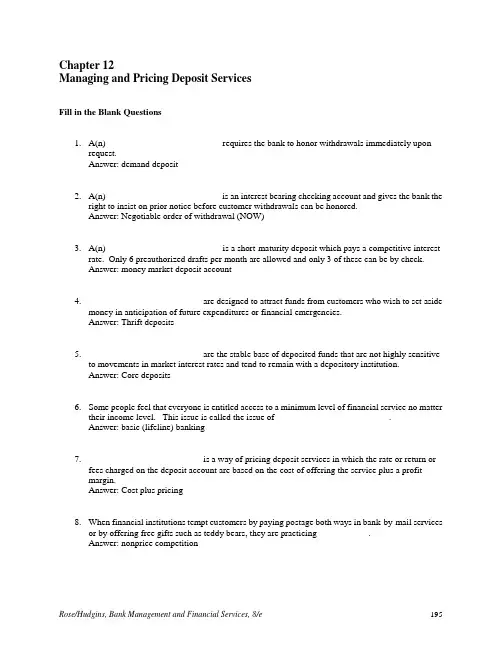

Chapter 12Managing and Pricing Deposit ServicesFill in the Blank Questions1. A(n) _________________________ requires the bank to honor withdrawals immediately uponrequest.Answer: demand deposit2. A(n) _________________________ is an interest bearing checking account and gives the bank theright to insist on prior notice before customer withdrawals can be honored.Answer: Negotiable order of withdrawal (NOW)3. A(n) _________________________ is a short-maturity deposit which pays a competitive interestrate. Only 6 preauthorized drafts per month are allowed and only 3 of these can be by check.Answer: money market deposit account4. _________________________ are designed to attract funds from customers who wish to set asidemoney in anticipation of future expenditures or financial emergencies.Answer: Thrift deposits5. _________________________ are the stable base of deposited funds that are not highly sensitiveto movements in market interest rates and tend to remain with a depository institution.Answer: Core deposits6. Some people feel that everyone is entitled access to a minimum level of financial service no mattertheir income level. This issue is called the issue of _________________________.Answer: basic (lifeline) banking7. _________________________ is a way of pricing deposit services in which the rate or return orfees charged on the deposit account are based on the cost of offering the service plus a profitmargin.Answer: Cost plus pricing8. When financial institutions tempt customers by paying postage both ways in bank-by-mail servicesor by offering free gifts such as teddy bears, they are practicing ___________.Answer: nonprice competition9. The _________________________is the added cost of bringing in new funds.Answer: marginal cost10. 
_________________________ pricing is where the financial institution sets up a schedule of feesin which the customer pays a low or no fee if the deposit balance stays above some minimum level and pays a higher fee if the balance declines below that minimum level.Answer: Conditional11. When a customer is charged a fixed charge per check this is called __________________ pricing.Answer: flat rate12. When a customer is charged based on the number and kinds of services used, with the customersthat use a number of services being charged less or having some fees waived, this is called__________________ pricing.Answer: relationship13. _________________________ is part of the new technology for processing checks where the banktakes a picture of the back and the front of the original check and which can now be processed as if they were the original.Answer: Check imaging14. A(n) _________________________ is a thrift account which carries a fixed maturity date andgenerally carries a fixed interest rate for that time period.Answer: time deposit15. A(n) _________________________ is a conditional method of pricing deposit services in whichthe fees paid by the customer depend mainly on the account balance and volume of activity.Answer: deposit fee schedule16. The _________________________ was passed in 1991 and specifies the information thatinstitutions must disclose to their customers about deposit accounts.Answer: Truth in Savings Act17. The _________________________ must be disclosed to customers based on the formula of oneplus the interest earned divided by the average account balance adjusted for an annual 365 day year.It is the interest rate the customer has actually earned on the account.Answer: annual percentage yield (APY)18. A(n) _________________________ is a retirement plan that institutions can sell which is designedfor self-employed individuals.Answer: Keogh plan19. Deposit institution location is most important to ______-income consumers.Answer: low20. 
_____-income consumers appear to be more influenced by the size of the financial institution.Answer: high21.For decades depository institutions offered one type of savings plan. could be opened withas little as $5 and withdrawal privileges were unlimited.Answer: Passbook savings deposits22.CD’s allow depositors to switch to a higher interest rate if market ratesrise.Answer: Bump-up23.CD’s permit periodic adjustm ents in promised interest rates.Answer: Step-up24.CD’s allow the depositor t o withdraw some of his or her funds without awithdrawal penalty.Answer: Liquid25.A(n) , which was authorized by Congress in 1997, allows individuals to makenon-tax-deductible contributions to a retirement fund that can grow tax free and also pay no taxes on their investment earnings when withdrawn.Answer: Roth IRA26.Due to the fact that they may be perceived as more risky, banks generally offer higherdeposit rates than traditional banks.Answer: virtual27. are accounts in domestic banking institutions where the U.S.Treasury keeps most of their operating funds.Answer: Treasury Tax and Loan Accounts (TT&L accounts)28. is a process where merchants and utility companies take theinformation from a check an individual has just written and electronically debits the individual’s account instead of sending the check through the regular check clearing process.Answer: electronic check conversion29.On October 28, 2004, became the law, permitting depository institutions toelectronically transfer check images instead of the checks themselves.Answer: Check 2130.The to the cost plus pricing derives the weighted average cost of all fundsraised and is based on the assumption that it is not the cost of each type of deposit that matters but rather the weighted average cost of all funds that matters.Answer: pooled-funds cost approachTrue/False QuestionsT F 31. The volume of core deposits at U.S. banks has been growing in recent years relative to other categories of deposits.Answer: FalseT F 32. The U.S. 
Treasury keeps most of its operating funds in TT&L deposits, according to the textbook.Answer: TrueT F 33. Deposits owned by commercial banks and held with other banks are called correspondent deposits.Answer: TrueT F 34. The implicit interest rate on checkable deposits equals the difference between the cost of supplying deposit services to a customer and the amount of the service charge actuallyassessed that customer.Answer: TrueT F 35. Legally imposed interest-rate ceilings on deposits were first set in place in the United States after passage of the Bank Holding Company Act.Answer: FalseT F 36. Gradual phase-out of legal interest-rate ceilings on deposits offered by U.S. banks was first authorized by the Glass-Steagall Act.Answer: FalseT F 37. The contention that there are certain banking services (such as small loans or savings and checking accounts) that every citizen should have access to is usually called socializedbanking.Answer: FalseT F 38. Domestic deposits generate legal reserves.Answer: TrueT F 39. Excess legal reserves are the source out of which new bank loans are created.Answer: TrueT F 40. Demand deposits are among the most volatile and least predictable of a bank's sources of funds with the shortest potential maturity.Answer: TrueT F 41. IRA and Keogh deposits have great appeal for bankers principally because they can be sold bearing relatively low (often below-market) interest rates.Answer: FalseT F 42. In general, the longer the maturity of a deposit, the lower the yield a financial institution must offer to its depositors because of the greater interest-rate risk the bank faces withlonger-term deposits.Answer: FalseT F 43. The availability of a large block of core deposits decreases the duration of a bank's liabilities.Answer: FalseT F 44. Interest-bearing checking accounts, on average, tend to generate lower net returns than regular (noninterest-bearing) checking accounts.Answer: FalseT F 45. 
Personal checking accounts tend to be more profitable than commercial checking accounts.
Answer: False
T F 46. NOW accounts can be held by businesses and individuals and are interest-bearing checking accounts.
Answer: False
T F 47. An MMDA is a short-term deposit where the bank can offer a competitive interest rate and which allows up to 6 preauthorized drafts per month.
Answer: True
T F 48. A Roth IRA allows an individual to accumulate investment earnings tax free and also pay no tax on their investment earnings when withdrawn provided the taxpayer follows the rules on this new account.
Answer: True
T F 49. Competition tends to raise deposit interest costs.
Answer: True
T F 50. Competition lowers the expected return to a bank from putting its deposits to work.
Answer: True
T F 51. A bank has full control of its deposit prices in the long run.
Answer: False
T F 52. Nonprice competition for deposits has tended to distort the allocation of scarce resources in the banking sector.
Answer: True
T F 53. Deposits are usually priced separately from loans and other bank services.
Answer: True
T F 54. According to recent Federal Reserve data, no-fee savings accounts are on the decline.
Answer: True
T F 55. According to recent survey information provided by the staff of the Federal Reserve Board, the average level of fees on most types of checking and NOW accounts appears to have risen.
Answer: True
T F 56. The Truth in Savings Act requires a bank to disclose to its deposit customer the frequency with which interest is compounded on all interest-bearing accounts.
Answer: True
T F 57. Under the Truth in Savings Act customers must be informed of the impact of any early deposit withdrawals on the annual percentage yield they expect to receive from an interest-bearing deposit.
Answer: True
T F 58. The number one factor households consider in selecting a bank to hold their checking account is, according to recent studies cited in this chapter, low fees and low minimum balance.
Answer: False
T F 59.
The number one factor households consider in choosing a bank to hold their savings deposits, according to recent studies cited in this chapter, is location.
Answer: False
T F 60. Conditionally free deposits for customers mean that as long as the customers do not go above a certain level of deposits there are no monthly fees or per transaction charges.
Answer: False
T F 61. When a bank temporarily offers higher than average interest rates or lower than average customer fees in order to attract new business they are practicing conditional pricing.
Answer: False
T F 62. Web-centered banks with little or no physical facilities are known as ________ banks.
Answer: True
T F 63. The total dollar value of checks paid in the United States has grown modestly in recent years.
Answer: False
T F 64. There are still a number of existing problems with online bill-paying services which have limited their growth.
Answer: True
T F 65. The depository institutions which tend to have the highest deposit yields are credit unions.
Answer: False
T F 66. Urban markets are more responsive to deposit interest rates and fees than rural markets.
Answer: False
T F 67. Research indicates that at least half of all households and small businesses hold their primary checking account at a depository institution situated within 3 miles of their location.
Answer: True

Multiple Choice Questions
68. Deposit accounts whose principal function is to make payments for purchases of goods and services are called:
A) Drafts
B) Second-party payments accounts
C) Thrift deposits
D) Transaction accounts
E) None of the above
Answer: D
69. Interest payments on regular checking accounts were prohibited in the United States under terms of the:
A) Glass-Steagall Act
B) McFadden-Pepper Act
C) National Bank Act
D) Garn-St. Germain Depository Institutions Act
E) None of the above
Answer: A
70.
Money-market deposit accounts (MMDAs), offering flexible interest rates, accessible for payments purposes, and designed to compete with share accounts offered by money market mutual funds, were authorized by the:
A) Glass-Steagall Act
B) Depository Institutions Deregulation and Monetary Control Act (DIDMCA)
C) Bank Holding Company Act
D) Garn-St. Germain Depository Institutions Act
E) None of the above
Answer: D
71. The stable and predictable base of deposited funds that are not highly sensitive to movements in market interest rates but tend to remain with the bank are called:
A) Time deposits
B) Core deposits
C) Consumer CDs
D) Nontransaction deposits
E) None of the above
Answer: B
72. Negotiable Orders of Withdrawal (NOW) accounts, interest-bearing savings accounts that can be used essentially the same as checking accounts, were authorized by:
A) Glass-Steagall Act
B) Depository Institutions Deregulation and Monetary Control Act (DIDMCA)
C) Bank Holding Company Act
D) Garn-St. Germain Depository Institutions Act
E) None of the above
Answer: B
74. A deposit which offers flexible money market interest rates but is accessible for spending by writing a limited number of checks or executing preauthorized drafts is known as a:
A) Demand deposit
B) NOW account
C) MMDA
D) Time deposit
E) None of the above
Answer: C
75. The types of deposits that will be created by the banking system depend predominantly upon:
A) The level of interest rates
B) The state of the economy
C) The monetary policies of the central bank
D) Public preference
E) None of the above.
Answer: D
76. The most profitable deposit for a bank is a:
A) Time deposit
B) Commercial checking account
C) Personal checking account
D) Passbook savings deposit
E) Special checking account
Answer: B
77. Some people feel that individuals are entitled to some minimum level of financial services no matter what their income level.
This issue is often called:
A) Lifeline banking
B) Preference banking
C) Nondiscriminatory banking
D) Lifeboat banking
E) None of the above
Answer: A
78. The formula Operating expense per unit of deposit service + Estimated overhead expense + Planned profit from each deposit service unit sold reflects what deposit pricing method listed below?
A) Marginal cost pricing
B) Cost plus pricing
C) Conditional pricing
D) Upscale target pricing
E) None of the above.
Answer: B
79. Using deposit fee schedules that vary deposit prices according to the number of transactions, the average balance in the deposit account, and the maturity of the deposit represents what deposit pricing method listed below?
A) Marginal cost pricing
B) Cost plus pricing
C) Conditional pricing
D) Upscale target pricing
E) None of the above.
Answer: C
80. The deposit pricing method that favors large-denomination deposits because services are free if the deposit account balance stays above some minimum figure is called:
A) Free pricing
B) Conditionally free pricing
C) Flat-rate pricing
D) Upscale target pricing
E) Marginal cost pricing
Answer: B
81. The federal law that requires U.S. depository institutions to make greater disclosure of the fees, interest rates, and other terms attached to the deposits they sell to the public is called the:
A) Consumer Credit Protection Act
B) Fair Pricing Act
C) Consumer Full Disclosure Act
D) Truth in Savings Act
E) None of the above.
Answer: D
82. Depository institutions selling deposits to the public in the United States must quote the rate of return pledged to the owner of the deposit which reflects the customer's average daily balance kept in the deposit. This quoted rate of return is known as the:
A) Annual percentage rate (APR)
B) Annual percentage yield (APY)
C) Daily deposit yield (DDY)
D) Daily average return (DAR)
E) None of the above.
Answer: B
83.
According to recent studies cited in this book, in selecting a bank to hold their checking accounts household customers rank first which of the following factors?
A) Safety
B) High deposit interest rates
C) Convenient location
D) Availability of other services
E) Low fees and low minimum balance.
Answer: C
84. According to recent studies cited in this chapter, in choosing a bank to hold their savings deposits household customers rank first which of the following factors?
A) Familiarity
B) Interest rate paid
C) Transactional convenience
D) Location
E) Fees charged.
Answer: A
85. According to recent studies cited in this chapter, in choosing a bank to supply their deposits and other services business firms rank first which of the following factors?
A) Quality of financial advice given
B) Financial health of lending institution
C) Whether loans are competitively priced
D) Whether cash management and operations services are provided.
E) Quality of bank officers.
Answer: B
86. A financial institution that charges customers based on the number of services they use and gives lower deposit fees or waives some fees for a customer that purchases two or more services is practicing:
A) Marginal cost pricing
B) Conditional pricing
C) Relationship pricing
D) Upscale target pricing
E) None of the above
Answer: C
87. A bank determines from an analysis on its deposits that account processing and other operating expenses cost the bank $3.95 per month. It has also determined that its nonoperating expenses on its deposits are $1.35 per month. The bank wants to have a profit margin which is 10 percent of monthly costs. What monthly fee should this bank charge on its deposit accounts?
A) $5.30 per month
B) $3.95 per month
C) $5.83 per month
D) $5.70 per month
E) None of the above
Answer: C
88. A bank determines from an analysis on its deposits that account processing and other operating expenses cost the bank $4.45 per month. The bank has also determined that nonoperating expenses on deposits are $1.15 per month.
It has also decided that it wants a profit of $.45 on its deposits. What monthly fee should this bank charge on its deposit accounts?
A) $6.05
B) $5.60
C) $5.15
D) $4.45
E) None of the above
Answer: A
89. A customer has a savings deposit for 45 days. During that time they earn $5 in interest and have an average daily balance of $1000. What is the annual percentage yield on this savings account?
A) 0.5%
B) 4.13%
C) 4.07%
D) 4.5%
E) None of the above
Answer: B
90. A customer has a savings account for one year. During that year they earn $65.50 in interest. For 180 days they have $2000 in the account, and for the other 180 days they have $1000 in the account. What is the annual percentage yield on this savings account?
A) 6.55%
B) 3.28%
C) 4.37%
D) 8.73%
E) None of the above
Answer: C
91. If you deposit $1,000 into a certificate of deposit that quotes you a 5.5% APY, how much will you have at the end of 1 year?
A) $1,050.00
B) $1,055.00
C) $1,550.00
D) $1,005.50
E) None of the above.
Answer: B
92. A bank quotes an APY of 8%. A small business that has an account with this bank had $2,500 in their account for half the year and $5,000 in their account for the other half of the year. How much in total interest earnings did this business make during the year?
A) $300
B) $200
C) $400
D) $150
E) None of the above
Answer: A
93. Conditional deposit pricing may involve all of the following factors except:
A) The level of interest rates
B) The number of transactions passing through the account
C) The average balance in the account
D) The maturity of the account
E) All of the above are used
Answer: A
94. Customers who wish to set aside money in anticipation of future expenditures or financial emergencies put their money in:
A) Drafts
B) Second-party payment accounts
C) Thrift deposits
D) Transaction accounts
E) None of the above
Answer: C
95.
A savings account evidenced only by computer entry for which the customer gets a monthly printout is called:
A) Passbook savings account
B) Statement savings plan
C) Negotiable order of withdrawal
D) Money market mutual fund
E) None of the above
Answer: B
96. A traditional savings account evidenced by the entries recorded in a booklet kept by the customer is called:
A) Passbook savings account
B) Statement savings plan
C) Negotiable order of withdrawal
D) Money market mutual fund
E) None of the above
Answer: A
97. An account at a bank that carries a fixed maturity date with a fixed interest rate and which often carries a penalty for early withdrawal of money is called:
A) Demand deposit
B) Transaction deposit
C) Time deposit
D) Money market mutual deposit
E) None of the above
Answer: C
98. A time deposit that has a denomination greater than $100,000 and is generally for wealthy individuals and corporations is known as a:
A) Negotiable CD
B) Bump-up CD
C) Step-up CD
D) Liquid CD
E) None of the above
Answer: A
99.
A time deposit that is non-negotiable but where the promised interest rate can rise with market interest rates is called a:
A) Negotiable CD
B) Bump-up CD
C) Step-up CD
D) Liquid CD
E) None of the above
Answer: B
100. A time deposit that allows for a periodic upward adjustment to the promised rate is called a:
A) Negotiable CD
B) Bump-up CD
C) Step-up CD
D) Liquid CD
E) None of the above
Answer: C
101. A time deposit that allows the depositor to withdraw some of his or her funds without a withdrawal penalty is called a:
A) Negotiable CD
B) Bump-up CD
C) Step-up CD
D) Liquid CD
E) None of the above
Answer: D
102. What has made IRA and Keogh accounts more attractive to depositors recently?
A) Allowing the bank to have FDIC insurance on these accounts
B) Allowing the fund to grow tax free over the life of the fund
C) Allowing the depositor to pay no taxes on investment earnings when withdrawn
D) Requiring banks to pay at least 6% on these accounts to depositors
E) Increasing FDIC insurance coverage to $250,000 on these accounts
Answer: E
103. The dominant holder of bank deposits in the U.S. is:
A) The private sector
B) State and local governments
C) Foreign governments
D) Deposits of other banks
E) None of the above
Answer: A
104. The deposit pricing method absent of any monthly account maintenance fee or per-transaction fee is called:
A) Free pricing
B) Conditionally free pricing
C) Flat-rate pricing
D) Marginal cost pricing
E) Nonprice competition
Answer: A
105. The deposit pricing method that charges a fixed charge per check or per period or both is called:
A) Free pricing
B) Conditionally free pricing
C) Flat-rate pricing
D) Marginal cost pricing
E) Nonprice competition
Answer: C
106. The deposit pricing method that focuses on the added cost of bringing in new funds is called:
A) Free pricing
B) Conditionally free pricing
C) Flat-rate pricing
D) Marginal cost pricing
E) Nonprice competition
Answer: D
107. Prior to the Depository Institutions Deregulation and Monetary Control Act (DIDMCA), banks used _____.
This tended to distort the allocation of scarce resources.
A) Free pricing
B) Conditionally free pricing
C) Flat-rate pricing
D) Marginal cost pricing
E) Nonprice competition
Answer: E
108. A customer has a savings deposit for 60 days. During that time they earn $11 and have an average daily balance of $1500. What is the annual percentage yield on this savings account?
A) .73%
B) 4.3%
C) 4.5%
D) 4.7%
E) None of the above
Answer: C
109. A customer has a savings deposit for 15 days. During that time they earn $15 and have an average daily balance of $2200. What is the annual percentage yield on this savings account?
A) .68%
B) 16.36%
C) 16.59%
D) 17.98%
E) None of the above
Answer: D
110. A bank determines from an analysis on its deposits that account processing and other operating expenses cost the bank $4.15 per month. It has also determined that its nonoperating expenses on its deposits are $1.65 per month. The bank wants to have a profit margin which is 10 percent of monthly costs. What monthly fee should this bank charge on its deposit accounts?
A) $6.38 per month
B) $5.80 per month
C) $4.57 per month
D) $4.15 per month
E) None of the above
Answer: A
111. A bank has $200 in checking deposits. Interest and noninterest costs on these accounts are 4%. This bank has $400 in savings and time deposits with interest and noninterest costs of 8%. This bank has $200 in equity capital with a cost of 24%. This bank has estimated that reserve requirements, deposit insurance fees and uncollected balances reduce the amount of money available on checking deposits by 10% and on savings and time deposits by 5%. What is this bank's before-tax cost of funds?
A) 11.00%
B) 11.32%
C) 11.50%
D) 12.00%
E) None of the above
Answer: B
112. A bank has $100 in checking deposits. Interest and noninterest costs on these accounts are 8%. This bank has $600 in savings and time deposits with interest and noninterest costs of 12%. This bank has $100 in equity capital with a cost of 26%.
This bank has estimated that reserve requirements, deposit insurance fees and uncollected balances reduce the amount of money available on checking deposits by 20% and on savings and time deposits by 5%. What is the bank's before-tax cost of funds?
A) 13.05%
B) 13.25%
C) 15.33%
D) 19.17%
E) None of the above
Answer: A
113. A bank has $500 in checking deposits. Interest and noninterest costs on these accounts are 6%. This bank has $250 in savings and time deposits with interest and noninterest costs of 14%. This bank has $250 in equity capital with a cost of 25%. This bank has estimated that reserve requirements, deposit insurance fees and uncollected balances reduce the amount of money available on checking deposits by 15% and on savings and time deposits by 4%. What is the bank's before-tax cost of funds?
A) 15.00%
B) 12.75%
C) 13.42%
D) 15.74%
E) None of the above
Answer: C
114. A bank expects to raise $30 million in new money if it pays a deposit rate of 7%. It can raise $60 million in new money if it pays a deposit rate of 7.5%. It can raise $80 million in new money if it pays a deposit rate of 8% and it can raise $100 million in new money if it pays a deposit rate of 8.5%. This bank expects to earn 9% on all money that it receives in new deposits. What deposit rate should the bank offer on its deposits, if they use the marginal cost method of determining deposit rates?
A) 7%
B) 7.5%
C) 8%
D) 8.5%
E) None of the above
Answer: B
115. A bank expects to raise $30 million in new money if it pays a deposit rate of 7%. It can raise $60 million in new money if it pays a deposit rate of 7.5%. It can raise $80 million in new money if it pays a deposit rate of 8% and it can raise $100 million in new money if it pays a deposit rate of 8.5%. This bank expects to earn 9% on all money that it receives in new deposits.
What is the marginal cost of deposits if the bank raises their deposit rate from 7 to 7.5%?
A) .5%
B) 7.5%
C) 8.0%
D) 9.5%
E) 10.5%
Answer: C
116. Under the Truth in Savings Act, a bank must inform its customers of the terms being quoted on their deposits. Which of the following is not one of the terms listed?
A) Loan rate information
B) Balance computation method
C) Early withdrawal penalty
D) Transaction limitations
E) Minimum balance requirements
Answer: A
117. Which of these Acts is attempting to address the low savings rate of workers in the U.S. by including an automatic enrollment (“default option”) in employees' retirement accounts?
A) The Economic Recovery Tax Act of 1981
B) The Tax Reform Act of 1986
C) The Tax Relief Act of 1997
D) The Pension Protection Act of 2006
E) None of the above
Answer: D
118. Business (commercial) transaction accounts are generally more profitable than personal checking accounts, according to the textbook. Which of the following explain the reasons for this statement:
A) The average size of the business transaction is smaller than the personal transaction
B) Lower interest expenses are associated with commercial deposit transactions
C) The bank receives more investable funds in the commercial deposits transaction
D) A and B
E) B and C
Answer: E
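The annual percentage yield questions (89, 108, 109) and the cost plus pricing questions (87, 110) above can be checked with a short calculation. The sketch below is not taken from the test bank: it assumes the standard Truth in Savings formula, APY = 100 × [(1 + interest / average balance)^(365 / days) − 1], and reads the 10 percent profit margin as a share of total monthly cost. The function names are illustrative. Under those assumptions the results agree with the answer key to the precision of the listed options.

```python
def annual_percentage_yield(interest, avg_balance, days_in_period):
    """Return the APY in percent for interest earned over a period of days.

    Assumed formula: 100 * [(1 + interest / balance) ** (365 / days) - 1].
    """
    growth = 1.0 + interest / avg_balance
    return 100.0 * (growth ** (365.0 / days_in_period) - 1.0)


def cost_plus_fee(operating_cost, overhead_cost, profit_share_of_cost):
    """Return the monthly fee: unit cost plus a profit margin on that cost."""
    total_cost = operating_cost + overhead_cost
    return total_cost * (1.0 + profit_share_of_cost)


# Question 89: $5 earned in 45 days on a $1,000 average balance (key: 4.13%).
print(round(annual_percentage_yield(5, 1000, 45), 2))
# Question 109: $15 earned in 15 days on a $2,200 average balance (key: 17.98%).
print(round(annual_percentage_yield(15, 2200, 15), 2))
# Question 87: $3.95 operating + $1.35 nonoperating, 10% margin (key: $5.83).
print(round(cost_plus_fee(3.95, 1.35, 0.10), 2))
# Question 110: $4.15 operating + $1.65 nonoperating, 10% margin (key: $6.38).
print(round(cost_plus_fee(4.15, 1.65, 0.10), 2))
```

For a full-year deposit such as question 90, the exponent is 1 and the APY reduces to interest divided by the average balance, $65.50 / $1,500, or about 4.37%.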

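The pooled-funds questions (111, 113) and the marginal cost questions (114, 115) in the banking test bank above follow two small formulas, sketched here under stated assumptions. The pooled cost is taken as the sum over funding sources of (share of total funds) × (cost rate) / (1 − share of funds unavailable for investment), which is the reading that reproduces the keyed answers to 111 and 113. The marginal cost rate is the change in total interest cost divided by the additional funds raised. Function and parameter names are hypothetical, and the rate-selection helper assumes the lowest tier is already profitable.

```python
def pooled_funds_cost(sources):
    """Return the before-tax pooled cost of funds as a fraction.

    sources: list of (amount, cost_rate, unavailable_share) tuples.
    """
    total = sum(amount for amount, _, _ in sources)
    return sum(
        (amount / total) * cost_rate / (1.0 - unavailable_share)
        for amount, cost_rate, unavailable_share in sources
    )


def marginal_cost_rates(schedule):
    """Return (deposit_rate, marginal_cost_rate) for each step up the schedule.

    schedule: list of (deposit_rate, funds_raised) in increasing order.
    """
    steps = []
    for (prev_rate, prev_funds), (rate, funds) in zip(schedule, schedule[1:]):
        change_in_cost = rate * funds - prev_rate * prev_funds
        steps.append((rate, change_in_cost / (funds - prev_funds)))
    return steps


def best_deposit_rate(schedule, marginal_revenue):
    """Return the highest rate whose marginal cost does not exceed revenue."""
    best = schedule[0][0]  # assumes the first tier is profitable
    for rate, marginal_cost in marginal_cost_rates(schedule):
        if marginal_cost > marginal_revenue:
            break
        best = rate
    return best


# Question 111: checking, savings/time deposits, equity capital (key: 11.32%).
q111 = [(200, 0.04, 0.10), (400, 0.08, 0.05), (200, 0.24, 0.0)]
print(round(100 * pooled_funds_cost(q111), 2))

# Questions 114 and 115: funds in $ millions at each offered deposit rate.
schedule = [(0.07, 30), (0.075, 60), (0.08, 80), (0.085, 100)]
print(marginal_cost_rates(schedule)[0])   # step from 7% to 7.5% (key: 8.0%)
print(best_deposit_rate(schedule, 0.09))  # rate to offer (key: 7.5%)
```

Moving from 7.5% to 8% costs about 9.5% on the extra $20 million, which exceeds the 9% the bank expects to earn, so the search stops at 7.5%.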
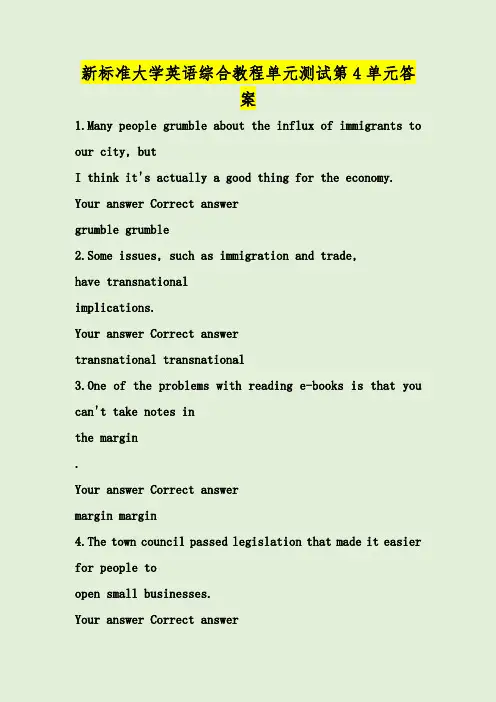

New Standard College English, Integrated Course: Unit 4 Test Answers

1. Many people grumble about the influx of immigrants to our city, but I think it's actually a good thing for the economy.
Your answer / Correct answer: grumble / grumble
2. Some issues, such as immigration and trade, have transnational implications.
Your answer / Correct answer: transnational / transnational
3. One of the problems with reading e-books is that you can't take notes in the margin.
Your answer / Correct answer: margin / margin
4. The town council passed legislation that made it easier for people to open small businesses.
Your answer / Correct answer: legislation / legislation
5. There are some mysteries that have baffled scientists for centuries.
Your answer / Correct answer: baffled / baffled
6. I'm in shock—have you seen the staggering stock market numbers this morning?
Your answer / Correct answer: staggering / staggering
7. We are all living in an increasingly multicultural society as more and more people move to different countries.
Your answer / Correct answer: multicultural / multicultural
8. The computer's influence on our daily lives is immeasurable; it has had the most massive effect on how humans live.
Your answer / Correct answer: massive / massive
9. There will always be people who disapprove of new technology simply because it is "different."
Your answer / Correct answer: disapprove / disapprove
10. After several years of travel, Jake moved to London to find a job, a house, and the stability he needed in his life.
Your answer / Correct answer: stability / stability

Section B: Choose the best way to complete the sentences.
11. Some people have an unhealthy _____ with technology and need to have every new gadget available.
A. session B. obsession C. recession D. cessation
12. After much _____, Heather was finally able to convince her boss to award her employees with a holiday bonus.
A. desistence B. consistence C. insistence D. resistance
13. It's hard to _____ what the future might look like, but science fiction writers have been doing it for over a hundred years.
A. visualize B. confer C. mechanize D. ascertain
14. I know almost everyone loves her new book, but I'm
not even _____ interested in reading it.
A. massively B. supposedly C. entirely D. remotely
15. Even though Mr Lewis didn't say it out loud, the _____ in his speech was that his staff didn't work hard enough.
A. personification B. implication C. justification D. explanation
16. Many people complain that the cost of education is prohibitively expensive, but I think the cost of _____ is much higher.
A. ignore B. ignorant C. ignorance D. ignoring
17. After he lost the competition, Lawrence felt completely and utterly _____.
A. dispirited B. inquisitive C. observant D. unchanged
18. We need to come up with a _____ explanation for why we missed class yesterday.
A. plausible B. sentient C. staggering D. corporate
19. Paul has become very _____ and conceited since he first tasted fame.
A. arrogance B. elegant C. elegance D. arrogant
20. Dinosaurs have been _____ for approximately 65 million years!
A. succinct B. extinct C. instinct D. blinked

Section C: Complete each sentence with a suitable word.
21. I'm as ignorant of your country's laws as you are of mine.
Your answer / Correct answer: as / as
22. It's a pretty safe bet that almost all college graduates are more technically savvy than their parents.
Your answer / Correct answer: that / that
23. What do you make of the generation of MP3 players?
Your answer / Correct answer: of / of
24. The New York team qualified for the championship round by a narrow margin.
Your answer / Correct answer: by / by
25. For all the excitement surrounding the new computer program, not much is different.
Your answer / Correct answer: For / For
26. My mother still hasn't come to grips with the convenience of e-mail.
Your answer / Correct answer: with / with
27. How can we ask George to help in such a way that he will think it's his idea?
Your answer / Correct answer: in / in
28. When you think about it, it is not surprising that most people now own a cell phone.
Your answer / Correct answer: that / that
29. I think it's time to buy new pants when the knees begin to wear out.
Your answer / Correct answer: out / out
30. In the wake of the earthquake, many people displayed an incredible amount of generosity.
Your answer /
Correct answer: of / of

Part II: Banked Cloze
Questions 31 to 40 are based on the following passage.
When my grandfather thinks about how the world is changing, he inevitably tells the story of Dr Reynolds. Dr Reynolds was a(n) (31) eminent doctor from the big city. It seemed as if he was in the newspaper every day. My grandfather lived in a small (32) suburb outside the city. This was at a time when such neighbourhoods were still relatively new. People just couldn't understand why anyone would want to live so far from the city centre. However, the neighbourhood my grandfather lived in was growing every day. Many people couldn't afford to live in the city, and others were tired of the crowds. Cost and congestion comprised a real (33) curse of the cities, a problem that has only gotten worse with [...] Large sections of the urban (34) workforce were moving out of the city to the smaller towns. Along with these people and their families, companies also started to relocate outside major cities. A great example of this was Dr Reynolds. It is impossible to overstate the (35) magnitude of the significance that Dr Reynolds' move had on my grandfather's town. Because he was so famous, there was obviously a considerable amount of (36) hype associated with his arrival. However, he quickly showed that he had the skills to back up his reputation. Dr Reynolds was not only a big, (37) brawny man who played football in his youth, but he also graduated at the top of his class in medical school. I'd be willing to (38) bet that this was one of the most exciting things that happened in my grandfather's town. It must have been since he told the story so many times! Dr Reynolds' arrival (39) fundamentally changed the dynamics of the town. It was no longer "far away" from the city; it suddenly became "just outside" the city limits. Today, the world is changing much faster than can be measured by the arrival of a big-city doctor in a small town. Who knows, maybe (40) someday I'll have my own story to tell my own grandchildren!

Your answer / Correct answer:
(31) eminent / eminent
(32) suburb /
suburb
(33) curse / curse
(34) workforce / workforce
(35) magnitude / magnitude
(36) hype / hype
(37) brawny / brawny
(38) bet / bet
(39) fundamentally / fundamentally
(40) someday / someday

Part III: Reading Comprehension
Questions 41 to 45 are based on the following passage.
Sometimes it seems we hear about the wonders of modern technology on a daily basis. I will admit that it's hard to argue with the [...] Computers, automobiles, the Internet, and increasingly smaller handheld devices certainly do make our lives more convenient. Everything, it seems, is getting faster, lighter, cheaper, and smaller. Pretty soon, computers will be the size of iPods and iPods will be the size of fingernails. Technology is making our lives more convenient, to be sure. But is it making our lives better? How dependent on these conveniences should we become? How dependent have we already become? Instant communication, video telephones, robots, thousands of songs in the palm of your hand—all of these things are now available at your local shopping centre. Indeed, what was once considered science fiction now seems downright plausible. I can realistically envision a world where literally everything we do depends on a computer or a machine and, frankly, it scares me. Consider the following scenario. Michael begins his day by waking up to an electric alarm clock. He gets dressed and pours himself a cup of coffee from a machine that is set to brew automatically every morning. He walks to the corner and boards a subway train for his commute to work. The train is controlled by a computer that knows how fast to go and when to slow down and stop. Michael enters his office building through doors that "see" he is coming and open for him. At work, Michael sits in front of a computer all day to do his job. He writes e-mails, updates a Website, attends a videoconference online, and makes telephone calls. At night, he relaxes in front of the television (which has recorded all of his favourite shows), or he reads an e-book on his smart phone. How much of Michael's day is not dependent on
computers or machines? How much of this scenario is unbelievable? The answer: none of it. It is a daily reality for many people. Look around you. Do you use a computer to send e-mails, write reports, do homework, or search the Internet? What happens when that computer gets a virus or breaks down? Most people just sit there, baffled. They simply don't know what to do because they don't know how the computer works. We depend on computers to make our lives easier, and we depend on computer technicians to keep our computers operational. The same can be said for nearly any technology. That's why engineers, computer professionals, and technology consultants make such remarkable salaries. The more we rely on technology, the more we rely on specialists. The more we rely on specialists, the less responsible we feel to actually know how to do something. This is a slippery slope that will eventually lead most people to complete ignorance of how things work. It is ironic, then, that the very technologies that are currently touted as the greatest advancements in human knowledge could ultimately have the exact opposite effect.

41. The writer of this passage would probably agree with which of the following statements?
A. Technology is beginning to control our lives.
B. Computers play a marginal role in our society.
C. We rely on computer specialists because they are convenient.
D. Technology is slowly becoming more expensive.
42. The writer's scenario with Michael is presented in order to _____.
A. describe an innovation
B. substantiate his argument
C. illustrate an example from a bygone time
D. brag about personal accomplishments
43. Which paragraph draws a personal analogy to the reader?
A. Paragraph 2.
B. Paragraph 3.
C. Paragraph 4.
D. Paragraph 5.
44. Which of the following is the best one-sentence summary of this passage?
A. Technology makes our lives more convenient.
B. Science fiction is becoming a daily reality.
C. Everybody uses computers and other technologies.
D. The more we rely on technology, the more helpless we become.
45. Which of the following
events does not support the writer's main idea?
A. Marcus plays the latest video game after school.
B. Lisa reads a book before going to bed each night.
C. Carol uses a GPS system to find her way in a new city.
D. Tony blogs and sends e-mails from his mobile phone.

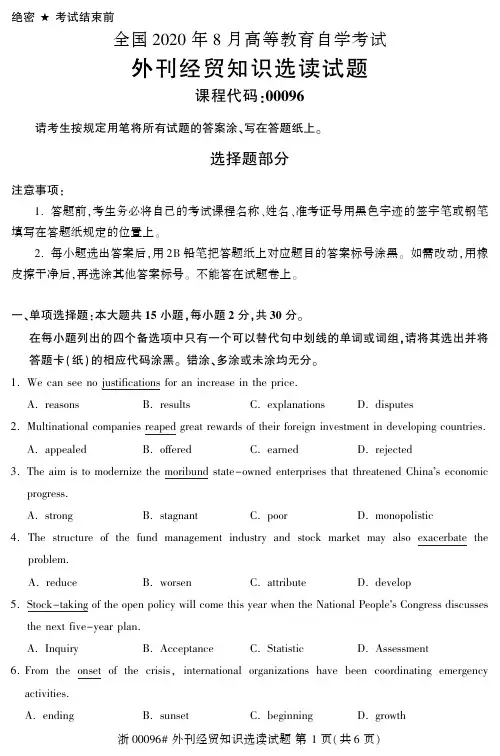

Top Secret ★ Until the Examination Ends
National Higher Education Self-Study Examination, August 2020
Selected Readings in Foreign Business and Trade Periodicals: Examination Paper
Course code: 00096

Candidates must mark or write the answers to all questions on the answer sheet using the prescribed pen.

Multiple-Choice Section
Notes:
1. Before answering, candidates must write the course name, their name, and their admission ticket number in the designated places on the answer sheet, using a black-ink signing pen or fountain pen.
2. After choosing the answer to each question, blacken the corresponding answer label on the answer sheet with a 2B pencil. To change an answer, erase it cleanly and then mark another label. Answers must not be written on the question paper.

I. Single-choice questions: 15 questions, 2 points each, 30 points in total. Only one of the four options listed for each question can replace the underlined word or phrase in the sentence; choose it and blacken the corresponding code on the answer sheet. Wrong, multiple, or missing marks earn no points.
1. We can see no justifications for an increase in the price.
A. reasons B. results C. explanations D. disputes
2. Multinational companies reaped great rewards of their foreign investment in developing countries.
A. appealed B. offered C. earned D. rejected
3. The aim is to modernize the moribund state-owned enterprises that threatened China's economic progress.
A. strong B. stagnant C. poor D. monopolistic
4. The structure of the fund management industry and stock market may also exacerbate the problem.
A. reduce B. worsen C. attribute D. develop
5. Stock-taking of the open policy will come this year when the National People's Congress discusses the next five-year plan.
A. Inquiry B. Acceptance C. Statistic D. Assessment
6. From the onset of the crisis, international organizations have been coordinating emergency activities.
A. ending B. sunset C. beginning D. growth
7. The government plans to curtail economic growth to avoid speculative bubbles.
A. enlarge B. continue C. assist D. restrict
8. The America's formidable capital and technological resources would be utilized to meet its objectives.
A. powerful B. available C. vast D. different
9. The law banned discrimination against people with physical disabilities in the workplace.
A. equality B. fairness C. differentiation D. protection
10. Investment funds have moved out of commodities and into liquid assets.
A. water resources B. current assets C. real estate D. fixed assets
11. With unemployment rate at 9.7%, the president is pressing the Congress to approve financial incentives for small businesses to add workers.
A. control B. capital C. tools D. stimulation
12. 9% of listed firms are technically insolvent and have stopped paying their debts.
A. private B. public C. bankrupt D. unsuccessful
13. A substantial proportion of loans from the World
Bank is still to be disbursed.
A. borrowed B. paid C. declined D. recovered
14. This marks the first time in history that these companies will be subject to federal supervision.
A. be free from B. be exchanged by C. be occupied in D. be subordinated to
15. Online education is a fledgling sector where no perfect model has been created.
A. inexperienced B. mature C. unfinished D. uncertain

II. True/false questions: 10 questions, 2 points each, 20 points in total. Judge each of the following statements; in the corresponding place on the answer sheet, mark "A" if it is correct and "B" if it is wrong.

Passage 1
Force of the Multinationals
Direct investment by multinational companies is becoming a hugely important force in the world economy. In essence, a combination of factors, such as the development of global communications and a change in the political climate towards multinationals, is bringing in an era of true global manufacturing. A company such as Siemens may now start making a product in Germany then ship it to Malaysia for the labor-intensive final stages of manufacture. The strategy by Japanese companies of locating production in cheaper Far Eastern countries such as Thailand has done much to integrate the economies of the [...] companies were setting up production in Mexico, for similar reasons, before negotiations on the NAFTA had even started. There is an important distinction to be made between the kind of integration based on trade, which is relatively simple, and the far more complex links involved in global manufacturing. The report says that “as integration moves from shallow trade-based linkages to deep international production-based linkage under the common governance, the traditional division between integration at the corporate and country levels begins to break down.” Foreign direct investment tends to transfer assets from the developed world to the developing world. But the pattern is not entirely simple. Big shifts have occurred in the composition of foreign direct investment by sector. Increasing investment is going into services and high-tech manufacture, rather than basic manufacture and natural resources. As might be expected, foreign direct
investment in the developed world is mostly in the former category,whereas in the developing world the emphasis is on the latter.It seems countries have to reach a basic level of sophistication before they can get in the act.Simple cash incentives to set up production in a country have little effect, other than on margin.In addition,the increasing sophistication of global production means that cheap labor is often not a deciding factor either.16.International production⁃based integration is better than trade⁃based integration.bor⁃intensive manufacture is the production mainly depend on the use of a large number of labor. companies set up production in Mexico for its cheaper labor and cost.19.Cash incentive is a deciding factor in global production.20.Foreign direct investment in the developed countries is mostly in services and high⁃tech sector.Passage2Asia,You Cost Too Much The Asian economic miracle can be best summed up as the biggest price undercut in history. Asia grew because it was the cheapest source for the low⁃tech consumer goods that the West craves. Hong Kong and Korea didn’t invent new or more efficient manufacturing techniques,they simply bought market share with low wages.But now Asia is beginning to cost too much.If you still thinkAsia is cheap or even a bargain,compare office rents in Shanghai with those in Chicago and Paris. Or try to hold a qualified manager in China against the almost weekly job offers he receives due to the shortage of Chinese professionals.No wonder companies are voting with their feet in response to Asia’s rising cost.Germany’s Siemens is dumping Singapore in favor of lower cost locations in the region.The way things are going,Siemens may have to move again before too long. 
The competition facing Asia is not going to let up, either. Local council representatives from Britain are running all over the world advertising tax cuts, giving away state land and slashing bureaucracy in an effort to attract industry. Technological innovations and cost reduction in communications and transport mean that location isn't as important as it once was. Only Singapore seems to understand that keeping up isn't good enough and that being competitive means forging ahead. The Lion City made a concerted effort to open markets, cut government regulations and create transparency. But most Asian governments just don't seem to understand the relationship between high costs and low competitiveness. Otherwise why would tariffs on agricultural imports be crippling the Korean and Japanese food processing industries? The oligarchical nature of trucking in Malaysia guarantees that high transport costs will drive business away.

21. Asian economic growth was primarily based on cheap exports rather than high technology.
22. Siemens is satisfied with Singapore's low cost and will stay there all the time.
23. High rents and shortage of professionals became China's disadvantage in business.
24. The importance of location is weakened by technology and communication innovations.
25. Most Asian governments learned from Singapore to open markets and cut tariffs.

Non-Multiple-Choice Part

Notes: Write your answers on the answer sheet with a black-ink signing pen or fountain pen. Answers may not be written on the question paper.

III. Translate the following Chinese phrases into English: 10 items, 1 point each, 10 points in total.
26. 经济特区
27. 出口配额制
28. 消费品
29. 批发商
30. 贸易差额
31. 服务部门
32. 垄断
33. 最惠国待遇
34. 硬通货
35. 经常项目

IV. Translate the following English words or phrases into Chinese: 10 items, 1 point each, 10 points in total.
36. market forces
37. countertrade
38. commercial hub
39. intellectual property right
40. brain trust
41. austerity programmes
42. the General Agreement on Tariffs and Trade
43. discount rate
44. countervailing duty
45. dumping

V. Short-answer questions: 6 questions, 3 points each, 18 points in total.

Passage 1
Once, when Japan faced pressure from abroad, it would either give in reluctantly or keep quiet and hope that the fuss would die down. No longer, it seems. America wants Japan to meet import targets for some American goods, but far from capitulating to the thrust of American trade policy, Japan is taking a stand that could lead to a trans-Pacific confrontation. The annual white paper on trade development published by the Ministry of International Trade and Industry will reject the argument that Japan needs special trade sanctions. It is Japan's persistent surplus, more than anything, that has provoked anger in Washington. This year the surplus has been growing fast. With the economy still barely growing, despite two fiscal packages in the past nine months, Japan's critics say that the country is once more exporting its way out of recession.

46. Which word can "give in" be replaced by?
47. Explain "the fuss would die down".
48. Paraphrase "exporting its way out of recession".

Passage 2
The price of tin on the European spot market rose to 4,400 pounds per ton, reflecting widespread production cuts in the world tin industry. At a two-day meeting in Indonesia, the association of tin-producing countries, whose members represent 70 percent of world tin output, decided to strengthen their co-operation in a bid to stabilize tin prices and to call on the United States to limit sales of tin from its strategic stockpile. Platinum progressed at the outset on concern about strike action in South African mines but quickly fell victim to profit-taking as work resumed.

49. What is "strategic stockpile"?
50. What does "progress" mean in the context?
51. What is profit-taking in business?

VI. Translation: 12 points.
52.
Commerce among nations entered a modern era; the constrained trading between imperial powers and their colonies began to break down. World markets opened to all countries, and multilateral trade flourished. Generally, a country could sell its goods in the best market it could find and buy what is needed from the least expensive supplier. Moreover, since currencies were convertible, most transactions could be completed with cash. Barter was as antiquated as the horse-soldier. During the past few years, however, the international monetary system has begun to strain under a variety of economic changes. One important cause is the enormous burden of debt carried by Third World countries. A professor at Harvard University says, "The plain fact is that many countries are broken."

Top Secret ★ Before Use
National Unified Higher Education Self-Study Examination, August 2020
Selected Readings on Economics and Trade from Foreign Periodicals: Answers and Marking Guide
(Course code: 00096)

I. Single-choice questions: 15 questions, 2 points each, 30 points in total.
1. A  2. C  3. B  4. B  5. D  6. C  7. D  8. A  9. C  10. B
11. D  12. C  13. B  14. D  15. C

II. True/false questions: 10 questions, 2 points each, 20 points in total.
16. B  17. A  18. A  19. B  20. A
21. A  22. B  23. A  24. A  25. B

III. Translate the following Chinese phrases into English: 10 items, 1 point each, 10 points in total.
26. The Special Economic Zone
27. export quota system
28. consumer goods
29. wholesaler
30. trade balance
31. the service sector
32. monopolize
33. most-favored nation treatment
34. hard currency
35. current account

IV. Translate the following English words or phrases into Chinese: 10 items, 1 point each, 10 points in total.
36. 市场力量
37. 对等贸易(反向贸易)
38. 商业活动中心
39. 知识产权
40. 智囊团
41. 紧缩计划
42. 关贸总协定
43. 贴现率
44. 反补贴税
45. 倾销

V. Short-answer questions: 6 questions, 3 points each, 18 points in total.
Passage 1
46. surrender or yield
47. nervous activities become weaker
48. get through the recession by exporting
Passage 2
49. Strategic stockpile is the stock for future use when faced with extremely difficult situations.
50. Price goes up.
51. To profit in a price fluctuation on an exchange by selling what one has bought at a lower price when the price goes up.

VI. Translation: 12 points.
52. 国家间商贸进入了新的纪元;帝国主义列强及其殖民地间的强制性贸易开始崩溃。(2 points)
世界市场向所有国家开放,多边贸易开始繁荣。(2 points)
一般来说,一个国家可以在其能找到的最有利的市场上销售其货物,也能从最廉价的供应商那里购得它所需要的货物。(2 points)
而且,因为货币可以兑换,大部分交易可以用现金支付,易货贸易就像骑兵一样时过境迁了。(2 points)
然而,在过去的几年里,国际货币体系在种种经济变化影响下,开始承受着巨大的压力。(2 points)
一个重要的原因是第三世界国家的巨大债务负担。哈佛大学一位教授说:"显而易见,许多国家都已经破产了。"(2 points)

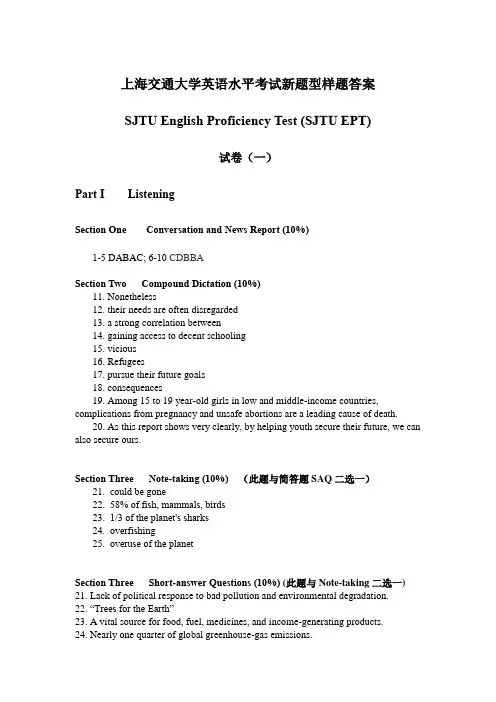

Shanghai Jiao Tong University English Proficiency Test (SJTU EPT): Sample Answers for the New Question Types
Paper 1

Part I Listening

Section One Conversation and News Report (10%)
1-5 DABAC; 6-10 CDBBA

Section Two Compound Dictation (10%)
11. Nonetheless
12. their needs are often disregarded
13. a strong correlation between
14. gaining access to decent schooling
15. vicious
16. Refugees
17. pursue their future goals
18. consequences
19. Among 15 to 19 year-old girls in low and middle-income countries, complications from pregnancy and unsafe abortions are a leading cause of death.
20. As this report shows very clearly, by helping youth secure their future, we can also secure ours.

Section Three Note-taking (10%) (candidates take either this task or the short-answer questions)
21. could be gone
22. 58% of fish, mammals, birds
23. 1/3 of the planet's sharks
24. overfishing
25. overuse of the planet

Section Three Short-answer Questions (10%) (candidates take either this task or Note-taking)
21. Lack of political response to bad pollution and environmental degradation.
22. "Trees for the Earth"
23. A vital source for food, fuel, medicines, and income-generating products.
24. Nearly one quarter of global greenhouse-gas emissions.
25. Reductions in deforestation and mass planting of new trees.

Section Four Listening and translating (5%)
26. 不会有人排队时加塞,或购买时超过50美元(汽油)的限制。

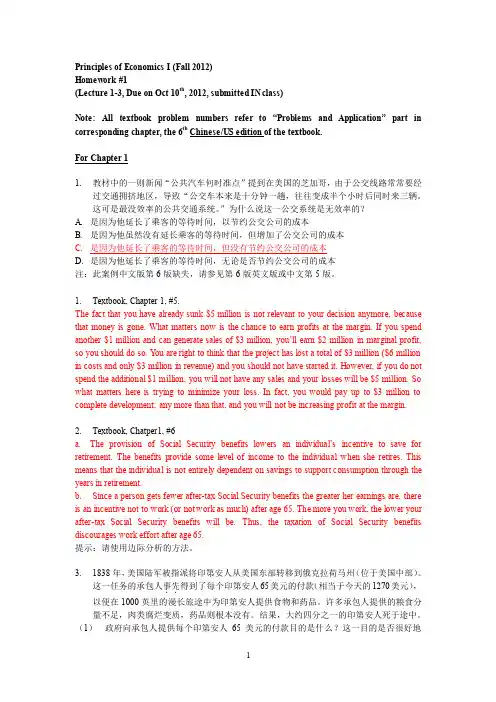

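Principles of Economics I (Fall 2012)
Homework #1
(Lecture 1-3, Due on Oct 10th, 2012, submitted IN class)

Note: All textbook problem numbers refer to the "Problems and Applications" part of the corresponding chapter in the 6th Chinese/US edition of the textbook.

For Chapter 1

1. A news item in the textbook, "When Will the Bus Be on Time," notes that in Chicago, because bus routes often pass through congested areas, "buses that are supposed to come every ten minutes often turn into three arriving together after half an hour. This is the most inefficient public transit system there is." Why is this bus system said to be inefficient?
A. Because it lengthens passengers' waiting time in order to save the bus company's costs
B. Because, although it does not lengthen passengers' waiting time, it raises the bus company's costs
C. Because it lengthens passengers' waiting time without saving the bus company's costs
D. Because it lengthens passengers' waiting time, whether or not it saves the bus company's costs
Note: This case is missing from the 6th Chinese edition; please see the 6th English edition or the 5th Chinese edition.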

1. Textbook, Chapter 1, #5.
The fact that you have already sunk $5 million is not relevant to your decision anymore, because that money is gone. What matters now is the chance to earn profits at the margin. If you spend another $1 million and can generate sales of $3 million, you'll earn $2 million in marginal profit, so you should do so. You are right to think that the project has lost a total of $3 million ($6 million in costs and only $3 million in revenue) and you should not have started it. However, if you do not spend the additional $1 million, you will not have any sales and your losses will be $5 million. So what matters here is trying to minimize your loss. In fact, you would pay up to $3 million to complete development; any more than that, and you will not be increasing profit at the margin.

2. Textbook, Chapter 1, #6.
a. The provision of Social Security benefits lowers an individual's incentive to save for retirement. The benefits provide some level of income to the individual when she retires. This means that the individual is not entirely dependent on savings to support consumption through the years in retirement.
b. Since a person gets fewer after-tax Social Security benefits the greater her earnings are, there is an incentive not to work (or not work as much) after age 65. The more you work, the lower your after-tax Social Security benefits will be. Thus, the taxation of Social Security benefits discourages work effort after age 65.

Hint: Use marginal analysis.
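
The sunk-cost reasoning in the answer to Chapter 1, #5 can be checked with a few lines of arithmetic. The sketch below is only an illustration: the function names are hypothetical, amounts are in millions of dollars, and it assumes the only choice is between finishing development and abandoning the project with no sales.

```python
def marginal_profit(extra_cost, extra_revenue):
    """Profit from the remaining decision only; sunk costs are ignored."""
    return extra_revenue - extra_cost


def should_finish(extra_cost, extra_revenue):
    """Finish the project whenever the marginal profit is not negative."""
    return marginal_profit(extra_cost, extra_revenue) >= 0


def total_loss(sunk_cost, extra_cost, extra_revenue, finish):
    """Overall loss on the project, counting the money already spent."""
    if finish:
        return sunk_cost + extra_cost - extra_revenue
    return sunk_cost  # abandoning means no sales and no further spending


# Figures from the problem, in millions of dollars.
SUNK, EXTRA, REVENUE = 5.0, 1.0, 3.0

print("marginal profit:", marginal_profit(EXTRA, REVENUE))
print("loss if finished:", total_loss(SUNK, EXTRA, REVENUE, finish=True))
print("loss if abandoned:", total_loss(SUNK, EXTRA, REVENUE, finish=False))
```

The sunk $5 million appears in both loss figures, so it cannot change which option is better; only the marginal cost and marginal revenue enter the decision rule.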