ABSTRACT Remote Control for Videoconferencing

- Format: pdf

- Size: 93.73 KB

- Pages: 11

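On Teacher Quality in Innovation-Oriented Education

Rapidly changing times and the swift advance of science and technology call for creativity.

People without creativity cannot become masters of tomorrow's world, and a nation without creativity cannot stand among the nations of the world.

Schools are the main battlefield for cultivating talent, and teachers are the people who do that work.

As innovation-oriented education advances toward us with unstoppable momentum, what should we do, and how should we do it?

I. Update educational concepts, adjust the knowledge structure, and keep improving oneself

Teachers organize educational activities, and their role in students' growth and development must not be underestimated.

What future society demands of education is, in the final analysis, what it demands of teachers.

To train people who can meet the needs of economic and social development in the 21st century, teachers must first adapt themselves to the needs of the new century and build a fresh knowledge structure, because that structure is the foundation for broadening horizons, freeing the mind, and renewing ideas.

(1) Systematically relearn the knowledge of one's own subject and specialty, in particular by digesting and absorbing the most advanced scientific knowledge in the field, continually enriching one's mind and building the professional knowledge the work requires; then integrate that knowledge and theory into future teaching, so that advanced scientific and technical knowledge becomes a source of motivation that sparks students' interest in learning and spurs them to study hard, innovate, and forge ahead.

(2) Study modern educational and teaching theory diligently, master the general laws of modern teaching theory, understand the essence of teaching, and plan and design classroom teaching scientifically from the standpoint of epistemology and methodology.

Make a real effort to study the psychology of students in the new era, and build teaching on the background of students' rich inner lives and non-intellectual activities.

(3) Pay attention to learning and mastering related subjects such as Chinese, mathematics, and physics, as well as modern audio-visual teaching tools.

The development of modern science has two prominent features, a high degree of differentiation and a high degree of integration: on the one hand, classification grows ever finer; on the other, disciplines permeate one another widely, giving rise to many comprehensive interdisciplinary fields.

Social development requires teachers to have knowledge that is broad, extensive, refined, and deep, and also requires them to use modern teaching media to widen students' horizons and enrich their thinking.

(4) "Experiment is the life of chemistry."

A chemistry teacher must have solid experimental skills.

A teacher needs strong hands-on ability: improving and designing experimental schemes suited to teaching, finding ways to carry out every experiment in the textbook, and using reliable experimental facts to derive laws and conclusions.

A teacher should also have a sound experimental style and good experimental habits, and should guide students to observe and record experimental phenomena and to process experimental results with a scientific attitude.

II. Combine the characteristics of the subject, reform classroom teaching, and cultivate innovative thinking

To meet the demands of innovation-oriented education and make the most of the classroom, the main front of quality-oriented education, teachers should change in the following respects:

1. Create a harmonious atmosphere, make use of encouragement, guide students to express their opinions, and make the thinking process more visible, thereby raising students' creativity.

In the learning process, thinking efficiency ranks from low to high in the order: seeing, listening, writing, speaking.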

轮机工程常用英语缩写语博客【篇一:轮机工程常用英语缩写语博客】轮机工程常用英语缩写语安(培)ampere(a) 加速度acceleration(a) 面积area 绝对温度absolute temperature 近似绝对温标approximate absolute temperature(aa) 自动振幅控制automatic amplitube control(aac) 美国工程师协会american association engineer(aae) 美国电气工程师协会american association electricalengineer(aaee) 空气乙炔焊air-acetylene welding(aaw) 鼓风airblast(ab) 空气断路器air-breaker(ab) 美国船舶局american bureau shipping(ab) 空气吹灰断路器air-blast circuitbreaker(ab) 自动反偏器automatic back bisa(aab) 缩写abbreviation(abbr) 自动偏压补偿automatic bisa compensation(abc) 自动偏压控制automatic bisa control(abc) 鼓风冷却的aie blast cooled(abc)锅炉自动控制automatic boiler control(abc) 在下死点后after bottom dead center(abdc) 后轴承aft bearing(a.brg) 耐磨的abrasive resistant(abs) 美国标准局american bureau standards(abs) 绝对地absolue(abs) 绝对误差absolue error(abs.e) 摘要,文摘abstract(abs) 绝对粘度absolueviscosity(abs.visv) 空冷器air cooler(ac) 蓄电池,蓄能器,蓄压器,蓄热器accumulator(ac) 交流电alternative current(ac) 模拟计算机analog computer(ac) 自动控制automatic control(ac) 轴流离心式axial centrifugal(a 空气压缩机airopressor(a/c) 空气调节air conditioning(acb) 燃烧自动控制automatic combustion control(acc) 音响报警acoustic alarm(acc alarm) ( 经美国船舶局)核准的automatic control system certified for(accu) 无人机舱自动控制系统unattended engine room 空气调节设备air conditioning(aci) 自动控制器automatic controller(acr) 自动电流调节器automatic current regulator(acr) 交流继电器alternating current relay(ac rel) 检修孔access opening(acs_o) 自动控制阀automatic control valve(acv) 反时针方向anti-clockwise(acw) 平均偏差average deviation(ad) 公元annodomini(a.d.) 
自动数据测取装置automatic data acquisition(ada) 交流直流转换器a/dconverter(ada.conv) 详细报警数据additional alarm information(add.alarm info) 可调速度adjustable(adj.sp) 交流直流基准电a/dreference(a/d ref) 提前的,超前的advanced(adv) 轮机助理,助理工程师assistant engineer(ae) 辅助发动机auxiliary engine(ae) 防污染的anti-fouling(af) 防污油漆anti-fouling paint(af) 轴向流动axial flow(af) 减磨轴承antifriction bearing(afb) 自动断油automatic fuel cutoff(afco) 减摩金属antifriction metal(afm) 自动频率调谐器automatic frequency tuner(aft) 自动微调automatic fine tuning(aft) 后面的,后部的af terward(aftw) 自动给水控制automatic feed watercontol(afwc) 自动增益控制automatic gain control(agc) 机组,成套设备aggregate(agg) 安培,小时ampere-hour(ah.a.h) 安培小时计ampere-hour meter(ahm,ahm) 自动高压控制automatic high-voltage control(ahvc) 全铁的all iro(ai) 进气阀air inlet valve(aiv) 合金接头alloy junction(aj) 成套配合件adaptation kit(ak) 铸铝青铜aluminium bronze casting(albc) 铝铸件aluminium casting(alc) 碱性的alkali(ne)(alk) 镍合金钢alloyed nickel steel(aini) 镍钴合金钢alloyed nickel cobalt steel(ainico) 硅铝合金aluminium silicon(alsi) 调幅,幅度调制amplitude modulation(am) 上午ante meridiem(am) 算术平均值arithmetic mean(a.m.) 美国商船学会american merchant marine institute(a.m.m.i) 自动-手动auto-manual(a/m) 兵器铝青铜arms bronze(amb) 放大器输入端amplifier input(amp.in) 放大器输出端amplifier output(amp.out) 总数,总量amount(amt.) 
自动延时补偿器automatic time element compenstator(amtec) 自然空气冷却air natural cooled(an) 角铁angle bar(ang) 年度报告annual report(ann rep) 实际净重actural net weight(an wt) 空气油冷却器air oil cooler(aoc) 航空滑油aviation oil(a-oil) 自动操作进口阀automatic operated inlet valve(aoiv) 自动操作仪表板automatic operations panel(aop) 辅助油泵auxiliary oil pump(aop) 自动操纵阀automatically operated valve(aov) 观测台,观察板access panel(ap) 自动舵autopilot(ap) 原子动力atomic power(ap) 自动程序设计automatic programming(ap) 自动程控制automatic program control(apc) 石油比重分级标准degree americanpetroleum institute(api) 附录appendix(app) 近似的approximate(appr) 辅助电源accessory power supply(aps) 自动移相器automatic phase shifter(aps) 自动相位同步automatic phase synchronization(a.p.s) 辅助电源设备,辅助动力装置auxiliary power nit(apu) 装配和修理assembly repair(a-r) 自动遥控,远距离自动控制automatic remote control(arc) 电弧焊arc weld(arc/w) 布置arangement(arrang) 自动跟踪装置automatic range tracing unit(artu) 项目条款article(art) 年度检验annual survey(as) 自动同步器automatic synchroonizer(as) 自动灵敏度控制automatic sensibility control(asc) 故障传感控制装置abort sensing control unit(ascu) 自动开关板automatic switchingpanel(asp) 合金钢保护电镀alloy steel protective plating(aspp) 验收总结报告acceptance summaryreport(asr) 协会,社,公司association(ass) 助理assistant(asst) 美国标准螺纹american standard thread(ast) 自动蒸汽温度控制automatic steam-tempeature control(astc) 快速启闭阀acceleration- switching valve(asv) 角阀angle stop valve(asv) 自动滑阀automatic shuttle valve(asv) 验收试验acceptant test(at) 安(培)匝(数)ampere-turns(at) 大气压atmosphere(at) 绝对大气压atmospheric pressure,absolute(ata) 上死点后after top dead center(atdc) 表压atmospheric pressure gauge(atg) 自藕变压器auto-transformer(a-tr) 附件,附属装置attachment(att) 自动起动失灵automatic startfailure(au sta) 自动停车automatic stop(au sto) 自动停止失灵automatic stop failure(au stof) ( 法国船级社) 机舱自动化automation(aut) 自动优先automation priority(auto.p) 自动截止止回阀automatic stop checkvalve(auto s&cv) 空气出口air vent(av) 酸值acid value(a.v.) 
平均值average(avg) 万用表avometer(avo) 辅助绕组auxiliary winding(aw) 电焊机arc weiding machine(awm) 维修用备件awaiting parts(awp) 实际作压力actual working pressure(awp) 轴线axis(ax) 宽度breadth(b) 呼吸装置breathing apparatus(ba) 桥式放大器bridging amplifier(ba) 蓄电池battery(ba) 缓冲放大器buffer amplifier(ba) 锅炉和机械设备检验完毕boiler machineriessurveyed(b&ms) 套筒接头bell spigot(b&s) 压载ballast(ball) 桶barrel(bar) 在下死点前before bottom center(bbc) 轴承青铜bearing bronze(bbz) 中心间距between centers(bc) 公元前before christ(b.c) 控制板board control(b.c.) 基园直径base circle diameter(bcd) 保险丝back-up fuse(bckup fuse) 配电盘switch board(bd) 击穿电压breakdownvoltage(bdv) 反馈back-feed(bf) 锅炉给水boiler feed(bf) 布式硬度brinell hardness(bh) 传感头bulk head(b/head) 制动马力brake horse power(bhp) 制动马力/小时brake-horse-power per hour(bhph) 黄铜套brass jacket(bj) 齿隙back lash(b/lash) 蓝图blueprint(bp) 沸点boiling point(bp) 反压力,背压back pressure(bp) 不合格,废品below proof(bp) 锅炉装置boiler plant(bp) 锅炉汽压boilerpressure(bp) 参考书,手册book reference(b.r.) 总登记吨brutton register tonne(brt) 锅炉检验完毕boiler surveyed(bs) 按钮开关button switch(bs) 船上用品免税单bill store(b/s) 黄铜锻件brassforging(bsf) 制动热效率brake thermal efficiency(bthe) 污水井bilge well(bw) 回水阀back-watervalve(bwv) 青铜bronze(bz) 电容capacity(c) 摄氏温度celsius scale(c) 百分度的centigrade(c) 合同签订日期contract award date(cad) 大卡,千卡kilocalorie(call) 卡calorie(cal) 热值,发热量calorific value(cal val) 计算机辅助测量系统computer assisted measurement system(cams) 产品目录catalogue(cat) 线路断路器circuit breaker(cb) 接触断路器contact breaker(cb) 闭合回路closedcircuit(cc) 燃烧室combustion chamber(cc) 连续冷却continuous cooling(cc) 中央控制台central control console(ccc) 冷铸生铁chilled cast iron(ccit) 立方厘米cubic centimeter(ccm) 全套设备协调试验complex coordination test(cct) 故障类别classification(cd) 阻力系数coefficient drag(cd) 交货证明书certificate delivery(c/d) 机舱集中控制centralized engine room control(cerc) 换算系数conversionfactor(cf) 重心centre gravity(cg) 表面硬化case hardening(ch) 通道channel(chann) 校验和读出check read(chre) 气缸盖温度cylinder head temperature(cht) 合同项目contract item(ci) 控制项目controlled item(ci) 接通cut-in(ci) 铸铁管cast-iron pipe(cip) 断路器circuit breaker(cir bkr) 
循环circulating(circ) 中国工业标准chinese industrial standard(cis) 铜套copper jacket(cj) 中心线center line(c 冷却退火cooldown(cldwn) 限流电阻current-limiting reslstor(clr) 控制电机control motor(cm) 制造厂证明书certificate manufacture(c/m) 持续最大功率continuous maximum rating(cmr) 原油crude oil(c.o.) 系数coefficient(coef) 股份有限公司company limited(co ltd) 集油柜collecting(coltk) 计算机故障computer fail(comp fail) 冷凝液condensate(cond) 合闸故障connnection failure(conf) 常数constant(const) 恒速constant speed(const-sp) 合同,契约contract(cotr) 污油沉淀柜contaminated oil settling tank(cost) 恒压constant pressure(cp) 发动机完全停止,供电完全停止complete power failure(cpf) 压缩比compression ratio(cr) 抗腐浊剂corrosion resistant(cre) 不锈钢corrosion resistant steel 横截面cross- section(crs) 控制信号control signal(cs) 电流强度current strength(cs) 持续使用功率continuousserving rating(c.s.r.) 恒温constant teperature(ct) 电路,线路。

AVRCP

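AVRCP (Audio/Video Remote Control Profile) is the Bluetooth profile for audio/video remote control.

AVRCP is designed to provide a standard interface for controlling TVs, Hi-Fi equipment, and similar devices.

The profile allows a single remote control (or other device) to control all the A/V equipment to which a user has access.

It can be used together with A2DP or VDP.

AVRCP defines how to control the characteristics of streaming media.

These include pause, stop, start playback, volume control, and other types of remote control operations.

AVRCP defines two roles: the controller and the target device.

The controller is typically the remote control device, while the target is the device whose characteristics are being changed.

In AVRCP, the controller translates a detected user action into an A/V control signal and then transmits it to the remote Bluetooth device.

For a "walkman"-type media player, the controller may be a headset that allows tracks to be skipped, while the target is the actual player.

The functions available on a conventional infrared remote control can be implemented in this protocol.

AVRCP specifies the scope of the AV/C Digital Interface Command Set (the AV/C command set, defined by the 1394 Trade Association) that is to be applied, which keeps implementation simple and operation easy.

The protocol adopts the AV/C device model and command format for control messages, and these messages can be transported by the Audio/Video Control Transport Protocol (AVCTP).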
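To make the AV/C command format mentioned above more concrete, here is a minimal Python sketch that assembles the body of a PASS THROUGH command, the kind of message a controller sends when the user presses a button. The constant values (CONTROL ctype 0x0, panel subunit type 0x09, opcode 0x7C, and the operation IDs) are recalled from the AV/C Panel Subunit specification and should be treated as assumptions to verify against that specification; the function name `build_pass_through` is hypothetical, and the AVCTP header that would wrap this frame on the air is deliberately left out.

```python
# Sketch of an AV/C PASS THROUGH command frame as carried by AVRCP.
# All numeric constants are assumptions recalled from the AV/C Panel
# Subunit specification; check them against the spec before relying on them.

CTYPE_CONTROL = 0x0          # command type: CONTROL
SUBUNIT_PANEL = 0x09         # subunit type: panel
OPCODE_PASS_THROUGH = 0x7C   # opcode: PASS THROUGH

# A small subset of panel operation IDs (assumed values).
OPERATION_IDS = {
    "volume_up": 0x41,
    "volume_down": 0x42,
    "play": 0x44,
    "stop": 0x45,
    "pause": 0x46,
    "forward": 0x4B,
    "backward": 0x4C,
}


def build_pass_through(operation: str, released: bool = False,
                       subunit_id: int = 0) -> bytes:
    """Return the 5-byte AV/C frame for a button press or release."""
    operation_id = OPERATION_IDS[operation]
    state_flag = 0x80 if released else 0x00  # bit 7: 0 = pressed, 1 = released
    return bytes([
        CTYPE_CONTROL & 0x0F,                        # 4 zero bits + ctype
        (SUBUNIT_PANEL << 3) | (subunit_id & 0x07),  # subunit type + subunit ID
        OPCODE_PASS_THROUGH,
        state_flag | operation_id,                   # state flag + operation ID
        0x00,                                        # operand data length
    ])


if __name__ == "__main__":
    print(build_pass_through("play").hex())
    print(build_pass_through("play", released=True).hex())
```

A controller would normally send the "pressed" frame followed by the "released" frame for each button action; the target answers each one with a response frame, which this sketch does not model.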

Further reading:

1

/Bluetooth/Learn/Works/Profiles_Overview.htm

Categories:

Mobile phones, communications, Bluetooth.

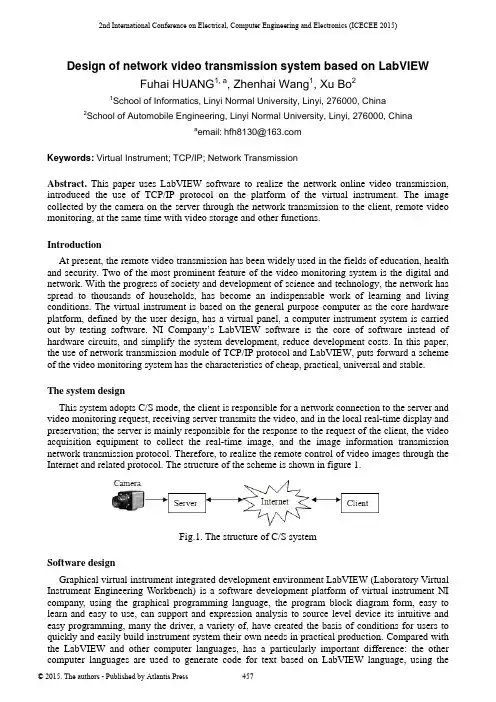

2nd International Conference on Electrical, Computer Engineering and Electronics (ICECEE 2015)

Design of network video transmission system based on LabVIEW

Fuhai HUANG1, a, Zhenhai Wang1, Xu Bo2

1School of Informatics, Linyi Normal University, Linyi, 276000, China

2School of Automobile Engineering, Linyi Normal University, Linyi, 276000, China

a email:***************

Keywords: Virtual Instrument; TCP/IP; Network Transmission

Abstract. This paper uses LabVIEW to implement online video transmission over a network and introduces the use of the TCP/IP protocol on the virtual instrument platform. Images collected by the camera on the server are transmitted over the network to the client for remote video monitoring, with video storage and other functions provided as well.

Introduction

At present, remote video transmission is widely used in education, health, and security. The two most prominent features of video monitoring systems are digitization and networking. With the progress of society and the development of science and technology, the network has reached thousands of households and has become an indispensable part of work, study, and daily life. A virtual instrument is a computer-based instrument system that uses a general-purpose computer as its core hardware platform: its functions are defined and designed by the user, it has a virtual panel, and its test functions are carried out in software. NI's LabVIEW is the core of this approach, replacing hardware circuits with software, which simplifies system development and reduces development costs. Using the TCP/IP network transmission modules in LabVIEW, this paper proposes a video monitoring scheme that is cheap, practical, universal, and stable.

The system design

The system adopts the client/server (C/S) mode. The client is responsible for opening a network connection to the server and requesting video monitoring, receiving the video that the server transmits, and displaying and saving it locally in real time. The server is mainly responsible for responding to the client's request, acquiring real-time images from the video acquisition equipment, and transmitting the image information over the network transmission protocol. Remote control of video images is thus realized through the Internet and the related protocols. The structure of the scheme is shown in figure 1.

Fig.1. The structure of C/S system

Software design

LabVIEW (Laboratory Virtual Instrument Engineering Workbench) is NI's graphical integrated development environment for virtual instruments. It uses a graphical programming language in which programs take the form of block diagrams, which makes it easy to learn and easy to use. Its intuitive, simple programming style, numerous device drivers, and varied analysis and display support give users the basis for quickly and easily building instrument systems that meet their own needs in practical production. LabVIEW differs from other computer languages in one particularly important respect: other languages generate code from text, whereas LabVIEW uses a graphical programming language.

LabVIEW provides a Vision and Motion module that can initialize the camera and capture video images, as shown in figure 2(a). In addition, the TCP protocol controls provide a data communication module that makes it convenient to establish a network connection and transmit data, as shown in figure 2(b).

(a) NI-IMAQ module (b) TCP module

Fig.2. LabVIEW module

The system initializes the camera on the server side and establishes a connection with the client over TCP; the client then receives and displays the video image. The program flow is shown in figure 3.

Fig.3. System flow diagram

Server design

The server side calls the camera for image acquisition. LabVIEW image acquisition mainly takes one of two approaches: one uses the industrial camera's own driver, such as a LabVIEW driver or a DLL (dynamic link library); the other uses the NI IMAQ and IMAQdx drivers. This design uses NI IMAQdx for image acquisition. The video image should be converted to a suitable format before transmission so that it can be transmitted effectively. The design uses the TCP/IP protocol for video transmission. TCP is a connection-based protocol: a connection must be created before data is transmitted. Creating a TCP connection requires specifying an address and a port at that address; different ports at an address identify different services. The 'TCP Listen' VI creates a listener and waits for an accepted TCP connection at the specified port. If the connection succeeds, the VI returns a connection handle together with the remote address and port of the connecting client. Two 'TCP Write' VIs send the data: the first transmits the data length and the second transmits the video data. Finally, the 'TCP Close Connection' VI ends the connection. The server program block diagram is shown in figure 4.

Fig.4. The server program block diagram

The design of the client

The client mainly establishes the network connection, reads the video data, and saves it. The 'TCP Open Connection' VI opens the TCP connection, and two 'TCP Read' VIs then read the data: the first receives the length of the video data and passes it as the input to the second, which receives the video data itself. Finally, the 'TCP Close Connection' VI ends the connection. The block diagram is shown in figure 5.

Fig.5. The client program

Test result

Before running the program, select a port, usually one above 1000, because many ports below 1000 are already occupied by other programs. The server and the client must be set to the same port to ensure normal listening. The client sends a request to the server, the server responds, and the connection is established; the server then sends the data packets, and finally the client receives the data and unpacks it to restore the image. The result of running the program is shown in figure 6.

Fig.6. The result of running the program

Conclusion

This design adopts the C/S mode and combines the powerful network functions provided by the LabVIEW platform with the TCP/IP protocol to realize effective network transmission and storage of video. The running results show good performance.
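The two-write, two-read exchange described in the server and client designs of the LabVIEW paper above amounts to length-prefixed framing over TCP. The following is a minimal Python sketch of that idea, not the authors' LabVIEW block diagram. The 4-byte big-endian length header, the helper names (`send_frame`, `recv_exact`, `recv_frame`, `serve_one_frame`, `demo`), and the OS-assigned loopback port are all assumptions made for illustration; the paper does not state how the length is encoded.

```python
import socket
import struct
import threading


def send_frame(sock: socket.socket, payload: bytes) -> None:
    """Server side: first write the payload length, then the payload."""
    sock.sendall(struct.pack(">I", len(payload)))  # assumed 4-byte header
    sock.sendall(payload)


def recv_exact(sock: socket.socket, count: int) -> bytes:
    """Read exactly `count` bytes; TCP may deliver them in several pieces."""
    chunks = []
    remaining = count
    while remaining:
        chunk = sock.recv(remaining)
        if not chunk:
            raise ConnectionError("peer closed before the full frame arrived")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


def recv_frame(sock: socket.socket) -> bytes:
    """Client side: the first read yields the length used by the second read."""
    (length,) = struct.unpack(">I", recv_exact(sock, 4))
    return recv_exact(sock, length)


def serve_one_frame(listener: socket.socket, frame: bytes) -> None:
    """Accept one client, send one frame, then close the connection."""
    conn, _addr = listener.accept()
    with conn:
        send_frame(conn, frame)


def demo(frame: bytes) -> bytes:
    """Send one frame from a server thread to a client over loopback."""
    # Port 0 asks the OS for a free port; the paper instead picks a fixed
    # port above 1000 and configures the same value on both sides.
    listener = socket.create_server(("127.0.0.1", 0))
    port = listener.getsockname()[1]
    server = threading.Thread(target=serve_one_frame, args=(listener, frame))
    server.start()
    with socket.create_connection(("127.0.0.1", port)) as client:
        received = recv_frame(client)
    server.join()
    listener.close()
    return received


if __name__ == "__main__":
    fake_image = bytes(range(256)) * 400  # stand-in for one encoded video frame
    print(demo(fake_image) == fake_image)
```

Sending the length first lets the receiver know how many bytes belong to the current image, since TCP is a byte stream and does not preserve message boundaries on its own.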

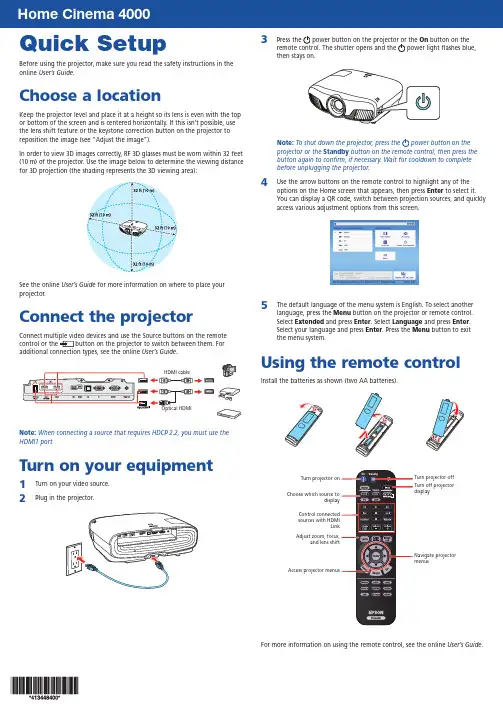

Quick Setup

Before using the projector, make sure you read the safety instructions in the online User’s Guide.

Choose a location

Keep the projector level and place it at a height so its lens is even with the top or bottom of the screen and is centered horizontally. If this isn’t possible, use the lens shift feature or the keystone correction button on the projector to reposition the image (see “Adjust the image”).

In order to view 3D images correctly, RF 3D glasses must be worn within 32 feet (10 m) of the projector. Use the image below to determine the viewing distance for 3D projection (the shading represents the 3D viewing area):

See the online User’s Guide for more information on where to place your projector.

Connect the projector

Connect multiple video devices and use the Source buttons on the remote control or the button on the projector to switch between them. For additional connection types, see the online User’s Guide.

Note: When connecting a source that requires HDCP 2.2, you must use the HDMI1 port.

Turn on your equipment

1 Turn on your video source.

2 Plug in the projector.

3 Press the power button on the projector or the On button on the remote control. The shutter opens and the power light flashes blue, then stays on.

Note: To shut down the projector, press the power button on the projector or the Standby button on the remote control, then press the button again to confirm, if necessary. Wait for cooldown to complete before unplugging the projector.

4 Use the arrow buttons on the remote control to highlight any of the options on the Home screen that appears, then press Enter to select it. You can display a QR code, switch between projection sources, and quickly access various adjustment options from this screen.

5 The default language of the menu system is English. To select another language, press the Menu button on the projector or remote control. Select Extended and press Enter. Select Language and press Enter. Select your language and press Enter. Press the Menu button to exit the menu system.

Using the remote control

Install the batteries as shown (two AA batteries).

Remote control callouts: Turn off projector display; Navigate projector menus; Turn projector off

For more information on using the remote control, see the online User’s Guide.

Adjust the image

1 Press the Pattern button on the remote control to display a test pattern.

2 To raise the image or correct a tilted image, adjust the front feet as shown below.

3 Press the

4 Press the

5

6 If your image looks like or , you can use the buttons on the projector to correct this.

Note: Using the keystone adjustment can affect the size and effective resolution of your image. If possible, change the position of your projector to eliminate the keystone effect and use the lens shift option to position the image as necessary.

7 Press Esc to exit.

Viewing 3D images

To view 3D content, you must first connect a 3D-compatible video device to one of the HDMI ports on your projector. You also need a pair of Epson® (part number V12H548006) or Epson-compatible RF 3D active shutter glasses.

1 Turn on and begin playback on the 3D-compatible video device.

Note: Make sure you set the video device to play content in 3D mode.

2 Slide the power switch on your 3D glasses to the On position.

Note: If the glasses don’t automatically display 3D content, you may need to pair them with the projector. Move the glasses within 10 feet (3 m) of the projector, then press and hold the Pairing button on the 3D glasses for at

press Enter

Medium

Troubleshooting

If you see a blank screen or the No signal message after turning on your video device or computer, check the following:

• Make sure the power light on the projector is blue and not flashing.

• Press the button on the projector or one of the Source buttons on the remote control to switch to the correct image source, if necessary.

• On some Windows® laptops, you may need to hold down the Fn key and press F7 or the function key that lets you display on an external monitor. It may be labeled CRT/LCD or have an icon such as.

• On Windows 7 or later, hold down the Windows key and press P at the same time, then click Duplicate.

• If you’re using a Mac laptop, open System Preferences and select Displays. Click the Arrangement tab and select the Mirror Displays checkbox.

If 3D images aren’t displaying properly, check the following:

• Press the Menu button, then select Signal > 3D Setup > 3D Display and make sure that the 3D option is selected.

• Make sure that you are within the 3D viewing range. See “Choose a location” on the front of this sheet or the online User’s Guide for more information.

• Check that your 3D glasses have not entered standby mode. Slide the power switch on the 3D glasses to the Off position, then back to the On position.

• Check that your video device and media are both 3D-compatible. Refer to the documentation that came with your video device for more information.

Where to get help

Manuals

For more information about using the projector, click the icons on your desktop to access the online manuals (requires an Internet connection). If you don’t see icon links to the manuals, you can install them from the projector CD or go to the Epson website, as described below.

Telephone support services

To use the Epson® PrivateLine® Support service, call (800) 637-7661.
This service is available for the duration of your warranty period. You may also speak with a support specialist by calling (562) 276-4394 (US) or (905) 709-3839 (Canada). Support hours are 6 am to 8 pm, Pacific Time, Monday through Friday, and 7 am to 4 pm, Pacific Time, Saturday. Days and hours of support are subject to change without notice. Toll or long distance charges may apply.

Internet support

Visit /support (U.S.) or www.epson.ca/support (Canada) for solutions to common problems. You can download utilities and documentation, get FAQs and troubleshooting advice, or e-mail Epson.

Registration

Register today to get product updates and exclusive offers. You can use the CD included with your projector or register online at /webreg.

Optional accessories

For a list of optional accessories, see the online User’s Guide.

You can purchase RF 3D glasses (part number V12H548006) or other accessories from an Epson authorized reseller. To find the nearest reseller, call 800-GO-EPSON (800-463-7766). Or you can purchase online at (U.S. sales) or www.epson.ca (Canadian sales).

Notices

Bluetooth Safety and Specifications

Contains Bluetooth module model: DBUB-E207

This document provides safety instructions and describes the specifications. Read this document carefully before use to ensure your safety and product performance.

U.S.

Contains FCC ID: BKMAE-E207

This device complies with Part 15 of the FCC Rules. Operation is subject to the following two conditions: (1) This device may not cause harmful interference, and (2) this device must accept any interference received, including interference that may cause undesired operation.

This equipment has been tested and found to comply with the limits for a Class B digital device, pursuant to Part 15 of the FCC Rules. These limits are designed to provide reasonable protection against harmful interference in a residential installation. This equipment generates, uses and can radiate radio frequency energy and, if not installed and used in accordance with the instructions, may cause harmful interference to radio communications. However, there is no guarantee that interference will not occur in a particular installation. If this equipment does cause harmful interference to radio or television reception, which can be determined by turning the equipment off and on, the user is encouraged to try to correct the interference by one of the following measures:

• Reorient or relocate the receiving antenna.

• Increase the separation between the equipment and receiver.

• Connect the equipment into an outlet on a circuit different from that to which the receiver is connected.

• Consult the dealer or an experienced radio/TV technician for help.

This transmitter must not be co-located or operating in conjunction with any other antenna or transmitter.

Radiation Exposure Statement:

This equipment complies with FCC radiation exposure limits set forth for an uncontrolled environment. This equipment should be installed and operated with minimum distance 7.9 inches (20 cm) between the radiator and your body.

Canada

Contains IC: 1052D-E207

This Class B digital apparatus complies with RSS-102 of the IC radio frequency (RF) Exposure rules.

This device complies with Industry Canada license-exempt RSS standards. Operation is subject to the following two conditions: (1) This device may not cause harmful interference, and (2) this device must accept any interference received, including interference that may cause undesired operation.

This transmitter must not be co-located or operating in conjunction with any other antenna or transmitter.

CAN ICES-3(B) / NMB-3(B)

Radiation Exposure Statement:

This equipment complies with IC RSS-102 radiation exposure limits set forth for an uncontrolled environment. This equipment should be installed and operated with minimum distance 20 cm (7.9 inches) between the radiator and your body.
Declaration of ConformityAccording to 47CFR, Part 2 and 15, Class B Personal Computers and Peripherals; and/or CPU Boards and Power Supplies used with Class B Personal Computers.We: Epson America, Inc.Located at: 3840 Kilroy Airport WayMS: 3-13Long Beach, CA 90806Telephone: (562) 981-3840Declare under sole responsibility that the product identified herein, complies with47CFR Part 2 and 15 of the FCC rules as a Class B digital device. Each product marketed, is identical to the representative unit tested and found to be compliant with the standards. Records maintained continue to reflect the equipment being producedcan be expected to be within the variation accepted, due to quantity production and testing on a statistical basis as required by 47CFR 2.909. Operation is subject to the following two conditions: (1) this device may not cause harmful interference, and (2) this device must accept any interference received, including interference that may cause undesired operation.Trade Name: EpsonType of Product: LCD ProjectorModel: H715AMarketing Name: Home Cinema 4000Epson America, Inc. Limited WarrantyTwo-Year Projector Limited Warranty and 90-Day Lamp Limited WarrantyWhat Is Covered: Epson America, Inc. (“Epson”) warrants to the original retail purchaser of the Epson projector product enclosed with this limited warranty statement that the product, if purchased new and operated in the United States, Canada, or Puerto Rico will be free from defects in workmanship and materials for a period of two years from the date of original purchase. This limited warranty applies only to the projector and not to the projector lamp, which carries a limited warranty period of ninety days from the date of original purchase. 
For warranty service, you may be required to provide proof of the date of original purchase.What Epson Will Do To Correct Problems: If your product requires service during thelimited warranty period, please call Epson at the number on the bottom of this statement and be prepared to provide the model, serial number, and, if required, date of original purchase. If Epson confirms that warranty service is required, Epson will, at its option, repair or replace the defective unit, without charge for parts or labor. If Epson authorizes an exchange for the defective unit, Epson will ship a replacement product to you, freight prepaid, so long as you use an address in the United States, Canada, or Puerto Rico. You are responsible for securely packaging the defective unit and returning it to Epson within five working days of receipt of the replacement. Epson requires a debit or a credit card number to secure the cost of the replacement product in the event that you fail to return the defective one. If Epson authorizes repair instead of exchange, Epson will direct you to send your product to Epson or its authorized service center, where the product will be repaired and sent back to you. You are responsible for packing the product and for all postage or shipping costs to and from the Epson authorized service center. When warranty service involves the exchange of the product or of a part, the item replaced becomes Epson property. The exchanged product or part may be new or refurbished to the Epson standard of quality. If service cannot be provided on the product for any reason and Epson no longer sells the same model, Epson will replace your product with a model of equal or superior value. Replacement products or parts assume the remaining warranty period of the original product. 
If Epson replaces the lamp as part of thewarranty service, the replacement lamp carries the limited 90-day warranty stated above.What This Warranty Does Not Cover: This warranty covers only normal use in the United States, Canada, or Puerto Rico.This warranty does not cover the following:•Excessive continual use •Consumables such as filters •Installation or removal•Cosmetic damage caused by handling or normal wear and tear during usage• D amage caused by failure to properly maintain the projector (see your online User’sGuide for details) • D amage caused by interaction with non-Epson products, such as add-in cards or cables• A ny problem resulting from misuse, abuse, improper installation, neglect, impropershipping, disasters such as fire, flood, and lightning, improper electrical current, software problems, exposure to chemical smoke, or excessive humidity • A ny problem resulting from service by other than Epson or an Epson AuthorizedServicerEpson is not responsible for warranty service should the Epson label or logo or the rating label or serial number be removed. This warranty is not transferrable. Epson is not responsible for your data or applications, which cannot be restored and should be backed up by you. Postage, insurance, or shipping costs incurred in presenting your Epson product for carry-in warranty service are your responsibility. If a claimed defect cannot be identified or reproduced in service, you will be held responsible for costs incurred.DISCLAIMER OF OTHER WARRANTIES: THE WARRANTY AND REMEDY PROVIDEDABOVE ARE EXCLUSIVE AND IN LIEU OF ALL OTHER EXPRESS OR IMPLIED WARRANTIES INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY , NONINFRINGEMENT OR FITNESS FOR A PARTICULAR PURPOSE. SOME LAWS DO NOT ALLOW THE EXCLUSION OF IMPLIED WARRANTIES. IF THESE LAWS APPLY , THEN ALL EXPRESS AND IMPLIED WARRANTIES ARE LIMITED TO THE WARRANTY PERIOD IDENTIFIED ABOVE. 
UNLESS STATED HEREIN, ANY STATEMENTS OR REPRESENTATIONS MADE BY ANY OTHER PERSON OR FIRM ARE VOID.EXCLUSION OF DAMAGES; EPSON’S MAXIMUM LIABILITY: IN NO EVENT SHALL EPSON OR ITS AFFILIATES BE LIABLE FOR ANY SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES OR ANY LOST PROFITS RESULTING FROM THE USE OR INABILITY TO USE THE EPSON PRODUCT, WHETHER RESULTING FROM BREACH OF WARRANTY OR ANY OTHER LEGAL THEORY . IN NO EVENT SHALL EPSON OR ITS AFFILIATES BE LIABLE FOR DAMAGES OF ANY KIND IN EXCESS OF THE ORIGINAL RETAIL PURCHASE PRICE OF THE PRODUCT.Arbitration, Governing Laws: Any dispute, claim or controversy arising out of or relating to this warranty shall be determined by arbitration in Los Angeles County, California before a single arbitrator. The arbitration shall be administered by JAMS pursuant to its Comprehensive Arbitration Rules and Procedures. Judgment on the award may beentered in any court having jurisdiction. Any action must be brought within three months of the expiration of the warranty. This clause shall not preclude parties from seeking provisional remedies in aid of arbitration from a court of appropriate jurisdiction. This warranty shall be construed in accordance with the laws of the State of California, except this arbitration clause which shall be construed in accordance with the Federal Arbitration Act.Other Rights You May Have: This warranty gives you specific legal rights, and you may also have other rights which vary from jurisdiction to jurisdiction. Some jurisdictions do not allow limitations on how long an implied warranty lasts, or allow the exclusion or limitation of incidental or consequential damages, so the above limitations or exclusions may not apply to you.In Canada, warranties include both warranties and conditions.EPSON is a registered trademark, and EPSON Exceed Your Vision is a registered logomark of Seiko Epson Corpo-ration. 
Epson Connection is a service mark and PrivateLine is a registered trademark of Epson America, Inc.

Mac is a trademark of Apple Inc., registered in the US and other countries.

General Notice: Other product names used herein are for identification purposes only and may be trademarks of their respective owners. Epson disclaims any and all rights in those marks.

This information is subject to change without notice.

© 2017 Epson America, Inc., 3/17
Printed in XXXXXX
CPD-53682

To find the Epson Authorized Reseller nearest you, please visit: in the U.S. or www.epson.ca in Canada.

To find the Epson Customer Care Center nearest you, please visit /support in the U.S. or www.epson.ca/support in Canada.

To contact the Epson Connection℠, please call (800) 637-7661 or (562) 276-4394 in the U.S. and (905) 709-3839 in Canada or write to Epson America, Inc., P.O. Box 93012, Long Beach, CA 90809-3012.

International Conference on Advances in Materials, Machinery, Electrical Engineering (AMMEE 2017)

Transmission of UAV Videos Based on HEVC

Zhiyuan Li, Chao Wang, Xiaoduo Zhang
Zhengzhou Electromechanical Engineering Research Institute, China

Keywords: UAV, transmission of videos, region-of-interest, HEVC.

Abstract. To meet the requirement of high-quality, low-bandwidth transmission of videos captured by unmanned aerial vehicles (UAVs), a novel rate control (RC) scheme based on region-of-interest (ROI) is proposed. First, the ROI information is sent to an encoder conforming to the latest High Efficiency Video Coding (HEVC) standard to generate an ROI map. Then, using the ROI map, bit allocation methods are developed at frame level and large coding unit (LCU) level to avoid the inaccurate bit allocation produced by camera movement. Finally, the quantization parameter (QP) for each LCU is calculated with the more robust R-λ model. Experimental results on the HEVC platform show that, with an appropriate pixel weight, the proposed RC method achieves a lower bit rate error and a higher quality of reconstructed video.

1. Introduction

Unmanned aerial vehicles (UAVs) equipped with surveillance systems have been widely used in military missions and civilian domains [1,2]. The huge volume of data poses big challenges for the video compression systems on UAVs. Rate control (RC) plays a major role in video coding. By using RC, better video quality can be achieved without violating the constraints imposed by the available channel bandwidth or memory. Many valuable results on RC algorithms based on region-of-interest (ROI) have been published [3-6]. The methods in Refs. [3]-[6] were developed for earlier compression standards and are not suitable for the latest High Efficiency Video Coding (HEVC) standard, with its flexible picture partitioning and other new techniques [7-9]. Moreover, they were designed for low-resolution videos and are not suitable for high-resolution, high-frame-rate video services such as UAV videos.

Although some RC methods [10,11] have been proposed for HEVC at high resolutions, these methods compute the quantization parameter (QP) with a quadratic model, which may degrade the video quality due to inaccurate bit allocation and which has been replaced by the more robust R-λ model for HEVC [12,13]. ROI based RC approaches with the R-λ model [14,15] have already been applied to video conferencing. However, the diversity and uncertainty of objects in aerial video across various missions make ROI detection more difficult than for the single type of ROI in video conferencing. Furthermore, the ratio of bit allocation between the pictures in one group of pictures (GOP) is inflexible and predetermined in the previous schemes, which does not suit videos captured by high-speed UAVs.

2. The Framework of the ROI

In this paper, we design a novel RC scheme appropriate for high-resolution, high-frame-rate UAV video coding in HEVC. It uses an ROI map for bit allocation at frame level and large coding unit (LCU) level. At frame level, the scheme allocates more bits to frames with a larger ROI, so that a larger ROI receives enough coding bits to improve its quality. At LCU level, different numbers of bits are dynamically allocated to LCUs according to the ROI map, which avoids inaccurate bit allocation caused by local object motion or global camera motion. The QP for each LCU is then calculated with the R-λ model. Experimental results show that, in HEVC, the proposed ROI based RC scheme significantly improves performance compared with state-of-the-art methods.

Fig. 1 presents an overview of the proposed scheme. First, the scheme generates an ROI map from the objects detected in UAV videos. Two classes of methods can be used for object detection: automatic detection based on computer vision, and manual detection based on interactive information. After that, the RC module uses the generated ROI map to perform two efficient bit allocation methods, at frame level and at LCU level. Then the R-λ model is applied to calculate the QP.

Fig. 1 The framework of the ROI based rate control

Previous ROI based RC schemes were designed for conversational applications, in which ROI detection is mostly implemented as face detection or motion detection. An object detection method built for one specific application has limited scope and makes the detected ROI fairly inaccurate in non-conversational scenarios. Unlike the single type of object in conversation, many regions may be the ROI in an aerial video (such as pedestrians, roads and vehicles). The diversity and uncertainty of objects in various missions pose big challenges to ROI detection in UAV videos.

Fortunately, many valuable results on salient object detection have been published. A comprehensive survey of salient object detection can be found in Ref. [16]. Cheng et al. [17] designed a generic objectness measure with a high object detection rate. Moreover, research on target detection for UAVs in different applications [18] makes multi-object extraction from UAV videos more convenient. On the other hand, interaction and real-time performance are the two key characteristics of a UAV system. Targets can be detected through simple interaction with users or with other equipment in the UAV system. With manual detection, the encoder obtains a high-quality ROI while avoiding a large amount of calculation and inaccuracy.
After detecting the ROI in one frame, pixels in the ROI are assigned a weight value k, and pixels in the non-ROI are assigned a weight value 1, which can be expressed as

$$RM(w,h)=\begin{cases}k, & (w,h)\in\mathrm{ROI}\\ 1, & \text{otherwise}\end{cases} \tag{1}$$

where RM is the ROI map at pixel level, having the same size as the original frame, and (w, h) is the location of the pixel in the frame. Then, the ROI map is sent to the HEVC encoder to guide an ROI based RC with an effective bit allocation method.

Since a human observer is the end-user of UAV video, enhancing the quality of the ROI is an important way to improve user satisfaction. As the overall coding and transmission resources are limited, allocating more bits to the ROI while decreasing the quality of the non-ROI is reasonable. Thus, the necessary bit stream can be reduced by allocating fewer bits to the less important parts. We introduce the proposed ROI based RC scheme with an HEVC encoder for UAV videos. Two bit allocation methods are applied in this scheme. At frame level, we allocate bits to each frame in the GOP according to the frame weight measured by the ROI map at frame level. Similarly, the RC scheme allocates bits to each LCU in the frame in accordance with its importance measured by the ROI map at LCU level.

In the traditional R-λ method [12], the ratio of bit allocation between the pictures in one GOP is determined by bits per pixel (BPP). The BPP value ignores the characteristics of the video content, since it depends only on the bandwidth, frame rate and resolution. Hence, the BPP value performs poorly as a weight for frame level bit allocation. Alternatively, frame level bit allocation can be determined by the ratio between the Lagrangian multiplier (λ) values derived from the R-λ method [13]. However, the ratio of λ within each GOP is fixed. It cannot adapt well to changing aerial video, especially when the UAV flies rapidly or works in a zoom state. When working in short-focus mode for target positioning, the ROI is smaller, and we can reduce the allocated bits to improve the compression ratio. Conversely, when working in long-focus mode to identify and observe target details, the ROI is larger, and we should increase the allocated bits to improve the satisfaction of the observer. Therefore, to meet the requirements of the UAV application, the frame level bit allocation is determined by the ratio between the ROI maps at frame level in a GOP. The ROI map at frame level $F_\omega[i]$ can be expressed as

$$F_\omega[i]=\sum_{h=1}^{H}\sum_{w=1}^{W}RM(w,h)=k\,N_{ROI}[i]+\bigl(HW-N_{ROI}[i]\bigr) \tag{2}$$

where $F_\omega[i]$ represents the bit allocation weight of the ith frame in the current GOP, H and W are the height and width of one frame, and $N_{ROI}[i]$ is the number of pixels in the ROI of the current frame. The RC scheme then allocates bits adaptively to each frame in the GOP according to its importance measured by $F_\omega[i]$. More bits are allocated to more important frames to improve the quality of the video. Fewer bits are allocated to less important frames to improve the compression ratio. The target bits for the ith frame in the current GOP are obtained by

$$T_f[i]=T_{GOP,rem}\cdot\frac{F_\omega[i]}{F_{\omega,rem}} \tag{3}$$

where $T_f[i]$ is the target bits to be allocated to the ith frame in the current GOP, $T_{GOP,rem}$ is the remaining bits available to encode the rest of the frames in the current GOP, and $F_{\omega,rem}$ is the sum of the weights of the remaining frames in the current GOP.

In the typical RC algorithm [12], the mean absolute difference (MAD) of the LCU at the same position in the previously decoded frame is used as the weight for LCU level bit allocation. However, with the high-speed movement of a UAV, the MAD of the previous LCU may be quite different from the MAD of the current one. Thus the MAD performs poorly at LCU level bit allocation. In Ref. [19], the gradient of the current LCU was used to allocate bits at LCU level, as it can be obtained directly from the current LCU. Instead of the gradient weight, we use the ROI information of the current LCU to allocate bits at LCU level. The quality of the ROI can be controlled flexibly by adjusting the pixel weight in the ROIs.
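As a concrete illustration of the frame level allocation in Eqs. (1)-(3), the following minimal Python sketch computes a weight for each frame and then splits a GOP budget in proportion to those weights. It rests on two assumptions that are not stated in the text: the frame weight is taken to be the plain sum of the ROI map over the frame (k per ROI pixel, 1 per non-ROI pixel), and the encoder is assumed to spend exactly its target on every frame, so the remaining budget shrinks by the target each time. The function names and the example numbers are hypothetical.

```python
def roi_frame_weight(height, width, n_roi, k):
    """Frame weight as the sum of the ROI map: k per ROI pixel, 1 elsewhere.

    Assumed form of Eq. (2); the source text does not show the equation.
    """
    return k * n_roi + (height * width - n_roi)


def allocate_gop_bits(gop_bits, roi_pixel_counts, height, width, k):
    """Split a GOP bit budget over its frames in proportion to ROI weights.

    Follows the proportional rule of Eq. (3): each frame receives the
    remaining budget times its weight over the remaining total weight.
    Assumes (hypothetically) that each frame consumes exactly its target.
    """
    weights = [roi_frame_weight(height, width, n, k) for n in roi_pixel_counts]
    remaining_bits = float(gop_bits)
    remaining_weight = float(sum(weights))
    targets = []
    for weight in weights:
        target = remaining_bits * weight / remaining_weight
        targets.append(target)
        # Update the budget and the weight pool for the frames still to code.
        remaining_bits -= target
        remaining_weight -= weight
    return targets


if __name__ == "__main__":
    # Hypothetical 4-frame GOP of 1920x1080 frames; the ROI grows as the
    # camera zooms in, so later frames receive a larger share of the bits.
    roi_sizes = [20_000, 50_000, 120_000, 300_000]
    for index, bits in enumerate(allocate_gop_bits(400_000, roi_sizes, 1080, 1920, k=5)):
        print(f"frame {index}: {bits:.0f} bits")
```

In a real encoder the actual bits spent on a frame differ from the target, so the remaining budget would be updated with the measured bits rather than the target; the sketch keeps the idealized version to make the proportional rule easy to follow.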
The ROI map at LCU level $L_\omega[i,j]$ can be expressed as

$$L_\omega[i,j]=\sum_{h=r_j}^{r_j+H[j]-1}\ \sum_{w=c_j}^{c_j+W[j]-1}RM(w,h)=k\,N_{ROI}[i,j]+\bigl(H[j]\,W[j]-N_{ROI}[i,j]\bigr) \tag{4}$$

where $L_\omega[i,j]$ represents the bit allocation weight of the jth LCU in the ith frame, H[j] and W[j] are the height and width of the jth LCU, $r_j$ and $c_j$ are the row and column position of the top left corner of the jth LCU in the ith frame, and $N_{ROI}[i,j]$ is the number of pixels in the ROI of the jth LCU. An LCU with a larger ROI is more important and needs more protection during video transmission.

Once the target bits for the ith frame are allocated, the target bits for each LCU, denoted by $T_{LCU}[i,j]$, are obtained with the ROI map at LCU level as

$$T_{LCU}[i,j]=T_{f,rem}\cdot\frac{L_\omega[i,j]}{L_{\omega,rem}} \tag{5}$$

where $L_{\omega,rem}$ is the sum of the weights of the remaining LCUs in the current frame, and $T_{f,rem}$ is the remaining bits available to encode the rest of the LCUs in the current frame. Clearly, the quality of the ROI is emphasized, since more target bits are allocated through Eq. (5) to LCUs with high values of $L_\omega[i,j]$ in the ROI. We can flexibly control the quality of the ROI by adjusting the value of k in Eq. (1). In this way, more bits are allocated to the ROI to ensure better perceived quality. λ can be derived from $T_{LCU}[i,j]$ by the R-λ model as

$$\lambda=\alpha\left(\frac{T_{LCU}[i,j]}{H[j]\,W[j]}\right)^{\beta} \tag{6}$$

where α and β are initialized as 3.2003 and −1.367, respectively, and then updated by least square regression once a coded picture is available. From λ, the QP is finally obtained by

$$QP=4.2005\,\ln\lambda+13.7122 \tag{7}$$

3. Experimental Results

Fig. 2 shows the RD curves of HM16.0 and our algorithm in the ROI, non-ROI and whole regions. Our approach outperforms HM16.0 at various bitrates in terms of the average Y-PSNR of the ROI. The cost is that the RD performance of the non-ROI may be degraded. However, because the human visual system (HVS) pays more attention to the ROI, the reduction of visual quality outside the ROI is almost unnoticeable. Consequently, the degraded RD performance of the non-ROI has little negative effect on the perceived visual quality of the entire frame.

Fig. 2 RD performance comparison between HM16.0 and our algorithm in ROI, non-ROI and whole regions

Fig. 3 shows a reconstructed frame of the UAV video sequence compressed at 1 000 kbit/s by HM16.0 and by our algorithm. As expected, our approach yields more favorable visual quality over the whole frame, since the visual quality in the ROI is improved and that in the non-ROI is reduced almost unnoticeably, as can be seen in the amplified blocks in Fig. 3.

Fig. 3 Perceived visual quality comparison of encoded frame and amplified blocks between (a) HM16.0 and (b) our algorithm

Finally, we compare our scheme with different values of k against the gradient based R-λ (GRL) method [19] in terms of the PSNR increase (ΔPSNR) over HM16.0 in the ROI. As shown in Fig. 4, a larger k leads to a bigger increase of PSNR in the ROI. This means that our scheme can flexibly control the quality of the ROI by adjusting the value of k.

Fig. 4 ΔPSNR in ROI of our algorithm with k = 9 and k = 5 and of the GRL method

4. Conclusion

In this paper, we put forward an ROI based RC scheme for the HEVC standard, developed for UAV applications. More efficient and accurate ROI information can be obtained for a real-time encoder through salient object detection and the interaction capabilities of the UAV system. More bits are assigned to frames that contain a larger ROI, to adapt to the fast-changing scene as the UAV flies at high speed. Bits are adaptively allocated with the ROI map at LCU level in order to improve the quality of the ROI. The quality of the ROI can be flexibly controlled by using an appropriate pixel weight in the ROI, and the more robust R-λ model is used to obtain a proper QP. The experimental results demonstrate that the reconstructed video quality is improved and the bitrate error is reduced. Hence, the proposed RC scheme has potential value for UAV applications.

References

[1] R. Schneiderman, IEEE Signal Processing Magazine 29, 8 (2012).
[2] M. Bhaskaranand and J. D. Gibson, IEEE Journal of Selected Topics in Signal Processing 9, 139 (2015).
[3] B.-R. Joan, S.-S. Joan and A.-L. Francesc, IEEE Signal Processing Letters 16, 45 (2009).
[4] J. Y. Kim, C. H. Yi and T. Y. Kim, IEEE Transactions on Consumer Electronics 56, 951 (2010).
[5] Y. Liu, Z. Li and Y. Soh, IEEE Transactions on Circuits and Systems for Video Technology 18, 134 (2008).
[6] K. Sun and D. Wu, Journal of Visual Communication & Image Representation 30, 234 (2015).
[7] Xu Sheng-yang, Yu Mei, Jiang Gang-yi, Fang Shu-qing and Shao Feng, Journal of Optoelectronics·Laser 26, 2381 (2015).
[8] Xu Jian, Wang Rang-ding, Huang Mei-ling, Li Qian and Xu Da-wen, Journal of Optoelectronics·Laser 26, 1753 (2015). (in Chinese)
[9] G. J. Sullivan, J.-R. Ohm, W.-J. Han and T. Wiegand, IEEE Transactions on Circuits and Systems for Video Technology 22, 1649 (2012).
[10] M. Xu, X. Deng, S. Li and Z. Wang, IEEE Journal of Selected Topics in Signal Processing 8, 475 (2014).
[11] M. Simone, B. Riccardo and R. Roberto, A Saliency-Based Rate Control for People Detection in Video, IEEE International Conference on Acoustics, Speech and Signal Processing, 2016 (2013).
[12] B. Li, H. Li, L. Li and J. Zhang, Rate Control by R-lambda Model for HEVC, ITU-T SG16 Contribution, JCT-VC K0103, Shanghai, (2012).
[13] X. Wang and M. Karczewicz, Intra Frame Rate Control Based on SATD, ITU-T SG16 Contribution, JCT-VC M0257, Incheon, (2013).
[14] S. Li, M. Xu, X. Deng and Z. Wang, Signal Processing-Image Communication 38, 127 (2015).
[15] M. Marwa, C. Marco and P.-P. Beatrice, Region-of-Interest Based Rate Control Scheme for High Efficiency Video Coding, IEEE International Conference on Acoustics, Speech and Signal Processing, 7338 (2014).

Video Remote Control User Guide

Remote Control Layout

STB POWER: Turn set top box device on or off
Playback Controls: Control playback of DVR or Live TV
REC: Begin DVR recording
INFO: Display the Infobar
OK: Enter a choice you have made
EXIT: Exit to Main Screen
GUIDE: Display TV Guide
CH +/-: Change the channel
Up/Down Arrows: Page Up and Page Down Control
P<P: Return to previous channel
TITLES: Activate Subtitles
TV Power: Turn TV on or off
TV AV Source: Change TV Input
Stop: Stop playback of DVR content
Info: Display the Infobar
LIST MENU: Displays DVR Guide
MENU: Displays Menubar
ARROWS: Navigation Arrows
Not Active: For future use
VOL +/-: Adjust volume
MUTE: Toggle TV audio on or off
Number Pad: Enter a channel number or PIN

Willow Remote Control

This is not a universal remote control that can be used to program or control the menu or tool functions of your television set. We suggest using the remote control that came with your TV set for this type of program functionality, or a universal remote that works with Amino set top boxes. For the complete Amino remote control user guide visit our website at: https://www.directlink.coop/docs/AminoRemoteControlUserGuidev4.pdf

Remote Setup

Operation

When the remote control is first operated, all buttons transmit commands for the set-top box. Point the remote control towards the front of the set-top box when pressing a remote control button to ensure correct operation. Do not cover the IR window at the front of the product.

When a button on the remote is pressed, either the set-top box standby button or the TV standby button (if a TV brand has been entered) will flash, depending on which product is being controlled by the remote control.

If a button is pressed for more than 30 seconds, the remote control will stop transmitting commands in order to preserve battery life.

TV control setup: Brand Search

The remote control can also be programmed to operate certain functions of your TV set. To program this functionality, perform the following instructions in sequence. In case of a mistake, the process can be exited at any point by pressing and holding the TEXT/SHIFT button and at the same time pressing the STOP button. The remote control will revert to normal operation and no TV brand code will be stored.

1. Determine the manufacturer of your TV set.
2. Look up the 4-digit manufacturer code supplied in this manual and make a note of this code.
3. Make sure your TV set is turned on. (The set-top box does not need to be turned on to perform this programming feature.)
4. Press and hold both the 1 and the 3 buttons at the same time on the remote control for approximately 3 seconds until the TV standby button remains lit, then release both buttons.
5. Now enter the 4-digit manufacturer code for your TV set. On each digit entry the TV standby button will flash. On entry of the 4th digit the TV standby button will flash and then remain lit.
6. Point the remote control at the TV set and press and hold down the TV Standby button or the Mute button on the remote control.
7. When the TV either turns off or brings up the MUTE symbol on screen, release that button. This may take a few minutes to happen.
8. Press and hold the TEXT/SHIFT button and at the same time press the STOP button to finish setting up the TV control mode.

The TV standby button will go out. The TV control function is now programmed into the remote control.

The following buttons should now operate the TV set: TV Standby, AV source select, Volume Up, Volume Down, Mute.

If not all of the above-mentioned buttons operate the TV set, a KEY FIX operation can be performed (see the Key Fix section).

Should the TV model be changed and the remote control require re-programming, repeat the above setup procedure with the new TV.

Should the TV brand not be successfully found by the remote control, the TV standby button will flash rapidly and the remote control will revert to normal operation. No TV brand code will be stored.

TV control setup: Auto Search

Should the TV brand not be successfully found by the previous Brand Search method, Auto Search can be used.

Caution: this process may take a long time to find your TV code (in some cases up to 5 minutes).

Make sure your TV set is turned on. (The set-top box does not need to be turned on to perform this programming feature.)

1. Press and hold both the 1 and the 3 buttons at the same time on the remote control for approximately 3 seconds until the TV standby button remains lit, then release both buttons.
2. Point the remote control at the TV set and press and hold down the TV Standby button or the Mute button on the remote control.
3. When the TV either turns off or brings up the MUTE symbol on screen, release that button. This may take up to 5 minutes to happen.
4. Press and hold the TEXT/SHIFT button and at the same time press the STOP button to finish setting up the TV control mode.
5. The TV standby button will go out. The TV control function is now programmed into the remote control.

Should the TV brand not be successfully found by the remote control, the TV standby button will flash rapidly and the remote control will revert to normal operation. No TV brand code will be stored.

If even Auto Search fails to set up operation of the TV set, the remote is unable to control that particular TV set.

TV Shift functionality

When a TV brand has successfully been programmed into the remote control with the above steps, the following additional buttons may also control the TV set.
These can be accessed by pressing and holding the TEXT/SHIFT button 20 on the remote control and at the same time pressing:Digit buttons 0..9, Left, Right, Up, Down, OK, Channel Up, Channel Down, the 4 colour keysOperation of these buttons is not guaranteed to control the normally expected functionality of the TV set.Key FixIf not all the previously mentioned buttons operate the TV set then a KEY FIX operation can be performed as follows.1 Press and hold the 1and 9buttons simultaneously forapproximately 3 seconds until the TV standby button is lit2 Try all the above mentioned TV buttons3 If the TV does not respond to a button, then hold that button downpermanently. The remote control will now step through alternativesettings. When the TV responds correctly then release that button4 Repeat that procedure for other buttons if necessary5 Once finished, press TEXT/SHIFT and STOP at the same time.Removing TV functionalityTo reset the remote control and erase the TV functionality1 Press and hold the 1and 6buttons simultaneously forapproximately 3 seconds until the TV standby button is lit2 Enter the following key sequence in the correct order 9 9 63 The TV settings will be deleted from the remote control.7A.R. 
Systems0012 Abex0014 ABS0016 Accent0019 Acer0028 Acme3521 Acoustic Solutions0032 Action0033 Acura0036 ADA0038 ADC0040 Addison0043 Admiral0046 Advent0054 Adventura0055 Adyson0058 AEG0059 Aftron3470 Agashi0063 Agazi3522 AGB0064 Aiko0069 Aim0070 Aiwa0072 Akai0074 Akashi0075 Akiba0078 Akira0079 Akito0082 Akura0083 Alaron0085 Alba0086 Albatron0087 Alcyon0093Alienware0099Alkos3523Allorgan0105Allstar0108AmericaAction0123Amoi0132Amplivision0138Ampro0139Amstrad0140Anam0146AnamNational0147Andersson0148Anglo0151Anitech0154Ansonic0156AOC0165Aomni3555ApexDigital0170Apollo0171Apple0172Arc En Ciel0178Arcam0179ArcamDelta3524Ardem0184Arena3375Aristona0192ART0199ArthurMartin0200ASA0202Asberg0205Astar0218Astra0221Asuka0227ATD0229Atlantic0233Atori0237Auchan0240Audiosonic0264Audioton0266Audiovox0268Audioworld0269Ausind0276Autovox0280Aventura0287Awa0296Axxon0303Ayomi3525Baird0311Bang &Olufsen0314Barco0319BARON3959Basic Line0325Bastide0327Baur0331Baysonic0333Bazin0335Beaumark0340Beijing0345Beko0346Belcor0348Bell &Howell0350Belson0355Belstar0357BenQ0359Beon0361Berthen0363Best0364Bestar0368Bestar-Daewoo0369BGH3507Binatone0378BlackDiamond0384Black Star0386Blacktron3526Blackway0388Blaupunkt0390Blue Sky0395Blue Star0396Boca0399Bondstec0403Boots0405Bork3615BPL0413Bradford0414Brandt0416Brillian0422Brinkmann0423Brionvega0424Britannia0425Brockwood0430Broksonic0432Bruns0435BskyB0436BSR0437BTC0439Bush0445Caihong0462Caishi0465Cameron4032Brand Code Brand Code Brand Code Brand Code8Camper3911 Candle0480 Capsonic0486 Carad0488 Carena0489 Carnivale0491 Carrefour0492 Carver0494 Cascade0496 Casio0499 Catha4094 Cathay0501 CCE0504 Celebrity0509 Celera0510 Celestial0511 Centrex0516 Centrum0519 Centurion0520 Century0521 CGE0523 Changcheng0526 Changfei0527 Changfeng0528 Changhai0529 Changhong0530 Chimei3563 Chuangjia4096 Chun Yun0545 Chunfeng0546 Chung Hsin0547 Chunsun0549 Cimline0552 Cinex0563 Citizen0567 City0569Clarion0575Clarivox0576Clatronic0581Clayton0582CMS0590CMSHightec0591Coby0597Commer-cial 
Solu-tions0615Concerto0625Concorde0626Condor0627Conia0628Conrowa0634Contec0635ContinentalEdison0637Cosmel0647Craig0650Crosley0655Crown0658CS Elec-tronics0663CTC0664C-Tech0449CTX0665Curtis0666CurtisMathes0667CXC0670Cyber-Power0674Cybertron0675Cytron0680Daewoo0692Dainichi0694Dansai0699Dantax0702Datron4201Datsura0703Dawa0707Daytron0710De Graaf0716DEC0717Decca0718Deitron0722Dell0725Denon0731Denver0733Desmet0738Diamant0746Diamond0747DiamondVision0748Dick SmithElectronics0750Digatron0751Digihome0758Digiline0759DigiMax3808Digital Life0772DigitalLifestyles3567Digitex0780Digitor0781DirecTV0794Dixi0807Domeos0817Dongda0820Donghai0821Drean0832DSE0833DTS0837Dual0838Dual-Tec3528Dumont0840Duongjie4101Durabrand0842Dux0843D-Vision0684DVX0847Dwin0848DX An-tenna0849Dynatron0855Dynex3476Easy Living0860Ecco0864ECE0865Elbe0880Elbe-Sharp3529Elcit0883Electa3530ELECTROTECH3531Electro-band0888Electro-graph0889Electro-home0890Elektra0896Element3477Elfunk0899ELG0900Elin0902Elite0903Elman0907Elta0910Emerson0917Brand Code Brand Code Brand Code Brand Code9Emperor0921 Emprex0922 Envision0933 Enzer0934 Epson0937 Erres0942 ESA0943 ESC0945 Etron0949 Eurofeel0954 EuroLine0955 Euroman0956 Europa0957 Europhon0959 Evesham0972 Evolution0973 Excello0975 Expert0976 Exquisit0978 Fagor Life4102 Feilang0990 Feilu0991 Feiyue0993 Fenmenti4103 Fenner0994 Ferguson0996 Fidelity0998 Filsai1000 Finlandia1003 Finlux1004 Firstline1008 Fisher1009 Flint1014 FNR1016 Formenti1023Formenti-Phoenix1024Fortress1027Fraba1030Friac1040Frontech1042Fujitsu1052FujitsuGeneral1053FujitsuSiemens1054Funai1056Furichi1058Futronic1061Futuretech1064Gaba4059Galaxi1068Gateway1076GBC1078GE1081GeantCasino1082GEC1083Geloso1087GeneralTechnic1095Genesis1096Genexxa1097GFM3820G-Hanz3397Giant1113Gibralter1114Go Video1126Goldfunk1135GoldHand1136Goldline1138GoldStar1140Goodmans1142Gorenje1144GP1147GPM1149GPX1150Gradiente1151Graetz1152Granada1154Grandin1156Great 
Wall4105Gronic1160Grundig1162Grunkel1164Grunpy1165GVA3510Haaz1172Haier1175Haihong1176Halifax1179Hallmark1180Hampton1183Hankook1188Hanns.G3478Hannspree1189Hanseatic1190Hantarex1192Hantor1193HarleyDavidson1197Harman/Kardon1198Harvard1202Harwa1203Harwood1204Hauppauge1206Havermy1208HCM1210Helios1219Hello Kitty1221Hema1222Hemmer-mann1223HewlettPackard1229Hifivox1233Higashi1234Highline1236Hikona1237HiLine3533Hinari1243Hisawa1247Hisense1249Hitachi1251HitachiFujian1252Hitec1253Hitsu1257Hoeher1262Hongmei1272Hornyphon1277Hoshai1278HowardComputers1281HP1283Huafa1287Huanghai-mei1288Huanghe1289Huanglong1290Huangshan1291Huanyu1292Huari1294Huijiaban4108Brand Code Brand Code Brand Code Brand Code10Humax1298 Hush1304 Hygashi1308 Hyper1309 Hypson1312 Hyundai1315 Iberia1320 iBUYPOW-ER1322 ICE1324 ICeS1325 iDEAL3641 I-Inc3462 iLo1341 Imperial1346 Indesit1349 Indiana1350 Infinity1352 InFocus1353 Ingelen1354 Ingersol1355 Initial1356 Inno Hit1358 Innova1359 Innovation1360 Inotech1364 Insignia1368 Inspira4296 Inteq1373 Interactive3534 Interbuy1376 Interfunk1377 Internal1379 Interna-tional1380 Intervision1386IR4110Irradio1396IRT1397Isukai1402ITC1404ITS1405ITT1406ITT Nokia1407ITV1408Janeil1414JBL1420JCB1421Jean1424JEC1426Jensen1429Jiahua1435Jinfeng1438Jinhai1439Jinxing1442JMB1445JNC1446Jocel1448JTV3645Jubilee1460JVC1464Kaisui1471Kamosonic3535Kamp1475Kangchong1476Kanghua1477Kangwei4115Kapsch1483Karcher1484Kathrein1486Kawa1487Kawasho1489KDS1494KEC1496Ken Brown1499Kendo1500Kennedy1504Kennex1505Kenstar3756Kenwood1507Khind1511KIC1512Kingsley1520Kiota1522Kioto1523Kiton1525KLH1529KLL1531Kloss1532Kneissel1535Kolin1541Kolster1543Konig1547Konka1548Korpel1552Korting1554Kosmos1557Koyoda1561KTV1572Kunlun1578Kuro1579Kyoshu1583Kyoto1585L&SElectronic1588LaSAT1597Lavis1602Layco4117Lecson1606Lenco1615Lenoir1617Lesa1622Lexsor1626Leyco1627LG1628LG/Gold-star3536Liesenk3537Liesenk &Tter1630Liesenkot-ter1631Life3538Lifetec1633Linksys1640Lloyd’s1648Local IndiaTV1653Local Ma-laysia 
TV1656Lodos1659Loewe1660LoeweOpta3540Logik1661Logix1663Luma1674Lumatron1676Lux May1680Luxman1682Luxor1683LXI1686MElectronic1688Madison1698MAG1701Brand Code Brand Code Brand Code Brand Code11Magna-dyne1702 Magnafon1703 Magna-sonic1704 Magnavox1706 Magnin1708 Magnum1709 Majestic1713 Mandor1717 Manesth1718 Manhattan1719 Marantz1724 Marelli1729 Mark1731 Mascom1738 Mastro1743 Masuda1744 Matsui1750 Matsushita1751 Maxdorf1756 Maxent1757 Maxim1759 McMichael1768 Meck1775 MediaCenter PC1777 Mediator1784 Medion1787 Medison1788 Mega-power1791 Megatron1795 MEI1796 Melectronic3654 Melvox1799 Memorex1800Memphis1802Mercury1804Mermaid1806Metronic1809Metz1810MGA1811Micromaxx1822Microsoft1826Microstar1827MicroTEK1829Midland1831Mikomi1833Minato1835Mind1837Minerva1838Ministry OfSound4247Minoka1840Mintek1845Minutz1847Mitsai3851Mitsubishi1855Mivar1857Monivision1872Moree4565Morgan’s1875Morgans3970Motion1877Motorola1878MTC1889MTEC1890MTlogic1892Mudan1896Multistan-dard1904Multisys-tem4121Multitec1906Multitech1907Murphy1911Musikland1915Myryad1922NAD1926Naiko1930Nakimura1933Naonis1936NAT1941National1942NEC1950Necker-mann1951NEI1952Nesco1960Netsat1966NetTV1967Network1968Neufunk1970New Tech1979New World1980Newave1981Nexus Elec-tronics3948Nicamagic1994Nikai4566Nikkai1998Nikkei1999Nikko2000Nimbro4065Nintaus2006NiveusMedia2012Noblex2013Nobliko2014Nogamatic2016Nokia2017Norcent2020Nordic2021Nord-mende2022Nordvision3543Normerel2024Nortek3862Northgate2027NorwoodMicro2029Novatronic2035Novex4567NTC2045Nu-Tec2048NuVision2053O.K.Line4301Oceanic2061Okana4122Okano2065Olevia2067Omni2074ONCEAS3544Onei4302Onida2081Onimax2082Onn3663Onwa2087Opera2090Oppo2092Optimus2095Optoma2097Optonica2099Orbit2103Orion2111Orline2113Ormond2114Brand Code Brand Code Brand Code Brand Code12Orsowe2116 Osaki2118 Osio2121 Oso2122 Osume2123 Otic2125 Otto Versand2126Oulin4123 Pacific2135 PackardBell2138 Pael2140 Palladium2145 Palsonic2147 Panama2149 Panasonic2153 Panavision2154 Panda2155 PARK3951 Pathe Cinema2168Pathe Marconi2169 
Pausa  2171
PengSheng  2181
Penney  2182
Pensonic  3778
Perdio  2185
Perfekt  2186
Petters  2189
Philco  2192
Philharmonic  2194
Philips  2195
Phocus  2198
Phoenix  2199
Phonola  2201
Phonotrend  2202
Pilot  2207
Pioneer  2212
Pionier  2213
Plantron  2219
Playsonic  2224
Polaroid  2230
Polyvision  4304
Poppy  2236
Portland  2238
Powerpoint  2241
PrandoniPrince  4125
Precision  2244
Premier  2248
President  2250
Prima  2253
Princess  3514
Princeton  2258
Prinston  2259
Prinz  2260
Prism  2261
Profekt  4126
Profex  2269
Profilo  2272
Profitronic  2273
Proline  2274
Promax  2275
Proscan  2279
Prosco  2280
Prosonic  2282
Protech  2284
Proton  2288
Protron  2289
Proview  2290
ProVision  2291
Pulsar  2296
Pye  2302
Pymi  2304
Qingdao  2308
Quadro  4071
QuandraVision  3546
Quasar  2320
Quelle  2322
Questa  2324
Radialva  2329
Radio  4128
RadioShack  3547
Radiola  2330
Radiomarelli  2331
Radionette  2332
RadioShack  2333
Radiotone  2334
Rank  2345
Rank Arena  2346
RBM  2350
RCA  2351
Realistic  2354
Recco  2358
Recor  2359
Rectiligne  2362
Rediffusion  2364
Redstar  2366
Reflex  2368
Relisys  2374
Remotec  2377
Reoc  2379
RevolutionHD  3503
Revox  2383
Rex  2385
RFT  2386
Rhapsody  2387
Ricavision  2388
Rinex  2392
R-Line  2327
Roadstar  2398
Robotron  2401
Rolson  2410
Rowa  2416
Royal Lux  2420
RTF  3548
Runco  2423
Saba  2429
Sagem  2434
Saisho  2437
Saivod  2439
Salora  2443
Sambers  2445
Sampo  2446
Samsung  2448
Samsux  2449
Sandra  2454
Sansui  2458
Sanyo  2462
Sanyuan  2464
SBR  2492
Sceptre  2498
Schaub Lorenz  2500
Schneider  2501
Scimitsu  2505
Scotch  2506
Scott  2508
Sears  2514
Seaway  2515
Seelver  2520
SEG  2522
SEI  2524
Sei-Sinudyne  2525
Seleco  2528
Semivox  2529
Semp  2530
Sencora  2531
Sentra  2534
Serie Dorada  2538
Serino  2539
Shanghai  2547
Shanshui  4133
Shaofeng  2549
Sharp  2550
Shencai  2553
Sheng Chia  2554
ShengCai  4217
Shenyang  2555
Sherwood  2557
Shinelco  4074
Shintoshi  2564
Shivaki  2567
Shogun  2568
Shorai  2569
Siam  2572
Siarem  2573
Siemens  2574
Siera  2576
Siesta  2577
Signature  2582
Silva  2591
Silva Schneider  2592
Silver  2594
SilverCrest  2595
Simpson  2598
Singer  2599
Sinotec  2600
Sinudyne  2601
Skantic  2605
SKY  2610
Skysonic  2627
Skyworth  2631
SLX  2638
Sogera  2649
Solavox  2654
Sole  2655
Sonawa  2663
Songdian  4136
Soniko  2669
Sonitron  2671
Sonneclair  2673
Sonoko  2675
Sonolor  2676
Sontec  2677
Sony  2679
Sound & Vision  2680
Soundesign  2684
Soundwave  2689
Sova  2690
Sowa  2691
Soyea  2692
Soyo  2693
Spectroniq  2701
Squareview  2703
Srypton  4138
SSS  2708
Stack 9  2710
Standard  2713
Starlite  2728
Stenway  2739
Stern  2741
Strato  2745
Strong  2748
Studio Experience  2750
Stylandia  2752
SunBrite  2759
Sunkai  2762
Sunny  2764
Sunstar  2768
Sunwood  2772
Superla  2782
Superscan  2786
Supersonic  2787
SuperTech  2789
Supervision  2791
Supra  2792
Supre-Macy  2794
Supreme  2795
Susumu  2797
Sutron  2798
SVA  2800
Svasa  2801
Swisstec  2806
Sydney  2808
Sylvania  2809
Symphonic  2810
Synco  2811
Syntax  2814
Sysline  2815
Systemax  2817
Sytong  2820
Tacico  2823
Tactus  2825
Tagar Systems  2831
Taishan  2835
Talent  2838
Tandberg  2842
Tandy  2843
Tashiko  2850
Tatung  2852
TCL  2856
TCM  2857
Teac  2860
Teachimagen  4140
Tec  2861
Tech Line  2863
Techica  2865
Technema  2866
Technica  2868
Technics  2869
Technika  2870
TechniSat  2873
Technisson  2874
TechnolAce  2875
Technosonic  2878
Techview  2883
Techwood  2884
Tecnimagen  2885
Teco  2886
Tedelex  2889
Tek  2892
Teknika  2895
Teleavia  2901
Telecor  2910
Telefunken  2914
Telefusion  2915
Telegazi  2917
Telemeister  2924
Telesonic  2930
Telestar  2931
Teletech  2934
Teleton  2935
Televideon  2938
Teleview  2939
Televiso  2941
Temco  2946
Tennessee  2952
Tensai  2954
Tenson  2955
Tesla  3680
Tesmet  3550
Tevion  2962
Texet  2963
Thomas  2971
Thomson  2972
Thorn  2974
Tiankeban  4142
TMK  2994
TML  2995
TNCi  2996
Tobo  2999
Tokai  3001
Tokaido  3002
Tokyo  3004
Tomashi  3006
Topline  3016
Toshiba  3021
Totevision  3024
Touch  3025
Towada  3028
Toyoda  3030
Trakton  3036
TransContinens  3037
TRANS-continents  3039
Transfec  4143
Transonic  3041
Transtec  3042
Triad  3049
Trident  3054
Tristar  3057
Triumph  3058
TruTech  3467
TVS  3081
TVTEXT 95  3082
Uher  3089
Ultra  3093
Ultravox  3095
Unic Line  3100
Union  3781
United  3106
Universal  3113
Universum  3115
Univox  3116
US Logic  3124
Vector Research  3137
Venturer  3143
VEOS  3144
Vestel  3148
Vexa  3149
Vibrant  3154
Victor  3155
Videocon  3163
Videologic  3165
Videologique  3166
Videosat  3170
VideoSystem  3172
Videotechnic  3173
Videoton  3174
Vidikron  3178
Vidtech  3179
Viewsonic  3186
Viking  3188
Viore  3192
Visiola  3197
Vision  3198
Vistar  3207
Vistron  3773
Vizio  3211
Voodoo  3215
Vortec  3217
Voxson  3220
VU  4078
Vue  3225
Walker  4311
Waltham  3230
Wards  3231
Watson  3233
Watt Radio  3234
Waycon  3237
Wega  3238
Wegavox  3239
Welltech  3244
Weltblick  3245
Weltstar  3247
Westinghouse  3249
Weston  3251
Wharfedale  3255
White Westinghouse  3258
Wilson  3260
Windsor  3265
Windstar  3266
Windy Sam  3267
Wintel  3271
Wyse  3288
Xenius  3297
Xiahua  3299
Xiangyu  3302
Xingfu  3305
Xinghai  3306
Xinrisong  3308
XLogic  3310
Xoro  3315
Xrypton  3317
Xuelian  3320
Yamaha  3326
Yamishi  3328
Yokan  3335
Yoko  3336
Yorx  3340
Yuhang  3345
Zanussi  3349
Zenith  3356
ZhuHai  3364
Ziggo  4007
Zonda  3369
ZT Group  3370

COPYRIGHT NOTICE
© Amino Communications Ltd. All rights reserved. Printed in China. Amino and AmiNET are trademarks of Amino Communications Ltd. All other product or brand names as they appear are trademarks or registered trademarks of their respective holders. This document contains proprietary information of Amino Communications Ltd (“Amino”). No part of this document may be copied, reproduced, transmitted, distributed, transcribed, stored in a retrieval system, modified or translated into any other language or computer language, or sublicensed in any form or by any means - electronic, mechanical, magnetic, optical, chemical, manual or otherwise - without the prior written consent of Amino.

Product Disposal Instructions for Residential Users
Please remove any batteries and dispose of them and the product as per your local authority’s recycling processes.
For further information please contact your local authority or the retailer where the product was purchased.

Product Disposal Instructions for Business Users
Business Users should contact their supplier and check the terms and conditions of the purchase contract and ensure that the product is not mixed with other commercial waste for disposal.

Disclaimer
Amino shall not be liable for any errors contained herein nor for any damages arising out of or related to this document or the information contained within it, even if the Company has been advised of the possibility of such damages. This document is intended for informational and instructional purposes only. The Company reserves the right to make changes to the specifications and other information contained in this document without prior notification.

Contact Us
503.266.8111
190 SE 2nd Avenue, Canby, Oregon 97013
We appreciate your business and are proud to serve the Canby area with the very best in entertainment.
www.directlink.coop
06.18