Association Rules and Predictive Models for e-Banking Services

Class 5: ANOVA (Analysis of Variance) and F-tests

I. What is ANOVA

What is ANOVA? ANOVA is the short name for the Analysis of Variance. The essence of ANOVA is to decompose the total variance of the dependent variable into two additive components, one for the structural part and the other for the stochastic part of a regression. Today we are going to examine the easiest case.

II. ANOVA: An Introduction

Let the model be

$$y = X\beta + \varepsilon.$$

Assuming $x_i$ is a column vector (of length $p$) of independent variable values for the $i$th observation,

$$y_i = x_i'\beta + \varepsilon_i.$$

Then $x_i'b$ is the predicted value.

Sum of squares total:

$$
\begin{aligned}
SST &= \sum (y_i - \bar{Y})^2 \\
&= \sum \left[(y_i - x_i'b) + (x_i'b - \bar{Y})\right]^2 \\
&= \sum (y_i - x_i'b)^2 + \sum (x_i'b - \bar{Y})^2 + 2\sum (y_i - x_i'b)(x_i'b - \bar{Y}) \\
&= \sum e_i^2 + \sum (x_i'b - \bar{Y})^2 \\
&= SSE + SSR,
\end{aligned}
$$

because $\sum (y_i - x_i'b)(x_i'b - \bar{Y}) = \sum e_i (x_i'b - \bar{Y}) = 0$. This is always true by OLS (for a model that includes an intercept).

Important: the total variance of the dependent variable is decomposed into two additive parts: SSE, which is due to errors, and SSR, which is due to regression. Geometric interpretation: [blackboard]

Decomposition of Variance

If we treat X as a random variable, we can decompose total variance into the between-group portion and the within-group portion in any population:

$$V(y_i) = V(x_i'\beta) + V(\varepsilon_i). \tag{1}$$

Proof:

$$
\begin{aligned}
V(y_i) &= V(x_i'\beta + \varepsilon_i) \\
&= V(x_i'\beta) + V(\varepsilon_i) + 2\,\mathrm{Cov}(x_i'\beta, \varepsilon_i) \\
&= V(x_i'\beta) + V(\varepsilon_i)
\end{aligned}
$$

(by the assumption that $\mathrm{Cov}(x_k'\beta, \varepsilon) = 0$ for all possible $k$).

The ANOVA table estimates the three quantities of equation (1) from the sample. As the sample size gets larger and larger, the ANOVA table approaches the equation more and more closely.

In a sample, the decomposition of estimated variance is not strictly true. We thus need to decompose sums of squares and degrees of freedom separately. Is ANOVA a misnomer?

III. ANOVA in Matrix

I will try to give a simplified representation of ANOVA as follows:

$$
\begin{aligned}
SST &= \sum (y_i - \bar{Y})^2 \\
&= \sum \left(y_i^2 + \bar{Y}^2 - 2\bar{Y} y_i\right) \\
&= \sum y_i^2 + \sum \bar{Y}^2 - 2\bar{Y}\sum y_i \\
&= \sum y_i^2 + n\bar{Y}^2 - 2n\bar{Y}^2 \quad \text{(because } \textstyle\sum y_i = n\bar{Y}\text{)} \\
&= \sum y_i^2 - n\bar{Y}^2 \\
&= y'y - n\bar{Y}^2 \\
&= y'y - \tfrac{1}{n}\, y'Jy \quad \text{(in your textbook, monster look)}
\end{aligned}
$$

where $J$ is the $n \times n$ matrix of ones.

$$SSE = e'e$$

$$
\begin{aligned}
SSR &= \sum (x_i'b - \bar{Y})^2 \\
&= \sum \left[(x_i'b)^2 + \bar{Y}^2 - 2\bar{Y}(x_i'b)\right] \\
&= \sum (x_i'b)^2 + n\bar{Y}^2 - 2\bar{Y}\sum (x_i'b) \\
&= \sum (x_i'b)^2 + n\bar{Y}^2 - 2\bar{Y}\sum (y_i - e_i) \\
&= \sum (x_i'b)^2 + n\bar{Y}^2 - 2n\bar{Y}^2 \quad \text{(because } \textstyle\sum y_i = n\bar{Y},\ \sum e_i = 0\text{, as always)} \\
&= \sum (x_i'b)^2 - n\bar{Y}^2 \\
&= b'X'Xb - n\bar{Y}^2 \\
&= b'X'y - \tfrac{1}{n}\, y'Jy \quad \text{(in your textbook, monster look)}
\end{aligned}
$$

IV. ANOVA Table

Let us use a real example. Assume that we have a regression estimated to be

$$y = -1.70 + 0.840\,x$$

ANOVA Table

| Source | SS | DF | MS | F (with df 1, 18) |
|---|---|---|---|---|
| Regression | 6.44 | 1 | 6.44 | 6.44/0.19 = 33.89 |
| Error | 3.40 | 18 | 0.19 | |
| Total | 9.84 | 19 | | |

We know $\sum x_i = 100$, $\sum y_i = 50$, $\sum x_i^2 = 509.12$, $\sum y_i^2 = 134.84$, $\sum x_i y_i = 257.66$. If we know that DF for SST = 19, what is n?

$$n = 20$$

$$\bar{Y} = 50/20 = 2.5$$

$$SST = \sum y_i^2 - n\bar{Y}^2 = 134.84 - 20 \times 2.5 \times 2.5 = 9.84$$

$$
\begin{aligned}
SSR &= \sum (-1.7 + 0.84\,x_i)^2 - 125.0 \\
&= \sum \left[1.7 \times 1.7 + 0.84 \times 0.84\, x_i^2 - 2 \times 1.7 \times 0.84\, x_i\right] - 125.0 \\
&= 20 \times 1.7 \times 1.7 + 0.84 \times 0.84 \times 509.12 - 2 \times 1.7 \times 0.84 \times 100 - 125.0 \\
&= 6.44
\end{aligned}
$$

$$SSE = SST - SSR = 9.84 - 6.44 = 3.40$$

DF (degrees of freedom): demonstration. Note: discounting the intercept when calculating SST.

MS = SS/DF

p = 0.000 [ask students]. What does the p-value say?

V. F-Tests

F-tests are more general than t-tests; t-tests can be seen as a special case of F-tests.

If you have difficulty with F-tests, please ask your GSIs to review F-tests in the lab. F-tests take the form of a ratio of two MS's:

$$F_{df_1,\, df_2} = MSR/MSE$$

An F statistic has two degrees of freedom associated with it: the degrees of freedom in the numerator, and the degrees of freedom in the denominator.

An F statistic is usually larger than 1. An F statistic is interpreted by asking whether the variance explained under the alternative hypothesis is due to chance. In other words, the null hypothesis is that the explained variance is due to chance, or that all the coefficients are zero.

The larger an F-statistic, the more likely that the null hypothesis is not true.
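
As a check on the arithmetic in Section IV, the following Python sketch rebuilds the ANOVA table from the summary sums quoted there. The function name `anova_from_sums`, its argument names, and the dictionary keys are illustrative assumptions, not part of the notes. The coefficients are the rounded values -1.70 and 0.84, so the results agree with the table only to about two decimals.

```python
def anova_from_sums(n, sum_x, sum_y, sum_x2, sum_y2, b0, b1):
    """Rebuild a simple-regression ANOVA table from summary sums.

    b0 and b1 are the fitted intercept and slope (hypothetical argument
    names; the notes only quote the numbers).
    """
    y_bar = sum_y / n
    # SST = sum(y_i^2) - n * Ybar^2
    sst = sum_y2 - n * y_bar ** 2
    # Sum of squared fitted values, expanding (b0 + b1 * x_i)^2 term by term
    sum_fit2 = n * b0 ** 2 + b1 ** 2 * sum_x2 + 2 * b0 * b1 * sum_x
    # SSR = sum((x_i'b)^2) - n * Ybar^2
    ssr = sum_fit2 - n * y_bar ** 2
    sse = sst - ssr
    df_reg, df_err = 1, n - 2          # one slope; n - p with p = 2
    msr, mse = ssr / df_reg, sse / df_err
    return {
        "SST": sst, "SSR": ssr, "SSE": sse,
        "MSR": msr, "MSE": mse,
        "F": msr / mse, "R2": ssr / sst,
    }


table = anova_from_sums(
    n=20, sum_x=100.0, sum_y=50.0,
    sum_x2=509.12, sum_y2=134.84,
    b0=-1.70, b1=0.84,
)
for key, value in table.items():
    print(f"{key:>4}: {value:.3f}")
```

The sums of squares round to the table's 9.84, 6.44 and 3.40. The F ratio comes out near 34.0 rather than 33.89, because the table divides by an MSE that has already been rounded to 0.19.
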
There is a table in the back of your book from which you can find exact probability values.

In our example, the F is 34, which is highly significant.

VI. $R^2$

$$R^2 = SSR / SST$$

The proportion of variance explained by the model. In our example, $R^2$ = 65.4%.

VII. What happens if we add more independent variables

1. SST stays the same.
2. SSR never decreases (it increases unless the added variable explains nothing new).
3. SSE never increases.
4. $R^2$ never decreases.
5. MSR usually increases.
6. MSE usually decreases.
7. The F-statistic usually increases.

Exceptions to 5 and 7: irrelevant variables may not explain the variance but take up degrees of freedom. We really need to look at the results.

VIII. Important: General Ways of Hypothesis Testing with F-Statistics

All tests in linear regression can be performed with F-test statistics. The trick is to run "nested models."

Two models are nested if the independent variables in one model are a subset, or linear combinations of a subset, of the independent variables in the other model.

That is to say, if model A has independent variables $(1, x_1, x_2)$ and model B has independent variables $(1, x_1, x_2, x_3)$, A and B are nested. A is called the restricted model; B is called the less restricted or unrestricted model. We call A restricted because A implies that $\beta_3 = 0$. This is a restriction.

Another example: C has independent variables $(1, x_1, x_2 + x_3)$, D has $(1, x_2 + x_3)$.

- C and A are not nested.
- C and B are nested. One restriction in C: $\beta_2 = \beta_3$.
- C and D are nested. One restriction in D: $\beta_1 = 0$.
- D and A are not nested.
- D and B are nested. Two restrictions in D: $\beta_2 = \beta_3$; $\beta_1 = 0$.

We can always test hypotheses implied in the restricted models. Steps: run two regressions for each hypothesis, one for the restricted model and one for the unrestricted model. The SST should be the same across the two models. What is different is SSE and SSR. That is, what is different is $R^2$.
Let $df_r = df(SSE_r)$ and $df_u = df(SSE_u)$, so that

$$df_u - df_r = (n - p_u) - (n - p_r) = p_r - p_u < 0.$$

Use the following formulas:

$$F_{df_r - df_u,\; df_u} = \frac{(SSE_r - SSE_u)/\left[df(SSE_r) - df(SSE_u)\right]}{SSE_u/df_u}$$

or

$$F_{df_r - df_u,\; df_u} = \frac{(SSR_u - SSR_r)/\left[df(SSR_u) - df(SSR_r)\right]}{SSE_u/df_u}$$

(proof: use SST = SSE + SSR).

Note that $df(SSE_r) - df(SSE_u) = df(SSR_u) - df(SSR_r) = \Delta df$ is the number of constraints (not the number of parameters) implied by the restricted model.

Or

$$F_{\Delta df,\; df_u} = \frac{(R_u^2 - R_r^2)/\Delta df}{(1 - R_u^2)/df_u}$$

Note that

$$F_{1,\, df} = t_{df}^2$$

That is, for 1-df tests, you can do either an F-test or a t-test. They yield the same result. Another way to look at it is that the t-test is a special case of the F-test, with the numerator DF being 1.

IX. Assumptions of F-tests

What assumptions do we need to make an ANOVA table work?

Not much of an assumption. All we need is the assumption that $(X'X)$ is not singular, so that the least squares estimate $b$ exists.

The assumption of $X'\varepsilon = 0$ is needed if you want the ANOVA table to be an unbiased estimate of the true ANOVA (equation 1) in the population. Reason: we want $b$ to be an unbiased estimator of $\beta$, and the covariance between $b$ and $\varepsilon$ to disappear.

For reasons I discussed earlier, the assumptions of homoscedasticity and no serial correlation are necessary for the estimation of $V(\varepsilon_i)$.

The normality assumption, that $\varepsilon_i$ follows a normal distribution, is needed for small samples.

X. The Concept of Increment

Every time you put one more independent variable into your model, you get an increase in $R^2$. We sometimes call the increase "incremental $R^2$." What it means is that more variance is explained: SSR is increased and SSE is reduced. What you should understand is that the incremental $R^2$ attributed to a variable is generally smaller than the $R^2$ when other variables are absent.

XI. Consequences of Omitting Relevant Independent Variables

Say the true model is the following:

$$y_i = \beta_0 + \beta_1 x_{1i} + \beta_2 x_{2i} + \beta_3 x_{3i} + \varepsilon_i.$$

But for some reason we only collect or consider data on $y$, $x_1$, and $x_2$.
Therefore, we omit $x_3$ from the regression; that is, $x_3$ is left out of our model. We briefly discussed this problem before. The short story is that we are likely to have a bias due to the omission of a relevant variable in the model. This is so even though our primary interest is to estimate the effect of $x_1$ or $x_2$ on $y$.

Why? We will have a formal presentation of this problem.

XII. Measures of Goodness-of-Fit

There are different ways to assess the goodness-of-fit of a model.

A. $R^2$

$R^2$ is a heuristic measure for the overall goodness-of-fit. It does not have an associated test statistic.

$R^2$ measures the proportion of the variance in the dependent variable that is "explained" by the model:

$$R^2 = \frac{SSR}{SST} = \frac{SSR}{SSR + SSE}$$

B. Model F-test

The model F-test tests the joint hypothesis that all the model coefficients except for the constant term are zero.

Degrees of freedom associated with the model F-test: numerator $p - 1$; denominator $n - p$.

C. t-tests for individual parameters

A t-test for an individual parameter tests the hypothesis that a particular coefficient is equal to a particular number (commonly zero).

$$t_k = (b_k - \beta_{k0}) / SE_k,$$

where $SE_k$ is the square root of the $(k, k)$ element of $MSE\,(X'X)^{-1}$, with degrees of freedom $n - p$.

D. Incremental $R^2$

Relative to a restricted model, the gain in $R^2$ for the unrestricted model:

$$\Delta R^2 = R_u^2 - R_r^2$$

E. F-tests for Nested Models

This is the most general form of F-tests and t-tests.

$$F_{df_r - df_u,\; df_u} = \frac{(SSE_r - SSE_u)/\left[df(SSE_r) - df(SSE_u)\right]}{SSE_u/df_u}$$

It is equivalent to a t-test if the unrestricted and restricted models differ by only one parameter.

It is equal to the model F-test if we set the restricted model to the constant-only model.

[Ask students] What are SST, SSE, and SSR, and their associated degrees of freedom, for the constant-only model?

Numerical Example

A sociological study is interested in understanding the social determinants of mathematical achievement among high school students. You are now asked to answer a series of questions.
The data are real but have been tailored for educational purposes. The total number of observations is 400. The variables are defined as:

- y: math score
- x1: father's education
- x2: mother's education
- x3: family's socioeconomic status
- x4: number of siblings
- x5: class rank
- x6: parents' total education (note: x6 = x1 + x2)

For the following regression models, we know:

Table 1

| Model | SST | SSR | SSE | DF | $R^2$ |
|---|---|---|---|---|---|
| (1) y on (1 x1 x2 x3 x4) | 34863 | 4201 | | | |
| (2) y on (1 x6 x3 x4) | 34863 | | | 396 | .1065 |
| (3) y on (1 x6 x3 x4 x5) | 34863 | 10426 | 24437 | 395 | .2991 |
| (4) x5 on (1 x6 x3 x4) | | | 269753 | 396 | .0210 |

1. Please fill the missing cells in Table 1.
2. Test the hypothesis that the effects of father's education (x1) and mother's education (x2) on math score are the same after controlling for x3 and x4.
3. Test the hypothesis that x6, x3 and x4 in Model (2) all have a zero effect on y.
4. Can we add x6 to Model (1)? Briefly explain your answer.
5. Test the hypothesis that the effect of class rank (x5) on math score is zero after controlling for x6, x3, and x4.

Answer:

1.

| Model | SST | SSR | SSE | DF | $R^2$ |
|---|---|---|---|---|---|
| (1) y on (1 x1 x2 x3 x4) | 34863 | 4201 | 30662 | 395 | .1205 |
| (2) y on (1 x6 x3 x4) | 34863 | 3713 | 31150 | 396 | .1065 |
| (3) y on (1 x6 x3 x4 x5) | 34863 | 10426 | 24437 | 395 | .2991 |
| (4) x5 on (1 x6 x3 x4) | 275539 | 5786 | 269753 | 396 | .0210 |

Note that the SST for Model (4) is different from those for Models (1) through (3).

2. The restricted model is

$$y = b_0 + b_1 (x_1 + x_2) + b_3 x_3 + b_4 x_4 + e$$

The unrestricted model is

$$y = b_0' + b_1' x_1 + b_2' x_2 + b_3' x_3 + b_4' x_4 + e'$$

$$F_{1,\,395} = \frac{(31150 - 30662)/1}{30662/395} = \frac{488}{77.63} = 6.29$$

3.

$$F_{3,\,396} = \frac{3713/3}{31150/396} = \frac{1237.67}{78.66} = 15.73$$

4. No. x6 is a linear combination of x1 and x2, so $X'X$ is singular.

5.

$$F_{1,\,395} = \frac{(31150 - 24437)/1}{24437/395} = \frac{6713}{61.87} = 108.50$$

$$t = \sqrt{F} = \sqrt{108.50} = 10.42$$
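
The answers to questions 2, 3 and 5 all apply the same nested-model F formula, so they can be checked with one small helper. The sketch below is in Python; the function name `nested_f` and the variable names are assumptions made for illustration. For question 3 the restricted model is taken to be the constant-only model, for which SSE = SST and the degrees of freedom are n - 1 = 399.

```python
import math


def nested_f(sse_r, sse_u, df_r, df_u):
    """F statistic for a restricted model tested against an unrestricted one.

    sse_r, df_r: error sum of squares and its df for the restricted model.
    sse_u, df_u: the same quantities for the unrestricted model.
    """
    delta_df = df_r - df_u                      # number of constraints
    return ((sse_r - sse_u) / delta_df) / (sse_u / df_u)


# Values taken from the completed Table 1 (n = 400).
SST = 34863.0
sse = {1: 30662.0, 2: 31150.0, 3: 24437.0}
df = {1: 395, 2: 396, 3: 395}

# Q2: Model (2) restricts Model (1) by forcing equal effects of x1 and x2.
f_q2 = nested_f(sse[2], sse[1], df[2], df[1])

# Q3: constant-only model (SSE = SST, df = n - 1) against Model (2).
f_q3 = nested_f(SST, sse[2], 400 - 1, df[2])

# Q5: Model (2) restricts Model (3) by dropping x5; one constraint, so t = sqrt(F).
f_q5 = nested_f(sse[2], sse[3], df[2], df[3])
t_q5 = math.sqrt(f_q5)

print(f"Q2: F(1, 395) = {f_q2:.2f}")
print(f"Q3: F(3, 396) = {f_q3:.2f}")
print(f"Q5: F(1, 395) = {f_q5:.2f}, t = {t_q5:.2f}")
```

The printed values agree with the worked answers up to rounding of the intermediate mean squares, which the notes round to two decimals before dividing.
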

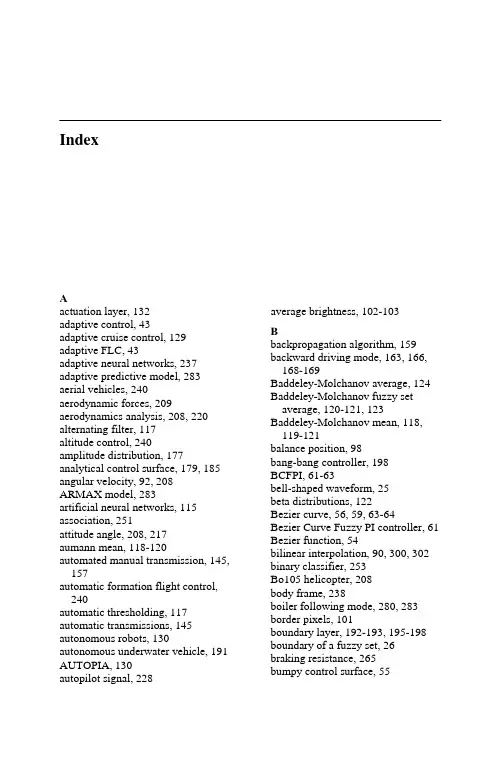


Data Min Knowl Disc
DOI 10.1007/s10618-014-0372-z

Leveraging the power of local spatial autocorrelation in geophysical interpolative clustering

Annalisa Appice · Donato Malerba

Received: 16 December 2012 / Accepted: 22 June 2014
© The Author(s) 2014

Abstract Nowadays ubiquitous sensor stations are deployed worldwide, in order to measure several geophysical variables (e.g. temperature, humidity, light) for a growing number of ecological and industrial processes. Although these variables are, in general, measured over large zones and long (potentially unbounded) periods of time, stations cannot cover every space location. On the other hand, due to their huge volume, data produced cannot be entirely recorded for future analysis. In this scenario, summarization, i.e. the computation of aggregates of data, can be used to reduce the amount of produced data stored on the disk, while interpolation, i.e. the estimation of unknown data in each location of interest, can be used to supplement station records. We illustrate a novel data mining solution, named interpolative clustering, that has the merit of addressing both these tasks in time-evolving, multivariate geophysical applications. It yields a time-evolving clustering model, in order to summarize geophysical data, and computes a weighted linear combination of cluster prototypes, in order to predict data. Clustering is done by accounting for the local presence of the spatial autocorrelation property in the geophysical data. Weights of the linear combination are defined, in order to reflect the inverse distance of the unseen data to each cluster geometry. The cluster geometry is represented through shape-dependent sampling of geographic coordinates of clustered stations. Experiments performed with several data collections investigate the trade-off between the summarization capability and predictive accuracy of the presented interpolative clustering algorithm.

Responsible editors: Hendrik Blockeel, Kristian Kersting, Siegfried Nijssen and
Filip Železný.

A. Appice (✉) · D. Malerba
Dipartimento di Informatica, Università degli Studi di Bari "Aldo Moro", Via Orabona 4, 70125 Bari, Italy
e-mail: annalisa.appice@uniba.it

D. Malerba
e-mail: donato.malerba@uniba.it

Keywords Spatial autocorrelation · Clustering · Inverse distance weighting · Geophysical data stream

1 Introduction

The widespread use of sensor networks has paved the way for the explosive living ubiquity of geophysical data streams (i.e. streams of data that are measured repeatedly over a set of locations). Procedurally, remote sensors are installed worldwide. They gather information along a number of variables over large zones and long (potentially unbounded) periods of time. In this scenario, spatial distribution of data sources, as well as temporal distribution of measures, pose new challenges in the collection and query of data. Much scientific and industrial interest has recently been focused on the deployment of data management systems that gather continuous multivariate data from several data sources, recognize and possibly adapt a behavioral model, deal with queries that concern present and past data, as well as seen and unseen data. This poses specific issues that include storing entirely (unbounded) data on disks with finite memory (Chiky and Hébrail 2008), as well as looking for predictions (estimations) where no measured data are available (Li and Heap 2008).

Summarization is one solution for addressing storage limits, while interpolation is one solution for supplementing unseen data. So far, both these tasks, namely summarization and interpolation, have been extensively investigated in the literature. However, most of the studies consider only one task at a time. Several summarization algorithms, e.g. Rodrigues et al. (2008), Chen et al. (2010), Appice et al. (2013a), have been defined in data mining, in order to compute fast, compact summaries of geophysical data as they arrive. Data storage systems store computed summaries, while discarding actual data. Various interpolation
algorithms, e.g. Shepard (1968b), Krige (1951), have been defined in geostatistics, in order to predict unseen measures of a geophysical variable. They use predictive inferences based upon actual measures sampled at specific locations of space. In this paper, we investigate a holistic approach that links predictive inferences to data summaries. Therefore, we introduce a summarization pattern of geophysical data, which can be computed to save memory space and make predictive inferences easier. We use predictive inferences that exploit knowledge in data summaries, in order to yield accurate predictions covering any (seen and unseen) space location.

We begin by observing that the common factor of several summarization and interpolation algorithms is that they accommodate the spatial autocorrelation analysis in the learned model. Spatial autocorrelation is the cross-correlation of values of a variable strictly due to their relatively close locations on a two-dimensional surface. Spatial autocorrelation exists when there is systematic spatial variation in the values of a given property. This variation can exist in two forms, called positive and negative spatial autocorrelation (Legendre 1993). In the positive case, the value of a variable at a given location tends to be similar to the values of that variable in nearby locations. This means that if the value of some variable is low at a given location, the presence of spatial autocorrelation indicates that nearby values are also low. Conversely, negative spatial autocorrelation is characterized by dissimilar values at nearby locations.
Goodchild (1986) remarks that positive autocorrelation is seen much more frequently in practice than negative autocorrelation in geophysical variables. This is justified by Tobler's first law of geography, according to which "everything is related to everything else, but near things are more related than distant things" (Tobler 1979).

As observed by LeSage and Pace (2001), the analysis of spatial autocorrelation is crucial and can be fundamental for building a reliable spatial component into any (statistical) model for geophysical data. With the same viewpoint, we propose to: (i) model the property of spatial autocorrelation when collecting the data records of a number of geophysical variables, (ii) use this model to compute compact summaries of actual data that are discarded and (iii) inject computed summaries into predictive inferences, in order to yield accurate estimations of geophysical data at any space location.

The paper is organized as follows. The next section clarifies the motivation and the actual contribution of this paper. In Sect. 3, related works regarding spatial autocorrelation, spatial interpolators and clustering are reported. In Sect. 4, we report the basics of the presented algorithm, while in Sect. 5, we describe the proposed algorithm. An experimental study with several data collections is presented in Sect. 6 and conclusions are drawn.

2 Motivation and contributions

The analysis of the property of spatial autocorrelation in geophysical data poses specific issues.

One issue is that most of the models that represent and learn data with spatial autocorrelation are based on the assumption of spatial stationarity. Thus, they assume a constant mean and a constant variance (no outlier) across space (Stojanova et al.
2012). This means that possible significant variabilities in autocorrelation dependencies throughout the space are overlooked. The variability could be caused by a different underlying latent structure of the space, which varies among its portions in terms of scale of values or density of measures. As pointed out by Angin and Neville (2008), when autocorrelation varies significantly throughout space, it may be more accurate to model the dependencies locally rather than globally.

Another issue is that the spatial autocorrelation analysis is frequently decoupled from the multivariate analysis. In this case, the learning process accounts for the spatial autocorrelation of univariate data, while dealing with distinct variables separately (Appice et al. 2013b). Bailey and Krzanowski (2012) observe that ignoring complex interactions among multiple variables may overlook interesting insights into the correlation of potentially related variables at any site. Based upon this idea, Dray and Jombart (2011) formulate a multivariate definition of the concept of spatial autocorrelation, which centers on the extent to which values for a number of variables observed at a given location show a systematic (more than likely under spatial randomness), homogeneous association with values observed at the "neighboring" locations.

In this paper, we develop an approach to modeling non-stationary spatial autocorrelation of multivariate geophysical data by using interpolative clustering. As in clustering, clusters of records that are similar to each other at nearby locations are identified, but a cluster description and a predictive model are associated to each cluster. Data records are aggregated through clusters based on the cluster descriptions.
The associated predictive models, that provide predictions for the variables, are stored as summaries of the clustered data. On any future demand, predictive models queried to databases are processed according to the requests, in order to yield accurate estimates for the variables. Interpolative clustering is a form of conceptual clustering (Michalski and Stepp 1983) since, besides the clusters themselves, it also provides symbolic descriptions (in the form of conjunctions of conditions) of the constructed clusters. Thus, we can also plan to consider this description, in order to obtain clusters in different contexts of the same domain. However, in contrast to conceptual clustering, interpolative clustering is a form of supervised learning. On the other hand, interpolative clustering is similar to predictive clustering (Blockeel et al. 1998), since it is a form of supervised learning. However, unlike predictive clustering, where the predictive space (target variables) is typically distinguished from the descriptive one (explanatory variables; see footnote 1), variables of interpolative clustering play, in principle, both target and explanatory roles.

Interpolative clustering trees (ICTs) are a class of tree structured models where a split node is associated with a cluster and a leaf node with a single predictive model for the target variables of interest. The top node of the ICT contains the entire sample of training records. This cluster is recursively partitioned along the target variables into smaller sub-clusters. A predictive model (the mean) is computed for each target variable and then associated with each leaf. All the variables are predicted independently. In the context of this paper, an ICT is built by integrating the consideration of a local indicator of spatial autocorrelation, in order to account for significant variabilities of autocorrelation dependencies in training data. Spatial autocorrelation is coupled with a multivariate analysis by accounting for the spatial dependence of data and their
multivariate variance, simultaneously (Dray and Jombart 2011). This is done by maximizing the variance reduction of local indicators of spatial autocorrelation computed for multivariate data when evaluating the candidates for adding a new node to the tree. This solution has the merit of improving both the summarization, as well as the predictive performance of the computed models. Memory space is saved by storing a single summary for data of multiple variables. Predictive accuracy is increased by exploiting the autocorrelation of data clustered in space.

From the summarization perspective, an ICT is used to summarize geophysical data according to a hierarchical view of the spatial autocorrelation. We can browse generated clusters at different levels of the hierarchy. Predictive models on leaf clusters model spatial autocorrelation dependencies as stationary over the local geometry of the clusters. From the interpolation perspective, an ICT is used to compute knowledge to make accurate predictive inferences easier. We can use Inverse Distance Weighting (Shepard 1968b; see footnote 2) to predict variables at a specific location by a weighted linear combination of the predictive models on the leaf clusters. Weights are inverse functions of the distance of the query point from the clusters.

Footnote 1. The predictive clustering framework is originally defined in Blockeel et al. (1998), in order to combine clustering problems and classification/regression problems. The predictive inference is performed by distinguishing between target variables and explanatory variables. Target variables are considered when evaluating similarity between training data such that training examples with similar target values are grouped in the same cluster, while training examples with dissimilar target values are grouped in separate clusters. Explanatory variables are used to generate a symbolic description of the clusters. Although the algorithm presented in Blockeel et al. (1998) can be, in principle, run by considering the same set of variables for both explanatory and target roles, this case is not investigated in the original study.

Finally, we can observe that an ICT provides a static model of a geophysical phenomenon. Nevertheless, inferences based on static models of spatial autocorrelation require temporal stationarity of statistical properties of variables. In the geophysical context, data are frequently subject to the temporal variation of such properties. This requires dynamic models that can be updated continuously as new fresh data arrive (Gama 2010). In this paper, we propose an incremental algorithm for the construction of a time-adaptive ICT. When a new sample of records is acquired through stations of a sensor network, a past ICT is modified, in order to model new data of the process, which may change their properties over time. In theory, a distinct tree can be learned for each time point and several trees can be subsequently combined using some general framework (e.g. Spiliopoulou et al. 2006) for tracking cluster evolution over time. However, this solution is prohibitively time-consuming when data arrive at a high rate.
By taking into account that (1) geophysical variables are often slowly time-varying, and (2) a change of the properties of the data distribution of variables is often restricted to a delimited group of stations, more efficient learning algorithms can be derived. In this paper, we propose an algorithm that retains past clusters as long as they discriminate between surfaces of spatial autocorrelated data, while it mines novel clusters only if the latest become inaccurate. In this way, we can save computation time and track the evolution of the cluster model by detecting changes in the data properties at no additional cost.

The specific contributions in this paper are: (1) the investigation of the property of spatial autocorrelation in interpolative clustering; (2) the development of an approach that uses a local indicator of the spatial autocorrelation property, in order to build an ICT by taking into account non-stationarity in autocorrelation and multivariate analysis; (3) the development of an incremental algorithm to yield a time-evolving ICT that accounts for the fact that the statistical properties of the geophysical data may change over time; (4) an extensive evaluation of the effectiveness of the proposed (incremental) approach on several real geophysical data.

3 Related works

This work has been motivated by the research literature for the property of spatial autocorrelation and its influence on the interpolation theory and (predictive) clustering.
In the following subsections, we report related works from these research lines.

Footnote 2. Inverse distance weighting is a common interpolation algorithm. It has several advantages that endorse its widespread use in geostatistics (Li and Revesz 2002; Karydas et al. 2009; Li et al. 2011): simplicity of implementation; lack of tunable parameters; ability to interpolate scattered data and work on any grid without suffering from multicollinearity.

3.1 Spatial autocorrelation

Spatial autocorrelation analysis quantifies the degree of spatial clustering (positive autocorrelation) or dispersion (negative autocorrelation) in the values of a variable measured over a set of point locations. In the traditional approach to spatial autocorrelation, the "overall pattern" of spatial dependence in data is summarized into a single indicator, such as the familiar Global Moran's I, Global Geary's C or Gamma indicators of spatial associations (see Legendre 1993, for details). These indicators allow us to establish whether nearby locations tend to have similar (i.e. spatial clustering), random or different values (i.e. spatial dispersion) of a variable on the entire sample. They are referred to as global indicators of spatial autocorrelation, in contrast to the local indicators that we will consider in this paper. Procedurally, global indicators (see Getis 2008) are computed, in order to indicate the degree of regional clustering over the entire distribution of a geophysical variable. Stojanova et al. (2013), as well as Appice et al. (2012), have recently investigated the addition of these indicators to predictive inferences. They show how global measures of spatial autocorrelation can be computed at multiple levels of a hierarchical clustering, in order to improve predictions that are consistently clustered in space. Despite the useful results of these studies, a limitation of global indicators of spatial autocorrelation is that they assume a spatial stationarity across space (Angin and Neville 2008). While this may be
useful when spatial associations are studied for the small areas associated to the bottom levels of a hierarchical model, it is not very meaningful or may even be highly misleading in the analysis of spatial associations for large areas associated to the top levels of a hierarchical model.

Local indicators offer an alternative to global modeling by looking for "local patterns" of spatial dependence within the study region (see Boots 2002 for a survey). Unlike global indicators, which return one value for the entire sample of data, local indicators return one value for each sampled location of a variable; this value expresses the degree to which that location is part of a cluster. Broadly speaking, a local indicator of spatial autocorrelation allows us to discover deviations from global patterns of spatial association, as well as hot spots like local clusters or local outliers. Several local indicators of spatial autocorrelation are formulated in the literature.

Anselin's local Moran's I (Anselin 1995) is a local indicator of spatial autocorrelation that has gained wide acceptance in the literature. It is formulated as follows:

$$I(i) = \frac{z(i) - \bar{z}}{m_2} \sum_{j=1,\, j \neq i}^{n} \lambda(ij)\,\bigl(z(j) - \bar{z}\bigr), \qquad (1)$$

where $z(i)$ is the value of a variable $Z$ measured at the location $i$, $\bar{z} = \frac{1}{n}\sum_{i=1}^{n} z(i)$ is the mean of the data measured for $Z$, $\lambda(ij)$ is a spatial (Gaussian or bi-square) weight between the locations $i$ and $j$ over a neighborhood structure of the training data and $m_2 = \frac{1}{n}\sum_{j}\bigl(z(j) - \bar{z}\bigr)^2$ is the second moment. Anselin's local Moran's I is related to the global Moran's I, as the average of $I(i)$ is equal to the global I, up to a factor of proportionality. A positive value for $I(i)$ indicates that $z(i)$ is surrounded by similar values. Therefore, this value is part of a cluster. A negative value for $I(i)$ indicates that $z(i)$ is surrounded by dissimilar values. Thus, this value is an outlier.

The standardized Getis and Ord local GI* (Getis and Ord 1992) is a local
indicator of spatial autocorrelation that is formulated as follows:

$$GI^{*}(i) = \frac{1}{\sqrt{\dfrac{S^2}{n-1}\left(n \sum_{j=1,\, j \neq i}^{n} \lambda(ij)^2 - \Lambda(i)^2\right)}} \left(\sum_{j=1,\, j \neq i}^{n} \lambda(ij)\, z(j) - \bar{z}\,\Lambda(i)\right), \qquad (2)$$

where $\Lambda(i) = \sum_{j=1,\, j \neq i}^{n} \lambda(ij)$ and $S^2 = \frac{1}{n}\sum_{j=1}^{n}\bigl(z(j) - \bar{z}\bigr)^2$. A positive value for $GI^{*}(i)$ indicates clusters of high values around $i$, while a negative value for $GI^{*}(i)$ indicates clusters of low values around $i$.

The interpretation of GI* is different from that of I: the former distinguishes clusters of high and low values, but does not capture the presence of negative spatial autocorrelation (dispersion); the latter is able to detect both positive and negative spatial autocorrelations, but does not distinguish clusters of high or low values. Getis and Ord (1992) recommend computing GI* to look for spatial clusters and I to detect spatial outliers. By following this advice, Holden and Evans (2010) apply fuzzy C-means to GI* values, in order to cluster satellite-inferred burn severity classes. Scrucca (2005) and Appice et al. (2013c) use k-means to cluster GI* values, to compute spatially aware clusters of the data measured for a geophysical variable.

Measures of both global and local spatial autocorrelation are principally defined for univariate data. However, the integration of multivariate and autocorrelation information has recently been advocated by Dray and Jombart (2011). The simplest approach considers a two-step procedure, where data are first summarized with a multivariate analysis such as PCA. In a second step, any univariate (either global or local) spatial measure can be applied to PCA scores for each axis separately. The other approach finds coefficients to obtain a linear combination of variables, which maximizes the product between the variance and the global Moran measure of the scores. Alternatively, Stojanova et al. (2013) propose computing the mean of global measures (Moran I and global Getis C), computed for distinct variables of a vector, as a global indicator of spatial autocorrelation of the vector by blurring cross-correlations between separate variables. Dray et
al. (2006) explore the theory of the principal coordinates of neighbor matrices and develop the framework of Moran's eigenvector maps. They demonstrate that their framework can be linked to spatial autocorrelation structure functions also in multivariate domains. Blanchet et al. (2008) expand this framework by taking into account asymmetric directional spatial processes.

3.2 Interpolation theory

Studies on spatial interpolation were initially encouraged by the analysis of ore mining, water extraction or pumping and rock inspection (Cressie 1993). In these fields, interpolation algorithms are required as the main resource to recover unknown information and account for problems like missing data, energy saving, sensor default, as well as to support data summarization and investigation of spatial correlation between observed data (Lam 1983). The interpolation algorithms estimate a geophysical quantity in any geographic location where the variable measure is not available. The interpolated value is derived by making use of the knowledge of the nearby observed data and, sometimes, of some hypotheses or supplementary information on the data variable. The rationale behind this spatially aware estimate of a variable is the property of positive spatial autocorrelation. Any spatial interpolator accounts for this property, including within its formulation the consideration of a stronger correlation between data which are closer than for those that are farther apart.

Regression (Burrough and McDonnell 1998), Inverse Distance Weighting (IDW) (Shepard 1968a), Radial Basis Functions (RBF) (Lin and Chen 2004) and Kriging (Krige 1951) are the most common interpolation algorithms. These algorithms are studied to deal with the irregular sampling of the investigated area (Isaaks and Srivastava 1989; Stein 1999) or with the difficulty of describing the area by the local atlas of larger and irregular manifolds. Regression algorithms, that are statistical interpolators, determine a functional relationship between
the variable to be predicted and the geographic coordinates of points where the variable is measured. IDW and RBF, which are both deterministic interpolators, use mathematical functions to calculate an unknown variable value in a geographic location, based either on the degree of similarity (IDW) or the degree of smoothing (RBF) in relation to neighboring data points. Both algorithms share with Kriging the idea that the collection of variable observations can be considered as a production of correlated spatial random data with specific statistical properties. In Kriging, specifically, this correlation is used to derive a second-order model of the variable (the variogram). The variogram represents an approximate measure of the spatial dissimilarity of the observed data. The IDW interpolation is based on a linear combination of nearby observations with weights inversely proportional to a power of the distances. It is a heuristic but efficient approach justified by the typical power-law of the spatial correlation. In this sense, IDW accomplishes the same strategy adopted by the more rigorous formulation of Kriging (Li and Revesz 2002; Karydas et al. 2009; Li et al. 2011).

Several studies have arisen from these base interpolation algorithms. Ohashi and Torgo (2012) investigate a mapping of the spatial interpolation problem into a multiple regression task. They define a series of spatial indicators to better describe the spatial dynamics of the variable of interest. Umer et al. (2010) propose to recover missing data of a dense network by a Kriging interpolator. By considering that the computational complexity of a variogram is cubic in the size of the observed data (Cressie 1993), the variogram calculus, in this study, is sped up by processing only the areas with information holes, rather than the global data. Goovaerts (1997) extends Kriging, in order to predict multiple variables (cokriging) measured at the same location.
Cokriging uses direct and cross covariance functions that are computed in the sample of the observed data. Teegavarapu et al. (2012) use IDW and 1-Nearest Neighbor, in order to interpolate a grid of rainfall data and re-sample data at multiple resolutions. Lu and Wong (2008) formulate IDW in an adaptive way, by accounting for the varying distance-decay relationship in the area under examination. The weighting parameters are varied according to the spatial pattern of the sampled points in the neighborhood. The algorithm proves more efficient than ordinary IDW and, in several cases, also better than Kriging. Recently, Appice et al. (2013b) have started to link IDW to the spatio-temporal knowledge enclosed in specific summarization patterns, called trend clusters. Nevertheless, this investigation is restricted to univariate data.

There are several applications (e.g. Li and Revesz 2002; Karydas et al. 2009; Li et al. 2011) where IDW is used. This contributes to highlighting IDW as a deterministic, quick and simple interpolation algorithm that yields accurate predictions. On the other hand, Kriging is based on the statistical properties of a variable and, hence, it is expected to be more accurate regarding the general characteristics of the recorded data and the efficacy of the model. In any case, the accuracy of Kriging depends on a reliable estimation of the variogram (Isaaks and Srivastava 1989; Şen and Şahin 2001) and the variogram computation cost is proportional to the cube of the number of observed data (Cressie 1990). This cost can be prohibitive in time-evolving applications, where the statistical properties of a variable may change over time. In the Data Mining framework, the change of the underlying properties over time is usually called concept drift (Gama 2010). It is noteworthy that the concept drift, expected in dynamic data, can be a serious complication for Kriging. It may impose the repetition of costly computation of the variogram each time
the statistical properties of the variable change significantly. These considerations motivate the broad use of an interpolator like IDW that is accurate enough and whose learning phase can be run on-line when data are collected in a stream.

3.3 Cluster analysis

Cluster analysis is frequently used in geophysical data interpretation, in order to obtain meaningful and useful results (Song et al. 2010). In addition, it can be used as a summarization paradigm for data streams, since it underlines the advantage of discovering summaries (clusters) that adjust well to the evolution of data. The seminal work is that of Aggarwal et al. (2007), where a k-means algorithm is tailored to discover micro-clusters from multidimensional transactions that arrive in a stream. Micro-clusters are adjusted each time a transaction arrives, in order to preserve the temporal locality of data along a time horizon. Another clustering algorithm to summarize data streams is presented in Nassar and Sander (2007). The main characteristic of this algorithm is that it allows us to summarize multi-source data streams. The multi-source stream is composed of sets of numeric values that are transmitted by a variable number of sources at consecutive time points. Timestamped values are modeled as 2D (time-domain) points of a Euclidean space. Hence, the source location is neither represented as a dimension of analysis nor processed as information-bearing. The stream is broken into windows.
Dense regions of 2D points are detected in these windows and represented by means of cluster feature vectors. Although a spatial clustering algorithm is employed, the spatial arrangement of data sources is still neglected. Appice et al. (2013a) describe a clustering algorithm that accounts for the property of spatial autocorrelation when computing clusters that are compact and accurate summaries of univariate geophysical data streams. In all these studies clustering is addressed as an unsupervised task.

Predictive clustering is a supervised extension of cluster analysis, which combines elements of prediction and clustering. The task, originally formulated in Blockeel et al. (1998), assumes: (1) a descriptive space of explanatory variables, (2) a predictive space of target variables and (3) a set of training records defined on both descriptive and predictive space. Training records that are similar to each other are clustered and a predictive model is associated to each cluster. This allows us to predict the unknown
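
To make the local indicator of Sect. 3.1 concrete, the following is a minimal sketch of Anselin's local Moran's I as written in Eq. (1). It is an illustration only, not the authors' implementation: the function names, the Gaussian kernel form and the `bandwidth` parameter are assumptions introduced here, and every other location is treated as a neighbor.

```python
import math


def gaussian_weight(p, q, bandwidth):
    """Gaussian spatial weight between two (x, y) locations.

    The kernel form and the bandwidth are assumptions made for this sketch.
    """
    squared_distance = (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2
    return math.exp(-0.5 * squared_distance / bandwidth ** 2)


def local_morans_i(coords, values, bandwidth=1.0):
    """Return the list of I(i) values of Eq. (1), one per location."""
    n = len(values)
    mean = sum(values) / n
    # Second moment m2 = (1/n) * sum_j (z(j) - mean)^2
    m2 = sum((v - mean) ** 2 for v in values) / n
    if m2 == 0:
        raise ValueError("local Moran's I is undefined for constant data")
    indicators = []
    for i in range(n):
        # Weighted sum of the deviations of all other locations j != i
        lag = sum(
            gaussian_weight(coords[i], coords[j], bandwidth) * (values[j] - mean)
            for j in range(n)
            if j != i
        )
        indicators.append((values[i] - mean) / m2 * lag)
    return indicators
```

With two well-separated groups of similar values every I(i) comes out positive (each value sits inside a cluster), whereas a single high value surrounded by low values receives a negative I(i), matching the cluster/outlier reading given for Eq. (1).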

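The Inverse Distance Weighting scheme discussed in Sect. 3.2 can be sketched in a few lines. This is a plain, textbook-style IDW over point observations, offered under stated assumptions: the function name `idw_predict` and the default exponent `power=2.0` are choices made for this illustration, and the paper's own variant, which weights cluster prototypes by the distance to each cluster geometry, is not reproduced here.

```python
import math


def idw_predict(query, points, values, power=2.0):
    """Estimate the value at `query` as an inverse-distance-weighted mean.

    `points` are (x, y) locations with known `values`; the weight of each
    observation is 1 / distance**power (the exponent is an assumed default).
    """
    numerator = 0.0
    denominator = 0.0
    for point, value in zip(points, values):
        distance = math.dist(query, point)
        if distance == 0.0:
            # The query coincides with an observed location: return it as-is
            return value
        weight = 1.0 / distance ** power
        numerator += weight * value
        denominator += weight
    return numerator / denominator
```

For instance, with observations 10 at (0, 0) and 20 at (4, 0), a query at (1, 0) is three times closer to the first point, so the weights are 1 and 1/9 and the estimate is pulled toward 10. Swapping the observed points for cluster representatives and the observed values for cluster means would give a rough analogue of predicting from leaf clusters.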
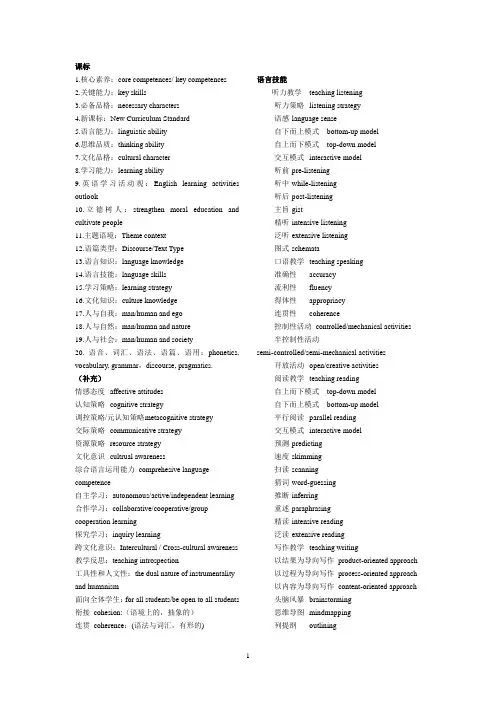

Curriculum standards (课标)

1. 核心素养: core competences / key competences
2. 关键能力: key skills
3. 必备品格: necessary characters
4. 新课标: New Curriculum Standard
5. 语言能力: linguistic ability
6. 思维品质: thinking ability
7. 文化品格: cultural character
8. 学习能力: learning ability
9. 英语学习活动观: English learning activities outlook
10. 立德树人: strengthen moral education and cultivate people
11. 主题语境: theme context
12. 语篇类型: discourse/text type
13. 语言知识: language knowledge
14. 语言技能: language skills
15. 学习策略: learning strategy
16. 文化知识: cultural knowledge
17. 人与自我: man/human and ego
18. 人与自然: man/human and nature
19. 人与社会: man/human and society
20. 语音、词汇、语法、语篇、语用: phonetics, vocabulary, grammar, discourse, pragmatics

Supplementary terms (补充)

- 情感态度: affective attitudes
- 认知策略: cognitive strategy
- 调控策略/元认知策略: metacognitive strategy
- 交际策略: communicative strategy
- 资源策略: resource strategy
- 文化意识: cultural awareness
- 综合语言运用能力: comprehensive language competence
- 自主学习: autonomous/active/independent learning
- 合作学习: collaborative/cooperative/group cooperation learning
- 探究学习: inquiry learning
- 跨文化意识: intercultural / cross-cultural awareness
- 教学反思: teaching introspection
- 工具性和人文性: the dual nature of instrumentality and humanism
- 面向全体学生: for all students / be open to all students
- 衔接: cohesion (grammatical and lexical, tangible)
- 连贯: coherence (contextual, abstract)

Language skills (语言技能)

Teaching listening (听力教学)

- 听力策略: listening strategy
- 语感: language sense
- 自下而上模式: bottom-up model
- 自上而下模式: top-down model
- 交互模式: interactive model
- 听前: pre-listening
- 听中: while-listening
- 听后: post-listening
- 主旨: gist
- 精听: intensive listening
- 泛听: extensive listening
- 图式: schemata

Teaching speaking (口语教学)

- 准确性: accuracy
- 流利性: fluency
- 得体性: appropriacy
- 连贯性: coherence
- 控制性活动: controlled/mechanical activities
- 半控制性活动: semi-controlled/semi-mechanical activities
- 开放活动: open/creative activities

Teaching reading (阅读教学)

- 自上而下模式: top-down model
- 自下而上模式: bottom-up model
- 平行阅读: parallel reading
- 交互模式: interactive model
- 预测: predicting
- 速读: skimming
- 扫读: scanning
- 猜词: word-guessing
- 推断: inferring
- 重述: paraphrasing
- 精读: intensive reading
- 泛读: extensive reading

Teaching writing (写作教学)

- 以结果为导向写作: product-oriented approach
- 以过程为导向写作: process-oriented approach
- 以内容为导向写作: content-oriented approach
- 头脑风暴: brainstorming
- 思维导图: mind mapping
- 列提纲: outlining
- 打草稿: drafting
- 修改: editing
- 校对: proofreading
- 控制型写作: controlled writing
- 指导性写作: guided writing
- 表达性写作: expressive writing

Teaching implementation (教学实施)

- 程序性提问: procedural questions
- 展示性问题: display questions
- 参阅性问题: referential questions
- 评估性问题: evaluative questions
- 开放式问题: open questions
- 封闭式问题: closed questions
- 直接纠错: explicit correction
- 间接纠错: implicit correction
- 重复法: repetition
- 重述法: recast
- 强调暗示法: pinpointing
- 指导者: instructor
- 促进者: facilitator
- 控制者: controller
- 组织者: organizer
- 参与者: participant
- 提示者: prompter
- 评估者: assessor
- 资源提供者: resource-provider
- 研究者: researcher
- 形成性评价: formative assessment
- 终结性评价: summative assessment
- 诊断性评价: diagnostic assessment
- 成绩测试: achievement test
- 水平测试: proficiency test
- 潜能测试: aptitude test
- 诊断测试: diagnostic test
- 结业测试: exit test
- 主观性测试: subjective test
- 客观性测试: objective test
- 直接测试: direct test
- 间接测试: indirect test
- 常模参照性测试: norm-referenced test
- 标准参照性测试: criterion-referenced test
- 个体参照性测试: individual-referenced test
- 信度: reliability
- 效度: validity
- 表面效度: face validity
- 结构效度: construct validity
- 内容效度: content validity
- 共时效度: concurrent validity
- 预示效度: predictive validity
- 区分度: discrimination
- 难度: difficulty

Teaching methods (教学法)

- 语法翻译法: grammar-translation method
- 直接法: direct method
- 听说法: audio-lingual method
- 视听法: audio-visual method
- 交际法: communicative approach
- 全身反应法: total physical response (TPR)
- 任务型教学法: task-based teaching approach

Linguistics: Chinese-English glossary (语言学中英对照)

Introduction (导论)

- 规定性与描写性: prescriptive [prɪˈskrɪptɪv] and descriptive [dɪˈskrɪptɪv]
- 共时性与历时性: synchronic [sɪŋˈkrɒnɪk] and diachronic [ˌdaɪəˈkrɒnɪk]
- 语言和言语: langue (US [lɑːŋɡ]) and parole [pəˈrəʊl]
- 语言能力与语言运用: competence and performance
- 任意性: arbitrariness [ˈɑːbɪtrərinəs]
- 能产性: productivity [ˌprɒdʌkˈtɪvəti]
- 双重性: duality [djuːˈæləti]
- 移位性: displacement [dɪsˈpleɪsmənt]
- 文化传承性: cultural transmission [trænzˈmɪʃn]

Phonetics [fəˈnetɪks] (语音学)

- 发音语音学: articulatory [ɑːˈtɪkjuleɪtəri] phonetics
- 听觉语音学: auditory [ˈɔːdətri] phonetics
- 声学语音学: acoustic [əˈkuːstɪk] phonetics
- 元音: vowel [ˈvaʊəl]
- 辅音: consonant [ˈkɒnsənənt]
- 发音方式: manner of articulation
- 闭塞音: stops
- 摩擦音: fricative [ˈfrɪkətɪv]
- 塞擦音: affricate [ˈæfrɪkət]
- 鼻音: nasal [ˈneɪzl]
- 边音: lateral [ˈlætərəl]
- 流音: liquids [ˈlɪkwɪdz]
- 滑音: glides [ɡlaɪdz]
- 发音部位: place of articulation
- 双唇音: bilabial [ˌbaɪˈleɪbiəl]
- 唇齿音: labiodental [ˌleɪbiəʊˈdentl]
- 齿音: dental
- 齿龈音: alveolar [ælˈviːələ]
- 硬颚音: palatal [ˈpælətl]
- 软腭音: velar [ˈviːlə(r)]
- 喉音: glottal [ˈɡlɒtl]
- 声带振动: vocal [ˈvəʊkl] cord [kɔːd] vibration
- 清辅音: voiceless
- 浊辅音: voiced [vɔɪst]

Phonology [fəˈnɒlədʒi] (音位学)

- 音素: phone
- 音位: phoneme [ˈfəʊniːm]
- 音位变体: allophone [ˈæləfəʊn]
- 音位对立: phonemic [fəʊˈniːmɪk] contrast
- 互补分布: complementary [ˌkɒmplɪˈmentri] distribution
- 最小对立体: minimal pair
- 序列规则: sequential [sɪˈkwenʃl] rule
- 同化规则: assimilation [əˌsɪməˈleɪʃn] rules
- 省略规则: deletion [dɪˈliːʃən] rule
- 重音: stress
- 语调: intonation [ˌɪntəˈneɪʃn]
- 升调: rising tone
- 降调: falling tone [təʊn]

Morphology [mɔːˈfɒlədʒi] (形态学)

- 词素: morphemes [ˈmɔːfiːmz]
- 同质异形体: allomorphs [ˈæləmɔːf]
- 自由词素: free morphemes
- 粘着词素: bound morphemes
- 屈折词素: inflectional [ɪnˈflɛkʃən(ə)l] / grammatical morphemes
- 派生词素: derivational [ˌdɛrɪˈveɪʃən(ə)l] / lexical morphemes
- 词缀: affix [əˈfɪks, ˈæfɪks]
- 前缀: prefix
- 后缀: suffix [ˈsʌfɪks]

Semantics [sɪˈmæntɪks] (语义学)

- 词汇意义: lexical meaning
- 意义: sense
- 概念意: conceptual [kənˈseptʃuəl] meaning
- 本意: denotative [ˌdiː.nəʊˈteɪtiv] meaning
- 字面意: literal meaning
- 内涵意: connotative (US [ˈkɑː.nə.teɪ.t̬ɪv]) meaning
- 指称: reference [ˈrefrəns]
- 同义现象: synonymy [sɪˈnɒnɪmi]
- 方言同义词: dialectal [ˌdaɪ.əˈlek.təl] synonyms [ˈsɪnənɪmz]
- 文体同义词: stylistic [staɪˈlɪstɪk] synonyms
- 搭配同义词: collocational [ˌkɒləʊˈkeɪʃən(ə)l] synonyms
- 语义不同的同义词: semantically different synonyms
- 多义现象: polysemy [pəˈlɪsɪmi]
- 同音异义: homonymy
- 同音异义词: homophones [ˈhɒməʊfəʊnz]
- 同形异义词: homographs
- 完全同音异义词: complete homonyms [ˈhɒm.ə.nɪm]
- 下义关系: hyponymy (US [haɪˈpɒnɪmɪ])
- 上义词: superordinate
- 下义词: hyponyms [ˈhaɪpəʊnɪm]
- 反义词: antonymy [ænˈtɒnɪmɪ]
- 等级反义词: gradable [ˈɡreɪdəbl] antonyms [ˈæntəʊnɪmz]
- 互补反义词: complementary antonyms
- 关系反义词: relational opposites
- 蕴含: entailment [ɪnˈteɪl.mənt]
- 预设: presuppose [ˌpriːsəˈpəʊz]
- 语义反常: semantically anomalous [əˈnɒmələs]

Pragmatics [præɡˈmætɪks] (语用学)

- 言语行为理论: speech act theory
- 言内行为: locutionary [ləˈkjuː.ʃən.ər.i]
- 言外行为: illocutionary [ˌɪl.əˈkjuː.ʃən.ər.i]
- 阐述类: representatives [ˌrɛprɪˈzɛntətɪvz]
- 指令类: directives [dɪˈrɛktɪvz]
- 承诺类: commissives
- 表达类: expressives [ɪkˈspresɪvz]
- 宣告类: declarations [ˌdɛkləˈreɪʃənz]
- 言后行为: perlocutionary
- 直接言语行为: direct speech act
- 间接言语行为: indirect speech act
- 会话原则: principle of conversation
- 合作原则: cooperative principle
- 数量准则: the maxim [ˈmæksɪm] of quantity
- 质量准则: the maxim of quality
- 关系准则: the maxim of relation
- 方式准则: the maxim of manner

Language and society (语言与社会)

- 地域方言: regional dialect
- 社会方言: sociolect [ˈsəʊsiəʊlekt]
- 语域: register [ˈredʒɪstə(r)]
- 语场: field of discourse
- 语旨: tenor [ˈtenə(r)] of discourse
- 语式: mode of discourse

Second language acquisition (第二语言习得)

- 语言习得机制: LAD (language acquisition device)
- 对比分析: contrastive [kənˈtrɑː.stɪv] analysis
- 错误分析: error analysis
- 语际错误: interlingual [ˌɪntəˈlɪŋɡwəl] errors
- 语内错误: intralingual [ˌɪntrəˈlɪŋɡwəl] errors
- 过度概括: overgeneralization
- 互相联想: cross-association
- 中介语: interlanguage
- 石化: fossilization [ˌfɒs.ɪ.laɪˈzeɪ.ʃən]

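2024 Senior High Year 2 English: Analysis of Building Cooperation Mechanisms in Global Cooperation Research (30 single-choice questions)

1. International cooperation is crucial for addressing global challenges. The ______ of different countries is essential.

A. efforts B. organizations C. cooperations D. initiatives

Answer: B.

The sentence says that international cooperation is crucial for addressing global challenges and that the organizations of different countries are indispensable. Option A is "efforts"; option C, "cooperations", repeats what the first sentence already says; option D is "initiatives". The context stresses the organizations of different countries, so B is correct.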

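2. Global cooperation requires strong ______ among nations.

A. associations B. partnerships C. connections D. relationships

Answer: B.

The sentence says that global cooperation requires strong partnerships among nations. Option A, "associations", refers to societies or leagues; option C is "connections"; option D is "relationships". "Partnerships" better reflects what global cooperation requires, so B is correct.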

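3. The success of global cooperation depends on effective ______.

A. coordinations B. arrangements C. organizations D. plans

Answer: C.

The sentence says that the success of global cooperation depends on effective organizations. Option A is "coordinations"; option B is "arrangements"; option D is "plans". The emphasis here is on the importance of organization, so C is correct.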

4. In global cooperation, ______ play an important role in promoting common development.

A. institutions B. companies C. factories D. schools

Answer: A.