Abstract Managing Wire Delay in Large Chip-Multiprocessor Caches


SAFER SMARTER FASTER
MTP6000 SERIES ACCESSORIES
SAFETY FOCUSED. EXCEPTIONAL USABILITY.

Chasing a suspect down a busy street or managing a 3-alarm fire, you depend on communications that perform in demanding environments. That’s why it’s critical to choose the only accessories designed, tested and certified to work optimally with Motorola TETRA radios. Our comprehensive portfolio of MTP6000 accessories delivers on that promise – new enhancements for exceptional audio, integrated Bluetooth® and secure connections.

STAY CONNECTED, STAY SAFE IN THE MOST DEMANDING SITUATIONS

REMOTE SPEAKER MICROPHONES, SURVEILLANCE EARPIECES & HEADSETS
BATTERIES, CHARGERS, VEHICULAR ACCESSORIES & ANTENNAS
CARRY ACCESSORIES & CARRY CASES

MTP6000 SERIES ACCESSORIES
MOTOROLA’S NEW TETRA RADIO ACCESSORY CATALOGUE

IMPRES™ ACCESSORIES ARE AUTOMATIC
Plug in our IMPRES accessories – such as the remote speaker microphone (RSM) – and your radio identifies it and automatically loads the correct profile to optimise audio performance. What’s more, our IMPRES large-speaker RSMs match the high-powered speaker on your radio for even better communications in high-noise environments – essential when on noisy, hectic incident scenes.

PMMN4082: IMPRES large submersible windporting remote speaker microphone, high/low volume toggle switch, emergency button, and programmable button, IP57.
MDRLN4941: Receive-only earpiece with translucent tube and rubber eartip.
PMMN4081: IMPRES large noise-cancelling remote speaker microphone with 3.5mm audio jack and emergency button, IP54.
WADN4190: Receive-only flexible earpiece.

SURVEILLANCE ACCESSORY REPLACEMENT KITS FOR 2- AND 3-WIRE KITS PMLN6123, PMLN6124, PMLN6129, PMLN6130, and NNTN8459

Temple transducers rest on your temple and convert audio into sound vibration and transmit it to your inner ear. If you’re using hearing protection or directing heavy traffic, you can hear the audio from your radio and the surrounding environment. Since the transducer sits in front of your ears, you can also wear earplugs and still hear the radio.

PMLN6634: Lightweight headset, single muff with adjustable headband, boom microphone and in-line push-to-talk switch.
PMLN6124: IMPRES 3-wire surveillance kit with translucent tube and one programmable button, beige.
PMLN5102: Ultra lightweight headset with boom microphone and in-line push-to-talk.
PMLN5653: Bone conduction ear microphone with in-line large push-to-talk with protective ring. Speak softly and be heard. Also suitable in high-noise environments when ear muffs are worn over the ear microphones.
PMLN5096: D-style earset with boom microphone and in-line push-to-talk.
PMLN6406: Heavy duty headset with noise-cancelling boom microphone and push-to-talk on earcup, noise reduction = 24dB.
PMLN6624: IMPRES Temple Transducer, bone conduction headset with boom microphone and in-line push-to-talk (behind-the-head headband).

BONE CONDUCTION ACCESSORIES
NTN2572: Operations critical wireless earpiece with 12” cable, requires NNTN8191 Operations

INTEGRATED BLUETOOTH®
Bluetooth audio is embedded in the radio, enabling connectivity with a variety of wireless accessories. Our exclusive Operations Critical Wireless accessories handle loud environments, while an optional wireless push-to-talk (PTT) seamlessly links to any accessory. Just put the PTT in a pocket or on a lapel and connect instantly.

PMLN5004: Shoulder wearing device with Peter Jones (ILG) Klick Fast stud. Compatible with all Peter Jones (ILG) Klick Fast docks and belt loops.
PMLN6252: Soft leather case with 2.5 inch swivel belt loop.
PMLN6249: Hard leather case with 3 inch swivel belt loop.
PMLN6250: Soft leather case with 3 inch swivel belt loop.
PMLN5616: 2 inch short belt clip.
HLN9714: 2.5 inch large belt clip.
PMLN6251: Soft leather pouch for use with shoulder wearing device (PMLN5004).

PERSONAL CHARGERS
Conveniently and quickly charge your radio.
NNTN8038: Personal Charger with US plug (110-240V).
NNTN8133: Personal Charger with Euro plug (110-240V).
NNTN8134: Personal Charger with UK plug (110-240V).

the leading brands. If your radio gets banged on a ride, rattled by heavy equipment or shocked by static electricity, you can depend on our batteries to stay true and stand tough.

MOTOROLA, MOTO, MOTOROLA SOLUTIONS and the Stylized M Logo are trademarks or registered trademarks of Motorola Trademark Holdings, LLC and are used under license. All other trademarks are the property of their respective owners. © 2012 Motorola Solutions, Inc. All rights reserved. MTP3000SERIES_ACCESSORIES_CATALOGUE_UK_(023/12)

YOUR RADIO YOUR PARTNER

MOTOROLA, MOTO, MOTOROLA SOLUTIONS and the Stylized M Logo are trademarks or registered trademarks of Motorola Trademark Holdings, LLC and are used under license. All other trademarks are the property of their respective owners. © 2014 Motorola Solutions, Inc. All rights reserved. MTP6000_ACCESSORIES_CATALOGUE_UK_(03/14)

For more information on the MTP6000 Series radio accessories, please visit us on the web at: /MTP6000

Module 12 Personality

12.1 Contemporary approaches to personality

“Personality” – a characteristic pattern of thinking, feeling, and behaving that is unique to each individual and remains relatively consistent over time and situations

Personality measurements
1. “Idiographic approach” – focuses on creating detailed descriptions of a specific person’s unique personality characteristics
- Figuring myself out
- Helpful for understanding your social world; can be applied to the full range of human experience
2. “Nomothetic approach” – examines personality in large groups of people, with the aim of making generalizations about personality structure
- To understand the factors that predict certain behaviours across people in general
- The KEY is to identify the important personality traits that are related to whatever it is that you are interested in understanding

The trait perspective
∙ “Personality trait” – describes a person’s habitual patterns of thinking, feeling, and behaving
- (How that person is most of the time)
- The first systematic attempt to identify all possible traits was made in the 1930s by Allport
∙ “The Barnum effect” – people are easily convinced that a personality profile describes them well, even though the profile is generic and does not actually describe them
- This is why personality tests of questionable validity are so widely believed, as are horoscopes, astrologers, and psychics
- Experiment: everyone received exactly the same description, yet each felt it described them personally
∙ “Factor analysis” – used to group items that people respond to similarly
- For example, friendly, warm, and kind can be grouped together

The Five Factor Model (Big Five)
A trait-based theory of personality based on the finding that personality can be described using five major dimensions
1. “Openness”
a. High scorers: Dreamers, creative, open to new things, new ways of seeing things, and new experiences, curious, considered unconventional, think more abstractly, more sensitive to emotions
b. Low scorers: Defenders, conventional, avoid the unknown and find security in the known, prefer the tangible, practical, straightforward, dislike complexity, resistant to change
2. “Conscientiousness”
a. High scorers: Organizers, efficient, self-disciplined, dependable, comfortable with schedules and lists, great employees and students, live longer due to positive health behaviours
b. Low scorers: Easy-going, fun to hang out with, poor collaborators, disorganized, careless with details, have difficulty meeting deadlines, live in the moment, do not stress much about details
3. “Extraversion”
a. High scorers: Socializers, sensation seekers, love stimulating environments, love company, outgoing and energetic, assertive, talkative, enthusiastic, prefer high levels of stimulation and excitement, may take things too far, at higher risk of danger
b. Low scorers: Quiet, solitary, need to recharge their batteries, overwhelmed by high levels of stimulation, more cautious and reserved, someone you can really talk to
4. “Agreeableness”
a. High scorers: Warm and friendly, easy to befriend, compassionate, empathetic, helpful and altruistic, willing to put their own interests aside in order to please others or avoid conflict, find it hard to choose, great team members, poor leadership skills
b. Low scorers: Uncooperative, unkind, put themselves first, value being authentic over pandering to other people’s needs, engage in conflict if necessary, skeptical of others’ motives, less trusting of human nature, self-interested
5. “Neuroticism”
a. High scorers: Neurotics, difficult to deal with, emotionally volatile, prone to negative emotions, sensitive, react strongly to stressful situations, magnify small frustrations into major problems that persist, most vulnerable to anxiety and depressive disorders
b. Low scorers: Mentally healthy, secure and confident, highly resilient to stress, excellent at managing emotions, regarded as stable

Personality of evil
“Authoritarian personality”
- Theorized to be rigid and dogmatic in their thinking; separate the social world into US and THEM; believe strongly in the superiority of US and the inferiority of THEM
- Likely to endorse prejudice and violence towards THEM
- Proposed after the Second World War
There are 3 lines of research that explore personality beyond the Big Five
1. “HEXACO model of personality” – a six-factor theory that adds one additional factor to the Big Five, “honesty-humility”
a. High scorers: Sincere, honest, faithful, modest, perform altruistic and prosocial behaviours
b. Low scorers: Deceitful, greedy, pompous, selfish, antisocial, violent tendencies, materialistic, manipulate others, break the rules, unfaithful in relationships, strong sense of self-importance
2. “The Dark Triad” – comprises three traits
I. “Machiavellianism” (using people, being manipulative and deceitful, lacking respect, focusing predominantly on their own self-interest)
Become aggressive when it serves their goals
II. “Psychopathy” (having shallow emotional responses, feeling little empathy, harming others with little remorse for their actions)
Become aggressive when they feel physically threatened
III. “Narcissism” (egotistical preoccupation with self-image and excessive focus on self-importance; being full of oneself)
Become aggressive when they feel their self-esteem is threatened
3. “Right-wing Authoritarianism” (RWA)
- A highly problematic set of personality characteristics that involves three key tendencies
a. Obeying orders and deferring to the established authorities in a society
b. Supporting aggression against those who dissent or differ from the established social order
c. Believing strongly in maintaining the existing social order
- CORE: thinking in dogmatic terms (black and white, with no grey area)
- Thinking with complete certainty
- Processing information in highly biased ways, ignoring or rejecting evidence that contradicts their views
- Unquestioning acceptance of authority figures
- Helped to maintain repressive dictatorships, as in Hitler’s time
- “Global Change Game” experiment: a group of people high in RWA quickly ruined the world

Personality traits over the lifespan
∙ Our personality starts even before we are born
- Infants possess different temperaments right from birth, suggesting that the seeds of our personalities are present right from birth
- Infant temperament predicts adult neuroticism, extraversion, and conscientiousness
- “The child at three shows the adult” experiment: a longitudinal study tested whether the temperaments of three-year-olds predict their adult personality
∙ Neurons that fire together wire together
- Personality conditions how you feel, perceive, interpret, and behave; this sets in motion processes that feed back to reinforce the original personality trait, like a positive feedback system
∙ Young adults tend to experience fewer negative emotions than adolescents do, reflecting a decrease in neuroticism. Conscientiousness, agreeableness, and extraversion also all increase in early adulthood
∙ There is considerable stability in our personality
- Over time, our environments change, as do the roles we play in those environments
- Although individuals may change over time, their personal characteristics relative to other people remain remarkably stable

Personality traits and states
People’s behaviour is also determined by situational factors and context
∙ “State” – a temporary physical or psychological engagement that influences behaviour
∙ Four kinds of situational factors
1. Location
2. Associations (alone or with others)
3. Activities
4. Subjective states (angry, happy, or ill)

Behaviourist perspective
∙ Internalized traits do not exist
- What matters is the relationship between specific environmental stimuli and the observed pattern of behaviour; behaviour is based on past experiences
- Personality is a description of the response tendencies that occur in different situations
∙ The dimension of “extraversion” is unnecessary
- Emphasizes the importance of stimulus-response associations that are learned through exposure to specific situations, rather than internalized, relatively stable personality traits
∙ The AGREEMENT between behaviourist and other approaches is the emphasis on how learning contributes to personality

Social-Cognitive theory perspective (by Albert Bandura)
It emphasizes the role of beliefs and the reciprocal relationships between people and their environment
∙ Environmental stimuli do not automatically trigger specific behaviours; instead they inform individuals’ beliefs about the world, in particular their beliefs about what consequences are likely to follow from certain behaviours
∙ CENTRAL IDEA: “reciprocal determinism”
- Behaviour, internal factors, and external factors interact to determine one another, and our personalities are based on interactions among these three aspects
∙ So personality is not something inside the person, but rather exists between the person and the environment
∙ People’s personalities and their environments are interdependent in many different ways, linked together in feedback loops that connect their perceptions, cognitions, emotions, and behaviours
- The ways they structure their environments, and the ways their environments structure them

12.2 Cultural and biological approaches to personality

Universals and differences across cultures
∙ Despite the differences that may exist between cultures, the people in those cultures share the same basic personality structures
∙ Although individual personalities differ enormously, the basic machinery of the human personality system is universal
∙ Research in China: researchers found 4 traits:
- Dependability, social potency, individualism, interpersonal relatedness
∙ At this point, most psychologists would agree the five factor model captures important and perhaps universal dimensions of personality
- But it might also miss important culture-specific qualities that can only be understood by analyzing personality from that culture’s perspective

Comparing between nations
∙ Many of the findings in these large-scale cross-cultural studies defy cultural stereotypes
∙ It is difficult to know whether these differences are real because of:
1. Language translation challenges
2. “Response styles” – characteristic ways of responding to questions
(Researchers must ensure that people use the same kind of reasoning process when answering the questions, because response styles can be strongly influenced by cultural norms)
For example, Japanese respondents score especially low, not because they are actually worse people but because their cultural background makes modesty habitual
∙ The problem of essentializing cultural differences
- It is hard to attribute a difference to something fundamental to the cultures, some sort of basic difference between the essence of each culture
∙ Individualism (Western countries) vs. Collectivism (East Asian countries)
- Individualism emphasizes the self; collectivism emphasizes connections with others
- For individualists, the individualistic task was processed by the brain as most self-relevant (the parietal lobe is firing). For collectivists, it was the collectivistic task that was most self-relevant

Genetic influence on personality
Genetic factors contribute substantially to personality
∙ Twin studies: experiments with identical and fraternal twins show that genes do determine personality to some extent
∙ In terms of basic personality characteristics, your genes are more important than your home
- Most of the time, the influence of parenting on personality is overshadowed by the contribution made by our genes
∙ Researchers have discovered genes that code for specific brain chemicals that are related to personality

Role of evolution in personality
∙ Animals have personalities
- Research on chimpanzees shows that the Big Five exist in them too
∙ The Big Five traits were selected for being adaptive in past evolutionary epochs, helping to promote survival and reproduction
- Both high and low scores on each trait have their own strengths, so a high neuroticism score is not necessarily bad, nor a low score necessarily good
∙ Being either high or low in each Big Five trait could be desirable, depending on the situation

The brain and personality
∙ “Humourism” – explained both physical illness and disorders of personality as resulting from imbalances in key fluids in the body, the 4 “humours”
- 4 humours: blood, phlegm, black bile, yellow bile
∙ “Phrenology” – (by Franz Gall) the theory that personality characteristics could be assessed by carefully measuring the outer skull
- The specific dimensions of the skull indicated the size of the brain area inside, which in turn corresponded to specific personality characteristics
- The brain was divided into regions according to the skull, each responsible for a different personality characteristic

Extraversion and arousal
∙ “Arousal theory of extraversion” – argues that extraversion is determined by people’s threshold of arousal
- Extraverts have a higher threshold for arousal than introverts, so extraverts generally seek greater amounts of stimulation
∙ Models of the brain-personality relationship:
1. “Ascending reticular activating system (ARAS)” – plays a central role in controlling this arousal response
- It is its reactivity that differentiates extraverts from introverts
2. “Approach/inhibition model of motivation” – describes two major brain systems for processing rewards and punishments
A. “Behavioural activation system (BAS)” – a GO system
Arouses the person to action in the pursuit of desired goals
Responsive to rewards and unresponsive to negative consequences
Related to extraversion
B. “Behavioural inhibition system (BIS)” – a DANGER system
Motivates the person to action in order to avoid punishment or other negative outcomes
Associated with greater negative emotional responses and avoidance motivation
Related to neuroticism

Image of personality in the brain
∙ Extraversion
1. Larger medial orbitofrontal cortex – for processing reward
2. Less activation in the amygdala – for processing novelty, danger, and fear
∙ Conscientiousness
1. Larger middle frontal gyrus in the left prefrontal cortex – involved in working memory and carrying out planned actions
∙ Neuroticism
1. Smaller dorsomedial prefrontal cortex – controlling emotions
2. Smaller hippocampus – controlling obsessive negative thinking
3. Larger mid-cingulate gyrus – detecting errors and perceiving pain
∙ Agreeableness
1. Smaller volume of superior temporal sulcus – interpreting others’ intentions
2. Larger posterior cingulate – involved in empathy and perspective-taking
∙ Openness
1. Greater activation in dorsolateral prefrontal cortex – involved in creativity and intelligence
∙ This does not mean these differences cause the personality differences; it suggests these brain regions are involved in serving neurological functions that are related to personality processes
∙ There is no specific brain area that is involved uniquely in a single personality trait

12.3 Psychodynamic and humanistic approaches to personality

Psychodynamic perspective
∙ “Psychodynamic theory” – by Freud (in fact not really a single theory at all)
- The universal assumption is that personality and behaviour are shaped by powerful forces in consciousness
- We have very little control over ourselves, and remarkably little insight into the reasons for our own behaviours
- Our mind is a black box
∙ “Conscious”
- Current awareness, containing everything that you are aware of right now
∙ “Unconscious”
- The most vast and powerful but inaccessible part of your consciousness, operating without your conscious endorsement or will to influence and guide your behaviour
- Contains a full lifetime of memories and experiences, including those that can no longer be brought into conscious awareness
- It is the primary driver of behaviour

Structure of personality
∙ “Id”
- Collection of basic biological needs and animal desires
- Fueled by an energy called “libido”
- Operates according to the “pleasure principle” – motivating people to seek out experiences that bring pleasure, with little regard for appropriateness or consequences
- The predominant force controlling our actions in the earliest stage of our life
- Tells us what our body wants to do
∙ “Superego”
- Comprises our values and moral standards
- Tells us what we ought to do
- Forms over time as we become socialized and educated; learned through being praised or punished
- Represents a process of internalization
∙ “Ego”
- Sits between id and superego, mediating their conflicts
- The decision maker, and frequently under tension
- Operates according to the “reality principle” – plugged into reality
∙ The tension between the 3 systems gives rise to personality in two ways
1. Different people will have deep personality differences because of the relative strength of their id, ego, and superego
2. Much of our personality is generated by how we react to “anxiety”, an experiential result of the tension between id, ego, and superego

Defence mechanisms
The unconscious strategies the ego uses to reduce or avoid anxiety
- The undesired tendencies are not confronted and problems are not dealt with

Psychosexual stages (child development)
∙ At each stage, the libido manifests in a specific area of the body, depending on which areas are most important for providing pleasure
∙ “Fixation” – becoming preoccupied with obtaining the pleasure associated with a particular stage and then fixed at that stage; a child who cannot develop normally past a stage will later show behaviour patterns fixed at that stage, with negative effects
1. Oral stage: fixation shows up as a lack of self-confidence and a tendency toward dependence and aggression
2. Anal stage: centred on “toilet training”; if the parents handle it poorly, the result is:
a. “Anal retentive”: overly concerned with cleanliness and controlling, because the parents applied too much pressure
b. “Anal expulsive”: sloppy and lazy, because parental guidance was insufficient
3. Phallic stage: differs for boys and girls:
a. Boys: “Oedipus complex” and “castration anxiety”
b. Girls: “Electra complex” and “penis envy”
4. Latency stage: no fixation occurs, because boys and girls grow emotionally distant and group activities tend to be sex-segregated
5. Genital stage: no fixation occurs

Exploring the unconscious
∙ Freud refined dream analysis and free association to reveal unconscious material
∙ “Projective tests” – personality tests in which ambiguous images are presented to an individual to elicit responses that reflect unconscious desires or conflicts
1. “Rorschach inkblot test” – in which people are asked to describe what they see in inkblots
2. “Thematic Apperception Test (TAT)” – asks respondents to tell stories about ambiguous pictures involving various interpersonal situations
∙ But these projective tests have been criticized, because of:
1. Low reliability – the same person retaking the test at different times gets different results
2. Low validity – the test does not measure what it is meant to measure
- For example, the “figure drawing test” actually measures artistic ability and intelligence

Working the Scientific Literacy Model
Perceiving others as a projective test
∙ Projection originally belonged to Freud’s psychodynamic theory; because its methods were highly contested, modern researchers tend to apply the “trait approach” to projective testing
- We make guesses as to what other people are like by using our own self-concepts as a guide
- Researchers found several correlations showing that the way people view themselves is, in fact, related to how they view others; in other words, how people perceive others appears to be a projection of how they perceive themselves
- Limitations: it cannot make precise predictions, only general statements; and it cannot tell whether the result arises because the rater is either optimistic or pessimistic

Alternative approaches
All opposed Freud, holding that a sense of belonging, achievement, and wholeness are the important factors in personality development
∙ Carl Jung – “Analytical psychology” – focuses on the role of unconscious archetypes in personality development
- Held that there are two kinds of unconscious
1. “Personal unconscious” – the same as Freud’s
2. “Collective unconscious” – a non-personal realm of the unconscious that holds the collective memories and mythologies of humankind, stretching deep into our ancestral past; knowledge and experience accumulated unconsciously over generations, unconstrained by regional or cultural differences
- CENTRAL ROLE: “archetypes” – images and symbols that reflect common truths held across cultures, which represent major narrative patterns in the unconscious
∙ Alfred Adler – “inferiority complex” – the struggle that many people have with feelings of inferiority
- Opposed Freud’s pleasure principle
- Stems from experiences of helplessness and powerlessness during childhood
∙ Karen Horney – “womb envy”, proposed in opposition to Freud’s Oedipal account
- She focused on social and cultural factors
- Highlighted the importance of interpersonal conflict between children and their parents

Humanistic approach
∙ Against the pessimism and disempowerment inherent in Freudian approaches; seeks to explore the potential for humans to become truly free and deeply fulfilled. It rejects Freud’s view that human nature is basically bad and promotes positive motivation and humanity
∙ Key figure: Carl Rogers – “Person-centered perspective”
- States that people are basically good, and given the right environment their personality will develop fully and normally
- Believes that people possess an inner drive toward “self-actualization”; the more self-actualized a person becomes, the more their inherently good nature will dominate their personality
∙ Abraham Maslow
∙ Martin Seligman
- Launched the “positive psychology” movement

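Operation and Maintenance Technology

Application of Big Data Analysis in Fault Diagnosis of Substation Communication Network
CHEN Xingdong, BAI Yunhai
State Grid Hebei Electric Power Co., Ltd., UHV Branch, Shijiazhuang

Abstract: This paper mainly discusses the application of big data analysis technology in substation communication network fault diagnosis. The main characteristics and challenges of power communication networks are analyzed in detail. Based on big data analysis techniques such as feature engineering and machine learning, a complete fault diagnosis workflow is proposed. An analysis of specific algorithms provides preliminary validation of the effectiveness of big data analysis methods for fault diagnosis. In particular, for fault diagnosis of communication network service traffic and link status, the accuracy [...]/s. The results show that big data analysis technology can significantly improve the accuracy of fault detection and shorten diagnosis time.

Keywords: big data analysis; communication network; fault diagnosis

Big data analysis technology can efficiently manage and analyze the massive volume of data from power communication networks, enabling fast and accurate [...] of network faults. As shown in [...], the workflow mainly comprises data collection, feature engineering, model training, and model deployment. (Figure residue, model deployment stage: containerization technology; stream processing platform; alerting and notification mechanism.)

Data collection software is deployed in network devices and business systems to gather flow data and log data from the network.

In addition, [...] model parameters, improving the model's performance and generalization ability.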
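The four stages named in this excerpt (data collection, feature engineering, model training, model deployment) can be illustrated with a toy sketch. The excerpt does not say which algorithm or features the authors use, so everything below is an assumption made for illustration: the sample records, the three features, and the nearest-centroid classifier standing in for the unspecified machine learning model.

```python
from statistics import mean, pstdev

# Hypothetical collected records: (traffic samples in Mbit/s, link-flap count, label).
# Real input would come from the data collection software the paper mentions.
TRAINING = [
    ([98.0, 101.0, 99.5, 100.2], 0, "normal"),
    ([97.5, 102.3, 100.1, 99.0], 1, "normal"),
    ([12.0, 180.0, 3.0, 150.0], 7, "fault"),
    ([5.0, 160.0, 0.5, 140.0], 9, "fault"),
]


def extract_features(traffic, link_flaps):
    """Feature engineering: summarize one window as (mean, spread, flap count)."""
    return (mean(traffic), pstdev(traffic), float(link_flaps))


def train(records):
    """Model training: compute one centroid (per-feature average) per label."""
    grouped = {}
    for traffic, flaps, label in records:
        grouped.setdefault(label, []).append(extract_features(traffic, flaps))
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in grouped.items()
    }


def diagnose(model, traffic, link_flaps):
    """Deployed model: return the label whose centroid is closest to the window."""
    x = extract_features(traffic, link_flaps)

    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(x, centroid))

    return min(model, key=lambda label: squared_distance(model[label]))


model = train(TRAINING)
print(diagnose(model, [99.0, 100.5, 101.2, 98.7], 0))
print(diagnose(model, [8.0, 170.0, 2.0, 155.0], 8))
```

A production pipeline would replace the in-memory list with streamed flow and log data and would tune model parameters on held-out data; the sketch only shows how the stages hand data to one another.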

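Tiered Fault Tolerance for Long-Term Integrity

Byung-Gon Chun, Petros Maniatis (Intel Research Berkeley)
Scott Shenker, John Kubiatowicz (University of California, Berkeley)

Abstract

Fault-tolerant services typically make assumptions about the type and maximum number of faults that they can tolerate while providing their correctness guarantees; when such a fault threshold is violated, correctness is lost. We revisit the notion of fault thresholds in the context of long-term archival storage. We observe that fault thresholds are inevitably violated in long-term services, making traditional fault tolerance inapplicable to the long term. In this work, we undertake a reallocation of the fault-tolerance budget of a long-term service. We split the service into service pieces, each of which can tolerate a different number of faults without failing (and without causing the whole service to fail): each piece can be either in a critical trusted fault tier, which must never fail, or an untrusted fault tier, which can fail massively and often, or other fault tiers in between. By carefully engineering the split of a long-term service into pieces that must obey distinct fault thresholds, we can prolong its inevitable demise. We demonstrate this approach with Bonafide, a long-term key-value store that, unlike all similar systems proposed in the literature, maintains integrity in the face of Byzantine faults without requiring self-certified data. We describe the notion of tiered fault tolerance and the design, implementation, and experimental evaluation of Bonafide, and argue that our approach is a practical yet significant improvement over the state of the art for long-term services.

1 Introduction

Current fault-tolerant replicated service designs are often unsuitable for long-term applications such as archival storage of digital artifacts, which is increasingly important [42] for business, regulatory [5, 6], and cultural [36] reasons. This unsuitability results from the typical fault assumptions on which the correctness of such systems is conditioned. For example, in typical Byzantine-fault-tolerant (BFT) systems [13], it is assumed that the number of faulty replicas is always less than some fixed threshold, such as 1/3 of the replica population.

In typical short-term applications, such a uniform, threshold-based fault assumption is reasonable and achievable. For example, it can be argued that in a well-maintained population of diverse, high-assurance replica servers, by the time one third of the population has been broken into or has simply malfunctioned, the operators of the faulty replicas can repair them.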
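As a small worked example of the one-third threshold mentioned in the introduction: requiring the number of faulty replicas f to be strictly less than n/3 is the same as requiring n >= 3f + 1. The helper names below are hypothetical and are not taken from the paper or from Bonafide; this is only a sketch of the arithmetic.

```python
def max_tolerated_faults(n_replicas: int) -> int:
    """Largest f such that f < n/3, equivalently n >= 3f + 1."""
    if n_replicas < 1:
        raise ValueError("need at least one replica")
    return (n_replicas - 1) // 3


def threshold_holds(n_replicas: int, faulty: int) -> bool:
    """True while the uniform one-third fault assumption is still satisfied."""
    return faulty <= max_tolerated_faults(n_replicas)


for n in (4, 7, 10):
    print(n, "replicas tolerate", max_tolerated_faults(n), "faulty")
```

The paper's observation is about time rather than arithmetic: over a long enough service lifetime, `threshold_holds` will eventually return False for any fixed population, which is what motivates splitting the service into tiers with different thresholds.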

Java Foreign-Language Literature Graduation Project

Below is an example of a Java foreign-language literature graduation project, titled "Design and Implementation of a Java-based Inventory Management System".

Abstract:
This paper presents the design and implementation of a Java-based inventory management system. The system aims to provide efficient and effective inventory management for businesses by automating various processes such as tracking inventory levels, managing orders, and generating reports. The system is developed using the Java programming language with the help of popular frameworks and libraries such as Spring Boot and Hibernate. The design follows the principles of object-oriented programming and utilizes various design patterns to ensure maintainability, extensibility, and reusability. In addition, the system incorporates security measures such as authentication and authorization to protect sensitive data. The implementation of the system demonstrates the capabilities of Java in developing robust, scalable, and user-friendly applications for inventory management.

1. Introduction

2. System Architecture
The system architecture consists of multiple layers including the presentation layer, business logic layer, and data access layer. The presentation layer is responsible for providing a user interface for users to interact with the system. The business logic layer handles the processing and manipulation of data, while the data access layer is responsible for interacting with the database.

3. Object-Oriented Design
The design of the system follows the principles of object-oriented programming. The system is divided into several modules, each representing a specific functionality or process. The modules are designed to be loosely coupled and highly cohesive, making it easier to maintain and extend the system in the future. In addition, various design patterns such as the Factory pattern and the Singleton pattern are used to improve code reuse and maintainability.

4. Security Measures
To protect sensitive data such as customer information and inventory data, the system incorporates security measures such as authentication and authorization. Users are required to authenticate themselves using their credentials before being granted access to the system. Different roles and permissions are assigned to users to ensure that they can only perform authorized actions.

5. Implementation
The system is implemented using the Java programming language with the help of popular frameworks and libraries such as Spring Boot and Hibernate. Spring Boot is used to simplify the configuration and deployment of the system, while Hibernate is used for object-relational mapping and database interaction. The implementation demonstrates the capabilities of Java in developing robust, scalable, and user-friendly applications for inventory management.

6. Conclusion
In conclusion, this paper presents the design and implementation of a Java-based inventory management system. The system provides efficient and effective inventory management for businesses by automating various processes such as tracking inventory levels, managing orders, and generating reports. The system incorporates security measures to protect sensitive data and follows the principles of object-oriented programming for maintainability and extensibility. The implementation demonstrates the capabilities of Java in developing robust, scalable, and user-friendly applications for inventory management.

Keywords: Java, inventory management system, object-oriented design, security measures, implementation.
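The layering and role-based authorization described in sections 2 and 4 can be sketched in a few lines. This is a language-neutral illustration written in Python, not the Java, Spring Boot, and Hibernate implementation the paper describes; every class name, role, and permission below is a hypothetical stand-in, and the in-memory dictionary replaces the database.

```python
class InMemoryInventoryRepository:
    """Data access layer stand-in (the paper uses Hibernate and a database)."""

    def __init__(self):
        self._stock = {}

    def get(self, sku):
        return self._stock.get(sku, 0)

    def set(self, sku, quantity):
        self._stock[sku] = quantity


# Hypothetical role-to-permission table used for the authorization check.
PERMISSIONS = {
    "manager": {"view", "adjust"},
    "clerk": {"view"},
}


class InventoryService:
    """Business logic layer: checks permissions and rules before touching storage."""

    def __init__(self, repository):
        self._repo = repository

    def _require(self, role, action):
        if action not in PERMISSIONS.get(role, set()):
            raise PermissionError(f"role {role!r} may not {action}")

    def stock_level(self, role, sku):
        self._require(role, "view")
        return self._repo.get(sku)

    def adjust(self, role, sku, delta):
        self._require(role, "adjust")
        new_level = self._repo.get(sku) + delta
        if new_level < 0:
            raise ValueError("stock cannot go negative")
        self._repo.set(sku, new_level)
        return new_level


# A presentation layer (web or desktop UI) would call the service like this.
service = InventoryService(InMemoryInventoryRepository())
service.adjust("manager", "SKU-1", 10)
print(service.stock_level("clerk", "SKU-1"))
```

Passing the repository into the service keeps the layers loosely coupled: the storage backend can be swapped without changing the business rules, which is the maintainability argument the paper makes for its layered design.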

2024届山西省省际名校联考三模英语试题一、阅读理解Instructors can use examinations to evaluate student performance in a class. Midterm examination notices and final examination dates and times are published before registering begins.Midterm Examination GuidelinesMidterm examinations are usually held during regularly scheduled class meeting times. However, large classes with multiple sections requiring a common midterm examination can hold examinations outside regular class meeting times with the following restrictions:●Notice of out-of-class midterm examination dates and times must be published in the Schedule of Classes and on MyUCLA before registration begins.●Instructors must include out-of-class midterm examination dates in the course outline. Final Examination GuidelinesFinal examinations are generally held in the same rooms as class meetings; however, by prior arrangement with the Registrar’s Office, common final examinations can be scheduled, and additional room locations may be booked. Final examination locations are displayed on Monday of the ninth week of the term in the Schedule of Classes and in MyUCLA.It is the student’s responsibility to make sure the final examination times do not conflict by checking the Schedule of Classes.The instructor’’s methods of evaluation must be announced at the beginning of the course. Final written examinations may not surpass three hours’ length and are given only at the times and places established and published by the department chair and the registrar.Instructors must submit grades no later than 10 days after the last day of finals for fall, winter, and spring terms, and no later than 10 days after the last day of any given summer session. 
1.What is the purpose of publishing exam notices before registering begins?A.To announce changes about exam policies.B.To ensure students are well-prepared for exams.C.To emphasize the importance of midterm exams.D.To allow students to plan their schedules in advance.2.When can out-of-class midterm examination dates and times be announced?A.After registration begins.B.Before registration begins.C.Anytime during the semester.D.Only on the first day of the class,3.What is the responsibility of students regarding the final examination times?A.To determine the locations of final examinations.B.To make sure the final examination times do not conflict.C.To schedule additional room locations for final exams.D.To submit grades within 10 days after the last day of finals.Frederick Phiri, known as the junk-art king of Zambia, set out on a remarkable journey at the age of 22 when he began earning an international reputation for being able to make complex and elegant sculptures from deserted metal found in his community.Phiri’s path to artistic recognition was filled with challenges. His childhood was marked by the loss of his father and his mother leaving him behind, making him under the care of his grandfather. While his grandfather provided for his basic education, Phiri faced financial struggles when he entered secondary school, forcing him to take on various jobs to fund his studies. Yet, despite these obstacles, his passion for art remained growing, and he dedicated his free time to drawing and crafting in the classroom.Upon completing his education, Phiri sought to support himself by creating wire animal sculptures (雕塑品), which he sold to tourists. 
It was during this period that his exceptional talent caught the attention of Karen Beattie, the director of Project Luangwa, a nonprofit organization committed to education and economic development in central Africa.

Teaming up with local welder Moses Mbewe in 2017, Phiri contributed to the creation of a complex set of doors for Project Luangwa during the rainy season. Impressed by his work, Beattie presented Phiri with a challenge: to create art from abandoned waste metal. Undiscouraged, Phiri embraced the opportunity, transforming forgotten keys, broken bike chains, and old metal bottles into attractive abstract animal sculptures, including elephants, cranes, giraffes, and monkeys.

Today, Phiri’s artistic pursuits continue to flourish as he transforms deserted junk into striking sculptures showcased at the Project Luangwa headquarters. His talent has earned recognition and admiration from the community, fueling his dreams of pursuing formal art education at the Evelyn Hone College in Lusaka and creating even more magnificent sculptures in the future.
Through creativity and determination, Phiri has turned adversity into artistic success, leaving a lasting impact on Zambia’s art scene.

4. What is Phiri distinguished for?
A. Serving his community.
B. Collecting works of art.
C. Being the king of Zambia.
D. Turning trash into treasure.

5. What aspect of Phiri’s childhood shaped his early life?
A. His struggle to pay for primary schooling by himself.
B. The loss of his father and abandonment by his mother.
C. His dedication to part-time jobs while attending school.
D. His responsibility to support his grandfather financially.

6. What challenge did Karen Beattie present to Phiri?
A. To create sculptures from wire.
B. To sell his sculptures internationally.
C. To create art from deserted waste metal.
D. To design a complex set of doors for Project Luangwa.

7. What attitude does Phiri exhibit towards his future?
A. Uncertain.
B. Confident.
C. Depressed.
D. Confused.

Humans spend approximately one-third of their lives sleeping, which means that by the time you reach 15 years old, you will have slept for about 5 years! However, the question remains: why do we do it? Sleep has long been a puzzle for people, leading scientists to explore its mysteries. They have found that during sleep, our brains and bodies do not simply shut down; instead, complex processes unfold.

What causes sleepiness? It’s caused by our biological clock releasing a special chemical signal at the same time daily, signaling it’s time to wind down. The feeling of heaviness comes from the buildup of old nerve messenger chemicals. When the buildup gets too large, the brain senses that it’s time to sleep. During sleep, the body breaks down and clears away these old messengers, preparing for a fresh start.

As you slip into sleep, your heart and breathing slow down, and your brain ignores most of the sounds and surroundings around you. Despite appearances, your brain remains active during sleep, performing essential tasks.
Throughout the night, you cycle through light, deep, and REM (rapid eye movement) sleep stages every 90 minutes. Early on, slow-wave sleep is the primary stage, aiding in brain cleaning, while towards morning, REM sleep increases, leading to vivid dreams. During sleep, your body repairs and grows, creating new skin, muscle, and other tissues with released chemicals. It’s also a time for hormone production, aiding growth, and boosting the immune system to fight infections.

Scientists still have many questions about sleep, such as why some people need more sleep than others and how certain animals can function with less sleep. They aim to find ways to improve sleep quality. However, one thing is clear: getting enough sleep is vital for health and intelligence. Think of sleep as a free magic potion that strengthens you, aids growth, boosts immunity, and enhances intelligence, happiness, and creativity. Simply lie down and rest to enjoy these benefits.

8. What inference can be drawn from scientists’ findings about sleep?
A. Sleep serves no purpose.
B. Sleep has fundamental processes.
C. Sleep involves complex processes.
D. Sleep has minimal impact on brain function.
9. What is the primary function of the body’s “biological clock”?
A. Determining the best time for sleep.
B. Controlling chemicals within the body.
C. Managing eating and digestion patterns.
D. Regulating daytime and nighttime activities.

10. What is the main focus of the third paragraph?
A. Describing the different stages and functions of sleep.
B. Discussing the benefits of daytime activities for overall health.
C. Exploring the effects of inadequate sleep on the body and mind.
D. Analyzing the impact of stress on the immune system during sleep.

11. Which of the following best describes the structure of the passage?
A. Narrative of personal sleep experiences.
B. Comparison of human and animal sleep patterns.
C. Imaginary stories illustrating the advantages of sleep.
D. Explanation of sleep importance, with body processes described.

In a groundbreaking achievement, a rhino has successfully undergone embryo transfer, marking the first successful use of a method that holds promise for saving the nearly extinct northern white rhino subspecies.

The experiment, conducted with the less endangered southern white rhino subspecies, involved creating an embryo in a lab using eggs and sperm collected from other rhinos. This embryo was then transferred into a southern white rhino alternative mother in Kenya. Despite the unfortunate death of the alternative mother due to an infection in November 2023, researchers praised the successful embryo transfer and pregnancy as a proof of concept. They are now ready to proceed to the next stage of the project: transferring northern white rhino embryos.

Professor Thomas Hildebrandt expressed optimism about the findings, highlighting the significance of the successful embryo transfer in demonstrating that frozen and defrosted embryos produced in a lab can survive. This development offers hope for the revival of the northern white rhino population.

However, challenges facing rhino conservation remain significant.
While the southern white rhino subspecies and the black rhino species have shown signs of recovery from population declines due to illegal hunting for their horns, the northern white rhino subspecies is on the edge of extinction. With only two known members left in the world, Najin and her daughter Fatu, both unable to reproduce naturally, and the recent death of the last male white rhino, Sudan, in 2018, urgent action is needed to prevent the extinction of this subspecies. Dr. Jo Shaw, CEO of Save the Rhino International, emphasized the importance of addressing the primary threats facing rhinos worldwide: illegal hunting for their horns and habitat loss due to development. She stressed the need to provide rhinos with the space and security they need to succeed in their natural environment.

While the successful embryo transfer represents a significant advancement in rhino conservation efforts, organized action is required to address the main challenges facing rhino populations worldwide.

12. What is the purpose of the experiment mentioned in the text?
A. To evaluate the efficiency of a new rhino birth program.
B. To observe the behavior of rhinos in a controlled environment.
C. To assess the effects of climate change on the southern rhino habitats.
D. To develop a way of rescuing the endangered northern white rhino subspecies.

13. Which word can replace the underlined word “revival” in paragraph 3?
A. Rebirth.
B. Decline.
C. Stability.
D. Decrease.

14. What is the current condition of the northern white rhino subspecies?
A. Facing extinction.
B. Showing signs of recovery.
C. Developing in their natural habitat.
D. Recovering from population declines.

15. What might be the best title of this text?
A. Dr. Jo Shaw’s Call to Action: Addressing Threats to Rhino Survival
B. Challenges Facing Rhino Conservation Efforts: Urgent Action Needed
C. The Successful Embryo Transfer: A Breakthrough in Rhino Conservation
D. Professor Thomas Hildebrandt’s Optimism: Hope for Rhino Population Revival

Being more organized can change your life for the better and can even result in you becoming more successful. After all, when you have things in order, you will be likely to get more done and achieve more of your goals. (16). If you aim to enhance your organizational skills, consider using the following strategies.

● Create a To-Do List
Keeping a to-do list is crucial for staying organized and ensuring productivity throughout your day. You can choose traditional pen and paper methods or use phone apps and computer software programs to track tasks and receive reminders for deadlines. (17)

● Keep a Schedule
It’s essential to maintain a schedule by recording important dates for tasks such as bill payments and project submissions. Additionally, keeping track of meetings and setting alerts beforehand ensures adequate preparation and timely attendance. (18)

● Get Things Done Right Away
Procrastination is only going to cause you problems. (19). Get things done as soon as you can, rather than putting them off until later on. Not only will this help you be more organized, but it will also reduce the amount of time you waste during the day.

● Set Priorities
(20). This means knowing what you want and not being sorry for taking time out for it. Everyone has priorities and they are all different, so it’s important to be true to yourself and what you want. You have to make a list, and make sure that you work your way down.
Put the most important thing at the top; most of the things we keep putting off usually get pushed to the bottom of the list.

A. This can prevent forgetfulness
B. So this is a trait you definitely need to change
C. You have to take note of what your priorities are
D. This is an absolute must for those who are diligent
E. Actually highly successful people share the trait of being organized
F. Make sure you can easily access the things that you use most often
G. This approach helps in staying organized and managing time effectively

Part II. Cloze

Alia found herself disappointed in the drawing studio after missing out on picking her classes due to illness. She had hoped for Biology, but it was (21) by the time she got to choose. She loved everything about science, from the way it used facts to how it revealed the basic nature of things. But (22) was so... un-scientific.

The first class project was self-portraits. Some students were drawing self-portraits using (23). Others were working from photographs. Alia (24) the incomplete outlines, feeling like a cat in a dog show.

The teacher (25) her. “Every portrait begins with a circle,” he said. “Then you create a series of lines.” To (26), he drew a group of small, quick portraits. He began each one with a (27), some straight lines, and a triangle to determine (28) the eyes, nose, and chin should go.

(29), Alia began her own self-portrait. She (30) the basic form of a head, the way she had been shown. From there, she used lines to plot the (31) of her face.

The process took patience and (32). She had to take note of each detail. One wrong measurement could throw off the whole (33).

Alia was surprised by the structure and discipline involved in drawing a portrait.
Measuring, studying details and (34) the basic nature of something (35) her of what she loved about science.

21. A. full  B. changeable  C. busy  D. tough
22. A. biology  B. art  C. physics  D. medicine
23. A. artbooks  B. glass  C. brushes  D. mirrors
24. A. cared for  B. searched for  C. glanced at  D. got rid of
25. A. punished  B. approached  C. blamed  D. requested
26. A. introduce  B. demonstrate  C. complete  D. promote
27. A. letter  B. square  C. circle  D. dot
28. A. why  B. when  C. how  D. where
29. A. Surprisingly  B. Excitedly  C. Hesitantly  D. Disappointedly
30. A. drew  B. wrote  C. printed  D. typed
31. A. features  B. sizes  C. colors  D. lengths
32. A. accuracy  B. love  C. courage  D. humor
33. A. design  B. paper  C. portrait  D. book
34. A. changing  B. revealing  C. distinguishing  D. understanding
35. A. warned  B. informed  C. suspected  D. reminded

Part III. Grammar Fill-in-the-Blank

Read the passage below and fill in each blank with one appropriate word or the correct form of the word in brackets.

Telecom Power Technology, Nov. 10, 2023, Vol. 40 No. 21 (Communication Network Technology section)

Discussion on the Application and Development Direction of Wired Transmission Technology in Communication Engineering

CHEN Renquan (China Post Construction Consulting Co., Ltd., Nanjing, Jiangsu)

Abstract: The article mainly discusses the application and development direction of wired transmission technology in communication engineering. It analyzes the application of wired transmission technology in communication engineering from five aspects: telephone communication, broadcasting and television, Internet access, local area network and sensor ... It also explores wired transmission from the perspectives of high-speed transmission, network security, and others ...

Keywords: wired transmission technology; communication engineering; telephone communication; Internet

... role; people can connect to the network through Internet tools to send e-mail and to upload and download files.

OM-CP-VOLT101A DC Voltage Data Logger

Product Overview

The OM-CP-VOLT101A data loggers are versatile data logging devices with many uses and applications. Connect negative and positive wire leads directly to the terminal port on the OM-CP-VOLT101A to monitor and measure voltage levels. The OM-CP-VOLT101A is commonly used to assess battery efficiencies or in photovoltaic studies to identify how much energy is being created from solar cells.

The OM-CP-VOLT101A features a removable terminal block to allow for simple retrieval of the data logger for downloading while leaving the leads connected. With a ten-year battery life and the ability to store up to one million time- and date-stamped readings, this device is ideal for long-term deployment and voltage studies. Four models of the OM-CP-VOLT101A are available. The 2.5V model can measure from -3V to 3V, the 15V model from -8V to 24V, and the 30V model from -8V to 32V. For lower voltage applications that require a higher resolution, Omega also offers the OM-CP-VOLT101A-160MV model, which can measure voltage between -160 and 160mV.

Wiring the Data Logger

OM-CP-VOLT101A, 2.5V, 15V and 30V models, Single Ended Wiring: Two-position removable screw terminal connections; accepts 2-wire configurations.

OM-CP-VOLT101A-160MV, DC Differential Wiring: Three-position removable screw terminal connections; accepts 3-wire configurations.

Warning: Note the polarity instructions. Do not attach wires to the wrong terminals.

LEDs

- Green LED blinks: 10 seconds to indicate logging and 15 seconds to indicate delay start mode
- Red LED blinks: 10 seconds to indicate low battery and/or memory and 1 second to indicate an alarm condition

Engineering Units

Native measurement units can be scaled to display measurement units of another type.
This is useful when monitoring voltage outputs from different types of sensors such as temperature, CO2, flow rate and more.

Password Protection

An optional password may be programmed into the device to restrict access to configuration options. Data may be read out without the password.

Multiple Start/Stop Mode Activation

- To start the device: Press and hold the pushbutton for 5 seconds; the green LED will flash during this time. The device has started logging.
- To stop the device: Press and hold the pushbutton for 5 seconds; the red LED will flash during this time. The device has stopped logging.

Alarm

Troubleshooting Tips

Why is the data logger not appearing in the software?

If the OM-CP-VOLT101A or OM-CP-VOLT101A-160MV doesn’t appear in the Connected Devices panel, or an error message is received while using the OM-CP-VOLT101A or OM-CP-VOLT101A-160MV, try the following:

• Check that the OM-CP-IFC200 is properly connected. For more information, see Troubleshooting Interface Cable problems (below).
• Ensure that the battery is not discharged. For best voltage accuracy, use a voltage meter connected to the battery of the device. If possible, try switching the battery with a new OM-CP-BAT105.
• Ensure that no other Omega software is running in the background.
• Ensure that Omega Software is being used.
• Ensure that the Connected Devices panel is large enough to display devices. This can be verified by positioning the cursor on the edge of the Connected Devices panel until the resize cursor appears, then dragging the edge of the panel to resize it. The screen layout may also be reset in the options menu by selecting File, Options, and scrolling to the bottom.

Troubleshooting Interface Cable problems

Check that the software properly recognizes the connected OM-CP-IFC200.

If the data logger is not appearing in the Connected Devices list, it may be that the OM-CP-IFC200 is not properly connected.

1. In the software, click the File button, then click Options.
2. In the Options window, click Communications.
3. The Detected Interfaces box will list all of the available communication interfaces. If the OM-CP-IFC200 is listed here, then the software has correctly recognized it and is ready to use it.

Check that Windows recognizes the connected OM-CP-IFC200.

If the software does not recognize the OM-CP-IFC200, there may be a problem with Windows or the USB drivers.

1. In Windows, click Start, right-click Computer and choose Properties, or press Windows+Break as a keyboard shortcut.
2. Click Device Manager in the left-hand column.
3. Double-click Universal Serial Bus Controllers.
4. Look for an entry for Data logger Interface.
5. If the entry is present, and there are no warning messages or icons, then Windows has correctly recognized the connected OM-CP-IFC200.
6. If the entry is not present, or has an exclamation point icon next to it, the USB drivers may need to be installed. These are available on the software flash drive included with the OM-CP-IFC200.

Ensure that the USB end of the OM-CP-IFC200 is securely connected to the computer.

1. Locate the USB-A plug of the OM-CP-IFC200.
2. If the interface cable is connected to the PC, unplug it. Wait ten seconds.
3. Reconnect the cable to the PC.
4. Check to make sure that the red LED is lit, indicating a successful connection.

Installation Guide

Installing the Interface Cable - OM-CP-IFC200

Refer to the “Quick Start Guide” included in the package.

Installing the software

Insert the Omega Software Flash Drive in an open USB port. If the autorun does not appear, locate the drive on the computer and double-click on Autorun.exe. Follow the instructions provided in the Installation Wizard.

Device Operation

Connecting and Starting the data logger

1. Once the software is installed and running, plug the interface cable into the docking station.
2. Connect the USB end of the interface cable into an open USB port on the computer. Place the data logger into the docking station.
3. The data logger will automatically appear under Connected Devices within the software.
4. For most applications, select “Custom Start” from the menu bar, choose the desired start method, reading rate and other parameters appropriate for the data logging application, and click “Start”. (“Quick Start” applies the most recent custom start options, “Batch Start” is used for managing multiple loggers at once, “Real Time Start” stores the dataset as it records while connected to the logger.)
5. The status of the device will change to “Running”, “Waiting to Start” or “Waiting to Manual Start”, depending upon your start method.
6. Disconnect the data logger from the docking station and place it in the environment to measure.

Note: The device will stop recording data when the end of memory is reached or the device is stopped. At this point the device cannot be restarted until it has been re-armed by the computer.

Downloading data from a data logger

1. Connect the logger to the docking station.
2. Highlight the data logger in the Connected Devices list. Click “Stop” on the menu bar.
3. Once the data logger is stopped, with the logger highlighted, click “Download”. You will be prompted to name your report.
4. Downloading will offload and save all the recorded data to the PC.

Product Maintenance

Battery Replacement

Materials: Small Phillips Head Screwdriver & Replacement Battery (OM-CP-BAT105)

1. Puncture the center of the back label with the screwdriver and unscrew the enclosure.
2. Remove the battery by pulling it perpendicular to the circuit board.
3. Insert the new battery into the terminals and verify it is secure.
4. Screw the enclosure back together securely.

Note: Be sure not to overtighten the screws or strip the threads.
Recalibration

The OM-CP-VOLT101A and OM-CP-VOLT101A-160MV standard calibration depends on the range.

| Voltage | 160mV | 2.5V | 15V | 30V |
| --- | --- | --- | --- | --- |
| Range | 0V and 90-160mV | 0V and 2.25-2.5V | 0V and 13.5-15V | 0V and 27-30V |

Recalibration is recommended annually for any Omega data logger; a reminder is automatically displayed in the software when the device is due.

OM-CP-VOLT101A General Specifications

Battery Warning

WARNING: FIRE, EXPLOSION, AND SEVERE BURN HAZARD. DO NOT SHORT CIRCUIT, CHARGE, FORCE OVER DISCHARGE, DISASSEMBLE, CRUSH, PENETRATE OR INCINERATE. BATTERY MAY LEAK OR EXPLODE IF HEATED ABOVE 80°C (176°F).

OM-CP-VOLT101A-160MV General Specifications

WARRANTY/DISCLAIMER

OMEGA ENGINEERING, INC. warrants this unit to be free of defects in materials and workmanship for a period of 13 months from date of purchase. OMEGA’s WARRANTY adds an additional one (1) month grace period to the normal one (1) year product warranty to cover handling and shipping time. This ensures that OMEGA’s customers receive maximum coverage on each product.

If the unit malfunctions, it must be returned to the factory for evaluation. OMEGA’s Customer Service Department will issue an Authorized Return (AR) number immediately upon phone or written request. Upon examination by OMEGA, if the unit is found to be defective, it will be repaired or replaced at no charge. OMEGA’s WARRANTY does not apply to defects resulting from any action of the purchaser, including but not limited to mishandling, improper interfacing, operation outside of design limits, improper repair, or unauthorized modification.
This WARRANTY is VOID if the unit shows evidence of having been tampered with or shows evidence of having been damaged as a result of excessive corrosion; or current, heat, moisture or vibration; improper specification; misapplication; misuse or other operating conditions outside of OMEGA’s control. Components in which wear is not warranted include but are not limited to contact points, fuses, and triacs.

OMEGA is pleased to offer suggestions on the use of its various products. However, OMEGA neither assumes responsibility for any omissions or errors nor assumes liability for any damages that result from the use of its products in accordance with information provided by OMEGA, either verbal or written. OMEGA warrants only that the parts manufactured by the company will be as specified and free of defects. OMEGA MAKES NO OTHER WARRANTIES OR REPRESENTATIONS OF ANY KIND WHATSOEVER, EXPRESSED OR IMPLIED, EXCEPT THAT OF TITLE, AND ALL IMPLIED WARRANTIES INCLUDING ANY WARRANTY OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE HEREBY DISCLAIMED.

LIMITATION OF LIABILITY: The remedies of purchaser set forth herein are exclusive, and the total liability of OMEGA with respect to this order, whether based on contract, warranty, negligence, indemnification, strict liability or otherwise, shall not exceed the purchase price of the component upon which liability is based. In no event shall OMEGA be liable for consequential, incidental or special damages.

CONDITIONS: Equipment sold by OMEGA is not intended to be used, nor shall it be used: (1) as a “Basic Component” under 10 CFR 21 (NRC), used in or with any nuclear installation or activity; or (2) in medical applications or used on humans.
Should any Product(s) be used in or with any nuclear installation or activity, medical application, used on humans, or misused in any way, OMEGA assumes no responsibility as set forth in our basic WARRANTY / DISCLAIMER language, and, additionally, purchaser will indemnify OMEGA and hold OMEGA harmless from any liability or damage whatsoever arising out of the use of the Product(s) in such a manner.

RETURN REQUESTS / INQUIRIES

Direct all warranty and repair requests/inquiries to the OMEGA Customer Service Department. BEFORE RETURNING ANY PRODUCT(S) TO OMEGA, PURCHASER MUST OBTAIN AN AUTHORIZED RETURN (AR) NUMBER FROM OMEGA’S CUSTOMER SERVICE DEPARTMENT (IN ORDER TO AVOID PROCESSING DELAYS). The assigned AR number should then be marked on the outside of the return package and on any correspondence.

The purchaser is responsible for shipping charges, freight, insurance and proper packaging to prevent breakage in transit.

FOR WARRANTY RETURNS, please have the following information available BEFORE contacting OMEGA:
1. Purchase Order number under which the product was PURCHASED,
2. Model and serial number of the product under warranty, and
3. Repair instructions and/or specific problems relative to the product.

FOR NON-WARRANTY REPAIRS, consult OMEGA for current repair charges. Have the following information available BEFORE contacting OMEGA:
1. Purchase Order number to cover the COST of the repair,
2. Model and serial number of the product, and
3. Repair instructions and/or specific problems relative to the product.

The information contained in this document is believed to be correct, but OMEGA accepts no liability for any errors it contains, and reserves the right to alter specifications without notice.

Servicing North America:
U.S.A. Headquarters: Omega Engineering, Inc.
Toll-Free: 1-800-826-6342 (USA & Canada only)
Customer Service: 1-800-622-2378 (USA & Canada only)
Engineering Service: 1-800-872-9436 (USA & Canada only)
Tel: (203) 359-1660
Fax: (203) 359-7700
e-mail: **************

For Other Locations Visit /worldwide

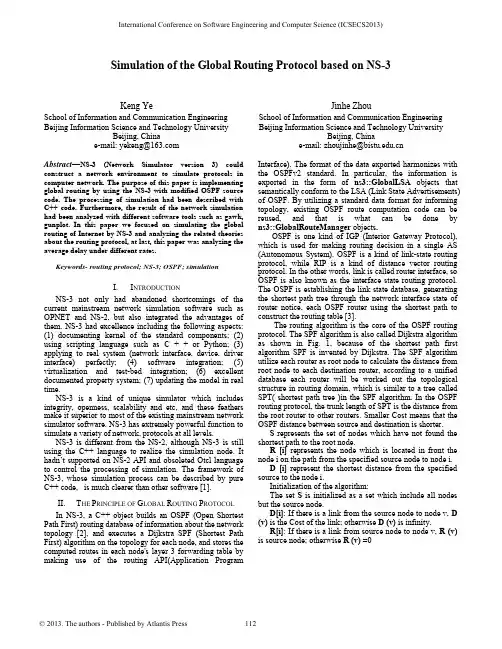

Simulation of the Global Routing Protocol based on NS-3

Keng Ye
School of Information and Communication Engineering, Beijing Information Science and Technology University, Beijing, China
e-mail: **************

Jinhe Zhou
School of Information and Communication Engineering, Beijing Information Science and Technology University, Beijing, China
e-mail: *******************.cn

Abstract: NS-3 (Network Simulator version 3) can construct a network environment for simulating computer network protocols. The purpose of this paper is to implement global routing in NS-3 using modified OSPF source code. The simulation process is described with C++ code, and the results of the network simulation are analyzed with software tools such as gawk and gnuplot. The paper focuses on simulating the global routing of the Internet with NS-3 and on analyzing the related routing protocol theory; finally, it analyzes the average delay under different data rates.

Keywords-routing protocol; NS-3; OSPF; simulation

I. INTRODUCTION

NS-3 not only avoids the shortcomings of current mainstream network simulation software such as OPNET and NS-2, but also integrates their advantages. Its strengths include the following: (1) a documented kernel of standard components; (2) simulation programs written in C++ or Python; (3) close alignment with real systems (network interfaces, devices, driver interfaces); (4) software integration; (5) virtualization and test-bed integration; (6) a well-documented attribute system; (7) models that are updated continually.

NS-3 is distinguished by its completeness, openness, and scalability, and these features make it superior to most existing mainstream network simulators.
NS-3 can simulate a wide variety of networks and protocols at all layers.

NS-3 differs from NS-2, although it still uses C++ to implement simulation nodes: it does not support the NS-2 API and no longer uses the OTcl language to control the simulation. The framework of NS-3, whose simulation process can be described in pure C++ code, is much clearer than that of other software [1].

II. THE PRINCIPLE OF GLOBAL ROUTING PROTOCOL

In NS-3, a C++ object builds an OSPF (Open Shortest Path First) routing database of information about the network topology [2], executes a Dijkstra SPF (Shortest Path First) algorithm on the topology for each node, and stores the computed routes in each node's layer 3 forwarding table by using the routing API (Application Program Interface). The format of the exported data follows the OSPFv2 standard. In particular, the information is exported in the form of ns3::GlobalLSA objects that semantically conform to the LSAs (Link State Advertisements) of OSPF. Because a standard data format is used to describe the topology, existing OSPF route computation code can be reused, and this is what the ns3::GlobalRouteManager objects do.

OSPF is an IGP (Interior Gateway Protocol), used to make routing decisions within a single AS (Autonomous System). OSPF is a link-state routing protocol, whereas RIP is a distance-vector routing protocol. Here a link means a router interface, so OSPF is also known as an interface-state routing protocol. OSPF establishes a link-state database and generates the shortest path tree from the interface states advertised by the routers; each OSPF router then uses the shortest path tree to construct its routing table [3].

The routing algorithm is the core of the OSPF routing protocol. The SPF algorithm, shown in Fig. 1, is also called the Dijkstra algorithm because it was invented by Dijkstra. The SPF algorithm takes each router as the root node and calculates the distance from the root to every destination router. From a unified database, each router works out the topology of the routing domain, which resembles a tree and is called the SPT (shortest path tree). In the OSPF routing protocol, the trunk length of the SPT is the distance from the root router to the other routers. A smaller Cost means that the OSPF distance between source and destination is shorter.

S represents the set of nodes for which the shortest path to the root node has not yet been found.
R[i] represents the node that immediately precedes node i on the path from the specified source node to node i.
D[i] represents the shortest distance from the specified source node to node i.

Initialization of the algorithm:
The set S is initialized to contain all nodes except the source node.
D[v]: If there is a link from the source node to node v, D[v] is the Cost of the link; otherwise D[v] is infinity.
R[v]: If there is a link from the source node to node v, R[v] is the source node; otherwise R[v] = 0.

International Conference on Software Engineering and Computer Science (ICSECS2013)

Figure 1. Pseudo code for Dijkstra algorithm

When a router initializes or the network structure changes (for example, routers are added or removed, or a link state changes), the router generates an LSA, which contains all of the routers connected to the link, and calculates the shortest path.

All routers exchange link-state data with each other through flooding, in which each router sends its LSAs to all adjacent OSPF routers. Based on the LSAs they receive, routers update their databases and forward the link-state information to adjacent routers until the process stabilizes. When the network has re-stabilized, the convergence of the OSPF routing protocol is complete.
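
The SPF computation described above, with the set S and the arrays D[i] and R[i], can be sketched in plain C++ as follows. This is a minimal illustration under stated assumptions, not the NS-3 GlobalRouteManager implementation: the names Link, SpfResult and RunSpf are hypothetical, the topology is given as an adjacency list, and a missing predecessor is stored as -1 rather than 0 so that node 0 remains a valid predecessor.

```cpp
#include <cassert>
#include <limits>
#include <vector>

// Hypothetical types for illustration; they are not part of NS-3.
struct Link {
    int to;         // neighbour reached over this link
    unsigned cost;  // OSPF Cost of the link
};

struct SpfResult {
    std::vector<unsigned> D;  // D[i]: shortest distance from the source to node i
    std::vector<int> R;       // R[i]: node preceding i on that path (-1 if none)
};

constexpr unsigned kInfinity = std::numeric_limits<unsigned>::max();

// Runs Dijkstra's shortest-path-first algorithm with `source` as the root.
SpfResult RunSpf(const std::vector<std::vector<Link>>& adj, int source) {
    const int n = static_cast<int>(adj.size());
    SpfResult res{std::vector<unsigned>(n, kInfinity), std::vector<int>(n, -1)};

    // S: nodes whose shortest path is not yet final (all but the source).
    std::vector<bool> inS(n, true);
    inS[source] = false;
    res.D[source] = 0;

    // Initialization: direct neighbours get the link Cost, all others stay at infinity.
    for (const Link& l : adj[source]) {
        if (l.cost < res.D[l.to]) {
            res.D[l.to] = l.cost;
            res.R[l.to] = source;
        }
    }

    for (;;) {
        // Pick the reachable node in S with the smallest tentative distance.
        int u = -1;
        for (int i = 0; i < n; ++i) {
            if (inS[i] && res.D[i] != kInfinity && (u == -1 || res.D[i] < res.D[u])) {
                u = i;
            }
        }
        if (u == -1) break;  // S is empty or only unreachable nodes remain
        inS[u] = false;      // the distance to u is now final

        // Relax every link leaving u towards nodes still in S.
        for (const Link& l : adj[u]) {
            if (inS[l.to] && res.D[u] + l.cost < res.D[l.to]) {
                res.D[l.to] = res.D[u] + l.cost;
                res.R[l.to] = u;
            }
        }
    }
    return res;
}
```

Following R[i] back from a destination to the source yields the branch of the shortest path tree for that destination; its first hop is what a router would install as the next hop. The linear scan for the minimum keeps the sketch close to the S/D/R description; a priority queue would be the usual choice for larger topologies.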
Each router then computes its routing table from its own link-state database; the table lists each reachable destination and the next-hop router toward it.

III. THE SIMULATION PROCESS OF GLOBAL ROUTING

In this section, we introduce some important terms that implement specific functions in NS-3.

A. Node

In NS-3 the basic computing device abstraction is called the Node. The class Node provides methods for managing the representation of such devices in the simulation. A Node should be thought of as a computer or a router to which you add functionality yourself [1]. One adds things like topology, channels, protocol stacks and peripheral devices with their associated drivers to enable the Node to do useful work.

Class NodeContainer provides a very convenient way to create, manage, and access nodes, as follows:

    NodeContainer c;
    c.Create (7);

B. Topology

A NodeContainer can also group any two nodes that are to be connected, as follows:

    NodeContainer n0n4 = NodeContainer (c.Get (0), c.Get (4));

C. Channel

The class Channel provides methods for constructing communication subnetwork objects and connecting nodes to each other. Channels may also be specialized by developers in the object-oriented programming sense. A specialized Channel can simulate things as complicated as a wire, a large Ethernet switch, or, in the case of wireless networks, a three-dimensional space full of obstructions [1].

NS-3 provides specialized versions of Channel called CsmaChannel, PointToPointChannel and WifiChannel. This paper uses a point-to-point channel, configured through PointToPointHelper as follows:

    PointToPointHelper p2p;

D. Device

In NS-3 the network device abstraction covers both the software driver and the simulated hardware. A network device is “installed” in a Node to enable the Node to communicate with other Nodes in the simulation via Channels. Just as in a real computer, a Node may be connected to more than one Channel via multiple NetDevices.
The network device abstraction is represented in C++ by the class NetDevice. The class NetDevice provides methods for managing connections to Node and Channel objects, and may be specialized by developers in the object-oriented programming sense. ns-3 provides several specialized versions of the NetDevice called CsmaNetDevice, PointToPointNetDevice, and WifiNetDevice [1]. This paper uses PointToPointNetDevice, set as follows:

    p2p.SetDeviceAttribute ("DataRate", StringValue ("5Mbps"));
    NetDeviceContainer d0d4 = p2p.Install (n0n4);

E. Protocol Stack

Now that nodes, devices, and channels have been created, we use the class InternetStackHelper to add the protocol stack, as follows:

    InternetStackHelper internet;
    internet.Install (c);

F. Assigning IPv4 Addresses

This paper uses the class Ipv4AddressHelper to assign IP addresses to the device interfaces.

    NS_LOG_INFO ("Assign IP Addresses.");
    Ipv4AddressHelper ipv4;
    ipv4.SetBase ("10.1.1.0", "255.255.255.0");
    Ipv4InterfaceContainer i0i4 = ipv4.Assign (d0d4);

G. Setting OSPF Cost Metric

    i0i4.SetMetric (0, sampleMetric04);
    i0i4.SetMetric (1, sampleMetric04);

H. Generating Routing Table

NS_LOG_INFO records information on the progress of the program.
This paper uses the global routing protocol introduced in Section II.

    Ipv4GlobalRoutingHelper::PopulateRoutingTables ();

I. Sending Traffic and Setting the Data Rate and Packet Size

    NS_LOG_INFO ("Create Applications.");
    uint16_t port = 80; // destination port (the RFC 863 discard port would be 9)
    OnOffHelper onoff ("ns3::TcpSocketFactory",
                       InetSocketAddress (i5i6.GetAddress (1), port));
    onoff.SetAttribute ("OnTime", RandomVariableValue (ConstantVariable (1)));
    onoff.SetAttribute ("OffTime", RandomVariableValue (ConstantVariable (0)));
    onoff.SetAttribute ("DataRate", StringValue ("5kbps"));
    onoff.SetAttribute ("PacketSize", UintegerValue (50));

J. Generating and Stopping Traffic

    ApplicationContainer apps = onoff.Install (c.Get (0));
    apps.Start (Seconds (1.0));
    apps.Stop (Seconds (100.0));

K. Class PacketSink Receives the Traffic

    PacketSinkHelper sink ("ns3::TcpSocketFactory",
                           Address (InetSocketAddress (Ipv4Address::GetAny (), port)));
    apps = sink.Install (c.Get (6));
    apps.Start (Seconds (1.0));
    apps.Stop (Seconds (100.0));

L. Generating Trace File to Record Simulation Information

    NS_LOG_INFO ("Configure Tracing.");
    AsciiTraceHelper ascii;
    Ptr<OutputStreamWrapper> stream = ascii.CreateFileStream ("ospf.tr");
    p2p.EnableAsciiAll (stream);
    internet.EnableAsciiIpv4All (stream);

M. Generating Routing File to Record Route Information

    Ipv4GlobalRoutingHelper g;
    Ptr<OutputStreamWrapper> routingStream = Create<OutputStreamWrapper> ("ospf.routes", std::ios::out);
    g.PrintRoutingTableAllAt (Seconds (12), routingStream);

N. Executing Simulator

    NS_LOG_INFO ("Run Simulation.");
    Simulator::Run ();
    Simulator::Destroy ();
    NS_LOG_INFO ("Done.");

IV. SIMULATION RESULT

The simulation results verify that the shortest path tree conforms to Dijkstra's algorithm in the OSPF protocol, and measure packet delay to check network performance.

A. Generating Shortest Path

According to the topology in Fig. 2, in the 1st second the Dijkstra SPF algorithm is executed on the topology for each node, finding the shortest path between node 0 and node 3. As shown in Fig.
3, the shortest path is node 0 -> node 4 -> node 1 -> node 2 -> node 5 -> node 6 -> node 3, rather than node 0 -> node 4 -> node 1 -> node 2 -> node 3, which includes the fewest nodes.

In the 2nd second, the link between node 1 and node 2 is disconnected. Node 0 reruns the Dijkstra SPF algorithm on the topology for each node and finds the shortest path between node 0 and node 3. As shown in Fig. 4, the shortest path is now node 0 -> node 4 -> node 1 -> node 5 -> node 6 -> node 3.

In the 4th second, the link between node 1 and node 2 is reconnected. Node 0 reruns the Dijkstra SPF algorithm and finds that the shortest path between node 0 and node 3 is node 0 -> node 4 -> node 1 -> node 2 -> node 5 -> node 6 -> node 3.

In the 6th second, the link between node 5 and node 6 is disconnected. Node 0 reruns the Dijkstra SPF algorithm and finds that the shortest path between node 0 and node 3 is now node 0 -> node 4 -> node 1 -> node 2 -> node 3.

In the 8th second, the link between node 2 and node 3 is disconnected. Node 0 reruns the Dijkstra SPF algorithm and finds that the shortest path between node 0 and node 3 is now node 0 -> node 4 -> node 1 -> node 5 -> node 2 -> node 3.

In the 12th second, the link between node 1 and node 2 is reconnected. Node 0 reruns the Dijkstra SPF algorithm and finds that the shortest path between node 0 and node 3 is now node 0 -> node 4 -> node 1 -> node 5 -> node 6 -> node 3.

In the 14th second, the link between node 1 and node 2 is reconnected. Node 0 reruns the Dijkstra SPF algorithm and finds that the shortest path between node 0 and node 3 is now node 0 -> node 4 -> node 1 -> node 2 -> node 5 -> node 6 -> node 3.

Figure 2. Topology with cost in simulation
Figure 3. Shortest path in the 1st second
Figure 4. Shortest path in the 2nd second

B. Delay

In Fig.
5, the simulation results of delay for the communication between node 0 and node 3 are shown. The delay differs under different shortest paths. The network delay is very close when the packets take the same path. The path of least delay is not necessarily the shortest path.

The average delay is the average time taken by data packets to travel from the source node to the destination node. It characterizes the current status of the network. As shown in Fig. 6,

V. CONCLUSION

In summary, this paper describes an effective simulation method to realize global routing protocols, evaluate network performance, and carry out a detailed analysis of delay and the shortest path tree. The conclusion has certain reference value.

ACKNOWLEDGMENT

This work was supported by the National Natural Science Foundation of China (61271198) and the Beijing Natural Science Foundation (4131003).

Figure 5. The packet delay
Figure 6. The average delay under different rates

REFERENCES

[1] NS-3 Tutorial. /, 12 Dec 2012.
[2] NS-3 project. NS-3 Doxygen. /, 12 Dec 2012.
[3] Andrew S. Tanenbaum. Computer Networks, Fourth Edition. Beijing: Tsinghua University Press, pp. 350-366, 454-459 (2008).
[4] WANG Jianqiang, LI Shiwei, ZENG Junwei, DOU Yingying (2011). Simulation Research of VANETs Routing Protocols Performance Based on NS-3. Microcomputer Applications, Vol. 32, No. 11.
[5] NS-3 project. NS-3 Reference Manual. /, 12 Dec 2012.
[6] Timo Bingmann (2009). Accuracy Enhancements of the 802.11 Model and EDCA QoS Extensions in ns-3. Diploma Thesis at the Institute of Telematics.
[7] Learmonth, G., Holliday, J. (2011). NS3 simulation and analysis of MCCA: Multihop Clear Channel Assessment in 802.11 DCF. Consumer Communications and Networking Conference (CCNC). IEEE.
[8] Henderson T. R., Floyd S., Riley G. F. (2006). NS-3 Project Goals. Proceedings of the Workshop of Network Simulation. Pisa, Italy.
[9] Vincent S., Montavont J. (2008). Implementation of an IPv6 Stack for NS-3. Proceedings of the Workshop of Network Simulation. Athens, Greece: [s. n.].