Fast Adaptation for Robust Speech Recognition in Reverberant Environments

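Research on Multichannel Speech Enhancement Methods Based on Deep Learning (Part 1)

I. Introduction
With the rapid development of artificial intelligence, speech signal processing plays an increasingly important role in many fields.

However, because of environmental noise, channel distortion, interfering sources, and similar factors, speech signals captured in real environments often suffer from serious quality problems.

To address this, and to improve the accuracy of speech recognition and the intelligibility of speech, multichannel speech enhancement techniques have emerged.

This paper focuses on multichannel speech enhancement methods based on deep learning, with the aim of using deep learning to improve the signal-to-noise ratio (SNR) and clarity of speech signals.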

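II. Overview of Multichannel Speech Enhancement
Multichannel speech enhancement uses multiple sensors (microphones) to capture speech arriving from different directions, so that information in both the spatial and the temporal domain can be exploited.

With this technique, noise and interfering sources can be suppressed effectively, improving the SNR and clarity of the speech signal.

Traditional multichannel speech enhancement methods rely mainly on signal processing techniques such as filtering and beamforming.

However, these methods often struggle with complex noise environments and with sources that move or change over time.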
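Beamforming, one of the traditional techniques mentioned above, can be illustrated with a delay-and-sum beamformer. The NumPy sketch below assumes a linear microphone array, a far-field source from a known direction, and a speed of sound of 343 m/s; the function name and parameters are illustrative and are not taken from this paper.

```python
import numpy as np


def delay_and_sum(signals, fs, mic_positions, doa_deg, c=343.0):
    """Delay-and-sum beamformer for a linear array under a far-field assumption.

    signals: array of shape (n_mics, n_samples)
    mic_positions: microphone positions along the array axis, in metres
    doa_deg: steering direction, in degrees from broadside
    """
    n = signals.shape[1]
    # Arrival delay of a plane wave from doa_deg at each microphone
    delays = np.asarray(mic_positions) * np.sin(np.deg2rad(doa_deg)) / c
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    spectra = np.fft.rfft(signals, axis=1)
    # Undo each channel's delay with a phase shift (this also handles fractional delays)
    aligned = spectra * np.exp(2j * np.pi * freqs[None, :] * delays[:, None])
    # Coherent average: the steered source adds in phase, uncorrelated noise does not
    return np.fft.irfft(aligned.mean(axis=0), n=n)
```

Averaging M aligned channels leaves the steered source essentially unchanged while reducing uncorrelated noise power by roughly a factor of M. A fixed beamformer of this kind cannot follow a moving source, which is the limitation the text points to.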

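III. Applications of Deep Learning in Multichannel Speech Enhancement
Deep learning offers new solutions for multichannel speech enhancement.

By building deep neural network models, useful features can be learned and extracted automatically from speech signals, enabling more effective suppression of noise and interfering sources.

In addition, when fusing and denoising multichannel speech signals, deep learning can process temporal and spatial information jointly, further improving enhancement performance.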

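IV. A Multichannel Speech Enhancement Method Based on Deep Learning
This paper proposes a multichannel speech enhancement method based on deep learning.

The method first uses multiple sensors to capture speech signals from different directions, and then applies a deep neural network model to the captured signals for feature extraction and noise reduction.

Specifically, we use a model that combines a convolutional neural network (CNN) and a recurrent neural network (RNN) to process the temporal and spatial domains jointly.

During training, we used a large amount of real recorded data and simulated noise data so that the model could better adapt to diverse noise environments and time-varying sources.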

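V. Experiments and Analysis of Results
To verify the performance of the proposed multichannel speech enhancement method, we conducted extensive experiments.

The experimental results show that the method significantly improves the SNR and clarity of speech signals in a variety of noise environments.

Compared with traditional multichannel speech enhancement methods, the deep-learning-based method achieves higher accuracy and robustness.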

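An English Speech Recognition Technique Based on Deep Learning

I. Introduction
With technological progress and growing demand for speech technology, speech recognition has attracted increasing attention.

Speech recognition has a wide range of applications, such as smart speakers, in-car speech recognition, and voice assistants.

English is widely used across these applications, so this paper introduces an English speech recognition technique based on deep learning.

II. Overview of English Speech Recognition
English speech recognition refers to the computer analysis of English speech in order to convert it into the corresponding text.

The technique consists of three main stages: feature extraction, model training, and recognition.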

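(1) Feature Extraction
A speech signal varies over time; it must be converted into a digital signal that a computer can process, and effective speech features must then be extracted from it.

A commonly used method is Mel-frequency cepstral coefficient (MFCC) extraction, which is based on the short-time Fourier transform.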
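The MFCC pipeline can be sketched in a few steps: framing and windowing, the short-time power spectrum, a mel filterbank, a logarithm, and a DCT. The sketch assumes 16 kHz audio, 25 ms frames with a 10 ms hop, 26 mel filters, and 13 coefficients; these are common defaults, not values given in the text.

```python
import numpy as np


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mfcc(x, fs, frame_len=400, hop=160, n_fft=512, n_mels=26, n_ceps=13):
    # 1) Split into overlapping frames and apply a Hamming window
    n_frames = 1 + (len(x) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = x[idx] * np.hamming(frame_len)
    # 2) Short-time power spectrum via the FFT
    power = np.abs(np.fft.rfft(frames, n=n_fft)) ** 2 / n_fft
    # 3) Triangular filters equally spaced on the mel scale
    mel_pts = np.linspace(hz_to_mel(0.0), hz_to_mel(fs / 2.0), n_mels + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_pts) / fs).astype(int)
    fbank = np.zeros((n_mels, n_fft // 2 + 1))
    for m in range(1, n_mels + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):
            fbank[m - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):
            fbank[m - 1, k] = (right - k) / max(right - center, 1)
    log_mel = np.log(power @ fbank.T + 1e-10)
    # 4) DCT-II decorrelates the log filterbank energies; keep the first n_ceps
    n = np.arange(n_mels)
    dct = np.cos(np.pi / n_mels * (n[None, :] + 0.5) * np.arange(n_ceps)[:, None])
    return log_mel @ dct.T
```

The result is one 13-dimensional feature vector per 10 ms frame, which is the kind of input the models described below operate on.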

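(2) Model Training
Model training means letting the computer learn a mapping from a known dataset so that it can recognize new speech signals.

In English speech recognition, deep learning models are used increasingly widely.

Once the model is trained, it can be used to recognize new speech signals.

This process consists of two basic steps: preprocessing of the speech signal and model inference.

In preprocessing, MFCC features are extracted from the incoming speech signal and normalized; in inference, the trained model is applied to the preprocessed features to produce the corresponding text output.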
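The normalization step is often implemented as per-utterance cepstral mean and variance normalization (CMVN). The text does not say which normalization it uses, so the following is only one plausible choice, applied to a (frames, dims) feature matrix such as MFCCs:

```python
import numpy as np


def cmvn(features, eps=1e-8):
    """Per-utterance mean and variance normalization of a (frames, dims) matrix."""
    mean = features.mean(axis=0, keepdims=True)
    std = features.std(axis=0, keepdims=True)
    # Each feature dimension ends up with zero mean and (almost exactly) unit variance
    return (features - mean) / (std + eps)
```

Removing the per-utterance mean also cancels a fixed channel (microphone) effect, which becomes an additive constant in the cepstral domain.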

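III. English Speech Recognition Based on Deep Learning
Commonly used deep learning models include recurrent neural networks (RNNs), convolutional neural networks (CNNs), and deep neural networks (DNNs).

All of these models are widely used in English speech recognition.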

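(1) RNN
An RNN is a neural network with memory: at each time step, its forward pass combines the current input with the network's state (output) from the previous time step.

In speech signal processing, an RNN can therefore use the output of the previous time step when making a prediction for the current input.

Variants such as long short-term memory (LSTM) networks and gated recurrent units (GRUs), in particular, effectively address the problem of retaining long-range context during speech recognition.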
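The recurrence described above can be written as h_t = tanh(x_t W_xh + h_{t-1} W_hh + b). A minimal NumPy forward pass of this plain (Elman) RNN over a sequence of feature frames follows; the weight names and shapes are illustrative assumptions. LSTM and GRU cells replace the single tanh update with gated updates.

```python
import numpy as np


def rnn_forward(x, W_xh, W_hh, b_h):
    """Elman RNN over a (T, d_in) sequence; returns hidden states of shape (T, d_h).

    W_xh: (d_in, d_h) input weights, W_hh: (d_h, d_h) recurrent weights, b_h: (d_h,) bias
    """
    h = np.zeros(W_hh.shape[0])
    states = []
    for x_t in x:
        # The new state mixes the current input with the previous step's state
        h = np.tanh(x_t @ W_xh + h @ W_hh + b_h)
        states.append(h)
    return np.stack(states)
```

Because each state depends only on past and current frames, changing the last input frame leaves all earlier hidden states untouched.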

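(2) CNN
The convolutional neural network (CNN) was originally used mainly in image processing.

However, CNNs are also widely used in speech signal processing.

By applying convolution kernels, a CNN can extract local features of the speech signal, which improves recognition accuracy.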
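The local-feature extraction can be sketched as a one-dimensional convolution along time over a (frames, features) matrix, followed by a ReLU. As in most deep learning libraries, the operation below is technically a cross-correlation; the kernel width and channel counts are illustrative assumptions.

```python
import numpy as np


def conv1d_time(features, kernels, bias):
    """Valid 1-D convolution along time with ReLU.

    features: (T, d_in); kernels: (k, d_in, d_out); bias: (d_out,)
    """
    k = kernels.shape[0]
    T = features.shape[0]
    out = np.empty((T - k + 1, kernels.shape[2]))
    for t in range(T - k + 1):
        window = features[t : t + k]  # (k, d_in): only k neighbouring frames
        out[t] = np.einsum("ki,kio->o", window, kernels) + bias
    return np.maximum(out, 0.0)
```

Each output frame depends only on a window of k input frames, which is what "local features" means here; stacking such layers widens the context step by step.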

The Prospects of English Speech Recognition Technology in Natural Language Processing

Introduction
In recent years, the field of natural language processing (NLP) has witnessed significant advancements, thanks in part to the rapid development of English speech recognition technology. As a subfield of artificial intelligence, NLP focuses on enabling computers to understand, interpret, and generate human language. English speech recognition technology plays a crucial role in enhancing NLP applications by converting spoken English into written text. This article explores the potential applications and future prospects of English speech recognition technology in NLP.

Improved Voice Assistants and Chatbots
Voice assistants such as Siri and Alexa have become ubiquitous in daily life, performing tasks such as setting reminders, answering questions, and providing recommendations. English speech recognition technology can enhance the accuracy and efficiency of these voice assistants by offering robust speech-to-text conversion. Users can communicate with their devices more naturally, enabling voice assistants to understand and respond accurately. Additionally, chatbots, which rely on NLP algorithms to engage in natural language conversations, can benefit significantly from improved speech recognition technology. The ability to understand spoken language opens up a whole new level of interactivity, making chatbots more user-friendly and effective.

Transcription and Voice Search
One of the primary applications of English speech recognition technology is transcription. Transcribing interviews, meetings, or lectures can be time-consuming, but speech recognition can automate much of the process. By converting spoken language into text with high accuracy, English speech recognition technology saves valuable time and resources for professionals and researchers alike.
Furthermore, voice search is a growing trend in internet searches. Improved speech recognition yields more accurate and relevant search results, giving users faster access to information. This technology can revolutionize the way we navigate the internet and retrieve information.

Language Learning and Accessibility
English speech recognition technology has immense potential in language learning and accessibility. Language learners can use real-time speech recognition to improve their pronunciation and communication skills. With instant feedback on their errors, learners can correct mistakes and progress more effectively. Additionally, people with disabilities that affect their ability to type or write can benefit from speech recognition technology. People with motor disabilities or conditions such as dyslexia can express themselves more easily using spoken language, which is then converted into written text for communication.

Enhanced Sentiment Analysis and Voice Analytics
Sentiment analysis, or opinion mining, is a valuable tool for determining the sentiment expressed in text. Combining speech recognition with acoustic cues such as tone and intonation can improve sentiment analysis by adding a dimension that text alone lacks. Voice analytics, which measures patterns and characteristics in speech, can also benefit from advanced speech recognition. It enables businesses to gather meaningful insights from customer calls and interactions, helping improve customer service and product development.

Virtual Assistants in Healthcare
The healthcare industry stands to benefit significantly from integrating English speech recognition technology into virtual assistants and chatbot applications. Patients can use virtual assistants to schedule appointments, access healthcare information, and even receive personalized medical advice.
Healthcare professionals can dictate patient notes and medical records, making documentation more efficient and reducing administrative burden. This technology opens up new possibilities for telemedicine and remote patient monitoring, where accurate speech recognition plays a vital role in transforming spoken medical instructions into written text.

Challenges and Future Developments
While English speech recognition technology has shown remarkable progress, challenges remain. Accents, dialects, and background noise can make accurate recognition difficult. Ongoing research aims to address these challenges by developing algorithms that adapt to various speaking styles and environmental conditions. Moreover, advances in machine learning and deep neural networks hold promise for further improving speech recognition accuracy and efficiency.

In conclusion, English speech recognition technology holds immense potential for enhancing NLP applications. From improving voice assistants and chatbots to aiding transcription services, language learning, sentiment analysis, and healthcare, the prospects are vast. As researchers continue to advance the technology and overcome existing challenges, we can expect even more innovative applications and improved user experiences in the future. English speech recognition is set to revolutionize how we interact with technology and harness the power of spoken language.

Patent title: Noise-robust speech processing
Inventor: Neti, Chalapathy V.
Application No.: EP96308906.5; filing date: 1996-12-09
Publication No.: EP0781833A2; publication date: 1997-07-02
Patent content provided by Intellectual Property Publishing House.
Abstract: A method for noise-robust speech processing with cochlea filters within a computer system is disclosed. This invention provides a method for producing feature vectors from a segment of speech that are more robust to variations in the environment due to additive noise. A first output is produced by convolving (50) a speech signal input with spatially dependent impulse responses that resemble cochlea filters. The temporal transient and the spatial transient of the first output are then enhanced by taking a time derivative (52) and a spatial derivative (54), respectively, of the first output to produce a second output. Next, all the negative values of the second output are replaced (56) with zeros. A feature vector is then obtained (58) from each frame of the second output by a multiple-resolution extraction. Finally, the parameters of the cochlea filters are optimized by minimizing the difference between a feature vector generated from a relatively noise-free speech signal input and a feature vector generated from a noisy speech signal input.
Applicant: International Business Machines Corporation; address: Old Orchard Road, Armonk, N.Y. 10504, US; nationality: US
Agent: Ling, Christopher John
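The processing chain in this abstract can be sketched as follows. The abstract does not give the filter shapes or the multiple-resolution scheme, so this NumPy sketch assumes gammatone impulse responses as the cochlea-like filters, applies the time derivative and then the across-channel (spatial) derivative in sequence, and pools each frame at several channel resolutions; all of these choices, and the function names, are illustrative. The final filter-optimization step is omitted.

```python
import numpy as np


def gammatone_ir(fc, fs, duration=0.025, order=4):
    """Gammatone impulse response, used here as a stand-in for a cochlea filter."""
    t = np.arange(int(duration * fs)) / fs
    bandwidth = 1.019 * 24.7 * (4.37 * fc / 1000.0 + 1.0)  # 1.019 x ERB(fc)
    g = t ** (order - 1) * np.exp(-2 * np.pi * bandwidth * t) * np.cos(2 * np.pi * fc * t)
    return g / np.max(np.abs(g))


def cochlear_features(x, fs, n_channels=16, frame_len=400, hop=160, resolutions=(1, 2, 4)):
    # Channel centre frequencies on a log axis, standing in for place along the cochlea
    fcs = np.geomspace(100.0, 0.45 * fs, n_channels)
    # (50) First output: convolve the input with each channel's impulse response
    first = np.stack([np.convolve(x, gammatone_ir(fc, fs), mode="same") for fc in fcs])
    # (52), (54) Enhance transients: time derivative, then across-channel derivative
    second = np.diff(np.diff(first, axis=1), axis=0)
    # (56) Replace negative values with zeros
    second = np.maximum(second, 0.0)
    # (58) Per-frame features pooled at several channel resolutions (assumed scheme)
    n_frames = 1 + (second.shape[1] - frame_len) // hop
    feats = []
    for i in range(n_frames):
        energy = second[:, i * hop : i * hop + frame_len].mean(axis=1)
        pooled = [energy[: len(energy) // r * r].reshape(-1, r).mean(axis=1) for r in resolutions]
        feats.append(np.concatenate(pooled))
    return np.array(feats)
```

To mimic the optimization step, one could treat the filter parameters (here, for example, the centre-frequency range) as variables and minimize the squared difference between `cochlear_features(clean, fs)` and `cochlear_features(noisy, fs)` over a set of clean/noisy training pairs.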

Patent title: Fast speech recognition method for mandarin words
Inventor: Chung-Mou Pengwu
Application No.: US08/685733; filing date: 1996-07-24
Publication No.: US05764851A; publication date: 1998-06-09
Abstract: A method for fast speech recognition of Mandarin words is accomplished by obtaining a first database, which is a vocabulary of N Mandarin phrases. The vocabulary is described by an acoustic model formed by concatenating word models. Each concatenated word model is itself a concatenation of an initial model and a final model, where the initial model may be a null element, and both the initial and the final model are represented by probability models. A second database containing initial models is determined. A preliminary logarithmic probability is then calculated. Using the preliminary logarithmic probabilities, a subset of the vocabulary comprising the acoustic models with the highest probability of occurrence is established. This facilitates recognizing Mandarin phrases, which are then output to the user.
Applicant: INDUSTRIAL TECHNOLOGY RESEARCH INSTITUTE
Agent: W. Wayne Liauh
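The two-stage search in this abstract can be sketched as below: a cheap first pass scores every phrase with its initial models only, keeps the highest-scoring shortlist, and only those phrases are rescored with their final models. The sketch assumes the utterance is already segmented into syllables and that per-segment log-likelihoods for each model are given; the data structures, the shortlist size, and the treatment of a null initial as an ordinary label (`None`) are illustrative assumptions.

```python
import heapq
import math


def two_pass_recognize(vocabulary, init_ll, final_ll, shortlist_size=2):
    """Two-pass phrase recognition over (initial, final) syllable models.

    vocabulary: {phrase: [(initial, final), ...]}; initial may be None (null initial)
    init_ll, final_ll: one dict per syllable position mapping model label -> log-likelihood
    """
    def preliminary(syllables):
        # First pass: score only the initial models
        if len(syllables) != len(init_ll):
            return -math.inf
        return sum(init_ll[i].get(ini, -math.inf) for i, (ini, _) in enumerate(syllables))

    def full(syllables):
        # Second pass: add the final-model scores for shortlisted phrases only
        if len(syllables) != len(final_ll):
            return -math.inf
        return preliminary(syllables) + sum(
            final_ll[i].get(fin, -math.inf) for i, (_, fin) in enumerate(syllables)
        )

    shortlist = heapq.nlargest(shortlist_size, vocabulary, key=lambda p: preliminary(vocabulary[p]))
    return max(shortlist, key=lambda p: full(vocabulary[p]))
```

For example, with a three-phrase vocabulary and a shortlist size of 2, a phrase whose initials score poorly is discarded before its finals are ever evaluated; with N phrases, the expensive second pass runs on only the shortlist.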

DUTCH HLT RESOURCES: FROM BLARK TO PRIORITY LISTS
H. Strik (1), W. Daelemans (2), D. Binnenpoorte (3), J. Sturm (1), F. De Vriend (1), C. Cucchiarini (1, 4)
(1) Department of Language and Speech, University of Nijmegen, The Netherlands
{Strik, D.Binnenpoorte, Janienke.Sturm, F.deVriend, C.Cucchiarini}@let.kun.nl
(2) Department of CNTS Language Technology, University of Antwerp, Belgium
Walter.Daelemans@uia.ua.ac.be
(3) Speech Processing Expertise Centre (SPEX), Nijmegen, The Netherlands
(4) Nederlandse Taalunie, The Hague, The Netherlands

ABSTRACT
In this paper we report on a project about Dutch Human Language Technologies (HLT) resources. In this project we first defined a so-called BLARK (Basic LAnguage Resources Kit). Subsequently, a survey was carried out to make an inventory and evaluation of existing Dutch HLT resources. Based on the information collected in the survey, a priority list was drawn up of materials that need to be developed to complete the Dutch BLARK. Although the current project only concerns the Dutch language, the method employed and some of the results are also relevant for other languages.

1. INTRODUCTION
With information and communication technology becoming more and more important, the need for HLT also increases. HLT enable people to use natural language in their communication with computers, and for many reasons it is desirable that this natural language be the user’s mother tongue. In order for people to use their native language in these applications, a set of basic provisions (such as tools, corpora, and lexicons) is required. However, since the costs of developing HLT resources are high, it is important that all parties involved, both in industry and academia, co-operate so as to maximise the outcome of efforts in the field of HLT.
This particularly applies to languages that are commercially less interesting than English, such as Dutch.

For this reason, the Dutch Language Union (Nederlandse Taalunie, abbreviated NTU), which is a Dutch/Flemish intergovernmental organisation responsible for strengthening the position of the Dutch language (for further details on the NTU, see [1]), launched an initiative, the Dutch HLT Platform. This platform aims at stimulating co-operation between industry and scientific institutes and at providing an infrastructure that will make it possible to develop, maintain and distribute HLT resources for Dutch.

The work to be carried out in this project was organised along four action lines, which are described in more detail in [2]. In the present paper, action lines B and C are further outlined. Action line A is about constructing a 'broking and linking' function, and the goal of action line D is to define a blueprint for management, maintenance and distribution.

The aims of action line B are to define a set of basic HLT resources for Dutch that should be available for both academia and industry, the so-called BLARK (Basic LAnguage Resources Kit), and to carry out a survey to determine what is needed to complete this BLARK and what costs are associated with the development of the materials needed. These efforts should result in a priority list with cost estimates, which can serve as a policy guideline. Action line C is aimed at drawing up a set of standards and criteria for the evaluation of the basic materials contained in the BLARK and for the assessment of project results. Obviously, the work done in action lines B and C is closely related, for determining whether materials are available cannot be done without a quality evaluation. For this reason, action lines B and C have been carried out in an integrated way.

The work in action lines B and C was carried out in three stages, which are described in more detail below:
1. defining the BLARK,
2. carrying out a field survey to make an inventory and evaluation of existing HLT resources, and
3. defining the priority list.

The project was co-ordinated by a steering committee consisting of Dutch and Flemish HLT experts.

2. DEFINING THE BLARK
The first step towards defining the BLARK was to reach consensus on the components and the instruments to be distinguished in the survey. A distinction was made between applications, modules, and data (see Table 1). 'Applications' refers to classes of applications that make use of HLT. 'Modules' are the basic software components that are essential for developing HLT applications, while 'data' refers to data sets and electronic descriptions that are used to build, improve, or evaluate modules.

In order to guarantee that the survey is complete, unbiased and uniform, a matrix was drawn up by the steering committee describing (1) which modules are required for which applications, (2) which data are required for which modules, and (3) what the relative importance is of the modules and data. This matrix (subdivided into language and speech technology) is depicted in Table 1, where "+" means important and "++" means very important.

This matrix serves as the basis for defining the BLARK. Table 1 shows, for instance, that monolingual lexicons and annotated corpora are required for the development of a wide range of modules; these should therefore be included in the BLARK.
Furthermore, semantic analysis, syntactic analysis, and text pre-processing (for language technology) and speech recognition, speech synthesis, and prosody prediction (for speech technology) serve a large number of applications and should therefore be part of the BLARK as well.

Based on the data in the matrix and the additional prerequisite that the technology with which to construct the modules be available, a BLARK is proposed consisting of the following components:

For language technology:
Modules:
• Robust modular text pre-processing (tokenisation and named entity recognition)
• Morphological analysis and morpho-syntactic disambiguation
• Syntactic analysis
• Semantic analysis
Data:
• Mono-lingual lexicon
• Annotated corpus of text (a treebank with syntactic, morphological, and semantic structures)
• Benchmarks for evaluation

For speech technology:
Modules:
• Automatic speech recognition (including tools for robust speech recognition, recognition of non-natives, adaptation, and prosody recognition)
• Speech synthesis (including tools for unit selection)
• Tools for calculating confidence measures
• Tools for identification (speaker identification as well as language and dialect identification)
• Tools for (semi-)automatic annotation of speech corpora
Data:
• Speech corpora for specific applications, such as Computer Assisted Language Learning (CALL), directory assistance, etc.
• Multi-modal speech corpora
• Multi-media speech corpora
• Multi-lingual speech corpora
• Benchmarks for evaluation

3. SURVEY: INVENTORY & EVALUATION
In the second stage, a survey was carried out to establish which of the components that make up the BLARK are already available, i.e. which modules and data can be bought or are freely obtainable, for example through open source. Besides being available, the components should also be (re-)usable. Note that only language-specific modules and data were considered in this survey.

Obviously, components can only be considered usable if they are of sufficient quality.
Therefore, a formal evaluation of the quality of all modules and data is indispensable. Evaluation of the components can be carried out on two levels: a descriptive level and a content level. Evaluation on a content level would comprise validation of data and performance validation of modules, whereas evaluation on a descriptive level would mean checking the modules and data against a list of evaluation criteria. Since there was only a limited amount of time, it was decided that only the checklist approach would be feasible. A checklist was drawn up consisting of the following items:
• Availability:
  • public domain, freeware, shareware, etc.
  • legal aspects, IPR
• Programming code:
  • language: Fortran, Pascal, C, C++, etc.
  • makefile
  • stand-alone or part of a larger module?
• Platform: Unix, Linux, Windows 95/98/NT, etc.
• Documentation
• Compatibility with standards: (S)API, SABLE
• Compatibility with standard packages: MATLAB, Praat, etc.
• Reusability / adaptability / extendibility:
  • to other tasks and applications
  • to other platforms
• Standards

As a first step in the inventory, the experts in the steering committee made an overview of the availability of components. Then the steering committee appointed four field researchers to carry out the survey. The field researchers extended and completed this overview on the basis of information found on the internet and in the literature, and personal communication with experts.

4. PRIORITY LISTS
The survey of Dutch and Flemish HLT resources resulted in an extensive overview of the present state of HLT for the Dutch language. We then combined the BLARK with the inventory of components that were available and of sufficient quality, and drew up priority lists of the components that need to be developed to complete the BLARK.
The prioritisation proposed was based on the following requirements:
• the components should be relevant (either directly or indirectly) for a large number of applications,
• the components should currently be either unavailable, inaccessible, or of insufficient quality, and
• developing the components should be feasible in the short term.

At this point, we incorporated all information gathered in a report containing the BLARK, the availability figures together with a detailed inventory of available HLT resources for Dutch, priority lists of components that need to be developed, and a number of recommendations [3]. This report was given a provisional status, because feedback on this version from many actors in the field was considered desirable: reaching consensus on the analysis and recommendations for the Dutch and Flemish HLT field is one of the main objectives.

Therefore, we consulted the whole HLT field. Using the address list compiled in Action Line A of the Platform, a first version of the priority lists, the recommendations, and a link to a pre-final version of the inventory [3] were sent to all known actors in the Dutch HLT field: a total of about 2000 researchers, commercial developers and users of commercial systems. We asked all actors to comment on the report, the priority lists, and the recommendations. Relevant comments were incorporated in the report.

Simultaneously, the same group of people was invited to a workshop organised to discuss the BLARK, the priority list and the recommendations. Some of the actors who had sent comments were asked to give a presentation to make their ideas publicly known. The presentations served as a starting point for a concluding discussion between the audience and a panel of five experts (all members of the steering committee).
A number of conclusions that could be drawn from the workshop are:
• Cooperation between universities, research institutes and companies should be stimulated.
• It should be clear for all components in the BLARK how they can be integrated with off-the-shelf software packages. Furthermore, documentation and information about performance should be readily available.
• Control and maintenance of all modules and data sets in the BLARK should be guaranteed.
• Feedback of users on the components (regarding quality and usefulness of the components) should be processed in a structured way.
• The question as to what open source / license policy should be used needs some further discussion.

On the basis of the feedback received from the Dutch HLT field, some adjustments were made to the first version of the report. The final priority lists are as follows:

For language technology:
1. Annotated corpus of written Dutch: a treebank with syntactic and morphological structures
2. Syntactic analysis: robust recognition of sentence structure in texts
3. Robust text pre-processing: tokenisation and named entity recognition
4. Semantic annotations for the treebank mentioned above
5. Translation equivalents
6. Benchmarks for evaluation

For speech technology:
1. Automatic speech recognition (including modules for non-native speech recognition, robust speech recognition, adaptation, and prosody recognition)
2. Speech corpora for specific applications (e.g. directory assistance, CALL)
3. Multi-media speech corpora (speech corpora that also contain information from other media, i.e. speech together with text, html, figures, movies, etc.)
4. Tools for (semi-)automatic transcription of speech data
5. Speech synthesis (including tools for unit selection)
6. Benchmarks for evaluation

From the inventory and the reactions from the field, it can be concluded that the current HLT infrastructure is scattered, incomplete, and not sufficiently accessible. Often the available modules and applications are poorly documented.
Moreover, there is a great need for objective and methodologically sound comparisons and benchmarking of the materials. The components that constitute the BLARK should be available at low cost or for free.

To overcome the problems in the development of HLT resources for Dutch, the following can be recommended:
• existing parts of the BLARK should be collected, documented and maintained by some sort of HLT agency,
• the BLARK should be completed by encouraging funding bodies to finance the development of the prioritised resources,
• the BLARK should be available to academia and the HLT industry under the conditions of some sort of open source / open license development,
• benchmarks, test corpora, and a methodology for objective comparison, evaluation, and validation of parts of the BLARK should be developed.

Furthermore, it can be concluded that there is a need for well-trained HLT researchers, as this was one of the issues discussed at the workshop. Finally, enough funding should be assigned to fundamental research.

The results of the survey will be disseminated to the HLT field. The priority lists and the recommendations will be made available to funding bodies and policy institutions by the NTU. A summary of the report, containing the priority lists, the recommendations, and the BLARK, will be translated into English to reach a broader public. More information can be found at [4, 5, 6].

5. ACKNOWLEDGEMENT
The following people participated in the steering committee (at various stages of the project): J. Beeken, G. Bouma, C. Cucchiarini, E. D’Halleweyn, W. Daelemans, E. Dewallef, A. Dirksen, A. Dijkstra, D. Heijlen, F. de Jong, J.P. Martens, A. Nijholt, H. Strik, L. Teunissen, D. van Compernolle, F. van Eynde, and R. Veldhuis. The four field researchers were: D. Binnenpoorte, J. Sturm, F. de Vriend, and M. Kempen. We would like to thank all of them, and all others who contributed to the work presented in this paper.
Furthermore, we would like to thank an anonymous reviewer for constructive remarks on a previous version of this paper.

6. REFERENCES
[1] Beeken, J., Dewallef, E., D'Halleweyn, E. (2000). A Platform for Dutch in Human Language Technologies. Proceedings of LREC2000, Athens, Greece.
[2] Cucchiarini, C., D'Halleweyn, E., Teunissen, L. (2002). A Human Language Technologies Platform for the Dutch language: awareness, management, maintenance and distribution. Proceedings of LREC2002, Canary Islands, Spain.
[3] Daelemans, W., Strik, H. (Eds.) (2001). Het Nederlands in de taal- en spraaktechnologie: prioriteiten voor basisvoorzieningen (versie 1), 27 sept. 2001. See /tst/actieplan/batavo-v1.pdf or http://lands.let.kun.nl/TSpublic/strik/publications/a82-batavo-v1.pdf
[4] /tst/
[5] http://www.ntu.nl/_/werkt/technologie.html
[6] http://lands.let.kun.nl/TSpublic/strik/taalunie/

Table 1. Overview of the importance of data for modules, and modules for applications.
Rows: modules, grouped into Language Technology and Speech Technology.
Data columns: monoling lex, multilin lex, thesauri, anno corp, unanno corp, speech corp, multiling corp, multimod corp, multimedia corp.
Application columns: CALL, access control, speech input, speech output, dialog systems, doc prod, info access, translation.
"+" = important, "++" = very important.

Language Technology
Grapheme-phoneme conv.: ++ ++ + ++ ++ + +
Token detection: ++ + ++ + + + + + +
Sent boundary detection: + ++ ++ + ++ ++ + ++ ++ ++
Name recognition: + + + ++ ++ ++ + ++ ++ + ++ ++ ++
Spelling correction: +
Lemmatising: ++ ++ + + + + + + + +
Morphological analysis: ++ ++ + + + ++ + ++ ++ ++
Morphological synthesis: ++ ++ + + ++ + ++ ++
Word sort disambig.: ++ ++ + + ++ + ++ ++ ++ ++
Parsers and grammars: ++ ++ + ++ ++ ++ ++ ++ ++
Shallow parsing: ++ ++ ++ + ++ ++ ++ ++ ++ ++
Constituent recognition: ++ ++ + + ++ ++ ++ ++ ++ ++
Semantic analysis: ++ ++ ++ ++ ++ + ++ ++ ++ ++ ++
Referent resolution: + ++ ++ + + ++ ++ ++ ++ ++
Word meaning disambig.: + ++ ++ + + ++ + + + ++ ++
Pragmatic analysis: + + ++ ++ ++ + ++ ++ ++ + ++
Text generation: ++ ++ ++ ++ ++ + ++ ++ ++ ++
Lang. dep. translation: ++ ++ ++ ++ + ++ ++

Speech Technology
Complete speech recog.: ++ + ++ + ++ + ++ ++ ++ ++ ++ ++ ++ ++ ++
Acoustic models: ++ + ++ + ++ + + + ++ + ++ ++ + + +
Language models: + ++ + + + + + ++ + ++ ++ ++ ++ ++
Pronunciation lexicon: ++ + + ++ + + + ++ + ++ + ++ + ++ ++
Robust speech recognition: + + + + + + ++ + + ++ ++ + + +
Non-native speech recog.: + ++ + ++ ++ + + ++ + + + + +
Speaker adaptation: + + + ++ + + ++ + + ++ + + ++ +
Lexicon adaptation: ++ + + ++ + + + ++ + ++ + ++ + ++ ++
Prosody recognition: + + ++ + ++ + + + ++ + ++ ++ ++ ++ ++
Complete speech synth.: ++ + + + + + ++ ++ + + ++
Allophone synthesis: + + + + + + + + + +
Di-phone synthesis: ++ + + + + + ++ ++ + + +
Unit selection: ++ + + + + + ++ ++ + + +
Prosody prediction for Text-to-Speech: ++ + + + + + ++ ++ ++ + ++
Aut. phon. transcription: ++ ++ + + ++ + + + ++ + + + + + + +
Aut. phon. segmentation: ++ ++ + + ++ + + + ++ + + + + + + +
Phoneme alignment: + + + ++ + + + ++ + + + +
Distance calc. phonemes: + + + ++ + + + ++ + + + +
Speaker identification: + ++ ++ ++ + ++ + + ++ + + + +
Speaker verification: + ++ ++ ++ + ++ + ++ + + + +
Speaker tracking: + ++ ++ ++ + ++ + + + + +
Language identification: + ++ + + ++ ++ + + + + + + + +
Dialect identification: + ++ + + ++ ++ + + + + + + + +
Confidence measures: + + + ++ + ++ + ++ ++ ++ ++ + + +
Utterance verification: + + + ++ + + + + + ++ ++ + + +

Received: 12 October 2020; revised: 22 November 2020. Funding: supported by the Shanxi Institute of Technology research project (No. 2020004).

About the author: Liu Peng, male, M.Sc., lecturer and engineer; research interests: pattern recognition and machine learning.

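1 Introduction
Traditional speech enhancement algorithms, such as the subspace method, spectral subtraction, and Wiener filtering, are unsupervised methods that mostly reduce noise on the basis of the complex statistical properties of speech and noise signals; however, they inevitably introduce "musical noise" during noise reduction, which distorts the speech [1].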

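Given the complex way in which noise corrupts clean speech, using neural networks to build a nonlinear mapping model between noisy and clean speech signals for speech enhancement has become an active research topic.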

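Xugang Lu, Yu Tsao, et al. pre-trained a stacked autoencoder layer by layer (layer-wise pre-training) and then fine-tuned the entire network to build a deep denoising autoencoder (DDAE), which they used to denoise noisy speech; they also showed that increasing the depth of the denoising autoencoder improves speech enhancement performance [2].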
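A DDAE of this kind learns a regression from noisy feature frames to clean ones. The NumPy sketch below trains a small fully connected network (tanh hidden layers, linear output, mean squared error) with plain full-batch gradient descent; it skips the layer-wise pre-training of [2], and the layer sizes, learning rate, and synthetic data are illustrative assumptions.

```python
import numpy as np


def init_params(sizes, rng):
    # One (W, b) pair per layer, with small random weights
    return [(rng.normal(0.0, 0.1, (m, n)), np.zeros(n)) for m, n in zip(sizes[:-1], sizes[1:])]


def forward(params, x):
    acts = [x]
    for i, (W, b) in enumerate(params):
        z = acts[-1] @ W + b
        # tanh on hidden layers, linear output layer for regression onto clean features
        acts.append(np.tanh(z) if i < len(params) - 1 else z)
    return acts


def train_step(params, noisy, clean, lr):
    """One gradient-descent step on the mean (over frames) squared reconstruction error."""
    acts = forward(params, noisy)
    err = acts[-1] - clean
    loss = np.mean(np.sum(err ** 2, axis=1))
    delta = 2.0 * err / noisy.shape[0]  # dLoss / d(output)
    new_params = []
    for i in reversed(range(len(params))):
        W, b = params[i]
        gW = acts[i].T @ delta
        gb = delta.sum(axis=0)
        if i > 0:
            # Back-propagate through the tanh of the previous layer
            delta = (delta @ W.T) * (1.0 - acts[i] ** 2)
        new_params.append((W - lr * gW, b - lr * gb))
    return new_params[::-1], loss
```

Training repeatedly calls `train_step(params, noisy, clean, lr)` on paired noisy/clean frames; at test time `forward(params, noisy)[-1]` gives the enhanced features. Because the network fits an average over the training pairs, a small training set invites the overfitting discussed next.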

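However, because a DDAE effectively learns a statistical average over the noisy/clean speech pairs in the training set, training it on a dataset without enough samples easily leads to co-adaptation of neurons, which in turn causes overfitting.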

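To address this, reference [3] proposed an ensemble model of DDAEs: the training data are clustered, a separate DDAE is trained on each cluster, and a function that combines the multiple DDAEs is then fitted by regression on the training data.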
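The ensemble scheme can be sketched as: cluster the training data, fit one model per cluster, then fit combination weights by regression. To keep the example short, each per-cluster DDAE is replaced by a least-squares affine map, k-means is used for clustering, and the combination function is assumed to be a single weight per model; none of these choices is specified in the text.

```python
import numpy as np


def kmeans(x, k, iters=20, seed=0):
    """Plain k-means; returns one cluster label per row of x."""
    rng = np.random.default_rng(seed)
    centers = x[rng.choice(len(x), k, replace=False)].copy()
    for _ in range(iters):
        dists = ((x[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)
        labels = dists.argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):
                centers[j] = x[labels == j].mean(axis=0)
    return labels


def fit_affine(x, y):
    """Least-squares affine map x -> y; a stand-in for one trained DDAE."""
    X = np.hstack([x, np.ones((len(x), 1))])
    W, *_ = np.linalg.lstsq(X, y, rcond=None)
    return lambda z: np.hstack([z, np.ones((len(z), 1))]) @ W


def fit_ensemble(noisy, clean, k=3):
    labels = kmeans(noisy, k)
    experts = []
    for j in range(k):
        mask = labels == j
        if mask.sum() <= noisy.shape[1]:
            # Too few frames for a stable fit: fall back to all training data
            mask = np.ones(len(noisy), dtype=bool)
        experts.append(fit_affine(noisy[mask], clean[mask]))
    # Combination function: one weight per expert, fitted by least squares
    preds = np.stack([e(noisy) for e in experts], axis=-1)  # (frames, dims, k)
    w, *_ = np.linalg.lstsq(preds.reshape(-1, k), clean.reshape(-1), rcond=None)
    return lambda z: np.stack([e(z) for e in experts], axis=-1) @ w
```

The sketch also makes the cost noted next visible: every expert must be trained, stored, and evaluated on every input frame.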

However, the ensemble model requires training and evaluating multiple DDAE models, which costs considerable running time and memory.

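Research has shown that ensemble models usually can only

A Speech Enhancement Algorithm Based on Deep Denoising Autoencoders
Liu Peng (Department of Information Engineering and Big Data Science, Shanxi Institute of Technology, Yangquan 045000)
Abstract: Because different types of speech segments in noisy speech affect overall intelligibility differently, a speech enhancement algorithm is proposed that trains deep denoising autoencoders (DDAEs) separately for each class of speech segment.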

Iterative and Sequential Kalman Filter-Based Speech Enhancement Algorithms
Sharon Gannot, Student Member, IEEE, David Burshtein, Member, IEEE, and Ehud Weinstein, Fellow, IEEE

Abstract: Speech quality and intelligibility might significantly deteriorate in the presence of background noise, especially when the speech signal is subject to subsequent processing. In particular, speech coders and automatic speech recognition (ASR) systems that were designed or trained to act on clean speech signals might be rendered useless in the presence of background noise. Speech enhancement algorithms have therefore attracted a great deal of interest in the past two decades. In this paper, we present a class of Kalman filter-based algorithms with some extensions, modifications, and improvements of previous work. The first algorithm employs the estimate-maximize (EM) method to iteratively estimate the spectral parameters of the speech and noise parameters. The enhanced speech signal is obtained as a byproduct of the parameter estimation algorithm. The second algorithm is a sequential, computationally efficient, gradient descent algorithm. We discuss various topics concerning the practical implementation of these algorithms. Extensive experimental study using real speech and noise signals is provided to compare these algorithms with alternative speech enhancement algorithms, and to compare the performance of the iterative and sequential algorithms.

I. INTRODUCTION
Speech quality and intelligibility might significantly deteriorate in the presence of background noise, especially when the speech signal is subject to subsequent processing.
In particular, speech coders and automatic speech recognition (ASR) systems that were designed or trained to act on clean speech signals might be rendered useless in the presence of background noise. Speech enhancement algorithms have therefore attracted a great deal of interest in the past two decades [2], [5]–[8], [11], [13], [15]–[18], [20], [21], [23], [25], [26], [29], [30]. Lim and Oppenheim [20] have suggested modeling the speech signal as a stochastic autoregressive (AR) model embedded in additive white Gaussian noise, and using this model for speech enhancement. The proposed algorithm is iterative in nature. It consists of estimating the speech AR parameters by solving the Yule–Walker equations using the current estimate of the speech signal, and then applying the (noncausal) Wiener filter to the observed signal to obtain a hopefully improved estimate of the desired speech signal. It can be shown that the version of the algorithm that uses the covariance of the speech signal estimate, given at the output of the Wiener filter, is in fact the estimate-maximize (EM) algorithm (up to a scale factor) for the problem at hand. As such, it is guaranteed to converge to the maximum likelihood (ML) estimate of the AR parameters, or at least to a local maximum of the likelihood function, and to yield the best linear filtered estimate of the speech signal, computed at the ML parameter estimate. Hansen and Clements [15] proposed to incorporate auditory domain constraints in order to improve the convergence behavior of the Lim and Oppenheim algorithm, and Masgrau et al. [21] proposed to incorporate third-order cumulants in the Yule–Walker equations in order to improve the immunity of the AR parameter estimate to additive Gaussian noise.

Weinstein et al. [29] presented a time-domain formulation of the problem at hand. Their approach consists of representing the signal model using linear dynamic state equations and applying the EM method. The resulting algorithm is similar in structure to the Lim and Oppenheim [20] algorithm, except that the noncausal Wiener filter is replaced by the Kalman smoothing equations. In addition, sequential speech enhancement algorithms are presented in [29]. These sequential algorithms are characterized by a forward Kalman filter whose parameters are continuously updated. In [30], similar methods were proposed for the related problem of multisensor signal enhancement. Lee et al. [17] extended the sequential single-sensor algorithm of Weinstein et al. by replacing the white Gaussian excitation of the speech signal with a mixed Gaussian term that may account for the presence of an impulse train in the excitation sequence of voiced speech. Lee et al. examined the signal-to-noise ratio (SNR) improvement of the algorithm when applied to synthetic speech input, and also provided limited results on real speech signals.

The use of Kalman filtering was previously proposed by Paliwal and Basu [23] for speech enhancement, where experimental results reveal its distinct advantage over the Wiener filter for the case where the estimated speech parameters are obtained from the clean speech signal (before being corrupted by the noise). Gibson et al. [13] proposed to extend the use of the Kalman filter by incorporating a colored noise model in order to improve the enhancement performance for certain classes of noise sources. A disadvantage of the above-mentioned Kalman filtering algorithms is that they do not address the model parameter estimation problem. Koo and Gibson [16] suggested an algorithm that iterates between Kalman filtering of the given corrupted speech measurements and estimation of the speech parameters given the enhanced speech waveform. The resulting algorithm is, in fact, an approximate EM algorithm.

Manuscript received June 22, 1996; revised August 27, 1997. The associate editor coordinating the review of this manuscript and approving it for publication was Dr. Jean-Claude Junqua. The authors are with the Department of Electrical Engineering–Systems, Tel-Aviv University, Tel-Aviv 69978, Israel (e-mail: burstyn@eng.tau.ac.il).

Publisher Item Identifier S1063-6676(98)04218-7.
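The Lim–Oppenheim iteration reviewed in the introduction alternates between fitting AR parameters to the current speech estimate and applying a noncausal Wiener filter. The Python sketch below shows that loop on a single frame. Its simplifying assumptions are ours, not the paper's: white noise of known variance, a Yule–Walker fit (via Levinson–Durbin) to the sample autocorrelation of the current estimate rather than the full EM version that uses the a posteriori covariance, and a circular DFT implementation of the Wiener filter. The helper names and the synthetic AR(2) test frame are hypothetical.

```python
import cmath
import math
import random


def autocorr(x, maxlag):
    """Biased sample autocorrelation r[0..maxlag]."""
    n = len(x)
    return [sum(x[t] * x[t - k] for t in range(k, n)) / n for k in range(maxlag + 1)]


def levinson(r, p):
    """Levinson-Durbin solution of the Yule-Walker equations.

    Model: s(t) = sum_k a_k s(t-k) + g u(t). Returns ([a_1..a_p], g^2).
    """
    a = [0.0] * (p + 1)  # a[0] is unused
    err = r[0]
    for i in range(1, p + 1):
        k = (r[i] - sum(a[j] * r[i - j] for j in range(1, i))) / err
        new = a[:]
        new[i] = k
        for j in range(1, i):
            new[j] = a[j] - k * a[i - j]
        a, err = new, err * (1.0 - k * k)
    return a[1:], err


def ar_psd(a, g2, n):
    """AR spectrum g^2 / |1 - sum_k a_k e^{-jwk}|^2 on an n-point DFT grid."""
    out = []
    for f in range(n):
        w = 2.0 * math.pi * f / n
        den = 1 - sum(ak * cmath.exp(-1j * w * k) for k, ak in enumerate(a, start=1))
        out.append(g2 / abs(den) ** 2)
    return out


def dft(x, sign=-1):
    """Direct O(n^2) DFT (sign=-1) or unnormalized inverse DFT (sign=+1)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(sign * 2j * math.pi * f * t / n) for t in range(n))
            for f in range(n)]


def iterative_wiener(y, p, noise_var, iters=3):
    """Alternate AR estimation and noncausal Wiener filtering on one frame."""
    n = len(y)
    Y = dft(y)
    s_hat = list(y)  # initial speech estimate: the noisy frame itself
    for _ in range(iters):
        a, g2 = levinson(autocorr(s_hat, p), p)   # AR step from the current estimate
        ps = ar_psd(a, g2, n)
        S = [Yf * P / (P + noise_var) for Yf, P in zip(Y, ps)]  # Wiener gain per bin
        s_hat = [v.real / n for v in dft(S, sign=+1)]
    return s_hat


def demo(n=256, seed=0):
    """Return (MSE of the noisy frame, MSE of the enhanced frame) for a synthetic AR(2) frame."""
    rng = random.Random(seed)
    a_true = [1.3, -0.8]  # illustrative stable AR(2) "speech" model
    s = [0.0, 0.0]
    for _ in range(n + 100):  # 100 burn-in samples
        s.append(a_true[0] * s[-1] + a_true[1] * s[-2] + rng.gauss(0.0, 1.0))
    s = s[-n:]
    y = [v + rng.gauss(0.0, 1.0) for v in s]  # additive white noise, unit variance

    def mse(est):
        return sum((e - v) ** 2 for e, v in zip(est, s)) / n

    return mse(y), mse(iterative_wiener(y, p=2, noise_var=1.0))
```

Because the DFT is computed directly, the sketch costs O(N^2) per frame; an FFT would normally be used instead.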
1063–6676/98 $10.00 © 1998 IEEE

In this paper, we present iterative-batch and sequential algorithms with some extensions, modifications, and improvements of previous work [29], and discuss various topics concerning the practical implementation of these algorithms. This discussion is supported by an extensive experimental study using recorded speech signals and actual noise sources. The outcomes consist of the assessment of sound spectrograms, objective distortion measures such as total output SNR, segmental SNR, and the Itakura–Saito distance, informal subjective tests, speech intelligibility tests, and ASR experiments. The iterative-batch algorithm is compared to various methods, including spectral subtraction [2], the short-time spectral amplitude (STSA) estimator [5], the log spectral amplitude estimator (LSAE) [6], the hidden Markov model (HMM) based filtering algorithms [7], [8], and the Wiener filter approach of [20]. These algorithms may be distinguished by the amount of a priori knowledge that is assumed about the statistics of the clean speech signal. For example, the algorithms in [7] and [8] require a training stage in which the clean speech parameters are estimated prior to the application of the enhancement algorithm, while the other approaches do not require such a training stage. A distinct advantage of the proposed algorithm compared to alternative algorithms is that it enhances the quality and SNR of the speech while preserving its intelligibility and natural sound. The sequential algorithm is generally inferior to the iterative-batch algorithm. However, at low SNR's the degradation is usually insignificant.

The organization of the paper is as follows. In Section II, we present the signal model. In Section III, we present the iterative-batch algorithm. In Section IV, we show how higher-order statistics might be incorporated in order to improve the performance of the iterative-batch algorithm. The sequential algorithm is presented in Section V. Experimental results are provided in Section VI. In Sections VII and VIII, we
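discuss and summarize our results.

GANNOT et al.: SPEECH ENHANCEMENT ALGORITHMS
IEEE TRANSACTIONS ON SPEECH AND AUDIO PROCESSING, VOL. 6, NO. 4, JULY 1998

II. THE SIGNAL MODEL

Let the signal measured by the microphone be given by

$$y(t) = s(t) + n(t) \qquad (1)$$

where $s(t)$ represents the sampled speech signal and $n(t)$ represents additive background noise. We shall assume the standard LPC modeling for the speech signal over the analysis frame, in which $s(t)$ is modeled as a stochastic AR process, i.e.,

$$s(t) = \sum_{k=1}^{p} a_k\, s(t-k) + g_s\, u(t) \qquad (2)$$

where the excitation $u(t)$ is a normalized (zero-mean, unit-variance) white noise, $g_s$ is the excitation gain, and $a_1, \ldots, a_p$ are the AR coefficients. We may incorporate the more detailed voiced speech model suggested in [3], in which the excitation process is composed of a weighted linear combination of an impulse train and a white noise sequence to represent voiced and unvoiced speech, respectively. However, as indicated in [11], this approach did not yield any significant performance improvement over the standard LPC modeling. The additive noise $n(t)$ is also assumed to be a realization from a zero-mean, possibly nonwhite, stochastic AR process:

$$n(t) = \sum_{k=1}^{q} b_k\, n(t-k) + g_n\, w(t) \qquad (3)$$

where $w(t)$ is a normalized white noise, $b_1, \ldots, b_q$ are the noise AR coefficients, and $g_n$ is the noise excitation gain. Equations (1)–(3) can be written jointly in state-space form,

$$\mathbf{x}(t) = \Phi\, \mathbf{x}(t-1) + G\, \mathbf{v}(t), \qquad y(t) = H\, \mathbf{x}(t)$$

where the state vector is $\mathbf{x}(t) = [s(t-p+1), \ldots, s(t), n(t-q+1), \ldots, n(t)]^T$ and $\mathbf{v}(t) = [u(t), w(t)]^T$. Here $\Phi = \mathrm{diag}(\Phi_s, \Phi_n)$, where $\Phi_s$ is the $p \times p$ companion matrix (ones on the superdiagonal, last row $[a_p, \ldots, a_1]$) and $\Phi_n$ is the $q \times q$ companion matrix with last row $[b_q, \ldots, b_1]$; $G$ is the $(p+q) \times 2$ matrix whose only nonzero entries are $g_s$ in row $p$, column 1, and $g_n$ in row $p+q$, column 2; and $H = [0, \ldots, 0, 1, 0, \ldots, 0, 1]$ is the $1 \times (p+q)$ row vector with ones in positions $p$ and $p+q$.

Assuming that all the signal and noise parameters are known (which implies that $\Phi$ and $G$ are known), […] Let $\theta$ denote the vector of speech and noise parameters, i.e., the AR coefficients $a_k$ and $b_k$ and the gains $g_s$ and $g_n$ (4), let $\theta^{(\ell)}$ be the estimate of $\theta$ after $\ell$ iteration cycles, and let $\mathbf{y} = [y(1), \ldots, y(N)]^T$ (5) be the vector of observed data in the current analysis frame, where $N$ is the frame length. […] The E-step is implemented by the Kalman filter propagation equations followed by a backward (smoothing) recursion, (12)–(15); the a posteriori correlation terms needed by the M-step are extracted from sub-matrices of the resulting state covariance matrices. […]

The smoothed estimate may be replaced by fixed-lag smoothing (delayed Kalman filter estimate) [23], or even just by filtering. That is, instead of the smoothed estimate, one may use $\hat{s}(t-p+1 \mid t)$ (fixed-lag smoothing) or $\hat{s}(t \mid t)$ (filtering), which are the first and the $p$-th entries of the filtered state estimate $\hat{\mathbf{x}}(t \mid t)$, respectively. […]

If the noise $n(t)$ is assumed to be Gaussian, then higher-order statistics (HOS) may be incorporated in order to improve the initial estimate of the speech parameters. In that case, the quality of the enhanced speech signal is significantly improved compared to the standard initialization method that was indicated above. It can be shown, by invoking the basic cumulant properties in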
[22, Sect. II-B3], and recalling (2), that

$$c_s(k,l,m) = \sum_{i=1}^{p} a_i\, c_s(k-i,\, l-i,\, m-i), \qquad k, l, m \ge 1$$

where $c_s(k,l,m) = \operatorname{cum}\{s(t), s(t-k), s(t-l), s(t-m)\}$ and $\operatorname{cum}\{\cdot\}$ denotes the joint cumulant of the bracketed variables. We note that if $n(t)$ is Gaussian, it can be shown, by invoking the same basic cumulant properties in [22] and recalling (1) and the statistical independence of $s(t)$ and $n(t)$, that $c_y(k,l,m) = c_s(k,l,m)$, where $c_y$ is defined analogously for $y(t)$. Hence, by estimating these cumulants directly from the observed signal, one typically obtains a better and more robust initial estimate of the speech parameters than with the conventional LPC approach based on second-order statistics. The use of third-order cumulants […]

[…] is the CDF of a Gaussian random variable whose mean and variance are the empirical mean and variance of the given signal segment. Hence, a Gaussian random variable corresponds to a straight line (the dashed line in the figure). As can be seen, the noise segment shown (which is typical of that noise signal) is very close to an ideal Gaussian curve. On the other hand, the voiced segment shown (unvoiced speech segments possess similar Gaussian curves) deviates significantly from the Gaussian curve. More precisely, for the noise segment shown, the deviation from the Gaussian curve is significant only for data samples with cumulative probability higher than 99% or lower than 3%. For the speech segment shown, on the other hand, the deviation from the Gaussian curve is already significant for data samples whose cumulative probability is higher than 80% or lower than 20%.

Fig. 1. Gaussian curves for typical speech and computer fan noise segments.

Our informal speech quality test involved ten listeners. Each listener considered 40 Hebrew and English sentences. Some of the English sentences were taken from the TIMIT database [12]; the rest were recorded in a silent environment. The speech signal was corrupted by several noise signals at various SNR's.
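The fourth-order cumulant initialization discussed above reduces to a linear system for the AR coefficients: for $k \ge 1$, $c(k) = \sum_{i=1}^{p} a_i\, c(k-i)$, where $c(j) = \operatorname{cum}\{y(t), y(t-j), y(t-j), y(t-j)\}$. The Python sketch below is one possible instance, assuming the diagonal slice $k = l = m$ (our choice; the paper does not say which lags it uses), zero-mean stationary signals, and a least-squares solve over $2p$ equations. Because the fourth-order cumulants of Gaussian noise vanish, the equations hold for the noisy $y(t)$ as long as the excitation is non-Gaussian. All function names are hypothetical.

```python
import random


def slice_cumulant(y, j):
    """Sample estimate of cum{y(t), y(t-j), y(t-j), y(t-j)} (diagonal slice, lag j)."""
    n = len(y)
    mu = sum(y) / n
    x = [v - mu for v in y]
    pairs = [(x[t], x[t - j]) for t in range(max(0, j), min(n, n + j))]
    m = len(pairs)
    m13 = sum(a * b ** 3 for a, b in pairs) / m   # E[x(t) x(t-j)^3]
    r_j = sum(a * b for a, b in pairs) / m        # E[x(t) x(t-j)]
    r_0 = sum(v * v for v in x) / n               # E[x(t)^2]
    # Zero-mean fourth-order cumulant: E[x1 x2^3] - 3 E[x1 x2] E[x2^2]
    return m13 - 3.0 * r_j * r_0


def solve(A, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def ar_from_slice(c, p, n_eq=None):
    """Least-squares solution of c(k) = sum_i a_i c(k - i), k = 1..n_eq."""
    n_eq = n_eq or 2 * p
    rows = [[c(k - i) for i in range(1, p + 1)] for k in range(1, n_eq + 1)]
    rhs = [c(k) for k in range(1, n_eq + 1)]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    atb = [sum(r[i] * v for r, v in zip(rows, rhs)) for i in range(p)]
    return solve(ata, atb)


def hos_ar_init(y, p):
    """Initial AR estimate of the speech taken directly from the noisy signal y."""
    cache = {}

    def c(j):
        if j not in cache:
            cache[j] = slice_cumulant(y, j)
        return cache[j]

    return ar_from_slice(c, p)


if __name__ == "__main__":
    rng = random.Random(0)
    a_true = [0.5, -0.3]  # illustrative AR(2) speech model
    s, y = [0.0, 0.0], []
    for _ in range(20000):
        u = rng.choice((-1.0, 1.0))  # non-Gaussian (binary) excitation
        s.append(a_true[0] * s[-1] + a_true[1] * s[-2] + u)
        y.append(s[-1] + rng.gauss(0.0, 0.5))  # additive Gaussian noise
    print("estimate:", hos_ar_init(y, 2), "true:", a_true)
```

Fourth-order sample cumulants have high variance, so long records (or several slices) are needed for a stable estimate, which fits their use only as an initialization for the EM iterations.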
Each listener had to characterize the quality of the enhanced speech and to compare it with the quality of the corrupted speech. Each listener examined each enhanced speech signal without knowing which signal corresponded to which algorithm. All listeners indicated that the quality of the speech processed by the KEMI algorithm is superior to the quality of the corrupted speech over the entire SNR range examined (between […] and 15 dB). They also indicated a significant reduction in the noise level without any severe distortion of the speech signal. At SNR values below 5 dB, the speech quality of the KGDS algorithm is only slightly inferior to that of the KEMI algorithm. Above 5 dB, the KGDS algorithm was sometimes unstable, with a time-varying signal level. We attribute this phenomenon to the fact that at high SNR's the estimated noise model parameters might be very inaccurate (since the noise is masked by the signal). A possible solution is to replace the sequential update equation of the noise parameters by an estimator that, based on a voice activity detector, considers only signal segments where speech activity is not detected.

A comparison between the filtered output and the fixed-lag smoothed output showed a slight advantage to the former (the fixed-lag smoothed output was sometimes characterized as being slightly muffled). The spectral subtraction algorithm significantly reduced the noise level, but generated an annoying "musical noise" effect, i.e., the enhanced speech contained tones with fast-shifting frequencies. The algorithm collapses at SNR values below 10 dB; the intelligibility of the speech processed by the HMM-MMSE algorithm is severely damaged. All these observations were valid both for synthetic white Gaussian noise and for a recorded fan noise signal.
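Both outputs compared above, the filtered and the fixed-lag smoothed estimate, can be read off one forward Kalman filter. The Python sketch below assumes known parameters (as within a single E-step) and the state ordering $\mathbf{x}(t) = [s(t-p+1), \ldots, s(t), n(t-q+1), \ldots, n(t)]^T$, so that $\hat{s}(t \mid t)$ is the $p$-th entry and $\hat{s}(t-p+1 \mid t)$ the first entry of the filtered state. It omits the backward smoothing recursion and the parameter (M-step) updates, and the model values in `demo` are illustrative only.

```python
import random


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def build_model(a, b, gs, gn):
    """Phi and G G^T for the stacked speech/noise state; returns indices of s(t), n(t)."""
    p, q = len(a), len(b)
    dim = p + q
    phi = [[0.0] * dim for _ in range(dim)]
    for i in range(p - 1):
        phi[i][i + 1] = 1.0                # shift the speech samples
    for k in range(1, p + 1):
        phi[p - 1][p - k] = a[k - 1]       # s(t) = sum_k a_k s(t-k) + g_s u(t)
    for i in range(q - 1):
        phi[p + i][p + i + 1] = 1.0        # shift the noise samples
    for k in range(1, q + 1):
        phi[dim - 1][dim - k] = b[k - 1]   # n(t) = sum_k b_k n(t-k) + g_n w(t)
    ggt = [[0.0] * dim for _ in range(dim)]
    ggt[p - 1][p - 1] = gs ** 2            # excitations enter the newest samples
    ggt[dim - 1][dim - 1] = gn ** 2
    return phi, ggt, p - 1, dim - 1


def kalman_enhance(y, a, b, gs, gn):
    """Kalman filter with known parameters; returns (s(t|t), s(t-p+1|t)) sequences."""
    phi, ggt, i_s, i_n = build_model(a, b, gs, gn)
    dim = len(phi)
    phi_t = [list(col) for col in zip(*phi)]
    x = [0.0] * dim
    P = [[float(i == j) for j in range(dim)] for i in range(dim)]
    filt, lag = [], []
    for yt in y:
        # Time update: x <- Phi x, P <- Phi P Phi^T + G G^T
        x = [sum(phi[i][j] * x[j] for j in range(dim)) for i in range(dim)]
        P = matmul(matmul(phi, P), phi_t)
        P = [[P[i][j] + ggt[i][j] for j in range(dim)] for i in range(dim)]
        # Measurement update: y(t) = H x(t), H picks s(t) + n(t), no extra noise term
        ph = [P[i][i_s] + P[i][i_n] for i in range(dim)]   # P H^T
        S = ph[i_s] + ph[i_n]                              # innovation variance H P H^T
        K = [v / S for v in ph]
        innov = yt - (x[i_s] + x[i_n])
        x = [xi + ki * innov for xi, ki in zip(x, K)]
        P = [[P[i][j] - K[i] * ph[j] for j in range(dim)] for i in range(dim)]
        filt.append(x[i_s])  # p-th entry: filtered estimate s(t|t)
        lag.append(x[0])     # first entry: fixed-lag estimate s(t-p+1|t)
    return filt, lag


def demo(n_samples=2000, seed=1):
    """Return MSEs (noisy, filtered, fixed-lag) for synthetic AR speech in AR noise."""
    rng = random.Random(seed)
    a, b, gs, gn = [1.3, -0.8], [0.9], 1.0, 0.19 ** 0.5  # illustrative parameters
    s, v = [0.0, 0.0], [0.0]
    for _ in range(n_samples + 200):  # 200 burn-in samples
        s.append(a[0] * s[-1] + a[1] * s[-2] + gs * rng.gauss(0.0, 1.0))
        v.append(b[0] * v[-1] + gn * rng.gauss(0.0, 1.0))
    s, v = s[-n_samples:], v[-n_samples:]
    y = [si + vi for si, vi in zip(s, v)]
    filt, lag = kalman_enhance(y, a, b, gs, gn)
    d = len(a) - 1  # lag[t] estimates s(t - d)

    def mse(est, ref):
        return sum((e - r) ** 2 for e, r in zip(est, ref)) / len(ref)

    return mse(y, s), mse(filt, s), mse(lag[d:], s[:n_samples - d])
```

Since both estimates come from the same recursion, choosing between filtering and fixed-lag smoothing only changes which state entry is output and the resulting delay.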
The WEM algorithm was designed under the assumption of white Gaussian noise, and hence was not tested in colored-noise environments. Our listening tests indicate some advantage of the KEMI algorithm over the WEM algorithm.

Fig. 2 shows the spectrograms of a clean speech segment (upper left), the corresponding noisy segment (upper right), the enhanced KEMI (fixed-lag smoothing version) signal (lower left), and the enhanced LSAE signal (lower right). As can be seen, the LSAE algorithm shows better noise reduction, at the expense of larger distortion of the speech signal, which is expressed by formant widening.

Fig. 2. Clean, noisy, and enhanced (KEMI and LSAE) sound spectrograms.

Intelligibility tests were also conducted for the KEMI algorithm. The clean speech database was the high-quality connected digits database recorded at TI (TIDIGITS) [19] by 225 adult female and male speakers. Using the TIDIGITS database, we created two new databases, each consisting of 200 utterances of isolated digits. The first database consisted of digit utterances that were corrupted by additive computer fan noise, at an SNR level of […] algorithm. Twelve listeners participated in the experiment. The recognition rate of each listener was evaluated by considering 20 noisy utterances and 20 enhanced utterances. All 40 utterances were randomly selected and were presented to the listener in random order. The overall word error rate decreased slightly, from 31% (74 wrong decisions) without speech enhancement to 26% (63 wrong decisions) when the speech signal was preprocessed using the KEMI algorithm.

Our objective set of experiments consisted of total output SNR measurements, segmental SNR, and Itakura–Saito (IS) distortion measurements. These distortion measures are known to be correlated with the subjective perception of speech quality [14]. The IS distortion measure and the segmental SNR possess the highest correlation, with a small advantage to the former. Let $s(t)$ and $\hat{s}(t)$ denote the clean and enhanced speech signals, respectively. The total output SNR
is defined by

$$\mathrm{SNR} = 10 \log_{10} \frac{\sum_t s^2(t)}{\sum_t \left[s(t) - \hat{s}(t)\right]^2}$$

[…]

Fig. 3. Total SNR level averaged over the four sentences (fan noise).
Fig. 4. Total SNR level averaged over the four sentences (white noise).

[…] At input SNR's below 0 dB, SNR improvement figures might be misleading: an algorithm that produces a constant zero signal has an output SNR of 0 dB and would therefore appear to improve the SNR. In fact, below 0 dB, the distortion of the HMM-MMSE algorithm is very significant. The distortion of the LSAE algorithm in that region is less severe, but the enhanced signal sounds unnatural. On the other hand, the KEMI algorithm improves the SNR over the full range of input SNR values without affecting the intelligibility and natural sound of the speech.

The IS distance measure results in Fig. 5 show an advantage of the KEMI algorithm over the full range of input SNR's. The IS values of the HMM algorithm were much worse (i.e., higher) than those of the other algorithms, and are therefore not presented. At SNR's below […]

Fig. 5. Median Itakura–Saito distortion measure (same legend as Fig. 3, except for HMM).
Fig. 6. Single digits recognition rate with preprocessing (KEMI, HMM, and LSAE) and without.

[…] system, when tested on the clean isolated digit sentences, was 99.1%. The single digits recognition rate of the system when subject to speech signals contaminated by computer fan noise at various SNR's (the SNR was measured in the frequency region of interest, between 200 and 3200 Hz) is summarized in Fig. 6. We also show the corresponding recognition rate when the noisy speech is preprocessed by the KEMI algorithm (fixed-lag smoothing version; the filtered version was slightly inferior), by the LSAE algorithm, and by the HMM-MMSE algorithm. The main purpose of this comparison is to compare the performance of the enhancement algorithms on an additional task. Alternative noise adaptation algorithms [10], [28], which adapt the parameters of the recognizer instead of preprocessing the input speech, cannot produce an enhanced speech signal. Hence, the
performance of these algorithms was not evaluated. As can be seen, the KEMI algorithm improves the performance by about 6 dB (i.e., when speech enhancement is not employed, the SNR needs to be increased by 6 dB in order to obtain the same recognition rate). The KEMI algorithm shows superior performance compared to the HMM-MMSE algorithm, and is comparable to the LSAE at input SNR's higher than 6 dB. Below 6 dB input SNR, the LSAE is superior to the KEMI algorithm.

VII. DISCUSSION

The proposed Kalman filter-based enhancement algorithms use parallel AR models to describe the dynamics of the speech and noise signals. The combined model is used to decompose the noisy signal into speech and noise components. HMM-based enhancement algorithms also use parallel models, one set for each of the components into which the signal is to be decomposed (although usually the noise model degenerates to a single-state HMM). The main difference between the two approaches is that the HMM-based methods constrain the estimated speech (and noise) parameters to a codebook of possible spectra that is obtained from a clean speech database. This codebook is in fact a detailed model of the speech signal, and the success of the HMM-based methods depends on the accuracy of this model. A mismatch between the database used to construct the speech codebook and the actual speech signal that needs to be enhanced might deteriorate the quality of the enhanced signal. For example, speaker-dependent applications might be more successful than speaker-independent applications when using the HMM-based methods. The LSAE algorithm shows improved performance compared to HMM-MMSE, especially in the low SNR range. We attribute this phenomenon to the fact that LSAE (and also STSA) tends to attenuate the noisy speech signal less than Wiener filtering does, especially in the lower SNR range (in addition, recall that HMM-MMSE is a weighted combination of Wiener
filters). HMM-based signal decomposition has also been used to enhance the robustness of HMM-based speech recognition systems [10], [28]. In this case, parallel HMM model combination is used to transform the probability distribution of the clean speech signal into the probability distribution of the noisy signal.

The Kalman filter-based algorithms employ the MMSE criterion, although other criteria such as minimax (H-infinity) are also possible in the enhancement stage of the algorithm. In fact, in [26], a Kalman filter based on the minimax criterion is shown to be superior to a standard (MMSE) Kalman filter. It should be noted, however, that implementing the minimax criterion in the parameter estimation stage of the algorithm seems to be much more complicated.

The proposed algorithms use Kalman filtering instead of the Wiener filter approach of Lim and Oppenheim [20]. The advantage of Kalman filtering compared to Wiener filtering is attributed to the fact that the Kalman filter approach enables accurate modeling of the nonstationary transitions at frame boundaries. This advantage was previously noted in [23] for the case where the estimated speech parameters are obtained from the clean speech signal (e.g., a 4.5 dB improvement in segmental SNR for speech contaminated by white noise at 0 dB input SNR, and a 5.5 dB improvement when using fixed-lag Kalman smoothing). In [18], the HMM-based enhancement of [7] and [8], which employs parallel Wiener filters, was modified by replacing Wiener filtering with Kalman filtering.

An important feature of the proposed algorithm is that, unlike the alternative algorithms that were examined, a voice activity detector (VAD) is not required. In fact, the implementation of these alternative algorithms in Section VI assumed an ideal VAD that produces the true noise spectrum. In that sense, the comparison was biased in favor of these competing algorithms. This advantage of the proposed algorithm is especially significant for
noise sources with fast-changing spectra, since a VAD is less useful in that case. It is also significant in the lower SNR region, where it is more difficult to construct reliable VADs.

VIII. SUMMARY

We presented iterative-batch and sequential speech enhancement algorithms in the presence of colored background noise, and compared the performance of these algorithms with alternative speech enhancement algorithms. The iterative-batch algorithm employs the EM method to estimate the spectral parameters of the speech signal and noise process. Each iteration of the algorithm is composed of an estimation (E) step and a maximization (M) step. The E-step is implemented by using the Kalman filtering equations. The M-step is implemented by using a nonstandard Yule–Walker (YW) equation set, in which correlations are replaced by their a posteriori values, which are calculated by using the Kalman filtering equations. The enhanced speech is obtained as a byproduct of the E-step.

The performance of this algorithm was compared to that of alternative speech enhancement algorithms. A distinct advantage of the proposed algorithm compared to alternative algorithms is that it enhances the quality and SNR of the speech while preserving its intelligibility and natural sound. Another advantage of the algorithm is that a VAD is not required.

Our development assumes a colored, rather than white, Gaussian noise model. The incremental computational price that is paid for this extension is moderate. However, the realizable improvement in enhancement performance may be quite significant, as indicated in [11] and [13].

Fixed-lag Kalman smoothing was superior to Kalman filtering in terms of the objective distance measures (total and segmental SNR and Itakura–Saito distance) and in terms of ASR performance. However, our informal speech quality tests suggest the opposite conclusion (i.e., that filtering is slightly superior to fixed-lag smoothing).

Fourth-order cumulant based equations were shown to provide a reliable initialization to the EM algorithm. Alternative initialization methods that we tried, such as third-order statistics based equations, were not as effective.

In order to reduce the computational load and to eliminate the delay of the iterative-batch algorithm, the sequential algorithm may be used. Although in general the performance of the iterative-batch algorithm is superior, at low SNR's the differences in performance are small.

APPENDIX A

We provide a derivation of the EM algorithm presented in Section III. […] The ML estimate of $\theta$ is given by

$$\hat{\theta}_{\mathrm{ML}} = \arg\max_{\theta} \log f(\mathbf{y}; \theta)$$

[…] a "complete data" vector $\mathbf{z}$ that is related to the observed data vector $\mathbf{y}$ ("incomplete data") through a (generally noninvertible) transformation. The $\ell$-th iteration of the EM algorithm consists of the following estimation (E) step and maximization (M) step.

E-Step: $Q(\theta, \theta^{(\ell)}) = E\{\log f(\mathbf{z}; \theta) \mid \mathbf{y}; \theta^{(\ell)}\}$

M-Step: $\theta^{(\ell+1)} = \arg\max_{\theta} Q(\theta, \theta^{(\ell)})$

where $\theta^{(\ell)}$ is the estimate of $\theta$ after $\ell$ iteration cycles. The corresponding vectors of speech and noise samples are $\mathbf{s} = [s(1), \ldots, s(N)]^T$ and $\mathbf{n} = [n(1), \ldots, n(N)]^T$, where $N$ is the frame length ([…] $p$ and $q$ are the speech and noise AR orders). The "complete data" vector […], where $\theta$ is the vector of unknown parameters defined in (4). Under the assumption that both the speech innovation sequence $u(t)$ and the noise innovation sequence $w(t)$ are Gaussian (hence $s(t)$ and $n(t)$ are also assumed Gaussian), and recalling (2) and (3), one obtains (26) […]. Invoking (28) and (29) yields […].

To obtain our sequential algorithm, the iteration index is replaced by the time index. We also incorporate a forgetting factor for calculating the covariance terms. […]

REFERENCES

[25] […], "[…]ment strategies with application to hearing aid design," in Proc. Int. Conf. Acoustics, Speech, Signal Processing, vol. 1, 1994, pp. 13–16.
[26] X. Shen, L. Deng, and A. Yasmin, "H-infinity filtering for speech enhancement," in
Proc. Int. Conf. Spoken Language Processing, 1996, pp. 873–876.
[27] R. H. Shumway and D. S. Stoffer, "An approach to time series smoothing and forecasting using the EM algorithm," J. Time Series Anal., vol. 3, pp. 253–264, 1982.
[28] A. P. Varga and R. K. Moore, "Hidden Markov model decomposition of speech and noise," in Proc. Int. Conf. Acoustics, Speech, Signal Processing, 1990, pp. 845–848.
[29] E. Weinstein, A. V. Oppenheim, and M. Feder, "Signal enhancement using single and multi-sensor measurements," RLE Tech. Rep. 560, Mass. Inst. Technol., Cambridge, MA, 1990.
[30] E. Weinstein, A. V. Oppenheim, M. Feder, and J. R. Buck, "Iterative and sequential algorithms for multisensor signal enhancement," IEEE Trans. Signal Processing, vol. 42, pp. 846–859, 1994.

Sharon Gannot (S'95) received the B.Sc. degree (summa cum laude) from the Technion–Israel Institute of Technology, Haifa, in 1986, and the M.Sc. degree (cum laude) from Tel-Aviv University, Israel, in 1995, both in electrical engineering. Currently, he is a Ph.D. student in the Department of Electrical Engineering–Systems, Tel-Aviv University. From 1986 to 1993, he was head of a research and development section in the Israeli Defense Forces. His research interests include parameter estimation, statistical signal processing, and speech processing using either single- or multimicrophone arrays.

David Burshtein (M'92) received the B.Sc. (summa cum laude) and Ph.D. degrees in electrical engineering from Tel-Aviv University, Israel, in 1982 and 1987, respectively. From 1982 to 1987, he was a Senior Research Engineer in the Research Laboratories, Israel Ministry of Defense, and was involved in research and development of digital signal processing systems. During 1988 and 1989, he was a Research Staff Member in the Speech Recognition Group, IBM T. J. Watson Research Center. In October 1989, he joined the Department of Electrical Engineering–Systems, Tel-Aviv University, where he is currently a faculty member. In the past six years, he has also been acting as a consultant to the Israeli Ministry of Defense and to DSPC-Israel.
His research interests include parameter estimation, speech processing, speech recognition, statistical pattern recognition, and neural networks.

Ehud Weinstein (M'82–SM'86–F'94) was born in Tel-Aviv, Israel, on May 9, 1950. He received the B.Sc. degree from the Technion–Israel Institute of Technology, Haifa, and the Ph.D. degree from Yale University, New Haven, CT, both in electrical engineering, in 1975 and 1978, respectively. In 1980, he joined the Department of Electrical Engineering–Systems, Faculty of Engineering, Tel-Aviv University, Israel, where he is currently a Professor. Since 1978, he has been affiliated with the Woods Hole Oceanographic Institution. He has also been a Research Affiliate in the Research Laboratory of Electronics at the Massachusetts Institute of Technology, Cambridge, since 1990. His research interests are in the general areas of estimation theory, statistical signal processing, array processing, and digital communications. Dr. Weinstein is a co-recipient of the 1983 Senior Award of the IEEE Acoustics, Speech, and Signal Processing Society.

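A Comparative Study of Speech Denoising Methods
Yao Jianqin; Wang Hailing; Liu Haisheng
[Abstract] Five speech denoising methods are presented: empirical mode decomposition (EMD), autocorrelation frequency-domain denoising, Gabor-transform-based time-frequency-domain denoising, joint EMD-autocorrelation denoising, and joint Gabor transform-autocorrelation denoising. The characteristics and performance of the five methods are compared using the signal-to-noise ratio, the cross-correlation coefficient, and subjective evaluation. The results show that, compared with any single method, the joint methods combine the advantages of different methods and achieve better denoising.
[Journal] Audio Engineering (电声技术)
[Year (Volume), Issue] 2017 (041) 006
[Total pages] 5 (pp. 92–96)
[Keywords] speech denoising; autocorrelation; EMD; Gabor transform; signal-to-noise ratio; spectrogram
[Authors] Yao Jianqin; Wang Hailing; Liu Haisheng
[Affiliation] Institute of Acoustics, School of Physics Science and Engineering, Tongji University, Shanghai 200092 (all three authors)
[Language] Chinese
[CLC number] TN931.42
In speech transmission, interference of environmental noise with the speech signal is unavoidable. The main goal of speech denoising is to extract speech that is as close as possible to the clean original from the noisy speech, thereby improving the recognition rate.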

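In this paper, white noise is added to a speech signal recorded in an anechoic chamber to synthesize a noisy speech signal with an SNR of -20 dB, which is then denoised with five methods.

These methods are: empirical mode decomposition (EMD), autocorrelation frequency-domain denoising, Gabor-transform-based time-frequency-domain denoising, joint EMD-autocorrelation denoising, and joint Gabor transform-autocorrelation denoising.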

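The characteristics and performance of the five denoising methods are compared using the signal-to-noise ratio, the cross-correlation coefficient, and subjective evaluation.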
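The two objective measures named above can be computed as in the Python sketch below. It assumes the usual definitions, which the abstract does not spell out: output SNR as clean-signal energy over residual energy, and the cross-correlation coefficient as the zero-lag normalized (Pearson) correlation between the clean and denoised signals. The function names are hypothetical.

```python
import math


def snr_db(clean, processed):
    """Output SNR in dB: 10 log10( sum s^2 / sum (s - s_hat)^2 )."""
    num = sum(s * s for s in clean)
    den = sum((s - p) ** 2 for s, p in zip(clean, processed))
    return 10.0 * math.log10(num / den)


def corr_coef(x, y):
    """Zero-lag normalized cross-correlation (Pearson) of two equal-length signals."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)
```

A coefficient close to 1 indicates that the denoised waveform preserves the shape of the clean speech, which complements the energy-based SNR figure.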

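The noisy speech signal was synthesized by adding white noise to a clean, dry speech signal recorded in an anechoic chamber.

The clean recordings consist of voiceless and voiced letters of Hanyu Pinyin.

Recording was done with LabVIEW audio software at a sampling rate of 44 100 Hz.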

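After the recordings were converted to WAV format, a MATLAB program added generated white noise, as additive noise, to each single-letter speech clip (with silent segments removed) to obtain the noisy signals.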
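The mixing step can be sketched in Python (rather than MATLAB) as below. The noise is rescaled using its realized power, so the mixture reaches the requested SNR exactly, for example -20 dB as in this study. The function name and the use of Gaussian white noise are assumptions; the paper does not state how its white noise was generated.

```python
import math
import random


def add_white_noise(clean, snr_db, seed=None):
    """Return (noisy, noise) with 10*log10(P_clean / P_noise) equal to snr_db."""
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in clean]
    p_s = sum(x * x for x in clean) / len(clean)
    p_n = sum(w * w for w in noise) / len(noise)
    # Scale so that the realized noise power is p_s / 10^(snr_db / 10)
    scale = math.sqrt(p_s / (p_n * 10 ** (snr_db / 10.0)))
    noise = [scale * w for w in noise]
    return [x + w for x, w in zip(clean, noise)], noise


if __name__ == "__main__":
    fs = 44100  # sampling rate used in the study
    tone = [math.sin(2 * math.pi * 440 * t / fs) for t in range(fs // 10)]  # stand-in clip
    noisy, noise = add_white_noise(tone, -20.0, seed=0)
    print(len(noisy))
```

At -20 dB the noise power is 100 times the speech power, which makes the clip nearly unintelligible before processing.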

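"Research on Deep-Learning-Based Multichannel Speech Enhancement Methods," Part 1
I. Introduction
With the rapid development of artificial intelligence, speech signal processing is widely used in many fields, such as speech recognition, speech synthesis, and speech communication.

However, in complex acoustic environments the original speech signal is often corrupted by various kinds of noise, which severely degrades its quality and intelligibility.

Speech enhancement research is therefore particularly important.

In recent years, deep-learning-based multichannel speech enhancement has attracted much attention because of its advantages in complex acoustic environments.

This paper examines deep-learning-based multichannel speech enhancement methods in depth.

II. Background and Importance of Multichannel Speech Enhancement
Multichannel speech enhancement uses the signals received by multiple microphones or sensors and processes them algorithmically to improve speech quality.

This approach can effectively suppress noise interference and improve speech intelligibility.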

Traditional multichannel speech enhancement methods rely mainly on signal processing techniques such as filtering and beamforming.
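As a reference point for the traditional beamforming mentioned above, here is a minimal delay-and-sum beamformer in Python. It assumes the target's arrival delay at each microphone is already known as an integer number of samples (in practice it comes from the array geometry or a time-delay estimator); the function name and demo values are hypothetical.

```python
import random


def delay_and_sum(channels, delays):
    """Advance each channel by its arrival delay (in samples) and average the channels.

    channels: equal-length lists of samples; delays: nonnegative integer delays of
    the target source at each microphone. Output length is len - max(delays).
    """
    m = len(channels)
    n_out = len(channels[0]) - max(delays)
    return [sum(ch[t + d] for ch, d in zip(channels, delays)) / m for t in range(n_out)]


if __name__ == "__main__":
    rng = random.Random(0)
    src = [rng.gauss(0.0, 1.0) for _ in range(1000)]
    delays = [0, 2, 5, 7]  # hypothetical geometry-derived delays
    mics = [[0.0] * d + src[:len(src) - d] for d in delays]
    mics = [[v + rng.gauss(0.0, 1.0) for v in ch] for ch in mics]  # independent sensor noise
    out = delay_and_sum(mics, delays)
    err = sum((o - s) ** 2 for o, s in zip(out, src)) / len(out)
    # Expected value: 1/len(delays) of a single channel's noise power (here 0.25)
    print("residual noise power:", err)
```

With independent, equal-power sensor noise, averaging M aligned channels leaves the target unchanged and reduces the noise power by a factor of M; learned beamformer weights aim to improve on this baseline when the noise is not spatially white.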

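However, these methods have limited effectiveness in complex acoustic environments.

In recent years, the rise of deep learning has provided new ideas and methods for multichannel speech enhancement.

III. Deep-Learning-Based Multichannel Speech Enhancement Methods
Deep learning plays an important role in multichannel speech enhancement, chiefly through noise suppression based on deep neural networks and through deep-learning-based beamforming algorithms.

Noise suppression based on deep neural networks learns, from large amounts of training data, a mapping from noisy speech to clean speech, and thereby suppresses the noise.

Deep-learning-based beamforming, in turn, trains a deep neural network to optimize the beamforming weights and thereby improve the signal-to-noise ratio.

IV. Design and Implementation of the Deep Learning Model
Deep learning models for multichannel speech enhancement typically use structures such as convolutional neural networks (CNNs) or recurrent neural networks (RNNs).

These networks learn the spatiotemporal features of the input signals and extract useful information for suppressing noise.

End-to-end training can also be used, mapping the noisy input signal directly to the enhanced output signal.

During training, a large set of paired noisy and clean speech data is usually used as the training set, and the model's performance is improved by optimizing a loss function.

V. Experiments and Analysis
This section verifies the effectiveness of deep-learning-based multichannel speech enhancement through experiments.

First, a public corpus was used as the training and test dataset.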

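Speech Enhancement Algorithm Based on Adaptive Filtering

Ge Liang; Tao Zhi

[Journal] Journal of Soochow University (Engineering Science Edition)

[Year (Volume), Issue] 2002 (022) 004

[Abstract] A method of speech enhancement using adaptive filtering is introduced. Noisy speech is processed by an adaptive filter, and the SNR and perceived quality of the filtered speech are greatly improved.

[Total pages] 3 (pp. 23–25)

[Authors] Ge Liang; Tao Zhi

[Affiliations] School of Information Technology, Soochow University, Suzhou, Jiangsu 215006; School of Science, Soochow University, Suzhou, Jiangsu 215006

[Language] Chinese

[CLC number] TN912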

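[Related literature]

1. DSP implementation of a speech enhancement algorithm based on adaptive filtering

2. Adaptive speech enhancement algorithm based on a DFRCT filter bank

3. Research on a speech enhancement algorithm based on adaptive filtering

4. Research on a speech enhancement algorithm based on adaptive filtering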

5. Speech enhancement algorithm based on wavelet packets and adaptive Wiener filtering
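The abstract above does not describe the filter structure. One common form of adaptive-filter speech enhancement is the two-microphone adaptive noise canceller adapted with the LMS algorithm; the Python sketch below illustrates that structure only as an assumption, not as the authors' method. The tap count, step size, noise path, and sinusoidal stand-in for speech are hypothetical.

```python
import math
import random


def lms_noise_canceller(primary, reference, n_taps=8, mu=0.01):
    """Two-input adaptive noise canceller adapted with LMS.

    primary:   speech plus noise at the speech microphone
    reference: noise picked up by a separate reference sensor
    Returns the error signal e(t), which serves as the enhanced speech.
    """
    w = [0.0] * n_taps
    buf = [0.0] * n_taps  # most recent reference samples, newest first
    out = []
    for d, r in zip(primary, reference):
        buf = [r] + buf[:-1]
        y = sum(wi * xi for wi, xi in zip(w, buf))         # noise estimate
        e = d - y                                          # enhanced output sample
        w = [wi + mu * e * xi for wi, xi in zip(w, buf)]   # LMS weight update
        out.append(e)
    return out


def demo(n=4000, seed=0):
    """Return (error power before, error power after) over the second half of the run."""
    rng = random.Random(seed)
    speech = [math.sin(2 * math.pi * 0.01 * t) for t in range(n)]  # stand-in "speech"
    ref = [rng.gauss(0.0, 1.0) for _ in range(n)]
    h = [0.8, -0.4, 0.2]  # hypothetical acoustic path from noise source to primary mic
    noise = [sum(h[k] * ref[t - k] for k in range(len(h)) if t - k >= 0) for t in range(n)]
    primary = [s + v for s, v in zip(speech, noise)]
    enhanced = lms_noise_canceller(primary, ref)
    half = n // 2  # evaluate after the filter has converged

    def err_power(est):
        return sum((e - s) ** 2 for e, s in zip(est[half:], speech[half:])) / (n - half)

    return err_power(primary), err_power(enhanced)
```

The step size trades convergence speed against residual misadjustment; it must stay below roughly 2 / (n_taps * reference power) for the update to remain stable.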


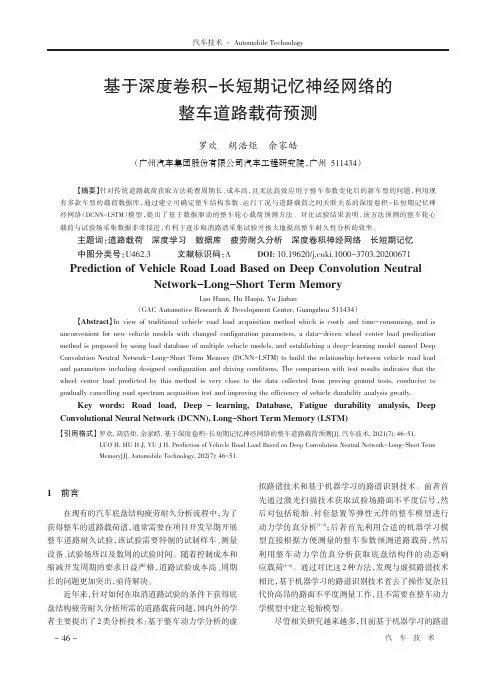

Fast Adaptation for Robust Speech Recognition in Reverberant Environments

L. Couvreur, S. Dupont, C. Ris, J.-M. Boite, and C. Couvreur
Faculté Polytechnique de Mons, Belgium
Lernout & Hauspie Speech Products, Belgium
lcouv,dupont,ris,boite@tcts.fpms.ac.be
christophe.couvreur@lhs.be

Abstract

We present a fast method, i.e., one requiring little data, for adapting a hybrid Hidden Markov Model/Multi Layer Perceptron speech recognizer to reverberant environments. Adaptation is performed by a linear transformation of the acoustic feature space. A dimensionality reduction technique similar to the eigenvoice approach is also investigated. A pool of adaptation transformations is estimated a priori for various reverberant environments. Then, the principal directions of the pool, the so-called eigenrooms, are extracted. The adaptation transformation for every new reverberant environment is constrained to lie in the subspace spanned by the most significant eigenrooms. Consequently, the adaptation procedure involves estimating only the projection coefficients on the selected eigenrooms, which requires less data than direct estimation of the adaptation transformation. Supervised adaptation experiments for recognition of connected digit sequences (AURORA database) in reverberant environments are carried out. Standard adaptation yields word error rate improvements higher than 30% for typical reverberation levels. The eigenroom-based adaptation technique implemented so far allows at most a 50% reduction of adaptation data for the same improvement.

1. Introduction

In many real applications, automatic speech recognition (ASR) systems have to deal with noise and room reverberation. Since these systems, or more exactly their acoustic models, are commonly trained on clean speech material, i.e., noise-free and echo-free speech, they perform poorly during operation because of the mismatch between the training conditions and the operating conditions. In this work, we are primarily concerned with the mismatch due to room
reverberation. Two approaches come out naturally for reducing this mismatch. One can train the acoustic models on reverberated speech; such training material can be obtained by convolving clean speech with room impulse responses that are either measured in reverberant enclosures [1] or artificially generated [2]. Alternatively, one can (partially) recover echo-free acoustic features and keep using the acoustic models trained on echo-free speech.

We propose here adaptation methods in the framework of connectionist speech recognition [3] that compensate for room reverberation by a linear transformation of the acoustic features. The standard adaptation procedure consists in estimating the coefficients of the linear mapping from data recorded in the target reverberant operating environment. Recently, the eigenvoice concept has been introduced [4, 5] to reduce the dimensionality problem inherent to such adaptation procedures. The eigenvoice method increases the reliability and the efficiency of the adaptation procedure by limiting the number of parameters that must be estimated. The method was originally developed for fast speaker adaptation of recognizers based on Hidden Markov Models/Gaussian Mixture Models (HMM/GMM) [6, 7]. In [8], the method was extended to hybrid Hidden Markov Model/Multi Layer Perceptron (HMM/MLP) recognizers. We generalize here the latter approach to room reverberation adaptation by introducing the eigenroom concept.

In the next section, we first review the standard technique for adapting an HMM/MLP recognizer by linear transformation of the acoustic features. We then describe how the eigenvoice concept can be generalized to adaptation to room reverberation, and we propose a fast version of the standard adaptation technique. In Section 3, results for recognition of connected digit sequences are reported. Conclusions are drawn in Section 4.

2. Adaptation Procedure

In this work, we use a Multi Layer Perceptron (MLP) as the acoustic model for speech
recognition [3]. More precisely, a single-hidden-layer MLP is used. The MLP inputs are acoustic feature vectors computed for successive frames of speech along the utterance to be recognized. The MLP outputs are estimates of a posteriori phone probabilities. The resulting lattice of probabilities is then searched for the most likely word sequence given a lexicon of word phonetic transcriptions. Such an acoustic model is commonly trained on a large database consisting of a sequence of acoustic feature vectors and the corresponding sequence of phone labels. The training procedure aims at minimizing the squared error between the actual outputs and the expected ones (1 for the output of the desired phone and 0 otherwise). This supervised training of the MLP coefficients can be efficiently implemented via a gradient descent procedure using the popular back-propagation (BP) algorithm [9].

2.1. Standard Adaptation

Unfortunately, the performance of an MLP-based speech recognizer degrades severely when the training acoustic conditions differ from the operating acoustic conditions [2]. In order to recover satisfactory performance, the acoustic model has to be adapted. A usual technique for adapting an MLP consists in transforming the input acoustic feature vectors linearly [10]:

$$\hat{x} = W x + b \qquad (1)$$

where $x$, $\hat{x}$, and $\{W, b\}$ denote the current acoustic feature vector (possibly augmented with left and right context acoustic feature vectors), its compensated version, and the adaptation parameters, respectively. The transformed feature vector then serves as input to the unchanged existing MLP. Hence, the adaptation procedure consists in estimating the adaptation parameters.

Figure 1: Adaptation scheme for hands-free speech recognition in reverberant environments.

The linear transformation can be seen as
an extra linear input layer appended to the existing MLP.Ini-tializing this layer with the identity matrix()and zerobiases(),it can be estimated by resuming the supervisedtraining of the augmented MLP on the available adaptation data,keeping all the other layers frozen[10].In this work,we ap-ply this procedure for adapting an existing speaker-independentMLP trained on echo-free speech to a reverberant environment(seefigure1).2.2.Fast AdaptationAs shown in section3,a significant amount of data is necessaryto adapt efficiently the echo-free MLP,and to obtain a room-dependent but still speaker-independent acoustic model.Wepropose to apply a method similar to the eigenvoice approach[5]in order to reduce the amount of adaptation data.Let de-fine as the-dimensional adaptation vector gathering theadaptation parameters,(2)Assume that such a vector may be computed for reverber-ant environments with different reverberation levels.That is,a set of adaptation vectors are com-puted a priori.Next,a Principal Component Analysis(PCA)[11]is performed on the set.The principal directionsare extracted by eigendecomposition of the covariance matrix(3) withandand the th element of(7) where denotes the learning rate coefficient.The gradient termFigure2:Eigenroom-based adaptation:the adaptation transfor-mation is assumed to be modeled by only3parameters(=3) and constrained to lay on thefirst eigenroom(=1).Table1:Word error rate(WER)as the sum of substitution errorrate(SUB),deletion error rate(DEL)and insertion error rate(INS)for the baseline speaker-independent echo-free MLP.WER[%]0.70.50.5Test set=2004006008001000WER=8.220.433.446.748.5 25k4.97.412.020.022.175k3.4 6.29.717.118.5125k3.4 5.78.815.817.1175k3.9 5.48.515.116.1(8) where thefirst term is obtained by the BP algorithm and thesecond term is easily derived from equation(6),i.e.equal to theoutput of the th eigenroom transformation.3.Experimental ResultsThe speech material used in this work comes from the cleanpart of the AURORA 
database[12]and consists of English con-nected digit sequences.The corpus is divided into a training setof8840utterances and a test set of1001utterances,pronouncedby110speakers and104other speakers,respectively.First,we train a MLP with a600-node hidden layer onthe echo-free training set.The resulting model is assumed tobe speaker-independent.For every speech frame,it estimatesthe a posteriori probabilities for a33-phoneme set given theacoustic vectors of the current frame augmented with7-frame left-context and7-frame right-context acoustic vectors.Eachacoustic vector is composed of12Mel-warped frequency cep-stral coefficients(MFCC)and the energy.The performance of the resulting MLP for recognition of the echo-free test set isgiven in table1.Speech decoding is done by Viterbi search,with neither pruning nor grammar constraints.Then,we try to adapt the echo-free MLP to various rever-berant environments.Following the notation of section2,the compensated vector is obtained by linear transformation(see equation(1))of the-dimensional vector formed by the cur-rent acoustic vector with its context,i.e..As described in section2.2,no reverberated data arecollected in the reverberant environment to which we want toadapt.Only the reverberation time has to be known.Given,one can generate adaptation material by convolving the echo-free training set with artificial room impulse responses matching[2].Once the echo-free MLP has been adapted, it is used to recognize a reverberated test set.In this work,the Table3:Word error rate(WER[%])for various reverberant environments([ms])and for various amounts of adaptation data in the case of a block diagonal adaptation matrix.Adapt.data[frame]No6.112.922.034.637.050k5.511.318.128.632.5100k5.510.416.627.130.2150k5.210.216.126.728.8200k=.Figure3:Block diagonal adaptation matrix. 
reverberated test sets are obtained by acoustic room simulation (Image method[13])which allows us to specify any room con-figuration and control the reverberation time.Table2shows the WER for various as a function of the number of adapta-tion frames.Thefirst line corresponds to the performance of the echo-free system,i.e.with no adaptation.As expected,adapt-ing the acoustic model significantly improves the performance of the speech recognizer.Though the standard adaptation technique provides high WER improvements,especially with large amounts of adap-tation data,the number of parameters defining the adaptation transformation is too large() for using it in a fast adaptation framework.Indeed,the compu-tation of the eigenrooms would be highly memory demanding, computationally prohibitive and prone to round-off error.First, we observe that the biases do not help adapting.Besides,we observe that high value coefficients of the adaptation matrix are mostly located along the main diagonal.Hence,we decide to use a block diagonal adaptation matrix instead of a full matrix. That is,the elements which are off the main block diagonal are forced to zero(seefigure3).The number of adaptation parame-ters is reduced drastically().For the sake of comparison,table3reports the WER for variousas a function of the number of adaptation frames.As expected, the adaptation procedure with a block diagonal matrix provides less WER improvement than with a full matrix.Next,we test the eigenroom-based adaptation technique. 
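The input-layer adaptation described in sections 2.1 and 3 (context-augmented 195-dimensional input, identity initialization, frozen MLP, block diagonal matrix without biases) can be sketched in Python with NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the block layout (one 13×13 block per context frame), the edge padding, and the helper names `stack_context` and `adapt_step` are hypothetical, and the error gradient that back-propagation through the frozen MLP would supply is simply passed in as `grad_out`.

```python
import numpy as np

N_COEFFS = 13            # 12 MFCCs + energy per frame (section 3)
LEFT, RIGHT = 7, 7       # context frames on each side (section 3)
N_FRAMES = LEFT + 1 + RIGHT
N = N_FRAMES * N_COEFFS  # dimension of the MLP input vector x (195)


def stack_context(frames: np.ndarray) -> np.ndarray:
    """Map (T, 13) per-frame features to (T, 195) context-augmented vectors.

    Edge frames are padded by repetition; this is an assumption, the paper
    does not describe how utterance boundaries are handled.
    """
    padded = np.concatenate([np.repeat(frames[:1], LEFT, axis=0),
                             frames,
                             np.repeat(frames[-1:], RIGHT, axis=0)])
    return np.stack([padded[t:t + N_FRAMES].reshape(-1)
                     for t in range(frames.shape[0])])


# Hypothetical block layout: one 13x13 block per context frame.
MASK = np.kron(np.eye(N_FRAMES), np.ones((N_COEFFS, N_COEFFS)))


def adapt_step(A: np.ndarray, x: np.ndarray, grad_out: np.ndarray,
               lr: float = 0.01) -> np.ndarray:
    """One gradient step on the extra input layer x_hat = A @ x (biases dropped).

    grad_out stands for dE/dx_hat, which back-propagation through the frozen
    MLP would provide. dE/dA is the outer product grad_out x^T; multiplying
    by MASK keeps the off-block elements of A at zero.
    """
    return A - lr * np.outer(grad_out, x) * MASK


full_params = N * N + N          # full matrix plus biases, as in equation (1)
block_params = int(MASK.sum())   # block diagonal matrix, no biases

A = np.eye(N)  # identity initialization: the augmented MLP starts out unchanged
```

For instance, `stack_context` turns T frames of 13 coefficients into T vectors of length 195, and `A = np.eye(N)` leaves those vectors untouched, which is why the identity initialization makes the augmented MLP behave exactly like the original one before any adaptation step. Under the assumed layout, the mask cuts the parameter count from 38,220 (full matrix plus biases) to 2,535.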
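The eigenroom procedure of section 2.2 can likewise be sketched in NumPy: PCA over R precomputed adaptation vectors following the covariance of equation (3), an adaptation matrix modeled as the mean vector plus a weighted sum of the K leading eigenrooms, and gradient updates of the weights in the spirit of equations (7) and (8). The mean-plus-weighted-sum form is an eigenvoice-style assumption, since the exact form of equation (6) is not given in this text. The function names, the bias-free vectorized matrix, the synthetic "rooms", and the squared-error stand-in used instead of back-propagating through a real MLP are all hypothetical and serve only to show the mechanics.

```python
import numpy as np


def eigenrooms(thetas, K):
    """PCA over R adaptation vectors (rows of thetas, shape (R, D)).

    Returns the mean vector, the K leading eigenvectors of the covariance
    matrix as columns of a (D, K) array, and all eigenvalues sorted in
    decreasing order and normalized to sum to 1 (what a scree plot shows).
    """
    mean = thetas.mean(axis=0)
    centered = thetas - mean
    cov = centered.T @ centered / thetas.shape[0]   # 1/R normalization
    eigvals, eigvecs = np.linalg.eigh(cov)          # ascending order
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    return mean, eigvecs[:, :K], eigvals / eigvals.sum()


def transform_from_weights(mean, E, w, n):
    """Assumed model: A(w) = mean + sum_k w_k e_k, reshaped to (n, n), no biases."""
    return (mean + E @ w).reshape(n, n)


def weight_gradient(E, X, grad_out, n):
    """dE/dw_k averaged over the T frames in X (shape (n, T)).

    grad_out holds dE/dx_hat (shape (n, T)); each term multiplies it by the
    output of the k-th eigenroom transformation A_k @ x.
    """
    T = X.shape[1]
    return np.array([np.sum(grad_out * (E[:, k].reshape(n, n) @ X)) / T
                     for k in range(E.shape[1])])


# Synthetic demo: 12 "rooms" whose 3x3 matrices vary mostly along one direction.
rng = np.random.default_rng(0)
n, R = 3, 12
direction = rng.standard_normal(n * n)
direction /= np.linalg.norm(direction)
levels = np.linspace(-1.0, 1.0, R)
thetas = (np.eye(n).reshape(-1) + levels[:, None] * direction
          + 0.01 * rng.standard_normal((R, n * n)))
mean, E, norm_eigvals = eigenrooms(thetas, K=1)

# Adapt to an unseen room; a squared error stands in for the MLP criterion.
target = (np.eye(n).reshape(-1) + 0.7 * direction).reshape(n, n)
X = rng.standard_normal((n, 50))
Y = target @ X


def loss(w):
    residual = transform_from_weights(mean, E, w, n) @ X - Y
    return 0.5 * np.mean(np.sum(residual ** 2, axis=0))


w = np.zeros(1)
initial_loss = loss(w)
for _ in range(100):
    grad_out = transform_from_weights(mean, E, w, n) @ X - Y   # dE/dx_hat
    w -= 0.3 * weight_gradient(E, X, grad_out, n)              # gradient step on w
final_loss = loss(w)
```

With a single eigenroom, adapting to a new room reduces to estimating one scalar weight, which illustrates why far fewer adaptation frames should be needed than for estimating a full or block diagonal matrix.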
As a first step, block diagonal adaptation matrices are generated for $T_{60}$ varying from 100 ms to 1200 ms. For each $T_{60}$, 200000 frames of adaptation data are obtained by using artificially generated room impulse responses (see section 2.2). Then, the adaptation vectors are formed and the principal directions of the resulting vector set are extracted. Figure 4 gives the scree plot of the resulting eigenvalues. It clearly shows that the first few principal directions account for most of the variability within the adaptation vector set.

Figure 4: Scree plot for PCA applied to block diagonal matrices for adaptation to room reverberation (limited to the first 20 eigenvalues; horizontal axis: eigenvalue rank k, vertical axis: normalized eigenvalue λ_k).

Finally, we apply the eigenroom-based adaptation approach with various numbers of eigenrooms. Figure 5 compares WER improvements relative to the performance of the unadapted MLP: (a) for $T_{60}$ = … ms with the number of adaptation frames varying from 25000 to 200000, and (b) for 50000 adaptation frames with $T_{60}$ varying from 200 ms to 1000 ms. The eigenroom-based adaptation procedure performs significantly better than the standard adaptation procedure, especially for low amounts of adaptation data.

Figure 5: WER improvement relative to the unadapted MLP for eigenroom-based adapted MLPs with various numbers of eigenrooms, (a) as a function of the number of adaptation frames and (b) as a function of the reverberation time $T_{60}$.

4. Conclusion and Future Work
We have shown that HMM/MLP recognizers can be efficiently adapted to room reverberation by linear transformation of the input acoustic vectors. Besides, the eigenroom concept has been proposed. Similarly to the eigenvoice approach for fast speaker adaptation, the eigenroom-based approach requires less data for room adaptation than the standard approach. Unfortunately, the adaptation matrix has to be limited to a block diagonal matrix for computational reasons. Future work will focus on relaxing this constraint; for example, a structured full matrix such as an FIR matrix might be used. Furthermore, the promising results obtained for supervised adaptation have to be confirmed in unsupervised adaptation mode.

5. References
[1] D. Giuliani, M. Matassoni, M. Omologo and P. Svaizer, "Training of HMM with Filtered Speech Material for Hands-free Recognition", Proc. ICASSP'99, vol. 1, pp. 449–452, Phoenix, USA, Mar. 1999.
[2] L. Couvreur, C. Couvreur and C. Ris, "A Corpus-Based Approach for Robust ASR in Reverberant Environments", Proc. ICSLP'2000, vol. 1, pp. 397–400, Beijing, China, Oct. 2000.
[3] H. Bourlard and N. Morgan, "Connectionist Speech Recognition – A Hybrid Approach", Kluwer Academic Publishers, 1994.
[4] P. Nguyen, C. Wellekens and J.-C. Junqua, "Maximum Likelihood Eigenspace and MLLR for Speech Recognition in Noisy Environments", Proc. EUROSPEECH'99, vol. 6, pp. 2519–2522, Budapest, Hungary, Sep. 1999.
[5] R. Kuhn, J.-C. Junqua, P. Nguyen and N. Niedzielski, "Rapid Speaker Adaptation in Eigenvoice Space", IEEE Trans. on Speech and Audio Processing, vol. 8, no. 6, pp. 695–707, Nov. 2000.
[6] R. Kuhn, P. Nguyen, J.-C. Junqua, L. Goldwasser, N. Niedzielski, S. Fincke, K. Field and M. Contolini, "Eigenvoices for Speaker Adaptation", Proc. ICSLP'98, vol. 5, pp. 1771–1774, Sydney, Australia, Dec. 1998.
[7] P. Nguyen, "Fast Speaker Adaptation", Technical Report, Eurécom Institute, Jun. 1998.
[8] S. Dupont and L. Cheboub, "Fast Speaker Adaptation of Artificial Neural Networks for Automatic Speech Recognition", Proc. ICASSP'2000, vol. 3, pp. 1795–1798, Istanbul, Turkey, Jun. 2000.
[9] S. Haykin, "Neural Networks: A Comprehensive Foundation", Macmillan, 1994.
[10] o, C. Martins and L. Almeida, "Speaker-Adaptation in a Hybrid HMM-MLP Recognizer", Proc. ICASSP'96, vol. 6, pp. 3383–3386, Atlanta, USA, May 1996.
[11] K. Fukunaga, "Introduction to Statistical Pattern Recognition", Academic Press, 1990.
[12] AURORA database, http://www.elda.fr/aurora2.html.
[13] J. B. Allen and D. A. Berkley, "Image Method for Efficiently Simulating Small-Room Acoustics", J. Acoust. Soc. Am., vol. 65, no. 4, pp. 943–950, Apr. 1979.