Appearance models for occlusion handling

Andrew Senior *,Arun Hampapur,Ying-Li Tian,Lisa Brown,

Sharath Pankanti,Ruud Bolle

IBM T.J.Watson Research Center,P.O.Box 704,Yorktown Heights,NY 10598,USA Received 7April 2003;received in revised form 6June 2005;accepted 7June 2005

Abstract

Objects in the world exhibit complex interactions.When captured in a video sequence,some interactions manifest themselves as occlusions.A visual tracking system must be able to track objects,which are partially or even fully occluded.In this paper we present a method of tracking objects through occlusions

using appearance models.These models are used to localize objects during partial occlusions,detect complete occlusions and resolve depth ordering of objects during occlusions.This paper presents a tracking system which successfully deals with complex real world interactions,as demonstrated on the PETS 2001dataset.q 2005Elsevier B.V.All rights reserved.

Keywords:Probabilistic color appearance models;Visual vehicle and people tracking;Occlusion resolution;Moving object segmentation;Surveillance

1.Introduction

Real world video sequences capture the complex interactions between objects (people,vehicles,building,trees,etc.).In video sequences,these interactions result in several challenges to the tracking algorithm:distinct objects cross paths and cause occlusions;a number of objects may exhibit similar motion,causing dif?culties in segmentation;new objects may emerge from existing objects (a person getting out of a car)or existing objects may disappear (a person entering a car or exiting the scene).Maintaining appearance models of objects over time is necessary for a visual tracking system to be able to model and understand such complex interactions.

In this paper,we present a tracking system which uses appearance models to successfully track objects through complex real world interactions.Section 2presents a short review of related research.Section 3presents the overall architecture of the system,and its components:background

subtraction,high-level tracking and appearance models,are discussed in Sections 4–6,respectively.We have developed an interactive tool for generating ground truth using partial tracking results,which is discussed in Section 9.Section 10discusses our method for comparing automatic tracking results to the ground truth.Section 11presents results on the PETS test sequences.We summarize our paper and present future directions in Section 12.

2.Related work

Visually tracking multiple objects involves estimating 2D or 3D trajectories and maintaining identity through occlusion.One solution to the occlusion problem is to avoid it altogether by placing cameras overhead,looking down on the plane of motion of the objects [1–3]or to use the fusion of multiple cameras to determine depth [4,5].

In the case investigated here—of static monocular cameras viewing moving vehicles and people from a side view—occlusion is a signi?cant problem.It involves correctly segmenting visually merged objects,i.e.during occlusion,and specifying depth layering.An useful categorization of the work in this ?eld is based on the complexity of the model used to track objects over time.As model complexity increases,stronger assumptions about the object are invoked.In general,as the complexity of the models increases,more constraints are imposed,better

Image and Vision Computing 24(2006)1233–1243

https://www.doczj.com/doc/702220944.html,/locate/imavis

0262-8856/$-see front matter q 2005Elsevier B.V.All rights reserved.doi:10.1016/j.imavis.2005.06.007

*Corresponding author.

E-mail addresses:aws@https://www.doczj.com/doc/702220944.html, (A.Senior),arunh@https://www.doczj.com/doc/702220944.html, (A.Hampapur),yltian@https://www.doczj.com/doc/702220944.html, (Y.-L.Tian),lisabr@https://www.doczj.com/doc/702220944.html, (L.Brown),sharat@https://www.doczj.com/doc/702220944.html, (S.Pankanti),bolle@https://www.doczj.com/doc/702220944.html, (R.Bolle).

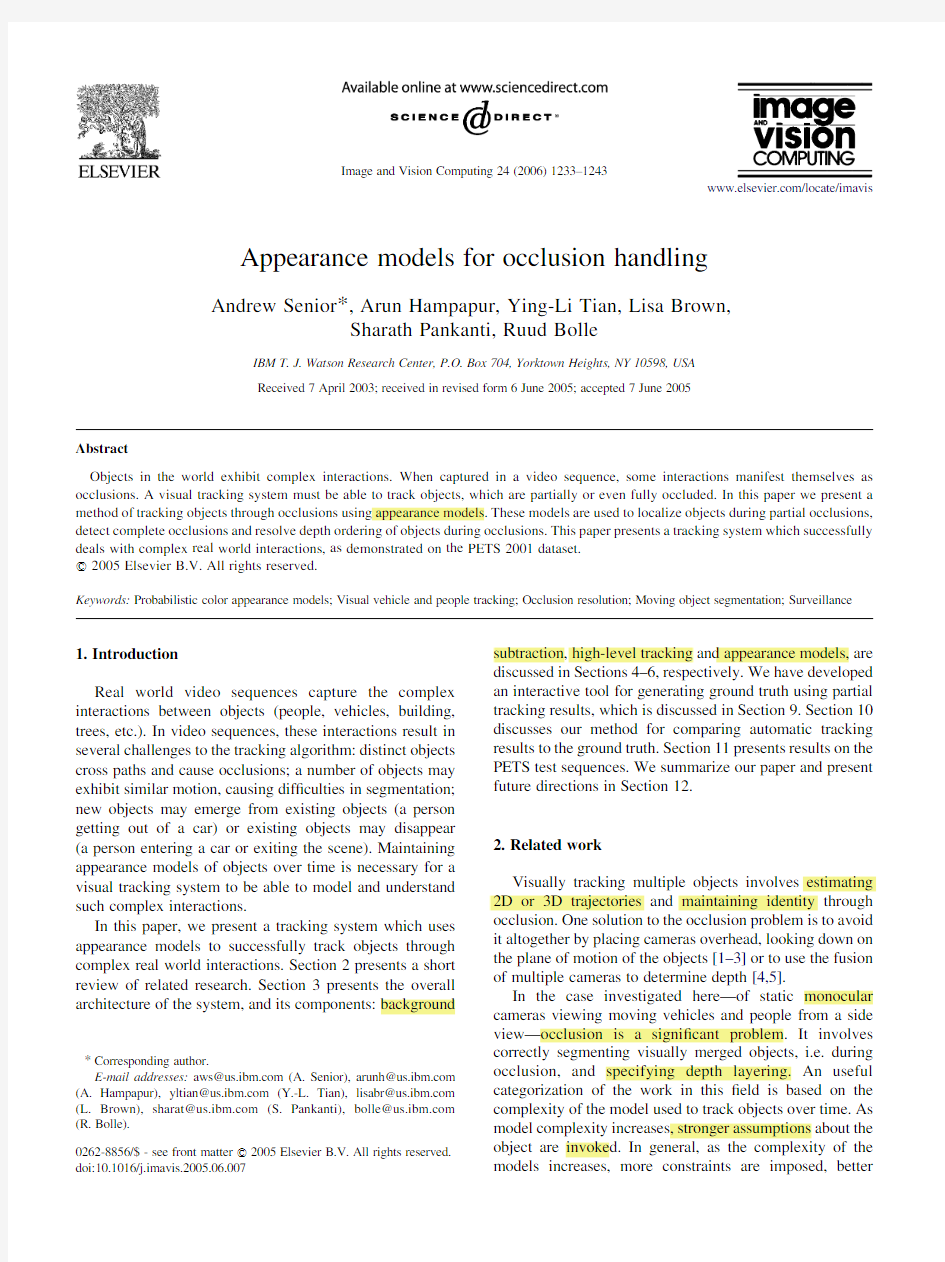

performance is achieved—when the appropriate conditions are met—but general robustness,applicability and real-time speed are more dif?cult to attain.Table1gives examples of multi-person tracking methods for progressively more complex tracking models.Several prototype projects are listed for each class of method.The right-hand column describes the constraints and limitations for each class.A few of the projects are listed in more than one category because they integrate model information from more than one class.In order to achieve the generality of simple methods and the accuracy of re?ned models,requires spanning a range of model complexity.In almost all of these approaches,background subtraction is the?rst step,and plays an important role including adapting to scene changes and removing shadows and re?ections.

At the top of Table1are the simplest methods that rely on basic cues such as color histograms and recent temporal history of each object.These systems have achieved a high degree of robustness and real-time performance but are limited in the accuracy with which they can perform trajectory estimation and identity maintenance through occlusion.Each object is represented by a set of features. In[Roh00]each object/person is represented by two or three colors temporally weighted depending on the size, duration and frequency of its appearance.In[6,7]each object/person is represented by a temporally updated color histogram.In[6],the histogram intersection method is used to determine the identity of an object and the histograms are used directly to compute the posterior probability of a pixel belonging to each object during an occlusion.In[7],people are tracked using the similarity of their color histograms from frame to frame using a mean shift iteration approach. To segment individuals from each other,temporal infor-mation is used.

Appearance-based systems have improved the perform-ance of tracking and identity maintenance through occlusion but are still limited to simple interactions and scene conditions.Appearance-based systems,by our de?nition, maintain information about each pixel in an evolving image-based model for each moving object/person.In the simplest case,this is just an image template.For example in [8],people and vehicles are classi?ed using shape characteristics then tracked by template matching combined with temporal consistency information such as recent proximity and similar classi?cation.Their method can deal with partial occlusion but does not take into account the variable appearance of objects due to lighting changes,self-occlusions,and other complex3-dimensional projection effects.The work most closely related to this paper is that of [9].Their system combines gray-scale texture appearance and shape information of a person together in a2D dynamic template,but does not use appearance information in analyzing multi-people groups.In our system,we use a color appearance model(thereby incorporating texture, shape,temporal and occlusion history)and are able to successfully perform identity maintenance in a wide range of circumstances but our system is still vulnerable to misclassi?cation for similarly colored/textured objects particularly for complex non-rigid3D objects when they interact,such as people,since their appearance depends on viewpoint and is often complicated by shadows and scene geometry.

As the complexity of tracking models,the methods become progressively more sophisticated at performing identity maintenance but at the expense of greater constraints.For example,if it is possible to calibrate the camera and manually input speci?c information regarding the scene,then systems can be designed which take advantage of knowledge of where people will enter/exit the scene,where the ground plane is,where occluding objects occur,and the typical height in the image a person will occupy.This process is referred to as‘closed-world tracking’in[10]and was applied to tracking children in an interactive narrative play space.The contextual information is exploited to adaptively select and weight image features used for correspondence.In[11]a scene model is created, spatially specifying short term occluding objects such as a street sign in the foreground,long term occluding objects such as a building,and bordering occlusion due to the limits of the camera?eld of view.In[12]static foreground occluding objects are actively inferred while the system is running without prior scene information.In[13]not only is

Table1

Performance measures for Dataset1,Camera1

Multi-person tracking models Constraints and limitations

Simple cues:color histogram,temporal consistency,shape features[6–8]Cannot perform segmentation during occlusion,limited identity

maintenance,good computational speed performance

Appearance based:evolving image information[9,10,20]Better identity maintenance but not robust to3D object viewpoint changes,

similar textured,colored objects;simplistic occlusion rules

3D scene model and2D human properties[11,12,14]Requires camera calibration and input of scene information;improves

reliability of constraints when correct but makes assumptions about

normative human height stance,and behavior

2D human appearance model:active shape model,2D body con?gurations, 2D clothing blobs[15–17]Requires prior training,sometimes requires learning individual appearances,assumes people wearing clothing of different colors with uniform colors/textures

2d human temporal model:motion templates of people walking,higher order dynamics[14,18,19]Assumes people are actively walking,moving in a periodic fashion or assumes second order dynamics

A.Senior et al./Image and Vision Computing24(2006)1233–1243 1234

the expected height of a person used based on the ground plane location,but also the time of day is utilized to calculate the angle of the sun and estimate the size and location of potential shadows.

Several systems make assumptions regarding the composition of a typical person in a scene.For example,it is frequently assumed that each person is composed of a small number of similarly colored/textured blobs,such as their shirt and pants.One of the earliest works to exploit this type of assumption was[14].In their work,a single person is tracked in real-time.The human is modeled as a connected set of blobs.Each blob has a spatial and color distribution and a support map indicating which pixels are members of the blob.Body parts(heads and hands)are tracked through occlusions using simple Newtonian dynamics and maxi-mum likelihood estimations.Several researchers have extended this work to tracking multiple people during occlusion.In[15]each person is segmented into classes of similar color using the Expectation Maximization algor-ithm.Then a maximum a posteriori probability approach is used to track these classes from frame to frame.In[16]the appearance of each person is modeled as a combination of regions;each region has a color distribution represented non-parametrically.Each region also has a vertical height distribution and a horizontal location distribution.Assum-ing the probability of each color is independent of location for each blob(i.e.each region can have multiple colors but their relative positions are independent)and the vertical location of the blob is independent of the horizontal position,the system computes the product of the prob-abilities to estimate the best arrangement of blobs(i.e., maximum likelihood estimation)and therefore the relative location of the people in the scene.These assumptions can be powerful constraints that reduce the search space for multi-person tracking but inevitably they also reduce the range of applicability.Although these constraints improve performance,there are always natural exceptions and environmental conditions which will cause these systems to fail.Examples include peculiar postures and transactions involving objects.

The performance of tracking can also be improved by the use of temporal or motion constraints.All systems assume proximity,most methods employ simplistic?rst order dynamics,but a more recent generation of systems is assuming higher order dynamics and speci?c periodicities which can be attributed to people.[17]presents an approach to detect and predict occlusion by using temporal analysis and trajectory prediction.In temporal analysis,a map of the previous segmented and processed frame is used as a possible approximation of the current connected elements. In trajectory prediction,an extended Kalman?lter provides an estimate of each object’s position and velocity.[18]have built a system for tracking people walking by each other in a corridor.Each foreground object is statistically modeled using a generalized cylinder object model and a mixture of Gaussians model based on intensity.A simple particle?lter (often called condensation)is used to perform joint inference on both the number of objects present and their con?gurations.Assumptions about motion include model-ing translational dynamics as damped constant velocity plus Gaussian noise and a turn parameter that encodes how much the person(represented by a generalized cylinder)has turned away from the camera.[13]track multiple humans using a Kalman?lter with explicit handling of occlusion. Proper segmentation is veri?ed by‘walking recognition’. This is implemented via motion templates(based on prior data of walking phases)and temporal integration.The basis for walking recognition relies on the observation that the functional walking gaits of different people do not exhibit signi?cant dynamical time warping,i.e.the phases should correspond linearly to the phases of the average walker. However,in practice,people start and stop,are not always walking,and it is dif?cult to?nd phase correspondence if the viewed in the same direction as the walking motion.

All of the systems mentioned here are prototypes.Each project usually evaluates its own performance based on a small number of video sequences.In most cases,these sequences are produced by the investigators and typical results are primarily a small number of visual examples. Hence it is very dif?cult to compare the capabilities of these methods or quantify their accuracy or robustness.However, in general these methods are brittle and will fail if their large number of stated and unstated assumptions is not met.The IEEE Workshop on Performance Evaluation of Tracking and Surveillance is an attempt to address some of these issues by making a public dataset widely available and inviting researchers to compare the performance of their algorithms.However,there is still a need to provide ground truth evaluation of prede?ned performance criteria.

3.Tracking system architecture

In this paper,we describe a new visual tracking system designed to track independently moving objects using the output of a conventional video camera.Fig.1shows the a schematic of the principal components of the tracking system.

The input video sequence is used to estimate a background model,which is then used to perform back-ground subtraction,as described in Section4.The resulting foreground regions form the raw material of a two-tiered tracking system.

The?rst tracking process associates foreground regions in consecutive frames to construct hypothesized tracks.The second tier of tracking uses appearance models to resolve ambiguities in these tracks that occur due to object interactions and result in tracks corresponding to indepen-dently moving objects.

A?nal operation?lters the tracks to remove tracks which are invalid artefacts of the track construction process,

A.Senior et al./Image and Vision Computing24(2006)1233–12431235

and saves the track information (the centroids of the objects at each time frame)in the PETS XML ?le format.

In this paper,we describe results using the PETS 2001evaluation dataset 1,camera 1.For reasons of speed and storage economy,we have chosen to process the video at half resolution.The sytem operates on AVI video ?les (Cinepak compressed)generated from the distributed JPEG images.Naturally,higher accuracies and reliability are to be expected from processing the video at full size and without compression artefacts.

4.Background estimation and subtraction

The background subtraction approach presented here is based on that taken by Horprasert et al.[19]and is an attempt to make the background subtraction robust to illumination changes.The background is modeled statisti-cally at each pixel.The estimation process computes the brightness distortion and color distortion in RGB color space.Each pixel i is modeled by a 4-tuple (E i ,s i ,a i ,b i ),where E i is a vector with the means of the pixel’s red,green,and blue components computed over N background frames;s i is a vector with the standard deviations of the color values;a i is the variation of the brightness distortion;and b i is the variation of the chromaticity distortion.

By comparing the difference between the background image and the current image,a given pixel is classi?ed into one of four categories:original background,shaded

background or shadow,highlighted background,and fore-ground objects.The pixels in the foreground objects,are passed to the next stage (Section 5,and the remaining categories are grouped together as background pixels.The categorization thresholds are calculated automatically;details can be found in the original paper [19].Finally,isolated pixels are removed and then a morphological closing operator is applied to join nearby foreground pixels.We have also developed an active background estimation method that can deal with objects moving in the training images (except the ?rst).The ?rst frame is stored as a prototype background image,and differenced with sub-sequent training frames—areas of signi?cant difference being the moving objects.When the statistical background model is constructed,these moving object regions are excluded from the calculations.To handle variations in illumination that have not been seen in the training set,we have also added a further two modi?cations to the background subtraction algorithm that operate when running on test sequences.The ?rst is an overall gain control that applies a global scaling factor to the pixel intensities before comparing them to the stored means.The scale factor is calculated on the non-foreground regions of the previous image,under the assumption that lighting changes between adjacent frames are small.Further,background adaptation is employed by blending in the pixel values of current non-foreground regions,thus slowly learning local changes in appearance not attributable to moving objects.These processes reduce the sensitivity of the algorithm to the lighting changes seen at the end of sequence 1,and throughout sequence 2.

5.High-level tracking

The foreground regions of each frame are grouped into connected components.A size ?lter is used to remove small components.Each foreground component is described by a bounding box and an image mask,which indicates those pixels in the bounding box and an image mask,which indicates those pixels in the bounding box that belong to the foreground.The set of foreground pixels is called F .For each successive frame,the correspondence process attempts to associate each foreground region with one of the existing tracks.This is achieved by constructing a distance matrix showing the distance between each of the foreground regions and all the currently active tracks.We use a bounding box distance measure ,as shown in Fig.2.The distance between bounding boxes A and B (Fig.2,left)is the lower of the distance from the centroid,C a ,of A to the closest point on B or from the centroid,C b ,of B to the closest point on A .If either centroid lies within the other bounding box (Fig.2,right),the distance is zero.The motivation for using the bounding box distance as opposed to Euclidean distance between the centroids is the large jump in the Euclidean distance when two bounding boxes

Fig.1.Block diagram of the tracking system.

A.Senior et al./Image and Vision Computing 24(2006)1233–1243

1236

(objects)merge or split.A time distance between the observations is also added in to penalize tracks for which no evidence has been seen for some time.

The distance matrix is then binarized,by thresholding,resulting in a correspondence matrix associating tracks with foreground regions.The analysis of the correspondence matrix produces four possible results as shown in Fig.1:existing object,new object,merge detected and split detected.

For well-separated moving objects,the correspondence matrix (rows correspond to existing tracks and columns to foreground regions in the current segmentation)will have at most one non-zero element in each row or column-associating each track with one foreground region and each foreground region with one track,respectively.Columns with all zero elements represent new objects in the scene,which are not associated with any track,and result in the creation of a new track.Rows with all zero elements represent tracks that are no longer visible (because they left the scene,or were generated because of artefacts of the background subtraction).

In the case of merging objects,two or more tracks will correspond to one foreground region,i.e.a column in the correspondence matrix will have more than one non-zero entry.When objects split,for example when people in a group walk away from each other,a single track will correspond to multiple foreground regions,resulting in more than one non-zero element in a row of the correspondence matrix.When a single track corresponds to more than one bounding box,all those bounding boxes are merged together,and processing proceeds.If two objects hitherto tracked as one should separate,the parts continue to be tracked as one until they separate suf?ciently that both bounding boxes do not correspond to the track,and a new track is created.

Once a track is created,an appearance model of the object is initialized.This appearance model is adapted every time the same object is tracked into the next frame.On the detection of object merges,the appearance model is used to resolve the ambiguity.A detailed discussion of the appearance model and its application to occlusion handling is presented in the Section 6.

Because of failures in the background subtraction,particularly in the presence of lighting variation,some spurious foreground regions are generated,which result in tracks.However,most of these are ?ltered out with rules detecting their short life or the fact that the appearance model created in one frame fails to explain the ‘foreground’

pixels in subsequent frames.An additional rule is used to prune out tracks,which do not move.These are considered to be static objects whose appearance varies,such as moving trees and re?ections of sky.

6.Appearance-based tracking

To resolve more complex structures in the track lattice produced by the bounding box tracking,we use appearance-based modeling.Here,for each track we build an appearance model,showing how the object appears in the image.The appearance model is an RGB color model with an associated probability mask.The color model,M RGB (x ),shows the appearance of each pixel of an object,and the probability mask,P c (x ),records the likelihood of the object being observed at that pixel.For simplicity of notation,the coordinates x are assumed to be in image coordinates,but in practice the appearance models model local regions of the image only,normalized to the current centroid,which translate with respect to the image coordinates.However,at any time an alignment is known,allowing us to calculate P c and M RGB for any point x in the image,P c (x )being zero outside the modeled region.

When a new track is created,a rectangular appearance model is created with the same size as the bounding box of the foreground region.The model is initialized by copying the pixels of the track’s foreground component into the color model.The corresponding probabilities are initialized to 0.4,and pixels which did not correspond to this track are given zero initial probability.

On subsequent frames,the appearance model is updated by blending in the current foreground region.The color model is updated by blending the current image pixel with the color model for all foreground pixels,and all the probability mask values are also updated with the following formulae (a Z l Z 0.95):

M RGB ex ;t TZ M RGB ex ;t K 1Ta C e1K a TI ex Tif x 2F (1)P c ex ;t TZ P c ex ;t K 1Tl if x ;F (2)P c ex ;t TZ P c ex ;t K 1Tl C e1K l Tif x 2F

(3)

In this way,we maintain a continuously updated model of the appearance of the pixels in a foreground region,together with their observation probabilities.The latter can be thresholded and treated as a mask to ?nd the boundary of the object,but also gives information about non-rigid variations in the object,for instance retaining observation information about the whole region swept out by a pedestrian’s legs.

Fig.3shows the appearance model for a van from the PETS data at several different frames.The appearance models are used to solve a number of problems,including improved localization during tracking,track correspon-dence and occlusion

resolution.

Fig.2.Bounding box distance measure.

A.Senior et al./Image and Vision Computing 24(2006)1233–12431237

Given a one-to-one track-to-foreground-region corre-spondence,we use the appearance model to provide improved localization of the tracked object.The back-ground subtraction is unavoidably noisy,and the additional layers of morphology increase the noise in the localization of the objects,by adding some background pixels to a foreground region,and removing extremities.The appear-ance model,however,has an accumulation of information about the appearance of the pixels of an object and can be correlated with the image to give a more accurate estimate of the centroid of the object.The accumulated Euclidean RGB distance,p (I ,x ,M ),is minimized over a small search region and the point with the lower distance taken as the object’s location.The process could be carried out to

sub-pixel accuracy,but the pixel level is suf?cient for our tracking.

p eI ;x ;M TZ

Y

y

p RGB ex C y TP c ex C y T(4)p RGB ex TZ e2p s 2T

K e3=2T

e

K jj I ex T?M ex Tjj 2

2s 2

(5)

When two tracks merge into a single foreground region,we use the appearance models for the tracks to estimate the separate objects’locations and their depth ordering.

This is done by the following operations,illustrated in Figs.4–6:

(1)Using a ?rst-order model,the centroid locations of the

objects i are predicted.

(2)For a new merge,with no estimate of the depth-ordering,each object is correlated with the image in the predicted position,to ?nd the location of best-?t.

(3)Given this best-?t location,the ‘disputed’pixels-those

which have non-zero observation probabilities in more than one of the appearance model probability masks-are classi?ed using a maximum likelihood classi?er with a simple spherical Gaussian RGB model,determining which model was most likely to have produced them.

p i ex TZ p RGB i ex TP c i ex T

(6)

Figs.4c and 5c show the results of such classi?cations.(4)Objects are ordered so that those which are assigned fewer

disputed pixels are given greater depth.Those with few visible pixels are marked as occluded.

(5)All disputed pixels are reclassi?ed,with disputed

pixels being assigned to the foremost object which overlapped

them.

Fig.3.The evolution of an appearance model.In each ?gure,the upper image shows the appearance for pixels where observation probability is greater than 0.5.The lower shows the probability mask as grey levels,with white being 1.The frame numbers at which these images represent the models are given,showing the progressive accommodation of the model to slow changes in scale and

orientation.

Fig.4.An occlusion resolution (Frame 921of dataset 1,camera 1).(a)Shows three appearance models for tracks converging in a single region.(b)Shows the pixels of a single foreground region,classi?ed independently as to which of the models they belong to.(d–f)show the pixels ?nally allocated to each track,and (c)shows the regions overlaid on the original frame,with the original foreground region bounding box (thick box),the new bounding boxes (thin boxes)and the tracks of the object centroids.

A.Senior et al./Image and Vision Computing 24(2006)1233–1243

1238

On subsequent frames,the localization step is carried out in depth order,with the foremost objects being ?tted ?rst,and pixels which match their appearance model being ignored in the localization of ‘deeper’objects,as they are considered occluded.After the localization and occlusion resolution,the appearance model for each track is updated using only those pixels assigned to that track.

If a tracked object separates into two bounding boxes,then a new track is created for each part,with the appearance models being initialized from the corresponding areas of the previous appearance model.

7.Multi-object segmentation

The appearance models can also be used to split complex objects.While the background subtractions yields complex,noisy foreground regions,the blending process of the model update allows ?ner structure in objects to be observed.The principal way in which this structure is used in the current system is to look for objects,which are actually groups of people.These can be detected in the representation if the people are walking suf?ciently far apart that background pixels are visible between them.These are evidenced in the probability mask,and can be detected by observing the vertical projection of the probability mask.We look for minima in this projection,which are suf?ciently low and divide suf?ciently high maxima.When such a minimum is detected,the track can be divided into the two component objects,though here we choose to track the multi-person object and ?ag its identity.

8.Object classi?cation

For the understanding of video it is important to label the objects in the scene.For the limited variety of objects in the test data processed here,we have written a simple rules-based classi?er.Objects are initially classi?ed by size and shape.We classify objects as:Single Person,Multiple People,Vehicle,and Other.For each object we ?nd the area,the length of the contour,and the length and orientation of the principal axes.We compute the ‘dispersedness’,which is the ratio of the perimeter squared to the area.Dispersedness has been shown to be a useful cue to distinguish 2D image objects of one or more people from those of individual vehicles [8].For each 2D image object,we also determine which principal axis is most nearly vertical and compute the ratio of the more-nearly horizontal axis length to the more-nearly vertical axis length.This ratio,r ,is used to distinguish a foreground region of a single person from one representing multiple people since a single person’s image is typically signi?cantly taller than it is wide while a multi-person blob grows in width with the number of visible people.From these principles,we have designed the ad-hoc,rule-based classi?cation shown in Fig.7–10.In addition,we use temporal consistency to improve robustness so a cleanly tracked object,which is occasionally misclassi?ed,can use its classi?cation history to improve the results.

9.Ground truth generation

The tracking results were evaluated by comparing them with ground truth.This section overviews the ground truth generation process.A semi-automatic interactive tool was developed to aid the user in generating ground truth.The ground truth marking (GTM)tool has the following four major components:(i)iterative frame

acquisition

Fig.5.An occlusion resolution (Frame 825of dataset 1,camera 1).(a)appearance models.(b)Independently classi?ed foreground region pixels as to which of the models they belong to.(d,e)the pixels allocated to each track after enforcing the depth-ordering,and (c)the regions overlaid on the original

frame.

Fig.6.The appearance model for a group of people.

A.Senior et al./Image and Vision Computing 24(2006)1233–12431239

and advancement mechanism;(ii)automatic object detection;(iii)automatic object tracking;(iv)visualization;(v)re?nement.After each frame of video is acquired,the object detection component automatically determines the foreground objects.The foreground objects detected in frame n are related to those in frame n K 1by the object tracking component.At any frame n ,all the existing tracks up to frame n and the bounding boxes detected in frame n are displayed by the visualization component.The editing component allows the user to either (a)accept the results of the object detection/tracking components,(b)modify (insert/delete/update)the detected components,(c)partially/totally modify (create,associate,and dis-sociate)track relationships among the objects detected in frame n K 1and those in frame n .Once the user is satis?ed with the object detection/tracking results at frame n ,she can proceed to the next frame.

Generating object position and track ground truth for video sequences is a very labour intensive process.In order to alleviate the tedium of the ground truth determination,GTM allows for sparse ground truth marking mode.In this mode,the user need not mark all the frames of the video but only a subset thereof.The intermediate object detection and tracking results are interpolated for the skipped frames using linear interpolation.The rate,t ,of frame subsampling is user-adaptable and can be changed dynamically from frame to frame.

The basic premise in visual determination of the ground truth is that the humans are perfect vision machines.Although we refer to the visually determined object position and tracks as ‘the ground truth’,it should be emphasized that there is a signi?cant subjective component of human judgment involved in the process.The objects to be tracked in many instances were very small (e.g.few pixels)and

Fig.

7.The classi?cation rules for a foreground region.r is the horizontal-to-vertical principal axis length ratio.

Fig.8.A comparison of estimated tracks

(black)with ground truth positions (white),for two tracks superimposed on a mid-sequence frame showing the two objects.

Fig.9.An image showing all the tracks detected by the system for dataset 1,camera 1,overlaid on a background image.

20406080

100050010001500200025003000

T i m e (m s )

Frame

Total Background

Fig.10.Per frame time requirements for the tracking and background subtraction.The lower line shows the time required for the background subtraction,and the upper line shows the total time required to process each frame.Times vary from about 10ms when there are no objects to be tracked to a peak of about 130ms when there are several overlapping objects being tracked.

A.Senior et al./Image and Vision Computing 24(2006)1233–1243

1240

exhibited poor contrast against the surrounding background. When several objects came very close to each other, determination of the exact boundary of each object was not easy.Further,since the judgments about of the object location were based on visual observation of a single (current)frame,the motion information(which is a signi?cant clue for determining the object boundary)was not available for marking the ground truth information. Finally,limited human ability to exert sustained attention to mark minute details frame after frame tends to introduce errors in the ground truth data.Because of the monotonous nature of the ground truth determination,there may be an inclination to acceptance of the ground truth proposed by the(automatic)component of the GTM interface.Conse-quently,the resultant ground truth results may be biased towards the algorithms used in the automatic component of the GTM recipe.Perhaps,some of the subjectiveness of the ground truth data can be assessed by juxtaposing indepen-dently visually marked tracks obtained from different individuals and from different GTM interfaces.For the purpose of this study,we assume that the visually marked ground truth data is error-free.

10.Performance metrics

Given a ground truth labelling of a sequence,this section presents the method used for comparison of the ground truth with tracking results to evaluate the performance.The approach presented here is similar to the approach presented by Pingali and Segen[20].Given two sets of tracks,a correspondence between the two sets needs to be established before the individual tracks can be compared to each other. Let N g be the number of tracks in the ground truth and N r be the number of tracks in the results.Correspondence is established by minimizing the distance between individual tracks.The following distance measure is used,evaluated for frames when both tracks exist:

D TeT1;T2TZ

1

N12

X

i:d T1et iT&d T2et iT

?????????????????????????

d2xeiTC d2veiT

q

(7)

d xeiTZ j x1eiTK x2eiTj(8) d veiTZ j v1eiTK v2eiTj(9) wher

e N12is the number o

f points in both tracks T1and T2, x k(i)is the centroid and v k(i)is the velocity of object k at time t i.Thus the distance between two tracks increases with the distance between the centroids and the difference in velocities.The distance is inversely proportional to the length for which both tracks exist-so tracks,which have many frames in common will have low distances.An N g! N r distance matrix is constructed usin

g the track distance measure D T.Track correspondence is established by thresholding this matrix.Eac

h track in the ground truth can be assigned one or more tracks from the results.This accommodates fragmented tracks.Once the correspondence between the ground truth and the result tracks are established,the following error measures are computed between the corresponding tracks.

?Object centroid position error:Objects in the ground truth are represented as bounding boxes.The object centroid position error is approximated by the distance between the centroids of the bounding boxes of ground truth and the results.This error measure is useful in determining how close the automatic tracking is to the actual position of the object.

?Object area error:Here again,the object area is approximated by the area of the bounding box.The bounding box area will be very different from the actual object area.However,given the impracticality of manually identifying the boundary of the object in thousands of frames,the bounding box area error gives some idea of the quality of the segmentation.

?Object detection lag:This is the difference in time between when a new object appears in the ground truth and when the tracking algorithm detects it.

?Track incompleteness factor:This measures how well the automatic track covers the ground truth:

Trackincompleteness Z

F nf C F pf

T i

(10)

where,F nf is the false negative frame count,i.e.the number of frames that are missing from the result track.

F pf is the false positive frame count,i.e.the number of

frames that are reported in the result which are not present in the ground truth and T i is the number frames present in both the results and the ground truth.

?Track error rates:These include the false positive rate f p and the false negative rate f n as ratios of numbers of tracks:

f p Z

Results without corresponding ground truth

Total number of ground truth tracks

(11)

f n Z

Ground truth without corresponding result

Total number of ground truth tracks

(12)

?Object type error:This counts the number of tracks for which our classi?cation(person/car)was incorrect.

11.Experimental results

The goal of our effort was to develop a tracking system for handling occlusion.Given this focus,we report results only on PETS test dataset1,camera1.The current version of our system does not support continuous background estimation and hence we do not report results on the remaining sequences,which have signi?cant lighting variations.Given the labour intensive nature of the ground

A.Senior et al./Image and Vision Computing24(2006)1233–12431241

truth generation,we have only generated ground truth up to frame 841.Table 2shows the various performance metrics for these frames.

Of the seven correct tracks,four are correctly detected,and the remaining three (three people walking together)are merged into a single track,though we do detect that it is several people.This accounts for the majority of the position error,since this result track is compared to each of the three ground truth tracks.No incorrect tracks are detected,though in the complete sequence,?ve spurious tracks are generated by failures in the background subtraction in the ?nal frames,which are accumulated into tracks.The bounding box area measure is as yet largely meaningless since the bounding boxes in the results are only crude approximations of the object bounding boxes,subject to the vagaries of back-ground subtraction and morphology.The detection lag is small,showing that the system detects objects nearly as quickly as the human ground truther.

12.Summary and conclusions

We have written a computer system capable of tracking moving objects in video,suitable for understanding moderately complex interactions of people and vehicles,as seen in the PETS 2001data sets.We believe that for the sequence on which we have concentrated our efforts,the tracks produced are accurate.The two tier approach proposed in the paper successfully tracks through all the occlusions in the dataset.The high level bounding box association is suf?cient to handle isolated object tracking.At object interactions,the appearance model is very effective in segmenting and localizing the individual objects and successfully handles the interactions.

To evaluate the system,we have designed and built a ground truthing tool and carried out preliminary evaluation of our results in comparison to the ground truth.The attempt to ground truth the data and use it for performance evaluation lead to the following insights.The most important aspect of the ground truth is at object interactions.Thus ground truth can be generated at varying resolutions through a sequence,coarse resolutions for isolated object paths and high resolution at object interactions.The tool we designed allows for this variation.

13.Future work

The implementation of the appearance models holds much scope for future investigation.A more complex model,for instance storing color covariances or even multi-modal distributions for each pixel would allow more robust modeling,but the models as described seem to be adequate for the current task.The background subtraction algorithm is currently not adaptive,and so begins to fail for long sequences with varying lighting conditions.Continuous updating of background regions will improve its robustness to such situations.The system must also operate in real-time to be applicable to real-world tracking problems.Currently the background subtraction works at about 9fps and the subsequent processing takes a similar amount of time.Without further optimization,the system should run on live data by dropping frames,but we have not tested the system in this mode.Acknowledgements

We would like to thank Ismail Haritaog

?lu of IBM Almaden Research for providing the background estimation and subtraction code.References

[1]A.F.Bobick,et al.,The KidsRoom:a perceptually-based interactive

and immersive story environment,Teleoperators and Virtual Environment 8(1999)367–391.

[2]W.Grimson,C.Stauffer,R.Romano,L.Lee,Using adaptive tracking

to classify and monitor activities in a site,Conference on Computer Vision and Pattern Recognition (1998)22–29.

[3]H.Tao,H.Sawhney,R.Kumar,Dynamic layer representation with

applications to tracking,Proceedings of International Conference on Pattern Recognition (2000).

[4]T.-H.Chang,S.Gong,E.-J.Ong,Tracking multiple people under

occlusion using multiple cameras,Proceedings of 11th British Machine Vision Conference (2000).

[5]S.Dockstader,A.Tekalp,Multiple camera fusion for multi-object

tracking,Proceedings of IEEE Workshop on Multi-Object Tracking (2001)95–102.

[6]S.McKenna,J.Jabri,Z.Duran,H.Wechsler,Tracking interacting

people,International Workshop on Face and Gesture Recognition (2000)348–353.

[7]I.Haritaog

?lu,M.Flickner,Detection and tracking of shopping groups in stores,CVPR (2001).

[8]A.Lipton,H.Fuyiyoshi,R.Patil,Moving target classi?cation and

tracking from real-time video,Proceedings of Fourth IEEE Workshop on Applications of Computer Vision (1998).

[9]I.Haritaog

?lu,D.Harwood,L.S.Davis,W 4:Real-time surveillance of people and their activities,IEEE Transaction on Pattern Analysis and Machine Intelligence 22(8)(2000)809–830.

[10]S.Intille,J.Davis, A.Bobick,Real-time closed-world tracking,

Conference on Computer Vision and Pattern Recognition (1997)697–703.

[11]T.Ellis,M.Xu,Object detection and tracking in an open and dynamic

world,International Workshop on Performance Evaluation of Tracking and Surveillance (2001).

[12]A.Senior,Tracking with probabilistic appearance models,Third

International workshop on Performance Evaluation of Tracking and Surveillance Systems (2002).

Table 2

Performance measures for Dataset 1,Camera 1

Dataset 1,Camera 1

Track error f p 5/7Track error f n

2/7

Average position error 5.51pixels Average area error K 346pixels Average detection lag

1.71frames Average track incompleteness 0.12Object type error

0tracks

A.Senior et al./Image and Vision Computing 24(2006)1233–1243

1242

[13]T.Zhao,R.Nevatia,F.Lv,Segmentation and tracking of multiple

humans in complex situations,Conference on Computer Vision and Pattern(2001).

[14]C.R.Wren,A.Azarbayejani,T.Darrell,A.P.Pentland,P?nder:real-

time tracking of the human body,IEEE Trans Pattern Analysis and Machine Intelligence19(7)(1997)780–785.

[15]S.Khan,M.Shah,Tracking people in presence of occlusion,Asian

Conference on Computer(2000).

[16]A.Elgammal,L.Davis,Probabilistic framework for segmenting

people under occlusion,Eighth International Conference on Computer Vision,II,IEEE(2001)145–152.[17]R.Rosales,S.Sclaroff,Improved tracking of multiple humans with

trajectory prediction and occlusion modelling,IEEE CVPR Workshop on the Interpretation of Visual Motion(1998).

[18]M.Isard,J.MacCormick,BraMBLe:a Bayesian multiple-blob tracker,

International Conference on Computer Vision2(2001)34–41. [19]T.Horprasert,D.Harwood,L.S.Davis,A statistical approach for real-

time robust background subtraction and shadow detection,ICCV’99 Frame-Rate Workshop(1999).

[20]G.Pingali,J.Segen,Performance evaluation of people tracking

systems,Proceedings of IEEE Workshop on Applications of Computer Vision(1996)33–38.

A.Senior et al./Image and Vision Computing24(2006)1233–12431243

外表重要吗Is Appearance Important When I was very small, I was always educated by my parents and teachers that appearance was not important, and we shouldn’t deny a person by his face, only the beautiful soul could make a person shinning. I kept this words in my heart, but as I grow up, I start to question about the importance of appearance. It occupies some places in my heart. People will make judgement of another person in first sight. The one who looks tidy and beautiful will be surely attract the public’s attention and becomes the spotlight. On the contrary, if the person looks terrible, he will miss many chances to make friends, because he will be ignored by the first sight. It is true that an attractive people is confident and has the charm, and everybody will appreciate his character. So before you go out of the house, make sure you look good. 在我很小的时候,我的父母和老师总是教育我外表是不重要的,我们不能通过外表而去否认一个人,因为只有美丽的心灵才能使人发光。我心里一直把这些话牢记于心,但是当我长大后,我开始怀疑关于外貌的重要性,因为外貌在我心里占据了一定分量。人们会根据第一印象来判断一个人。那些看起来整洁,漂亮的人肯定会吸引更多公众的

水平对置、后置后驱、低重心、前双横臂后多连杆、全铝合金车架、5门5座,你以为笔者说的是保时捷新车型吗?那笔者再补充多几个关键词好了,后置的水平对置双电刷电动机、0油耗、藏在地板下的笔记本电池组,同时拥有这些标签的,便是Tesla第二款车型Model S。Model S是五门五座纯电动豪华轿车,布局设计及车身体积与保时捷Panamera相当,并且是目前电动车续航里程的纪录保持者(480公里)。虽然现在纯电动在我国远未至于普及,但是在香港地区却是已经有Tesla的展厅,在该展厅内更是摆放了一台没有车身和内饰,只有整个底盘部分的Model S供人直观了解Model S的技术核心。 图:Tesla Model S。

图:拆除车壳之后,Model S的骨架一目了然。

图:这套是Model S的个性化定制系统,可以让买家选择自己喜爱的车身颜色、内饰配色和轮圈款式,然后预览一下效果。可以看到Model S共分为普通版、Sign at ure版和Performance版,后面两个型号标配的是中间的21寸轮圈,而普通版则是两边的19寸款式。Signature版是限量型号,在美国已全部售罄,香港也只有少量配额。 图:笔者也尝试一下拼出自己心目中的Model S,碳纤维饰条当然是最爱啦。

图:参观了一下工作车间,不少Roadster在等着检查保养呢,据代理介绍,不同于传统的汽车,电动车的保养项目要少很多,至少不用更换机油和火花塞嘛,换言之电动车的维护成本要比燃油汽车要低。 Tesla于2010年5月进军香港市场,并于翌年2011年9月成立服务中心。由于香港政府对新能源车的高度支持,香港的电动车市场发展比起大陆地区要好得多。例如Tesla的第一款车型Roadster(详见《无声的革命者——Tesla Roadster Sport 》),在香港获得豁免资格,让车主可以节省将近100万港元的税款。在这样的优惠政策之下,Tesla Roadster尽管净车价达100万港元,但50台的配额已经基本售罄。而Model S目前在香港已经开始接受报名预定,确定车型颜色和配置之后约两个月左右可以交车。

英语情景口语:personal appearance相貌 3 personal appearance Beginner A: That girl looks very attractive, doesn’t she? B: do you think so? I don’t like girls who look like that. I like girls who aren’t too slim. If you like her, go and talk to her. A: I’d like to, but there’s her boyfriend. He’s very broad-shouldered. B: he’s huge! He must go to the gym to have a well-built body like that. A: do you prefer tall girls or short ones? B: I don’t mind, but I like girls with long hair. A: we have different tastes. I like girls with short hair. I like tall girls- probably because I’m so tall myself. B: have you ever dated a girl taller than you? A: no, never. I don’t think I’ve ever met a girl taller than me! Have you gained weight recently? B: yes, I have. Perhaps I should go to the gym, like that girl’s boyfriend. A: I ‘m getting a bit plump myself. Perhaps I’ll go with you. Intemp3ediate A: let’s play a little game. I’ll describe someone and you try and guess who it is. B: ok. I’m really bored at the moment. A: ok. This man is tall and slim. He’s got blue eyes and curly brown hair. B: does he have a moustache or a beard? A: good question. Yes, he has a moustache, but no beard. B: sounds like mike, is it? A: yes, it is. You describe someone we both know. B: right. She’s not very tall and she’s quite plump. She’s got blonder hair, but I don’t know what color her eyes are. A: is she attractive? I don’t think I know anyone like that. B: I like slim girls, so I doubt I would find a plump girl attractive. You’ll have to give me some more infomp3ation. A: she’s got tiny feet and wears really unfashionable shoes. In fact, she wears unfashionable clothes too. B: this doesn’t sound like anyone I know. I give up. Teel me who she is. A; she’s your mother! B: how embarrassing! I don’t even recognize a description of my own mother! How important do you think appearance is ? A: I think that unfortunately it’s more important than a person’s character. Advertising and stuff tells us that we have to be attractive. I think it’s wrong, but that’s the way the world is now. A: I’m afraid you’re right. I chose my girlfriend because she has a wonderful personality. B: well, you certainly didn’t choose her because of her looks! Hey, I was joking! Don’t hit me!

文化资源的概念与分 类

第二讲文化资源的概念与分类 提要: 1. 文化资源的概念 2. 文化资源的分类 3. 文化资源的特点与开发类型 1 文化资源的概念 文化资源是一个涵盖很广泛的概念,有人说,除了自然资源,都是文化资源。这个说法有一定的道理,因为它的前提——文化,正如爱德华·泰勒所说,本身就是一个“复合的整体”,“它包括知识、信仰、艺术、道德、法律、习俗和个人作为社会成员所必需的其他能力及习惯”。当然,文化资源并不能完全等同于文化,加了“资源”一词就意味着它已经拥有过去时态(时间性)、可资利用(效用性)等含义。因此,我们可以认为:文化资源可用于指称人类文化中能够传承下来,可资利用的那部分内容和形式。 因此,文化资源是人类劳动创造的物质成果及其转化的一部分。在本课程中,我们围绕所关注的文化经济一体化的生产经营活动来理解各类文化资源。文化产业的中心任务是将有限的文化资源转化为有用的文化产品,包括文化实物产品、文化服务产品及其衍生形态。 1.1 什么是资源? 一般来说,资源是指不直接用于生活消费的生产性资产。资源常常讲利用和开发,与生产的关系十分紧密。我们认为与文化生产或者与文化产业相关的“素材”才是文化资源的核心指向,而这些素材也来自于人类的活动与创造。 人类早期的资源以自然资源和生活资源为主,如土地、森林、猎物等。随着生产的发展和社会生活的复杂化,人类自身创造的资源越来越重要,其的构成也越来越复杂、多样。符号化知识、经验型技能、创新型能力、通讯手段、社会组织系统等等,都成为了生产的要素即生产的资源。不仅

有经济生产方面的资源,而且有社会生活方面的资源,如政治资源、文化资源等。因此,人类积累的一切创造发明成果,都成了人类政治、经济、文化、科技活动进一步发展的资源。 总之,资源是指“资财的来源”,即包括自然资源如土地、森林、矿藏、水域等,也包括各类社会资源。其中,文化就是一种社会资源,它也同时体现为有形的物质载体和无形的精神资产。 1.2 资源科学的学科体系: 资源科学——综合资源学,区域资源学,部门资源学 综合资源学——资源地理学,资源生态学,资源经济学,资源评价学,资源工程与工艺学 ,资源管理学,资源法学 ↓ 区域资源学 ↑ 部门资源学——气候资源学,生物资源学,水资源学,土地资源学,矿产资源学,海洋资源学,能源学,旅游资源学。 1.3 我国的文化资源 中国有五千年的文明历史,文化积累十分丰富。从形而上的角度看,有哲学思想和文学艺术两大源流,每条源流的支脉又蕴藏着丰富的精神内涵,至今影响我们的思想、价值观念、审美意识。 从形而下的角度看,几千年来我们的衣食住行发展出复杂多样的方式,其间凸现的生活艺术融进了中国人高雅的情趣和独具匠心的巧思。此外,中国地域辽阔,民风民俗、民间文化异彩纷呈。剪纸、年画、皮影、木偶、砖雕、器具、面具、玩具、民居、刺绣、服饰、饮食、建筑、园林、风筝、印染等民间文化堪称世界一流。这也是我们的又一大财富。 2 文化资源的分类

特斯拉电动汽车动力电池管理系统解析 1. Tesla目前推出了两款电动汽车,Roadster和Model S,目前我收集到的Roadster 的资料较多,因此本回答重点分析的是Roadster的电池管理系统。 2. 电池管理系统(Battery Management System, BMS)的主要任务是保证电池组工作在安全区间内,提供车辆控制所需的必需信息,在出现异常时及时响应处理,并根据环境温度、电池状态及车辆需求等决定电池的充放电功率等。BMS的主要功能有电池参数监测、电池状态估计、在线故障诊断、充电控制、自动均衡、热管理等。我的主要研究方向是电池的热管理系统,因此本回答分析的是电池热管理系统 (Battery Thermal Management System, BTMS). 1. 热管理系统的重要性 电池的热相关问题是决定其使用性能、安全性、寿命及使用成本的关键因素。首先,锂离子电池的温度水平直接影响其使用中的能量与功率性能。温度较低时,电池的可用容量将迅速发生衰减,在过低温度下(如低于0°C)对电池进行充电,则可能引发瞬间的电压过充现象,造成内部析锂并进而引发短路。其次,锂离子电池的热相关问题直接影响电池的安全性。生产制造环节的缺陷或使用过程中的不当操作等可能造成电池局部过热,并进而引起连锁放热反应,最终造成冒烟、起火甚至爆炸等严重的热失控事件,威胁到车辆驾乘人员的生命安全。另外,锂离子电池的工作或存放温度影响其使用寿命。电池的适宜温度约在10~30°C之间,过高或过低的温度都将引起电池寿命的较快衰减。动力电池的大型化使得其表面积与体积之比相对减小,电池内部热量不易散出,更可能出现内部温度不均、局部温升过高等问题,从而进一步加速电池衰减,缩短电池寿命,增加用户的总拥有成本。 电池热管理系统是应对电池的热相关问题,保证动力电池使用性能、安全性和寿命的关键技术之一。热管理系统的主要功能包括:1)在电池温度较高时进行有效散热,防止产生热失控事故;2)在电池温度较低时进行预热,提升电池温度,确保低温下的充电、放电性能和安全性;3)减小电池组内的温度差异,抑制局部热区的形成,防止高温位置处电池过快衰减,降低电池组整体寿命。 2. Tesla Roadster的电池热管理系统 Tesla Motors公司的Roadster纯电动汽车采用了液冷式电池热管理系统。车载电池组由6831节18650型锂离子电池组成,其中每69节并联为一组(brick),再将9组串联为一层(sheet),最后串联堆叠11层构成。电池热管理系统的冷却液为50%水与50%乙二醇混合物。

如何挖掘旅游资源的文化内涵 摘要:随着我国的国民经济不断发展,人们的生活水平不断提高,大家都开始注重享受型消费,旅游便是其中最典型的一项,旅游不只是一种社会经济现象,更是一种文化现象。旅游总是离不开文化,与文化息息相关,旅游的发展需要借助文化的推动。发掘旅游景点的文化内涵就相当于在寻找旅游景点的灵魂。中华文化博大精深,旅游景点只要有了传统文化作为依托,这汪泉水才能永不干涸。本文的目的是为了探究如何从博大精深的中华文化中挖掘出旅游景点的文化内涵,从而推动当地旅游的科学发展和可持续发展。 关键词:挖掘;旅游资源;文化内涵 近年来,随着经济的不断发展,人们的出游量也在不断增大。但是对于一个景点来说,想要得到长久的可持续发展。他就一定要依靠时代所赋予自己的文化内涵。没有文化内涵的旅游景点,就像是一个没有灵魂的人,景色再美,也经不住时间的推敲和人们的考验。旅游资源不只需要依赖于一定的自然条件,更需要依赖经济文化条件。所以,研究如何挖掘旅游资源的文化内涵是推动当地旅游发展的重要载体。笔者通过考察走访,从联系历史背景与历史人物,结合当地特产特色,不断进行丰富沉淀,保护文化内涵不消灭四个方面对于如何挖掘旅游资源的文化内涵给出了一些建议。 一、联系历史背景与历史人物 旅游业想要生存和发展,就必须要依靠旅游资源,拥有足够丰富的旅游资源才能够吸引游客的目光。旅游资源往往分为自然旅游资源和人文旅游资源。人们在选择旅游目的地和旅游产品时,越来越开始更加重视他们的文化内涵。那些自然旅游资源和人文旅游资源兼备的旅游景点自然便更能受到人们的青睐。所以我们在进行资源开发和对旅游城市进行规划时应该更加注重它的文化内涵。文化是历史的积淀,时间的产物,所以在发掘旅游资源的文化内涵时必然离不开对历史的挖掘。我国五千年的悠久历史对于旅游资源的文化内涵的挖掘是非常有帮助的。历史的年代感会使人感到纯厚的文化积淀。有故事,有历史的旅游资源必然得到较好的发展。 在挖掘旅游资源的文化内涵的同时,一定要深入了解当地的历史,开发旅游资源的历史背景,借助相应的历史典故和有名的历史人物。例如,风波亭,秦桧跪姿石像等风景点都是借助大将军岳飞这个历史人物,和他精忠报国的历史典故作为这一旅游资源的文化内涵的。当人们站在风波亭中,想起岳飞的这首满江红:怒发冲冠,凭栏处,潇潇雨歇,抬望眼,仰天长啸,怀壮激烈。三十功名尘与土,八千里路云和月。莫等闲白了少年头空悲切。锦康耻,犹未雪。臣子恨,何时灭。!加长车踏破,贺兰山缺,壮志饥餐胡虏肉,笑谈渴饮匈奴血。待从头,收拾旧山河,朝天阙。便能犹如感受到了当时的场景和氛围一般,这也是开发旅游资源的文化内涵的价值所在。 除了利用真实的历史人物和历史事件之外,我们当然也可以利用中国古代传说和一些神话故事。神话和传说以其独有的美好性往往能够使人记忆深刻,并且充满向往,以此作为旅游例如,山东蓬莱借助八仙过海的故事作为文化内涵。山东省济源县燕崖乡的织女洞和牛郎庙,牛郎织女相约的鹊桥和由此延伸出来的情人谷。 二、结合开发当地特产特色 文化具有差异性,它是各民族和地区进行生活实践的产物。不同地区,不同民族的传统文化和文化氛围各不相同。旅游这项迁移类娱乐方式便是基于这一点而产生的。人们从一个地方短暂性的迁移到另一个地方,往往是为了去体验这个地方的文化氛围和风土人情,感受他们之间的差异和不同。文化并没有优劣之分,旅游文化也并不只是旅游文化的简单相加。旅游渐渐成为一种文化现象。文化虽然是历史,但它并不只是历史。

Tesla Model S 特斯拉Model S是一款纯电动车型,外观造型方面,该车定位一款四门Coupe车型,动感的车身线条使人过目不忘。此外在前脸造型方面,该车也采用了自己的设计语言。另值得一提的是,特斯拉Model S的镀铬门把手在触摸之后可以自动弹出,充满科技感的设计从拉开车门时便开始体现。该车在2011年年中正式进入量产阶段,预计在2012年年内将有5000台量产车投放市场。 目录 1概述 2售价 3内饰 4动力 5车型 6技术规格 7性能表现 8荣誉 9对比测试 10车型参数 1概述

Tesla Model S是一款由Tesla汽车公司制造的全尺寸高性能电动轿车,预计于2012年年中投入销售,而它的竞争对手则直指宝马5系。该款车的设计者Franz von Holzhausen,曾在马自达北美分公司担任设计师。在Tesla汽车公司中,Model S拥有独一无二的底盘、车身、发动机以及能量储备系统。Model S的第一次亮相是在2009年四月的一期《大卫深夜秀》节目中 4 Tesla Model S 。 2售价 Model S的电池规格分为三种,分别可以驱动车辆行驶260公里、370公里和480公里。而配备这三种电池的Model S的售价则分别为57400美元、67400美元和77400美元。下线的首批1000辆签名款车型将配有可以行驶480公里的蓄电池。尽管官方尚未公布该签名款车型的具体售价,但据推测,价格将会保持在50000美元左右。 Tesla汽车公司称其将会对市场出租可以提供480公里行驶距离的电池。而从Model S中取得的收益将为第三代汽车的发展提供资金保障。 3内饰

基于4P-4C-4R理论的特斯拉电动汽车品牌营销策略探究 兰州大学管理学院:鲍瑞雪指导老师:苏云【摘要】:特斯拉汽车作为新兴的电动汽车品牌,其发展速度是十分惊人的,尤其是自2014年进入中国市场后更是在中国新能源汽车业引起巨大的轰动,其成功模式值得深思,其中特斯拉汽车公司的营销策略,不仅根据目标市场进行分析,还结合了自身的实际情况,通过对多种营销策略的综合利用,最终实现了成功,促进了企业的持续发展。本文基于营销学4P-4C-4R理论对特斯拉汽车公司的营销策略进行深入分析,研究表明特斯拉从顾客角度出发,采取4P、4C、4R相结合的营销策略。4P是战术,4C是战略,应用4C、4R来思考, 4P角度来行动。 【关键词】: 4P 4C 4R 品牌营销策略特斯拉电动汽车 一、研究背景 当前,美国电动汽车企业特斯拉公司已经成为世界电动汽车界的一匹黑马。特斯拉公司成立于2003年,不同于其它新能源汽车企业,它主要从事电动跑车等高端电动汽车的设计、制造和销售。成立仅仅10年,特斯拉就已经开始在新能源汽车企业和高端汽车企业中崭露头角。作为新兴电动汽车品牌,特斯拉汽车的成功不是偶然的,这与其注重品牌营销策略密不可分。特斯拉汽车公司不仅对目标市场进行具体细分,结合自身实际进行有特色的精准的定位,还充分利用名人效应,提升品牌形象,并借助已有成功模式,寻求找到符合自身发展的途径,出一条饱含自己特色的品牌营销之路。 二、理论依据及文献综述 (一)理论依据 本文以经典的营销学理论4P-4C-4R理论为理论依据,着力探究4P-4C-4R 理论与特斯拉电动汽车营销策略之间的内在联系,分析特斯拉电动汽车品牌营销策略的经典之处。 1、4P营销理论 4P营销理论被归结为四个基本策略的组合,即产品(Product)、价格(Price)、渠道(Place)、促销(Promotion)。该理论注重产品开发的功能,要求产品有独特的卖点,把产品的功能诉求放在第一位。在价格策略方面,企业应当根据不

如何挖掘旅游资源的文 化内涵 公司内部编号:(GOOD-TMMT-MMUT-UUPTY-UUYY-DTTI-

如何挖掘旅游资源的文化内涵 摘要:随着我国的国民经济不断发展,人们的生活水平不断提高,大家都开始注重享受型消费,旅游便是其中最典型的一项,旅游不只是一种社会经济现象,更是一种文化现象。旅游总是离不开文化,与文化息息相关,旅游的发展需要借助文化的推动。发掘旅游景点的文化内涵就相当于在寻找旅游景点的灵魂。中华文化博大精深,旅游景点只要有了传统文化作为依托,这汪泉水才能永不干涸。本文的目的是为了探究如何从博大精深的中华文化中挖掘出旅游景点的文化内涵,从而推动当地旅游的科学发展和可持续发展。 关键词:挖掘;旅游资源;文化内涵 近年来,随着经济的不断发展,人们的出游量也在不断增大。但是对于一个景点来说,想要得到长久的可持续发展。他就一定要依靠时代所赋予自己的文化内涵。没有文化内涵的旅游景点,就像是一个没有灵魂的人,景色再美,也经不住时间的推敲和人们的考验。旅游资源不只需要依赖于一定的自然条件,更需要依赖经济文化条件。所以,研究如何挖掘旅游资源的文化内涵是推动当地旅游发展的重要载体。笔者通过考察走访,从联系历史背景与历史人物,结合当地特产特色,不断进行丰富沉淀,保护文化内涵不消灭四个方面对于如何挖掘旅游资源的文化内涵给出了一些建议。 一、联系历史背景与历史人物 旅游业想要生存和发展,就必须要依靠旅游资源,拥有足够丰富的旅游资源才能够吸引游客的目光。旅游资源往往分为自然旅游资源和人文旅游资源。人们在选择旅游目的地和旅游产品时,越来越开始更加重视他们的文化内涵。那些自

详解特斯拉Model S 1、Model S的核心技术是什么? 核心技术是软件,主要包括电池管理软件,电机以及车载设备的电控技术。最重要的是电池控制技术。 Model S的加速性能,续航里程、操控性能的基础都是电池控制技术,没有电池控制技术,一切都就没有了。 2、Model S的电池控制技术有什么特色? 顶配的Model S使用了接近7000块松下NCR 18650 3100mah电池,对电池两次分组,做串并联。设置传感器,感知每块电池的工作状态和温度情况,由电池控制系统进行控制。防止出现过热短路温度差异等危险情况。 在日常使用中,保证电池在大电流冲放电下的安全性。 其他厂商都采用大电池,最多只有几百块,也没有精确到每块电池的控制系统。 3、为什么要搞这么复杂的电池控制系统? 为了能够使用高性能的18650钴锂电池。高性能电池带来高性能车。因为18650钴锂电池的高危性,没有一套靠谱的系统,安全性就不能保证。这也是大多数厂商无论电力车,插电车,混合动力车都不太敢用钴锂电池,特别是大容量钴锂电池的原因。 松下NCR 18650 3100mah,除了测试一致性最好,充放电次数多,安全性相对较好以外,最重要的是能量大,重量轻,价格也不高。 由于能量大,重量轻,在轿车2吨以内的车重限制下,可以塞进去更多的电池,从而保证更长的续航里程。因为电池输出电流有限制,电池越多,输出电流越大,功率越大,可以使用的电机功率也就越大。电机功率越大,相当于发动机功率大,车就有更快的加速性能,而且可以保持较长的一段时间。 4、作为一辆车,Model S有哪些优点?这些优点是电动车带来的吗? 作为一辆车,Model S主要具有以下几个优点 (1)起步加速快,顶配版本0-100公里加速4秒多,能战宝马M5

Don’t Judge a Person by His Appearance With the development of the society and the improvement of living standard of the people, more and more advanced theories emerge. However, the conventional view of judging the person by the appearance still exists among the public. This phenomenon arouses substantial discussion and heated debates. Judging a person by his appearance will lead to a number of problems. To start with, the appearance of one person is not equal to his ability, which is the key to success. The most handsome boy may have lowest quality. Additionally,if you treat a person only because of his pleasant appearance, you may be cheated since a dangerous man frequently disguise himself with charming smile and gentle behaviors. Eventually, when you seek for a husband or a wife only by one’s good looks, but don’t care about whether you have

TESLA 硅谷工程师、资深车迷、创业家马丁·艾伯哈德(Martin Eberhard)在寻找创业项目时发现,美国很多停放丰田混合动力汽车普锐斯的私家车道上经常还会出现些超级跑车的身影。他认为,这些人不是为了省油才买普锐斯,普锐斯只是这群人表达对环境问题的方式。于是,他有了将跑车和新能源结合的想法,而客户群就是这群有环保意识的高收入人士和社会名流。 2003年7月1日,马丁·艾伯哈德与长期商业伙伴马克·塔彭宁(Marc Tarpenning)合伙成立特斯拉(TESLA)汽车公司,并将总部设在美国加州的硅谷地区。成立后,特斯拉开始寻找高效电动跑车所需投资和材料。

由于马丁·艾伯哈德毫无这方面的制造经验,最终找到AC Propulsion公司。当时,对AC Propulsion公司电动汽车技术产生兴趣的还有艾龙·穆思科(Elon Musk)。在AC Propulsion公司CEO汤姆·盖奇(Tom Gage)的引见下,穆思科认识了艾伯哈德的团队。2004年2月会面之后,穆思科向TESLA投资630万美元,但条件是出任公司董事长、拥有所有事务的最终决定权,而艾伯哈德作为创始人任TESLA的CEO。 在有了技术方案、启动资金后,TESLA开始开发高端电动汽车,他们选择英国莲花汽车的Elise作为开发的基础。没有别的原因,只是因为莲花是唯一一家把TESLA放在眼里的跑车生产商。

艾伯哈德和穆思科的共同点是对技术的热情。但是,作为投资人,穆思科拥有绝对的话语权,随着项目的不断推进,TESLA开始尝到“重技术研发轻生产规划、重性能提升轻成本控制”的苦果。2007年6月,离预定投产日期8月27日仅剩下两个月时,TESLA还没有向零部件供应商提供Roadster的技术规格,核心的部件变速箱更是没能研制出来。另一方面,TESLA在两个月前的融资中向投资人宣称制造Roadster的成本为6.5万美元,而此时成本分析报告明确指出Roadster最初50辆的平均成本将超过10万美元。 生意就是生意,尤其硅谷这样的世界级IT产业中心,每天都在发生一些令人意想不到的事情。投资人穆思科以公司创始人艾伯哈德产品开发进度拖延、成本超支为由撤销其

Ladies and Gentlemen: It’s really my great honor to be here and give you a speech. Today my topic is Judge by Appearance. Now I am standing here, dear friends, what is your impression of me? A girl with small eyes? She is not my style! So just now, you judged me by appearance. The most interesting thing is everyone judges others by their appearance, but Judge by appearance has negative meanings. Actually, in my view, it’s inevitable and necessary to Judge by appearance. Imagine you are now meeting a stranger, there is no doubt that outward appearance is the first thing and the only thing you see and know about the person. So Judge by Appearance is a kind of human nature. In many novels, hero and heroine tends to twist together because of judging each other by appearance, though it may lead to misunderstanding, nobody can avoid it. Apart from facial appearance, outward appearance also exist in personal attire as well as behavior,I don’t care weather your clothes are in fashion or not. But if your hair is all funky with a disgusting smell, I just want to step away. What’s more, if somebody appear smiley and behave in good manners, we will have a great first impression of them as this is the type of person we want to be around. However if someone looks grumpy we will avoid this type of person. So actually, we judge one‘s appearance to determine if the other person is a threat or trustworthy and decide how to get along with them or possible reject them. However, it doesn’t means that appearance can show everything. You know what? At the time when I have butch haircu t in primary school, everyone thought I was a tomboy. But they don’t understand me at all. Only god knows I am a typical sentimental girl! It’s obviously that appearances are deceiving sometimes and we should always make an effort to investigate further and get to know the real person. Appearance doesn’t stay, what stays is our heart and our soul, and that is the real thing that we should judge people upon. Remember this and you may make a sound judgment in the future.

第二讲文化资源的概念与分类 提要: 1. 文化资源的概念 2. 文化资源的分类 3. 文化资源的特点与开发类型 1 文化资源的概念 文化资源是一个涵盖很广泛的概念,有人说,除了自然资源,都是文化资源。这个说法有一定的道理,因为它的前提——文化,正如爱德华·泰勒所说,本身就是一个“复合的整体”,“它包括知识、信仰、艺术、道德、法律、习俗和个人作为社会成员所必需的其他能力及习惯”。当然,文化资源并不能完全等同于文化,加了“资源”一词就意味着它已经拥有过去时态(时间性)、可资利用(效用性)等含义。因此,我们可以认为:文化资源可用于指称人类文化中能够传承下来,可资利用的那部分内容和形式。 因此,文化资源是人类劳动创造的物质成果及其转化的一部分。在本课程中,我们围绕所关注的文化经济一体化的生产经营活动来理解各类文化资源。文化产业的中心任务是将有限的文化资源转化为有用的文化产品,包括文化实物产品、文化服务产品及其衍生形态。 1.1 什么是资源? 一般来说,资源是指不直接用于生活消费的生产性资产。资源常常讲利用和开发,与生产的关系十分紧密。我们认为与文化生产或者与文化产业相关的“素材”才是文化资源的核心指向,而这些素材也来自于人类的活动与创造。 人类早期的资源以自然资源和生活资源为主,如土地、森林、猎物等。随着生产的发展和社会生活的复杂化,人类自身创造的资源越来越重要,其的构成也越来越复杂、多样。符号化知识、经验型技能、创新型能力、通讯手段、社会组织系统等等,都成为了生产的要素即生产的资源。不仅有经济生产方面的资源,而且有社会生活方面的资源,如政治资源、文化资源等。因此,人类积累的一切创造发明成果,都成了人类政治、经济、文化、科技活动进一步发展的资源。 总之,资源是指“资财的来源”,即包括自然资源如土地、森林、矿藏、水域等,也包括各类社会资源。其中,文化就是一种社会资源,它也同时体现为有形的物质载体和无形的精神资产。 1.2 资源科学的学科体系: 资源科学——综合资源学,区域资源学,部门资源学 综合资源学——资源地理学,资源生态学,资源经济学,资源评价学,资源工程与工艺学 ,资源管理学,资源法学 ↓ 区域资源学 ↑ 部门资源学——气候资源学,生物资源学,水资源学,土地资源学,矿产资源学,海洋资源学,能源学,旅游资源学。 1.3 我国的文化资源

facial features she has a thin face an oval face a round face clean-shaven a bloated face a cherubic face a chubby face chubby-cheeked a chubby/podgy face he had a weather-beaten face face she has freckles spots/pimples blackheads moles warts wrinkles rosy cheeks a birthmark a double chin

hollow cheeks a dimple smooth-cheeked/smooth-faced a deadpan face a doleful face a sad face a serious face a smiling face a happy face smooth-cheeked/smooth-faced to go red in the face (with anger/heat) to go red/to blush (with embarassment) he looks worried frightened surprised a smile a smirk a frown nose a bulbous nose a hooked nose

a big nose a turned-up/snu b nose a pointed nose a flat nose/a pug nose a lopsided nose a hooter/conk (colloquial Br. Eng.) a schnozzle (colloquial Am. Eng.) to flare your nostrils/to snort eyes she has brown eyes he has beady eyes a black eye red eyes bloodshot eyes to wink to blink she is cross-eyed a squint she's blind he's blind in one eye to go blind

1.名词:资源文化资源 (1)资源,是指一定的社会历史条件下存在着,能够为人类开发利用,在社会经济活动中经由人类劳动而创造出财富或资产的各种要素。 (2)文化资源就是人们从事文化生产和文化活动所利用或可资利用的各种资源。 2.简要谈谈文化资源与自然资源的区别。 (1)自然资源,一般是指能够进入人类劳动生产过程并被加工成生产资料和生活资料的那部分自然要素,如土地、矿藏、水、大气、森林、草地、野生生物等。 (2)文化资源是资源的一种形式,相对于其他资源来说,它是一种特殊的资源,它指的是具有文化属性的各种资料,包括物质资料和精神资料等。文化资源蕴藏于深厚的历史文化传统之中,与自然资源不同的是,文化资源并不是固定不变的,而是具有变异性的特点,它会随着社会历史的变化而出现变化。文化资源是以文化形态表现出来的,这是它不同于自然资源的突出特征。文化资源既可以一种可感的物质化和符号化形式存在,又可以一种思想化、观念化和经验化的方式表现出来。 3.如何认识文化资源对当代社会的重要意义? (1)文化资源是人类思想资料的重要来源(2)文化资源是人类价值观念形成的依据(3)文化资源可以转化为文化资本,成为影响社会发展的重要因素(4)文化资源是人类文化遗产的重要来源(5)文化资源是文化生产力的重要基础 4.文化资源有哪些主要特点?(1)意识性文化资源是人类创造的一种资源,而非天然形成的,所以,无论是物质文化资源还是非物质文化资源,都与人类意识有关,是人类有意识、有目的地创造出来的,这是与自然资源不同的地方.(2)再生性与不可再生性(3)社会性文化资源是人类所创造的一种社会化的资源,它是为满足社会需要而创造的,因此,文化资源带有鲜明的社会性特点。(4)传承性是指文化的延续和继承,它是一种文化传递活动,它使得文化具有了稳定性和连续性。(5)教化性它具有十分突出的社会功能和作用,因为文化体现出的是人类的某种意志和价值观,它是按照人类社会的愿望和要求创造和形成的,具有明显的社会意识形态内容。(6)共享性(7)动态性世界上任何一种文化均承载着一定的内容,而此内容既有其相对稳定的特征,也有其发展变化的一面。由此决定了文化资源也是一个不断变化的动态过程。 5.文化资源的传承方式主要有哪些?主要有物质的方式、精神的方式和行为的方式。(1)物质的方式是通过物质的有形载体来传承文化的,例如,历史上保存下来的各种不同文化风格和样式的建筑、工艺、雕刻、器物、服饰、饮食等。这些物质载体不仅成为民族文化的一种象征而具有深厚的历史价值,而且在现代社会中还因为表达了某种特定的文化观念而具有很高的经济价值。(2)精神的传承方式属于非物质的,它是通过精神的无形方式来传承文化的,例如,风俗习惯、口传文化(神话、传说、歌谣、民间故事等)、宗教信仰、语言艺术、道德观念、思想意识等。(3)行为方式的传承主要表现在人类的生产方式和生活方式等层面,事实上它们与人类的物质文化和精神文化都有密切关系。 6.结合现实简要谈谈知识资源对当代社会发展的影响 知识经济的兴起,就包含着极为深刻的当代文化内涵,它不仅决定着知识在当代社会的发展趋势,同时也关系到知识对当代社会所产生的影响作用。知识这一精神文化资源在当代已经成为一种重要的新型资源,知识与经济的相互渗透、相互结合、相互影响,是促使现代社会发生深刻变革的重要原因。知识经济是通过知识创新与知识成果的转化而形成的一种新型经济活动。 知识经济是相对于传统的农业经济、工业经济和商业经济的一种新的经济形态。知识经济把知识看作是内在的生产力要素,通过知识形态的转化与生成,把知识资源转化为生产资源,以获取经济效益。