Integral Invariants for Robust Geometry_期刊版

- 格式:pdf

- 大小:1.28 MB

- 文档页数:15

V、X、Z:Value of function :函数值Variable :变数Vector :向量Velocity :速度Vertical asymptote :垂直渐近线Volume :体积X-axis :x轴x-coordinate :x坐标x-intercept :x截距Zero vector :函数的零点Zeros of a polynomial :多项式的零点T:Tangent function :正切函数Tangent line :切线Tangent plane :切平面Tangent vector :切向量Total differential :全微分Trigonometric function :三角函数Trigonometric integrals :三角积分Trigonometric substitutions :三角代换法Tripe integrals :三重积分S:Saddle point :鞍点Scalar :纯量Secant line :割线Second derivative :二阶导数Second Derivative Test :二阶导数试验法Second partial derivative :二阶偏导数Sector :扇形Sequence :数列Series :级数Set :集合Shell method :剥壳法Sine function :正弦函数Singularity :奇点Slant asymptote :斜渐近线Slope :斜率Slope-intercept equation of a line :直线的斜截式Smooth curve :平滑曲线Smooth surface :平滑曲面Solid of revolution :旋转体Space :空间Speed :速率Spherical coordinates :球面坐标Squeeze Theorem :夹挤定理Step function :阶梯函数Strictly decreasing :严格递减Strictly increasing :严格递增Sum :和Surface :曲面Surface integral :面积分Surface of revolution :旋转曲面Symmetry :对称R:Radius of convergence :收敛半径Range of a function :函数的值域Rate of change :变化率Rational function :有理函数Rationalizing substitution :有理代换法Rational number :有理数Real number :实数Rectangular coordinates :直角坐标Rectangular coordinate system :直角坐标系Relative maximum and minimum :相对极大值与极小值Revenue function :收入函数Revolution , solid of :旋转体Revolution , surface of :旋转曲面Riemann Sum :黎曼和Riemannian geometry :黎曼几何Right-hand derivative :右导数Right-hand limit :右极限Root :根P、Q:Parabola :拋物线Parabolic cylinder :抛物柱面Paraboloid :抛物面Parallelepiped :平行六面体Parallel lines :并行线Parameter :参数Partial derivative :偏导数Partial differential equation :偏微分方程Partial fractions :部分分式Partial integration :部分积分Partition :分割Period :周期Periodic function :周期函数Perpendicular lines :垂直线Piecewise defined function :分段定义函数Plane :平面Point of inflection :反曲点Polar axis :极轴Polar coordinate :极坐标Polar equation :极方程式Pole :极点Polynomial :多项式Positive angle :正角Point-slope form :点斜式Power function :幂函数Product :积Quadrant :象限Quotient Law of limit :极限的商定律Quotient Rule :商定律M、N、O:Maximum and minimum values :极大与极小值Mean Value Theorem :均值定理Multiple integrals :重积分Multiplier :乘子Natural exponential function :自然指数函数Natural logarithm function :自然对数函数Normal line :法线Normal vector :法向量Octant :卦限Odd function :奇函数One-sided limit :单边极限Open interval :开区间Optimization problems :最佳化问题Order :阶Ordinary differential equation :常微分方程Origin :原点Orthogonal :正交的L:Laplace transform :Laplace 变换Law of Cosines :余弦定理Least upper bound :最小上界Left-hand derivative :左导数Left-hand limit :左极限Lemniscate :双钮线Level curve :等高线L'Hospital's rule :洛必达法则Limacon :蚶线Linear approximation:线性近似Linear equation :线性方程式Linear function :线性函数Linearity :线性Linearization :线性化Line in the plane :平面上之直线Line in space :空间之直线Lobachevski geometry :罗巴切夫斯基几何Local extremum :局部极值Local maximum and minimum :局部极大值与极小值Logarithm :对数Logarithmic function :对数函数I:Implicit differentiation :隐求导法Implicit function :隐函数Improper integral :瑕积分Increasing/Decreasing Test :递增或递减试验法Increment :增量Increasing Function :增函数Indefinite integral :不定积分Independent variable :自变数Indeterminate from :不定型Inequality :不等式Infinite point :无穷极限Infinite series :无穷级数Inflection point :反曲点Instantaneous velocity :瞬时速度Integer :整数Integral :积分Integrand :被积分式Integration :积分Integration by part :分部积分法Intercepts :截距Intermediate value of Theorem :中间值定理Interval :区间Inverse function :反函数Inverse trigonometric function :反三角函数Iterated integral :逐次积分H:Higher mathematics 高等数学/高数E、F、G、H:Ellipse :椭圆Ellipsoid :椭圆体Epicycloid :外摆线Equation :方程式Even function :偶函数Expected Valued :期望值Exponential Function :指数函数Exponents , laws of :指数率Extreme value :极值Extreme Value Theorem :极值定理Factorial :阶乘First Derivative Test :一阶导数试验法First octant :第一卦限Focus :焦点Fractions :分式Function :函数Fundamental Theorem of Calculus :微积分基本定理Geometric series :几何级数Gradient :梯度Graph :图形Green Formula :格林公式Half-angle formulas :半角公式Harmonic series :调和级数Helix :螺旋线Higher Derivative :高阶导数Horizontal asymptote :水平渐近线Horizontal line :水平线Hyperbola :双曲线Hyperboloid :双曲面D:Decreasing function :递减函数Decreasing sequence :递减数列Definite integral :定积分Degree of a polynomial :多项式之次数Density :密度Derivative :导数of a composite function :复合函数之导数of a constant function :常数函数之导数directional :方向导数domain of :导数之定义域of exponential function :指数函数之导数higher :高阶导数partial :偏导数of a power function :幂函数之导数of a power series :羃级数之导数of a product :积之导数of a quotient :商之导数as a rate of change :导数当作变率right-hand :右导数second :二阶导数as the slope of a tangent :导数看成切线之斜率Determinant :行列式Differentiable function :可导函数Differential :微分Differential equation :微分方程partial :偏微分方程Differentiation :求导法implicit :隐求导法partial :偏微分法term by term :逐项求导法Directional derivatives :方向导数Discontinuity :不连续性Disk method :圆盘法Distance :距离Divergence :发散Domain :定义域Dot product :点积Double integral :二重积分change of variable in :二重积分之变数变换in polar coordinates :极坐标二重积分Calculus :微积分differential :微分学integral :积分学Cartesian coordinates :笛卡儿坐标一般指直角坐标Cartesian coordinates system :笛卡儿坐标系Cauchy’s Mean Value Theorem :柯西均值定理Chain Rule :连锁律Change of variables :变数变换Circle :圆Circular cylinder :圆柱Closed interval :封闭区间Coefficient :系数Composition of function :函数之合成Compound interest :复利Concavity :凹性Conchoid :蚌线Cone :圆锥Constant function :常数函数Constant of integration :积分常数Continuity :连续性at a point :在一点处之连续性of a function :函数之连续性on an interval :在区间之连续性from the left :左连续from the right :右连续Continuous function :连续函数Convergence :收敛interval of :收敛区间radius of :收敛半径Convergent sequence :收敛数列series :收敛级数Coordinate:s:坐标Cartesian :笛卡儿坐标cylindrical :柱面坐标polar :极坐标rectangular :直角坐标spherical :球面坐标Coordinate axes :坐标轴Coordinate planes :坐标平面Cosine function :余弦函数Critical point :临界点Cubic function :三次函数Curve :曲线Cylinder:圆柱Cylindrical Coordinates :圆柱坐标A、B:Absolute convergence :绝对收敛Absolute extreme values :绝对极值Absolute maximum and minimum :绝对极大与极小Absolute value :绝对值Absolute value function :绝对值函数Acceleration :加速度Antiderivative :反导数Approximate integration :近似积分Approximation :逼近法by differentials :用微分逼近linear :线性逼近法by Simpson’s Rule :Simpson法则逼近法by the Trapezoidal Rule :梯形法则逼近法Arbitrary constant :任意常数Arc length :弧长Area :面积under a curve :曲线下方之面积between curves :曲线间之面积in polar coordinates :极坐标表示之面积of a sector of a circle :扇形之面积of a surface of a revolution :旋转曲面之面积Asymptote :渐近线horizontal :水平渐近线slant :斜渐近线vertical :垂直渐近线Average speed :平均速率Average velocity :平均速度Axes, coordinate :坐标轴Axes of ellipse :椭圆之轴Binomial series :二项级数。

哈密尔顿系统的辛几何算法哈密尔顿系统是一类具有特殊的物理意义的动力系统,其在物理学、力学、动力学和计算力学等领域有着广泛应用。

哈密尔顿系统通常具有一组关于位置和动量的相变量,其演化满足哈密尔顿方程。

由于哈密尔顿系统具有良好的保持量和结构稳定性,因此在数值模拟中的算法设计尤其重要。

辛几何算法是一类特殊的数值演化方法,其以保持哈密尔顿系统相变量守恒和辛结构稳定性为目标,常常用于哈密尔顿系统的数值积分。

辛几何算法最早由李约瑟于 1988 年提出,其不仅能够在数值计算中保持相变量的守恒,还能够在哈密尔顿系统的长期演化中保持辛结构稳定性。

辛几何算法主要由两个部分组成,即辛映射和辛算子。

辛映射指的是从一个相变量向下一个相变量的映射,它通常满足“保相量”和辛结构不变性的特点。

保相量指的是相变量在变化过程中的守恒,而辛结构不变性则指的是哈密尔顿系统在演化过程中的辛不变性。

而辛算子则是这个辛映射的数值逼近,常常采用辛波发方法、显式和隐式辛算法等方法。

在演化哈密尔顿系统时,辛几何算法通常采用显式辛算法进行数值模拟。

显式辛算法的主要思路是采用辛映射和辛算子的组合,来实现对哈密尔顿系统的数值模拟。

在模拟过程中,辛几何算法需要保证每一步的演化都是辛的,这样系统才能保持哈密尔顿量以及其他相变量不变。

因此,辛几何算法在数值模拟中的应用非常广泛。

然而,辛几何算法的实现却比较困难。

在数值模拟时,辛几何算法需要考虑一系列问题,如相变量的数值守恒、哈密尔顿量的捕获和重构、快速演化、长时间演化、难以计算的高维效应等等。

这些问题都需要采用一些特殊的技巧和策略来解决。

总的来说,辛几何算法是一种特殊的数值演化方法,其以保持哈密尔顿系统相变量守恒和辛结构稳定性为目标,在计算力学、物理学、动力学等领域有着广泛应用。

不等式机器证明的降维算法与通用程序

杨路

【期刊名称】《高技术通讯》

【年(卷),期】1998(8)7

【摘要】提出了降维算法,它能有效地处理带参数的根式,将维数控制在最小限度。

据此编成的通用程序已在PC机上验证了400多个具有相当难度的代数和几何的不等式,对Botema的《几何不等式》一书中120个基本不等式的验证仅用时20几秒。

【总页数】6页(P20-25)

【关键词】降维算法;结式;临界曲面;判别曲面;机器证明

【作者】杨路

【作者单位】中国科学院成都计算机应用研究所

【正文语种】中文

【中图分类】TP301.6;TP391.75

【相关文献】

1.一类根式不等式的有理化算法与机器证明 [J], 徐嘉;姚勇

2.运用统计流形降维的通用型隐写分析算法 [J], 戴良斌;全笑梅

3.基于PCA降维结合机器学习算法的人机交互手势识别研究 [J], 钟健; 何韦颖; 谭汉松

4.基于局域降维Dijkstra算法的校园送餐机器人多目标路径规划 [J], 宫金良;牛作

硕;张彦斐

5.具有线性不等式约束非线性规划问题的降维算法 [J], 杨懿;张守贵

因版权原因,仅展示原文概要,查看原文内容请购买。

一种非单调自适应新锥模型信赖域算法近年来,统计推断和模式识别领域的研究人员发展了一些有效的算法来提取有用信息,这些算法大部分都建立在锥模型中。

在锥模型中,对对象进行抽样,并采用不同的模型表示在不同的空间中。

通过计算锥模型中的约束,可以获得两个或更多的对象的信息。

然而,由于子空间有可能交叉,锥模型在提取信息时存在两个主要问题:第一,多维空间中的子空间可能会发生重叠,这会影响模型的有效性;第二,子空间边界变化可能会导致模型精度降低。

因此,开发一种非单调自适应新锥模型信赖域算法(NDA-NCRM)是很有必要的。

NDA-NCRM是一种半抽象的算法,它可以通过在子空间中构造拉格朗日乘数,从而使模型的边界具有可变性,并使子空间不会发生重叠现象。

NDA-NCRM的另一个优点是,它可以支持任意数量的对象,这对锥模型的计算量有很大的影响。

NDA-NCRM算法的主要原理是,在子空间中构造一系列连续的拉格朗日乘数,以使模型的边界具有可变性,从而避免子空间发生重叠的情况,并且算法可以支持任意数量的对象。

NDA-NCRM的基本思想是,在锥模型中建立一个简单的有效的拉格朗日乘数,然后通过迭代对拉格朗日乘数进行修改,从而得到更好的目标函数值的结果。

NDA-NCRM的迭代过程中,需要计算原始锥模型中的约束,从而获取不同子空间中的信息。

在每一步迭代中,都要重新估计拉格朗日乘数,以确保所有变量可以有效地调节子空间的边界,从而保证模型的有效性。

此外,对NDA-NCRM算法进行改进,可以更好地提高模型的精度。

这种改进可以通过引入权重来实现,从而使调整参数更加精确。

此外,可以将NDA-NCRM算法和其他半抽象算法结合起来,比如聚类或分类等,从而更有效地提取有用信息。

总之,NDA-NCRM算法是一种有效的、非单调的锥模型信赖域算法,它可以提高模型的精度,同时可以有效地支持任意数量的对象。

此外,NDA-NCRM算法还可以实现通过调整参数更加精确地提取有用信息,并可以与其他半抽象算法结合起来,从而更有效地提取有用信息。

电磁理论中的Hodge星算子

饶明忠;黄键

【期刊名称】《电工技术学报》

【年(卷),期】1995(000)004

【摘要】在三维空间中给Hodge星算子下了定义,导出了0 ̄3-形式基的星运算公式,指出了欧几里得空间1-形式电磁量间的外积构成一个李代数,并导出了微分流形中的格林定理。

【总页数】4页(P56-59)

【作者】饶明忠;黄键

【作者单位】不详;不详

【正文语种】中文

【中图分类】TM15

【相关文献】

1.复Finsler流形上的Hodge-Laplace算子 [J], 钟春平;钟同德

中(p,q)型微分形式关于Hodge *算子、( )算子及其伴随形式( )的积分表示[J], 钟春平

3.Finsler流形上的Hodge-Laplace算子 [J], 崔宁伟;王佳

4.霍奇星算子,余微分及拉—贝算子与电二阶电磁场方程 [J], 陈强顺;王建成

5.紧复流形上的星算子定义及伴随算子 [J], 黄晴;杨秋花;卢卫君

因版权原因,仅展示原文概要,查看原文内容请购买。

改进的无奇异局部边界积分方程方法

付东杰;陈海波;张培强

【期刊名称】《应用数学和力学》

【年(卷),期】2007(28)8

【摘要】在局部边界积分方程方法中,当源节点位于分析域的整体边界上时,局部边界积分将出现奇异积分问题,这些奇异积分需要做特别的处理.为此,提出了对域内节点采用局部积分方程,而对边界节点直接采用移动最小二乘近似函数引入边界条件来解决奇异积分问题,这同时也解决了对积分边界进行插值引入近似误差的问题.作为应用和数值实验,对Laplace方程和Helmholtz方程问题进行了分析,取得了很好的数值结果.进而,在Helmholtz方程求解中,采用了含波解信息的修正基函数来代替单项式基函数进行近似.数值结果显示,这样处理是简单高效的,在高波数声传播问题的求解中非常具有前景.

【总页数】7页(P976-982)

【关键词】无网格方法;移动最小二乘近似;局部边界积分方程方法;奇异积分

【作者】付东杰;陈海波;张培强

【作者单位】中国科学技术大学力学和机械工程系;中科院材料力学行为和设计重点实验室

【正文语种】中文

【中图分类】O302

【相关文献】

空间中无界域的光滑边界上的奇异积分和奇异积分方程 [J], 钟同德

2.一种求解边界积分方程中三阶奇异体积分的数值方法 [J], 邹广德;王志超

3.Helmholtz边界积分方程中奇异积分间接求解方法 [J], 周琪; 陈永强

4.改进的无网格局部边界积分方程方法 [J], 戴保东;程玉民

5.关于薄板的无网格局部边界积分方程方法中的友解 [J], 龙述尧;熊渊博

因版权原因,仅展示原文概要,查看原文内容请购买。

基于压缩感知与小波域奇异值分解的图像认证

高志荣;吕进

【期刊名称】《中南民族大学学报(自然科学版)》

【年(卷),期】2010(029)004

【摘要】提出了一种基于压缩感知与小波域奇异值分解的图像哈希认证新方法.该方法首先通过小波变换得到图像的低频子带,然后对低频子带进行基于块的奇异值分解,最后对产生的最大奇异值数组进行基于压缩感知的随机投影,产生哈希认证信息,并偕同原图像传送到接收端.图像接收端通过比较哈希信息完成图像认证,并通过利用传递的哈希信息重建原始图像的奇异值,进一步映射奇异值差值到图像像素域,从而实现对图像篡改的识别与定位.大量实验结果证明了该方法的有效性.

【总页数】5页(P89-93)

【作者】高志荣;吕进

【作者单位】中南民族大学,计算机科学学院,武汉,430074;中南民族大学,计算机科学学院,武汉,430074

【正文语种】中文

【中图分类】TP309.2

【相关文献】

1.基于小波域奇异值分解的振动信号压缩算法 [J], 王怀光;张培林;陈林;陈彦龙

2.基于奇异值分解的自嵌入图像认证水印算法 [J], 胡玉平

3.一种基于压缩感知的无损图像认证算法 [J], 艾鸽;伍家松;段宇平;舒华忠

4.基于压缩感知的数字图像认证算法研究 [J], 邓桂兵;周爽;李珊珊;蒋天发

5.基于奇异值分解的小波域数字水印方法 [J], 曾晴;马苗;孙莉;周涛

因版权原因,仅展示原文概要,查看原文内容请购买。

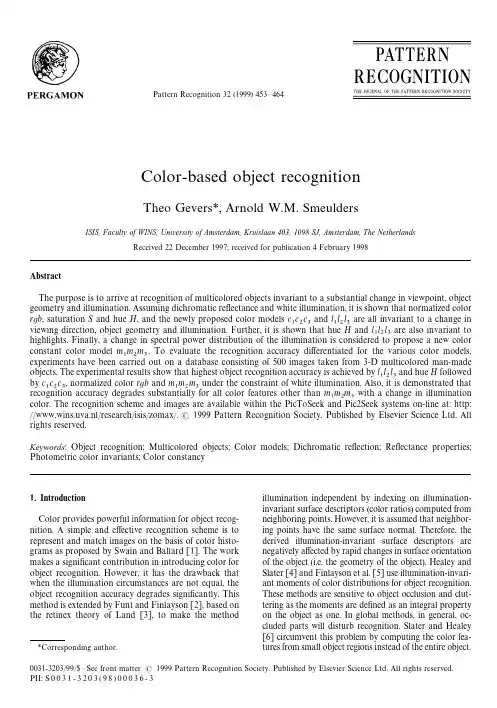

Speeded-Up Robust Features (SURF)Herbert Bay a ,Andreas Essa,*,Tinne Tuytelaars b ,Luc Van Goola,ba ETH Zurich,BIWI,Sternwartstrasse 7,CH-8092Zurich,Switzerland bK.U.Leuven,ESAT-PSI,Kasteelpark Arenberg 10,B-3001Leuven,BelgiumReceived 31October 2006;accepted 5September 2007Available online 15December 2007AbstractThis article presents a novel scale-and rotation-invariant detector and descriptor,coined SURF (Speeded-Up Robust Features).SURF approximates or even outperforms previously proposed schemes with respect to repeatability,distinctiveness,and robustness,yet can be computed and compared much faster.This is achieved by relying on integral images for image convolutions;by building on the strengths of the leading existing detectors and descriptors (specifically,using a Hessian matrix-based measure for the detector,and a distribution-based descriptor);and by sim-plifying these methods to the essential.This leads to a combination of novel detection,description,and matching steps.The paper encompasses a detailed description of the detector and descriptor and then explores the effects of the most important param-eters.We conclude the article with SURF’s application to two challenging,yet converse goals:camera calibration as a special case of image registration,and object recognition.Our experiments underline SURF’s usefulness in a broad range of topics in computer vision.Ó2007Elsevier Inc.All rights reserved.Keywords:Interest points;Local features;Feature description;Camera calibration;Object recognition1.IntroductionThe task of finding point correspondences between two images of the same scene or object is part of many com-puter vision applications.Image registration,camera cali-bration,object recognition,and image retrieval are just a few.The search for discrete image point correspondences can be divided into three main steps.First,‘interest points’are selected at distinctive locations in the image,such as cor-ners,blobs,and T-junctions.The most valuable property of an interest point detector is its repeatability.The repeat-ability expresses the reliability of a detector for finding the same physical interest points under different viewing condi-tions.Next,the neighbourhood of every interest point is represented by a feature vector.This descriptor has to be distinctive and at the same time robust to noise,detectiondisplacements and geometric and photometric deforma-tions.Finally,the descriptor vectors are matched between different images.The matching is based on a distance between the vectors,e.g.the Mahalanobis or Euclidean dis-tance.The dimension of the descriptor has a direct impact on the time this takes,and less dimensions are desirable for fast interest point matching.However,lower dimensional feature vectors are in general less distinctive than their high-dimensional counterparts.It has been our goal to develop both a detector and descriptor that,in comparison to the state-of-the-art,are fast to compute while not sacrificing performance.In order to succeed,one has to strike a balance between the above requirements like simplifying the detection scheme while keeping it accurate,and reducing the descriptor’s size while keeping it sufficiently distinctive.A wide variety of detectors and descriptors have already been proposed in the literature (e.g.[21,24,27,39,25]).Also,detailed comparisons and evaluations on benchmarking datasets have been performed [28,30,31].Our fast detector and descriptor,called SURF (Speeded-Up Robust1077-3142/$-see front matter Ó2007Elsevier Inc.All rights reserved.doi:10.1016/j.cviu.2007.09.014*Corresponding author.E-mail address:aess@vision.ee.ethz.ch (A.Ess)./locate/cviuAvailable online at Computer Vision and Image Understanding 110(2008)346–359Features),was introduced in[4].It is built on the insights gained from this previous work.In our experiments on these benchmarking datasets,SURF’s detector and descriptor are not only faster,but the former is also more repeatable and the latter more distinctive.We focus on scale and in-plane rotation-invariant detec-tors and descriptors.These seem to offer a good compromise between feature complexity and robustness to commonly occurring photometric deformations.Skew,anisotropic scaling,and perspective effects are assumed to be second order effects,that are covered to some degree by the overall robustness of the descriptor.Note that the descriptor can be extended towards affine-invariant regions using affine normalisation of the ellipse(cf.[31]),although this will have an impact on the computation time.Extending the detector, on the other hand,is less straightforward.Concerning the photometric deformations,we assume a simple linear model with a bias(offset)and contrast change(scale factor).Nei-ther detector nor descriptor use colour information.The article is structured as follows.In Section2,we give a review over previous work in interest point detection and description.In Section3,we describe the strategy applied for fast and robust interest point detection.The input image is analysed at different scales in order to guarantee invariance to scale changes.The detected interest points are provided with a rotation and scale-invariant descriptor in Section4.Furthermore,a simple and efficientfirst-line indexing technique,based on the contrast of the interest point with its surrounding,is proposed.In Section5,some of the available parameters and their effects are discussed,including the benefits of an upright version(not invariant to image rotation).We also investi-gate SURF’s performance in two important application scenarios.First,we consider a special case of image regis-tration,namely the problem of camera calibration for3D reconstruction.Second,we will explore SURF’s applica-tion to an object recognition experiment.Both applications highlight SURF’s benefits in terms of speed and robustness as opposed to other strategies.The article is concluded in Section6.2.Related work2.1.Interest point detectionThe most widely used detector is probably the Harris corner detector[15],proposed back in1988.It is based on the eigenvalues of the second moment matrix.However, Harris corners are not scale invariant.Lindeberg[21]intro-duced the concept of automatic scale selection.This allows to detect interest points in an image,each with their own characteristic scale.He experimented with both the deter-minant of the Hessian matrix as well as the Laplacian (which corresponds to the trace of the Hessian matrix)to detect blob-like structures.Mikolajczyk and Schmid[26] refined this method,creating robust and scale-invariant feature detectors with high repeatability,which they coined Harris-Laplace and Hessian-Laplace.They used a(scale-adapted)Harris measure or the determinant of the Hessian matrix to select the location,and the Laplacian to select the scale.Focusing on speed,Lowe[23]proposed to approxi-mate the Laplacian of Gaussians(LoG)by a Difference of Gaussians(DoG)filter.Several other scale-invariant interest point detectors have been proposed.Examples are the salient region detec-tor,proposed by Kadir and Brady[17],which maximises the entropy within the region,and the edge-based region detector proposed by Jurie and Schmid[16].They seem less amenable to acceleration though.Also several affine-invari-ant feature detectors have been proposed that can cope with wider viewpoint changes.However,these fall outside the scope of this article.From studying the existing detectors and from published comparisons[29,30],we can conclude that Hessian-based detectors are more stable and repeatable than their Harris-based counterparts.Moreover,using the determinant of the Hessian matrix rather than its trace(the Laplacian) seems advantageous,as itfires less on elongated,ill-localised structures.We also observed that approximations like the DoG can bring speed at a low cost in terms of lost accuracy.2.2.Interest point descriptionAn even larger variety of feature descriptors has been proposed,like Gaussian derivatives[11],moment invari-ants[32],complex features[1],steerablefilters[12], phase-based local features[6],and descriptors representing the distribution of smaller-scale features within the interest point neighbourhood.The latter,introduced by Lowe[24], have been shown to outperform the others[28].This can be explained by the fact that they capture a substantial amount of information about the spatial intensity patterns, while at the same time being robust to small deformations or localisation errors.The descriptor in[24],called SIFT for short,computes a histogram of local oriented gradients around the interest point and stores the bins in a128D vec-tor(8orientation bins for each of4Â4location bins).Various refinements on this basic scheme have been pro-posed.Ke and Sukthankar[18]applied PCA on the gradi-ent image around the detected interest point.This PCA-SIFT yields a36D descriptor which is fast for matching, but proved to be less distinctive than SIFT in a second comparative study by Mikolajczyk and Schmid[30];and applying PCA slows down feature computation.In the same paper[30],the authors proposed a variant of SIFT, called GLOH,which proved to be even more distinctive with the same number of dimensions.However,GLOH is computationally more expensive as it uses again PCA for data compression.The SIFT descriptor still seems the most appealing descriptor for practical uses,and hence also the most widely used nowadays.It is distinctive and relatively fast, which is crucial for on-line applications.Recently,Se et al.[37]implemented SIFT on a Field ProgrammableH.Bay et al./Computer Vision and Image Understanding110(2008)346–359347Gate Array(FPGA)and improved its speed by an order of magnitude.Meanwhile,Grabner et al.[14]also used inte-gral images to approximate SIFT.Their detection step is based on difference-of-mean(without interpolation),their description step on integral histograms.They achieve about the same speed as we do(though the description step is constant in speed),but at the cost of reduced quality compared to SIFT.Generally,the high dimensionality of the descriptor is a drawback of SIFT at the matching step. For on-line applications relying only on a regular PC,each one of the three steps(detection,description,matching)has to be fast.An entire body of work is available on speeding up the matching step.All of them come at the expense of getting an approximative matching.Methods include the best-bin-first proposed by Lowe[24],balltrees[35],vocabulary trees[34],locality sensitive hashing[9],or redundant bit vectors[13].Complementary to this,we suggest the use of the Hessian matrix’s trace to significantly increase the matching speed.Together with the descriptor’s low dimen-sionality,any matching algorithm is bound to perform faster.3.Interest point detectionOur approach for interest point detection uses a very basic Hessian matrix approximation.This lends itself to the use of integral images as made popular by Viola and Jones[41],which reduces the computation time drastically. Integral imagesfit in the more general framework of box-lets,as proposed by Simard et al.[38].3.1.Integral imagesIn order to make the article more self-contained,we briefly discuss the concept of integral images.They allow for fast computation of box type convolutionfilters.The entry of an integral image I RðxÞat a location x¼ðx;yÞT represents the sum of all pixels in the input image I within a rectangular region formed by the origin and x.I RðxÞ¼X i6xi¼0X j6yj¼0Iði;jÞð1ÞOnce the integral image has been computed,it takes three additions to calculate the sum of the intensities over any upright,rectangular area(see Fig.1).Hence,the calcu-lation time is independent of its size.This is important in our approach,as we use bigfilter sizes.3.2.Hessian matrix-based interest pointsWe base our detector on the Hessian matrix because of its good performance in accuracy.More precisely,we detect blob-like structures at locations where the determi-nant is maximum.In contrast to the Hessian-Laplace detector by Mikolajczyk and Schmid[26],we rely on the determinant of the Hessian also for the scale selection,as done by Lindeberg[21].Given a point x¼ðx;yÞin an image I,the Hessian matrix Hðx;rÞin x at scale r is defined as followsHðx;rÞ¼L xxðx;rÞL xyðx;rÞL xyðx;rÞL yyðx;rÞ;ð2Þwhere L xxðx;rÞis the convolution of the Gaussian secondorder derivative o22gðrÞwith the image I in point x,and similarly for L xyðx;rÞand L yyðx;rÞ.Gaussians are optimal for scale-space analysis[19,20], but in practice they have to be discretised and cropped (Fig.2,left half).This leads to a loss in repeatability under image rotations around odd multiples of p.This weakness holds for Hessian-based detectors in general. Fig.3shows the repeatability rate of two detectors based on the Hessian matrix for pure image rotation. The repeatability attains a maximum around multiples of p2.This is due to the square shape of thefilter.Nev-ertheless,the detectors still perform well,and the slight decrease in performance does not outweigh the advan-tage of fast convolutions brought by the discretisation and cropping.As realfilters are non-ideal in any case, and given Lowe’s success with his LoG approximations, we push the approximation for the Hessian matrix even further with boxfilters(in the right half of Fig.2). These approximate second order Gaussian derivatives and can be evaluated at a very low computationalcost ing integral images,it takes only three additions and four memory accesses to calculate the sum of intensities inside a rectangular region of anysize.Fig.2.Left to right:The(discretised and cropped)Gaussian second order partial derivative in y-(L yy)and xy-direction(L xy),respectively;our approximation for the second order Gaussian partial derivative in y-(D yy) and xy-direction(D xy).The grey regions are equal to zero.348H.Bay et al./Computer Vision and Image Understanding110(2008)346–359using integral images.The calculation time therefore is independent of thefilter size.As shown in Section5 and Fig.3,the performance is comparable or better than with the discretised and cropped Gaussians.The9Â9boxfilters in Fig.2are approximations of a Gaussian with r¼1:2and represent the lowest scale(i.e. highest spatial resolution)for computing the blob response maps.We will denote them by D xx,D yy,and D xy.The weights applied to the rectangular regions are kept simple for computational efficiency.This yieldsdetðH approxÞ¼D xx D yyÀðwD xyÞ2:ð3ÞThe relative weight w of thefilter responses is used to bal-ance the expression for the Hessian’s determinant.This is needed for the energy conservation between the Gaussian kernels and the approximated Gaussian kernels,w¼j L xyð1:2ÞjFj D yyð9ÞjFj L yyð1:2ÞjFj D xyð9ÞjF¼0:912:::’0:9;ð4Þwhere j x jF is the Frobenius norm.Notice that for theoret-ical correctness,the weighting changes depending on the scale.In practice,we keep this factor constant,as this did not have a significant impact on the results in our experiments.Furthermore,thefilter responses are normalised with respect to their size.This guarantees a constant Frobenius norm for anyfilter size,an important aspect for the scale space analysis as discussed in the next section.The approximated determinant of the Hessian repre-sents the blob response in the image at location x.These responses are stored in a blob response map over different scales,and local maxima are detected as explained in Sec-tion3.4.3.3.Scale space representationInterest points need to be found at different scales,not least because the search of correspondences often requires their comparison in images where they are seen at different scales.Scale spaces are usually implemented as an image pyramid.The images are repeatedly smoothed with a Gaussian and then sub-sampled in order to achieve a higher level of the pyramid.Lowe[24]subtracts these pyr-amid layers in order to get the DoG(Difference of Gaussi-ans)images where edges and blobs can be found.Due to the use of boxfilters and integral images,we do not have to iteratively apply the samefilter to the output of a previouslyfiltered layer,but instead can apply boxfilters of any size at exactly the same speed directly on the original image and even in parallel(although the latter is not exploited here).Therefore,the scale space is analysed by up-scaling thefilter size rather than iteratively reducing the image size,Fig.4.The output of the9Â9filter,intro-duced in previous section,is considered as the initial scale layer,to which we will refer as scale s¼1:2(approximating Gaussian derivatives with r¼1:2).The following layers are obtained byfiltering the image with gradually bigger masks,taking into account the discrete nature of integral images and the specific structure of ourfilters.Note that our main motivation for this type of sampling is its computational efficiency.Furthermore,as we do not have to downsample the image,there is no aliasing.On the downside,boxfilters preserve high-frequency compo-nents that can get lost in zoomed-out variants of the same scene,which can limit scale-invariance.This was however not noticeable in our experiments.The scale space is divided into octaves.An octave repre-sents a series offilter response maps obtained by convolv-ing the same input image with afilter of increasing size.In total,an octave encompasses a scaling factor of2(which implies that one needs to more than double thefilter size, see below).Each octave is subdivided into a constant num-ber of scale levels.Due to the discrete nature of integral images,the minimum scale difference between two subse-quent scales depends on the length l0of the positive or neg-ative lobes of the partial second order derivative in the direction of derivation(x or y),which is set to a third of thefilter size length.For the9Â9filter,this length l0is 3.For two successive levels,we must increase this size byFig.3.Top:Repeatability score for image rotation of up to180°.Hessian-based detectors have in general a lower repeatability score for anglesFig.4.Instead of iteratively reducing the image size(left),the use ofintegral images allows the up-scaling of thefilter at constant cost(right).H.Bay et al./Computer Vision and Image Understanding110(2008)346–359349a minimum of 2pixels (1pixel on every side)in order to keep the size uneven and thus ensure the presence of the central pixel.This results in a total increase of the mask size by 6pixels (see Fig.5).Note that for dimensions different from l 0(e.g.the width of the central band for the vertical filter in Fig.5),rescaling the mask introduces rounding-offerrors.However,since these errors are typically much smaller than l 0,this is an acceptable approximation.The construction of the scale space starts with the 9Â9filter,which calculates the blob response of the image for the smallest scale.Then,filters with sizes 15Â15,21Â21,and 27Â27are applied,by which even more than a scale change of two has been achieved.But this is needed,as a 3D non-maximum suppression is applied both spa-tially and over the neighbouring scales.Hence,the first and last Hessian response maps in the stack cannot contain such maxima themselves,as they are used for reasons of comparison only.Therefore,after interpolation,see Sec-tion 3.4,the smallest possible scale is r ¼1:6¼1:2129corre-sponding to a filter size of 12Â12,and the highest to r ¼3:2¼1:224.For more details,we refer to [2].Similar considerations hold for the other octaves.For each new octave,the filter size increase is doubled (going from 6–12to 24–48).At the same time,the sampling inter-vals for the extraction of the interest points can be doubled as well for every new octave.This reduces the computation time and the loss in accuracy is comparable to the image sub-sampling of the traditional approaches.The filter sizes for the second octave are 15,27,39,51.A third octave is com-puted with the filter sizes 27,51,75,99and,if the original image size is still larger than the corresponding filter sizes,the scale space analysis is performed for a fourth octave,using the filter sizes 51,99,147,and 195.Fig.6gives an over-view of the filter sizes for the first three octaves.Further octaves can be computed in a similar way.In typical scale-space analysis however,the number of detected interest points per octave decays very quickly,cf.Fig.7.The large scale changes,especially between the first fil-ters within these octaves (from 9to 15is a change of 1.7),renders the sampling of scales quite crude.Therefore,we have also implemented a scale space with a finer sam-pling of the scales.This computes the integral image on the image up-scaled by a factor of 2,and then starts the first octave by filtering with a filter of size 15.Additional filter sizes are 21,27,33,and 39.Then a second octave starts,again using filters which now increase their sizes by 12pixels,after which a third and fourth octave follow.Now the scale change between the first two filters is only 1.4(21/15).The lowest scale for the accurate version that can be detected through quadratic interpolation is s ¼ð1:2189Þ=2¼1:2.As the Frobenius norm remains constant for our filters at any size,they are already scale normalised,and no fur-ther weighting of the filter response is required,for more information on that topic,see [22].3.4.Interest point localisationIn order to localise interest points in the image and over scales,a non-maximum suppression in a 3Â3Â3neigh-bourhood is applied.Specifically,we use a fast variant introduced by Neubeck and Van Gool [33].The maxima of the determinant of the Hessian matrix are then interpo-lated in scale and image space with the method proposed by Brown and Lowe [5].Scale space interpolation is especially important in our case,as the difference in scale between the first layers of every octave is relatively large.Fig.8shows an example of the detected interest points using our ‘Fast-Hessian’detector.4.Interest point description and matchingOur descriptor describes the distribution of the intensity content within the interest point neighbourhood,similartoFig.5.Filters D yy (top)and D xy (bottom)for two successive scale levels (9Â9and 15Â15).The length of the dark lobe can only be increased by an even number of pixels in order to guarantee the presence of a central pixel(top).Fig.6.Graphical representation of the filter side lengths for three different octaves.The logarithmic horizontal axis represents the scales.Note that the octaves are overlapping in order to cover all possible scales seamlessly.350H.Bay et al./Computer Vision and Image Understanding 110(2008)346–359the gradient information extracted by SIFT [24]and its variants.We build on the distribution of first order Haar wavelet responses in x and y direction rather than the gra-dient,exploit integral images for speed,and use only 64D.This reduces the time for feature computation and match-ing,and has proven to simultaneously increase the robust-ness.Furthermore,we present a new indexing step based on the sign of the Laplacian,which increases not only the robustness of the descriptor,but also the matching speed (by a factor of 2in the best case).We refer to our detec-tor-descriptor scheme as SURF—Speeded-Up Robust Features.The first step consists of fixing a reproducible orienta-tion based on information from a circular region around the interest point.Then,we construct a square region aligned to the selected orientation and extract the SURF descriptor from it.Finally,features are matched between two images.These three steps are explained in the following.4.1.Orientation assignmentIn order to be invariant to image rotation,we identify a reproducible orientation for the interest points.For that purpose,we first calculate the Haar wavelet responses in x and y direction within a circular neighbourhood of radius 6s around the interest point,with s the scale at which the interest point was detected.The sampling step is scale dependent and chosen to be s .In keeping with the rest,also the size of the wavelets are scale dependent and set to a side length of 4s .Therefore,we can again use integral images for fast filtering.The used filters are shown in Fig.9.Only six operations are needed to compute the response in x or y direction at any scale.Once the wavelet responses are calculated and weighted with a Gaussian (r ¼2s )centred at the interest point,the responses are represented as points in a space with the hor-izontal response strength along the abscissa and the vertical response strength along the ordinate.The dominant orien-tation is estimated by calculating the sum of all responses within a sliding orientation window of size p ,see Fig.10.The horizontal and vertical responses within the window are summed.The two summed responses then yield a local orientation vector.The longest such vector over all win-dows defines the orientation of the interest point.The size of the sliding window is a parameter which had to be cho-sen carefully.Small sizes fire on single dominating gradi-ents,large sizes tend to yield maxima in vector length that are not outspoken.Both result in a misorientation of the interest point.Note that for many applications,rotation invariance is not necessary.Experiments of using the upright version of SURF (U-SURF,for short)for object detection can be found in [3,4].U-SURF is faster to compute and can increase distinctivity,while maintaining a robustness to rotation of about ±15°.4.2.Descriptor based on sum of Haar wavelet responses For the extraction of the descriptor,the first step con-sists of constructing a square region centred around the interest point and oriented along the orientation selected in previous section.The size of this window is 20s .Exam-ples of such square regions are illustrated in Fig.11.The region is split up regularly into smaller 4Â4square sub-regions.This preserves important spatial information.For each sub-region,we compute Haar waveletresponsesFig.8.Detected interest points for a Sunflower field.This kind of scenes shows the nature of the features obtained using Hessian-baseddetectors.Fig.9.Haar wavelet filters to compute the responses in x (left)and y direction (right).The dark parts have the weight À1and the light parts þ1.H.Bay et al./Computer Vision and Image Understanding 110(2008)346–359351at 5Â5regularly spaced sample points.For reasons of simplicity,we call d x the Haar wavelet response in horizon-tal direction and d y the Haar wavelet response in vertical direction (filter size 2s ),see Fig.9again.‘‘Horizontal’’and ‘‘vertical’’here is defined in relation to the selected interest point orientation (see Fig.12).1To increase the robustness towards geometric deformations and localisa-tion errors,the responses d x and d y are first weighted with a Gaussian (r ¼3:3s )centred at the interest point.Then,the wavelet responses d x and d y are summed up over each sub-region and form a first set of entries in thefeature vector.In order to bring in information about the polarity of the intensity changes,we also extract the sum of the absolute values of the responses,j d x j and j d y j .Hence,each sub-region has a 4D descriptor vector v for its underlying intensity structure v ¼ðP d x ;P d y ;Pj d x j ;P j d y jÞ.Concatenating this for all 4Â4sub-regions,this results in a descriptor vector of length 64.The wavelet responses are invariant to a bias in illumina-tion (offset).Invariance to contrast (a scale factor)is achieved by turning the descriptor into a unit vector.Fig.13shows the properties of the descriptor for three distinctively different image-intensity patterns within a sub-region.One can imagine combinations of such local intensity patterns,resulting in a distinctive descriptor.SURF is,up to some point,similar in concept as SIFT,in that they both focus on the spatial distribution of gradi-ent information.Nevertheless,SURF outperforms SIFT in practically all cases,as shown in Section 5.We believe this is due to the fact that SURF integrates the gradient infor-mation within a subpatch,whereas SIFT depends on the orientations of the individual gradients.This makesSURFFig.10.Orientation assignment:a sliding orientation window of size p3detects the dominant orientation of the Gaussian weighted Haar wavelet responses at every sample pointwithin a circular neighbourhood around the interest point.Fig.11.Detail of the Graffiti scene showing thesize of the oriented descriptor window at different scales.Fig.12.To build the descriptor,an oriented quadratic grid with 4Â4square sub-regions is laid over the interest point (left).For each square,the wavelet responses are computed from 5Â5samples (for illustrative purposes,we show only 2Â2sub-divisions here).For each field,we collect the sums d x ,j d x j ;d y ,and j d y j ,computed relatively to the orientation of the grid (right).1For efficiency reasons,the Haar wavelets are calculated in the unrotated image and the responses arethen interpolated,instead of actually rotating the image.Fig.13.The descriptor entries of a sub-region represent the nature of the underlying intensity pattern.Left:In case of a homogeneous region,all values are relatively low.Middle:In presence of frequencies in x direction,the value of P j d x j is high,but all others remain low.Ifthe intensity is gradually increasing in x direction,both values P d x andP j d x j are high.352H.Bay et al./Computer Vision and Image Understanding 110(2008)346–359。

关于复合泊松分布的一个注记(英文)

张博;祁永成

【期刊名称】《北京大学学报:自然科学版》

【年(卷),期】2007(43)3

【摘要】复合泊松分布的无穷组合与分拆的性质将被讨论,所得结果改进了Gerber H.U.的经典结果。

【总页数】3页(P299-301)

【关键词】复合泊松分布;随机和;组合;分拆;分层保险

【作者】张博;祁永成

【作者单位】北京大学经济学院环境资源与发展经济学系;Department of Mathematics and Statistics,University of Minnesota Duluth,Duluth,MN 55812,USA

【正文语种】中文

【中图分类】O211

【相关文献】

1.关于构造多元高斯过程协相关函数的一个注记(英文) [J], 牟唯嫣;熊世峰;

2.Thue-Morse线性子序列的一个注记(英文) [J], 牛敏;张婷;殷弘

3.一个关于Cantor展式中收缩靶问题的注记(英文) [J], 曹春云

4.关于一些无三角形的平面图选择数的一个注记(英文) [J], 朱晓颖;段滋明

5.区间上Zygmund类最大值的一个注记(英文) [J], 张思汇

因版权原因,仅展示原文概要,查看原文内容请购买。

泊松表面重建摘要:我们展示了对有向点集的表面重建可以转化为一个空间泊松问题。

这种泊松公式化表达的方法同时考虑了所有的点,而不借助于启发式的空间分割或合并,于是对数据的噪声有很大的抵抗性。

不像径向基函数的方案,我们的泊松方法允许对局部基函数划分层次结构,从而使问题的解缩减为一个良态的稀疏线性系统。

我们描述了一个空间自适应的多尺度算法,其时间和空间复杂度正比于重建模型的大小。

使用公共提供的扫描数据进行实验,在重建的表面,我们的方法比先前的方法显示出更详细的细节。

1、 引言:由点样本重建三维表面在计算机图形学中是一个热门研究问题。

它允许对扫描数据的拟合,对表面空洞的填充,和对现有模型的重新构网。

我们提出了一种重要的方法,把表面重建问题表示为泊松方程的解。

跟许多先前的工作一样(参见第2部分),我们使用隐式函数框架来处理表面重建问题。

特别地,像[K a z 05]我们计算了一个三维指示函数 (在模型内部的点定义为1,外部的点定义为0),然后可以通过提取合适的等值面获得重建的表面。

我们的核心观点是从模型表面采样的有向点集和模型的指示函数之间有一个内在关系。

特别地,指示函数的梯度是一个几乎在任何地方都是零的向量场(由于指示函数在几乎任何地方都是恒定不变的),除了模型表面附近的点,在这些地方指示函数的梯度等于模型表面的内法线。

这样,有向点样本可视为模型的指示函数梯度的样本(如图1)。

图1 二维泊松重建的直观图例计算指示函数的问题因此简化为梯度算子的反算,即找到标量函数~χ,使其梯度最佳逼近样本定义的向量场V u r ,即,,如果我们使用散度算子,那么这个变化的问题转化为标准的泊松问题:计算标量函数~χ,它的拉普拉斯算子(梯度的散度)等于向量场V u r的散度,在第3、4部分我们将会对上式作精确的定义。

把表面重建问题表达成泊松问题拥有许多优点。

很多对隐式表面进行拟合的方法把数据分割到不同区域以进行局部拟合,然后使用合成函数进一步合并这些局部拟合结果。

复杂零假设的边界P值及其应用

史宁中

【期刊名称】《应用概率统计》

【年(卷),期】1992(008)004

【摘要】本文研究零假设为复杂情况时的P值问题。

对于一维正态均值的单边检验H_0:θ≤θ_0,P值是指拒绝域在参数θ=θ_0时的概率。

根据这一想法,本文定义了一般情况下的边界P值。

当零假设是一个多面体凸锥时,本文给出了一个计算边界P值的方法,并利用这个方法解决了一个实际问题。

【总页数】9页(P411-419)

【作者】史宁中

【作者单位】无

【正文语种】中文

【中图分类】O212.1

【相关文献】

1.具有零边界值的Orlicz-Sobolev空间 [J], 刘春芳;付永强

2.内燃机复杂零件结构分析的实用边界元方法 [J], 胡圣荣;罗锡文;陈国华

3.一种复杂二值图像边界跟踪与提取算法 [J], 唐世伟;冯永强;陈静

4.QTL作图中零假设检验统计量分布特征及LOD临界值估计方法 [J], 孙子淇;李慧慧;张鲁燕;王建康

5.双CPU并行算法求解Poisson方程零边界值问题试验 [J], 陈赣中;王宗皓

因版权原因,仅展示原文概要,查看原文内容请购买。

求解带二次约束的非凸二次规划的一种分支定界算法(英文)杨永健;高岳林

【期刊名称】《应用数学》

【年(卷),期】2006(19)1

【摘要】本文提出了一种求解带二次约束和线性约束的二次规划的分支定界算法.在算法中,我们运用Lipschitz条件来确定目标函数和约束函数的在每个n矩形上的上下界,对于n矩形的分割,我们采用选择n矩形最长边的二分法,同时我们采用了一些矩形删除技术,在不大幅增加计算量的前提下,起到了加速算法收敛的效果.从理论上我们证明了算法的收敛性,同时数值实验表明该算法是有效的.

【总页数】5页(P25-29)

【关键词】二次规划;二次约束;分支定界;最优化

【作者】杨永健;高岳林

【作者单位】上海大学数学系

【正文语种】中文

【中图分类】O212.2

【相关文献】

1.一种新的求解带有非凸二次约束的非凸二次规划问题的加速全局优化方法 [J], 吴慧卓;段东东;张可村

2.线性约束非凸二次规划的有限分支定界算法 [J], 田朝薇;宋海洲

3.基于D.C.分解的一类箱型约束的非凸二次规划的新型分支定界算法 [J], 付文龙;

杜廷松;翟军臣

4.求解单二次约束非凸二次规划问题的全局最优DC算法 [J], 王建国;郑芳英;胡觉亮

5.一种新的二次约束二次规划问题的分支定界算法 [J], 黄小利;高岳林;谢金宵;谷剑峰

因版权原因,仅展示原文概要,查看原文内容请购买。

Integral Invariants for Robust Geometry ProcessingHelmut PottmannTU WienJohannes WallnerTU GrazQi-Xing Huang Stanford UniversityYong-Liang Yang Tsinghua UniversityAbstractDifferential invariants of curves and surfaces such as curvatures and their derivatives play a central role in Geometry Processing.They are,however,sensitive to noise and minor perturbations and do not exhibit the desired multi-scale behaviour.Recently,the relation-ships between differential invariants and certain integrals over small neighborhoods have been used to define efficiently computable in-tegral invariants which have both a geometric meaning and useful stability properties.This paper considers integral invariants defined via distance functions,and the stability analysis of integral invari-ants in general.Such invariants proved useful for many tasks where the computation of shape characteristics is important.A prominent and recent example is the automatic reassembling of broken objects based on correspondences between fracture surfaces.Keywords:geometry processing,curvature,integral invariant,sta-bility,3D shape understanding1IntroductionLocal shape analysis of curves and surfaces usually employs con-cepts of elementary differential geometry like curvatures (see e.g.[do Carmo 1976;Porteous 2001]).Likewise,global shape under-standing benefits from differential geometry concepts like principal curvature lines or crest lines (see for instance [Alliez et al.2003;Hildebrandt et al.2005;Kim and Kim 2005;Ohtake et al.2004;Yokoya and Levine 1989]).However,the actual computation of curvatures for real data,given as triangle meshes or voxel grids,is a nontrivial task,because numerical differentiation is sensitive to noise.A standard method to deal with rough data is denoising and smoothing prior to numeric computation.These techniques come in two categories:Global methods which employ appropriate geo-metric flows (cf.[Bajaj and Xu 2003;Clarenz et al.2004b;Osher and Fedkiw 2002])and local ones,which use approximation by smooth surfaces (cf.[Taubin 1995;Cazals and Pouget 2003;Gold-feather and Interrante 2004;Ohtake et al.2004;Razdan and Bae 2005]).We especially want to mention the method of tensor voting (cf.[Tong and Tang 2005]).Semi-differential invariants in the sense of [Van Gool et al.1992]are a way of avoiding higher derivatives by combining reference points with first order derivatives.An alternative approach to differential geometry foremploy an exact theory of discrete analogues of ties,instead of numerically approximating the area of research,which could be called discrete etry in a narrower sense,has been investigated for a many results have been achieved –see e.g.work by and Zalgaller 1967],[Cheeger et al.1984],[Pinkall 1993],[Bobenko and Pinkall 1996],[Polthier 2002],2002],[Cohen-Steiner and Morvan 2003],[Bobenko 2005].It is possible to deal with noisy data using theories,as shown by [Hildebrandt and Polthier Klette 2006],and [Rusinkiewicz 2004].However,data appears to be neither the main strength nor the of an entirely discrete theory.Typically a discrete theory of curvatures associates them to vertices or edges or faces in a mesh,and such a discrete curvature often to appear in Computer Aided Geometric Designhas an interpretation,e.g.as ‘curvature concentrated in a vertex’,or ‘integral of curvature over a face’.This measure-theoretic in-terpretation of curvatures should not be confused with the integral invariants of the present paper.Our approach to the numerical problems inherent in the computa-tion of higher order differential invariants of noisy geometry is the following:For given 3D data,we integrate various functions over suitable small kernel domains like balls and spheres,which yields integral invariants associated with each kernel location.These in-variants turn out to have a geometric meaning and can be used as curvature estimators.This method has been initiated by [Manay et al.2004]and [Connolly 1986]and is also the topic of [Yang et al.2006;Pottmann et al.2007].The following properties of curvature estimators are important:•Robustness with respect to noise,including discretization ar-tifacts;•Multi-scale behaviour,i.e.,adaptability to the choice of reso-lution.Integration,which is an essential ingredient in the definition of in-tegral invariants,has a smoothing effect and achieves stability and robustness without the need for preprocessing.The present paper studies robustness aspects of integral invariants,as well as integral invariants related to distance functions.Thus we establish the theoretical explanation of robustness properties en-countered in numerical experiments and geometry processing algo-rithms.For the volume descriptor and similar invariants the rela-tion to shape (i.e.,curvatures)have already been established;we here present this analysis for geometry descriptors based on dis-tance functions.1A Prior WorkThe first to introduce integral invariants were [Manay et al.2004].One example is the area invariant suitable for estimating the curva-ture of a curve c at a point p ,where c is assumed to be the boundary of a planar domain D (see Fig.1,left):Consider the circular disk B r (p )of radius r and center p ,and compute the area A r (p )of the centered in a point p .The area invariant (left)is the surface area of the intersection of the disk p +rB with the domain D ,whereas the Connolly function (right)is the perimeter of the arc (p +rS )∩D divided by r .ture yields a way to estimate curvature at scale r,because features smaller than r hardly influence the result of computation.Manay et al.show the superior performance of this and other integral in-variants on noisy data,especially for the reliable retrieval of shapes from geometric databases.A similar invariant appears in earlier work(see Fig.1,right):The angle of the circular arc∂B r(p)∩D has been used by[Connolly 1986]for molecular shape analysis.If we multiply this so-called Connolly function by the kernel radius r,we obtain the length of the circular arc,which happens to be the derivative of the area invariant with respect to the kernel radius.The extensions of area invariant and Connolly function to surfaces in three-space are straightforward.One arrives at the volume de-scriptor,whose relation to mean curvature is derived by[Hulin and Troyanov2003],and which is used by[Gelfand et al.2005]for global surface matching.Its derivative with respect to r is the sur-face area of the spherical patch∂B r(p)∩D(see[Connolly1986]). The precise relation between these integral invariants on the one hand,and the curvature of planar curves and the mean curvature on surfaces on the other hand,has been derived by[Cazals et al.2003]. Principal component analysis of the domain B r(p)∩D is done by integrating coordinate functions and their products over that do-main.Therefore also principal moments of inertia and principal directions of B r(p)∩D are integral invariants.A discussion of their relation to principal curvatures and of computational issues is given by[Pottmann et al.2007],while[Yang et al.2006]shows applications and compares this method with other ways of estimat-ing principal curvatures.We also point to[Clarenz et al.2004b; Clarenz et al.2004a],who use principal component analysis of sur-face patches for feature detection.Results of a similarflavour,without the emphasis on computability and robustness,are the formulae of J.Bertrand and V.A.Puiseux (1848)which relate Gaussian curvature to perimeter and area of geodesic disks(cf.p.127of[Strubecker1969]).1B Organization and main contributions of the present paperOur paper is organized as follows:After introducing notation and some basic facts in sections2and3,we briefly discuss integral in-variants for planar curves in Section4.Those are mainly the area invariant and its derivatives with respect to kernel radius and kernel center,respectively.The analogous but slightly more involved dis-cussion of the3D counterparts,namely the volume descriptor and its derivatives,is performed in Section5.Section6studies invari-ants for curves in surfaces and shows how to obtain the geodesic curvature by integration.Invariants based on distance functions are the topic of Section7–both for surfaces and for space curves. Section8analyzes the stability of some important integral invari-ants in the presence of noise or surface perturbations.Section9 is concerned with efficient computation and implementation issues. Applications are briefly surveyed in Section10.We conclude our paper with Section11,which contains pointers to future research. The main contributions of the present paper are(i)new facts about asymptotics expansion of integral invariants and their relations to curvature,notably those computed from distance functions(ii)a thorough theoretical stability and robustness analysis of integral in-variants,with a focus on the volume descriptor;(iii)methods for computing integral invariants,especially the octree-based approach of Section9B.2Basics2A Notation and definition of integral invariantsIn this paper we assume that a curve in R2or a surface in R3is theboundary∂D of a domain D in R n,with n=2,3,respectively(locally,this is always the case,so this is no actual restriction).Wewrite1D for the indicator function of that domain:1D(x)=1ifthe point x is contained in D,and1D(x)=0otherwise.B denotes the unit ball,and p+rB denotes the ball with radius r and center p.In R2,such a ball is actually a disk,but we use thesame notation regardless of dimension.Further,the unit circle ofR2and the unit sphere of R3are denoted by S.S is the boundary of B.The symbol p+rS denotes the sphere or circle with centerp and radius r.In many cases,integral invariants,evaluated at the boundary pointp of a domain D,have the form of one of the two following convo-lution integrals:I r(p)=Zp+rBg(x)w(p−x)d x,I r(p)=Zp+rSg(x)w(p−x)d x,(1)where g is a function associated with the domain(e.g.,its indica-tor function or the distance from the boundary),and w is a weight function(e.g.,a constant).The symbol d x has various meanings, depending on the domain of integration.E.g.in dimension n=3, when integrating over the domain p+rB,it means a volume inte-gral.In dimension n=2,when integrating over p+rS,it means an arc length integral.In the remaining cases(n=3,integral over a sphere,and n=2,integral over a disk)d x means an area integral. As an example,both the area invariant and the Connolly function (cf.Fig.1)have the general form of Equation(1):we let g(x)= 1D(x)and w(x)=1.2B Integral invariants as functions of the kernel radiusFor geometry processing applications,the multi-scale behaviour of an integral invariant defined by(1)is important,which means the change of its value,if the kernel radius r varies.This leads us to consider integral invariants as univariate functions of the kernel ra-dius.A useful piece of information on these functions is the general relationddrI r(p)=I r(p),(2) which follows immediately from the product formula for integrals: I r(p)=R rI ρ(p)dρ(see[Pottmann et al.2007]).Another im-portant topic is the asymptotic behaviour when the kernel radius r tends to zero.[Pottmann et al.2007]give a general recipe for computing thefirst few terms in the Taylor expansion of I r(p).In-variants which are of this type are the area and volume functionals (see below),geometry descriptors based on the distance function (introduced in the present paper),and quantities used in principal component analysis(cf.[Yang et al.2006;Pottmann et al.2007]).2C Integral invariants as functions of the kernel centerWhen the radius used in the definition of an integral invariant I r(p) or I r(p)is kept constant,then this invariant is a function of the point ually we are interested in the values of the invariant when the point p is situated on the surface under investigation.The relations between integral invariants and geometric characteristics of the surface(mostly curvatures),which one is interested in,are no longer valid if p is not contained in the surface.Nevertheless we are interested in the behaviour of the invariant when p leaves the surface.The main reason for this is that in ac-tual computations we are often concerned with imprecise or quan-tized data,and the point for which integral invariants are eval-uated may actually lie at some distance from the hypothetical smooth surface under consideration.In order to estimate the ef-fect of these perturbations,we compute the gradient vector ∇I r =(∂∂p 1,∂∂p 2,∂∂p 3)·R p +rB g (x )d x ,and the same for I r (p ).The magnitude of perturbation is then measured by I r (p +∆p )=I r (p )+ ∆p ,∇I r (p ) +O (2),where O (2)denotes second or-der terms and , is the scalar product of vectors.Consequently,we can give a simple estimate for the change ∆I r in the integral invariant in terms of the change in the point p :Approximately, ∆I r ∆p · ∇I r .3Facts about curves and surfacesThis material is found e.g.in the monographs by [do Carmo 1976]or [Spivak 1975].References for the facts on distance functions quoted below are [Ambrosio and Mantegazza 1998]and [Pottmann and Hofer 2003].3A Planar curvesFor every point p of a sufficiently smooth curve c we can choose a Cartesian coordinate system with p as origin,such that the x 1axis is tangent to the curve (the Frenet frame).With respect to such coordinates,the curve may be written as the graph of a functionx 2=f (x 1),with f (x 1)=αx 21+βx 31+γx 41+O (x 51).With the well known formula κ=f (1+(f )2)−3/2for the curva-ture we can relate the coefficients in the Taylor expansion with the derivatives of the curvatures;the result isx 2=κ2x 21+κ 6x 31+κ −3κ324x 41+O (x 51).(3)Here the derivatives κ and κ of the curvature are with respectto x 1.For x 1=0this is also the derivative with respect to arc length.If the curve is of lesser smoothness,this Taylor expansion terminates not with O (x 51),but earlier.Because we need it later,we record in this place the coordinate of the intersection points c +r andc −r of this curve with the circle x 21+x 22=r 2(see Fig.2):c +r =(r,κ2r 2)+(−κ28,κ 6)r 3+(−κκ 12,κ 24)r 4+O (r 5).The coordinates of c −r are found by substituting −r for r in the previous formula.3B SurfacesNext,we discuss coordinate systems for surfaces.For every point p of a sufficiently smooth surface Φthere is a coordinate system with p as origin,such that the surface can be written as the graph of a functionx 3=1(κ1x 21+κ2x 22)+R (x 1,x 2),(4)where κ1,κ2are the principal curvatures of the surface in the point p ,and the remainder term R (x 1,x 2)is bounded by |R (x 1,x 2)|≤C ·(p x 21+x 22)3,i.e.,it is of third order.Mean curvature H andGaussian curvature K are defined by H =κ1+κ2,K =κ1κ2.If the surface is the boundary of the domain D ,we assume that the positive x 3axis points to the inside of D ,so that a convex domain gets nonnegative curvatures.The distance of a point x ∈R 3from the surface can be given a sign,depending on whether x is inside D or outside:dist(x ,Φ)>0⇐⇒x ∈D.Recall that the distance,signed or not,fulfills the eikonal equation ∇dist(x ,Φ) =1.ATaylor approximation of the signed distance function is given by dist(x ,Φ)=1(κ1x 21+κ2x 22)−x 3+O (3),(5)where O (3)means a third order remainder term (we use f (x )=O (k )as an abbreviation of f (x )=O ( x k )as x →0).Note that this Taylor expansion does not contain any quadratic terms which involve x 3(cf.[Ambrosio and Mantegazza 1998;Pottmann and Hofer 2003]).3C Space curvesA space curve c (u )has several orthonormal frames associated with it.For the purposes of this paragraph,we assume that u is an arc length parameter,and a dot indicates differentiation with respect tou .Then the Frenet frame {t ,h ,b }is defined by t =˙c,˙t =κh ,and b =t ×h .Here κis the curvature.Further,˙b=−τh ,where τis the torsion of the curve.By rotation of the Frenet frame about the unit tangent vector t we get the class of frames {t ,e 1,e 2}with e 1=cos φh +sin φb and e 2=−sin φh +cos φb .If wechoose the function φsuch that ˙φ=−τ,then both ˙e 1and ˙e 2are proportional to t ,and {t ,e 1,e 2}is called a rotation-minimizing frame.Now consider the ruled surface Ψ1parametrized by g 1(u,v )=c (u )+v e 1(u ).By differentiation we see that the partial deriva-tives g 1,u ,g 1,v and therefore the tangent plane of Ψ1in the point g 1(u,v )is spanned by t (u )and e 1(u ).As there is no dependence on v ,the surface is developable.An analogous result is true for the surface Ψ2which is defined via e 2.It follows that the planes orthogonal to c are orthogonal to both Ψ1and Ψ2,and thereforedist(x ,c )2=dist(x ,Ψ1)2+dist(x ,Ψ2)2.(6)As the surface Ψ1is developable,its principal frame in the pointc (u )=g 1(u,0)is {t ,e 1,e 2},with e 2as normal vector.By Meusnier’s theorem,the principal curvatures have the values κ1=κcos (h ,e 2)=κcos(φ+π/2)and κ2=0.For the surface Ψ2,the principal frame is {−t ,e 2,e 1},and the principal curva-tures have the values κ1=κcos (h ,e 1)=κcos φand κ2=0.This information will be useful when computing distance functions.3D Curves in surfacesA curve c contained in a surface Φhas an associated Darboux frame {t ,e 2,n },where t is the unit tangent vector of c ,n is the sur-face normal vector,and e 2=n ×t .If the curve is traversedwith unit speed,then ˙t=κg e 2+κn n ,˙e 2=−κg t +τg n ,and ˙n=−κn t −τg e 2.The coefficient functions κg ,κn ,τg which occur here are the geodesic curvature,normal curvature,and geodesic torsion of the curve c w.r.t.Φ,respectively.In this place we would like to mention the famous Gauss-Bonnet formula:For a closed curve c with interior D ⊂Φ,we have the identity H c κg =2π−R D K (x )d x ,with K as the Gaussian curvature of the surface Φ.4Simple invariants for planar curves4AArea invariant and Connolly functionGiven a planar curve c which occurs as the boundary of the planar domain D ,and a point p ∈c ,[Manay et al.2004]define the area invariant as the invariant I r according to Equation (1)with g =1D and w (x )=1=const .,i.e.,A r (p ):=Zp +rB1D (x )d x .(7)pp c(a)(b) p(c)(d)Figure2:Deriving the gradient of the area invariant.(a)The point p is moved towards p+d.(b)This is equivalent to moving the curvec in the opposite direction.(c)The area difference is highlighted.(d)The apparent length L d of the chord c+r−c−r when viewed in direction d contributes to the area difference.A r is the area of the intersection D∩B r(p)of the curve’s interior and the kernel disk B r(p).The perimeter of the circular arc D∩(p+rS)defines the invariantCA r(p):=Zp+rS 1D(x)d x=ddrA r(p),(8)(see Fig.1).The differential relation follows from(2).The name “Connolly function”is given to CA r(p)/r.It has been shown by [Cazals et al.2003]that there is the Taylor expansionCA r=πr−κr2+O(r3).(9) By integrating(9),we getA r=π2r2−κ3r3+O(r4).(10)This is a more precise estimate than A r≈r3arccos(rκ/2),which was given by[Manay et al.2004],in the sense that these two ex-pressions have different Taylor expansions as r→0.It is worth noting how the behaviour of both the area invariant and the Connolly function changes if the curve under consideration is not smooth.Of course,formulas(9)and(10)are useless.Sup-pose that the curve c is still smooth,but consists of two curvature continuous pieces joined together at the point p.If left and right limit curvatures have valuesκ−andκ+,then Equation(9)is obvi-ously still valid,with the arithmetic mean of the left and right hand curvature instead ofκ.If the curve is not even smooth,but piece-wise smooth with an opening angleαdifferent from180degrees, then the perimeter of the circular arc which lies inside the curve is shortened or lengthened accordingly,and we arrive atCA r=αr−κ−+κ+2r2+O(r3),(11)A r=αr2−κ−+κ+r3+O(r4),(12)where the second equation is found by integrating thefirst one.The caseα=πandκ−=κ+=κyields the smooth case.4B The gradient of the area functionalFollowing the general discussion of Section2C,we are interested in the change of the area invariant A r(p),if the point p varies.We pick a direction d and consider A r(p+d).Figures2.(a)–(d)show the change in area inflicted by the change in p:We haveA r(p+d)−A r(p)=L d· d +O( d 2),(13) where L d is the apparent length of the curve segment c∩(p+rB) when viewed from direction d(see Fig.2).Denote the two points of intersection of the circle p+rS with the curve c by c−r,c+r,and consider the chord vectorc r(p)=c+r−c−r.(14) With the symbol c⊥for a rotation about90degrees,the apparent length Ld of the curve segment visible in Fig.2is now expressed as L d= c⊥r,dd.This leads to the following theorem:Theorem1The gradient of the area invariant A r(p)with respect to p is obtained by rotating the chord vector about90degrees.The gradient and its norm are expressed by∇p A r=c⊥r=(c+r−c−r)⊥,(15)∇A r(p) =2r−κ24r3+O(r4).(16)Proof.The discussion preceding the theorem implies that the change in area is∆A r(p)= c⊥r,d +O(2),so the area gradient equals c⊥r.For the computation of ∇A r = c+r−c−r we use the Frenet frame associated with the point p and the coordinates of c+r and c−r given by Section3A:We getc r=(2r−κ2r3/4+O(r4),O(r3))T(17) and compute the norm of the gradient: c⊥r = c =[(2r−κ2r3/4+O(r4))2+O(r6)]1/2=2r[1−κ2r2/4+O(r4)]1/2= 2r(1−κ2r2/8+O(r4)),by the binomial series. The proof of Theorem1shows a phenomenon which occurs often when computing with Taylor expansions:The x1coordinate of the vector c r is known up to third order,whereas the x2coordinate has only the general term O(r3)there.It would appear that we cannot compute the norm up to third order at all.Nevertheless,the computation shows that the third order term in the x2coordinate is irrelevant.5Simple invariants for surfaces5A The volume and surface area descriptors3D counterparts of the invariants A r(p),CA r(p)are the volume descriptor V r(p)and the surface area descriptor SA r(p)of a point p on the boundary surface of a domain D:V r(p)=Zp+rB1D(x)d x,(18) SA r(p)=Zp+rS1D(x)d x=dV r(p).(19)The relation of these quantities to mean curvature is discussed in [Hulin and Troyanov2003]and[Pottmann et al.2007]:V r=2π3r3−πH4r4+O(r5),SA r=2πr2−πHr3+O(r4).(20)A r,dd(p+rS)∩Φreference planeFigure3:The apparent area A r,d enclosed by a space curve(p+ rS)∩Φwhen seen in direction of the vector d is found as area enclosed by the planar curve which arises when projecting the given space curve onto a reference plane orthogonal to d.The area is endowed with a sign,depending on the orientation of the boundary curve.With the area vector a r,we have A r,d= a r,d/ d . The normalized sphere area descriptor SA r/r2has been introduced by Connolly[Connolly1986]for molecular shape analysis.For an application in the samefield it has been studied by[Cazals et al. 2003].Equation(20)can be used to estimate the mean curvature.We de-fine the mean curvature estimators e H r(p)and H e r(p)at scale r by deleting higher order terms in the Taylor expansions in(20):e H r(p)=8−4V r(p),H e r(p)=2−SA r(p).(21)In the limit r→0,both e H r(p)and H e r(p)tend to the actual mean curvature H(p).Like in the curve case,we would like to study the behaviour of the volume descriptor for surfaces which are only piecewise smooth and piecewise curvature-continuous.Consider a point p at a sharp edge,where two smooth surface patches meet.The open-ing angle of that edge shall beα.In the smooth case(no edge),α=π.Clearly,the Taylor series of the volume descriptor starts with2αr3/3,but the higher order terms are not so obvious.A more detailed analysis shows that the curvature of the edge relative to the adjoining surfaces is irrelevant for the r4term,and that the volumedescriptor has the form V r=2α3r3−π(H−+H+)8r4+O(r5).HereH+and H−are the mean curvatures to either side of the edge.The case of a sharp corner is more complicated.5B The gradient of the volume functionalWe are now interested in the gradient∇V r(p)of the volume de-scriptor with respect to p.We consider the surfaceΦwhich is the boundary of the domain D.As in Section4B,we choose a direc-tion d and investigate the difference V r(p+d)−V r(p).The2D counterpart of this analysis is illustrated by Fig.2:the difference of volumes is given byV r(p+d)−V r(p)=A r,d d +O( d 2),(22) where A r,d is the apparent oriented area of the surface patchΦ∩B r(p)when viewed from the direction d.This area is the same as the oriented area enclosed by the projection of the closed curve c r=Φ∩∂B r(p)onto any plane which is orthogonal to d.Let c r(u)be a parameterization of this curve c r on the sphere S2r(p). We consider its area vectora r(p)=12Ic rx×d x=12ZIc r(u)×˙c r(u)du.(23)It is well known that the apparent area A r,d can be expressed as A r,d= a r(p),dd,which leads to∆V r(p)= a r(p),∆p + O(2).Theorem2The gradient of the volume descriptor V r(p)with re-spect to the point p is the area vector a r(p),whose definition in terms of the intersection curveΦ∩(p+rS)is given by Equation (23):∇V r(p)=a r(p).(24) If n(p)is a unit normal vector of the surfaceΦin the point p,which points towards the inside of the domain D,then the area vector has the following Taylor expansion:a r(p)=πhr2−3H2−K8r4in(p)+O(r5).(25)Proof.Equation(24)follows directly from the discussion preced-ing the theorem.In order to investigate the behavior of a r for r→0,we express the intersection curve c r in the principal frame associated with the point p,and we employ cylinder coordinates (ρ,φ,x3),such that x1=ρcosφ,x2=ρsinφ.The point of the curve c r which lies in the planeφ=const according to (4)is found by intersecting the curve x3=12(κ1(ρcosφ)2+κ2(ρsinφ)2)+O(ρ3)with the circleρ2+x23=r.From the coordinates of c+(r)in Section3A we read off that this point in cylinder coordinates is given byρ(φ)=r−18κ2n(φ)r3+O(r4),x3(φ)=12κn(φ)r2+O(r3),whereκn(φ)=κ1cos2φ+κ2sin2φ.When we return to Cartesian coordinates,we get a point c r(φ)of the intersection curve,and the area vector is computedby integration:a r(p)=122πRφ=0[ρ(φ)cosφ,ρ(φ)sinφ,x3(φ)]T×ddφ[ρ(φ)cosφ,ρ(φ)sinφ,x3(φ)]T.Carrying out this integration yields Equation(25).6Invariants for curves in surfacesSection4A dealt with the area invariant for a planar curve c,which arises as the boundary of a domain D.The area invariant A r(p) means that part of D whose distance from p does not exceed r. The same question can be asked if both the domain D and its boundary curve c are contained in a smooth surfaceΦ.We defineA r(p,Φ):=Area((p+rB)∩Φ).(26) It is interesting that the Taylor expansion of this area invariant given by the following theorem does not feature surface curvatures in its first two terms.Theorem3The area invariant of a curve c contained in a smooth surfaceΦhas the Taylor expansionA r(p,Φ)=πr2−κgr3+O(r4),(27) whereκg is the signed geodesic curvature of the curve c(i.e.,the curvature of the projection of c onto the tangent plane in the point p).Proof.We use a coordinate frame with p as origin and x 3axis or-thogonal to Φ.Without loss of generality Φhas the parametriza-tion x 3=g (x 1,x 2).We use the notation g ,i =∂g∂x i and ρ=px 21+x 22.As both the x 1and x 2axes are tangent to Φ,g ,1=O (ρ),g ,2=O (ρ).The surface area differential equals d x =q 1+g 2,1+g 2,2dx 1dx 2=(1+O (ρ2))dx 1dx 2.A domain D in the surface has a corresponding domain e Din the x 1,x 2plane,which arises as projection of D .If the diameter of Dis of magnitude O (ρ2),then the area of D ,which is computed as R e Dd x ,obviously equals Re D dx 1dx 2+O (ρ4).It follows that we can compute the area invariant A r (p ,Φ)to an ac-curacy of O (ρ4),if we project the curve c under consideration into the x 1,x 2plane.The result now follows directly from Equation (10). We notice that the last paragraph of the preceding proof shows that the area of the surface patch Φ∩(p +rB )equalsA r (p )=r 2π+O (r 4),(28)(which is e.g.Equ.(21)of [Pottmann et al.2007]).Remark 1The area invariant for curves in surfaces employs those points of the surface whose distance from the point p ,measured in Euclidean space,does not exceed r .We could also ask for the points whose distance,measured inside the surface Φ,does not exceed r .As it turns out,Theorem 3remains unchanged.The reason for this is that the area of a geodesic disk (i.e.,the points at distance ≤r from p )differs from the area of a Euclidean disk only by a term of magnitude O (r 4):According to the formulae of J.Bertrand and V .A.Puiseux (see p.127of [Strubecker 1969]),that area has theexpansion GA r =πr 2−π12Kr 4+O (r 5).Here K is the Gaussian curvature of the surface.We should note that the cost of computing geodesic disks usually is too high to merit their use if all we want to compute is curvatures.7Invariants from distance functionsIn applications where we have access to a distance function,itmakes sense to employ this function or functions derived from it for the definition of integral invariants.We discuss several ways to do this.7A Invariants composed from the signed distanceLet dist(x ,Φ)be the signed distance function associated with a sur-face Φ:dist(x ,Φ)is positive if x lies outside the domain bounded by Φ,and negative if x lies inside.The absolute value of the signed distance equals the distance from Φin the ordinary sense.We employ the general method of Equation (1)to define the signed distance integral D r .Letting g (x )=dist(x ,Φ)and w (x )=1leads toD r (p )=Zp +rBdist(x ,Φ)d x .(29)The relation of these descriptors to shape characteristics is de-scribed as follows:Theorem 4The signed distance integral D r (p )defined by Equa-tion (29)has the Taylor expansion D r =4πH 15r 5+O (r 6).Here H is the mean curvature of the surface Φin the point p .Proof.We consider the principal coordinate frame associated with the point p and the quadratic Taylor polynomial of the signed dis-tance function given by Equation (5).Obviously,D r =R rB `−x 3+1(κ1x 21+κ2x 22)+O (ρ3)´d x ,with ρ=(x 21+x 22+x 23)1/2.The volume integral of O (ρ3)over a volume of size O (r 3)is boundedby O (r 6).Computation of the integrals of the functions x 3,x 21,x 22finally yields the expression for D r . Note that the descriptor D r is related to mean curvature,just as the volume descriptor,which moreover is more stable (see the discus-sion below).Figure 4illustrates the similarity between these two descriptors.The sphere integral SD r corresponding to D r by dif-ferentiation has the Taylor expansion 4πH 3r 4+O (r 5).D 0.8D 0.4D noise 0.4D noise0.8V 0.8V 0.4Figure 4:Comparison of the descriptor D r derived from the signed(un-squared)distance the volume descriptor V r .Both are related to mean curvature.From top left:D r for two different kernel radii;D r for two different kernel radii with artificial noise added;V r for two different kernel radii.It is clearly visible that all descriptors try to capture the same geometric property (in this case,mean curva-ture),and that the effect of adding noise is almost eliminated when choosing a bigger neighbourhood for computation.For the kernel ball size compared to the bunny,see Fig.5.7B Invariants composed from the squared distanceWe now use the square of the signed distance function for the defi-nition of integral invariants.We study the squared distance integralD 2,r (p )=Zp +rBdist(x ,Φ)2d x ,(30)and the corresponding squared sphere distance integral SD 2,r (p )defined by integration over p +rS .The following theorem de-scribes their relation to the curvatures of the surface Φ.。