Introduction to Statistical Shape and Appearance Models for Segmentation

- 格式:pdf

- 大小:2.24 MB

- 文档页数:37

A GENERAL EXCEL SOLUTION FOR LTPD TYPESAMPLING PLANSDavid C. Trindade, Sun Microsystems, and David J. Meade, AMDDavid C. Trindade, Sun Microsystems, 901 San Antonio Road, MS UCUP03-706, Palo Alto, CA 94303KEY WORDS: Acceptance sampling, sample size, EXCEL add-in, risk probabilityAbstractIn this paper we discuss a fairly common problem in lot acceptance sampling. Suppose a company qualification or lot acceptance plan calls for a large sample size n with a non-zero acceptance number c. For example, a plan may call for accepting a lot on three or less failures out of 300 sample units. Because the sample units are costly, the manufacturer wants to reduce the acceptance number and consequently the sample size while holding the rejectable quality percent defective p value constant at a specified consumer's risk (e.g., 10% probability of acceptance). Thus, an LTPD sampling plan is desired. However, typical tables available in the literature cover only a limited number of p values for a few risk probabilities. For the general situation of any desired p value at any probability of acceptance, what are the sample sizes n corresponding to various c values? We describe methods for obtaining solutions via EXCEL and present a simple add-in for the calculations. IntroductionThere are several ways to categorize a single-sampling lot acceptance plan for fraction non-conforming product. See discussion in Western Electric handbook on acceptance sampling [1]. In particular, plans that have a low probability of acceptance (say, 10%) for a specified quality level are called LTPD or Lot Tolerance Percent Defective plans. This quality level is considered the highest percent defective (that is, the poorest quality) that can be tolerated in a small percentage of the product. The Dodge-Romig [2] Sampling Inspection Tables define LTPD as “an allowable percent defective which may be considered as the borderline of distinction between a satisfactory lot and an unsatisfactory one.” An alternate name for the LTPD is the rejectable quality level or RQL.For our purposes, we will consider a random sample of a lot from a process or from a large lot for which the sample is less than 10% of the lot size (Type B sampling). In this manner, we can apply the binomial distribution exactly instead of the more complicated hypergeometric distribution (Type A). For a discussion of the differences between Type A and B sampling see Duncan [3] or Montgomery [4].The probability of acceptance of the poorest quality LTPD that can be tolerated in an individual lot is often referred to as the “consumer’s risk.” A value of 10% for the consumer’s risk is commonly referenced in LTPD plans. Many product qualification plans are based on LTPD considerations in order to assure consumer protection against individual lots of poor quality. However, a common problem is the need to adjust the sample lot size and corresponding acceptance number based on time, money, or resource considerations while holding the consumer’s risk constant at a specified quality level. Because of this objective, many tables are available (see [2], [5] or [6]) to assist in the proper selection of sample sizes and acceptance numbers. A difficulty with utilizing these tables is the restriction to only the values specified in the tables. Other authors have provided graphical solutions to handle more general requirements. See Tobias and Trindade [7]. Further details on LTPD schemes can be found in Schilling [8]. We present below a general EXCEL solution that handles any specified LTPD at any probability of acceptance.The following example is based on the problem (solved graphically) in Tobias and Trindade [7]Example 1: Consider the case where a given sampling plan calls for a lot to be accepted on three or less failures out of 300 sampled units. Because of the cost associated with each individual unit, we need to construct a sampling plan that will allow the lot to be rejected on just one failure. One important requirement of the new plan is that the LTPD and the probability of acceptance be equivalent to that of the original plan. In this example, we will set the probability of acceptance equal to 10%.Step 1: Begin by creating in a spreadsheet a table like the one shown in Figure 1, specifying the sample size n, the acceptance number c, and the probability of acceptance (10% for LTPD plans). We are interested in determining the percent defective at the specified probability of acceptance, that is, the LTPD.Step 2: The binomial cumulative distribution function gives the probability of realizing up to c rejects in a sample of size n . To determine the percent defective value that makes the binomial CDF equal to the probability of acceptance value, we use an identity between the binomial CDF F (x ) and the Beta CDF G (p ) for integer valued shape parameters. (See Bury [9], page 346.) The relationship is:F (x ; p, n ) = 1 – G(p ; x + 1, n – x ).We use the EXCEL BETAINV function to calculate the LTPD for the original plan, as illustrated in cell B10. The LTPD calculated in this example is 0.02213, as shown in Figure 2. The general format of the BETAINV isBETAINV(1-prob of acceptance, c + 1, n – c).Figure 1: Illustration of how the Excel worksheet should be set up prior to solving for a solution to the original samplingplan.where c=3, n=300, and prob of acceptance = 0.1.Step 3: Create a second table with two columns. The first column should contain a list of possible samplesizes in descending order if a lower acceptance number is desired or in ascending order for a higher c .set up prior to solving for the alternative sample size.The second column should contain the EXCEL BINOMDIST function (binomial CDF) for each sample size, LTPD, acceptance number combination, as shown in Figure 3 (column F). Our goal is to find the smallest value of n that yields a BINOMDIST function value less than or equal to the probability of acceptance, 10%. From Figure 4 we can easily see that the appropriate sample size is 175. This is the smallest possible sample size for which the BINOMDIST function is less than or equal to 0.10. The format of the BINOMDIST function isBINOMDIST(acceptance number, sample size, LTPD, 1)The final “1” instructs Excel to calculate the cumulative distribution function.Figure 4: Illustration of solving for the correct value of n.The new sampling plan calls for rejection of any lot where more than 1 unit fails out of a sample of 175 units. The probability of accepting any given lot at an incoming percent defective value equal to the LTPD is 10%.Automated Analysis ToolsThe process of finding an alternative LTPD sampling plan can be made simple through the use of visual basic macros. The example below introduces a user-friendly Excel macro that performs LTPD sampling plan calculations. We will use the data from the previous example where c= 3, n= 300, and probability of acceptance = 10%.Step 1: Execute t he macro and select “Find alternative sampling plan.” See Figure 5.Step 2: Enter the information for the original sampling plan. Then, enter a new acceptance number for the alternative sampling plan. Finally, enter the worksheet cell address where output table should be placed. Figure 6 illustrates typical input.box.Step 3:Click the “OK” button. The final analysis table is show in Figure 7. The routine also provides the approximate AQL to accept 95% of the lots.The program also provides the capability for: 1. solving for the LTPD for a given sampling plan; 2. solving for the sample size for a specified LTPD and acceptance number. The execution of these routines are self-evident from the dialog boxes. output table. The sample size for the alternative sampling plan is 175 (reference cell C10).Program AvailabilityThe EXCEL LTPD add-in, written in Visual Basic by David Meade, is available for free downloading from the website /LTPD.html. References[1] Western Electric Company (1956), Statistical Quality Control Handbook, Delmar Printing Company, Charlotte, NC[2] Dodge, H.F., and H.G. Romig (1959) Sampling Inspection Tables, Single and Double Sampling, 2nd ed., John Wiley and Sons, New York[3] Duncan, A.J (1986) Quality Control and Industrial Statistics, 5th ed., Irwin, Homewood, IL[4] Montgomery, D.C. (1991) Introduction to Statistical Quality Control, 2nd ed,, John Wiley and Sons, New York[5] MIL-STD-105D (1963) Sampling Procedures and Tables for Inspection by Attributes, U.S. Government Printing Office[6] MIL-S-19500G (1963) General Specification for Semiconductor Devices, U.S. Government Printing Office[7] Tobias, P.A. and D.C. Trindade (1995) Applied Reliability, 2nd ed., Kluwer Academic Publishers, Boston, MA[8] Schilling, E.G. (1982) Acceptance Sampling in Quality Control, Marcel Dekker, New York[9] Bury, K.V. (1975) Statistical Models in Applied Science, John Wiley and Sons, New York。

1Introduction and OverviewJeroen C.J.M.van den Bergh and Marco A.Janssen1.1IntroductionThe up-and-comingfield known as‘‘industrial ecology’’is currently dominated by descriptive and design studies of physical processes and technical solutions that leave out relevant economic conditions and mechanisms.The main motivation for this book is that such an approach is insu‰cient to provide policymakers and business managers with eco-nomically feasible,cost-e¤ective,and socially supported instruments and solutions.The approach presented here therefore aims to integrate the natural science and technological dimensions of industrial ecology with an economic angle.Various authors in thefield of industrial ecology have strongly recommended such a synthesis(e.g.,Koenig and Cantlon2000; Fischho¤and Small2000;Brunner2002).The main value of adding eco-nomics to industrial ecology can best be summarized as increasing policy bining economics with industrial ecology will entail three elements that are largely lacking from the current literature on industrial ecology:1.Adding an economic context to industrial ecology,in the form of costs,benefits,economic e‰ciency considerations,(re)allocations,invest-ments,market processes and distortions,economic growth,multisectoral interactions,international trade,and so forth.2.Showing the usefulness of a range of concepts,theories,and methods for the integration of economics and industrial ecology in empirical applications.Examples are structural decomposition analysis,(general) equilibrium analysis,complex systems modeling,econometric-statistical analysis,dynamic input-output modeling,urban and regional economics, theories of thefirm,and institutional and evolutionary economics.3.Deriving general policy lessons based on the integration of economic and industrial ecology considerations at a theoretical level,as well as on4Introduction and Overview the insights of empirical case studies.In essence,this means combining lessons about physical,technical,and economic opportunities as well as about their limitations.To motivate the idea that industrial ecology lacks a coherent and thorough treatment of relevant economic dimensions,let us consider au-thoritative surveys of and outlets for insights and research in thefield of industrial ecology.Socolow et al.(1994),Graedel and Allenby(2003), and Ayres and Ayres(2002)are very representative of the insights that thefield has delivered so far,and the Journal of Industrial Ecology allows us to trace recent research themes and trends.Socolow et al.(thirty-six chapters in530pages)is so broad that it really holds the middle ground between a text on industrial ecology and one on environmental science, covering issues from thefirm to the global level.It contains two chapters that deal with economics,one analyzing principal-agent problems at the level offirms,and another studying raw materials extraction and trade. Although useful,these chapters can certainly not be regarded as any-thing close to a complete treatment of economic dimensions as previously outlined.Graedel and Allenby(twenty-six chapters in363pages)more clearly steps away from traditional environmental science and is strong onfirm-level and manufacturing issues but lacks any treatment of eco-nomic considerations,be it at thefirm or the economy-wide level.Ayres and Ayres(forty-six chapters in680pages)includes an entire section on the theme‘‘Economics and Industrial Ecology,’’which consists of seven very short chapters.But it turns out that several of these chapters do not really address economic issues at all,but instead focus on physical indi-cators(Total Material Requirement,or TMR),exergy,transmaterializa-tion,and technology policy.The chapters that do deal with economic issues survey the inclusion of materialflows in economic models,the em-pirical relationship between dematerialization and economic growth,and the literature on optimal resource extraction.Again,this is useful but does not represent a su‰ciently broad coverage of economic issues.In e¤ect,from reading both these books and the Journal of Industrial Ecology,one can easily obtain the impression that industrial ecology completely lacks economic considerations and instead is mainly about technological planning and design.At the aggregate level,this is perhaps most clearly reflected in the well-known Factor X debate(X¼10in the case of Factor Ten Club1994,and X¼4in the case of von Weizsa¨cker et al.1997).A scan of all the issues of the Journal of Industrial Ecology (twenty-three in seven volumes)delivered a disappointingly small numberJeroen C.J.M.van den Bergh and Marco A.Janssen5 of articles that address(and then often only tangentially)economic rea-soning or methods(these articles are mentioned in the literature survey presented in chapter2).The planning-and-design perspective strongly contrasts with the economics perspective,which emphasizesfirm behavior (strategies,routines,and input-mix decisions that a¤ect material use), market processes(liberalization and decentralization),and economy-wide feedback involving prices,incomes,foreign trade,change in sector struc-ture and intermediate deliveries,shifts in consumer expenditures,and market-based policy instruments.One can conclude that,with regard to the three elements listed earlier,(1)and(2)have received very little to no attention in the literature on industrial ecology.Instead,all method-related developments and empirical work have focused on noneconomic methods like material and substanceflow analysis,life cycle analysis,and process and product design.Given the neglect of thesefirst two elements, element(3)has evidently not been accomplished.Economists have long been interested in the impact that economies have on natural resources and the environment and the negative feedback this may provide to welfare and economic growth(van den Bergh1999). This interest has,inter alia,resulted in studies that examine the rela-tionship between physicalflows through the economy,on the one hand, and market functioning,economic growth,international trade,and en-vironmental regulation,on the other.Early work that combined eco-nomics and materialflow analysis started with the methodological studies by Ayres and Kneese(1969),Kneese,Ayres,and D’Arge(1970),and Georgescu-Roegen(1971).Much of the subsequent work focused on input-output and other types of economic models,with extensions to ac-count for polluting residuals(James1985).Boulding(1966)and Daly (1968)can be seen as conceptual predecessors of this line of thought.In the context of his‘‘steady state,’’Daly(1977)proposes the idea of reduc-ing or minimizing the physical resource‘‘throughput’’that runs through the economy,which closely meets the approach of industrial ecology. Daly and Townsend(1993)presents a collection of reprinted classic articles on the boundary of philosophy,economics,and environmental science,many of which are imbued with the spirit of industrial ecology. The emergence of thefield of industrial ecology since the1990s has stimulated a great deal of methodologically well-founded research that is aimed at measuring,describing,predicting,redirecting,and reducing physicalflows in economies.The current book intends to o¤er a balanced combination of environmental-physical,technological,and economic con-siderations.This is believed to provide the best basis for identifying6Introduction and Overview opportunities to reduce pressures on the environment that are linked to materialflows,as well as to design public policies to foster such oppor-tunities.A brief overview of the relevant literature on the interface between economics and industrial ecology is provided in chapter2.In addition,the book presents new and original studies that try to accom-plish a close link between economics,in particular environmental and re-source economics,and industrial ecology.These have not yet influenced the dominant themes in industrial ecology—witness the critical evalua-tion from an economic perspective of the representative books and the field journal earlier in the chapter.Research on industrial metabolism has emphasized the description of materialflows in economic systems(Daniels and Moore2002and Daniels 2002o¤er a good overview of all the methods and their applications). Studies along these lines provide interesting and useful information about the size of materialflows and the identification of stocks in which certain undesirable materials accumulate.This leads to concepts like‘‘chemi-cal time bombs’’(e.g.,the accumulation of chemicals in river mud)and ‘‘waste mining’’(i.e.,beyond a certain point,material waste that has accumulated in certain locations can be turned into a resource suitable for profitable mining)(e.g.,Bartone1990;Allen and Behmanesh1994). Nevertheless,several relevant issues cannot be addressed properly with a descriptive industrial ecology approach.One reason is that the‘‘metabo-lism’’of economic systems changes over time,which cannot be under-stood simply by the measurement of materialflows.An understanding of the relationship between economic activities and materialflows can help to unravel the socioeconomic causes of both the physicalflows and the changes therein.An analytical approach to this problem requires that at-tention be given to,inter alia,the behavior of economic agents like pro-ducers and consumers,interactions among stakeholders in production chains,the spectrum of technological choices,and the role of trade and interregional issues.At a more abstract level,such an approach involves substitution and allocation mechanisms at the level of production tech-nologies,firms,sectors,and the composition of demand.In other words, besides a description of materialflows,thefield of industrial ecology requires a comprehension of the processes behind the materialflows.A deeper understanding of the processes leading to changes in material flows can also provide insight into how to develop both e¤ective and e‰-cient policies that lead to a reduction in harmful materialflows.In addi-tion,the notion of rebound e¤ects can be seriously examined.This notion comprises the whole range of indirect technological and economic con-Jeroen C.J.M.van den Bergh and Marco A.Janssen7 sequences of certain technological scenarios.Such indirect e¤ects can be induced by,or run through,alterations in prices,changes in market de-mand and supply,substitution in production or consumption,growth in incomes,and changes in consumer expenditures.A better grip on the sign and magnitude of rebound e¤ects is needed to counter simplistic technology-based arguments in favor of certain policy or management strategies and to temper naı¨ve expectations about what can be achieved over certain periods of time with technology.This is relevant,for exam-ple,when estimating the impacts of rapid changes in information and communication technology on materialflows.This book provides a unique overview of di¤erent economic ap-proaches to address problems associated with the use of materials in economic systems at di¤erent levels.Some of these approaches have eco-nomics at the core,whereas others add economic aspects to technologi-cal or natural-science-dominated approaches or applications.The major part of the book o¤ers a collection of new studies that cover a wide range of approaches and methods for integrating physics and technologi-cal analysis and knowledge with economics.The scale varies from indus-trial parks in Denmark and the Netherlands to the international trade of waste.The variety of approaches can be explained by the fact that the diversity and complexity of topics on the boundary of economics and industrial ecology—indicated by the dimensions:materials,technology, physics,economics,and varying(spatial and aggregation)scales—cannot be completely covered with a single approach.As a result,the book reflects a pluralistic approach.The contributions have been organized in four themes(parts II to V). Thefirst theme(chapters3and4)is concerned with the historical analysis of structural change.The second theme(chapters5to8)covers a range of models that try to predict future structural change under di¤erent policy scenarios.The third theme(chapters9and10)addresses two models that can be used to examine waste management and recycling opportunities. Finally,the fourth theme(chapters11and12)adopts a local-scale per-spective by focusing on the dynamics of eco-industrial parks.Chapter13 closes the book with a summary and synthesis of policy implications.1.2Historical Analysis of Structural ChangeChapters3and4o¤er di¤erent(statistical and decomposition)ap-proaches to the historical analysis of the impact of structural change on materialflows through the economy.In chapter3,Ayres,Ayres,and8Introduction and Overview Warr challenge the widespread idea of dematerialization by presenting data on the major commodityflows in the U.S.economy since1900,in both mass and exergy terms.Based on these data,the U.S.economy turns out not to be‘‘dematerializing,’’to any degree that has environmental significance.Since1900it has exhibited a slow and modest long-term in-crease in materials consumption per capita,except during the Depression and World War II.The trends with regard to resource productivity(gross domestic product[GDP]per unit of materials consumption)are moder-ately increasing overall.Ayres et al.argue that policy should focus not on reducing the total mass of materials consumed,but on reducing the need for consumables,especially intermediates.In chapter4,Hoekstra and van den Bergh present a quantitative his-torical analysis based on the method of input-output(I/O)structural de-composition analysis(SDA).They open with an overview of the literature on SDA.This method uses historical I/O data to identify the relative im-portance of a wide range of drivers.SDA has been applied in studies of energy use and energy-related emissions,but only once(in unpublished work)of materialflows.The authors carry out a decomposition of the use of iron and steel and plastics products in the Netherlands for the period1990–1997.This is based on a new data set,unique in the world, which incorporates hybrid-unit input-output tables.The data were con-structed especially for the present purpose in cooperation with the na-tional statistical o‰ce of the Netherlands,through a construction process that is briefly discussed.Finally,an illustration is given of how the results of SDA can serve as an input to a forecasting scenario analysis.1.3Projective Analysis of Structural ChangeChapters5to8present case studies on regional and national scales that employ a range of modeling approaches.In chapter5,Ruth, Davidsdottir,and Amato describe a model that combines engineering and econometric techniques for the analysis of the dynamics of large industrial systems.A transparent dynamic computer modeling approach is chosen to integrate information from these analyses in ways that foster the participation of stakeholders from industry and government agencies in all stages of the modeling process—from problem definition and de-termination of system boundaries to the generation of scenarios and the interpretation of results.Three case studies of industrial energy use in the United States are presented:one each for the iron and steel,pulp and paper,and ethylene industries.Jeroen C.J.M.van den Bergh and Marco A.Janssen9 In chapter6,Foran and Poldy describe two analytical frameworks that have been applied to Australia:namely,the Australian Stocks and Flows Framework(ASFF)and the OzEcco embodied energyflows model.The first(ASFF)is a set of thirty-two linked calculators that follow,and ac-count for,the important physical transactions that underpin our everyday life.The second(OzEcco)is based on the concept of embodied energy, the chain of energyflows from oil wells and coal mines that eventually are included or embodied in every good and service in both the domestic and export components of our economy.Both analytical frameworks are based on systems theory and implemented in a dynamic approach.In chapter7,Mannaerts presents STREAM,a partial equilibrium model for materialflows in Europe,with emphasis on the Netherlands. The model provides a consistent framework for material use scenarios and related environmental policy analysis of dematerialization,recycling, input substitution,market and cost prices,and international allocation of production capacity.The chapter reports the e¤ects of various envi-ronmental policy instruments for Western Europe and the Netherlands, including imposed energy taxation,taxation of primary materials,per-formance standards for energy and emissions,and deposit money for scrap.Chapter7shows that no absolute decline in material use can be found in member countries of the Organization for Economic Coopera-tion and Development(OECD),but that a relative decoupling of material use and GDP can be observed.In chapter8,Idenburg and Wilting present the DIMITRI model,a mesoeconomic model that operates at the level of production sectors, focusing on production and related environmental pressure in the Neth-erlands.The use of a multiregional input-output structure enables an analysis of changes among sectors and among regions resulting from technological changes.Because of the dynamic nature of the model,it allows analysis not only of the consequences of changes in direct or op-erational inputs,but also of shifts from operational inputs toward cap-ital inputs and vice versa.Di¤erent analyses with the model are presented to show the benefits of a dynamic input-output framework for policy analysis.1.4Waste Management and RecyclingChapters9and10address modeling of opportunities for waste manage-ment and recycling.In chapter9,Bartelings,Dellink,and van Ierland present a general equilibrium model of the waste market to study market10Introduction and Overview distortions and specificallyflat-fee pricing.A numerical example is used to demonstrate the e¤ects offlat-fee pricing on the generation of waste. The results show that introducing a unit-based price will stimulate both the prevention and recycling of waste and can improve welfare,even if implementation and enforcement costs are taken into account.Intro-ducing an upstream tax can provide incentives for the prevention of waste but will not automatically stimulate recycling.A unit-based pricing scheme is therefore a more desirable policy option.In chapter10,van Beukering provides an overview of the internaliza-tion of wasteflows.He focuses attention on the international trade of recyclable materials between developed countries and developing coun-tries.Empirical facts indicate a high rate of growth of such trade.More-over,a particular trade pattern has emerged,characterized by waste materials being recovered in developing countries and then exported to other developing countries,where they are recycled.Chapter10discusses the economic and environmental significance of the simultaneous increase in international trade and the recycling of materials.1.5Dynamics of Eco-Industrial ParksChapters11and12deal with industrial ecology dynamics at the lowest scale and consider the self-organization and stimulation of eco-industrial parks.A classic example in industrial ecology is the industrial symbio-sis reported in Kalundborg.Both chapter11and chapter12use the Kalundborg case study as their starting point.The eco-industrial park in Kalundborg is one of the most internationally well-known examples of a local network for exchanging waste products among industrial producers. In chapter11,Jacobsen and Anderberg o¤er an analysis of the evolution of the‘‘Kalundborg symbiosis.’’This case has been studied from di¤erent viewpoints.For comparison and contrast,the authors discuss ongoing e¤orts to develop an eco-industrial park in Avedøre Holme,an indus-trial district around the major power plant in the Copenhagen area.The chapter closes by addressing the limitations of the Kalundborg symbiosis. In similar vein,in chapter12,Boons and Janssen use the Kalundborg example to analyze why there have been so many e¤orts to re-create Kalundborg in other locations.The main problem of creating an eco-industrial park is to overcome a collective action problem,which is not without costs.But such costs are generally neglected,since most studies look only at benefits from technical bottom-up and top-down designs.As research on collective action problems shows,top-downJeroen C.J.M.van den Bergh and Marco A.Janssen11 arrangements are often not e¤ective in creating sustained cooperative arrangements.Furthermore,bureaucrats and designers may not have the required knowledge to see entrepreneurial opportunities for reducing wasteflows.Boons and Janssen argue that more may be expected from incentives for self-organized interactions that stimulate repeated inter-actions and reduce investment costs,or from providing subsidies for investments in interfirm linkages.1.6Policy ImplicationsFinally,in chapter13,van den Bergh,Verbruggen,and Janssen discuss the policy implications of the research reported here.In particular,the chapter considers the question of whether a combined policy specifi-cally focused on materials and waste is needed.It concludes that a gen-eral dematerialization policy is meaningful for a number of theoretical and practical reasons.Dematerialization and waste policy support one another in the long run,even if,in the short run,they are often conflict-ing.The aim of a combined policy is to facilitate technological innovation and transitions toward a material-poor economy.Policy can attempt to stimulate the incorporation of certain physical requirements into produc-tion processes and products,even when from an economic point of view these requirements are second best.ReferencesAllen,D.T.,and N.Behmanesh.(1994).Wastes as raw materials.In B.R.Allenby and D.J. Richards(eds.),The Greening of Industrial Ecosystems,69–89.Washington,DC:Na-tional Academy Press.Ayres,R.U.,and L.W.Ayres(eds.).(2002).A Handbook of Industrial Ecology.Chelten-ham,England:Edward Elgar.Ayres,R.U.,and A.V.Kneese.(1969).Production,consumption and externalities.Ameri-can Economic Review59:282–297.Bartone,C.(1990).Economic and policy issues in resource recovery from municipal wastes. Resources Conservation and Recycling4:7–23.Boulding,K.E.(1966).The economics of the coming spaceship Earth.In H.Jarrett(ed.), Environmental Quality in a Growing Economy,3–14.Baltimore:Johns Hopkins University Press for Resources for the Future.Brunner,P.H.(2002).Beyond materialsflow analysis.Journal of Industrial Ecology6(1): 8–10.Daly,H.E.(1968).On economics as a life science.Journal of Political Economy76(3): 392–406.Daly,H.E.(1977).Steady-State Economics.San Francisco:Freeman.12Introduction and Overview Daly,H.E.,and K.N.Townsend(eds.).(1993).Valuing the Earth:Economic,Ecology andEthics.Cambridge,MA:MIT Press.Daniels,P.L.(2002).Approaches for quantifying the metabolism of physical economies:A comparative survey.Part II:Review of individual approaches.Journal of Industrial Ecology 6(1):65–88.Daniels,P.L.,and S.Moore.(2002).Approaches for quantifying the metabolism of physical economies:A comparative survey.Part I:Methodological overview.Journal of Industrial Ecology5(4):69–93.Factor Ten Club.(1994).Carnoules Declaration.Wuppertal,Germany:Wuppertal Institute. Fischho¤,B.,and M.J.Small.(2000).Human behavior in industrial ecology modeling. Journal of Industrial Ecology3(2–3):4–7.Georgescu-Roegen,N.(1971).The Entropy Law and the Economic Process.Cambridge, MA:Harvard University Press.Graedel,T.E.,and B.R.Allenby.(2003).Industrial Ecology.2nd edition(1st ed.,1995). Upper Saddle River,NJ:Pearson Education,Prentice Hall.James, D.(1985).Environmental economics,industrial process models,and regional-residuals management models.In A.V.Kneese and J.L.Sweeney(eds.),Handbook of Nat-ural Resource and Energy Economics,vol.1,271–324.Amsterdam:North-Holland. Kneese,A.V.,R.U.Ayres,and R.C.D’Arge.(1970).Economics and the Environment:A Materials Balance Approach.Baltimore:Johns Hopkins University Press.Koenig,H.E.,and J.E.Cantlon.(2000).Quantitative industrial ecology and ecological economics.Journal of Industrial Ecology3(2–3):63–83.Socolow,R.,C.Andrews,F.Berkhout,and V.Thomas(eds.).(1994).Industrial Ecology and Global Change.Cambridge:Cambridge University Press.van den Bergh,J.C.J.M.(ed.).(1999).Handbook of Environmental and Resource Econom-ics.Cheltenham,England:Edward Elgar.von Weizsa¨cker, E., A. B.Lovins,and L.H.Lovins.(1997).Factor Four:Doubling Wealth—Halving Resource Use:A Report to the Club of Rome.London:Earthscan.。

ga分布的密度函数-回复Title: Understanding the Probability Density Function of the gamma distributionIntroduction:Probability density functions (PDFs) play a critical role in describing the likelihood of different values occurring in a given continuous random variable. One such PDF is the gamma distribution, which has various applications in fields such as healthcare, physics, and economics. In this article, we will explore the gamma distribution and its properties, step by step, providing a comprehensive understanding of this important probability distribution.1. Definition and Properties:The gamma distribution is a continuous probability distribution that is widely used to model positive-valued random variables. It is characterized by two parameters: shape parameter (α) and rate parameter (β). The gamma distribution is denoted as Gamma(α, β).2. Probability Density Function (PDF) of the Gamma Distribution: The probability density function (PDF) of the gamma distribution is given as:f(x) = (β^α* x^(α-1) * e^(-βx)) / Γ(α)Where:f(x) - Probability density function of the gamma distributionx - Random variableα- Shape parameter (α> 0)β- Rate parameter (β> 0)e - Euler's number (~2.71828)Γ(α) - Gamma function, which is the generalization of the factorial function for positive real numbers3. Shape Parameter (α):The shape parameter, denoted as α, defines the shape of the gamma distribution. It determines the skewness and kurtosis of the distribution. Higher values of αresult in distributions with higher peaks and less variability. When αis less than 1, the gamma distribution becomes a skewed distribution.4. Rate Parameter (β):The rate parameter, denoted as β, controls the rate at which the gamma distribution decays. Higher values of βlead to faster decayand narrower distributions. Conversely, lower values of βresult in slower decay and wider distributions.5. Relationship with Other Distributions:The gamma distribution is related to several other distributions, making it a versatile tool in statistical modeling. For example, when αis an integer, the gamma distribution is equivalent to the Erlang distribution. Furthermore, the exponential distribution is a special case of the gamma distribution when α= 1.6. Moments of the Gamma Distribution:Moments are descriptive statistics that provide insights into the shape and characteristics of a distribution. The moments of a gamma distribution can be calculated using the shape and rate parameters. The mean (μ) of the gamma distribution is given by μ= α/ β, and the variance (σ^2) is given by σ^2 = α/ β^2.7. Applications of the Gamma Distribution:The gamma distribution finds widespread use across various fields. In healthcare, it can model waiting times and durations, such as the time until a patient is discharged from a hospital or the lifespan of individuals. In physics, the gamma distribution can describe thedecay rates of radioactive substances. In economics, it can be used to model income distribution and stock market returns.8. Estimation and Fitting:Given a set of observed data, it is often necessary to estimate the parameters of the gamma distribution that best fit the data. Maximum likelihood estimation (MLE) is commonly used for this purpose. Additionally, various software packages and programming languages provide functions to fit gamma distributions to data.Conclusion:In conclusion, the gamma distribution is a powerful tool in statistical modeling due to its flexibility and wide-ranging applications. Its probability density function, characterized by the shape and rate parameters, provides insights into the shape and characteristics of random variables. Understanding the properties of the gamma distribution allows researchers to accurately model and analyze real-world phenomena, making it a valuable technique in various fields.。

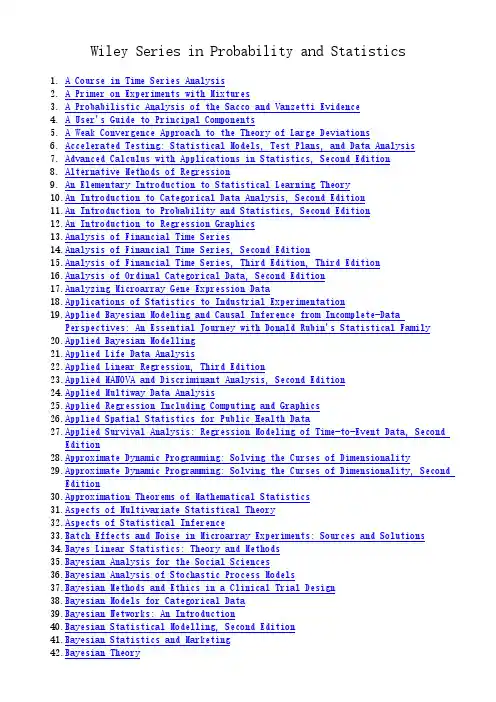

Wiley Series in Probability and Statistics1. A Course in Time Series Analysis2. A Primer on Experiments with Mixtures3. A Probabilistic Analysis of the Sacco and Vanzetti Evidence4. A User's Guide to Principal Components5. A Weak Convergence Approach to the Theory of Large Deviations6.Accelerated Testing: Statistical Models, Test Plans, and Data Analysis7.Advanced Calculus with Applications in Statistics, Second Edition8.Alternative Methods of Regression9.An Elementary Introduction to Statistical Learning Theory10.An Introduction to Categorical Data Analysis, Second Edition11.An Introduction to Probability and Statistics, Second Edition12.An Introduction to Regression Graphics13.Analysis of Financial Time Series14.Analysis of Financial Time Series, Second Edition15.Analysis of Financial Time Series, Third Edition, Third Edition16.Analysis of Ordinal Categorical Data, Second Edition17.Analyzing Microarray Gene Expression Data18.Applications of Statistics to Industrial Experimentation19.Applied Bayesian Modeling and Causal Inference from Incomplete-DataPerspectives: An Essential Journey with Donald Rubin's Statistical Family20.Applied Bayesian Modelling21.Applied Life Data Analysis22.Applied Linear Regression, Third Edition23.Applied MANOVA and Discriminant Analysis, Second Edition24.Applied Multiway Data Analysis25.Applied Regression Including Computing and Graphics26.Applied Spatial Statistics for Public Health Data27.Applied Survival Analysis: Regression Modeling of Time-to-Event Data, SecondEdition28.Approximate Dynamic Programming: Solving the Curses of Dimensionality29.Approximate Dynamic Programming: Solving the Curses of Dimensionality, SecondEdition30.Approximation Theorems of Mathematical Statistics31.Aspects of Multivariate Statistical Theory32.Aspects of Statistical Inference33.Batch Effects and Noise in Microarray Experiments: Sources and Solutions34.Bayes Linear Statistics: Theory and Methods35.Bayesian Analysis for the Social Sciences36.Bayesian Analysis of Stochastic Process Models37.Bayesian Methods and Ethics in a Clinical Trial Design38.Bayesian Models for Categorical Data39.Bayesian Networks: An Introduction40.Bayesian Statistical Modelling, Second Edition41.Bayesian Statistics and Marketing42.Bayesian Theory43.Bias and Causation44.Biostatistical Methods in Epidemiology45.Biostatistical Methods: The Assessment of Relative Risks46.Biostatistical Methods: The Assessment of Relative Risks, Second Edition47.Biostatistics: A Methodology for the Health Sciences, Second Edition48.Bootstrap Methods: A Guide for Practitioners and Researchers, Second Edition49.Business Survey Methods50.Case Studies in Reliability and Maintenance51.Categorical Data Analysis, Second Edition52.Causality: Statistical Perspectives and Applications53.Clinical Trial Design: Bayesian and Frequentist Adaptive Methods54.Clinical Trials: A Methodologic Perspective, Second Edition55.Cluster Analysis, 5th Editionbinatorial Methods in Discrete Distributionsparative Statistical Inference, Third Edition58.Constrained Statistical Inference: Inequality, Order, and Shape Restrictions59.Contemporary Bayesian Econometrics and Statistics60.Continuous Multivariate Distributions: Models and Applications, Volume 1,Second Edition61.Convergence of Probability Measures, Second Edition62.Counting Processes and Survival Analysis63.Decision Theory64.Design and Analysis of Clinical Trials: Concepts and Methodologies, SecondEdition65.Design and Analysis of Experiments: Advanced Experimental Design, Volume 266.Design and Analysis of Experiments: Introduction to Experimental Design, Volume1, Second Edition67.Design and Analysis of Experiments: Special Designs and Applications, Volume 368.Directional Statistics69.Dirichlet and Related Distributions: Theory, Methods and Applications70.Discrete Distributions: Applications in the Health Sciences71.Discriminant Analysis and Statistical Pattern Recognition72.Empirical Model Building73.Empirical Model Building: Data, Models, and Reality, Second Edition74.Environmental Statistics: Methods and Applications75.Exploration and Analysis of DNA Microarray and Protein Array Data76.Exploratory Data Mining and Data Cleaning77.Exploring Data Tables, Trends, and Shapes78.Financial Derivatives in Theory and Practice79.Finding Groups in Data: An Introduction to Cluster Analysis80.Finite Mixture Models81.Flowgraph Models for Multistate Time-to-Event Data82.Forecasting with Dynamic Regression Models83.Forecasting with Univariate Box-Jenkins Models: Concepts and Cases84.Fourier Analysis of Time Series: An Introduction, Second Edition85.Fractal-Based Point Processes86.Fractional Factorial Plans87.Fundamentals of Exploratory Analysis of Variance88.Generalized Least Squares89.Generalized Linear Models: With Applications in Engineering and the Sciences,Second Edition90.Generalized, Linear, and Mixed Models91.Geometrical Foundations of Asymptotic Inference92.Geostatistics: Modeling Spatial Uncertainty93.Geostatistics: Modeling Spatial Uncertainty, Second Edition94.High-Dimensional Covariance Estimation95.Hilbert Space Methods in Probability and Statistical Inference96.History of Probability and Statistics and Their Applications before 175097.Image Processing and Jump Regression Analysis98.Inference and Prediction in Large Dimensions99.Introduction to Nonparametric Regression100.Introduction to Statistical Time Series, Second Edition101.Introductory Stochastic Analysis for Finance and Insurancetent Class and Latent Transition Analysis: With Applications in the Social, Behavioral, and Health Sciencestent Curve Models: A Structural Equation Perspectivetent Variable Models and Factor Analysis: A Unified Approach, 3rd Edition105.Leading Personalities in Statistical Sciences: From the Seventeenth Century to the Present106.Lévy Processes in Finance: Pricing Financial Derivatives107.Linear Models: The Theory and Application of Analysis of Variance108.Linear Statistical Inference and its Applications: Second Editon109.Linear Statistical Models110.LISP-STAT: An Object-Oriented Environment for Statistical Computing and Dynamic Graphics111.Longitudinal Data Analysis112.Long-Memory Time Series: Theory and Methods113.Loss Distributions114.Loss Models: From Data to Decisions, Third Edition115.Management of Data in Clinical Trials, Second Edition116.Markov Decision Processes: Discrete Stochastic Dynamic Programming117.Markov Processes and Applications118.Markov Processes: Characterization and Convergence119.Mathematics of Chance120.Measurement Error Models121.Measurement Errors in Surveys122.Meta Analysis: A Guide to Calibrating and Combining Statistical Evidence 123.Methods and Applications of Linear Models: Regression and the Analysis of Variance124.Methods for Statistical Data Analysis of Multivariate Observations, Second Edition125.Methods of Multivariate Analysis, Second Edition126.Methods of Multivariate Analysis, Third Edition127.Mixed Models: Theory and Applications128.Mixtures: Estimation and Applications129.Modelling Under Risk and Uncertainty: An Introduction to Statistical, Phenomenological and Computational Methods130.Models for Investors in Real World Markets131.Models for Probability and Statistical Inference: Theory and Applications 132.Modern Applied U-Statistics133.Modern Experimental Design134.Modes of Parametric Statistical Inference135.Multilevel Statistical Models, 4th Edition136.Multiple Comparison Procedures137.Multiple Imputation for Nonresponse in Surveys138.Multiple Time Series139.Multistate Systems Reliability Theory with Applications140.Multivariable Model-Building: A pragmatic approach to regression analysis based on fractional polynomials for modelling continuous variables141.Multivariate Density Estimation: Theory, Practice, and Visualization142.Multivariate Observations143.Multivariate Statistics: High-Dimensional and Large-Sample Approximations 144.Nonlinear Regression145.Nonlinear Regression Analysis and Its Applications146.Nonlinear Statistical Models147.Nonparametric Analysis of Univariate Heavy-Tailed Data: Research and Practice148.Nonparametric Regression Methods for Longitudinal Data Analysis: Mixed-Effects Modeling Approaches149.Nonparametric Statistics with Applications to Science and Engineering 150.Numerical Issues in Statistical Computing for the Social Scientist151.Operational Risk: Modeling Analytics152.Optimal Learning153.Order Statistics, Third Edition154.Periodically Correlated Random Sequences: Spectral Theory and Practice 155.Permutation Tests for Complex Data: Theory, Applications and Software 156.Planning and Analysis of Observational Studies157.Planning, Construction, and Statistical Analysis of Comparative Experiments158.Precedence-Type Tests and Applications159.Preparing for the Worst: Incorporating Downside Risk in Stock Market Investments160.Probability and Finance: It's Only a Game!161.Probability: A Survey of the Mathematical Theory, Second Edition162.Quantitative Methods in Population Health: Extensions of Ordinary Regression163.Random Data: Analysis and Measurement Procedures, Fourth Edition164.Random Graphs for Statistical Pattern Recognition165.Randomization in Clinical Trials: Theory and Practice166.Recent Advances in Quantitative Methods in Cancer and Human Health Risk Assessment167.Records168.Regression Analysis by Example, Fourth Edition169.Regression Diagnostics: Identifying Influential Data and Sources of Collinearity170.Regression Graphics: Ideas for Studying Regressions Through Graphics171.Regression Models for Time Series Analysis172.Regression with Social Data: Modeling Continuous and Limited Response Variables173.Reliability and Risk: A Bayesian Perspective174.Reliability: Modeling, Prediction, and Optimization175.Response Surfaces, Mixtures, and Ridge Analyses, Second Edition176.Robust Estimation & Testing177.Robust Methods in Biostatistics178.Robust Regression and Outlier Detection179.Robust Statistics180.Robust Statistics, Second Edition181.Robust Statistics: Theory and Methods182.Runs and Scans with Applications183.Sampling, Third Edition184.Sensitivity Analysis in Linear Regression185.Sequential Estimation186.Shape & Shape Theory187.Simulation and the Monte Carlo Method188.Simulation and the Monte Carlo Method, Second Edition189.Simulation and the Monte Carlo Method: Solutions Manual to Accompany, Second Edition190.Simulation: A Modeler's Approach191.Smoothing and Regression: Approaches, Computation, and Application192.Smoothing of Multivariate Data: Density Estimation and Visualization193.Spatial Statistics194.Spatial Statistics and Spatio-Temporal Data: Covariance Functions and Directional Properties195.Spatial Tessellations: Concepts and Applications of Voronoi Diagrams, Second Edition196.Stage-Wise Adaptive Designs197.Statistical Advances in the Biomedical Sciences: Clinical Trials, Epidemiology, Survival Analysis, and Bioinformatics198.Statistical Analysis of Profile Monitoring199.Statistical Design and Analysis of Experiments: With Applications to Engineering and Science, Second Edition200.Statistical Factor Analysis and Related Methods: Theory and Applications 201.Statistical Inference for Fractional Diffusion Processes202.Statistical Meta-Analysis with Applications203.Statistical Methods for Comparative Studies: Techniques for Bias Reduction 204.Statistical Methods for Forecasting205.Statistical Methods for Quality Improvement, Third Edition206.Statistical Methods for Rates and Proportions, Third Edition207.Statistical Methods for Survival Data Analysis, Third Edition208.Statistical Methods for the Analysis of Biomedical Data, Second Edition 209.Statistical Methods in Diagnostic Medicine210.Statistical Methods in Diagnostic Medicine, Second Edition211.Statistical Methods in Engineering and Quality Assurance212.Statistical Methods in Spatial Epidemiology, Second Edition213.Statistical Modeling by Wavelets214.Statistical Models and Methods for Lifetime Data, Second Edition215.Statistical Rules of Thumb, Second Edition216.Statistical Size Distributions in Economics and Actuarial Sciences217.Statistical Texts for Mixed Linear Models218.Statistical Tolerance Regions: Theory, Applications, and Computation219.Statistics for Imaging, Optics, and Photonics220.Statistics for Research, Third Edition221.Statistics of Extremes: Theory and Applications222.Statistics: A Biomedical Introduction223.Stochastic Dynamic Programming and the Control of Queueing Systems224.Stochastic Processes for Insurance & Finance225.Stochastic Simulation226.Structural Equation Modeling: A Bayesian Approach227.Subjective and Objective Bayesian Statistics: Principles, Models, and Applications, Second Edition228.Survey Errors and Survey Costs229.System Reliability Theory: Models, Statistical Methods, and Applications, Second Edition230.The Analysis of Covariance and Alternatives: Statistical Methods for Experiments, Quasi-Experiments, and Single-Case Studies, Second Edition231.The Construction of Optimal Stated Choice Experiments: Theory and Methods 232.The EM Algorithm and Extensions, Second Edition233.The Statistical Analysis of Failure Time Data, Second Edition234.The Subjectivity of Scientists and the Bayesian Approach235.The Theory of Measures and Integration236.The Theory of Response-Adaptive Randomization in Clinical Trials237.Theory of Preliminary Test and Stein-Type Estimation With Applications 238.Time Series Analysis and Forecasting by Example239.Time Series: Applications to Finance with R and S-Plus, Second Edition 240.Uncertainty Analysis with High Dimensional Dependence Modelling241.Univariate Discrete Distributions, Third Editioning the Weibull Distribution: Reliability, Modeling, and Inference243.Variance Components244.Variations on Split Plot and Split Block Experiment Designs245.Visual Statistics: Seeing Data with Dynamic Interactive Graphics246.Weibull Models。

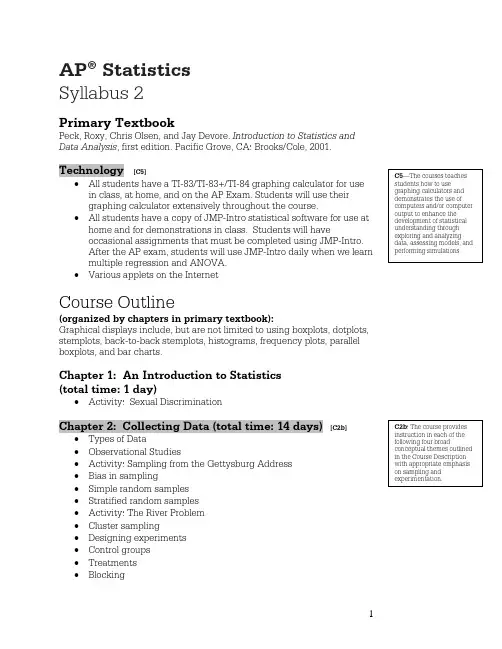

AP® StatisticsSyllabus 2Primary TextbookPeck, Roxy, Chris Olsen, and Jay Devore. Introduction to Statistics and Data Analysis, first edition. Pacific Grove, CA: Brooks/Cole, 2001. Technology [C5]•All students have a TI-83/TI-83+/TI-84 graphing calculator for use in class, at home, and on the AP Exam. Students will use theirgraphing calculator extensively throughout the course.•All students have a copy of JMP-Intro statistical software for use at home and for demonstrations in class. Students will haveoccasional assignments that must be completed using JMP-Intro.After the AP exam, students will use JMP-Intro daily when we learn multiple regression and ANOVA.•Various applets on the InternetCourse Outline(organized by chapters in primary textbook):Graphical displays include, but are not limited to using boxplots, dotplots, stemplots, back-to-back stemplots, histograms, frequency plots, parallel boxplots, and bar charts.Chapter 1: An Introduction to Statistics(total time: 1 day)•Activity: Sexual DiscriminationChapter 2: Collecting Data (total time: 14 days)[C2b]•Types of Data•Observational Studies•Activity: Sampling from the Gettysburg Address•Bias in sampling•Simple random samples•Stratified random samples•Activity: The River Problem•Cluster sampling•Designing experiments•Control groups•Treatments•Blocking• Random assignment • Replication• Activity: The Caffeine Experiment • The scope of inferenceChapter 3: Displaying Univariate Data (total time: 4 days) [C2a]•Displaying categorical data: pie and bar charts • Dotplots • Stemplots • Histograms• Describing the shape of a distribution • Cumulative frequency graphsChapter 4: Describing Univariate Data (total time: 8 days) [C2a] [C5]• Describing center: mean and median• Describing spread: range, interquartile range, and standard deviation • Boxplots • Outliers• Using the TI-83 • The empirical rule • Standardized scores • Percentiles and quartiles • Transforming data • Using JMP-Intro• Activity: Matching DistributionsChapter 5: Describing Bivariate Data (total time: 16 days) [C2a] [C5]• Introduction to bivariate data• Making and describing scatterplots • The correlation coefficient• Properties of the correlation coefficient • Least squares regression line • Using the TI-83• Regression to the mean • Residual plots• Standard deviation• Coefficient of determination •Unusual and influential points• Activity: Matching Scatterplots and Correlations • Applets: Demonstrating the effects of outliers • Using JMP-Intro• Modeling nonlinear data: exponential and power transformationsMidterm: Chapters 1–5 (total time: 3 days)• Review Chapters 1–5 using previous AP questions • Introduce first semester projectChapter 6: Probability (total time: 13 days) [C2c] [C5]• Definition of probability, outcomes, and events • Law of large numbers • Properties of probabilities • Conditional probability •Independence • Addition rule• Multiplication rule• Estimating probabilities using simulation • Using the TI-83 for simulations • Activity: Cereal Boxes • Activity: ESP Testing • Activity: Senior parkingChapter 7: Random Variables (total time: 18 days) [C2c] [C5]• Properties of discrete random variables • Properties of continuous random variables• Expected value (mean) of a discrete random variable • Standard deviation of a discrete random variable• Linear functions and linear combinations of random variables • The binomial distribution • The geometric distribution • The normal distribution • Using the normal table• Using the TI-83 distribution menu • Combining normal random variables • Normal approximation to the binomialFirst Semester Final Exam (total time: 4 days)• Review using previous AP questionsChapter 8: Sampling Distributions (total time: 9 days)[C2b]• Sampling distributions• Activity: How many textbooks?• Activity: Cents and the central limit theorem• Sampling distribution of the sample mean (including distribution of a difference between two independent sample means) • Sampling distribution of the sample proportion (including distribution of a difference between two independent sample proportions)Chapter 9: Confidence Intervals (total time: 10 days) [C2d]•Properties of point estimates: bias and variability • Confidence interval for a population proportion • Confidence interval for a population mean • Logic of confidence intervals • Meaning of confidence level• Activity: What does it mean to be 95% confident? •Finding sample size• Finite population correction factor • +4 confidence interval for a proportion • Confidence interval for a population mean • The t-distribution • Checking conditionsChapter 10: Hypothesis Tests (total time: 11 days) [C2d] [C5]• Forming hypotheses• Logic of hypothesis testing • Type I and Type II errors• Hypothesis test for a population proportion • Test statistics and p-values • Activity: Kissing the right way • Two-sided tests• Hypothesis test for a population mean • Checking conditions • Power• Using JMP-IntroChapter 11: Two Sample Procedures (total time: 11 days) [C2d] [C5]• Activity: Fish Oil• Hypothesis test for the difference of two means (unpaired) • Two-sided tests• Checking conditions• Confidence interval for the difference of two means (unpaired) • Matched pairs hypothesis test• Matched pairs confidence interval• Hypothesis test for the difference of two proportions • Confidence interval for the difference of two proportions • Using the TI-83 test menu• Choosing the correct test: It’s all about the designMidterm (chapters 8-11) (total time: 3 days)• Review using previous AP questionsChapter 12: Chi-square Tests (total time: 8 days) [C2d] [C5]• Activity: M&M’s• The chi-square distribution • Goodness of Fit test • Checking conditions •Assessing normality•Homogeneity of Proportions test (including large sample test for a proportion) • Using the TI-83• Test of independence• Choosing the correct test: It’s all about the design.Chapter 13: Inference for Slope (total time: 5 days)[C2d] [C5]• Activity: Dairy and Mucus• Hypothesis test for the slope of a least squares regression line • Confidence interval for the slope of a least squares regression line • Using the TI-83 • Using JMP-Intro• Understanding computer outputReview for AP Exam and Final Exam (total time: 7 days)• 2002 complete AP exam• Remaining previous AP questions • Final exam • AP examPost AP Exam (total time: 25 days)• Second semester project (see below) • Chapter 14: Multiple regression • Using JMP-Intro• Chapter 15: ANOVA • Using JMP-Intro•Guest speakers: careers in statisticsAP Statistics Example Project[[C2a, b, c, d]] [[C3]] [[C4]] [[C5]]The Project: Students will design and conduct an experiment toinvestigate the effects of response bias in surveys. They may choose the topic for their surveys, but they must design their experiment so that it can answer at least one of the following questions:•Can the wording of a question create response bias?• Do the characteristics of the interviewer create response bias? • Does anonymity change the responses to sensitive questions? • Does manipulating the answer choices change the response?The project will be done in pairs. Students will turn in one project per pair. A written report must be typed (single-spaced, 12-point font) and included graphs should be done on the computer using JMP-Intro or Excel.Proposal : The proposal should• Describe the topic and state which type of bias is being investigated.• Describe how to obtain subjects (minimum sample size is 50). • Describe what questions will be and how they will be asked, including how to incorporate direct control, blocking, and randomization.Written Report: The written report should include a title in the form of a question and the following sections (clearly labeled):• Introduction: What form of response bias was investigated? Whywas the topic chosen for the survey?• Methodology: Describe how the experiment was conducted and justify why the design was effective. Note: This section should be very similar to the proposal.• Results: Present the data in both tables and graphs in such a way that conclusions can be easily made. Make sure to label thegraphs/tables clearly and consistently.• Conclusions: What conclusions can be drawn from theexperiment? Be specific. Were any problems encountered during the project? What could be done different if the experiment were tobe repeated? What was learned from this project? • The original proposal.Poster: The poster should completely summarize the project, yet be simple enough to be understood by any reader. Students should include some pictures of the data collection in progress.Oral Presentation: Both members will participate equally. Theposter should be used as a visual aid. Students should be prepared for questions.。

FDA《⾏业指南:注射产品可见颗粒的检查》,中英⽂对照版来了!⾏业指南:注射剂可见异物的检查》草案,该⽂件已完成翻译,现12⽉14⽇,FDA发布了《⾏业指南:注射剂可见异物的检查分享给⼤家,如下:Inspection of Injectable Productsfor Visible Particulates注射产品中可见颗粒的检查Guidance for Industry⾏业指南TABLE OFCONTENTS⽬录I. INTRODUCTION介绍II. STATUTORY AND REGULATORY FRAMEWORK法律法规框架III. CLINICAL RISK OF VISIBLE PARTICULATES可见颗粒物的临床风险IV. QUALITY RISK ASSESSMENT质量风险评估V. VISUAL INSPECTION PROGRAM CONSIDERATIONS⽬视检查的程序考虑A. 100% Inspection100%检查1. Components and Container Closure Systems部件和容器密封系统2. Facility and Equipment设施和设备3. Process⼯艺4. Special Injectable Product Considerations特殊注射产品的考虑B. Statistical Sampling统计学抽样C. Training and Qualification培训和确认D. Quality Assurance Through a Life Cycle Approach通过⽣命周期⽅法实现质量保证E. Actions To Address Nonconformance解决不符合问题的措施VI. REFERENCES参考⽂献I. INTRODUCTION介绍Visibleparticulates in injectable products can jeopardize patient safety. Thisguidanceaddresses the development and implementation of a holistic, risk-basedapproach to visibleparticulate control that incorporates product development,manufacturing controls, visualinspection techniques, particulate identification,investigation, and corrective actions designedto assess, correct, and preventthe risk of visible particulate contamination.2The guidance alsoclarifies that meeting an applicable United StatesPharmacopeia (USP)3compendialstandardalone is not generally sufficient for meeting the current goodmanufacturing practice (CGMP)requirements for the manufacture of injectableproducts. The guidance does not coversubvisible particulates4 or physical defects that products are typicallyinspected for along withinspection for visible particulates (e.g., containerintegrity flaws, fill volume, appearance of lyophilized cake/suspensionsolids).注射产品中的可见颗粒物会危及患者安全。

应用统计复试专业英语Title: The Essentials of Applied Statistics for Postgraduate Studies.Introduction.Applied statistics, a field that intersects with various disciplines, plays a pivotal role in extracting meaningful insights from data. In the realm of postgraduate studies, proficiency in applied statistics is crucial for effective research, analysis, and decision-making. This article delves into the key areas of applied statistics relevant to postgraduate students, highlighting their importance and applications in various fields.1. Basic Concepts and Terminology.Familiarity with the fundamental terminology and concepts of statistics is the foundation of applied statistics. Terms such as mean, median, mode, variance,standard deviation, probability, and distribution are essential for understanding data characteristics and patterns. Additionally, concepts like hypothesis testing and confidence intervals are vital for making inferences about populations based on sample data.2. Descriptive Statistics.Descriptive statistics involves summarizing and presenting data in a meaningful way. It encompasses measures of central tendency (e.g., mean, median, mode), dispersion (e.g., variance, standard deviation), and shape (e.g., skewness, kurtosis). Postgraduate students need to master these descriptive techniques to effectively summarize and communicate their data findings.3. Inferential Statistics.Inferential statistics allows researchers to draw conclusions about a population based on sample data. It involves hypothesis testing, estimation of population parameters, and confidence intervals. Postgraduate studentsneed to understand the principles of inferential statistics to make informed decisions and test their research hypotheses.4. Regression Analysis.Regression analysis is a powerful tool for exploring the relationship between two or more variables. It helps researchers understand how changes in one variable affect another variable. Postgraduate students often use regression analysis in their research to identify predictors, assess the strength of relationships, and predict future outcomes.5. Experimental Design.Experimental design is crucial for ensuring thevalidity and reliability of research findings. It involves planning the experiment, selecting appropriate samples, controlling variables, and analyzing the collected data. Postgraduate students need to be familiar with different types of experimental designs (e.g., randomized controlledtrials, factorial experiments) to design and conduct effective research studies.6. Data Visualization.Data visualization is an essential skill for communicating complex data effectively. It involves creating graphs, charts, and other visual aids to represent data and highlight patterns and trends. Postgraduate students should have a strong grasp of data visualization techniques to present their research findings clearly and engage their audience.7. Applications in Diverse Fields.Applied statistics finds applications across various fields, including social sciences, business, medicine, engineering, and more. Postgraduate students need to understand how applied statistics is used in their respective fields to solve practical problems and make informed decisions. For example, in business, applied statistics is used for market research, sales forecasting,and quality control. In medicine, it is employed for statistical analysis of clinical trials and epidemiological studies.Conclusion.In summary, applied statistics is a crucial skill for postgraduate students across various disciplines. Itinvolves mastering basic concepts and terminology, descriptive and inferential statistics, regression analysis, experimental design, data visualization, and applicationsin diverse fields. By developing proficiency in these areas, postgraduate students can effectively analyze data, make informed decisions, and contribute to the advancement of knowledge in their respective fields.。