Cointegration and Forward and Spot Exchange Rate Regressions


Original title: Can macroeconomic variables explain long term stock market movements? A comparison of the US and Japan
Source: School of Economics and Finance, University of St Andrews, St Andrews, UK
Authors: Andreas Humpe, Peter Macmillan

Original text:

ABSTRACT

Within the framework of a standard discounted value model we examine whether a number of macroeconomic variables influence stock prices in the US and Japan. A cointegration analysis is applied in order to model the long term relationship between industrial production, the consumer price index, money supply, long term interest rates and stock prices in the US and Japan. For the US we find the data are consistent with a single cointegrating vector, where stock prices are positively related to industrial production and negatively related to both the consumer price index and a long term interest rate. We also find an insignificant (although positive) relationship between US stock prices and the money supply. However, for the Japanese data we find two cointegrating vectors. We find for one vector that stock prices are influenced positively by industrial production and negatively by the money supply. For the second cointegrating vector we find industrial production to be negatively influenced by the consumer price index and a long term interest rate. These contrasting results may be due to the slump in the Japanese economy during the 1990s and consequent liquidity trap.

Keywords: Stock Market Indices, Cointegration, Interest Rates.

I. Introduction.

A significant literature now exists which investigates the relationship between stock market returns and a range of macroeconomic and financial variables, across a number of different stock markets and over a range of different time horizons.
Existing financial economic theory offers a number of models that provide a framework for studying this relationship.

One way of linking macroeconomic variables and stock market returns is through arbitrage pricing theory (APT) (Ross, 1976), where multiple risk factors can explain asset returns. While early empirical papers on APT focussed on individual security returns, it may also be used in an aggregate stock market framework, where a change in a given macroeconomic variable could be seen as reflecting a change in an underlying systematic risk factor influencing future returns. Most of the empirical studies based on APT, linking the state of the macro economy to stock market returns, are characterised by modelling a short run relationship between macroeconomic variables and the stock price in terms of first differences, assuming trend stationarity. For a selection of relevant studies see inter alia Fama (1981, 1990), Fama and French (1989), Schwert (1990), Ferson and Harvey (1991) and Black, Fraser and MacDonald (1997). In general, these papers found a significant relationship between stock market returns and changes in macroeconomic variables, such as industrial production, inflation, interest rates, the yield curve and a risk premium.

An alternative, but not inconsistent, approach is the discounted cash flow or present value model (PVM). This model relates the stock price to future expected cash flows and the future discount rate of these cash flows. Again, all macroeconomic factors that influence future expected cash flows or the discount rate by which these cash flows are discounted should have an influence on the stock price. The advantage of the PVM is that it can be used to focus on the long run relationship between the stock market and macroeconomic variables. Campbell and Shiller (1988) estimate the relationship between stock prices, earnings and expected dividends.
They find that a long term moving average of earnings predicts dividends and the ratio of this earnings variable to current stock price is powerful in predicting stock returns over several years. They conclude that these facts make stock prices and returns much too volatile to accord with a simple present value model. Engle and Granger (1987) and Granger (1986) suggest that the validity of long term equilibria between variables can be examined using cointegration techniques. These have been applied to the long run relationship between stock prices and macroeconomic variables in a number of studies, see inter alia Mukherjee and Naka (1995), Cheung and Ng (1998), Nasseh and Strauss (2000), McMillan (2001) and Chaudhuri and Smiles (2004). Nasseh and Strauss (2000), for example, find a significant long-run relationship between stock prices and domestic and international economic activity in France, Germany, Italy, Netherlands, Switzerland and the U.K. In particular they find large positive coefficients for industrial production and the consumer price index, and smaller but nevertheless positive coefficients on short term interest rates and business surveys of manufacturing. The only negative coefficients are found on long term interest rates. Additionally, they find that European stock markets are highly integrated with that of Germany and also find industrial production, stock prices and short term rates in Germany positively influence returns on other European stock markets (namely France, Italy, Netherlands, Switzerland and the UK).

In this paper, we will draw upon theory and existing empirical work as a motivation to select a number of macroeconomic variables that we might expect to be strongly related to the real stock price. We then make use of these variables, in a cointegration model, to compare and contrast the stock markets in the US and Japan.
In contrast to most other studies we explicitly use an extended sample covering most of the last half century, which includes the most severe stock market booms in the US and Japan. While Japan's heyday was in the late 1980s, the US stock market boom occurred during the 1990s and ended in 2000. Japan's stock market has not yet fully recovered from a significant decline during the 1990s, and at the time of writing, trades at around a quarter of the value it saw at its peak in 1989.

The aim of this paper is to see whether the same model can explain the US and Japanese stock market while yielding consistent factor loadings. This might be highly relevant, for example, to private investors, pension funds and governments, as many long term investors base their investment in equities on the assumption that corporate cash flows should grow in line with the economy, given either a constant or slowly moving discount rate. Thus, the expected return on equities may be linked to future economic performance. A further concern might be the impact of the Japanese deflation on real equity returns. In this paper, we make use of the cointegration methodology to investigate whether the Japanese stock market has broadly followed the same equity model that has been found to hold in the US.

In the following section, we briefly outline the simple present value model of stock price formation and make use of it in order to motivate our discussion of the macroeconomic variables we include in our empirical analysis. In the third section we briefly outline the cointegration methodology, in the fourth section we discuss our results and in the fifth section we offer a summary and some tentative conclusions based on our results.

As suggested by Chen, Roll and Ross (1986), the selection of relevant macroeconomic variables requires judgement and we draw upon both existing theory and existing empirical evidence.
Theory suggests, and many authors find, that corporate cash flows are related to a measure of aggregate output such as GDP or industrial production. We follow Chen, Roll and Ross (1986), Maysami and Koh (1998) and Mukherjee and Naka (1995) and make use of industrial production in this regard.

Unanticipated inflation may directly influence real stock prices (negatively) through unexpected changes in the price level. Inflation uncertainty may also affect the discount rate, thus reducing the present value of future corporate cash flows. DeFina (1991) has also argued that rising inflation initially has a negative effect on corporate income due to immediate rising costs and slowly adjusting output prices, reducing profits and therefore the share price. Contrary to the experience of the US, Japan suffered periods of deflation during the late 1990s and early part of the 21st century, and this may have had some impact on the relationship between inflation and share prices.

The money supply, for example M1, is also likely to influence share prices through at least three mechanisms. First, changes in the money supply may be related to unanticipated increases in inflation and future inflation uncertainty and hence negatively related to the share price. Second, changes in the money supply may positively influence the share price through its impact on economic activity. Finally, portfolio theory suggests a positive relationship, since it relates an increase in the money supply to a portfolio shift from non-interest bearing money to financial assets, including equities. For a further discussion on the role of the money supply see Urich and Wachtel (1981), Rogalski and Vinso (1977) and Chaudhuri and Smiles (2004).

The interest rate directly changes the discount rate in the valuation model and thus influences current and future values of corporate cash flows. Frequently, authors have included both a long term interest rate (e.g.
a 10 year bond yield) and a short term interest rate (e.g. a 3 month T-bill rate). We do not use a short term rate as our aim here is to find a long term relationship between the stock market and interest rate variables. Changes in the short rate are mainly driven by the business cycle and monetary policy. In contrast, the long term interest rate should indicate the longer term view of the economy concerning the discount rate.

For our long term rate using the US data we have chosen a 10 year bond yield; for Japan, however, we instead use the official discount rate. This is due largely to data availability constraints and to the fact that the market for 10 year bonds (and similar maturities) in Japan has not been liquid for most of the time period covered. We should note that the discount rate is an official lending rate for banks in Japan, which implies it is generally lower than a market rate.

Unlike many studies (see inter alia Mukherjee and Naka (1995) and Maysami and Koh (2000)) we do not include the exchange rate as an explanatory variable. We reason the domestic economy should adjust to currency developments and thus reflect the impact of foreign income due to firms' exports measured in domestic currency over the medium run. Additionally, the white paper on the Japanese economy in 1993, published by the Japanese Economic Planning Agency, pointed out that the boom in the economy during the late 1980s was driven by domestic demand rather than exports (Government of Japan, 1993).

In contrast to our study, many researchers have based their analysis on business cycle variables or stock market valuation measures such as the term spread or default spread for the former category or dividend yield or earnings yield for the latter.
Examples of those papers include Black, Fraser and MacDonald (1997); Campbell and Hamao (1992); Chen, Roll and Ross (1986); Cochran, DeFina and Mills (1993); Fama (1990); Fama and French (1989); Harvey, Solnik and Zhou (2002) and Schwert (1990). These variables are usually found to be stationary and, as we plan to model long term equilibrium using non-stationary variables, we do not include them in our model.

As McAdam (2003) has confirmed, the US economy has been characterized by more frequent but less significant downturns relative to those suffered by the Japanese economy. This might be explained by a more capital and export orientated Japanese economy relative to the US. We might therefore expect higher relative volatility in corporate cash flows and hence also in Japanese share prices. A priori, therefore, share prices in Japan may be more sensitive to changes in industrial production, although the greater relative volatility may also influence the estimated coefficient standard errors in any regression equation. However, previous research (see Binswanger, 2000) has found that although both stock markets move positively with economic output, the coefficient for output on equity returns tends to be larger for the US data relative to the Japanese data. Campbell and Hamao (1992) also find smaller positive coefficients for the dividend price ratio and the long-short interest rate spread on stock market returns in Japan relative to the US in a sample covering monthly data from 1971 to 1990. Thus the intuitive expectation of higher coefficients in Japan due to higher capital and export exposure has not been confirmed empirically in existing research.
Japan's banking crisis and subsequent asset deflation during the 1990s could have changed significantly the influence of a number of variables, particularly the interest rate and money supply.

To our knowledge, there has not been any empirical study of the present value model in Japan covering the period after the early 1990s, and thus the severe downturn with low economic growth and deflation. Existing studies of the Japanese stock market before 1990 include Brown and Otsuki (1990), Elton and Gruber (1988), and Hamao (1988), although these papers mainly consider stationary business cycle variables and risk factors and therefore cannot give an indication of the empirical relationships we might expect to find in our model.

In this paper we compare the US and Japan over the period January 1965 until June 2005. The use of monthly data gives the opportunity to analyse a very rich data set; to our knowledge, earlier papers have only analysed shorter periods or have made use of a lower data frequency. This allows us to include the impact of the historically high volatility of both stock markets. The US stock market showed very high returns between 1993 and 1999, while from 2000 until 2003 returns were very large and negative. In the Japanese stock market during the period from 1980 through to 1990, returns were in the main large and positive, while they tended to be large and negative for most of the years between 1990 and 2003. The impact of recent problems in the Japanese banking sector is also captured in our data (see Government of Japan, 1993). Most existing research has been applied to US data and very little is known about the differences between US and Japanese stock market valuation.
This paper investigates the differences and common patterns in both countries in order to verify whether the same variables that explain aggregate stock market movement in the US can also be used to do so in Japan.

Conclusion

In order to achieve a deeper understanding of long term stock market movements, a comparison of the US and Japanese stock markets, using monthly data over the last 40 years, has been conducted. Using US data we found evidence of a single cointegrating vector between stock prices, industrial production, inflation and the long interest rate. The coefficients from the cointegrating vector, normalised on the stock price, suggested US stock prices were influenced, as expected, positively by industrial production and negatively by inflation and the long interest rate. However, we found the money supply had an insignificant influence over the stock price. In Japan, we found two cointegrating vectors. One, normalised on the stock price, provided evidence that stock prices are positively related to industrial production but negatively related to the money supply. We also found for our second vector, normalised on industrial production, that industrial production was negatively related to the interest rate and the rate of inflation. An explanation of the difference in behaviour between the two stock markets may lie in Japan's slump after 1990 and its consequent liquidity trap of the late 1990s and early 21st century.

Wu'an No. 1 Middle School, Wu'an, Handan, Hebei Province: Senior 2 first-semester midterm English exam (October), 2024-2025 academic year

I. Listening: multiple choice

1. What does the woman hurry to do?
A. Go to the classroom. B. Catch the bus. C. Go up one floor.

2. What does the woman think of the medicine?
A. It doesn't work. B. It makes her tired. C. It makes her have no appetite.

3. How many cookies were left?
A. Three. B. Four. C. Ten.

4. What may the speakers do next?
A. Have a conference. B. Exchange seats. C. Take the fight.

5. Where might the speakers be?
A. In a library. B. At the man's home. C. In a supermarket.

Listen to the following longer conversation and answer the questions below.

6. What does the woman like about the old design?
A. The red walls. B. The piano. C. The floor.

7. What will the speakers do next?
A. Play the piano. B. Have something to eat. C. Enjoy the live music.

Listen to the following longer conversation and answer the questions below.

8. What are the speakers doing?
A. Having a party. B. Travelling to France. C. Watching a movie.

9. Who is the woman speaking to?
A. Her brother. B. Her friend. C. Her father.

Listen to the following longer conversation and answer the questions below.

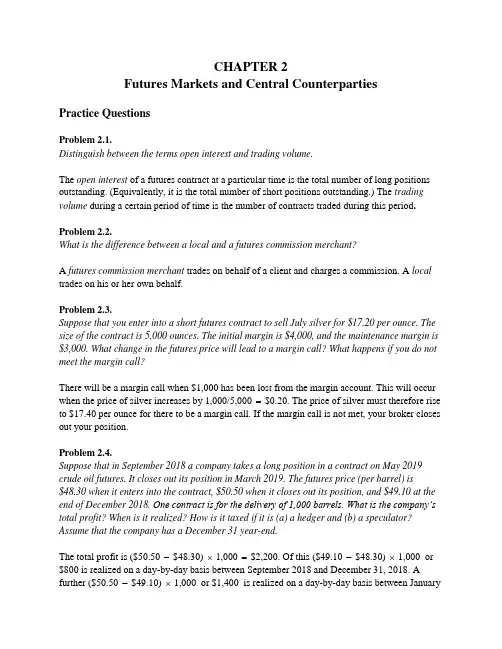

CHAPTER 2
Futures Markets and Central Counterparties

Practice Questions

Problem 2.1.
Distinguish between the terms open interest and trading volume.

The open interest of a futures contract at a particular time is the total number of long positions outstanding. (Equivalently, it is the total number of short positions outstanding.) The trading volume during a certain period of time is the number of contracts traded during this period.

Problem 2.2.
What is the difference between a local and a futures commission merchant?

A futures commission merchant trades on behalf of a client and charges a commission. A local trades on his or her own behalf.

Problem 2.3.
Suppose that you enter into a short futures contract to sell July silver for $17.20 per ounce. The size of the contract is 5,000 ounces. The initial margin is $4,000, and the maintenance margin is $3,000. What change in the futures price will lead to a margin call? What happens if you do not meet the margin call?

There will be a margin call when $1,000 has been lost from the margin account. This will occur when the price of silver increases by 1,000/5,000 = $0.20. The price of silver must therefore rise to $17.40 per ounce for there to be a margin call. If the margin call is not met, your broker closes out your position.

Problem 2.4.
Suppose that in September 2018 a company takes a long position in a contract on May 2019 crude oil futures. It closes out its position in March 2019. The futures price (per barrel) is $48.30 when it enters into the contract, $50.50 when it closes out its position, and $49.10 at the end of December 2018. One contract is for the delivery of 1,000 barrels. What is the company's total profit? When is it realized? How is it taxed if it is (a) a hedger and (b) a speculator? Assume that the company has a December 31 year-end.

The total profit is ($50.50 - $48.30) × 1,000 = $2,200. Of this ($49.10 - $48.30) × 1,000 or $800 is realized on a day-by-day basis between September 2018 and December 31, 2018.
A further ($50.50 - $49.10) × 1,000 or $1,400 is realized on a day-by-day basis between January 1, 2019, and March 2019. A hedger would be taxed on the whole profit of $2,200 in 2019. A speculator would be taxed on $800 in 2018 and $1,400 in 2019.

Problem 2.5.
What does a stop order to sell at $2 mean? When might it be used? What does a limit order to sell at $2 mean? When might it be used?

A stop order to sell at $2 is an order to sell at the best available price once a price of $2 or less is reached. It could be used to limit the losses from an existing long position. A limit order to sell at $2 is an order to sell at a price of $2 or more. It could be used to instruct a broker that a short position should be taken, providing it can be done at a price at least as favorable as $2.

Problem 2.6.
What is the difference between the operation of the margin accounts administered by a clearing house and those administered by a broker?

The margin account administered by the clearing house is marked to market daily, and the clearing house member is required to bring the account back up to the prescribed level daily. The margin account administered by the broker is also marked to market daily. However, the account does not have to be brought up to the initial margin level on a daily basis. It has to be brought up to the initial margin level when the balance in the account falls below the maintenance margin level. The maintenance margin is usually about 75% of the initial margin.

Problem 2.7.
What differences exist in the way prices are quoted in the foreign exchange futures market, the foreign exchange spot market, and the foreign exchange forward market?

In futures markets, prices are quoted as the number of U.S. dollars per unit of foreign currency. Spot and forward rates are quoted in this way for the British pound, euro, Australian dollar, and New Zealand dollar. For other major currencies, spot and forward rates are quoted as the number of units of foreign currency per U.S.
dollar.

Problem 2.8.
The party with a short position in a futures contract sometimes has options as to the precise asset that will be delivered, where delivery will take place, when delivery will take place, and so on. Do these options increase or decrease the futures price? Explain your reasoning.

These options make the contract less attractive to the party with the long position and more attractive to the party with the short position. They therefore tend to reduce the futures price.

Problem 2.9.
What are the most important aspects of the design of a new futures contract?

The most important aspects of the design of a new futures contract are the specification of the underlying asset, the size of the contract, the delivery arrangements, and the delivery months.

Problem 2.10.
Explain how margin accounts protect futures traders against the possibility of default.

Margin is money deposited by a trader with his or her broker. It acts as a guarantee that the trader can cover any losses on the futures contract. The balance in the margin account is adjusted daily to reflect gains and losses on the futures contract. If losses lead to the balance in the margin account falling below a certain level, the trader is required to deposit a further margin. This system makes it unlikely that the trader will default. A similar system of margin accounts makes it unlikely that the trader's broker will default on the contract it has with the clearing house member and unlikely that the clearing house member will default with the clearing house.

Problem 2.11.
A trader buys two July futures contracts on frozen orange juice concentrate. Each contract is for the delivery of 15,000 pounds. The current futures price is 160 cents per pound, the initial margin is $6,000 per contract, and the maintenance margin is $4,500 per contract. What price change would lead to a margin call?
Under what circumstances could $2,000 be withdrawn from the margin account?

There is a margin call if more than $1,500 is lost on one contract. This happens if the futures price of frozen orange juice falls by more than 10 cents to below 150 cents per pound. $2,000 can be withdrawn from the margin account if there is a gain on one contract of $1,000. This will happen if the futures price rises by 6.67 cents to 166.67 cents per pound.

Problem 2.12.
Show that, if the futures price of a commodity is greater than the spot price during the delivery period, then there is an arbitrage opportunity. Does an arbitrage opportunity exist if the futures price is less than the spot price? Explain your answer.

If the futures price is greater than the spot price during the delivery period, an arbitrageur buys the asset, shorts a futures contract, and makes delivery for an immediate profit. If the futures price is less than the spot price during the delivery period, there is no similar perfect arbitrage strategy. An arbitrageur can take a long futures position but cannot force immediate delivery of the asset. The decision on when delivery will be made is made by the party with the short position. Nevertheless companies interested in acquiring the asset may find it attractive to enter into a long futures contract and wait for delivery to be made.

Problem 2.13.
Explain the difference between a market-if-touched order and a stop order.

A market-if-touched order is executed at the best available price after a trade occurs at a specified price or at a price more favorable than the specified price.
A stop order is executed at the best available price after there is a bid or offer at the specified price or at a price less favorable than the specified price.

Problem 2.14.
Explain what a stop-limit order to sell at 20.30 with a limit of 20.10 means.

A stop-limit order to sell at 20.30 with a limit of 20.10 means that as soon as there is a bid at 20.30 the contract should be sold providing this can be done at 20.10 or a higher price.

Problem 2.15.
At the end of one day a clearing house member is long 100 contracts, and the settlement price is $50,000 per contract. The original margin is $2,000 per contract. On the following day the member becomes responsible for clearing an additional 20 long contracts, entered into at a price of $51,000 per contract. The settlement price at the end of this day is $50,200. How much does the member have to add to its margin account with the exchange clearing house?

The clearing house member is required to provide 20 × $2,000 = $40,000 as initial margin for the new contracts. There is a gain of (50,200 - 50,000) × 100 = $20,000 on the existing contracts. There is also a loss of (51,000 - 50,200) × 20 = $16,000 on the new contracts. The member must therefore add

40,000 - 20,000 + 16,000 = $36,000

to the margin account.

Problem 2.16.
Explain why collateral requirements will increase in the OTC market as a result of new regulations introduced since the 2008 credit crisis.

Regulations require most standard OTC transactions entered into between financial institutions to be cleared by CCPs. These have initial and variation margin requirements similar to exchanges. There is also a requirement that initial and variation margin be provided for most bilaterally cleared OTC transactions between financial institutions.

As more transactions go through CCPs there will be more netting. (Transactions with counterparty A can be netted against transactions with counterparty B providing both are cleared through the same CCP.)
This will tend to offset the increase in collateral somewhat. However, it is expected that on balance there will be collateral increases.

Problem 2.17.
The forward price on the Swiss franc for delivery in 45 days is quoted as 1.1000. The futures price for a contract that will be delivered in 45 days is 0.9000. Explain these two quotes. Which is more favorable for a trader wanting to sell Swiss francs?

The 1.1000 forward quote is the number of Swiss francs per dollar. The 0.9000 futures quote is the number of dollars per Swiss franc. When quoted in the same way as the futures price the forward price is 1/1.1000 = 0.9091. The Swiss franc is therefore more valuable in the forward market than in the futures market. The forward market is therefore more attractive for a trader wanting to sell Swiss francs.

Problem 2.18.
Suppose you call your broker and issue instructions to sell one July hogs contract. Describe what happens.

Live hog futures are traded by the CME Group. The broker will request some initial margin. The order will be relayed by telephone to your broker's trading desk on the floor of the exchange (or to the trading desk of another broker). It will then be sent by messenger to a commission broker who will execute the trade according to your instructions. Confirmation of the trade eventually reaches you. If there are adverse movements in the futures price your broker may contact you to request additional margin.

Problem 2.19.
"Speculation in futures markets is pure gambling. It is not in the public interest to allow speculators to trade on a futures exchange." Discuss this viewpoint.

Speculators are important market participants because they add liquidity to the market.
However, regulators generally only approve contracts when they are likely to be of interest to hedgers as well as speculators.

Problem 2.20.
Explain the difference between bilateral and central clearing for OTC derivatives.

In bilateral clearing the two sides enter into a master agreement covering all outstanding transactions. The agreement defines the circumstances under which transactions can be closed out by one side, how transactions will be valued if there is a close out, how the collateral required by each side is calculated, and so on. In central clearing a CCP stands between the two sides in the same way that an exchange clearing house stands between two sides for transactions entered into on an exchange. The CCP requires initial and variation margin.

Problem 2.21.
What do you think would happen if an exchange started trading a contract in which the quality of the underlying asset was incompletely specified?

The contract would not be a success. Parties with short positions would hold their contracts until delivery and then deliver the cheapest form of the asset. This might well be viewed by the party with the long position as garbage! Once news of the quality problem became widely known no one would be prepared to buy the contract. This shows that futures contracts are feasible only when there are rigorous standards within an industry for defining the quality of the asset. Many futures contracts have in practice failed because of the problem of defining quality.

Problem 2.22.
"When a futures contract is traded on the floor of the exchange, it may be the case that the open interest increases by one, stays the same, or decreases by one." Explain this statement.

If both sides of the transaction are entering into a new contract, the open interest increases by one. If both sides of the transaction are closing out existing positions, the open interest decreases by one.
If one party is entering into a new contract while the other party is closing out an existing position, the open interest stays the same.

Problem 2.23.
Suppose that on October 24, 2018, a company sells one April 2019 live-cattle futures contract. It closes out its position on January 21, 2019. The futures price (per pound) is 121.20 cents when it enters into the contract, 118.30 cents when it closes out its position, and 118.80 cents at the end of December 2018. One contract is for the delivery of 40,000 pounds of cattle. What is the total profit? How is it taxed if the company is (a) a hedger and (b) a speculator? Assume that the company has a December 31 year end.

The total profit is

40,000 × (1.2120 - 1.1830) = $1,160

If the company is a hedger this is all taxed in 2019. If it is a speculator

40,000 × (1.2120 - 1.1880) = $960

is taxed in 2018 and

40,000 × (1.1880 - 1.1830) = $200

is taxed in 2019.

Problem 2.24.
A cattle farmer expects to have 120,000 pounds of live cattle to sell in three months. The live-cattle futures contract traded by the CME Group is for the delivery of 40,000 pounds of cattle. How can the farmer use the contract for hedging? From the farmer's viewpoint, what are the pros and cons of hedging?

The farmer can short 3 contracts that have 3 months to maturity. If the price of cattle falls, the gain on the futures contract will offset the loss on the sale of the cattle. If the price of cattle rises, the gain on the sale of the cattle will be offset by the loss on the futures contract. Using futures contracts to hedge has the advantage that the farmer can greatly reduce the uncertainty about the price that will be received. Its disadvantage is that the farmer no longer gains from favorable movements in cattle prices.

Problem 2.25.
It is July 2017. A mining company has just discovered a small deposit of gold. It will take six months to construct the mine. The gold will then be extracted on a more or less continuous basis for one year.
Futures contracts on gold are available with delivery months every two months from August 2017 to December 2018. Each contract is for the delivery of 100 ounces. Discuss how the mining company might use futures markets for hedging.

The mining company can estimate its production on a month by month basis. It can then short futures contracts to lock in the price received for the gold. For example, if a total of 3,000 ounces are expected to be produced in September 2018 and October 2018, the price received for this production can be hedged by shorting 30 October 2018 contracts.

Problem 2.26.
Explain how CCPs work. What are the advantages to the financial system of requiring CCPs to be used for all standard derivatives transactions between financial institutions?

A CCP stands between the two parties in an OTC derivative transaction in much the same way that a clearing house does for exchange-traded contracts. It absorbs the credit risk but requires initial and variation margin from each side. In addition, CCP members are required to contribute to a default fund. The advantage to the financial system is that there is a lot more collateral (i.e., margin) available and it is therefore much less likely that a default by one major participant in the derivatives market will lead to losses by other market participants. The disadvantage is that CCPs are replacing banks as the too-big-to-fail entities in the financial system. There clearly needs to be careful oversight of the management of CCPs.

Further Questions

Problem 2.27.
Trader A enters into futures contracts to buy 1 million euros for 1.1 million dollars in three months. Trader B enters into a forward contract to do the same thing. The exchange rate (dollars per euro) declines sharply during the first two months and then increases for the third month to close at 1.1300. Ignoring daily settlement, what is the total profit of each trader?
When the impact of daily settlement is taken into account, which trader does better?

The total profit of each trader in dollars is 0.03 × 1,000,000 = 30,000. Trader B’s profit is realized at the end of the three months. Trader A’s profit is realized day-by-day during the three months. Substantial losses are made during the first two months and profits are made during the final month. It is likely that Trader B has done better because Trader A had to finance its losses during the first two months.

Problem 2.28.
Explain what is meant by open interest. Why does the open interest usually decline during the month preceding the delivery month? On a particular day, there were 2,000 trades in a particular futures contract. This means that there were 2,000 buyers (going long) and 2,000 sellers (going short). Of the 2,000 buyers, 1,400 were closing out positions and 600 were entering into new positions. Of the 2,000 sellers, 1,200 were closing out positions and 800 were entering into new positions. What is the impact of the day's trading on open interest?

Open interest is the number of contracts outstanding. Many traders close out their positions just before the delivery month is reached. This is why the open interest declines during the month preceding the delivery month. The open interest went down by 600. We can see this in two ways. First, 1,400 shorts closed out and there were 800 new shorts. Second, 1,200 longs closed out and there were 600 new longs.

Problem 2.29.
One orange juice futures contract is on 15,000 pounds of frozen concentrate. Suppose that in September 2017 a company sells a March 2019 orange juice futures contract for 120 cents per pound. At the end of December 2017 the futures price is 140 cents; at the end of December 2018 the futures price is 110 cents; and in February 2019 it is closed out at 125 cents. The company has a December 31 year end. What is the company's profit or loss on the contract? How is it realized?
What is the accounting and tax treatment of the transaction if the company is classified as a) a hedger and b) a speculator?

The price goes up during the time the company holds the contract from 120 to 125 cents per pound. Overall the company therefore takes a loss of 15,000 × 0.05 = $750. If the company is classified as a hedger this loss is realized in 2019. If it is classified as a speculator it realizes a loss of 15,000 × 0.20 = $3,000 in 2017, a gain of 15,000 × 0.30 = $4,500 in 2018, and a loss of 15,000 × 0.15 = $2,250 in 2019.

Problem 2.30.
A company enters into a short futures contract to sell 5,000 bushels of wheat for 750 cents per bushel. The initial margin is $3,000 and the maintenance margin is $2,000. What price change would lead to a margin call? Under what circumstances could $1,500 be withdrawn from the margin account?

There is a margin call if $1,000 is lost on the contract. This will happen if the price of wheat futures rises by 20 cents from 750 cents to 770 cents per bushel. $1,500 can be withdrawn if the futures price falls by 30 cents to 720 cents per bushel.

Problem 2.31.
Suppose that there are no storage costs for crude oil and the interest rate for borrowing or lending is 4% per annum. How could you make money if the June and December futures contracts for a particular year trade at $50 and $56?

You could go long one June oil contract and short one December contract. In June you take delivery of the oil, borrowing $50 per barrel at 4% to meet cash outflows. The interest accumulated in six months is about 50 × 0.04 × 1/2 or $1 per barrel. In December the oil is sold for $56 per barrel, which is more than the $51 that has to be repaid on the loan. The strategy therefore leads to a profit. Note that this profit is independent of the actual price of oil in June and December.
It will be slightly affected by the daily settlement procedures.

Problem 2.32.
What position is equivalent to a long forward contract to buy an asset at K on a certain date and a put option to sell it for K on that date?

The long forward contract provides a payoff of \(S_T - K\) where \(S_T\) is the asset price on the date and \(K\) is the delivery price. The put option provides a payoff of \(\max(K - S_T, 0)\). If \(S_T > K\) the sum of the two payoffs is \(S_T - K\). If \(S_T < K\) the sum of the two payoffs is 0. The combined payoff is therefore \(\max(S_T - K, 0)\). This is the payoff from a call option. The equivalent position is therefore a call option.

Problem 2.33.
A company has derivatives transactions with Banks A, B, and C which are worth +$20 million, −$15 million, and −$25 million, respectively, to the company. How much margin or collateral does the company have to provide in each of the following two situations?
a) The transactions are cleared bilaterally and are subject to one-way collateral agreements where the company posts variation margin, but no initial margin. The banks do not have to post collateral.
b) The transactions are cleared centrally through the same CCP and the CCP requires a total initial margin of $10 million.

If the transactions are cleared bilaterally, the company has to provide collateral to Banks A, B, and C of (in millions of dollars) 0, 15, and 25, respectively. The total collateral required is $40 million. If the transactions are cleared centrally they are netted against each other and the company’s total variation margin (in millions of dollars) is −20 + 15 + 25 or $20 million in total. The total margin required (including the initial margin) is therefore $30 million.

Problem 2.34.
A bank’s derivatives transactions with a counterparty are worth +$10 million to the bank and are cleared bilaterally.
The counterparty has posted $10 million of cash collateral. What credit exposure does the bank have?

The counterparty may stop posting collateral and some time will then elapse before the bank is able to close out the transactions. During that time the transactions may move in the bank’s favor, increasing its exposure. Note that the bank is likely to have hedged the transactions and will incur a loss on the hedge if the transactions move in the bank’s favor. For example, if the transactions change in value from $10 to $13 million after the counterparty stops posting collateral, the bank loses $3 million on the hedge and will not necessarily realize an offsetting gain on the transactions.

Problem 2.35. (Excel file)
The author’s Web page (www-2.rotman.utoronto.ca/~hull/data) contains daily closing prices for a crude oil futures contract and a gold futures contract. You are required to download the data for crude oil and answer the following:
(a) Assuming that daily price changes are normally distributed with zero mean, estimate the standard deviation of daily price changes. Calculate the standard deviation of two-day changes from the standard deviation of one-day changes assuming that changes are independent.
(b) Suppose that an exchange wants to set the margin requirement for a member with a long position in one contract so that it is 99% certain that the margin will not be wiped out by a two-day price move. (It chooses two days because it considers that it can take two days to close out a defaulting member.) How high does the margin have to be when the normal distribution assumption is made? Each contract is on 1,000 barrels of oil.
(c) Use the data to determine how often the margin of the member would actually be wiped out by a two-day price move. What do your results suggest about the appropriateness of the normal distribution assumption?
(d) Suppose that for retail clients the maintenance margin is equal to the amount calculated in (b) and is 75% of the initial margin.
How frequently would the balance in the account of a client with a long position be negative immediately before a margin payment is due (so that the client has an incentive to default)? Assume that balances in excess of the initial margin are withdrawn by the client.

(a) The standard deviation of one-day price changes is $1,577.7 per contract. The standard deviation of two-day price changes is 1,577.7 × √2 = $2,231.2 per contract.
(b) Margin for member = $2,231.2 × 2.326 = $5,190.6
(c) Worksheet shows that the margin would be wiped out 24 times or on 2.31% of the days. This suggests that price changes have heavier tails than the normal distribution.
(d) Worksheet shows that there would be 157 margin calls and the client has an incentive to default 9 times.
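The margin figure in part (b) can be reproduced with a short calculation. This is a minimal sketch, assuming the one-day standard deviation is $1,577.7 per contract (the value consistent with the $2,231.2 two-day figure used in (b)) and that daily changes are independent and normally distributed with zero mean. The function name is illustrative and is not taken from the worksheet.

```python
from math import sqrt
from statistics import NormalDist


def two_day_margin(one_day_sd: float, confidence: float = 0.99) -> float:
    """Margin that a two-day loss exceeds with probability 1 - confidence."""
    # Independent daily changes: variances add, so the SD scales by sqrt(2).
    two_day_sd = one_day_sd * sqrt(2)
    # One-sided normal quantile, about 2.326 at the 99% level.
    z = NormalDist().inv_cdf(confidence)
    return two_day_sd * z


one_day_sd = 1577.7  # dollars per contract (assumed one-day figure)
print(f"Two-day SD: {one_day_sd * sqrt(2):,.1f}")
print(f"Margin:     {two_day_margin(one_day_sd):,.1f}")
```

Using the exact normal quantile rather than the rounded 2.326 gives a margin of roughly $5,190.6 per contract, in line with the answer to (b).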

Forward exchange rate

Not to be confused with forward rate or forward price.

The forward exchange rate (also referred to as forward rate or forward price) is the exchange rate at which a bank agrees to exchange one currency for another at a future date when it enters into a forward contract with an investor.[1][2][3] Multinational corporations, banks, and other financial institutions enter into forward contracts to take advantage of the forward rate for hedging purposes.[1] The forward exchange rate is determined by a parity relationship among the spot exchange rate and differences in interest rates between two countries, which reflects an economic equilibrium in the foreign exchange market under which arbitrage opportunities are eliminated. When in equilibrium, and when interest rates vary across two countries, the parity condition implies that the forward rate includes a premium or discount reflecting the interest rate differential. Forward exchange rates have important theoretical implications for forecasting future spot exchange rates. Financial economists have put forth a hypothesis that the forward rate accurately predicts the future spot rate, for which empirical evidence is mixed.

1 Introduction

The forward exchange rate is the rate at which a commercial bank is willing to commit to exchange one currency for another at some specified future date.[1] The forward exchange rate is a type of forward price. It is the exchange rate negotiated today between a bank and a client upon entering into a forward contract agreeing to buy or sell some amount of foreign currency in the future.[2][3] Multinational corporations and financial institutions often use the forward market to hedge future payables or receivables denominated in a foreign currency against foreign exchange risk by using a forward contract to lock in a forward exchange rate. Hedging with forward contracts is typically used for larger transactions, while futures contracts are used for smaller transactions. This is
due to the customization afforded to banks by forward contracts traded over-the-counter, versus the standardization of futures contracts which are traded on an exchange.[1] Banks typically quote forward rates for major currencies in maturities of one, three, six, nine, or twelve months; in some cases, however, quotations for greater maturities are available up to five or ten years.[2]

2 Relation to covered interest rate parity

Covered interest rate parity is a no-arbitrage condition in foreign exchange markets which depends on the availability of the forward market. It can be rearranged to give the forward exchange rate as a function of the other variables. The forward exchange rate depends on three known variables: the spot exchange rate, the domestic interest rate, and the foreign interest rate. This effectively means that the forward rate is the price of a forward contract, which derives its value from the pricing of spot contracts and the addition of information on available interest rates.[4]

The following equation represents covered interest rate parity, a condition under which investors eliminate exposure to foreign exchange risk (unanticipated changes in exchange rates) with the use of a forward contract: the exchange rate risk is effectively covered. Under this condition, a domestic investor would earn equal returns from investing in domestic assets or converting currency at the spot exchange rate, investing in foreign currency assets in a country with a different interest rate, and exchanging the foreign currency for domestic currency at the negotiated forward exchange rate. Investors will be indifferent to the interest rates on deposits in these countries due to the equilibrium resulting from the forward exchange rate. The condition allows for no arbitrage opportunities because the return on domestic deposits, \(1 + i_d\), is equal to the return on foreign deposits, \(\frac{S}{F}(1 + i_f)\). If these two returns weren't equalized by the use of a forward contract, there would be a potential
arbitrage opportunity in which, for example, an investor could borrow currency in the country with the lower interest rate, convert to the foreign currency at today’s spot exchange rate, and invest in the foreign country with the higher interest rate.[4]

\[ (1 + i_d) = \frac{S}{F}(1 + i_f) \]

where
\(F\) is the forward exchange rate
\(S\) is the current spot exchange rate
\(i_d\) is the interest rate in domestic currency (base currency)
\(i_f\) is the interest rate in foreign currency (quoted currency)

This equation can be arranged such that it solves for the forward rate:

\[ F = S\,\frac{(1 + i_f)}{(1 + i_d)} \]

3 Forward premium or discount

The equilibrium that results from the relationship between forward and spot exchange rates within the context of covered interest rate parity is responsible for eliminating or correcting for market inefficiencies that would create potential for arbitrage profits. As such, arbitrage opportunities are fleeting. In order for this equilibrium to hold under differences in interest rates between two countries, the forward exchange rate must generally differ from the spot exchange rate, such that a no-arbitrage condition is sustained. Therefore, the forward rate is said to contain a premium or discount, reflecting the interest rate differential between two countries. The following equations demonstrate how the forward premium or discount is calculated.[1][2]

The forward exchange rate differs by a premium or discount of the spot exchange rate:

\[ F = S(1 + P) \]

where
\(P\) is the premium (if positive) or discount (if negative)

The equation can be rearranged as follows to solve for the forward premium/discount:

\[ P = \frac{F}{S} - 1 \]

In practice, forward premiums and discounts are quoted as annualized percentage deviations from the spot exchange rate, in which case it is necessary to account for the number of days to delivery as in the following example.[2]

\[ P_N = \left(\frac{F}{S} - 1\right)\frac{360}{d} \]

where
\(N\) represents the maturity of a given forward exchange rate quote
\(d\) represents the number of days to delivery

For example, to calculate
the 6-month forward premium or discount for the euro versus the dollar deliverable in 30 days, given a spot rate quote of 1.2238 $/€ and a 6-month forward rate quote of 1.2260 $/€:

\[ P_6 = \left(\frac{1.2260}{1.2238} - 1\right)\frac{360}{30} = 0.021572 = 2.16\% \]

The resulting 0.021572 is positive, so one would say that the euro is trading at a 0.021572 or 2.16% premium against the dollar for delivery in 30 days. Conversely, if one were to work this example in euro terms rather than dollar terms, the perspective would be reversed and one would say that the dollar is trading at a discount against the euro.

4 Forecasting future spot exchange rates

4.1 Unbiasedness hypothesis

The unbiasedness hypothesis states that given conditions of rational expectations and risk neutrality, the forward exchange rate is an unbiased predictor of the future spot exchange rate. Without introducing a foreign exchange risk premium (due to the assumption of risk neutrality), the following equation illustrates the unbiasedness hypothesis.[3][5][6][7]

\[ F_t = E_t(S_{t+k}) \]

where
\(F_t\) is the forward exchange rate at time \(t\)
\(E_t(S_{t+k})\) is the expected future spot exchange rate at time \(t + k\)
\(k\) is the number of periods into the future from time \(t\)

The empirical rejection of the unbiasedness hypothesis is a well-recognized puzzle among finance researchers.
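The forward premium example above, together with the covered interest rate parity formula from Section 2, can be checked numerically. The sketch below follows the article's convention \(F = S(1 + i_f)/(1 + i_d)\) and its 360-day annualization. The function names and the interest rates passed to the first call are illustrative assumptions, not values from the text.

```python
def forward_rate(spot: float, i_domestic: float, i_foreign: float) -> float:
    """Forward rate implied by covered interest parity: F = S (1 + i_f) / (1 + i_d)."""
    return spot * (1 + i_foreign) / (1 + i_domestic)


def annualized_premium(forward: float, spot: float, days: int) -> float:
    """Annualized forward premium (positive) or discount (negative), 360-day basis."""
    return (forward / spot - 1) * 360 / days


# Hypothetical period interest rates, chosen only to exercise the parity formula.
f = forward_rate(spot=1.2238, i_domestic=0.02, i_foreign=0.03)
# Parity check: the domestic return equals the covered foreign return.
print(abs((1 + 0.02) - (1.2238 / f) * (1 + 0.03)) < 1e-12)

# The article's example: spot 1.2238 $/EUR, forward 1.2260 $/EUR, 30 days to delivery.
p = annualized_premium(forward=1.2260, spot=1.2238, days=30)
print(f"{p:.6f}")  # 0.021572
```

A positive result corresponds to a premium and a negative one to a discount, matching the sign convention for \(P\) given in Section 3.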
Empirical evidence for cointegration between the forward rate and the future spot rate is mixed.[5][8][9] Researchers have published papers demonstrating empirical failure of the hypothesis by conducting regression analyses of the realized changes in spot exchange rates on forward premiums and finding negative slope coefficients.[10] These researchers offer numerous rationales for such failure. One rationale centers around the relaxation of risk neutrality, while still assuming rational expectations, such that a foreign exchange risk premium may exist that can account for differences between the forward rate and the future spot rate.[11]

The following equation represents the forward rate as being equal to a future spot rate and a risk premium (not to be confused with a forward premium):[12]

\[ F_t = E_t(S_{t+1}) + P_t \]

The current spot rate can be introduced so that the equation solves for the forward-spot differential (the difference between the forward rate and the current spot rate):

\[ F_t - S_t = E_t(S_{t+1} - S_t) + P_t \]

Eugene Fama concluded that large positive correlations of the difference between the forward exchange rate and the current spot exchange rate signal variations over time in the premium component of the forward-spot differential \(F_t - S_t\) or in the forecast of the expected change in the spot exchange rate. Fama suggested that slope coefficients in the regressions of the difference between the forward rate and the future spot rate \(F_t - S_{t+1}\), and the expected change in the spot rate \(E_t(S_{t+1} - S_t)\), on the forward-spot differential \(F_t - S_t\) which are different from zero imply variations over time in both components of the forward-spot differential: the premium and the expected change in the spot rate.[12] A significant body of research sought to validate Fama’s findings empirically, ultimately finding that large variance in expected changes in the spot rate could only be accounted for by risk aversion coefficients that were deemed “unacceptably high.”[7][11] Other researchers
have found that the unbiasedness hypothesis has been rejected in both cases where there is evidence of risk premia varying over time and cases where risk premia are constant.[13]

Other rationales for the failure of the forward rate unbiasedness hypothesis include considering the conditional bias to be an exogenous variable explained by a policy aimed at smoothing interest rates and stabilizing exchange rates, or considering that an economy allowing for discrete changes could facilitate excess returns in the forward market. Some researchers have contested empirical failures of the hypothesis and have sought to explain conflicting evidence as resulting from contaminated data and even inappropriate selections of the time length of forward contracts.[11] Economists demonstrated that the forward rate could serve as a useful proxy for future spot exchange rates between currencies with liquidity premia that average out to zero during the onset of floating exchange rate regimes in the 1970s.[14] Research examining the introduction of endogenous breaks to test the structural stability of cointegrated spot and forward exchange rate time series has found some evidence to support forward rate unbiasedness in both the short and long term.[9]

5 See also

• Foreign exchange derivative

6 References

[1] Madura, Jeff (2007). International Financial Management: Abridged 8th Edition. Mason, OH: Thomson South-Western. ISBN 0-324-36563-2.
[2] Eun, Cheol S.; Resnick, Bruce G. (2011). International Financial Management, 6th Edition. New York, NY: McGraw-Hill/Irwin. ISBN 978-0-07-803465-7.
[3] Levi, Maurice D. (2005). International Finance, 4th Edition. New York, NY: Routledge. ISBN 978-0-415-30900-4.
[4] Feenstra, Robert C.; Taylor, Alan M. (2008). International Macroeconomics. New York, NY: Worth Publishers. ISBN 978-1-4292-0691-4.
[5] Delcoure, Natalya; Barkoulas, John; Baum, Christopher F.; Chakraborty, Atreya (2003). “The Forward Rate Unbiasedness Hypothesis Reexamined: Evidence from a New Test”. Global Finance
Journal 14 (1): 83–93. doi:10.1016/S1044-0283(03)00006-1. Retrieved 2011-06-21.
[6] Ho, Tsung-Wu (2003). “A re-examination of the unbiasedness forward rate hypothesis using dynamic SUR model”. The Quarterly Review of Economics and Finance 43 (3): 542–559. doi:10.1016/S1062-9769(02)00171-0. Retrieved 2011-06-23.
[7] Sosvilla-Rivero, Simón; Park, Young B. (1992). “Further tests on the forward exchange rate unbiasedness hypothesis”. Economics Letters 40 (3): 325–331. doi:10.1016/0165-1765(92)90013-O. Retrieved 2011-06-27.
[8] Moffett, Michael H.; Stonehill, Arthur I.; Eiteman, David K. (2009). Fundamentals of Multinational Finance, 3rd Edition. Boston, MA: Addison-Wesley. ISBN 978-0-321-54164-2.
[9] Villanueva, O. Miguel (2007). “Spot-forward cointegration, structural breaks and FX market unbiasedness”. International Financial Markets, Institutions & Money 17 (1): 58–78. doi:10.1016/j.intfin.2005.08.007. Retrieved 2011-06-22.
[10] Zivot, Eric (2000). “Cointegration and forward and spot exchange rate regressions”. Journal of International Money and Finance 19 (6): 785–812. doi:10.1016/S0261-5606(00)00031-0. Retrieved 2011-06-22.
[11] Diamandis, Panayiotis F.; Georgoutsos, Dimitris A.; Kouretas, Georgios P. (2008). “Testing the forward rate unbiasedness hypothesis during the 1920s”. International Financial Markets, Institutions & Money 18 (4): 358–373. doi:10.1016/j.intfin.2007.04.003. Retrieved 2011-06-23.
[12] Fama, Eugene F. (1984). “Forward and spot exchange rates”. Journal of Monetary Economics 14 (3): 319–338. doi:10.1016/0304-3932(84)90046-1. Retrieved 2011-06-20.
[13] Chatterjee, Devalina (2010). Three essays in forward rate unbiasedness hypothesis (Thesis). Utah State University. pp. 1–102. Retrieved 2012-06-21.
[14] Cornell, Bradford (1977). “Spot rates, forward rates and exchange market efficiency”. Journal of Financial Economics 5 (1): 55–65. doi:10.1016/0304-405X(77)90029-0. Retrieved 2011-06-28.

7 Text and image sources, contributors, and licenses

7.1 Text

• Forward exchange rate
Source: https:///wiki/Forward_exchange_rate?oldid=725209361 Contributors: Edward, Lowellian, Fintor, Rjwilmsi, Salix alba, BiH, Cydebot, TonyTheTiger, Magioladitis, Addbot, RjwilmsiBot, John Shandy`, ZéroBot, Helpful Pixie Bot, Phelmoxes, Gcodrut and Anonymous: 13

7.2 Images

7.3 Content license

• Creative Commons Attribution-Share Alike 3.0