Human Pose Estimation From Silhouettes A Consistent Approach Using Distance

- 格式:pdf

- 大小:222.66 KB

- 文档页数:9

(原)⼈体姿态识别alphapose转载请注明出处:论⽂RMPE: Regional Multi-Person Pose Estimation官⽅代码:官⽅pytorch代码:1. 简介该论⽂指出,定位和识别中不可避免的会出现错误,这些错误会引起单⼈姿态估计(single-person pose estimator,SPPE)的错误,特别是完全依赖⼈体检测的姿态估计算法。

因⽽该论⽂提出了区域姿态估计(Regional Multi-Person Pose Estimation,RMPE)框架。

主要包括symmetric spatial transformer network (SSTN)、Parametric Pose Non-Maximum-Suppression (NMS), 和Pose-Guided Proposals Generator (PGPG)。

并且使⽤symmetric spatial transformer network (SSTN)、deep proposals generator (DPG) 、parametric pose nonmaximum suppression (p-NMS) 三个技术来解决野外场景下多⼈姿态估计问题。

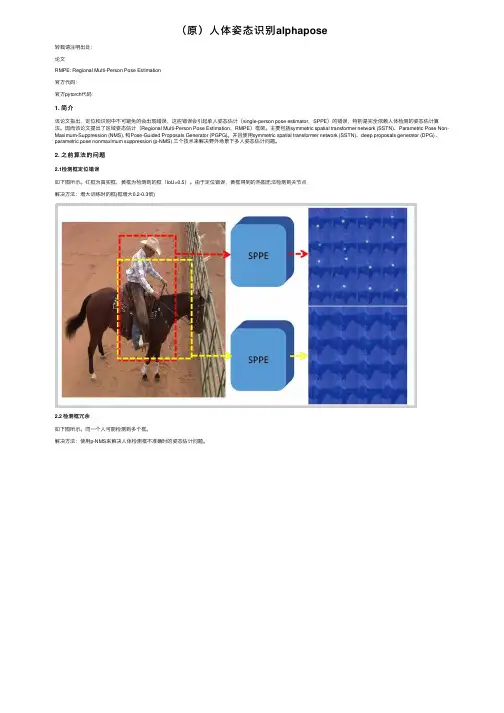

2. 之前算法的问题2.1检测框定位错误如下图所⽰。

红框为真实框,黄框为检测到的框(IoU>0.5)。

由于定位错误,黄框得到的热图⽆法检测到关节点解决⽅法:增⼤训练时的框(框增⼤0.2-0.3倍)2.2 检测框冗余如下图所⽰。

同⼀个⼈可能检测到多个框。

解决⽅法:使⽤p-NMS来解决⼈体检测框不准确时的姿态估计问题。

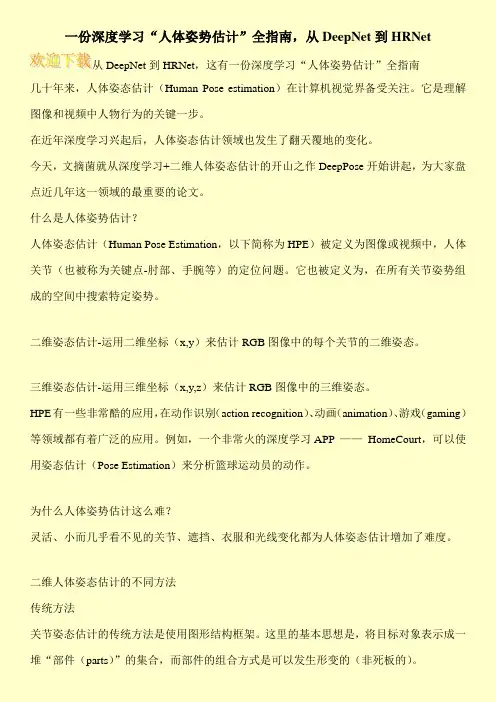

3. ⽹络结构3.1 总体结构总体⽹络结构如下图:Symmetric STN=STN+SPPE+SDTNSTN:空间变换⽹络,对于不准确的输⼊,得到准确的⼈的框。

输⼊候选区域,⽤于获取⾼质量的候选区域。

SPPE:得到估计的姿态。

SDTN:空间逆变换⽹络,将估计的姿态映射回原始的图像坐标。

一份深度学习“人体姿势估计”全指南,从DeepNet到HRNet 从DeepNet到HRNet,这有一份深度学习“人体姿势估计”全指南

几十年来,人体姿态估计(Human Pose estimation)在计算机视觉界备受关注。

它是理解图像和视频中人物行为的关键一步。

在近年深度学习兴起后,人体姿态估计领域也发生了翻天覆地的变化。

今天,文摘菌就从深度学习+二维人体姿态估计的开山之作DeepPose开始讲起,为大家盘点近几年这一领域的最重要的论文。

什么是人体姿势估计?

人体姿态估计(Human Pose Estimation,以下简称为HPE)被定义为图像或视频中,人体关节(也被称为关键点-肘部、手腕等)的定位问题。

它也被定义为,在所有关节姿势组成的空间中搜索特定姿势。

二维姿态估计-运用二维坐标(x,y)来估计RGB图像中的每个关节的二维姿态。

三维姿态估计-运用三维坐标(x,y,z)来估计RGB图像中的三维姿态。

HPE有一些非常酷的应用,在动作识别(action recognition)、动画(animation)、游戏(gaming)等领域都有着广泛的应用。

例如,一个非常火的深度学习APP ——HomeCourt,可以使用姿态估计(Pose Estimation)来分析篮球运动员的动作。

为什么人体姿势估计这么难?

灵活、小而几乎看不见的关节、遮挡、衣服和光线变化都为人体姿态估计增加了难度。

二维人体姿态估计的不同方法

传统方法

关节姿态估计的传统方法是使用图形结构框架。

这里的基本思想是,将目标对象表示成一堆“部件(parts)”的集合,而部件的组合方式是可以发生形变的(非死板的)。

(2条消息)2020CVPR人体姿态估计论文盘点Hey,今天总结盘点一下2020CVPR论文中涉及到人体姿态估计的论文。

人体姿态估计分为2D(6篇)和3D(11篇)两大类。

2D 人体姿态估计[1].UniPose: Unified Human Pose Estimation in Single Images and Videos作者 | Bruno Artacho, Andreas Savakis单位 | 罗切斯特理工学院摘要:我们提出了一个统一的人体姿态估计框架UniPose,它基于我们的“瀑布式”萎缩空间池架构,在多个姿态估计指标上取得了state-of-art结果。

单姿态合并率上下文分割和联合定位在一个阶段内估计人体姿态,精度高,不依赖统计后处理方法UniPose中的瀑布模块利用了级联结构中渐进式过滤的效率,绘制可与空间金字塔结构相媲美的多尺度视野。

此外,我们的方法扩展到单姿态LSTM进行多帧处理,并获得了视频中时间姿态估计的最新结果。

我们在多个数据集上的结果表明,具有ResNet主干网和瀑布模型的UniPose是一个健壮而有效的姿势估计体系结构,可获得单人姿势检测的state-of-the-art.一种不需要后处理的单人姿态估计方法,可扩展到视频[2].The Devil Is in the Details: Delving Into Unbiased Data Processing for Human Pose Estimation作者 | Junjie Huang, Zheng Zhu, Feng Guo, Guan Huang单位 | XForwardAI Technology Co.,Ltd;清华GitHub:https:///HuangJunJie2017/UDP-Pose摘要:近年来,自顶向下的姿态估计方法在人体姿态估计中占据主导地位。

据我们所知,数据处理作为训练和推理中的一个有趣的基本组成部分,并没有在姿态估计领域中得到系统的考虑。

人体姿态模型英文文献Unfortunately, I don't have access to the specific article database that you're referencing. However, I can provide you with a general outline and structure for an English literature review on human pose estimation models, which you can use as a starting point for your research. Please note that this is a general template, and you will need to conduct your own research and analysis to fill in the specific details and references.Title: A Review of Human Pose Estimation Models.Abstract: This article presents a comprehensive review of human pose estimation models, focusing on the recent advancements and challenges in this field. It discusses various techniques, including deep learning-based methods, traditional computer vision approaches, and their applications in real-world scenarios. The article also highlights the importance of pose estimation in areas such as human-computer interaction, sports analysis, andhealthcare.Introduction: Human pose estimation is a crucial task in computer vision that aims to detect and localize key body joints of a person in an image or video. It has applications in various domains, including action recognition, sports analysis, virtual reality, and healthcare. In recent years, significant progress has been made in this field, especially with the advent of deep learning techniques. This article aims to provide a comprehensive review of human pose estimation models, focusing on their principles, recent advancements, and potential challenges.Section 1: Principles of Human Pose Estimation.This section introduces the fundamental concepts and principles of human pose estimation. It explains the importance of keypoint detection and the challenges involved in accurately estimating pose in different scenarios.Section 2: Traditional Computer Vision Approaches.This section reviews traditional computer vision techniques used for human pose estimation. It discusses methods such as feature extraction, shape models, and optimization algorithms. It also highlights the limitations of these approaches and their inability to handle complex poses and backgrounds.Section 3: Deep Learning-Based Methods.This section presents a detailed overview of deep learning-based human pose estimation models. It covers convolutional neural networks (CNNs), recurrent neural networks (RNNs), and transformer-based architectures. It also discusses the advantages of these methods, such as their ability to learn complex representations and handle diverse poses and backgrounds.Section 4: Applications of Human Pose Estimation.This section explores the various applications ofhuman pose estimation in real-world scenarios. It covers areas such as human-computer interaction, sports analysis, virtual reality, and healthcare. It also discusses the potential impact of pose estimation in these domains and the challenges associated with their implementation.Section 5: Challenges and Future Directions.This section highlights the current challenges and future directions in human pose estimation research. It identifies areas such as robustness to occlusions, pose estimation in crowded scenes, and real-time performance as key areas for further exploration. It also discusses potential advancements in deep learning techniques and the integration of pose estimation with other computer vision tasks.Conclusion: Human pose estimation has emerged as a crucial task in computer vision, with significant progress made in recent years. Deep learning-based methods have demonstrated remarkable performance in estimating poses in diverse scenarios. However, there are still challenges tobe addressed, such as robustness to occlusions and real-time performance. Future research in this field is expected to bring further advancements in pose estimation techniques and their applications in various domains.This outline provides a general structure for a literature review on human pose estimation models. You can expand each section by adding more details, discussing specific models, techniques, and applications. Remember to include relevant references and citations to support your arguments and analysis.。

第38卷第3期__________________________计算机仿真_____________________________2021年3月文章编号:1006 -9348(2021 )03 -0292 -06基于S M P L模型的人体姿态估计李健,杨镖镖,张皓若(陕西科技大学电子信息与人工智能学院,陕西西安710021)摘要:针对目前人体形变模型中姿态估计算法容易出现误差、信息缺失等问题,提出一种利用深度相机获取的人体三维信息 来优化模型的方法。

通过深度相机I C i n e c t获取的三维骨架信息,与S M P L模型进行配准,修正原始的模型姿态,得到一个接近人体真实姿态的模型。

实验结果表明,融合人体三维信息后,模型的准确性得到一定程度上的提高。

关键词:形变模型;姿态估计;深度相机;三维骨架中图分类号:TP391 文献标识码:BH u m a n Pose Estimation Based on S M P L ModelLI Jian, YANG Biao - biao,ZHANG Hao - ruo(College of Electronic Information and Artificial Intelligence,Shaanxi University of Science and Technology,Xi'an Shanxi710021, China)A B S T R A C T:Aiming at the current pose estimation algorithms in human body deformation models that are prone t oerrors and lack of information,a method for optimizing the model i s proposed,using the three- dimensional information of the human body obtained by the depth camera.The three- dimensional skeleton information obtained by the depth camera Kinect was registered with the S M P L model,and the original model pose was corrected t o obtain a model close to the real pose of the human body.The experimental results show that the accuracy of the model i s improved to a certain extent after fusing the three- dimensional information of the human body.K E Y W O R D S:MoqDnable model;Pose estimation;Depthi引言三维人体建模一直以来都是三维重建研究领域一个比 较富有挑战性且比较重要的部分,其本质在于将现实世界中 的人体以三维数字化的方式在计算机中存储并表示。

以依赖联合解释变量估计身体姿势在这项工作中,我们根据静态图像估计2 d人类身体姿势。

近期非常成功的在解决这一任务的方法是依靠识别将可变形的部分集合于树模型。

在这样一个图形结构框架内,我们为获得好的模板,通过新的、非线性联合解释变量。

特别是,我们使用两层随机森林作为解释变量。

第一层作为独立的身体部位分类器。

第二层将第一个分布估计纳入考虑,从而将由相互依存的和同现的各部分建模。

这形成一个姿势估计框架,需要身体部位之间的依赖关系联合定位考虑,因而能够绕过树结构的不确定性,如腿和手臂。

在实验中,我们证明我们的身体部位联合解释变量比基于树的最先进的方法实现更高的依赖联合定位精度。

背景由于其应用的相关领域广,从静态图像估计一个人的姿势是很活跃的研究领域。

在这个领域最受欢迎的一个方法是图像结构框架,模型模拟使用一个树模型各个部分的空间关系。

图示结构在姿势模拟上改进了许多方面,如或模拟身体部位的模型。

在目标检测中,表现最好的方法之一是依赖于所谓的可变形模型,一部分使用混合物的恒星模型模板的部分。

最近有研究显示,混合物的模板也可以有效地一部分用于树模型,形成非常强大的姿势估计模型。

特别是,相反的建模转换一个部位模板的经典图形结构模型, 由不同的可变形模板编码身体的部分转换四肢。

虽然这种方法优于经典图片结构模型对人类姿势估计,它说明,使用模板,视窗扫描模板与线性向量机模板训练HOG的特性对噪声非常敏感,限制性能。

在这项研究中,我们因此在一个更好的图形结构背景下解决获取的问题。

同样地,我们没有明确模拟肢体的转换。

但是使用不明确的肢体姿势变化学习模板的处理。

相反,我们不使用噪音敏感、视窗扫描模板,而是提出非线性解释变量联合位置作为解释变量。

我们依靠随机森林, 从深度数据联合位置显示快速、健壮,和准确的预测或身体部分。

虽然以前的工作独立对所有身体部位模板并使用图形结构框架、模型空间和方向关系部分模板。

我们提出一个更有识别力的模板表示需要共生和关系在某种程度上考虑到其他地区,如图1。

2021年第40卷第2期传感器与微系统(Transducer and Microsystem Technologies)23DOI : 10.13873/J. 1000-9787(2021)02-0023-03基于深度学习的3D 时空特征融合步态识别赵黎明,张荣,张超越(宁波大学信息科学与工程学院,浙江宁波315211)摘 要:现有基于轮廓图的步态识别方法易受服装等外部条件干扰,而基于3D 模型的识别方法虽然一 定程度上抵抗了外部干扰,但对摄像设备有额外的要求,且模型计算复杂。

针对上述问题,利用3D 姿态 估讣技术,建立了行人运动的"轻"模型,利用神经网络框架,提取行人3D 空间运动的时空信息,并且与伦 廓图的信息相融合,进一步丰富了步态特征。

在CASIA-B 的数据集上的实验结果表明:融合了 3D 时空运 动信息增强了步态特征的鲁棒性,进一步提升了识别率。

关键词:深度学习;步态识别;3D 姿态;吋空特征融合中图分类号:TP391 文献标识码:A 文章编号:1000-9787(2021)02-0023-03Fusion of 3D spatiotemporal features for gait recognitionbased on deep learning **收稿日期:2020-11-20*基金项目:浙江省公益性技术研究项目(LGF18F020007,LGF21 F020008);宁波市自然科学基金资助项目(2018A610057,2018A610163)ZHAO Liming, ZHANG Rung, ZHANG Chaoyue(College of Information Science and Engineering ,Ningbo University ,Ningbo 315211,China)Abstract : The existing gait recognition methods based on silhouettes are easy to be interfered by clothing and other external conditions ・ Although the 3D model-based recognition method resists external interference to a certain extent , it has additional requirements of camera equipment and complicated model calculations. In order to solve the above problems," light" model for pedestrian motion is established by using 3D pose estimation technology ・ The spatiotemporal information of pedestrian 3D spatial motion is extracted by using neural network framework ,and the information is fused with the information of skeleton map to further enrich the gait features. The results on CASIA-B dataset show that the fusion of 3D spatiotemporal motion information enhances the robustness of gait features and further improves recog n ition rate.Keywords : deep learning ; gait recognition ; 3D pose ; spatiotemporal feature fusion 0引言步态识别是利用步态信息对人的身份进行识别⑴。

人体姿态捕捉方法综述人体姿态捕捉(Human Pose Estimation)是指从图像或视频中提取人体姿态的过程。

它在许多应用领域中起着重要的作用,如人机交互、多媒体检索、人体动作分析等。

随着计算机视觉和深度学习的发展,人体姿态捕捉方法不断演进和改进。

本文将对人体姿态捕捉方法进行综述,系统地介绍几种主要方法。

传统的人体姿态捕捉方法主要分为基于模型的方法和基于特征的方法。

基于模型的方法试图通过建立人体姿态模型来解决捕捉问题,并通过优化算法来拟合模型与输入图像之间的对应关系。

基于特征的方法则试图直接从输入图像中提取特征,并通过分类或回归算法来估计人体姿态。

基于模型的方法主要包括预定义模型和灵活模型。

预定义模型是指事先定义好的人体姿态模型,如人体关节模型、骨骼模型等。

这些模型一般是基于人体解剖学知识构建的,并通过优化算法来拟合模型与图像之间的对应关系。

灵活模型则是指根据输入图像自动学习的模型,如图像表示模型、概率图模型等。

这些模型能够根据输入图像的不同自适应调整,提高姿态估计的准确性和鲁棒性。

基于特征的方法主要包括手工设计特征和深度学习特征。

手工设计特征是指通过对输入图像进行特征提取和降维,将复杂的姿态估计问题简化为特征分类或回归问题。

常用的手工设计特征包括HOG(Histogram of Oriented Gradient)、SIFT(Scale-Invariant Feature Transform)等。

深度学习特征则是指通过深度神经网络自动学习图像特征,并通过分类或回归算法来估计人体姿态。

深度学习特征在人体姿态捕捉问题中取得了显著的成果,如卷积神经网络(CNN)、循环神经网络(RNN)等。

除了基于模型和特征的方法,还有一些将两者结合起来的方法,如混合方法和端到端方法。

混合方法将传统的基于模型和特征的方法进行融合,通过建立模型和提取特征相结合来解决姿态捕捉问题。

端到端方法则是指直接从原始图像输入开始,通过一个深度神经网络来学习图像特征和姿态估计模型,实现一体化的姿态捕捉流程。

People Recognition and Pose Estimation in Image SequencesChikahito NakajimaCentral Research Institute of Electric Power Industry,2-11-1, Iwado Kita,Komae,Tokyo Japan. nakajima@criepi.denken.or.jpMassimiliano Pontil and Tomaso Poggio Center for Biological and Computational Learning,MIT45Carleton Steet,Cambridge,MA,02142USA.{pontil,tp}@AbstractThis paper presents a system which learns from examples to automatically recognize people andestimate their poses in image sequences with the potential application to daily surveillance in indoorenvironments.The person in the image is represented by a set of features based on color andshape information.Recognition is carried out through a hierarchy of biclass SVM classifiers thatare separately trained to recognize people and estimate their poses.The system shows a very highaccuracy in people recognition and about85%level of performance in pose estimation,outperformingin both cases k-Nearest Neighbors classifiers.The system works in real time.1IntroductionThis paper presents a system which learns from examples to automatically recognize people and to estimate their poses in image sequences.The problem of people recognition presents a number of difficulties due to the similarity of people images and the high variability of conditions under which such images are recorded.As afirst attempt to attack the problem,we have made two assumptions about the context in which people are observed:(a) each person wears the same clothes throughout the day,and(b)images are taken from afixed camera with a static background.The second assumption is introduced for practical reasons(images are taken in a small indoor environment)and facilitates the detection of a person in the image.Thefirst assumption simplifies the recognition task as it allows us to extract features based on color information.In the paper, we discuss both features based on color and pixel histograms and local shape information[5].People recognition and pose estimation is formulated as a multiclass classification problem.In this work,classification is based on a hierarchy of biclass Support Vector Machine(SVM)classifiers[1,11]. SVM is a technique for learning from examples motivated in the framework of statistical learning theory [11].This technique has received a great deal of attention in the last few years due to its successful application to different problems(see[3]and references therein).We have used two types of hierarchies of biclass SVM classifiers:a bottom-up decision tree[7]and a top-down decision graph[6].We have performed a preliminary set of experiments,where the system is trained to recognize four different people and estimate as many possible poses(front,back,left and right side).The system has been trained with640examples,40for each person at a given pose.The system works in real time and shows very high performance in people recognition and about85%level of performance in pose estimation,outperforming in both cases k-Nearest Neighbors classifiers.The experimental results also indicate a potential application of the system to daily surveillance in indoor environments.The paper is organized as follows.Section2presents a description of the system outline.Section3 describes the experimental results.Section4summarizes our work and presents our future research.2System OutlineThe system consists of three modules:Image I/O,Pre-Processing and Recognition.Figure1shows an outline of the system.Each image from a camera is distributed to the Pre-Processing module throughFigure1:Outline of the system.a. b.Figure2:An example of moving person detection.the Image I/O.The results of the Pre-Processing and the Recognition modules are shown on a display. Each module works independently on three separated computers connected through a network.2.1Pre-ProcessingThe Pre-Processing module consists of two parts:moving person detection and feature extraction.2.1.1Moving Person DetectionThe system uses twofilters to detect a moving person in an image sequence.Thefirstfilter computes the difference between a given image and an average image.The average image is calculated over the k previous images in the sequence.In our experiments we choose k=3.Generally,the result of thisfilter has a lot of noise.To reduce the noise,we use anotherfilter which extracts moving edges from the image sequence andfills the interior part with the original pixel values.Figure2-a shows an image from the sequence and Figure2-b is the combined result of the twofilters.2.1.2Feature ExtractionOnce the person has been detected in the image,a set of features is extracted.Typically color or shape features are used for surveillance systems[4].In this paper,we used features based on color and pixel histograms and local shapefilters.1.Color Histogram and Pixel HistogramThese features consist of two sections.Thefirst section is the RGB color histograms.The system computes a1-D color histogram of32bins per color channel of an image.The second section is the pixel histograms along the horizontal and vertical lines in the image.To compute the pixel histogramsfirst a50×120window is centered at the detected person and then the horizontal and vertical(HV)histograms are calculated inside the window.We have chosen a resolution of10bins for the vertical histogram and30bins for the horizontal histogram.The total number of extracted features is136,32×3for the RGB histograms and10+30for the HV pixel histograms.Figure3:Shape Patterns.a.A top−down decision graph.b.A bottom−up decision tree.Figure4:Hierarchy of SVMs.2.Local Shape FeaturesA second set of features is obtained by convolving the local shape patterns shown in Figure3witha given image.These patterns have been introduced in[5]for position invariant person detection.Let M i,i=1,...,25,be the pattern in Figure3and V k the3×3patch at pixel k in an image.We consider two different types of convolution operations.Thefirst is the linear convolution given by k M i·V k,where the sum is on the image pixels.The second is a non-linear convolution given by F i= k C(k,i),whereC(k,i)= V k·M i:if V k·M i=max j(V k·M j)0:otherwise.The system uses the simple convolution from the pattern1to5and the non-linear convolution from the pattern6to25.The non-linear convolution works mainly on edge areas in the image and has been inspired by recent work in[8].This paper uses a simple combination model,such as”R+G-B”,”R-G”and”R+G”,suggested by physiological study[10].The system extracts75 (25×3)features from the three types of the above RGB combinations.2.2RecognitionWe have used two similar types of multiclass classifiers based on the combination of biclass SVM:the top-down decision graph recently proposed in[6]and the bottom-up decision tree described in[7].These methods are illustrated in Figure4-a and Figure4-b in the case four classes.They both require the computation of all the possible biclass SVM classifiers,each trained on a pair of classes(i.e.two different people or two different poses).Each class is represented by a set of input vectors,each vector consisting of the features extracted above.Briefly,a linear SVM[11,7]finds the hyperplane w·x+b which best separates two classes. The w is the weight vector,the x is the vector of features,and b is a constant.This hyperplane is the one which maximizes the distance or margin between the two classes.The margin,equal to2 w −1,is an important geometrical quantity because it provides an estimate of the similarity of the two classesa.Frontb.Backc.Leftd.RightFigure5:Examples of the four poses for one person.a.Cpapab.Chikac.Hiroed.BerndFigure6:Examples of the four people in the frontal pose.and can play a very important role in designing the multiclass classifier.This idea can be extended to non-linear SVM;see[11]for more information.Each node in the decision graph in Figure4-a represents a biclass SVM and has two children(except the bottom nodes).Classification of an input vector starts from the root node of the graph and follow the decision path along the graph.For example,looking at Figure4-a,if the root note classifies the input in class A(resp.D),the node is exited via the left(resp.right)edge and so on.Notice that the classification result depends on the initial position of each class in the graph as each node can be associated with different class pairs.A possible heuristic to achieve high classification performance consists in selecting the SVMs with the largest margin in the top nodes of the graph.In the bottom-up decision tree of Figure4-b,there are2nodes in the bottom layer and one node in the second layer,each representing a biclass SVM.To classify an input,first the biclass SVM classifiers in the bottom nodes are evaluated and,depending on their result,a last biclass SVM is evaluated in the top node.For example,if A and D win at the bottom node classes,the A/D biclass SVM is evaluated as thefinal classification at the top node.This method can easily be extended to any number of classes[7].Both the bottom-up decision tree and the decision graph requires the evaluation of n−1SVMs(n being the number of classes)and are very fast if compared to other classification strategy like in[9,2]. 3ExperimentsWe have performed a preliminary set of experiments where the system is trained to recognize within four different people,and estimate as many poses(front,back,left and right side).These poses are shown in Figure5for one person.The frontal image of the four people are shown in Figure6.The system has been trained with640examples,40for each person at a given pose.First,we have trained a multiclass classifier to recognize people.In this case,each class contained40images of one person,10per each different pose.For each person another classifier was trained to classify the pose.In this case,each class contained40images of the same person at approximately the same pose.To summarize,five multiclass classifiers have been trained,one for people recognition and four for pose estimation.The system usesfirst the multiclass classifier to recognize the person in the image.Once the person has been recognized,the pose multiclass classifier relative to that person is used to classifya.Cpapab.Chikac.Hiroed.BerndFigure7:Examples of people recognition results.a.Frontb.Backc.Leftd.RightFigure8:Examples of pose estimation results.Table1:People recognition and pose estimation rates from the test set.SV M(T op−down)k=1,2k=4RGB+HV91.694.494.1LocalShape99.585.084.8 P ose68.067.866.8 Estimation84.582.082.71In case of a tie,the system chooses the class whose nearest neighbors have minimum average distance from the input.4ConclusionsWe have presented a system which is able to recognize people and estimate their poses in an image sequence.The core of the system is a multiclass classification problem which we have approached using two types of hierarchical SVM classifiers.The experimental results indicate the effectiveness of the system to solve the task and a better per-formance of the two hierarchical SVM classifiers with respect to k−Nearest Neighbors.This system is part of the surveillance system currently under development.In the future research we plan to combine this system and a face recognition system to develop a robust surveillance system. References[1]C.Cortes and V.Vapnik.Support vector networks.Machine Learning,20:273-297,1995.[2]J.Friedman.Another approach to pilychotomous classification.Stanford University,Dept.of Statis-tics,Technical Report,1996.[3]I.Guyon.SVM Application List./isabelle/Projects/SVM/applist.html.[4]I.Haritaoglu,D.Harwood and L.Davis.Hydra:multiple people detection and tracking usingsilhouettes.Proc.of International Workshop on Visual Surveillance,6-13,1999.[5]T.Kurita,K.Hotta,and T.Mishima.Scale and rotation invariant recognition method using higher-order local autocorrelation features of log-polar image.Proc.of ACCV,1998.[6]J.C.Platt,N.Cristianini,and rge margin dags for multiclass classification.Advances in Neural Information Processing Systems(to appear).[7]M.Pontil and A.Verri.Support vector machines for3-d object recognition.IEEE Trans.PAMI,637–646,1998.[8]M.Riesenhuber and T.Poggio.Hierarchical models of object recognition in cortex.Nature Neuro-science,2(11):1019–1037,1999.[9]B.Scholkpof and C.Burges and V.Vapnik.Extracting support data for a given task.Proc.of thefirst Int.Conf.on Knowledge Discovery and Data Mining,AAAI Press,1995.[10]K.Uchikawa.Mechanism of color perception.Asakura syoten,(Japanese),1998.[11]V.Vapnik.Statistical learning theory.John wiley&sons,inc.,1998.。

⼈体⾏为识别数据集ActionThis dataset consists of a set of actions collected from various sports which are typically featured on broadcast television channels such as the BBC and ESPN. The video sequences were obtained from a wide range of stock footage websites including BBC Motion gallery, and GettyImages.This dataset features video sequences that were obtained using a R/C-controlled blimp equipped with an HD camera mounted on a gimbal.The collection represents a diverse pool of actions featured at different heights and aerial viewpoints. Multiple instances of each action were recorded at different flying altitudes which ranged from 400-450 feet and were performed by different actors.It contains 11 action categories collected from YouTube.Walk, Run, Jump, Gallop sideways, Bend, One-hand wave, Two-hands wave, Jump in place, Jumping Jack, Skip.UCF50 is an action recognition dataset with 50 action categories, consisting of realistic videos taken from YouTube.The Action Similarity Labeling (ASLAN) Challenge.The dataset was captured by a Kinect device. There are 12 dynamic American Sign Language (ASL) gestures, and 10 people. Each person performs each gesture 2-3 times.Contains six types of human actions (walking, jogging, running, boxing, hand waving and hand clapping) performed several times by25 subjects in four different scenarios: outdoors, outdoors with scale variation, outdoors with different clothes and indoors.Hollywood-2 datset contains 12 classes of human actions and 10 classes of scenes distributed over 3669 video clips andapproximately 20.1 hours of video in total.This dataset contains 5 different collective activities : crossing, walking, waiting, talking, and queueing and 44 short video sequences some of which were recorded by consumer hand-held digital camera with varying view point.The Olympic Sports Dataset contains YouTube videos of athletes practicing different sports.Surveillance-type videosThe dataset is designed to be realistic, natural and challenging for video surveillance domains in terms of its resolution, background clutter, diversity in scenes, and human activity/event categories than existing action recognition datasets.Collected from various sources, mostly from movies, and a small proportion from public databases, YouTube and Google videos. The dataset contains 6849 clips divided into 51 action categories, each containing a minimum of 101 clips.Dataset of 9,532 images of humans performing 40 different actions, annotated with bounding-boxes.Fully annotated dataset of RGB-D video data and data from accelerometers attached to kitchen objects capturing 25 people preparing two mixed salads each (4.5h of annotated data). Annotated activities correspond to steps in the recipe and include phase (pre-/ core-/ post) and the ingredient acted upon.Human pose/ExpressionDynamic temporal facial expressions data corpus consisting of close to real world environment extracted from movies.Action Databases1. - fully annotated 4.5 hour dataset of RGB-D video + accelerometer data, capturing 25 people preparing two mixed salads each(Dundee University, Sebastian Stein)2. database (Orit Kliper-Gross)3. (Ferda Ofli)4. (Scott Blunsden, Bob Fisher, Aroosha Laghaee)5. (Janez Pers)6. - synchronised video, depth and skeleton data for 20 gaming actions captured with Microsoft Kinect (Victoria Bloom)7. - 650 3D action recognition in the wild videos, 14 action classes (Simon Hadfield)8. (Marcin Marszalek, Ivan Laptev, Cordelia Schmid)9. : Synchronized Video and Motion Capture Dataset for Evaluation of Articulated Human Motion (Brown University)10. (Hansung Kim)11. (Paul Hosner)12. (INRIA)13. - 7 types of human activity videos taken from a first-person viewpoint (Michael S. Ryoo, JPL)14. (KTH CVAP lab)15. - 2 cameras, annotated, depth images (Christian Wolf, et al)16. - Multicamera Human Action Video Data (Hossein Ragheb)17. (Oxford Visual Geometry Group)18. (Ross Messing)19. (Michael S. Ryoo, J. K. Aggarwal, Amit K. Roy-Chowdhury)20. (Michael S. Ryoo, J. K. Aggarwal, Amit K. Roy-Chowdhury)21. (Moritz Tenorth, Jan Bandouch)22. (Alonso Patron-Perez)23. (Univ of Central Florida)24. (Univ of Central Florida)25. (Kishore Reddy)26. 101 action classes, over 13k clips and 27 hours of video data (Univ of Central Florida)27. (Univ of Central Florida)28. Aerial camera, Rooftop camera and Ground camera (UCF Computer Vision Lab)29. (Amit K. Roy-Chowdhury)30. (Marco Cristani)31. (B. Bhanu, G. Denina, C. Ding, A. Ivers, A. Kamal, C. Ravishankar, A. Roy-Chowdhury, B. Varda)32. (userID: VIHASI password: virtual$virtual) (Hossein Ragheb, Kingston University)33. Kinect dataset for exercise actions (Ceyhun Akgul)34. - 88 open-source YouTube cooking videos with annotations (Jason Corso)35. (Univ. of West Virginia)。

Shape-From-Silhouette of Articulated Objects and its Use for Human Body Kinematics Estimation and Motion Capture German K.M.Cheung Simon Baker Takeo KanadeRobotics Institute,Carnegie Mellon University,Pittsburgh PA15213german+@ simonb+@ tk+@AbstractShape-From-Silhouette(SFS),also known as Visual Hull (VH)construction,is a popular3D reconstruction method which estimates the shape of an object from multiple silhou-ette images.The original SFS formulation assumes that all of the silhouette images are captured either at the same time or while the object is static.This assumption is violated when the object moves or changes shape.Hence the use of SFS with moving objects has been restricted to treating each time instant sequentially and independently.Recently we have successfully extended the traditional SFS formu-lation to refine the shape of a rigidly moving object over time.Here we further extend SFS to apply to dynamic ar-ticulated objects.Given silhouettes of a moving articulated object,the process of recovering the shape and motion re-quires two steps:(1)correctly segmenting(points on the boundary of)the silhouettes to each articulated part of the object,(2)estimating the motion of each individual part us-ing the segmented silhouette.In this paper,we propose an iterative algorithm to solve this simultaneous assignment and alignment problem.Once we have estimated the shape and motion of each part of the object,the articulation points between each pair of rigid parts are obtained by solving a simple motion constraint between the connected parts.To validate our algorithm,wefirst apply it to segment the dif-ferent body parts and estimate the joint positions of a per-son.The acquired kinematic(shape and joint)information is then used to track the motion of the person in new video sequences.1.IntroductionTraditional Shape-From-Silhouette(SFS)assumes either that all of the silhouette images are captured at the same time or that the object is static[15,18,14].Although sys-tems have been proposed to apply SFS to video[5,2],these systems apply SFS to each frame sequentially and inde-pendently.Recently there has been some work on using SFS on rigidly moving objects to recover shape and motion [21,22],or to refine the shape over time[4].These meth-ods involve the estimation of the6DOF rigid motion of the object between successive frames.In[22]the motion is as-sumed to be circular.Frontier points are extracted from the silhouette boundary and used to estimate the axis of rota-tion.In[21],Ponce et al.define a local parabolic structure on the surface of a smooth curved object and use epipolar geometry to locate corresponding frontier points on three silhouette images.The motion between the images is then estimated by a two-step minimization.In[4]the6DOF motion is estimated by combining both the silhouette and the color information.At each time in-stant,3D line segments called Bounding Edges are con-structed from rays through the camera centers and points on the silhouette ing the fact that each Bounding Edge touches the object at at least one point,a multi-view stereo algorithm is proposed to extract the colors and posi-tions of these touching points(subsequently referred to as Colored Surface Points).The motion between consecutive frames is then computed by minimizing the errors of pro-jecting the Colored Surface Points into the images.Once the6DOF rigid motion is recovered and compensated for, all the silhouette images are treated as taken at the same time and traditional SFS is applied to get a refined shape of the object.In this paper we extend[4]to handle articulated objects. An articulated object consists of a set of rigidly moving parts which are connected to each other at certain articu-lation points.A good example of an articulated object is the human body(if we approximate the body parts as rigid). Here we propose an algorithm to automatically recover the joint positions,and the shape and motion of each part of an articulated object.We begin with silhouette images,al-though color information is used to break the alignment am-biguity as in[4].Given silhouettes of a moving articulated object,recov-ering the shape and motion requires two inter-related steps: (1)correctly segment(points on the boundary of)the silhou-ettes to each part of the object and(2)estimate the shape and motion of the individual parts.We propose an itera-tive algorithm to solve this simultaneous assignment and 6DOF motion estimation problem.Once the motions of the rigid parts are known,their articulation points are es-timated by imposing motion constraints between adjoining parts.To test our algorithm,we apply it to acquire the shape and joint locations of articulated human models.Once this kinematic information of the person has been acquired,we show how the6DOF motion estimation algorithm can be used to track the articulated motion of that person in new video sequences.Results on both synthetic and real data are presented to show the validity of our algorithms.Bounding EdgeCamera center 3Object Oi r 1E 1 i& I13Figure1.The Bounding Edge is obtained byfirst pro-jecting the ray onto,,and then re-projecting thesegments overlapping the silhouettes back into3D space.is the intersection of the reprojected segments.The point where the object touches is located by searchingalong for the point with the minimum projected colorvariance.Note that the image from camera4is not usedbecause it is occluded.See[4]for details.2.BackgroundIn[3]and[4]we extended the traditional SFS formu-lation to rigidly moving bining the silhouette and color images,wefirst extract3D points on the surface of the object at each time instant.These surface points are then used to estimate the6DOF motion between succes-sive frames.Once the rigid motion across time is known, all of the silhouette images are treated as being captured at the same time and SFS is performed to estimate the shape of the object.Below we give a brief review of this temporal SFS algorithm.2.1.Visual Hulls and Their Bounding EdgesThe term Visual Hull(VH)wasfirst coined by Lauren-tini in[13]to denote the3D shape obtained by intersecting the visual cones formed by the silhouette images and the camera centers[13,14,2].One useful property of a VH is that it provides an upper bound on the shape of the ob-ject.In[4],we introduced a new representation of a VH called the Bounding Edge representation.Assume there are color-balanced and calibrated cameras positioned around a Lambertian object.Letbe the set of color and corresponding silhouette images of the object obtained from the cameras at time.Let be a point on the boundary of the silhouette image. Through the center of camera,defines a ray in3D space.A Bounding Edge is defined as the portion of such that the projection of on the image planes of all the other cameras lies completely inside the silhouettes.An example is shown in Figure1.can be constructed by successively projecting the ray onto each silhouette im-age,and retaining the portion whose projection overlaps all the silhouettes.2.2.Colored Surface Points(CSP)The most important property of a Bounding Edge is the Second Fundamental Property of Visual Hulls(2nd FPVH) which states that each Bounding Edge touches the object (which forms the silhouette images)at at least one point [4].Using this property,we are able to locate points on the surface of the object using a multi-stereo color match-ing approach.Consider a Bounding Edge.Since we assume the object is Lambertian and the cameras are color balanced,there exists at least one point on(the point where it touches the object)such that the projected colors of this point in all the visible color images are the same. In other words,this point has zero projected color variance among the visible color images.In practice,due to noise and inaccuracies in color balancing,instead of searching for the point that has zero projected color variance,we assign the touching point on to be the point with the minimum color variance,as shown in Figure1.We refer to this point as a Colored Surface Point(CSP)of the object and repre-sent its position and color(which is obtained by averaging its projected color across all visible cameras)by and respectively.By sampling the boundaries of all the sil-houette images,a set of Colored Surface Points can be constructed.Note that there is no point-to-point correspon-dence relationship between two different sets of CSPs ob-tained at different time instant.The only property common to the CSPs is that they all lie on the surface of the object.2.3.SFS Across Time for Rigid ObjectsWe now describe our algorithm for recovering the6DOF motion of a rigid object using the CSPs.Without loss of generality,we assume that the orientation and position of the object at time is0¯and that at time it is.The rigid object alignment prob-lem is then equivalent to recovering.Consider the motion between and as an example and assume we have two sets of dataobtained at and respectively. Tofind,we align the CSPs with the2D silhouette and color images.The idea is very similar to that in[19]for 2D image alignment.Suppose we have an estimate of.For a CSP (with color)at time,its3D position at time would be.Consider two different cases of the projection of into the camera:1.The projection lies inside the silhouette.In thiscase,we use the color difference as an error measure:(1)where is the projected color of a3D pointinto the color image.Here we assume this color error is zero if the projection of lies outside.(b)(a)(d)(c)Figure 2.Results of our temporal SFS algorithm [4]applied to synthetic data:(a)one of the input images,(b)unaligned CSPs,(c)aligned CSPs,(d)refined visual hull.2.The projection lies outside.In this case,we use the distance of the projection from ,represented byas an error measure.The distance iszero if the projection lies inside .Summing over all cameras in which is visible,the errormeasure of with respect tois given by(2)where is a weighing constant.Similarly,the error measure of a CSP at time is written as(3)Now the problem of estimating the motion is posedas minimizing the total error(4)which can be solved using a gradient descent or Iterative Levenberg-Marquardt algorithm [20].Hereafter we refer to this motion estimation process as either “temporal SFS”or the visual hull alignment algorithm.To show the validity of our visual hull alignment algo-rithm,we apply it to both synthetic and real sequences of a rigidly moving person.In the synthetic sequence,a com-puter graphics model of a person is made to rotate about the z-axis.Twenty five sets of color and silhouette images of the model from eight virtual cameras are rendered us-ing OpenGL.One example of the rendered color images is shown in Figure 2(a).CSPs are then extracted and aligned.Figures 2(b)and (c)illustrate respectively the unaligned and aligned CSPs for all 25frames.Figure 2(d)shows the vi-sual hull constructed by applying SFS to all the silhouette images (compensating for the alignment).A real sequence of a person standing on a turntable (with unknown speed and rotation axis)was also captured by eight cameraswith(b)(a)(d)(c)Figure 3.Results of our temporal SFS algorithm [4]ap-plied to estimate the shape of a real human body (a)one of the input images,(b)unaligned CSPs,(c)aligned CSPs,(d)refined visual hull displayed from several different view points.thirty frames per camera.The person was asked to remainstill throughout the capture process to satisfy the rigidity as-sumption.The results are presented in Figure 3.It can be seen that excellent shape estimates (the visual hulls shown in Figure 2(d)and Figure 3(d))of the human bodies can be obtained using our temporal SFS algorithm [4].Although the 3D shape of a person can be obtained in detail using the VH alignment algorithm described above,the acquired shape does not contain any kinematic infor-mation.Kinematic information is essential for applications such as motion tracking,capture,recognition and render-ing.We now show how this information can be obtained automatically and accurately using temporal SFS algorithm for articulated objects.3.SFS for Articulated ObjectsTo extend the temporal SFS algorithm to articulated ob-jects we employ an idea similar to that used for multiple layered motion estimation in [16].The rigid parts of the articulated object are first modeled as separate and indepen-dent of each other.With this assumption,we iteratively (1)assign the extracted CSPs to different parts of the object and (2)apply the temporal SFS algorithm to align each part across time.Once the motions of all the parts are recovered,an articulation constraint is applied to estimate the joint po-sitions.Note that this iterative approach can be categorized as belonging to the Expectation Maximization framework [7].The whole algorithm is explained below in detail using a two-part,one-joint articulated object.3.1.Segmentation/Alignment AlgorithmConsider an one-joint objectwhich consists of two rigid partsand as shown in Figure 4at two dif-ferent time instants and .Assume CSPs of the ob-ject are extracted from the color and silhouette images of calibrated and color-balanced cameras,denoted by.Furthermore,treatingPart APart B time t 2Part APart BY 2B(R 2, t 2)AAMotion of part BW 11(R 2, BR 2+ t 2BBW 11R 2+ t 2AAW 12W 21Figure 4.A two-part articulated object at two different time instants and .and as two independently moving rigid objects allows us to represent the relative motion of between and as and that of as .Now consider the following two complementary cases.3.1.1.Alignment with known segmentationSuppose we have segmented the CSPs at into groupsbelonging to partand part ,represented by and respectively for both .By applying the temporal SFS algorithm described in Section 2(Eq.(4))to and separately,estimates of the relative motionsare obtained.3.1.2.Segmentation with known alignment Assumewearegiven the relative motion of and from to .For a CSP at time ,consider the following two errormeasures(5)(6)Here is the error of with respect to thecolor/silhouette images at if it belongs to part (thusfollowing the motion model ()).Similarly is the error if lies on the surface of .In these expres-sions the summations are over all visible cameras .By comparing these two errors,a simple strategy to classify the point is devised as follows:if ifotherwise(7)where is a thresholding constant and contains all the CSPs which are classified as neither belonging to part nor part .Similarly,the CSPs at time can be classified using the errors and .In practice,the above decision rule does not work very well because of image/silhouette noise and camera calibra-tion errors.Here we suggest using spatial coherency and temporal consistency to improve the segmentation.To use spatial coherency,the notion of a spatial neighborhood has to be defined.Since it is difficult to define a spatial neigh-borhood for the scattered CSPs in 3D space (see for example Figure 3(b)),an alternate way is used.Recall that each CSPlies on a Bounding Edge which in turn corresponds to a boundary point of the silhouette image .We define two CSPs and as “neighbors”if their correspond-ing 2D boundary pointsand are neighboring pixels (in 8-connectivity sense)in the same silhouette image.This neighborhood definition allows us to easily apply spatial co-herency to the CSPs.From Figure 5(a)it can be seen that different parts of an articulated object usually project onto the silhouette image as continuous outlines.Inspired by this property,the following spatial coherency rule (SCR)is pro-posed:Spatial Coherency Rule (SCR):Ifis classified as belonging to part by Eq.(7),it stays as belonging to part if all of its left and right immediate “neighbors”are also classified as belonging to part by Eq.(7),otherwise it is reclassified as belonging to .The same procedure applies to part .Figure 5(a)shows how the spatial coherency rule can be used to remove spurious partition error.The second con-straint we utilize to improve the segmentation results is tem-poral consistency as illustrated in Figure 5(b).Consider three successive frames captured at ,and .For a CSP ,it has two classifications due to motion from to and motion from to .Since either belongs to part or ,the temporal consistency rule (TCR)simply requires that the two classifications have to agree with each other:Temporal Consistency Rule (TCR):If has the same classification by SCR from to and from to ,the classification is maintained,other-wise,it is reclassified as belonging to .Note that SCR and TCR not only remove wrongly seg-mented points,but they also remove some of the correctlyclassified CSPs.Overall though they are effective because few but more accurate data is always preferred over abun-dant but less accurate data,especially in our case where the segmentation has a great effect on motion estimation.3.1.3.Iterative algorithmSummarizing the above discussion,we propose an iterative segmentation/alignment process to estimate the shape and motion of parts and over frames as follows :1.Given segmentationsof CSPs,recover therelative motions of andover all frames using the temporal SFSalgorithm.classifiedObjectclassifiedremovedCoherencyCSPs(a)Figure5.(a)Spatial coherency removes spurious segmenta-tion errors,(b)Temporal consistency ensures segmentationagrees between successive frames.2.Repartition the CSPs according to the estimated mo-tions by applying Eq.(7),followed by the intra-frame SCR and inter-frame TCR.3.Repeat Steps1and2until the segmentation/alignmentconverges or for afixed maximum number of times.Although for the sake of explanation we have described this algorithm for an articulated object with two rigid parts, it can easily be generalized to apply to objects with parts.3.2.InitializationAs common to all iterative EM algorithms,initialization is always a problem[16].Here we suggest two different ap-proaches to start our algorithm.Both approaches are com-monly used in the layer estimation literature[16].Thefirst approach uses the fact that the6DOF motion of each part of the articulated object represents a single point in a six di-mensional space.In other words,if we have a large set of estimated motions of all the parts of the object,we can ap-ply clustering algorithms on these estimates in the6D space to separate the motion of each individual part.To get a set of estimated motions for all the parts,the following method is used.The CSPs at each time instant arefirst divided into subgroups by cutting the corresponding silhouette bound-aries into arbitrary segments.These subgroups of CSPs are then used to generate the motion estimates using the VH alignment algorithm,each time with a randomly cho-sen subgroup from each time instant.Since this approach requires the clustering of points in a6D space,it performs best when the motions between different parts of the artic-ulated object are relatively large so that the motion clusters are distinct from each other.The second approach is applicable in situations where one part of the object is much larger than the other.As-sume,say,part is the dominant part.Since this assump-tion means that most of the CSPs of the object belong to, the dominant motion of can be approximated using all the CSPs.Once an approximation of is available,the CSPs are sorted in terms of their errors with respect to this dominant motion.An initial segmentation is then obtained by thresholding the sorted CSPs errors.3.3.Articulation Point EstimationAfter recovering the motions of parts and sepa-rately,the point of articulation between them is estimated. Suppose we represent the joint position at time as. Since lies on both and,it must satisfy the motion equation from to as follows(8) Putting together similar equations for over frames, we get......(9)The least squares solution of Eq.(9)can be computed using Singular Value Decomposition.3.4.Human Body Kinematics AcquisitionHere we apply our SFS algorithm for articulated objects to segment the body parts and to estimate the joint positions of a person.Instead of estimating all the joints at the same time,we take a sequential approach and model the joints one by one.Tofind the position of,say the left shoulder joint,the person is asked to move his whole left arm around the shoulder while keeping the rest of the body still.This makes the human body a one-articulation point object.Since the size of the whole body is much larger than a single body part,the dominant motion initialization method is used.Figure6(a)shows some of the input images and the results for the right elbow and the right hip joints of the computer graphics model used in the synthetic sequence(Figure2)at the end of Section2. Figure6(b)presents some of the input images and the results for the left shoulder and the left knee joints of the person in Figure3.The input images,CSPs and results for the left hip/knee joints of the synthetic data set can be seen in the movie syn-kinematics-leftleg.mpg and those for the right shoulder/elbow and right hip/knee joints of the real person in the movie real-kinematics-rightarm.mpg and real-kinematics-rightleg.mpg respectively.All the movie sequences mentioned in this paper can be found at /˜german/research/CVPR2003/HumanMT.To create a complete articulated human model(after each body part is segmented and its joint position is located using our SFS algorithm for articulated objects)the various body parts are aligned to the whole body voxel model acquired at the end of Section2(Figure2(d)for the synthetic data and Figure3(d)for the real person).The alignment is done between the3D CSPs of the body part and the reference im-age of the sequences that are used to obtain the whole body voxel model.Figure7(a)displays the complete articulated model of the synthetic data set with the joint locations andSegmented Segmented (a). Results of right elbow and right hip joints for the synthetic data setestimated joint positionUnaligned CSPsAligned and CSPsestimated joint positionUnaligned CSPsAligned and CSPsThree of the input images from camera 6Three of the input images from camera 1(b). Results left shoulder and left knee joints for the real humanSegmented Segmented estimated joint positionUnaligned CSPsAligned and CSPsestimated joint positionUnaligned CSPsAligned and CSPsThree of the input images from camera 7Left ShoulderJointThree of the input images from camera 1Left Knee JointFigure 6.(a).Estimated right elbow and right hip joints of a synthetic data set.(b).Estimated left shoulder and left knee joints of a real data set.For each joint,the unaligned CSPs from different frames are drawn with different colors.The aligned and segmented CSPs are shown with two different colors to show the segmentation.Theestimated articulation point (joint location)is indicated by the black sphere.segmented body parts (shown in terms of the 3D points de-rived from the voxel centers of the model).We have also added a skeleton by joining the joint locations together.The articulated model of the real person is shown in Figure 7(b).The work most similar to our vision-based human body kinematic information acquisition is by Kakadiaris et al.in [12].They first use deformable templates to segment 2D body parts in a silhouette sequence.The segmented 2D shapes from three orthogonal view-points are then com-bined into a 3D shape by SFS.Here we address the acqui-sition of motion,shape and articulation information at the same time,while [12]focuses mainly on shape estimation.4.Application:Motion CaptureDue to increased applications in entertainment,secu-rity/surveillance and human-computer interaction,the prob-lem of vision-based motion capture has gained much at-tention in recent years.Several researchers have pro-posed systems to track body parts from video sequences [10,1,11,6,5,17,8,9].In most of these systems,generic shapes (e.g.rectangles/ellipses in 2D,cylinders/ellipsoids in 3D)are used to model the body parts of the person.Al-though generic models/shapes are simple to use and can be generalized to different persons easily,they suffer from two disadvantages.Firstly they only coarsely approximate the actual body shape of the person.Secondly generic shapes/models also lack accurate joint information of theRight Left Right Right Shoulder Left Shoulder Right Right Left Elbow Left Hip Knee Lower Arm (LLA) Upper (LUA)Upper Leg Left Lower Upper Arm (RUA)Right Lower Arm (RLA) Right Upper Leg Right Lower Leg (RLL)(TSO)(a)Figure plete articulated human model of (a)the syn-thetic data set (different body parts shown with different colors),(b)the real person.These are the models used for motion tracking in the experimental results in Section 4.3.person.In vision-based motion capture systems,precise kinematic information (shape and joint)is essential to ob-tain accurate motion data.Here we show how to use the ac-quired human kinematic model of the person in the previous section to perform motion capture from color and silhouette image sequences.As compared to other systems which use either only color images [1,17]or only silhouette images [6,5],our algorithm combines both silhouette and color in-formation to fit the articulated model.4.1.Human Body ModelThe articulated human model used in our tracking algo-rithm is the same as those depicted in Figure 7.It consists of nine body parts (torso,right/left lower/upper arms,right/left lower/upper legs)connected by eight joints (right/left shoul-der/elbow joints,right/left hip/knee joints).Each body part is assumed to be rigid with the torso being the base.The shoulder and hip joints have 3DOF each while there is 1DOF for each of the elbow and knee joints.Including trans-lation and rotation of the torso base,there are a total of 22DOF in the model.4.2.Tracking with An Articulated ModelAssume we have estimated the kinematic information of all nine body parts of the person at a reference time with color and silhouette images .Represent the shape of body part in terms of a set of CSPs as,its joint as and call this the model dataset.Now suppose we are given the run-time data set at ,which consists of color/silhouette images and the corre-sponding CSPs of the person.Let be the rotation matrix of at its joint and be the trans-lation of the torso base at .Without loss of generality,assume is zero and is the identity matrix for all body parts at .The motion capture problem can be posed as estimating and for all the body parts from the color and the silhouette images .The most straightforward way to solve the above motion capture problem is to align all the body parts (with a total of 22DOF)of the human model directly to the silhouetteand color images all at once.Although this all-at-onceproach can be done by generalizing the temporal SFS rithm to perform a non-linear optimization over all22in practice it is prone to the problem of falling into minima because of the high dimensionality.To avoid local minimum problem,we instead use a two-step chical approach:firstfit the torso base and then each independently.This approach makes use of the fact that motion of the body is largely independent of the motion the limbs which are,under most of the cases,largely pendent of each other.Thefirst step of our hierarchical approach volves recovering the global translation andof the torso base.This can be done by ing the6DOF temporal SFS algorithm described in tion2.Once the global motion of the body isthe four joint positions:left/right shoulders andhips are calculated.The four limbs of the body are then aligned separately around thesefixed joint positions in the second step.For each limb,the two joint rotations(shoul-der and elbow for arms,hip and knee for legs)are estimated simultaneously.We briefly explain the second step below using the left arm and time as an example.Here only the errors of projecting the model CSPs onto the run-time color/silhouette images are considered.This can be ex-tended to include the projection errors of the run-time CSPs by segmenting them to individual part of the body.Assume we have recovered the torso translation and ori-entation,then the joint location and the transformed position of a model CSPon the left upper arm(LUA)at time are expressed asUsing these and Eq.(2),we can express the sum of pro-jected color/silhouette error of across visi-ble cameras at as a function of the unknown.Sim-ilarly,the error for each CSP on the Left Lower Arm (LLA)can be written as function of and.By optimizing the combined errors of the whole left arm as(10)the joint rotation matrices are estimated.This simultaneous estimation approach,as compared to estimating the joint ro-tations(e.g.first shoulder and then elbow)of the limb indi-vidually and sequentially,is better because both joint con-straints are incorporated implicitly into the equations at the same time.4.3.Experimental Results4.3.1.Synthetic sequencesTwo synthetic motion video sequences:KICK(60frames) and PUNCH(72frames)were generated using the syn-joint angles of the left arm and right leg of the syntheticsequence KICK.The estimated joint angles closely followthe ground-truth values throughout the whole sequence.thetic human model in Figure2(a).A total of eight cam-eras are used.The complete articulated model shown in Figure7(a)is used to track the motion in these sequences. Figure8compares the ground-truth and estimated joint an-gles of the left arm and right leg of the body in the KICK sequence.It can be seen that our tracking algorithm per-forms very well.The moviefile syn-track.mpg illustrates the tracking results on both sequences.In the movie,the upper left corner shows one of the input camera sequences, the upper right corner shows the tracked body parts and joint skeleton(rendered color)overlaid on one of the input images(which are converted from color to gray-scale for clarity).The lower left corner depicts the ground-truth mo-tion rendered through an avatar and the lower right corner represents the tracked motions rendered through the same avatar.The avatar renderings show that the ground-truth and tracked motions are almost indistinguishable from each order.4.3.2.Real sequencesThree video sequences:STILLMARCH(158frames), AEROBICS(110frames)and KUNGFU(200frames)of the real person in Figure3(a)were captured to test the track-ing algorithm.Eight cameras are used in each sequence and the articulated model in Figure7(b)acquired in Section3.4 is used.Figures9(a)(b)show the tracking results on the AEROBICS and KUNGFU sequences respectively.Each figure shows four selected frames of the sequence with the (color)tracked body parts and the joint skeleton overlaid on one of the eight camera(turned gray-scale)input images. The movie real-track.mpg contains results on all three se-quences.In the movie,the upper left corner represents one of the input camera images and the upper right corner il-lustrates the tracked body parts with joint skeleton overlaid on a gray-scale version of the input images.The lower left corner illustrates the results of applying the estimated joint angles to a3D articulated visual hull(voxel)model(ob-tained by combining the results in Figure3(d)and the kine-。