太极拳虚拟练习体统

- 格式:pdf

- 大小:2.09 MB

- 文档页数:8

利用虚拟现实技术进行体育训练的技巧虚拟现实(Virtual Reality,简称VR)技术是近年来技术领域的一大创新。

随着VR技术的不断发展和普及,它也开始在体育训练领域发挥越来越大的作用。

利用虚拟现实技术进行体育训练,不仅可以提高训练效果,还可以打破传统训练的限制,给运动员们带来更丰富、更真实的体验。

接下来,我们将介绍一些利用虚拟现实技术进行体育训练的技巧,帮助你更好地掌握这一先进的训练方式。

首先,利用虚拟现实技术进行体育训练最大的优势之一是模拟真实场景。

通过虚拟现实技术,我们可以在虚拟环境中再现各种真实比赛场景,无论是足球场、篮球场还是网球场等,都可以通过VR技术进行模拟。

这样一来,运动员们可以在虚拟环境中进行实战演练,提高比赛适应能力和应对战术的能力。

例如,足球运动员可以在虚拟环境中看到对方球员的位置、动作变化,从而更好地找到突破对方防守的办法。

篮球运动员可以在虚拟环境中练习各种技巧,比如投篮、传球等,提高比赛的技术水平。

其次,利用虚拟现实技术进行体育训练还可以针对特定运动项目进行专业化训练。

不同的运动项目有着不同的技巧要求和训练方法,而虚拟现实技术可以根据不同项目的特点进行定制化训练。

通过专业的VR训练系统,运动员们可以进行针对性强的技术训练,例如足球运动员可以进行射门、盘带等训练,篮球运动员可以进行自由扔篮球、接球等训练。

这种定制化的训练可以帮助运动员们更好地掌握运动项目的技巧要领,提高竞技水平。

第三,虚拟现实技术还可以提供全方位的训练反馈。

传统的体育训练往往需要借助教练的指导和运动员的自感觉来判断动作是否准确,而这种方式存在一定的主观性和不准确性。

而利用虚拟现实技术进行体育训练,可以在训练过程中提供实时的动作反馈。

运动员们可以通过VR设备观察自己的动作,如姿势是否正确、动作是否流畅,从而及时调整并改进训练方式。

这种实时反馈能够帮助运动员们更好地提高自身的技术水平,并有效纠正不良动作习惯。

如何练习太极拳的虚和实太极拳是当下比较好的运动方式之一,不管是老人、小孩都喜欢练习太极拳。

对于太极拳虚和实的练习你了解多少呢?我们该如何练好太极拳呢?下面就和店铺一起来看看吧!如何练习太极拳的虚和实太极拳拳谱说,虚实宜分清楚,一处有一处虚实,处处总有此一虚一实。

可以知道,太极拳的所有动作都有虚实,虚实转换,上下相随。

从初学时的动作虚实明显的大虚大实,逐步发展到较难察觉的小虚小实,乃至内有虚实而不见有虚实的境界。

总之,虚实在太极拳中是必不可少的。

通常,把太极拳的虚实分为脚的虚实、手的虚实、手与足的虚实。

对于双脚的虚实要求,练习中始终有一虚一实的转换。

如云手动作,左脚虚时,向左横步。

左脚实时,右脚抬起向左并步;就如弓步的定势动作也有前1/3和后2/3的虚实之分。

手的方法较多,虚实转换更为复杂。

如揽雀尾动作,棚、将、挤、按为一体,虚实的变幻十分讲究;如动作“如封似闭”也称做“六封四闭”,就是按虚实的四六比例变化而得名。

手与足的上下虚实更是有讲究,除了在动作上有要求,而且在技击的动作上也是必需的。

如右手下持为实,其右脚则为虚;待左手下抨为实时,其左脚则为虚;待右手上棚虚时,则右脚需为实。

太极拳练习时,虚实的掌握准确与否较为关键。

身体的重心一移动,虚实变化随之而来。

虚实的练可以首先从双脚开始,因为较容易掌握;其次从双手人门;最后才‘是最主要的上下相随的手足虚实变化和配合。

以腰为轴的上下相随虚实久习久练,逐步会成为一种习惯,从而自然有了行云流水的感觉了。

至于在太极拳中的心意与动作和技击的虚虚实实,以及虚实与阴阳的理论关系亦很复杂,这要从太极拳的起源结合五行八卦等传统理论来讨论,以上主要谈及的是太极拳技术与套路表演中的虚实变化。

如何练好太极拳首先,要练好太极拳必须明白太极拳是怎样一种拳术?它具有什么样的内涵和性质?概括为一句话:太极拳是一种性命双修的内功拳术。

性命双求也可称为心肾双求,心主性,肾主命;心象火,肾象水。

基于虚拟现实技术的体育运动训练系统设计随着科技的不断进步,虚拟现实技术(Virtual Reality,VR)已经逐渐应用到各个领域,包括体育运动训练。

虚拟现实技术的引入为体育训练带来了全新的可能性,可以提供更加真实、沉浸式的训练环境,使运动员能够在虚拟场景中进行技巧的练习和运动能力的提升。

一、虚拟现实技术在体育运动训练中的优势1. 提供更真实的训练环境:虚拟现实技术可以模拟各种训练场景,包括比赛场地、山脉、森林等,使得运动员能够在虚拟环境中进行真实训练。

这种逼真度的训练环境可以帮助运动员更好地适应实际比赛的情况,并提高他们的反应能力和应对能力。

2. 提供即时的数据反馈:虚拟现实技术可以通过传感器来实时监测运动员的动作,并提供即时的数据反馈。

通过这些数据,运动员可以了解他们的表现如何,并针对性地进行改进。

这种实时反馈可以帮助运动员更好地理解和掌握技巧,提高运动的准确性和稳定性。

3. 安全性高:在一些体育项目中,训练过程中存在一定的危险性,比如高空跳伞、攀岩等。

利用虚拟现实技术,运动员可以在安全的环境下进行这些高风险项目的训练,降低了事故和伤害的风险。

二、基于虚拟现实技术的体育运动训练系统设计1. 虚拟训练场景的建立:首先,需要建立逼真、真实的虚拟训练场景,包括比赛场地的模拟、天气条件的模拟等。

这可以通过三维建模技术来实现,利用计算机图形技术将真实场景还原到虚拟环境中。

2. 运动动作传感器的设计:为了能够准确感知运动员的动作,需要设计运动动作传感器。

这些传感器可以通过肩部、腰部等位置的附着装置来感知运动员的身体动作,并将数据传输到计算机系统进行分析。

3. 实时数据反馈与分析:在训练过程中,需要通过虚拟现实技术提供即时的数据反馈和分析。

运动员可以通过佩戴虚拟现实头盔来观看虚拟训练场景,并通过头盔上的显示屏或声音设备获取数据反馈。

这些数据可以包括运动员的动作准确度、力度、速度等,运动员可以根据反馈来调整动作,提高技巧和训练效果。

利用拓展现实技术进行虚拟运动训练的教程拓展现实技术(Augmented Reality,AR)是一种利用计算机生成的虚拟信息与真实世界实时叠加的技术。

利用拓展现实技术进行虚拟运动训练,已经成为迅速发展的领域,为运动员和健身爱好者提供了全新的训练方式。

本文将介绍如何利用拓展现实技术进行虚拟运动训练的教程。

首先,为了进行虚拟运动训练,我们需要一套拓展现实设备,如头戴式显示器(Head-Mounted Display,HMD)、智能手机、平板电脑等,以及相应的拓展现实应用程序。

这些设备通常配备有摄像头、加速计和陀螺仪等传感器,能够追踪用户的头部、身体动作和位置,为用户呈现出虚拟世界与现实世界的结合。

接下来,选择适合的虚拟运动训练应用程序。

市场上已经有许多针对不同运动项目的拓展现实应用程序可供选择。

根据用户的偏好和训练目标,选择适合自己的应用程序进行训练。

在进行虚拟运动训练之前,首先需要进行设备的设置和校准。

校准过程可以保证设备能够正确追踪用户的动作和位置,并提供更加准确的虚拟环境呈现。

校准通常包括校准头部追踪、调整设备位置和角度等步骤,根据不同的设备和应用程序,具体操作可能会有所不同。

完成设备校准后,我们可以开始虚拟运动训练了。

根据选择的应用程序,用户可以进行各种类型的虚拟运动训练,如健身训练、瑜伽、舞蹈等。

在虚拟环境中,用户可以看到虚拟的教练或引导视频,并根据提示进行相应的动作。

设备通过传感器实时追踪用户的动作,并将其反馈到虚拟环境中,让用户可以看到自己的动作与虚拟引导的对比,从而纠正姿势和动作。

虚拟运动训练不仅可以提供个性化的训练方案,还能够提供即时的反馈和指导。

在训练过程中,设备会根据用户的表现给出相应的评价和建议,帮助用户优化训练效果。

通过与虚拟教练的互动,用户可以更好地掌握正确的动作技巧和运动要领。

虚拟运动训练还具有趣味性和互动性,可以增加用户的参与度和动力。

一些应用程序提供了竞技和社交功能,用户可以与其他训练者进行比赛、交流和分享成果,增强训练的乐趣和挑战感。

基于虚拟现实技术的武术训练动作模拟系统设计虚拟现实(VR)技术是一种以电脑生成的模拟环境来模拟多种感官体验的技术。

它已经被广泛应用于各个领域,如游戏、教育和医疗等。

本文将介绍一个基于虚拟现实技术的武术训练动作模拟系统的设计。

系统需要一个虚拟现实头盔,用于提供沉浸式的虚拟现实体验。

头盔上有一个高分辨率的显示屏,用于显示模拟的武术训练场景。

头盔还配备有传感器,可以跟踪头部的运动,以实现用户对场景的自由观察。

系统需要一个控制器,用于模拟武术动作。

这个控制器可以是一个手柄,用于模拟剑、棍等器械的挥舞动作。

控制器上也有传感器,可以跟踪用户的手部运动,并将其转化为虚拟场景中的动作。

在系统的设计中,我们需要一个强大的虚拟现实引擎,用于生成和渲染虚拟场景。

引擎应该能够实现真实的物理模拟,包括重力、碰撞等效果。

引擎还应该能够实时更新虚拟场景,根据用户的动作和输入实时调整场景中的物体和角色。

为了使用户能够更好地感受武术训练,系统可以设计一些特殊的辅助功能。

系统可以在虚拟场景中显示用户的运动轨迹,让用户能够更清楚地了解自己的训练效果。

系统还可以提供实时反馈,根据用户的动作来评估其技术的准确性和流畅性。

系统还应该提供一个交互界面,方便用户选择不同的武术训练场景和动作。

界面可以采用图形化的设计,让用户可以通过点击或手势来选择和切换不同的选项。

基于虚拟现实技术的武术训练动作模拟系统设计需要结合虚拟现实头盔、控制器、虚拟现实引擎和交互界面等多个组件。

通过这个系统,用户可以在虚拟场景中进行武术训练,获得身临其境的感觉,并得到实时的动作反馈和训练效果评估。

这种系统可以提高武术训练的效果和乐趣,同时也带来了更多的创新和可能性。

使用虚拟现实技术进行体育训练的技巧与方法虚拟现实(VR)技术近年来迅速发展,逐渐在各个领域中得到广泛应用,包括体育训练领域。

通过虚拟现实技术,运动员可以在模拟的环境中进行训练,从而改善技术和技能,提高竞争力。

本文将探讨使用虚拟现实技术进行体育训练的技巧和方法。

首先,为了成功运用虚拟现实技术进行体育训练,我们需要了解该技术的基本原理和工作方式。

虚拟现实技术通过头戴式显示器和传感器等设备创建虚拟现实环境,运动员可以通过佩戴这些设备来体验不同的场景和环境。

虚拟现实技术能够提供身临其境的感觉,使运动员感觉自己置身于真实的比赛场景中。

在使用虚拟现实技术进行体育训练时,下面是一些关键的技巧和方法:1. 设定明确的目标:在开始虚拟现实训练之前,运动员应该设定明确的目标。

这些目标可以是技术上的,例如提高准确性、速度或力量。

同时,目标也可以是战略上的,例如提高比赛中的决策能力。

通过设定目标,运动员可以更好地衡量训练的进展,以及虚拟现实技术提供的帮助。

2. 选择适当的虚拟现实场景:虚拟现实技术可以模拟各种场景,包括比赛场地、训练场地和特定运动的环境。

运动员应选择与其实际训练需求相符的虚拟场景。

例如,篮球运动员可以选择在虚拟的比赛场地中进行投篮训练,而足球运动员可以在虚拟的足球场地中进行射门训练。

选择适当的场景可以使运动员更好地适应真实的训练环境。

3. 结合实际训练:虚拟现实技术可以用作实际训练的补充,而不是替代。

将虚拟现实训练与实际训练相结合,可以更好地提高技术和技能。

例如,在进行高尔夫训练时,运动员可以使用虚拟现实技术来模拟各种球场情况,然后在实际的球场上进行实际击球练习。

4. 利用反馈机制:虚拟现实技术可以提供实时反馈,帮助运动员纠正动作和改进技术。

这一功能可以大大提高训练的效果。

运动员可以通过观察自己的动作和技巧在虚拟现实环境中的表现,以及虚拟教练的指导,来纠正问题并改进技术。

5. 记录和评估进展:在使用虚拟现实技术进行体育训练过程中,记住记录自己的进展并进行评估至关重要。

使用增强现实技术进行虚拟运动训练的步骤与技巧随着科技的不断发展,增强现实技术(AR)正逐渐应用于各个领域,并在体育训练中占据重要地位。

使用增强现实技术进行虚拟运动训练可以为运动员和教练员提供全新的训练方式和体验。

本文将介绍使用增强现实技术进行虚拟运动训练的步骤与技巧。

第一步,准备设备和软件进行虚拟运动训练需要一些特定的设备和软件。

首先,您需要一台适合运行增强现实应用程序的计算机或智能手机。

然后,您需要购买或下载相应的增强现实软件,这些软件通常可以在应用商店或官方网站上找到。

一些虚拟运动训练软件还需要特定的硬件设备,如AR眼镜或传感器。

确保您的设备和软件都是最新版本,并按照说明进行安装和设置。

第二步,选择适合的虚拟运动训练方案根据您的训练需求和运动类型,选择适合的虚拟运动训练方案。

增强现实技术可以应用于各种体育项目,包括足球、篮球、游泳、高尔夫等。

您可以选择模拟比赛、习题和技巧训练等不同类型的训练方案。

确保根据您的水平和目标选择合适的训练方案。

第三步,设置运动场景和环境使用增强现实技术进行虚拟运动训练需要设置适当的运动场景和环境。

根据您的训练需求,您可以选择不同的场景,如足球场、沙滩、游泳池等。

确保清空并确保您的训练区域安全,没有任何障碍物可能导致意外。

如果您使用AR眼镜,确保您的训练区域没有明显的光线反射或光线干扰,以获得更好的视觉效果。

第四步,跟随指导进行训练启动您选择的虚拟运动训练软件,并按照指导进行训练。

增强现实技术将在您的周围创建虚拟对象,通过视觉和声音指导您进行训练。

跟随指导进行训练,注意动作的准确性和节奏。

训练软件将提供实时反馈和评估,帮助您改进技术和提高表现。

第五步,分析和评估训练成果虚拟运动训练软件通常提供分析和评估功能,以帮助您分析和评估训练成果。

通过分析您的技术和表现,您可以发现潜在的问题和改进点。

许多训练软件还提供数据记录和比较功能,您可以跟踪您的进展和与其他运动员进行比较。

太极拳的全面系统训练(一)罗红元-基宏太极拳传承人、国际A级裁判、武术七段太极拳的全面、系统训练的目的是:不断提高练习者的竞技能力和水平。

训练的内容包括:“自制功夫”和“制人功夫”两大部分。

“自制功夫”的练习着重于增进身体健康水平和身体形态的改善,发展运动素质,使身体进入一种良好的预应状态——“太极状态”。

“制人功夫”的练习刚注重技击技术和战术水平的提高,使之达到纯熟、运用自如的程度。

传统的太极拳训练体系总结和积累了十分丰富和有效的练习方法和手段,首先我们要将这些训练方法进行整理、总结和系统化,使每一练习方法都有明确的目的和定位,同时我们要大胆引进现代体育项目的先进训练方法和理论,提高太极拳训练的科学水平。

由于不同的练习者有其不同的练习目的,有的只想做一般水平的提高者,可着重于自制功夫和“太极状态”的练习,也可能加太极拳套路比赛和一些规则限制较多、对抗程度相对较低的太极对手对抗交流式比赛,训练的程度以适中为度。

而以提高竞竞赛能力为目的的练习者,应着重全面的训练和提高训练强度,积极参与开放和对抗程度强的推手比赛和散手比赛,以此作为提高训练水平的动力和检验竞技水平的标准。

一、太极拳的自制功夫与训练太极拳自制功夫的练习,是围绕着“太极状态”的形成、维持和提高而进行的。

练习时要求由外及内,然后由内及外,内外合一。

太极拳的自制功夫包括了以意导体、以体导气和以气导体三个主要练习内容和层次。

“太极状态”是产生太极拳专项素质和技巧的硬件,“太极状态”的质量与特点,直接影响着专项素质和运用技巧的质量与特点。

在周身一家、内外高度协调的基础上,时时处处保持弹性和压力不丢是太极拳的基本状态和特点。

像一个充满气的轮胎富于弹性与压力,这就是所谓的“太极状态”。

“太极状态”的形成从以意导体开始,在意识的支配下,通过特定的身形调整,如五把弓调节和对拉拔长等,使身体调节成一理想的模式,为下一步内气的聚合和运行创造条件,这一过程就像轮胎的制造过程;以体导气,就是通过正确的身法调节,引导内气有序地聚合和分布,如“气沉丹田”、“气遍身躯”和“气势饱满”等,这一过程就像为做好成型的轮胎注入气体而产生压力;以气导体,就是在以上两个环节的调节基础上,以意导气,一旦丹田为原动中心产生内气的激荡,从而带动身体和“气势中心”的运动而做功。

基于虚拟现实技术的武术训练动作模拟系统设计一、系统需求分析在虚拟现实环境的实现上,系统需要具备高清晰度的视觉效果、真实的3D空间感和交互性。

在动作模拟和反馈功能方面,系统应该能够准确地捕捉训练者的动作并进行实时分析,给出针对性的指导与反馈。

在个性化训练方案方面,系统需要根据训练者的身体状况、训练目标和训练习惯进行个性化配置,并提供相应的训练计划和记录功能。

二、系统设计方案基于以上需求分析,设计一个基于虚拟现实技术的武术训练动作模拟系统是可行的。

系统的设计方案主要包括硬件设备、软件系统和用户界面。

1.硬件设备在硬件设备方面,系统需要使用高性能的VR设备,如头戴式显示器、体感捕捉器等。

头戴式显示器可以提供高清晰度的视觉效果和真实的3D空间感,而体感捕捉器可以准确地捕捉训练者的动作。

系统还需要配备专业的运动传感器,用于监测训练者的身体姿势和动作,并提供实时反馈。

2.软件系统3.用户界面用户界面是训练者与系统交互的重要部分,它需要直观、友好并且易于操作。

用户界面应该包括虚拟现实环境的导航和操作、动作模拟与反馈的显示和操作、个性化训练方案的配置和管理等功能。

用户界面还需要提供实时的训练数据和统计信息,以帮助训练者监控训练效果和调整训练计划。

三、系统实现的可行性分析基于虚拟现实技术的武术训练动作模拟系统的实现是可行的,因为现有的VR设备和运动传感器已经能够满足系统的硬件需求,而动作捕捉和分析技术也已经非常成熟。

软件系统方面也可以借鉴目前已有的VR体育训练软件和个性化健身软件的设计理念和技术手段。

在实现过程中,可以采用Unity3D、Unreal Engine等成熟的虚拟现实开发平台,借助其强大的建模、渲染和物理引擎功能,快速构建高质量的虚拟现实模拟环境和动作模拟系统。

可以利用深度学习和机器学习技术,训练系统的动作识别和反馈算法,提高系统的准确性和智能化程度。

四、系统未来的发展方向基于虚拟现实技术的武术训练动作模拟系统具有广阔的发展前景。

使用虚拟现实进行体育运动训练的步骤虚拟现实(Virtual Reality,简称VR)技术的发展为体育运动训练带来了全新的可能性。

通过虚拟现实技术,运动员可以在虚拟环境中进行身体训练和技能提升,提高运动表现和竞技水平。

下面将介绍使用虚拟现实进行体育运动训练的步骤。

第一步:设定训练目标在使用虚拟现实进行体育运动训练之前,运动员需要明确自己的训练目标。

无论是提高力量、增加速度还是改善协调性,设定明确的目标对于制定训练计划至关重要。

通过设定目标,运动员可以更好地利用虚拟现实技术进行有针对性的训练。

第二步:选择适合的虚拟现实设备虚拟现实设备的选择对于体育运动训练至关重要。

目前市面上有各种各样的虚拟现实设备,如头戴式显示器、手柄控制器等。

根据不同的训练需求,选择适合的设备可以提供更好的训练体验和效果。

第三步:选择合适的虚拟现实训练程序虚拟现实训练程序是指在虚拟环境中进行的体育运动训练。

运动员可以选择各种不同的训练程序,如篮球、足球、高尔夫等。

通过选择合适的训练程序,运动员可以模拟真实比赛场景,提高技术水平和应对能力。

第四步:进行虚拟现实训练一旦选择了合适的虚拟现实训练程序,运动员可以开始进行虚拟现实训练。

通过戴上头戴式显示器和手柄控制器,运动员可以进入虚拟环境,并进行各种训练动作。

虚拟现实技术可以实时追踪运动员的动作,并反馈给他们,帮助他们纠正动作错误和提高技术水平。

第五步:分析训练数据虚拟现实技术可以生成大量的训练数据,如运动员的动作轨迹、速度、力量等。

通过分析这些数据,运动员和教练可以了解训练效果,并进行针对性的调整和改进。

这些数据还可以用于比较不同训练阶段的差异,帮助运动员制定更有效的训练计划。

第六步:定期评估训练效果使用虚拟现实进行体育运动训练需要定期评估训练效果。

运动员和教练可以通过比较训练前后的数据和表现来评估训练的效果。

如果发现训练效果不理想,可以根据评估结果进行调整和改进,以达到更好的训练效果。

基于虚拟现实技术的武术训练动作模拟系统设计

虚拟现实技术的快速发展为各个领域带来了许多创新和变革。

利用虚拟现实技术来模拟武术训练动作是一种创新的方法,可以提供更加安全、交互性强的训练环境,有效提高学习者的技能和体验。

本文将设计一种基于虚拟现实技术的武术训练动作模拟系统。

系统需要具备一个可视化的虚拟现实环境。

这可以通过虚拟现实头盔、手柄等设备来实现。

学习者可以通过头盔观看一个虚拟的训练场景,并通过手柄来进行交互。

虚拟训练场景应具备良好的真实感,包括场景的细节、光影效果等。

系统需要模拟不同的武术动作,并提供实时的反馈。

系统可以通过人体姿势识别技术来捕捉学习者的动作,并与设定的标准动作进行比对。

如果学习者的动作符合标准,系统应给予正面的反馈,例如鼓励音效、成功提示等;如果学习者的动作不符合标准,系统应给予指导性的反馈,例如错误提示、示范动作等。

这可以帮助学习者即时纠正错误,提高训练效果。

系统还可以提供不同级别的训练模式和挑战模式。

初学者可以选择较为简单的训练模式,通过模拟动作来学习基本的武术技巧;熟练者可以选择挑战模式,挑战更高难度的动作和训练任务,提高自己的技术水平。

系统应根据学习者的水平和进步情况,动态调整难度,确保训练的有效性。

系统还可以提供其他辅助功能,例如学习者的数据记录和分析。

系统可以记录学习者的训练时间、速度、准确度等指标,并生成数据报告,帮助学习者了解自己的训练情况和进步情况。

系统还可以提供音乐、视频等个性化设置,增加学习的乐趣和吸引力。

专利名称:简化24式太极拳综合训练系统专利类型:发明专利

发明人:范继军,朱伟,贺超

申请号:CN201610637515.1

申请日:20160802

公开号:CN106166376A

公开日:

20161130

专利内容由知识产权出版社提供

摘要:本发明公开了一种简化24式太极拳综合训练系统,包括人机操作模块,视频采集模块,体感控制模块,数据处理模块,动作标准评估模块,动作指导建议输出模块,人工专家指导模块,中央处理器,节奏契合度评估模块,空气投影训练教学模块。

本发明实现了太极拳动作的自动判定评估和指导,且在可以在训练学习的过程中的采用三维投放的形式进行教学视频或指导视频的播放,并将理论知识穿插在其中,大大方便了训练者对于各个动作的反复观摩和理解,提高了训练效率,使得动作更加规范,同时也减轻了教师的工作量;还可以营造各种训练的背景,也可以建立一个虚拟的三维模型进行陪练,提高了动作训练过程中的趣味性。

申请人:河南牧业经济学院

地址:450046 河南省郑州市郑东新区龙子湖北路6号

国籍:CN

代理机构:西安铭泽知识产权代理事务所(普通合伙)

代理人:俞晓明

更多信息请下载全文后查看。

太极阴虚拟定位使用教程太极阴虚位是太极拳中重要的动作之一,也是许多拳友们追求的终极目标。

它既是太极拳的核心要义,也是修炼者内功的体现。

下面是太极阴虚位的使用教程,希望能对您有所帮助。

一、阴虚位的定义太极阴虚位,即将身体的重量向下沉,使脚掌贴地,腿部和腰部形成一个统一、稳定的支撑体系。

这种站立姿势能够锻炼人体的肌肉、韧带和关节,提高身体的平衡能力和稳定性,使身体的力量更加集中、充实。

二、阴虚位的步骤1.始终站直在练习太极阴虚位时,身体要保持笔直的姿势。

双腿稍微分开与肩同宽,两脚平行放置,小腿轻微内八字。

膝盖微微弯曲,臀部、背部、头颈都要呈直线状,同时保持三怀(怀胸、怀气和怀中)。

2.提起气势通过吸气来提起气势,感受全身肌肉的张力。

同时,要将重心沉下,感觉身体下沉、稳定。

这样可以增加身体的稳定性和灵活性,使拳术的力量更加集中、充实。

3.注意呼吸呼吸时要做到自然、平稳,不要急促或用力。

吸气时感受气息进入丹田,吐气时慢慢将气息排出,呼吸要与身体的动作相配合。

通过合理的呼吸,可以调节身体的能量,增加内力的积聚。

在进行太极阴虚位练习时,要保持身体的平衡。

可以通过调整脚和腿的姿势,使整个身体呈现一个稳定的三角形支撑架。

双脚贴地,重心均匀分布在两腿之间,保持身体的稳定性。

5.注意力的集中太极阴虚位需要集中注意力,感受身体的变化和内力的流动。

要时刻保持意识的清醒,将注意力集中于身体的各个部分,感受内力在身体中的流动和产生的变化。

三、使用阴虚位的好处1.强化肌肉和韧带通过练习太极阴虚位可以增强身体的肌肉力量和韧带的弹性。

长时间的站立会使腿部肌肉得到锻炼,增强腿部的力量和平衡能力。

同时,太极阴虚位还可以加强腰部、臀部和腹部的肌肉,提高身体的稳定性和控制力。

2.提高身体的平衡能力太极阴虚位是锻炼身体平衡能力的重要方法之一、通过保持身体的平衡,可以使身体的重心均匀分布,提高身体的稳定性和控制力。

在进行太极拳的动作时,身体能够更加灵活自如地移动,使力量更集中、准确。

基于虚拟现实技术的武术训练动作模拟系统设计

随着科技的不断发展,虚拟现实技术的应用越来越广泛,已经在许多领域得到应用。

虚拟现实技术也逐渐开始在武术训练领域中应用。

本文基于虚拟现实技术,设计了一款武

术训练动作模拟系统。

该系统可以为武术爱好者提供更加直观、互动、个性化的武术训练

体验,提高武术训练的效率和趣味性。

首先,该系统需要进行武术动作的录制和建模。

我们可以通过摄像机等设备记录武术

动作的实际演练情况,然后使用刚体运动学等技术将其转化为计算机可识别的3D模型。

由于武术动作具有很高的复杂性和多变性,因此需要对不同的动作类型分别进行建模,并

且根据用户的不同需求进行个性化的定制。

同时,我们还需要设计真实的场景、情境和对手,以增强用户的身临其境感。

其次,该系统需要借助虚拟现实技术呈现训练场景,使用户可以身临其境地进行武术

训练。

用户可以通过虚拟现实头盔和手柄等设备进行交互,与虚拟对手进行实时的互动。

这样用户就可以更加真实地体验到武术训练中的情境和感觉,并且可以在虚拟的环境中不

断重复实践和修正自己的动作,并且可以根据视觉和听觉反馈来纠正自己的错误。

最后,该系统还需要配合一些智能算法,来对用户的训练进行监控和分析,并且向用

户提供相应的反馈和建议。

例如,该系统可以记录用户的训练时间、次数、速度等基础数据,并且通过神经网络等算法来对用户的动作进行评估,判断是否达到规范、标准的要求,并且向用户提供相应的建议和改进方案。

同时,该系统还可以收集用户的数据,并且在后

期进行数据分析和统计,对武术训练的效果和成果进行评估。

基于虚拟现实技术的武术训练动作模拟系统设计摘要:本文基于虚拟现实技术的武术训练动作模拟系统设计,旨在研究如何将虚拟现实技术应用于武术训练中,提升武术训练的实用性和教学效果。

本文首先介绍了武术训练中存在的问题,然后分析了虚拟现实技术的优势和特点,提出了基于虚拟现实技术的武术训练动作模拟系统的设计思路和实现方法,最后简单说明了系统的应用价值和前景。

引言:一、武术训练中存在的问题在传统的武术训练中,教学多依靠师父传授、传帮带的方式,通常需要在指定的时间和地点进行实地教学。

这种教学模式存在教学内容单一、教学难度大、时间和地点限制等问题。

因此,许多武术爱好者和学生常常发现武术训练难以持续,难以保持兴趣和热情,且无法得到及时有效的指导和培训。

同时,武术训练中的技术难度也有所增加,特别是针对初学者,他们需要大量的练习来完善他们的技能。

二、虚拟现实技术的优势和特点虚拟现实技术为武术训练带来了新的思路和技术手段。

虚拟现实技术可以使用计算机技术模拟现实场景和环境,用户可以使用非常逼真的虚拟动作进行互动,不仅可以提高训练的效果,而且可以使学生随时随地进行训练,反复练习,大大缩短了培训时间。

另外,虚拟现实技术可以给学生更多的自主选择权,让他们可以根据自己的需求进行选择和学习。

(1)系统要素:在设计系统运作过程中,应该考虑到虚拟世界、交互、音频、视频等要素。

其中,虚拟世界是基于虚拟现实技术实现的,交互包括语音和手势控制,音视频可以防止工艺显示紫色与培训材料中的内容视频。

(2)基础动作库的建立:为了使系统更加准确、完善,需要建立武术动作库。

首先应该对每个武术动作进行详细记录和视频拍摄,制作动作图表,并计算各个关节角度及移动轨迹。

然后需要通过模拟器进行动作重现,使得系统可以更真实地模拟武术动作。

(3)口令和语音识别技术:可以更好的控制系统,使虚拟人物完成动作训练动作。

同时,通过语音识别技术可以使系统更加便捷,学生可以直接通过口令让虚拟人物进行训练动作。

虚拟现实在体育训练中的应用虚拟现实(Virtual Reality,简称VR)技术是一种能够创造出仿真的三维环境,并将用户通过视觉、听觉、触觉等感官进行身临其境体验的技术手段。

随着科技的不断进步,虚拟现实技术在各个领域得到了广泛的应用,其中体育训练领域也不例外。

本文将探讨虚拟现实技术在体育训练中的应用。

一、虚拟现实在体育训练中的优势虚拟现实技术在体育训练中的应用具有以下几个优势。

1. 提供真实的仿真环境虚拟现实技术可以创造出逼真的三维环境,使训练者感觉自己置身于实际比赛场景中。

通过这种方式,训练者可以更好地模拟比赛情境,提高对于现实比赛的适应能力。

2. 提供个性化的定制训练虚拟现实技术可以根据个人需求进行定制化的训练。

无论是初学者还是职业选手,都可以通过调节虚拟场景的难度和训练模式,来实现个性化的训练效果。

3. 提供实时的数据反馈虚拟现实技术可以通过传感器等设备实时获取训练者的动作、姿态等信息,并对其进行分析和反馈。

这种实时的数据反馈可以帮助训练者及时纠正错误动作,优化训练效果。

二、虚拟现实在不同项目的体育训练中的应用虚拟现实技术在不同体育项目的训练中都有广泛的应用。

以下将以足球、篮球和跑步三个项目为例,介绍虚拟现实技术在体育训练中的具体应用。

1. 足球训练虚拟现实技术可以创造出真实的足球场景,让训练者在虚拟环境中进行技术训练和比赛模拟。

训练者可以通过虚拟现实设备和感应器,感受到真实的球场环境和对手的防守压力。

此外,虚拟现实还可以辅助训练者改善传球、射门、头球等特定技术动作,提高训练效果。

2. 篮球训练虚拟现实技术可以创造出室内篮球场景,让训练者进行个人技术和战术训练。

训练者可以在虚拟现实的环境中感受到真实的比赛节奏和防守压力,通过对抗训练和投篮练习等模拟训练,提高球员的技术水平和应对能力。

3. 跑步训练虚拟现实技术可以创造出不同场景的跑步环境,如城市街道、郊外风景等,让跑步者在家中就能享受到各种跑步路线的体验。

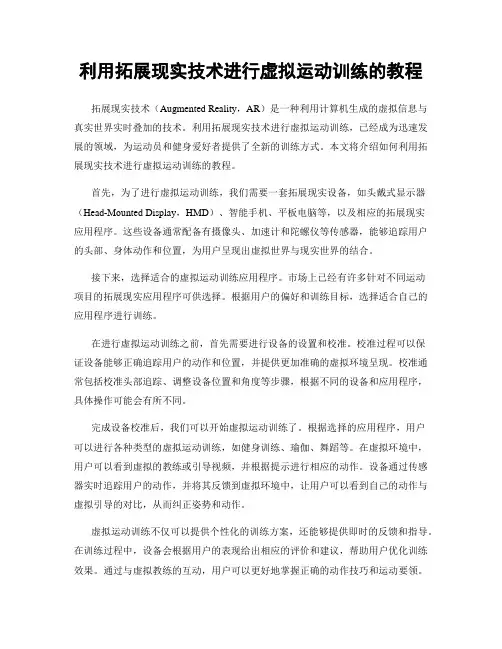

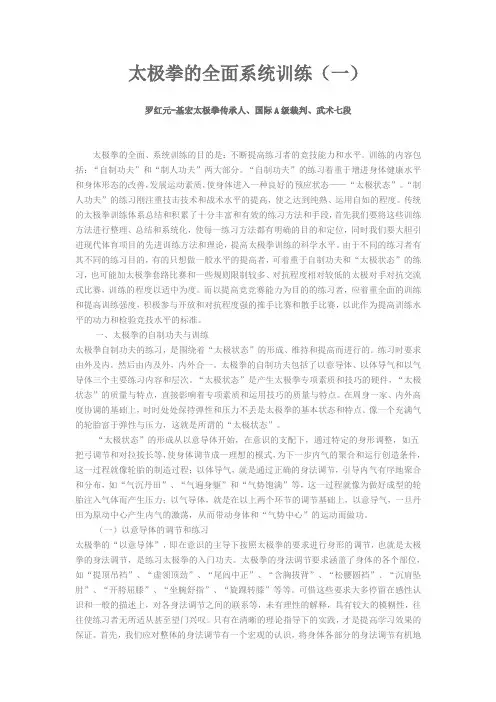

Training for Physical Tasks in Virtual Environments:Tai Chi Philo Tan Chua Rebecca Crivella Bo Daly Ning Hu Russ Schaaf David Ventura Todd Camill Jessica Hodgins Randy PauschEntertainment Technology CenterCarnegie Mellon UniversityAbstractWe present a wireless virtual reality system and a pro-totype full body Tai Chi training application.Our primary contribution is the creation of a virtual reality system that tracks the full body in a working volume of4meters by5 meters by2.3meters high to produce an animated repre-sentation of the user with42degrees of freedom.This–combined with a lightweight(<3pounds)belt-worn video receiver and head-mounted display–provides a wide area, untethered virtual environment that allows exploration of new application areas.Our secondary contribution is our attempt to show that user interface techniques made possi-ble by such a system can improve training for a full body motor task.We tested several immersive techniques,such as providing multiple copies of a teacher’s body positioned around the student and allowing the student to superimpose his body directly over the virtual teacher.None of these techniques proved significantly better than mimicking tra-ditional Tai Chi instruction,where we provided one virtual teacher directly in front of the student.We consider the im-plications of thesefindings for future motion training tasks. 1IntroductionWith recent advancements in wireless technology and motion tracking,virtual environments have the potential to deliver on some of their early promises,creating effective training environments and compelling entertainment expe-riences.In this paper,we describe and evaluate a system for training in a physical task–Tai Chi–and show the ap-plication of training techniques not possible with real world training.The capture and display system is wireless,freeing the student from the encumbrance of trailing wires.We place a Tai Chi student in a3D rendered environment with a vir-tual instructor,and they learn Tai Chi by mimicking expert motions,similar to real-world Tai Chi instruction.Our hy-pothesis is that virtual environments allow us to takeadvan-Figure1.Left:A student in the Tai Chi trainer.Right:The virtual world.Student is in light,teacher in dark clothestage of how physical activities such as Tai Chi are currently learned while visually enhancing the learning environment in ways not possible in the real world.The student’s move-ment is recorded with an optical motion capture system that allows real-time capture of the whole body.Captured mo-tion is used to animate representations of the student and teacher and displayed via a wireless head-mounted display (HMD),allowing students to have a natural representation of their body in the virtual environment and easily acquire feedback on their movement.We view this system as afirst step toward more effective and general systems for the training of physical tasks.Phys-ical training is an economically important application with a significant impact on both health and safety.VR has great potential for enhancing physical training,with the ability to record a motion once and then replay and practice the motion unlimited times,enabling self-guided training and evaluation.Tai Chi is itself important because there are demonstrated positive effects in preventing falls in the el-derly and other health improvements[22].We chose Tai Chi because the slow nature of the move-ments and the range of motionfit the properties of our VR system well,additionally we can offer evaluation and feed-back to the student during training.Tai Chi is a challenging training application because the sequence of movements, the form,is complicated and the standards for performance are exacting.Novices struggle to memorize the sequence of movements and more experienced students spend20years or more refining their form.Because emphasis is on bal-ance and shape of the body during slow movements,stu-dents are able to adjust their motion based on feedback from the teacher during the sequence.Figure1shows a user wearing the wireless virtual reality equipment(a belt and an HMD)and the virtual environment with the real-time data applied to a student model.In the virtual environment,the student is rendered with a white shirt and the master wears red.Real-time,full body capture of the student’s motion allows us to experiment with different forms of visual feedback.We implemented several user interaction tech-niques,such as providing multiple simultaneous copies of the teacher surrounding the student,and allowing the stu-dent to superimpose his body directly inside the teacher’s body.While the former could be done via projection or CA VE-like systems,the latter technique is not readily im-plemented without the use of an HMD.We compared var-ious interfaces objectively by computing the error in limb position between student and teacher,and subjectively via a questionnaire of our subjects.Surprisingly,both the ob-jective measures and subjective questionnaires showed that none of the virtual environment enabling techniques per-formed better than merely mimicking a real world situation, with one teacher in front of the student.2BackgroundWireless virtual environments with full body capture have become possible only in the past few years.To our knowledge,ours is thefirst wireless full body virtual en-vironment system usable for a broad variety of applica-tions.The only other wireless system of which we are aware was a highly specialized dismounted soldier training system that required the participant to wear25pounds of equip-ment[11],severely limiting its applicability.That system was constructed for combat training and used a General Re-ality CyberEye HMD and Premier Wireless for the trans-mittal of a bi-ocular NTSC video image.The motion of the soldier and rifle were captured with a Datacube optical system.The technology for wireless whole body capture in real-time has been available for a number of years,first with magnetic sensors and more recently with commercial op-tical motion capture systems.Researchers have explored ways to make magnetic systems less burdensome for the user by using fewer sensors and supplementing the data with inverse kinematics for the limbs where motion is not directly measured[2,15,10].The HiBall wireless,wide-area system created by Welch et al.provides very good data but requires the student to wear relatively encumber-ing trackers[21].Technologies for capturing some of the motion of the user via video have also been developed[23].With the system described in this paper,our goal has been specifically to explore the use of virtual environ-ments in training applications.A number of virtual en-vironments have been developed in which the goal is to teach the student decision-making strategies for such situ-ations as hostage negotiations,earthquakes,parachute de-scent andfirefighting[13,17,6].Fewer virtual environ-ments have been built that attempt to train the user for phys-ical tasks.Emering et al.created a system for action recog-nition that was applied to a martial arts scenario[1].Davis and Blumberg built a virtual aerobics trainer in which the user’s actions were captured and recognized as he or she was instructed to“get moving”or given feedback such as “good job!”[4].The user’s silhouette was captured via in-frared light and matched to a set of pre-recorded templates. However,the effectiveness of the system as a trainer was not assessed.Becker and Pentland built a Tai Chi trainer[3]in a modified version of the Alive[8]system that provided bet-ter depth information.Their system used a hidden Markov Model to interpret the user’s gestures and achieved a greater than90%recognition rate on a small set of18gestures. When gestures were recognized but did not receive a high score,the system provided feedback by playing back the segment of the motion in which the student’s motion dif-fered most from that of the teacher.Yang performed some preliminary user studies with his virtual environment called“Just Follow Me”[24]using a Ghost metaphor to show the motion of a teacher to a subject. This form of visual feedback is similar to one of our layouts although the rendering styles differed.Subjects executed slow and fast hand motions in one of two test conditions, either while seeing the teacher’s motion displayed in afirst person view or while viewing the teacher’s motion on a third person video display.Although Yang did not quantitatively assess the results,qualitative assessment indicated that the virtual environment produced more accurate trajectories. 3Training EnvironmentOur training environment uses the Vicon real-time op-tical motion capture system from Oxford Metrics.The recorded motion isfiltered to reduce noise and recorded for offline analysis.The students wear a wireless HMD through which they see a rendered virtual environment containing animated representations of both the student and the teacher.Figure2.The virtual environment for Tai ChiX10TransmitterRecieverFigure3.Data pipeline and system diagramThe head-mounted display is extremely light,which is im-portant for a task involving body posture.3.1Virtual WorldThe virtual environment places the student in a pavil-ion on an island surrounded by a calm lake(Figure2).On the horizon the sun is setting behind the mountains.The environment is designed to be quiet and peaceful as those are the circumstances in which Tai Chi should be practiced. The pavilion also indicates to the student where the motion capture area is.In the motion capture laboratory,floor mats are used to demarcate the capture region for the optical motion capture system.To provide further continuity between the real and virtual worlds,the virtual world has a Yin Yang symbol on thefloor in the same place that the symbol appears in the real world painted on afloor mat.These physical and visual cues allow the student to easily position him or herself in the appropriate part of the laboratory and virtual environment. This positioning is important because the complete Tai Chi form uses the entire available motion capture area.The student wears a Spandex unitard with reflective markers applied to the clothing and skin.The student also wears a fabric hip belt that holds the batteries and the re-ceiver for the HMD.These weigh3pounds in total.We use a marker set with4114mm markers,an adaptation of a standard biomechanical marker set.Markers are placed at most joints and along every major bone,and additional markers provide redundant information for critical body segments(head,hands,and feet).When subjects begin the experiment,they are asked to perform a calibration motion in which they move each joint through its full range of mo-tion.The system uses this information to compute a17bone skeleton with bones sized to the correct length for that stu-dent.The setup process–from having the student put on the unitard through calibration–takes approximately30min-utes.3.2Real-time Optical Motion CaptureTwelve cameras were placed around the laboratory to provide good coverage of a4m×5m capture region2.3m high.The lens of each camera is surrounded by infrared emitters that reflect IR light off the retroreflective tape on the markers.The cameras capture1024×1024bitmap im-ages at60fps.These locations on the image plane of each camera are converted to a set of3D points by the Oxford Metrics Vicon512Datastation hardware.The locations of the markers are sent to the Oxford Met-rics Tarsus real-time server whichfits the student’s skeleton to the three-dimensional marker locations.Thefitting pro-cess runs at between30-60fps on a1GHz Pentium III.The variability in frame rate appears to be caused by occasional spurious marker locations produced by the image processor and large changes in the data.3.3FilteringAfter the skeleton has beenfit to the capture data,the position and orientation of each limb isfiltered by the real-time server to reduce noise.Because thefitting process oc-curs in real time and must be done very quickly,two types of error are apparent in the output.Thefirst is a gross error due to marker mislabeling which causes bones to“flip”out of position.The other error is a general jittering of the skeleton that results from inaccuracy of thefitting process and marker position reconstruction.Flipping errors,which usually occur during high accelerations,are rare in our sys-tem since Tai Chi motions tend to be slow.Jittering,how-ever,is noticeable regardless of the speed of the student’s motion and needs to be corrected throughfiltering.The mean residual error of markers in thefitting process is between3.9mm and7.2mm for our students(as reported by the Viconfitting software),enough to cause distracting movement in the virtual environment.In particular whenviewing the environment infirst-person,where the virtual camera isfixed to the head’s position and orientation,those small errors become large changes in the view.We chose a Discrete Kalmanfilter to reduce the noise in our data because it had been successfully applied in systems and applications similar to ours[20].Sul and his colleagues also used a Kalmanfilter to post-process motion capture data to smooth the motion and maintain the kinematic con-straints of a hierarchical model[16].We,however,chose to ignore the bone length constraints during thefiltering pro-cess.This gives acceptable visual results and avoids any extra lag due to the processing time needed to satisfy those constraints.The Discrete Kalmanfilter requires the input signal to be in a linear space.This constraint is not a problem for bone position vectors(which are vectors in R3),but it is a problem for bone orientations(quaternions representing points in S3).In order to apply thisfilter to the orientation data wefirst linearize the orientation space using a local linearization technique[7].Because the virtual camera isfixed to the position and orientation of the student’s head,jitter that may have been unnoticeable on other parts of the body is magnified and re-sults in an unacceptably shakyfirst-person view.To correct this,we apply a more aggressive set offilter parameters to the head position and orientation than to the rest of the body. These parameters make thefirst-person point of view stable without sacrificing agility in the rest of the body.3.4RenderingWe chose the Lithtech3.1game engine for our render-ing because it was a fast,robust,and proven engine that supported rendering features critical to our Tai Chi envi-ronment and future environments built with our system: smooth skinned models,custom render styles,and efficient networking.Our rendering application is structured with a client/server model.The server acts as an intermediary, broadcasting commands to control the layout of students and teachers in the environment and the playback position and speed.The server also records data for both the virtual teacher and student for offline analysis.The client takes the data from thefiltering application,applies the position and orientation data to an avatar skeleton,and renders the virtual world with the teacher and student models. The client,server,and thefiltering process all reside on a 733MHz Pentium II with a GeForce3graphics accelerator. The rendering speed averages17fps,and is always at least 14fps.After the scene is rendered,a scan converter changes the signal from VGA to NTSC.The signal is then sent to an off-the-shelf X10wireless video transmitter that broadcasts the video from a location3m above the student’s head in the center of the capture area.This signal is received by an X10video receiver on the student’s belt,and then sent to the Olympus Eye-Trek glasses we use for our HMD.The ren-dering is bi-ocular,with the same image sent to each eye via separate LCD screens.Because a lightweight HMD is re-quired for this application,we had to compromise on spatial resolution of the display itself.The Eye-Trek had a37.5◦horizontal and21.7◦verticalfield of view,each screen was 640×360pixels.The weight of the apparatus was3.4oz. Therefore,our decision to degrade VGA to NTSC in order to broadcast it implied little or no further loss of resolution. An important detail in rendering the image for the student is to eliminate other light sources in the laboratory so that the virtual environment is the all the subject sees.We accom-plish this by turning off the lights in the laboratory rather than via a cowling.3.5LatencyThe latency of the motion capture and rendering process was170ms.To measure our end-to-end system latency, we put markers on a rotating disc revolving at60RPM and then added representations of those markers to our existing Tai Chi environment.We then projected the virtual disc image onto the physical disc as it rotated and took pho-tographs to measure the angle between the projected and real markers.The angle difference∆θbetween the markers is proportional to latency such that latency=k∆θwhere k=60s/(60RPM∗2π)=1/2π≈0.159.Our measured∆θwas1.1radians,giving us an end-to-end latency of170ms.A latency of170ms is high for an interactive virtual en-vironment,but the effect on the student’s performance is somewhat mitigated by the slow nature of movements in Tai Chi.In addition,the students tend to lag behind the teacher by an average of610ms as described in section4.2.4AssessmentIn order to evaluate the effectiveness of our system for learning Tai Chi,we tested40volunteers to analyze how well they learned the motions of a previously recorded teacher,given different visual user interfaces involving the teacher and student in the virtual space.There are a large number of design choices for represent-ing the student and teacher in a full body motion training task.We made the constraining assumptions that the stu-dent would always see afirst-person view,that the teacher and student would be represented in a fairly realistic way (both would remain humanoid,be of the correct propor-tions,etc.),and that the feedback that the students received would be entirely visual and based on body positions alone. We alsofixed the orientation of the teacher and student so(a)One onOne(b)FourTeachers(c)Side bySide(d)Superimposition1(e)Superimposition2Figure4.Thefive conditionsthat they faced that same direction.This decision was basedboth on early pilot studies and the fact that traditional TaiChi classes are taught this way.After a number of pilotexperiments,we identifiedfive representative points in thedesign space(Figure4).•One on One:One teacher with one student•Four Teachers:Four teachers surrounding one student•Side By Side:Four teachers standing next to four stu-dents with one student in the center.•Superimposition1:Five normally rendered studentswith a red wireframe teacher superimposed on them.•Superimposition2:Five wireframe and transparentgreen students with a red stickfigure teacher superim-posed inside them.4.1Experiment DesignThe majority of our40volunteers were college studentswith no previous formal motion training.Each subject ex-perienced four randomized(layout,motion)pairs where thelayout was one of thefive in Figure4and the motion wasone of the four described below.There were originally onlyfour layouts;thefifth,Superimposition2,was added latein the experiment.Based on early subject feedback regard-ing the rendering of Superimposition1,we chose to test analternate rendering method with the same general layout.No subject repeated a layout or a motion.The order inwhich the students saw the layouts and motions was ran-domized to minimize any learning effects between motions.For each(layout,motion)pair,subjects were asked to matchthe teacher as they performed twelve repetitions of the samemotion after watching the motion from any viewpoint thesubject chose.Subjects were told when six repetitions re-mained and when four repetitions remained.All four motion animations were segments of the sameTai Chi form performed by a Tai Chi teacher and captured inan offline motion capture session.Each motion was approx-imately twenty seconds long and had distinguishing charac-teristics.Motion1featured simple hand movements and a90degree turn to the right;motion2had little hand mo-tion,a180degree turn of the feet,and some turning ofthe upper body;motion3featured slow hand movements,large movement of the feet,and some turning of the tho-rax;and motion4featured swift hand movement and turn-ing of the thorax and hips but little movement of the feet.Based on participants’error measurements,we can say thatall motions were of approximately equal difficulty exceptfor motion1which was significantly easier(Figure5(b)).4.2ResultsIn order to measure how closely the student mimickedthe teacher’s motion we used12bones for comparison:theupper and lower arms,hands,upper and lower legs,andfeet.Each bone was defined using the skeleton’s hierarchywith a parent end and a child end.For example the upperarm had a parent end corresponding to the shoulder and achild end corresponding to the elbow.We measured theerror for each bone as the difference between teacher andstudent child end positions,once the parent end positionsand bone lengths had been normalized.Given student boneE r r o r(a)Learning EffectsE r r o r (b)MotionDifficulty E r r o r(c)Layout ComparisonFigure 5.Error analysis results.Error bars show 95%confidence interval.end positions S p and S c and teacher bone end positions T p and T c the error E for that bone was:E limb = S c −S p S c −S p −T c −T p T c −T pWe then average the errors over all limbs to get thetotal error for one frame of data:E f rame =∑12i =1E limb (i )/12.To obtain the total error for one repetition of the motion,we summed over the n frames in that repetition:E rep =∑n j =1E f rame (j )/n .Finally,though there are 12repetitions for each motion,we considered only the last four that the students performed.Before the 9th trial,subjects were reminded that there were four repetitions remaining and encouraged to do their best on the remaining trials.Averaging over these four gives a reasonable indication of how well the student learned the form.So the total error,for one student,given one layout and one motion,is E trial =∑12k =9E rep (k )/44.2.1Data Analysis DetailsThe error measurement for full motions was slightly more complicated.There were two major sources of error which were common for all students who participated in the ex-periment:time lag and yaw offset.If the student was not oriented in precisely the same way as the teacher,the error in yaw would add an additional error to all bones in the student’s body.Even if the student performed the form per-fectly,if there was a few degree offset in the orientation the student would have a large error.To minimize this,we considered the student’s starting pose and found the yaw offset that minimized this static pose error.We found thatE r r o r (a)Yaw Shift (degrees)E r r o r(b)Time Shift (s)Figure 6.Change in error with yaw and time shiftingwhen considering all possible offset angles,from −30◦to 30◦there was one clear point where error was minimized (Figure 6(a)shows a typical case).In addition to the yaw shift,as the students performed the motion they all tended to lag behind the master.Be-cause they were still doing the motion correctly,just not at the same time as the master,we searched for a time shift that would minimize this error.For each repetition of the motion,we considered all possible time shifts from 0to 120frames (0to 2seconds).For each value,we compared the shifted student data with the original teacher data to get the error value.Again we found that there was one clear shift value which minimized the error (Figure 6(b)shows a typi-cal case).The average time shift was approximately 610ms.We also needed to make sure there was no learning or fatigue effect.Figure 5(a)shows that when we averaged all conditions performed first,second,third,and fourth across all subjects,the order of performance had no effect on er-ror.Before we could average results from different mo-tions we needed to compensate for different difficulties in the motions.Figure 5(b)shows that for the average across all subjects,motion 1was significantly easier than the other motions.For our final analysis,we normalized the error values based on the mean difficulty of each motion.Having dealt with these issues,we can now compare all of the layouts with each other.Figure 5(c)shows the average error across all subjects for each layout.The N varies for each of the layouts because Superimposition 2was added later in the experiment and some subjects were unable to do all four (layout,motion)tests due to lack of time,discomfort,and other reasons.The only statistically significant conclusion we can draw based on the 95%con-fidence intervals for the average errors is that Superimpo-sition 1is worse than the others by a small amount.One on One,which most closely mimics a traditional teaching environment,is as good as the other techniques.4.2.2Questionnaire ResultsAfter each experiment ended,we gave a questionnaire to the subjects.We asked them to rank the four layouts they expe-One On One (n =30)(n =23)(n =26)(n =27)Super.2(n =6)%S t u d e n t sEasiest Hardest Figure 7.Questionnaire Results:Layout Dif-ficultyrienced,from easiest to hardest.As Figure 7shows,the two Superimposition trials were considered to be significantly harder than the other trials.In fact,all of the subjects who tried Superimposition 2thought it was the most difficult.In-terestingly,although subjects considered Superimposition 2very difficult compared to the other layouts,average error on that layout was not significantly greater than the other non-superimposed layouts.5ConclusionsOur primary contribution is the engineering of a wireless virtual reality system that tracks 41points on the human body in a working volume of 4meters by 5meters by 2.3meters high.Our system has a positional accuracy of roughly 5mm,a latency of 170ms,and maintains a ren-dering frame rate of 17fps using a commodity ing a light sunglasses-style HMD and a 3pound waist pack,ours is the first wireless virtual environment system useful for a large variety of applications.To our surprise,none of the layouts of students and teachers had a substantial effect on learning a motor con-trol task involving full body motion.This result contradicts the initial intuition of virtual reality researchers with which we have discussed this project.They generally assumed su-perimposition would make the task much easier.If other studies come to similar conclusions for other motor con-trol tasks,the virtual environment research community may need to more carefully examine which tasks are best suited for immersive training,as full body motion motor tasks are often cited as example applications.We have several theories about why the VR-only tech-niques fared no better than our simulation of real-world instruction (the One on One layout).In our experiments,students were expected to perform motor movements while simultaneously watching the movements of the teacher’s avatar.Other studies of simpler movements have indicated that this simultaneous activity may interfere with learn-ing [12,14].Pomplun and Mataric compared the per-formance of subjects making arm movements when the subjects were instructed to rehearse while watching a video presentation of the motion and when they were instructed to just watch the video before performing the task [12].The subjects who did not rehearse performed substantially better.Ellis et al.showed that system latency is highly corre-lated with performance in a virtual reality tracking task [5]and Ware and Balakrishnan show that mean time for static target acquisition is directly proportional to latency [19].Although our latency is admittedly large,we chose a slow task so that latency effects would be small.We also mea-sured our errors with a technique that should account for the students’latencies in responding to the teacher.It would have benefited our experiment to understand how our la-tency –and by extension other factors such as field of view and frame rate –affected performance,and what amount of latency is acceptable for a motion training task such as ours.With the exception of the superimposition layouts,our rendering styles were chosen to represent the student and teacher in as realistic a fashion as possible given the limited polygon count possible in a virtual environment.However,experimental evidence indicates that subjects tend to track the hands when watching or imitating a teacher performing arm movements [9].If these results generalize to Tai Chi,a rendering style that emphasized the position and orientation of the hands might be more effective.We evaluated the motion of the students during trials 9-12in the virtual environment.A better evaluation technique would have been to remove the visual stimulus of the images of the teacher and student and assess the stu-dent’s unaided performance [14].In addition to assessing retention in the absence of feedback,this assessment tech-nique would have allowed us to compare the effectiveness of more standard visual presentations such as a video of a teacher projected on the wall of the laboratory and perhaps understand whether the small field of view and low resolu-tion of the HMD were affecting the student’s ability to learn in the environment.We can not conclusively rule out that our virtual envi-ronment was at fault;one can always worry that if only our fidelity had been better,the latency had been lower,or we had just been clever enough to try another rendering style or user interface,the results would be different.However,our system is substantially better in both tracking area and spatial resolution than most full body tracking systems,and。