Introduction to Descriptive Linguistics Artificial Languages A Study of Formal Grammars

- Format: PDF

- Size: 139.53 KB

- Pages: 26

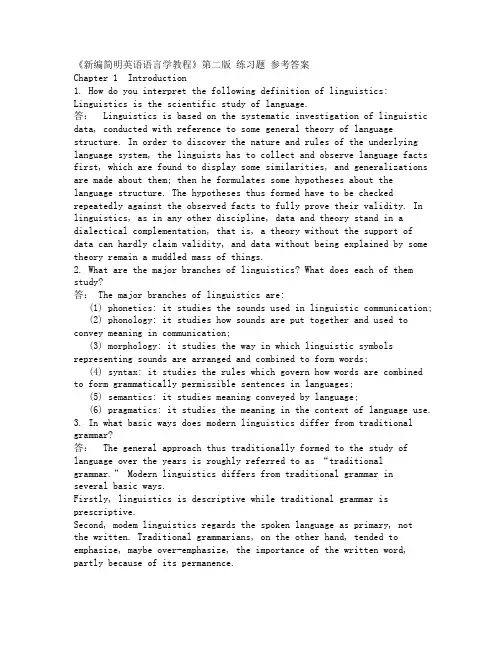

《新编简明英语语言学教程》 (A New Concise Course in Linguistics for Students of English), 2nd Edition: Reference Answers to the Exercises

Chapter 1 Introduction

1. How do you interpret the following definition of linguistics: "Linguistics is the scientific study of language"?
Answer: Linguistics is based on the systematic investigation of linguistic data, conducted with reference to some general theory of language structure. In order to discover the nature and rules of the underlying language system, the linguist first has to collect and observe language facts, which are found to display some similarities, and generalizations are made about them; he then formulates hypotheses about the language structure. The hypotheses thus formed have to be checked repeatedly against the observed facts to fully prove their validity. In linguistics, as in any other discipline, data and theory stand in dialectical complementation: a theory without the support of data can hardly claim validity, and data not explained by some theory remain a muddled mass of things.

2. What are the major branches of linguistics? What does each of them study?
Answer: The major branches of linguistics are:
(1) phonetics: it studies the sounds used in linguistic communication;
(2) phonology: it studies how sounds are put together and used to convey meaning in communication;
(3) morphology: it studies the way in which linguistic symbols representing sounds are arranged and combined to form words;
(4) syntax: it studies the rules which govern how words are combined to form grammatically permissible sentences in languages;
(5) semantics: it studies the meaning conveyed by language;
(6) pragmatics: it studies meaning in the context of language use.

3. In what basic ways does modern linguistics differ from traditional grammar?
Answer: The general approach to the study of language that took shape over the years is roughly referred to as "traditional grammar." Modern linguistics differs from traditional grammar in several basic ways. First, linguistics is descriptive, while traditional grammar is prescriptive. Second, modern linguistics regards the spoken language, not the written, as primary. Traditional grammarians, on the other hand, tended to emphasize, perhaps over-emphasize, the importance of the written word, partly because of its permanence. Third, modern linguistics does not force languages into a Latin-based framework.

4. Is modern linguistics mainly synchronic or diachronic? Why?
Answer: In modern linguistics, a synchronic approach seems to enjoy priority over a diachronic one, because it is believed that unless the various states of a language in different historical periods are successfully studied, it would be difficult to describe the changes that have taken place in its historical development.

5. For what reasons does modern linguistics give priority to speech rather than to writing?
Answer: Speech and writing are the two major media of linguistic communication. Modern linguistics regards the spoken language as the natural or primary medium of human language for several reasons. First, from the point of view of linguistic evolution, speech is prior to writing: the writing system of any language is always "invented" by its users to record speech when the need arises, and even in today's world many languages are only spoken and not written. Second, in everyday communication, speech plays a greater role than writing in terms of the amount of information conveyed. Third, speech is always the way in which every native speaker acquires his mother tongue, while writing is learned and taught later, when he goes to school.
For modern linguists, spoken language reveals many true features of human speech, while written language is only the "revised" record of speech. Their data for investigation and analysis are therefore mostly drawn from everyday speech, which they regard as authentic.

6. How is Saussure's distinction between langue and parole similar to Chomsky's distinction between competence and performance?
Answer: The two distinctions are very similar: both separate the abstract language system (langue, competence) from its concrete realization in actual use (parole, performance). They differ at least in that Saussure took a sociological view of language, and his notion of langue is a matter of social conventions, whereas Chomsky looks at language from a psychological point of view, and to him competence is a property of the mind of each individual.

7. What characteristics of language do you think should be included in a good, comprehensive definition of language?
Answer: First, language is a system, i.e., elements of language are combined according to rules. Second, language is arbitrary in the sense that there is no intrinsic connection between a linguistic symbol and what the symbol stands for. Third, language is vocal, because the primary medium for all languages is sound. Fourth, language is human-specific, i.e., it is very different from the communication systems that other forms of life possess.

8. What are the main features of human language that have been specified by C. Hockett to show that it is essentially different from animal communication systems?
Answer: The main features of human language are termed design features. They include:
1) Arbitrariness: Language is arbitrary. This means that there is no logical connection between meanings and sounds. A good example is the fact that different sounds are used to refer to the same object in different languages.
2) Productivity: Language is productive or creative in that it makes possible the construction and interpretation of new signals by its users. This is why they can produce and understand an infinitely large number of sentences, including sentences they have never heard before.
3) Duality: Language consists of two sets of structures, or two levels. At the lower, basic level there is a structure of sounds, which are meaningless by themselves. But the sounds of language can be grouped and regrouped into a large number of units of meaning, which are found at the higher level of the system.
4) Displacement: Language can be used to refer to things which are present or not present, real or imagined, in the past, present, or future, or in far-away places. In other words, language can be used to refer to contexts removed from the immediate situation of the speaker. This is what "displacement" means.
5) Cultural transmission: While the human capacity for language has a genetic basis, i.e., we are all born with the ability to acquire language, the details of any language system are not genetically transmitted but have to be taught and learned.

9. What are the major functions of language? Think of your own examples for illustration.
Answer: Three main functions of language are often recognized: the descriptive function, the expressive function, and the social function. The descriptive function is to convey factual information, which can be asserted or denied, and in some cases even verified. For example: "China is a large country with a long history." The expressive function supplies information about the user's feelings, preferences, prejudices, and values. For example: "I will never go window-shopping with her." The social function serves to establish and maintain social relations between people. For example: "We are your firm supporters."

Chapter 2 Speech Sounds

1. What are the two major media of linguistic communication? Of the two, which one is primary and why?
Answer: Speech and writing are the two major media of linguistic communication. Of the two, speech is primary; for the reasons, see the answer to Question 5 in Chapter 1.

2. What is voicing and how is it caused?
Answer: Voicing is a quality of speech sounds and a feature of all vowels and some consonants in English. It is caused by the vibration of the vocal cords.

3. Explain with examples how broad transcription and narrow transcription differ.
Answer: Transcription with letter-symbols only is called broad transcription. This is the transcription normally used in dictionaries and textbooks for general purposes. Transcription with letter-symbols together with diacritics is called narrow transcription. This is the transcription needed and used by phoneticians in their study of speech sounds; with the help of diacritics they can faithfully represent as much fine detail as their purpose requires.
In broad transcription, the symbol [l] is used for the [l] sounds in the four words leaf [li:f], feel [fi:l], build [bild], and health [helθ]. In fact, the [l] differs slightly in all four. The [l] in [li:f], occurring before a vowel, is called a clear [l], and no diacritic is needed to indicate it. The [l] in [fi:l] and [bild], occurring at the end of a word or before another consonant, is pronounced differently from the clear [l] in "leaf"; it is called dark [ɫ], and in narrow transcription the diacritic [~] is used to indicate it. In [helθ], the [l] is followed by the dental sound [θ], and its pronunciation is somewhat affected by it. It is thus called a dental [l], and in narrow transcription the diacritic [ ̪ ] is used to indicate it: [hel̪θ].
Another example is the consonant [p].
We all know that [p] is pronounced differently in the two words pit and spit. In pit, [p] is pronounced with a strong puff of air, but in spit the puff of air is withheld to some extent. In pit the [p] is said to be aspirated, and in spit it is unaspirated. This difference is not shown in broad transcription, but in narrow transcription a small raised "h" is used to show aspiration: pit is transcribed as [pʰɪt] and spit as [spɪt].

4. How are the English consonants classified?
Answer: English consonants can be classified in two ways: in terms of manner of articulation and in terms of place of articulation. By manner of articulation, they are classified into stops, fricatives, affricates, liquids, nasals, and glides. By place of articulation, they are classified into bilabial, labiodental, dental, alveolar, palatal, velar, and glottal.

5. What criteria are used to classify the English vowels?
Answer: Vowels may be distinguished as front, central, and back according to which part of the tongue is held highest. To further distinguish the members of each group, we apply another criterion, the openness of the mouth. Accordingly, we classify the vowels into four groups: close vowels, semi-close vowels, semi-open vowels, and open vowels. A third criterion often used in the classification of vowels is the shape of the lips. In English, all the front vowels and central vowels are unrounded, i.e., pronounced without rounding the lips, and all the back vowels, with the exception of [a:], are rounded. It should be noted that some front vowels can be pronounced with rounded lips.

6. A. Give the phonetic symbol for each of the following sound descriptions:
1) voiced palatal affricate 2) voiceless labiodental fricative 3) voiced alveolar stop 4) front, close, short 5) back, semi-open, long 6) voiceless bilabial stop
B. Give the phonetic features of each of the following sounds:
1) [t] 2) [l] 3) [tʃ] 4) [w] 5) [ʊ] 6) [æ]
Answer:
A. (1) [dʒ] (2) [f] (3) [d] (4) [ɪ] (5) [ɔ:] (6) [p]
B. (1) voiceless alveolar stop (2) voiced alveolar liquid (3) voiceless palatal affricate (4) voiced bilabial glide (5) back, close, short (6) front, open

7. How do phonetics and phonology differ in their focus of study? Who do you think will be more interested in the difference between, say, [l] and [ɫ], or [pʰ] and [p], a phonetician or a phonologist? Why?
Answer: (1) Phonetics and phonology are concerned with the same aspect of language, the speech sounds, but they differ in approach and focus. Phonetics is of a general nature; it is interested in all the speech sounds used in all human languages: how they are produced, how they differ from each other, what phonetic features they possess, how they can be classified, and so on. Phonology, on the other hand, aims to discover how speech sounds in a language form patterns and how these sounds are used to convey meaning in linguistic communication.
(2) A phonologist will be more interested, because one of the tasks of phonologists is to find the rules that govern the distribution of [l] and [ɫ], and of [pʰ] and [p].

8. What is a phone? How is it different from a phoneme? How are allophones related to a phoneme?
Answer: A phone is a phonetic unit or segment. The speech sounds we hear and produce during linguistic communication are all phones. A phoneme is not any particular sound; rather, it is represented or realized by a certain phone in a certain phonetic context. The different phones which can represent a phoneme in different phonetic environments are called the allophones of that phoneme.
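The relation between a phoneme and its allophones can be sketched as a rule that chooses a phone from the phonetic environment. The Python sketch below encodes only the /l/ cases described in Question 3 (clear [l] before a vowel, dental [l̪] before [θ], dark [ɫ] word-finally or before another consonant). The symbol sets `VOWELS` and `DENTALS` and the function names `realize_l` and `narrow` are illustrative assumptions, not a complete description of English /l/.

```python
# Simplified sketch: realization of the English phoneme /l/ as clear, dark, or dental [l].
# VOWELS and DENTALS are a hypothetical, minimal inventory covering only the
# leaf / feel / build / health examples; they are not a full English inventory.
VOWELS = {"i:", "i", "e", "æ", "a:", "ɔ:", "u:", "ʊ", "ə", "ʌ"}
DENTALS = {"θ", "ð"}

DARK_L = "ɫ"            # dark [ɫ] (l with middle tilde)
DENTAL_L = "l\u032a"    # dental [l̪]: l + combining bridge below


def realize_l(next_sound):
    """Return the allophone of /l/ given the following segment (None = word end)."""
    if next_sound in VOWELS:
        return "l"          # clear [l] before a vowel, e.g. leaf
    if next_sound in DENTALS:
        return DENTAL_L     # dental [l̪] before a dental, e.g. health
    return DARK_L           # dark [ɫ] word-finally or before a consonant, e.g. feel, build


def narrow(broad):
    """Convert a broad transcription (a list of segments) to a narrow one, for /l/ only."""
    out = []
    for i, seg in enumerate(broad):
        if seg == "l":
            following = broad[i + 1] if i + 1 < len(broad) else None
            out.append(realize_l(following))
        else:
            out.append(seg)
    return "".join(out)


if __name__ == "__main__":
    examples = {
        "leaf": ["l", "i:", "f"],
        "feel": ["f", "i:", "l"],
        "build": ["b", "i", "l", "d"],
        "health": ["h", "e", "l", "θ"],
    }
    for word, broad in examples.items():
        print(f"{word}: [{''.join(broad)}] -> [{narrow(broad)}]")
```

Here /l/ is a single phoneme and the three outputs are its allophones: which one appears is predictable from the following sound, which is why the difference does not distinguish meaning in English.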
For example, the phoneme /l/ in English can be realized as dark [ɫ], clear [l], and so on; these are allophones of the phoneme /l/.

9. Explain with examples the sequential rule, the assimilation rule, and the deletion rule.
Answer: Rules that govern the combination of sounds in a particular language are called sequential rules. There are many such rules in English. For example, if a word begins with [l] or [r], the next sound must be a vowel. That is why [lbik] and [lkbi] are impossible combinations in English: they violate the restrictions on the sequencing of phonemes.
The assimilation rule assimilates one sound to another by "copying" a feature of a neighbouring phoneme, thus making the two phones similar. Assimilation of neighbouring sounds is, for the most part, caused by articulatory or physiological processes. When we speak, we tend to increase the ease of articulation, and this "sloppy" tendency may become regularized as rules of language.
Nasalization is not a phonological feature in English, i.e., it does not distinguish meaning. But this does not mean that English vowels are never nasalized in actual pronunciation; in fact they are nasalized in certain phonetic contexts. For example, the [i:] sound is nasalized in words like bean, green, team, and scream, because in all these words [i:] is followed by a nasal [n] or [m].
The assimilation rule also accounts for the varying pronunciation of the alveolar nasal [n] in some sound combinations. The rule is that within a word, the nasal [n] assumes the same place of articulation as the consonant that follows it. In English the prefix in- can be added to an adjective to make its meaning negative, e.g., discreet – indiscreet, correct – incorrect. But the [n] in the prefix in- is not always pronounced as an alveolar nasal. It is in indiscreet, because the consonant that follows it, [d], is an alveolar stop; but the [n] in incorrect is actually pronounced as a velar nasal, [ŋ], because the consonant that follows it is [k], a velar stop. So in pronouncing [n], we "copy" a feature of the consonant that follows it.
The deletion rule tells us when a sound is to be deleted although it is orthographically represented. In the pronunciation of words such as sign, design, and paradigm, there is no [g] sound, although it is represented in spelling by the letter g. But in the corresponding forms signature, designation, and paradigmatic, the [g] represented by the letter g is pronounced. The rule can be stated as: delete a [g] when it occurs before a final nasal consonant. Given this rule, the phonemic representation of the stems in sign – signature, resign – resignation, phlegm – phlegmatic, and paradigm – paradigmatic will include the phoneme /g/, which is deleted by the rule if no suffix is added.

10. What are suprasegmental features? How do the major suprasegmental features of English function in conveying meaning?
Answer: The phonemic features that occur above the level of the segments are called suprasegmental features. The main suprasegmental features include stress, intonation, and tone. The location of stress in English distinguishes meaning. There are two kinds of stress: word stress and sentence stress. For example, a shift of word stress may change the part of speech of a word from a noun to a verb although its spelling remains unchanged, as in 'import (noun) and im'port (verb). Tones are pitch variations which can distinguish meaning just like phonemes. Intonation plays an important role in conveying meaning in almost every language, especially in a language like English: spoken with different intonation, the same sequence of words may have different meanings.

Chapter 3 Morphology

1. Divide the following words into their separate morphemes by placing a "+" between each morpheme and the next:
a. microfile b. bedraggled c. announcement d. predigestion e. telecommunication f. forefather g. psychophysics h. mechanist
Answer:
a. micro+file b. be+draggle+ed c. announce+ment d. pre+digest+ion e. tele+communicate+ion f. fore+father g. psycho+physics h. mechan+ist

2. Think of three morpheme suffixes, give their meaning, and specify the types of stem they may be suffixed to. Give at least two examples of each.
Model:
suffix: -or
meaning: the person or thing performing the action
stem type: added to verbs
examples: actor, "one who acts in stage plays, motion pictures, etc."; translator, "one who translates"
Answer:
(1) suffix: -able
meaning: something can be done or is possible
stem type: added to verbs
examples: acceptable, "can be accepted"; respectable, "can be respected"
(2) suffix: -ly
meaning: functional (it forms adverbs)
stem type: added to adjectives
examples: freely, "adverbial form of 'free'"; quickly, "adverbial form of 'quick'"
(3) suffix: -ee
meaning: the person receiving the action
stem type: added to verbs
examples: employee, "one who is employed"; interviewee, "one who is interviewed"

3. Think of three morpheme prefixes, give their meaning, and specify the types of stem they may be prefixed to. Give at least two examples of each.
Model:
prefix: a-
meaning: "without; not"
stem type: added to adjectives
examples: asymmetric, "lacking symmetry"; asexual, "without sex or sex organs"
Answer:
(1) prefix: dis-
meaning: showing an opposite
stem type: added to verbs or nouns
examples: disapprove, "not approve"; dishonesty, "lack of honesty"
(2) prefix: anti-
meaning: against, opposed to
stem type: added to nouns or adjectives
examples: antinuclear, "opposing the use of atomic weapons and power"; antisocial, "opposed or harmful to the laws and customs of an organized community"
(3) prefix: counter-
meaning: the opposite of
stem type: added to adjectives, verbs, or nouns
examples: counterproductive, "producing results opposite to those intended"; counteract, "act against and reduce the force or effect of (sth.)"

4. The italicized part in each of the following sentences is an inflectional morpheme. Study each inflectional morpheme carefully and point out its grammatical meaning.
(1) Sue moves in high-society circles in London.
(2) A traffic warden asked John to move his car.
(3) The club has moved to Friday, February 22nd.
(4) The branches of the trees are moving back and forth.
Answer: (1) the third person singular (2) the past tense (3) the present perfect (4) the present progressive

5. Determine whether the words in each of the following groups are related to one another by processes of inflection or derivation.
a) go, goes, going, gone
b) discover, discovery, discoverer, discoverable, discoverability
c) inventor, inventor's, inventors, inventors'
d) democracy, democrat, democratic, democratize
Answer: (omitted)

6. The following sentences contain both derivational and inflectional affixes. Underline all of the derivational affixes and circle the inflectional affixes.
a) The farmer's cows escaped.
b) It was raining.
c) Those socks are inexpensive.
d) Jim needs the newer copy.
e) The strongest rower continued.
f) She quickly closed the book.
g) The alphabetization went well.
Answer: (omitted)

Chapter 4 Syntax

1. What is syntax?
Syntax is a branch of linguistics that studies how words are combined to form sentences and the rules that govern the formation of sentences.

2. What is a phrase structure rule?
The grammatical mechanism that regulates the arrangement of the elements (i.e., specifiers, heads, and complements) that make up a phrase is called a phrase structure rule. The phrase structure rules for NP, VP, AP, and PP can be written as follows:
NP → (Det) N (PP) ...
VP → (Qual) V (NP) ...
AP → (Deg) A (PP) ...
PP → (Deg) P (NP) ...
The general phrase structure rule (X stands for the head N, V, A, or P):
The XP rule: XP → (specifier) X (complement)

3. What is a category? How is a word's category determined?
Category refers to a group of linguistic items which fulfill the same or similar functions in a particular language, such as a sentence, a noun phrase, or a verb. To determine a word's category, three criteria are usually employed: meaning, inflection, and distribution. A word's distributional facts, together with information about its meaning and inflectional capabilities, help identify its syntactic category.

4. What is a coordinate structure and what properties does it have?
The structure formed by joining two or more elements of the same type with the help of a conjunction is called a coordinate structure. Conjunction exhibits four important properties:
1) There is no limit on the number of coordinated categories that can appear prior to the conjunction.
2) A category at any level (a head or an entire XP) can be coordinated.
3) Coordinated categories must be of the same type.
4) The category type of the coordinate phrase is identical to the category type of the elements being conjoined.

5. What elements does a phrase contain and what role does each element play?
A phrase usually contains the following elements: head, specifier, and complement. Sometimes it also contains another kind of element termed modifier. The role of each element:
Head: the word around which a phrase is formed.
Specifier: it has both special semantic and syntactic roles. Semantically, it helps to make the meaning of the head more precise. Syntactically, it typically marks a phrase boundary.
Complement: complements are themselves phrases and provide information about entities and locations whose existence is implied by the meaning of the head.
Modifier: modifiers specify optionally expressible properties of the heads.

6. What is deep structure and what is surface structure?
There are two levels of syntactic structure. The first, formed by the XP rule in accordance with the head's subcategorization properties, is called deep structure (or D-structure). The second, corresponding to the final syntactic form of the sentence which results from appropriate transformations, is called surface structure (or S-structure).

7. Indicate the category of each word in the following sentences.
a) The old lady got off the bus carefully.
Det A N V P Det N Adv
b) The car suddenly crashed onto the river bank.
Det N Adv V P Det N N
c) The blinding snowstorm might delay the opening of the schools.
Det A N Aux V Det N P Det N
d) This cloth feels quite soft.
Det N V Deg A

(For Questions 8–12, only a preliminary division into constituents is given; the tree diagrams are not drawn. For reference only.)

8. The following phrases include a head, a complement, and a specifier. Draw the appropriate tree structure for each.
a) rich in minerals
XP (AP) → head (rich) A, complement (in minerals) PP
b) often read detective stories
XP (VP) → specifier (often) Qual, head (read) V, complement (detective stories) NP
c) the argument against the proposals
XP (NP) → specifier (the) Det, head (argument) N, complement (against the proposals) PP
d) already above the window
XP (PP) → specifier (already) Deg, head (above) P, complement (the window) NP

9. The following sentences contain modifiers of various types. For each sentence, first identify the modifier(s), then draw the tree structures. (Underlined: modifiers of the verb; italicized: modifiers of the noun.)
a) A crippled passenger landed the airplane with extreme caution.
b) A huge moon hung in the black sky.
c) The man examined his car carefully yesterday.
d) A wooden hut near the lake collapsed in the storm.

10. The following sentences all contain conjoined categories. Draw a tree structure for each of the sentences. (Underlined: the conjoined categories.)
a) Jim has washed the dirty shirts and pants.
b) Helen put on her clothes and went out.
c) Mary is fond of literature but tired of statistics.

11. The following sentences all contain embedded clauses that function as complements of a verb, an adjective, a preposition, or a noun. Draw a tree structure for each sentence. (Underlined: the complement clauses.)
a) You know that I hate war.
b) Gerry believes the fact that Anna flunked the English exam.
c) Chris was happy that his father bought him a Rolls-Royce.
d) The children argued over whether bats had wings.

12. Each of the following sentences contains a relative clause. Draw the deep structure and the surface structure trees for each of these sentences. (Underlined: the relative clauses.)
a) The essay that he wrote was excellent.
b) Herbert bought a house that she loved.
c) The girl whom he adores majors in linguistics.

13. The derivations of the following sentences involve the inversion transformation. Give the deep structure and the surface structure of each of these sentences.
a) Would you come tomorrow? (surface structure)
you would come tomorrow (deep structure)
b) What did Helen bring to the party? (surface structure)
Helen brought what to the party (deep structure)
c) Who broke the window? (surface structure)
who broke the window (deep structure)

Chapter 5 Semantics

1. What are the major views concerning the study of meaning?
Answer:
(1) The naming theory, proposed by the ancient Greek scholar Plato. According to this theory, linguistic forms or symbols, in other words the words used in a language, are simply labels of the objects they stand for. So words are just names or labels for things.
(2) The conceptualist view, held by some philosophers and linguists since ancient times. This view holds that there is no direct link between a linguistic form and what it refers to (i.e., between language and the real world); rather, in the interpretation of meaning they are linked through the mediation of concepts in the mind.
(3) The contextualist view, which holds that meaning should be studied in terms of situation, use, and context, elements closely linked with language behaviour. The representative of this approach was J. R. Firth, the famous British linguist.
(4) The behaviorist view. Behaviorists attempted to define the meaning of a language form as the "situation in which the speaker utters it and the response it calls forth in the hearer." This theory, somewhat close to contextualism, is linked with psychological interest.

2. What are the major types of synonyms in English?
Answer: The major types of synonyms are dialectal synonyms, stylistic synonyms, emotive or evaluative synonyms, collocational synonyms, and semantically different synonyms.
Examples: (omitted)

3. Explain with examples "homonymy", "polysemy", and "hyponymy".
Answer:
(1) Homonymy refers to the phenomenon that words having different meanings have the same form, i.e., different words are identical in sound or spelling, or in both.
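The three-way distinction in this definition (identical in sound, in spelling, or in both) can be expressed as a small classification rule. The Python sketch below is an illustration only: the `Word` record, the simplified broad transcriptions, and the sample pairs are assumptions chosen for the example, and the labels follow the usual division of homonyms into homophones, homographs, and complete homonyms.

```python
from typing import NamedTuple, Optional


class Word(NamedTuple):
    spelling: str
    sound: str      # simplified broad transcription (illustrative only)
    meaning: str    # a short gloss standing in for the word's meaning


def homonym_type(a: Word, b: Word) -> Optional[str]:
    """Classify two words with different meanings by the form they share."""
    if a.meaning == b.meaning:
        return None                     # same meaning: not a case of homonymy
    same_sound = a.sound == b.sound
    same_spelling = a.spelling == b.spelling
    if same_sound and same_spelling:
        return "complete homonyms"      # identical in both sound and spelling
    if same_sound:
        return "homophones"             # identical in sound only
    if same_spelling:
        return "homographs"             # identical in spelling only
    return None                         # no shared form: not homonyms


# Hypothetical sample pairs chosen for illustration.
PAIRS = [
    (Word("rain", "reɪn", "water falling from clouds"), Word("reign", "reɪn", "period of rule")),
    (Word("lead", "li:d", "to guide"), Word("lead", "led", "a heavy metal")),
    (Word("bank", "bæŋk", "side of a river"), Word("bank", "bæŋk", "financial institution")),
]

for a, b in PAIRS:
    print(f"{a.spelling} / {b.spelling}: {homonym_type(a, b)}")
```

Comparing meanings first keeps a word from being counted as its own homonym; only then do shared sound and spelling decide which subtype applies.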

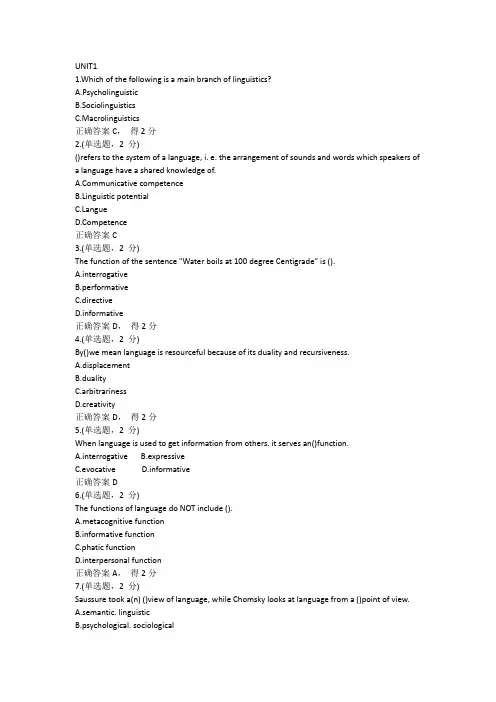

UNIT 1

1. Which of the following is a main branch of linguistics? A. Psycholinguistics B. Sociolinguistics C. Macrolinguistics. Correct answer: C
2. (Single choice, 2 points) ( ) refers to the system of a language, i.e., the arrangement of sounds and words which speakers of a language have a shared knowledge of. A. Communicative competence B. Linguistic potential C. Langue D. Competence. Correct answer: C
3. (Single choice, 2 points) The function of the sentence "Water boils at 100 degrees Centigrade" is ( ). A. interrogative B. performative C. directive D. informative. Correct answer: D
4. (Single choice, 2 points) By ( ) we mean language is resourceful because of its duality and recursiveness. A. displacement B. duality C. arbitrariness D. creativity. Correct answer: D
5. (Single choice, 2 points) When language is used to get information from others, it serves a(n) ( ) function. A. interrogative B. expressive C. evocative D. informative. Correct answer: D
6. (Single choice, 2 points) The functions of language do NOT include ( ). A. metacognitive function B. informative function C. phatic function D. interpersonal function. Correct answer: A
7. (Single choice, 2 points) Saussure took a(n) ( ) view of language, while Chomsky looks at language from a ( ) point of view. A. semantic; linguistic B. psychological; sociological C. sociological; psychological D. applied; pragmatic. Correct answer: C
8. (Single choice, 2 points) The actual production and comprehension of speech by speakers of a language is called ( ). A. performance B. language device C. competence D. grammar rules. Correct answer: A
9. (Single choice, 2 points) Saussure is closely connected with ( ). A. langue B. competence C. parole D. performance. Correct answer: C
10. (Single choice, 2 points) "A refers to Confucius even though he died 2,000 years ago." This shows that language has the design feature of ( ). A. creativity B. arbitrariness C. displacement D. duality. Correct answer: C
11. (Single choice, 2 points) According to F. de Saussure, ( ) refers to the abstract linguistic system shared by all the members of a speech community. A. language B. performance C. langue D. parole. Correct answer: C
12. (Single choice, 2 points) The study of the physical properties of the sounds produced in speech is closely connected with ( ). A. auditory phonetics B. articulatory phonetics C. acoustic phonetics. Correct answer: C
13. (Single choice, 2 points) Which of the following is NOT a frequently discussed design feature? A. Arbitrariness B. Convention C. Duality D. Cultural transmission. Correct answer: B
14. (Single choice, 2 points) Which of the following words is entirely arbitrary? A. bang B. crash C. tree D. typewriter. Correct answer: C
15. (Single choice, 2 points) The study of language at one point in time is a ( ) study. A. descriptive B. diachronic C. synchronic D. historical. Correct answer: C
16. (Single choice, 2 points) Which of the following statements is true of Jakobson's framework of language functions? A. The emotive function is to convey message and information. B. The conative function is to clear up intentions, words and meaning. C. The referential function is to indulge in language for its own sake. D. The phatic function is to establish communion with others. Correct answer: D
17. (Fill in the blank, 2 points) ____ grammars attempt to tell what is in the language, while ____ grammars tell people what should be in the language. Most contemporary linguists believe that whatever occurs naturally in the language should be described. Correct answer: (1) Descriptive (2) prescriptive
18. (Fill in the blank, 2 points) The features that define our human languages can be called ____ features. Correct answer: design
19. (Fill in the blank, 2 points) The link between a linguistic sign and its meaning is a matter of ____ relation. Correct answer: convention
20. (Fill in the blank, 2 points) ____ can be defined as the study of language in use. Sociolinguistics, on the other hand, attempts to show the relationship between language and society. Correct answer: Pragmatics
21. (Fill in the blank, 2 points) Saussure distinguished the linguistic competence of the speaker and the actual phenomena or data of linguistics (utterances) as ____ and ____. The former refers to the abstract linguistic system shared by all the members of a speech community, and the latter is the concrete manifestation of language either through speech or through writing. Correct answer: langue, parole
22. (Fill in the blank, 2 points) Arbitrariness of language makes it potentially creative, and ____ of language makes learning a language laborious. For learners of a foreign language, it is this feature of language that is more worth noticing than its arbitrariness. Correct answer: conventionality
23. (Fill in the blank, 2 points) Chomsky initiated the distinction between ____ and performance. Correct answer: competence
24. (Fill in the blank, 2 points) Syntagmatic relation is in fact a ____ relation. Correct answer: positional
25. (Fill in the blank, 2 points) Linguistics is usually defined as the ____ study of language. Correct answer: scientific
26. (Fill in the blank, 2 points) Our language can be used to talk about itself. This is the ____ function of language. Correct answer: metalingual
27. (Fill in the blank, 2 points) By ____ is meant the property of having two levels of structure, such that units of the primary level are composed of elements of the secondary level and each of the two levels has its own principles of organization. Correct answer: duality
28. (Fill in the blank, 2 points) The theory that primitive man made involuntary vocal noises while performing heavy work has been called the ____ theory. Correct answer: yo-he-ho
29. (Fill in the blank, 2 points) Human language is arbitrary. This refers to the fact that there is no logical or intrinsic connection between a particular sound and the ____ it is associated with. Correct answer: meaning
30. (Fill in the blank, 2 points) Semantics and ____ investigate different aspects of linguistic meaning. Correct answer: pragmatics
31. (Fill in the blank, 2 points) The relation between them is ____. Correct answer: arbitrary
32. (Fill in the blank, 2 points) By ____, we mean language is resourceful because of its duality and its recursiveness. Correct answer: creativity
33. (Fill in the blank, 2 points) In linguistics, ____ refers to the study of the rules governing the way words are combined to form sentences in a language, or simply, the study of the formation of sentences. Correct answer: syntax
34. (Fill in the blank, 2 points) Modern linguistics is ____ in the sense that the linguist tries to discover what language is rather than lay down rules for people to observe. Correct answer: descriptive
35. (Fill in the blank, 2 points) ____ mainly studies the characteristics of speech sounds and provides methods for their description, classification, and transcription. Correct answer: Phonetics
36. (Fill in the blank, 2 points) One of the important distinctions in linguistics is ____ and performance. Correct answer: competence
37. (Fill in the blank, 2 points) The most important function of language is the ____ function. Correct answer: informative
38. (Fill in the blank, 2 points) ____ refers to the role language plays in communication (e.g., to express ideas, attitudes) or in particular social situations (e.g., religious, legal). Correct answer: Function
39. (Fill in the blank, 2 points) When language is used for establishing an atmosphere or maintaining social contact rather than exchanging information or ideas, its function is the ____ function. Correct answer: phatic
40. (Fill in the blank, 2 points) The abstract linguistic system shared by all the members of a speech community is ____. Correct answer: langue
41. (True/false, 2 points) It is conclusive that Chinese is regarded as the primeval language. Correct answer: False
42. (True/false, 2 points) Historical linguistics is equivalent to synchronic study. Correct answer: False
43. (True/false, 2 points) Onomatopoeic words can show the arbitrary nature of language. Correct answer: False
44. (True/false, 2 points) Wherever humans exist, language exists. Correct answer: False
45. (True/false, 2 points) The bow-wow theory is a theory on the origin of language. Correct answer: True
46. (True/false, 2 points) According to Saussure, the relation between the signified and the signifier is arbitrary. Correct answer: True
47. (True/false, 2 points) When language is used to get information from others, it serves an informative function. Correct answer: False
48. (True/false, 2 points) Prescriptive linguistics is more popular than descriptive linguistics, because it can tell us how to speak correct language. Correct answer: False
49. (True/false, 2 points) The features that define our human languages can be called DESIGN FEATURES. Correct answer: True
50. (True/false, 2 points) Duality is one of the characteristics of human language.
It refers to the fact that language has two levels of structures: the system of sounds and the system of meaning.对UNIT21.(单选题,1 分)The vowel()is a low back vowel.A./i:/B./e/C./u/D./a:/教师批阅正确答案D,得1 分2.(单选题,1 分)Which one is different from the others according to manners of articulation?A.[w]B.[f]C.[z]D.[v]教师批阅正确答案A,得1 分3.(单选题,1 分)Which of the following is true of an allophone?A.An allophone changes the meaning of the word.B.There is no possibility of an allophone becoming a phoneme.C.A phone can be the allophone of all English vowel phonemes.D.There are no restrictions on the distribution of an allophone.教师批阅正确答案B,得1 分4.(单选题,1 分)Which of the following CANNOT be considered as minimal par?A./s / /T/B./ai/ /Oi/C./s/ /z/D./p/ /b/教师批阅正确答案A,我的答案:C 得0 分5.(单选题,1 分)Which of the following is the correct description of [v]?A.voiced labiodental fricativeB.voiced labiodental stopC.voiceless labiodental fricativeD.voiceless labiodental stop教师批阅正确答案A,得1 分6.(单选题,1 分)The consonant /s / in the word “smile” can be described as:().A.Voiceless oral alveolar fricativeB.voiced oral bilabial fricativeC.voiceless nasal bilabial liquidD.voiced oral alveolar plosive教师批阅正确答案A,得1 分7.(单选题,1 分)Point out which item does not fall under the same category as the rest, and explain the reason in ONE sentence.A.residentB.restartC.resolutionD.resignation教师批阅正确答案B,得1 分8.(单选题,1 分)()is the smallest meaningful unit of language.A.PhoneB.PhonemeC.MorphemeD.Syllable教师批阅正确答案B,得1 分9.(单选题,1 分)An aspirated P and an unaspirated p are()of the p phoneme.A.analoguesB.allophonesC.tagmemesD.morphemes教师批阅正确答案B,得1 分10.(单选题,1 分)()refers to the degree of force used in producing a syllable.A.RhymeB.StressC.ToneD.Coda教师批阅正确答案B,得1 分11.(单选题,1 分)Which branch of phonetics concerns the production of speech sounds?A.Acoustic phoneticsB.articulatory phoneticsC.None of themD.auditory phonetics教师批阅正确答案B,得1 分12.(单选题,1 分)A sound which is capable of distinguishing one word or one shape of word from another in a givenlanguage is 
a().A.phonemeB.allophoneC.phoneD.word教师批阅正确答案A,得1 分13.(单选题,1 分)()is one of the suprasegmental features.A.stopB.toneC.voicingD.deletion教师批阅正确答案B,得1 分14.(单选题,1 分)Which one is different from others according to places of articulatory?A.[p]B.[m]C.[b]D.[n]教师批阅正确答案D,得1 分15.(单选题,1 分)Classification of English speech sounds in terms of manner of articulation involves the following EXCEPT(). DA.affricatesB.bilabialC. lateralD.fricative教师批阅正确答案B,得1 分16.(单选题,1 分)Of the consonants/p/ /t/ /k/ /f/ /m/ /z/and /g/, which has the features of voiceless and velar?A./p/B./t/C./g/D./k/教师批阅正确答案D,得1 分17.(单选题,1 分)Of the three cavities, ()is the most variable and active in amplifying and modifying speech sounds.A.none of themB.oral cavityC. pharynx cavityD.nasal cavity教师批阅正确答案B,得1 分18.(单选题,1 分)What kind of sounds can we make when the vocal cords are vibrating?A.Glottal stopB.VoicedC.ConsonantD.Voiceless教师批阅正确答案B,得1 分19.(单选题,1 分)The most recognizable differences between American English and British English are in()and vocabulary.A.grammarB.structureC.pronunciationage教师批阅正确答案C,得1 分20.(单选题,1 分)Which of the allowing is not a minimal pair?A./keit/ /feit/B./sai / sei/C./li:f/ /fi:l/D./sip/ /zip/教师批阅正确答案C,得1 分21.(填空题,2.5 分)Consonant articulations are relatively easy to feel. 
And as a result are most conveniently described in terms of____and manner of articulation.正确答案:(1) place22.(填空题,2.5 分)The different members of a phoneme, sounds which are phonetically different but do not make one word different from another in meaning, are ____allophones教师批阅得2.5 分正确答案:(1) allophones23.(填空题,2.5 分)____are produced by constricting or obstructing the vocal tract at some place to divert, impede,or completely shut off the flow of air in the oral cavity.正确答案:(1) Consonants24.(填空题,2.5 分)The sound /k/ can be described with "voiceless,____,stop”.教师批阅得2.5 分正确答案:(1) velar25.(填空题,2.5 分)According to ____, when there is choice as to where to place consonant put into the onset rather than the coda.(1) the Maximal Onset Principle26.(填空题,2.5 分)The sound /b/can be described with" ____, bilabial,stop”.正确答案:(1) voiced27.(填空题,2.5 分)____transcription should transcribe all the possible speech sounds, including the minute shades. Narrow28.(填空题,2.5 分)Most speech sounds are made by movements of the tongue and the lips, and these movements are called ____, as compared to those made by hands. These movements of the tongue and lips are made____ _so that they can be heard and recognized.正确答案:(1) gestures(2) audibles29.(填空题,2.5 分)Stress refers to the degree of ____used in producing syllable.force教师批阅得2.5 分正确答案:(1) force30.(填空题,2.5 分)In phonological analysis the words fail -veil are distinguishable simply because of the two phonemes/f/-/v/. 
This is an example for illustrating____minimal pairs教师批阅得0 分正确答案:(1) minimal pair31.(填空题,2.5 分)The syllable structure in Chinese is ____or____or ____正确答案:(1) CVC(2) CV(3) V32.(填空题,2.5 分)Voicing refers to the ____of the vocal folds.(1) vibration33.(填空题,2.5 分)____refers to the change of a sound as a result of the influence of an adjacent sound.正确答案:(1) Assimilation34.(填空题,2.5 分)In English, the two words cut and gut differ only in their initial sounds and the two sounds are two different ____and the two words are a____pair.正确答案:(1) phonemes(2) minimal35.(填空题,2.5 分)In ____assimilation, a following sound is influencing a preceding sound.正确答案:(1) regressive36.(填空题,2.5 分)The sound /p/can be described with____, bilabial,stop”.正确答案:(1) voiceless37.(填空题,2.5 分)In English, consonant clusters in onset and coda positions disallow many consonant combinations, which is explained by the work of____.正确答案:(1) sonority scale38.(填空题,2.5 分)Phonetic similarity means that the____of phoneme must bear some phonetic resemblance.正确答案:(1) allophones39.(填空题,2.5 分)In English there are a number of ____which are produced by moving from one vowel position to another through intervening positions.正确答案:(1) diphthongs40.(填空题,2.5 分)The present system of the____derives mainly from one developed in the 1920s by the British phonetician, Daniel Jones (1881-1967) and his colleagues at University of London.正确答案:(1) cardinal vowels41.(判断题,1 分)There are two nasal consonants in English.正确答案错,得1 分42.(判断题,1 分)In English, we can have the syllable structure of CCCVCCCC.正确答案对,得1 分43.(判断题,1 分)In the sound writing system, the reference of the grapheme is the phoneme.正确答案对,得0 分44.(判断题,1 分)Phonology studies speech sounds, including the production of speech, that is, how speech sounds are actually made, transmitted and received.正确答案错,得1 分45.(判断题,1 分)The “Minimal Pair” test that can be used to find out which sound substitutions cause differences in meaning do not work well for all languages.正确答案对,得1 分46.(判断题,1 分)The 
airstream provided by the lungs has to undergo a number of modifications to acquire the quality of a speech sound.正确答案对,得1 分47.(判断题,1 分)Two sounds are in free variation when they occur in the same environment and do not contrast, namely, the substitution of one for the other does not produce a different word, but merely a different pronunciation.正确答案对,得0 分48.(判断题,1 分)Sound [p] in the word"spit "is an unaspirated stop.正确答案对,得1 分49.(判断题,1 分)Speech sounds are those sounds made by human beings that have become units in the language system. We can analyze speech sounds from various perspectives.正确答案对,得1 分50.(判断题,1 分)Tones in tone language are not always fixed. For example, tones in Chinese never change.正确答案错,得1 分51.(判断题,1 分)The International Phonetic Alphabet uses narrow transcription.正确答案对,得0 分52.(判断题,1 分)All syllables must have a nucleus but not all syllables contain an onset and a coda.正确答案对,得1 分The speech sounds we hear and produce during linguistic communications are all phonemes.正确答案错,得1 分54.(判断题,1 分)Broad Transcription is intended to symbolize all the possible speech sounds, including the minute shades.正确答案错,得1 分55.(判断题,1 分)It is sounds by which we make communicative meaning.正确答案错,得0 分56.(判断题,1 分)All the suffixes may change the position of the stress.正确答案错,得1 分57.(判断题,1 分)The assimilation rule assimilates one sound to another by copying a feature of a sequential phoneme, thus making the two phones similar.正确答案错,得0 分58.(判断题,1 分)The speech sounds which are in complementary distribution are definitely allophones of the same phoneme.正确答案错,得1 分59.(判断题,1 分)Phonetic similarity means that the allophones of a phoneme must bear some morphological resemblance.正确答案错,得1 分60.(判断题,1 分)A syllable can be divided into two parts, the NUCLEUS and the CODA.正确答案错,得1 分61.(判断题,1 分)The last sound of "top can be articulated as an unreleased or released plosive. 
These different realizations of the same phoneme are not in complementary distribution.正确答案对,得1 分62.(判断题,1 分)All syllables contain three parts: onset, nucleus and coda.正确答案错,得1 分63.(判断题,1 分)Larynx is what we sometimes call “Adam’s apple”.正确答案错,得1 分64.(判断题,1 分)Chinese is a tone language.正确答案对,得1 分65.(判断题,1 分)A phoneme in one language or one dialect may be an allophone in another language or dialect.正确答案对,得1 分When preceding /p/, the negative prefix “in-” always changes to “im-” .正确答案对,得1 分67.(判断题,1 分)The last sound of "sit"can be articulated as an unreleased or released plosive. These different realizations of the same phoneme are not in complementary distribution.正确答案对,得0 分68.(判断题,1 分)The initial sound of"peak "is aspirated while the second sound of"speak"is unaspirated. They are in free variation.正确答案错,得1 分69.(判断题,1 分)[p] is voiced bilabial stop.正确答案错,得1 分70.(判断题,1 分)Pure vowels are a set of vowel qualities arbitrarily defined, fixed and unchanging, intended to provide a frame of reference for the description of the actual vowels of existing languages.正确答案错,得0 分UNIT31.(单选题,1 分)“-s” in the word “books” is ().A.a stemB.an inflectional affixC.a derivational affixD.a root教师批阅正确答案B,得1 分2.(单选题,1 分)other than compounds may be divided into roots and affixes.A.Poly-morphemic wordsB.Free morphemesC.Bound morphemes教师批阅正确答案A,得1 分3.(单选题,1 分)Of the following sound combinations, only() is permissible according to the sequential rules in English.A.ilmbB.miblC.ilbmD.bmil教师批阅正确答案B,我的答案:C 得0 分4.(单选题,1 分)Which two terms can best describe the following pairs of words: table-- tables, day+ break-- daybreak?A.inflection and derivationpound and derivationC.derivation and inflectionD.inflection and compound教师批阅正确答案D,得1 分5.(单选题,1 分)()is a branch of grammar which studies the internal structure of words and the rules by which words are formed.A.morphologyB.morphemeC.grammarD.syntax教师批阅正确答案A,得1 分6.(单选题,1 分)Which of the following words are formed by 
blending?A.televisionB.bunchC.girlfriendD.smog教师批阅正确答案D,得1 分7.(单选题,1 分)The word UN is formed in the way of().A.acronymB.clippingC.InitialismD.blending教师批阅正确答案C,我的答案:A 得0 分8.(单选题,1 分)Language has been changing, but such changes are not so obvious at all linguistic aspects except that of().A.phonologyB.lexiconC.semanticsD.syntax教师批阅正确答案B,得1 分9.(单选题,1 分)There are different types of affixes or morphemes. The affix “ed” in the word “learned” is knownas a(n) ().A.derivational affixesB.free formC.free morphemeD.inflectional affixes教师批阅正确答案D,得1 分10.(单选题,1 分)Which of the following is not a boundary to morpheme? ()A.-putB.-mitC.-tainD.-ceive教师批阅正确答案A,得1 分11.(单选题,1 分)()modify the meaning of the stem, but usually do not change the part of speech of the original word.A.AffixesB.PrefixesC.SuffixesD.Roots教师批阅正确答案B,得1 分12.(单选题,1 分)The words that contain only one morpheme are called().A.free morphemeB.affixesC.bound momsD.roots教师批阅正确答案A,我的答案:D 得0 分13.(单选题,1 分)Wife", which used to refer to any woman, stands for a married woman" in modem English. This phenomenon is known as().A.semantic narrowingB.semantic broadeningC.semantic shiftD.semantic elevation教师批阅正确答案A,得1 分14.(单选题,1 分)() are added to an existing form to create a word, which is a very common way in English.A.derivational affixesB.inflectional affixesC.stemsD.free morpheme教师批阅正确答案A,得1 分15.(单选题,1 分)Which of the following is under the category of “open class”? 
()A.ConjunctionsB.NounsC.PreparationD.determinants教师批阅正确答案B,得1 分16.(单选题,1 分)Nouns, verbs and adjectives can be classified as().A.lexical wordsB.invariable wordsC.grammatical wordsD.function words教师批阅正确答案A,我的答案:C 得0 分17.(单选题,1 分)The word “selfish” contains two().A.morphsB.phonemesC.allomorphsD.morphemes教师批阅正确答案D,得1 分18.(单选题,1 分)Which of the following ways of word-formation does not change the grammatical class of the stems?()A.coinageB.inflectionpoundD.derivation教师批阅正确答案B,得1 分19.(单选题,1 分)Which of the following is an inflectional suffix?()A.-aryB.-ifyC.-istD.-ing正确答案D,得1 分20.(单选题,1 分)() is a branch of grammar which studies the internal structure of words and the rules by which words are formed.A.MorphemeB.SyntaxC.MorphologyD.Grammar教师批阅正确答案C,得1 分21.(单选题,1 分)()is the smallest unit of language in regard to the relationship between sounding and meaning, a unit that cannot be divided into further smaller units without destroying or drastically altering the meaning.A.MorphemeB.RootC.WordD.Allomorph教师批阅正确答案A,得1 分22.(单选题,1 分)The number of morphemes in the word “girls” is().A.fourB.twoC.oneD.three教师批阅正确答案B,得1 分23.(单选题,1 分)() at the end of stems can modify the meaning of the original word and in many cases change its part of speech.A.prefixesB.suffixesC.free morphemesD.roots教师批阅正确答案B,得1 分24.(单选题,1 分)Compound words consist of()morphemes.A.freeB.either bound or freeC.boundD.both bound and free正确答案A,得1 分25.(单选题,1 分)Derivational morpheme contrasts sharply with inflectional morpheme in that the former changes the() while the latter does not.A.speech soundB.formC.MeaningD.word class教师批阅正确答案D,得1 分26.(单选题,1 分)A prefix is an affix which appears().A.in the middle of the stemB.below the stemC.before the stemD.after the stem教师批阅正确答案C,得1 分27.(单选题,1 分)() is the collective term for the type of morpheme that can be used only when added to another morpheme.A.AffixB.SuffixC.StemD.Prefix教师批阅正确答案A,得1 分28.(单选题,1 分)The words that contain only one morpheme are called().A.bound morphemeB.free 
morphemeC.rootsD.Affixes教师批阅正确答案B,我的答案:C 得0 分29.(单选题,1 分)The meaning carried by the inflectional morpheme is().A.morphemicB.prefixesC.semanticD.grammatical教师批阅正确答案D,得1 分30.(单选题,1 分)()refers to the way in which a particular verb changes for tense, person, or number.A.DerivationB.InflectionC.affixationD.Conjunction教师批阅正确答案B,得1 分31.(单选题,1 分)Those that affect the syntactic category and the meaning of the root as well are ().A.prefixesB.suffixesC.stemsD.affixes教师批阅正确答案B,我的答案:D 得0 分32.(单选题,1 分)() is the smallest meaningful unit of language.A.PhonemeB.WordC.AllomorphD.Morpheme教师批阅正确答案D,得1 分33.(单选题,1 分)The number of the closed-class words is() and no new members are regularly added.A.fixedrgeC.smallD.limitless教师批阅正确答案A,得1 分34.(单选题,1 分)Words like pronouns, prepositions, conjunctions, articles are()items.A.open-classB.variable wordsC.closed-classD.lexical words教师批阅正确答案C,得1 分35.(单选题,1 分)Bound morphemes do not include().A.wordsB.rootsD.suffixes教师批阅正确答案A,得1 分36.(单选题,1 分)Inflectional morphemes manifest the following meaning EXCEPT().A.caseB.numberC.toneD.tense教师批阅正确答案C,我的答案:D 得0 分37.(单选题,1 分)It is true that words may shift in meaning, i.e. semantic change. The semantic change of the word tail belongs to().A.widening of meaningB.meaning shiftC.narrowing of meaningD.loss of meaning教师批阅正确答案A,得1 分38.(单选题,1 分)The word “hospitalize” is an example of() in terms of word formation.poundB.inflectionC.clippingD.derivation教师批阅正确答案D,得1 分39.(单选题,1 分)The morpheme “vision” in the word “television” is a /an().A.inflectional morphemeB.bound formC.free morphemeD.bound morphine教师批阅正确答案C,得1 分40.(单选题,1 分)The compound word "bookstore"is the place where books are sold. 
This indicates that the meaning of a compound().A.is the sum total of the meaning of its componentsB.can always be worked out by looking at the meanings of morphemesC.is the same as the meaning of a free phraseD.None of the above正确答案D,得1 分41.(单选题,1 分)The morpheme “ vision” in the common word “television” is a(n)().A.bound formB.bound morphemeC.free morphemeD.inflectional morpheme教师批阅正确答案C,得1 分42.(填空题,1 分)____is a unit of expression that has universal intuitive recognition by native speakers, whether it is expressed in spoken or written form. It is the minimum free form.正确答案:(1) Word43.(填空题,1 分)Words can be classified into variable words and invariable words. As for variable words, they may have ____changes. That is, the same word my have different grammatical forms but part of the word remainsrelatively constant.inflective教师批阅得1 分正确答案:(1) inflective44.(填空题,1 分)Bound morphemes are classified into two types: ____and ____ root.正确答案:(1) affix(2) bound45.(填空题,1 分)A word formed by derivation is called a____and a word formed by compounding is called a____.正确答案:(1) derivative(2) compound46.(填空题,1 分)According to Leonard Bloomfield, word should be treated as the minimum ____.morpheme教师批阅得0 分正确答案:(1) conjunction47.(填空题,1 分)Back-formation refers to an abnormal type of word-formation where a shorter word is derived by。
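The minimal-pair test used in Unit 2 (cut/gut, fail/veil, and the non-pair /li:f/ /fi:l/) is mechanical enough to sketch in code: two words form a minimal pair when their transcriptions have the same length and differ in exactly one segment. The sketch below assumes a small hypothetical lexicon of simplified broad transcriptions, one string per segment; it illustrates the test and is not a full phonological analysis.

```python
from itertools import combinations

def is_minimal_pair(a, b):
    """True if two transcriptions are the same length and differ in exactly one segment."""
    if len(a) != len(b):
        return False
    return sum(1 for x, y in zip(a, b) if x != y) == 1

def find_minimal_pairs(lexicon):
    """Return (word1, word2, segment1, segment2) for every minimal pair in the lexicon."""
    pairs = []
    for (w1, t1), (w2, t2) in combinations(lexicon.items(), 2):
        if is_minimal_pair(t1, t2):
            # Locate the single position where the two words contrast.
            i = next(k for k, (x, y) in enumerate(zip(t1, t2)) if x != y)
            pairs.append((w1, w2, t1[i], t2[i]))
    return pairs

# Hypothetical toy lexicon: simplified broad transcriptions, one string per segment.
LEXICON = {
    "cut": ("k", "ʌ", "t"),
    "gut": ("g", "ʌ", "t"),
    "fail": ("f", "eɪ", "l"),
    "veil": ("v", "eɪ", "l"),
    "leaf": ("l", "iː", "f"),
    "feel": ("f", "iː", "l"),
}

print(find_minimal_pairs(LEXICON))
```

With this lexicon the function reports cut/gut (/k/ vs /g/), fail/veil (/f/ vs /v/) and fail/feel (/eɪ/ vs /iː/), while leaf/feel is rejected because the two words differ in two positions, which matches Unit 2, item 20.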
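Fill-in item 25 of Unit 2 states the Maximal Onset Principle: when a consonant could belong to either syllable, it goes into the onset of the following syllable rather than the coda of the preceding one, as long as the resulting onset is permitted. Below is a minimal sketch of that procedure. The vowel set and the list of legal onsets are hypothetical, heavily simplified stand-ins for English phonotactics, and word-initial and word-final consonants are assumed to be well formed.

```python
# Hypothetical, heavily simplified inventories (not a complete description of English).
VOWELS = {"æ", "e", "ə", "ɪ", "iː"}
LEGAL_ONSETS = {
    ("s",), ("t",), ("r",), ("k",), ("l",), ("p",),
    ("s", "t"), ("t", "r"), ("p", "l"), ("k", "l"),
    ("s", "t", "r"),
}

def syllabify(segments, vowels=VOWELS, legal_onsets=LEGAL_ONSETS):
    """Split a list of segments into syllables using the Maximal Onset Principle."""
    nuclei = [i for i, seg in enumerate(segments) if seg in vowels]
    if not nuclei:
        raise ValueError("every syllable needs a nucleus")
    starts = [0]  # word-initial consonants form the onset of the first syllable
    for prev, nxt in zip(nuclei, nuclei[1:]):
        cluster = segments[prev + 1:nxt]  # consonants between two nuclei
        k = len(cluster)
        # Give the next syllable the longest legal onset; the rest stays in the coda.
        while k > 0 and tuple(cluster[len(cluster) - k:]) not in legal_onsets:
            k -= 1
        starts.append(nxt - k)
    ends = starts[1:] + [len(segments)]
    return ["".join(segments[s:e]) for s, e in zip(starts, ends)]

print(syllabify(["e", "k", "s", "t", "r", "ə"]))  # "extra"
print(syllabify(["æ", "t", "l", "ə", "s"]))       # "atlas"
```

Under these assumptions "extra" is split as ek.strə, because /str/ is a legal onset but /kstr/ is not, and "atlas" as æt.ləs, because /tl/ is not a legal English onset. A fuller version would also check that the leftover coda is permitted.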

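I. Key points: language and linguistics
Language: the definition of language and how to understand it / the design features of language / the functions of language / the origin of language.

Linguistics: the definition of linguistics and how to understand it / general linguistics and its scope / important distinctions commonly drawn in linguistics.

1. What is language, and how should its definition be understood?
Defining language looks simple, but it is in fact a vexing problem. Many people think that language is nothing more than a tool for communication. Language certainly is a tool for communication, but sometimes, if you are moved by a scene and spontaneously recite a poem with no one to address, that clearly does not count as communication, yet it is still done through language. Moreover, language is not the only tool for communication; there are many others, such as flag semaphore and the language of flowers.

Language is a means of verbal communication.
Language is a system of arbitrary vocal symbols used for human communication.
"Language is a systematic mode of behavior, produced by the speech organs, through which people exchange information with one another." (Chao Yuen Ren)
"Language is the house of Being." (Heidegger)
"Language is the last home of humankind." (Qian Guanlian)
The definition most widely adopted in China at present is: Language is a system of arbitrary vocal symbols used for human communication.

System: language is first of all a system; its elements are combined according to certain rules, and this systematicity shows at different levels such as words, phrases and sentences.

Arbitrary: the arbitrariness of language shows that language is a product of social convention. Arbitrariness is reflected at several levels.

Vocal: sound, rather than a writing system, is the primary medium of all human languages. Speech always comes before writing.

Symbols: the words of a language are associated with objects, actions and ideas in the world by convention. Arbitrariness and symbolic nature go hand in hand.

H: language is unique to human beings.

2. Design features of language: the essential features that distinguish human language from the communication systems of other animals.

1) Arbitrariness: there is no logical connection between the sounds of a language and their meanings.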

Chapter 1 Invitations to Linguistics
1.1 Why Study Language?
1. Some myths about language
2. Some fundamental views about language
3. Some concrete demonstrations to show the importance of linguistics
1.2 What is Language?
1. Language "is not to be confused with human speech, of which it is only a definite part, though certainly an essential one. It is both a social product of the faculty of speech and a collection of necessary conventions that have been adopted by a social body to permit individuals to exercise that faculty." --Ferdinand de Saussure (1857-1913): Course in General Linguistics (1916)
2. "Language is a purely human and non-instinctive method of communicating ideas, emotions and desires by means of voluntarily produced symbols." --Edward Sapir (1884-1939): Language: An Introduction to the Study of Speech (1921)
3. "A language is a system of arbitrary vocal symbols by means of which a social group co-operates." --Bernard Bloch (1907-1965) & George Trager (1906-1992): Outline of Linguistic Analysis (1942)
4. "A language is a system of arbitrary vocal symbols by means of which the members of a society interact in terms of their total culture." --George Trager: The Field of Linguistics (1949)
5. "From now on I will consider language to be a set (finite or infinite) of sentences, each finite in length and constructed out of a finite set of elements." --Noam Chomsky (1928- ): Syntactic Structures (1957)
6. Language is "the institution whereby humans communicate and interact with each other by means of habitually used oral-auditory arbitrary symbols." --Robert A. Hall (1911-1997): Introductory Linguistics (1964)
7. "Language is a system of arbitrary vocal symbols used for human communication." --Ronald Wardhaugh: Introduction to Linguistics (1977)
8. "Language is a means of verbal communication." --Our textbook (2006)
- It is instrumental in that communicating by speaking or writing is a purposeful act.
- It is social and conventional in that language is a social semiotic, and communication can only take place effectively if all the users share a broad understanding of human interaction, including such associated factors as nonverbal cues, motivation, and socio-cultural roles.
9. Language is a system of arbitrary vocal symbols used for human communication.
1.3 Design Features of Language
Language distinguishes human beings from animals in that it is far more sophisticated than any animal communication system. Human language is "unique".
1. Arbitrariness
① Definition
② Different levels of arbitrariness
a. Arbitrary relationship between the sound of a morpheme and its meaning
b. Arbitrariness at the syntactic level
c. Arbitrariness and convention
2. Duality
① Definition
② Two levels of structures in language: the secondary level (sounds, meaningless) and the primary level (words, meaningful)
③ Hierarchy of language
3. Creativity
① Language is resourceful because of its duality and its recursiveness. We can use it to create new meanings.
② The recursive nature of language provides the potential to create an infinite number of sentences. For instance: He bought a book which was written by a teacher who taught in a school which was known for its graduates who ...
4. Displacement
① Definition
② Two examples
③ The advantage of displacement
1.4 Origin of language
1. The "bow-wow" theory
2. The "pooh-pooh" theory
3. The "yo-he-ho" theory
1.5 Functions of language
1. Jakobson's classification (factor: function)
Context: REFERENTIAL
Addresser: EMOTIVE (e.g. intonation showing anger)
Message: POETIC (e.g. poetry)
Addressee: CONATIVE (e.g. imperatives and vocatives)
Contact: PHATIC (e.g. Good morning!)
Code: METALINGUAL (e.g. Hello, do you hear me?)
2. Halliday's classification
① Three metafunctions of language
② Seven categories of language functions, identified by observing child language development
3. The author's introduction
① Informative function
② Interpersonal function
③ Performative function
④ Emotive function
⑤ Phatic communion
⑥ Recreational function
⑦ Metalingual function
1.6 What is Linguistics?
Linguistics is usually defined as the science of language or, alternatively, as the scientific study of language.
1.7 Main branches of linguistics
1. Phonetics
2. Phonology
3. Morphology
4. Syntax
5. Semantics
6. Pragmatics
1.8 Macrolinguistics
1. Psycholinguistics
2. Sociolinguistics
3. Anthropological linguistics
4. Computational linguistics
1.9 Important distinctions in linguistics
1. Descriptive vs. prescriptive
2. Synchronic vs. diachronic
3. Langue and parole
4. Competence and performance
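Two points in the outline above, Chomsky's view of a language as a set of sentences built from a finite set of elements and the recursiveness behind creativity (1.3, item 3), can be made concrete with a toy rule that re-applies to its own output. The sketch below assumes a small hypothetical vocabulary taken from the relative-clause example; it is a simplified illustration, not a real grammar of English.

```python
# A finite, hypothetical vocabulary taken from the relative-clause example in 1.3.
NOUN_PHRASES = ["a book", "a teacher", "a school", "its graduates"]
RELATIVE_LINKS = ["which was written by", "who taught in", "which was known for"]

def noun_phrase(depth, i=0):
    """Build a noun phrase containing `depth` relative clauses, each nested in the previous one."""
    head = NOUN_PHRASES[i % len(NOUN_PHRASES)]
    if depth == 0:
        return head
    link = RELATIVE_LINKS[i % len(RELATIVE_LINKS)]
    # Recursive step: the noun phrase contains a relative clause that itself contains a noun phrase.
    return f"{head} {link} {noun_phrase(depth - 1, i + 1)}"

def sentence(depth):
    """Wrap the noun phrase in the frame 'He bought ... .'"""
    return f"He bought {noun_phrase(depth)}."

print(sentence(3))
```

With only seven vocabulary items, every value of `depth` yields a different, finite sentence, so the set of sentences this single rule can produce has no upper bound. That is the sense in which finite means give rise to an infinite number of sentences.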

I. Explain the following terms: (15 points, 3 points each)
1. Ideational function: The ideational function is the content function of language; it allows us to conceptualize the world for our own benefit and that of others.
2. Interpersonal function: The interpersonal function is the participatory function of language. It establishes, maintains and signals relationships between people.
3. Textual function: This function uses language to bring texts into being, that is, to create written and spoken texts.
4. Prescriptive and descriptive: Modern linguistics is descriptive, not prescriptive. Prescriptivism prescribes rules of what is correct. Descriptivism claims that the first task of linguistics is to describe the way people actually speak and write their language, not to prescribe how they ought to speak and write.
5. Competence and performance: Competence is the knowledge that native speakers have of their language as a system of abstract formal relations. Performance is what we do when we speak or listen, that is, the infinitely varied individual acts of verbal behavior with their irregularities, inconsistencies, and errors. (Competence enables a speaker to produce and understand an indefinite number of sentences and to recognize grammatical mistakes and ambiguities; it is stable. Performance is influenced by psychological and social factors such as pressure, distress, anxiety, or embarrassment.)
6. Functionalism and formalism: see p. 34.
7. Phonetics: The scientific study of speech, concerned with defining and classifying speech sounds. (It deals with the production, perception, and physical properties of speech sounds.)
8. Transcription of sounds: The process of representing oral text in a written format.
9. Phonology: Phonology is not specifically concerned with the physical properties of the speech production system. (Phoneticians are concerned with how sounds differ in the way they are pronounced, while phonologists are interested in the patterning of such sounds and the rules that underlie such variations.)
10. Phonemes: The smallest units of sound that can change the meaning of a word.
11. Allophone: A phonetic variant of a phoneme in a particular language, i.e. one of several similar speech sounds belonging to a phoneme.
12. Complementary distribution: When two or more sounds never occur in the same environment, they are said to be in complementary distribution.
13. Suprasegmental features: Those aspects of speech that involve more than single sound segments.
14. Morphology: The study of the internal structure of words and the rules by which words are formed.
15. Morpheme: Morphemes are the smallest meaningful units in the grammatical system of a language.
16. Inflection: The process of adding an affix to a word or changing it in some other way according to the rules of the grammar of a language.
17. Word formation: New words may be added to the vocabulary or lexicon of a language by compounding, conversion, derivation and a number of other processes.
18. Lexicon: Lexicon is synonymous with vocabulary; it refers to all the words and phrases used in a language or that a particular person knows.
19. Lexeme: A more abstract and more technical term referring to the smallest unit in the meaning system of a language that can be distinguished from other similar units.
20. Syntax: Syntax is the study of sentences; traditionally, the study of the rules governing the way in which words are combined to form sentences in a language. (The branch of linguistics that studies how the words of a language can be combined to make larger units, such as phrases, clauses, and sentences.)
21. Immediate constituents: The constituents into which a construction is directly divided at the next level down; for example, "The boy ate the apple" divides first into "the boy" and "ate the apple".
22. Semantics: Traditionally defined as the study of meaning.
23. Referential/representational theory: The referential theory holds that a linguistic sign derives its meaning from what it refers to in reality. The representational theory holds that language in general, and words in particular, are only an icon for the actual thing being symbolized; this suggests that there is a kind of "natural" resemblance or relationship between words and the things they represent.
24. Semantic field: The organization of related lexemes into a system which shows their relationship to one another.
25. Illocutionary act: The making of a statement, offer, promise, etc. in uttering a sentence, by virtue of the conventional force associated with it (or with its explicit performative paraphrase).
26. Design features: The defining properties of human language that distinguish it from any animal system of communication.
27. Speech act theory: One of the basic theories of pragmatics. All linguistic activities are related to speech acts; therefore, to speak a language is to perform a set of speech acts, such as statement, command, inquiry and commitment.
28. Politeness principle and its maxims: According to this theory, everybody has face wants, i.e. expectations concerning their public self-image. In order to maintain harmonious interpersonal relationships and ensure successful social interaction, we should be aware of the two aspects of another person's face, i.e. positive face and negative face. The politeness principle is based on the assumption that conversational implicatures arising from the flouting of the maxims of the cooperative principle are… Its maxims: (1) Tact maxim (2) Generosity maxim (3) Approbation maxim (4) Modesty maxim (5) Agreement maxim (6) Sympathy maxim
29. Cooperative principle and its maxims: A tacit agreement exists between the speaker and the hearer in all linguistic communicative activities: they follow a set of principles in order to achieve particular communicative goals, communicating in a maximally efficient, rational and cooperative way, i.e. sincerely, relevantly and clearly, while providing sufficient information. (1) The maxim of quality (2) The maxim of quantity (3) The maxim of relevance (4) The maxim of manner
30. Indirect speech act: An indirect relationship between the propositional content and the illocutionary force of an utterance.
31. Lingua franca: The general term for a language that serves as a means of communication between different groups of speakers.
32. Sapir-Whorf Hypothesis: The hypothesis as we know it today can be broken down into two basic principles, linguistic determinism and linguistic relativity. Linguistic determinism: language determines the way people perceive the world. Linguistic relativity: language influences the way people perceive the world.
33. Critical Period Hypothesis: This hypothesis states that there is only a limited window of time in which a first language can be natively acquired.
34. Validity and reliability
II. Answer the following questions briefly: (25 points, 5 points each)
1. What are the functions of human language?
Ideational (also descriptive) function: to organize a speaker's or writer's experience of the world and to convey information which can be stated or denied and in some cases tested. It can be divided into the experiential function and the logical function.
Interpersonal (also social) function: to establish, maintain and signal relationships between people.
Textual function: to create written and spoken texts.
2. What are the sub-branches of linguistics within the language system?
Phonetics, phonology, morphology, syntax, semantics, discourse analysis, pragmatics.
3. What are the characteristics of English speech sounds?
Common pattern: consonant-vowel-consonant (fit, dig, net, sit); consonant clusters (stream, glimpse, task).
Consonants: voiced and voiceless.
Vowels: characteristic length (lip, lap, leap).
English vowels: 7 short vowels, 5 long vowels, 8 diphthongs, 5 triphthongs.
4. What are morphological rules? Give at least four rules with examples.
Morphological rules are the rules which determine how morphemes are combined to form new words.
5. What is an endocentric/exocentric construction? Explain with examples.
An endocentric construction is one whose distribution is functionally equivalent to that of one or more of its constituents; a word or group of words acts as a definable center or head. An exocentric construction is a group of syntactically related words where none of the words is functionally equivalent to the group as a whole. E.g. "the old man" is endocentric (it can be replaced by its head "man"), whereas the prepositional phrase "in the room" is exocentric.
6. Illustrate the differences between PS rules and T-rules.
7. What are the major concerns of pragmatics?
8. Explain the difference between inflectional and derivational affixes in terms of both function and position.
9. Talk briefly about syllabus design.
III. Make comments on the following topics. (40 points, 20 points each) (Answers are open.)
Approach for this part: first state the key theoretical points, then give your own comments and argument.
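For question 4 of Part II (morphological rules), a few such rules can be written as small functions. This is a minimal sketch under stated assumptions. It works on spelling rather than phonemes. It assumes the adjective actually takes the prefix in- (many take un- instead, e.g. unhappy). It ignores spelling changes such as happy → happiness. The function names are hypothetical. The negative-prefix rule encodes the regressive assimilation of in- to im- before a bilabial (compare Unit 2, true/false item 66).

```python
def add_negative_in(adjective):
    """Attach the negative prefix in- with a simplified, spelling-based assimilation rule."""
    first = adjective[0]
    if first in "pbm":
        prefix = "im"   # in- becomes im- before bilabials: impossible, imbalanced, immature
    elif first == "l":
        prefix = "il"   # illegal
    elif first == "r":
        prefix = "ir"   # irregular
    else:
        prefix = "in"   # inactive, incorrect
    return prefix + adjective

def add_ness(adjective):
    """Derivational rule: Adj + -ness -> Noun (spelling changes are ignored)."""
    return adjective + "ness"

def add_plural_s(noun):
    """Inflectional rule: regular plural -s (irregular and -es plurals are not handled)."""
    return noun + "s"

print(add_negative_in("possible"), add_ness(add_negative_in("polite")), add_plural_s("book"))
```

Rules can be chained: "polite" → "impolite" → "impoliteness". The first two rules are derivational, and the plural rule is inflectional, since it does not change the word class.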

Chapter 1: Introduction
1. Linguistics: It is generally defined as the scientific study of language. (Linguistics studies not any particular language, but language in general.)
2. General linguistics: The study of language as a whole is called general linguistics. (Language is a complicated entity with multiple layers and facets.)
3. Language: Language is a system of arbitrary vocal symbols used for human communication.
4. Descriptive: A linguistic study is descriptive if it aims to describe and analyze the language people actually use.
5. Prescriptive: A linguistic study is prescriptive if it aims to lay down rules for "correct and standard" behavior, i.e., what people should say and what they should not say.
6. Synchronic linguistics: the description of a language at some point of time in history.
7. Diachronic linguistics: the description of a language as it changes through time.
Speech and writing: these are the two media of communication.

Chapter One Introduction to Linguistics
I. Mark the choice that best completes the statement.
1. All languages have three major components: a sound system, a system of ___ and a system of semantics.
A. morphology B. lexicogrammar C. syntax D. meaning
2. Which of the following words is entirely arbitrary?
A. tree B. typewriter C. bowwow D. bang
3. The function of the sentence Water boils at 100 degrees Centigrade is ___.
A. interpersonal B. emotive C. informative D. performative
4. In Chinese, when someone breaks a bowl or a plate, the host or the people present are likely to say 碎碎(岁岁)平安 (a pun: 碎 "broken" sounds like 岁 "year", so the phrase means "peace year after year") as a means of controlling the forces which they believe might affect their lives. Which function does it perform?
A. interpersonal B. emotive C. informative D. performative
5. Which of the following properties of language enables language users to overcome the barriers caused by time and place of speaking (due to this feature of language, speakers of a language are free to talk about anything in any situation)?
A. Transferability B. Duality C. Displacement D. Arbitrariness
6. What language function does the following conversation perform? (The two chatters have just met and are starting their conversation with the following dialogue.)
A: A nice day, isn't it?
B: Right! I really enjoy the sunlight.
A. Emotive B. Phatic C. Performative D. Interpersonal
7. _______ refers to the actual realization of the ideal language user's knowledge of the rules of his language in utterances.
A. Performative B. Competence C. Langue D. Parole
8. When a dog is barking, you assume it is barking for something or at someone that exists here and now. It couldn't be sorrowful for some lost love or lost bone. This indicates that a dog's language does not have the feature of _______.
A. Reference B. Productivity C. Displacement D. Duality
9. _______ answers such questions as how we as infants acquire our first language.
A. Psycholinguistics B. Anthropological linguistics C. Sociolinguistics D. Applied linguistics
10. _______ deals with the study of dialects in different social classes in a particular region.
A. Linguistic theory B. Practical linguistics C. Sociolinguistics D. Comparative linguistics
II. Mark the following statements with "T" if they are true or "F" if they are false. (10%)
1. The widely accepted meaning of arbitrariness was discussed by Chomsky first.
2. For learners of a foreign language, it is arbitrariness that is more worth noticing than its conventionality.
3. Displacement benefits human beings by giving them the power to handle generalizations and abstractions.
4. For Jakobson and the Prague school structuralists, the purpose of communication is to refer.
5. Interpersonal function is also called ideational function in the framework of functional grammar.
6. Emotive function is also discussed under the term expressive function.
7. The relationship between competence and performance in Chomsky's theory is that between a language community and an individual language user.
8. A study of the features of the English used in Shakespeare's time is an example of the diachronic study of language.
9. Articulatory phonetics investigates the properties of the sound waves.
10. The nature of linguistics as a science determines its preoccupation with prescription instead of description.
III. Fill in each of the following blanks with an appropriate word. The first letter of the word is already given. (10%)
1. Nowadays, two kinds of research methods co-exist in linguistic studies, namely, qualitative and q__________ research approaches.
2. In any language, words can be used in new ways to mean new things and can be combined into innumerable sentences based on limited rules. This feature is usually termed p__________.
3. Language has many functions. We can use language to talk about language. This function is the m__________ function.
4. The claim that language originated from primitive man involuntarily making vocal noises while performing heavy work has been called the y__________ theory.
5. P__________ is often said to be concerned with the organization of speech within specific languages, or with the systems and patterns of sounds that occur in particular languages.
6. Modern linguistics is d__________ in the sense that the linguist tries to discover what language is rather than lay down some rules for people to observe.
7. One general principle of linguistic analysis is the primacy of s__________ over writing.
8. The description of a language as it changes through time is a d__________ linguistic study.
9. Saussure put forward the concept l__________ to refer to the abstract linguistic system shared by all members of a speech community.
10. Linguistic potential is similar to Saussure's langue and Chomsky's c__________.
IV. Explain the following concepts or theories.
1. Design features
2. Displacement
3. Competence
4. Synchronic linguistics
V. Answer the following questions briefly. (10%)
1. Why do people take duality as one of the important design features of human languages? Can you tell us what language would be like if it had no such design feature?
2. How can we use language to do things? Please give two examples to show this point.
II. Fill in each of the following blanks with (an) appropriate word(s).
1. Language is ____________ in that communicating by speaking or writing is a purposeful act.
2. Language is _____________ and __________ in that language is a social semiotic and communication can only take place effectively if all the users share a broad understanding of human interaction.
3. The features that define our human languages can be called _____________, which include ____________, _____________, ______________, _____________.
4. ________ is the opposite side of arbitrariness.
5. The fact that in the system of spoken language we have the primary units as words and secondary units as sounds shows that language has the property of ___________.
6. Language is resourceful because of its _____________ and its ___________, which contribute to the _____________ of language.
7. _______ benefits human beings by giving them the power to handle generalizations and abstractions.
8. In Jakobson's version, there are six functions of language, namely, ____________, _____________, _______________, ________________, ________________ and metalingual function.
9. When people use language to express attitudes, feelings and emotions, they are using the _____________ function of language in Jakobson's version.
10. In functional grammar, language has three metafunctions, namely, _____________, ____________________, __________________.
11. Among Halliday's three metafunctions, ______________ creates relevance to context.
12. The ________________ function of language is primarily used to change the social status of persons.
13. Please name five main branches of linguistics: ___________________________, ___________________, __________________, _____________________ and ____________________.
14. In ________________ phonetics, we study the speech sounds produced by articulatory organs by identifying and classifying the individual sounds.
15. In ________________ phonetics, we focus on the way in which the listener analyzes or processes a sound wave.
16. ________________ is the minimal unit of meaning.
17. The study of sounds used in linguistic communication is called _______________.
18. The study of how sounds are put together and used to convey meaning in communication is called _________________.
19. The study of the way in which symbols representing sounds in linguistic communication are arranged to form words constitutes the branch of study called _____________.
20. The study of rules which govern the combinations of words to form permissible sentences constitutes a major branch of linguistic studies, that is, _________________.
21. The fact that we have alliteration in poems is probably because of the __________________ function of language.
III. Mark the choice that best completes the statement.
1. The description of a language at some point in time is a _______________ study.
A. descriptive B. prescriptive C. synchronic D. diachronic
2. According to Chomsky, a speaker can produce and understand an infinitely large number of sentences because _______.
A. he has come across all of them in his life B. he has internalized a set of rules about his language C. he has acquired the ability through the act of communicating with others language
3. Saussure's distinction between langue and parole is very similar to Chomsky's distinction between competence and performance, but Saussure takes a ____________ view of language and Chomsky looks at language from a __________ point of view.
A. sociological, psychological B. psychological, sociological C. biological, psychological D. psychological, biological
4. The fact that there is no intrinsic connection between the word pen and the thing we write with indicates that language is ______.
A. arbitrary B. rule-governed C. applied D. illogical
5. We can understand and produce an infinitely large number of sentences, including sentences we have never heard before, because language is ______.
A. creative B. arbitrary C. limitless D. resourceful
6. ______ means language can be used to refer to contexts removed from the immediate situation of the speaker.
A. Duality B. Displacement C. Productivity D. Arbitrariness
7. ______ examines how meaning is encoded in a language.
A. Phonetics B. Syntax C. Semantics D. Pragmatics
8. ______ is concerned with the internal organization of words.
A. Morphology B. Syntax C. Semantics D. Phonology
9. ______ refers to the fact that the forms of linguistic signs bear no natural relationship to their meaning.
A. Duality B. Arbitrariness C. Replacement D. Creativity
10. ______ of language makes it potentially creative, and ______ of language makes learning a language laborious.
A. Conventionality, arbitrariness B. Arbitrariness, replacement C. Arbitrariness, conventionality D. Conventionality, arbitrariness
11. When people use language to indulge in itself for its own sake, they are using the ______ function of language.
A. poetic B. creative C. phatic D. metalingual
12. ____ proposes a theory of metafunctions of language.
A. Chomsky B. Saussure C. Jakobson D. Halliday
13. ____ function constructs a model of experience and constructs logical relations.
A. Interpersonal B. Textual C. Logical D. Ideational
14. Interpersonal function enacts _________ relationships.
A. social B. experiential C. textual D. personal
15. By _____________ function, people establish and maintain their status in society.
A. experiential B. referential C. metalingual D. interpersonal
16. The study of the description and classification of speech sounds, words and connected speech belongs to the study of _____.
A. phonology B. phonetics C. morphology D. syntax
17. In __________ phonetics, we investigate the properties of the sound waves.
A. articulatory B. acoustic C. auditory D. sound
18. French distinguishes between nouns like GARE (station), which is feminine, and nouns like TRAIN, which is masculine. This shows that French is a language which ____.
A. is illogical B. has grammatical gender C. has biological gender D. has two cases
19. Competence, in the linguistic sense of the word, is ______.
A. pragmatic skill B. intuitive knowledge of language C. perfect knowledge of language skill D. communicative ability
20. French has Tu (means: you) aimeras (means: will love) Jean and English has You will love Jean. This shows us that ____.
A. both languages are alike in expressing future time B. both languages have a future tense but English requires more words C. English is loose while French is compact D. French forms its future tense by adding a special suffix
21. Knowing how to say something appropriate in a given situation and with exactly the effect you intend is a question of _____.
A. lexis B. syntax C. semantics D. pragmatics
22. A(n) _____ is a speaker/listener who is a member of a homogeneous speech community, who knows the language perfectly and is not affected by memory limitations or distractions.
A. perfect language user B. ideal language user C. proficient user D. native language user
IV. Analyze the following with your linguistic knowledge.
1. Use the following two examples to support the idea that language is not all arbitrary.
a. They married and had a baby.
b. They had a baby and married.
2. Examine the way the following words are separated. Comment on the way of separation in relation to Bloomfield's idea that the word is the minimal unit of meaning.
a. typical, success.ful.ly, organiz.ation, hard.ly, wind.y, word
3. What is the difference between the following two statements in terms of attitude to grammar? What kind of linguistic concepts do they represent?
a. Never put an a before an uncountable noun.
b. People usually do not put an a before an uncountable noun.
4. How do you understand the sentence Music is a universal language?
5. What are the two interpretations of the sentence They are hunting dogs? What is the linguistic knowledge that enables you to distinguish the meanings of this sentence?
V. Match each term in Column A with one relevant item in Column B.