基于集成学习的遥感文本图像分类(IJIGSP-V8-N12-3)

- 格式:pdf

- 大小:620.24 KB

- 文档页数:9

基于深度学习的遥感图像分类1. 引言随着遥感技术的发展,遥感图像的分类问题已经成为了遥感数据处理的重要问题。

对于大规模的遥感图像数据,传统的分类方法会面临很大的挑战,而基于深度学习的分类方法能够很好地处理这些数据,同时具有很强的泛化能力和鲁棒性。

本文将介绍基于深度学习的遥感图像分类方法,主要包括卷积神经网络(Convolutional Neural Network,CNN)介绍,遥感图像数据处理方法和实验结果分析等内容。

2. 卷积神经网络概述卷积神经网络是一种深度学习算法,主要用于图像分类、目标检测和语音识别等领域。

它的主要特点是在网络中加入了卷积层和池化层,能够有效地提取图像的特征。

卷积层通过卷积核(filter)对输入的图像进行卷积操作,得到图像的特征。

池化层则对卷积层的输出进行降采样操作,进一步减少特征提取的计算量和复杂度。

卷积神经网络的结构一般包括输入层、卷积层、池化层和全连接层等。

其中,输入层用于接收输入数据,卷积层负责提取输入数据的特征,池化层对特征进行降采样,全连接层对特征进行分类。

在深度学习中,卷积神经网络往往需要进行多层的迭代学习,以提高分类的准确率。

3. 遥感图像处理在遥感图像分类中,数据的处理和选择对分类结果有重要影响。

一般来说,遥感图像数据具有很高的空间分辨率和光谱分辨率,需要对数据进行预处理以降低数据维度,并提取有用的特征。

首先,对于多光谱遥感图像,需要进行波段选择和波段组合,选择代表地物类别的波段进行组合。

同时,为了减少计算量,进行图像降维处理,可以使用主成分分析法(PCA)或独立成分分析法(ICA)等方法。

其次,对于高光谱遥感图像,需要进行光谱特征提取,在具体实现中可以使用多种方法,如线性判别分析(LDA)、局部保持投影(LPP)和稀疏编码等方法。

最后,遥感图像常常存在噪声和遮挡等问题,因此需要进行图像增强和去噪处理,以提高分类准确率。

4. 实验结果分析本文以美国明尼苏达州One Metro Area数据集作为实验数据,使用深度学习方法对数据进行分类。

遥感图像分类方法与准确性评价指标遥感图像分类是利用遥感数据进行地物分类的过程,其目的是将遥感图像中的不同地物进行识别与分类。

在遥感图像分类中,有效的分类方法和准确性评价指标对于获得准确的分类结果至关重要。

一、常用的遥感图像分类方法1. 监督分类方法监督分类方法是指在进行分类之前,通过在选定的地物样本中确定其类别,并利用这些样本进行分类算法的训练。

常用的监督分类方法包括最大似然分类、支持向量机、决策树等。

最大似然分类是一种基于统计理论的方法,其基本假设是不同类别地物的像元值符合某种概率分布。

支持向量机是一种基于几何学原理的分类方法,其核心思想是将不同类别地物的像元用超平面分割成两个部分,以实现分类。

决策树是一种基于判定树的分类方法,通过根据不同属性进行逐级判定,最终将地物分类。

2. 无监督分类方法无监督分类方法是指在进行分类之前不需要先进行样本标签的确定,而是根据图像中像元之间的相似性和差异性进行聚类。

常用的无监督分类方法包括K-means 聚类、高斯混合模型等。

K-means聚类是一种基于距离度量的分类方法,其核心思想是将图像中的像元根据相似性进行分组,形成不同的类,实现地物分类。

高斯混合模型是一种基于概率统计的分类方法,通过假设图像像元符合多个高斯分布的线性组合,确定不同类别地物的概率分布。

二、遥感图像分类准确性评价指标1. 精度(Accuracy)精度是指分类结果中被正确分类的像元数占总像元数的比例。

精度越高,表示分类结果越准确。

在实际应用中,精度常常使用整体精度(Overall Accuracy)和Kappa系数进行评价。

整体精度是指分类正确的像元数占总像元数的比例,其范围为0到1之间,1表示分类完全正确。

Kappa系数是基于整体精度的一种校正指标,它考虑了分类结果与随机分类之间的差异性,范围也在0到1之间,1表示没有误分类。

2. 生产者精度(Producer's Accuracy)生产者精度是指在分类结果中,某一类地物被正确分类的像元数占该类地物实际像元数的比例。

遥感图像分类方法及应用示例遥感技术是通过卫星、飞机等远距离传感器获取地表信息的一种技术手段。

遥感图像分类是遥感技术中的一项重要任务,它可以将遥感图像中的像素按照其特征进行分类,并生成分类结果。

本文将介绍遥感图像分类的方法,并给出一些应用示例。

一、遥感图像分类方法1. 基于像元的分类方法基于像元的分类方法是将遥感图像中的每个像素点看作一个样本进行分类,通过像素点的光谱特征来确定其所属类别。

常见的方法有最大似然法、支持向量机等。

最大似然法是一种基于统计学原理的分类方法,它通过求解样本的概率密度函数来确定像素点的类别。

支持向量机是一种基于样本间距离的分类方法,它通过构建超平面将不同类别的样本分开。

2. 基于对象的分类方法基于对象的分类方法是将遥感图像中的像素组成的对象进行分类,通过对象的形状、纹理等特征来确定其所属类别。

常见的方法有基于区域的分割和基于对象的分类。

基于区域的分割将遥感图像中的像素按照相似性进行分组,形成具有相同特征的区域。

基于对象的分类是在分割得到的区域基础上,通过提取区域的特征来确定其所属类别。

3. 基于深度学习的分类方法随着深度学习技术的发展,基于深度学习的分类方法在遥感图像分类中得到了广泛应用。

深度学习通过构建深层神经网络模型,可以自动学习遥感图像中的特征表示。

常见的方法有卷积神经网络(CNN)、循环神经网络(RNN)等。

卷积神经网络可以有效地提取图像的空间特征,循环神经网络可以捕捉图像序列的时序特征。

二、遥感图像分类的应用示例1. 农作物类型分类农作物类型分类是农业生产中的重要任务,可以帮助农民了解农田的分布情况和种植结构,指导农作物管理和精细化农业。

通过遥感图像分类方法,可以将农田遥感图像中的不同农作物进行分类,比如小麦、玉米、水稻等。

这样可以帮助农民进行农作物识别和农田监测,提高农业效益。

2. 土地利用分类土地利用分类是城市规划和土地资源管理中的重要任务,可以帮助决策者了解土地利用的分布情况和变化趋势,指导城市规划和土地资源开发。

![一种基于深度集成学习的高分辨率遥感图像分类方法[发明专利]](https://uimg.taocdn.com/c8f486e516fc700aba68fcb3.webp)

专利名称:一种基于深度集成学习的高分辨率遥感图像分类方法

专利类型:发明专利

发明人:席江波,聂聪冲,孙悦鸣,姜万冬

申请号:CN202010173481.1

申请日:20200313

公开号:CN111368776A

公开日:

20200703

专利内容由知识产权出版社提供

摘要:本发明公开了一种基于深度集成学习的高分辨率遥感图像分类方法,其思路为:首先使用像元亮度值作为分类特征进行全连接网络分类实验;其次使用面向对象分割,再以重心为中心提取卷积块进行卷积神经网络分类;再将原始图像全部裁剪为图像块,使用U‑Net完全卷积网络进行one vs all多元分类;最后,在前三个深度网络基分类器的分类结果上训练一个全连接网络进行概率组合,实现了较好的分类性能。

本发明通过使用深度集成学习方法,能够有效结合光谱和空间等信息改善分类准确率,且综合基分类器的优势,在高分辨率遥感图像分类的单个类别和总体分类精度上都能得到比单一分类器更好的分类精度。

申请人:长安大学

地址:710064 陕西省西安市南二环中段33号

国籍:CN

代理机构:西安睿通知识产权代理事务所(特殊普通合伙)

代理人:惠文轩

更多信息请下载全文后查看。

遥感图像分类方法综述

张裕;杨海涛;袁春慧

【期刊名称】《四川兵工学报》

【年(卷),期】2018(039)008

【摘要】将常见的遥感图像分类方法分为基于人工特征描述的分类方法、基于机器学习的分类方法和基于深度学习的分类方法三类.介绍了各类方法的主要算法,总结和评述了各算法的优缺点,最后展望了遥感图像分类方法研究发展趋势.

【总页数】5页(P108-112)

【作者】张裕;杨海涛;袁春慧

【作者单位】航天工程大学研究生管理大队,北京101416;航天工程大学航天遥感室,北京101416;航天工程大学研究生管理大队,北京101416

【正文语种】中文

【中图分类】TP751

【相关文献】

1.遥感图像分类方法综述 [J], 马莉

2.高光谱遥感图像分类方法综述 [J], 张蓓

3.稀疏表示遥感图像分类方法综述 [J], 何苗;王保云;盛伟;孔艳

4.遥感图像分类方法综述 [J], 张裕; 杨海涛; 袁春慧

5.稀疏表示遥感图像分类方法综述 [J], 何苗;王保云;盛伟;孔艳

因版权原因,仅展示原文概要,查看原文内容请购买。

![一种基于集成学习算法的遥感图像分类方法[发明专利]](https://uimg.taocdn.com/f90a587e51e79b8969022661.webp)

专利名称:一种基于集成学习算法的遥感图像分类方法专利类型:发明专利

发明人:潘浩,蔡晓斌,周年荣,马仪,文刚,黄然,赵加能

申请号:CN202010465983.1

申请日:20200528

公开号:CN111612086A

公开日:

20200901

专利内容由知识产权出版社提供

摘要:本申请提供一种基于集成学习算法的遥感图像分类方法,根据遥感图像的原始数据集D,确定支持向量机、随机森林和BP神经网络分类算法模型的最终精度,进而确定主分类算法模型与辅助算法模型;获取遥感图像的预测数据集Dt并导入算法模型,得到主分类算法结果集S和辅助分类算法结果集S1和S2;比较主分类算法结果集S中的遥感图像分类类型Wi与辅助分类算法结果集S1和S2中对应的遥感图像分类类型V1i和V2i,判断对应的遥感图像Dti的分类结果;本申请采用交叉验证进行分组提高样本代表程度;利用多种算法模型,在每次循环时利用精确度确定主分类算法和辅助分类算法,对预测结果进行多重计算、多对比,减少误判和错判情况的概率,提高了预测精度。

申请人:云南电网有限责任公司电力科学研究院

地址:650217 云南省昆明市经济技术开发区云大西路105号

国籍:CN

代理机构:北京弘权知识产权代理事务所(普通合伙)

更多信息请下载全文后查看。

基于深度学习的遥感图像分类技术研究概述遥感图像分类是利用遥感传感器获取的图像信息对不同地物进行识别和分类的过程。

传统的遥感图像分类方法通常依赖于手工设计特征和分类器,这些方法在处理大规模遥感数据时面临着挑战。

近年来,深度学习技术的快速发展为遥感图像分类带来了新的可能性。

深度学习通过对大规模数据的自动学习,能够从数据中自动提取出最具代表性的特征,提高了遥感图像分类的准确性和效率。

基于深度学习的遥感图像分类方法1. 卷积神经网络(CNN)卷积神经网络是一种用于图像识别的深度学习模型。

通过通过卷积层、池化层和全连接层等不同组成部分,CNN可以从原始图像中逐步提取特征,并进行分类。

在遥感图像分类中,CNN已经取得了很大的成功。

2. 迁移学习遥感数据通常具有特别的特征,在没有大规模标注数据的情况下,很难直接利用深度学习方法进行训练。

迁移学习是一种通过利用预训练模型的方法,将已经在大规模数据上训练好的深度学习模型应用于遥感图像分类中。

迁移学习能够将已有的知识迁移到新的任务中,提高遥感图像分类的准确性。

3. 多尺度特征融合遥感图像通常具有不同的分辨率和信息密度。

通过融合多尺度的特征,可以提取更加全面和丰富的信息,从而提高遥感图像分类的准确性。

基于深度学习的方法可以通过多尺度卷积、多尺度注意力机制等技术实现多尺度特征融合。

4. 数据增强在遥感图像分类任务中,不同目标物体的遥感图像样本通常数量不平衡。

通过数据增强技术,可以通过对已有的样本进行旋转、平移、缩放等变换操作,生成新的合成样本,从而扩大训练数据的规模,改善模型的泛化能力。

5. 目标检测与定位遥感图像分类不仅需要识别图像中的目标物体,还需要准确地定位目标的位置。

基于深度学习的目标检测算法,如基于区域的卷积神经网络(R-CNN)、快速区域卷积神经网络(Fast R-CNN)和区域卷积神经网络(Faster R-CNN)等,可以实现遥感图像中目标的准确检测和定位。

I.J. Engineering and Manufacturing, 2018, 4, 13-20Published Online July 2018 in MECS ()DOI: 10.5815/ijem.2018.04.02Available online at /ijemRemote Sensing Image Scene ClassificationMd. Arafat Hussain, Emon Kumar Dey*Institute of Infromation Technology, University of Dhaka, Dhaka-1000, BangladeshReceived: 11 March 2018; Accepted: 06 June 2018; Published: 08 July 2018AbstractRemote sensing image scene classification has gained remarkable attention because of its versatile use in different applications like geospatial object detection, natural hazards detection, geographic image retrieval, environment monitoring and etc. We have used the strength of convolutional neural network in scene image classification and proposed a new CNN to classify the images. Pre-trained VGG16 and ResNet50 are used to reduce overfitting and the training time in this paper. We have experimented on a recently proposed NWPU-RESISC45 dataset which is the largest dataset of remote sensing scene images. This paper found a significant improvement of accuracy by applying the proposed CNN and also the approaches have applied.Index Terms: Convolutional Neural Network, Remote Sensing Image, Scene Classification, CNN.© 2018 Published by MECS Publisher. Selection and/or peer review under responsibility of the Research Association of Modern Education and Computer Science.1.IntroductionImage or picture is a visual representation anything. Human can see a complex picture, understand it and can interpret properly without thinking twice but it is a very difficult task for machine. A machine can learn from the provided labelled data. Due to machines learning capability, image recognition and classification has become an active research area.Image classification and recognition is important for various applications a like facial image classification, medical image classification, scene image classification, human pose classification, gender classification etc. Recently scene image classification has found remarkable attention for its growing importance. Researchers are trying to efficiently classify different types of scene images like natural scene, remote sensing scene, indoor scene etc.Satellite and airborne observing the earth and taking a huge amount of remote sensing scene images. With the development of modern satellite and airborne, high spatial resolution (HSR) remote sensing images can * Corresponding author.E-mail address: emonkd@iit.du.ac.bdprovide detailed information. Remote sensing image classification has great importance in many applications such as natural vegetation mapping, urban planning, geospatial object detection, hazards detection, geographic image retrieval and environment monitoring [1]. The effectiveness of these applications depends on the classification accuracy.The goal of the classification is to categorize a scene image in appropriate classes. In the past years, some datasets have proposed on remote sensing image. These datasets are very small and already found high accuracy using feature extraction based hand crafted methods. The benefits of deep learning approach cannot be found with these small datasets because of various reasons. Recently, few large datasets have been published and some models have already been applied also. But there is a huge scope to improve the performance of this dataset. For example, a recently published NWPU-RESISC45 dataset [2] with 311,000 images and 45 scene classes achieved 90.36% accuracy using fine tuning VGGNet-16 [3] which is the current state of the art solution.On the other hand, current state-of-the-art hand crafted feature extraction methods showing poor performance on large dataset for scene classification. For example, the NWPU-RESISC45 achieved only 24.84% accuracy using the colored histogram features of this dataset. So the major objective of this research is how we can improve the performances remote sensing dataset using hand engineered feature extraction method as well as deep learning architecture.Many experiments happened on focusing remote sensing scene image using different datasets. Local descriptors like local binary pattern, local ternary pattern, completed local binary pattern or histograms of oriented gradients have proven their worth in different scene classification. Hand engineered based feature extraction method was very popular for scene detection before the deep learning based approach was proposed. Still it is very attractive for its simplicities. Different researches have proposed different ways to solve this problem. Xiao et al. proposed the SUN for large scale scene detection in [4]. The dataset contains 899 categories of 130519 images. Which helped the scene detection research group of the world a lot. They also applied different feature extraction method to find the accuracy of the dataset and found a satisfactory state of the art result.Lienou et. al. [5] conducted experiment on satellite images using sematic concept. They applied latent dirichle allocation model is used to training for each class. The model basically obtains their feature by extracting image words. They obtained 96.5% accuracy which is comparatively support vector machine which gained 85% accuracy on QuickBird image dataset.Vatsavai et. al. [6] proposed an unsupervised way based on the Latent Dirichlet Allocation (LDA) method which is a semantic labelling framework. They collected 130 images of refineries, international nuclear plants, airports and coal power plants. Though there were reasonable overlapping between two classes they found 73% training accuracy and 67% testing accuracy.Deep learning has come with a revolutionary change in the field of machine learning. Accuracy of different datasets jumped after applying deep learning approach [21-23]. The typical Convolutional Neural Networks (ConvNet) including Alexnet [7], VGGNET [8], GoogleNet [9] has been applied for scene classification purpose. As, in most of the existing scene dataset number of images are small, the activations of different layers of ConvNet or their fusion considered as the visual representation sent to the classification layer of ConVNet. For example, in [10-12] the authors used deep learning model for scene classification based on 7 layer AlexNet or CaffeNet [13]. Castelluccio, Marco [14] achieved state of the art accuracy on UC Merced dataset and the Brazilian coffee scene dataset. They applied CaffeNet and GoogLeNet with various learning procedures on these datasets. They used these two network from scratch and also used fine-tuning on their experimental dataset and they used the procedure to reduce overfitting. They found 97.10% accuracy using fine-tuned GoogleNet on UC Merced dataset and 91.83% accuracy using GoogleNet on Brazilian coffee scene dataset.VGG architecture consisting 16 layers have also been applied in [15-16]. Wang, Limin, et al. used [17] VGG networks to find state of the art accuracy on Place205, MIT67 and SUN397. They used Caffe toolbox to design ConvNets. They designed three types of VGG nets named VGG11, VGG13, VGG16. Trained the VGG nets onPlace205 dataset. To reduce computation cost they loaded weight to VGG13 from VGG11 and from VGG13 to VGG16.Many deep learning based models have proposed to classify images of different dataset. But there is no specific model or approach which is best suited for all dataset. A deep knowledge in this field and many year research experience can give an appropriate path to design a good model for a dataset. In this paper we have focused on the improvement of the performances of large-scale image dataset. We have experimented on NWPU-RESISC45 using our proposed architecture of deep learning model. We have found a reasonable accuracy.2.MethodologyIn this paper we have applied two types of approaches to evaluate the dataset. We applied both transfer learning approach and training from the scratch. Transfer learning fine tunes the existing deep convolutional neural network models. We considered VGG16 and ResNet50 for the transfer learning. In 2.3 we described our proposed from the scratch. Following sub-sections describe the methodologies.2.1.Transfer Learning using Pre-trained VGG16 ModelWe fine-tuned the VGG16 model which was trained on ImageNet dataset [7]. As this dataset covers a huge variety of images, a model that is trained on this dataset with a good accuracy rate has a huge knowledge indeed. We just want to fit this knowledge for our dataset.We consider two factors, one is the size of the dataset another is the similarity with the ImageNet dataset. Our dataset is comparatively small and partially similar with ImageNet dataset. In this situation, partially training the VGG16 model will be best. We kept the convolutional part same as before but changed the fully connected layers. We replaced the first fully-connected layer with 256 neurons, second fully-connected layer with 256 neurons and third with 45 neurons. We replaced 4096 by 256 to reduce overfitting by reducing the parameters.2.2.Transfer Learning using Pre-trained ResNet50 ModelWe applied fine-tuning on pre-trained model not only changing the output layer and training the classifier but also training some layers near the last layer. Here last layer is replaced with six layers. After the last average pooling we added a global average pooling layer to reduce dimensionality of input. It also works an alternative of flatten layer by producing one dimensional vector from 3 dimension. Then a fully-connected layer is added with 256 neurons. After that a dropout layer is added and another fully-connected layer of 256 neurons follows the dropout layer. Another dropout layer follows the second fully connected layer. The last layer of the model is a fully-connected layer with 45 neurons with a softmax classifier.2.3.Proposed CNN model from ScratchThe model is a VGGNet based seven-layer convolutional neural network. First four layers are convolutional layer and other three are fully connected layers. There are four max-pooling layer followed by each convolutional layer. Two dropout layers are added which follows first two convolutional layers. Last fully-connected layer is basically classification layer which classify images into different classes. Figure 1 shows the basic architecture of our proposed model. Table 1 shows every layers, their output and parameters. Convolutional layers (marked in blue) and fully-connected layers (marked in yellow) are the skeleton of our model.Fig.1. Proposed ModelTable 1. Layers, Output Shape and Parameters of Proposed3.Experimental ResultsIn this paper we have used NWPU-RESISC45 dataset. We applied some hand engineered learning procedure and deep learning procedure in this experiment. In deep learning procedure we used two well-known deep learning model VGG16 and ResNet50. We, trained them from scratch as well as applied transfer learning for the dataset. We used Theano, Tensorflow as machine learning library, Keras as deep learning API. The experiment was implemented on Windows 10 operating system.3.1.DatasetNWPU-RESISC45 dataset is used in this experiment. It is one of the largest remote sensing image scene dataset. The dataset contains 31,500 images in total with 45 scene classes and each class contain 700 images. The dataset is developed from the inspiration of applying many data driven algorithms like deep learning. Scarcity of data severely limits the learning capability of deep learning models which was the main motivation behind this dataset. So, the dataset is produced to face the problem by combining a number of datasets. Firstly we have used twelve scene classes for our experiment due to our resource constraints. We split the dataset into two parts where 80% data is used for training and another 20% for validation. Images were randomly chosen for training and validation. Figure 2 shows some sample images from NWPU-RESISC45 dataset.Fig.2. Sample Images from NWPU-RESISC45 Dataset3.2.ResultsWe have applied the proposed models and compared the result with existing methods. Table 2 shows the experimental results.Table 2 shows that the handheld feature extraction method NABP shows 71.76% accuracy, again 72.28% accuracy have been found using CENTRIST and 73.80% using tCENTRIST. All of these three methods was performed using 5 fold cross validation with SVM classifier.Table 2. Experimental Results of Different Methods.In the next phase we applied VGG11, VGG16 and our proposed 7 layer CNN from the scratch. VGG11 and VGG16 shows comparatively less accuracy than our proposed CNN trained from scratch. VGG16 and VGG11 shows 70.52% and 76.9% of accuracy respectively and our proposed 7 layers CNN shows 87.57%. The result improved more than 10% than VGG11. Results of initial two models produce less accuracy. The main reason is the models are large enough and huge number of parameters but we could not feed enough data to these models due to resource constraints. So, the models over fits the data and yields a poor accuracy rate. We have reduced a number of parameters to reduce overfitting in our model.We found our best results using transfer learning. 91.9% accuracy is found when we used VGG16 as fixed feature extractor and trained only the softmax classifier, 90.48% accuracy found by fine-tuning the last three layers of VGG16.Fig.3. Experimental Results Comparison for Different Methods on NWPU-RESISC45 DatasetLastly we applied ResNet50 to classify the remote sensing image dataset and found best results. Usingoriginal ResNet50 we found 95.9% and after applying some fine tuning described previously we got 96.91% accuracy for our dataset. Figure 3 shows a comparison of results based on different findings using different procedures.4.ConclusionThe goal of this experiment was to increase accuracy of remote sensing scene images. We have chosen NWPU-RESISC45 dataset. The main dataset contains 31,500 images with 45 classes. Different machine learning procedures are applied on this dataset including deep learning. We applied various types of procedures on the dataset. We proposed a new model which yields the best result (87.57%) for the models learned from scratch. Best result has been found using transfer learning (fine-tuned ResNet50 96.91%). ResNet50 is a large model compared to the proposed 7 layer CNN model. It takes long time to find output from input using ResNet. Proposed CNN takes less than half time compared to ResNet50 to take decision. But, as accuracy is our main concern, transfer learning is the best suited for the dataset we have used. Recently a new large dataset on scene image has been published named by PatternNet [20]. It is a high scaled remote sensing image dataset containing 38 classes. In future we will try to do our research on a better environment on these largest datasets. These will help us to accurately classify remote sensing scene images.References[1]Guo, Zhenhua, Lei Zhang, and David Zhang. "A completed modeling of local binary pattern operatorfor texture classification." IEEE Transactions on Image Processing 19.6 (2010): 1657-1663.[2]Cheng, Gong, Junwei Han, and Xiaoqiang Lu. "Remote sensing image scene classification: benchmarkand state of the art." Proceedings of the IEEE 105.10 (2017): 1865-1883.[3]Simonyan, Karen, and Andrew Zisserman. "Very deep convolutional networks for large-scale imagerecognition." arXiv preprint arXiv:1409.1556 (2014).[4]Xiao, J., Hays, J., Ehinger, K. A., Oliva, A., & Torralba, A. (2010, June). Sun database: Large-scalescene recognition from abbey to zoo. In Computer vision and pattern recognition (CVPR), 2010 IEEE conference on (pp. 3485-3492). IEEE.[5]Lienou, Marie, Henri Maitre, and Mihai Datcu. "Semantic annotation of satellite images using latentDirichlet allocation." IEEE Geoscience and Remote Sensing Letters 7.1 (2010): 28-32.[6]Vatsavai, Ranga Raju, Anil Cheriyadat, and Shaun Gleason. "Unsupervised semantic labelingframework for identification of complex facilities in high-resolution remote sensing images." Data Mining Workshops (ICDMW), 2010 IEEE International Conference on. IEEE, 2010.[7] A. Krizhevsky, I. Sutskever, G. E. Hinton, Imagenet classification with deep convolutional neuralnetworks, in: Advances in Neural Information Processing Systems (NIPS), 2012, pp. 1097-1105.[8]K. He, X. Zhang, S. Ren, J. Sun, Spatial pyramid pooling in deep convolutional networks for visualrecognition, IEEE Transactions on Pattern Analysis and Machine Intelligence 37 (9) (2015) 1904-1916.[9] C. Szegedy, W. Liu, Y. Jia, P. Sermanet, S. Reed, D. Anguelov, D. Erhan, V. Vanhoucke, A.Rabinovich, Going deeper with convolutions, in: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2015.[10] F. P. S. Luus, B. P. Salmon, F. van den Bergh, B. T. J. Maharaj, Multiview deep learning for land-useclassification, IEEE Geoscience and Remote Sensing Letters 12 (12) (2015) 2448-2452.[11] B. Zhao, B. Huang, Y. Zhong, Transfer learning with fully pretrained deep convolution networks forland-use classification, IEEE Geoscience and Remote Sensing Letters 14 (9) (2017) 1436-1440.[12] F. Hu, G.-S. Xia, J. Hu, L. Zhang, Transferring deep convolutional neural networks for the sceneclassification of high-resolution remote sensing imagery, Remote Sensing 7 (11) (2015) 14680-14707. [13]Y. Jia, E. Shelhamer, J. Donahue, S. Karayev, J. Long, R. Girshick, S. Guadarrama, T. Darrell, Ca_e:Convolutional architecture for fast feature embedding, in: ACM International Conference on Multimedia, 2014, pp. 675-678.[14]Castelluccio, Marco, et al. "Land use classification in remote sensing images by convolutional neuralnetworks." arXiv preprint arXiv:1508.00092 (2015)[15]K. Nogueira, O. A. Penatti, J. A. dos Santos, Towards better exploiting convolutional neural networksfor remote sensing scene classi_cation, Pattern Recognition 61 (2017) 539 -556.[16]G. Cheng, J. Han, X. Lu, Remote sensing image scene classi_cation: Benchmark and state of the art,Proceedings of the IEEE 105 (10) (2017) 1865-1883.[17]Wang, Limin, et al. "Places205-vggnet models for scene recognition." arXiv preprintarXiv:1508.01667 (2015).[18]Rahman, Md Mostafijur, et al. "Noise adaptive binary pattern for face image analysis." Computer andInformation Technology (ICCIT), 2015 18th International Conference On. IEEE, 2015.[19]Wu, J.; Rehg, J.M. CENTRIST: A visual descriptor for scene categorization. IEEE Trans. Pattern Anal.Mach. Intell. 2011, 33, 1489–1501[20]Zhou, W., Newsam, S., Li, C., & Shao, Z. (2017). Patternnet: a benchmark dataset for performanceevaluation of remote sensing image retrieval. arXiv preprint arXiv:1706.03424.[21]Dey, E. K., Tawhid, M. N. A., & Shoyaib, M. (2015). An automated system for garment texture designclass identification. Computers, 4(3), 265-282.[22]Islam, S. S., Rahman, S., Rahman, M. M., Dey, E. K., & Shoyaib, M. (2016, May). Application of deeplearning to computer vision: A comprehensive study. In Informatics, Electronics and Vision (ICIEV), 2016 5th International Conference on (pp. 592-597). IEEE.[23]Islam, S. S., Dey, E. K., Tawhid, M. N. A., & Hossain, B. M. (2017). A CNN Based Approach forGarments Texture Design Classification. Advances in Technology Innovation, 2(4), 119-125.[24]Rahman, M. M., Rahman, S., Dey, E. K., & Shoyaib, M. (2015). A gender recognition approach with anembedded preprocessing. International Journal of Information Technology and Computer Science (IJITCS), 7(7), 19.Authors’ ProfilesMd. Arafat Hussain Completed Bachelor of Science in Software Engineering from Institute ofInformation Technology (IIT), University of Dhaka, Bangladesh in 2017. His area of interest inresearch is machine learning, image processing and pattern recognition.Emon Kumar Dey is currently an assistant professor in Institute of Information Technology(IIT), University of Dhaka. He received his M.S degree from the Department of ComputerScience and Engineering, University of Dhaka, Bangladesh in 2011. His research area includepattern recognition, machine learning, image processing, LiDAR data processing, 3D buildingmodelling etc.How to cite this paper: Md. Arafat Hussain, Emon Kumar Dey,"Remote Sensing Image Scene Classification", International Journal of Engineering and Manufacturing(IJEM), Vol.8, No.4, pp.13-20, 2018.DOI: 10.5815/ijem.2018.04.02。

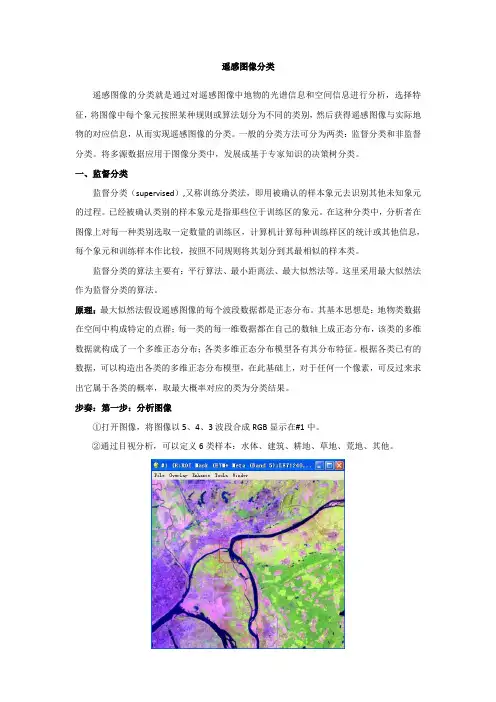

遥感图像分类遥感图像的分类就是通过对遥感图像中地物的光谱信息和空间信息进行分析,选择特征,将图像中每个象元按照某种规则或算法划分为不同的类别,然后获得遥感图像与实际地物的对应信息,从而实现遥感图像的分类。

一般的分类方法可分为两类:监督分类和非监督分类。

将多源数据应用于图像分类中,发展成基于专家知识的决策树分类。

一、监督分类监督分类(supervised),又称训练分类法,即用被确认的样本象元去识别其他未知象元的过程。

已经被确认类别的样本象元是指那些位于训练区的象元。

在这种分类中,分析者在图像上对每一种类别选取一定数量的训练区,计算机计算每种训练样区的统计或其他信息,每个象元和训练样本作比较,按照不同规则将其划分到其最相似的样本类。

监督分类的算法主要有:平行算法、最小距离法、最大似然法等。

这里采用最大似然法作为监督分类的算法。

原理:最大似然法假设遥感图像的每个波段数据都是正态分布。

其基本思想是:地物类数据在空间中构成特定的点群;每一类的每一维数据都在自己的数轴上成正态分布,该类的多维数据就构成了一个多维正态分布;各类多维正态分布模型各有其分布特征。

根据各类已有的数据,可以构造出各类的多维正态分布模型,在此基础上,对于任何一个像素,可反过来求出它属于各类的概率,取最大概率对应的类为分类结果。

步奏:第一步:分析图像①打开图像,将图像以5、4、3波段合成RGB显示在#1中。

②通过目视分析,可以定义6类样本:水体、建筑、耕地、草地、荒地、其他。

第二步:选择训练样本①在主图像窗口选择Overlay-----Region of Interest,打开ROI Tool对话框。

②在ROI Tool对话框中设置相关样本的名称、颜色等。

③选择ROI_Type—Polygon,在window中选择image,在图像上绘制训练区。

④重复②、③步奏,最终完成以下结果:第三步:评价训练样本①在ROI Tool对话框中,选择Options——Compute ROI Separability,打开目标图像。

![基于分层集成学习的高光谱遥感图像分类方法[发明专利]](https://uimg.taocdn.com/1f48d7f4a76e58fafbb0039f.webp)

专利名称:基于分层集成学习的高光谱遥感图像分类方法专利类型:发明专利

发明人:陈雨时,赵兴,刘柏森

申请号:CN201410541909.8

申请日:20141014

公开号:CN104268579A

公开日:

20150107

专利内容由知识产权出版社提供

摘要:基于分层集成学习的高光谱遥感图像分类方法,属于高光谱遥感图像分类技术领域。

本发明是为了解决高光谱遥感图像数据的分类精度低的问题。

它主要是使用两层的集成结构对高光谱图像进行分类,分别是内层结构和外层结构;内层结构是通过随机波段选择构成存在差异的光谱集合;之后以光谱集合为单位,分别使用Adaboost的集成方法来训练,再对测试样本进行分类;外层结构是将内层集成中各个光谱集合的分类结果进行整合,采用权重投票的方法确定样本的最终类别;最后是将整幅图像作为测试样本,实现全图分类从而得到分类主题图。

本发明用于对高光谱遥感图像分类。

申请人:哈尔滨工业大学

地址:150001 黑龙江省哈尔滨市南岗区西大直街92号

国籍:CN

代理机构:哈尔滨市松花江专利商标事务所

代理人:张利明

更多信息请下载全文后查看。

遥感图像分类方法及其在测绘中的应用遥感图像是通过遥感技术获取的地球表面的图像信息。

遥感图像具有广泛的应用领域,其中之一就是测绘领域。

遥感图像分类方法是将遥感图像中的像素点按照一定的规则进行分类和标记,以获取图像中不同区域的信息,从而实现对地物和地貌的解译和分析。

本文将介绍常用的遥感图像分类方法,并探讨其在测绘中的应用。

一、遥感图像分类方法1. 基于像元的分类方法基于像元的分类方法是将遥感图像中的每个像素点单独进行分类。

常用的方法包括最大似然分类、支持向量机等。

最大似然分类是根据每个像素点的灰度值和概率分布进行分类,将每个像素点归入具有最大可能性的类别。

支持向量机是一种基于统计学习理论的分类方法,通过构建一个划分超平面将不同类别的像素点分开。

2. 基于对象的分类方法基于对象的分类方法将相邻像素点组成的图像区域作为一个整体进行分类。

常用的方法包括分割-分类法、基于决策树的分类方法等。

分割-分类法首先将遥感图像进行分割,得到不同的图像区域,然后对每个图像区域进行分类。

基于决策树的分类方法通过构建一棵决策树,根据遥感图像特征进行划分,逐层分类。

3. 基于深度学习的分类方法基于深度学习的分类方法是近年来新兴的分类方法,它利用神经网络模型对遥感图像进行分类。

常用的方法包括卷积神经网络(CNN)、循环神经网络(RNN)等。

卷积神经网络通过多层卷积和池化操作提取图像的特征,然后通过全连接层进行分类。

循环神经网络则适用于序列数据的分类,可以处理带有时序关系的遥感图像数据。

二、遥感图像分类在测绘中的应用1. 地物提取与更新遥感图像分类可以用于地物提取与更新。

通过对遥感图像进行分类,可以有效地将地物区分出来,如建筑物、道路、水体等。

同时,可以利用多期遥感图像进行地物的更新,观察地物的变化情况,为城市规划、土地利用等提供依据。

2. 地貌分析与监测遥感图像分类也可以应用于地貌分析与监测。

通过对地表的分类,可以得到不同区域的地貌类型,如山地、平原、河流等。

基于集成学习的遥感图像分类技术研究基于集成学习的遥感图像分类技术研究摘要:随着遥感技术的发展和应用领域的扩展,遥感图像分类在环境监测、城市规划、资源调查等领域中具有重要的地位。

然而,由于遥感图像的复杂性和大规模的数据量,传统的图像分类算法在处理遥感图像时存在着一些问题。

为了解决这些问题,近年来基于集成学习的遥感图像分类技术逐渐受到了研究者的关注。

本文将详细介绍基于集成学习的遥感图像分类技术的研究现状和发展趋势。

一、引言遥感图像分类是将遥感图像中的像素分为不同的类别,以获取地物信息的过程。

目前,常用的遥感图像分类方法包括传统的最邻近算法、支持向量机、决策树等。

然而,这些传统方法在处理遥感图像时存在着一些局限性,如分类精度不高、对噪声敏感等。

因此,需要寻找一种更加有效和鲁棒的遥感图像分类方法。

二、集成学习的基本原理集成学习是一种通过结合多个分类器的预测结果来改善分类准确率的技术。

常用的集成学习方法包括投票法、堆叠法和权重法等。

通过集成学习方法,可以将多个基分类器的决策进行整合,提高分类的准确性和稳定性。

三、基于集成学习的遥感图像分类技术(一)基于投票的集成学习方法基于投票的集成学习方法是常见的集成学习方法之一。

该方法通过对基分类器进行投票,根据投票结果确定最终的分类结果。

对于遥感图像分类来说,可以使用基于投票的集成学习方法来提高分类准确性。

例如,将多个决策树分类器进行投票,根据投票结果来确定地物的分类结果。

(二)基于堆叠的集成学习方法基于堆叠的集成学习方法是一种将多个基分类器的预测结果作为输入,经过另一个分类器进行整合的方法。

该方法通过将多个基分类器的预测结果输入到一个最终的分类器中,从而提高分类的准确性。

对于遥感图像分类来说,可以使用基于堆叠的集成学习方法将多个基分类器的预测结果进行整合,得到更准确的分类结果。

(三)基于权重的集成学习方法基于权重的集成学习方法是一种根据基分类器的性能对其进行加权的方法。

基于松弛因子改进FastICA算法的遥感图像分类方法

王小敏;曾生根;夏德深

【期刊名称】《计算机研究与发展》

【年(卷),期】2006(43)4

【摘要】多波段遥感图像反映了不同地物的光谱特征,其分类是遥感应用的基础.独立分量分析算法利用信号的高阶统计信息,去除了遥感图像各个波段之间的相关性,获得的波段图像是相互独立的.然而独立分量分析算法计算量太大,影响了其在多波段遥感图像分类上的应用.M-FastICA算法可以改善FastICA算法的性能,减少计算量,但是同FastICA算法一样,其收敛依赖于初始权值的选择.在M-FastICA算法中引入松弛因子,使算法可以实现大范围的收敛.应用BP神经网络对独立分量分析算法预处理后的图像进行自动分类,其分类精度比原始遥感图像的精度高,并且3种独立分量分析算法的最终分类性能相当.

【总页数】8页(P708-715)

【作者】王小敏;曾生根;夏德深

【作者单位】南京理工大学计算机科学系,南京,210094;南京理工大学计算机科学系,南京,210094;南京理工大学计算机科学系,南京,210094

【正文语种】中文

【中图分类】TP391.41

【相关文献】

1.基于松弛因子的快速独立分量分析算法的遥感图像分类技术 [J], 王小敏;曾生根;夏德深

2.引入松弛因子的高阶收敛FastICA算法 [J], 季策;王艳茹;沙明博;杨正义

3.基于改进的AlexNet网络模型的遥感图像分类方法研究 [J], 周天顺;党鹏飞;谢辉

4.基于松弛改进FastICA算法的星基ADS-B信号分离 [J], 刘慧; 倪育德; 刘鹏

5.基于改进GoogLeNet的遥感图像分类方法 [J], 韩要昌; 王洁; 史通; 蔡启航因版权原因,仅展示原文概要,查看原文内容请购买。