Sigmetrics15

- 格式:pdf

- 大小:306.43 KB

- 文档页数:14

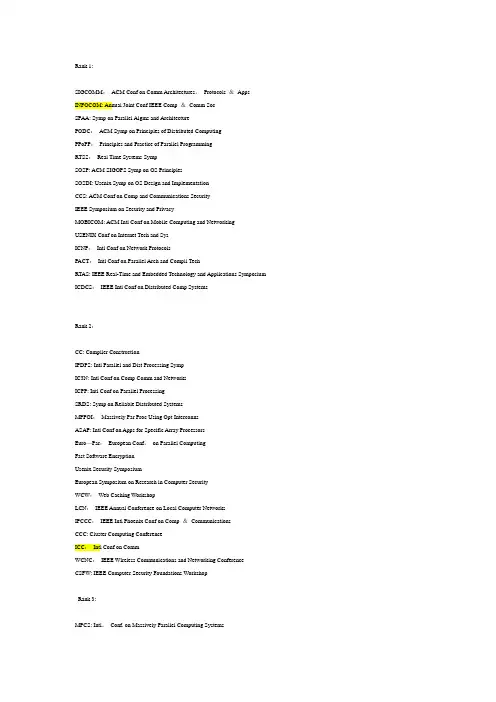

Rank 1:SIGCOMM:ACM Conf on Comm Architectures,Protocols &Apps INFOCOM: Annual Joint Conf IEEE Comp &Comm SocSPAA: Symp on Parallel Algms and ArchitecturePODC:ACM Symp on Principles of Distributed ComputingPPoPP:Principles and Practice of Parallel ProgrammingRTSS:Real Time Systems SympSOSP: ACM SIGOPS Symp on OS PrinciplesSOSDI: Usenix Symp on OS Design and ImplementationCCS: ACM Conf on Comp and Communications SecurityIEEE Symposium on Security and PrivacyMOBICOM: ACM Intl Conf on Mobile Computing and NetworkingUSENIX Conf on Internet Tech and SysICNP:Intl Conf on Network ProtocolsPACT:Intl Conf on Parallel Arch and Compil TechRTAS: IEEE Real-Time and Embedded Technology and Applications Symposium ICDCS:IEEE Intl Conf on Distributed Comp SystemsRank 2:CC: Compiler ConstructionIPDPS: Intl Parallel and Dist Processing SympIC3N: Intl Conf on Comp Comm and NetworksICPP: Intl Conf on Parallel ProcessingSRDS: Symp on Reliable Distributed SystemsMPPOI:Massively Par Proc Using Opt InterconnsASAP: Intl Conf on Apps for Specific Array ProcessorsEuro—Par:European Conf。

Manuals+— User Manuals Simplified.Mytrix GMS-15 RGB Gaming Mousepad Large Cherry Pink User GuideHome » mytrix » Mytrix GMS-15 RGB Gaming Mousepad Large Cherry Pink User GuideContents1 Mytrix GMS-15 RGB Gaming Mousepad Large CherryPink2 INTERFACE INSTRUCTIONS3 RGB SETTINGS4 TECHNICAL SPECIFICATION5 Documents / Resources6 Related PostsMytrix GMS-15 RGB Gaming Mousepad Large Cherry PinkThank you for buying Mytrix RGB mouse pad GMS-15. It supports a plug-andplay USB interface conveniently and there are a total of 14 light modes to meet your preference.INTERFACE INSTRUCTIONS1. RGB Light Mode Selecting Button2. Breathable Waterproof Micro-Texture Cloth Surface3. Non-slip Rubber Base4. RGB Light EdgeCONNECTIONPlug the detachable Type-C to USB-A cable into the mouse pad and turn on the SWITCH button.RGB SETTINGSFunctions:Select the Light Mode:1. RGB Light Mode Selecting Button2. Breathable Waterproof Micro-Texture Cloth Surface3. Non-slip Rubber Base4. RGB Light EdgePlug the detachable Type-C to USB-A cable into the mouse pad and turn on the SWITCH button. Press the SWITCH button to switch from 14 light modes in sequenceAdjust the Brightness:Double press the fingerprint icon to switch between the high and low brightness of the current light modeACCESSORY LISTThe product comes with a 1-year limited warranty. Our goal is to achieve total customer satisfaction, we will do our best to understand our customer’s requirements and always meet the requirements. If you have any questions, please do not hesitate to contact us via *****************. We will be more than happy to assist you.Company: Mytrix Technology LLCCustomer Service: +1-978-496-8821Email: *****************Web: Address: 13 Garabedian Dr. Unit C,Salem NH 03079Documents / ResourcesMytrix GMS-15 RGB Gaming Mousepad Large Cherry Pink [pdf] User GuideGMS-15 RGB Gaming Mousepad Large Cherry Pink, GMS-15, RGB Gaming Mousepad LargeCherry Pink, Gaming Mousepad Large Cherry Pink, Mousepad Large Cherry Pink, Large Cherry Pink, Cherry Pink, PinkManuals+,。

A Large-Scale Study of Flash Memory Failures in the FieldJustin Meza Carnegie Mellon University meza@Qiang WuFacebook,Inc.qwu@Sanjeev KumarFacebook,Inc.skumar@Onur MutluCarnegie Mellon Universityonur@ABSTRACTServers useflash memory based solid state drives(SSDs)as a high-performance alternative to hard disk drives to store per-sistent data.Unfortunately,recent increases inflash density have also brought about decreases in chip-level reliability.In a data center environment,flash-based SSD failures can lead to downtime and,in the worst case,data loss.As a result, it is important to understandflash memory reliability char-acteristics overflash lifetime in a realistic production data center environment running modern applications and system software.This paper presents thefirst large-scale study offlash-based SSD reliability in thefield.We analyze data collected across a majority offlash-based solid state drives at Facebook data centers over nearly four years and many millions of operational hours in order to understand failure properties and trends of flash-based SSDs.Our study considers a variety of SSD char-acteristics,including:the amount of data written to and read fromflash chips;how data is mapped within the SSD address space;the amount of data copied,erased,and discarded by the flash controller;andflash board temperature and bus power. Based on ourfield analysis of howflash memory errors man-ifest when running modern workloads on modern SSDs,this paper is thefirst to make several major observations:(1) SSD failure rates do not increase monotonically withflash chip wear;instead they go through several distinct periods corresponding to how failures emerge and are subsequently detected,(2)the effects of read disturbance errors are not prevalent in thefield,(3)sparse logical data layout across an SSD’s physical address space(e.g.,non-contiguous data),as measured by the amount of metadata required to track logical address translations stored in an SSD-internal DRAM buffer, can greatly affect SSD failure rate,(4)higher temperatures lead to higher failure rates,but techniques that throttle SSD operation appear to greatly reduce the negative reliability im-pact of higher temperatures,and(5)data written by the op-erating system toflash-based SSDs does not always accurately indicate the amount of wear induced onflash cells due to op-timizations in the SSD controller and buffering employed in the system software.We hope that thefindings of thisfirst large-scaleflash memory reliability study can inspire others to develop other publicly-available analyses and novelflash reliability solutions.Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage,and that copies bear this notice and the full ci-tation on thefirst page.Copyrights for third-party components of this work must be honored.For all other uses,contact the owner/author(s).Copyright is held by the author/owner(s).SIGMETRICS’15,June15–19,2015,Portland,OR,USA.ACM978-1-4503-3486-0/15/06./10.1145/2745844.2745848.Categories and Subject DescriptorsB.3.4.[Hardware]:Memory Structures—Reliability,Test-ing,and Fault-ToleranceKeywordsflash memory;reliability;warehouse-scale data centers1.INTRODUCTIONServers useflash memory for persistent storage due to the low access latency offlash chips compared to hard disk drives. Historically,flash capacity has lagged behind hard disk drive capacity,limiting the use offlash memory.In the past decade, however,advances in NANDflash memory technology have increasedflash capacity by more than1000×.This rapid in-crease inflash capacity has brought both an increase inflash memory use and a decrease inflash memory reliability.For example,the number of times that a cell can be reliably pro-grammed and erased before wearing out and failing dropped from10,000times for50nm cells to only2,000times for20nm cells[28].This trend is expected to continue for newer gen-erations offlash memory.Therefore,if we want to improve the operational lifetime and reliability offlash memory-based devices,we mustfirst fully understand their failure character-istics.In the past,a large body of prior work examined the failure characteristics offlash cells in controlled environments using small numbers e.g.,tens)of rawflash chips(e.g.,[36,23,21, 27,22,25,16,33,14,5,18,4,24,40,41,26,31,30,37,6,11, 10,7,13,9,8,12,20]).This work quantified a variety offlash cell failure modes and formed the basis of the community’s un-derstanding offlash cell reliability.Yet prior work was limited in its analysis because these studies:(1)were conducted on small numbers of rawflash chips accessed in adversarial man-ners over short amounts of time,(2)did not examine failures when using real applications running on modern servers and instead used synthetic access patterns,and(3)did not account for the storage software stack that real applications need to go through to accessflash memories.Such conditions assumed in these prior studies are substantially different from those expe-rienced byflash-based SSDs in large-scale installations in the field.In such large-scale systems:(1)real applications access flash-based SSDs in different ways over a time span of years, (2)applications access SSDs via the storage software stack, which employs various amounts of buffering and hence affects the access pattern seen by theflash chips,(3)flash-based SSDs employ aggressive techniques to reduce device wear and to cor-rect errors,(4)factors in platform design,including how many SSDs are present in a node,can affect the access patterns to SSDs,(5)there can be significant variation in reliability due to the existence of a very large number of SSDs andflash chips.All of these real-world conditions present in large-scalePlatform SSDs PCIePer SSDCapacity Age(years)Data written Data read UBERA1v1,×4720GB 2.4±1.027.2TB23.8TB5.2×10−10B248.5TB45.1TB2.6×10−9C1v2,×41.2TB 1.6±0.937.8TB43.4TB1.5×10−10D218.9TB30.6TB5.7×10−11E13.2TB0.5±0.523.9TB51.1TB5.1×10−11F214.8TB18.2TB1.8×10−10Table1:The platforms examined in our study.PCIe technology is denoted by vX,×Y where X=version and Y=number of lanes.Data was collected over the entire age of the SSDs.Data written and data read are to/from the physical storage over an SSD’s lifetime.UBER=uncorrectable bit error rate(Section3.2).rection and forwards the result to the host’s OS.Errors that are not correctable by the driver result in data loss.1Our SSDs allow us to collect information only on large er-rors that are uncorrectable by the SSD but correctable by the host.For this reason,our results are in terms of such SSD-uncorrectable but host-correctable errors,and when we refer to uncorrectable errors we mean these type of errors.We refer to the occurrence of such uncorrectable errors in an SSD as an SSD failure.2.3The SystemsWe examined the majority offlash-based SSDs in Face-book’s serverfleet,which have operational lifetimes extend-ing over nearly four years and comprising many millions of SSD-days of usage.Data was collected over the lifetime of the SSDs.We found it useful to separate the SSDs based on the type of platform an SSD was used in.We define a platform as a combination of the device capacity used in the system,the PCIe technology used,and the number of SSDs in the system. Table1characterizes the platforms we examine in our study. We examined a range of high-capacity multi-level cell(MLC)flash-based SSDs with capacities of720GB,1.2TB,and3.2TB. The technologies we examined spanned two generations of PCIe,versions1and2.Some of the systems in thefleet em-ployed one SSD while others employed two.Platforms A and B contain SSDs with around two or more years of operation and represent16.6%of the SSDs examined;Platforms C and D contain SSDs with around one to two years of operation and represent50.6%of the SSDs examined;and Platforms E and F contain SSDs with around half a year of operation and represent around22.8%of the SSDs examined.2.4Measurement MethodologyTheflash devices in ourfleet contain registers that keep track of statistics related to SSD operation(e.g.,number of bytes read,number of bytes written,number of uncorrectable errors).These registers are similar to,but distinct from,the standardized SMART data stored by some SSDs to monitor their reliability characteristics[3].The values in these regis-ters can be queried by the host machine.We use a collector script to retrieve the raw values from the SSD and parse them into a form that can be curated in a Hive[38]table.This process is done in real time every hour.The scale of the systems we analyzed and the amount of data being collected posed challenges for analysis.To process the many millions of SSD-days of information,we used a cluster of machines to perform a parallelized aggregation of the data stored in Hive using MapReduce jobs in order to get a set of 1We do not examine the rate of data loss in this work.lifetime statistics for each of the SSDs we analyzed.We then processed this summary data in R[2]to collect our results.2.5Analytical MethodologyOur infrastructure allows us to examine a snapshot of SSD data at a point in time(i.e.,our infrastructure does not store timeseries information for the many SSDs in thefleet).This limits our analysis to the behavior of thefleet of SSDs at a point in time(for our study,we focus on a snapshot of SSD data taken during November2014).Fortunately,the number of SSDs we examine and the diversity of their characteristics allow us to examine how reliability trends change with vari-ous characteristics.When we analyze an SSD characteristic (e.g.,the amount of data written to an SSD),we group SSDs into buckets based on their value for that characteristic in the snapshot and plot the failure rate for SSDs in each bucket. For example,if an SSD s is placed in a bucket for N TB of data written,it is not also placed in the bucket for(N−k)TB of data written(even though at some point in its life it did have only(N−k)TB of data written).When performing bucketing,we round the value of an SSD’s characteristic to the nearest bucket and we eliminate buckets that contain less than0.1%of the SSDs analyzed to have a statistically sig-nificant sample of SSDs for our measurements.In order to express the confidence of our observations across buckets that contain different numbers of servers,we show the95th per-centile confidence interval for all of our data(using a binomial distribution when considering failure rates).We measure SSD failure rate for each bucket in terms of the fraction of SSDs that have had an uncorrectable error compared to the total number of SSDs in that bucket.3.BASELINE STATISTICSWe willfirst focus on the overall error rate and error distri-bution among the SSDs in the platforms we analyze and then examine correlations between different failure events.3.1Bit Error RateThe bit error rate(BER)of an SSD is the rate at which er-rors occur relative to the amount of information that is trans-mitted from/to the SSD.BER can be used to gauge the relia-bility of data transmission across a medium.We measure the uncorrectable bit error rate(UBER)from the SSD as:UBER=Uncorrectable errorsBits accessedForflash-based SSDs,UBER is an important reliability met-ric that is related to the SSD lifetime.SSDs with high UBERs are expected to have more failed cells and encounter more(se-vere)errors that may potentially go undetected and corruptdata than SSDs with low UBERs.Recent work by Grupp et al.examined the BER of raw MLCflash chips(without per-forming error correction in theflash controller)in a controlled environment[20].They found the raw BER to vary from 1×10−1for the least reliableflash chips down to1×10−8 for the most reliable,with most chips having a BER in the 1×10−6to1×10−8range.Their study did not analyze the effects of the use of chips in SSDs under real workloads andsystem software.Table1shows the UBER of the platforms that we examine. Wefind that forflash-based SSDs used in servers,the UBER ranges from2.6×10−9to5.1×10−11.While we expect that the UBER of the SSDs that we measure(which correct small errors,perform wear leveling,and are not at the end of their rated life but still being used in a production environment) should be less than the raw chip BER in Grupp et al.’s study (which did not correct any errors in theflash controller,exer-cisedflash chips until the end of their rated life,and accessed flash chips in an adversarial manner),wefind that in some cases the BERs were within around one order of magnitude of each other.For example,the UBER of Platform B in our study,2.6×10−9,comes close to the lower end of the raw chip BER range reported in prior work,1×10−8.Thus,we observe that inflash-based SSDs that employ error correction for small errors and wear leveling,the UBER ranges from around1/10to1/1000×the raw BER of similarflash chips examined in prior work[20].This is likely due to the fact that ourflash-based SSDs correct small errors,perform wear leveling,and are not at the end of their rated life.As a result,the error rate we see is smaller than the error rate the previous study observed.As shown by the SSD UBER,the effects of uncorrectable errors are noticeable across the SSDs that we examine.We next turn to understanding how errors are distributed amonga population of SSDs and how failures occur within SSDs.3.2SSD Failure Rate and Error CountFigure2(top)shows the SSD incidence failure rate within each platform–the fraction of SSDs in each platform that have reported at least one uncorrectable error.Wefind that SSD failures are relatively common events with between4.2% and34.1%of the SSDs in the platforms we examine reporting uncorrectable errors.Interestingly,the failure rate is much lower for Platforms C and E despite their comparable amounts of data written and read(cf.Table1).This suggests that there are differences in the failure process among platforms.We analyze which factors play a role in determining the occurrence of uncorrectable errors in Sections4and5.Figure2(bottom)shows the average yearly uncorrectable error rate among SSDs within the different platforms–the sum of errors that occurred on all servers within a platform over12months ending in November2014divided by the num-ber of servers in the platform.The yearly rates of uncor-rectable errors on the SSDs we examined range from15,128 for Platform D to978,806for Platform B.The older Platforms A and B have a higher error rate than the younger Platforms C through F,suggesting that the incidence of uncorrectable errors increases as SSDs are utilized more.We will examine this relationship further in Section4.Platform B has a much higher average yearly rate of un-correctable errors(978,806)compared to the other platforms (the second highest amount,for Platform A,is106,678).We find that this is due to a small number of SSDs having amuchA B C D E FSSDfailurerate..2.4.6.81.A B C D E FYearlyuncorrectableerrorsperSSDe+4e+58e+5Figure2:The failure rate(top)and average yearly uncorrectable errors per SSD(bottom),among SSDs within each platform.higher number of errors in that platform:Figure3quantifies the distribution of errors among SSDs in each platform.The x axis is the normalized SSD number within the platform,with SSDs sorted based on the number of errors they have had over their lifetime.The y axis plots the number of errors for a given SSD in log scale.For every platform,we observe that the top 10%of SSDs with the most errors have over80%of all un-correctable errors seen for that platform.For Platforms B,C, E,and F,the distribution is even more skewed,with10%of SSDs with errors making up over95%of all observed uncor-rectable errors.We alsofind that the distribution of number of errors among SSDs in a platform is similar to that of a Weibull distribution with a shape parameter of0.3and scale parameter of5×103.The solid black line on Figure3plots this distribution.An explanation for the relatively large differences in errors per machine could be that error events are correlated.Exam-ining the data shows that this is indeed the case:during a recent two weeks,99.8%of the SSDs that had an error during thefirst week also had an error during the second week.We therefore conclude that an SSD that has had an error in the past is highly likely to continue to have errors in the future. With this in mind,we next turn to understanding the correla-tion between errors occurring on both devices in the two-SSD systems we examine.3.3Correlations Between Different SSDsGiven that some of the platforms we examine have twoflash-based SSDs,we are interested in understanding if the likeli-directly to flash cells),cells are read,programmed,and erased,and unreliable cells are identified by the SSD controller,re-sulting in an initially high failure rate among devices.Figure 4pictorially illustrates the lifecycle failure pattern we observe,which is quantified by Figure 5.Figure 5plots how the failure rate of SSDs varies with theamount of data written to the flash cells.We have groupedthe platforms based on the individual capacity of their SSDs.Notice that across most platforms,the failure rate is low whenlittle data is written to flash cells.It then increases at first(corresponding to the early detection period,region 1in thefigures),and decreases next (corresponding to the early failureperiod,region 2in the figures).Finally,the error rate gener-ally increases for the remainder of the SSD’s lifetime (corre-sponding to the useful life and wearout periods,region 3inthe figures).An obvious outlier for this trend is Platform C –in Section 5,we observe that some external characteristics ofthis platform lead to its atypical lifecycle failure pattern.Note that different platforms are in different stages in theirlifecycle depending on the amount of data written to flashcells.For example,SSDs in Platforms D and F,which havethe least amount of data written to flash cells on average,aremainly in the early detection or early failure periods.On theother hand,SSDs in older Platforms A and B,which havemore data written to flash cells,span all stages of the lifecyclefailure pattern (depicted in Figure 4).For SSDs in PlatformA,we observe up to an 81.7%difference between the failurerates of SSDs in the early detection period and SSDs in thewearout period of the lifecycle.As explained before and as depicted in Figure 4,the lifecy-cle failure rates we observe with the amount of data writtento flash cells does not follow the conventional bathtub curve.In particular,the new early detection period we observe acrossthe large number of devices leads us to investigate why this“early detection period”behavior exists.Recall that the earlydetection period refers to failure rate increasing early in life-time (i.e.,when a small amount of data is written to the SSD).After the early detection period,failure rate starts decreasing.We hypothesize that this non-monotonic error rate behav-ior during and after the early detection period can be ac-counted for by a two-pool model of flash blocks:one poolof blocks,called the weaker pool ,consists of cells whose errorrate increases much faster than the other pool of blocks,called the stronger pool .The weaker pool quickly generates uncor-rectable errors (leading to increasing failure rates observed in the early detection period as these blocks keep failing).The cells comprising this pool ultimately fail and are taken out of use early by the SSD controller.As the blocks in the weaker pool are exhausted,the overall error rate starts decreasing (as we observe after the end of what we call the early detection period )and it continues to decrease until the more durable blocks in the stronger pool start to wear out due to typical use.We notice a general increase in the duration of the lifecycle periods (in terms of data written)for SSDs with larger capac-ities.For example,while the early detection period ends after around 3TB of data written for 720GB and 1.2TB SSDs (in Platforms A,B,C,and D),it ends after around 10TB of data written for 3.2TB SSDs (in Platforms E and F).Similarly,the early failure period ends after around 15TB of data written for 720GB SSDs (in Platforms A and B),28TB for 1.2TB SSDs (in Platforms C and D),and 75TB for 3.2TB SSDs (in Platforms E and F).This is likely due to the more flexibility a higher-capacity SSD has in reducing wear across a larger number of flash cells.4.2Data Read from Flash Cells Similar to data written ,our framework allows us to measure the amount of data directly read from flash cells over each SSD’s lifetime.Prior works in controlled environments have shown that errors can be induced due to read-based access patterns [5,32,6,8].We are interested in understanding how prevalent this effect is across the SSDs we examine.Figure 6plots how failure rate of SSDs varies with the amount of data read from flash cells.For most platforms (i.e.,A,B,C,and F),the failure rate trends we see in Figure 6are similar to those we observed in Figure 5.We find this similar-ity when the average amount of data written to flash cells by SSDs in a platform is more than the average amount of data read from the flash cells.Platforms A,B,C,and F show this behavior.22Though Platform B has a set of SSDs with a large amount of data read,the average amount of data read across all SSDs in this platform is less than the average amount of data written.0e+004e+138e+13Data written (B)0.000.501.00S S D f a i l u r e r a t e ●Platform B0.0e+00 1.0e+14Data written (B)0.000.501.00S S D f a i l u r e r a te ●Platform D0.0e+00 1.5e+14 3.0e+14Data written (B)0.000.501.00S S D f a i l u r e r a t e ●Platform E Platform F Figure 5:SSD failure rate vs.the amount of data written to flash cells.SSDs go through several distinct phases throughout their life:increasing failure rates during early detection of less reliable cells (1),decreasing failure rates during early cell failure and subsequent removal (2),and eventually increasing error rates during cell wearout (3).0.0e+00 1.0e+14 2.0e+14Data read (B)0.000.501.00S S D f a i l u r e r a t e ●Platform B0.0e+00 1.0e+142.0e+14Data read (B)0.000.501.00S S D f a i l u r e r a te ●Platform C Platform D 0.0e+00 1.5e+14Data read (B)0.000.501.00S S D f a i l u r e r a t e ●Platform E Platform F Figure 6:SSD failure rate vs.the amount of data read from flash cells.SSDs in Platform E,where over twice as much data is read from flash cells as written to flash cells,do not show failures dependent on the amount of data read from flash cells.In Platform D,where more data is read from flash cells thanwritten to flash cells (30.6TB versus 18.9TB,on average),wenotice error trends associated with the early detection andearly failure periods.Unfortunately,even though devices inPlatform D may be prime candidates for observing the occur-rence of read disturbance errors (because more data is readfrom flash cells than written to flash cells),the effects of earlydetection and early failure appear to dominate the types oferrors observed on this platform.In Platform E,however,over twice as much data is readfrom flash cells as written to flash cells (51.1TB versus 23.9TBon average).In addition,unlike Platform D,devices in Plat-form E display a diverse amount of utilization.Under theseconditions,we do not observe a statistically significant differ-ence in the failure rate between SSDs that have read the mostamount of data versus those that have read the least amountof data.This suggests that the effect of read-induced errors inthe SSDs we examine is not predominant compared to othereffects such as write-induced errors.This corroborates priorflash cell analysis that showed that most errors occur due toretention effects and not read disturbance [32,11,12].4.3Block ErasesBefore new data can be written to a page in flash mem-ory,the entire block needs to be first erased.3Each erasewears out the block as shown in previous works [32,11,12].Our infrastructure tracks the average number of blocks erasedwhen an SSD controller performs garbage collection .Garbagecollection occurs in the background and erases blocks to en-sure that there is enough available space to write new data.The basic steps involved in garbage collection are:(1)select-ing blocks to erase (e.g.,blocks with many unused pages),(2)copying pages from the selected block to a free area,and (3)erasing the selected block [29].While we do not have statisticson how frequently garbage collection is performed,we exam-ine the number of erases per garbage collection period metricas an indicator of the average amount of erasing that occurswithin each SSD.We examined the relationship between the number of erasesperformed versus the failure rate (not plotted).Across the3Flash pages (around 8KB in size)compose each flash block.A flash block is around 128×8KB flash pages.SSDs we examine,we find that the failure trends in terms of erases are correlated with the trends in terms of data written (shown in Figure 5).This behavior reflects the typical opera-tion of garbage collection in SSD controllers:As more data is written,wear must be leveled across the flash cells within an SSD,requiring more blocks to be erased and more pages to be copied to different locations (an effect we analyze next).4.4Page Copies As data is written to flash-based SSDs,pages are copied to free areas during garbage collection in order to free up blocks with unused data to be erased and to more evenly level wear across the flash chips.Our infrastructure measures the num-ber of pages copied across an SSD’s lifetime.We examined the relationship between the number of pages copied versus SSD failure rate (not plotted).Since the page copying process is used to free up space to write data and also to balance the wear due to writes,its operation is largely dic-tated by the amount of data written to an SSD.Accordingly,we observe similar SSD failure rate trends with the number ofcopied pages (not plotted)as we observed with the amount ofdata written to flash cells (shown in Figure 5).4.5Discarded Blocks Blocks are discarded by the SSD controller when they are deemed unreliable for further use.Discarding blocks affects the usable lifetime of a flash-based SSD by reducing the amount of over-provisioned capacity of the SSD.At the same time,dis-carding blocks has the potential to reduce the amount of errors generated by an SSD,by preventing unreliable cells from being accessed.Understanding the reliability effects of discarding blocks is important,as this process is the main defense that SSD controllers have against failed cells and is also a major impediment for SSD lifetime.Figure 7plots the SSD failure rate versus number of dis-carded blocks over the SSD’s lifetime.For SSDs in most plat-forms,we observe an initially decreasing trend in SSD failure rate with respect to the number of blocks discarded followed by an increasing and then finally decreasing failure rate trend.We attribute the initially high SSD failure rate when few blocks have been discarded to the two-pool model of flash cell failure discussed in Section 4.1.In this case,the weaker pool of flash blocks (corresponding to weak blocks that fail。

最新无线网络领域顶级国际期刊、会议档次划分和介绍无线网络算是计算机(CS)和电子工程(EE)的一个交叉学科。

相关的会议和期刊通常没有物理生物等领域那些量化的影响因子排名。

但是有一点是公认的:会议影响力远大于期刊。

而会议之间也没有任何量化比较,只有人们默认的一些不成文的档次划分。

我读硕士和博士期间,组里对于会议和期刊档次的划分大致如下(其中加入了一些个人分析和评价)。

第一档:SIGCOMM, MobiComSIGCOMM是ACM老牌会议,其首席地位无可置疑。

SIGCOMM论文录用率在10%左右,收录的论文偏重系统和协议设计,极少有理论论文。

另外,每年收录的二三十篇论文中仅有1/5左右是无线网络,但这些论文的平均质量和影响力都很高。

MobiCom 专注于无线网络,每年收录30篇左右,录用率在10%~15%。

在2005年以前,MobiCom论文以理论加仿真占主导,所以一人一电脑就可搞定。

但之后随着WiFi硬件接口的公开和软件无线电平台的星期,做实验变得越来越容易,所以对于实验验证的要求越来越高,收录的论文也越来越偏系统设计测量之类。

2010年MobiCom的一位组委会成员感叹:MobiCom is becoming highly imbalanced. The systems guys clearly dominate the entire conference. Most of them don’t have the patience to read theory papers… If you don’t have any running code, it’s very hard to get in.第二档:MobiHoc, MobiSys, SIGMETRICSMobiHoc, MobiSys两个会议可以说是MobiCom下比肩而立的两兄弟。

MobiHoc偏理论,MobiSys偏系统和应用(特别是手机等移动计算系统)。

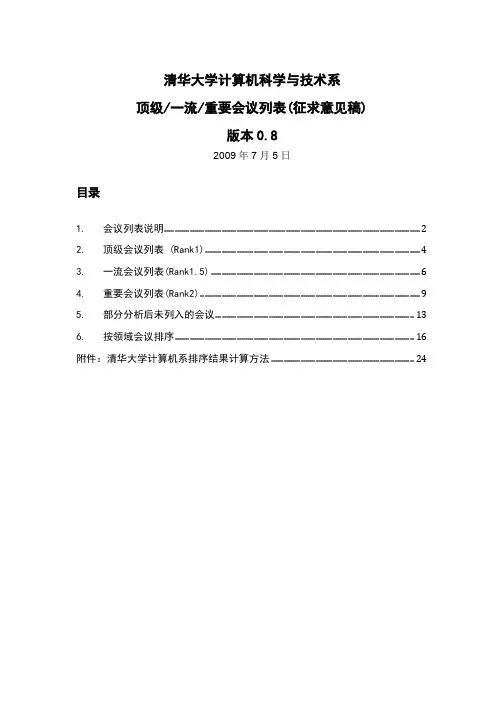

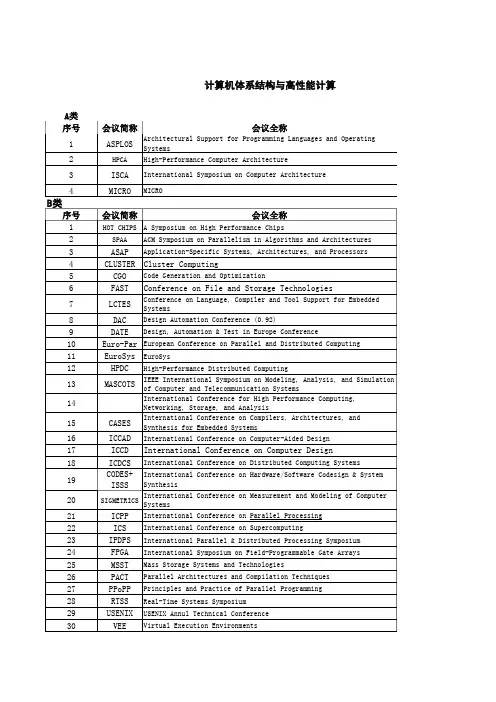

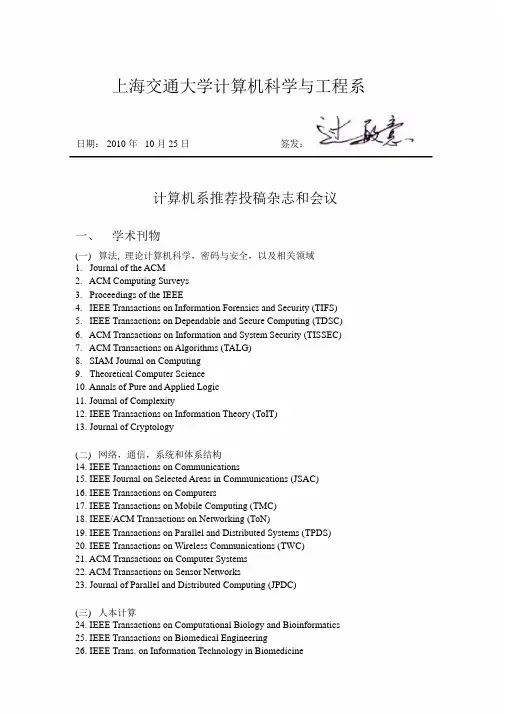

中国计算机学会推荐国际学术会议和期刊目录(2015年)中国计算机学会中国计算机学会推荐国际学术期刊(计算机体系结构/并行与分布计算/存储系统)一、A类二、B类三、C类中国计算机学会推荐国际学术会议计算机体系结构/并行与分布计算/存储系统一、A类二、B类三、C类中国计算机学会推荐国际学术期刊(计算机网络)一、A类二、B类三、C类中国计算机学会推荐国际学术会议(计算机网络)一、A类二、B类三、C类中国计算机学会推荐国际学术期刊(网络与信息安全)一、A类二、B类三、C类中国计算机学会推荐国际学术会议(网络与信息安全)一、A类二、B类三、C类中国计算机学会推荐国际学术期刊(软件工程/系统软件/程序设计语言)一、A类二、B类三、C类中国计算机学会推荐国际学术会议(软件工程/系统软件/程序设计语言)一、A类二、B类三、C类中国计算机学会推荐国际学术期刊(数据库/数据挖掘/内容检索)一、A类二、B类三、C类中国计算机学会推荐国际学术会议(数据库/数据挖掘/内容检索)一、A类二、B类三、C类中国计算机学会推荐国际学术期刊(计算机科学理论)一、A类二、B类三、C类中国计算机学会推荐国际学术会议(计算机科学理论)一、A类二、B类三、C类中国计算机学会推荐国际学术期刊(计算机图形学与多媒体)一、A类二、B类三、C类中国计算机学会推荐国际学术会议(计算机图形学与多媒体)一、A类二、B类三、C类中国计算机学会推荐国际学术期刊(人工智能)一、A类。

ACM的具体介绍ACM(Association for Computing Machinery)国际计算机协会ACM 是一个国际科学教育计算机组织,它致力于发展在高级艺术、最新科学、工程技术和应用领域中的信息技术。

它强调在专业领域或在社会感兴趣的领域中培养、发展开放式的信息交换,推动高级的专业技术和通用标准的发展。

1947年,即世界第一台电子数字计算机(ENIAC)问世的第二年,ACM即成为第一个,也一直是世界上最大的科学教育计算机组织。

它的创立者和成员都是数学家和电子工程师,其中之一是约翰.迈克利(John.Mauchly),他是ENIAC的发明家之一。

他们成立这个组织的初衷是为了计算机领域和新兴工业的科学家和技术人员能有一个共同交换信息、经验知识和创新思想的场合。

几十年的发展,ACM的成员们为今天我们所称之为“信息时代”作出了贡献。

他们所取得的成就大部分出版在ACM印刷刊物上并获得了ACM颁发的在各种领域中的杰出贡献奖。

例如:A.M.Turing奖和GranceMurr—ay Hopper奖。

ACM组织成员今天已达到九万人之多,他们大部分是专业人员、发明家、研究员、教育家、工程师和管理人员;三分之二以上的ACM成员,又是属于一个或多个SIGs(Special Interest Group)专业组织成员。

他们都对创造和应用信息技术有着极大的兴趣。

有些最大的最领先的计算机企业和信息工业也都是ACM 的成员。

ACM就像一个伞状的组织,为其所有的成员提供信息,包括最新的尖端科学的发展,从理论思想到应用的转换,提供交换信息的机会。

正象ACM建立时的初衷,它仍一直保持着它的发展“信息技术”的目标,ACM成为一个永久的更新最新信息领域的源泉。

编辑本段竞赛规则1比赛试题由6-10道试题组成,题目由英文或中文描述(中文题一半以上)。

2采用Windows环境,可使用的编程语言与编程工具为C/C++(VC++6.0)和pascal语言。

CCF推荐国际刊物会议列表2015中国计算机学会(CCF)推荐国际刊物会议列表20151.免费下载CSDN附件:2.存储在七⽜,不需CSDN:计算机体系结构/并⾏与分布计算/存储系统中国计算机学会推荐国际学术刊物 (计算机体系结构/并⾏与分布计算/存储系统)⼀、A类序号刊物简称刊物全称出版社⽹址1TOCS ACM Transactions on ComputerSystemsACM2TOC IEEE Transactions on Computers IEEE3TPDS IEEE Transactions on Parallel andDistributed SystemsIEEE4TCAD IEEE Transactions On Computer-AidedDesign Of Integrated Circuits AndSystemIEEE5TOS ACM Transactions on Storage ACM⼆、B类序号刊物简称刊物全称出版社⽹址1TACO ACM Transactions on Architecture andCode OptimizationACM2TAAS ACM Transactions on Autonomous and Adaptive SystemsACM3TODAES ACM Transactions on DesignAutomation of Electronic SystemsACM4TECS ACM Transactions on EmbeddedComputing SystemsACM5TRETS ACM Transactions on Reconfigurable Technology and SystemsACM6TVLSI IEEE Transactions on VLSI Systems IEEE7JPDC Journal of Parallel and DistributedComputingElsevier8PARCO Parallel Computing Elsevier9Performance Evaluation: An InternationalJournalElsevier10JSA Journal of Systems Architecture Elsevier三、C类序号刊物简称刊物全称出版社⽹址1Concurrency and Computation: Practiceand ExperienceWiley2DC Distributed Computing Springer 3FGCS Future Generation Computer Systems Elsevier 4Integration Integration, the VLSI Journal Elsevier5Microprocessors and Microsystems:Embedded Hardware DesignElsevier6JGC The Journal of Grid computing Springer 7TJSC The Journal of Supercomputing Springer8JETC The ACM Journal on EmergingTechnologies in Computing SystemsACM9JET Journal of Electronic Testing-Theory and ApplicationsSpringer10RTS Real-Time Systems Springer中国计算机学会推荐国际学术会议中国计算机学会推荐国际学术会议 (计算机体系结构/并⾏与分布计算/存储系统)⼀、A类序号会议简称会议全称出版社⽹址1ASPLOS Architectural Support for ProgrammingLanguages and Operating SystemsACM2FAST Conference on File and StorageTechnologiesUSENIX3HPCA High-Performance Computer Architecture IEEE4ISCA International Symposium on ComputerArchitectureACM /IEEE5MICRO MICRO IEEE/ACM6SC International Conference for HighPerformance Computing, Networking,Storage, and AnalysisIEEE7USENIXATCUSENIX Annul Technical Conference USENIX8PPoPP Principles and Practice of ParallelProgrammingACM⼆、B类序号会议简称会议全称出版社⽹址1HOT CHIPS ACM Symposium on HighPerformance ChipsIEEE2SPAA ACM Symposium on Parallelism in Algorithms and ArchitecturesACM3PODC ACM Symposium on Principles ofDistributed ComputingACM4CGO Code Generation and Optimization IEEE/ACM 5DAC Design Automation Conference ACM6DATE Design, Automation & Test in Europe ConferenceIEEE/ACM7EuroSys EuroSys ACM8HPDC High-Performance DistributedComputingIEEE9ICCD International Conference on ComputerDesignIEEE10ICCAD International Conference on Computer-Aided DesignIEEE/ACM11ICDCS International Conference on Distributed Computing SystemsIEEE12HiPEAC International Conference on High Performance and EmbeddedArchitectures and CompilersACM13SIGMETRICS International Conference onMeasurement and Modeling ofComputer SystemsACM14ICPP International Conference on Parallel ProcessingIEEE15ICS International Conference on SupercomputingACM16IPDPS International Parallel & Distributed Processing SymposiumIEEE17FPGA ACM/SIGDA International Symposiumon Field-Programmable Gate ArraysACM18 Performance International Symposium on Computer Performance, Modeling, Measurementsand EvaluationACM19LISA Large Installation systemAdministration ConferenceUSENIX20MSST Mass Storage Systems andTechnologiesIEEE21PACT Parallel Architectures and Compilation TechniquesIEEE/ACM23RTAS Real-Time and Embedded Technologyand Applications SymposiumIEEE24VEE Virtual Execution Environments ACM25CODES+ISSS ACM/IEEE Conf. Hardware/SoftwareCo-design and System SynthesisACM/ IEEE26ITC International Test Conference IEEE26ITC International Test Conference IEEE27SOCC ACM Symposium on Cloud Computing ACM三、C类序号会议简称会议全称出版社⽹址1CF ACM International Conference onComputing FrontiersACM2NOCS ACM/IEEE International Symposium onNetworks-on-ChipACM/IEEE3ASP-DAC Asia and South Pacific DesignAutomation ConferenceACM/IEEE4ASAP Application-Specific Systems, Architectures, and ProcessorsIEEE5CLUSTER Cluster Computing IEEE 6CCGRID Cluster Computing and the Grid IEEE7Euro-Par European Conference on Parallel and Distributed ComputingSpringer8ETS European Test Symposium IEEE9FPL Field Programmable Logic andApplicationsIEEE10FCCM Field-Programmable Custom Computing MachinesIEEE11GLSVLSI Great Lakes Symposium on VLSISystemsACM/IEEE12HPCC IEEE International Conference on High Performance Computing and CommunicationsIEEE13MASCOTS IEEE International Symposium onModeling, Analysis, and Simulation of Computer and TelecommunicationSystemsIEEE14NPC IFIP International Conference onNetwork and Parallel ComputingSpringer15ICA3PP International Conference on Algorithmsand Architectures for ParallelProcessingIEEE16CASES International Conference on Compilers, Architectures, and Synthesis forEmbedded SystemsACM17FPT International Conference on Field-Programmable TechnologyIEEE18HPC International Conference on HighPerformance ComputingIEEE/ ACM19ICPADS International Conference on Parallel and Distributed SystemsIEEE20ISCAS International Symposium on Circuits and SystemsIEEE21ISLPED International Symposium on Low Power Electronics and DesignACM/IEEE22ISPD International Symposium on PhysicalDesignACM23HotInterconnects Symposium on High-Performance InterconnectsIEEE24VTS VLSI Test Symposium IEEE25ISPA Sym. on Parallel and Distributed Processing with Applications26SYSTOR ACM SIGOPS International Systemsand Storage ConferenceACM27ATS IEEE Asian Test Symposium IEEE中国计算机学会推荐国际学术刊物 (计算机⽹络)⼀、A类序号刊物简称刊物全称出版社⽹址1TON IEEE/ACM Transactions on Networking IEEE, ACM2JSAC IEEE Journal of Selected Areas in CommunicationsIEEE3TMC IEEE Transactions on Mobile Computing IEEE⼆、B类序号刊物简称刊物全称出版社⽹址序号刊物简称刊物全称出版社⽹址1TOIT ACM Transactions on InternetTechnologyACM2TOMCCAP ACM Transactions on MultimediaComputing, Communications andApplicationsACM3TOSN ACM Transactions on Sensor Networks ACM 4CN Computer Networks Elsevier 5TOC IEEE Transactions on Communications IEEE6TWC IEEE Transactions on Wireless CommunicationsIEEE三、C类序号刊物简称刊物全称出版社⽹址1Ad hoc Networks Elsevier2CC Computer Communications Elsevier3TNSM IEEE Transactions on Network andService ManagementIEEE4IET Communications IET5JNCA Journal of Network and ComputerApplicationsElsevier6MONET Mobile Networks & Applications Springer 7Networks Wiley8PPNA Peer-to-Peer Networking andApplicationsSpringer9WCMC Wireless Communications & MobileComputingWiley.10Wireless Networks Springer中国计算机学会推荐国际学术会议 (计算机⽹络)⼀、A类序号会议简称会议全称出版社⽹址1MOBICOM ACM International Conference on Mobile Computing and NetworkingACM2SIGCOMM ACM International Conference on the applications, technologies, architectures,and protocols for computercommunicationACM3INFOCOM IEEE International Conference onComputer CommunicationsIEEE⼆、B类序号会议简称会议全称出版社⽹址1SenSys ACM Conference on EmbeddedNetworked Sensor SystemsACM2CoNEXT ACM International Conference onemerging Networking EXperiments and TechnologiesACM3SECON IEEE Communications SocietyConference on Sensor and Ad Hoc Communications and NetworksIEEE4IPSN International Conference on Information Processing in Sensor NetworksIEEE/ACM5ICNP International Conference on NetworkProtocolsIEEE6MobiHoc International Symposium on Mobile AdHoc Networking and ComputingACM/IEEE7MobiSys International Conference on MobileSystems, Applications, and ServicesACM8IWQoS International Workshop on Quality ofServiceIEEE9IMC Internet Measurement Conference ACM/USENIX10NOSSDAV Network and Operating System Supportfor Digital Audio and VideoACM11NSDI Symposium on Network System Designand ImplementationUSENIX三、C类序号会议简称会议全称出版社⽹址1ANCS Architectures for Networking and Communications SystemsACM/IEEE Formal Techniques for Networked and2FORTE Formal Techniques for Networked and Distributed SystemsSpringer3LCN IEEE Conference on Local ComputerNetworksIEEE4Globecom IEEE Global CommunicationsConference, incorporating the GlobalInternet SymposiumIEEE5ICC IEEE International Conference on CommunicationsIEEE6ICCCN IEEE International Conference onComputer Communications andNetworksIEEE7MASS IEEE International Conference on MobileAd hoc and Sensor SystemsIEEE8P2P IEEE International Conference on P2P ComputingIEEE9IPCCC IEEE International PerformanceComputing and CommunicationsConferenceIEEE10WoWMoM IEEE International Symposium on aWorld of Wireless Mobile and Multimedia NetworksIEEE11ISCC IEEE Symposium on Computers and CommunicationsIEEE12WCNC IEEE Wireless Communications &Networking ConferenceIEEE13Networking IFIP International Conferences onNetworkingIFIP14IM IFIP/IEEE International Symposium onIntegrated Network ManagementIFIP/IEEE15MSWiM International Conference on Modeling,Analysis and Simulation of Wireless andMobile SystemsACM16NOMS Asia-Pacific Network Operations and Management SymposiumIFIP/IEEE17HotNets The Workshop on Hot Topics inNetworksACM18WASA International Conference on Wireless Algorithms, Systems, and ApplicationsSpringer⽹络与信息安全中国计算机学会推荐国际学术刊物 (⽹络与信息安全)⼀、A类序号刊物简称刊物全称出版社⽹址1TDSC IEEE Transactions on Dependable andSecure ComputingIEEE2TIFS IEEE Transactions on InformationForensics and SecurityIEEE3Journal of Cryptology Springer⼆、B类序号刊物简称刊物全称出版社⽹址1TISSEC ACM Transactions on Information andSystem SecurityACM2Computers & Security Elsevier3Designs, Codes and Cryptography Springer4JCS Journal of Computer Security IOS Press三、C类序号刊物简称刊物全称出版社⽹址1CLSR Computer Law and Security Reports Elsevier2EURASIP Journal on InformationSecuritySpringer3IET Information Security IET4IMCS Information Management & ComputerSecurityEmerald4IMCSSecurityEmerald 5ISTR Information Security Technical Report Elsevier6IJISP International Journal of InformationSecurity and PrivacyIdea Group Inc7IJICS International Journal of Information andComputer SecurityInderscience8SCN Security and Communication Networks Wiley中国计算机学会推荐国际学术会议 (⽹络与信息安全)⼀、A类序号会议简称会议全称出版社⽹址1CCS ACM Conference on Computer and Communications SecurityACM2CRYPTO International Cryptology Conference Springer 3EUROCRYPTEuropean Cryptology Conference Springer4S&P IEEE Symposium on Security andPrivacyIEEE5USENIXSecurityUsenix Security SymposiumUSENIXAssociation⼆、B类序号会议简称会议全称出版社⽹址1ACSAC Annual Computer Security Applications ConferenceIEEE2ASIACRYPT Annual International Conference on theTheory and Application of Cryptologyand Information SecuritySpringer3ESORICS European Symposium on Research inComputer SecuritySpringer4FSE Fast Software Encryption Springer5NDSS ISOC Network and Distributed SystemSecurity SymposiumISOC6CSFW IEEE Computer Security Foundations WorkshopIEEE7RAID International Symposium on RecentAdvances in Intrusion DetectionSpringer8PKC International Workshop on Practice andTheory in Public Key CryptographySpringer9DSN The International Conference onDependable Systems and NetworksIEEE/IFIP10TCC Theory of Cryptography Conference Springer11SRDS IEEE International Symposium onReliableDistributed SystemsIEEE12CHES Workshop on Cryptographic HardwareandEmbedded SystemsSpringer三、C类序号会议简称会议全称出版社⽹址1WiSec ACM Conference on Security andPrivacy inWireless and Mobile NetworksACM2ACMMM&SECACM Multimedia and SecurityWorkshopACM3SACMAT ACM Symposium on Access ControlModels and TechnologiesACM4ASIACCS ACM Symposium on Information,Computer andCommunications SecurityACM5DRM ACM Workshop on Digital Rights ManagementACM6ACNS Applied Cryptography and NetworkSecuritySpringer7ACISP Australasia Conference on InformationSecurity and PrivacySpringer8DFRWS Digital Forensic Research Workshop Elsevier9FC Financial Cryptography and DataSecuritySpringer10DIMVA Detection of Intrusions and Malware & Vulnerability AssessmentSIDAR、GI、SpringerVulnerability Assessment Springer 11SECIFIP International InformationSecurity ConferenceSpringer 12IFIP WG 11.9IFIP WG 11.9 InternationalConferenceon Digital ForensicsSpringer 13ISC Information Security ConferenceSpringer 14ICICS International Conference onInformationand Communications SecuritySpringer15SecureComm International Conference on SecurityandPrivacy in Communication NetworksACM 16NSPW New Security Paradigms WorkshopACM 17CT-RSARSA Conference, Cryptographers'TrackSpringer 18SOUPSSymposium On Usable Privacy andSecurityACM 19HotSecUSENIX Workshop on Hot Topics inSecurityUSENIX 20SAC Selected Areas in CryptographySpringer 21TrustComIEEE International Conference on Trust,Security and Privacy in Computing and CommunicationsIEEE22PAMPassive and Active MeasurementConferenceSpringer 23PETSPrivacy Enhancing TechnologiesSymposiumSpringer 24International Conference on DigitalForensics & Cyber CrimeSpringer软件⼯程/系统软件/程序设计语⾔中国计算机学会推荐国际学术期刊 (软件⼯程/系统软件/程序设计语⾔)⼀、A 类序号刊物简称刊物全称出版社⽹址1TOPLASACM Transactions on ProgrammingLanguages & SystemsACM 2TOSEM ACM Transactions on Software Engineering Methodology ACM3TSE IEEE Transactions on Software EngineeringIEEE⼆、B 类序号刊物简称刊物全称出版社⽹址1ASE Automated Software Engineering Springer 2ESE Empirical Software Engineering Springer 3TSC IEEE Transactions on Service Computing IEEE 4IETS IET Software IET 5IST Information and Software Technology Elsevier6JFP Journal of Functional Programming Cambridge University Press7 Journal of Software: Evolution and Process Wiley 8JSS Journal of Systems and Software Elsevier 9RE Requirements Engineering Springer 10SCP Science of Computer Programming Elsevier 11SoSyM Software and System Modeling Springer 12SPE Software: Practice and Experience Wiley 13STVR Software Testing, Verification and ReliabilityWiley三、C 类序号刊物简称刊物全称出版社⽹址1CL Computer Languages, Systems and StructuresElsevier 2IJSEKE International Journal on Software Engineering and Knowledge Engineering World Scientific 3STTT International Journal on Software Tools for Technology TransferSpringer3STTT International Journal on Software Tools for Technology Transfer Springer 4JLAP Journal of Logic and Algebraic Programming Elsevier 5JWE Journal of Web Engineering Rinton Press 6SOCA Service Oriented Computing and Applications Springer 7SQJ Software Quality Journal Springer8TPLP Theory and Practice of Logic Programming Cambridge University Press中国计算机学会推荐国际学术会议(软件⼯程/系统软件/程序设计语⾔)⼀、A类序号会议简称会议全称出版社⽹址1FSE/ESEC ACM SIGSOFT Symposium on the Foundationof Software Engineering/ European SoftwareEngineering ConferenceACM2OOPSLA Conference on Object-OrientedProgramming Systems, Languages,and ApplicationsACM3ICSE International Conference onSoftware EngineeringACM/IEEE4OSDI USENIX Symposium onOperating Systems Designand ImplementationsUSENIX5PLDI ACM SIGPLAN Symposium on ProgrammingLanguage Design & ImplementationACM6POPL ACM SIGPLAN-SIGACTSymposium on Principlesof Programming LanguagesACM7SOSP ACM Symposium onOperating Systems PrinciplesACM8ASE International Conference onAutomated Software EngineeringIEEE/ACM⼆、B类序号会议简称会议全称出版社⽹址1ECOOP European Conference on Object-Oriented Programming2ETAPS European Joint Conferences on Theoryand Practice of SoftwareSpringer3FM World Congress on Formal Methods FME4ICPC IEEE International Conference onProgram ComprehensionIEEE5RE IEEE International RequirementEngineering ConferenceIEEE6CAiSE International Conference on AdvancedInformation Systems EngineeringSpringer7ICFP International Conference onFunction ProgrammingACM8LCTES International Conference on Languages,Compilers, Tools and Theory forEmbedded SystemsACM9MoDELS International Conference on Model DrivenEngineering Languages and SystemsACM, IEEE10CP International Conference on Principlesand Practice of Constraint ProgrammingSpringer11ICSOC International Conference on ServiceOriented ComputingSpringer12ICSME International Conference on SoftwareMaintenance and EvolutionIEEE13VMCAI International Conference on Verification,Model Checking, and Abstract InterpretationSpringer14ICWS International Conference on Web Services(Research Track)IEEE15SAS International Static Analysis Symposium Springer16ISSRE International Symposium on SoftwareReliability EngineeringIEEE17ISSTA International Symposium onSoftware Testing and AnalysisACM18Middleware Conference on middlewareACM/IFIP/18Middleware Conference on middleware ACM/IFIP/ USENIX19SANER International Conference on SoftwareAnalysis, Evolution, and ReengineeringIEEE20HotOS USENIX Workshop on Hot Topics inOperating SystemsUSENIX21ESEM International Symposium on EmpiricalSoftware Engineering and MeasurementACM/IEEE三、C类序号会议简称会议全称出版社⽹址1PASTE ACMSIGPLAN-SIGSOFT Workshopon Program Analysis for SoftwareTools and EngineeringACM2APLAS Asian Symposium on ProgrammingLanguages and SystemsSpringer3APSEC Asia-Pacific Software Engineering ConferenceIEEE4COMPSAC International Computer Software andApplications ConferenceIEEE5ICECCS IEEE International Conference onEngineering of Complex Computer SystemsIEEE6SCAM IEEE International Working Conferenceon Source Code Analysis and ManipulationIEEE7ICFEM International Conference on FormalEngineering MethodsSpringer8TOOLS International Conference on Objects,Models, Components, PatternsSpringer9PEPM ACM SIGPLAN Symposium on PartialEvaluation and Semantics BasedProgramming ManipulationACM10QRS The IEEE International Conference on Software Quality, Reliability and Security IEEE11SEKE International Conference on SoftwareEngineering and Knowledge EngineeringKSI12ICSR International Conference on Software Reuse Springer 13ICWE International Conference on Web Engineering Springer14SPIN International SPIN Workshop on ModelChecking of SoftwareSpringer15LOPSTR International Symposium on Logic-based Program Synthesis and Transformation Springer16TASE International Symposium on TheoreticalAspects of Software EngineeringIEEE17ICST The IEEE International Conference onSoftware Testing, Verification and ValidationIEEE18ATVA International Symposium on Automated Technology for Verification and Analysis Springer19ISPASS IEEE International Symposium onPerformance Analysis of Systems andSoftwareIEEE20SCC International Conference on ServiceComputingIEEE21ICSSP International Conference on Softwareand System ProcessISPA22MSR Working Conference on Mining Software Repositories IEEE/ACM 23REFSQ Requirements Engineering: Foundation for Software Quality Springer24WICSA Working IEEE/IFIP Conference onSoftware ArchitectureIEEE25EASE Evaluation and Assessment in SoftwareEngineeringACM数据库/数据挖掘/内容检索中国计算机学会推荐国际学术期刊(数据库/数据挖掘/内容检索)⼀、A类序号刊物简称刊物全称出版社⽹址1TODS ACM Transactions on Database Systems ACM2TOIS ACM Transactions on InformationSystemsACM2TOISSystemsACM3TKDE IEEE Transactions on Knowledge andData EngineeringIEEE4VLDBJ VLDB Journal Springer⼆、B类序号刊物简称刊物全称出版社⽹址1TKDD ACM Transactions onKnowledge Discovery from DataACM2AEI Advanced Engineering Informatics Elsevier 3DKE Data and Knowledge Engineering Elsevier4DMKD Data Mining andKnowledge DiscoverySpringer5EJIS European Journal of Information Systems6GeoInformatica Springer 7IPM Information Processing and Management Elsevier 8Information Sciences Elsevier 9IS Information Systems Elsevier10JASIST Journal of the American Society forInformation Science and TechnologyAmericanSociety forInformationScience andTechnology11JWS Journal of Web Semantics Elsevier12KIS Knowledge and Information Systems Springer13TWEB ACM Transactions on the Web ACM三、C类序号刊物简称刊物全称出版社⽹址1DPD Distributed and Parallel Databases Springer2I&M Information and Management Elsevier3IPL Information Processing Letters Elsevier4IR Information Retrieval Springer5IJCIS International Journal of CooperativeInformation SystemsWorldScientific6IJGIS International Journal of Geographical Information Science7IJIS International Journal of Intelligent Systems Wiley8IJKM International Journal of KnowledgeManagementIGI9IJSWIS International Journal on Semantic Web and Information SystemsIGI10JCIS Journal of Computer Information Systems IACIS 11JDM Journal of Database Management IGI-Global12JGITM Journal of Global Information Technology Management13JIIS Journal of Intelligent Information Systems Springer14JSIS Journal of Strategic Information Systems Elsevier中国计算机学会推荐国际学术会议(数据库/数据挖掘/内容检索)⼀、A类序号会议简称会议全称出版社⽹址1SIGMOD ACM Conference on Management ofDataACM2SIGKDD ACM Knowledge Discovery and DataMiningACM3SIGIR International Conference on ResearchonDevelopment in Information RetrievalACM4VLDB International Conference onVery Large Data BasesMorganKaufmann/ACM5ICDE IEEE International Conference onData EngineeringIEEE⼆、B类序号会议简称会议全称出版社⽹址1CIKM ACM International Conferenceon Information and KnowledgeManagementACM2PODS ACM SIGMOD Conference onPrinciples ACM2PODS Principlesof DB SystemsACM3DASFAA Database Systems for AdvancedApplicationsSpringer4ECML-PKDDEuropean Conference on MachineLearning and Principles andPractice of Knowledge Discoveryin DatabasesSpringer5ISWC IEEE International Semantic WebConferenceIEEE6ICDM International Conference on Data Mining IEEE7ICDT International Conference on DatabaseTheorySpringer8EDBT International Conference onExtending DB TechnologySpringer9CIDR International Conference onInnovation Database ResearchOnlineProceeding10SDM SIAM International Conference on DataMiningSIAM11WSDM ACM International Conference onWeb Search and Data MiningACM三、C类序号会议简称会议全称出版社⽹址1DEXA Database and Expert System Applications Springer2ECIR European Conference on IR Research Springer3WebDB International ACM Workshop onWeb and DatabasesACM4ER International Conference on ConceptualModelingSpringer5MDM International Conference on Mobile Data ManagementIEEE6SSDBM International Conference on Scientificand Statistical DB ManagementIEEE7WAIM International Conference on Web AgeInformation ManagementSpringer8SSTD International Symposium on Spatialand Temporal DatabasesSpringer9PAKDD Pacific-Asia Conference on KnowledgeDiscovery and Data MiningSpringer10APWeb The Asia Pacific Web Conference Springer11WISE Web Information Systems Engineering Springer12ESWC Extended Semantic Web Conference Elsevier计算机科学理论中国计算机学会推荐国际学术期刊(计算机科学理论)⼀、A类序号刊物简称刊物全称出版社⽹址1IANDC Information and Computation Elsevier2SICOMP SIAM Journal on Computing SIAM3TIT IEEE Transactions on Information Theory IEEE⼆、B类序号刊物简称刊物全称出版社⽹址1TALG ACM Transactions on Algorithms ACM2TOCL ACM Transactions on ComputationalLogicACM3TOMS ACM Transactions on MathematicalSoftwareACM4AlgorithmicaAlgorithmica Springer 5CC Computational complexity Springer 6FAC Formal Aspects of Computing Springer 7FMSD Formal Methods in System Design Springer 8INFORMS INFORMS Journal on Computing INFORMS9JCSS Journal of Computer and SystemElsevier9JCSS Journal of Computer and SystemSciencesElsevier10JGO Journal of Global Optimization Springer 11JSC Journal of Symbolic Computation Elsevier12MSCS Mathematical Structures in ComputerScienceCambridgeUniversityPress13TCS Theoretical Computer Science Elsevier三、C类序号刊物简称刊物全称出版社⽹址1APAL Annals of Pure and Applied Logic Elsevier2ACTA Acta Informatica Springer3DAM Discrete Applied Mathematics Elsevier4FUIN Fundamenta Informaticae IOS Press5LISP Higher-Order and SymbolicComputationSpringer6IPL Information Processing Letters Elsevier 7JCOMPLEXITYJournal of Complexity Elsevier8LOGCOM Journal of Logic and ComputationOxford University Press9Journal of Symbolic Logic Association for SymbolicLogic10LMCS Logical Methods in Computer Science LMCS11SIDMA SIAM Journal on Discrete Mathematics SIAM12Theory of Computing Systems Springer中国计算机学会推荐国际学术会议(计算机科学理论)⼀、A类序号会议简称会议全称出版社⽹址1STOC ACM Symposium on Theory ofComputingACM2FOCS IEEE Symposium on Foundations ofComputer ScienceIEEE3LICS IEEE Symposium on Logic inComputer ScienceIEEE4CAV Computer Aided Verification Springer⼆、B类序号会议简称会议全称出版社⽹址1SoCG ACM Symposium on ComputationalGeometryACM2SODA ACM-SIAM Symposium on DiscreteAlgorithmsSIAM3CADE/IJCAR Conference on AutomatedDeduction/The International JointConference on Automated ReasoningSpringer4CCC IEEE Conference on Computational ComplexityIEEE5ICALP International Colloquium onAutomata, Languages andProgrammingSpringer6CONCUR International Conference onConcurrency TheorySpringer7HSCC International Conference onHybrid Systems: Computation andControlSpringerand ACM8ESA European Symposium on Algorithms Springer三、C类序号会议简称会议全称出版社⽹址1CSL Computer Science Logic Springer2FSTTCS Foundations of Software Technologyand Theoretical ComputerScienceIndian AssociationforResearch inComputingScience3IPCO International Conference onInteger Programming andCombinatorial OptimizationSpringerCombinatorial Optimization4RTA International Conference onRewriting Techniques andApplicationsSpringer5ISAAC International Symposium onAlgorithmsand ComputationSpringer6MFCS Mathematical Foundations ofComputer ScienceSpringer7STACS Symposium on Theoretical Aspectsof Computer ScienceSpringer8FMCAD Formal Method in Computer-AidedDesignACM9SAT Theory and Applications ofSatisfiability TestingSpringer10ICTAC International Colloquium onTheoreticalAspects of ComputingSpringer计算机图形学与多媒体中国计算机学会推荐国际学术刊物(计算机图形学与多媒体)⼀、A类序号刊物简称刊物全称出版社⽹址1TOG ACM Transactions on Graphics ACM2TIP IEEE Transactions on Image Processing IEEE3TVCG IEEE Transactions on Visualization and Computer GraphicsIEEE⼆、B类序号刊物简称刊物全称出版社⽹址1TOMCCAP ACM Transactions on MultimediaComputing,Communications andApplicationACM2CAD Computer-Aided Design Elsevier 3CAGD Computer Aided Geometric Design Elsevier 4CGF Computer Graphics Forum Wiley 5GM Graphical Models Elsevier6TCSVT IEEE Transactions on Circuits andSystems forVideo TechnologyIEEE7TMM IEEE Transactions on Multimedia IEEE8JASA Journal of The Acoustical Society ofAmericaAIP9SIIMS SIAM Journal on Imaging Sciences SIAM10SpeechComSpeech Communication Elsevier三、C类序号刊物简称刊物全称出版社⽹址1CAVW Computer Animation and Virtual Worlds Wiley2C&G Computers & Graphics-UK Elsevier3CGTA Computational Geometry: Theory and ApplicationsElsevier4DCG Discrete & Computational Geometry Springer 5IET-IPR IET Image Processing IET 6IEEE Signal Processing Letter IEEE7JVCIR Journal of Visual Communication andImage RepresentationElsevier8MS Multimedia Systems Springer 9MTA Multimedia Tools and Applications Springer10SignalProcessingElsevier11SPIC Signal processing : imagecommunicationElsevier12TVC The Visual Computer Springer中国计算机学会推荐国际学术会议(计算机图形学与多媒体)。

学术会议排名一、A类序号会议名称论文集的出版社1. Special Interest Group on Data Communication(SIGCOMM) ACM录取率:9~13%2. Special Interest Group on Mobility of Sys-tems, Users, Data and Computing (MOBICOM) ACM录取率:~10%3. International Symposium on Mobile Ad Hoc Networking and Computing (MOBIHOC) ACM/IEEE录取率:<13%4. Special Interest Group on Measurement and Evaluation (SIGMETRICS) ACM录取率:<13%二、B类序号会议名称论文集的出版社1. International Conference on Network Protocols (ICNP) IEEE录取率:14~18%2. Conference on Computer Communications (INFOCOM) IEEE录取率:18~20%3. International World Wide Web (WWW)录取率:~15%4. International Conference on Embedded Networked SensorSystems (SenSys) ACM录取率:~15%5. USENIX Annual Technical Conference USENIX '09 January 9, 2009 full papers: <14 pages, short papers: <6 pages 录取率:12~16%6. Network and Distributed System Security Symposium (NDSS ) USENIX录取率:12~20%7. International Conference on Distributed Computing Systems (ICDCS) IEEE录取率:13~17%8. International Conference on Pervasive Computing and Communications (PerCom) IEEE '10 Paper submission: September 28, 2009录取率:9~17%三、C类序号会议名称论文集的出版社1. International Workshop on Quality of Service (IWQoS) IEEE录取率:~20%2. IEEE/ACM International Workshop on Grid Computing(Grid) IEEE/ACM录取率:~20%3. Symposium on Network System Design and Implementation (NDSI) USENIX录取率:~22%4. International Conferences on Networking (Networking) IFIP录取率:19~24%5. Conference on High Performance Computing, Networking and Storage (Supercomputing) IEEE/ACM录取率:22~29%6. International Conference on Information Processing in Sensor Networks (IPSN) ACM/IEEE录取率:15~18%四、其他会议序号会议名称论文集的出版社1. IFIP/IEEE International Symposium on Integrated Network Management(IM) IFIP/IEEE录取率:23~50%2. International Parallel & Distributed Processing Symposium (IPDPS) IEEE录取率:23~38%3. International Symposium on Modeling, Analysis and Simulation of Wireless and Mobile Systems (MSWiM) ACM 录取率:23~38%4. IEEE Communications Society Conference on Sensor and Ad Hoc Communications and Networks(SECON) IEEE 录取率:18~27%5. Network Operations and Management Symposium(MONS) IFIP/IEEE录取率:~26%6. Conference on Local Computer Networks (LCN) IEEE录取率:26~40%7. Formal Techniques for Networked and Distributed Systems FORTE录取率:30~70%8. IEEE Global Communications Conference, incorporating the Global Internet Symposium (Globecom) IEEE录取率:~35%9. International Conference on Communications (ICC) IEEE录取率:~35%10. SYMPOSIUM ON COMPUTERS AND COMMUNICATIONS (ISCC) IEEE录取率:~40%11. IEEE Semiannual Vehicular Technology Conference (VTC) IEEE。

计算机学科国际会议分级说明:本列表合并了UCLA、NUS、NTU、CCF、清华大学计算机系、上海交大计算机系认可的国际会议,分级时采用了“就高”的原则。