Online object tracking A benchmark

- 格式:pdf

- 大小:5.70 MB

- 文档页数:8

2017目标跟踪算法综述2017目标跟踪算法综述时间:2017年7月31日。

本文所提的跟踪主要指的是单目标跟踪,多目标跟踪暂时不作为考虑范围。

本文主要从常用的评价标准,如EAO,EFO,fps等,分析2016-2017年最新出的目标跟踪文章在应用层面的优缺点。

算法原理不作为本文重点,旨在对比不同方法的“效果和时间性能”,方便大家根据不同的业务常见选择不同的方法。

本文按照以下几类进行展开,并不局限于传统方法或者深度学习。

TCNN C-COT ECO (根据名次递增)CFNet DCFNet SANet DRLT (端到端CNN RNN)CA-CF BACF (利用背景信息,框架性通用改进)ACET Deep-LK (优于C-COT or MDNet, 且CPU实时)LMCF Vision-based (速度提升,但性能提升不明显CPU实时)2017目标跟踪算法综述目标跟踪VOT2016 BenchMark评价标准介绍推荐网站TCNN C-COT ECO 根据名次递增TCNN17_arXiv CVPR_TCNN_Modeling and Propagating CNNs in a Tree Structure for Visual TrackingC-COT16_ECCV_CCOT_Beyond Correlation Filters - Learning ContinuousCFNet DCFNet SANet DRLT 端到端CNN RNNDCFNet17_arXiv prePrint_DCFNet_ Discriminant Correlation Filters Network for Visual TrackingSANet17_CVPR_SANet Structure-Aware Network for Visual Tracking DRLT17_arXiv prePrint_DRLT_Deep Reinforcement Learning for Visual Object Tracking in VideosCA-CF BACF 利用背景信息框架性通用改进CA-CF17_CVPR_CA-CFContext-Aware Correlation Filter Tracking BACF17_CVPR_BACF_Learning Background-Aware Correlation Filters for Visual TrackingACET Deep-LK 优于C-COT or MDNet 且CPU实时ACET17_arXiv prePrint_ACET_Active Collaborative Ensemble TrackingDeep-LK17_arXiv prePrint_Deep-LK_ for Efficient Adaptive Object TrackingLMCF Vision-based 速度提升但性能提升不明显CPU实时LMCF17_CVPR_LMCF_Large Margin Object Tracking with Circulant Feature MapsVision-based17_arXiv prePrint_NULL_Vision-based Real-Time Aerial Object Localization and Tracking for UAV Sensing System目标跟踪VOT2016 BenchMark评价标准介绍见目标跟踪VOT2016 BenchMark评价标准介绍推荐网站OTB Results: 这是foolwood总结的目标跟踪发展主线图:这是foolwood总结的这是浙江大学Mengmeng Wang同学在极视角公开课上展示的一个总结: foolwood这个人在github上最新的一些跟踪方法常见数据集上的结果比较。

benchmark方法-回复什么是benchmark方法?Benchmark方法是一种常用的研究方法,用于比较和评估不同实体、系统或策略之间的性能差异。

它是一种定量研究方法,通过设定标准或基准来衡量被测试对象的表现。

Benchmark方法广泛应用于各个领域,包括金融、科技、医疗等,用于评估各种产品、系统或策略的效果和可行性。

Benchmark方法的重要性和意义是什么?Benchmark方法的目的是提供一个客观的标准来评估不同实体、系统或策略的性能差异。

通过使用统一的基准,我们可以更好地识别出行业或领域内的佼佼者,并找到并改进低效或不理想的方案。

Benchmark方法为决策者提供了有力的工具和数据,帮助他们做出明智的决策和策略选择。

Benchmark方法的步骤是什么?1. 确定研究目的和对象:首先,我们需要明确研究的目的是什么,以及要测试或比较的实体、系统或策略是什么。

例如,我们可能想要比较两种不同的投资组合的回报率,或者评估两个电子产品的性能差异。

2. 设定基准或标准:接下来,我们需要确定一个标准或基准来比较被测试对象的性能。

这个基准应该是一个可衡量的、普遍接受的标准,以便于与其他研究结果进行比较和评估。

3. 收集数据和测量指标:在进行比较之前,我们需要收集和记录需要比较的数据。

这些数据应该是客观的、可衡量的,并与所设定的基准相对应。

比如,在比较两种投资组合的回报率时,我们需要收集和记录每个投资组合的历史数据。

4. 进行比较和分析:一旦数据收集完毕,我们可以开始进行比较和分析,将被测试对象的性能与所设定的基准进行对比。

这可以通过统计方法、图表或其他分析工具来完成。

比如,我们可以计算投资组合的年化收益率,并将其与市场指数进行比较。

5. 做出决策和改进:通过比较和分析的结果,我们可以得出关于被测试对象的性能和差异的结论。

根据这些结论,我们可以做出相应的决策和改进。

例如,在投资领域,如果一个投资组合的回报率明显低于基准,我们可能会考虑调整投资策略或选择其他的投资组合。

java英⽂参考⽂献java英⽂参考⽂献汇编 导语:Java是⼀门⾯向对象编程语⾔,不仅吸收了C++语⾔的各种优点,还摒弃了C++⾥难以理解的多继承、指针等概念,因此Java语⾔具有功能强⼤和简单易⽤两个特征。

下⾯⼩编为⼤家带来java英⽂参考⽂献,供各位阅读和参考。

java英⽂参考⽂献⼀: [1]Irene Córdoba-Sánchez,Juan de Lara. Ann: A domain-specific language for the effective design and validation of Java annotations[J]. Computer Languages, Systems & Structures,2016,:. [2]Marcelo M. Eler,Andre T. Endo,Vinicius H.S. Durelli. An Empirical Study to Quantify the Characteristics of Java Programs that May Influence Symbolic Execution from a Unit Testing Perspective[J]. The Journal of Systems & Software,2016,:. [3]Kebo Zhang,Hailing Xiong. A new version of code Java for 3D simulation of the CCA model[J]. Computer Physics Communications,2016,:. [4]S. Vidal,A. Bergel,J.A. Díaz-Pace,C. Marcos. Over-exposed classes in Java: An empirical study[J]. Computer Languages, Systems & Structures,2016,:. [5]Zeinab Iranmanesh,Mehran S. Fallah. Specification and Static Enforcement of Scheduler-Independent Noninterference in a Middleweight Java[J]. Computer Languages, Systems & Structures,2016,:. [6]George Gabriel Mendes Dourado,Paulo S Lopes De Souza,Rafael R. Prado,Raphael Negrisoli Batista,Simone R.S. Souza,Julio C. Estrella,Sarita M. Bruschi,Joao Lourenco. A Suite of Java Message-Passing Benchmarks to Support the Validation of Testing Models, Criteria and Tools[J]. Procedia Computer Science,2016,80:. [7]Kebo Zhang,Junsen Zuo,Yifeng Dou,Chao Li,Hailing Xiong. Version 3.0 of code Java for 3D simulation of the CCA model[J]. Computer Physics Communications,2016,:. [8]Simone Hanazumi,Ana C.~V. de Melo. A Formal Approach to Implement Java Exceptions in Cooperative Systems[J]. The Journal of Systems & Software,2016,:. [9]Lorenzo Bettini,Ferruccio Damiani. Xtraitj : Traits for the Java Platform[J]. The Journal of Systems & Software,2016,:. [10]Oscar Vega-Gisbert,Jose E. Roman,Jeffrey M. Squyres. Design and implementation of Java bindings in OpenMPI[J]. Parallel Computing,2016,:. [11]Stefan Bosse. Structural Monitoring with Distributed-Regional and Event-based NN-Decision Tree Learning Using Mobile Multi-Agent Systems and Common Java Script Platforms[J]. Procedia Technology,2016,26:. [12]Pablo Piedrahita-Quintero,Carlos Trujillo,Jorge Garcia-Sucerquia. JDiffraction : A GPGPU-accelerated JAVA library for numerical propagation of scalar wave fields[J]. Computer Physics Communications,2016,:. [13]Abdelhak Mesbah,Jean-Louis Lanet,Mohamed Mezghiche. Reverse engineering a Java Card memory management algorithm[J]. Computers & Security,2017,66:. [14]G. Bacci,M. Bazzicalupo,A. Benedetti,A. Mengoni. StreamingTrim 1.0: a Java software for dynamic trimming of 16S rRNA sequence data from metagenetic studies[J]. Mol Ecol Resour,2014,14(2):. [15]Qing‐Wei Xu,Johannes Griss,Rui Wang,Andrew R. Jones,Henning Hermjakob,Juan Antonio Vizcaíno. jmzTab: A Java interface to the mzTab data standard[J]. Proteomics,2014,14(11):. [16]Rody W. J. Kersten,Bernard E. Gastel,Olha Shkaravska,Manuel Montenegro,Marko C. J. D. Eekelen. ResAna: a resource analysis toolset for (real‐time) JAVA[J]. Concurrency Computat.: Pract. Exper.,2014,26(14):. [17]Stephan E. Korsholm,Hans S?ndergaard,Anders P. Ravn. A real‐time Java tool chain for resource constrained platforms[J]. Concurrency Computat.: Pract. Exper.,2014,26(14):. [18]M. Teresa Higuera‐Toledano,Andy Wellings. Introduction to the Special Issue on Java Technologies for Real‐Time and Embedded Systems: JTRES 2012[J]. Concurrency Computat.: Pract. Exper.,2014,26(14):. [19]Mostafa Mohammadpourfard,Mohammad Ali Doostari,Mohammad Bagher Ghaznavi Ghoushchi,Nafiseh Shakiba. Anew secure Internet voting protocol using Java Card 3 technology and Java information flow concept[J]. Security Comm. Networks,2015,8(2):. [20]Cédric Teyton,Jean‐Rémy Falleri,Marc Palyart,Xavier Blanc. A study of library migrations in Java[J]. J. Softw. Evol. and Proc.,2014,26(11):. [21]Sabela Ramos,Guillermo L. Taboada,Roberto R. Expósito,Juan Touri?o. Nonblocking collectives for scalable Java communications[J]. Concurrency Computat.: Pract. Exper.,2015,27(5):. [22]Dusan Jovanovic,Slobodan Jovanovic. An adaptive e‐learning system for Java programming course, based on Dokeos LE[J]. Comput Appl Eng Educ,2015,23(3):. [23]Yu Lin,Danny Dig. A study and toolkit of CHECK‐THEN‐ACT idioms of Java concurrent collections[J]. Softw. Test. Verif. Reliab.,2015,25(4):. [24]Jonathan Passerat?Palmbach,Claude Mazel,David R. C. Hill. TaskLocalRandom: a statistically sound substitute to pseudorandom number generation in parallel java tasks frameworks[J]. Concurrency Computat.: Pract.Exper.,2015,27(13):. [25]Da Qi,Huaizhong Zhang,Jun Fan,Simon Perkins,Addolorata Pisconti,Deborah M. Simpson,Conrad Bessant,Simon Hubbard,Andrew R. Jones. The mzqLibrary – An open source Java library supporting the HUPO‐PSI quantitative proteomics standard[J]. Proteomics,2015,15(18):. [26]Xiaoyan Zhu,E. James Whitehead,Caitlin Sadowski,Qinbao Song. An analysis of programming language statement frequency in C, C++, and Java source code[J]. Softw. Pract. Exper.,2015,45(11):. [27]Roberto R. Expósito,Guillermo L. Taboada,Sabela Ramos,Juan Touri?o,Ramón Doallo. Low‐latency Java communication devices on RDMA‐enabled networks[J]. Concurrency Computat.: Pract. Exper.,2015,27(17):. [28]V. Serbanescu,K. Azadbakht,F. Boer,C. Nagarajagowda,B. Nobakht. A design pattern for optimizations in data intensive applications using ABS and JAVA 8[J]. Concurrency Computat.: Pract. Exper.,2016,28(2):. [29]E. Tsakalos,J. Christodoulakis,L. Charalambous. The Dose Rate Calculator (DRc) for Luminescence and ESR Dating-a Java Application for Dose Rate and Age Determination[J]. Archaeometry,2016,58(2):. [30]Ronald A. Olsson,Todd Williamson. RJ: a Java package providing JR‐like concurrent programming[J]. Softw. Pract. Exper.,2016,46(5):. java英⽂参考⽂献⼆: [31]Seong‐Won Lee,Soo‐Mook Moon,Seong‐Moo Kim. Flow‐sensitive runtime estimation: an enhanced hot spot detection heuristics for embedded Java just‐in‐time compilers [J]. Softw. Pract. Exper.,2016,46(6):. [32]Davy Landman,Alexander Serebrenik,Eric Bouwers,Jurgen J. Vinju. Empirical analysis of the relationship between CC and SLOC in a large corpus of Java methods and C functions[J]. J. Softw. Evol. and Proc.,2016,28(7):. [33]Renaud Pawlak,Martin Monperrus,Nicolas Petitprez,Carlos Noguera,Lionel Seinturier. SPOON : A library for implementing analyses and transformations of Java source code[J]. Softw. Pract. Exper.,2016,46(9):. [34]Musa Ata?. Open Cezeri Library: A novel java based matrix and computer vision framework[J]. Comput Appl Eng Educ,2016,24(5):. [35]A. Omar Portillo‐Dominguez,Philip Perry,Damien Magoni,Miao Wang,John Murphy. TRINI: an adaptive load balancing strategy based on garbage collection for clustered Java systems[J]. Softw. Pract. Exper.,2016,46(12):. [36]Kim T. Briggs,Baoguo Zhou,Gerhard W. Dueck. Cold object identification in the Java virtual machine[J]. Softw. Pract. Exper.,2017,47(1):. [37]S. Jayaraman,B. Jayaraman,D. Lessa. Compact visualization of Java program execution[J]. Softw. Pract. Exper.,2017,47(2):. [38]Geoffrey Fox. Java Technologies for Real‐Time and Embedded Systems (JTRES2013)[J]. Concurrency Computat.: Pract. Exper.,2017,29(6):. [39]Tórur Biskopst? Str?m,Wolfgang Puffitsch,Martin Schoeberl. Hardware locks for a real‐time Java chip multiprocessor[J]. Concurrency Computat.: Pract. Exper.,2017,29(6):. [40]Serdar Yegulalp. JetBrains' Kotlin JVM language appeals to the Java faithful[J]. ,2016,:. [41]Ortin, Francisco,Conde, Patricia,Fernandez-Lanvin, Daniel,Izquierdo, Raul. The Runtime Performance of invokedynamic: An Evaluation with a Java Library[J]. IEEE Software,2014,31(4):. [42]Johnson, Richard A. JAVA DATABASE CONNECTIVITY USING SQLITE: A TUTORIAL[J]. Allied Academies International Conference. Academy of Information and Management Sciences. Proceedings,2014,18(1):. [43]Trent, Rod. SQL Server Gets PHP Support, Java Support on the Way[J]. SQL Server Pro,2014,:. [44]Foket, C,De Sutter, B,De Bosschere, K. Pushing Java Type Obfuscation to the Limit[J]. IEEE Transactions on Dependable and Secure Computing,2014,11(6):. [45]Parshall, Jon. Rising Sun, Falling Skies: The Disastrous Java Sea Campaign of World War II[J]. United States Naval Institute. Proceedings,2015,141(1):. [46]Brunner, Grant. Java now pollutes your Mac with adware - here's how to uninstall it[J]. ,2015,:. [47]Bell, Jonathan,Melski, Eric,Dattatreya, Mohan,Kaiser, Gail E. Vroom: Faster Build Processes for Java[J]. IEEE Software,2015,32(2):. [48]Chaikalis, T,Chatzigeorgiou, A. Forecasting Java Software Evolution Trends Employing Network Models[J]. IEEE Transactions on Software Engineering,2015,41(6):. [49]Lu, Quan,Liu, Gao,Chen, Jing. Integrating PDF interface into Java application[J]. Library Hi Tech,2014,32(3):. [50]Rashid, Fahmida Y. Oracle fixes critical flaws in Database Server, MySQL, Java[J]. ,2015,:. [51]Rashid, Fahmida Y. Library misuse exposes leading Java platforms to attack[J]. ,2015,:. [52]Rashid, Fahmida Y. Serious bug in widely used Java app library patched[J]. ,2015,:. [53]Odeghero, P,Liu, C,McBurney, PW,McMillan, C. An Eye-Tracking Study of Java Programmers and Application to Source Code Summarization[J]. IEEE Transactions on Software Engineering,2015,41(11):. [54]Greene, Tim. Oracle settles FTC dispute over Java updates[J]. Network World (Online) [55]Rashid, Fahmida Y. FTC ruling against Oracle shows why it's time to dump Java[J]. ,2015,:. [56]Whitwam, Ryan. Google plans to remove Oracle's Java APIs from Android N[J]. ,2015,:. [57]Saher Manaseer,Warif Manasir,Mohammad Alshraideh,Nabil Abu Hashish,Omar Adwan. Automatic Test Data Generation for Java Card Applications Using Genetic Algorithm[J]. Journal of Software Engineering andApplications,2015,8(12):. [58]Paul Venezia. Prepare now for the death of Flash and Java plug-ins[J]. ,2016,:. [59]PW McBurney,C McMillan. Automatic Source Code Summarization of Context for Java Methods[J]. IEEE Transactions on Software Engineering,2016,42(2):. java英⽂参考⽂献三: [61]Serdar Yegulalp,Serdar Yegulalp. Sputnik automates code review for Java projects on GitHub[J].,2016,:. [62]Fahmida Y Rashid,Fahmida Y Rashid. Oracle security includes Java, MySQL, Oracle Database fixes[J]. ,2016,:. [63]H M Chavez,W Shen,R B France,B A Mechling. An Approach to Checking Consistency between UML Class Model and Its Java Implementation[J]. IEEE Transactions on Software Engineering,2016,42(4):. [64]Serdar Yegulalp,Serdar Yegulalp. Unikernel power comes to Java, Node.js, Go, and Python apps[J]. ,2016,:. [65]Yudi Zheng,Stephen Kell,Lubomír Bulej,Haiyang Sun. Comprehensive Multiplatform Dynamic Program Analysis for Java and Android[J]. IEEE Software,2016,33(4):. [66]Fahmida Y Rashid,Fahmida Y Rashid. Oracle's monster security fixes Java, database bugs[J]. ,2016,:. [67]Damian Wolf,Damian Wolf. The top 5 Java 8 features for developers[J]. ,2016,:. [68]Jifeng Xuan,Matias Martinez,Favio DeMarco,Maxime Clément,Sebastian Lamelas Marcote,Thomas Durieux,Daniel LeBerre. Nopol: Automatic Repair of Conditional Statement Bugs in Java Programs[J]. IEEE Transactions on Software Engineering,2017,43(1):. [69]Loo Kang Wee,Hwee Tiang Ning. Vernier caliper and micrometer computer models using Easy Java Simulation and its pedagogical design features-ideas for augmenting learning with real instruments[J]. Physics Education,2014,49(5):. [70]Loo Kang Wee,Tat Leong Lee,Charles Chew,Darren Wong,Samuel Tan. Understanding resonance graphs using Easy Java Simulations (EJS) and why we use EJS[J]. Physics Education,2015,50(2):.【java英⽂参考⽂献汇编】相关⽂章:1.2.3.4.5.6.7.8.。

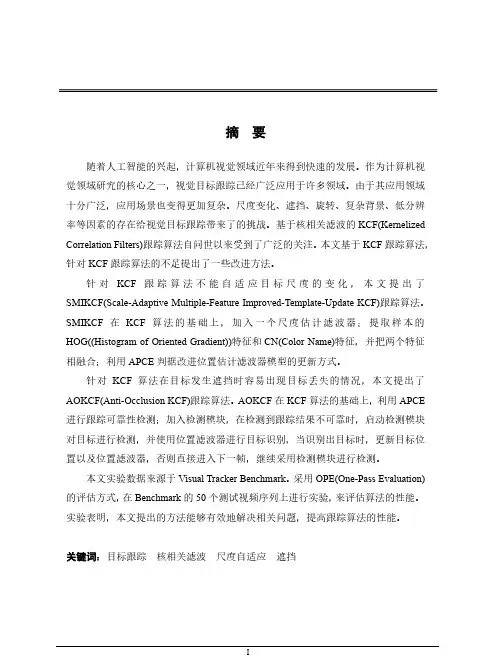

华中科技大学硕士学位论文摘要随着人工智能的兴起,计算机视觉领域近年来得到快速的发展。

作为计算机视觉领域研究的核心之一,视觉目标跟踪已经广泛应用于许多领域。

由于其应用领域十分广泛,应用场景也变得更加复杂。

尺度变化、遮挡、旋转、复杂背景、低分辨率等因素的存在给视觉目标跟踪带来了的挑战。

基于核相关滤波的KCF(Kernelized Correlation Filters)跟踪算法自问世以来受到了广泛的关注。

本文基于KCF跟踪算法,针对KCF跟踪算法的不足提出了一些改进方法。

针对KCF跟踪算法不能自适应目标尺度的变化,本文提出了SMIKCF(Scale-Adaptive Multiple-Feature Improved-Template-Update KCF)跟踪算法。

SMIKCF在KCF算法的基础上,加入一个尺度估计滤波器;提取样本的HOG((Histogram of Oriented Gradient))特征和CN(Color Name)特征,并把两个特征相融合;利用APCE判据改进位置估计滤波器模型的更新方式。

针对KCF算法在目标发生遮挡时容易出现目标丢失的情况,本文提出了AOKCF(Anti-Occlusion KCF)跟踪算法。

AOKCF在KCF算法的基础上,利用APCE 进行跟踪可靠性检测;加入检测模块,在检测到跟踪结果不可靠时,启动检测模块对目标进行检测,并使用位置滤波器进行目标识别,当识别出目标时,更新目标位置以及位置滤波器,否则直接进入下一帧,继续采用检测模块进行检测。

本文实验数据来源于Visual Tracker Benchmark。

采用OPE(One-Pass Evaluation)的评估方式,在Benchmark的50个测试视频序列上进行实验,来评估算法的性能。

实验表明,本文提出的方法能够有效地解决相关问题,提高跟踪算法的性能。

关键词:目标跟踪核相关滤波尺度自适应遮挡华中科技大学硕士学位论文ABSTRACTWith growing of artificial intelligence, computer vision has been rapid development in recent years. Visual target tracking is one of the most important part of computer vision research, with widely used in many fields. The application situation is becoming more and more complicated due to the wide application fields. The existence of scale changes, occlusion, rotation, complex background, low resolution and other factors has brought higher challenges to visual target tracking. KCF (Kernelized Correlation Filters) tracking algorithm has been widely concerned since it was proposed. Based on the KCF tracking algorithm, this paper proposed improved methods for the shortcoming of KCF tracking algorithm.Firstly, the SMIKCF(Scale-Adaptive Multiple-Feature Improved-Template-Update KCF) tracking algorithm is proposed in this paper to solve the problem that the KCF tracking algorithm can not adapt to the target scale. On the basis of KCF algorithm, SMIKCF adds a scale estimation filter, and combines HOG characteristics and CN characteristics, using the APCE criterion to improve the updating method of the position estimation filter model.Secondly, the AOKCF(Anti-Occlusion KCF) tracking algorithm is proposed to solve the problem of occlusion. AOKCF tracking algorithm is based on KCF tracking algorithm. APCE criterion is used to check the reliability of tracking results. When the tracking result is unreliable, add detection module to detect the target, and then use the position filter to the target recognition. If the target is recognized, then update the target position and the position filter module. Otherwise, go directly to the next frame.The experimental datas are from Visual Tracker Benchmark. Experiments were performed on Benchmark's 50 test video sequences and use the OPE (One-Pass Evaluation) evaluation method to evaluate the performance of the algorithm.华中科技大学硕士学位论文Experimental results show that the proposed method can effectively solve the related problems and improve the performance of the tracking algorithm.Keywords: Object tracking Kernelized correlation filters Scale adaptive Occlusion华中科技大学硕士学位论文目录摘要 (I)Abstract (II)目录 (IV)1 绪论 (1)1.1研究背景与意义 (1)1.2国内外研究概况 (1)1.3论文的主要研究内容 (4)1.4主要组织结构 (4)2 KCF跟踪算法介绍 (6)2.1引言 (6)2.2相关滤波器 (6)2.3核相关滤波器 (9)2.4本章小结 (17)3 改进的尺度自适应KCF跟踪算法研究 (18)3.1引言 (18)3.2尺度估计 (19)3.3多特征融合 (22)3.4模型更新策略改进 (22)3.5算法整体框架 (24)华中科技大学硕士学位论文3.6实验结果与分析 (25)3.7本章小结 (40)4 抗遮挡KCF跟踪算法研究 (41)4.1引言 (41)4.2跟踪可靠性检测 (43)4.3重检测算法研究 (43)4.4算法整体框架 (47)4.5实验结果与分析 (48)4.6本章小结 (60)5 总结与展望 (61)5.1全文总结 (61)5.2课题展望 (62)致谢 (63)参考文献 (64)附录1 攻读学位期间发表论文与参与课题 (70)华中科技大学硕士学位论文1 绪论1.1 研究背景与意义人工智能的飞速发展使得计算机视觉备受关注,作为计算机视觉领域研究的核心之一,视觉目标跟踪已经广泛应用于许多领域[1]。

CVPR 2013 录用论文(目标跟踪部分)/gxf1027/article/details/8650878完整录用论文官方链接:/cvpr13/program.php过段时间CvPaper上面应该会有正文链接今年有关RGB-D摄像机应用和研究的论文渐多起来了。

当然,自己还是比较关心Tracking方面的Papers。

从作者来看,一作大部分为华人,而且有不少在Tracking这个圈子里相当有名的牛,比如Ming-Hsuan Yang,Robert Collins等(中科院到阿大的Xi Li也是非常活跃,从他的论文可以看出深厚的数学功底,另外Chunhua Shen老师团队非常高产)。

此外,从录用论文题目初步判断,Sparse coding (representation)的热度在减退,所以Haibin Ling老师并没有这方面的文章录用,且纯粹的tracking-by-detection几乎不见踪影了。

以下是摘录的tracking方面的录用论文:Oral部分:Structure Preserving Object Tracking. Lu Zhang, Laurens van der MaatenTracking Sports Players with Context-Conditioned Motion Models. Jingchen Liu, Peter Carr, Robert Collins, Yanxi Liu Post部分:Online Object Tracking: A Benchmark. Yi Wu, Jongwoo Lim,Ming-Hsuan YangLearning Compact Binary Codes for Visual Tracking. Xi Li, Chunhua Shen, Anthony Dick, Anton van den HengelPart-based Visual Tracking with Online Latent Structural Learning. Rui Yao, Qinfeng Shi,Chunhua Shen, Yanning Zhang, Anton van den HengelSelf-paced learning for long-term tracking.James Supancic III, Deva Ramanan(long-term的噱头还是很吸引人的,和当年TLD一样,看看是否是工程的思想多一些)Visual Tracking via Locality Sensitive Histograms.Shengfeng He, Qingxiong Yang, Rynson Lau, Jiang Wang,Ming-Hsuan Yang (CityU of HK,使用直方图作为表观在当前研究背景下真是反其道而行之啊)Minimum Uncertainty Gap for Robust Visual Tracking. Junseok Kwon, Kyoung Mu Lee (VTD作者)Least Soft-thresold Squares Tracking. Dong Wang, Huchuan Lu, Ming-Hsuan YangTracking People and Their Objects. Tobias Baumgartner, Dennis Mitzel, Bastian Leibe(这个应该也有应用的背景和前景)(以上不全包括多目标跟踪方面的论文)其它关注的论文:Alternating Decision Forests. Samuel Schulter, Paul Wohlhart,Christian Leistner, Amir Saffari,Peter M. Roth,Horst Bischof(Forest也是近些年的热点之一。

视觉跟踪以连续视频帧为对象,提取目标特征后,根据视频帧序列的上下文信息,利用特征构建目标外观模型,判断候选样本是否为跟踪目标。

跟踪算法按照特征提取方式的不同,通常分为基于传统模型和基于深度学习模型,传统模型通常利用灰度特征、颜色特征、梯度特征以及纹理特征等[1-4]人工特征,单个特征表达单一,跟踪精度不高,融合多个特征之后虽然能够一定程度上提高算法的跟踪精度,但算法对复杂环境下目标出镜头、严重遮挡等情况跟踪效果不佳。

深度学习模型得到的卷积特征具有高层特征更抽象、底层特征更细粒的特点,对目标特征的表达更为丰富[5-6]。

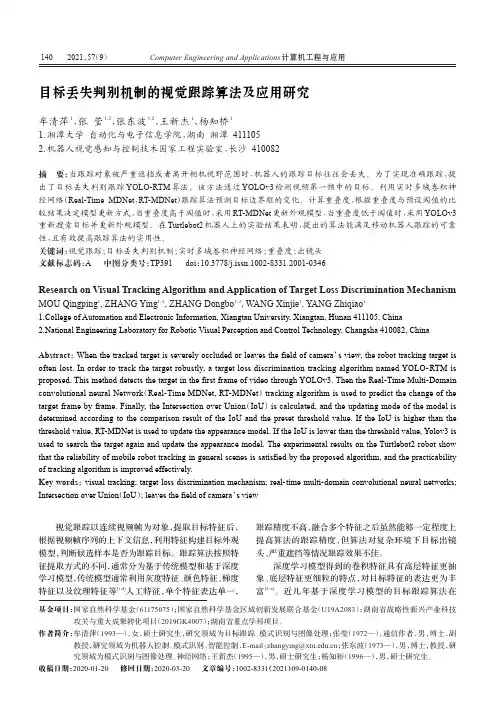

近几年基于深度学习模型的目标跟踪算法在目标丢失判别机制的视觉跟踪算法及应用研究牟清萍1,张莹1,2,张东波1,2,王新杰1,杨知桥11.湘潭大学自动化与电子信息学院,湖南湘潭4111052.机器人视觉感知与控制技术国家工程实验室,长沙410082摘要:当跟踪对象被严重遮挡或者离开相机视野范围时,机器人的跟踪目标往往会丢失。

为了实现准确跟踪,提出了目标丢失判别跟踪YOLO-RTM算法。

该方法通过YOLOv3检测视频第一帧中的目标。

利用实时多域卷积神经网络(Real-Time MDNet,RT-MDNet)跟踪算法预测目标边界框的变化。

计算重叠度,根据重叠度与预设阈值的比较结果决定模型更新方式,当重叠度高于阈值时,采用RT-MDNet更新外观模型,当重叠度低于阈值时,采用YOLOv3重新搜索目标并更新外观模型。

在Turtlebot2机器人上的实验结果表明,提出的算法能满足移动机器人跟踪的可靠性,且有效提高跟踪算法的实用性。

关键词:视觉跟踪;目标丢失判别机制;实时多域卷积神经网络;重叠度;出镜头文献标志码:A中图分类号:TP391doi:10.3778/j.issn.1002-8331.2001-0346Research on Visual Tracking Algorithm and Application of Target Loss Discrimination Mechanism MOU Qingping1,ZHANG Ying1,2,ZHANG Dongbo1,2,WANG Xinjie1,YANG Zhiqiao11.College of Automation and Electronic Information,Xiangtan University,Xiangtan,Hunan411105,China2.National Engineering Laboratory for Robotic Visual Perception and Control Technology,Changsha410082,ChinaAbstract:When the tracked target is severely occluded or leaves the field of camera’s view,the robot tracking target is often lost.In order to track the target robustly,a target loss discrimination tracking algorithm named YOLO-RTM is proposed.This method detects the target in the first frame of video through YOLOv3.Then the Real-Time Multi-Domain convolutional neural Network(Real-Time MDNet,RT-MDNet)tracking algorithm is used to predict the change of the target frame by frame.Finally,the Intersection over Union(IoU)is calculated,and the updating mode of the model is determined according to the comparison result of the IoU and the preset threshold value.If the IoU is higher than the threshold value,RT-MDNet is used to update the appearance model.If the IoU is lower than the threshold value,Yolov3is used to search the target again and update the appearance model.The experimental results on the Turtlebot2robot show that the reliability of mobile robot tracking in general scenes is satisfied by the proposed algorithm,and the practicability of tracking algorithm is improved effectively.Key words:visual tracking;target loss discrimination mechanism;real-time multi-domain convolutional neural networks; Intersection over Union(IoU);leaves the field of camera’s view基金项目:国家自然科学基金(61175075);国家自然科学基金区域创新发展联合基金(U19A2083);湖南省战略性新兴产业科技攻关与重大成果转化项目(2019GK4007);湖南省重点学科项目。

KCF:High-SpeedTrackingwithKernelizedCorrelati。

High-Speed Tracking with Kernelized Correlation Filters 的翻译与分析基于核相关滤波器的⾼速⽬标跟踪⽅法,简称KCF 写在前⾯,之所以对这篇⽂章进⾏精细的阅读,是因为这篇⽂章极其重要,在⽬标跟踪领域⽯破天惊的⼀篇论⽂,后来在此论⽂基础上⼜相继出现了很多基于KCF的⽂章,因此⽂章好⽐作⼤厦的基⽯,深度学习,长短记忆等框架⽹络也可以在KCF上进⾏增添模块,并能够达到较好的效果,因此我将深⼊学习这篇⽂章,并在此与⼤家分享,由于学识有限,难免有些谬误,还请斧正。

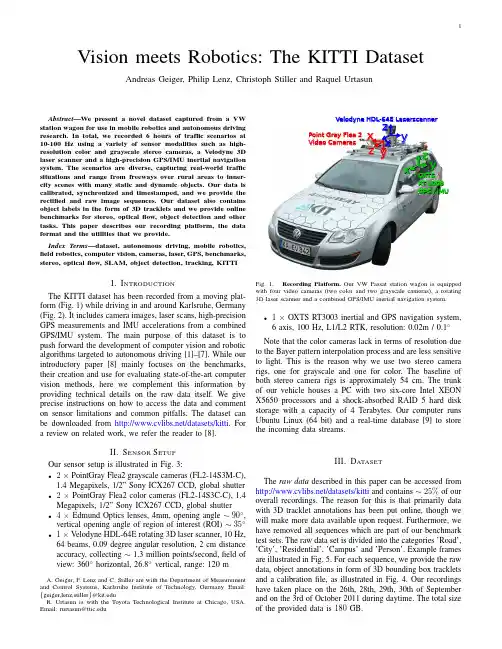

High-Speed Tracking with Kernelized Correlation Filters:Abstract---The core component of most modern trackers is discriminative classifier, tasked with distinguishing between the target and the surrounding environment. To cope with natural image changes, this classifier is typically trained with translated and scaled sample patches. Such sets of samples are riddled with redundancies --any overlapping pixels are constrained to be the same. Based on this simple observation, we can diagonalize it with the Discrete Fourier Transform, reducing both storage and computation by several orders of magnitude. Interestingly, for linear regression our formulation is equivalent to a correlation Filter (KCF), that unlike other kernel algorithms has the exact same complexity as its linear counterpart. Building on it ,we also propose a fast multi-channel extension of linear correlation filters ,via a linear kernel ,which we call Dual Correlation Filter(DCF).Both KCF and DCF outperform top-ranking trackers such as Struck of TLD on a 50videos benchmark, despite running at hundreds of frames-per -second , and being implemented in a few lines ofcode(Algorithm1). To encourage further developments, our tracking framework was made open-source.Index Terms——Visual tracking, circulant matrices, discrete Fourier transform, kernel methods, ridge regression, correlation filters.摘要:当前最前沿的跟踪器的核⼼组成是⼀个具有分辨能⼒的分类器,负责将⽬标和其背景环境区分开来。

第55卷第1期2021年1月Vol.55No.1Jan72021西安交通大学学报JOURNAL OF XI'AN JIAOTONG UNIVERSITY目标跟踪中基于深度可分离卷积的剪枝方法毛远宏,贺占庄,刘露露(西安微电子技术研究所,710065,西安%摘要:为了减少跟踪网络中存在的参数量和计算量大的问题,提出了基于深度可分离卷积的剪枝方法%深度可分离卷积将跟踪网络中的传统卷积层分解为逐点卷积和逐层卷积两部分%在逐点卷积中,通过逐点卷积层中权重的大小来评估输入特征图通道在线性组合中的重要程度,将较小的权重及其关联的特征通道裁减掉。

在逐层卷积中,通过K-L散度来衡量逐层卷积中滤波器的相似性,将相似的滤波器裁剪掉,减少冗余。

通过上述方法进行多轮迭代剪枝,从而减少跟踪网络的参数量和计算量%在VOT数据集上的实验结果表明,在精度没有下降的前提下,剪枝后网络的参数量下降了22.54%,计算量下降了17.8%%在NVIDIA TX2设备上的实验结果表明,剪枝后网络的跟踪速度在CPU上提升了14.95%,在GPU上提升了13.07%%关键词:目标跟踪;卷积神经网络;深度可分离卷积;网络剪枝中图分类号:TP391文献标志码:ADOI:10.7652/xjtuxb202101007文章编号:0253-987X(2021)01-0052-08OSID码Pruning Based on Separable Convolutions for Object TrackingMAO Yuanhong,HE Zhanzhuang,LIU Lulu(Xi'an Microelectronics Technology Institute,Xi'an710065,China)Abstract:A pruning method based on separable convolution is proposed to reduce parameters and calculationamountinthetracking network.Separableconvolution decomposesthetraditional convolutional layer into two parts,i.e.pointwise convolution and depthwise convolution in the trackingnetwork.Inpointwiseconvolution,theimportanceoftheinputfeature mapchannelin thelinearcombinationisevaluatedbytheweightsinpointwiseconvolutions,sothatthesma l er weightsandtheirassociatedfeaturechanneloughttobepruned.Indepthwiseconvolutions,the K-Ldivergenceisadoptedto measurethesimilarityoffilters,andthesimilarfiltersarethen prunedtoreduceredundancy.Withtheimprovementsabove,iterativepruningisperformed.The parameters in the tracker are reduced by22.54%,and the computation is reduced by17.8%.On the NVIDIA TX2,the tracking rate increases by14.95%for CPU and13.07%for GPU without significantdegradationoftrackingperformanceinVOTbenchmark.Keywords:object tracking;convolutional neural networks;separable convolution;filter pruning目前,基于深度学习的方法在目标跟踪领域得度学习通常存在着参数过多和计算量过大的问题。

基于Camshift 方法的视觉目标跟踪技术综述作者:伍祥张晓荣潘涛朱文武来源:《电脑知识与技术》2024年第17期摘要:视觉目标跟踪技术是机器视觉、模式识别等相关领域中重要的研究内容之一。

受限于场景的复杂度、目标速度、目标的遮挡程度等状况,其相关研究具有一定的难度和挑战性,而均值漂移及其相关算法是解决该类问题的重要途径。

首先介绍视觉目标跟踪的研究方法和原理,然后介绍Camshift方法的前身Meanshift方法的原理和算法过程,并做出相应的分析和阐述。

再介绍针对Camshift算法的相关研究和改进方法,最后总结Camshift方法的应用情况以及后续可能的研究方向。

关键词:机器视觉;模式识别;目标跟踪;Meanshift;Camshift中图分类号:TP391 文献标识码:A文章编号:1009-3044(2024)17-0011-04 开放科学(资源服务)标识码(OSID):0 引言实现视觉目标跟踪可能的前置步骤包括目标分类、物体检测以及图像分割。

目标分类是指根据目标在图像中呈现的特征,将不同类别的目标加以区分的图像处理方法。

物体检测是指在某张图像中,检测出目标出现的位置、尺度以及其對应的类别。

相较于目标分类,物体检测不仅需要指出其类别,还需返回该目标在图像中的物理坐标信息,其量化度得到了增强。

而图像分割在物体检测的基础上提出了更加精细的要求,即不仅需要指出物体在图像中的坐标位置信息,还要标注出目标在图像中的精准轮廓,其难度和复杂度进一步提升。

上述技术在图像处理过程中,存在一些共性化的技术难点,比如尺度变化、部分遮挡等[1-2]。

针对物体尺度变化,一种常规的方式是采用尺度不变特征变换(SIFT)技术[3],通过尺度空间极值计算、关键点定位、方向和幅值计算以及关键点描述等步骤完成目标在尺度变化时的特征匹配。

针对目标部分区域被遮挡的问题,Wang B等[4]提出了一种通过网络流优化的轨迹片段(tracklet)关联中的在线目标特定度量来忽略目标被遮挡的时段,从而连续化目标运动轨迹;Fir⁃ouznia Marjan[5]提出了一种改进的粒子滤波方法,通过状态空间重构,在有遮挡的情况下提升目标跟踪的精度。

CVPR2020papers # CVPR2020-Code**【推荐阅读】****【CVPR 2020 论⽂开源⽬录】**- [CNN](#CNN)- [图像分类](#Image-Classification)- [视频分类](#Video-Classification)- [⽬标检测](#Object-Detection)- [3D⽬标检测](#3D-Object-Detection)- [视频⽬标检测](#Video-Object-Detection)- [⽬标跟踪](#Object-Tracking)- [语义分割](#Semantic-Segmentation)- [实例分割](#Instance-Segmentation)- [全景分割](#Panoptic-Segmentation)- [视频⽬标分割](#VOS)- [超像素分割](#Superpixel)- [交互式图像分割](#IIS)- [NAS](#NAS)- [GAN](#GAN)- [Re-ID](#Re-ID)- [3D点云(分类/分割/配准/跟踪等)](#3D-PointCloud)- [⼈脸(识别/检测/重建等)](#Face)- [⼈体姿态估计(2D/3D)](#Human-Pose-Estimation)- [⼈体解析](#Human-Parsing)- [场景⽂本检测](#Scene-Text-Detection)- [场景⽂本识别](#Scene-Text-Recognition)- [特征(点)检测和描述](#Feature)- [超分辨率](#Super-Resolution)- [模型压缩/剪枝](#Model-Compression)- [视频理解/⾏为识别](#Action-Recognition)- [⼈群计数](#Crowd-Counting)- [深度估计](#Depth-Estimation)- [6D⽬标姿态估计](#6DOF)- [⼿势估计](#Hand-Pose)- [显著性检测](#Saliency)- [去噪](#Denoising)- [去⾬](#Deraining)- [去模糊](#Deblurring)- [去雾](#Dehazing)- [特征点检测与描述](#Feature)- [视觉问答(VQA)](#VQA)- [视频问答(VideoQA)](#VideoQA)- [视觉语⾔导航](#VLN)- [视频压缩](#Video-Compression)- [视频插帧](#Video-Frame-Interpolation)- [风格迁移](#Style-Transfer)- [车道线检测](#Lane-Detection)- ["⼈-物"交互(HOI)检测](#HOI)- [轨迹预测](#TP)- [运动预测](#Motion-Predication)- [光流估计](#OF)- [图像检索](#IR)- [虚拟试⾐](#Virtual-Try-On)- [HDR](#HDR)- [对抗样本](#AE)- [三维重建](#3D-Reconstructing)- [深度补全](#DC)- [语义场景补全](#SSC)- [图像/视频描述](#Captioning)- [线框解析](#WP)- [数据集](#Datasets)- [其他](#Others)- [不确定中没中](#Not-Sure)<a name="CNN"></a># CNN**Exploring Self-attention for Image Recognition****Improving Convolutional Networks with Self-Calibrated Convolutions****Rethinking Depthwise Separable Convolutions: How Intra-Kernel Correlations Lead to Improved MobileNets**<a name="Image-Classification"></a># 图像分类**Interpretable and Accurate Fine-grained Recognition via Region Grouping****Compositional Convolutional Neural Networks: A Deep Architecture with Innate Robustness to Partial Occlusion** **Spatially Attentive Output Layer for Image Classification**<a name="Video-Classification"></a># 视频分类**SmallBigNet: Integrating Core and Contextual Views for Video Classification**<a name="Object-Detection"></a># ⽬标检测**Overcoming Classifier Imbalance for Long-tail Object Detection with Balanced Group Softmax****AugFPN: Improving Multi-scale Feature Learning for Object Detection****Noise-Aware Fully Webly Supervised Object Detection****Learning a Unified Sample Weighting Network for Object Detection****D2Det: Towards High Quality Object Detection and Instance Segmentation****Dynamic Refinement Network for Oriented and Densely Packed Object Detection****Scale-Equalizing Pyramid Convolution for Object Detection****Revisiting the Sibling Head in Object Detector****Scale-equalizing Pyramid Convolution for Object Detection****Detection in Crowded Scenes: One Proposal, Multiple Predictions****Instance-aware, Context-focused, and Memory-efficient Weakly Supervised Object Detection****Bridging the Gap Between Anchor-based and Anchor-free Detection via Adaptive Training Sample Selection****BiDet: An Efficient Binarized Object Detector****Harmonizing Transferability and Discriminability for Adapting Object Detectors****CentripetalNet: Pursuing High-quality Keypoint Pairs for Object Detection****Hit-Detector: Hierarchical Trinity Architecture Search for Object Detection****EfficientDet: Scalable and Efficient Object Detection**<a name="3D-Object-Detection"></a># 3D⽬标检测**SESS: Self-Ensembling Semi-Supervised 3D Object Detection****Associate-3Ddet: Perceptual-to-Conceptual Association for 3D Point Cloud Object Detection****What You See is What You Get: Exploiting Visibility for 3D Object Detection****Learning Depth-Guided Convolutions for Monocular 3D Object Detection****Structure Aware Single-stage 3D Object Detection from Point Cloud****IDA-3D: Instance-Depth-Aware 3D Object Detection from Stereo Vision for Autonomous Driving****Train in Germany, Test in The USA: Making 3D Object Detectors Generalize****MLCVNet: Multi-Level Context VoteNet for 3D Object Detection****3DSSD: Point-based 3D Single Stage Object Detector**- CVPR 2020 Oral**Disp R-CNN: Stereo 3D Object Detection via Shape Prior Guided Instance Disparity Estimation****End-to-End Pseudo-LiDAR for Image-Based 3D Object Detection****DSGN: Deep Stereo Geometry Network for 3D Object Detection****LiDAR-based Online 3D Video Object Detection with Graph-based Message Passing and Spatiotemporal Transformer Attention** **PV-RCNN: Point-Voxel Feature Set Abstraction for 3D Object Detection****Point-GNN: Graph Neural Network for 3D Object Detection in a Point Cloud**<a name="Video-Object-Detection"></a># 视频⽬标检测**Memory Enhanced Global-Local Aggregation for Video Object Detection**<a name="Object-Tracking"></a># ⽬标跟踪**SiamCAR: Siamese Fully Convolutional Classification and Regression for Visual Tracking****D3S -- A Discriminative Single Shot Segmentation Tracker****ROAM: Recurrently Optimizing Tracking Model****Siam R-CNN: Visual Tracking by Re-Detection****Cooling-Shrinking Attack: Blinding the Tracker with Imperceptible Noises****High-Performance Long-Term Tracking with Meta-Updater****AutoTrack: Towards High-Performance Visual Tracking for UAV with Automatic Spatio-Temporal Regularization****Probabilistic Regression for Visual Tracking****MAST: A Memory-Augmented Self-supervised Tracker****Siamese Box Adaptive Network for Visual Tracking**## 多⽬标跟踪**3D-ZeF: A 3D Zebrafish Tracking Benchmark Dataset**<a name="Semantic-Segmentation"></a># 语义分割**FDA: Fourier Domain Adaptation for Semantic Segmentation****Super-BPD: Super Boundary-to-Pixel Direction for Fast Image Segmentation**- 论⽂:暂⽆**Single-Stage Semantic Segmentation from Image Labels****Learning Texture Invariant Representation for Domain Adaptation of Semantic Segmentation****MSeg: A Composite Dataset for Multi-domain Semantic Segmentation****CascadePSP: Toward Class-Agnostic and Very High-Resolution Segmentation via Global and Local Refinement****Unsupervised Intra-domain Adaptation for Semantic Segmentation through Self-Supervision****Self-supervised Equivariant Attention Mechanism for Weakly Supervised Semantic Segmentation****Temporally Distributed Networks for Fast Video Segmentation****Context Prior for Scene Segmentation****Strip Pooling: Rethinking Spatial Pooling for Scene Parsing****Cars Can't Fly up in the Sky: Improving Urban-Scene Segmentation via Height-driven Attention Networks** **Learning Dynamic Routing for Semantic Segmentation**<a name="Instance-Segmentation"></a># 实例分割**D2Det: Towards High Quality Object Detection and Instance Segmentation****PolarMask: Single Shot Instance Segmentation with Polar Representation****CenterMask : Real-Time Anchor-Free Instance Segmentation****BlendMask: Top-Down Meets Bottom-Up for Instance Segmentation****Deep Snake for Real-Time Instance Segmentation****Mask Encoding for Single Shot Instance Segmentation**<a name="Panoptic-Segmentation"></a># 全景分割**Video Panoptic Segmentation****Pixel Consensus Voting for Panoptic Segmentation****BANet: Bidirectional Aggregation Network with Occlusion Handling for Panoptic Segmentation**<a name="VOS"></a># 视频⽬标分割**A Transductive Approach for Video Object Segmentation****State-Aware Tracker for Real-Time Video Object Segmentation****Learning Fast and Robust Target Models for Video Object Segmentation****Learning Video Object Segmentation from Unlabeled Videos**<a name="Superpixel"></a># 超像素分割**Superpixel Segmentation with Fully Convolutional Networks**<a name="IIS"></a># 交互式图像分割**Interactive Object Segmentation with Inside-Outside Guidance**<a name="NAS"></a># NAS**AOWS: Adaptive and optimal network width search with latency constraints****Densely Connected Search Space for More Flexible Neural Architecture Search****MTL-NAS: Task-Agnostic Neural Architecture Search towards General-Purpose Multi-Task Learning****FBNetV2: Differentiable Neural Architecture Search for Spatial and Channel Dimensions****Neural Architecture Search for Lightweight Non-Local Networks****Rethinking Performance Estimation in Neural Architecture Search****CARS: Continuous Evolution for Efficient Neural Architecture Search**<a name="GAN"></a># GAN**SEAN: Image Synthesis with Semantic Region-Adaptive Normalization****Reusing Discriminators for Encoding: Towards Unsupervised Image-to-Image Translation****Distribution-induced Bidirectional Generative Adversarial Network for Graph Representation Learning****PSGAN: Pose and Expression Robust Spatial-Aware GAN for Customizable Makeup Transfer****Semantically Mutil-modal Image Synthesis****Unpaired Portrait Drawing Generation via Asymmetric Cycle Mapping****Learning to Cartoonize Using White-box Cartoon Representations****GAN Compression: Efficient Architectures for Interactive Conditional GANs****Watch your Up-Convolution: CNN Based Generative Deep Neural Networks are Failing to Reproduce Spectral Distributions** <a name="Re-ID"></a># Re-ID**High-Order Information Matters: Learning Relation and Topology for Occluded Person Re-Identification****COCAS: A Large-Scale Clothes Changing Person Dataset for Re-identification**- 数据集:暂⽆**Transferable, Controllable, and Inconspicuous Adversarial Attacks on Person Re-identification With Deep Mis-Ranking****Pose-guided Visible Part Matching for Occluded Person ReID****Weakly supervised discriminative feature learning with state information for person identification**<a name="3D-PointCloud"></a># 3D点云(分类/分割/配准等)## 3D点云卷积**PointASNL: Robust Point Clouds Processing using Nonlocal Neural Networks with Adaptive Sampling****Global-Local Bidirectional Reasoning for Unsupervised Representation Learning of 3D Point Clouds****Grid-GCN for Fast and Scalable Point Cloud Learning****FPConv: Learning Local Flattening for Point Convolution**## 3D点云分类**PointAugment: an Auto-Augmentation Framework for Point Cloud Classification**## 3D点云语义分割**RandLA-Net: Efficient Semantic Segmentation of Large-Scale Point Clouds****Weakly Supervised Semantic Point Cloud Segmentation:Towards 10X Fewer Labels****PolarNet: An Improved Grid Representation for Online LiDAR Point Clouds Semantic Segmentation****Learning to Segment 3D Point Clouds in 2D Image Space**## 3D点云实例分割PointGroup: Dual-Set Point Grouping for 3D Instance Segmentation## 3D点云配准**Feature-metric Registration: A Fast Semi-supervised Approach for Robust Point Cloud Registration without Correspondences** **D3Feat: Joint Learning of Dense Detection and Description of 3D Local Features****RPM-Net: Robust Point Matching using Learned Features**## 3D点云补全**Cascaded Refinement Network for Point Cloud Completion**## 3D点云⽬标跟踪**P2B: Point-to-Box Network for 3D Object Tracking in Point Clouds**## 其他**An Efficient PointLSTM for Point Clouds Based Gesture Recognition**<a name="Face"></a># ⼈脸## ⼈脸识别**CurricularFace: Adaptive Curriculum Learning Loss for Deep Face Recognition****Learning Meta Face Recognition in Unseen Domains**## ⼈脸检测## ⼈脸活体检测**Searching Central Difference Convolutional Networks for Face Anti-Spoofing**## ⼈脸表情识别**Suppressing Uncertainties for Large-Scale Facial Expression Recognition**## ⼈脸转正**Rotate-and-Render: Unsupervised Photorealistic Face Rotation from Single-View Images**## ⼈脸3D重建**AvatarMe: Realistically Renderable 3D Facial Reconstruction "in-the-wild"****FaceScape: a Large-scale High Quality 3D Face Dataset and Detailed Riggable 3D Face Prediction** <a name="Human-Pose-Estimation"></a># ⼈体姿态估计(2D/3D)## 2D⼈体姿态估计**TransMoMo: Invariance-Driven Unsupervised Video Motion Retargeting****HigherHRNet: Scale-Aware Representation Learning for Bottom-Up Human Pose Estimation****The Devil is in the Details: Delving into Unbiased Data Processing for Human Pose Estimation****Distribution-Aware Coordinate Representation for Human Pose Estimation**## 3D⼈体姿态估计**Cascaded Deep Monocular 3D Human Pose Estimation With Evolutionary Training Data****Fusing Wearable IMUs with Multi-View Images for Human Pose Estimation: A Geometric Approach** **Bodies at Rest: 3D Human Pose and Shape Estimation from a Pressure Image using Synthetic Data** **Self-Supervised 3D Human Pose Estimation via Part Guided Novel Image Synthesis****Compressed Volumetric Heatmaps for Multi-Person 3D Pose Estimation****VIBE: Video Inference for Human Body Pose and Shape Estimation****Back to the Future: Joint Aware Temporal Deep Learning 3D Human Pose Estimation****Cross-View Tracking for Multi-Human 3D Pose Estimation at over 100 FPS**<a name="Human-Parsing"></a># ⼈体解析**Correlating Edge, Pose with Parsing**<a name="Scene-Text-Detection"></a># 场景⽂本检测**STEFANN: Scene Text Editor using Font Adaptive Neural Network****ContourNet: Taking a Further Step Toward Accurate Arbitrary-Shaped Scene Text Detection****UnrealText: Synthesizing Realistic Scene Text Images from the Unreal World****ABCNet: Real-time Scene Text Spotting with Adaptive Bezier-Curve Network****Deep Relational Reasoning Graph Network for Arbitrary Shape Text Detection**<a name="Scene-Text-Recognition"></a># 场景⽂本识别**SEED: Semantics Enhanced Encoder-Decoder Framework for Scene Text Recognition****UnrealText: Synthesizing Realistic Scene Text Images from the Unreal World****ABCNet: Real-time Scene Text Spotting with Adaptive Bezier-Curve Network****Learn to Augment: Joint Data Augmentation and Network Optimization for Text Recognition**<a name="Feature"></a># 特征(点)检测和描述**SuperGlue: Learning Feature Matching with Graph Neural Networks**<a name="Super-Resolution"></a># 超分辨率## 图像超分辨率**Closed-Loop Matters: Dual Regression Networks for Single Image Super-Resolution****Learning Texture Transformer Network for Image Super-Resolution****Image Super-Resolution with Cross-Scale Non-Local Attention and Exhaustive Self-Exemplars Mining****Structure-Preserving Super Resolution with Gradient Guidance****Rethinking Data Augmentation for Image Super-resolution: A Comprehensive Analysis and a New Strategy** ## 视频超分辨率**TDAN: Temporally-Deformable Alignment Network for Video Super-Resolution****Space-Time-Aware Multi-Resolution Video Enhancement****Zooming Slow-Mo: Fast and Accurate One-Stage Space-Time Video Super-Resolution**<a name="Model-Compression"></a># 模型压缩/剪枝**DMCP: Differentiable Markov Channel Pruning for Neural Networks****Forward and Backward Information Retention for Accurate Binary Neural Networks****Towards Efficient Model Compression via Learned Global Ranking****HRank: Filter Pruning using High-Rank Feature Map****GAN Compression: Efficient Architectures for Interactive Conditional GANs****Group Sparsity: The Hinge Between Filter Pruning and Decomposition for Network Compression**<a name="Action-Recognition"></a># 视频理解/⾏为识别**Oops! Predicting Unintentional Action in Video****PREDICT & CLUSTER: Unsupervised Skeleton Based Action Recognition****Intra- and Inter-Action Understanding via Temporal Action Parsing****3DV: 3D Dynamic Voxel for Action Recognition in Depth Video****FineGym: A Hierarchical Video Dataset for Fine-grained Action Understanding****TEA: Temporal Excitation and Aggregation for Action Recognition****X3D: Expanding Architectures for Efficient Video Recognition****Temporal Pyramid Network for Action Recognition**## 基于⾻架的动作识别**Disentangling and Unifying Graph Convolutions for Skeleton-Based Action Recognition**<a name="Crowd-Counting"></a># ⼈群计数<a name="Depth-Estimation"></a># 深度估计**BiFuse: Monocular 360◦ Depth Estimation via Bi-Projection Fusion****Focus on defocus: bridging the synthetic to real domain gap for depth estimation****Bi3D: Stereo Depth Estimation via Binary Classifications****AANet: Adaptive Aggregation Network for Efficient Stereo Matching****Towards Better Generalization: Joint Depth-Pose Learning without PoseNet**## 单⽬深度估计**On the uncertainty of self-supervised monocular depth estimation****3D Packing for Self-Supervised Monocular Depth Estimation****Domain Decluttering: Simplifying Images to Mitigate Synthetic-Real Domain Shift and Improve Depth Estimation** <a name="6DOF"></a># 6D⽬标姿态估计**PVN3D: A Deep Point-wise 3D Keypoints Voting Network for 6DoF Pose Estimation****MoreFusion: Multi-object Reasoning for 6D Pose Estimation from Volumetric Fusion****EPOS: Estimating 6D Pose of Objects with Symmetries****G2L-Net: Global to Local Network for Real-time 6D Pose Estimation with Embedding Vector Features**<a name="Hand-Pose"></a># ⼿势估计**HOPE-Net: A Graph-based Model for Hand-Object Pose Estimation****Monocular Real-time Hand Shape and Motion Capture using Multi-modal Data**<a name="Saliency"></a># 显著性检测**JL-DCF: Joint Learning and Densely-Cooperative Fusion Framework for RGB-D Salient Object Detection****UC-Net: Uncertainty Inspired RGB-D Saliency Detection via Conditional Variational Autoencoders**<a name="Denoising"></a># 去噪**A Physics-based Noise Formation Model for Extreme Low-light Raw Denoising****CycleISP: Real Image Restoration via Improved Data Synthesis**<a name="Deraining"></a># 去⾬**Multi-Scale Progressive Fusion Network for Single Image Deraining****Detail-recovery Image Deraining via Context Aggregation Networks**<a name="Deblurring"></a># 去模糊## 视频去模糊**Cascaded Deep Video Deblurring Using Temporal Sharpness Prior**<a name="Dehazing"></a># 去雾**Domain Adaptation for Image Dehazing****Multi-Scale Boosted Dehazing Network with Dense Feature Fusion**<a name="Feature"></a># 特征点检测与描述**ASLFeat: Learning Local Features of Accurate Shape and Localization**<a name="VQA"></a># 视觉问答(VQA)**VC R-CNN:Visual Commonsense R-CNN**<a name="VideoQA"></a># 视频问答(VideoQA)**Hierarchical Conditional Relation Networks for Video Question Answering**<a name="VLN"></a># 视觉语⾔导航**Towards Learning a Generic Agent for Vision-and-Language Navigation via Pre-training** <a name="Video-Compression"></a># 视频压缩**Learning for Video Compression with Hierarchical Quality and Recurrent Enhancement** <a name="Video-Frame-Interpolation"></a># 视频插帧**AdaCoF: Adaptive Collaboration of Flows for Video Frame Interpolation****FeatureFlow: Robust Video Interpolation via Structure-to-Texture Generation****Zooming Slow-Mo: Fast and Accurate One-Stage Space-Time Video Super-Resolution** **Space-Time-Aware Multi-Resolution Video Enhancement****Scene-Adaptive Video Frame Interpolation via Meta-Learning****Softmax Splatting for Video Frame Interpolation**<a name="Style-Transfer"></a># 风格迁移**Diversified Arbitrary Style Transfer via Deep Feature Perturbation****Collaborative Distillation for Ultra-Resolution Universal Style Transfer**<a name="Lane-Detection"></a># 车道线检测**Inter-Region Affinity Distillation for Road Marking Segmentation**<a name="HOI"></a># "⼈-物"交互(HOT)检测**PPDM: Parallel Point Detection and Matching for Real-time Human-Object Interaction Detection****Detailed 2D-3D Joint Representation for Human-Object Interaction****Cascaded Human-Object Interaction Recognition****VSGNet: Spatial Attention Network for Detecting Human Object Interactions Using Graph Convolutions**<a name="TP"></a># 轨迹预测**The Garden of Forking Paths: Towards Multi-Future Trajectory Prediction****Social-STGCNN: A Social Spatio-Temporal Graph Convolutional Neural Network for Human Trajectory Prediction** <a name="Motion-Predication"></a># 运动预测**Collaborative Motion Prediction via Neural Motion Message Passing****MotionNet: Joint Perception and Motion Prediction for Autonomous Driving Based on Bird's Eye View Maps**<a name="OF"></a># 光流估计**Learning by Analogy: Reliable Supervision from Transformations for Unsupervised Optical Flow Estimation**<a name="IR"></a># 图像检索**Evade Deep Image Retrieval by Stashing Private Images in the Hash Space**<a name="Virtual-Try-On"></a># 虚拟试⾐**Towards Photo-Realistic Virtual Try-On by Adaptively Generating↔Preserving Image Content**<a name="HDR"></a># HDR**Single-Image HDR Reconstruction by Learning to Reverse the Camera Pipeline**<a name="AE"></a># 对抗样本**Enhancing Cross-Task Black-Box Transferability of Adversarial Examples With Dispersion Reduction****Towards Large yet Imperceptible Adversarial Image Perturbations with Perceptual Color Distance**<a name="3D-Reconstructing"></a># 三维重建**Unsupervised Learning of Probably Symmetric Deformable 3D Objects from Images in the Wild****Multi-Level Pixel-Aligned Implicit Function for High-Resolution 3D Human Digitization****Implicit Functions in Feature Space for 3D Shape Reconstruction and Completion**<a name="DC"></a># 深度补全**Uncertainty-Aware CNNs for Depth Completion: Uncertainty from Beginning to End**<a name="SSC"></a># 语义场景补全**3D Sketch-aware Semantic Scene Completion via Semi-supervised Structure Prior**<a name="Captioning"></a># 图像/视频描述**Syntax-Aware Action Targeting for Video Captioning**<a name="WP"></a># 线框解析**Holistically-Attracted Wireframe Parser**<a name="Datasets"></a># 数据集**OASIS: A Large-Scale Dataset for Single Image 3D in the Wild****STEFANN: Scene Text Editor using Font Adaptive Neural Network****Interactive Object Segmentation with Inside-Outside Guidance****Video Panoptic Segmentation****FSS-1000: A 1000-Class Dataset for Few-Shot Segmentation****3D-ZeF: A 3D Zebrafish Tracking Benchmark Dataset****TailorNet: Predicting Clothing in 3D as a Function of Human Pose, Shape and Garment Style****Oops! Predicting Unintentional Action in Video****The Garden of Forking Paths: Towards Multi-Future Trajectory Prediction****Open Compound Domain Adaptation****Intra- and Inter-Action Understanding via Temporal Action Parsing****Dynamic Refinement Network for Oriented and Densely Packed Object Detection****COCAS: A Large-Scale Clothes Changing Person Dataset for Re-identification**- 数据集:暂⽆**KeypointNet: A Large-scale 3D Keypoint Dataset Aggregated from Numerous Human Annotations** **MSeg: A Composite Dataset for Multi-domain Semantic Segmentation****AvatarMe: Realistically Renderable 3D Facial Reconstruction "in-the-wild"****Learning to Autofocus****FaceScape: a Large-scale High Quality 3D Face Dataset and Detailed Riggable 3D Face Prediction** **Bodies at Rest: 3D Human Pose and Shape Estimation from a Pressure Image using Synthetic Data** **FineGym: A Hierarchical Video Dataset for Fine-grained Action Understanding****A Local-to-Global Approach to Multi-modal Movie Scene Segmentation****Deep Homography Estimation for Dynamic Scenes****Assessing Image Quality Issues for Real-World Problems****UnrealText: Synthesizing Realistic Scene Text Images from the Unreal World****PANDA: A Gigapixel-level Human-centric Video Dataset****IntrA: 3D Intracranial Aneurysm Dataset for Deep Learning****Cross-View Tracking for Multi-Human 3D Pose Estimation at over 100 FPS**<a name="Others"></a># 其他**CONSAC: Robust Multi-Model Fitting by Conditional Sample Consensus****Learning to Learn Single Domain Generalization****Open Compound Domain Adaptation****Differentiable Volumetric Rendering: Learning Implicit 3D Representations without 3D Supervision****QEBA: Query-Efficient Boundary-Based Blackbox Attack****Equalization Loss for Long-Tailed Object Recognition****Instance-aware Image Colorization****Contextual Residual Aggregation for Ultra High-Resolution Image Inpainting****Where am I looking at? Joint Location and Orientation Estimation by Cross-View Matching****Epipolar Transformers****Bringing Old Photos Back to Life****MaskFlownet: Asymmetric Feature Matching with Learnable Occlusion Mask****Self-Supervised Viewpoint Learning from Image Collections****Towards Discriminability and Diversity: Batch Nuclear-norm Maximization under Label Insufficient Situations** - Oral**Towards Learning Structure via Consensus for Face Segmentation and Parsing****Plug-and-Play Algorithms for Large-scale Snapshot Compressive Imaging****Lightweight Photometric Stereo for Facial Details Recovery****Footprints and Free Space from a Single Color Image****Self-Supervised Monocular Scene Flow Estimation****Quasi-Newton Solver for Robust Non-Rigid Registration****A Local-to-Global Approach to Multi-modal Movie Scene Segmentation****DeepFLASH: An Efficient Network for Learning-based Medical Image Registration****Self-Supervised Scene De-occlusion****Polarized Reflection Removal with Perfect Alignment in the Wild****Background Matting: The World is Your Green Screen****What Deep CNNs Benefit from Global Covariance Pooling: An Optimization Perspective****Look-into-Object: Self-supervised Structure Modeling for Object Recognition****Video Object Grounding using Semantic Roles in Language Description****Dynamic Hierarchical Mimicking Towards Consistent Optimization Objectives****SDFDiff: Differentiable Rendering of Signed Distance Fields for 3D Shape Optimization****On Translation Invariance in CNNs: Convolutional Layers can Exploit Absolute Spatial Location****GhostNet: More Features from Cheap Operations****AdderNet: Do We Really Need Multiplications in Deep Learning?****Deep Image Harmonization via Domain Verification****Blurry Video Frame Interpolation****Extremely Dense Point Correspondences using a Learned Feature Descriptor****Filter Grafting for Deep Neural Networks****Action Segmentation with Joint Self-Supervised Temporal Domain Adaptation****Detecting Attended Visual Targets in Video****Deep Image Spatial Transformation for Person Image Generation****Rethinking Zero-shot Video Classification: End-to-end Training for Realistic Applications** <a name="Not-Sure"></a># 不确定中没中**FADNet: A Fast and Accurate Network for Disparity Estimation**。

基于视频图像的运动人体目标跟踪检测系统研究与设计的开题报告一、选题背景及意义现代智能视频监控系统已经在安防领域得到了广泛的应用,而基于视频图像的运动人体目标跟踪检测技术是其中关键的一环。

传统的人体目标跟踪算法主要基于像素级的物体分割与轮廓描述,这种方法存在一些问题,例如对快速运动的物体跟踪效果较差,对目标旋转、遮挡等情况处理能力较弱。

因此,近年来研究人员开始尝试基于深度学习等方法改进人体目标跟踪技术,取得了显著的成果。

本论文旨在研究设计一种基于视频图像的运动人体目标跟踪检测系统,实现对运动目标的精确跟踪与检测,提高视频监控系统的安防性能,具有重要意义。

二、研究内容及方法本文将研究以下内容:1. 基于深度学习技术的人体目标检测算法研究,包括Faster RCNN、YOLO 等目标检测算法的原理、优缺点等。

2. 基于视觉目标跟踪算法研究,包括粒子滤波、卡尔曼滤波、Meanshift 等视觉目标跟踪算法的原理、优缺点等。

3. 综合运用深度学习技术和视觉目标跟踪技术,设计一种基于视频图像的运动人体目标跟踪检测系统。

研究方法包括文献调研、数据采集、算法实现与比较。

三、预期成果及创新点预期成果包括:1. 设计一种基于视频图像的运动人体目标跟踪检测系统,并进行有效性验证。

2. 分析比较不同算法在目标跟踪与检测表现上的优缺点。

3. 探索深度学习技术与视觉跟踪技术的结合方式,提高系统运行效率与准确度。

创新点包括:1. 设计一种基于视频图像的运动人体目标跟踪检测系统,与传统目标跟踪算法相比,具有更好的跟踪效果和适应性。

2. 综合运用深度学习技术和视觉跟踪技术,能够有效地解决目标快速运动和旋转、遮挡等问题。

3. 对目标跟踪与检测算法做出深入的分析和比较,为后续相关研究提供参考。

四、论文进度安排第一阶段(2021年4月— 2021年6月):文献调研与数据采集第二阶段(2021年7月— 2021年9月):基于深度学习技术的人体目标检测算法研究第三阶段(2021年10月— 2022年1月):基于视觉目标跟踪算法研究第四阶段(2022年2月— 2022年5月):综合运用深度学习技术和视觉目标跟踪技术,设计一种基于视频图像的运动人体目标跟踪检测系统。

⽬标跟踪算法综述第⼀部分:⽬标跟踪速览先跟⼏个SOTA的tracker混个脸熟,⼤概了解⼀下⽬标跟踪这个⽅向都有些什么。

⼀切要从2013年的那个数据库说起。

如果你问别⼈近⼏年有什么⽐较niubility的跟踪算法,⼤部分⼈都会扔给你吴毅⽼师的论⽂,OTB50和OTB100(OTB50这⾥指OTB-2013,OTB100这⾥指OTB-2015,50和100分别代表视频数量,⽅便记忆):Wu Y, Lim J, Yang M H. Online object tracking: A benchmark [C]// CVPR, 2013.Wu Y, Lim J, Yang M H. Object tracking benchmark [J]. TPAMI, 2015.顶会转顶刊的顶级待遇,在加上引⽤量1480+320多,影响⼒不⾔⽽喻,已经是做tracking必须跑的数据库了,测试代码和序列都可以下载:,OTB50包括50个序列,都经过⼈⼯标注:两篇论⽂在数据库上对⽐了包括2012年及之前的29个顶尖的tracker,有⼤家⽐较熟悉的OAB, IVT, MIL, CT, TLD, Struck等,⼤都是顶会转顶刊的神作,由于之前没有⽐较公认的数据库,论⽂都是⾃卖⾃夸,⼤家也不知道到底哪个好⽤,所以这个database的意义⾮常重⼤,直接促进了跟踪算法的发展,后来⼜扩展为OTB100发到TPAMI,有100个序列,难度更⼤更加权威,我们这⾥参考OTB100的结果,⾸先是29个tracker的速度和发表时间(标出了⼀些性能速度都⽐较好的算法):接下来再看结果(更加详细的情况建议您去看论⽂⽐较清晰):直接上结论:平均来看Struck, SCM, ASLA的性能⽐较⾼,排在前三不多提,着重强调CSK,第⼀次向世⼈展⽰了相关滤波的潜⼒,排第四还362FPS简直逆天了。

速度排第⼆的是经典算法CT(64fps)(与SCM, ASLA等都是那个年代最热的稀疏表⽰)。

kitti注册要100字Submit Your ResultsYou will see your results on a private' page first. We will not make your results public without your consent.Note:For each benchmark, you can only submit once per hour and three times per month last 30 days). n case you cantsubmit to a benchmark,the time of the next possible submission is shown in red. lf you want to adjust the parameters of your method,please do this by using the provided training data with your choice oftraining/validation split(leave-one-out, 5-fold cross-validation). if you have a good reason to submitresults while being blocked (e.g. when evaluating on different benchmarks sinultaneously or your submission went wrong), write an email to me.lmportant: Do not create a second KITTI account! We actively detect fraud.Submission instructions: For the object detection and orientationestimation as well as 3D object detection and bird's eye view benchmark,OStereo 2012all results must be provided in the root directory of azip file using theformat described in the readme.txt (one line per detection) and the fileOptical Flow 2012name convention: 000000.txt,...,007517.txt.To avoid the 3D detectionCStereo / Flow / Scene Flow 2015benchmark, either set the 3D bounding boxdimensions(height, width,OVisual Odometrylength) to zero or set the 3D translation to (-1000,-1000,-1000). To avoidObject Detection / Orientation / 3D Object Detection / Bird'sthe 3D detection benchmark and evaluate only 2D detection and birdsEyeeye view detection, set the 3D bounding box height to 0. For the 3DOObject Trackingobject detection and the bird's eye view benchmark 2D bounding boxesRoadare always required.ODepth Completion (coming soon)OSingle Image Depth Prediction (coming soon) Submit [.zip, contents <= 500 MB]Your SubmissionsOBJECT 11 Dec. 2017 06:49OBJECT 22 NoV. 2017 03:15。