The Case for Exploiting Packet Loss Locality in Multicast Loss Recovery

- 格式:pdf

- 大小:250.44 KB

- 文档页数:13

0 IntroductionMobile terminal in Ad hoc networks has a portable, light and facility qualities while the limited energy depends on batteries under the urgent and hazardous circumstance, which will have an impact on connectivity of the whole topological network and reduce the quality of communication when the nodes run out of energy due to failing to charge up and then stops. Currently, there are some various effective works about minimum energy in Ad hoc networks. There are Medium Access Control (MAC) layer mechanism, 2.5 layer mechanism and network energy-saving mechanism, respectively, as classified according to network hierarchy. PAMAS[1] protocol and 802.11PSM [2] protocol are used in MAC layer; minimum energy protocol in 2.5 layer includes Span [3] protocol for connection of backbone set and on-demand protocol deciding states of the nodes by network traffic as well as minimum transmission power routing (MTPR), minimum battery consumption routing (MBCR), and minimum-maximum battery consumption routing are all minimum energy protocol in the network layer.By exploiting multi-path in Ad hoc network, energy can be minimized, frequent routing updating can be reduced, data transmission rate can be improved and wireless network bandwidth can be increased. Source Transmit Power Selection Algorithm referred to in reference [7] is, currently, an efficient, multi-path and energy-minimized routing algorithm with disjoint nodes. However, due to properties of multicast in wireless transmission, if there are multi path transmitting data simultaneously, it will give rise to great collision problems, such as, high packets loss rate, bad transmitting performance of data. Hence, if we employ multi-path, we should exploit related strategy to lower the collision and choose minimum possibility of collision to conduct communication.In this paper, we proposed Energy Collision-Constrained Algorithm, (ECCA) and correlation factor was introduced to weigh the collision probability between node-disjoint multi-path when transmitting data simultaneously, then a upper limit for correlation factor was calculated according to service requirements, finally a minimum energy node-disjoint multi-path routing was found to satisfy the limit.1 the theory of energy in Mobile Ad hoc network1.1 energy model in Ad hoc networkIn nature, there are difference between wireless transmission and wire transmission: a link exists between two nodes in a traditional wire network, which refers to they can directly communicate through the physical medium while, in Ad hoc wireless network, referring to sending nodes try to send signals in high enough power to make Signal-to-Noise in the received nodes greater than threshold value which can ensure bit error rate in transmission lower than some value to ensure right transmission.Assume that the nodes in the network exploit omnidirectional aerial as well as regulate power dynamically, besides, every node has a maximum transmission power . This paper is based on wireless transmitting energy model in reference [7,8], inwhich signal power sent by sending nodes is decreased by ,where d is distancebetween source and destination ,generally, m is 2 or 4. If the node i want tocommunicate with the node j, the minimum sending power of the node i is :To simplify the calculation and simulation afterwards as well as without loss of generality, we assume k=1, therefore, the equation 1 can be changed to:In mobile Ad hoc network, if the node i can communicate with the node j, then the sending power of the node I must meet:The channel in wireless Ad hoc network is unidirectional due to the influence of emission frequency and geographical position. For example, the required power is lower when transmitted from uphill mountains to downhill, vice versa. Observed in unidirectional channel as follow:1.2 topological graph for energyWith the development off Global Positioning System (GPS), it’s become probability to achieve low-cost GPS in Ad hoc nodes, and the nodes may know where its physical position is as follow:1)firstly, locate the position of every node in the network and assign a related IDto every node to make all nodes form a node set by exploiting GPS2)as for random nodes , if , then there exists a link from i to j.to carry out energy management, we must assign a weight and the set “V” formed by all linksFigure1 topological graph for energy1.3 source transmission power selection (STPS)Assume that Q is the sub-graph which is formed by the energy topological graph with only M disjoint nodes. However, as for moving nodes frequently, the power of every node can be considered as the biggest weight in all borders. And the required power E(Q) equals the total power of all nodes. As shown in the following equations:Where . This is much difference compared to the traditional networks. For Ad hoc network, its energy is based on nodes, and equals the total power of all nodes, while it’s based on borders and equals the total weight of all borders in a traditional network. The equation 6 is for a traditional network:Figure 2 source transmission power selectionIn figure 2, it’s an sub-graph G’ of energy topological graph G with only M disjoint nodes. By observing, we find there is only border in the middle of nodes except the source node and destination node, so the nodes in the middle are constants while the power of sources have M kinds of choices. The total power E(Q) of sub-graph Q can be changed as follow:Where transmission power of source node is p(s) .2node-disjoint multi-path collision problemsthe properties of WMA (Wireless Multicast Advantage) refers to when one node is sending message, all nodes can received messages in its bound of power. In Figure 3, and are the distance from the node i to j, respectively. If the node jwants to communicate with the node k, in traditional networks, the power of thenode i equals , while in Ad hoc networks, the power of the node Iequals p(i)= according to the properties of WMA, apparently, it’s minimum energy. Rather , it’s this properties that results in collision problems.Assume , when the node i wants to send data packets to the node j, and the power of the node i is still ,and for this reason, the collision will happen.Figure 3.WMA propertiesTo reduce the great collision problems caused by using multi-path, we employed the disjoint path in the traditional networks. It includes node-disjoint and link-disjoint path. The former is better than the latter according to the collision problems. However, there still exist great collision problem between multi-path , even if the nodes are disjoint. Figure 4, two node-joint path, S-b-c-D and S-d-e-D, when b sends data packets to c, d can also receive the packets due to the weight in on the border (b, c) is greater up to 5 than that of on border (b, d). hence, when from b to c, the collision will happen. Similarly, when c sends packets to D, the node d and e can neither send packets or receive packets.Therefore, there still exist collision problems while transmitting data simultaneously, even though the nodes are disjoint. To solve this problem, a correlation factor was introduced to weigh the probability of collision in a group of node-joint.Definition 1 the correlation factor 入of two node-disjoint path: assume the correlation factor 入is the number of the borders of two node-disjoint in G’, andG’ is the sub-graph of the energy topological graph in two node-disjoint path. Figure.4 is illustrated by the two node-disjoint path, S-b-c-D and S-d-e-D.There are three such links in G’(b, d), (c, d), (c, e). the only common property is :one node belongs to S-b-c-D and another belongs to S-d-e-D. therefore, the correlation factor 入in figure 2 is 3.Figure 4. node-disjoint paths (入=3)Definition 2the correlation factor in k piece of nodes 入: a sub-graph G’ of energy topological graph G, and the k is the number of the two random borders belonging to k pieces of node-disjoint in G’.3Based on ECCA algorithmThis paper proposed two steps of ECCA:1)according to the loss packet rate required by the specific application andnetwork simulation experiments, we calculated a upper limit for collision, namely when the correlation factor in used node-disjointpaths, this will meet the requirement that specific application to losspackets rate.2)On the condition of the appropriate correlation factor less than , try tofind the most energy-saving group of node-disjoint paths with k pieces ofborders.To avoid overusing some node and make its energy run out quickly, bytrials, we define a threshold value , when choosing nodes, try not to usethose which the remains of energy is less than unless not using thisnode to find a group node-disjoint paths required.3.1 ECCA AlgorithmAssume that the source node S has M close nodes and the requiredpower of these close nodes are, respectively, and the relatedborders to these power are respectively.Input: networks energy topological graph GOutput: correlation factor , most energy-saving node-disjointpaths with k borders.Initialize: set m=k, the power of source node.Step1 modify energy topological graph G, delete the power of sourcenode, unable to cover to the borders ,attain the relatedpower of source nodes.Step2 for n=1 to k{operate algorithm on modified the topological graph , only usethose without marks which the remaining energy is greater than nodeand find a shortest path and mark the middle nodes in this path.}If node-disjoint path can’t be found ,then directly turn to step4; if it’s found,then determine the power of all nodes, attain the sub-graph of , marked .Step3relative to the sub-graph,calculate the transmission power of thesource node, and find the correlation factorcalculate the total transmission power by theequationstep4 set m=m+1, repeatedly run step1 to step4 until m>M.step5choose the smallest group of gathering energy, the total consumed power for the minimum energy which meets is :(8)3.2 the analysis of algorithm complexityAssume n as the number of node in the networks, so the complexity ofDijstra algorithm. The number of close nodes of the source node is at most the n-1, therefore, the algorithm complexity of the whole algorithm is , namely.3.3 the analysis of performanceaccording to the loss packet rate required by the specific application and network simulation experiments, we calculated a upper limit forcollision , namely when the correlation factor in usednode-disjoint paths, this will meet the requirement that specific application to loss packets rate.4.simulation analysissimulate a network which contains 80 hosts distribute in the grid of 50mX50m, the bound of MTU of every node is 100m,and set a=2. so the maximum power of every node is 10000. 40 CBRs will be produced by the upper traffic at random, the average lasting time of every connection corresponds to the time of 200 packets transmitted. The length of every packet is 512 bytes, the rate is 4 packets/s. data link exploits IEEE802.11 protocol, and the bandwidth is 2 Mbps. The initialized energy of every node is set to 20J, variance ranges from 0 to 2 by Gauss. The simulation time is 1200s. k=2 in ECCA. The simulation is produced as the number of the nodes are 20,30,40,50,60,70,80. the random chosen source and destination nodes in the simulation is the average value by 10 times of experimentations.4.2 the result of simulationFigure5. the comparison of loss packets rateThe first simulation compared the loss packets rate of two algorithm as shown in figure5. the benefit of ECCA compared with STPS is not finding the minimum energy but choosing the energy-saving path which can meet the condition of limit collision by correlation factor.The second one compared the energy consumption of two algorithm as shown infigure6. by choosing different the value of , we can compare the totaltransmission power , due to the path attained by ECCA. These two algorithm is much similar, and that shows the more strict the limit for collision, the more energy will be consumed. In general, compared with STPS, the increased volume of the total transmission power is very small.Figure5 comparison of power consumptionFigure7. comparison of seek timeWe compared the seek time of ECCA and STPS by simulation as shown in figure7. some calculations are added due to correlation factor in algorithm, so it took much time to seek the time. However, when the seek time respectively is 2,4,6 in ECCA, compared with the minimum energy algorithm STPS, it increases 0.02s,0.06s,0.07s respectively. Therefore, the seek time of ECCA is better. Reference:。

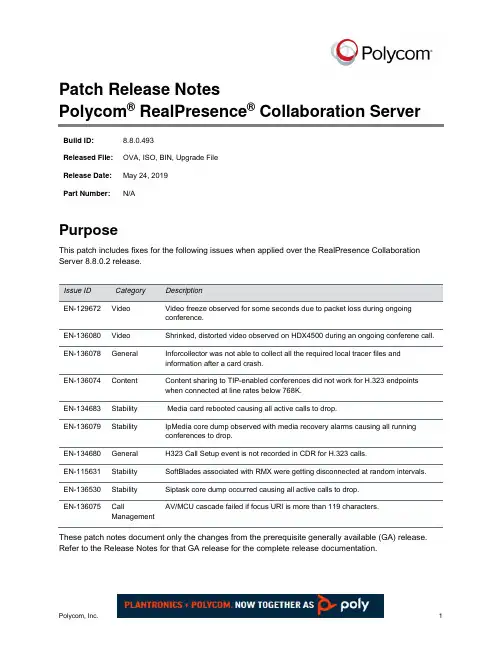

Patch Release NotesPolycom® RealPresence® Collaboration Server Build ID: 8.8.0.493Released File: OVA, ISO, BIN, Upgrade FileRelease Date: May 24, 2019Part Number: N/APurposeThis patch includes fixes for the following issues when applied over the RealPresence Collaboration Server 8.8.0.2 release.Issue ID Category DescriptionEN-129672 Video Video freeze observed for some seconds due to packet loss during ongoingconference.EN-136080 Video Shrinked, distorted video observed on HDX4500 during an ongoing conferene call. EN-136078 General Inforcollector was not able to collect all the required local tracer files andinformation after a card crash.EN-136074 Content Content sharing to TIP-enabled conferences did not work for H.323 endpointswhen connected at line rates below 768K.EN-134683 Stability Media card rebooted causing all active calls to drop.EN-136079 Stability IpMedia core dump observed with media recovery alarms causing all runningconferences to drop.EN-134680 General H323 Call Setup event is not recorded in CDR for H.323 calls.EN-115631 Stability SoftBlades associated with RMX were getting disconnected at random intervals. EN-136530 Stability Siptask core dump occurred causing all active calls to drop.AV/MCU cascade failed if focus URI is more than 119 characters.EN-136075 CallManagementThese patch notes document only the changes from the prerequisite generally available (GA) release. Refer to the Release Notes for that GA release for the complete release documentation.Patch Release Notes – 8.8.0.3 Polycom, Inc.Prerequisites and Configuration ConsiderationsFor information on prerequisites and configuration, please refer to the Polycom RealPresence Collaboration Server v8.8.0 Release Notes and Polycom RealPresence Collaboration Server v8.8 Administrator Guide.Installation and Upgrade NotesFor information on installation, please refer to the Polycom RealPresence Collaboration Server v8.8.0 Release Notes.。

科技论文题目The Application of LCD系别尚德光伏学院专业微电子技术班级1001学生姓名于路学号100100193指导教师谢丽君2013年4 月On the deployment of VolP in Ethernet networks:methodology and case studyKhaled Salah, Department of Information and Computer Science, King Fahd University of Petroleum and Minerals, P.O. Box 5066, Dhahran 31261, Saudi ArabiaReceived 25 May 2004; revised 3 June 2005; accepted 3 June 2005. Available online 1 July 2005.AbstractDeploying IP telephony or voice over IP (V olP) is a major and challenging task for data network researchers and designers. This paper outlines guidelines and a step-by-step methodology on how V olP can be deployed successfully. The methodology can be used to assess the support and readiness of an existing network. Prior to the purchase and deployment of V olP equipment, the methodology predicts the number of V olP calls that can be sustained by an existing network while satisfying QoS requirements of all network services and leaving adequate capacity for future growth. As a case study, we apply the methodology steps on a typical network of a small enterprise. We utilize both analysis and simulation to investigate throughput and delay bounds. Our analysis is based on queuing theory, and OPNET is used for simulation. Results obtained from analysis and simulation are in line and give a close match. In addition, the paper discusses many design and engineering issues. These issues include characteristics of V olP traffic and QoS requirements, V olP flow and call distribution, defining future growth capacity, and measurement and impact of background traffic.Keywords: Network Design,Network Management,V olP,Performance Evaluation,Analysis,Simulation,OPNET1 IntroductionThese days a massive deployment of V olP is taking place over data networks. Most ofthese networks are Ethernet based and running IP protocol. Many network managers are finding it very attractive and cost effective to merge and unify voice and data networks into one. It is easier to run, manage, and maintain. However, one has to keep in mind that IP networks are best-effort networks that were designed for non-real time applications. On the other hand, V olP requires timely packet delivery with low latency, jitter, packet loss, and sufficient bandwidth. To achieve this goal, an efficient deployment of V olP must ensure these real-time traffic requirements can be guaranteed over new or existing IP networks. When deploying a new network service such as V olP over existing network, many network architects, managers, planners, designers, and engineers are faced with common strategic, and sometimes challenging, questions. What are the QoS requirements for V olP? How will the new V olP load impact the QoS for currently running network services and applications? Will my existing network support V olP and satisfy the standardized QoS requirements? If so, how many V olP calls can the network support before upgrading prematurely any part of the existing network hardware? These challenging questions have led to the development of some commercial tools for testing the performance of multimedia applications in data networks. A list of the available commercial tools that support V olP is listed in [1,2]. For the most part, these tools use two common approaches in assessing the deployment of V olP into the existing network. One approach is based on first performing network measurements and then predicting the network readiness for supporting V olP. The prediction of the network readiness is based on assessing the health of network elements. The second approach is based on injecting real V olP traffic into existing network and measuring the resulting delay, jitter, and loss. Other than the cost associated with the commercial tools, none of the commercial tools offer a comprehensive approach for successful V olP deployment. I n particular, none gives any prediction for the total number of calls that can be supported by the network taking into account important design and engineering factors. These factors include V olP flow and call distribution, future growth capacity, performance thresholds, impact of V olP on existing network services and applications, and impact background traffic on V olP. This paper attempts to address those important factors and layout a comprehensive methodology for a successful deployment of any multimedia application such as V olP and video conferencing. However, thepaper focuses on V olP as the new service of interest to be deployed. The paper also contains many useful engineering and design guidelines, and discusses many practical issues pertaining to the deployment of V olP. These issues include characteristics of V olP traffic and QoS requirements, V olP flow and call distribution, defining future growth capacity, and measurement and impact of background traffic. As a case study, we illustrate how our approach and guidelines can be applied to a typical network of a small enterprise. The rest of the paper is organized as follows. Section 2 presents a typical network topology of a small enterprise to be used as a case study for deploying V olP. Section 3 outlines practical eight-step methodology to deploy successfully V olP in data networks. Each step is described in considerable detail. Section 4 describes important design and engineering decisions to be made based on the analytic and simulation studies. Section 5 concludes the study and identifies future work.Moreover, industrial automation communication protocols have not reached the same level of standardization as office communication networks, which further justifies the predominance of proprietary architectures.However, the success of the Internet and of the Web has started impacting the industrial V olP world too. Industrial users are starting to familiarize with Web interfaces, graphical quality, multimedia content, and features such as mobility, adaptivity, and personalization of the applications.At the same time,TCP-IP based communication protocols and embedded operating systems have started to spread in the industrial automation field [6][10], thus reducing the need of proprietary architectures making enterprisewide integration more appealing.In this scenario, it is easy to foresee a slow but inexorable convergence of the industrial V olP solutions towards standard architectures, standard communication protocols, and advanced interactive functions.Our work focuses on the design of a new distributed software architecture for VOLP systems able to provide features and services such as personalization, adaptivity, distribution, mobility, multi-channel notification, integration with office networks and software packages, although preserving the robustness, reliability, performance and cost-effectiveness oftraditional V olP solutions.The project, called ESAMyV olP is a research activity carried out in collaboration between Politecnico di Milano and ESA Elettronica S.p.A., an Italian company operating in the V olP market.2 Existing networkFig. 1 illustrates a typical network topology for a small enterprise residing in a high-rise building. The network shown is realistic and used as a case study only; however, our work presented in this paper can be adopted easily for larger and general networks by following the same principles, guidelines, and concepts laid out in this paper. The network is Ethernet-based and has two Layer-2 Ethernet switches connected by a router. The router is Cisco 2621, and the switches are 3Com Superstack 3300. Switch 1 connects Floors 1 and 2 and two servers; while Switch 2 connects Floor 3 and four servers. Each floor LAN is basically a shared Ethernet connecting employee PCs with workgroup and printer servers. The network makes use of VLANs in order to isolate broadcast and multicast traffic. A total of five LANs exist. All VLANs are port based. Switch 1 is configured such that it has three VLANs. VLAN1 includes the database and file servers. VLAN2 includes Floor 1. VLAN3 includes Floor2. On the other hand, Switch 2 is configured to have two VLANs. VLAN4 includes the servers for E-mail, HTTP, Web and cache proxy, and firewall. VLAN5 includes Floor 3. All the links are switched Ethernet 100 Mbps full duplex except for the links for Floors 1–3 which are shared Ethernet 100 Mbps half duplex.Industrial V olP products rarely implement innovative services, such as remote access to the plant control, messaging and remote notification.Indeed, V olP companies seem to privilege exclusively performance and good access to industrial communication standards, even if these factors could be incompatible with the adoption of innovative solution based on modern and solid Web architectures.Even the V olP players that seem to offer the most innovative contents (and claim their products are Web-enabled) still leverage on legacy architectures, typically exploiting monolithic Applications.On the contrary, recent studies [6] show how users are increasingly looking towards a new range of products with advanced features, superior graphical capabilities and improved usability that could grant:• remote and possibly, distributed control of an industrial plant;• remote notification solutions even when the user is not in front of the terminal;• personalization and automatic adaptation of the GUI;• integration with existing enterprise processes, systems and equipments;• openness to new standard and best practises in the field, by offering low cost modularity and extensibility.SCADA (Supervisory Control And Data Acquisition) systems recently introduced some interesting innovations but, as the acronym suggests, their target is focused on products that implement a wide range of high-level functionalities and that can be deployed in a large set of contexts.They are typically deployed on high-profile devices (PCs and powerful embedded systems) and represent a niche in the V olP market.In the other market’s sectors, innovation has been led by main vendors (e.g., Siemens), who have been working for the past few years in raising the level of the features provided by traditional V olP applications.Sm@rtAccess [15], for example, is a technology developed that allows distributing the control of an industrial plant over a maximum of three stations.Its functioning, though, is based on simply broadcasting the displayed interface of the apparatus that is directly connected with the plant to the others clients.The bandwidth requirements of this approach exceed the capability of a typical Internet connection.Progea [16] proposes a more innovative solution by offering remotization features and a Web-based architecture.Running the Progea server application on a Windows XP based PC, it is possible to remotely control a plant from an internet connected standard Web browser that has the support of a JVM (Java Virtual Machine).Even if powerful, this approach lacks in offering a portable solution since differentimplementations have been provided for different platforms.3 Step-by-step methodologyFig. 2 shows a flowchart of a methodology of eight steps for a successful V olP deployment. The first four steps are independent and can be performed in parallel. Before embarking on the analysis and simulation study, in Steps 6 and 7, Step 5 must be carried out which requires any early and necessary redimensioning or modifications to the existing network. As shown, both Steps 6 and 7 can be done in parallel. The final step is pilot deployment.3.1. V olP traffic characteristics, requirements, and assumptionsFor introducing a new network service such as V olP, one has to characterize first the nature of its traffic, QoS requirements, and any additional components or devices. For simplicity, we assume a point-to-point conversation for all V olP calls with no call conferencing. For deploying V olP, a gatekeeper or Call Manager node has to be added to the network [3,4,5]. The gatekeeper node handles signaling for establishing, terminating, and authorizing connections of all V olP calls. Also a V olP gateway is required to handle external calls. A V olP gateway is responsible for converting V olP calls to/from the Public Switched Telephone Network (PSTN). As an engineering and design issue, the placement of these nodes in the network becomes crucial. We will tackle this issue in design step 5. Other hardware requirements include a V olP client terminal, which can be a separate V olP device, i.e. IP phones, or a typical PC or workstation that is V olP-enabled. A V olP-enabled workstation runs V olP software such as IP Soft Phones .Fig. 3 identifies the end-to-end V olP components from sender to receiver [9]. The first component is the encoder which periodically samples the original voice signal and assigns a fixed number of bits to each sample, creating a constant bit rate stream. The traditionalsample-based encoder G.711 uses Pulse Code Modulation (PCM) to generate 8-bit samples every 0.125 ms, leading to a data rate of 64 kbps . The packetizer follows the encoder and encapsulates a certain number of speech samples into packets and adds the RTP, UDP, IP, and Ethernet headers. The voice packets travel through the data network. An important component at the receiving end, is the playback buffer whose purpose is to absorb variations or jitter in delay and provide a smooth playout. Then packets are delivered to the depacketizer and eventually to the decoder which reconstructs the original voice signal. We will follow the widely adopted recommendations of H.323, G.711, and G.714 standards for V olP QoS requirements.Table 1 compares some commonly used ITU-T standard codecs and the amount of one-way delay that they impose. To account for upper limits and to meet desirable quality requirement according to ITU recommendation P.800, we will adopt G.711u codec standards for the required delay and bandwidth. G.711u yields around 4.4 MOS rating. MOS, Mean Opinion Score, is a commonly used V olP performance metric given in a scale of 1–5, with 5 is the best. However, with little compromise to quality, it is possible to implement different ITU-T codecs that yield much less required bandwidth per call and relatively a bit higher, but acceptable, end-to-end delay. This can be accomplished by applying compression, silence suppression, packet loss concealment, queue management techniques, and encapsulating more than one voice packet into a single Ethernet frame.3.1.1. End-to-end delay for a single voice packetFig. 3 illustrates the sources of delay for a typical voice packet. The end-to-end delay is sometimes referred to by M2E or Mouth-to-Ear delay. G.714 imposes a maximum total one-way packet delay of 150 ms end-to-end for V olP applications . In [22], a delay of up to 200 ms was considered to be acceptable. We can break this delay down into at least three different contributing components, which are as follows (i) encoding, compression, and packetization delay at the sender (ii) propagation, transmission and queuing delay in the network and (iii) buffering, decompression, depacketization, decoding, and playback delay atthe receiver.3.1.2. Bandwidth for a single callThe required bandwidth for a single call, one direction, is 64 kbps. G.711 codec samples 20 ms of voice per packet. Therefore, 50 such packets need to be transmitted per second. Each packet contains 160 voice samples in order to give 8000 samples per second. Each packet is sent in one Ethernet frame. With every packet of size 160 bytes, headers of additional protocol layers are added. These headers include RTP+UDP+IP+Ethernet with preamble of sizes 12+8+20+26, respectively. Therefore, a total of 226 bytes, or 1808 bits, needs to be transmitted 50 times per second, or 90.4 kbps, in one direction. For both directions, the required bandwidth for a single call is 100 pps or 180.8 kbps assuming a symmetric flow.3.1.3. Other assumptionsThroughout our analysis and work, we assume voice calls are symmetric and no voice conferencing is implemented. We also ignore the signaling traffic generated by the gatekeeper. We base our analysis and design on the worst-case scenario for V olP call traffic. The signaling traffic involving the gatekeeper is mostly generated prior to the establishment of the voice call and when the call is finished. This traffic is relatively small compared to the actual voice call traffic. In general, the gatekeeper generates no or very limited signaling traffic throughout the duration of the V olP call for an already established on-going call. In this paper, we will implement no QoS mechanisms that can enhance the quality of packet delivery in IP networks.A myriad of QoS standards are available and can be enabled for network elements. QoS standards may include IEEE 802.1p/Q, the IETF’s RSVP, and DiffServ.Analysis of implementation cost, complexity, management, and benefit must be weighed carefully before adopting such QoS standards. These standards can be recommended when the cost for upgrading some network elements is high and the network resources are scarce and heavily loaded.3.2. V olP traffic flow and call distributionKnowing the current telephone call usage or volume of the enterprise is an importantstep for a successful V olP deployment. Before embarking on further analysis or planning phases for a V olP deployment, collecting statistics about of the present call volume and profiles is essential. Sources of such information are organization’s PBX, telephone records and bills. Key characteristics of existing calls can include the number of calls, number of concurrent calls, time, duration, etc. It is important to determine the locations of the call endpoints, i.e. the sources and destinations, as well as their corresponding path or flow. This will aid in identifying the call distribution and the calls made internally or externally. Call distribution must include percentage of calls within and outside of a floor, building, department, or organization. As a good capacity planning measure, it is recommended to base the V olP call distribution on the busy hour traffic of phone calls for the busiest day of a week or a month. This will ensure support of the calls at all times with high QoS for all V olP calls. When such current statistics are combined with the projected extra calls, we can predict the worst-case V olP traffic load to be introduced to the existing network.Fig. 4 describes the call distribution for the enterprise under study based on the worst busy hour and the projected future growth of V olP calls. In the figure, the call distribution is described as a probability tree. It is also possible to describe it as a probability matrix. Some important observations can be made about the voice traffic flow for inter-floor and external calls. For all these type of calls, the voice traffic has to be always routed through the router. This is so because Switchs 1 and 2 are layer 2 switches with VLANs configuration. One can observe that the traffic flow for inter-floor calls between Floors 1 and 2 imposes twice the load on Switch 1, as the traffic has to pass through the switch to the router and back to the switch again. Similarly, Switch 2 experiences twice the load for external calls from/to Floor 3.3.3. Define performance thresholds and growth capacityIn this step, we define the network performance thresholds or operational points for a number of important key network elements. These thresholds are to be considered when deploying the new service. The benefit is twofold. First, the requirements of the new service to be deployed are satisfied. Second, adding the new service leaves the network healthy andsusceptible to future growth. Two important performance criteria are to be taken into account. First is the maximum tolerable end-to-end delay; and second is the utilization bounds or thresholds of network resources. The maximum tolerable end-to-end delay is determined by the most sensitive application to run on the network. In our case, it is 150 ms end-to-end for V olP. It is imperative to note that if the network has certain delay sensitive applications, the delay for these applications should be monitored, when introducing V olP traffic, such that they do not exceed their required maximum values. As for the utilization bounds for network resources, such bounds or thresholds are determined by factors such as current utilization, future plans, and foreseen growth of the network. Proper resource and capacity planning is crucial. Savvy network engineers must deploy new services with scalability in mind, and ascertain that the network will yield acceptable performance under heavy and peak loads, with no packet loss. V olP requires almost no packet loss. In literature, 0.1–5% packet loss was generally asserted. However, in [24] the required V olP packet loss was conservatively suggested to be less than 105 . A more practical packet loss, based on experimentation, of below 1% was required in [22]. Hence, it is extremely important not to utilize fully the network resources. As rule-of-thumb guideline for switched fast full-duplex Ethernet, the average utilization limit of links should be 190%, and for switched shared fast Ethernet, the average limit of links should be 85% [25]. The projected growth in users, network services, business, etc. must be all taken into consideration to extrapolate the required growth capacity or the future growth factor. In our study, we will ascertain that 25% of the available network capacity is reserved for future growth and expansion. For simplicity, we will apply this evenly to all network resources of the router, switches, and switched-Ethernet links. However, keep in mind this percentage in practice can be variable for each network resource and may depend on the current utilization and the required growth capacity. In our methodology, the reservation of this utilization of network resources is done upfront, before deploying the new service, and only the left-over capacity is used for investigating the network support of the new service to be deployed.3.4. Perform network measurementsIn order to characterize the existing network traffic load, utilization, and flow, network measurements have to be performed. This is a crucial step as it can potentially affect results to be used in analytical study and simulation. There are a number of tools available commercially and noncommercially to perform network measurements. Popular open-source measurement tools include MRTG, STG, SNMPUtil, and GetIF [26]. A few examples of popular commercially measurement tools include HP OpenView, Cisco Netflow, Lucent VitalSuite, Patrol DashBoard, Omegon NetAlly, Avaya ExamiNet, NetIQ Vivinet Assessor, etc. Network measurements must be performed for network elements such as routers, switches, and links. Numerous types of measurements and statistics can be obtained using measurement tools. As a minimum, traffic rates in bits per second (bps) and packets per second (pps) must be measured for links directly connected to routers and switches. To get adequate assessment, network measurements have to be taken over a long period of time, at least 24-h period. Sometimes it is desirable to take measurements over several days or a week. One has to consider the worst-case scenario for network load or utilization in order to ensure good QoS at all times including peak hours. The peak hour is different from one network to another and it depends totally on the nature of business and the services provided by the network.The market of industrial V olP is seeing a slow but steady evolution towards the integration of industrial automation terminals with software and hardware architectures typical of office and Web-based applications, to achieve greater usability and flexibility of the interface and easier interoperability between industrial automation solutions and enterprise information systems.This goal requires unbundling the functions and modules of a traditional V olP solution, deploying them over a modular and distributed system, which exploits the open standards of the Internet and the architectural patterns of multi-tier Web Applications. The MyV olP project aims at designing, implementing and evaluating a distributed V olP platform which can be seamlessly accessed both locally and remotely and can be easily integrated in the enterprise ICT infrastructure. The major functional and non-functional requirements at the base of the MyV olP design are summarized in Table 1 and Table 2, respectively.Table 2 shows a summary of peak-hour utilization for traffic of links in both directions connected to the router and the two switches of the network topology of Fig. 1. These measured results will be used in our analysis and simulation study.4 The VolP architectureIn this section we overview the main characteristics of the design of the V olP framework. The overall architecture of the V olP platform is illustrated in Figure 1: the V olP functionality, usually embedded within the terminal attached to the controlled system, becomes partitioned into a client-server architecture, implemented on top of a hybrid communication network, comprising an Ethernet backbone that connects the V olP devices and a set of field bus protocols for connecting to the controlled plant.4.1. General design choicesThe design of the system had to address several issues, according to the requirements. In this section we give a summary of the adopted solutions.4.1.1. Distribution model of presentation andbusiness logic.The architecture design has been addressed by applying the state of the art solutions for granting separation of concerns and modular implementation. We adopted a Rich web interface paradigm consisting of extending the client side of traditional Web architectures, thus moving some of the computation from the server to the client.The business layer is still located at server side and contains the control policies, while the presentation layer is implemented at the client-side. It is responsible of building the interface for the human supervisor and of managing the user interaction.4.1.2. Personalization solutions.One of the most challenging requirements advocated for strong personalization capabilities of the platform. We use personalization based on groups that assumes users can be classified in roles, factoring out most of the personalization rules at the group level. The remaining detailed personalization rules can be applied on the single user, but we may assumethat their number and complexity are limited. This solution implements a good trade-off between the needs of fine-grained personalization and of reducing the complexity of the computation.4.1.3. Connectivity.The communication between client and server was implemented by means of HTTP with (emulated) callback through the HTTP request-response cycle. The client submits requests upon user interaction and upon timeouts generated by its internal clock. Requests submitted to the server are left pending until an actual update on the status of the controlled system happens. In this case, the server sends a response to the client, thus simulating an event-based message exchange.4.1.4. Personalization enactment.Some specific decisions must be taken about how and where to apply the personalization and adaptation rules on the Interfaces.Personalization and adaptivity rules could be stored and managed with two approaches:1.Rules encoded as XML files: personalization rules are generated by the offline configuration tool in XML format. Such rules are parsed and interpreted at runtime by a general purpose code, that generates the expected interface. A variant of the approach could devise several specific components for parsing the personalization and adaptivity rules addressing different issues (e.g., user interface, alarm configuration, and so on);2.Rules embedded in the code: this solution consists in generating and compiling a source code at configuration time that include specific personalization and adaptation rules targeted to the developed project. The result is a binary code that embeds all the rules and can be executed extremely fast, because no access to files or rule repositories is needed; We adopt both client and server side rule calculations: a hybrid approach in which part of the rules are applied at server side and the remaining ones at client side. We apply at client side the rules that affects the user interface and, in general, the client-side issues. For this part, the rules have been stored as binary code in the client application, for performance reasons. Vice versa, we adopted server-side application of the rules concerning the server configuration. In this case, the rules have been encoded as XML files and parsed by the server components.。

PF_RING User GuideLinux High Speed Packet CaptureVersion 1.1January 2008© 2004-08 1. IntroductionPF_RING is a high speed packet capture library that turns a commodity PC into an efficient and cheap network measurement box suitable for both packet and active traffic analysis and manipulation. Moreover, PF_RING opens totally new markets as it enables the creation of efficient application such as traffic balancers or packet filters in a matter of lines of codes.This manual is divided in two parts:•PF_RING installation and configuration.•PF_RING SDK.1.1 What’s New with PF_RING?•Release 1.0 (January 2008)•Initial PF_RING users guide.•Release 1.1 (January 2008)•Described PF_RING plugins architecture.2. PF_RING InstallationPF_RING’s architecture is depicted in the figure below.PF_RING User-Space LibraryPF_RING MonitoringApplication Monitoring Application Monitoring Application Ethernet Device DriverPF_RINGLegacyU s e r l a n d K e r n e l PF_RINGThe main building blocks are:•The accelerated kernel driver that provides low-level packet copying into the kernel PF_RINGs.•The user space PF_RING SDK that provides transparent PF_RING-support to user-space applications.When you download PF_RING you fetch the following components:•An automatic patch mechanism allows you to automatically patch a vanilla kernel with PF_RING.•The PF_RING user-space SDK.•An enhanced version of the libpcap library that transparently takes advantage of PF_RING if installed, or fallback to the standard behavior if not installed.PF_RING is downloaded by means of SVN as explained in /PF_RING.html2.1 Linux Kernel InstallationThe PF_RING source code layout is the following:8 README 0 kernel/ 32 mkpatch.sh 0 userland/The Linux kernel patch is performed automatically by mkpatch.sh tool. This tool downloads from the Internet the linux kernel source and patches it. The patched kernel will be placed on a new directory named workspace that will sit at the same level of the other PF_RING files.Users can decide what Linux kernel version to download by modifying the following mkpatch.sh variables:VERSION=${VERSION:-2}PATCHLEVEL=${PATCHLEVEL:-6}SUBLEVEL=${SUBLEVEL:-18.4}In the above configuration the kernel 2.6.18.4 will be downloaded.After the kernel has been downloaded and patched, users need to compile and install the kernel as usual. Once the kernel is installed you need to modify your boot loader (usually lilo or grub) in order to let your system access the new kernel. Done this, you need to reboot the box and make sure you select the kernel you just installed as default kernel.Note that:•the kernel installation requires super user (root) capabilities.•For some Linux distributions a kernel installation/compilation package is provided.2.2 PF_RING Device ConfigurationWhen PF_RING is activated, a new entry /proc/net/pf_ring is created.nbox-factory:/home/deri# ls /proc/net/pf_ring/info plugins_infonbox-factory:/home/deri# cd /proc/net/pf_ring/nbox-factory:/proc/net/pf_ring# cat infoVersion : 3.7.5Bucket length : 2000 bytesRing slots : 4096Slot version : 9Capture TX : Yes [RX+TX]IP Defragment : NoTransparent mode : YesTotal rings : 0Total plugins : 2nbox-factory:/proc/net/pf_ring# cat plugins_infoID Plugin2 sip [SIP protocol analyzer]12 rtp [RTP protocol analyzer]PF_RING allows users to install plugins for handling custom traffic. Those plugins are also registered in the pf_ring /proc tree and can be listed by typing the plugins_info file.2.3 Libpfring and Libpcap InstallationBoth libpfring and libpcap are distributed in source format. They can be compiled as follows:•cd userland/libpfring•make•sudo make install•cd ../libpcap-0.9.7-ring/•./configure•makeNote that the libpfring is reentrant hence it’s necessary to link you PF_RING-enabled applications also against the -lpthread library.IMPORTANTLegacy pcap-based applications need to be recompiled against the new libpcap andlinked with a PF_RING enabled libpcap.a in order to take advantage of PF_RING. Donot expect to use PF_RING without recompiling your existing application.3. PF_RING for Application DevelopersConceptually PF_RING is a simple yet powerful technology that enables developers to create high-speed traffic monitor and manipulation applications in a small amount of time. This is because PF_RING shields the developer from inner kernel details that are handled by a library and kernel driver. This way developers can dramatically save development time focusing on they application they are developing without paying attention to the way packets are sent and received.This chapter covers:•The PF_RING API.•Extensions to the libpcap library for supporting legacy applications.•How to patch the Linux kernel for enabling PF_RING3.1 The PF_RING APIThe PF_RING internal data structures should be hidden to the user who can manipulate packets and devices only by means of the available API defined in the include file pfring.h that comes with PF_RING.3.1.1 Return CodesBy convention, the library returns negative values for errors and exceptions. Non-negative codes indicate success.3.1.2 PF_RING: Device Initializationpfring* pfring_open(char *device_name, u_int8_t promisc, u_int8_t reentrant);This call is used to initialize an PF_RING device hence obtain a handle of type struct pfring that can be used in subsequent calls. Note that:• You can use both physical (e.g. eth0) and virtual (e.g. tap devices)• You need super-user capabilities in order to open a device.Input parameters:device_nameSymbolic name of the PF_RING-aware device we’re attempting to open (e.g. eth0).promiscIf set to a value different than zero, the device is open in promiscuous mode.reentrantIf set to a value different than zero, the device is open in reentrant mode. This isimplemented by means of semaphores and it results is slightly worse performance.Use reentrant mode only for multithreaded applications.Return value:On success a handle is returned, NULL otherwise.3.1.3 PF_RING: Device Terminationvoid pfring_close(pfring *ring);This call is used to terminate an PF_RING device previously open. Note that you must always close a device before leaving an application. If unsure, you can close a device from a signal handler.Input parameters:ringThe PF_RING handle that we are attempting to close.3.1.4 PF_RING: Read an Incoming Packetint pfring_recv(pfring *ring, char* buffer, u_int buffer_len, struct pfring_pkthdr *hdr,u_char wait_for_incoming_packet);This call returns an incoming packet when available.Input parameters:ringThe PF_RING handle where we perform the check.bufferA memory area allocated by the caller where the incoming packet will be stored.buffer_lenThe length of the memory area above. Note that the incoming packet is cut if the incoming packet is too long for the allocated area.hdrA memory area where the packet header will be copied.wait_for_incoming_packetIf 0 we simply check the packet availability, otherwise the call is blocked until a packet isavailable.Return value:The actual size of the incoming packet, from ethernet onwards.3.1.5 PF_RING: Ring Clustersint pfring_set_cluster(pfring *ring, u_int clusterId);This call allows a ring to be added to a cluster that can spawn across address spaces. On a nuthsell when two or more sockets are clustered they share incoming packets that are balanced on a per-flow manner. This technique is useful for exploiting multicore systems of for sharing packets in the same address space across multiple threads.Input parameters:ringThe PF_RING handle to be cluster.clusterIdA numeric identifier of the cluster to which the ring will be bound.Return value:Zero if success, a negative value otherwise.int pfring_remove_from_cluster(pfring *ring);This call allows a ring to be removed from a previous joined cluster.Input parameters:ringThe PF_RING handle to be cluster.clusterIdA numeric identifier of the cluster to which the ring will be bound.Return value:Zero if success, a negative value otherwise.3.1.6 PF_RING: Packet Reflectionint pfring_set_reflector(pfring *ring, char *reflectorDevice);This call allows packets received from a ring not to be forwarded to user-space (as usual) but to be sent unmodified on a reflector device. This technique allows users to implement simple applications that set one or more filters and forward all packets matching the filter. All this is done in kernel space for maximum speed: the application just needs to instrument the ring without the need to fetch-and-forward packets.Input parameters:ringThe PF_RING handle to be used as reflector.reflectorDeviceThe reflector device (e.g. eth0). Note that it’s not possible to use the same device for bothreceiving and forwarding packet.Return value:Zero if success, a negative value otherwise.int pfring_set_sampling_rate(pfring *ring, u_int32_t rate /* 1 = no sampling */);Implement packet sampling directly into the kernel. Note that this solution is much more efficient than implementing it in user-space. Sampled packets are only those that pass all filters (if any)Input parameters:ringThe PF_RING handle on which sampling is applied.rateThe sampling rate. Rate of X means that 1 packet out of X is forwarded. This means that asampling rate of 1 disables samplingReturn value:Zero if success, a negative value otherwise.PF_RING allows to filter packets in two ways: precise (a.k.a. hash filtering) or wildcard filtering. Precise filtering is used when it is necessary to track a precise 6-tuple connection <vlan Id, protocol, source IP, source port, destination IP, destination port>. Wildcard filtering is used instead whenever a filter can have wildcards on some of its fields (e.g. match all UDP packets regardless of their destination).3.1.8.1 PF_RING: Wildcard Filteringint pfring_add_filtering_rule(pfring *ring, filtering_rule* rule_to_add);Add a filtering rule to an existing ring. Each rule will have a unique rule Id across the ring (i.e. two rings can have rules with the same id).Input parameters:ringThe PF_RING handle on which the rule will be added.rule_to_addThe rule to add.Return value:Zero if success, a negative value otherwise.int pfring_remove_filtering_rule(pfring *ring, u_int16_t rule_id);Remove a previously added filtering rule.Input parameters:ringThe PF_RING handle on which the rule will be added.rule_idThe id of a previously added rule that will be removed.Return value:Zero if success, a negative value otherwise (e.g. the rule does not exist).int pfring_get_filtering_rule_stats(pfring *ring, u_int16_t rule_id, char* stats, u_int *stats_len);Read statistics of a hash filtering rule.Input parameters:ringThe PF_RING handle from which stats will be read.rule_idThe rule id that identifies the rule for which stats are read.statsA buffer allocated by the user that will contain the rule statistics. Please make sure that thebuffer is large enough to contain the statistics.stats_lenThe size (in bytes) of the stats buffer.Return value:Zero if success, a negative value otherwise (e.g. the rule does not exist).int pfring_handle_hash_filtering_rule(pfring *ring,hash_filtering_rule* rule_to_add,u_char add_rule);Add or remove a hash filtering rule.Input parameters:ringThe PF_RING handle from which stats will be read.rule_to_addThe rule that will be added/removed.add_ruleIf set to a positive value the rule is added, if zero the rule is removedReturn value:Zero if success, a negative value otherwise (e.g. the rule to be removed does not exist).int pfring_get_hash_filtering_rule_stats(pfring *ring,hash_filtering_rule* rule,char* stats, u_int *stats_len);Read statistics of a hash filtering rule.Input parameters:ringThe PF_RING handle on which the rule will be added/removed.ruleThe rule for which stats are read. This needs to be the same rule that has been previously added.statsA buffer allocated by the user that will contain the rule statistics. Please make sure that thebuffer is large enough to contain the statistics.stats_lenThe size (in bytes) of the stats buffer.Return value:Zero if success, a negative value otherwise (e.g. the rule to be removed does not exist).int pfring_toggle_filtering_policy(pfring *ring, u_int8_t rules_default_accept_policy);Set the default filtering policy. This means that if no rule is matching the incoming packet the default policy will decide if the packet is forwarded to user space of dropped. Note that filtering rules are limited to a ring, so each ring can have a different set of rules and default policy.Input parameters:ringThe PF_RING handle on which the rule will be added/removed.rules_default_accept_policyIf set to a positive value the default policy is accept (i.e. forward packets to user space), dropotherwiseReturn value:Zero if success, a negative value otherwise.3.1.9 PF_RING: Miscellaneous Functionsint pfring_enable_ring(pfring *ring);A ring is not enabled (i.e. incoming packets are dropped) until the user space application calls pfring_recv() or the above function. This function should usually not be called unless the user space application sets drop-filters and periodically reads statistics from the ring.Input parameters:ringThe PF_RING handle to enable.Return value:Zero if success, a negative value otherwise.int pfring_stats(pfring *ring, pfring_stat *stats);Read ring statistics (packets received and dropped).Input parameters:ringThe PF_RING handle to enable.statsA user-allocated buffer on which stats will be stored.Return value:Zero if success, a negative value otherwise.int pfring_version(pfring *ring, u_int32_t *version);Read the ring version. Note that is the ring version is 3.7 the retuned ring version is 0x030700.Input parameters:ringThe PF_RING handle to enable.versionA user-allocated buffer on which ring version will be copied.Return value:Zero if success, a negative value otherwise.3.2 The C++ PF_RING interfaceThe C++ interface (see. PF_RING/userland/libpfring/c++/) is equivalent to the C interface. No major changes have been made and all the methods have the same name as C. For instance:• C: int pfring_stats(pfring *ring, pfring_stat *stats);• C++: inline int get_stats(pfring_stat *stats);4. Writing PF_RING PluginsSince version 3.7, developers can write plugins in order to delegate to PF_RING activities like:• Packet payload parsing• Packet content filtering• In-kernel traffic statistics computation.In order to clarify the concept, imagine that you need to develop an application for VoIP traffic monitoring. In this case it’s necessary to:•parse signaling packets (e.g. SIP or IAX) so that those that only packets belonging to interesting peers are forwarded.•compute voice statistics into PF_RING and report to user space only the statistics, not the packets.In this case a developer can code two plugins so that PF_RING can be used as an advanced traffic filter and a way to speed-up packet processing by avoiding packets to cross the kernel boundaries when not needed.The rest of the chapter explains how to implement a plugin and how to call it from user space.4.1 Implementing a PF_RING PluginInside the directory kernel/net/ring/plugins/ there is a simple plugin called dummy_plugin that shows how to implement a simple plugin. Let’s explore the code.Each plugin is implemented as a Linux kernel module. Each module must have two entry points, module_init and module_exit, that are called when the module is insert and removed. The module_init function, in the dummy_plugin example it’s implement by the function dummy_plugin_init(), is responsible for registering the plugin by calling the do_register_pfring_plugin() function. The parameter passed to the registration function is a data structure of type ‘struct pfring_plugin_registration’ that contains:•a unique integer pluginId.•pfring_plugin_handle_skb: a pointer to a function called whenever an incoming packet is received.•pfring_plugin_filter_skb: a pointer to a function called whenever a packet needs to be filtered. This function is called after pfring_plugin_handle_skb().•pfring_plugin_get_stats: a pointer to a function called whenever a user wants to read statistics from a filtering rule that has set this plugin as action.A developer can choose not to implement all the above functions, but in this case the plugin will be limited in functionality (e.g. if pfring_plugin_filter_skb is set to NULL filtering is not supported).static int plugin_handle_skb(filtering_rule_element *rule,filtering_hash_bucket *hash_rule,struct pcap_pkthdr *hdr,struct sk_buff *skb,u_int16_t filter_plugin_id,struct parse_buffer *filter_rule_memory_storage);This function is called whenever an incoming packet (RX or TX) is received. This function typically updates rule statistics. Note that if the developer has set this plugin as filter plugin, then the packet has:•already been parsed•passed a rule payload filter (if set).Input parameters:ruleA pointer to a wildcard rule (if this plugin has been set on a wildcard rule) or NULL (if this pluginhas been set to a hash rule).hash_ruleA pointer to a hash rule (if this plugin has been set on a hash rule) or NULL (if this plugin hasbeen set to a wildcard rule). Note if rule is NULL, hash_rule is not, and vice-versa.hdrA pointer to a pcap packet header for the received packet. Please note that:•the packet is already parsed•the header is an extended pcap header containing parsed packet header metadata.skbA sk_buff datastructure used in Linux to carry packets inside the kernel.filter_plugin_idThe id of the plugin that has parsed packet payload (not header that is already stored into hdr).if the filter_plugin_id is the same as the id of the dummy_plugin then this packet has already been parsed by this plugin and the parameter filter_rule_memory_storage points to the payload parsed memory.filter_rule_memory_storagePointer to a data structure containing parsed packet payload information that has been parsed by the plugin identified by the parameter filter_plugin_id. Note that:•only one plugin can parse a packet.•the parsed memory is allocated dynamically (i.e. via kmalloc) by plugin_filter_skb and freedby the PF_RING core.Return value:Zero if success, a negative value otherwise.int plugin_filter_skb(filtering_rule_element *rule,struct pcap_pkthdr *hdr,struct sk_buff *skb,struct parse_buffer **parse_memory)This function is called whenever a previously parsed packet (via plugin_handle_skb) incoming packet (RX or TX) needs to be filtered. In this case the packet is parsed, parsed information is returned and the return value indicates whether the packet has passed the filter.Input parameters:ruleA pointer to a wildcard rule that contains a payload filter to apply to the packet.hdrA pointer to a pcap packet header for the received packet. Please note that:•the packet is already parsed•the header is an extended pcap header containing parsed packet header metadata.skbA sk_buff data structure used in Linux to carry packets inside the kernel.Output parameters:parse_memoryA pointer to a memory area allocated by the function, that will contain information about theparsed packet payload.Return value:Zero if the packet has not matched the rule filter, a positive value otherwise.4.1.3 PF_RING Plugin: Read Packet Statisticsint plugin_plugin_get_stats(filtering_rule_element *rule,filtering_hash_bucket *hash_bucket,u_char* stats_buffer,u_int stats_buffer_len)This function is called whenever a user space application wants to read statics about a filtering rule.Input parameters:ruleA pointer to a wildcard rule (if this plugin has been set on a wildcard rule) or NULL (if this pluginhas been set to a hash rule).hash_ruleA pointer to a hash rule (if this plugin has been set on a hash rule) or NULL (if this plugin hasbeen set to a wildcard rule). Note if rule is NULL, hash_rule is not, and vice-versa.stats_bufferA pointer to a buffer where statistics will be copied..stats_buffer_lenLength in bytes of the stats_buffer.Return value:The length of the rule stats, or zero in case of error.4.2 Using a PF_RING PluginA PF_RING based application, can take advantage of plugins when filtering rules are set. The filtering_rule data structure is used to both set a rule and specify a plugin associated to it.filtering_rule rule;rule.rule_id = X;....rule.plugin_action.plugin_id = MY_PLUGIN_ID;When the plugin_action.plugin_id is set, whenever a packet matches the header portion of the rule, then the MY_PLUGIN_ID plugin (if registered) is called and the plugin_filter_skb () and plugin_handle_skb() are called.If the developer is willing to filter a packet before plugin_handle_skb() is called, then extra filtering_rule fields need to be set. For instance suppose to implement a SIP filter plugin and to instrument it so that only the packets with INVITE are returned. The following lines of code show how to do this.struct sip_filter *filter = (struct sip_filter*)rule.extended_fields.filter_plugin_data;rule.extended_fields.filter_plugin_id = SIP_PLUGIN_ID;filter->method = method_invite;filter->caller[0] = '\0'; /* Any caller */filter->called[0] = '\0'; /* Any called */filter->call_id[0] = '\0'; /* Any call-id */As explained before, the pfring_add_filtering_rule() function is used to register filtering rules.。

七、任现职后主要专业技术工作业绩登记任现职期间教学方面主要工作业绩:任现职期间平均每年指导本科毕业论文3篇以上,其中论文《基于S7-200系列海水养殖自动控制系统》获得2010年校级优秀学士论文;任现职期间科研方面的主要工作业绩:(1)主持纵向课题累计经费1230万元,其中省部级课题2项,厅局级课题1项;(2)主持横向课题累计经费122.7万元;(3)参与横向课题累计经费39.75万元;(4)作为第一发明人获授权实用新型专利8项:ZL200820026210.8、ZL201020657692.4、ZL201020657697.7、ZL201621064576.5、ZL201620834804.6、ZL201620834816.9、ZL201621064540.7、ZL201120234588.9;(5)作为第一发明人获发明专利受理2项:CN106230858A、CN104729739A;(6)作为第一发明人获软件著作权登记1项:2016SR338514;(7)主持烟台市科技成果鉴定1项:烟科成鉴字[2013]第18号;(8)发表EI收录论文3篇,国内核心期刊论文1篇:图像的多尺度稀疏分解及其在遥感图像融合上的应用,烟台大学学报(自然科学与工程版)第30卷第一期;八、各部门意见学校教务部门审核意见:负责人(签章):(公章)年月日学校科研部门审核意见:负责人(签章):(公章)年月日学校人事部门审核意见:负责人(签章):(公章)年月日评价委员会意见:(公章)主任(签章):年月日学校意见:(公章)年月日单位(章):现职称:实验师申报职称:高级实验师方式:正常推荐单位意见申报人承诺是否人事代理人员本单位已审核申报人基本条件和材料,符合《烟台大学职称评审条件》规定的申报条件,经评议,其推荐位次为2/2 。

负责人签名:本人所提供的个人信息和证明材料真实准确,对因提供有关信息、证件不实或违反有关规定造成的后果负责。