A Machine Learning Approach to Automatic Production of Compiler Heuristics


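Grade 12 English: 40 Multiple-Choice Questions on Artificial Intelligence

1. Artificial intelligence is a branch of computer science that aims to create intelligent _____.
A. machines
B. devices
C. equipments
D. instruments
Answer and explanation: A.

"Machines" refers to machines in general and, in the field of artificial intelligence, to machines that have some degree of intelligence; "devices" means pieces of equipment or apparatus; "equipments" is given as the plural of "equipment" and means gear or apparatus; "instruments" means instruments or tools.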

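2. In the field of artificial intelligence, algorithms are used to train _____.
A. models
B. patterns
C. shapes
D. forms
Answer and explanation: A.

In artificial intelligence, "models" are what algorithms are used to train; "patterns" means regularities or designs; "shapes" means shapes; "forms" means forms.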

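3. Deep learning is a powerful technique in artificial intelligence that uses neural _____.
A. networks
B. systems
C. structures
D. organizations
Answer and explanation: A.

"Networks" means networks and, in deep learning, refers to neural networks; "systems" means systems; "structures" means structures; "organizations" means organizations.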

4. Artificial intelligence can process large amounts of data to make accurate _____.
A. predictions
B. forecasts
C. projections
D. expectations
Answer and explanation: A.

Explanations of Technical Terms Related to the Artificial Intelligence Ecosystem

The rapid development of artificial intelligence technology is driving the formation and maturation of the AI ecosystem.

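Chinese and English News Corpus of the Institute of Automation, Chinese Academy of Sciences

The Institute of Automation, Chinese Academy of Sciences (中科院自动化所) is a research institute under the Chinese Academy of Sciences dedicated to research on automation science and its applications.

Its research covers a broad range of areas from theoretical foundations to technological innovation, including artificial intelligence, robotics, automatic control, and pattern recognition.

The sections below present news items related to the institute, first in Chinese and then in English.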

[中文新闻语料]1. 中国科学院自动化所在人脸识别领域取得重大突破中国科学院自动化所的研究团队在人脸识别技术方面取得了重大突破。

通过深度学习算法和大规模数据集的训练,该研究团队成功地提高了人脸识别的准确性和稳定性,使其在安防、金融等领域得到广泛应用。

2. 中科院自动化所发布最新研究成果:基于机器学习的智能交通系统中科院自动化所发布了一项基于机器学习的智能交通系统研究成果。

通过对交通数据的收集和分析,研究团队开发了智能交通控制算法,能够优化交通流量,减少交通拥堵和时间浪费,提高交通效率。

3. 中国科学院自动化所举办国际学术研讨会中国科学院自动化所举办了一场国际学术研讨会,邀请了来自不同国家的自动化领域专家参加。

研讨会涵盖了人工智能、机器人技术、自动化控制等多个研究方向,旨在促进国际间的学术交流和合作。

4. 中科院自动化所签署合作协议,推动机器人技术的产业化发展中科院自动化所与一家著名机器人企业签署了合作协议,共同推动机器人技术的产业化发展。

合作内容包括技术研发、人才培养、市场推广等方面,旨在加强学界与工业界的合作,加速机器人技术的应用和推广。

5. 中国科学院自动化所获得国家科技进步一等奖中国科学院自动化所凭借在人工智能领域的重要研究成果荣获国家科技进步一等奖。

该研究成果在自动驾驶、物联网等领域具有重要应用价值,并对相关行业的创新和发展起到了积极推动作用。

[English news corpus]

1. Institute of Automation, Chinese Academy of Sciences achieves a major breakthrough in face recognition
The research team at the Institute of Automation, Chinese Academy of Sciences has made a major breakthrough in face recognition technology. Through training with deep learning algorithms and large-scale datasets, the research team has successfully improved the accuracy and stability of face recognition, which has been widely applied in areas such as security and finance.

2. Institute of Automation, Chinese Academy of Sciences releases latest research on machine learning-based intelligent transportation system
The Institute of Automation, Chinese Academy of Sciences has released a research paper on a machine learning-based intelligent transportation system. By collecting and analyzing traffic data, the research team has developed intelligent traffic control algorithms that optimize traffic flow, reduce congestion, and minimize time wastage, thereby enhancing overall traffic efficiency.

3. Institute of Automation, Chinese Academy of Sciences hosts international academic symposium
The Institute of Automation, Chinese Academy of Sciences recently held an international academic symposium, inviting automation experts from different countries to participate. The symposium covered various research areas, including artificial intelligence, robotics, and automatic control, aiming to facilitate academic exchanges and collaborations on an international level.

4. Institute of Automation, Chinese Academy of Sciences signs cooperation agreement to promote the industrialization of robotics technology
The Institute of Automation, Chinese Academy of Sciences has signed a cooperation agreement with a renowned robotics company to jointly promote the industrialization of robotics technology. The cooperation includes areas such as technology research and development, talent cultivation, and market promotion, aiming to strengthen the collaboration between academia and industry and accelerate the application and popularization of robotics technology.

5. Institute of Automation, Chinese Academy of Sciences receives National Science and Technology Progress Award (First Class)
The Institute of Automation, Chinese Academy of Sciences has been awarded the National Science and Technology Progress Award (First Class) for its important research achievements in the field of artificial intelligence. The research outcomes have significant application value in areas such as autonomous driving and the Internet of Things, playing a proactive role in promoting innovation and development in related industries.

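High School Entrance Exam English: 40 Multiple-Choice Questions on Frontier Technologies in Bioengineering

1. Gene editing technology in medicine can ____ many incurable diseases.
A. treat
B. prevent
C. cause
D. ignore
Answer and explanation: A.

This question tests the ability to distinguish verb meanings.

Option A, "treat," means to give medical care for a disease; gene editing technology can be used in medicine to treat many incurable diseases, which fits the context.

Option B, "prevent," means to stop something from happening; gene editing technology mainly deals with existing diseases rather than preventing them, so this option is wrong.

Option C, "cause," means to bring about, which contradicts the positive role of gene editing technology in medicine, so it is wrong.

Option D, "ignore," means to disregard, which does not match the function of gene editing technology in medicine. The question mainly tests vocabulary comprehension.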

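2. In agricultural gene editing, which of the following is a possible benefit?
A. Reducing the yield
B. Increasing the resistance to pests
C. Making plants more sensitive to diseases
D. Decreasing the nutritional value
Answer and explanation: B.

This question tests knowledge of the benefits of gene editing in agriculture.

Option B, "Increasing the resistance to pests," is a benefit that gene editing may bring to agriculture.

Option A, "Reducing the yield," is not a benefit and does not answer the question.

Option C, "Making plants more sensitive to diseases," is negative and is not a benefit.

Option D, "Decreasing the nutritional value," is also negative and does not match the positive purpose of gene editing in agriculture. The question mainly tests vocabulary and understanding of how gene editing is applied in agriculture.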

3. The gene-editing tool CRISPR is known for its ____.
A. complexity
B. inaccuracy
C. high cost
D. simplicity and efficiency
Answer and explanation: D.

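Hot Topic 1: Artificial Intelligence and Technology

Title: The Impact of Artificial Intelligence on the Job Market
Summary: As artificial intelligence continues to develop, many fields of work are undergoing major change.

The application of automation and machine learning has had a far-reaching effect on the job market.

Some jobs may be replaced by automation, but new job opportunities will also appear, requiring more advanced skills such as creative thinking, complex problem solving, and interpersonal communication.

Education systems and training institutions need to adjust accordingly in order to prepare people for the future work environment.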

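Title: Ethical Considerations in Artificial Intelligence Development
Summary: The rapid development of artificial intelligence has raised a series of ethical questions.

Examples include how self-driving cars make decisions on the road, the accuracy of medical diagnosis, and the use of personal data in data analysis.

These questions call for serious thought and oversight to ensure that applications of artificial intelligence do not harm human safety or rights.

Laws and standards are also needed to guide the development and use of artificial intelligence technology.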

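Title: The Role of Artificial Intelligence in Healthcare
Summary: Artificial intelligence is increasingly used in healthcare.

From helping doctors make more precise diagnoses to drug development and gene editing, artificial intelligence is changing the face of the medical industry.

These technologies can speed up diagnosis and treatment and improve medical efficiency.

At the same time, attention must be paid to data security and patient privacy, and to ensuring that medical decisions still reflect the judgment and ethics of human doctors.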

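Title: Artificial Intelligence in Education: Personalized Learning
Summary: Artificial intelligence also has great potential in education.

Personalized learning platforms use artificial intelligence to analyze students' study habits and performance and tailor teaching content and pace to each student.

This can improve learning outcomes and stimulate interest in learning.

It also brings challenges, such as how to balance AI assistance with teacher guidance, and how to ensure that students gain well-rounded knowledge and skills through personalized learning.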

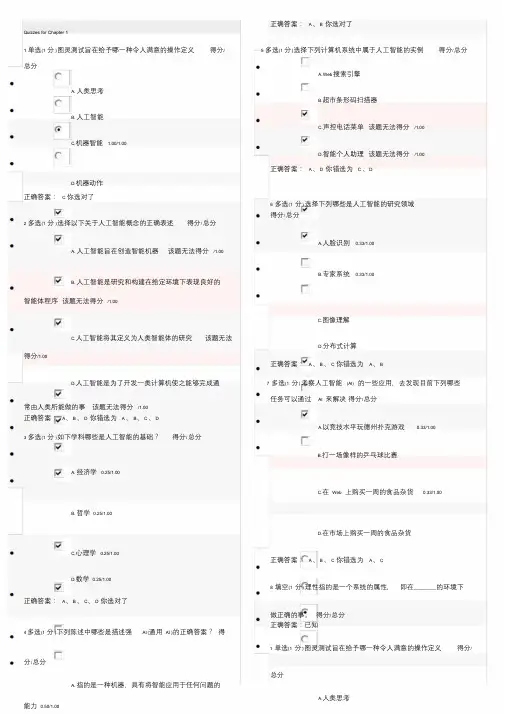

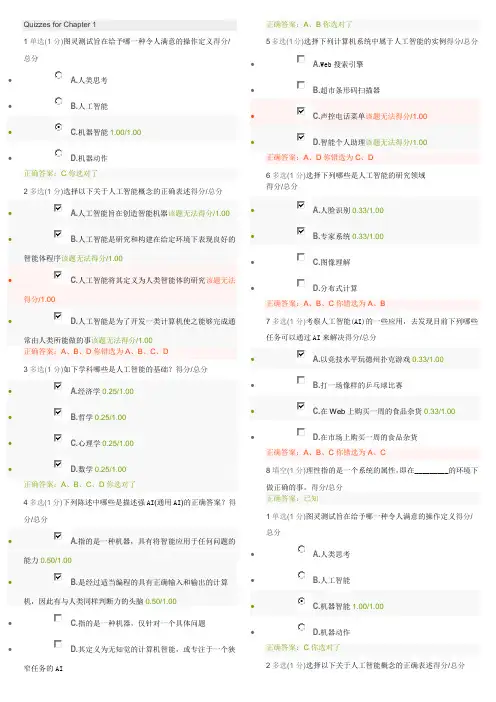

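Quizzes for Chapter 1

1. Single choice (1 point): The Turing test is intended to give a satisfactory operational definition of which of the following?
A. Human thinking
B. Artificial intelligence
C. Machine intelligence
D. Machine action
Correct answer: C

2. Multiple choice (1 point): Select the correct statements about the concept of artificial intelligence.
A. Artificial intelligence aims to create intelligent machines.
B. Artificial intelligence is the study and construction of agent programs that perform well in a given environment.
C. Artificial intelligence is defined as the study of human agents.
D. Artificial intelligence aims to develop computers that can do things normally done by humans.
Correct answer: A, B, D

3. Multiple choice (1 point): Which of the following disciplines are foundations of artificial intelligence?
A. Economics
B. Philosophy
C. Psychology
D. Mathematics
Correct answer: A, B, C, D

4. Multiple choice (1 point): Which of the following statements correctly describe strong AI (general AI)?
A. It refers to a machine with the ability to apply intelligence to any problem.
B. It is an appropriately programmed computer with the right inputs and outputs, which therefore has a mind with the same judgment as a human.
C. It refers to a machine aimed at only one specific problem.
D. It is defined as non-sentient computer intelligence, or AI focused on one narrow task.
Correct answer: A, B

5. Multiple choice (1 point): Select the computer systems below that are instances of artificial intelligence.
A. Web search engine
B. Supermarket bar code scanner
C. Voice-activated telephone menu
D. Intelligent personal assistant
Correct answer: A, D

6. Multiple choice (1 point): Which of the following are research areas of artificial intelligence?
A. Face recognition
B. Expert systems
C. Image understanding
D. Distributed computing
Correct answer: A, B, C

7. Multiple choice (1 point): Consider some applications of artificial intelligence (AI). Which of the following tasks can currently be solved by AI?
A. Playing Texas hold'em poker at a competitive level
B. Playing a decent game of table tennis
C. Buying a week's worth of groceries on the Web
D. Buying a week's worth of groceries at the market
Correct answer: A, B, C

8. Fill in the blank (1 point): Rationality is a property of a system: doing the right thing in a _________ environment.
Correct answer: known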

Grade 10 English: 25 Reading Comprehension Questions on Artificial Intelligence

1
<Background passage>
Artificial intelligence (AI) has become one of the most talked-about topics in recent years. AI can be defined as the simulation of human intelligence processes by machines, especially computer systems. These processes include learning, reasoning, problem-solving, perception, and language understanding.

The development of AI has a long history. It started in the 1950s when the concept was first introduced. In the early days, AI research focused on simple tasks like playing games and solving basic mathematical problems. However, with the development of computer technology and the increase in data availability, AI has made great strides.

AI has found applications in various fields. In the medical field, AI can assist doctors in diagnosing diseases. For example, it can analyze medical images such as X-rays and MRIs to detect early signs of diseases that might be missed by human eyes. In education, AI-powered tutoring systems can provide personalized learning experiences for students. They can adapt to the individual learning pace and style of each student, helping them to better understand difficult concepts. In the transportation industry, self-driving cars, which are a significant application of AI, are expected to revolutionize the way we travel. They can potentially reduce traffic accidents caused by human error and improve traffic efficiency.

However, AI also brings some potential negative impacts. One concern is the impact on employment. As AI systems can perform many tasks that were previously done by humans, there is a fear that many jobs will be lost. For example, jobs in manufacturing, customer service, and some administrative tasks may be at risk. Another issue is the ethical considerations. For instance, how should AI make decisions in life-or-death situations? And there are also concerns about data privacy as AI systems rely on large amounts of data.

1. <Question 1> What is the main idea of this passage?
A. To introduce the development of computer technology.
B. To discuss the applications and impacts of artificial intelligence.
C. To explain how to solve problems in different fields.
D. To show the importance of data in AI systems.
Answer: B.

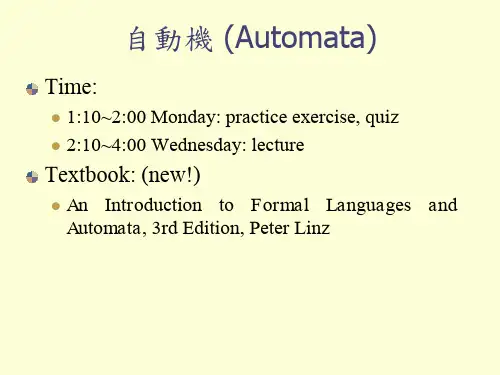

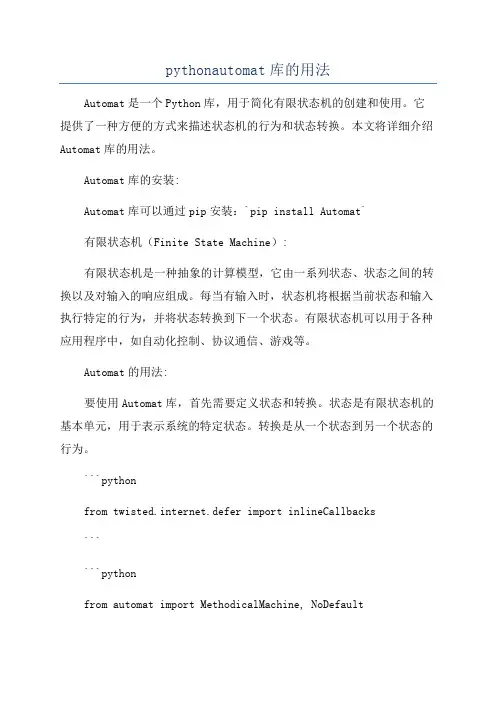

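Using the Python Automat Library

Automat is a Python library that simplifies creating and using finite-state machines.

It provides a convenient way to describe a state machine's behavior and its state transitions.

This article describes how to use the Automat library.

Installing Automat: Automat can be installed with pip: `pip install Automat`

Finite-state machines: A finite-state machine is an abstract model of computation made up of a set of states, the transitions between those states, and the responses to inputs.

Whenever an input arrives, the machine performs a specific action determined by its current state and the input, and then moves to the next state.

Finite-state machines are used in many kinds of applications, such as automation and control, protocol communication, and games.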
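The state, input, action, and next-state cycle described above can be sketched in plain Python without any library. This is only an illustrative sketch, not Automat's API: the states (`idle`, `running`), the inputs (`start`, `stop`), and the class name `TableStateMachine` are all hypothetical names chosen for the example.

```python
from typing import Callable, Dict, List, Tuple

# Transition table: (current state, input) -> (action, next state).
# All state, input, and action names here are hypothetical.
Transition = Tuple[Callable[[List[str]], None], str]


def start_motor(log: List[str]) -> None:
    log.append("motor started")


def stop_motor(log: List[str]) -> None:
    log.append("motor stopped")


TRANSITIONS: Dict[Tuple[str, str], Transition] = {
    ("idle", "start"): (start_motor, "running"),
    ("running", "stop"): (stop_motor, "idle"),
}


class TableStateMachine:
    """Minimal finite-state machine driven by a transition table."""

    def __init__(self, initial: str = "idle") -> None:
        self.state = initial
        self.log: List[str] = []

    def feed(self, symbol: str) -> None:
        key = (self.state, symbol)
        if key not in TRANSITIONS:
            raise ValueError(f"no transition from {self.state!r} on {symbol!r}")
        action, next_state = TRANSITIONS[key]
        action(self.log)         # run the behavior for this input
        self.state = next_state  # then move to the next state


def run_demo() -> Tuple[str, List[str]]:
    """Feed three inputs and return the final state and the action log."""
    machine = TableStateMachine()
    for symbol in ("start", "stop", "start"):
        machine.feed(symbol)
    return machine.state, machine.log
```

Keeping the transitions in a single table makes illegal inputs easy to detect: any (state, input) pair missing from the table raises an error instead of silently doing nothing.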

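Using Automat: To use the Automat library, you first define states and transitions.

A state is the basic unit of a finite-state machine and represents a particular condition of the system.

A transition is the move from one state to another.

```python
from automat import MethodicalMachine


class MyStateMachine:
    pass
```

Defining actions:

```python
from automat import MethodicalMachine


class MyStateMachine:
    def action1(self, arg1):
        # Behavior to run when the machine handles an input.
        print("Action 1 executed with argument:", arg1)

    def action2(self):
        print("Action 2 executed")
```

Defining transitions:

```python
from automat import MethodicalMachine


class MyStateMachine:
    def action1(self, arg1):
        print("Action 1 executed with argument:", arg1)

    def action2(self):
        print("Action 2 executed")

    # Inputs (events) that will later trigger transitions.
    def event1(self, arg):
        pass

    def event2(self, arg):
        pass
```

Defining transitions between states: with `MethodicalMachine`, a transition is declared by calling `upon` on the source state, for example `MY_STATE.upon(event1, enter=MY_STATE2, outputs=[action1])`, where the states, inputs, and outputs are methods marked with the machine's `state()`, `input()`, and `output()` decorators.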


English Essay on Autonomous Driving

Title: The Future of Transportation: Autonomous Driving

In recent years, the rapid development of technology has brought forth a myriad of innovations, one of the most significant being autonomous driving. This groundbreaking advancement holds the potential to revolutionize the way we commute, travel, and transport goods. In this essay, we will explore the implications, benefits, challenges, and future prospects of autonomous driving.

Autonomous driving, also known as self-driving or driverless technology, refers to vehicles capable of navigating and operating without human input. This technology relies on a combination of sensors, cameras, radar, lidar, GPS, and advanced algorithms to perceive the environment, make decisions, and control the vehicle. The ultimate goal is to create a safer, more efficient, and convenient transportation system.

One of the primary advantages of autonomous driving is its potential to enhance road safety. Human error is a leading cause of traffic accidents, accounting for a significant portion of fatalities and injuries worldwide. By eliminating the human factor, autonomous vehicles have the potential to significantly reduce the number of accidents, saving lives and preventing injuries. These vehicles can react faster, avoid collisions, and adhere strictly to traffic laws, making roads safer for everyone.

Furthermore, autonomous driving has the potential to improve traffic flow and reduce congestion. With interconnected vehicles communicating in real-time, they can coordinate movements more efficiently, optimizing routes and minimizing delays. This efficiency not only saves time for commuters but also reduces fuel consumption and greenhouse gas emissions, contributing to a more sustainable transportation system.

Another significant benefit of autonomous driving is its potential to enhance accessibility and mobility for individuals with disabilities or limited mobility.
Self-driving vehicles can provide newfound independence and freedom for those who may have difficulty driving or accessing traditional transportation options. Additionally, autonomous ride-sharing services could offer affordable and convenient transportation solutions for underserved communities, bridging the gap between urban and rural areas.

Despite these promising benefits, autonomous driving also presents several challenges and concerns that must be addressed. One of the most pressing issues is the ethical and legal implications surrounding liability and responsibility in the event of accidents or malfunctions. Determining accountability in such scenarios raises complex legal and moral questions that require careful consideration and regulation.

Moreover, there are concerns regarding cybersecurity and the potential for malicious actors to hack into autonomous vehicles, compromising their safety and security. As these vehicles rely on interconnected systems and data sharing, robust cybersecurity measures are essential to safeguard against cyber threats and ensure the integrity of autonomous driving technology.

Additionally, the widespread adoption of autonomous vehicles raises questions about job displacement and the impact on various industries, such as transportation, logistics, and automotive manufacturing. While autonomous driving has the potential to create new job opportunities in areas such as software development, maintenance, and support services, it may also lead to the displacement of traditional roles, requiring proactive measures to mitigate potential economic disruptions.

Looking ahead, the future of autonomous driving holds immense promise and potential. As technology continues to advance and regulatory frameworks evolve, we can expect to see increasingly sophisticated autonomous vehicles on our roads.
However, realizing the full benefits of autonomous driving will require collaboration among industry stakeholders, policymakers, regulators, and society as a whole to address challenges, ensure safety, and maximize the positive impact on our transportation systems and communities.

In conclusion, autonomous driving represents a transformative innovation with the power to revolutionize transportation as we know it. By enhancing safety, efficiency, accessibility, and sustainability, autonomous vehicles have the potential to reshape our cities, improve quality of life, and create a more connected and inclusive society. As we navigate the road ahead, it is essential to approach the development and deployment of autonomous driving technology with careful consideration, foresight, and a commitment to realizing its full potential for the benefit of all.

Beijing Institute of Technology 2023 Doctoral English Exam Papers

Three sample papers in total, for readers' reference.

Sample 1

Beijing Institute of Technology
Doctoral English Exam 2023

Instructions:
- Total time: 3 hours
- Total marks: 100
- Answer all questions

Section A: Reading Comprehension (40 marks)
Read the following passage and answer the questions that follow.

The future of artificial intelligence (AI) is a topic that continues to generate both excitement and fear. While the possibilities of AI are endless, there are also concerns about its potential impact on the job market and society as a whole. One key question is whether AI will create more jobs than it eliminates.

1. What is the main topic of the passage?
2. What are the possibilities of AI mentioned in the passage?
3. What concerns are raised about the impact of AI?

Section B: Writing (30 marks)
In about 300-350 words, write an essay on the following topic: "The role of technology in education"

Section C: Grammar and Vocabulary (30 marks)
1. Choose the correct form of the verb to complete the sentence: "The team _____ won the championship last year."
a) have
b) has
c) is
d) are
2. Which of the following sentences is correct in terms of punctuation?
a) She likes to read, write and paint.
b) She likes to read write, and paint.
c) She likes to read write and paint.
d) She likes to read, write, and paint.
3. Fill in the blank with the correct preposition: "The book is _____ the table."
a) in
b) on
c) under
d) next to

Section D: Speaking (30 marks)
Prepare a 5-minute presentation on the topic "The impact of climate change on the environment" and be prepared to answer questions from the examiners.

Good luck!

Sample 2

Beijing Institute of Technology
PhD English Exam 2023

Part I: Reading Comprehension (40 points)

Section A
Read the following passage and answer the questions below.

Nanotechnology is a promising field that has the potential to revolutionize various industries.
By manipulating materials at the nanoscale, scientists can design new materials with unique properties that can be used in electronics, medicine, and environmental remediation.

1. What is nanotechnology?
2. What are some potential applications of nanotechnology?
3. How can nanotechnology revolutionize industries?

Section B
Read the following passage and answer the questions below.

Artificial intelligence (AI) is rapidly transforming industries and society as a whole. AI technologies, such as machine learning and natural language processing, are being used in a wide range of applications, from autonomous vehicles to personalized medicine.

1. What is artificial intelligence?
2. Name two AI technologies mentioned in the passage.
3. Provide two examples of how AI is being used in different industries.

Part II: Writing (60 points)
In 800-1000 words, discuss a recent technological advancement that has had a significant impact on society. Consider the implications of this advancement on various aspects of life, such as education, healthcare, and the economy. Provide examples to support your argument and discuss the potential challenges and opportunities associated with the adoption of this technology.

---

This document provides a brief overview of the Beijing Institute of Technology's 2023 PhD English exam. The exam consists of two parts: Reading Comprehension and Writing. In the Reading Comprehension section, students are required to read passages on various topics and answer questions based on their understanding of the text. The Writing section requires students to write an essay on a given topic, demonstrating their ability to analyze and articulate complex ideas in English.

Overall, the exam aims to assess students' proficiency in English and their ability to engage with academic content in the language. Students are expected to demonstrate critical thinking skills, analytical abilities, and effective communication in both sections of the exam.
Good luck to all the test-takers!

Sample 3

2023 Beijing Institute of Technology Ph.D. English Exam Questions

Section A: Reading Comprehension (40 points)
Directions: Read the following passage and answer the questions below.

"The Impact of Climate Change on Global Food Security"

Climate change is a pressing issue that has far-reaching consequences, particularly on global food security. Rising temperatures, changing precipitation patterns, and extreme weather events are posing significant challenges to agriculture and food production worldwide. According to the United Nations, climate change could lead to a decline in crop yields by up to 30% by 2050, exacerbating food shortages and increasing food prices.

One of the key impacts of climate change on food security is the shift in growing seasons. As temperatures rise, traditional planting and harvesting times may no longer be suitable, leading to reduced crop yields and disruptions in food supply chains. Extreme weather events, such as droughts, floods, and hurricanes, can also destroy crops and infrastructure, further destabilizing food production.

In addition to affecting crop production, climate change also has implications for food safety and nutrition. Rising temperatures can create favorable conditions for the spread of foodborne pathogens and pests, increasing the risk of food contamination and outbreaks of foodborne illnesses. Moreover, changes in precipitation patterns can affect water availability for irrigation and drinking, leading to water scarcity and food insecurity in many regions.

To address the challenges posed by climate change on global food security, policymakers, researchers, and stakeholders must work together to develop sustainable agriculture practices, promote resilience in food systems, and reduce greenhouse gas emissions.
Investments in climate-smart agriculture, water management, and crop diversification are essential to ensuring a stable and resilient food supply in the face of changing climatic conditions.

Question 1: What are some of the key impacts of climate change on global food security?
Question 2: How can rising temperatures affect food safety and nutrition?
Question 3: What are some solutions to address the challenges posed by climate change on food security?

Section B: Writing (60 points)
Directions: Write an essay in response to the following prompt.

Prompt: "Discuss the role of education in promoting sustainable agriculture and food security in the context of climate change."

In your essay, you should:
1. Define the concept of sustainable agriculture and explain its importance in addressing global food security.
2. Discuss the ways in which education can help raise awareness about climate change and its impact on food production.
3. Provide examples of educational initiatives or programs that have successfully promoted sustainable agriculture practices and resilience in food systems.
4. Offer recommendations for how educational institutions, governments, and communities can collaborate to build a more sustainable and food-secure future.

Section C: Speaking (40 points)
Directions: In this section, you will be asked to respond to a series of questions related to the theme of climate change and food security. You will have two minutes to prepare your response before presenting it to the examiners.

Question 1: "How can individuals contribute to promoting sustainable agriculture and food security in their communities?"
Question 2: "What role can governments play in supporting smallholder farmers and enhancing their resilience to climate change impacts?"

Good luck with your exam preparation!

Journal of Machine Learning Research 17 (2016) 1-5. Submitted 5/16; Revised 11/16; Published 11/16

Auto-WEKA 2.0: Automatic model selection and hyperparameter optimization in WEKA

Lars Kotthoff
Chris Thornton
Holger H. Hoos
Frank Hutter
Kevin Leyton-Brown
Department of Computer Science
University of British Columbia
2366 Main Mall, Vancouver, B.C. V6T 1Z4, Canada

Editor: Geoff Holmes

Abstract

WEKA is a widely used, open-source machine learning platform. Due to its intuitive interface, it is particularly popular with novice users. However, such users often find it hard to identify the best approach for their particular dataset among the many available. We describe the new version of Auto-WEKA, a system designed to help such users by automatically searching through the joint space of WEKA's learning algorithms and their respective hyperparameter settings to maximize performance, using a state-of-the-art Bayesian optimization method. Our new package is tightly integrated with WEKA, making it just as accessible to end users as any other learning algorithm.

Keywords: Hyperparameter Optimization, Model Selection, Feature Selection

1. The Principles Behind Auto-WEKA

The WEKA machine learning software (Hall et al., 2009) puts state-of-the-art machine learning techniques at the disposal of even novice users. However, such users do not typically know how to choose among the dozens of machine learning procedures implemented in WEKA and each procedure's hyperparameter settings to achieve good performance.

Auto-WEKA¹ addresses this problem by treating all of WEKA as a single, highly parametric machine learning framework, and using Bayesian optimization to find a strong instantiation for a given dataset. Specifically, it considers the combined space of WEKA's learning algorithms $\mathcal{A} = \{A^{(1)}, \ldots, A^{(k)}\}$ and their associated hyperparameter spaces $\Lambda^{(1)}, \ldots, \Lambda^{(k)}$ and aims to identify the combination of algorithm $A^{(j)} \in \mathcal{A}$ and hyperparameters $\lambda \in \Lambda^{(j)}$ that minimizes cross-validation loss,

$$A^{*}_{\lambda^{*}} \in \operatorname*{argmin}_{A^{(j)} \in \mathcal{A},\ \lambda \in \Lambda^{(j)}} \frac{1}{k} \sum_{i=1}^{k} L\left(A^{(j)}_{\lambda}, D^{(i)}_{\mathrm{train}}, D^{(i)}_{\mathrm{test}}\right),$$

where $L(A_{\lambda}, D^{(i)}_{\mathrm{train}}, D^{(i)}_{\mathrm{test}})$ denotes the loss achieved by algorithm $A$ with hyperparameters $\lambda$ when trained on $D^{(i)}_{\mathrm{train}}$ and evaluated on $D^{(i)}_{\mathrm{test}}$. We call this the combined algorithm selection and hyperparameter optimization (CASH) problem.

¹ Thornton et al. (2013) first introduced Auto-WEKA and empirically demonstrated state-of-the-art performance. Here we describe an improved and more broadly accessible implementation of Auto-WEKA, focussing on usability and software design.

CASH can be seen as a blackbox function optimization problem: determining $\operatorname{argmin}_{\theta \in \Theta} f(\theta)$, where each configuration $\theta \in \Theta$ comprises the choice of algorithm $A^{(j)} \in \mathcal{A}$ and its hyperparameter settings $\lambda \in \Lambda^{(j)}$. In this formulation, the hyperparameters of algorithm $A^{(j)}$ are conditional on $A^{(j)}$ being selected. For a given $\theta$ representing algorithm $A^{(j)} \in \mathcal{A}$ and hyperparameter settings $\lambda \in \Lambda^{(j)}$, $f(\theta)$ is then defined as the cross-validation loss $\frac{1}{k} \sum_{i=1}^{k} L(A^{(j)}_{\lambda}, D^{(i)}_{\mathrm{train}}, D^{(i)}_{\mathrm{test}})$.²

Bayesian optimization (see, e.g., Brochu et al., 2010), also known as sequential model-based optimization, is an iterative method for solving such blackbox optimization problems. In its $n$-th iteration, it fits a probabilistic model based on the first $n-1$ function evaluations $\langle \theta_i, f(\theta_i) \rangle_{i=1}^{n-1}$, uses this model to select the next $\theta_n$ to evaluate (trading off exploration of new parts of the space vs exploitation of regions known to be good) and evaluates $f(\theta_n)$. While Bayesian optimization based on Gaussian process models is known to perform well for low-dimensional problems with numerical hyperparameters (see, e.g., Snoek et al., 2012), tree-based models have been shown to be more effective for high-dimensional, structured, and partly discrete problems (Eggensperger et al., 2013), such as the highly conditional space of WEKA's learning algorithms and their corresponding hyperparameters we face here.³ Thornton et
al. (2013) showed that tree-based Bayesian optimization methods yielded the best performance in Auto-WEKA, with the random-forest-based SMAC (Hutter et al., 2011) performing better than the tree-structured Parzen estimator, TPE (Bergstra et al., 2011). Auto-WEKA uses SMAC to determine the classifier with the best performance on the given data.

2. In fact, on top of machine learning algorithms and their respective hyperparameters, we also include attribute selection methods and their respective hyperparameters in the configurations $\theta$, thereby jointly optimizing over their choice and the choice of algorithms.

3. Conditional dependencies can also be accommodated in the Gaussian process framework (Hutter and Osborne, 2013; Swersky et al., 2013), but currently, tree-based methods achieve better performance.

2. Auto-WEKA 2.0

Since the initial release of a usable research prototype in 2013, we have made substantial improvements to the Auto-WEKA package described by Thornton et al. (2013). At a prosaic level, we have fixed bugs, improved tests and documentation, and updated the software to work with the latest versions of WEKA and Java. We have also added four major features.

First, we now support regression algorithms, expanding Auto-WEKA beyond its previous focus on classification (starred entries in Fig. 1). Second, we now support the optimization of all performance metrics WEKA supports. Third, we now natively support parallel runs (on a single machine) to find good configurations faster and save the N best configurations of each run instead of just the single best. Fourth, Auto-WEKA 2.0 is now fully integrated with WEKA. This is important, because the crux of Auto-WEKA lies in its simplicity: providing a push-button interface that requires no knowledge about the available learning algorithms or their hyperparameters, asking the user to provide, in addition to the dataset to be processed, only a memory bound (1GB by default) and the overall time budget available for the entire learning process.⁴ The overall budget is set to 15 minutes by default to accommodate impatient users; longer runs allow the Bayesian optimizer to search the space more thoroughly; we recommend at least several hours for production runs.

Figure 1: Learners and methods supported by Auto-WEKA 2.0, along with number of hyperparameters |Λ|. Every learner supports classification; starred learners also support regression.

Learners: BayesNet (2), DecisionStump* (0), DecisionTable* (4), GaussianProcesses* (10), IBk* (5), J48 (9), JRip (4), KStar* (3), LinearRegression* (3), LMT (9), Logistic (1), M5P (4), M5Rules (4), MultilayerPerceptron* (8), NaiveBayes (2), NaiveBayesMultinomial (0), OneR (1), PART (4), RandomForest (7), RandomTree* (11), REPTree* (6), SGD* (5), SimpleLinearRegression* (0), SimpleLogistic (5), SMO (11), SMOreg* (13), VotedPerceptron (3), ZeroR* (0)
Ensemble Methods: Stacking (2), Vote (2)
Meta-Methods: LWL (5), AdaBoostM1 (6), AdditiveRegression (4), AttributeSelectedClassifier (2), Bagging (4), RandomCommittee (2), RandomSubSpace (3)
Attribute Selection Methods: BestFirst (2), GreedyStepwise (4)

The usability of the earlier research prototype was hampered by the fact that users had to download Auto-WEKA manually and run it separately from WEKA. In contrast, Auto-WEKA 2.0 is now available through WEKA's package manager. Users do not need to install software separately; everything is included in the package and installed automatically upon request. After installation, Auto-WEKA 2.0 can be used in two different ways:

1. As a meta-classifier: Auto-WEKA can be run like any other machine learning algorithm in WEKA: via the GUI, the command-line interface, or the public API. Figure 2 shows how to run it from the command line.
2. Through the Auto-WEKA tab: This provides a customized interface that hides some of the complexity. Figure 3 shows the output of an example run.

Source code for Auto-WEKA is hosted on GitHub (https:///automl/autoweka) and is available under the GPL license (version 3). Releases are published to the WEKA package repository and available both through the WEKA package manager and from the Auto-WEKA project website (/autoweka). A manual
describes how to use the WEKA package and gives a high-level overview for developers; we also provide lower-level Javadoc documentation. An issue tracker on GitHub, JUnit tests and the continuous integration system Travis facilitate bug tracking and correctness of the code. Since its release on March 1, 2016, Auto-WEKA 2.0 has been downloaded more than 15000 times, with an average of about 400 downloads per week.

Footnote 4: Internally, to avoid using all its budget for executing a single slow learner, Auto-WEKA limits individual runs of any learner to 1/12 of the overall budget; it further limits feature search to 1/60 of the budget.

Kotthoff, Thornton, Hutter, Hoos, Leyton-Brown

    java -cp autoweka.jar weka.classifiers.meta.AutoWEKAClassifier -timeLimit 5 -t iris.arff -no-cv

Figure 2: Command-line call for running Auto-WEKA with a time limit of 5 minutes on training dataset iris.arff. Auto-WEKA performs cross-validation internally, so we disable WEKA's cross-validation (-no-cv). Running with -h lists the available options.

Figure 3: Example Auto-WEKA run on the iris dataset. The resulting best classifier along with its parameter settings is printed first, followed by its performance. While Auto-WEKA runs, it logs to the status bar how many configurations it has evaluated so far.

3. Related Implementations

Auto-WEKA was the first method to use Bayesian optimization to automatically instantiate a highly parametric machine learning framework at the push of a button. This automated machine learning (AutoML) approach has recently also been applied to Python and scikit-learn (Pedregosa et al., 2011) in Auto-WEKA's sister package, Auto-sklearn (Feurer et al., 2015). Auto-sklearn uses the same Bayesian optimizer as Auto-WEKA, but comprises a smaller space of models and hyperparameters, since scikit-learn does not implement as many different machine learning techniques as WEKA; however, Auto-sklearn includes additional meta-learning techniques.

It is also possible to optimize hyperparameters using WEKA's own grid search and MultiSearch
packages. However, these packages only permit tuning one learner and one filtering method at a time. Grid search handles only one hyperparameter. Furthermore, hyperparameter names and possible values have to be specified by the user.

References

J. Bergstra, R. Bardenet, Y. Bengio, and B. Kégl. Algorithms for hyper-parameter optimization. In Advances in Neural Information Processing Systems 24 (NIPS'11), pages 2546–2554, 2011.

E. Brochu, V. Cora, and N. de Freitas. A tutorial on Bayesian optimization of expensive cost functions, with application to active user modeling and hierarchical reinforcement learning. Computing Research Repository (arXiv), abs/1012.2599, 2010.

K. Eggensperger, M. Feurer, F. Hutter, J. Bergstra, J. Snoek, H. Hoos, and K. Leyton-Brown. Towards an empirical foundation for assessing Bayesian optimization of hyperparameters. In NIPS Workshop on Bayesian Optimization (BayesOpt'13), 2013.

M. Feurer, A. Klein, K. Eggensperger, J. Springenberg, M. Blum, and F. Hutter. Efficient and Robust Automated Machine Learning. In Advances in Neural Information Processing Systems 28 (NIPS'15), pages 2944–2952, 2015.

M. Hall, E. Frank, G. Holmes, B. Pfahringer, P. Reutemann, and I. H. Witten. The WEKA Data Mining Software: An Update. SIGKDD Explor. Newsl., 11(1):10–18, Nov. 2009. ISSN 1931-0145.

F. Hutter and M. Osborne. A Kernel for Hierarchical Parameter Spaces. Computing Research Repository (arXiv), abs/1310.5738, Oct. 2013.

F. Hutter, H. H. Hoos, and K. Leyton-Brown. Sequential Model-Based Optimization for General Algorithm Configuration. In Learning and Intelligent OptimizatioN Conference (LION 5), pages 507–523, 2011.

F. Pedregosa, G. Varoquaux, A. Gramfort, V. Michel, B. Thirion, O. Grisel, M. Blondel, P. Prettenhofer, R. Weiss, V. Dubourg, J. Vanderplas, A. Passos, D. Cournapeau, M. Brucher, M. Perrot, and E. Duchesnay. Scikit-learn: Machine learning in Python. Journal of Machine Learning Research, 12:2825–2830, 2011.

J. Snoek, H. Larochelle, and R. P. Adams. Practical Bayesian optimization of machine learning algorithms. In Advances in Neural Information Processing Systems 25 (NIPS'12), pages 2951–2959, 2012.

K. Swersky, D. Duvenaud, J. Snoek, F. Hutter, and M. Osborne. Raiders of the lost architecture: Kernels for Bayesian optimization in conditional parameter spaces. In NIPS Workshop on Bayesian Optimization (BayesOpt'13), 2013.

C. Thornton, F. Hutter, H. H. Hoos, and K. Leyton-Brown. Auto-WEKA: Combined selection and hyperparameter optimization of classification algorithms. In 19th ACM SIGKDD Conference on Knowledge Discovery and Data Mining (KDD'13), 2013.
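
The budget rule stated in footnote 4 of the Auto-WEKA text (individual learner runs capped at 1/12 of the overall budget, feature search at 1/60) can be illustrated with a little arithmetic. The sketch below is only an illustration of those two fractions, not Auto-WEKA's code: the function name `run_limits` is hypothetical, and it assumes that both fractions are taken of the overall time budget expressed in minutes.

```python
def run_limits(overall_budget_minutes=15.0):
    """Return (per-learner-run cap, feature-search cap) in minutes.

    Hypothetical helper: it only applies the fractions quoted in
    footnote 4 (1/12 and 1/60) to the overall time budget.
    """
    per_run = overall_budget_minutes / 12          # cap on a single learner run
    feature_search = overall_budget_minutes / 60   # cap on feature search
    return per_run, feature_search


if __name__ == "__main__":
    # Default 15-minute budget mentioned in the text.
    print(run_limits())      # (1.25, 0.25)
    # A one-hour budget for comparison.
    print(run_limits(60))    # (5.0, 1.0)
```

Under this reading, the default 15-minute budget leaves at most 75 seconds for any single learner run, which may help explain why the text recommends budgets of several hours for production runs.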

Autonomous Learning (Five Sample Essays)

Essay 1: Autonomous Learning

Autonomous learning, or learner autonomy, is in my view a very important capability, and one that Chinese students lack. Compared with students in other countries, Chinese students are accustomed to accepting standard answers without question, but as we all know, a little learning is a dangerous thing. So I approve of autonomous learning in Chinese education.

According to its definition, autonomous learning is a school of education which sees learners as individuals who can and should be autonomous, in other words, responsible for their own learning climate. The learners themselves, not teachers and not parents, are the main part of autonomous learning. Autonomous education helps students develop their self-consciousness, vision, practicality and freedom of discussion. These attributes serve to aid the student in his or her independent learning.

We live in a learning society, and learning only from books and teachers is far from enough. When we were kids, we were told that we must respect teachers and must not do anything bad. We should honor the teacher and respect his teaching. We should be diligent at our lessons. And we should on questions. The teachers flog learning into us, and we have to accept it eventually. Burying ourselves in books trains many excellent students, some good at math, some good at literature, some good at geography. But what stops us from moving toward the Nobel Prize, toward independent innovation? It is that most of us students have lost the basic capability of autonomous learning.

People don't learn anything today. I think it's a great shame the way educational standards are declining. Education is about something much more important. It's about teaching people how to live, how to get on with one another, how to form relationships. It's about understanding things, not just knowing them. Yes, of course, seven sevens are forty-nine. But what does that mean? Our teachers never told us. It's not just a formula, maybe teachers would say so, but we need to know, and we need to understand.

Autonomous learning is very popular with those who home educate their children. The child usually gets to decide what projects they wish to tackle or what interests to pursue. In home education this can be instead of or in addition to regular subjects like doing math or English. But some teachers and parents still approve of school education. So whether autonomous learning is a good choice or not depends on your ideas.

But in one respect at least, the definition of autonomous learning is uncontroversial: it is the exercise of the capacity to think for oneself. Just as there is little contention over the minimal definition of what autonomous learning is, there is little dispute over how it is recognised. It is generally accepted that the capacity for autonomous learning is recognised by its expression in a number of different forms, such as the ability to understand an argument and set it in context; to search for, read, and understand relevant primary and secondary material; to explain and articulate an issue in oral and written form to others; and to demonstrate an awareness of the consequences of what has been learned. So I think that, for truly wise teachers, developing responsible and autonomous learners is the key to motivating students.

Maybe you don't understand the academic definition of autonomous learning; neither do I. However, the minimal definition of autonomous learning can support two different views about the issue. One view is that autonomous learning simply and solely constitutes learning that students do for themselves. For those that hold such a view, an autonomous learner is someone who, given minimal information, would, for example, go away to the library, find sources for themselves and work by themselves. In the discipline of philosophy such work would amount to the student sitting down with a text and trying to come to an understanding of it on their own. Another view, however, and one that we believe significantly contradicts the first, has it that autonomous learning involves showing the student how to do something in such a way that they are then capable of undertaking a comparable activity by themselves. From this perspective, autonomous learning becomes the habitual exercise of skills, developed and perfected through continuous practice, which come to be second nature.

In Chinese education, most teachers are frustrated by their unmotivated students. What they may not know is how important the connection is between student motivation and self-determination. Research has shown that motivation is related to whether or not students have opportunities to be autonomous and to make important academic choices. Having choices allows children to feel that they have control or ownership over their own learning. This, in turn, helps them develop a sense of responsibility and self-motivation. When students feel a sense of ownership, they want to engage in academic tasks and persist in learning. That's the first step for students to learn autonomously. Passion for study makes students feel happy and energetic.

There is no doubt that many teachers fear that giving students more choice will lead to their losing control over classroom management. Research tells us that in fact the opposite happens. When students understand their role as agent over their feeling, thinking, and learning behaviors, they are more likely to take responsibility for their learning. To be autonomous learners, however, students need to have some choice and control. And teachers need to learn how to help students develop the ability to make appropriate choices and take control over their own learning. So teachers need not worry about students becoming uncontrollable.

Autonomous learning is a lean approach to learning. At the least, autonomous learning can help us improve our independent creative ability. We need to know things for a fact and know the whys and wherefores of them. Maybe we have many immature ideas. So what? Just learn it, just do it yourself. Before the coming of the knowledge explosion, many experts in the education field put forward that the main mission is no longer knowledge itself, but the study of the study method. So we need to understand, not learn by rote. The motivation for learning is our thirst for knowledge, our passion. Learning can take place only when there is motivation. That's autonomous learning!

Application Research of Deep Reinforcement Learning in Autonomous Driving

With the continuous development and progress of artificial intelligence technology, autonomous driving technology has become one of the research hotspots in the field of intelligent transportation. In the research of autonomous driving technology, deep reinforcement learning, as an emerging artificial intelligence technology, is increasingly widely used. This paper explores the application research of deep reinforcement learning in autonomous driving.

1. Introduction to Deep Reinforcement Learning

Deep reinforcement learning is a machine learning method based on reinforcement learning, which enables machines to acquire knowledge and experience from the external environment so that they can better complete tasks. The basic framework of deep reinforcement learning is to use a deep learning network to learn the mapping between state and action. Through continuous interaction with the environment, the machine can learn the optimal strategy, thereby realizing the automation of tasks.

The application of deep reinforcement learning in the field of autonomous driving is to automate driving decisions through machine learning, so as to realize intelligent driving.

2. Application of Deep Reinforcement Learning in Autonomous Driving

1. State recognition in autonomous driving

In autonomous driving, state recognition is a very critical step, which mainly obtains the state information of the environment through sensors and converts it into data that the computer can understand. Traditional state recognition methods are mainly based on rules and feature engineering, but this approach not only requires human participation, but also has low accuracy when recognizing complex environmental states. Therefore, state recognition based on deep learning has gradually become the mainstream method in autonomous driving.

The deep learning network can perform feature extraction and classification on images and videos collected by sensors, through methods such as convolutional neural networks, thereby realizing state recognition for complex environments.

2. Decision making in autonomous driving

Decision making in autonomous driving refers to the process of formulating an optimal driving strategy based on the state information acquired by sensors, as well as the goals and constraints of the driving task. In deep reinforcement learning, machines can learn optimal strategies by interacting with the environment, enabling decision making in autonomous driving.

The decision-making process of deep reinforcement learning mainly includes two aspects: one is the learning of the state-value function, which is used to evaluate the value of the current state; the other is the learning of the policy function, which is used to select the optimal action. In deep reinforcement learning, the machine can learn the state-value function and policy function through interaction with the environment, so as to automate driving decision-making.

3. Behavior planning in autonomous driving

Behavior planning in autonomous driving refers to selecting an optimal behavior from all possible behaviors based on the current state information and the goal of the driving task. In deep reinforcement learning, machines can learn optimal strategies for behavior planning in autonomous driving.

4. Path planning in autonomous driving

Path planning in autonomous driving refers to selecting the optimal driving path according to the goals and constraints of the driving task. In deep reinforcement learning, machines can learn optimal strategies for path planning in autonomous driving.

3. Advantages and Challenges of Deep Reinforcement Learning in Autonomous Driving

1. Advantages

Deep reinforcement learning has the following advantages in autonomous driving:

(1) It can automatically complete tasks such as driving decision-making, behavior planning, and path planning, reducing manual participation and improving driving efficiency and safety.
(2) The deep learning network can perform feature extraction and classification on the images and videos collected by the sensors, so as to realize the state recognition of complex environments.
(3) Deep reinforcement learning can learn the optimal strategy through interaction with the environment, so as to carry out the tasks of decision making, behavior planning and path planning in autonomous driving.

2. Challenges

Deep reinforcement learning also presents some challenges in autonomous driving:

(1) Insufficient data: Deep reinforcement learning requires a large amount of data for training, but in the field of autonomous driving, it is very difficult to obtain large-scale driving data.
(2) Safety: The safety of autonomous driving technology is an important issue, because once an accident occurs, its consequences will be unpredictable. Therefore, the use of deep reinforcement learning in autonomous driving requires very strict safety safeguards.
(3) Computing performance: Deep reinforcement learning requires a lot of computing resources and time for training and optimization. Therefore, in practical applications, the problems of computing performance and time cost need to be considered.
(4) Interpretability: Deep reinforcement learning models are usually black-box models, and their decision-making process is difficult to understand and explain, which has a negative impact on the reliability and safety of autonomous driving systems. Therefore, how to improve the interpretability of deep reinforcement learning models is an important research direction.
(5) Generalization ability: In the field of autonomous driving, vehicles face a wide variety of environments and situations. Therefore, the deep reinforcement learning model needs a strong generalization ability in order to make accurate and safe decisions and plans.

In summary, deep reinforcement learning has great application potential in autonomous driving, but challenges such as data scarcity, safety, interpretability, computational performance, and generalization capabilities need to be addressed. Future research should address these issues and promote the development and application of deep reinforcement learning in the field of autonomous driving.
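
The two ingredients named in the decision-making discussion of the essay above, a value function that scores states and a policy that picks the best action, can be sketched on a toy problem. The example below is a deliberately simplified stand-in and rests on several assumptions: it uses a plain table of action values (tabular Q-learning) instead of a deep network, the five-cell corridor environment and the names `step`, `train`, `state_values` and `greedy_policy` are hypothetical, and every state-action pair is visited in a fixed sweep rather than through exploratory driving.

```python
GAMMA = 0.9           # discount factor
ALPHA = 0.5           # learning rate
N_STATES = 5          # cells 0..4 of a toy corridor
GOAL = N_STATES - 1   # reaching the last cell ends the episode
ACTIONS = (0, 1)      # 0 = move left, 1 = move right


def step(state, action):
    """Toy environment: return (next_state, reward, done)."""
    if action == 1:
        nxt = min(state + 1, GOAL)
    else:
        nxt = max(state - 1, 0)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL


def train(sweeps=200):
    """Learn action values by repeatedly trying every state-action pair."""
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(sweeps):
        for s in range(GOAL):              # the goal state is terminal
            for a in ACTIONS:
                nxt, r, done = step(s, a)
                target = r if done else r + GAMMA * max(q[nxt])
                q[s][a] += ALPHA * (target - q[s][a])
    return q


def state_values(q):
    """State-value estimate: value of the best action in each state."""
    return [max(row) for row in q]


def greedy_policy(q):
    """Policy: pick the highest-valued action in each non-terminal state."""
    return [max(ACTIONS, key=lambda a: q[s][a]) for s in range(GOAL)]


if __name__ == "__main__":
    q = train()
    print(state_values(q))
    print(greedy_policy(q))
```

In this toy setting the learned policy moves right in every cell, and the state values shrink by the discount factor with each extra step away from the goal. A deep reinforcement learning system would replace the table `q` with a neural network fed by sensor data, which this sketch does not attempt.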

The future of technology is a fascinating topic that sparks the imagination and fuels debates about the potential advancements and their impact on society. Here's a detailed exploration of what the future might hold in the realm of technology, written in a style that is both informative and engaging.

The Dawn of a New Era: A Glimpse into the Future of Technology

As we stand on the precipice of the future, it is impossible not to be captivated by the rapid pace of technological innovation. The fusion of science, creativity, and human ingenuity is set to redefine the way we live, work, and interact with the world around us. This essay delves into the potential advancements in various sectors and the profound implications they may have for humanity.

Artificial Intelligence and Machine Learning

The rise of artificial intelligence (AI) and machine learning is perhaps one of the most transformative forces in the tech landscape. As these systems become more sophisticated, they are expected to take on roles traditionally reserved for humans, from complex decision-making processes to creative endeavors. The integration of AI into everyday life could lead to a more efficient and personalized experience, with smart homes, autonomous vehicles, and personalized healthcare becoming the norm.

Quantum Computing

Quantum computing represents a leap forward in computational power. By harnessing the principles of quantum mechanics, these machines have the potential to solve problems that are currently beyond the reach of classical computers. This could revolutionize fields such as cryptography, drug discovery, and complex system simulations, offering solutions to some of the world's most pressing challenges.

Biotechnology and Genetic Engineering

The ability to manipulate genetic material is opening doors to a future where diseases can be eradicated, and human potential can be maximized. Advances in biotechnology and genetic engineering could lead to personalized medicine, where treatments are tailored to an individual's genetic makeup. Moreover, the ethical implications of such power cannot be overstated, as they raise questions about the nature of human identity and the potential for genetic discrimination.

Renewable Energy and Environmental Technology

As the world grapples with the effects of climate change, the push for sustainable energy sources is more critical than ever. Solar, wind, and tidal power are set to become the mainstays of our energy mix, with advancements in energy storage and distribution systems ensuring a reliable supply. Additionally, technologies that can clean up pollution and restore ecosystems will become increasingly important in our quest for a greener planet.

Virtual and Augmented Reality

The immersive worlds of virtual reality (VR) and augmented reality (AR) are poised to revolutionize entertainment, education, and even remote work. VR can transport users to entirely new environments, while AR overlays digital information onto the physical world, enhancing our perception and interaction with our surroundings. The potential for these technologies to transform industries and everyday life is immense.

Space Exploration and Colonization

The final frontier is no longer just a concept but a tangible goal for the future. With companies and governments investing in space exploration, we may soon witness the establishment of lunar bases and manned missions to Mars. The technological advancements required for such endeavors will have spin-off benefits for Earth-based industries, from materials science to robotics.

Cybersecurity and Privacy

As our reliance on digital systems grows, so too does the importance of cybersecurity. Protecting personal data and ensuring the integrity of digital transactions will be paramount. The development of quantum cryptography and other advanced security measures will be essential in safeguarding our digital world against the ever-evolving threat landscape.

The Ethical and Societal Implications

With great power comes great responsibility. The future of technology is not without its challenges. The ethical considerations of AI, the potential for job displacement due to automation, and the digital divide are just a few of the issues that society will need to address. Balancing the benefits of technological progress with the need for social equity and environmental sustainability will be a key task for policymakers and technologists alike.

In conclusion, the future of technology is a canvas of endless possibilities, filled with both promise and peril. As we step into this brave new world, it is crucial that we approach these advancements with a sense of responsibility, ensuring that they serve to enhance the human experience and contribute to a better, more equitable future for all.

A Machine Learning Approach to Automatic Production of Compiler Heuristics

Antoine Monsifrot, François Bodin, and René Quiniou
IRISA-University of Rennes, France
{amonsifr,bodin,quiniou}@irisa.fr

Abstract. Achieving high performance on modern processors heavily relies on the compiler optimizations to exploit the microprocessor architecture. The efficiency of optimization directly depends on the compiler heuristics. These heuristics must be target-specific and each new processor generation requires heuristics reengineering.
In this paper, we address the automatic generation of optimization heuristics for a target processor by machine learning. We evaluate the potential of this method on an always legal and simple transformation: loop unrolling. Though simple to implement, this transformation may have strong effects on program execution (good or bad). However deciding to perform the transformation or not is difficult since many interacting parameters must be taken into account. So we propose a machine learning approach.
We try to answer the following questions: is it possible to devise a learning process that captures the relevant parameters involved in loop unrolling performance? Does the Machine Learning Based Heuristics achieve better performance than existing ones?

Keywords: decision tree, boosting, compiler heuristics, loop unrolling.

1 Introduction

Achieving high performance on modern processors heavily relies on the ability of the compiler to exploit the underlying architecture. Numerous program transformations have been implemented in order to produce efficient programs that exploit the potential of the processor architecture. These transformations interact in a complex way. As a consequence, an optimizing compiler relies on internal heuristics to choose an optimization and whether or not to apply it. Designing these heuristics is generally difficult. The heuristics must be specific to each implementation of the instruction set architecture. They are also dependent on changes made to the compiler.

In this paper, we address the problem of automatically generating such heuristics by a machine learning approach. To our knowledge this is the first study of machine learning to build these heuristics. The usual approach consists in running a set of benchmarks to set up heuristics parameters. Very few papers have specifically addressed the issue of building such heuristics. Nevertheless approximate heuristics have been proposed [8,11] for unroll and jam, a transformation that is like unrolling (our example) but that behaves differently.

D. Scott (Ed.): AIMSA 2002, LNAI 2443, pp. 41–50, 2002. © Springer-Verlag Berlin Heidelberg 2002

Our study aims to simplify compiler construction while better exploiting optimizations. To evaluate the potential of this approach we have chosen a simple transformation: loop unrolling [6]. Loop unrolling is always legal and is easy to implement, but because it has many side effects, it is difficult to devise a decision rule that will be correct in most situations.

In this novel study we try to answer the following questions: is it possible to learn a decision rule that selects the parameters involved in loop unrolling efficiency? Does the Machine Learning Based Heuristics (denoted MLBH) achieve better performance than existing ones? Does the learning process really take into account the target architecture?

To answer the first question we build on previous studies [9] that defined an abstract representation of loops in order to capture the parameters influencing performance. To answer the second question we compare the performance of our Machine Learning Based Heuristics and the GNU Fortran compiler [3] on a set of applications. To answer the last question we have used two target machines, an UltraSPARC machine [12] and an IA-64 machine [7], and used on each the MLBH computed on the other.

The paper is organized as follows. Section 2 gives an overview of the loop unrolling transformation. Section 3 shows how machine learning techniques can be
used to automatically build loop unrolling heuristics. Section 4 illustrates an implementation of the technique based on the OC1 decision tree software [10].

2 Loop Unrolling as a Case

The performance of superscalar processors relies on a very high frequency (footnote 1) and on the parallel execution of multiple instructions (this is also called Instruction Level Parallelism, ILP). To achieve this, the internal architecture of superscalar microprocessors is based on the following features:

Memory hierarchy: the main memory access time is typically hundreds of times greater than the CPU cycle time. To limit the slowdown due to memory accesses, a set of intermediate levels are added between the CPU unit and the main memory; the level closest to the CPU is the fastest, but also the smallest. The data or instructions are loaded by blocks (sets of contiguous bytes in memory) from one memory level of the hierarchy to the next level to exploit the following fact: when a program accesses some memory element, the next contiguous one is usually also accessed in the very near future. In a classical configuration there are 2 levels of cache memories, L1 and L2, as shown in Figure 1. The penalty to load data from main memory tends to be equivalent to executing 1000 processor cycles. If the data is already in L2, it is one order of magnitude less. If the data is already in L1, the access can be done in only a few CPU cycles.

Footnote 1: typically 2 gigahertz, corresponding to a processor cycle time of 0.5 nanosecond.

[Figure: Main Memory (x1000), L2 Cache (x100), L1 Cache (x10), CPU (x1)]
Fig. 1. Memory hierarchy

Multiple Pipelined Functional Units: the processor has multiple functional units that can run in parallel to execute several instructions per cycle (typically an integer operation can be executed in parallel with a memory access and a floating point computation). Furthermore these functional units are pipelined. This divides the operation into a sequence of steps that can be performed in parallel. Scheduling instructions in the functional units is performed in an out-of-order or in-order mode. Contrary to the in-order mode, in the out-of-order mode instructions are not always executed in the order specified by the program.

When a processor runs at maximum speed, each pipelined functional unit executes one instruction per cycle. This requires that all operands and branch addresses are available at the beginning of the cycle. Otherwise, functional units must wait during some delays. The processor performance depends on these waiting delays.

The efficiency of the memory hierarchy and ILP are directly related to the structure and behavior of the code. Many program transformations reduce the number of waiting delays in program execution.

Loop unrolling [6] is a simple program transformation where the loop body is replicated many times. It may be applied at source code level to benefit from all compiler optimizations. It improves the exploitation of ILP: increasing the size of the body augments the number of instructions eligible to out-of-order scheduling. Loop unrolling also reduces loop management overhead, and it has some beneficial side effects on later compiler steps such as common sub-expression elimination. However it also has many negative side effects that can cancel the benefits of the transformation:

– the instruction cache behavior may be degraded (if the loop body becomes too big to fit in the cache),
– the register allocation phase may generate spill code (additional load and store instructions),
– it may prevent other optimization techniques.

As a consequence, it is difficult to fully exploit loop unrolling. Compilers are usually very conservative. Their heuristics are generally based on the loop body size: under a specific threshold, if there is no control flow statement, the loop is unrolled. This traditional approach under-exploits loop unrolling [5] and must be adapted when changes are made to the compiler or to the target architecture.

The usual approach to build loop unrolling heuristics for a given target computer consists
in running a set of benchmarks to setup the heuristics parameters.This approach is intrinsically limited because in most optimizations such as loop unrolling,too many parameters are involved.Microarchitecture characteristics44 A.Monsifrot,F.Bodin,and R.Quiniou(for instance the size of instruction cache,...)as well as the other optimizations (for instance instruction scheduling,...)that follow loop unrolling during the compilation process should be considered in the decision procedure.The main parameters,but not all(for instance number instruction cache misses),which influence loop unrolling efficiency directly depend on the loop body statements. This is because loop unrolling mainly impacts code generation and instruction scheduling.As a consequence,it is realistic to base the unrolling decision on the properties of the loop code while ignoring its execution context.3Machine Learning for Building HeuristicsMachine learning techniques offer an automatic,flexible and adaptive framework for dealing with the many parameters involved in deciding the effectiveness of program optimizations.Classically a decision rule is learnt from feature vectors describing positive and negative applications of the transformation.However, it is possible to use this framework only if the parameters can be abstracted statically from the loop code and if their number remains limited.Reducing the number of parameters involved in the process is important as the performance of machine learning techniques is poor when the number of dimensions of the learning space is high[10].Furthermore learning from complex spaces requires more data.To summarize the approach,the steps involved in using a machine learning technique for building heuristics for program transformation are:1.finding a loop abstraction that captures the“performance”features involvedin an optimization,in order to build the learning set,2.choosing an automatic learning process to compute a rule in order to decidewhether loop 
unrolling should be applied,
3. setting up the result of the learning process as heuristics for the compiler.

In the remainder of this section, we present the representation used for abstracting loop properties. The next subsection shows how to sort the loops into winning and losing classes with respect to unrolling. Finally, the learning process based on decision trees is overviewed.

3.1 Loop Abstraction

The loop abstraction must capture the main loop characteristics that influence the execution efficiency on a modern processor. They are represented by integer features, which are static loop properties relevant to unrolling. We have selected 5 classes of integer features:
- Memory access: number of memory accesses, number of array element reuses from one iteration to another.
- Arithmetic operations count: number of additions, multiplications or divisions, excluding those in array index computations.
- Size of the loop body: number of statements in the loop.
- Control statements in the loop: number of if statements, goto, etc. in the loop body.
- Number of iterations: if it can be computed at compile time.

In order to reduce the learning complexity, only a subset of these features is used for a given compiler and target machine. The chosen subset was determined experimentally by cross validation (see Section 4). The quality of the predictions achieved by an artificial neural network based on 20 indices was equivalent to the predictive quality of the 6 chosen features.

Figure 2 gives the features that were selected and an example of loop abstraction.

    do i = 2, 100
      a(i) = a(i) + a(i-1) * a(i+1)
    enddo

    Number of statements                1
    Number of arithmetic operations     2
    Minimum number of iterations       99
    Number of array accesses            4
    Number of array element reuses      3
    Number of if statements             0

Fig. 2. Example of features for a loop.

3.2 Unrolling Beneficial Loops

A learning example refers to a loop in a particular context (represented by the loop features). To determine if unrolling is beneficial, each loop is executed
twice. Then, the execution times of the original loop and of the unrolled loop are compared. Four cases can be considered:
- not significant: the loop execution time is too small and therefore the timing is not significant. The loop is discarded from the learning set.
- equal: the execution times of the original and of the unrolled loop are close. A threshold is used to take into account the timer inaccuracy. Thus, the loop performance is considered as invariant by unrolling if the benefit is less than 10%.
- improved: the speedup is above 10%. The loop is considered as being beneficial by unrolling.
- degraded: there is a speed-down. The loop is considered as a degraded loop by unrolling.

The loop set is then partitioned into equivalence classes (denoted loop classes in the remainder). Two loops are in the same loop class if their respective abstractions are equal. The next step is to decide if a loop class is to be considered as a positive or a negative example. Note that there can be beneficial and degraded loops in the same class, as non-exhaustive descriptions are used to represent the loops. This is a natural situation, as the loop execution or compilation context may greatly influence its execution time, for instance due to instruction cache memory effects.
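As an illustration, the labeling and partitioning steps described above can be sketched in Python. This is a minimal sketch under stated assumptions, not the implementation used in the experiments: the names `LoopFeatures`, `label_loop` and `partition_into_classes` are hypothetical, the cut-off `min_time` for "not significant" timings is an arbitrary placeholder (the text gives no value), the benefit is measured as the relative reduction in execution time, and any measured slowdown is treated as "degraded".

```python
from collections import defaultdict
from typing import NamedTuple


class LoopFeatures(NamedTuple):
    """Static abstraction of a loop: the six features listed in Fig. 2."""
    statements: int
    arithmetic_ops: int
    min_iterations: int
    array_accesses: int
    array_reuses: int
    if_statements: int


def label_loop(t_original, t_unrolled, min_time=1e-3, gain_threshold=0.10):
    """Return one of the four cases of Section 3.2.

    min_time (seconds) is a hypothetical cut-off; the text gives no value.
    Treating every measured slowdown as "degraded" is also an assumption.
    """
    if t_original < min_time:
        return "not significant"
    gain = (t_original - t_unrolled) / t_original
    if gain > gain_threshold:
        return "improved"
    if gain < 0.0:
        return "degraded"
    return "equal"


def partition_into_classes(measurements):
    """Group labels by loop abstraction; equal abstractions share a class."""
    classes = defaultdict(list)
    for features, t_original, t_unrolled in measurements:
        label = label_loop(t_original, t_unrolled)
        if label != "not significant":  # discarded from the learning set
            classes[features].append(label)
    return dict(classes)


# Illustrative timings only; these are not measurements from the paper.
fig2 = LoopFeatures(1, 2, 99, 4, 3, 0)      # the loop of Fig. 2
other = LoopFeatures(3, 5, 1000, 6, 0, 1)   # a made-up second abstraction
measurements = [
    (fig2, 2.0, 1.6),     # 20% gain
    (fig2, 2.0, 2.2),     # slowdown, same abstraction as above
    (other, 1.0, 0.95),   # 5% gain, below the 10% threshold
    (other, 1e-5, 1e-5),  # too short to time reliably
]
loop_classes = partition_into_classes(measurements)
```

Because `LoopFeatures` is a tuple, two loops with identical abstractions hash to the same dictionary key, which mirrors the definition of a loop class. In this made-up data the class of the Fig. 2 loop ends up holding both an improved and a degraded loop, which is exactly the mixed situation noted above.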
The following criterion has been used to decide whether a class will represent a positive or a negative example:
1. In a particular class, a loop whose performance degrades by less than 5% is counted once, a loop that degrades performance by 10% is counted twice. A loop that degrades performance by more than 20% is counted three times.
2. If the number of unrolling beneficial loops is greater than the number of degraded loops (using the weights above), then the class represents a positive example, else the class represents a negative example.

3.3 A Learning Method Based on Decision Trees and Boosting

We have chosen to represent unrolling decision rules as decision trees. Decision trees can be learnt efficiently from feature-based vectors. Each node of the decision tree represents a test checking the value(s) of one (or several) feature(s); such tests are easy for an expert to read. This is not the case for statistical methods such as Nearest Neighbor or Artificial Neural Networks, which have comparable or slightly better performance.

We used the OC1 [10] software. OC1 is a classification tool that induces oblique decision trees. Oblique decision trees produce polygonal partitionings of the feature space. OC1 recursively splits a set of objects in a hyperspace by finding optimal hyperplanes until every object in a subspace belongs to the same class.

[Figure 3: an oblique decision tree with node tests 6x + y > 60 ?, 3x − 2y > 6 ? and −x + 2y > 8 ?, branches labeled y and n, and leaves labeled A and B, shown next to the corresponding partitioning of the (x, y) attribute space.]

Fig. 3. The left side of the figure shows an oblique decision tree that uses two attributes. The right side shows the partitioning that this tree creates in the attribute space.

A decision tree example is shown in Figure 3, together with its related 2-D space. Each node of the tree tests a linear combination of some indices (equivalent to a hyperplane) and each leaf of the tree corresponds to a class. The main advantage of OC1 is that it finds smaller decision trees than classical tree learning methods. The major drawback is that they are less readable than classical
ones. The classification of a new loop is equivalent to finding a leaf loop class. Once induced, a decision tree can be used as a classification process. An object represented by its feature vector is classified by following the branches of the tree indicated by node tests until a leaf is reached.

To improve the accuracy obtained with OC1 we have used boosting [13]. Boosting is a general method for improving the accuracy of any given algorithm. Boosting consists in learning a set of classifiers for more and more difficult problems: the weights of examples that are misclassified by the classifier learnt at step n are increased (by a factor proportional to the global error), and at step n+1 a new classifier is learnt on these weighted examples. Finally, the global classification is obtained by a weighted vote of the individual classifiers according to their respective accuracy. In our case 9 trees were computed.

4 Experiments

The learning set used in the experiments is made of loops extracted from programs in Fortran 77. Most of them were chosen in available benchmarks [4,1]. We have studied two types of programs: real applications (the majority comes from SPEC [4]) and computational kernels. Table 1 presents some characteristics of a loop set (cf. Section 3.2).

Table 1. IA-64 learning set.

    Number of loops               1036
    Discarded loops                177
    Unrolling beneficial loops     233
    Unrolling invariant loops      505
    Unrolling degraded loops       121
    Loop classes                   572
    Positive examples              139
    Negative examples              433

The accuracy of the learning method was assessed by a 10-fold cross-validation. We have experimented with pruning. We obtained smaller trees, but the resulting quality was degraded. The results without pruning are presented in Table 2. Two factors can explain the fact that the overall accuracy cannot be better than 85%:
1. since unrolling beneficial and degraded loops can appear in the same class (cf. Section 3.2), a significant proportion of examples may be noisy,
2. the classification of positive examples is far
worse than the classification of negative ones. Maybe the learning set does not contain enough beneficial loops.

Table 2. Cross validation accuracy

                                                   UltraSPARC            IA-64
                                                   normal   boosting     normal   boosting
    Accuracy of overall example classification     79.4%    85.2%        82.6%    85.2%
    Accuracy of positive example classification    62.4%    61.7%        73.9%    69.6%
    Accuracy of negative example classification    85.1%    92.0%        86.3%    92.3%

To go beyond cross validation another set of experiments has been performed on two target machines, an UltraSPARC and an IA-64. They aim at showing the technique does catch the most significant loops of the programs. The g77 [3] compiler was used. With this compiler, loop unrolling can be globally turned on and off. To assess our method we have implemented loop unrolling at the source code level using TSF [2]. This is not the most efficient scheme because in some cases this inhibits some of the compiler optimizations (contrary to unrolling performed by the compiler itself).

We have performed experiments to check whether the MLB heuristics are at least as good as compiler heuristics and whether the specificities of a target architecture can be taken into account. A set of benchmark programs were selected in the learning set and for each one we have:
1. run the code compiled by g77 with -O3 option,
2. run the code compiled with -O3 -funroll options: the compiler uses its own unrolling strategy,
3. unroll the loops according to the result of the MLB heuristics and run the compiled code with -O3 option. The heuristics was learned for the target machine from learning set where the test program was removed.
4. unroll the loops according to the result of the MLB heuristics learnt for the other target machine and run the compiled code with -O3 option.

Fig. 4. IA-64: -O3 is the reference execution time.

The performance results are given in Figure 4 and Figure 5 respectively for the IA-64 and UltraSPARC targets.

The average execution time of the optimized programs for the IA-64 is 93.8% of the reference execution
time (no unrolling) using the MLB heuristics and 96.8% using the g77 unrolling strategy. On the UltraSPARC we have respectively 96% and 98.7%, showing that our unrolling strategy performs better.

Fig. 5. UltraSPARC: -O3 is the reference execution time.

Indeed, gaining a few percent on average execution time with one transformation is significant because a given transformation is seldom beneficial. For example, only 22% of the loops benefit from unrolling on IA-64 and 17% on UltraSPARC.

In the last experiment we exchanged the decision trees learnt for the two target machines. On the UltraSPARC, the relative execution time degrades from 96% to 97.9%, and on the IA-64 it degrades from 93.8% to 96.8%. This shows that the heuristics are indeed tuned to a target architecture.

5 Conclusion

Compilers implement a lot of optimization algorithms for improving performance. The choice of a particular sequence of optimizations and of their parameters is made through a set of heuristics hard coded in the compiler. At each major compiler revision, but also at new implementations of the target Instruction Set Architecture, a new set of heuristics must be reengineered.

In this paper, we have presented a new method for addressing such reengineering in the case of loop unrolling. Our method is based on a learning process which adapts to new target architectures or new compilers. Using an abstract loop representation, we showed that decision trees that provide target specific heuristics for loop unrolling can be learnt.

While our study is limited to the simple case of loop unrolling, it opens a new direction for the design of compiler heuristics. Even for loop unrolling, there are still many issues to consider to go beyond this first result. Are there better abstractions that can capture loop characteristics? Can hardware counters (for instance cache miss counters) provide better insight on loop unrolling? How large should the learning set be? Can other machine learning
techniques be more efficient than decision trees?

More fundamentally, our study raises the question of whether it is possible to quasi-automatically reengineer the implementation of a set of optimization heuristics for new processor target implementations.

Acknowledgments. We would like to gratefully thank I.C. Lerman and L. Miclet for their insightful advice on machine learning techniques.

References

1. David Bailey. Nas kernel benchmark program, June 1988. /benchmark/nas.
2. F. Bodin, Y. Mével, and R. Quiniou. A User Level Program Transformation Tool. In Proceedings of the International Conference on Supercomputing, pages 180–187, July 1998, Melbourne, Australia.
3. GNU Fortran Compiler. /.
4. Standard Performance Evaluation Corporation. /.
5. Jack W. Davidson and Sanjay Jinturkar. Aggressive Loop Unrolling in a Retargetable, Optimizing Compiler. In Compiler Construction, volume 1060 of Lecture Notes in Computer Science, pages 59–73. Springer, April 1996.
6. J.J. Dongarra and A.R. Hinds. Unrolling loops in FORTRAN. Software Practice and Experience, 9(3):219–226, March 1979.
7. IA-64. /design/Itanium/idfisa/index.htm.
8. A. Koseki, H. Komatsu, and Y. Fukazawa. A Method for Estimating Optimal Unrolling Times for Nested Loops. In Proceedings of the International Symposium on Parallel Architectures, Algorithms and Networks, 1997.
9. A. Monsifrot and F. Bodin. Computer Aided Hand Tuning (CAHT): "Applying Case-Based Reasoning to Performance Tuning". In Proceedings of the 15th ACM International Conference on Supercomputing (ICS-01), pages 196–203. ACM Press, June 17–21 2001, Sorrento, Italy.
10. Sreerama K. Murthy, Simon Kasif, and Steven Salzberg. A System for Induction of Oblique Decision Trees. Journal of Artificial Intelligence Research, 2:1–32, 1994.
11. Vivek Sarkar. Optimized Unrolling of Nested Loops. In Proceedings of the 14th ACM International Conference on Supercomputing (ICS-00), pages 153–166. ACM Press, May 2000.
12. UltraSPARC. /processors/UltraSPARC-II/.
13. C. Yu and D.B. Skillicorn. Parallelizing Boosting and Bagging. Technical report, Queen’s
University, Kingston, Ontario, Canada K7L 3N6, February 2001.