11Visualizing document content using language structure

- 格式:pdf

- 大小:986.00 KB

- 文档页数:8

Beyond Reason CodesA Blueprint for Human-Centered,Low-Risk AutoML H2O.ai Machine Learning Interpretability TeamH2O.aiMarch21,2019ContentsBlueprintEDABenchmarkTrainingPost-Hoc AnalysisReviewDeploymentAppealIterateQuestionsBlueprintThis mid-level technical document provides a basic blueprint for combining the best of AutoML,regulation-compliant predictive modeling,and machine learning research in the sub-disciplines of fairness,interpretable models,post-hoc explanations,privacy and security to create a low-risk,human-centered machine learning framework.Look for compliance mode in Driverless AI soon.∗Guidance from leading researchers and practitioners.Blueprint†EDA and Data VisualizationKnow thy data.Automation implemented inDriverless AI as AutoViz.OSS:H2O-3AggregatorReferences:Visualizing Big DataOutliers through DistributedAggregation;The Grammar ofGraphicsEstablish BenchmarksEstablishing a benchmark from which to gauge improvements in accuracy,fairness, interpretability or privacy is crucial for good(“data”)science and for compliance.Manual,Private,Sparse or Straightforward Feature EngineeringAutomation implemented inDriverless AI as high-interpretabilitytransformers.OSS:Pandas Profiler,Feature ToolsReferences:Deep Feature Synthesis:Towards Automating Data ScienceEndeavors;Label,Segment,Featurize:A Cross Domain Framework forPrediction EngineeringPreprocessing for Fairness,Privacy or SecurityOSS:IBM AI360References:Data PreprocessingTechniques for Classification WithoutDiscrimination;Certifying andRemoving Disparate Impact;Optimized Pre-processing forDiscrimination Prevention;Privacy-Preserving Data MiningRoadmap items for H2O.ai MLI.Constrained,Fair,Interpretable,Private or Simple ModelsAutomation implemented inDriverless AI as GLM,RuleFit,Monotonic GBM.References:Locally InterpretableModels and Effects Based onSupervised Partitioning(LIME-SUP);Explainable Neural Networks Based onAdditive Index Models(XNN);Scalable Bayesian Rule Lists(SBRL)LIME-SUP,SBRL,XNN areroadmap items for H2O.ai MLI.Traditional Model Assessment and DiagnosticsResidual analysis,Q-Q plots,AUC andlift curves confirm model is accurateand meets assumption criteria.Implemented as model diagnostics inDriverless AI.Post-hoc ExplanationsLIME,Tree SHAP implemented inDriverless AI.OSS:lime,shapReferences:Why Should I Trust You?:Explaining the Predictions of AnyClassifier;A Unified Approach toInterpreting Model Predictions;PleaseStop Explaining Black Box Models forHigh Stakes Decisions(criticism)Tree SHAP is roadmap for H2O-3;Explanations for unstructured data areroadmap for H2O.ai MLI.Interlude:The Time–Tested Shapley Value1.In the beginning:A Value for N-Person Games,19532.Nobel-worthy contributions:The Shapley Value:Essays in Honor of Lloyd S.Shapley,19883.Shapley regression:Analysis of Regression in Game Theory Approach,20014.First reference in ML?Fair Attribution of Functional Contribution in Artificialand Biological Networks,20045.Into the ML research mainstream,i.e.JMLR:An Efficient Explanation ofIndividual Classifications Using Game Theory,20106.Into the real-world data mining workflow...finally:Consistent IndividualizedFeature Attribution for Tree Ensembles,20177.Unification:A Unified Approach to Interpreting Model Predictions,2017Model Debugging for Accuracy,Privacy or SecurityEliminating errors in model predictions bytesting:adversarial examples,explanation ofresiduals,random attacks and“what-if”analysis.OSS:cleverhans,pdpbox,what-if toolReferences:Modeltracker:RedesigningPerformance Analysis Tools for MachineLearning;A Marauder’s Map of Security andPrivacy in Machine Learning:An overview ofcurrent and future research directions formaking machine learning secure and privateAdversarial examples,explanation ofresiduals,measures of epistemic uncertainty,“what-if”analysis are roadmap items inH2O.ai MLI.Post-hoc Disparate Impact Assessment and RemediationDisparate impact analysis can beperformed manually using Driverless AIor H2O-3.OSS:aequitas,IBM AI360,themisReferences:Equality of Opportunity inSupervised Learning;Certifying andRemoving Disparate ImpactDisparate impact analysis andremediation are roadmap items forH2O.ai MLI.Human Review and DocumentationAutomation implemented as AutoDocin Driverless AI.Various fairness,interpretabilityand model debugging roadmapitems to be added to AutoDoc.Documentation of consideredalternative approaches typicallynecessary for compliance.Deployment,Management and MonitoringMonitor models for accuracy,disparateimpact,privacy violations or securityvulnerabilities in real-time;track modeland data lineage.OSS:mlflow,modeldb,awesome-machine-learning-opsmetalistReference:Model DB:A System forMachine Learning Model ManagementBroader roadmap item for H2O.ai.Human AppealVery important,may require custom implementation for each deployment environment?Iterate:Use Gained Knowledge to Improve Accuracy,Fairness, Interpretability,Privacy or SecurityImprovements,KPIs should not be restricted to accuracy alone.Open Conceptual QuestionsHow much automation is appropriate,100%?How to automate learning by iteration,reinforcement learning?How to implement human appeals,is it productizable?ReferencesThis presentation:https:///navdeep-G/gtc-2019/blob/master/main.pdfDriverless AI API Interpretability Technique Examples:https:///h2oai/driverlessai-tutorials/tree/master/interpretable_ml In-Depth Open Source Interpretability Technique Examples:https:///jphall663/interpretable_machine_learning_with_python https:///navdeep-G/interpretable-ml"Awesome"Machine Learning Interpretability Resource List:https:///jphall663/awesome-machine-learning-interpretabilityAgrawal,Rakesh and Ramakrishnan Srikant(2000).“Privacy-Preserving Data Mining.”In:ACM Sigmod Record.Vol.29.2.URL:/cs/projects/iis/hdb/Publications/papers/sigmod00_privacy.pdf.ACM,pp.439–450.Amershi,Saleema et al.(2015).“Modeltracker:Redesigning Performance Analysis Tools for Machine Learning.”In:Proceedings of the33rd Annual ACM Conference on Human Factors in Computing Systems.URL: https:///en-us/research/wp-content/uploads/2016/02/amershi.CHI2015.ModelTracker.pdf.ACM,pp.337–346.Calmon,Flavio et al.(2017).“Optimized Pre-processing for Discrimination Prevention.”In:Advances in Neural Information Processing Systems.URL:/paper/6988-optimized-pre-processing-for-discrimination-prevention.pdf,pp.3992–4001.Feldman,Michael et al.(2015).“Certifying and Removing Disparate Impact.”In:Proceedings of the21th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining.URL:https:///pdf/1412.3756.pdf.ACM,pp.259–268.Hardt,Moritz,Eric Price,Nati Srebro,et al.(2016).“Equality of Opportunity in Supervised Learning.”In: Advances in neural information processing systems.URL:/paper/6374-equality-of-opportunity-in-supervised-learning.pdf,pp.3315–3323.Hu,Linwei et al.(2018).“Locally Interpretable Models and Effects Based on Supervised Partitioning (LIME-SUP).”In:arXiv preprint arXiv:1806.00663.URL:https:///ftp/arxiv/papers/1806/1806.00663.pdf.Kamiran,Faisal and Toon Calders(2012).“Data Preprocessing Techniques for Classification Without Discrimination.”In:Knowledge and Information Systems33.1.URL:https:///content/pdf/10.1007/s10115-011-0463-8.pdf,pp.1–33.Kanter,James Max,Owen Gillespie,and Kalyan Veeramachaneni(2016).“Label,Segment,Featurize:A Cross Domain Framework for Prediction Engineering.”In:Data Science and Advanced Analytics(DSAA),2016 IEEE International Conference on.URL:/static/papers/DSAA_LSF_2016.pdf.IEEE,pp.430–439.Kanter,James Max and Kalyan Veeramachaneni(2015).“Deep Feature Synthesis:Towards Automating Data Science Endeavors.”In:Data Science and Advanced Analytics(DSAA),2015.366782015.IEEEInternational Conference on.URL:https:///EVO-DesignOpt/groupWebSite/uploads/Site/DSAA_DSM_2015.pdf.IEEE,pp.1–10.Keinan,Alon et al.(2004).“Fair Attribution of Functional Contribution in Artificial and Biological Networks.”In:Neural Computation16.9.URL:https:///profile/Isaac_Meilijson/publication/2474580_Fair_Attribution_of_Functional_Contribution_in_Artificial_and_Biological_Networks/links/09e415146df8289373000000/Fair-Attribution-of-Functional-Contribution-in-Artificial-and-Biological-Networks.pdf,pp.1887–1915.Kononenko,Igor et al.(2010).“An Efficient Explanation of Individual Classifications Using Game Theory.”In: Journal of Machine Learning Research11.Jan.URL:/papers/volume11/strumbelj10a/strumbelj10a.pdf,pp.1–18.Lipovetsky,Stan and Michael Conklin(2001).“Analysis of Regression in Game Theory Approach.”In:Applied Stochastic Models in Business and Industry17.4,pp.319–330.Lundberg,Scott M.,Gabriel G.Erion,and Su-In Lee(2017).“Consistent Individualized Feature Attribution for Tree Ensembles.”In:Proceedings of the2017ICML Workshop on Human Interpretability in Machine Learning(WHI2017).Ed.by Been Kim et al.URL:https:///pdf?id=ByTKSo-m-.ICML WHI2017,pp.15–21.Lundberg,Scott M and Su-In Lee(2017).“A Unified Approach to Interpreting Model Predictions.”In: Advances in Neural Information Processing Systems30.Ed.by I.Guyon et al.URL:/paper/7062-a-unified-approach-to-interpreting-model-predictions.pdf.Curran Associates,Inc.,pp.4765–4774.Papernot,Nicolas(2018).“A Marauder’s Map of Security and Privacy in Machine Learning:An overview of current and future research directions for making machine learning secure and private.”In:Proceedings of the11th ACM Workshop on Artificial Intelligence and Security.URL:https:///pdf/1811.01134.pdf.ACM.Ribeiro,Marco Tulio,Sameer Singh,and Carlos Guestrin(2016).“Why Should I Trust You?:Explaining the Predictions of Any Classifier.”In:Proceedings of the22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining.URL:/kdd2016/papers/files/rfp0573-ribeiroA.pdf.ACM,pp.1135–1144.Rudin,Cynthia(2018).“Please Stop Explaining Black Box Models for High Stakes Decisions.”In:arXiv preprint arXiv:1811.10154.URL:https:///pdf/1811.10154.pdf.Shapley,Lloyd S(1953).“A Value for N-Person Games.”In:Contributions to the Theory of Games2.28.URL: http://www.library.fa.ru/files/Roth2.pdf#page=39,pp.307–317.Shapley,Lloyd S,Alvin E Roth,et al.(1988).The Shapley Value:Essays in Honor of Lloyd S.Shapley.URL: http://www.library.fa.ru/files/Roth2.pdf.Cambridge University Press.Vartak,Manasi et al.(2016).“Model DB:A System for Machine Learning Model Management.”In: Proceedings of the Workshop on Human-In-the-Loop Data Analytics.URL:https:///~matei/papers/2016/hilda_modeldb.pdf.ACM,p.14.Vaughan,Joel et al.(2018).“Explainable Neural Networks Based on Additive Index Models.”In:arXiv preprint arXiv:1806.01933.URL:https:///pdf/1806.01933.pdf.Wilkinson,Leland(2006).The Grammar of Graphics.—(2018).“Visualizing Big Data Outliers through Distributed Aggregation.”In:IEEE Transactions on Visualization&Computer Graphics.URL:https:///~wilkinson/Publications/outliers.pdf.Yang,Hongyu,Cynthia Rudin,and Margo Seltzer(2017).“Scalable Bayesian Rule Lists.”In:Proceedings of the34th International Conference on Machine Learning(ICML).URL:https:///pdf/1602.08610.pdf.。

初中生英语阅读技巧指南Guide to English Reading Skills for Middle School StudentsAs young learners embark on their journey to master the English language, developing strong reading skills is crucial for their overall language proficiency. In middle school, students are introduced to more complex texts that require higher comprehension abilities. To help them navigate through this important phase, it is essential to equip them with effective English reading strategies. In this article, we will provide a comprehensive guide to English reading skills for middle school students.1. Set a Reading Goal:Before diving into any text, it is essential to set a clear reading goal. The purpose of reading could be to gain information, understand a concept, analyze a story, or simply enhance vocabulary. Discussing the reading goal with the students helps them focus on the specific task at hand and stay engaged throughout the process.2. Preview the Text:Encourage students to develop the habit of previewing the text before reading it in detail. This involves skimming through the title, headings, subheadings, and graphic features such as pictures, tables, and graphs. Previewing allows students to activate their prior knowledge, make predictions about the content, and set expectations for what they are about to read.3. Activate Prior Knowledge:Help students make connections between their existing knowledge and the text they are about to read. This can be done by asking them to brainstorm everything they already know about the topic. Activating prior knowledge not only enhances comprehension but also builds confidence.4. Build Vocabulary:Middle school is a critical period for expanding vocabulary. Encourage students to create a list of unfamiliar words from the text and use context clues, such as surrounding words or sentence structure, to decipher their meanings. Additionally, suggest using online dictionaries or apps to look up unfamiliar words and reinforce their usage and pronunciation.5. Skim and Scan:Teach students the importance of skimming and scanning while reading. Skimming involves quickly glancing through the text to get a general understanding of the main ideas. Scanning, on the other hand, involves searching for specific information by quickly moving the eyes over the text. These skills help students locate relevant information efficiently.6. Highlight and Annotate:Encourage students to become actively engaged readers by highlighting important details and making annotations in the margins of the text. This practice improves concentration and allows for easy reference when reviewing the material later.7. Take Notes:After completing a reading passage, students should be encouraged to summarize the main points in their own words. Taking notes helps solidify understanding, aids in retention, and serves as a valuable resource for future reference.8. Practice Active Reading:Active reading involves interacting with the text by asking questions, making predictions, and drawing conclusions. Encourage students to pause at certain points and reflect on what they have read. This not only enhances comprehension but also develops critical thinking skills.9. Utilize Graphic Organizers:Graphic organizers, such as concept maps or Venn diagrams, can be invaluable tools for visualizing information and organizing thoughts. Encourage students to use these tools while reading to break down complex concepts and identify relationships between ideas.10. Collaborative Reading:Promoting collaborative reading activities fosters discussion, expands perspectives, and encourages active learning. Group discussions can focus on sharing insights, summarizing the text, or debating different interpretations. Collaborative reading also boosts social skills and teamwork.11. Read Widely:Encourage students to explore a variety of genres, including fiction, non-fiction, poetry, and news articles. Diversifying their reading materialsexposes them to different writing styles, expands their knowledge, and improves their overall language skills.12. Set a Reading Schedule:Establish a consistent reading schedule at home and at school. Designate a specific time for independent reading, making it a daily habit. Consistency is key to building reading stamina and developing a love for reading.13. Seek Guidance:Middle school students should be encouraged to seek guidance from teachers, parents, or tutors when encountering challenging texts. Clarifying doubts and discussing difficult concepts promotes deeper understanding and prevents the development of misconceptions.14. Keep a Reading Journal:Encourage students to maintain a reading journal where they can record their impressions, reflections, and reactions to various texts. This practice not only enhances their writing skills but also allows for self-expression and personal growth.15. Emphasize Reading for Pleasure:Above all, it is crucial to foster a love for reading among middle school students. Encourage them to choose books or articles that align with their interests and passions. Reading for pleasure helps students develop a lifelong habit that will enrich their knowledge, broaden their horizons, and instill a love for the English language.In conclusion, acquiring strong English reading skills is vital for middle school students. By implementing these various strategies, students will develop effective reading habits, improve their comprehension abilities, and ultimately become confident readers. Guiding them through this critical phase empowers them to excel in their English language journey and lays a solid foundation for their future academic success.。

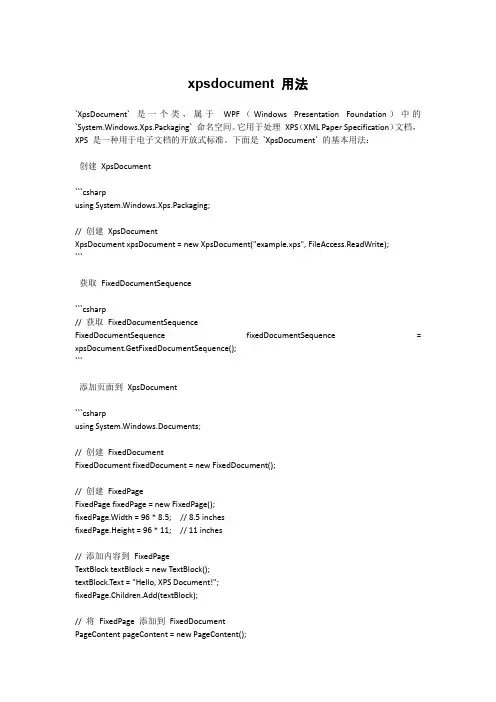

xpsdocument 用法`XpsDocument` 是一个类,属于WPF(Windows Presentation Foundation)中的`System.Windows.Xps.Packaging` 命名空间。

它用于处理XPS(XML Paper Specification)文档,XPS 是一种用于电子文档的开放式标准。

下面是`XpsDocument` 的基本用法:创建XpsDocument```csharpusing System.Windows.Xps.Packaging;// 创建XpsDocumentXpsDocument xpsDocument = new XpsDocument("example.xps", FileAccess.ReadWrite);```获取FixedDocumentSequence```csharp// 获取FixedDocumentSequenceFixedDocumentSequence fixedDocumentSequence = xpsDocument.GetFixedDocumentSequence();```添加页面到XpsDocument```csharpusing System.Windows.Documents;// 创建FixedDocumentFixedDocument fixedDocument = new FixedDocument();// 创建FixedPageFixedPage fixedPage = new FixedPage();fixedPage.Width = 96 * 8.5; // 8.5 inchesfixedPage.Height = 96 * 11; // 11 inches// 添加内容到FixedPageTextBlock textBlock = new TextBlock();textBlock.Text = "Hello, XPS Document!";fixedPage.Children.Add(textBlock);// 将FixedPage 添加到FixedDocumentPageContent pageContent = new PageContent();((IAddChild)pageContent).AddChild(fixedPage);fixedDocument.Pages.Add(pageContent);// 将FixedDocument 添加到XpsDocumentXpsDocumentWriter xpsDocumentWriter = XpsDocument.CreateXpsDocumentWriter(xpsDocument);xpsDocumentWriter.Write(fixedDocument);```关闭XpsDocument```csharp// 关闭XpsDocumentxpsDocument.Close();```这只是一个基本的示例,你可以根据需要使用更多的WPF 元素和功能来创建和编辑XPS 文档。

visual studio 扩展自动整理项目文件结构-概述说明以及解释1.引言1.1 概述概述部分的内容可以根据Visual Studio扩展自动整理项目文件结构的背景和目的来进行编写。

以下是一个概述的例子:Visual Studio是一款广泛使用的集成开发环境(IDE),提供了丰富的功能和工具来帮助开发人员快速、高效地开发各种类型的应用程序。

然而,随着项目规模的扩大和代码的增加,项目文件结构可能变得复杂而混乱,给代码的维护和理解带来了困难。

为了解决这个问题,许多开发人员希望能够自动整理项目文件结构,使其更加清晰和有组织。

通过自动整理项目文件结构,开发人员可以更方便地定位和管理各种资源、文件和文件夹,提高代码的可读性和可维护性。

基于这个目标,我们开发了一个Visual Studio扩展,旨在提供一种自动整理项目文件结构的解决方案。

该扩展可以帮助开发人员快速、轻松地优化项目文件结构,将文件和文件夹按照一定的规则和约定进行组织和排列。

它可以通过自动重命名、移动和排序来实现整理,减少了手动整理的工作量和出错的可能性。

通过使用我们的Visual Studio扩展,您可以节省大量时间和精力来维护项目文件结构,专注于代码的开发和调试工作。

本文将介绍该扩展的概念、项目文件结构的重要性,以及自动整理项目文件结构的必要性和实现方法。

希望通过阅读本文,您能够更加深入地了解和掌握如何利用Visual Studio扩展来自动整理项目文件结构。

文章结构是指文章中各个部分的组织方式和相互之间的关系。

一个良好的文章结构能够帮助读者更好地理解文章的内容,并能够清晰地表达作者的观点。

本文将按照以下结构进行展开:1. 引言1.1 概述在这一部分,我们将介绍Visual Studio扩展自动整理项目文件结构的概念和目的。

我们将讨论Visual Studio扩展的定义以及为什么自动整理项目文件结构对于开发人员来说是非常重要的。

1.2 文章结构(即本部分)在本部分中,我们将介绍整篇文章的结构,以帮助读者更好地理解我们要讨论的内容。

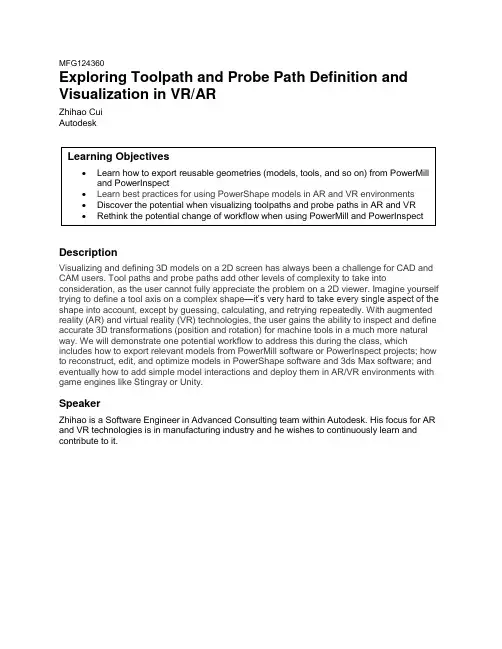

MFG124360Exploring Toolpath and Probe Path Definition and Visualization in VR/ARZhihao CuiAutodeskDescriptionVisualizing and defining 3D models on a 2D screen has always been a challenge for CAD and CAM users. Tool paths and probe paths add other levels of complexity to take into consideration, as the user cannot fully appreciate the problem on a 2D viewer. Imagine yourself trying to define a tool axis on a complex shape—it’s very hard to take every single aspect of the shape into account, except by guessing, calculating, and retrying repeatedly. With augmented reality (AR) and virtual reality (VR) technologies, the user gains the ability to inspect and define accurate 3D transformations (position and rotation) for machine tools in a much more natural way. We will demonstrate one potential workflow to address this during the class, which includes how to export relevant models from PowerMill software or PowerInspect projects; how to reconstruct, edit, and optimize models in PowerShape software and 3ds Max software; and eventually how to add simple model interactions and deploy them in AR/VR environments with game engines like Stingray or Unity.SpeakerZhihao is a Software Engineer in Advanced Consulting team within Autodesk. His focus for AR and VR technologies is in manufacturing industry and he wishes to continuously learn and contribute to it.Data PreparationThe first step of the journey to AR or VR is generating the content to be visualized. Toolpath and probe path need to be put into certain context to be meaningful, which could be models for the parts, tools, machines or even the entire factory.PowerMill ExportFigure 1 Typical PowerMill ProjectPartsExporting models of the part is relatively simple.1. Choose the part from the Explorer -> Models -> Right click on part name -> ExportModel…2. Follow the Export Model dialog to choose the name with DMT format1.1DGK file is also supported if additional CAD modification is needed later. See Convert PowerMill part mesh on page 7Figure 2 PowerMill – Export ModelToolTool in PowerMill consists three parts – Tip, Shank and Holder.To export the geometry of the tool, type in the macro commands shown in Figure 3, which would generate STL files2 contains the corresponding parts. Three lines of commands3 are used instead of exporting three in one file (See Figure 11), or one single mesh would be created instead of three which will make the coloring of the tool difficult.EDIT TOOL ; EXPORT_STL TIP "powermill_tool_tip.stl"EDIT TOOL ; EXPORT_STL SHANK "powermill_tool_shank.stl"EDIT TOOL ; EXPORT_STL HOLDER "powermill_tool_holder.stl"Figure 3 PowerMill Macro - Export ToolToolpathToolpath is the key part of the information generated by a CAM software. They are created based on the model of the part and various shapes of the tool for different stages (e.g. roughing, polishing, etc.). Toolpaths are assumed to be fully defined for visualization purposes in this class, and other classes might be useful around toolpath programming, listed on page 15. Since there doesn’t exist a workflow to directly stream data into AR/VR environment, a custom post-processor4 is used to extract minimal information needed to describe a toolpath, i.e. tool tip position, normal direction and feed rate (its format is described in Figure 17).The process is the same way as an NC program being generated for a real machine to operate. Firstly, create an NC program with the given post-processor shown in Figure 4. Then grab and drop the toolpath onto the NC program and write it out to a text file shown in Figure 5.2DDX file format can also be exported if geometry editing is needed later in PowerShape3 The macro is also available in addition al material PowerMill\ExportToolMesh.mac4 The file is in additional material PowerMill\simplepost_free.pmoptzFigure 4 PowerMill Create NC ProgramFigure 5 PowerMill Insert NC ProgramPowerInspect ExportFigure 6 Typical PowerInspect OMV ProjectCADCAD files can be found in the CAD tab of the left navigation panel. The model can be re-processed into a generic mesh format for visualization using PowerShape, which is discussed in Section Convert PowerMill part mesh on page 7.Figure 7 Find CAD file path in PowerInspectProbeDefault probe heads are installed at the following location:C:\Program Files\Autodesk\PowerInspect 2018\file\ProbeDatabaseCatalogueProbes shown in PowerInspect are defined in Catalogue.xml file and their corresponding mesh files are in probeheads folder. These files will be used to assemble the probe mentioned in Section Model PowerInspect probe on page 9.Probe toolAlthough probe tool is defined in PowerInspect, they cannot be exported as CAD geometries to be reused later. In Model PowerInspect probe section on page 9, steps to re-create the probe tool will be introduced in detail based on the stylus definition.Probe pathLike toolpath in PowerMill, probe path can be exported using post processor5 to a generic MSR file format, which contains information of nominal and actual probing points, measuring tolerance, etc.This can be achieved from Run tab -> NC Program, which is shown in Figure 8.Figure 8 Export Probe Path from PowerInspect5 The file is in additional materialPowerInspect\MSR_ResultsGenerator_1.022.pmoptzModelling using PowerShapeConvert PowerMill part meshDMT or DGK files can be converted to mesh in PowerShape to FBX format, which is a more widely adopted format.DMT file contains mesh definition, which can be exported again from PowerShape after color change and mesh decimation if needed (discussed in Section Exporting Mesh in PowerShape on page 10).Figure 9 PowerShape reduce meshDGK file exported from PowerMill / PowerInspect is still parametric CAD model not mesh, which means further editing on the shape is made possible. Theoretically, the shape of the model won’t be changed since the toolpath is calculated based on the original version, but further trimming operations could be carried here to keep minimal model to be rendered on the final device. For example, not all twelve blades of the impeller may be needed to visualize the toolpath defined on one single surface. It’s feasible to remove ten out of the twelve blades and still can verify what’s going on with the toolpath defined. After editing the model, PowerShape can convert the remaining to mesh and export to FBX format as shown below.Figure 10 Export FBX from PowerShapeModel PowerMill toolImport three parts of the tool’s STL files into PowerShape, and change the color of individual meshes to match PowerMill’s color scheme for easier recognition.Figure 11 PowerShape Model vs PowerMill assembly viewBefore exporting, move the assembled tool such that the origin is at the tool tip and oriented z-axis upwards, which saves unnecessary positional changes during AR/VR setup. Then follow Figure 10 to export FBX file from PowerShape to be used in later stages.Model PowerInspect probeTake the example Probe OMP400. OMP400.mtd file6 contains where the mesh of individual components of the probe head are located and their RGB color. For most of the probe heads, DMT mesh files will be located in its subfolder. They can be dragged and dropped into PowerShape in one go to form the correct shape, but all in the same color (left in Figure 14). To achieve similar looking in PowerInspect, it’s better to follow the definition fi le, and import each individual model and color it according to the rgb value one by one (right in Figure 14).<!-- Head --><machine_part NAME="head"><model_list><dmt_file><!-- Comment !--><path FILE="probeheads/OMP400/body.dmt"/><rgb R="192"G="192"B="192"/></dmt_file>Figure 12 Example probe definition MTD fileFigure 13 PowerShape apply custom colorFigure 14 Before and after coloring probe headFor the actual probe stylus, it’s been defined in ProbePartCatalogue.xml file. For theTP20x20x2 probe used in the example, TP20 probe body, TP20_STD module and M2_20x2_SS stylus are used. Construct them one by one in the order of probe body, module and stylus, and each of them contains the definition like the below, which is the TP20 probe body.6C:\Program Files\Autodesk\PowerInspect 2018\file\ProbeDatabaseCatalogue<ProbeBody name="TP20"from_mounting="m8"price="15.25"docking_height="0"to_mounting="AutoMagnetic"length="17.5"><Manufacturer>Renishaw</Manufacturer><Geometry><Cylinder height="14.5"diameter="13.2"offset="0"reference_length="14.5" material="Aluminium"color="#C8C8C8"/><Cylinder height="3.0"diameter="13.2"offset="0"reference_length="3.0" material="Stainless"color="#FAFAFA"/></Geometry></ProbeBody>Figure 15 Example TP20 probe body definitionAlmost all geometries needed are cylinder, cone and sphere to model a probing tool. Start with the first item in the Geometry section, and use the parameters shown in the definition to model each of the geometries with solid in PowerShape and then convert to mesh. To make the result look as close as it shows in PowerInspect, color parameter can also be utilized (Google “color #xxx” to convert the hex color).Figure 16 Model PowerInspect ToolU nlike PowerMill tool, PowerInspect probe’s model origin should be set to the probe center instead of tip, which is defined in the MSR file. But the orientation should still be tuned to be z-axis facing upwards.DiscussionsExporting Mesh in PowerShapeIn PowerShape, there are different ways that a mesh can be generated and exported. Take the impellor used in PowerMill project as an example, the end mesh polycount is 786,528 if it’s been converted from surfaces to solid and then mesh with a tolerance set to 0.01. However, if the model was converted straight from surface to mesh, the polycount is 554,630, where the 30% reduce makes a big impact on the performance of the final AR/VR visualization.Modifying the tolerance could be another choice. For visualization purposes, the visual quality will be the most impactable factor of choosing the tolerance value. If choosing the value is set too high, it may introduce undesired effect that the simulated tool is clipped into the model in certain position. However, setting the tolerance too small will quickly result in a ridiculous big mesh, which will dramatically slow down the end visualization.Choosing the balance of the tolerance here mainly depends on what kind of end devices will the visualization be running on. If it will be a well-equipped desktop PC running VR, going towards a large mesh won’t ne cessarily be a problem. On the other hand, if a mobile phone is chosen for AR, a low polycount mesh will be a better solution, or it can be completely ignored as a placeholder, which is discussed in Section On-machine simulation on page 12.Reading dataSame set of model and paths data can be used in multiple ways on different devices. The easiest way to achieve this is through game engines like Stingray or Unity 3D, which has built-in support for rendering in VR environment like HTC Vive and mobile VR, and AR environment like HoloLens and mobile AR.Most of the setup in the game engine will be the same for varies platform, like models and paths to be displayed. Small proportion will need to be implemented differently for each platform due to different user interaction availability. For example, for AR when using HoloLens, the user will mainly control the application with voice and gesture commands, while on the mobile phones, it will make more sense to offer on-screen controls.For part and tool models, FBX files can be directly imported into the game engines without problem. Unit of the model could be a problem here, where export from PowerShape is usually in millimeter but units in game engines are normally in meters. Unit change in this case could result in a thousand times bigger, which may cause the user seeing nothing when running the application.For toolpath data, three sets of toolpath information are exported from PowerMill with the given post-processor, i.e. tool tip position, tool normal vector and its feed rate. They can be read line by line, and its positions can be used to create toolpath lines. And together with the normal vector and feed rates, an animation of the tool head can be created.Position(x,y,z) Normal(i,j,k) Feed rate33.152,177.726,52.0,0.713,-0.208,0.67,3000.0Figure 17 Example toolpath output from PowerMillFor probe path data, similar concept could be applied with an additional piece of information7–actual measured point, which means not only the nominal probe path can be simulated ahead of time, but also the actual measured result could be visualized with the same setup.7 See page 14 for MSR file specification.STARTG330 N0 A0.0 B0.0 C0.0 X0.0 Y0.0 Z0.0 I0 R0G800 N1 X0 Y0 Z25.0I0 J0 K1 O0 U0.1 L-0.1G801 N1 X0.727 Y0.209 Z27.489 R2.5ENDFigure 18 Example probe path output from PowerInspectUse casesOn-machine simulationWhen running a new NC program with a machine tool, it’s common to see the machine operator tuning down the feed rate and carefully looking through the glass to see what is happening inside the box. After several levels of collision checking in CAM software and machine code simulator, why would they still not have enough confidence to run the program?Figure 19 Toolpath simulation with AR by Hans Kellner @ AutodeskOne potential solution to this problem is using AR on the machine. Since how the fixture is used nowadays is still fairly a manual job constrained by operator’s experience, variations of fixtures make it a very hard process to verify ahead of machining process. Before hitting the start button for the NC program, the operator could start the AR simulation on the machine bed, with fixtures and part held in place. It will become an intuitive task for the operator to check for collisions between part of the virtual tool and the real part and fixtures. Furthermore, a three-second in advance virtual simulation of the tool head can be shown during machining process to significantly increase the confidence and therefore leave the machine always running at full speed, which ultimately increases the process efficiency.Toolpath programming assistanceProgramming a toolpath within a CAM software can sometimes be a long iterative try and error process since the user always imagines how the tool will move with the input parameters. Especially with multi-axis ability, the user will often be asked to provide not only the basic parameters like step over values but also coordinate or direction in 3D for the calculation to start. Determining these 3D values on a screen becomes increasingly difficult when othersurfaces surround the places needed to be machined. Although there are various ways to let the user to navigate to those positions through hiding and sectioning, workarounds are always not ideal and time-consuming. As shown in Figure 20, there’s no easy and intuitive way to analyze the clearance around the tool within a tight space, which is one of the several places to be considering.Figure 20 Different angles of PowerMill simulation for a 5-axis toolpath in a tight spaceTaking the example toolpath in PowerMill, a user will need to recalculate the toolpath after each modification of the tool axis point, to balance between getting enough clearance8and achieving better machining result makes the user and verify the result is getting better or worth. However, this workflow can be changed entirely if the user can intuitively determine the position in VR. The tool can be attached to the surface and freely moved by hand in 3D, which would help to determine the position in one go.Post probing verificationProbing is a common process to follow a milling process on a machine tool, making sure the result of the manufacturing is within desired tolerance. Generating an examination report in PowerInspect is one of the various ways to control the quality. However, what often happens is that if an out of tolerance position is detected, the quality engineer will go between the PC screen and the actual part to determine what is the best treatment process depending on different kind of physical appearance.8 Distance between the tool and the partFigure 21 Overlay probing result on to a physical partOverlaying probing result with AR could dramatically increase the efficiency by avoiding this coming back and forth. Same color coded probed point can be positioned exactly at the place of occurrence, so that the surrounding area can be considered separately. The same technique could also be applied to scanning result, as shown in Figure 22.Figure 22 Overlaying scanning result on HoloLens by Thomas Gale @ AutodeskAppendixReference Autodesk University classesPowerMillMFG12196-L: PowerMILL Hands on - Multi Axis Machining by GORDON MAXWELL MP21049: How to Achieve Brilliant Surface Finishes for CNC Machining by JEFF JAJE MSR File format9G330 Orientation of the probeG800 Nominal valuesG801 Measured valuesN Item numberA Rotation about the X axisB Rotation about the Y axisC Rotation about the Z axisXYZ Translations along the X, Y and Z axes (these are always zero)U Upper toleranceL Lower toleranceO OffsetI and R (in G330) just reader valuesR (in G801) probe radius9 Credit to Stefano Damiano @ Autodesk。

Reading is an essential skill that not only enhances knowledge but also improves cognitive abilities.Here are some effective methods to make your reading experience more fruitful:1.Set a Purpose for Reading:Before you start reading,decide what you want to achieve from the text.Are you reading for pleasure,to learn a new skill,or to gather information for a project?2.Choose the Right Material:Select books or articles that align with your interests and reading level.This will keep you engaged and motivated to read.3.Create a Reading Environment:Find a quiet and comfortable place where you can focus without distractions.Good lighting and a comfortable chair can make a significant difference.4.PreReading Strategies:Skim through the material before diving in.Look at the headings,subheadings,and any summaries or introductions to get an overview of what the text is about.5.Active Reading:Engage with the text by asking questions,making predictions,and visualizing what you read.This helps in better understanding and retention.6.NoteTaking:Jot down important points,new vocabulary,or any thoughts that come to mind as you read.This not only helps in remembering the content but also in reviewing later.7.Vocabulary Building:When you encounter unfamiliar words,look them up and try to use them in sentences.This will expand your vocabulary and improve your language skills.8.Reading Speed:Practice reading faster without sacrificing comprehension.Speed reading techniques can be helpful,but always ensure that you understand what youre reading.9.Critical Thinking:Analyze the authors arguments,the texts structure,and the evidence presented.This will help you develop your critical thinking skills.10.Discussion and Reflection:Discuss what youve read with others or write a reflection on your thoughts and opinions about the material.This can deepen your understanding and appreciation of the text.11.Regular Reading:Make reading a daily habit.The more you read,the better you become at it.Consistency is key to improving your reading skills.e of Technology:Utilize ereaders,apps,and online resources to enhance your reading experience.These tools can offer features like highlighting,notetaking,and even texttospeech options.13.Diversify Your Reading:Read different genres and styles to broaden your perspective and improve your adaptability to various writing styles.14.Review and Summarize:After finishing a chapter or a section,take a moment to review and summarize what youve learned.This helps in reinforcing the information. 15.Apply What Youve Learned:Try to apply the knowledge gained from your reading to reallife situations or other areas of study.This will make the learning more practical and memorable.By incorporating these methods into your reading routine,you can enhance your comprehension,improve your language skills,and enjoy a more enriching reading experience.。

/cgi/content/full/313/5786/504/DC1Supporting Online Material forReducing the Dimensionality of Data with Neural NetworksG. E. Hinton* and R. R. Salakhutdinov*To whom correspondence should be addressed. E-mail: hinton@Published 28 July 2006, Science313, 504 (2006)DOI: 10.1126/science.1127647This PDF file includes:Materials and MethodsFigs. S1 to S5Matlab CodeSupporting Online MaterialDetails of the pretraining:To speed up the pretraining of each RBM,we subdivided all datasets into mini-batches,each containing100data vectors and updated the weights after each mini-batch.For datasets that are not divisible by the size of a minibatch,the remaining data vectors were included in the last minibatch.For all datasets,each hidden layer was pretrained for50passes through the entire training set.The weights were updated after each mini-batch using the averages in Eq.1of the paper with a learning rate of.In addition,times the previous update was added to each weight and times the value of the weight was sub-tracted to penalize large weights.Weights were initialized with small random values sampled from a normal distribution with zero mean and standard deviation of.The Matlab code we used is available at /hinton/MatlabForSciencePaper.htmlDetails of thefine-tuning:For thefine-tuning,we used the method of conjugate gradients on larger minibatches containing1000data vectors.We used Carl Rasmussen’s“minimize”code(1).Three line searches were performed for each mini-batch in each epoch.To determine an adequate number of epochs and to check for overfitting,wefine-tuned each autoencoder on a fraction of the training data and tested its performance on the remainder.We then repeated thefine-tuning on the entire training set.For the synthetic curves and hand-written digits,we used200epochs offine-tuning;for the faces we used20epochs and for the documents we used 50epochs.Slight overfitting was observed for the faces,but there was no overfitting for the other datasets.Overfitting means that towards the end of training,the reconstructions were still improving on the training set but were getting worse on the validation set.We experimented with various values of the learning rate,momentum,and weight-decay parameters and we also tried training the RBM’s for more epochs.We did not observe any significant differences in thefinal results after thefine-tuning.This suggests that the precise weights found by the greedy pretraining do not matter as long as itfinds a good region from which to start thefine-tuning.How the curves were generated:To generate the synthetic curves we constrained the co-ordinate of each point to be at least greater than the coordinate of the previous point.We also constrained all coordinates to lie in the range.The three points define a cubic spline which is“inked”to produce the pixel images shown in Fig.2in the paper.The details of the inking procedure are described in(2)and the matlab code is at(3).Fitting logistic PCA:Tofit logistic PCA we used an autoencoder in which the linear code units were directly connected to both the inputs and the logistic output units,and we minimized the cross-entropy error using the method of conjugate gradients.How pretraining affectsfine-tuning in deep and shallow autoencoders:Figure S1com-pares performance of pretrained and randomly initialized autoencoders on the curves dataset. Figure S2compares the performance of deep and shallow autoencoders that have the same num-ber of parameters.For all these comparisons,the weights were initialized with small random values sampled from a normal distribution with mean zero and standard deviation. Details offinding codes for the MNIST digits:For the MNIST digits,the original pixel intensities were normalized to lie in the interval.They had a preponderance of extreme values and were therefore modeled much better by a logistic than by a Gaussian.The entire training procedure for the MNIST digits was identical to the training procedure for the curves, except that the training set had60,000images of which10,000were used for validation.Figure S3and S4are an alternative way of visualizing the two-dimensional codes produced by PCA and by an autencoder with only two code units.These alternative visualizations show many of the actual digit images.We obtained our results using an autoencoder with1000units in thefirst hidden layer.The fact that this is more than the number of pixels does not cause a problem for the RBM–it does not try to simply copy the pixels as a one-hidden-layer autoencoder would. Subsequent experiments show that if the1000is reduced to500,there is very little change in the performance of the autoencoder.Details offinding codes for the Olivetti face patches:The Olivetti face dataset from which we obtained the face patches contains ten6464images of each of forty different people.We constructed a dataset of165,6002525images by rotating(to),scaling(1.4to1.8), cropping,and subsampling the original400images.The intensities in the cropped images were shifted so that every pixel had zero mean and the entire dataset was then scaled by a single number to make the average pixel variance be.The dataset was then subdivided into124,200 training images,which contained thefirst thirty people,and41,400test images,which contained the remaining ten people.The training set was further split into103,500training and20,700 validation images,containing disjoint sets of25and5people.When pretraining thefirst layer of2000binary features,each real-valued pixel intensity was modeled by a Gaussian distribution with unit variance.Pretraining thisfirst layer of features required a much smaller learning rate to avoid oscillations.The learning rate was set to0.001and pretraining proceeded for200epochs. We used more feature detectors than pixels because a real-valued pixel intensity contains more information than a binary feature activation.Pretraining of the higher layers was carried out as for all other datasets.The ability of the autoencoder to reconstruct more of the perceptually significant,high-frequency details of faces is not fully reflected in the squared pixel error.This is an example of the well-known inadequacy of squared pixel error for assessing perceptual similarity.Details offinding codes for the Reuters documents:The804,414newswire stories in the Reuters Corpus V olume II have been manually categorized into103topics.The corpus covers four major groups:corporate/industrial,economics,government/social,and markets.The labels were not used during either the pretraining or thefine-tuning.The data was randomly split into 402,207training and402,207test stories,and the training set was further randomly split into 302,207training and100,000validation mon stopwords were removed from the documents and the remaining words were stemmed by removing common endings.During the pretraining,the confabulated activities of the visible units were computed using a“softmax”which is the generalization of a logistic to more than2alternatives:the results using the best value of.We also checked that K was large enough for the whole dataset to form one connected component(for K=5,there was a disconnected component of21 documents).During the test phase,for each query document,we identify the nearest count vectors from the training set and compute the best weights for reconstructing the count vector from its neighbors.We then use the same weights to generate the low-dimensional code for from the low-dimensional codes of its high-dimensional nearest neighbors(7).LLE code is available at(8).For2-dimensional codes,LLE()performs better than LSA but worse than our autoencoder.For higher dimensional codes,the performance of LLE()is very similar to the performance of LSA(see Fig.S5)and much worse than the autoencoder.We also tried normalizing the squared lengths of the document count vectors before applying LLE but this did not help.Using the pretraining andfine-tuning for digit classification:To show that the same pre-training procedure can improve generalization on a classification task,we used the“permuta-tion invariant”version of the MNIST digit recognition task.Before being given to the learning program,all images undergo the same random permutation of the pixels.This prevents the learning program from using prior information about geometry such as affine transformations of the images or local receptivefields with shared weights(9).On the permutation invariant task,Support Vector Machines achieve1.4%(10).The best published result for a randomly initialized neural net trained with backpropagation is1.6%for a784-800-10network(11).Pre-training reduces overfitting and makes learning faster,so it makes it possible to use a much larger784-500-500-2000-10neural network that achieves1.2%.We pretrained a784-500-500-2000net for100epochs on all60,000training cases in the just same way as the autoencoders were pretrained,but with2000logistic units in the top layer.The pretraining did not use any information about the class labels.We then connected ten“softmaxed”output units to the top layer andfine-tuned the whole network using simple gradient descent in the cross-entropy error with a very gentle learning rate to avoid unduly perturbing the weights found by the pretraining.For all but the last layer,the learning rate was for the weights and for the biases.To speed learning,times the previous weight increment was added to each weight update.For the biases and weights of the10output units, there was no danger of destroying information from the pretraining,so their learning rates were five times larger and they also had a penalty which was times their squared magnitude. After77epochs offine-tuning,the average cross-entropy error on the training data fell below a pre-specified threshold value and thefine-tuning was stopped.The test error at that point was %.The threshold value was determined by performing the pretraining andfine-tuning on only50,000training cases and using the remaining10,000training cases as a validation set to determine the training cross-entropy error that gave the fewest classification errors on the validation set.We have alsofine-tuned the whole network using using the method of conjugate gradients (1)on minibatches containing1000data vectors.Three line searches were performed for eachmini-batch in each epoch.After48epochs offine-tuning,the test error was%.The stopping criterion forfine-tuning was determined in the same way as described above.The Matlab code for training such a classifier is available at/hinton/MatlabForSciencePaper.htmlSupporting textHow the energies of images determine their probabilities:The probability that the model assigns to a visible vector,isSupportingfiguresFig.S1:The average squared reconstruction error per test image duringfine-tuning on the curves training data.Left panel:The deep784-400-200-100-50-25-6autoencoder makes rapid progress after pretraining but no progress without pretraining.Right panel:A shallow784-532-6autoencoder can learn without pretraining but pretraining makes thefine-tuning much faster,and the pretraining takes less time than10iterations offine-tuning.Fig.S2:The average squared reconstruction error per image on the test dataset is shown during the fine-tuning on the curves dataset.A784-100-50-25-6autoencoder performs slightly better than a shal-lower784-108-6autoencoder that has about the same number of parameters.Both autoencoders were pretrained.Fig.S3:An alternative visualization of the2-D codes produced by taking thefirst two principal compo-nents of all60,000training images.5,000images of digits(500per class)are sampled in random order.Each image is displayed if it does not overlap any of the images that have already been displayed.Fig.S4:An alternative visualization of the2-D codes produced by a784-1000-500-250-2autoencoder trained on all60,000training images.5,000images of digits(500per class)are sampled in random order.Each image is displayed if it does not overlap any of the images that have already been displayed.A c c u r a c y Fig.S5:Accuracy curves when a query document from the test set is used to retrieve other test set documents,averaged over all 7,531possible queries.References and Notes1.For the conjugate gradient fine-tuning,we used Carl Rasmussen’s “minimize”code avail-able at http://www.kyb.tuebingen.mpg.de/bs/people/carl/code/minimize/.2.G.Hinton,V .Nair,Advances in Neural Information Processing Systems (MIT Press,Cam-bridge,MA,2006).3.Matlab code for generatingthe images of curves is available at/hinton.4.G.E.Hinton,Neural Computation 14,1711(2002).5.S.T.Roweis,L.K.Saul,Science 290,2323(2000).6.The 20newsgroups dataset (called 20news-bydate.tar.gz)is available at/jrennie/20Newsgroups.7.L.K.Saul,S.T.Roweis,Journal of Machine Learning Research 4,119(2003).8.Matlab code for LLE is available at /roweis/lle/index.html.9.Y .Lecun,L.Bottou,Y .Bengio,P.Haffner,Proceedings of the IEEE 86,2278(1998).10.D.V .Decoste,B.V .Schoelkopf,Machine Learning 46,161(2002).11.P.Y .Simard,D.Steinkraus,J.C.Platt,Proceedings of Seventh International Conferenceon Document Analysis and Recognition (2003),pp.958–963.。

knowledge of the general world 例子1 .the importance of reading in english learningin language learning. reading is regarded as a major source of input,and for many efllcarners it is the most important skill in an academic context (grabe 1991). in addition, readingcan help learners extend their general knowledge of the world. in the context of china, as chineseefl learners arc learning the target languagc in anacquisition-poor environment, they need all themore to depend on reading for language and culture immersion.what is more,there is anothernecessity to chinese efl learners—reading for examinations (zhou 2003). it is obvious thatreading makes up a large proportion of the total scores in important english proficiency tests atvarious levels such as test of english as a foreign l.anguage(toefl),international englishlanguage testing system (ielts), college english test band 4(cet-4), college english testband 6 (cet-6),test for english majors band 4 (tem-4),test for english majors band 8(tem-8) (pu 2006). therefore, reading is considered as an essential and prerequisite ability on thepart of english majors.2.the reading process2.1 the nature of readingreading comprehension begins at the smallest and simplest language units and each singleword, scentcnce and passage carries its own mcaning independently which has no direct link withthe reader (chomsky cited in zhang & guo 2005). it is the process of acquiring information froma written or printed text. so to rcad a tcxt successfully is to know the meaning of the tcxt. (eskey2002, cited in yu 2005).another view is that reading is al “psycholinguistic guessing game" (goodman 1967, cited inzhang 2006) during which the reader can make predictions about the content ofa passageaccording to the textual clues,his prior knowledge and experience. if his predictions areconfirmed, thc rcader continucs,otherwisc,he reviscs those predictions(goodman 1967; smith1971, cited in silberstein 2002:6).from this perspective,reading can be taken as an interactive activity (eskey 1988;grabe1993,cited in hedge 2002:188) which can be understood to be a complex cognitive process inwhich the reader and the text interact to (re)create meaningful discourse(silberstein 2002:x). it isin at least two ways. firstly,the various processes involved in reading arecarried outsimultaneously.secondly, it is interactive in the sense that linguistic information from the textinleracts with information activated by the reader from his long-tcrm memory,as backgroundknowledge (grabe & stoller 2005:18).at this point, reading can also be described as a kind ofdialogue between the reader and thc text, or even between the reader and the author (widdowson1979a, cited in hedge 2002: 188).meanwhile, reading is a complex process. it involves processing ideas generated by othevsthat are transmitted through language and involvcs highly complcx cognitive processingoperations (nunan 1999, cited in yu 2005).and many processing skills are coordinated in veryefficient combinations (grabe & stoller 2005: 4).furthermore,reading is a purposeful process. we can divide the purpose into differentcategories: reading for pleasure or reading for information in order to find out something or dosomcthing with the information youget(grellet 2000: 4): to get information,to respond tocuriosity about a topic; to follow instructions to perform a task; for pleasure,amusement,andpersonal cnjoyment; to keep in touch with fricnds and collcagues; to know what is happcninginthe world; and to find out when and where things are (rivers and temperley1978: 187, cited inhedge 2002: 195).besides these,reading is a critical process. critical reading views reading as a socialengagement (kress 1985, cited in hedge 2002:197).form this viewpoint, texts are organized incertain ways by writers to shape thc perceptions of readers towards acceptancc of the undcrlyingideology of the text (hedge 2002: 197).so it is the process for the readers to 出现恶意脚本uate the writers‘attitudes or viewpoints.2.2 three components of reading2.2.1 language competencemany researchers have poinited out that l2 learners must reach a certann level of seconalanguage competence before they can smoothly read in the target language (see (rabe & stoller2005).an efficient reader can recognize and decode the words, grammatical structures and otherlinguistie features quickly, accurately, and automatically.obviously,somctimes sccond language rcadcrs havc dificultics in proccssing texts whichcontain unfamiliar elements of the english language such as the cohesive devices (hedge 2002:192)..lust as berman ( 1984,cited in hedge 2002: 193) suggests,deletion, another cohesive deviee,can make atext ‘opaque’to the reader. it seems to confirm the hypothesis that foreign languagereaders are partly dependent on processing syntactic structures successfully to get access tomeaning (hedge 2002:193).another major difficulty lies in vocabulary. language learners experience difficulty withvocabulary, but the degree of dilliculty varies with the demands of the texl, the prior knowledge ofthe reader,the degree of automaticity a learner has achieved in general word recognition,anyspecialist lexical knowledge a student might have, and the learner ‘s first language(hedge 2002:193).2.2.2 background knowledgebackground knowledge is one‘s previously acquired comprehensive knowledge or worldknowledge and one‘s special knowledge on cecrtain subjects (zhang & guo 2005). in languagclearning, especially for reading comprehension, the function of background knowledge in readingcomprehension is formularized as schema theory(bartlett 1932;rumelhart & ortony 1977;rumelhart 1980, cited in zhang 2006).the reader begins with the perception of graphic cues, butknowledge of the world in gencral arc brought into play (parry 1987: 61, cited in qian 1997).sowords in texts functionas signs within a culture-bound system, and familiar cultural schemata cansometimes be more powerful than lexical knowledge (swaffar 1988:123).if the texts are productsof an unfamiliar culture,some learners’reading problems may be caused by insufficientbackground knowledge,and a particular schema fails to exist for them because this schema isspecific to a given culture and is not part of their own background (carrcll1 1988: 245, cited inqian 1997).thereforc, comprchending a text is dcscribed as an intcractive process betwecn the reader‘sbackground knowledge and the text (qian 1997), but it depends largely on the reader rather thanon the text (carrell 1984:333;swaffar 1988: 123 , cited in qian 1997). the reason is that anytext,either spoken or written,doesn‘t by itself carry meaning and what a text provides is only thedirections as to how a reader should retrieve or construct meaning from previously acquiredknowledlge which is the recader‘s background knowledgc(zhang & guo 2005).there are two basic types of schemata: formal schemata and content schemata.formalschemata, often known as textual schemata, consists of knowledge of different text types,genres,and the understanding that different types oftexts use text organization,language structures,vocabulary, grammar, level of formality/register differently.if esl readers possess the appropriateformal schemata against which they process the discourse typc of the text and if they utilize thatformal schemata to organize their recall protocols,they will retrieve more information (carrell1984:460,cited in hedge 2002:192). therefore, the readers’background knowledge and priorexperience with textual organization can facilitate reading comprehension(pu 2006). contentschemata involve a reader‘s existent knowledge about certain topics and his general worldknowledge. the reader ‘s content schemata functions while he is trying to comprehendsubject-specific and culture-specific texts.2.2.3 reading strategiesreading strategies are defined as the mental operations involved when readers approach atext effectively and make sense of what they are reading (barnett 1988, cited in pani 2006). thesestrategies consist of cognitive strategies and metacognitive strategies,william grabe 71991: 379,citedl in qian 1997) includes the into six categories:( 1 ) automatic recognition skills(2) vocabulary and structure knowledge(3) formal discourse structure knowledge(4) content/wor1d background knowledge(5) synthesis and 出现恶意脚本uation skills ( 6) metacognitive knowledge and monitoring skills2.2.3.1 cognitive strategics in rcadingcognitive stralegics are described as mental processes directly concerned with the processingof information in order to learn,that is for obtaining,storage,retri出现恶意脚本or use of information(williams & burden 2000: 148).they are involved in the analysis, synthesis, or transformation oflearning materials (ellis2000: 77).furthermore,these strategies enable readers to deal with theinformation presentcd in tasks and matcrials by working on it in different ways (hedge 20027了-t8).mikulecky (1990: 25-26) has listed 24 reading strategies which an efficient reader mustacquire:( 1 ) automatic decoding2 ) previcwing and predicting(3) specifying purpose(4) ldentifying gcnrc(5) questioning( ) scanning(7) recognizing topics( 8) classification of ideas into main topics and details(9) locating topic scntencc(10) stating the main idea of a sentence, paragraph or passage(11 ) recognizing patterns of relationships( 12)identifying and using words which signal the patterns of relationships(13) inferring the main idea, using patterns and other clues(14)recognizing and using pronouns,referents, and other lexical equivalents as clues to cohesion(15) guessing the meaning of unknown words from the context( 16) skimming(17)paraphrasing(1 8) sunmarizing(19) draw conclusions(20) draw inferences and using evidence(21) visualizing(22) reading critically(23) reading faster(24)adjusting reading rate according to materials and purposeamong these reading stratcegies/skills,some are more basic to intermediatc english majors,such as previewing and predicting, scanning. locating topic sentence,recognizing patterns ofrelationships,inferring the main ing patterns and other clues, guessing the meaning ofunknown words from the context,skimming.paraphrasing, or etc. some are more essential toadvanced english majors,such as automaticdecoding,draw conclusions,draw inferences andusing evidence, rcading critically, ctc.2.2.3.2 metacognitive strategies in readinga large body of literature on fsl/efl. reading has proved that the ability to usemetacognitive strategies is a critical component of skilled reading(grabe 1991:382, cited inqian1997). as the indispensable strategies,metacognitive strategies consist of planning for learning,thinking about lcarning and how to make it cffective,self-monitoring during lcarning, and出现恶意脚本uation of how successful learning has been after working on language in some way(hledge2002:78). when it comes to reading, metacognitive strategies involve awareness,monitoring andregulating (qian 2005).awareness includes readers’consciousness of their own reading strengths and weaknesses(mikulecky 1990: 28, citcd in qian 2005), thcir purpose of rcading, their recognition of implicit aswell as explicit information in the text (haller et al. 1988,cited in qian 2005), and their awarenessof strategies to be employed by them in the process of reading (qian 2005).monitoring can be used to adjust reading rate, check comprehension during reading,integrateprior knowledge with current information,compare main ideas,generateself-questioning andsummarize the written text (grabe 1991; haller ct al.1988, cited in qian 2005).regulating is used to redirect self-comprehension,check effectiveness of the strategies used(grabe 199 1, cited in qian 2005), and operate repair stratcgies if comprchension fails.the relationship among these is that monitoring and regulating of cognitive processingfinction on the basis of awareness (qian 2005).3. the importance of strategic processing in efl reading3.1 modes of information processing in efl readingtext comprchension requires the simultancous intcraction of two modes of informationprocessing, that is, bottom-up processing (text-based or dada driven) and top-down processingknowledge-based or conceptuallydriven)(silberstein 2002: 7).bottom-up processing refers to the decoding of the letters,words, and other language featuresin the text(hedge 2002:189). the processing occurs when linguistic input from the text ismapped against the reader ‘s cxistent linguisticknowledge, and it is also evoked by the incomingdata (silberstein 2002:7). this processing ensures the reader to be sensitive to information that isnovel or thal docs nol lit their ongoing hypothescs about the contenl or structure of the text (zhang2006). for fluent reading, the most fundamental requirement is rapid and automatic wordrecognition(grabe & stoller 2005: 20).and a fluent reader can take in and store words togetherso that basic grammatical information can be extracted to support clause-level meaning (ibid:22).so improving reading speed is an important strategy for this processing(grellet 2000:16). butthis is the basic processing mode dependent on linguistic competence and leading tocomprehension mainly on the sentence level.on the other hand,top-down processing refers to the application /of prior knowledge toworking on the meaning of a text (hedge 2002: 189). the processing occurs when readers useprior knowldgc to make predictions about thc data thcey will find in a text (silbcrstcin 2002:8). itassumes that reading is primarily directed by reader goals and expectations (grabe & stoller 2005:32). it helns the readers to resolve ambiguities or to select between alternative possibleinterpretations of the incoming data (zhang 2006).some strategies involved are:makinginferences through the context and word formation,skimming and scanning or etc (grellet2000:14-18).however, there is a qucstion about what a rcadcr could lcarn from a text if thc rcadermust first have expectations about all the information in the text (grabe & stoller 2005:32).therefore, to some extent, these views concerning processing in reading comprehension arenot entirely adequate. a more effective way is to use these two modes of processing interactively,during which one can take useful ideas from a bottom-up perspective and combine them with keyideas from a top-down view (grabe & stoller 2005: 33). it is claimed that prior knowledge andprediction facilitate the processing of input from the text. meanwhile, the integrated mode requireslinguistic pcrccption.rcaders can use bottom-up proccssing as a base for sampling data and thcnswitch totop-down process to execute higher-level interpretation of the text. furthermore, throughfurther sampling of data, readers will confirm, revise or reject predictions about the content of thetext. so these two modes of information processing are complementary to each other (qian &ding 2004).to be strategic readers,efl learners should possess theability of between bottom-upprocessing and top-down processing flcxibly to monitor and regulate the rcading process.3.2 strategy trainingmany sludies have provided suflicient evidence for the efficacy of strategy training. oneexample was a study undertaken by carrell, pharis, and liberto(1989, cited in hedge 2002:80-81)with twenty-six esl students of mixed first language backgrounds. this investigated the valuc oftwo training techniques: semantic mapping andexperience-text-relationship(etr) in pre-reading.ln the experiment, onc group of students underwent training in the semantic mapping techniqueand another underwent training in the etr technique. a third group received no training. thegroups were pre-tested and post-tested on their ability to answer multiple choice comprehensionquestions, to complete partially constructed maps of the topics of threc texts,and to create theirown semantic maps. the general results suggested that the use of both techniques enhancedthesccond-language rcading of the students involved as compared with thc group that reccive notraining.another example of such studies was undertaken with fifty-six freshmen in two classes ofchina university of geosciences (liu & zuo 2006). the students took the cet-4 reading testwhich contained five passages of different style and registers were chosen from the cet-4 test ofthe ycars 1991,1993,1995 and 1996.finally they finished arcading-stratcgy questionnaire. thisquestionnaire was aself-assessment inventory including 19 statements on afive-point scaleranging from “*strongly disagree”to"strongly agree" for ussessing the reading stralegics usedduring the three stages of reading comprehension: pre-reading stage, during-reading stage andpost-reading stage. the results indicated that reading strategies contributed a lot to the readingperformance. the students who used more reading strategies that promoted their reading scoresperformed better than those who used less reading strategies. therefore,students shouild and canbe trained to acquire and develop reading strategies to improve their reading proliciency.the above two examples show that strategy training has a significant positive effect onreading comprehension and different techniques can be employed for strategy trainingwhich aimsto help learners apply strategies to upgrade their reading ability. the use of cognitive strategiesand metacognitive strategies enhance the second-language reading (hedge 2002:8) and facilitatescomprehension ( ibid.79).furthermore, these strategies make the readers become more self-reliant,responsible,confident,motivated and autonomous when they begin to understand the relationshipbetween the use of strategies and success in reading conprchension(chamol & kupper 1989;chamot & o‘malley 1994; cited in rasekh & ranjbary 2003).4.summarymany researchers have done lots of elaborated studies on strategic reading. although manystudics have provided us with much enlightcnmcnt, they cither 出现恶意脚本on thc stratcgic training foresl learners as is shown in the study of carrell, pharis, and liberto, or fornon-english majors asis shown in the research of liu and7.uo.ujp to now, there have been not many studies on strategictraining in the efl reading classroom for english majors in china.under these circumstances,there is still some room for further probe in this field.so on the basis of others’studies,myrcscarch is to discuss the following questions:(1)what are the major problems in reading among english majors in china?(2)what kind of rcading stratcgics english majors frcqucntly cmploy?(3)what kind of reading strategies get much more correlation with the efficiency of reading?(4) what are the major differences between successful and less successful readers in the use ofreading strategies?the purpose of my research is to identify the reading problems of english majors, analyze theundcrlying causcs,and suggcst cffectivc ways for stratcgy training in the rcading classroom..。

科技改变了我们的学习方式英文作文Technology is Changing How We Learn at SchoolWow, school is so different now from how it used to be when my parents and grandparents were kids! Technology has changed pretty much everything about how we learn and what we learn about. I can't imagine going to school without laptops, tablets, interactive whiteboards, and all the cool apps and websites we use every day. Learning is so much more fun and interesting with all the amazing tech tools we get to use.In our classroom, we don't just read from dusty old books anymore. We use e-books that have moving pictures, videos, and audio clips to make the stories come alive. Sometimes we even get to click on interactive maps or take virtual field trips right on our tablets. It's like the whole world is opened up to explore right from our desks!Instead of just copying notes from the board like my grandma had to do, our teacher uses a huge interactive whiteboard at the front of the class. She can write notes, draw diagrams, show videos, or let us interact with animations and games, all on that giant screen. If we get stuck on something, she can easily pull up another resource like a website or video to helpexplain it in a different way. With so many multimedia tools at her fingertips, our lessons are always engaging andmulti-dimensional.When we learn about history or science topics, we don't just read about them in a textbook. We can watch video footage of historical events, explore 3D models of ancient structures, or see animations that show how things work, like the water cycle or the human body. Visualizing all these concepts makes them click so much better than just reading about them. We can even take virtual reality field trips to museums, heritage sites, or national parks from our classroom. It's like being there in person without even leaving school!Working on projects and assignments is a total tech experience too. We use apps and websites to do research, create presentations and videos, analyze data, and collaborate with teammates. Instead of having to make posters with markers and glue, we can build amazing multimedia projects with audio, video, graphics, and special effects. All the tools we need are right at our fingertips on computers and tablets.Every student also has their own online portfolio where we save and share our work. Our teachers and parents can view our portfolios anytime to see what we're learning and the projectswe're working on. We can even video chat with experts or students from other schools to get feedback and different perspectives. Collaboration is so much easier with technology.Another huge way tech has changed learning is by letting us have more personalized, self-paced instruction. We use adaptive learning apps and websites that automatically adjust the content and pace based on our individual strengths and struggles. If I'm zipping through a math unit, it will keep challenging me with harder lessons. But if I'm getting stuck on something, it will automatically provide review materials and practice activities to build up my skills before moving ahead.There are also tons of educational websites, videos, podcasts, and apps we can use for independent study on any topic that interests us. My friend Marcus is obsessed with dinosaurs, so he's always finding new games and videos to learn more about different species and time periods. I'm really into coding and robotics, so I use kid-friendly coding apps and tutorials to work on my programming skills. With technology, we can explore our passions and keep learning at our own level and pace.Having one-to-one devices like laptops and tablets in the classroom makes collaboration and interaction so much easier too. We can do group work and projects right on our devices,sharing our screens and documents. Sometimes we'll split into teams for group competitions using gamified learning apps like Kahoot or Quizlet Live. Those always get us really excited and motivated to learn!Technology has even changed how we take tests and demonstrate our knowledge. Instead of just pencil and paper tests, we often take assessments right on our devices. The tests can have multimedia components like videos, simulations, drag-and-drop activities, and even video submissions for some open-ended questions where we record our verbal responses. It's a much more interactive and versatile way to show what we know.Of course, tech in the classroom does have some downsides too. I have to be really careful not to get distracted by games, social media, or websites that have nothing to do with my schoolwork. Cyberbullying and internet safety are serious issues we have to learn about too. And sometimes all the devices can have technical difficulties or glitches that frustrate everyone.But overall, injecting technology into our learning has been an awesome change that makes school more engaging, interactive, collaborative, and personalized. We have countless resources at our fingertips and multimedia tools that bringlessons to life in ways a traditional textbook style of teaching never could.While my grandparents just had to sit passively and listen to their teachers lecture, we get to be active participants in the learning process with technology. We can explore topics through videos and virtual reality. We can build multimedia projects to show our knowledge. We can learn at our own pace with adaptive learning tools. We can satisfy our curiosities and chase our passions with endless online resources. School is just way more empowering, individualized, and fun with technology in the mix.I honestly can't imagine what learning would be like without laptops, tablets, apps, and all the latest ed-tech. While technology definitely has its downsides, it's mostly been an awesome force for transforming education and motivating students like me. I feel really lucky to be growing up in this digital age of learning!。

Macromedia网页设计认证部分试题一1、要想设置在浏览器中,当鼠标指针移动到某段文字上时,改变成沙漏形状,那么应该()A. 在command命令中,选择get more commands命令,再对这段文字应用B. 在command命令中,选择edit command list命令,再对这段文字应用C. 编辑css样式表,再对该段文字应用D. 在page properties中对这段文字2、html中段落标志中,标注文件子标题的是?A. <Hn></Hn>B. <PRE><PRE>C. <p>D. <BR>3、利用时间链做动画效果,如果想要一个动作在页面载入5秒启动,并且是每秒15帧的效果,那么起始关键帧应该设置在时间链的()A 第1帧B 第60帧 c 第75帧 D 第5帧4 什么菜单可以允许用户根据模板进行表格的分类和排序A. format layerB. format tableC. format textD. format cell5、在CSS语言中下列哪一项是"字体大小"的允许值?A. list-style-position: <值>B. xx-smallC. list-style: <值>D. <族科名称>6、Bulleseyet图标像什么样子?A. 汽水瓶B. 钟C. 小狗D. 飞机7、在CSS语言中下列哪一项的适用对象是"内部元素"?A. 背景重复B. 背景附件C. 纵向排列D. 背景位置8、分帧文档的文档格式是()。

A. HTML格式B. ASP格式C. CSS格式D. TXT格式9、下列对CSS文本转换表述不正确的一项是?A. 语法: text-transform: <值>B. 允许值: none | capitalize | uppercase | lowercaseC. 初始值:0D. 适用于: 所有元素10、下列哪一项是"多选式选单"的语法?A. <SELECT MULTIPLE>B. <SAMP></SAMP>C. <ISINDEX PROMPT="***">D. <TEXTAREA WRAP=OFF|VIRTUAL|PHYSICAL></TEXTAREA11、动态HTML中随机分解的转换特效类型是?A. Random dissolveB. Split vertical inC. Split vertical outD. Split horizontal in12、Dreamweaver的图像在Fireworks中可以得到什么处理?A. 直接优化B. 间接优化C. 直接钝化D. 间接钝化13、禁止表格格子内的内容自动断行回卷的HTML代码是?A. <tr valign=?>B. <td colspan=#>C. <td rowspan=#>D. <td nowrap>14、动态HTML文档的多媒体控件"Sequencer"表示?A. 同时播放多个声音文件B. 为子画面和其他可视化对象定义移动路径C. 控制多媒体事年的定时自动播放D. 显示动画图形对象15、CSS分层是利用什么标记构建的分层?A. 〈dir〉B. 〈div〉C. 〈dis〉D. 〈dif〉16、在CSS语言中下列哪一项的适用对象不是"带有显示值的目录项元素"?A. 目录样式位置B. 背景位置C. 目录样式类型D. 目录样式图象17、html语言中,创建一个位于文档内部的靶位的标记是?A. <name="NAME">B. <name="NAME"></name>C. <a name="NAME"></a>D.<a name="NAME"18、在Dreamweaver中,如果网页中的某幅图片(hgj.gif)和该网页的地址从"C:\my document\123\"变为"D:\123\my document\123\",在不改变该网页的地址设置情况下,仍然能正确在浏览器中浏览到该图象的地址设置是:A. "C:\my document\123\hgj.gif"B. "\my document\123\hgj.gif"C."\123\hgj.gif" D. "hgj.gif"19、下列对CSS"值"和"组合"表述不正确的一项是?A. 声明的值是一个属性接受的指定B. 为了减少样式表的重复声明,组合的选择符声明被禁止C. 文档中所有的标题可以通过组合给出相同的声明D. red是属性颜色能接受的值20、HTML的段落标志中,标注行中断的是?A. <Hn> </Hn>B. <PRE> </PRE>C. <P>D. <BR>21、HTML文本显示状态代码中,<SUP></SUP>表示?A. 文本加注下标线B. 文本加注上标线C. 文本闪烁D. 文本或图片居中22、动态HTML中设定路径移动时间的属性是?A. BounceB. DurationC. RepeatD. Target23、下面CGI脚本中的通用格式和content-types不是一一对应的是哪一项?A. HTML与text/htmlB. Text与text/plainC. GIF与image/gifD. MPEG与image/jpeg24、下列对CSS内容表述不正确的一项是?A. 伪类和伪元素不应用HTML的CLASS属性来指定B. 一般的类不可以与伪类和伪元素一起使用C. 一个已访问连接可以定义为不同颜色的显示D. 一个已访问连接可以定义为不同字体大小和风格25、用户可以在()命令的动作中见到canAcceptCommand。