Exploiting Similarities among Languages for Machine Translation - google word2vec

- Format: pdf

- Size: 280.49 KB

- Pages: 10

Questionnaires in language teaching research

Author: Ping Guo. Source: 《速读·下旬》, 2019, No. 03.

Questionnaires are often used to examine people’s attitudes, beliefs and behaviors in language learning and teaching. The data we get from questionnaire research can be especially insightful and satisfying when patterns emerge from a large number of respondents, when apparent differences or similarities are found among groups, or when relationships are ascertained among variables. As researchers, we feel empowered when we make recommendations for learning and teaching if the pattern we find is not only salient and strong, but also attested on a large scale. However, by nature, survey research is exploratory and shallow. It often does not go beyond pattern finding or relationship mapping. The patterns themselves are long on description and short on explanation. And of course, with every pattern there are exceptions.

Most consumers of our research only care about our conclusions and pedagogical implications. As researchers, however, it is our responsibility to make sure that we have selected the right tool, and that the tool we have used to reach our conclusions is indeed trustworthy. The questionnaire as a research instrument has been a concern for applied linguistics for some time. As early as 1990, Reid (1990) traced the processes in developing her learning styles measure and boldly revealed the ‘dirty laundry of ESL [English as a second language] survey research’. Luppescu and Day (1990) attempted to develop a questionnaire to canvass the attitudes and beliefs of Japanese learners and teachers towards the learning of English, but found it difficult to validate the student questionnaire. In fact, instead of arriving at any conclusions about student beliefs, they presented a ‘more important conclusion’, that ‘questionnaire data should not blindly be accepted or considered meaningful unless they have been properly validated’.

Compared to other fields such as the social sciences, education, marketing, and medicine that extensively make use of questionnaires, applied linguists have paid only cursory attention to the questionnaire tool itself. Despite an occasional paper on questionnaire validation (e.g. Brown, 1997) or issues involved in using the Likert scale (e.g. Busch, 1993; Gu, Wen, & Wu, 1995), questionnaire design and validation remained a topic rarely touched upon until the end of the 20th century. Even up until this day, Dörnyei and Taguchi (2010; first edition published in 2003). Only a few papers (e.g. Petrić & Czárl, 2003) showcase how a questionnaire can be validated. With these precious efforts, novice researchers today have much clearer guidance in the process of questionnaire design and validation. However, applied linguists have barely explored the major issues involved in the analysis of questionnaire data. One example would be the analysis of Likert scale data. It is now time for the field to pay more attention not just to what a questionnaire study reveals, but also to how the questionnaire is designed, validated, and analyzed.

Questionnaire research is often seen as ‘quick and dirty’. While the administration of a questionnaire does seem quick, the development, validation, and analysis of the questionnaire are far from a quick and easy process. Another common practice that makes it feel easy is the adoption/adaptation of existing questionnaires. Again, this is not as straightforward as it seems. In commenting on Park’s (2014) recent validation of the FLCAS, Horwitz (2016) emphasized the point that the factor structure of the foreign language anxiety scale may vary from group to group, and that:

future empirical efforts to understand the components of FLA [foreign language anxiety] should clearly delineate the specific learner population and learning context being examined to determine if, and if so, how the construct of FLA differs across learning populations and situations.

References

[1] Brown, J. D. Designing surveys for language programs. In D. T. Griffee & D. Nunan (Eds.), Classroom teachers and classroom research[J]. Tokyo: JALT, 1997: 109-121.
[2] Brown, J. D. Likert items and scales of measurement?[J]. SHIKEN: JALT Testing & Evaluation SIG Newsletter, 2011, 15: 10-14.
[3] Gu, Y., Wen, Q., & Wu, D. How often is often: Reference ambiguities of the Likert-scale in language learning strategy research[J]. Occasional Papers in English Language Teaching, ERIC Document Reproduction Service No. ED 391 358. 1995, 5: 19-35.

About the author: Guo Ping (born July 1981), female, Han ethnicity, from Longchang County, Sichuan; lecturer; bachelor's degree; affiliation: Chengdu Art Vocational College; research interests: foreign languages and language teaching.

Transactions of the Philological Society Volume 103:2 (2005) 121–146

VERTICAL AND HORIZONTAL TRANSMISSION IN LANGUAGE EVOLUTION¹

By WILLIAM S-Y. WANG (a) and JAMES W. MINETT (b)
(a) Chinese University of Hong Kong and Academia Sinica, Taiwan; (b) Chinese University of Hong Kong

ABSTRACT

It has been observed that borrowing within a group of genetically related languages often causes the lexical similarities among them to be skewed. Consequently, it has been proposed that borrowing can sometimes be inferred from such skewing. However, heterogeneity in the rate of lexical replacement, as well as borrowing from other languages, can also give rise to skewed lexical similarities. It is important, therefore, to determine to what degree skewing is a statistically significant indicator of borrowing. Here, we describe a statistical hypothesis test for detecting language contact based on skewing of linguistic characters of arbitrary type. Significant probabilities of correct detection of contact are maintained for various contact scenarios, with low false alarm probability. Our experiments show that the test is fairly robust to substantial heterogeneity in the retention rate, both across characters and across lineages, suggesting that the method can provide an objective criterion against which claims of significant skewing due to contact can be tested, pointing the way for more detailed analysis.

¹ This work has been supported in part by grants 1224/02H and 1127/04H awarded by the Research Grants Council of Hong Kong to the Chinese University of Hong Kong. We would like to thank the anonymous reviewers of an earlier draft of this paper for their constructive criticism. We are also grateful to Drs. Jinyun Ke and Feng Wang, and Miss Joyce Cheung for their helpful comments. Additional supplementary material can be found at /transactions.asp

© The Philological Society 2005. Published by Blackwell Publishing, 9600 Garsington Road, Oxford OX4 2DQ and 350 Main Street, Malden, MA 02148, USA.

1. INTRODUCTION

The tree diagram allows the
hierarchy of language splits that are hypothesized to have taken place in a language stock to be displayed clearly and simply. However, the underlying assumption that languages split discretely into two (or more) lineages, each evolving independently thereafter by vertical transmission only, is far from realistic. As Schmidt recognized when he proposed the Wellentheorie, languages often do not split nearly so cleanly as supposed in the Stammbaumtheorie. Furthermore, innovations that arise in one language may come to be acquired by other nearby languages with which it comes into contact. When such horizontally transmitted innovations are incorrectly interpreted as vertically transmitted innovations, and are used to infer a language tree, the topology of the tree provides a warped representation of the genetic relationships.

In order to prepare tree diagrams that accurately reflect only the genetic relationships among a set of languages, the two main mechanisms by which innovations come to be shared must be distinguished, and the horizontal transmission filtered out. Alternatively, if a hybrid picture of the evolution of a set of languages is sought, comprising both modes of transmission, the tree diagram must be discarded and replaced by a network diagram on which are marked both the lines of descent by vertical transmission and the contact events involving horizontal transmission.

One method of distinguishing vertical and horizontal transmission, which can be traced back to Hübschmann’s (1875) work on Armenian, is to stratify the correspondences between two lineages and to recognize that only the oldest stratum can possibly reflect the vertically transmitted signal. This idea has been taken up by Wen (1940), and more recently by Sagart & Xu (2001), to resolve contact among Sino-Tibetan languages, and forms part of the Distillation Procedure for reconstruction recently proposed by Wang (2004).

Other, more quantitative approaches to detecting horizontal transmission have also been
proposed. One such class of methods is based on cladistics. For example, Warnow and Ringe, and their colleagues, have promoted the use of cladistic methods in linguistic classification, publishing a number of papers (e.g., Warnow et al. 1995; Ringe et al. 2002) in which they apply their own implementation of the maximum compatibility method to refine the classification of Indo-European. In their approach, determination of the optimal position of each sub-group is undertaken by seeking the topologies (there might be more than one) that are compatible with the greatest number of characters. The remaining, incompatible characters are viewed as having been subject to non-genetic processes, such as borrowing, and are not used to determine the optimal topologies. Application of the computational technique answer set programming to the automatic assessment of contact-induced innovations that are optimal within the Warnow-Ringe paradigm is currently under investigation (Erdem et al. 2003; Brooks et al. 2005), and shows promising results.

Minett & Wang (2003) have described another cladistic method for detecting borrowed characters. In this method, the optimal trees are sought according to the maximum parsimony criterion. Each innovation is assumed to arise independently only once, hence instances of some innovation after its first occurrence are considered to be due to contact. The method has been applied to detect lexical borrowing among representative dialects of each of the seven main sub-groups of Chinese. More tests must be performed to check that these cladistic methods are indeed detecting contact-induced change and not leading toward plausible, but spurious, inferences.

Methods based on lexicostatistics have also been attempted. A number of Africanists, including Hinnebusch (1999) and Heine (1971) before him, have noted that the lexical similarities between sub-families of closely genetically related
languages tend to be approximately equal when only genetic effects have influenced the languages, but tend to be different when borrowing or other non-genetic effects have influenced them. This observation has led Hinnebusch (1996) to propose a lexicostatistical concept for identifying languages that have come into contact by looking for skewing in the lexical similarities among them. Skewing is just the difference that is observed between the similarities of one language with respect to two other languages; a more formal definition of skewing is given in Section 2. Hinnebusch cites a comment by Heine (1974: 17), regarding possible contact among three Nilotic languages, which we repeat here to illustrate the concept (Hinnebusch, 1999: 177):

The Nilotic languages Samburu and Nandi share 9.9 percent lexical resemblances on the basis of the 200-word list. The percentage between Masai and Nandi, on the other hand, amounts to 15.7. These two languages have been in close contact over the last few centuries. It seems reasonable to assume that the difference of 5.8 percent between Samburu/Nandi and Masai/Nandi is a result of the process of borrowing which took place between Masai and Nandi.

The claim that the 5.8% difference, or skewing, between the lexical similarities observed for Samburu/Nandi and Masai/Nandi is due to borrowing between Masai and Nandi is certainly intuitively appealing. However, notwithstanding the fact that Masai and Nandi are known to have come into contact recently, an alternative explanation for the skewing noted by Heine might simply be that Masai has been more conservative than Samburu since splitting from it, thereby causing the lexical similarity between Masai/Nandi to exceed that between Samburu/Nandi. Another possible explanation is that Samburu might have come into contact with some other language, causing some lexical items that had hitherto been cognate with lexical items in Nandi to be replaced, so reducing the lexical similarity for Samburu/Nandi.

Chen
(2000) has proposed a lexicostatistical method for identifying whether a pair of languages have come into contact. The method, called rank analysis, divides the lexical items into groups, or ranks, that are known (or assumed) to have different average rates of replacement, and works with the lexical similarities between the languages in each of those ranks. In its simplest implementation, universal rank analysis, the Swadesh basic words are grouped into two ranks: Rank 1, consisting of the Swadesh 100 word-list (Swadesh, 1955), and Rank 2, the remaining words of the Swadesh 200 word-list (Swadesh, 1951). The lexical similarity between the pair of languages is then calculated for each rank. Based on the assumption that the Rank 1 words are more conservative and less susceptible to borrowing than the Rank 2 words, the two languages are inferred to be genetically related only if the Rank 1 similarity exceeds the Rank 2 similarity; otherwise, the shared resemblances are inferred to have come about through contact. Used in conjunction with stratification, whereby the rank analysis is applied only to the oldest detected stratum, the method may prove to be a powerful tool for detecting horizontal transmission. However, it is not yet clear how accurate this method is.

Several other lexicostatistical methods for detecting horizontal transmission have also been developed. For example, both Sankoff (1972) and Embleton (1981; 1986) have extended the traditional implementation of lexicostatistics to account for both heterogeneous retention rates and borrowing, modelling the borrowing between languages in terms of their geographic neighbourhood.
However, these methods do not in themselves allow the detection of horizontal transmission. Rather, they use estimates of the borrowing rates between neighbouring languages to improve the classification produced by the lexicostatistical analyses.

In his study of the settlement of Taiwan, Wang (1989) postulated that patterns in the lexicostatistical error matrix (the absolute difference between the input lexical distances and the distances reconstructed from the optimal lexicostatistical tree) are indicative of horizontal transmission. While this approach has produced suggestive results, its efficacy is yet to be verified.

In addition to developing a cladistic method for detecting contact, Minett & Wang (2003) also proposed a lexicostatistical approach to detecting contact, hypothesizing that branches of negative length in the trees built by distance-based tree-building algorithms, such as Neighbor-Joining (Saitou and Nei, 1987), are indicative of contact. This hypothesis, however, turned out to be false.

Yet another approach, suggested by Cavalli-Sforza et al. (1994) for detecting admixture among human populations, is to use bootstrapping in conjunction with an arbitrary tree-building algorithm. Bootstrapping works by generating multiple trees, with one or more randomly selected languages removed from the analysis each time. The stability of the topologies so produced is then examined: clusters of languages that are grouped together for many bootstrap samples are considered to be representative of valid genetic relationships. But when a language is unstable in the sampling, shifting from one sub-grouping to another, it is considered likely that that language has come into contact with other languages. The multiple allegiances of such a language may perhaps be identified by examining for which bootstrap samples it shifts sub-group. This method has been applied to Indo-European by Ogura and Wang (1996) with some success, correctly detecting the heavy lexical borrowing by English from both French and the
Scandinavian languages.

It is also important to mention the split decomposition method for phylogenetic analysis (Bandelt & Dress, 1992). The majority of classification algorithms that have been applied to historical linguistics constrain the topologies that are produced to be trees. However, as we have mentioned, horizontal transmission cannot be shown on a language tree, and actually warps the tree away from representing genetic relationships accurately. Vertically transmitted characters and horizontally transmitted characters, if not distinguished, tend to produce contradictory sub-groupings on the tree; in other words, they tend to be incompatible. The split decomposition method, however, does not constrain the topology to be a tree, but transforms the characters into a set of splits to construct a so-called splits graph. Only when the characters are compatible, suggesting that there has been no horizontal transmission, does the split decomposition method construct a tree; otherwise, a tree-like network is constructed. As more characters become subject to horizontal transmission, so the departure from a tree topology becomes more pronounced.

Our aim in this paper is to place Hinnebusch’s idea for using skewing to detect language contact on firm ground by deriving a statistical hypothesis test that can detect contact under idealized conditions at prescribed levels of significance, and to investigate its level of performance under several less-idealized conditions.

The paper is laid out as follows. Section 2 summarizes the skewing concept and our implementation of Hinnebusch’s method for detecting contact among an arbitrary number of genetically related languages. In Section 3, we show results for a number of contact scenarios that illustrate the robustness of the test. Some concluding remarks are given in Section 4.

2. THE SKEWING METHOD FOR DETECTING LANGUAGE CONTACT

The skewing method
for inferring language contact outlined by Hinnebusch is a similarity-based lexicostatistical method. In a standard lexicostatistical approach, the lexical similarity of two languages is calculated by counting the proportion of some set of pre-selected meanings for which the corresponding glosses appear to be reflexes of the same etymon. Languages having a greater lexical similarity are considered to be more closely genetically related than languages having a lesser lexical similarity.

There are a number of theoretical problems with the lexicostatistical method. Sometimes, no word can be found to express a particular meaning. At other times, multiple words are found to correspond to a certain meaning: which word should the linguist use to encode the character? However, looking at these problems from the viewpoint of statistics, the lexical similarity calculated for any two languages is simply an estimate of their similarity based on noisy data. As long as there are not too many such noisy characters and the linguist adopts a consistent approach to handling them, we believe that the lexical similarity can still be a useful tool for estimating how closely related two languages are. Also, Blust (2000) has reminded us that use of lexicostatistics can lead to incorrect classifications when the retention rate across lineages is heterogeneous. While it is true that lexicostatistics can perform poorly, even for only slight heterogeneity in the retention rate, it remains an important question as to when and how often lexicostatistics performs poorly. We emphasise, however, that in the skewing method described here, no attempt is made to actually classify languages using lexicostatistics.

2.1. Skewing

In order to explain more clearly what we mean by skewing and how skewing might be used to detect language contact, we find it convenient to make use of certain concepts used in cladistics. In cladistics, a character can be defined, rather loosely, as some feature of the taxa being classified, here languages, that allows
them to be categorized on the basis of the different character states that selected characters manifest. Suppose that character state data is available for two sets of languages that are known to be members of two distinct, genetically related sub-groups of some language family, but for which the genetic relationships within each of the two sub-groups are unknown. Our aim is to formalize Hinnebusch’s method into a statistical hypothesis test that can be used to infer whether there has been contact between the languages in two such sub-groups.

We begin by defining the skewing between two sibling languages, A and B, with respect to a third language, C, as the similarity of A and C minus the similarity of B and C. The similarity measure can be simply the usual lexical similarity that is adopted in lexicostatistical studies (e.g., as in Dyen et al. 1992) or some other measure of similarity based on characters of arbitrary type. If contact has occurred between, say, recipient language A and donor language C, A will tend to have a higher similarity with C than does B, resulting in positive skewing between A and B with respect to C. This tendency for contact to induce positive skewing forms the basis of the skewing method. As Hinnebusch (1999: 184) observes, “languages which group together lexicostatistically will tend to have a numerical symmetry with other noncontiguous languages in the comparison set if in fact the grouped languages form a genetic group.” In other words, we would expect languages within one sub-group of languages to exhibit little skewing with respect to languages of another, related sub-group of languages. When, nonetheless, skewing is observed, language contact is one possible cause. We also define the aggregate skewing of a language, A, with respect to another language, C, as the average skewing between A and each of its siblings with respect to C.

The potential use of skewing as an indicator of language contact is best explained by means of an example. Consider the lexical
similarities shown in Table 1 among eight Bantu dialects: four Mijikenda dialects (Chonyi, Giriyama, Duruma and Digo) and four Comorian dialects (Ngazija, Mwali, Nzuani and Maore), all members of the Sabaki sub-group of Bantu (data from Hinnebusch, 1999). Calculation of the aggregate skewing for the Mijikenda dialect Digo with respect to each of the Comorian dialects is summarized in Table 2. So, for example, the aggregate skewing of Digo with respect to Ngazija is −3 2/3 per cent. Proceeding in this way, we can calculate the aggregate skewing for each of the Mijikenda dialects with respect to each of the Comorian dialects and vice versa, the results for which are shown in Table 3.

Table 1. Lexical similarities (%) among two sub-groups of Bantu dialects, after Hinnebusch (1999).

            Mijikenda                             Comorian
            Chonyi  Giriyama  Duruma  Digo        Ngazija  Mwali  Nzuani  Maore
Chonyi      100     81        78      68          59       60     59      59
Giriyama            100       77      66          60       58     59      60
Duruma                        100     70          60       58     58      59
Digo                                  100         56       54     56      59
Ngazija                                           100      81     77      80
Mwali                                                      100    83      84
Nzuani                                                            100     83
Maore                                                                     100

Table 2. An example of the calculation of aggregate skewing. Aggregate skewing (%) is calculated for the Mijikenda dialect Digo with respect to four Comorian dialects.

                     Ngazija         Mwali           Nzuani          Maore
Chonyi               56 − 59 = −3    54 − 60 = −6    56 − 59 = −3    59 − 59 = 0
Giriyama             56 − 60 = −4    54 − 58 = −4    56 − 59 = −3    59 − 60 = −1
Duruma               56 − 60 = −4    54 − 58 = −4    56 − 58 = −2    59 − 59 = 0
Aggregate (Digo)     −3 2/3          −4 2/3          −2 2/3          −1/3

Notice that the aggregate skewing is not symmetric. For example, the skewing of Digo with respect to Ngazija, −3 2/3 per cent, does not equal that of Ngazija with respect to Digo, −1/3 per cent. This is because the former value is obtained by summing the skewing for Digo and Ngazija over all the Mijikenda dialects while the latter value is obtained by summing over all the Comorian dialects. These values indicate that Digo is lexically less similar to Ngazija than are the other Mijikenda dialects, but that Ngazija is about as similar to Digo as are the other Comorian
dialects. Digo is also negatively skewed with respect to both Mwali and Nzuani. One possible cause is that Digo has borrowed from a separate sub-group. Indeed, Hinnebusch suggests that this is so, arguing that Digo has come into heavy contact with Swahili. If this is indeed the case, negative skewing would seem to indicate contact with some language outside the group. However, an alternative explanation, one based only on the skewing data, is simply that Digo is less conservative than the other Mijikenda dialects, causing it to exhibit fewer similarities with the Comorian dialects than its Mijikenda siblings. This would also account for the relatively low similarity of Digo with its siblings (shown in Table 1).

Table 3(a). Aggregate skewing percentages of the Mijikenda dialects with respect to the Comorian dialects.

            Ngazija   Mwali     Nzuani    Maore
Chonyi      +1/3      +3 1/3    +1 1/3    −1/3
Giriyama    +1 2/3    +2/3      +1 1/3    +1
Duruma      +1 2/3    +2/3      0         −1/3
Digo        −3 2/3    −4 2/3    −2 2/3    −1/3

Table 3(b). Aggregate skewing percentages of the Comorian dialects with respect to the Mijikenda dialects.

            Chonyi    Giriyama  Duruma    Digo
Ngazija     −1/3      +1        +1 2/3    −1/3
Mwali       +1        −1 2/3    −1        −3
Nzuani      −1/3      −1        −1        −1/3
Maore       −1/3      +1        +1/3      +3 2/3

Table 4. Parameter values used to generate pseudo-random character state data in the absence of contact.

Number of languages in sub-group K1:   5
Number of languages in sub-group K2:   5
Time depth of family:                  2
Time depth of sub-groups:              1
Retention rate:                        90%
Number of characters:                  100

Examining Table 3 we see large magnitude positive skewing for Maore with respect to Digo, +3 2/3 per cent, and for Chonyi with respect to Mwali, +3 1/3 per cent. If then contact between two languages tends to induce positive skewing, these values imply possible contact between Maore and Digo, and between Chonyi and Mwali. But what is the probable direction of transmission? Consider the case of Chonyi and Mwali. If the direction of transmission were from Mwali to Chonyi, we would expect some of the character states acquired from Mwali by borrowing
to also be present in the other Comorian dialects. Therefore, in addition to positive skewing of Chonyi with respect to Mwali, we would also expect to observe at least some positive skewing of Chonyi with respect to the other Comorian dialects. On the other hand, if the direction of transmission were from Chonyi to Mwali, we would not expect the lexical similarity between Chonyi and Mwali’s Comorian siblings to be affected. Consequently, we would not expect any significant positive skewing of Chonyi with respect to the other Comorian dialects. Examining Table 3, it is apparent that horizontal transmission from Mwali into Chonyi is the more probable scenario. But of course, the skewing might be caused simply by heterogeneity of the retention rates.

2.2. Distribution of Aggregate Skewing: no contact

We proceed by deriving the distribution of aggregate skewing when there is no contact between the two sub-groups. Pseudo-random character state data are generated for ten languages that have split into two sub-groups, each sub-group comprising five languages.² The parameter values chosen for this experiment are summarized in Table 4; there is no contact. Note that the retention rate is set to be homogeneous, both across lineages and across characters, at 90%. We estimate the distribution of aggregate skewing by Monte-Carlo simulation, observing the relative frequency of different values of aggregate skewing in multiple runs of the algorithm. Figure 1 shows the distribution of aggregate skewing for all pairs of languages observed over 1000 runs with the parameters specified in Table 4; both the frequency (the vertical bars) and the cumulative frequency (the curve) of aggregate skewing are shown in the figure. Notice that the aggregate skewing is approximately Gaussian distributed with zero mean.

² The algorithm used to generate the pseudo-random character state data is described in Appendix A in the supplementary material, available at http:// /More/philsoc/Transactions/html.

2.3. Distribution of Aggregate Skewing: contact

We now examine how the distribution of skewing changes when there is contact between the two sub-groups by injecting borrowing of various degrees between a single donor language and a single recipient language, one language in each sub-group. For the first such set of runs, the parameter values of the algorithm are set to those values specified in Table 4; the contact rate is set to 10%. Figures 2 & 3 summarize the results of this experiment: Figure 2 shows the distribution of aggregate skewing for the pairs of languages that have not come into contact; Figure 3 shows the distribution of aggregate skewing of the recipient language with respect to the donor language.

In both cases, the distribution of aggregate skewing is roughly Gaussian. For the pairs of languages that have not come into contact, the mean level of aggregate skewing is −0.1% (Figure 2), only slightly lower than the zero-mean skewing observed under the no-contact scenario (cf. Figure 1). However, due to the contact between the donor and recipient languages, we expect these two languages to exhibit positive aggregate skewing. In fact, the observed mean level of aggregate skewing of the recipient language with respect to the donor language is +3.3% (Figure 3). We find then that language contact tends to induce positive aggregate skewing between the donor and recipient, but does not greatly affect the aggregate skewing for languages that have not come into contact.

2.4. Hypothesis test for detecting language contact

The above findings point to a method for identifying languages that have come into contact: positive aggregate skewing tends to indicate language contact. But what amount of aggregate skewing is a significant indicator of contact? As Figure 1 indicates, when there is no contact, less than 5% of language pairs have aggregate skewing of +3.7% or greater; but slightly more than 5% of language pairs have aggregate skewing of +3.6% or greater.
Hence, for a 5% probability of false alarm (the significance), language contact can be inferred whenever the aggregate skewing exceeds the threshold value, h, of +3.7%. Looking back at Figure 2, which shows the distribution of aggregate skewing among language pairs which have not come into contact when contact has occurred between some other language pair, we observe that 6.0% of language pairs exhibit significant skewing that exceeds the threshold, only slightly greater than the specified level of significance of 5%. This is further evidence (for this set of parameters at least) that contact between a single pair of languages does not induce significant aggregate skewing between other language pairs. From Figure 3, we observe significant aggregate skewing of the recipient language with respect to the donor language with frequency 42.2%. Thus we can correctly infer contact between a single pair of languages with probability roughly 42% as long as we are prepared to accept a 6% chance of incorrectly inferring contact between a pair of languages between which no contact has occurred.

3. RESULTS AND DISCUSSION

We now examine the performance of the method described in Section 2 for inferring contact in a variety of contact situations. One thousand pseudo-random data sets are generated for each experiment detailed below. In each case, the observed distribution of aggregate skewing is determined so that the probabilities of incorrectly inferring contact, the false alarm rate, and of correctly inferring contact, the detection rate, can be estimated.
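To make the quantities used in these experiments concrete, the following Python sketch computes the aggregate skewing of Section 2.1 from the cross-group similarities of Table 1 and applies a threshold test in the spirit of Section 2.4. It is an illustration only, not the authors' implementation: the function names (sim, aggregate_skewing, empirical_threshold, infer_contact) are hypothetical, and the rule used to pick a threshold from no-contact samples is one assumed reading of the text. Note also that the +3.7% threshold was derived for the simulated parameters of Table 4, so it should not be read as calibrated for the Bantu data.

```python
from fractions import Fraction

MIJIKENDA = ["Chonyi", "Giriyama", "Duruma", "Digo"]
COMORIAN = ["Ngazija", "Mwali", "Nzuani", "Maore"]

# Cross-group lexical similarities (%) copied from Table 1.
# Rows: Mijikenda dialects; columns: Comorian dialects in COMORIAN order.
CROSS = {
    "Chonyi":   [59, 60, 59, 59],
    "Giriyama": [60, 58, 59, 60],
    "Duruma":   [60, 58, 58, 59],
    "Digo":     [56, 54, 56, 59],
}


def sim(x, y):
    """Similarity between one Mijikenda and one Comorian dialect (either order)."""
    if x in CROSS and y in COMORIAN:
        return CROSS[x][COMORIAN.index(y)]
    if y in CROSS and x in COMORIAN:
        return CROSS[y][COMORIAN.index(x)]
    raise ValueError("expected one dialect from each sub-group")


def aggregate_skewing(a, c):
    """Average of sim(a, c) - sim(b, c) over every sibling b of a."""
    group = MIJIKENDA if a in MIJIKENDA else COMORIAN
    siblings = [b for b in group if b != a]
    total = sum(sim(a, c) - sim(b, c) for b in siblings)
    return Fraction(total, len(siblings))


def empirical_threshold(null_values, alpha):
    """Smallest observed value h such that fewer than a fraction alpha of the
    no-contact samples are >= h (assumed reading of Section 2.4)."""
    n = len(null_values)
    for h in sorted(set(null_values)):
        if sum(v >= h for v in null_values) / n < alpha:
            return h
    return None  # no observed value is rare enough


def infer_contact(skew, threshold):
    """Contact is inferred when the aggregate skewing exceeds the threshold."""
    return skew > threshold


if __name__ == "__main__":
    print(aggregate_skewing("Digo", "Maore"))   # -1/3
    print(aggregate_skewing("Ngazija", "Digo"))  # -1/3
```

Exact fractions are used so that thirds are not lost to rounding; with real simulation output, empirical_threshold would be fed the aggregate skewing values collected from the no-contact runs.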
Experiment 1: The examples given in Section 2 were implemented on the assumption that the sub-group time depth, as well as the time depth of the entire family, was known, allowing an appropriate threshold value to be calculated. Here we consider the effect of setting the threshold based on an incorrect evaluation of the sub-group time depth. The sub-group time depth is set successively to 0.25, 0.50, …, 1.75, while the contact rate is set to 10%. However, for each value of the actual sub-group time depth, the performance is assessed for a threshold optimised for each value of the assumed, or nominal, sub-group time depth. Thus, in most cases, the chosen threshold is not optimised for the actual value of the sub-group time depth.

Figure 4 shows the resultant performance for 10% contact: (a) the detection rate, and (b) the false alarm rate. Examining the detection rate curves, we observe that for each value of the nominal sub-group time depth the detection rate is roughly constant for all actual values of the sub-group time depth (although there is a slight dependence on the actual time depth for the more extreme values of the nominal time depth). This means that a reasonably accurate assessment of the detection rate can be obtained based on just the nominal sub-group time depth: the greater its value, the greater the detection rate.

Examining now the false alarm rate curves in Figure 4(b), we see that the false alarm rate certainly does depend on the values of both the actual sub-group time depth and the nominal sub-group time

Words that differ in only one sound. They differ in meaning, they differ in only one sound segment, and the different sounds occur in the same environment.
Example: beat, bit. They form a minimal pair, so /iː/ and /ɪ/ are different sounds in English. They are different phonemes.

1. The Sapir-Whorf Hypothesis
Linguistic determinism: language determines thought. Linguistic relativity: there is no limit to the structural diversity of languages.

2. Behaviorism
Behaviorism in linguistics holds the view that children learn language through a chain of stimulus-response-reinforcement, and that adults' use of language is also a process of stimulus-response.

3. Discovery procedures
A grammar is discovered through the performing of certain operations on a corpus of data.

4. Universal Grammar
UG consists of a set of innate grammatical principles. Each principle is associated with a number of parameters.

5. Systemic Grammar
It aims to explain the internal relations in language as a system network, or meaning potential.

6. Ideational Metafunction
The Ideational Function (Experiential and Logical) is to convey new information, to communicate a content that is unknown to the hearer. It is a meaning potential. It mainly consists of “transitivity” and “voice”. This function not only specifies the available options in meaning but also determines the nature of their structural realisations. For example, “John built a new house” can be analysed as a configuration of functions:
Actor: John
Process: Material: Creation: built
Goal: Affected: a new house

7. Interpersonal Metafunction
The interpersonal function embodies all uses of language to express social and personal relations.
This includes the various ways the speaker enters a speech situation and performs a speech act.

8. Basic speech roles
The most fundamental types of speech role are just two: (i) giving, and (ii) demanding. Cutting across this basic distinction between giving and demanding is another distinction that relates to the nature of the commodity being exchanged. This may be either (a) goods-&-services or (b) information.

9. Finite verbal operators
Finiteness is thus expressed by means of a verbal operator which is either temporal or modal.

10. Textual Metafunction
The textual metafunction enables the realization of the relation between language and context, making the language user produce a text which matches the situation. It refers to the fact that language has mechanisms to make any stretch of spoken or written discourse into a coherent and unified text and to make a living passage different from a random list of sentences. It is realized by thematic structure, information structure and cohesion.

11. Theme and rheme
The Theme is the element which serves as the point of departure of the message. The remainder of the message, the part in which the Theme is developed, is called the Rheme. As a message structure, a clause consists of a Theme accompanied by a Rheme. The Theme is the first constituent of the clause. All the rest of the clause is simply labelled the Rheme.

12. Experientialism
Experientialism assumes that the external reality is constrained by our uniquely human experience. The parts of this external reality to which we have access are largely constrained by the ecological niche we have adapted to and the nature of our embodiment. In other words, language does not directly reflect the world. Rather, it reflects our unique human construal of the world: our ‘world view’ as it appears to us through the lens of our embodiment. This view of reality has been termed experientialism or experiential realism by cognitive linguists George Lakoff and Mark Johnson.
Experiential realism acknowledges that there is an external reality that is reflected by concepts and by language. However, this reality is mediated by our uniquely human experience, which constrains the nature of this reality ‘for us’.

13. Image schemata
An image schema is a recurring structure within our cognitive processes which establishes patterns of understanding and reasoning. Image schemas are formed from our bodily interactions, from linguistic experience, and from historical context.

14. Prototype theory
Prototype theory is a mode of graded categorization in cognitive science, where some members of a category are more central than others. For example, when asked to give an example of the concept furniture, chair is more frequently cited than, say, stool. Prototype theory has also been applied in linguistics, as part of the mapping from phonological structure to semantics.

II. Directions: Please answer the following questions.

1. Why is Saussure called “one of the founders of structural linguistics” and “father of modern linguistics”?
He helped to set the study of human behavior on a new footing (basis).
He helped to promote semiology.
He clarified the formal strategies of Modernist thought.
He attached importance to the study of the intimate relation between language and the human mind.

2. What are the similarities and differences between Saussure’s langue and parole and Chomsky’s competence and performance?
The similarities: (1) langue and competence mainly concern the user’s underlying knowledge, while parole and performance concern the actual phenomena; (2) langue and competence are abstract, while parole and performance are concrete.
The differences: (1) according to Saussure, langue is a mere systematic inventory of items; according to Chomsky, competence should refer to the underlying competence as a system of generative processes; (2) according to Saussure, langue is mainly based on sociology, and in separating langue from parole we separate the social from the individual; according to
Chomsky, competence is restricted to a knowledge of grammar.

3. What is the conflict between descriptive adequacy and explanatory adequacy? And what is Chomsky’s solution to this conflict?
A descriptively adequate theory of grammar:
should adequately describe the grammatical data of a language.
should not just focus on a fragment of a language.
An explanatorily adequate theory of grammar:
should explain the general form of language.
should choose among alternative descriptively adequate grammars.
should essentially be about how a child acquires a grammar.
A theory of grammar should be both descriptively and explanatorily adequate. But there is a conflict:
To achieve DA, the grammar must be very detailed.
To achieve EA, the grammar must be very simple (think why), because the child can learn a language very easily on very little language exposure.
Chomsky’s solution:
construct a simple UG;
let individual grammars be derivable from UG.

4. What are Chomsky’s contributions to the linguistic revolution?
Chomsky’s contribution to the linguistic revolution is that he showed the world a totally new way of looking at language and at human nature, particularly the human mind. Chomsky challenged behaviorism and empiricism because he believes that language is innate.
Rationalism (vs. empiricism in philosophy): empiricist evidence is often unreliable.
Innateness (vs. behaviorism in psychology): children can acquire a complicated language on the basis of very limited exposure to speech. This indicates that UG is an innate faculty.

5. How can Generative Linguistics and Systemic-Functional Linguistics be compared and contrasted from the perspectives of epistemology, theoretical basis, research tasks and methodology?

6. How many process types are there in the transitivity system? Please illustrate each type with a proper example.
Six.
Material Processes, Mental Processes, Relational Processes, Behavioural Processes, Verbal Processes, Existential Processes.
The typical types of outer experience are actions, goings-on and events: actions happen, people act on other people or things, or make things happen. This type of process is called Material Processes.
The inner experience is that in our consciousness or imagination. You may react to it, think about it, or perceive it. This type of process is called Mental Processes.
Then there is a third type of process: we learn to generalize, to relate one fragment of experience to another. It does this by classifying or identifying. This kind of process is called Relational Processes.
These three processes are called major processes. Related to them are three minor processes: each one lies at the boundary between two of the three. Not so clearly set apart, they share some features of each, and finally acquire a character of their own.
On the borderline between material and mental are the Behavioural Processes: those that represent outer manifestations of inner workings, the acting out of processes of consciousness and physiological states.
On the borderline of mental and relational is the category of Verbal Processes: symbolic relationships constructed in human consciousness and enacted in the form of language.
Then on the borderline between the relational process and the material process are Existential Processes, by which phenomena of all kinds are recognized to be or to exist.

7. What is a multiple Theme, as contrasted with a simple Theme? What is a marked Theme, as contrasted with an unmarked Theme? Please illustrate them with proper examples.
Conjunctions in Theme
Conjunctive and modal Adjuncts in Theme
Textual, interpersonal and experiential elements in Theme
Interrogatives as multiple Themes

8. What are the similarities and differences between conceptual metaphor and conceptual metonymy?
Metaphor and metonymy are viewed as phenomena fundamental to the
structure of the conceptual system rather than superficial linguistic ‘devices’. Conceptual metaphor maps structure from one conceptual domain onto another, while metonymy highlights an entity by referring to another entity within the same domain. In other words, metaphor projects a concept from one domain onto another, that is, from one cognitive domain (the source domain) onto another cognitive domain (the target domain).

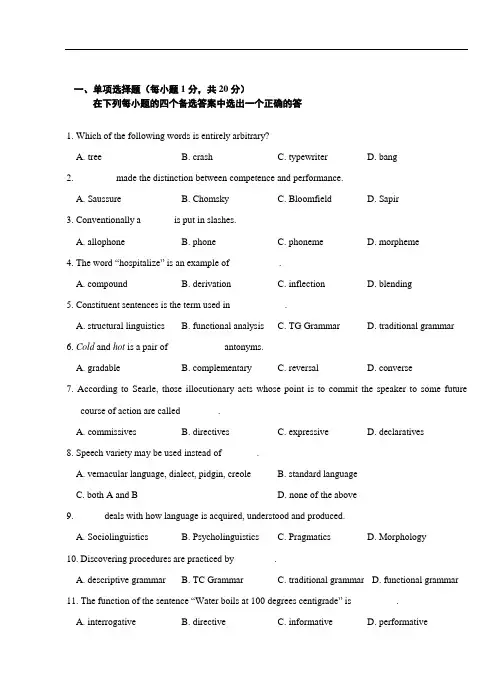

I. Multiple-choice questions (1 point each, 20 points in total). For each of the following questions, choose the one correct answer from the four options given.

1. Which of the following words is entirely arbitrary? __________
A. tree B. crash C. typewriter D. bang
2. ________ made the distinction between competence and performance.
A. Saussure B. Chomsky C. Bloomfield D. Sapir
3. Conventionally a ______ is put in slashes.
A. allophone B. phone C. phoneme D. morpheme
4. The word “hospitalize” is an example of __________.
A. compound B. derivation C. inflection D. blending
5. Constituent sentences is the term used in ___________.
A. structural linguistics B. functional analysis C. TG Grammar D. traditional grammar
6. Cold and hot are a pair of ___________ antonyms.
A. gradable B. complementary C. reversal D. converse
7. According to Searle, those illocutionary acts whose point is to commit the speaker to some future course of action are called ________.
A. commissives B. directives C. expressives D. declaratives
8. Speech variety may be used instead of _______.
A. vernacular language, dialect, pidgin, creole B. standard language C. both A and B D. none of the above
9. ______ deals with how language is acquired, understood and produced.
A. Sociolinguistics B. Psycholinguistics C. Pragmatics D. Morphology
10. Discovery procedures are practiced by ________.
A. descriptive grammar B. TG Grammar C. traditional grammar D. functional grammar
11. The function of the sentence “Water boils at 100 degrees centigrade” is _________.
A. interrogative B. directive C. informative D. performative
12. _________ refers to the abstract linguistic system shared by all the members of a speech community.
A. Parole B. Langue C. Speech D. Writing
13. The opening between the vocal cords is sometimes referred to as _________.
A. glottis B. vocal cavity C. pharynx D. uvula
14. ________ refers to the study of the internal structure of words, and the rules by which words are formed.
A. Morphology B. Syntax C. Semantics D. Phonology
15. “When did you stop taking this medicine?” is an example of _________ in sense relationships.
A. entailment B. presupposition C. assumption D. implicature
16.
Idioms are ________.
A. sentences B. naming units C. phrases D. communication units
17. An illocutionary act is identical with ________.
A. sentence meaning B. the speaker’s intention C. language understanding D. the speaker’s competence
18. In sociolinguistics, ______ refers to a group of institutionalized social situations typically constrained by a common set of behavioral rules.
A. domain B. situation C. society D. community
19. ______ refers to the gradual and subconscious development of ability in the first language by using it naturally in communicative situations.
A. Learning B. Competence C. Performance D. Acquisition
20. In which of the following stages did Chomsky add the semantic component to his TG Grammar for the first time? __________
A. The Classic Theory B. The Standard Theory C. The Extended Standard Theory D. The Minimalist Program

1. In Chinese, when someone breaks a bowl or a plate, the host or the people present are likely to say sui sui ping an (every year be safe and happy) as a means of controlling the forces which the believers feel might affect their lives. Which function does it perform? __________
A. Interrogative B. Emotive C. Performative D. Recreational
2. Which of the following properties of language enables language users to overcome the barriers caused by time and place? Due to this feature of language, speakers of a language are free to talk about anything in any situation. ___________
A. Interchangeability B. Duality C. Displacement D. Arbitrariness
3. Which of the following is not a major branch of linguistics? ___________
A. Phonology B. Pragmatics C. Syntax D. Speech
4. _______ deals with language application to other fields, particularly education.
A. Linguistic geography B. Sociolinguistics C. Applied linguistics D. Comparative linguistics
5. A phoneme is a group of similar sounds called _________.
A. minimal pairs B. allomorphs C. phones D. allophones
6. Which one is different from the others according to manner of articulation? _________
A. [z] B. [w] C. [h] D.
[v]
7. ________ doesn’t belong to the most productive means of word-formation.
A. Affixation B. Compounding C. Conversion D. Blending
8. Nouns, verbs, and adjectives can be classified as __________.
A. lexical words B. grammatical words C. function words D. form words
9. ________ refers to the relations holding between elements replaceable with each other at a particular place in structure, or between one element present and the others absent.
A. Syntagmatic relation B. Paradigmatic relation C. Co-occurrence relation D. Hierarchical relation
10. According to the Standard Theory of Chomsky, ________ contain all the information necessary for the semantic interpretation of sentences.
A. deep structures B. surface structures C. transformational rules D. PS-rules
11. ________ describes whether a proposition is true or false.
A. Truth B. Truth value C. Truth condition D. Falsehood
12. “John hit Peter” and “Peter was hit by John” are the same ________.
A. proposition B. sentence C. utterance D. truth
13. ________ is a branch of linguistics which is the study of meaning in the context of use.
A. Morphology B. Syntax C. Pragmatics D. Semantics
14. ________ is the study of how speakers of a language use sentences to effect successful communication.
A. Semantics B. Pragmatics C. Sociolinguistics D. Psycholinguistics
15. ______ is defined as any regionally or socially definable human group identified by a shared linguistic system.
A. A speech community B. A race C. A society D. A country
16. ______ variation of language is the most discernible and definable in speech variation.
A. Regional B. Social C. Stylistic D. Idiolectal
17. In first language acquisition children usually _________ grammatical rules from the linguistic information they hear.
A. use B. accept C. generalize D. reconstruct
18. By the time children are going beyond the ______ stage, they begin to incorporate some of the inflectional morphemes.
A. telegraphic B. multiword C. two-word D. one-word
19. According to Halliday, the three metafunctions of language are ________.
A.
ideational, interpersonal and textual B. ideational, informative and textual C. metalinguistic, interpersonal and textual D. ideational, interpersonal and referential
20. The person who is often described as the “father of modern linguistics” is _______.
A. Firth B. Saussure C. Halliday D. Chomsky

1. Study the following dialogue. What function does it play according to the functions of language? ___________
- A nice day, isn’t it?
- Right! I really enjoy the sunlight.
A. Emotive B. Phatic C. Performative D. Interpersonal
2. Unlike animal communication systems, human language is __________.
A. stimulus free B. stimulus bound C. under immediate stimulus control D. stimulated by some occurrence of communal interest
3. Which branch of linguistics studies the similarities and differences among languages? ___________
A. Diachronic linguistics B. Synchronic linguistics C. Prescriptive linguistics D. Comparative linguistics
4. __________ has been widely accepted as the forefather of modern linguistics.
A. Chomsky B. Saussure C. Bloomfield D. John Lyons
5. Which vowel is different from the others according to the tongue position of vowels? ___________
A. [i] B. [u] C. [e] D. [a]
6. Liquids are classified in the light of __________.
A. manner of articulation B. place of articulation C. place of tongue D. none of the above
7. Morphemes that represent tense, number, gender and case are called _____ morphemes.
A. inflectional B. free C. bound D. derivational
8. There are _______ morphemes in the word denationalization.
A. three B. four C. five D. six
9. In English, theme and rheme are often expressed by ________ and ________.
A. subject, object B. subject, predicate C. predicate, object D. object, predicate
10. The semantic triangle holds that the meaning of a word ________.
A. is interpreted through the mediation of concept B. is related to the thing it refers to C. is the idea associated with that word in the minds of speakers D. is the image it is represented by in the mind
11. “John killed Bill but Bill didn’t die” is a(n) ___________.
A.
entailment B. presupposition C. anomaly D. contradiction
12. ________ found that natural language had its own logic and concluded the cooperative principle.
A. John Austin B. John Firth C. Paul Grice D. William Jones
13. _______ proposed that speech acts can fall into five general categories.
A. Austin B. Searle C. Sapir D. Chomsky
14. ______ is not a typical example of official bilingualism.
A. Canada B. Finland C. Belgium D. Germany
15. The most recognizable differences between American English and British English are in _____ and vocabulary.
A. diglossia B. bilingualism C. pidginization D. blending
16. ______ transfer is a process that is more commonly known as interference.
A. Acquisition B. Positive C. Negative D. Interrogative
17. In general, the two-word stage begins roughly in the _____ half of the child’s second year.
A. early B. late C. first D. second
18. The most important contribution of the Prague School to linguistics is that it sees language in terms of ________.
A. function B. meaning C. signs D. system
19. The principal representative of American descriptive linguistics is ________.
A. Boas B. Sapir C. Bloomfield D. Harris
20. At the _______ stage negation is simply expressed by single words with negative meaning.
A. prelinguistic B. multiword C. two-word D. one-word

1. Which of the following is the most important function of language? ___________
A. Interpersonal function B. Performative function C. Informative function D. Recreational function
2. In different languages, different terms are used to express the animal “狗” (dog); this shows the nature of ______ of human language.
A. arbitrariness B. cultural transmission C. displacement D. discreteness
3. The study of language as a whole is often called ____________.
A. general linguistics B. sociolinguistics C. psycholinguistics D. applied linguistics
4. The study of language meaning is called __________.
A. syntax B. semantics C. morphology D. pragmatics
5. In English, there is one glottal fricative. It is _______.
A. [I] B. [h] C. [k] D. [f]
6.
The phonetic symbol for “voiced bilabial glide” is _________.
A. [v] B. [d] C. [f] D. [w]
7. In English -ise and -tion are called ________.
A. prefixes B. suffixes C. infixes D. free morphemes
8. Morphology is generally divided into two fields: the study of word-formation and ______.
A. affixation B. etymology C. inflection D. root
9. The sense relationship between “John plays the violin” and “John plays a musical instrument” is ________.
A. hyponymy B. antonymy C. entailment D. presupposition
10. Conceptual meaning is ________.
A. denotative B. connotative C. associative D. affective
11. Promising, undertaking, vowing are the most typical of the _______.
A. declarations B. directives C. sociolinguistics D. Chomsky
12. The violation of one or more of the conversational _______ (of the CP) can, when the listener fully understands the speaker, create conversational implicatures, and sometimes humor.
A. standards B. principles C. levels D. maxims
13. _______ variety refers to speech variation according to the particular area where a speaker comes from.
A. Regional B. Social C. Stylistic D. Register variety
14. In a speech community people have something in common ______: a language or a particular variety of language and rules for using it.
A. socially B. linguistically C. culturally D. pragmatically
15. The optimum age for SLA is _______.
A. childhood B. early teens C. teens D. adulthood
16. In general, ________ language acquisition refers to children’s development of the language of the community in which a child has been brought up.
A. first B. second C. third D. fourth
17. Children follow a similar ________ schedule of predictable stages along the route of language development across cultures.
A. learning B. studying C. acquisition D. acquiring
18. The theory of _______ considers that all sentences are generated from a semantic structure.
A. Case Grammar B. Stratificational Grammar C. Relational Grammar D. Generative Semantics
19.
_______ grammar is the most widespread and the best understood method of discussing Indo-European languages.
A. Traditional B. Structural C. Functional D. Generative
20. Hjelmslev is a Danish linguist and the central figure of the ______.
A. Prague School B. Copenhagen School C. London School D. Generative Semantics

21. The relation between form and meaning in human language is natural.
22. Descriptive linguistics studies one specific language.
23. Phonetics is the science that deals with the sound system.
24. Phonology is the study of speech sounds of all human languages.
25. All consonants are produced with vocal-cord vibration.
26. Inflectional morphology is one of the two sub-branches of morphology.
27. The structure of words is not governed by rules.
28. If a word has sense, it must have reference.
29. “He didn’t stop smoking” presupposes that he had been smoking.
30. A locutionary act is the act of expressing the speaker’s intention.
31. A text is best regarded as a semantic unit, a unit not of form but of meaning.
32. Although the age at which children will pass through a given stage can vary significantly from child to child, the particular sequence of stages seems to be the same for all children acquiring a given language.
33. It’s normally assumed that, by the age of five, with an operating vocabulary of more than 2,000 words, children have completed the greater part of the language acquisition process.
34. “Tom hit Mary and Mary hit Tom” is an exocentric construction while “men and women” is an endocentric construction.
35. Following Saussure’s distinction between langue and parole, Trubetzkoy argued that phonetics belonged to langue whereas phonology belonged to parole.
36. The subject-predicate distinction is the same as the theme and functional linguistics.
37. Langue refers to the abstract linguistic system shared by all the members of a speech community.
38. Consonant sounds can be either voiced or voiceless, while all vowel sounds are voiceless.
39.
The standard language is a superposed, socially prestigious dialect of language.
40. An illocutionary act is identical with the speaker’s intention.

21. When language is used to get information from others, it serves an informative function.
22. All the English words are not symbolic.
23. All sounds produced by human speech organs are linguistic symbols.
24. There are 72 symbols for consonants and 25 for vowels in English.
25. The sound [z] is an oral voiced post-alveolar fricative.
26. A morpheme is the basic unit in the study of morphology.
27. Derivational affixes are added to an existing form to create a word.
28. The grammatical meaning of a sentence refers to its grammaticality.
29. There is only one argument in the sentence “Kids like apples”.
30. While conversation participants nearly always observe the CP, they do not always observe these maxims strictly.
31. Inviting, suggesting, warning, ordering are instances of commissives.
32. Cohesion and coherence are identical with each other in essence.
33. It has been recognized that in an ideal acquisition situation, many adults can reach native-like proficiency in all aspects of a second language.
34. All roots are free morphemes while not all free morphemes are roots.
35. In the Classical theory, Chomsky’s aim is to make linguistics a science. This theory is characterized by three features: emphasis on prescription of language, introduction of transformational rules, and grammatical description regardless of language formation.
36. Generative grammar is a system of rules that in some explicit and well-defined way assigns structural descriptions to sentences.
37. All words may be said to contain a root morpheme.
38. Phrase structure rules allow us to better understand how words and phrases form sentences, and so on.
39. Promising, undertaking, vowing are the most typical of the psycholinguistics.
40. Halliday’s Systemic Grammar contains a functional component, and the theory behind his Functional Grammar is systemic.

21.
Most animal communication systems lack the primary level of articulation.
22. Langue is more abstract than parole and therefore is not directly observable.
23. General linguistics deals with the whole human language.
24. Auditory phonetics investigates how a sound is perceived by the listener.
25. In English, there are two nasal consonants. They are [m] and [n].
26. Phonetically, the stress of a compound always falls on the first element, while the second element receives secondary stress.
27. The meaning of the word we often used is the primary meaning.
28. Meaning is central to the study of communication.
29. Of the three speech acts, linguists are most interested in the illocutionary act because this kind of speech act is identical with the speaker’s intention.
30. As the process of communication is essentially a process of conveying meaning in a certain context, pragmatics can also be considered as a kind of meaning study.
31. If a text has no cohesive words, we say the text is not coherent.
32. The optimum age for SLA always accords with the maxim of “the younger the better”.
33. In general, language acquisition refers to children’s development of their first language, that is, the native language of the community in which a child has been brought up.
34. The London School is also known as systemic linguistics and functional linguistics.
35. Coarticulation refers to the phenomenon in which sounds continually show the influence of their neighbors.
36. Bound morphemes are independent units of meaning and can be used freely all by themselves.
37. In the history of American linguistics, the period between 1933 and 1950 is also known as the Bloomfieldian Age.
38. Paul Grice found that artificial language had its own logic and concluded the cooperative principle.
39. Cultural transmission refers to the fact that language is culturally transmitted. It is passed on from one generation to the next through teaching and learning, rather than by instinct.
40.
Linguistic potential is similar to Saussure’s langue and Chomsky’s performance.

21. Language change is universal, ongoing and arbitrary.
22. Competence is more concrete than performance.
23. Descriptive linguistics attempts to establish a theory which accounts for the rules of language in general.
24. The space between the vocal cords is called the glottis.
25. Stops can be divided into two types: plosives and nasals.
26. All roots are free and all affixes are bound.
27. The sentences “Tom, smoke!” and “Tom smokes” have the same semantic predication.
28. Sentences that contain the same words are the same in meaning.
29. A sentence is a grammatical unit and an utterance is a pragmatic notion.
30. “John has been to Asia” entails “John has been to Japan”.
31. Coherence is a logical, orderly and aesthetic relationship between parts, in speech, writing, or argument.
32. Language acquisition is in accordance with language learning on the assumption that there are different processes.
33. SLA is primarily the study of how learners acquire or learn an additional language after they have acquired their first language.
34. According to Firth, a system is a set of mutually exclusive options that come into play at some point in a linguistic structure.
35. American structuralism is a branch of diachronic linguistics that emerged independently in the United States at the beginning of the twentieth century.
36. Phonological knowledge is a native speaker’s intuition about the sounds and sound patterns of his language.
37. Phonetics has three sub-branches: acoustic phonetics, auditory phonetics and articulatory phonetics.
38. The paradigmatic relation shows us the inner layering of sentences.
39. An ethnic dialect is spoken mainly by a less privileged population that has experienced some sort of social isolation, such as racial discrimination.
40. Searle proposed that speech acts can fall into six general categories.

41. _______ is the actual realization of one’s linguistic knowledge in utterances.
42.
Combining two parts of two already existing words is called ________ in word-formation.
43. Lexicon, in most cases, is synonymous with _______.
44. A ________ is a structurally independent unit that usually comprises a number of words to form a complete statement, question or command.
45. _______ studies the sentence structure of language.
46. In semantic analysis, ________ is the abstraction of the meaning of a sentence.
47. A speech _______ is a group of people who share the same language or a particular variety of language.
48. In learning a second language, a learner will subconsciously use his L1 knowledge. This process is called language _______.
49. The development of a first or native language is called first language ________.
50. ________ is a branch of linguistics which is the study of meaning in the context of use.

41. In any language words can be used in new ways to mean new things and can be combined into innumerable sentences based on limited rules. This feature is usually termed _________ or creative.
42. The description of a language as it changes through time is a ___________ study.
43. The qualities of vowels depend upon the position of the _________ and the lips.
44. Consonants differ from vowels in that the latter are produced without ___________.
45. ________________ is a reverse process of derivation, and therefore is a process of shortening.
46. For ______________________ antonyms, it is a matter of either one or the other.
47. A ___________ language is originally a pidgin that has become established as a native language in some speech community.
48. A linguistic _____________ refers to a word or expression that is prohibited by the “polite” society from general use.
49. For the vast majority of children, language development occurs spontaneously and requires little conscious _____________ on the part of adults.
50. Systemic-Functional Grammar is a(n) _________________ oriented functional linguistics approach.

41.
One general principle of linguistic analysis is the primacy of _______ over writing.
42. ___________ is the branch of linguistics which studies the form of words.
43. A word formed by derivation is called a ____________, and a word formed by compounding is called a ___________.
44. ____________ is a science that is concerned with how words are combined to form phrases and how phrases are combined by rules to form sentences.
45. The ________________ relation is a kind of relation between linguistic forms in a sentence and linguistic forms outside the sentence.
46. The various meanings of a _____________ word are related to some degree.
47. The pre-school years are a ____________ period for first language acquisition.
48. Whorf proposed that all higher levels of thinking are dependent on ____________.
49. _______________ deals with how language is acquired, understood and produced.
50. Structuralism is based on the assumption that grammatical categories should be defined not in terms of meaning but in terms of ________________.

41. Language is a system of arbitrary _________ symbols used for human communication.
42. Langue or competence is _________ and not directly observable, while parole or performance is concrete and directly observable.
43. The vocal tract can be divided into two parts: the oral cavity and the __________.
44. The combination of two or sometimes more than two words to create new words is called _____________.
45. The words of English are classified into native words and __________ words.
46. Language itself is not sexist, but its use may reflect the ______________ attitude connoted in the language that is sexist.
47. _____________ refers to the gradual and subconscious development of ability in the first language by using it naturally in communicative situations.
48. In first language acquisition children usually __________ grammatical rules from the linguistic information they hear.
49. The starting point of Chomsky’s TG Grammar is his ___________ hypothesis.
50.
A ____________ analysis of an utterance will reveal what the speaker intends to dowith it.51. discreteness52. competence53. triphthongs54. bound morpheme55.syntax51. design features52. performance53. minimal pair54. morpheme55. polysemy51. arbitrarinessngue53.vowel54. affixs55. reference51. language52. phonemes, phones53. backformation54.lexical semantics55.speech community56. How does a linguist construct a rule?57. How can we decide a minimal pair or a minimal set?58. Explain the interrelations between semantic and structural classifications of morphemes.59. List the differences between surface structure and deep structure of a sentence.60. How does competence differ from performance?56. Explain the differences between langue and parole.57. Use examples to illustrate the difference between a compulsory constituent and an optional constituent.58. Define the two terms: phonemes and allophones.59. What are the three types of distribution?60. How many types of linguistic knowledge does a native speaker possess? What are they?56.What are the five sub-branches of linguistics?57. What are the suprasegmental features are?58. What is the difference between cohesion and coherence?59. What is ethnic dialect?60. What is learner language and target language?56. What is the difference between synchronic linguistics and diachronic linguistics?57. What are the functions of language?58.Explain the relationship between speech and writing.59. Analyze the word “disestablishment” by IC analysis:60.What does morphology study?61. What are the differences between inflectional and derivational affixes in terms of both function and position?61. List the differences between surface structure and deep structure of a sentence.61. Define the three types of distribution respectively.61. Describe with examples various types of morpheme used in English.。

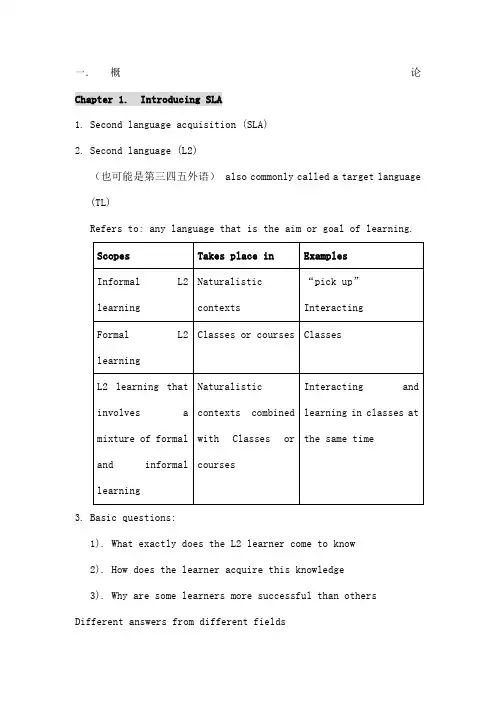

I. Overview

Chapter 1. Introducing SLA

1. Second language acquisition (SLA)
2. Second language (L2) (it may also be a third, fourth, or fifth language), also commonly called a target language (TL)
   Refers to: any language that is the aim or goal of learning.
3. Basic questions:
   1) What exactly does the L2 learner come to know?
   2) How does the learner acquire this knowledge?
   3) Why are some learners more successful than others?
   Different fields give different answers.
4. Three main perspectives: linguistic; psychological; social.
   Only one (x) Combine (√)

Chapter 2. Foundations of SLA

Ⅰ. The world of second languages

1. Multi-; bi-; mono-lingualism
   1) Multilingualism: the ability to use 2 or more languages. (bilingualism: 2 languages; multilingualism: >2)
   2) Monolingualism: the ability to use only one language.
   3) Multilingual competence (Vivian Cook, Multicompetence)
      Refers to: the compound state of a mind with 2 or more grammars.
   4) Monolingual competence (Vivian Cook, Monocompetence)
      Refers to: knowledge of only one language.
2. People with multicompetence (a unique combination) ≠ 2 monolinguals
   World demographic shows:
3. Acquisition
4. The number of L1 and L2 speakers of different languages can only be estimated.
   1) Linguistic information is often not officially collected.
   2) Answers to questions seeking linguistic information may not be reliable.
   3) There is a lack of agreement on the definition of terms and on criteria for identification.

Ⅱ. The nature of language learning

1. L1 acquisition
   1) L1 acquisition was completed before you came to school, and the development normally takes place without any conscious effort.
   2) Complex grammatical patterns continue to develop through the school years.

| Time | Children will |
| --- | --- |
| < 6 months (infant) | Produce all of the vowel sounds and most of the consonant sounds of any language in the world. Learn to discriminate among the sounds that make a difference in the meaning of words (the phonemes) |
| < 3 years old | Master an awareness of basic discourse patterns |
| < 3 years old | Master most of the distinctive sounds of L1 |
| < 5 or 6 years old | Control most of the basic L1 grammatical patterns |

2. The role of natural ability
   1) Refers to: Humans are born with an innate capacity to learn language.
   2) Reasons:
      Children begin to learn L1 at the same age and in much the same way.
      …master the basic phonological and grammatical operations in L1 at 5/6.
      …can understand and create novel utterances, and are not limited to repeating what they have heard; the utterances they produce are often systematically different from those of the adults around them.
      There is a cut-off age for L1 acquisition.
      L1 acquisition is not simply a facet of general intelligence.
   3) The natural ability, in terms of innate capacity, is that part of language structure is genetically "given" to every human child.
3. The role of social experience
   1) Appropriate social experience (including L1 input and interaction) is a necessary condition for acquisition.
   2) Intentional L1 teaching to children is not necessary and may have little effect.
   3) Sources of L1 input and interaction vary with cultural and social factors.
   4) Children get adequate L1 input and interaction → the sources have little effect on the rate and sequence of phonological and grammatical development.
      The regional and social varieties (sources) of the input → pronunciation

Ⅲ. L1 vs. L2 learning

1. L1 and L2 development:
2. Understanding the states

Ⅳ. The logical problem of language learning

1. Noam Chomsky:
   1) Innate linguistic knowledge must underlie language acquisition
   2) Universal Grammar
2. The theory of Universal Grammar:
   Reasons:
   1) Children's knowledge of language > what could be learned from the input.
   2) Constraints and principles cannot be learned.
   3) Universal patterns of development cannot be explained by language-specific input.
   Children often say things that adults do not.
   Children use language in accordance with general universal rules of language though they have not developed the cognitive ability to understand these rules. These rules are not learned from deduction or imitation.
   Patterns of children's language development are not directly determined by the input they receive.

Ⅴ. Frameworks for SLA

≤1950s, 1960s, 1970s, 1980s, 1990s