The empirical evidence for predictability in common st...


High School Entrance Exam English: In-Depth Reading Comprehension on Classic Scientific Experiments and Theories, 20 Questions

1 <Background passage>

Isaac Newton is one of the most famous scientists in history. He is known for his discovery of the law of universal gravitation. Newton was sitting under an apple tree when an apple fell on his head. This event led him to think about why objects fall to the ground. He began to wonder if there was a force that acted on all objects.

Newton spent many years studying and thinking about this problem. He realized that the force that causes apples to fall to the ground is the same force that keeps the moon in orbit around the earth. He called this force gravity.

The discovery of the law of universal gravitation had a huge impact on science. It helped explain many phenomena that had previously been mysteries. For example, it explained why planets orbit the sun and why objects fall to the ground.

1. Newton was sitting under a(n) ___ tree when he had the idea of gravity. A. orange B. apple C. pear D. banana

Answer: B.

Grade 8 English Reading Comprehension on Frontier Technology, 25 Questions

1 <Background passage>

Artificial intelligence (AI) has been making remarkable strides in the medical field in recent years. AI-powered systems are being increasingly utilized in various aspects of healthcare, bringing about significant improvements and new possibilities.

One of the most prominent applications of AI in medicine is in disease diagnosis. AI algorithms can analyze vast amounts of medical data, such as patient symptoms, medical histories, and test results. For example, deep-learning algorithms can scan X-rays, CT scans, and MRIs to detect early signs of diseases like cancer, pneumonia, or heart diseases. These algorithms can often spot minute details that might be overlooked by human doctors, thus enabling earlier and more accurate diagnoses.

In the realm of drug development, AI also plays a crucial role. It can accelerate the process by predicting how different molecules will interact with the human body. AI-based models can sift through thousands of potential drug candidates in a short time, identifying those with the highest probability of success. This not only saves time but also reduces the cost associated with traditional trial-and-error methods in drug research.

Medical robots are another area where AI is making an impact. Surgical robots, for instance, can be guided by AI systems to perform complex surgeries with greater precision. These robots can filter out the natural tremors of a surgeon's hand, allowing for more delicate and accurate incisions. Additionally, there are robots designed to assist in patient care, such as those that can help patients with limited mobility to move around or perform simple tasks.

However, the application of AI in medicine also faces some challenges. Issues like data privacy, algorithmic bias, and the need for regulatory approval are important considerations. But overall, the potential of AI to transform the medical field is vast and holds great promise for the future of healthcare.

1. What is one of the main applications of AI in the medical field according to the article? A. Designing hospital buildings. B. Disease diagnosis. C. Training medical students. D. Managing hospital finances.

Answer: B.

Reading Comprehension Practice by Question Type (4): Inference Questions (Inferring Implied Meaning)

A [2023, Shijiazhuang Teaching Quality Test]

Throughout all the events in my life, one in particular sticks out more than the others. As I reflect on this significant event, a smile spreads across my face. As I think of Shanda, I feel loved and grateful.

It was my twelfth year of dancing. I thought it would end up like any other year: stuck in emptiness, forgotten and without the belief of any teacher or friend that I really had the potential to achieve greatness.

However, I met Shanda, a young, talented choreographer. She influenced me to work to the best of my ability, pushed me to keep going when I wanted to give up, encouraged me and showed me the real importance of dancing. Throughout our hard work, not only did my ability to dance grow, but my friendship with Shanda grew as well.

With the end of the year came our show time. As I walked to a backstage filled with other dancers, I hoped for a good performance that would prove my improvement.

I waited anxiously for my turn. Finally, after what seemed like days, the loudspeaker announced my name. Butterflies filled my stomach as I took trembling steps onto the big lighted stage. But, with the determination to succeed and eagerness to live up to Shanda's expectations for me, I began to dance. All my troubles and nerves went away as I danced my whole heart out.

As I walked up to the judge to receive my first place shining gold trophy, I realized that dance is not about becoming the best. It was about loving dance for dance itself, a getaway from all my problems in the world. Shanda showed me that you could let everything go and just dance what you feel at that moment. After all the doubts that people had in me, I believed in myself and did not care what others thought. Thanks to Shanda, dance became more than a love of mine, but a passion.

1. What did the author think her dancing would be for the twelfth year? A. A change for the better. B. A disappointment as before. C. A proof of her potential. D. A pride of her teachers and friends.

2. How did Shanda help the author? A. By offering her financial help. B. By entering her in a competition. C. By coaching her for longer hours. D. By awakening her passion for dancing.

3. How did the author feel when she stepped on the stage? A. Proud. B. Nervous. C. Scared. D. Relieved.

4. What can we learn from the author's story? A. Success lies in patience. B. Fame is a great thirst of the young. C. A good teacher matters. D. A youth is to be treated with respect.

B [2023, Liaoning Province selected schools, second mock exam]

Almost a decade ago, researchers at Yale University launched a global database called Map of Life to track biodiversity distributions across the planet. Now, the team added a new feature to the database that predicts where species currently unknown to scientists may be hiding.

In 2018, ecologist Mario Moura of the Federal University of Paraiba in Brazil teamed up with Yale ecologist Walter Jetz, who took the lead in the initial creation of the Map of Life. The pair set out to identify where 85 percent of Earth's undiscovered species may be. For two years, the team collected information about 32,000 vertebrate species. Data on population size, geographical range, historical discovery dates and other biological characteristics were used to create a computer model that estimated where undescribed species might exist today.

The model found tropical environments in countries including Brazil, Indonesia, Madagascar, and Colombia house the most undiscovered species. Smaller animals have limited ranges that may be inaccessible, making their detection more difficult. In contrast, larger animals that occupy greater geographic ranges are more likely to be discovered, the researchers explain.

“It is striking to see the importance of tropical forests as the birthplace of discoveries, stressing the urgent need to protect tropical forests and address the need of controlling deforestation rate if we want a chance to truly discover our biodiversity,” said Moura.

The map comes at a crucial time when Earth is facing a biodiversity crisis. It was reported that there was a 68 percent decrease in vertebrate species populations between 1970 and 2006 and a 94 percent decline in animal populations in the Americas' tropical subregions. “At the current pace of global environmental change, there is no doubt that many species will go extinct before we have ever learned about their existence and had the chance to consider their fate,” Jetz said.

5. What can be learned about the Map of Life? A. It only tracks biodiversity distributions. B. It was initially created by Mario Moura. C. It predicts where undiscovered species exist. D. It managed to locate 85% of the undiscovered species.

6. Which factor makes animals easier to discover? A. Location. B. Species. C. Size. D. Population.

7. What does the underlined word “address” mean in Paragraph 4? A. Tackle. B. Ignore. C. Maintain. D. Postpone.

8. What can we infer from the last two paragraphs? A. Tropical animal populations have slightly declined. B. The Map of Life is significant to protecting biodiversity. C. Tropical forests are the birthplace of many extinct species. D. Many species will undoubtedly go extinct even if discovered.

C

This is the digital age, and the advice to managers is clear. If you don't know what ChatGPT is or dislike the idea of working with a robot, enjoy your retirement. So, as for the present you should get for your manager this festive season, a good choice may be anything made of paper. Undoubtedly, it can serve as a useful reminder of where the digital world's limitations lie. Several recent studies highlighted the enduring value of this ancient technology in several different aspects.

A study by Vicky Morwitz of Columbia Business School, Yanliu Huang of Drexel University and Zhen Yang of California State University, Fullerton, finds that paper calendars produce different behaviours from digital calendars. Users of old-fashioned calendars made more detailed project plans than those looking at an app, and they were more likely to stick to those plans. Simple dimensions seem to count. The ability to see lots of days at once on a paper calendar matters.

Here is another study from Maferima Touré-Tillery of the Kellogg School of Management at Northwestern University and Lili Wang of Zhejiang University. In one part of their study, the researchers asked strangers to take a survey. Half the respondents were given a pen and paper to fill out a form; the other half were handed an iPad. When asked for their email address to receive information, those who used paper were much likelier to decide on a positive answer. The researchers believe that people make better decisions on paper because it feels more consequential than a digital screen. Paper-and-pen respondents were more likely than iPad users to think their choices indicated their characters better.

Researchers had other findings. They found shoppers were willing to pay more for reading materials in printed form than those they could only download online. Even the sight of someone handling something can help online sales. Similarly, people browsing in a virtual-reality shop were more willing to buy a T-shirt if they saw their own virtual hand touch it.

9. How does the author lead in the topic? A. By telling a story. B. By giving examples. C. By raising questions. D. By describing a situation.

10. Why can paper calendars make users stick to plans better? A. They are a better reminder. B. They can show more detailed plans. C. They provide chances for people to practice writing. D. They provide a better view of many days' plans at a time.

11. Which of the following did paper influence based on Paragraph 3? A. Decision. B. Sympathy. C. Efficiency. D. Responsibility.

12. What can we infer from the last paragraph? A. Paper posters will greatly promote sales online. B. E-magazines are thought less valuable than paper ones. C. Seeing others buy will increase one's purchasing desire. D. People prefer items made of paper instead of other materials.

[Answer area]

Reading Comprehension Practice by Question Type (4)

A [Passage analysis] This passage is a narrative.

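The Role of β-Amyloid in Alzheimer's Disease. Zheng Lingyan (郑玲艳); Han Ruilan (韩瑞兰); Cao Junyan (曹俊彦). [Abstract] Alzheimer's disease has become one of the diseases that threaten human health. Research on its course and mechanisms shows that Aβ plays a central role in the pathological process of Alzheimer's disease, leading to AD through multiple targets and multiple pathways.

Studies in recent years have shown that β-amyloid plays an important role in promoting neuronal apoptosis: it can trigger apoptosis directly, and other pathogenic factors can also accelerate neuronal apoptosis indirectly through the toxic effects of β-amyloid.

Drawing on the literature on the neurotoxicity of Aβ, this article reviews its role in the development of AD.

[Journal] Journal of Inner Mongolia Medical University (《内蒙古医科大学学报》) [Year (Volume), Issue] 2016 (038) 002 [Total pages] 5 (P147-150, 155) [Keywords] Alzheimer's disease; β-amyloid; neurotoxicity [Authors] Zheng Lingyan; Han Ruilan; Cao Junyan [Affiliation] School of Pharmacy, Inner Mongolia Medical University, Hohhot, Inner Mongolia 010110 [Language] Chinese [Chinese Library Classification] R742.5

Alzheimer's disease (AD) is the main type of senile dementia and a progressive, fatal neurodegenerative disease. Its etiology and molecular mechanisms are highly complex; proposed explanations include the cholinergic hypothesis, the Aβ (β-amyloid) cascade hypothesis, the oxidative stress hypothesis, the neuronal apoptosis hypothesis, the immunity and inflammation hypothesis, the genetic hypothesis, the toxic metal ion hypothesis, the calcium metabolism disorder hypothesis, and the estrogen deficiency hypothesis [1].

However, many studies indicate that Aβ may be the common pathway through which various causes induce AD and is a key factor in its formation and development [2]; an imbalance between the rates of Aβ production and clearance is the initiating factor in neuronal degeneration and the onset of dementia [3].

Studying the production, metabolism, and toxicity of Aβ in the formation of AD is therefore important. This article surveys the literature on the role of Aβ in Alzheimer's disease and reviews its neurotoxic effects in the pathogenesis of AD.

Aβ is a fragment of 39 to 43 amino acids produced by enzymatic cleavage of amyloid precursor protein (APP), which is located in the cell membrane.

APP has a molecular weight of 110 to 135 kD, and its gene is located in the middle segment of the long arm of human chromosome 21.

After transcription, differences in splice sites yield mRNAs that can be translated into several isoforms: APP695, APP751, and APP770.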

Latest Theory Test Questions and Answers: English

I. Multiple Choice (1 point each, 10 points total)

1. The word "phenomenon" is most closely related to which of the following concepts? A. Event B. Fact C. Theory D. Hypothesis
Answer: C

2. In the context of scientific research, what does the term "hypothesis" refer to? A. A proven fact B. A testable statement C. A final conclusion D. An unverifiable assumption
Answer: B

3. Which of the following is NOT a characteristic of scientific theories? A. They are based on empirical evidence. B. They are subject to change. C. They are always universally applicable. D. They are supported by a body of evidence.
Answer: C

4. The scientific method typically involves which of the following steps? A. Observation, hypothesis, experimentation, conclusion B. Hypothesis, observation, conclusion, experimentation C. Experimentation, hypothesis, observation, conclusion D. Conclusion, hypothesis, observation, experimentation
Answer: A

5. What is the role of experimentation in the scientific process? A. To confirm a hypothesis B. To disprove a hypothesis C. To provide evidence for or against a hypothesis D. To replace the need for a hypothesis
Answer: C

6. The term "paradigm shift" in the philosophy of science refers to: A. A minor change in scientific theory B. A significant change in the dominant scientific view C. The process of scientific discovery D. The end of scientific inquiry
Answer: B

7. Which of the following is an example of inductive reasoning? A. Observing a pattern and making a general rule B. Drawing a specific conclusion from a general rule C. Making a prediction based on a hypothesis D. Testing a hypothesis through experimentation
Answer: A

8. Deductive reasoning is characterized by: A. Starting with a specific observation and drawing a general conclusion B. Starting with a general rule and applying it to a specific case C. Making assumptions without evidence D. Relying on intuition rather than logic
Answer: B

9. In scientific research, what is the purpose of a control group? A. To provide a baseline for comparison B. To test an alternative hypothesis C. To increase the number of participants D. To confirm the results of previous studies
Answer: A

10. The principle of falsifiability, introduced by Karl Popper, suggests that: A. Scientific theories must be proven true B. Scientific theories must be able to withstand attempts at being disproven C. Scientific theories are never wrong D. Scientific theories are always based on personal beliefs
Answer: B

II. Fill in the Blanks (1 point each, 5 points total)

1. The scientific method is a systematic approach to __________ knowledge through observation, experimentation, and __________.
Answer: gaining; logical reasoning

2. A scientific law is a statement that describes a __________ pattern observed in nature, while a scientific theory explains the __________ behind these patterns.
Answer: recurring; underlying principles

3. The process of peer review in scientific publishing is important because it helps to ensure the __________ and __________ of research findings.
Answer: validity; reliability

4. In the context of scientific inquiry, an __________ is a tentative explanation for an aspect of the natural world that is based on a limited range of __________.
Answer: hypothesis; observations

5. The term "empirical" refers to knowledge that is based on __________ and observation, rather than on theory or __________.
Answer: experimentation; speculation

III. Short Answer (5 points each, 10 points total)

1. Explain the difference between a scientific theory and a scientific law.
Answer: A scientific theory is a well-substantiated explanation of some aspect of the natural world, based on a body of facts that have been repeatedly confirmed through observation and experimentation. It is a broad framework that can encompass multiple laws and observations. A scientific law, on the other hand, is a concise verbal or mathematical statement that describes a general pattern observed in nature. Laws summarize specific phenomena, while theories explain the broader principles behind those phenomena.

2. What is the significance of the falsifiability criterion in the philosophy of science?
Answer: The falsifiability criterion, proposed by philosopher of science Karl Popper, is significant because it provides a way to distinguish between scientific and non-scientific theories. For a theory to be considered scientific, it must be testable and potentially refutable by empirical evidence. This criterion ensures that scientific theories are open...

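Definition of the Term "Empirical" (实证)

Introduction: "Empirical" is a commonly used term, often applied to the methods and results of scientific research.

However, its exact meaning is not widely understood.

This article explores what "empirical" means and offers some examples to help readers better understand the term.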

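I. Definition of "empirical"

The word "empirical" derives from the Latin "empiricus", meaning "of experience".

In scientific research, the empirical method means collecting and analyzing real data and facts through observation and practice in order to test hypotheses and inferences.

In other words, the empirical method is an approach to scientific research that relies on experience and observation.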

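II. Characteristics of the empirical method

The empirical method has several notable characteristics, including the following:

1. Based on observation: observation and practice are at the core of the empirical method.

Researchers observe real situations, collect data and information from them, and draw inferences and analyses from those data.

2. Dependent on data: the empirical method requires research to use observable data and facts.

These data may be quantitative (such as numbers or statistics) or qualitative (such as observations and descriptions).

3. Reproducibility: the empirical method requires that results can be repeated and verified by other researchers.

This means that the process, methods, and results of empirical research should be as transparent and reproducible as possible.

4. Hypothesis testing: the goal of the empirical method is to confirm or refute a hypothesis or theory.

By collecting and analyzing real data, researchers can assess the reliability and accuracy of a hypothesis.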

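III. Practical applications of the empirical method

Empirical methods are widely used in many disciplines.

Below are some specific applications in different fields.

1. Medical research: in medical research, empirical methods are used to evaluate the effectiveness and safety of particular treatments.

Researchers judge the value of a treatment by comparing the outcomes of patients who received it with those of patients who did not.

2. Economics: empirical economics is a field that makes extensive use of empirical methods.

Economists use historical data, statistical models, experiments, and other tools to study economic phenomena and the effects of policy.

3. Education research: empirical methods also have important applications in education research.

Education researchers collect data such as student grades, teaching methods, and student feedback to evaluate the effectiveness of different teaching methods and students' learning outcomes.

4. Psychology: in psychology, empirical methods are used to study and analyze patterns of human behavior and thought.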

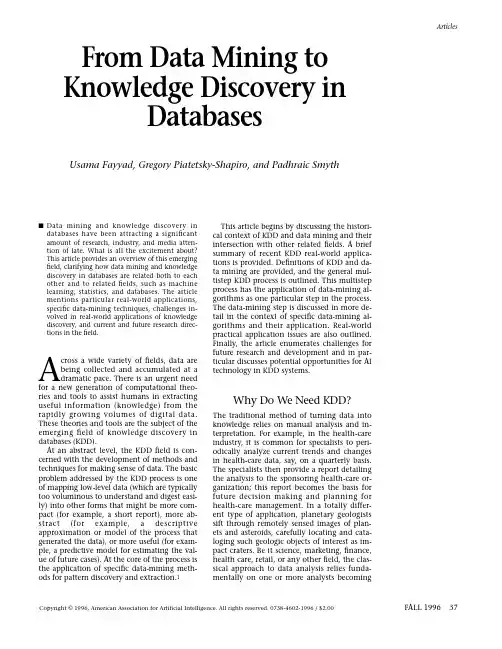

From Data Mining to Knowledge Discovery in Databases

Usama Fayyad, Gregory Piatetsky-Shapiro, and Padhraic Smyth

AI Magazine, Fall 1996 (Articles). Copyright © 1996, American Association for Artificial Intelligence. All rights reserved. 0738-4602-1996 / $2.00

Data mining and knowledge discovery in databases have been attracting a significant amount of research, industry, and media attention of late. What is all the excitement about? This article provides an overview of this emerging field, clarifying how data mining and knowledge discovery in databases are related both to each other and to related fields, such as machine learning, statistics, and databases. The article mentions particular real-world applications, specific data-mining techniques, challenges involved in real-world applications of knowledge discovery, and current and future research directions in the field.

Across a wide variety of fields, data are being collected and accumulated at a dramatic pace. There is an urgent need for a new generation of computational theories and tools to assist humans in extracting useful information (knowledge) from the rapidly growing volumes of digital data. These theories and tools are the subject of the emerging field of knowledge discovery in databases (KDD).

At an abstract level, the KDD field is concerned with the development of methods and techniques for making sense of data. The basic problem addressed by the KDD process is one of mapping low-level data (which are typically too voluminous to understand and digest easily) into other forms that might be more compact (for example, a short report), more abstract (for example, a descriptive approximation or model of the process that generated the data), or more useful (for example, a predictive model for estimating the value of future cases). At the core of the process is the application of specific data-mining methods for pattern discovery and extraction.

This article begins by discussing the historical context of KDD and data mining and their intersection with other related fields. A brief summary of recent KDD real-world applications is provided. Definitions of KDD and data mining are provided, and the general multistep KDD process is outlined. This multistep process has the application of data-mining algorithms as one particular step in the process. The data-mining step is discussed in more detail in the context of specific data-mining algorithms and their application. Real-world practical application issues are also outlined. Finally, the article enumerates challenges for future research and development and in particular discusses potential opportunities for AI technology in KDD systems.

Why Do We Need KDD?

The traditional method of turning data into knowledge relies on manual analysis and interpretation. For example, in the health-care industry, it is common for specialists to periodically analyze current trends and changes in health-care data, say, on a quarterly basis. The specialists then provide a report detailing the analysis to the sponsoring health-care organization; this report becomes the basis for future decision making and planning for health-care management. In a totally different type of application, planetary geologists sift through remotely sensed images of planets and asteroids, carefully locating and cataloging such geologic objects of interest as impact craters. Be it science, marketing, finance, health care, retail, or any other field, the classical approach to data analysis relies fundamentally on one or more analysts becoming intimately familiar with the data and serving as an interface between the data and the users and products.

For these (and many other) applications, this form of manual probing of a data set is slow, expensive, and highly subjective. In fact, as data volumes grow dramatically, this type of manual data analysis is becoming completely impractical in many domains. Databases are increasing in size in two ways: (1) the number N of records or objects in the database and (2) the number d of fields or attributes to an object. Databases containing on the order of N = 10^9 objects are becoming increasingly common, for example, in the astronomical sciences. Similarly, the number of fields d can easily be on the order of 10^2 or even 10^3, for example, in medical diagnostic applications. Who could be expected to digest millions of records, each having tens or hundreds of fields? We believe that this job is certainly not one for humans; hence, analysis work needs to be automated, at least partially.

The need to scale up human analysis capabilities to handling the large number of bytes that we can collect is both economic and scientific. Businesses use data to gain competitive advantage, increase efficiency, and provide more valuable services to customers. Data we capture about our environment are the basic evidence we use to build theories and models of the universe we live in. Because computers have enabled humans to gather more data than we can digest, it is only natural to turn to computational techniques to help us unearth meaningful patterns and structures from the massive volumes of data. Hence, KDD is an attempt to address a problem that the digital information era made a fact of life for all of us: data overload.

Data Mining and Knowledge Discovery in the Real World

A large degree of the current interest in KDD is the result of the media interest surrounding successful KDD applications, for example, the focus articles within the last two years in Business Week, Newsweek, Byte, PC Week, and other large-circulation periodicals. Unfortunately, it is not always easy to separate fact from media hype. Nonetheless, several well-documented examples of successful systems can rightly be referred to as KDD applications and have been deployed in operational use on large-scale real-world problems in science and in business.

In science, one of the primary application areas is astronomy. Here, a notable success was achieved by SKICAT, a system used by astronomers to perform image analysis, classification, and cataloging of sky objects from sky-survey images (Fayyad, Djorgovski, and Weir 1996). In its first application, the system was used to process the 3 terabytes (10^12 bytes) of image data resulting from the Second Palomar Observatory Sky Survey, where it is estimated that on the order of 10^9 sky objects are detectable. SKICAT can outperform humans and traditional computational techniques in classifying faint sky objects. See Fayyad, Haussler, and Stolorz (1996) for a survey of scientific applications.

In business, main KDD application areas include marketing, finance (especially investment), fraud detection, manufacturing, telecommunications, and Internet agents.

Marketing: In marketing, the primary application is database marketing systems, which analyze customer databases to identify different customer groups and forecast their behavior. Business Week (Berry 1994) estimated that over half of all retailers are using or planning to use database marketing, and those who do use it have good results; for example, American Express reports a 10- to 15-percent increase in credit-card use. Another notable marketing application is market-basket analysis (Agrawal et al. 1996) systems, which find patterns such as, “If customer bought X, he/she is also likely to buy Y and Z.” Such patterns are valuable to retailers.

Investment: Numerous companies use data mining for investment, but most do not describe their systems. One exception is LBS Capital Management. Its system uses expert systems, neural nets, and genetic algorithms to manage portfolios totaling $600 million; since its start in 1993, the system has outperformed the broad stock market (Hall, Mani, and Barr 1996).

Fraud detection: HNC Falcon and Nestor PRISM systems are used for monitoring credit-card fraud, watching over millions of accounts. The FAIS system (Senator et al. 1995), from the U.S. Treasury Financial Crimes Enforcement Network, is used to identify financial transactions that might indicate money-laundering activity.

Manufacturing: The CASSIOPEE troubleshooting system, developed as part of a joint venture between General Electric and SNECMA, was applied by three major European airlines to diagnose and predict problems for the Boeing 737. To derive families of faults, clustering methods are used. CASSIOPEE received the European first prize for innovative applications (Manago and Auriol 1996).

Telecommunications: The telecommunications alarm-sequence analyzer (TASA) was built in cooperation with a manufacturer of telecommunications equipment and three telephone networks (Mannila, Toivonen, and Verkamo 1995). The system uses a novel framework for locating frequently occurring alarm episodes from the alarm stream and presenting them as rules. Large sets of discovered rules can be explored with flexible information-retrieval tools supporting interactivity and iteration. In this way, TASA offers pruning, grouping, and ordering tools to refine the results of a basic brute-force search for rules.

Data cleaning: The MERGE-PURGE system was applied to the identification of duplicate welfare claims (Hernandez and Stolfo 1995). It was used successfully on data from the Welfare Department of the State of Washington.

In other areas, a well-publicized system is IBM’s ADVANCED SCOUT, a specialized data-mining system that helps National Basketball Association (NBA) coaches organize and interpret data from NBA games (U.S. News 1995). ADVANCED SCOUT was used by several of the NBA teams in 1996, including the Seattle Supersonics, which reached the NBA finals.

Finally, a novel and increasingly important type of discovery is one based on the use of intelligent agents to navigate through an information-rich environment. Although the idea of active triggers has long been analyzed in the database field, really successful applications of this idea appeared only with the advent of the Internet. These systems ask the user to specify a profile of interest and search for related information among a wide variety of public-domain and proprietary sources. For example, FIREFLY is a personal music-recommendation agent: It asks a user his/her opinion of several music pieces and then suggests other music that the user might like (<http:// www.ffl/>). CRAYON (/>) allows users to create their own free newspaper (supported by ads); NEWSHOUND (<http://www. /hound/>) from the San Jose Mercury News and FARCAST (</>) automatically search information from a wide variety of sources, including newspapers and wire services, and e-mail relevant documents directly to the user.

These are just a few of the numerous such systems that use KDD techniques to automatically produce useful information from large masses of raw data. See Piatetsky-Shapiro et al. (1996) for an overview of issues in developing industrial KDD applications.

Data Mining and KDD

Historically, the notion of finding useful patterns in data has been given a variety of names, including data mining, knowledge extraction, information discovery, information harvesting, data archaeology, and data pattern processing. The term data mining has mostly been used by statisticians, data analysts, and the management information systems (MIS) communities. It has also gained popularity in the database field. The phrase knowledge discovery in databases was coined at the first KDD workshop in 1989 (Piatetsky-Shapiro 1991) to emphasize that knowledge is the end product of a data-driven discovery. It has been popularized in the AI and machine-learning fields.

In our view, KDD refers to the overall process of discovering useful knowledge from data, and data mining refers to a particular step in this process. Data mining is the application of specific algorithms for extracting patterns from data. The distinction between the KDD process and the data-mining step (within the process) is a central point of this article. The additional steps in the KDD process, such as data preparation, data selection, data cleaning, incorporation of appropriate prior knowledge, and proper interpretation of the results of mining, are essential to ensure that useful knowledge is derived from the data. Blind application of data-mining methods (rightly criticized as data dredging in the statistical literature) can be a dangerous activity, easily leading to the discovery of meaningless and invalid patterns.

The Interdisciplinary Nature of KDD

KDD has evolved, and continues to evolve, from the intersection of research fields such as machine learning, pattern recognition, databases, statistics, AI, knowledge acquisition for expert systems, data visualization, and high-performance computing. The unifying goal is extracting high-level knowledge from low-level data in the context of large data sets.

The data-mining component of KDD currently relies heavily on known techniques from machine learning, pattern recognition, and statistics to find patterns from data in the data-mining step of the KDD process. A natural question is, How is KDD different from pattern recognition or machine learning (and related fields)? The answer is that these fields provide some of the data-mining methods that are used in the data-mining step of the KDD process. KDD focuses on the overall process of knowledge discovery from data, including how the data are stored and accessed, how algorithms can be scaled to massive data sets and still run efficiently, how results can be interpreted and visualized, and how the overall man-machine interaction can usefully be modeled and supported. The KDD process can be viewed as a multidisciplinary activity that encompasses techniques beyond the scope of any one particular discipline such as machine learning. In this context, there are clear opportunities for other fields of AI (besides machine learning) to contribute to KDD. KDD places a special emphasis on finding understandable patterns that can be interpreted as useful or interesting knowledge. Thus, for example, neural networks, although a powerful modeling tool, are relatively difficult to understand compared to decision trees. KDD also emphasizes scaling and robustness properties of modeling algorithms for large noisy data sets.

Related AI research fields include machine discovery, which targets the discovery of empirical laws from observation and experimentation (Shrager and Langley 1990) (see Kloesgen and Zytkow [1996] for a glossary of terms common to KDD and machine discovery), and causal modeling for the inference of causal models from data (Spirtes, Glymour, and Scheines 1993). Statistics in particular has much in common with KDD (see Elder and Pregibon [1996] and Glymour et al. [1996] for a more detailed discussion of this synergy). Knowledge discovery from data is fundamentally a statistical endeavor. Statistics provides a language and framework for quantifying the uncertainty that results when one tries to infer general patterns from a particular sample of an overall population. As mentioned earlier, the term data mining has had negative connotations in statistics since the 1960s when computer-based data analysis techniques were first introduced. The concern arose because if one searches long enough in any data set (even randomly generated data), one can find patterns that appear to be statistically significant but, in fact, are not. Clearly, this issue is of fundamental importance to KDD. Substantial progress has been made in recent years in understanding such issues in statistics. Much of this work is of direct relevance to KDD. Thus, data mining is a legitimate activity as long as one understands how to do it correctly; data mining carried out poorly (without regard to the statistical aspects of the problem) is to be avoided. KDD can also be viewed as encompassing a broader view of modeling than statistics. KDD aims to provide tools to automate (to the degree possible) the entire process of data analysis and the statistician’s “art” of hypothesis selection.

A driving force behind KDD is the database field (the second D in KDD). Indeed, the problem of effective data manipulation when data cannot fit in the main memory is of fundamental importance to KDD. Database techniques for gaining efficient data access, grouping and ordering operations when accessing data, and optimizing queries constitute the basics for scaling algorithms to larger data sets. Most data-mining algorithms from statistics, pattern recognition, and machine learning assume data are in the main memory and pay no attention to how the algorithm breaks down if only limited views of the data are possible.

A related field evolving from databases is data warehousing, which refers to the popular business trend of collecting and cleaning transactional data to make them available for online analysis and decision support. Data warehousing helps set the stage for KDD in two important ways: (1) data cleaning and (2) data access.

Data cleaning: As organizations are forced to think about a unified logical view of the wide variety of data and databases they possess, they have to address the issues of mapping data to a single naming convention, uniformly representing and handling missing data, and handling noise and errors when possible.

Data access: Uniform and well-defined methods must be created for accessing the data and providing access paths to data that were historically difficult to get to (for example, stored offline).

Once organizations and individuals have solved the problem of how to store and access their data, the natural next step is the question, What else do we do with all the data? This is where opportunities for KDD naturally arise.

A popular approach for analysis of data warehouses is called online analytical processing (OLAP), named for a set of principles proposed by Codd (1993). OLAP tools focus on providing multidimensional data analysis, which is superior to SQL in computing summaries and breakdowns along many dimensions. OLAP tools are targeted toward simplifying and supporting interactive data analysis, but the goal of KDD tools is to automate as much of the process as possible. Thus, KDD is a step beyond what is currently supported by most standard database systems.

Basic Definitions

KDD is the nontrivial process of identifying valid, novel, potentially useful, and ultimately understandable patterns in data (Fayyad, Piatetsky-Shapiro, and Smyth 1996).

Here, data are a set of facts (for example, cases in a database), and pattern is an expression in some language describing a subset of the data or a model applicable to the subset. Hence, in our usage here, extracting a pattern also designates fitting a model to data; finding structure from data; or, in general, making any high-level description of a set of data. The term process implies that KDD comprises many steps, which involve data preparation, search for patterns, knowledge evaluation, and refinement, all repeated in multiple iterations. By nontrivial, we mean that some search or inference is involved; that is, it is not a straightforward computation of predefined quantities like computing the average value of a set of numbers.

The discovered patterns should be valid on new data with some degree of certainty. We also want patterns to be novel (at least to the system and preferably to the user) and potentially useful, that is, lead to some benefit to the user or task. Finally, the patterns should be understandable, if not immediately then after some postprocessing.

The previous discussion implies that we can define quantitative measures for evaluating extracted patterns. In many cases, it is possible to define measures of certainty (for example, estimated prediction accuracy on new data) or utility (for example, gain, perhaps in dollars saved because of better predictions or speedup in response time of a system). Notions such as novelty and understandability are much more subjective.
In certain contexts, understandability can be estimated by simplicity (for example, the number of bits to describe a pattern). An important notion, called interestingness (for example, see Silberschatz and Tuzhilin [1995] and Piatetsky-Shapiro and Matheus [1994]), is usually taken as an overall measure of pattern value, combining validity, novelty, usefulness, and simplicity. Interestingness functions can be defined explicitly or can be manifested implicitly through an ordering placed by the KDD system on the discovered patterns or models.

Given these notions, we can consider a pattern to be knowledge if it exceeds some interestingness threshold, which is by no means an attempt to define knowledge in the philosophical or even the popular view. As a matter of fact, knowledge in this definition is purely user oriented and domain specific and is determined by whatever functions and thresholds the user chooses.

Data mining is a step in the KDD process that consists of applying data analysis and discovery algorithms that, under acceptable computational efficiency limitations, produce a particular enumeration of patterns (or models) over the data. Note that the space of patterns is often infinite, and the enumeration of patterns involves some form of search in this space. Practical computational constraints place severe limits on the subspace that can be explored by a data-mining algorithm.

The KDD process involves using the database along with any required selection, preprocessing, subsampling, and transformations of it; applying data-mining methods (algorithms) to enumerate patterns from it; and evaluating the products of data mining to identify the subset of the enumerated patterns deemed knowledge. The data-mining component of the KDD process is concerned with the algorithmic means by which patterns are extracted and enumerated from data. The overall KDD process (figure 1) includes the evaluation and possible interpretation of the mined patterns to determine which patterns can be considered new knowledge. The KDD process also includes all the additional steps described in the next section.

Figure 1. An Overview of the Steps That Compose the KDD Process.

The notion of an overall user-driven process is not unique to KDD: analogous proposals have been put forward both in statistics (Hand 1994) and in machine learning (Brodley and Smyth 1996).

The KDD Process

The KDD process is interactive and iterative, involving numerous steps with many decisions made by the user. Brachman and Anand (1996) give a practical view of the KDD process, emphasizing the interactive nature of the process. Here, we broadly outline some of its basic steps:

First is developing an understanding of the application domain and the relevant prior knowledge and identifying the goal of the KDD process from the customer’s viewpoint.

Second is creating a target data set: selecting a data set, or focusing on a subset of variables or data samples, on which discovery is to be performed.

Third is data cleaning and preprocessing. Basic operations include removing noise if appropriate, collecting the necessary information to model or account for noise, deciding on strategies for handling missing data fields, and accounting for time-sequence information and known changes.

Fourth is data reduction and projection: finding useful features to represent the data depending on the goal of the task. With dimensionality reduction or transformation methods, the effective number of variables under consideration can be reduced, or invariant representations for the data can be found.

Fifth is matching the goals of the KDD process (step 1) to a particular data-mining method. For example, summarization, classification, regression, clustering, and so on, are described later as well as in Fayyad, Piatetsky-Shapiro, and Smyth (1996).

Sixth is exploratory analysis and model and hypothesis selection: choosing the data-mining algorithm(s) and selecting method(s) to be used for searching for data patterns. This process includes deciding which models and parameters might be appropriate (for example, models of categorical data are different than models of vectors over the reals) and matching a particular data-mining method with the overall criteria of the KDD process (for example, the end user might be more interested in understanding the model than its predictive capabilities).

Seventh is data mining: searching for patterns of interest in a particular representational form or a set of such representations, including classification rules or trees, regression, and clustering. The user can significantly aid the data-mining method by correctly performing the preceding steps.

Eighth is interpreting mined patterns, possibly returning to any of steps 1 through 7 for further iteration. This step can also involve visualization of the extracted patterns and models or visualization of the data given the extracted models.

Ninth is acting on the discovered knowledge: using the knowledge directly, incorporating the knowledge into another system for further action, or simply documenting it and reporting it to interested parties. This process also includes checking for and resolving potential conflicts with previously believed (or extracted) knowledge.

The KDD process can involve significant iteration and can contain loops between any two steps. The basic flow of steps (although not the potential multitude of iterations and loops) is illustrated in figure 1. Most previous work on KDD has focused on step 7, the data mining. However, the other steps are as important (and probably more so) for the successful application of KDD in practice. Having defined the basic notions and introduced the KDD process, we now focus on the data-mining component, which has, by far, received the most attention in the literature.

The Data-Mining Step of the KDD Process

The data-mining component of the KDD process often involves repeated iterative application of particular data-mining methods. This section presents an overview of the primary goals of data mining, a description of the methods used to address these goals, and a brief description of the data-mining algorithms that incorporate these methods.

The knowledge discovery goals are defined by the intended use of the system. We can distinguish two types of goals: (1) verification and (2) discovery. With verification, the system is limited to verifying the user’s hypothesis. With discovery, the system autonomously finds new patterns. We further subdivide the discovery goal into prediction, where the system finds patterns for predicting the future behavior of some entities, and description, where the system finds patterns for presentation to a user in a human-understandable form. In this article, we are primarily concerned with discovery-oriented data mining.

Data mining involves fitting models to, or determining patterns from, observed data.
The fitted models play the role of inferred knowledge: Whether the models reflect useful or interesting knowledge is part of the overall, interactive KDD process where subjective human judgment is typically required. Two primary mathematical formalisms are used in model fitting: (1) statistical and (2) logical. The statistical approach allows for nondeterministic effects in the model, whereas a logical model is purely deterministic. We focus primarily on the statistical approach to data mining, which tends to be the most widely used basis for practical data-mining applications given the typical presence of uncertainty in real-world data-generating processes.

Most data-mining methods are based on tried and tested techniques from machine learning, pattern recognition, and statistics: classification, clustering, regression, and so on. The array of different algorithms under each of these headings can often be bewildering to both the novice and the experienced data analyst. It should be emphasized that of the many data-mining methods advertised in the literature, there are really only a few fundamental techniques. The actual underlying model representation being used by a particular method typically comes from a composition of a small number of well-known options: polynomials, splines, kernel and basis functions, threshold-Boolean functions, and so on. Thus, algorithms tend to differ primarily in the goodness-of-fit criterion used to evaluate model fit or in the search method used to find a good fit.

In our brief overview of data-mining methods, we try in particular to convey the notion that most (if not all) methods can be viewed as extensions or hybrids of a few basic techniques and principles. We first discuss the primary methods of data mining and then show that the data-mining methods can be viewed as consisting of three primary algorithmic components: (1) model representation, (2) model evaluation, and (3) search.
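The remark that algorithms differ mainly in the goodness-of-fit criterion or in the search can be illustrated with a small sketch. It is not taken from the article: the one-parameter model y = a * x, the toy data, and the names `predict`, `squared_error`, `absolute_error`, and `grid_search` are all assumptions chosen for illustration. The representation and the search are held fixed, and only the evaluation criterion changes.

```python
# Illustrative sketch only; the model, data, and function names are hypothetical.

def predict(a, x):
    """Model representation: a line through the origin, y = a * x."""
    return a * x

def squared_error(a, xs, ys):
    """Model evaluation, option 1: sum of squared residuals."""
    return sum((predict(a, x) - y) ** 2 for x, y in zip(xs, ys))

def absolute_error(a, xs, ys):
    """Model evaluation, option 2: sum of absolute residuals."""
    return sum(abs(predict(a, x) - y) for x, y in zip(xs, ys))

def grid_search(criterion, xs, ys, candidates):
    """Search: exhaustively try every candidate slope and keep the best one."""
    return min(candidates, key=lambda a: criterion(a, xs, ys))

xs = [1.0, 2.0, 3.0, 4.0]
ys = [1.0, 2.0, 3.0, 8.0]                       # the last point is an outlier
candidates = [i / 100 for i in range(0, 301)]   # slopes 0.00, 0.01, ..., 3.00

a_sq = grid_search(squared_error, xs, ys, candidates)
a_abs = grid_search(absolute_error, xs, ys, candidates)
print(a_sq, a_abs)
```

With the outlier in the last data point, the squared-error criterion pulls the chosen slope upward, whereas the absolute-error criterion stays with the slope that fits the other three points, even though the model family and the search procedure are identical.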
In the discussion of KDD and data-mining methods, we use a simple example to make some of the notions more concrete. Figure 2 shows a simple two-dimensional artificial data set consisting of 23 cases. Each point on the graph represents a person who has been given a loan by a particular bank at some time in the past. The horizontal axis represents the income of the person; the vertical axis represents the total personal debt of the person (mortgage, car payments, and so on). The data have been classified into two classes: (1) the x’s represent persons who have defaulted on their loans and (2) the o’s represent persons whose loans are in good status with the bank. Thus, this simple artificial data set could represent a historical data set that can contain useful knowledge from the point of view of the bank making the loans. Note that in actual KDD applications, there are typically many more dimensions (as many as several hundreds) and many more data points (many thousands or even millions).

Figure 2. A Simple Data Set with Two Classes Used for Illustrative Purposes.
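A minimal sketch of how model representation, model evaluation, and search might look on data of this kind follows. The ten records are invented and are not the 23 cases of figure 2; the rule form (predict default when income falls below a threshold), the accuracy score, and the names `loans`, `accuracy`, and `best_income_threshold` are likewise assumptions made for illustration.

```python
# Hypothetical loan records: (income, debt, defaulted). Values are invented.
loans = [
    (20, 30, True), (25, 45, True), (30, 20, True), (35, 50, True),
    (40, 15, False), (42, 35, False), (45, 40, True),
    (50, 25, False), (60, 30, False), (70, 45, False),
]

def accuracy(threshold, records):
    """Model evaluation: fraction of records the rule classifies correctly.

    Model representation: predict 'defaulted' when income < threshold.
    Debt is carried along in each record but ignored by this one-variable rule.
    """
    correct = sum(
        (income < threshold) == defaulted
        for income, _debt, defaulted in records
    )
    return correct / len(records)

def best_income_threshold(records):
    """Search: try the midpoint between each pair of neighbouring incomes."""
    incomes = sorted({income for income, _debt, _defaulted in records})
    candidates = [(lo + hi) / 2 for lo, hi in zip(incomes, incomes[1:])]
    return max(candidates, key=lambda t: accuracy(t, records))

threshold = best_income_threshold(loans)
print(threshold, accuracy(threshold, loans))
```

On these invented records no single income threshold separates the two classes perfectly, which is the usual situation and one reason the statistical formalism, rather than a purely deterministic one, is the common choice.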

Shandong Province Elite Schools Examination Alliance, 2023-2024 Academic Year, Senior Two First Semester, November Midterm English Test

School: ___________ Name: ___________ Class: ___________ Exam number: ___________

I. Reading Comprehension

2023 Hot List: The Best New Restaurants in the World

Place des Fetes - New York City
This famous wine bar provides a spot with a rare sweet and warm atmosphere. For date night, go to the bar with views of the open kitchen, or fill up the large table in the back with a group and taste the entire item menu. Either way, do not miss the famous mushroom soup.

Le Doyenne - Saint-Vrain, France
Australian chefs James Henry and Shaun Kelly transformed the former stables (马厩) of a 19th-century private estate into a working farm, restaurant, and guesthouse driven by the principles of regenerative agriculture. More than one hundred varieties of fruits, vegetables, and herbs make their way into Henry’s cooking after being carefully nurtured by Kelly.

Mi Compa Chava - Mexico City
Almost everyone eating here is devoted to fixing last night’s damage from drunkenness and getting a head start on creating today’s. On the sidewalk, crowds of locals and tourists alike line up for fisherman Salvador Orozco’s creative takes on Sinaloa and Baja seafood. Anything from the raw half of the menu is a sure bet, though cooked dishes like fish can help fill out a meal.

Vilas - Bangkok
Can a dish inspired by a Spanish recipe using Japanese ingredients (原料) still be considered Thai? For Chef Prin Polsuk, one of Bangkok’s most famous Thai chefs, it most certainly can.
At his latest restaurant, a small dining room at the base of Bangkok’s landmark King Power Mahanakhon Tower, he draws inspiration from King Chulalongkorn’s 1897 journey around Europe and the foreign ingredients and cooking techniques he added to the royal cookbooks.

1. What can you do in Place des Fetes - New York City?
A. Drink the red wine.
B. Taste the mushroom soup.

2. Which restaurant best suits people who suffer from alcohol?
A. Place des Fetes.
B. Le Doyenne.
C. Mi Compa Chava.
D. Vilas.

3. What’s the purpose of the text?
A. To introduce the features of some restaurants.
B. To compare the origins of some restaurants.
C. To state the similarities of some restaurants.
D. To recommend some foods of some restaurants.

The 36-year-old Jia Juntingxian was born in Pingxiang, Jiangxi Province, and was blind in both eyes due to congenital eye disease. She has shown athletic talent since childhood and was selected as a track and field athlete by Jiangxi Disabled Persons’ Federation.

Although she can’t see the world, Jia breaks through the “immediate” obstacles again and again while running, letting the world see her. In her sports career, Jia has won 43 national and world-class sports medals. Among them, in 2016, she broke the world record and stood on the podium (领奖台) of the women’s T11-T13 4×100-meter relay event at the Rio Paralympics.

In 2017, Jia retired and chose to become a teacher at a special education school. Just a year ago, she found out that two young brothers, with visual impairments (视觉障碍), wanted to be an athlete. They had never attended a special education school and never achieved their athletic dream. Jia could only help them attend a local special education school. The experience made her realize that these children living in remote areas may have little knowledge of special education. Even she didn’t know about such schools until late into her education.
Therefore, she decided to become more involved with special education.

Changing from a Paralympic competitor to a special education teacher, Jia said that there is no discomfort, “Because I understand the students as well as myself and know the inconveniences and difficulties of the children. I hope that every child is like a different seed. Through hard study, they can bravely realize their own life.”

Jia also has paid close attention to the rights and interests of disabled people. In 2021, Jia proposed the construction of audible (听得见的) traffic signals for blind people. Her advice to local authorities on dog management has resulted in more indoor public places allowing [...] shop and currently employ 16 visually impaired people, with an average monthly salary of 3,500 yuan per person.

Jia always believes that the world is a circle, as long as the love of others is constantly passed on, the whole society will be full of love!

4. What can we learn about Jia from the passage?
A. She won 43 sports medals in her country.
B. She was strong-minded despite her disability.
C. She was good at sports at the age of 5 years old.
D. She never won national and world-class sports medals.

5. What made Jia decide to occupy herself in special education?
A. The high salary of special education.
B. Her wish to enrich her life after sports.
C. Local government’s need for special education.
D. Her experience of helping two disabled brothers.

6. Which of the following best describes Jia’s job on special education?
A. Boring and dangerous.
B. Patient and generous.
C. Humorous and brave.
D. Devoted and selfless.

7. What did Jia do to help the disabled?
A. She constructed audible traffic signals.
B. She set up a massage shop on her own.
C. She advised increasing indoor public places.
D. She provided employment opportunity for the blind.

Coral reefs in Florida have lost an estimated 90% of their corals in the last 40 years. This summer, a marine heat wave hit Florida’s coral reefs.
The record high temperatures created an extremely stressful environment for the coral reefs, which are currently also experiencing intense coral bleaching (白化).

A coral is an animal, which has a symbiotic relationship with a microscopic algae (藻类). The algae gets energy from the sun and shares it with the coral internally. The coral builds a rock-like structure, which makes up most of the reef, providing homes and food for many organisms that live there. Coral bleaching is when the symbiotic relationship breaks down. Without the algae, the corals appear white because the rock skeleton becomes visible. If the [...]

Florida is on the front lines of climate change. It is also on the cutting edge of restoration science. Many labs, institutions and other organizations are working nonstop to protect and maintain the coral reefs. This includes efforts to understand what is troubling the reef, from disease outbreaks to coastal development impacts. It also includes harvesting coral spawn (卵), or growing and planting coral parts. Scientists moved many coral nurseries into deeper water and shore-based facilities during this marine heat wave. They are digging into the DNA of the coral to discover which species will survive best in future.

There are some bright spots in the story, however. Some corals have recovered from the bleaching, and many did not bleach at all. In addition, researchers recorded coral spawning. Although it’s not clear yet whether the larvae (幼虫) will be successful in the wild, it’s a sign of recovery potential. If the baby corals survive, they will be able to regrow the reef. They just have to avoid one big boss: human-induced climate change.

8. What does the underlined word “symbiotic” in paragraph 2 mean?
A. Reliable.
B. Opposite.
C. Harmonious.
D. Contradictory.

9. What caused the coral bleaching?
A. The rock skeleton.
B. The microscopic algae.
C. The high temperatures.
D. The symbiotic relationship.
10. Which is not the efforts scientists made to help coral reefs?
A. Transferring coral nurseries.
B. Growing and planting coral spawn.
C. Researching the DNA of the coral.
D. Figuring out the reasons for problems.

11. Which of the following best describes the impact of scientists’ efforts?
A. Identifiable.
B. Predictable.
C. Far-reaching.
D. Effective.

Scientists at the UCL Institute for Neurology have developed new tools, based on AI language models, that can characterize subtle signatures in the speech of patients diagnosed with schizophrenia (精神分裂症). The research, published in PNAS, aims to understand how the automated analysis of language could help doctors and scientists diagnose and assess psychiatric (病) conditions.

Currently, psychiatric diagnosis is based almost entirely on talking with patients and those close to them, with only a minimal role for tests such as blood tests and brain scans. However, this lack of precision prevents a richer understanding of the causes of mental illness [...]

The researchers asked 26 participants with schizophrenia and 26 control participants to complete two verbal fluency tasks, where they were asked to name as many words as they could either belonging to the category “animals” or starting with the letter “p” in five minutes. To analyze the answers given by participants, the team used an AI language model to represent the meaning of words in a similar way to humans. They tested whether the words people naturally recalled could be predicted by the AI model, and whether this predictability was reduced in patients with schizophrenia.

They found that the answers given by control participants were indeed more predictable by the AI model than those generated by people with schizophrenia, and that this difference was largest in patients with more severe symptoms.
The researchers think that this difference might have to do with the way the brain learns relationships between memories and ideas, and stores this information in so-called “cognitive maps”.

The team now plan to use this technology in a larger sample of patients, across more diverse speech settings, to test whether it might prove useful in the clinic. Lead author, Dr. Matthew Nour, said: “There is enormous interest in using AI language models in medicine. If these tools prove safe, I expect they will begin to be used in the clinic within the next decade.”

12. What is the disadvantage of current psychiatric diagnosis?
A. It is greatly related to blood tests.
B. It mostly relies on talking with patients.
C. It refers to the words of patients’ family.
D. It can’t comprehend schizophrenia deeply.

13. What is paragraph 3 mainly about?
A. The process of the research.
B. The tasks of the participants.
C. The performance of researchers.
D. The predictability of AI language models.

14. What is Dr Matthew Nour’s attitude toward AI language models?
A. Unclear.
B. Positive.
C. Doubtful.
D. Negative.

15. What can be a suitable title for the text?
A. AI language new tools used in the clinic.
B. AI language tools developed by scientists.
C. AI language models treating schizophrenia.
D. AI language models diagnosing schizophrenia.

Protecting from above

A deadly asteroid (小行星) heading toward the Earth is a common plot in sci-fi movies. __16__ An increasing number of space agencies are now taking steps to defend against near-Earth asteroids (NEAs).

__17__
Wu Yanhua, deputy director of the China National Space Administration (CNSA), recently told CCTV News that China will start to build Earth and space-based monitoring and warning systems to detect NEAs. __18__ In 2025 or 2026, China hopes to be able to closely observe approaching asteroids before impacting them to change their path toward our planet.

Making an impact
NASA also has its own program for developing technology to deflect (使转向) incoming asteroids.
On Nov 23, 2021, the Double Asteroid Redirection Test (DART) was launched to slam into Dimorphos and change the speed at which it orbits its space neighbor, Didymos, an asteroid approximately 2,560 feet in diameter (直径). __19__

Global effort
__20__ It also re-launched its Planetary Defense Office in 2021, according to Electronics Weekly. Restarting the program, which seeks to communicate with space agencies around the world, is due to “the global character of the dangers we all face due to asteroids”, said ESA Director General Josef Aschbacher.

A. Plan to protect.
B. Taking prompt actions.
C. But most people believe this is only an imagination.
D. However, this is also a risk we should be worried about in real life.
E. They are aimed to classify incoming NEAs depending on the risks they pose.
F. The European Space Agency (ESA) signed a deal to make a spacecraft for a joint mission with NASA.
G. This will help prove out one viable (可行的) way to protect our planet from a dangerous asteroid.

Watching a plane fly across the sky as a young boy, Todd Smith knew that flying was what he wanted to do when he was older.

After five years’ training, he finally __21__ his dream job in his late twenties, working as an airline pilot. But in 2019, the travel firm he was __22__ for was closed down.

By this time Mr Smith had become increasingly __23__ about the growing threat of climate change, and the aviation (航空) industry’s carbon emissions (碳排放). “I had an uncomfortable __24__,” he says. “I was really eager to get involved in environmental protest groups, but I knew it would ruin my __25__, and I had a lot of __26__. It would be easier to return to the industry and pay them off.”

Yet after hesitating for several months, Mr Smith finally __27__ to quit his flying career for good. “I prefer flying and __28__ interesting destinations, and earning a decent __29__,” says Mr Smith. “But when we are __30__ the climate and ecological emergency, how could I possibly __31__ my needs?
We need to think about how to __32__ the biggest threat to humanity.”

Giving up his dream job was a __33__ decision, he says. “Financially I’ve been really __34__. It’s been challenging, but taking action has __35__ my anxiety.”

Mr Smith is now a climate activist.

21. A. quit  B. changed  C. completed  D. landed
22. A. waiting  B. preparing  C. working  D. looking
23. A. concerned  B. curious  C. serious  D. doubtful
24. A. tension  B. conflict  C. solution  D. passion
25. A. fame  B. life  C. ambition  D. career
26. A. needs  B. debts  C. pressures  D. troubles
27. A. refused  B. promised  C. expected  D. decided
28. A. discovering  B. comparing  C. recording  D. visiting
29. A. salary  B. honor  C. award  D. title
30. A. accustomed to  B. faced with  C. addicted to  D. trapped in
31. A. remove  B. raise  C. meet  D. stress
32. A. issue  B. view  C. make  D. handle
34. A. saving  B. struggling  C. investing  D. contributing
35. A. covered  B. balanced  C. eased  D. increased

IV. Complete the Passage with the Appropriate Word Forms

Read the following material and fill in each blank with appropriate content (one word) or the correct form of the word in brackets.

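Research advances in multimodal brain monitoring in evaluating cerebral edema after acute massive cerebral infarction

ZHU Bing (review author), CHEN Lixia (reviser)

Abstract: With the aging of the population, cerebrovascular disease has become the second leading cause of death worldwide, and acute cerebral infarction accounts for about 80% of cerebrovascular disease. Acute massive cerebral infarction is ischemic necrosis of a large area of brain tissue caused by arterial occlusion that results from atherosclerosis and thrombosis of the internal carotid artery or the main trunk of the middle cerebral artery. It is characterized by high incidence, high mortality, and high disability rates.

After acute massive cerebral infarction occurs, the condition is severe and progresses rapidly. Cerebral edema develops after extensive injury and necrosis of brain cells and further compresses the nerves, which can cause additional brain tissue damage. Without timely and effective treatment, life-threatening conditions such as cerebral herniation can occur.

Bedside dynamic monitoring of changes in cerebral edema is therefore extremely important for assessing the condition, judging prognosis, and guiding clinical treatment.

This article reviews the value of various monitoring methods for the dynamic assessment of cerebral edema after acute massive cerebral infarction, to provide a reference for future clinical diagnosis and treatment.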

Key words: acute massive cerebral infarction; cerebral edema; multimodal brain monitoring

CLC number: R743.3  Document code: A

Research advances in multimodal brain monitoring in evaluating cerebral edema after acute massive cerebral infarction

ZHU Bing, CHEN Lixia. (The Second Affiliated Hospital of Harbin Medical University, Harbin 150000, China)

Abstract: With the aging of the population, cerebrovascular diseases have become the second leading cause of death in the world, and acute cerebral infarction accounts for about 80% of cerebrovascular diseases. Acute massive cerebral infarction refers to a large area of brain ischemia and necrosis due to arterial occlusion caused by arteriosclerosis and thrombosis of the internal carotid artery or the middle cerebral artery, with the features of high incidence rate, mortality rate, and disability rate. After the onset of acute massive cerebral infarction, the disease progresses seriously and rapidly, and cerebral edema occurs after the damage and necrosis of a large number of brain cells, which further compresses nerves and leads to further brain tissue damage, resulting in life-threatening conditions like cerebral hernia without timely and effective treatment. Therefore, bedside dynamic monitoring of cerebral edema is of great importance for assessing disease conditions, judging prognosis, and guiding clinical treatment. This article reviews the application value of various monitoring methods in dynamic assessment of cerebral edema after acute massive cerebral infarction, so as to provide a reference for future clinical diagnosis and treatment.

Key words: Acute massive cerebral infarction; Cerebral edema; Multimodal brain monitoring

Massive cerebral infarction accounts for about 10% of all stroke types. Once it occurs, the condition changes rapidly and is complex, and the mortality rate is as high as 80%. During ischemia, failure of energy-dependent ion transport and disruption of the blood-brain barrier (BBB) [1,2] cause excess fluid to accumulate in the intracellular or extracellular spaces of the brain, which leads to tissue swelling and elevated intracranial pressure.

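Collaborative filtering

Collaborative filtering is a novel technique.

It was first proposed as early as 1989, but it did not see industrial application until the 21st century.

Representative applications abroad include Last.fm, Digg, and others.

I recently started studying this topic for my graduation thesis. After more than a week of reading papers and related material, I decided to write this summary of what I have collected so far.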

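Microsoft's 1998 paper on collaborative filtering [1] divides collaborative filtering into two schools: Memory-Based and Model-Based.

Memory-Based algorithms generate recommendations from the records of users' actions in the system. There are two main variants. The first is User-Based: it uses the similarity between users to find each user's nearest neighbors and, when a recommendation is needed, takes the highest-scoring articles from those neighbors. The second is Item-Based: it recommends on the basis of the relationships between items, and it achieves respectable results with a basic method.

Experimental results also show that the Item-Based approach is more effective than the User-Based one [2].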
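The Item-Based variant described above can be sketched in a few lines. This is only an illustration under stated assumptions: the post does not say which similarity measure or scoring rule is used, so cosine similarity between item rating vectors and a similarity-weighted sum of the user's own ratings are choices made here, and the `ratings` table and the function names are hypothetical.

```python
from math import sqrt

# Hypothetical ratings: user -> {item: rating}.
ratings = {
    "alice": {"A": 5, "B": 4},
    "bob": {"A": 4, "B": 5, "C": 1},
    "carol": {"A": 1, "C": 5, "D": 4},
    "dave": {"C": 4, "D": 5},
}

def item_vectors(ratings):
    """Invert the table into item -> {user: rating}."""
    vectors = {}
    for user, items in ratings.items():
        for item, rating in items.items():
            vectors.setdefault(item, {})[user] = rating
    return vectors

def cosine(u, v):
    """Cosine similarity between two sparse rating vectors."""
    dot = sum(u[k] * v[k] for k in u.keys() & v.keys())
    norm = sqrt(sum(x * x for x in u.values())) * sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0

def recommend(user, ratings):
    """Rank the user's unseen items by a similarity-weighted sum of their ratings."""
    vectors = item_vectors(ratings)
    seen = ratings[user]
    scores = {}
    for item, vec in vectors.items():
        if item in seen:
            continue
        scores[item] = sum(cosine(vec, vectors[j]) * r for j, r in seen.items())
    return sorted(scores, key=scores.get, reverse=True)

print(recommend("alice", ratings))
print(recommend("dave", ratings))
```

Because the item-to-item similarities depend only on the rating table, a real system could compute them offline and look them up at request time; this sketch recomputes them on every call for brevity.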

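Model-Based algorithms use modeling techniques from machine learning: the model is precomputed offline so that results can be produced quickly online.

The main algorithms used are Bayesian belief nets, clustering, and latent semantic models; in the last few years, CF algorithms based on SVM and similar methods have also appeared.

A further category has been proposed in recent years, content-based, which analyzes the content of the items themselves in order to make recommendations.

At present, the better-performing applied algorithms combine several of the approaches above.

Google News [3], for example, uses an algorithm that mixes the Memory-Based and Model-Based approaches.

The Google paper describes how to build a large-scale recommender system, in which Google's efficient infrastructure components, such as BigTable and MapReduce, are put to good use.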

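Predicting the key potential targets of resveratrol in the treatment of Alzheimer's disease based on network pharmacology

Tian Xiaoyan, Jiang Siyu, Zhang Rui, Xu Shunjiang, Li Guofeng*

[Abstract] Objective: To predict the key targets of resveratrol (RSV) in the treatment of Alzheimer's disease (AD) by network pharmacology.

Methods: The TCMSP database was searched for traditional Chinese medicines containing RSV, and their properties and flavors, meridian tropism, and effects were summarized and analyzed.

The SwissTargetPrediction, SEA, and HERB databases were used to predict the targets of RSV; the GeneCards, OMIM, TTD, and DisGeNET databases were searched for AD targets; the intersection of the RSV targets and the AD targets was taken as the set of potential therapeutic targets.

GO analysis of the potential therapeutic targets was performed with the DAVID database.

KEGG enrichment analysis and protein-protein interaction (PPI) data for the potential therapeutic targets were obtained from the STRING database, and the PPI network was drawn with Cytoscape.

Changes in the key AD targets were verified with the AlzData database.

Molecular docking of RSV with the key proteins was performed on the SwissDock website.

Results: Among the traditional Chinese medicines containing RSV, the most common flavor was bitter, the most common meridian tropism was the liver meridian, and the most common effect was clearing heat and detoxifying.

There were 388 predicted RSV targets, 1624 AD targets, and 119 intersection targets.

The Alzheimer's disease pathway among the KEGG enriched pathways was enriched with 27 proteins.

Analysis of the AlzData database identified proteins whose expression is altered in AD patients.

Molecular docking showed that RSV binds well to serine/threonine kinase 1 (AKT1), interleukin-6 (IL-6), catenin beta-1 (β-catenin, CTNNB1), and tumor necrosis factor (TNF).

Conclusion: The network pharmacology analysis shows that RSV acts on AD through multiple targets and multiple pathways, which can provide a reference for the direction of subsequent research.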

[Key words] Network pharmacology; Resveratrol; Alzheimer's disease; Molecular docking

CLC number: R285  Document code: A  Article ID: 1671-0223(2023)24-1879-08

Predicting the key potential targets of resveratrol in the treatment of Alzheimer's disease based on network pharmacology

Tian Xiaoyan, Jiang Siyu, Zhang Rui, Xu Shunjiang, Li Guofeng. Chengde Medical University, Chengde 067000, China

[Abstract] Objective: To predict the key targets of resveratrol (RSV) in the treatment of Alzheimer's disease (AD) by network pharmacology. Methods: Traditional Chinese medicines that contain RSV were retrieved from the TCMSP database, and their property and flavor, meridian tropism, and pharmacologic action were summarized and analyzed. The targets of RSV were predicted with the SwissTargetPrediction, SEA, and HERB databases. The targets of AD were retrieved from the GeneCards, OMIM, TTD, and DisGeNET databases. The intersection of the RSV and AD targets was taken as the set of potential therapeutic targets. Gene ontology (GO) annotation of the potential therapeutic targets was analyzed with the biological information annotation database DAVID. KEGG enrichment analysis and protein-protein interactions (PPIs) of the potential therapeutic targets were obtained from the STRING database, and the PPI network was drawn in Cytoscape. Changes in the key AD targets were verified in the AlzData database. RSV was docked with the key proteins on the SwissDock website. Results: Among the traditional Chinese medicines containing RSV, the most common flavor was bitter, the most common property was cold, and the most common meridian tropism was the liver; the main pharmacologic action was clearing away heat and toxic materials. There were 388 predicted targets of RSV, 1624 targets of AD, and 119 intersection targets. The Alzheimer's disease pathway among the KEGG enriched pathways was enriched with 27 proteins. Proteins whose expression changed in AD patients were identified in the AlzData database. Molecular docking showed that RSV had good binding ability with AKT1, IL-6, CTNNB1, and TNF. Conclusion: The results of network pharmacological analysis show that the treatment of AD by RSV is multi-target and multi-pathway, which can provide a reference for subsequent research directions.

[Key words] Network pharmacology; Resveratrol; Alzheimer's disease; Molecular docking

Author affiliations: Graduate School, Chengde Medical University, Chengde 067000, Hebei Province (Tian Xiaoyan, Li Guofeng); Central Laboratory, The First Hospital of Hebei Medical University (Jiang Siyu, Zhang Rui, Xu Shunjiang); Institute of Drug Research, Hebei Provincial Center for Disease Control and Prevention (Li Guofeng)
*Corresponding author

Modern scientific research holds that Alzheimer's disease (AD) is an irreversible degenerative neurological disease. Clinically it is characterized mainly by memory impairment, executive dysfunction, and personality changes, and it is the leading cause of senile dementia.