S04.A Frequent Pattern Based Framework for Event Detection in Sensor Network Stream Data


Finding Minimum Representative Pattern Sets

Guimei Liu, School of Computing, National University of Singapore, liugm@.sg
Haojun Zhang, School of Computing, National University of Singapore, zhanghao@.sg
Limsoon Wong, School of Computing, National University of Singapore, wongls@.sg

ABSTRACT
Frequent pattern mining often produces an enormous number of frequent patterns, which makes the generated patterns hard to understand and to analyze further. This calls for finding a small number of representative patterns that best approximate all other patterns. An ideal approach should 1) produce a minimum number of representative patterns; 2) restore the support of all patterns with an error guarantee; and 3) be efficient. Few existing approaches satisfy all three requirements. In this paper, we develop two algorithms, MinRPset and FlexRPset, for finding minimum representative pattern sets. Both algorithms provide an error guarantee. MinRPset produces the smallest solution that we can possibly have in practice under the given problem setting, and it takes a reasonable amount of time to finish. FlexRPset is developed based on MinRPset. It provides one extra parameter K that lets users trade off result size against efficiency. Our experiment results show that MinRPset and FlexRPset produce fewer representative patterns than RPlocal, an efficient algorithm developed for solving the same problem. FlexRPset can be slightly faster than RPlocal when K is small.

Categories and Subject Descriptors
H.2.8 [DATABASE MANAGEMENT]: Database Applications - Data Mining

Keywords
representative patterns, frequent pattern summarization

1. INTRODUCTION
Frequent pattern mining is an important problem in the data mining area. It was first introduced by Agrawal et al. in 1993 [4]. Frequent pattern mining is usually performed on a transaction database D = {t1, t2, ..., tn}, where tj is a transaction containing a set of items, j ∈ [1, n]. Let I = {i1, i2, ..., im} be the set of distinct items appearing in
D. A pattern X is a set of items in I, that is, X ⊆ I. If a transaction t ∈ D contains all the items of a pattern X, then we say t supports X and t is a supporting transaction of X. Let T(X) be the set of transactions in D supporting pattern X. The support of X, denoted as supp(X), is defined as |T(X)|. If the support of a pattern X is larger than a user-specified threshold min_sup, then X is called a frequent pattern. Given a transaction database D and a minimum support threshold min_sup, the task of frequent pattern mining is to find all the frequent patterns in D with respect to min_sup.

Many efficient algorithms have been developed for mining frequent patterns [9]. Now the focus has shifted from how to efficiently mine frequent patterns to how to effectively utilize them. Frequent patterns have the anti-monotone property: if a pattern is frequent, then all of its subsets must be frequent too. On dense datasets and/or when the minimum support is low, long patterns can be frequent. All the subsets of these frequent long patterns are frequent too based on the anti-monotone property. This leads to an explosion in the number of frequent patterns. The huge quantity of patterns can easily become a bottleneck for understanding and further analyzing frequent patterns.

It has been observed that the complete set of frequent patterns often contains a lot of redundancy. Many frequent patterns have similar items and supporting transactions. It is desirable to group similar patterns together and represent them using one single pattern. The concept of frequent closed patterns was proposed for this purpose [14]. Let X be a pattern and S be the set of patterns appearing in the same set of transactions as X, that is, S = {Y | T(Y) = T(X)}. The longest pattern in S is called a closed pattern, and all the other patterns in S are subsets of it. The closed pattern of S is selected to represent all the patterns in S. The set of frequent closed patterns is a lossless representation of the complete set of frequent patterns. That is, all the
frequent patterns and their exact support can be recovered from the set of frequent closed patterns. The number of frequent closed patterns can be much smaller than the total number of frequent patterns, but it can still be tens of thousands or even more.

To appear in Proc. KDD 2012.

Frequent closed patterns group together patterns supported by exactly the same set of transactions. This condition is too restrictive. Xin et al. [23] relax this condition to further reduce pattern set size. They propose the concept of δ-covered to generalize the concept of frequent closed pattern. A pattern X1 is δ-covered by another pattern X2 if X1 is a subset of X2 and (supp(X1) − supp(X2)) / supp(X1) ≤ δ. The goal is to find a minimum set of representative patterns that can δ-cover all frequent patterns. When δ = 0, the problem corresponds to finding all frequent closed patterns. Xin et al. show that the problem can be mapped to a set cover problem. They develop two algorithms, RPglobal and RPlocal, to solve the problem. RPglobal first generates the set of patterns that can be δ-covered by each pattern, and then employs the well-known greedy algorithm [8] for the set cover problem to find representative patterns. The optimality of RPglobal is determined by the optimality of the greedy algorithm, so the solution produced by RPglobal is almost the best solution we can possibly have in practice. However, RPglobal is very time-consuming and space-consuming. It is feasible only when the number of frequent patterns is not large. RPlocal is developed based on FPClose [10]. It integrates frequent pattern mining with representative pattern finding. RPlocal is very efficient, but it produces many more representative patterns than RPglobal.

In this paper, we analyze the bottlenecks for finding a minimum representative pattern set and develop two algorithms, MinRPset and FlexRPset, to solve the problem. Algorithm MinRPset is similar to RPglobal, but it utilizes several techniques to reduce running time and memory usage. In
particular, MinRPset uses a tree structure called CFP-tree [13] to store frequent patterns compactly. The CFP-tree structure also supports efficient retrieval of patterns that are δ-covered by a given pattern. Our experiment results show that MinRPset is only several times slower than RPlocal, while RPglobal is several orders of magnitude slower. Algorithm FlexRPset is developed based on MinRPset. It provides one extra parameter K which allows users to make a trade-off between efficiency and the number of representative patterns selected. When K = ∞, FlexRPset is the same as MinRPset. As K decreases, FlexRPset becomes faster, but it produces more representative patterns. When K = 1, FlexRPset is slightly faster than RPlocal, and it still produces fewer representative patterns than RPlocal in almost all cases.

The rest of the paper is organized as follows. Section 2 introduces related work. Section 3 gives the formal problem definition. The two algorithms, MinRPset and FlexRPset, are described in Section 4 and Section 5 respectively. Experiment results are reported in Section 6. Finally, Section 7 concludes the paper.

2. RELATED WORK
The number of frequent patterns can be very large. Besides frequent closed patterns, several other concepts, such as generators [5], non-derivable patterns [7], maximal patterns [12], top-k frequent closed patterns [19] and redundancy-aware top-k patterns [22], have been proposed to reduce pattern set size. The number of generators is larger than that of closed patterns. Furthermore, the set of generators itself is not lossless. It requires a border to be lossless [6]. Non-derivable patterns are generalizations of generators. A border is also needed to make non-derivable patterns lossless.
The number of maximal patterns is much smaller than the number of closed patterns. All frequent patterns can be recovered from maximal patterns, but their support information is lost. Another work that also ignores the support information is [3]. It selects k patterns that best cover a collection of patterns.

Frequent closed patterns preserve the exact support of all frequent patterns. In many applications, knowing the approximate support of frequent patterns is sufficient. Several approaches have been proposed to make a trade-off between pattern set size and the precision of pattern support. The work by Xin et al. [23] described in Section 1 is one such approach. Another approach proposed by Pei et al. [15] mines a minimal condensed pattern-base, which is a superset of the maximal pattern set. Pei et al. use heuristic algorithms to find condensed pattern-bases. All frequent patterns and their support can be restored from a condensed pattern-base with an error guarantee.

Yan et al. [24] use profiles to summarize patterns. A profile consists of a master pattern, a support and a probability distribution vector which contains the probability of the items in the master pattern. The set of patterns represented by a profile are subsets of the master pattern, and their support is calculated by multiplying the support of the profile and the probability of the corresponding items. To summarize a collection of patterns using k profiles, Yan et al. partition the patterns into k clusters, and use a profile to describe each cluster. There are several drawbacks with this profile-based approach: 1) It makes contradictory assumptions. On one hand, the patterns represented by the same profile are supposed to be similar in both item composition and supporting transactions, thus the items in the same profile are expected to be strongly correlated. On the other hand, based on how the support of patterns is calculated from a profile, the items in the same profile are expected to be independent.
It is hard to strike a balance between the two contradicting requirements. 2) There is no error guarantee on the estimated support of patterns. 3) The proposed algorithm for generating profiles is very slow because it needs to scan the original dataset repeatedly. 4) The support of a pattern is not determined by a single profile, but by all the profiles whose master pattern is a superset of the pattern. Thus it is very costly to recover the support of a pattern using profiles. 5) The boundary between frequent patterns and infrequent patterns cannot be determined using profiles.

Several improvements have been made to the profile-based approach. Jin et al. [11] develop a regression-based approach to minimize restoration error. They cluster patterns based on restoration errors instead of similarity between patterns, thus their approach can achieve lower restoration error. However, there is still no error guarantee on the restored support. CP-summary [16] uses conditional independence to reduce restoration error. It adds one more component to each profile, a pattern base, and the new profile is called a c-profile. The items in a c-profile are expected to be independent with respect to the pattern base. CP-summary provides an error guarantee on estimated support. However, patterns of a c-profile often share little similarity, so a c-profile is no longer representative of its patterns.

Profiles can be considered as generalizations of closed patterns. Wang et al. [18] generalize another concise representation of frequent patterns, namely non-derivable patterns. They use a Markov Random Field (MRF) to summarize frequent patterns. The support of a pattern is estimated from its subsets, which is similar to non-derivable patterns.
The Markov Random Field model is not as intuitive as profiles, and it is also expensive to learn. It does not provide an error guarantee on estimated support either.

3. PROBLEM STATEMENT
We follow the problem definition in [23]. The distance between two patterns is defined based on their supporting transaction sets.

Definition 1 (D(X1, X2)). Given two patterns X1 and X2, the distance between them is defined as
$D(X_1, X_2) = 1 - \frac{|T(X_1) \cap T(X_2)|}{|T(X_1) \cup T(X_2)|}$.

Definition 2 (ε-covered). Given a real number ε ∈ [0, 1] and two patterns X1 and X2, we say X1 is ε-covered by X2 if X1 ⊆ X2 and D(X1, X2) ≤ ε.

In the above definition, condition X1 ⊆ X2 ensures that the two patterns have similar items, and condition D(X1, X2) ≤ ε ensures that the two patterns have similar supporting transaction sets and similar support. Based on the definition, a pattern ε-covers itself.

Lemma 1. Given two patterns X1 and X2, if pattern X1 is ε-covered by pattern X2 and we use supp(X2) to approximate supp(X1), then the relative error $\frac{\mathrm{supp}(X_1) - \mathrm{supp}(X_2)}{\mathrm{supp}(X_1)}$ is no larger than ε.

Proof. $\frac{\mathrm{supp}(X_1) - \mathrm{supp}(X_2)}{\mathrm{supp}(X_1)} = 1 - \frac{\mathrm{supp}(X_2)}{\mathrm{supp}(X_1)} = 1 - \frac{|T(X_2)|}{|T(X_1)|} \le 1 - \frac{|T(X_1) \cap T(X_2)|}{|T(X_1) \cup T(X_2)|} \le \epsilon$. The third step holds (with equality) because X1 ⊆ X2 implies T(X2) ⊆ T(X1), so T(X1) ∩ T(X2) = T(X2) and T(X1) ∪ T(X2) = T(X1).

Lemma 2. If a frequent pattern X1 is ε-covered by pattern X2, then supp(X2) ≥ min_sup · (1 − ε).

Proof. Based on Lemma 1, $1 - \frac{\mathrm{supp}(X_2)}{\mathrm{supp}(X_1)} \le \epsilon$, so we have supp(X2) ≥ supp(X1) · (1 − ε) ≥ min_sup · (1 − ε).

Our goal here is to select a minimum set of patterns that can ε-cover all the frequent patterns. The selected patterns are called representative patterns. Based on Lemma 1, the restoration error of all frequent patterns is bounded by ε. We do not require representative patterns to be frequent.
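As a minimal sketch of Definitions 1 and 2, the Python below computes T(X), the distance D(X1, X2) and the ε-covered test on the eight-transaction example database that the paper lists as Table 1. The function names (`supporting_transactions`, `distance`, `is_covered`), the single-letter item encoding and the use of `Fraction` for exact arithmetic are illustrative assumptions, not part of the paper.

```python
from fractions import Fraction

# Example database from Table 1 of the paper: TID -> set of single-letter items.
DB = {
    1: set("acefmp"), 2: set("bev"), 3: set("abfmp"), 4: set("defhp"),
    5: set("acdmv"), 6: set("achms"), 7: set("afmpu"), 8: set("abdfg"),
}

def supporting_transactions(pattern, db=DB):
    """T(X): ids of the transactions that contain every item of the pattern."""
    items = set(pattern)
    return {tid for tid, t in db.items() if items.issubset(t)}

def distance(x1, x2, db=DB):
    """Definition 1: one minus the Jaccard similarity of T(X1) and T(X2)."""
    t1 = supporting_transactions(x1, db)
    t2 = supporting_transactions(x2, db)
    union = t1.union(t2)
    if not union:
        # Assumption: two patterns with no supporting transaction are at distance 0.
        return Fraction(0)
    return 1 - Fraction(len(t1.intersection(t2)), len(union))

def is_covered(x1, x2, eps, db=DB):
    """Definition 2: X1 is eps-covered by X2 if X1 is a subset of X2 and D <= eps."""
    return set(x1).issubset(set(x2)) and distance(x1, x2, db) <= eps

# T(f) = {1, 3, 4, 7, 8} and T(fp) = {1, 3, 4, 7}, so D(f, fp) = 1 - 4/5.
print(distance("f", "fp"))
print(is_covered("f", "fp", Fraction(1, 5)))
# fm and afmp are supported by exactly the same transactions, so D = 0.
print(is_covered("fm", "afmp", 0))
```

With ε = 0.2 the pattern {f} is ε-covered by {f, p}, and using supp(fp) = 4 in place of supp(f) = 5 gives a relative error of exactly 0.2, which matches the bound of Lemma 1.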
Based on Lemma 2, the support of representative patterns must be no less than min_sup · (1 − ε). The problem is how to find a minimum representative pattern set. In the next two sections, we describe two algorithms to solve the problem.

4. THE MINRPSET ALGORITHM
Let F be the set of frequent patterns in a dataset D with respect to threshold min_sup, and $\hat{F}$ be the set of patterns with support no less than min_sup · (1 − ε) in D. Obviously, F ⊆ $\hat{F}$. Given a pattern X ∈ $\hat{F}$, we use C(X) to denote the set of frequent patterns that can be ε-covered by X. We have C(X) ⊆ F. If X is frequent, we have X ∈ C(X).

A straightforward algorithm for finding a minimum representative pattern set is as follows. First we generate C(X) for every pattern X ∈ $\hat{F}$, and we get |$\hat{F}$| sets. The elements of these sets are frequent patterns in F. Let S = {C(X) | X ∈ $\hat{F}$}. Finding a minimum representative pattern set is now equivalent to finding a minimum number of sets in S that can cover all the frequent patterns in F. This is a set cover problem, and it is NP-hard. We use the well-known greedy algorithm [8] to solve the problem, which achieves an approximation ratio of $\sum_{i=1}^{k} \frac{1}{i}$, where k is the maximal size of the sets in S. We call this simple algorithm MinRPset.

The greedy algorithm is essentially the best-possible polynomial time approximation algorithm for the set cover problem. Our experiment results have shown that it usually takes little time to finish. Generating C(X)s is the main bottleneck of the MinRPset algorithm when F and $\hat{F}$ are large because we need to find C(X)s over a large F for a large number of patterns in $\hat{F}$. We use the following techniques to improve the efficiency of MinRPset: 1) consider closed patterns only; 2) use a structure called CFP-tree to find C(X)s efficiently; and 3) use a light-weight compression technique to compress C(X)s.

4.1 Considering closed patterns only
A pattern is closed if all of its supersets are less frequent than it. If a pattern X1 is non-closed, then there exists another pattern X2 such that X1 ⊂ X2 and
supp(X2) = supp(X1).

Lemma 3. Given two patterns X1 and X2 such that X1 ⊆ X2 and supp(X1) = supp(X2), if X2 is ε-covered by a pattern X, then X1 must be ε-covered by X too.

The above lemma directly follows from Definition 2. It implies that instead of covering all frequent patterns, we can cover frequent closed patterns only, which leads to the following lemma.

Lemma 4. Let F be the set of frequent patterns in a dataset D with respect to a threshold min_sup. If a set of patterns R ε-covers all the frequent closed patterns in F, then R ε-covers all the frequent patterns in F.

Lemma 5. Given two patterns X1 and X2 such that X1 ⊆ X2 and supp(X1) = supp(X2), if a pattern X is ε-covered by X1, then X must be ε-covered by X2 too.

This lemma also directly follows from Definition 2. It suggests that we can use closed patterns only to cover all frequent patterns.

The number of frequent closed patterns can be orders of magnitude smaller than the total number of frequent patterns. Considering only closed patterns improves the efficiency of the MinRPset algorithm in two aspects. On one hand, it reduces the size of individual C(X)s since now they contain only frequent closed patterns. On the other hand, it reduces the number of patterns whose C(X) needs to be generated as now we need to generate C(X)s for closed patterns only.

Table 1: An example dataset D
TID  Transactions
1    a, c, e, f, m, p
2    b, e, v
3    a, b, f, m, p
4    d, e, f, h, p
5    a, c, d, m, v
6    a, c, h, m, s
7    a, f, m, p, u
8    a, b, d, f, g

Table 2: Frequent patterns (min_sup = 3), listed as itemset:support
ID  Itemset    ID  Itemset    ID  Itemset
1   a:6        9   ac:3       17  acm:3
2   b:3        10  af:4       18  afm:3
3   c:3        11  am:5       19  afp:3
4   d:3        12  ap:3       20  amp:3
5   e:3        13  cm:3       21  fmp:3
6   f:5        14  fm:3       22  afmp:3
7   m:5        15  fp:4
8   p:4        16  mp:3

Figure 1: CFP-tree constructed on the frequent patterns in Table 2

4.2 Using CFP-tree to find C(X)s efficiently
The CFP-tree structure is specially designed for storing and querying frequent patterns [13]. It resembles a set-enumeration tree [17]. We use an example dataset D in Table 1 to illustrate its structure. Table 2 shows all the frequent patterns in D when min_sup = 3. The CFP-tree
constructed from the frequent patterns is shown in Figure 1.

Each node in a CFP-tree is a variable-length array. If a node contains multiple entries, then each entry contains exactly one item. If a node has only one entry, then it is called a singleton node. Singleton nodes can contain more than one item. For example, node 2 in Figure 1 is a singleton node with two items m and a. An entry E stores several pieces of information: (1) m items (m ≥ 1), (2) the support of E, (3) a pointer pointing to the child node of E and (4) the id of the entry, which is assigned using preordering. In the rest of this paper, we use E.items, E.support, E.child and E.preorder to denote the above fields.

Every entry in a CFP-tree represents one or more patterns with the same support, and these patterns contain the items on the path from the root to the entry. Items contained in singleton nodes are optional. Let E be an entry, and let X_m be the set of items in the multiple-entry nodes and X_s be the set of items in the singleton nodes on the path from the root to the parent of E. The set of patterns represented by E is {X_m ∪ Y ∪ Z | Y ⊆ X_s, Z ⊆ E.items, Z ≠ ∅}. The longest pattern represented by E is X_m ∪ X_s ∪ E.items. Let us look at an example. Node 4 contains only one entry. For this entry, we have X_m = {p}, X_s = {f} and E.items = {m, a}. Hence node 4 represents 6 itemsets: {p, m}, {p, a}, {p, m, a}, {p, f, m}, {p, f, a} and {p, f, m, a}. We use E.pattern to denote the longest pattern represented by E.

The above feature makes CFP-tree a very compact structure for storing frequent patterns. The number of entries in a CFP-tree is much smaller than the total number of patterns stored in the tree.

For each entry, we consider its longest pattern only, based on Lemma 3 and Lemma 5. For an entry E, only its longest pattern can be closed. Other patterns of E that are shorter than the longest pattern cannot be closed based on the definition of closed patterns. If the longest pattern of an entry is not closed, then we call the entry a non-closed
entry. The CFP-tree structure has the following property.

Property 1. In a multiple-entry node, the item of an entry E can appear in the subtrees pointed by entries before E, but it cannot appear in the subtrees pointed by entries after E.

For example, in the root node of Figure 1, item p is allowed to appear in the subtrees pointed by entries b, c, d and e, but it is not allowed to appear in the subtrees pointed by entries f, m and a. This property implies the following lemma.

Lemma 6. In a CFP-tree, the supersets of a pattern cannot appear on the right of the pattern. They appear either on the left of the pattern or in the subtree pointed by the pattern.

4.2.1 Finding one C(X)
Given a pattern X, C(X) contains the subsets of X that can be ε-covered by X. CFP-tree supports efficient retrieval of subsets of patterns. To find the subsets of a pattern X in a CFP-tree, we simply traverse the CFP-tree and match the items of the entries against X. For an entry E in a multiple-entry node, if its item appears in X, then entry E represents some subsets of X and the search is continued on its subtree. Otherwise, entry E and its subtree are skipped because all the patterns in the subtree of E contain E.items ∉ X, and these patterns cannot be subsets of X. An entry E in a singleton node can contain items not in X, and these items are simply ignored.

Algorithm 1 shows the pseudo-code for retrieving C(X). Initially, cnode is the root node of the CFP-tree. Parameter Y contains the set of items to be searched in cnode. It is set to X initially. Once an entry E is visited, the item of E is removed from Y when Y is passed to the subtree of E (line 8, 18). The item of E is also excluded when Y is passed to the entries after E (line 21). This is because the item of E cannot appear in the subtrees pointed by entries after E based on Property 1.

Algorithm 1 Search_CX Algorithm
Input:
  cnode is a CFP-tree node; // cnode is the root node initially.
  Y is the set of items to be searched in cnode; // Y = X initially.
  supp(X) is the support of X;
Output:
  C(X);
Description:
 1: if cnode contains only one entry E then
 2:   if E.support == supp(X) AND E.pattern ⊂ X then
 3:     Mark E as non-closed;
 4:   if E is not marked as non-closed then
 5:     if E.items ∩ Y ≠ ∅ AND E.support ≤ supp(X)/(1−ε) then
 6:       Put E.preorder into C(X);
 7:     if E.child ≠ NULL AND Y − E.items ≠ ∅ then
 8:       Search_CX(E.child, Y − E.items, supp(X));
 9: else if cnode contains multiple entries then
10:   for each entry E ∈ cnode from left to right do
11:     if E.items ∈ Y then
12:       if E.support == supp(X) AND E.pattern ⊂ X then
13:         Mark E as non-closed;
14:       if E is not marked as non-closed then
15:         if E.support ≤ supp(X)/(1−ε) then
16:           Put E.preorder into C(X);
17:         if E.child ≠ NULL AND Y − E.items ≠ ∅ then
18:           Search_CX(E.child, Y − E.items, supp(X));
19:       if supp(E.pattern ∪ Y) > supp(X)/(1−ε) then
20:         return;
21:       Y = Y − E.items;

During the search of C(X)s, we also mark non-closed patterns. If the longest pattern of E is a proper subset of X and E.support = supp(X), then E is marked as non-closed (line 2-3, 12-13), and it is skipped in subsequent search.

The early termination technique. If a pattern is ε-covered by X, then its support must be no larger than supp(X)/(1−ε) based on Definition 2. We use this requirement to further improve the efficiency of Algorithm 1. Given an entry E in a multiple-entry node, after we visit the subtree of E, if we find supp(E.pattern ∪ Y) > supp(X)/(1−ε), where Y is the set of items that is passed to E, then there is no need to visit the subtrees pointed by entries after E (line 19-20). The reason is that all the subsets of X in these subtrees must be subsets of (E.pattern ∪ Y), and their support must be larger than supp(X)/(1−ε) too based on the anti-monotone property. We call this pruning technique the early termination technique.

4.2.2 Finding C(X)s of all closed patterns
Algorithm 2 shows the pseudo-code for generating all C(X)s. It traverses the CFP-tree in depth-first order from left to right. Using this traversal order, the supersets of a pattern X that are on
the left of X are visited before X. If the support of X is the same as one of these supersets, then X should be marked as non-closed when Search_CX is called for that superset. If X is not marked as non-closed when X is visited, it means that X is more frequent than all its supersets on its left. Based on Lemma 6, the supersets of a pattern appear either on the left of the pattern or in the subtree pointed by the pattern. If X is also more frequent than its child entries, then X must be closed. The conditions listed at line 2 and line 3 ensure that Algorithm 2 generates C(X)s for only closed patterns.

Algorithm 2 DFS_Search_CXs Algorithm
Input:
  cnode is a CFP-tree node; // cnode is the root node initially.
Output:
  C(X)s;
Description:
1: for each entry E ∈ cnode from left to right do
2:   if E is not marked as non-closed then
3:     if E is more frequent than its child entries then
4:       X = E.pattern;
5:       C(X) = Search_CX(root, X, E.support);
6:     if E.child ≠ NULL then
7:       DFS_Search_CXs(E.child);

In Algorithm 2, if an entry E is marked as non-closed because it has the same support as one of its supersets on its left, then all the patterns in the subtree pointed by E are non-closed. We can safely skip E and its subtree in subsequent traversal (line 2). The same pruning is done in Algorithm 1 (line 4, 14). This observation has been used in almost all frequent closed pattern mining algorithms to prune non-closed patterns [10, 20].

4.3 Compressing C(X)s
In a CFP-tree, each entry E has an id, which is denoted as E.preorder. In Algorithm 1, we put the id of an entry E into C(X) if E is ε-covered by X (line 6, 16). Each id takes 4 bytes. Both the total number of C(X)s and the size of individual C(X)s grow with the number of frequent (closed) patterns. When the number of frequent closed patterns is large, the total size of C(X)s can be very large. If the main memory cannot accommodate all C(X)s, the greedy set cover algorithm becomes very slow. To alleviate this problem, we compress C(X)s using a light-weight compression
technique [21]. Each entry id occupies one or more bytes depending on its value. To reduce the number of bytes needed for storing entry ids, we sort the entry ids in ascending order and store the differences between consecutive ids instead. Our experiment results show that this compression technique can reduce the space needed for storing C(X)s by about three quarters.

Algorithm 3 MinRPset Algorithm
Description:
1: Mine patterns with support ≥ min_sup · (1 − ε) and store them in a CFP-tree; let root be the root node of the tree;
2: DFS_Search_CXs(root);
3: Remove non-closed entries from C(X)s;
4: Apply the greedy set cover algorithm on C(X)s to find representative patterns;
5: Output representative patterns;

Algorithm 3 shows the pseudo-code of the MinRPset algorithm, and it calls Algorithm 2 to find C(X)s. Note that we store all patterns, including non-closed patterns, with support no less than min_sup · (1 − ε) in a CFP-tree (line 1). Non-closed patterns are identified during the search of C(X)s. Hence it is possible that some C(X)s contain non-closed entries. These non-closed entries are removed from C(X)s (line 3) before the greedy set cover algorithm is applied.

5. THE FLEXRPSET ALGORITHM
When the number of frequent patterns is large on a dataset, the MinRPset algorithm may become very slow since it needs to search subsets over a large CFP-tree for a large number of patterns. Furthermore, the set of C(X)s may become too large to fit into the main memory. To solve this problem, instead of searching C(X)s for all closed patterns, we can selectively generate C(X)s such that every frequent pattern is covered a sufficient number of times, in the hope that the greedy set cover algorithm can still find a near-optimal solution. The fewer C(X)s are generated, the more efficient the algorithm is. This is the basic idea of the FlexRPset algorithm.

The FlexRPset algorithm uses a parameter K to control the minimum number of times that a frequent pattern needs to be
covered. Algorithm 4 shows how FlexRPset selectively generates C(X)s. The other steps of FlexRPset are the same as those of MinRPset.

Algorithm 4 Flex_Search_CXs Algorithm
Input:
  cnode is a CFP-tree node; // cnode is the root node initially.
  K is the minimum number of times that a frequent closed pattern needs to be covered;
Output:
  C(X)s;
Description:
1: for each entry E ∈ cnode from left to right do
2:   if E is not marked as non-closed then
3:     if E.child ≠ NULL then
4:       Flex_Search_CXs(E.child);
5:     if E is more frequent than its child entries then
6:       if (E is frequent AND E is covered less than K times) OR (∃ an ancestor entry E′ of E such that E′ is frequent, E′ can be ε-covered by E and E′ is covered less than K times) then
7:         X = E.pattern;
8:         C(X) = Search_CX(root, X, E.support);

Algorithm 4 still uses the depth-first order to traverse a CFP-tree from left to right. It traverses the subtree of an entry E first (line 3-4) before it processes entry E (line 5-8), which means that when entry E is processed, all the supersets of E have been processed already based on Lemma 6, and entry E cannot be covered any more except by E itself. If E is frequent and it is covered less than K times, then we generate C(E.pattern) to cover E (the first condition at line 6). If E has already been covered at least K times when E is visited, then we look at the ancestor entries of E. For an ancestor entry E′ of E, most of its supersets are already processed too when E is visited, hence not many remaining entries can cover E′. If E′ is frequent, E′ can be ε-covered by E and E′ is covered less than K times, then we also generate C(E.pattern) to cover E′ (the second condition at line 6).

6. EXPERIMENTS
In this section, we study the performance of our algorithms.
The experiments were conducted on a PC with a 2.33 GHz Intel Duo Core CPU and 3.25 GB memory. Our algorithms were implemented using C++. We downloaded the source code of RPlocal from the IlliMine package [2]. All source code was compiled using Microsoft Visual Studio 2005.

6.1 Datasets
The datasets used in the experiments are shown in Table 3. They are obtained from the FIMI repository [1]. Table 3 shows some basic statistics of these datasets: number of transactions (|T|), number of distinct items (|I|), maximum length of transactions (MaxTL) and average length of transactions (AvgTL).

Table 3: Datasets
dataset      |T|      |I|    MaxTL  AvgTL
accidents    340183   468    52     33.81
chess        3196     75     37     37.00
connect      67557    129    43     43.00
mushroom     8124     119    23     23.00
pumsb        49046    2113   74     74.00
pumsb_star   49046    2088   63     50.48

6.2 Comparing with RPlocal
The first experiment compares MinRPset and FlexRPset with RPlocal. Let N be the number of representative patterns generated by an algorithm. Figure 2 shows the ratio of N to the number of representative patterns generated by RPlocal when min_sup is varied. Obviously, the ratio is always 1 for RPlocal. ε is set to 0.2 on mushroom and to 0.1 on other datasets. In [23], the authors have shown that the number of representative patterns selected by RPlocal can be orders of magnitude smaller than the number of frequent closed patterns. MinRPset further reduces the number of representative patterns by 10%-65%. The FlexRPset algorithm generates a similar number of representative patterns to MinRPset when K is large. When K gets smaller, the number of representative patterns generated by FlexRPset increases. When K = 1, FlexRPset still generates fewer representative patterns than RPlocal in most of the cases.
Figure 3 shows the running time of the algorithms when min_sup is varied. The running time of MinRPset and FlexRPset includes the time for mining frequent patterns. The running time of all algorithms increases as min_sup decreases. MinRPset has similar running time to RPlocal on mushroom. On accidents and pumsb_star, when min_sup is relatively high, the running time of MinRPset and RPlocal is similar too. In other cases, except for dataset pumsb, MinRPset is several times slower than RPlocal. RPglobal is often hundreds of times slower than RPlocal as shown in [23]. This indicates that the techniques used in MinRPset are very effective in reducing running time. On pumsb, MinRPset is more than 10 times slower than RPlocal when min_sup ≤ 0.7, but it achieves the greatest reduction in the number of representative patterns on this dataset.

FlexRPset has similar running time to RPlocal when K is small. When K = 10, the running time of FlexRPset is close to that of RPlocal, and the number of representative patterns generated by FlexRPset is close to that of MinRPset.
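The greedy set cover step that Section 4 relies on can be sketched as follows. This is a generic illustration of the well-known greedy heuristic, not the authors' C++ implementation; the function name `greedy_set_cover`, the dictionary layout (each candidate pattern X mapped to its set C(X)) and the toy pattern names are assumptions made for the example.

```python
def greedy_set_cover(universe, covers):
    """Pick candidates greedily until every element of `universe` is covered.

    `covers` maps a candidate representative pattern X to C(X), the set of
    frequent (closed) patterns that X eps-covers.
    """
    uncovered = set(universe)
    chosen = []
    while uncovered:
        # Candidate covering the most still-uncovered elements; ties go to
        # the candidate that comes first in dictionary order.
        best = max(covers, key=lambda x: len(covers[x] & uncovered))
        gain = covers[best] & uncovered
        if not gain:
            raise ValueError("some elements cannot be covered by any candidate")
        chosen.append(best)
        uncovered -= gain
    return chosen

# Hypothetical toy instance: five frequent patterns p1..p5, four candidates.
covers = {
    "A": {"p1", "p2", "p3"},
    "B": {"p2", "p4"},
    "C": {"p3", "p4"},
    "D": {"p4", "p5"},
}
print(greedy_set_cover({"p1", "p2", "p3", "p4", "p5"}, covers))
```

On this toy instance the sketch first picks A (three new patterns) and then D (two new patterns). In the paper's setting the universe would be the frequent closed patterns and each C(X) would come from Algorithm 2 or Algorithm 4.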

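June 2016 College English Test Band 4 (CET-4) Past Paper, Set 2

This paper and its answers are excerpted from the 巨微英语 (Juwei English) CET-4 past papers with sentence-by-sentence explanations. The listening transcripts are omitted.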

Part I WritingDirections:For this part, you are allowed 30 minutes to write a letter to express your thanks to one of your school teachers upon entering college. You should write at least 120 words but no more than 180 words.Part ⅡListening Comprehensionwill hear two or three questions. Both the news report and the questions will be spoken only once. After you hear a question, you must choose the best answer from the four choices marked A), B), C) and D). Then mark the corresponding letter on Answer Sheet 1with a single line through the centre.Questions 1 and 2 are based on the news report you have just heard.1. A)How college students can improve their sleep habits.B)Why sufficient sleep is important for college students.D)Whether the Spanish company could offer better service.4. A)Inefficient management. B)Poor ownership structure.C)Lack of innovation and competition.D)Lack of runway and terminal capacity.Questions 5 to 7 are based on the news report you have just heard.5. A)Report the nicotine content of their cigarettes.B)Set a limit to the production of their cigarettes.C)Take steps to reduce nicotine in their products.D)Study the effects of nicotine on young smokers.6. A)The biggest increase in nicotine content tended to be in brands young smokers like.B)Big tobacco companies were frank withtheir customers about the hazards of smoking.C)Brands which contain higher nicotine content were found to be much more popular.D)Tobacco companies refused to discuss the detailed nicotine content of their products.7. A)They promised to reduce the nicotine content in cigarettes.B)They have not fully realized the harmful effect of nicotine.C)They were not prepared to comment on the cigarette study.D)They will pay more attention to the quality of their products.Section BDirections: In this section, you will hear two long conversations. At the end of each conversation, you will hear four questions. Both the conversation and the questions will be spoken only once. 
After you hear a question, you must choose the best answer from the four choices marked A), B), C) and D). Then mark the corresponding letter on Answer Sheet 1 with a single line through the centre.

Questions 8 to 11 are based on the conversation you have just heard.
8. A) Indonesia.
B) Holland.
C) Sweden.
D) England.
9. A) Getting a coach who can offer real help.
B) Talking with her boyfriend in Dutch.
C) Learning a language where it is not spoken.
D) Acquiring the necessary ability to socialize.
10. A) Listening to language programs on the radio.
B) Trying to speak it as much as one can.
C) Making friends with native speakers.
D) Practicing reading aloud as often as possible.
11. A) It creates an environment for socializing.
B) It offers various courses with credit points.
C) It trains young people’s leadership abilities.
D) It provides opportunities for language practice.

Questions 12 to 15 are based on the conversation you have just heard.
12. A) The impact of engine design on road safety.
B) The role policemen play in traffic safety.
C) A sense of freedom driving gives.
D) Rules and regulations for driving.
13. A) Make cars with automatic control.
B) Make cars that have better brakes.
C) Make cars that are less powerful.
D) Make cars with higher standards.
14. A) They tend to drive responsibly.
B) They like to go at high speed.
C) They keep within speed limits.
D) They follow traffic rules closely.
15. A) It is a bad idea.
B) It is not useful.
C) It is as effective as speed bumps.
D) It should be combined with education.

Section C
Directions: In this section, you will hear three passages. At the end of each passage, you will hear three or four questions. Both the passage and the questions will be spoken only once. After you hear a question, you must choose the best answer from the four choices marked A), B), C) and D). Then mark the corresponding letter on Answer Sheet 1 with a single line through the centre.

Questions 16 to 18 are based on the passage you have just heard.
16. A) The card got damaged.
B) The card was found invalid.
C) The card reader failed to do the scanning.
D) The card reader broke down unexpectedly.
17. A) By covering the credit card with a layer of plastic.
B) By calling the credit card company for confirmation.
C) By seeking help from the card reader maker Verifone.
D) By typing the credit card number into the cash register.
18. A) Affect the sales of high-tech appliances.
B) Change the life style of many Americans.
C) Give birth to many new technological inventions.
D) Produce many low-tech fixes for high-tech failures.

Questions 19 to 21 are based on the passage you have just heard.
19. A) They are set by the dean of the graduate school.
B) They are determined by the advising board.
C) They leave much room for improvement.
D) They vary among different departments.
20. A) By consulting the examining committee.
B) By reading the Bulletin of Information.
C) By contacting the departmental office.
D) By visiting the university’s website.
21. A) They specify the number of credits students must earn.
B) They are harder to meet than those for undergraduates.
C) They have to be approved by the examining committee.
D) They are the same among various divisions of the university.

Questions 22 to 25 are based on the passage you have just heard.
22. A) Students majoring in nutrition.
B) Students in health classes.
C) Ph.D. candidates in dieting.
D) Middle and high school teachers.
23. A) Its overestimate of the effect of dieting.
B) Its mistaken conception of nutrition.
C) Its changing criteria for beauty.
D) Its overemphasis on thinness.
24. A) To illustrate her point that beauty is but skin deep.
B) To demonstrate the magic effect of dieting on women.
C) To explain how computer images can be misleading.
D) To prove that technology has impacted our culture.
25.
A) To persuade girls to stop dieting.
B) To promote her own concept of beauty.
C) To establish an emotional connection with students.
D) To help students rid themselves of bad living habits.

Section A
Directions: In this section, there is a passage with ten blanks. You are required to select one word for each blank from a list of choices given in a word bank following the passage. Read the passage through carefully before making your choices. Each choice in the bank is identified by a letter. Please mark the corresponding letter for each item on Answer Sheet 2 with a single line through the centre. You may not use any of the words in the bank more than once.

Contrary to popular belief, older people generally do not want to live with their children. Moreover, most adult children (26) every bit as much care and support to their aging parents as was the case in the “good old days”, and most older people do not feel (27).

About 80% of people 65 years and older have living children, and about 90% of them have (28) contact with their children. About 75% of elderly parents who don’t go to nursing homes live within 30 minutes of at least one of their children.

However, (29) having contact with children does not guarantee happiness in old age. In fact, some research has found that people who are most involved with their families have the lowest spirits. This research may be (30), however, as ill health often makes older people more (31) and thereby increases contact with family members. So it is more likely that poor health, not just family involvement, (32) spirits.

Increasingly, researchers have begun to look at the quality of relationships, rather than at the frequency of contact, between the elderly and their children. If parents and children share interests and values and agree on childrearing practices and religious (33), they are likely to enjoy each other’s company. Disagreements on such matters can (34) cause problems.
If parents are angered by their daughter’s divorce, dislike her new husband, and disapprove of how she is raising their grandchildren, (35) are that they are not going to enjoy her visits.

Section B
Directions: In this section, you are going to read a passage with ten statements attached to it. Each statement contains information given in one of the paragraphs. Identify the paragraph from which the information is derived. You may choose a paragraph more than once. Each paragraph is marked with a letter. Answer the questions by marking the corresponding letter on Answer Sheet 2.

Could Food Shortages Bring Down Civilization?

[A] For many years I have studied global agricultural, population, environmental and economic trends and their interactions. The combined effects of those trends and the political tensions they generate point to the breakdown of governments and societies. Yet I, too, have resisted the idea that food shortages could bring down not only individual governments but also our global civilization.

[B] I can no longer ignore that risk. Our continuing failure to deal with the environmental declines that are undermining the world food economy forces me to conclude that such a collapse is possible.

[C] As demand for food rises faster than supplies are growing, the resulting food-price inflation puts severe stress on the governments of many countries. Unable to buy grain or grow their own, hungry people take to the streets. Indeed, even before the steep climb in grain prices in 2008, the number of failing states was expanding. If the food situation continues to worsen, entire nations will break down at an ever increasing rate. In the 20th century the main threat to international security was superpower conflict; today it is failing states.

[D] States fail when national governments can no longer provide personal security, food security and basic social services such as education and health care.
When governments lose their control on power, law and order begin to disintegrate. After a point, countries can become so dangerous that food relief workers are no longer safe and their programs are halted. Failing states are of international concern because they are a source of terrorists, drugs, weapons and refugees (难民), threatening political stability everywhere.

[E] The surge in world grain prices in 2007 and 2008 – and the threat they pose to food security – has a different, more troubling quality than the increases of the past. During the second half of the 20th century, grain prices rose dramatically several times. In 1972, for instance, the Soviets, recognizing their poor harvest early, quietly cornered the world wheat market. As a result, wheat prices elsewhere more than doubled, pulling rice and corn prices up with them. But this and other price shocks were event-driven – drought in the Soviet Union, crop-shrinking heat in the U.S. Corn Belt. And the rises were short-lived: prices typically returned to normal with the next harvest.

[F] In contrast, the recent surge in world grain prices is trend-driven, making it unlikely to reverse without a reversal in the trends themselves. On the demand side, those trends include the ongoing addition of more than 70 million people a year, a growing number of people wanting to move up the food chain to consume highly grain-intensive meat products, and the massive diversion (转向) of U.S. grain to the production of bio-fuel.

[G] As incomes rise among low-income consumers, the potential for further grain consumption is huge. But that potential pales beside the never-ending demand for crop-based fuels. A fourth of this year’s U.S. grain harvest will go to fuel cars.

[H] What about supply? The three environmental trends – the shortage of fresh water, the loss of topsoil and the rising temperatures – are making it increasingly hard to expand the world’s grain supply fast enough to keep up with demand.
Of all those trends, however, the spread of water shortages poses the most immediate threat. The biggest challenge here is irrigation, which consumes 70% of the world’s fresh water. Millions of irrigation wells in many countries are now pumping water out of underground sources faster than rainfall can refill them. The result is falling water tables (地下水位) in countries with half the world’s people, including the three big grain producers – China, India and the U.S.

[I] As water tables have fallen and irrigation wells have gone dry, China’s wheat crop, the world’s largest, has declined by 8% since it peaked at 123 million tons in 1997. But water shortages are even more worrying in India. Millions of irrigation wells have significantly lowered water tables in almost every state.

[J] As the world’s food security falls to pieces, individual countries acting in their own self-interest are actually worsening the troubles of many. The trend began in 2007, when leading wheat-exporting countries such as Russia and Argentina limited or banned their exports, in hopes of increasing local food supplies and thereby bringing down domestic food prices. Vietnam banned its exports for several months for the same reason. Such moves may eliminate the fears of those living in the exporting countries, but they are creating panic in importing countries that must rely on what is then left for export.

[K] In response to those restrictions, grain-importing countries are trying to nail down long-term trade agreements that would lock up future grain supplies. Food-import anxiety is even leading to new efforts by food-importing countries to buy or lease farmland in other countries. In spite of such temporary measures, soaring food prices and spreading hunger in many other countries are beginning to break down the social order.

[L] Since the current world food shortage is trend-driven, the environmental trends that cause it must be reversed.
We must cut carbon emissions by 80% from their 2006 levels by 2020, stabilize the world’s population at eight billion by 2040, completely remove poverty, and restore forests and soils. There is nothing new about the four objectives. Indeed, we have made substantial progress in some parts of the world on at least one of these – the distribution of family-planning services and the associated shift to smaller families.

[M] For many in the development community, the four objectives were seen as positive, promoting development as long as they did not cost too much. Others saw them as politically correct and morally appropriate. Now a third and far more significant motivation presents itself: meeting these goals may be necessary to prevent the collapse of our civilization. Yet the cost we project for saving civilization would amount to less than $200 billion a year, 1/6 of current global military spending. In effect, our plan is the new security budget.

36. The more recent steep climb in grain prices partly results from the fact that more and more people want to consume meat products.
37. Social order is breaking down in many countries because of food shortages.
38. Rather than superpower conflict, countries unable to cope with food shortages now constitute the main threat to world security.
39. Some parts of the world have seen successful implementation of family planning.
40. The author has come to agree that food shortages could ultimately lead to the collapse of world civilization.
41. Increasing water shortages prove to be the biggest obstacle to boosting the world’s grain production.
42. The cost for saving our civilization would be considerably less than the world’s current military spending.
43. To lower domestic food prices, some countries limited or stopped their grain exports.
44. Environmental problems must be solved to ease the current global food shortage.
45.
A quarter of this year’s American grain harvest will be used to produce bio-fuel for cars.

Section C
Directions: There are 2 passages in this section. Each passage is followed by some questions or unfinished statements. For each of them there are four choices marked A), B), C) and D). You should decide on the best choice and mark the corresponding letter on Answer Sheet 2 with a single line through the centre.

Passage One
Questions 46 to 50 are based on the following passage.

Declining mental function is often seen as a problem of old age, but certain aspects of brain function actually begin their decline in young adulthood, a new study suggests.

The study, which followed more than 2,000 healthy adults between the ages of 18 and 60, found that certain mental functions – including measures of abstract reasoning, mental speed and puzzle-solving – started to dull as early as age 27.

Dips in memory, meanwhile, generally became apparent around age 37.

On the other hand, indicators of a person’s accumulated knowledge – like performance on tests of vocabulary and general knowledge – kept improving with age, according to findings published in the journal Neurobiology of Aging.

The results do not mean that young adults need to start worrying about their memories.
Most people’s minds function at a high level even in their later years, according to researcher Timothy Salthouse.

“These patterns suggest that some types of mental flexibility decrease relatively early in adulthood, but that the amount of knowledge one has, and the effectiveness of integrating it with one’s abilities, may increase throughout all of adulthood if there are no diseases,” Salthouse said in a news release.

The study included healthy, educated adults who took standard tests of memory, reasoning and perception at the outset and at some point over the next seven years.

The tests are designed to detect subtle (细微的) changes in mental function, and involve solving puzzles, recalling words and details from stories, and identifying patterns in collections of letters and symbols.

In general, Salthouse and his colleagues found, certain aspects of cognition (认知能力) generally started to decline in the late 20s to 30s.

The findings shed light on normal age-related changes in mental function, which could aid in understanding the process of dementia (痴呆), according to the researchers.

“By following individuals over time,” Salthouse said, “we gain insight in cognition changes, and may possibly discover ways to slow the rate of decline.”

The researchers are currently analyzing the study participants’ health and lifestyle to see which factors might influence age-related cognitive changes.

46. What is the common view of mental function?
A) It varies from person to person.
B) It weakens in one’s later years.
C) It gradually expands with age.
D) It indicates one’s health condition.
47. What does the new study find about mental functions?
A) Some diseases inevitably lead to their decline.
B) They reach a peak at the age of 20 for most people.
C) They are closely related to physical and mental exercise.
D) Some of them begin to decline when people are still young.
48.
What does Timothy Salthouse say about people’s minds in most cases?
A) They tend to decline in people’s later years.
B) Their flexibility determines one’s abilities.
C) They function quite well even in old age.
D) Their functioning is still a puzzle to be solved.
49. Although people’s minds may function less flexibly as they age, they _____.
A) may be better at solving puzzles
B) can memorize things with more ease
C) may have greater facility in abstract reasoning
D) can put what they have learnt into more effective use
50. According to Salthouse, their study may help us _____.
A) find ways to slow down our mental decline
B) find ways to boost our memories
C) understand the complex process of mental functioning
D) understand the relation between physical and mental health

Passage Two
Questions 51 to 55 are based on the following passage.

The most important thing in the news last week was the rising discussion in Nashville about the educational needs of children. The shorthand (简写) educators use for this is “pre-K” – meaning instruction before kindergarten – and the big idea is to prepare 4-year-olds and even younger kids to be ready to succeed on their K-12 journey.

But it gets complicated. The concept has multiple forms, and scholars and policymakers argue about the shape, scope and cost of the ideal program.

The federal Head Start program, launched 50 years ago, has served more than 30 million children. It was based on concepts developed at Vanderbilt University’s Peabody College by Susan Gray, the legendary pioneer in early childhood education research.

A new Peabody study of the Tennessee Voluntary Pre-K program reports that pre-K works, but the gains are not sustained through the third grade. It seems to me this highlights quality issues in elementary schools more than pre-K, and indicates longer-term success must connect pre-K with all the other issues related to educating a child.

Pre-K is controversial. Some critics say it is a luxury and shouldn’t be free to families able to pay.
Pre-K advocates insist it is proven and will succeed if integrated with the rest of the child’s schooling. I lean toward the latter view.

This is, in any case, the right conversation to be having now as Mayor Megan Barry takes office. She was the first candidate to speak out for strong pre-K programming. The important thing is for all of us to keep in mind the real goal and the longer, bigger picture.

The weight of the evidence is on the side of pre-K that early intervention (干预) works. What government has not yet found is the political will to put that understanding into full practice with a sequence of smart schooling that provides the early foundation.

For this purpose, our schools need both the talent and the organization to educate each child who arrives at the schoolhouse door. Some show up ready, but many do not at this critical time when young brains are developing rapidly.

51. What does the author say about pre-kindergarten education?
A) It should cater to the needs of individual children.
B) It is essential to a person’s future academic success.
C) Scholars and policymakers have different opinions about it.
D) Parents regard it as the first phase of children’s development.
52. What does the new Peabody study find?
A) Pre-K achievements usually do not last long.
B) The third grade marks a new phase of learning.
C) The third grade is critical to children’s development.
D) Quality has not been the top concern of pre-K programs.
53. When does the author think pre-K works the best?
A) When it is accessible to kids of all families.
B) When it is made part of kids’ education.
C) When it is no longer considered a luxury.
D) When it is made fun and enjoyable to kids.
54. What do we learn about Mayor Megan Barry?
A) She knows the real goal of education.
B) She is a mayor of insight and vision.
C) She has once run a pre-K program.
D) She is a firm supporter of pre-K.
55. What does the author think is critical to kids’ education?
A) Teaching method.
B) Kids’ interest.
C) Early intervention.
D) Parents’ involvement.

Directions: For this part, you are allowed 30 minutes to translate a passage from Chinese into English. You should write your answer on Answer Sheet 2.

在山东省潍坊市,风筝不仅仅是玩具,而且还是这座城市文化的标志。

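Senior 2 English: 50 Multiple-Choice Questions on Machine Learning

1. Machine learning is a field of study that focuses on the development of algorithms that can learn from ___.
A. data
B. experience
C. intuition
D. opinion
Answer: A.

This question tests the basic concept of machine learning.

Machine learning learns from data, so option A, “data”, fits the question.

Option B, “experience”, usually refers to human experience; machine learning relies mainly on data rather than on human experience.

Option C, “intuition”, is wrong because machine learning is based on data and algorithms, not on intuition.

Option D, “opinion”, is wrong because machine learning does not learn from opinions.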

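2. The main goal of machine learning is to ___.
A. predict future events
B. create new algorithms
C. solve complex equations
D. store large amounts of data
Answer: A.

The main goal of machine learning is to predict future events from existing data, so option A is correct.

Option B, “create new algorithms”, is not the main goal of machine learning; research may produce new algorithms, but that is not its main purpose.

Option C, “solve complex equations”, is a task for fields such as mathematics, not the main goal of machine learning.

Option D, “store large amounts of data”, is only data storage, not a goal of machine learning.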
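The idea behind the answer to question 2, learning from existing data in order to predict something not yet observed, can be illustrated with a very small sketch. It fits a straight line to a few data points by ordinary least squares and uses the line to predict the next value. The data (study hours and test scores) and the function name `fit_line` are made up for illustration and do not come from the quiz.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept to the points by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance of x and y divided by the variance of x.
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical training data: hours studied and the resulting test score.
hours = [1, 2, 3, 4]
scores = [52, 54, 56, 58]

slope, intercept = fit_line(hours, scores)

# "Learning from data" gave us the line; "prediction" applies it to an unseen input.
predicted_score = slope * 5 + intercept
print(f"Predicted score after 5 hours: {predicted_score:.1f}")
```

Real machine learning models are far more elaborate, but the two stages shown here (fit to known data, then predict for new input) are the same.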

3. Machine learning algorithms can be used in ___.
A. image recognition
B. math calculations
C. physical experiments
D. literary analysis
Answer: A.

Machine learning algorithms can be used for image recognition, so option A is correct.

Filtering Frequent Spatial Patterns with Qualitative Spatial Reasoning

Vania Bogorny (a), Bart Moelans (a), Luis Otavio Alvares (a, b)
(a) Theoretical Computer Science Group, Hasselt University & Transnational University of Limburg, Belgium
(b) Instituto de Informática – Universidade Federal do Rio Grande do Sul, Brazil

The full version of this paper appeared in the 2007 IEEE Workshop Proceedings of the 23rd International Conference on Data Engineering (ICDE 2007) [6].

1 Introduction

The huge number of patterns generated by frequent pattern mining algorithms has been extensively addressed in the last few years. Different measures have been proposed to evaluate how interesting association rules are. However, according to [3] it is difficult to come up with a single metric that quantifies the “interestingness” or “goodness” of an association rule. In most approaches, non-interesting rules are eliminated during the rule generation, i.e., a posteriori, when frequent itemsets have already been generated.

In spatial frequent pattern mining the number of non-interesting association rules can increase even further than in transactional pattern mining. Geographic data have semantic dependencies and spatial properties which in many cases are well known and non-interesting for data mining. In the geographic domain, well-known geographic dependencies (e.g., is gasStation → touches street) are not the only source of patterns without novel and useful knowledge. Different spatial predicates may contain the same geographic object type.

In transactional frequent pattern mining, items have binary values and either participate or do not participate in a transaction. For instance, the item milk is either present or not in a transaction t. In spatial frequent pattern mining the same spatial object (item) may have different qualitative spatial relationships with the target feature (transaction), and as a consequence participate more than once in the same transaction t. For instance, a city C may contain an instance i1 of river, be crossed by an instance i2 of river, or even touch an instance i3 of river.

Different spatial relationships with the same geographic object type will generate associations such as contains River → touches River when data are considered at general granularity levels [8]. A city does not touch a river because it also contains a river, so this kind of rule is non-interesting for most applications. An interesting association rule would be the combination of either of these two predicates with a different geographic object type or some non-spatial attribute. For instance: contains River → WaterPollution = high or touches River → exportationRate = high.

In [5], Apriori-KC is proposed. This method makes some changes to Apriori [1] to eliminate well-known geographic patterns using background knowledge. In [4] this method is extended with an additional step in which not only are frequent itemsets reduced, but the input space is also reduced as much as possible, since this is still the most efficient way of pruning frequent patterns. In [7] the closed frequent pattern mining approach is applied to the geographic domain, eliminating both well-known dependencies and redundant frequent itemsets.

In this paper we extend the method presented in [5] to reduce the number of non-interesting spatial patterns using qualitative spatial reasoning. Not only the data but also its semantics is taken into account, and frequent patterns that contain the same feature type are removed a priori.

The method proposed in this paper is more effective and efficient than most other methods which perform pruning after the rule generation, since our method exploits the anti-monotone constraint of Apriori [1] and prunes non-interesting patterns during the frequent set generation. Other transactional pattern mining approaches such as [2, 9] remove redundant frequent patterns and association rules by exploiting support and confidence constraints, while our method is independent of such thresholds. In these approaches “redundant” rules are eliminated by frequent itemset pruning, but “non-interesting” and “meaningless” rules are still generated. Figure 1(a) shows a clear reduction in the number of frequent patterns, and Figure 1(b) confirms the efficiency of the method.

Figure 1: Experiments on real data. (a) Frequent geographic patterns with Apriori, Apriori-KC, and Apriori-KC+. (b) Computational time to generate frequent geographic patterns with Apriori, Apriori-KC, and Apriori-KC+.

Acknowledgements

This research has been funded by the Brazilian agency CAPES, the European Union (FP6-IST-FET programme, Project n. FP6-14915, GeoPKDD: Geographic Privacy-Aware Knowledge Discovery and Delivery), and by the Research Foundation Flanders (FWO-Vlaanderen), Research Project G.0344.05.

References

[1] Rakesh Agrawal and Ramakrishnan Srikant. Fast algorithms for mining association rules in large databases. In VLDB, pages 487–499, 1994.
[2] Yves Bastide, Nicolas Pasquier, Rafik Taouil, Gerd Stumme, and Lotfi Lakhal. Mining minimal non-redundant association rules using frequent closed itemsets. In CL ’00: Proceedings of the First International Conference on Computational Logic, pages 972–986, London, UK, 2000. Springer-Verlag.
[3] Roberto J. Bayardo and Rakesh Agrawal. Mining the most interesting rules. In KDD, pages 145–154, 1999.
[4] Vania Bogorny, Sandro Camargo, Paulo Engel, and Luis Otavio Alvares. Mining frequent geographic patterns with knowledge constraints. In ACM-GIS, pages 139–146. ACM, 2006.
[5] Vania Bogorny, Sandro Camargo, Paulo Engel, and Luis Otavio Alvares. Towards elimination of well known patterns in spatial association rule mining. In IS, pages 532–537. IEEE Computer Society, 2006.
[6] Vania Bogorny, Bart Moelans, and Luis Otavio Alvares. Filtering frequent spatial patterns with qualitative spatial reasoning. In Proceedings of the 23rd International Conference on Data Engineering Workshops, ICDE 2007, 15–16 & 20 April 2007, Istanbul, Turkey, pages 527–535, 2007.
[7] Vania Bogorny, Joao Valiati, Sandro Camargo, Paulo Engel, Bart Kuijpers, and Luis Otavio Alvares. Mining maximal generalized frequent geographic patterns with knowledge constraints. In ICDM, pages 813–817. IEEE Computer Society, 2006.
[8] Jiawei Han. Mining knowledge at multiple concept levels. In CIKM, pages 19–24. ACM, 1995.
[9] Mohammed J. Zaki. Generating non-redundant association rules. In KDD ’00: Proceedings of the Sixth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pages 34–43, New York, NY, USA, 2000. ACM Press.
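The pruning idea described in the introduction above, dropping any candidate itemset in which the same feature type appears under two different spatial relationships, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' Apriori-KC+ implementation: the data, the `(relationship, feature_type)` encoding of predicates, and the names `same_feature_type` and `frequent_itemsets` are all hypothetical. Because any superset of an itemset with a repeated feature type also repeats it, the filter is anti-monotone and can be applied during candidate generation.

```python
from itertools import combinations

# Hypothetical data: each transaction (e.g. a city) is a set of spatial
# predicates encoded as (relationship, feature_type) pairs.
transactions = [
    {("contains", "River"), ("touches", "River"), ("contains", "Slum")},
    {("contains", "River"), ("touches", "River"), ("contains", "Slum")},
    {("contains", "River"), ("contains", "Slum")},
    {("touches", "River"), ("contains", "Slum")},
]


def same_feature_type(itemset):
    """True if two predicates in the itemset refer to the same feature type."""
    features = [feature for _, feature in itemset]
    return len(features) != len(set(features))


def frequent_itemsets(transactions, min_support, prune_same_feature=True):
    """Level-wise (Apriori-style) search; returns {itemset: support count}."""
    items = sorted({item for t in transactions for item in t})
    level = [frozenset([item]) for item in items]
    result = {}
    k = 1
    while level:
        # Count support of each candidate and keep the frequent ones.
        counts = {c: sum(1 for t in transactions if c <= t) for c in level}
        frequent = {c: n for c, n in counts.items() if n >= min_support}
        result.update(frequent)
        k += 1
        # Join step: unions of two frequent (k-1)-itemsets that have k items.
        candidates = {a | b for a, b in combinations(frequent, 2) if len(a | b) == k}
        # Standard Apriori prune: every (k-1)-subset must itself be frequent.
        candidates = {
            c for c in candidates
            if all(frozenset(s) in frequent for s in combinations(c, k - 1))
        }
        # Semantic prune (assumed form): drop candidates that repeat a feature type.
        if prune_same_feature:
            candidates = {c for c in candidates if not same_feature_type(c)}
        level = list(candidates)
    return result


pruned = frequent_itemsets(transactions, min_support=2)
unpruned = frequent_itemsets(transactions, min_support=2, prune_same_feature=False)
print(len(pruned), "itemsets with the same-feature filter,", len(unpruned), "without")
```

On this toy data the filter removes the pair {contains River, touches River} and, as a consequence, every larger itemset built on it, without consulting any support or confidence threshold for that decision.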

Academic English Writing: Zhihuishu (智慧树知到) End-of-Chapter Answers, Second Half of 2023, Tianjin Foreign Studies University

Chapter 1 Test

1. What steps are involved in writing? ( )
A: Prewriting
B: Revising and editing
C: Writing the first draft
D: Rewriting
Answer: A, B, C

2. The objectives of the course include ( )
A: To improve students’ thinking ability via choosing a topic, sourcing information, writing different parts of a project paper including introduction, literature review, methods, findings, discussions and conclusion
B: To improve students’ writing and reading proficiency in English
C: To improve students’ capability of carrying out research by going through the process of writing a project paper
D: To improve students’ cultural competence through learning western ways of thinking in English writing
Answer: A, B, C, D

3. In freewriting, the writer needs to make everything perfect. ( )
A: False
B: True
Answer: False

4. The relationship between reading and writing can be described as ( )
A: When you start learning writing, you will read differently.
B: Reading is the input, while writing is the output of language.
C: When you start learning writing, you will improve the awareness of accumulating language, information and idea for various topics, which will consequently change the way you read.
D: Reading and writing are reciprocal activities; the outcome of a reading activity can serve as input for writing, and writing can lead a student to further reading resources.
Answer: A, B, C, D

5. The functions of writing include ( )
A: The discipline of writing will strengthen your skills as a reader and listener
B: Writing will make you a strong thinker and writing allows you to organize your thoughts in clear and logical ways
C: Writing encourages you to seek worthwhile questions
D: Writing helps you refine and enrich your ideas based on feedback from readers
Answer: A, B, C, D

Chapter 2 Test

1. A thesis statement should be one single sentence, including only one idea. ( )
A: True
B: False
Answer: True

2. An essay should include at least three specific details to be well-developed. ( )
A: True
B: False
Answer: False

3. The topic sentence for each body paragraph should be fairly specific because each body paragraph deals with only one of the many things stated in the thesis statement. ( )
A: False
B: True
Answer: True

4. Living with my ex-roommate was unbearable. First, she thought everything she owned was the best. Secondly, she possessed numerous filthy habits. Finally, she constantly exhibited immature behavior.
Read this paragraph and decide which statement is NOT TRUE? ( )

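December 2018 College English Test Band 6 (CET-6): Answers and Detailed Explanations (Set 3)

Part I Writing

The topic of this essay is “how to balance academic study and extracurricular activities”, which is closely tied to candidates’ own student life.

In terms of structure, candidates can open by introducing the topic, then offer their own suggestions on the issue, and finally sum up the essay by restating the argument or developing the theme further.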

旳厝作提纲'—、引出话题:适当地参加课⼣⼘活动不仅能促进学习,⽽且能提⾼综合能⼒(promote academic studyenhance our overallactivities more rationally)如何平衡学业和课外活动许多学⽣和他们的⽗母担⼼花时间参加课外活动会妨碍学习,这是可以理解的。

但在我看来,只要我们能在两者之间取得平衡,适当地参加课外活动不仅能促进学习'⽽且能提⾼我们的综合能⼒。

⾸先,合理安排课业并⾼效地完成是明智之举,因为只有这样,我们才能分配出额外的时间和精⼒参加课外201⾉12/6(第3套)梦想不会辜负每⼀个努⼒的⼈活珈并且不会对我们的学习产⽣负⾯影响。

其次,应该只将时间花在我们想参加的活动上,因为它们会给我们带来乐趣,并在⼀定程度上缓解学习压⼒。

Third, we can also join some clubs, where we can meet like-minded people and improve our abilities in a way that directly benefits our studies.

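In short, only by studying more efficiently and arranging our leisure activities more rationally can we achieve a true balance between academic study and extracurricular activities.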

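Topic vocabulary

excessive: too much; beyond a reasonable degree
in hot water: in trouble
under the spotlight: receiving a great deal of attention
disapprove of: to not approve of
for the sake of...: for the purpose or benefit of...
not to mention...: let alone...
trigger: to set off; to cause
transform...to...: to change ... into ...
pave the way for...: to prepare the ground for...

Part III Reading Comprehension

Section A

In what may be the craziest news story I have ever written, I reported on an experiment in which scientists painted eyes on the rumps of cattle, a (26) advance in livestock protection.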

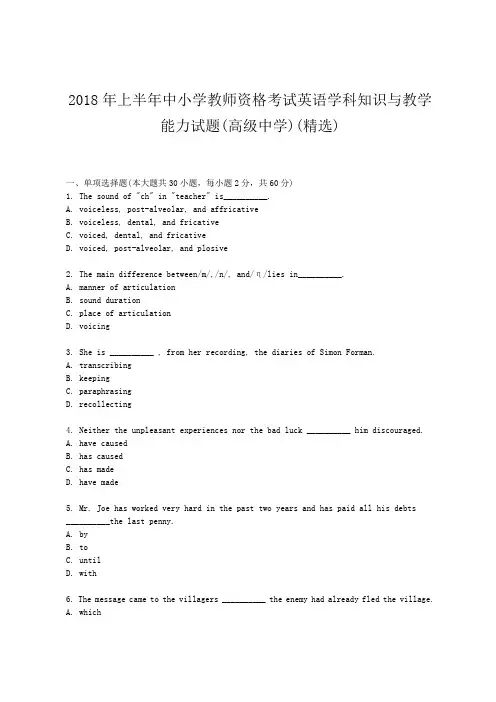

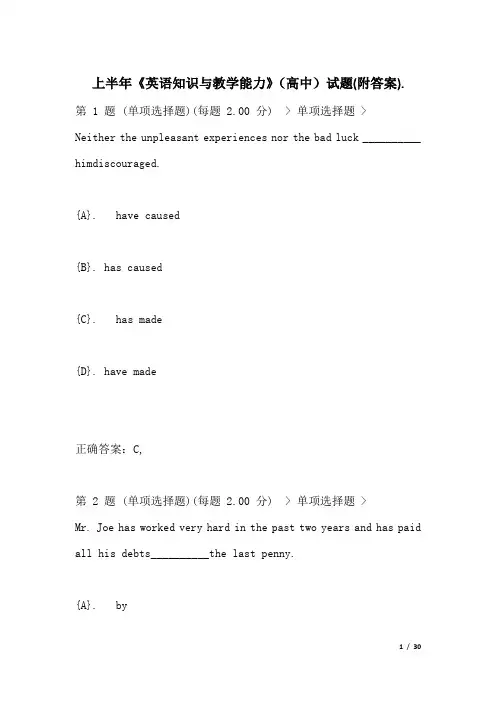

First Half of 2018 Primary and Secondary School Teacher Qualification Examination: English Subject Knowledge and Teaching Ability (Senior High School) (Selected)

I. Single-Choice Questions (30 questions, 2 points each, 60 points in total)

1. The sound of "ch" in "teacher" is __________.
A. voiceless, post-alveolar, and affricative
B. voiceless, dental, and fricative
C. voiced, dental, and fricative
D. voiced, post-alveolar, and plosive

2. The main difference between /m/, /n/, and /ŋ/ lies in __________.
A. manner of articulation
B. sound duration
C. place of articulation
D. voicing

3. She is __________, from her recording, the diaries of Simon Forman.
A. transcribing
B. keeping
C. paraphrasing
D. recollecting

4. Neither the unpleasant experiences nor the bad luck __________ him discouraged.
A. have caused
B. has caused
C. has made
D. have made

5. Mr. Joe has worked very hard in the past two years and has paid all his debts __________ the last penny.
A. by
B. to
C. until
D. with

6. The message came to the villagers __________ the enemy had already fled the village.
A. which
B. who
C. that
D. where

7. We must improve the farming method __________ we may get high yields.
A. in case
B. in order that
C. now that
D. even if

8. --Do you mind if I smoke here?
--__________.
A. Yes, I don't
B. Yes, you may
C. No, not at all
D. Yes, I won't

9. What is the main rhetorical device used in "The plowman homeward plods his weary way."?
A. Metaphor.
B. Metonymy.
C. Synecdoche.
D. Transferred epithet.

10. --A: Let's go to the movie tonight.
--B: I'd like to, but I have to study for an exam.
In the conversation above, B's decline of the proposal is categorized as a kind of __________.
A. illocutionary act
B. perlocutionary act
C. propositional condition
D. sincerity condition

11. Which of the following activities is NOT typical of the Task-Based Language Teaching method?
A. Problem-solving activities.
B. Opinion exchange activities.
C. Information-gap activities.
D. Pattern practice activities.

12. If a teacher shows students how to do an activity before they start doing it, he/she is using the technique of __________.
A. presentation
B. demonstration
C. elicitation
D. evaluation

13. When a teacher asks students to discuss how a text is organized, he/she is most likely to help them __________.
A. evaluate the content of the text
B. analyze the structure of the passage
C. understand the intention of the writer
D. distinguish the facts from the opinions

14. Which of the following practices can encourage students to read an article critically?
A. Evaluating its point of view.
B. Finding out the facts.
C. Finding detailed information.
D. Doing translation exercises.

15. Which of the following is a display question used by teachers in class?
A. If you were the girl in the story, would you behave like her?
B. Do you like this story Girl the Thumb, why or why not?
C. Do you agree that the girl was a kind-hearted person?
D. What happened to the girl at the end of the story?

16. Which of the following would a teacher encourage students to do in order to develop their cognitive strategies?
A. To make a study plan.
B. To summarize a story.
C. To read a text aloud.
D. To do pattern drills.

17. Which of the following exercises would a teacher most probably use if he/she wants to help students develop discourse competence?
A. Paraphrasing sentences.
B. Translating sentences.
C. Unscrambling sentences.
D. Transforming sentences.

18. The advantages of pair and group work include all of the following EXCEPT __________.
A. interaction with peers
B. variety and dynamism
C. an increase in language practice
D. opportunities to guarantee accuracy

19. Which of the following should a teacher avoid when his/her focus is on developing students' ability to use words appropriately?
A. Teaching both the spoken and written form.
B. Teaching words in context and giving examples.
C. Presenting the form, meaning, and use of a word.
D. Asking students to memorize bilingual word lists.

20. Which of the following practices is most likely to encourage students' cooperation in learning?
A. Doing a project.
B. Having a dictation.
C. Taking a test.
D. Copying a text.

Read Passage 1 and answer Questions 21-25.