Teleoperation

- 格式:pdf

- 大小:250.53 KB

- 文档页数:16

Teleoperation Remote-Controlled Robots Teleoperation of remote-controlled robots has become an integral part of various industries and sectors, including manufacturing, healthcare, and space exploration. This technology allows operators to control robots from a distance, enabling them to perform tasks in hazardous or hard-to-reach environments. While teleoperation offers numerous benefits, it also presents several challenges and limitations that need to be addressed.One of the primary advantages of teleoperated robots is their ability to access and operate in environments that are unsafe for humans. For example, in the field of disaster response, teleoperated robots can be deployed to search for survivors in collapsed buildings or navigate through hazardous materials. Similarly, in the healthcare sector, teleoperated surgical robots enable surgeons to perform minimally invasive procedures with precision and control. This capability not only enhances the safety of the operation but also reduces the risk of complications for the patient.Moreover, teleoperation allows for increased efficiency and productivity in various industrial processes. For instance, in manufacturing plants, remote-controlled robots can perform repetitive or physically demanding tasks with a high degree of accuracy, leading to improved production output and cost savings. Additionally, in the field of space exploration, teleoperated rovers and probes enable scientists to conduct research and gather data from distant planets and celestial bodies, expanding our understanding of the universe.Despite these benefits, teleoperation also presents several challenges, particularly in the areas of communication and feedback. The reliance on wireless communication for controlling remote robots introduces the risk of signal interference or latency, which can affect the real-time responsiveness of the robot. This can be particularly problematic in critical applications such as surgery or emergency response, where any delay or loss of control can have serious consequences. Therefore, ensuring robust and reliable communication systems is essential for the successful teleoperation of robots.Another significant challenge in teleoperation is the limited sensory feedback available to the operator. While modern teleoperated systems may provide visual and auditoryfeedback, they often lack the tactile and proprioceptive feedback that humans rely on for dexterous manipulation and spatial awareness. This limitation can make certain tasks more challenging for the operator, especially in complex and dynamic environments. Addressing this challenge requires the development of advanced haptic feedback systems that can simulate the sense of touch and enable operators to interact more intuitively with remote-controlled robots.Furthermore, the integration of artificial intelligence (AI) and autonomous capabilities into teleoperated robots raises ethical and safety concerns. As robots become increasingly autonomous and capable of making decisions without direct human input, questions arise regarding accountability and liability in the event of accidents or errors. Additionally, ensuring the ethical and responsible use of teleoperated robots, especially in sensitive areas such as healthcare and security, requires careful consideration of privacy, consent, and human oversight.In conclusion, teleoperation of remote-controlled robots offers significant advantages in terms of safety, efficiency, and access to remote environments. However, it also presents challenges related to communication, feedback, and ethical considerations that need to be carefully addressed. As technology continues to advance, addressing these challenges will be crucial in realizing the full potential of teleoperated robots across various industries and applications.。

Proceedings of the 35th International Symposium on Robotics (ISR2004), Paris-Nord Villepinte, France, March 23-26, 2004Robotics Research toward Next-GenerationHuman-Robot Networked SystemsSusumu TACHI, Ph.D.Professor, The University of TokyoDepartment of Information Physics & Computing7-3-1 Hongo, Bunkyo-ku, Tokyo 113-8656 Japanhttp://www.star.t.u-tokyo.ac.jp/Abstract. Research on human-robot systems started as teleoperation and cybernetic prostheses in the 1940's. Teleoperation developed into telerobotics, networked robotics, and telexistence. Telexistence is fundamentally a concept named for the technology that enables a human being to have a real-time sensation of being at a place other than where he or she actually exists, and to interact with the remote environment, which may be real, virtual, or a combination of both. It also refers to an advanced type of teleoperation system that enables an operator at the control to perform remote tasks dexterously with the feeling of existing in a surrogate robot. Although conventional telexistence systems provide an operator the real-time sensation of being in a remote environment, persons in the remote environment have only the sensation that a surrogate robot is present, not the operator. Mutual telexistence aims to solve this problem so that the existence of the operator is apparent to persons in the remote environment by providing mutual sensations of presence. This enables humans to be seemingly everywhere at the same time, i.e., to be virtually ubiquitous. This paper reviews the generation of robots and the prospects of future networked robotics.Keywords: generations of robots, networked robotics, real-time remote robotics (R-Cubed), humanoid robotics project (HRP), telexistence, mutual telexistence, telepresence, virtual reality, retro-reflective projection technology (RPT)1. IntroductionOne of humanity's most ancient dreams has been to have a substitute that can undertake those jobs that are dangerous, difficult or tedious. In primeval times, the dream was realized by utilizing animals, and unfortunately, by using fellowmen as slaves. In some countries, such conditions continued until nearly a hundred years ago.With the advent of robotics and automation technology, and also the progress of computers and electronics in recent years, it has become possible to let automated machinery replace human labor. Robots are expected to replace human work as the only tolerable slave from a humanitarian point of view. The “Human Use of Human Beings” of N. Wiener will be truly realized only when humans make robots to replace them for adverse tasks, supporting an important end in the development and safety of our modern society.The application fields are not limited only to ordinary manufacturing in secondary industry, but have been expanding gradually to mining, civil engineering and construction in the same secondary industry, as well as to agriculture, forestry and fisheries in primary industry.They are expanding also to retailing, wholesaling, finance, insurance, real estate, warehousing, transportation, communications, nuclear power, space, and ocean development, to social work, such as medical treatment, welfare and sanitation, and to disaster control, leisure, household, and other tertiary industry-related fields.Another dream of human beings has been to amplify human muscle power and sensing capability by using machines while reserving human dexterity with a sensation of direct operation. Also it has long been a desire of human beings to project themselves into a remote environment, that is, to have a sensation of being present or existing at the same time in a different place other than the place they really exist, i.e., to become virtually ubiquitous.This dream is now on the way to accomplishment using robots as surrogates of ourselves over networks through technologies such as virtual reality, augmented reality, wearable systems and ubiquitous computing.As this realization progresses our relations with the robot are becoming more and more important. These are called human-robot systems, human-robot interfaces, or human-robot communications, and are also referred to as teleoperation, telerobotics, networked robotics, r-cubed (real-time remoteS.Tachirobotics) or telexistence when robots are remotely placed. These are some of the most undeveloped areas despite being among the most important in robot technology.An example of the human-robot cooperation system, which will play an increasingly important role in the highly networked society of today and the future, as well as topics such as virtual reality and augmented realty for realizing such a system will be presented and intensively discussed.2. Generations of RobotsSince the latter half of the 1960’s, robots have been brought from the world of fiction to the practical world, and the development of the robot is characterized by generations, as in the case of the computer.With the rapid progress of science and technology after World War II, the robot, which had been only a dream, came to realize some human or animal functions, although it had a different shape. V ersatran and Unimate were the first robots made commercially available in 1960, and were introduced to Japan in 1967. They are called industrial robots and can be said to be the First Generation of robots finding practical use.This is considered to have resulted from a combination of two large areas of development after World War II: hardware configuration and control technology for a remote operational type mechanical hand (or manipulator), which had been under research and development for use in the hot radioactive cell of a nuclear reactor, and automation technology for automated machinery or NC machine tools.The term “industrial robot” is said to have originated under the title “Programmed Article Transfer,” which G. C. Devol applied for registration in 1954 and which was registered in 1961 in the United States. It has come into wide usage since the American Mental Market, a U.S. journal, used the expression in 1960. After passing through infancy in the latter half of the 1960’s, the industrial robot reached the age of practical use in the 1970’s.Thus the robot entered an age of prevalence in anticipation of a rapid increase in demand. That is why 1980 is called "the first year of the prevalence of the industrial robot." From a technical point of view, however, the first generation robot that found wide use is a kind of repetition machine, which plays back repeatedly its position and posture instructed in an embedding process before commencement of operation.In essence, it is a composite system of technology based on control techniques for various automated machines and NC machine tools, and design and control techniques of manipulators with multiple degrees of freedom. Naturally, the application area is limited. These robots can be most effectively used in manufacturing processes in secondary industry, especially in material handling, painting, spot welding, etc.In other areas, such as arc welding and assembling, it is necessary to vary actions and to better understand human instructions by using not only knowledge from within, like for the First Generation Robot, but also to acquire external information with sensors. A device that could change its actions according to the situation using a sensor is the so-called Second-Generation sensor-based adaptive robot. It came to prevail gradually in the 1970’s.The non-manufacturing areas of primary industry (agriculture, fisheries, and forestry), secondary industry (mining and construction), and tertiary industry (security and inspection) had so far been excluded from mechanization and automatization, as the older type First and Second Generation robots could not operate in environments that were dangerous and unregulated or unstructured. However, harsh and hazardous environments such as nuclear power plants, deep oceans, and areas affected with natural disasters, are where robots are needed the most as substitutes to humans who risk their lives working there. The Third Generation Robot was proposed to answer these problems.The key to the development of the Third Generation Robot was to figure out a way to enable the robot to work in an environment that was not maintained or structured. The First and Second Generation Robots possess the data of the maintained environment. This means that humans have a grasp of the entire scope of data concerning the environment. This is called the “structured environment.” The factory where first and second generation robots work is an example of the structured environment. All the information concerning the structure of the factory, such as where passages are and how things are arranged, is clear. One can also change the environment to accommodate the robot. For example, objects can be rearranged to where the robot's sensor can recognize them easily.However, there are structured environments that cannot be altered so easily. For example, it is not possible to change the environment in places such as the reactor of a nuclear power plant, objects in the ocean, and areas affected by disasters. Even with full knowledge about the environment, one cannot alter the environment to accommodate robots. In many cases, one cannot determine the vantage points and lighting. Furthermore, one can encounter an “unstructured environment” where humans do not possess accurate data. Nature is also full of environments where humans are totally disoriented.Proceedings of the 35th International Symposium on Robotics (ISR2004), Paris-Nord Villepinte, France, March 23-26, 2004In the development of the Third Generation Robot, one focused on the structuralization of the environment based on available information. Robots conduct their work automatically once the environment was structured, and worked under the direction of humans in an environment that was not structured. This system, called the supervisory controlled autonomous mobile robot system, was the major paradigm of the Third Generation Robot.Thus the Third Generation Robot was able to work in places where humans possessed basic data of the environment but were unable to alter the environment. These robots are engaged in security maintenance in such uncontrollable environments, and could deal with unpredictable events with the help of humans.In Japan, between 1983 and 1991, the Ministry of International Trade and Industry (now Ministry of Economy, Trade and Industry) promoted the research and development of a National Large-Scale Project under this paradigm called “Advanced Robot Technology in Hazardous Environments”. Telexistence played an important role in the paradigm of the Third Generation robots.Fig. 1 Generations of Robots.3. TelexistenceTelexistence (tel-existence) is a technology that enables us to control remote objects and communicate with others in a remote environment with a real-time sensation of presence by using surrogate robots, remote / local computers and cybernetic human interfaces. This concept has been expanded to include the projection of ourselves into computer-generated virtual environments, and also the use of a virtual environment for theaugmentation of the real environment. The concept of telexistence was proposed and patented in Japan in 1980, and became the fundamental guiding principle of the eight-year Japanese National Large Scale Project called "Advanced Robot Technology in Hazardous Environments," which was initiated in 1983 together with the concept of Third Generation Robotics. Through this project, we made theoretical considerations, established systematic design procedures, developed experimental hardware telexistence systems, and demonstrated the feasibility of the concept.Through the efforts of twenty years of research and development in the U.S., Europe and Japan [1-10], it has nearly become possible for humans to use a humanoid robot in a remote environment as if it was an other self, i.e., they are able to have the sensation of being just inside the robot in the remote environment.Our first report [5,7] proposed the principle of the telexistence sensory display, and explicitly defined its design procedure. The feasibility of a visual display with a sensation of presence was demonstrated through psychophysical measurements using experimental visual telexistence apparatus. A method was also proposed to develop a mobile telexistence system that can be driven remotely with both an auditory and visual sensation of presence. A prototype mobile televehicle system was constructed and the feasibility of the method was evaluated.In 1989, a preliminary evaluation experiment of telexistence was conducted with the first prototype telexistence master slave system for remote manipulation. An experimental telexistence system for real and/or virtual environments was designed and developed, and the efficacy and superiority of the telexistence master-slave system over conventional master-slave systems was demonstrated experimentally [11].Fig. 2 Telexistence Surrogate Anthropomorphic Robot (TELESAR) atWork (1988).S.TachiAugmented telexistence can be effectively used in numerous situations. For instance, to control a slave robot in a poor visibility environment, an experimental augmented telexistence system was developed that uses a virtual environment model constructed from design data of the real environment. To use augmented reality in the control of a slave robot, a calibration system using image measurements was proposed for matching the real environment and the environment model [12]. The slave robot has an impedance control mechanism for contact tasks and to compensate for errors that remain even after calibration. An experimental operation in a poor visibility environment was successfully conducted by using a humanoid robot called TELESAR (TELExistence Surrogate Anthropomorphic Robot), shown in Figure 2, and its virtual dual. Figure 3 shows the virtual TELESAR used in the experiment, and Figure 4 shows the master system for the control of both real TELESAR and virtual TELESAR.Experimental studies of tracking tasks demonstrated quantitatively that a human being can telexist in a remote and/or computer-generated environment by using the dedicated telexistence master slave system [11].Fig. 3 Virtual TELESAR at Work (1993).Fig. 4 Telexistence Master (1989).4. R-CubedIn order to realize a society where everyone can freelytelexist anywhere through a network, the Japanese Ministry of Economy, Trade and Industry (METI) together with the University of Tokyo, proposed a long-range national research and development scheme in 1995 dubbed R-Cubed (Real-time Remote Robotics) [13].Figure 5 shows an example of an artist's rendition of a future use of R-Cubed System. In this example, a handicapped person climbs a mountain with his friends using a networked telexistence system.In an R-Cubed system, each robot site includes its local robot's server. The robot type varies from a mobile camera on the low end, to a humanoid on the high end. A virtual robot canalso be a controlled system to be telexisted.Fig. 5 Mountain Climbing using R-Cubed.Each client has a teleoperation system called a cockpit, ranging from an ordinary personal computer system on the low end to a control cockpit with master manipulators and a Head Mounted Display (HMD), or a CA VE Automatic Virtual Environment (CA VE) on the high end. RCML/RCTP (R-Cubed Manipulation Language / R-Cubed Transfer Protocol) is now under development to support the lower end user's ability to control remote robots through a network [13]. To standardize the following control scheme, a language dubbed RCML (), which describes a remote robot's features and its working environment, has been proposed. A communication protocol RCTP has also been designed and developed to exchange control commands, status data, and sensory information between the robot and the user.5. Humanoid Robotics Project (HRP)After a two-year feasibility study called the Human Friendly Network Robot (FNR), which was conducted from April 1996 till March 1998 based on the R-Cubed Scheme, a National Applied Science & Technology Project called “Humanoid and Human Friendly Robotics (HRP)” was launched in 1998. It is a five-year project toward the realizationProceedings of the 35th International Symposium on Robotics (ISR2004), Paris-Nord Villepinte, France, March 23-26, 2004of a so-called R-Cubed Society by providing humanoid robots, control cockpits and remote control protocols.A novel robot system capable of assisting and cooperating with people is necessary for any human-centered system to be used for activities such as the maintenance of plants or power stations, the operation of construction work, the supply of aid in case of emergency or disaster, and the care of elderly people. If we consider such systems from both a technical and a safety point of view, however, it is clearly intractable to develop a completely autonomous robot system for these objectives.The robot system should therefore be realized with the combination of autonomous control and teleoperated control. By introducing telexistence techniques through an advanced type of teleoperated robot system, a human operator can be provided with information about the robot's remote site in the form of natural audio, visual, and force feedback, thus invoking the feeling of existing inside the robot itself [14,15].Fig. 6 Telexistence Cockpit for Humanoid Control (2000).In order to address the problem of narrow fields of view associated with HMD's, a surround visual display using immersive projection technology (as adopted in the CA VE), has recently been developed (Fig. 6). The surround visual display panoramically presents real images captured by a stereo multi-camera system for a wide field of view mounted on the robot, which allows the operator to have the feeling of on-board motion when he or she uses the robot to walk around.V arious teleoperation experiments using the developed telexistence master system confirmed that kinesthetic presentation by the master system through visual imagery greatly improves both the operator's sensation of walking, and dexterity at manipulating objects.If the operator issued a command to move the robot, the robot actually walked to the goal. As the robot walked around, real images captured by a wide field of view multi-camera system were displayed on four screens of the surrounded visual display. This made the operator feel as if he or she was inside the robot, walking around the robot site (Fig. 7).A CG model of the robot in the virtual environment was represented and updated according to the current location and orientation received from sensors on the real robot. The model was displayed on the bottom-right screen of the surround visual display, and by augmenting real images captured by the camera system, it supported the operator's navigation of the robot. Since the series of real images presented on the visual display are integrated with the movement of the motion base, the operator feels the real-time sensation of stepping up and down.This was the first experiment and success of controlling ahumanoid biped robot using telexistence [15].Fig. 7 HRP Humanoid Robot at Work (2000).6. Mutual Telexistence using RPTBy using a telexistence system, persons can control the robot by simply moving their bodies naturally, without using verbal commands. The robot conforms to the person’s motion, and through sensors on board the robot the human can see, hear and feel as if they sensed the remote environment directly. Persons can virtually exist in the remote environment without actually being there.For observers in the remote environment, however, the situation is quite different: they see only the robot moving and speaking. Although they can hear the voice and witness the behaviour of the human operator through the robot, it does not actually look like him or her. This means that the telexistence is not yet mutual. In order to realize mutual telexistence, we have been pursuing the use of projection technology with retro-reflective material as a surface, which we call Retro-reflective Projection Technology (RPT) [16,17,18,19].RPT is a new approach to augmented reality (AR) combining the versatility of projection technology with the tangibility of physical objects. By using RPT in conjunction with an HMP, the mutual telexistence problem can be solved asS.Tachishown in Figure 8: suppose a human user A uses his telexistence robot A' at the remote site where another human user B is present. The user B in turn uses another telexistence robot B', which exists in the site where the user A works. 3-D images of the remote scenery are captured by cameras on board both robots A' and B', and are sent to the HMP’s of human users A and B respectively, both with a sensation of presence. Both telexistence robots A' and B' are seen as if they were their respective human users by projecting the real image of the users onto their respective robots. The first demonstration of RPT together with an HMP was made at SIGGRAPH98, followed by demonstrations at SIGGRAPH99 and SIGGRAPH2000.Fig. 8 Concept of Robotic Mutual Telexistence (adopted from [16]). Fig. 9 Principle of Retro-reflective Projection Technology (RPT).Fig. 10 Head-Mounted Projector.Fig. 11 (A) Miniature of the HONDA Humanoid Robot,(B) Painted with Retro-reflective Material,(C) and (D) Examples of Projecting a Human onto it.(adopted from [16]).Figure 9 shows the principle of Retro-reflective Projection Technology (RPT) and Figure 10 shows a Head-Mounted Projector (HMP) constructed according to RPT [17,18,19].Figure 11 presents an example of how mutual telexistence can be achieved through the use of RPT. Figure 11(A) shows a miniature of the HONDA Humanoid Robot, while Figure 11(B) shows the robot painted with retro-reflective material. Figures 11 (C) and (D) show how they appear to a human wearing an HMP. The telexisted robot looks just like the human operator of the robot, and mutual telexistence can be naturally performed [16]. However, this preliminary experiment was conducted off-line, and real-time experiments are yet to be conducted by constructing and using a mutual telexistence hardware system.7. Toward the FutureThere are two major styles or ways of thinking inProceedings of the 35th International Symposium on Robotics (ISR2004), Paris-Nord Villepinte, France, March 23-26, 2004designing robots. An important point to note here is that these ways of thinking have nothing to do with the forms of robots, such as the distinction between humanoid robots or those with special forms. Other distinctions include those that perform general or specific functions, and those in the shapes of animals or those that are not. These distinctions are indeed important especially when the robots are applied to practical use, and must be considered in practical situations.However, the distinction that is discussed here concerns the philosophy toward robot design per se. The two different ways of thinking concern the question of whether to make "robots as independent beings" or "robots as extensions of humans". Robots as independent beings will ultimately have a will of their own, although that is far off from the stage of development today. Accordingly, commands toward the robots are made through language, such as spoken words, written manuals, or computer instructions.On the other hand, robots as extensions of humans do not have a will of their own. Robots are a part of the humans who command them, and humans are the only ones who possess will. Commands are made automatically according to human movements and internal states, and not through language. Robots move according to the human will.A prime example of robots as extensions of humans is a prosthetic upper-limb or an artificial arm, which substitutes lost arms. Humans move artificial arms as though they moved their own arms. What if one gained an artificial arm as a third arm, in addition to the existing two arms? The artificial arm would move according to the human will and function as an extra arm extending the human ability. The artificial arm, or, a robot as an extension of human, could physically be separate from the human body; it would still move according to the human will without receiving lingual commands. The robot would not have its own will and function as part of the human, even though the robot is physically separated from the human body. This is what can be called"one's other-self-robot". There may be multiple other-self-robots.It is also possible to create an environment where humans feel as if they are inside one's other-self-robots, thereby the human cognizes the environment through the sense organs of the robot and then operates the robot using its effect organs. This technology is known as telexistence. Telexistence enables humans to transcend time and space, and allow them to be virtually ubiquitous.Robots as independent beings must have the intelligence that pre-empts any attempt of the robots to harm humans. That is to say, "safety intelligence" is the number one priority in this type of robot. Isaac Asimov's three laws of robotics, for example, are quite relevant in designing this type of robot. It is crucial to find a solution to make sure that machines would never harm humans by any means.The safety intelligence requires high technology and its innovation will not an easy task. The intelligence must be perfect, as a partially successful safety intelligence would be totally useless. The robots need to possess safety intelligence that even exceeds human intelligence. As Alan M. Turing has argued, this idea was still not relevant in the twentieth century, when autonomous robots could not have true intelligence as humans have. However, inventing the safety intelligence is the most important mission in the twenty-first century as robots are about to enter the everyday lives of humans.On the other hand, there is an alternate approach to this problem. One could argue that the "one's other-self-robots" rather than the "independent robots" should be the priority in development. The other-self-robots are analogous to automobiles. The robots are machines and tools to be used by humans; robots are extensions of humans both intellectually and physically.This approach pre-empts the problem of robots having their own rights, as they remain extensions of humans. Humans therefore need not to be threatened by robots, as the robots remain subordinate to humans. One's other-self-robot therefore is a promising path that humans can follow.Take nursing for example. It is not desirable for a nursing robot that takes care of you to be an independent being. We can protect the patient's privacy the most when it is the patient who is taking care of himself. Accordingly, it is more appropriate if the nursing robot is the other-self-robot, an extension of oneself. The other-self-robot can either help himself or other people. The other-self-robot is more secure than the robot as an independent being, as the rights and the responsibilities associated with the robot are evident in the former type. The right and the responsibility of the robot belong to the humans who own the robot as their other self. Robots cannot claim their own rights or responsibilities.One can nurse himself not only by using his own other- self-robot but also by asking family members and professional nurses to take care of him by using robots. These people, who may live far away from the patient, can use telexistence technology to personify the robot near the patient to help him. One important consideration in using this technology is that through the robot the patient needs to feel as though a person he knows, rather than an impersonal robot, is taking care of him. It is essential that the robot have a "face": a clear marker。

移动操作机器人及其共享控制力反馈遥操作研究博士学位论文移动操作机器人及其共享控制的力反馈遥操作研究RESEARCH ON MOBILE MANIPULATOR AND ITSFORCE FEEDBACK TELEOPERATION BASED ONSHARED CONTROL MODE于振中哈尔滨工业大学2010年 11月国内图书分类号:TP242.2学校代码:10213国际图书分类号:681.5 密级:公开工学博士学位论文移动操作机器人及其共享控制的力反馈遥操作研究博士研究生 :于振中导师 :蔡鹤皋院士副导师 :赵杰教授申请学位 :工学博士学科 :机械电子工程所在单位 :机电工程学院答辩日期 : 2010 年 11 月授予学位单位 :哈尔滨工业大学Classified Index: TP242.2U.D.C: 681.5Dissertation for the Doctoral Degree in EngineeringRESEARCH ON MOBILE MANIPULATOR AND ITSFORCE FEEDBACK TELEOPERATION BASED ONSHARED CONTROL MODEYu ZhenzhongCandidate:Supervisor: Academician Cai HegaoVice-Supervisor: Prof. Zhao Jie Academic Degree Applied for: Doctor of EngineeringSpeciality: Mechantronics EngineeringUnit: School of Mechatronics EngineeringDate of Defense: Nov, 2010University: Harbin Institute of Technology摘要摘要移动操作机器人相比于固定操作机器人具有更大的操作空间和更强的操作灵活性,成为机器人领域研究的热点方向之一。

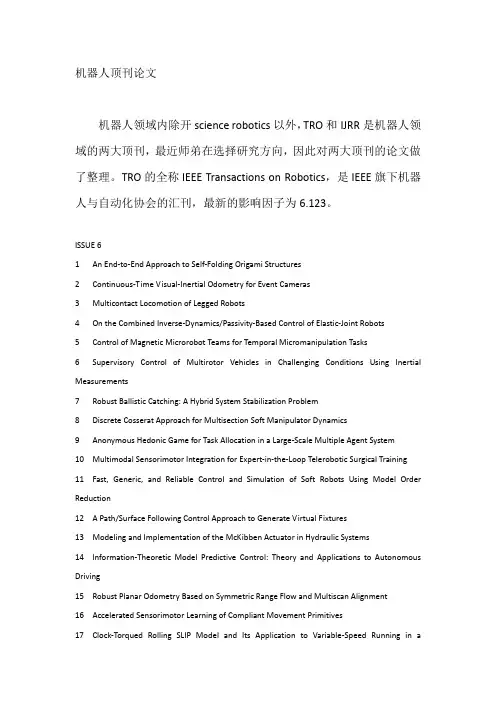

机器人顶刊论文机器人领域内除开science robotics以外,TRO和IJRR是机器人领域的两大顶刊,最近师弟在选择研究方向,因此对两大顶刊的论文做了整理。

TRO的全称IEEE Transactions on Robotics,是IEEE旗下机器人与自动化协会的汇刊,最新的影响因子为6.123。

ISSUE 61 An End-to-End Approach to Self-Folding Origami Structures2 Continuous-Time Visual-Inertial Odometry for Event Cameras3 Multicontact Locomotion of Legged Robots4 On the Combined Inverse-Dynamics/Passivity-Based Control of Elastic-Joint Robots5 Control of Magnetic Microrobot Teams for Temporal Micromanipulation Tasks6 Supervisory Control of Multirotor Vehicles in Challenging Conditions Using Inertial Measurements7 Robust Ballistic Catching: A Hybrid System Stabilization Problem8 Discrete Cosserat Approach for Multisection Soft Manipulator Dynamics9 Anonymous Hedonic Game for Task Allocation in a Large-Scale Multiple Agent System10 Multimodal Sensorimotor Integration for Expert-in-the-Loop Telerobotic Surgical Training11 Fast, Generic, and Reliable Control and Simulation of Soft Robots Using Model Order Reduction12 A Path/Surface Following Control Approach to Generate Virtual Fixtures13 Modeling and Implementation of the McKibben Actuator in Hydraulic Systems14 Information-Theoretic Model Predictive Control: Theory and Applications to Autonomous Driving15 Robust Planar Odometry Based on Symmetric Range Flow and Multiscan Alignment16 Accelerated Sensorimotor Learning of Compliant Movement Primitives17 Clock-Torqued Rolling SLIP Model and Its Application to Variable-Speed Running in aHexapod Robot18 On the Covariance of X in AX=XB19 Safe Testing of Electrical Diathermy Cutting Using a New Generation Soft ManipulatorISSUE 51 Toward Dexterous Manipulation With Augmented Adaptive Synergies: The Pisa/IIT SoftHand 22 Efficient Equilibrium Testing Under Adhesion and Anisotropy Using Empirical Contact Force Models3 Force, Impedance, and Trajectory Learning for Contact Tooling and Haptic Identification4 An Ankle–Foot Prosthesis Emulator With Control of Plantarflexion and Inversion–Eversion Torque5 SLAP: Simultaneous Localization and Planning Under Uncertainty via Dynamic Replanning in Belief Space6 An Analytical Loading Model for n -Tendon Continuum Robots7 A Direct Dense Visual Servoing Approach Using Photometric Moments8 Computational Design of Robotic Devices From High-Level Motion Specifications9 Multicontact Postures Computation on Manifolds10 Stiffness Modulation in an Elastic Articulated-Cable Leg-Orthosis Emulator: Theory and Experiment11 Human–Robot Communications of Probabilistic Beliefs via a Dirichlet Process Mixture of Statements12 Multirobot Reconnection on Graphs: Problem, Complexity, and Algorithms13 Robust Intrinsic and Extrinsic Calibration of RGB-D Cameras14 Reactive Trajectory Generation for Multiple Vehicles in Unknown Environments With Wind Disturbances15 Resource-Aware Large-Scale Cooperative Three-Dimensional Mapping Using Multiple Mobile Devices16 Control of Planar Spring–Mass Running Through Virtual Tuning of Radial Leg Damping17 Gait Design for a Snake Robot by Connecting Curve Segments and ExperimentalDemonstration18 Server-Assisted Distributed Cooperative Localization Over Unreliable Communication Links19 Realization of Smooth Pursuit for a Quantized Compliant Camera Positioning SystemISSUE 41 A Survey on Aerial Swarm Robotics2 Trajectory Planning for Quadrotor Swarms3 A Distributed Control Approach to Formation Balancing and Maneuvering of Multiple Multirotor UAVs4 Joint Coverage, Connectivity, and Charging Strategies for Distributed UAV Networks5 Robotic Herding of a Flock of Birds Using an Unmanned Aerial Vehicle6 Agile Coordination and Assistive Collision Avoidance for Quadrotor Swarms Using Virtual Structures7 Decentralized Trajectory Tracking Control for Soft Robots Interacting With the Environment8 Resilient, Provably-Correct, and High-Level Robot Behaviors9 Humanoid Dynamic Synchronization Through Whole-Body Bilateral Feedback Teleoperation10 Informed Sampling for Asymptotically Optimal Path Planning11 Robust Tactile Descriptors for Discriminating Objects From Textural Properties via Artificial Robotic Skin12 VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator13 Zero Step Capturability for Legged Robots in Multicontact14 Fast Gait Mode Detection and Assistive Torque Control of an Exoskeletal Robotic Orthosis for Walking Assistance15 Physically Plausible Wrench Decomposition for Multieffector Object Manipulation16 Considering Uncertainty in Optimal Robot Control Through High-Order Cost Statistics17 Multirobot Data Gathering Under Buffer Constraints and Intermittent Communication18 Image-Guided Dual Master–Slave Robotic System for Maxillary Sinus Surgery19 Modeling and Interpolation of the Ambient Magnetic Field by Gaussian Processes20 Periodic Trajectory Planning Beyond the Static Workspace for 6-DOF Cable-Suspended Parallel Robots1 Computationally Efficient Trajectory Generation for Fully Actuated Multirotor Vehicles2 Aural Servo: Sensor-Based Control From Robot Audition3 An Efficient Acyclic Contact Planner for Multiped Robots4 Dimensionality Reduction for Dynamic Movement Primitives and Application to Bimanual Manipulation of Clothes5 Resolving Occlusion in Active Visual Target Search of High-Dimensional Robotic Systems6 Constraint Gaussian Filter With Virtual Measurement for On-Line Camera-Odometry Calibration7 A New Approach to Time-Optimal Path Parameterization Based on Reachability Analysis8 Failure Recovery in Robot–Human Object Handover9 Efficient and Stable Locomotion for Impulse-Actuated Robots Using Strictly Convex Foot Shapes10 Continuous-Phase Control of a Powered Knee–Ankle Prosthesis: Amputee Experiments Across Speeds and Inclines11 Fundamental Actuation Properties of Multirotors: Force–Moment Decoupling and Fail–Safe Robustness12 Symmetric Subspace Motion Generators13 Recovering Stable Scale in Monocular SLAM Using Object-Supplemented Bundle Adjustment14 Toward Controllable Hydraulic Coupling of Joints in a Wearable Robot15 Geometric Construction-Based Realization of Spatial Elastic Behaviors in Parallel and Serial Manipulators16 Dynamic Point-to-Point Trajectory Planning Beyond the Static Workspace for Six-DOF Cable-Suspended Parallel Robots17 Investigation of the Coin Snapping Phenomenon in Linearly Compliant Robot Grasps18 Target Tracking in the Presence of Intermittent Measurements via Motion Model Learning19 Point-Wise Fusion of Distributed Gaussian Process Experts (FuDGE) Using a Fully Decentralized Robot Team Operating in Communication-Devoid Environment20 On the Importance of Uncertainty Representation in Active SLAM1 Robust Visual Localization Across Seasons2 Grasping Without Squeezing: Design and Modeling of Shear-Activated Grippers3 Elastic Structure Preserving (ESP) Control for Compliantly Actuated Robots4 The Boundaries of Walking Stability: Viability and Controllability of Simple Models5 A Novel Robotic Platform for Aerial Manipulation Using Quadrotors as Rotating Thrust Generators6 Dynamic Humanoid Locomotion: A Scalable Formulation for HZD Gait Optimization7 3-D Robust Stability Polyhedron in Multicontact8 Cooperative Collision Avoidance for Nonholonomic Robots9 A Physics-Based Power Model for Skid-Steered Wheeled Mobile Robots10 Formation Control of Nonholonomic Mobile Robots Without Position and Velocity Measurements11 Online Identification of Environment Hunt–Crossley Models Using Polynomial Linearization12 Coordinated Search With Multiple Robots Arranged in Line Formations13 Cable-Based Robotic Crane (CBRC): Design and Implementation of Overhead Traveling Cranes Based on Variable Radius Drums14 Online Approximate Optimal Station Keeping of a Marine Craft in the Presence of an Irrotational Current15 Ultrahigh-Precision Rotational Positioning Under a Microscope: Nanorobotic System, Modeling, Control, and Applications16 Adaptive Gain Control Strategy for Constant Optical Flow Divergence Landing17 Controlling Noncooperative Herds with Robotic Herders18 ε⋆: An Online Coverage Path Planning Algorithm19 Full-Pose Tracking Control for Aerial Robotic Systems With Laterally Bounded Input Force20 Comparative Peg-in-Hole Testing of a Force-Based Manipulation Controlled Robotic HandISSUE 11 Development of the Humanoid Disaster Response Platform DRC-HUBO+2 Active Stiffness Tuning of a Spring-Based Continuum Robot for MRI-Guided Neurosurgery3 Parallel Continuum Robots: Modeling, Analysis, and Actuation-Based Force Sensing4 A Rationale for Acceleration Feedback in Force Control of Series Elastic Actuators5 Real-Time Area Coverage and Target Localization Using Receding-Horizon Ergodic Exploration6 Interaction Between Inertia, Viscosity, and Elasticity in Soft Robotic Actuator With Fluidic Network7 Exploiting Elastic Energy Storage for “Blind”Cyclic Manipulation: Modeling, Stability Analysis, Control, and Experiments for Dribbling8 Enhance In-Hand Dexterous Micromanipulation by Exploiting Adhesion Forces9 Trajectory Deformations From Physical Human–Robot Interaction10 Robotic Manipulation of a Rotating Chain11 Design Methodology for Constructing Multimaterial Origami Robots and Machines12 Dynamically Consistent Online Adaptation of Fast Motions for Robotic Manipulators13 A Controller for Guiding Leg Movement During Overground Walking With a Lower Limb Exoskeleton14 Direct Force-Reflecting Two-Layer Approach for Passive Bilateral Teleoperation With Time Delays15 Steering a Swarm of Particles Using Global Inputs and Swarm Statistics16 Fast Scheduling of Robot Teams Performing Tasks With Temporospatial Constraints17 A Three-Dimensional Magnetic Tweezer System for Intraembryonic Navigation and Measurement18 Adaptive Compensation of Multiple Actuator Faults for Two Physically Linked 2WD Robots19 General Lagrange-Type Jacobian Inverse for Nonholonomic Robotic Systems20 Asymmetric Bimanual Control of Dual-Arm Exoskeletons for Human-Cooperative Manipulations21 Fourier-Based Shape Servoing: A New Feedback Method to Actively Deform Soft Objects into Desired 2-D Image Contours22 Hierarchical Force and Positioning Task Specification for Indirect Force Controlled Robots。

List of related AI Classes CS229covered a broad swath of topics in machine learning,compressed into a sin-gle quarter.Machine learning is a hugely inter-disciplinary topic,and there are many other sub-communities of AI working on related topics,or working on applying machine learning to different problems.Stanford has one of the best and broadest sets of AI courses of pretty much any university.It offers a wide range of classes,covering most of the scope of AI issues.Here are some some classes in which you can learn more about topics related to CS229:AI Overview•CS221(Aut):Artificial Intelligence:Principles and Techniques.Broad overview of AI and applications,including robotics,vision,NLP,search,Bayesian networks, and learning.Taught by Professor Andrew Ng.Robotics•CS223A(Win):Robotics from the perspective of building the robot and controlling it;focus on manipulation.Taught by Professor Oussama Khatib(who builds the big robots in the Robotics Lab).•CS225A(Spr):A lab course from the same perspective,taught by Professor Khatib.•CS225B(Aut):A lab course where you get to play around with making mobile robots navigate in the real world.Taught by Dr.Kurt Konolige(SRI).•CS277(Spr):Experimental Haptics.Teaches haptics programming and touch feedback in virtual reality.Taught by Professor Ken Salisbury,who works on robot design,haptic devices/teleoperation,robotic surgery,and more.•CS326A(Latombe):Motion planning.An algorithmic robot motion planning course,by Professor Jean-Claude Latombe,who(literally)wrote the book on the topic.Knowledge Representation&Reasoning•CS222(Win):Logical knowledge representation and reasoning.Taught by Profes-sor Yoav Shoham and Professor Johan van Benthem.•CS227(Spr):Algorithmic methods such as search,CSP,planning.Taught by Dr.Yorke-Smith(SRI).Probabilistic Methods•CS228(Win):Probabilistic models in AI.Bayesian networks,hidden Markov mod-els,and planning under uncertainty.Taught by Professor Daphne Koller,who works on computational biology,Bayes nets,learning,computational game theory, and more.1Perception&Understanding•CS223B(Win):Introduction to computer vision.Algorithms for processing and interpreting image or camera information.Taught by Professor Sebastian Thrun, who led the DARPA Grand Challenge/DARPA Urban Challenge teams,or Pro-fessor Jana Kosecka,who works on vision and robotics.•CS224S(Win):Speech recognition and synthesis.Algorithms for large vocabu-lary continuous speech recognition,text-to-speech,conversational dialogue agents.Taught by Professor Dan Jurafsky,who co-authored one of the two most-used textbooks on NLP.•CS224N(Spr):Natural language processing,including parsing,part of speech tagging,information extraction from text,and more.Taught by Professor Chris Manning,who co-authored the other of the two most-used textbooks on NLP.•CS224U(Win):Natural language understanding,including computational seman-tics and pragmatics,with application to question answering,summarization,and inference.Taught by Professors Dan Jurafsky and Chris Manning.Multi-agent systems•CS224M(Win):Multi-agent systems,including game theoretic foundations,de-signing systems that induce agents to coordinate,and multi-agent learning.Taught by Professor Yoav Shoham,who works on economic models of multi-agent interac-tions.•CS227B(Spr):General game playing.Reasoning and learning methods for playing any of a broad class of games.Taught by Professor Michael Genesereth,who works on computational logic,enterprise management and e-commerce.Convex Optimization•EE364A(Win):Convex Optimization.Convexity,duality,convex programs,inte-rior point methods,algorithms.Taught by Professor Stephen Boyd,who works on optimization and its application to engineering problems.AI Project courses•CS294B/CS294W(Win):STAIR(STanford AI Robot)project.Project course with no lectures.By drawing from machine learning and all other areas of AI, we’ll work on the challenge problem of building a general-purpose robot that can carry out home and office chores,such as tidying up a room,fetching items,and preparing meals.Taught by Professor Andrew Ng.2。

起重机中英⽂对照外⽂翻译⽂献中英⽂对照外⽂翻译(⽂档含英⽂原⽂和中⽂翻译)Control of Tower Cranes WithDouble-Pendulum Payload DynamicsAbstract:The usefulness of cranes is limited because the payload is supported by an overhead suspension cable that allows oscilation to occur during crane motion. Under certain conditions, the payload dynamics may introduce an additional oscillatory mode that creates a double pendulum. This paper presents an analysis of this effect on tower cranes. This paper also reviews a command generation technique to suppress the oscillatory dynamics with robustness to frequency changes. Experimental results are presented to verify that the proposed method can improve the ability of crane operators to drive a double-pendulum tower crane. The performance improvements occurred during both local and teleoperated control.Key words:Crane , input shaping , tower crane oscillation , vibrationI. INTRODUCTIONThe study of crane dynamics and advanced control methods has received significant attention. Cranes can roughly be divided into three categories based upontheir primary dynamic properties and the coordinate system that most naturally describes the location of the suspension cable connection point. The first category, bridge cranes, operate in Cartesian space, as shown in Fig. 1(a). The trolley moves along a bridge, whose motion is perpendicular to that of the trolley. Bridge cranes that can travel on a mobile base are often called gantry cranes. Bridge cranes are common in factories, warehouses, and shipyards.The second major category of cranes is boom cranes, such as the one sketched in Fig. 1(b). Boom cranes are best described in spherical coordinates, where a boom rotates aboutaxes both perpendicular and parallel to the ground. In Fig. 1(b), ψis the rotation aboutthe vertical, Z-axis, and θis the rotation about the horizontal, Y -axis. The payload is supported from a suspension cable at the end of the boom. Boom cranes are often placed on a mobile base that allows them to change their workspace.The third major category of cranes is tower cranes, like the one sketched in Fig. 1(c). These are most naturally described by cylindrical coordinates. A horizontal jib arm rotates around a vertical tower. The payload is supported by a cable from the trolley, which moves radially along the jib arm. Tower cranes are commonly used in the construction of multistory buildings and have the advantage of having a small footprint-to-workspace ratio. Primary disadvantages of tower and boom cranes, from a control design viewpoint, are the nonlinear dynamics due to the rotational nature of the cranes, in addition to the less intuitive natural coordinate systems.A common characteristic among all cranes is that the pay- load is supported via an overhead suspension cable. While this provides the hoisting functionality of the crane, it also presents several challenges, the primary of which is payload oscillation. Motion of the crane will often lead to large payload oscillations. These payload oscillations have many detrimental effects including degrading payload positioning accuracy, increasing task completion time, and decreasing safety. A large research effort has been directed at reducing oscillations. An overview of these efforts in crane control, concentrating mainly on feedback methods, is provided in [1]. Some researchers have proposed smooth commands to reduce excitation of system flexible modes [2]–[5]. Crane control methods based on command shaping are reviewed in [6]. Many researchers have focused on feedback methods, which necessitate the addition necessitate the addition of sensors to the crane and can prove difficult to use in conjunction with human operators. For example, some quayside cranes have been equipped with sophisticated feedback control systems to dampen payload sway. However, the motions induced by the computer control annoyed some of the human operators. As a result, the human operators disabled the feedback controllers. Given that the vast majority of cranes are driven by human operators and will never be equipped with computer-based feedback, feedback methods are not considered in this paper.Input shaping [7], [8] is one control method that dramatically reduces payload oscillation by intelligently shaping the commands generated by human operators [9], [10]. Using rough estimates of system natural frequencies and damping ratios, a series of impulses, called the input shaper, is designed. The convolution of the input shaper and the original command is then used to drive the system. This process is demonstrated with atwo-impulse input shaper and a step command in Fig. 2. Note that the rise time of the command is increased by the duration of the input shaper. This small increase in the rise time isnormally on the order of 0.5–1 periods of the dominant vibration mode.Fig. 1. Sketches of (a) bridge crane, (b) boom crane, (c) and tower crane.Fig. 2. Input-shaping process.Input shaping has been successfully implemented on many vibratory systems including bridge [11]–[13], tower [14]–[16], and boom [17], [18] cranes, coordinate measurement machines[19]–[21], robotic arms [8], [22], [23], demining robots [24], and micro-milling machines [25].Most input-shaping techniques are based upon linear system theory. However, some research efforts have examined the extension of input shaping to nonlinear systems [26], [14]. Input shapers that are effective despite system nonlinearities have been developed. These include input shapers for nonlinear actuator dynamics, friction, and dynamic nonlinearities [14], [27]–[31]. One method of dealing with nonlinearities is the use of adaptive or learning input shapers [32]–[34].Despite these efforts, the simplest and most common way to address system nonlinearities is to utilize a robust input shaper [35]. An input shaper that is more robust to changes in system parameters will generally be more robust to system nonlinearities that manifest themselves as changes in the linearized frequencies. In addition to designing robust shapers, input shapers can also be designed to suppress multiple modes of vibration [36]–[38].In Section II, the mobile tower crane used during experimental tests for this paper is presented. In Section III, planar and 3-D models of a tower crane are examined to highlight important dynamic effects. Section IV presents a method to design multimode input shapers with specified levels of robustness. InSection V, these methods are implemented on a tower crane with double-pendulum payload dynamics. Finally, in Section VI, the effect of the robust shapers on human operator performance is presented for both local and teleoperated control.II. MOBILE TOWER CRANEThe mobile tower crane, shown in Fig. 3, has teleoperation capabilities that allow it to be operated in real-time from anywhere in the world via the Internet [15]. The tower portion of the crane, shown in Fig. 3(a), is approximately 2 m tall with a 1 m jib arm. It is actuated by Siemens synchronous, AC servomotors. The jib is capable of 340°rotation about the tower. The trolley moves radially along the jib via a lead screw, and a hoisting motor controls the suspension cable length. Motor encoders are used for PD feedback control of trolley motion in the slewing and radial directions. A Siemens digital camera is mounted to the trolley and records the swing deflection of the hook at a sampling rate of 50 Hz [15].The measurement resolution of the camera depends on the suspension cable length. For the cable lengths used in this research, the resolution is approximately 0.08°. This is equivalent to a 1.4 mm hook displacement at a cable length of 1 m. In this work, the camera is not used for feedback control of the payload oscillation. The experimental results presented in this paper utilize encoder data to describe jib and trolley position and camera data to measure the deflection angles of the hook. Base mobility is provided by DC motors with omnidirectional wheels attached to each support leg, as shown in Fig. 3(b). The base is under PD control using two HiBot SH2-based microcontrollers, with feedback from motor-shaft-mounted encoders. The mobile base was kept stationary during all experiments presented in this paper. Therefore, the mobile tower crane operated as a standard tower crane.Table I summarizes the performance characteristics of the tower crane. It should be noted that most of these limits areenforced via software and are not the physical limitations of the system. These limitations are enforced to more closely match theoperational parameters of full-sized tower cranes.Fig. 3. Mobile, portable tower crane, (a) mobile tower crane, (b) mobile crane base.TABLE I MOBILE TOWER CRANE PERFORMANCE LIMITSFig. 4 Sketch of tower crane with a double-pendulum dynamics.III. TOWER CRANE MODELFig.4 shows a sketch of a tower crane with a double-pendulum payload configuration. The jib rotates by an angle around the vertical axis Z parallelto the tower column. The trolley moves radially along the jib; its position along the jib is described by r . The suspension cable length from the trolley to the hook is represented by an inflexible, massless cable of variable length 1l . The payload is connected to the hook via an inflexible, massless cable of length 2l . Both the hook and the payload are represented as point masses having masses h m and p m , respectively.The angles describing the position of the hook are shown in Fig. 5(a). The angle φrepresents a deflection in the radial direction, along the jib. The angle χ represents a tangential deflection, perpendicular to the jib. In Fig. 5(a), φ is in the plane of the page, and χ lies in a plane out of the page. The angles describing the payload position are shown in Fig. 5(b). Notice that these angles are defined relative to a line from the trolley to the hook. If there is no deflection of the hook, then the angleγ describes radial deflections, along the jib, and the angle α represents deflections perpendicular to the jib, in the tangential direction. The equations of motion for this model were derived using a commercial dynamics package, but they are too complex to show in their entirety here, as they are each over a page in length.To give some insight into the double-pendulum model, the position of the hook and payload within the Newtonian frame XYZ are written as —h q and —p q , respectivelyWhere -I , -J and -K are unit vectors in the X , Y , and Z directions. The Lagrangian may then be written asFig. 5. (a) Angles describing hook motion. (b) Angles describing payload motion.Fig. 6. Experimental and simulated responses of radial motion.(a) Hook responses (φ) for m 48.01=l ,(b) Hook responses for m 28.11=lThe motion of the trolley can be represented in terms of the system inputs. The position of the trolley —tr q in the Newtonian frame is described byThis position, or its derivatives, can be used as the input to any number of models of a spherical double-pendulum. More detailed discussion of the dynamics of spherical double pendulums can be found in [39]–[42].The addition of the second mass and resulting double-pendulum dramatically increases the complexity of the equations of motion beyond the more commonly used single-pendulum tower model [1], [16], [43]–[46]. This fact can been seen in the Lagrangian. In (3), the terms in the square brackets represent those that remain for the single-pendulum model; no —p q terms appear. This significantly reduces the complexity of the equations because —p q is a function of the inputs and all four angles shown in Fig. 5.It should be reiterated that such a complex dynamic model is not used to design the input-shaping controllers presented in later sections. The model was developed as a vehicle to evaluate the proposed control method over a variety of operating conditions and demonstrate its effectiveness. The controller is designed using a much simpler, planar model.A. Experimental V erification of the ModelThe full, nonlinear equations of motion were experimentally verified using several test cases. Fig.6 shows two cases involving only radial motion. The trolley was driven at maximum velocity for a distance of 0.30 m, with 2l =0.45m .The payload mass p m for both cases was 0.15 kg and the hook mass h m was approximately 0.105 kg. The two cases shown in Fig. 6 present extremes of suspension cable lengths 1l . In Fig. 6(a), 1l is 0.48 m , close to the minimum length that can be measured by the overhead camera. At this length, the double-pendulum effect is immediately noticeable. One can see that the experimental and simulated responses closely match. In Fig. 6(b), 1l is 1.28 m, the maximum length possible while keeping the payload from hitting the ground. At this length, the second mode of oscillation has much less effect on the response. The model closely matches the experimental response for this case as well. The responses for a linearized, planar model, which will be developed in Section III-B, are also shown in Fig. 6. The responses from this planar model closely match both the experimental results and the responses of the full, nonlinear model for both suspension cable lengths.Fig. 7. Hook responses to 20°jib rotation:(a) φ (radial) response;(b) χ (tangential) response.Fig. 8. Hook responses to 90°jib rotation:φ(radial) response;(b) χ(tangential) response.(a)If the trolley position is held constant and the jib is rotated, then the rotational and centripetal accelerations cause oscillation in both the radial and tangential directions. This can be seen in the simulation responses from the full nonlinear model in Figs. 7 and 8. In Fig. 7, the trolley is held at a fixed position of r = 0.75 m, while the jib is rotated 20°. This relatively small rotation only slightly excites oscillation in the radial direction, as shown in Fig. 7(a). The vibratory dynamics are dominated byoscillations in the tangential direction, χ, as shown in Fig. 7(b). If, however, a large angular displacement of the jib occurs, then significant oscillation will occur in both the radial and tangential directions, as shown in Fig. 8. In this case, the trolley was fixed at r = 0.75 m and the jib was rotated 90°. Figs. 7 and 8 show that the experimental responses closely match those predicted by the model for these rotational motions. Part of the deviation in Fig. 8(b) can be attributed to the unevenness of the floor on which the crane sits. After the 90°jib rotation the hook and payload oscillate about a slightly different equilibrium point, as measured by the overhead camera.Fig.9.Planardouble-pendulummodel.B.Dynamic AnalysisIf the motion of the tower crane is limited to trolley motion, like the responses shown in Fig. 6, then the model may be simplified to that shown in Fig. 9. This model simplifies the analysis of the system dynamics and provides simple estimates of the two natural frequencies of the double pendulum. These estimates will be used to develop input shapers for the double-pendulum tower crane.The crane is moved by applying a force )(t u to the trolley. A cable of length 1l hangs below the trolley and supports a hook, of mass h m , to which the payload is attached using rigging cables. The rigging and payload are modeled as a second cable, of length 2l and point mass p m . Assuming that the cable and rigging lengths do not change during the motion, the linearized equations of motion, assuming zero initial conditions, arewhere φ and γ describe the angles of the two pendulums, R is the ratio of the payload mass to the hook mass, and g is the acceleration due to gravity.The linearized frequencies of the double-pendulum dynamics modeled in (5) are [47]Where Note that the frequencies depend on the two cable lengths and the mass ratio.Fig. 10. Variation of first and second mode frequencies when m l l 8.121=+.。

一种移动机器人遥操作接口系统的设计与实现作者:侯保民冯健翔杜芳王俊锋郭小强侯海英来源:《现代电子技术》2009年第10期摘要:针对移动机器人的远程操作问题,基于C++ Builder软件环境,设计和实现了一种移动机器人的遥操作接口系统,可利用方向盘、键盘和鼠标来操作机器人的移动。

基于此接口系统建立了遥操作系统原型,并且进行了室内试验。

室内试验表明,此遥操作接口系统具有简便、界面友好等特点。

关键词:遥操作;人机接口;移动机器人;软件环境中图分类号:TP311文献标识码:A文章编号:1004-373X(2009)10-034-02Design and Implement of Teleoperation Interface System for Mobile RobotHOU Baomin,FENG Jianxiang,DU Fang,WANG Junfeng,GUO Xiaoqiang,HOU Haiying(Academy of Equipment Command & Technology,Beijing,101416,China)Abstract:To resolve the teleoperation problem for a mobile robot,a kind of human-machine interface systembased on the C++ Builder software environment is designed.It can operate movement of the robot in three operation modes:steering wheels,keyboard and mouse.Based on it,a teleoperation system prototype is constructed and implemented.and it is tested in the laboratory.The experimentation in doors indicates that the teleoperation interface system issimple,convenient,friendly and so on.Keywords:teleoperation;human-machine interface;mobile robot;software environment0 引言遥操作就是远距离操作,是在远方人的行为动作远距离作用下,使事物产生运动变化。

电气工程学院六个研究所简介一、检测技术研究所研究方向,科研方向主要有三个:①工业过程控制、智能控制理论及应用。

以计算机、单片机、DSP、PLC等为技术手段,应用各种先进控制理论,以粮油食品加工的过程控制和智能控制为特色,开发面向工农业生产的先进过程控制系统。

②检测技术和智能仪表。

研究各种信号的获取与数据实时处理技术,采用各种先进传感器技术、应用先进的数据融合技术,研制面向工农业生产一线各种智能仪表、新型测控装置和系统。

③光电检测技术的理论和应用研究。

尤其侧重于光电检测技术在粮油食品行业的理论和应用研究。

二、先进制造研究所研究方向:快速制造技术:新的快速成型方法、设备和软件;1.逆向工程技术:三维扫描设备、软件和应用技术;2.制造业信息化技术:三维设计、制造、工艺规程编制、优化分析、产品数;3.据管理、企业资源管理,数控技术与装备。

重点研究内容:开发适合中小企业的先进制造技术,为企业提供数控技术及装备的技术支撑;1.围绕河南省汽车、摩托车及零部件行业,开发覆盖件快速设计技术;2.以青铜器为核心,开发文物三维数字化技术及装备、残缺文物智能修复技术,开发高档文物工艺品。

三、机器人研究所研究所的主要研究方向:空间遥控智能机器人、遥操作技术、自动控制、数控技术和机电一体化。

主要从事遥控智能机器人(Telerobots)、遥操作技术(Teleoperation)的理论、关键技术及应用方面的研究与开发,尤其侧重遥操作系统手控器、高级人机接口及其相关技术的研究。

十多年来连续承担国家863计划空间机器人研究项目,河南省机器人类重大研究项目以及为企业研究开发机器人技术的基础上滚动发展起来的一个跨学科、专兼职相结合的研究机构。

研究所目前在研各类纵向课题10余项,同时承担横向课题多项。

已毕业硕士生8人,在读4人。

自1992年以来,承担国家863计划项目5项、国家“八五计划”项目1项、河南省杰出人才创新基金项目1项;获机械部科技进步二等奖1项、河南省科技进步奖4项;发表学术论文26篇,其中被EI收录4篇。

一种基于共享控制的双臂协同遥操作控制方法黄攀峰;鹿振宇;党小鹏;刘正雄【摘要】For the delicate teleoperation task of the dual-arm space robot,a novel shared control method for the cooperative tasks is proposed,which is based on the traditional four-channel bilateral control architecture and the shared control method.Adding multiple dominant factors to the controllers in the master and slave side,a double closed-loop feedback loop,containing the information of force and position is built.By adjusting the shared control factors,the master operator can receive and share operation intentions with other operators.A space teleoperation experiment is conducted by CHAI paring with the two cases that single operator operates a dual-arm space robot and two operators handle one of the space robot arms respectively,the experimental results show that the proposed algorithm can improve the accuracy of operation and reduce the frequency of collision,thereby making the efficiency of the teleoperation task enhanced.%针对双臂空间机器人精细操作任务,提出一种双臂协同遥操作共享控制方法.该方法将传统的遥操作四通道控制结构与共享控制相结合,通过在主从端控制器中加入优势因子,实现操作者与机器人左右操作手力和位置信息的双闭环反馈回路,并通过调节优势因子使主端的操作者对从端机械臂具有不同的控制权重,且主端操作者在协同操作时可以感受到对方的操作意图.通过CHAI 3D搭建的实验平台设计典型空间操作实验,并与单个操作者单独进行双手操作和双操作者分别操作单机械臂的情况对比.实验结果表明,本算法操作精度和效率较高,操作中的碰撞次数较少.【期刊名称】《宇航学报》【年(卷),期】2018(039)001【总页数】7页(P104-110)【关键词】遥操作;双臂空间机器人;共享控制;优势因子【作者】黄攀峰;鹿振宇;党小鹏;刘正雄【作者单位】西北工业大学航天学院智能机器人研究中心,西安710072;西北工业大学航天飞行动力学重点实验室,西安710072;西北工业大学航天学院智能机器人研究中心,西安710072;西北工业大学航天飞行动力学重点实验室,西安710072;西北工业大学航天学院智能机器人研究中心,西安710072;西北工业大学航天飞行动力学重点实验室,西安710072;中国航天科技集团公司第四研究院第四十一研究所,西安710025;西北工业大学航天学院智能机器人研究中心,西安710072;西北工业大学航天飞行动力学重点实验室,西安710072【正文语种】中文【中图分类】TP242.30 引言随着目前空间深空探测和在轨服务技术的发展,在轨维修、在轨组装技术的需求越来越强烈。

【导语】北京时间2021年10⽉16⽇0时23分,搭载神⾈⼗三号载⼈飞船的长征⼆号F遥⼗三运载⽕箭,在酒泉卫星发射中⼼点⽕发射取得圆满成功。

以下是⽆忧考为⼤家整理的内容,欢迎阅读参考。

1.神⾈13号发射成功英语作⽂ At present, we are facing great changes that have not been seen in a century and have embarked on a new journey of building a modern socialist country in an all-round way. Opportunities and challenges coexist, and difficulties and hopes coexist. On the one hand, young cadres must take over the "baton" of the revolutionary cause, refine experience, inspire wisdom and forge ahead in the history of the party's struggle, learn to be old scalpers, carry forward the spirit of dedication that doesn't care about gain and loss and the sense of responsibility that work conscientiously, and shoulder the important task of creating a new era. On the other hand, we should study hard, increase our skills, act actively, answer the "responsibility volume" of youth, and make good achievements belonging to the young generation in the "relay race" of national rejuvenation. The majority of scientific research workers should further carry forward the aerospace spirit, always climb the peak of science and technology, and take the long march of the new era. Carry forward the patriotic spirit of "grow here and serve the country". In the early stage of the development of China's aerospace industry, many successful and talented scientists gave up favorable conditions abroad and returned to the motherland without hesitation. Many development workers are willing to be unsung heroes, hide their names, make silent contributions, and some even give their precious lives. With their blood and life, they wrote a moving poem that dedicated themselves to the motherland and the people and died. Scientific research talents in the new era should learn from the older generation of scientists, strengthen the idea of scientific and technological innovation and serving the country, integrate the pursuit of career into the needs of the country, inherit the tradition of patriotism and dedication of predecessors, take the needs of the country and the nation as the research guidance, and realize the perfect integration of individual, career and the country on the road of serving the country scientifically and strengthening the country through science and technology. Carry forward the fighting spirit of "thousands of grinding and thousands of hitting, but also perseverance". In the boundless Gobi wasteland and the sparsely populated deep mountain canyon, the older generation of scientific researchers have overcome all kinds of unimaginable difficulties and dangers. Using limited scientific research and experimental means, relying on science, they worked tenaciously, worked hard and made innovations, broke through technical difficulties one by one, and won a great victory in the cause of "two bombs and one satellite". In this era, we are undoubtedly lucky. Our living environment and scientific research conditions are much better than those of the older generation of scientists. Contemporary scientific research workers take the older generation of scientists as an example, vigorously carry forward the spirit of self-reliance and hard work, work down-to-earth and hard in their respective fields, and create new achievements. Carry forward the unity spirit of "everyone gathers firewood and the flame is high". In the extraordinary process of developing "two bombs and one satellite", thousands of scientific and technical personnel, engineering and technical personnel and logistics support personnel from all regions and departments across the country have united, cooperated and made concerted efforts to form a vast Team marching towards the peak of modern science and technology. With their brilliant achievements, they have added a dazzling page to the history of the creation of Chinese civilization. Scientific research is a complex and arduous group work. In scientific research activities, the interaction between people directly affects the scientific research cooperation and the completion of scientific research plans. The majority of scientific research workers should firmly establish the awareness of the overall situation, cooperation and service, focus on the common goal, and cooperate with each other while giving full play to their respective strengths.2.神⾈13号发射成功英语作⽂ In the early morning of this morning, the Long March 2 F remote 13 carrier rocket launched the Shenzhou 13 spacecraft in Jiuquan, carrying three astronauts to pierce the sky like sharp arrows and fly into space. This is another major success of China's manned space project and the "key battle" of China's space station construction. Qingdao science and technology has always provided escort technical support. As early as the early stage of the mission launch, the information support team of CETC 22 Institute has fully entered the working state. On the one hand, the team made every effort to provide the space radio wave environment situation and abnormal event prediction and early warning information for the mission, and provided technical support for the determination of the launch window; On the other hand, the team also provided high-precision radio wave environmental effect data for the mission system to ensure the reliable operation of aerospace measurement and control, satellite communication, space target monitoring radar and other systems. Portable orienteers, land beacons, sea beacons, astronaut communication stations and other equipment developed by CETC 22 are "on the battlefield", forming a three-dimensional search and life-saving network with near, medium and long-distance matching and sea, land and air cooperation. This set of "star equipment" that has repeatedly provided support for China's space mission launch once again provided a strong escort for the smooth departure of Shenzhou 13. "The deep space exploration real-time three-dimensional visualization technology developed by the aerospacevisualization team of the Institute of complex network and visualization has once again accepted the test of practical missions in this flight mission." Dr. Guo Yang of the team told reporters that it is difficult to directly observe the flight state of the spacecraft in space. This technology can process the massive data generated during the flight of the spacecraft in 0.1 seconds "Real time translation" drives the spacecraft model on the screen of the control center to adjust the position and attitude, so that the ground control personnel can see the "live" of the spacecraft for the first time. "This technology is like the 'eyes' of the spacecraft, so that it can maintain a better attitude and run." Guo Yang said that the aerospace visualization team is on a "space business trip" for the Shenzhou 13 manned spacecraft Provide key technical support and engineering support for orbit correction, attitude adjustment and flight control and command. The real-time three-dimensional visualization technology for deep space exploration developed by the team plays a key role in the autonomous rendezvous and docking mission between Shenzhou 13 spacecraft and Tianhe core module, and escorts the on orbit flight of Shenzhou 13 spacecraft. "The real-time three-dimensional visualization technology for deep space exploration developed by the team has helped China's aerospace industry for more than 10 years in the implementation of rendezvous and docking missions, and has become the normalized mission execution system of Beijing aerospace flight control center." According to Guo Yang, the team has served as a deduction platform for the whole process of the mission as early as the launch mission of firefly-1 Mars probe in 2011. It has successively participated in and successfully completed a number of engineering practical missions such as national major manned spaceflight, lunar exploration project and deep space exploration, mainly including tianwen-1 Mars exploration, Chang'e-2, chang'e-3 and chang'e-5 T1 flights Visual flight control command and teleoperation control tasks of the tester, chang'e-4 and chang'e-5 missions, real-time three-dimensional visual flight control and command of the manned space engineering Tiangong-1 and Shenzhou-8, shenzhou-9, shenzhou-10, tiangong-2 and shenzhou-11, tianzhou-1, and the rendezvous and docking tasks of the space station Tianhe core module and shenzhou-12 and tianzhou-2 Wave the task.3.神⾈13号发射成功英语作⽂ Just now, the Long March 2 F remote 13 carrier rocket carrying the Shenzhou 13 manned spacecraft ignited and took off at the Jiuquan Satellite Launch Center. About 40 minutes after the launch of the rocket, the Shenzhou 13 manned spacecraft successfully separated from the rocket and entered the predetermined orbit, successfully sent three astronauts into space, and the launch was a complete success! "This is my first time to watch the rocket launch on site." just before the launch of Shenzhou 13 manned spacecraft, the people watching turned on their mobile phones and couldn't help counting down, "the countdown is almost the same as the rocket ignition!" Because the launch was in the evening, when the countdown to the last second of ignition, Gu Xiaoyan saw the fire flashing from the bottom of the rocket, followed by flames and smoke rising, illuminating the whole launch area, and she also felt the vibration of the ground. A few seconds later, as the low roar spread to the crowd watching the launch, watching the rocket gradually rise into the air, at that moment, everyone began to cheer! "I'm so excited. I'm proud of my motherland! I'm proud of China's aerospace!" I felt her excitement about an hour after the launch. "We saw the whole process of Shenzhou 13 flying to the sky!"。

基于虚拟环境的多操作者多机器人协作遥操作系统作者:马良, 闫继宏, 赵杰, 陈志峰, MA Liang, YAN Jihong, ZHAO Jie, CHEN Zhifeng 作者单位:哈尔滨工业大学机器人技术及系统国家重点实验室,黑龙江,哈尔滨,150080刊名:机器人英文刊名:ROBOT年,卷(期):2011,33(2)被引用次数:2次参考文献(9条)1.Burdea G C Invited review:The synergy between virtual reaIity and robotics[外文期刊] 1999(03)2.刘伟军;朱枫;董再励虚拟现实辅助机器人遥操作技术研究[期刊论文]-机器人 2001(05)3.闫继宏;赵楠;赵杰基于适应性虚拟向导的遥操作机器人系统[期刊论文]-哈尔滨工业大学学报 2007(01)4.赵杰;高胜;闻继宏基于虚拟向导的多操作者多机器人遥操作系统[期刊论文]-哈尔滨工业大学学报 2005(01)5.Cheng J P Research on distributed virtual environment based on web 20096.Ali A E E;El-Desoky A I;Salah M An allocation management algorithm for DVE system 20097.Chong N Y;Kotoku T;Ohba K A collaborative multi-siteteleoperation over an ISDN 2003(8/9)8.Chong N Y;Kotoku T;Ohba K Remote coordinated controis in multiple telerobot cooperation 20009.Alencastre-Miranda M;Munoz-Gomez L;Rudomin I Teleoperating robots in multiuser virtual envirorLments 2003本文读者也读过(3条)1.李珺.潘启树.周浦城.洪炳镕.LI Jun.PAN Qi-shu.Zhou Pu-cheng.HONG Bing-rong未知环境下多机器人协作追捕算法[期刊论文]-电子学报2011,39(3)2.刘利枚.蔡自兴.LIU Li-mei.CAI Zi-xing粒子群优化的多机器人协作定位方法[期刊论文]-中南大学学报(自然科学版)2011,42(3)3.丁滢颍.何衍.蒋静坪基于蚁群算法的多机器人协作策略[期刊论文]-机器人2003,25(5)引证文献(2条)1.王殿君移动机器人网络遥操作系统设计[期刊论文]-机床与液压 2013(9)2.李波.赵怀慈.孙士洁.花海洋空间机器人遥操作预测仿真系统研究[期刊论文]-计算机工程与设计 2013(10)引用本文格式:马良.闫继宏.赵杰.陈志峰.MA Liang.YAN Jihong.ZHAO Jie.CHEN Zhifeng基于虚拟环境的多操作者多机器人协作遥操作系统[期刊论文]-机器人 2011(2)。