Rigorous Analysis of (Distributed) Simulation Results, submitted to Distributed Systems Wor

- Format: pdf

- Size: 40.25 KB

- Pages: 16

2012 Ocean University of China, Fundamentals of English Translation: past exam paper (with answers and explanations)

Question types: 1. Term translation 2. English-Chinese passage translation

Term translation

English to Chinese

1. EQ. Answer: 情商 (Emotional Quotient)
2. A/P. Answer: 付款通知 (advice and pay)
3. IMF. Answer: 国际货币基金组织 (International Monetary Fund)
4. LAN. Answer: 局域网 (Local Area Network)
5. GMO. Answer: 转基因生物 (Genetically Modified Organism)
6. ISS. Answer: 工业标准规格 (Industry Standard Specifications)
7. ICRC. Answer: 国际红十字委员会 (International Committee of the Red Cross)
8. UNEP. Answer: 联合国环境规划署 (United Nations Environment Programme)
9. TARGET. Answer: 泛欧实时全额自动清算系统 (Trans-European Automated Real-time Gross Settlement Express Transfer)
10. carbon footprint. Answer: 碳足迹
11. Church of England. Answer: 英国国教
12. fine arts. Answer: 美术
13. multi-language vendor. Answer: 多语种供应商
14. liberal arts education. Answer: 博雅教育
15. Standard & Poor's Composite Index. Answer: 标准普尔综合指数

Chinese to English

16. 《论语》. Answer: The Analects of Confucius
17. 脸谱. Answer: Facebook
18. 安乐死. Answer: euthanasia
19. 核威慑. Answer: nuclear deterrence
20. 概念文化. Answer: concept culture
21. 教育公平. Answer: education equality
22. 国际结算. Answer: international settlement
23. 经济适用房. Answer: economically affordable house
24. 文化软实力. Answer: cultural soft power
25. 行政问责制. Answer: administrative accountability system
26. 保税物流园区. Answer: bonded logistics park
27. 中国海关总署. Answer: General Administration of Customs of the People's Republic of China
28. 黑社会性质组织. Answer: underworld organization
29. 和平共处五项原则. Answer: the Five Principles of Peaceful Coexistence
30. 《国家中长期人才发展规划纲要(2010-2020)》. Answer: National Medium and Long-term Talent Development Plan (2010-2020)

English-Chinese passage translation

English to Chinese

31. The current limitations of internet learning are actually those of the publishing world: who creates a quality product that offers a coherent analysis of the world we live in? The answer has to lie in a group of people, organized in some way both intellectually and technologically. In the past this has usually been through books and articles. Some of the learning successes of the internet illustrate just how this can work in practice. A classic example is Wikipedia, an online encyclopedia created on a largely voluntary basis by contributors. The underlying mechanisms of Wikipedia are technological; you can author an article by following hyperlinks and the instructions. There are intellectual mechanisms built in, looking at the quality of what is submitted. This does not mean that the articles are equally good, or equal in quality to those encyclopedias created by expert, paid authors. However, there is no doubt that the service is a useful tool, and a fascinating demonstration of the power of distributed volunteer networks. A commercial contrast, which is also free, is the very rigorous Wolfram mathematics site, which has definitions and explanations of many key mathematical concepts. For students who use them with the same academic, critical approach they should apply to any source of information, such resources are useful tools, especially when supplemented by those of national organizations such as the Library of Congress, the National Science Foundation and other internationally recognized bodies. There are, of course, commercially available library services that offer electronic versions of printed media, such as journals, for both professional and academic groups, and these are already a fundamental feature of higher and professional education. Regardless of the medium through which they learn, people have to be critical users of information, but at the same time the information has to be appealing and valuable to the learner. (From Making Minds by Paul Kelley, 2008, pp. 127-128)

Answer: 目前限制网络学习的实际上是出版界:分析我们这个世界的优秀作品是由谁来创作的呢?答案就在一群智力和技术上都有条理的人士身上。

In the realm of mathematics, solving intricate problems often requires more than the mere application of formulas or algorithms. It requires an astute understanding of underlying principles, a creative perspective, and the ability to analyze problems from multiple angles. This essay takes a hypothetical complex mathematical problem and outlines a multi-faceted approach to its resolution, highlighting the importance of analytical reasoning, strategic planning, and innovative thinking.

Suppose we are faced with a challenging combinatorial optimization problem, the Traveling Salesman Problem (TSP). The TSP asks for the shortest possible route that visits every city on a list exactly once and returns to the starting point. Despite its deceptively simple description, this problem is NP-hard: no known polynomial-time algorithm solves every instance. However, we can explore several strategies to find optimal solutions for small instances and near-optimal solutions for large ones.

Firstly, **Mathematical Modeling**: The initial step is to model the problem mathematically. We represent cities as nodes and the distances between them as weighted edges in a graph. By doing so, we convert the real-world scenario into a mathematical construct that can be analyzed systematically. This phase underscores the significance of abstraction and formalization in mathematics: transforming a complex problem into one that can be tackled using established mathematical tools.

Secondly, **Algorithmic Approach**: Exact algorithms such as Held-Karp guarantee an optimal tour, but their running time grows exponentially with the number of cities, so they suit only small instances. For larger instances, a constructive heuristic such as nearest neighbor, or an approximation algorithm such as Christofides' algorithm (which guarantees a tour at most 1.5 times the optimum on metric instances), finds feasible solutions quickly. Although these do not guarantee the absolute optimum, they provide a benchmark against which other solutions can be measured. Here, computational complexity theory comes into play, guiding our decision on which algorithm to use based on the size and characteristics of the dataset.

Thirdly, **Heuristic Methods**: When dealing with large-scale TSPs, heuristic methods like simulated annealing or genetic algorithms can offer practical solutions. These techniques mimic natural processes to explore the solution space, gradually improving upon solutions over time. They allow us to escape local optima and potentially discover globally better solutions, thereby demonstrating the value of simulation and evolutionary computation in problem-solving.

Fourthly, **Optimization Techniques**: Linear programming and dynamic programming can also shed light on the optimal path. For instance, the cutting-plane method iteratively tightens a linear-programming relaxation of the problem, leading to increasingly accurate bounds on the optimal tour. This highlights the importance of advanced optimization techniques in addressing complex mathematical puzzles.

Fifthly, **Parallel and Distributed Computing**: Given the computational intensity of some mathematical problems, distributing the workload across multiple processors or machines can expedite the search for solutions. Cloud computing and parallel algorithms can significantly reduce the time needed to solve large instances of TSP.

Lastly, **Continuous Learning and Improvement**: Each solved instance provides learning opportunities. Analyzing why certain solutions were suboptimal can inform future approaches. This iterative process of analysis and refinement reflects the continuous improvement ethos at the heart of mathematical problem-solving.

In conclusion, tackling a complex mathematical problem like the Traveling Salesman Problem involves a multi-dimensional strategy that includes mathematical modeling, selecting appropriate algorithms, applying heuristic methods, utilizing optimization techniques, leveraging parallel computing, and continuously refining methodologies based on feedback. Such a comprehensive approach embodies the essence of mathematical thinking: rigorous, adaptable, and relentlessly curious. It underscores that solving math problems transcends mere calculation; it is about weaving together diverse strands of knowledge to illuminate paths through the labyrinth of numbers and logic.
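The strategies in the essay above can be tried on a toy instance. The following is a minimal Python sketch, not a reference implementation: it assumes cities are points in the plane with Euclidean distances, and the function names (`tour_length`, `nearest_neighbor`, `two_opt`, `held_karp`) and the sample coordinates are made up for illustration. It builds a tour with the nearest-neighbor heuristic, improves it with 2-opt local search (a simple improvement heuristic swapped in here in place of simulated annealing or genetic algorithms), and compares the result with the exact Held-Karp optimum, which is feasible only for a handful of cities.

```python
import itertools
import math


def tour_length(points, tour):
    """Length of the closed tour that visits points in the given order."""
    return sum(
        math.dist(points[tour[i]], points[tour[(i + 1) % len(tour)]])
        for i in range(len(tour))
    )


def nearest_neighbor(points, start=0):
    """Constructive heuristic: always move to the closest unvisited city."""
    unvisited = set(range(len(points))) - {start}
    tour = [start]
    while unvisited:
        last = tour[-1]
        nxt = min(unvisited, key=lambda j: math.dist(points[last], points[j]))
        unvisited.remove(nxt)
        tour.append(nxt)
    return tour


def two_opt(points, tour):
    """Local search: reverse a segment whenever that shortens the tour."""
    best = tour[:]
    improved = True
    while improved:
        improved = False
        for i in range(1, len(best) - 1):
            for j in range(i + 1, len(best)):
                candidate = best[:i] + best[i:j + 1][::-1] + best[j + 1:]
                if tour_length(points, candidate) < tour_length(points, best) - 1e-12:
                    best = candidate
                    improved = True
    return best


def held_karp(points):
    """Exact optimum by dynamic programming over subsets (exponential time)."""
    n = len(points)
    # dp[(mask, j)]: shortest path from city 0 through the cities in mask, ending at j
    dp = {(1 << j, j): math.dist(points[0], points[j]) for j in range(1, n)}
    for size in range(2, n):
        for subset in itertools.combinations(range(1, n), size):
            mask = sum(1 << j for j in subset)
            for j in subset:
                prev = mask & ~(1 << j)
                dp[(mask, j)] = min(
                    dp[(prev, k)] + math.dist(points[k], points[j])
                    for k in subset
                    if k != j
                )
    full = sum(1 << j for j in range(1, n))
    return min(dp[(full, j)] + math.dist(points[j], points[0]) for j in range(1, n))


# Hypothetical city coordinates, chosen only for illustration.
cities = [(0, 0), (1, 5), (5, 2), (6, 6), (2, 3), (7, 1)]
greedy = nearest_neighbor(cities)
refined = two_opt(cities, greedy)
print("nearest neighbor:", tour_length(cities, greedy))
print("after 2-opt:     ", tour_length(cities, refined))
print("Held-Karp optimum:", held_karp(cities))
```

On any instance the three printed lengths are ordered optimum ≤ 2-opt ≤ nearest neighbor, which is the benchmarking role the essay assigns to exact and heuristic methods.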

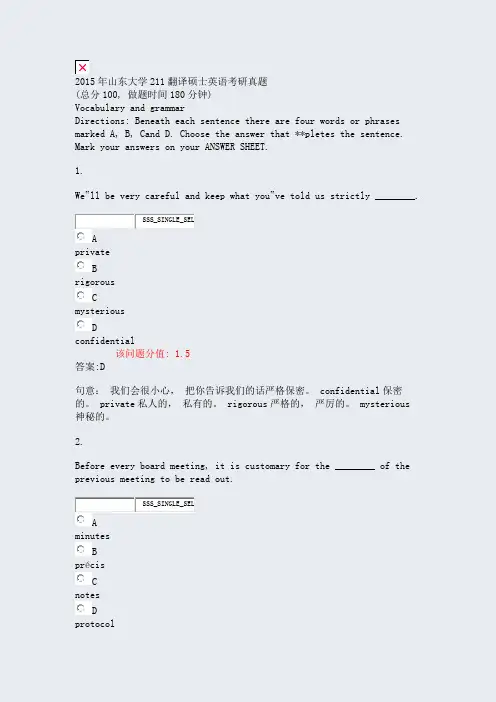

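2015 Shandong University, 211 English for the Master of Translation and Interpreting: past postgraduate entrance exam paper (total score: 100; time allowed: 180 minutes)

Vocabulary and grammar

Directions: Beneath each sentence there are four words or phrases marked A, B, C and D. Choose the answer that best completes the sentence. Mark your answers on your ANSWER SHEET.

1. We'll be very careful and keep what you've told us strictly ________.

A. private B. rigorous C. mysterious D. confidential

Points: 1.5. Answer: D. Sentence meaning: We will be very careful and keep what you have told us strictly secret.

confidential: meant to be kept secret.

private: personal, privately owned.

rigorous: strict, severe.

mysterious: hard to explain or understand.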

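2. Before every board meeting, it is customary for the ________ of the previous meeting to be read out.

A. minutes B. précis C. notes D. protocol

Points: 1.5. Answer: A. Sentence meaning: Before every board meeting, the record of the previous meeting is usually read out.

minutes: the official record of a meeting.

précis: a summary.

notes: informal written records.

protocol: a formal agreement or a draft of one.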

3. He was barred from the club for refusing to ________ with the rules.

A. conform B. abide C. adhere D. comply

Points: 1.5. Answer: D. Sentence meaning: He was thrown out of the club because he refused to follow the rules.

Common IMRD sentence patterns

The IMRD structure (Introduction, Methods, Results, Discussion) is very common in academic papers and reports.

The following common sentence patterns can help you organize and express your points more effectively:

1. Introduction:
- Introduce the background and importance of the research field: "The field of [research area] has been gaining increasing attention due to its potential impact on [specific aspect]."
- State the research question or aim: "This study aims to investigate the relationship between [variable 1] and [variable 2] in order to shed light on [research question]."
- Outline the research method and structure: "The following sections will present the methodology, results, and discussion of the study, providing a comprehensive analysis of the research findings."

2. Methods:
- Describe the research design and data collection: "A quantitative research design was employed, with data collected through surveys distributed to a sample of [number] participants."
- Explain the experimental steps and procedure: "Participants were randomly assigned to either the control group or the experimental group, and were instructed to complete a series of tasks within a specified time frame."
- Discuss the reliability and validity of the study: "The study's methodology was carefully designed to minimize bias and ensure the validity of the results, with rigorous data analysis procedures implemented to enhance the reliability of the findings."

3. Results:
- Describe the main findings: "The analysis revealed a significant positive correlation between [variable 1] and [variable 2], supporting the hypothesis that [research question]."
- Present data and figures to support the conclusions: "Figure 1 illustrates the distribution of responses to the survey questions, indicating a clear trend towards [specific outcome]."
- Emphasize the importance and significance of the results: "These findings have important implications for future research in the field, suggesting potential areas for further investigation and development."

4. Discussion:
- Relate the results to existing research: "The results of this study are consistent with previous research findings, highlighting the robustness of the relationship between [variables]."
- Explore possible explanations and implications: "It is likely that the observed differences in [variable] were due to [potential factors], which should be considered in future studies to enhance the understanding of the phenomenon."
- Make recommendations and point to future research: "Future research should focus on exploring the mechanisms underlying the observed patterns, as well as investigating the long-term effects of [intervention] on [outcome]."

Using these common sentence patterns, you can organize and present your research more clearly, making your points and conclusions easier for readers to understand and accept.

English essays on the role robots will play in the future: three sample essays, for readers' reference

Essay 1

The Role of Robots in Our Future Society

As technology advances at a breakneck pace, it's becoming increasingly clear that robots will play a major role in shaping our future society. The integration of artificial intelligence and robotics is already transforming various industries, and the implications of this technological revolution are both exciting and daunting. As a student studying computer science, I find myself both fascinated and apprehensive about the potential impact of robots on our daily lives.

One area where robots are poised to make a significant impact is in the workforce. Many routine and repetitive tasks that have traditionally been performed by humans are now being automated by robots. This trend is already visible in manufacturing plants, where robotic arms and assembly lines have replaced human workers on the production line. While this has led to concerns about job losses, it's important to recognize that robots are designed to augment human capabilities rather than replace them entirely.

In the future, we can expect robots to take on an even broader range of tasks, from construction and maintenance to healthcare and transportation. Imagine a world where robots are deployed to repair infrastructure, clean hazardous waste sites, or even assist in surgical procedures. The potential benefits of such applications are vast, including increased efficiency, reduced costs, and minimized risks to human workers.

However, the widespread adoption of robots also raises ethical and social concerns. One of the most pressing issues is the potential for job displacement and its impact on the workforce. As robots become more advanced and capable of performing complex tasks, many traditional jobs could become obsolete.
This could lead to widespread unemployment and economic disruption if appropriate measures are not taken to retrain and upskill workers for new roles.

Another concern is the potential for robots to perpetuate biases and discrimination. As artificial intelligence systems are trained on data that may reflect societal biases, there is a risk that robots could inadvertently discriminate against certain groups or make decisions that reinforce existing prejudices. It is crucial that the development and deployment of robots be guided by ethical principles and rigorous testing to ensure fairness and accountability.

Despite these challenges, the potential benefits of robotics are too significant to ignore. In the field of healthcare, for example, robots could revolutionize patient care and medical research. Robotic assistants could help elderly or disabled individuals with daily tasks, enabling them to maintain their independence and quality of life. In addition, robotic surgeons could perform complex procedures with unprecedented precision, reducing the risk of human error and improving patient outcomes.

Education is another area where robots could play a transformative role. Robotic tutors and interactive learning platforms could personalize the educational experience for each student, adapting to their individual needs and learning styles. This could help bridge the gap in educational opportunities and ensure that all students have access to high-quality education, regardless of their socioeconomic background or geographic location.

Furthermore, robots could contribute to scientific exploration and environmental conservation efforts. Robotic probes and rovers could explore distant planets and extreme environments that are inaccessible or too dangerous for human explorers.
Meanwhile, robotic systems could be deployed to monitor and protect endangered species, track environmental changes, and assist in disaster response and recovery efforts.

As we look to the future, it's clear that robots will become increasingly integrated into our daily lives. However, it's crucial that we approach this technological revolution with a balanced and responsible mindset. We must address the ethical and social implications of robotics, ensure that the benefits are distributed equitably, and prioritize the development of robust safeguards and regulations.

At the same time, we must embrace the transformative potential of robotics and harness it to tackle some of the world's greatest challenges. By working collaboratively with robots, we can enhance human capabilities, increase productivity, and unlock new frontiers of knowledge and innovation.

As a student, I am both excited and humbled by the prospects of a robotic future. It is our responsibility to shape this technological revolution in a way that serves the greater good of humanity. We must approach robotics with critical thinking, ethical reasoning, and a deep commitment to creating a more equitable, sustainable, and prosperous society for all.

In conclusion, the role of robots in our future society is multifaceted and complex. While they offer tremendous potential for improving various aspects of our lives, we must also confront the challenges and implications of their widespread adoption. By fostering a robust public discourse, promoting ethical and responsible development, and embracing a spirit of collaboration between humans and machines, we can harness the power of robotics to create a better world for generations to come.

Essay 2

The Role of Robots in the Future

As technology continues its rapid advancement, one area that is becoming increasingly prevalent is robotics. Robots are no longer just confined to science fiction movies and novels; they are very much a reality in the modern world.
From manufacturing plants to operating rooms, robots are being utilized in a wide variety of fields and industries. However, their role is only set to grow larger and more significant in the coming years and decades. As a student looking towards the future, it is fascinating to consider the ways in which robots may shape and define our world going forward.

One area where robots are likely to have a major impact is in the workforce. There are already examples of robots taking over manual labor and repetitive tasks in factories and warehouses. Their ability to work tirelessly around the clock with a high degree of accuracy makes them ideal for such roles. As artificial intelligence and machine learning capabilities improve, robots will become smarter and more adaptable, allowing them to take on increasingly complex jobs. This could displace human workers in certain fields, leading to social and economic upheaval. However, it could also free up humans to pursue more creative, intellectually stimulating professions. Rather than spending their days on assembly lines, people may be able to focus on innovation, entrepreneurship, science, and the arts.

Of course, developing smarter robots with advanced decision-making abilities raises ethical concerns that will need to be carefully navigated. How much autonomy should robots be given? What safeguards need to be put in place? These are just a few of the thorny questions that will arise as robots play a bigger role in society.

Another sphere where robots could be transformative is in healthcare and elder care. Robots may be enlisted to help care for the sick and elderly, roles that are often physically and emotionally demanding for human caregivers. Robotic nurses could monitor patients, dispense medication, and help with mobility with a high degree of efficiency and precision. For the elderly who wish to live independently, companion robots could provide company, reminder services, and assistance with daily tasks.
Japan, which has an aging population, is already a leader in developing care robots for this purpose.

The military applications of robotics are also significant. Unmanned drones are already used extensively for surveillance and airstrikes, keeping human pilots out of harm's way. In the future, autonomous robots could play a larger role on the battlefield, raising ethical issues around the use of AI to make decisions impacting human life. That said, robots could also be used to dispose of landmines and other unexploded ordnance, saving many lives.

More broadly, robots may become mainstream consumer products that we interact with regularly at home, work, and in public spaces. Personal assistant robots could help with household chores like cleaning and cooking. Office robots may schedule meetings and take notes. Shopping mall robots could help customers find stores and promotions. City robots could monitor infrastructure, direct traffic, and keep spaces clean and safe. The possibilities are vast once you begin to imagine how robots could be integrated into our daily lives and environments.

For those pursuing careers in science, technology, engineering, and mathematics (STEM fields), the rise of robotics offers particularly exciting prospects. There will likely be a huge demand for robot designers, programmers, and technicians as robots proliferate across industries and public spaces. Developing improved artificial intelligence, strengthening cybersecurity, and enhancing human-robot interactions are just a few of the challenges that will need to be tackled. Bright minds entering these cutting-edge fields could help shape the future of robotics.

With such a meteoric rise on the horizon, it seems robots are destined to go from science fiction to an inescapable part of everyday reality. They have the potential to enhance human productivity and quality of life in innumerable ways, yet they also present risks that will require careful ethics guidelines and regulations.
As a student today, I find it both exciting and somewhat daunting to ponder the robot-filled world that may await us. Perhaps one day, robots could even be helping students like me with homework and studying! One thing is for certain: staying ahead of the curve on robotics and other rapidly evolving technologies will be key to thriving in the world of tomorrow.

Essay 3

The Role of Robots in the Future

As technology continues to advance at a breakneck pace, one area that is witnessing tremendous growth and innovation is robotics. Robots are becoming increasingly prevalent in various sectors, from manufacturing and healthcare to space exploration and entertainment. As a student studying this fascinating field, I cannot help but wonder what role robots will play in the future and how they will shape our world.

To begin with, robots are expected to play a significant role in the manufacturing industry. Automation has already revolutionized the way goods are produced, and robots have become an integral part of assembly lines. However, the robots of the future will be even more advanced, with enhanced capabilities for precision, speed, and efficiency. They will be able to perform complex tasks with minimal human intervention, reducing costs and increasing productivity. Additionally, these robots will be able to adapt to changing conditions and learn from their experiences, making them even more valuable assets in the manufacturing process.

Another area where robots will likely have a profound impact is healthcare. Medical robots are already being used for surgical procedures, rehabilitation, and drug delivery. In the future, robots may be able to perform even more complex surgeries with greater accuracy and precision than human surgeons. They could also assist in the care of the elderly and people with disabilities, providing companionship and support.
Furthermore, robots could play a crucial role in telemedicine, allowing patients in remote areas to receive quality healthcare services.

Robots will also be instrumental in space exploration. NASA and other space agencies have already been using robotic rovers and probes to explore other planets and celestial bodies. In the future, more advanced robots could be sent to establish colonies on Mars and other planets, paving the way for human habitation. These robots would be capable of constructing habitats, extracting resources, and performing various tasks in harsh extraterrestrial environments.

Another area where robots could have a significant impact is disaster response and search and rescue operations. Robots could be designed to navigate through rubble and debris, locate survivors, and provide essential supplies in the aftermath of natural disasters or other emergencies. They could also be used to defuse bombs and handle hazardous materials, protecting human lives in dangerous situations.

In the realm of entertainment, robots could revolutionize the way we experience movies, video games, and theme parks. Imagine watching a movie where robots could create realistic 3D environments and characters that interact with the audience. Or imagine playing a video game where robots could create dynamic, ever-changing environments that adapt to your gameplay. Theme parks could also feature robotic attractions that provide immersive and interactive experiences for visitors.

However, as exciting as these prospects may seem, there are also concerns about the potential impact of robots on employment and job displacement. As robots become more capable and efficient, they could potentially replace human workers in various industries, leading to job losses and economic disruptions.
This is a valid concern that needs to be addressed by policymakers, educators, and society as a whole.

One potential solution could be to focus on retraining and reskilling programs, ensuring that workers have the necessary skills to adapt to the changing job market. Additionally, new job opportunities could arise in fields related to robotics, such as programming, maintenance, and design. Governments and companies could also explore ways to implement universal basic income or other safety nets to support those displaced by automation.

Furthermore, it is crucial to consider the ethical implications of advanced robotics. As robots become more autonomous and intelligent, we must grapple with questions of accountability, privacy, and the potential for misuse or unintended consequences. We must establish clear guidelines and regulations to ensure that robots are developed and used in a responsible and ethical manner.

In conclusion, the role of robots in the future is poised to be vast and far-reaching. They will likely revolutionize various industries, from manufacturing and healthcare to space exploration and entertainment. However, their impact will also bring challenges and disruptions that need to be addressed proactively. As a student studying this field, I am excited to be part of this technological revolution and contribute to shaping the responsible development and deployment of robots for the betterment of humanity.

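Northwestern Seismological Journal (西北地震学报), Vol. 29, No. 1, March 2007

Parallel Finite-Difference Simulation of the Seismic Wavefield in Three-Dimensional Viscoelastic Media①

WANG De-li, YONG Yun-dong, HAN Li-guo, LIAN Yu-guang (College of Geoexploration Science and Technology, Jilin University, Changchun, Jilin 130026, China)

Abstract (translated from the Chinese): Forward modeling of seismic wave propagation in three-dimensional viscoelastic complex media with the finite-difference method places heavy demands on computer memory and computing speed; a single PC or workstation can only compute short-duration wavefields on relatively small grids. This paper introduces an MPI-based parallel finite-difference method that can simulate, on a PC cluster, the wavefield of seismic waves propagating in larger-scale three-dimensional viscoelastic complex media, and can predict the kinematic and dynamic properties of seismic waves propagating under such conditions. This is of theoretical and practical significance for better understanding wave propagation phenomena, interpreting real seismic data, and solving inverse problems.

Keywords: three-dimensional viscoelastic media; seismic wavefield simulation; parallel computing; MPI; finite difference

CLC number: P315.3+1, P631.4. Document code: A. Article ID: 1000-0844(2007)01-0030-05

Parallel Simulation of Finite Difference for Seismic Wavefield Modeling in 3-D Viscoelastic Media

WANG De-li, YONG Yun-dong, HAN Li-guo, LIAN Yu-guang (College of Geoexploration Science and Technology, Jilin University, Changchun 130026, China)

Abstract: When the finite difference (FD) method is used to model the propagation of seismic waves in 3-D viscoelastic complex media, it consumes vast quantities of computational resources. On a single PC or workstation, 3-D calculations are therefore still limited to small grid sizes and short seismic wave traveltimes. In this paper, a parallel FD algorithm based on the message passing interface (MPI) is introduced to solve this problem. Using a PC cluster, we can calculate the wavefield of large 3-D viscoelastic complex models and, furthermore, predict and understand the kinematic and dynamic properties of seismic waves propagating through models of the crust. It helps us in every stage of a seismic investigation.

Key words: 3-D viscoelastic media; Seismic wavefield simulation; Parallel computing; MPI; Finite difference

0 Introduction

Seismic forward modeling is an important foundation of seismic exploration and seismology. It plays an important role not only in exploration for oil, natural gas, coal, and metallic and non-metallic mineral resources and in engineering and environmental geophysics, but is also widely used in earthquake hazard prediction, seismic zoning, and studies of crustal structure and the Earth's interior.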
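The abstract above describes splitting a finite-difference grid across cluster nodes with MPI. As a rough illustration of that idea only, the Python sketch below emulates domain decomposition with ghost-cell (halo) exchange inside a single process. It is heavily simplified and rests on assumptions that are not from the paper: a 1-D scalar wave equation instead of the 3-D viscoelastic system, a second-order leapfrog scheme, fixed zero boundaries, and plain list copies standing in for MPI messages. All function names are hypothetical.

```python
import math


def step(u_prev, u_curr, r2):
    """One leapfrog update of interior points; the two end values are set to 0."""
    n = len(u_curr)
    u_next = [0.0] * n
    for i in range(1, n - 1):
        u_next[i] = (2 * u_curr[i] - u_prev[i]
                     + r2 * (u_curr[i + 1] - 2 * u_curr[i] + u_curr[i - 1]))
    return u_next


def simulate_serial(u0, steps, r2):
    """Reference run on the whole grid (zero initial velocity)."""
    u_prev, u_curr = u0[:], u0[:]
    for _ in range(steps):
        u_prev, u_curr = u_curr, step(u_prev, u_curr, r2)
    return u_curr


def split_bounds(n_interior, parts):
    """Assign interior indices 1..n_interior to `parts` contiguous blocks."""
    base, extra = divmod(n_interior, parts)
    bounds, start = [], 1
    for p in range(parts):
        size = base + (1 if p < extra else 0)
        bounds.append((start, start + size))
        start += size
    return bounds


def exchange_halos(blocks):
    """Copy edge values between neighbours; stands in for MPI send/receive."""
    for p in range(len(blocks) - 1):
        left, right = blocks[p], blocks[p + 1]
        left[-1] = right[1]   # left block's right ghost <- right block's first owned point
        right[0] = left[-2]   # right block's left ghost <- left block's last owned point


def simulate_decomposed(u0, steps, r2, parts):
    """Same scheme, but each block only sees its own points plus two ghost cells."""
    bounds = split_bounds(len(u0) - 2, parts)
    curr = [u0[a - 1:b + 1] for a, b in bounds]  # owned points [a, b) plus ghosts
    prev = [block[:] for block in curr]
    for _ in range(steps):
        exchange_halos(curr)
        nxt = [step(pv, cu, r2) for pv, cu in zip(prev, curr)]
        prev, curr = curr, nxt
    result = [0.0] * len(u0)
    for (a, b), block in zip(bounds, curr):
        result[a:b] = block[1:-1]
    return result


# Hypothetical test pulse; r2 = (c*dt/dx)**2 must not exceed 1 for stability.
n = 41
u0 = [math.exp(-((i - 20) / 3.0) ** 2) for i in range(n)]
u0[0] = u0[-1] = 0.0
serial = simulate_serial(u0, steps=50, r2=0.25)
parallel_like = simulate_decomposed(u0, steps=50, r2=0.25, parts=4)
print(max(abs(a - b) for a, b in zip(serial, parallel_like)))
```

The point of the comparison is that each block needs only one layer of neighbour data per time step for this stencil, so the decomposed run reproduces the serial result while the per-block memory and work shrink with the number of blocks. In a real MPI code the `exchange_halos` copies would become messages between processes.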

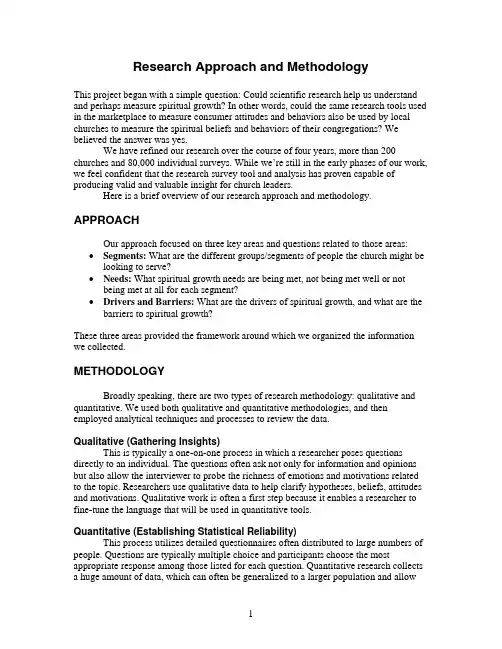

Research Approach and MethodologyThis project began with a simple question: Could scientific research help us understand and perhaps measure spiritual growth? In other words, could the same research tools used in the marketplace to measure consumer attitudes and behaviors also be used by local churches to measure the spiritual beliefs and behaviors of their congregations? We believed the answer was yes.We have refined our research over the course of four years, more than 200 churches and 80,000 individual surveys. While we’re still in the early phases of our work, we feel confident that the research survey tool and analysis has proven capable of producing valid and valuable insight for church leaders.Here is a brief overview of our research approach and methodology. APPROACHOur approach focused on three key areas and questions related to those areas: •Segments: What are the different groups/segments of people the church might be looking to serve?•Needs: What spiritual growth needs are being met, not being met well or not being met at all for each segment?•Drivers and Barriers: What are the drivers of spiritual growth, and what are the barriers to spiritual growth?These three areas provided the framework around which we organized the information we collected.METHODOLOGYBroadly speaking, there are two types of research methodology: qualitative and quantitative. We used both qualitative and quantitative methodologies, and then employed analytical techniques and processes to review the data.Qualitative (Gathering Insights)This is typically a one-on-one process in which a researcher poses questions directly to an individual. The questions often ask not only for information and opinions but also allow the interviewer to probe the richness of emotions and motivations related to the topic. Researchers use qualitative data to help clarify hypotheses, beliefs, attitudes and motivations. 
Qualitative work is often a first step because it enables a researcher to fine-tune the language that will be used in quantitative tools.Quantitative (Establishing Statistical Reliability)This process utilizes detailed questionnaires often distributed to large numbers of people. Questions are typically multiple choice and participants choose the most appropriate response among those listed for each question. Quantitative research collects a huge amount of data, which can often be generalized to a larger population and allowfor direct comparisons between two or more groups. It also provides statisticians with a great deal of flexibility in analyzing the results.Analytical Process and Techniques (Quantifying Insights and Conclusions) Quantitative research is followed by an analytical plan designed to process the data for information and empirically-based insights. Three common analytical techniques were used in our three research phases:•Correlation Analysis: Measures whether or not, and how strongly, two variables are related. This does not mean that one variable causes the other; it means theytend to follow a similar pattern of movement.•Discriminate Analysis: Determines which variables best explain the differences between two or more groups. This does not mean the variables cause thedifferences to occur between the groups; it means the variables distinguish onegroup from another.•Regression Analysis: Used to investigate relationships between variables. This technique is typically utilized to determine whether or not the movement of adefined (or dependent) variable is caused by one or more independent variables.We used both qualitative and quantitative methods in 2004 when we focused exclusively on Willow Creek Community Church and also in our 2007-2008 research involving hundreds of churches. Here is a summary of the methodology used in our most recent work.Qualitative Phase (December 2006)•One-on-one interviews with sixty-eight congregants. 
We specifically recruited people in the more advanced stages of spiritual growth. Our goal was to capturelanguage and insights to help guide the development of our survey questionnaire.•Interview duration: 30-45 minutes•Focused on fifteen topics. Topics included spiritual life history, church background, personal spiritual practices, spiritual attitudes and beliefs, etc. Quantitative PhasesPhase 1 (January-February 2007)•E-mail survey fielded with seven churches diverse in geography, size, ethnicity and format•Received 4,943 completed surveys, resulting in 1.4 million data points•Utilized fifty-three sets of questions on topics such as:o Attitudes about Christianity and one’s personal spiritual lifeo Personal spiritual practices, including statements about frequency of Bible reading, prayer, journaling, etc.o Satisfaction with the role of the church in spiritual growtho Importance and satisfaction of specific church attributes (e.g. helps me understand the Bible in depth) related to spiritual growtho Most significant barriers to spiritual growtho Participation and satisfaction with church activities, such as weekend service, small groups, youth ministries and servingPhase 2 (April-May 2007)•E-mail survey fielded with twenty-five churches diverse in geography, size, ethnicity and format•Received 15,977 completed surveys•Utilized refined set of questions based on Phase 1Phase 3 (October-November 2007 and January-February 2008)•E-mail survey fielded with 487 churches diverse in geography, size, ethnicity and format, including ninety-one churches in seventeen countries•Received 136,547 completed surveys•Utilized refined set of questions based on Phase 2 researcho Expanded survey to include twenty statements about core Christian beliefs and practices from The Christian Life Profile Assessment Tool Training Kit.* o Added importance and satisfaction measures for specific attributes related to weekend services, small groups, children’s and youth ministries, and 
serving experiences.

Analytical Process and Resources

Each phase of our research included an analytical plan executed by statisticians and research professionals. These plans utilized many analytical techniques, including correlation, discriminate and regression analyses. In FOLLOW ME, our observations about the predictability of spiritual factors are derived primarily from extensive discriminate analysis. To put our analytical approach into perspective, here are three points of explanation about the nature of our research philosophy.

1. Our research is a “snapshot” in time.

Because this research is intentionally done at one point in time, like a snapshot, it is impossible to determine with certainty that a given variable, such as “reflection on Scripture,” distinguishes one segment from another (for example, Growing in Christ compared with Close to Christ). To accomplish this, we would have to assess the spiritual development of the same people over a period of time (longitudinal research).

However, the fact that increased levels of reflection on Scripture occur in the Close to Christ segment compared with the Growing in Christ segment strongly suggests that reflection on Scripture does influence spiritual movement between these segments (Movement 2). While it does not determine conclusively that a given variable “causes” movement, discriminate analysis identifies the factors that are the most differentiating characteristics between the two segments. So we infer from its findings that certain factors are more “predictive,” and consequently more influential to spiritual growth.

* Randy Frazee, The Christian Life Profile Assessment Tool Training Kit (Grand Rapids, MI: Zondervan, 2005).

Our ultimate goal is to measure the same people over multiple points in time (longitudinal research) in order to more clearly understand the causal effects of spiritual growth. However, even then we know there will be much left to learn, and much we will never understand about spiritual formation.
The attitudes and behaviors we measure today should not be misinterpreted as defining spiritual formation. Instead they should be considered instruments used by the Holy Spirit to open our hearts for his formative work.

2. The purpose of this research is to provide a diagnostic tool for local churches.

Our intent is to provide a diagnostic tool for churches that is equivalent to the finest marketplace research tool at a fraction of the marketplace cost. This is “applied” research rather than “pure” research, meaning that its intent is to provide actionable insights for church leaders, not to create social science findings for academic journals.

In a nutshell, while we intend to reinforce our research base with longitudinal studies, we chose to draw conclusions about the predictability and the influence of spiritual attitudes and behaviors based on point-in-time research evaluated through discriminate analysis. This approach meets the most rigorous standards of market research that routinely influence decision-making at some of the most respected and successful organizations in the country.

3. Research is an art as well as a science.

While the data underlying our findings is comprehensive and compelling as science, we have also benefited from the art of experts whose judgment comes from years of experience. The two research experts closest to this work represent almost fifty years of wide-ranging applied research projects. Eric Arnson began his career in quantitative consumer science at Procter & Gamble, and ultimately became the North American brand strategy director for McKinsey and Company. Terry Schweizer spent twenty years with the largest custom-market research organization in the world, running its Chicago office before contributing full-time to REVEAL’s final development phase.
Eric and Terry poured the benefit of their expertise and judgment into every finding in this book, which gives us confidence that the art component of our research is on very solid ground.

A Note about the Top Five Catalysts for Each Movement

You may have noticed that the order of most influential factors shifts slightly between the four independent categories of spiritual catalysts and the lists of “top five catalysts” for Movements 1 and 2. For example, chart 2-7 shows that reflection on Scripture is the most influential personal spiritual practice for each movement. But when we list the top five catalysts for Movements 1 and 2 (charts 3-5 and 3-9), prayer appears to be more influential than reflection on Scripture. The apparent discrepancies are a function of the discriminate analysis.

The top five catalysts for each movement were determined by evaluating all fifty-plus spiritual factors through the discriminate lens, which at times recalibrates the predictability of one factor versus another. That happens when a portion of one factor’s predictive power is shared by another. For example, as noted, reflection on Scripture was more highly predictive of spiritual movement than prayer when we looked at personal spiritual practices across the three movements. However, when reflection on Scripture was analyzed alongside all the fifty-plus catalysts, its level of influence was shared to some extent with another factor, possibly the belief in salvation by grace. In this case, because the discriminate analysis is looking for the best combination of top five catalysts to explain differences between two segments, it’s possible that reflection on Scripture ranked lower than prayer because part of its predictive power is explained by the salvation by grace factor.

Confused? One way to think about this is to consider the Food Pyramid, which includes five basic food groups: grains, vegetables, fruits, dairy and meat. Each food group could list its most nutritious foods in order.
But when you pool all possible foods together looking for the best food plan for a young child, it’s possible that not all the top-ranked nutritious foods are on the list. Two reasons account for this. First, when looking for the best combination of nutrients, some foods will be more necessary for a young child than others; that influences the list. Second, some of those foods will have vitamins and nutrients that are redundant with others, so that affects which grains, vegetables, and other foods are on the best food plan. So the best combination of foods for a young child won’t necessarily include the most nutritious foods in each one of the food group categories, and the rank order of “best” foods could vary as well.

This is analogous to our efforts to find the best combination of spiritual catalysts for the three movements of spiritual growth. The bottom line is that pouring all the spiritual catalysts into one discriminate analysis bucket can shake up the order of most influential (the top five) because the predictive power of each factor has to recalibrate in relation to all the others.

In summary, we have employed the highest applied research standards available, including a robust qualitative process and three waves of quantitative surveys across hundreds of diverse churches. While there is much more work yet to do, we are confident that the insights and findings in Follow Me reflect a very high level of research excellence.
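The recalibration described in the note on the top five catalysts can be illustrated with a small simulation. The sketch below is not the discriminate analysis used in this research: it swaps in a much simpler technique (ordinary and semi-partial Pearson correlations) and runs on made-up data. Every name and coefficient in it (`grace`, `scripture`, `prayer`, `growth`, and the weights that link them) is an assumption chosen only to show how a factor that looks strongest on its own can rank lower once the predictive power it shares with another factor is set aside.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def residualize(xs, zs):
    """Return the part of xs that a straight-line fit on zs cannot explain."""
    n = len(xs)
    mx, mz = sum(xs) / n, sum(zs) / n
    slope = (sum((z - mz) * (x - mx) for x, z in zip(xs, zs))
             / sum((z - mz) ** 2 for z in zs))
    return [(x - mx) - slope * (z - mz) for x, z in zip(xs, zs)]


def simulate(n=2000, seed=0):
    """Generate hypothetical survey scores; all weights are illustrative."""
    rng = random.Random(seed)
    grace = [rng.gauss(0, 1) for _ in range(n)]
    # Assumption: reflection on Scripture overlaps heavily with the grace belief.
    scripture = [0.9 * g + math.sqrt(0.19) * rng.gauss(0, 1) for g in grace]
    # Assumption: prayer is unrelated to the other two factors.
    prayer = [rng.gauss(0, 1) for _ in range(n)]
    growth = [g + 0.2 * s + 0.6 * p + rng.gauss(0, 0.5)
              for g, s, p in zip(grace, scripture, prayer)]
    return grace, scripture, prayer, growth


grace, scripture, prayer, growth = simulate()

# Each practice examined on its own.
alone_scripture = pearson(scripture, growth)
alone_prayer = pearson(prayer, growth)

# Each practice after removing what it shares with the grace belief.
unique_scripture = pearson(residualize(scripture, grace), growth)
unique_prayer = pearson(residualize(prayer, grace), growth)

print(f"alone:  scripture={alone_scripture:.2f}  prayer={alone_prayer:.2f}")
print(f"unique: scripture={unique_scripture:.2f}  prayer={unique_prayer:.2f}")
```

In this toy setup the Scripture score correlates more strongly with growth than prayer does when each is examined alone, yet its unique contribution falls below prayer's once the overlap with the grace belief is removed. That is the same kind of reordering the food plan analogy describes, shown here with a deliberately simplified stand-in for the full analysis.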

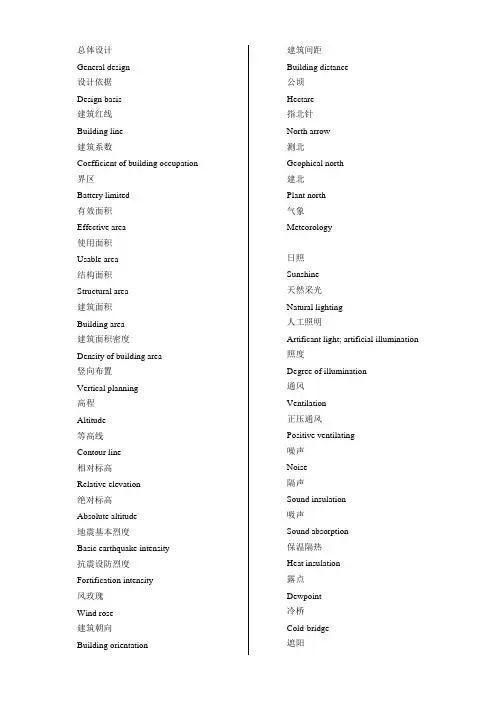

总体设计 General design
设计依据 Design basis
建筑红线 Building line
建筑系数 Coefficient of building occupation
界区 Battery limit
有效面积 Effective area
使用面积 Usable area
结构面积 Structural area
建筑面积 Building area
建筑面积密度 Density of building area
竖向布置 Vertical planning
高程 Altitude
等高线 Contour line
相对标高 Relative elevation
绝对标高 Absolute altitude
地震基本烈度 Basic earthquake intensity
抗震设防烈度 Fortification intensity
风玫瑰 Wind rose
建筑朝向 Building orientation
建筑间距 Building distance
公顷 Hectare
指北针 North arrow
测北 Geographical north
建北 Plant north
气象 Meteorology
日照 Sunshine
天然采光 Natural lighting
人工照明 Artificial light; artificial illumination
照度 Degree of illumination
通风 Ventilation
正压通风 Positive ventilating
噪声 Noise
隔声 Sound insulation
吸声 Sound absorption
保温隔热 Heat insulation
露点 Dew point
冷桥 Cold-bridge
遮阳 Sunshade
恒温恒湿 Constant temperature & constant humidity
消声 Noise elimination; noise reduction
防振 Antivibration

4.2 建筑一般词汇 Conventional terms of architecture
方案 Scheme; draft
草图 Sketch
透视图 Perspective drawing
建筑构图 Architectural composition
坐标 Coordinate
纵坐标 Ordinate
横坐标 Abscissa
跨度 Span
开间 Bay
进深 Depth
层高 Floor height
净高 Clear height; headroom
模数 Module; modulus
裙房 Skirt building
楼梯 Stair
梯段 Stair slab
楼梯平台 Stair landing
安全出口 Safety exit
疏散楼梯 Escape staircase
楼梯间 Stair well
封闭楼梯间 Enclosure staircase
防烟楼梯间 Smoke prevention stair well
消防电梯间 Emergency elevators well
自动扶梯 Escalator
中庭 Atrium
疏散走道 Escape corridor; escape way
耐火等级 Fire-resistive grade
生产类别 Classification of production
耐火极限 Duration of fire-resistance
防火间距 Fire-break distance
泄压面积 Area of pressure release
闷顶 Mezzanine
砖标号 Grade of brick; strength of brick
承重墙 Bearing wall
非承重墙 Non-bearing wall
挡土墙 Retaining wall
填充墙 Filler wall
围护墙 Curtain wall; cladding wall; enclosure wall
女儿墙 Parapet wall
隔墙 Partition
(浴室、厕所)隔断 Stall
窗间墙 Pier
墙垛 Pillar
过梁 Lintel
圈梁 Gird; girt; girth
防潮层 Damp-proof course
勒脚 Plinth
横梁 Transverse beam
纵墙 Longitudinal wall
山墙 Gable; gable wall
防火墙 Fire wall
压顶 Coping
勾缝 Pointing
砖砌平拱 Brick flat arch
预埋件 Embedded inserts
直爬梯 Ladder
栏杆 Railing
防腐蚀 Corrosion resistant; anticorrosion
化学溶蚀 Chemical erosion
膨胀腐蚀 Expansion corrosion
化学腐蚀 Chemical corrosion
电化学腐蚀 Electro-chemical corrosion
晶间腐蚀 Intergranular corrosion
气相腐蚀 Gaseous corrosion
液相腐蚀 Liquidoid corrosion
固相腐蚀 Solid corrosion
腐蚀裕度 Allowance for corrosion
锈蚀 Rusting

4.3 建筑材料 Building materials
级配砂石 Graded sand & gravel
素土夯实 Rammed earth
灰土 Lime-soil
素混凝土 Plain concrete
钢筋混凝土 Reinforced concrete (R.C)
细石混凝土 Fine aggregate concrete
轻质混凝土 Lightweight concrete
加气混凝土 Aerocrete; aerocrete concrete
陶粒混凝土 Ceramsite concrete
水泥膨胀珍珠岩 Cement & expanded perlite
岩棉 Mineral wool; rock wool
沥青 Asphalt
卷材 Felt
玛蹄脂 Asphalt mastic
粘土砖 Clay brick
釉面砖 Porcelain enamel brick
空心砖 Hollow brick
砌块 Block
缸砖 Quarry brick
锦砖 Mosaic
地面砖 Paving brick
防滑地砖 Non-slip brick
耐酸砖(板) Acid brick (acid tile)
胶泥 Mastic
粘土瓦 Clay tile
玻璃瓦 Enamelled tile
波形镀锌钢板 Galvanized corrugated steel sheet
玻璃钢瓦 Glass-fiber reinforced plastic tile
彩色压型钢(铝)板 Coloured corrugated steel (aluminium) plate; profiled coloured steel (aluminium) plate
石棉水泥瓦 Asbestos-cement sheet
水磨石 Terrazzo
水刷石 Granite plaster
花岗石 Granite
磨光花岗石 Polished granite
剁斧石 Artificial stone
大理石 Marble
水泥砂浆抹面 Cement plaster
石灰砂浆抹面 Lime plaster
水泥石灰砂浆抹面 Cement-lime plaster
刀灰打底 Hemp-cut and lime as base
原木 Log
方木 Square timber
板材 Plank
胶合板 Plywood
三夹板 3-plywood
五夹板 5-plywood
平板玻璃 Flat glass
浮法玻璃 Float-process glass
磨砂玻璃 Ground glass; frosted glass
起玻璃 Prism glass
夹丝玻璃 Wire glass
夹层玻璃 Sandwich glass
中空玻璃 Hollow glass
钢化玻璃 Reinforced glass
镀膜玻璃 Coating glass
有机玻璃 Organic glass

4.4 建筑构造及配件 Building construction & component
铺砌 Paving
地坪 Grade
基层 Bedding
素土夯实 Rammed earth
垫层 Base
结合层 Bonding course
面层 Covering
隔离层 Insulation course
活动地板 Raised floor; movable floor; access floor
篦子板 Grating
地面提示块 Ground prompt
遮阳板 Sunshade
窗套 Window moulding
护角 Curb guard
防水层 Water-proof course
找平层 Leveling course
隔热层 Heat insulation course
保温层 Thermal insulation course
檩条 Purlin
天窗 Skylight
天棚 Ceiling
吊顶 Suspended ceiling
吊顶龙骨 Ceiling joist
雨水口 Drain gulley
水斗 Leader head
雨水管 Leader; downspout
天沟 Valley
挑檐 Overhanging eave
檐口 Eave
泛水 Flashing
分水线 Watershed
檐沟 Eave gutter
汇水面积 Catchment area
雨罩 Canopy
散水 Apron
坡道 Ramp
台阶 Entrance steps
保温门 Thermal insulation door
隔声门(窗) Sound insulation door (window); acoustical door (window)
防火门(窗) Fire door (window)
冷藏门 Freezer door
安全门 Exit door
防护门(窗) Protection door (window)
屏蔽门(窗) Shield door (window)
防风砂门 Radiation resisting door (window)
密闭门(窗) Weather tight door
泄压门(窗) Pressure release door (window)
壁柜门 Closet door
变压器间门 Transformer room door
围墙门 Gate
车库门 Garage door
保险门 Safe door
引风门 Ventilation door
检修门 Access door
平开门(窗) Side-hung door
推拉门(窗) Sliding door
弹簧门 Swing door
折迭门 Folding door
卷帘门 Rolling door
转门 Revolving door
夹板门 Plywood door
拼板门 FLB door (framed, ledged and battened door); matchboard door
实拼门 Solid door
镶板门 Panel door
镶玻璃门 Glazed door
玻璃门 Glass door
钢木门 Steel & wooden door
百页门 Shutter door
连窗门 Door with side window
传递窗 Delivery window
观察窗 Observation window
换气窗 Vent sash
上悬窗 Top-hung window
中悬窗 Center-pivoted window
下悬窗 Bottom-hung window
立转窗 Vertically pivoted window
固定窗 Fixed window
单层窗 Single window
双层窗 Double window
百页窗 Shutter
带形窗 Continuous window
子母扇窗 Attached sash window
组合窗 Composite window
落地窗 French window
玻璃幕墙 Glazed curtain wall
门(窗)框 Door (window) frame
拼樘料 Transom (horizontal), mullion (vertical)
门(窗)扇 Door (window) leaf
平开窗扇 Casement sash
纱扇 Screen sash
亮子 Transom; fanlight
门心板 Panel
披水板 Weather board
贴脸板 Trim
筒子板 Lining
窗台板 Sill plate
防护铁栅 Barricade; iron grille; grating
合页 Butts; hinges; butt hinges
执手 Knob
撑档 Catch
滑道 Sliding track
插销 Bolt
拉手 Pull
推板 Push plate
门锁 Mortice lock; door lock
执手锁 Mortice lock with knob

4.5 建筑结构 Building structure

4.5.1 荷载 Load
活荷载 Live load
静荷载,恒载 Dead load
静力荷载 Static load
移动荷载 Moving load
动力荷载 Dynamic load
冲击荷载 Impact load
附加荷载 Superimposed load
规定荷载(又称标准荷载) Specified load
集中荷载 Concentrated load
分布荷载 Distributed load
设计荷载 Design load
轴向荷载 Axial load
偏心荷载 Eccentric load
风荷载 Wind load
风力、风压 Wind force; wind pressure
雪荷载 Snow load
屋面积灰荷载 Roof ash load
(吊车)最大轮压 Maximum wheel load
吊车荷载 Crane load
安装荷载 Erection load
施工荷载 Construction load
不对称荷载 Unsymmetrical loading
重复荷载 Repeated load
刚性荷载 Rigid load
柔性荷载 Flexible load
临界荷载 Critical load
容许荷载 Admissible load; allowable load; safe load
极限荷载 Ultimate load
条形荷载 Strip load
破坏荷载 Failure load; load at failure
地震荷载 Seismic load; earthquake load
荷载组合 Combination of load

4.5.2 地基和基础 Soil and foundation
地基 (Bed) soil
天然地基 Natural ground
人工地基 Artificial ground
混凝土基础 Concrete foundation; concrete footing
毛石基础 Rubble masonry footing; (rubble) stone footing
砖基础 Brickwork footing
桩基础 Pile foundation
设备基础 Equipment foundation
机器基础 Machine foundation
独立基础 Individual footing; isolated foundation; pad foundation
联合基础 Combined footing
大块式基础 Massive foundation
条形基础 Strip foundation; strip footing; strap footing; continuous footing
方形基础 Square footing
杯形基础 Footing socket
板式基础 Slab foundation; mat footing
阶梯形基础 Stepped foundation; benched foundation (stepped footing)
扩展基础 Spread footing
扩底基础 Under-reamed foundation
浮筏基础 Raft foundation; buoyant foundation; floating foundation
沉箱(井)基础 Caisson foundation
构架式基础 Frame foundation
深基础 Deep foundation
基础 Footing; foundation
基槽 Foundation ditch; foundation trench
基坑 Foundation pit
基础板 Foundation slab
基础梁 Foundation beam; footing beam
基础底面 Foundation base
基础底板 Foundation mat
基础垫层 Foundation bed
基础埋置深度 Depth of embedment foundation
地下连续墙 Underground continuous wall
打桩 Pile driving
打桩机 Pile engine; pile driver; ram machine
钢桩 Steel pile
木桩 Wood pile; timber pile
钢筋混凝土桩 Reinforced concrete pile
砂桩 Sand pile
石灰桩 Lime pile; lime column; quicklime pile
单桩 Single pile
群桩 Pile group; pile cluster
斜桩 Batter pile
预制桩 Precast pile
灌注桩 In-situ pile; cast-in-place pile; cast-in-situ pile; filling pile
板桩 Sheet pile
挤密桩 Compaction pile
挤密砂桩 Sand compaction pile
灰土挤密桩 Lime-soil compaction pile
钻孔桩 Bored pile
打入桩 Driven pile
摩擦桩 Friction pile; buoyant pile
端承桩 End bearing pile; point bearing pile; column pile (柱桩)
支承桩 Bearing pile
抗拔桩 Tension pile; uplift pile
抗滑桩 Anti-slide pile
桩承台 Pile cap
桩帽 Pile cap; pile cover
桩头 Pile crown; pile head
桩端 Pile tip
桩身 Pile shaft
桩距 Pile spacing
桩钢筋笼 Pile cage
试桩 Test pile
桩荷载试验 Pile load test
桩的动荷载试验 Dynamic load test of pile
桩的侧向荷载试验 Lateral pile load test
桩的极限荷载 Ultimate pile load
桩承载能力 Pile capacity; bearing capacity of a pile; carrying capacity of a pile
土压力 Earth pressure
主动土压力 Active earth pressure
被动土压力 Passive earth pressure
静止土压力 Earth pressure at rest
容许地耐力 Allowable bearing strength
冰冻深度 Frozen depth; frost depth; frost penetration; depth of frost penetration
防冻深度 Frost-proof depth
粘土类土 Clayey soil
轻亚粘土 Sandy loam
亚粘土 Sandy clay; loam
砂质土 Sandy soil
砂砾石 Sandy gravel stratum
膨胀土 Expansive soil
硬质土,硬盘岩,硬土层 Hard pan
液限 Liquid limit
塑限 Plastic limit
塑性指数 Index of plasticity
松软土 Mellow soil; spongy soil
回填土 Backfill; refilling
杂填土 Miscellaneous fill
地表水 Surface water
地下水 Groundwater
地下水位 Groundwater elevation; groundwater level; groundwater table
容重 Unit weight
干容重 Dry unit weight
湿容重 Wet unit weight
饱和容重 Saturated unit weight
不均匀沉降 Unequal settlement; differential settlement
地基处理 Ground treatment
地基加固 Ground stabilization; soil improvement
土质查勘 Soil exploration
地质勘察 Geological exploration
沉陷 Settlement
倾斜 Obliquity; inclination
滑移 Sliding
夯实土 Compacted soil
夯实填土 Compacted fill
夯实回填土 Tamped backfill
夯实分层厚度 Compacted lift
持力层 Bearing stratum; supporting course
管道与基础相碰 Pipeline interferes with foundation

4.5.3 一般结构用语 Terms for general structures
建筑结构 Building structure
建筑物 Building
结构形式 Structural type
混凝土(砼)结构 Concrete structure
砌体结构 Masonry structure
砖砌结构 Brick structure
石砌结构 Stone structure
砖砼结构 Brick and concrete structure
钢结构 Steel structure
结构型钢 Shape steel
木结构 Timber structure
组合结构 Composite structure
框架结构 Frame structure
梁板结构 Beam and slab structure
构件 Member
承重构件 Load-bearing member
结构构件 Structural member
肋形屋盖 Ribbed roof
肋形楼板 Ribbed floor slab
无梁楼板 Flat plate; flat slab
桁架;屋架 Truss; roof truss
三角形屋(桁)架 Triangular truss
梯形屋(桁)架 Trapezoidal truss
拱形屋架 Arch roof truss
折线形屋架 Segmental roof truss
弓形桁架 Bowstring truss
框架 Frame
排架 Bent frame
刚架 Rigid frame
门架 Portal frame
抗风构架 Wind frame
抗震构架 Aseismic frame
梁 Beam; girder
大梁 Girder
主梁 Principal beam; primary beam
次梁 Secondary beam
加腋梁 Haunched beam
简支梁 Simply supported beam
固端梁 Fixed beam
悬臂梁 Cantilever beam
连续梁 Continuous beam
托梁 Spandrel
圈梁 Girth
过梁 Lintel
曲梁 Curved beam; bow beam
基础梁 Foundation beam
吊车梁 Crane girder
T形梁 T-beam
柱 Column
组合柱 Combination column
立柱 Post
吊车柱 Crane column
抗风柱 End panel column
墙 Wall
板 Slab; plate
承重墙 Bearing walls
柱网 Column grid
支撑系统 Brace system
柱间支撑 Portal bracing between columns
屋石支撑 Roof bracing
垂直支撑 Vertical bracing
水平支撑 Horizontal bracing
剪刀撑 Cross bracing
临时顶撑,支撑 Shoring
桁架式支撑 Trussed bracing
斜撑 Knee brace
上弦 Top chord
下弦 Bottom chord
节间 Panel
节点 Panel point
压杆 Compression member; strut
拉杆 Tension member; tie-beam
腹杆 Web member
斜杆 Diagonal member
斜腹杆 Diagonal web member
吊杆 Hanger rod; sag rod
系杆,拉杆 Tie bar; sag rod
天窗架 Skylight frame
天窗 Monitor; skylight
托座、牛腿 Bracket
檩条 Purlin
连接 Connection
接点 Joint
铰接点 Hinged point
固接点 Fixed joint
安装接点 Erection joint
拼接接点 Splice joint
节点板、连接板 Gusset plate; connecting plate
加劲板 Stiffener plate
支撑板 Bearing plate
填隙板 Filler plate
梁柱接头 Beam-column connections
截面 Cross section
拱 Arch
壳 Shell
伸缩缝 Expansion joint
沉降缝 Settlement joint
施工缝 Construction joint
防震缝 Aseismic joint

4.5.4 结构理论用语 Terms for theory of structures

a) 设计方法术语 Terms for design method
结构设计 Structural design
按极限强度设计 Ultimate strength design
按许可应力设计 Working stress design
按承载能力设计 Loading capacity design
按稳定性设计 Design according to stability
按变形设计 Design according to deformation
结构分析 Structural analysis
结构计算 Structural calculation
静定结构 Statically determinate structures
超静定结构 Statically indeterminate structures
精确计算 Rigorous calculation
近似计算 Approximate calculation
安全等级 Safety classes
极限状态 Limit states
摩擦系数 Coefficient of friction
质量密度 Mass density
重力密度 Weight (force) density

b) 结构的作用效应术语 Terms for effects of actions on structures
轴向力 Normal force
剪力 Shear force
弯矩 Bending moment
扭矩 Torque
水平推力 Horizontal thrust
水平拉力 Horizontal pull
水平(垂直)分力 Horizontal (vertical) component
合力 Resultant
正应力 Normal stress
剪应力 Shear stress
主应力 Principal stress
次应力 Secondary stress
预应力 Prestress
位移 Displacement
挠度 Deflection
变形 Deformation
弯曲 Bending; flexure
扭转 Torsion

c) 材料性能、结构抗力术语 Terms of property of material and resistance of structure
抗力 Resistance
抵抗力矩 Resistance moment
强度 Strength
刚度 Stiffness; rigidity
抗裂度 Crack resistance
抗压强度 Compressive strength
抗拉强度 Tensile strength
抗剪强度 Shear strength
抗弯强度 Flexural strength
抗扭强度 Torsional strength
抗裂强度 Cracking strength
屈服强度 Yield strength
疲劳强度 Fatigue strength
弹性模量 Modulus of elasticity
剪切模量 Shear modulus
变形模量 Modulus of deformation
稳定性 Stability
泊松比 Poisson ratio

d) 几何参数术语 Terms of geometry parameter
截面高度 Height of section
截面有效高度 Effective depth of section
截面宽度 Breadth of section
截面厚度 Thickness of section
截面面积 Area of section
截面面积矩 First moment of area
截面惯性矩 Second moment of area
截面抵抗矩(模量,规范中用词) Section modulus
回转半径 Radius of gyration
偏心距 Eccentricity
长度 Length
跨度 Span
矢高 Rise
长细比 Slenderness ratio

4.5.5 砖石结构 Masonry construction
砖砌体 Brickwork
砌筑 Laying
砖标号 Grade of brick; strength of brick
毛石砌体 Rubble masonry
毛石砼砌体 Grouted rubble masonry
水泥砂浆 Cement mortar
石灰砂浆 Lime mortar
水泥石灰砂浆 Cement-lime mortar
砂浆找平 Mortar leveling

4.5.6 钢筋砼结构 Reinforced concrete structure
素混凝土 Plain concrete
钢筋混凝土 Reinforced concrete
预应力砼 Prestressed concrete
砼标号 Grade of concrete
钢号 Grade of steel
整体式结构 Monolithic structure
装配式结构 Assembled structure
预制构件 Precast element; fabricated element
现浇 Cast-in-site; placed-in-site
浇灌砼 Concreting; casting; pouring; placing
一次浇灌 At one pouring
分二次浇灌 Pours in two operations
二次灌浆 Final grouting
钢筋保护层 Reinforcement protective course
模板 Formwork; shuttering
拆模 Form stripping
预埋件 Embedded inserts
预留槽(洞) Groove (hole) to be provided
垫层 Bedding course
找平层 Leveling course; trowelling course

4.5.7 配筋 Reinforcement
配筋率 Percentage of reinforcement
主筋 Main reinforcement
分布筋 Distributing reinforcement
腹筋 Web reinforcement
开口箍筋 U-stirrups
闭口箍筋 Closed stirrups
环筋 Hoops
弯起钢筋 Bent-up bar
附加钢筋 Additional reinforcement
搭接接头 Lapped splice
焊接接头 Welded splice
钢筋间距 Spacing of reinforcement
吊筋 Suspender
双向配筋 Two-way reinforced
螺旋筋 Spiral bar
温度筋 Temperature reinforcement
锚固长度 Anchor length
埋入长度 Built-in length
螺孔直径 Diameter of bolt hole
螺孔中心线 Center line of bolt hole
锚栓、地脚螺栓 Anchor bolt
基础螺栓 Foundation bolt
安装螺栓 Erection bolt
普通螺栓 Common bolt
高强螺栓 High strength bolt

4.5.8 焊接 Welding
电弧焊 Electric arc welding
气焊,氧-乙炔焊 Gas welding; oxy-acetylene welding
焊条 Welding electrode; welding rod
手工焊 Manual welding
自动焊 Automatic welding
车间焊接 Shop-welding
现场焊接(工地焊接) Field-welded
满焊 Full weld
搭接焊 Lap weld
贴角焊 Fillet weld
点焊 Spot weld
对接焊 Butt weld
仰焊 Overhead weld
双面贴角焊 Flat fillet weld in front and back
连续贴角焊 Continuous fillet weld
间断贴角焊 Intermittent fillet weld
安装焊缝 Erection weld
单V形对接焊 Single V butt weld
双V形对接焊 Double V butt weld

4.5.9 特种结构 Special structures
水塔 Water tower
高烟筒 Tall chimney
冷却水塔 Cooling tower
油罐 Oil tank
蓄水池 Water reservoir
管廊 Pipe rack
管架 Pipe support
球罐 Spherical tank
裂解炉 Cracking furnace
起重机、吊车 Crane; hoist