Automatic Meaning Discovery Using Google

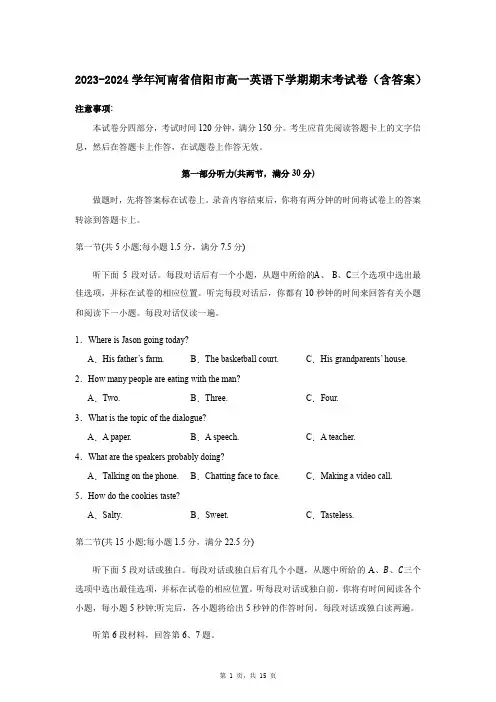

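2023-2024 Academic Year, Xinyang City, Henan Province: Senior One English Final Examination, Second Semester (with Answers)

Notes: This paper consists of four parts. The examination lasts 120 minutes and is worth 150 points.

Candidates should first read the information printed on the answer sheet and then write their answers on the answer sheet. Answers written on the test paper are invalid.

Part One: Listening (two sections, 30 points in total). While answering, first mark your answers on the test paper.

After the recording ends, you will have two minutes to transfer your answers from the test paper to the answer sheet.

Section One (5 questions; 1.5 points each, 7.5 points in total). Listen to the following 5 conversations.

Each conversation is followed by one question. Choose the best answer from options A, B and C, and mark it in the corresponding place on the test paper.

After each conversation, you will have 10 seconds to answer the question and read the next one.

Each conversation is read only once.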

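1. Where is Jason going today?
A. His father’s farm. B. The basketball court. C. His grandparents’ house.

2. How many people are eating with the man?
A. Two. B. Three. C. Four.

3. What is the topic of the dialogue?
A. A paper. B. A speech. C. A teacher.

4. What are the speakers probably doing?
A. Talking on the phone. B. Chatting face to face. C. Making a video call.

5. How do the cookies taste?
A. Salty. B. Sweet. C. Tasteless.

Section Two (15 questions; 1.5 points each, 22.5 points in total). Listen to the following 5 conversations or monologues.

Each conversation or monologue is followed by several questions. Choose the best answer from options A, B and C, and mark it in the corresponding place on the test paper.

Before each conversation or monologue, you will have time to read the questions, 5 seconds per question; after listening, you will have 5 seconds per question to answer.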

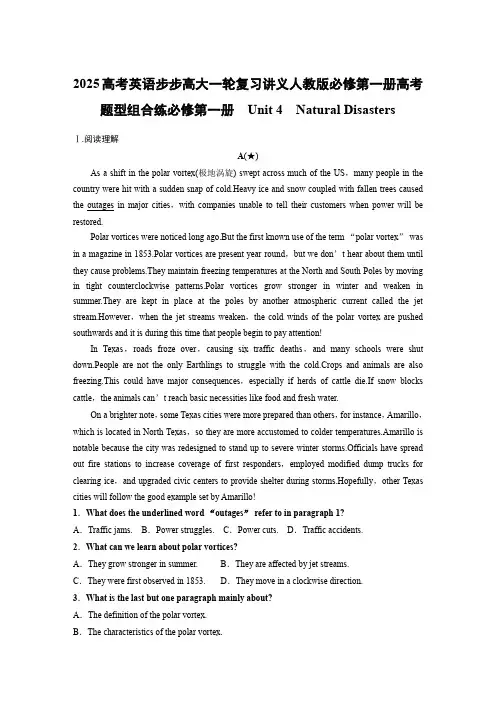

2025高考英语步步高大一轮复习讲义人教版必修第一册高考题型组合练必修第一册Unit 4Natural DisastersⅠ.阅读理解A(★)As a shift in the polar vortex(极地涡旋) swept across much of the US,many people in the country were hit with a sudden snap of cold.Heavy ice and snow coupled with fallen trees caused the outages in major cities,with companies unable to tell their customers when power will be restored.Polar vortices were noticed long ago.But the first known use of the term “polar vortex” was in a magazine in 1853.Polar vortices are present year-round,but we don’t hear about them until they cause problems.They maintain freezing temperatures at the North and South Poles by moving in tight counterclockwise patterns.Polar vortices grow stronger in winter and weaken in summer.They are kept in place at the poles by another atmospheric current called the jet stream.However,when the jet streams weaken,the cold winds of the polar vortex are pushed southwards and it is during this time that people begin to pay attention!In Texas,roads froze over,causing six traffic deaths,and many schools were shut down.People are not the only Earthlings to struggle with the cold.Crops and animals are also freezing.This could have major consequences,especially if herds of cattle die.If snow blocks cattle,the animals can’t reach basic necessities like food and fresh water.On a brighter note,some Texas cities were more prepared than others,for instance,Amarillo,which is located in North Texas,so they are more accustomed to colder temperatures.Amarillo is notable because the city was redesigned to stand up to severe winter storms.Officials have spread out fire stations to increase coverage of first responders,employed modified dump trucks for clearing ice,and upgraded civic centers to provide shelter during storms.Hopefully,other Texas cities will follow the good example set by Amarillo!1.What does the underlined word “outages” refer to in paragraph 1?A.Traffic jams. B.Power struggles. C.Power cuts. 
D.Traffic accidents.2.What can we learn about polar vortices?A.They grow stronger in summer. B.They are affected by jet streams.C.They were first observed in 1853. D.They move in a clockwise direction.3.What is the last but one paragraph mainly about?A.The definition of the polar vortex.B.The characteristics of the polar vortex.C.The ways to deal with the polar vortex.D.The serious impact made by the polar vortex.4.Why is Amarillo striking?A.Because it is located in the north of Texas.B.Because it has been upgraded and modernized.C.Because it has been regarded as an example to other cities.D.Because it has taken effective measures to resist winter storms.BWhat is the 15-minute city?It’s the urban planning concept that everything city residents need should be a short walk or bike ride away—about 15 minutes from home to work,shopping,entertainment,restaurants,schools,parks and health care.Supporters argue that 15-minute cities are healthier for residents and the environment,creating united mini-communities,boosting local businesses,and encouraging people to get outside walk,and cycle.Many cities across Europe offer similar ideas,but Paris has become its poster child.Mayor Anne Hidalgo has sought to fight climate change by decreasing choking traffic in the streets and fuel emissions.In 2015,Paris was 17th on the list of bike-friendly cities; by 2019,it was 8th.Car ownership,meanwhile,dropped from 60 percent of households in 2001 to 35 percent in 2019.The 15-minute city figured largely in Hidalgo’s successful 2020 re-election campaign.The idea has also gained support in the U.S.It clearly won’t work everywhere:Not every city is as centralized and walkable as Paris.Some car-dominated cities like Los Angeles and Phoenix would be hard-pressed to provide everything people need within walking distance.In addition,some urban planners argue that the 15-minute city could increase the separation of neighborhoods by income.Neighborhoods equipped with all the conveniences 
required by the 15-minute city also tend to have high housing costs and wealthier residents.Despite some resistance,the basic principles behind the 15-minute city are influencing planning in cities around the world,including Melbourne,Barcelona,Buenos Aires,Singapore,and Shanghai.Urban designer and thinker Jay Pitter says cities where basic needs are within walking distance create more individual freedom than needing to drive everywhere.“In a city where services are always close by,” he says,“mobility is a choice:You go where you want because you want to,not because you have to.My fight is not against the car.My fight is how we could improve the quality of life.”5.Which best describes the 15-minute city?A.Modern. B.Smart. C.Entertaining. D.Convenient.6.What’s the original intention for Paris to advocate the 15-minute city?A.To address climate issues. B.To beautify the city.C.To promote the bike industry. D.To help Hidalgo get re-elected.7.What’s some urban planners’ worry about the 15-minute city?A.It slows the city’s expansion.B.It represents a setback for society.C.It may widen the gap between neighborhoods.D.It can cause the specialization of neighborhoods.8.What’s Jay Pitter’s attitude to the concept of 15-minute city?A.Doubtful. B.Favorable. C.Critical. 
D.Uninterested.C(★)Earthquake forecasting is one of the most ancient skills known to mankind.From ancient Greece to the present day,countless scientists have tried to develop tools to predict earthquakes.Their attempts usually focused on searching for reliable evidence of coming quakes.However,there are many reasons why predicting quakes is so hard.“We don’t understand some basic physics of earthquakes,” said Egill,a research professor at the California Institute of Technology.Scientists have also attempted to create mathematical models of movement,but precisely predicting would require great mapping and analysis of the Earth’s crust.Other challenges include a lack of data on the early warning signs,given that these warning signs are not yet entirely understood.Actually,real earthquake prediction is very similar to the diagnosis of potential human illnesses based on observing and analyzing each patient’s signs and symptoms.As it turns out,quake prediction is extremely difficult.Many sources show that earthquake forecasting was recognized science in ancient Greece.Ancient Greeks lived very close to nature and were able to detect unusual phenomena and forecast earthquakes.The first known forecast was made by Pherecydes of Syros about 2,500 years ago:he made it as he scooped water from a well and noticed that usually very clean water had suddenly become muddy.Indeed,an earthquake occurred two days later,making Pherecydes famous.Nowadays,seismic(地震的) and remote-sensing methods are considered to have the greatest potential in terms of solving the earthquake prediction problem.Currently,Terra Seismic can identify a forthcoming earthquake with a high level of confidence.Generally,Terra Seismic does not predict a quake if the earthquake’s epicenter is located beyond a depth of 40km.Fortunately,such quakes are almost always harmless,since quake’s energy reduces before reaching the Earth’s surface.“Scientists have tried every possible method to try to predict earthquakes,” 
Bruneau said.“Nobody has been able to crack it and make a believable prediction.”9.What do we know about earthquake forecasting?A.Scientists have been passionate about accurately predicting earthquakes.B.As long as enough data is collected,earthquakes can be avoided.C.Mathematical models of motion can simulate and predict earthquakes.D.Scientists have fully studied the structure of earthquakes.10.How did Pherecydes successfully predict that earthquake?A.By seismic and remote-sensing methods.B.By observing unusual natural phenomena.C.By living in seismic zones throughout the year.D.By looking into data on the early warning signs.11.What was Bruneau’s opinion about the current methods of earthquake prediction? A.He strongly believed the Terra Seismic can solve the difficult problem.B.He was sure that humans could accurately predict earthquakes in the future.C.He considered it harmless to humans for an earthquake deeper than 40km.D.He thought that scientists had no reliable method to predict earthquakes.12.What is the text mainly about?A.Why do humans predict earthquakes.B.How to protect oneself during an earthquake.C.What methods can be used to forecast earthquakes.D.When to achieve accurate earthquake forecasting.Ⅱ.七选五(★)Hurricane season can be wild and unpredictable. 1 Here are some ways you can beef up your home security in hurricane weather,even if you need to move out.2 That actually does nothing to ensure your safety in the powerful winds.Sticking to hurricane shelters or plywood(胶合板) is the only way to go when it comes to protecting those points of entry in hurricane conditions.Buy sheets of plywood,measure your windows and use brackets(支架) to hold the plywood in place.Sandbags are very useful.While sandbags won’t be able to help in the extreme storm,many people will be able to prevent flooding and extensive damage to their belongings by placing sandbags. 
3 Any storm water that is kept out of your home is storm water that won’t be able to damage the belongings inside your home,so sandbags are well worth having at your doorways during a hurricane.It’s important to prepare the inside of your home for strong winds and rain.But you should also keep an eye on the outside of your house,too.Trim(修剪) trees,especially dead branches,to prevent anything else that could fly through a window and cause damage during a hurricane. 4 You should also take pictures of expensive items like electronics and keep notes of their serial numbers. 5 Also,there are always bad people out right after a storm.If the worst happens and your home is broken into right after a bad storm,having pictures and detailed notes about what was in your home prior to the hurricane will make it easier for police to track down stolen items. A.You should have a camera at home.B.It can be strong enough to resist the forceful winds.C.Making sure nothing is loose in your yard is important.D.You may see people taping windows before a hurricane comes.E.They will be able to effectively keep storm water out in many cases.F.It will aid with your insurance company if anything needs to be replaced.G.These dangerous storms can bring damage to your home and belongings.

Compulsory Book 1, Unit 5: Languages Around the World

Ⅰ.Reading Comprehension

A

Most of us take the task of buying a cup of coffee for granted,as it seems simple enough.However,we have no idea just how stressful tasks like this can be for people who suffer from disabilities.That’s why it’s so heart-warming to see a story like this in which a barista (咖啡师) does something small to make life a little easier for someone who is deaf.Ibby Piracha lost his hearing when he was only two years old,and he now goes to his local cafe in Leesburg,Virginia to order a cup of coffee at least three times a week.Though all the baristas who work there have his order memorized,Ibby always writes his order on his phone and shows it to the barista.One day,however,one of 
the baristas did something that changed everything! After Ibby ordered his coffee,he was amazed when barista Krystal Pane handed him a note in response.“I’ve been learning sign language just so you can have the same experience as everyone else,” the note read.Krystal then asked Ibby in sign language what he would like to order.Ibby was touched that she would learn sign language just to help him feel welcome.“I was just so moved that she actually wanted to learn sign language.It is really a totally different language and it was something that she wanted to do because of me.Because I was a deaf customer.I was very,very impressed,” Ibby said.Krystal had spent hours watching teaching videos so that she could learn enough sign language to give Ibby the best customer service that she could! “My job is to make sure people have the experience they expect and that’s what I gave him,” Krystal says.Ibby posted a photo of Krystal’s note online,and it quickly went viral,getting hundreds of likes and comments that praised Krystal for her kind action.1.What can we learn about Ibby Piracha from paragraph 2?A.He was born deaf. B.He lives a hard life.C.He loves to order take-out food. D.He visits the cafe regularly.2.Why did Krystal learn sign language?A.To serve Ibby better. B.To attract more customers.C.To give Ibby a big surprise. D.To make herself more popular.3.Which of the following can best describe Krystal?A.Kind and considerate. B.Honest and responsible.C.Sociable and humorous. D.Ambitious and sensitive.4.What message does the author want to convey in the text?A.Two heads are better than one. B.A small act makes a difference.C.One good turn deserves another. 
D.Actions speak louder than words.B(★)(2024 Wuhan, Hubei diagnostic exam)When I mentioned to some friends that we all have accents,most of them proudly replied,“Well,I speak perfect English/Chinese/etc.” But this kind of saying misses the point.More often than not,what we mean when we say someone “has an accent” is that their accent is different from the local one,or that pronunciations are different from our own.But this definition of accents is limiting and could give rise to prejudice.Funnily enough,in terms of the language study,every person speaks with an accent.It is the regular differences in how we produce sounds that define our accents.Even if you don’t hear it yourself,you speak with some sort of accent.In this sense,it’s pointless to point out that someone “has an accent”.We all do!Every person speaks a dialect,too.In the field of language study,a dialect is a version of a language that is characterized by its variations of structure,phrases and words.For instance,“You got eat or not?”(meaning “Have you eaten?”) is an acceptable and understood question in Singapore Oral English.The fact that this expression would cause a standard American English speaker to take pause doesn’t mean that Singapore Oral English is “wrong” or “ungrammatical”.The sentence is well-formed and clearly communicative,according to native Singapore English speakers’ solid system of grammar.Why should it be wrong just because it’s different?We need to move beyond a narrow conception of accents and dialects—for the benefit of everyone.Language differences like these provide insights into people’s cultural experiences and backgrounds.In a global age,the way one speaks is a distinct part of one’s identity.Most people would be happy to talk about the cultures behind their speech.We’d learn more about the world we live in and make friends along the way.5.What does the author think of his/her friends’ response in paragraph 1?A.It reflects their self confidence.B.It reflects their language levels.C.It misses the 
point of communication.D.It misses the real meaning of accents.6.Why does the author use the example of Singapore Oral English?A.To justify the use of dialects. B.To show the diversity of dialects.C.To correct a grammatical mistake. D.To highlight a traditional approach.7.What does the author recommend us to do in the last paragraph?A.Learn to speak with your local dialect.B.Seek for an official definition of accents.C.Appreciate the value of accents and dialects.D.Distinguish our local languages from others’.8.What can be a suitable title for the passage?A.Everyone Has an Accent B.Standard English Is at RiskC.Accents Enhance Our Identities D.Dialects Lead to MisunderstandingⅡ.完形填空One summer night in a seaside cottage,a small boy was in bed,sound asleep.Suddenly,he felt himself 1 from bed and carried in his father’s arms onto the beach.Overhead was the clear starry sky.“Watch!” As his father spoke, 2 ,one of the stars moved.It 3 across the sky like a golden fire.And before the 4 of this could fade,another star leapt from its place,then another...“What is it?” the child asked in 5 .“Shooting stars.They 6 every year on a certain night in August.”That was all:just an 7 encounter of something magic and beautiful.But,back in bed,the child stared into the dark,with mind full of the falling stars.I was the 8 seven-year-old boy whose father believed that a new experience 9 more for a small boy than an unbroken night’s sleep.That night,my father opened a door for his child,leading him into an area of splendid10 .Children are naturally curious,but they need someone to 11 them.This art of adding new dimensions to a child’s world doesn’t 12 require a great deal of time.It simply 13 doing things more often with children instead of for them or to them.Good parents know this:The most precious gift they can give a child is to spark their flame of 14 .That night is still deeply 15 in my memory.Next year,when August comes with its shooting stars,my son will be seven.1.A.hidden 
B.robbed C.lifted D.kicked2.A.incredibly B.accidentally C.apparently D.actually3.A.exploded B.circled C.spread D.flashed4.A.success B.wonder C.exhibition D.discovery5.A.amazement B.horror C.relief D.delight6.A.blow up B.turn up C.show off D.give out7.A.uncomfortable B.unbearable C.undetected D.unexpected8.A.curious B.fortunate C.determined D.chosen9.A.worked B.mattered C.deserved D.proved10.A.newness B.emptiness C.freedom D.innovation11.A.protect B.challenge C.guide D.believe12.A.absolutely B.basically C.possibly D.necessarily13.A.involves B.risks C.admits D.resists14.A.hope B.faith C.curiosity D.wisdom15.A.trapped B.set C.lost D.rootedⅢ.语法填空(★)The Olympics are a series of international athletic competitions held in different countries.They’re 1. important multi-sport event that takes place every four years.For the Olympics,participants from all over the world train for years and try their best 2. (win).Pierre Coubertin,a French man,3. (found) the International Olympic Committee (IOC) in 1894.He was once a teacher and historian.In his honor,French had the official status(地位) and 4. (prior) over other languages at the Olympics.According to the Olympic Charter,English and French are the official languages of the Olympics.Therefore,every participant needs to have their documents 5. (translate) in both languages.Besides,real-time interpretations in Arabic,German,Russian,and Spanish must be available for all sessions.The IOC has a complete guide for those 6. want to apply to these contests.French has the status of being the first official language for Olympic events and it was the language of diplomacy (外交) in the beginning.But later,more countries considered 7. (participate) in this contest.The IOC then made French and English the official languages of the Olympics.8.(actual),more than 100 countries in the world speak English now.Besides,almost every country has made 9. a must that children learn English in schools and colleges.This 10. 
(influence) status has caused the IOC to make English one of the official languages.必修第一册Welcome UnitⅠ.阅读理解AThroughout all the events in my life,one in particular sticks out more than the others.As I reflect on this significant event,a smile spreads across my face.As I think of Shanda,I feel loved and grateful.It was my twelfth year of dancing,I thought it would end up like any other year:stuck in emptiness,forgotten and without the belief of any teacher or friend that I really had the potential to achieve greatness.However,I met Shanda,a young,talented choreographer(编舞者).She influenced me to work to the best of my ability,pushed me to keep going when I wanted to give up,encouraged me and showed me the real importance of dancing.Throughout our hard work,not only did my ability to dance grow,but my friendship with Shanda grew as well.With the end of the year came our show time.As I walked to a backstage filled with other dancers,I hoped for a good performance that would prove my improvement.I waited anxiously for my turn.Finally,after what seemed like days,the loudspeaker announced my name.Butterflies filled my stomach as I took trembling steps onto the big lighted stage.But,with the determination to succeed and eagerness to live up to Shanda’s expectations for me,I began to dance.All my troubles and nerves went away as I danced my whole heart out.As I walked up to the judge to receive my first-place shining,gold trophy(奖杯),I realized that dance is not about becoming the best.It is about loving dance for dance itself,a getaway from all my problems in the world.Shanda showed me that you could let everything go and just dance what you feel at that moment.After all the doubts that people had in me,I believed in myself and did not care what others thought.Thanks to Shanda,dance became more than a love of mine,but a passion.1.What did the author think her dancing would be for the twelfth year?A.A change for the better. 
B.A disappointment as before.C.A proof of her potential. D.A pride of her teachers and friends.2.How did Shanda help the author?A.By offering her financial help. B.By entering her in a competition.C.By coaching her for longer hours. D.By awakening her passion for dancing.3.How did the author feel when she stepped on the stage?A.Proud. B.Nervous. C.Scared. D.Relieved.4.What can we learn from the author’s story?A.Success lies in patience. B.Fame is a great thirst of the young.C.A good teacher matters. D.A youth is to be treated with respect.B(★)Are you a good judge of character?Can you make an accurate judgement of someone’s personality based only on your first impression of them?Interestingly,the answer lies as much in them as it does in you.One of the first people to try to identify good judges of character was US psychologist Henry F Adams in 1927.His research led him to conclude that people fell into two groups—good judges of themselves and good judges of others.Adams’s research has been widely criticized since then,but he wasn’t entirely wrong about there being two clearly different types.More on that in a moment,but first we need to define what a good judge of character is.Is it someone who can read personality or someone who can read emotion?Those are two different skills.Emotions such as anger or joy or sadness can generate easily identifiable physical signs.Most of us would probably be able to accurately identify these signs,even in a stranger.As such,most of us are probably good judges of emotion.In order to be a good judge of personality,however,there needs to be an interaction with the other person,and that person needs to be a “good target”.“Good targets” are people who reveal relevant and useful signals to their personality.So this means “the good judge” will only manifest when reading “good targets”.This is according to Rogers and Biesanz in their 2019 journal entitled “Reassessing the good judge of personality”.“We found consistent,clear and strong 
evidence that the good judge does exist,” Rogers and Biesanz concluded.But their key finding is that the good judge does not have magical gifts of perception—they are simply able to “detect and use the information provided by the good target”.So,are first impressions really accurate?Well,if you’re a good judge talking to a “good target”,then it seems the answer is “yes”.And now we know that good judges probably do exist,more research can be done into how they read personality,what kind of people they are—and whether their skills can be taught.5.What can we learn about Adams from paragraph 2?A.He is a good judge of character.B.He divided psychologists into two groups.C.His research result has been widely accepted.D.His research on good judges was partially right.6.What does the author think of emotion reading?A.Annoying. B.Joyful. C.Simple. D.Strange.7.Which of the following would Rogers and Biesanz agree with?A.A good target is necessary for personality judgement.B.A good target needs to get his personality reassessed.C.A good judge can provide useful signals to our personality.D.It’s possible to be a good judge just by looking at the other person.8.What does the author think future research should focus on?A.The skills of good communication. B.The features of good judges.C.The ways to read personality. 
D.The accuracy of first impressions.C(2023 New Curriculum Standard Ⅱ,C)Reading Art: Art for Book Lovers is a celebration of an everyday object—the book,represented here in almost three hundred artworks from museums around the world.The image of the reader appears throughout history,in art made long before books as we now know them came into being.In artists’ representations of books and reading,we see moments of shared humanity that go beyond culture and time.In this “book of books”,artworks are selected and arranged in a way that emphasizes these connections between different eras and cultures.We see scenes of children learning to read at home or at school,with the book as a focus for relations between the generations.Adults are portrayed(描绘) alone in many settings and poses—absorbed in a volume,deep in thought or lost in a moment of leisure.These scenes may have been painted hundreds of years ago,but they record moments we can all relate to.Books themselves may be used symbolically in paintings to demonstrate the intellect(才智),wealth or faith of the subject.Before the wide use of the printing press,books were treasured objects and could be works of art in their own right.More recently,as books have become inexpensive or even throwaway,artists have used them as the raw material for artworks—transforming covers,pages or even complete volumes into paintings and sculptures.Continued developments in communication technologies were once believed to make the printed page outdated.From a 21st-century point of view,the printed book is certainly ancient,but it remains as interactive as any battery-powered e-reader.To serve its function,a book must be activated by a user: the cover opened,the pages parted,the contents reviewed,perhaps notes written down or words underlined.And in contrast to our increasingly networked lives where the information we consume is monitored and tracked,a printed book still offers the chance of a wholly private,“off-line” activity.9.Where is the text most probably 
taken from?A.An introduction to a book. B.An essay on the art of writing.C.A guidebook to a museum. D.A review of modern paintings.10.What are the selected artworks about?A.Wealth and intellect. B.Home and school.C.Books and reading. D.Work and leisure.11.What do the underlined words “relate to” in paragraph 2 mean?A.Understand. B.Paint. C.Seize. D.Transform.12.What does the author want to say by mentioning the e-reader?A.The printed book is not totally out of date.B.Technology has changed the way we read.C.Our lives in the 21st century are networked.D.People now rarely have the patience to read.Ⅱ.七选五Wonderful views from the mountains,breathtaking places waiting to explore,fresh air,as well as all the special health benefits,are the main rewards of mountain hiking. 1You need to train your lower body muscles,anytime and anywhere.Strong legs and core muscles will improve your balance and help you hike longer.Focus on your lower body strength and on your cardiovascular(心血管的) workout. 2 You really can train anywhere:use your backyard,living room,or bedroom to keep your body ready for the true conditions in the mountains.You will need a stronger back.Who’s going to carry your backpack? 3 So we are quite sure what the true answer is.Develop back muscles with exercises like V-ups,deadlifts,diver push-ups,and exercise named “mountain climbers”:Start in a traditional plank(平板支撑) position and then bring your knee forward under your chest.You will start to feel your abs(腹肌) for sure,too.4 Even Rome wasn’t built in a day so give yourself the pleasure to enjoy discovering what your body is capable of! 
All the perfect benefits will improve your blood pressure and blood sugar levels,helping you strengthen your core,and even help you control your weight as an additional benefit. 5 Just don’t forget to stretch after each training.Please remember that your training for mountain hiking should be combined with real hiking.And don’t miss the other clues on what to do for mountaineering training!

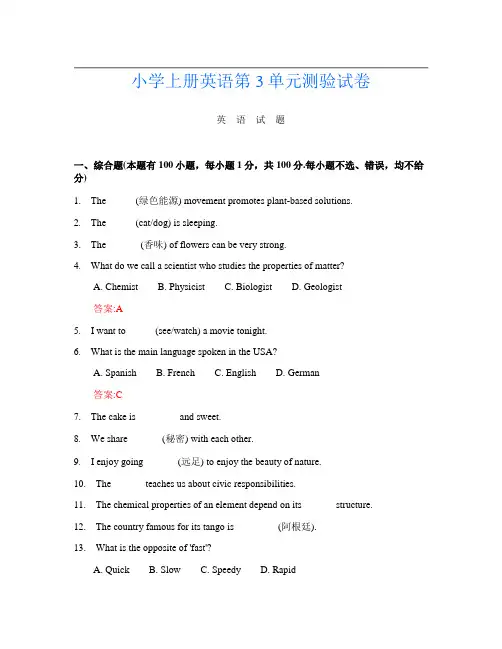

Primary School English, Volume 1, Unit 3 Test Paper

English Test Questions

I. Comprehensive Questions (100 questions, 1 point each, 100 points in total. No points are awarded for unanswered or incorrect questions.)

1. The _____ (绿色能源) movement promotes plant-based solutions.
2. The _____ (cat/dog) is sleeping.
3. The ______ (香味) of flowers can be very strong.
4. What do we call a scientist who studies the properties of matter?
A. Chemist B. Physicist C. Biologist D. Geologist
Answer: A
5. I want to _____ (see/watch) a movie tonight.
6. What is the main language spoken in the USA?
A. Spanish B. French C. English D. German
Answer: C
7. The cake is ________ and sweet.
8. We share ______ (秘密) with each other.
9. I enjoy going ______ (远足) to enjoy the beauty of nature.
10. The ______ teaches us about civic responsibilities.
11. The chemical properties of an element depend on its ______ structure.
12. The country famous for its tango is ________ (阿根廷).
13. What is the opposite of 'fast'?
A. Quick B. Slow C. Speedy D. Rapid
14. What is the capital of Tunisia?
A. Tunis B. Sousse C. Monastir D. Bizerte
Answer: A
15. What do you call a house made of ice?
A. Igloo B. Cabin C. Castle D. Hut
Answer: A
16. _____ (当地植物) can adapt to specific environments.
17. Chemical reactions often involve a change in ______.
18. The __________ is the layer of skin that helps to protect against injury.
19. I enjoy making memories with my toy ________ (玩具名称).
20. I can ________ (manage) my time effectively.
21. What do we call the opposite of ‘clean’?
A. Dirty B. Neat C. Tidy D. Clear
22. A __________ is a sudden release of energy in the Earth's crust.
23. My friend is a ______. He loves to collect stamps.
24. My dad shows me how to be __________ (勇敢的) in difficult times.
25. What is the largest country in the world?
A. Canada B. China C. Russia D. India
26. The _______ (The Battle of Waterloo) marked the defeat of Napoleon.
27. The ____ is a small creature that hides under leaves.
28. What do we call the study of the universe?
A. Biology B. Astronomy C. Geology D. Physics
Answer: B
29. The _____ (果实) develops after a flower blooms.
30. The Great Wall of China was built to __________ (保护) the country from invasions.
31. The ant carries food back to its ______ (巢).
32. What is 4 + 5?
A. 8 B. 9 C. 10 D. 11
33. The _____ (水果收成) happens in late summer.
34. Many plants have a specific ______ period for blooming. (许多植物有特定的开花期。)

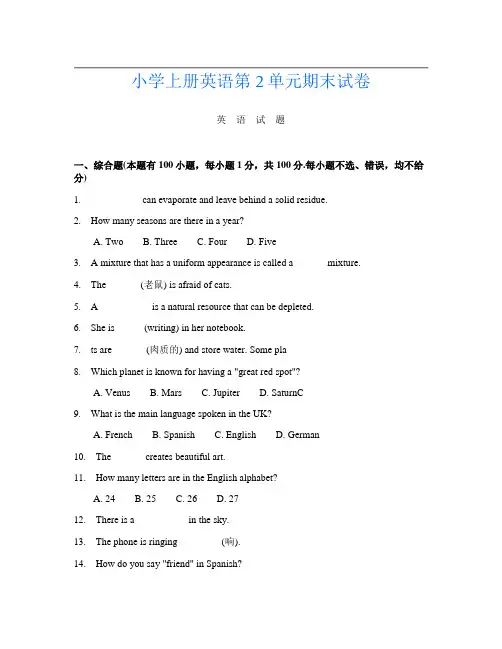

Primary School English, Volume 1, Unit 2 Final Exam Paper

English Test Questions

I. Comprehensive Questions (100 questions, 1 point each, 100 points in total. No points are awarded for unanswered or incorrect questions.)

1. __________ can evaporate and leave behind a solid residue.
2. How many seasons are there in a year?
A. Two B. Three C. Four D. Five
3. A mixture that has a uniform appearance is called a ______ mixture.
4. The ______ (老鼠) is afraid of cats.
5. A __________ is a natural resource that can be depleted.
6. She is _____ (writing) in her notebook.
7. Some plants are ______ (肉质的) and store water.
8. Which planet is known for having a "great red spot"?
A. Venus B. Mars C. Jupiter D. Saturn
Answer: C
9. What is the main language spoken in the UK?
A. French B. Spanish C. English D. German
10. The ______ creates beautiful art.
11. How many letters are in the English alphabet?
A. 24 B. 25 C. 26 D. 27
12. There is a __________ in the sky.
13. The phone is ringing ________ (响).
14. How do you say "friend" in Spanish?
A. Amigo B. Amie C. Frère D. Buddy
15. I like to collect _____ from different places. (postcards)
16. The fruits are fresh and ___. (juicy)
17. Which animal is famous for its slow movement?
A. Cheetah B. Sloth C. Rabbit D. Horse
Answer: B
18. My ________ (玩具名称) is inspired by my favorite character.
19. What is the opposite of fast?
A. Quick B. Slow C. Speedy D. Rapid
20. The _______ (蜥蜴) can be very quick.
21. I find ________ (化学) very interesting.
22. I have a ________ that glows in the dark.
23. This girl, ______ (这个女孩), enjoys acting in plays.
24. The ________ (覆盖层) protects the soil.
25. The snow is ___ (white/black).
26. The ____ (ocean) is home to many fish and other marine life.
27. I enjoy exploring nature and going on ______ (远足) with my family. We see beautiful ______ (风景) along the way.
28. The ancient Greeks held the Olympic Games every __________ years. (四年)
29. The ________ was a major event that shaped modern European history.
30. What is the name of the famous American musician known for "Shallow"?
A. Lady Gaga B. Kelly Clarkson C. Adele D. Kacey Musgraves
Answer: A
31. The _____ (pillow) is soft.
32. What is the name of the ocean on the east coast of the USA?
A. Atlantic Ocean B. Pacific Ocean C. Indian Ocean D. Arctic Ocean
Answer: A
33. Many _______ have different blooming seasons.
34. The __________ (历史的理解过程) is essential for growth.
35. The trees in the _______ provide shade in summer.
36. What type of animal is a penguin?
A. Mammal B. Bird C. Reptile D. Fish
Answer: B
37. What do we call the process of turning milk into cheese?
A. Fermentation B. Coagulation C. Pasteurization D. Homogenization
38. The _______ of a plant can be green, yellow, or brown.
39. My sister is learning how to ____ (swim) this summer.
40. The ________ (城市发展) affects many people.
41. I have a ___ (story/book) to read.
42. My cat purrs when it feels _______ (放松).
43. The ________ (气味) of herbs is wonderful.
44. A ________ (生态平衡) ensures diversity.
45. The __________ helps to create new cells in the body.
46. I can ______ (唱) very well.
47. The _____ (iris) blooms in various colors.
48. My brother is my ________ (哥哥). We play video games together every weekend.
49. The flowers are ______ in the garden.
50. The ancient Greeks established many ________ (城邦).
51. The ocean is _______ (闪闪发光).
52. He plays _______ (video games) every weekend.
53. The _______ (Silk Road) was a trade route that connected the East and West.
54. Which of these is a flying mammal?
A. Bat B. Dog C. Cat D. Elephant
55. What is the name of the fairy that helps Peter Pan?
A. Tinker Bell B. Cinderella C. Belle D. Ariel
Answer: A
56. What do you call the process of planting seeds?
A. Sowing B. Harvesting C. Cultivating D. Tilling
Answer: A
57. The part of the atom that has a negative charge is called an _______.
58. What is the opposite of fat?
A. Thin B. Slim C. Lean D. All of the above
Answer: D
59. I see a ___ (star/moon) shining.
60. Earth has one moon, while Mars has ______.
61. The cat is __________ on the sofa.
62. My birthday is in ______ (七月). I want to have a big ______ (派对) with cake and ______ (气球).
63. What do you call the main character in a comic book?
A. Hero B. Villain C. Sidekick D. Comic relief
Answer: A
64. What is 15 divided by 5?
A. 2 B. 3 C. 4 D. 5
Answer: B
65. My grandma teaches me how to ____ (knit).
66. The moon affects the ______ of the oceans.
67. What is the sound of a duck?
A. Quack B. Moo C. Woof D. Baa
68. The __________ (历史的多样性) enriches dialogue.
69. What is the name of the tree that produces acorns?
A. Pine B. Maple C. Oak D. Birch
Answer: C
70. We have ______ at the picnic. (sandwiches)
71. A ______ is a natural feature that can influence local climates.
72. What do you call a person who studies stars and planets?
A. Scientist B. Astronomer C. Biologist D. Geologist
Answer: B (Astronomer)
73. The _____ (雨伞) is red.
74. A turtle can hide in its ______ for protection.
75. A ______ plays a key role in the habitat.
76. The first successful double hand transplant was performed in _______. (2008年)
77. A __________ is a narrow strip of land connecting two larger land areas. (地峡)
78. What do you call a place where you can borrow books?
A. School B. Library C. Park D. Store
Answer: B
79. I want to ________ (learn) English.
80. What do you call the person who helps you learn in school?
A. Doctor B. Teacher C. Cook D. Driver
81. The ________ likes to chase after butterflies.
82. I like to watch the ________ when I go outside.
83. Acids taste ______ and can be sour.
84. The _____ (园艺) in my backyard is my favorite place.
85. The main component of antioxidants is _____.
86. My dog loves to fetch a ______ (球).
87. The chemical formula for acetic acid is _____.
88. What is the name of the ocean on the east coast of the United States?
A. Atlantic B. Pacific C. Indian D. Arctic
89. My sister is a ______. She likes to help others.
90. The country known for kangaroos is ________ (澳大利亚).
91. What is the name of the famous scientist known for his work on electromagnetism?
A. James Clerk Maxwell B. Nikola Tesla C. Thomas Edison D. Michael Faraday
Answer: A
92. The first successful airplane flight took place in ________.
93. The movie was very ___ (interesting).
94. The _____ (balloon/kite) is flying.
95. A base can neutralize an ______.
96. The movie was ___ (exciting/boring).
97. What do you call a person who studies history?
A. Historian B.
ArchaeologistC. GeographerD. Anthropologist答案:A98.The __________ (历史的持续演变) reflects societal changes.99.I think it’s important to ________ (保持积极).100.What is the name of the traditional Japanese dish made with rice and fish?A. PizzaB. SushiC. PastaD. BurgerB。

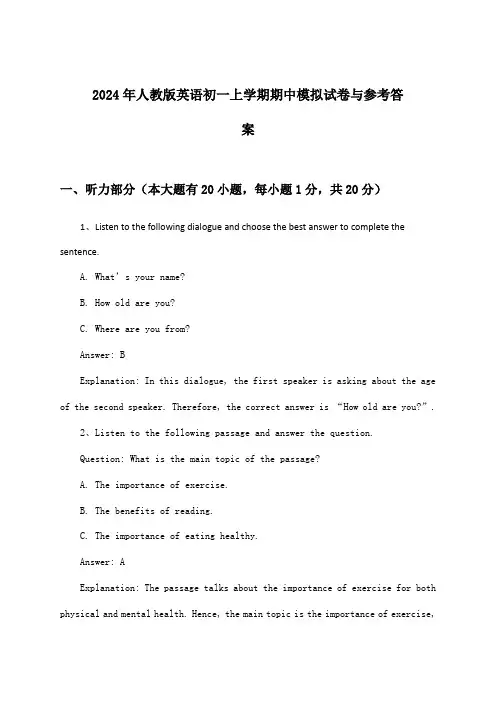

2024 PEP (People's Education Press) Edition English, Grade 7 First Semester: Mid-term Mock Exam with Reference Answers
I. Listening (20 questions, 1 point each, 20 points in total)
1. Listen to the following dialogue and choose the best answer to complete the sentence.
A. What’s your name? B. How old are you? C. Where are you from?
Answer: B
Explanation: In this dialogue, the first speaker is asking about the age of the second speaker. Therefore, the correct answer is “How old are you?”.
2. Listen to the following passage and answer the question.
Question: What is the main topic of the passage?
A. The importance of exercise. B. The benefits of reading. C. The importance of eating healthy.
Answer: A
Explanation: The passage talks about the importance of exercise for both physical and mental health. Hence, the main topic is the importance of exercise, which makes “The importance of exercise” the correct answer.
3. Listen to the conversation and choose the best answer to the question you hear.
Question: What does the man suggest they do next?
A) Go to the library. B) Have a picnic. C) Watch a movie.
Answer: B) Have a picnic.
Explanation: The man suggests they have a picnic, which is option B. The other options are not mentioned in the conversation.
4. Listen to the short passage and answer the question.
Question: What is the main topic of the passage?
A) The benefits of reading. B) The history of the book. C) The importance of education.
Answer: C) The importance of education.
Explanation: Although the passage does touch on the benefits of reading and the history of the book, the main topic is the importance of education, making option C the best answer.
5. You hear a conversation between two students discussing their weekend plans.
Student A: “Are you going to the beach this weekend?”
Student B: “Yes, I am. It’s going to be sunny and warm.”
Student A: “That sounds great! Are you planning to bring anything to do there?”
Student B: “I think I’ll bring some books to read. 
How about you?”
Question: What activity does Student B plan to do at the beach?
A) Go swimming B) Read books C) Play sports D) Visit a friend
Answer: B) Read books
Explanation: In the conversation, Student B mentions that they think they will bring some books to read at the beach, indicating that reading is their planned activity.
6. You hear a short dialogue between a teacher and a student about the upcoming science fair.
Teacher: “Hi, John. Are you ready for the science fair next week?”
Student: “I’m almost there, Mrs. Smith. I just need to finish my project on renewable energy sources.”
Teacher: “That sounds interesting. Do you already have all the materials you need?”
Student: “Yes, I have all the materials, but I’m still working on the experiment to test the efficiency of the solar panel.”
Question: What is the student working on for the science fair?
A) A painting B) A renewable energy project C) A history presentation D) A music performance
Answer: B) A renewable energy project
Explanation: The student explicitly states that they are working on a project about renewable energy sources for the science fair, indicating that the correct answer is B.
7. Listening script:
W: Hi, John! How was your science project fair?
M: It was great! I presented a model of the solar system and explained how each planet orbits the sun.
Q: What did John do at the science project fair?
A. He presented a model of the solar system. B. He explained the theory of relativity. C. He demonstrated a new type of rocket. D. He showed a collection of insects.
Answer: A
Explanation: The question asks what John did at the science project fair. In the dialogue, John says, “I presented a model of the solar system,” which directly answers the question.
8. Listening script:
M: Have you ever tried the new Italian restaurant downtown?
W: Yes, I went there last weekend. The pasta was amazing, but the service was a bit slow.
Q: What did the woman think about the service at the restaurant?
A. It was very quick. B. It was satisfactory. C. It was quite slow. D. 
It was exceptional.
Answer: C
Explanation: The question inquires about the woman’s opinion of the service at the restaurant. The woman says, “the service was a bit slow,” which indicates that she found the service to be slow, thus making option C the correct answer.
9. You are listening to a conversation between two students, Alex and Sarah, discussing their weekend plans.
M: Hi, Sarah, what are you planning to do this weekend?
W: Oh, I’m thinking of going hiking with a group of friends. How about you, Alex?
M: That sounds fun! I might just stay home and relax. Maybe I’ll go for a walk in the park.
Q: What is Alex’s plan for the weekend?
A: To stay home and relax. B: To go hiking with friends. C: To go for a walk in the park. D: To go shopping with Sarah.
Answer: A
Explanation: In the conversation, Alex says, “I might just stay home and relax,” indicating that his main plan is to stay home; the walk in the park is only a possibility.
10. You are listening to a phone conversation between a teacher, Mr. Green, and a student, Lily.
M: Hi, Lily. How are you doing with your homework assignment?
W: Hi, Mr. Green. I’m almost done. I just have one question about the math problem.
M: Sure, go ahead and ask me.
W: Okay, it’s about the quadratic equation. I’m not sure how to find the vertex.
M: Well, Lily, to find the vertex of a quadratic equation, you need to use the formula for the x-coordinate, which is -b/(2a), and then plug that value into the equation to find the y-coordinate.
Q: What is Lily’s question about the math problem?
A: How to solve a linear equation. B: How to find the vertex of a quadratic equation. C: How to factorize a trinomial. D: How to simplify an expression.
Answer: B
Explanation: Lily directly tells Mr. Green, “I’m not sure how to find the vertex,” which refers to the quadratic equation.
11. You are listening to a conversation between two students, Tom and Lily, discussing their weekend plans. 
Listen carefully and answer the question below.
Q: What are Tom and Lily planning to do this weekend?
A: Tom and Lily are planning to go hiking this weekend.
Answer: A
Explanation: The conversation starts with Tom saying, “Hey Lily, do you want to go hiking this weekend?” and Lily replies, “Sure, that sounds like fun!” This indicates that they have planned to go hiking.
12. You are listening to a weather report for a city. Listen carefully and answer the question below.
Q: What is the weather forecast for tomorrow in the city?
A: The weather forecast for tomorrow in the city is sunny with a high of 25 degrees Celsius.
Answer: Sunny, 25°C
Explanation: The weather report states, “Tomorrow, we expect a sunny day with a high of 25 degrees Celsius.” This provides the information needed to answer the question.
13. You hear a conversation between two students discussing their weekend plans.
A. What did the first student plan to do on Saturday?
1. Go to the movies. 2. Visit a museum. 3. Go hiking.
Answer: 2. Visit a museum.
Explanation: The first student mentions that they have a free Saturday and decides to visit the museum with a friend.
14. You hear a short dialogue between a teacher and a student about a school project.
A. What was the student’s initial plan for the project?
1. To complete it by next week. 2. To do it in groups. 3. To work on it over the weekend.
Answer: 3. To work on it over the weekend.
Explanation: The student explains to the teacher that they had planned to work on the project over the weekend but need additional help.
15. You are listening to a conversation between two students, Alex and Emily, discussing their weekend plans. 
Listen to the conversation and answer the question.
Question: What activity does Alex plan to do on Saturday afternoon?
A) Go shopping with friends. B) Attend a football game. C) Visit a museum.
Answer: B) Attend a football game.
Explanation: In the conversation, Alex mentions, “I’m going to a football game with some friends on Saturday afternoon,” which indicates the correct answer is B) Attend a football game.
16. Listen to a short dialogue between a teacher and a student, discussing a school project. Answer the following question.
Question: What is the main topic of the project?
A) Science fair experiments. B) Historical figures research. C) Environmental conservation efforts.
Answer: B) Historical figures research.
Explanation: The teacher says, “The project is about researching a historical figure from the past,” which suggests that the main topic of the project is B) Historical figures research.
17. You are listening to a conversation between two students, Tom and Alice, about their weekend plans. Listen carefully and answer the following question: What does Tom plan to do on Saturday afternoon?
A. Go to the movies. B. Go shopping with Alice. C. Visit his grandparents.
Answer: A
Explanation: In the conversation, Tom says, “I’m going to the movies this afternoon. Do you want to come with me?” This indicates that Tom plans to go to the movies on Saturday afternoon.
18. Listen to a short passage about the importance of exercise and answer the following question: What is the main idea of the passage?
A. Exercise is good for physical health. B. Exercise is important for mental health. C. Exercise can help people lose weight.
Answer: B
Explanation: The passage discusses the positive effects of exercise on mental health, such as reducing stress and improving mood. 
While physical health benefits are also mentioned, the main idea of the passage focuses on the mental health aspect of exercise.
19. You are listening to a conversation between two students discussing their weekend plans.
Student A: “Hey, have you thought about what you’re going to do this weekend?”
Student B: “I was thinking of going hiking. How about you?”
Student A: “That sounds fun! I was actually planning to visit the city museum with my family.”
Student B: “Oh, that’s cool. Do you know if there’s anything special going on there?”
Student A: “Yes, they have a special exhibit about ancient artifacts. It’s really interesting.”
Question: What is Student A planning to do this weekend?
A) Go hiking B) Visit the city museum C) Go to the movies D) Go shopping
Answer: B
Explanation: Student A mentions planning to visit the city museum with their family, which indicates that this is their weekend plan.
20. You are listening to a weather forecast for the coming week.
Narrator: “This week, we can expect a mix of sunny and cloudy days. Monday will be mostly sunny with a high of 75 degrees Fahrenheit. Tuesday will see some scattered showers in the afternoon, with a high of 68 degrees. Wednesday and Thursday will be sunny with highs in the low 70s. Friday will bring a chance of thunderstorms in the evening, with a high of 72 degrees. The weekend will be partly cloudy with a high of 68 degrees on Saturday and 70 degrees on Sunday.”
Question: Which day of the week will have the highest temperature?
A) Monday B) Tuesday C) Wednesday D) Sunday
Answer: A
Explanation: The forecast gives Monday a high of 75 degrees Fahrenheit, which is higher than any other day of the week (Sunday reaches only 70 degrees).
II. Reading Comprehension (30 points)
Passage:
The first day of school is always an exciting and nervous time for students. Imagine walking into a new classroom, filled with unfamiliar faces and a teacher who is about to be your guide through a new language. 
For many students in China, this experience is multiplied when they start learning English in the first grade. English is a challenging subject for beginners, but it opens up a world of opportunities.In the first grade, students are introduced to basic English vocabulary and grammar. They learn simple words like “hello,”“goodbye,” “yes,” and “no,” as well as basic sentence structures. One of the first activities they do is to create a simple “Hello, my name is…” card, which helps them practice their new vocabulary and introduces them to the concept of proper introductions.The classroom environment is designed to be engaging and interactive. There are posters with colorful pictures and words, and there are often games and activities that reinforce the lessons. One popular game is “Simon Says,” where the teacher calls out commands beginning with the word “Simon says,” and the students only follow the commands if they are preceded by “Simon says.”Despite the excitement, the first day of English class can also be daunting. Many students are worried about making mistakes and being corrected in front of their peers. 
However, the teacher reassures them that learning a new language is a process, and mistakes are a natural part of that process.
Questions:
1. Why is the first day of school exciting for students?
2. What are some of the basic words and sentence structures that first-grade students learn in English?
3. How does the classroom environment help students learn English?
Answers:
1. The first day of school is exciting for students because they are starting a new journey, meeting new classmates, and learning a new subject.
2. Some of the basic words and sentence structures that first-grade students learn in English include “hello,” “goodbye,” “yes,” “no,” and simple sentence structures like “I am…,” “My name is…,” and “This is…”.
3. The classroom environment helps students learn English by being engaging and interactive, with colorful posters and games that reinforce the lessons.
III. Cloze Test (15 points)
Title: PEP Edition Grade 7 English Mid-term Exam - Part 3: Cloze Test
Question 1: Read the following passage and choose the best word or phrase for each blank from the options given below.
In the small town of Greenfield, the [1] of the library was always a topic of conversation among the residents. The library was more than just a place to borrow books; it was a [2] where people of all ages could gather and share their interests.
Last year, the library [3] a new program called “Community Reads.” The program encouraged everyone in the town to read the same book and then come together for discussions. It was a great success, and the library became a [4] place for the community to connect.
Unfortunately, the library’s budget was cut this year, and it looked like the “Community Reads” program might [5]. 
Many residents were worried that the library would no longer be the heart of the community.
1. A) importance B) attraction C) benefit D) opportunity
2. A) meeting B) gathering C) destination D) collection
3. A) introduced B) removed C) destroyed D) expanded
4. A) favorite B) private C) outdated D) abandoned
5. A) continue B) stop C) replace D) combine
Answer Key: 1. B 2. C 3. A 4. A 5. B
IV. Grammar Fill-in (10 questions, 1 point each, 10 points in total)
1. Tom ______ (go) to the movies last night, but he had too much homework.
A. goes B. went C. is going D. will go
Answer: B
Explanation: The sentence describes an action that took place last night, so the simple past tense is required.

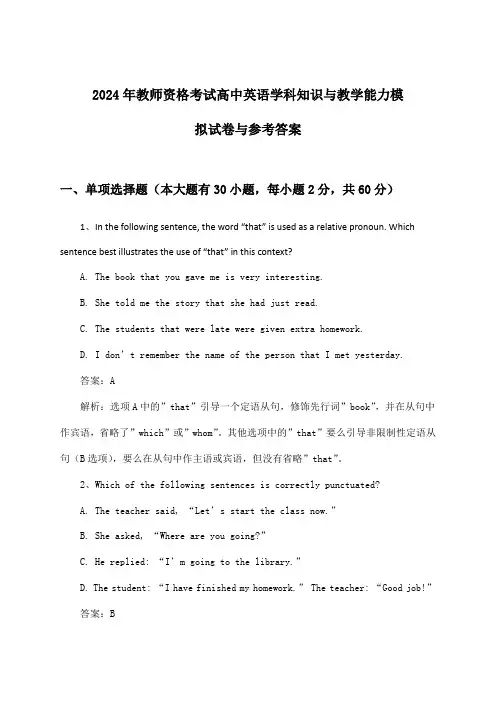

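2024 Teacher Qualification Examination, Senior High School English Subject Knowledge and Teaching Ability: Mock Paper with Reference Answers
I. Multiple-Choice Questions (30 questions, 2 points each, 60 points in total)
1. In the following sentence, the word “that” is used as a relative pronoun. Which sentence best illustrates the use of “that” in this context?
A. The book that you gave me is very interesting.
B. She told me the story that she had just read.
C. The students that were late were given extra homework.
D. I don’t remember the name of the person that I met yesterday.
Answer: A
Explanation: In option A, “that” introduces an attributive (relative) clause modifying the antecedent “book” and serves as the object of that clause, in place of “which”. In the other options, “that” either introduces a non-restrictive attributive clause (option B) or serves as the subject or object of its clause without being omitted.

2. Which of the following sentences is correctly punctuated?
A. The teacher said, “Let’s start the class now.”
B. She asked, “Where are you going?”
C. He replied: “I’m going to the library.”
D. The student: “I have finished my homework.” The teacher: “Good job!”
Answer: B
Explanation: The sentence in option B uses the question mark correctly: it is direct speech, and when the quoted sentence is a question it must end with a question mark.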

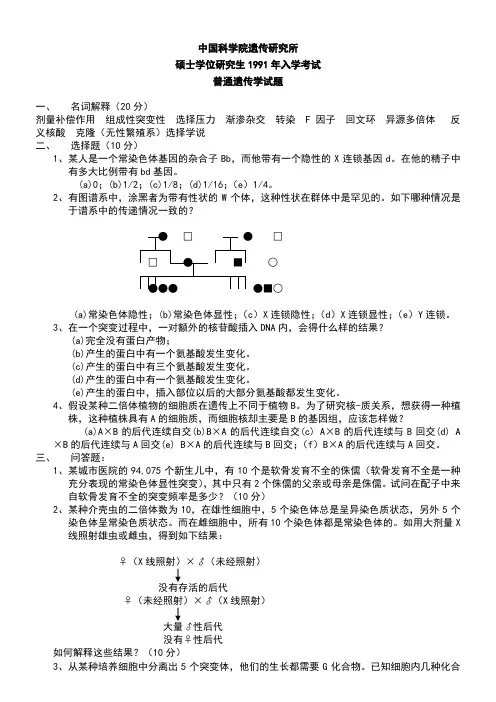

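Institute of Genetics, Chinese Academy of Sciences: 1991 Master's Degree Entrance Examination, General Genetics

I. Define the following terms (20 points)
Dosage compensation; constitutive mutation; sexual selection pressure; introgressive hybridization; transfection; F factor; palindromic loop; allopolyploid; antisense nucleic acid; clonal selection theory

II. Multiple choice (10 points)
1. A man is heterozygous (Bb) for an autosomal gene and also carries a recessive X-linked gene d. What proportion of his sperm carry both b and d?
(a) 0; (b) 1/2; (c) 1/8; (d) 1/16; (e) 1/4.

2. In the pedigree shown, shaded individuals carry trait W, which is rare in the population. Which of the following modes of inheritance is consistent with the transmission seen in the pedigree?
[Pedigree figure]
(a) autosomal recessive; (b) autosomal dominant; (c) X-linked recessive; (d) X-linked dominant; (e) Y-linked.

3. In a mutation event, one extra nucleotide pair is inserted into the DNA. What is the result?
(a) No protein product at all;
(b) one amino acid is changed in the protein produced;
(c) three amino acids are changed in the protein produced;
(d) one amino acid is changed in the protein produced;
(e) most of the amino acids after the insertion site are changed in the protein produced.

4. Suppose the cytoplasm of a diploid plant A differs genetically from that of plant B. To study nucleus-cytoplasm relationships, you want a plant that has the cytoplasm of A but a nucleus consisting mainly of the B genome. How should this be done?
(a) Repeatedly self the progeny of A×B;
(b) repeatedly self the progeny of B×A;
(c) repeatedly backcross the progeny of A×B to B;
(d) repeatedly backcross the progeny of A×B to A;
(e) repeatedly backcross the progeny of B×A to B;
(f) repeatedly backcross the progeny of B×A to A.

III. Essay questions
1. Among 94,075 newborns in a city hospital, 10 were achondroplastic dwarfs (achondroplasia is a fully penetrant autosomal dominant mutation), and only 2 of these dwarfs had a father or mother who was a dwarf. What is the frequency, per gamete, of mutation to achondroplasia? (10 points)

2. A species of scale insect has a diploid chromosome number of 10. In male cells, 5 chromosomes are always heterochromatic and the other 5 are euchromatic, whereas in female cells all 10 chromosomes are euchromatic.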
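For the achondroplasia question above, the arithmetic can be sketched as follows. This is a worked illustration, not part of the original paper, and it rests on stated assumptions: the trait is fully penetrant, every affected child with two unaffected parents represents exactly one new mutation, and each newborn derives from two gametes. The function name and parameters are hypothetical.

```python
def mutation_rate_per_gamete(births: int, affected: int, affected_with_affected_parent: int) -> float:
    """Estimate the per-gamete mutation rate for a fully penetrant dominant trait.

    Assumption: affected children of unaffected parents are new mutations,
    and each birth samples two gametes (one from each parent).
    """
    new_mutations = affected - affected_with_affected_parent  # sporadic cases only
    gametes = 2 * births                                       # two gametes per newborn
    return new_mutations / gametes


# Figures taken from the question: 94,075 births, 10 affected, 2 with an affected parent.
rate = mutation_rate_per_gamete(94_075, 10, 2)
print(f"{rate:.2e}")  # about 4.25e-05 mutations per gamete
```

Under these assumptions, 8 new mutations among 188,150 gametes give a rate of roughly 4.25 × 10⁻⁵ per gamete.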

English Essay: The Use of English Dictionaries

Introduction:
An English dictionary is an essential tool for English language learners. It provides definitions, explanations, and examples of words, helping learners to expand their vocabulary and improve their language skills. This essay will discuss the importance of English dictionaries and how they are used in different ways.

The Importance of English Dictionaries:
English dictionaries play a crucial role in language learning. They help learners to understand the meaning and usage of words, enabling them to communicate effectively in English. Dictionaries also provide information on word forms, pronunciation, synonyms, antonyms, and idiomatic expressions, facilitating learners' comprehension and usage of the language. Moreover, dictionaries can enhance learners' reading skills as they encounter unfamiliar words while reading. By using a dictionary, learners can decipher the meaning of these words and broaden their understanding of the text.

Types of English Dictionaries:
There are various types of English dictionaries available, catering to different needs and proficiency levels. The most common types include learner's dictionaries, bilingual dictionaries, and specialized dictionaries. Learner's dictionaries are designed specifically for language learners, providing simplified definitions, examples, and explanations. Bilingual dictionaries, on the other hand, offer translations of words from one language to another, assisting learners in understanding words in their native language. Specialized dictionaries focus on specific fields such as medicine, law, or technology, providing in-depth explanations of technical terms.

Using English Dictionaries:
English dictionaries can be used in different ways depending on the purpose and context. Here are some common ways dictionaries are utilized:

1. Vocabulary Expansion: Learners can use dictionaries to look up new words they encounter while reading or listening. 
By understanding the meaning and usage of these words, learners can expand their vocabulary and improve their language skills.

2. Word Pronunciation: Dictionaries provide phonetic transcriptions and audio pronunciations, helping learners to pronounce words correctly. This is particularly useful for non-native English speakers who may struggle with English phonetics.

3. Word Forms: Dictionaries provide information on word forms such as verb conjugations, noun plurals, and adjective comparisons. This helps learners to understand how words change in different contexts and grammatical structures.

4. Synonyms and Antonyms: Dictionaries offer synonyms and antonyms for words, enabling learners to enhance their vocabulary by exploring alternative words with similar or opposite meanings.

5. Idiomatic Expressions: Dictionaries provide explanations and examples of idiomatic expressions, helping learners to understand and use these phrases in appropriate contexts.

Conclusion:
In conclusion, English dictionaries are indispensable tools for language learners. They provide definitions, explanations, and examples of words, helping learners to expand their vocabulary, improve their language skills, and enhance their overall understanding of the English language. By utilizing dictionaries effectively, learners can overcome language barriers and communicate more confidently and accurately in English.

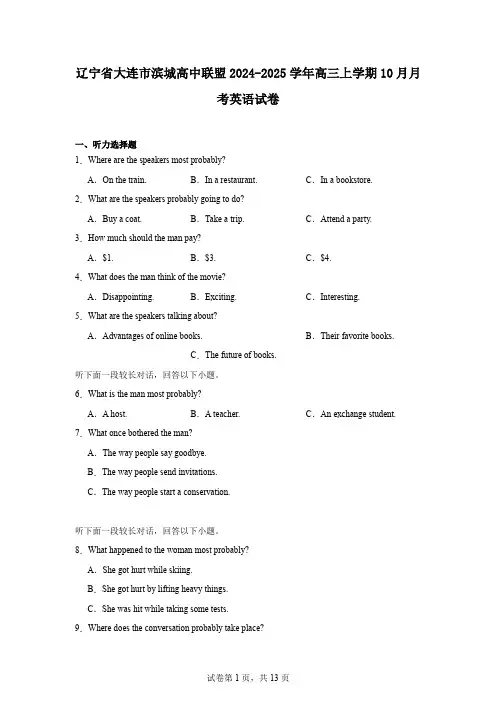

Bincheng Senior High School Alliance, Dalian, Liaoning Province: Grade 12 First-Semester October Monthly English Exam, 2024-2025 Academic Year
I. Listening (Multiple Choice)
1. Where are the speakers most probably?
A. On the train. B. In a restaurant. C. In a bookstore.
2. What are the speakers probably going to do?
A. Buy a coat. B. Take a trip. C. Attend a party.
3. How much should the man pay?
A. $1. B. $3. C. $4.
4. What does the man think of the movie?
A. Disappointing. B. Exciting. C. Interesting.
5. What are the speakers talking about?
A. Advantages of online books. B. Their favorite books. C. The future of books.

Listen to the following longer conversation and answer the questions below.

6. What is the man most probably?
A. A host. B. A teacher. C. An exchange student.
7. What once bothered the man?
A. The way people say goodbye. B. The way people send invitations. C. The way people start a conversation.

Listen to the following longer conversation and answer the questions below.

8. What happened to the woman most probably?
A. She got hurt while skiing. B. She got hurt by lifting heavy things. C. She was hit while taking some tests.
9. Where does the conversation probably take place?
A. In the doctor’s office. B. In the ski field. C. In the drugstore.

Listen to the following longer conversation and answer the questions below.
The Significance of Nonverbal Communication in Cross-Cultural Interactions

In the intricate web of human interactions, nonverbal communication stands out as a powerful and often overlooked tool. It involves the transmission of messages and meanings through facial expressions, gestures, eye contact, body language, and other forms of communication that do not rely on spoken or written words. In cross-cultural settings, where language barriers and cultural differences can pose significant challenges, nonverbal communication becomes even more crucial.

The importance of nonverbal communication in cross-cultural interactions lies in its ability to bridge the gaps created by linguistic and cultural differences. When words fail to convey a message accurately due to language barriers, nonverbal cues can often compensate and clarify the intended meaning. For instance, a smile can convey warmth and friendliness regardless of the language spoken, while a nod of the head can indicate agreement in many cultures.

Moreover, nonverbal communication can provide valuable insights into cultural norms and values. Different cultures often have distinct ways of expressing emotions and attitudes through nonverbal means. Observing and interpreting these cues can help us better understand and respect the cultural background of our interlocutors, thereby enhancing the effectiveness of our interactions.

However, it is important to note that nonverbal communication can also be a source of misunderstandings and misinterpretations in cross-cultural settings. Cultural differences can lead to varying interpretations of the same nonverbal cues. For example, what may be considered a respectful gesture in one culture may be deemed offensive in another. Therefore, it is crucial to be aware of and sensitive to these differences when engaging in cross-cultural interactions.

To effectively utilize nonverbal communication in cross-cultural settings, it is essential to develop cultural awareness and sensitivity. This involves understanding the nonverbal communication styles and norms of different cultures and adapting our own behavior accordingly. Additionally, active listening and observation skills are key to accurately interpreting the nonverbal cues of others. By paying attention to facial expressions, body language, and other forms of nonverbal communication, we can gain a deeper understanding of the thoughts and feelings of our interlocutors.

In conclusion, nonverbal communication plays a pivotal role in cross-cultural interactions. It serves as a powerful tool for bridging language and cultural gaps, enhancing understanding, and fostering effective communication. By developing cultural awareness and sensitivity, and honing our listening and observation skills, we can leverage the power of nonverbal communication to build stronger and more meaningful relationships across cultures.

小学上册英语第6单元真题试卷英语试题一、综合题(本题有100小题,每小题1分,共100分.每小题不选、错误,均不给分)1.The process of extracting oil from seeds is called ______.2.The _______ (The Age of Enlightenment) emphasized reason and individual rights.3.What do we call the act of developing a strategy?A. PlanningB. OrganizingC. StructuringD. All of the AboveD4.The _______ (小长颈鹿) eats leaves from tall trees.5.Which fruit is yellow and curved?A. AppleB. BananaC. GrapeD. Orange6.Which of these is a type of tree?A. FernB. RosebushC. OakD. GrassC7.How many wheels does a bicycle have?A. OneB. TwoC. ThreeD. FourB8.The cake is ___ (baking) in the oven.9.The pyramids in Egypt were built as __________ for pharaohs. (陵墓)10.What is the capital of El Salvador?A. San SalvadorB. Santa AnaC. San MiguelD. La Libertad11.My cousin is a fan of _______ (运动). 她喜欢 _______ (动词).12.The _______ of a comet can be seen when it approaches the sun.13.The ancient Greeks valued ______ (运动) and physical fitness.14.What is the opposite of 'thin'?A. SmallB. FatC. NarrowD. Slim15.My favorite subject in school is __________. I find it exciting because __________.I look forward to learning more about it each day.16.The chemical symbol for strontium is ______.17.The __________ can indicate areas of high biodiversity and ecological significance.18.I have a collection of toy _____.19.Planting native species can help support local ______ (生态).20.The antelope runs very ______ (快).21.I can ______ (ride) a unicycle.22.We have a ______ (丰富的) curriculum focused on arts.23.What do you call a large area of water surrounded by land?A. SeaB. OceanC. LakeD. PondC24. A _______ is a substance that can dissolve in water.25. A _______ is a chemical that changes color in different pH levels.26.The chemical formula for mercury(II) chloride is _____.27.My cat enjoys chasing ______ (小虫) in the sunlight.28.Which animal is known for being very slow?A. CheetahB. SnailC. RabbitD. Dog29.The cheetah is the _________ animal on land. 
(最快)
30. My favorite fruit is _______ (草莓).
31. I like to play ________ games.
32. I enjoy playing ______ (乐器) and practicing music every day.
33. The butterfly is very _______ (colorful).
34. There are _____ (三) birds in the tree.
35. I like to go ________ (滑翔伞) during summer.
36. What do we call the person who studies plants?
A. Biologist B. Botanist C. Ecologist D. Geologist
Answer: B
37. I can ______ (利用) online tools effectively.
38. She is ___ (playing/singing) a tune.
39. The _____ (turf) provides a soft lawn.
40. A __________ is a geological feature that provides habitats for wildlife.
41. A __________ is a type of mixture where particles do not settle.
42. What do you use to write on paper?
A. Paint B. Marker C. Pencil D. All of the above
Answer: D
43. What is 15 divided by 3?
A. 3 B. 4 C. 5 D. 6
Answer: C
44. The ______ is known for its colorful feathers.
45. What do you call a place where you can borrow books?
A. School B. Library C. Store D. Park
Answer: B
46. Many _______ have medicinal uses.
47. A liquid that can dissolve other substances is called a ______.
48. Earth has a magnetic ______ that protects us from solar wind.
49. I can ______ (read) a book in one day.
50. What is the largest planet in our solar system?
A. Earth B. Mars C. Jupiter D. Venus
51. A colloid is a mixture where tiny particles remain _______ suspended.
52. We have ______ (很多) books at home.
53. A parakeet enjoys hanging upside down on its ______ (栖木).
54. The chemical symbol for strontium is _____.
55. Wildflowers bloom in _____ (开放的) fields.
56. The _______ can be a source of inspiration for stories.
57. I can ______ my bike very fast. (ride)
58. I enjoy playing ________ (运动) during the week.
59. What do we call the process of changing from a liquid to a solid?
A. Melting B. Freezing C. Boiling D. Evaporating
Answer: B
60. My _____ (魔方) is difficult to solve.
61. At the party, we had a _________ (气球) fight and it was so _________ (有趣的).
62. I want to _____ (learn/teach) English.
63. What do you call a story that teaches a lesson?
A. Fable B. Myth C. Legend D. Tale
Answer: A
64. They are _____ (fishing) at the lake.
65. The Earth's surface is home to diverse ecosystems, including ______ and wetlands.
66. The _____ (游乐场) is fun for kids.
67. The ________ is a very fast animal.
68. What do you call a young flamingo?
A. Chick B. Kit C. Pup D. Calf
69. In chemistry, the term "molarity" refers to the concentration of a ________.
70. A _______ grows best in sandy soil.
71. The __________ (历史的创新) drives progress.
72. Which country is known for the kangaroo?
A. Canada B. Australia C. India D. South Africa
Answer: B
73. The process of evaporation happens when a __________ turns into a gas.
74. I like to race my ________ (玩具名称) with my friends.
75. What is the opposite of ‘cold’?
A. Warm B. Hot C. Cool D. Chilly
76. The chemical reaction that occurs when fuel burns is called _______.
77. The process of saponification involves fatty acids and ______.
78. The __________ is a large area of land with limited vegetation. (干旱地区)
79. The ancient Greeks contributed greatly to ________ and literature.
80. I want to _______ (学会) how to skateboard.
81. The _______ (Mongol Empire) was one of the largest empires in history.
82. Snakes can be often found in ________________ (草丛).
83. I like to explore ______ (新地方) when I travel.
84. The chemical formula for cetyl alcohol is ______.
85. What is the common name for the substance that falls from the sky during rain?
A. Snow B. Hail C. Water D. Ice
Answer: C
86. I enjoy going to ________ (电影院) with my friends.
87. How many players are there in a soccer team?
A. 5 B. 7 C. 11 D. 13
Answer: C
88. A mixture of oil and water is an example of an ______ mixture.
89. The spider spins its web to catch _________ (猎物).
90. What is the name of the sweet treat made from milk and sugar?
A. Chocolate B. Candy C. Fudge D. Ice Cream
Answer: D
91. What is the largest mammal in the ocean?
A. Shark B. Whale C. Dolphin D. Octopus
Answer: B
92. They are _____ (happy) together.
93. The skunk's spray is a powerful ________________ (防御).
94. I can ______ (dance) with my friends.
95. The capital of Iceland is __________.
96. The chemical formula for propanol is _____.
97. What do you call a person who makes bread?
A. Baker B. Cook C. Chef D. Pastry chef
Answer: A
98. Which of these animals is a reptile?
A. Frog B. Lizard C. Rabbit D. Dolphin
Answer: B
99. My grandma loves to bake ____ (bread).
100. The singer has a ______ (beautiful) voice.

Primary School English (Second Volume, First Book) Test Paper (with Answers)
English Test
I. Comprehensive questions (100 questions, 1 point each, 100 points in total; unanswered or incorrect items receive no points)
1. A rabbit's favorite snack is ______ (胡萝卜).
2. What do we call the device used to take pictures?
A. Camera B. Projector C. Monitor D. Scanner
Answer: A
3. The ______ (植物的形状) can affect light absorption.
4. At the park, children play with _________ (飞盘) and _________ (球).
5. She has a nice ________.
6. What is the capital of Lesotho?
A. Maseru B. Teyateyaneng C. Mafeteng D. Mohale's Hoek
Answer: A
7. What is the name of our planet?
A. Mars B. Earth C. Venus D. Jupiter
Answer: B
8. A parrot can live for several _______ (年).
9. The chemical formula for calcium acetate is _______.
10. My sister is a ______. She studies very hard.
11. What do caterpillars turn into?
A. Frogs B. Butterflies C. Moths D. Snakes
Answer: B (Butterflies)
12. The ______ (植物的生态功能) is vital for balance.
13. I enjoy ___ (playing) with my little brother.
14. The bed is very ___ (comfortable).
15. The __________ (历史的启蒙者) inspire change.
16. A snake moves by __________ its body.
17. What do you call the act of telling a story?
A. Narrative B. Description C. Exposition D. Dialogue
Answer: A
18. What do we call a scientist who studies plants?
A. Zoologist B. Botanist C. Geologist D. Biologist
Answer: B
19. What do we call the time it takes for one full rotation of the Earth?
A. Day B. Month C. Year D. Hour
Answer: A
20. The ______ is the part of the brain that controls emotions.
21. The __________ (历史的记录) serve as cautionary tales.
22. My friend is very ________.
23. Flowers are often very _____ (美丽的).
24. The __________ is known for its vibrant nightlife.
25. The kangaroo hops to _______ (寻找) food.
26. The capital of Ghana is __________.
27. What is the name of the famous ship that sank in 1912?
A. Queen Mary B. Titanic C. Britannic D. Lusitania
Answer: B
28. How many sides does a hexagon have?
A. 4 B. 5 C. 6 D. 7
Answer: C
29. What do we call the art of creating visual works?
A. Painting B. Sculpture C. Drawing D. All of the Above
Answer: D
30. What do we call a person who repairs cars?
A. Doctor B. Mechanic C. Pilot D. Chef
31. They are dancing at the ___. (party)
32. My cousin is very __________ (勤奋的) and studies hard.
33. The elephant's trunk is an extension of its ________________ (鼻子).
34. I like to participate in school ______ (俱乐部) to meet new friends and learn new skills.
35. What is the name of the famous landmark in Egypt?
A. Great Wall B. Eiffel Tower C. Pyramids D. Colosseum
36. The first successful double organ transplant was performed in ________.
37. What is the capital of Zambia?
A. Lusaka B. Ndola C. Kitwe D. Livingstone
Answer: A
38. They are _____ (dancing) to music.
39. I want to know more about _______ (主题). Learning is a lifelong _______ (旅程).
40. The turtle moves very _______ (乌龟的动作很_______).
41. We visit the ______ (博物馆) for educational trips.
42. A chemical reaction can occur in _____ conditions.
43. The fall of the Berlin Wall occurred in _______.
44. The Brit[...]n Tea Party was a protest against the ________ (茶税).
45. What is the name of the famous bear who loves honey?
A. Paddington Bear B. Winnie the Pooh C. Yogi Bear D. Baloo
Answer: B
46. What do we call a person who studies the impact of culture on society?
A. Anthropologist B. Sociologist C. Historian D. Psychologist
Answer: A
47. The butterfly's life cycle includes ______ (多个阶段).
48. The rabbit loves to hop around the _________. (院子)
49. A _______ is a diagram that shows the arrangement of electrons in an atom.
50. The ______ (花期) of a plant varies with species.
51. The cat is ___ (playing) with a ball of yarn.
52. She has a _____ (happy/sad) face.
53. A reaction that produces gas may cause bubbles to _______.
54. What do we call the act of traveling to different countries?
A. Exploring B. Adventuring C. Touring D. Vacationing
Answer: C
55. A ____ (community coalition) unites diverse stakeholders.
56. I want to be a ______ (pilot) when I grow up.
57. We will have a ______ tomorrow. (party)
58. We have a ______ (美丽的) garden full of flowers.
59. What do you call the line that separates two countries?
A. Boundary B. Border C. Frontier D. Division
60. I have a _____ (电子玩具) that lights up.
61. The Earth’s crust is divided into many pieces called ______ plates.
62. What do you call a series of events that happen one after another?
A. Sequence B. Cycle C. Pattern D. Series
Answer: A
63. The boiling point of a substance can change with ______.
64. What do we call a person who flies an airplane?
A. Driver B. Pilot C. Sailor D. Engineer
Answer: B
65. What do we call the art of making pottery?
A. Sculpture B. Painting C. Pottery D. Weaving
Answer: C
66. Many chemical reactions require an increase in ________ to proceed.
67. The __________ is a famous waterfall located in North America. (尼亚加拉大瀑布)
68. The capital of the United Arab Emirates is ________ (阿布扎比).
69. What do we call a scientist who studies ecosystems?
A. Ecologist B. Biologist C. Environmental scientist D. Conservationist
Answer: A
70. A ______ is a type of animal that can climb very well.
71. The ancient Romans used ________ to build their cities.
72. When I feel _______ (情感), I like to listen to _______ (音乐类型). It makes me feel better.
73. The __________ is a significant geological feature in South America. (安第斯山脉)
74. I want to ___ a great chef. (become)
75. The __________ is a critical area for studying geological processes.
76. A _______ (海马) is a unique type of fish.
77. A __________ is formed through the interaction of multiple geological processes.
78. What is the name of the galaxy we live in?
A. Milky Way B. Andromeda C. Whirlpool D. Triangulum
79. My dad works at a _____ (hospital/school).
80. I enjoy ______ (与同事们合作).
81. The cake is ________ and sweet.
82. The cat stretches after a long ____.
83. The __________ (历史的反响) echoes through time.
84. I like to ___ (build) sandcastles at the beach.
85. The __________ (历史的引导) shapes our future.
86. I want to _______ (学习) about physics.
87. What do you call a written message sent electronically?
A. Letter B. Email C. Text D. Note
88. The __________ is essential for transporting blood in animals.
89. My brother is interested in ____ (mathematics).
90. I see a ______ (car) in the driveway.
91. There are _____ (三) cats in the house.
92. I want to ___ a new toy. (get)
93. What animal says "moo"?
A. Dog B. Cat C. Cow D. Sheep
Answer: C
94. A reaction that absorbs energy is known as an ______ reaction.
95. Every Friday, we have a ________ (运动会) at school. I participate in the ________ (跑步) race.
96. What is the capital of Colombia?
A. Bogotá B. Medellín C. Cali D. Cartagena
97. A solution that can conduct electricity is called an ______ solution.
98. What do you call the process by which plants lose water?
A. Photosynthesis B. Transpiration C. Respiration D. Germination
Answer: B
99. What do you call the process of a liquid turning into a solid?
A. Freezing B. Melting C. Boiling D. Evaporating
Answer: A
100. What do we call the study of living things?
A. Biology B. Chemistry C. Physics D. Geology

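This paper was written by Google's founders, Sergey and Larry, while they were doctoral students in the Computer Science Department at Stanford University.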

It was published in 1998.

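No complete Chinese translation is available online, so I have gathered the original text, the few sentences I translated myself, and fragments collected from the web (generously contributed by the users xfygx and 雷声大雨点大) into a single version. The translation was done with the help of [...]. Because this is a technical paper with many compound terms and long sentences, some passages are translated freely rather than literally.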

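As the starting point of Google's success, this paper has great commemorative value, but because of its age, much of what it describes differs from today's latest technology.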

Even so, many of its ideas are still worth learning from.

My own ability is limited, and I may have misunderstood parts of the text; please consult the English original.

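The Anatomy of a Large-Scale Hypertextual Web Search Engine

Sergey Brin and Lawrence Page
{sergey, page}@
Computer Science Department, Stanford University, Stanford, CA 94305

Abstract

In this paper we present Google, a prototype of a large-scale search engine that makes heavy use of the structure present in hypertext.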

Google is designed to crawl and index the Web efficiently and to produce much more satisfying search results than existing systems.

The prototype's database contains the full text of 24 million pages and the links between them, and can be accessed at /.

Engineering a search engine is a challenging task.

Search engines index hundreds of millions of web pages of many different types and answer tens of millions of queries every day.

Despite the importance of large-scale search engines to the Web, very little academic research has been done on them.

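Furthermore, because of rapid advances in technology and the proliferation of web pages, creating a web search engine today is very different from doing so three years ago.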

This paper provides an in-depth description of our large-scale web search engine, the first such detailed public description to date.

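Apart from the problem of scaling traditional search techniques to data of this unprecedented magnitude, there are new technical challenges in using the additional information present in hypertext to produce better search results.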

Huanggang Experimental School, Yangjiang, Guangdong Province: 2023-2024 Academic Year, Senior Two, Second Semester, June Quality Monitoring English Test

I. Listening (multiple choice)

1. What will the man probably do this weekend?
A. Climb mountains. B. Have a picnic. C. See his grandparents.
2. Where does Bill work now?
A. In a supermarket. B. In a garage. C. In a restaurant.
3. What is the relationship between the speakers?
A. Teacher and student. B. School friends. C. Fellow workers.
4. How does the woman feel about the meal?
A. Greatly satisfied. B. A bit dissatisfied. C. Terribly disappointed.
5. Why does Amber call Mike?
A. To ask a favor. B. To rent an apartment. C. To find him a roommate.

Listen to the following longer conversation and answer the questions below.

6. What are the speakers talking about?
A. How to start a speech. B. How to behave in public. C. How to connect with people.
7. What is the woman’s attitude towards the man’s suggestion?
A. Supportive. B. Doubtful. C. Disapproving.

Listen to the following longer conversation and answer the questions below.

8. How often did the man run?
A. Twice a week. B. Four days a week. C. Every day.
9. Which event is the man good at?
A. Short distance. B. Middle distance. C. Long distance.
10. How fast can the man run the event now?
A. In 3 minutes and 47 seconds. B. Within 4 minutes. C. In about 6 minutes.

Listen to the following longer conversation and answer the questions below.