工具变量Instrumental variable methods for causal inference

- 格式:pdf

- 大小:1.33 MB

- 文档页数:44

孟德尔随机化的方法学

孟德尔随机化(Mendelian Randomization)是流行病学研究中评估病因推断的数据分析技巧,它在非实验数据中,使用遗传变异作为工具变量(Instrumental Variable, IV)来估计感兴趣的暴露因素与所关注结局之间的因果关系。

这种方法的主要优势在于它遵循了“亲代等位基因随机分配给子代”的孟德尔遗传规律,因此可以用来推断表型与疾病之间的关联。

孟德尔随机化方法的应用前提是,遗传变异与感兴趣的暴露因素存在关联,并且这种关联在某种程度上可以预测感兴趣的结局。

此外,应用孟德尔随机化方法时还需要考虑一些重要的原则和假设,包括工具变量的独立性、工具变量与暴露因素的关联性、工具变量与结局的关联性以及排除限定准则等。

在实践中,孟德尔随机化方法可以通过多种统计方法和技术来实现,如单样本MR、两样本MR、两阶段MR和双向MR等。

这些方法通过建立G-X回归模型和P-Y回归模型,定量估计暴露因素与结局之间的关联效应大小。

虽然孟德尔随机化方法具有很多优势和应用前景,但仍然需要注意其局限性,如工具变量的选择和潜在混杂因素的影响等。

总的来说,孟德尔随机化方法是一种非常有用的数据分析技巧,可以帮助我们更好地理解疾病发生的因果关系。

但是,在使用这种方

法时,需要充分考虑其前提条件和局限性,并进行合理的验证和解释。

工具变量法工具变量法具体步骤工具变量法(Instrumental Variable Method)是一种用于处理内生性问题的统计方法,它通过引入一个“工具变量”来解决内生性问题。

工具变量是一个有着良好相关性但不会受到内生性干扰的变量,它可以用来代替内生变量,从而解决内生性的影响。

1.确定内生变量和工具变量:首先,需要确定研究中存在的内生变量和可能的工具变量。

内生变量是对所研究问题有影响的变量,而工具变量是与内生变量具有相关性但不会受到内生性干扰的变量。

内生性问题是由于内生变量的存在而导致的因果关系估计偏倚。

2.检验工具变量的相关性:接下来,需要检验所选取的工具变量与内生变量之间的相关性。

这可以通过计算相关系数或进行统计检验来实现。

如果工具变量与内生变量存在显著相关性,那么它可能是一个有效的工具变量。

3.确定工具变量的外生性:除了相关性外,工具变量还需要满足外生性的要求,即工具变量对因变量的影响是通过内生变量而不是其他方式引起的。

这可以通过进行实证分析来判断,例如通过回归模型来检验工具变量对因变量的影响是否通过内生变量进行中介。

如果工具变量的影响仅通过内生变量介导,则可以认为工具变量满足外生性的要求。

4.估计工具变量模型:一旦确定了有效的工具变量,可以使用工具变量法来估计因果关系。

工具变量法的核心思想是通过回归模型来解释内生变量对因变量的影响,并利用工具变量对内生变量进行替代。

通过将工具变量引入估计方程中,可以消除内生性的影响,从而得到无偏的因果关系估计。

5.进行统计推断:在估计了工具变量模型之后,可以进行统计推断来评估估计结果的显著性。

这可以通过计算标准误差、置信区间和假设检验等来实现。

统计推断可以帮助判断估计结果的可靠性,并验证因果关系的存在与否。

总结而言,工具变量法是一种用于解决内生性问题的统计方法。

它通过引入一个有效的工具变量来代替内生变量,消除内生性的干扰,从而得到无偏的因果关系估计。

工具变量法的具体步骤包括确定内生变量和工具变量、检验工具变量的相关性和外生性、估计工具变量模型,并进行统计推断。

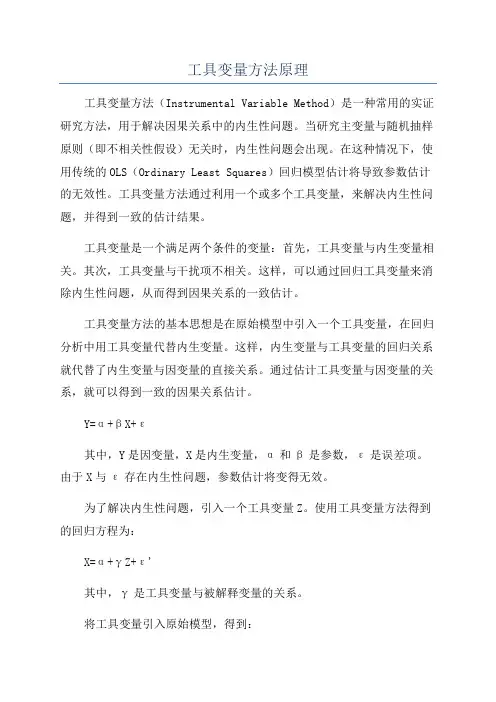

工具变量方法原理工具变量方法(Instrumental Variable Method)是一种常用的实证研究方法,用于解决因果关系中的内生性问题。

当研究主变量与随机抽样原则(即不相关性假设)无关时,内生性问题会出现。

在这种情况下,使用传统的OLS(Ordinary Least Squares)回归模型估计将导致参数估计的无效性。

工具变量方法通过利用一个或多个工具变量,来解决内生性问题,并得到一致的估计结果。

工具变量是一个满足两个条件的变量:首先,工具变量与内生变量相关。

其次,工具变量与干扰项不相关。

这样,可以通过回归工具变量来消除内生性问题,从而得到因果关系的一致估计。

工具变量方法的基本思想是在原始模型中引入一个工具变量,在回归分析中用工具变量代替内生变量。

这样,内生变量与工具变量的回归关系就代替了内生变量与因变量的直接关系。

通过估计工具变量与因变量的关系,就可以得到一致的因果关系估计。

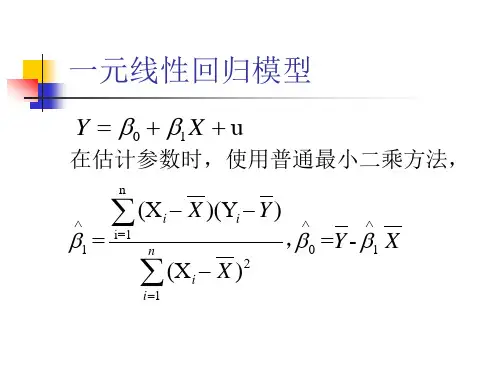

Y=α+βX+ε其中,Y是因变量,X是内生变量,α和β是参数,ε是误差项。

由于X与ε存在内生性问题,参数估计将变得无效。

为了解决内生性问题,引入一个工具变量Z。

使用工具变量方法得到的回归方程为:X=α+γZ+ε'其中,γ是工具变量与被解释变量的关系。

将工具变量引入原始模型,得到:Y=α+β(α+γZ+ε')+ε化简后可以得到:Y=α+βα+βγZ+βε'+ε由于内生性问题,βγ≠0,OLS估计将无效。

但是,由于工具变量与ε无相关性,βε'=0。

因此,使用工具变量方法可以得到一致的估计结果,即β的一致估计。

工具变量方法中的关键问题是选择合适的工具变量。

一个好的工具变量要满足两个条件:首先,与内生变量相关,以确保能够消除内生性问题;其次,与干扰项不相关,以确保工具变量不会引入新的内生性问题。

如果工具变量不满足这两个条件,工具变量方法仍然会产生一致的估计结果,但结果可能存在偏误。

要选择合适的工具变量,需要根据研究问题及具体情境进行判断。

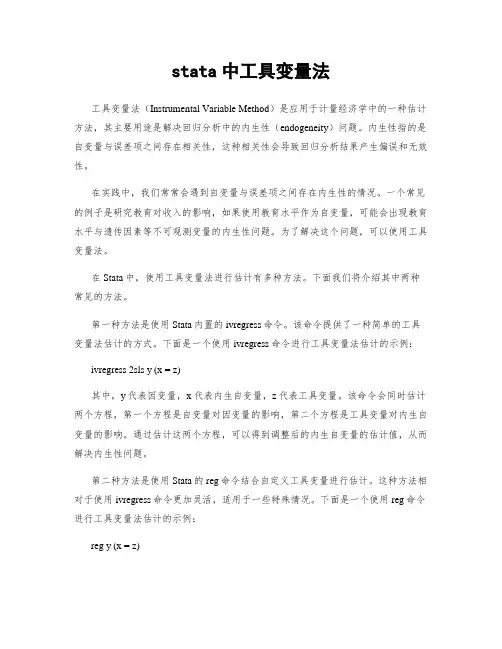

stata中工具变量法工具变量法(Instrumental Variable Method)是应用于计量经济学中的一种估计方法,其主要用途是解决回归分析中的内生性(endogeneity)问题。

内生性指的是自变量与误差项之间存在相关性,这种相关性会导致回归分析结果产生偏误和无效性。

在实践中,我们常常会遇到自变量与误差项之间存在内生性的情况。

一个常见的例子是研究教育对收入的影响,如果使用教育水平作为自变量,可能会出现教育水平与遗传因素等不可观测变量的内生性问题。

为了解决这个问题,可以使用工具变量法。

在Stata中,使用工具变量法进行估计有多种方法。

下面我们将介绍其中两种常见的方法。

第一种方法是使用Stata内置的ivregress命令。

该命令提供了一种简单的工具变量法估计的方式。

下面是一个使用ivregress命令进行工具变量法估计的示例:ivregress 2sls y (x = z)其中,y代表因变量,x代表内生自变量,z代表工具变量。

该命令会同时估计两个方程,第一个方程是自变量对因变量的影响,第二个方程是工具变量对内生自变量的影响。

通过估计这两个方程,可以得到调整后的内生自变量的估计值,从而解决内生性问题。

第二种方法是使用Stata的reg命令结合自定义工具变量进行估计。

这种方法相对于使用ivregress命令更加灵活,适用于一些特殊情况。

下面是一个使用reg命令进行工具变量法估计的示例:reg y (x = z)在这个示例中,y代表因变量,x代表内生自变量,z代表工具变量。

通过在reg命令中指定x和z之间的关系,可以实现工具变量法的估计。

需要注意的是,使用reg命令进行工具变量法估计需要确保工具变量满足一些假设条件,比如工具变量与误差项之间不应存在相关性。

总之,Stata中提供了多种方法进行工具变量法的估计。

根据实际问题的需求和假设条件的满足程度,可以选择合适的方法进行估计。

通过使用工具变量法可以有效解决回归分析中的内生性问题,提高估计结果的准确性和有效性。

工具变量是什么意思

工具变量的意思是:一个计量经济学的概念,它的出现是为了克服普通最小二乘法中的内生性问题。

在这里,内生性是指回归模型中的解释变量(X)和随机扰动项(δ)相关。

工具变量(英语:instrumental variable,简称“IV”)也称为“仪器变量”或“辅助变量”,是经济学、计量经济学、流行病学和相关学科中无法实现可控实验的时候,用于估计模型因果关系的方法。

工具变量(英语:instrumental variable,简称“IV”)也称为“仪器变量”或“辅助变量”,是经济学、计量经济学、流行病学和相关学科中无法实现可控实验的时,用于估计模型因果关系的方法。

在回归模型中,当解释变量与误差项存在相关性(内生性问题),使用工具变量法能够得到一致的估计量。

内生性问题一般产生于被忽略变量问题或者测量误差问题。

当内生性问题出现时,常见的线性回归模型会出现不一致的估计量。

此时,如果存在工具变量,那么人们仍然可以得到一致的估计量。

根据定义,工具变量应该是一个不属于原解释方程并且与内生解释变量相关的变量。

在线性模型中,一个有效的工具变量应该满足以下两点:

此变量和内生解释变量存在相关性;

此变量和误差项不相关,也就是说工具变量严格外生。

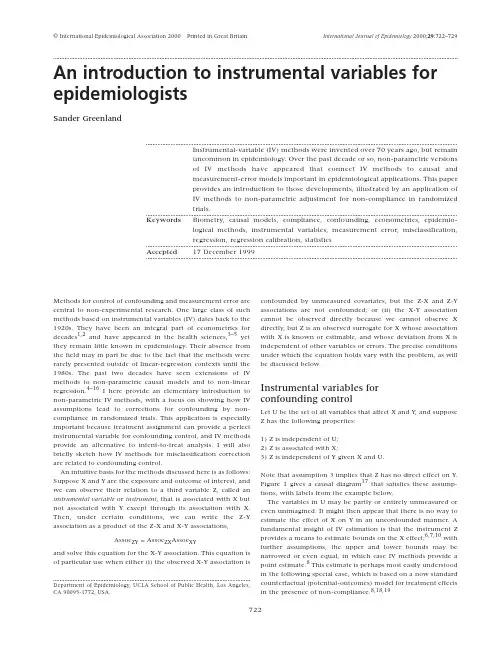

International Journal of Epidemiology 2000;29:722–729Methods for control of confounding and measurement error are central to non-experimental research. One large class of such methods based on instrumental variables (IV) dates back to the 1920s. They have been an integral part of econometrics for decades1,2and have appeared in the health sciences,3–5yet they remain little known in epidemiology. Their absence from the field may in part be due to the fact that the methods were rarely presented outside of linear-regression contexts until the 1980s. The past two decades have seen extensions of IV methods to non-parametric causal models and to non-linear regression.4–16I here provide an elementary introduction to non-parametric IV methods, with a focus on showing how IV assumptions lead to corrections for confounding by non-compliance in randomized trials. This application is especially important because treatment assignment can provide a perfect instrumental variable for confounding control, and IV methods provide an alternative to intent-to-treat analysis. I will also briefly sketch how IV methods for misclassification correction are related to confounding control.An intuitive basis for the methods discussed here is as follows: Suppose X and Y are the exposure and outcome of interest, and we can observe their relation to a third variable Z, called an instrumental variable or instrument, that is associated with X but not associated with Y except through its association with X. Then, under certain conditions, we can write the Z-Y association as a product of the Z-X and X-Y associations,Assoc ZY= Assoc ZX Assoc XYand solve this equation for the X-Y association. This equation is of particular use when either (i) the observed X-Y association is confounded by unmeasured covariates, but the Z-X and Z-Y associations are not confounded; or (ii) the X-Y association cannot be observed directly because we cannot observe X directly, but Z is an observed surrogate for X whose association with X is known or estimable, and whose deviation from X is independent of other variables or errors. The precise conditions under which the equation holds vary with the problem, as will be discussed below.Instrumental variables forconfounding controlLet U be the set of all variables that affect X and Y, and suppose Z has the following properties:1) Z is independent of U;2) Z is associated with X;3) Z is independent of Y given X and U.Note that assumption 3 implies that Z has no direct effect on Y. Figure 1 gives a causal diagram17that satisfies these assump-tions, with labels from the example below.The variables in U may be partly or entirely unmeasured or even unimagined. It might then appear that there is no way to estimate the effect of X on Y in an unconfounded manner. A fundamental insight of IV estimation is that the instrument Z provides a means to estimate bounds on the X effect;6,7,10with further assumptions, the upper and lower bounds may be narrowed or even equal, in which case IV methods provide a point estimate.8This estimate is perhaps most easily understood in the following special case, which is based on a now standard counterfactual (potential-outcomes) model for treatment effects in the presence of non-compliance.8,18,19© International Epidemiological Association 2000Printed in Great BritainAn introduction to instrumental variables for epidemiologistsSander GreenlandInstrumental-variable (IV) methods were invented over 70 years ago, but remainuncommon in epidemiology. Over the past decade or so, non-parametric versionsof IV methods have appeared that connect IV methods to causal andmeasurement-error models important in epidemiological applications. This paperprovides an introduction to those developments, illustrated by an application ofIV methods to non-parametric adjustment for non-compliance in randomizedtrials.Keywords Biometry, causal models, compliance, confounding, econometrics, epidemio-logical methods, instrumental variables, measurement error, misclassification,regression, regression calibration, statisticsAccepted17 December 1999Department of Epidemiology, UCLA School of Public Health, Los Angeles,CA 90095-1772, USA.722INSTRUMENTAL VARIABLES FOR EPIDEMIOLOGISTS723Intrumental variable methods fornon-complianceA paradigmatic example in which the IV conditions 1–3 are often satisfied is in a randomized trial with non-compliance: Z becomes treatment assignment, which is randomized and so fulfills assumption 1; X becomes treatment received, which is affected but not fully determined by assignment Z. To illustrate these concepts, Table 1 presents individual one-year mortality data from a cluster-randomized trial of vitamin A supplementa-tion in childhood.18,20Of 450 villages, 229 were assigned to a treatment in which village children received two oral doses of vitamin A; children in the 221 control villages were assigned none. This protocol resulted in 12094 children assigned to the treatment (Z=1) and 11588 assigned to the control (Z = 0). Only children assigned to treatment received the treatment; that is, no one had Z = 0 and X = 1. Unfortunately, 2419 (20%) of those assigned to the treatment did not receive the treatment (had Z = 1 and X = 0), resulting in only 9675 receiving treat-ment (X = 1). Nonetheless, assumption 1 is satisfied if the ran-domization was not subverted, while assumption 2 is supported by the data: Assignment to vitamin A increased the percentage receiving A from 0 to 80%.Assumption 3 is plausible biologically, but must be reconciled with the fact that, among those both assigned to no vitamin A (Z = 0) and receiving no vitamin A (X = 0), mortality is only 639 per 100000, versus 1406 per 100000 for those assigned to vitamin A (Z = 1) but receiving no vitamin A (X = 0). Assuming that this difference is due to confounding by factors U that affect compliance (and hence X) and mortality (Figure 1), this illusory direct effect of assignment Z exemplifies the type of bias that arises when one attempts to estimate direct effects by stratifying on intermediates21(X is intermediate between Z and Y). There are many plausible explanations for such confounding. For example, perhaps families that fail to comply tend to be the poorest and so provide high-risk environments (poorer nutrition and sanitation); their low compliance would leave behind a low-risk group of compliers in the X = Z = 1 category, and thus confound an unadjusted comparison of the treated group (X = 1) with the untreated group (X = 0). Confounding is a threat whenever people fail to comply with their assignment (i.e. have X ≠Z) for reasons (U) related to their outcome;4–10,18,19,22,23this problem is often referred to as one of biased selection for treatment. For example, patients assigned to a complex pill regimen may become lax in following that regimen. These non-compliers are often those who feel less ill and who have a better prognosis with respect to the outcome Y. In such situations, there will be confounding in a comparison of those complying with treatment to the other patients, because those complying are sicker than the others (i.e. there is self-selection for treatment that is related to prognosis).Concerns of this sort have led to recommendations (often rigid) that intent-to-treat analysis be followed. To test and esti-mate effects, intent-to-treat compares those assigned to one treatment against those assigned to another treatment without regard to actual treatment received (X). Critics of this approach point out that treatment received is the source of biological efficacy, and that comparison of treatment assigned is biased for the effect of treatment received (furthermore, the bias is not always toward the null,24contrary to common lore). By recognizing treatment assignment as an instrument, IV methods provide an alternative to the biased extremes of analysing Z as the treatment (intent-to-treat) and analysing received treat-ment X in the conventional manner (which is likely to be con-founded by determinants of compliance).To see how the IV concept can be used to control for confounding due to non-compliance, let us refer to people who would always obey their treatment assignment as co-operative; among these people, X and Z are always equal. It is crucial to distinguish the concept of co-operative people from the concept of compliance. Co-operative people are those who will receive their assigned regimen, no matter which regimen (treatment) they are assigned. In the example, co-operative children have parents or guardians who will fully co-operate with the researchers, in that they will allow the researchers to give their child the vitamin if assigned to receive it, and will not give their child the vitamin if assigned to not receive it. Non-co-operative people are those who will not receive certain regimens if assigned to them. In the example, some parents may refuse to let their child receive the experimental treatment. These refusers have children who exhibit non-compliance if they areassigned to the vitamin A, but who exhibit compliance if they Figure1Table 1One-year mortality data from cluster-randomized trial of vitamin A supplementation in children.20Z = 1 if assigned A, 0 if not; X = 1 if received A, 0 if notDeaths (Y = 1)123446074 Total9675241912 094011 588 Risk a1241406380undefined639 a Deaths per 100 000 within one year.724INTERNATIONAL JOURNAL OF EPIDEMIOLOGYare assigned to no vitamin. Thus, some non-co-operative people will be in compliance with their assignment, simply because they were not assigned to a treatment they would have refused.Following earlier derivations of the correction below,8,19I will introduce this simplifying assumption:4) Treatment assignment affects X only among co-operative people.This assumption says that the participants may be divided into two groups: co-operative, for whom X always equals Z; and non-co-operative, for whom assignment Z has no effect on X. If the treatment variable has only two levels (1 and 0),assumption 4 reduces to an assumption that no trial participant would always (perversely) receive the opposite of what she was assigned, no matter which treatment she was assigned,8so that there are only two types of non-co-operators: Those who would always receive treatment and those who would never receive treatment. Table 1 provides evidence that assumption 4 is satisfied in the example: If there were participants who would always receive the opposite of their assignment (‘defiers’8), we should expect to see some of them in the Z = 0, X = 1 column,but no one is seen there.Now define p c = proportion of trial participants who are co-operative.p c is the effect that assignment to treatment 1 rather than 0would have on the average value of the treatment indicator X.To see this, definep 1= proportion of participants who would receive treat-ment 1 (X = 1) if assigned treatment 1 (Z = 1)= average of X if everyone were assigned treatment 1p 0=proportion of participants who would receive treat-ment 1 (X = 1) if assigned treatment 0 (Z = 0)= average of X if no one were assigned treatment 1.Any difference between p 1and p 0has to be due to the change in X among co-operative people (because, by assumption 4,only the co-operative people are affected by Z). More precisely,p 1– p 0must equal p c because only co-operative people would go from X = 0 to X = 1 in response to a change from Z = 0 to Z = 1. Also, p 0must equal the proportion of non-co-operators who always receive treatment, 1–p 1must equal the proportion of non-co-operators who never receive treatment, and p c + p 0+ 1 – p 1= 1.Under assumption 1, p 1– p 0and hence p c is validly estimated by the observed difference in the proportion receiving treat-ment 1 (X = 1) for the group assigned treatment 1 (Z = 1) versus the group assigned treatment 0 (Z = 0). In the example, this estimate is the difference in the proportions receiving vitamin A among those assigned and not assigned to A:Next, definem •1= average outcome (Y¯) if everyone is assigned treatment 1 (Z = 1)m •0= average outcome if everyone is assigned treatment 0(Z = 0).The average outcome difference m •1– m •0is the effect that assignment to treatment 1 rather than 0 would have on the aver-age outcome. (Recall that, when Y is a binary (0,1) disease indi-cator, the average outcome is the average risk or incidence proportion, and the average outcome difference is the average risk difference.25) Under assumption 1, this difference is validly estimated by the observed difference in average outcome for the group assigned treatment 1 versus the group assigned treatment 0 (which is the intent-to-treat [ITT] estimate of treatment effect):m •1 = 46/12095 = 380 per 100000m •0 = 74/11588 = 639 per 100000.Next, consider two quantities that we do not observe directly:m 1c = average outcome of co-operative people if everyone isassigned treatment 1,m 0c = average outcome of co-operative people if everyone isassigned treatment 0.m 1c – m 0c is the effect that assignment to treatment 1 rather than 0 would have on the average outcome of co-operative people .In addition, because assignment Z and treatment received X are identical among co-operative people, m 1c – m 0c is the effect that receiving treatment 1 rather than 0 would have on the average outcome among co-operative people. In other words, m 1c – m 0c is both an intent-to-treat (Z) effect and a biological (X) effect on co-operative people.Finally, consider two quantities that are estimable in a ran-domized trial:m 1n = average outcome among non-co-operative peoplewho receive treatmentm 0n = average outcome among non-co-operative peoplewho do not receive treatment.Under assumptions 3 and 4, these averages do not depend on assignment Z. Thus, m 1n can be estimated directly from the average outcome of those assigned to 0 but receiving 1 (Z = 0, X = 1); similarly, m 0n can be estimated directly from the average outcome of those assigned to 1 but receiving 0 (Z = 1, X = 0).Table 2 summarizes the data expected from a study satisfying assumptions 1–4, using the above notation. It shows that we can write m •1, the average outcome if everyone were assigned treatment 1, as a weighted average of the average outcomes for co-operative people and non-co-operative people, with weights equal to the respective proportions of each type of person:m •1= p c m 1c + p 0m 1n – (1–p 1)m 0n(1)Similarly,m •0= p 0m 1n + (1–p 1)m 0n + p c m 0c(2)Subtracting the second equation from the first yieldsm •1– m •0= p c (m 1c – m 0c ) = (p 1– p 0)(m 1c – m 0c )(3)ˆˆ–ˆ–.–..p p p c 10====9675120940115880800080INSTRUMENTAL VARIABLES FOR EPIDEMIOLOGISTS725Thus, the effect m•1– m•0of assignment on the overall average outcome can be viewed as the effect p1– p0of assignment Z on treatment received X times the effect m1c– m0c of treatment received X on the outcome Y among co-operative people. Equation 3 exhibits the dilution of the Z effect produced by non-compliance; p c quantifies this dilution on a scale from 0 (no Z effect if no compliance) to 1 (Z effect equals X effect if full compliance).We can solve equation 3 for the effect of treatment X among co-operative people to getm1c– m0c= (m•1– m•0)͞p c= (m•1– m•0)͞(p1– p0)(4) This equation shows that, subject to the assumptions, we can estimate the effect of treatment received X on the average outcome among co-operative people as the estimated effect of treatment assignment Z on the overall average outcome divided by the estimated effect of treatment assignment on the received treatment X. The Appendix gives a formula for the variance of this estimate. Because m•1– m•0and p1– p0equal the Z-coefficients from the linear regressions of Y on Z and X on Z, the effect estimate from equation 4 equals the classical IV-regression estimate.2Returning to the example, the unadjusted estimate of the risk difference produced by treatment (which is confounded by non-compliance) compares X = 1 to X = 0:124 – 100 000 (34 + 74)͞(2419 + 11 588)= –647 per 100 000 = –0.65%(95% CI: –0.81%, –0.49%). In contrast, the usual ITT estimate of the risk difference (which is a valid estimate of the risk difference caused by assignment to vitamin A) compares Z = 1 to Z = 0:ˆm•1–ˆm•0= 380 – 639 = –259 per 100000 = –0.26% (95% CI: –0.44%, –0.08%); this is a 259/639 = 41% risk reduction. The IV estimate is(ˆm•1–ˆm•0)͞ˆp c= –259/0.80 = –323 per 100000 = –0.32% (95% CI: –0.55%, –0.10%), which is a 324/639 = 51% risk reduction. (Because the original data are unavailable, the confidence limits were computed assuming simple random-ization, and so are incorrect to the extent that village effects are present.) It thus appears that the unadjusted estimate severely overestimates the treatment effect, but that the ITT estimate somewhat underestimates the effect.Choice of target effectIn the above example the effect of vitamin A, m1c– m0c, is an effect restricted to co-operative people. This effect is useful if we think that co-operative people in the trial are typical (with respect to treatment effect) of people who will accept the treatment they are assigned. Aside from such generalizability issues (which arise in all trials), a conceptual problem is that we often cannot identify co-operative people with any certainty.8,18We can of course ask trial participants (or, above, their parents) assigned to and receiving a treatment if they would have obtained and taken that treatment had they not been assigned to it, but the reliability of the responses would ordinarily be unknown (even to the participant).Under assumption 4 and the assumption that the treatment would never be received by those not assigned to it (which is plausible in the above example), we may identify as co-operative those people who receive treatment among those assigned to treatment. Otherwise, although we can estimate the proportion p c of co-operative people (to whom our estimate of m1c– m0c applies), we cannot characterize those people. The only behavioural effect we can always identify is the effect of assignment Z on treatment received X within our trial.Sometimes, the effects of treatment under strictly enforced regimens are of central interest in planning mandatory programmes of otherwise unavailable prophylactics (such as vaccines). Definem jf= average outcome if everyone were forced to receive treatment j.In the example, m1f– m0f is the effect of forcing every child to take vitamin A versus withholding the supplement from every child. If co-operative and non-co-operative people differ in any way related to the effect of treatment received (i.e. if the variable co-operative/non-co-operative modifies the effect of X), the effect of X among co-operative people will not suffice to estimate the forcing effect. Unfortunately, we should expect the selective non-compliance that confounds the naïve X = 1 versus X = 0 comparison to also make m1c– m0c unequal to m1f– m0f. Thus, one should never presume that the IV estimate of the effect is a valid estimate of the forcing effect, even when it is a valid estimate of the effect on co-operative people.Estimation of ratio measuresA problem arises if one wishes to estimate measures that are ratios of average outcomes, reflective of more general non-collapsibility problems of ratio measures:25,26Because logs ofTable 2 Expected data under assumptions 1–4 and text notation for a binary treatment variable X, with everyone assigned (Z = 1) or not assigned (Z = 0) treatment. C = 1 for co-operators, N = 1 for non-co-operators who always have X = 1, N = 0 for non-co-operators who always have X = 0Average Y m1c m1n m0n m1n m0n m0c Proportion p c p0 1 – p1p0 1 – p1p c Overall average Y:p c m1c+ p0m1n + (1 – p1)m0n= m•1p0m1n+ (1 – p1)m0n+ p c m0c= m•0726INTERNATIONAL JOURNAL OF EPIDEMIOLOGYaverages are not averages of logs, one cannot simply take Y as (say) the log rate or log odds and apply the above formulas to differences in these logs to obtain corrected log relative risk estimates; one needs additional strong assumptions about homogeneity of risks within levels of X and all controlled covariates to use the formulas. Such assumptions are implicit in IV methods based on regression modelling.2,11–16 Alternatively, under assumptions 1–3 and one more assump-tion one may derive risk ratio (RR) estimators for the effect of treatment among co-operative people, as well as alternative risk-difference (RD) estimators18,23(note, however, that the formulas in Sommer and Zeger18have serious misprints). For example, suppose that p0= 0, i.e. one cannot get treatment 1 (X = 1) without assignment to treatment 1 (Z = 1), so that the only non-co-operators are those who do not receive treatment when assigned to treatment (as in the example). Then, from assumptions 1 and 3,The proportion receiving treatment among the proportion assigned to treatment, ˆp1, is a valid estimate of p c.People assigned to and receiving treatment (Z = X = 1) are representative of all co-operative people in the trial, and so their average outcome provides a valid estimate of m1c; call it ˆm1c. People assigned to but not receiving treatment (Z = 1, X = 0) are representative of non-co-operative people in the trial, and so their average outcome provides a valid estimate of m0n; call it ˆm0n.As before, we can also estimate m•0by the average outcome among those assigned to 0 (Z = 0), ˆm•0.We can now solve equation 2 for m0c to obtainm0c= [m•0– (1 – p c)m0n]/p c(5)and substitute the above estimates into this equation to get mˆ0c.The ratio and difference of ˆm lc and ˆm0c are now the estimated relative and absolute effects of received treatment on the average outcome. In the example,ˆp c=ˆp1= 0.80,ˆm lc= 12/9675 = 124 per 100000,ˆm•0= 639 per 100000,ˆm0n= 34/2419 = 11406 per 100000, and soˆm0c= [639 – (0.20)1406]/0.80 = 447 per 100000RRˆ = ˆm lc͞ˆm0c= 124/447 = 0.28,RDˆ= ˆm lc– ˆm0c= 124 – 447 = –323 per 100000.The latter estimate is the same as the estimate obtained from equation 4, although it was derived from the assumption that p0= 0, which is stronger than assumption 4 (from which equa-tion 4 was derived).Cuzick et al.23derived a more general risk-ratio estimator under an alternative assumption that the ratio is the same (homogeneous) for co-operators and for all types of non-compliers; when ˆp1= 0 (as in the example), their estimator reduces to that just given. As with the other estimators, their estimator also requires assumptions 1–3. Connor et al.27also derived formula 5 in the context in which treatment is screen-ing and compliance is acceptance of screening, using assump-tions equivalent to 1–3 and p0= 0.Insufficiency of the instrumental assumptions Assumptions 1–3, which here define Z as an instrument, are not sufficient to yield a point estimate of effects of the received treatment X. They do allow setting of bounds for X effects,4,6,7,10but these bounds can be uselessly wide.9Using only assumptions 1–3 in the above example, Balke and Pearl10 derived non-parametric bounds for the forcing risk difference m1f– m0f of –0.5% and 19%. The details of their derivation are beyond the present paper, but their upper bound suggests vitamin A might kill up to a fifth of the children, an absurd value in light of the extensive background information on the non-toxicity of vitamin A in the doses administered.20Thus, assumptions 1–3 plus the example data provide almost no additional information beyond what is already known to place an upper bound on the risk difference.To obtain useful results, one will often need further plausible biological (causal) or parametric statistical assumptions beyond those embodied in assumptions 1–3. In the above example, assumption 4 is quite plausible. An even more plausible assumption is that vitamin A would not kill any of the children. With this ‘no harm’ assumption replacing assumption 4, the ITT estimate (-0.26%) is an upper bound for the forcing risk difference; this bound is obtained by assuming that no death in the group with Z = 1 and X = 0 (who are non-co-operative people) would have been prevented by forcing every child to take vitamin A. A companion lower bound of 12/12094 –74/11584 = –0.54% is obtained by assuming that every death in the group with Z = 1 and X = 0 would have been prevented by forcing treatment on these children. These bounds are only for the point estimate of effect that would be obtained under a forced regimen; they do not account for random errors in the numbers of deaths. Nonetheless, the lower bound is already implausibly low on biological grounds, for we can be sure that treatment could not have prevented every death in the Z = 1, X = 0 group. Instrumental variables for misclassification correctionOne obtains a different perspective on IV methods by con-sidering a surrogate or noisy measure Z for the exposure of interest X. The chief difference from the confounding problem is that no causal interpretation of the associations is required; the methods apply even for a purely descriptive (associational) analysis. Thus, for simplicity, in this section I will set aside U;I will also assume Y is measured without error. Assumption 3 then simplifies to3’) Z is independent of Y given XThis assumption corresponds to the notion that error in Z as a measure of X is non-differential with respect to the outcome; this error may have systematic as well as random components, as long as neither component is associated with Y.Given assumptions 2 and 3’, we can validly estimate the association of Y with X using IV formulas, provided we have validation data that show how Z predicts X in our study (i.e. that provide estimates of the predictive values for Z as a measure of X). To see this relation for a binary exposure X in a cohort study, for each exposure level j (j = 1 or 0) definem X=j= average outcome among those with X = jINSTRUMENTAL VARIABLES FOR EPIDEMIOLOGISTS727m Z=j= average outcome among those with Z = jp j= probability that X = 1 when Z = j for the entire cohort;p1and 1 – p0are then the positive and negative predictive values for the entire cohort (without regard to the outcome).Under assumption 3′, Z has no effect on Y other than through Z; hence, the average outcomes within levels of X do not change across levels of Z, and in particular equal the m X=j. Further-more, the average outcome m Z=j within a given level j of Z is just the average of the average outcomes within levels of X, weighted by the probabilities of the X levels within the Z level; that is,m Z=1= p1m X=1+ (1 – p1)m X=0andm Z=0= p0m X=1+ (1 – p0)m X=0(6) Subtracting the second equation from the first yieldsm Z=1– m Z=0= (p1– p0)m X=1– (p1– p0)m X=0= (p1– p0) (m X=1– m X=0)(7) We now solve this equation to getm X=1– m X=0= (m Z=1– m Z=0)͞(p1– p0).(8) This equation shows that, subject to the assumptions, we can estimate the exposure-specific outcome difference as the ratio of the surrogate-specific outcome difference (the Z-Y association) and the difference of the surrogate-specific probabilities of X = 1 (the Z-X association).Use of validation dataEquation 8 does not require any data that directly relate the true exposure X to the outcome Y: We can correct the estimate from a study relating the surrogate Z to the outcome provided we can construct accurate estimates of p1and p0from a valida-tion study relating the true exposure to the surrogate. If, how-ever, the validation data also show the relation of the true exposure to the outcome (as would be the case if they were a random sample from the study relating surrogate to outcome), the IV-corrected estimate from applying equation 8 to the unvalidated data can and should be combined with the direct estimate from the validation data. Such data combination is done most efficiently with regression modelling.15,28Validation data that are not restricted on the outcome (e.g. that include both cases and non-cases when Y is a disease indicator) also allow one to test assumption 3 and to use methods that do not require that assumption.28–30Relation to confounding controlThe resemblance of equation 8 to 4 reflects an underlying com-mon feature of the two situations. In both, we lack complete data on the relation of X to Y. In the non-compliance problem, we lack data on who is co-operative; the IV method uses the X-Z data to correct for the dilution of the Z-Y association as a measure of the X effect that results from inclusion of non-co-operative people in the intent-to-treat comparison. Similarly, in the classification problem we lack data on who is correctly classi-fied;the IV method uses X-Z data to correct for the dilution result-ing from the inclusion of the misclassified data in our comparison.There is a distinction, however: For misclassification, assump-tion 3’ implies that the X-Y association is the same among the correctly and incorrectly classified; hence, the corrected esti-mate applies to the entire study cohort. In contrast, for con-founding control, the assumptions do not imply that the effect of X on Y would be the same for co-operative and non-co-operative people; hence, the corrected estimate applies only to co-operative people (who may be difficult to identify). This distinction does not appear in classical IV methods, as these methods are based on models in which the X effect on Y is homogeneous across covariates U. (Note that, by assumption 3, Z has no effect and so the X effect must be homogeneous within levels of Z.)DiscussionThe above corrections extend directly to situations requiring adjustment for measured covariates by applying them within covariate strata and summarizing; nonetheless, a more efficient approach is supplied by IV methods based on regression models.15Those methods are often presented under the head-ing of regression-calibration or linear-imputation methods13,29 but are special cases of general IV regression formulas. Bashir and Duffy29provide an elementary introduction to linear imputation and other measurement-error corrections, while Carroll et al.15,28provide advanced and thorough coverage of model-based corrections, including general IV corrections; the latter allow both Y and X to be measured with error in both the main and validation sample, as long as that error is uncorrelated with Z.An important limitation of corrections based on regression models is their model dependence, especially on the models for error distributions. For example, P-values and confidence limits from the basic regression-calibration (linear-imputation) form of IV correction assumes that the regression of the true (biologically relevant) exposure X on the instrument Z follows a linear model with normal errors.13,29This model is highly implausible in many situations and is mechanically impossible to satisfy if X and Z are discrete. This limitation is not shared by non-parametric methods4–10and special methods for cate-gorical variables.30–32In purely observational studies (in which neither the instru-ment Z nor the treatment X has been subject to experimental manipulation), a major limitation of all IV methods is their strong dependence on assumptions 1 and 3. The corrections may even be harmful if Z is associated with other errors or with unmeasured confounders, as might be expected if (say) Z is self-reported alcohol consumption, X is true consumption, Y is cog-nitive function, and U is use of illegal drugs. Thus, IV corrections are no cure for differential errors; they address only independ-ent non-differential errors, as embodied by assumptions 1 and 3. If there are violations of the assumptions, bias due to measure-ment error (using Z as a surrogate for X) will no longer act multi-plicatively (as in formula 7) and adjustment will require more complex formulas.15,28The sensitivity of IV corrections to the assumptions increases with the amount of non-compliance or the amount of error in Z as a measure of X; the corrections will be especially unreliable if Z is a very noisy measure of X, so that the association of Z and X is weak.33The key to successful IV correction is thus to find。

工具变量的标准工具变量在经济学和社会科学研究中起到至关重要的作用,它们用于处理内生性问题,即某种变量可能与因果变量以及其他自变量之间存在内在的相关性。

本文将从工具变量的定义、选择、标准以及使用等方面进行探讨。

工具变量(Instrumental Variables, IV)是一种经济学中用于解决内生性问题的技术手段。

内生性问题主要指的是观测数据中存在的内在的相关性,导致无法直接得到准确的因果关系。

例如,假设我们想研究教育对收入的影响,但由于教育与个体能力水平以及其他影响收入的因素存在共同决定因素,因此无法准确地测量教育对收入的独立影响。

在这种情况下,工具变量可以帮助我们解决内生性问题。

工具变量可以看作是对内生性问题的一个解决方案,它是一种可以从外部影响因果关系的变量。

通过使用工具变量,我们可以利用这种外部影响来估计原始因果效应,而不会受到内生性问题的影响。

工具变量的基本思想是通过利用这种外部影响,将原始内生性问题转化为一个外生性问题,进而得到更准确的因果关系估计。

在选择工具变量时,需要满足一些标准。

首先,工具变量与内生变量之间应该存在一定的相关性,即工具变量对内生变量有一定的影响。

如果工具变量与内生变量没有相关性,那么它就不能有效地解决内生性问题。

其次,工具变量与误差项之间应该不存在相关性。

如果工具变量与误差项之间存在相关性,那么工具变量就不能满足外生性的要求,从而无法有效地解决内生性问题。

此外,工具变量应该具有足够的异质性,即工具变量对不同个体的影响程度应该有所不同。

如果工具变量没有足够的异质性,那么它不能提供有效的“随机试验”条件,无法解决内生性问题。

在实际应用中,我们常常使用一些统计测量指标来评估工具变量是否符合标准。

例如,工具变量的相关性通常可以通过计算工具变量与内生变量之间的相关系数来衡量。

同时,我们可以使用所谓的第一阶段回归来检验工具变量与内生变量以及其他控制变量之间的相关性。

另外,工具变量也需要满足一些经济学上的合理性标准。

孟德尔随机化 missing data在数据分析中,missing data(缺失数据)是一个常见的问题。

缺失数据的出现可能是由于实验设计上的问题、数据收集过程中的错误或者被调查者的拒绝回答等原因导致的。

缺失数据会对分析结果产生不良影响,因此需要采用合适的方法来处理缺失数据。

孟德尔随机化(Mendelian randomization)是一种处理缺失数据的方法之一。

孟德尔随机化是以格里高利·孟德尔(Gregor Mendel)的遗传学理论为基础的一种因果推断方法。

该方法利用遗传变异对自然实验进行模拟,通过遗传变异对某一因素进行干预,进而研究该因素对特定结果的影响。

在处理缺失数据时,孟德尔随机化方法可以通过利用遗传变异来推断缺失数据的可能值。

具体而言,孟德尔随机化方法基于以下三个假设:第一,基因型对于受试者的特征是随机分配的;第二,基因型与受试者的特征之间不存在共同的因果关系;第三,基因型与其他干预因素之间不存在交互作用。

根据这些假设,可以利用基因型与受试者特征之间的关系来推断缺失数据的可能值。

孟德尔随机化方法可以通过多种方式来实施。

其中一种常见的方式是使用工具变量法(instrumental variable)来推断缺失数据的可能值。

工具变量是一种与感兴趣因素相关但与其他干预因素不相关的变量,通过利用工具变量与感兴趣因素之间的关系,可以推断缺失数据的可能值。

另外,孟德尔随机化方法还可以利用遗传突变作为工具变量,进一步提高推断的准确性。

孟德尔随机化方法在处理缺失数据时具有一定的优势。

首先,该方法可以避免传统的缺失数据处理方法所带来的偏差问题。

其次,孟德尔随机化方法可以提供因果推断,帮助我们理解缺失数据与感兴趣因素之间的关系。

此外,孟德尔随机化方法还可以应用于基因组学研究中,通过遗传变异来推断基因与疾病之间的关系。

然而,孟德尔随机化方法也存在一些限制。

首先,该方法的有效性依赖于基因型与受试者特征之间的关系,如果基因型与特征之间存在复杂的关联,则孟德尔随机化方法可能会失效。

stata中工具变量法在Stata 中,工具变量法(Instrumental Variables, IV)是一种处理内生性(endogeneity)问题的方法,通常用于解决因果关系中的回归模型。

内生性问题指的是模型中的某些变量可能与误差项相关,从而导致OLS估计结果的偏误。

工具变量法通过引入一个或多个外生性足够相关但与误差项不相关的变量(称为工具变量)来解决这个问题。

以下是在Stata 中使用工具变量法的一般步骤:1. 确定内生性问题:确定模型中是否存在内生性问题,即某些解释变量与误差项相关。

2. 选择工具变量:选择足够相关但与误差项不相关的工具变量。

这些变量通常被认为是外生的,与误差项独立。

3. 估计工具变量模型:使用Stata 中的`ivregress` 命令估计工具变量模型。

语法如下:```stataivregress 2sls dependent_variable (endogenous_variable = instruments) other_exogenous_variables```其中,`dependent_variable` 是因变量,`endogenous_variable` 是内生变量,`instruments` 是工具变量,`other_exogenous_variables` 是其他外生变量。

例如:```stataivregress 2sls y (x = z) controls```4. 检验工具变量的有效性:使用`ivregress` 命令的`ivendog` 选项来检验工具变量的有效性。

```stataivregress 2sls y (x = z) controls, ivendog(x)```此命令将进行工具变量的内生性检验。

5. 诊断:进行模型诊断,检查模型的合理性和有效性。

因果效应评估指标在社会科学研究中,因果效应评估是一个重要的研究领域。

因果效应评估旨在研究一些因素对特定结果的影响,并确定这种影响是否是因果关系。

评估因果效应需要一系列指标来衡量和分析,以确保研究的严谨性和可靠性。

本文将介绍几个常见的因果效应评估指标。

1. 实验设计指标(Experimental Design Indicators)在开展因果效应评估研究时,对实验设计的合理性和可信度进行评估至关重要。

一种常见的实验设计指标是随机化实验设计。

通过随机分配实验组和对照组,可以确保组间的差异是由于所研究因素引起的,而非其他因素的干扰。

其他常见的实验设计指标包括平衡性检验、双盲实验设计等。

2. 外源性指标(Externality Indicators)外源性指标是指研究因果关系时需要考虑的外部因素。

外部因素可能对研究结果产生干扰,因此需要使用合适的指标来控制这些因素。

一种常见的外源性指标是工具变量(Instrumental Variable, IV)分析。

IV分析通过引入一个与观测结果相关但与处理变量不相关的变量,来估计处理变量对观测结果的因果影响。

3. 效应大小指标(Effect Size Indicators)效应大小指标衡量了研究因果效应的强度和重要性。

常见的效应大小指标包括平均效应大小、标准化效应大小等。

平均效应大小可以通过计算处理组和对照组之间的均值差异来衡量。

标准化效应大小可以通过将平均效应大小与标准偏差相除来得到,以消除不同样本大小和变异性的影响。

4. 内在效应指标(Internal Validity Indicators)内在效应指标用于评估研究的内在有效性,即因果关系的可信度和稳定性。

一个常见的内在效应指标是偏差检验。

偏差检验可以检测研究中是否存在统计学上的偏差,以及这种偏差对结论的影响。

其他内在效应指标还包括鲁棒性检验、敏感性分析等,用于评估研究结果的稳健性和鲁棒性。

5. 可复制性指标(Replicability Indicators)可复制性指标评估研究结果的可复制性和可靠性。

工具变量法英文文献English:Instrumental variable (IV) method, also known as the two-stage least squares (2SLS) estimator, is a widely used econometric technique to deal with endogeneity issues in regression analysis. It involves the use of one or more instrumental variables that are correlated with the endogenous regressors but not directly correlated with the error term. In the first stage, these instrumental variables are regressed on the endogenous variables to obtain predicted values. In the second stage, these predicted values are used as regressors in the main regression equation to estimate the coefficients of interest. IV estimation helps to overcome biases that arise from endogeneity, where the endogenous variables are correlated with the error term, leading to inconsistent and biased parameter estimates. By using instrumental variables, IV estimation provides consistent estimates of causal effects even in the presence of endogeneity. However, the validity of instrumental variables relies on certain assumptions, such as relevance (instrumental variables are correlated with the endogenous regressors), exogeneity (instrumental variables are not correlated with the error term), and exclusion restriction(instrumental variables affect the outcome variable only throughtheir impact on the endogenous regressors). Violation of these assumptions can lead to biased estimates. Despite its usefulness, IV estimation requires careful consideration and interpretation of results to ensure the reliability of causal inferences.中文翻译:工具变量(IV)方法,也称为两阶段最小二乘(2SLS)估计器,是一种广泛使用的计量经济学技术,用于处理回归分析中的内生性问题。

Tutorial in BiostatisticsReceived20June2013,Accepted10February2014Published online in Wiley Online Library ()DOI:10.1002/sim.6128Instrumental variable methods for causal inference‡Michael Baiocchi,a Jing Cheng b and Dylan S.Small c*†A goal of many health studies is to determine the causal effect of a treatment or intervention on health outcomes. Often,it is not ethically or practically possible to conduct a perfectly randomized experiment,and instead,an observational study must be used.A major challenge to the validity of observational studies is the possibility of unmeasured confounding(i.e.,unmeasured ways in which the treatment and control groups differ before treat-ment administration,which also affect the outcome).Instrumental variables analysis is a method for controlling for unmeasured confounding.This type of analysis requires the measurement of a valid instrumental variable, which is a variable that(i)is independent of the unmeasured confounding;(ii)affects the treatment;and(iii) affects the outcome only indirectly through its effect on the treatment.This tutorial discusses the types of causal effects that can be estimated by instrumental variables analysis;the assumptions needed for instrumental vari-ables analysis to provide valid estimates of causal effects and sensitivity analysis for those assumptions;methods of estimation of causal effects using instrumental variables;and sources of instrumental variables in health studies.Copyright©2014John Wiley&Sons,Ltd.Keywords:instrumental variables;observational study;confounding;comparative effectiveness1.IntroductionThe goal of many medical studies is to estimate the causal effect of one treatment versus another,that is, to compare the effectiveness of giving patients one treatment versus another.To compare the effects of treatments,randomized controlled studies are the gold standard in medicine.Unfortunately,randomized controlled studies cannot answer many comparative effectiveness questions because of cost or ethical constraints.Observational studies offer an alternative source of data for developing evidence regarding the comparative effectiveness of different treatments.However,a major challenge for observational stud-ies is confounders—pretreatment variables that affect the outcome and differ in distribution between the group of patients who receive one treatment versus the group of patients who receive another treatment. The impact of confounders on the estimation of a causal treatment effect can be mitigated by meth-ods such as propensity scores,regression,and matching[1–3].However,these methods only control for measured confounders and do not control for unmeasured confounders.The instrumental variable(IV)method was developed to control for unmeasured confounders.The basic idea of the IV method is(i)find a variable that influences which treatment subjects receive but is independent of unmeasured confounders and has no direct effect on the outcome except through its effect on treatment;(ii)use this variable to extract variation in the treatment that is free of the unmeasured con-founders;and(iii)use this confounder-free variation in the treatment to estimate the causal effect of the treatment.The IV method seeks tofind a randomized experiment embedded in an observational study and use this embedded randomized experiment to estimate the treatment effect.a Department of Statistics,Stanford University,Stanford,CA,U.S.A.b Division of Oral Epidemiology and Dental Public Health,School of Dentistry,University of California,San Francisco (UCSF),San Francisco,CA,U.S.A.c Department of Statistics,The Wharton School,University of Pennsylvania,Philadelphia,PA,U.S.A.*Correspondence to:Dylan S.Small,Department of Statistics,The Wharton School,University of Pennsylvania,400 Huntsman Hall,Philadelphia,PA19104,U.S.A.†E-mail:dsmall@‡The three authors contributed equally to this paper.M.BAIOCCHI,J.CHENG AND D.S.SMALL1.1.Tutorial aims and outlineIV methods have long been used in economics and are being increasingly used to compare treatments in health studies.There have been many important contributions to IV methods in recent years.The goal of this tutorial is to bring together this literature to provide a practical guide on how to use IV methods to compare treatments in a health study.We focus on several important practical issues in using IVs:(i) when is an IV analysis needed and when is it feasible;(ii)what are sources of IVs for health studies; (iii)how to use the IV method to estimate treatment effects,including how to use currently available software;(iv)for what population does the IV method estimate the treatment effect;(v)how to assess whether a proposed IV satisfies the assumptions for an IV to be valid;(vi)how to carry out sensitivity analysis for violations of IV assumptions;and(vii)how does the strength of a potential IV affect its usefulness for a study.In the rest of this section,we will present an example of using the IV method that we will use through-out the paper.In Section2,we discuss situations when one should consider using the IV method.In Section3,we discuss common sources of IVs for health studies.In Section4,we discuss IV assump-tions and estimation for a binary IV and binary treatment.In Section5,we discuss the treatment effect that the IV method estimates.In Section6,we provide a framework for assessing IV assumptions and sensitivity analysis for violations of assumptions.In Section7,we demonstrate the consequences of weak instruments.In Section8,we discuss power and sample size calculations for IV studies.In Section9,we present techniques for analyzing outcomes that are not continuous outcomes,such as binary,survival, multinomial,and continuous outcomes.In Section10,we discuss multi-valued and continuous IVs.In Section11,we discuss using multiple IVs.In Section12,we present IV methods for multi-valued and continuously valued treatments.In Section13,we suggest a framework for reporting IV analyses.In Section14,we provide examples of using software for IV analysis.If you are just beginning to familiarize yourself with IVs,we recommend focussing on Sections1–4, 5.1–5.2,6–8,and13–14,while skipping Sections5.3–5.5and9–12.Sections5.3–5.5and9–12contain interesting,cutting-edge,and more specialized applications of IVs that a beginner may want to return to at a later point.We include these sections for advanced readers,or those interested in more specialized applications.Table I is a table of notation that will be used throughout the paper.1.2.Example:Effectiveness of high-level neonatal intensive care unitsAs an example where the IV method is useful,consider comparing the effectiveness of premature babies being delivered in high volume,high technology neonatal intensive care units(high-level NICUs)versus local hospitals(low-level NICUs),where a high-level NICU is defined as a NICU that has the capac-ity for sustained mechanical assisted ventilation and delivers at least50premature infants per year. Lorch et al.[4]used data from birth and death certificates and the UB-92form that hospitals use for billing purposes to study premature babies delivered in Pennsylvania.The data set covered the years 1995–2005(192,078premature babies).For evaluating the effect of NICU level on baby outcomes,a baby’s health status before delivery is an important confounder.Table II shows that babies delivered at high-level NICUs tend to have smaller birthweight and be more premature,and the babies’mothers tend to have more problems during the pregnancy.Although the available confounders,which include those in Table II and several other variables that are given in[4],describe certain aspects of a baby’sM.BAIOCCHI,J.CHENG AND D.S.SMALLTable II.Imbalance of measured covariates between babies delivered at high-level NICUs versus low-level NICUs.Characteristic X P.X j High-level NICU/P.X j Low-level NICU/Standardized difference Birthweight<1500g0.120.050.28 Gestational age632weeks0.180.070.34Mother college graduate0.280.230.12African-American0.220.090.36 Gestational diabetes0.050.050.03Diabetes mellitus0.020.010.06 Pregnancy-induced hypertension0.120.080.13Chronic hypertension0.020.010.07The standardized difference is the difference in means between the two groups in units of the pooled within group standard deviation,that is,for a binary characteristic X,where D D1or0according to whether the baby was delivered at a high-level or low-level NICU,the standardized difference is P.X D1j D D1/ P.X D1j D D0/.pf Var.X j D D1/C Var.X j D D0/g=2Figure1.Directed acyclic graph for the relationship between an instrumental variable Z,a treatment D,unmeasured confounders U,and an outcome Y.health prior to delivery,the data set is missing several important confounding variables such as fetal heart tracing results,the severity of maternal problems during pregnancy(e.g.,we only know whether the mother had pregnancy-induced hypertension but not the severity),and the mother’s adherence to prenatal care guidelines.Figure1,which is an example of a directed acyclic graph[5],illustrates the difficulty with estimating a causal effect in this situation.The arrows denote causal relationships.Read the arrow between the treatment D and outcome Y like so:Changing the value of D causes Y to change.In our example,Y represents in-hospital mortality,and D indicates whether or not a baby attended a high-level NICU.Our goal is to understand the arrow connecting D to Y,that is,the effect of attending a high-level NICU on in-hospital mortality compared with attending a low-level NICU.Assume that Figure1shows relation-ships within a strata of the observed covariates X,for example,Figure1represents the relationships for only babies with gestational age of33weeks and mother had pregnancy-induced hypertension.The U variable causes concern as it represents the unobserved level of severity of the preemie,and it is causally linked to both mortality(sicker babies are more likely to die)and to which treatment the preemie receives (sicker babies are more likely to be delivered in high-level NICUs).Because U is not recorded in the data set,it cannot be precisely adjusted for using statistical methods such as propensity scores or regression. If the story stopped with just D,Y,and U,then the effect of D on Y could not be estimated.IV estimation makes use of a form of variation in the system that is free of the unmeasured confound-ing.What is needed is a variable,called an IV(represented by Z in Figure1),which has very specialcharacteristics.In this example,we consider excess travel time as a possible IV.Excess travel time is defined as the time it takes to travel from the mother’s residence to the nearest high-level NICU minus the time it takes to travel to the nearest low-level NICU.We write Z D1if the excess travel time is less than or equal to10min(so that the mother is encouraged by the IV to go to a high-level NICU)M.BAIOCCHI,J.CHENG AND D.S.SMALL and Z D0if the excess travel time is greater than10min.(We dichotomize the instrument here for simplicity of discussion.)There are three key features a variable must have in order to qualify as an IV(see Section4for mathematical details on these features and additional assumptions for IV methods).Thefirst feature (represented by the directed arrow from Z to D in Figure1)is that the IV causes a change in the treatment assignment.When a woman becomes pregnant,she has a high probability of establishing a relationship with the proximal NICU,regardless of the level,because she is not anticipating having a preemie.Proximity as a leading determinant in choosing a facility has been discussed in[6].By selecting where to live,mothers assign themselves to be more or less likely to deliver in a high-level NICU.The fact that changes in the IV are associated with changes in the treatment is verifiable from the data.The second feature(represented by the crossed-out arrow from Z to U)is that the IV is not associated with variation in unobserved variables U that also affect the outcome.That is,Z is not connected to the unobserved confounding that was a worry to begin with.In our example,this would mean unobserved severity is not associated with variation in geography.As high-level NICUs tend to be in urban areas and low-level NICUs tend to be the only type in rural areas,this assumption would be dubious if there were a high level of pollutants in urban areas(think of Manchester,England,circa the Industrial Revolution) or if there were more pollutants in the drinking water in rural areas than in urban areas.These hypothet-ical pollutants may have an impact on the unobserved levels of severity.The assumption that the IV is not associated with variation in the unobserved variables,while certainly an assumption,can at least be corroborated by examining the values of variables that are perhaps related to the unobserved variables of concern(Section6.1).The third feature(represented by the crossed-out line from Z to Y in Figure1)is that the IV does not cause the outcome variable to change directly.That is,it is only through its impact on the treatment that the IV affects the outcome.This is often referred to as the exclusion restriction(ER)assumption. In our case,the ER assumption seems reasonable as presumably a nearby hospital with a high-level NICU affects a baby’s mortality only if the baby receives care at that hospital.That is,proximity to a high-level NICU in and of itself does not change the probability of death for a preemie,except through the increased probability of the preemie being delivered at the high-level NICU.See Section6.2.2for further discussion about the ER in the NICU study.2.Evaluating the need for and feasibility of an IV analysisAs discussed earlier,IV methods provide a way to control for unmeasured confounding in comparative effectiveness studies.Although this is a valuable feature of IV methods relative to regression,match-ing,and propensity score methods,which do not control for unmeasured confounding,IV methods have some less attractive features such as increased variance.When considering whether or not to include an IV analysis in an evaluation of a treatment effect,thefirst question one should ask is whether or not an IV analysis is needed.The second question one should ask is whether or not an IV analysis is feasible in the sense of there being an IV that is close enough to being valid and has a strong enough effect on the treatment to provide useful information about the treatment effect.In this section,we will discuss how to think about these two questions.2.1.Is an IV analysis needed?The key consideration in whether an IV analysis is needed is how much unmeasured confounding there is.It is useful to evaluate this using both scientific considerations and statistical considerations. Scientific consideration.Whether or not there is any unmeasured confounding should befirst thought of from a scientific point of view.In the NICU example discussed in Section1.2,mothers(as advised by doctors)who choose to deliver in a far-away high-level NICU rather than a nearby low-level NICU often do so because they think their baby may be at a high risk of having a problem and that delivery at a high-level NICU will reduce this risk.Investigators know that many variables can be confounders (i.e.,associated with delivery at a high-level NICU and associated with in-hospital mortality)such as variables indicating a baby’s health prior to delivery.They know that the data set available for analy-ses is missing several important confounding variables such as fetal heart tracing results,the severity of maternal problems during pregnancy,and the mother’s adherence to prenatal care guidelines.When unmeasured confounding is a big concern in a study like in the NICU study,analyses with IV methods are desired and helpful to better understand the treatment effect.M.BAIOCCHI,J.CHENG AND D.S.SMALLUnmeasured confounders are particularly likely to be present when the treatment is intended to help the patient(as compared with unintended exposures)[7].When two patients who have the same measured covariates are given different treatments,there are often rational but unrecorded reasons.In particular,administrative data often do not contain measurements of important prognostic variables that affect both treatment decisions and outcomes such as lab values(e.g.,serum cholesterol levels),clinical variables(e.g.,blood pressure and fetal heart tracing results),aspects of lifestyle(e.g.,smoking status and eating habits),and measures of cognitive and physical functioning[8,9].Common sources of IVs for health studies are discussed in Section3.When using IV analyses, the assumptions that are required for a variable to be a valid IV are usually at best plausible,but not certain.If the assumptions are merely plausible,but not certain,are IV analyses still useful?Imbens and Rosenbaum[10]provide a nice discussion of two settings in which IV analyses with plausible but not certain IVs are useful:(i)When one is concerned about unmeasured confounding in any way,it is helpful to replace the implausible assumption of no unmeasured confounding by a plausible assumption, although not a certain assumption,with IV methods;and(ii)when there is concern about unmeasured confounding,IV analyses play an important role in replicating an observational study.Consider two sequences of studies,thefirst sequence in which each study involves only adjusting for measured con-founders and the other sequence in which each study involves using a different IV(one of the studies in this second sequence could also involve only adjusting for measured confounders).Throughout the first sequence of studies,the comparison is likely to be biased in the same way.For example,a repeated finding that people with more education are more healthy from different survey data sets that do not con-tain information about genetic endowments or early life experiences does little to address the concern of unmeasured confounding from these two variables.However,if different IVs are used for education,for example,a lottery that affects education[11],a regional discontinuity in educational policy[12],a tem-poral discontinuity in educational policy[13]and distance lived from a college when growing up[14], and if each IV is plausibly,but not certainly,valid,then there may be no reason why these different IVs should provide estimates that are biased in the same direction.If studies with these different IVs all pro-vide evidence that education affects health in the same direction,this would strengthen the evidence for thisfinding[15](when different IVs are used,each IV identifies the average treatment effect for a differ-ent subgroup,so that we would only expect thatfindings from the different IVs would agree in direction if the average treatment effects for the different subgroups have the same direction;see Sections5.5and 11for discussion).In summary,when unmeasured confounding is a big concern in a study based on one’s understanding of the problem and data,investigators should consider IV methods in their analyses.At the same time, if investigators only expect a small amount of unmeasured confounding in their study,Brookhart et al.[16]suggested that investigators may not want to use IV methods for the primary analysis but may want to consider IV methods for a secondary analysis.Statistical tests.Under some situations in practice,especially for exploratory studies,investigators may not have enough scientific information to determine whether or not there is unmeasured confound-ing.Then statistical tests can be helpful to provide additional insight.The Durbin–Wu–Hausman test is widely used to test whether there is unmeasured confounding[17–19].The test requires the availability of a valid IV.The test compares the ordinary least squares(OLS)estimate and IV estimate of the treat-ment effect;a large difference between the two estimates indicates the potential presence of unmeasured confounding.The Durbin–Wu—Hausman test assumes homogeneous treatment effects,meaning that the treatment effect is the same at different levels of covariates.The test cannot distinguish between unmeasured confounding and treatment effect heterogeneity[16,20].As an alternative approach,Guo et al.[20] developed a test that can detect unmeasured confounding as distinct from treatment effect heterogeneity in the context of the model described in Section4.1.2.2.Valid IVsAs discussed in Section1,a variable must have three key features to qualify as an IV:(i)relevance:the IV causes a change in the treatment received;(ii)effective random assignment:the IV is independent ofunmeasured confounding conditional on covariates as if it was randomly assigned conditional on covari-ates;and(iii)ER:the IV does not have a direct effect on outcomes,that is,it only affects outcomes through the treatment.Section4includes mathematical details on these features and assumptions.To use IV methods in a real study,investigators need to evaluate if there is any variable that satisfies theM.BAIOCCHI,J.CHENG AND D.S.SMALL three features and qualifies as a good IV based on both scientific understanding and statistical evidence. Please see Section3for sources of IVs in health studies.Note that not all of the features/assumptions can be completely tested,but methods have been proposed to test certain parts of the assumptions.Please see Section6for a discussion about how to evaluate if a variable satisfies those features/assumptions needed to be a valid IV.We would also like to point out that even if there is no variable that is a perfectly valid IV,an IV anal-ysis may still provide helpful information about the treatment effect.As discussed earlier,when there is unmeasured confounding,a repeatedfinding from a sequence of analyses with different IVs(even though none of the IVs are perfect)will provide very helpful evidence on the treatment effect[10].Also, sensitivity analyses can be performed to assess the evidence provided by an IV analysis allowing for the IV not being perfectly valid;see Section6.2.2.3.Strength of IVsAn IV is considered to be a strong IV if it has a strong impact on the choice of different treatments and a weak IV if it only has a slight impact.When the IV is weak,even if it is a valid IV,treatment effect estimates based on IV methods have some limitations,such as large variance even with large samples. Then investigators face a trade-off between an IV estimate with a large variance and a conventional estimate with possible bias[16].Additionally,the estimate with a weak IV will be sensitive to a slight departure from being a valid IV.Please see Section7for more detailed discussion on the problems when only weak IVs are available in a study.In summary,whether or not an IV analysis will be helpful for a study depends on if unmeasured con-founding is a major concern,if there is any plausibly close to valid IV,and if the IV is strong enough for a study.For studies with treatments that are intentionally chosen by physicians and patients,there is often substantial unmeasured confounding from unmeasured indications or severity[7,10,16].There-fore,an IV analysis can be most helpful for those studies.When an IV is available,even if it is not perfectly valid,an IV analysis or a sequence of IV analyses with different IVs can provide very help-ful information about the treatment effect.For studies in which unmeasured confounding is not a big concern and no strong IV is available,we suggest investigators to consider IV analyses as secondary or sensitivity analyses.3.Sources of instruments in health studiesThe biggest challenge in using IV methods isfinding a good IV.There are several common sources of IVs for health studies.Randomized encouragement trials.One way to study the effect of a treatment when that treatment cannot be controlled is to conduct a randomized encouragement trial.In such a trial,some subjects are randomly chosen to obtain extra encouragement to take the treatment,and the rest of the subjects receive no extra encouragement[21].For example,Permutt and Hebel[22]studied the effect of mater-nal smoking during pregnancy on an infant’s birthweight using a randomized encouragement trial in which some mothers received extra encouragement to stop smoking through a master’s level staff per-son providing information,support,practical guidance,and behavioral strategies[23].For a randomized encouragement trial,the randomized encouragement assignment(1if encouraged,0if not encouraged) is a potential IV.The randomized encouragement is independent of unmeasured confounders because it is randomly assigned by the investigators and will be associated with the treatment if the encouragement is effective.The only potential concern with the randomized encouragement being a valid IV is that the randomized encouragement might have a direct effect on the outcome and not through the treatment.For example,in the aforementioned smoking example,the encouragement could have a direct effect if the staff person providing the encouragement also encouraged expectant mothers to stop drinking alcohol during pregnancy.To minimize a potential direct effect of the encouragement,Sexton and Hebel[23] asked the staff person providing encouragement to avoid recommendations or information concerningother habits that might affect birthweight such as alcohol or caffeine consumption and also prohibited discussion of maternal nutrition or weight gain.A special case of a randomized encouragement trial is a usual randomized trial in which the intent is for everybody to take their assigned treatment,but in fact, some people do not adhere to their assigned treatment so that assignment to treatment is in fact just anM.BAIOCCHI,J.CHENG AND D.S.SMALLencouragement to treatment.For such randomized trials with nonadherence,random assignment can be used as an IV to estimate the effect of receiving the treatment versus receiving the control provided that random assignment does not have a direct effect(not through the treatment);see Section4.7for further discussion and an example.Distance to specialty care provider.When comparing two treatments,one of which is only provided by specialty care providers and one of which is provided by more general providers,the distance a per-son lives from the nearest specialty care provider has often been used as an IV.For emergent conditions, proximity to a specialty care provider particularly enhances the chance of being treated by the specialty care provider.For less acute conditions,patients/providers have more time to decide and plan where to be treated,and proximity may have less of an influence on treatment selection,while for treatments that are stigmatized(e.g.,substance abuse treatment),proximity could have a negative effect on the chance of being treated.A classic example of using distance as an IV in studying treatment of an emergent condition is the McClellan et al.study of the effect of cardiac catheterization for patients suffering a heart attack[24].The IV used in the study was the differential distance the patient lives from the near-est hospital that performs cardiac catheterization to the nearest hospital that does not perform cardiac catheterization.Another example is the study of the effect of high-level versus low-level NICUs[4] that was discussed in Section1.2.Because distance to a specialty care provider is often associated with socioeconomic characteristics,it will typically be necessary to control for socioeconomic characteristics in order for distance to potentially be independent of unmeasured confounders.The possibility that dis-tance might have a direct effect because the time it takes to receive treatment affects outcomes needs to be considered in assessing whether distance is a valid IV.Preference-based IVs.A general strategy forfinding an IV for comparing two treatments A and B is to look for naturally occurring variation in medical practice patterns at the level of geographic region, hospital,or individual physician and then use whether the region/hospital/individual physician has a high or low use of treatment A(compared with treatment B)as the IV.Brookhart and Schneeweiss[9] termed these IVs‘preference-based instruments’because they assume that different providers or groups of providers have different preferences or treatment algorithms dictating how medications or medical procedures are used.Examples of studies using preference-based IVs are by Brooks et al.[25]who studied the effect of surgery plus irradiation versus mastectomy for breast cancer patients using geo-graphic region as the IV,Johnston[26]who studied the effect of surgery versus endovascular therapy for patients with a ruptured cerebral aneurysm using hospital as the IV,and Brookhart et al.[27]who studied the benefits and risks of selective cyclooxygenase2inhibitors versus nonselective nonsteroidal anti-inflammatory drugs for treating gastrointestinal problems using individual physician as the IV.For proposed preference-based IVs,it is important to consider that the patient mix may differ between the different groups of providers with different preferences,which would make the preference-based IV invalid unless patient mix is fully controlled for.It is useful to look at whether measured patient risk factors differ between groups of providers with different preferences.If there are measured differences, there are likely to be unmeasured differences as well;see Section6.1for further discussion.Also,for pro-posed preference-based IVs,it is important to consider whether the IV has a direct effect(not through the treatment);a direct effect could arise if the group of providers that prefers treatment A treats patients dif-ferently in ways other than the treatment under study compared with the providers who prefer treatment B.For example,Newman et al.[28]studied the efficacy of phototherapy for newborns with hyper-bilirubinemia and considered the frequency of phototherapy use at the newborn’s birth hospital as an IV.However,chart reviews revealed that hospitals that use more phototherapy also have a greater use of infant formula;use of infant formula is also thought to be an effective treatment for hyperbilirubinemia. Consequently,the proposed preference-based IV has a direct effect(going to a hospital with higher use of phototherapy also means a newborn is more likely to receive infant formula even if the newborn does not receive phototherapy)and is not valid.The issue of whether a proposed preference-based IV has a direct effect can be studied by looking at whether the IV is associated with concomitant treatments such as use of infant formula[9].A related way in which a proposed preference-based IV can have a direct effect is that the group of providers who prefer treatment A may have more skill than the group of providers who prefer treatment B.Also,providers who prefer treatment A may deliver treatment A better than those providers who prefer treatment B because they have more practice with it,for exam-ple,doctors who perform surgery more often may perform better surgeries.Korn and Baumrind[29] discussed a way to assess whether there are provider skill effects by collecting data from providers on whether or not they would have treated a different provider’s patient with treatment A or B based on the patient’s pretreatment records.。