Mining the Most Interesting Rules

- Format: PDF

- Size: 237.63 KB

- Pages: 10

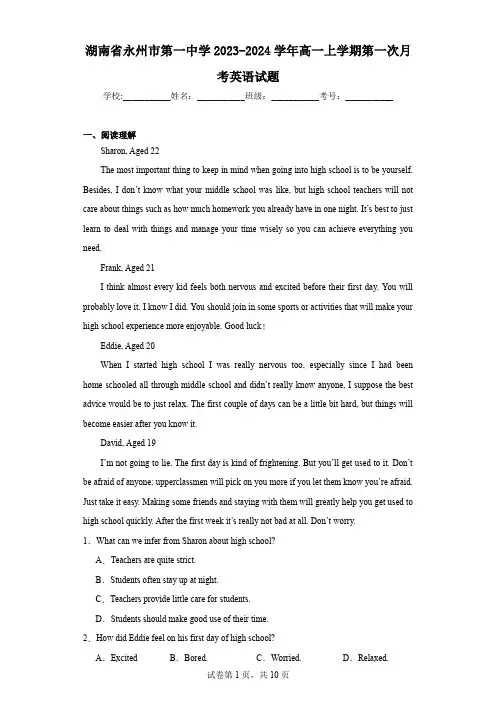

Yongzhou No. 1 Middle School, Hunan Province: English Test, First Monthly Exam, First Semester of Senior One, 2023-2024 Academic Year

School: ___________ Name: ___________ Class: ___________ Exam No.: ___________

I. Reading Comprehension

Sharon, Aged 22
The most important thing to keep in mind when going into high school is to be yourself. Besides, I don’t know what your middle school was like, but high school teachers will not care about things such as how much homework you already have in one night. It’s best to just learn to deal with things and manage your time wisely so you can achieve everything you need.

Frank, Aged 21
I think almost every kid feels both nervous and excited before their first day. You will probably love it. I know I did. You should join in some sports or activities that will make your high school experience more enjoyable. Good luck!

Eddie, Aged 20
When I started high school I was really nervous too, especially since I had been home-schooled all through middle school and didn’t really know anyone. I suppose the best advice would be to just relax. The first couple of days can be a little bit hard, but things will become easier before you know it.

David, Aged 19
I’m not going to lie. The first day is kind of frightening. But you’ll get used to it. Don’t be afraid of anyone; upperclassmen will pick on you more if you let them know you’re afraid. Just take it easy. Making some friends and staying with them will greatly help you get used to high school quickly. After the first week it’s really not bad at all. Don’t worry.

1. What can we infer from Sharon about high school?
A. Teachers are quite strict.
B. Students often stay up at night.
C. Teachers provide little care for students.
D. Students should make good use of their time.

2. How did Eddie feel on his first day of high school?
A. Excited.
B. Bored.
C. Worried.
D. Relaxed.

3. Who mentions the importance of friends?
A. Frank.
B. David.
C. Sharon.
D. Eddie.

I’m a seventeen-year-old boy preparing for my A Level exams at the end of the year. In the society where my peers and I live, we tend to accept the rat race values.
As students, we want to get good grades so that we can get good jobs. I enjoy studying and have consistently received A’s in my classes. There was a year when I finished first in my class in the final exams. It was a great accomplishment.

Another one I am pleased with is that I managed to improve the relationship between Mum and Dad. Dad was a successful businessman who was rarely at home. Mum was a housewife who always felt bored and constantly nagged him to let her go to work. Their constant arguing bothered me, so I advised Dad that Mum would be better off with a part-time job. He agreed, and their relationship has improved since then.

My proudest achievement, however, is my successful work in the local old folks’ home. My grandparents had raised me since I was a child. I wept bitterly when they died. Unlike many of my classmates, I do not take part in my school’s community service to earn points. I enjoy my voluntary work and believe I’m contributing to a worthwhile cause. This is where I can help. I talk to the elderly, assist them with their daily life, and listen to their problems, glory days and the hardships they experienced.

Last year, I hosted a successful New Year party for the elderly and they enjoyed a great time. Many expressed a desire to attend another party the following year. When I reflect on my accomplishments, I’m especially proud of my service at the old folks’ home, so I hope to study social work at university and work as a social worker in the future. I wish to be more skilled in attending to the less fortunate as well as find great satisfaction in it, after all.

4. What can be inferred about the author from the first paragraph?
A. His good grades got him a good job.
B. He refuses to compete with his peers fiercely.
C. His views on social values are well known.
D. He is content with his scholastic achievements.

5. Which role does the author play in his parents’ relationship?
A. A helper.
B. A judge.
C. A monitor.
D. A supporter.
6. What is the greatest accomplishment for the author?
A. The contribution to volunteering.
B. The success in exams.
C. The recovery of confidence in life.
D. The work in community service.

7. Why does the author want to major in social work at university?
A. To gain a well-paid job.
B. To give his life a purpose.
C. To meet his grandparents’ expectations.
D. To better help the disadvantaged.

"Creativity is the key to a brighter future," say education and business experts. Here is how schools and parents can encourage this important skill in children.

If Dick Drew had listened to his boss in 1925, we might not have the product that we now think of as greatly important: a new type of tape. Drew worked for the Minnesota Mining and Manufacturing Company. At work he developed a kind of material strong enough to hold things together. But his boss told him not to think more about the idea. Finally, using his own time, Drew improved the tape, which now is used everywhere by many people. And his former company learned from its mistake. Now it encourages people to spend 15 percent of their working time just thinking about and developing new ideas.

Creativity is not something one is just born with, nor is it necessarily a characteristic of high intelligence. The fact that a person is highly intelligent does not mean that he uses it creatively. Creativity is the matter of using the resources one has to produce new ideas that are good for something.

Unfortunately, schools have not tried to encourage creativity. With strong attention to test results and the development of reading, writing and mathematical skills, many educators give up creativity for correct answers. The result is that children can gain information but can't recognize ways to use it in new situations. They may know the rules correctly but they are unable to use them to work out practical problems.

It is important to give children choices. From the earliest age, children should be allowed to make decisions and understand their results.
Even if it's choosing between two food items for lunch, decision-making helps thinking skills. As children grow older, parents should try to let them decide how to use their time or spend their money. This is because the most important characteristic of creative people is a very strong desire to find a way out of trouble.

8. What did the company where Drew once worked learn from its mistake?
A. It should encourage people to work a longer time.
B. People should be discouraged from thinking freely.
C. People will do better if they spend most of their working time developing new ideas.
D. It is necessary for people to spend some of their working time considering and developing new ideas.

9. We can know from the passage that creativity is _________.
A. something that most people are born with
B. something that has nothing to do with intelligence at all
C. a way of using what one has learned to work out new problems
D. something that is not important to the life in the future at all

10. Why don't schools try to encourage creativity?
A. They don't attach importance to creativity education.
B. They don't want their students to make mistakes.
C. They pay no attention to examination marks.
D. They think it impossible to develop creativity in class.

11. What should the parents do when their children decide how to spend their money?
A. Allow them to have a try.
B. Try to help them as much as possible.
C. Take no notice of whatever they do.
D. Order them to spend the least money.

Livestreaming through channels such as Amazon Live and QVC is an increasingly popular way to sell goods online. It usually lasts between 5 and 10 minutes, and someone promotes a product. Viewers can then readily buy it by clicking on a link.

We analyzed 99,451 sales cases on a livestream selling platform and matched them with actual sales cases.
In terms of time, that is equal to over 2 million 30-second television advertisements.

To determine the emotional expression of the salesperson, we used two deep learning models: a face model and an emotion model. The face model discovers the presence or absence of a face in a frame of a video stream. The emotion model then determines the probability that a face is exhibiting any of the six basic human emotions: happiness, sadness, surprise, anger, fear or disgust. For example, smiling signals a high probability of happiness, while an off-putting expression usually points toward anger.

We wanted to see the effect of emotions expressed at different times in the sales cases, so we counted probabilities for each emotion for all 62 million frames in our database. We then combined these probabilities with other possible aspects that might drive sales (such as price and product characteristics) to judge the effect of emotion.

We found that, perhaps unsurprisingly, when salespeople showed more negative emotions, such as anger and disgust, the volume of sales went down. But we also found that a similar thing happened when the salespeople showed high levels of positive emotions, such as happiness or surprise.

A likely explanation, based on our research, is that smiling can be disgusting because it lacks true feelings and can reduce trust in the seller.
A seller’s happiness may be taken as a sign that the seller is gaining interests at the customer’s expense.

12. What can we know about the livestreaming to sell goods online?
A. It challenges the physical economy.
B. It helps big companies sell all goods.
C. It is very convenient for the buyers.
D. It helps the sellers develop fixed expressions.

13. What does the underlined word “off-putting” mean in paragraph 3?
A. Unhappy.
B. Embarrassed.
C. Surprised.
D. Shy.

14. How do customers feel about the seller’s smiling?
A. They feel thankful.
B. They feel happy.
C. They feel cheated.
D. They feel so tired.

15. Which of the following could be the best title for the text?
A. Livestreamers Sell Products Successfully
B. Smiling Can Increase the Sales in Reality
C. Emotions and Faces: What’s the Difference
D. Expressions Affect Selling Products Online

II. Gap Filling (choose five of the seven options)

Students must then answer properly, using materials they have learned in preparation for the exam. Showing plentiful knowledge of the subject results in passing the exam or an excellent grade.

(17) As part of the completion of a program at the undergraduate or graduate levels, students might need to prove their knowledge and understanding of a subject area. Many science majors finish Bachelor’s studies with oral exams, or a particular program may require oral and written exams that show how a student has taken in all the materials learned in a four-year period. Usually study guides are available for these exams. (18)

One valuable tip for students to succeed is to practice before the exam. (19) But by answering questions that classmates or family ask them, students can practice as much as possible. On the day of the exam, students should dress plainly but respectfully, and in keeping with any dress requirements.

Students taking these exams should remember how much they have learned, which is hopefully quite a lot.
(20) When waiting outside the test room, taking a deep breath and reminding themselves that the oral exam is a nice way to show off how much knowledge has been learned from teachers can help overcome stressful moments. Also, any other relaxation techniques that help can be used.

A. It raises questions to students in spoken form.
B. Behaving properly can earn students some favor.
C. They may not be able to predict all the questions.
D. Usually, students performing well are experienced.
E. Nervousness can even make the smartest student get stuck.
F. There are many examples in college where oral exams are used.
G. Therefore, prepared students tend not to be surprised by what they’re asked.

III. Cloze

The sun was shining brightly over our heads and sweat was pouring off our backs [...] training (28).

After this journey, we were more (29) than we had imagined and we were much braver than we had (30). Girls who looked pale rested for a little while, and then right away came back to the team. Boys who were being punished (31) to the playground at once and began to run. Sweat flowed down our faces when we thought about how to shout (32) than other classes.

Military training taught us perseverance and determination. On the last day of our training, the confidence could be (33) on our faces. We shouted so loud that our (34) could be heard across the heavens. With eyes like burning torches, we walked into the future.
Now the sun is (35) at all of us.

21. A. crying B. making C. sending D. telling
22. A. nothing B. anything C. something D. everything
23. A. wasted B. spent C. kept D. took
24. A. strict B. curious C. interesting D. normal
25. A. success B. pleasure C. worry D. wonder
26. A. referred to B. contributed to C. turned to D. listened to
27. A. sunlight B. wealth C. desire D. health
28. A. received B. helped C. offered D. happened
29. A. concerned B. determined C. interested D. surprised
30. A. expected B. expressed C. disliked D. rescued
31. A. escaped B. moved C. rode D. rushed
32. A. larger B. stronger C. louder D. lower
33. A. seen B. heard C. smelt D. touched
34. A. thoughts B. feelings C. opinions D. voices
35. A. staring B. laughing C. waving D. smiling

IV. Complete the Passage with the Proper Forms of Words

Read the following passage and fill in each blank with one suitable word or the correct form of the word in brackets.

English Essays Describing American History and Immigration Policy

Six sample essays in total, for readers' reference.

Essay 1

American History and Immigration Policies

Hi there! Today, I'm going to talk about the history of America and how it has welcomed people from all over the world through its immigration policies. It's an exciting and important topic that has shaped the country we know today.

America is often referred to as a "nation of immigrants." That's because, except for the Native Americans who were the original inhabitants of the land, everyone else came from somewhere else. Even the early settlers, like the Pilgrims who arrived on the Mayflower in 1620, were immigrants from Europe.

In the beginning, America was a collection of colonies ruled by different European powers like Britain, France, and Spain. Many people came to the colonies seeking religious freedom, economic opportunities, or a fresh start in a new land. The colonies welcomed immigrants, but they had to follow the rules set by their European rulers.

Things changed after the American Revolution in 1776. The thirteen colonies broke away from British rule and formed the United States of America. As a new independent nation, the United States could make its own immigration laws.

At first, the new country didn't have many immigration restrictions. People from all over Europe, particularly from countries like Ireland, Germany, and Italy, came to America in search of better lives. They were attracted by the promise of economic opportunities, religious freedom, and the chance to own land.

As the years went by, and more and more immigrants arrived, some people started to worry that too many foreigners were coming into the country. They feared that these newcomers might threaten American culture and values. So, in the late 1800s and early 1900s, the government started to pass laws to limit and control immigration.

One of the most famous immigration laws was the Chinese Exclusion Act of 1882. This law prevented Chinese laborers from entering the United States.
It was the first time the government had excluded a specific group of people based on their race or nationality.

Another important law was the Immigration Act of 1924, also known as the National Origins Act. This law set quotas, or limits, on how many immigrants could come from each country. The quotas favored immigrants from Western European countries like Britain and Germany, while restricting immigration from Eastern and Southern Europe, as well as from Asia.

During World War II, the United States also turned away many Jewish refugees fleeing from Nazi Germany. The government was worried that some of these refugees might be spies or criminals, so they didn't let them in. This decision was later criticized as a shameful moment in American history.

After World War II, attitudes towards immigration started to change. The country needed more workers to help rebuild and grow the economy. In 1965, the Immigration and Nationality Act was passed. This law abolished the old quota system and made it easier for people from all over the world to come to America.

Today, America is one of the most diverse countries in the world. People have come from every corner of the globe, bringing their cultures, traditions, and dreams with them. While immigration has sometimes been a controversial topic, it has also been a source of strength and renewal for the nation.

Immigration has played a huge role in shaping American history and culture. From the early settlers to the waves of immigrants in the 19th and 20th centuries, newcomers have contributed to the country's economic growth, scientific advances, and artistic achievements.

As an elementary school student, I'm proud to live in a country that has welcomed people from all over the world. I have classmates and friends whose families came from different countries, and I've learned so much from them.
Their stories and traditions have enriched my understanding of the world and made me appreciate the diversity that immigration has brought to America.

In the future, I hope America continues to be a beacon of hope and opportunity for people seeking a better life. While immigration policies may change over time, the spirit of welcoming and embracing newcomers should remain at the heart of what it means to be American.

That's my take on American history and immigration policies. It's a complex topic, but one that has played a crucial role in shaping the country we live in today. I'm grateful for the contributions of immigrants, and I look forward to seeing how their stories will continue to enrich and strengthen the United States.

Essay 2

American History and Immigration

Hi there! My name is Emma and I'm going to tell you all about the history of America and how its immigration policies have changed over time. It's a pretty interesting story with lots of ups and downs.

America was first explored and settled by European colonists starting in the 1600s. The earliest English settlers landed in Virginia in 1607 and established Jamestown, which was the first permanent English colony in what would later become the United States. More colonies were founded after that, including Massachusetts, Maryland, Pennsylvania, Georgia, and others.

Most of the early colonists were from England, but over time immigrants started arriving from other European nations like Germany, Ireland, Scotland, and the Netherlands. The English colonies needed people to settle the land and grow crops, so they welcomed immigrants who could help build the colonies.

As more and more colonists arrived, tensions grew between the colonists and the British government over taxes, representation, and independence. In 1776, the colonists declared independence from Britain and fought the Revolutionary War.
After winning the war in 1783, the United States of America was officially established as an independent nation.

In the early years of the United States, there were no strict immigration laws. The new country continued to allow immigrants, especially from Europe, to arrive and settle. New settlers and immigrants were needed to explore, farm, and build in the growing nation.

One of the key principles of America is that it is a nation of immigrants. The United States was built by wave after wave of immigrants coming from all over the world. The ancestors of most Americans today can be traced back to immigrants who came seeking a better life.

As the 1800s progressed and America continued expanding westward, immigration rates increased rapidly. Millions of immigrants poured in from Europe, especially from Ireland and Germany where there was widespread poverty and famine. Immigrants also started arriving from China to help build railroads and work in mining.

With so many immigrants coming, the first immigration laws were passed in the late 1800s to start controlling the flow of people. The Chinese Exclusion Act of 1882 was the first major law restricting immigration based on nationality. It stopped immigration from China for 10 years.

In the early 1900s, even more immigrants flooded in from Southern and Eastern Europe. This included many Italians, Greeks, Poles, Russians and others. To handle the large numbers, the government opened Ellis Island in 1892 as an entry point and inspection station for immigrants arriving in New York.

Between 1880 and 1920, around 25 million European immigrants came to America through places like Ellis Island, seeking better opportunities.
This huge wave of immigration from Europe eventually led to new restrictive laws in the 1920s limiting the numbers of immigrants allowed based on nationality.

The Immigration Act of 1924 established quotas based on national origins that heavily favored immigration from Western Europe while sharply restricting arrivals from places like Italy, Greece, Poland and other parts of Eastern and Southern Europe. Very few immigrants were allowed from Asian countries.

This quota system remained in place for over 40 years until it was replaced by the Immigration and Nationality Act of 1965. This new law abolished the discriminatory national origins quota system and established a new policy focused on reuniting immigrant families and attracting skilled labor to the United States.

After the 1965 reforms, immigration surged again from all around the world, with large numbers of immigrants coming from Asia and Latin America in addition to Europe. Between 1965 and 2000, over 25 million legal permanent residents entered the U.S.

Today, the debate over immigration reform continues. Some want to increase immigration to attract workers and talent, while others want to reduce immigration levels. There are also debates over policies regarding unauthorized or illegal immigration.

Immigration has played a huge role in American history from the earliest days. The United States was founded and built by generations of immigrants seeking freedom and opportunity. While immigration policies have gone through many changes over the centuries, immigrants from all over the globe continue to come to America and become part of the nation's rich diversity.

I hope you found this overview interesting! Immigration is a really important part of America's story. Even though policies have shifted, the idea of America as a nation of immigrants has remained a core part of its identity and values. Thanks for reading!

Essay 3

America: A Nation of Immigrants

Hi there! My name is Emily and I'm 10 years old.
Today, I want to tell you all about the history of America and how it became a nation of immigrants.

A long, long time ago, there were no cities, roads or houses in America. The only people living here were Native Americans. They lived in tribes, hunted animals, fished in rivers and lakes, and grew crops like corn, beans and squash. They had their own languages, customs and beliefs.

Then in 1492, an Italian explorer named Christopher Columbus arrived on a ship from Europe. He thought he had reached India, so he called the native people "Indians." But scientists later realized he had actually landed on islands near North America. More European explorers like John Cabot, Jacques Cartier and Juan Ponce de León followed. They claimed land for their countries and set up colonies.

The first permanent English colony was Jamestown, founded in 1607 in what is now the state of Virginia. The colonists had a really hard time at first. They didn't know how to farm the land properly and didn't have enough food or shelter. A lot of them got sick or died. But they kept getting new settlers and supplies from England.

In 1620, a group of English Protestants called the Pilgrims arrived in North America aboard the Mayflower ship. They created the Plymouth Colony in present-day Massachusetts. Unlike the colonists at Jamestown, the Pilgrims had a documented agreement on how they would govern themselves called the Mayflower Compact. This helped them work together.

Over time, more and more European immigrants came to America. Some came voluntarily to start a new life and pursue religious freedom, while others were brought as slaves from Africa against their will. By the 1700s, there were 13 British colonies along the East Coast of North America.

In 1776, leaders from these colonies declared independence from Britain in the Declaration of Independence. After winning the Revolutionary War, America became an independent nation: the United States of America!
The colonists were now considered American citizens.

During the 1800s, millions of immigrants continued flocking to America, many from Europe. They came by ship looking for better opportunities and life away from poverty or oppression in their home countries. Large numbers of Irish fled the potato famine, while Germans, Italians, Poles and others sought jobs and land.

Not everyone was welcomed though. Laws were passed like the Chinese Exclusion Act to stop immigration from China. There were also big arguments about whether new Western territories should allow slavery.

In the early 1900s, immigrants continued arriving from southern and eastern Europe. But then World War I happened and immigration slowed for a while. After the war, the U.S. passed national origin quotas to limit immigration from certain parts of the world.

Things changed again during World War II when America allowed in refugees from the war. Then in 1965, a new law ended national origin quotas and opened doors to more immigrants from around the world, not just Europe.

Today, America is one of the most diverse nations with people from all backgrounds, cultures and religions. Immigrants and their descendants make up a huge part of the country's population. People continue coming to America legally as students, workers, or to reunite with family members. But there's also a lot of debate about controlling illegal immigration, meaning people entering or staying without proper documentation.

Overall, America was built by generations of immigrants over hundreds of years. Each new group faced challenges and sometimes discrimination. But together they formed the rich, multicultural society of the United States that we know today.

No matter where your family is originally from, if you live in America now, you're an American! This country was made possible by the hopes, dreams, hard work and sacrifices of countless immigrants searching for freedom and opportunity.
That's why the words on the Statue of Liberty say "Give me your tired, your poor, your huddled masses yearning to breathe free."

Essay 4

America: A Nation of Immigrants

Do you know that unless you are a Native American, your family came from another country? That's right, we are all immigrants or descended from immigrants who came to America from somewhere else. This great nation was built by people from all over the world. Let me tell you the story of how America became a nation of immigrants.

A Long Time Ago

A very long time ago, there were no countries or borders. People lived in small tribes and moved from place to place to find food and shelter. About 15,000 years ago, the ancestors of today's Native Americans began migrating across a land bridge from Asia into North America during the Ice Age. These were the very first immigrants to the continent we now call America.

The Europeans Arrive

For thousands of years, Native Americans were the only people living in America. Then in 1492, an Italian explorer named Christopher Columbus arrived from Europe. He thought he had reached the Indies (Asia), so he called the native people "Indians." More European explorers soon followed, seeking wealth and claiming lands for their nations.

The First English Settlers

In 1607, the first permanent English settlement was established at Jamestown, Virginia. More English colonists kept coming, along with immigrants from other European countries. Most came seeking economic opportunities or religious freedom that they could not find at home.

The United States is Born

By the 1700s, there were 13 British colonies along the Atlantic coast. The colonists grew unhappy with unfair treatment by the British government. In 1776, they declared independence and fought the Revolutionary War. After winning, they formed a new nation: the United States of America.

More Settlers Head West

As the young United States expanded westward, even more immigrants poured in from across the Atlantic.
Millions of Europeans came seeking cheap farmland and a fresh start, including large numbers of Irish and Germans fleeing famine and poverty. Chinese immigrants arrived to work on the Transcontinental Railroad.

The New Immigration Wave

In the late 1800s, a new wave of immigrants started coming from Southern and Eastern Europe: Italians, Poles, Russians, and others. Many were escaping religious persecution or looking for jobs in booming U.S. industries like coal mining and steelmaking. Chinatowns and Little Italys sprouted in big cities.

Restrictions Begin

As immigration soared in the early 1900s, some Americans became worried about so many newcomers. They thought it was too much change happening too fast. In 1924, strict new limits were placed on immigration from Europe and other parts of the world. Only small numbers were allowed entry each year.

New Sources of Immigration

After World War II, immigration slowly increased again from new sources like Latin America and Asia. The U.S. economy was booming and needed more workers. In 1965, the old national quotas were abolished in favor of a new policy emphasizing family reunification and job skills.

Today's Immigrants

In recent decades, most immigrants to the U.S. have come from Asia and Latin America, especially Mexico. Some enter legally, others come without documentation. Immigration has helped make America a diverse nation of many cultures, languages and religions living together. Love of freedom and opportunity still draws people from all over the globe.

Debate Over Immigration

There is an ongoing debate about immigration policies in America today. Some people want to allow more immigrants to enter legally and provide a path for undocumented immigrants to become citizens. Others want to limit immigration, especially undocumented entries.
They are concerned about security risks, job competition and costs of social services.

Whatever your view, one thing is clear: the story of America is a story of immigration from its very beginning. This nation was built by generation after generation of immigrants seeking a better life. Their hopes and dreams, along with the cultures they brought with them, helped shape the diverse and prosperous America we know today. And the story continues...

Essay 5

The Story of America and Its Immigrants

America is a nation of immigrants. That means that except for the Native Americans who were here first, every single person in the United States has ancestors who came from somewhere else. My great-grandparents were immigrants from Italy and Ireland. Maybe your ancestors came from Mexico, China, India, or Nigeria. We're all descendants of people who left their home countries and came to America.

For as long as people have lived in North America, there has been migration and movement between lands. The Native Americans themselves migrated across the Bering Land Bridge from Asia thousands of years ago. They spread out across the continents, forming many diverse tribes and nations.

Then, in the 1500s and 1600s, European explorers and settlers began arriving and creating colonies in what would become the United States. The first successful English colony was at Jamestown, Virginia in 1607. Over the next few centuries, millions more immigrants came from Europe, especially from England.

Most of the earliest European immigrants came voluntarily, seeking freedom and opportunity. But there was also a dark side: the forced migration of enslaved Africans brought over in the transatlantic slave trade. This terrible injustice left a painful legacy that still impacts America today.

In the 1800s, America's immigrant population became even more diverse, with large numbers arriving from Germany, Ireland, China, and other nations. Chinese immigrants helped build the Transcontinental Railroad.
But they faced racism and the Chinese Exclusion Act that barred more immigration for decades.

By the 1900s, there was a new great wave of immigration from Southern and Eastern Europe. Millions of Italians, Poles, Russians, and others came through Ellis Island dreaming of freedom and opportunity. But racism and discrimination made life hard for many of these new immigrants too.

Finally, in 1965, America passed the Immigration and Nationality Act which did away with discriminatory quotas based on nationality and race. This opened the door to even more diversity, with immigrants coming from all across the world – Latin America, Asia, the Middle East, Africa, and everywhere else.

Throughout its history, immigration has had a huge impact on America's economy, culture, and identity as a nation of different people united by shared ideals of liberty, equality, and democracy. Immigrants today continue to make America dynamic, innovative, and prosperous.

But immigration has also always been controversial, with debates raging over how many immigrants to allow in and what the criteria should be. The 19th century saw backlash against Catholic immigrants, while the 20th century had arguments over rejecting Jewish refugees from the Nazis. In recent decades, the big debates have centered on immigrants from Mexico, Central America, and Muslim-majority countries.

Those in favor of more immigration point to America's founding ideals welcoming immigrants, as well as the economic and cultural benefits they bring. Critics worry about immigration's impact on jobs, security, and national unity.
They want more limits and border security.

The immigration debate rages on today as America keeps arguing over what kind of immigration policies it should have:

- How many immigrants should be allowed in each year?
- Should there be more border security and deportations?
- What criteria should immigrants need to meet (job skills, English ability, etc.)?
- Should immigrants be admitted based more on employment/skills or reuniting families?
- Should there be a path to citizenship for undocumented immigrants brought as children?
- How should immigration policies balance economic, security, and humanitarian concerns?

These are hard questions without easy answers. But immigration has always been central to America's story as a nation of immigrants constantly being reshaped and redefined by each new wave of newcomers seeking the American dream.

So that's the story of America and its immigrants – a nation of people coming from all over the world, some voluntarily and some forced, all contributing to the kaleidoscope of diversity that shapes our national identity. It's a story of contradictions, of openness and resistance, of new beginnings and long struggles. And it's a story that's still being written by the immigrants of today and tomorrow.

Essay 6

America: A Nation of Immigrants

Hi there! Let me tell you a story about America and all the people who came here from faraway lands. It's a tale of dreams, hopes, and a country that was built by immigrants like your ancestors.

A long time ago, even before your great-great-grandparents were born, America was a vast land inhabited by Native American tribes. They lived in harmony with nature, hunting, fishing, and cultivating crops. Their way of life was simple but fulfilling.

Then, in 1492, an Italian explorer named Christopher Columbus sailed across the Atlantic Ocean and landed in the Caribbean islands. He thought he had reached the Indies (a name Europeans then used for parts of Asia), so he called the native people "Indians."
Little did he know, he had discovered a whole new continent!

After Columbus, many more Europeans began exploring and settling in the "New World." The first successful English colony was established in 1607 at Jamestown, Virginia. The colonists had a tough time at first, but they learned to farm and trade with the Native Americans.

Over the next few centuries, millions of immigrants from all over the world came to America in search of a better life. They escaped poverty, religious persecution, and wars in their home countries. Some came as indentured servants or enslaved people, while others arrived as free settlers.

One of the most significant waves of immigration happened in the 1800s, when millions of Europeans fled famine and political turmoil. The Irish Potato Famine forced many Irish families to seek refuge in America. Italians, Germans, Poles, and Russians also arrived in large numbers, often settling in big cities like New York, Boston, and Chicago.

During this time, the United States government passed several laws to control and restrict immigration. The Naturalization Act of 1790 allowed only "free white persons" to become citizens. Later, laws like the Chinese Exclusion Act of 1882 and the Immigration Act of 1924 further limited immigration from certain countries.

In the early 1900s, a new wave of immigrants arrived from southern and eastern Europe. Many of them were poor and faced discrimination from earlier European immigrants who had already settled in America. Despite the challenges, these newcomers worked hard, started businesses, and became an integral part of American society.

After World War II, the United States opened its doors to refugees from war-torn countries and survivors of the Holocaust. The Immigration and Nationality Act of 1965 abolished the discriminatory quota system and allowed more immigrants from Asia, Africa, and Latin America.

Today, America is a melting pot of cultures, religions, and traditions.
People from all over the world continue to come here, seeking the American Dream – a life of freedom, opportunity, and prosperity. However, immigration policies are still hotly debated, with some favoring stricter laws and others advocating for more open borders.

No matter what side you're on, one thing is clear: America would not be the great nation it is today without the contributions of immigrants. From the first settlers to the latest arrivals, immigrants have helped shape American culture, built cities, fought in wars, and made countless contributions to science, art, and technology.

So the next time you see someone who looks or sounds different from you, remember that their family probably came to America searching for a better life, just like your ancestors did. We're all part of the great American story, a nation built by people from every corner of the globe.

Grade 8 English, Volume 2: Unit 4 Listening Section

6 sample essays in total, for readers' reference

Essay 1

Unit 4 Listening - My Struggles and Triumphs

English class can be pretty tough sometimes, especially the listening part. In Unit 4, we had to listen to all kinds of dialogues and passages and then answer comprehension questions. It was not easy!

The first dialogue was about two friends making plans to go to the movies. I thought I had it down at first - Jake wanted to see the new superhero movie and Lily preferred a romantic comedy. But then they started discussing times and locations, and I got a little lost. Did Jake say he could only go on Saturday evening or Sunday afternoon? I missed that part.

The next dialogue covered shopping for gifts. A daughter was asking her mom for advice on what to buy her dad for Father's Day. Again, I caught the main idea of needing a present for dad. However, there were so many specific details about his hobbies, favorite colors, and past gifts that just went right over my head. How was I supposed to remember all that?

Things didn't get any easier with the longer listening passages. One was about the history of poker and all the different variants of the game. I could barely even pronounce some of those crazy poker terms they were using, let alone understand the rules! The passage on the evolution of smartphones was slightly more interesting to me, but still loaded with technical vocabulary that had me scratching my head.

I have to admit, after bombing that listening section test, I felt pretty discouraged about my English skills. Why couldn't I just understand simple conversations without missing key details? How would I ever pass the bigger tests coming up?

That's when my English teacher Ms. Roberts stepped in to give me a big pep talk. She reminded me that listening comprehension is one of the most challenging skills to master in any new language. It takes a ton of practice, patience, and perseverance.

Ms.
Roberts said I was already making great progress from where I started at the beginning of the school year. Back then, I could barely catch a couple words here and there when we did listening exercises. Now I at least had the tools to identify the main topics and ideas. The finer details would come with more exposure over time.

She also shared some great tips, like listening for key words instead of trying to catch everything. Using context clues from the parts I did understand to piece together the missing gaps. Most importantly, not getting frustrated when my mind went blank for a moment, but just keeping my ears focused and waiting for the next understandable part.

With Ms. Roberts' advice and lots of hard work, I could feel myself improving little by little throughout the rest of the semester. Listening exercises that used to seem like complete gibberish gradually started making more sense. While I still had room for growth, I was no longer getting discouraged or overwhelmed.

The last listening test for Unit 4 went so much better than that first one. I sailed through the dialogues about everyday situations like eating out and shopping. The passages on more complex topics like space exploration were still pretty tough, but I managed to answer most of the questions correctly this time. What a contrasting feeling of pride compared to my earlier failure!

I realized that making progress in English listening is almost never a straight path. It's filled with ups and downs, easy wins and major struggles, demoralizing moments and then breakthroughs. Every student goes through this normal roller coaster. The key is having supportive teachers, helpful strategies, and most of all, a positive mindset that doesn't give up.

I've got a long road ahead before I can call myself truly fluent in listening to English. Still, I'm going to keep putting in the work, growing my skills day by day. Because feeling that sense of understanding and accomplishment makes it all worthwhile.
Maybe listening exercises won't seem so scary after all by the time I reach high school!

Essay 2

Hi there! My name is Emma and I'm in 8th grade. Today I want to tell you all about the listening section we just did in Unit 4 of our English textbook. It was actually pretty interesting!

The unit was called "Going Green" and it was all about environmental issues and how we can protect the planet. The listening exercises really helped bring the topic to life.

In the first exercise, we listened to a conversation between two friends talking about an environmental club at their school. One friend was trying to convince the other to join. I thought it was a realistic conversation - they used a lot of casual language and slang like "It's gonna be so much fun!" and "Come on, don't be a party pooper!"

The speaking was pretty natural too. The girl who wanted to join the club asked questions like "What kind of activities do they do?" and the other friend responded with details about beach clean-ups, recycling projects, and so on. It definitely made me want to join an environmental club if my school had one!

Next up was a longer listening exercise about the problem of electronic waste or "e-waste." It was a news-style report explaining what e-waste is, why it's an issue, and some potential solutions. I have to admit, some of the vocabulary was pretty challenging for me at first - words like "hazardous," "contaminants," and "disposal."

But the reporter spoke clearly and the report had a good structure with an introduction, examples in the middle, and a conclusion summarizing the main points at the end. Our teacher also had us answer comprehension questions along the way, which really forced me to pay close attention.

By the end, I realized I had learned a ton of new information! Like, did you know that things like old phones, computers, and televisions can leak toxic chemicals into the environment if they aren't disposed of properly?
And some of the practices they mentioned for dealing with e-waste, like refurbishing and recycling components, were really interesting to me.

Who knew listening exercises could actually be so educational and eye-opening? I'll definitely think twice before just tossing out any old electronics from now on.

The last listening was probably my favorite though - it was a fun song all about reducing, reusing, and recycling. The lyrics were kind of silly but they made all the main points stick in my head SO well. Like the line "Don't be a waste maker, be a re-creator!" How brilliant is that?

I already find myself singing little lines from the chorus when I'm trying to decide if I can reuse something instead of just throwing it away. The song-writers were geniuses to turn environmental tips into such a catchy, memorable tune.

Overall, while listening exercises can sometimes feel like a chore, this unit really opened my eyes to some important real-world issues in a way that kept me engaged and interested the whole time. The variety of conversations, reports, and even a song made the experience way more dynamic than just reading facts off a page.

I came away understanding way more about e-waste, recycling, and how small actions to reduce waste can have a big impact. Who knew learning English could also make me want to be a better environmental steward?

I'm actually really excited to see what sorts of creative, informative listening exercises are coming up in the next units. Maybe I'll even write one myself one day! For now, I've got plenty of new terms and concepts from this unit to practice applying in my speaking and writing. Getting to improve my English skills while learning about important issues? Now that's what I call a win-win!

Essay 3

Unit 4 - Going Green

Hi there! My name is Emma and I'm a 4th grader at Oakwood Elementary School. For our English listening practice this unit, we learned all about going green and protecting the environment.
It was really interesting and I wanted to share some of the things I learned with you!

First up, we talked about what it actually means to "go green." Basically, it refers to making choices and taking actions that are better for the planet. This could be things like recycling, saving energy and water, or reducing waste and pollution. The main idea is trying to live in a more environmentally-friendly or sustainable way.

Our teacher showed us some pretty mind-blowing facts about why going green is so important these days. Did you know that the Earth's temperature has risen by around 1°C in just the last 100 years or so? That might not sound like much, but even small temperature changes can cause huge problems like rising sea levels, more extreme weather, loss of plant and animal species, and other scary stuff. A big reason for this is human activities that release greenhouse gases into the atmosphere and contribute to climate change.

We also learned that we're losing forests at an alarming rate due to deforestation for things like agriculture, mining, and urban development. It's estimated that 18 million acres of forest are cut down each year - that's an area roughly the size of the country of Panama! This is really bad for the environment since trees absorb carbon dioxide and produce oxygen. Plus, millions of plants and animals lose their natural habitats.

Another major issue is excessive waste ending up in landfills and the ocean. The statistics on plastic pollution in particular are just staggering. Something like 8 million metric tons of plastic waste enters the oceans every year! That's the equivalent of dumping a garbage truck full of plastic into the water every single minute. So sad to think of all the marine life getting tangled up in or ing

Essay 4

My Struggle with Unit 4 Listening

Hey everyone, it's me again - Jack from 8th grade. I'm here to give you all the juicy details about the listening struggle I've been having with Unit 4 in our English textbook.
Get ready, because this is going to be a wild ride!

First off, let me set the scene. Unit 4 is all about talking about hobbies and free time activities. Sounds pretty straightforward, right? Well, little did I know, it was going to be a massive headache thanks to those dreadful listening exercises.

It all started with Exercise A, where we had to listen to a bunch of kids chatting about what they like to do for fun. Simple enough, or so I thought. But whenever I tried to tune in, it was like my brain went into screensaver mode. I could make out a few words here and there - "soccer", "video games", "shopping" - but piecing together whole sentences? Forget about it.

My friend Samantha, being the grade-A student that she is, seemed to breeze through that first exercise. I tried to convince her to share her secrets, but all she said was, "Jack, you just have to pay closer attention!" Gee, thanks for that brilliant advice, Sam.

Moving on to Exercise B, where we were supposed to listen for specific details about these kids' hobbies. I might as well have been listening to gibberish at this point. Anytime a new voice came on, I lost the thread completely. How was I meant to catch the part about how often this imaginary kid played basketball? I don't have bionic ears!

And don't even get me started on Exercise C - the one with those terrible-quality recordings that sounded like they were made in a broken tin can. Were those accents British? Australian? Martian? Who knows! All I heard were vague mumbles about "watching movies" and "going cycling". My brain checked out approximately 30 seconds in.

At that point, I was ready to give up and become a monk who takes a vow of silence. This listening stuff was just not my strong suit. But then I remembered - I'm Jack, and I never back down from a challenge!

So I buckled down and started coming up with strategies. First up, I convinced my dad to make me practice listening exercises using movie clips and TV shows.
The first few times, I could barely keep up with the plot. But slowly but surely, my ears started adjusting to processing continuous streams of dialogue.

Next, I found some listening practice websites and podcasts for English learners. Boring at first, sure, but so helpful for training my brain to separate different voices and accents. Shoutout to that guy on "Culips Listening" who helped me finally distinguish between the "th" and "z" sounds!

Finally, the breakthrough I'd been waiting for - I moved my desk right in front of the classroom speakers for our listening activities. No more spacing out or zoning out from the back row. With the audio clarity straight in my face, I was LOCKED IN.

Did I still struggle occasionally and have to replay some tracks? You betcha. English listening is no joke when it's not your first language. But using those techniques, pre

Essay 5

Unit 4 Listening - My Struggles and Triumphs

English class was never my strong suit, especially the listening part. I just couldn't seem to keep up with those rapid-fire recordings and make out all the words. When we started Unit 4 this semester, I braced myself for another round of frustration.

The first listening exercise was about a family going on vacation. Simple enough, right? Wrong! The moment the track started playing, my mind went into panic mode. All I could hear was a jumbled mess of sounds. I strained my ears, desperately trying to latch onto any familiar words, but it was like they were speaking a foreign language at double speed.

As the questions appeared on the screen, I felt my heart sink.

I had absolutely no clue about the answers. Reluctantly, I filled in a few random guesses, knowing full well that I was bound to fail this section yet again.

Dejected, I slumped in my seat, already dreading the next listening exercise. Little did I know, this unit would turn out to be a game-changer for me.

Our teacher, Mrs. Smith, must have sensed the collective struggle in the classroom.
She gathered us around and shared a simple yet brilliant tip: "Don't try to understand every single word. Focus on the main ideas and key details."

It was like a lightbulb went off in my head. All this time, I had been so fixated on catching each and every word that I missed the bigger picture. With her guidance, we learned strategies to identify context clues, tone, and the overall gist of the conversations.

The next few exercises were still challenging, but something had shifted within me. Instead of panicking, I took a deep breath and tried to tune into the main ideas. Slowly but surely, I started picking up on the critical information.

One exercise was about two friends planning a camping trip. At first, the rapid back-and-forth banter threw me off. But then I caught on to key phrases like "next weekend," "hiking trail," and "bring a tent." Suddenly, the overall context became clear, and I could answer the questions with newfound confidence.

With each successive exercise, my listening skills improved. I started recognizing common expressions and idioms, and I became better at separating relevant information from background noise.

As we neared the end of Unit 4, I couldn't believe how far I had come. The final exercise was a news report about a local community event, and I managed to answer most of the questions correctly. It was a small victory, but it felt monumental to me.

Looking back, Unit 4 was a turning point in my English listening journey. It taught me the value of perseverance and the importance of not getting overwhelmed by the details. By focusing on the main ideas and key phrases, I was able to break through my listening comprehension barriers.

Will I ace every listening exercise from now on? Probably not. But I've gained valuable strategies and a newfound confidence that will serve me well as I continue to navigate the challenges of English class.

So, to anyone else struggling with listening comprehension, don't lose hope.
Keep an open mind, focus on the big picture, and trust that with practice and the right mindset, those jumbled sounds will eventually start making sense.

Essay 6

My Exciting School Trip

Hey friends! I'm so excited to tell you all about the awesome school trip I just went on last week. It was for our English listening class and man, did we have some fun adventures.

It all started bright and early on Tuesday morning. Our teacher, Mrs. Johnson, had us all meet at the school bus loop at 7am sharp. I had stayed up way too late the night before playing video games, so I was pretty tired. But I could barely contain my excitement knowing we were going on a field trip!

After we all piled onto the big yellow bus, Mrs. Johnson got on the microphone and gave us the rundown for the day. "Alright class, our first stop is going to be the radio station downtown to learn all about how they broadcast music, news, and other programs over the airwaves."

The bus ride there seemed to take forever, but I didn't mind too much since I got to sit next to my best friend Jessica. We were gabbing away about all the latest gossip when finally, Mrs. Johnson announced we had arrived at 102.7 THE BEAT!

We were greeted by one of the radio DJs named Josh. He was really friendly and had a great sense of humor as he gave us a tour of the studio. I couldn't believe all the crazy equipment they had to operate everything! Josh showed us the sound board, where they queue up songs, commercials, and sound effects. He even let a few of us put on headphones and read some lines over a fake radio broadcast which was super cool.

After touring the radio station, we all piled back onto the bus and headed to our next destination - the local television news station, Channel 8 News. This place was even more high-tech than the radio station! We got to watch the news team in action filming their afternoon broadcast from the control room.
It was fascinating seeing all the cameras, teleprompters, green screens, and other gear they use to make everything come together.

During the commercial break, the meteorologist even brought a few of us on set to demonstrate how they use the green screen to show weather maps and graphics. I have to admit, I looked pretty goofy pretending to point at fake temperatures and storm systems! But it was still a really unique opportunity.

After the news station, we stopped for a quick lunch at a pizza place downtown. Jessica and I split an extra large pepperoni pizza - it was so delicious but we probably shouldn't have eaten that much! We both had horrible stomachaches by the time we made it to our third and final destination of the day.

Our last stop was the public library, which doesn't seem that exciting compared to the other places we visited. But it ended up being one of the highlights! We got to go behind the scenes and learn all about how libraries acquire, catalog, and shelve their huge book collections. We even got to sit in on a reading circle for little kids where the librarian did different voices and sound effects for all the different characters. It was honestly the cutest thing ever.

At the end, the librarians had a surprise for us. They brought out these awesome 3D audio systems and let us experience what it's like to have stories come alive with immersive sound all around you. We listened to a few excerpts from popular audiobooks and it honestly felt like you were right there in the middle of the action. The effects were mind-blowing! I've never experienced books that way before but I am 100% going to start checking out more audiobooks from now on.

As we headed back to the bus at the end of the day, I was exhausted but totally felt like I had learned so much about how sound and audio are used for radio, TV, libraries, and more.
It really opened my eyes to all the careers out there that involve listening, broadcasting, and bringing stories to life through sound.

Who knows, maybe I'll look into becoming a radio DJ, television personality, audiobook narrator, or even a sound engineer someday? One thing's for sure, this field trip gave me a way deeper appreciation for audio and the roles it plays in our everyday lives. I can't wait for the next academic adventure!

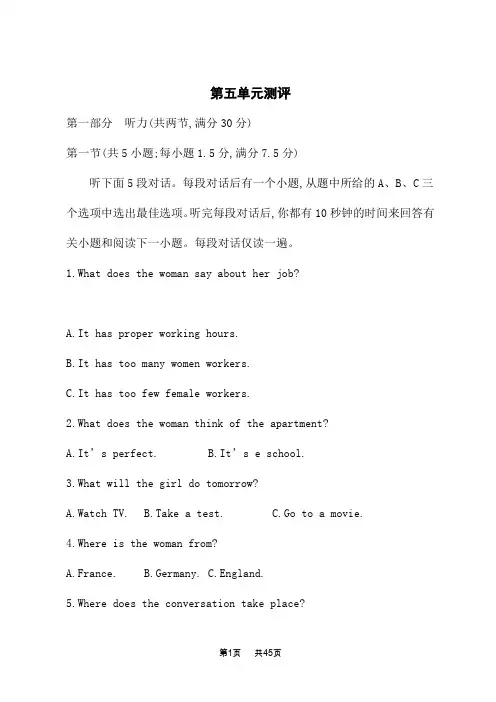

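Unit 5 Assessment

Part 1: Listening (two sections, 30 points in total)

Section 1 (5 questions; 1.5 points each, 7.5 points in total)

Listen to the following 5 conversations. Each conversation is followed by one question; choose the best answer from the three options A, B, and C. After each conversation, you will have 10 seconds to answer the question and read the next one. Each conversation will be read only once.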

1. What does the woman say about her job?
A. It has proper working hours.
B. It has too many women workers.
C. It has too few female workers.

2. What does the woman think of the apartment?
A. It’s perfect.
B. It’s e school.

3. What will the girl do tomorrow?
A. Watch TV.
B. Take a test.
C. Go to a movie.

4. Where is the woman from?
A. France.
B. Germany.
C. England.

5. Where does the conversation take place?
A. In a travel agency.
B. In a hotel.
C. In an art museum.

Section 2 (15 questions; 1.5 points each, 22.5 points in total)

Listen to the following 5 conversations or monologues.

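Each conversation or monologue is followed by several questions; choose the best answer from the three options A, B, and C. Before each conversation or monologue, you will have time to read the questions, 5 seconds per question; after listening, you will have 5 seconds per question to answer. Each conversation or monologue will be read twice.

Listen to recording 6 and answer questions 6 and 7.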

6. Why is the man talking to the woman?
A. She doesn’t have a ticket.
B. She parks in the wrong place.
C. She doesn’t pay the parking fee.

7. What does the woman suggest the man do?
A. Remove the tree.
B. Stay behind the tree.
C. Keep the sign in plain sight.

Listen to recording 7 and answer questions 8 to 10.