Verifying Cryptographic Protocols for Electronic Commerce

- 格式:pdf

- 大小:126.70 KB

- 文档页数:14

信息安全工程师英语词汇Information Security Engineer English VocabularyIntroductionIn today's digital era, information security plays a critical role in safeguarding sensitive data from unauthorized access, alteration, or destruction. As technology continues to advance, the need for highly skilled professionals, such as Information Security Engineers, has become increasingly important. These professionals possess a vast knowledge of English vocabulary used in the field of information security. This article aims to provide an extensive list of English words and phrases commonly used by Information Security Engineers.1. Basic Terminology1.1 ConfidentialityConfidentiality refers to the protection of information from unauthorized disclosure. It ensures that only authorized individuals have access to sensitive data.1.2 IntegrityIntegrity refers to maintaining the accuracy, consistency, and trustworthiness of data throughout its lifecycle. It involves preventing unauthorized modification or alteration of information.1.3 AvailabilityAvailability refers to ensuring that authorized users have access to the information they need when they need it. It involves implementing measures to prevent service interruptions and downtime.1.4 AuthenticationAuthentication is the process of verifying the identity of a user, device, or system component. It ensures that only authorized individuals or entities can access the system or data.1.5 AuthorizationAuthorization involves granting or denying specific privileges or permissions to users, ensuring they can only perform actions they are allowed to do.2. Network Security2.1 FirewallA firewall is a network security device that monitors and controls incoming and outgoing traffic based on predetermined security rules. It acts as a barrier between internal and external networks, protecting against unauthorized access.2.2 Intrusion Detection System (IDS)An Intrusion Detection System is a software or hardware-based security solution that monitors network traffic for suspicious activities or patterns that may indicate an intrusion attempt.2.3 Virtual Private Network (VPN)A Virtual Private Network enables secure communication over a public network by creating an encrypted tunnel between the user's device and the destination network. It protects data from being intercepted by unauthorized parties.2.4 Secure Socket Layer/Transport Layer Security (SSL/TLS)SSL/TLS is a cryptographic protocol that provides secure communication over the internet. It ensures the confidentiality and integrity of data transmitted between a client and a server.3. Malware and Threats3.1 VirusA computer virus is a type of malicious software that can replicate itself and infect other computer systems. It can cause damage to data, software, and hardware.3.2 WormWorms are self-replicating computer programs that can spread across networks without human intervention. They often exploit vulnerabilities in operating systems or applications to infect other systems.3.3 Trojan HorseA Trojan Horse is a piece of software that appears harmless or useful but contains malicious code. When executed, it can provide unauthorized access to a user's computer system.3.4 PhishingPhishing is a fraudulent technique used to deceive individuals into providing sensitive information, such as usernames, passwords, or credit card details. It often involves impersonating trusted entities via email or websites.4. Cryptography4.1 EncryptionEncryption is the process of converting plain text into cipher text using an encryption algorithm. It ensures confidentiality by making the original data unreadable without a decryption key.4.2 DecryptionDecryption is the process of converting cipher text back into plain text using a decryption algorithm and the appropriate decryption key.4.3 Key ManagementKey management involves the generation, distribution, storage, and revocation of encryption keys. It ensures the secure use of encryption algorithms.5. Incident Response5.1 IncidentAn incident refers to any event that could potentially harm an organization's systems, data, or users. It includes security breaches, network outages, and unauthorized access.5.2 ForensicsDigital forensics involves collecting, analyzing, and preserving digital evidence related to cybersecurity incidents. It helps identify the cause, scope, and impact of an incident.5.3 RemediationRemediation involves taking actions to mitigate the impact of a security incident and prevent future occurrences. It includes removing malware, patching vulnerabilities, and implementing additional security controls.ConclusionAs Information Security Engineers, a strong command of English vocabulary related to information security is crucial for effective communication and understanding. This article has provided an extensive list of terms commonly used in the field, ranging from basic terminology to network security, malware, cryptography, and incident response. By mastering these words and phrases, professionals in the field can enhance their knowledge and contribute to the protection of sensitive information in today's ever-evolving digital landscape.。

【最新整理,下载后即可编辑】PART 11 Electronic Records; Electronic Signatures第11款电子记录;电子签名Subpart A--General Provisions分章A 一般规定Sec. 11.1 Scope.11.1适用范围(a) The regulations in this part set forth the criteria under which the agency considers electronic records, electronic signatures, and handwritten signatures executed to electronic records to be trustworthy, reliable, and generally equivalent to paper records and handwritten signatures executed on paper.本条款的规则提供了标准,在此标准之下FDA将认为电子记录、电子签名、和在电子记录上的手签名是可信赖的、可靠的并且通常等同于纸制记录和在纸上的手写签名。

(b) This part applies to records in electronic form that are created, modified, maintained, archived, retrieved, or transmitted, under any records requirements set forth in agency regulations.This part also applies to electronic records submitted to the agency under requirements of the Federal Food, Drug, and Cosmetic Act and the Public Health Service Act, even if such records are not specifically identified in agency regulations.However, this part does not apply to paper records that are, or have been, transmitted by electronic means.本条款适用于在FDA规则中阐明的在任何记录的要求下,以电子表格形式建立、修改、维护、归档、检索或传送的记录。

FDA 21 CFR part 11译文21 CFR Part 11是针对电子记录和电子签名的FDA法规,对于药厂和医疗器械使用的众多电子记录和电子签名提供了详尽的要求和规范。

Subpart A--General ProvisionsA部分—通用规定11.1 Scope.11.1 范围(a) 本部分的法规制定了接受标准,用于机构评估电子记录、电子签名、电子记录加手写签名的可信性、可靠性,以及通常等同于纸质记录和手写签名的形式。

(a) The regulations in this part set forth the criteria under which the agency considers electronic records, electronic signatures, and handwritten signatures executed to electronic records to be trustworthy, reliable, and generally equivalent to paper record sand handwritten signatures executed on paper.(b) 本部分适用于根据法规需求制定的,以电子形式生成、修改、维护、存档、恢复或传输的任何记录。

还适用于提交给监管机构的关于联邦食品、药品和化妆品以及公共健康服务法案需求的电子记录,即使此类记录不是法规中特别提到的。

但是,本部分不适用于以电子形式传输的纸质记录。

(b) This part applies to records in electronic form that are created, modified, maintained, archived, retrieved, or transmitted, under any records requirements set forth in agency regulations. This part also applies to electronic records submitted to the agency under requirements of the Federal Food, Drug, and Cosmetic Act and the Public Health Service Act, even if such records are not specifically identified in agency regulations. However, this part does not apply to paper records that are, or have been, transmitted by electronic means.(c) 当电子签名和相关的电子记录符合本部分要求时,机构应认可电子签名等同于手写签名、缩写和其他法规中要求常用的签名形式,除非是法规自1997年8月20日以来特别强调的情况。

ATTACKS ON SECURITY PROTOCOLSUSING AVISPAVaishakhi SM. Tech Computer EngineeringKSV University, Near Kh-5, Sector 15Gandhinagar, GujaratProf.Radhika MDept of Computer EngineeringKSV University, Near Kh-5, Sector 15Gandhinagar, GujaratAbstractNow a days, Use of Internet is increased day by day. Both Technical and non technical people use the Internet very frequently but only technical user can understand the aspects working behind Internet. There are different types of protocols working behind various parameters of Internet such as security, accessibility, availability etc. Among all these parameters, Security is the most important for each and every internet user. There are many security protocols are developed in networking and also there are many tools for verifying these types of protocols. All these protocols should be analyzed through the verification tool. AVISPA is a protocol analysis tool for automated validation of Internet security protocol and applications. In this paper, we will discuss about Avispa library which describes the security properties, their classification, the attack found and the actual HLPSL specification of security protocols.Keywords- HLPSL,OFMC,SATMC,TA4SP,MASQURADE,DOSI.I NTRODUCTIONAs the Usage of Internet Increases, its security accessibility and availability must be increased. All users are concerns about their confidentiality and security while sending the data through the Internet. We have many security protocols for improve the security. But Are these protocols are technically verified? Are these protocols are working correctly? For answers of all these questions, there are some verification tools are developed. There are many tools like SPIN, Isabelle, FDR, Scyther, AVISPA for verification and validation of Internet security protocols. Among these, we will use the AVISPA research tool is more easy to use[1].The AVISPA tool provides the specific language called HLPSL (High Level Protocol Specification Language). Avispa tool has the library which includes different types of security protocols and its specifications. Avispa library contains around 79 security protocols from 33 groups[1]. It constitutes 384 security problems. Various standardization committees like IETF (Internet Engineering Task Force), W3C(World Wide Web Consortium) and IEEE(Institute of Electrical and Electronics Engineers)work on this tool. AVISPA library is the collection of specification of security which is characterized as IETF protocols, NON IETF protocols and E-Business protocols.Each protocol is describe in Alice-Bob notation. AVISPA library also describes the security properties, their classification and the attack found[2].AVISPA library also provides the short description of the included protocols. AVISPA tool is working using four types of Back Ends:(1)OFMC(On the Fly Model Checker) performs protocol falsification and bounded verification. It implements the symbolic techniques and support the algebraic properties of cryptographic operators.(2)CL-Atse(Constraint logic Based Attack Searcher)applies redundancy elimination techniques. It supports type flaw detection.(3)SATMC(SAT based Attack Searcher)builds proportional formula encoding a bounded unrolling of the transition relation by Intermediate format.(4)TA4SP(Tree Automata Based Protocol Analyser).It approximates the intruder knowledge by regular tree language.TA4SP can show whether a protocol is flawed or whether it is safe for any number of sessions[4]. We found some security attacks while analyzing the security protocols. All security attacks are discussed below:II. HLPSL SyntaxPROTOCOL Otway_Rees;IdentifiersA, B, S : User;Kas,Kbs, Kab: Symmetric_Key;M,Na,Nb,X : Number;KnowledgeA : B,S,Kas;B : S,Kbs;S : A,B,Kas,Kbs;Messages1. A -> B : M,A,B,{Na,M,A,B}Kas2. B -> S : M,A,B,{Na,M,A,B}Kas,{Nb,M,A,B}Kbs3. S -> B : M,{Na,Kab}Kas,{Nb,Kab}Kbs4. B -> A : M,{Na,Kab}Kas5. A -> B : {X}KabSession_instances[ A:a; B:b; S:s; Kas:kas; Kbs:kbs ];Intruder Divert, Impersonate;Intruder_knowledge a;Goal secrecy_of X;A.Basic Roles[2]It is very easy to translate a protocol into HLPSL if it is written in Alice-Bob notation. A-B notation for particular protocol is as following:A ->S: {Kab}_KbsS ->B:{Kab}_KbsIn this protocol ,A want to set up a secure session with B by exchanging a new session key with the help of trusted server. Here Kas is the shared key between A and S.A starts by generating a new session key which is intended for B.She encrypts this key with Kas and send it to S.Then S decrypts message ,re encrypts kab with Kbs.After this exchange A and B share the new session key and can use it to communicate with one another.B.Transitions[2]The transition part contains set of transitions.Each represents the receipt of message and the sending of a reply message.The example of simple transition is as follows:Step 1: State = 0 /\ RCV({Kab’}_Kas) =|>State’:=2/\SND({kab’}_Kbs)Here, Step 1 is the name of the transition. This step 1 specifies that if the value of state is equal to zero and a message is received on channel RCV which contain some value Kab’ encrypted with Kas, then a transition files which sets the new value of state to 2 and sends the same value kab’ on channel SND, but this time encrypted with Kbs.posed Roles[2]Role session(A,B,S : agent,Kas, Kbs : symmetric key ) def=Local SA, RA, SB,RB,SS,RS :channel (dy)CompositionAlice (A,B,S, Kas, SA,RA)/\bob (B, A, S, Kbs, SB, RB)/\server (S, A, B, Kas, Kbs, SS, RS)end roleComposed roles contains one or more basic roles and executes together in parallel. It has no transition section. The /\ operator indicates that the roles should execute in parallel[4]. Here the type declaration channel (dy)stands for the Dolev-Yao intruder model[2]. The intruder has full control over the network, such that all messages sent by agents will go to the intruder. All the agents can send and receive on whichever channel they want; the intended connection between certain channel variables is irrelevant because the intruder is the network.We create the HLPSL code of security protocol using above syntax and verify those through the AVISPA tool [2]. Here we found some protocols with attack and some protocols without attacks. All the verified security protocol list are as below (figure 1):III. Security AttacksAs we show in the table that Internet security protocols may suffer from several types of attacks like flaw, replay, Man in the middle, masquerade, DOS etc. In Dos attack ,the attacker may target your computer and its network connection and the sites you are trying to use, an attacker may able to prevent you for accessing email, online accounts, websites etc[6].A flaw attack is an attack where a principal accepts a message component of one type as a message of another[7]. A replay attack Masqurade is the type of attack where the attackers pretends to be an authorized user of a system in order to gain access the private information of the system. Man in the middle is the attack where a user gets between the sender and receiver of information and sniffs any information being sent[6]. Man in the middle attack is sometimes known as Brigade attacks. Evasdropping attack is the act of secretly listening to the private conversation of others without their concent. It is a network layer attack. The attack could be done using tools called network sniffers [7]. These types of attacks can be removed by making some changes in the sessions and transactions.occurs when an attacker copies a stream of messages between two parties and replays the stream to one or more of the parties.IV.CONCLUSIONHere we have studied about the protocols using the AVISPA verification tool and we found different types of attacks on different Internet security protocols. All different types of goals are specified for different protocols.The attacks are interrupting to achieve their goals.We have to remove those attacks to make the protocols working properly.Figure 1: Attacks on security protocolsV.FUTURE WORKIn this paper we have defined the AVISPA library for Internet security protocols and survey the protocols and categorized the protocol with attacks and protocols without attacks. In the next stage we will apply some modifications in HLPSL language code on the security protocol which have the man in the middle attack using the techniques and we will try our best to remove the particular attack.VI.REFERENCES[1] Information Society Technologies, Automated Validation of Internet Security Protocols and Applications (version 1.1) user manual bythe AVISPA team,IST-2001-39252[2] Information Society Technologies, High Level Protocol Specification language Tutorial, A beginners Guide to Modelling and AnalyzingInternet Security Protocols,IST-2001-39252[3] Laura Takkinen,Helsinki University of Technology,TKKT-110.7290 Research Seminar on Network security[4] Daojing He,Chun Chen,Maode Ma,Sammy chan,International Journal of Communication Systems DOI:10.1002/Dac.1355 [5] Luca Vigano,Information Security Group,Electronic Theoretical Computer Science 155(2006)61-86 [6] U.Oktay and O.K.Sahingoz,6th [7] James Heather,Gavin Lowe,Steve Schneider,Programming Research group Oxford UniversityInternational Information security and cryptology conference,Turkey。

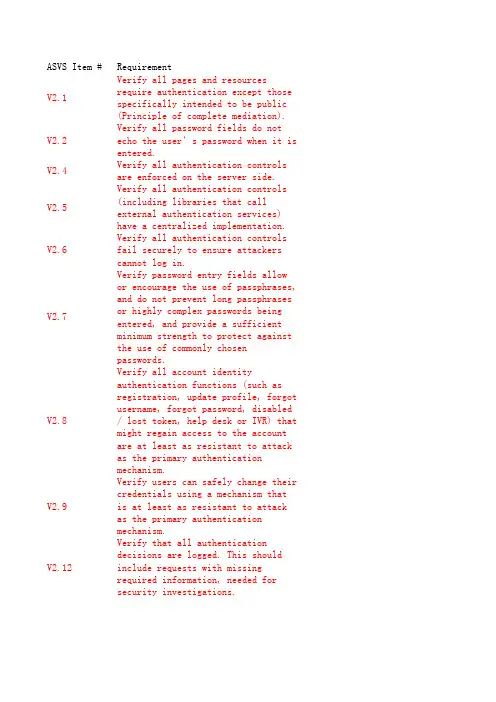

ASVS Item #RequirementV2.1Verify all pages and resources require authentication except those specifically intended to be public (Principle of complete mediation).V2.2Verify all password fields do not echo the user’s password when it is entered.V2.4Verify all authentication controls are enforced on the server side.V2.5Verify all authentication controls (including libraries that call external authentication services) have a centralized implementation.V2.6Verify all authentication controls fail securely to ensure attackers cannot log in.V2.7Verify password entry fields allow or encourage the use of passphrases, and do not prevent long passphrases or highly complex passwords being entered, and provide a sufficient minimum strength to protect against the use of commonly chosen passwords.V2.8Verify all account identity authentication functions (such as registration, update profile, forgot username, forgot password, disabled / lost token, help desk or IVR) that might regain access to the account are at least as resistant to attack as the primary authentication mechanism.V2.9Verify users can safely change their credentials using a mechanism that is at least as resistant to attack as the primary authentication mechanism.V2.12Verify that all authentication decisions are logged. This should include requests with missing required information, needed for security investigations.V2.13salted using a salt that is unique to that account (e.g., internal user ID, account creation) and use bcrypt, scrypt or PBKDF2 before storing the password.V2.16Verify that credentials, and all other identity information handled by the application(s), do not traverse unencrypted or weakly encrypted links.V2.17Verify that the forgotten password function and other recovery paths do not reveal the current password and that the new password is not sent in clear text to the user.V2.18Verify that username enumeration is not possible via login, password reset, or forgot account functionality.V2.19Verify there are no default passwords in use for the application framework or any components used by the application (such as “admin/password”).V2.20Verify that a resource governor is in place to protect against vertical (a single account tested against all possible passwords) and horizontal brute forcing (all accounts tested with the same password e.g. “Password1”). A correct credential entry should incur no delay. Both these governor mechanisms should be active simultaneously to protect against diagonal and distributed attacks.V2.21Verify that all authentication credentials for accessing services external to the application are encrypted and stored in a protected location (not in source code).V2.22other recovery paths send a link including a time-limited activation token rather than the password itself. Additional authentication based on soft-tokens (e.g. SMS token, native mobile applications, etc.) can be required as well before the link is sent over.V2.23Verify that forgot password functionality does not lock or otherwise disable the account until after the user has successfully changed their password. This is to prevent valid users from being locked out.V2.24Verify that there are no shared knowledge questions/answers (so called "secret" questions and answers).V2.25Verify that the system can be configured to disallow the use of a configurable number of previous passwords.V2.26Verify re-authentication, step up or adaptive authentication, SMS or other two factor authentication, or transaction signing is required before any application-specific sensitive operations are permitted as per the risk profile of the application.V3.1Verify that the framework’s default session management control implementation is used by the application.V3.2Verify that sessions are invalidated when the user logs out.V3.3Verify that sessions timeout after a specified period of inactivity.V3.4Verify that sessions timeout after an administratively-configurable maximum time period regardless of activity (an absolute timeout).V3.5Verify that all pages that require authentication to access them have logout links.V3.6Verify that the session id is never disclosed other than in cookie headers; particularly in URLs, error messages, or logs. This includes verifying that the application does not support URL rewriting of session cookies.V3.7Verify that the session id is changed on login to prevent session fixation.V3.8Verify that the session id is changed upon re-authentication.V3.10Verify that only session ids generated by the application framework are recognized as valid by the application.V3.11Verify that authenticated session tokens are sufficiently long and random to withstand session guessing attacks.V3.12Verify that authenticated session tokens using cookies have their path set to an appropriately restrictive value for that site. The domain cookie attribute restriction should not be set unless for a business requirement, such as single sign on.V3.14Verify that authenticated session tokens using cookies sent via HTTP, are protected by the use of "HttpOnly".V3.15Verify that authenticated session tokens using cookies are protected with the "secure" attribute and a strict transport security header (such as Strict-Transport-Security: max-age=60000; includeSubDomains) are present.V3.16Verify that the application does not permit duplicate concurrent user sessions, originating from different machines.V4.1Verify that users can only access secured functions or services for which they possess specific authorization.V4.2Verify that users can only access secured URLs for which they possess specific authorization.V4.3Verify that users can only access secured data files for which they possess specific authorization.V4.4Verify that direct object references are protected, such that only authorized objects or data are accessible to each user (for example, protect against direct object reference tampering).V4.5Verify that directory browsing is disabled unless deliberately desired.V4.8Verify that access controls fail securely.V4.9Verify that the same access control rules implied by the presentation layer are enforced on the server side for that user role, such that controls and parameters cannot be re-enabled or re-added from higher privilege users.V4.10Verify that all user and data attributes and policy information used by access controls cannot be manipulated by end users unless specifically authorized.V4.11Verify that all access controls are enforced on the server side.V4.12Verify that there is a centralized mechanism (including libraries that call external authorization services) for protecting access to each type of protected resource.V4.14Verify that all access control decisions are be logged and all failed decisions are logged.V4.16Verify that the application or framework generates strong random anti-CSRF tokens unique to the user as part of all high value transactions or accessing sensitive data, and that the application verifies the presence of this token with the proper value for the current user when processing these requests.V4.17Aggregate access control protection – verify the system can protect against aggregate or continuous access of secured functions, resources, or data. For example, possibly by the use of a resource governor to limit the number of edits per hour or to prevent the entire database from being scraped by an individual user.V5.1Verify that the runtime environment is not susceptible to buffer overflows, or that security controls prevent buffer overflows.V5.3Verify that all input validation failures result in input rejection.V5.4Verify that a character set, such as UTF-8, is specified for all sources of input.V5.5Verify that all input validation or encoding routines are performed and enforced on the server side.V5.6Verify that a single input validation control is used by the application for each type of data that is accepted.V5.7Verify that all input validation failures are logged.V5.8Verify that all input data is canonicalized for all downstream decoders or interpreters prior to validation.V5.10Verify that the runtime environment is not susceptible to SQL Injection, or that security controls prevent SQL Injection.V5.11Verify that the runtime environment is not susceptible to LDAP Injection, or that security controls prevent LDAP Injection.V5.12Verify that the runtime environment is not susceptible to OS Command Injection, or that security controls prevent OS Command Injection.V5.13Verify that the runtime environment is not susceptible to XML External Entity attacks or that security controls prevents XML External Entity attacks.V5.14Verify that the runtime environment is not susceptible to XML Injections or that security controls prevents XML Injections.V5.16Verify that all untrusted data that are output to HTML (including HTML elements, HTML attributes, JavaScript data values, CSS blocks, and URI attributes) are properly escaped for the applicable context.V5.17If the application framework allows automatic mass parameter assignment (also called automatic variable binding) from the inbound request to a model, verify that security sensitive fields such as “accountBalance”, “role” or “password” are protected from malicious automatic binding.V5.18Verify that the application has defenses against HTTP parameter pollution attacks, particularly if the application framework makes no distinction about the source of request parameters (GET, POST, cookies, headers, environment, etc.)V5.19Verify that for each type of output encoding/escaping performed by the application, there is a single security control for that type of output for the intended destination.V7.1Verify that all cryptographic functions used to protect secrets from the application user are implemented server side.V7.2Verify that all cryptographic modules fail securely.V7.3Verify that access to any master secret(s) is protected from unauthorized access (A master secret is an application credential stored as plaintext on disk that is used to protect access to security configuration information).V7.6Verify that all random numbers, random file names, random GUIDs, and random strings are generated using the cryptographic module’s approved random number generator when these random values are intended to be unguessable by an attacker.V7.7Verify that cryptographic modules used by the application have been validated against FIPS 140-2 or an equivalent standard.V7.8Verify that cryptographic modules operate in their approved mode according to their published security policies.V7.9Verify that there is an explicit policy for how cryptographic keys are managed (e.g., generated, distributed, revoked, expired). Verify that this policy is properly enforced.V8.1Verify that the application does not output error messages or stack traces containing sensitive datathat could assist an attacker, including session id and personal information.V8.2Verify that all error handling is performed on trusted devicesV8.3Verify that all logging controls are implemented on the server.V8.4Verify that error handling logic in security controls denies access by default.V8.5Verify security logging controls provide the ability to log both success and failure events that are identified as security-relevant.V8.6Verify that each log event includes: a timestamp from a reliablesource,severity level of the event, an indication that this is asecurity relevant event (if mixed with other logs), the identity of the user that caused the event (if there is a user associated with the event), the source IP address of the request associated with the event, whether the event succeeded or failed, and a description of the event.V8.7Verify that all events that include untrusted data will not execute as code in the intended log viewing software.V8.8Verify that security logs are protected from unauthorized access and modification.V8.9Verify that there is a single application-level logging implementation that isused by the software.V8.10Verify that the application does not log application-specific sensitive data that could assist an attacker, including user’s sessionidentifiers and personal orsensitive information. The length and existence of sensitive data can be logged.V8.11Verify that a log analysis tool is available which allows the analyst to search for log events based on combinations of search criteria across all fields in the log record format supported by this system.V8.13Verify that all non-printable symbols and field separators are properly encoded in log entries, to prevent log injection.V8.14Verify that log fields from trusted and untrusted sources are distinguishable in log entries.V8.15Verify that logging is performed before executing the transaction. If logging was unsuccessful (e.g. disk full, insufficient permissions) the application fails safe. This is for when integrity and non-repudiation are a must.V9.1Verify that all forms containing sensitive information have disabled client side caching, including autocomplete features.V9.2Verify that the list of sensitive data processed by this application is identified, and that there is an explicit policy for how access to this data must be controlled, and when this data must be encrypted (both at rest and in transit). Verify that this policy is properly enforced.V9.3Verify that all sensitive data is sent to the server in the HTTP message body (i.e., URL parameters are never used to send sensitive data).V9.4Verify that all cached or temporary copies of sensitive data sent to the client are protected from unauthorized access orpurged/invalidated after the authorized user accesses the sensitive data (e.g., the proper no-cache and no-store Cache-Control headers are set).V9.5Verify that all cached or temporary copies of sensitive data stored on the server are protected from unauthorized access orpurged/invalidated after the authorized user accesses the sensitive data.V9.6Verify that there is a method to remove each type of sensitive data from the application at the end of its required retention period.V9.7Verify the application minimizes the number of parameters sent to untrusted systems, such as hidden fields, Ajax variables, cookies and header values.V9.8Verify the application has theability to detect and alert on abnormal numbers of requests for information or processing high value transactions for that user role, such as screen scraping, automated use of web service extraction, or data loss prevention. For example, the average user should not be able to access more than 5 records per hour or 30 records per day, or add 10 friends to a social network per minute.V10.1Verify that a path can be built from a trusted CA to each Transport Layer Security (TLS) server certificate, and that each server certificate is valid.V10.2Verify that failed TLS connections do not fall back to an insecure HTTP connection.V10.3Verify that TLS is used for all connections (including both external and backend connections) that are authenticated or that involve sensitive data or functions.V10.4Verify that backend TLS connection failures are logged.V10.5Verify that certificate paths are built and verified for all client certificates using configured trust anchors and revocation information.V10.6Verify that all connections to external systems that involve sensitive information or functions are authenticated.V10.7Verify that all connections to external systems that involve sensitive information or functions use an account that has been set up to have the minimum privileges necessary for the application to function properly.V10.8Verify that there is a single standard TLS implementation that is used by the application that is configured to operate in an approved mode of operation (See/groups/STM/cmvp /documents/fips140-2/FIPS1402IG.pdf ).V10.9Verify that specific character encodings are defined for all connections (e.g., UTF-8).V11.2Verify that the application accepts only a defined set of HTTP request methods, such as GET and POST and unused methods are explicitly blocked.V11.3Verify that every HTTP response contains a content type header specifying a safe character set (e.g., UTF-8).V11.6Verify that HTTP headers in both requests and responses contain only printable ASCII characters.V11.8Verify that HTTP headers and / or other mechanisms for older browsers have been included to protect against clickjacking attacks.V11.9Verify that HTTP headers added by a frontend (such as X-Real-IP), and used by the application, cannot be spoofed by the end user.V11.10Verify that the HTTP header, X-Frame-Options is in use for sites where content should not be viewed in a 3rd-party X-Frame. A common middle ground is to send SAMEORIGIN, meaning only websites of the same origin may frame it.V11.12Verify that the HTTP headers do not expose detailed version information of system components.V13.1Verify that no malicious code is in any code that was either developed or modified in order to create the application.V13.2Verify that the integrity of interpreted code, libraries, executables, and configuration files is verified using checksums or hashes.V13.3Verify that all code implementing or using authentication controls is not affected by any malicious code.V13.4Verify that all code implementing or using session management controls is not affected by any malicious code.V13.5Verify that all code implementing or using access controls is not affected by any malicious code.V13.6Verify that all input validation controls are not affected by any malicious code.V13.7Verify that all code implementing or using output validation controls is not affected by any malicious code.V13.8Verify that all code supporting or using a cryptographic module is not affected by any malicious code.V13.9Verify that all code implementing or using error handling and logging controls is not affected by any malicious code.V13.10Verify all malicious activity is adequately sandboxed.V13.11Verify that sensitive data is rapidly sanitized from memory as soon as it is no longer needed and handled in accordance to functions and techniques supported by the framework/library/operating system.V15.1Verify the application processes or verifies all high value business logic flows in a trusted environment, such as on a protected and monitored server.V15.2allow spoofed high value transactions, such as allowing Attacker User A to process a transaction as Victim User B by tampering with or replaying session, transaction state, transaction or user IDs.V15.3Verify the application does not allow high value business logic parameters to be tampered with, such as (but not limited to): price, interest, discounts, PII, balances, stock IDs, etc.V15.4Verify the application has defensive measures to protect against repudiation attacks, such as verifiable and protected transaction logs, audit trails or system logs, and in highest value systems real time monitoring of user activities and transactions for anomalies.V15.5Verify the application protects against information disclosure attacks, such as direct object reference, tampering, session brute force or other attacks.V15.6Verify the application hassufficient detection and governor controls to protect against brute force (such as continuously using a particular function) or denial of service attacks.V15.7Verify the application hassufficient access controls to prevent elevation of privilege attacks, such as allowing anonymous users from accessing secured data or secured functions, or allowing users to access each other’s details or using privileged functions.V15.8process business logic flows in sequential step order, with all steps being processed in realistic human time, and not process out of order, skipped steps, process steps from another user, or too quickly submitted transactions.V15.9Verify the application hasadditional authorization (such as step up or adaptive authentication) for lower value systems, and / or segregation of duties for high value applications to enforce anti-fraud controls as per the risk of application and past fraud.V15.10Verify the application has business limits and enforces them in atrusted location (as on a protected server) on a per user, per day or daily basis, with configurable alerting and automated reactions to automated or unusual attack. Examples include (but not limited to): ensuring new SIM users don’t exceed $10 per day for a new phone account, a forum allowing more than 100 new users per day or preventing posts or private messages until the account has been verified, a health system should not allow a single doctor to access more patient records than they can reasonably treat in a day, or a small business finance system allowing more than 20 invoice payments or $1000 per day across all users. In all cases, the business limits and totals should be reasonable for the business concerned. The only unreasonable outcome is if there are no business limits, alerting or enforcement.V16.1Verify that URL redirects and forwards do not include unvalidated data.V16.2Verify that file names and path data obtained from untrusted sources is canonicalized to eliminate path traversal attacks.V16.3Verify that files obtained from untrusted sources are scanned by antivirus scanners to prevent upload of known malicious content.V16.4Verify that parameters obtained from untrusted sources are not used in manipulating filenames, pathnames or any file system object without first being canonicalized and input validated to prevent local file inclusion attacks.V16.5Verify that parameters obtained from untrusted sources are canonicalized, input validated, and output encoded to prevent remote file inclusion attacks, particularly where input could be executed, such as header, source, or template inclusionV16.6Verify remote IFRAMEs and HTML5 cross-domain resource sharing does not allow inclusion of arbitrary remote content.V16.7Verify that files obtained from untrusted sources are stored outside the webroot.V16.8Verify that web or application server is configured by default to deny access to remote resources or systems outside the web or application server.V16.9Verify the application code does not execute uploaded data obtained from untrusted sources.V16.10Verify if Flash, Silverlight or other rich internet application (RIA) cross domain resource sharing configuration is configured to prevent unauthenticated or unauthorized remote access.V17.1Verify that the client validates SSL certificatesV17.2Verify that unique device ID (UDID) values are not used as security controls.V17.3Verify that the mobile app does not store sensitive data onto shared resources on the device (e.g. SD card or shared folders)V17.4Verify that sensitive data is not stored in SQLite database on the device.V17.5Verify that secret keys or passwords are not hard-coded in the executable.V17.6Verify that the mobile app prevents leaking of sensitive data via auto-snapshot feature of iOS.V17.7Verify that the app cannot be run on a jailbroken or rooted device.V17.8Verify that the session timeout is of a reasonable value.V17.9Verify the permissions being requested as well as the resources that it is authorized to access (i.e. AndroidManifest.xml, iOS Entitlements) .V17.10Verify that crash logs do not contain sensitive data.V17.11Verify that the application binary has been obfuscated.V17.12Verify that all test data has been removed from the app container (.ipa, .apk, .bar).V17.13Verify that the application does not log sensitive data to the system log or filesystem.V17.14Verify that the application does not enable autocomplete for sensitive text input fields, such as passwords, personal information or credit cards.V17.15Verify that the mobile app implements certificate pinning to prevent the proxying of app traffic.V17.16Verify no misconfigurations are present in the configuration files (Debugging flags set, world readable/writable permissions) and that, by default, configuration settings are set to theirsafest/most secure value.V17.17Verify any 3rd-party libraries in use are up to date, contain no known vulnerabilities.V17.18Verify that web data, such as HTTPS traffic, is not cached.V17.19Verify that the query string is not used for sensitive data. Instead, a POST request via SSL should be used with a CSRF token.V17.20Verify that, if applicable, any personal account numbers are truncated prior to storing on the device.V17.21Verify that the application makes use of Address Space Layout Randomization (ASLR).V17.22Verify that data logged via the keyboard (iOS) does not contain credentials, financial information or other sensitive data.V17.23If an Android app, verify that the app does not create files with permissions of MODE_WORLD_READABLE or MODE_WORLD_WRITABLEV17.24Verify that sensitive data is stored in a cryptographically secure manner (even when stored in the iOS keychain).V17.25Verify that anti-debugging and reverse engineering mechanisms are implemented in the app.V17.26Verify that the app does not export sensitive activities, intents, content providers etc. on Android.V17.27Verify that mutable structures have been used for sensitive strings such as account numbers and are overwritten when not used. (Mitigate damage from memory analysis attacks).V17.28Verify that any exposed intents, content providers and broadcast receivers perform full data validation on input (Android).需求Level 1Level 2验证所有页面和资源要求除了那些专门旨在成为公共的Y Y(完整的调解原则)认证。

ca证书身份认证流程A digital certificate, also known as a CA certificate, is a crucial component of the process of verifying the identity of users online. It is essentially an electronic document issued by a trusted third party, the certificate authority (CA), which vouches for the authenticity of the credentials presented by an individual or an organization. This process plays a vital role in ensuring the security and integrity of online transactions, communications, and data exchanges.数字证书,也称为CA证书,是验证用户在线身份的过程中至关重要的组成部分。

它本质上是由受信任的第三方,即证书颁发机构(CA)发出的电子文档,证实个人或组织提出的凭证的真实性。

这个过程在确保在线交易、通信和数据交流的安全性和完整性方面起着至关重要的作用。

The process of CA certificate identity verification typically involves several steps. First, the individual or organization requesting the certificate must generate a pair of cryptographic keys, consisting of a public key for encryption and a private key for decryption. These keys are used to create a digital signature, which serves as a unique identifier for the entity. The certificate authority then verifies theidentity of the requester through various means, such as validating official documents, conducting background checks, and verifying the authenticity of the cryptographic keys.CA证书身份验证的过程通常涉及几个步骤。

云计算外文翻译参考文献(文档含中英文对照即英文原文和中文翻译)原文:Technical Issues of Forensic Investigations in Cloud Computing EnvironmentsDominik BirkRuhr-University BochumHorst Goertz Institute for IT SecurityBochum, GermanyRuhr-University BochumHorst Goertz Institute for IT SecurityBochum, GermanyAbstract—Cloud Computing is arguably one of the most discussedinformation technologies today. It presents many promising technological and economical opportunities. However, many customers remain reluctant to move their business IT infrastructure completely to the cloud. One of their main concerns is Cloud Security and the threat of the unknown. Cloud Service Providers(CSP) encourage this perception by not letting their customers see what is behind their virtual curtain. A seldomly discussed, but in this regard highly relevant open issue is the ability to perform digital investigations. This continues to fuel insecurity on the sides of both providers and customers. Cloud Forensics constitutes a new and disruptive challenge for investigators. Due to the decentralized nature of data processing in the cloud, traditional approaches to evidence collection and recovery are no longer practical. This paper focuses on the technical aspects of digital forensics in distributed cloud environments. We contribute by assessing whether it is possible for the customer of cloud computing services to perform a traditional digital investigation from a technical point of view. Furthermore we discuss possible solutions and possible new methodologies helping customers to perform such investigations.I. INTRODUCTIONAlthough the cloud might appear attractive to small as well as to large companies, it does not come along without its own unique problems. Outsourcing sensitive corporate data into the cloud raises concerns regarding the privacy and security of data. Security policies, companies main pillar concerning security, cannot be easily deployed into distributed, virtualized cloud environments. This situation is further complicated by the unknown physical location of the companie’s assets. Normally,if a security incident occurs, the corporate security team wants to be able to perform their own investigation without dependency on third parties. In the cloud, this is not possible anymore: The CSP obtains all the power over the environmentand thus controls the sources of evidence. In the best case, a trusted third party acts as a trustee and guarantees for the trustworthiness of the CSP. Furthermore, the implementation of the technical architecture and circumstances within cloud computing environments bias the way an investigation may be processed. In detail, evidence data has to be interpreted by an investigator in a We would like to thank the reviewers for the helpful comments and Dennis Heinson (Center for Advanced Security Research Darmstadt - CASED) for the profound discussions regarding the legal aspects of cloud forensics. proper manner which is hardly be possible due to the lackof circumstantial information. For auditors, this situation does not change: Questions who accessed specific data and information cannot be answered by the customers, if no corresponding logs are available. With the increasing demand for using the power of the cloud for processing also sensible information and data, enterprises face the issue of Data and Process Provenance in the cloud [10]. Digital provenance, meaning meta-data that describes the ancestry or history of a digital object, is a crucial feature for forensic investigations. In combination with a suitable authentication scheme, it provides information about who created and who modified what kind of data in the cloud. These are crucial aspects for digital investigations in distributed environments such as the cloud. Unfortunately, the aspects of forensic investigations in distributed environment have so far been mostly neglected by the research community. Current discussion centers mostly around security, privacy and data protection issues [35], [9], [12]. The impact of forensic investigations on cloud environments was little noticed albeit mentioned by the authors of [1] in 2009: ”[...] to our knowledge, no research has been published on how cloud computing environments affect digital artifacts,and on acquisition logistics and legal issues related to cloud computing env ironments.” This statement is also confirmed by other authors [34], [36], [40] stressing that further research on incident handling, evidence tracking and accountability in cloud environments has to be done. At the same time, massive investments are being made in cloud technology. Combined with the fact that information technology increasingly transcendents peoples’ private and professional life, thus mirroring more and more of peoples’actions, it becomes apparent that evidence gathered from cloud environments will be of high significance to litigation or criminal proceedings in the future. Within this work, we focus the notion of cloud forensics by addressing the technical issues of forensics in all three major cloud service models and consider cross-disciplinary aspects. Moreover, we address the usability of various sources of evidence for investigative purposes and propose potential solutions to the issues from a practical standpoint. This work should be considered as a surveying discussion of an almost unexplored research area. The paper is organized as follows: We discuss the related work and the fundamental technical background information of digital forensics, cloud computing and the fault model in section II and III. In section IV, we focus on the technical issues of cloud forensics and discuss the potential sources and nature of digital evidence as well as investigations in XaaS environments including thecross-disciplinary aspects. We conclude in section V.II. RELATED WORKVarious works have been published in the field of cloud security and privacy [9], [35], [30] focussing on aspects for protecting data in multi-tenant, virtualized environments. Desired security characteristics for current cloud infrastructures mainly revolve around isolation of multi-tenant platforms [12], security of hypervisors in order to protect virtualized guest systems and secure network infrastructures [32]. Albeit digital provenance, describing the ancestry of digital objects, still remains a challenging issue for cloud environments, several works have already been published in this field [8], [10] contributing to the issues of cloud forensis. Within this context, cryptographic proofs for verifying data integrity mainly in cloud storage offers have been proposed,yet lacking of practical implementations [24], [37], [23]. Traditional computer forensics has already well researched methods for various fields of application [4], [5], [6], [11], [13]. Also the aspects of forensics in virtual systems have been addressed by several works [2], [3], [20] including the notionof virtual introspection [25]. In addition, the NIST already addressed Web Service Forensics [22] which has a huge impact on investigation processes in cloud computing environments. In contrast, the aspects of forensic investigations in cloud environments have mostly been neglected by both the industry and the research community. One of the first papers focusing on this topic was published by Wolthusen [40] after Bebee et al already introduced problems within cloud environments [1]. Wolthusen stressed that there is an inherent strong need for interdisciplinary work linking the requirements and concepts of evidence arising from the legal field to what can be feasibly reconstructed and inferred algorithmically or in an exploratory manner. In 2010, Grobauer et al [36] published a paper discussing the issues of incident response in cloud environments - unfortunately no specific issues and solutions of cloud forensics have been proposed which will be done within this work.III. TECHNICAL BACKGROUNDA. Traditional Digital ForensicsThe notion of Digital Forensics is widely known as the practice of identifying, extracting and considering evidence from digital media. Unfortunately, digital evidence is both fragile and volatile and therefore requires the attention of special personnel and methods in order to ensure that evidence data can be proper isolated and evaluated. Normally, the process of a digital investigation can be separated into three different steps each having its own specificpurpose:1) In the Securing Phase, the major intention is the preservation of evidence for analysis. The data has to be collected in a manner that maximizes its integrity. This is normally done by a bitwise copy of the original media. As can be imagined, this represents a huge problem in the field of cloud computing where you never know exactly where your data is and additionallydo not have access to any physical hardware. However, the snapshot technology, discussed in section IV-B3, provides a powerful tool to freeze system states and thus makes digital investigations, at least in IaaS scenarios, theoretically possible.2) We refer to the Analyzing Phase as the stage in which the data is sifted and combined. It is in this phase that the data from multiple systems or sources is pulled together to create as complete a picture and event reconstruction as possible. Especially in distributed system infrastructures, this means that bits and pieces of data are pulled together for deciphering the real story of what happened and for providing a deeper look into the data.3) Finally, at the end of the examination and analysis of the data, the results of the previous phases will be reprocessed in the Presentation Phase. The report, created in this phase, is a compilation of all the documentation and evidence from the analysis stage. The main intention of such a report is that it contains all results, it is complete and clear to understand. Apparently, the success of these three steps strongly depends on the first stage. If it is not possible to secure the complete set of evidence data, no exhaustive analysis will be possible. However, in real world scenarios often only a subset of the evidence data can be secured by the investigator. In addition, an important definition in the general context of forensics is the notion of a Chain of Custody. This chain clarifies how and where evidence is stored and who takes possession of it. Especially for cases which are brought to court it is crucial that the chain of custody is preserved.B. Cloud ComputingAccording to the NIST [16], cloud computing is a model for enabling convenient, on-demand network access to a shared pool of configurable computing resources (e.g., networks, servers, storage, applications and services) that can be rapidly provisioned and released with minimal CSP interaction. The new raw definition of cloud computing brought several new characteristics such as multi-tenancy, elasticity, pay-as-you-go and reliability. Within this work, the following three models are used: In the Infrastructure asa Service (IaaS) model, the customer is using the virtual machine provided by the CSP for installing his own system on it. The system can be used like any other physical computer with a few limitations. However, the additive customer power over the system comes along with additional security obligations. Platform as a Service (PaaS) offerings provide the capability to deploy application packages created using the virtual development environment supported by the CSP. For the efficiency of software development process this service model can be propellent. In the Software as a Service (SaaS) model, the customer makes use of a service run by the CSP on a cloud infrastructure. In most of the cases this service can be accessed through an API for a thin client interface such as a web browser. Closed-source public SaaS offers such as Amazon S3 and GoogleMail can only be used in the public deployment model leading to further issues concerning security, privacy and the gathering of suitable evidences. Furthermore, two main deployment models, private and public cloud have to be distinguished. Common public clouds are made available to the general public. The corresponding infrastructure is owned by one organization acting as a CSP and offering services to its customers. In contrast, the private cloud is exclusively operated for an organization but may not provide the scalability and agility of public offers. The additional notions of community and hybrid cloud are not exclusively covered within this work. However, independently from the specific model used, the movement of applications and data to the cloud comes along with limited control for the customer about the application itself, the data pushed into the applications and also about the underlying technical infrastructure.C. Fault ModelBe it an account for a SaaS application, a development environment (PaaS) or a virtual image of an IaaS environment, systems in the cloud can be affected by inconsistencies. Hence, for both customer and CSP it is crucial to have the ability to assign faults to the causing party, even in the presence of Byzantine behavior [33]. Generally, inconsistencies can be caused by the following two reasons:1) Maliciously Intended FaultsInternal or external adversaries with specific malicious intentions can cause faults on cloud instances or applications. Economic rivals as well as former employees can be the reason for these faults and state a constant threat to customers and CSP. In this model, also a malicious CSP is included albeit he isassumed to be rare in real world scenarios. Additionally, from the technical point of view, the movement of computing power to a virtualized, multi-tenant environment can pose further threads and risks to the systems. One reason for this is that if a single system or service in the cloud is compromised, all other guest systems and even the host system are at risk. Hence, besides the need for further security measures, precautions for potential forensic investigations have to be taken into consideration.2) Unintentional FaultsInconsistencies in technical systems or processes in the cloud do not have implicitly to be caused by malicious intent. Internal communication errors or human failures can lead to issues in the services offered to the costumer(i.e. loss or modification of data). Although these failures are not caused intentionally, both the CSP and the customer have a strong intention to discover the reasons and deploy corresponding fixes.IV. TECHNICAL ISSUESDigital investigations are about control of forensic evidence data. From the technical standpoint, this data can be available in three different states: at rest, in motion or in execution. Data at rest is represented by allocated disk space. Whether the data is stored in a database or in a specific file format, it allocates disk space. Furthermore, if a file is deleted, the disk space is de-allocated for the operating system but the data is still accessible since the disk space has not been re-allocated and overwritten. This fact is often exploited by investigators which explore these de-allocated disk space on harddisks. In case the data is in motion, data is transferred from one entity to another e.g. a typical file transfer over a network can be seen as a data in motion scenario. Several encapsulated protocols contain the data each leaving specific traces on systems and network devices which can in return be used by investigators. Data can be loaded into memory and executed as a process. In this case, the data is neither at rest or in motion but in execution. On the executing system, process information, machine instruction and allocated/de-allocated data can be analyzed by creating a snapshot of the current system state. In the following sections, we point out the potential sources for evidential data in cloud environments and discuss the technical issues of digital investigations in XaaS environmentsas well as suggest several solutions to these problems.A. Sources and Nature of EvidenceConcerning the technical aspects of forensic investigations, the amount of potential evidence available to the investigator strongly diverges between thedifferent cloud service and deployment models. The virtual machine (VM), hosting in most of the cases the server application, provides several pieces of information that could be used by investigators. On the network level, network components can provide information about possible communication channels between different parties involved. The browser on the client, acting often as the user agent for communicating with the cloud, also contains a lot of information that could be used as evidence in a forensic investigation. Independently from the used model, the following three components could act as sources for potential evidential data.1) Virtual Cloud Instance: The VM within the cloud, where i.e. data is stored or processes are handled, contains potential evidence [2], [3]. In most of the cases, it is the place where an incident happened and hence provides a good starting point for a forensic investigation. The VM instance can be accessed by both, the CSP and the customer who is running the instance. Furthermore, virtual introspection techniques [25] provide access to the runtime state of the VM via the hypervisor and snapshot technology supplies a powerful technique for the customer to freeze specific states of the VM. Therefore, virtual instances can be still running during analysis which leads to the case of live investigations [41] or can be turned off leading to static image analysis. In SaaS and PaaS scenarios, the ability to access the virtual instance for gathering evidential information is highly limited or simply not possible.2) Network Layer: Traditional network forensics is knownas the analysis of network traffic logs for tracing events that have occurred in the past. Since the different ISO/OSI network layers provide several information on protocols and communication between instances within as well as with instances outside the cloud [4], [5], [6], network forensics is theoretically also feasible in cloud environments. However in practice, ordinary CSP currently do not provide any log data from the network components used by the customer’s instances or applications. For instance, in case of a malware infection of an IaaS VM, it will be difficult for the investigator to get any form of routing information and network log datain general which is crucial for further investigative steps. This situation gets even more complicated in case of PaaS or SaaS. So again, the situation of gathering forensic evidence is strongly affected by the support the investigator receives from the customer and the CSP.3) Client System: On the system layer of the client, it completely depends on the used model (IaaS, PaaS, SaaS) if and where potential evidence could beextracted. In most of the scenarios, the user agent (e.g. the web browser) on the client system is the only application that communicates with the service in the cloud. This especially holds for SaaS applications which are used and controlled by the web browser. But also in IaaS scenarios, the administration interface is often controlled via the browser. Hence, in an exhaustive forensic investigation, the evidence data gathered from the browser environment [7] should not be omitted.a) Browser Forensics: Generally, the circumstances leading to an investigation have to be differentiated: In ordinary scenarios, the main goal of an investigation of the web browser is to determine if a user has been victim of a crime. In complex SaaS scenarios with high client-server interaction, this constitutes a difficult task. Additionally, customers strongly make use of third-party extensions [17] which can be abused for malicious purposes. Hence, the investigator might want to look for malicious extensions, searches performed, websites visited, files downloaded, information entered in forms or stored in local HTML5 stores, web-based email contents and persistent browser cookies for gathering potential evidence data. Within this context, it is inevitable to investigate the appearance of malicious JavaScript [18] leading to e.g. unintended AJAX requests and hence modified usage of administration interfaces. Generally, the web browser contains a lot of electronic evidence data that could be used to give an answer to both of the above questions - even if the private mode is switched on [19].B. Investigations in XaaS EnvironmentsTraditional digital forensic methodologies permit investigators to seize equipment and perform detailed analysis on the media and data recovered [11]. In a distributed infrastructure organization like the cloud computing environment, investigators are confronted with an entirely different situation. They have no longer the option of seizing physical data storage. Data and processes of the customer are dispensed over an undisclosed amount of virtual instances, applications and network elements. Hence, it is in question whether preliminary findings of the computer forensic community in the field of digital forensics apparently have to be revised and adapted to the new environment. Within this section, specific issues of investigations in SaaS, PaaS and IaaS environments will be discussed. In addition, cross-disciplinary issues which affect several environments uniformly, will be taken into consideration. We also suggest potential solutions to the mentioned problems.1) SaaS Environments: Especially in the SaaS model, the customer does notobtain any control of the underlying operating infrastructure such as network, servers, operating systems or the application that is used. This means that no deeper view into the system and its underlying infrastructure is provided to the customer. Only limited userspecific application configuration settings can be controlled contributing to the evidences which can be extracted fromthe client (see section IV-A3). In a lot of cases this urges the investigator to rely on high-level logs which are eventually provided by the CSP. Given the case that the CSP does not run any logging application, the customer has no opportunity to create any useful evidence through the installation of any toolkit or logging tool. These circumstances do not allow a valid forensic investigation and lead to the assumption that customers of SaaS offers do not have any chance to analyze potential incidences.a) Data Provenance: The notion of Digital Provenance is known as meta-data that describes the ancestry or history of digital objects. Secure provenance that records ownership and process history of data objects is vital to the success of data forensics in cloud environments, yet it is still a challenging issue today [8]. Albeit data provenance is of high significance also for IaaS and PaaS, it states a huge problem specifically for SaaS-based applications: Current global acting public SaaS CSP offer Single Sign-On (SSO) access control to the set of their services. Unfortunately in case of an account compromise, most of the CSP do not offer any possibility for the customer to figure out which data and information has been accessed by the adversary. For the victim, this situation can have tremendous impact: If sensitive data has been compromised, it is unclear which data has been leaked and which has not been accessed by the adversary. Additionally, data could be modified or deleted by an external adversary or even by the CSP e.g. due to storage reasons. The customer has no ability to proof otherwise. Secure provenance mechanisms for distributed environments can improve this situation but have not been practically implemented by CSP [10]. Suggested Solution: In private SaaS scenarios this situation is improved by the fact that the customer and the CSP are probably under the same authority. Hence, logging and provenance mechanisms could be implemented which contribute to potential investigations. Additionally, the exact location of the servers and the data is known at any time. Public SaaS CSP should offer additional interfaces for the purpose of compliance, forensics, operations and security matters to their customers. Through an API, the customers should have the ability to receive specific information suchas access, error and event logs that could improve their situation in case of aninvestigation. Furthermore, due to the limited ability of receiving forensic information from the server and proofing integrity of stored data in SaaS scenarios, the client has to contribute to this process. This could be achieved by implementing Proofs of Retrievability (POR) in which a verifier (client) is enabled to determine that a prover (server) possesses a file or data object and it can be retrieved unmodified [24]. Provable Data Possession (PDP) techniques [37] could be used to verify that an untrusted server possesses the original data without the need for the client to retrieve it. Although these cryptographic proofs have not been implemented by any CSP, the authors of [23] introduced a new data integrity verification mechanism for SaaS scenarios which could also be used for forensic purposes.2) PaaS Environments: One of the main advantages of the PaaS model is that the developed software application is under the control of the customer and except for some CSP, the source code of the application does not have to leave the local development environment. Given these circumstances, the customer obtains theoretically the power to dictate how the application interacts with other dependencies such as databases, storage entities etc. CSP normally claim this transfer is encrypted but this statement can hardly be verified by the customer. Since the customer has the ability to interact with the platform over a prepared API, system states and specific application logs can be extracted. However potential adversaries, which can compromise the application during runtime, should not be able to alter these log files afterwards. Suggested Solution:Depending on the runtime environment, logging mechanisms could be implemented which automatically sign and encrypt the log information before its transfer to a central logging server under the control of the customer. Additional signing and encrypting could prevent potential eavesdroppers from being able to view and alter log data information on the way to the logging server. Runtime compromise of an PaaS application by adversaries could be monitored by push-only mechanisms for log data presupposing that the needed information to detect such an attack are logged. Increasingly, CSP offering PaaS solutions give developers the ability to collect and store a variety of diagnostics data in a highly configurable way with the help of runtime feature sets [38].3) IaaS Environments: As expected, even virtual instances in the cloud get compromised by adversaries. Hence, the ability to determine how defenses in the virtual environment failed and to what extent the affected systems havebeen compromised is crucial not only for recovering from an incident. Also forensic investigations gain leverage from such information and contribute to resilience against future attacks on the systems. From the forensic point of view, IaaS instances do provide much more evidence data usable for potential forensics than PaaS and SaaS models do. This fact is caused throughthe ability of the customer to install and set up the image for forensic purposes before an incident occurs. Hence, as proposed for PaaS environments, log data and other forensic evidence information could be signed and encrypted before itis transferred to third-party hosts mitigating the chance that a maliciously motivated shutdown process destroys the volatile data. Although, IaaS environments provide plenty of potential evidence, it has to be emphasized that the customer VM is in the end still under the control of the CSP. He controls the hypervisor which is e.g. responsible for enforcing hardware boundaries and routing hardware requests among different VM. Hence, besides the security responsibilities of the hypervisor, he exerts tremendous control over how customer’s VM communicate with the hardware and theoretically can intervene executed processes on the hosted virtual instance through virtual introspection [25]. This could also affect encryption or signing processes executed on the VM and therefore leading to the leakage of the secret key. Although this risk can be disregarded in most of the cases, the impact on the security of high security environments is tremendous.a) Snapshot Analysis: Traditional forensics expect target machines to be powered down to collect an image (dead virtual instance). This situation completely changed with the advent of the snapshot technology which is supported by all popular hypervisors such as Xen, VMware ESX and Hyper-V.A snapshot, also referred to as the forensic image of a VM, providesa powerful tool with which a virtual instance can be clonedby one click including also the running system’s mem ory. Due to the invention of the snapshot technology, systems hosting crucial business processes do not have to be powered down for forensic investigation purposes. The investigator simply creates and loads a snapshot of the target VM for analysis(live virtual instance). This behavior is especially important for scenarios in which a downtime of a system is not feasible or practical due to existing SLA. However the information whether the machine is running or has been properly powered down is crucial [3] for the investigation. Live investigations of running virtual instances become more common providing evidence data that。

英汉网络安全词典英汉网络安全词典1. antivirus software / 杀毒软件Antivirus software, also known as anti-malware software, is a program designed to detect, prevent and remove malicious software from a computer or network.2. firewall / 防火墙A firewall is a network security device that monitors and filters incoming and outgoing network traffic based on predetermined security rules. It helps protect a computer or network from unauthorized access and potential threats.3. encryption / 加密Encryption is the process of converting plain text or data into an unreadable format using an algorithm and a key. It helps protect sensitive information and ensures secure communication.4. phishing / 钓鱼Phishing is a fraudulent practice where cybercriminals try to trick individuals into revealing sensitive information, such as passwords or credit card numbers, by pretending to be a legitimate entity.5. malware / 恶意软件Malware, short for malicious software, is any software designed to cause damage, disrupt operations, or gain unauthorized access to a computer or network. Common types of malware include viruses, worms, trojans, and ransomware.6. vulnerability / 漏洞A vulnerability is a weakness or flaw in a computer system or network that can be exploited by attackers. It can result in unauthorized access, data breaches, or system disruptions.7. authentication / 身份验证Authentication is the process of verifying the identity of an individual or device accessing a computer system or network. It can involve passwords, biometrics, or other means to ensure the authorized user's identity.8. intrusion detection system (IDS) / 入侵检测系统An intrusion detection system is a network security technology that monitors network traffic for malicious activity or unauthorized access. It alerts administrators or automatically takes action to prevent further damage.9. encryption key / 加密密钥An encryption key is a piece of information used in encryption algorithms to convert plain text into cipher text or vice versa. The key is necessary to decrypt the encrypted data and ensure secure communication.10. cybersecurity / 网络安全Cybersecurity refers to the practice of protecting computer systems, networks, and data from unauthorized access, damage, or theft. It involves implementing measures to prevent, detect, and respond to cyber threats.11. two-factor authentication (2FA) / 双因素身份验证Two-factor authentication is a security process that requires two different forms of identification before granting access to a computer system or network. It typically involves something the user knows (password) and something the user possesses (security token or mobile device).12. data breach / 数据泄露A data breach is an incident where unauthorized individuals gain access to protected or sensitive data without permission. It can result in the exposure or theft of personal information, financial records, or other confidential data.13. cyber attack / 网络攻击A cyber attack is an intentional act to compromise computer systems, networks, or devices by exploiting vulnerabilities. It can involve stealing sensitive data, disrupting operations, or causing damage to digital infrastructure.14. vulnerability assessment / 漏洞评估A vulnerability assessment is the process of identifying and evaluating vulnerabilities in a computer system, network, or application. It helps organizations understand their security weaknesses and take appropriate measures to mitigate risks.15. secure socket layer (SSL) / 安全套接字层Secure Socket Layer is a cryptographic protocol that ensures secure communication over a computer network. It provides encryption, authentication, and integrity, making it widely used for securing online transactions and data transfer.以上是英汉网络安全词典的部分词汇,以供参考。

[联邦法规][第21章第1卷][2006年04月01日修改] [代号:21CFR 11]第21章-食品与药品第1节-食品和药品管理局健康与人类服务部亚节-一般规定[Code of Federal Regulations][Title 21, Volume 1][Revised as of April 1, 2006][CITE: 21CFR 11]TITLE 21--Food And DrugsCHAPTER I--Food And Drug Administration Department of Health And Human Services Subchapter A--General第11款电子记录;电子签名PART 11 Electronic Records; Electronic Signatures分章A 一般规定适用范围Subpart A--General Provisions Sec. Scope.本条款的规则提供了标准,在此标准之下FDA将认为电子记录、电子签名、和在电子记录上的手签名是可信赖的、可靠的并且通常等同于纸制记录和在纸上的手写签名。

本条款适用于在FDA规则中阐明的在任何记录的要求下,以电子表格形式建立、修改、维护、归档、检索或传送的记录。

本条款同样适用于在《联邦食品、药品和化妆品法案》和《公众健康服务法案》要求下的呈送给FDA的电子记录,即使该记录没有在FDA规则下明确识别。

然而,本条款不适用于现在和已经以电子的手段传送的纸制记录。

一旦电子签名和与它相关的电子记录符合本条款的要求,FDA将会认为电子签名等同于完全手签名、缩写签名、和其他的FDA规则所求的一般签名。

除非被从1997年8月20日起(包括该日)生效后的规则明确地排除在外。

(a) The regulations in this part set forth the criteria under which the agency considers electronic records, electronic signatures, and handwritten signatures executed to electronic records to be trustworthy, reliable, and generally equivalent to paper records and handwritten signatures executed on paper.(b) This part applies to records in electronic form that are created, modified, maintained, archived, retrieved, or transmitted, under any records requirements set forth in agency regulations.This part also applies to electronic records submitted to the agency under requirements of the Federal Food, Drug, and Cosmetic Act and the Public Health Service Act, even if such records are not specifically identified in agency regulations.However, this part does not apply to paper records that are, or have been, transmitted by electronic means.(c) Where electronic signatures and their associated electronic records meet the requirements of this part, the agency will consider the electronic signatures to be equivalent to full handwritten signatures, initials, and other general signings as required by agency regulations, unless specifically excepted by regulation(s) effective on or after August 20, 1997.依照本条款,除非纸制记录有特殊的要求,符合本条款要求的电子记录可以代替纸制记录使用。