Data Swapping: Variations on a Theme by Dalenius and Reiss

- Format: PDF

- Size: 156.80 KB

- Pages: 16

The Word Root "sequ"

The letters "seq" come from the Latin root *sequ*, meaning "to follow". This root gives English the word "sequence". In this article, we explore the concept of sequence and its applications in mathematics, genetics, and computer science. Step by step, we will look at what sequences are and how they help us understand patterns and processes.

The concept of sequence is deeply embedded in everyday life. We meet sequences whenever order matters, whether in the order in which we perform a series of tasks or in the steps involved in solving a problem. The essence of a sequence lies in recognizing an order and arranging elements accordingly.

In mathematics, sequences are central to understanding patterns and series. A sequence is a list of numbers arranged in a particular order, and each number in it is called a term. A sequence can be finite or infinite. For example, 1, 2, 3, 4, 5 is a finite sequence with five terms. By contrast, 2, 4, 6, 8, ... continues indefinitely and is therefore infinite.

Sequences often follow a specific rule. The Fibonacci sequence is a famous example: after the first two terms, each term is the sum of the previous two (1, 1, 2, 3, 5, 8, 13, ...). By identifying the rule that governs a sequence, mathematicians can predict later terms and model a wide range of phenomena.

Beyond mathematics, the concept of sequence is fundamental to genetics. DNA, short for deoxyribonucleic acid, carries the genetic instructions required for the development, functioning, and reproduction of all known living organisms. The human genome, for instance, is made up of billions of DNA base pairs arranged in a specific order. This order is critical for biological processes such as protein synthesis and gene regulation. Sequencing DNA means determining the order of its bases. With it, scientists can read the genetic code, investigate the causes of genetic disorders, develop personalized medicine, and trace the evolutionary history of species.

Sequencing technology has advanced considerably. Early DNA sequencing was labor-intensive and time-consuming. High-throughput methods such as next-generation sequencing (NGS) have since made it far faster and cheaper, so researchers can analyze complex genomes and identify genetic variations more efficiently.

Sequences are equally important in computer science, where a sequence is an ordered collection of elements. The order of the elements determines how a program processes and manipulates the data.

Many algorithms and data structures are built around sequences. Sorting algorithms put elements into a defined order according to a comparison rule. Quicksort partitions the elements by swapping them around a chosen pivot. Merge sort splits the sequence into halves, sorts each half, and merges the sorted halves back together. Data structures such as arrays and linked lists store elements in a specific order. An array allows fast access to any element by its position, while a linked list makes it cheap to insert or remove an element once its position is known.

In short, the root *sequ* and the concept of sequence reach across many fields. From mathematics to genetics to computer science, understanding order helps us uncover patterns, solve problems, and gain insight into a wide range of phenomena.
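Two of the ideas above can be sketched in Python: a sequence generated by a rule (Fibonacci) and a sequence put into order (merge sort). This is an illustrative sketch, not part of the original article. `fibonacci` and `merge_sort` are hypothetical helper names, and the Fibonacci sequence is seeded with 1, 1 to match the example terms in the text.

```python
from itertools import islice
from typing import Iterator


def fibonacci() -> Iterator[int]:
    """Yield the infinite Fibonacci sequence 1, 1, 2, 3, 5, ..."""
    a, b = 1, 1
    while True:
        yield a
        a, b = b, a + b  # each new term is the sum of the previous two


def merge_sort(items: list[int]) -> list[int]:
    """Return a new list with the items in ascending order."""
    if len(items) <= 1:
        return list(items)
    mid = len(items) // 2
    left = merge_sort(items[:mid])    # sort each half recursively
    right = merge_sort(items[mid:])
    # Merge step: repeatedly take the smaller front element of the two sorted halves.
    merged: list[int] = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])   # at most one of these two is non-empty
    merged.extend(right[j:])
    return merged


if __name__ == "__main__":
    print(list(islice(fibonacci(), 7)))  # [1, 1, 2, 3, 5, 8, 13]
    print(merge_sort([5, 2, 4, 1, 3]))   # [1, 2, 3, 4, 5]
```

Because the infinite sequence is a generator, `islice` takes only as many terms as needed.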

Data Analysis Test in English, with Answers

Part 1. Multiple Choice (2 points each, 10 points total)

1. Which of the following is not a common data type in data analysis?
   A. Numerical; B. Categorical; C. Textual; D. Binary
2. What is the process of transforming raw data into an understandable format called?
   A. Data cleaning; B. Data transformation; C. Data mining; D. Data visualization
3. In data analysis, what does the term "variance" refer to?
   A. The average of the data points; B. The spread of the data points around the mean; C. The sum of the data points; D. The highest value in the data set
4. Which statistical measure is used to determine the central tendency of a data set?
   A. Mode; B. Median; C. Mean; D. All of the above
5. What is the purpose of using a correlation coefficient in data analysis?
   A. To measure the strength and direction of a linear relationship between two variables; B. To calculate the mean of the data points; C. To identify outliers in the data set; D. To predict future data points

Part 2. Fill in the Blanks (2 points each, 10 points total)

6. The process of identifying and correcting (or removing) errors and inconsistencies in data is known as ________.
7. A type of data that can be ordered or ranked is called ________ data.
8. The ________ is a statistical measure that shows the average of a data set.
9. A ________ is a graphical representation of data that uses bars to show comparisons among categories.
10. When two variables move in opposite directions, the correlation between them is ________.

Part 3. Short Answer (5 points each, 20 points total)

11. Explain the difference between descriptive and inferential statistics.
12. What is the significance of a p-value in hypothesis testing?
13. Describe the concept of data normalization and its importance in data analysis.
14. How can data visualization help in understanding complex data sets?

Part 4. Calculation (10 points each, 20 points total)

15. Given a data set with the values 10, 12, 15, 18, 20, calculate the mean and standard deviation.
16. If a data analyst wants to compare the performance of two different marketing campaigns, what type of statistical test might they use, and why?

Part 5. Case Analysis (15 points each, 30 points total)

17. A company wants to analyze the sales data of its products over the last year. What steps should the data analyst take to prepare the data for analysis?
18. Discuss the ethical considerations a data analyst should keep in mind when handling sensitive customer data.

Answers

Part 1: 1. D; 2. B; 3. B; 4. D; 5. A

Part 2: 6. Data cleaning; 7. Ordinal; 8. Mean; 9. Bar chart; 10. Negative

Part 3:

11. Descriptive statistics summarize and describe the features of a data set. Inferential statistics draw conclusions or make predictions about a population based on a sample.
12. A p-value is the probability of observing data at least as extreme as the data actually observed, assuming the null hypothesis is true. A small p-value (below the chosen significance level, such as 0.05) means the observed data would be unlikely under the null hypothesis, which leads to its rejection.
13. Data normalization is the process of rescaling variables to a common scale, for example to the range 0 to 1, or to zero mean and unit standard deviation. It is important because it allows meaningful comparisons between variables measured in different units. It can also improve the performance of algorithms that are sensitive to scale.
14. Data visualization helps in understanding complex data sets by presenting the data graphically, which makes patterns, trends, and outliers easier to identify.

Part 4:

15. Mean: $\bar{x} = (10 + 12 + 15 + 18 + 20)/5 = 75/5 = 15$. Population standard deviation: $\sigma = \sqrt{\sum_i (x_i - \bar{x})^2 / N} = \sqrt{(25 + 9 + 0 + 9 + 25)/5} = \sqrt{68/5} = \sqrt{13.6} \approx 3.69$. With the sample formula, which divides by $N - 1 = 4$, the standard deviation is $\sqrt{17} \approx 4.12$.
16. A two-sample t-test is the natural choice for comparing the mean performance of two campaigns. Welch's version is appropriate if the two groups have unequal variances. ANOVA extends the same comparison to three or more groups. These tests determine whether the difference between the group means is statistically significant rather than due to chance.

Part 5:

17. The data analyst should first clean the data by removing errors and inconsistencies. They should then transform the data into a format suitable for analysis, such as a monthly sales time series. They might also normalize the data if necessary and perform exploratory data analysis to identify patterns or trends.
18. A data analyst should ensure the confidentiality and privacy of customer data, comply with relevant data protection laws, and obtain consent where required. They should also be transparent about how the data will be used and take steps to prevent any potential misuse of the data.
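You can check the arithmetic in answer 15 with Python's standard `statistics` module. `pstdev` divides by N (the population formula used in the answer), while `stdev` divides by N − 1. The z-scores at the end illustrate one common form of the normalization described in answer 13. The variable names are illustrative only.

```python
import statistics

values = [10, 12, 15, 18, 20]

mean = statistics.fmean(values)       # (10 + 12 + 15 + 18 + 20) / 5 = 15.0
pop_sd = statistics.pstdev(values)    # divides by N: sqrt(68 / 5) ≈ 3.69
sample_sd = statistics.stdev(values)  # divides by N - 1: sqrt(68 / 4) ≈ 4.12

# z-score standardization: distance of each value from the mean, in SD units
z_scores = [(x - mean) / pop_sd for x in values]

print(f"mean = {mean}, population SD = {pop_sd:.2f}, sample SD = {sample_sd:.2f}")
print([round(z, 2) for z in z_scores])
```

Standardized values always have mean 0, so the z-scores sum to zero (up to rounding).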

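For question 16, here is a minimal sketch of Welch's two-sample t statistic. This variant of the t-test does not assume that the two groups have equal variances. The campaign figures are made-up illustration data, and `welch_t` is a hypothetical helper name. Converting t into a p-value requires the t distribution, which this sketch does not compute.

```python
import math
import statistics


def welch_t(a: list[float], b: list[float]) -> tuple[float, float]:
    """Return Welch's t statistic and its approximate degrees of freedom."""
    se2_a = statistics.variance(a) / len(a)  # squared standard error of mean(a)
    se2_b = statistics.variance(b) / len(b)  # squared standard error of mean(b)
    t = (statistics.fmean(a) - statistics.fmean(b)) / math.sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation for the degrees of freedom
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1)
    )
    return t, df


# Hypothetical daily sign-ups under each campaign
campaign_a = [12, 15, 11, 14, 13]
campaign_b = [10, 9, 12, 11, 8]
t, df = welch_t(campaign_a, campaign_b)
print(f"t = {t:.2f}, df = {df:.1f}")
```

In practice, a library routine such as SciPy's `scipy.stats.ttest_ind(a, b, equal_var=False)` computes the same statistic together with its p-value.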
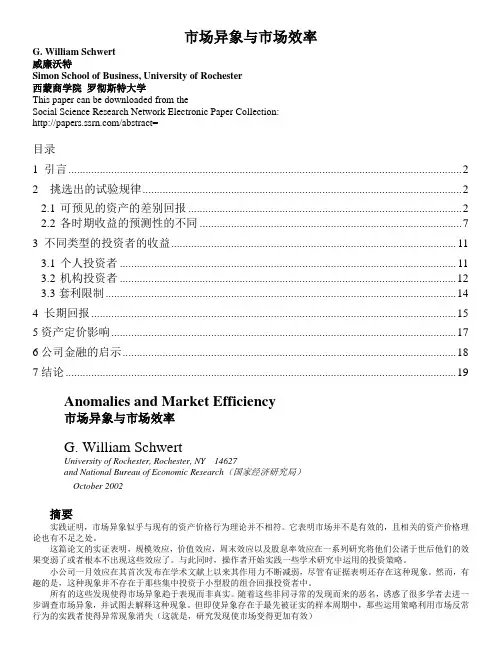

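Anomalies and Market Efficiency

G. William Schwert
Simon School of Business, University of Rochester

This paper can be downloaded from the Social Science Research Network Electronic Paper Collection: /abstract=

Contents
- 1 Introduction
- 2 Selected Empirical Regularities
- 2.1 Predictable Differences in Returns Across Assets
- 2.2 Predictable Differences in Returns Through Time
- 3 Returns to Different Types of Investors
- 3.1 Individual Investors
- 3.2 Institutional Investors
- 3.3 Limits to Arbitrage
- 4 Long-Run Returns
- 5 Implications for Asset Pricing
- 6 Implications for Corporate Finance
- 7 Conclusions

Anomalies and Market Efficiency

G. William Schwert
University of Rochester, Rochester, NY 14627, and National Bureau of Economic Research
October 2002

Abstract

Anomalies are empirical results that seem to be inconsistent with maintained theories of asset-pricing behavior.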

They indicate either market inefficiency (profit opportunities) or inadequacies in the underlying asset-pricing model.

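The evidence in this paper shows that the size effect, the value effect, the weekend effect, and the dividend yield effect seem to have weakened or disappeared after the papers that highlighted them were published.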

At about the same time, practitioners began to implement investment strategies based on some of these academic studies.

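The small-firm turn-of-the-year (January) effect became weaker in the years after it was first documented in the academic literature, although there is some evidence that it still exists.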

Interestingly, however, it does not seem to exist in the portfolio returns of practitioners who focus on small-capitalization firms.

All of these findings raise the possibility that anomalies are more apparent than real.

The notoriety associated with unusual findings tempts researchers to investigate puzzling anomalies further and later to try to explain them.

A User’s Guide to RockWare® Aq•QA®
Version 1.1

RockWare, Inc.
Golden, Colorado, USA

Copyright © 2003–2004 Prairie City Computing, Inc. All rights reserved.

Aq•QA® information and updates:
Aq•QA® sales and support:
RockWare, Inc.
2221 East Street, Suite 101
Golden, Colorado 80401 USA
Sales: 303-278-3534, aqqa@
Orders: 800-775-6745
Fax: 303-278-4099

Developer: Developed exclusively for RockWare, Inc. by:
Prairie City Computing, Inc.
115 West Main Street, Suite 400
PO Box 1006
Urbana, Illinois 61803-1006 USA

Trademarks: Aq•QA® and Prairie City Computing® are trademarks or registered trademarks of Prairie City Computing, Inc. RockWare® is a registered trademark of RockWare, Inc. All other trademarks used herein are the properties of their respective owners.

Warranty: RockWare warrants that the original CD is free from defects in material and workmanship, assuming normal use, for a period of 90 days from the date of purchase. If a defect occurs during this time, you may return the defective CD to PCC, along with a dated proof of purchase, and RockWare will replace it at no charge. After 90 days, you can obtain a replacement for a defective CD by sending it and a check for $25 (to cover postage and handling) to RockWare. Except for the express warranty of the original CD set forth here, neither RockWare nor Prairie City Computing (PCC) makes any other warranties, express or implied. RockWare attempts to ensure that the information contained in this manual is correct as of the time it was written. We are not responsible for any errors or omissions. RockWare’s and PCC’s liability is limited to the amount you paid for the product. Neither RockWare nor PCC is liable for any special, consequential, or other damages for any reason.

Copying and Distribution: You are welcome to make backup copies of the software for your own use and protection, but you are not permitted to make copies for the use of anyone else.
We put a lot of time and effort into creating this product, and we appreciate your support in seeing that it is used by licensed users only.

End User License Agreement: Use of Aq•QA® is subject to the terms of the accompanying End User License Agreement. Please refer to that Agreement for details.

Contents

A Guided Tour of Aq•QA®
- About Aq•QA®
- Data Sheet
- Entering Data
- Working With Data
- Graphing Data
- Replicates, Standards, and Mixing

The Data Sheet
- About the Data Sheet
- Creating a New Data Sheet
- Opening an Existing Data Sheet
- Layout of the Data Sheet
- Selecting Rows and Columns
- Reordering Rows and Columns
- Adding Samples and Analytes
- Deleting Samples and Analytes
- Using Analyte Symbols
- Data Cells
- Entering Data
- Changing Units
- Using Elemental Equivalents
- Notes and Comments
- Flagging Data Outside Regulatory Limits
- Saving Data
- Exporting Data to Other Software
- Printing the Data Sheet

Analytes
- About Analytes
- Analyte Properties
- Changing the Properties of an Analyte
- Creating a New Analyte
- Analyte Libraries
- Editing the Analyte Library
- Updating Aq•QA Files

Data Analysis
- About Data Analysis
- Fluid Properties
  - Water Type
  - Dissolved Solids
  - Density
  - Electrical Conductivity
  - Hardness
- Internal Consistency
  - Anion-Cation Balance
  - Measured TDS Matches Calculated TDS
  - Measured Conductivity Matches Calculated Value
  - Measured Conductivity and Ion Sums
  - Calculated TDS to Conductivity Ratio
  - Measured TDS to Conductivity Ratio
  - Organic Carbon Cannot Exceed Sum of Organics
- Carbonate Equilibria
  - Speciation
  - Total Carbonate From Titration Alkalinity
  - Titration Alkalinity From Total Carbonate
  - Mineral Saturation
  - Partial Pressure of CO2
- Irrigation Waters
  - Salinity Hazard
  - Sodium Adsorption Ratio
  - Exchangeable Sodium Ratio
  - Magnesium Hazard
  - Residual Sodium Carbonate
  - Reference
- Geothermometry
- Unit Conversion

Replicates, Standards, and Mixing
- About Replicates, Standards, and Mixing
- Comparing Replicate Analyses
- Checking Against Standards
- Fluid Mixing

Graphing Data
- About Graphing Data
- Time Series Plots
- Series Plots
- Cross Plots
- Ternary Diagrams
- Piper Diagrams
- Durov Diagrams
- Schoeller Diagrams
- Stiff Diagrams
- Radial Plots
- Ion Balance Diagrams
- Pie Charts
- Copying a Graph to Another Document
- Saving Graphs

Tapping Aq•QA®’s Power
- About Tapping Aq•QA®’s Power
- Template for New Data Sheets
- Exporting the Data Sheet
- Subscripts, Superscripts, and Greek Characters
- Analyte Symbols
- Colors and Markers
- Calculated Ions
- Hiding Analytes and Samples
- Selecting Display Fonts
- Searching the Data Sheet
- Arrow Key Behavior During Editing
- Sorting Samples and Analytes
- “Tip of the Day”

Appendix: Carbonate Equilibria
- About Carbonate Equilibria
- Necessary Data
- Activity Coefficients
- Apparent Equilibrium Constants
- Speciation
- Titration Alkalinity
- Mineral Saturation
- CO2 Partial Pressure

Index

A Guided Tour of Aq•QA®

About Aq•QA

Imagine you could keep the results of your chemical analyses in a spreadsheet developed especially for the purpose: a spreadsheet that knows how to convert units, check your analyses for internal consistency, graph your data in the ways you want it graphed, and so on.

A spreadsheet like that exists, and it’s called Aq•QA. Aq•QA was written by water chemists, for water chemists. Best of all, it is not only powerful but easy to learn, so you can start using it in minutes.
Just copy the data from your existing ordinary spreadsheets, paste it into Aq•QA, and you’re ready to go!

To see what Aq•QA can do for you, take the guided tour below.

Data Sheet

When you start Aq•QA, you see an empty Data Sheet. Click on File → Open…, move to directory “\Program Files\AqQA\Examples”, and open file “Example1.aqq”. The example Data Sheet is arranged with samples in columns, and analytes (the things you measure) in rows.

You can flip an Aq•QA Data Sheet so the samples are in rows and analytes in columns by selecting View → Transpose Data Sheet. Select this menu item again to return to the original view.

Tip: By default, Aq•QA labels analytes by name (Sodium, Potassium, Dissolved Solids, …), but by clicking on View → Show Analyte Symbols you can view them by chemical symbol (Na, K, TDS, …).

To include more samples or analytes in your Data Sheet, click on the “Add Sample” or “Add Analyte” button.

You select analytes or samples by clicking on “handles”, marked in the Data Sheet by small triangles. You can select the values associated with an analyte using a separate set of handles, next to the “Unit” column. Give it a try!

Tip: To rearrange rows or columns, select one or more, hold down the Alt key, and drag them to the desired location.

Entering Data

To see how to enter your own data into an Aq•QA Data Sheet, begin by selecting File → New.
Add to the Data Sheet whatever analytes you need, and delete any you don’t need.

Tip: To delete analytes, select one or more and click on the button. To delete samples you have selected, click on the button.

When you click on the “Add Analyte” button, you can pick from among a number of predefined choices in various categories, such as “Inorganic Analytes”, “Organic Analytes”, and so on. A number of commonly encountered data fields (Date, pH, Temperature, …) can be found in the “General” category.

Tip: If you don’t find an analyte you need among the predefined choices, you can easily define your own by clicking on Analytes → New Analyte….

To make your work easier, rearrange the analytes (select, hold down the Alt key, and drag) so they appear in the same order as in your data.

Tip: You can add a number of analytes in a single step by clicking on Analytes → Add Analytes….

Set units for the various analytes, as necessary: right-click in the unit field and choose the desired units from under Change Units, or select Change Units under Analytes on the menu bar.

Tip: You can change the units for more than one analyte in one step. Simply select any number of analytes and right-click on the unit field.

Tip: Analyses are sometimes reported in elemental equivalents. For example, sulfate might be reported as “SO4 (as S)”, bicarbonate as “HCO3 (as C)”, and so on. In this case, right-click on the unit of such an analyte and select Convert to Elemental Equivalents.

You can now enter your data into the Data Sheet as you would in an ordinary spreadsheet.

Tip: If you have an analysis below the detection limit, you can enter a field such as “<0.01”. Aq•QA knows what this means.
If the analysis reports that an analyte was not detected, enter a string such as “n/d” or “--”. For missing data, enter a non-numeric string, or simply leave the entry blank.

You can of course type data into the Data Sheet by hand, or paste the values into cells one by one. But it’s far easier to copy them as a block from an ordinary spreadsheet or other document and paste them all at once into the Aq•QA Data Sheet.

First make sure the analytes appear in the same order as in your spreadsheet. Then copy the data block, click on the top, leftmost cell in the Aq•QA Data Sheet, and select Edit → Paste, or press Ctrl+V.

Tip: If there are more samples in the data block you are pasting than in your Aq•QA Data Sheet, Aq•QA will make room automatically.

Tip: If data arranged in columns in your spreadsheet fall in rows in your Aq•QA Data Sheet, or vice versa, you can transpose the Data Sheet, or simply select Edit → Paste Special → Paste Transposed.

Tip: You can flag data in an Aq•QA Data Sheet that fall outside regulatory guidelines. Select Samples → Check Regulatory Limits, or click on the corresponding toolbar button. Violations on the Data Sheet are flagged in red.

Working With Data

Once you have entered your chemical analyses in the Data Sheet, Aq•QA can tell you lots of useful information.

Click on File → Open… and load file “Example2.aqq” from directory “\Program Files\AqQA\Examples”. To see Aq•QA’s analysis of one of the samples in the Data Sheet, select the sample by clicking on its handle and then click on the tab. This moves you to the Data Analysis pane.

There are a number of categories in the Data Analysis pane. To open a category, click on the corresponding bar. A second click on the bar closes the category.
Clicking on the symbol gives more information about the category.

Tip: You can view the data analysis for the previous or next sample in your Data Sheet by clicking on the buttons to the left and right of the top bar in the Data Analysis pane.

The top category, Fluid Properties, identifies the water type, dissolved solids content, density, temperature-corrected conductivity, and hardness, as measured or calculated by Aq•QA.

The next category, Internal Consistency, reports the results of a number of Quality Assurance tests from the American Water Works Association “Standard Methods” reference. For example, Aq•QA checks that anions and cations balance electrically, that TDS and conductivity measurements are consistent with the reported fluid composition, and so on.

The Carbonate Equilibria category gives several results. These are the speciation of carbonate in solution, the carbonate concentration calculated from measured titration alkalinity (and vice versa), the fluid’s calculated saturation state with respect to the calcium carbonate minerals calcite and aragonite, and the calculated partial pressure of carbon dioxide.

The Irrigation Waters category shows the irrigation properties of a sample. The Geothermometry category shows the results of applying chemical geothermometers to the samples, assuming they are geothermal waters.

Finally, the sample’s analysis is displayed in a broad range of units, from mg/kg to molal and molar.

Tip: You can print results in the Data Analysis pane: open the categories you want printed and click on File → Print….

Graphing Data

Aq•QA can display the data in your Data Sheet on a number of the types of plots most commonly used by water chemists.

To try your hand at making a graph, make sure that you have file “Example2.aqq” open. If not, click on File → Open… and select the file from directory “\Program Files\AqQA\Examples”.

On the Data Sheet, select the row for Iron. Hold down the Ctrl key and select the row for Manganese.
Click on the button and select Time Series Plot. The graph appears in Aq•QA as a new pane.

You can select the analytes and samples to appear in the graph on the control panel to the right of the plot. Right-clicking on the pane’s tab, along the bottom of the Aq•QA window, lets you change the plot to a different type, or delete it.

Tip: You can alter the appearance of a graph by clicking on the Advanced Options… button on the graph pane.

You can copy the graph (Edit → Copy) and paste it into another program. Examples include an illustration program like Adobe® Illustrator® or Microsoft® PowerPoint®, or a word processing program like Microsoft® Word®.

Tip: Once you have pasted a graph into an illustration program, you can edit its appearance and content. To do so, select the graphic and ungroup the picture elements (you may need to ungroup them twice).

You can also send the graph to a printer by clicking on File → Print.

Tip: In addition to copying a graph to the clipboard, you can save it in a file in one of several formats: as a Windows® EMF file, an Encapsulated PostScript® (EPS) file, or a bitmap.
Select File → Save Image As… and select the format from the “Save as type” dropdown menu.

Tip: To choose a linear or logarithmic vertical axis for a Series or Time Series plot, uncheck or check the box labeled “Log Scale” in the Advanced Options… dialog.

Aq•QA can display your data on a broad variety of graphs and diagrams: simply choose a diagram type from the pulldown. In addition to Time Series plots, Aq•QA can produce the following types of diagrams:
- Series diagrams
- Cross plots, in linear and logarithmic coordinates
- Ternary diagrams
- Piper diagrams
- Durov diagrams
- Schoeller diagrams
- Stiff diagrams
- Radial diagrams
- Ion balance diagrams
- Pie charts

Replicates, Standards, and Mixing

Aq•QA can check replicate analyses, compare analyses to a standard, and figure the compositions of sample mixtures.

Replicate analyses are splits of the same sample that have been analyzed more than once, whether by the same lab or by different labs. The analyses, therefore, should agree to within a small margin of error.

To see how this feature works, load (File → Open…) file “Replicates.aqq” from directory “\Program Files\AqQA\Examples”. Select samples PCC-2, PCC-2a, and PCC-2b: click on the handle for PCC-2, then hold down the Shift key and click on the handle for PCC-2b. Now click on the button on the toolbar. A new display appears at the right side of the Aq•QA Data Sheet, or along the bottom if you have transposed it.

The display shows the coefficient of variation for each analyte, and whether this value falls within a certain tolerance. Small coefficients of variation indicate good agreement among the replicates.
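The replicate check can be sketched as follows. This is an illustration only, not Aq•QA’s code. It assumes that the coefficient of variation is the sample standard deviation expressed as a percentage of the mean, and that the default tolerance of ±5 is in percent. The guide does not state the exact formula, and the analyte values and helper names below are hypothetical.

```python
import statistics


def coefficient_of_variation(replicates: list[float]) -> float:
    """Sample standard deviation as a percentage of the mean (assumed definition)."""
    return 100.0 * statistics.stdev(replicates) / statistics.fmean(replicates)


def check_replicates(
    analytes: dict[str, list[float]], tolerance: float = 5.0
) -> dict[str, tuple[float, bool]]:
    """Map each analyte to (CV in percent, whether it falls within +/- tolerance)."""
    results: dict[str, tuple[float, bool]] = {}
    for name, values in analytes.items():
        cv = coefficient_of_variation(values)  # needs at least two replicates
        results[name] = (cv, abs(cv) <= tolerance)
    return results


# Hypothetical concentrations (mg/L) for three splits of one sample
replicates = {
    "Sodium": [20.0, 20.4, 19.6],
    "Sulfate": [48.0, 55.0, 41.0],
}
for analyte, (cv, ok) in check_replicates(replicates).items():
    print(f"{analyte}: CV = {cv:.1f}% -> {'OK' if ok else 'outside tolerance'}")
```

With these made-up numbers, Sodium (CV of 2%) passes and Sulfate (CV of about 14.6%) falls outside the ±5 tolerance.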
The tolerance, by default, is ±5, but you can set it to another value by clicking on Samples → Set Replicate Tolerance….

A standard is a sample of well-known composition: one that was prepared synthetically, or whose composition has already been analyzed precisely. Enter the known composition as a sample in the Data Sheet and click on Samples → Designate As Standard, or the button. Then select an analysis of the standard on the Data Sheet and click on Samples → Compare To Standard, or the button. The display at the right or bottom of the Data Sheet shows the error in the analysis, relative to the standard. To set the tolerance for the comparison (by default ±10), click on Samples → Set Standard Tolerance….

To find the composition of a mixture of two or more samples, select those samples and click on the button on the toolbar. The composition of the mixed fluid appears to the right or bottom of the Data Sheet.

The Data Sheet

About the Data Sheet

The Aq•QA® Data Sheet is a special spreadsheet that holds your chemical data. The data typically consist of the values measured for various analytes, for a number of samples. You can enter data into a Data Sheet and manipulate it, as described below.

Creating a New Data Sheet

To create a new Aq•QA Data Sheet, select File → New, or press Ctrl+N. An empty Data Sheet appears, containing a number of analytes but no data.

The appearance of new Data Sheets is specified by a template. You can create your own template so new Data Sheets contain the analytes you need, in your choice of units, and ordered as you desire. For more information, see Template for New Data Sheets in the Tapping Aq•QA’s Power chapter of this guide.

Opening an Existing Data Sheet

Aq•QA files end with the extension “.aqq”.
These files contain the data entered in the Data Sheet, as well as any graphs produced and the program’s current configuration.

You can open an existing Data Sheet by clicking on File → Open… and selecting a “.aqq” file. This can be one that you have previously saved or an example file installed with the Aq•QA package. A number of example files are installed in the “Examples” directory within the Aq•QA installation directory (commonly “\Program Files\AqQA”).

Layout of the Data Sheet

An Aq•QA Data Sheet contains the values measured for various analytes (Na⁺, Ca²⁺, HCO₃⁻, and so on) for any number of samples that have been analyzed. Each piece of information about a sample is considered an analyte, even the sample ID, location, sampling date, and so on.

By default, each analyte occupies a row in the Data Sheet, and the samples fall in columns. You can reverse this arrangement, so analytes fall in columns and the samples occupy rows, by clicking on View → Transpose Data Sheet. To flip the Data Sheet back to its original arrangement, click on this menu item a second time.

You can rearrange the order of analytes or samples on the Data Sheet, as described below under Reordering Rows and Columns.

Selecting Rows and Columns

To select a row or column, click on the marker to the left of a row, or at the top of a column. The marker for a row or column appears as a small triangle. Analytes have two markers: one for selecting the entire analyte, and one for selecting only the analyte’s data values.

You can select a range of rows or columns by holding down the left mouse button on the marker at the beginning of the range, then dragging the mouse to the marker at the end of the range.
Alternatively, select the beginning of the range, then hold down the Shift key and click on the marker for the end of the range.

To select a series of rows or columns that are not necessarily contiguous on the Data Sheet, select the first row or column, then hold down the Ctrl key and select subsequent rows or columns.

By clicking on one of the small blue squares at the top or left of the Data Sheet, you can select either the entire sheet, or all of the data values on the sheet.

Reordering Rows and Columns

You can easily rearrange the rows and columns of samples and analytes in your Data Sheet. To do so, first select a row or column, or a range of rows and columns, as described under Selecting Rows and Columns. Then, holding down the Alt key, press the left mouse button, drag the selection to its new position, and release the mouse button.

Adding Samples and Analytes

To include more samples or analytes in your Data Sheet, select Samples → Add Sample or Analytes → Add Analyte, or simply click on the corresponding buttons on the toolbar. To add several analytes at once, select Analytes → Add Analytes…, which opens a dialog box for this purpose.

When you add an analyte, you choose from among the large number that Aq•QA knows about. These are arranged in categories: inorganics, organics, biological assays, radioactivity, isotopes, and a general category that includes things like pH, temperature, date, and sample location.

If you don’t find the analyte you need, you can quickly define your own. Select Analytes → New Analyte…, or New Analyte… from the dropdown menu. For more information about defining analytes, see the Analytes chapter of the guide.

Deleting Samples and Analytes

To delete analytes or samples, select one or more and click on Analytes → Delete, or Samples → Delete. Alternatively, select an analyte or a sample and click on the corresponding delete button on the toolbar.

Using Analyte Symbols

Analytes are labeled with names such as Sodium, Calcium, and Bicarbonate.
If you prefer, you can view them labeled with the corresponding chemical symbols, such as Na⁺, Ca²⁺, and HCO₃⁻. Simply click on View → Show Analyte Symbols. A second click on this menu item returns to labeling analytes by name.

Data Cells

Each cell in the Data Sheet contains one of several types of information:
1. A numerical value, such as the concentration of a species.
2. A character string.
3. A date or a time.

Numerical values are, most commonly, simply a number. You can, however, indicate a lack of data with a character string, such as “n/d” or “Not analyzed”, or by just leaving the cell empty.

If an analysis falls below the detection limit for a species, enter the detection limit preceded by a “<”, for example “<0.01”.

Character strings, such as you might enter for the “Sample ID”, can contain any combination of characters and can be of any length.

You can enter dates in a variety of formats: “Sep 21, 2003”, “9/21/03”, “September 23”, and so on. Aq•QA will interpret your input and cast it in your local format (e.g., mm/dd/yy in the U.S.). Similarly, enter a time as “2:20 PM” or “14:20”. Append seconds, if you wish: “2:20:30 PM”.

To change the width of the data cells (i.e., the column width), drag the dividing line between columns to the left or right. This changes the width of all the data columns in the Data Sheet.

Entering Data

To enter data into an Aq•QA Data Sheet, you can of course type it in from the keyboard, or paste it into the cells in the Data Sheet, one by one.

It is generally more expedient, however, to copy all of the values as a block from a source file, such as a table in a word processing document or a spreadsheet. To do so, set up your Aq•QA Data Sheet so that it contains the same analytes as the source file, in the same order (see Adding Samples and Analytes above, and Reordering Rows and Columns).
You don’t necessarily need to add samples: Aq•QA will add columns (or rows) to accommodate the data you paste.
Now, select the data block in the source document and copy it to the clipboard. Move to Aq•QA, click on the top, leftmost data cell, and select Edit → Paste. If the source data is arranged in the opposite sense to your Data Sheet (the samples are in rows instead of columns, or vice versa), transpose the Data Sheet (View → Transpose Data Sheet), or select Edit → Paste Special → Paste Transposed.
Changing Units
You can change the units of analytes on the Data Sheet at any time. To do so, select one or more analytes, then click on Analytes → Change Units. Alternatively, right-click and choose a new unit from the options under Change Units. If you have entered numerical data for the analyte (or analytes), you will be given the option of converting the values to the new unit.
Some unit conversions require that the program be able to estimate values for the fluid’s density, dissolved solids content, or both. If you have entered values for the Density or Dissolved Solids analytes, Aq•QA will use these values directly when converting units.
If you have not specified these data for a sample, Aq•QA will calculate working values for density and dissolved solids from the chemical analysis provided. It is best, therefore, to enter the complete analysis for a sample before converting units, so that Aq•QA can estimate density and dissolved solids as accurately as possible.
Aq•QA estimates density and dissolved solids using the methods described in the Data Analysis section of the User’s Guide, assuming a temperature of 20°C if none is specified. Aq•QA can estimate density only over the temperature range 0°C to 100°C; outside this range, it assumes a value of 1.0 g/cm³, which can be quite inaccurate and lead to erroneous unit conversions.
Using Elemental Equivalents
You may find that some of your analytical results are reported as elemental equivalents.
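The arithmetic behind two of the conversions described here is simple scaling. The Python sketch below is a hypothetical illustration, not Aq•QA's code. It assumes that “mg/kg” means milligrams per kilogram of solution, so density alone links it to mg/L (1 g/cm³ equals 1 kg/L). Units per kilogram of water would also need the dissolved-solids content, which this sketch ignores. The sketch also shows what reporting a species “as” an element means: the concentration is multiplied by the element's share of the species' molar mass, computed here from rounded standard atomic weights. All function names are made up for the example.

```python
# Rounded standard atomic weights in g/mol (assumed values for this sketch).
ATOMIC_WEIGHT = {"H": 1.008, "C": 12.011, "O": 15.999, "S": 32.06}

# Species written as {element: number of atoms}.
SO4 = {"S": 1, "O": 4}
HCO3 = {"H": 1, "C": 1, "O": 3}


def molar_mass(species: dict[str, int]) -> float:
    """Sum of atomic weight times atom count, in g/mol."""
    return sum(ATOMIC_WEIGHT[el] * n for el, n in species.items())


def as_element(conc: float, species: dict[str, int], element: str) -> float:
    """Re-express a species concentration as the mass of one contained element.

    Works for any mass-based unit (mg/L, mg/kg, ...) since only a mass ratio is applied.
    """
    return conc * species[element] * ATOMIC_WEIGHT[element] / molar_mass(species)


def as_species(conc_as_element: float, species: dict[str, int], element: str) -> float:
    """Inverse of as_element: from the elemental equivalent back to the species."""
    return conc_as_element * molar_mass(species) / (species[element] * ATOMIC_WEIGHT[element])


def mg_per_l_to_mg_per_kg(conc_mg_per_l: float, density_g_per_cm3: float) -> float:
    """mg per litre of solution -> mg per kilogram of solution.

    1 g/cm3 equals 1 kg/L, so dividing by the density turns litres of
    solution into kilograms of solution.
    """
    return conc_mg_per_l / density_g_per_cm3


if __name__ == "__main__":
    print(f"100 mg/L SO4  = {as_element(100.0, SO4, 'S'):.2f} mg/L as S")
    print(f"100 mg/L HCO3 = {as_element(100.0, HCO3, 'C'):.2f} mg/L as C")
    print(f"500 mg/L at 1.02 g/cm3 = {mg_per_l_to_mg_per_kg(500.0, 1.02):.1f} mg/kg")
```

At a density of 1.02 g/cm³, 500 mg/L corresponds to roughly 490 mg/kg. The 1.0 g/cm³ fallback mentioned above would leave the value at 500 mg/kg, an error of about 2%.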
For example, sulfate might be reported as “SO4 (as S)”, bicarbonate as “HCO3 (as C)”, and so on.
In this case, select the analyte or analytes in question and click on Analytes → Convert to Elemental Equivalents. Alternatively, select the analyte(s), then right-click on your selection and choose Convert to Elemental Equivalents.
To return to the default setting, select Analytes → Convert to Species, or select the Convert to Species option when you right-click.
Notes and Comments
When you construct a Data Sheet, you may want to save certain notes and comments, such as a site’s location, who conducted the sampling, what laboratory analyzed the samples, and so on.
To do so, select File → Notes and Comments… and type the information into the box that appears. This information will be saved with your Aq•QA document; you may access it and alter it at any time.
Flagging Data Outside Regulatory Limits
You can highlight on the Data Sheet concentrations in excess of an analyte’s regulatory limit. Select Samples → Check Regulatory Limits. Concentrations above the limit now appear highlighted in a red font. Select the option a second time to disable it. Pressing ctrl+L also toggles the option.
Aq•QA can maintain a regulatory limit for each analyte. The analyte library contains default limits based on U.S. water quality standards at the time of compilation, but you should of course verify these against standards as implemented locally.
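Here is a hypothetical Python sketch of the comparison behind this kind of flagging. Each numeric concentration in a sample is checked against a per-analyte limit, and only values strictly above the limit are reported, matching “concentrations above the limit” in the text. The limits and sample are placeholders, not Aq•QA's library defaults. Empty cells and text entries such as “n/d” or a detection-limit entry like “<0.01” are skipped rather than interpreted.

```python
from typing import Union

Cell = Union[float, int, str, None]


def exceedances(sample: dict[str, Cell], limits: dict[str, float]) -> list[str]:
    """Return the analytes whose numeric concentration is above their limit.

    Analytes without a limit, empty cells, and text entries (for example
    "n/d" or a detection-limit entry such as "<0.01") are never flagged.
    """
    flagged = []
    for analyte, value in sample.items():
        limit = limits.get(analyte)
        # Skip analytes with no limit and any cell that is not a plain number.
        if limit is None or isinstance(value, bool) or not isinstance(value, (int, float)):
            continue
        if value > limit:
            flagged.append(analyte)
    return flagged


# Hypothetical limits (mg/L) and one hypothetical sample, for illustration only.
limits = {"Arsenic": 0.010, "Nitrate": 10.0, "Sodium": 200.0}
sample = {"Arsenic": 0.018, "Nitrate": "<0.5", "Sodium": 40.0, "pH": 7.2}
print(exceedances(sample, limits))
```

Only Arsenic is flagged here. The Nitrate entry is a detection-limit string, and pH has no limit in the placeholder table.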
You can easily change the limit carried for an analyte, as described in the Analytes chapter of this guide.
Saving Data
Before you exit Aq•QA, you will probably want to save your workspace, which includes the data in your Data Sheet, any graphs you have created, and so on, in a .aqq file.
To save your workspace, select File → Save, or click on the corresponding button on the Aq•QA toolbar.
To save your workspace as a .aqq file under a different name, select File → Save As… and specify the file’s new name.
You may also want to save the data in the Data Sheet as a file that can be read by other applications, such as Microsoft® Excel®. For information on saving data in this way, see the next section, Exporting Data to Other Software.
Exporting Data to Other Software
When Aq•QA saves a .aqq file, it does so in a special format that includes all of the information about your Aq•QA session, such as the

English Essays on the Lanzhou Weather Forecast (Three Sample Essays for Reference)
Essay 1
The Unpredictable Skies of Lanzhou: A Student's Perspective on Weather Forecasting
As a student hailing from the captivating city of Lanzhou, located in the heart of China's Gansu Province, I have developed a keen interest in the ever-changing weather patterns that grace our skies. Nestled along the Yellow River, our city is renowned for its unique geographical location, which often leads to unexpected meteorological phenomena. Keeping a watchful eye on the weather forecast has become a ritual for many of us, as it holds the key to planning our daily activities and ensuring our safety.
Growing up in Lanzhou, I quickly learned that the weather here could be as unpredictable as the winding streets of our ancient city. One moment, the sun would be shining brightly, and the next, dark clouds would gather, threatening to unleash a torrential downpour. This capricious nature of the weather has taught me the importance of being prepared for any eventuality, whether it's carrying an umbrella or donning an extra layer of clothing.
For a student like me, the weather forecast plays a crucial role in academic life. During exam season, when the pressure is at its peak, a sudden change in weather conditions can significantly impact my ability to concentrate and perform at my best. On sweltering summer days, the scorching heat can drain my energy, making it challenging to focus on my studies.
Conversely, during the bitter winter months, the frigid temperatures can make the journey to and from school a daunting task.
However, it's not just the extremes that concern us; even the slightest variations in weather can have far-reaching consequences. A bout of heavy rain can turn the city's streets into treacherous rivers, making it difficult for students like me to make our way to class. Similarly, a sudden snowfall can bring the city to a standstill, causing transportation disruptions and forcing schools to close unexpectedly.
Despite the challenges posed by Lanzhou's unpredictable weather, I have learned to embrace the excitement it brings. Watching the clouds gather and dissipate, observing the subtle shifts in wind direction, and feeling the temperature fluctuations have become a part of my daily routine. It's a constant reminder of the power of nature and the importance of respecting the forces that shape our environment.
In recent years, advances in technology have made weather forecasting more accurate and accessible than ever before. Meteorological agencies now employ sophisticated models and algorithms to predict weather patterns with increasing precision. As a tech-savvy student, I have come to rely heavily on mobile applications and online resources that provide real-time updates on weather conditions, ensuring that I am always prepared for whatever Mother Nature has in store.
Yet, even with these technological advancements, there is still an element of uncertainty that surrounds weather forecasting in Lanzhou. The city's location in a narrow Yellow River valley hemmed in by mountains creates a complex interplay of factors that can influence the weather in unpredictable ways.
It's a humbling reminder that, despite our best efforts, nature still holds the ultimate trump card.
Nonetheless, the challenges posed by Lanzhou's ever-changing weather have taught me valuable lessons in resilience, adaptability, and respect for the natural world. As I navigate the academic and personal challenges that life as a student presents, I find solace in the knowledge that, just like the weather, every obstacle is temporary, and with perseverance and preparation, I can weather any storm.
In conclusion, the weather forecast in Lanzhou is more than just a report on atmospheric conditions; it's a window into the city's unique character, a reflection of the unpredictable forces that shape our lives, and a constant reminder of the beauty and power of nature. Embracing the uncertainties of the weather has taught me, as a student, invaluable lessons that will undoubtedly serve me well as I embark on the journey of life beyond the classroom walls.
Essay 2
An Unexpected Surprise: Lanzhou's Peculiar Weather Forecast
As I was getting ready for school this morning, I couldn't help but notice the odd weather report on the TV. Living in Lanzhou, the capital city of Gansu Province in northwest China, we're accustomed to a relatively dry and continental climate. However, today's forecast seemed to defy all logic and normalcy.
The cheery meteorologist on screen announced with a bright smile, "Good morning, Lanzhou! Brace yourselves for a delightfully unexpected surprise today. We're in for a burst of tropical weather conditions unlike anything we've experienced before!"
I nearly choked on my breakfast, certain I had misheard. Tropical weather? In Lanzhou? The city rests on the upper reaches of the Yellow River, surrounded by arid loess hills and rugged mountain ranges. Surely, this had to be some kind of joke or technical glitch.
Nevertheless, the forecast insisted on defying my disbelief. "That's right, folks!
We can expect scorching temperatures reaching a sweltering 40°C (104°F), coupled with intense humidity levels of 90%. But that's not all! Get ready for torrential downpours, with rainfall accumulations of up to 300 millimeters (12 inches) throughout the day."
My jaw must have hit the floor at that point. Lanzhou receives an average annual precipitation of merely 315 millimeters (12.4 inches), and the thought of that much rain falling in a single day was mind-boggling.
As if that wasn't enough, the meteorologist continued, "And hold on to your hats, folks, because we're also anticipating hurricane-force winds gusting up to 200 kilometers per hour (124 mph)! It's going to be one wild ride, so make sure to secure any loose objects and seek shelter when necessary."
I couldn't believe what I was hearing. Lanzhou, a city known for its dry, temperate climate, was supposedly going to transform into a tropical cyclone zone overnight. This had to be a prank, right?
Skeptical yet intrigued, I decided to keep an open mind and see how the day would unfold. After all, stranger things have happened in the world of weather.
As I stepped outside, the first thing that hit me was the overwhelming humidity. The air felt thick and heavy, like walking through a sauna. Beads of sweat immediately formed on my forehead, and my clothes clung to my skin uncomfortably.
Pushing through the oppressive heat, I made my way to school, only to be met with a torrential downpour halfway there. Within seconds, I was drenched from head to toe, my backpack soaked through and weighing a ton. The streets quickly transformed into raging rivers, with water levels rising rapidly.
Seeking refuge under a shop's awning, I watched in awe as the storm intensified. Winds howled ferociously, whipping debris through the air and threatening to sweep me off my feet.
Trees bent precariously, their branches thrashing violently, and streetlights swayed ominously.
Just when I thought the situation couldn't get any more surreal, a massive flash of lightning illuminated the sky, followed by a deafening clap of thunder that rattled the windows around me. The hair on the back of my neck stood on end, and I couldn't help but feel a sense of awe mixed with trepidation.
As the hours ticked by, the tropical onslaught showed no signs of letting up. News reports flooded in, detailing widespread flooding, power outages, and even a few tornado sightings on the city's outskirts.
By the time I finally made it to school, the campus resembled a war zone. Fallen trees and branches littered the grounds, and the once-pristine lawns had transformed into muddy quagmires. Several classrooms were flooded, forcing the cancellation of afternoon classes.
During our lunch break, my friends and I huddled in the cafeteria, swapping stories of our harrowing journeys through the storm. Some had even witnessed roof tiles being ripped off buildings or cars being swept away by the raging floodwaters.
As the day drew to a close, the meteorologists offered a glimmer of hope, predicting that the tropical conditions would begin to subside by the following morning. However, they warned that the aftermath would be significant, with widespread damage and cleanup efforts required throughout the city.
On my way home, I felt a sense of disbelief and wonder at the day's events. Lanzhou, a city known for its dry, temperate climate, had been transformed into a tropical paradise (or nightmare, depending on your perspective) in a matter of hours.
As I finally reached the sanctuary of my home, I reflected on the incredible power of nature and the unpredictability of weather patterns.
What had seemed like an ordinary day had turned into an adventure straight out of a Hollywood disaster movie.
In the end, this unexpected tropical surprise left me with a newfound appreciation for the meteorologists and their tireless efforts to forecast the weather and prepare us for Mother Nature's whims. It also served as a humbling reminder that no matter how advanced our technology or knowledge may be, the forces of nature still possess the ability to surprise and astound us.
As for Lanzhou, well, let's just say we'll be stocking up on raincoats and umbrellas from now on, just in case the tropics decide to pay us another unexpected visit.
Essay 3
A Grey and Hazy Future: Lanzhou's Troubling Weather Forecast
For my classmates and me, students living in Lanzhou, the capital of Gansu Province in northwest China, the weather forecast has become a topic of significant concern and anxiety. Surrounded by mountains and situated in a semi-arid climate zone, Lanzhou has long faced environmental challenges, but recent projections paint a grim picture of what lies ahead.
According to the latest meteorological data and climate models, Lanzhou is expected to experience an increasing number of days with severe air pollution, extreme temperatures, and water scarcity over the next decade. These alarming trends not only threaten our quality of life but also raise serious questions about the long-term sustainability of our city.
One of the most pressing issues is the prevalence of air pollution, which has become an all-too-familiar aspect of life in Lanzhou. The city's geography, with its surrounding mountains trapping pollutants, combined with industrial emissions and vehicle exhaust, has created a perfect storm for poor air quality. In recent years, we've witnessed an alarming rise in the number of days with hazardous levels of particulate matter (PM2.5) and other harmful pollutants.
The weather forecast for the upcoming years only paints a bleaker picture.
Meteorologists predict that Lanzhou will experience a significant increase in the number of days with severe smog, often lasting for weeks at a time. During these periods, the air becomes thick and hazy, making it difficult to breathe and forcing schools and businesses to close temporarily.
As a student, I see the impact of air pollution on our health and education as a major concern. Exposure to high levels of particulate matter has been linked to respiratory problems, heart disease, and even cognitive impairment. Numerous studies have shown that air pollution can negatively affect children's lung development and academic performance. It's heartbreaking to see my younger siblings and their classmates struggle with asthma and other respiratory issues exacerbated by the poor air quality.
Unfortunately, air pollution is not the only challenge we face. The weather forecast also warns of an increase in the frequency and intensity of extreme temperatures, both hot and cold. Lanzhou's continental climate has always been characterized by hot summers and cold winters, but climate change is amplifying these extremes.
During the summer months, we can expect more frequent and prolonged heatwaves, with temperatures soaring well above 40°C (104°F). These scorching conditions not only make outdoor activities unbearable but also put a strain on the city's energy resources as air conditioning usage skyrockets. Moreover, the risk of heat-related illnesses, such as heat stroke and dehydration, becomes a significant concern for vulnerable populations, including the elderly and young children.
In contrast, the winters in Lanzhou are expected to become even harsher, with longer periods of extreme cold and heavy snowfall. While we're accustomed to dealing with frigid temperatures, the forecast suggests that we may face more frequent and intense cold snaps, with temperatures plummeting below -20°C (-4°F).
These conditions can lead to disruptions in transportation, power outages, and increased heating costs, placing a significant burden on families and the local economy.
Perhaps the most alarming aspect of Lanzhou's weather forecast is the projected water scarcity. As a semi-arid region, Lanzhou has long relied on the Yellow River and other water sources for its freshwater supply. However, climate change is expected to further exacerbate the already strained water resources in the region.
The forecast indicates a significant decrease in precipitation levels, coupled with more frequent and prolonged droughts. This combination could lead to water shortages, affecting not only households but also agricultural production and industrial activities. The prospect of water rationing and potential conflicts over limited resources is a genuine concern for our community.
As students, we are taught about the importance of environmental stewardship and sustainable development, but the reality we face in Lanzhou makes it challenging to remain hopeful. We watch helplessly as our city grapples with the consequences of pollution, climate change, and resource depletion, and we worry about the future that awaits us.
Despite the grim forecast, we must not lose hope. It is crucial for us, as the next generation, to actively participate in finding solutions and advocating for change. We must demand that our local authorities and policymakers take decisive action to address these issues, from implementing stricter environmental regulations to investing in renewable energy sources and water conservation measures.
Furthermore, we must educate ourselves and our peers about the importance of adopting sustainable lifestyles. Simple actions, such as reducing energy consumption, using public transportation, and minimizing waste, can collectively make a significant impact.
By fostering a culture of environmental awareness and responsibility, we can work towards mitigating the adverse effects of climate change and preserving our city's natural resources.
As I look out of my classroom window and see the hazy skyline, I am reminded of the challenges we face. But I also see the determination and resilience of my peers, who refuse to accept this grim future as inevitable. We are the future of Lanzhou, and it is our responsibility to fight for a cleaner, more sustainable, and livable city.
The weather forecast may paint a bleak picture, but it is also a call to action. By working together, embracing innovation, and prioritizing environmental protection, we can create a brighter future for Lanzhou: a future where we can breathe clean air, enjoy moderate temperatures, and have access to clean water. It won't be easy, but as students, we must be the driving force behind this change, for the sake of our city, our health, and the generations to come.

Imbalance Coefficient
In machine learning, the imbalance coefficient is particularly important when dealing with datasets in which the number of instances differs markedly between classes. This disparity, often referred to as class imbalance, makes it harder to train accurate and reliable models. The imbalance coefficient quantifies the disparity, allowing researchers and practitioners to assess how severe the problem is and take appropriate measures to address it.
The imbalance coefficient is typically calculated as the ratio of the number of instances in the majority class to the number of instances in the minority class. For example, a dataset with 900 negative and 100 positive instances has an imbalance coefficient of 9. A higher coefficient indicates a more severe imbalance, which can bias a model towards the majority class and lead to poor performance on the minority class. This is problematic in scenarios where accurate predictions on the minority class are crucial, such as fraud detection or rare-disease diagnosis.
Several strategies can be employed to address class imbalance. One common approach is oversampling, which increases the representation of the minority class, either by duplicating existing minority-class instances or by generating synthetic ones (as SMOTE does). Another approach is undersampling, which reduces the number of instances in the majority class. Both approaches aim to create a more balanced dataset, which can improve model performance.
However, simply balancing the classes is not always sufficient. The imbalance coefficient, although useful, does not capture all the nuances of class imbalance. For instance, the distribution of instances within each class may still be highly skewed, even if the overall class counts are balanced.
In such cases, more advanced techniques such as cost-sensitive learning or ensemble methods may be required to handle the imbalance effectively.
In addition, it is crucial to evaluate model performance not just on the overall dataset but also on each individual class. Metrics such as precision, recall, and F1-score give a more nuanced picture of model performance under class imbalance than overall accuracy, which can be misleading: on a 90/10 split, a model that always predicts the majority class reaches 90% accuracy while missing every minority instance. By monitoring per-class metrics, researchers and practitioners can assess whether their strategies for addressing imbalance are effective and make informed decisions about model improvements.
In conclusion, the imbalance coefficient plays a pivotal role in machine learning, particularly when dealing with datasets exhibiting class imbalance. It serves as a valuable metric to quantify the severity of the imbalance and guide strategies to address it. By understanding and effectively addressing class imbalance, researchers and practitioners can develop more accurate and reliable models that perform well across all classes, leading to improved outcomes in various real-world applications.
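The quantities discussed above can be sketched in plain Python. This is an illustrative sketch, not a reference implementation, and the function names are hypothetical. The imbalance coefficient is taken as the majority-to-minority count ratio described above. Oversampling is shown in its simplest form: random duplication of minority instances, not synthetic generation as in SMOTE. Precision, recall, and F1 are computed separately for each class.

```python
import random
from collections import Counter


def imbalance_coefficient(labels):
    """Majority-class count divided by minority-class count."""
    counts = Counter(labels)
    return max(counts.values()) / min(counts.values())


def random_oversample(X, y, seed=0):
    """Duplicate randomly chosen instances of each smaller class until
    every class matches the majority-class count."""
    rng = random.Random(seed)
    counts = Counter(y)
    target = max(counts.values())
    X_out, y_out = list(X), list(y)
    for cls, n in counts.items():
        members = [x for x, label in zip(X, y) if label == cls]
        for _ in range(target - n):
            X_out.append(rng.choice(members))
            y_out.append(cls)
    return X_out, y_out


def per_class_scores(y_true, y_pred):
    """Map each class to its (precision, recall, F1) triple."""
    pairs = list(zip(y_true, y_pred))
    scores = {}
    for c in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores[c] = (precision, recall, f1)
    return scores


if __name__ == "__main__":
    y = [0] * 90 + [1] * 10          # 90 majority, 10 minority instances
    X = list(range(len(y)))          # stand-in features
    print("imbalance coefficient:", imbalance_coefficient(y))
    _, y_bal = random_oversample(X, y)
    print("after oversampling:", Counter(y_bal))
    y_pred = [0] * len(y)            # a model that always predicts the majority class
    accuracy = sum(t == p for t, p in zip(y, y_pred)) / len(y)
    print("accuracy:", accuracy, "per-class:", per_class_scores(y, y_pred))
```

In the demo, the always-majority model reaches 90% accuracy but has zero recall on the minority class, which is the gap per-class metrics expose. In practice, oversampling should be applied only to the training split; otherwise duplicated minority instances can leak into the evaluation data.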

Usage of Variance and Variation
Variance and variation are terms commonly used in statistics and probability to describe the extent, dispersion, or spread of data. While they are related concepts, there are subtle differences in their usage. In this essay, we will explore the definitions, applications, and significance of variance and variation in various fields.
Variance is a statistical measure that quantifies how spread out or dispersed a set of values is. It is calculated as the average of the squared differences from the mean of the data set. The idea is to measure how far the data points lie from the mean; squaring the differences keeps positive and negative deviations from cancelling out and gives extra weight to large deviations. (When a sample is used to estimate the variance of a larger population, the sum of squared differences is usually divided by n − 1 rather than n.) A larger variance indicates a greater spread or dispersion of data, while a smaller variance indicates a more concentrated cluster of values around the mean.
Variance is widely used in fields such as finance, economics, engineering, and physics. In finance, for example, variance is a key measure of volatility in asset prices. Higher variance implies greater price fluctuations, making an investment riskier. Economists use variance to assess the volatility of economic indicators like GDP, inflation rates, and stock market returns. In engineering, variance helps evaluate the consistency and reliability of processes or systems. For instance, in manufacturing, measuring the variance of product dimensions supports quality control. In physics, variance is used to analyze the fluctuations or noise in experimental measurements.
On the other hand, variation refers to the range or diversity of values within a data set or population. It describes how different individual observations are from one another. Variation can be expressed in several ways, such as the range (maximum minus minimum), the interquartile range (the spread of the middle 50% of observations, Q3 − Q1), or the coefficient of variation (standard deviation divided by the mean). Variation is used in fields including biology, genetics, ecology, and social sciences.
In biology and genetics, variation is crucial for understanding the diversity of traits within a species or population. It helps researchers study genetic variability, evolution, and adaptability. In ecology, variation is used to analyze how different environmental factors impact species diversity, population dynamics, and ecosystem stability. Social scientists use variation to investigate differences in attitudes, behaviors, socioeconomic factors, or cultural practices across different groups or regions.
While variance and variation share similarities, they serve distinct purposes in statistics. Variance focuses specifically on the dispersion of data around the mean: it is the average squared distance between individual values and the mean of the data set. Variation, on the other hand, is a broader concept that considers the entire range of values or patterns in a data set and quantifies the degree of diversity, heterogeneity, or variability within it.
Both variance and variation play vital roles in hypothesis testing, modeling, and decision-making. They help researchers and practitioners make inferences, draw comparisons, and evaluate statistical significance. For example, when testing the effectiveness of a new drug, variance allows researchers to assess the consistency and reliability of treatment outcomes. In a manufacturing process, variation analysis helps identify sources of defects, optimize performance, and minimize waste. Moreover, in social sciences, analyzing variation across different groups provides insights into social inequalities, policy implications, or cultural differences.
In conclusion, variance and variation are critical statistical measures used to analyze the spread, diversity, or dispersion of data. Variance focuses on the differences between individual values and the mean, providing a measure of how spread out the values are.
Variation, on the other hand, considers the entire range of values or patterns within a data set, quantifying the degree of diversity or heterogeneity. Both concepts are extensively applied in various fields and are instrumental in decision-making, evaluating statistical significance, and understanding patterns in data.
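The measures named in this essay can be computed with Python's standard `statistics` module. The sketch below uses a made-up data set, and the helper name `spread_summary` is hypothetical. `pvariance` divides by n (the "average of squared differences" definition above), while `variance` divides by n − 1 (the usual sample estimate). The interquartile range comes from `statistics.quantiles`, whose quartile values depend on the interpolation method; `method="inclusive"` is an arbitrary choice here. The coefficient of variation uses the population standard deviation in this sketch, although using the sample standard deviation is also common.

```python
import statistics


def spread_summary(data):
    """Variance- and variation-type measures for a list of numbers."""
    mean = statistics.mean(data)
    q1, _, q3 = statistics.quantiles(data, n=4, method="inclusive")
    return {
        "mean": mean,
        "population variance": statistics.pvariance(data),  # divides by n
        "sample variance": statistics.variance(data),       # divides by n - 1
        "range": max(data) - min(data),
        "interquartile range": q3 - q1,
        # Coefficient of variation: standard deviation relative to the mean.
        "coefficient of variation": statistics.pstdev(data) / mean,
    }


if __name__ == "__main__":
    values = [2, 4, 4, 4, 5, 5, 7, 9]  # made-up example data
    for name, value in spread_summary(values).items():
        print(f"{name}: {value:.3f}")
```

For this data set the mean is 5 and the population variance is 4, so the population standard deviation is 2 and the coefficient of variation is 0.4. The sample variance is 32/7, slightly larger, because of the n − 1 divisor.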