Mining Subsidence Paper_Ren_Li_Buckeridge_rev4_Jan2010


A review on time series data mining

Tak-chung Fu
Department of Computing, Hong Kong Polytechnic University, Hunghom, Kowloon, Hong Kong

Engineering Applications of Artificial Intelligence 24 (2011) 164–181. doi:10.1016/j.engappai.2010.09.007. 0952-1976/$ - see front matter © 2010 Elsevier Ltd. All rights reserved. Journal homepage: /locate/engappai. E-mail addresses: cstcfu@.hk, cstcfu@

Article info
Article history: Received 19 February 2008; received in revised form 14 March 2010; accepted 4 September 2010.
Keywords: Time series data mining; Representation; Similarity measure; Segmentation; Visualization

Abstract

Time series is an important class of temporal data objects and it can be easily obtained from scientific and financial applications. A time series is a collection of observations made chronologically. Time series data are characterized by large data size, high dimensionality and the need for continuous updating. Moreover, time series data, which is characterized by its numerical and continuous nature, is always considered as a whole instead of as individual numerical fields. The increasing use of time series data has initiated a great deal of research and development attempts in the field of data mining. The abundant research on time series data mining in the last decade could hamper the entry of interested researchers, due to its complexity. In this paper, a comprehensive review of the existing time series data mining research is given. The research is generally categorized into representation and indexing, similarity measure, segmentation, visualization and mining. Moreover, state-of-the-art research issues are also highlighted. The primary objective of this paper is to serve as a glossary for interested researchers to have an overall picture of the current time series data mining development and to identify potential research directions for further investigation.

1. Introduction

Recently, the increasing use of temporal data, in particular time series data, has initiated various research and development attempts in the field of data mining. Time series is an important class of temporal data objects, and it can be easily obtained from scientific and financial applications (e.g. electrocardiogram (ECG), daily temperature, weekly sales totals, and prices of mutual funds and stocks). A time series is a collection of observations made chronologically. Time series data are characterized by large data size, high dimensionality and continuous updating. Moreover, time series data, which is characterized by its numerical and continuous nature, is always considered as a whole instead of as individual numerical fields. Therefore, unlike traditional databases where similarity search is exact match based, similarity search in time series data is typically carried out in an approximate manner.

There are various kinds of time series data related research, for example, finding similar time series (Agrawal et al., 1993a; Berndt and Clifford, 1996; Chan and Fu, 1999), subsequence searching in time series (Faloutsos et al., 1994), dimensionality reduction (Keogh, 1997b; Keogh et al., 2000) and segmentation (Abonyi et al., 2005). These topics have been studied in considerable detail by both the database and pattern recognition communities for different domains of time series data (Keogh and Kasetty, 2002).

In the context of time series data mining, the fundamental problem is how to represent the time series data. One of the common approaches is transforming the time series to another domain for dimensionality reduction, followed by an indexing mechanism. Moreover, similarity measure between time series or time series subsequences and segmentation are two core tasks for various time series mining tasks. Based on the time series representation, different mining tasks can be found in the literature and they can be roughly classified into four fields: pattern discovery and clustering, classification, rule discovery and summarization. Some of the research concentrates on one of these fields, while other work may focus on more than one of the above processes. In this paper, a comprehensive review of the existing time series data mining research is given. Three state-of-the-art time series data mining issues, streaming, multi-attribute time series data and privacy, are also briefly introduced.

The remaining part of this paper is organized as follows: Section 2 contains a discussion of time series representation and indexing. The concept of similarity measure, which includes both whole time series and subsequence matching, based on the raw time series data or the transformed domain, will be reviewed in Section 3. The research work on time series segmentation and visualization will be discussed in Sections 4 and 5, respectively. In Section 6, various time series data mining tasks and recent time series data mining directions will be reviewed, whereas the conclusion will be made in Section 7.

2. Time series representation and indexing

One of the major reasons for time series representation is to reduce the dimension (i.e. the number of data points) of the original data. The simplest method perhaps is sampling (Astrom, 1969). In this method, a rate of m/n is used, where m is the length of a time series P and n is the dimension after dimensionality reduction (Fig. 1). However, the sampling method has the drawback of distorting the shape of the sampled/compressed time series if the sampling rate is too low.

An enhanced method is to use the average (mean) value of each segment to represent the corresponding set of data points.
Again, with time series $P = (p_1, \ldots, p_m)$ and $n$ is the dimension after dimensionality reduction, the "compressed" time series $\hat{P} = (\hat{p}_1, \ldots, \hat{p}_n)$ can be obtained by

$$\hat{p}_k = \frac{1}{e_k - s_k + 1} \sum_{i=s_k}^{e_k} p_i \qquad (1)$$

where $s_k$ and $e_k$ denote the starting and ending data points of the $k$th segment in the time series P, respectively (Fig. 2). That is, using the segmented means to represent the time series (Yi and Faloutsos, 2000). This method is also called piecewise aggregate approximation (PAA) by Keogh et al. (2000).¹ Keogh et al. (2001a) propose an extended version called an adaptive piecewise constant approximation (APCA), in which the length of each segment is not fixed, but adaptive to the shape of the series. A signature technique is proposed by Faloutsos et al. (1997) with similar ideas. Besides using the mean to represent each segment, other methods are proposed. For example, Lee et al. (2003) propose to use the segmented sum of variation (SSV) to represent each segment of the time series. Furthermore, a bit level approximation is proposed by Ratanamahatana et al. (2005) and Bagnall et al. (2006), which uses a bit to represent each data point.

To reduce the dimension of time series data, another approach is to approximate a time series with straight lines. Two major categories are involved. The first one is linear interpolation. A common method is using piecewise linear representation (PLR)² (Keogh, 1997b; Keogh and Smyth, 1997; Smyth and Keogh, 1997).
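The segment-mean reduction of Eq. (1) can be sketched in a few lines. This is a minimal illustration, not the reviewed authors' implementation: the function name `paa` and the rule for choosing the segment boundaries $s_k$ and $e_k$ (near-equal contiguous segments) are assumptions, since the text does not fix how the segments are chosen.

```python
def paa(series, n):
    """Reduce `series` (length m) to n segment means, following Eq. (1).

    Assumption for illustration: segments are contiguous and of
    near-equal length; the source does not prescribe the boundaries.
    """
    m = len(series)
    if not 1 <= n <= m:
        raise ValueError("n must satisfy 1 <= n <= len(series)")
    means = []
    for k in range(n):
        s_k = (k * m) // n            # first index of segment k (0-based)
        e_k = ((k + 1) * m) // n - 1  # last index of segment k (inclusive)
        segment = series[s_k:e_k + 1]
        # Mean of the segment: sum divided by (e_k - s_k + 1) points.
        means.append(sum(segment) / (e_k - s_k + 1))
    return means


if __name__ == "__main__":
    print(paa([1, 2, 3, 4, 5, 6], 3))
```

When m is not a multiple of n, this sketch simply lets some segments be one point longer than others; an adaptive scheme such as APCA would choose the boundaries from the shape of the series instead.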
The approximating line for the subsequence $P(p_i, \ldots, p_j)$ is simply the line connecting the data points $p_i$ and $p_j$. It tends to closely align the endpoints of consecutive segments, giving a piecewise approximation with connected lines. PLR is a bottom-up algorithm. It begins by creating a fine approximation of the time series, so that m/2 segments are used to approximate the m-length time series, and iteratively merges the lowest-cost pair of adjacent segments until it reaches the required number of segments. When the pair of adjacent segments $S_i$ and $S_{i+1}$ are merged, the cost of merging the new segment with its right neighbor and the cost of merging the $S_{i-1}$ segment with its new larger neighbor are recalculated. Ge (1998) extends PLR to a hierarchical structure. Furthermore, Keogh and Pazzani enhance PLR by considering weights of the segments (Keogh and Pazzani, 1998) and relevance feedback from the user (Keogh and Pazzani, 1999). The second approach is linear regression, which represents the subsequences with the best fitting lines (Shatkay and Zdonik, 1996).

Furthermore, reducing the dimension by preserving the salient points is a promising method. These points are called perceptually important points (PIP). The PIP identification process is first introduced by Chung et al. (2001) and used for pattern matching of technical (analysis) patterns in financial applications.
With the time series P, there are n data points: $P_1, P_2, \ldots, P_n$. All the data points in P can be reordered by their importance by going through the PIP identification process. The first data point $P_1$ and the last data point $P_n$ in the time series are the first two PIPs. The next PIP found will be the point in P with the maximum distance to the first two PIPs. The fourth PIP found will be the point in P with the maximum vertical distance to the line joining its two adjacent PIPs, either between the first and second PIPs or between the second and the last PIPs. The PIP location process continues until all the points in P are attached to a reordered list L or the required number of PIPs is reached (i.e. reduced to the required dimension). Seven PIPs are identified from the sample time series in Fig. 3. Detailed treatment can be found in Fu et al. (2008c).

The idea is similar to a technique proposed about 30 years ago by Douglas and Peucker (1973) for reducing the number of points required to represent a line (see also Hershberger and Snoeyink, 1992). Perng et al. (2000) use a landmark model to identify the important points in the time series for similarity measure. Man and Wong (2001) propose a lattice structure to represent the identified peaks and troughs (called control points) in the time series. Pratt and Fink (2002) and Fink et al. (2003) define extrema as minima and maxima in a time series and compress the time series by selecting only certain important extrema and dropping the other points. The idea is to discard minor fluctuations and keep major minima and maxima. The compression is controlled by the compression ratio with parameter R, which is always greater than one; an increase of R leads to the selection of fewer points. That is, given indices i and j, where $i \le x \le j$, a point $p_x$ of a series P is an important minimum if $p_x$ is the minimum among $p_i, \ldots, p_j$, and $p_i / p_x \ge R$ and $p_j / p_x \ge R$. Similarly, $p_x$ is an important maximum if $p_x$ is the maximum among $p_i, \ldots, p_j$, and $p_x / p_i \ge R$ and $p_x / p_j \ge R$. This algorithm takes linear time and constant memory. It outputs the values and indices of all important points, as well as the first and last point of the series. This algorithm can also process new points as they arrive, without storing the original series. It identifies important points based on local information of each segment (subsequence) of the time series. Recently, a critical point model (CPM) (Bao, 2008) and a high-level representation based on a sequence of critical points (Bao and Yang, 2008) are proposed for financial data analysis. On the other hand, special points are introduced to restrict the error on PLR (Jia et al., 2008). Key points are suggested to represent time series in Leng et al. (2009) for anomaly detection.

Fig. 1. Time series dimensionality reduction by sampling. The time series on the left is sampled regularly (denoted by dotted lines) and displayed on the right with a large distortion.

Fig. 2. Time series dimensionality reduction by PAA. The horizontal dotted lines show the mean of each segment.

¹ This method was originally called piecewise constant approximation (Keogh and Pazzani, 2000a).
² It is also called piecewise linear approximation (PLA).

Another common family of time series representation approaches converts the numeric time series to symbolic form. That is, the time series is first discretized into segments, and each segment is then converted into a symbol (Yang and Zhao, 1998; Yang et al., 1999; Motoyoshi et al., 2002; Aref et al., 2004). Lin et al. (2003; 2007) propose a method called symbolic aggregate approximation (SAX) to convert the result from PAA to a symbol string. The distribution space (y-axis) is divided into equiprobable regions. Each region is represented by a symbol and each segment can then be mapped into the symbol corresponding to the region in which it resides. The transformed time series $\hat{P}$ using PAA is finally converted to a symbol string $SS(s_1, \ldots, s_W)$. Two parameters must be specified for the conversion: the length of subsequence w and the alphabet size A (number of symbols used).

Besides using the means of the segments to build the alphabets, another method uses the volatility change to build the alphabets. Jonsson and Badal (1997) use the "Shape Description Alphabet (SDA)". Example symbols like highly increasing transition, stable transition, and slightly decreasing transition are adopted. Qu et al. (1998) use gradient alphabets like upward, flat and downward as symbols. Huang and Yu (1999) suggest transforming the time series to a symbol string, using the change ratio between contiguous data points. Megalooikonomou et al. (2004) propose to represent each segment by a codeword from a codebook of key-sequences. This work has been extended to multi-resolution consideration (Megalooikonomou et al., 2005). Morchen and Ultsch (2005) propose an unsupervised discretization process based on quality score and persisting states. Instead of ignoring the temporal order of values like many other methods, the Persist algorithm incorporates temporal information. Furthermore, subsequence clustering is a common method to generate the symbols (Das et al., 1998; Li et al., 2000a; Hugueney and Meunier, 2001; Hebrail and Hugueney, 2001). A multiple abstraction level mining (MALM) approach is proposed by Li et al. (1998), which is based on the symbolic form of the time series. The symbols in that work are determined by clustering the features of each segment, such as regression coefficients, mean square error and higher order statistics based on the histogram of the regression residuals.

Most of the methods described so far represent time series in the time domain directly. Representing time series in the transformation domain is another large family of approaches. One of the popular transformation techniques in time series data mining is the discrete Fourier transform (DFT), since first being proposed for use in this context by Agrawal et al. (1993a). Rafiei and Mendelzon (2000) develop similarity-based queries using DFT. Janacek et al. (2005) propose to use likelihood ratio statistics to test the hypothesis of difference between series instead of a Euclidean distance in the transformed domain. Recent research uses the wavelet transform to represent time series (Struzik and Siebes, 1998). In particular, the discrete wavelet transform (DWT) has been found to be effective in replacing DFT (Chan and Fu, 1999) and the Haar transform is typically selected (Struzik and Siebes, 1999; Wang and Wang, 2000). The Haar transform is a series of averaging and differencing operations on a time series (Chan and Fu, 1999). The average and difference between every two adjacent data points are computed. For example, given a time series P = (1, 3, 7, 5), the dimension of four data points is the full resolution (i.e. the original time series); in the dimension of two coefficients, the averages are (2, 6) with the coefficients (−1, 1); and in the dimension of one coefficient, the average is 4 with coefficient (−2). A multi-level representation of the wavelet transform is proposed by Shahabi et al. (2000). Popivanov and Miller (2002) show that a large class of wavelet transformations can be used for time series representation. Dasha et al. (2007) compare different wavelet feature vectors. On the other hand, comparisons between DFT and DWT can be found in Wu et al. (2000b) and Morchen (2003), and a combined use of Fourier and wavelet transforms is presented in Kawagoe and Ueda (2002). An ensemble-index is proposed by Keogh et al. (2001b) and Vlachos et al. (2006), which ensembles two or more representations for indexing.

Principal component analysis (PCA) is a popular multivariate technique used for developing multivariate statistical process monitoring methods (Yang and Shahabi, 2005b; Yoon et al., 2005) and it is applied to analyze financial time series by Lesch et al. (1999). In most of the related works, PCA is used to eliminate the less significant components or sensors, to reduce the data representation to only the most significant ones and to plot the data in two dimensions. The PCA model defines a linear hyperplane, so it can be considered as the multivariate extension of the PLR. PCA maps the multivariate data into a lower dimensional space, which is useful in the analysis and visualization of correlated high-dimensional data. Singular value decomposition (SVD) (Korn et al., 1997) is another transformation-based approach.

Other time series representation methods include modeling time series using hidden Markov models (HMMs) (Azzouzi and Nabney, 1998), and a compression technique for multiple streams is proposed by Deligiannakis et al. (2004). It is based on a base signal, which encodes piecewise linear correlations among the collected data values. In addition, a recent biased dimension reduction technique is proposed by Zhao and Zhang (2006) and Zhao et al. (2006).

Fig. 3. Time series compression by data point importance. The time series on the left is represented by seven PIPs on the right.

Moreover, many of the representation schemes described above are incorporated with different indexing methods. A common approach is to adapt an existing multidimensional indexing structure (e.g. the R-tree proposed by Guttman (1984)) to the representation. Agrawal et al. (1993a) propose an F-index, which adopts the R*-tree (Beckmann et al., 1990) to index the first few DFT coefficients. An ST-index is further proposed by Faloutsos et al. (1994), which extends the previous work for subsequence handling. Agrawal et al. (1995a) adopt both the R*- and R+-tree (Sellis et al., 1987) as the indexing structures. A multi-level distance based index structure is proposed by Yang and Shahabi (2005a) for indexing time series represented by PCA. Vlachos et al. (2005a) propose a Multi-Metric (MM) tree, which is a hybrid indexing structure on Euclidean and periodic spaces. Minimum bounding rectangle (MBR) is also a common technique for time series indexing (Chu and Wong, 1999; Vlachos et al., 2003). An MBR is adopted by Rafiei (1999), where an MT-index is developed based on the Fourier transform, and by Kahveci and Singh (2004), where a multi-resolution index is proposed based on the wavelet transform. Chen et al. (2007a) propose an indexing mechanism for PLR representation. On the other hand, Kim et al. (1996) propose an index structure called TIP-index (TIme series Pattern index) for manipulating time series pattern databases. The TIP-index is developed by improving the extended multidimensional dynamic index file (EMDF) (Kim et al., 1994). An iSAX (Shieh and Keogh, 2009) is proposed to index massive time series, which is developed based on SAX. A multi-resolution indexing structure is proposed by Li et al. (2004), which can be adapted to different representations.

To sum up, for a given index structure, the efficiency of indexing depends only on the precision of the approximation in the reduced dimensionality space. However, in choosing a dimensionality reduction technique, we cannot simply choose an arbitrary compression algorithm. It requires a technique that produces an indexable representation. For example, many time series can be efficiently compressed by delta encoding, but this representation does not lend itself to indexing. In contrast, SVD, DFT, DWT and PAA all lend themselves naturally to indexing, with each eigenwave, Fourier coefficient, wavelet coefficient or aggregate segment mapping onto one dimension of an index tree.
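The Haar averaging-and-differencing example given earlier in this section, P = (1, 3, 7, 5), can be reproduced with a short sketch. It assumes the unnormalized convention that the example's numbers imply (average = (a + b)/2, coefficient = (a − b)/2 for each adjacent pair) and a series length that is a power of two; the function name `haar_levels` is illustrative only.

```python
def haar_levels(series):
    """Return [(averages, coefficients), ...] from fine to coarse resolution.

    Assumptions for illustration: len(series) is a power of two, and the
    unnormalized convention average = (a + b) / 2, coefficient = (a - b) / 2
    is used for each pair of adjacent values.
    """
    n = len(series)
    if n == 0 or n & (n - 1):
        raise ValueError("series length must be a power of two")
    levels = []
    current = list(series)
    while len(current) > 1:
        pairs = [(current[i], current[i + 1]) for i in range(0, len(current), 2)]
        averages = [(a + b) / 2 for a, b in pairs]
        coefficients = [(a - b) / 2 for a, b in pairs]
        levels.append((averages, coefficients))
        current = averages  # the next, coarser level works on the averages
    return levels


if __name__ == "__main__":
    for averages, coefficients in haar_levels([1, 3, 7, 5]):
        print(averages, coefficients)
```

Keeping the final average together with the coefficients of every level is enough to rebuild the original series, which is why the first few values can serve as a reduced-dimension representation for indexing.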
Post-processing is then performed by computing the actual distance between sequences in the time domain and discarding any false matches.

3. Similarity measure

Similarity measure is of fundamental importance for a variety of time series analysis and data mining tasks. Most of the representation approaches discussed in Section 2 also propose a similarity measure method on the transformed representation scheme. In traditional databases, similarity measure is exact match based. However, in time series data, which is characterized by its numerical and continuous nature, similarity measure is typically carried out in an approximate manner. Considering stock time series, one may expect queries like:

Query 1: find all stocks which behave "similar" to stock A.
Query 2: find all "head and shoulders" patterns lasting for a month in the closing prices of all high-tech stocks.

The query results are expected to provide useful information for different stock analysis activities. Queries like Query 2 are in fact tightly coupled with the patterns frequently used in technical analysis, e.g. double top/bottom, ascending triangle, flag and rounded top/bottom.

In the time series domain, devising an appropriate similarity function is by no means trivial. There are essentially two ways the data might be organized and processed (Agrawal et al., 1993a). In whole sequence matching, the whole length of all time series is considered during the similarity search. It requires comparing the query sequence to each candidate series by evaluating the distance function and keeping track of the sequence with the smallest distance. In subsequence matching, where a query sequence Q and a longer sequence P are given, the task is to find the subsequences in P which match Q.
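Subsequence matching as just defined can be sketched by brute force: slide the query Q across the longer sequence P and keep the offsets whose distance falls within a tolerance. The Euclidean distance, the `epsilon` threshold and the function name are assumptions made for illustration; the methods reviewed in this paper replace this linear scan with indexed search on a reduced representation.

```python
import math


def subsequence_matches(p, q, epsilon):
    """Return (offset, distance) for every window of p within epsilon of q.

    Hypothetical helper for illustration: uses plain Euclidean distance
    on the raw values and tries every possible offset of q within p.
    """
    w = len(q)
    matches = []
    for offset in range(len(p) - w + 1):
        window = p[offset:offset + w]
        dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(window, q)))
        if dist <= epsilon:
            matches.append((offset, dist))
    return matches


if __name__ == "__main__":
    print(subsequence_matches([0, 1, 2, 3, 2, 1, 0], [2, 3, 2], 0.5))
```

The scan evaluates one distance per offset, so its cost grows with the product of the two sequence lengths, which is the motivation for the indexing structures discussed in Section 2.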
Subsequence matching requires that the query sequence Q be placed at every possible offset within the longer sequence P. With respect to Query 1 and Query 2 above, they can be considered as a whole sequence matching and a subsequence matching, respectively. Gavrilov et al. (2000) study the usefulness of different similarity measures for clustering similar stock time series.

3.1. Whole sequence matching

To measure the similarity/dissimilarity between two time series, the most popular approach is to evaluate the Euclidean distance on the transformed representation, like the DFT coefficients (Agrawal et al., 1993a) and the DWT coefficients (Chan and Fu, 1999). Although most of these approaches guarantee a lower bound of the Euclidean distance to the original data, Euclidean distance is not always a suitable distance function in specific domains (Keogh, 1997a; Perng et al., 2000; Megalooikonomou et al., 2005). For example, stock time series have their own characteristics compared with other time series data (e.g. data from scientific areas like ECG), in which the salient points are important.

Besides Euclidean-based distance measures, other distance measures can easily be found in the literature. A constraint-based similarity query is proposed by Goldin and Kanellakis (1995), which extended the work of Agrawal et al. (1993a). Das et al. (1997) apply computational geometry methods for similarity measure. Bozkaya et al. (1997) use a modified edit distance function for time series matching and retrieval. Chu et al. (1998) propose to measure the distance based on the slopes of the segments for handling amplitude and time scaling problems. A projection algorithm is proposed by Lam and Wong (1998). A pattern recognition method is proposed by Morrill (1998), which is based on the building blocks of the primitives of the time series.
Ruspini and Zwir (1999) address the automated identification of significant qualitative features of complex objects. They propose a process for the discovery and representation of interesting relations between those features, and for the generation of structured indexes and textual annotations describing features and their relations. The discovery of knowledge by an analysis of collections of qualitative descriptions is then achieved. They focus on methods for the succinct description of interesting features lying in an effective frontier. Generalized clustering is used for extracting features which interest domain experts. Generalized Markov models are adopted for waveform matching in Ge and Smyth (2000). A content-based query-by-example retrieval model called FALCON is proposed by Wu et al. (2000a), which incorporates a feedback mechanism.

Indeed, one of the most popular and field-tested similarity measures is called the "time warping" distance measure. Based on the dynamic time warping (DTW) technique, the method proposed in Berndt and Clifford (1994) predefines some patterns to serve as templates for the purpose of pattern detection. To align two time series, P and Q, using DTW, an n-by-m matrix M is first constructed. The (i-th, j-th) element of the matrix, $m_{ij}$, contains the distance $d(q_i, p_j)$ between the two points $q_i$ and $p_j$, and a Euclidean distance is typically used, i.e. $d(q_i, p_j) = (q_i - p_j)^2$. It corresponds to the alignment between the points $q_i$ and $p_j$. A warping path, W, is a contiguous set of matrix elements that defines a mapping between Q and P. Its $k$th element is defined as $w_k = (i_k, j_k)$ and

$$W = w_1, w_2, \ldots, w_k, \ldots, w_K \qquad (2)$$

where $\max(m, n) \le K < m + n - 1$.

The warping path is typically subjected to the following constraints: boundary conditions, continuity and monotonicity. The boundary conditions are $w_1 = (1, 1)$ and $w_K = (m, n)$. This requires the warping path to start and finish in diagonally opposite corners of the matrix. The next constraint is continuity. Given $w_k = (a, b)$, then $w_{k-1} = (a', b')$, where $a - a' \le 1$ and $b - b' \le 1$. This restricts the allowable steps in the warping path to adjacent cells, including the diagonally adjacent cell. Also, the constraints $a - a' \ge 0$ and $b - b' \ge 0$ force the points in W to be monotonically spaced in time. There is an exponential number of warping paths satisfying the above conditions. However, only the path that minimizes the warping cost is of interest. This path can be efficiently found by using dynamic programming (Berndt and Clifford, 1996) to evaluate the following recurrence equation, which defines the cumulative distance $\gamma(i, j)$ as the distance $d(i, j)$ found in the current cell plus the minimum of the cumulative distances of the adjacent elements, i.e.

$$\gamma(i, j) = d(q_i, p_j) + \min\{\gamma(i-1, j-1),\ \gamma(i-1, j),\ \gamma(i, j-1)\} \qquad (3)$$

A warping path, W, such that the "distance" between the two series is minimized, can be calculated by a simple method

$$\mathrm{DTW}(Q, P) = \min_{W}\left[\sum_{k=1}^{K} d(w_k)\right] \qquad (4)$$

where $d(w_k)$ can be defined as

$$d(w_k) = d(q_{i_k}, p_{j_k}) = (q_{i_k} - p_{j_k})^2 \qquad (5)$$

Detailed treatment can be found in Kruskall and Liberman (1983).

As DTW is computationally expensive, different methods are proposed to speed up the DTW matching process. Different constraint (banding) methods, which control the subset of the matrix that the warping path is allowed to visit, are reviewed in Ratanamahatana and Keogh (2004). Yi et al. (1998) introduce a technique for approximate indexing of DTW that utilizes a FastMap technique, which filters the non-qualifying series. Kim et al. (2001) propose an indexing approach under the DTW similarity measure. Keogh and Pazzani (2000b) introduce a modification of DTW, which integrates with PAA and operates on a higher level abstraction of the time series. An exact indexing approach, which is based on representing the time series by PAA for the DTW similarity measure, is further proposed by Keogh (2002). An iterative deepening dynamic time warping (IDDTW) is suggested by Chu et al. (2002), which is based on a probabilistic model of the approximation errors for all levels of approximation prior to the query process. Chan et al. (2003) propose a filtering process based on the Haar wavelet transformation from a low resolution approximation of the real-time warping distance. Shou et al. (2005) use an APCA approximation to compute the lower bounds for DTW distance. They improve the global bound proposed by Kim et al. (2001), which can be used to index the segments, and propose a multi-step query processing technique. A FastDTW is proposed by Salvador and Chan (2004). This method uses a multi-level approach that recursively projects a solution from a coarse resolution and refines the projected solution. Similarly, a fast DTW search method, FTW, is proposed by Sakurai et al. (2005) for efficiently pruning a significant number of search candidates. Ratanamahatana and Keogh (2005) clarify some points about DTW which are related to lower bounding and speed. Euachongprasit and Ratanamahatana (2008) also focus on this problem. A sequentially indexed structure (SIS) is proposed by Ruengronghirunya et al. (2009) to balance the tradeoff between indexing efficiency and I/O cost during DTW similarity measure. A lower bounding function for a group of time series, LBG, is adopted.

On the other hand, Keogh and Pazzani (2001) point out the potential problems of DTW: it can lead to unintuitive alignments, where a single point on one time series maps onto a large subsection of another time series. Also, DTW may fail to find obvious and natural alignments in two time series, because of a single feature (i.e. peak, valley, inflection point, plateau, etc.).
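The cumulative-distance recurrence of Eq. (3) can be sketched as a small dynamic program. This is a minimal, unconstrained version under stated assumptions: the point distance is the squared difference of Eq. (5), no banding constraint is applied, and the function name `dtw_distance` is illustrative rather than taken from any of the cited works.

```python
def dtw_distance(q, p):
    """Minimum cumulative warping cost between sequences q and p (Eqs. 3-5).

    Assumptions for illustration: d(q_i, p_j) = (q_i - p_j) ** 2, no
    banding constraint, and both sequences are non-empty.
    """
    m, n = len(q), len(p)
    if m == 0 or n == 0:
        raise ValueError("both sequences must be non-empty")
    inf = float("inf")
    # gamma[i][j] holds the cumulative distance for q[:i] and p[:j];
    # row 0 and column 0 are sentinels so the path must start at (1, 1).
    gamma = [[inf] * (n + 1) for _ in range(m + 1)]
    gamma[0][0] = 0.0
    for i in range(1, m + 1):
        for j in range(1, n + 1):
            d = (q[i - 1] - p[j - 1]) ** 2
            gamma[i][j] = d + min(gamma[i - 1][j - 1],  # diagonal step
                                  gamma[i - 1][j],      # step in q only
                                  gamma[i][j - 1])      # step in p only
    return gamma[m][n]


if __name__ == "__main__":
    print(dtw_distance([1, 2, 3], [1, 2, 2, 3]))
```

The table has (m + 1)(n + 1) cells and each is filled in constant time, which is the quadratic cost that the banding, lower-bounding and multi-resolution methods cited above aim to reduce.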
One of the causes is the great difference between the lengths of the series being compared. Therefore, besides improving the performance of DTW, methods are also proposed to improve the accuracy of DTW. Keogh and Pazzani (2001) propose a modification of DTW that considers the higher level feature of shape for better alignment. Ratanamahatana and Keogh (2004) propose to learn arbitrary constraints on the warping path. Regression time warping (RTW) is proposed by Lei and Govindaraju (2004) to address the challenges of shifting, scaling, robustness and … Latecki et al. (2005) propose a method called minimal variance matching (MVM) for elastic matching. It determines a subsequence of the time series that best matches a query series by finding the cheapest path in a directed acyclic graph. A segment-wise time warping distance (STW) is proposed by Zhou and Wong (2005) for time scaling search. Fu et al. (2008a) propose a scaled and warped matching (SWM) approach for handling both DTW and uniform scaling simultaneously. Different customized DTW techniques are applied to the field of music research for query by humming (Zhu and Shasha, 2003; Arentz et al., 2005).

Focusing on similar problems as DTW, the Longest Common Subsequence (LCSS) model (Vlachos et al., 2002) is proposed. The LCSS is a variation of the edit distance and the basic idea is to match two sequences by allowing them to stretch, without rearranging the sequence of the elements, but allowing some elements to be unmatched. One of the important advantages of LCSS over DTW is its consideration of outliers. Chen et al. (2005a) further introduce a distance function based on edit distance on real sequence (EDR), which is robust against data imperfection. Morse and Patel (2007) propose a Fast Time Series Evaluation (FTSE) method which can be used to evaluate the threshold value of these kinds of techniques in a faster way.

Threshold-based distance functions are proposed by Aßfalg et al. (2006). The proposed function considers intervals during which the time series exceeds a certain threshold for comparing time series, rather than using the exact time series values. A T-Time application is developed (Aßfalg et al., 2008) to demonstrate its usage. Fu et al. (2007) further suggest introducing rules to govern the pattern matching process, if a priori knowledge exists in the given domain.

A parameter-light distance measure method based on Kolmogorov complexity theory is suggested in Keogh et al. (2007b). A compression-based dissimilarity measure (CDM)³ is adopted in that paper. Chen et al. (2005b) present a histogram-based representation for similarity measure. Similarly, a histogram-based similarity measure, bag-of-patterns (BOP), is proposed by Lin and Li (2009). The frequency of occurrences of each pattern in

³ CDM is proposed by Keogh et al. (2004), and is used to compare the co-compressibility between data sets.

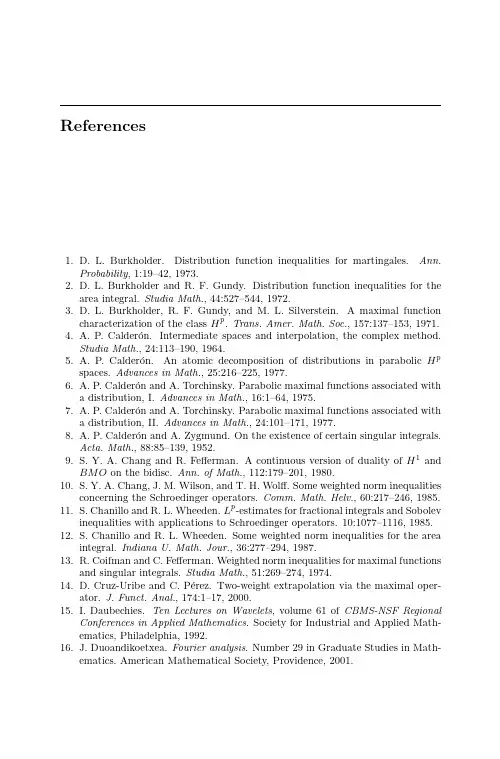


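Transnational Writing in Claude McKay's Home to Harlem

Shu Jinyan

Abstract: Claude McKay's Home to Harlem portrays the black transnational experience of the early twentieth century.

Scholars have mainly explained how the author's personal transnational experience and black internationalist thought shaped the novel's main characters, while overlooking the nationality of Ray, the protagonist of the subplot, and the significance of his sojourn in Harlem.

Ray's experience of transnational migration both re-presents McKay's complex transnational feelings and experience of identification and reflects the spatial meaning that Harlem carries as an ideal home for the migrating African diaspora and as an urban black community.

The paper proposes that Harlem has three dimensions: a geographic space in which immigrants evoke historical memory, a political space in which transnational identities are constructed, and a multicultural space that accommodates difference. It examines the experience of modernity that immigrants undergo in transnational movement, and thereby reveals how, by changing their established identities and redefining themselves, they strive to free themselves from the constraints of traditional concepts of nation, race and class and from confusion over identity, and so take part in the production of racial space in American cities.

Keywords: Claude McKay; Home to Harlem; transnational writing

Funding: This paper is an interim research result of the National Social Science Major Project "Literary-Historical Textual Research and Disciplinary Construction of American Literary Geography" (project no. 16ZDA197) and of the Tianjin Graduate Research Innovation Project "A Study of Transnational Space in American Neorealist Fiction" (project no. 19YJSB039).

About the author: Shu Jinyan is a doctoral candidate at the College of Foreign Languages, Nankai University, and an associate professor at the School of Foreign Studies, Kashi University. Her research focuses on American literature.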

Title: Claude McKay's Transnational Writing in Home to Harlem

Abstract: Claude McKay's Home to Harlem depicts the black transnational experience of the early 20th century. Scholars have mainly studied the influence of McKay's personal transnational experience and black internationalist thinking on his main characters, but have neglected Ray, the protagonist of the subplot, his nationality, and the significance of his sojourn in Harlem. Ray's transnational migration experience not only embodies McKay's complex transnational feelings and experience of identity, but also reflects Harlem's spatial significance as an ideal home for the African diaspora and as an urban black community. The paper examines Caribbean immigrants' experience of modernity in Harlem, which is interpreted as a geographic space in which immigrants evoke historical memories, a political space for constructing transnational identities, and a multicultural space for accommodating differences. It argues that they manage to extricate themselves from the shackles of traditional concepts of nation, race and class, and from their confusion over identity, by changing their established identities and redefining themselves, and thus participate in the production of racial space in American cities.

Key words: Claude McKay; Home to Harlem; transnational writing

Author: Shu Jinyan is a Ph.D. candidate at the College of Foreign Languages, Nankai University (Tianjin 300071, China), and an associate professor at the School of Foreign Studies, Kashi University (Kashi 844000, China). Her major academic research interests include American literature. E-mail: ******************

Foreign Language and Literature Research 2 (2021), p. 60

In 1925, in the anthology The New Negro, Alain Locke described Harlem as a cosmopolitan cultural capital, one whose importance he considered comparable to that of the capitals of Europe's emerging nation-states.