A new hybrid critic-training method for approximate dynamic programming


混合研究法的设计类型朱雁【期刊名称】《中学数学月刊》【年(卷),期】2016(000)001【总页数】7页(P1-7)【作者】朱雁【作者单位】华东师范大学 200062【正文语种】中文质性与量化的争论,与结合两者以调和它们之间的差异从而服务于同一研究问题的混合方法的迅速崛起几乎是同步的.混合方法的源起通常可以追溯到Campbell 和Fiske的多质多法(multi-trait,multi-method)方式(见[2]).尽管混合法被认为是一种较新的方法,但其主要的哲学和方法论方面的基础,以及实践标准早在20世纪90年代初期就已经形成了[3].关于混合法研究的基本原理,Johnson和Turner提出,不同类型的数据应当可以使用不同的策略和方法进行收集,从而优势互补,弥补各自的不足,这就可使混合法研究能够提供通过收集单一质性或量化数据而无法得到的见解[4].类似地,Greene提出,此种方法可以“弥补方法中所固有的不足,利用方法中所固有的优势,并消除方法上无法避免的偏向性”[5].混合法研究结合质性和量化的方法,以期利用两者的优势,这很明显区别于原有方法的局限性及相应的实践,尤其是有别于量化的方法.Johnson和Onwuegbuzie指出,混合法研究是研究者混合或组合量化及质性研究的技巧、方法、途径、概念或语言,以用于某个单个的研究中的一类研究;混合法研究也是试图让使用多种方法合法化的一种尝试,而不再限制或约束研究者们的选择(这亦是对教条主义的否定);它是研究的一种拓展和创造性形式,而不再是一种限制;它具有包容性、多元性和互补性,它要求研究者以折衷的方式进行方法的选择、思考以及实施研究[6]17-18.Johnson和Onwuegbuzie的上述提法,一方面强调了混合质性及量化方法中的多种元素的潜在价值,同时也指出了这一做法的潜在复杂性.针对混合法研究的使用方式,Caracelli和Greene概括了三种典型的类型,包括:(1)检验由不同测试工具得出的结果的一致性;(2)用另一种方法说明和建构由前一种方法得出的结果;(3)说明从一种方法得出的结果如何能够影响后续的方法或是从该结果推导出的结论[7].这些功能以某种方式已在多个领域的许多混合法研究中有所体现,包括教育、心理学、犯罪学、护理与保健科学、家庭研究、商业等.混合法研究近期在数量上的增长,其部分原因应归于研究资助的增长[8,9].然而,期刊论文以及资助的项目也通过大量的数据显示,混合方法在教育研究中被接纳及资助仍是一项正在进展中的工作[10-14].尽管混合法研究已变得越来越为普及,但关于它是由什么构成的,却始终未能取得广泛一致的认识[15].例如,有些学者坚持,混合法研究是任何同时包含有质性和量化数据的研究,而另一些学者则认为,混合法研究必须存在一个混合方式的问题,同时又要具备质性和量化分析,以及整合性的推论[3].此外,对于与混合方式相关的几个元素,学者们的认识也未能有所统一.例如,应当在什么时候进行混合,是在设计研究的时候?在数据收集的过程当中?在数据分析的过程当中?又或是在进行数据解释的时候?还有另一些学者对混合法的整个理念持批判意见[16-18],其中一些对混合法的批判是基于某一特定立场的,例如纯粹主义立场、实用主义立场、辩证主义立场等[6,19,20].那些持纯粹主义立场的学者认为混合法不是一种合适的研究方法,其根源在于这种方法与质性和量化方法的基本世界观或信念体系(范式)是不相容的[21],因为质性和量化方法是通过使用不同的方法来研究不同的现象的[18].有部分纯粹主义者还指出,混合法设计将质性方法置于了量化研究的从属位置[16,22,23].秉持实用主义立场的研究者认为,范式间的差异在回应同一研究问题时是相互独立的,因此可以配合使用[6,24].Wheeldon在总结这一观点时提到,相较于依赖归纳推理及一般性前提得出特定的结论,或是基于特定前提的演绎法寻求一般性的结论,实用主义允许更为灵活的溯因推理(abductive 
approach)[25].通过强调解决实际问题,可以有效地回避关于客观性“事实”的存在性的争议,或是主观性观念的价值.由此,实用主义者便得出了以下两个论点,一是存在一个单一的“真实世界”,二是所有个体对“世界”都是有他/她自己独特的解读的[25]88.不过,实用主义立场也有其自身的批判者[17,26].例如,不同于实用主义的“独立”说,辩证主义研究者认为,多重范式是相互兼容的,且应当加以使用,但它们之间的差异和研究的意义应予以澄清[19].不过需要说明的是,采取辩证主义立场的研究者在认为各类范式具有平等性(即没有哪一种范式优于其他范式)的前提下,其所使用的混合过程与持有实用主义立场的研究者是相同的.目前,有关立场理念的问题还未得到解决,而且关于这一主题的其他发展在混合法的文献中仍在不断的持续中(如Creswell和Plan Clark提出的设计立场[8]).混合法设计的起源与有关于评估的文献的关系较为密切.这一领域中早期的一篇重要论文出自Greene,Caracelli和Graham,该论文提出使用混合法评估的五种主要的用途(或是理由)[27].第一种是三角论证法,其目的在于检验研究结果之间的一致性.比如,那些通过使用不同工具而得出的结果,其中可能会用到访谈和问卷.Greene等人指出,三角论证法可以降低推论受到质疑的几率.第二种用途是互补,即运用质性和量化数据评估研究现象中既有重合又有所区别的方面(如课堂观察、调查).第三种是用于发展,即由一种方法得出的结果对研究的后续方法或步骤产生影响.例如,与教师的访谈可能会建议需要增加额外的年终评估.混合法评估的第四种用途是用于起始,包括由一种方法得出的结果对其他的结果形成挑战或者是引发研究的新方向.例如,对教师的访谈可能挑战学区管理者提出的论点.第五,也为最后一种用途是拓展,即用以澄清结论或是增加结果的丰富程度.在有关于混合法的文献中,出现了不少的框架,其中有许多[6,7,15,20,21,28,29,30]是建立在Greene等人的工作之上的.虽然这些框架在很多方面存在着差异,但它们都较为成功地体现了同一个理念,即研究者在研究中有大量的工具可选用.而在另外一方面,没有一种框架获得研究者们的广泛青睐和使用.针对混合法研究的探究策略,Creswell划分出了六种具有一定重合性的设计类型[28].这些设计可以用来指导建构混合法研究的特定属性,而这些不同的设计,依据质性和量化数据是通过顺序性还是并行性的方式收集,不同属性的数据在进行混合时所被赋予的权重,以及使用某一理论观点(如后实证主义、建构主义)并指导研究设计的程度等,会有所不同.Creswell提出的第一种设计类型是顺序性解释设计(sequential explanatory design)[28].在这种设计中,质性数据被用来提升、补充,和在某些情况下跟进未能预计到的量化结果.这类设计的重点在于解释和说明变量之间的关系,其过程可能或可能不受某一特定理论观念的指导.这种设计首先是收集和分析量化数据,接着再收集和分析质性数据;这也就意味着在顺序性解释设计中,质性和量化数据在分析阶段并没有得到合并/混合,它们的实际合成是发生在数据解释的阶段.一般而言,此种设计在解释结果时往往给予量化部分较多的权重;设计的各个阶段相对独立,因而不同属性(即质性与量化)的数据在收集和呈现上是分别进行的,这也常常被视为是此种设计的特点,因为从操作和安排上看实施起来较为方便.当然,这种设计也显然存在着一些不足.例如,相对于收集单一的质性或量化数据,此种设计在收集不同属性数据的阶段所要花费的时间和资源会较多;在整合质性和量化数据时会要用到专门的知识.Morgan指出,顺序性解释设计是混合法研究使用得最为频繁的一种方式[24].在此,Howell等人实施的一个量化研究[31]可作为一个实际的案例.在这个研究中,研究者比对了获得代金券并被允许就读私立学校(之后确实就读于此类学校)的学生,他们在学业成绩的提升上是否优于未获得代金券且就读于公立学校的学生.为了探讨这个问题,这些作者考察了三所美国学校、超过4 
000名学生的学习成绩,其中学生是否能够获得代金券是以抽签的方式随机确定的.由此而产生的随机设计,使得代金券的获得与否成为研究中的主要自变量,而学生的成绩就成了主要的因变量.另外,该研究并没有提供理论模型.研究结果显示,获得代金券并就读于私立学校的非洲裔美国学生的成绩较之于未获得代金券的非洲裔美国学生有所提高.然而该研究同时也发现,上述的结果对于其他族裔的学生群体并不成立.从量化研究的角度来看,这个研究具有很多的长处,特别是在随机分配方面,大大增强了因果关系的结论.但Howell等人也指出,他们对于研究中发现的非洲裔美国学生在成绩上的优势,为何未能在其他族裔群体上出现的原因并不是很清楚.这一差异是否与学生对其自身的学习技巧的看法有关,是否与其同伴有关联,是否是由动机上的差异或是父母对于教育的较强支持、学生与学校职员的关系或是其他因素而引起的?鉴于Howell等人提出的困惑,我们可以进行一个顺序性解释研究设计,从而帮助这些作者找出有关代金券提升非洲裔美国学生而非其他族裔学生的成绩的缘由.比如,在前一阶段的研究结束之后,研究者可以开展后续的访谈,访谈的对象可在获得及未获得代金券的学生的家长中进行有目的性的选择,这一做法可以增强和补充之前在量化研究得出的结果.第二种设计类型是顺序性探索设计(sequential exploratory design),其在本质上与顺序性解释设计恰好相反,即是运用量化数据来增强和补充质性数据.当研究者的兴趣在于增强研究结果的一般化能力时,这种设计尤其有用,而相应的研究可能或可能不受某一理论观点的指导.Creswell指出,在开发研究工具时常常会用到此种设计[28].具体来说,在某一研究工具(比如调查、测试)形成初稿后,研究者往往会邀请少量的被试对该工具实施预测,这些被试根据他们的使用体会,通常会提出一些较为重要的反馈,基于此,研究者便可对相关工具进行适当的修改,以形成日后用于量化数据收集的工具的终稿.经过这一过程而获得的量化数据,可用于增强、补充、拓展之前获得的预测结果.这一设计的优势和不足与顺序性解释设计是较为类似的.Norman的混合法研究[32]可视为是此一设计的一个研究案例.Norman的研究关注的是,揭示大学学业导师在为新入学的学生建议其在开始大学数学学习时应修读何种适当的数学课程时的理念,并将这一信息与反映学生已完成的高中数学课程及他们的大学分班测试成绩的量化信息相整合.Norman的这一研究是受如下的现象所启发的,通常那些在高中阶段完成基于标准(standards-based)的数学课程的学生,相较于那些完成传统高中数学课程的学生,更倾向于被建议在大学开始阶段去修读难度较低的数学课程.高中阶段基于标准的数学课程在每一学年都会较注重于一系列的数学主题,强调问题解决和小组作业,而弱化算法操作;传统的数学课程则更为注重代数、算法和重复性教学,并且在学生的学习上较为依赖于教师[33].依据学生在高中阶段完成的数学课程的不同,给予学生不同的修课建议,这可能会使一些学生被建议在开始大学数学学习时去修读他们不应该修读的课程(如微积分初步[precalculus],而非微积分I).这一做法意味着,学生和学校可能都要在不必要的课程上花费时间、金钱和精力.Norman改编了两位Miller的一个理论性决策模型[34],以用于研究学业导师的决策过程,同时该研究还使用到了个案研究法[35].具体地,Norman有目的地选取了6名导师和24名学生,通过深度访谈这些导师和学生收集数据,其中还包括观察指导过程,以及学生在高中和大学阶段的数学课程的修读记录及成绩[32].Norman发现,导师对高中成绩单中的数学部分有错误的解读,而这对于他们建议其学生在学习开始时应修读哪一门大学数学课程会产生重要的影响.比如,某学生的成绩单显示,该生已完成的最高级别的高中数学课程是“整体数学IV”(Integrated Mathematics IV),这是一门基于标准的课程,那么这名学生往往会被建议从修读“微积分基础”开始;而其实,“整体数学IV”本身就是一门微积分基础课程,因此应当建议该学生去修读“微积分I”.同时Norman也发现,对于那些完成传统高中数学课程的学生,若他们的成绩单显示其所完成的最高级别的数学课程是“微积分基础”,他们的导师会更倾向于建议这些学生去修读“微积分I”.Norman认为,导师对基于标准的课程的看法可能与他们在数学系的工作经历有关系,因为数学系通常对基于标准的课程会有相当的挑剔[33,36].此外,Norman还对1 
000多名大学新生收集了关于他们的量化数据并做了相应的分析,其中包括他们在高中阶段所完成的数学课程信息、在大学数学分班测试中的成绩,以及修读的第一门大学数学课程的难度(这一指标是通过一个4点Likert变量进行测量的:1=应在高中完成的课程,有时被视为是一门补修课程[developmental course];4=难度超越“微积分I”的课程)[32].这些分析的结果显示,学生所完成的课程,与他们在数学分班测试中的成绩及所修读的第一门大学数学课程的难度水平是相关的.尤其是完成基于标准的高中数学课程的学生,比较那些完成传统课程的学生,更可能去修读那些难度较低的大学数学课程.Norman的研究体现了混合法研究的几个典型特征,包括使用个案研究法,强调发现和理解导师和学生的经验、观念和想法,以及呈现量化数据以凸显导师对学生们高中成绩单的误解所可能带来的后果.对于具体何时收集量化及质性数据,Norman的研究并没有给出详细的描述;有关的数据似乎是在一个较为宽松的时间段内收集起来的,这可能意味着某次数据收集的结果对另一次的数据收集不会产生较为显著的影响.由于主要的研究问题关注的是导师,就首先收集和分析了有关导师的数据,其次再有目的地使用这一信息指导和分析有关学生的量化数据,随后整合这两组数据的结果.从这一过程,不难看出顺序性探索设计的价值所在.例如,从有关导师对学生在高中修读的数学课程的信息的误解的质性数据而得出的信息,可用于编写学生问卷,诸如学生是否认为他们的导师对他们已修读的高中课程有所误解等.第三种类型是顺序性转换设计(sequential transformative design).其中,无论是质性或是量化数据,都可以首先进行收集.这类设计的理论基础对于实施的相应研究是很关键的,即所选择的研究方法应服从于该理论基础.在这里,对于质性和量化数据的分析是分别处理的,而它们的结果是在解释的阶段再加以整合的.这种方法通常用来确保能够体现各个不同参与者的观点和理念,或是寻求对所研究的一个变化中的过程的更深层次的理解.此种研究设计的优势与不足与顺序性探索设计也较为类似.举例来说,依据Norman使用的决策模型提示,收集那些受导师建议影响(有的是负面的)的学生的数据,对于评价和改进指导的过程来说是重要的.Norman指出,大多数的学生是遵循那些建议而修读相应的数学课程的,即便他们对这些建议有异议.在这一研究中,重点在于发现和理解学生对于指导过程的体验,以及这些体验对于他们的大学经历的影响.运用一种目的性的选样手段,挑选修读不同难易程度的数学课程以开始他们的大学学习的学生,以个案研究的方式展开此项研究是合适的.从访谈和学生学业记录得来的信息可用于建构学生问卷,而问卷调查的结果则可用于改进导师的决策及提升研究发现的一般化程度.第四种设计类型是并行性三角互证设计(concurrent triangulation 
design),此类设计常用于确定(confirm)、交叉校验(cross-validate)或是证实(corroborate)源自某一单个研究的结果.此类研究通过并行收集质性和量化数据,使得某类数据的不足可为另一种数据的优势所弥补.通常,在混合研究的发现结果时,会给予两种数据以相同的权重,尽管也可以赋予其中某一类的数据较多一些的权重.对质性和量化数据的分析是分别进行的,混合则是在解释结果的阶段发生.此种设计的重要优势在于,能够将从单个研究得到的信息做到最大化.例如,当研究关注的是交叉校验时,此种设计比之顺序性数据收集的方式,在数据收集的时长上会短一些.而此种设计的不足包括有,同时进行质性和量化数据的收集所附带有的复杂性,以及需要将专门性的知识有效地运用于两种方法之上等.此外,质性及量化研究结果的不相一致也可能较难调和.在前面提到的Howell等人的研究中,其主要的发现是获得代金券且就读于私立学校的非洲裔美国学生的平均成绩要高于那些没有获得代金券的非洲裔美国学生,而这一差异在其他族裔的学生群体中没有出现.选用并行性三角互证设计,可以解释这些通过以访谈收到和未收到代金券的学生的家长的形式收集而来的质性数据,以及基于学生的测试成绩和背景信息收集而来的量化数据.这就为证实从该研究中得到的有关非洲裔(而非其他族裔)美国学生的成绩有所提高的研究发现提供了机会.例如,通过并行性数据收集,可能得出如下结果,由质性数据显示,非洲裔美国学生的家长比之其他学生的家长,对于学生的教育,尤其是代金券项目,显得更为负责且热衷,而这种热情在这一学年中(用于本研究的学业测试是在学年结束时实施的)始终保持着.在这种情况下,相关的质性和量化数据将可为非洲裔美国学生所提高的成绩,部分地归因于他们所获得代金券和就读于私立学校,部分地归因于学生家长们的支持、鼓励和积极性,提供确凿信息.第五种设计类型是并行性嵌套设计(concurrent nested design),其中的质性和量化数据是并行收集的,而之后的分析则是一起进行的.此种设计会给予某一类数据较大的权重,从这个意义上说,某一类数据将会嵌套于另一类数据之中;在此种设计中,可能存在、也可能不存在某种指导性理论观念.对于此种设计的一个较为流行的应用是使用多层结构[21],在其中,某一结构的不同水平或单元得以研究.该设计的优势,包括数据收集的时长较短,数据中嵌套有多种的理念;不足的方面则包括需要具有不同层面的专业知识才能有效地实施研究,特别是在数据分析时要混合质性和量化数据,而调和产生于质性和量化分析的相互矛盾的结果存在一定的困难.在这一设计中,质性和量化数据是在分析阶段得以混合的,而这一过程可以采用不同的方式(如[21,37]).Caracelli和Greene描述了分析中可使用的四种混合质性和量化数据的策略[38].一种是数据转换(data transformation),即将质性数据转换为量化数据,或是将量化数据转换为叙述性数据,而后再分析这些新产生的数据.对于Norman的研究,就可将以访谈和笔录等形式获得的质性数据转换(即标尺化[rescaling])成为能够体现这些数据所传达的关键性主题(key themes)的量化形式.通常,被转换的质性数据会以测量的类别性(nominal scale)或等级性(ordinal scale)量表形式呈现出来.Caracelli和Greene提出的第二种混合数据的策略是定义类型(typology development),即通过对某一类数据的分析提出某种类型或一组类别,其可作为一种框架用来分析另一类数据.在Norman的研究中,通过分析质性数据形成一些主题,由此定义出一个以类别性量表量度的变量,其中的类别对于为何参与者能够成为导师的缘由给予了一个解释.这个变量可用于量化分析.第三种混合数据的策略是极端案例分析(extreme case analysis),这里的极端案例是在结合一类数据、研究另一类数据时产生的,其目的是为了解释为何这些案例具有极端性.例如,在Norman的研究中,对量化数据的多层分析显示,对应于导师样本,某些导师在统计意义上属于极端案例(例如,有些导师的学生在开始他们的大学学习时,不相称地修读了原应在高中完成的课程).质性数据可用于尝试解释为何这些导师在关于大学数学课程的修读上给予其学生不太相称的指导.Caracelli和Greene描述的第四种混合数据的策略是数据加固/合并(data 
consolidation/merging),即通过对两类数据的仔细审核,创设出新的变量或是以质性或量化度量标准呈现数据集合.而合并后的数据再用于附加的分析.在Norman的研究中,对质性和量化数据的审核可能会产生一些新的变量.例如,基于对导师和学生数据的观察,可以建构一个用于衡量学生在指导过程中坚持自己主张的程度的变量.在Caracelli和Greene[38],Sandelowski,Voils和Knafl[39]及Tashakkori和Teddlie[21]的研究中都有混合数据的情况.由于同时涉及到导师和学生,Norman的研究似乎已为并行性嵌套设计做好了准备.这一研究关注的一个主要结果是,学生修读的第一门大学数学课程的难度水平、学生修读的高中数学课程(基于标准型或传统型)和他们修读的第一门大学数学课程间的量化关系,可以与从导师所并行提供的质性信息相混合.例如,质性数据可以通过使用叙述的方式获取,其中导师会谈论发生在他们生活中的、使他们成为导师并继续指导学生的那些事件.转换这些有关导师的质性数据,使由此产生的变量能够反映一些重要的主题,并让这些信息与学生变量一起包含(混合)于量化多层数据分析;在此种分析中,学生嵌套于他们的导师之中[40].这些分析可用以探究嵌套的影响及导师变量对学生数据的影响,并对学生如何及为何修读某一特定的大学数学课程给出有力的解释.第六种设计类型是并行性转换设计(concurrent transformative design).与顺序性转换设计相类似,也有一个明确定义了的理论性观念指导此种方法.在这种方式中,质性和量化数据是并行收集的,在整合相应的研究发现时可以对它们赋予相等或不相等的权重.质性和量化数据通常是在分析阶段加以混合的.其优点包括较短。

In-Depth Analysis of the 2024 Gaokao English New Curriculum Standard Papers and Post-Exam Improvement
Topic 05: Reading Comprehension Passage D (New Curriculum Standard Paper I), original-paper edition
(Expert commentary + full translation + past three years' papers + vocabulary variations + full-marks strategies + topic variations)

Contents
I. The Original Question
II. Answer Analysis
III. Expert Commentary
IV. Full Translation
V. Vocabulary Variations
  (1) Word-form conversion of syllabus vocabulary
  (2) Recognizing syllabus vocabulary and its meaning
  (3) Building up high-frequency phrases
  (4) Single-sentence gap-fill variations on the reading passage
  (5) Analysis of long and difficult sentences
VI. Past Three Years' Papers
  (1) 2023 New Curriculum Standard Paper I, Reading Comprehension Passage D
  (2) 2022 New Curriculum Standard Paper I, Reading Comprehension Passage D
  (3) 2021 New Curriculum Standard Paper I, Reading Comprehension Passage D
VII. Full-Marks Strategies (expository reading passages)
VIII. Reading Comprehension Variations
  Variation 1: Biodiversity research, discoveries, and progress (6 passages)
  Variation 2: Variations on Passage D, Question 35 (popular-science research and recommendation type) (6 passages)

I. The Original Question

Reading Comprehension Passage D
Keywords: expository text; people and society; research on social science research methods; biodiversity; spirit of scientific inquiry; scientific literacy

In the race to document the species on Earth before they go extinct, researchers and citizen scientists have collected billions of records. Today, most records of biodiversity are often in the form of photos, videos, and other digital records. Though they are useful for detecting shifts in the number and variety of species in an area, a new Stanford study has found that this type of record is not perfect.

“With the rise of technology it is easy for people to make observations of different species with the aid of a mobile application,” said Barnabas Daru, who is lead author of the study and assistant professor of biology in the Stanford School of Humanities and Sciences. “These observations now outnumber the primary data that comes from physical specimens (标本), and since we are increasingly using observational data to investigate how species are responding to global change, I wanted to know: Are they usable?”

Using a global dataset of 1.9 billion records of plants, insects, birds, and animals, Daru and his team tested how well these data represent actual global biodiversity patterns.

“We were particularly interested in exploring the aspects of sampling that tend to bias (使有偏差) data, like the greater likelihood of a citizen scientist to take a picture of a flowering plant instead of the grass right next to it,” said Daru.

Their study revealed that the large number of observation-only records did not lead to better global coverage. Moreover, these data are biased and favor certain regions, time periods, and species. This makes sense because the people who get observational biodiversity data on mobile devices are often citizen scientists recording their encounters with species in areas nearby. These data are also biased toward certain species with attractive or eye-catching features.

What can we do with the imperfect datasets of biodiversity?

“Quite a lot,” Daru explained. “Biodiversity apps can use our study results to inform users of oversampled areas and lead them to places – and even species – that are not well-sampled. To improve the quality of observational data, biodiversity apps can also encourage users to have an expert confirm the identification of their uploaded image.”

32. What do we know about the records of species collected now?
A. They are becoming outdated.
B. They are mostly in electronic form.
C. They are limited in number.
D. They are used for public exhibition.

33. What does Daru’s study focus on?
A. Threatened species.
B. Physical specimens.
C. Observational data.
D. Mobile applications.

34. What has led to the biases according to the study?
A. Mistakes in data analysis.
B. Poor quality of uploaded pictures.
C. Improper way of sampling.
D. Unreliable data collection devices.

35. What is Daru’s suggestion for biodiversity apps?
A. Review data from certain areas.
B. Hire experts to check the records.
C. Confirm the identity of the users.
D. Give guidance to citizen scientists.

II. Answer Analysis

III. Expert Commentary

Testing key competencies and promoting the development of thinking skills

The 2024 Gaokao national English papers continue to strengthen innovation in content and form, refine the angle and manner in which questions are set, and make the questions more open and flexible. They guide students to think and judge independently, and they cultivate logical, critical, and creative thinking.

Gaokao English 2024, New Curriculum Standard Paper I

I. Listening (30 points)

Section A
1. Choose the correct answer based on the dialogue you hear.

- Question 1: What is the man going to do this weekend?
  - A. Visit his parents.
  - B. Go to a concert.
  - C. Work on a project.
- Question 2: Why does the woman suggest the man should take a break?
  - A. He has been working too hard.
  - B. He needs to prepare for an exam.
  - C. He is going to have a meeting.

...

Section B
1. Choose the correct answer based on the passage you hear.

- Question 1: What is the main topic of the passage?
  - A. The importance of environmental protection.
  - B. The impact of technology on education.
  - C. The benefits of physical exercise.
- Question 2: What does the speaker think about the future of education?
  - A. It will be completely online.
  - B. It will be a combination of online and offline learning.
  - C. It will not change much.

...

II. Reading Comprehension (40 points)

Passage 1
A new study has found that regular exercise can significantly improve memory and cognitive function in older adults. The research, conducted by a team of scientists at the University of XYZ, involved a group of participants aged between 60 and 80. They were asked to engage in moderate physical activity for 30 minutes a day, five days a week, over a period of six months.

Questions:
1. What was the age range of the participants in the study?
2. How long did the participants engage in physical activity each day?
3. What was the duration of the study?

Passage 2
The article discusses the impact of social media on teenagers' mental health. It highlights the negative effects such as increased anxiety and depression, while also acknowledging the positive aspects like the ability to connect with others and share experiences.

Questions:
1. What is the main concern of the article?
2. What are some of the negative effects of social media mentioned?
3. How does the article view the positive aspects of social media?

Passage 3
...

III. Cloze (20 points)

Once upon a time, in a small village, there lived an old man named John. He was known for his wisdom and kindness. One day, a young boy approached him with a problem. The boy had lost his favorite toy, and he was very upset.

John listened to the boy's story and then said, "Life is full of ups and downs. It's important to learn from our losses and to keep moving forward."

The boy looked at John with confusion and asked, "But how can I find my toy?"

John smiled and said, "Sometimes, the things we lose are not meant to be found. Instead, we should focus on the lessons we learn from the experience."

The boy thought about John's words and slowly began to understand the importance of resilience and acceptance.

IV. Grammar Fill-in (10 points)

In recent years, the popularity of online shopping has grown rapidly. Many people prefer to buy products online because it is convenient and time-saving. However, there are also some disadvantages, such as the inability to try products before buying them.

1. The popularity of online shopping has grown rapidly because it is convenient and time-saving.
2. Despite its convenience, online shopping also has some disadvantages, such as the inability to try products before buying them.

V. Error Correction (10 points)

One day, a boy was walking along the street when he suddenly saw a wallet on the ground. He picked it up and found that it was full of money. He decided to hand it in to the police station. The police officer thanked him and asked for his name, but the boy refused to tell. He said that he did not want to receive any reward for his honesty.

VI. Writing (30 points)

Suppose you are Li Hua, and your American friend Tom is very interested in traditional Chinese festivals.

Comparative Effectiveness Review
Number 6

Efficacy and Comparative Effectiveness of Off-Label Use of Atypical Antipsychotics

This report is based on research conducted by the Southern California/RAND Evidence-based Practice Center (EPC) under contract to the Agency for Healthcare Research and Quality (AHRQ), Rockville, MD (Contract No. 290-02-0003). The findings and conclusions in this document are those of the author(s), who are responsible for its contents; the findings and conclusions do not necessarily represent the views of AHRQ. Therefore, no statement in this report should be construed as an official position of the Agency for Healthcare Research and Quality or of the U.S. Department of Health and Human Services.

This report is intended as a reference and not as a substitute for clinical judgment. Anyone who makes decisions concerning the provision of clinical care should consider this report in the same way as any medical reference and in conjunction with all other pertinent information.

This report may be used, in whole or in part, as the basis for development of clinical practice guidelines and other quality enhancement tools, or as a basis for reimbursement and coverage policies. AHRQ or U.S. Department of Health and Human Services endorsement of such derivative products may not be stated or implied.

Prepared for:
Agency for Healthcare Research and Quality
U.S. Department of Health and Human Services
540 Gaither Road
Rockville, MD 20850
Contract No. 290-02-0003

Prepared by:
Southern California/RAND Evidence-based Practice Center

Investigators
- Paul Shekelle, M.D., Ph.D.: Director
- Margaret Maglione, M.P.P.: Project Manager/Policy Analyst
- Steven Bagley, M.D., M.S.: Content Expert/Physician Reviewer
- Marika Suttorp, M.S.: Statistician
- Walter A. Mojica, M.D., M.P.H.: Physician Reviewer
- Jason Carter, B.A., and Cony Rolón, B.A.: Literature Database Managers
- Lara Hilton, B.A.: Programmer/Analyst
- Annie Zhou, M.S.: Statistician
- Susan Chen, B.A.: Staff Assistant
- Peter Glassman, M.B., B.S., M.Sc.: Pharmacy Benefits Management Expert
- Sydne Newberry, Ph.D.: Medical Editor

AHRQ Publication No. 07-EHC003-EF
January 2007

This document is in the public domain and may be used and reprinted without permission except those copyrighted materials noted for which further reproduction is prohibited without the specific permission of copyright holders.

Suggested citation:
Shekelle P, Maglione M, Bagley S, Suttorp M, Mojica WA, Carter J, Rolon C, Hilton L, Zhou A, Chen S, Glassman P. Comparative Effectiveness of Off-Label Use of Atypical Antipsychotics. Comparative Effectiveness Review No. 6. (Prepared by the Southern California/RAND Evidence-based Practice Center under Contract No. 290-02-0003.) Rockville, MD: Agency for Healthcare Research and Quality. January 2007. Available at: /reports/final.cfm.

Preface

The Agency for Healthcare Research and Quality (AHRQ) conducts the Effective Health Care Program as part of its mission to organize knowledge and make it available to inform decisions about health care. As part of the Medicare Prescription Drug, Improvement, and Modernization Act of 2003, Congress directed AHRQ to conduct and support research on the comparative outcomes, clinical effectiveness, and appropriateness of pharmaceuticals, devices, and health care services to meet the needs of Medicare, Medicaid, and the State Children’s Health Insurance Program (SCHIP).

AHRQ has an established network of Evidence-based Practice Centers (EPCs) that produce Evidence Reports/Technology Assessments to assist public- and private-sector organizations in their efforts to improve the quality of health care.
The EPCs now lend their expertise to the Effective Health Care Program by conducting Comparative Effectiveness Reviews of medications, devices, and other relevant interventions, including strategies for how these items and services can best be organized, managed, and delivered.

Systematic reviews are the building blocks underlying evidence-based practice; they focus attention on the strengths and limits of evidence from research studies about the effectiveness and safety of a clinical intervention. In the context of developing recommendations for practice, systematic reviews are useful because they define the strengths and limits of the evidence, clarifying whether assertions about the value of the intervention are based on strong evidence from clinical studies. For more information about systematic reviews, see /reference/purpose.cfm.

AHRQ expects that Comparative Effectiveness Reviews will be helpful to health plans, providers, purchasers, government programs, and the health care system as a whole. In addition, AHRQ is committed to presenting information in different formats so that consumers who make decisions about their own and their family’s health can benefit from the evidence. Transparency and stakeholder input are essential to the Effective Health Care Program. Please visit the Web site () to see draft research questions and reports or to join an e-mail list to learn about new program products and opportunities for input. Comparative Effectiveness Reviews will be updated regularly.

Acknowledgments

We would like to thank the Effective Health Care Scientific Resource Center, located at Oregon Health & Science University, for assisting in communicating with stakeholders and ensuring consistency of methods and format.

We would like to acknowledge Di Valentine, J.D., Catherine Cruz, B.A., and Rena Garland, B.A., for assistance in abstraction of adverse events data.

Technical Expert Panel
- Mark S. Bauer, M.D., Brown University, Providence Veterans Affairs Medical Center, Providence, RI
- Barbara Curtis, R.N., M.S.N., Washington State Department of Corrections, Olympia, WA
- Carol Eisen, L.A. County Department of Mental Health, Los Angeles, CA
- Bruce Kagan, M.D., Ph.D., UCLA Psychiatry and Biobehavioral Science – Neuropsychiatric Institute, Los Angeles, CA
- Jamie Mai, Pharm.D., Office of the Medical Director, Tumwater, WA
- Alexander L. Miller, M.D., University of Texas, Department of Psychiatry, Health Science Center at San Antonio, San Antonio, TX
- Adelaide S. Robb, M.D., Children’s National Medical Center, Department of Psychiatry, Washington, DC
- Charles Schulz, M.D., University of Minnesota, Department of Psychiatry, Minneapolis, MN
- Sarah J. Spence, M.D., Ph.D., UCLA, Autism Evaluation Clinic, Los Angeles, CA
- David Sultzer, M.D., UCLA and VA Greater L.A. Healthcare System, Department of Psychiatry and Biobehavioral Sciences, Los Angeles, CA

AHRQ Contacts
- Beth A. Collins-Sharp, Ph.D., R.N.: Director, Evidence-based Practice Center Program, Center for Outcomes and Evidence, Agency for Healthcare Research and Quality, Rockville, MD
- Margaret Coopey, M.P.S., M.G.A., R.N.: Task Order Officer, Evidence-based Practice Center Program, Center for Outcomes and Evidence, Agency for Healthcare Research and Quality, Rockville, MD

Contents

Executive Summary
Introduction
  Background
  Scope and Key Questions
Methods
  Topic Development
  Search Strategy
  Technical Expert Panel
  Study Selection
  Data Abstraction
  Adverse Events
  Quality Assessment
  Applicability
  Rating the Body of Evidence
  Data Synthesis
  Peer Review
Results
  Literature Flow
  Key Question 1: What are the leading off-label uses of antipsychotics in the literature?
  Key Question 2: What does the evidence show regarding the effectiveness of antipsychotics for off-label indications, such as depression? How do antipsychotic medications compare with other drugs for treating off-label indications?
    Dementia
    Depression
    Obsessive-Compulsive Disorder
    Posttraumatic Stress Disorder
    Personality Disorders
    Tourette’s Syndrome
    Autism
    Sensitivity Analysis
    Publication Bias
  Key Question 3: What subset of the population would potentially benefit from off-label uses?
  Key Question 4: What are the potential adverse effects and/or complications involved with off-label antipsychotic prescribing?
  Key Question 5: What is the appropriate dose and time limit for off-label indications?
Summary and Discussion
  Limitations
  Conclusions
  Future Research
References

Tables
Table 1. Efficacy outcomes abstracted
Table 2. Pooled results of placebo-controlled trials of atypical antipsychotics for patients with dementia and behavioral disturbances or agitation
Table 3. Trials of atypical antipsychotics as augmentation therapy for major depression
Table 4. Placebo-controlled trials of atypical antipsychotics as augmentation for obsessive compulsive disorder
Table 5. Posttraumatic Stress Disorder
Table 6. Personality Disorders
Table 7. Tourette’s Syndrome
Table 8. Cardiovascular adverse events among dementia patients – Atypical Antipsychotics Compared to Placebo
Table 9. Neurological adverse events among dementia patients – Atypical Antipsychotics Compared to Placebo
Table 10. Urinary adverse events among dementia patients – Atypical Antipsychotics Compared to Placebo
Table 11. Summary of Evidence – Efficacy
Table 12. Summary of adverse event and safety findings for which there is moderate or strong evidence

Figures
Figure 1. Literature flow
Figure 2. Pooled analysis of the effect of atypical antipsychotic medications versus placebo on “response” in patients with obsessive compulsive disorder

Appendixes
Appendix A. Exact Search Strings
Appendix B. Data Collection Forms
Appendix C. Evidence and Quality Tables
Appendix D. Excluded Articles
Appendix E. Adverse Event Analysis

Executive Summary

The Effective Health Care Program was initiated in 2005 to provide valid evidence about the comparative effectiveness of different medical interventions. The object is to help consumers, health care providers, and others in making informed choices among treatment alternatives. Through its Comparative Effectiveness Reviews, the program supports systematic appraisals of existing scientific evidence regarding treatments for high-priority health conditions. It also promotes and generates new scientific evidence by identifying gaps in existing scientific evidence and supporting new research. The program puts special emphasis on translating findings into a variety of useful formats for different stakeholders, including consumers.

The full report and this summary are available at /reports/final.cfm

Background

Aripiprazole, olanzapine, quetiapine, risperidone, and ziprasidone are atypical antipsychotics approved by the U.S. Food and Drug Administration (FDA) for treatment of schizophrenia and bipolar disorder. These drugs have been studied for off-label use in the following conditions: dementia and severe geriatric agitation, depression, obsessive-compulsive disorder, posttraumatic stress disorder, and personality disorders. The atypicals have also been studied for the management of Tourette’s syndrome and autism in children. The purpose of this report is to review the scientific evidence on the safety and effectiveness of such off-label uses.

The Key Questions were:

Key Question 1. What are the leading off-label uses of atypical antipsychotics in the literature?
Key Question 2. What does the evidence show regarding the effectiveness of atypical antipsychotics for off-label indications, such as depression?
How do atypical antipsychotic medications compare with other drugs for treating off-label indications?
Key Question 3. What subset of the population would potentially benefit from off-label uses?
Key Question 4. What are the potential adverse effects and/or complications involved with off-label prescribing of atypical antipsychotics?
Key Question 5. What are the appropriate dose and time limit for off-label indications?

Conclusions

Evidence on the efficacy of off-label use of atypical antipsychotics is summarized in Table A. Table B summarizes findings on adverse events and safety.

Leading off-label uses of atypical antipsychotics
• The most common off-label uses of atypical antipsychotics found in the literature were treatment of depression, obsessive-compulsive disorder, posttraumatic stress disorder, personality disorders, Tourette's syndrome, autism, and agitation in dementia. In October 2006, the FDA approved risperidone for the treatment of autism.

Effectiveness and comparison with other drugs

Dementia-agitation and behavioral disorders
• A recent meta-analysis of 15 placebo-controlled trials found a small but statistically significant benefit for risperidone and aripiprazole on agitation and psychosis outcomes. The clinical benefits must be balanced against side effects and potential harms. See “Potential adverse effects and complications” section.
• Evidence from this meta-analysis shows a trend toward effectiveness of olanzapine for psychosis; results did not reach statistical significance. The authors found three studies of quetiapine; they were too dissimilar in their design and the outcomes studied to pool.
• A large head-to-head placebo controlled trial (Clinical Antipsychotic Trials of Intervention Effectiveness-Alzheimer’s Disease; CATIE-AD) concluded there were no differences in time to discontinuation of medication between risperidone, olanzapine, quetiapine, and placebo. Efficacy outcomes favored risperidone and olanzapine, and tolerability outcomes favored quetiapine and placebo.
• We found no studies of ziprasidone for treatment of agitation and behavioral disorders in patients with dementia.
• Strength of evidence = moderate for risperidone, olanzapine, and quetiapine; low for aripiprazole.

Depression
• We identified seven trials where atypical antipsychotics were used to augment serotonin reuptake inhibitor (SRI) treatment in patients with initial poor response to therapy, two studies in patients with depression with psychotic features, and four trials in patients with depression with bipolar disorder.
• For SRI-resistant patients with major depressive disorder, combination therapy with an atypical antipsychotic plus an SRI antidepressant is not more effective than an SRI alone at 8 weeks.
• In two trials enrolling patients with major depressive disorder with psychotic features, olanzapine and olanzapine plus fluoxetine were compared with placebo for 8 weeks. Neither trial indicated a benefit for olanzapine alone. In one trial, the combination group had significantly better outcomes than placebo or olanzapine alone, but the contribution of olanzapine cannot be determined, as the trial lacked a fluoxetine-only comparison arm.
• For bipolar depression, olanzapine and quetiapine were superior to placebo in one study for each drug, but data are conflicting in two other studies that compared atypical antipsychotics to conventional treatment.
• We found no studies of aripiprazole for depression.
• Strength of evidence = moderate strength of evidence that olanzapine, whether used as monotherapy or augmentation, does not improve outcomes at 8 weeks in SRI-resistant depression; low strength of evidence for all atypical antipsychotics for other depression indications due to small studies, inconsistent findings, or lack of comparisons to usual treatment.

Obsessive-compulsive disorder (OCD)
• We identified 12 trials of risperidone, olanzapine, and quetiapine used as augmentation therapy in patients with OCD who were resistant to standard treatment.
• Nine trials were sufficiently similar clinically to pool. Atypical antipsychotics have a clinically important benefit (measured by the Yale-Brown Obsessive-Compulsive Scale) when used as augmentation therapy for patients who fail to adequately respond to SRI therapy. Overall, patients taking atypical antipsychotics were 2.66 times as likely to “respond” as placebo patients (95-percent confidence interval (CI): 1.75 to 4.03). Relative risk of “responding” was 2.74 (95-percent CI: 1.50 to 5.01) for augmentation with quetiapine and 5.45 (95-percent CI: 1.73 to 17.20) for augmentation with risperidone. There were too few studies of olanzapine augmentation to permit separate pooling of this drug.
• We found no trials of ziprasidone or aripiprazole for obsessive-compulsive disorder.
• Strength of evidence = moderate for risperidone and quetiapine; low for olanzapine due to sparse and inconsistent results.

Posttraumatic stress disorder (PTSD)
• We found four trials of risperidone and two trials of olanzapine of at least 6 weeks duration in patients with PTSD.
• There were three trials enrolling men with combat-related PTSD; these showed a benefit in sleep quality, depression, anxiety, and overall symptoms when risperidone or olanzapine was used to augment therapy with antidepressants or other psychotropic medication.
• There were three trials of olanzapine or risperidone as monotherapy for women with PTSD; the evidence was inconclusive regarding efficacy.
• We found no studies of quetiapine, ziprasidone, or aripiprazole for PTSD.
• Strength of evidence = low for risperidone and olanzapine for combat-related PTSD due to sparse data; very low for risperidone or olanzapine for treating non-combat-related PTSD.

Personality disorders
• We identified five trials of atypical antipsychotic medications as treatment for borderline personality disorder and one trial as treatment for schizotypal personality disorder.
• Three randomized controlled trials (RCTs), each with no more than 60 subjects, provide evidence that olanzapine is more effective than placebo and may be more effective than fluoxetine in treating borderline personality disorder.
• The benefit of adding olanzapine to dialectical therapy for borderline personality disorder was small.
• Olanzapine caused significant weight gain in all studies.
• Risperidone was more effective than placebo for the treatment of schizotypal personality disorder in one small 9-week trial.
• Aripiprazole was more effective than placebo for the treatment of borderline personality in one small 8-week trial.
• We found no studies of quetiapine or ziprasidone for personality disorders.
• Strength of evidence = very low due to small effects, small size of studies, and limitations of trial quality (e.g., high loss to followup).

Tourette’s syndrome
• We found four trials of risperidone and one of ziprasidone for treatment of Tourette’s syndrome.
• Risperidone was more effective than placebo in one small trial, and it was at least as effective as pimozide or clonidine for 8 to 12 weeks of therapy in the three remaining trials.
• The one available study of ziprasidone showed variable effectiveness compared to placebo.
• We found no studies of olanzapine, quetiapine, or aripiprazole for Tourette’s syndrome.
• Strength of evidence = low for risperidone; very low for ziprasidone.

Autism
• Just before this report was published, the FDA approved risperidone for use in autism.
• Two trials of 8 weeks duration support the superiority of risperidone over placebo in improving serious behavioral problems in children with autism. The first trial showed a greater effect for risperidone than placebo (57-percent decrease vs. 14-percent decrease in the irritability subscale of the Aberrant Behavior Checklist). In the second trial, more risperidone-treated than placebo-treated children improved on that subscale (65 percent vs. 31 percent).
• We found no trials of olanzapine, quetiapine, ziprasidone, or aripiprazole for this indication.
• Strength of evidence = low.

Population that would benefit most from atypical antipsychotics
• There was insufficient information to answer this question. It is included as a topic for future research.

Potential adverse effects and complications
• There is high-quality evidence that olanzapine patients are more likely to report weight gain than those taking placebo, other atypical antipsychotics, or conventional antipsychotics. In two pooled RCTs of dementia patients, olanzapine users were 6.12 times more likely to report weight gain than placebo users.
In a head-to-head trial ofdementia patients, olanzapine users were 2.98 times more likely to gain weight thanrisperidone patients. In the CATIE trial, elderly patients with dementia who were treatedwith olanzapine, quetiapine, or risperidone averaged a monthly weight gain of 1.0, 0.7, and 0.4 pounds while on treatment, compared to a weight loss among placebo-treated patients of 0.9 pounds per month. Even greater weight gain relative to placebo has been reported in trials of non-elderly adults.•In two pooled RCTs for depression with psychotic features, olanzapine patients were 2.59 times as likely as those taking conventional antipsychotics to report weight gain.•In a recently published meta-analysis of 15 dementia treatment trials, death occurred in3.5 percent of patients randomized to receive atypical antipsychotics vs. 2.3 percent ofpatients randomized to receive placebo. The odds ratio for death was 1.54, with a 95-percent CI of 1.06 to 2.23. The difference in risk for death was small but statistically significant. Sensitivity analyses did not show evidence for differential risks forindividual atypical antipsychotics. Recent data from the DEcIDE (Developing Evidence to Inform Decisions about Effectiveness) Network suggest that conventionalantipsychotics are also associated with an increased risk of death in elderly patients with dementia, compared to placebo.•In another recently published meta-analysis of six trials of olanzapine in dementia patients, differences in mortality between olanzapine and risperidone were notstatistically significant, nor were differences between olanzapine and conventionalantipsychotics.•In our pooled analysis of three RCTs of elderly patients with dementia, risperidone was associated with increased odds of cerebrovascular accident compared to placebo (odds ratio (OR): 3.88; 95-percent CI: 1.49 to 11.91). 
This risk was equivalent to 1 additional stroke for every 31 patients treated in this patient population (i.e., number needed to harm of 31). The manufacturers of risperidone pooled four RCTs and found thatcerebrovascular adverse events were twice as common in dementia patients treated with risperidone as in the placebo patients.•In a separate industry-sponsored analysis of five RCTs of olanzapine in elderly dementia patients, the incidence of cerebrovascular adverse events was three times higher inolanzapine patients than in placebo patients.•We pooled three aripiprazole trials and four risperidone trials that reported extrapyramidal side effects (EPS) in elderly dementia patients. Both drugs wereassociated with an increase in EPS (OR: 2.53 and 2.82, respectively) compared toplacebo. The number needed to harm was 16 for aripiprazole and 13 for risperidone.•Ziprasidone was associated with an increase in EPS when compared to placebo in a pooled analysis of adults with depression, PTSD, or personality disorders (OR: 3.32; 95-percent CI: 1.12 to 13.41).•In the CATIE trial, risperidone, quetiapine, and olanzapine were each more likely to cause sedation than placebo (15-24 percent vs. 5 percent), while olanzapine andrisperidone were more likely to cause extrapyramidal signs than quetiapine or placebo(12 percent vs. 1-2 percent). Cognitive disturbance and psychotic symptoms were morecommon in olanzapine-treated patients than in the other groups (5 percent vs. 0-1percent).•There is insufficient evidence to compare atypical with conventional antipsychotics regarding EPS or tardive dyskinesia in patients with off-label indications.•Risperidone was associated with increased weight gain compared to placebo in our pooled analyses of three trials in children/adolescents. Mean weight gain in therisperidone groups ranged from 2.1 kg to 3.9 kg per study. 
Odds were also higher forgastrointestinal problems, increased salivation, fatigue, EPS, and sedation among theseyoung risperidone patients.•Compared to placebo, all atypicals were associated with sedation in multiple pooled analyses for all psychiatric conditions studied.Appropriate dose and time limit•There was insufficient information to answer this question. It is a topic for future research.Remaining IssuesMore research about how to safely treat agitation in dementia is urgently needed. The CATIE-AD study has substantially added to our knowledge, but more information is still necessary. We make this statement based on the prevalence of the condition and uncertainty about the balance between risks and benefits in these patients. While the increased risk of death in elderly dementia patients treated with atypical antipsychotics was small, the demonstrable benefits in the RCTs were also small. Information is needed on how the risk compares to risks for other treatments.An established framework for evaluating the relevance, generalizability, and applicability of research includes assessing the participation rate, intended target population, representativeness of the setting, and representativeness of the individuals, along with information about implementation and assessment of outcomes. As these data are reported rarely in the studies we reviewed, conclusions about applicability are necessarily weak. In many cases, enrollment criteria for these trials were highly selective (for example, requiring an open-label run-in period). Such highly selective criteria may increase the likelihood of benefit and decrease the likelihood of adverse events. At best, we judge these results to be only modestly applicable to the patients seen in typical office-based care.With few exceptions, there is insufficient high-grade evidence to reach conclusions about the efficacy of atypical antipsychotic medications for any of the off-label indications, either vs. placebo or vs. 
active therapy.More head-to-head trials comparing atypical antipsychotics are needed for off-label indications other than dementia.IntroductionBackgroundAntipsychotic medications, widely used for the treatment of schizophrenia and other psychotic disorders, are commonly divided into two classes, reflecting two waves of historical development. The conventional antipsychotics--also called typical antipsychotics, conventional neuroleptics, or dopamine antagonists--first appeared in the 1950s and continued to evolve over subsequent decades, starting with chlorpromazine (Thorazine), and were the first successful pharmacologic treatment for primary psychotic disorders, such as schizophrenia. While they provide treatment for psychotic symptoms - for example reducing the intensity and frequency of auditory hallucinations and delusional beliefs - they also commonly produce movement abnormalities, both acutely and during chronic treatment, arising from the drugs’ effects on the neurotransmitter dopamine. These side effects often require additional medications, and in some cases, necessitate antipsychotic dose reduction or discontinuation. Such motor system problems spurred the development of the second generation of antipsychotics, which have come to be known as the “atypical antipsychotics.”Currently, the U.S. Food and Drug Administration (FDA)-approved atypical antipsychotics are aripiprazole, clozapine, olanzapine, quetiapine, risperidone, and ziprasidone. Off-label use of the atypical antipsychotics has been reported for the following conditions: dementia and severe geriatric agitation, depression, obsessive-compulsive disorder, posttraumatic stress disorder, and personality disorders. The purpose of this Evidence Report is to review the evidence supporting such off-label uses of these agents. We were also asked to study the use of the atypical antipsychotics for the management of Tourette’s Syndrome and autism in children. 
The medications considered in this report are those listed above; however, we have excluded clozapine, which has been associated with a potentially fatal disorder of bone-marrow suppression and requires frequent blood tests for safety monitoring. Because of these restrictions, it is rarely used except for schizophrenia that has proven refractive to other treatment. Dementia and Severe Geriatric AgitationDementia is a disorder of acquired deficits in more than one domain of cognitive functioning. These domains are memory, language production and understanding, naming and recognition, skilled motor activity, and planning and executive functioning. The most common dementias – Alzheimer’s and vascular dementia - are distinguished by their cause. Alzheimer’s dementia occurs with an insidious onset and continues on a degenerative course to death after 8 to10 years; the intervening years are marked by significant disturbances of cognitive functioning and behavior, with severe debilitation in the ability to provide self-care. Vascular dementia refers to deficits of cognitive functioning that occur following either a cerebrovascular event – a stroke – leading to a macrovascular dementia, or, alternatively, more diffusely located changes in the smaller blood vessels, leading to a microvascular dementia. These (and other) dementia types commonly co-occur. Psychotic symptoms are frequent among dementia patients and include。

[GAN Paper 01] Translation: Progressive growing of GANs for impro

Published as a conference paper at ICLR 2018
Tero Karras, Timo Aila, Samuli Laine and Jaakko Lehtinen
NVIDIA and Aalto University

I. Paper translation

ABSTRACT
We describe a new training methodology for generative adversarial networks. The key idea is to grow both the generator and discriminator progressively: starting from a low resolution, we add new layers that model increasingly fine details as training progresses. This both speeds the training up and greatly stabilizes it, allowing us to produce images of unprecedented quality, e.g., CELEBA images at $1024^2$. We also propose a simple way to increase the variation in generated images, and achieve a record inception score of 8.80 in unsupervised CIFAR10. Additionally, we describe several implementation details that are important for discouraging unhealthy competition between the generator and discriminator. Finally, we suggest a new metric for evaluating GAN results, both in terms of image quality and variation. As an additional contribution, we construct a higher-quality version of the CELEBA dataset.

Abstract (translated): We describe a new training method for GANs.

Hybrid Method

Hybrid Method is a powerful technique that has been used in many areas of research and development, including optimization, simulation, control, and machine learning. A hybrid method combines the strengths of both numerical and symbolic methods to develop an efficient and accurate algorithm. In this article, we will discuss the basic concept, applications, advantages, and limitations of the Hybrid Method.

Basic Concept of Hybrid Method
A hybrid method combines the numerical power of mathematical modeling and the symbolic power of algorithmic approach. It is designed to tackle difficult problems that are beyond the reach of traditional numerical and symbolic methods. Hybrid method is a methodology that integrates numerical and symbolic algorithms in a single algorithmic framework. It combines the numerical accuracy of numerical methods with the symbolic reasoning of symbolic methods, thus providing a powerful tool for solving complex problems.

Applications of Hybrid Method
Hybrid method has wide applications in many areas. For example, in optimization problems, hybrid methods are used to combine the strengths of different optimization techniques such as linear programming, genetic algorithms, and simulated annealing. This combination of methods results in a more efficient and effective optimization method. Hybrid methods are also used in control systems, especially for modeling and control of complex systems such as power plants, chemical plants, and robotics.

In machine learning, hybrid methods are used in the development of intelligent algorithms, such as support vector machines, deep learning, and decision trees. These algorithms combine the advantages of both numerical and symbolic approaches, resulting in better accuracy and performance. Hybrid methods are also used in simulation, particularly in the simulation of physical systems, where mathematical models and simulations are combined to create a more accurate and realistic simulation.

Advantages of Hybrid Method
The main advantage of hybrid method is its ability to tackle complex problems that are beyond the reach of traditional methods. Hybrid methods are capable of handling problems that are mathematically complex, computationally intensive, or have high dimensionalities. The hybrid method also provides a robust and flexible framework that can handle different types of problems and data structures.

Hybrid methods provide accurate and efficient solutions by combining the strengths of different approaches. They allow for a more holistic understanding of the problem by utilizing multiple perspectives. Additionally, these methods are extensible, meaning that they allow for the integration of new techniques and algorithms as they become available.

Limitations of Hybrid Method
Despite the many advantages of hybrid methods, they also have some limitations. First, hybrid methods can be computationally intensive, and they may require high-performance computing resources. Additionally, these methods may be difficult to implement and may require specialized expertise. As such, they may not be accessible to less experienced users or those without access to advanced computing resources.

Another limitation is that hybrid methods may not always result in the best solution. Although hybrid methods can provide accurate and efficient solutions, they may not always be the optimal solution. This is because the hybrid method relies on combining multiple methods, and the final solution can depend on the specific combination of methods used. Additionally, the hybrid method may not always be the most transparent approach. This means that it may be difficult to understand why the algorithm produced a particular result.

Conclusion
Hybrid Method is an important and valuable technique that has been widely used in various fields of research and development. The hybrid method combines the strengths of both numerical and symbolic methods to develop an efficient and accurate algorithm. The hybrid method has been used in optimization, simulation, control, and machine learning. It provides accurate and efficient solutions by combining the strengths of different approaches. Although hybrid methods have limitations, they are still an essential tool for solving complex problems. As such, hybrid methods remain an important area of research and development, and we can expect to see continued growth and application of this technique in the future.

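Reinforcement Learning
Reinforcement Learning Notes (1)

1 Overview of Reinforcement Learning
Following the success of AlphaGo, reinforcement learning (RL) has become one of the most active research areas in machine learning.

Unlike the more familiar supervised and unsupervised learning, reinforcement learning emphasizes the interaction between an agent and its environment. During this interaction the agent chooses its next action according to its current state; after executing the action, the agent enters the next state and receives from the environment a reward for that state transition.

The goal of reinforcement learning is to extract information from the agent-environment interaction and learn a mapping from states to actions that guides the agent to make the best decision in each state, maximizing the reward obtained.

2 Elements of Reinforcement Learning
Reinforcement learning is usually described using a Markov decision process (MDP).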

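Mathematically, an MDP is usually written as a five-tuple: the set of states, the set of actions, the state transition function, the reward function, and the discount factor.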
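As an illustration of the five-tuple just described, here is a minimal Python sketch. The class name `MDP`, the function signatures, and the two-state toy problem are all assumptions made for this example; they are not taken from the notes. The helper `discounted_return` shows one common way the discount factor is used to weight a sequence of rewards.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

State = str
Action = str


@dataclass(frozen=True)
class MDP:
    """Hypothetical container for the five elements of an MDP."""
    states: List[State]
    actions: List[Action]
    # transition(s, a) returns a distribution over next states: {s': P(s' | s, a)}
    transition: Callable[[State, Action], Dict[State, float]]
    # reward(s, a, s') returns the scalar reward for that transition
    reward: Callable[[State, Action, State], float]
    gamma: float  # discount factor


def toy_transition(state: State, action: Action) -> Dict[State, float]:
    # Assumed toy dynamics: "go" flips between A and B, "stay" does nothing.
    if action == "go":
        return {"B" if state == "A" else "A": 1.0}
    return {state: 1.0}


def toy_reward(state: State, action: Action, next_state: State) -> float:
    # Assumed toy reward: landing in B pays 1, everything else pays 0.
    return 1.0 if next_state == "B" else 0.0


toy = MDP(
    states=["A", "B"],
    actions=["stay", "go"],
    transition=toy_transition,
    reward=toy_reward,
    gamma=0.5,
)


def discounted_return(rewards: List[float], gamma: float) -> float:
    """Sum of rewards where the k-th reward is weighted by gamma**k."""
    total = 0.0
    for r in reversed(rewards):
        total = r + gamma * total
    return total
```

With these toy definitions, `discounted_return([1.0, 1.0, 1.0], toy.gamma)` evaluates 1 + 0.5 + 0.25.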

In recent years, research has applied reinforcement learning to more complex forms of MDP, such as the partially observable Markov decision process (POMDP), the parameterized action Markov decision process (PAMDP), and the stochastic game (SG).

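State (S): a task can have many states, and we assume that the states are equally spaced in time;
Action (A): for every state there should be at least one action to choose from;
Reward (R): for every state, the environment returns a numerical feedback directly in the next state; the higher this value, the more desirable the state;
Policy (π): given a state, π always produces exactly one action a, that is, a = π(s); π can be a lookup table or a function.

3 Classification of Reinforcement Learning Algorithms
There are many kinds of reinforcement learning algorithms; commonly seen ones include algorithms based on the Markov decision process (MDP), the Q-Learning algorithm, and others.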
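To make the ideas of a lookup-table policy a = π(s) and of Q-Learning more concrete, the following is a minimal tabular Q-Learning sketch in Python. The five-state chain environment, the reward of 1 on reaching the last state, the uniformly random behaviour policy, and all parameter values are assumptions chosen for illustration; the notes themselves do not specify an environment.

```python
import random

N_STATES = 5       # hypothetical chain: states 0..4, state 4 is terminal
ACTIONS = (0, 1)   # 0 = move left, 1 = move right


def step(state, action):
    """Deterministic toy dynamics; moving left from state 0 stays at 0."""
    nxt = max(state - 1, 0) if action == 0 else state + 1
    reward = 1.0 if nxt == N_STATES - 1 else 0.0
    return nxt, reward


def q_learning(episodes=500, alpha=0.5, gamma=0.9, seed=0):
    rng = random.Random(seed)
    # q[s][a] is the current estimate of the value of action a in state s
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s = 0
        while s != N_STATES - 1:
            # Q-Learning is off-policy, so a uniformly random behaviour
            # policy is enough for this small example.
            a = rng.randrange(2)
            s2, r = step(s, a)
            # Standard Q-Learning update toward r + gamma * max_a' Q(s', a')
            target = r + gamma * max(q[s2])
            q[s][a] += alpha * (target - q[s][a])
            s = s2
    return q


def greedy_policy(q):
    """Lookup-table policy: for each non-terminal state pick the best action."""
    return [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]


q_table = q_learning()
policy = greedy_policy(q_table)
```

Here `policy` is a plain list indexed by state, which is one way to realise π as a lookup table; on this chain the greedy policy learned from `q_table` moves right in every non-terminal state.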

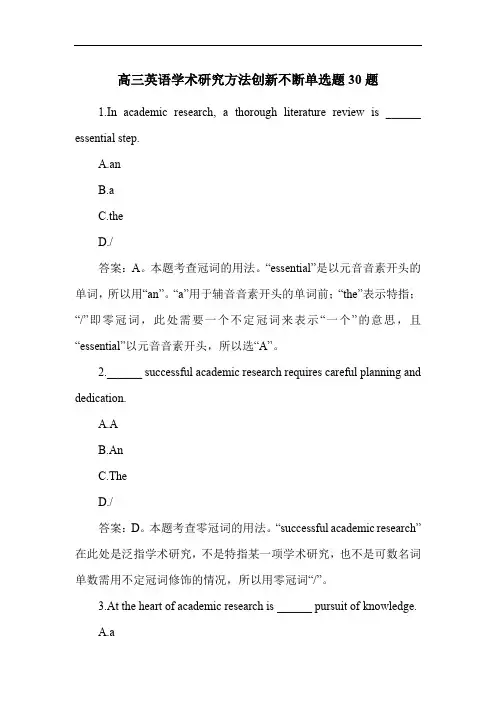

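Senior Three English: 30 Single-Choice Questions on Continual Innovation in Academic Research Methods

1. In academic research, a thorough literature review is ______ essential step.
A. an  B. a  C. the  D. /
Answer: A.

This question tests the use of articles.

“essential” begins with a vowel sound, so “an” is used.

“a” is used before words beginning with a consonant sound; “the” marks a specific reference; “/” is the zero article. An indefinite article meaning “one” is needed here, and “essential” begins with a vowel sound, so the answer is A.

2. ______ successful academic research requires careful planning and dedication.
A. A  B. An  C. The  D. /
Answer: D.

This question tests the use of the zero article.

“successful academic research” here refers to academic research in general, not to one particular piece of research, and it is not a singular countable noun that needs an indefinite article, so the zero article “/” is used.

3. At the heart of academic research is ______ pursuit of knowledge.
A. a  C. the  D. /
Answer: C.

This question tests the use of the definite article.

“the pursuit of knowledge” is a specific concept, so “the” is used.

4. Researchers need ______ accurate data to draw valid conclusions.
A. an  B. a  C. the  D. /
Answer: D.

This question tests the use of the zero article.

“data” is used here as an uncountable noun and does not refer to one particular set of data, so the zero article “/” is used.

5. ______ innovation is crucial in academic research.
A. An  B. A  C. The  D. /
Answer: D.

This question tests the use of the zero article.

“innovation” here refers to innovation in general, not to one particular innovation, and it is not a singular countable noun that needs an indefinite article, so the zero article “/” is used.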

STUDIA UNIV. BABEŞ–BOLYAI, INFORMATICA, Volume XLVIII, Number 1, 2003

A NEW REINFORCEMENT LEARNING ALGORITHM

GABRIELA ŞERBAN

Abstract. The field of Reinforcement Learning, a sub-field of machine learning, represents an important direction for research in Artificial Intelligence, the way for improving an agent’s behavior, given a certain feedback about its performance. In this paper we propose an original algorithm (URU - Utility-Reward-Utility), which is a temporal difference reinforcement learning algorithm. Moreover, we design an Agent for solving a path-finding problem (searching a maze), using the URU algorithm.

Keywords: Reinforcement Learning, Intelligent Agents.

1. Reinforcement Learning

Reinforcement Learning (RL) is the way of improving the behavior of an agent, given a certain feedback about his performance.

Reinforcement Learning [3] is an approach to machine intelligence that combines two disciplines to successfully solve problems that neither discipline can address individually.

Dynamic Programming is a field of mathematics that has traditionally been used to solve problems of optimization and control. However, traditional dynamic programming is limited in the size and complexity of the problems it can address.

Supervised learning is a general method for training a parameterized function approximator, such as a neural network, to represent functions. However, supervised learning requires sample input-output pairs from the function to be learned. In other words, supervised learning requires a set of questions with the right answers. Unfortunately, there are many situations where we do not know the correct answers that supervised learning requires.

For these reasons there has been much interest recently in a different approach known as reinforcement learning (RL).
Reinforcement learning is not a type of neural network,nor is it an alternative to neural networks.Rather,it is an orthogonal approach that addresses a different, more difficult question.Reinforcement learning combines thefields of dynamic Received by the editors:December10,2002.2000Mathematics Subject Classification.68T05.1998CR Categories and Descriptors.I.2.6[Computing Methodologies]:Artificial In-telligence–Learning.34GABRIELA S¸ERBANprogramming and supervised learning to yield powerful machine-learning systems. Reinforcement learning appeals to many researchers because of its generality.In RL,the computer is simply given a goal to achieve.The computer then learns how to achieve that goal by trial-and-error interactions with its environment.A reinforcement learning problem has three fundamental parts[3]:•the environment–represented by“states”.Every RL system learns amapping from situations to actions by trial-and-error interactions witha dynamic environment.This environment must at least be partiallyobservable by the reinforcement learning system;•the reinforcement function–the“goal”of the RL system is defined usingthe concept of a reinforcement function,which is the exact function offuture reinforcements the agent seeks to maximize.In other words,thereis a mapping from state/action pairs to reinforcements;after performingan action in a given state the RL agent will receive some reinforcement(reward)in the form of a scalar value.The RL agent learns to performactions that will maximize the sum of the reinforcements received whenstarting from some initial state and proceeding to a terminal state.It isthe job of the RL system designer to define a reinforcement function thatproperly defines the goals of the RL agent.Although complex reinforce-ment functions can be defined,there are at least three noteworthy classesoften used to construct reinforcement functions that properly define thedesired goals;•the value(utility)function–explains how the agent learns to 
choose“good”actions,or even how we might measure the utility of an action.Two terms were defined:a policy determines which action should beperformed in each state;a policy is a mapping from states to actions.The value of a state is defined as the sum of the reinforcements receivedwhen starting in that state and following somefixed policy to a terminalstate.The value(utility)function would therefore be the mapping fromstates to actions that maximizes the sum of the reinforcements whenstarting in an arbitrary state and performing actions until a terminalstate is reached.In a reinforcement learning problem,the agent receives a feedback,known as reward or reinforcement;the reward is received at the end,in a terminal state,or in any other state,where the agent has exactly information about what he did well or wrong.2.A Reinforcement Learning ProblemLet us consider the following problem:The Problem DefinitionA NEW REINFORCEMENT LEARNING ALGORITHM5We consider an environment represented as a space of states(each state is characterized by its position in the environment-two coordinates specifying the X-coordinate,respectively the Y-coordinate of the current position).The goal of a robotic agent is to learn to move in the environment from an initial to afinal state,on a shortest path(as number of transitions between states).Notational conventions used in the followings are:•M={s1,s2,...,s n}-the environment represented as a space of states;•si∈M,sf∈M-the initial,respectively thefinal state of the environ-ment(the problem could be generalized for the case of the environmentswith a set offinal states);•h:M→P(M)-the transition function between the states,having thefollowing signification:h(i)={j1,j2,...,j k},if,at a given moment,fromthe state i the agent could move in one of the states j1,j2,...,j k;wewill call a state j that is accessible from state i(j∈h(i))the neighbor(successor)state of i;•the transition probabilities between a state i and each neighbor state j(we note with 
card(M)the number of i are the same,P(i,j)=1card(h(i))of elements of the set M);The GoalThe problem will consist in training the agent tofind the shortest path to reach thefinal state sf starting from the initial state si.For solving this problem,we propose in the followings a reinforcement learn-ing algorithm,based on learning the states’utilities(values),in which the agent receives rewards from interactions with the environment.3.The URU Algorithm(Utility-Reward-Utility)The algorithm described in this section is an algorithm for learning the states’values,a variant of learning based on Temporal Differences[1].The algorithm’s idea is the following:•the agent starts with some initial estimates of the state’s utilities;•during some training episodes,the agent will experiment some pathsfrom si to sf(possible optimal),updating,properly,the states’utilitiesestimations;•during the training process the states’utilities estimations converge tothe exact values of the states’utilities,thus,at the end of the trainingprocess,the estimations will be in the vicinity of the exact values.We make the following notations:•U(i)-the estimated utility of the state i;•R(i)-the reward received by the agent in the state i.The URU Algorithm6GABRIELA S¸ERBANThe algorithm is shown in Figure1.(1)Initialize the state utilities with some initial values;(2)Initialize the current state with the initial state sc:=si;(3)Choose a state s neighbor of sc(s∈h(sc)),using some known actionselection mechanisms( -Greedy or SoftMax[2]),following the steps:(a)determine the set of successors of the current state(m=h(sc));(b)if the current state has no successors(m is empty),return to theprevious state(s:=sc);otherwise go to step(c);(c)select from m a subset m1containing the states that were not visitedyet in the current training sequence;(d)choose a state s from m1using a selection mechanism.(4)determine the reward r received by the agent in the state sc;(5)if the current state is notfinal,then update the 
utility of the currentstate as follows:(1)U(sc):=U(sc)+α·(r+γ·U(s)−U(sc))whereα∈(0,1)is afixed parameter(the learning rate),andγ∈(0,1)is afixed parameter(the reward factor).(6)sc:=s;(7)repeat the step3until sc is thefinal state;(8)repeat the steps2-7for a given number of training episodes.Figure1.The Reward-Utility-Reward(URU)Algorithm.We have to make the following specifications:•the training process during an episode has the complexity in the worstcase O(n2),where n is the number of the environment’s states;•in a training sequence,the agent updates the utility of the current stateusing only the selected successor state,not all the successors(the tem-poral difference characteristic).4.Case StudyIt is known that the estimated utility of a state[1]in a reinforcement learning process is the estimated reward-to-go of the state(the sum of rewards received from the given state to afinal state).So,after a reinforcement learning process, the agent learns to execute those transitions that maximize the sum of rewards received on a path from the initial to afinal state.If we consider the reward function as:r(s)=−1if s=sf,and r(s)=0, otherwise,it is obvious that the goal of the learning process is to minimize theA NEW REINFORCEMENT LEARNING ALGORITHM7 number of transitions from the initial to thefinal state(the agent learns to move on the shortest path).For illustrating the convergence of the algorithm,we will consider that the problem is one of learning the shortest path(the reward function is as we described above),and the environment has the following characteristics:•the environment has a rectangular form;•at a given moment,from a given state,the agent could move in fourdirections:North,South,East,West.In the followings,we will enounce an original theorem that gives the conditions for convergence of the URU algorithm.Theorem1.Let us consider the learning problem described above and which sat-isfies the conditions:•the initial values of the states’utilities(the step(1)of 
the URU algorithmdescribed in Figure1)are calculated as:U(s)=−d(s,sf)−2,for alls∈M,where d(s1,s2)represent the Manhattan distance between thetwo states;•γ≤13In this case,the URU algorithm is convergent(the states’utilities are convergent after the training sequence).The Theorem1proving is based on the following lemmas:Lemma 2.At the n-th training episode of the agent the following inequalities hold:U n(i)≤−2,for all i∈M.Lemma 3.At the n-th training episode of the agent the following inequalities hold:|U n(i)−U n(j)|≤1,for each transition from i(i=sf)to j made by the agent in the current training sequence.Lemma4.The inequalities U n+1(i)≥U n(i)hold for all i∈M and for all n∈N, in other words the states’utilities increase from a training episode to another.Theorem2gives the equilibrium equation of the states’utilities after applying the URU algorithm.Theorem5.In our learning problem,the equilibrium equation of the states’util-ities is given by the following equation:(2)U∗URU(i)=R(i)+γcard(h(i))·j successor of iU∗URU(j)for all i∈M,where U∗URU (i)represents the exact utility of the state i,obtainedafter applying the URU algorithm.We note by card(M)the number of elements of the set M.8GABRIELA S¸ERBAN5.An Agent for Maze Searching5.1.General Presentation.The application is written in JDK1.4and imple-ments the behavior of an Intelligent Agent(a robotic agent),whose purpose is coming out from a maze on the shortest path,using the algorithm described in the previous section(URU).We assume that:•the maze has a rectangular form;in some positions there are obstacles;the agent starts in a given state and tries to reach afinal(goal)state,avoiding the obstacles;•from a certain position on the maze the agent could move in four direc-tions:north,south,east,west(there are four possible actions);5.2.The Agent’s Design.The basis classes used for implementing the agent’s behavior are the followings:•CState:defines the structure of a State from the environment.Thisclass has methods 
for:
  – setting components (the current position in the maze, the value of a state, the utility of a state);
  – accessing components;
  – calculating the utility of a state;
  – verifying whether the state is accessible (whether or not it contains an obstacle).
• CList: defines the structure of a list of objects. The main methods of the class are for:
  – adding elements;
  – accessing elements;
  – updating elements.
• CEnvironment: defines the structure of the agent's environment (it depends on the concrete problem; in our example the environment is a rectangular maze).
• CNeighborhood: the class that defines the accessibility relation between two states of the environment.
• CRLAgent: the main class of the application, which implements the agent's behavior and the learning algorithm.
  The private member data of this class are:
  – m: the agent's environment (a CEnvironment);
  – v: the accessibility relation between the states (a CNeighborhood).
  The public methods of the agent are the following:
  – readEnvironment: reads the information about the environment from an input stream;
  – writeEnvironment: writes the information about the environment to an output stream;
  – learning: the main method of the agent, which implements the URU algorithm; based on this algorithm, the agent updates the utilities of the environment's states;
  – next: the agent determines the next state to move to (this is done after the learning process has taken place).
  Besides the public methods, the agent has some private methods used in the method learning.

5.3. Experimental Results. For our experiment, we consider the environment shown in Figure 2. The state marked with 1 represents the initial state of the agent, the state marked with 2 represents the final state, and the states filled with black contain obstacles (which the agent should avoid).

Figure 2. The agent's environment

For the environment described in Figure 2, we use the URU algorithm, with the following initial settings:
• $\gamma = 0.9$;
• $\alpha = 0.01$;
• number of episodes = 10;
• as a selection mechanism, we
choose the $\varepsilon$-Greedy selection, with $\varepsilon = 0.1$.

The results obtained after the URU learning are presented in Table 1. The states of the environment are numbered from 1 to 36, starting with the upper-left corner, row by row. The columns give the estimated values of the states' utilities during the training episodes.

Table 1. The states' utilities after the training episodes with the URU algorithm (Ep. = Episode)

State     Ep. 1     Ep. 2     Ep. 3     Ep. 4     Ep. 5     Ep. 6     Ep. 7     Ep. 8     Ep. 9    Ep. 10
    1    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000
    2   -6.0000   -6.0000   -6.0000   -6.0000   -6.0000   -6.0000   -5.9710   -5.9422   -5.9422   -5.9422
    3   -5.0000   -5.0000   -5.0000   -5.0000   -5.0000   -5.0000   -4.9780   -4.9561   -4.9561   -4.9561
    4    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000
    5   -3.0000   -2.9760   -2.9760   -2.9760   -2.9522   -2.9522   -2.9522   -2.9522   -2.9522   -2.9522
    6   -2.0000   -2.0000   -2.0000   -2.0000   -2.0000   -2.0000   -2.0000   -2.0000   -2.0000   -2.0000
    7   -8.0000   -8.0000   -8.0000   -8.0000   -8.0000   -8.0000   -7.4610   -7.1124   -7.1124   -7.1124
    8   -7.0000   -7.0000   -7.0000   -7.0000   -7.0000   -7.0000   -6.9640   -6.9267   -6.9267   -6.9267
    9   -6.0000   -6.0000   -6.0000   -6.0000   -6.0000   -6.0000   -5.9650   -5.9303   -5.9303   -5.9303
   10   -5.0000   -5.0000   -5.0000   -5.0000   -5.0000   -5.0000   -5.0000   -4.9779   -4.9779   -4.9779
   11   -4.0000   -3.9790   -3.9790   -3.9790   -3.9581   -3.9581   -3.9581   -3.9436   -3.9436   -3.9436
   12   -3.0000   -3.0000   -3.0000   -3.0000   -2.9919   -2.9919   -2.9919   -2.9839   -2.9839   -2.9601
   13   -9.0000   -9.0000   -9.0000   -9.0000   -9.0000   -9.0000   -8.3031   -7.8600   -7.8600   -7.8600
   14    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000
   15   -7.0000   -7.0000   -7.0000   -7.0000   -7.0000   -7.0000   -6.9580   -6.9580   -6.9580   -6.9580
   16    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000
   17   -5.0000   -4.9720   -4.9720   -4.9720   -4.9720   -4.9720   -4.9720   -4.9720   -4.9720   -4.9720
   18   -4.0000   -3.9850   -3.9850   -3.9850   -3.9641   -3.9641   -3.9641   -3.9435   -3.9435   -3.9230
   19    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000
   20   -8.3664   -8.3166   -7.7394   -7.7394   -7.6956   -7.6956   -7.6521   -7.6521   -7.0875   -7.0498
   21   -7.9510   -7.9024   -7.8541   -7.8541   -7.8063   -7.8063   -7.7592   -7.7592   -7.7122   -7.6655
   22   -6.9640   -6.9282   -6.8926   -6.8926   -6.8573   -6.8573   -6.8573   -6.8573   -6.8218   -6.7866
   23    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000
   24   -5.0000   -4.9720   -4.9720   -4.9720   -4.9442   -4.9442   -4.9442   -4.9167   -4.9167   -4.8893
   25  -10.9300  -10.8584  -10.7872   -9.7732   -9.7732   -9.7105   -9.7105   -9.6483   -9.5865   -9.5233
   26   -9.2171   -9.1601   -8.4560   -8.4138   -8.3629   -8.3214   -8.2712   -8.2303   -7.5718   -7.5273
   27    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000
   28   -7.9510   -7.9024   -7.8541   -7.8541   -7.8063   -7.7588   -7.7588   -7.7117   -7.6691   -7.6268
   29   -6.9640   -6.9282   -6.8927   -6.8927   -6.8573   -6.8166   -6.8166   -6.7761   -6.7761   -6.7359
   30   -6.0000   -5.9650   -5.9650   -5.9650   -5.9303   -5.9017   -5.9017   -5.8675   -5.8675   -5.8058
   31  -11.9230  -11.8466  -11.7707  -10.5639  -10.5006  -10.4349  -10.3725  -10.3079  -10.2438  -10.1801
   32  -10.9298  -10.9298  -10.8578  -10.7846  -10.7120  -10.6445  -10.5731  -10.5047  -10.4342  -10.4342
   33   -9.9430   -9.9430   -9.8864   -9.8864   -9.8864   -9.1123   -9.1123   -9.0559   -9.0068   -9.0068
   34   -8.9500   -8.9500   -8.9003   -8.9003   -8.9003   -8.2313   -8.2313   -8.1823   -8.1376   -8.0896
   35   -7.9570   -7.9084   -7.8662   -7.8662   -7.8184   -7.7768   -7.7768   -7.7768   -7.7768   -7.7293
   36   -7.0000   -6.9580   -6.9580   -6.9580   -6.9163   -6.8806   -6.8806   -6.8806   -6.8806   -6.8393

From Table 1 it can be seen that the states' utilities grow (or remain unchanged) during the training episodes; none of them decreases.

After the training, the agent reports the learned path (from the initial to the final state), that is the path (6-1), (5-1), (5-2), (4-2), (4-3), (4-4), (5-4), (5-5), (5-6), (4-6), (3-6), (2-6), (1-6).

As a policy for moving in the environment after the learning, we consider that, from a given state, the agent moves to a neighboring state that has not been visited yet and has maximum utility (of course, to determine the policy, we could also use a probabilistic action selection mechanism).

In order to illustrate the experimental results, we give below graphical representations that confirm the theoretical results from the previous sections.

Figure 3 presents the change of the initial state's utility during the training episodes (the utility grows during the training).

Figure 3. The initial state's
utilities during the training process

Figure 4 presents the graphical representation of the states' utilities in the first training episode, and Figure 5 presents the graphical representation of the states' utilities in the last training episode. Comparing the two figures, we observe that, for each state of the environment, the utilities grow during the training.

In Figure 6 we compare the states' utilities in the first, the 5th and the 10th training episode.

Figure 4. The states' utilities in the first training episode

5.4. Experimental comparison between the URU algorithm and the TD (Temporal Difference) algorithm [1]. In this section we illustrate that, for the learning problem in the environment shown in Figure 2, the states' utilities are not convergent when the TD algorithm is applied. This fact is presented in Table 2, which gives the utilities of the first five states during the training, obtained by applying the TD algorithm to our problem.

Table 2. The states' utilities after the training episodes with the TD algorithm (Ep. = Episode)

State     Ep. 1     Ep. 2     Ep. 3     Ep. 4     Ep. 5     Ep. 6     Ep. 7     Ep. 8     Ep. 9    Ep. 10
    1    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000
    2   -6.0000   -6.0000   -6.0000   -6.0002   -6.0002   -6.0002   -6.0002   -6.0002   -6.0202   -6.0202
    3   -5.0000   -5.0000   -5.0000   -5.0200   -5.0200   -5.0400   -5.0400   -5.0400   -5.0600   -5.0600
    4    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000    0.0000
    5   -2.9900   -2.9801   -2.9703   -2.9703   -2.9703   -2.9606   -2.9606   -2.9606   -2.9510   -2.9510
  ...

From Table 2 it can be seen that the states' utilities do not behave monotonically: for some states the utilities increase, while for other states they decrease during the training, so the convergence of the algorithm is not guaranteed.

6. Conclusions and Further Work

As we have mentioned in the previous sections, the URU algorithm is a variant of an RL algorithm based on temporal differences (if $\gamma = 1$, URU becomes the classical temporal difference learning algorithm, TD).

In comparison
with the classical TD algorithm, the following remarks may be made (they follow naturally from the theoretical results described in Section 4):
• the states' utilities grow faster in the URU algorithm than in the TD algorithm, in other words $U_{URU}(i) > U_{TD}(i)$, for all $i \in M$, which means that the URU algorithm converges faster to the solution than the TD algorithm;
• in the case of our learning problem, convergence is proved in Theorem 1 only for $\gamma \leq \frac{1}{3}$; for $\gamma = 1$ (the TD algorithm), we cannot prove the convergence of the states' utilities.

Figure 5. The states' utilities in the last training episode

Further work is planned in the following directions:
• to analyze what happens if the transitions between states are nondeterministic (the environment is a Hidden Markov Model [4]);
• to analyze what happens if the reward factor ($\gamma$) is not a fixed parameter, but a function whose values depend on the current state of the agent;
• to develop the algorithm for solving path-finding problems with multiple agents.

Figure 6. The states' utilities in the first, the 5th and the 10th training episode

References

[1] Russell, S. J., Norvig, P.: Artificial Intelligence: A Modern Approach. Prentice-Hall, Englewood Cliffs, NJ, 1995
[2] Sutton, R., Barto, A. G.: Reinforcement Learning. The MIT Press, Cambridge, MA, 1998
[3] Harmon, M., Harmon, S.: Reinforcement Learning – A Tutorial, Wright State University, /~mharmon/rltutorial/frames.html, 2000
[4] Serban, G.: Training Hidden Markov Models – A Method for Training Intelligent Agents, Proceedings of the Second International Workshop of Central and Eastern Europe on Multi-Agent Systems, Krakow, Poland, 2001, pp. 267-276

Babeş-Bolyai University, Cluj-Napoca, Romania
E-mail address: gabis@cs.ubbcluj.ro
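
The update rule (1) of Figure 1, the initial utilities required by Theorem 1, and the ε-Greedy selection used in Section 5.3 can be sketched as follows. This is only a minimal illustration in Python, not the Java application described in Section 5: all function names are hypothetical, the reward r is assumed to be the reward of the current state under the shortest-path reward function of Section 4, and `max_steps` is an added safety bound. It is not expected to reproduce the values in Table 1.

```python
import random


def manhattan(a, b):
    """Manhattan distance between two (row, col) cells."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def init_utilities(rows, cols, obstacles, goal):
    """Initial utilities U(s) = -d(s, s_f) - 2 for every obstacle-free cell."""
    return {
        (r, c): -manhattan((r, c), goal) - 2.0
        for r in range(rows)
        for c in range(cols)
        if (r, c) not in obstacles
    }


def successors(state, utilities):
    """Accessible neighbours to the North, South, East and West."""
    r, c = state
    candidates = [(r - 1, c), (r + 1, c), (r, c + 1), (r, c - 1)]
    return [s for s in candidates if s in utilities]


def reward(state, goal):
    """Shortest-path reward: -1 in every non-final state, 0 in the final one."""
    return 0.0 if state == goal else -1.0


def update(utilities, current, successor, r, alpha, gamma):
    """Update (1): U(sc) := U(sc) + alpha * (r + gamma * U(s) - U(sc))."""
    utilities[current] += alpha * (
        r + gamma * utilities[successor] - utilities[current]
    )


def run_episode(utilities, start, goal, alpha=0.01, gamma=0.9, epsilon=0.1,
                rng=None, max_steps=10_000):
    """One training episode with epsilon-greedy successor selection.

    Assumptions (not taken from the paper): r is the reward of the current
    state, every visited state has at least one accessible neighbour, and
    max_steps is a safety bound on the episode length.
    """
    rng = rng or random.Random(0)
    current, path = start, [start]
    while current != goal and len(path) <= max_steps:
        options = successors(current, utilities)
        if rng.random() < epsilon:
            chosen = rng.choice(options)              # explore
        else:
            chosen = max(options, key=utilities.get)  # exploit
        update(utilities, current, chosen, reward(current, goal), alpha, gamma)
        current = chosen
        path.append(current)
    return path
```

With `epsilon=0.0` the selection is purely greedy, so on an obstacle-free grid the agent follows the initial Manhattan-based utilities straight to the goal; with the default `epsilon=0.1` roughly one move in ten is chosen at random, as in the experiment of Section 5.3.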

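Artificial intelligence is an emerging and challenging discipline.

Since its birth, artificial intelligence has developed rapidly and has produced many branches.

These include reinforcement learning, simulated environments, intelligent hardware, and machine learning.

At present, artificial intelligence technology is developing rapidly and bringing many conveniences to people's lives.

Below is a compiled collection of English-language references on artificial intelligence, shared for your reference.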