The Implementation of ObjectMath - a High-Level Programming Environment for Scientific Comp

- Format: PDF

- Size: 138.84 KB

- Pages: 7

Design and Implementation of a Bionic Robotic Hand with Multimodal Perception Based on Model Predictive Control

Abstract: This paper presents a modular bionic robotic hand system based on Model Predictive Control (MPC). The system's main controller is a six-degree-of-freedom STM32 servo control board. The Newton-Euler method is used to analyze the kinematic equations of the bionic robotic hand in detail, supporting both forward and inverse kinematic calculations. In addition, MPC strategies are implemented to achieve precise control of the robotic hand and efficient execution of complex tasks. To enhance the hand's environmental perception, the system integrates a range of sensor and interface modules: a sound sensor, an infrared sensor, an ultrasonic distance sensor, an OLED display module, a digital tilt sensor, a Bluetooth module, and a PS2 wireless remote-control module. These modules allow the robotic hand to perceive and respond to environmental changes in real time, improving operational flexibility and precision. Experimental results indicate that the system offers flexible control, good synchronization performance, and broad application prospects.

Keywords: Bionic robotic hand; Model Predictive Control (MPC); kinematic analysis; modular design

I. INTRODUCTION

With the rapid development of robotics technology, bionic systems have become increasingly important in industrial and research settings. This study presents a bionic robotic hand that mimics the structure of the human hand and integrates an STM32 microcontroller with various sensors to achieve precise and flexible control. Traditional control methods for robotic hands often suffer from slow response, insufficient control accuracy, and poor adaptability to complex environments.
To address these challenges, this paper uses the Newton-Euler method to establish a dynamic model and introduces Model Predictive Control (MPC) strategies, which improve the control precision and task-execution efficiency of the robotic hand. The hand can reproduce basic human arm movements, and a motion-sensing glove gives precise control over each joint, enabling complex and delicate operations. The integrated sensors give the hand biologically inspired "tactile," "auditory," and "visual" capabilities, increasing its interactivity and level of automation.

Beyond industrial automation, the bionic robotic hand has applications in scientific exploration and daily life. For instance, it demonstrates high reliability and precision in extreme environments, such as simulating extraterrestrial terrain and studying the possibility of life.

II. SYSTEM DESIGN

The bionic robotic hand consists primarily of fingers with multiple jointed degrees of freedom, each of which can be controlled independently. The STM32 servo controller acts as the main controller: it receives data from sensors placed at appropriate locations on the hand and drives the hand's movements by adjusting the joint angles.
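
Adjusting a joint angle ultimately means changing the width of the PWM pulse sent to that joint's servo. The sketch below shows that mapping for the six joints. It is a minimal illustration, not the authors' firmware: it assumes a typical hobby servo driven by a 50 Hz PWM frame in which a 500 to 2500 µs pulse spans 0 to 180 degrees, and all constants and function names are hypothetical. It is written in Python for readability; on the STM32 the same arithmetic would set a timer's compare value.

```python
# Hypothetical servo timing: typical hobby-servo values, not taken from the paper.
PWM_PERIOD_US = 20_000   # 50 Hz PWM frame
MIN_PULSE_US = 500       # pulse width commanding 0 degrees
MAX_PULSE_US = 2_500     # pulse width commanding 180 degrees
MAX_ANGLE_DEG = 180.0


def angle_to_pulse_us(angle_deg: float) -> int:
    """Map a joint angle in degrees to a pulse width in microseconds,
    clamping the angle to the servo's 0..180 degree range."""
    angle = min(max(angle_deg, 0.0), MAX_ANGLE_DEG)
    span = MAX_PULSE_US - MIN_PULSE_US
    return round(MIN_PULSE_US + span * angle / MAX_ANGLE_DEG)


def pulse_to_compare(pulse_us: int, ticks_per_period: int = 20_000) -> int:
    """Convert a pulse width to a compare value for a PWM timer whose
    period is divided into ticks_per_period counts (hypothetical setup)."""
    return pulse_us * ticks_per_period // PWM_PERIOD_US


def hand_pose_to_pulses(angles_deg):
    """Convert six joint angles (rotating base + five fingers) into six
    pulse widths, one per PWM channel."""
    if len(angles_deg) != 6:
        raise ValueError("expected six joint angles")
    return [angle_to_pulse_us(a) for a in angles_deg]


if __name__ == "__main__":
    # Example pose: base centred, fingers at different flexion angles.
    print(hand_pose_to_pulses([90, 0, 45, 90, 135, 180]))
```

Clamping before conversion keeps an out-of-range command from driving a servo past its mechanical stop. The real pulse limits differ between servo models and would have to be calibrated.
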
To improve motion control, the Newton-Euler method is used to establish a dynamic model, a kinematic analysis is carried out, and MPC strategies are integrated to improve performance in complex environments. For control input, the system uses a motion-sensing glove together with a PS2 controller and a Bluetooth module, combining multiple control modalities.

[Figure placeholder]

III. HARDWARE SELECTION AND DESIGN

Before designing the system, a key consideration is selecting hardware modules that meet the functional requirements while controlling cost and providing adequate performance. The hardware consists mainly of the bionic robotic hand, a servo controller system, a sound module, an infrared module, an ultrasonic distance measurement module, and a Bluetooth module. The main components are described below.

A. Bionic Mechanical Structure

The robotic hand consists of a rotating base and five articulated fingers, forming a six-degree-of-freedom motion structure. These six degrees of freedom let the system meet complex motion requirements while maintaining high efficiency and fast response. In operation, the microcontroller outputs a separate PWM signal for each of the six degrees of freedom, so that each joint's movement is controlled independently.

B. Controller and Servo System

The control system requires a variety of serial interfaces. To achieve efficient control, it combines an STM32 microcontroller with an Arduino control board to exploit the strengths of both. The STM32 microcontroller serves as the servo controller, while the Arduino board provides extensive interfaces and sensor support, simplifying programming and application development.
This integration ensures fast, precise control of the robotic hand and speeds up development.

C. Bluetooth Module

The HC-05 Bluetooth module supports full-duplex serial communication at distances of up to 10 meters and offers several operating modes. In automatic-connection mode, the module transmits data according to a preset program. In command-response mode, it accepts AT commands, allowing users to configure parameters or issue control commands. The logic levels on its external pins switch it between states, making the module suitable for a variety of control scenarios.

D. Ultrasonic Distance Measurement Module

The US-016 ultrasonic distance measurement module provides non-contact distance measurement up to 3 meters and supports several operating modes. In continuous-measurement mode, the module repeatedly emits ultrasonic waves and receives the reflected signals to calculate the distance to an object in real time. The measurement range or sensitivity can also be adjusted in a configuration-response mode, allowing users to set measurement parameters or change the measurement frequency as needed. The output signal reports the measurement result through the levels of external pins, making the module suitable for a variety of distance-sensing and automatic-control applications.

IV. DESIGN AND IMPLEMENTATION OF SYSTEM SOFTWARE

A. Kinematic Analysis and MPC Strategies

Control research on the robotic hand rests on a mathematical model, and a reliable model is essential for studying the system's controllability. The Denavit-Hartenberg (D-H) method is used to model the kinematics of the bionic robotic hand, assigning a local coordinate system to each joint.
The Z-axis is aligned with the joint's rotation axis, and the X-axis lies along the common normal (the shortest distance) between adjacent Z-axes, which establishes the coordinate system for the robotic hand. By determining the D-H parameters of each joint (joint angle, link offset, link length, and twist angle), the transformation matrix of each joint is derived, and the overall transformation matrix from the base to the fingertip is computed as their product. This matrix encodes the position and orientation of the fingertip in space, enabling precise forward and inverse kinematic analysis. The model's accuracy is validated through simulation, which confirms the correct positioning of the fingertip. In addition, MPC strategies are introduced to control the robotic hand efficiently and to achieve trajectory tracking by predicting system states and optimizing control inputs.

Taking the index finger as an example, the D-H parameter table is established as shown in Table I.

TABLE I. DATA SHEET

… joints, both the forward and the inverse kinematic solutions are derived, yielding the kinematic model of the index finger. Following the same approach, the kinematic models of all the other fingers can be obtained. The movement space of the index fingertip is shown in Figure 1.

Fig. 1. The movement space at the end of the index finger

Mathematical Model of the Bionic Robotic Hand Based on the Newton-Euler Method
According to the design, each joint of the bionic robotic hand has a specified degree of freedom. For each joint $i$, the angle is denoted $\theta_i$, the angular velocity $\dot{\theta}_i$, and the angular acceleration $\ddot{\theta}_i$. The dynamics equation of each joint can be expressed as

$$\tau_i = I_i \ddot{\theta}_i + \omega_i \times (I_i \omega_i)$$

where $\tau_i$ is the joint torque, $I_i$ is the joint inertia matrix, and $\omega_i$ and $\ddot{\theta}_i$ are the joint angular velocity and angular acceleration, respectively.

The control input is generated by the motor driver (servo), whose output is torque. If the motor input of joint $i$ is $u_i$, the joint torque $\tau_i$ is obtained through the motor's torque constant $k_\tau$:

$$\tau_i = k_\tau \, u_i$$

The system dynamics can then be described as

$$I_i \ddot{\theta}_i + b_i \dot{\theta}_i + c_i \theta_i = \tau_i - \tau_{\text{ext},i}$$

where $b_i$ is the damping coefficient, $c_i$ is the spring constant (accounting for joint elasticity), and $\tau_{\text{ext},i}$ represents external torques acting on joint $i$, such as gravity and friction.

The primary control objective is for the end-effector of the robotic hand (e.g., a fingertip) to track a predefined trajectory accurately. Let the desired trajectory be $y_d(t)$ and the actual trajectory $y(t)$. The tracking error is

$$e(t) = y_d(t) - y(t)$$

The goal of MPC is to minimize the cumulative tracking error, typically through the objective function

$$J = \sum_{k=0}^{N-1} e(k)^{T} Q_e \, e(k)$$

where $Q_e$ is the error weight matrix and $N$ is the length of the prediction horizon.

Mechanical constraints require the joint angles and velocities to remain within their physically permissible ranges. Assume the angle range of the $i$-th joint is $[\theta_i^{\min}, \theta_i^{\max}]$ and the velocity range is $[\dot{\theta}_i^{\min}, \dot{\theta}_i^{\max}]$.
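
The joint model, the objective $J$, and the angle and velocity limits above can be combined into a small receding-horizon controller. The sketch below is a minimal illustration under stated assumptions, not the paper's implementation. It models a single joint with scalar, hypothetical parameters (inertia, damping, stiffness, torque constant) and sets $\tau_{\text{ext}} = 0$. It uses the joint angle itself as the tracked output $y$ and discretizes the dynamics with a semi-implicit Euler step. The optimal input sequence is found by exhaustive search over a coarse grid of admissible inputs, with the $N$ predicted errors indexed from $k = 0$. Sequences that leave the angle or velocity range are discarded, and only the first input of the best sequence is applied, which is the defining receding-horizon step of MPC.

```python
import itertools

# Hypothetical single-joint parameters, chosen for illustration only.
I_J, B_J, C_J, K_TAU = 0.01, 0.05, 0.2, 0.5  # inertia, damping, stiffness, torque constant
DT = 0.01                                    # control / integration step [s]
THETA_MIN, THETA_MAX = 0.0, 1.6              # joint-angle limits [rad]
OMEGA_MIN, OMEGA_MAX = -3.0, 3.0             # joint-velocity limits [rad/s]
U_LEVELS = [-1.0 + 0.25 * j for j in range(9)]  # admissible motor inputs, -1.0 .. 1.0


def step(theta, omega, u, tau_ext=0.0):
    """Advance I*theta'' + b*theta' + c*theta = k_tau*u - tau_ext by one
    semi-implicit Euler step and return the new (theta, omega)."""
    tau = K_TAU * u
    alpha = (tau - tau_ext - B_J * omega - C_J * theta) / I_J
    omega = omega + DT * alpha
    theta = theta + DT * omega
    return theta, omega


def mpc_control(theta, omega, reference, horizon=3, q_e=1.0):
    """Receding-horizon control by exhaustive search over input sequences.

    Minimizes J = sum_k q_e * e(k)^2, with e(k) = y_d(k) - theta(k) over the
    predicted horizon, rejects sequences that violate the angle or velocity
    limits, and returns only the first input of the best sequence."""
    best_cost, best_u = float("inf"), 0.0
    for seq in itertools.product(U_LEVELS, repeat=horizon):
        th, om, cost = theta, omega, 0.0
        for k, u in enumerate(seq):
            th, om = step(th, om, u)
            if not (THETA_MIN <= th <= THETA_MAX and OMEGA_MIN <= om <= OMEGA_MAX):
                cost = float("inf")      # infeasible: violates mechanical constraints
                break
            e = reference[k] - th
            cost += q_e * e * e
        if cost < best_cost:
            best_cost, best_u = cost, seq[0]
    return best_u                        # stays 0.0 if no sequence is feasible


if __name__ == "__main__":
    theta, omega = 0.0, 0.0
    for _ in range(100):                 # closed loop: re-plan at every step
        u = mpc_control(theta, omega, [0.5] * 3)
        theta, omega = step(theta, omega, u)
    print(round(theta, 3), round(omega, 3))
```

Brute-force search is only practical for tiny horizons and input grids. A practical controller would pose the same constrained problem as a quadratic program and pass it to a dedicated solver.
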

Journal of VLSI Signal Processing 39, 295–311, 2005
© 2005 Springer Science + Business Media, Inc. Manufactured in The Netherlands.

Parallel-Beam Backprojection: An FPGA Implementation Optimized for Medical Imaging

MIRIAM LEESER, SRDJAN CORIC, ERIC MILLER AND HAIQIAN YU
Department of Electrical and Computer Engineering, Northeastern University, Boston, MA 02115, USA

MARC TREPANIER
Mercury Computer Systems, Inc., Chelmsford, MA 01824, USA

Received September 2, 2003; Revised March 23, 2004; Accepted May 7, 2004

Abstract. Medical image processing in general, and computerized tomography (CT) in particular, can benefit greatly from hardware acceleration. This application domain is marked by computationally intensive algorithms requiring the rapid processing of large amounts of data. To date, reconfigurable hardware has not been applied to the important area of image reconstruction. For efficient implementation and maximum speedup, fixed-point implementations are required. The associated quantization errors must be carefully balanced against the requirements of the medical community. Specifically, care must be taken so that very little error is introduced compared to floating-point implementations and the visual quality of the images is not compromised. In this paper, we present an FPGA implementation of the parallel-beam backprojection algorithm used in CT for which all of these requirements are met. We explore a number of quantization issues arising in backprojection and concentrate on minimizing error while maximizing efficiency. Our implementation shows approximately 100 times speedup over software versions of the same algorithm running on a 1 GHz Pentium, and is more flexible than an ASIC implementation. Our FPGA implementation can easily be adapted both to medical sensors with different dynamic ranges and to tomographic scanners employed in a wider range of application areas, including nondestructive evaluation and baggage inspection in airport terminals.

Keywords: backprojection, medical imaging, tomography, FPGA, fixed point arithmetic

1. Introduction

Reconfigurable hardware offers significant potential for the efficient implementation of a wide range of computationally intensive signal and image processing algorithms. The advantages of using Field Programmable Gate Arrays (FPGAs) instead of DSPs include reduced size, weight, and power and improved performance of the computational platform. FPGA implementations are also preferred over ASIC implementations because FPGAs offer more flexibility and lower cost. To date, the full utility of this class of hardware has gone largely unexplored and unexploited for many mainstream applications.

In this paper, we consider a detailed implementation and comprehensive analysis of one of the most fundamental tomographic image reconstruction steps, backprojection, on reconfigurable hardware. While we concentrate our analysis on issues arising in the use of backprojection for medical imaging applications, both the implementation and the analysis we provide can be applied directly or easily extended to a wide range of other fields where this task needs to be performed. These include remote sensing and surveillance using synthetic aperture radar, and non-destructive evaluation.

Tomography refers to the process that generates a cross-sectional or volumetric image of an object from a series of projections collected by scanning the object from many different directions [1]. Projection data acquisition can use X-rays, magnetic resonance, radioisotopes, or ultrasound. The discussion presented here pertains to the case of two-dimensional X-ray absorption tomography. In this type of tomography, projections are obtained by a number of sensors that measure the intensity of X-rays travelling through a slice of the scanned object. The radiation source and the sensor array rotate around the object in small increments.
One projection is taken for each rotational angle. The image reconstruction process uses these projections to calculate the average X-ray attenuation coefficient in cross-sections of a scanned slice. If different structures inside the object induce different levels of X-ray attenuation, they are discernible in the reconstructed image. The most commonly used approach for image reconstruction from dense projection data (many projections, many samples per projection) is filtered backprojection (FBP). Depending on the type of X-ray source, FBP comes in parallel-beam and fan-beam variations [1]. In this paper, we focus on parallel-beam backprojection, but the methods and results presented here can be extended to the fan-beam case with modifications.

FBP is a computationally intensive process. For an image of size $n \times n$ reconstructed from $n$ projections, the complexity of the backprojection algorithm is $O(n^3)$. Image reconstruction through backprojection is a highly parallelizable process. Such applications are good candidates for implementation in Field Programmable Gate Array (FPGA) devices, since these provide fine-grained parallelism and can be customized to the needs of a particular implementation.
We have implemented backprojection by making use of these principles and shown approximately 100 times speedup over a software implementation on a 1 GHz Pentium. Our architecture can easily be expanded to newer and larger FPGA devices, further accelerating image generation by extracting more data parallelism.

A difficulty of implementing FBP is that producing high-resolution images that closely resemble the internal characteristics of the scanned object requires both the density of each projection and the total number of projections to be large. This is a considerable challenge for hardware implementations, which attempt to maximize parallelism. Therefore, it can be beneficial to use fixed-point implementations and to optimize the bit-width of a projection sample to the specific needs of the targeted application domain. We show this for medical imaging, which exhibits distinctive properties in terms of required fixed-point precision.

In addition, medical imaging requires high-precision reconstructions, since the visual quality of images must not be compromised. We have paid special attention to this requirement by carefully analyzing the effects of quantization on the quality of reconstructed images. We have found that a fixed-point implementation with properly chosen bit-widths can give high-quality reconstructions and, at the same time, make the hardware implementation fast and area efficient. Our quantization analysis investigates both algorithm-specific and general data quantization issues that pertain to input data. Algorithm-specific quantization deals with the precision of spatial address generation, including the interpolation factor, and also investigates bit reduction of intermediate results for different rounding schemes.

In this paper, we focus on both FPGA implementation performance and medical image quality. In previous work on hardware implementations of tomographic processing algorithms, Wu [2] gives a brief overview of all major subsystems in a computed tomography (CT) scanner and proposes locations where ASICs and FPGAs can be used. According to the author, semi-custom digital ASICs were the most appropriate choice given the level of sophistication of FPGA technology in 1991. Agi et al. [3] present the first description of a hardware solution for computerized tomography of which we are aware. It is a unified architecture that implements the forward Radon transform and parallel- and fan-beam backprojection in an ASIC-based multi-processor system. Our FPGA implementation focuses on backprojection. Agi et al. [4] present a similar investigation of quantization effects; however, their results do not demonstrate the suitability of their implementation for medical applications. Although their filtered sinogram data are quantized with 12-bit precision, extensive bit truncation on functional unit outputs and low accuracy of the interpolation factor (absolute error of up to 2) render this implementation significantly less accurate than ours, which is based on 9-bit projections and a maximal interpolation factor absolute error of $2^{-4}$. An alternative to using specially designed processors for the implementation of filtered backprojection (FBP) is presented in [5]. In this work, a fast and direct FBP algorithm is implemented using texture-mapping hardware. It can perform parallel-beam backprojection of a 512-by-512-pixel image from 804 projections in 2.1 sec, while our implementation takes 0.25 sec for 1024 projections. Luiz et al. [6] investigated residue number systems (RNS) for the implementation of convolution-based backprojection to speed up the processing. Unfortunately, this introduces extra binary-to-RNS and RNS-to-binary conversions. Other approaches to accelerating the backprojection algorithm have been investigated [7, 8]. One approach [7] presents an $O(n^2 \log n)$ algorithm and merits further study. The suitability of these approaches [7, 8] for medical image quality and hardware implementation needs to be
demonstrated.

There is also considerable interest in hardware implementations of fan-beam and cone-beam reconstruction. An FPGA-based fan-beam reconstruction module [9] is proposed and simulated using MAX+PLUS II, version 9.1, but no actual FPGA implementation is mentioned. Moreover, the authors did not explore the potential parallelism across different projections as we do, which is essential for speedup. 3D cone-beam FBP requires even more data and computation. Yu's PC-based system [10] can reconstruct $512^3$ data from $288 \times 512^2$ projections in 15.03 min, which is not suitable for real-time use. The embedded system described in [11] can perform 3D reconstruction in 38.7 sec, the fastest time reported in the literature. However, it is based on a Mercury RACE++ AdapDev 1120 development workstation and would need many modifications for a different platform. Bins et al. [12] have investigated precision vs. error in JPEG compression. The goals of this research are very similar to ours: to implement designs in fixed point in order to maximize parallelism and area utilization. However, JPEG compression is an application that can tolerate a great deal more error than medical imaging.

In the next section, we present the backprojection algorithm in more detail. In Section 3 we present our quantization studies and the analysis of the error introduced. Section 4 presents the hardware implementation in detail. Finally, we present results and discuss future directions. An earlier version of this research was presented in [13]. This paper provides a fuller discussion of the project and updated results.

Figure 1. (a) Illustration of the coordinate system used in parallel-beam backprojection, and (b) geometric explanation of the incremental spatial address calculation.

2. Parallel-Beam Filtered Backprojection

A parallel-beam CT scanning system uses an array of equally spaced unidirectional sources of focused X-ray beams. Generated radiation not absorbed by the object's internal structure reaches a collinear array of detectors
(Fig. 1(a)). Spatial variation of the absorbed energy in the two-dimensional plane through the object is expressed by the attenuation coefficient $\mu(x, y)$. The logarithm of the measured radiation intensity is proportional to the integral of the attenuation coefficient along the straight line traversed by the X-ray beam. The set of values given by all detectors in the array comprises a one-dimensional projection of the attenuation coefficient, $P(t, \theta)$, where $t$ is the detector distance from the origin of the array and $\theta$ is the angle at which the measurement is taken. A collection of projections for different angles over 180° can be visualized as an image in which one axis is position $t$ and the other is angle $\theta$. This is called a sinogram or Radon transform of the two-dimensional function $\mu$, and it contains the information needed to reconstruct the image $\mu(x, y)$. The Radon transform can be formulated as

$$\log_e \frac{I_0}{I_d} = \iint \mu(x, y)\, \delta(x\cos\theta + y\sin\theta - t)\, dx\, dy \equiv P(t, \theta) \qquad (1)$$

where $I_0$ is the source intensity, $I_d$ is the detected intensity, and $\delta(\cdot)$ is the Dirac delta function. Equation (1) is actually a line integral along the path of the X-ray beam, which is perpendicular to the $t$ axis (see Fig. 1(a)) at location $t = x\cos\theta + y\sin\theta$. The Radon transform is an operator that maps an image $\mu(x, y)$ to a sinogram $P(t, \theta)$. Its inverse mapping, the inverse Radon transform, when applied to a sinogram, results in an image. The filtered backprojection (FBP) algorithm performs this mapping [1].

FBP begins by high-pass filtering all projections with the Ram-Lak or ramp filter, whose frequency response is $|f|$, before they are fed to the hardware. The discrete formulation of backprojection is

$$\mu(x, y) = \frac{\pi}{K} \sum_{i=1}^{K} \tilde{P}_{\theta_i}(x\cos\theta_i + y\sin\theta_i) \qquad (2)$$

where $\tilde{P}_{\theta}(t)$ is a filtered projection at angle $\theta$, and $K$ is the number of projections taken during CT scanning at angles $\theta_i$ over a 180° range. The number of values in $\tilde{P}_{\theta}(t)$ depends on the image size. In the case of $n \times n$ pixel images, $N = \sqrt{2}\, n D$ detectors are
required. The ratio $D = d/\tau$, where $d$ is the distance between adjacent pixels and $\tau$ is the detector spacing, is a critical factor for the quality of the reconstructed image, and it should clearly satisfy $D > 1$. In our implementation, we use $D \approx 1.4$ and $N = 1024$, which are typical for real systems. Higher values do not significantly increase image quality.

Algorithmically, Eq. (2) is implemented as a triply nested "for" loop. The outermost loop is over the projection angle, $\theta$. For each $\theta$, we update every pixel in the image in raster-scan order: starting in the upper left corner and looping first over columns, $c$, and next over rows, $r$. Thus, from (2), the pixel at location $(r, c)$ is incremented by the value of $\tilde{P}_{\theta}(t)$, where $t$ is a function of $r$ and $c$. The issue here is that the X-ray going through the currently reconstructed pixel, in general, intersects the detector array between detectors. This is solved by linear interpolation. The point of intersection is calculated as an address corresponding to detectors numbered from 0 to 1023. The fractional part of this address is the interpolation factor. The equation that performs linear interpolation is

$$\mathrm{int}\tilde{P}_{\theta}(i) = \left[\tilde{P}_{\theta}(i+1) - \tilde{P}_{\theta}(i)\right] \cdot IF + \tilde{P}_{\theta}(i) \qquad (3)$$

where $IF$ denotes the interpolation factor, $\tilde{P}_{\theta}(t)$ is the 1024-element array containing filtered projection data at angle $\theta$, and $i$ is the integer part of the calculated address. The interpolation can be performed beforehand in software, or it can be part of the backprojection hardware itself. We implement interpolation in hardware because it substantially reduces the amount of data that must be transmitted to the reconfigurable hardware board.

The key to an efficient implementation of Eq. (2) is shown in Fig. 1(b). It shows how a distance $d$ between square areas that correspond to adjacent pixels can be converted to a distance $\Delta t$ between the locations where X-ray beams through the centers of these areas hit the detector array. This also follows from the equation $t = x\cos\theta + y\sin\theta$. Assuming
that pixels are processed in raster-scan fashion, $\Delta t = d\cos\theta$ for two adjacent pixels in the same row ($x_2 = x_1 + d$), and similarly $\Delta t = -d\sin\theta$ (a distance of $d\sin\theta$) for two adjacent pixels in the same column ($y_2 = y_1 - d$). Our implementation is based on pre-computing and storing these deltas in look-up tables (LUTs). Three LUTs are used, corresponding to the nested "for" loop structure of the backprojection algorithm. LUT 1 stores the initial address along the detector axis (i.e., along $t$) for a given $\theta$, required to update the pixel at row 1, column 1. LUT 2 stores the increment in $t$ required as we move across a row. LUT 3 stores the increment for columns.

Figure 2. Major simulation steps.

3. Quantization

Mapping the algorithm directly to hardware will not produce an efficient implementation. Several modifications must be made to obtain a good hardware realization. The most significant modification is the use of fixed-point arithmetic. For hardware implementation, narrow bit widths are preferred because they allow more parallelism, which translates to higher overall processing speed. However, medical imaging requires high precision, which may require wider bit widths. We carried out extensive analysis to optimize this tradeoff. We quantize all data and all calculations to increase the speed and decrease the resources required for implementation. The allowable quantization is determined with a software simulation of the tomographic process. Figure 2 shows the major blocks of the simulation.
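
The core of this simulation can be sketched in a few lines: reconstruct in floating point, reconstruct again from quantized data, and compare the two. The sketch below is a hedged, scaled-down illustration, not the authors' simulator. It uses a random array in place of a real filtered sinogram and a 16 × 16 image instead of 512 × 512, and its function names are hypothetical. The backprojection follows Eq. (2), with the linear interpolation of Eq. (3) and the three-LUT incremental addressing described above. Rows and columns are indexed from 0, the top-left pixel comes first, pixel spacing is $d = 1$, and detector spacing is $\tau = d/D$ with $D = 1.4$. The quantizer applies the slope/bias encoding of Eqs. (4) to (7) to the filtered sinogram only (as in simulation cycle 3). The comparison uses the Relative Error of Eq. (8). Both are defined later in this section.

```python
import math
import random


def build_luts(thetas, n, num_det, d=1.0, tau=1.0):
    """Per-angle look-up tables, in detector-address units (0 .. num_det-1).

    LUT1: address hit by the ray through the top-left pixel (row 0, column 0).
    LUT2: address increment per step along a row (x increases by d).
    LUT3: address increment per step down a column (y decreases by d).
    """
    half_img, half_det = (n - 1) / 2.0, (num_det - 1) / 2.0
    lut1 = [half_img * d * (math.sin(t) - math.cos(t)) / tau + half_det for t in thetas]
    lut2 = [d * math.cos(t) / tau for t in thetas]
    lut3 = [-d * math.sin(t) / tau for t in thetas]
    return lut1, lut2, lut3


def backproject(filtered, thetas, n, d=1.0, tau=1.0):
    """Eq. (2) with linear interpolation (Eq. 3) and incremental addressing."""
    num_det = len(filtered[0])
    lut1, lut2, lut3 = build_luts(thetas, n, num_det, d, tau)
    img = [[0.0] * n for _ in range(n)]
    for k, proj in enumerate(filtered):        # outermost loop: projection angle
        row_addr = lut1[k]
        for r in range(n):
            addr = row_addr
            for c in range(n):                 # raster-scan order
                i = math.floor(addr)           # integer part: detector index
                if 0 <= i < num_det - 1:
                    frac = addr - i            # fractional part: interpolation factor
                    img[r][c] += (proj[i + 1] - proj[i]) * frac + proj[i]
                addr += lut2[k]
            row_addr += lut3[k]
    scale = math.pi / len(thetas)
    return [[scale * v for v in row] for row in img]


def quantize_sinogram(sino, ws):
    """Encode with slope/bias scaling into unsigned ws-bit integers Q
    (Eqs. 5-7, min(Q) = 0) and decode back to S*Q + B (Eq. 4)."""
    flat = [v for row in sino for v in row]
    vmin, vmax = min(flat), max(flat)
    s = (vmax - vmin) / (2 ** ws - 1)
    if s == 0.0:
        s = 1.0                                # constant data: any slope works
    b = vmin                                   # B = min(V) - S * min(Q)
    return [[s * round((v - b) / s) + b for v in row] for row in sino]


def relative_error(x, y_fp):
    """Relative Error of Eq. (8) between a quantized and an FP reconstruction."""
    xs = [v for row in x for v in row]
    ys = [v for row in y_fp for v in row]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum(((a - mx) - (b - my)) ** 2 for a, b in zip(xs, ys))
    den = sum((b - my) ** 2 for b in ys)
    return num / den


if __name__ == "__main__":
    random.seed(0)
    n, K, D = 16, 16, 1.4
    num_det = math.ceil(math.sqrt(2) * n * D)       # N = sqrt(2) * n * D detectors
    thetas = [math.pi * k / K for k in range(K)]    # K angles over 180 degrees
    sino = [[random.uniform(-1.0, 1.0) for _ in range(num_det)] for _ in thetas]
    ref = backproject(sino, thetas, n, tau=1.0 / D)  # floating-point reference
    for ws in (6, 9, 12):
        img = backproject(quantize_sinogram(sino, ws), thetas, n, tau=1.0 / D)
        print(ws, relative_error(img, ref))
```

The incremental addresses only ever add LUT entries, which mirrors why the hardware needs no multiplier for address generation. The quantized reconstruction here still runs in floating point, so it isolates data quantization from datapath bit reduction.
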
An input image is first fed to the software implementation of the Radon transform, also known as reprojection [14], which generates a sinogram of 1024 projections and 1024 samples per projection. The filtering block convolves the sinogram data with the impulse response of the ramp filter, generating a filtered sinogram, which is then backprojected to give a reconstructed image.

All values in the backprojection algorithm are real numbers. These can be implemented as either floating-point or fixed-point values. Floating-point representation gives increased dynamic range, but is significantly more expensive to implement in reconfigurable hardware, in terms of both area and speed. For these reasons we have chosen to use fixed-point arithmetic. An important issue, especially in medical imaging, is how much numerical accuracy is sacrificed when fixed-point values are used. Here, we present the methods used to find appropriate bit-widths for maintaining sufficient numerical accuracy. In addition, we investigate possibilities for bit reduction on the outputs of certain functional units in the datapath for different rounding schemes, and the influence this has on the error introduced in reconstructed images. Our analysis shows that medical images display distinctive properties with respect to how different quantization choices affect their reconstruction. We exploit this and customize the quantization to best fit medical images. We compute the quantization error by comparing a fixed-point image reconstruction with a floating-point one.

Fixed-point variables in our design use a general slope/bias encoding, meaning that they are represented as

$$V \approx V_a = SQ + B \qquad (4)$$

where $V$ is an arbitrary real number, $V_a$ is its fixed-point approximation, $Q$ is an integer that encodes $V$, $S$ is the slope, and $B$ is the bias. Fixed-point versions of the sinogram and the filtered sinogram use slope/bias scaling, where the slope and bias are calculated to give maximal precision. The quantization of these two variables is calculated
as

$$S = \frac{\max(V) - \min(V)}{\max(Q) - \min(Q)} = \frac{\max(V) - \min(V)}{2^{ws} - 1} \qquad (5)$$

$$B = \max(V) - S \cdot \max(Q) \quad \text{or} \quad B = \min(V) - S \cdot \min(Q) \qquad (6)$$

$$Q = \mathrm{round}\left(\frac{V - B}{S}\right) \qquad (7)$$

where $ws$ is the word size in bits of the integer $Q$. Here, $\max(V)$ and $\min(V)$ are the maximum and minimum values that $V$ will take, respectively; $\max(V)$ was determined by analysis of the data. Since sinogram data are unsigned numbers, in this case $\min(V) = \min(Q) = B = 0$. The interpolation factor is an unsigned fractional number and uses radix-point-only scaling. Thus, the quantized interpolation factor is calculated as in Eq. (7), with saturation on overflow, with $S = 2^{-E}$, where $E$ is the number of fractional bits, and with $B = 0$.

For a given sinogram, $S$ and $B$ are constants and do not appear in the hardware; only the quantized value $Q$ is part of the hardware implementation. Note that in Eq. (3), two data samples are subtracted from each other before multiplication with the interpolation factor takes place. Thus, in general, the bias $B$ is eliminated from the multiplication, which makes quantization of filtered sinogram data with maximal-precision scaling easy to implement in hardware.

Figure 3. Some of the images used as inputs to the simulation process.

The next important issue is the metric used to evaluate the error introduced by quantization. Our goal was to find a metric that would accurately describe visual differences between compared images regardless of their dynamic range. If 8-bit and 16-bit versions of a single image are reconstructed so that there is no visible difference between the original and reconstructed images, the proper metric should give a comparable estimate of the error for both bit-widths. The proper metric should also be insensitive to the shift of the pixel value range that can emerge for different quantization and rounding schemes. Absolute values of single pixels do not affect visual image quality as long as their relative values are preserved, because pixel values are mapped to a set of grayscale values. The error metric we use that meets these
criteria is the Relative Error (RE):

$$RE = \frac{\sum_{i=1}^{M} \left[ (x_i - \bar{x}) - (y_i^{FP} - \bar{y}^{FP}) \right]^2}{\sum_{i=1}^{M} \left( y_i^{FP} - \bar{y}^{FP} \right)^2} \qquad (8)$$

Here, $M$ is the total number of pixels, $x_i$ and $y_i^{FP}$ are the values of the $i$-th pixel in the quantized and floating-point reconstructions, respectively, and $\bar{x}$ and $\bar{y}^{FP}$ are their means. The mean value is subtracted because we only care about relative pixel values.

Figure 3 shows some characteristic images from a larger set of 512-by-512-pixel images used as inputs to the simulation process. All images are monochrome 8-bit images, but 16-bit versions are also used in simulations. Each image was chosen for a specific reason. For example, the Shepp-Logan phantom is well known and widely used to test the ability of algorithms to accurately reconstruct cross sections of the human head. Cross-sectional images of the human head are believed to be the most sensitive to numerical inaccuracies and to the presence of artifacts induced by a reconstruction algorithm [1]. The other medical images, Female, Head, and Heart, were obtained from the Visible Human web site [15]. The Random image (a white noise image) should give the upper bound on the bit-widths required for a precise reconstruction. The Artificial image is unique because it contains all values in the 8-bit grayscale range. This image also contains straight edges of rectangles, which induce more artifacts in the reconstructed image. This is also characteristic of the Head image, which contains a rectangular border around the head slice.

Figure 4 shows the detailed flowchart of the simulated CT process. In addition to the major blocks designated Reproject, Filter, and Backproject, Fig. 4 also includes the different quantization steps that we have investigated. Each path in this flowchart represents a separate simulation cycle. Cycle 1 gives a floating-point (FP) reconstruction of an input image.

Figure 4. Detailed flowchart of the simulation process.

All other cycles perform one or more types of quantization and
their resulting images are compared to the corresponding FP reconstruction by computing the Relative Error. The first quantization step converts the FP projection data obtained by the reprojection step to a fixed-point representation. Simulation cycle 2 is used to determine how different bit-widths for quantized sinogram data affect the quality of a reconstructed image. Our research was based on a prototype system that used 12-bit accurate detectors for the acquisition of sinogram data. Simulations showed that this bit-width is a good choice, since the worst-case introduced error amounts to 0.001%.

Figure 5. Simulation results for the quantization of filtered sinogram data.

The second quantization step converts filtered sinogram data from FP to fixed-point representation. Simulation cycle 3 is used to find the appropriate bit-width of the words representing a filtered sinogram. Figure 5 shows the results for this cycle. Since we use linear interpolation of projection values corresponding to adjacent detectors, the interpolation factor in Eq. (3) also has to be quantized. Figure 6 summarizes the results obtained from simulation cycle 4, which is used to evaluate the error induced by this quantization. Figures 5 and 6 show the Relative Error metric for different word lengths and for different simulation cycles for a number of input images. Some input images were used in both 8-bit and 16-bit versions.

Figure 6. Simulation results for the quantization of the interpolation factor.

Figure 5 corresponds to the quantization of filtered sinogram data (path 3 in Fig. 4). The conclusion here is that 9-bit quantization is the best choice, since it gives considerably smaller error than 8-bit quantization, which for some images induces visible artifacts. At the same time, 10-bit quantization does not give visible improvement. The exceptions are images 2 and 3, which require 13 bits. From Fig. 6 (path 4 in Fig. 4), we conclude that 3 bits for the interpolation factor (meaning the maximum
error for the spatial address is $2^{-4}$) is sufficiently accurate. As expected, image 1 is more sensitive to the precision of the linear interpolation because of its randomness. Figure 7 shows that combining these quantization schemes results in a very small error for the image "Head" in Fig. 3.

Figure 7. Relative error between fixed-point and floating-point reconstruction.

We also investigated whether it is feasible to discard some of the least significant bits (LSBs) on the outputs of functional units (FUs) in the datapath and still not introduce any visible artifacts. The goal is for the reconstructed pixel values to have the smallest possible bit-widths. This is based on the intuition that bit reduction done further down the datapath will introduce a smaller amount of error in the result. If the same bit-width were obtained by simply quantizing the filtered projection data with fewer bits, the error would be magnified by the operations performed in the datapath, especially by the multiplication. Path 5 in Fig. 4 depicts the simulation cycles that investigate bit reduction at the outputs of three of the FUs. These FUs implement subtraction, multiplication, and addition, which are all part of the linear interpolation from Eq. (3). When some LSBs are discarded, the remaining part of a binary word can be rounded in different ways. We investigate two rounding schemes: rounding to nearest and truncation (rounding to floor). Rounding to nearest is expected to introduce the smallest error but requires additional logic resources. Truncation has no resource requirements but introduces a negative shift in the values representing reconstructed pixels. Bit reduction effectively optimizes the bit-widths of FUs that are downstream in the data flow.

Figure 8 shows the tradeoffs of bit reduction and the two rounding schemes after multiplication for medical images. It should be noted that sinogram data are quantized to 12 bits, filtered sinogram data to 9 bits, and the
interpolation factor is quantized to 3 bits ($2^{-4}$ precision). Similar studies were done for the subtraction and addition operations and on a broader set of images. It was determined that medical images suffer the least error from combining quantizations and bit reduction. For medical images, with rounding to nearest, there is very little difference in the introduced error between 1 and 3 discarded bits after multiplication and addition. This difference is higher in the case of bit reduction after addition because the multiplication that follows magnifies the error.

Figure 8. Bit reduction on the output of the interpolation multiplier.

For all three FUs, when only medical images are considered, there is a fixed relationship between rounding to nearest and truncation: two LSBs discarded with rounding to nearest introduce an error that is lower than or close to the error of one bit discarded with truncation. Although rounding to nearest requires logic resources, even when only one LSB is discarded with rounding to nearest after each of the three FUs, the overall resource consumption is reduced because of the savings provided by smaller FUs and pipeline registers (see Figs. 11 and 12). Figure 9 shows that discarding LSBs introduces additional error on medical images for this combination of quantizations. In our case there was no need to use bit reduction to achieve smaller resource consumption, because the targeted FPGA chip (Xilinx Virtex 1000) provided sufficient logic resources.

There is one more quantization issue we considered. It pertains to the data needed to generate the address into a projection array (spatial address addr) and to the interpolation factor. As described in the introduction, there are three different sets of data stored in look-up tables (LUTs) that can be quantized. Since pixels are processed in raster-scan order, the spatial address addr is generated by accumulating entries from LUTs 2 and 3 to the corresponding entry in LUT
1. The 10-bit integer part of the address addr is the index into the projection array θ(·), while its fractional part is the interpolation factor. By using radix point-only
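The scaling rules of Eqs. (5)-(7), the Relative Error of Eq. (8), and the two rounding schemes used for bit reduction can be sketched in plain Python. This is a minimal illustration, not the authors' simulation code. The function names are hypothetical, and Python's built-in round() (which rounds exact halves to even) stands in for the rounding in Eq. (7). Because Eq. (3) is not reproduced in this section, the interpolation helper assumes it has the usual linear-interpolation form θ(n) + f·(θ(n+1) − θ(n)). The quantized image passed to relative_error must already be in the same units as the FP image (for example, multiplied back by S).

```python
import math


def quantize_unsigned(values, ws):
    """Maximal precision scaling for unsigned data: Eqs. (5)-(7) with min(V) = B = 0."""
    scale = max(values) / (2 ** ws - 1)                # Eq. (5): S = (max(V) - min(V)) / (max(Q) - min(Q))
    return [round(v / scale) for v in values], scale   # Eq. (7): Q = round((V - B) / S)


def quantize_fraction(f, frac_bits):
    """Radix point-only scaling (S = 2^-E, B = 0) for f in [0, 1), saturating on overflow."""
    q = round(f * 2 ** frac_bits)
    return min(q, 2 ** frac_bits - 1)                  # e.g. 0.99 with E = 3 saturates to 7, not 8


def interpolate_fixed(a, b, q_f, frac_bits):
    """Assumed form of Eq. (3), a + f * (b - a), in fixed point; result is scaled by 2^E.

    The two samples are subtracted before the multiply, so a bias shared by a and b cancels.
    """
    return (a << frac_bits) + q_f * (b - a)


def truncate_lsbs(x, k):
    """Discard k LSBs by truncation (round to floor): no extra logic, but biased downward."""
    return x >> k


def round_lsbs(x, k):
    """Discard k >= 1 LSBs with round-to-nearest (ties round up): costs an adder."""
    return (x + (1 << (k - 1))) >> k


def relative_error(x, y_fp):
    """Eq. (8): mean-removed RMS difference, normalized by the spread of the FP image."""
    m = len(x)
    x_mean, y_mean = sum(x) / m, sum(y_fp) / m
    num = sum(((xi - x_mean) - (yi - y_mean)) ** 2 for xi, yi in zip(x, y_fp))
    den = sum((yi - y_mean) ** 2 for yi in y_fp)
    return math.sqrt(num / den)


q, scale = quantize_unsigned([0.0, 2.5, 10.0], ws=12)
print(q)                                               # [0, 1024, 4095]
print(truncate_lsbs(15, 2), round_lsbs(15, 2))         # 3 4  (15 / 4 = 3.75)
print(relative_error([1, 2, 3], [11, 12, 13]))         # 0.0: a constant shift is not an error
```

The last line illustrates why the means are subtracted in Eq. (8): a reconstruction that differs from the FP image only by a constant offset scores zero error.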

Object-Oriented Programming (in English)

Object-oriented programming (OOP) is a programming paradigm based on the concept of "objects". It is widely used today for developing software applications, and it has become an essential skill for software developers. In this article, we discuss the basics of OOP and why mastering it matters.

1. What is OOP?
OOP is a programming paradigm that revolves around the idea of "objects". An object is an instance of a class, which is a blueprint for creating objects. Classes define the attributes and behavior of their objects, and objects interact with each other through their methods. OOP is built on three main principles: encapsulation, inheritance, and polymorphism.

2. Encapsulation
Encapsulation is the practice of hiding an object's implementation details from the outside world. It protects the object's data from being read or modified by outside code except through the methods the object chooses to expose. Encapsulation improves the robustness and maintainability of code by limiting the side effects of changes to an object's data.

3. Inheritance
Inheritance is a mechanism that allows a class to inherit attributes and behavior from a parent (base) class. It lets developers reuse code without duplicating it, and it lets them build complex systems by arranging classes in a hierarchical structure.

4. Polymorphism
Polymorphism is the ability of objects of different types to respond to the same method call, each in its own way. It allows developers to write code that works with many types of objects through a common interface, without needing to know the exact class of each object. Polymorphism is typically achieved by overriding methods in subclasses (and, in some languages, through method overloading or generics).

5. Why is OOP important?
Mastery of OOP has become a fundamental skill for software developers because of its many benefits. OOP allows developers to write more organized, modular, and reusable code, and it improves readability, making code easier to understand, maintain, and test.
OOP also supports teamwork by dividing work into smaller components that can be developed independently. Additionally, OOP is central to many modern languages and platforms, such as Java, Python, and .NET.

Conclusion
In conclusion, OOP is a powerful programming paradigm that has become essential for software developers. Its fundamental principles of encapsulation, inheritance, and polymorphism enable developers to write more organized, modular, and reusable code. Mastery of OOP is crucial for building complex software systems, improving team productivity, and keeping up with modern software development technologies.
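The three principles described above can be seen together in a short Python sketch. The class names Account and SavingsAccount are hypothetical and chosen only for illustration. Note that Python marks internal state with a leading underscore by convention instead of enforcing access control the way Java or C# do.

```python
class Account:
    """Base class: the balance is reachable only through methods (encapsulation)."""

    def __init__(self, owner, balance=0.0):
        self.owner = owner
        self._balance = balance          # leading underscore: internal by convention

    @property
    def balance(self):
        return self._balance             # read-only view of the internal state

    def deposit(self, amount):
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self._balance += amount

    def describe(self):
        return f"{self.owner}: {self._balance:.2f}"


class SavingsAccount(Account):
    """Reuses deposit() and balance from Account (inheritance)."""

    def __init__(self, owner, balance=0.0, rate=0.02):
        super().__init__(owner, balance)
        self.rate = rate

    def describe(self):                  # overrides the base method (polymorphism)
        return f"{super().describe()} at {self.rate:.0%}"


accounts = [Account("Ann", 10), SavingsAccount("Bob", 20, rate=0.05)]
for acct in accounts:
    acct.deposit(5)
    print(acct.describe())               # same call, class-specific behavior
```

The loop never checks which class it is holding: it calls describe() on each object, and the override in SavingsAccount supplies the specialized output ("Bob: 25.00 at 5%").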

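Computer English (2nd Edition): Reference Answers
Note: Only the answers for texts that are new or revised in Computer English (2nd Edition) are given here. For the answers to the unchanged texts, see the original answers in Computer English (1st Edition).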

Unit One, Section C: PDA Prizefight: Palm vs. Pocket PC
I. Fill in the blanks with the information given in the text:
1. With DataViz’s Documents To Go, you can view and edit desktop documents on your PDA without first converting them to a PDA-specific ________. (format)
2. Both Palm OS and Windows Mobile PDAs can offer e-mail via ________ so that new messages received on your desktop system are transferred to the PDA for on-the-go reading. (synchronization)
3. The Windows Mobile keyboard, Block Recognizer, and Letter Recognizer are all ________ input areas, meaning they appear and disappear as needed. (virtual)
4. Generally speaking, Windows Mobile performs better in entering information and playing ________ files, while Palm OS offers easier operation, more ________ programs, better desktop compatibility, and a stronger e-mail application. (multimedia; third-party)
II. Translate the following terms or phrases from English into Chinese and vice versa:
1. data field 数据字段
2. learning curve 学习曲线
3. third-party solution 第三方解决方案
4. Windows Media Player Windows媒体播放器
5. 开始按钮 Start button
6. 指定输入区 designated input area
7. 手写体识别系统 handwriting-recognition system
8. 字符集 character set

Unit Three, Section B: Longhorn: The Next Version of Windows
I. Fill in the blanks with the information given in the text:
1. NGSCB, the new security architecture Microsoft is developing for Longhorn, splits the OS into two parts: a standard mode and a(n) ________ mode. (secure)
2. It is reported that Longhorn will provide different levels of operation that disable the more intensive Aero effects to boost ________ on less capable PCs. (performance)
3. With Longhorn’s new graphics and presentation engine, we can create and display Tiles on the desktop, which remind us of the old Active Desktop but are based on ________ instead of ________. (XML; HTML)
4. The most talked-about feature in Longhorn so far is its new storage system, WinFS, which works like a(n) ________ database. (relational)
II. Translate the following terms or phrases from English into Chinese and vice versa:
1. search box 搜索框
2. built-in firewall 内置防火墙
3. standalone application 独立应用程序
4. active desktop 活动桌面
5. mobile device 移动设备
6. 专有软件 proprietary software
7. 快速加载键 quick-launch key
8. 图形加速器 graphics accelerator
9. 虚拟文件夹 virtual folder
10. 三维界面 three-dimensional interface

Unit Four, Section C: Arrays
I. Fill in the blanks with the information given in the text:
1. Given the array called object with 20 elements, if you see the term object₁₀, you know the array is in ________ form; if you see the term object[10], you know the array is in ________ form. (subscript; index)
2. In most programming languages, an array is a static data structure. When you define an array, the size is ________. (fixed)
3. A(n) ________ is a pictorial representation of a frequency array. (histogram)
4. An array that consists of just rows and columns is probably a(n) ________ array. (two-dimensional)
II. Translate the following terms or phrases from English into Chinese and vice versa:
1. bar chart 条形图
2. frequency array 频率数组
3. graphical representation 图形表示
4. multidimensional array 多维数组
5. 用户视图 user(’s) view
6. 下标形式 subscript form
7. 一维数组 one-dimensional array
8. 编程结构 programming construct

Unit Five, Section B: Microsoft .NET vs. J2EE
I. Fill in the blanks with the information given in the text:
1. One of the differences between C# and Java is that Java runs on any platform with a Java Virtual ________, while C# only runs on Windows for the foreseeable future. (Machine)
2. With .NET, Microsoft is opening up a channel both to ________ in other programming languages and to ________. (developers; components)
3. J2EE is a single-language platform; calls from/to objects in other languages are possible through ________, but this kind of support is not a ubiquitous part of the platform. (CORBA)
4. One important element of the .NET platform is a common language ________, which runs bytecodes in an Internal Language format. (runtime)
II. Translate the following terms or phrases from English into Chinese and vice versa:
1. messaging model 消息收发模型
2. common language runtime 通用语言运行时刻(环境)
3. hierarchical namespace 分等级层次的名称空间
4. development community 开发社区
5. CORBA 公用对象请求代理(程序)体系结构
6. 基本组件 base component
7. 元数据标记 metadata tag
8. 虚拟机 virtual machine
9. 集成开发环境 IDE (integrated development environment)
10. 简单对象访问协议 SOAP (Simple Object Access Protocol)

Unit Six, Section A: Software Life Cycle
I. Fill in the blanks with the information given in the text:
1. The development process in the software life cycle involves four phases: analysis, design, implementation, and ________. (testing)
2. In the system development process, the system analyst defines the user, needs, requirements, and methods in the ________ phase. (analysis)
3. In the system development process, the code is written in the ________ phase. (implementation)
4. In the system development process, modularity is a very well-established principle used in the ________ phase. (design)
5. The most commonly used tool in the design phase is the ________. (structure chart)
6. In the system development process, ________ and pseudocode are tools used by programmers in the implementation phase. (flowcharts)
7. Pseudocode is part English and part program ________. (logic)
8. While black box testing is done by the system test engineer and the ________, white box testing is done by the ________. (user; programmer)
II. Translate the following terms or phrases from English into Chinese and vice versa:
1. standard graphical symbol 标准图形符号
2. logical flow of data 数据的逻辑流
3. test case 测试用例
4. program validation 程序验证
5. white box testing 白盒测试
6. student registration system 学生注册系统
7. customized banking package 定制的金融软件包
8. software life cycle 软件生命周期
9. user working environment 用户工作环境
10. implementation phase 实现阶段
11. 测试数据 test data
12. 结构图 structure chart
13. 系统开发阶段 system development phase
14. 软件工程 software engineering
15. 系统分析员 system(s) analyst
16. 测试工程师 test engineer
17. 系统生命周期 system life cycle
18. 设计阶段 design phase
19. 黑盒测试 black box testing
20. 会计软件包 accounting package
III. Fill in each of the blanks with one of the words given in the following list, making changes if necessary:
development; testing; programmer; chart; engineer; attend; interfaces
system; software; small; user
develop; changes; quality; board; Uncontrolled
IV. Translate the following passage from English into Chinese:
软件工程是软件开发的一个领域;在这个领域中,计算机科学家和工程师研究有关的方法与工具,以使高效开发正确、可靠和健壮的计算机程序变得容易。
(In English: Software engineering is an area of software development in which computer scientists and engineers study methods and tools that make it easier to develop correct, reliable, and robust computer programs efficiently.)

1 Preface

2 Mathematics

3 Data Structures & Algorithms

4 Compiler

5 Operating System

6 Database

7 C

8 C++

9 Object-Oriented

10 Software Engineering

11 UNIX Programming

12 UNIX Administration

13 Networks

14 Windows Programming

15 Other (*)

Mathematics

Title (English): Discrete Mathematics and Its Applications (Fifth Edition)

Title (Chinese edition): 离散数学及其应用(第五版)

Author: Kenneth H. Rosen

Title (English): Concrete Mathematics: A Foundation for Computer Science (Second Edition)

Title (Chinese edition): 具体数学:计算机科学基础(第2版)

Authors: Ronald L. Graham / Donald E. Knuth / Oren Patashnik

Data Structures & Algorithms

Title (English): Data Structures and Algorithm Analysis in C, Second Edition

Title (Chinese edition): 数据结构与算法分析--C语言描述(第二版)

Author: Mark Allen Weiss

Title (English): Data Structures & Program Design in C (Second Edition)

Title (Chinese edition): 数据结构与程序设计C语言描述(第二版)

Authors: Robert Kruse / C. L. Tondo / Bruce Leung

Units 1, 4, 5, 6 and 8: Translation

Unit 1

4 Translate the paragraph into Chinese.

篮球运动是一个名叫詹姆斯·奈史密斯的体育老师发明的。

1891年冬天,他接到一个任务,要求他发明一种运动,让田径运动员既保持良好的身体状态,又能不受伤害。

篮球在大学校园里很快流行起来。

20世纪40年代,职业联赛开始之后,美国职业篮球联赛一直从大学毕业生里招募球员。

这样做对美国职业篮球联赛和大学双方都有好处:大学留住了可能转向职业篮球赛的学生,而美国职业篮球联赛无需花钱组建一个小职业篮球联盟。

大学篮球在全国的普遍推广以及美国大学体育协会对“疯狂三月”(即美国大学体育协会甲级联赛男篮锦标赛)的市场推广,使得这项大学体育赛事一直在蓬勃发展。

The sport of basketball was created by a physical education teacher named James Naismith, who in the winter of 1891 was given the task of creating a game that would keep track athletes in shape without much risk of injury. Basketball quickly became popular on college campuses. After the professional league was established in the 1940s, the National Basketball Association (NBA) drafted players who had graduated from college. This was a mutually beneficial relationship for the NBA and the colleges: the colleges held onto players who would otherwise have gone professional, and the NBA did not have to fund a minor league. The pervasiveness of college basketball throughout the nation and the NCAA’s (National Collegiate Athletic Association) marketing of “March Madness” (officially the NCAA Division I Men’s Basketball Championship) have kept the college game alive and well.

5 Translate the paragraph into English.
现在中国大学生参加志愿活动已成为常态。
(Nowadays, taking part in volunteer activities has become the norm for Chinese college students.)

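Software Engineering Exercises

1. Examine the failures that have occurred in software you have written.

Identify and list the faults and errors that caused each failure.

2. Describe the process you follow to get to class or to work in the morning, and draw a diagram that represents this process.

3. What is the difference between a static model and a dynamic model? Explain the role and use of each kind of model.

4. Using a work breakdown structure, describe the process of earning a degree (bachelor's, master's, or doctoral).

Draw an activity graph of the process.

What is the critical path?

5. A prediction produces an estimate E, which will eventually be compared with the actual value A.

Design two values that can be computed from E and A to help determine the accuracy of the estimate.

Define these two values, and discuss how each can be used to tell us whether a prediction is acceptable.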

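6. Describe two different size measures, and point out the advantages and disadvantages of each.

7. The requirements for most systems specify that the system should do what it is expected to do.

Do such requirements also specify that the system should not do what it is not expected to do? If your answer is no, explain why; if your answer is yes, give an example.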

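8. The following statements describe (hypothetical) program modules.

For each module, decide whether it is likely to have high or low cohesion.

If it has low cohesion, explain why.

a. The module “InventorySearchByID” searches the inventory records for those that match a specified range of ID numbers.

It returns a data structure containing any matching records.

b. The module “ProcessPurchase” removes purchased products from the inventory, prints a receipt for the customer, and updates the log.

c. The module “FindSet” processes the user's request, determines the set of inventory items that satisfy it, and lists them in a format that can be shown to the customer.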

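9. Why is it useful to divide design into two stages, system design and program design?

10. Suppose you are building an operations system for a bookstore. The bookstore's revenue comes from two different services: customers buying books, and customers bringing in their own books to be rebound. You design two different classes for the two services, both inheriting from a class called “Sales Item”. What are the possible benefits of doing this? In this example, is there any possible reason not to use inheritance? Describe in detail the factors that would influence your decision.

11. Section 6.7 discussed Chidamber and Kemerer's depth-of-inheritance metric. Why would a class deep in an inheritance hierarchy appear harder to understand and maintain than a class in a relatively shallow one?

12. Explain the relationship between design and implementation.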

A Wrong Way to Use CArchive

Introduction

Object serialization is one of the most powerful features of MFC. With it, you can store your objects in a file and let MFC recreate them from that file.

Unfortunately, there are some pitfalls in using it correctly.

Yesterday I spent a lot of time figuring out what I had done wrong while programming a routine that wrapped up some objects for a drag-and-drop operation. After a long debugging session I understood what had happened, so I think it might be a good idea to share my newly acquired knowledge with you.

The Wrong Way

Let's start with my wrong implementation of object serialization.

A very convenient way to implement drag-and-drop and clipboard operations is to wrap up your objects in a COleDataSource object.

Design

Design is the process of creating and refining a plan or blueprint for the construction or implementation of an object, system, or process. It involves carefully considering and combining various elements and factors to achieve a desired outcome.

Design can be applied to many fields and disciplines, such as architecture, fashion, graphic design, industrial design, interior design, and web design. Each field has its own considerations and requirements, but all share a common goal: to create something functional, aesthetically pleasing, and meaningful.

In architecture, design entails the creation of detailed plans, drawings, and specifications for buildings and structures. Architects take into account factors such as aesthetics, functionality, safety, and sustainability when designing structures. They must consider the needs and preferences of the client, as well as the context and environment in which the building will be located.

Fashion design involves the creation of clothing, footwear, and accessories. Designers in this field must consider factors such as trends, market demand, and the target audience. They often draw inspiration from various sources, such as art, culture, and nature, to create unique and visually appealing garments.

Graphic design focuses on visual communication. Graphic designers create visual content for various media, such as print, the web, and social media. Their designs may include logos, brochures, posters, websites, and advertisements. They must consider factors such as the message, audience, and medium when creating their designs.

Industrial design involves the creation of products for mass production. Industrial designers consider factors such as usability, ergonomics, aesthetics, and cost when designing products. Their designs may range from household appliances to automobiles.

Interior design involves the planning and design of interior spaces.
Interior designers consider factors such as functionality, aesthetics, and safety when designing spaces. They work with clients to create spaces that meet their needs and preferences while ensuring that the design is practical and visually pleasing.

Web design focuses on the creation and layout of websites. Web designers consider factors such as usability, accessibility, and aesthetics when designing websites. They must create user-friendly and visually appealing interfaces that effectively communicate the desired message.

In all fields of design, the process usually involves several stages, including research, conceptualization, development, and refinement. Designers often collaborate with other professionals, such as engineers, architects, and marketers, to ensure that the final product meets the desired objectives.

In conclusion, design is a multifaceted process that involves careful consideration of various factors and elements to create something functional, aesthetically pleasing, and meaningful. Design can be applied to various fields and disciplines, each with its own considerations and requirements. Through research, conceptualization, and collaboration, designers strive to create innovative and impactful designs.

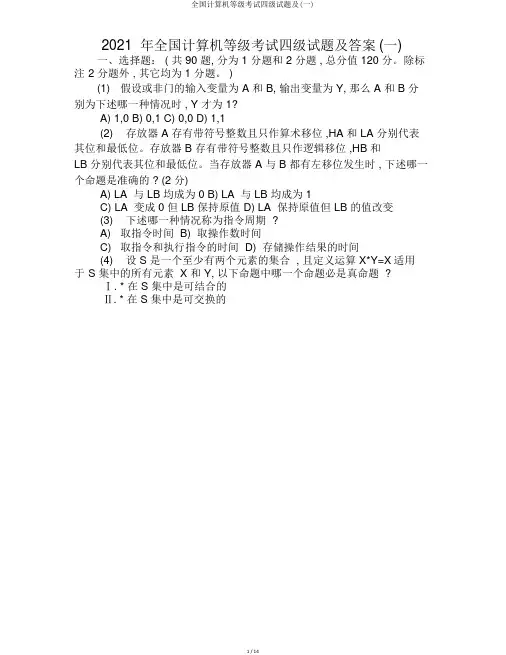

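2021 National Computer Rank Examination (NCRE) Level 4: Questions and Answers (Part 1)

I. Multiple-choice questions (90 questions in total, worth either 1 or 2 points each, for a total of 120 points. Questions marked "(2 points)" are worth 2 points; all others are worth 1 point.)

(1) Let A and B be the inputs of a NOR gate and Y its output. For which values of A and B, respectively, is Y equal to 1?
A) 1, 0  B) 0, 1  C) 0, 0  D) 1, 1
(2) Register A holds a signed integer and performs only arithmetic shifts; HA and LA denote its most significant and least significant bits, respectively. Register B holds a signed integer and performs only logical shifts; HB and LB denote its most significant and least significant bits, respectively. When both A and B are shifted left, which of the following statements is correct? (2 points)
A) LA and LB both become 0  B) LA and LB both become 1
C) LA becomes 0 but LB keeps its original value  D) LA keeps its original value but LB changes
(3) Which of the following is called the instruction cycle?
A) The time to fetch an instruction  B) The time to fetch the operands
C) The time to fetch and execute an instruction  D) The time to store the result of an operation
(4) Let S be a set with at least two elements, and define the operation X*Y = X for all elements X and Y of S. Which of the following statements must be true?
Ⅰ. * is associative on S
Ⅱ. * is commutative on S
Ⅲ. * has an identity element in S
A) Ⅰ only  B) Ⅱ only  C) Ⅰ and Ⅲ  D) Ⅱ and Ⅲ
(5) Let Z be the set of integers, and let f: Z×Z→Z be defined by f(<m, n>) = m²n for every <m, n> ∈ Z×Z. The preimage of the set {0} is (2 points)
A) {0}×Z  B) Z×{0}  C) ({0}×Z) ∩ (Z×{0})  D) ({0}×Z) ∪ (Z×{0})
(6) For a set A with exactly 3 distinct elements, the total number of equivalence relations on A is
A) 2  B) 5  C) 9  D) It depends on whether the elements are numbers
(7) Consider the following proposition: for a collection C whose elements are sets, there exists a function f: C→∪C such that f(S) ∈ S for every S ∈ C.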
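Questions (1), (4), and (6) above can be checked by brute force. The sketch below is a study aid, not part of the original exam; the helper names are hypothetical. It prints the NOR truth table, tests the operation X*Y = X on a two-element set (associativity holds for any set, while a single two-element counterexample is enough to show that commutativity and an identity element are not guaranteed), and counts equivalence relations on a three-element set by checking every one of the 2⁹ possible relations for reflexivity, symmetry, and transitivity.

```python
from itertools import product


def nor(a, b):
    """NOR gate: output 1 only when neither input is 1."""
    return int(not (a or b))


def properties_of_left_projection(s):
    """Check the operation X*Y = X on a finite set s."""
    def op(x, y):
        return x

    associative = all(op(op(x, y), z) == op(x, op(y, z))
                      for x, y, z in product(s, repeat=3))
    commutative = all(op(x, y) == op(y, x) for x, y in product(s, repeat=2))
    has_identity = any(all(op(e, x) == x and op(x, e) == x for x in s) for e in s)
    return associative, commutative, has_identity


def count_equivalence_relations(elements):
    """Enumerate every relation on `elements` and count the equivalence relations."""
    pairs = list(product(elements, repeat=2))
    count = 0
    for mask in range(1 << len(pairs)):          # 512 candidate relations for 3 elements
        rel = {p for i, p in enumerate(pairs) if (mask >> i) & 1}
        reflexive = all((x, x) in rel for x in elements)
        symmetric = all((y, x) in rel for (x, y) in rel)
        transitive = all((x, w) in rel
                         for (x, y) in rel for (z, w) in rel if y == z)
        if reflexive and symmetric and transitive:
            count += 1
    return count


print([(a, b, nor(a, b)) for a, b in product((0, 1), repeat=2)])
print(properties_of_left_projection({"p", "q"}))     # (True, False, False)
print(count_equivalence_relations(["a", "b", "c"]))  # 5
```

The printed results correspond to option C for question (1), option A for question (4), and option B for question (6). The count of 5 matches the number of ways to partition a three-element set, since each equivalence relation corresponds to exactly one partition.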

Object-Oriented Programming in Java (in English)

English Answer:
Object-oriented programming (OOP) is a programming paradigm that revolves around the concept of "objects." An object is a data structure that bundles data fields together with the methods that operate on them. This makes it easier to build complex programs that are easier to maintain and reuse. OOP is based on several concepts, such as encapsulation, abstraction, inheritance, and polymorphism. It aims to model real-world entities by programming objects that contain both data and functions.

Encapsulation refers to bundling data and the methods that operate on that data within a single unit. This keeps data safe from external interference and ensures that the object's internal state is not exposed.

Abstraction is the practice of hiding an object's implementation details from its users. It allows users to interact with the object without knowing how it works internally, which simplifies the design and maintenance of complex systems.

Inheritance is a mechanism that allows a class to inherit properties and behaviors from another class. It promotes code reuse and reduces redundancy.

Polymorphism is the ability of an object to take on multiple forms. It allows objects of different types to respond to the same message in different ways.

OOP has several advantages over other programming paradigms. It promotes code reuse, making programs easier to maintain and update. Through encapsulation, it also protects data from unintended access by other parts of a program. OOP makes programs more flexible and extensible, enabling them to adapt to changing requirements.

However, OOP also has some disadvantages. It can be more complex to design and implement than other programming paradigms, and it can require more memory and processing power, which may make it less suitable for resource-constrained environments.

Overall, OOP is a powerful programming paradigm that has revolutionized software development.
It has enabled the creation of complex, maintainable, and reusable software systems. However, it is important to understand its advantages and disadvantages before choosing it for a specific project.

Chinese Answer (translated):
Object-oriented programming (OOP) is a programming paradigm that revolves around the concept of "objects."
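Abstraction, which the answer above lists alongside encapsulation, inheritance, and polymorphism, can be sketched with Python's standard abc module. This is an illustrative analogue of a Java abstract class, not Java code, and the Shape, Circle, and Rectangle names are hypothetical.

```python
import math
from abc import ABC, abstractmethod


class Shape(ABC):
    """Abstract base class: callers see area() but not how it is computed."""

    @abstractmethod
    def area(self):
        """Each concrete subclass must supply its own implementation."""

    def describe(self):
        # Written only against the abstract interface; works for any subclass
        return f"{type(self).__name__} with area {self.area():.2f}"


class Circle(Shape):
    def __init__(self, radius):
        self.radius = radius

    def area(self):
        return math.pi * self.radius ** 2


class Rectangle(Shape):
    def __init__(self, width, height):
        self.width, self.height = width, height

    def area(self):
        return self.width * self.height


shapes = [Circle(1.0), Rectangle(2.0, 3.0)]
for s in shapes:
    print(s.describe())

try:
    Shape()                  # an abstract class cannot be instantiated
except TypeError as exc:
    print("cannot instantiate Shape:", exc)
```

As with a Java abstract class, code that depends on Shape never needs to know how a particular subclass computes its area, and the interpreter refuses to create a Shape that lacks a concrete implementation.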

About the Tutorial
LISP is the second-oldest high-level programming language after Fortran. It has changed a great deal since its early days, and a number of dialects have existed over its history. Today, the most widely known general-purpose LISP dialects are Common LISP and Scheme.
This tutorial takes you through the features of the LISP programming language with a simple and practical approach.
Audience
This reference has been prepared for beginners, to help them understand basic to advanced concepts of the LISP programming language.
Prerequisites
Before you start practicing with the various examples given in this reference, we assume that you are already familiar with the fundamentals of computer programming and programming languages.
Copyright & Disclaimer
Copyright 2014 by Tutorials Point (I) Pvt. Ltd.
All the content and graphics published in this e-book are the property of Tutorials Point (I) Pvt. Ltd. The user of this e-book is prohibited from reusing, retaining, copying, distributing, or republishing any contents or part of the contents of this e-book in any manner without the written consent of the publisher.
We strive to update the contents of our website and tutorials as promptly and as precisely as possible; however, the contents may contain inaccuracies or errors. Tutorials Point (I) Pvt. Ltd. provides no guarantee regarding the accuracy, timeliness, or completeness of our website or its contents, including this tutorial.
If you discover any errors on our website or in this tutorial, please notify us at **************************iT able of ContentsAbout the Tutorial (i)Audience (i)Prerequisites (i)Copyright & Disclaimer (i)Table of Contents (ii)1.OVERVIEW (1)Features of Common LISP (1)Applications Developed in LISP (1)2.ENVIRONMENT SETUP (3)How to Use CLISP (3)3.PROGRAM STRUCTURE (4)A Simple LISP Program (4)LISP Uses Prefix Notation (5)Evaluation of LISP Programs (5)The 'Hello World' Program (6)4.BASIC SYNTAX (7)Basic Elements in LISP (7)Adding Comments (8)Notable Points (8)LISP Forms (8)Naming Conventions in LISP (9)Use of Single Quotation Mark (9)5.DATA TYPES (11)Type Specifiers in LISP (11)6.MACROS (14)Defining a Macro (14)7.VARIABLES (15)Global Variables (15)Local Variables (16)8.CONSTANTS (18)9.OPERATORS (19)Arithmetic Operations (19)Comparison Operations (20)Logical Operations on Boolean Values (22)Bitwise Operations on Numbers (24)10.DECISION MAKING (27)The cond Construct in LISP (28)The if Construct (29)The when Construct (30)The case Construct (31)11.LOOPS (32)The loop Construct (33)The loop for Construct (33)The do Construct (35)The dotimes Construct (36)The dolist Construct (37)Exiting Gracefully from a Block (38)12.FUNCTIONS (40)Defining Functions in LISP (40)Optional Parameters (41)Keyword Parameters (43)Returning Values from a Function (43)Lambda Functions (45)Mapping Functions (45)13.PREDICATES (47)14.NUMBERS (51)Various Numeric Types in LISP (52)Number Functions (53)15.CHARACTERS (56)Special Characters (56)Character Comparison Functions (57)16.ARRAYS (59)17.STRINGS (66)String Comparison Functions (66)Case Controlling Functions (68)Trimming Strings (69)Other String Functions (70)18.SEQUENCES (73)Creating a Sequence (73)Generic Functions on Sequences (73)Standard Sequence Function Keyword Arguments (76)Finding Length and Element (76)Modifying Sequences (77)Sorting and Merging Sequences (78)Sequence Predicates (79)19.LISTS (81)The Cons Record Structure (81)Creating 
Lists with list Function in LISP
List Manipulating Functions
Concatenation of car and cdr Functions
20. SYMBOLS
Property Lists
21. VECTORS
Creating Vectors
Fill Pointer Argument
22. SET
Implementing Sets in LISP
Checking Membership
Set Union
Set Intersection
Set Difference
23. TREE
Tree as List of Lists
Tree Functions in LISP
Building Your Own Tree
Adding a Child Node into a Tree
24. HASH TABLE
Creating Hash Table in LISP
Retrieving Items from Hash Table
Adding Items into Hash Table
Applying a Specified Function on Hash Table
25. INPUT & OUTPUT
Input Functions
Reading Input from Keyboard
Output Functions
Formatted Output
26. FILE I/O
Opening Files
Writing to and Reading from Files
Closing a File
27. STRUCTURES
Defining a Structure
28. PACKAGES
Package Functions in LISP
Creating a Package
Using a Package
Deleting a Package
29. ERROR HANDLING
Signaling a Condition
Handling a Condition
Restarting or Continuing the Program Execution
Error Signaling Functions in LISP
30. COMMON LISP OBJECT SYSTEMS
Defining Classes
Providing Access and Read/Write Control to a Slot
Defining a Class Method
Inheritance

1. OVERVIEW

LISP stands for LISt Processing. John McCarthy invented LISP in 1958, shortly after the development of FORTRAN. It was first implemented by Steve Russell on an IBM 704 computer. It is particularly suitable for Artificial Intelligence programs, as it processes symbolic information efficiently.

Common LISP originated in the 1980s. It was an attempt to unify the work of several implementation groups that had produced successors to Maclisp, such as ZetaLisp and NIL (New Implementation of LISP). It serves as a common language that can easily be extended for specific implementations.

Programs written in Common LISP do not depend on machine-specific characteristics such as word length.

Features of Common LISP
∙ It is machine-independent.
∙ It uses an iterative design methodology.
∙ It is easily extensible.
∙ It allows programs to be updated dynamically.
∙ It provides high-level debugging.
∙ It provides advanced object-oriented programming.
∙ It provides a convenient macro system.
∙ It provides wide-ranging data types such as objects, structures, lists, vectors, adjustable arrays, hash tables, and symbols.
∙ It is expression-based.
∙ It provides an object-oriented condition system.
∙ It provides a complete I/O library.
∙ It provides extensive control structures.

Applications Developed in LISP
The following large, successful applications were built in LISP:
∙ Emacs: a cross-platform editor with the features of extensibility, customizability, self-documentation, and real-time display.
∙ G2
∙ AutoCAD
∙ Igor Engraver
∙ Yahoo Store

2. ENVIRONMENT SETUP

CLISP is the GNU Common LISP multi-architecture compiler used for setting up LISP on Windows. The Windows version emulates a Unix environment using MinGW under Windows. The installer takes care of this and automatically adds CLISP to the Windows PATH variable.

You can get the latest CLISP for Windows at:
/projects/clisp/files/latest/download

By default, the installer creates a Start Menu shortcut for the line-by-line interpreter.

How to Use CLISP
During installation, CLISP is automatically added to your PATH variable if you select that option (RECOMMENDED). This means you can simply open a new Command window and type "clisp" to bring up the compiler. To run a *.lisp or *.lsp file, simply use:
clisp hello.lisp

3. PROGRAM STRUCTURE

LISP expressions are called symbolic expressions or S-expressions. The S-expressions are composed of three valid objects:
∙ Atoms
∙ Lists
∙ Strings
Any S-expression is a valid program.

LISP programs run either on an interpreter or as compiled code.

The interpreter checks the source code in a repeated loop, which is also called the Read-Evaluate-Print Loop (REPL). It reads the program code, evaluates it, and prints the values returned by the program.

A Simple LISP Program
Let us write an S-expression to find the sum of three numbers 7, 9 and 11. To do this, we can type the following at the interpreter prompt ->:
(+ 7 9 11)
LISP returns the following result:
27

If you would like to execute the same program as compiled code, create a LISP source code file named myprog.lisp and type the following code in it:
(write (+ 7 9 11))
When you click the Execute button, or type Ctrl+E, LISP executes it immediately and the result is:
27

LISP Uses Prefix Notation
In prefix notation, operators are written before their operands. You might have noticed that LISP uses prefix notation. In the above program, the '+' symbol works as a function name for the process of summation of the numbers.
For example, the following expression:
a * (b + c) / d
is written in LISP as:
(/ (* a (+ b c)) d)
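The prefix form above can be checked by giving the variables concrete values. The sketch below is a minimal illustration. It evaluates the nested expression directly, and then evaluates it again one operator at a time with let*. The function names prefix-demo and prefix-demo-steps and the sample values 6, 2, 4 and 9 are hypothetical choices made only for this example.

```lisp
;; Evaluate a * (b + c) / d written in prefix notation.
;; PREFIX-DEMO and PREFIX-DEMO-STEPS are hypothetical names for illustration.
(defun prefix-demo (a b c d)
  "Return a * (b + c) / d as one nested prefix expression."
  (/ (* a (+ b c)) d))

(defun prefix-demo-steps (a b c d)
  "Same computation, evaluated one operator at a time, innermost first."
  (let* ((sum (+ b c))         ; b + c
         (product (* a sum)))  ; a * (b + c)
    (/ product d)))            ; a * (b + c) / d

(print (prefix-demo 6 2 4 9))        ; prints 4
(print (prefix-demo-steps 6 2 4 9))  ; prints 4
(print (prefix-demo 1 1 1 3))        ; prints 2/3, an exact ratio
```

In Common Lisp, dividing integers with / returns an exact ratio, so the last call yields 2/3 rather than a truncated 0.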
Let us write code for converting a temperature of 60°C to the Fahrenheit scale.
The mathematical expression for this conversion is:
(60 * 9 / 5) + 32
Create a source code file named main.lisp and type the following code in it:
(write (+ (* (/ 9 5) 60) 32))
When you click the Execute button, or type Ctrl+E, LISP executes it immediately and the result is:
140

Evaluation of LISP Programs
The LISP program has two parts:
∙ Translation of program text into LISP objects by a reader program.
∙ Implementation of the semantics of the language in terms of LISP objects by an evaluator program.
The evaluation process takes the following steps:
∙ The reader translates the strings of characters to LISP objects or S-expressions.
∙ The evaluator defines the syntax of LISP forms that are built from S-expressions. This second level of evaluation defines a syntax that determines which S-expressions are LISP forms.
∙ The evaluator works as a function that takes a valid LISP form as an argument and returns a value. This is why we put the LISP expression in parentheses: we are sending the entire expression/form to the evaluator as an argument.

The 'Hello World' Program
Learning a new programming language does not really take off until you learn how to greet the entire world in that language, right?
Let us create a new source code file named main.lisp and type the following code in it:
(write-line "Hello World")
(write-line "I am at 'Tutorials Point'! Learning LISP")
When you click the Execute button, or type Ctrl+E, LISP executes it immediately and the result is:
Hello World
I am at 'Tutorials Point'! Learning LISP

4. BASIC SYNTAX

This chapter introduces you to the basic syntax structure in LISP.

Basic Elements in LISP
LISP programs are made up of three basic elements:
∙ atom
∙ list
∙ string
An atom is a number or string of contiguous characters. It includes numbers and special characters.
The following examples show some valid atoms:
hello-from-tutorials-point
name
123008907
*hello*
Block#221
abc123
A list is a sequence of atoms and/or other lists enclosed in parentheses. The following examples show some valid lists:
(i am a list)
(a (a b c) d e fgh)
(father tom (susan bill joe))
(sun mon tue wed thur fri sat)
()
A string is a group of characters enclosed in double quotation marks. The following examples show some valid strings:
" I am a string"
"a ba c d efg #$%^&!"
"Please enter the following details:"
"Hello from 'Tutorials Point'! "

Adding Comments
The semicolon symbol (;) is used for indicating a comment line.
Example
(write-line "Hello World") ; greet the world
; tell them your whereabouts
(write-line "I am at 'Tutorials Point'! Learning LISP")
When you click the Execute button, or type Ctrl+E, LISP executes it immediately and the result returned is:
Hello World
I am at 'Tutorials Point'! Learning LISP

Notable Points
The following important points are notable:
∙ The basic numeric operations in LISP are +, -, *, and /.
∙ LISP represents a function call f(x) as (f x); for example, cos(45) is written as (cos 45).
∙ LISP symbols are not case-sensitive by default: (cos 45) and (COS 45) are the same.
∙ LISP tries to evaluate everything, including the arguments of a function.
  Several kinds of elements are constants that always return their own value, including:
  o Numbers
  o The letter t, which stands for logical true
  o The value nil, which stands for logical false, as well as an empty list

LISP Forms
In the previous chapter, we mentioned that the evaluation process of LISP code takes the following steps:
∙ The reader translates the strings of characters to LISP objects or S-expressions.
∙ The evaluator defines the syntax of LISP forms that are built from S-expressions. This second level of evaluation defines a syntax that determines which S-expressions are LISP forms.
A LISP form can be:
∙ An atom
∙ An empty list or non-list
∙ Any list that has a symbol as its first element
The evaluator works as a function that takes a valid LISP form as an argument and returns a value. This is why we put the LISP expression in parentheses: we are sending the entire expression/form to the evaluator as an argument.

Naming Conventions in LISP
Names or symbols can consist of any number of alphanumeric characters other than whitespace, opening and closing parentheses, double and single quotes, backslash, comma, colon, semicolon and vertical bar. To use these characters in a name, you need to use the escape character (\).
A name can have digits but must not consist only of digits, because then it would be read as a number. Similarly, a name can have periods, but cannot consist entirely of periods.

Use of Single Quotation Mark
LISP evaluates everything, including the function arguments and list members.
At times, we need to take atoms or lists literally and do not want them evaluated or treated as function calls.
To do this, we need to precede the atom or the list with a single quotation mark.
The following example demonstrates this.
Create a file named main.lisp and type the following code into it:
(write-line "single quote used, it inhibits evaluation")
(write '(* 2 3))
(write-line " ")
(write-line "single quote not used, so expression evaluated")
(write (* 2 3))
When you click the Execute button, or type Ctrl+E, LISP executes it immediately and the result is:
single quote used, it inhibits evaluation
(* 2 3)
single quote not used, so expression evaluated
6

5. DATA TYPES

LISP data types can be categorized as:
∙ Scalar types: numbers, characters, symbols, etc.
∙ Data structures: lists, vectors, bit-vectors, and strings.
Any variable can take any LISP object as its value, unless you explicitly declare its type. It is not necessary to specify a data type for a LISP variable. Doing so helps, however, in certain loop expansions, in method declarations, and in some other situations that we will discuss in later chapters.
The data types are arranged into a hierarchy. A data type is a set of LISP objects, and many objects may belong to one such set.
The typep predicate is used for finding whether an object belongs to a specific type. The type-of function returns the data type of a given object.

Type Specifiers in LISP
Type specifiers are system-defined symbols for data types.

array              fixnum      package            simple-string
atom               float       pathname           simple-vector
bignum             function    random-state       single-float
bit                hash-table  ratio              standard-char
bit-vector         integer     rational           stream
character          keyword     readtable          string
[common]           list        sequence           [string-char]
compiled-function  long-float  short-float        symbol
complex            nil         signed-byte        t
cons               null        simple-array       unsigned-byte
double-float       number      simple-bit-vector  vector

Apart from these system-defined types, you can create your own data types.
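The typep predicate and the type-of function described above can be tried directly on a few values. The sketch below uses typep to classify values and subtypep to probe the type hierarchy. The helper describe-value is hypothetical and was written only for this illustration. The printed result of type-of differs between implementations, so the sketch does not rely on it.

```lisp
;; A small sketch exercising TYPEP, TYPE-OF and SUBTYPEP.
;; DESCRIBE-VALUE is a hypothetical helper, not part of Common Lisp.
(defun describe-value (value)
  "Return a keyword naming the first of a few types that VALUE belongs to."
  (cond ((typep value 'integer) :integer)
        ((typep value 'float)   :float)
        ((typep value 'ratio)   :ratio)
        ((typep value 'string)  :string)
        ((typep value 'symbol)  :symbol)   ; note: NIL is a symbol, so it lands here
        ((typep value 'list)    :list)
        (t                      :other)))

(print (describe-value 10))        ; prints :INTEGER
(print (describe-value 34.567))    ; prints :FLOAT
(print (describe-value 1/3))       ; prints :RATIO
(print (describe-value "hello"))   ; prints :STRING
(print (describe-value '(1 2 3)))  ; prints :LIST

;; TYPE-OF reports a type for the object; the exact form is implementation-dependent.
(print (type-of 34.567))

;; The hierarchy: SUBTYPEP's first value is T when one type is contained in another.
(print (subtypep 'fixnum 'integer))  ; prints T
(print (subtypep 'integer 'number))  ; prints T
```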
When a structure type is defined using defstruct, the name of the structure type becomes a valid type symbol.

Example 1
Create a new source code file named main.lisp and type the following code in it:
(setq x 10)
(setq y 34.567)
(setq ch nil)
(setq n 123.78)
(setq bg 11.0e+4)
(setq r 124/2)
(print x)
(print y)
(print n)
(print ch)
(print bg)
(print r)
When you click the Execute button, or type Ctrl+E, LISP executes it immediately and the result returned is:
10
34.567
123.78
NIL
110000.0
62

Example 2
Next, let us check the types of the variables used in the previous example. Create a new source code file named main.lisp and type the following code in it:
(setq x 10)
(setq y 34.567)
(setq ch nil)
(setq n 123.78)
(setq bg 11.0e+4)
(setq r 124/2)
(print (type-of x))
(print (type-of y))
(print (type-of n))
(print (type-of ch))
(print (type-of bg))
(print (type-of r))
When you click the Execute button, or type Ctrl+E, LISP executes it immediately and the result is shown below. The integer type reported depends on the implementation, and other Common Lisp implementations may print a different range.
(INTEGER 0 281474976710655)
SINGLE-FLOAT
SINGLE-FLOAT
NULL
SINGLE-FLOAT
(INTEGER 0 281474976710655)

6. MACROS

This chapter introduces you to macros in LISP.
A macro is a function that takes S-expressions as arguments and returns a LISP form, which is then evaluated. Macros allow you to extend the syntax of standard LISP.

Defining a Macro
In LISP, a named macro is defined using another macro named defmacro. The syntax for defining a macro is:
(defmacro macro-name (parameter-list)
  "Optional documentation string."
  body-form)
The macro definition consists of the name of the macro, a parameter list, an optional documentation string, and a body of LISP expressions that defines the job to be performed by the macro.

Example
Let us write a simple macro named setTo10, which takes a variable and sets its value to 10.
Create a new source code file named main.lisp and type the following code in it:
(defmacro setTo10 (num)
  `(setq ,num 10))  ; expands into code that assigns 10 to the variable passed in
(setq x 25)
(print x)
(setTo10 x)
(print x)
When you click the Execute button, or type Ctrl+E, LISP executes it immediately and the result is:
25
10

7. VARIABLES

In LISP, each variable is represented by a symbol. The name of the variable is the name of the symbol, and the value is stored in the storage cell of the symbol.

Global Variables
Global variables are generally declared using the defvar construct. Global variables have permanent values throughout the LISP system and remain in effect until new values are specified.
Example
(defvar x 234)
(write x)
When you click the Execute button, or type Ctrl+E, LISP executes it immediately and the result is:
234
As there is no type declaration for variables in LISP, you can assign a value to a symbol directly with the setq construct.
Example
->(setq x 10)
The above expression assigns the value 10 to the variable x. You can refer to the variable using the symbol itself as an expression.
The symbol-value function allows you to extract the value stored at the symbol storage place.
Example
Create a new source code file named main.lisp and type the following code in it:
(setq x 10)
(setq y 20)
(format t "x = ~2d y = ~2d ~%" x y)
(setq x 100)
(setq y 200)
(format t "x = ~2d y = ~2d" x y)
When you click the Execute button, or type Ctrl+E, LISP executes it immediately and the result is:
x = 10 y = 20
x = 100 y = 200

Local Variables
Local variables are defined within a given procedure.
The parameters named as arguments within a function definition are also local variables. Local variables are accessible only within the respective function.
As with global variables, you can assign new values to local variables with the setq construct. The local variables themselves are created with one of two other constructs: let and prog.
The let construct has the following syntax:
(let ((var1 val1) (var2 val2) .. (varn valn)) <s-expressions>)
where var1, var2, …, varn are variable names and val1, val2, …, valn are the initial values assigned to the respective variables.
When let is executed, each variable is assigned its value and then the s-expressions are evaluated. The value of the last expression evaluated is returned.
If you do not include an initial value for a variable, the variable is assigned nil.
Example
Create a new source code file named main.lisp and type the following code in it:
(let ((x 'a) (y 'b) (z 'c))
  (format t "x = ~a y = ~a z = ~a" x y z))
When you click the Execute button, or type Ctrl+E, LISP executes it immediately and the result is:
x = A y = B z = C
The prog construct also takes the list of local variables as its first argument. This list is followed by the body of the prog, which can contain any number of s-expressions.
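The let and prog constructs described above can be compared side by side. The sketch below is a minimal illustration under stated assumptions. It shows a let variable without an initial value starting as nil, and it shows that let returns the value of its last form. It also shows a prog that loops with a go tag and exits with return. The function names local-demo and count-to-three and the tag again are hypothetical names used only here.

```lisp
;; A minimal sketch of the two local-variable constructs.
;; LOCAL-DEMO, COUNT-TO-THREE and the tag AGAIN are hypothetical names.

(defun local-demo ()
  ;; LET binds X and Y to the given values; Z has no initial value,
  ;; so it starts out as NIL.
  (let ((x 'a)
        (y 'b)
        z)
    (format t "x = ~a y = ~a z = ~a~%" x y z)
    ;; The value of the last form in the body is the value of the LET.
    (list x y z)))

(defun count-to-three ()
  ;; PROG takes its list of local variables first, then its body.
  ;; AGAIN is a tag that GO can jump to; RETURN exits the PROG with a value.
  (prog ((counter 0))
   again
     (when (>= counter 3)
       (return counter))
     (setq counter (+ counter 1))
     (go again)))

(print (local-demo))      ; prints x = A y = B z = NIL, then (A B NIL)
(print (count-to-three))  ; prints 3
```

A variable bound by let or prog exists only inside that form. Once the form returns, counter and z are no longer accessible.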

Java (programming language)
From Wikipedia, the free encyclopedia
Java is a programming language originally developed by James Gosling at Sun Microsystems (which has since merged into Oracle Corporation) and released in 1995 as a core component of Sun Microsystems' Java platform. The language derives much of its syntax from C and C++ but has a simpler object model and fewer low-level facilities. Java applications are typically compiled to bytecode (class files) that can run on any Java Virtual Machine (JVM) regardless of computer architecture. Java is a general-purpose, concurrent, class-based, object-oriented language that is specifically designed to have as few implementation dependencies as possible. It is intended to let application developers "write once, run anywhere," meaning that code that runs on one platform does not need to be edited to run on another. Java is currently one of the most popular programming languages in use, particularly for client-server web applications, with a reported 10 million users.[10][11] The original and reference implementation Java compilers, virtual machines, and class libraries were developed by Sun from 1995.