M. Algorithms for openings of binary and label images with rectangular structuring elements


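Introduction to Algorithms, Third Edition, Chapter 2, Section 1: exercise solutions

2.1-1: Using Figure 2.2 as a model, illustrate the operation of INSERTION-SORT on the array A = <31, 41, 59, 26, 41, 58>.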
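The trace asked for in 2.1-1 can be reproduced with a short Python sketch. The function name `insertion_sort_trace` and the 0-based indexing are assumptions made for illustration; the textbook pseudocode is 1-based.

```python
def insertion_sort_trace(a):
    """Return the array state before sorting and after each outer-loop pass."""
    a = list(a)                      # work on a copy
    states = [list(a)]
    for j in range(1, len(a)):
        key = a[j]
        i = j - 1
        # Shift elements larger than key one slot to the right.
        while i >= 0 and a[i] > key:
            a[i + 1] = a[i]
            i -= 1
        a[i + 1] = key
        states.append(list(a))
    return states


for state in insertion_sort_trace([31, 41, 59, 26, 41, 58]):
    print(state)
```

Each printed row corresponds to one panel of a Figure 2.2 style illustration: the prefix to the left of the current key is always sorted.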

2.1-2: Rewrite the INSERTION-SORT procedure to sort into nonincreasing instead of nondecreasing order.
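One possible answer to the exercise above, sketched in Python rather than in the textbook's pseudocode. The function name `insertion_sort_nonincreasing` and the 0-based indexing are assumptions for illustration.

```python
def insertion_sort_nonincreasing(a):
    """Return a copy of a sorted into nonincreasing order by insertion sort."""
    a = list(a)
    for j in range(1, len(a)):
        key = a[j]
        i = j - 1
        # Shift elements SMALLER than key to the right
        # (the nondecreasing version compares with '>' here).
        while i >= 0 and a[i] < key:
            a[i + 1] = a[i]
            i -= 1
        a[i + 1] = key
    return a


print(insertion_sort_nonincreasing([31, 41, 59, 26, 41, 58]))
```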

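Note that this differs from the earlier nondecreasing version in only one place: the comparison in the inner loop.

2.1-3: Consider the following searching problem:
Input: a sequence of n numbers A = <a1, a2, ..., an> and a value v.
Output: an index i such that v = A[i], or NIL if v does not appear in A.

Write pseudocode for linear search, which scans through the whole sequence in order, looking for v.

Using a loop invariant, prove that the algorithm is correct.

Make sure that the loop invariant fulfills the three necessary properties.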

(2.1-3 Consider the searching problem:
Input: A sequence of n numbers A = <a1, a2, ..., an> and a value v.
Output: An index i such that v = A[i], or the special value NIL if v does not appear in A.
Write pseudocode for linear search, which scans through the sequence, looking for v. Using a loop invariant, prove that your algorithm is correct. Make sure that your loop invariant fulfills the three necessary properties.)

LINEAR-SEARCH(A, v)
    for i = 1 to A.length
        if v == A[i]
            return i
    return NIL

Proof of correctness of the linear search algorithm.
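A runnable sketch of LINEAR-SEARCH in Python, with one candidate loop invariant checked by an assertion. The invariant chosen here ("v does not occur among the elements already scanned") is an assumption about what the proof would use; the function name and the use of `None` for NIL are also illustrative choices.

```python
def linear_search(a, v):
    """Return the first 0-based index i with a[i] == v, or None (NIL)."""
    for i in range(len(a)):
        # Loop invariant: v does not occur in a[0:i].
        # Initialization: a[0:0] is empty, so the invariant holds trivially.
        # Maintenance: if a[i] != v, the invariant extends to a[0:i+1].
        assert v not in a[:i]
        if a[i] == v:
            return i
    # Termination: the loop scanned all of a, so by the invariant v is absent.
    return None


print(linear_search([31, 41, 59, 26, 41, 58], 26))
print(linear_search([31, 41, 59, 26, 41, 58], 7))
```

The assertion costs extra time per iteration and is there only to make the invariant executable; it would be dropped in real use.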

Numerical Methods Using MATLAB

Introduction

Numerical methods are essential for solving mathematical problems that cannot be solved analytically. They use computational algorithms to obtain approximate solutions to equations that have no closed-form answer. MATLAB, a numerical computing environment, provides built-in functions and tools for implementing and solving such problems efficiently.

In this article, we explore several numerical methods that can be implemented in MATLAB. We discuss their underlying concepts, give small worked examples, and point to the related MATLAB built-ins.

Root-Finding Methods

1. Bisection Method

The bisection method is a simple and robust technique for finding a root of a continuous function within an interval [a, b] on which the function changes sign. The interval is repeatedly halved, keeping the half that still brackets the root, until the root is located to the desired accuracy. MATLAB's built-in root finder fzero is related but not identical: it combines bisection with secant and inverse quadratic interpolation steps.

Here is an example of finding the root of f(x) = x^2 - 4 using the bisection method:

1. Initialize the interval [a, b]: a = 1, b = 3
2. Calculate the midpoint c: c = (a + b) / 2 = 2
3. Evaluate f(c): f(c) = c^2 - 4 = 2^2 - 4 = 0
4. If f(c) = 0, c is the root. Otherwise, update the interval:
   - If f(a) * f(c) < 0, set b = c
   - If f(c) * f(b) < 0, set a = c
5. Repeat steps 2-4 until the desired accuracy is achieved.

In this example the first midpoint already satisfies f(c) = 0, so the method stops after one step with the root x = 2.

2. Newton-Raphson Method

The Newton-Raphson method is an iterative technique for finding a root of a differentiable function. It linearizes the function at the current estimate and takes the root of that linearization as the next estimate, repeating until convergence. fzero does not use derivative information, so in MATLAB the Newton-Raphson iteration is usually written as a short loop.

Consider finding the root of f(x) = x^2 - 4 using the Newton-Raphson method:

1. Choose the initial guess x0: x0 = 3
2. Calculate the next approximation using the formula: x_{i+1} = x_i - f(x_i) / f'(x_i). With f'(x) = 2x, the first step gives x1 = 3 - 5/6 ≈ 2.1667.
3. Repeat step 2 until convergence is achieved.

Interpolation Methods

1. Linear Interpolation

Linear interpolation estimates the value of a function between two known data points by assuming that the function varies linearly between them. MATLAB provides the function interp1, whose default method is linear interpolation.

Here is an example of linear interpolation:

1. Given two data points: (x1, y1) = (1, 2) and (x2, y2) = (3, 6)
2. Calculate the slope: m = (y2 - y1) / (x2 - x1) = (6 - 2) / (3 - 1) = 2
3. Calculate the y-intercept: c = y1 - m * x1 = 2 - 2 * 1 = 0
4. Use the equation of a line, y = mx + c, to estimate the value of the function at a new point. For example, at x = 2 the estimate is y = 2 * 2 + 0 = 4.

2. Polynomial Interpolation

Polynomial interpolation estimates the value of a function between known data points using a polynomial that passes through them. MATLAB's polyfit fits a polynomial of a chosen degree in the least-squares sense; when the degree is one less than the number of data points, the fitted polynomial interpolates the data. polyval then evaluates it.

Consider the following set of data points: (x1, y1) = (1, 2), (x2, y2) = (2, 4), and (x3, y3) = (3, 6). We want to estimate the value of the function at x = 2.5 using polynomial interpolation:

1. Define the polynomial: y = a0 + a1 * x + a2 * x^2
2. Substitute the data points into the equation and solve the resulting system of equations. Here the three points lie on a straight line, so the solution is a0 = 0, a1 = 2, a2 = 0.
3. Use the obtained coefficients to evaluate the function at the desired point: y(2.5) = 2 * 2.5 = 5.

Numerical Integration Methods

1. Trapezoidal Rule

The trapezoidal rule approximates the definite integral of a function by dividing the interval into subintervals, replacing the function on each by a straight line, and summing the areas of the resulting trapezoids. MATLAB provides the function trapz for the trapezoidal rule on sampled data.

To illustrate the trapezoidal rule, consider evaluating the integral of f(x) = x^2 over the interval [0, 1] (exact value 1/3):

1. Divide the interval into n subintervals of equal width: h = (b - a) / n
2. Approximate the integral using the formula: I ≈ 0.5 * h * (f(a) + 2 * sum(f(xi)) + f(b)), where the sum runs over the interior points xi = a + i * h, i = 1, ..., n - 1.
3. Increase the value of n to improve the accuracy of the approximation.

2. Simpson's Rule

Simpson's rule gives a more accurate approximation by fitting a quadratic through each pair of adjacent subintervals, which leads to a weighted sum of the function values. MATLAB's quad implements adaptive Simpson quadrature; newer MATLAB releases recommend integral in its place.

Consider evaluating the integral of f(x) = x^4 over the interval [0, 1] (exact value 1/5) using Simpson's rule:

1. Divide the interval into an even number n of subintervals: h = (b - a) / n
2. Approximate the integral using the formula: I ≈ (h / 3) * (f(a) + 4 * sum(f(xi), i odd) + 2 * sum(f(xi), i even) + f(b)), where xi = a + i * h for the interior indices i = 1, ..., n - 1.
3. Increase the value of n to improve the accuracy of the approximation.

Conclusion

Numerical methods in MATLAB provide powerful tools for solving complex mathematical problems. In this article, we discussed root-finding methods, interpolation methods, and numerical integration methods, explained the concepts behind them, and worked through small examples alongside the related MATLAB built-in functions.
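The root-finding and integration recipes in this article can be sketched in a few lines of plain Python (used here instead of MATLAB so the example runs without a license). All function names, tolerances, and iteration limits below are assumptions chosen for illustration; this is not how fzero, trapz, or quad are implemented.

```python
def bisection(f, a, b, tol=1e-10, max_iter=200):
    """Halve [a, b] until it brackets a root of f to within tol."""
    fa, fb = f(a), f(b)
    if fa * fb > 0:
        raise ValueError("f(a) and f(b) must have opposite signs")
    for _ in range(max_iter):
        c = (a + b) / 2
        fc = f(c)
        if fc == 0 or (b - a) / 2 < tol:
            return c
        if fa * fc < 0:
            b, fb = c, fc          # root lies in [a, c]
        else:
            a, fa = c, fc          # root lies in [c, b]
    return (a + b) / 2


def newton(f, df, x0, tol=1e-12, max_iter=50):
    """Newton-Raphson iteration x <- x - f(x)/f'(x) starting from x0."""
    x = x0
    for _ in range(max_iter):
        step = f(x) / df(x)
        x -= step
        if abs(step) < tol:
            break
    return x


def trapezoid(f, a, b, n):
    """Composite trapezoidal rule with n equal subintervals."""
    h = (b - a) / n
    interior = sum(f(a + i * h) for i in range(1, n))
    return 0.5 * h * (f(a) + 2 * interior + f(b))


def simpson(f, a, b, n):
    """Composite Simpson's rule; n must be even."""
    if n % 2:
        raise ValueError("n must be even")
    h = (b - a) / n
    odd = sum(f(a + i * h) for i in range(1, n, 2))
    even = sum(f(a + i * h) for i in range(2, n, 2))
    return (h / 3) * (f(a) + 4 * odd + 2 * even + f(b))


print(bisection(lambda x: x * x - 4, 1, 3))
print(newton(lambda x: x * x - 4, lambda x: 2 * x, 3.0))
print(trapezoid(lambda x: x ** 2, 0.0, 1.0, 100))
print(simpson(lambda x: x ** 4, 0.0, 1.0, 10))
```

The two integration routines use the same examples as the text, so their printed values can be compared with the exact answers 1/3 and 1/5.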

Research Statement
Parikshit Gopalan

My research focuses on fundamental algebraic problems such as polynomial reconstruction and interpolation arising from various areas of theoretical computer science. My main algorithmic contributions include the first algorithm for list-decoding a well-known family of codes called Reed-Muller codes [13], and the first algorithms for agnostically learning parity functions [3] and decision trees [11] under the uniform distribution. On the complexity-theoretic side, my contributions include the best-known hardness results for reconstructing low-degree multivariate polynomials from noisy data [12] and the discovery of a connection between representations of Boolean functions by polynomials and communication complexity [2].

1 Introduction

Many important recent developments in theoretical computer science, such as probabilistic proof checking, deterministic primality testing and advancements in algorithmic coding theory, share a common feature: the extensive use of techniques from algebra. My research has centered around the application of these methods to problems in coding theory, computational learning, hardness of approximation and Boolean function complexity.

While at first glance these might seem like four research areas that are not immediately related, there are several beautiful connections between them. Perhaps the best illustration of these links is the noisy parity problem, where the goal is to recover a parity function from a corrupted set of evaluations. The seminal Goldreich-Levin algorithm solves a version of this problem; this result initiated the study of list-decoding algorithms for error-correcting codes [5]. An alternate solution is the Kushilevitz-Mansour algorithm [19], which is a crucial component in algorithms for learning decision trees and DNFs [17]. Håstad's ground-breaking work on the hardness of this problem has revolutionized our understanding of inapproximability [16]. All these results rely on insights into the Fourier structure of Boolean functions.

As I illustrate below, my research has contributed to a better understanding of these connections, and yielded progress on some important open problems in these areas.

2 Coding Theory

The broad goal of coding theory is to enable meaningful communication in the presence of noise, by suitably encoding the messages. The natural algorithmic problem associated with this task is that of decoding, or recovering the transmitted message from a corrupted encoding. The last twenty years have witnessed a revolution with the discovery of several powerful decoding algorithms for well-known families of error-correcting codes. A key role has been played by the notion of list-decoding, a relaxation of the classical decoding problem where we are willing to settle for a small list of candidate transmitted messages rather than insisting on a unique answer. This relaxation allows one to break the classical half-the-minimum-distance barrier for decoding error-correcting codes. We now know powerful list-decoding algorithms for several important code families; these algorithms have also made a huge impact on complexity theory [5, 15, 23].

List-Decoding Reed-Muller Codes: In recent work with Klivans and Zuckerman, we give the first such list-decoding algorithm for a well-studied family of codes known as Reed-Muller codes, obtained from low-degree polynomials over the finite field F_2 [13]. The highlight of this work is that our algorithm is able to tolerate error rates which are much higher than what is known as the Johnson bound in coding theory. Our results imply new combinatorial bounds on the error-correcting capability of these codes. While Reed-Muller codes have been studied extensively in both the coding theory and computer science communities, our result is the first to show that they are resilient to remarkably high error rates. Our algorithm is based on a novel view of the Goldreich-Levin algorithm as a reduction from list-decoding to unique-decoding; our view readily extends to polynomials of arbitrary degree over any field. Our result complements recent work on the Gowers norm, showing that Reed-Muller codes are testable up to large distances [21].

Hardness of Polynomial Reconstruction: In the polynomial reconstruction problem, one is asked to recover a low-degree polynomial from its evaluations at a set of points, where some of the values could be incorrect. The reconstruction problem is ubiquitous in both coding theory and computational learning. Both the noisy parity problem and the Reed-Muller decoding problem are instances of this problem. In joint work with Khot and Saket, we address the complexity of this problem and establish the first hardness results for multivariate polynomials of arbitrary degree [12]. Previously, the only hardness known was for degree 1, which follows from the celebrated work of Håstad [16]. Our work introduces a powerful new algebraic technique called global folding, which allows one to bypass a module called consistency testing that is crucial to most hardness results. I believe this technique will find other applications.

Average-Case Hardness of NP: Algorithmic advances in decoding of error-correcting codes have helped us gain a deeper understanding of the connections between worst-case and average-case complexity [23, 24]. In recent work with Guruswami, we use this paradigm to explore the average-case complexity of problems in NP against algorithms in P [8]. We present the first hardness amplification result in this setting by giving a construction of an error-correcting code where most of the symbols can be recovered correctly from a corrupted codeword by a deterministic algorithm that probes very few locations in the codeword. The novelty of our work is that our decoder is deterministic, whereas previous algorithms for this task were all randomized.

3 Computational Learning

Computational learning aims to understand the algorithmic issues underlying how we learn from examples, and to explore how the complexity of learning is influenced by factors such
as the ability to ask queries and the possibility of incorrect answers. Learning algorithms for a concept class typically rely on understanding the structure of that class, which naturally ties learning to Boolean function complexity. Learning in the presence of noise has several connections to decoding from errors. My work in this area addresses the learnability of basic concept classes such as decision trees, parities and halfspaces.

Learning Decision Trees Agnostically: The problem of learning decision trees is one of the central open problems in computational learning. Decision trees are also a popular hypothesis class in practice. In recent work with Kalai and Klivans, we give a query algorithm for learning decision trees with respect to the uniform distribution on inputs in the agnostic model: given black-box access to an arbitrary Boolean function, our algorithm finds a hypothesis that agrees with it on almost as many inputs as the best decision tree [11]. Equivalently, we can learn decision trees even when the data is corrupted adversarially; this is the first polynomial-time algorithm for learning decision trees in a harsh noise model. Previous decision-tree learning algorithms applied only to the noiseless setting. Our algorithm can be viewed as the agnostic analog of the Kushilevitz-Mansour algorithm [19]. The core of our algorithm is a procedure to implicitly solve a convex optimization problem in high dimensions using approximate gradient projection.

The Noisy Parity Problem: The noisy parity problem has come to be widely regarded as a hard problem. In work with Feldman et al., we present evidence supporting this belief [3]. We show that in the setting of learning from random examples (without queries), several outstanding open problems such as learning juntas, decision trees and DNFs reduce to restricted versions of the problem of learning parities with random noise. Our result shows that, in some sense, noisy parity captures the gap between learning from random examples and learning with queries, as it is believed to be hard in the former setting and is known to be easy in the latter. On the positive side, we present the first non-trivial algorithm for the noisy parity problem under the uniform distribution in the adversarial noise model. Our result shows that, somewhat surprisingly, adversarial noise is no harder to handle than random noise.

Hardness of Learning Halfspaces: The problem of learning halfspaces is a fundamental problem in computational learning. One could hope to design algorithms that are robust even in the presence of a few incorrectly labeled points. Indeed, such algorithms are known in the setting where the noise is random. In work with Feldman et al., we show that the setting of adversarial errors might be intractable: given a set of points where 99% are correctly labeled by some halfspace, it is NP-hard to find a halfspace that correctly labels even 51% of the points [3].

4 Prime versus Composite Problems

My thesis work focuses on new aspects of an old and famous problem: the difference between primes and composites. Beyond basic problems like primality and factoring, there are many other computational issues that are not yet well understood. For instance, in circuit complexity, we have excellent lower bounds for small-depth circuits with mod 2 gates, but the same problem for circuits with mod 6 gates is wide open. Likewise in combinatorics, set systems where the sizes of the sets need to satisfy certain modular conditions are well studied. Again the prime case is well understood, but little is known for composites. In all these problems, the algebraic techniques that work well in the prime case break down for composites.

Boolean Function Complexity: Perhaps the simplest class of circuits for which we have been unable to show lower bounds is small-depth circuits with And, Or and Mod m gates where m is composite; indeed this is one of the frontier open problems in circuit complexity. When m is prime, such bounds were proved by Razborov and Smolensky [20, 22]. One reason for this gap is that we do not fully understand the computational power of polynomials over composites; Barrington et al. were the first to show that such polynomials are surprisingly powerful [1]. In joint work with Bhatnagar and Lipton, we solve an important special case: when the polynomials are symmetric in their variables [2]. We show an equivalence between computing Boolean functions by symmetric polynomials over composites and multi-player communication protocols, which enables us to apply techniques from communication complexity and number theory to this problem. We use these techniques to show tight degree bounds for various classes of functions where no bounds were known previously. Our viewpoint simplifies previously known results in this area, and reveals new connections to well-studied questions about Diophantine equations.

Explicit Ramsey Graphs: A basic open problem regarding polynomials over composites is: can asymmetry in the variables help us compute a symmetric function with low degree? I show a connection between this question and an important open problem in combinatorics, which is to explicitly construct Ramsey graphs, or graphs with no large cliques and independent sets [6]. While good Ramsey graphs are known to exist by probabilistic arguments, explicit constructions have proved elusive. I propose a new algebraic framework for constructing Ramsey graphs and show how several known constructions can all be derived from this framework in a unified manner. I show that all known constructions rely on symmetric polynomials, and that such constructions cannot yield better Ramsey graphs. Thus the question of symmetry versus asymmetry of variables is precisely the barrier to better constructions by such techniques.

Interpolation over Composites: A basic problem in computational algebra is polynomial interpolation, which is to recover a polynomial from its evaluations. Interpolation and related algorithmic tasks which are easy for primes become much harder, even intractable, over composites. This difference stems from the fact that over primes, the number of roots of a polynomial is bounded by the degree, but no such theorem holds for composites. In lieu of this theorem I present an algorithmic bound; I show how to compute a bound on the degree of a polynomial given its zero set [7]. I use this to give the first optimal algorithms for interpolation, learning and zero-testing over composites. These algorithms are based on new structural results about the zeroes of polynomials. These results were subsequently useful in ruling out certain approaches for better Ramsey constructions [6].

5 Other Research Highlights

My other research work spans areas of theoretical computer science ranging from algorithms for massive data sets to computational complexity. I highlight some of this work below.

Data Stream Algorithms: Algorithmic problems arising from complex networks like the Internet typically involve huge volumes of data. This has led to increased interest in highly efficient algorithmic models like sketching and streaming, which can meaningfully deal with such massive data sets. A large body of work on streaming algorithms focuses on estimating how sorted the input is. This is motivated by the realization that sorting the input is intractable in the one-pass data stream model. In joint work with Krauthgamer, Jayram and Kumar, we presented the first sub-linear space data stream algorithms to estimate two well-studied measures of sortedness: the distance from monotonicity (or Ulam distance for permutations), and the length of the Longest Increasing Subsequence, or LIS. In more recent work with Anna Gál, we prove optimal lower bounds for estimating the length of the LIS in the data-stream model [4]. This is established by proving a direct-sum theorem for the communication complexity of a related problem. The novelty of our techniques is the model of communication that they address. As a corollary, we obtain a separation between two models of
communication that are commonly studied in relation to data stream algorithms.

Structural Properties of SAT Solutions: The solution space of random SAT formulae has been studied with a view to better understanding connections between computational hardness and phase transitions from satisfiable to unsatisfiable. Recent algorithmic approaches rely on connectivity properties of the space and break down in the absence of connectivity. In joint work with Kolaitis, Maneva and Papadimitriou, we consider the problem: given a Boolean formula, do its solutions form a connected subset of the hypercube? We classify the worst-case complexity of various connectivity properties of the solution space of SAT formulae in Schaefer's framework [14]. We show that the jump in the computational hardness is accompanied by a jump in the diameter of the solution space from linear to exponential.

Complexity of Modular Counting Problems: In joint work with Guruswami and Lipton, we address the complexity of counting the roots of a multivariate polynomial over a finite field F_q modulo some number r [9]. We establish a dichotomy showing that the problem is easy when r is a power of the characteristic of the field and intractable otherwise. Our results give several examples of problems whose decision versions are easy, but whose modular counting version is hard.

6 Future Research Directions

My broad research goal is to gain a complete understanding of the complexity of problems arising in coding theory, computational learning and related areas; I believe that the right tools for this will come from Boolean function complexity and hardness of approximation. Below I outline some of the research directions I would like to pursue in the future.

List-decoding algorithms have allowed us to break the unique-decoding barrier for error-correcting codes. It is natural to ask if one can perhaps go beyond the list-decoding radius and solve the problem of finding the codeword nearest to a received word at even higher error rates. On the negative side, we do not currently know any examples of codes where one can do this. But I think that recent results on Reed-Muller codes do offer some hope [13, 21]. Algorithms for solving the nearest codeword problem, if they exist, could also have exciting implications in computational learning. There are concept classes which are well-approximated by low-degree polynomials over finite fields lying just beyond the threshold of what is currently known to be learnable efficiently [20, 22]. Decoding algorithms for Reed-Muller codes that can tolerate very high error rates might present an approach to learning such concept classes.

One of the challenges in algorithmic coding theory is to determine whether known algorithms for list-decoding Reed-Solomon codes [15] and Reed-Muller codes [13, 23] are optimal. This raises both computational and combinatorial questions. I believe that my work with Khot et al. represents a good first step towards understanding the complexity of the decoding/reconstruction problem for multivariate polynomials. Proving similar results for univariate polynomials is an excellent challenge which seems to require new ideas in hardness of approximation.

There is a large body of work proving strong NP-hardness results for problems in computational learning. However, all such results only address the proper learning scenario, where the learning algorithm is restricted to produce a hypothesis from some particular class H which is typically the same as the concept class C. In contrast, known learning algorithms are mostly improper algorithms which could use more complicated hypotheses. For hardness results that are independent of the hypothesis class H used by the algorithm, one currently has to resort to cryptographic assumptions. In ongoing work with Guruswami and Raghavendra, we are investigating the possibility of proving NP-hardness for improper learning.

Finally, I believe that there are several interesting directions to explore in the agnostic learning model. An exciting insight in this area comes from the work of Kalai et al., who show that ℓ1 regression is a powerful tool for noise-tolerant learning [18]. A powerful paradigm in computational learning is to prove that the concept has some kind of polynomial approximation and then recover the approximation. Algorithms based on ℓ1 regression require a weaker polynomial approximation in comparison with previous algorithms (which use ℓ2 regression), but use more powerful machinery for the recovery step. Similar ideas might allow us to extend the boundaries of efficient learning even in the noiseless model; this is a possibility I am currently exploring.

Having worked in areas ranging from data stream algorithms to Boolean function complexity, I view myself as both an algorithm designer and a complexity theorist. I have often found that working on one aspect of a problem gives insights into the other; indeed much of my work has originated from such insights ([12] and [13], [10] and [4], [6] and [7]). I find that this is increasingly the case across several areas in theoretical computer science. My aim is to maintain this balance between upper and lower bounds in my future work.

References

[1] D. A. Barrington, R. Beigel, and S. Rudich. Representing Boolean functions as polynomials modulo composite numbers. Computational Complexity, 4:367–382, 1994.
[2] N. Bhatnagar, P. Gopalan, and R. J. Lipton. Symmetric polynomials over Z_m and simultaneous communication protocols. Journal of Computer & System Sciences (special issue for FOCS'03), 72(2):450–459, 2003.
[3] V. Feldman, P. Gopalan, S. Khot, and A. K. Ponnuswami. New results for learning noisy parities and halfspaces. In Proc. 47th IEEE Symp. on Foundations of Computer Science (FOCS'06), 2006.
[4] A. Gál and P. Gopalan. Lower bounds on streaming algorithms for approximating the length of the longest increasing subsequence. In Proc. 48th IEEE Symp. on Foundations of Computer Science (FOCS'07), 2007.
[5] O. Goldreich and L. Levin. A hard-core predicate for all one-way functions. In Proc. 21st ACM Symposium on the Theory of Computing (STOC'89), pages 25–32, 1989.
[6] P. Gopalan. Constructing Ramsey graphs from Boolean function representations. In Proc. 21st IEEE Symposium on Computational Complexity (CCC'06), 2006.
[7] P. Gopalan. Query-efficient algorithms for polynomial interpolation over composites. In Proc. 17th ACM-SIAM Symposium on Discrete Algorithms (SODA'06), 2006.
[8] P. Gopalan and V. Guruswami. Deterministic hardness amplification via local GMD decoding. Submitted to 23rd IEEE Symp. on Computational Complexity (CCC'08), 2008.
[9] P. Gopalan, V. Guruswami, and R. J. Lipton. Algorithms for modular counting of roots of multivariate polynomials. In Latin American Symposium on Theoretical Informatics (LATIN'06), 2006.
[10] P. Gopalan, T. S. Jayram, R. Krauthgamer, and R. Kumar. Estimating the sortedness of a data stream. In Proc. 18th ACM-SIAM Symposium on Discrete Algorithms (SODA'07), 2007.
[11] P. Gopalan, A. T. Kalai, and A. R. Klivans. Agnostically learning decision trees. In Proc. 40th ACM Symp. on Theory of Computing (STOC'08), 2008.
[12] P. Gopalan, S. Khot, and R. Saket. Hardness of reconstructing multivariate polynomials over finite fields. In Proc. 48th IEEE Symp. on Foundations of Computer Science (FOCS'07), 2007.
[13] P. Gopalan, A. R. Klivans, and D. Zuckerman. List-decoding Reed-Muller codes over small fields. In Proc. 40th ACM Symp. on Theory of Computing (STOC'08), 2008.
[14] P. Gopalan, P. G. Kolaitis, E. N. Maneva, and C. H. Papadimitriou. Computing the connectivity properties of the satisfiability solution space. In Proc. 33rd Intl. Colloquium on Automata, Languages and Programming (ICALP'06), 2006.
[15] V. Guruswami and M. Sudan. Improved decoding of Reed-Solomon and Algebraic-Geometric codes. IEEE Transactions on Information Theory, 45(6):1757–1767, 1999.
[16] J. Håstad. Some optimal inapproximability results. J. ACM, 48(4):798–859, 2001.
[17] J. Jackson. An efficient membership-query algorithm for learning DNF with respect to the uniform distribution. Journal of Computer and System Sciences, 55:414–440, 1997.
[18] A. T. Kalai, A. R. Klivans, Y. Mansour, and R. A. Servedio. Agnostically learning halfspaces. In Proc. 46th IEEE Symp. on Foundations of Computer Science, pages 11–20, 2005.
[19] E. Kushilevitz and Y. Mansour. Learning decision trees using the Fourier spectrum. SIAM Journal on Computing, 22(6):1331–1348, 1993.
[20] A. Razborov. Lower bounds for the size of circuits of bounded depth with basis {∧, ⊕}. Mathematical Notes of the Academy of Science of the USSR, (41):333–338, 1987.
[21] A. Samorodnitsky. Low-degree tests at large distances. In Proc. 39th ACM Symposium on the Theory of Computing (STOC'07), pages 506–515, 2007.
[22] R. Smolensky. Algebraic methods in the theory of lower bounds for Boolean circuit complexity. Proc. 19th Annual ACM Symposium on Theoretical Computer Science (STOC'87), pages 77–82, 1987.
[23] M. Sudan, L. Trevisan, and S. P. Vadhan. Pseudorandom generators without the XOR lemma. J. Comput. Syst. Sci., 62(2):236–266, 2001.
[24] L. Trevisan. List-decoding using the XOR lemma. In Proc. 44th IEEE Symposium on Foundations of Computer Science (FOCS'03), pages 126–135, 2003.

Efficient Hardware Architectures for Modular Multiplication

by
David Narh Amanor

A Thesis submitted to The University of Applied Sciences Offenburg, Germany
In partial fulfillment of the requirements for the Degree of Master of Science in Communication and Media Engineering

February, 2005

Approved:
Prof. Dr. Angelika Erhardt, Thesis Supervisor
Prof. Dr. Christof Paar, Thesis Supervisor

Declaration of Authorship

"I declare in lieu of an oath that the Master thesis submitted has been produced by me without illegal help from other persons. I state that all passages which have been taken out of publications of all means or unpublished material either whole or in part, in words or ideas, have been marked as quotations in the relevant passage. I also confirm that the quotes included show the extent of the original quotes and are marked as such. I know that a false declaration will have legal consequences."

David Narh Amanor
February, 2005

Preface

This thesis describes the research which I conducted while completing my graduate work at the University of Applied Sciences Offenburg, Germany.

The work produced scalable hardware implementations of existing and newly proposed algorithms for performing modular multiplication.

The work presented can be instrumental in generating interest in the hardware implementation of emerging algorithms for doing faster modular multiplication, and can also be used in future research projects at the University of Applied Sciences Offenburg, Germany, and elsewhere.

Of particular interest is the integration of the new architectures into existing public-key cryptosystems such as RSA, DSA, and ECC to speed up the arithmetic.

I wish to thank the following people for their unselfish support throughout the entire duration of this thesis.

I would like to thank my external advisor Prof. Christof Paar for providing me with all the tools and materials needed to conduct this research. I am particularly grateful to Dipl.-Ing. Jan Pelzl, who worked with me closely, and whose constant encouragement and advice gave me the energy to overcome several problems I encountered while working on this thesis.

I wish to express my deepest gratitude to my supervisor Prof. Angelika Erhardt for being in constant touch with me and for all the help and advice she gave throughout all stages of the thesis. If it was not for Prof. Erhardt, I would not have had the opportunity of doing this thesis work and therefore, I would have missed out on a very rewarding experience.

I am also grateful to Dipl.-Ing. Viktor Buminov and Prof. Manfred Schimmler, whose newly proposed algorithms and corresponding architectures form the basis of my thesis work and provide the necessary theoretical material for understanding the algorithms presented in this thesis.

Finally, I would like to thank my brother, Mr. Samuel Kwesi Amanor, my friend and Pastor, Josiah Kwofie, Mr. Samuel Siaw Nartey and Mr. Csaba Karasz for their diverse support which enabled me to undertake my thesis work in Bochum.

Abstract

Modular multiplication is a core operation in many public-key cryptosystems, e.g., RSA, Diffie-Hellman key agreement (DH), ElGamal, and ECC. The Montgomery multiplication algorithm [2] is considered to be the fastest algorithm to compute X*Y mod M in computers when the values of X, Y and M are large.

Recently, two new algorithms for modular multiplication and their corresponding architectures were proposed in [1]. These algorithms are optimizations of the Montgomery multiplication algorithm [2] and interleaved modular multiplication algorithm [3].

In this thesis, software (Java) and hardware (VHDL) implementations of the existing and newly proposed algorithms and their corresponding architectures for performing modular multiplication have been done.
In summary, three different multipliers for 32, 64, 128, 256, 512, and 1024 bits were implemented, simulated, and synthesized for a Xilinx FPGA.

The implementations are scalable to any precision of the input variables X, Y and M.

This thesis also evaluated the performance of the multipliers in [1] by a thorough comparison of the architectures on the basis of the area-time product.

This thesis finally shows that the newly optimized algorithms and their corresponding architectures in [1] require minimum hardware resources and offer faster speed of computation compared to multipliers with the original Montgomery algorithm.

Table of Contents

1 Introduction
1.1 Motivation
1.2 Thesis Outline
2 Existing Architectures for Modular Multiplication
2.1 Carry Save Adders and Redundant Representation
2.2 Complexity Model
2.3 Montgomery Multiplication Algorithm
2.4 Interleaved Modular Multiplication
3 New Architectures for Modular Multiplication
3.1 Faster Montgomery Algorithm
3.2 Optimized Interleaved Algorithm
4 Software Implementation
4.1 Implementational Issues
4.2 Java Implementation of the Algorithms
4.2.1 Imported Libraries
4.2.2 Implementation Details of the Algorithms
4.2.3 1024 Bits Test of the Implemented Algorithms
5 Hardware Implementation
5.1 Modeling Technique
5.2 Structural Elements of Multipliers
5.2.1 Carry Save Adder
5.2.2 Lookup Table
5.2.3 Register
5.2.4 One-Bit Shifter
5.3 VHDL Implementational Issues
5.4 Simulation of Architectures
5.5 Synthesis
6 Results and Analysis of the Architectures
6.1 Design Statistics
6.2 Area Analysis
6.3 Timing Analysis
6.4 Area – Time (AT) Analysis
6.5 RSA Encryption Time
7 Discussion
7.1 Summary and Conclusions
7.2 Further Research
7.2.1 RAM of FPGA
7.2.2 Word Wise Multiplication
References

List of Figures

2.3 Architecture of the loop of Algorithm 1b [1]
3.1 Architecture of Algorithm 3 [1]
3.2 Inner loop of modular multiplication using carry save addition [1]
3.2 Modular multiplication with one carry save adder [1]
4.2.2 Path through the loop of Algorithm 3
4.2.3 A 1024 bit test of Algorithm 1b
4.2.3 A 1024 bit test of Algorithm 3
4.2.3 A 1024 bit test of Algorithm 5
5.2 Block diagram showing components that were implemented for Faster Montgomery Architecture
5.2.1 VHDL implementation of carry save adder
5.2.2 VHDL implementation of lookup table
5.2.3 VHDL implementation of register
5.2.4 Implementation of ‘Shift Right’ unit
5.3 32 bit blocks of registers for storing input data bits
5.4 State diagram of implemented multipliers
6.2 Percentage of configurable logic blocks occupied
6.2 CLB Slices versus bitlength for Fast Montgomery Multiplier
6.3 Minimum clock periods for all implementations
6.3 Absolute times for all implementations
6.4 Area – time product analysis

List of Tables

6.1 Percentage of configurable logic block slices (out of 19200) occupied depending on bitlength
6.1 Number of gates
6.1 Minimum period and maximum frequency
6.1 Number of Dffs or Latches
6.1 Number of Function Generators
6.1 Number of MUX CARRYs
6.1 Total equivalent gate count for design
6.3 Absolute Time (ns) for all implementations
6.4 Area – Time Product Values
6.5 Time (ns) for 1024 bit RSA encryption

Chapter 1
Introduction

1.1 Motivation

The rapid growth of data communication and electronic transactions over the internet has made security the most important issue over the network. To provide modern security features, public-key cryptosystems are used. The widely used algorithms for public-key cryptosystems are RSA, Diffie-Hellman key agreement (DH), the digital signature algorithm (DSA) and systems based on elliptic curve cryptography (ECC). All these algorithms have one thing in common: they operate on very large numbers (e.g. 160 to 2048 bits).
Long word lengths are necessary to provide a sufficient amount of security, but also account for the computational cost of these algorithms.

By far, the most popular public-key scheme in use today is RSA [9]. The core operation for data encryption processing in RSA is modular exponentiation, which is done by a series of modular multiplications (i.e., X*Y mod M). This accounts for most of the complexity in terms of time and resources needed. Unfortunately, the large word length (e.g. 1024 or 2048 bits) makes the RSA system slow and difficult to implement. This gives reason to search for dedicated hardware solutions which compute the modular multiplications efficiently with minimum resources.

The Montgomery multiplication algorithm [2] is considered to be the fastest algorithm to compute X*Y mod M in computers when the values of X, Y and M are large. Another efficient algorithm for modular multiplication is the interleaved modular multiplication algorithm [4].

In this thesis, two new algorithms for modular multiplication and their corresponding architectures which were proposed in [1] are implemented. These algorithms are optimisations of Montgomery multiplication and interleaved modular multiplication. They are optimised with respect to area and time complexity. In both algorithms the product of two n-bit integers X and Y modulo M is computed by n iterations of a simple loop.
Each iteration consists of one single carry save addition, a comparison of constants, and a table lookup.

These new algorithms have been shown in [1] to speed up the modular multiplication operation by at least a factor of two in comparison with all methods previously known.

The main advantages offered by these new algorithms are:

• faster computation time, and
• area requirements and resources for the implementation of their architectures in hardware that are relatively small compared to the Montgomery multiplication algorithm presented in [1, Algorithm 1a and 1b].

1.2 Thesis Outline

Chapter 2 provides an overview of the existing algorithms and their corresponding architectures for performing modular multiplication. The necessary background knowledge which is required for understanding the algorithms, architectures, and concepts presented in the subsequent chapters is also explained. This chapter also discusses the complexity model which was used to compare the existing architectures with the newly proposed ones.

In Chapter 3, a description of the new algorithms for modular multiplication and their corresponding architectures is presented. The modifications that were applied to the existing algorithms to produce the new optimized versions are also explained in this chapter.

Chapter 4 covers issues on the software implementation of the algorithms presented in Chapters 2 and 3. The special classes in Java which were used in the implementation of the algorithms are mentioned. The testing of the new optimized algorithms presented in Chapter 3 using randomly generated input variables is also discussed.

The hardware modeling technique which was used in the implementation of the multipliers is explained in Chapter 5. In this chapter, the design capture of the architectures in VHDL is presented and the simulations of the VHDL implementations are also discussed. This chapter also discusses the target technology device and synthesis results.
The state machine of the implemented multipliers is also presented in this chapter.

In Chapter 6, analysis and comparison of the implemented multipliers is given. The vital design statistics which were generated after place and route were tabulated and graphically represented in this chapter. Of prime importance in this chapter is the area – time (AT) analysis of the multipliers, which is the complexity metric used for the comparison.

Chapter 7 concludes the thesis by setting out the facts and figures of the performance of the implemented multipliers. This chapter also itemizes a list of recommendations for further research.

Chapter 2
Existing Architectures for Modular Multiplication

2.1 Carry Save Adders and Redundant Representation

The core operation of most algorithms for modular multiplication is addition. There are several different methods for addition in hardware: carry ripple addition, carry select addition, carry look ahead addition and others [8]. The disadvantage of these methods is the carry propagation, which is directly proportional to the length of the operands. This is not a big problem for operands of size 32 or 64 bits, but the typical operand size in cryptographic applications ranges from 160 to 2048 bits. The resulting delay has a significant influence on the time complexity of these adders.

The carry save adder seems to be the most cost effective adder for our application. Carry save addition is a method for an addition without carry propagation. It is simply a parallel ensemble of n full-adders without any horizontal connection.
Its function is to add three n-bit integers X, Y, and Z to produce two integers C and S as results such that

C + S = X + Y + Z,

where C represents the carry and S the sum.

The i-th bit s_i of the sum S and the (i+1)-st bit c_{i+1} of the carry C are calculated using the boolean equations

$$s_i = x_i \oplus y_i \oplus z_i$$
$$c_{i+1} = x_i y_i \lor x_i z_i \lor y_i z_i$$
$$c_0 = 0$$

When carry save adders are used in an algorithm one uses a notation of the form

(S, C) = X + Y + Z

to indicate that two results are produced by the addition.

The results are now represented in two binary words, an n-bit word S and an (n+1)-bit word C. Of course, this representation is redundant in the sense that we can represent one value in several different ways. This redundant representation has the advantage that the arithmetic operations are fast, because there is no carry propagation. On the other hand, it brings to the fore one basic disadvantage of the carry save adder:

• It does not solve our problem of adding two integers to produce a single result. Rather, it adds three integers and produces two such that the sum of these two is equal to that of the three inputs. This method may not be suitable for applications which only require the normal addition.

2.2 Complexity Model

For comparison of different algorithms we need a complexity model that allows for a realistic evaluation of time and area requirements of the considered methods. In [1], the delay of a full adder (1 time unit) is taken as a reference for the time requirement and quantifies the delay of an access to a lookup table with the same time delay of 1 time unit. The area estimation is based on empirical studies in full-custom and semi-custom layouts for adders and storage elements: The area for 1 bit in a lookup table corresponds to 1 area unit. A register cell requires 4 area units per bit and a full adder requires 8 area units.
These values provide a powerful and realistic model for evaluation of area and time for most algorithms for modular multiplication.

In this thesis, the percentage of configurable logic block slices occupied and the absolute time for computation are used to evaluate the algorithms. Other hardware resources such as total number of gates and number of flip-flops or latches required were also documented to provide a more practical and realistic evaluation of the algorithms in [1].

2.3 Montgomery Multiplication Algorithm

The Montgomery algorithm [1, Algorithm 1a] computes P = (X*Y*(2^n)^(-1)) mod M. The idea of Montgomery [2] is to keep the lengths of the intermediate results smaller than n+1 bits. This is achieved by interleaving the computations and additions of new partial products with divisions by 2; each of them reduces the bit-length of the intermediate result by one.

For a detailed treatment of the Montgomery algorithm, the reader is referred to [2] and [1].

The key concepts of the Montgomery algorithm [1, Algorithm 1b] are the following:

• Adding a multiple of M to the intermediate result does not change the value of the final result, because the result is computed modulo M. M is an odd number.
• After each addition in the inner loop the least significant bit (LSB) of the intermediate result is inspected. If it is 1, i.e., the intermediate result is odd, we add M to make it even. This even number can be divided by 2 without remainder. This division by 2 reduces the intermediate result to n+1 bits again.
• After n steps these divisions add up to one division by 2^n.

The Montgomery algorithm is very easy to implement since it operates least significant bit first and does not require any comparisons.
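The key concepts above can be illustrated with a minimal software sketch. It is not taken from the thesis or from [1]: the function name `montgomery_multiply` and the small test values are assumptions made for illustration, and ordinary Python integers stand in for the hardware registers.

```python
def montgomery_multiply(x: int, y: int, m: int, n: int) -> int:
    """Bit-serial Montgomery product x * y * 2^(-n) mod m.

    Assumes m is odd and 0 <= x, y < m < 2^n (hypothetical helper,
    written only to illustrate the three key concepts).
    """
    p = 0
    for i in range(n):          # least significant bit of x first
        if (x >> i) & 1:
            p += y              # add the partial product x_i * Y
        if p & 1:
            p += m              # p is odd: adding the odd modulus makes it even
        p >>= 1                 # exact division by 2
    if p >= m:                  # p < 2m holds here, so one subtraction suffices
        p -= m
    return p


if __name__ == "__main__":
    m, n = 239, 8
    x, y = 123, 200
    # pow(2, -n, m) is the modular inverse of 2^n (Python 3.8 or later).
    reference = (x * y * pow(2, -n, m)) % m
    print(montgomery_multiply(x, y, m, n) == reference)
```

The loop never compares the intermediate result against M; only the final correction does, which is the property the text highlights.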
A modification of Algorithm 1a with carry save adders is given in [1, Algorithm 1b]:

Algorithm 1a: Montgomery multiplication [1]

    Inputs: X, Y, M with 0 ≤ X, Y < M
    Output: P = (X*Y*(2^n)^(-1)) mod M
    n: number of bits in X;
    x_i: i-th bit of X;
    p_0: LSB of P;
    (1) P := 0;
    (2) for (i = 0; i < n; i++) {
    (3)     P := P + x_i*Y;
    (4)     P := P + p_0*M;
    (5)     P := P div 2; }
    (6) if (P ≥ M) then P := P - M;

Algorithm 1b: Fast Montgomery multiplication [1]

    Inputs: X, Y, M with 0 ≤ X, Y < M
    Output: P = (X*Y*(2^n)^(-1)) mod M
    n: number of bits in X;
    x_i: i-th bit of X;
    s_0: LSB of S;
    (1) S := 0; C := 0;
    (2) for (i = 0; i < n; i++) {
    (3)     (S, C) := S + C + x_i*Y;
    (4)     (S, C) := S + C + s_0*M;
    (5)     S := S div 2; C := C div 2; }
    (6) P := S + C;
    (7) if (P ≥ M) then P := P - M;

In this algorithm the delay of one pass through the loop is reduced from O(n) to O(1). This remarkable improvement of the propagation delay inside the loop of Algorithm 1b is due to the use of carry save adders to implement steps (3) and (4) in Algorithm 1a.

Steps (3) and (4) in Algorithm 1b represent carry save adders. S and C denote the sum and carry of the three input operands respectively.

Of course, the additions in steps (6) and (7) are conventional additions. But since they are performed only once while the additions in the loop are performed n times, this is subdominant with respect to the time complexity.

Figure 1 shows the architecture for the implementation of the loop of Algorithm 1b. The layout comprises two carry save adders (CSA) and registers for storing the intermediate results of the sum and carry. The carry save adders are the dominant occupiers of area in hardware, especially for very large values of n (e.g. n ≥ 1024).

In Chapter 3, we shall see the changes that were made in [1] to reduce the number of carry save adders in Figure 1 from 2 to 1, thereby saving considerable hardware space.
However, these changes also brought about other area consuming blocks such as lookup tables for storing precomputed values before the start of the loop.

Fig. 1: Architecture of the loop of Algorithm 1b [1].

There are various modifications to the Montgomery algorithm in [5], [6] and [7]. All these algorithms aimed at decreasing the operating time for faster system performance and reducing the chip area for practical hardware implementation.

2.4 Interleaved Modular Multiplication

Another well known algorithm for modular multiplication is the interleaved modular multiplication. The details of the method are sketched in [3, 4]. The idea is to interleave multiplication and reduction such that the intermediate results are kept as short as possible.

As shown in [1, Algorithm 2], the computation of P requires n steps and at each step we perform the following operations:

• A left shift: 2*P
• A partial product computation: x_i*Y
• An addition: 2*P + x_i*Y
• At most 2 subtractions:
  If (P ≥ M) Then P := P - M;
  If (P ≥ M) Then P := P - M;

The partial product computation and left shift operations are easily performed by using an array of AND gates and wiring respectively. The difficult task is the addition operation, which must be performed fast.
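The per-step operations listed above can be sketched in software as follows. This is a minimal illustration, not the thesis implementation: the name `interleaved_multiply` is hypothetical, and it assumes 0 ≤ X, Y < M and that X fits in n bits.

```python
def interleaved_multiply(x: int, y: int, m: int, n: int) -> int:
    """Interleaved modular multiplication: returns x * y mod m.

    Assumes 0 <= x, y < m and x < 2^n (hypothetical helper for illustration).
    """
    p = 0
    for i in range(n - 1, -1, -1):   # most significant bit of x first
        p <<= 1                      # left shift: 2 * P
        if (x >> i) & 1:
            p += y                   # add the partial product x_i * Y
        # 2*P + x_i*Y < 3*M, so at most two subtractions restore P < M
        if p >= m:
            p -= m
        if p >= m:
            p -= m
    return p


if __name__ == "__main__":
    print(interleaved_multiply(123, 200, 239, 8) == (123 * 200) % 239)
```

In this sketch every addition and comparison uses full-width carry propagation, which is exactly the cost that a hardware design has to avoid.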
The addition was done using carry save adders in [1, Algorithm 4], introducing only O(1) delay per step.

Algorithm 2: Standard interleaved modulo multiplication [1]

    Inputs: X, Y, M with 0 ≤ X, Y < M
    Output: P = X*Y mod M
    n: number of bits in X;
    x_i: i-th bit of X;
    (1) P := 0;
    (2) for (i = n-1; i ≥ 0; i--) {
    (3)     P := 2*P;
    (4)     I := x_i*Y;
    (5)     P := P + I;
    (6)     if (P ≥ M) then P := P - M;
    (7)     if (P ≥ M) then P := P - M; }

The main advantages of Algorithm 2 compared to the separated multiplication and division are the following:

• Only one loop is required for the whole operation.
• The intermediate results are never any longer than n+2 bits (thus reducing the area for registers and full adders).

But there are some disadvantages as well:

• The algorithm requires three additions with carry propagation in steps (5), (6) and (7).
• In order to perform the comparisons in steps (6) and (7), the preceding additions have to be completed. This is important for the latency because the operands are large and, therefore, the carry propagation has a significant influence on the latency.
• The comparison in steps (6) and (7) also requires the inspection of the full bit lengths of the operands in the worst case. In contrast to addition, the comparison is performed MSB first. Therefore, these two operations cannot be pipelined without delay.

Many researchers have tried to address these problems, but the only solution with a constant delay in the loop is the one of [8], which has an AT-complexity of 156n^2.

In [1], a different approach is presented which reduces the AT-complexity for modular multiplication considerably. In Chapter 3, this new optimized algorithm is presented and discussed.

Chapter 3
New Architectures for Modular Multiplication

The detailed treatment of the new algorithms and their corresponding architectures presented in this chapter can be found in [1]. In this chapter, a summary of these algorithms and architectures is given.
They have been designed to meet the core requirements of most modern devices: small chip area and low power consumption.

3.1 Faster Montgomery Algorithm

In Figure 1, the layout for the implementation of the loop of Algorithm 1b consists of two carry save adders. For large wordsizes (e.g. n = 1024 or higher), this would require considerable hardware resources to implement the architecture of Algorithm 1b. The motivation behind this optimized algorithm is that of reducing the chip area for practical hardware implementation of Algorithm 1b. This is possible if we can precompute the four possible values to be added to the intermediate result within the loop of Algorithm 1b, thereby reducing the number of carry save adders from 2 to 1. There are four possible scenarios:

• If the sum of the old values of S and C is an even number, and if the actual bit x_i of X is 0, then we add 0 before we perform the reduction of S and C by division by 2.
• If the sum of the old values of S and C is an odd number, and if the actual bit x_i of X is 0, then we must add M to make the intermediate result even. Afterwards, we divide S and C by 2.
• If the sum of the old values of S and C is an even number, and if the actual bit x_i of X is 1, but the increment x_i*Y is even, too, then we do not need to add M to make the intermediate result even. Thus, in the loop we add Y before we perform the reduction of S and C by division by 2. The same action is necessary if the sum of S and C is odd, and if the actual bit x_i of X is 1 and Y is odd as well. In this case, S+C+Y is an even number, too.
• If the sum of the old values of S and C is odd, the actual bit x_i of X is 1, but the increment x_i*Y is even, then we must add Y and M to make the intermediate result even. Thus, in the loop we add Y+M before we perform the reduction of S and C by division by 2. The same action is necessary if the sum of S and C is even, and the actual bit x_i of X is 1, and Y is odd. In this case, S+C+Y+M is an even number, too.

The computation of Y+M can be done prior to the loop. This saves one of the two additions, which are replaced by the choice of the right operand to be added to the old values of S and C. Algorithm 3 is a modification of Montgomery’s method which takes advantage of this idea.

The advantage of Algorithm 3 in comparison to Algorithm 1 can be seen in the implementation of the loop of Algorithm 3 in Figure 2. The possible values of I are stored in a lookup-table, which is addressed by the actual values of x_i, y_0, s_0 and c_0. The operations in the loop are now reduced to one table lookup and one carry save addition. Both these activities can be performed concurrently. Note that the shift right operations that implement the division by 2 can be done by routing.

Algorithm 3: Faster Montgomery multiplication [1]

    Inputs: X, Y, M with 0 ≤ X, Y < M
    Output: P = (X*Y*(2^n)^(-1)) mod M
    n: number of bits in X;
    x_i: i-th bit of X;
    s_0: LSB of S, c_0: LSB of C, y_0: LSB of Y;
    R: precomputed value of Y + M;
    (1)  S := 0; C := 0;
    (2)  for (i = 0; i < n; i++) {
    (3)      if ((s_0 = c_0) and not x_i) then I := 0;
    (4)      if ((s_0 ≠ c_0) and not x_i) then I := M;
    (5)      if (not(s_0 ⊕ c_0 ⊕ y_0) and x_i) then I := Y;
    (6)      if ((s_0 ⊕ c_0 ⊕ y_0) and x_i) then I := R;
    (7)      (S, C) := S + C + I;
    (8)      S := S div 2; C := C div 2; }
    (9)  P := S + C;
    (10) if (P ≥ M) then P := P - M;

Fig. 2: Architecture of Algorithm 3 [1]

In [1], the proof of Algorithm 3 is presented and the assumptions which were made in arriving at an Area-Time (AT) complexity of 96n^2 are shown.

3.2 Optimized Interleaved Algorithm

The new algorithm [1, Algorithm 4] is an optimisation of the interleaved modular multiplication [1, Algorithm 2].
In [1], four details of Algorithm 2 were modified in order to overcome the problems mentioned in Chapter 2:

• The intermediate results are no longer compared to M (as in steps (6) and (7) of Algorithm 2). Rather, a comparison to k*2^n (k = 0, ..., 6) is performed which can be done in constant time. This comparison is done implicitly in the mod-operation in step (13) of Algorithm 4.
• Subtractions in steps (6), (7) of Algorithm 2 are replaced by one subtraction of k*2^n which can be done in constant time by bit masking.
• Next, the value of k*2^n mod M is added in order to generate the correct intermediate result (step (12) of Algorithm 4).
• Finally, carry save adders are used to perform the additions inside the loop, thereby reducing the latency to a constant. The intermediate results are in redundant form, coded in two words S and C instead of one word P.

These changes made by the authors in [1] led to Algorithm 4, which looks more complicated than Algorithm 2. Its main advantage is the fact that all the computations in the loop can be performed in constant time. Hence, the time complexity of the whole algorithm is reduced to O(n), provided the values of k*2^n mod M are precomputed before execution of the loop.

Algorithm 4: Modular multiplication using carry save addition [1]

    Inputs: X, Y, M with 0 ≤ X, Y < M
    Output: P = X*Y mod M
    n: number of bits in X;
    x_i: i-th bit of X;
    k*2^n: value of the bits of S and C at positions n and above;
    (1)  S := 0; C := 0; A := 0;
    (2)  for (i = n-1; i ≥ 0; i--) {
    (3)      S := S mod 2^n;
    (4)      C := C mod 2^n;
    (5)      S := 2*S;
    (6)      C := 2*C;
    (7)      A := 2*A;
    (8)      I := x_i*Y;
    (9)      (S, C) := CSA(S, C, I);
    (10)     (S, C) := CSA(S, C, A);
    (11)     A := (k*2^n) mod M; }
    (12) P := (S + C) mod M;

Fig. 3: Inner loop of modular multiplication using carry save addition [1]

In [1], the authors specified some modifications that can be applied to Algorithm 2 in order to simplify and significantly speed up the operations inside the loop.
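The four modifications can be checked with a small software model. The sketch below is an assumption-laden illustration rather than the architecture of [1]: the helper names `csa` and `csa_interleaved_multiply` are hypothetical, the value added back in each round is modelled as "the bits of S and C at positions n and above, reduced modulo M", and a `%` operation stands in for the lookup of the precomputed values k*2^n mod M.

```python
def csa(x: int, y: int, z: int) -> tuple:
    """Carry save addition: returns (s, c) with s + c == x + y + z."""
    s = x ^ y ^ z                               # bitwise sum without carries
    c = ((x & y) | (x & z) | (y & z)) << 1      # carries, moved one position left
    return s, c


def csa_interleaved_multiply(x: int, y: int, m: int, n: int) -> int:
    """Interleaved multiplication with carry save addition: x * y mod m.

    Assumes 0 <= x, y < m < 2^n. The handling of the high bits is an
    assumption consistent with the k*2^n description in the text.
    """
    mask = (1 << n) - 1
    s = c = a = 0
    for i in range(n - 1, -1, -1):
        s &= mask                   # S mod 2^n: drops the bits counted in k
        c &= mask                   # C mod 2^n
        s <<= 1
        c <<= 1
        a <<= 1
        addend = y if (x >> i) & 1 else 0
        s, c = csa(s, c, addend)
        s, c = csa(s, c, a)
        k = (s >> n) + (c >> n)     # high part of the redundant result
        a = (k << n) % m            # stands in for a table lookup of k*2^n mod M
    return (s + c) % m


if __name__ == "__main__":
    print(csa_interleaved_multiply(123, 200, 239, 8) == (123 * 200) % 239)
```

The model relies on the invariant that S + C stays congruent to the running product modulo M, because the bits removed by masking are re-added (reduced modulo M and doubled) in the next round.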
The mathematical proof which confirms the correctness of Algorithm 4 can be found in [1].

The architecture for the implementation of the loop of Algorithm 4 can be seen in the hardware layout in Figure 3.

In [1], the authors showed how to reduce both area and time by further exploiting precalculation of values in a lookup-table and thus saving one carry save adder. The basic idea is:
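Precalculation with a lookup table was already used in Section 3.1, where the four scenarios select one of the values 0, M, Y or Y+M. As a minimal sketch of that selection (not the VHDL design of the thesis), the following model uses a four-entry table; the names `csa` and `faster_montgomery` and the way the table is indexed are assumptions made for illustration.

```python
def csa(x: int, y: int, z: int) -> tuple:
    """Carry save addition: returns (s, c) with s + c == x + y + z."""
    return x ^ y ^ z, ((x & y) | (x & z) | (y & z)) << 1


def faster_montgomery(x: int, y: int, m: int, n: int) -> int:
    """Montgomery product x * y * 2^(-n) mod m with one CSA per iteration.

    Assumes m is odd and 0 <= x, y < m < 2^n (illustrative model only).
    """
    r = y + m                                   # precomputed before the loop
    y0 = y & 1
    # Table indexed by (x_i, "does M have to be added to reach an even sum?")
    table = {(0, 0): 0, (0, 1): m, (1, 0): y, (1, 1): r}
    s = c = 0
    for i in range(n):
        xi = (x >> i) & 1
        parity = (s ^ c) & 1                    # parity of S + C
        need_m = parity ^ (xi & y0)             # parity of S + C + x_i*Y
        s, c = csa(s, c, table[(xi, need_m)])
        s >>= 1                                 # S + C is even and C has LSB 0,
        c >>= 1                                 # so both shifts are exact
    p = s + c
    if p >= m:
        p -= m
    return p


if __name__ == "__main__":
    m, n = 239, 8
    print(faster_montgomery(123, 200, m, n) == (123 * 200 * pow(2, -n, m)) % m)
```

Compared with two carry save additions per iteration, this model performs one table access and one carry save addition, which mirrors the trade of adder area for table storage described in the text.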

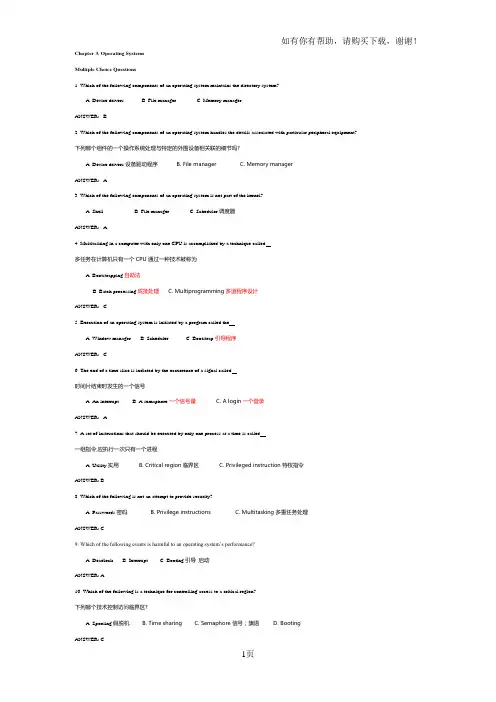

Chapter 3. Operating Systems

Multiple Choice Questions

1. Which of the following components of an operating system maintains the directory system?
A. Device drivers
B. File manager
C. Memory manager
ANSWER: B

2. Which of the following components of an operating system handles the details associated with particular peripheral equipment?
A. Device drivers
B. File manager
C. Memory manager
ANSWER: A

3. Which of the following components of an operating system is not part of the kernel?
A. Shell
B. File manager
C. Scheduler
ANSWER: A

4. Multitasking in a computer with only one CPU is accomplished by a technique called ____.
A. Bootstrapping
B. Batch processing
C. Multiprogramming
ANSWER: C

5. Execution of an operating system is initiated by a program called the ____.
A. Window manager
B. Scheduler
C. Bootstrap
ANSWER: C

6. The end of a time slice is indicated by the occurrence of a signal called ____.
A. An interrupt
B. A semaphore
C. A login
ANSWER: A

7. A set of instructions that should be executed by only one process at a time is called ____.
A. Utility
B. Critical region
C. Privileged instruction
ANSWER: B

8. Which of the following is not an attempt to provide security?
A. Passwords
B. Privileged instructions
C. Multitasking
ANSWER: C

9. Which of the following events is harmful to an operating system’s performance?
A. Deadlock
B. Interrupt
C. Booting
ANSWER: A

10. Which of the following is a technique for controlling access to a critical region?
A. Spooling
B. Time sharing
C. Semaphore
D. Booting
ANSWER: C

11. Which of the following is not involved in a process switch?
A. Interrupt
B. Process table
C. Dispatcher
D. Shell
ANSWER: D

12. Which of the following is a task that is not performed by the kernel of an operating system?
A. Communicate with the user
B. Schedule processes
C. Allocate resources
D. Avoid deadlock
ANSWER: A

13. Which of the following components of an operating system is executed to handle an interrupt signal?
A. Dispatcher
B. Memory manager
C. File manager
ANSWER: A

Fill-in-the-blank/Short-answer Questions

1. In contrast to early batch processing techniques, __A__ allows the user to communicate with the computer while the user’s application is being executed. In turn, this type of processing requires that the computer’s responses to its environment be performed in a timely manner, a requirement known as __B__.

Algorithm Design Techniques and Analysis: English Version Exercise with Answers

Introduction

Algorithms are an essential aspect of computer science. As such, students who are part of this field must master the art of algorithm design and analysis. Algorithm design refers to the process of creating algorithms that solve computational problems. Algorithm analysis, on the other hand, focuses on evaluating the resources required to execute those algorithms. This includes computational time and memory consumption.

This document provides students with helpful algorithm design and analysis exercises. The exercises are in the form of questions with step-by-step solutions. The document is suitable for students who have completed the English version of the Algorithm Design Techniques and Analysis textbook. The exercises cover various algorithm design techniques, such as divide-and-conquer, dynamic programming, and greedy approaches.

Instruction

Each exercise comes with a question and its solution. Read the question carefully and try to find a solution without looking at the answer first. If you get stuck, look at the solution. Lastly, try the exercise again without referring to the answer.

Exercise 1: Divide and Conquer

Question:

Given an array of integers, find the maximum possible sum of a contiguous subarray.

Example:

Input: [-2, -3, 4, -1, -2, 1, 5, -3]
Output: 7 (the contiguous subarray [4, -1, -2, 1, 5])

Solution:

    def max_subarray_sum(arr):
        # Base case: a single element is its own best subarray.
        if len(arr) == 1:
            return arr[0]
        mid = len(arr) // 2
        max_left_sum = max_subarray_sum(arr[:mid])
        max_right_sum = max_subarray_sum(arr[mid:])
        # Best sum ending at arr[mid - 1]. Start from -inf, not 0, so that
        # the crossing subarray is never empty (matters for all-negative input).
        max_left_border_sum = float('-inf')
        left_border_sum = 0
        for i in range(mid - 1, -1, -1):
            left_border_sum += arr[i]
            max_left_border_sum = max(max_left_border_sum, left_border_sum)
        # Best sum starting at arr[mid].
        max_right_border_sum = float('-inf')
        right_border_sum = 0
        for i in range(mid, len(arr)):
            right_border_sum += arr[i]
            max_right_border_sum = max(max_right_border_sum, right_border_sum)
        return max(max_left_sum, max_right_sum,
                   max_left_border_sum + max_right_border_sum)

Exercise 2: Dynamic Programming

Question:

Given a list of lengths of steel rods and a corresponding list of prices, determine the maximum revenue you can get by cutting these rods into smaller pieces and selling them. Assume the cost of each cut is 0.

Lengths: [1, 2, 3, 4, 5, 6, 7, 8]
Prices: [1, 5, 8, 9, 10, 17, 17, 20]

If the rod length is 4, the maximum revenue is 10.

Solution:

    def max_revenue(lengths, prices, n):
        # prices[i] is the price of a piece of length i + 1, matching the
        # lengths list above. best[j] is the maximum revenue for a rod of
        # length j, filled bottom-up so each subproblem is solved once.
        best = [0] * (n + 1)
        for j in range(1, n + 1):
            best[j] = max(prices[i] + best[j - i - 1] for i in range(j))
        return best[n]

Exercise 3: Greedy Algorithm

Question:

Given a set of jobs with start times and end times, find the maximum number of non-overlapping jobs that can be scheduled.

Start times: [1, 3, 0, 5, 8, 5]
End times: [2, 4, 6, 7, 9, 9]
Output: 4

Solution:

    def maximum_jobs(start_times, end_times):
        # Greedy choice: always take the job that finishes earliest.
        job_list = sorted(zip(end_times, start_times))
        count = 0
        end_time = float('-inf')
        for e, s in job_list:
            if s >= end_time:
                count += 1
                end_time = e
        return count

Conclusion

The exercises presented in this document provide a practical way to master essential algorithm design and analysis techniques. Solving the problems without looking at the answers will expose students to the type of problems they might encounter in real life. The document’s solutions provide step-by-step instructions to ensure that students can approach the problems with confidence.

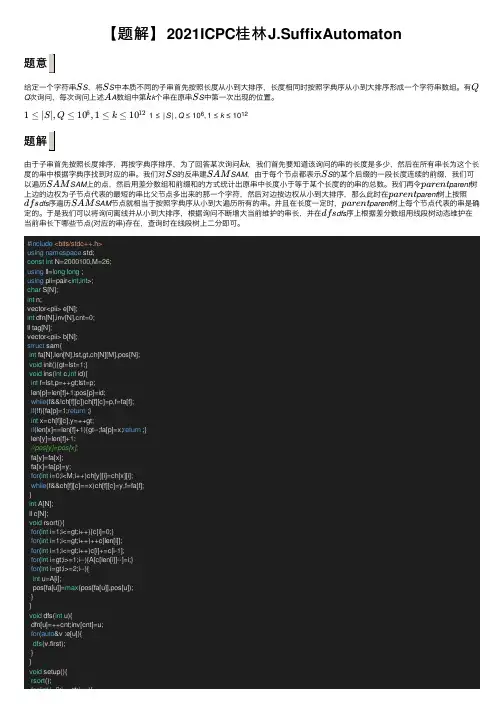

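[Solution] 2021 ICPC Guilin J. Suffix Automaton

Problem

Given a string S, sort the distinct substrings of S first by length in ascending order and, for equal lengths, in ascending lexicographic order, forming an array of strings A.

There are Q queries. Each query asks for the position in S at which the k-th string of A first occurs.

Constraints: 1 ≤ |S|, Q ≤ 10^6, 1 ≤ k ≤ 10^12.

Solution

Because the substrings are ordered by length first and lexicographically second, answering a query k requires first finding the length of the queried string and then locating it, by lexicographic rank, among all strings of that length.

We build a suffix automaton (SAM) on the reverse of S. Every node then represents a run of prefixes, with consecutive lengths, of some suffix of S. We can therefore iterate over the SAM nodes and use a difference array with prefix sums to count, for every length, the number of distinct substrings of S whose length is at most that length.

Next, let the weight of each edge of the parent tree be the one extra character by which the shortest string of the child node extends the parent node, and sort the edges of every node by weight in ascending order. Visiting the SAM nodes in DFS order of the parent tree is then equivalent to visiting all strings in ascending lexicographic order.

Moreover, once the length is fixed, the string represented by each node of the parent tree is uniquely determined.

We can therefore process the queries offline in ascending order of k, increase the currently maintained length as the queries require, and use a segment tree over the DFS order, driven by the difference array, to maintain dynamically which nodes (that is, which strings) exist at the current length. Each query is answered by a binary search on the segment tree.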
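For testing on small inputs, a brute-force reference can be handy. The sketch below is not part of the original solution: the name `kth_substring_first_occurrence` is hypothetical, and the output convention (1-indexed inclusive positions of the first occurrence, or (-1, -1) when k exceeds the number of distinct substrings) is an assumption chosen to match what the program prints.

```python
def kth_substring_first_occurrence(s: str, k: int) -> tuple:
    """Brute force: (l, r) of the first occurrence of the k-th distinct
    substring in (length, lexicographic) order, 1-indexed and inclusive.
    Suitable for short strings only.
    """
    first_start = {}
    n = len(s)
    for i in range(n):
        for j in range(i + 1, n + 1):
            # Starts are visited in ascending order, so the first stored
            # start of each substring is its earliest occurrence.
            first_start.setdefault(s[i:j], i)
    ordered = sorted(first_start, key=lambda t: (len(t), t))
    if k > len(ordered):
        return (-1, -1)
    t = ordered[k - 1]
    left = first_start[t] + 1
    return (left, left + len(t) - 1)


if __name__ == "__main__":
    for k in range(1, 9):
        print(k, kth_substring_first_occurrence("abab", k))
```

Comparing its answers with the automaton-based program on random short strings is one way to validate the offline segment tree logic.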

    #include <bits/stdc++.h>
    using namespace std;

    const int N = 2000100, M = 26;
    using ll = long long;
    using pii = pair<int, int>;

    char S[N];
    int n;
    vector<pii> e[N];          // parent-tree children: (node, first extra character)
    int dfn[N], inv[N], cnt = 0;
    ll tag[N];                 // difference array over lengths, later prefix sums
    vector<pii> b[N];          // b[L]: nodes that appear (+1) or disappear (-1) at length L

    struct sam {
        int fa[N], len[N], lst, gt, ch[N][M], pos[N];

        void init() { gt = lst = 1; }

        void ins(int c, int id) {
            int f = lst, p = ++gt;
            lst = p;
            len[p] = len[f] + 1;
            pos[p] = id;
            while (f && !ch[f][c]) ch[f][c] = p, f = fa[f];
            if (!f) { fa[p] = 1; return; }
            int x = ch[f][c], y = ++gt;
            if (len[x] == len[f] + 1) { gt--; fa[p] = x; return; }
            len[y] = len[f] + 1;
            // pos[y] = pos[x];
            fa[y] = fa[x];
            fa[x] = fa[p] = y;
            for (int i = 0; i < M; i++) ch[y][i] = ch[x][i];
            while (f && ch[f][c] == x) ch[f][c] = y, f = fa[f];
        }

        int A[N];
        ll c[N];

        // Counting sort by len, then push the largest end position up the parent tree.
        void rsort() {
            for (int i = 1; i <= gt; i++) c[i] = 0;
            for (int i = 1; i <= gt; i++) ++c[len[i]];
            for (int i = 1; i <= gt; i++) c[i] += c[i - 1];
            for (int i = gt; i >= 1; i--) A[c[len[i]]--] = i;
            for (int i = gt; i >= 2; i--) {
                int u = A[i];
                pos[fa[u]] = max(pos[fa[u]], pos[u]);
            }
        }

        void dfs(int u) {
            dfn[u] = ++cnt;
            inv[cnt] = u;
            for (auto &v : e[u]) dfs(v.first);
        }

        void setup() {
            rsort();
            for (int i = 2; i <= gt; i++)
                e[fa[i]].push_back(pii{i, S[pos[i] - len[fa[i]]] - 'a'});
            for (int i = 1; i <= gt; i++)
                sort(e[i].begin(), e[i].end(),
                     [&](const pii &a, const pii &b) { return a.second < b.second; });
            dfs(1);
            for (int i = 2; i <= gt; i++) {
                ++tag[len[fa[i]] + 1];
                --tag[len[i] + 1];
                b[len[fa[i]] + 1].push_back(pii{i, 1});
                b[len[i] + 1].push_back(pii{i, -1});
            }
            tag[0] = 0;
            // First pass: number of distinct substrings of each length.
            for (int i = 1; i <= n; i++) tag[i] += tag[i - 1];
            // Second pass: number of distinct substrings of length <= i.
            for (int i = 1; i <= n; i++) tag[i] += tag[i - 1];
        }
    } g;

    #define ls o<<1
    #define rs o<<1|1
    #define mid ((l+r)/2)
    int s[N << 2];

    void mt(int o) { s[o] = s[ls] + s[rs]; }

    void bd(int o, int l, int r) {
        s[o] = 0;
        if (l == r) return;
        bd(ls, l, mid);
        bd(rs, mid + 1, r);
    }

    void upd(int o, int l, int r, int x, int d) {
        s[o] += d;
        if (l == r) return;
        if (x <= mid) upd(ls, l, mid, x, d);
        else upd(rs, mid + 1, r, x, d);
    }

    // Position of the k-th active node in DFS order.
    int query(int o, int l, int r, int k) {
        if (l == r) return l;
        return s[ls] >= k ? query(ls, l, mid, k) : query(rs, mid + 1, r, k - s[ls]);
    }

    pair<ll, int> qu[N];
    pii ans[N];

    void f1() {
        scanf("%s", S + 1);
        n = strlen(S + 1);
        for (int i = 1; i <= n / 2; i++) swap(S[i], S[n - i + 1]);   // reverse S
        g.init();
        for (int i = 1; i <= n; i++) g.ins(S[i] - 'a', i);
        g.setup();
        int Q;
        scanf("%d", &Q);
        for (int i = 1; i <= Q; i++) {
            scanf("%lld", &qu[i].first);
            qu[i].second = i;
        }
        sort(qu + 1, qu + 1 + Q,
             [&](const pair<ll, int> &a, const pair<ll, int> &b) { return a.first < b.first; });
        bd(1, 1, g.gt);
        int na = 1;
        for (int i = 1; i <= Q; i++) {
            ll k = qu[i].first;
            int id = qu[i].second;
            int np = lower_bound(tag + 1, tag + 1 + n, k) - tag;   // length of the answer
            if (np > n) {
                ans[id] = pii{-1, -1};
                continue;
            }
            ll res = k - tag[np - 1];                              // rank within that length
            while (na <= np) {
                for (auto &v : b[na]) {
                    if (v.second == 1) upd(1, 1, g.gt, dfn[v.first], 1);
                    else upd(1, 1, g.gt, dfn[v.first], -1);
                }
                ++na;
            }
            int nb = query(1, 1, g.gt, res);
            nb = inv[nb];
            ans[id] = pii{n - g.pos[nb] + 1, n - (g.pos[nb] - np + 1) + 1};
        }
        for (int i = 1; i <= Q; i++) printf("%d %d\n", ans[i].first, ans[i].second);
    }

    int main() {
        f1();
        return 0;
    }