Perceptually-Friendly H.264AVC Video Coding Based on Foveated Just-Noticeable-Distortion Model

- 格式:pdf

- 大小:6.10 MB

- 文档页数:14

Preferred Contact MethodIntroductionIn this digital age, communication has become easier and more convenient than ever before. With a multitude of contact methods available, it is important to determine the preferred method for effective and efficient communication. This article will explore the various contact methods and discuss the factors that influence individuals’ preferences.Factors Influencing Preferred Contact Method1. Accessibility•Availability of technology: The level of access to smartphones, computers, and the internet can grea tly influence an individual’s preferred contact method.•Ease of use: Some contact methods may require a certain level of technical knowledge or skills, which can impact an individual’schoice.2. Urgency•Time sensitivity: Depending on the urgency of the message, individuals may prefer a contact method that allows for immediate communication, such as phone calls or instant messaging.•Response time: If a quick response is expected, individuals may choose a contact method that is known for prompt replies.3. Privacy and Security•Confidentiality: For sensitive information, individuals may prefer contact methods that ensure privacy and security, such asencrypted messaging apps or face-to-face meetings.•Data protection: Concerns about data breaches and online security may influence an individual’s choice of contact method.4. Personal Preference•Communication style: Some individuals may prefer face-to-face interactions, while others may feel more comfortable communicating through written messages.•Personal habits: Factors like introversion or extroversion, preference for written or verbal communication, and individualhabits can play a role in determining the preferred contact method. Types of Contact Methods1. Phone Calls•Pros:–Real-time communication: Phone calls allow for immediate interaction, enabling quick clarification and discussion.–Tone and emotion: Verbal communication allows for theconveyance of tone and emotion, which can enhanceunderstanding.•Cons:–Availability: Phone calls require both parties to beavailable at the same time, which may not always beconvenient.–Intrusion: Unsolicited phone calls can be intrusive and disruptive.2. Email•Pros:–Asynchronous communication: Emails can be sent and received at any time, allowing for flexibility in communication.–Documentation: Emails provide a written record ofconversations, which can be useful for reference andclarification.•Cons:–Delayed response: Emails may not receive an immediateresponse, leading to potential delays in communication.–Spam and clutter: Email inboxes can easily become cluttered with spam or irrelevant messages, making important emailsharder to find.3. Instant Messaging•Pros:–Real-time interaction: Instant messaging allows for quick back-and-forth communication, similar to a conversation.–Multi-media sharing: Users can easily share files, images, and videos through instant messaging platforms.•Cons:–Distractions: Instant messaging can be distracting, with constant notifications and the temptation to engage in non-work-related conversations.–Misinterpretation: Without visual cues, written messages can be misinterpreted, leading to misunderstandings.4. Face-to-Face Meetings•Pros:–Personal connection: Face-to-face meetings allow for a deeper level of personal connection and relationshipbuilding.–Non-verbal communication: Body language and facialexpressions can enhance understanding and convey emotions.•Cons:–Time-consuming: Face-to-face meetings often require travel time and coordination, which can be impractical for remoteor geographically distant individuals.–Scheduling conflicts: Finding a mutually convenient time fora face-to-face meeting can be challenging.ConclusionDetermining the preferred contact method depends on various factors, including accessibility, urgency, privacy, and personal preference. Each contact method has its own advantages and disadvantages, and individuals may choose different methods for different situations. Ultimately, effective communication relies on understanding and respecting each other’s preferred contact method to ensure successful interaction.。

The ITU-T published J.144, a measurement of quality of service, for the transmission of television and other multimedia digital signals over cable networks. This defines the relationship between subjective assessment of video by a person and objective measurements taken from the network.The correlation between the two are defined by two methods:y Full Reference (Active) – A method applicable when the full reference video signal is available, and compared with the degraded signal as it passes through the network.y No Reference (Passive) – A method applicable when no reference video signal or informationis available.VIAVI believes that a combination of both Active and Passive measurements gives the correct blendof analysis with a good trade off of accuracy and computational power. T eraVM provides both voice and video quality assessment metrics, active and passive, based on ITU-T’s J.144, but are extended to support IP networks.For active assessment of VoIP and video, both the source and degraded signals are reconstituted from ingress and egress IP streams that are transmitted across the Network Under T est (NUT).The VoIP and video signals are aligned and each source and degraded frame is compared to rate the video quality.For passive measurements, only the degraded signal is considered, and with specified parameters about the source (CODEC, bit-rate) a metric is produced in real-time to rate the video quality.This combination of metrics gives the possibility of a ‘passive’ but lightweight Mean Opinion Score (MOS) per-subscriber for voice and video traffic, that is correlated with CPU-expensive but highly-accurate ‘active’ MOS scores.Both methods provide different degrees of measurement accuracy, expressed in terms of correlation with subjective assessment results. However, the trade off is the considerable computation resources required for active assessment of video - the algorithm must decode the IP stream and reconstitute the video sequence frame by frame, and compare the input and outputnframesto determine its score. The passive method is less accurate, but requires less computing resources. Active Video AnalysisThe active video assessment metric is called PEVQ– Perceptual Evaluation of Video Quality. PEVQ provides MOS estimates of the video quality degradation occurring through a network byBrochureVIAVITeraVMVoice, Video and MPEG Transport Stream Quality Metricsanalysing the degraded video signal output from the network. This approach is based on modelling the behaviour of the human visual tract and detecting abnormalities in the video signal quantified by a variety of KPIs. The MOS value reported, lies within a range from 1 (bad) to 5 (excellent) and is based on a multitude of perceptually motivated parameters.T o get readings from the network under test, the user runs a test with an video server (T eraVM or other) and an IGMP client, that joins the stream for a long period of time. The user selects the option to analysis the video quality, which takes a capture from both ingress and egress test ports.Next, the user launches the T eraVM Video Analysis Server, which fetches the video files from the server, filters the traffic on the desired video channel and converts them into standard video files. The PEVQ algorithm is run and is divided up into four separate blocks.The first block – pre-processing stage – is responsible for the spatial and temporal alignment of the reference and the impaired signal. This process makes sure, that only those frames are compared to each other that also correspond to each other.The second block calculates the perceptual difference of the aligned signals. Perceptual means that only those differences are taken into account which are actually perceived by a human viewer. Furthermore the activity of the motion in the reference signal provides another indicator representing the temporal information. This indicator is important as it takes into account that in frame series with low activity the perception of details is much higher than in frame series with quick motion. The third block in the figure classifies the previously calculated indicators and detects certain types of distortions.Finally, in the fourth block all the appropriate indicators according to the detected distortions are aggregated, forming the final result ‒ the mean opinion score (MOS). T eraVM evaluates the quality of CIF and QCIF video formats based on perceptual measurement, reliably, objectively and fast.In addition to MOS, the algorithm reports:y D istortion indicators: For a more detailed analysis the perceptual level of distortion in the luminance, chrominance and temporal domain are provided.y D elay: The delay of each frame of the test signal related to the reference signal.y Brightness: The brightness of the reference and degraded signal.y Contrast: The contrast of the distorted and the reference sequence.y P SNR: T o allow for a coarse analysis of the distortions in different domains the PSNR is provided for theY (luminance), Cb and Cr (chrominance) components separately.y Other KPIs: KPIs like Blockiness (S), Jerkiness, Blurriness (S), and frame rate the complete picture of the quality estimate.Passive MOS and MPEG StatisticsThe VQM passive algorithm is integrated into T eraVM, and when required produces a VQM, an estimation of the subjective quality of the video, every second. VQM MOS scores are available as an additional statistic in the T eraVM GUI and available in real time. In additionto VQM MOS scores, MPEG streams are analysed to determine the quality of each “Packet Elementary Stream” and exports key metrics such as Packets received and Packets Lost for each distinct Video stream within the MPEG Transport Stream. All major VoIP and Video CODECs are support, including MPEG 2/4 and the H.261/3/3+/4.2 TeraVM Voice, Video and MPEG Transport Stream Quality Metrics© 2020 VIAVI Solutions Inc.Product specifications and descriptions in this document are subject to change without notice.tvm-vv-mpeg-br-wir-nse-ae 30191143 900 0620Contact Us +1 844 GO VIAVI (+1 844 468 4284)To reach the VIAVI office nearest you, visit /contacts.VIAVI SolutionsVoice over IP call quality can be affected by packet loss, discards due to jitter, delay , echo and other problems. Some of these problems, notably packet loss and jitter, are time varying in nature as they are usually caused by congestion on the IP path. This can result in situations where call quality varies during the call - when viewed from the perspective of “average” impairments then the call may appear fine although it may have sounded severely impaired to the listener. T eraVM inspects every RTP packet header, estimating delay variation and emulating the behavior of a fixed or adaptive jitter buffer to determine which packets are lost or discarded. A 4- state Markov Model measures the distribution of the lost and discarded packets. Packet metrics obtained from the Jitter Buffer together with video codec information obtained from the packet stream to calculate a rich set of metrics, performance and diagnostic information. Video quality scores provide a guide to the quality of the video delivered to the user. T eraVM V3.1 produces call quality metrics, includinglistening and conversational quality scores, and detailed information on the severity and distribution of packet loss and discards (due to jitter). This metric is based on the well established ITU G.107 E Model, with extensions to support time varying network impairments.For passive VoIP analysis, T eraVM v3.1 emulates a VoIP Jitter Buffer Emulator and with a statistical Markov Model accepts RTP header information from the VoIP stream, detects lost packets and predicts which packets would be discarded ‒ feeding this information to the Markov Model and hence to the T eraVM analysis engine.PESQ SupportFinally , PESQ is available for the analysis of VoIP RTP Streams. The process to generate PESQ is an identical process to that of Video Quality Analysis.。

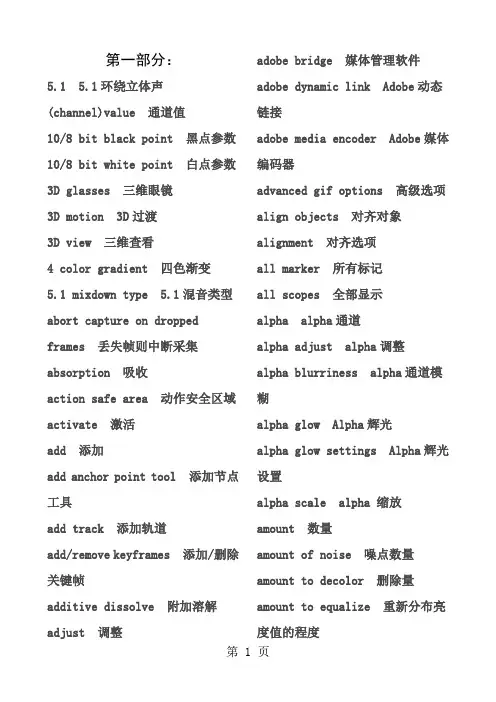

第一部分:5.1 5.1环绕立体声(channel)value 通道值10/8 bit black point 黑点参数10/8 bit white point 白点参数3D glasses 三维眼镜3D motion 3D过渡3D view 三维查看4 color gradient 四色渐变5.1 mixdown type 5.1混音类型abort capture on dropped frames 丢失帧则中断采集absorption 吸收action safe area 动作安全区域activate 激活add 添加add anchor point tool 添加节点工具add track 添加轨道add/remove keyframes 添加/删除关键帧additive dissolve 附加溶解adjust 调整adobe bridge 媒体管理软件adobe dynamic link Adobe动态链接adobe media encoder Adobe媒体编码器advanced gif options 高级选项align objects 对齐对象alignment 对齐选项all marker 所有标记all scopes 全部显示alpha alpha通道alpha adjust alpha调整alpha blurriness alpha通道模糊alpha glow Alpha辉光alpha glow settings Alpha辉光设置alpha scale alpha 缩放amount 数量amount of noise 噪点数量amount to decolor 删除量amount to equalize 重新分布亮度值的程度amount to tint 指定色彩化的数量amplitude 振幅anchor 定位点anchor 交叉anchor point 定位点angle 角度antialias 抗锯齿antialiasing 反锯齿anti-flicker filter 去闪烁滤镜append style library 加载样式库apply audio transition 应用音频转换apply phase map to alpha 应用相位图到alpha通道apply style 应用样式apply style color only 仅应用样式颜色apply style with font size 应用样式字体大小apply video transition 应用视频转换arc tool 扇形工具area type tool 水平段落文本工具arithmetic 计算arrange 排列aspect 比例assume this frame rate 手动设置帧速率audio 音频audio effects 音频特效audio gain 音频增益audio hardware 音频硬件audio in 音频入点audio master meters 音频主电平表audio mixer 混音器audio options 音频选项audio out 音频出点audio output mapping 音频输出映射audio previews 音频预演audio samples 音频采样audio transitions 音频转换audio transitions default duration 默认音频切换持续时间auto black level 自动黑色auto color 自动色彩auto contrast 自动对比度auto levels 自动色阶auto save 自动保存auto white level 自动白色auto-generate dvd markers 自动生成DVD标签automatch time 自动匹配时间automate to sequence 序列自动化automatic quality 自动设置automatically save every 自动保存时间automatically save projects 自动保存average pixel colors 平均像素颜色background color 背景色balance 平衡balance angle 白平衡角度balance gain 增加白平衡balance magnitude 设置平衡数量band slide 带形滑行band wipe 带形擦除bandpass 带通滤波barn doors 挡光板bars and tone 彩条色调栅栏based on current title 基于当前字幕based on template 基于字幕模板baseline shift 基线basic 3D 基本三维bass 低音batce capture 批量采集bend 弯曲bend settings 弯曲设置best 最佳质量between 两者bevel 斜面填充bevel alpha alpha导角bevel edges 边缘倾斜bin 文件夹black & white 黑白black input level 黑色输入量black video 黑屏blend 混合blend with layer 与层混合blend with original 混合来源层blending 混合选项blending mode 混合模式block dissolve 块面溶解block width/block height 板块宽度和板块高度blue 蓝色blue blurriness 蓝色通道模糊blue screen key 蓝屏抠像blur & sharpen 模糊与锐化blur center 模糊中心blur dimensions 模糊方向blur dimensions 模糊维数blur length 模糊程度blur method 模糊方式blurriness 模糊强度blurs 斜面边框boost light 光线亮度border 边界bottom 下branch angle 分支角度branch seg. Length 分支片段长度branch segments 分支段数branch width 分支宽度branching 分支brightness 亮度brightness & contrast 亮度与对比度bring forward 提前一层bring to front 放在前层broadcast colors 广播级颜色broadcast locale 指定PAL或NTSC两种电视制式browse 浏览brush hardness 画笔的硬度brush opacity 画笔的不透明度brush position 画笔位置brush size 画笔尺寸brush spacing 绘制的时间间隔brush strokes 画笔描边brush time properties 应用画笔属性(尺寸和硬度)到每个笔触段或者整个笔触过程calculations 计算器camera blur 镜头模糊camera view 相机视图camera view settings 照相机镜头设置capture 采集capture format 采集格式capture settings 采集设置cell pattern 单元图案center 中心center at cut 选区中间切换center gradient 平铺center merge 中心融合center of sphere 球体中心center peel 中心卷页center split 中心分裂center texture 纹理处于中心center X 中心 Xcenter Y 中心 Ychange by 颜色转换的执行方式change color 转换颜色change to color 转换到颜色channel 通道channel blur 通道模糊channel map 通道映射图channel map settings 通道映射图设置channel mixer 通道混合channel volume 通道音量checker wipe 方格擦除checkerboard 棋盘格choose a texture image 选择纹理图片chroma 浓度chroma key 色度键chroma max 最小值chroma min 最大值cineon converter 转换cineon文件circle 圆形clear 清除clear all dvd markers 清除所有DVD标签clear clip marker 清除素材标记clear dvd marker 清除DVD标记clear sequence marker 清除序列标记clip 素材clip gain 修剪增益clip name 存放名称clip notes 剪辑注释clip overlap 重叠时间clip result values 用于防止设置颜色值的所有功能函数项超出限定范围clip sample 原始画面clip speed/duration 片段播放速度/持续时间clip/speed/duration 片段/播放速度/持续时间clipped corner rectangletool 尖角矩形工具clipped face 简略表面clipping 剪辑clock wipe 时钟擦除clockwise 顺时针close 关闭color 色彩color balance(HLS) 色彩平衡HLScolor balance(RGB)色彩平衡RGBcolor correction 色彩校正color correction layer 颜色校正层color correction mask 颜色校正遮罩color depth 色彩深度color emboss 色彩浮雕color influence 色彩影响color key 色彩键color match 色彩匹配color matte 颜色底纹color offset 色彩偏移color offset settings 色彩偏移设置color pass 色彩通道color pass settings 色彩过滤设置color picker 颜色提取color replace 色彩替换color replace settings 颜色替代设置color stop color 色彩设置color stop opacity 色彩不透明度color to change 颜色变换color to leave 保留色彩color tolerance 颜色容差colorze 颜色设置complexity 复杂性composite in back 使用alpha通道从后向前叠加composite in front 使用alpha 通道从前向后叠加composite matte withoriginal 指定使用当前层合成新的蒙版,而不是替换原素材层composite on original 与源图像合成composite rule 混合通道composite using 设置合成方式composite video 复合视频composition 合成项目compound arithmetic 复合算法compressor 压缩constant gain 恒定功率constant power 恒定增益contrast 对比度contrast level 对比度的级别convergence offset 集中偏移conversion type 转换类型convert anchor point tool 节点转换工具convolution kernel 亮度调整convolution kernel settings 亮度调整设置copy 复制core width 核心宽度corner 交叉corner pin 边角count 数量counterclockwise 逆时针counting leader 计数向导crawl 水平爬行crawl left 从右向左游动crawl right 从左向右游动crop 裁剪cross dissolve 淡入淡出cross stretch 交叉伸展cross zoom 十字缩放crossfade 淡入淡出cube spin 立体旋转cue blip at all second starts 每秒开始时提示音cue blip on 2 倒数第2秒时提示音cue blip on out 最后计数时提示音cursor 光标curtain 窗帘curvature 曲率custom settings 自定义custom setup 自定义设置cut 剪切cutoff 对比cyan 青色cycle (in revolutions)指定旋转循环cycle evolution 循环设置第二部分:decay 组合素材强度减弱的比例default 默认default crawl 默认水平滚动default roll 默认垂直滚动default sequence 默认序列设置default still 默认静态defringing 指定颜色通道delay 延时delete anchor point tool 删除节点工具delete render files 删除预览文件delete style 删除样式delete tracks 删除轨道delete workspace 删除工作界面denoiser 降噪density 密度depth 深度deselect all 取消全选detail amplitude 细节振幅detail level 细节级别device control 设备控制devices 设备diamond 菱形difference matte key 差异抠像dip to black 黑色过渡direct 直接direction 角度directional blur 定向模糊directional lights 平行光disperse 分散属性displace 位移displacement 转换display format 时间显示格式display mode 显示模式dissolve 溶解distance 距离distance to image 图像距离distort 扭曲distribute objects 分布对象dither dissolve 颗粒溶解doors 关门down 下draft 草图draft quality 草稿质量drop face 正面投影drop shadow 阴影duplicate 副本duplicate style 复制样式duration 持续时间dust & scratches 灰尘噪波dvd layout DVD布局dynamics 动态echo 重复echo operator 重复运算器echo time 重复时间edge 边缘edge behavior 设置边缘edge color 边缘颜色edge feather 边缘羽化edge sharpness 轮廓的清晰度edge softness 柔化边缘edge thickness 边缘厚度edge thin 边缘减淡edge type 边缘类型edit 编辑edit dvd marker 编辑DVD标记edit in adobe audition 用adobe audition编辑edit in adobe photoshop 用adobe photoshop编辑edit original 初始编辑edit sequence marker 编辑序列标记edit subelip 编辑替代素材editing 编辑模式editing mode 编辑模式effect controls 特效控制effects 特效eight-point garbage matte 八点无用信号遮罩eliminate 去除填充ellipse 椭圆ellipse tool 椭圆形工具embedding options 嵌入选项emboss 浮雕enable 激活end at cut 结束处切换end color 终点颜色end of ramp 渐变终点end off screen 从幕内滚出end point 结束点EQ 均衡器equalize 均衡events 事件窗口evolution 演变evolution options 演变设置exit 退出expander 扩展export 输出export batch list 批量输出列表export for clip notes 导出素材记录export frame 输出帧export frame settings 输出帧设置export movie 输出影片export movie settings 输出影片设置export project as aaf 按照AAF 格式输出项目export to DVD 输出到DVDextended character 扩展特性extract 提取extract settings 提取设置eyedropper fill 滴管填充face 表面facet 平面化fade 淡化fade out 淡出fast blur 快速模糊fast color corrector 快速校正色彩feather 羽化feather value 羽化的强度feedback 回馈field interpolate 插入新场field options 场设置fields 场设置file 文件文件格式for 文件信息fill 填充fill alpha channel 填充alpha 通道fill color 填充颜色fill key 填充键fill left 填充左声道fill point 填充点fill right 填充右声道fill selector 填充选择fill type 填充类型find 查找find edges 查找边缘first object above 第一对象上fit 自动fixed endpoint 固定结束点flare brightness 光晕亮度flare center 光晕中心flip over 翻页flip with object 翻转纹理focal length 焦距fold up 折叠font 字体font browser 字体浏览器font size 字号大小format 格式four-point garbage matte 四角无用信号遮罩fractal influence 不规则影响程度frame blend 帧混合frame blending 帧混合frame hold 帧定格frame rate 帧速率frame size 帧尺寸frames 逐帧显示funnel 漏斗gamma 调整gamma还原曲线gamma correction gamma修正gamma correctionsettings gamma修正设置gaussian blur 高斯模糊gaussian sharen 高斯锐化general 常规generate batch log on unsuccessful completion 生成批处理文件get properties for 获得属性ghost 阴影填充ghosting 重影glow 辉光go to clip marker 到素材标记点go to dvd marker 到DVD标记点go to next keyframe 转到下一关键帧go to previous keyframe 转到上一关键帧go to sequence marker 到序列标记点good 好GPU effects GPU特效GPU transitions GPU转场效果gradient layer 选择使用哪个渐变层进行参考gradient placement 设置渐变层的放置gradient wipe 渐变擦拭grain size 颗粒大小gray 灰色green 绿色green blurriness 绿色通道模糊green channels 绿色通道green screen key 绿屏抠像grid 网格group 群组height 高度help 帮助hi damp 高频阻尼high 高highest quality 最高质量highlight color 高光颜色highlight opacity 高光不透明度highlight rolloff 高光重算highlights 高光highpass 高通滤波history 历史horizontal 水平方向horizontal and vertical 水平和垂直方向horizontal blocks 水平方格horizontal center 水平居中horizontal decentering 水平偏移horizontal flip 水平翻转horizontal hold 水平保持horizontal prism fx 水平方向扭曲程度how to make color safe 实现“安全色”的方法how to use help 使用帮助hue 色调hue balance and angle 色调平衡和角度hue transform 色相调制if layer size differ 混合层的位置ignore alpha 忽视通道ignore audio 忽略音频ignore audio effects 忽略音频效果特效ignore options 忽略选项ignore video 忽略视频image control 图像控制image mask 图像遮罩image matte key 图像遮罩抠像import 导入import after effects composition 导入after effects 合成import batch list 批量导入列表import clip notes comments 导入素材记录import image as logo 导入图像为标志import recent file 最近导入的文件in 入点in and out around selection 入点和出点间选择individual parameters 特定参数info 信息inner strokes 内描边inport recent file 最近打开过的文件input channel 导入频道input levels 输入级别input range 输入范围insert 插入insert edit 插入编辑insert logo 插入标志insert logo into text 插入标志图到正文inset 插入切换inside color 内部颜色intensity 强度interlace upper L lower R 左右隔行交错interpolation 插入值interpret footage 解释影片invert 反转invert alpha 反转invert circle 反转圆环invert color correctionmask 设置是否将颜色校正遮罩反向invert composite 反转通道invert fill 反转填充invert grid 反转网格invert matte 反转蒙版层的透明度iris 划像iris box 盒子划像iris cross 十字划像iris diamond 钻石形划像iris points 四角划像iris round 圆形划像iris shapes 菱形划像iris star 五角星划像jitter 躁动kerning 字间距key 键控key color 键控颜色key out safe 将安全颜色透明key out unsafe 将不安全的像素透明keyboard 快捷键keyboard customization 键盘自定义keyframe 关键帧keyframe and rendering 关键帧和渲染keyframe every 关键帧间隔keying 键控label 标签label colors 标签颜色label defaults 默认标签large thumbnails 大图标large trim offset 最大修剪幅度last object below 最末对象下latitude 垂直角度layout 设置分屏预览的布局leading 行间距leave color 分离颜色left 左left view 左视图lens distortion 光学变形lens distortion settings 光学变形设置lens flare 镜头光晕lens flare settings 镜头光晕设置lens type 镜头类型level 等级levels 电平levels settings 电平设置lift 删除light angle 灯光角度light color 灯光颜色light direction 光源方向light intensity 灯光强度light magnitude 光照强度light source 光源lighting 光lighting effect 光效lightness 亮度lightness transform 亮度调制lightning 闪电lilac 淡紫limit date rate to 限制传输率line color 坐标线颜色line tool 直线工具linear gradient 线性渐变填充linear ramp 线性渐变linear wipe 线性擦拭link 链接link media 链接媒体lit 变亮lo damp 低频阻尼load 载入load preset 装载预置location 位置log clip 原始片段logging 原始素材logo 图形long 长longitude 水平角度lower field first 下场优先lower third 底部居中lowpass 低通滤波luma corrector luma校正luma curve luma曲线luma key 亮度键luminance map 亮光过渡第三部分:magnification 扩放倍率magnify 扩放magnitude 数量main menu 主菜单maintain original alpha 保持来源层的alpha通道make offline 解除关联make subclip 制作替代素材map 映射图map black to 黑色像素被映像到该项指定的颜色map white to 白色像素被映像到该项指定的颜色marker 标记mask feather 遮罩羽化mask only 蒙版mask radius 遮罩半径master 复合通道master 主要部分match colors 匹配颜色matching softness 匹配柔和度matching tolerance 匹配容差matte 蒙板maximum 最大值maximum project versions 最大项目数maximum signalamplitude(IRE) 限制最大的信号幅度media 媒体median 中值method 方法mid high 中间高色mid low 中间阴影midtones 中间色milliseconds 毫秒minimum 最小值mirror 镜像mix 混合mode 模式monitor 监视器mono 单声道monochrome 单色mosaic 马赛克motion 运动motion settings 运动设置movie 影片multiband compressor 多步带压缩multicam editing 多视频编辑multi-camera 多摄像机模式multiply key 增加键multi-spin 多方格旋转multi-split 多层旋转切换multitap delay 多重延迟name 名称new 新建new after effectscomposition 新建after effects合成new bin 新建文件夹new project 新建项目new style 新建样式new title 新建字幕next 下一个next available numbered 下一个有效编号next object above 下一对象上next object below 下一对象下no fields 无场noise 噪波noise & grain 噪波颗粒noise alpha alpha噪波noise hls HLS噪波noise hls auto 自动HLS噪波noise options 噪波的动画控制方式noise phase 噪波的状态noise type 噪点类型non red key 非红色抠像non-additive dissolve 无附加溶解none 无notch 凹槽number of echoes 重复数量numbered 编号标记numeral color 数字颜色object X 物体 Xobject Y 物体 Yoffline file 非线性文件offset 偏移omnilights 全光源online support 在线服务opacity 不透明度open in source monitor 用素材监视器打开open project 打开项目open recent project 打开最近编辑过的项目open style library 打开样式库operate on alpha channel 特效应用在alpha通道中operate on channels 通道运算方式operator 运算方法optimize stills 优化静态图像orange 橙色ordering 排序,分类,调整orientation 排列方式original alpha 原始alpha通道和噪波的关系other numbered 其他编号设置out 出点outer strokes 外描边output 输出选项output levels 输出级别output sample 应用特效后的画面outside color 外部颜色oval 圆形overflow 噪波的溢出方式overflow behavior 溢出范围overlay 覆盖overlay edit 覆盖编辑page peel 卷页page turn 卷页paint bucket 油漆桶paint splatter 泼溅油漆paint style 设置笔触是应用到源素材还是应用到透明层paint surface 绘画表面paint time properties 应用绘画属性(颜色和透明度)到每个笔触段或者整个笔触过程parametric EQ 参量均衡paste 粘贴paste attributes 粘贴属性paste insert 插入粘贴path type tool 水平路径文字工具peel back 剥落卷页pen tool 钢笔工具percent 百分比perspective 透视phase 相位photoshop 文件photoshop style photoshop样式pink 粉红pinning 定位pinwheel 旋转风车pips 模糊pitchshifter 变速变调pixel aspect ratio 像素高宽比pixelate 颗粒pixels 像素placement 布置play audio while scrubbing 当擦洗时播放声音playback settings 播放设置polar coordinates 极坐标polar to rect 极坐标转换为直角坐标position 位置position & colors 位置和颜色posterize 多色调分色posterize time 多色调分色时期postroll 后滚pre delay 时间间隔precent blur 模糊百分比preferences 参数设置premultiply matte layer 选择和背景合成的遮罩层pre-process 预处理preroll 前滚preset 预先设定presets 近来preview 预览previous 上一个procamp 色阶program 节目program monitor 节目监视器project 项目project manager 项目管理器project settings 项目设置projection distance 投影距离properties 属性ps arbitrary map 映像pull direction 拉力方向pull force 拉力push 推动quality 质量radial blur 放射模糊radial gradient 放射渐变填充radial ramp 辐射渐变radial shadow 放射阴影radial wipe 径向擦拭radius 半径ramp 渐变ramp scatter 渐变扩散ramp shape 渐变类型random blocks 随机碎片random invert 随机颠倒random seed 随机速度random strobe probablity 闪烁随机性random wipe 随机擦除range 范围rate 频率ray length 光线长度razor at current time indicator 在编辑线上使用修剪工具razor tool 剪切工具rebranching 再分支rect to polar 平面到极坐标rectangle 矩形rectangle tool 矩形工具red 红色red blurriness 红色通道模糊redo 重做reduce luminance 降低亮度reduce saturation 降低饱和度reduction axis 设置减少亮度或者视频电平reduction method 设置限制方法reflection angle 反射角度reflection center 反射中心registration 注册relief 浮雕程度remove matte 移除遮罩remove unused 移除未使用的素材rename 重命名rename style 重命名样式render 光效render details 渲染细节render work area 预览工作区域rendering 渲染repeat 重复repeat edge pixels 重复像素边缘replace color 替换色彩replace style library 替换样式库replicate 复制report dropped frames 丢失帧时显示报告rerun at each frame 每一帧上都重新运行reset 恢复reset style library 恢复样式库resize layer 调整层reveal in bridge 用媒体管理器bridge显示reveal in project 在项目库中显示reverb 混响reverse 反相revert 恢复rgb color corrector RGB色彩校正rgb curves RGB曲线rgb difference key RGB不同抠像rgb parade RGB三基色right 右right view 右视图ripple 波纹ripple delete 波动删除ripple settings 涟漪设置roll 滚动roll away 滚动卷页roll settings 滚动设置roll/crawl options 滚动/爬行选项rotate with object 旋转纹理rotation 旋转rotation tool 旋转工具roughen edges 粗糙边缘round corner rectangle tool 圆角矩形工具round rectangle tool 圆矩形工具rule X X 轴对齐方式rule Y Y 轴对齐方式safe action margin 操作安全框safe margins 安全框safe title margin 字幕安全框same as project 和项目保存相同路径sample point 取样点sample radius 取样范围sample rate 采样率sample type 采样类型saturation 饱和度saturation transform 饱和度调制save 保存save a copy 保存一个备份文件save as 另存为save file 保存文件save style library 保存样式库save workspace 保存工作界面scale 比例scale height 缩放高度scale to frame size 按比例放大至满屏scale width 画面宽度scaling 缩放比例scene 场景scratch disks 采集放置路径screen 屏幕screen key 屏幕键second source 第二来源second source layer 另一个来源层secondary color correction 二级色彩校正segments 片段select 选择select a matte image 选择图像select all 全选select label group 选择标签组selection 选项selection tool 选择工具send backward 退到后层send to back 退后一层sequence 序列sequence/add tracks 序列/添加轨道sequentially 继续set clip marker 设置素材标记set dvd marker 设置DVD标记set in 起点set matte 设置蒙版set out 结束点set sequence marker 设置序列标记set style as default 设定默认样式setting to color 设置到颜色setup 设置shadow 投影shadow color 投影颜色shadow only 只显示投影shadow opacity 阴影不透明度shadows 暗色调shape 形状sharp colors 锐化颜色sharpen 锐化sharpen amount 锐化强度sharpen edges 锐化边缘sheen 辉光shift center to 图像的上下和左右的偏移量shimmer 微光控制shine opacity 光线的透明度short 短show clip keyframes 显示素材关键帧show keyframes 显示关键帧show name only 仅显示名称show split view 设置为分屏预览模式show track keyframes 显示轨道关键帧show video 显示视频show waveform 显示波形shutter angle 设置应用于层运动模糊的量similarity 相似sine 正弦sixteen-point garbage matte 十六点无用信号遮罩size 尺寸size from 格子的尺寸skew 歪斜skew axis 歪斜轴slant 倾斜slash slide 斜线滑行slide 滑行sliding bands 百叶窗1sliding boxes 百叶窗2small caps 小写small caps size 小写字号small thumbnails 小图标smoothing 平滑snap 边缘吸附soft edges 当质量为最佳时,指定柔和板块边缘softness 柔和度solarize 曝光solid 实色填充solid colors 实色solid composite 固态合成sort order 排序source 来源source monitor 素材监视器source opacity 源素材不透明度source point 中心点special effect 特技specular highlight 镜面高光speed 速度speed/duration 播放速度/持续时间spherize 球面化spilt view percent 设置分屏比例spin 旋转方式spin away 翻转spiral boxes 旋转消失split 分开split percent 分离百分比split screen 分离屏幕spotlights 点光spread 延展square 方形stability 稳定性start angle 初始角度start at cut 开始处切换start color 起点颜色start of ramp 渐变起点start off screen 从幕外滚入start point 开始点start/end point 起始点/结束点starting intensity 开始帧的强度stereo 立体声still 静态stop 停止stretch 伸展stretch gradient to fit 拉伸stretch in 快速飞入stretch matte to fit 用于放大缩小屏蔽层的尺寸与当前层适配stretch over 变形进入stretch second source to fit 拉伸另一层stretch texture to fit 拉伸纹理到适合stretch width or height 宽度和高度的延伸程度strobe 闪烁方式strobe color 闪烁颜色strobe duration(secs) 闪烁周期strobe light 闪光灯strobe operator 闪烁的叠加模式strobe period(secs) 间隔时间stroke 描边stroke angle 笔触角度stroke density 笔触密度stroke length 笔触长度stroke randomness 笔触随机性style swatches 样本风格stylize 风格化swap 交换s 交换声道s 交换左右视图swing in 外关门swing out 内关门swirl 旋涡swivel 旋转synchronize 同步第四部分:tab margin 制表线tab markers 跳格止点tab stops 制表符设置take 获取take matte from layer 指定作为蒙版的层tape name 录像带的名称target color 目标色彩templates 模板text baselines 文本基线text only 文件名texture 纹理texture contrast 纹理对比度texture key 纹理键texture layer 纹理层texture placement 纹理类型texturize 纹理化thickness 厚度three-d 红蓝输出three-way color corrector 三方色彩校正threshold 混合比例threshold 曝光值tile gradient 居中tile texture 平铺纹理tile X 平铺 X轴tile Y 平铺 Y轴tiling options 重复属性tilt 倾斜time 时期time base 时基timecode 时间码timecode offset 时间码设置timeline 时间线timing 适时, 时间选择, 定时, 调速tint 色调title 字幕title actions 字幕操作title designer 字幕设计title properties 字幕属性title safe 字幕安全框title safe area 字幕安全区域title style 字幕样式title tools 字幕工具title type 字幕类型titler 字幕titler styles 字幕样式toggle animation 目标关键帧tolerance 容差tonal range definition 选择调整区域tool 工具top 上track matte key 跟踪抠像tracking 间距tracks 跟踪transcode settings 码流设置transfer activation 转移激活transfer mode 叠加方式transform 转换transforming to color 转换到颜色transition 切换transition completion 转场完成百分比transition softness 指定边缘柔化程度transparency 抠像transparent video transparent 视频treble 高音triangle 三角形trim 修剪tube 斜面加强tumble away 翻筋斗turbulent displace 湍动位移twirl 漩涡twirl center 漩转中心twirl radius 漩转半径twirls 马赛克type 类型type alignment 对齐方式type of conversion 转换方式type tool 水平文字工具underline 下划线undo 撤消ungroup 解散群组uniform scale 锁定比例units 单位universal counting leader 通用倒计时器universal counting leader setup 倒计时片头设置unlink 拆分unnumbered 无编号标记up 上upper field first 上场优先use color noise 使用颜色杂色use composition's shutter angle 合成图像的快门角度use for matte 指定蒙版层中用于效果处理的通道use mask 使用遮罩user interface 用户界面vect/yc wave/rgb parade 矢量/YC 波形/三基色vect/yc wave/ycbcr parade 矢量/YC 波形/分量vectorscope 矢量venetian blinds 百叶窗vertical 垂直方向vertical area type tool 垂直段落文本工具vertical blocks 垂直方格vertical center 垂直居中vertical decentering 垂直偏移vertical flip 垂直翻转vertical hold 垂直保持vertical path type tool 垂直路径文字工具vertical prism fx 垂直方向扭曲程度vertical type tool 垂直文字工具video 视频video effects 视频特效video in 视频入点video limiter 视频限制器video options 视频选项video out 视频出点video previews 视频预览video rendering 视频渲染设置video transitions 视频转换video transitions default duration 默认视频切换持续时间view 查看view correction matte 查看差异蒙版violet 紫色wave 波纹wave height 波纹高度wave speed 波纹速度wave type 波纹类型wave warp 波浪波纹wave width 波纹宽度wedge tool 三角形工具wedge wipe 楔形擦除white balance 设置白平衡white input level 白色输入量width 宽度width variation 宽度变化window 窗口wipe 擦除wipe angle 指定转场擦拭的角度wipe center 指定擦拭中心的位置wipe color 擦除区域颜色word wrap 自动换行workspace 工作界面write-on 书写X offset X 轴偏移量X Position X 轴坐标xor 逻辑运算Y offset Y 轴偏移量Y Position Y 轴坐标YC waveform YC 波形ycbcr parade ycbcr 分量zig-zag blocks 积木碎块zoom 变焦方式zoom boxes 盒子缩放zoom in 放大素材zoom out 缩小素材zoom trails 跟踪缩放。

Zhou et al./J Zhejiang Univ SCIENCE A 20067(Suppl.I):76-8176Resynchronization and remultiplexing fortranscoding to H.264/AVC *ZHOU Jin,XIONG Hong-kai,SONG Li,YU Song-yu(Institu te of Image Comm unication and Infor m ation Processing,Shanghai Jiao Tong University ,Shanghai 200030,China)E-mail:zhoujin@;xion ghongkai@;songli@;syyu@Received Dec.9,2005;revision accepted Feb.19,2006Abstract:H.264/MPEG-4AVC standard appears highly competitive dueto its high efficiency,flexibility and error resilience.In order to maintain universal multimedia access,statistical multiplexing,or adaptive video content delivery,etc.,it induces an immense demand forconverting a large volume ofexisting multimedia content from other formats into the H.264/AVC format and vice versa.In this work,we study theremultiplexing and resynchronization issue within system coding aftertranscoding,aiming to sustain the management and time information destroyed in transcoding and enable synchronized decoding of decoder buffers over a wide range of retrieval or receipt conditions.Given the common intention of multiplexing and synchronization mechanism in system coding of different standards,this paper takes the most widely used MPEG-2transport stream (TS)as an example,and presents a software system and the key technologies to solve the time stamp mapping and relevant buffer management.The so-lution reuses previous information contained in the input streams to remultiplex and resynchronize the output information with the regulatory coding and composition structure.Experimental results showed that our solutions efficiently preserve the performance in multimedia presentation.Key words:Transport stream (TS),Remultiplex,Time stamp,Transcoding,H.264/AVC doi:10.1631/jzus.2006.AS0076Document code:A CLC number:TN919.8INTRODUCTIONWith its prominent coding efficiency,flexibility and error resilience,the H.264/MPEG-4AVC stan-dard,jointly developed by the ITU-T and the MPEG committees,appears highly pared with various standards such as MPEG-1,MPEG-2,and MPEG-4,H.264/AVC offers an improvement of about 1/3to 1/2in coding efficiency with perceptually equivalent quality video (Thomas et al.,2003).These significant bandwidth savings open a great market to new products and services.As a result,the potential of H.264/AVC yields several possibilities for em-ploying the transcoding and corresponding technolo-gies (Hari,2004).Especially,it induces immense demand for converting a large volume of existing multimedia content from other formats into the H.264/AVC format and vice versa.The transcoding technologies also lead to the emergence of the corresponding technologies.For example,currently,MPEG-2system coding is widely used in digital TV,DVD,and HDTV applications.It is specified in the program stream (PS)and the transport stream (TS)based on packet-oriented mul-tiplexes (ISO/IEC JTC11/SC29/WG11,1994).It is assumed that transport streams be the preferred for-mat.It is of great interest that the video ESs in transport streams are transcoded to the H.264/AVC video ESs to save a great amount of bandwidth and resources.Transport streams provide coding syntax necessary and sufficient to synchronize the video and audio ESs,while ensuring that data buffers in the decoders do not overflow or underflow.Therefore,Corresponding author*Project suppo rted by the National Natural Science Foundation of China (No.60502033),the Natural Science Foundation of Shanghai (No.04Z R14084)and the Research Fund for the Doctoral Program of Higher Education (No.20040248047),ChinaJ ournal of Z hejiang University SCIENCE A ISSN 1009-3095(Print);ISSN 1862-1775(Online)/jzus; E-mail:jzus@Zhou et a l./J Zhejiang Univ SCIENCE A 20067(Suppl.I):76-8177remultiplexing and resynchronization in system cod-ing for the dedicated transcoding to H.264/AVC will become two key technologies.In this work,we focus on the issues of remulti-plexing and resynchronization in system coding after video transcoding.Unlike previously proposed remultiplexers which are usually implemented by hardware,this paper proposes a software solution.Our solution reuses the management and time infor-mation contained in the input streams instead of re-generating new information by hardware,to remulti-plex and resynchronize the output information com-bining with the regulatory coding and composition structure.Consequently,it is cheap in the sense that it is simple and easy to implement,and could be adopted by desktop PCs or intermediate gateways servers with a minimum extra cost.This paper is organized as follows.Section 2describes the mechanisms in multiplexing.And Sec-tion 3describes the architecture of our system.Sec-tion 4analyzes the key technologies in the system.Experimental results are discussed in Section 5.We close the paper with concluding remarks in Section 6.MULTIPLEXING MECHANISMAlthough the semantics and syntax differ much in system coding of various coding standards,the main functions of the system part are similar:to mul-tiplex different bitstreams and provide information that enable demultiplexing by decoders.The transport stream (TS)combines one or more programs with one or more independent time bases into a single stream.Between the encoder and de-coder,a common system time clock (STC)is main-tained.Decoding time stamp (DTS)and presentation time stamp (PTS)specify when the data is to be de-coded and presented at the decoder.A program is composed of one or more elementary streams,each labeled with a PID.Other formats such as program stream have similar synchronization mechanism (us-ing time stamps)and also the management mecha-nism,which are sometimes even much simpler as there may be just one program in a program stream.Therefore,we can apply these mechanisms for H.264/AVC in system layer.When multiplexing the H.264bitstream into a transport stream,the H.264/AVC video bitstream can be regarded as an elementary stream and encapsulated in the transport stream with other elementary streams such as audio ES,Program Specific Information (PSI)data,etc.,and the necessary time stamps are also inserted for synchronization.The entire system is illustrated in Fig.1and can also be regarded as a remultiplexer consisting of three major parts:demultiplexer,transcoder and multiplexer.ARCHITECTURE OF THE TRANSCODING SYS-TEMDemultiplexerThe main function of the demultiplexer is to ex-tract the video bitstream and the necessary informa-tion from the input multiplexed stream.In our system,the demultiplexer first scans the transport stream to obtain the needed PSI.Second,the video ES is ex-tracted from the transport stream according to the given PSI.Meanwhile,the demultiplexer ought to store all the time stamps and their exact positions in the trans-Outputtingmultiplexed bitstreamH.264video bits treamControl informationTimestamp InfoNon-video dataInputting multi-plexed bitstreamTime-stamp correctionPacket managerH.264bitstreamT ranscoderDE M U X CorrectionMUXVideo bitstreamFig.1Architecture of the systemZhou et al./J Zhejiang Univ SCIENCE A20067(Suppl.I):76-81 78sport stream.To describe these positions,the demultiplexer can mark each frame in the video se-quence with a unique number.For example,the first frame is marked1,the second is2,and so on.Tr anscoderThe main function of the transcoder is to transcode the input video sequence to a H.264/AVC compliant video stream without any time information.The video coding layer(VCL)of H.264/AVC is similar in concept to other standards such as MPEG-2 video.Moreover,the concept of generalized B-pictures in H.264/AVC allows hierarchical tem-poral decomposition.In fact,any picture can be marked as reference picture and used for mo-tion-compensated prediction of following pictures independent of the coding types of the corresponding slices in H.264/AVC.Multiplexer and the cor r esponding problems At the input of the multiplexer are the newly-transcoded H.264/AVC video bitstream and the original multiplexed stream.The multiplexer will replace the encapsulated original video bitstream with the new generated H.264/AVC bitstream and output the transcoded multiplexed stream,which is,however, not as simple as it appears.For example,in a transport stream,as an ele-mentary stream is erased and another resized ele-mentary stream enters,the PSI and the timing and spacing of PCR packets will be disturbed.Furthermore,the input video sequence and the H.264/AVC sequence can possibly have different picture structures.Therefore,the time stamps of the former cannot be directly copied to the latter and time stamps correction step should be taken.Finally,how to insert the data on video ES and other ESs into single transport stream needs consid-eration.As discussed above,we will recommend our solutions for the four key technologies:DTS/PTS correction,PCR correction,PID mapping and data insertion(Wang et a l.,2002).OUR SOLUTIONSPTS/DTS cor r ectionOther coding standards may have their own video/audio synchronization mechanism,although many common standards use time stamps for syn-chronization.For example,the composition time stamp(CTS)and DTS in MPEG-4are similar to the PTS and DTS in MPEG-2respectively.Assume diT S and piTS to be the DTS and the PTSof the ith picture,and diTS′and piTS′to be those of the ith H.264/AVC picture respectively.The PTS/DTS correction between the ith picture and the ith H.264/AVC picture is done as follows: Step1:If PTS is present while DTS is not,the DTS value of the input picture is supposed to be equal to the PTS value.d pi iTS TS=,if diTS is not provided.(1)Step2:As the decoding order is assumed to be the same as the encoding order,the DTS value of pictures with the same position should be equal.d di iT S T S′=.(2)Step3:PTS correction.The purpose of PTS correction is to compensate for the time delay due to the picture reordering.There are3conditions as the H.264/AVC picture may be an anchor picture(I-or P-picture),a B-picture,or a hi-erarchical B-picture.(1)H.264/AVC I-picture or P-pictureThere are still2conditions as the corresponding input video picture is an anchor picture or a B-picture:(a)If the input picture is an I-or P-picture,piTS′is calculated as follows:p p12[()/_],i iTS TS N N frame rate frequency ′=×(3) where N1denotes the number of B-pictures between the relevant input picture and the next MPEG-2I-or P-picture and N2is the number of frames between the H.264/AVC I-picture and the next H.264/AVC I-or P-picture.frame_rate is the number of frames dis-played per second.frequency denotes the frequency of encoder STC.(b)If the input picture is a B-picture,piT S′isZhou et a l./J Zhejiang Univ SCIENCE A 20067(Suppl.I):76-8179calculated as follows:p p 2[(1)/_].i i TS T S N frame rate frequency ′=++×(4)(2)H.264/AVC B-pictureB-pictures are displayed immediately as soon as it is received.Consequently,p i TS ′is calculated as follows:p d i i TS TS ′′=.(5)Especially,DTS should not be inserted in B-picture as it is always equal to PTS.(3)H.264/AVC hierarchical B-pictureIf the pyramid coding is employed in the transcoder,hierarchical B-pictures will be present in the bitstream.p i TS ′is calculated as follows:(a)If the input MPEG-2picture is an I-or P-picture,p p 1{[(__)]/_}.i i TS TS N dis ordenc ord frame ra te frequency ′=×(6)(b)If it is a B-picture,p p {[1(__)]/_},i i TS TS dis ordenc ord frame ra te frequency ′=++×(7)where dis_ord and enc_ord are the display order and the encoding order of the H.264hierarchical B-picture in a group of pictures (GOP).For example,if we code the sequence using the coding pattern I0-P8-RB4-RB2-RB6-B1-B3-B5-B7-P16-RB12-RB 10-RB14-B9-B11-B13-B15-P24…,where RB refers to a B slice only coded picture which can be used as a reference.See Fig.2.Then the dis_ord and enc_ord ofRB4are 4and 2while those of B1are 1and 5re-spectively.PID mappingIn a transport stream,PID is used to identify different elementary streams,as our multiplexer just exchanges the H.264/AVC video sequence for the video sequence.The PID of the input sequence can be assigned to the H.264/AVC video as its PID,and the PID of other elementary streams may stay the same.Therefore,the Program Association Table (PAT)and Program Map Table (PMT)are not necessary to make a change.PCR corr ection and data inser tionAs the video sequence is resized,the original PCR ought to be changed to accommodate the new bit rate.In (Bin and Klara,2002),a software approach to reuse the PCRs was proposed.This approach scales the PCR packets ’distance and achieves a fixed new bit rate.The remaining non-video stream packet can be simply mapped to the new position on the scaled output time line corresponding to the same time point in the original input time line.For example,if in the input stream,packet 3is a frame header,packet 6is an audio packet and packets 4,5,7through 15are video data from the same frame as packet 3.Since the video sequence is shrunk to its 1/2size after transcoding,while the non-video data are not changed,the trans-port stream is approximately 2/3of its bandwidth.After shrinking,packet 3is positioned to slot 2,and packet 6to slot 4.The empty slots such as slot 3,5through 10,are used to carry the other H.264/AVC video data.As the video data are about 1/2of original data,the slots should be enough to carry the video frame.And the redundant slots can simply be padded with NULL packets to maintain a constant bit rate (CBR).Resynchr onization when the input TS is unsyn-chr onizedAn important problem is how to synchronize the video and audio stream if the input TS is not syn-chronized.We can solve this problem when the ex-tractor marks the input video frame.For example,if the frame rate is 25Hz and the video is 0.08s (2frames ’time)later or earlier than the audio,we should mark the first frame 1instead of 1and so do theP BPB B B BB B Fig.2Level pyr a mid str u ctureZhou et al./J Zhejiang Univ SCIENCE A20067(Suppl.I):76-81 80following frames.Then the third frame would bestamped by the first frame’s time stamp and display atthe time when the first frame used to.Consequently,the stream is synchronized again.EXPERIMENTAL RESULTSWe applied our system to a transport streamwhose scenario is a person’s talk.The transportstream multiplexed an MPEG-2video stream and anMP2audio stream.We use libmpeg2as the MPEG-2decoder and JM10.1as the H.264/AVC encoder.Inour experiments,the MPEG-2video ES,which com-plies with a picture structure I P B B P B B (i)transcoded into an H.264/AVC stream with the struc-ture I P B P B P B….The MP2audio ES remains thesame.After transcoding,the H.264/AVC ES and MP2ES and other data bitstreams are multiplexed again toproduce the new TS.Remultiplexing and resynchro-nization are achieved by employing our solution inthe multiplexer as discussed with the experimentalresults being satisfactory as expected.Fig.3shows20pairs of display time of theframes sampled from the two bitstreams.It is clearthat each pair of marked spots are approximate su-perposition,indicating that the transcoded video se-quence is also temporal consistent with the audio justas the MPEG-2video is.After analyzing the experi-mental results,we find that the maximum jitter amongthe20pairs of display time is less than10ms whichassures the synchronization of video and audio aftertranscoding given the timestamp information pro-vided by the original video bit stream are all correctand that the MPEG-2sequence has already been syn-chronized.Fig.4shows the comparison between the twovideo ES by sampling them at the same points of time.As the MP2audio ES does not change in thetranscoding process,pictures sampled at the sametime points of the two video ES are comparable.The leftmost part of Fig.4is the time axis and theaudio waveform of the MP2mentioned above.Sixpairs of pictures sampled from the MPEG-2ES andH.264/AVC ES respectively are shown in the middleand the corresponding sampling time is listed in theright.Obviously,each two relevant pictures show great similarity which means that the resynchronization Fig.4Comparisons of synchronization during the mul-timedia presentation0.16s1.97s3.67s4.95s6.85s8.24sFig.3Comparison of display time050100150200Frame number87654321Di spl ayt ime(s)Display time of MPEG-2pictureDisplay time of H.264pictureZhou et a l./J Zhejiang Univ SCIENCE A 20067(Suppl.I):76-8181obtained properly after applying our method in the remultiplexing step.CONCLUSIONIn this work,we studied the problem of the bit rate being changed after transcoding to the H.264/AVC bitstream,control information and that time stamps in the multiplexed stream may be destroyed.We have proposed a software system and discussed the key technologies and have taken transport stream as an example.We studied the relationship between the DTS/PTS in the input and output TS to make DTS/PTS correction.DTS/PTS correction is also effective for transcoding with hierarchical B-pictures.The PID mapping method we used is simple and effective and a scaling method is employed to do the PCR correction and data insertion.Moreover,our system can easily synchronize the TS which was pre-viously unsynchronized.Experimental results showed that with our solutions,the H.264/AVC bitstream is remultiplexed and well-synchronized with the audio bitstream.Refer encesBin,Y.,Klara,N.,2002.A Realtime Software Solution forResynchronizing Filtered MPEG-2Transport Stream.Proceedings of the IEEE Fourth International Symposium on Multimedia Software Engineering.Hari,K.,2004.Issues in H.264/MPEG-2Video Transcoding.Consumer Communications and Networking Conference (CCNC).The First IEEE,p.657-659.ISO/IEC JTC11/SC29/WG11,1994.Generic Coding ofMoving Pictures and Associated Audio:Systems.ISO/IEC 13818-1.Thomas,W.,Gary,S.,Gisle,B.,Ajay,L.,2003.Overview ofthe H.264/AVC video coding standard.IEEE Trans.on Circuits and Systems for Video Technology,7:560-576.Wang,X.D.,Yu,S.,Liang,L.,2002.Implementation ofMPEG-2transport stream remultiplexer for DTV broad-casting.IEEE Trans.on Consumer Electronics,48(2):329-334.[doi:10.1109/TCE.2002.1010139]JZUS -A foc us es on “Applied P hys ics &Engine e ring ”We lcome Your Contributions to JZUS-AJourna l of Zhejiang Univ ersity SCIENCE A warmly and sincerely welcomes scientists all overthe world to contribute Reviews,Articles and Science Letters focused on Applied Physics &Engi-neer ing.Especially,Science Letters (34pages)would be published as soon as about 30days (Note:detailed research articles can still be published in the professional journals in the future after Science Letters is published by JZUS-A).SCIENCE AJournal of ZhejiangUniversityEditors-in-Chief:Pan Yun-heISSN 1009-3095(P rin t);ISS N 1862-1775(On lin e ),mo n th ly/jz us ;www.springe jzus@zju.e du.c n。

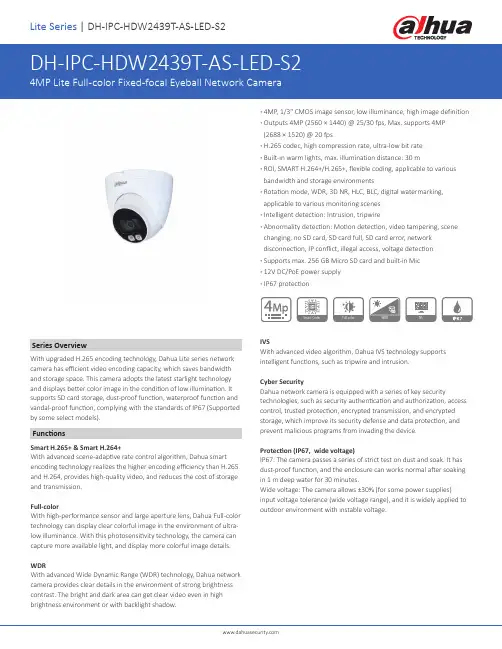

· 4MP , 1/3" CMOS image sensor, low illuminance, high image definition · Outputs 4MP (2560 × 1440) @ 25/30 fps, Max. supports 4MP (2688 × 1520) @ 20 fps· H.265 codec, high compression rate, ultra-low bit rate · Built-in warm lights, max. illumination distance: 30 m· ROI, SMART H.264+/H.265+, flexible coding, applicable to various bandwidth and storage environments· Rotation mode, WDR, 3D NR, HLC, BLC, digital watermarking, applicable to various monitoring scenes · Intelligent detection: Intrusion, tripwire· Abnormality detection: Motion detection, video tampering, scene changing, no SD card, SD card full, SD card error, network disconnection, IP conflict, illegal access, voltage detection · Supports max. 256 GB Micro SD card and built-in Mic · 12V DC/PoE power supply · IP67 protectionSeries OverviewWith upgraded H.265 encoding technology, Dahua Lite series network camera has efficient video encoding capacity, which saves bandwidth and storage space. This camera adopts the latest starlight technology and displays better color image in the condition of low illumination. It supports SD card storage, dust-proof function, waterproof function and vandal-proof function, complying with the standards of IP67 (Supported by some select models).FunctionsSmart H.265+ & Smart H.264+With advanced scene-adaptive rate control algorithm, Dahua smart encoding technology realizes the higher encoding efficiency than H.265 and H.264, provides high-quality video, and reduces the cost of storage and transmission.Full-colorWith high-performance sensor and large aperture lens, Dahua Full-color technology can display clear colorful image in the environment of ultra-low illuminance. With this photosensitivity technology, the camera can capture more available light, and display more colorful image details. WDRWith advanced Wide Dynamic Range (WDR) technology, Dahua network camera provides clear details in the environment of strong brightness contrast. The bright and dark area can get clear video even in high brightness environment or with backlight shadow.IVSWith advanced video algorithm, Dahua IVS technology supports intelligent functions, such as tripwire and intrusion.Cyber SecurityDahua network camera is equipped with a series of key securitytechnologies, such as security authentication and authorization, access control, trusted protection, encrypted transmission, and encrypted storage, which improve its security defense and data protection, and prevent malicious programs from invading the device.Protection (IP67, wide voltage)IP67: The camera passes a series of strict test on dust and soak. It has dust-proof function, and the enclosure can works normal after soaking in 1 m deep water for 30 minutes.Wide voltage: The camera allows ±30% (for some power supplies) input voltage tolerance (wide voltage range), and it is widely applied to outdoor environment with instable voltage.Smart CodecWDRFull-colorRev 001.001PFM114TLC SD Card© 2020 Dahua . All rights reserved. Design and specifications are subject to change without notice.Pictures in the document are for reference only, and the actual product shall prevail.AccessoriesOptional:PFA130-EJunction BoxPFB203WWall MountPFA152-EPole MountLR1002-1ET/1ECSingle-port LongReach Ethernet overCoax ExtenderPFM900-EIntegrated MountTesterPFM321D12V DC 1A PowerAdapter。