Budgeted learning, part I: The multi-armed bandit case. Submitted.


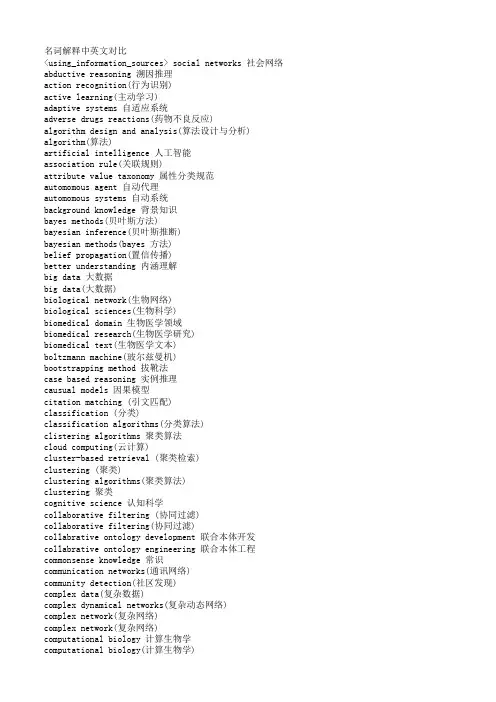

Glossary of terms, English and Chinese

social networks (社会网络)
abductive reasoning (溯因推理)
action recognition (行为识别)
active learning (主动学习)
adaptive systems (自适应系统)
adverse drug reactions (药物不良反应)
algorithm design and analysis (算法设计与分析)
algorithm (算法)
artificial intelligence (人工智能)
association rule (关联规则)
attribute value taxonomy (属性分类规范)
autonomous agent (自动代理)
autonomous systems (自动系统)
background knowledge (背景知识)
bayes methods (贝叶斯方法)
bayesian inference (贝叶斯推断)
bayesian methods (bayes 方法)
belief propagation (置信传播)
better understanding (内涵理解)
big data (大数据)
biological network (生物网络)
biological sciences (生物科学)
biomedical domain (生物医学领域)
biomedical research (生物医学研究)
biomedical text (生物医学文本)
boltzmann machine (玻尔兹曼机)
bootstrapping method (拔靴法)
case based reasoning (实例推理)
causal models (因果模型)
citation matching (引文匹配)
classification (分类)
classification algorithms (分类算法)
cloud computing (云计算)
cluster-based retrieval (聚类检索)
clustering (聚类)
clustering algorithms (聚类算法)
cognitive science (认知科学)
collaborative filtering (协同过滤)
collaborative ontology development (联合本体开发)
collaborative ontology engineering (联合本体工程)
commonsense knowledge (常识)
communication networks (通讯网络)
community detection (社区发现)
complex data (复杂数据)
complex dynamical networks (复杂动态网络)
complex network (复杂网络)
computational biology (计算生物学)
computational complexity (计算复杂性)
computational intelligence (智能计算)
computational modeling (计算模型)
computer animation (计算机动画)
computer networks (计算机网络)
computer science (计算机科学)
concept clustering (概念聚类)
concept formation (概念形成)
concept learning (概念学习)
concept map (概念图)
concept model (概念模型)
concept modelling (概念模型)
conceptual model (概念模型)
conditional random field (条件随机场模型)
conjunctive queries (合取查询)
constrained least squares (约束最小二乘)
convex programming (凸规划)
convolutional neural networks (卷积神经网络)
customer relationship management (客户关系管理)
data analysis (数据分析)
data center (数据中心)
data clustering (数据聚类)
data compression (数据压缩)
data envelopment analysis (数据包络分析)
data fusion (数据融合)
data generation (数据生成)
data handling (数据处理)
data hierarchy (数据层次)
data integration (数据整合)
data integrity (数据完整性)
data intensive computing (数据密集型计算)
data management (数据管理)
data mining (数据挖掘)
data model (数据模型)
data models (数据模型)
data partitioning (数据划分)
data point (数据点)
data privacy (数据隐私)
data security (数据安全)
data stream (数据流)
data streams (数据流)
data structure (数据结构)
data visualisation (数据可视化)
data visualization (数据可视化)
data warehouse (数据仓库)
data warehouses (数据仓库)
data warehousing (数据仓库)
database management systems (数据库管理系统)
database management (数据库管理)
date interlinking (日期互联)
date linking (日期链接)
decision analysis (决策分析)
decision maker (决策者)
decision making (决策)
decision models (决策模型)
decision rule (决策规则)
decision support system (决策支持系统)
decision support systems (决策支持系统)
decision tree (决策树)
deep belief network (深度信念网络)
deep learning (深度学习)
default reasoning (默认推理)
density estimation (密度估计)
design methodology (设计方法论)
dimension reduction (降维)
dimensionality reduction (降维)
directed graph (有向图)
disaster management (灾害管理)
disastrous event (灾难性事件)
dissimilarity (相异性)
distributed databases (分布式数据库)
distributed query (分布式查询)
document clustering (文档聚类)
domain experts (领域专家)
domain knowledge (领域知识)
domain specific language (领域专用语言)
dynamic databases (动态数据库)
dynamic logic (动态逻辑)
dynamic network (动态网络)
dynamic system (动态系统)
earth mover's distance (EMD 距离)
education (教育)
efficient algorithm (有效算法)
electronic commerce (电子商务)
electronic health records (电子健康档案)
entity disambiguation (实体消歧)
entity recognition (实体识别)
entity resolution (实体解析)
event detection (事件检测)
event extraction (事件抽取)
event identification (事件识别)
exhaustive indexing (完整索引)
expert system (专家系统)
expert systems (专家系统)
explanation based learning (解释学习)
factor graph (因子图)
feature extraction (特征提取)
feature selection (特征选择)
feature space (特征空间)
first order logic (一阶逻辑)
formal logic (形式逻辑)
formal meaning representation (形式意义表示)
formal semantics (形式语义)
formal specification (形式描述)
frame based system (框为本的系统)
frequent itemsets (频繁项目集)
frequent pattern (频繁模式)
fuzzy clustering (模糊聚类)
fuzzy data mining (模糊数据挖掘)
fuzzy logic (模糊逻辑)
fuzzy set theory (模糊集合论)
fuzzy set (模糊集)
fuzzy sets (模糊集合)
fuzzy systems (模糊系统)
gaussian processes (高斯过程)
gene expression data (基因表达数据)
gene expression (基因表达)
generative model (生成模型)
genetic algorithm (遗传算法)
genome wide association study (全基因组关联分析)
graph classification (图分类)
graph clustering (图聚类)
graph data (图数据)
graph data (图形数据)
graph database (图数据库)
graph mining (图挖掘)
graph partitioning (图划分)
graph query (图查询)
graph structure (图结构)
graph theory (图论)
graph visualization (图形可视化)
graphical user interface (图形用户界面)
graphical user interfaces (图形用户界面)
health care (卫生保健)
heterogeneous data source (异构数据源)
heterogeneous data (异构数据)
heterogeneous database (异构数据库)
heterogeneous information network (异构信息网络)
heterogeneous network (异构网络)
heterogeneous ontology (异构本体)
heuristic rule (启发式规则)
hidden markov model (隐马尔可夫模型)
hidden markov models (隐马尔可夫模型)
hierarchical clustering (层次聚类)
homogeneous network (同构网络)
human centered computing (人机交互技术)
human computer interaction (人机交互)
human interaction (人机交互)
human robot interaction (人机交互)
image classification (图像分类)
image clustering (图像聚类)
image mining (图像挖掘)
image reconstruction (图像重建)
image retrieval (图像检索)
image segmentation (图像分割)
inconsistent ontology (本体不一致)
incremental learning (增量学习)
inductive learning (归纳学习)
inference mechanisms (推理机制)
inference rule (推理规则)
information cascades (信息追随)
information diffusion (信息扩散)
information extraction (信息提取)
information filtering (信息过滤)
information integration (信息集成)
information network analysis (信息网络分析)
information network mining (信息网络挖掘)
information network (信息网络)
information processing (信息处理)
information resource management (信息资源管理)
information retrieval models (信息检索模型)
information retrieval (信息检索)
information science (情报科学)
information sources (信息源)
information system (信息系统)
information technology (信息技术)
information visualization (信息可视化)
instance matching (实例匹配)
intelligent assistant (智能辅助)
intelligent systems (智能系统)
interaction network (交互网络)
interactive visualization (交互式可视化)
kernel function (核函数)
kernel operator (核算子)
keyword search (关键字检索)
knowledge reuse (知识再利用)
knowledge acquisition
knowledge base (知识库)
knowledge based system (知识系统)
knowledge building (知识建构)
knowledge capture (知识获取)
knowledge construction (知识建构)
knowledge discovery (知识发现)
knowledge extraction (知识提取)
knowledge fusion (知识融合)
knowledge integration
knowledge management systems (知识管理系统)
knowledge management (知识管理)
knowledge model (知识模型)
knowledge reasoning
knowledge representation (知识表达)
knowledge sharing (知识共享)
knowledge storage
knowledge technology (知识技术)
knowledge verification (知识验证)
language model (语言模型)
language modeling approach (语言模型方法)
large graph (大图)
life science (生命科学)
linear programming (线性规划)
link analysis (链接分析)
link prediction (链接预测)
linked data (关联数据)
location based service (基于位置的服务)
location based services (基于位置的服务)
logic programming (逻辑编程)
logical implication (逻辑蕴涵)
logistic regression (logistic 回归)
machine learning (机器学习)
machine translation (机器翻译)
management system (管理系统)
manifold learning (流形学习)
markov chains (马尔可夫链)
markov processes (马尔可夫过程)
matching function (匹配函数)
matrix decomposition (矩阵分解)
maximum likelihood estimation (最大似然估计)
medical research (医学研究)
mixture of gaussians (混合高斯模型)
mobile computing (移动计算)
multi agent systems (多智能体系统)
multiagent systems (多智能体系统)
multimedia (多媒体)
natural language processing (自然语言处理)
nearest neighbor (近邻)
network analysis (网络分析)
network formation (组网)
network structure (网络结构)
network theory (网络理论)
network topology (网络拓扑)
network visualization (网络可视化)
neural network (神经网络)
neural networks (神经网络)
nonlinear dynamics (非线性动力学)
nonmonotonic reasoning (非单调推理)
nonnegative matrix factorization (非负矩阵分解)
object detection (目标检测)
object oriented (面向对象)
object recognition (目标识别)
online community (网络社区)
online social network (在线社交网络)
online social networks (在线社交网络)
ontology alignment (本体映射)
ontology development (本体开发)
ontology engineering (本体工程)
ontology evolution (本体演化)
ontology extraction (本体抽取)
ontology interoperability (互用性本体)
ontology language (本体语言)
ontology mapping (本体映射)
ontology matching (本体匹配)
ontology versioning (本体版本)
ontology (本体论)
open government data (政府公开数据)
opinion analysis (舆情分析)
opinion mining (意见挖掘)
outlier detection (孤立点检测)
parallel processing (并行处理)
patient care (病人医疗护理)
pattern classification (模式分类)
pattern matching (模式匹配)
pattern mining (模式挖掘)
pattern recognition (模式识别)
personal data (个人数据)
prediction algorithms (预测算法)
predictive model (预测模型)
predictive models (预测模型)
privacy preservation (隐私保护)
probabilistic logic (概率逻辑)
probabilistic model (概率模型)
probability distribution (概率分布)
project management (项目管理)
pruning technique (修剪技术)
quality management (质量管理)
query expansion (查询扩展)
query language (查询语言)
query processing (查询处理)
query rewrite (查询重写)
question answering system (问答系统)
random forest (随机森林)
random graph (随机图)
random processes (随机过程)
random walk (随机游走)
range query (范围查询)
RDF database (资源描述框架数据库)
RDF query (资源描述框架查询)
RDF repository (资源描述框架存储库)
RDF storage (资源描述框架存储)
real time (实时)
recommender system (推荐系统)
recommender systems (推荐系统)
record linkage (记录链接)
recurrent neural network (递归神经网络)
regression (回归)
reinforcement learning (强化学习)
relation extraction (关系抽取)
relational database (关系数据库)
relational learning (关系学习)
relevance feedback (相关反馈)
resource description framework (资源描述框架)
restricted boltzmann machines (受限玻尔兹曼机)
retrieval models (检索模型)
rough set theory (粗糙集理论)
rough set (粗糙集)
rule based system (基于规则系统)
rule based (基于规则)
rule induction (规则归纳)
rule learning (规则学习)
schema mapping (模式映射)
schema matching (模式匹配)
scientific domain (科学域)
search problems (搜索问题)
semantic (web) technology (语义技术)
semantic analysis (语义分析)
semantic annotation (语义标注)
semantic computing (语义计算)
semantic integration (语义集成)
semantic interpretation (语义解释)
semantic model (语义模型)
semantic network (语义网络)
semantic relatedness (语义相关性)
semantic relation learning (语义关系学习)
semantic search (语义检索)
semantic similarity (语义相似度)
semantic web rule language (语义网规则语言)
semantic web (语义网)
semantic workflow (语义工作流)
semi supervised learning (半监督学习)
sensor data (传感器数据)
sensor networks (传感器网络)
sentiment analysis (情感分析)
sequential pattern (序列模式)
service oriented architecture (面向服务的体系结构)
shortest path (最短路径)
similar kernel function (相似核函数)
similarity measure (相似性度量)
similarity relationship (相似关系)
similarity search (相似搜索)
similarity (相似性)
situation aware (情境感知)
social behavior (社交行为)
social influence (社会影响)
social interaction (社交互动)
social learning (社会学习)
social life networks (社交生活网络)
social machine (社交机器)
social media (社交媒体)
social network analysis (社会网络分析)
social network analysis (社交网络分析)
social network (社交网络)
social science (社会科学)
social tagging system (社交标签系统)
social tagging (社交标签)
social web (社交网页)
sparse coding (稀疏编码)
sparse matrices (稀疏矩阵)
sparse representation (稀疏表示)
spatial database (空间数据库)
spatial reasoning (空间推理)
statistical analysis (统计分析)
statistical model (统计模型)
string matching (串匹配)
structural risk minimization (结构风险最小化)
structured data (结构化数据)
subgraph matching (子图匹配)
subspace clustering (子空间聚类)
supervised learning (有监督学习)
support vector machine (支持向量机)
support vector machines (支持向量机)
system dynamics (系统动力学)
tag recommendation (标签推荐)
taxonomy induction (感应规范)
temporal logic (时态逻辑)
temporal reasoning (时序推理)
text analysis (文本分析)
text classification (文本分类)
text data (文本数据)
text mining technique (文本挖掘技术)
text mining (文本挖掘)
text summarization (文本摘要)
thesaurus alignment (同义对齐)
time frequency analysis (时频分析)
time series analysis (时间序列分析)
time series data (时间序列数据)
time series (时间序列)
topic model (主题模型)
topic modeling (主题模型)
transfer learning (迁移学习)
triple store (三元组存储)
uncertainty reasoning (不精确推理)
undirected graph (无向图)
unified modeling language (统一建模语言)
unsupervised learning (无监督学习)
upper bound (上界)
user behavior (用户行为)
user generated content (用户生成内容)
utility mining (效用挖掘)
visual analytics (可视化分析)
visual content (视觉内容)
visual representation (视觉表征)
visualisation (可视化)
visualization technique (可视化技术)
visualization tool (可视化工具)
web 2.0 (网络2.0)
web forum (web 论坛)
web mining (网络挖掘)
web of data (数据网)
web ontology language (网络本体语言)
web pages (web 页面)
web resource (网络资源)
web science (万维科学)
web search (网络检索)
web usage mining (web 使用挖掘)
wireless networks (无线网络)
world knowledge (世界知识)
world wide web (万维网)
xml database (可扩展标志语言数据库)

Appendix 2: Data Mining knowledge graph (15 second-level nodes and 93 third-level nodes in total)

Columns: field, second-level category, third-level category.

Linear Algebra and its Applications 432 (2010) 2089–2099

Contents lists available at ScienceDirect

journal homepage: www.elsevier.com/locate/laa

Integrating learning theories and application-based modules in teaching linear algebra

William Martin a,∗, Sergio Loch b, Laurel Cooley c, Scott Dexter d, Draga Vidakovic e

a Department of Mathematics and School of Education, 210F Family Life Center, NDSU Department #2625, P.O. Box 6050, Fargo, ND 58105-6050, United States
b Department of Mathematics, Grand View University, 1200 Grandview Avenue, Des Moines, IA 50316, United States
c Department of Mathematics, CUNY Graduate Center and Brooklyn College, 2900 Bedford Avenue, Brooklyn, New York 11210, United States
d Department of Computer and Information Science, CUNY Brooklyn College, 2900 Bedford Avenue, Brooklyn, NY 11210, United States
e Department of Mathematics and Statistics, Georgia State University, University Plaza, Atlanta, GA 30303, United States

ARTICLE INFO

Article history:
Received 2 October 2008
Accepted 29 August 2009
Available online 30 September 2009

Submitted by L. Verde-Star

AMS classification:
Primary: 97H60
Secondary: 97C30

Keywords:
Linear algebra
Learning theory
Curriculum
Pedagogy
Constructivist theories
APOS – Action–Process–Object–Schema
Theoretical framework
Encapsulated process
Thematicized schema
Triad – intra, inter, trans
Genetic decomposition
Vector addition
Matrix
Matrix multiplication
Matrix representation
Basis
Column space
Row space
Null space
Eigenspace
Transformation

ABSTRACT

The research team of The Linear Algebra Project developed and implemented a curriculum and a pedagogy for parallel courses in (a) linear algebra and (b) learning theory as applied to the study of mathematics with an emphasis on linear algebra. The purpose of the ongoing research, partially funded by the National Science Foundation, is to investigate how the parallel study of learning theories and advanced mathematics influences the development of thinking of individuals in both domains. The researchers found that the particular synergy afforded by the parallel study of math and learning theory promoted, in some students, a rich understanding of both domains that had a mutually reinforcing effect. Furthermore, there is evidence that the deeper insights will contribute to more effective instruction by those who become high school math teachers and, consequently, better learning by their students. The courses developed were appropriate for mathematics majors, pre-service secondary mathematics teachers, and practicing mathematics teachers. The learning seminar focused most heavily on constructivist theories, although it also examined socio-cultural and historical perspectives. A particular theory, Action–Process–Object–Schema (APOS) [10], was emphasized and examined through the lens of studying linear algebra. APOS has been used in a variety of studies focusing on student understanding of undergraduate mathematics. The linear algebra courses include the standard set of undergraduate topics. This paper reports the results of the learning theory seminar and its effects on students who were simultaneously enrolled in linear algebra and students who had previously completed linear algebra, and outlines how prior research has influenced the future direction of the project.

© 2009 Elsevier Inc. All rights reserved.

The work reported in this paper was partially supported by funding from the National Science Foundation (DUE CCLI 0442574).

∗ Corresponding author. Address: NDSU School of Education, NDSU Department of Mathematics, 210F Family Life Center, NDSU Department #2625, P.O. Box 6050, Fargo, ND 58105-6050, United States. Tel.: +1 701 231 7104; fax: +1 701 231 7416.

E-mail addresses: william.martin@ (W. Martin), sloch@ (S. Loch), LCooley@ (L. Cooley), SDexter@ (S. Dexter), dvidakovic@ (D. Vidakovic).

0024-3795/$ - see front matter © 2009 Elsevier Inc. All rights reserved.
doi:10.1016/a.2009.08.030

1. Research rationale

The research team of the
Linear Algebra Project (LAP) developed and implemented a curriculum and a pedagogy for parallel courses in linear algebra and learning theory as applied to the study of mathematics with an emphasis on linear algebra. The purpose of the research, which was partially funded by the National Science Foundation (DUE CCLI 0442574), was to investigate how the parallel study of learning theories and advanced mathematics influences the development of thinking of high school mathematics teachers in both domains. The researchers found that the particular synergy afforded by the parallel study of math and learning theory promoted, in some teachers, a richer understanding of both domains that had a mutually reinforcing effect and affected their thinking about their identities and practices as teachers.

It has been observed that linear algebra courses often are viewed by students as a collection of definitions and procedures to be learned by rote. Scanning the tables of contents of many commonly used undergraduate textbooks yields a common list of terms such as those listed here (based on linear algebra texts by Strang [1] and Lang [2]).

Vector space            Kernel            Gaussian
Independence            Image             Triangular
Linear combination      Inverse           Gram–Schmidt
Span                    Transpose         Eigenvector
Basis                   Orthogonal        Singular value
Subspace                Operator          Decomposition
Projection              Diagonalization   LU form
Matrix                  Normal form       Norm
Dimension               Eigenvalue        Condition
Linear transformation   Similarity        Isomorphism
Rank                    Diagonalize       Determinant

This is not something unique to linear algebra – a similar situation holds for many undergraduate mathematics courses. Certainly the authors of undergraduate texts do not share this student view of mathematics. In fact, the variety of ways in which different authors organize their texts reflects the individual ways in which they have conceptualized introductory linear algebra courses. The wide variability that can be seen in a perusal of the many linear algebra texts that are used is a reflection of the many ways that mathematicians
think about linear algebra and their beliefs about how students can come to make sense of the content. Instruction in a course is based on considerations of content, pedagogy, resources (texts and other materials), and beliefs about teaching and learning of mathematics. The interplay of these ideas shaped our research project.

We deliberately mention two authors with clearly differing perspectives on an undergraduate linear algebra course: Strang’s organization of the material takes an applied or application perspective, while Lang views the material from more of a “pure mathematics” perspective. A review of the wide variety of textbooks to classify and categorize the different views of the subject would reveal a broad variety of perspectives on the teaching of the subject. We have taken a view that seeks to go beyond the mathematical content to integrate current theoretical perspectives on the teaching and learning of undergraduate mathematics. Our project used integration of mathematical content, applications, and learning theories to provide enhanced learning experiences using rich content, student metacognition, and their own experience and intuition. The project also used co-teaching and collaboration among faculty with expertise in a variety of areas including mathematics, computer science and mathematics education.

If one moves beyond the organization of the content of textbooks, we find that at their heart they do cover a common core of the key ideas of linear algebra – all including fundamental concepts such as vector space and linear transformation. These observations lead to our key question: “How is one to think about this task of organizing instruction to optimize learning?” In our work we focus on the conception of linear algebra that is developed by the student and its relationship with what we reveal about our own understanding of the subject. It seems that even in cases where researchers consciously
study the teaching and learning of linear algebra (or other mathematics topics) the questions are “What does it mean to understand linear algebra?” and “How do I organize instruction so that students develop that conception as fully as possible?” In broadest terms, our work involves (a) simultaneous study of linear algebra and learning theories, (b) having students connect learning theories to their study of linear algebra, and (c) the use of parallel mathematics and education courses and integrated workshops.

As students simultaneously study mathematics and learning theory related to the study of mathematics, we expect that reflection, or metacognition, on their own learning will enable them to construct deeper and more meaningful understanding in both domains. We chose linear algebra for several reasons: it has not been the focus of as much instructional research as calculus, it involves abstraction and proof, and it is taken by many students in different programs for a variety of reasons. It seems to us to involve important mathematical content along with rich applications, with abstraction that builds on experience and intuition.

In our pilot study we taught parallel courses: the regular upper division undergraduate linear algebra course and a seminar in learning theories in mathematics education. Early in the project we also organized an intensive three-day workshop for teachers and prospective teachers that included topics in linear algebra and examination of learning theory. In each case (two sets of parallel courses and the workshop) we had students reflect on their learning of linear algebra content and asked them to use their own learning experiences to reflect on the ideas about teaching and learning of mathematics. Students read articles (in the case of the workshop, this reading was in advance of the long weekend session) drawn from mathematics education sources including [3–10].

APOS (Action, Process, Object, Schema) is a theoretical framework that has been used by many researchers who
study the learning of undergraduate and graduate mathematics [10,11]. We include a sketch of the structure of this framework and refer the reader to the literature for more detailed descriptions. More detailed and specific illustrations of its use are widely available [12]. The APOS Theoretical Framework involves four levels of understanding that can be described for a wide variety of mathematical concepts such as function, vector space, linear transformation: Action, Process, Object (either an encapsulated process or a thematicized schema), Schema (intra, inter, trans – triad stages of schema formation). Genetic decomposition is the analysis of a particular concept in which developing understanding is described as a dynamic process of mental constructions that continually develop, abstract, and enrich the structural organization of an individual’s knowledge.

We believe that students’ simultaneous study of linear algebra along with theoretical examination of teaching and learning – particularly on what it means to develop conceptual understanding in a domain – will promote learning and understanding in both domains. Fundamentally, this reflects our view that conceptual understanding in any domain involves rich mental connections that link important ideas or facts, increasing the individual’s ability to relate new situations and problems to that existing cognitive framework. This view of conceptual understanding of mathematics has been described by various prominent math education researchers such as Hiebert and Carpenter [6] and Hiebert and Lefevre [7].

2. Action–Process–Object–Schema theory (APOS)

APOS theory is a theoretical perspective of learning based on an interpretation of Piaget’s constructivism and poses descriptions of mental constructions that may occur in understanding a mathematical concept. These constructions are called Actions, Processes, Objects, and Schema.

An action is a transformation of a mathematical object
according to an explicit algorithm seen as externally driven. It may be a manipulation of objects or acting upon a memorized fact. When one reflects upon an action, constructing an internal operation for a transformation, the action begins to be interiorized. A process is this internal transformation of an object. Each step may be described or reflected upon without actually performing it. Processes may be transformed through reversal or coordination with other processes.

There are two ways in which an individual may construct an object. A person may reflect on actions applied to a particular process and become aware of the process as a totality. One realizes that transformations (whether actions or processes) can act on the process, and is able to actually construct such transformations. At this point, the individual has reconstructed a process as a cognitive object. In this case we say that the process has been encapsulated into an object. One may also construct a cognitive object by reflecting on a schema, becoming aware of it as a totality. Thus, he or she is able to perform actions on it and we say the individual has thematized the schema into an object. With an object conception one is able to de-encapsulate that object back into the process from which it came, or, in the case of a thematized schema, unpack it into its various components. Piaget and Garcia [13] indicate that thematization has occurred when there is a change from usage or implicit application to consequent use and conceptualization.

A schema is a collection of actions, processes, objects, and other previously constructed schemata which are coordinated and synthesized to form mathematical structures utilized in problem situations. Objects may be transformed by higher-level actions, leading to new processes, objects, and schemata.
Hence, reconstruction continues in evolving schemata.

To illustrate different conceptions of the APOS theory, imagine the following ‘teaching’ scenario. We give students multi-part activities in a technology-supported environment. In particular, we assume students are using Maple in the computer lab. The multi-part activities in Maple, focusing on vectors and operations, begin with a given Maple code and drawing. In the case of scalar multiplication of a vector, students are asked to substitute one parameter in the Maple code, execute the code and observe what has happened. They are asked to repeat this activity with a different value of the parameter. Then students are asked to predict what will happen in a more general case and to explain their reasoning. Similarly, students may explore addition and subtraction of vectors. In the next part of the activity students might be asked to investigate the commutative property of vector addition.

Based on APOS theory, in the first part of the activity, in which students are asked to perform a certain operation and make observations, our intention is to induce each student’s action conception of that concept. By asking students to imagine what will happen if they make a certain change, but not physically perform that change, we are hoping to induce a somewhat higher level of students’ thinking, the process level. In order to predict what will happen, students would have to imagine performing the action based on the actions they performed before (reflective abstraction). Activities designed to explore vector addition properties require students to encapsulate the process of addition of two vectors into an object on which some other action could be performed. For example, in order for a student to conclude that u + v = v + u, he/she must encapsulate the process of adding two vectors, u + v, into an object (the resulting vector) which can then be compared [action] with another vector representing the sum v + u.

As with all theories of learning, APOS has a limitation
that researchers may only observe externally what one produces and discusses. While schemata are viewed as dynamic, the task is to attempt to take a snapshot of understanding at a point in time using a genetic decomposition. A genetic decomposition is a description by the researchers of specific mental constructions one may make in understanding a mathematical concept. As with most theories (economics, physics) that have restrictions, it can still be very useful in describing what is observed.

3. Initial research

In our preliminary study we investigated three research questions:

• Do participants make connections between linear algebra content and learning theories?
• Do participants reflect upon their own learning in terms of studied learning theories?
• Do participants connect their study of linear algebra and learning theories to the mathematics content or pedagogy for their mathematics teaching?

In addition to linear algebra course activities designed to engage students in explorations of concepts and discussions about learning theories and connections between the two domains, we had students construct concept maps and describe how they viewed the connections between the two subjects.
We found that some participants saw significant connections and were able to apply APOS theory appropriately to their learning of linear algebra. For example, here is a sketch outline of how one participant described the elements of the APOS framework late in the semester. The student showed a reasonable understanding of the theoretical framework and then was able to provide an example from linear algebra to illustrate the model.

The student’s description of the elements of APOS:

Action: “Students’ approach is to apply ‘external’ rules to find solutions. The rules are said to be external because students do not have an internalized understanding of the concept or the procedure to find a solution.”

Process: “At the process level, students are able to solve problems using an internalized understanding of the algorithm. They do not need to write out an equation or draw a graph of a function, for example. They can look at a problem and understand what is going on and what the solution might look like.”

Object level as performing actions on a process: “At the object level, students have an integrated understanding of the processes used to solve problems relating to a particular concept. They understand how a process can be transformed by different actions. They understand how different processes, with regard to a particular mathematical concept, are related. If a problem does not conform to their particular action-level understanding, they can modify the procedures necessary to find a solution.”

Schema as a ‘set’ of knowledge that may be modified: “Schema – At the schema level, students possess a set of knowledge related to a particular concept. They are able to modify this set of knowledge as they gain more experience working with the concept and solving different kinds of problems. They see how the concept is related to other concepts and how processes within the concept relate to each other.”

She used the ideas of determinant and basis to illustrate her understanding of the framework.
(Another student also described how student recognition of the recursive relationship of computations of determinants of different orders corresponded to differing levels of understanding in the APOS framework.)

Action conception of determinant: “A student at the action level can use an algorithm to calculate the determinant of a matrix. At this level (at least for me), the formula was complicated enough that I would always check that the determinant was correct by finding the inverse and multiplying by the original matrix to check the solution.”

Process conception of determinant: “The student knows different methods to use to calculate a determinant and can, in some cases, look at a matrix and determine its value without calculations.”

Object conception: “At the object level, students see the determinant as a tool for understanding and describing matrices. They understand the implications of the value of the determinant of a matrix as a way to describe a matrix. They can use the determinant of a matrix (equal to or not equal to zero) to describe properties of the elements of a matrix.”

Triad development of a schema (intra, inter, trans): “A singular concept – basis. There is a basis for a space. The student can describe a basis without calculation. The student can find different types of bases (column space, row space, null space, eigenspace) and use these values to describe matrices.”

The descriptions of components of APOS along with examples illustrate that this student was able to make valid connections between the theoretical framework and the content of linear algebra. While the descriptions may not match those that would be given by scholars using APOS as a research framework, the student does demonstrate a recognition of and ability to provide examples of how understanding of linear algebra can be organized conceptually as more than a collection of facts.

As would be expected, not all participants showed gains in either
domain. We viewed the results of this study as a proof of concept, since there were some participants who clearly gained from the experience. We also recognized that there were problems associated with the implementation of our plan.

To summarize our findings in relation to the research questions:

• Do participants make connections between linear algebra content and learning theories? Yes, to widely varying degrees and levels of sophistication.
• Do participants reflect upon their own learning in terms of studied learning theories? Yes, to the extent possible from their conception of the learning theories and understanding of linear algebra.
• Do participants connect their study of linear algebra and learning theories to the mathematics content or pedagogy for their mathematics teaching? Participants describe how their experiences will shape their own teaching, but we did not visit their classes.

Of the 11 students at one site who took the parallel courses, we identified three in our case studies (a detailed report of that study is presently under review) who demonstrated a significant ability to connect learning theories with their own learning of linear algebra. At another site, three teachers pursuing math education graduate studies were able, to varying degrees, to make these connections; two demonstrated strong ability to relate content to APOS and described important ways that the experience had affected their own thoughts about teaching mathematics. Participants in the workshop produced richer concept maps of linear algebra topics by the end of the weekend.

Still, there were participants who showed little ability to connect material from linear algebra and APOS. A common misunderstanding of the APOS framework was that increasing levels corresponded to increasing difficulty or complexity. For example, a student might suggest that computing the determinant of a 2 × 2 matrix was at the action level, while computation of a determinant in the 4 × 4 case was at the object level because of the increased
complexity of the computations. (Contrast this with the previously mentioned student who observed that the object conception was necessary to recognize that higher dimension determinants are computed recursively from lower dimension determinants.)

We faced more significant problems than the extent to which students developed an understanding of the ideas that were presented. We found it very difficult to get students, especially undergraduates, to agree to take an additional course while studying linear algebra. Most of the participants in our pilot projects were either mathematics teachers or prospective mathematics teachers. Other students simply do not have the time in their schedules to pursue an elective seminar not directly related to their own area of interest. This problem led us to a new project in which we plan to integrate the material on learning theory (perhaps implicitly for the students) in the linear algebra course. Our focus will be on working with faculty teaching the course to ensure that they understand the theory and are able to help ensure that course activities reflect these ideas about learning.

4. Continuing research

Our current Linear Algebra in New Environments (LINE) project focuses on having faculty work collaboratively to develop a series of modules that use applications to help students develop conceptual understanding of key linear algebra concepts. The project has three organizing concepts:

• Promote enhanced learning of linear algebra through integrated study of mathematical content, applications, and the learning process.
• Increase faculty understanding and application of mathematical learning theories in teaching linear algebra.
• Promote and support improved instruction through co-teaching and collaboration among faculty with expertise in a variety of areas, such as education and STEM disciplines.

For example, computer and video graphics involve linear transformations. Students
will complete a series of activities that use manipulation of graphical images to illustrate and help them move from action and process conceptions of linear transformations to object conceptions and the development of a linear transformation schema. Some of these ideas were inspired by material in Judith Cederberg’s geometry text [14] and some software developed by David Meel, both using matrix representations of geometric linear transformations.

The modules will have these characteristics:

• Embed learning theory in linear algebra course for both the instructor and the students.
• Use applied modules to illustrate the organization of linear algebra concepts.
• Applications draw on student intuitions to aid their mental constructions and organization of knowledge.
• Consciously include meta-cognition in the course.

To illustrate, we sketch the outline of a possible series of activities in a module on geometric linear transformations. The faculty team, including individuals with expertise in mathematics, education, and computer science, will develop a series of modules to engage students in activities that include reflection and meta-cognition about their learning of linear algebra. (The Appendix contains a more detailed description of a module that includes these activities.)

Task 1: Use Photoshop or GIMP to manipulate images (rotate, scale, flip, shear tools). Describe and reflect on processes. This activity uses an ACTION conception of transformation.

Task 2: Devise rules to map one vector to another. Describe and reflect on process. This activity involves both ACTION and PROCESS conceptions.

Task 3: Use a matrix representation to map vectors. This requires both PROCESS and OBJECT conceptions.

Task 4: Compare transform of sum with sum of transforms for matrices in Task 3 as compared to other non-linear functions. This involves ACTION, PROCESS, and OBJECT conceptions.

Task 5: Compare pre-image and transformed image of rectangles in the plane; identify software tool that was used (from Task 1) and how it might be
represented in matrix form. This requires OBJECT and SCHEMA conceptions.

Education, mathematics and computer science faculty participating in this project will work prior to the semester to gain familiarity with the APOS framework and to identify and sketch potential modules for the linear algebra course. During the semester, collaborative teams of faculty continue to develop and refine modules that reflect important concepts, interesting applications, and learning theory: Modules will present activities that help students develop important concepts rather than simply presenting important concepts for students to absorb.

The researchers will study the impact of project activities on student learning: We expect that students will be able to describe their knowledge of linear algebra in a more conceptual (structured) way during and after the course. We also will study the impact of the project on faculty thinking about teaching and learning: As a result of this work, we expect that faculty will be able to describe both the important concepts of linear algebra and how those concepts are mentally developed and organized by students. Finally, we will study the impact on instructional practice: Participating faculty should continue to use instructional practices that focus both on important content and how students develop their understanding of that content.

5. Summary

Our preliminary study demonstrated that prospective and practicing mathematics teachers were able to make connections between their concurrent study of linear algebra and of learning theories relating to mathematics education, specifically the APOS theoretical framework. In cases where the participants developed understanding in both domains, it was apparent that this connected learning strengthened understanding in both areas. Unfortunately, we were unable to encourage undergraduate students to consider studying both linear algebra and learning theory in separate, parallel courses.
Consequently, we developed a new strategy that embeds the learning theory in the linear algebra …
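Tasks 3 and 4 of the module outlined in Section 4 (mapping vectors with a matrix, then comparing the transform of a sum with the sum of the transforms) can be illustrated with a short computation. What follows is only a minimal sketch in Python, not material from the LINE modules: the helper names (`apply`, `add`, `is_additive`), the sample shear matrix, and the component-wise squaring map used as a non-linear contrast are all assumptions chosen for illustration.

```python
# Illustrative sketch only: every name and sample map here is hypothetical,
# chosen for this example and not taken from the LINE project materials.

def apply(matrix, v):
    """Map the 2D vector v by a 2x2 matrix stored as nested lists (Task 3)."""
    return [matrix[0][0] * v[0] + matrix[0][1] * v[1],
            matrix[1][0] * v[0] + matrix[1][1] * v[1]]


def add(u, v):
    """Component-wise sum of two 2D vectors."""
    return [u[0] + v[0], u[1] + v[1]]


def is_additive(f, u, v):
    """Compare the transform of a sum with the sum of transforms (Task 4)."""
    return f(add(u, v)) == add(f(u), f(v))


shear = [[1, 2], [0, 1]]  # a horizontal shear, like an image editor's shear tool


def shear_map(v):
    """Linear map given by the shear matrix."""
    return apply(shear, v)


def square_map(v):
    """Non-linear contrast: squares each component."""
    return [v[0] ** 2, v[1] ** 2]


u, v = [1, 2], [3, -1]
print(is_additive(shear_map, u, v))   # True
print(is_additive(square_map, u, v))  # False
```

Integer entries keep the equality test exact. Passing the check for one pair of vectors does not establish linearity, which also requires compatibility with scalar multiples for all inputs, but a single failing pair, as with the squaring map, is enough to show that a function is not linear.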

Higher Education Self-Study Examination, English II: Mock Test with Answer Guide

I. Reading and Judgment (10 points)

First Question: Reading Comprehension and Judgment

Read the following passage carefully and then decide whether the statements that follow are true or false. Mark your answers as “True (T)” or “False (F)”.

Passage:
In today’s globalized world, learning a second language has become more important than ever. Not only does it enhance personal development but it also opens up numerous career opportunities. Many experts agree that the best way to learn a new language is through immersion: living in a country where the language is spoken and using it daily. However, not everyone has this luxury. For those who cannot immerse themselves fully in another culture, there are other effective methods such as watching movies in the target language, practicing with native speakers online, or attending language classes at local community centers. Regardless of the method chosen, consistency and practice are key to mastering any language.

Questions:
1. Learning a second language is considered less important in today’s world.
Answer: False (F)
2. According to the passage, immersion is the most effective way to learn a new language.
Answer: True (T)
3. The passage suggests that living in a foreign country is the only way to learn a new language.
Answer: False (F)
4. Watching movies in the target language is mentioned as an alternative method to full immersion.
Answer: True (T)
5. Consistency and practice are crucial for mastering a new language.
Answer: True (T)

This is a sample set of questions based on the given passage. Please note that actual examination questions would be more varied and challenging.

Second Question: Reading Comprehension and Judgment

Passage:
In the heart of the city stands an old library, known for its vast collection of rare books and historical manuscripts. Established in the early 19th century, it has been a beacon of learning and culture for generations.
The library not only serves as a repository of knowledge but also hosts regular workshops and seminars to encourage literacy and academic research among the young and old. In recent years, due to the increasing use of digital media, the library has adapted by incorporating e-books and online databases into its resources. Despite these changes, the essence of the library remains the same: a place where people can come together to learn, share ideas, and grow intellectually.

Questions:
1. The old library is primarily known for its modern architecture.
Answer: False
2. The library was established in the late 20th century.
Answer: False
3. The library offers workshops and seminars to promote literacy.
Answer: True
4. Recently, the library has begun to offer digital resources.
Answer: True
5. The library’s purpose has changed significantly with the introduction of e-books.
Answer: False

II. Reading Comprehension (10 points)

Title: The Digital Age and Its Impact on Education

In the digital age, education has undergone significant transformations, reshaping the way we learn and access knowledge. With the widespread adoption of the internet, smartphones, and tablets, learning has become more accessible, interactive, and personalized than ever before. This revolution in education, often referred to as e-learning or online education, has opened doors to countless opportunities for learners worldwide.

One of the most notable changes is the rise of massive open online courses (MOOCs). These platforms offer a wide range of courses, from introductory subjects to advanced specialties, for free or at a minimal cost. Students can enroll in courses from top universities around the globe, engaging with world-class professors and peers from diverse backgrounds. The flexibility of these courses allows learners to study at their own pace, fitting education into their busy schedules.

Moreover, digital tools have enhanced the learning experience by providing interactive resources, such as simulations, videos, and virtual labs.
These multimedia elements make abstract concepts more tangible, helping students grasp complex ideas more easily. Additionally, educational apps and software facilitate personalized learning, tailoring content and pace to each student’s needs and abilities.

However, the digital age also poses challenges for education. The abundance of information online can be overwhelming, and the authenticity of sources needs to be carefully verified. Moreover, not everyone has access to the technology or the internet, creating a digital divide that exacerbates existing educational inequalities.

Despite these challenges, the digital age has undeniably revolutionized education, making it more inclusive, accessible, and engaging. As we continue to navigate this digital landscape, it is crucial to harness its potential while addressing the challenges it presents, ensuring that everyone has the opportunity to benefit from this new era of learning.

Questions:
1. What is the main topic of the passage?
A) The history of online education.
B) The benefits and challenges of the digital age in education.
C) The evolution of traditional classrooms.
D) The role of technology in the workplace.
Answer: B
2. What are MOOCs?
A) A type of online course offered by universities at no cost.
B) A software program for creating educational videos.
C) A method of assessing students’ understanding through tests.
D) A tool for tracking students’ progress in traditional classrooms.
Answer: A
3. How do digital tools enhance the learning experience?
A) By making education less accessible to students.
B) By providing interactive resources like simulations and videos.
C) By reducing the number of courses available online.
D) By creating more opportunities for face-to-face interaction.
Answer: B
4. What is one challenge of the digital age in education?
A) The lack of technology available to students.
B) The ease of verifying the authenticity of online sources.
C) The abundance of information available online.
D) The inability to tailor
content to individual students’ needs.
Answer: C
5. What is the author’s overall attitude towards the digital age in education?
A) Negative, as it has created more problems than solutions.
B) Neutral, as it has both advantages and disadvantages.
C) Positive, as it has revolutionized education for the better.
D) Undecided, as the impact is still unclear.
Answer: C

III. Summarizing Paragraphs and Completing Sentences (10 points)

First Question

Passage:
The following passage is about the benefits and challenges of online learning. Please read the passage carefully and answer the questions below.

Online learning has become increasingly popular in recent years, especially with the advancements in technology. It offers numerous benefits, such as flexibility, convenience, and cost-effectiveness. However, it also presents several challenges, including lack of face-to-face interaction, time management issues, and potential for distraction. Despite these challenges, many students have successfully completed their courses online and achieved academic success.

Questions:
1. What are some of the benefits of online learning?
2. What challenges do students face when taking online courses?
3. According to the passage, how have many students responded to the challenges of online learning?
4. What is the author’s opinion about online learning?
5. Choose the sentence that best summarizes the main idea of the passage.

Answers:
1. Flexibility, convenience, and cost-effectiveness.
2. Lack of face-to-face interaction, time management issues, and potential for distraction.
3. Many students have successfully completed their courses online and achieved academic success.
4. The author believes that online learning offers numerous benefits but also presents several challenges.
5. “Online learning has become increasingly popular due to its benefits, such as flexibility and convenience, but it also comes with challenges like lack of face-to-face interaction and time management issues.”

Second Question

Passage:
The history of the Great Wall of China is a testament to the ingenuity and perseverance
of ancient Chinese engineers. Constructed over several centuries, the Great Wall was originally built to protect the Chinese Empire from invasions by nomadic tribes from the north. As time passed, the Wall became a symbol of China’s strength and unity. Its construction involved the labor of millions of workers, including soldiers, convicts, and civilians. The Wall stretches over 13,000 miles and is made primarily of stone, brick, wood, and earth, depending on the region. Despite its age, the Great Wall remains a marvel of human achievement and continues to attract tourists from around the world.

1. The Great Wall of China was primarily built to __________.
a) connect different provinces
b) protect the Chinese Empire from northern invasions
c) serve as a road for trade
d) enhance the beauty of the Chinese landscape
2. The construction of the Great Wall spanned over __________.
a) a few decades
b) a few centuries
c) a few years
d) a few months
3. The Great Wall is a symbol of __________.
a) Chinese agriculture
b) Chinese strength and unity
c) Chinese religion
d) Chinese politics
4. The Great Wall was built using various materials such as __________.
a) only stone and brick
b) only wood and earth
c) stone, brick, wood, and earth
d) only metal and glass
5. Today, the Great Wall attracts __________.
a) only local tourists
b) a few tourists each year
c) millions of tourists from around the world
d) no tourists at all

Answers:
1. b) protect the Chinese Empire from northern invasions
2. b) a few centuries
3. b) Chinese strength and unity
4. c) stone, brick, wood, and earth
5. c) millions of tourists from around the world

IV. Gap Filling (10 points)

Section 4: Fill in the Blanks

Read the following passage and choose the best word or phrase to complete each blank.

Passage:
In the modern world, the importance of learning a second language cannot be overstated. The ability to communicate in multiple languages has become a crucial skill for personal and professional development.
One such language that has gained immense popularity is English. The demand for English proficiency is evident in various sectors, such as business, technology, and entertainment. To meet this demand, many individuals opt for self-study programs to improve their English skills. One of the most popular self-study programs is the Self-Taught Higher Education Self-Exam (Zhíxíng Zhíshì Gàokǎo, abbreviated 自考) for English Level Two (Yīngyǔ Èr, abbreviated 英语二).

1. The ability to communicate in multiple languages has become a crucial skill for __________ and professional development.
A. personal
B. personalization
C. personally
D. personalizes
2. The demand for English proficiency is evident in various sectors, such as __________.
A. business
B. businesses
C. the business
D. businesses’
3. One such language that has gained immense popularity is __________.
A. English
B. Englishes
C. Englishs
D. Englished
4. To meet this demand, many individuals opt for __________ programs to improve their English skills.
A. self-study
B. self-studying
C. self-studied
D. self-studyingly
5. The most popular self-study program is the Self-Taught Higher Education Self-Exam for English Level Two, also known as __________.
A. English Level Two
B. Yīngyǔ Èr
C. Zhíxíng Zhíshì Gàokǎo
D. Self-Taught Higher Education Self-Exam

Answers:
1. A
2. A
3. A
4. A
5. B

V. Cloze (15 points)

First Question

Please read the following passage and choose the best word or phrase to complete each sentence. There is only one correct answer for each question.

Passage:
The world of technology has seen tremendous growth over the past few decades. With advancements in computing power and connectivity, the Internet has become an integral part of our daily lives, facilitating communication, commerce, education, and entertainment.
From smartphones that keep us connected on the go, to software that makes our work more efficient, technology has transformed how we interact with the world around us.

1. The rapid development in technology over the last few decades has been ________.
A) astounding
B) declining
C) stagnant
D) puzzling
Answer: A) astounding
2. With the increase in computing power and connectivity, the Internet has become ________.
A) less relevant
B) optional
C) essential
D) obsolete
Answer: C) essential
3. Smartphones allow us to stay connected while we are ________.
A) stationary
B) disconnected
C) at home
D) on the move
Answer: D) on the move
4. Technology has ________ how we communicate and conduct business.
A) hindered
B) complicated
C) transformed
D) isolated
Answer: C) transformed
5. Software applications have made our work ________.
A) less productive
B) more cumbersome
C) more efficient
D) outdated
Answer: C) more efficient

This completes the “First Question” section of the cloze test designed for the Higher Education Self-Study Examination’s English Course B. Each question requires choosing the most appropriate word or phrase to fit into the context of the paragraph provided.

Second Question

Read the following passage and, for each blank, choose the best answer from the four options that follow it.