Decentralizing UNIX abstractions in the exokernel architecture

- Format: PDF

- Size: 96.38 KB

- Pages: 42

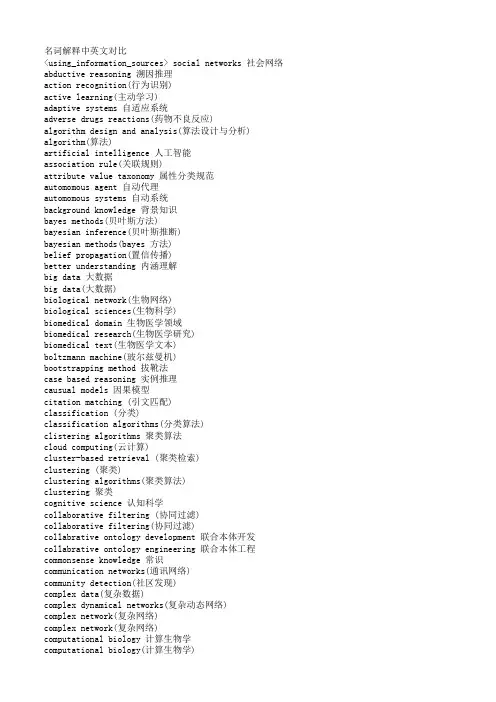

名词解释中英文对比<using_information_sources> social networks 社会网络abductive reasoning 溯因推理action recognition(行为识别)active learning(主动学习)adaptive systems 自适应系统adverse drugs reactions(药物不良反应)algorithm design and analysis(算法设计与分析) algorithm(算法)artificial intelligence 人工智能association rule(关联规则)attribute value taxonomy 属性分类规范automomous agent 自动代理automomous systems 自动系统background knowledge 背景知识bayes methods(贝叶斯方法)bayesian inference(贝叶斯推断)bayesian methods(bayes 方法)belief propagation(置信传播)better understanding 内涵理解big data 大数据big data(大数据)biological network(生物网络)biological sciences(生物科学)biomedical domain 生物医学领域biomedical research(生物医学研究)biomedical text(生物医学文本)boltzmann machine(玻尔兹曼机)bootstrapping method 拔靴法case based reasoning 实例推理causual models 因果模型citation matching (引文匹配)classification (分类)classification algorithms(分类算法)clistering algorithms 聚类算法cloud computing(云计算)cluster-based retrieval (聚类检索)clustering (聚类)clustering algorithms(聚类算法)clustering 聚类cognitive science 认知科学collaborative filtering (协同过滤)collaborative filtering(协同过滤)collabrative ontology development 联合本体开发collabrative ontology engineering 联合本体工程commonsense knowledge 常识communication networks(通讯网络)community detection(社区发现)complex data(复杂数据)complex dynamical networks(复杂动态网络)complex network(复杂网络)complex network(复杂网络)computational biology 计算生物学computational biology(计算生物学)computational complexity(计算复杂性) computational intelligence 智能计算computational modeling(计算模型)computer animation(计算机动画)computer networks(计算机网络)computer science 计算机科学concept clustering 概念聚类concept formation 概念形成concept learning 概念学习concept map 概念图concept model 概念模型concept modelling 概念模型conceptual model 概念模型conditional random field(条件随机场模型) conjunctive quries 合取查询constrained least squares (约束最小二乘) convex programming(凸规划)convolutional neural networks(卷积神经网络) customer relationship management(客户关系管理) data analysis(数据分析)data analysis(数据分析)data center(数据中心)data clustering (数据聚类)data compression(数据压缩)data envelopment analysis (数据包络分析)data fusion 数据融合data 
generation(数据生成)data handling(数据处理)data hierarchy (数据层次)data integration(数据整合)data integrity 数据完整性data intensive computing(数据密集型计算)data management 数据管理data management(数据管理)data management(数据管理)data miningdata mining 数据挖掘data model 数据模型data models(数据模型)data partitioning 数据划分data point(数据点)data privacy(数据隐私)data security(数据安全)data stream(数据流)data streams(数据流)data structure( 数据结构)data structure(数据结构)data visualisation(数据可视化)data visualization 数据可视化data visualization(数据可视化)data warehouse(数据仓库)data warehouses(数据仓库)data warehousing(数据仓库)database management systems(数据库管理系统)database management(数据库管理)date interlinking 日期互联date linking 日期链接Decision analysis(决策分析)decision maker 决策者decision making (决策)decision models 决策模型decision models 决策模型decision rule 决策规则decision support system 决策支持系统decision support systems (决策支持系统) decision tree(决策树)decission tree 决策树deep belief network(深度信念网络)deep learning(深度学习)defult reasoning 默认推理density estimation(密度估计)design methodology 设计方法论dimension reduction(降维) dimensionality reduction(降维)directed graph(有向图)disaster management 灾害管理disastrous event(灾难性事件)discovery(知识发现)dissimilarity (相异性)distributed databases 分布式数据库distributed databases(分布式数据库) distributed query 分布式查询document clustering (文档聚类)domain experts 领域专家domain knowledge 领域知识domain specific language 领域专用语言dynamic databases(动态数据库)dynamic logic 动态逻辑dynamic network(动态网络)dynamic system(动态系统)earth mover's distance(EMD 距离) education 教育efficient algorithm(有效算法)electric commerce 电子商务electronic health records(电子健康档案) entity disambiguation 实体消歧entity recognition 实体识别entity recognition(实体识别)entity resolution 实体解析event detection 事件检测event detection(事件检测)event extraction 事件抽取event identificaton 事件识别exhaustive indexing 完整索引expert system 专家系统expert systems(专家系统)explanation based learning 解释学习factor graph(因子图)feature extraction 特征提取feature extraction(特征提取)feature extraction(特征提取)feature selection (特征选择)feature selection 特征选择feature selection(特征选择)feature space 特征空间first order logic 一阶逻辑formal logic 
形式逻辑formal meaning prepresentation 形式意义表示formal semantics 形式语义formal specification 形式描述frame based system 框为本的系统frequent itemsets(频繁项目集)frequent pattern(频繁模式)fuzzy clustering (模糊聚类)fuzzy clustering (模糊聚类)fuzzy clustering (模糊聚类)fuzzy data mining(模糊数据挖掘)fuzzy logic 模糊逻辑fuzzy set theory(模糊集合论)fuzzy set(模糊集)fuzzy sets 模糊集合fuzzy systems 模糊系统gaussian processes(高斯过程)gene expression data 基因表达数据gene expression(基因表达)generative model(生成模型)generative model(生成模型)genetic algorithm 遗传算法genome wide association study(全基因组关联分析) graph classification(图分类)graph classification(图分类)graph clustering(图聚类)graph data(图数据)graph data(图形数据)graph database 图数据库graph database(图数据库)graph mining(图挖掘)graph mining(图挖掘)graph partitioning 图划分graph query 图查询graph structure(图结构)graph theory(图论)graph theory(图论)graph theory(图论)graph theroy 图论graph visualization(图形可视化)graphical user interface 图形用户界面graphical user interfaces(图形用户界面)health care 卫生保健health care(卫生保健)heterogeneous data source 异构数据源heterogeneous data(异构数据)heterogeneous database 异构数据库heterogeneous information network(异构信息网络) heterogeneous network(异构网络)heterogenous ontology 异构本体heuristic rule 启发式规则hidden markov model(隐马尔可夫模型)hidden markov model(隐马尔可夫模型)hidden markov models(隐马尔可夫模型) hierarchical clustering (层次聚类) homogeneous network(同构网络)human centered computing 人机交互技术human computer interaction 人机交互human interaction 人机交互human robot interaction 人机交互image classification(图像分类)image clustering (图像聚类)image mining( 图像挖掘)image reconstruction(图像重建)image retrieval (图像检索)image segmentation(图像分割)inconsistent ontology 本体不一致incremental learning(增量学习)inductive learning (归纳学习)inference mechanisms 推理机制inference mechanisms(推理机制)inference rule 推理规则information cascades(信息追随)information diffusion(信息扩散)information extraction 信息提取information filtering(信息过滤)information filtering(信息过滤)information integration(信息集成)information network analysis(信息网络分析) information network mining(信息网络挖掘) information network(信息网络)information processing 信息处理information processing 信息处理information 
resource management (信息资源管理) information retrieval models(信息检索模型) information retrieval 信息检索information retrieval(信息检索)information retrieval(信息检索)information science 情报科学information sources 信息源information system( 信息系统)information system(信息系统)information technology(信息技术)information visualization(信息可视化)instance matching 实例匹配intelligent assistant 智能辅助intelligent systems 智能系统interaction network(交互网络)interactive visualization(交互式可视化)kernel function(核函数)kernel operator (核算子)keyword search(关键字检索)knowledege reuse 知识再利用knowledgeknowledgeknowledge acquisitionknowledge base 知识库knowledge based system 知识系统knowledge building 知识建构knowledge capture 知识获取knowledge construction 知识建构knowledge discovery(知识发现)knowledge extraction 知识提取knowledge fusion 知识融合knowledge integrationknowledge management systems 知识管理系统knowledge management 知识管理knowledge management(知识管理)knowledge model 知识模型knowledge reasoningknowledge representationknowledge representation(知识表达) knowledge sharing 知识共享knowledge storageknowledge technology 知识技术knowledge verification 知识验证language model(语言模型)language modeling approach(语言模型方法) large graph(大图)large graph(大图)learning(无监督学习)life science 生命科学linear programming(线性规划)link analysis (链接分析)link prediction(链接预测)link prediction(链接预测)link prediction(链接预测)linked data(关联数据)location based service(基于位置的服务) loclation based services(基于位置的服务) logic programming 逻辑编程logical implication 逻辑蕴涵logistic regression(logistic 回归)machine learning 机器学习machine translation(机器翻译)management system(管理系统)management( 知识管理)manifold learning(流形学习)markov chains 马尔可夫链markov processes(马尔可夫过程)matching function 匹配函数matrix decomposition(矩阵分解)matrix decomposition(矩阵分解)maximum likelihood estimation(最大似然估计)medical research(医学研究)mixture of gaussians(混合高斯模型)mobile computing(移动计算)multi agnet systems 多智能体系统multiagent systems 多智能体系统multimedia 多媒体natural language processing 自然语言处理natural language processing(自然语言处理) nearest neighbor (近邻)network analysis( 网络分析)network analysis(网络分析)network analysis(网络分析)network 
formation(组网)network structure(网络结构)network theory(网络理论)network topology(网络拓扑)network visualization(网络可视化)neural network(神经网络)neural networks (神经网络)neural networks(神经网络)nonlinear dynamics(非线性动力学)nonmonotonic reasoning 非单调推理nonnegative matrix factorization (非负矩阵分解) nonnegative matrix factorization(非负矩阵分解) object detection(目标检测)object oriented 面向对象object recognition(目标识别)object recognition(目标识别)online community(网络社区)online social network(在线社交网络)online social networks(在线社交网络)ontology alignment 本体映射ontology development 本体开发ontology engineering 本体工程ontology evolution 本体演化ontology extraction 本体抽取ontology interoperablity 互用性本体ontology language 本体语言ontology mapping 本体映射ontology matching 本体匹配ontology versioning 本体版本ontology 本体论open government data 政府公开数据opinion analysis(舆情分析)opinion mining(意见挖掘)opinion mining(意见挖掘)outlier detection(孤立点检测)parallel processing(并行处理)patient care(病人医疗护理)pattern classification(模式分类)pattern matching(模式匹配)pattern mining(模式挖掘)pattern recognition 模式识别pattern recognition(模式识别)pattern recognition(模式识别)personal data(个人数据)prediction algorithms(预测算法)predictive model 预测模型predictive models(预测模型)privacy preservation(隐私保护)probabilistic logic(概率逻辑)probabilistic logic(概率逻辑)probabilistic model(概率模型)probabilistic model(概率模型)probability distribution(概率分布)probability distribution(概率分布)project management(项目管理)pruning technique(修剪技术)quality management 质量管理query expansion(查询扩展)query language 查询语言query language(查询语言)query processing(查询处理)query rewrite 查询重写question answering system 问答系统random forest(随机森林)random graph(随机图)random processes(随机过程)random walk(随机游走)range query(范围查询)RDF database 资源描述框架数据库RDF query 资源描述框架查询RDF repository 资源描述框架存储库RDF storge 资源描述框架存储real time(实时)recommender system(推荐系统)recommender system(推荐系统)recommender systems 推荐系统recommender systems(推荐系统)record linkage 记录链接recurrent neural network(递归神经网络) regression(回归)reinforcement learning 强化学习reinforcement learning(强化学习)relation extraction 关系抽取relational database 关系数据库relational learning 关系学习relevance 
feedback (相关反馈)resource description framework 资源描述框架restricted boltzmann machines(受限玻尔兹曼机) retrieval models(检索模型)rough set theroy 粗糙集理论rough set 粗糙集rule based system 基于规则系统rule based 基于规则rule induction (规则归纳)rule learning (规则学习)rule learning 规则学习schema mapping 模式映射schema matching 模式匹配scientific domain 科学域search problems(搜索问题)semantic (web) technology 语义技术semantic analysis 语义分析semantic annotation 语义标注semantic computing 语义计算semantic integration 语义集成semantic interpretation 语义解释semantic model 语义模型semantic network 语义网络semantic relatedness 语义相关性semantic relation learning 语义关系学习semantic search 语义检索semantic similarity 语义相似度semantic similarity(语义相似度)semantic web rule language 语义网规则语言semantic web 语义网semantic web(语义网)semantic workflow 语义工作流semi supervised learning(半监督学习)sensor data(传感器数据)sensor networks(传感器网络)sentiment analysis(情感分析)sentiment analysis(情感分析)sequential pattern(序列模式)service oriented architecture 面向服务的体系结构shortest path(最短路径)similar kernel function(相似核函数)similarity measure(相似性度量)similarity relationship (相似关系)similarity search(相似搜索)similarity(相似性)situation aware 情境感知social behavior(社交行为)social influence(社会影响)social interaction(社交互动)social interaction(社交互动)social learning(社会学习)social life networks(社交生活网络)social machine 社交机器social media(社交媒体)social media(社交媒体)social media(社交媒体)social network analysis 社会网络分析social network analysis(社交网络分析)social network(社交网络)social network(社交网络)social science(社会科学)social tagging system(社交标签系统)social tagging(社交标签)social web(社交网页)sparse coding(稀疏编码)sparse matrices(稀疏矩阵)sparse representation(稀疏表示)spatial database(空间数据库)spatial reasoning 空间推理statistical analysis(统计分析)statistical model 统计模型string matching(串匹配)structural risk minimization (结构风险最小化) structured data 结构化数据subgraph matching 子图匹配subspace clustering(子空间聚类)supervised learning( 有support vector machine 支持向量机support vector machines(支持向量机)system dynamics(系统动力学)tag recommendation(标签推荐)taxonmy induction 感应规范temporal logic 时态逻辑temporal reasoning 时序推理text analysis(文本分析)text anaylsis 
文本分析text classification (文本分类)text data(文本数据)text mining technique(文本挖掘技术)text mining 文本挖掘text mining(文本挖掘)text summarization(文本摘要)thesaurus alignment 同义对齐time frequency analysis(时频分析)time series analysis( 时time series data(时间序列数据)time series data(时间序列数据)time series(时间序列)topic model(主题模型)topic modeling(主题模型)transfer learning 迁移学习triple store 三元组存储uncertainty reasoning 不精确推理undirected graph(无向图)unified modeling language 统一建模语言unsupervisedupper bound(上界)user behavior(用户行为)user generated content(用户生成内容)utility mining(效用挖掘)visual analytics(可视化分析)visual content(视觉内容)visual representation(视觉表征)visualisation(可视化)visualization technique(可视化技术) visualization tool(可视化工具)web 2.0(网络2.0)web forum(web 论坛)web mining(网络挖掘)web of data 数据网web ontology lanuage 网络本体语言web pages(web 页面)web resource 网络资源web science 万维科学web search (网络检索)web usage mining(web 使用挖掘)wireless networks 无线网络world knowledge 世界知识world wide web 万维网world wide web(万维网)xml database 可扩展标志语言数据库附录 2 Data Mining 知识图谱(共包含二级节点15 个,三级节点93 个)间序列分析)监督学习)领域 二级分类 三级分类。

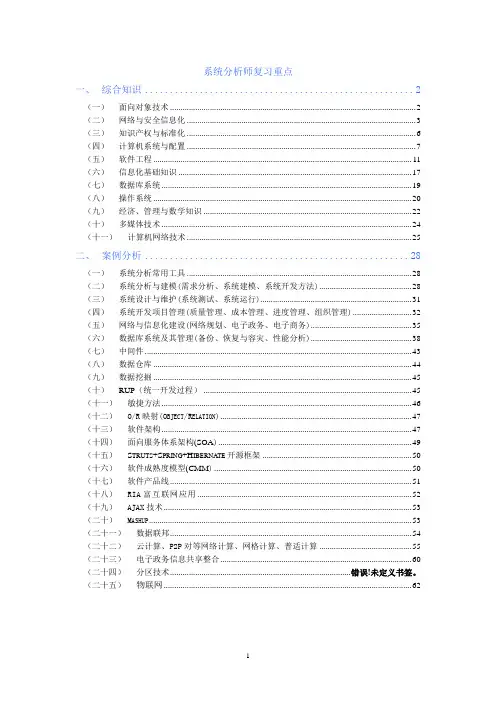

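System Analyst Review Highlights

I. General knowledge
(1) Object-oriented technology
(2) Networking, security, and informatization
(3) Intellectual property and standardization
(4) Computer systems and configuration
(5) Software engineering
(6) Fundamentals of informatization
(7) Database systems
(8) Operating systems
(9) Economics, management, and mathematics
(10) Multimedia technology
(11) Computer network technology

II. Case analysis
(1) Common tools for system analysis
(2) System analysis and modeling (requirements analysis, system modeling, system development methods)
(3) System design and maintenance (system testing, system operation)
(4) System development project management (quality, cost, schedule, and organization management)
(5) Network and informatization construction (network planning, e-government, e-commerce)
(6) Database systems and their management (backup, recovery and disaster recovery, performance analysis)
(7) Middleware
(8) Data warehouses
(9) Data mining
(10) RUP (Unified Process)
(11) Agile methods
(12) O/R mapping (Object/Relation)
(13) Software architecture
(14) Service-oriented architecture (SOA)
(15) Struts + Spring + Hibernate open-source frameworks
(16) Capability Maturity Model for software (CMM)
(17) Software product lines
(18) RIA (rich Internet applications)
(19) AJAX
(20) Mashup
(21) Data federation
(22) Cloud computing, P2P computing, grid computing, pervasive computing
(23) E-government information sharing and integration
(24) Partitioning technology
(25) Internet of Things

I. General knowledge

(1) Object-oriented technology

1. Jackson, Booch, and UML
2. Class: a description of a set of objects that share the same attributes, operations, relationships, and semantics. Interface: the operations that describe a service of a class or component. Component: a physical, replaceable software module that conforms to a set of interface specifications and implements them. Package: used to organize elements into groups. Node: a physical object that exists at run time and represents a computing resource, usually with at least storage space and processing capability.
3.
4. UML
5. A traditional program flowchart differs from a UML activity diagram in that the flowchart explicitly specifies the order of every activity, whereas the activity diagram only describes the activities and the necessary order of work.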

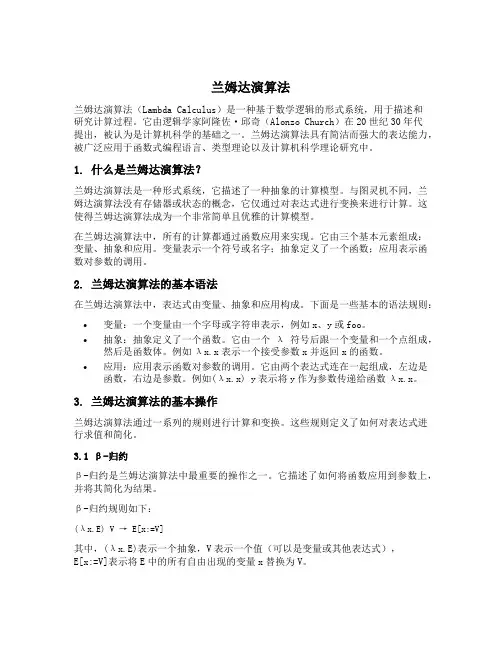

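Lambda calculus

Lambda calculus is a formal system grounded in mathematical logic for describing and studying computation.

It was introduced by the logician Alonzo Church in the 1930s and is regarded as one of the foundations of computer science.

Lambda calculus is concise yet highly expressive, and it is widely used in functional programming languages, type theory, and theoretical computer science research.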

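1. What is lambda calculus?

Lambda calculus is a formal system that describes an abstract model of computation.

Unlike a Turing machine, lambda calculus has no notion of memory or state; it computes solely by transforming expressions.

This makes it a very simple and elegant model of computation.

In lambda calculus, all computation is carried out through function application.

It is built from three basic elements: variables, abstractions, and applications.

A variable is a symbol or name; an abstraction defines a function; an application represents calling a function on an argument.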

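2. Basic syntax of lambda calculus

In lambda calculus, expressions are built from variables, abstractions, and applications.

The basic syntax rules are:

• Variable: a variable is written as a letter or a string, for example x, y, or foo.

• Abstraction: an abstraction defines a function. It is written as the symbol λ followed by a variable and a dot, and then the function body. For example, λx.x denotes a function that takes an argument x and returns x.

• Application: an application represents calling a function on an argument. It is written as two expressions side by side, with the function on the left and the argument on the right. For example, (λx.x) y passes y as the argument to the function λx.x.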
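The three syntactic forms above map naturally onto a small data structure. The following is a minimal sketch, not part of the original article: the class names `Var`, `Lam`, and `App` and the helper `show` are illustrative choices, and the printer assumes the usual convention that application associates to the left.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Var:
    """A variable such as x, y, or foo."""
    name: str


@dataclass(frozen=True)
class Lam:
    """An abstraction λparam.body (illustrative name, not from the article)."""
    param: str
    body: "Term"


@dataclass(frozen=True)
class App:
    """An application: func applied to arg."""
    func: "Term"
    arg: "Term"


Term = Union[Var, Lam, App]


def show(t: Term) -> str:
    """Render a term in the textual syntax used in this section."""
    if isinstance(t, Var):
        return t.name
    if isinstance(t, Lam):
        return f"λ{t.param}.{show(t.body)}"
    # Application: parenthesize an abstraction on the left and any
    # non-variable on the right so the printed form is unambiguous.
    left = f"({show(t.func)})" if isinstance(t.func, Lam) else show(t.func)
    right = show(t.arg) if isinstance(t.arg, Var) else f"({show(t.arg)})"
    return f"{left} {right}"


identity = Lam("x", Var("x"))      # λx.x
example = App(identity, Var("y"))  # (λx.x) y
```

With these definitions, `show(identity)` gives `λx.x` and `show(example)` gives `(λx.x) y`, matching the two examples in the text.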

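3. Basic operations of lambda calculus

Lambda calculus computes by applying a set of transformation rules.

These rules define how expressions are evaluated and simplified.

3.1 β-reduction

β-reduction is one of the most important operations in lambda calculus.

It describes how to apply a function to an argument and simplify the application to its result.

The β-reduction rule is: (λx.E) V → E[x:=V], where (λx.E) is an abstraction, V is a value (a variable or any other expression), and E[x:=V] denotes E with every free occurrence of the variable x replaced by V. Bound variables in E are renamed where necessary so that no free variable of V becomes bound by the substitution.

The rule says that when a function is applied to an argument, the variable in the function body is replaced by the argument, yielding a new expression.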
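The rule (λx.E) V → E[x:=V] can be sketched in a few lines of Python. This is a hedged illustration under stated assumptions, not a reference implementation: the term classes, the helper names `free_vars`, `fresh`, `subst`, and `beta_step`, and the `v0, v1, ...` renaming scheme are all choices made here. The substitution renames bound variables when needed so that free variables of V are not captured.

```python
from dataclasses import dataclass
from itertools import count
from typing import Union


@dataclass(frozen=True)
class Var:
    name: str


@dataclass(frozen=True)
class Lam:
    param: str
    body: "Term"


@dataclass(frozen=True)
class App:
    func: "Term"
    arg: "Term"


Term = Union[Var, Lam, App]


def free_vars(t: Term) -> set:
    """Names that occur free (not bound by an enclosing λ) in t."""
    if isinstance(t, Var):
        return {t.name}
    if isinstance(t, Lam):
        return free_vars(t.body) - {t.param}
    return free_vars(t.func) | free_vars(t.arg)


def fresh(avoid: set) -> str:
    """Pick the first name v0, v1, ... not in `avoid` (assumed scheme)."""
    return next(f"v{i}" for i in count() if f"v{i}" not in avoid)


def subst(e: Term, x: str, v: Term) -> Term:
    """Compute E[x:=V]: replace free occurrences of x in e by v."""
    if isinstance(e, Var):
        return v if e.name == x else e
    if isinstance(e, App):
        return App(subst(e.func, x, v), subst(e.arg, x, v))
    if e.param == x:
        return e  # x is rebound here, so there is no free x below
    if e.param in free_vars(v):
        # Rename the bound variable first so it cannot capture v's free names.
        new = fresh(free_vars(v) | free_vars(e.body) | {x})
        renamed = subst(e.body, e.param, Var(new))
        return Lam(new, subst(renamed, x, v))
    return Lam(e.param, subst(e.body, x, v))


def beta_step(t: Term) -> Term:
    """Contract the redex at the root of t; return t unchanged otherwise."""
    if isinstance(t, App) and isinstance(t.func, Lam):
        return subst(t.func.body, t.func.param, t.arg)
    return t
```

For example, `beta_step(App(Lam("x", Var("x")), Var("y")))` returns `Var("y")`, which is the reduction (λx.x) y → y. Applying λx.λy.x to y returns `Lam("v0", Var("y"))`: the inner binder is renamed rather than capturing the argument. Note that `beta_step` only contracts a redex at the root; a full evaluator would also need a strategy for reducing inside subterms.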

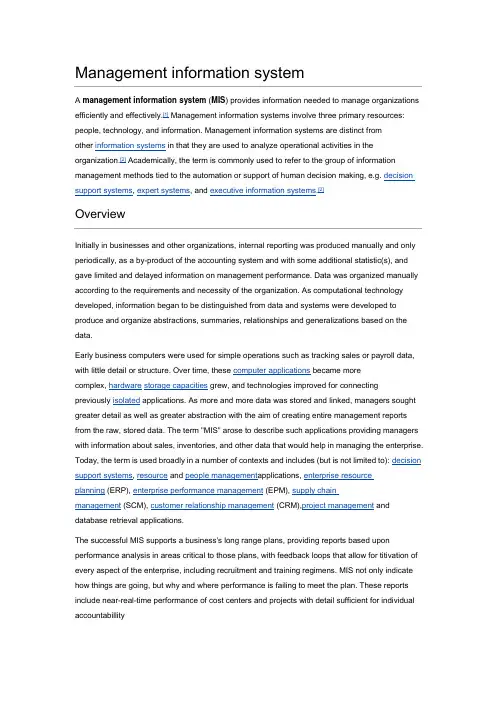

Initially in businesses and other organizations, internal reporting was produced manually and only periodically, as a by-product of the accounting system and with some additional statistics, and gave limited and delayed information on management performance. Data was organized manually according to the requirements and necessity of the organization. As computational technology developed, information began to be distinguished from data, and systems were developed to produce and organize abstractions, summaries, relationships and generalizations based on the data.

Early business computers were used for simple operations such as tracking sales or payroll data, with little detail or structure. Over time, these computer applications became more complex, hardware storage capacities grew, and technologies improved for connecting previously isolated applications. As more and more data was stored and linked, managers sought greater detail as well as greater abstraction with the aim of creating entire management reports from the raw, stored data. The term "MIS" arose to describe such applications providing managers with information about sales, inventories, and other data that would help in managing the enterprise. Today, the term is used broadly in a number of contexts and includes (but is not limited to): decision support systems, resource and people management applications, enterprise resource planning (ERP), enterprise performance management (EPM), supply chain management (SCM), customer relationship management (CRM), project management and database retrieval applications.

A successful MIS supports a business's long-range plans, providing reports based upon performance analysis in areas critical to those plans, with feedback loops that allow for refinement of every aspect of the enterprise, including recruitment and training regimens. An MIS not only indicates how things are going, but also why and where performance is failing to meet the plan. These reports include near-real-time performance of cost centers and projects with detail sufficient for individual accountability.

Kenneth and Jane Laudon identify five eras of MIS evolution corresponding to five phases in the development of computing technology: 1) mainframe and minicomputer computing, 2) personal computers, 3) client/server networks, 4) enterprise computing, and 5) cloud computing.[3]

The first (mainframe and minicomputer) era was ruled by IBM and its mainframe computers. These computers would often take up whole rooms and require teams to run them, and IBM supplied both the hardware and the software. As technology advanced, these computers were able to handle greater capacities and therefore reduce their cost. Smaller, more affordable minicomputers allowed larger businesses to run their own computing centers in-house.

The second (personal computer) era began in 1965 as microprocessors started to compete with mainframes and minicomputers and accelerated the process of decentralizing computing power from large data centers to smaller offices. In the late 1970s minicomputer technology gave way to personal computers, and relatively low-cost computers became mass-market commodities, allowing businesses to provide their employees access to computing power that ten years before would have cost tens of thousands of dollars. This proliferation of computers created a ready market for interconnecting networks and the popularization of the Internet.

As the complexity of the technology increased and the costs decreased, the need to share information within an enterprise also grew, giving rise to the third (client/server) era, in which computers on a common network were able to access shared information on a server. This allowed large amounts of data to be accessed by thousands and even millions of people simultaneously.

The fourth (enterprise) era, enabled by high-speed networks, tied all aspects of the business enterprise together, offering rich information access encompassing the complete management structure.

The fifth and latest (cloud computing) era of information systems employs networking technology to deliver applications as well as data storage independent of the configuration, location or nature of the hardware. This, along with high-speed cellphone and Wi-Fi networks, led to new levels of mobility in which managers access the MIS from almost anywhere with laptops, tablet PCs, and smartphones.

Most management information systems specialize in particular commercial and industrial sectors, aspects of the enterprise, or management substructure.

▪ Management information systems (MIS), per se, produce fixed, regularly scheduled reports based on data extracted and summarized from the firm's underlying transaction processing systems[4] for middle and operational level managers, to identify and inform structured and semi-structured decision problems.
▪ Decision support systems (DSS) are computer program applications used by middle management to compile information from a wide range of sources to support problem solving and decision making.
▪ An executive information system (EIS) is a reporting tool that provides quick access to summarized reports coming from all company levels and departments such as accounting, human resources and operations.
▪ Marketing information systems are MIS designed specifically for managing the marketing aspects of the business.
▪ Office automation systems (OAS) support communication and productivity in the enterprise by automating work flow and eliminating bottlenecks. OAS may be implemented at any and all levels of management.

Advantages

The following are some of the benefits that can be attained for different types of management information systems.[5]

▪ The company is able to highlight its strengths and weaknesses thanks to revenue reports, employee performance records and similar data. Identifying these aspects can help the company improve its business processes and operations.
▪ An MIS gives an overall picture of the company and acts as a communication and planning tool.
▪ The availability of customer data and feedback can help the company align its business processes with the needs of its customers. Effective management of customer data can help the company perform direct marketing and promotion activities.
▪ Information is considered an important asset for any company in the modern competitive world. Consumer buying trends and behaviors can be predicted by analyzing sales and revenue reports from each operating region of the company.

Enterprise applications

▪ Enterprise systems, also known as enterprise resource planning (ERP) systems, provide an organization with integrated software modules and a unified database which enable efficient planning, managing, and controlling of all core business processes across multiple locations. Modules of ERP systems may include finance, accounting, marketing, human resources, production, inventory management and distribution.
▪ Supply chain management (SCM) systems enable more efficient management of the supply chain by integrating the links in a supply chain. This may include suppliers, manufacturers, wholesalers, retailers and final customers.
▪ Customer relationship management (CRM) systems help businesses manage relationships with potential and current customers and business partners across marketing, sales, and service.
▪ Knowledge management systems (KMS) help organizations facilitate the collection, recording, organization, retrieval, and dissemination of knowledge. This may include documents, accounting records, and unrecorded procedures, practices and skills.

Developing Information Systems

"The actions that are taken to create an information system that solves an organizational problem are called system development" (Laudon & Laudon, 2010). These include system analysis, system design, programming, testing, conversion, production and finally maintenance. These actions usually take place in that order, but some may need to be repeated or carried out concurrently.

System analysis is carried out on the problem the company is facing and is trying to solve with the information system. Whoever performs this step will identify the problem areas and outline a solution through achievable objectives. This analysis will include a feasibility study, which determines the solution's feasibility based on money, time and technology. Essentially, the feasibility study determines whether the solution is a good investment. This process also lays out what the information requirements will be for the new system.

System design shows how the system will fulfill the requirements and objectives laid out in the system analysis phase. The designer will address all the managerial, organizational and technological components the system will involve and need. It is important to note that user information requirements drive the building effort. The users of the system must be involved in the design process to ensure the system meets their needs and operations.

Programming entails translating the design into software code. This work is usually outsourced to another company, or the company buys existing software that meets the system's needs. The key is to make sure the software is user-friendly and compatible with current systems.

Testing can take many different forms but is essential to the successful implementation of the new system. You can conduct unit testing, which tests each program in the system separately, or system testing, which tests the system as a whole. Either way, there should also be acceptance testing, which certifies that the system is ready to use. Regardless of the kind of test, a comprehensive test plan should be developed that identifies what is to be tested and what the expected outcome should be.

Conversion is the process of changing over from the old system to the new one. This can be done in four ways:

▪ Parallel strategy: both old and new systems are run together until the new one functions correctly (this is the safest approach, since you do not lose the old system until the new one is free of bugs).
▪ Direct cutover: the new system replaces the old at an appointed time.
▪ Pilot study: the new system is introduced to a small portion of the operation to see how it fares. If it performs well, it is expanded to the rest of the company.
▪ Phased approach: the new system is introduced in stages.

Whichever way you implement the conversion, you must document what goes well and what goes badly during the process in order to identify benchmarks and fix problems. Conversion also includes training all personnel who are required to use the system to perform their jobs.

Production is the stage at which the new system is officially the system of record for the operation. Maintenance means keeping the system running as it performs the function it was intended to fulfill.

Draft:Deep Learning in Neural Networks:An OverviewTechnical Report IDSIA-03-14/arXiv:1404.7828(v1.5)[cs.NE]J¨u rgen SchmidhuberThe Swiss AI Lab IDSIAIstituto Dalle Molle di Studi sull’Intelligenza ArtificialeUniversity of Lugano&SUPSIGalleria2,6928Manno-LuganoSwitzerland15May2014AbstractIn recent years,deep artificial neural networks(including recurrent ones)have won numerous con-tests in pattern recognition and machine learning.This historical survey compactly summarises relevantwork,much of it from the previous millennium.Shallow and deep learners are distinguished by thedepth of their credit assignment paths,which are chains of possibly learnable,causal links between ac-tions and effects.I review deep supervised learning(also recapitulating the history of backpropagation),unsupervised learning,reinforcement learning&evolutionary computation,and indirect search for shortprograms encoding deep and large networks.PDF of earlier draft(v1):http://www.idsia.ch/∼juergen/DeepLearning30April2014.pdfLATEX source:http://www.idsia.ch/∼juergen/DeepLearning30April2014.texComplete BIBTEXfile:http://www.idsia.ch/∼juergen/bib.bibPrefaceThis is the draft of an invited Deep Learning(DL)overview.One of its goals is to assign credit to those who contributed to the present state of the art.I acknowledge the limitations of attempting to achieve this goal.The DL research community itself may be viewed as a continually evolving,deep network of scientists who have influenced each other in complex ways.Starting from recent DL results,I tried to trace back the origins of relevant ideas through the past half century and beyond,sometimes using“local search”to follow citations of citations backwards in time.Since not all DL publications properly acknowledge earlier relevant work,additional global search strategies were employed,aided by consulting numerous neural network experts.As a result,the present draft mostly consists of references(about800entries so far).Nevertheless,through an expert 
selection bias I may have missed important work.A related bias was surely introduced by my special familiarity with the work of my own DL research group in the past quarter-century.For these reasons,the present draft should be viewed as merely a snapshot of an ongoing credit assignment process.To help improve it,please do not hesitate to send corrections and suggestions to juergen@idsia.ch.Contents1Introduction to Deep Learning(DL)in Neural Networks(NNs)3 2Event-Oriented Notation for Activation Spreading in FNNs/RNNs3 3Depth of Credit Assignment Paths(CAPs)and of Problems4 4Recurring Themes of Deep Learning54.1Dynamic Programming(DP)for DL (5)4.2Unsupervised Learning(UL)Facilitating Supervised Learning(SL)and RL (6)4.3Occam’s Razor:Compression and Minimum Description Length(MDL) (6)4.4Learning Hierarchical Representations Through Deep SL,UL,RL (6)4.5Fast Graphics Processing Units(GPUs)for DL in NNs (6)5Supervised NNs,Some Helped by Unsupervised NNs75.11940s and Earlier (7)5.2Around1960:More Neurobiological Inspiration for DL (7)5.31965:Deep Networks Based on the Group Method of Data Handling(GMDH) (8)5.41979:Convolution+Weight Replication+Winner-Take-All(WTA) (8)5.51960-1981and Beyond:Development of Backpropagation(BP)for NNs (8)5.5.1BP for Weight-Sharing Feedforward NNs(FNNs)and Recurrent NNs(RNNs)..95.6Late1980s-2000:Numerous Improvements of NNs (9)5.6.1Ideas for Dealing with Long Time Lags and Deep CAPs (10)5.6.2Better BP Through Advanced Gradient Descent (10)5.6.3Discovering Low-Complexity,Problem-Solving NNs (11)5.6.4Potential Benefits of UL for SL (11)5.71987:UL Through Autoencoder(AE)Hierarchies (12)5.81989:BP for Convolutional NNs(CNNs) (13)5.91991:Fundamental Deep Learning Problem of Gradient Descent (13)5.101991:UL-Based History Compression Through a Deep Hierarchy of RNNs (14)5.111992:Max-Pooling(MP):Towards MPCNNs (14)5.121994:Contest-Winning Not So Deep NNs (15)5.131995:Supervised Recurrent Very Deep Learner(LSTM RNN) (15)5.142003:More 
Contest-Winning/Record-Setting,Often Not So Deep NNs (16)5.152006/7:Deep Belief Networks(DBNs)&AE Stacks Fine-Tuned by BP (17)5.162006/7:Improved CNNs/GPU-CNNs/BP-Trained MPCNNs (17)5.172009:First Official Competitions Won by RNNs,and with MPCNNs (18)5.182010:Plain Backprop(+Distortions)on GPU Yields Excellent Results (18)5.192011:MPCNNs on GPU Achieve Superhuman Vision Performance (18)5.202011:Hessian-Free Optimization for RNNs (19)5.212012:First Contests Won on ImageNet&Object Detection&Segmentation (19)5.222013-:More Contests and Benchmark Records (20)5.22.1Currently Successful Supervised Techniques:LSTM RNNs/GPU-MPCNNs (21)5.23Recent Tricks for Improving SL Deep NNs(Compare Sec.5.6.2,5.6.3) (21)5.24Consequences for Neuroscience (22)5.25DL with Spiking Neurons? (22)6DL in FNNs and RNNs for Reinforcement Learning(RL)236.1RL Through NN World Models Yields RNNs With Deep CAPs (23)6.2Deep FNNs for Traditional RL and Markov Decision Processes(MDPs) (24)6.3Deep RL RNNs for Partially Observable MDPs(POMDPs) (24)6.4RL Facilitated by Deep UL in FNNs and RNNs (25)6.5Deep Hierarchical RL(HRL)and Subgoal Learning with FNNs and RNNs (25)6.6Deep RL by Direct NN Search/Policy Gradients/Evolution (25)6.7Deep RL by Indirect Policy Search/Compressed NN Search (26)6.8Universal RL (27)7Conclusion271Introduction to Deep Learning(DL)in Neural Networks(NNs) Which modifiable components of a learning system are responsible for its success or failure?What changes to them improve performance?This has been called the fundamental credit assignment problem(Minsky, 1963).There are general credit assignment methods for universal problem solvers that are time-optimal in various theoretical senses(Sec.6.8).The present survey,however,will focus on the narrower,but now commercially important,subfield of Deep Learning(DL)in Artificial Neural Networks(NNs).We are interested in accurate credit assignment across possibly many,often nonlinear,computational stages of NNs.Shallow NN-like models have been 
around for many decades if not centuries(Sec.5.1).Models with several successive nonlinear layers of neurons date back at least to the1960s(Sec.5.3)and1970s(Sec.5.5). An efficient gradient descent method for teacher-based Supervised Learning(SL)in discrete,differentiable networks of arbitrary depth called backpropagation(BP)was developed in the1960s and1970s,and ap-plied to NNs in1981(Sec.5.5).BP-based training of deep NNs with many layers,however,had been found to be difficult in practice by the late1980s(Sec.5.6),and had become an explicit research subject by the early1990s(Sec.5.9).DL became practically feasible to some extent through the help of Unsupervised Learning(UL)(e.g.,Sec.5.10,5.15).The1990s and2000s also saw many improvements of purely super-vised DL(Sec.5).In the new millennium,deep NNs havefinally attracted wide-spread attention,mainly by outperforming alternative machine learning methods such as kernel machines(Vapnik,1995;Sch¨o lkopf et al.,1998)in numerous important applications.In fact,supervised deep NNs have won numerous of-ficial international pattern recognition competitions(e.g.,Sec.5.17,5.19,5.21,5.22),achieving thefirst superhuman visual pattern recognition results in limited domains(Sec.5.19).Deep NNs also have become relevant for the more generalfield of Reinforcement Learning(RL)where there is no supervising teacher (Sec.6).Both feedforward(acyclic)NNs(FNNs)and recurrent(cyclic)NNs(RNNs)have won contests(Sec.5.12,5.14,5.17,5.19,5.21,5.22).In a sense,RNNs are the deepest of all NNs(Sec.3)—they are general computers more powerful than FNNs,and can in principle create and process memories of ar-bitrary sequences of input patterns(e.g.,Siegelmann and Sontag,1991;Schmidhuber,1990a).Unlike traditional methods for automatic sequential program synthesis(e.g.,Waldinger and Lee,1969;Balzer, 1985;Soloway,1986;Deville and Lau,1994),RNNs can learn programs that mix sequential and parallel information processing in a natural and efficient 
way, exploiting the massive parallelism viewed as crucial for sustaining the rapid decline of computation cost observed over the past 75 years.
The rest of this paper is structured as follows. Sec. 2 introduces a compact, event-oriented notation that is simple yet general enough to accommodate both FNNs and RNNs. Sec. 3 introduces the concept of Credit Assignment Paths (CAPs) to measure whether learning in a given NN application is of the deep or shallow type. Sec. 4 lists recurring themes of DL in SL, UL, and RL. Sec. 5 focuses on SL and UL, and on how UL can facilitate SL, although pure SL has become dominant in recent competitions (Sec. 5.17-5.22). Sec. 5 is arranged in a historical timeline format with subsections on important inspirations and technical contributions. Sec. 6 on deep RL discusses traditional Dynamic Programming (DP)-based RL combined with gradient-based search techniques for SL or UL in deep NNs, as well as general methods for direct and indirect search in the weight space of deep FNNs and RNNs, including successful policy gradient and evolutionary methods.

2 Event-Oriented Notation for Activation Spreading in FNNs/RNNs

Throughout this paper, let $i, j, k, t, p, q, r$ denote positive integer variables assuming ranges implicit in the given contexts. Let $n, m, T$ denote positive integer constants.
An NN’s topology may change over time (e.g., Fahlman, 1991; Ring, 1991; Weng et al., 1992; Fritzke, 1994). At any given moment, it can be described as a finite subset of units (or nodes or neurons) $N = \{u_1, u_2, \ldots\}$ and a finite set $H \subseteq N \times N$ of directed edges or connections between nodes. FNNs are acyclic graphs, RNNs cyclic. The first (input) layer is the set of input units, a subset of $N$. In FNNs, the $k$-th layer ($k > 1$) is the set of all nodes $u \in N$ such that there is an edge path of length $k-1$ (but no longer path) between some input unit and $u$. There may be shortcut connections between distant layers.
The NN’s behavior or program is determined by a set of real-valued, possibly modifiable, parameters or weights $w_i$ ($i = 1, \ldots, n$). We now focus
on a single finite episode or epoch of information processing and activation spreading, without learning through weight changes. The following slightly unconventional notation is designed to compactly describe what is happening during the runtime of the system.
During an episode, there is a partially causal sequence $x_t$ ($t = 1, \ldots, T$) of real values that I call events. Each $x_t$ is either an input set by the environment, or the activation of a unit that may directly depend on other $x_k$ ($k < t$) through a current NN topology-dependent set $in_t$ of indices $k$ representing incoming causal connections or links. Let the function $v$ encode topology information and map such event index pairs $(k, t)$ to weight indices. For example, in the non-input case we may have $x_t = f_t(net_t)$ with real-valued $net_t = \sum_{k \in in_t} x_k w_{v(k,t)}$ (additive case) or $net_t = \prod_{k \in in_t} x_k w_{v(k,t)}$ (multiplicative case), where $f_t$ is a typically nonlinear real-valued activation function such as tanh. In many recent competition-winning NNs (Sec. 5.19, 5.21, 5.22) there also are events of the type $x_t = \max_{k \in in_t}(x_k)$; some network types may also use complex polynomial activation functions (Sec. 5.3). $x_t$ may directly affect certain $x_k$ ($k > t$) through outgoing connections or links represented through a current set $out_t$ of indices $k$ with $t \in in_k$. Some non-input events are called output events.
Note that many of the $x_t$ may refer to different, time-varying activations of the same unit in sequence-processing RNNs (e.g., Williams, 1989, “unfolding in time”), or also in FNNs sequentially exposed to time-varying input patterns of a large training set encoded as input events. During an episode, the same weight may get reused over and over again in topology-dependent ways, e.g., in RNNs, or in convolutional NNs (Sec. 5.4, 5.8). I call this weight sharing across space and/or time. Weight sharing may greatly reduce the NN’s descriptive complexity, which is the number of bits of information required to describe the NN (Sec. 4.3).
In Supervised Learning (SL), certain NN output events $x_t$ may be associated with teacher-given, real-valued labels or targets $d_t$ yielding errors $e_t$, e.g., $e_t = \frac{1}{2}(x_t - d_t)^2$. A typical goal of supervised NN training is to find weights that yield episodes with small total error $E$, the sum of all such $e_t$. The hope is that the NN will generalize well in later episodes, causing only small errors on previously unseen sequences of input events. Many alternative error functions for SL and UL are possible.
SL assumes that input events are independent of earlier output events (which may affect the environment through actions causing subsequent perceptions). This assumption does not hold in the broader fields of Sequential Decision Making and Reinforcement Learning (RL) (Kaelbling et al., 1996; Sutton and Barto, 1998; Hutter, 2005) (Sec. 6). In RL, some of the input events may encode real-valued reward signals given by the environment, and a typical goal is to find weights that yield episodes with a high sum of reward signals, through sequences of appropriate output actions.
Sec. 5.5 will use the notation above to compactly describe a central algorithm of DL, namely, backpropagation (BP) for supervised weight-sharing FNNs and RNNs. (FNNs may be viewed as RNNs with certain fixed zero weights.) Sec. 6 will address the more general RL case.

3 Depth of Credit Assignment Paths (CAPs) and of Problems

To measure whether credit assignment in a given NN application is of the deep or shallow type, I introduce the concept of Credit Assignment Paths or CAPs, which are chains of possibly causal links between events.
Let us first focus on SL. Consider two events $x_p$ and $x_q$ ($1 \le p < q \le T$). Depending on the application, they may have a Potential Direct Causal Connection (PDCC) expressed by the Boolean predicate $pdcc(p, q)$, which is true if and only if $p \in in_q$. Then the 2-element list $(p, q)$ is defined to be a CAP from $p$ to $q$ (a minimal one). A learning algorithm may be allowed to change $w_{v(p,q)}$ to improve performance in future episodes.
More general, possibly indirect, Potential Causal Connections (PCC) are
expressed by the recursively defined Boolean predicate $pcc(p, q)$, which in the SL case is true only if $pdcc(p, q)$, or if $pcc(p, k)$ for some $k$ and $pdcc(k, q)$. In the latter case, appending $q$ to any CAP from $p$ to $k$ yields a CAP from $p$ to $q$ (this is a recursive definition, too). The set of such CAPs may be large but is finite. Note that the same weight may affect many different PDCCs between successive events listed by a given CAP, e.g., in the case of RNNs, or weight-sharing FNNs.
Suppose a CAP has the form $(\ldots, k, t, \ldots, q)$, where $k$ and $t$ (possibly $t = q$) are the first successive elements with modifiable $w_{v(k,t)}$. Then the length of the suffix list $(t, \ldots, q)$ is called the CAP’s depth (which is 0 if there are no modifiable links at all). This depth limits how far backwards credit assignment can move down the causal chain to find a modifiable weight.¹
Suppose an episode and its event sequence $x_1, \ldots, x_T$ satisfy a computable criterion used to decide whether a given problem has been solved (e.g., total error $E$ below some threshold). Then the set of used weights is called a solution to the problem, and the depth of the deepest CAP within the sequence is called the solution’s depth. There may be other solutions (yielding different event sequences) with different depths. Given some fixed NN topology, the smallest depth of any solution is called the problem’s depth.
Sometimes we also speak of the depth of an architecture: SL FNNs with fixed topology imply a problem-independent maximal problem depth bounded by the number of non-input layers. Certain SL RNNs with fixed weights for all connections except those to output units (Jaeger, 2001; Maass et al., 2002; Jaeger, 2004; Schrauwen et al., 2007) have a maximal problem depth of 1, because only the final links in the corresponding CAPs are modifiable. In general, however, RNNs may learn to solve problems of potentially unlimited depth.
Note that the definitions above are solely based on the depths of causal chains, and agnostic of the temporal distance between events. For example, shallow
FNNs perceiving large “time windows” of input events may correctly classify long input sequences through appropriate output events, and thus solve shallow problems involving long time lags between relevant events.
At which problem depth does Shallow Learning end, and Deep Learning begin? Discussions with DL experts have not yet yielded a conclusive response to this question. Instead of committing myself to a precise answer, let me just define for the purposes of this overview: problems of depth > 10 require Very Deep Learning.
The difficulty of a problem may have little to do with its depth. Some NNs can quickly learn to solve certain deep problems, e.g., through random weight guessing (Sec. 5.9) or other types of direct search (Sec. 6.6) or indirect search (Sec. 6.7) in weight space, or through training an NN first on shallow problems whose solutions may then generalize to deep problems, or through collapsing sequences of (non)linear operations into a single (non)linear operation (but see an analysis of non-trivial aspects of deep linear networks: Baldi and Hornik, 1994, Section B). In general, however, finding an NN that precisely models a given training set is an NP-complete problem (Judd, 1990; Blum and Rivest, 1992), also in the case of deep NNs (Síma, 1994; de Souto et al., 1999; Windisch, 2005); compare a survey of negative results (Síma, 2002, Section 1).
Above we have focused on SL. In the more general case of RL in unknown environments, $pcc(p, q)$ is also true if $x_p$ is an output event and $x_q$ any later input event: any action may affect the environment and thus any later perception. (In the real world, the environment may even influence non-input events computed on a physical hardware entangled with the entire universe, but this is ignored here.) It is possible to model and replace such unmodifiable environmental PCCs through a part of the NN that has already learned to predict (through some of its units) input events (including reward signals) from former input events and actions (Sec. 6.1). Its weights are
frozen, but can help to assign credit to other, still modifiable weights used to compute actions (Sec. 6.1). This approach may lead to very deep CAPs though.
Some DL research is about automatically rephrasing problems such that their depth is reduced (Sec. 4). In particular, sometimes UL is used to make SL problems less deep, e.g., Sec. 5.10. Often Dynamic Programming (Sec. 4.1) is used to facilitate certain traditional RL problems, e.g., Sec. 6.2. Sec. 5 focuses on CAPs for SL, Sec. 6 on the more complex case of RL.

¹ An alternative would be to count only modifiable links when measuring depth. In many typical NN applications this would not make a difference, but in some it would, e.g., Sec. 6.1.

4 Recurring Themes of Deep Learning

4.1 Dynamic Programming (DP) for DL

One recurring theme of DL is Dynamic Programming (DP) (Bellman, 1957), which can help to facilitate credit assignment under certain assumptions. For example, in SL NNs, backpropagation itself can be viewed as a DP-derived method (Sec. 5.5). In traditional RL based on strong Markovian assumptions, DP-derived methods can help to greatly reduce problem depth (Sec. 6.2). DP algorithms are also essential for systems that combine concepts of NNs and graphical models, such as Hidden Markov Models (HMMs) (Stratonovich, 1960; Baum and Petrie, 1966) and Expectation Maximization (EM) (Dempster et al., 1977), e.g., (Bottou, 1991; Bengio, 1991; Bourlard and Morgan, 1994; Baldi and Chauvin, 1996; Jordan and Sejnowski, 2001; Bishop, 2006; Poon and Domingos, 2011; Dahl et al., 2012; Hinton et al., 2012a).

4.2 Unsupervised Learning (UL) Facilitating Supervised Learning (SL) and RL

Another recurring theme is how UL can facilitate both SL (Sec. 5) and RL (Sec. 6). UL (Sec. 5.6.4) is normally used to encode raw incoming data such as video or speech streams in a form that is more convenient for subsequent goal-directed learning. In particular, codes that describe the original data in a less redundant or more compact way can be fed into SL (Sec. 5.10, 5.15) or RL machines (Sec. 6.4), whose search spaces may thus become
smaller (and whose CAPs shallower) than those necessary for dealing with the raw data. UL is closely connected to the topics of regularization and compression (Sec. 4.3, 5.6.3).

4.3 Occam’s Razor: Compression and Minimum Description Length (MDL)

Occam’s razor favors simple solutions over complex ones. Given some programming language, the principle of Minimum Description Length (MDL) can be used to measure the complexity of a solution candidate by the length of the shortest program that computes it (e.g., Solomonoff, 1964; Kolmogorov, 1965b; Chaitin, 1966; Wallace and Boulton, 1968; Levin, 1973a; Rissanen, 1986; Blumer et al., 1987; Li and Vitányi, 1997; Grünwald et al., 2005). Some methods explicitly take into account program runtime (Allender, 1992; Watanabe, 1992; Schmidhuber, 2002, 1995); many consider only programs with constant runtime, written in non-universal programming languages (e.g., Rissanen, 1986; Hinton and van Camp, 1993). In the NN case, the MDL principle suggests that low NN weight complexity corresponds to high NN probability in the Bayesian view (e.g., MacKay, 1992; Buntine and Weigend, 1991; De Freitas, 2003), and to high generalization performance (e.g., Baum and Haussler, 1989), without overfitting the training data. Many methods have been proposed for regularizing NNs, that is, searching for solution-computing, low-complexity SL NNs (Sec. 5.6.3) and RL NNs (Sec. 6.7). This is closely related to certain UL methods (Sec. 4.2, 5.6.4).

4.4 Learning Hierarchical Representations Through Deep SL, UL, RL

Many methods of Good Old-Fashioned Artificial Intelligence (GOFAI) (Nilsson, 1980) as well as more recent approaches to AI (Russell et al., 1995) and Machine Learning (Mitchell, 1997) learn hierarchies of more and more abstract data representations. For example, certain methods of syntactic pattern recognition (Fu, 1977) such as grammar induction discover hierarchies of formal rules to model observations.
The partially (un)supervised Automated Mathematician / EURISKO (Lenat, 1983; Lenat and Brown, 1984) continually learns concepts by combining previously learnt concepts. Such hierarchical representation learning (Ring, 1994; Bengio et al., 2013; Deng and Yu, 2014) is also a recurring theme of DL NNs for SL (Sec. 5), UL-aided SL (Sec. 5.7, 5.10, 5.15), and hierarchical RL (Sec. 6.5). Often, abstract hierarchical representations are natural by-products of data compression (Sec. 4.3), e.g., Sec. 5.10.

4.5 Fast Graphics Processing Units (GPUs) for DL in NNs

While the previous millennium saw several attempts at creating fast NN-specific hardware (e.g., Jackel et al., 1990; Faggin, 1992; Ramacher et al., 1993; Widrow et al., 1994; Heemskerk, 1995; Korkin et al., 1997; Urlbe, 1999), and at exploiting standard hardware (e.g., Anguita et al., 1994; Muller et al., 1995; Anguita and Gomes, 1996), the new millennium brought a DL breakthrough in form of cheap, multi-processor graphics cards or GPUs. GPUs are widely used for video games, a huge and competitive market that has driven down hardware prices. GPUs excel at fast matrix and vector multiplications required not only for convincing virtual realities but also for NN training, where they can speed up learning by a factor of 50 and more. Some of the GPU-based FNN implementations (Sec. 5.16-5.19) have greatly contributed to recent successes in contests for pattern recognition (Sec. 5.19-5.22), image segmentation (Sec. 5.21), and object detection (Sec. 5.21-5.22).

5 Supervised NNs, Some Helped by Unsupervised NNs

The main focus of current practical applications is on Supervised Learning (SL), which has dominated recent pattern recognition contests (Sec. 5.17-5.22). Several methods, however, use additional Unsupervised Learning (UL) to facilitate SL (Sec. 5.7, 5.10, 5.15). It does make sense to treat SL and UL in the same section: often gradient-based methods, such as BP (Sec. 5.5.1), are used to optimize objective functions of both UL and SL, and the boundary between SL and UL may blur, for example, when it comes to time series
prediction and sequence classification, e.g., Sec. 5.10, 5.12.
A historical timeline format will help to arrange subsections on important inspirations and technical contributions (although such a subsection may span a time interval of many years). Sec. 5.1 briefly mentions early, shallow NN models since the 1940s, Sec. 5.2 additional early neurobiological inspiration relevant for modern Deep Learning (DL). Sec. 5.3 is about GMDH networks (since 1965), perhaps the first (feedforward) DL systems. Sec. 5.4 is about the relatively deep Neocognitron NN (1979) which is similar to certain modern deep FNN architectures, as it combines convolutional NNs (CNNs), weight pattern replication, and winner-take-all (WTA) mechanisms. Sec. 5.5 uses the notation of Sec. 2 to compactly describe a central algorithm of DL, namely, backpropagation (BP) for supervised weight-sharing FNNs and RNNs. It also summarizes the history of BP 1960-1981 and beyond. Sec. 5.6 describes problems encountered in the late 1980s with BP for deep NNs, and mentions several ideas from the previous millennium to overcome them. Sec. 5.7 discusses a first hierarchical stack of coupled UL-based Autoencoders (AEs); this concept resurfaced in the new millennium (Sec. 5.15). Sec. 5.8 is about applying BP to CNNs, which is important for today’s DL applications. Sec. 5.9 explains BP’s Fundamental DL Problem (of vanishing/exploding gradients) discovered in 1991. Sec. 5.10 explains how a deep RNN stack of 1991 (the History Compressor) pre-trained by UL helped to solve previously unlearnable DL benchmarks requiring Credit Assignment Paths (CAPs, Sec. 3) of depth 1000 and more. Sec. 5.11 discusses a particular WTA method called Max-Pooling (MP) important in today’s DL FNNs. Sec. 5.12 mentions a first important contest won by SL NNs in 1994. Sec. 5.13 describes a purely supervised DL RNN (Long Short-Term Memory, LSTM) for problems of depth 1000 and more. Sec. 5.14 mentions an early contest of 2003 won by an ensemble of shallow NNs, as well as good pattern recognition results with CNNs and LSTM RNNs (2003). Sec. 5.15 is
mostly about Deep Belief Networks (DBNs, 2006) and related stacks of Autoencoders (AEs, Sec. 5.7) pre-trained by UL to facilitate BP-based SL. Sec. 5.16 mentions the first BP-trained MPCNNs (2007) and GPU-CNNs (2006). Sec. 5.17-5.22 focus on official competitions with secret test sets won by (mostly purely supervised) DL NNs since 2009, in sequence recognition, image classification, image segmentation, and object detection. Many RNN results depended on LSTM (Sec. 5.13); many FNN results depended on GPU-based FNN code developed since 2004 (Sec. 5.16, 5.17, 5.18, 5.19), in particular, GPU-MPCNNs (Sec. 5.19).

5.1 1940s and Earlier

NN research started in the 1940s (e.g., McCulloch and Pitts, 1943; Hebb, 1949); compare also later work on learning NNs (Rosenblatt, 1958, 1962; Widrow and Hoff, 1962; Grossberg, 1969; Kohonen, 1972; von der Malsburg, 1973; Narendra and Thathatchar, 1974; Willshaw and von der Malsburg, 1976; Palm, 1980; Hopfield, 1982). In a sense NNs have been around even longer, since early supervised NNs were essentially variants of linear regression methods going back at least to the early 1800s (e.g., Legendre, 1805; Gauss, 1809, 1821). Early NNs had a maximal CAP depth of 1 (Sec. 3).

5.2 Around 1960: More Neurobiological Inspiration for DL

Simple cells and complex cells were found in the cat’s visual cortex (e.g., Hubel and Wiesel, 1962; Wiesel and Hubel, 1959). These cells fire in response to certain properties of visual sensory inputs, such as the orientation of edges. Complex cells exhibit more spatial invariance than simple cells. This inspired later deep NN architectures (Sec. 5.4) used in certain modern award-winning Deep Learners (Sec. 5.19-5.22).

5.3 1965: Deep Networks Based on the Group Method of Data Handling (GMDH)

Networks trained by the Group Method of Data Handling (GMDH) (Ivakhnenko and Lapa, 1965; Ivakhnenko et al., 1967; Ivakhnenko, 1968, 1971) were perhaps the first DL systems of the Feedforward Multilayer Perceptron type. The units of GMDH nets may have polynomial activation functions implementing Kolmogorov-Gabor polynomials (more general than
traditional NN activation functions). Given a training set, layers are incrementally grown and trained by regression analysis, then pruned with the help of a separate validation set (using today’s terminology), where Decision Regularisation is used to weed out superfluous units. The numbers of layers and units per layer can be learned in problem-dependent fashion. This is a good example of hierarchical representation learning (Sec. 4.4). There have been numerous applications of GMDH-style networks, e.g. (Ikeda et al., 1976; Farlow, 1984; Madala and Ivakhnenko, 1994; Ivakhnenko, 1995; Kondo, 1998; Kordík et al., 2003; Witczak et al., 2006; Kondo and Ueno, 2008).

5.4 1979: Convolution + Weight Replication + Winner-Take-All (WTA)

Apart from deep GMDH networks (Sec. 5.3), the Neocognitron (Fukushima, 1979, 1980, 2013a) was perhaps the first artificial NN that deserved the attribute deep, and the first to incorporate the neurophysiological insights of Sec. 5.2. It introduced convolutional NNs (today often called CNNs or convnets), where the (typically rectangular) receptive field of a convolutional unit with given weight vector is shifted step by step across a 2-dimensional array of input values, such as the pixels of an image. The resulting 2D array of subsequent activation events of this unit can then provide inputs to higher-level units, and so on. Due to massive weight replication (Sec. 2), relatively few parameters may be necessary to describe the behavior of such a convolutional layer.
Competition layers have WTA subsets whose maximally active units are the only ones to adopt non-zero activation values. They essentially “down-sample” the competition layer’s input. This helps to create units whose responses are insensitive to small image shifts (compare Sec. 5.2).
The Neocognitron is very similar to the architecture of modern, contest-winning, purely supervised, feedforward, gradient-based Deep Learners with alternating convolutional and competition layers (e.g., Sec. 5.19-5.22). Fukushima, however, did not set the weights by supervised
backpropagation (Sec. 5.5, 5.8), but by local unsupervised learning rules (e.g., Fukushima, 2013b), or by pre-wiring. In that sense he did not care for the DL problem (Sec. 5.9), although his architecture was comparatively deep indeed. He also used Spatial Averaging (Fukushima, 1980, 2011) instead of Max-Pooling (MP, Sec. 5.11), currently a particularly convenient and popular WTA mechanism. Today’s CNN-based DL machines profit a lot from later CNN work (e.g., LeCun et al., 1989; Ranzato et al., 2007) (Sec. 5.8, 5.16, 5.19).

5.5 1960-1981 and Beyond: Development of Backpropagation (BP) for NNs

The minimisation of errors through gradient descent (Hadamard, 1908) in the parameter space of complex, nonlinear, differentiable, multi-stage, NN-related systems has been discussed at least since the early 1960s (e.g., Kelley, 1960; Bryson, 1961; Bryson and Denham, 1961; Pontryagin et al., 1961; Dreyfus, 1962; Wilkinson, 1965; Amari, 1967; Bryson and Ho, 1969; Director and Rohrer, 1969; Griewank, 2012), initially within the framework of Euler-LaGrange equations in the Calculus of Variations (e.g., Euler, 1744).
Steepest descent in such systems can be performed (Bryson, 1961; Kelley, 1960; Bryson and Ho, 1969) by iterating the ancient chain rule (Leibniz, 1676; L’Hôpital, 1696) in Dynamic Programming (DP) style (Bellman, 1957). A simplified derivation of the method uses the chain rule only (Dreyfus, 1962).
The methods of the 1960s were already efficient in the DP sense. However, they backpropagated derivative information through standard Jacobian matrix calculations from one “layer” to the previous one, explicitly addressing neither direct links across several layers nor potential additional efficiency gains due to network sparsity (but perhaps such enhancements seemed obvious to the authors).
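The event-oriented notation of Sec. 2, on which the BP description builds, can be made concrete with a small sketch. It covers only the additive case and the squared error; the function names, the dictionary-based encoding of the index sets and of the map v, and the toy network are assumptions made for illustration and do not come from the survey.

```python
import math

def run_episode(inputs, in_sets, v, w, f=math.tanh):
    """Spread activation through one episode (additive case only).

    inputs  : {t: value} for events set by the environment
    in_sets : {t: [k, ...]} the index set in_t of each non-input event (k < t)
    v       : {(k, t): weight index}, the topology map v
    w       : list of weights
    Every t in 1..T must appear in either inputs or in_sets.
    """
    T = max(list(inputs) + list(in_sets))
    x = [0.0] * (T + 1)  # x[0] is padding so that x[t] corresponds to x_t
    for t in range(1, T + 1):
        if t in inputs:
            x[t] = inputs[t]
        else:
            # net_t = sum over k in in_t of x_k * w_{v(k,t)}
            net_t = sum(x[k] * w[v[(k, t)]] for k in in_sets[t])
            x[t] = f(net_t)
    return x

def total_error(x, targets):
    """E = sum of e_t with e_t = 1/2 (x_t - d_t)^2 over labelled output events."""
    return sum(0.5 * (x[t] - d) ** 2 for t, d in targets.items())

# Hypothetical toy episode: two input events, one hidden event, one output event.
inputs = {1: 1.0, 2: -1.0}
in_sets = {3: [1, 2], 4: [3]}
v = {(1, 3): 0, (2, 3): 1, (3, 4): 0}  # weight 0 is shared by two links
w = [0.5, 0.25]
x = run_episode(inputs, in_sets, v, w)
E = total_error(x, {4: 0.0})
```

In this toy episode the link (1, 3) and the link (3, 4) reuse the same weight index, which is the weight sharing across space and/or time described in Sec. 2.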

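An Improved Occlusion Dictionary Construction Method for Sparse Representation Classification

Chapter 1: Introduction
Introduces the background and significance of sparse representation classification (SRC) and the occlusion dictionary construction problem found in existing research.

Briefly outlines the main research content and contributions of this paper.

Chapter 2: Related Work
Surveys sparse representation classification and its applications in image recognition, together with the problems that existing methods already solve.

Focuses on the limitations and shortcomings of existing methods, laying the theoretical groundwork for the improved method proposed here.

Chapter 3: The Improved Occlusion Dictionary Construction Method
Describes the proposed improvement in detail: an occlusion dictionary construction method based on a non-empty subspace threshold.

Explains the method's theoretical basis and concrete implementation, and compares and analyzes it against existing methods.

Chapter 4: Experimental Results and Analysis
Through experiments on real datasets, compares the classification accuracy and efficiency of the improved method and existing methods, verifies the effectiveness and superiority of the improved method, and analyzes and discusses the results.

Chapter 5: Summary and Outlook
Summarizes the main work and results, discusses the importance and application prospects of the improved method, and proposes directions for further improvement.

With the development and spread of computer technology, image recognition is now widely applied across many fields.

Within this field, sparse representation classification, as an effective classification method, has attracted much attention for its advantages in recognition accuracy and efficiency.

SRC is a dictionary-learning-based image recognition method: it represents an image as a vector formed by a linear combination of dictionary atoms, in which most atom coefficients are zero.

At classification time, the image to be classified is expressed as a sparse vector, which allows fast classification and recognition.

Its high recognition accuracy and low computational cost have brought it growing attention in recent years.

In practice, however, SRC also runs into problems.

One of them is the occlusion dictionary construction problem.

Here, an occlusion dictionary refers to the situation in which parts of an image are occluded or missing, so that some dictionary atoms can no longer distinguish images of different classes.

Such occlusion is common and seriously degrades the accuracy and robustness of image recognition.

Existing approaches to this problem mainly rely on strategies such as incremental dictionary learning and gradient iteration, but they suffer from long training times, oversized dictionaries, and low recognition efficiency, and need further optimization.

On this basis, this paper proposes an improved occlusion dictionary construction method, namely one based on a non-empty subspace threshold.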

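Part 1: Student Grade Management System Design Report
Reflections on designing a student grade information management system

Nanjing University of Science and Technology, Database Course Project
Author:
Student ID:
School (Department): School of Computer Science and Engineering
Major: Network Engineering
Title: Student Grade Management System
Supervisor: 衷宜
September 2013

Contents
1. Overview
2. Requirements Analysis
3. System Design
4. System Implementation
5. System Testing
6. Takeaways and Reflections
7. Appendix
8. References

1. Overview
1.1 Project Background
We live in a rapidly developing information age.

Information processing is indispensable in every industry, and this is the setting in which computers have come to be widely used in information management systems.

The greatest benefit of computers is that they make information management possible.

Using computers to control information not only raises work efficiency but also greatly improves security.

For complex information management in particular, computers can bring their advantages fully into play.

Computer-based information management is closely tied to the development of information management systems: system development is the precondition for system management.

As universities expand enrollment, the number of enrolled students has become very large.

Good grade management software plays a positive role in strengthening the management of students' grades.

It also makes it convenient for students to check their grades at any time, for teachers to enter grades, and for administrators to maintain information, saving the school considerable staff effort. This system was designed to manage student grade information well.

1.2 Purpose
First, grade management is an indispensable part of any school, and its content matters greatly to administrators, students, and parents, so a good student grade management system should offer users ample information and fast ways to query it.

Such a system is extremely important for strengthening a school's grade management. As one kind of computer application, computerized management of grade information has advantages that manual management cannot match.

Examples include fast retrieval, easy lookup, high reliability, large storage capacity, good confidentiality, long lifetime, and low cost.

These advantages can greatly improve administrators' efficiency, and they are an important condition for a school to move toward scientific, standardized management in line with international practice.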

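A method modified with the keyword abstract is called an abstract method.

abstract int min(int x, int y);

An abstract method may only be declared, not implemented (it has no method body). final and abstract may not be used together on the same method or class, and static may not modify an abstract method; that is, an abstract method must be an instance method.

1. An abstract class may contain abstract methods

Compared with an ordinary (non-abstract) class, an abstract class may contain abstract methods as well as non-abstract methods (a non-abstract class may not contain abstract methods).

Note: an abstract class may also contain no abstract methods at all.

2. An abstract class cannot be instantiated with the new operator.

3. Subclasses of an abstract class

If a non-abstract class is a subclass of an abstract class, it must override the parent's abstract methods, that is, drop the abstract modifier and provide a method body.

If an abstract class is a subclass of an abstract class, it may either override the parent's abstract methods or simply inherit them.

4. A variable of an abstract class type can hold an upcast object

A variable can be declared with an abstract class as its type. Although no object of that class can be created with the new operator, the variable can refer to a subclass object as an upcast reference, and the methods overridden by the subclass can then be called through it.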

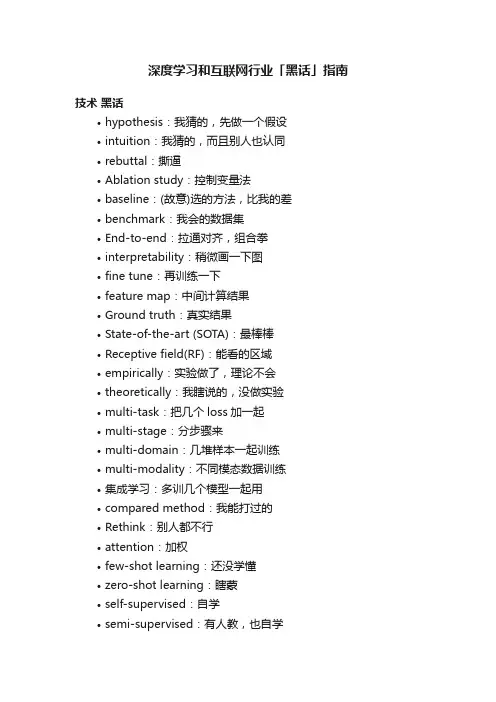

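A Guide to Deep Learning and Internet-Industry Jargon

Technical jargon
• hypothesis: my guess; let's assume it for now
• intuition: my guess, and other people agree
• rebuttal: a slanging match
• Ablation study: the control-variable method
• baseline: a (deliberately) chosen method that is worse than mine
• benchmark: the datasets I know how to use
• End-to-end: pull everything through and align it; a combination punch
• interpretability: draw a few figures
• fine tune: train it a bit more
• feature map: intermediate computation results
• Ground truth: the real result
• State-of-the-art (SOTA): the very best
• Receptive field (RF): the region it can see
• empirically: ran the experiments, cannot do the theory
• theoretically: I made it up and ran no experiments
• multi-task: add several losses together
• multi-stage: do it step by step
• multi-domain: train on several piles of samples together
• multi-modality: train on data of different modalities
• 集成学习 (ensemble learning): train several models and use them together
• compared method: the ones I can beat
• Rethink: nobody else got it right
• attention: weighting
• few-shot learning: has not really learned it yet
• zero-shot learning: blind guessing
• self-supervised: self-taught
• semi-supervised: partly taught, partly self-taught
• unsupervised: nobody teaches it, so it learns haphazardly
• pixel-wise: at the pixel level
• image-wise: at the image level
• anchor: anchor point
• Short connection: taking a shortcut

Industry jargon
• 接地气 (down to earth): don't make it too fancy; keep it a bit rustic
• 联动 (linkage): two brands @ each other
• 背书 (endorsement): get someone influential to praise me
• 咱们拉个群吧 (let's set up a group chat): talking to you is exhausting
• 在做了 (working on it): progress 0%
• 有案例吗? (any case studies?): is there something ready-made to copy?
• 能承受较大的工作压力 (can handle heavy work pressure): overtime
• 有强烈责任心 (strong sense of responsibility): nobody leaves until it is done
• 包三餐 (three meals provided): overtime both morning and night
• 扁平化管理 (flat management): the boss sits in your office

There are many more that are not listed one by one: 复盘 (review), 赋能 (enable), 抓手 (grip), 对标 (benchmark), 沉淀 (internalize), 对齐 (alignment), 拉通 (stream-line), 倒逼 (push back), 落地 (landing), 中台 (middle office), 漏斗 (funnel), 闭环 (closed loop), 打法 (tactics), 履约 (delivery), 串联 (cascade), 纽带 (bond), 矩阵 (matrix), 协同 (collaboration), 反哺 (give back), 交互 (inter-link), 兼容 (inclusive), 包装 (package), 相应 (relative), 刺激 (stimulate), 规模 (scale), 重组 (restructure), 量化 (measurable), 宽松 (loose), 认知 (perception), 发力 (put the force on), 智能 (smart), 颗粒度 (granularity), 方法论 (methodology), 组合拳 (blended measures), 生命周期 (life cycle).

Source: coggle.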

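Extended Backus-Naur Form (reposted from Wikipedia)
https:///wiki/%E6%89%A9%E5%B1%95%E5%B7%B4%E7%A7%91%E6%96%AF%E8%8C%83%E5%BC%8F

Extended Backus-Naur Form (EBNF) is a metasyntax (metalanguage) notation for expressing context-free grammars, which are a formal way of describing computer programming languages and formal languages.

It is an extension of the basic Backus-Naur Form (BNF) metasyntax notation.

It was originally developed by Niklaus Wirth, and the most commonly used EBNF variants are defined by standards, in particular ISO/IEC 14977.

Basics
Text such as source code is made up of terminal symbols, that is, visible characters, digits, punctuation marks, whitespace characters, and so on.

EBNF defines production rules that assign sequences of symbols to nonterminals:

    digit excluding zero = "1" | "2" | "3" | "4" | "5" | "6" | "7" | "8" | "9" ;
    digit = "0" | digit excluding zero ;

This production rule defines the nonterminal digit, which stands on the left side of the assignment.

The vertical bar denotes an alternative, terminal symbols are enclosed in quotation marks, and a semicolon terminates the rule.

So a digit is either a "0" or a digit excluding zero, which can be 1, 2, 3, and so on up to 9.

A production rule can also contain a sequence of terminals or nonterminals separated by commas:

    twelve = "1" , "2" ;
    two hundred one = "2" , "0" , "1" ;
    three hundred twelve = "3" , twelve ;
    twelve thousand two hundred one = twelve , two hundred one ;

Expressions that may be omitted or repeated are written in curly braces { ... }:

    natural number = digit excluding zero , { digit } ;

In this case the strings 1, 2, ..., 10, ..., 12345, ... are all correct expressions.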
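The natural number rule can be checked by hand with a small recognizer. The following is a minimal sketch that hard-codes the three rules quoted above; the function and constant names are illustrative assumptions, and a general EBNF parser would of course read the grammar rather than hard-code it.

```python
# digit excluding zero = "1" | "2" | ... | "9" ;
DIGIT_EXCLUDING_ZERO = set("123456789")
# digit = "0" | digit excluding zero ;
DIGIT = {"0"} | DIGIT_EXCLUDING_ZERO

def is_natural_number(text):
    """natural number = digit excluding zero , { digit } ;"""
    # The first symbol must be a digit excluding zero.
    if not text or text[0] not in DIGIT_EXCLUDING_ZERO:
        return False
    # { digit } allows zero or more further digits.
    return all(ch in DIGIT for ch in text[1:])

accepted = [s for s in ["1", "10", "12345", "0", "012", "", "12a"]
            if is_natural_number(s)]
```

The braces map directly onto the `all(...)` over the remaining characters, which is also true for an empty remainder, so a single non-zero digit is accepted.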

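Methods for Mapping Data to a New Space

Mapping data to a new space is a common data-processing technique: it transforms the raw data into data with new features so that it can be better understood and analyzed.

This article introduces several common ways to implement such a mapping: principal component analysis, manifold learning, and autoencoders.

1. Principal Component Analysis (PCA)
PCA is a widely used linear dimensionality-reduction method. It applies a linear transformation that maps the raw data into a new space in which the samples have maximal variance.

Its core idea is to project the raw data onto the eigenvector directions of the covariance matrix, which yields the new features.

These eigenvectors are called principal components; sorted by the size of their eigenvalues, they represent the main directions of variation in the data.

Keeping only the first few principal components reduces the dimensionality while retaining most of the information.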
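The projection step of PCA can be sketched without any numerical library. The example below estimates only the first principal component, and it uses power iteration on the covariance matrix instead of a full eigendecomposition; that choice, the function names, and the toy data are assumptions made to keep the sketch short.

```python
import math

def first_principal_component(data, iterations=100):
    """Return (mean, direction, variance) of the leading principal component."""
    n, d = len(data), len(data[0])
    mean = [sum(row[j] for row in data) / n for j in range(d)]
    centered = [[row[j] - mean[j] for j in range(d)] for row in data]
    # Sample covariance matrix of the centered data.
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
           for i in range(d)]
    vec = [1.0 / math.sqrt(d)] * d
    for _ in range(iterations):
        # Power iteration: repeatedly apply cov and renormalize.
        nxt = [sum(cov[i][j] * vec[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(c * c for c in nxt))
        if norm == 0.0:  # all samples identical: no direction of variation
            break
        vec = [c / norm for c in nxt]
    variance = sum(vec[i] * cov[i][j] * vec[j]
                   for i in range(d) for j in range(d))
    return mean, vec, variance

def project(row, mean, vec):
    """Coordinate of one sample along the principal direction."""
    return sum((row[j] - mean[j]) * vec[j] for j in range(len(vec)))

# Toy data lying exactly on the line y = 2x.
data = [[0.0, 0.0], [1.0, 2.0], [2.0, 4.0], [3.0, 6.0], [4.0, 8.0]]
mean, pc, variance = first_principal_component(data)
coords = [project(row, mean, pc) for row in data]
```

Because the toy points lie on a line, one coordinate per sample reproduces them exactly; with real data the discarded components carry the information that is lost.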

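2. Manifold Learning
Manifold learning is a nonlinear dimensionality-reduction approach. It assumes that high-dimensional data lie on a low-dimensional manifold and tries to learn the structure of that manifold in order to map the data.

Manifold learning methods can preserve both the local and the global structure of the raw data and handle nonlinear data better.

Common methods include Isometric Mapping (Isomap), Locally Linear Embedding (LLE), and Laplacian Eigenmaps.

These methods build the manifold structure by computing distances or similarities between samples and then map the raw data into a low-dimensional space.

3. Autoencoders
An autoencoder is an unsupervised neural network model. It compresses the input into a low-dimensional code and uses a decoder to reconstruct the original input from that code as faithfully as possible.

The training objective is to minimize the reconstruction error, which leads the model to learn an effective representation of the data.

Variants include sparse autoencoders, denoising autoencoders, and variational autoencoders.

Because autoencoders can be trained on unlabeled data, they suit unsupervised data-mapping tasks.

Besides the methods above, other data-mapping methods exist, such as Non-negative Matrix Factorization (NMF), Linear Discriminant Analysis (LDA), and Gaussian Process Regression (GPR).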

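Object-Oriented Programming Fundamentals Exam (answers on the last page)

I. Multiple-choice questions
1. What is object-oriented programming (OOP)? A. A programming paradigm B. A programming language C. A software development methodology D. None of the above
2. In object-oriented programming, which concept describes the relationship between a class and its instances? A. Encapsulation B. Inheritance C. Polymorphism D. Abstraction
3. In object-oriented programming, what is encapsulation? A. Hiding data inside an object B. Hiding methods inside an object C. Hiding both data and methods inside an object D. Hiding objects inside a class
4. In object-oriented programming, what is inheritance? A. Creating a new class that extends an existing class B. Creating a new class that is identical to an existing class C. Creating a new class that has only one subclass D. Creating a new class that has no subclasses
5. In object-oriented programming, what is polymorphism? A. The same interface can be implemented in different ways by different objects B. All methods of a class are static C. All attributes of a class are static D. Every object of a class has a fixed attribute value
6. In object-oriented programming, what is abstraction? A. Creating a new class that is a subclass of an existing class B. Creating a new class that is entirely different from an existing class C. Creating a new class that has only one subclass D. Creating a new class that has no subclasses
7. In object-oriented programming, what is a local variable? A. A variable defined inside a method B. A variable defined inside a class that can also be accessed outside its methods C. A variable defined inside a class that can also be accessed inside its methods D. A variable defined inside a method and visible only within that method
8. In object-oriented programming, what is a global variable? A. A variable defined inside a method B. A variable defined inside a class that can also be accessed outside its methods C. A variable defined inside a class that can also be accessed inside its methods D. A variable that can be accessed from anywhere in the program
9. In object-oriented programming, what is a constructor? A. A function used to initialize an object B. A function used to create an object C. A function used to destroy an object D. None of the above
10. In object-oriented programming, what is a destructor? A. A function used to initialize an object B. A function used to create an object C. A function used to destroy an object D. None of the above
11. What is object-oriented programming (OOP)? A. A programming paradigm B. A programming language C. A software development methodology D. An algorithm
12. In object-oriented programming, which concept describes the interaction between objects? A. Encapsulation B. Inheritance C. Polymorphism D. Abstraction
13. In object-oriented programming, what is a class? A. A blueprint or prototype for objects B. An abstract concept C. A concrete implementation D. A component of an operating system
14. In object-oriented programming, what is an object? A. All the data in a program B. All the functions in a program C. An entity with attributes and behavior D. A hardware component of a computer
15. In object-oriented programming, what is inheritance? A. A code reuse mechanism B. A way of creating new classes C. An event-handling mechanism D. A data-processing technique
16. In object-oriented programming, what is polymorphism? A. A code reuse mechanism B. A way of creating new classes C. An event-handling mechanism D. A data-processing technique
17. In object-oriented programming, what is encapsulation? A. A code reuse mechanism B. A way of creating new classes C. An event-handling mechanism D. A data-hiding technique
18. In object-oriented programming, what is abstraction? A. A code reuse mechanism B. A way of creating new classes C. An event-handling mechanism D. A conceptual representation
19. In object-oriented programming, what is an inheritance chain? A. An association between objects B. A code reuse mechanism C. A way of creating new classes D. A path along which attributes and methods are passed down
20. In object-oriented programming, what is an interface? A. A code reuse mechanism B. A way of creating new classes C. An event-handling mechanism D. A communication contract between objects
21. What is object-oriented programming (OOP)? A. Object-oriented programming is a programming paradigm that uses "objects" to represent data and methods.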

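Abstract (Chinese original, translated)
Moving target detection and tracking is a hot topic in computer vision, widely applied in video surveillance, human-computer interaction, virtual reality, image compression, and related areas.

Tracking targets accurately in all kinds of complex environments and under different conditions (such as occlusion and illumination changes) is a common focus of researchers and a problem that urgently needs solving in practical applications.

This thesis studies three topics: detection of moving targets against a static background, moving target tracking, and simulation analysis of the results.

For detection, after analyzing three commonly used methods (the optical flow method, the inter-frame difference method, and the background subtraction method), it concentrates on the principle and workflow of detection based on the inter-frame difference method and discusses the advantages and disadvantages of the three algorithms.

For tracking, after analyzing three commonly used algorithms (the mean shift algorithm, the Kalman filter algorithm, and feature-based tracking), it concentrates on a feature-based tracker that uses the minimum bounding rectangle.

Its principle and tracking steps are analyzed.

Finally, MATLAB is used to simulate videos containing moving targets with inter-frame difference detection and minimum-bounding-rectangle tracking.

With these two methods, the MATLAB simulation detects the contour of the human body, and the rectangle follows the trajectory of the moving person, achieving moving target detection and tracking.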

Keywords: moving target detection; moving target tracking; inter-frame difference method; minimum bounding rectangle

Abstract
Moving target detection and tracking is a hot topic in the field of computer vision and is widely used in video surveillance, human-computer interaction, virtual reality, image compression, and related areas. Tracking a target accurately in various complex environments and under different conditions (such as occlusion and illumination changes) is a shared focus of researchers and an urgent problem in current applications.
This thesis covers three aspects: moving target detection under a static background, moving target tracking, and analysis of the simulation results. For detection, it analyzes three commonly used methods (the optical flow method, the inter-frame difference method, and the background subtraction method), focuses on the principle and workflow of detection based on the inter-frame difference method, and discusses the advantages and disadvantages of the three detection algorithms. For tracking, it analyzes three commonly used algorithms (the mean shift algorithm, the Kalman filter algorithm, and feature-based tracking) and focuses on feature-based tracking with the minimum bounding rectangle.
Finally, MATLAB is used to simulate videos containing moving targets with inter-frame difference detection and minimum-bounding-rectangle tracking. The simulation detects the contour of the human body, and the rectangle follows the trajectory of the moving person, achieving moving target detection and tracking.
Keywords: moving object detection; object tracking; inter-frame difference method; minimum circumscribed rectangle

Contents
1 Introduction
1.1 Research Background and Significance
1.2 Research Status at Home and Abroad
1.3 Chapter Organization
2 Moving Target Detection and Tracking Techniques
2.1 Digital Image Processing Concepts
2.1.1 The Digital Image Processing Pipeline
2.1.2 Image Enhancement
2.1.3 Image Segmentation
2.1.4 Mathematical Morphology
2.2 Moving Target Detection Workflow and Common Algorithms
2.2.1 Background Subtraction
2.2.2 Inter-Frame Difference
2.2.3 Optical Flow
2.3 Common Moving Target Tracking Algorithms
2.3.1 Mean Shift Tracking
2.3.2 Kalman Filter Tracking
2.3.3 Feature-Based Tracking
3 Moving Target Detection Based on the Inter-Frame Difference Method
3.1 Detection Workflow
3.2 Detection Process and Principles
3.2.1 Converting RGB Images to Grayscale
3.2.2 Image Differencing
3.2.3 Binarizing the Difference Image
3.2.4 Morphological Filtering
3.2.5 Connectivity Detection
4 Target Tracking Based on the Minimum Bounding Rectangle
4.1 Tracking Workflow
4.1.1 Tracking Process Diagram
4.1.2 Tracking Process Analysis
4.2 Principle of Minimum-Bounding-Rectangle Tracking
4.2.1 Feature Extraction
4.2.2 Minimum Bounding Rectangle Extraction
4.3 Implementing Minimum-Bounding-Rectangle Tracking
5 Simulation Results and Analysis
5.1 Simulation Environment
5.2 Moving Target Detection Simulation
5.3 Moving Target Tracking Simulation
Conclusion
Acknowledgments
References
Appendix A
Appendix B
Appendix C

1 Introduction
1.1 Research Background and Significance
Vision is one of the most direct and effective means by which humans perceive the complex environment around them. In real life, a great deal of meaningful visual information is contained in motion, and the human eye is more sensitive to moving objects and targets: it quickly spots a moving target and predicts and traces its trajectory [1].
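The two core steps named in the abstract, inter-frame differencing followed by extraction of a bounding rectangle, can be sketched on tiny grayscale frames. The thesis itself uses MATLAB; this Python sketch is not its code. It assumes an axis-aligned rectangle around all changed pixels and skips the grayscale conversion, morphological filtering, and connectivity detection listed in the contents. The function names, the threshold, and the frames are illustrative assumptions.

```python
def frame_difference_mask(prev_frame, curr_frame, threshold):
    """Binarize the absolute difference of two equally sized grayscale frames."""
    return [[1 if abs(c - p) > threshold else 0
             for p, c in zip(prev_row, curr_row)]
            for prev_row, curr_row in zip(prev_frame, curr_frame)]

def bounding_rectangle(mask):
    """Smallest axis-aligned rectangle (top, left, bottom, right) around all
    foreground pixels, or None when nothing moved."""
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for row in mask for c, value in enumerate(row) if value]
    if not rows:
        return None
    return (min(rows), min(cols), max(rows), max(cols))

# Hypothetical 4x5 frames: a bright blob appears in the second frame.
prev_frame = [[10] * 5 for _ in range(4)]
curr_frame = [row[:] for row in prev_frame]
curr_frame[1][2] = curr_frame[2][2] = curr_frame[2][3] = 200

mask = frame_difference_mask(prev_frame, curr_frame, threshold=30)
box = bounding_rectangle(mask)
```

Applying the same two functions to each consecutive pair of frames yields one rectangle per frame, and the sequence of rectangles is what traces the target's trajectory.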