ABSTRACT Cloud Control with Distributed Rate Limiting


CMAPSO Algorithm for Fuzzy Cloud Resource Scheduling

Authors: LI Chengyan, SONG Yue, MA Jintao (School of Computer Science and Technology, Harbin University of Science and Technology, Harbin 150080, China)

Source: Journal of Harbin University of Science and Technology, 2022, No. 01

Abstract: To address the multi-objective cloud resource scheduling problem, a fuzzy cloud resource scheduling model is established using fuzzy mathematics, with the goal of optimizing the total completion time and total execution cost of tasks.

Exploiting the ability of the covariance matrix to handle non-convex problems, a covariance matrix adaptation evolution strategy is used to initialize the population, and a hybrid intelligent optimization algorithm, CMAPSO (covariance matrix adaptation evolution strategy particle swarm optimization), is proposed to solve the fuzzy cloud resource scheduling model.

Cloud resource scheduling data were randomly generated on the CloudSim simulation platform to test the CMAPSO algorithm. The experimental results show that, compared with the PSO (particle swarm optimization) algorithm, CMAPSO improves optimization capability by 28% and the number of iterations by 20%, and achieves good load-balancing performance.

Keywords: cloud computing; task scheduling; particle swarm algorithm; covariance matrix adaptation evolution strategy

DOI: 10.15938/j.jhust.2022.01.005

CLC number: TP399; Document code: A; Article ID: 1007-2683(2022)01-0031-09

0 Introduction

Cloud computing is a commercial computing model and service model [1]. The main purpose of cloud resource scheduling is to manage and schedule the resources on the network in a unified way and then make them available for users to call as services.
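The two objectives named in the abstract can be illustrated with a small sketch. This is not the authors' fuzzy model or the CMAPSO algorithm: it uses crisp (non-fuzzy) numbers, reads "total completion time" as the makespan, and the names `schedule_objectives`, `weighted_fitness`, `vm_speeds`, `vm_prices`, and `w_time` are hypothetical choices made for illustration only.

```python
from typing import Sequence, Tuple


def schedule_objectives(
    assignment: Sequence[int],
    task_lengths: Sequence[float],
    vm_speeds: Sequence[float],
    vm_prices: Sequence[float],
) -> Tuple[float, float]:
    """Return (completion_time, total_cost) for one task-to-VM assignment.

    assignment[i] is the index of the VM that runs task i.
    Assumption: completion time is the makespan (busiest VM's total run time),
    and cost is run time multiplied by a per-time-unit price for each VM.
    """
    busy = [0.0] * len(vm_speeds)
    for task, vm in enumerate(assignment):
        busy[vm] += task_lengths[task] / vm_speeds[vm]
    completion_time = max(busy)
    total_cost = sum(t * p for t, p in zip(busy, vm_prices))
    return completion_time, total_cost


def weighted_fitness(
    assignment: Sequence[int],
    task_lengths: Sequence[float],
    vm_speeds: Sequence[float],
    vm_prices: Sequence[float],
    w_time: float = 0.5,
) -> float:
    """Collapse both objectives into one score (lower is better).

    Assumption: a simple weighted sum; the paper may combine them differently.
    """
    completion_time, total_cost = schedule_objectives(
        assignment, task_lengths, vm_speeds, vm_prices
    )
    return w_time * completion_time + (1.0 - w_time) * total_cost


if __name__ == "__main__":
    # Three tasks on two VMs: tasks 0 and 2 run on VM 0, task 1 on VM 1.
    print(schedule_objectives([0, 1, 0], [100, 200, 300], [100, 200], [1.0, 2.0]))
```

A swarm-based optimizer such as PSO would evaluate a function like `weighted_fitness` for each candidate assignment and keep the lowest-scoring one.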

Overview

Rubrik, the Zero Trust Data Security Company™, delivers data security and operational resilience for enterprises. Rubrik provides data security and data protection on a single platform, going beyond just backup and recovery with Zero Trust Data Protection, Ransomware Investigation, Incident Containment, Sensitive Data Discovery, and Orchestrated Application Recovery. Data is ready at all times so users can recover the data they need, and avoid paying a ransom. Rubrik’s software solution scales linearly and can be quickly deployed anywhere across on-prem, hybrid cloud, and multi-cloud environments to protect physical, virtual, and cloud-native apps.

Discovery Questions

Due to a massive increase in cyber-attacks, security is top of mind to every customer and vertical. What are you doing to ensure your data is safe and recoverable?

Rubrik cyber resilience capabilities empower IT and Security teams across the lifecycle of a cyber incident. Rubrik can identify indicators of compromise from backup data to alert teams and help contain the impact of ransomware. Rubrik can also perform analytics across snapshots to identify the last known-good state to prevent reinfection and delayed recovery. Plus, Rubrik can preserve point-in-time snapshots for use in both internal post-incident reviews and cyber investigations for external regulatory compliance.

What tools are you using to drive efficiencies in delivering IT services via automation?

Rubrik enables users to build custom workflows through integrations with automation tools such as Terraform, ServiceNow, Chef, Puppet, and Ansible.

Every organization has a different stance on Cloud. What does cloud mean for your business?

Rubrik simplifies multi-cloud data protection across AWS, Azure, Google Cloud, Microsoft 365, and hybrid-cloud workloads, facilitating compliance and governance with centralized reporting. Rubrik also optimizes storage costs with tiering, and automates backup across hundreds of cloud accounts.

Microsoft has also made an equity investment in Rubrik, partnering with Rubrik to deliver regulatory compliance tools alongside data security. One such benefit is Rubrik Cloud Vault, built to reduce the risk of backup data breach, loss, or theft. Rubrik Cloud Vault is a fully managed solution for isolated, offsite cloud archival, allowing users to set up archival policies, select the tier and available regions, and begin direct, authenticated Azure Blob integration for encrypted data upload.

What is your current disaster recovery solution?

Rubrik customers can migrate VMs to public cloud; Rubrik automatically converts vSphere and Hyper-V VMs to cloud-native instances such as Amazon EC2 and Azure VM. Rubrik also provides “Brik-to-Brik” replication that is WAN-optimized, meaning that Rubrik minimizes the amount of data sent over long-distance network connections. And, Rubrik offers Orchestrated Application Recovery, automating the configuration process for VMs, taking into account application dependencies with predefined blueprints for 2-click DR.

Are you meeting your backup and recovery objectives/SLAs?

Rubrik customers reduce RTOs and RPOs from hours to seconds. With Continuous Data Protection, users can recover mission-critical VMs to just seconds before a disaster or corruption point. Rubrik’s SLA policy engine also simplifies compliance for a “set-it-and-forget-it” approach to backup, replication, and archival.

Target Customer

• Decision-maker is VP of Infrastructure, IT Director/Manager
• User is IT Ops, Systems or Network Manager/Admin, Virtualization or Backup Admin
• Influencer is SecOps, DBA, Enterprise Architect
• Looking to reduce risk of paying the ransom in the case of ransomware attack
• Using enterprise backup software/storage
• Looking to reduce financial, operational, and business costs of backup, archival, test/dev
• Using or exploring private/public cloud archival (object store, Azure, AWS, GCP)

Objection Handling

Our air gap has to be physical.

99.9% of Rubrik customers buy Rubrik for the security of a physical air gap without the need and the cost/expense of implementing one. But if you do want a physical air gap, Rubrik has options.

Aren’t you just going to restore infected files? How do you know what point to recover to?

Rubrik users can threat hunt within backups and restore everything except the executable that launched the attack. Rubrik can show what the executable did and if sensitive or regulated data was in scope.

Everyone says they are immutable. How is Rubrik any different?

Immutability is table stakes these days. Rubrik solutions offer not only that, but also help organizations in terms of speeding up time to recovery, identifying the blast radius of an attack, and identifying what sensitive data has potentially been impacted in order to help customers get back up and running quickly.

I don’t buy into the Zero Trust hype. What does it even mean?

Assume a breach has already happened. Authenticate everything. Trust nothing. That is a cornerstone of a successful cyber resilience program and what many executives and boards ask for. It’s also a vital, last-line-of-defense approach. One of the most trusted roles is the backup admin. If they go rogue, and expire all of an organization’s backups, Zero Trust is a critical platform defense strategy. With Rubrik, all data is encrypted and logically air gapped from the rest of the environment, with all access governed by comprehensive authentication and access controls.

Unique Selling Points

1. Built-in recovery: Recover quickly from ransomware and other DR events via immutable backups, ML-driven detection, detailed impact analysis, and single-click recovery.
2. High performance (backup and recovery) at large scale: Near-zero RTOs for VMs and databases via Live Mount. Accelerate test/dev, perform application health checks, and deliver ad-hoc restores with self-service access.
3. Policy-driven automation to simplify management operations and protect more apps.
4. An API-first design that makes it easy to integrate data management into a larger IT environment for greater efficiency and control.
5. Automated protection that applies SLA policies to new cloud applications.
6. Consolidated multi-cloud protection eliminates the need to rely on multiple native tools.
7. Easy configuration and control with SaaS-based management.
8. Zero Trust Security protects cloud and SaaS data so that applications running on-prem, at the edge, and in the cloud can be recovered reliably. Rubrik indexes all app data stored in the cloud, enabling instant access to cloud data through predictive search.

Customer Use Cases

WED2B prevented disruption from a ransomware attack in November 2019, restoring critical SQL databases with Active Directory immediately. “With Rubrik, we experienced zero data loss, zero impact to our core business, we paid nothing in ransom fees and were up and running in 24 hours, which is fantastic,” said Rob Mole, Head of IT & Solutions.

Langs Building Supplies was hacked in May of 2021. With Rubrik, they recovered 100% of their data within 24 hours and paid $0 in ransom. CIO Matthew Day stated, “Rubrik is not just about recovering from ransomware. Rubrik is the difference between survival and non-survival in this new digital age.”

Colchester Institute recovered from a crippling ransomware attack within days leveraging Rubrik Zero Trust Data Security. Ben Williams, IT Services Manager, said, “Rubrik safeguarded our backups. Due to its native immutability, 100% of our backups were protected against corruption and deletion. We were able to keep the lights on and deliver a robust service to our students and staff.”

Estée Lauder: A leading house of prestige beauty, Estée Lauder is a collection of over 25 globally renowned beauty brands, each with its own unique set of data and unique challenges. To protect all data at scale while keeping costs low, the beauty company partners with Rubrik to break free from legacy data protection and mobilize to the cloud.

For more customer stories, check out /customers.

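FLTRP English Compulsory Book 5 Word List

In this article, I go through the contents of the word list for the FLTRP (Foreign Language Teaching and Research Press) edition of English Compulsory Book 5.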

The word list below is arranged in alphabetical order:

A: abandon, abbreviation, abdomen, abide, ability, abnormal, aboard, abolish, about, above, abroad, abrupt, absence, absolute, absorb, abstract, absurd, abundance, abuse, academic, accelerate, accent, accept, access, acclaim, accommodate, accompany, accomplish, accord, account, accumulate, accurate, accuse, achieve, acknowledge, acquire, act, adapt, addict, addition, adequate, adjust, administration, admire, admit, adopt, adore, adult, advance, advice, advocate, aerial, aesthetic, affirm, affix, affordable, Afro-American, afterwards, agenda, aged, agency, aggress, agony, agree, aid, aim, air, aircraft, aisle, alert, alien, alive, allocate, allot, aloud, amateur, ambassador, ambiguous, ambition, ambulance, among, amount, amuse, analyze, anchor, ancient, anecdote, angel, anger, angle, anniversary, announce, annoy, anonymous, anticipate, antique, anxiety, apart, apartment, ape, apologise, apparent, appeal, applause, appoint, appreciate, approach, appropriate, approve, approximate, aquarium, arch, architect, architecture, archive, area, argue, arise, arm, armed, army, arouse, articulate, artificial, ascend, ash, ashamed, aside, ask, aspect, aspirin, assemble, assert, assess, assign, assist, associate, assume, assurance, astonish, attach, attack, attain, attempt, attend, attention, attitude, attract, attribute, auction, audition, authentic, author, authority, autobiography, automobile, autumn, available, avenue, average, avoid, await, aware, awful, awkward, axe

B: baby, bachelor, background, backup, bacteria, badminton, bag, baggage, bait, balance, balcony, bald, ball, ballet, balloon, ballot, ban, band, bandage, bank, bankruptcy, banner, banquet, bar, barbarian, barber, bare, bargain, bark, barn, barrel, barrier, barter, base, baseball, basic, basket, bat, batch, batter, battle, bay, beam, bean, bear, beard, beast, beat, beatify, because, beckon, become, bed, bedroom, bee, beef, beer, beetle, beforehand, beg, begin, behalf, behavior, belief, bell, belle, bellow, belly, belong, belt, bench, bend, beneath, beneficial, benefit, bent, beside, best, betray, better, between, beverage, beware, beyond, bicycle, bid, big, bike, bill, biology, bird, birth, biscuit, bit, bite, bitter, bizarre, blackboard, blade, blame, blank, blanket, blast, blaze, bleach, blend, bless, blind, block, blossom, blouse, blow, blush, board, boast, boat, bob, boil, bold, bolt, bond, bone, bonus, bookcase, booth, border, bore, born, bother, bottle, bottom, bounce, bour, bow, bowling, box, boxer, boy, brac, bracelet, bracket, brave, breach, bread, breakfast, break, breeze, brick, bride, bridge, brief, bright, brilliant, bring, broom, brooch, brood, brother, brow, brown, browse, brush, brutal, bubble, bucket, buckle, bud, bug, build, bulb, bull, bullet, bulletin, bump, bun, bundle, burden, bureau, burglar, burst, business, bust, butcher, butterfly, button, buy, buzz, by

C: cab, cabin, cabinet, cafe, cafeteria, cage, calculate, calendar, calf, call, calm, calorie, camera, campaign, camp, campsite, campus, canal, candidate, candle, canteen, canvas, cap, capability, capacity, capital, capitalism, capsize, capsule, captain, capture, car, carbon, card, care, career, careless, cargo, carpenter, carpet, carriage, carrot, carry, cart, cartoon, case, cassette, cast, castle, casually, cat, cattle, cause, caution, cave, ceiling, celebrate, celebrity, censor, certain, certificate, chain, chair, chairman, chalk, challenge, champion, chance, change, channel, chaos, chap, chapel, chapter, character, charge, charity, charm, chart, chase, cheap, check, cheese, chef, chemical, chemistry, cherry, chess, chest, chew, chicken, chief, child, chill, chin, chip, chorus, choose, chop, chuckle, church, cigarette, cinema, circle, circumstance, cite, citizen, city, civilization, claim, clasp, classical, classify, clean, clergy, clerk, clever, cliff, climate, climb, clinic, clip, cloak, clock, close, clothes, clothing, cloud, clown, club, clue, clumsy, coach, coal, coast, collapse, collar, colleague, collect, college, collocation, colony, column, comb, combat, combine, comedian, comedy, comma, command, comment, commercial, commission, commitment, committee, common, communicate, communism, community, commute, compact, companion, company, compare, comparison, compel, compensate, compete, competition, compile, complain, complaint, complete, complicated, comply, compose, composition, comprehend, comprehensive, compress, comprise, compute, computer, concentrate, concept, concern, concert, conclude, conclusion, concrete, condense, condition, conduct, cone, confess, confidence, confirm, conflict, confuse, confusion, congratulate, congress, connect, connection, conquer, conscience, conscious, consent, consequence, conservative, consider, consist, consistent, console, consolidate, conspiracy, constant, constitute, construct, construction, consult, consume, consumer, consumption, contact, contain, contemporary, contempt, content, contest, context, continent, continue, contradiction, contrary, contribution, control, convert, conviction, convince, cook, cool, cooperation, coordinate, cope, copper, copy, core, corn, corner, correct, correspondence, corridor, corrupt, corruption, cost, costume, cosy, cotton, couch, cough, council, counsel, count, country, countryside, county, couple, courage, course, court, cousin, cover, coward, cow, crack, craft, cram, crane, crash, crawl, crazy, cream, create, creature, credit, crew, cricket, crime, criminal, crisis, crisp, criterion, criticize, crop, cross, crowd, crown, crucial, cruel, cruise, crush, crust, cry, crystal, cube, cucumber, cuddle, cuisine, cultivate, culture, cup, cupboard, cure, curious, curl, currency, current, curriculum, curse, curtain, curve, cushion, custom, customer, customs, cute, cycle, cylinder

D: dad, daily, dairy, dam, damage, dame, damp, dance, danger, dangerous, dare, dark, darling, dart, dash, date, daughter, dawn, day, daylight, dazzle, dead, deaf, deal, dear, death, debate, debris, debt, decay, deceit, deceive, decide, decision, declare, decline, decorate, decrease, dedicate, deem, deep, deer, defeat, defend, defendant, defense, define, defy, degree, delay, delete, deliberate, delightful, deliver, delivery, demand, democracy, demonstrate, deny, depart, department, departure, depend, dependant, deposit, depress, depression, deputy, depth, derive, describe, desert, deserve, design, designate, desirable, desire, desk, despair, desperate, despise, destination, destroy, destruction, detach, detail, determination, determine, devote, diamond, diary, dice, dictionary, die, difference, different, difficult, dig, dilemma, dimension, dine, dinner, dip, direct, director, dirt, dirty, disability, disaster, discard, discharge, disciple, discipline, disclose, discount, discourse, discover, discretion, discriminate, discuss, disease, disguise, disgust, dish, dismay, dismiss, disorder, display, disposal, dispose, dispute, dissent, dissolve, distance, distinguish, distract, distress, distribute, district, disturb, dive, divide, divine, division, divorce, dizzy, document, dog, doll, dollar, dolphin, domain, dome, domestic, dominant, dominate, donate, donation, donkey, doom, door, dope, dose, dot, double, doubt, dough, dove, down, downstairs, downward, dozen, draft, drag, drain, drama, drapery, draw, dread, dream, dreary, dress, dressmaker, dribble, drift, drill, drink, drip, drive, drop, drown, drug, drum, drunk, dry, dual, duck, dull, dumb, dump, dust, duty, dwell, dynamic

E: each, eager, eagle, ear, earnest, ease, easily, east, easy, eat, echo, economic, economics, economize, economy, edge, edit, edition, editor, educate, education, effective, effectiveness, efficient, effort, either, elastic, elation, electric, electricity, electronic, elegant, elevator, eliminate, elite, else, elsewhere, embarrass, embassy, emerge, emergency, emotion, emotional, emphasis, emphasize, employ, employee, employer, employment, empty, enable, enclose, enclosure, encourage, end, endless,
enemy, energy, engage, engine, engineer, engineering, enhance, enjoy, enlarge, enormous, enough, ensure, enter, entertain, enthusiasm, entire, entrance, entry, envelope, environment, envy, episode, equal, equality, equator, equip, equipment, equivalent, era, erode, error, escape, especially, essay, essence, essential, establish, estate, esteemed, estimate, eternal, ethics, ethnic, evaporate, even, event, eventual, ever, every, evidence, evil, evolution, evolve, exaggerate, examine, example, excavate, exceed, excel, excellent, except, exception, excess, exchange, excited, exciting, exclude, excuse, execute, executive, exercise, exhaust, exhibit, existence, exit, exclamation, excursion, exhausted.

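Recommended Tutorials on Distributed Systems Technology in Cloud Computing

Cloud computing has become a hot topic in technology, and knowing how to apply distributed systems techniques to build cloud applications is a key skill for every cloud practitioner.

In this article, I introduce several classic texts on distributed systems to help you better understand and apply distributed systems technology in cloud computing.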

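1. Distributed Systems: Concepts and Design

This classic textbook, co-authored by George Coulouris and others, has become one of the standard references in the field of distributed systems.

It describes the concepts, architecture, and design principles of distributed systems in detail.

Starting from the basic concepts, it covers communication, consistency, fault tolerance, security, and related topics, and suits both beginners and experienced practitioners.

The book also discusses many classic distributed systems techniques and real-world case studies, which are a great help in understanding distributed systems in depth.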

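2. Distributed Systems: Principles and Paradigms

Co-authored by Andrew S. Tanenbaum and Maarten van Steen, this book is another classic in the field of distributed systems.

Besides introducing the basic principles of distributed systems, it emphasizes the paradigms commonly found in them, such as the client-server model and the peer-to-peer model.

The book provides many examples and case studies that help readers understand how distributed systems are designed and implemented in practice.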

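3. Distributed Systems: Principles and Paradigms (Second Edition)

This is the second edition of the book above, again co-authored by Andrew S. Tanenbaum and Maarten van Steen.

Compared with the first edition, the second edition adds coverage of newer distributed systems technology and of cloud computing.

It describes the concepts, architecture, and key technologies of cloud computing in detail, including virtualization and containerization.

It also discusses frontier issues such as security and privacy in cloud computing and big data processing.

For readers who want an in-depth understanding of distributed systems technology in cloud computing, this book is indispensable.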

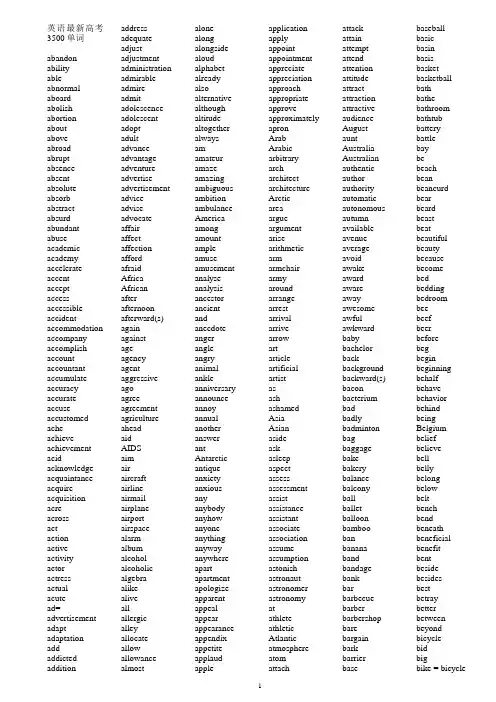

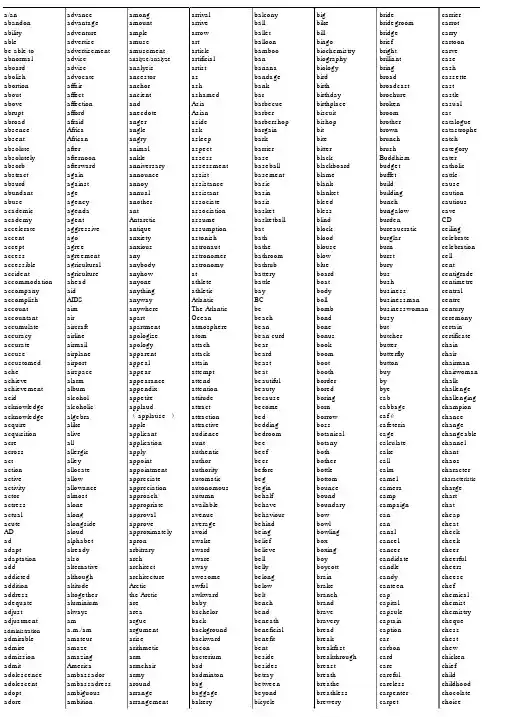

The Latest 3,500 English Words for the College Entrance Examination (Gaokao)

abandon, ability, able, abnormal, aboard, abolish, abortion, about, above, abroad, abrupt, absence, absent, absolute, absorb, abstract, absurd, abundant, abuse, academic, academy, accelerate, accent, accept, access, accessible, accident, accommodation, accompany, accomplish, account, accountant, accumulate, accuracy, accurate, accuse, accustomed, ache, achieve, achievement, acid, acknowledge, acquaintance, acquire, acquisition, acre, across, act, action, active, activity, actor, actress, actual, acute, ad = advertisement, adapt, adaptation, add, addicted, addition, address, adequate, adjust, adjustment, administration, admirable, admire, admit, adolescence, adolescent, adopt, adult, advance, advantage, adventure, advertise, advertisement, advice, advise, advocate, affair, affect, affection, afford, afraid, Africa, African, after, afternoon, afterward(s), again, against, age, agency, agent, aggressive, ago, agree, agreement, agriculture, ahead, aid, AIDS, aim, air, aircraft, airline, airmail, airplane, airport, airspace, alarm, album, alcohol, alcoholic, algebra, alike, alive, all, allergic, alley, allocate, allow, allowance, almost, alone, along, alongside, aloud, alphabet, already, also, alternative, although, altitude, altogether, always, am, amateur, amaze, amazing, ambiguous, ambition, ambulance, America, among, amount, ample, amuse, amusement, analyse, analysis, ancestor, ancient, and, anecdote, anger, angle, angry, animal, ankle, anniversary, announce, annoy, annual, another, answer, ant, Antarctic, antique, anxiety, anxious, any, anybody, anyhow, anyone, anything, anyway, anywhere, apart, apartment, apologize, apparent, appeal, appear, appearance, appendix, appetite, applaud, apple, application, apply, appoint, appointment, appreciate, appreciation, approach, appropriate, approve, approximately, apron, Arab, Arabic, arbitrary, arch, architect, architecture, Arctic, area, argue, argument, arise, arithmetic, arm, armchair, army, around, arrange, arrest, arrival, arrive, arrow, art, article, artificial, artist, as, ash, ashamed, Asia, Asian, aside, ask, asleep, aspect, assess, assessment, assist, assistance, assistant, associate, association, assume, assumption, astonish, astronaut, astronomer, astronomy, at, athlete, athletic, Atlantic, atmosphere, atom, attach, attack, attain, attempt, attend, attention, attitude, attract, attraction, attractive, audience, August, aunt, Australia, Australian, authentic, author, authority, automatic, autonomous, autumn, available, avenue, average, avoid, awake, award, aware, away, awesome, awful, awkward

baby, bachelor, back, background, backward(s), bacon, bacterium, bad, badly, badminton, bag, baggage, bake, bakery, balance, balcony, ball, ballet, balloon, bamboo, ban, banana, band, bandage, bank, bar, barbecue, barber, barbershop, bare, bargain, bark, barrier, base, baseball, basic, basin, basis, basket, basketball, bath, bathe, bathroom, bathtub, battery, battle, bay, be, beach, bean, beancurd, bear, beard, beast, beat, beautiful, beauty, because, become, bed, bedding, bedroom, bee, beef, beer, before, beg, begin, beginning, behalf, behave, behavior, behind, being, Belgium, belief, believe, bell, belly, belong, below, belt, bench, bend, beneath, beneficial, benefit, bent, beside, besides, best, betray, better, between, beyond, bicycle, bid, big, bike = bicycle, bill, bingo, biochemistry, biography, biology, bird, birth, birthday, birthplace, biscuit, bishop, bit, bite, bitter, black, blackboard, blame, blank, blanket, bleed, bless, blind, block, blood, blouse, blow, blue, board, boat, body, boil, bomb, bond, bone, bonus, book, boom, boot, booth, border, bored, boring, born, borrow, boss, botanical, botany, both, bother, bottle, bottom, bounce, bound, boundary, bow, bowl, bowling, box, boxing, boy, boycott, brain, brake, branch, brand, brave, bravery, bread, break, breakfast, breakthrough, breast, breath, breathe, breathless, brewery, brick, bride, bridegroom, bridge, brief, bright, brilliant, bring, Britain, British, broad, broadcast, brochure, broken, broom, brother, brown, brush, bucket, Buddhism, budget, build, building, bunch, bungalow, burden, bureaucratic, burn, burst, bury, bus, bush, business, businessman, busy, but, butcher, butter, butterfly, button, buy, by, bye

cab, cabbage, cafe, cafeteria, cage, cake, calculate, call, calm, camel, camera, camp, campaign, can, Canadian, canal, cancel, cancer, candidate, candle, candy, canteen, cap, capital, capsule, captain, caption, car, carbon, card, care, careful, careless, carpenter, carpet, carriage, carrier, carrot, carry, cart, cartoon, carve, case, cash, cassette, cast, castle, casual, cat, catalogue, catastrophe, catch, category, cater, Catholic, cattle, cause, cautious, cave, celebrate, celebration, cell, cent, centigrade, centimeter, central, centre, century, ceremony, certain, certificate, chain, chair, chairman, chalk, challenge, challenging, champion, chance, change, changeable, channel, chant, chaos, chapter, character, characteristic, charge, chart, chat, cheap, cheat, check, cheek, cheer, cheerful, cheese, chef, chemical, chemist, chemistry, cheque, chess, chest, chew, chicken, chief, child, childhood, chimney, China, Chinese, chocolate, choice, choir, choke, choose, chopsticks, chorus, Christian, Christmas, church, cigar, cigarette, cinema, circle, circuit, circulate, circumstance, circus, citizen, city, civil, civilization, claim, clap, clarify, class, classic, classmate, classroom, claw, clay, clean, cleaner, clear, clerk, clever, click, climate, climb, clinic, clock, clone, close, cloth, clothes, clothing, cloud, cloudy, club, clumsy, coach, coal, coast, coat, cock, cocoa, coffee, coin, coincidence, coke, cold, collar, colleague, collect, collection, college, collision, colour, comb, combine, come, comedy, comfort, comfortable, command, comment, commercial, commit, committee, common, communicate, communication, communism, communist, companion, company, compare, compass, compensate, compete, competence, competition, complain, complete, complex, component, composition, comprehension, compromise, compulsory, computer, comrade, concentrate, concept, concern, concert, conclude, conclusion, concrete, condemn, condition, conduct, conductor, conference, confident, confidential, confirm, conflict, confuse, congratulate, congratulation, connect, connection, conscience, consensus, consequence, conservative, consider, considerate, consideration, consist, consistent, constant, constitution, construct, construction, consult, consultant, consume, contain, container, contemporary, content, continent, continue, contradict, contradictory, contrary, contribute, contribution, control, controversial, convenience, convenient, conventional, conversation, convey, convince, cook, cooker, cookie, cool, copy, corn, corner, corporation, correct, correction, correspond, corrupt, cost, cosy, cottage, cotton, cough, could, count, counter, country, countryside, couple, courage, course, court, courtyard, cousin, cover, cow, crash, crayon, crazy, cream, create, creature, credit, crew, crime, criminal, criterion, crop, cross, crossing, crossroads, crowd, cruel, cry, cube, cubic, cuisine, culture, cup, cupboard, cure, curious, currency, curriculum, curtain, cushion, custom, customer, customs, cut, cycle, cyclist

dad = daddy, daily, dam, damage, dance, danger, dangerous, dare, dark, darkness, dash, data, database, date, daughter, dawn, day, dead, deadline, deaf, deal, dear, death, debate, debt, decade, December, decide, decision, declare, decline, decorate, decoration, decrease, deed, deep, deer, defeat, defence, defend, degree, delay, delete, deliberately, delicate, delicious, delight, delighted, deliver, demand, dentist, department, departure, depend, deposit, depth, describe, description, desert, deserve, design, desire, desk, desperate, dessert, destination, destroy, detective, determine, develop, development, devote, devotion, diagram, dial, dialogue, diary, dictation, dictionary, die, diet, differ, difference, different, difficult, difficulty, dig, digest, digital, dignity, dilemma, dimension, dine, dinner, dinosaur, dioxide, dip, diploma, direct, direction, director, directory, dirty, disability, disabled, disadvantage, disagree, disagreement, disappear, disappointed, disaster, discount, discourage, discover, discovery, discrimination, discuss, discussion, disease, disgusting, disk, dislike, dismiss, disobey, distance, distant, distinction, distinguish, distribute, district, disturb, disturbing, dive, diverse, divide, division, divorce, dizzy, do, doctor, document, dog, doll, dollar, donate, door, dormitory, dot, double, doubt, down, download, downstairs, downtown, dozen, draft, drag, draw, drawback, drawer, drawing, dream, dress, drill, drink, drive, driver, drop, drown, drug, drum, dry, duck, due, dull, during, dusk, dust, dustbin, dusty, duty
dynamicdynastyeacheagereagleearearlyearnearthearthquakeeastEastereasterneasyeatedgeeditioneditoreducateeducationeducatoreffectefforteggEgyptEgyptianeighteeneightheightyeitherelderelectelectricelectricalelectricityelectronicelegantelephantelevenelsee-maile-mailembarrassembassyemergencyemperoremployemptyencourageencouragementendendingendlessenemyenergeticenergyengineengineerEnglandEnglishenjoyenjoyableenlargeenoughenquiryenterenterpriseentertainmententhusiasticentireentranceentryenvelopeenvironmentenvyequalequalityequipequipmenterasererroreruptescapeespeciallyessayEuropeEuropeanevaluateeveeveneveningeventeventuallyevereveryeverybodyeverydayeveryoneeverythingeverywhereevidenceevidentevolutionexactexamexamineexampleexcellentexceptexchangeexciteexcuseexerciseexhibitionexistexistenceexitexpandexpectexpectationexpenseexpensiveexperienceexperimentexpertexplainexplanationexplicitexplodeexploreexportexposeexpressexpressionextensionextraextraordinaryextremeextremely3eye eyesight face facial fact factory fadefail failure fair faithfall false familiar family famous fan fancy fantastic fantasy farfare farm farmer fashion fast fasten fat father fault favour favourite faxfear feast feather February federal fee feed feel feeling fellow female fence ferry festival fetch fever few fibre fiction fiction field fierce fifteen fifth fifty fight figure figure filefillfilm final 
finance find fine finger finish fire firework firm first fish fisherman fist fit five fix flag flame flash flashlight flat flee flesh flexible flight float flood floor flour flow flower flu fluency fluent fluent fly focus fog foggy fold folk follow fond food fool foolish foot football for forbid force forecast forehead foreign foreigner foresee forest forever forget forgetful forgive fork form former fortnight fortunate fortune forty forward foster found found fountain four fourteen fourth fox fragile fragrant framework franc free freedom freeway freeze freezing frequent fresh Friday fridge friend friendly friendship frighten frog from front frontier frost fruit fry fuel full fun function fundamental funeral funny fur furnished furniture future gain gallery gallon game garage garbage garden garlic garment gas gate gather gay general generally generation generous gentle gentleman geography geometry gesture get gift gifted giraffe girl give glad glare glass globe glory glove glue go goal goat god gold golf good goods goose govern government grade gradual graduate graduation grain gram grammar grand grandchild granddaughter grandma grandpa grandparents grandson granny grape graph grasp grass grateful gravity great Greece greedy Greek green greengrocer greet greeting grey grocer grocery ground group grow growth guarantee guard guess guest guidance guide guilty guitar gun gym gymnastics habit hair haircut half hall ham hamburger hammer hand handbag handful handkerchief handle handsome handwriting handy hang happen happiness happy harbour hard hardly hardship hardworking harm harmful harmony harvest hat hatch hate have he head headache headline headmaster headmistress health healthy heap hear hearing heart heat heavy heel height helicopter hello helmet help helpful hen her here hero hers herself hesitate hi hide high highway hill him himself hire his history hit hobby hold hole holiday holy home homeland hometown homework honest honour hook hope hopeful hopeless horrible horse hospital host hostess hot hotdog hotel hour house housewife housework how however howl hug huge human human being humorous humour hundred hunger hungry hunt hunter hurricane hurry hurt husband hydrogen I ice ice-cream Iceland idea identification identity idiom if ignore ill illegal illness imagine immediately immigration
import importance important impossible impress impression improve in inch incident include income increase indeed independence independent India Indian indicate industry infer influence inform information initial injure injury ink inn innocent insect inside insist inspect inspire instant instead institute institution instruction instrument insurance insure intelligence intend intention interest interesting international Internet interpreter interrupt interview into introduce introduction invent invention invitation invite invite Ireland Irish iron irrigation island it Italian Italy its itself jacket jam January Japan Japanese jar jaw jazz jeans jet jewellery job jog join joke journalist journey joy judge judgment juice July jump June jungle junior just justice kangaroo keep kettle key keyboard kick kid kill kilo kilogram kilometre kind kindergarten kindness king kingdom kiss kitchen kite knee knife knock know knowledge lab = laboratory labour lack lady lake lame lamp land language lantern lap large last late latter laugh laughter laundry law lawyer lay lazy lead leader leading leaf league leak learn least leather leave lecture left leg legal lemon lemonade lend length lesson let letter level liberty librarian library licence lid lie life lifetime lift light lightning like likely limit line link lion lip liquid list listen literary literature litre litter little live lively living load loaf local lock lonely long look loose lorry lose loss lot loud lounge love lovely low luck lucky luggage lunch lung machine mad madam/madame magazine magic maid mail mailbox main mainland major majority make male man manage manager mankind manner many map maple marathon marble march market market marriage married marry mask mass master mat match material mathematics
= maths matter mature maximum may maybe me meal mean meaning means meanwhile measure meat medal media medical medicine medium meet meeting melon member memorial memory mend mental mention menu merchant merciful mercy merely merry mess message messy metal method meter Mexican Mexico microscope microwave middle midnight might mild mile milk million millionaire mind mine mineral minibus minimum minister ministry minority minus minute mirror miss missile mist mistake mistaken misunderstand mix mixture mm mobile model modem modern modest mom = mum moment mommy Monday money monitor monkey month moon mop moral more morning Moslem mosquito most mother motherland motivation motor motorcycle motto mountain mountainous mourn mouse moustache mouth move movement movie Mr. Mrs. Ms. much mud muddy multiply murder museum mushroom music musical musician must mustard mutton my myself nail name narrow nation national nationality nationwide native natural nature navy near nearby nearly neat necessary neck necklace need needle negotiate neighbour neighbourhood neither nephew nervous nest net network never new New Zealand news newspaper next nice niece night nine nineteen ninety ninth no No.
= number noble nobodynodnoisenoisynonenoodlenoonnornormalnorth northeast northern northwest nosenot notenotebooknothingnoticenovelnovelistNovembernownowadaysnowherenuclearnuclearnumbnumbernursenurserynutnutritionnylonO.K.o’clockobeyobjectobserveobtainobviousoccupationoccupyoccuroceanOceaniaofoffofferofficeofficerofficialoffshoreoftenohoiloilfieldOKoldOlympic(s)ononceoneoneselfoniononlyontoopenopeningoperaoperateoperationoperatoropinionopposeoppositeoptimisticoptionalororalorangeorbitorderordinaryorganorganizeorganizationorganizeoriginotherotherwiseoughtouroursourselvesoutoutcomeoutdoorsouteroutgoingoutingoutlineoutputoutsideoutspokenoutstandingoutwardsovaloverovercoatovercomeoverheadoverlookoverweightoweownownerownershipoxoxygenP.E.p.m./pm,P.M./PMpacePacificpackpackagepacketpaddlepagepainpainfulpaintpainterpaintingpairpalacepalepanpancakepandapanicpaperpaperworkparagraphparallelparcelpardonparentparkparrotpartparticipateparticularpartlypartnerpart-timepartypasspassagepassengerpasser-bypassivepassportpastpatentpathpatiencepatientpatternpausepavementpayPCpeapeacepeacefulpeachpearpeasantpedestrianpenpencilpennypensionpeoplepepperperpercentpercentageperfectperformperformanceperformerperfumeperhapsperiodpermanentpermissionpermitpersonpersonalpersonnelpersuadepestpetpetrolphenomenonphonephoto =photographphotographerphrasephysicalphysicistphysicspianistpianopickpicnicpicturepiepiecepigpilepillpillowpilotpinpinepineapplepingpongpinkpintpioneerpipepityplaceplainplanplaneplanetplantplasticplateplatformplayplayerplaygroundpleasantpleasepleasedpleasureplentyplotploughplugpluspmpocketpoempoetpointpoisonpoisonouspolepolicepolicemanpolicypolishpolitepoliticalpoliticianpoliticspollutepollutionpondpoolpoorpop = 
popularpopcornpopularpopulationporkporridgeportportableporterpositionpositivepossesspossessionpossibilitypossiblepostpostagepostcardpostcodepostmanpostponepotpotatopotentialpoundpourpowderpowerpowerfulpracticalpracticepractisepraiseprayprayerpreciousprecisepredictpreferpreferencepregnantprejudicepremierpreparationprepareprescriptionpresentpresentationpreservepresidentpresspressurepretendprettypreventpreviewpreviouspriceprideprimaryprimitive6principle printprison prisoner private privilege prize probably problem procedure process produce product production profession professor profit programme progress prohibit project promise promote pronounce pronunciation proper properly protect protection proudprove provide province psychology pubpublic publishpullpulsepump punctual punctuation punish punishment pupil purchase purepurple purpose pursepushputpuzzle pyramid quake qualification quality quantity quarrel quarter queen question questionnaire queuequick quietquitquitequizrabbitraceracialradiationradioradioactiveradiumragrailrailwayrainrainbowraincoatrainfallrainyraiserandomrangerankrapidrareratrateratherrawrayrazorreachreactreadreadingreadyrealrealiserealityreallyreasonreasonablerebuildreceiptreceivereceiverrecentreceptionreceptionistrecipereciterecogniserecommendrecordrecorderrecoverrecreationrecycleredreducereferrefereereferencereflectreformrefreshrefrigeratorrefuseregardregardlessregisterregretregularregulationrejectrelaterelationrelationshiprelativerelaxrelayrelevantreliablereliefreligionreligiousrelyremainremarkrememberremindremoteremoverentrepairrepeatreplacereplyreportreporterrepresentrepresentativerepublicrequestrequirerequirementrescueresearchresemblereservationreserveresignresistrespectrespondresponsibilityrestrestaurantrestrictionresultretellretirereturnreviewrevisionrevolutionrewardrewindrhymericerichriddlerideridiculousrightrigidringriperipenriseriskriverroadroastrobrobotrockrocketrolerollroofroomroosterrootroperoserotroughroundroundaboutroutinerowroyalrubb
errubbishruderugbyruinrulerulerrunrunnerrunningrushRussiaRussiansacredsacrificesadsadnesssafesafetysailsailorsaladsalarysalesalesgirlsalesmansaltsaltysalutesamesandsandwichsatellitesatisfactionsatisfySaturdaysaucersausagesavesaysayingscanscarescarfscenesceneryschedulescholarscholarshipschoolschoolbagschoolboyschoolmatesciencescientificscientistscissorsscoldscoreScotlandScottishscratchscreamscreensculptureseaseagullsealsearchseashellseasideseasonseatseaweedsecondsecretsecretarysectionsecuresecurityseeseedseekseemseizeseldomselectselfselfishsellsemicircleseminarsendseniorsensesensitivesentenceseparateseparationSeptemberseriousservantserveservicesessionsetsettlesettlementsettlersevenseventeenseventhseventyseveralseveresewsexshabbyshadeshadowshakeshallshallowshameshapesharesharksharpsharpensharpenershaveshavershesheepsheetshelfshelfsheltershineshipshirtshockshoeshootshopshopkeepershoppingshoreshortshortcomingshortlyshortsshotshouldshouldershoutshowshowershrinkshutshuttleshy7sick sickness side sideroad sideway sideways sighsight sightseeing sign signal signature significance silence silentsilksillysilver similar simple simplify simply since sincerely sing singlesinksirsistersit situation six sixteen sixteenth sixthsixtysizeskate skateboard skeptical skiskill skillful skinskipskirtsky skyscraper slave slavery sleep sleepy sleeve sliceslideslightslimslipslowsmall smart smell smile smog smoke 
smokersmoothsnakesneakersneezesniffsnowsnowysosoapsobsoccersocialsocialismsocialistsocietysocksocketsofasoftsoftwaresoilsolarsoldiersolidsomesomebodysomehowsomeonesomethingsometimessomewheresonsongsoonsorrowsorrysortsoulsoundsoupsoursouthsoutheastsouthernsouvenirsowspacespaceshipspadeSpainSpanishsparesparrowspeakspeakerspearspecialspecialistspecificspeechspeedspellspellingspendspinspiritspiritualspitsplendidsplitspoilspokenspokesmansponsorspoonspoonfulsportspotsprayspreadspringspysquaresqueezesquidsquirrelstablestadiumstaffstagestainstainlessstairstampstandstandardstarstarestartstarvationstarvestatestatementstatesmanstationstatisticstatuestatusstaysteadysteakstealsteamsteelsteepstepstewardstewardessstickstillstockingstomachstonestopstoragestorestormstorystoutstovestraightstraightforwardstraitstrangestrangerstrawstrawberrystreamstreetstrengthstrengthenstressstrictstrikestringstrongstrugglestubbornstudentstudiostudystupidstylesubjectsubjectivesubmitsubscribesubstitutesucceedsuccesssuchsucksuddensuffersufferingsugarsuggestsuggestionsuitsuitablesuitcasesuitesummarysummersunsunburntSundaysunlightsunnysunsetsunshinesupersuperiorsupermarketsuppersupplysupportsupposesupremesuresurfacesurgeonsurplussurprisesurroundsurroundingsurvivalsurvivesuspectsuspensionswallowswapswearsweatsweatersweepsweepsweetswellswiftswimswingSwissswitchSwitzerlandswordsymbolsympathysymphonysymptomsystemsystematictabletable 
tennis tail tailor take tale talent talk tall tank tap tape target task taste tasteless tasty tax taxi taxpayer tea teach teacher team teamwork teapot tear tease technical technique technology teenager telephone telescope television tell temperature temple temporary ten tend tendency tennis tense tent tentative term terminal terrible terrify terror test text textbook than thank thankful that the theatre theft their theirs them theme themselves then theoretical theory there therefore thermos these they thick thief thin thing think thinking third thirst thirsty thirteen thirty this thorough those though thought thousand thread three thrill thriller throat through throughout throw thunder thunderstorm Thursday

---------a/an advance among arrival balcony big bride carrier abandon advantage amount arrive ball bike bridegroom carrot ability adventure ample arrow ballet bill bridge carryable advertise amuse art balloon bingo brief cartoonbe able to advertisement amusement article bamboo biochemistry bright carve abnormal advice analyse/analyze artificial ban biography brilliant case aboard advise analysis artist banana biology bring cash abolish advocate ancestor as bandage bird broad cassette abortion affair anchor ash bank birth broadcast castabout affect ancient ashamed bar birthday brochure castle above affection and Asia barbecue birthplace broken casual abrupt afford anecdote Asian barber biscuit broom catabroad afraid anger aside barbershop bishop brother catalogue absence Africa angle ask bargain bit brown catastrophe absent African angry asleep bark bite brunch catch absolute after animal aspect barrier bitter brush category absolutely afternoon ankle assess base black Buddhism cater absorb afterward anniversary assessment baseball blackboard budget catholic abstract again announce assist basement blame buffet cattle absurd against annoy assistance basic blank build cause abundant age annual assistant basin blanket building caution abuse agency another associate basis bleed bunch cautious academic agenda ant association basket bless bungalow cave academy agent Antarctic assume basketball blind burden CD accelerate aggressive antique assumption bat block bureaucratic ceiling accent ago anxiety astonish bath blood burglar celebrate accept agree anxious astronaut bathe blouse burn celebration access agreement any astronomer bathroom blow burst cell accessible agricultural anybody astronomy bathtub blue bury cent accident agriculture anyhow at battery board bus centigrade accommodation ahead anyone athlete battle boat bush centimetre accompany aid anything athletic bay body business central accomplish AIDS anyway Atlantic BC boll businessman centre account aim anywhere 
The Atlantic be bomb businesswoman century accountant air apart Ocean beach bond busy ceremony accumulate aircraft apartment atmosphere bean bone but certain accuracy airline apologize atom bean curd bonus butcher certificate accurate airmail apology attach bear book butter chain accuse airplane apparent attack beard boom butterfly chair accustomed airport appeal attain beast boot button chairman ache airspace appear attempt beat booth buy chairwoman achieve alarm appearance attend beautiful border by chalk achievement album appendix attention beauty bored bye challenge acid alcohol appetite attitude because boring cab challenging acknowledge alcoholic applaud attract become born cabbage champion acknowledge algebra( applause)attraction bed borrow caf échance acquire alike apple attractive bedding boss cafeteria change acquisition alive applicant audience bedroom botanical cage changeable acre all application aunt bee botany calculate channel across allergic apply authentic beef both cake chantact alley appoint author beer bother call chaos action allocate appointment authority before bottle calm character active allow appreciate automatic beg bottom camel characteristic activity allowance appreciation autonomous begin bounce camera charge actor almost approach autumn behalf bound camp chart actress alone appropriate available behave boundary campaign chatactual along approval avenue behaviour bow can cheap acute alongside approve average behind bowl can cheatAD aloud approximately avoid being bowling canal checkad alphabet apron awake belief box cancel cheek adapt already arbitrary award believe boxing cancer cheer adaptation also arch aware bell boy candidate cheerful add alternative architect away belly boycott candle cheers addicted although architecture awesome belong brain candy cheese addition altitude Arctic awful below brake canteen chef address altogether the Arctic awkward belt branch cap chemical adequate aluminium are baby bench brand capital chemist 
adjust always area bachelor bend brave capsule chemistry adjustment am argue back beneath bravery captain cheque administration a.m./am argument background beneficial bread caption chess admirable amateur arise backward benefit break car chest admire amaze arithmetic bacon bent breakfast carbon chew admission amazing arm bacterium beside breakthrough card chicken admit America armchair bad besides breast care chief adolescence ambassador army badminton betray breath careful child adolescent ambassadress around bag between breathe careless childhood adopt ambiguous arrange baggage beyond breathless carpenter chocolate adore ambition arrangement bakery bicycle brewery carpet choiceadult ambulance arrest balance bid brick carriage choir---------choke communication cookie dawn dioxide due equality fantastic choose communism cool day dip dull equip fantasy chopsticks communist copy dead diploma dumpling equipment far chorus companion corn deadline direct during eraser fare Christian company corner deaf direction dusk error farm Christmas compare corporation deal director dust erupt farmer church compass correct dear directory dustbin escape fastcigar compensate correction death dirty dusty especially fasten cigarette compete correspond debate disability duty essay fat cinema competence corrupt debt disabled DVD Europe father circle competition cost decade disadvantage dynamic European fault circuit complete cosy/cozy decide disagree dynasty evaluate favour circulate complex cottage decision disagreement each even favourite circumatance component cotton declare disappear eager evening fax circus composition cough decline disappoint eagle event fear citizen comprehension count decorate disappointed ear eventually feastcity compromise counter decoration disaster early ever feather civil compulsory country decrease discount earn every federal civilian computer countryside deed discourage earth everybody fee civilization concentrate couple deep discover earthquake everyday 
feedclap concept courage deer discovery east everyone feel clarify concern course defeat discrimination Easter everything feeling class concert court defence discuss eastern everywhere fellow classic conclude courtyard defend discussion easy evidence female classify conclusion cousin degree disease eat evident fence classmate concrete cover delay disgusting ecology evolution ferry classroom condition cow delete dish edge exact festival claw condemn crash deliberately dislike edition exam fetch clay conduct crayon delicate dislike editor examine fever clean conductor crazy delicious dismiss educate example few cleaner conference cream delight distance educator excellent fibre clear confident create delighted distant education except fiction clerk confidential creature deliver distinction effect exchange field clever confirm credit demand distinguish effort excite fierce click conflict crew dentist distribute egg excuse fight climate confuse crime department district eggplant exercise figure climb congratulate criminal departure disturb either exhibition fileclinic congratulation criterion depend disturbing elder exist fillclock connect crop deposit dive elect existence film clone connection cross depth diverse electric exit final close conscience crossing describe divide electrical expand finance cloth consensus crossroads description division electricity expect find clothes consequence crowd desert divorce electronic expectation fine clothing conservation cruel deserve dizzy elegant expense fine cloud conservative cry design do elephant expensive finger cloudy consider cube desire doctor else experience fingernail club considerate cubic desk document e-mail experiment finish clumsy consideration cuisine desperate dog embarrass expert fire coach consist culture dessert doll embassy explain fireworks coal consistent cup destination dollar emergency explanation firm coast constant cupboard destroy donate emperor explicit firmcoat constitution cure detective door 
employ explode fish cocoa construct curious determine dormitory empty explore fisherman coffee construction currency develop dot encourage export fistcoin consult curriculum development double encouragement expose fit coincidence consultant curtain devote doubt end express fixcoke consume cushion devotion down ending expression flagcold contain custom diagram download endless extension flame collar container customer dial downstairs enemy extra flash colleague contemporary customs dialogue downtown energetic extraordinary flashlight collect content cut diamond dozen energy extreme flat collection content cycle diary Dr.engine eye flat college continent cyclist dictation draft engineer eyesight Flee collision continue dad dictionary drag enjoy face flexible colour contradict daily die draw enjoyable facial flesh comb contradictory dam diet drawback enlarge fact flight combine contrary damage differ drawer enough factory float come contribute damp difference dream enquiry fade flood comedy contribution dance different dress enter failure floor comfort control danger difficult drill enterprise fair flour comfortable controversial dangerous difficulty drink entertainment faith flow command convenience dare dig drive enthusiastic fall flower comment convenient dark digest driver entire fall flu commercial conventional darkness digital drop entrance FALSE fluency commit conversation dash dignity drug entry familiar fluent commitment convey data dilemma drum envelope family fly committee convince database dimension drunk environment famous fly common cook date dinner dry envy fan focuscommunicate cooker daughter dinosaur duck equal fancy fog---------foggy gas guide hobby information kilogram liquid mealfold gate guilty hold initial Kilometre list meanfolk gather guitar hole injure kind listen meaningfollow gay gun holiday injury kind literature meansfond general gym holy ink kindergarten literary meanwhile food generation gymnastics home inn kindness litre measurefool 
generous habit homeland innocent King litter meatfoolish gentle hair Hometown insect kingdom little medal gold foot gentleman haircut homework insert kiss live medalfootball geography half honest inside kitchen lively mediafor geometry hall honey insist kite load medicalforbid gesture ham honour inspect knee loaf medicineforce get hamburger hook inspire knife local medium forecast gift hammer hope instant knock lock meetforehead gifted hand hopeful instead know lonely meeting foreign giraffe handbag hopeless institute knowledge long melon foreigner girl handful horrible institution lab look member foresee give handkerchief horse instruct labour loose memorial forest glad handle hospital instruction lack lorry memory forever glance handsome host instrument lady loss mendforget glare handwriting hostess insurance lake lose mental forgetful glass handy hot insure lamb lot mention forgive globe hang hot dog intelligence lame Lost & Found menufork glory happen hotel intend lamp loud merchantform glove happiness hour intention land lounge mercifulformat glue happy house interest language love mercyformer go harbour housewife interesting lantern lovely merely fortnight goal hard housework international lap low merry fortunate goat hardly how internet large luck messfortune god hardship however interpreter last lucky messageforty gold hardworking howl interrupt late luggage messyforward golden harm hug interval latter lunch metalfound golf harmful huge interview laugh lung method fountain good harmony human into laughter machine metrefox goods harvest human being introduce laundry mad microscope fragile goose hat humorous introduction law madam/madame microwave fragrant govern hatch hunger invent lawyer magazine middle framework government hate hungry invention lay magic Middle East franc grade have hunt invitation lazy maid midnightfree gradual he hunter invite lead mail mightfreedom graduate head hurricane iron leader mailbox mildfreeway graduation headache hurry 
irrigation leaf main milefreeze grain headline hurt island league mainland milkfreezing gram headmaster husband it leak major millionaire frequent grammar headmistress hydrogen its learn majority mindfresh grand health ice itself least make minefriction grandchild healthy ice-cream jacket leather make minefridge granddaughter hear idea jam leave male mineralfriend grandma hearing identity jar lecture man minibus friendly grandpa heart identification jaw left manage minimum friendship grandparents heat idiom jazz leg manager minister frighten grandson heaven if jeans legal mankind ministryfrog granny heavy ignore jeep lemon manner table minorityfrom grape heel ill jet lemonade manners minusfront graph height illegal jewellery lend many minutefrontier grasp helicopter illness job length map mirrorfrost grass hello imagine jog lesson maple missfruit grateful helmet immediately join let maple missilefry gravity help immigration joke letter leaves mistfuel great helpful import journalist level marathon mistakefull greedy hen importance journey liberty marble mistakenfun green her important joy liberation march misunderstand function green herb impossible judge librarian mark mix fundamental grocer here impress judgement library market mixturefuneral greet hero impression juice license marriage mmfunny greeting hers improve jump lid marry mobilefur grey herself in jungle lie mask mobile phone furnished grill hesitate inch junior lie mass model furniture grocer hi incident just life master modemfuture grocery hide include justice lift mat moderngain ground high income kangaroo light match modestgallery group highway increase keep lightning material momgallon grow hill indeed kettle like mathematics momentgame growth him independence key likely matter mommy garage guarantee himself independent keyboard limit mature money garbage guard hire indicate Kick line maximum monitor garden guess his industry kid link may monkeygarlic guest history influence kill lion maybe 
monthgarment guidance hit inform kilo lip me monument---------moon No.or Party pineapple power punish recorder moon cake noble oral pass ping-pong powerful punishment recovermop nobody orange passage Pink practical Pupil recreationmoral nod orbit passenger pint practice purchase recycle more noise order passer-by pioneer practise pure rectangle morning noisy ordinary passive pipe praise Purple red Moslem none organ passport pity pray Purpose reduce mosquito noodle organise past place prayer purse refer mother noon organization patent plain precious push referee motherland nor origin path plan precise put reference motivation normal other patience plane predict puzzle reflect motor north otherwise patient Planet prefer pyramid reform motorcycle northeast ought patient plant preference quake refresh motto northern our pattern plastic pregnant qualification refrigerator mountain northwest ours pause plate prejudice quality refusemountainous nose ourselves Pavement platform premier quantity regard mourn not out pay play Preparation quarrel regardless mouse note outcome P.E.playground prepare quarter register moustache notebook outdoors P.C.pleasant prescription queen regret mouth nothing outer pea please present question regular from mouth to mouth notice outgoing peace pleased present questionnaire regulation move novel outing peaceful pleasure presentation queue rejectmovement novelist outline peach Plenty preserve quick relatemovie now output pear plot president quiet relation Mr.nowadays outside pedestrian plug press quilt relationship Mrs.nowhere outspoken pen Plus press quit relative Ms.nuclear outstanding pence p.m./pm,P.M./PM pressure quite relaxmud numb outward (s )pencil pocket pretend quiz relay muddy number oval penny poem pretty rabbit relevant multiply nurse over pension poet prevent race reliable murder nursery overcoat People point preview racial relief museum nut overcome pepper poison previous radiation religion mushroom nutrition overhead per 
poisonous price radio religious music obey overlook percent pole pride radioactive rely musical object overweight percentage<the North primary radium remain musician observe owe perfect(South ) Pole >primary school rag remark must obtain own perform police primitive rail remember mustard obvious owner performance policeman principle railway remind mutton occupation ownership performer Policewoman print rain remotemy occupy ox perfume policy prison rainbow remove myself occur oxygen perhaps polish prisoner raincoat rentnail ocean pace period Polite private rainfall repair name Oceania Pacific permanent Political privilege rainy repeat narrow o'clock the Pacific Ocean permission politician prize raise replace nation of pack permit politics Probably random reply national off package Person pollute problem range report nationality offence packet personal pollution procedure rank reporter nationwide offer paddle personnel pond process rapid represent native office page personally pool produce rare representative natural officer Pain persuade poor product rat republic nature official painful pest pop production rather reputation navy offshore paint pet popcorn profession raw request near often Painter petrol Popular professor raw material require nearby oh painting phenomenon population profit ray requirement nearly oil pair phone pork programme razor rescue neat oilfield palace photo porridge progress reach research necessary O.K.pale photograph port Prohibit react resemble neck old pan Photographer Portable project read reservation necklace Olympic ( s)pancake phrase porter promise reading reserve need Olympic Games panda physical position promote ready resign needle on panic physical positive pronounce real Resist negotiate once paper examination possess Pronunciation reality Respect neighbour one paperwork physician possession proper realise respond neighbourhood oneself paragraph physicist possibility properly really responsibility neither onion parallel physics 
possible protect reason rest
nephew only parcel pianist post protection reasonable restaurant
nervous onto pardon piano post proud rebuild restriction
nest open parent pick postage prove receipt result
net opening park picnic postcard provide receive retell
network opera park picture postcode province receiver retire
never opera house parking pie poster psychology recent return
new operate parking lot piece postman pub reception review
news operation parrot pig postpone public receptionist revision
newspaper operator part pile pot publish recipe revolution
next opinion participate pill potato pull recite reward
nice oppose particular pillow potential pulse recognise rewind
niece opposite partly pilot pound pump recommend rhyme
night optimistic partner pin pour punctual record rice
no optional part-time pine powder punctuation record holder rich

rid scholar she slip spoken stupid tap throat
riddle scholarship sheep slow spokesman/woman style tape through
ride schoolbag sheet small sponsor subject target throughout
ridiculous schoolboy/girl shelf smart spoon subjective task throw
right schoolmate shelter smell spoonful submit tasteless thunder
rigid science shine smile sport subscribe tasty thunderstorm
ring scientific ship smog spot substitute tax thus
ripe scientist shirt smoke spray succeed taxi tick
ripen scissors shock smoker spread success taxpayer ticket
rise scold shoe smooth spring successful tea tidy
risk score shoot snake spy such teach tie
river scratch shop sneaker square suck teacher tiger
road scream shop assistant sneeze squeeze sudden team tight
roast screen shopkeeper sniff squirrel suffer teamwork till
rob sculpture shopping snow stable suffering teapot time
robot sea shore snowy stadium sugar tear timetable
rock seagull short so staff suggest tear tin
rocket seal shortcoming soap stage suggestion tease tiny
role search shortly sob stain suit technical tip
roll seashell shorts soccer stainless suitable technique tire
roof seaside shot social stair suitcase
technology tired
room season should socialism stamp suite teenager tiresome
rooster seat shoulder socialist stand summary telephone tissue
root seaweed shout society standard summer telescope title
rope second show sock star sun television to
rose secret shower socket stare sunburnt tell toast
rot secretary shrink sofa start sunlight temperature tobacco
rough section shut soft starvation sunny temple today
round secure shuttle soft drink starve sunshine temporary together
roundabout security shy software state super tend toilet
routine see sick soil station superb tendency tolerate
row seed melon sickness solar statement superior tennis tomato
row seed side soldier <statesman/stateswoman> supermarket tense tomb
royal seek sideroad solid supper tension tomorrow
rubber seem sideway some statistics supply tent ton
rubbish seize sideways somebody statue support tentative tongue
rude seldom sigh somehow status suppose term tonight
rugby select sight someone stay supreme terminal too
ruin self sightseeing something steady surplus terrible tool
rule selfish signal sometimes steal sure terrify tooth
ruler sell signature somewhere steam surface terror toothache
run semicircle significance son steel surgeon test toothbrush
rush seminar silence song steep surprise text toothpaste
sacred send silent soon step surround textbook top
sacrifice senior silk sorrow steward surrounding than topic
sad sense silly sorry stewardess survival thank tortoise
sadness sensitive silver sort stick survive thankful total
safe sentence similar soul still suspect that touch
safety separate simple sound stocking suspension the tough
sail separation simplify soup stomach swallow theatre tour
sailor serious since sour stone swap theft tourism
salad servant sincerely south stop swear their tourist
salary serve sing southeast storage sweat theirs tournament
sale service single southern store sweater them toward(s)
salesgirl session sink southwest storm sweep theme towel
salesman set sir souvenir story sweet themselves tower
saleswoman settle sister sow stout swell then town
salt settlement sit space stove swift theoretical toy
salty settler situation spaceship straight swim theory track
salute several size spade straightforward swing there tractor
same severe skate spare strait switch therefore trade
sand sew skateboard sparrow strange sword thermos tradition
sandwich sex ski speak stranger symbol these traditional
satellite shabby skill speaker straw sympathy they traffic
satisfaction shade skillful spear strawberry symphony thick train
satisfy shadow skin special stream symptom thief training
saucer shake skip specialist street system thin tram
sausage shall skirt specific strength systematic thing transform
save shallow sky speech strengthen table think translate
say shame skyscraper speed stress table tennis thinking translation
saying shape slave spell strict tablet thirst translator
scan share slavery spelling strike tail this transparent
scar shark sleep spend string tailor thorough transport
scarf sharp sleepy spin strong take those trap
scene sharpen sleeve spirit struggle tale though travel
scenery sharpener pencil slice spiritual stubborn talent thought traveler
sceptical sharpener slide spit student talk thread treasure
schedule shave slight splendid studio tall thrill treat
school shaver slim split study tank thriller treatment

tree urgent waste without
tremble use watch witness
trend used water wolf
trial useful watermelon woman
triangle useless wave wonder
trick user wax wonderful
trip usual way wood
trolleybus vacation we wool
troop vacant weak woolen
trouble vague weakness word
troublesome vain wealth work
trousers valid wealthy worker
truck valley wear world
true valuable weather worldwide
truly value web worm
trunk variety website worried
trust various wedding worry
truth vase weed worth
try vast week worthwhile
T-shirt VCD weekday worthy
tube vegetable weekend wound
tune vehicle weekly wrestle
turkey version weep wrinkle
turn vertical weigh wrist
turning very weight write
tutor vest
welcome wrong
TV via welfare X-ray
twice vice well yard
twin victim west yawn
twist victory western yell
type video westwards year
typewriter videophone wet yellow
typical view whale yes
typhoon village what yesterday
typist villager whatever yet
tyre vinegar wheat yoghurt
ugly violate wheel you
umbrella violence when young
unable violent whenever your
unbearable violin where yours
unbelievable violinist wherever yourself
uncertain virtue whether yourselves
uncle virus which youth
uncomfortable visa whichever yummy
unconditional visit while zebra
unconscious visitor whisper zebra crossing
under visual whistle zero
underground vital white zip
underline vivid who zip code
understand vocabulary whole zipper
undertake voice whom who zone
underwear volcano whose zoo
unemployment volleyball why zoom
unfair voluntary wide
unfit volunteer widespread
unfortunate vote widow
uniform voyage wife
union wag wild
unique wage wildlife
unit waist will
unite wait will
universal waiter willing
universe waiting-room win
university waitress wind
unless wake wind
unlike walk window
unrest wall windy
until wallet wine
unusual walnut wing
unwilling wander winner
up want winter
update war wipe
upon ward wire
upper warehouse wisdom
upset warm wise
upstairs warmth wish
upward(s) warn with
urban wash withdraw
urge washroom within