The treatment of epsilon moves in subset construction

- Format: PDF

- Size: 202.62 KB

- Pages: 16

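DOI: 10.16662/ki.1674-0742.2021.33.089
Analysis of the Effect of Escitalopram Combined with Quetiapine in the Treatment of First-episode Depression
YANG Xiaojiang, Department of Psychiatry, Quanzhou Third Hospital, Quanzhou, Fujian 362121
[Abstract] Objective: To explore the effect of escitalopram combined with quetiapine in the treatment of first-episode depression.

Methods: By convenience sampling, 116 patients with first-episode depression treated at the hospital from January 2018 to December 2020 were selected as study subjects and divided into two groups using the random number table method.

The 58 patients in the control group were treated with escitalopram, and the 58 patients in the study group were additionally given quetiapine on this basis.

The two groups were compared in clinical efficacy, neural factor levels before and after treatment [myelin basic protein (MBP) and brain-derived neurotrophic factor (BDNF)], cognitive function before treatment and at 4 and 8 weeks of treatment [assessed with the Repeatable Battery for the Assessment of Neuropsychological Status (RBANS)], and adverse reactions.

Results: The total effective rate in the study group (94.83%) was higher than that in the control group (79.31%), and the difference was statistically significant (χ²=6.202, P<0.05).

After treatment, MBP in the study group, (4.52±0.85) ng/mL, was lower than in the control group, (5.79±0.69) ng/mL, and BDNF in the study group, (27.52±4.03) ng/mL, was higher than in the control group, (24.33±5.00) ng/mL; the differences were statistically significant (t=8.834, 3.783; P<0.05).

At 4 and 8 weeks of treatment, the RBANS scores in the study group, (73.52±10.00) and (78.62±10.40) points, were higher than those in the control group, (68.03±8.46) and (71.32±9.05) points; the differences were statistically significant (t=3.192, 4.033; P<0.05).

The incidence of adverse reactions was 10.34% in the study group and 8.62% in the control group, and the difference was not statistically significant (χ²=0.100, P>0.05).

Conclusion: Combined treatment with escitalopram and quetiapine in patients with first-episode depression can improve clinical efficacy, neural factor levels, and cognitive function with good safety, and is worth promoting in clinical practice.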

[CLC number] R5 [Document code] A [Article ID] 1674-0742(2021)11(c)-0089-04

Analysis of the Effect of Escitalopram and Quetiapine in the Treatment of First-episode Depression
YANG Xiaojiang, Department of Psychiatry, Quanzhou Third Hospital, Quanzhou, Fujian Province, 362121 China
[Abstract] Objective To explore the effect of escitalopram combined with quetiapine in the treatment of first-episode depression. Methods A total of 116 patients with first-episode depression who visited the hospital from January 2018 to December 2020 were selected by convenience sampling and divided into two groups using a random number table. The 58 patients in the control group were treated with escitalopram, and the 58 patients in the study group were additionally treated with quetiapine. The two groups were compared in clinical efficacy, levels of neural factors [myelin basic protein (MBP) and brain-derived neurotrophic factor (BDNF)] before and after treatment, cognitive function [assessed with the Repeatable Battery for the Assessment of Neuropsychological Status (RBANS)] before treatment and at 4 and 8 weeks of treatment, and adverse reactions. Results The total effective rate in the study group (94.83%) was higher than that in the control group (79.31%), and the difference was statistically significant (χ²=6.202, P<0.05). After treatment, MBP in the study group, (4.52±0.85) ng/mL, was lower than in the control group, (5.79±0.69) ng/mL, and BDNF, (27.52±4.03) ng/mL, was higher than in the control group, (24.33±5.00) ng/mL; the differences were statistically significant (t=8.834, 3.783; P<0.05). At 4 and 8 weeks of treatment, the RBANS scores of the study group, (73.52±10.00) and (78.62±10.40) points, were higher than those of the control group, (68.03±8.46) and (71.32±9.05) points; the differences were statistically significant (t=3.192, 4.033; P<0.05). The incidence of adverse reactions was 10.34% in the study group and 8.62% in the control group; the difference was not statistically significant (χ²=0.100, P>0.05). Conclusion Combined treatment with escitalopram and quetiapine in patients with first-episode depression can improve clinical efficacy, neural factor levels, and cognitive function, with good safety and value for clinical promotion.
[Key words] Escitalopram; Quetiapine; First-episode depression
[About the author] YANG Xiaojiang (1978–), male, bachelor's degree, attending physician; research interest: psychiatric disorders.
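The test statistics reported in this abstract can be recomputed from the summary data as a consistency check. The sketch below is an illustration under stated assumptions, not the authors' analysis: the 2×2 counts (55/58 vs. 46/58 effective; 6/58 vs. 5/58 with adverse reactions) are back-calculated from the reported percentages, χ² is assumed to be the uncorrected Pearson statistic, and t is assumed to be the pooled-variance two-sample t with 58 patients per group. The helper names `pearson_chi2_2x2` and `two_sample_t` are hypothetical.

```python
import math


def pearson_chi2_2x2(a: int, b: int, c: int, d: int) -> float:
    """Uncorrected Pearson chi-square for the 2x2 table [[a, b], [c, d]]."""
    n = a + b + c + d
    numerator = n * (a * d - b * c) ** 2
    denominator = (a + b) * (c + d) * (a + c) * (b + d)
    return numerator / denominator


def two_sample_t(mean1: float, sd1: float, mean2: float, sd2: float, n: int) -> float:
    """Pooled-variance two-sample t for two groups of equal size n."""
    pooled_var = (sd1 ** 2 + sd2 ** 2) / 2
    standard_error = math.sqrt(pooled_var * 2 / n)
    return (mean1 - mean2) / standard_error


N_PER_GROUP = 58  # patients per group, as stated in the abstract

results = {
    # Assumed counts. Rows: study, control; columns: effective, not effective.
    "total effective rate (chi2)": pearson_chi2_2x2(55, 3, 46, 12),
    # Assumed counts. Rows: study, control; columns: adverse reaction, none.
    "adverse reactions (chi2)": pearson_chi2_2x2(6, 52, 5, 53),
    # Control minus study, because MBP was lower in the study group.
    "MBP after treatment (t)": two_sample_t(5.79, 0.69, 4.52, 0.85, N_PER_GROUP),
    "BDNF after treatment (t)": two_sample_t(27.52, 4.03, 24.33, 5.00, N_PER_GROUP),
    "RBANS at 4 weeks (t)": two_sample_t(73.52, 10.00, 68.03, 8.46, N_PER_GROUP),
    "RBANS at 8 weeks (t)": two_sample_t(78.62, 10.40, 71.32, 9.05, N_PER_GROUP),
}

for label, value in results.items():
    print(f"{label}: {value:.3f}")
```

Under these assumptions the printed values agree with the reported χ²=6.202 and 0.100 and t=8.834, 3.783, 3.192, and 4.033. With equal group sizes, the pooled-variance and Welch t statistics are numerically identical, so the check does not distinguish between them.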

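Advances in the diagnosis and treatment of dyspepsia in patients with chronic pancreatitis
HU Lianghao, JIN Zhendong
Department of Gastroenterology, The First Affiliated Hospital of Navy Medical University, Shanghai 200433
Corresponding author: JIN Zhendong, ****************(ORCID: 0000-0003-1196-9047)
Abstract: Dyspepsia is a common group of clinical symptoms that can be divided into organic and functional types.

Chronic pancreatitis (CP) is often accompanied by dyspeptic symptoms such as steatorrhea, abdominal distension, and abdominal pain; most of these patients have pancreatic exocrine insufficiency (PEI), which constitutes organic dyspepsia.

In clinical practice, diagnosing PEI and dyspepsia requires a comprehensive assessment of the patient's clinical manifestations, nutritional status, and pancreatic exocrine function, which then guides an individualized treatment plan.

However, some patients have dyspeptic symptoms despite good exocrine function, and their diagnosis and treatment remain a clinical challenge.

This article reviews research advances in the diagnosis and treatment of dyspepsia in CP patients.

Advances in the diagnosis and treatment of dyspepsia in chronic pancreatitis patients
HU Lianghao, JIN Zhendong. (Department of Gastroenterology, The First Affiliated Hospital of Navy Medical University, Shanghai 200433, China)
Corresponding author: JIN Zhendong, ****************(ORCID: 0000-0003-1196-9047)
Abstract: Dyspepsia is a common group of clinical symptoms and can be classified into organic and functional dyspepsia. Patients with chronic pancreatitis (CP) often have symptoms of dyspepsia such as fatty diarrhea, abdominal distention, and abdominal pain, and most of these patients have pancreatic exocrine insufficiency (PEI), which belongs to organic dyspepsia. In clinical practice, the diagnosis of PEI and dyspepsia requires a comprehensive assessment of clinical manifestations, nutritional status, and pancreatic exocrine function, and an individualized treatment regimen should be developed based on these factors. However, some patients with normal exocrine function have symptoms of dyspepsia, and their diagnosis and treatment remain difficult in clinical practice. This article reviews the advances in the diagnosis and treatment of dyspepsia in CP patients.
Key words: Pancreatitis, Chronic; Exocrine Pancreatic Insufficiency; Dyspepsia; Diagnosis; Therapeutics

Dyspepsia refers to discomfort originating in the stomach and duodenum, mainly upper abdominal bloating, epigastric pain or burning, postprandial fullness and early satiety, belching, and nausea. When an organic or metabolic cause is present (such as peptic ulcer, gastrointestinal tumor, pancreatic disease, hyperthyroidism, or an adverse drug reaction), it is classified as organic dyspepsia; the remaining cases, which cannot be explained by an underlying disease, are classified as functional dyspepsia [1].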

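Jiangsu, Affiliated High School of Nanjing Normal University, 2022–2023 Academic Year, Senior Three First Mock Adaptive Examination: English
Full marks: 150 points. Time allowed: 120 minutes.
Part I Listening (two sections, 30 points)
While answering, first mark your answers on the test paper.

After the recording ends, you will have two minutes to transfer your answers from the test paper to the answer sheet.

Section 1 (5 questions; 1.5 points each, 7.5 points in total) Listen to the following 5 conversations.

Each conversation is followed by one question. Choose the best answer from the three options A, B, and C.

After each conversation, you will have 10 seconds to answer the question and read the next one.

Each conversation will be read only once.

1. What did the woman forget? A. Her gloves. B. Her scarf. C. Her coat.
2. What does the boy suggest doing? A. Going to the circus. B. Playing with small animals. C. Taking a trip to a farm.
3. How far is the last stop sign? A. Two blocks away. B. Three blocks away. C. Five blocks away.
4. Why couldn't the woman hear the man clearly? A. The man was eating. B. The man was in the shower. C. The woman has bad hearing.
5. Where are the speakers? A. In a gas station. B. In a clinic. C. In an interview room.

Section 2 (15 questions; 1.5 points each, 22.5 points in total) Listen to the following 5 conversations or monologues.

Each conversation or monologue is followed by several questions. Choose the best answer from the three options A, B, and C, and mark it in the corresponding place on the test paper.

Before each conversation or monologue, you will have time to read the questions, 5 seconds per question; after listening, you will have 5 seconds to answer each question.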

China Journal of Traditional Chinese Medicine and Pharmacy (formerly Zhongguo Yiyao Xuebao), CJTCMP, February 2021, Vol. 36, No. 2, p. 869
• Clinical Experience •
ZHOU Bin's experience in treating autoimmune gastritis with microscopic syndrome differentiation
MO Fang-zheng¹, GUO Zhe-yu, ZHOU Bin² (¹Beijing University of Chinese Medicine, Beijing 100029; ²Guang'anmen Hospital, China Academy of Chinese Medical Sciences, Beijing 100053)
Abstract: This article outlines the clinical characteristics of autoimmune gastritis and summarizes Professor ZHOU Bin's clinical experience in treating autoimmune gastritis with TCM syndrome differentiation. Professor ZHOU Bin proposes that insufficient congenital endowment and poor development of the zang-fu organs lead to deficiency of both the spleen and kidney, which is the main cause of the disease; treatment therefore emphasizes tonifying the spleen and kidney from the onset of the disease and throughout its course. At the same time, microscopic syndrome differentiation is performed using modern gastroscopy, pathology, serology, and other methods, and is combined with the patient's individual symptoms; combining microscopic and macroscopic syndrome differentiation allows precise and thus effective treatment of autoimmune gastritis.

Clinical experience of ZHOU Bin in treating autoimmune gastritis based on microscopic syndrome differentiation
MO Fang-zheng¹, GUO Zhe-yu, ZHOU Bin² (¹Beijing University of Chinese Medicine, Beijing 100029, China; ²Guang'anmen Hospital, China Academy of Chinese Medical Sciences, Beijing 100053, China)
Abstract: The article summarizes the clinical characteristics of autoimmune gastritis and the clinical experience of Professor ZHOU Bin in applying TCM syndrome differentiation to autoimmune gastritis. Professor ZHOU Bin pointed out that insufficient congenital endowment and poor development of the viscera result in deficiency of both the spleen and kidney, which is the main cause of this disease. During treatment, the spleen and kidney should be nourished from the beginning of the disease and throughout the treatment. In addition, treatment should take into account gastroscopy, histology, serum markers, and the patient's individual symptoms. The combination of microscopic and macroscopic syndrome differentiation provides precise treatment, thus effectively treating autoimmune gastritis.
Key words: Autoimmune gastritis; ZHOU Bin; Microscopic syndrome differentiation; Experience

The Classification and Grading of Gastritis: The Updated Sydney System [1] divides chronic gastritis into type A and type B gastritis according to lesion location. Type A gastritis is autoimmune gastritis (AIG). Whereas type B gastritis mainly involves the gastric antrum, AIG mainly involves the gastric body and fundus, and the antrum is largely unaffected. Traditional Chinese medical literature has no disease name corresponding to AIG, but based on its symptoms and signs, Professor ZHOU Bin holds that the disease belongs to categories such as "pi disease" (stuffiness) and "consumptive disease" (xu lao).

· Review · Research progress in the treatment of extra-articular distal tibial fractures
DU Wujun¹,², XU Bin², LIU Chenhe²
[Abstract] Distal tibial fractures are relatively common in orthopedic trauma.

Their treatment remains both a difficulty and a focus of attention in orthopedic trauma. Many treatment methods exist, but no single fixation method is suitable for every fracture type, and current efforts mostly center on minimizing disruption of the blood supply and damage to the soft tissue.

With the continuing refinement of the AO and BO principles, minimally invasive treatment has become the current trend.

This article reviews several minimally invasive fixation methods that have been widely used for distal tibial fractures in recent years.

Research progress in the treatment of extra-articular distal tibial fractures
Du Wujun¹,², Xu Bin², Liu Chenhe². ¹Shanxi Medical University, Taiyuan 030001, China; ²Department of Orthopaedics, the First Hospital of Shanxi Medical University, Taiyuan 030001, China
Corresponding author: Xu Bin, Email: xushu07017@
[Abstract] Distal tibial fracture is a common fracture in orthopedic trauma, and its treatment remains both a research focus and a difficulty. Many treatments exist for distal tibial fractures, but no single fixation method covers all fracture types, and treatment centers on minimizing damage to the blood supply and soft tissue. With the continuing development of the AO and BO principles, minimally invasive fixation has become the current trend in treatment. This article comprehensively reviews several minimally invasive fixation methods widely used in the treatment of distal tibial fractures in recent years.
[Key words] Tibial fractures; Internal fixators; Fracture fixation, intramedullary

Distal tibial fractures are relatively common in orthopedic trauma.

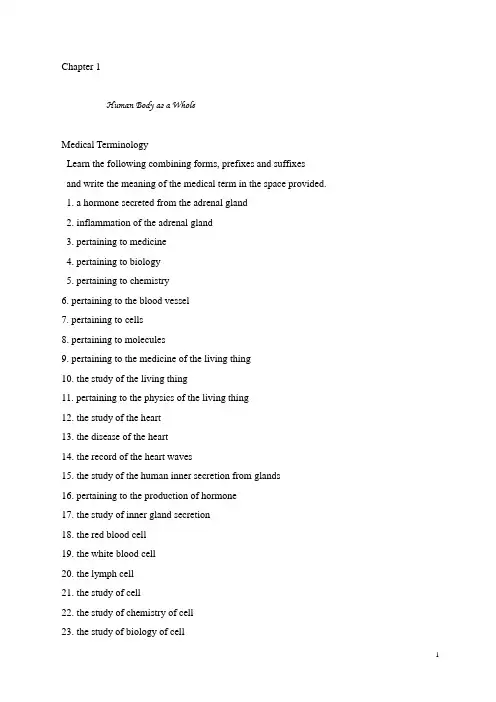

Chapter 1 Human Body as a Whole

Medical Terminology

Learn the following combining forms, prefixes, and suffixes, and write the meaning of the medical term in the space provided: 1. a hormone secreted from the adrenal gland; 2. inflammation of the adrenal gland; 3. pertaining to medicine; 4. pertaining to biology; 5. pertaining to chemistry; 6. pertaining to the blood vessel; 7. pertaining to cells; 8. pertaining to molecules; 9. pertaining to the medicine of living things; 10. the study of living things; 11. pertaining to the physics of living things; 12. the study of the heart; 13. disease of the heart; 14. the record of the heart waves; 15. the study of the human internal secretions from glands; 16. pertaining to the production of hormones; 17. the study of internal gland secretion; 18. the red blood cell; 19. the white blood cell; 20. the lymph cell; 21. the study of the cell; 22. the study of the chemistry of the cell; 23. the study of the biology of the cell; 25. tumor of the embryo; 26. the study of the diseases of the embryo; 27. the study of the human internal secretions from glands; 28. pertaining to the inside of the heart; 29. pertaining to the inside of the cell; 30. above the skin; 31. above the skin; 32. inflammation of the skin; 33. red blood cells; 34. instrument for measuring red blood cells; 35. to breathe out the waste gas; 36. to drive somebody or something out; 37. to spread outside; 38. something that produces disease; 39. the study of blood; 40. blood cells; 41. the study of tissues; 42. the study of tissue pathology; 43. therapy treatment, hence tissue treatment; 44. pertaining to the base; 45. pertaining to the toxin; 46. pertaining to symptoms; 47. the study of the body's self-protection from disease; 48. protected from; 49. deficiency in the immune system of the body; 50. substance from the adrenal gland; 51. water from it; 52. the study of societies; 53. the study of the urinary system; 55. lymph cells; 56. tumor of the lymphatic system; 57. the study of the lymphatic system; 58. the study of physical growth; 59. a doctor of internal medicine; 60. treatment by physiological methods; 61. the new growth, tumor; 62. the study of the mind; 63. abnormal condition of the mind; 64. the study of the relationship between psychology and biology; 65. a condition of overactivity of the thyroid gland; 66. the condition of underactivity of the thyroid gland; 67. inflammation of the thyroid gland; 68. cutting apart the human body as a branch of medical sciences; 69. cutting open the bone; 70. cutting the heart open; 71. pertaining to the blood vessel; 72. inflammation of the blood vessel; 73. pertaining to the lymphatic system and the blood vessel.

Key to the Exercises B

1. (embryo) embryology
2. (process/condition) mechanism
3. (heart) cardiovascular
4. (color) chromatin
5. (secretion) endocrinology
6. (cell) cytology
7. (something that produces or is produced) pathogen
8. (lymph) lymphatic
9. (the study of) psychology
10. (pertaining to) regular

Language Points: put together (formed, composed); known as (called); joints between bones (articulations); to provide points of attachment for the muscles that move the body (the skeletal muscles pull on the bones to produce various movements); hip joint; flexible (pliable); cushioning (buffering); replaced by bone (ossified); one bone moves in relation to the other (the two bones move toward each other to produce motion); contents (substances); nourishment (nutrients); function (perform its role); waste products (wastes); accumulate (build up); poison the body (endanger life); distributes (transports); needed materials (useful substances); unneeded ones (wastes); is made up of (includes); protect… against foreign invaders (prevent outside invasion) (see the PowerPoint); identical (equivalent); receives (takes in); traveled through (flowed through); forced out (pumped out); reenter (flow back into); directly; channels (vessels); filters; larynx; trachea (windpipe); two lungs (the left and right lungs); very large number of (a great many); air spaces (alveoli); release (give off); extending (extending to); broken down (decomposed); absorbed into; chewing (mastication); esophagus.

Key to the Section B Passage 1 Exercises B

1. (The skeletal system consists of bones, joints, and soft bones.)
2. (Heat is generated when muscles are contracted, which helps keep the body temperature constant.)
3. (The circulation of blood carries useful materials to all body cells while removing waste ones.)
4. (Oxygen is inhaled and carbon dioxide is exhaled in the process of respiration.)
5. (The digestion of food involves both mechanical and chemical procedures.)
6. (The urinary system keeps normal levels of water and of certain chemicals in the body.)
7. (The pituitary is a major gland located under the brain in the middle of the head.)
8. (The brain collects and processes information and then sends instructions to all parts of the body to be carried out.)
9. (The main function of the male reproductive system is to generate, transport, and keep active male sex cells.)
10. (The largest of the body's organs, the skin protects the inner structure of the body with a complete layer.)

Key to the Section A Passage 1 Exercises E

1. cardiovascular diseases
2. function of the pituitary
3. the urinary tract
4. molecules
5. artery
6. endocrinology
7. dyspnea / difficulty in respiration
8. saliva
9. histology
10. blood circulation
11. hematology
12. physiology
13. anatomy
14. the female reproductive
15. nervous cells
16. immunology
17. indigestion / poor digestion
18. voluntary muscle
19. embryology
20. psychology

Section B, Passage 2 Cells and Tissues

Language Points: organized (composed); arranged into (make up); in turn are grouped into (are further grouped into); serves its specific (has its specific); bear in mind (remember); result from (originate from); billions (countless numbers); determined (established); fit on (put together); by contrast (in comparison); machinery (mechanism); while normally (under normal conditions); function with great efficiency (work efficiently); are subject to (are prone to); result in (lead to); millionth (one millionth); equal (be equal to); average (typical); a speck barely visible (a tiny dot that can hardly be seen).

The science that deals with cells on the smallest structural and functional level is called molecular biology.

Exercise and Type 2 Diabetes
The American College of Sports Medicine and the American Diabetes Association: joint position statement
Sheri R. Colberg, PhD, FACSM¹; Ronald J. Sigal, MD, MPH, FRCP(C)²; Bo Fernhall, PhD, FACSM³; Judith G. Regensteiner, PhD⁴; Bryan J. Blissmer, PhD⁵; Richard R. Rubin, PhD⁶; Lisa Chasan-Taber, ScD, FACSM⁷; Ann L. Albright, PhD, RD⁸; Barry Braun, PhD, FACSM⁹

Although physical activity (PA) is a key element in the prevention and management of type 2 diabetes, many with this chronic disease do not become or remain regularly active. High-quality studies establishing the importance of exercise and fitness in diabetes were lacking until recently, but it is now well established that participation in regular PA improves blood glucose control and can prevent or delay type 2 diabetes, along with positively affecting lipids, blood pressure, cardiovascular events, mortality, and quality of life. Structured interventions combining PA and modest weight loss have been shown to lower type 2 diabetes risk by up to 58% in high-risk populations. Most benefits of PA on diabetes management are realized through acute and chronic improvements in insulin action, accomplished with both aerobic and resistance training. The benefits of physical training are discussed, along with recommendations for varying activities, PA-associated blood glucose management, diabetes prevention, gestational diabetes mellitus, and safe and effective practices for PA with diabetes-related complications.

Diabetes Care 33:e147–e167, 2010

INTRODUCTION

Diabetes has become a widespread epidemic, primarily because of the increasing prevalence and incidence of type 2 diabetes. According to the Centers for Disease Control and Prevention, in 2007, almost 24 million Americans had diabetes, with one-quarter of those, or six million, undiagnosed (261).
Currently, it is estimated that almost 60 million U.S. residents also have prediabetes, a condition in which blood glucose (BG) levels are above normal, thus greatly increasing their risk for type 2 diabetes (261). Lifetime risk estimates suggest that one in three Americans born in 2000 or later will develop diabetes, but in high-risk ethnic populations, closer to 50% may develop it (200). Type 2 diabetes is a significant cause of premature mortality and morbidity related to cardiovascular disease (CVD), blindness, kidney and nerve disease, and amputation (261). Although regular physical activity (PA) may prevent or delay diabetes and its complications (10,46,89,112,176,208,259,294), most people with type 2 diabetes are not active (193). In this article, the broader term "physical activity" (defined as "bodily movement produced by the contraction of skeletal muscle that substantially increases energy expenditure") is used interchangeably with "exercise," which is defined as "a subset of PA done with the intention of developing physical fitness (i.e., cardiovascular [CV], strength, and flexibility training)." The intent is to recognize that many types of physical movement may have a positive effect on physical fitness, morbidity, and mortality in individuals with type 2 diabetes.

Diagnosis, classification, and etiology of diabetes

Currently, the American Diabetes Association (ADA) recommends the use of any of the following four criteria for diagnosing diabetes: 1) glycated hemoglobin (A1C) value of 6.5% or higher, 2) fasting plasma glucose ≥126 mg/dl (7.0 mmol/l), 3) 2-h plasma glucose ≥200 mg/dl (11.1 mmol/l) during an oral glucose tolerance test using 75 g of glucose, and/or 4) classic symptoms of hyperglycemia (e.g., polyuria, polydipsia, and unexplained weight loss) or hyperglycemic crisis with a random plasma glucose of 200 mg/dl (11.1 mmol/l) or higher. In the absence of unequivocal hyperglycemia, the first three criteria should be confirmed by repeat testing (4). Prediabetes is diagnosed with an A1C of 5.7–6.4%, fasting plasma glucose
of 100–125 mg/dl (5.6–6.9 mmol/l; i.e., impaired fasting glucose [IFG]), or 2-h postload glucose of 140–199 mg/dl (7.8–11.0 mmol/l; i.e., impaired glucose tolerance [IGT]) (4).

From the ¹Human Movement Sciences Department, Old Dominion University, Norfolk, Virginia; the ²Departments of Medicine, Cardiac Sciences, and Community Health Sciences, Faculties of Medicine and Kinesiology, University of Calgary, Calgary, Alberta, Canada; the ³Department of Kinesiology and Community Health, University of Illinois at Urbana-Champaign, Urbana, Illinois; the ⁴Divisions of General Internal Medicine and Cardiology and Center for Women's Health Research, University of Colorado School of Medicine, Aurora, Colorado; the ⁵Department of Kinesiology and Cancer Prevention Research Center, University of Rhode Island, Kingston, Rhode Island; the ⁶Departments of Medicine and Pediatrics, The Johns Hopkins University School of Medicine, Baltimore, Maryland; the ⁷Division of Biostatistics and Epidemiology, University of Massachusetts, Amherst, Massachusetts; the ⁸Division of Diabetes Translation, Centers for Disease Control and Prevention, Atlanta, Georgia; and the ⁹Department of Kinesiology, University of Massachusetts, Amherst, Massachusetts. Corresponding author: Sheri R. Colberg, scolberg@. This joint position statement is written by the American College of Sports Medicine and the American Diabetes Association and was approved by the Executive Committee of the American Diabetes Association in July 2010. This statement is published concurrently in Medicine & Science in Sports & Exercise and Diabetes Care. Individual name recognition is stated in the ACKNOWLEDGMENTS at the end of the statement. The findings and conclusions in this report are those of the authors and do not necessarily represent the official position of the Centers for Disease Control and Prevention. DOI: 10.2337/dc10-9990. © 2010 by the American Diabetes Association. Readers may use this article as long as the work is properly cited, the use is educational and not for profit, and the work is not altered. See http://creativecommons.org/licenses/by-nc-nd/3.0/ for details. See accompanying article, p. 2692.

The major forms of diabetes can be categorized as type 1 or type 2 (4). In type 1 diabetes, which accounts for 5–10% of cases, the cause is an absolute deficiency of insulin secretion resulting from autoimmune destruction of the insulin-producing cells in the pancreas. Type 2 diabetes (90–95% of cases) results from a combination of the inability of muscle cells to respond to insulin properly (insulin resistance) and inadequate compensatory insulin secretion. Less common forms include gestational diabetes mellitus (GDM), which is associated with a 40–60% chance of developing type 2 diabetes in the next 5–10 years (261). Diabetes can also result from genetic defects in insulin action, pancreatic disease, surgery, infections, and drugs or chemicals (4,261).

Genetic and environmental factors are strongly implicated in the development of type 2 diabetes. The exact genetic defects are complex and not clearly defined (4), but risk increases with age, obesity, and physical inactivity. Type 2 diabetes occurs more frequently in populations with hypertension or dyslipidemia, women with previous GDM, and non-Caucasian people including Native Americans, African Americans, Hispanic/Latinos, Asians, and Pacific Islanders.
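The ADA criteria quoted in the diagnosis section pair each plasma glucose cutoff in mg/dl with an mmol/l value. The minimal sketch below encodes only those numeric cutoffs (A1C, fasting plasma glucose, and 2-h OGTT glucose); it does not model the requirement to confirm results by repeat testing or the symptom-based fourth criterion. The conversion factor of 18.016 mg/dl per mmol/l is an assumption derived from the molar mass of glucose (about 180.16 g/mol), and the function names are hypothetical.

```python
# Assumed conversion factor: glucose has a molar mass of about 180.16 g/mol,
# so 1 mmol/l corresponds to about 18.016 mg/dl.
MG_DL_PER_MMOL_L = 18.016


def mgdl_to_mmoll(glucose_mg_dl: float) -> float:
    """Convert a plasma glucose value from mg/dl to mmol/l."""
    return glucose_mg_dl / MG_DL_PER_MMOL_L


def classify_a1c(a1c_percent: float) -> str:
    """Apply the A1C cutoffs quoted in the text (6.5% and 5.7%)."""
    if a1c_percent >= 6.5:
        return "diabetes range"
    if a1c_percent >= 5.7:
        return "prediabetes range"
    return "below prediabetes range"


def classify_fasting_glucose(glucose_mg_dl: float) -> str:
    """Apply the fasting plasma glucose cutoffs (126 and 100 mg/dl)."""
    if glucose_mg_dl >= 126:
        return "diabetes range"
    if glucose_mg_dl >= 100:
        return "prediabetes range (IFG)"
    return "below prediabetes range"


def classify_2h_ogtt(glucose_mg_dl: float) -> str:
    """Apply the 2-h 75-g OGTT cutoffs (200 and 140 mg/dl)."""
    if glucose_mg_dl >= 200:
        return "diabetes range"
    if glucose_mg_dl >= 140:
        return "prediabetes range (IGT)"
    return "below prediabetes range"


# Print the mmol/l equivalents of the cutoffs that appear in the text.
for cutoff in (100, 125, 126, 140, 199, 200):
    print(f"{cutoff} mg/dl = {mgdl_to_mmoll(cutoff):.1f} mmol/l")

print(classify_a1c(6.1), "|", classify_fasting_glucose(131), "|", classify_2h_ogtt(150))
```

Rounded to one decimal place, the conversions reproduce the paired values given in the text, for example 126 mg/dl = 7.0 mmol/l and 199 mg/dl = 11.0 mmol/l.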
Treatment goals in type 2 diabetes

The goal of treatment in type 2 diabetes is to achieve and maintain optimal BG, lipid, and blood pressure (BP) levels to prevent or delay chronic complications of diabetes (5). Many people with type 2 diabetes can achieve BG control by following a nutritious meal plan and exercise program, losing excess weight, implementing necessary self-care behaviors, and taking oral medications, although others may need supplemental insulin (261). Diet and PA are central to the management and prevention of type 2 diabetes because they help treat the associated glucose, lipid, and BP control abnormalities, as well as aid in weight loss and maintenance. When medications are used to control type 2 diabetes, they should augment lifestyle improvements, not replace them.

ACUTE EFFECTS OF EXERCISE

Fuel metabolism during exercise

Fuel mobilization, glucose production, and muscle glycogenolysis. The maintenance of normal BG at rest and during exercise depends largely on the coordination and integration of the sympathetic nervous and endocrine systems (250).
Contracting muscles increase uptake of BG, although BG levels are usually maintained by glucose production via liver glycogenolysis and gluconeogenesis and mobilization of alternate fuels, such as free fatty acids (FFAs) (250,268). Several factors influence exercise fuel use, but the most important are the intensity and duration of PA (9,29,47,83,111,133,160,181,241). Any activity causes a shift from predominant reliance on FFA at rest to a blend of fat, glucose, and muscle glycogen, with a small contribution from amino acids (15,31). With increasing exercise intensity, there is a greater reliance on carbohydrate as long as sufficient amounts are available in muscle or blood (21,23,47,133). Early in exercise, glycogen provides the bulk of the fuel for working muscles. As glycogen stores become depleted, muscles increase their uptake and use of circulating BG, along with FFA released from adipose tissue (15,132,271). Intramuscular lipid stores are more readily used during longer-duration activities and recovery (23,223,270). Glucose production also shifts from hepatic glycogenolysis to enhanced gluconeogenesis as duration increases (250,268).

Evidence statement. PA causes increased glucose uptake into active muscles balanced by hepatic glucose production, with a greater reliance on carbohydrate to fuel muscular activity as intensity increases. American College of Sports Medicine (ACSM) evidence category A (see Tables 1 and 2 for explanation).

Insulin-independent and insulin-dependent muscle glucose uptake during exercise. There are two well-defined pathways that stimulate glucose uptake by muscle (96). At rest and postprandially, its uptake by muscle is insulin dependent and serves primarily to replenish muscle glycogen stores. During exercise, contractions increase BG uptake to supplement intramuscular glycogenolysis (220,227). As the two pathways are distinct, BG uptake into working muscle is normal even when insulin-mediated uptake is impaired in type 2 diabetes (28,47,293). Muscular BG uptake remains elevated
postexercise, with the contraction-mediated pathway persisting for several hours (86,119) and insulin-mediated uptake for longer (9,33,141,226). Glucose transport into skeletal muscle is accomplished via GLUT proteins, with GLUT4 being the main isoform in muscle modulated by both insulin and contractions (110,138). Insulin activates GLUT4 translocation through a complex signaling cascade (256,293). Contractions, however, trigger GLUT4 translocation at least in part through activation of 5′-AMP–activated protein kinase (198,293). Insulin-stimulated GLUT4 translocation is generally impaired in type 2 diabetes (96). Both aerobic and resistance exercises increase GLUT4 abundance and BG uptake, even in the presence of type 2 diabetes (39,51,204,270).

Evidence statement. Insulin-stimulated BG uptake into skeletal muscle predominates at rest and is impaired in type 2 diabetes, while muscular contractions stimulate BG transport via a separate, additive mechanism not impaired by insulin resistance or type 2 diabetes. ACSM evidence category A.

Postexercise glycemic control/BG levels

Aerobic exercise effects. During moderate-intensity exercise in nondiabetic persons, the rise in peripheral glucose uptake is matched by an equal rise in hepatic glucose production, the result being that BG does not change except during prolonged, glycogen-depleting exercise. In individuals with type 2 diabetes performing moderate exercise, BG utilization by muscles usually rises more than hepatic glucose production, and BG levels tend to decline (191). Plasma insulin levels normally fall, however, making the risk of exercise-induced hypoglycemia in anyone not taking insulin or insulin secretagogues minimal, even with prolonged PA (152). The effects of a single bout of aerobic exercise on insulin action vary with duration, intensity, and subsequent diet; a single session increases insulin action and glucose tolerance for more than 24 h but less than 72 h (26,33,85,141). The effects of moderate aerobic exercise are similar whether the PA is performed in a
single session or multiple bouts with the same total duration (14).

During brief, intense aerobic exercise, plasma catecholamine levels rise markedly, driving a major increase in glucose production (184). Hyperglycemia can result from such activity and persist for up to 1–2 h, likely because plasma catecholamine levels and glucose production do not return to normal immediately with cessation of the activity (184).

Evidence statement. Although moderate aerobic exercise improves BG and insulin action acutely, the risk of exercise-induced hypoglycemia is minimal without use of exogenous insulin or insulin secretagogues. Transient hyperglycemia can follow intense PA. ACSM evidence category C.

Resistance exercise effects. The acute effects of a single bout of resistance training on BG levels and/or insulin action in individuals with type 2 diabetes have not been reported. In individuals with IFG (BG levels of 100–125 mg/dl), resistance exercise results in lower fasting BG levels 24 h after exercise, with greater reductions in response to both volume (multiple- vs. single-set sessions) and intensity of resistance exercise (vigorous compared with moderate) (18).

Evidence statement. The acute effects of resistance exercise in type 2 diabetes have not been reported, but result in lower fasting BG levels for at least 24 h after exercise in individuals with IFG. ACSM evidence category C.

Combined aerobic and resistance and other types of training. A combination of aerobic and resistance training may be more effective for BG management than either type of exercise alone (51,238). Any increase in muscle mass that may result from resistance training could contribute to BG uptake without altering the muscle's intrinsic capacity to respond to insulin, whereas aerobic exercise enhances its uptake via a greater insulin action, independent of changes in muscle mass or aerobic capacity (51). However, all reported combination training had a greater total duration of exercise and caloric use than when each
type of training was undertaken alone (51,183,238).

Mild-intensity exercises such as tai chi and yoga have also been investigated for their potential to improve BG management, with mixed results (98,117,159,257,269,286,291). Although tai chi may lead to short-term improvements in BG levels, effects from long-term training (i.e., 16 weeks) do not seem to last 72 h after the last session (257). Some studies have shown lower overall BG levels with extended participation in such activities (286,291), although others have not (159,257). One study suggested that yoga's benefits on fasting BG, lipids, oxidative stress markers, and antioxidant status are at least equivalent to more conventional forms of PA (98). However, a meta-analysis of yoga studies stated that the limitations characterizing most studies, such as small sample size and varying forms of yoga, preclude drawing firm conclusions about benefits to diabetes management (117).

Evidence statement. A combination of aerobic and resistance exercise training may be more effective in improving BG control than either alone; however, more studies are needed to determine if total caloric expenditure, exercise duration, or exercise mode is responsible. ACSM evidence category B. Milder forms of exercise (e.g., tai chi, yoga) have shown mixed results. ACSM evidence category C.

Table 1: Evidence categories for ACSM and evidence-grading system for clinical practice recommendations for ADA

I. ACSM evidence categories

| Evidence category | Source of evidence | Definition |
|---|---|---|
| A | Randomized, controlled trials (overwhelming data) | Provides a consistent pattern of findings with substantial studies |
| B | Randomized, controlled trials (limited data) | Few randomized trials exist, which are small in size, and results are inconsistent |
| C | Nonrandomized trials, observational studies | Outcomes are from uncontrolled, nonrandomized, and/or observational studies |
| D | Panel consensus judgment | Panel's expert opinion when the evidence is insufficient to place it in categories A–C |

II. ADA evidence-grading system for clinical practice recommendations

| Level of evidence | Description |
|---|---|
| A | Clear evidence from well-conducted, generalizable, randomized, controlled trials that are adequately powered, including the following: evidence from a well-conducted multicenter trial; evidence from a meta-analysis that incorporated quality ratings in the analysis. Compelling nonexperimental evidence, i.e., the "all-or-none" rule developed by the Centre for Evidence-Based Medicine at Oxford. Supportive evidence from well-conducted, randomized, controlled trials that are adequately powered, including the following: evidence from a well-conducted trial at one or more institutions; evidence from a meta-analysis that incorporated quality ratings in the analysis. |
| B | Supportive evidence from well-conducted cohort studies, including the following: evidence from a well-conducted prospective cohort study or registry; evidence from a well-conducted meta-analysis of cohort studies. Supportive evidence from a well-conducted case-control study. |
| C | Supportive evidence from poorly controlled or uncontrolled studies, including the following: evidence from randomized clinical trials with one or more major or three or more minor methodological flaws that could invalidate the results; evidence from observational studies with high potential for bias (such as case series with comparison to historical controls); evidence from case series or case reports. Conflicting evidence with the weight of evidence supporting the recommendation. |
| E | Expert consensus or clinical experience |

Table 2: Summary of ACSM evidence and ADA clinical practice recommendation statements

| ACSM evidence and ADA clinical practice recommendation statements | ACSM evidence category (A, highest; D, lowest)/ADA level of evidence (A, highest; E, lowest) |
|---|---|
| Acute effects of exercise | |
| PA causes increased glucose uptake into active muscles balanced by hepatic glucose production, with a greater reliance on carbohydrate to fuel muscular activity as intensity increases. | A/* |
| Insulin-stimulated BG uptake into skeletal muscle predominates at rest and is impaired in type 2 diabetes, while muscular contractions stimulate BG transport via a separate, additive mechanism not impaired by insulin resistance or type 2 diabetes. | A/* |
| Although moderate aerobic exercise improves BG and insulin action acutely, the risk of exercise-induced hypoglycemia is minimal without use of exogenous insulin or insulin secretagogues. Transient hyperglycemia can follow intense PA. | C/* |
| The acute effects of resistance exercise in type 2 diabetes have not been reported, but result in lower fasting BG levels for at least 24 h postexercise in individuals with IFG. | C/* |
| A combination of aerobic and resistance exercise training may be more effective in improving BG control than either alone; however, more studies are needed to determine whether total caloric expenditure, exercise duration, or exercise mode is responsible. | B/* |
| Milder forms of exercise (e.g., tai chi, yoga) have shown mixed results. | C/* |
| PA can result in acute improvements in systemic insulin action lasting from 2 to 72 h. | A/* |
| Chronic effects of exercise training | |
| Both aerobic and resistance training improve insulin action, BG control, and fat oxidation and storage in muscle. | B/* |
| Resistance exercise enhances skeletal muscle mass. | A/* |
| Blood lipid responses to training are mixed but may result in a small reduction in LDL cholesterol with no change in HDL cholesterol or […] | […] |
| Combined weight loss and PA may be more effective than aerobic exercise training alone on lipids. | C/* |
| Aerobic training may slightly reduce systolic BP, but reductions in diastolic BP are less common, in individuals with type 2 diabetes. | C/* |
| Observational studies suggest that greater PA and fitness are associated with a lower risk of all-cause and CV mortality. | C/* |
| Recommended levels of PA may help produce weight loss. However, up to 60 min/day may be required when relying on exercise alone for weight loss. | C/* |
| Individuals with type 2 diabetes engaged in supervised training exhibit greater compliance and BG control than those undertaking exercise training | |
withoutsupervision.B/*•Increased PA and physicalfitness can reduce symptoms of depression and improvehealth-related QOL in those with type2diabetes.B/*PA and prevention of type2diabetes •At least2.5h/week of moderate to vigorous PA should be undertaken as part oflifestyle changes to prevent type2diabetes onset in high-risk adults.A/APA in prevention and control of GDM •Epidemiological studies suggest that higher levels of PA may reduce risk of developingGDM during pregnancy.C/*•RCTs suggest that moderate exercise may lower maternal BG levels in GDM.B/*Preexercise evaluation•Before undertaking exercise more intense than brisk walking,sedentary persons withtype2diabetes will likely benefit from an evaluation by a physician.ECG exercisestress testing for asymptomatic individuals at low risk of CAD is not recommended butmay be indicated for higher risk.C/CRecommended PA participation for persons with type2 diabetes •Persons with type2diabetes should undertake at least150min/week of moderate tovigorous aerobic exercise spread out during at least3days during the week,with nomore than2consecutive days between bouts of aerobic activity.B/B•In addition to aerobic training,persons with type2diabetes should undertakemoderate to vigorous resistance training at least2–3days/week.B/B •Supervised and combined aerobic and resistance training may confer additional healthbenefits,although milder forms of PA(such as yoga)have shown mixed results.Persons with type2diabetes are encouraged to increase their total daily unstructuredPA.Flexibility training may be included but should not be undertaken in place ofother recommended types of PA.B/C(continued)Exercise and type2diabetesInsulin resistanceAcute changes in muscular insulin re-sistance.Most benefits of PA on type2 diabetes management and prevention are realized through acute and chronic im-provements in insulin action(29,46, 116,118,282).The acute effects of a re-cent bout of exercise account for most of the improvements in insulin 
action,with most individuals experiencing a decrease in their BG levels during mild-and mod-erate-intensity exercise and for2–72h af-terward(24,83,204).BG reductions are related to the dura-tion and intensity of the exercise,preex-ercise control,and state of physical training(24,26,47,238).Although previ-ous PA of any intensity generally exerts its effects by enhancing uptake of BG for gly-cogen synthesis(40,83)and by stimulat-ing fat oxidation and storage in muscle (21,64,95),more prolonged or intense PAacutely enhances insulin action for longerperiods(9,29,75,111,160,238).Acute improvements in insulin sensi-tivity in women with type2diabetes havebeen found for equivalent energy expen-ditures whether engaging in low-intensityor high-intensity walking(29)but may beaffected by age and training status(24,75,100,101,228).For example,mod-erate-to heavy-intensity aerobic trainingundertaken three times a week for6months improved insulin action in bothyounger and older women but persistedonly in the younger group for72–120h.Acute changes in liver’s ability to pro-cess glucose.Increases in liver fat con-tent common in obesity and type2diabetesare strongly associated with reduced he-patic and peripheral insulin action.En-hanced whole-body insulin action afteraerobic training seems to be related to gainsin peripheral,not hepatic,insulin action(146,282).Such training not resulting inoverall weight loss may still reduce hepaticlipid content and alter fat partitioning anduse in the liver(128).Evidence statement.PA can result inacute improvements in systemic insulinaction lasting from2to72h.ACSM evi-dence category A.CHRONIC EFFECTS OFEXERCISE TRAININGMetabolic control:BG levels and insu-lin resistance.Aerobic exercise has beenthe mode traditionally prescribed for dia-betes prevention and management.Even1week of aerobic training can improvewhole-body insulin sensitivity in individ-uals with type2diabetes(282).Moderateand vigorous aerobic training improve in-Table2—ContinuedACSM 
evidence and ADA clinical practice recommendation statements ACSM evidence category (A,highest;D,lowest)/ ADA level of evidence (A,highest;E,lowest)Exercise with nonoptimal BG control •Individuals with type2diabetes may engage in PA,using caution when exercising withBG levels exceeding300mg/dl(16.7mmol/l)without ketosis,provided they arefeeling well and are adequately hydrated.C/E•Persons with type2diabetes not using insulin or insulin secretagogues are unlikely toexperience hypoglycemia related to ers of insulin and insulin secretagogues areadvised to supplement with carbohydrate as needed to prevent hypoglycemia duringand after exercise.C/CMedication effects on exercise responses •Medication dosage adjustments to prevent exercise-associated hypoglycemia may berequired by individuals using insulin or certain insulin secretagogues.Most othermedications prescribed for concomitant health problems do not affect exercise,withthe exception of-blockers,some diuretics,and statins.C/CExercise with long-term complications of diabetes •Known CVD is not an absolute contraindication to exercise.Individuals with anginaclassified as moderate or high risk should likely begin exercise in a supervised cardiacrehabilitation program.PA is advised for anyone with PAD.C/C•Individuals with peripheral neuropathy and without acute ulceration may participatein moderate weight-bearing prehensive foot care including dailyinspection of feet and use of proper footwear is recommended for prevention and earlydetection of sores or ulcers.Moderate walking likely does not increase risk of footulcers or reulceration with peripheral neuropathy.B/B•Individuals with CAN should be screened and receive physician approval and possiblyan exercise stress test before exercise initiation.Exercise intensity is best prescribedusing the HR reserve method with direct measurement of maximal HR.C/C•Individuals with uncontrolled proliferative retinopathy should avoid activities thatgreatly increase intraocular 
pressure and hemorrhage risk.D/E•Exercise training increases physical function and QOL in individuals with kidneydisease and may even be undertaken during dialysis sessions.The presence ofmicroalbuminuria per se does not necessitate exercise restrictions.C/CAdoption and maintenance of exercise by persons with diabetes •Efforts to promote PA should focus on developing self-efficacy and fostering socialsupport from family,friends,and health care providers.Encouraging mild or moderatePA may be most beneficial to adoption and maintenance of regular PA participation.Lifestyle interventions may have some efficacy in promoting PA behavior.B/B*No recommendation given.Colberg and Associates。
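The aerobic-activity statement in Table 2 (at least 150 min/week of moderate to vigorous aerobic exercise, spread over at least 3 days, with no more than 2 consecutive days between bouts) is concrete enough to check mechanically. The Python sketch below is illustrative only: the function name `meets_aerobic_guideline`, the 7-day input format (index 0 taken as Monday), and the choice to treat the week as repeating, so that a rest gap can wrap from the end of one week into the next, are assumptions of this example rather than part of the ACSM/ADA statement. It also does not judge whether an activity actually counts as moderate to vigorous.

```python
from typing import Sequence


def meets_aerobic_guideline(daily_minutes: Sequence[float]) -> bool:
    """Return True if a repeating 7-day pattern of moderate-to-vigorous
    aerobic minutes meets the Table 2 statement:
      * at least 150 min per week in total,
      * spread over at least 3 days,
      * no more than 2 consecutive days without aerobic activity.
    Assumptions: index 0 is Monday, and the week repeats, so the gap after
    the last active day wraps around to the first active day.
    """
    if len(daily_minutes) != 7:
        raise ValueError("expected exactly 7 daily totals")
    if any(m < 0 for m in daily_minutes):
        raise ValueError("minutes cannot be negative")

    active_days = [day for day, minutes in enumerate(daily_minutes) if minutes > 0]
    if sum(daily_minutes) < 150 or len(active_days) < 3:
        return False

    # Count rest days between each pair of consecutive active days,
    # including the wrap-around pair (last active day -> first active day + 7).
    next_days = active_days[1:] + [active_days[0] + 7]
    for day, next_day in zip(active_days, next_days):
        if next_day - day - 1 > 2:
            return False
    return True
```

For example, 50 minutes on Monday, Thursday, and Sunday satisfies all three conditions, whereas 50 minutes on each of the first three days of the week leaves a 4-day gap before the next bout and does not.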

Clinical study on sevelamer combined with hemoperfusion in the treatment of intractable cutaneous pruritus in hemodialysis patients
CAO Shan, LIU Yu (Department of Nephrology, Pingxiang People's Hospital, Pingxiang, Jiangxi 337000, China)

Abstract: Objective To investigate the clinical value of sevelamer combined with hemoperfusion in the treatment of intractable cutaneous pruritus in hemodialysis patients.

Methods Fifty patients with intractable cutaneous pruritus who had been receiving hemodialysis in our hospital for more than 3 months between December 2018 and June 2020 were selected as the research subjects and divided into two groups of 25 using the random number table method. The control group received hemoperfusion, and the observation group received sevelamer in addition to the control group's treatment. Clinical efficacy, serum factors, pruritus scores, and the incidence of adverse reactions were compared between the two groups.

Results After treatment, the pruritus remission rate in the observation group (96.00%) was higher than that in the control group (80.00%), but the difference was not statistically significant. The modified Duo's pruritus score, serum phosphorus (P), parathyroid hormone (PTH), serum creatinine (SCr), and blood urea nitrogen (BUN) in the observation group were lower than those in the control group, and the differences were statistically significant (P<0.05). There were no statistically significant differences in serum calcium (Ca) or in the incidence of adverse reactions between the two groups.

Conclusion Sevelamer combined with hemoperfusion is markedly effective for intractable cutaneous pruritus in hemodialysis patients and can clearly improve patients' clinical pruritus symptoms.

Key words: Sevelamer; Hemoperfusion; Hemodialysis; Intractable cutaneous pruritus

Cutaneous pruritus is a common complication of end-stage renal disease; 22%–48% of patients on maintenance hemodialysis have moderate-to-severe pruritus [1].

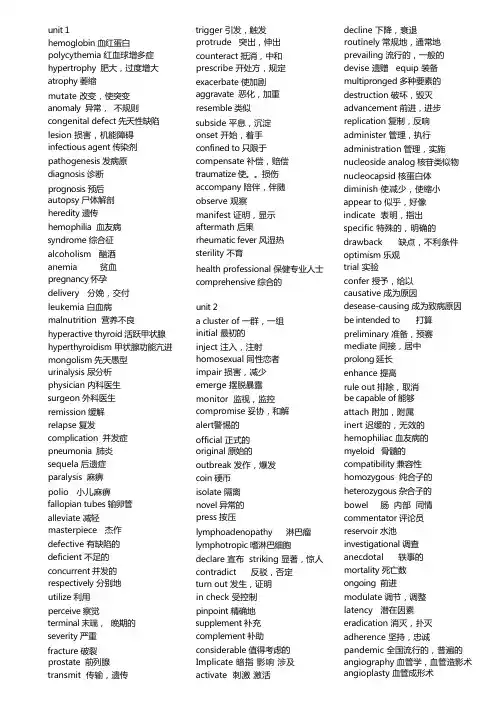

Unit 1
hemoglobin 血红蛋白
polycythemia 红血球增多症
hypertrophy 肥大,过度增大
atrophy 萎缩
mutate 改变,使突变
anomaly 异常,不规则
congenital defect 先天性缺陷
lesion 损害,机能障碍
infectious agent 传染剂
pathogenesis 发病机制
diagnosis 诊断
prognosis 预后
autopsy 尸体解剖
heredity 遗传
hemophilia 血友病
syndrome 综合征
alcoholism 酗酒
anemia 贫血
pregnancy 怀孕
delivery 分娩,交付
leukemia 白血病
malnutrition 营养不良
hyperactive thyroid 活跃甲状腺
hyperthyroidism 甲状腺功能亢进
mongolism 先天愚型
urinalysis 尿分析
physician 内科医生
surgeon 外科医生
remission 缓解
relapse 复发
complication 并发症
pneumonia 肺炎
sequela 后遗症
paralysis 麻痹
polio 小儿麻痹
fallopian tubes 输卵管
alleviate 减轻
masterpiece 杰作
defective 有缺陷的
deficient 不足的
concurrent 并发的
respectively 分别地
utilize 利用
perceive 察觉
terminal 末端,晚期的
severity 严重
fracture 破裂
prostate 前列腺
transmit 传输,遗传
trigger 引发,触发
protrude 突出,伸出
counteract 抵消,中和
prescribe 开处方,规定
exacerbate 使加剧
aggravate 恶化,加重
resemble 类似
subside 平息,沉淀
onset 开始,着手
confined to 只限于
compensate 补偿,赔偿
traumatize 使

Guidelines and Consensus
Chinese expert consensus on rapid intravenous infusion of rituximab (2020 version)
Lymphoma Committee of Chinese Aging Well Association; Chinese Society of Hematology, Chinese Medical Association
Corresponding author: Huang Huiqiang, Department of Oncology, Sun Yat-sen University Cancer Center, Guangzhou 510060, China, Email: huanghq@

【Abstract】Rituximab has been on the market for more than 20 years and has shown good efficacy and safety in clinical practice. In 2012, the U.S. Food and Drug Administration (FDA) approved rapid intravenous infusion of rituximab for the second and subsequent cycles of treatment. The 90-minute rapid infusion regimen is now well established outside China, and the National Comprehensive Cancer Network (NCCN) guidelines recommend a 90-minute rapid infusion for subsequent cycles in patients who do not experience infusion-related reactions during the first cycle. Research data from China have also confirmed the safety and tolerability of the 90-minute rapid infusion regimen. Therefore, on the basis of evidence-based medicine from China and abroad, and in order to improve the quality of patient care and reduce healthcare costs, this expert consensus recommends that patients who have no obvious adverse reactions during the first infusion may receive the 90-minute rapid intravenous infusion of rituximab in subsequent cycles.

【Keywords】Lymphoma, non-Hodgkin; Rituximab; Rapid infusion; Infusion-related reactions
DOI: 10.3760/l 15356-20201030-00259

Rituximab is a monoclonal antibody targeting CD20 that significantly improves the prognosis of patients with CD20-positive non-Hodgkin lymphoma (NHL).

Medical and Nursing English Test with Answers

I. Multiple-choice questions (2 points each, 20 points total)
1. What is the most common method of transmission for the common cold?
A. Airborne   B. Foodborne   C. Direct contact   D. Vector-borne
Answer: A
2. Which of the following is not a vital sign?
A. Temperature   B. Pulse   C. Respiration   D. Blood pressure
Answer: C
3. The abbreviation "IV" stands for:
A. Intravenous   B. Intramuscular   C. Intraperitoneal   D. Intrathecal
Answer: A
4. What does "ICU" refer to in a hospital setting?
A. Intensive Care Unit   B. Inpatient Care Unit   C. International Care Unit   D. Intermittent Care Unit
Answer: A
5. Which of the following is a symptom of anemia?
A. Fatigue   B. High fever   C. Excessive sweating   D. Rapid weight gain
Answer: A
6. The term "auscultation" refers to listening to sounds within the body using a:
A. Stethoscope   B. Otoscope   C. Ophthalmoscope   D. Thermometer
Answer: A
7. A patient is said to be in "shock" when:
A. They are experiencing severe pain   B. They are unconscious   C. Their blood pressure is significantly low   D. They have a high fever
Answer: C
8. What is the purpose of a "suture" in medical terms?
A. To provide anesthesia   B. To close a wound   C. To remove a foreign object   D. To diagnose a condition
Answer: B
9. The "HCG" test is used to detect:
A. Diabetes   B. Pregnancy   C. Anemia   D. Infection
Answer: B
10. Which of the following is a type of imaging technique used in medical diagnostics?
A. X-ray   B. Blood test   C. Biopsy   D. Electrocardiogram
Answer: A

II. Fill in the blanks (2 points each, 20 points total)
1. The medical term for a broken bone is ____________. Answer: fracture
2. A doctor's written instructions for the use of medication are called a ____________. Answer: prescription
3. The process of removing a damaged or diseased organ is called ____________. Answer: surgery
4. The study of the causes and control of diseases is known as ____________. Answer: epidemiology
5. A ____________ is a healthcare professional who specializes in the care of patients with mental disorders. Answer: psychiatrist
6. The medical term for a surgical incision is ____________. Answer: incision
7. The ____________ is the part of the hospital where critically ill patients are treated. Answer: intensive care unit
8. A ____________ is a healthcare professional who provides care to patients in their homes. Answer: nurse
9. The ____________ is a medical device used to measure blood pressure. Answer: sphygmomanometer
10. The term ____________ refers to the process of removing a patient's blood for analysis. Answer: blood draw

III. Short-answer questions (10 points each, 40 points total)
1. Explain the difference between a "nurse" and a "nurse practitioner".
Answer: A nurse is a healthcare professional who provides care to patients under the supervision of a physician. A nurse practitioner (NP) is an advanced practice registered nurse who has completed additional education and training, allowing them to diagnose and treat patients, prescribe medications, and often act as a primary care provider.
2. Describe the role of a "physician assistant" in a medical setting.
Answer: A physician assistant (PA) is a healthcare professional who practices medicine under the supervision of a physician. They can perform physical exams, diagnose and treat illnesses, order and interpret tests, assist in surgery, and prescribe medications.
3. What is the purpose of a "dialysis" treatment?
Answer: Dialysis is a medical treatment that removes waste products and excess fluids from the blood when the kidneys are unable to do so. It is used to treat patients with kidney failure or severe kidney disease.
4. Explain the concept of "informed consent" in medical procedures.
Answer: Informed consent is the process by which a patient is given information about a medical procedure or treatment, including its risks and benefits, and then voluntarily agrees to undergo the procedure. It is a fundamental ethical principle in healthcare that ensures the patient's autonomy and right to make decisions about their own medical care.

Chapter 3.I. IntroductionThis chapter has three purposes. The first purpose is to teach many of the most common suffixes in the medical language. As you work through the entire book, the suffixes mastered in this chapter will appear often. An additional group of suffixes is presented in Chapter 6. The second purpose is to introduce new combining forms and use them to make works with suffixes. Your analysis of the terminology in Section III of this chapter will increase tour medical language wocabulary.The third purpose is to expand your understanding ofterminology beyond basic word analgsis. The appendices in Section IV present illustrations and additional explanations of new terms. Your should refer to these appendices as your complete the meanings of terms in Section III.II. Combining FormsRead this list and underline those combining forms that are unfamiliarCombining FormsCombining Form MeaningAbdomin/o abdomenAcr/o extremities, top, extreme pointAcu/o sharp, severe, suddenAden/o glandAgor/a marketplaceAmni/o amnionAngi/o vesselArteri/o arteryArthr/o jointAxill/o armpitBlephar/o eyelidBronch/o bronchial tubesCarcin/o cancerChem/o drug, chemicalChondr/o cartilageChron/o timeCol/o colonCyst/o urinary bladderEncephal/o brainHydr/o water, fluidInguin/o groinIsch/o to hold backLapar/o abdomen, abdominal wallLaryng/o larynxLymph/o lymphLymph, a clear fluid that bathes tissue spaces, is contained inspecial lymph vessels and nodes throughout the bodyMamm/o breastMast/o breastMorph/o shape, formMuc/o mucusMy/o muscleMyel/o spinal cord, bone marrowContext of usage indicates the meaning intendedNecr/o deathNephr/o kidneyNeur/o nerveNeutr/o neurophilOphthalm/o eyeOste/o boneOt/o earPath/o diseasePeritone/o peritoneumPhag/o to eat, swallowPhleb/o veinPlas/o formation, developmentPleur/o pleuraPneumon/o lungsPulmon/o lungsRect/o rectumRen/o kidneySarc/o fleshSplen/o spleenStaphyl/o clystersStrept/o twisted chainsThorac/o chestThromb/o clotTonsill/o tonsilsTrache/o 
tracheaVen/o veinIII. Suffixes and TerminologyNoun SuffixesThe following list includes common noun suffixes. After the meaning of each suffix, terminology illustrates the use of the suffix in various words. Remember the basic rule for building a medical term: Use a combining vowel, such as o, to connect the root to the suffix. However, drop the combining vowel if the suffix begins with a vowel. For example: gastr/it is, not gastr/o/it is. Numbers after certain terms direct you to the Appendices that follow this list. These Appendices contain additional information to help you understand the terminology.Suffix Meaning Terminology Meaning-algia pain arthralgia _________________________otalgia ____________________________neuralgia __________________________myalgia ___________________________-cele hernia rectocele ___________________________cystocele __________________________-centesis surgical puncture to remove fluid thoracentesis __________________________Notice that this term is shortened fromthoracocentesisamniocentesis__________________________abdominocentesis ________________________This procedure is more commonly known as aparacentesis. 
A tube is placed through anincision of the abdomen and fluid is removedtfrom the peritoneal cavity .-coccus berry-shaped, bacterium streptococcus _________________________staphylocci ___________________________ -cyte cell erythrocyte __________________________leukocyte ___________________________thrombocyte _________________________-dynia pain pleurodynia _________________________Pain in the chest wall muscles that isaggravated by breathing-ectomy excision, removal, resection laryngectomy _______________________mastectomy _________________________ -ectomy blood condition anemia ___________________________ischemia _________________________-genesis condition of producing, forming carcinogenesis _____________________pathogenesis ________________________angiogenesis ________________________-genic pertaining to, producing, carcinogenic_________________________ produced by, or inosteogenic ___________________________An osteogenic sarcoma is a tumor producedin bone tissue-gram record electroencephalogram __________________myelogram _________________________Myle/o means spinal cord in this term. 
This isan x-ray record taken after contrast material isinjected into membranes around the spinalcordmammogram _________________________ -graph instrument for recording electroencephalograph _________________ -graphy process of recording electroencephalography ________________angiography _________________________ -itis inflammation bronchitis ___________________________tonsillitis ____________________________thrombophlebitis ______________________Also called phlebitis-logy study of ophthalmology _______________________morphology__________________________ -lysis breakdown, destruction, separation hemolysis ___________________________Breakdown of red blood cells with release ofhemoglobin-malacia softening osteomalacia _______________________chondromalacia ______________________ -megaly enlargement acromegaly _________________________splenomegaly _______________________-oma tumor, mass, collection of fluid myoma __________________________A benign tumormyosarcoma _______________________A malignant tumor. Muscle is a type of fleshtissuemyltiple myeloma ____________________Myel/o means bone marrow in this term. 
Thismaligant tumor occurs in bone marrow tissuethroughout the bodyhematoma _______________________-opsy to view biopsy _____________________________necropsy __________________________This is an autopsy or postmortemexamination-osis condition, usually abnormal necrosis ___________________________hydronephrosis ______________________leukocytosis _________________________ -pathy disease condition cardiomyopathy _____________________Primary disease of the heart muscle in theabsence of a known underlying etiology-penia deficiency erythropenia ______________________neutropenia ________________________In this term, neutr/o means neutrophilthrombocytopenia___________________-phobia fear acrophobia ________________________Fear of heightsagoraphobia _______________________An anxiety disorder maked by fear ofventuring out into a crowded place-plasm development, formation, growth achondroplasia _____________________ -plasty surgical repair angioplasty ________________________A cardiologist opens a narrowed bloodvessel using a ballon that is inflated afterinsertion into the vessel. Stents, or slottedtubes, are then put in place to keep theartery open-ptosis drooping, sagging, prolapse blepharoptosis _____________________Physcians use ptosis alone, to indicateprolapse of the upper eyelidnephroptosis ________________________ -sclerosis hardening arteriosclerosis _____________________In atherosclerisis deposits of fat collect inan artery-scope instrument for visual examination laparoscope _______________________ -scopy process of visual examination laparoscopy _______________________ -stasis stopping, controlling metastasis ________________________Meta- means beyond. 
A metastasis is thespread of a malignant tumor beyond itsoriginal site to a secondary organ orlocationhemostasis ________________________Blood flow is stopped naturally byclotting or artificially by compression orwsuturing of a wound-stomy opening to form a mouth colostomy ________________________tracheostomy ______________________ -therapy treatment hydrotherapy ______________________chemotherapy ______________________radiotherapy _____________________-tomy incision, to cut into laparotomy _____________________This is a large incision through theabdominal wallphlebotomy ______________________ -trophy development, nourishment hypertrophy ______________________Cells increase in size, not number.Muscles of weight lifters often hypertrophyatrophy ___________________________Cells decrease in size. Muscles atrophywhen immobilized in a cast and not in use The following are shorter noun suffixes that are usually attached to roots in wordsSuffix Meaning Terminology Meaning-er one who radiographer _______________________A technologist who assists in the making ofdiagnostic x-ray pictures-ia condition leukemia __________________________pneumonia ________________________-ist specialist nephrologist _______________________-ole little, small arteriole __________________________-ule little, small venule ____________________________ -um, -ium structure, tissue, thing pericardium _______________________This membrane surrounds the heart-y condition, process nephropathy _______________________Adjective SuffixesThe following are adjectival suffixes. No simple rule will tell you which suffix meanin “pertaining to” should be used with a specific combining form. 
Your job is to recongnize the suffix in each term and know the meaning of the entire termSuffix Meaning Terminology Meaning-ac, -iac pertaining to cardiac _________________________-al pertaining to peritoneal _______________________inguinal _________________________pleural __________________________-ar pertaining to tonsillar __________________________-ary pertaining to pulmonary ________________________axillary ___________________________-eal pertaining to laryngeal __________________________-ic, -ical pertaining to chronic ____________________________Acute is the opposite of chronic. It describes adisease that is of rapid onset and has severesymptoms and brief durationpathological __________________________-oid resembling adenoids __________________________-ose pertaining to, full of adipose ___________________________-ous pertaining to mucous ___________________________Mecous membranes produce the stickysection called mucus-tic pertaining to necrotic _____________________________IV. AppendicesAppendix A.A hernia is protrusion of an organ or the muscular wall of an organ througg the cavity that normally contains it. A hiatal hernia occurs when the stomach protrudes upward into the mediastinum through the esophageal opening in the diaphragm, and an inguinal hernia occurs when part of the intestine protrudes downward into the groin region and commonly into the scrotal sac in the male. A rectocele is the protrusion of a portion of the rectum toward the vagina through a weak part of the vaginal wall muscles. An omphalocele is a herniation of the intestines through the navel occurring in infants at birth. A cystocele occurs when part of the urinary bladder herniates through the vaginal wall due to weakened pelvic musclesAppendix B: AmniocentesisThe amnion is the sac that surrounds the embbryo in the uterus. Fluid accumulates within the sac and can be withdrawn for analysis between the 12th and 18th weeks of pregnancy. 
The fetus sheds cells into the fluid, and these cells are grown for microscopic analysis. A karyotype is made to analyze chromosomes, and the fluid can be examined for high levels of chemicals that indicate defects in the developing spinal cord and spinal column of the fetusAppendix C: PluralsWords ending in –us commonly form their plural by dropping the –us and adding –i. Thus, nucleus becomes nuclei and coccus becomes cocci. For additional information on formation of plurals, please refer to Appendix I, page 949, at the end of the book.Appendix D: Streptococcus and StaphylococcusStreptococcus, a berry-shaped bacterium, grows in twisted chains. One group of streptocoocci causes such conditions as “strep” throat, tonsillitis, rheumatic fever, and certain kidney ailments, whereas another group causes infections in teeth, in the sinuses of the nose and face, and in the valves of the heart.Staphylococci, other berry-shaped bacteria, grow in small clusters, like grapes. Staphylococcal lesions may be external or internal. An abscess is a collection of pus, white blood cells, and protein that is present at the site of infection.Examples of diplococci are pneumococci and gonococci. Pneumococci cause bacterial pneumonia, and gonococci invade the reproductive organs causing gonorrhea. Figure 3-3 illustrates the different growth patterns of streptococci, staphylococci, and diplococci.Appendix E: Blood CellsStudy Figure 3-4 as you read the following to note the differences among the three different types of cells in the blood.Erythrocytes. These cells are made in the bone marrow. They carry oxygen from the lungs through the blood to all body cells. The body cells use oxygen to burn food and release energy. Hemoglobin, an important protein in erythrocytes, carries the oxygen through the bloodstream. Leukocytes. There are five different leukocytes:Granulocytes contain dark-staining granules in their gytoplasm and have a multilobed nucleus. 
They are formed in the bone marrow and there are three types:1.Eosinophils are active and elevated in allergic conditions such as asthma. About 3 percent ofleukocytes are eosinophils2.Basophils. The function of basophils is not clear, but they play a role in inflammation. Lessthan 1 percent of leukocytes are basophils3.Neutrophils are important disease-fighting cells. They are phagocytes because they engulf anddigest bacteria. They are the most numerous disease-fighting “soldiers”, and are referred to as “polys” or polymorphonuclear leukocytes because of their multilobed nucleusMononuclear leukocytes have one large nucleus and only a few granules in their cytoplasm.They are produced in lymph nodes and the spleen. There are two types of mononuclear leukocytes4. Lymphocytes fight disease by producing antibodies and thus destroying foreign cells. Theymay also attach directly to foreign cells and destroy them. Two types of lymphocytes are T cells and B cells. About 32 percent of leukocytes are lymphocytes5. Monocyte: Engulf and destroy cellular debris after neutrophils have attacked foreign cells.Monocytes leave the bloodstream and enter tissues (such as lung and liver) to become macrophages, which are large phagocytes. Monocytes make up about 4 percent of all leukocytes.Appendix F: Pronunciation CluePronunciation clue: The letters g and c are soft when followed by an i or e, and are hard when followed by an o or aAppendix G: AnemiaAnemia literally means no blood. However, in medical language and usage, anemia is a condition of reduction in the number of erythrocytes or amount of hemoglobin in circulating blood. Anemias are classified according to the different problems that arise with red blood cells. Aplastic (a = no, plas/o = formation) anemia, a severe type, occurs when bone marrow fails to produce not only erythrocytes but leukocytes and thrombocytes as well.Appendix H: IschemiaIschemia litterally mens to hold back (isch/o) blood (-emia) from a part of the body. 
Tissue that becomes ischemic loses its normal flow of blood and becomes deprived of oxygen. The ischemia can be caused by mechanical injury to a blood vessel, by blood clots lodging in a vessel, or by the progressive and gradual closing off (occlusion) of a vessel caused by collection of fatty material.

Appendix I: Tonsillitis
The tonsils are lymphatic tissue in back of the throat. They contain white blood cells (lymphocytes), which filter and fight bacteria. However, tonsils can also become infected and inflamed. Streptococcal infection of the throat causes tonsillitis, which may require tonsillectomy.

Appendix J: Acromegaly
Acromegaly is an endocrine disorder. It occurs when the pituitary gland, attached to the base of the brain, produces an excessive amount of growth hormone after the completion of puberty. The excess growth hormone most often results from a benign tumor of the pituitary gland. A person with acromegaly is of normal height because the long bones have stopped growing after puberty, but bones and soft tissue in the hands, feet, and face grow abnormally. High levels of growth hormone before completion of puberty produce excessive growth of long bones (gigantism) as well as acromegaly.

Appendix K: Splenomegaly
The spleen is an organ in the left upper quadrant (LUQ) of the abdomen (below the diaphragm and to the side of the stomach). Composed of lymph tissue and blood vessels, it disposes of dying red blood cells and manufactures white blood cells (lymphocytes) to fight disease. If the spleen is removed (splenectomy), other organs carry out these functions.

Appendix L: Leukocytosis
When –osis is a suffix with blood cells, it denotes an abnormal condition of increase in normal circulating blood cells. Thus, in leukocytosis, an elevation in numbers of normal white blood cells occurs in response to the presence of infection.
When –emia is a suffix with blood cells, the condition is an abnormally high, excessive increase in the number of cancerous blood cells.

Appendix M: Achondroplasia
Achondroplasia is an inherited disorder in which the bones of the arms and legs fail to grow to normal size because of a defect in both cartilage and bone. It results in a type of dwarfism characterized by short limbs, a normal-sized head and body, and normal intelligence.

Appendix N: -ptosis = blepharoptosis
The suffix –ptosis is pronounced TO-sis: when two consonants begin a word, the first is silent. If the two consonants are found in the middle of a word, both are pronounced, as in blepharoptosis. This condition occurs when eyelid muscles weaken, and a person has difficulty lifting the eyelid to keep it open.

Appendix O: Laparoscopy = peritoneoscopy = MIS, minimally invasive surgery
Laparoscopy is visual examination of the abdominal cavity using a laparoscope. The laparoscope, a lighted telescopic instrument, is inserted through an incision in the abdomen near the navel, and gas is infused into the peritoneal cavity to prevent injury to abdominal structures during surgery. Surgeons use laparoscopy to examine abdominal viscera for evidence of disease or for procedures such as removal of the appendix, gallbladder, adrenal gland, spleen, or colon, and repair of hernias. It is also used to clip and collapse the fallopian tube, which prevents sperm cells from reaching eggs that leave the ovary.

Appendix P: Arteriole, capillary, venule
Notice the relationship among an artery, arterioles, capillaries, venules, and a vein as illustrated in Figure 3-8.

Appendix Q: Adenoids
The adenoids are lymphatic tissue in the part of the pharynx (throat) near the nose and nasal passages. The literal meaning “resembling glands” is appropriate because they are neither endocrine nor exocrine glands. Enlargement of adenoids may cause blockage of the airway from the nose to the pharynx, and adenoidectomy may be advised.
The tonsils are also lymphatic tissue, and their location as well as that of the adenoids is indicated in Figure 3-9.

V. Practical Applications
Match the diagnostic or treatment procedures with their descriptions:
amniocentesis colostomy mastectomy tonsillectomy angiography laparoscopy paracentesis tracheotomy angioplasty laparotomy thoracentesis
1. removal of abdominal fluid from the peritoneal space __________
2. large abdominal incision to remove an ovarian adenocarcinoma __________
3. removal of an adenocarcinoma of the breast __________
4. a method used to determine the karyotype of a fetus __________
5. establishment of an emergency airway path __________
6. surgical procedure to remove pharyngeal lymphatic tissue __________
7. surgical procedure to open clogged coronary arteries __________
8. method of removing fluid from the chest (pleural effusion) __________
9. procedure to drain feces from the body after bowel resection __________
10. X-ray procedure used to examine blood vessels before surgery __________
11. minimally invasive surgery within the abdomen __________

VI. Exercises
Remember to check your answers carefully with those given in Section VII, Answers to Exercises.

A. Give the meanings for the following suffixes.
1. –cele __________
2. –emia __________
3. –coccus __________
4. –gram __________
5. –cyte __________
6. –algia __________
7. –ectomy __________
8. –centesis __________
9. –genesis __________
10. –graph __________
11. –itis __________
12. –graphy __________

B. Using the following combining forms and your knowledge of suffixes, build the following medical terms.
amni/o isch/o ot/o angi/o laryng/o rect/o arthr/o mast/o staphyl/o bronch/o my/o strept/o carcin/o myel/o thorac/o cyst/o
1. hernia of the urinary bladder __________
2. pain of muscle __________
3. process of producing cancer __________
4. record (x-ray) of the spinal cord __________
5. berry-shaped bacteria in twisted chains __________
6. surgical puncture to remove fluid from the chest __________
7. removal of the breast __________
8. inflammation of the tubes leading from the windpipe to the lungs __________
9. to hold back blood from cells __________
10. process of recording (x-ray) blood vessels __________
11. visual examination of joints __________
12. berry-shaped bacteria in clusters __________
13. resection of the voice box __________
14. surgical procedure to remove fluid from the sac around the fetus __________

C. Match the following terms, which describe blood cells, with their meanings below.
basophil eosinophil erythrocyte lymphocyte monocyte neutrophil thrombocyte
1. granulocytic white blood cell (granules stain purple) that destroys foreign cells by engulfing and digesting them; also called a polymorphonuclear leukocyte __________
2. mononuclear white blood cell that destroys foreign cells by making antibodies __________
3. clotting cell; also called a platelet __________
4. leukocyte with reddish-staining granules and numbers elevated in allergic reactions __________
5. red blood cell __________
6. mononuclear white blood cell that engulfs and digests cellular debris; contains one large nucleus __________
7. granulocytic (granules stain blue) white blood cell prominent in inflammatory reactions __________

D. Give the meaning of the following suffixes.
1. –logy __________
2. –lysis __________
3. –pathy __________
4. –penia __________
5. –malacia __________
6. –osis __________
7. –phobia __________
8. –megaly __________
9. –oma __________
10. –opsy __________
11. –plasia __________
12. –plasty __________
13. –sclerosis __________
14. –stasis __________

E. Using the following combining forms and your knowledge of suffixes, build the following medical terms.
acr/o agor/o arteri/o bi/o blephar/o cardi/o chondr/o hem/o hydr/o morph/o my/o myel/o nephr/o phleb/o sarc/o splen/o
1. fear of the marketplace (crowds) __________
2. enlargement of the spleen __________
3. study of the shape (of cells) __________
4. softening of cartilage __________
5. abnormal condition of water (fluid) in the kidney __________
6. disease condition of heart muscle __________
7. hardening of arteries __________
8. tumor (benign) of muscle __________
9. flesh tumor (malignant) of muscle __________
10. surgical repair of the nose __________
11. tumor of bone marrow __________
12. fear of heights __________
13. view of living tissue under the microscope __________
14. stoppage of the flow of blood (by mechanical or natural means) __________
15. inflammation of the eyelid __________
16. incision of vein __________

F. Match the following terms with their meanings below.
achondroplasia acromegaly atrophy chemotherapy colostomy hydrotherapy hypertrophy laparoscope laparoscopy metastasis necrosis osteomalacia
1. treatment using drugs __________
2. condition of death (of cells) __________
3. softening of bone __________
4. opening of the large intestine to the outside of the body __________
5. no development; shrinkage of cells __________
6. beyond control; spread of a cancerous tumor to another organ __________
7. instrument to visually examine the abdomen __________
8. enlargement of extremities; an endocrine disorder that causes excess growth hormone to be produced by the pituitary gland after puberty __________
9. condition of improper formation of cartilage in the embryo that leads to short bones and dwarf-like deformities __________
10. process of viewing the peritoneal (abdominal) cavity __________
11. treatment using water __________
12. excessive development of cells (increase in size of individual cells) __________

G. Give the meaning of the following suffixes.
1. –ia __________
2. –trophy __________
3. –stasis __________
4. –stomy __________
5. –tomy __________
6. –ole __________
7. –um __________
8. –ule __________
9. –y __________
10. –oid __________
11. –genic __________
12. –ptosis __________

H. Using the following combining forms and suffixes, build the following medical terms.
Combining forms: arteri/o pleur/o lapar/o pneumon/o mamm/o radi/o nephr/o ven/o
Suffixes: -dynia -ole -therapy -ectomy -pathy -tomy -gram -plasty -ule -ia -scopy
1. incision of the abdomen __________
2. process of visual examination of the abdomen __________
3. a small artery __________
4. condition of the lungs __________
5. treatment using x-rays __________
6. record (x-ray) of the breast __________
7. pain of the chest wall and the membranes surrounding the lungs __________
8. a small vein __________
9. disease condition of the kidney __________
10. surgical repair of the breast __________

I. Underline the suffix in the following terms and give the meaning of the entire term.
1. …ryngeal __________
2. inguinal __________
3. chronic __________
4. pulmonary __________
5. adipose __________
6. peritoneal __________
7. axillary __________
8. necrotic __________
9. mucoid __________
10. mucous __________

J. Select from the following terms relating to blood and blood vessels to complete the sentences below.
anemia angioplasty arterioles hematoma hemolysis hemostasis ischemia leukemia leukocytosis multiple myeloma thrombocytopenia venules
1. Billy was diagnosed with excessively high numbers of cancerous white blood cells, or __________. His doctor prescribed chemotherapy and expected an excellent prognosis.
2. Mr. Clark’s angiogram showed that he had serious atherosclerosis of one of the arteries supplying blood to his heart. His doctor recommended that __________ would be helpful to open up his clogged artery by threading a catheter (tube) through his artery and opening a balloon at the end of the catheter to widen the artery.
3. Mrs. Jackson’s blood count showed a reduced number of red blood cells, indicating __________. Her erythrocytes were being destroyed by __________.
4. Doctors refused to operate on Joe Hite because of his low platelet count, a condition called __________.
5. Blockage of an artery leading to Mr. Stein’s brain led to the holding back of blood flow to nerve tissue in his brain. This condition, called __________, could lead to necrosis of tissue and a cerebrovascular accident.
6. Small arteries, or __________, were broken under Ms. Bein’s scalp when she was struck on the head with a rock. She soon developed a mass of blood, a (an) __________, under the skin in that region of her head.
7. Sarah Jones had a staphylococcal infection causing elevation of her white blood cell count. She was treated with antibiotics, and the __________ returned to normal.
8. Within the body, the bone marrow (soft tissue within bones) is the “factory” for making blood cells. Mr. Scott developed __________, a malignant condition of the bone marrow cells in his hip, upper arm, and thigh bones.
9. During operations, surgeons use clamps to close off blood vessels and prevent blood loss. Thus, they maintain __________ and avoid blood transfusions.
10. Small vessels that carry blood toward the heart from capillaries and tissues are __________.

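September 2020, Vol. 17, No. 17, Drugs and Clinic

Stroke, commonly known as “apoplexy,” is caused by the sudden rupture or blockage of cerebral blood vessels. Its clinical incidence is high. It not only damages neurological function but also alters the patient’s psychological state, causing anxiety, depression, insomnia, and similar problems; severe cases may show suicidal tendencies, with serious consequences for patients and their families [1].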

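Surveys show [2] that 2 to 12 months after a stroke, most patients display marked depressive symptoms and lose hope in life, which hinders later recovery of neurological function and improvement in quality of life.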

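Therefore, attention should be paid to the psychological state of stroke patients in order to relieve or eliminate anxiety and depression.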

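Sertraline hydrochloride and paroxetine are both antidepressants in common clinical use. Both are serotonin (5-hydroxytryptamine, 5-HT) reuptake inhibitors, but they act at different sites [3].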

This study compared the clinical efficacy and safety of sertraline hydrochloride and paroxetine. The results are reported below.

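1 Materials and Methods
1.1 General information
Ninety-six stroke patients treated at our hospital from January 2019 to January 2020 were selected and divided into an observation group and a control group of 48 patients each using a random number table.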

Observation group: 25 men and 23 women; age 40 to 82 years, mean (57.12±5.81) years; disease duration 3 to 10 months, mean (5.77±1.28) months.

Control group: 26 men and 22 women; age 41 to 80 years, mean (57.33±5.79) years; disease duration 2 to 10 months, mean (5.69±1.14) months.

There were no significant differences between the two groups in sex, age, or disease duration (P>0.05).

The study was approved by the hospital ethics committee, and all patients signed informed consent forms.

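1.2 Inclusion and exclusion criteria
Inclusion criteria: (1) confirmed diagnosis of stroke

Comparison of the Effects of Paroxetine Hydrochloride Tablets and Sertraline Hydrochloride Tablets in the Treatment of Post-Stroke Depression
ZHANG Zongjiang
Department of Pharmacy, The First Affiliated Hospital of Nanyang Medical College, Nanyang, Henan 473000
[Abstract] Objective: To compare the clinical efficacy and adverse reactions of paroxetine hydrochloride tablets and sertraline hydrochloride tablets in the treatment of post-stroke depression.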

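Methods: 98 patients with post-stroke depression were selected and randomly divided into an observation group (n=48, conventional treatment plus oral sertraline hydrochloride tablets) and a control group (n=48, conventional treatment plus oral paroxetine hydrochloride tablets). Clinical efficacy and adverse reactions were compared between the two groups, and patients’ neurological function and depressive status were evaluated before and after treatment.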