Compressing Sparse Tables using a Genetic Algorithm

- Format: PDF

- Size: 30.26 KB

- Pages: 11

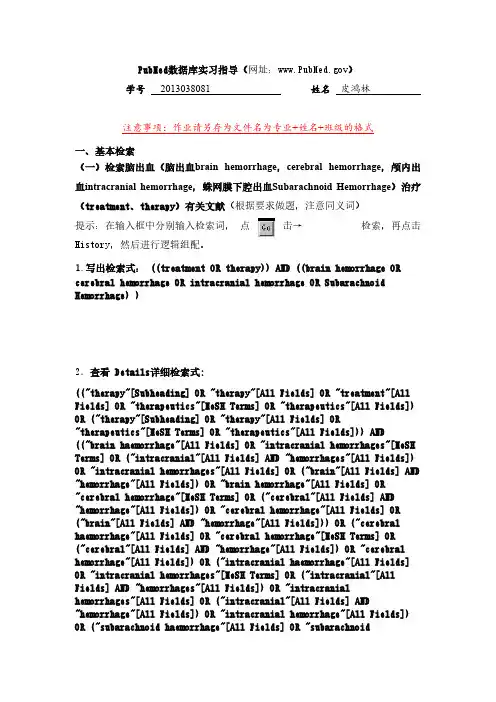

PubMed Database Practical Guide (URL: ) Student ID: 2013038081 Name: 皮鸿林

Note: save your assignment under a file name in the format major + name + class.

I. Basic Search

(1) Search for literature on the treatment (treatment, therapy) of cerebral hemorrhage (brain hemorrhage, cerebral hemorrhage, intracranial hemorrhage, subarachnoid hemorrhage). Answer as required and pay attention to synonyms.

Hint: enter each search term in the search box and click → to search, then click History and combine the searches with logical operators.

1. Write the search expression: ((treatment OR therapy)) AND ((brain hemorrhage OR cerebral hemorrhage OR intracranial hemorrhage OR Subarachnoid Hemorrhage))

2. View the detailed search expression under Details: (("therapy"[Subheading] OR "therapy"[All Fields] OR "treatment"[All Fields] OR "therapeutics"[MeSH Terms] OR "therapeutics"[All Fields]) OR ("therapy"[Subheading] OR "therapy"[All Fields] OR "therapeutics"[MeSH Terms] OR "therapeutics"[All Fields])) AND (("brain haemorrhage"[All Fields] OR "intracranial hemorrhages"[MeSH Terms] OR ("intracranial"[All Fields] AND "hemorrhages"[All Fields]) OR "intracranial hemorrhages"[All Fields] OR ("brain"[All Fields] AND "hemorrhage"[All Fields]) OR "brain hemorrhage"[All Fields] OR "cerebral hemorrhage"[MeSH Terms] OR ("cerebral"[All Fields] AND "hemorrhage"[All Fields]) OR "cerebral hemorrhage"[All Fields] OR ("brain"[All Fields] AND "hemorrhage"[All Fields])) OR ("cerebral haemorrhage"[All Fields] OR "cerebral hemorrhage"[MeSH Terms] OR ("cerebral"[All Fields] AND "hemorrhage"[All Fields]) OR "cerebral hemorrhage"[All Fields]) OR ("intracranial haemorrhage"[All Fields] OR "intracranial hemorrhages"[MeSH Terms] OR ("intracranial"[All Fields] AND "hemorrhages"[All Fields]) OR "intracranial hemorrhages"[All Fields] OR ("intracranial"[All Fields] AND "hemorrhage"[All Fields]) OR "intracranial hemorrhage"[All Fields]) OR ("subarachnoid haemorrhage"[All Fields] OR "subarachnoid hemorrhage"[MeSH Terms] OR ("subarachnoid"[All Fields] AND "hemorrhage"[All Fields]) OR "subarachnoid hemorrhage"[All Fields]))

(2) Search for free review articles, in English or French and published in the last two years, on the surgical treatment (surgery) of fractures (fracture).

Hint: click Limits, select the four required limits, then click to start the search.
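The search expression in exercise (1) follows a simple pattern: synonyms within a concept are joined with OR, and the concepts are then joined with AND. A minimal sketch of that pattern is shown here. The helper names `or_group` and `and_groups` are hypothetical and are not part of PubMed or any library; the code only builds the text of the query and does not contact PubMed.

```python
def or_group(terms):
    """Join the synonyms for one concept with OR, wrapped in parentheses."""
    return "(" + " OR ".join(terms) + ")"


def and_groups(groups):
    """Join several synonym groups with AND."""
    return " AND ".join(or_group(group) for group in groups)


# Synonym lists taken from exercise (1).
treatment_terms = ["treatment", "therapy"]
hemorrhage_terms = [
    "brain hemorrhage",
    "cerebral hemorrhage",
    "intracranial hemorrhage",
    "subarachnoid hemorrhage",
]

query = and_groups([treatment_terms, hemorrhage_terms])
print(query)
```

Pasting the printed string into the search box should be equivalent to combining the individual History entries by hand, since PubMed then expands each term as shown under Details.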

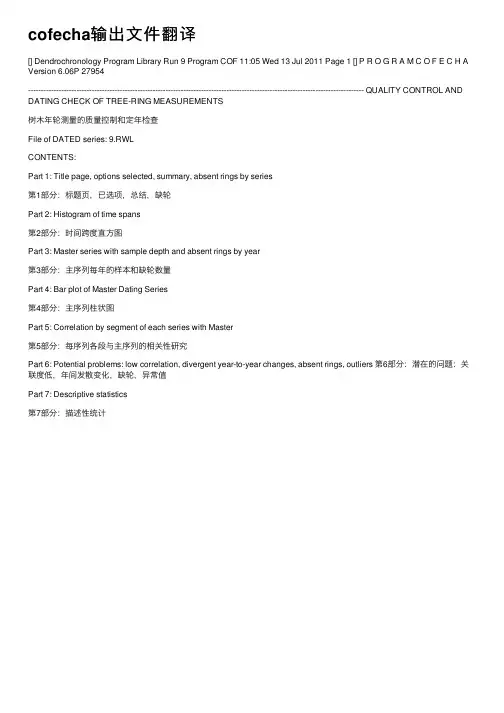

COFECHA output file (translated)

[] Dendrochronology Program Library    Run 9    Program COF    11:05 Wed 13 Jul 2011    Page 1
[] P R O G R A M   C O F E C H A    Version 6.06P 27954

QUALITY CONTROL AND DATING CHECK OF TREE-RING MEASUREMENTS

File of DATED series: 9.RWL

CONTENTS:
Part 1: Title page, options selected, summary, absent rings by series
Part 2: Histogram of time spans
Part 3: Master series with sample depth and absent rings by year
Part 4: Bar plot of Master Dating Series
Part 5: Correlation by segment of each series with Master
Part 6: Potential problems: low correlation, divergent year-to-year changes, absent rings, outliers
Part 7: Descriptive statistics

Time span of Master dating series is 1815 to 2009 195 years
Continuous time span is 1815 to 2009 195 years
Portion with two or more series is 1816 to 2009 194 years

*C* Number of dated series 4
*O* Master series 1815 2009 195 yrs
*F* Total rings in all series 768
*E* Total dated rings checked 767
*C* Series intercorrelation .299
*H* Average mean sensitivity .195
*A* Segments, possible problems 26
*** Mean length of series 192.0

ABSENT RINGS listed by SERIES: (See Master Dating Series for absent rings listed by year)
No ring measurements of zero value

PART 6: POTENTIAL PROBLEMS:    08:08 Thu 14 Jul 2011    Page 5

For each series with potential problems the following diagnostics may appear:

An Introduction to Categorical Data Analysis Using R

Brett Presnell

March 28, 2000

Abstract

This document attempts to reproduce the examples and some of the exercises in An Introduction to Categorical Data Analysis [1] using the R statistical programming environment.

Chapter 0 About This Document

This document attempts to reproduce the examples and some of the exercises in An Introduction to Categorical Data Analysis [1] using the R statistical programming environment. Numbering and titles of chapters will follow that of Agresti's text, so if a particular example/analysis is of interest, it should not be hard to find, assuming that it is here.

Since R is particularly versatile, there are often a number of different ways to accomplish a task, and naturally this document can only demonstrate a limited number of possibilities. The reader is urged to explore other approaches on their own. In this regard it can be very helpful to read the online documentation for the various functions of R, as well as other tutorials. The help files for many of the R functions used here are also included in the appendix for easy reference, but the online help system is definitely the preferred way to access this information.

It is also worth noting that as of this writing (early 2000), R is still very much under development.
Thus new functionality is likely to become available that might be more convenient to use than some of the approaches taken here. Of course any user can also write their own R functions to automate any task, so the possibilities are endless. Do not be intimidated though, for this is really the fun of using R and its best feature: you can teach it to do whatever is needed, instead of being constrained only to what is "built in."

A Note on the Datasets

Often in this document I will show how to enter the data into R as part of the example. However, most of the datasets are already available in R format in the R package for the course, sta4504, available from the course web site. After installing the library on your computer and starting R, you can list the functions and data files available in the package by typing

    > library(help=sta4504)
    > data(package=sta4504)

You can make the files in the package available to your R session by typing

    > library(sta4504)

and you can read one of the package's datasets into your R session simply by typing, e.g.,

    > data(deathpen)

Chapter 1 Introduction

1.3 Inference for a (Single) Proportion

The function prop.test (appendix A.1.3) will carry out tests of hypotheses and produce confidence intervals in problems involving one or several proportions. In the example concerning opinion on abortion, there were 424 "yes" responses out of 950 subjects. Here is one way to use prop.test to analyze these data:

    > prop.test(424,950)

            1-sample proportions test with continuity correction

    data:  424 out of 950, null probability 0.5
    X-squared = 10.7379, df = 1, p-value = 0.001050
    alternative hypothesis: true p is not equal to 0.5
    95 percent confidence interval:
     0.4144634 0.4786078
    sample estimates:
            p
    0.4463158

Note that by default:
• the null hypothesis π = .5 is tested against the two-sided alternative π ≠ .5;
• a 95% confidence interval for π is calculated; and
• both the test and the CI incorporate a continuity correction.

Any of these defaults can be changed. The call above is equivalent to

    prop.test(424,950,p=.5,alternative="two.sided",conf.level=0.95,correct=TRUE)

Thus, for example, to test the null hypothesis that π = .4 versus the one-sided alternative π > .4 and a 99% (one-sided) CI for π, all without continuity correction, just type

    prop.test(424,950,p=.4,alternative="greater",conf.level=0.99,correct=FALSE)

Chapter 2 Two-Way Contingency Tables

Entering and Manipulating Data

There are a number of ways to enter counts for a two-way table into R. For a simple concrete example, we consider three different ways of entering the "belief in afterlife" data. Other methods and tools will be introduced as we go along.

Entering Two-Way Tables as a Matrix

One way is to simply enter the data using the matrix function (this is similar to using the array function which we will encounter later). For the "belief in afterlife" example, we might type:

    > afterlife <- matrix(c(435,147,375,134),nrow=2,byrow=TRUE)
    > afterlife
         [,1] [,2]
    [1,]  435  147
    [2,]  375  134

Things are somewhat easier to read if we name the rows and columns:

    > dimnames(afterlife) <- list(c("Female","Male"),c("Yes","No"))
    > afterlife
           Yes  No
    Female 435 147
    Male   375 134

We can dress things up even more by providing names for the row and column variables:

    > names(dimnames(afterlife)) <- c("Gender","Believer")
    > afterlife
            Believer
    Gender   Yes  No
      Female 435 147
      Male   375 134

Calculating the total sample size, n, and the overall proportions, {p_ij}, is easy:

    > tot <- sum(afterlife)
    > tot
    [1] 1091
    > afterlife/tot
            Believer
    Gender         Yes        No
      Female 0.3987168 0.1347388
      Male   0.3437214 0.1228231

To calculate the row and column totals, n_i+ and n_+j, and the row and column proportions, p_i+ and p_+j, one can use the apply (appendix A.1.1) and sweep (appendix A.1.4) functions:

    > rowtot <- apply(afterlife,1,sum)
    > coltot <- apply(afterlife,2,sum)
    > rowtot
    Female   Male
       582    509
    > coltot
    Yes  No
    810 281
    > rowpct <- sweep(afterlife,1,rowtot,"/")
    > rowpct
            Believer
    Gender         Yes        No
      Female 0.7474227 0.2525773
      Male   0.7367387 0.2632613
    > round(rowpct,3)
            Believer
    Gender     Yes    No
      Female 0.747 0.253
      Male   0.737 0.263
    > sweep(afterlife,2,coltot,"/")
            Believer
    Gender        Yes        No
      Female 0.537037 0.5231317
      Male   0.462963 0.4768683

Entering Two-Way Tables as a Data Frame

One might also put the data into a data frame, treating the row and column variables as factor variables. This approach is actually more convenient when the data is stored in a separate file to be read into R, but we will consider it now anyway.

    > Gender <- c("Female","Female","Male","Male")
    > Believer <- c("Yes","No","Yes","No")
    > Count <- c(435,147,375,134)
    > afterlife <- data.frame(Gender,Believer,Count)
    > afterlife
      Gender Believer Count
    1 Female      Yes   435
    2 Female       No   147
    3   Male      Yes   375
    4   Male       No   134
    > rm(Gender, Believer, Count) # No longer needed

As mentioned above, you can also just enter the data into a text file to be read into R using the read.table command. For example, if the file afterlife.dat contained the lines

    Gender Believer Count
    Female Yes 435
    Female No 147
    Male Yes 375
    Male No 134

then the command

    > read.table("afterlife.dat",header=TRUE)

would get you to the same point as above.¹

¹ Actually, there is one small difference: the levels of the factor "Believer" will be ordered alphabetically, and this will make a small difference in how some things are presented. If you want to make sure that the levels of the factors are ordered as they appear in the data file, you can use the read.table2 function provided in the sta4504 package for R. Or use the relevel command.

To extract a contingency table (a matrix in this case) for these data, you can use the tapply (appendix A.1.5) function in the following way:

    > attach(afterlife) # attach the data frame
    > beliefs <- tapply(Count,list(Gender,Believer),c)
    > beliefs
            No Yes
    Female 147 435
    Male   134 375
    > detach(afterlife) # can detach the data when no longer needed
    > names(dimnames(beliefs)) <- c("Gender","Believer")
    > beliefs
            Believer
    Gender    No Yes
      Female 147 435
      Male   134 375
    > beliefs <- beliefs[,c(2,1)] # reverse the columns?
    > beliefs
            Believer
    Gender   Yes  No
      Female 435 147
      Male   375 134

At this stage, beliefs can be manipulated as in the previous subsection.

2.3 Comparing Proportions in Two-by-Two Tables

As explained by the documentation for prop.test (appendix A.1.3), the data may be represented in several different ways for use in prop.test. We will use the matrix representation of the last section in examining the Physician's Health Study example.

    > phs <- matrix(c(189,10845,104,10933),byrow=TRUE,ncol=2)
    > phs
         [,1]  [,2]
    [1,]  189 10845
    [2,]  104 10933
    > dimnames(phs) <-
    +   list(Group=c("Placebo","Aspirin"),MI=c("Yes","No"))
    > phs
             MI
    Group     Yes    No
      Placebo 189 10845
      Aspirin 104 10933
    > prop.test(phs)

            2-sample test for equality of proportions
            with continuity correction

    data:  phs
    X-squared = 24.4291, df = 1, p-value = 7.71e-07
    alternative hypothesis: two.sided
    95 percent confidence interval:
     0.004597134 0.010814914
    sample estimates:
        prop 1     prop 2
    0.01712887 0.00942285

A continuity correction is used by default, but it makes very little difference in this example:

    > prop.test(phs,correct=F)

            2-sample test for equality of proportions
            without continuity correction

    data:  phs
    X-squared = 25.0139, df = 1, p-value = 5.692e-07
    alternative hypothesis: two.sided
    95 percent confidence interval:
     0.004687751 0.010724297
    sample estimates:
        prop 1     prop 2
    0.01712887 0.00942285

You can also save the output of the test and manipulate it in various ways:

    > phs.test <- prop.test(phs)
    > names(phs.test)
    [1] "statistic"   "parameter"   "p.value"     "estimate"
    [5] "null.value"  "conf.int"    "alternative" "method"
    [9] ""
    > phs.test$estimate
        prop 1     prop 2
    0.01712887 0.00942285
    > phs.test$conf.int
    [1] 0.004597134 0.010814914
    attr(,"conf.level")
    [1] 0.95
    > round(phs.test$conf.int,3)
    [1] 0.005 0.011
    attr(,"conf.level")
    [1] 0.95
    > phs.test$estimate[1]/phs.test$estimate[2] # relative risk
      prop 1
    1.817802

2.4 The Odds Ratio

Relative risk and the odds ratio are easy to calculate (you can do it in lots of ways of course):

    > phs.test$estimate
        prop 1     prop 2
    0.01712887 0.00942285
    > odds <- phs.test$estimate/(1-phs.test$estimate)
    > odds
         prop 1      prop 2
    0.017427386 0.009512485
    > odds[1]/odds[2]
      prop 1
    1.832054
    > (phs[1,1]*phs[2,2])/(phs[2,1]*phs[1,2]) # as cross-prod ratio
    [1] 1.832054

Here's one way to calculate the CI for the odds ratio:

    > theta <- odds[1]/odds[2]
    > ASE <- sqrt(sum(1/phs))
    > ASE
    [1] 0.1228416
    > logtheta.CI <- log(theta) + c(-1,1)*1.96*ASE
    > logtheta.CI
    [1] 0.3646681 0.8462073
    > exp(logtheta.CI)
    [1] 1.440036 2.330790

It is easy to write a quick and dirty function to do these calculations for a 2×2 table.

    odds.ratio <-
      function(x, pad.zeros=FALSE, conf.level=0.95) {
        if (pad.zeros) {
          if (any(x==0)) x <- x + 0.5
        }
        theta <- x[1,1] * x[2,2] / (x[2,1] * x[1,2])
        ASE <- sqrt(sum(1/x))
        CI <- exp(log(theta)
                  + c(-1,1) * qnorm(0.5*(1+conf.level)) * ASE)
        list(estimator=theta,
             ASE=ASE,
             conf.interval=CI,
             conf.level=conf.level)
      }

This has been added to the sta4504 package. For the example above:

    > odds.ratio(phs)
    $estimator
    [1] 1.832054

    $ASE
    [1] 0.1228416

    $conf.interval
    [1] 1.440042 2.330780

    $conf.level
    [1] 0.95

2.5 Chi-Squared Tests of Independence

Gender Gap Example

The chisq.test function will compute Pearson's chi-squared test statistic (X²) and the corresponding P-value. Here it is applied to the gender gap example:

    > gendergap <- matrix(c(279,73,225,165,47,191),byrow=TRUE,nrow=2)
    > dimnames(gendergap) <- list(Gender=c("Females","Males"),
    +   PartyID=c("Democrat","Independent","Republican"))
    > gendergap
             PartyID
    Gender    Democrat Independent Republican
      Females      279          73        225
      Males        165          47        191
    > chisq.test(gendergap)

            Pearson's Chi-square test

    data:  gendergap
    X-squared = 7.0095, df = 2, p-value = 0.03005

In case you are worried about the chi-squared approximation to the sampling distribution of the statistic, you can use simulation to compute an approximate P-value (or use an exact test; see below). The argument B (default is 2000) controls how many simulated tables are used to compute this value. More is better, but eventually you will run out of either computer memory or time, so don't get carried away. It is interesting to do it a few times though to see how stable the simulated P-value is (does it change much from run to run). In this case the simulated P-values agree closely with the chi-squared approximation, suggesting that the chi-squared approximation is good in this example.

    > chisq.test(gendergap,simulate.p.value=TRUE,B=10000)

            Pearson's Chi-square test with simulated p-value
            (based on 10000 replicates)

    data:  gendergap
    X-squared = 7.0095, df = NA, p-value = 0.032

    > chisq.test(gendergap,simulate.p.value=TRUE,B=10000)

            Pearson's Chi-square test with simulated p-value
            (based on 10000 replicates)

    data:  gendergap
    X-squared = 7.0095, df = NA, p-value = 0.0294

An exact test of independence in I×J tables is implemented in the function fisher.test of the ctest (classical tests) package (this package is now part of the base distribution of R and is loaded automatically when any of its functions are called). This test is just a generalization of Fisher's exact test for 2×2 tables. Note that the P-value here is in pretty good agreement with the simulated values and the chi-squared approximation.

    > library(ctest) # this is not needed with R versions >= 0.99
    > fisher.test(gendergap)

            Fisher's Exact Test for Count Data

    data:  gendergap
    p-value = 0.03115
    alternative hypothesis: two.sided

Job Satisfaction Example

For the job satisfaction example given in class, there was some worry about the chi-squared approximation to the null distribution of the test statistic. However the P-value again agrees closely with the simulated P-values and the P-value for the exact test:

    > jobsatis <- c(2,4,13,3, 2,6,22,4, 0,1,15,8, 0,3,13,8)
    > jobsatis <- matrix(jobsatis,byrow=TRUE,nrow=4)
    > dimnames(jobsatis) <- list(
    +   Income=c("<5","5-15","15-25",">25"),
    +   Satisfac=c("VD","LS","MS","VS"))
    > jobsatis
           Satisfac
    Income  VD LS MS VS
      <5     2  4 13  3
      5-15   2  6 22  4
      15-25  0  1 15  8
      >25    0  3 13  8
    > chisq.test(jobsatis)

            Pearson's Chi-square test

    data:  jobsatis
    X-squared = 11.5243, df = 9, p-value = 0.2415

    Warning message:
    Chi-square approximation may be incorrect in: chisq.test(jobsatis)
    > chisq.test(jobsatis,simulate.p.value=TRUE,B=10000)

            Pearson's Chi-square test with simulated p-value
            (based on 10000 replicates)

    data:  jobsatis
    X-squared = 11.5243, df = NA, p-value = 0.2408

    > fisher.test(jobsatis)

            Fisher's Exact Test for Count Data

    data:  jobsatis
    p-value = 0.2315
    alternative hypothesis: two.sided

A "PROC FREQ" for R

Here is a little R function to do some of the calculations that SAS's PROC FREQ does. There are other ways to get all of this information, so the main idea is simply to illustrate how you can write R functions to do the sorts of calculations that you might find yourself doing repeatedly. Also, you can always go back later and modify your function to add capabilities that you need. Note that this is just supposed to serve as a simple utility function. If I wanted it to be really nice, I would write a general method function and a print method for the output (you can also find source for this function on the course web page).

    "procfreq" <-
      function(x, digits=4) {
        total <- sum(x)
        rowsum <- apply(x,1,sum)
        colsum <- apply(x,2,sum)
        prop <- x/total
        rowprop <- sweep(x,1,rowsum,"/")
        colprop <- sweep(x,2,colsum,"/")
        expected <- (matrix(rowsum) %*% t(matrix(colsum))) / total
        dimnames(expected) <- dimnames(x)
        resid <- (x-expected)/sqrt(expected)
        adj.resid <- resid /
          sqrt((1-matrix(rowsum)/total) %*% t(1-matrix(colsum)/total))
        df <- prod(dim(x)-1)
        X2 <- sum(resid^2)
        attr(X2,"P-value") <- 1-pchisq(X2,df)
        ## Must be careful about zero frequencies. Want 0*log(0) = 0.
        tmp <- x*log(x/expected)
        tmp[x==0] <- 0
        G2 <- 2 * sum(tmp)
        attr(G2,"P-value") <- 1-pchisq(G2,df)
        list(sample.size=total,
             row.totals=rowsum,
             col.totals=colsum,
             overall.proportions=prop,
             row.proportions=rowprop,
             col.proportions=colprop,
             expected.freqs=expected,
             residuals=resid,
             adjusted.residuals=adj.resid,
             chi.square=X2,
             likelihood.ratio.stat=G2,
             df=df)
      }

If you save this function definition in a file called "procfreq.R" and then "source" it into R, you can use it just like any built-in function. Here is procfreq in action on the income data:

    > source("procfreq.R")
    > jobsat.freq <- procfreq(jobsatis)
    > names(jobsat.freq)
     [1] "sample.size"           "row.totals"
     [3] "col.totals"            "overall.proportions"
     [5] "row.proportions"       "col.proportions"
     [7] "expected.freqs"        "residuals"
     [9] "adjusted.residuals"    "chi.square"
    [11] "likelihood.ratio.stat" "df"
    > jobsat.freq$expected
           Satisfac
    Income         VD       LS       MS       VS
      <5    0.8461538 2.961538 13.32692 4.865385
      5-15  1.3076923 4.576923 20.59615 7.519231
      15-25 0.9230769 3.230769 14.53846 5.307692
      >25   0.9230769 3.230769 14.53846 5.307692
    > round(jobsat.freq$adjusted.residuals,2)
           Satisfac
    Income     VD    LS    MS    VS
      <5     1.44  0.73 -0.16 -1.08
      5-15   0.75  0.87  0.60 -1.77
      15-25 -1.12 -1.52  0.22  1.51
      >25   -1.12 -0.16 -0.73  1.51
    > jobsat.freq$chi.square
    [1] 11.52426
    attr(,"P-value")
    [1] 0.2414764
    > jobsat.freq$likelihood.ratio.stat
    [1] 13.46730
    attr(,"P-value")
    [1] 0.1425759

Fisher's Exact Test

As mentioned above, Fisher's exact test is implemented as fisher.test in the ctest (classical tests) package. Here is the tea tasting example in R. Note that the default is to test the two-sided alternative.

    > library(ctest) # not needed with R versions >= 0.99
    > tea <- matrix(c(3,1,1,3),ncol=2)
    > dimnames(tea) <-
    +   list(Poured=c("milk","tea"),Guess=c("milk","tea"))
    > tea
          Guess
    Poured milk tea
      milk    3   1
      tea     1   3
    > fisher.test(tea)

            Fisher's Exact Test for Count Data

    data:  tea
    p-value = 0.4857
    alternative hypothesis: true odds ratio is not equal to 1
    95 percent confidence interval:
       0.2117329 621.9337505
    sample estimates:
    odds ratio
      6.408309

    > fisher.test(tea,alternative="greater")

            Fisher's Exact Test for Count Data

    data:  tea
    p-value = 0.2429
    alternative hypothesis: true odds ratio is greater than 1
    95 percent confidence interval:
     0.3135693       Inf
    sample estimates:
    odds ratio
      6.408309

Chapter 3 Three-Way Contingency Tables

The Cochran-Mantel-Haenszel test is implemented in the mantelhaen.test function of the ctest library.
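For readers who want to see what the Cochran-Mantel-Haenszel statistic for a set of 2×2 tables actually adds up, here is a minimal sketch in plain Python. It is not the code behind mantelhaen.test. The function name `cmh_statistic` and the tuple layout of each stratum are assumptions made for illustration, and the rule used for the continuity correction (subtract 0.5 from the absolute difference when that difference is at least 0.5) is likewise an assumption. The counts are those of the death penalty example that follows, one table per victim race, with death penalty (yes, no) as rows and defendant (white, black) as columns.

```python
def cmh_statistic(strata, correct=True):
    """Cochran-Mantel-Haenszel chi-squared statistic for K 2x2 tables.

    Each stratum is ((n11, n12), (n21, n22)). The statistic compares the
    summed (1,1) cell counts with their summed expected values under
    conditional independence, scaled by the summed hypergeometric variances.
    """
    observed = expected = variance = 0.0
    for (n11, n12), (n21, n22) in strata:
        n = n11 + n12 + n21 + n22
        row1, row2 = n11 + n12, n21 + n22
        col1, col2 = n11 + n21, n12 + n22
        observed += n11
        expected += row1 * col1 / n
        variance += row1 * row2 * col1 * col2 / (n ** 2 * (n - 1))
    diff = abs(observed - expected)
    # Assumed continuity correction: 0.5, applied only when diff >= 0.5.
    yates = 0.5 if (correct and diff >= 0.5) else 0.0
    return (diff - yates) ** 2 / variance


# Death penalty counts, one 2x2 table per victim race.
death_penalty = [
    ((53, 11), (414, 37)),  # victim white
    ((0, 4), (16, 139)),    # victim black
]

with_correction = cmh_statistic(death_penalty)
without_correction = cmh_statistic(death_penalty, correct=False)
print(f"{with_correction:.4f}")     # about 4.779
print(f"{without_correction:.4f}")  # about 5.7959
```

Both values agree with the mantelhaen.test output reported for the death penalty data.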
The Death Penalty Example

Here we illustrate the use of mantelhaen.test as well as the ftable function to present a multiway contingency table in a "flat" format. Both of these are included in base R as of version 0.99. Note that by default mantelhaen.test applies a continuity correction in doing the test.

    > dp <- c(53, 414, 11, 37, 0, 16, 4, 139)
    > dp <- array(dp, dim=c(2,2,2))
    > dimnames(dp) <- list(DeathPen=c("yes","no"),
    +   Defendant=c("white","black"), Victim=c("white","black"))
    > dp
    , , Victim = white

            Defendant
    DeathPen white black
         yes    53    11
         no    414    37

    , , Victim = black

            Defendant
    DeathPen white black
         yes     0     4
         no     16   139

    > ftable(dp, row.vars=c("Victim","Defendant"), col.vars="DeathPen")
                     DeathPen yes  no
    Victim Defendant
    white  white               53 414
           black               11  37
    black  white                0  16
           black                4 139
    > mantelhaen.test(dp)

            Mantel-Haenszel chi-square test with continuity correction

    data:  dp
    Mantel-Haenszel X-square = 4.779, df = 1, p-value = 0.02881

    > mantelhaen.test(dp,correct=FALSE)

            Mantel-Haenszel chi-square test without continuity correction

    data:  dp
    Mantel-Haenszel X-square = 5.7959, df = 1, p-value = 0.01606

Smoking and Lung Cancer in China Example

This is a bigger example that uses the Cochran-Mantel-Haenszel test. First we will enter the data as a "data frame" instead of as an array as in the previous example.
This is mostly just to demonstrate another way to do things.

    > cities <- c("Beijing","Shanghai","Shenyang","Nanjing","Harbin",
    +   "Zhengzhou","Taiyuan","Nanchang")
    > City <- factor(rep(cities,rep(4,length(cities))),levels=cities)
    > Smoker <-
    +   factor(rep(rep(c("Yes","No"),c(2,2)),8),levels=c("Yes","No"))
    > Cancer <- factor(rep(c("Yes","No"),16),levels=c("Yes","No"))
    > Count <- c(126,100,35,61,908,688,497,807,913,747,336,598,235,
    +   172,58,121,402,308,121,215,182,156,72,98,60,99,11,43,104,89,21,36)
    > chismoke <- data.frame(City,Smoker,Cancer,Count)
    > chismoke
            City Smoker Cancer Count
    1    Beijing    Yes    Yes   126
    2    Beijing    Yes     No   100
    3    Beijing     No    Yes    35
    4    Beijing     No     No    61
    5   Shanghai    Yes    Yes   908
    6   Shanghai    Yes     No   688
    7   Shanghai     No    Yes   497
    8   Shanghai     No     No   807
    9   Shenyang    Yes    Yes   913
    10  Shenyang    Yes     No   747
    11  Shenyang     No    Yes   336
    12  Shenyang     No     No   598
    13   Nanjing    Yes    Yes   235
    14   Nanjing    Yes     No   172
    15   Nanjing     No    Yes    58
    16   Nanjing     No     No   121
    17    Harbin    Yes    Yes   402
    18    Harbin    Yes     No   308
    19    Harbin     No    Yes   121
    20    Harbin     No     No   215
    21 Zhengzhou    Yes    Yes   182
    22 Zhengzhou    Yes     No   156
    23 Zhengzhou     No    Yes    72
    24 Zhengzhou     No     No    98
    25   Taiyuan    Yes    Yes    60
    26   Taiyuan    Yes     No    99
    27   Taiyuan     No    Yes    11
    28   Taiyuan     No     No    43
    29  Nanchang    Yes    Yes   104
    30  Nanchang    Yes     No    89
    31  Nanchang     No    Yes    21
    32  Nanchang     No     No    36
    > rm(cities,City,Smoker,Cancer,Count) # Cleaning up

Alternatively, we can read the data directly from the file chismoke.dat. Note that if we want "Yes" before "No" we have to relevel the factors, because read.table puts the levels in alphabetical order.

    > chismoke <- read.table("chismoke.dat",header=TRUE)
    > names(chismoke)
    [1] "City"   "Smoker" "Cancer" "Count"
    > levels(chismoke$Smoker)
    [1] "No"  "Yes"
    > chismoke$Smoker <- relevel(chismoke$Smoker,c("Yes","No"))
    > levels(chismoke$Smoker)
    [1] "Yes" "No"
    > levels(chismoke$Cancer)
    [1] "No"  "Yes"
    > chismoke$Cancer <- relevel(chismoke$Cancer,c("Yes","No"))
    > levels(chismoke$Cancer)
    [1] "Yes" "No"

If you use the function read.table2 in the sta4504 package, you will not have to relevel the factors. Of course if you have the package, then

Now, returning to the example:

    > attach(chismoke)
    > x <- tapply(Count,list(Smoker,Cancer,City),c)
    > detach(chismoke)
    > names(dimnames(x)) <- c("Smoker","Cancer","City")
    > # ftable will be in the next release of R
    > ftable(x,row.vars=c("City","Smoker"),col.vars="Cancer")
                     Cancer Yes  No
    City      Smoker
    Beijing   Yes           126 100
              No             35  61
    Shanghai  Yes           908 688
              No            497 807
    Shenyang  Yes           913 747
              No            336 598
    Nanjing   Yes           235 172
              No             58 121
    Harbin    Yes           402 308
              No            121 215
    Zhengzhou Yes           182 156
              No             72  98
    Taiyuan   Yes            60  99
              No             11  43
    Nanchang  Yes           104  89
              No             21  36
    > ni.k <- apply(x,c(1,3),sum)
    > ni.k
          City
    Smoker Beijing Shanghai Shenyang Nanjing Harbin Zhengzhou
       Yes     226     1596     1660     407    710       338
       No       96     1304      934     179    336       170
          City
    Smoker Taiyuan Nanchang
       Yes     159      193
       No       54       57
    > n.jk <- apply(x,c(2,3),sum)
    > n.jk
          City
    Cancer Beijing Shanghai Shenyang Nanjing Harbin Zhengzhou
       Yes     161     1405     1249     293    523       254
       No      161     1495     1345     293    523       254
          City
    Cancer Taiyuan Nanchang
       Yes      71      125
       No      142      125
    > n..k <- apply(x,3,sum)
    > mu11k <- ni.k[1,] * n.jk[1,] / n..k
    > mu11k
      Beijing  Shanghai  Shenyang   Nanjing    Harbin Zhengzhou
     113.0000  773.2345  799.2830  203.5000  355.0000  169.0000
      Taiyuan  Nanchang
      53.0000   96.5000
    > sum(mu11k)
    [1] 2562.517
    > sum(x[1,1,])
    [1] 2930
    > varn11k <- ni.k[1,]*ni.k[2,]*n.jk[1,]*n.jk[2,] / (n..k^2 * (n..k-1))
    > sum(varn11k)
    [1] 482.0612
    > MH <- (sum(x[1,1,]-mu11k))^2/sum(varn11k)
    > MH
    [1] 280.1375

Chapter 4 Generalized Linear Models

Snoring and Heart Disease

This covers the example in Section 4.2.2 and also Exercise 4.2. There are several ways to fit a logistic regression in R using the glm function (more on this in Chapter 5). In the method illustrated here, the response in the model formula (e.g., snoring in snoring ~ scores.a) is a matrix whose first column is the number of "successes" and whose second column is the number of "failures" for each observed binomial.

    > snoring <-
    +   matrix(c(24,1355,35,603,21,192,30,224),ncol=2,byrow=TRUE)
    > dimnames(snoring) <-
    +   list(snore=c("never","sometimes","often","always"),
    +        heartdisease=c("yes","no"))
    > snoring
               heartdisease
    snore       yes   no
      never      24 1355
      sometimes  35  603
      often      21  192
      always     30  224
    > scores.a <- c(0,2,4,5)
    > scores.b <- c(0,2,4,6)
    > scores.c <- 0:3
    > scores.d <- 1:4
    > # Fitting and comparing logistic regression models
    > snoring.lg.a <- glm(snoring ~ scores.a, family=binomial())
    > snoring.lg.b <- glm(snoring ~ scores.b, family=binomial())
    > snoring.lg.c <- glm(snoring ~ scores.c, family=binomial())
    > snoring.lg.d <- glm(snoring ~ scores.d, family=binomial())
    > coef(snoring.lg.a)
    (Intercept)    scores.a
     -3.8662481   0.3973366
    > coef(snoring.lg.b)
    (Intercept)    scores.b
     -3.7773755   0.3272648
    > coef(snoring.lg.c)
    (Intercept)    scores.c
     -3.7773755   0.6545295
    > coef(snoring.lg.d)
    (Intercept)    scores.d
     -4.4319050   0.6545295
    > predict(snoring.lg.a, type="response") # compare to table 4.1
    [1] 0.02050742 0.04429511 0.09305411 0.13243885
    > predict(snoring.lg.b, type="response")
    [1] 0.02237077 0.04217466 0.07810938 0.14018107
    > predict(snoring.lg.c, type="response")
    [1] 0.02237077 0.04217466 0.07810938 0.14018107
    > predict(snoring.lg.d, type="response")
    [1] 0.02237077 0.04217466 0.07810938 0.14018107

Note that the default link function with the binomial family is the logit link. To do a probit analysis, say using the original scores used in Table 4.1:

    > snoring.probit <-
    +   glm(snoring ~ scores.a, family=binomial(link="probit"))
    > summary(snoring.probit)

    Call:
    glm(formula = snoring ~ scores.a, family = binomial(link = "probit"))

    Deviance Residuals:
    [1] -0.6188  1.0388  0.1684 -0.6175

    Coefficients:
                Estimate Std. Error z value Pr(>|z|)
    (Intercept) -2.06055    0.07017 -29.367  < 2e-16 ***
    scores.a     0.18777    0.02348   7.997 1.28e-15 ***
    ---
    Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

    (Dispersion parameter for binomial family taken to be 1)

        Null deviance: 65.9045 on 3 degrees of freedom
    Residual deviance:  1.8716 on 2 degrees of freedom
    AIC: 26.124

    Number of Fisher Scoring iterations: 3

    > predict(snoring.probit, type="response") # compare with Table 4.1
    [1] 0.01967292 0.04599325 0.09518762 0.13099512

There is no identity link provided for the binomial family, so we cannot reproduce the third fit given in Table 4.1. This is not such a great loss of course, since linear probability models are rarely used.

Grouped Crabs Data

This is the example done in class (slightly different from that done in the text). The data are in the file "crabs.dat" (available on the course web site) and can be read into R using the read.table function:

    > crabs <- read.table("crabs.dat",header=TRUE)

Alternatively, these data can be accessed directly from the sta4504 package by typing

To appear in ACM Transactions on Computer Systems

A General Framework for Prefetch Scheduling in Linked Data Structures and its Application to Multi-Chain Prefetching

SEUNGRYUL CHOI
University of Maryland, College Park
NICHOLAS KOHOUT
EVI Technology LLC.
SUMIT PAMNANI
Advanced Micro Devices, Inc.
and
DONGKEUN KIM and DONALD YEUNG
University of Maryland, College Park

This research was supported in part by NSF Computer Systems Architecture grant CCR-0093110, and in part by NSF CAREER Award CCR-0000988.

Author's address: Seungryul Choi, University of Maryland, Department of Computer Science, College Park, MD 20742.

Permission to make digital/hard copy of all or part of this material without fee for personal or classroom use provided that the copies are not made or distributed for profit or commercial advantage, the ACM copyright/server notice, the title of the publication, and its date appear, and notice is given that copying is by permission of the ACM, Inc. To copy otherwise, to republish, to post on servers, or to redistribute to lists requires prior specific permission and/or a fee.

© 2001 ACM 1529-3785/2001/0700-0001 $5.00

Pointer-chasing applications tend to traverse composite data structures consisting of multiple independent pointer chains. While the traversal of any single pointer chain leads to the serialization of memory operations, the traversal of independent pointer chains provides a source of memory parallelism. This article investigates exploiting such inter-chain memory parallelism for the purpose of memory latency tolerance, using a technique called multi-chain prefetching.
Previous works [Roth et al. 1998; Roth and Sohi 1999] have proposed prefetching simple pointer-based structures in a multi-chain fashion. However, our work enables multi-chain prefetching for arbitrary data structures composed of lists, trees, and arrays.

This article makes five contributions in the context of multi-chain prefetching. First, we introduce a framework for compactly describing LDS traversals, providing the data layout and traversal code work information necessary for prefetching. Second, we present an off-line scheduling algorithm for computing a prefetch schedule from the LDS descriptors that overlaps serialized cache misses across separate pointer-chain traversals. Our analysis focuses on static traversals. We also propose using speculation to identify independent pointer chains in dynamic traversals. Third, we propose a hardware prefetch engine that traverses pointer-based data structures and overlaps multiple pointer chains according to the computed prefetch schedule. Fourth, we present a compiler that extracts LDS descriptors via static analysis of the application source code, thus automating multi-chain prefetching. Finally, we conduct an experimental evaluation of compiler-instrumented multi-chain prefetching and compare it against jump pointer prefetching [Luk and Mowry 1996], prefetch arrays [Karlsson et al. 2000], and predictor-directed stream buffers (PSB) [Sherwood et al. 2000].

Our results show compiler-instrumented multi-chain prefetching improves execution time by 40% across six pointer-chasing kernels from the Olden benchmark suite [Rogers et al. 1995], and by 3% across four SPECint2000 benchmarks. Compared to jump pointer prefetching and prefetch arrays, multi-chain prefetching achieves 34% and 11% higher performance for the selected Olden and SPECint2000 benchmarks, respectively. Compared to PSB, multi-chain prefetching achieves 27% higher performance for the selected Olden benchmarks, but PSB outperforms multi-chain prefetching by 0.2% for the selected SPECint2000 benchmarks. An ideal PSB with an infinite markov predictor achieves comparable performance to multi-chain prefetching, coming within 6% across all benchmarks. Finally, speculation can enable multi-chain prefetching for some dynamic traversal codes, but our technique loses its effectiveness when the pointer-chain traversal order is highly dynamic.

Categories and Subject Descriptors: B.8.2 [Performance and Reliability]: Performance Analysis and Design Aids; B.3.2 [Memory Structures]: Design Styles—Cache Memories; C.0 [General]: Modeling of computer architecture; System Architectures; C.4 [Performance of Systems]: Design Studies; D.3.4 [Programming Languages]: Processors—Compilers

General Terms: Design, Experimentation, Performance

Additional Key Words and Phrases: Data Prefetching, Memory parallelism, Pointer Chasing Code

... performance platforms. The use of LDSs will likely have a negative impact on memory performance, making many non-numeric applications severely memory-bound on future systems.
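The inter-chain memory parallelism described in the abstract can be illustrated with a toy cost model. This is only a back-of-the-envelope sketch and not the scheduling algorithm of the article. It assumes that every node access misses in the cache, that the traversal code does no other work, and that the memory system can keep any number of misses outstanding. The function names and the 100-cycle miss latency are hypothetical.

```python
def serialized_cycles(chain_lengths, miss_latency):
    """All chains walked one after another: every miss waits for the previous one."""
    return sum(chain_lengths) * miss_latency


def overlapped_cycles(chain_lengths, miss_latency):
    """Independent chains walked concurrently: misses within a chain stay
    serialized, so the longest chain determines the total time."""
    return max(chain_lengths) * miss_latency


chains = [8, 8, 8, 8]  # four independent pointer chains of eight nodes each
latency = 100          # hypothetical cycles per cache miss

print(serialized_cycles(chains, latency))  # 3200
print(overlapped_cycles(chains, latency))  # 800
```

Under these assumptions the benefit is bounded by the number of independent chains. A single long chain gains nothing from overlap, which is the serialization that the pointer-chasing problem refers to.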
LDSs can be very large owing to their dynamic heap construction. Consequently, the working sets of codes that use LDSs can easily grow too large to fit in the processor’s cache. In addition, logically adjacent nodes in an LDS may not reside physically close in memory. As a result, traversal of an LDS may lack spatial locality, and thus may not benefit from large cache blocks. The sparse memory access nature of LDS traversal also reduces the effective size of the cache, further increasing cache misses.

In the past, researchers have used prefetching to address the performance bottlenecks of memory-bound applications. Several techniques have been proposed, including software prefetching techniques [Callahan et al. 1991; Klaiber and Levy 1991; Mowry 1998; Mowry and Gupta 1991], hardware prefetching techniques [Chen and Baer 1995; Fu et al. 1992; Jouppi 1990; Palacharla and Kessler 1994], and hybrid techniques [Chen 1995; Chiueh 1994; Temam 1996]. While such conventional prefetching techniques are highly effective for applications that employ regular data structures (e.g., arrays), these techniques are far less successful for non-numeric applications that make heavy use of LDSs due to memory serialization effects known as the pointer-chasing problem. The memory operations performed for array traversal can issue in parallel because individual array elements can be referenced independently. In contrast, the memory operations performed for LDS traversal must dereference a series of pointers, a purely sequential operation. The lack of memory parallelism during LDS traversal prevents conventional prefetching techniques from overlapping cache misses suffered along a pointer chain.

Recently, researchers have begun investigating prefetching techniques designed for LDS traversals. These new LDS prefetching techniques address the pointer-chasing problem using several different approaches. Stateless techniques [Luk and Mowry 1996; Mehrotra and Harrison 1996; Roth et al. 1998; Yang and Lebeck 2000] prefetch pointer chains
sequentially using only the natural pointers belonging to the LDS. Existing stateless techniques do not exploit any memory parallelism at all, or they exploit only limited amounts of memory parallelism. Consequently, they lose their effectiveness when the LDS traversal code contains insufficient work to hide the serialized memory latency [Luk and Mowry 1996]. A second approach [Karlsson et al. 2000; Luk and Mowry 1996; Roth and Sohi 1999], which we call jump pointer techniques, inserts additional pointers into the LDS to connect non-consecutive link elements. These “jump pointers” allow prefetch instructions to name link elements further down the pointer chain without sequentially traversing the intermediate links, thus creating memory parallelism along a single chain of pointers. Because they create memory parallelism using jump pointers, jump pointer techniques tolerate pointer-chasing cache misses even when the traversal loops contain insufficient work to hide the serialized memory latency. However, jump pointer techniques cannot commence prefetching until the jump pointers have been installed. Furthermore, the jump pointer installation code increases execution time, and the jump pointers themselves contribute additional cache misses.

ACM Transactions on Computer Systems
4 · Seungryul Choi et al.

Finally, a third approach consists of prediction-based techniques [Joseph and Grunwald 1997; Sherwood et al. 2000; Stoutchinin et al. 2001]. These techniques perform prefetching by predicting the cache-miss address stream, for example using hardware predictors [Joseph and Grunwald 1997; Sherwood et al. 2000]. Early hardware predictors were capable of following striding streams only, but more recently, correlation [Charney and Reeves 1995] and Markov [Joseph and Grunwald 1997] predictors have been proposed that can follow arbitrary streams, thus enabling prefetching for LDS traversals. Because predictors need not traverse program data structures to generate the prefetch addresses, they avoid the pointer-chasing problem
altogether. In addition, for hardware prediction, the techniques are completely transparent since they require no support from the programmer or compiler. However, prediction-based techniques lose their effectiveness when the cache-miss address stream is unpredictable.

This article investigates exploiting the natural memory parallelism that exists between independent serialized pointer-chasing traversals, or inter-chain memory parallelism. Our approach, called multi-chain prefetching, issues prefetches along a single chain of pointers sequentially, but aggressively pursues multiple independent pointer chains simultaneously whenever possible. Due to its aggressive exploitation of inter-chain memory parallelism, multi-chain prefetching can tolerate serialized memory latency even when LDS traversal loops have very little work; hence, it can achieve higher performance than previous stateless techniques. Furthermore, multi-chain prefetching does not use jump pointers. As a result, it does not suffer the overheads associated with creating and managing jump pointer state. And finally, multi-chain prefetching is an execution-based technique, so it is effective even for programs that exhibit unpredictable cache-miss address streams.

The idea of overlapping chained prefetches, which is fundamental to multi-chain prefetching, is not new: both Cooperative Chain Jumping [Roth and Sohi 1999] and Dependence-Based Prefetching [Roth et al. 1998] already demonstrate that simple “backbone and rib” structures can be prefetched in a multi-chain fashion. However, our work pushes this basic idea to its logical limit, enabling multi-chain prefetching for arbitrary data structures (our approach can exploit inter-chain memory parallelism for any data structure composed of lists, trees, and arrays). Furthermore, previous chained prefetching techniques issue prefetches in a greedy fashion. In contrast, our work provides a formal and systematic method for scheduling prefetches that controls the timing of chained prefetches. By
controlling prefetch arrival, multi-chain prefetching can reduce both early and late prefetches, which degrade performance, compared to previous chained prefetching techniques.

In this article, we build upon our original work in multi-chain prefetching [Kohout et al. 2001], and make the following contributions:

(1) We present an LDS descriptor framework for specifying static LDS traversals in a compact fashion. Our LDS descriptors contain data layout information and traversal code work information necessary for prefetching.

(2) We develop an off-line algorithm for computing an exact prefetch schedule from the LDS descriptors that overlaps serialized cache misses across separate pointer-chain traversals. Our algorithm handles static LDS traversals involving either loops or recursion. Furthermore, our algorithm computes a schedule even when the extent of dynamic data structures is unknown. To handle dynamic LDS traversals, we propose using speculation. However, our technique cannot handle codes in which the pointer-chain traversals are highly dynamic.

(3) We present the design of a programmable prefetch engine that performs LDS traversal outside of the main CPU, and prefetches the LDS data using our LDS descriptors and the prefetch schedule computed by our scheduling algorithm. We also perform a detailed analysis of the hardware cost of our prefetch engine.

(4) We introduce algorithms for extracting LDS descriptors from application source code via static analysis, and implement them in a prototype compiler using the SUIF framework [Hall et al. 1996]. Our prototype compiler is capable of extracting all the program-level information necessary for multi-chain prefetching fully automatically.

(5) Finally, we conduct an experimental evaluation of multi-chain prefetching using several pointer-intensive applications. Our evaluation compares compiler-instrumented multi-chain prefetching against jump pointer prefetching [Luk and
Mowry 1996; Roth and Sohi 1999] and prefetch arrays [Karlsson et al. 2000], two jump pointer techniques, as well as predictor-directed stream buffers [Sherwood et al. 2000], an all-hardware prediction-based technique. We also investigate the impact of early prefetch arrival on prefetching performance, and we compare compiler- and manually-instrumented multi-chain prefetching to evaluate the quality of the instrumentation generated by our compiler. In addition, we characterize the sensitivity of our technique to varying hardware parameters. Lastly, we undertake a preliminary evaluation of speculative multi-chain prefetching to demonstrate its potential in enabling multi-chain prefetching for dynamic LDS traversals.

The rest of this article is organized as follows. Section 2 further explains the essence of multi-chain prefetching. Then, Section 3 introduces our LDS descriptor framework. Next, Section 4 describes our scheduling algorithm, Section 5 discusses our prefetch engine, and Section 6 presents our compiler for automating multi-chain prefetching. After presenting all our algorithms and techniques, Sections 7 and 8 then report on our experimental methodology and evaluation, respectively. Finally, Section 9 discusses related work, and Section 10 concludes the article.

2. MULTI-CHAIN PREFETCHING

This section provides an overview of our multi-chain prefetching technique. Section 2.1 presents the idea of exploiting inter-chain memory parallelism. Then, Section 2.2 discusses the identification of independent pointer chain traversals.
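The contrast drawn in the introduction between array traversal, a single pointer chain, and several independent chains can be made concrete with a toy latency model. The sketch below is illustrative only and is not the simulator used in this article: it assumes every reference misses, a fixed miss latency l, and an unlimited number of outstanding misses, and all function names are hypothetical. The last function adds w cycles of work per link node and counts processor stalls when the chain is fetched serially, starting at the same moment as the processor.

```c
#include <assert.h>

/* Toy latency model (an illustrative assumption, not the paper's simulator):
 * every reference misses, each miss costs l cycles, and any number of
 * misses may be in flight at once. */

/* Array traversal: element addresses are independent, so all n misses can
 * be issued together and complete after about one miss latency. */
long array_cycles(long n, long l) {
    assert(n >= 0 && l >= 0);
    return n > 0 ? l : 0;
}

/* One pointer chain: the address of node k+1 is known only after node k
 * has arrived, so the n misses are paid back to back. */
long chain_cycles(long n, long l) {
    assert(n >= 0 && l >= 0);
    return n * l;
}

/* Several independent chains traversed at once: each chain is still
 * serial, but the chains overlap, so the longest chain sets the total. */
long multi_chain_cycles(const long *lengths, int chains, long l) {
    long longest = 0;
    for (int i = 0; i < chains; i++)
        if (lengths[i] > longest)
            longest = lengths[i];
    return chain_cycles(longest, l);
}

/* Processor stall cycles for one chain of n nodes with w cycles of work
 * per node, when the serial fetch of the chain starts at time 0 together
 * with the processor (hypothetical helper). */
long chain_stall_cycles(long n, long l, long w) {
    long now = 0, stall = 0;
    assert(n >= 0 && l >= 0 && w >= 0);
    for (long k = 1; k <= n; k++) {
        long arrival = k * l;       /* node k arrives after k serial misses */
        if (arrival > now) {        /* data not there yet: processor waits */
            stall += arrival - now;
            now = arrival;
        }
        now += w;                   /* work performed on node k */
    }
    return stall;
}
```

For a three-node chain with l = 100 and w = 30, chain_stall_cycles returns 240, which agrees with the 3l − 2w1 stall count derived in Section 2.1.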
2.1 Exploiting Inter-Chain Memory Parallelism

The multi-chain prefetching technique augments a commodity microprocessor with a programmable hardware prefetch engine. During an LDS computation, the prefetch engine performs its own traversal of the LDS in front of the processor, thus prefetching the LDS data. The prefetch engine, however, is capable of traversing multiple pointer chains simultaneously when permitted by the application. Consequently, the prefetch engine can tolerate serialized memory latency by overlapping cache misses across independent pointer-chain traversals.

a)
    while (ptr) {
        <compute>
        ptr = ptr->next;
    }

b)
    for (i=0; i < N; i++) {
        ptr = A[i];
        while (ptr) {
            <compute>
            ptr = ptr->next;
        }
    }

[Timing diagrams not reproduced: they begin with INIT(ID_ll) in panel a and INIT(ID_aol) in panel b, mark the processor stalls, and carry the annotation PD = 2.]

Fig. 1. Traversing pointer chains using a prefetch engine. a). Traversal of a single linked list. b). Traversal of an array of lists data structure.

To illustrate the idea of exploiting inter-chain memory parallelism, we first describe how our prefetch engine traverses a single chain of pointers. Figure 1a shows a loop that traverses a linked list of length three. Each loop iteration, denoted by a hashed box, contains w1 cycles of work. Before entering the loop, the processor executes a prefetch directive, INIT(ID_ll), instructing the prefetch engine to initiate traversal of the linked list identified by the ID_ll label. If all three link nodes suffer an l-cycle cache miss, the linked list traversal requires 3l cycles since the link nodes must be fetched sequentially. Assuming l > w1, the loop alone contains insufficient work to hide the serialized memory latency. As a result, the processor stalls for 3l − 2w1 cycles. To hide these stalls, the prefetch engine would have to initiate its linked list traversal 3l − 2w1 cycles before the processor traversal. For this reason, we call this delay the pre-traversal time (PT).

While a single pointer chain traversal does not provide much opportunity for latency
tolerance, pointer-chasing computations typically traverse many pointer chains, each of which is often independent. To illustrate how our prefetch engine exploits such independent pointer-chasing traversals, Figure 1b shows a doubly nested loop that traverses an array of lists data structure. The outer loop, denoted by a shaded box with w2 cycles of work, traverses an array that extracts a head pointer for the inner loop. The inner loop is identical to the loop in Figure 1a.

In Figure 1b, the processor again executes a prefetch directive, INIT(ID_aol), causing the prefetch engine to initiate a traversal of the array of lists data structure identified by the ID_aol label. As in Figure 1a, the first linked list is traversed sequentially, and the processor stalls since there is insufficient work to hide the serialized cache misses. However, the prefetch engine then initiates the traversal of subsequent linked lists in a pipelined fashion. If the prefetch engine starts a new traversal every w2 cycles, then each linked list traversal will initiate the required PT cycles in advance, thus hiding the excess serialized memory latency across multiple outer loop iterations. The number of outer loop iterations required to overlap each linked list traversal is called the prefetch distance (PD). Notice when PD > 1, the traversals of separate chains overlap, exposing inter-chain memory parallelism despite the fact that each chain is fetched serially.

2.2 Finding Independent Pointer-Chain Traversals

In order to exploit inter-chain memory parallelism, it is necessary to identify multiple independent pointer chains so that our prefetch engine can traverse them in parallel and overlap their cache misses, as illustrated in Figure 1. An important question is whether such independent pointer-chain traversals can be easily identified.

Many applications perform traversals of linked data structures in which the order of link node traversal
does not depend on runtime data. We call these static traversals. The traversal order of link nodes in a static traversal can be determined a priori via analysis of the code, thus identifying the independent pointer-chain traversals at compile time. In this paper, we present an LDS descriptor framework that compactly expresses the LDS traversal order for static traversals. The descriptors in our framework also contain the data layout information used by our prefetch engine to generate the sequence of load and prefetch addresses necessary to perform the LDS traversal at runtime.

While compile-time analysis of the code can identify independent pointer chains for static traversals, the same approach does not work for dynamic traversals. In dynamic traversals, the order of pointer-chain traversal is determined at runtime. Consequently, the simultaneous prefetching of independent pointer chains is limited since the chains to prefetch are not known until the traversal order is computed, which may be too late to enable inter-chain overlap. For dynamic traversals, it may be possible to speculate the order of pointer-chain traversal if the order is predictable. In this paper, we focus on static LDS traversals. Later in Section 8.7, we illustrate the potential for predicting pointer-chain traversal order in dynamic LDS traversals by extending our basic multi-chain prefetching technique with speculation.

3. LDS DESCRIPTOR FRAMEWORK

Having provided an overview of multi-chain prefetching, we now explore the algorithms and hardware underlying its implementation. We begin by introducing a general framework for compactly representing static LDS traversals, which we call the LDS descriptor framework. This framework allows compilers (and programmers) to compactly specify two types of information related to LDS traversal: data structure layout, and traversal code work. The former captures memory reference dependences that occur in an LDS traversal, thus identifying pointer-chasing chains, while the latter quantifies the
amount of computation performed as an LDS is traversed. After presenting the LDS descriptor framework, subsequent sections of this article will show how the information provided by the framework is used to perform multi-chain prefetching (Sections 4 and 5), and how the LDS descriptors themselves can be extracted by a compiler (Section 6).

3.1 Data Structure Layout Information

Data structure layout is specified using two descriptors, one for arrays and one for linked lists. Figure 2 presents each descriptor along with a traversal code example and an illustration of the traversed data structure. The array descriptor, shown in Figure 2a, contains three parameters: base (B), length (L), and stride (S). These parameters specify the base address of the array, the number of array elements traversed by the application code, and the stride between consecutive memory references, respectively. The array descriptor specifies the memory address stream emitted by the processor during a constant-stride array traversal. Figure 2b illustrates the linked list descriptor which contains three parameters similar to the array descriptor. For the linked list descriptor, the B parameter specifies the root pointer of the list, the L parameter specifies the number of link elements traversed by the application code, and the ∗S parameter specifies the offset from each link element address where the “next” pointer is located. The linked list descriptor specifies the memory address stream emitted by the processor during a linked list traversal.

a)
    for (i = 0 ; i < N ; i++) {
        ... = data[i].value;
    }

b)
    for (ptr = root ; ptr != NULL; ) {
        ptr = ptr->next;
    }

Fig. 2. Two LDS descriptors used to specify data layout information. a). Array descriptor. b). Linked list descriptor. Each descriptor appears inside a box, and is accompanied by a traversal code example and an illustration of the data structure.

To specify the layout of complex data structures, our framework permits descriptor
composition. Descriptor composition is represented as a directed graph whose nodes are array or linked list descriptors, and whose edges denote address generation dependences. Two types of composition are allowed. The first type of composition is nested composition. In nested composition, each address generated by an outer descriptor forms the B parameter for multiple instantiations of a dependent inner descriptor. An offset parameter, O, is specified in place of the inner descriptor’s B parameter to shift its base address by a constant offset. Such nested descriptors capture the memory reference streams of nested loops that traverse multi-dimensional data structures. Figure 3 presents several nested descriptors, showing a traversal code example and an illustration of the traversed multi-dimensional data structure along with each nested descriptor.

a)
    for (i = 0 ; i < L0 ; i++) {
        ... = node[i].value;
        for (j = 0 ; j < L1 ; j++) {
            ... = node[i].data[j];
        }
    }

b)
    for (i = 0 ; i < L0 ; i++) {
        down = node[i].pointer;
        for (j = 0 ; j < L1 ; j++) {
            ... = down->data[j];
        }
    }

c)
    for (i = 0 ; i < L0 ; i++) {
        for (j = 0 ; j < L1 ; j++) {
            ... = node[i].data[j];
        }
        down = node[i].pointer;
        for (j = 0 ; j < L2 ; j++) {
            ... = down->data[j];
        }
    }

Fig. 3. Nested descriptor composition. a). Nesting without indirection. b). Nesting with indirection. c). Nesting multiple descriptors. Each descriptor composition appears inside a box, and is accompanied by a traversal code example and an illustration of the composite data structure.

    main() {
        foo(root, depth_limit);
    }
    foo(node, depth) {
        depth = depth - 1;
        if (depth == 0 || node == NULL)
            return;
        foo(node->child[0], depth);
        foo(node->child[1], depth);
        foo(node->child[2], depth);
    }

Fig. 4. Recursive descriptor composition. The recursive descriptor appears inside a box, and is accompanied by a traversal code example and an illustration of the tree data structure.

Figure 3a shows the traversal of an array of structures, each structure itself containing an array. The code example’s outer loop traverses the array “node,” accessing the field “value” from each traversed structure, and the inner loop traverses each embedded array “data.” The outer and inner array descriptors, (B, L0, S0) and (O1, L1, S1), represent the address streams produced by the outer and inner loop traversals, respectively. (In the inner descriptor, “O1” specifies the offset of each inner array from the top of each structure.) Figure 3b illustrates another form of descriptor nesting in which indirection is used between nested descriptors. The data structure in Figure 3b is similar to the one in Figure 3a, except the inner arrays are allocated separately, and a field from each outer array structure, “node[i].pointer,” points to a structure containing the inner array. Hence, as shown in the code example from Figure 3b, traversal of the inner array requires indirecting through the outer array’s pointer to compute the inner array’s base address. In our framework, this indirection is denoted by placing a “*” in front of the inner descriptor. Figure 3c, our last nested descriptor example, illustrates the nesting of multiple inner descriptors underneath a single outer descriptor to represent the address stream produced by nested distributed loops. The code example from Figure 3c shows the two inner loops from Figures 3a-b nested in a distributed fashion inside a common outer loop. In our framework, each one of
the multiple inner array descriptors represents the address stream for a single distributed loop, with the order of address generation proceeding from the leftmost to rightmost inner descriptor.

It is important to note that while all the descriptors in Figure 3 show two nesting levels only, our framework allows an arbitrary nesting depth. This permits describing higher-dimensional LDS traversals, for example loop nests with a nesting depth greater than 2. Also, our framework can handle non-recurrent loads using “singleton” descriptors. For example, a pointer to a structure may be dereferenced multiple times to access different fields in the structure. Each dereference is a single non-recurrent load. We create a separate descriptor for each non-recurrent load, nest it underneath its recurrent load’s descriptor, and assign an appropriate offset value, O, and length value, L = 1.

In addition to nested composition, our framework also permits recursive composition. Recursively composed descriptors describe depth-first tree traversals. They are similar to nested descriptors, except the dependence edge flows backwards. Since recursive composition introduces cycles into the descriptor graph, our framework requires each backwards dependence edge to be annotated with the depth of recursion, D, to bound the size of the data structure. Figure 4 shows a simple recursive descriptor in which the backwards dependence edge originates from and terminates at a single array descriptor. The “L” parameter in the descriptor specifies the fanout of the tree. In our example, L = 3, so the traversed data structure is a ternary tree, as shown in Figure 4. Notice the array descriptor has both B and O parameters: B provides the base address for the first instance of the descriptor, while O provides the offset for all recursively nested instances.

In Figures 2 and 4, we assume the L parameter for linked lists and the D parameter for trees are known a priori, which is generally not the case. Later in Section 4.3, we discuss how our framework handles these
unknown descriptor parameters. In addi-
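The layout descriptors of Section 3.1 can be read as small address generators. The following C sketch is one possible interpretation, not the prefetch engine's actual interface: the type and function names are hypothetical, strides and offsets are assumed to be in bytes, and only nesting without indirection (as in Figure 3a) is shown.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Array descriptor (B, L, S): base address, element count, byte stride. */
typedef struct { uintptr_t base; size_t length; size_t stride; } array_desc;

/* Linked list descriptor (B, L, *S): root pointer, number of link
 * elements, and byte offset of the "next" pointer in each element. */
typedef struct { uintptr_t root; size_t length; size_t next_offset; } list_desc;

/* Write the addresses named by an array descriptor into out[].
 * Every address depends only on B, S and the index, never on memory. */
size_t array_stream(array_desc d, uintptr_t *out) {
    assert(out != NULL);
    for (size_t i = 0; i < d.length; i++)
        out[i] = d.base + i * d.stride;
    return d.length;
}

/* Write the addresses named by a linked list descriptor into out[].
 * Each address is loaded from the previous element, which is the
 * serializing dependence behind pointer chasing. Stops early at NULL. */
size_t list_stream(list_desc d, uintptr_t *out) {
    size_t n = 0;
    uintptr_t addr = d.root;
    assert(out != NULL);
    while (n < d.length && addr != 0) {
        void *next;
        out[n++] = addr;
        /* Assumes all object pointers share one representation. */
        memcpy(&next, (const void *)(addr + d.next_offset), sizeof next);
        addr = (uintptr_t)next;
    }
    return n;
}

/* Nested composition without indirection: each outer address a is
 * emitted, then serves as the base of an inner descriptor (O1, L1, S1)
 * shifted by the constant offset O1. */
size_t nested_stream(array_desc outer, size_t o1, size_t l1, size_t s1,
                     uintptr_t *out) {
    size_t n = 0;
    assert(out != NULL);
    for (size_t i = 0; i < outer.length; i++) {
        uintptr_t a = outer.base + i * outer.stride;
        array_desc inner = { a + o1, l1, s1 };
        out[n++] = a;
        n += array_stream(inner, out + n);
    }
    return n;
}
```

Under these assumptions, an array of structures whose first field is "value" and whose second field is an embedded array "data" would be described by an outer descriptor (&node[0], L0, sizeof node[0]) and an inner descriptor with O1 equal to the offset of "data" within the structure. The caller supplies an output buffer large enough for the whole stream.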

RECIST 1.1: Full Text in Chinese and English

Assessment of the change in tumour burden is an important feature of the clinical evaluation of cancer therapeutics: both tumour shrinkage (objective response) and disease progression are useful endpoints in clinical trials. Since RECIST was published in 2000, many investigators, cooperative groups, industry and government authorities have adopted these criteria in the assessment of treatment outcomes. However, a number of questions and issues have arisen which have led to the development of a revised RECIST guideline (version 1.1). Evidence for changes, summarised in separate papers in this special issue, has come from assessment of a large data warehouse (6500 patients), simulation studies and literature reviews.

临床上评价肿瘤治疗效果最重要的一点就是对肿瘤负荷变化的评估:瘤体皱缩(目标疗效)和病情恶化在临床试验中都是有意义的判断终点。

自从2000年RECIST出版以来,许多研究人员、企业团体、行业和政府当局都采纳了这一标准来评价治疗效果。