Engineering the Compression of Massive Tables An Experimental Approach SIAM 2000.

- 格式:pdf

- 大小:209.84 KB

- 文档页数:10

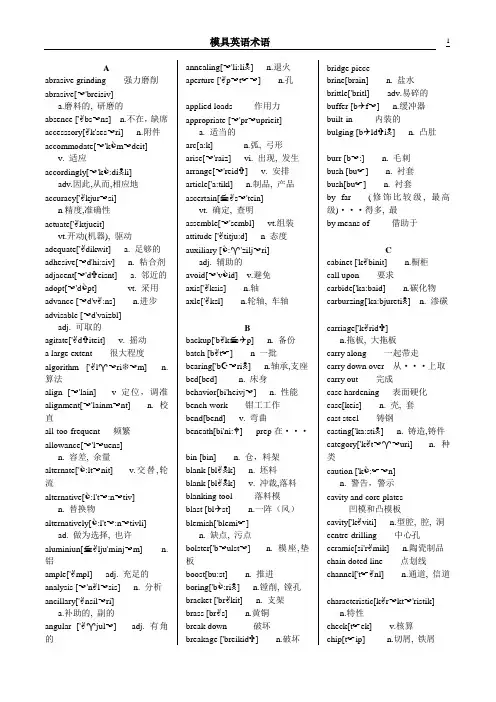

Aabrasive grinding 强力磨削abrasive[☜'breisiv]a.磨料的, 研磨的absence ['✌bs☜ns] n.不在,缺席accesssory[✌k'ses☜ri] n.附件accommodate[☜'k m☜deit]v. 适应accordingly[☜'k :di☠li]adv.因此,从而,相应地accuracy['✌kjur☜si]n精度,准确性actuate['✌ktjueit]vt.开动(机器), 驱动adequate['✌dikwit] a. 足够的adhesive[☜d'hi:siv] n. 粘合剂adjacent[☜'d✞eisnt] a. 邻近的adopt[☜'d pt] vt. 采用advance [☜d'v✌:ns] n.进步advisable [☜d'vaizbl]adj. 可取的agitate['✌d✞iteit] v. 摇动a large extent 很大程度algorithm ['✌l♈☜ri❆☜m] n. 算法align [☜'lain] v 定位,调准alignment[☜'lainm☜nt] n. 校直all-too-frequent 频繁allowance[☜'l☜uens]n. 容差, 余量alternate[' :lt☜nit] v.交替,轮流alternative[ :l't☜:n☜tiv]n. 替换物alternatively[ :l't☜:n☜tivli]ad. 做为选择, 也许aluminiun[ ✌lju'minj☜m] n.铝ample['✌mpl] adj. 充足的analysis [☜'n✌l☜sis] n. 分析ancillary['✌nsil☜ri]a.补助的, 副的angular ['✌♈jul☜] adj. 有角的annealing[☜'li:li☠] n.退火aperture ['✌p☜t☞☜] n.孔applied loads 作用力appropriate [☜'pr☜uprieit]a. 适当的arc[a:k] n.弧, 弓形arise[☜'raiz] vi. 出现, 发生arrange[☜'reid✞] v. 安排article['a:tikl] n.制品, 产品ascertain[ ✌s☜'tein]vt. 确定, 查明assemble[☜'sembl] vt.组装attitude ['✌titju:d] n 态度auxiliary [ :♈'zilj☜ri]adj. 辅助的avoid[☜'v id] v.避免axis['✌ksis] n.轴axle['✌ksl] n.轮轴, 车轴Bbackup['b✌k ✈p] n. 备份batch [b✌t☞] n 一批bearing['b☪☜ri☠] n.轴承,支座bed[bed] n. 床身behavior[bi'heivj☜] n. 性能bench-work 钳工工作bend[bend] v. 弯曲beneath[bi'ni: ] prep在···bin [bin] n. 仓,料架blank [bl✌☠k] n. 坯料blank [bl✌☠k] v. 冲裁,落料blanking tool 落料模blast [bl✈st] n.一阵(风)blemish['blemi☞]n. 缺点, 污点bolster['b☜ulst☜] n. 模座,垫板boost[bu:st] n. 推进boring['b :ri☠] n.镗削, 镗孔bracket ['br✌kit] n. 支架brass [br✌s] n.黄铜break down 破坏breakage ['breikid✞] n.破坏bridge piecebrine[brain] n. 盐水brittle['britl] adv.易碎的buffer [b✈f☜] n.缓冲器built-in 内装的bulging [b✈ld✞i☠] n. 凸肚burr [b☜:] n. 毛刺bush [bu☞] n. 衬套bush[bu☞] n. 衬套by far (修饰比较级, 最高级)···得多, 最by means of 借助于Ccabinet ['k✌binit] n.橱柜call upon 要求carbide['ka:baid] n.碳化物carburzing['ka:bjureti☠] n. 渗碳carriage['k✌rid✞]n.拖板, 大拖板carry along 一起带走carry down over 从···上取carry out 完成case hardening 表面硬化case[keis] n. 壳, 套cast steel 铸钢casting['ka:sti☠] n. 铸造,铸件category['k✌t☜♈☜uri] n. 种类caution ['k :☞☜n]n. 警告,警示cavity and core plates凹模和凸模板cavity['k✌viti] n.型腔, 腔, 洞centre-drilling 中心孔ceramic[si'r✌mik] n.陶瓷制品chain doted line 点划线channel['t☞✌nl] n.通道, 信道characteristic[k✌r☜kt☜'ristik]n.特性check[t☞ek] v.核算chip[t☞ip] n.切屑, 铁屑chuck [t☞✈k] n.卡盘chute [☞u:t] n. 斜道circa ['s☜k☜:] adv. 大约circlip['s☜:klip] n.(开口)簧环circuit['s☜:kit] n. 回路, 环路circular supoport block circulate['s☜:kjuleid]v.(使)循环clamp [kl✌mp] vt 夹紧clamp[kl✌mp] n.压板clay[klei] n. 泥土clearance ['kli☜r☜ns] n. 间隙clip [klip] vt. 切断,夹住cold hobbing 冷挤压cold slug well 冷料井collapse[k☜'l✌ps]vi. 崩塌, 瓦解collapsible[k☜'l✌ps☜bl]adj. 可分解的combination [k mbi'nei☞☜n] n. 组合commence[k☜'mens]v. 开始, 着手commence[k☜'mens] v. 开始commercial [k☜'m☜:☞☜l]adj. 商业的competitive[k☜m'petitiv]a. 竞争的complementary[ k mpli'ment☜ri]a. 互补的complexity [kem'pleksiti]n. 复杂性complicated['k mpl☜keitid]adj. 复杂的complication [k mpli'kei☞☜n] n. 复杂化compression [k☜m'pre☞☜n] n.压缩comprise[k☜m'prais] vt.包含compromise['k mpr☜maiz]n. 妥协, 折衷concern with 关于concise[k☜n'sais]a. 简明的, 简练的confront[k☜n'fr✈nt] vt. 使面临connector[k☜'nekt☜]n. 连接口, 接头consequent['k nsikw☜nt]a. 随之发生的, 必然的console ['k nsoul] n.控制台consume [k☜n'sjum]vt. 消耗, 占用consummate [k☜n's✈meit]vt. 使完善container[k☜n'tein☜] n. 容器contingent[ken'tind✞☜nt]a.可能发生的contour['k☜ntu☜] n.轮廓conventional[k☜n'ven☞☜nl]a. 常规的converge[k☜n'v☜:d✞]v. 集中于一点conversant[k n'v☜:s☜nt]a. 熟悉的conversion[k☜n'v☜:☞☜n]n 换算, 转换conveyer[ken'vei☜]n. 运送装置coolant['ku:l☜nt] n. 冷却液coordinate [k☜u' :dnit]vt. (使)协调copy machine 仿形(加工)机床core[k :] n. 型芯, 核心corresponding [ka:ri'sp di☠]n.相应的counteract [kaunt☜'r✌kt]vt. 反作用,抵抗couple with 伴随CPU (central processing unit)中央处理器crack[kr✌k ] v.(使)破裂,裂纹critical['kritikl] adj.临界的cross-hatching 剖面线cross-section drawn 剖面图cross-slide 横向滑板CRT (cathoder-ray tube)阴极射线管crush[kr✈☞] vt.压碎cryogenic[ krai☜'d✞enik ]a. 低温学的crystal['kristl] adj.结晶状的cubic['kju:bik]a. 立方的, 立方体的cup [k✈p]vt (使)成杯状, 引伸curable ['kjur☜bl]adj. 可矫正的curvature['k☜:v☜t☞☜] n.弧线curve [k☜:v] vt. 使弯曲cutter bit 刀头, 刀片cyanide['sai☜naid] n.氰化物Ddash [d✌☞] n. 破折号daylight ['deilait] n. 板距decline[di'klain]v. 下落,下降,减少, ,deform[di'f :m] v. (使)变形demonstrate['dem☜streit ]v 证明depict[di'pikt ] vt 描述deposite [di'p zit] vt. 放置depression[di'pre☞☜n] n. 凹穴descend [di'sent] v. 下降desirable[di'zair☜bl] a. 合适的detail ['diteil] n.细节,详情deterioration[diti☜ri:☜'rei☞☜n]n. 退化, 恶化determine[di't☜:min] v.决定diagrammmatic[ dai☜gr☜'m✌tik] a. 图解的,图表的dictate['dikteit] v. 支配die[dai] n.模具, 冲模, 凹模dielectric[daii'lektrik]n. 电介质die-set 模架digital ['did✞itl ]n. 数字式数字, a.数字的dimensional[dddi'men☞☜nl]a. 尺寸的, 空间的discharge[dis't☞a:d✞]n.v. 放电, 卸下, 排出discharge[dis't☞a:d✞] v.卸下discrete [dis'cri:t]adj. 离散的,分立的dislodge[dis'l d✞]v. 拉出, 取出dissolution[dis☜'lu:☞☜n] n.结束distinct [dis'ti☠kt]a.不同的,显著的distort [dis'd :t] vt. 扭曲distort[dis't :t]vt. (使)变形, 扭曲distributed system分布式系统dowel ['dau☜l] n. 销子dramaticlly [dr☜'m✌tikli]adv. 显著地drastic ['dr✌stik] a.激烈的draughting[dra:fti☠] n. 绘图draughtsman['dr✌ftsm☜n]n. 起草人drawing['dr :i☠] n. 制图drill press 钻床drum [dr✈m] n.鼓轮dual ['dju:☜l]adv. 双的,双重的ductility [d✈k'tiliti ] n.延展性dynamic [dai'n✌mik ]adj 动力的Eedge [ed✞] n .边缘e.g.(exempli gratia) [拉] 例如ejector [i'd✞ekt☜] n.排出器,ejector plate 顶出板ejector rob 顶杆elasticity[il✌'stisiti] n.弹性electric dicharge machining电火花加工electrochemical machining电化学加工electrode[i'lektr☜ud] n. 电极electro-deposition 电铸elementary [el☜'ment☜ri]adj.基本的eliminate[i'limineit]vt. 消除, 除去elongate[i'l ☠♈et]vt. (使)伸长, 延长emerge [i'm☜:d✞]vi. 形成, 显现emphasise['emf☜saiz]vt. 强调endeavour[en'dev☜] n. 尽力engagement[in'♈eid✞ment]n. 约束, 接合enhance[in'h✌ns]vt. 提高, 增强ensure [in'☞u☜] vt. 确保,保证envisage[in'vizid✞] vt.设想erase[i'reis] vt. 抹去, 擦掉evaluation[i'v✌lju ei☞☜n]n. 评价, 估价eventually[i'v☜nt☞u☜li ]adv. 终于evolution[ev☜'lu:☞☜n] n.进展excecution[eksi'kju:☞☜n]n. 执行, 完成execute ['ekskju:t] v. 执行exerte [i♈'z☜:t] vt. 施加experience[iks'piri☜ns] n. 经验explosive[iks'pl☜usiv]adj.爆炸(性)的extend[eks'tend] v. 伸展external[eks't☜:nl] a. 外部的extract[eks'tr✌kt] v. 拔出extreme[iks'tri:m] n. 极端extremely[iks'tri:mli]adv. 非常地extremity[iks'tmiti] n. 极端extrusion[eks'tru:✞☜n]n. 挤压, 挤出FF (Fahrenheit)['f✌r☜nhait]n. 华氏温度fabricate ['f✌brikeit]vt. 制作,制造facilitate [f☜'siliteit] vt. 帮助facility[f☜'siliti] n. 设备facing[feisi☠] n. 端面车削fall within 属于, 适合于fan[f✌n] n.风扇far from 毫不, 一点不, 远非fatigue[f☜'ti♈] n.疲劳feasible ['fi:z☜bl] a 可行的feature ['fi:t☞☜] n.特色, 特征feed[fi:d] n.. 进给feedback ['fi:db✌k] n. 反馈female['fi:meil]a. 阴的, 凹形的ferrule['fer☜l] n. 套管file system 文件系统fitter['fit☜] n.装配工, 钳工fix[fiks]vt. 使固定, 安装, vi. 固定fixed half and moving half定模和动模flat-panel technology平面(显示)技术flexibility[fleksi'biliti]n. 适应性, 柔性flexible['fleks☜bl] a. 柔韧的flow mark 流动斑点follow-on tool 连续模foregoing ['f :'♈☜ui☠]adj.在前的,前面的foretell[f :'tell]vt. 预测, 预示, 预言forge[f :d✞] n. v. 锻造forming[f :mi☠] n. 成型four screen quadrants四屏幕象限fracture['fr✌kt☞☜] n.破裂free from 免于Ggap[♈✌p] n. 裂口, 间隙gearbox['♈i☜b ks] n.齿轮箱general arrangementgovern['♈✈v☜n]v. 统治, 支配, 管理grain [♈rein] n. 纹理graphic ['♈r✌fik] adj. 图解的grasp [♈r✌sp] vt. 抓住grid[♈rid] n. 格子, 网格grind[♈raind]v. 磨, 磨削, 研磨grinding ['♈raindi☠]n. 磨光,磨削grinding machine 磨床gripper[♈rip☜] n. 抓爪, 夹具groove[♈ru:v] n. 凹槽guide bush 导套guide pillar 导柱guide pillars and bushes导柱和导套Hhandset['h✌ndset] n. 电话听筒hardness['ha:dnis] n.硬度hardware ['ha:dw☪☜] n. 硬件headstock['hedst k] n.床头箱, 主轴箱hexagonal[hek's✌♈☜nl]a. 六角形的, 六角的hindrance['hindr☜ns]n.障碍, 障碍物hob[h b] n. 滚刀, 冲头hollow-ware 空心件horizontal[h ri'z ntl]a. 水平的hose[h☜uz] n. 软管, 水管hyperbolic [haip☜'b lik]adj. 双曲线的Ii.e. (id est) [拉] 也就是identical[ai'dentikl] a同样的identify [ai'dentifai] v. 确定,识别idle ['aidl] adj.空闲的immediately[i'mi:dj☜tli] adv.正好, 恰好impact['imp✌kt] n.冲击impart [im'pa:t] v.给予implement ['implim☜nt]vt 实现impossibility[imp s☜'biliti]n.不可能impression[im'pre☞☜n] n. 型腔in contact with 接触in terms of 依据inasmuch (as)[in☜z'm✈t☞]conj. 因为, 由于inch-to-metric conversions英公制转换inclinable [in'klain☜bl]adj. 可倾斜的inclusion [in'klu☞☜n]n. 内含物inconspicuous[ink☜n'spikju☜s]a. 不显眼的incorporate [in'k :p☜reit]v 合并,混合indentation[ inden'tei☞☜n ]n. 压痕indenter[in'dent☜] n. 压头independently[indi'pein☜ntli]a. 独自地, 独立地inevitably[in'evit☜bli]ad. 不可避免地inexpensive[inik'spensiv]adj. 便宜的inherently [in'hi☜r☜ntli]adv. 固有的injection mould 注塑模injection[in'd✞ek☞☜n] n. 注射in-line-of-draw 直接脱模insert[in's☜:t] n. 嵌件inserted die 嵌入式凹模inspection[in'spek☞☜n]n. 检查,监督installation[inst☜'lei☞☜n]n. 安装integration [inti'♈rei☞☜n]n.集成intelligent[in'telid✞☜nt]a. 智能的intentinonally [in'ten☞☜n☜li]adv 加强地,集中地interface ['int☜feis] n.. 界面internal[in't☜:nl] a. 内部的interpolation [int☜p☜'lei☞☜n]n.插值法investment casting 熔模铸造irregular [i'regjul☜]adj. 不规则的,无规律irrespective of 不论, 不管irrespective[iri'spektiv]a. 不顾的, 不考虑的issue ['isju] vt. 发布,发出Jjoint line 结合线Kkerosene['ker☜si:n] n.煤油keyboard ['ki:b :d ] n. 健盘knock [n k] v 敲,敲打Llance [la:ns] v. 切缝lathe[lei❆] n. 车床latitude ['l✌titju:d] n. 自由lay out 布置limitation[limi'tei☞☜n]n. 限度,限制,局限(性)local intelligence 局部智能locate [l☜u'keit] vt. 定位logic ['l d✞ik] n. 逻辑longitudinal['l nd✞☜'tju:dinl]a. 纵向的longitudinally['l nd✞☜'tju:dinl]a. 纵向的look upon 视作, 看待lubrication[lju:bri'kei☞☜n ]n. 润滑Mmachine shop 车间machine table 工作台machining[m☜'☞i:ni☠] n. 加工made-to-measure 定做maintenance['meintin☜ns]n.维护,维修majority[m☜'d✞a:riti] n.多数make use of 利用male[meil] a. 阳的, 凸形的malfunction['m✌l'f✈☠☞☜n]n. 故障mandrel['m✌dtil] n.心轴manifestation[m✌nif☜s'tei☞☜n] n. 表现, 显示massiveness ['m✌sivnis ]厚实,大块measure['me✞☜] n. 大小, 度量microcomputer 微型计算机microns['maikr n] n.微米microprocessor 微处理器mild steel 低碳钢milling machine 铣床mineral['min☜r☜l] n.矿物, 矿产minimise['minimaiz]v.把···减到最少, 最小化minute['minit] a.微小的mirror image 镜像mirror['mir☜] n. 镜子MIT (Massachusetts Institute ofTechnology) 麻省理工学院moderate['m d☜rit]adj. 适度的modification [m difi'kei☞☜n ]n. 修改, 修正modulus['m djul☜s] n.系数mold[m☜uld]n. 模, 铸模, v. 制模, 造型monitor ['m nit☜ ] v. 监控monograph['m n☜♈ra:f]n. 专著more often than not 常常motivation[m☜uti'vei☞☜n]n. 动机mould split line 模具分型线moulding['m☜udi☠] n. 注塑件move away from 抛弃multi-imprssion mould多型腔模Nnarrow['n✌r☜u] a. 狭窄的NC (numerical control ) 数控nevertheless[ nev☜❆☜'les]conj.,adv.然而,不过nonferrous['n n'fer☜s]adj.不含铁的, 非铁的normally['n :mli] adv.通常地novice['n vis]n. 新手, 初学者nozzle['n zl] n. 喷嘴, 注口numerical [nju'merikl]n. 数字的Oobjectionable [☜b'd✞ek☞☜bl]adj. 有异议的,讨厌的observe[☜b'z☜:v] vt. 观察obviously [' bvi☜sli]adv 明显地off-line 脱机的on-line 联机operational [ p☜'rei☞☜nl]adj. 操作的, 运作的opportunity[ p☜'tju:niti]n. 时机, 机会opposing[☜'p☜uzi☠]a. 对立的, 对面的opposite[' p☜zit]n. 反面a.对立的,对面的optimization [ ptimai'zei☞☜n]n. 最优化orient [' :ri☜nt] vt. 确定方向orthodox [' : ☜d ks]adj. 正统的,正规的overall['☜uv☜r :l]a. 全面的,全部的overbend v.过度弯曲overcome[☜uv☜'k✈m]vt. 克服, 战胜overlaping['☜uv☜'l✌pi☠]n. 重叠overriding[☜uv☜'raidi☠]a. 主要的, 占优势的Ppack[p✌k] v. 包装package ['p✌kid✞] vt.包装pallet ['p✌lit] n.货盘panel ['p✌nl] n.面板paraffin['p✌r☜fin] n. 石蜡parallel[p✌r☜lel] a.平行的penetration[peni'trei☞☜n ]n.穿透peripheral [p☜'rif☜r☜l] adj外围的periphery [p☜'rif☜ri] n. 外围permit[p☜'mit] v. 许可, 允许pessure casting 压力铸造pillar['pil☜] n. 柱子, 导柱pin[pin] n. 销, 栓, 钉pin-point gate 针点式浇口piston ['pist☜n] n.活塞plan view 主视图plasma['pl✌zm☜] n. 等离子plastic['pl✌stik] n. 塑料platen['pl✌t☜n] n. 压板plotter[pl t☜] n. 绘图机plunge [pl✈nd✞] v翻孔plunge[pl✈nd✞] v.投入plunger ['pl✈nd✞☜ ] n. 柱塞pocket-size 袖珍portray[p :'trei] v.描绘pot[p t] n.壶pour[p :] vt. 灌, 注practicable['pr✌ktik☜b]a. 行得通的preferable['pref☜r☜bl]a.更好的, 更可取的preliminary [pri'limin☜ri]adj 初步的,预备的press setter 装模工press[pres]n. 压,压床,冲床,压力机, prevent [pri'vent] v. 妨碍primarily['praim☜rili]adv. 主要地procedure[pr☜'si:d✞☜]n. 步骤, 方法, 程序productivity.[pr☜ud✈k'tiviti] n. 生产力profile ['pr☜ufail] n. 轮廓progressively[pr☜'♈resiv]ad. 渐进地project[pr☜'d✞ekt] n.项目project[pr☜'d✞ekt] v. 凸出projection[pr☜'d✞ek☞☜n]n. 突出部分proper['pr p☜] a. 本身的property['pr p☜ti] n.特性prototype ['pr☜ut☜taip] n. 原形proximity[pr k'simiti] n.接近prudent['pru:d☜nt] a. 谨慎的punch [p✈nt☞] v. 冲孔punch shapper tool 刨模机punch-cum-blanking die凹凸模punched tape 穿孔带purchase ['p☜:t☞☜s] vt. 买,购买push back pin 回程杆pyrometer[pai'n mit☜] n. 高温计Qquality['kwaliti] n. 质量quandrant['kw dr☜nt] n. 象限quantity ['kw ntiti] n. 量,数量quench[kwent☞] vt. 淬火Rradial['reidi☜l] adv.放射状的ram [r✌m] n 撞锤.rapid['r✌pid]adj. 迅速的rapidly['r✌pidli] adv. 迅速地raster['r✌st☜] n. 光栅raw [r :] adj. 未加工的raw material 原材料ream [ri:m] v 铰大reaming[ri:mi☠]n. 扩孔, 铰孔recall[ri'k :l] vt. 记起, 想起recede [ri'si:d] v. 收回, 后退recess [ri'ses]n. 凹槽,凹座,凹进处redundancy[ri'd✈nd☜nsi]n. 过多re-entrant 凹入的refer[ri'f☜:]v. 指, 涉及, 谈及,reference['ref☜r☜ns]n.参照,参考refresh display 刷新显示register ring 定位环register['red✞st☜]v. 记录, 显示, 记数regrind[ri:'♈aind](reground[ri:'gru:nd]) vt. 再磨研relative['rel☜tiv]a. 相当的, 比较的relay ['ri:lei] n. 继电器release[ri'li:s] vt. 释放relegate['rel☜geit] vt.把··降低到reliability [rilai☜'biliti] n. 可靠性relief valves 安全阀relief[ri'li:f] n. 解除relieve[ri'li:v ] vt.减轻, 解除remainder[ri'meind☜]n. 剩余物, 其余部分removal[ri'mu:vl] n. 取出remove[ri'mu:v] v. 切除, 切削reposition [rip☜'zi☞☜n]n. 重新安排represent[ repri'zent☜]v 代表,象征reputable['repjut☜bl]a. 有名的, 受尊敬的reservoir['rez☜vwa: ]n.容器, 储存器resident['rezid☜nt] a. 驻存的resist[ri'zist] vt. 抵抗resistance[ri'zist☜ns]n.阻力, 抵抗resolution[ rez☜'lu:☞☜n] n.分辨率respective[ri'spektiv]a. 分别的,各自的respond[ris'p nd]v. 响应, 作出反应responsibility[risp ns☜'biliti]n. 责任restrain[ris'trein] v. 抑制restrict [ris'trikt] vt 限制,限定restriction[ris'trik☞☜n] n. 限制retain[ri'tein] vt.保持, 保留retaining plate 顶出固定板reveal [ri'vil] vt.显示,展现reversal [ri'v☜sl] n. 反向right-angled 成直角的rigidity[ri'd✞iditi] n. 刚度rod[r d] n. 杆, 棒rotate['r☜uteit] vt.(使)旋转rough machining 粗加工rough[r✈f] a. 粗略的routine [ru:'ti:n] n. 程序rubber['r✈b☜] n.橡胶runner and gate systems 流道和浇口系统Ssand casting 砂型铸造satisfactorily[ s✌tis'f✌ktrili] adv. 满意地saw[a :] n. 锯子scale[skeil] n. 硬壳score[sk :] v. 刻划scrap[skr✌p]n. 废料, 边角料, 切屑, screwcutting 切螺纹seal[si:l] vt.密封secondary storagesection cutting plane 剖切面secure[si'kju☜] v. 固定secure[si'kju☜]vt. 紧固,夹紧,固定segment['se♈m☜nt] v. 分割sensitive['sensitiv] a. 敏感的sequence ['si:kw☜ns] n. 次序sequential[si'kwen☞☜l]a. 相继的seriously['si☜ri☜sli] adv.严重地servomechanism['s☜:v☜'mek☜nizm] n.伺服机构Servomechanism aboratoies 伺服机构实验室servomotor ['s☜:v☜m☜ut☜] n. 伺服马达setter ['set☜] n 安装者set-up 机构sever ['sev☜] v 切断severity [si'veriti] n. 严重shaded[☞✌did] adj. 阴影的shank [☞✌☠k] n. 柄.shear[☞i☜] n.剪,切shot[☞t] n. 注射shrink[☞ri☠k] vi. 收缩side sectional view 侧视图signal ['si♈nl] n. 信号similarity[simi'l✌riti] n.类似simplicity[sim'plisiti] n. 简单single-point cutting tool 单刃刀具situate['sitjueit] vt. 使位于, 使处于slide [slaid] vi. 滑动, 滑落slideway['slaidwei] n. 导轨slot[sl t] n. 槽slug[sl✈♈] n. 嵌条soak[s☜uk] v. 浸, 泡, 均热software ['s ftw☪☜] n. 软件solid['s lid] n.立体, 固体solidify[s☜'lidifai] vt.vi. (使)凝固, (使)固solution[s☜'lu:☞☜n] n.溶液sophisiticated [s☜'fistikeitid]adj.尖端的,完善的sound[saund] a. 结实的, 坚固的)spark erosion 火花蚀刻spindle['spindl] n. 主轴spline[splain] n.花键split[split] n. 侧向分型, 分型spool[spu:l] n. 线轴springback n.反弹spring-loaded 装弹簧的sprue bush 主流道衬套sprue puller 浇道拉杆square[skw☪☜] v. 使成方形stage [steid✞] n. 阶段standardisation[ st✌nd☜dai'zei☞☜n] n. 标准化startling['sta:tli☠]a. 令人吃惊的steadily['sted☜li ] adv. 稳定地step-by-step 逐步stickiness['stikinis] n.粘性stiffness['stifnis] n. 刚度stock[st k] n.毛坯, 坯料storage tube display储存管显示storage['st :rid✞] n. 储存器straightforward[streit'f :w☜d]a.直接的strain[strein] n. 应变strength[stre☠] n.强度stress[stres] n. 压力,应力stress-strain 应力--应变stretch[stret☞] v.伸展strike [straik] vt. 冲击stringent['strind✞☜nt ] a.严厉的stripper[strip☜] n. 推板stroke[strouk] n. 冲程, 行程structrural build-up 结构上形成的sub-base 垫板subject['s✈bd✞ikt] vt.使受到submerge[s☜b'm☜:d✞] v.淹没subsequent ['s✈bsikwent] adj.后来的subsequently ['s✈bsikwentli]adv. 后来, 随后substantial[s☜b'st✌n☞☜l] a.实质的substitute ['s✈bstitju:t] vt. 代替,.替换subtract[s☜b'tr✌kt] v.减, 减去suitable['su:t☜bl]a. 合适的, 适当的suitably['su:t☜bli] ad.合适地sunk[s✈☠k](sink的过去分词)v. 下沉, 下陷superior[s☜'pi☜ri☜] adj.上好的susceptible[s☜'sept☜bl]adj.易受影响的sweep away 扫过symmetrical[si'metrikl]a. 对称的synchronize ['si☠kr☜naiz]v. 同步,同时发生Ttactile['t✌ktail] a. 触觉的, 有触觉的tailstock['teilst k] n.尾架tapered['teip☜d] a. 锥形的tapping['t✌pi☠] n. 攻丝technique[tek'ni:k] n. 技术tempering['temp☜r☠] n.回火tendency['tend☜nsi]n. 趋向, 倾向tensile['tensail]a. 拉力的, 可拉伸的拉紧的, 张紧的tension ['ten☞☜n] n.拉紧,张紧terminal ['t☜:m☜nl ] n. 终端机terminology[t☜:mi'n l☜d✞i ] n. 术语, 用辞theoretically [ i:☜'retikli ] adv.理论地thereby['❆☪☜bai] ad. 因此, 从而thermoplastic[' ☜:m☜u'pl✌stik] a. 热塑性的,n. 热塑性塑料thermoset[' ☜:m☜set] n.热固性thoroughly[' ✈r☜uli] adv.十分地, 彻底地thread pitch 螺距thread[ red] n. 螺纹thrown up 推上tilt [tilt] n. 倾斜, 翘起tolerance ['t l☜r☜ns] n..公差tong[t ☠] n. 火钳tonnage['t✈nid✞]n. 吨位, 总吨数tool point 刀锋tool room 工具车间toolholder['tu:lh☜uld☜]n. 刀夹,工具柄toolmaker ['tu:l'meik☜]n 模具制造者toolpost grinder 工具磨床toolpost['tu:l'p☜ust] n. 刀架torsional ['t :☞☜nl] a扭转的.toughness['t fnis] n. 韧性trace [treis] vt.追踪tracer-controlled milling machine仿形铣床transverse[tr✌ns'v☜:s]a. 横向的tray [trei] n. 盘,盘子,蝶treatment['tri:tm☜nt] n.处理tremendous[tri'mend☜s]a. 惊人的, 巨大的trend [trend] n.趋势trigger stop 始用挡料销tungsten['t✈☠st☜n] n.钨turning['t☜:ni☠] n.车削twist[twist ] v.扭曲,扭转two-plate mould双板式注射模Uultimately['✈ltimitli] adv终于.undercut moulding侧向分型模undercut['✈nd☜k✈t]n. 侧向分型undercut['✈nd☜k✈t] n. 底切underfeed['✈nd☜'fi:d]a 底部进料的undergo[✈nd☜'♈☜u] vt. 经受underside['✈nd☜said]n 下面,下侧undue[✈n'dju:]a.不适当的, 过度的uniform['ju:nif :m]a. 统一的, 一致的utilize ['ju:tilaiz] v 利用Utopian[ju't☜upi☜n]adj. 乌托邦的, 理想化的Vvalve[v✌lv] n. 阀vaporize['veip☜raiz]vt.vi. 汽化, (使)蒸发variation [v☪☜ri'ei☞☜n] n.变化various ['v☪☜ri☜s]a. 不同的,各种的,vector feedrate computation向量进刀速率计算vee [vi:] n. v字形velocity[vi'l siti] n.速度versatile['v☜s☜tail]a. 多才多艺的,万用的vertical['v☜:tikl] a. 垂直的via [vai☜] prep.经,通过vicinity[v☜'siniti] n. 附近viewpoint['vju:p int] n. 观点Wwander['w nd☜] v. 偏离方向warp[w :p] v. 翘曲washer ['w ☞☜] n. 垫圈wear [w☪☜] v. 磨损well line 结合线whereupon [hw☪☜r☜'p n]adv. 于是winding ['waindi☠] n. 绕, 卷with respect to 相对于withstand[wi❆'st✌nd]vt. 经受,经得起work[w☜:k] n. 工件workstage 工序wrinkle['ri☠kl] n.皱纹vt.使皱Yyield[ji:ld] v. 生产Zzoom[zu:] n. 图象电子放大。

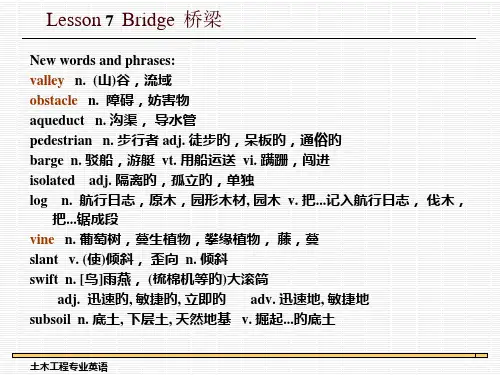

桥梁建设英语In the realm of civil engineering, the art of constructing bridges is nothing short of a marvel that intertwines human ingenuity with the natural landscape. Imagine standing at the edge of a vast chasm, pondering the challenge of connecting two distant points, and then behold, a bridge emerges, a testament to the power of engineering and the human spirit. The process of bridge construction is a symphony of meticulous planning, cutting-edge technology, and sheer determination.Starting with the conceptualization, engineers and architects collaborate to design a structure that not only meets the functional requirements but also stands as an architectural landmark. The design phase is a meticulous dance of calculations, where forces of gravity, tension, and compression are carefully considered to ensure the bridge's stability and longevity.Once the design is finalized, the real work begins. The site preparation involves clearing the area, setting up access routes, and ensuring the ground is ready to support the massive structure. Foundations are laid, often requiring deep excavations and the installation of piles that reach down into the earth's core, providing a solid base for the bridge's weight.The construction itself is a spectacle of coordinationand precision. Steel beams, concrete slabs, and cables are assembled with the precision of a Swiss watch, each piece playing a crucial role in the overall integrity of the bridge. The use of heavy machinery, such as cranes and piling rigs,is a common sight, as they hoist and position the massive components into place.Safety is paramount during bridge construction. Workersare equipped with the necessary personal protective equipment, and safety protocols are strictly enforced to prevent accidents. As the bridge takes shape, it becomes a beacon of progress, a symbol of overcoming obstacles, and a gateway to new opportunities.Upon completion, the bridge stands as a testament to the hard work and dedication of all those involved. It is notjust a passage over an obstacle; it is a connection between communities, a link to economic growth, and a bridge to the future. The engineering marvel that is a bridge is a story of overcoming challenges, of uniting people, and of creating pathways where none existed before.。

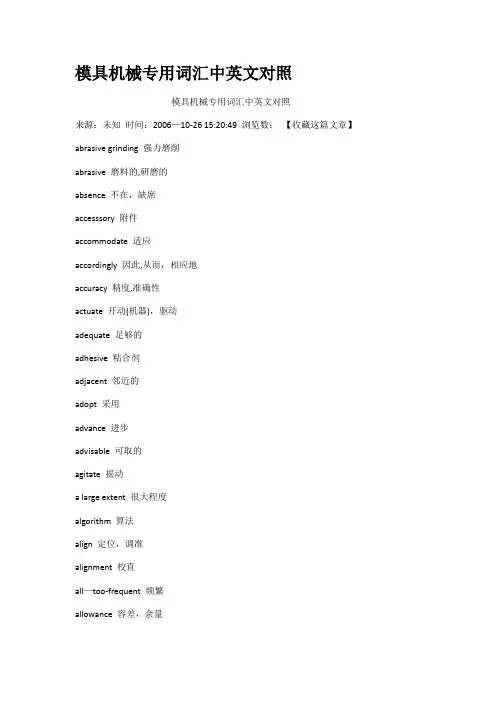

模具机械专用词汇中英文对照模具机械专用词汇中英文对照来源:未知时间:2006—10-26 15:20:49 浏览数:【收藏这篇文章】abrasive grinding 强力磨削abrasive 磨料的,研磨的absence 不在,缺席accesssory 附件accommodate 适应accordingly 因此,从而,相应地accuracy 精度,准确性actuate 开动(机器),驱动adequate 足够的adhesive 粘合剂adjacent 邻近的adopt 采用advance 进步advisable 可取的agitate 摇动a large extent 很大程度algorithm 算法align 定位,调准alignment 校直all—too-frequent 频繁allowance 容差,余量alternate 交替,轮流alternatively 做为选择,也许aluminiun 铝ample 充足的analysis 分析ancillary 补助的,副的angular 有角的annealing 退火aperture 孔applied loads 作用力appropriate 适当的arc 弧,弓形arise 出现,发生arrange 安排article 制品,产品ascertain 确定,查明assemble 组装attitude 态度auxiliary 辅助的avoid 避免axis 轴axle 轮轴,车轴alternative 替换物backup 备份batch 一批bearing 轴承,支座bed 床身behavior 性能bench—work 钳工工作bend 弯曲beneath 在???下bin 仓,料架blank 坯料blank 冲裁,落料blanking 落料模blast 一阵(风) blemish 缺点,污点bolster 模座,垫板boring 镗削,镗孔bracket 支架brass 黄铜break down 破坏breakage 破坏brine 盐水brittle 易碎的buffer 缓冲器built-in 内装的bulging 凸肚burr 毛刺bush 衬套by far ???得多,最by means of 借助于boost 推进cabinet 橱柜call upon 要求carbide 碳化物carburzing 渗碳carriage 拖板,大拖板carry along 一起带走carry down over 从???上取下carry out 完成case hardening 表面硬化case 壳,套cast steel 铸钢casting 铸造,铸件category 种类caution 警告,警示cavity and core plates 凹模和凸模板cavity 型腔,腔,洞centre—drilling 中心孔ceramic 陶瓷制品chain doted line 点划线channel 通道,信道characteristic 特性check 核算chip 切屑,铁屑chuck 卡盘chute 斜道circa 大约circlip (开口)簧环circuit 回路,环路circulate (使)循环clamp 夹紧clamp 压板clay 泥土clearance 间隙clip 切断,夹住cold hobbing 冷挤压cold slug well 冷料井collapse 崩塌,瓦解collapsible 可分解的combination 组合commence 开始,着手commence 开始commercial 商业的competitive 竞争的complementary 互补的complexity 复杂性complication 复杂化compression 压缩comprise 包含compromise 妥协,折衷concern with 关于concise 简明的,简练的confront 使面临connector 连接口,接头consequent 随之发生的,必然的console 控制台consume 消耗,占用consummate 使完善container 容器contingent 可能发生的CPU (central processing unit) 中央处理器conventional 常规的converge 集中于一点conversant 熟悉的conversion 换算,转换conveyer 运送装置coolant 冷却液coordinate (使)协调copy machine 仿形(加工)机床core 型芯,核心corresponding 相应的counteract 反作用,抵抗couple with 伴随contour 轮廓crack (使)破裂,裂纹critical 临界的cross—hatching 剖面线cross-section drawn 剖面图cross—slide 横向滑板CRT (cathoder—ray tube)阴极射线管crush 压碎cryogenic 低温学的crystal 结晶状的cubic 立方的,立方体的cup (使)成杯状,引伸curable 可矫正的curvature 弧线curve 使弯曲cutter bit 刀头,刀片cyanide 氰化物complicated 复杂的dash 破折号daylight 板距decline 下落,下降,减少deform (使)变形demonstrate 证明depict 描述deposite 放置depression 凹穴descend 下降desirable 合适的detail 细节,详情deterioration 退化,恶化determine 决定diagrammmatic 图解的,图表的dictate 支配die 模具,冲模,凹模dielectric 电介质die-set 模架digital 数字式数字dimensional 尺寸的,空间的discharge 放电,卸下,排出discharge 卸下discrete 离散的,分立的dislodge 拉出,取出dissolution 结束distinct 不同的,显著的distort 扭曲distort (使)变形,扭曲distributed system 分布式系统dowel 销子dramaticlly 显著地drastic 激烈的draughting 绘图draughtsman 起草人drawing 制图drill press 钻床drum 鼓轮dual 双的,双重的ductility 延展性dynamic 动力的edge 边缘e。

岩土力学英文版IntroductionGeotechnical Engineering, also known as Soil Mechanics or Rock Mechanics, is a branch of civil engineering that deals with the behavior of soil and rock materials under various conditions. It is an important field of study as it helps engineers understand the properties and characteristics of these materials, which in turn enables them to design and construct safe and stable structures.Soil MechanicsSoil Mechanics is the study of the behavior of soil materials, including its formation, classification, and properties. Various aspects of soil mechanics are essential in geotechnical engineering, such as soil compaction, permeability, and soil stability.Soil formation is a complex process that involves the weathering and erosion of existing rocks, resulting in the formation of different soil types. The composition and particle size distribution of soil influence its properties, including its bearing capacity, shear strength, and compressibility.Soil classification is an important step in understanding the behavior of various soil types. The Unified Soil Classification System, which categorizes soils based on their particle size and organic content, is widely used in geotechnical engineering. Common soil types include gravel, sand, silt, clay, and organic soils.Understanding soil properties is crucial in determining its suitability for construction projects. Soil compaction refers to the process of densifying soil by applying mechanical force, ensuring stability and reducing settlement. Permeability is the ability of soil to transmit fluids such as water or gas, which is essential in designing drainage systems.Shear strength is another critical property of soil, as it determines its ability to resist sliding or deformation. Soil stability can be assessed through various laboratory tests, such as direct shear tests or triaxial tests, which simulate the conditions that soil experiences in real-world applications.Rock MechanicsRock Mechanics, on the other hand, is the study of thebehavior of rock materials, including its strength, deformation, and stability. It plays a crucial role in the design and construction of underground structures, such as tunnels and mines, as well as in slope stability analysis.Rock strength is an essential characteristic to consider when designing structures in rock formations. Different rock types have varying strength properties, with factors such as mineral composition, rock structure, and geological history influencing their behavior. Lab testing, such as uniaxial compression tests or point load tests, is typically conducted to determine the rock's strength.Rock deformation refers to the response of rock materials to applied stresses, including compression, tension, and shear. Understanding the deformation behavior of rock is crucial in predicting stability and designing support systems for underground excavations. Slope stability analysis is a critical aspect of geotechnical engineering, especially in hilly or mountainous regions. An unstable slope can lead to landslides or slope failures with disastrousconsequences. Various methods, including limit equilibrium analysis and numerical modeling, are used to assess slope stability and design appropriate reinforcement measures.ConclusionGeotechnical engineering plays a vital role in the construction industry as it helps design safe and stable structures by understanding the behavior of soil and rock materials. Soil mechanics focuses on the properties and characteristics of soil, including its formation, classification, and behavior under various conditions. Rock mechanics, on the other hand, studies the properties of rock materials such as strength, deformation, and stability. These fields of study are essential for engineers to ensure the safety and integrity of construction projects.。

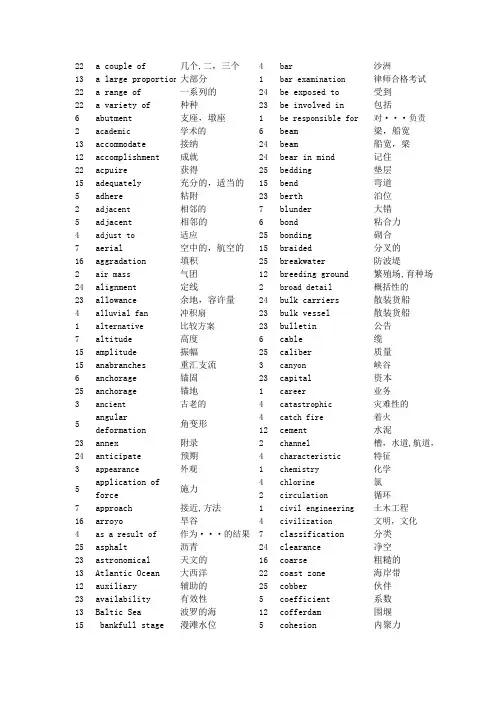

22 a couple of 几个,二,三个13 a large proportion 大部分22 a range of 一系列的22 a variety of 种种6 abutment 支座,墩座2 academic 学术的13 accommodate 接纳12 accomplishment 成就22 acpuire 获得15 adequately 充分的,适当的5 adhere 粘附2 adjacent 相邻的5 adjacent 相邻的4 adjust to 适应7 aerial 空中的,航空的16 aggradation 填积2 air mass 气团24 alignment 定线23 allowance 余地,容许量4 alluvial fan 冲积扇1 alternative 比较方案7 altitude 高度15 amplitude 振幅15 anabranches 重汇支流6 anchorage 锚固25 anchorage 锚地3 ancient 古老的5 angulardeformation角变形23 annex 附录24 anticipate 预期3 appearance 外观5 application offorce施力7 approach 接近,方法16 arroyo 旱谷4 as a result of 作为···的结果25 asphalt 沥青23 astronomical 天文的13 Atlantic Ocean 大西洋12 auxiliary 辅助的23 availability 有效性13 Baltic Sea 波罗的海15 bankfull stage 漫滩水位4 bar 沙洲1 bar examination 律师合格考试24 be exposed to 受到23 be involved in 包括1 be responsible for 对···负责6 beam 梁,船宽24 beam 船宽,梁24 bear in mind 记住25 bedding 垫层15 bend 弯道23 berth 泊位7 blunder 大错6 bond 粘合力25 bonding 砌合15 braided 分叉的25 breakwater 防波堤12 breeding ground 繁殖场,育种场2 broad detail 概括性的24 bulk carriers 散装货船23 bulk vessel 散装货船23 bulletin 公告6 cable 缆25 caliber 质量3 canyon 峡谷23 capital 资本1 career 业务4 catastrophic 灾难性的4 catch fire 着火12 cement 水泥2 channel 槽,水道,航道,4 characteristic 特征1 chemistry 化学4 chlorine 氯2 circulation 循环1 civil engineering 土木工程4 civilization 文明,文化7 classification 分类24 clearance 净空16 coarse 粗糙的22 coast zone 海岸带25 cobber 伙伴5 coefficient 系数12 cofferdam 围堰5 cohesion 内聚力4 commodity 商品 12 compact 压实 23 comparative 相当的 16 composition 组成 6 compression压缩1 computer programing 编制计算机程序 15 concave凹的 2 concern themselves with关心22 concomitant with 与...相伴的 1 concrete 混凝土2 condense 冷凝25 confine 限制16 confluence 汇合点,汇流点 23 congress 会议24 conservative 保守的2 constant 不变的,常数 1 construction 施工 23 container集装箱23 container berth 集装箱泊位23 container vessel 集装箱船24 container vessel 集装箱船7 contaminate 混淆,沾染13 continent 大陆25 coping顶层15 correspondingly 相应的25 course 层6 crack 开裂4 cradle 摇篮,发源地 6 creep 蠕动,徐变25 crib木笼24 criteria 标准4 crop庄稼,植物 24 cross wind 横风24 crosscurrent 横流6 cross —section 横截面,断面,剖面 12 crush碎16 cultivation 耕作16 cure治愈1 curriculum 全部课程 7 curvature 曲率12 cut stone 琢石25 cyclopean 毛石1 data数据 24dead weight (=dwt) 载重吨位,载重量 23 deficit亏损 4 definite integral 定积分 6 deflection 挠度 21 degenerate 退化7 delineate描绘,勾画 16 delivery ratio 递送比 7 demonstrate 表明 21 denote 表示 5density密度 24Department of Commerce 商业部4 deposits 沉积物 16desert 沙漠 1 design设计6 design load 设计载荷 22 destructive 破坏性的 7detect察觉 2 determinant 决定因素 24 dictate支配 24 differentation 区别 5 dimension 量纲,维5diminish [di'mini ʃ] 减小3 dirt泥土4 discharge 排放,泄放 25 disintegrate 崩解2 disperse 分散,散发 1disrepair 失修15 dissipate 消散,消耗 21 distinction 区别 21 distinguish 区别 2distribution 分布12 divert 改道,转向 2 domain 范围,领域 25 dowel 销钉 15 downcut 下切23 dredge 挖泥,疏浚 13 dredger 挖泥船,疏浚船 2drought 干旱12 duct 导管,通道 4 dump倾倒 22 duration历时 2earth science地球科学1 earth-fillembankment dam填土坝12 earthquake 地震5 edge condition 边界条件2 elevation 海拔,高程5 elevation 高程13 eliminate 排除21 ellipse 椭圆21 ellipse 椭圆12 embankment 填土堤坝,路基12 embankment dam 填筑坝,土石坝6 embed 埋置2 embrace 包括12 enclosure 围栏21 energy flux 能通量,能流22 energy-transfer 能量传递1 environment 环境5 equivalent[i’kwivələnt]相当于16 erosion 侵蚀2 erraatic 不稳定的7 error 误差15 evaluation 估计值2 evaporation 蒸发2 eventually 最终的16 evidence 证据21 exception 例外22 expanse 浩瀚,广阔24 explicit 明显的,明确的,显式24 explicitly 明显的4 exploration 探险3 explore 探险23 exposure 方位24 extrapollation 外延,外推2 extremes 极值,极端情况12 facility 设施,设备6 failure 破坏6 faulty 毛病1 feasibility 可行性7 features 特征,地物4 fertile 肥沃的4 fertilizer 肥料22 fetch 风浪区22 fetch area 受风水域7 field work 野外作业,外业1 finance 资助2find its way 。

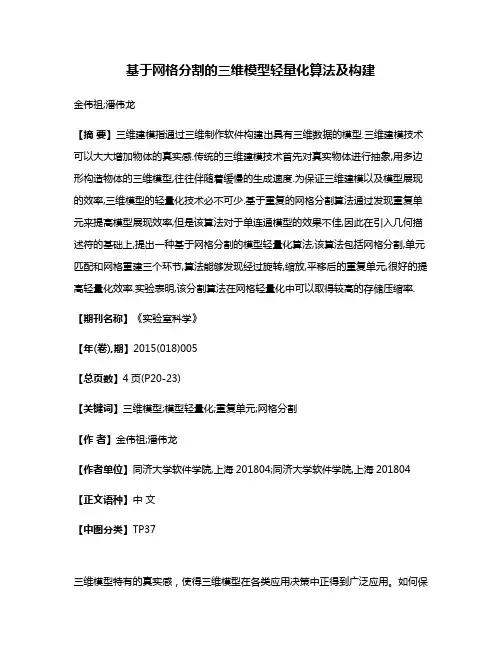

基于网格分割的三维模型轻量化算法及构建金伟祖;潘伟龙【摘要】三维建模指通过三维制作软件构建出具有三维数据的模型.三维建模技术可以大大增加物体的真实感.传统的三维建模技术首先对真实物体进行抽象,用多边形构造物体的三维模型,往往伴随着缓慢的生成速度.为保证三维建模以及模型展现的效率,三维模型的轻量化技术必不可少.基于重复的网格分割算法通过发现重复单元来提高模型展现效率,但是该算法对于单连通模型的效果不佳,因此在引入几何描述符的基础上,提出一种基于网格分割的模型轻量化算法,该算法包括网格分割,单元匹配和网格重建三个环节,算法能够发现经过旋转,缩放,平移后的重复单元,很好的提高轻量化效率.实验表明,该分割算法在网格轻量化中可以取得较高的存储压缩率.【期刊名称】《实验室科学》【年(卷),期】2015(018)005【总页数】4页(P20-23)【关键词】三维模型;模型轻量化;重复单元;网格分割【作者】金伟祖;潘伟龙【作者单位】同济大学软件学院,上海201804;同济大学软件学院,上海201804【正文语种】中文【中图分类】TP37三维模型特有的真实感,使得三维模型在各类应用决策中正得到广泛应用。

如何保证三维建模以及模型展现的效率至关重要,核心问题就是三维模型的轻量化,减少三维模型数据的存储量是研究的焦点之一。

本文具体分析了现有的、基于轻量化的模型分割算法,并分析它们各自的优劣和适用场景,最终在这些算法的基础上提出一种针对模型轻量化的网格分割算法,并以该算法为基础,提出了三维模型的轻量化构建步骤。

1 基于重复单元的轻量化研究重复单元是指模型中相同或相似的部分,这些相似的部分可以通过一系列几何变换来完成。

重复单元的轻量化是指通过提取模型中的重复单元,只保存一份模型数据和几何变换信息代替整个模型,达到大幅度减少数据存储量的目的。

在三维模型中,常常含有很多相似或者可以通过几何变换而变得相似的部分,例如一个建筑中含有很多窗口,而这些窗口模型之间是可以通过平移来转换的,即可以通过保存一份窗口模型的数据和平移信息来实现对窗口的保存,这样就可以使用更少的数据来保存窗口模型。

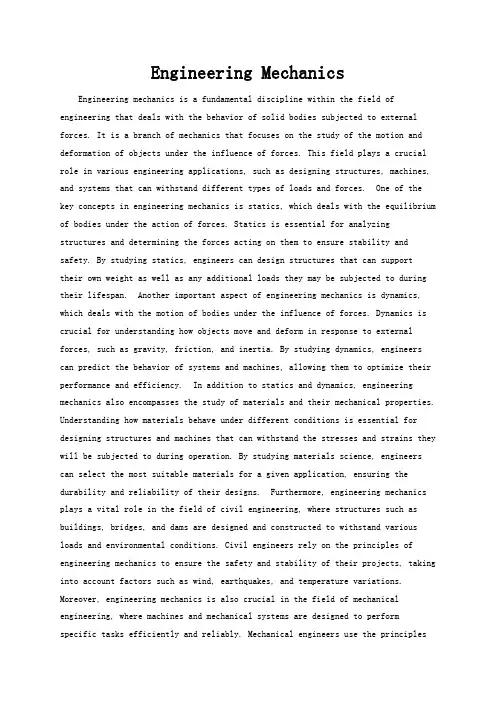

Engineering MechanicsEngineering mechanics is a fundamental discipline within the field of engineering that deals with the behavior of solid bodies subjected to external forces. It is a branch of mechanics that focuses on the study of the motion and deformation of objects under the influence of forces. This field plays a crucial role in various engineering applications, such as designing structures, machines, and systems that can withstand different types of loads and forces. One of the key concepts in engineering mechanics is statics, which deals with the equilibrium of bodies under the action of forces. Statics is essential for analyzingstructures and determining the forces acting on them to ensure stability and safety. By studying statics, engineers can design structures that can supporttheir own weight as well as any additional loads they may be subjected to during their lifespan. Another important aspect of engineering mechanics is dynamics, which deals with the motion of bodies under the influence of forces. Dynamics is crucial for understanding how objects move and deform in response to external forces, such as gravity, friction, and inertia. By studying dynamics, engineers can predict the behavior of systems and machines, allowing them to optimize their performance and efficiency. In addition to statics and dynamics, engineering mechanics also encompasses the study of materials and their mechanical properties. Understanding how materials behave under different conditions is essential for designing structures and machines that can withstand the stresses and strains they will be subjected to during operation. By studying materials science, engineers can select the most suitable materials for a given application, ensuring the durability and reliability of their designs. Furthermore, engineering mechanics plays a vital role in the field of civil engineering, where structures such as buildings, bridges, and dams are designed and constructed to withstand various loads and environmental conditions. Civil engineers rely on the principles of engineering mechanics to ensure the safety and stability of their projects, taking into account factors such as wind, earthquakes, and temperature variations. Moreover, engineering mechanics is also crucial in the field of mechanical engineering, where machines and mechanical systems are designed to performspecific tasks efficiently and reliably. Mechanical engineers use the principlesof engineering mechanics to analyze the forces and stresses acting on machine components, ensuring that they can withstand the forces generated during operation. By applying the principles of engineering mechanics, mechanical engineers can design machines that are safe, durable, and efficient. In conclusion, engineering mechanics is a fundamental discipline within the field of engineering that plays a crucial role in designing structures, machines, and systems that can withstand different types of loads and forces. By studying statics, dynamics, and materials science, engineers can analyze the behavior of objects under the influence of forces, ensuring the safety, stability, and reliability of their designs. Whetherin civil engineering or mechanical engineering, the principles of engineering mechanics are essential for ensuring the success of engineering projects and the safety of the built environment.。

标准与质量中图分类号:TU528.07文献标识码:A文章编号:1001-6945(2023)06-44-02本栏编辑:冯凯混凝土是现代建设工程中必不可少的原材料之一,其抗压强度是混凝土最基本的性能之一,也是混凝土最重要的物理性能;混凝土物理性能与建设工程中混凝土工程的质量和耐久性能息息相关,直接关系整个建设工程的质量安全。

混凝土抗压强度检测是混凝土性能检测中最为基本的项目,对于混凝土抗压强度检测,许多学者均对其影响因素开展了研究,主要是混凝土成型、浇筑、养护等方面,但对于混凝土抗压强度的不确定度评定的研究相对较少[1-2]。

不确定度评定是通过一种分析各种实验因素对实验结果造成偏差的方法[3]。

本文通过开展混凝土抗压强度检测中不确定度评定,分析影响检测过程中各种因素对检测结果造成的影响程度分析,可以明确影响较大因素,进而为检测准确性的提升提供技术支撑。

1检测过程测量对象:混凝土试件。

测量依据:GB/T 50081-2019《混凝土物理力学性能试验方法标准》[4];GB/T 27418-2017《测量不确定度评定和表示》[5]。

测量仪器:微机控制电液伺服万能试验机(量程1000kN ,精度1级),游标卡尺(量程200mm ,分度值0.02mm ),塞尺(精度0.01mm )。

其中,扩展不确定度及扩展因子由设备计量单位提供。

测量环境:温度20℃,湿度60%。

试验方法:按照GB/T 50081-2019《混凝土物理力学性能试验方法标准》中轴向抗压强度要求,使用游标卡尺测量150mm×150mm×150mm 的立方体混凝土试块尺寸,精确至0.1mm 。

采用钢板尺和塞尺测量试件承压面的平面度,精确至0.01mm 。

将试件放置在承压板中心处,设置加荷速度为0.65MPa/s ,直至试件破坏,记录破坏力值,按照下式计算混凝土试件抗压强度。

考虑试件制作偏差可能导致评定无效,选取10块试件进行评定。

Value Engineering1建筑大体积混凝土施工现状1.1需要加强施工管理人员和操作人员的技术水平施工现场质量管理的决定性因素之一是施工管理人员的专业能力和责任感。

我国目前的建设过程缺乏高素质的管理人才,管理人才的技术水平低下。

他们无法完全理解设计图纸上加密节点的详细信息(例如梁和箍筋)的重要性。

一旦被管理人员忽略,施工人员可能会错过并减少钢筋的放置,这会影响施工质量并导致潜在的安全隐患。

但是,在施工过程中,大多数建筑工人是农民工,缺乏安全生产所需的专业技能和知识。

在施工过程中,无法按照相应的施工规定进行操作,从而难以保证工程质量。

1.2影响温度的因素在房屋建筑工程中浇筑大体积混凝土结构时,温度的影响很容易引起结构裂缝,直接影响工程的整体质量。

更确切地说,第一个是水泥的水热现象。

水泥在水合过程中会散发一定量的热量。

由于大体积混凝土结构的壁厚,大量的热量积聚在结构内部。

另外,混凝土的导热性差并且不能在短时间内散发热量。

最终,混凝土在固化过程中会产生巨大的拉伸应力,超过其自身的拉伸强度,会导致裂纹。

其次,外界温度的影响。

在建造大体积混凝土结构时,由于外部环境温度的影响,很可能会发生裂缝。

混凝土的温度将随着外部温度的变化而变化,特别是当外部温度急剧下降时,混凝土结构的内部和表面之间将存在较大的温差。

温差越大,热应力越大,这将导致混凝土结构在硬化过程中收缩。

一系列问题,例如不均匀和不均匀膨胀,会导致裂纹。

1.3不平等地基大体积混凝土结构主要在房屋建设项目中起一定作用,直接影响到工程的稳定性和安全性。

在实际施工中,由于对地基的处理不当,工程的地基部分在外力的作用下不均匀沉降,在混凝土结构中产生内应力。

安装基础时的质量一旦应力超过拉伸范围,就会出现裂纹。

诸如断裂等一系列问题严重影响了项目的稳定性,并降低了项目的整体性能和寿命。

2建筑中大体积混凝土施工技术2.1施工前准备在高层建筑进行大体积混凝土施工之前,应根据建筑物设计的要求选择材料。

电气工程的英文定义Electrical engineering, often referred to as EE, is a broad field that deals with the study, design, and application of electrical systems and electronics. It encompasses a diverse range of sub-disciplines, such as power engineering, electronics engineering, control systems, telecommunications, computer engineering, and more. Thefield is constantly evolving, driven by advancements in technology and the increasing demand for efficient, sustainable, and intelligent electrical systems.The core of electrical engineering lies in the fundamental principles of electricity, magnetism, and electronics. These principles form the backbone of all electrical systems, from the tiny components inside electronic devices to the massive power grids that supply electricity to cities and industries. Electrical engineers are responsible for understanding and applying these principles to create systems that are reliable, efficient, and safe.One of the most significant areas of electrical engineering is power engineering. This sub-disciplinefocuses on the generation, transmission, and distribution of electrical power. With the growth of the global population and the increasing demand for electricity, power engineers are constantly challenged to find new and innovative ways to meet this demand while ensuring the efficiency and sustainability of the power systems.Another crucial area is electronics engineering, which deals with the design and development of electronic devices and systems. From smartphones and computers to medical equipment and aerospace systems, electronics engineers play a pivotal role in creating the technology that shapes our world.Control systems are another important aspect of electrical engineering. These systems are responsible for monitoring and regulating the operation of variouselectrical and mechanical devices. They are crucial in ensuring the safety and efficiency of these devices, and they are widely used in industries such as automotive, aerospace, and manufacturing.Telecommunications is another key area of electrical engineering, dealing with the transmission and reception ofinformation over long distances. This field has seen significant advancements in recent years, with the emergence of new technologies such as 5G and the Internet of Things (IoT).Computer engineering, which combines the principles of electrical engineering and computer science, is another crucial discipline within electrical engineering. It deals with the design and development of computer systems and hardware, including processors, memory, and networking components.Looking ahead, the future of electrical engineering is bright. With the continued advancement of technology and the increasing demand for intelligent, efficient, and sustainable electrical systems, there are ample opportunities for electrical engineers to innovate and make a positive impact on society. From developing renewable energy systems to creating smart cities and autonomous vehicles, the role of electrical engineers in shaping the future is undeniable.电气工程:定义、发展与未来展望电气工程,简称电气工程(EE),是一个涉及电力系统和电子学研究、设计与应用的广泛领域。

工程车左右英语作文1. The construction truck rumbled down the dusty road, its massive tires kicking up clouds of dirt behind it.2. The driver of the engineering vehicle gripped the steering wheel tightly, his eyes focused on the road ahead as he navigated through the construction site.3. The sound of the engine roared in the ears of the workers nearby, drowning out their conversations andforcing them to pause their work momentarily.4. The hydraulic arms of the construction truck moved with precision, lifting heavy loads of materials andplacing them in the designated areas with ease.5. The construction truck was a vital piece of equipment on the site, helping to transport materials,clear debris, and support the work of the construction crew.6. The bright yellow paint of the engineering vehicle gleamed in the sunlight, a stark contrast to the dusty, muddy surroundings of the construction site.7. The driver of the construction truck expertly maneuvered the vehicle through tight spaces, skillfully avoiding obstacles and ensuring the safety of himself and those around him.8. The construction truck was a symbol of progress and development, its presence on the site signaling the beginning of a new project and the promise of a finished product in the near future.。

土木工程巨匠:茅以升Title: Engineering Genius: Mao YishengIn the vast realm of civil engineering, few names stand as tall as Mao Yisheng. A renowned Chinese civil engineer, bridge expert, and academician, Mao Yisheng left anindelible mark on the global engineering community. His contributions to the field of civil engineering are numerous and far-reaching, making him a legend in the industry.Born in 1896, Mao Yisheng's early life was filled with promise. He excelled academically and demonstrated a keen interest in engineering, particularly bridges. His hardwork and dedication paid off when he was offered a position at the Tsinghua University, where he would later become a professor.One of Mao Yisheng's most famous achievements was his role in the design and construction of the Qianjiang Bridge. Completed in 1937, this bridge was a testament to Mao's engineering prowess. It was not only a massive feat of engineering but also a symbol of China's technologicalprowess at the time. The Qianjiang Bridge remains a landmark in civil engineering, showcasing Mao's innovative thinking and dedication to excellence.Mao Yisheng's contributions to the field of civil engineering extend beyond his individual projects. He was a staunch advocate for the advancement of engineering education in China. His belief was that a strong foundation in engineering principles and practices was crucial for the country's future development. His efforts in this regard resulted in the establishment of several engineering schools and programs, laying the groundwork for generations of engineers in China.Mao Yisheng's legacy is not just in the bridges hebuilt or the schools he founded, but in the spirit he instilled in his students and colleagues. He emphasized the importance of innovation, collaboration, and a relentless pursuit of excellence in all aspects of engineering. His words and actions continue to inspire engineers around the world, reminding them of the impact they can have on society through their work.Mao Yisheng's impact on civil engineering is indelible. His contributions to bridge design and construction, as well as his dedication to engineering education, have left a lasting impact on the field. His legacy serves as a reminder of the power of engineering in shaping the world and the responsibility that engineers carry in ensuring safe, efficient, and sustainable infrastructure.茅以升,这位土木工程领域的巨匠,以其卓越的成就和深远的影响力,成为了世界工程界的传奇人物。

科技创新为主题的科普英语作文四年级全文共3篇示例,供读者参考篇1The Wonders of Technology and InnovationTechnology is so cool! It seems like every day there are new gadgets, machines, or inventions that make our lives easier and more fun. My favorite technologies are computers, robots, and anything that lets me play video games. But technology isn't just for gaming - it's also helping solve big problems and make amazing discoveries in science, medicine, transportation and so many other fields.One area where technology is really advancing quickly is in robotics. Robots can now do amazing things like assisting in surgeries, exploring other planets, and even beating the world's best chess players! My dream is to one day build my own robot assistant to help me with chores and homework. Wouldn't that be awesome?Some of the most cutting-edge robots are made using a technology called 3D printing. 3D printers can manufacture or "print" solid objects like tools, toys, and even artificial body partsjust by following a computer design plan. The printer squeezes out thin layers of a special plastic or metal material, stacking up the layers to gradually form a 3D shape. With 3D printing, we can produce just about anything we can imagine on a computer!Another incredible new technology is virtual reality, or VR. By wearing a special headset, VR can make you feel like you're inside a completely different digital world. You could hang out in simulated outer space, explore ancient civilizations, or walk through the human body - all from your own home! VR is being used to train pilots, treat medical patients, and create fun new video game worlds to explore. I got to try a VR headset at a museum once and it was a trippy mind-blowing experience.In medicine, new technologies like robotic surgeons, artificial organs, and advanced x-ray scanners are allowing doctors to treat injuries and diseases better than ever before. Scientists are even working on ways to edit our genes to cure genetic disorders or give people cool new traits like being stronger or smarter. With gene editing, we may be able to get rid of all hereditary diseases in the future! That would be incredible.Of course, we've had major breakthroughs in computing and digital technologies too. Our phones and computers are getting smaller yet more powerful every year. Technologies like 5Gwireless networks, cloud computing, and quantum computers that work differently than regular computers could lead to amazing new capabilities in how we communicate, store data, and process information. Maybe one day our phones or laptops will be powerful enough to work like the talking computer assistants you see in sci-fi movies!I'm really excited to see what new mind-boggling innovations will emerge as today's kids grow up surrounded by all this incredible technology. With all the new tools and knowledge we have access to, I think my generation will be able to create world-changing inventions and solve huge global challenges like climate change, diseases, hunger, and more.Scientists and engineers are working hard to develop new sustainable energy sources like solar, wind, and nuclear fusion power to reduce pollution and our reliance on fossil fuels. They're also trying to figure out how to remove excess greenhouse gases from the atmosphere to slow global warming, protect the environment, and prevent more extreme weather disasters.In the future, we may have electric self-driving cars that can help reduce emissions and traffic accidents. Or maybe we'll all get around in efficient mag-lev train systems and personal flyingdrones! With artificial intelligence getting smarter each year, maybe AI will be able to crunch data and model solutions to challenges like climate change for us.Another huge area for future innovation will be in space exploration. Reusable rockets, robotic rovers, and orbiting telescopes are letting us study the solar system, launch satellites to beam internet across the globe, and observe incredible new galaxies and cosmic phenomenon like black holes. Maybe I'll get to be one of the first people to travel to Mars or join a mission to explore deeper into our mysterious universe!Whatever new technologies lie ahead, I have no doubt they will be super awesome and mind-bending. It's an incredibly exciting time to be alive with how quickly science and innovation are advancing. The future possibilities are endless for my generation to use our creativity and these powerful new tools to build a better world. I can't wait to see what we dream up and make a reality! The road to new discoveries and inventions starts with an idea, and kids imaginations today could transform life as we know it tomorrow. That's the magic of technology and human ingenuity.篇2Technology All Around UsHave you ever stopped to think about how much technology we use every single day? It's absolutely amazing when you really pay attention! Technology is everywhere and makes so many things possible that wouldn't be possible without it. Let me tell you about some of the awesome innovations in different areas of technology.Computing and the InternetCan you imagine life without computers, tablets, smartphones and the internet? I can't! Computers are like modern-day superbrains that can process massive amounts of data at lightning speeds. The internet connects all of these computers and devices together in an enormous global network. We can use search engines to find information on virtually any topic instantly. We can watch videos, play games, do schoolwork, and communicate with people anywhere in the world over email, text, video calls and social media. It's mind-blowing!The computers and internet we use today built upon decades of incremental innovations by scientists, engineers and inventors. Things like the binary number system, silicon microchips, operating systems, programming languages, networking protocols and data compression techniquescollectively enabled this digital revolution. And innovations in computing and the internet keep accelerating at a blistering pace with things like artificial intelligence, cloud computing, big data analytics, and the "internet of things." I can't wait to see what computer geniuses come up with next!Energy and Green TechnologyKeeping all of our technology charged up and running requires huge amounts of energy from power plants. Unfortunately, a lot of that energy comes from dirty sources like coal and oil that pollute the air and contribute to climate change and global warming. That's a big problem we need to solve soon!Luckily, scientists and engineers have been developing some really cool green technology innovations that create clean energy from renewable sources like the sun, wind, water movement and even trash! Things like solar panels that turn sunlight into electricity, giant windmills that use the wind to generate power, hydroelectric dams that harness the force of moving water, and waste-to-energy plants that burn garbage as fuel. There are also cleaner ways to produce energy from fossil fuels like using carbon capture technology to trap emissions.The more we can create energy using green tech instead of dirty fuels, the healthier our planet will be. And exciting newinnovations like nuclear fusion reactors, outer space-based solar farms, and algae biofuel are being developed that could power our world entirely with clean energy someday. How awesome is that?!Medicine and BiotechnologyOne area where new technology is quite literally saving lives is medicine and biotechnology. Being able to use machines like X-ray and MRI scanners to safely see inside the human body was a huge breakthrough in helping diagnose diseases and injuries. And innovations in fields like genomics, pharmaceuticals, medical devices and regenerative medicine have given us all sorts of new ways to treat and even cure sicknesses.For example, biotechnology allows us to modify crops to grow faster and bigger while being resistant to droughts and diseases. Scientists can use tools like CRISPR to edit DNA sequences to get rid of genetic disorders or enhance helpful traits in plants, animals and humans. Thanks to innovations in 3D printing and bioengineering, doctors can even manufacture compatible organs and tissues to help patients who need transplants!Robotics and EngineeringAnother incredible technological field that is rapidly evolving is robotics and engineering. Robots are incredible machines that can be programmed to do all sorts of tasks with amazing speed, precision and efficiency. We use robots to build things like cars and electronics in factories. We also have robots that can go places people can't like into space, under the ocean or into hazardous areas during disasters.Robots come in all shapes and sizes from tiny nanorobots that can navigate inside the human body to giant mechanical arms used for construction. Many big companies and militaries utilize fleets of specialized robots to handle logistics and other operations. And in the future, we may have robots that can think for themselves using artificial intelligence and carry out complex jobs and services alongside humans.Engineering geniuses are also coming up with innovations like 3D printed construction, self-driving vehicles, hyperloop transportation systems, advanced prosthetics and exoskeletons to improve humans' mobility and abilities. Some of the greatest challenges we face like colonizing other planets may only be possible by using cutting-edge robotic and engineering tech. It's so exciting to think about!ConclusionAs you can see, technological innovation is rapidly changing and improving virtually every aspect of our lives. From computing and green energy to medicine and robotics, the boundaries of what's possible are constantly being pushed thanks to the creativity of scientists, inventors and engineers. While not every new technology turns out to be useful or good, many of them equip us with powerful tools to tackle global issues like disease, hunger, pollution and resource shortages.We kids today get to grow up in a world of wonders that would seem like magic compared to the past. And thanks to innovations happening now and in the future, our lives will no doubt be full of even more amazing technology that we can't even dream of today! I feel so fortunate to be a kid in this incredible age of technological progress. The 21st century is going to be an awesome time to be alive.篇3Technology Innovation is Changing the WorldHi there! My name is Jamie and I'm a 4th grader who really loves learning about all the awesome new technologies that are being invented. Technology is advancing at a blistering pacethese days and it's exciting to think about how it could change the world in the years to come.Let me tell you about some of the amazing innovations that have me psyched for the future. One area where we're seeing huge breakthroughs is in artificial intelligence, or AI for short. AI involves creating computer systems that can sense their environment, process data, and then make decisions or take actions based on that information.AI is already being used in lots of products and services we use every day. The virtual assistants in our smartphones like Siri and Alexa use AI to understand our voice commands and questions. Streaming services like Netflix use AI to analyze our viewing habits and suggest new shows and movies we might enjoy. Many websites and social media platforms use AI for things like content recommendations, facial recognition, language translation and spam filtering.But AI capabilities are advancing rapidly and researchers are working on more advanced systems that could be revolutionary. There are AI systems now that can beat the best humans at complex games like chess and Go. They are using AI to develop self-driving cars that could make our roads much safer. And inhealthcare, AI is helping doctors detect diseases earlier and determine the best treatments.In the future, AI could help solve some of the world's biggest challenges around things like climate change, disease, hunger, and more. Imagine smart AI systems that can comprehend massive amounts of data and then guide us towards creative solutions! With AI, we may be able to invent world-changing technologies that we can't even imagine yet.Another area where innovation is soaring is in the field of robotics. Robots are machines guided by computer programs to automatically carry out tasks and operations. While robots have been used for manufacturing for many decades, new advances are allowing robots to take on roles that once seemed impossible.Today's robots can move, sense, and operate with much more flexibility and intelligence than the rigid, single-task robots of the past. Some robots can now recognize faces, understand human language, learn from situations, and even walk over rough terrain. The capabilities just keep getting better.We're seeing robots being used for things like exploring other planets, disarming explosives, assisting with surgeries, and much more. Robots are also being developed as companions forelderly people to provide company, remind them to take medicine, and monitor their safety.In the near future, robots may become advanced enough to help out around our homes by doing chores like cleaning, yardwork and cooking meals. At schools, robots could be utilized to make education more engaging by acting as teaching assistants. And in dangerous jobs like firefighting or construction, robots could keep humans out of harm's way.Another exciting area of innovation is in the field of biotechnology. Biotechnology is where we apply biological concepts to create useful products and technologies. This can involve modifying the genes of living organisms or using biological molecules like proteins or enzymes.Through biotechnology, scientists have made important advances in areas like agriculture, medicine, and environmental protection. They've developed crops that can better withstand droughts or pests. They've created medicines through bioengineering to treat diseases like cancer and diabetes. Biotechnology has also given us biofuels and microorganisms that can help clean up pollution.What's really fascinating about biotechnology is its potential to solve global challenges around food scarcity, disease, andenvironmental sustainability. Bioengineers may be able to design super nutritious crops that can grow in very harsh climates. They could develop biofuels from plant sources as a renewable alternative to fossil fuels. And they could create microbes that can break down and digest plastics, helping address our plastic waste crisis.Beyond AI, robotics and biotechnology, there are so many other areas where innovations are happening at a dizzying rate. With 3D printing, we can now manufacture objects with complex shapes on-demand by building them up one layer at a time. The Internet of Things involves connecting devices like home appliances, vehicles, and infrastructure to the internet to allow intelligent monitoring and control.Renewable energy sources like solar and wind power are advancing quickly and becoming cheaper to help us reduce our reliance on polluting fossil fuels. In space exploration, we're developing new propulsion systems and advanced telescopes to help us travel farther and learn more about the universe. The list goes on and on!All of this technological progress is exciting, but it's a bit scary too. New innovations often come with risks, challenges and unintended consequences that we have to grapple with. Thingslike AI systems that get too smart and become difficult to control. Or genetic engineering techniques that get misused. Or automation that disrupts too many jobs before society is ready.We have to be thoughtful about developing these new technologies responsibly and making sure they are safe, secure and beneficial for humanity as a whole. We'll need strong safety guidelines, robust testing, and public discourse to tackle the tough ethical dilemmas that technological revolutions can create.That said, I'm still super optimistic about our innovative future! With ingenuity and good intentions, we can use technology as a great catalyst for human progress. Innovations could help us create abundance and solve major challenges around food, water, energy, education, and the environment. Technology may give us powerful tools to fight disease, extend our lifespans, and push the boundaries of human potential.Just think - the mind-blowing technologies of 100 years from now could be completely unrecognizable to us today. They might be based on things like quantum computing, molecular engineering, or even a unified theory that combines principles from physics, computing, and biology!But one thing is certain - the accelerating pace of innovation means my generation will experience more technological upheaval and progress than any other period in human history. I can't wait to be a part of it and maybe even help createworld-changing innovations myself one day. The future is brimming with possibilities if we dream big and work hard. Bring it on!。

土木工程专业英语慕课Civil Engineering English MOOCCivil engineering is a broad field that encompasses the design, construction, and maintenance of various infrastructure projects, such as buildings, roads, bridges, dams, and water treatment facilities. As the world continues to evolve and grow, the demand for skilled civil engineers has never been higher. In this context, the development of online learning platforms, known as Massive Open Online Courses (MOOCs), has become an increasingly popular and accessible way for individuals to gain knowledge and skills in the field of civil engineering.One of the key advantages of a civil engineering English MOOC is the opportunity for students to learn the technical terminology and language used in the industry. Civil engineering, like many other specialized fields, has its own unique vocabulary and communication practices. By participating in an English-based MOOC, students can immerse themselves in the language of civil engineering, improving their understanding of the subject matter and enhancing their ability to communicate effectively with colleagues, clients, and stakeholders.Moreover, a civil engineering English MOOC can provide a diverse and global learning experience. With participants from around the world, students can engage in discussions and exchange ideas with individuals from different cultural and linguistic backgrounds. This exposure to diverse perspectives can broaden the students' understanding of civil engineering practices and challenges, as well as foster a more inclusive and collaborative mindset.Another significant benefit of a civil engineering English MOOC is the flexibility it offers. Unlike traditional classroom-based courses, MOOCs allow students to learn at their own pace, fitting the coursework around their existing commitments. This flexibility is particularly valuable for working professionals or individuals with other responsibilities, who may not have the time or resources to attend on-campus classes.Furthermore, a civil engineering English MOOC can provide access to a wealth of educational resources, including video lectures, interactive simulations, case studies, and online discussions. These resources can help students deepen their understanding of complex topics, such as structural analysis, soil mechanics, or transportation engineering, while also developing their critical thinking and problem-solving skills.One of the key challenges in developing a successful civilengineering English MOOC is ensuring the quality and relevance of the course content. Civil engineering is a rapidly evolving field, with new technologies, regulations, and best practices constantly emerging. Therefore, the MOOC must be designed and maintained by a team of experienced civil engineering educators and industry professionals, who can continuously update the course material to keep it aligned with the latest developments in the field.Another challenge is the effective delivery of the course content in an online format. While MOOCs offer the convenience of remote learning, they also require careful instructional design and the integration of interactive learning activities to keep students engaged and motivated. This may involve the use of multimedia elements, virtual simulations, and online collaboration tools, among other strategies.Despite these challenges, the potential benefits of a civil engineering English MOOC are significant. By providing access to high-quality educational resources and opportunities for global collaboration, these online learning platforms can play a crucial role in addressing the growing demand for skilled civil engineers and promoting the advancement of the field.In conclusion, the development of a civil engineering English MOOC represents a valuable opportunity to enhance the learningexperience and professional development of individuals interested in the field of civil engineering. By leveraging the power of technology and the global reach of online education, these courses can help students acquire the necessary knowledge, skills, and language proficiency to excel in the dynamic and ever-evolving world of civil engineering.。

解开原子弹之谜作文英文回答:The mystery of unlocking the atomic bomb is a complex and fascinating topic. To understand it, we need to delve into the history of nuclear physics and the key players involved in its development.One of the most significant breakthroughs in atomic bomb technology was the discovery of nuclear fission. This process involves splitting the nucleus of an atom, releasing an enormous amount of energy. Scientists such as Otto Hahn and Fritz Strassmann discovered this phenomenon in 1938, laying the foundation for further research.However, it was the work of physicists like Enrico Fermi and J. Robert Oppenheimer that ultimately led to the successful creation of the atomic bomb. Fermi conducted the first controlled nuclear chain reaction in 1942, demonstrating the feasibility of a nuclear weapon.Oppenheimer, on the other hand, played a crucial role in the Manhattan Project, which brought together the brightest minds in nuclear physics to develop the bomb.The actual process of building an atomic bomb involves several stages. First, highly enriched uranium or plutonium is obtained. This requires advanced techniques for separating the desired isotopes from natural uranium or plutonium. One method is the centrifuge process, which involves spinning uranium hexafluoride gas at high speeds to separate the isotopes based on their weight.Once the fissile material is obtained, it must be shaped into a critical mass. This means bringing enough fissile material together to sustain a chain reaction. The shape and configuration of the material are crucial to achieving this. For example, the Little Boy bomb dropped on Hiroshima used a gun-type design, where two sub-critical masses of uranium-235 were brought together by a conventional explosive, creating a critical mass and initiating the chain reaction.The final stage is the actual detonation of the bomb. This involves triggering a high-explosive conventional charge that compresses the fissile material into a supercritical state. The compression increases the density of the material, causing more atoms to collide and increasing the likelihood of a chain reaction. Once the chain reaction is initiated, a massive amount of energy is released in the form of an explosion.中文回答:解开原子弹之谜是一个复杂而迷人的话题。

信工所博士英语As a doctoral student in the field of Information and Communication Engineering, I have been exposed to a wide range of advanced research topics and cutting-edge technologies. My research focuses on the application of machine learning algorithms in wireless communication systems, specifically in the optimization of resource allocation and interference management.I am currently investigating the potential of deep learning techniques to enhance the performance of massive MIMO systems. By leveraging the power of artificial intelligence, I aim to develop novel algorithms that can adaptively allocate resources and mitigate interference in real-time, thereby improving the overall efficiency and reliability of wireless networks.Moreover, I have also been involved in projects related to the integration of IoT devices in 5G networks, where I have explored the challenges and opportunities associated with the massive deployment of IoT devices and the impact on network performance.In addition to my research work, I have actively participated in academic conferences and workshops, where I have presented my findings and engaged in discussions with experts in the field. These experiences have not only broadened my knowledge but also provided me with valuable insights and feedback that have helped shape my research direction.Overall, I am passionate about leveraging my expertisein information and communication engineering to addressreal-world challenges and contribute to the advancement of wireless communication technologies.作为信息与通信工程领域的博士生,我接触了广泛的先进研究课题和尖端技术。