Random networks created by biological evolution

- Format: pdf

- Size: 261.88 KB

- Pages: 9

An English Essay on Whether Artificial Intelligence Can Think

English answer:

When we contemplate the intriguing realm of artificial intelligence (AI), a fundamental question arises: can AI think? This profound inquiry has captivated philosophers, scientists, and futurists alike, generating a rich tapestry of perspectives.

One school of thought posits that AI can achieve true thought by emulating human reasoning. This approach, known as symbolic AI, seeks to encode human knowledge and reasoning processes as explicit symbols and rules in computational models. By simulating the cognitive functions of the mind, proponents argue, AI can unlock the ability to think, reason, and solve problems much as humans do.

A contrasting perspective, known as connectionism, eschews symbolic representations and instead focuses on the interconnectedness of neurons and the emergence of intelligent behavior from complex networks. This approach, inspired by biological neural systems, posits that thought and consciousness arise from the collective activity of vast numbers of nodes and connections within an artificial neural network.

Yet another framework, termed embodied AI, emphasizes the role of physical interaction and embodiment in shaping thought. This perspective contends that intelligence is inextricably linked to the body and its experiences in the real world. By grounding AI systems in physical environments, proponents argue, we can foster a more naturalistic and intuitive form of thought.

Beyond these overarching approaches, ongoing research in natural language processing (NLP) and machine learning (ML) is producing AI systems that can engage in sophisticated dialogue, understand complex texts, and make predictions based on vast data sets. These advances are gradually expanding the cognitive capabilities of AI, bringing us closer to the possibility of artificial thought.

However, it is essential to recognize the limitations of current AI systems. While they may excel at specific tasks, they still lack the comprehensive understanding, self-awareness, and creativity that characterize human thought. Truly thinking machines remain a distant prospect, requiring significant breakthroughs in our understanding of consciousness, cognition, and embodiment.

Chinese answer (translated): Can artificial intelligence think? One of the central questions in the field of artificial intelligence is whether AI can think.

Iod Terminology

Iod is an open-source machine learning framework developed by OpenAI. It aims to provide a unified interface for building, training, and deploying machine learning models. In this article, we will explore some key terms and concepts related to Iod.

1. Machine Learning: Machine learning is the field of study that enables computers to learn from data and make predictions or decisions without being explicitly programmed. Iod is designed to facilitate the development of machine learning models.
2. Deep Learning: Deep learning is a subfield of machine learning that focuses on training neural networks with multiple layers. Deep learning models can automatically learn hierarchical representations of data. Iod provides tools and utilities for building deep learning models.
3. Artificial Neural Networks (ANNs): ANNs are computational models inspired by the biological neural networks in the human brain. They consist of interconnected nodes, or "neurons", that process and transmit information. Iod supports various types of neural networks, such as feedforward networks, recurrent neural networks (RNNs), and convolutional neural networks (CNNs).
4. Model Training: Model training is the process of optimizing a machine learning model's parameters (weights) using a labeled dataset. Iod offers a flexible training pipeline that lets users write custom training loops or use pre-built training routines, which helps when training complex models on large datasets.
5. Model Evaluation: Model evaluation is the process of assessing a trained model's performance on unseen data. Iod provides functions and metrics for evaluating models against criteria such as accuracy, precision, recall, and F1 score. These metrics help users gauge the effectiveness of their models and make informed decisions.
6. Model Deployment: Model deployment means putting a trained model into a production environment so that it can make predictions on new, unseen data. Iod streamlines deployment by offering tools for model serialization, model versioning, and serving predictions through APIs, so that trained models can be integrated into applications.
7. Natural Language Processing (NLP): NLP is a branch of artificial intelligence that focuses on the interaction between computers and human language. Iod includes libraries for NLP tasks such as text classification, sentiment analysis, named entity recognition, and machine translation, which developers can use to build NLP applications.
8. Computer Vision: Computer vision is the field concerned with enabling computers to understand and interpret visual information from images or videos. Iod provides tools for tasks such as image classification, object detection, and image segmentation, which developers can use to build computer vision applications with deep learning techniques.
9. Model Optimization: Model optimization refers to techniques used to improve a machine learning model's efficiency, inference speed, or memory footprint. Iod includes utilities for model compression, model pruning, and quantization. These techniques reduce the size of trained models, often with only a small loss in accuracy.
10. Transfer Learning: Transfer learning is a technique in which knowledge gained while training a model on one task is reused for a different but related task. Iod supports transfer learning by providing pre-trained models and tools to fine-tune them on custom datasets, so that users can quickly adapt existing models to their specific needs.

In summary, Iod is a machine learning framework that covers the development, training, evaluation, and deployment of machine learning models. It offers tools and libraries for tasks such as NLP, computer vision, and model optimization. By using Iod, developers can streamline the process of building and deploying machine learning applications.
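The text does not show Iod's own API. The following framework-independent sketch therefore illustrates one of the model optimization techniques listed in item 9: post-training quantization of a weight tensor to 8-bit integers. The function names and the symmetric, per-tensor scaling scheme are illustrative assumptions, not Iod functions.

```python
from typing import List, Tuple

# Hypothetical helpers for illustration; this is not an Iod API.


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization of float weights to integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # The largest magnitude maps to 127; an all-zero tensor gets scale 1.0.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest code and clamp to guard against floating-point overshoot.
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_int8(codes: List[int], scale: float) -> List[float]:
    """Map integer codes back to approximate float weights."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    w = [0.5, -1.27, 0.0, 0.031, 1.0]
    codes, scale = quantize_int8(w)
    w_hat = dequantize_int8(codes, scale)
    # Each code fits in one signed byte instead of four bytes for float32;
    # the rounding error per weight is at most about scale / 2.
    print(codes, scale)
    print(max(abs(a - b) for a, b in zip(w, w_hat)))
```

Each weight is stored as a single byte plus one shared float scale. This is where the size reduction comes from. The price is a bounded rounding error per weight.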

Classification

Classification is a fundamental task in machine learning and data analysis. It involves assigning data to predefined classes or categories based on their features or characteristics. The goal of classification is to build a model that can accurately predict the class of new, unseen instances.

In this document, we will explore the concept of classification, different types of classification algorithms, and their applications in various domains. We will also discuss the process of building and evaluating a classification model.

I. Introduction to Classification

A. Definition and Importance of Classification

Classification is the process of assigning predefined labels or classes to instances based on their relevant features. It plays a vital role in numerous fields, including finance, healthcare, marketing, and customer service. By classifying data, organizations can make informed decisions, automate processes, and enhance efficiency.

B. Types of Classification Problems

1. Binary Classification: In binary classification, instances are classified into one of two classes. Spam detection, fraud detection, and sentiment analysis framed as positive versus negative are examples of binary classification problems.
2. Multi-class Classification: In multi-class classification, instances are classified into one of more than two classes. Examples include document categorization, image recognition, and disease diagnosis.

II. Classification Algorithms

A. Decision Trees

Decision trees are widely used for classification tasks. They provide a clear and interpretable way to classify instances by building a tree-like model. Each internal node tests a feature, and an instance follows the matching branches until it reaches a leaf node, which holds the class label. Popular decision tree algorithms include C4.5 and CART. Random Forest is a widely used ensemble method that combines many decision trees.

B. Naive Bayes

Naive Bayes is a probabilistic classification algorithm based on Bayes' theorem. It assumes that the features are conditionally independent of each other given the class, a simplifying assumption that often does not hold in the real world. Despite this, Naive Bayes is known for its simplicity and efficiency and works well in text classification and spam filtering.

C. Support Vector Machines

Support Vector Machines (SVMs) are powerful classification algorithms that find the hyperplane separating the classes with the largest margin. With kernel functions, which implicitly map instances into a higher-dimensional space, SVMs can handle both linear and non-linear classification problems. They have applications in image recognition, handwritten digit recognition, and text categorization.

D. K-Nearest Neighbors (KNN)

K-Nearest Neighbors is a simple yet effective classification algorithm. It classifies an instance by a majority vote among its k nearest neighbors in the training set. KNN is a non-parametric algorithm, meaning it does not assume any specific distribution of the data. It has applications in recommendation systems and pattern recognition.

E. Artificial Neural Networks (ANN)

Artificial Neural Networks are inspired by the biological structure of the human brain. They consist of interconnected nodes (neurons) organized in layers. ANN architectures such as Multilayer Perceptrons and Convolutional Neural Networks have achieved remarkable success in various classification tasks, including image recognition, speech recognition, and natural language processing.

III. Building a Classification Model

A. Data Preprocessing

Before a classification algorithm is applied, the data usually needs preprocessing. This step involves cleaning the data, handling missing values, and encoding categorical variables. It may also include feature scaling and dimensionality reduction techniques like Principal Component Analysis (PCA).

B. Training and Testing

To build a classification model, a labeled dataset is divided into a training set and a testing set. The training set is used to fit the model, while the testing set is used to evaluate its performance on data it has not seen. Cross-validation techniques such as k-fold cross-validation give more reliable performance estimates by averaging results over several different train/test splits.

C. Evaluation Metrics

Several metrics can be used to evaluate the performance of a classification model. Commonly used metrics include:

- accuracy;
- precision, the fraction of predicted positives that are correct;
- recall, the fraction of actual positives that are found;
- F1-score, the harmonic mean of precision and recall.

Additionally, the ROC curve and its AUC (Area Under the Curve) assess the model's performance across different probability thresholds.

IV. Applications of Classification

A. Spam Detection

Classification algorithms can be used to detect spam emails. By training a model on a dataset of labeled spam and non-spam emails, it can learn to classify incoming emails as either spam or legitimate.

B. Fraud Detection

Classification algorithms are essential in fraud detection systems. By analyzing features such as account activity, transaction patterns, and user behavior, a model can identify potentially fraudulent transactions or activities.

C. Disease Diagnosis

Classification algorithms can assist in disease diagnosis by analyzing patient data, including symptoms, medical history, and test results. By comparing a patient's data against historical data, the model can estimate the likelihood of a specific disease.

D. Image Recognition

Classification algorithms, particularly deep learning models like Convolutional Neural Networks (CNNs), have revolutionized image recognition. They can accurately identify objects or scenes in images, enabling applications like facial recognition and autonomous driving.

V. Conclusion

Classification is a vital task in machine learning and data analysis. It enables us to categorize instances into different classes based on their features. By understanding different classification algorithms and their applications, organizations can make better decisions, automate processes, and gain valuable insights from their data.
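To make the K-Nearest Neighbors description and the evaluation metrics above concrete, here is a minimal pure-Python sketch. It pairs a majority-vote KNN classifier using Euclidean distance with accuracy, precision, recall, and F1 for a binary problem. The toy data, the choice of k = 3, and the function names are illustrative assumptions.

```python
import math
from collections import Counter
from typing import Dict, Sequence, Tuple

Point = Tuple[float, ...]

# Illustrative sketch; the function names are hypothetical.


def knn_predict(train_x: Sequence[Point], train_y: Sequence[int],
                query: Point, k: int = 3) -> int:
    """Predict the label of `query` by majority vote among its k nearest neighbors."""
    # Sort training points by Euclidean distance to the query.
    neighbors = sorted(
        (math.dist(x, query), y) for x, y in zip(train_x, train_y)
    )
    votes = Counter(y for _, y in neighbors[:k])
    # Vote ties go to the label encountered first in distance order,
    # because Counter.most_common keeps first-seen order for equal counts.
    return votes.most_common(1)[0][0]


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int],
                   positive: int = 1) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 with respect to one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    correct = sum(t == p for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": correct / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}


if __name__ == "__main__":
    # Toy 2-D data: class 0 near the origin, class 1 near (5, 5).
    train_x = [(0, 0), (1, 0), (0, 1), (5, 5), (6, 5), (5, 6)]
    train_y = [0, 0, 0, 1, 1, 1]
    # (1, 1) is labeled 1 on purpose, so one prediction is wrong.
    test_x = [(0.5, 0.5), (5.5, 5.5), (4, 4), (1, 1)]
    test_y = [0, 1, 1, 1]
    preds = [knn_predict(train_x, train_y, q, k=3) for q in test_x]
    print(preds)  # [0, 1, 1, 0]
    print(binary_metrics(test_y, preds))
```

On this toy test set the model never raises a false alarm, so precision is 1.0. It misses one positive, so recall is 2/3. The F1-score of 0.8 summarizes both numbers.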

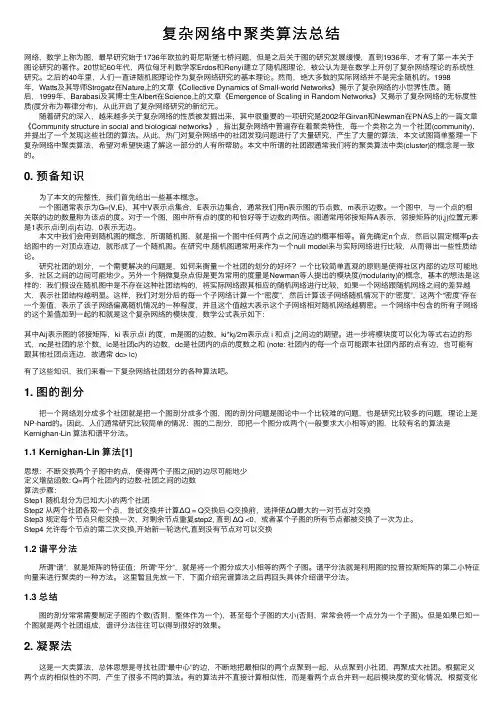

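A Summary of Clustering Algorithms in Complex Networks

Networks, known in mathematics as graphs, were first studied in 1736, when Euler analyzed the Seven Bridges of Königsberg problem. Research on graphs then progressed slowly, and the first book devoted to graph theory did not appear until 1936.

In the 1960s, the Hungarian mathematicians Erdős and Rényi established the theory of random graphs. Their work is widely regarded as the beginning of the systematic mathematical study of complex networks.

For the next 40 years, random graph theory served as the basic theory of complex-network research.

However, the vast majority of real-world networks are not completely random.

In 1998, Watts and his advisor Strogatz revealed the small-world property of complex networks in their Nature paper "Collective dynamics of 'small-world' networks".

Then, in 1999, Barabási and his doctoral student Albert published the Science paper "Emergence of Scaling in Random Networks". It showed that complex networks are scale-free, meaning their degree distribution follows a power law, and it opened a new era in complex-network research.

As research deepened, more and more properties of complex networks came to light. One important study was Girvan and Newman's 2002 PNAS paper "Community structure in social and biological networks". It pointed out that clustering is common in complex networks, called each cluster a community, and proposed an algorithm for finding these communities.

Since then, researchers have studied community detection in complex networks extensively and produced a large number of algorithms. This article attempts to briefly organize the clustering algorithms for complex networks, in the hope of helping readers who want a quick overview of the area.

In this article, a community means the same thing as a cluster in conventional clustering algorithms.

0. Preliminaries

For completeness, we first introduce some basic concepts.

A graph is usually written as G = (V, E), where V is the set of vertices and E is the set of edges. We usually use n for the number of vertices and m for the number of edges.

In a graph, the number of edges incident to a vertex is called the degree of that vertex.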
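The definitions above (G = (V, E), n, m, and vertex degree) can be illustrated with a small undirected graph stored as an edge list. The sketch below is not code from the original article, and the helper name is hypothetical. It also checks the handshake lemma: the degrees sum to 2m, because every edge adds one to the degree of each of its two endpoints.

```python
from typing import Dict, Iterable, Tuple

Edge = Tuple[str, str]


def degrees(vertices: Iterable[str], edges: Iterable[Edge]) -> Dict[str, int]:
    """Return the degree of every vertex of an undirected simple graph.

    Hypothetical helper for illustration only.
    """
    deg = {v: 0 for v in vertices}  # isolated vertices keep degree 0
    for u, v in edges:
        # Each edge is incident to both of its endpoints.
        deg[u] += 1
        deg[v] += 1
    return deg


if __name__ == "__main__":
    V = ["a", "b", "c", "d", "e"]
    E = [("a", "b"), ("a", "c"), ("b", "c"), ("c", "d")]  # "e" is isolated
    n, m = len(V), len(E)
    deg = degrees(V, E)
    print(n, m, deg)
    assert sum(deg.values()) == 2 * m  # handshake lemma
```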

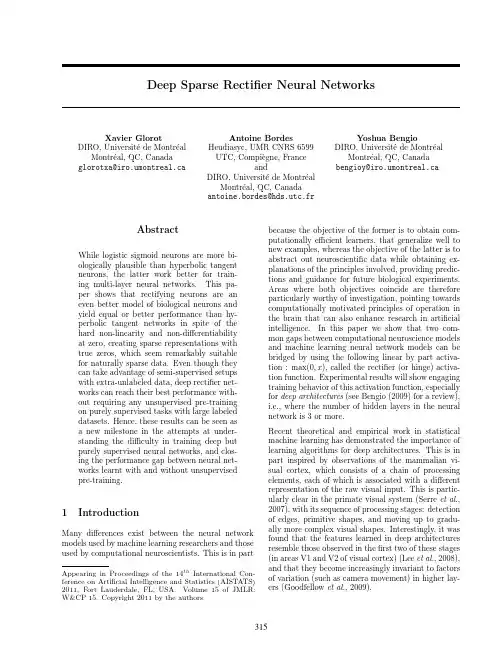

Deep Sparse Rectifier Neural Networks

Xavier Glorot, Antoine Bordes, Yoshua Bengio

- Xavier Glorot: DIRO, Université de Montréal, Montréal, QC, Canada (glorotxa@iro.umontreal.ca)
- Antoine Bordes: Heudiasyc, UMR CNRS 6599, UTC, Compiègne, France, and DIRO, Université de Montréal, Montréal, QC, Canada (antoine.bordes@hds.utc.fr)
- Yoshua Bengio: DIRO, Université de Montréal, Montréal, QC, Canada (bengioy@iro.umontreal.ca)

Appearing in Proceedings of the 14th International Conference on Artificial Intelligence and Statistics (AISTATS) 2011, Fort Lauderdale, FL, USA. Volume 15 of JMLR: W&CP 15. Copyright 2011 by the authors.

Abstract

While logistic sigmoid neurons are more biologically plausible than hyperbolic tangent neurons, the latter work better for training multi-layer neural networks. This paper shows that rectifying neurons are an even better model of biological neurons. They yield equal or better performance than hyperbolic tangent networks in spite of the hard non-linearity and non-differentiability at zero, and they create sparse representations with true zeros, which seem remarkably suitable for naturally sparse data. Even though they can take advantage of semi-supervised setups with extra unlabeled data, deep rectifier networks can reach their best performance on purely supervised tasks with large labeled datasets without requiring any unsupervised pre-training. Hence, these results can be seen as a new milestone in the attempts at understanding the difficulty in training deep but purely supervised neural networks, and closing the performance gap between neural networks learnt with and without unsupervised pre-training.

1 Introduction

Many differences exist between the neural network models used by machine learning researchers and those used by computational neuroscientists. This is in part because the objective of the former is to obtain computationally efficient learners that generalize well to new examples, whereas the objective of the latter is to abstract out neuroscientific data while obtaining explanations of the principles involved, providing predictions
and guidance for future biological experiments. Areas where both objectives coincide are therefore particularly worthy of investigation, pointing towards computationally motivated principles of operation in the brain that can also enhance research in artificial intelligence. In this paper we show that two common gaps between computational neuroscience models and machine learning neural network models can be bridged by using the following piecewise linear activation: max(0, x), called the rectifier (or hinge) activation function. Experimental results will show engaging training behavior of this activation function, especially for deep architectures (see Bengio (2009) for a review), i.e., where the number of hidden layers in the neural network is 3 or more.

Recent theoretical and empirical work in statistical machine learning has demonstrated the importance of learning algorithms for deep architectures. This is in part inspired by observations of the mammalian visual cortex, which consists of a chain of processing elements, each of which is associated with a different representation of the raw visual input. This is particularly clear in the primate visual system (Serre et al., 2007), with its sequence of processing stages: detection of edges, then primitive shapes, and moving up to gradually more complex visual shapes. Interestingly, it was found that the features learned in deep architectures resemble those observed in the first two of these stages (in areas V1 and V2 of visual cortex) (Lee et al., 2008), and that they become increasingly invariant to factors of variation (such as camera movement) in higher layers (Goodfellow et al., 2009).

Regarding the training of deep networks, something that can be considered a breakthrough happened in 2006, with the introduction of Deep Belief Networks (Hinton et al., 2006), and more generally the idea of initializing each layer by unsupervised learning (Bengio et al., 2007; Ranzato et al., 2007). Some authors have tried to understand
why this unsupervised procedure helps (Erhan et al., 2010) while others investigated why the original training procedure for deep neural networks failed (Bengio and Glorot, 2010). From the machine learning point of view, this paper brings additional results in these lines of investigation.

We propose to explore the use of rectifying non-linearities as alternatives to the hyperbolic tangent or sigmoid in deep artificial neural networks. We also use an L1 regularizer on the activation values to promote sparsity and prevent potential numerical problems with unbounded activation. Nair and Hinton (2010) present promising results of the influence of such units in the context of Restricted Boltzmann Machines compared to logistic sigmoid activations on image classification tasks. Our work extends this to the case of pre-training using denoising auto-encoders (Vincent et al., 2008). It also provides an extensive empirical comparison of the rectifying activation function against the hyperbolic tangent on image classification benchmarks, as well as an original derivation for the text application of sentiment analysis.

Our experiments on image and text data indicate that training proceeds better when the artificial neurons are either off or operating mostly in a linear regime. Surprisingly, rectifying activation allows deep networks to achieve their best performance without unsupervised pre-training. Hence, our work proposes a new contribution to the trend of understanding and closing the performance gap between deep networks learnt with and without unsupervised pre-training (Erhan et al., 2010; Bengio and Glorot, 2010). Still, rectifier networks can benefit from unsupervised pre-training in the context of semi-supervised learning where large amounts of unlabeled data are provided. Furthermore, rectifier units naturally lead to sparse networks and are closer to biological neurons' responses in their main operating regime. This work therefore also bridges (in part) a machine learning/neuroscience
gap in terms of activation function and sparsity.

This paper is organized as follows. Section 2 presents some neuroscience and machine learning background which inspired this work. Section 3 introduces rectifier neurons and explains their potential benefits and drawbacks in deep networks. Then we propose an experimental study with empirical results on image recognition in Section 4.1 and sentiment analysis in Section 4.2. Section 5 presents our conclusions.

2 Background

2.1 Neuroscience Observations

For models of biological neurons, the activation function is the expected firing rate as a function of the total input currently arising out of incoming signals at synapses (Dayan and Abbott, 2001). An activation function is termed symmetric when its response to the opposite of a strongly excitatory input pattern is a strongly excitatory one. It is termed antisymmetric when that response is a strongly inhibitory one, and one-sided when that response is zero. The main gaps that we wish to consider between computational neuroscience models and machine learning models are the following:

• Studies on brain energy expense suggest that neurons encode information in a sparse and distributed way (Attwell and Laughlin, 2001), estimating the percentage of neurons active at the same time to be between 1 and 4% (Lennie, 2003). This corresponds to a trade-off between richness of representation and small action potential energy expenditure. Without additional regularization, such as an L1 penalty, ordinary feedforward neural nets do not have this property. For example, the sigmoid activation has a steady state regime around 1/2; therefore, after initializing with small weights, all neurons fire at half their saturation regime. This is biologically implausible and hurts gradient-based optimization (LeCun et al., 1998; Bengio and Glorot, 2010).

• Important divergences between biological and machine learning models concern non-linear activation functions. A common biological model of neuron, the leaky integrate-and-fire (or LIF) (Dayan and
Abbott, 2001), gives the following relation between the firing rate and the input current, illustrated in Figure 1 (left):

$$
f(I) = \begin{cases}
\left[\tau \log\left(\dfrac{E + RI - V_r}{E + RI - V_{th}}\right) + t_{ref}\right]^{-1}, & \text{if } E + RI > V_{th} \\
0, & \text{if } E + RI \le V_{th}
\end{cases}
$$

where $t_{ref}$ is the refractory period (minimal time between two action potentials), $I$ the input current, $V_r$ the resting potential and $V_{th}$ the threshold potential (with $V_{th} > V_r$), and $R$, $E$, $\tau$ the membrane resistance, potential and time constant. The most commonly used activation functions in the deep learning and neural networks literature are the standard logistic sigmoid and the hyperbolic tangent (see Figure 1, right), which are equivalent up to a linear transformation. The hyperbolic tangent has a steady state at 0, and is therefore preferred from the optimization standpoint (LeCun et al., 1998; Bengio and Glorot, 2010), but it forces an antisymmetry around 0 which is absent in biological neurons.

Figure 1: Left: Common neural activation function motivated by biological data. Right: Commonly used activation functions in neural networks literature: logistic sigmoid and hyperbolic tangent (tanh).

2.2 Advantages of Sparsity

Sparsity has become a concept of interest, not only in computational neuroscience and machine learning but also in statistics and signal processing (Candes and Tao, 2005). It was first introduced in computational neuroscience in the context of sparse coding in the visual system (Olshausen and Field, 1997). It has been a key element of deep convolutional networks exploiting a variant of auto-encoders (Ranzato et al., 2007, 2008; Mairal et al., 2009) with a sparse distributed representation, and has also become a key ingredient in Deep Belief Networks (Lee et al., 2008). A sparsity penalty has been used in several computational neuroscience models (Olshausen and Field, 1997; Doi et al., 2006) and machine learning models (Lee et al., 2007; Mairal et al., 2009), in particular for deep architectures (Lee et al., 2008; Ranzato et al., 2007, 2008). However, in the latter, the neurons end up taking small but non-zero
activation or firing probability. We show here that using a rectifying non-linearity gives rise to real zeros of activations and thus truly sparse representations. From a computational point of view, such representations are appealing for the following reasons:

• Information disentangling. One of the claimed objectives of deep learning algorithms (Bengio, 2009) is to disentangle the factors explaining the variations in the data. A dense representation is highly entangled because almost any change in the input modifies most of the entries in the representation vector. Instead, if a representation is both sparse and robust to small input changes, the set of non-zero features is almost always roughly conserved by small changes of the input.

• Efficient variable-size representation. Different inputs may contain different amounts of information and would be more conveniently represented using a variable-size data structure, which is common in computer representations of information. Varying the number of active neurons allows a model to control the effective dimensionality of the representation for a given input and the required precision.

• Linear separability. Sparse representations are also more likely to be linearly separable, or more easily separable with less non-linear machinery, simply because the information is represented in a high-dimensional space. Besides, this can reflect the original data format. In text-related applications for instance, the original raw data is already very sparse (see Section 4.2).

• Distributed but sparse. Dense distributed representations are the richest representations, being potentially exponentially more efficient than purely local ones (Bengio, 2009). Sparse representations' efficiency is still exponentially greater, with the power of the exponent being the number of non-zero features. They may represent a good trade-off with respect to the above criteria.

Nevertheless, forcing too much sparsity may hurt predictive performance for an equal number of neurons, because it reduces the
effective capacity of the model.

Figure 2: Left: Sparse propagation of activations and gradients in a network of rectifier units. The input selects a subset of active neurons and computation is linear in this subset. Right: Rectifier and softplus activation functions. The second one is a smooth version of the first.

3 Deep Rectifier Networks

3.1 Rectifier Neurons

The neuroscience literature (Bush and Sejnowski, 1995; Douglas et al., 2003) indicates that cortical neurons are rarely in their maximum saturation regime, and suggests that their activation function can be approximated by a rectifier. Most previous studies of neural networks involving a rectifying activation function concern recurrent networks (Salinas and Abbott, 1996; Hahnloser, 1998).

The rectifier function rectifier(x) = max(0, x) is one-sided and therefore does not enforce a sign symmetry¹ or antisymmetry¹. Instead, the response to the opposite of an excitatory input pattern is 0 (no response). However, we can obtain symmetry or antisymmetry by combining two rectifier units sharing parameters.

¹ The hyperbolic tangent absolute value non-linearity |tanh(x)| used by Jarrett et al. (2009) enforces sign symmetry. A tanh(x) non-linearity enforces sign antisymmetry.

Advantages. The rectifier activation function allows a network to easily obtain sparse representations. For example, after uniform initialization of the weights, around 50% of hidden units' continuous output values are real zeros, and this fraction can easily increase with sparsity-inducing regularization. Apart from being more biologically plausible, sparsity also leads to mathematical advantages (see previous section).

As illustrated in Figure 2 (left), the only non-linearity in the network comes from the path selection associated with individual neurons being active or not. For a given input only a subset of neurons are active. Computation is linear on this subset: once this subset of neurons is selected, the output is a linear function of the
input (although a large enough change can trigger a discrete change of the active set of neurons). The function computed by each neuron or by the network output in terms of the network input is thus piecewise linear. We can see the model as an exponential number of linear models that share parameters (Nair and Hinton, 2010). Because of this linearity, gradients flow well on the active paths of neurons, since there is no gradient vanishing effect due to activation non-linearities of sigmoid or tanh units. Mathematical investigation is also easier. Computations are also cheaper: there is no need for computing the exponential function in activations, and sparsity can be exploited.

Potential Problems. One may hypothesize that the hard saturation at 0 may hurt optimization by blocking gradient back-propagation. To evaluate the potential impact of this effect we also investigate the softplus activation: softplus(x) = log(1 + e^x) (Dugas et al., 2001), a smooth version of the rectifying non-linearity. We lose the exact sparsity, but may hope to gain easier training. However, experimental results (see Section 4.1) tend to contradict that hypothesis, suggesting that hard zeros can actually help supervised training. We hypothesize that the hard non-linearities do not hurt so long as the gradient can propagate along some paths, i.e., that some of the hidden units in each layer are non-zero. With the credit and blame assigned to these ON units rather than distributed more evenly, we hypothesize that optimization is easier. Another problem could arise due to the unbounded behavior of the activations; one may thus want to use a regularizer to prevent potential numerical problems. Therefore, we use the L1 penalty on the activation values, which also promotes additional sparsity. Also recall that, in order to efficiently represent symmetric/antisymmetric behavior in the data, a rectifier network would need twice as many hidden units as a network of symmetric/antisymmetric
activation functions.

Finally, rectifier networks are subject to ill-conditioning of the parametrization. Biases and weights can be scaled in different (and consistent) ways while preserving the same overall network function. More precisely, consider for each layer of depth $i$ of the network a positive scalar $\alpha_i$, and scale the parameters as

$$
W_i' = \frac{W_i}{\alpha_i}, \qquad b_i' = \frac{b_i}{\prod_{j=1}^{i} \alpha_j}.
$$

The output unit values then change as follows:

$$
s' = \frac{s}{\prod_{j=1}^{n} \alpha_j}.
$$

Therefore, as long as $\prod_{j=1}^{n} \alpha_j = 1$, the network function is identical.

3.2 Unsupervised Pre-training

This paper is particularly inspired by the sparse representations learned in the context of auto-encoder variants, as they have been found to be very useful in training deep architectures (Bengio, 2009), especially for unsupervised pre-training of neural networks (Erhan et al., 2010).

Nonetheless, certain difficulties arise when one wants to introduce rectifier activations into stacked denoising auto-encoders (Vincent et al., 2008). First, the hard saturation below the threshold of the rectifier function is not suited for the reconstruction units. Indeed, whenever the network happens to reconstruct a zero in place of a non-zero target, the reconstruction unit cannot backpropagate any gradient.² Second, the unbounded behavior of the rectifier activation also needs to be taken into account. In the following, we use this notation:

- $\tilde{x}$ is the corrupted version of the input $x$;
- $\sigma(\cdot)$ is the logistic sigmoid function;
- $\theta$ denotes the model parameters $(W_{enc}, b_{enc}, W_{dec}, b_{dec})$.

We define the linear reconstruction function as:

$$
f(x, \theta) = W_{dec} \max(W_{enc} x + b_{enc}, 0) + b_{dec}.
$$

Here are the strategies we have experimented with:

1. Use a softplus activation function for the reconstruction layer, along with a quadratic cost:
   $$L(x, \theta) = \lVert x - \log(1 + \exp(f(\tilde{x}, \theta))) \rVert^2.$$
2. Scale the rectifier activation values coming from the previous encoding layer to bound them between 0 and 1, then use a sigmoid activation function for the reconstruction layer, along with a cross-entropy reconstruction cost:
   $$L(x, \theta) = -x \log(\sigma(f(\tilde{x}, \theta))) - (1 - x) \log(1 - \sigma(f(\tilde{x}, \theta))).$$
3. Use a linear activation function for the reconstruction layer, along with a quadratic cost. We tried to use input unit values either before or after the rectifier non-linearity as reconstruction targets. (For the first layer, raw inputs are directly used.)
4. Use a rectifier activation function for the reconstruction layer, along with a quadratic cost.

The first strategy has proven to yield better generalization on image data and the second one on text data. Consequently, the following experimental study presents results using those two.

² Why is this not a problem for hidden layers too? We hypothesize that it is because gradients can still flow through the active (non-zero) units, possibly helping rather than hurting the assignment of credit.

4 Experimental Study

This section discusses our empirical evaluation of rectifier units for deep networks. We first compare them to hyperbolic tangent and softplus activations on image benchmarks with and without pre-training, and then apply them to the text task of sentiment analysis.

4.1 Image Recognition

Experimental setup. We considered the image datasets detailed below. Each of them has a training set (for tuning parameters), a validation set (for tuning hyper-parameters) and a test set (for reporting generalization performance). For each dataset we give the number of training/validation/test examples, the image size, and the number of classes:

• MNIST (LeCun et al., 1998): 50k/10k/10k, 28×28 digit images, 10 classes.

• CIFAR10 (Krizhevsky and Hinton, 2009): 50k/5k/5k, 32×32×3 RGB images, 10 classes.

• NISTP: 81,920k/80k/20k, 32×32 character images from the NIST database 19, with randomized distortions (Bengio et al., 2010), 62 classes. This dataset is much larger and more difficult than the original NIST (Grother, 1995).

• NORB: 233,172/58,428/58,320, taken from Jittered-Cluttered NORB (LeCun et al., 2004). Stereo-pair images of toys on a cluttered background, 6 classes. The data has been preprocessed similarly to (Nair and
Hinton,2010):we subsampled the original 2×108×108stereo-pair images to 2×32×32and scaled linearly the image in the range [−1,1].We followed the procedure used by Nair and Hinton (2010)to create the validation set.Deep Sparse Rectifier Neural NetworksTable1:Test error on networks of depth3.Bold results represent statistical equivalence between similar ex-periments,with and without pre-training,under the null hypothesis of the pairwise test with p=0.05.Neuron MNIST CIF AR10NISTP NORB With unsupervised pre-trainingRectifier 1.20%49.96%32.86%16.46% Tanh 1.16%50.79%35.89%17.66% Softplus 1.17%49.52%33.27%19.19% Without unsupervised pre-trainingRectifier 1.43%50.86%32.64%16.40% Tanh 1.57%52.62%36.46%19.29% Softplus 1.77%53.20%35.48%17.68% For all experiments except on the NORB data(Le-Cun et al.,2004),the models we used are stacked denoising auto-encoders(Vincent et al.,2008)with three hidden layers and1000units per layer.The ar-chitecture of Nair and Hinton(2010)has been used on NORB:two hidden layers with respectively4000 and2000units.We used a cross-entropy reconstruc-tion cost for tanh networks and a quadratic cost over a softplus reconstruction layer for the rectifier and softplus networks.We chose masking noise as the corruption process:each pixel has a probability of0.25of being artificially set to0.The unsuper-vised learning rate is constant,and the following val-ues have been explored:{.1,.01,.001,.0001}.We se-lect the model with the lowest reconstruction error. 
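The pre-training cost used for the rectifier networks (strategy 1: a softplus reconstruction layer with a quadratic cost) and the masking-noise corruption can be sketched in NumPy as below. This is a minimal illustration of the forward computation only, assuming a single auto-encoder layer; the function names, layer sizes and initialization are hypothetical choices, not the authors' code, and no gradient or training loop is shown.

```python
import numpy as np

def mask_noise(x, p, rng):
    """Masking noise: each input component is set to 0 with probability p."""
    keep = rng.random(x.shape) >= p
    return x * keep

def reconstruct_preactivation(x_tilde, W_enc, b_enc, W_dec, b_dec):
    """f(x~, theta) = W_dec max(W_enc x~ + b_enc, 0) + b_dec."""
    h = np.maximum(W_enc @ x_tilde + b_enc, 0.0)   # rectifier hidden layer
    return W_dec @ h + b_dec

def softplus_quadratic_cost(x, x_tilde, params):
    """Strategy 1: L(x, theta) = ||x - log(1 + exp(f(x~, theta)))||^2."""
    f = reconstruct_preactivation(x_tilde, *params)
    x_hat = np.logaddexp(0.0, f)   # numerically stable softplus: log(exp(0) + exp(f))
    return float(np.sum((x - x_hat) ** 2))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_in, n_hid = 784, 1000        # hypothetical MNIST-sized input, 1000 hidden units
    params = (rng.normal(0.0, 0.01, (n_hid, n_in)), np.zeros(n_hid),
              rng.normal(0.0, 0.01, (n_in, n_hid)), np.zeros(n_in))
    x = rng.random(n_in)           # stand-in for pixel intensities in [0, 1)
    x_tilde = mask_noise(x, 0.25, rng)   # corruption probability 0.25, as in the text
    print("fraction masked:", np.mean(x_tilde == 0))
    print("reconstruction cost:", softplus_quadratic_cost(x, x_tilde, params))
```

Note that the cost compares the softplus reconstruction with the clean input x, while the encoder only sees the corrupted x̃; that asymmetry is what makes the auto-encoder "denoising".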
For the supervised fine-tuning we chose a constant learning rate in the same range as the unsupervised learning rate, selected according to the supervised validation error. The training cost is the negative log likelihood −log P(correct class | input), where the probabilities are obtained from the output layer (which implements a softmax logistic regression). We used stochastic gradient descent with mini-batches of size 10 for both unsupervised and supervised training phases.

To take into account the potential problem of rectifier units not being symmetric around 0, we use a variant of the activation function for which half of the units' output values are multiplied by −1. This serves to cancel out the mean activation value for each layer and can be interpreted either as inhibitory neurons or simply as a way to equalize activations numerically. Additionally, an L1 penalty on the activations with a coefficient of 0.001 was added to the cost function during pre-training and fine-tuning in order to increase the amount of sparsity in the learned representations.
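The two training details above, the sign-flipped rectifier and the L1 penalty on activations, can be sketched in a few lines of NumPy, together with the "exact sparsity" (fraction of activations that are exactly zero) reported in the main results. Which half of the units is negated (here the second half, by index) and the helper names are assumptions for illustration; the text only says "half of the units".

```python
import numpy as np

def signed_rectifier(z):
    """Rectifier in which the second half of the units (by index) is multiplied by -1.

    Assumption: the split into halves is by unit index along the last axis.
    """
    h = np.maximum(z, 0.0)
    sign = np.ones(z.shape[-1])
    sign[z.shape[-1] // 2:] = -1.0
    return h * sign

def l1_activation_penalty(h, coef=0.001):
    """L1 penalty on hidden activations, to be added to the training cost."""
    return coef * float(np.abs(h).sum())

def exact_sparsity(h):
    """Fraction of activations that are exactly zero (sign flips do not change it)."""
    return float(np.mean(h == 0.0))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    z = rng.normal(size=(100, 1000))     # hypothetical pre-activations: 100 examples, 1000 units
    h = signed_rectifier(z)
    print("mean activation:", h.mean())  # near 0, since half of the units are negated
    print("exact sparsity:", exact_sparsity(h))
    print("L1 penalty:", l1_activation_penalty(h))
```

Because the penalty acts on |h|, negating half of the units leaves both the penalty and the sparsity measure unchanged; only the mean activation of the layer is affected.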
Main results. Table 1 summarizes the results on networks of 3 hidden layers of 1000 hidden units each, comparing all the neuron types³ on all the datasets, with or without unsupervised pre-training. In the latter case, the supervised training phase has been carried out using the same experimental setup as the one described above for fine-tuning. The main observations we make are the following:

• Despite the hard threshold at 0, networks trained with the rectifier activation function can find local minima of greater or equal quality than those obtained with its smooth counterpart, the softplus. On NORB, we tested a rescaled version of the softplus defined by $\frac{1}{\alpha}\mathrm{softplus}(\alpha x)$, which allows us to interpolate in a smooth manner between the softplus ($\alpha = 1$) and the rectifier ($\alpha = \infty$). We obtained the following α/test error couples: 1/17.68%, 1.3/17.53%, 2/16.9%, 3/16.66%, 6/16.54%, ∞/16.40%. There is no trade-off between those activation functions. Rectifiers are not only biologically plausible, they are also computationally efficient.
• There is almost no improvement when using unsupervised pre-training with rectifier activations, contrary to what is experienced using tanh or softplus. Purely supervised rectifier networks remain competitive on all 4 datasets, even against the pretrained tanh or softplus models.
• Rectifier networks are truly deep sparse networks. There is an average exact sparsity (fraction of zeros) of the hidden layers of 83.4% on MNIST, 72.0% on CIFAR10, 68.0% on NISTP and 73.8% on NORB. Figure 3 provides a better understanding of the influence of sparsity. It displays the MNIST test error of deep rectifier networks (without pre-training) according to different average sparsity obtained by varying the L1 penalty on the activations. Networks appear to be quite robust to it, as models with 70% to almost 85% of true zeros can achieve similar performances.

Figure 3: Influence of final sparsity on accuracy. 200 randomly initialized deep rectifier networks were trained on MNIST with various L1 penalties (from 0 to 0.01) to obtain different sparsity levels. Results show that enforcing sparsity of the activation does not hurt final performance until around 85% of true zeros.

³ We also tested a rescaled version of the LIF and max(tanh(x), 0) as activation functions. We obtained worse generalization performance than those of Table 1, and chose not to report them.

With labeled data, deep rectifier networks appear to be attractive models. They are biologically credible, and, compared to their standard counterparts, do not seem to depend as much on unsupervised pre-training, while ultimately yielding sparse representations.

This last conclusion is slightly different from those reported in (Nair and Hinton, 2010), in which it is demonstrated that unsupervised pre-training with Restricted Boltzmann Machines and using rectifier units is beneficial. In particular, the paper reports that pre-trained rectified Deep Belief Networks can achieve a test error on NORB below 16%. However, we believe that our results are compatible with those: we extend the experimental framework to a different kind of models (stacked denoising auto-encoders) and different datasets (on which conclusions seem to be different). Furthermore, note that our rectified model without pre-training on NORB is very competitive (16.4% error) and outperforms the 17.6% error of the non-pretrained model from Nair and Hinton (2010), which is basically what we find with the non-pretrained softplus units (17.68% error).

Semi-supervised setting. Figure 4 presents results of semi-supervised experiments conducted on the NORB dataset. We vary the percentage of the original labeled training set which is used for the supervised training phase of the rectifier and hyperbolic tangent networks and evaluate the effect of the unsupervised pre-training (using the whole training set, unlabeled). Confirming conclusions of Erhan et al. (2010), the network with hyperbolic tangent activations improves with unsupervised pre-training for any labeled set size (even when all the training set is labeled).

However, the picture changes with rectifying activations. In semi-supervised setups (with few labeled data), the pre-training is highly beneficial. But the more the labeled set grows, the closer the models with and without pre-training. Eventually, when all available data is labeled, the two models achieve identical performance. Rectifier networks can maximally exploit labeled and unlabeled information.

Figure 4: Effect of unsupervised pre-training. On NORB, we compare hyperbolic tangent and rectifier networks, with or without unsupervised pre-training, and fine-tune only on subsets of increasing size of the training set.

4.2 Sentiment Analysis

Nair and Hinton (2010) also demonstrated that rectifier units were efficient for image-related tasks. They mentioned the intensity equivariance property (i.e. without bias parameters the network function is linearly variant to intensity changes in the input) as an argument to explain this observation. This would suggest that rectifying activation is mostly useful for image data. In this section, we investigate a different modality to cast a fresh light on rectifier units.

A recent study (Zhou et al., 2010) shows that Deep Belief Networks with binary units are competitive with the state-of-the-art methods for sentiment analysis.
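Both the intensity equivariance property mentioned above and the parameter rescaling discussed before Section 3.2 rest on the positive homogeneity of the rectifier: max(0, c·z) = c·max(0, z) for any c > 0 (so the rescaling argument needs every α_i > 0). The NumPy sketch below checks both properties numerically on a small random network with a linear output layer; the layer widths, α values and helper names are hypothetical.

```python
import numpy as np

def forward(x, weights, biases):
    """Rectifier hidden layers followed by a linear output layer; returns s."""
    h = x
    for W, b in zip(weights[:-1], biases[:-1]):
        h = np.maximum(W @ h + b, 0.0)
    return weights[-1] @ h + biases[-1]

def rescale(weights, biases, alphas):
    """W'_i = W_i / alpha_i and b'_i = b_i / prod_{j<=i} alpha_j (all alpha_i > 0)."""
    new_w, new_b, prod = [], [], 1.0
    for W, b, a in zip(weights, biases, alphas):
        prod *= a
        new_w.append(W / a)
        new_b.append(b / prod)
    return new_w, new_b

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    sizes = [8, 16, 16, 3]                      # hypothetical layer widths
    weights = [rng.normal(size=(o, i)) for i, o in zip(sizes[:-1], sizes[1:])]
    biases = [rng.normal(size=o) for o in sizes[1:]]
    x = rng.normal(size=sizes[0])
    s = forward(x, weights, biases)

    # Rescaling invariance: 2 * 0.25 * 2 = 1, so the network function is unchanged.
    w2, b2 = rescale(weights, biases, [2.0, 0.25, 2.0])
    print(np.allclose(forward(x, w2, b2), s))   # True

    # Intensity equivariance: without biases, scaling the input by c > 0 scales the output by c.
    no_bias = [np.zeros_like(b) for b in biases]
    print(np.allclose(forward(5.0 * x, weights, no_bias),
                      5.0 * forward(x, weights, no_bias)))   # True
```

If the product of the α_i differs from 1, the same code shows the output being divided by that product, which is exactly the ill-conditioning described in the text: many parameter settings represent the same function up to a scale.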
This indicates that deep learning is appropriate to this text task, which therefore seems ideal to observe the behavior of rectifier units on a different modality, and to provide a data point towards the hypothesis that rectifier nets are particularly appropriate for sparse input vectors, such as those found in NLP. Sentiment analysis is a text mining area which aims to determine the judgment of a writer with respect to a given topic (see (Pang and Lee, 2008) for a review). The basic task consists in classifying the polarity of reviews either by predicting whether the expressed opinions are positive or negative, or by assigning them star ratings on either 3, 4 or 5 star scales.

Following a task originally proposed by Snyder and Barzilay (2007), our data consists of restaurant reviews which have been extracted from the restaurant review site . We have access to 10,000 labeled and 300,000 unlabeled training reviews, while the test set contains 10,000 examples. The goal is to predict the rating on a 5 star scale and performance is evaluated using Root Mean Squared Error (RMSE).⁴

⁴ Even though our tasks are identical, our database is
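The RMSE criterion used for the 5-star rating task penalizes large rating errors more heavily than small ones. A minimal plain-Python sketch; the ratings in the usage line are made-up numbers for illustration, not data from the paper.

```python
import math

def rmse(predicted, actual):
    """Root mean squared error between predicted and true star ratings."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared) / len(squared))

# Made-up predictions for four reviews on a 5-star scale.
print(rmse([4.0, 2.5, 5.0, 1.0], [5, 2, 4, 1]))  # 0.75
```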

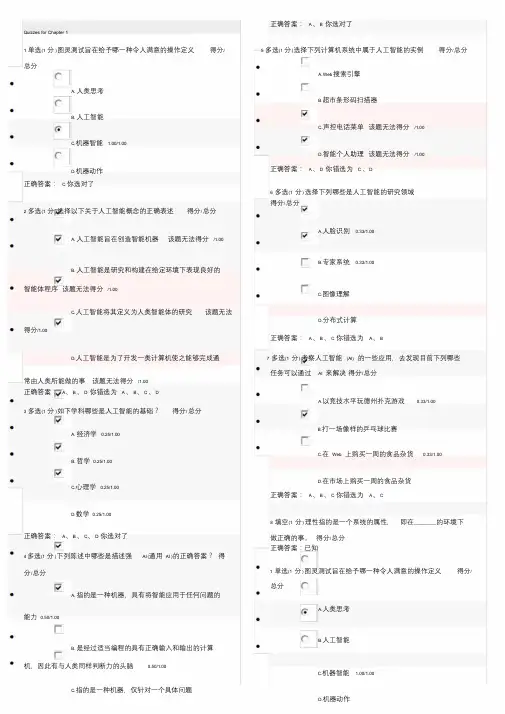

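Correct answer: A, B. You chose correctly.

Quizzes for Chapter 1

1 Single choice (1 point): The Turing test is designed to provide a satisfactory operational definition of which of the following?
A. Human thinking
B. Artificial intelligence
C. Machine intelligence (1.00/1.00)
D. Machine action
Correct answer: C. You chose correctly.

2 Multiple choice (1 point): Select the correct statements about the concept of artificial intelligence.
A. AI aims to create intelligent machines. (no credit/1.00)
B. AI is the study and construction of agent programs that perform well in a given environment. (no credit/1.00)
C. AI is defined as the study of human agents. (no credit/1.00)
D. AI aims to develop a class of computers able to do things normally done by humans. (no credit/1.00)
Correct answer: A, B, D. You wrongly chose A, B, C, D.

3 Multiple choice (1 point): Which of the following disciplines are foundations of artificial intelligence?
A. Economics (0.25/1.00)
B. Philosophy (0.25/1.00)
C. Psychology (0.25/1.00)
D. Mathematics (0.25/1.00)
Correct answer: A, B, C, D. You chose correctly.

4 Multiple choice (1 point): Which of the following statements correctly describe strong AI (general AI)?
A. It refers to a machine with the ability to apply intelligence to any problem. (0.50/1.00)
B. It is an appropriately programmed computer with the right inputs and outputs, and therefore has a mind with the same judgment as a human. (0.50/1.00)
C. It refers to a machine aimed at only one specific problem.
D. It is defined as non-sentient computer intelligence, or AI focused on one narrow task.

5 Multiple choice (1 point): Which of the following computer systems are instances of artificial intelligence?
A. Web search engine
B. Supermarket barcode scanner
C. Voice-controlled telephone menu (no credit/1.00)
D. Intelligent personal assistant (no credit/1.00)
Correct answer: A, D. You wrongly chose C, D.

6 Multiple choice (1 point): Which of the following are research areas of artificial intelligence?
A. Face recognition (0.33/1.00)
B. Expert systems (0.33/1.00)
C. Image understanding
D. Distributed computing
Correct answer: A, B, C. You wrongly chose A, B.

7 Multiple choice (1 point): Considering some applications of AI, which of the following tasks can currently be solved by AI?
A. Playing Texas hold'em poker at a competitive level (0.33/1.00)
B. Playing a decent game of table tennis
C. Buying a week's worth of groceries on the Web (0.33/1.00)
D. Buying a week's worth of groceries at the market
Correct answer: A, B, C. You wrongly chose A, C.

8 Fill in the blank (1 point): Rationality is a property of a system, namely doing the right thing in a(n) _________ environment.
Correct answer: known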

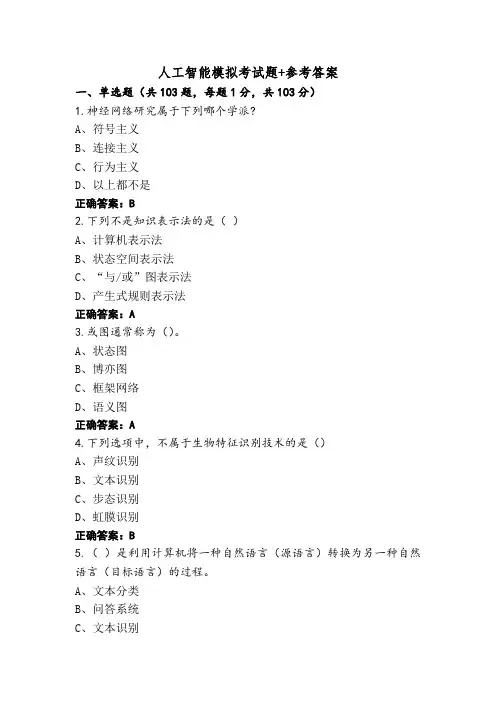

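AI Mock Exam Questions + Reference Answers
I. Single-choice questions (103 questions in total, 1 point each, 103 points in total)
1. Neural network research belongs to which school of thought?
A. Symbolism  B. Connectionism  C. Behaviorism  D. None of the above
Correct answer: B
2. Which of the following is not a knowledge representation method? ( )
A. Computer representation  B. State-space representation  C. AND/OR graph representation  D. Production rule representation
Correct answer: A
3. An OR graph is usually called ( ).
A. State graph  B. Game graph  C. Frame network  D. Semantic graph
Correct answer: A
4. Which of the following is not a biometric identification technology? ( )
A. Voiceprint recognition  B. Text recognition  C. Gait recognition  D. Iris recognition
Correct answer: B
5. ( ) is the process of using a computer to convert one natural language (the source language) into another natural language (the target language).
A. Text classification  B. Question answering system  C. Text recognition  D. Machine translation
Correct answer: D
6. Based on the meaning of the ndim attribute of a numpy array, determine the output of the program ( ).
array = np.array([[1,2,3],[4,5,6],[7,8,9],[10,11,12]]); print(array.ndim)
A. 4  B. (3,4)  C. (4,3)  D. 2
Correct answer: D
7. Which of the following operations achieves an effect similar to Dropout in neural networks?
A. Boosting  B. Bagging  C. Stacking  D. Mapping
Correct answer: B
8. We want to train a decision tree on a large dataset. To reduce training time, we can:
A. Increase the learning rate  B. Increase the depth of the tree  C. Pre-prune the decision tree model  D. Reduce the number of trees
Correct answer: C
9. Deep learning uses many types of neural networks. In which of the following does information propagate in one direction only?
A. LSTM  B. GRU  C. Recurrent neural network  D. Convolutional neural network
Correct answer: D
10. When processing sequence data, which model is more prone to vanishing gradients? ( )
A. CNN  C. GRU  D. LSTM
Correct answer: B
11. The development of artificial intelligence is roughly divided into three stages. In which stage did Symbolism develop?
A. 1970s to 1990s  B. 1950s to 1980s  C. 1960s to 1990s
Correct answer: B
12. Which of the following is NOT a difficulty for face detection algorithms? ( )
A. Faces of different genders must be detected  B. Large variation in face angle  C. Very low-resolution faces must be detected  D. Faces are occluded
Correct answer: A
13. The number of hidden layers in a deep neural network affects its performance. Which of the following statements about this effect is correct?
A. Appropriately increasing the number of hidden layers weakens the network's discriminative ability
B. Appropriately reducing the number of hidden layers leaves the discriminative ability unchanged
C. Appropriately reducing the number of hidden layers strengthens the discriminative ability
D. Appropriately increasing the number of hidden layers strengthens the discriminative ability
Correct answer: D
14. The Inception module adopts a ( ) design, and each branch uses convolution kernels of ( ) size.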
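Question 6 can be checked directly: `ndim` counts the number of array axes, while `shape` gives the size along each axis, which is why option C, (4,3), is the shape rather than the answer. The snippet assumes NumPy is installed and imported as `np`, as the question's code implies.

```python
import numpy as np

array = np.array([[1, 2, 3], [4, 5, 6], [7, 8, 9], [10, 11, 12]])
print(array.ndim)   # 2: a list of rows gives a 2-D array
print(array.shape)  # (4, 3): 4 rows, 3 columns
```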

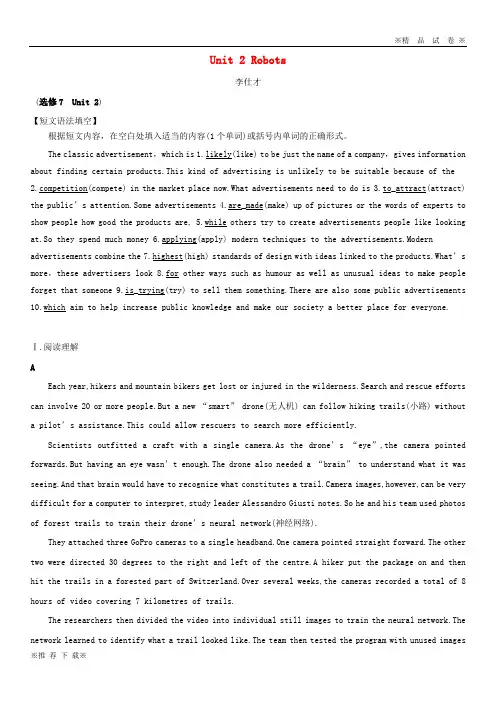

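Unit 2 Robots, Li Shicai (Elective 7, Unit 2) [Grammar fill-in: short passage] Based on the content of the passage, fill in each blank with an appropriate word (one word) or the correct form of the word in brackets.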

The classic advertisement, which is 1. likely (like) to be just the name of a company, gives information about finding certain products. This kind of advertising is unlikely to be suitable because of the 2. competition (compete) in the market place now. What advertisements need to do is 3. to attract (attract) the public’s attention. Some advertisements 4. are made (make) up of pictures or the words of experts to show people how good the products are, 5. while others try to create advertisements people like looking at. So they spend much money 6. applying (apply) modern techniques to the advertisements. Modern advertisements combine the 7. highest (high) standards of design with ideas linked to the products. What’s more, these advertisers look 8. for other ways such as humour as well as unusual ideas to make people forget that someone 9. is trying (try) to sell them something. There are also some public advertisements 10. which aim to help increase public knowledge and make our society a better place for everyone.

I. Reading Comprehension

A
Each year, hikers and mountain bikers get lost or injured in the wilderness. Search and rescue efforts can involve 20 or more people. But a new “smart” drone can follow hiking trails without a pilot’s assistance. This could allow rescuers to search more efficiently.

Scientists outfitted a craft with a single camera. As the drone’s “eye”, the camera pointed forwards. But having an eye wasn’t enough. The drone also needed a “brain” to understand what it was seeing. And that brain would have to recognize what constitutes a trail.

Camera images, however, can be very difficult for a computer to interpret, study leader Alessandro Giusti notes. So he and his team used photos of forest trails to train their drone’s neural network. They attached three GoPro cameras to a single headband. One camera pointed straight forward. The other two were directed 30 degrees to the right and left of the centre. A hiker put the package on and then hit the trails in a forested part of Switzerland. Over several weeks, the cameras recorded a total of 8 hours of video covering 7 kilometres of trails.

The researchers then divided the video into individual still images to train the neural network. The network learned to identify what a trail looked like. The team then tested the program with unused images from the video. The computer did a slightly better job than the people had in correctly classifying the images. This meant the drone was ready to navigate the real world on its own.

Giusti and his team took their now “smart” device to a forest with trails that had not been used in the drone’s training. It flew along the path, constantly adjusting its direction based on the images coming in through its camera.

The drone didn’t perform as well in real life as it did in the lab, Giusti admits. That’s because the drone’s camera took lower-quality images than the cameras that had been used to train it. As a result, the images being taken by the drone looked different, he explains. This confused its neural network.

“It’s an exciting use for neural networks,” says Christopher Rasmussen, a computer-vision scientist who was not involved with the study. “However, the problems faced in the experiments show that there are still some important issues to be worked out.”

1. The “smart” drone has been created in order to help ________.
A. find travelers who get lost
B. ensure the safety of travelers
C. deliver necessities to travelers
D. show travelers the way to a place
2. While being tested to classify the images in the lab, the computer ________.
A. did as well as the people
B. did a lot better than the people
C. did much worse than the people
D. did a little better than the people
3. Why didn’t the drone perform well in the forest?
A. The light in the forest was very poor.
B. The trees or bushes covered the trails.
C. The drone couldn’t fly very low there.
D. The pictures taken were of poor quality.
4. What is Christopher Rasmussen’s attitude towards the results of the experiments?
A. Critical.
B. Doubtful.
C. Objective.
D. Optimistic.

B (2016, Shijiazhuang diagnostic test)
Shopping centres, stadiums and universities may soon have a new tool to help fight crime. A California company called Knightscope says its robots can predict and prevent crime. Knightscope says the goal is to reduce crime by half in areas the robots guard.

William Santana Li is the chief executive officer of Knightscope. He says, “These robot security guards will change the world. Our planet has seven billion people on it. It’s going to quickly get to nine billion people. The security equipment that we have globally is just not going to develop that fast. The company’s Autonomous Data Machines can become the eyes and ears of law enforcement.”

“You want it to be machines plus humans. Let the machines do the heavy and sometimes dangerous work and let the humans do the strategic decision-making work, so it’s always working all together.”

The machines are one and a half meters tall and weigh 136 kilograms. They do not carry weapons but they have day-and-night time video cameras which are able to turn 360 degrees and can also sense chemical and biological weapons.

Some people may become concerned about their privacy, especially in connection with the video recordings. Some people may worry that such recordings will appear on the Internet. Eugene Volokh, a law professor at the University of California, says the machines have to be used in the right way and it will be interesting to see how state laws deal with this kind of video.

William Santana Li says there is a long waiting list for the robots in the US. Workers in the company are working overtime to meet the demands of the market. At least 25 other countries are also interested in these robotic security guards.

5. What can this new tool do for humans?
A. Make strategic decisions.
B. Keep watching day and night.
C. Carry heavy weapons.
D. Stop crime autonomously.
6. Why are people worried?
A. Their privacy may be let out.
B. The robots are very expensive.
C. Robots will replace humans.
D. They will be out of work soon.
7. Which of the following can be the best title of the text?
A. Robots Are Becoming More Popular
B. Robots Contribute a Lot to the World
C. Robots Are in Great Demand Now
D. Security Robots Could Help Cut Crime

C
From young kids to fitness lovers, to those looking for a laugh, or to families looking for a free movie night, Atlantic Station prepares some days for them to enjoy this year.

Tot Spot: Each Tuesday morning, Atlantic Station's Central Park transforms into every child's dream playground! From 10:00 am to noon, kids can enjoy games, storytelling, toys, music and some very special things. This event begins on April 5 and lasts through September 27.

Wellness Wednesday: Each Wednesday from 6:30 pm to 7:30 pm, Atlantic Station's Central Park becomes the perfect place to find quietness of the mind and body while instructors lead guests through exercises including yoga and more. Wellness Wednesday welcomes all ages and skill levels. This event begins on April 6 and lasts through September 28.

Improv in the Park: Whole World Theatre, Atlanta's premier improv group, has once again partnered with Atlantic Station to bring a family-friendly night of comedy to the Central Park on the first Wednesday of each month until September. Bring a blanket, relax under the stars and prepare to laugh until your stomach hurts! The hour-long show begins at 8:00 pm. This event occurs on April 5, May 4, June 1, July 6 and August 3.

Movies in the Central Park: Each Thursday night at dusk, guests are invited to bring chairs, blankets, to-go snacks and picnics to Atlantic Station's Central Park. All outdoor screenings are available, weather permitting, so keep your fingers crossed for no The Wizard of Oz-type storms! Be sure to arrive early to find a good seat.

1. Mothers can take their kids who like listening to stories to ________.
A. Tot Spot
B. Improv in the Park
C. Wellness Wednesday
D. Movies in the Central Park
2. When can you do yoga with an instructor in Atlantic Station's Central Park?
A. On each Tuesday from 6:30 pm to 7:30 pm in August.
B. On each Tuesday morning from 10:00 am to noon in July.
C. At 8:00 pm on April 5, May 4, June 1, July 6 and August 3.
D. On each Wednesday at 7:00 pm in June and July.
3. What can we learn from the text?
A. Atlantic Station sets the days for guests to have fun.
B. Tickets for Movies in Central Park are hard to get.
C. Movies in the Central Park are shown only indoors.
D. Only teenagers are welcome to join in Wellness Wednesday.
[Guide] This passage mainly introduces several activities at Atlantic Station that let people relax, enjoy life and have fun.

arXiv:physics/0602124v1 [physics.data-an] 17 Feb 2006

Modularity and community structure in networks

M. E. J. Newman
Department of Physics and Center for the Study of Complex Systems, Randall Laboratory, University of Michigan, Ann Arbor, MI 48109–1040

Many networks of interest in the sciences, including a variety of social and biological networks, are found to divide naturally into communities or modules. The problem of detecting and characterizing this community structure has attracted considerable recent attention. One of the most sensitive detection methods is optimization of the quality function known as “modularity” over the possible divisions of a network, but direct application of this method using, for instance, simulated annealing is computationally costly. Here we show that the modularity can be reformulated in terms of the eigenvectors of a new characteristic matrix for the network, which we call the modularity matrix, and that this reformulation leads to a spectral algorithm for community detection that returns results of better quality than competing methods in noticeably shorter running times. We demonstrate the algorithm with applications to several network data sets.

Introduction

Many systems of scientific interest can be represented as networks: sets of nodes or vertices joined in pairs by lines or edges. Examples include the Internet and the worldwide web, metabolic networks, food webs, neural networks, communication and distribution networks, and social networks. The study of networked systems has a history stretching back several centuries, but it has experienced a particular surge of interest in the last decade, especially in the mathematical sciences, partly as a result of the increasing availability of large-scale accurate data describing the topology of networks in the real world. Statistical analyses of these data have revealed some unexpected structural features, such as high network transitivity [1], power-law degree distributions [2], and the existence of repeated local motifs [3]; see [4, 5, 6] for reviews.

One issue that has received a considerable amount of attention is the detection and characterization of community structure in networks [7, 8], meaning the appearance of densely connected groups of vertices, with only sparser connections between groups (Fig. 1). The ability to detect such groups could be of significant practical importance. For instance, groups within the worldwide web might correspond to sets of web pages on related topics [9]; groups within social networks might correspond to social units or communities [10]. Merely the finding that a network contains tightly-knit groups at all can convey useful information: if a metabolic network were divided into such groups, for instance, it could provide evidence for a modular view of the network’s dynamics, with different groups of nodes performing different functions with some degree of independence [11, 12].

Past work on methods for discovering groups in networks divides into two principal lines of research, both with long histories. The first, which goes by the name of graph partitioning, has been pursued particularly in computer science and related fields, with applications in parallel computing and VLSI design, among other areas [13, 14]. The second, identified by names such as block modeling, hierarchical clustering, or community structure detection, has been pursued by sociologists and more recently also by physicists and applied mathematicians, with applications especially to social and biological networks [7, 15, 16].

FIG. 1: The vertices in many networks fall naturally into groups or communities, sets of vertices (shaded) within which there are many edges, with only a smaller number of edges between vertices of different groups.

It is tempting to suggest that these two lines of research are really addressing the same question, albeit by somewhat different means. There are, however, important differences between the goals of the two camps that make quite different technical approaches desirable. A typical problem in graph partitioning is the division of a set of tasks between the processors of a parallel computer so as to minimize the necessary amount of interprocessor communication. In such an application the number of processors is usually known in advance, as is at least an approximate figure for the number of tasks that each processor can handle. Thus we know the number and size of the groups into which the network is to be split. Also, the goal is usually to find the best division of the network regardless of whether a good division even exists; there is little point in an algorithm or method that fails to divide the network in some cases.

Community structure detection, by contrast, is perhaps best thought of as a data analysis technique used to shed light on the structure of large-scale network datasets, such as social networks, Internet and web data, or biochemical networks. Community structure methods normally assume that the network of interest divides naturally into subgroups and the experimenter’s job is to find those groups. The number and size of the groups is thus determined by the network itself and not by the experimenter. Moreover, community structure methods may explicitly admit the possibility that no good division of the network exists, an outcome that is itself considered to be of interest for the light it sheds on the topology of the network.

In this paper our focus is on community structure detection in network datasets representing real-world systems of interest. However, both the similarities and differences between community structure methods and graph partitioning will motivate many of the developments that follow.

The method of optimal modularity

Suppose then that we are given, or discover, the structure of some network and that we wish to determine whether there exists any natural division of its vertices into nonoverlapping groups or communities, where these communities may be of any size.

Let us approach this question in stages and focus initially on the problem of whether any good division of the network exists into just two communities. Perhaps the most obvious way to tackle this problem is to look for divisions of the vertices into two groups so as to minimize the number of edges running between the groups. This “minimum cut” approach is the approach adopted, virtually without exception, in the algorithms studied in the graph partitioning literature. However, as discussed above, the community structure problem differs crucially from graph partitioning in that the sizes of the communities are not normally known in advance. If community sizes are unconstrained then we are, for instance, at liberty to select the trivial division of the network that puts all the vertices in one of our two groups and none in the other, which guarantees we will have zero intergroup edges. This division is, in a sense, optimal, but clearly it does not tell us anything of any worth. We can, if we wish, artificially forbid this solution, but then a division that puts just one vertex in one group and the rest in the other will often be optimal, and so forth.

The problem is that simply counting edges is not a good way to quantify the intuitive concept of community structure. A good division of a network into communities is not merely one in which there are few edges between communities; it is one in which there are fewer than expected edges between communities. If the number of edges between two groups is only what one would expect on the basis of random chance, then few thoughtful observers would claim this constitutes evidence of meaningful community structure. On the other hand, if the number of edges between groups is significantly less than we expect by chance, or equivalently if the number within groups is significantly more, then it is reasonable to conclude that something interesting is going on.
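The comparison of observed and expected edge counts argued for above is made precise below through the modularity matrix B (Eqs. (1) and (2)) and its subgraph generalization B^(g) (Eq. (4)). The NumPy sketch that follows illustrates those formulas under stated assumptions: a small, undirected, unweighted graph given as a dense symmetric adjacency matrix, repeated bisection by the signs of the leading eigenvector, and the direct check that a proposed split raises the modularity. It does not include the vertex-moving refinement the paper goes on to describe, and all function names are hypothetical.

```python
import numpy as np

def modularity_matrix(A):
    """Eq. (2): B_ij = A_ij - k_i k_j / 2m for a symmetric adjacency matrix A."""
    k = A.sum(axis=1)                       # degrees k_i
    return A - np.outer(k, k) / k.sum()     # k.sum() equals 2m

def modularity(A, s):
    """Eq. (1): Q = s^T B s / 4m for a two-group split encoded as s_i = +1 / -1."""
    s = np.asarray(s, dtype=float)
    return float(s @ modularity_matrix(A) @ s) / (2.0 * A.sum())   # A.sum() = 2m

def split_in_two(B, members):
    """Leading-eigenvector bisection of the subgraph `members`, using B^(g) of Eq. (4).

    Returns (s, dQ): the +1/-1 signs of the leading eigenvector and
    s^T B^(g) s, the modularity change of the split up to the factor 1/4m.
    """
    B_sub = B[np.ix_(members, members)]
    # Subtracting each row sum on the diagonal is the delta_ij term of Eq. (4).
    B_g = B_sub - np.diag(B_sub.sum(axis=1))
    _, vecs = np.linalg.eigh(B_g)           # eigenvalues in ascending order
    s = np.where(vecs[:, -1] >= 0, 1.0, -1.0)
    return s, float(s @ B_g @ s)

def communities(A, tol=1e-10):
    """Repeated bisection; a subgraph is left undivided when its best split has dQ <= tol."""
    B = modularity_matrix(A)
    pending, done = [np.arange(A.shape[0])], []
    while pending:
        members = pending.pop()
        s, dQ = split_in_two(B, members)
        if dQ <= tol:
            done.append(sorted(int(v) for v in members))
        else:
            pending.append(members[s > 0])
            pending.append(members[s < 0])
    return sorted(done)

if __name__ == "__main__":
    # Toy example: two triangles joined by the single edge (2, 3).
    A = np.zeros((6, 6))
    for i, j in [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]:
        A[i, j] = A[j, i] = 1.0
    print(communities(A))                          # [[0, 1, 2], [3, 4, 5]]
    print(modularity(A, [1, 1, 1, -1, -1, -1]))    # 5/14, about 0.357
```

For the whole network the row sums of B are zero, so B^(g) reduces to B at the first step, mirroring the remark in the text that Eq. (4) reduces to Eq. (2) for the complete network.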
This idea,that true community structure in a network corresponds to a statistically surprising arrangement of edges,can be quantified using the measure known as modularity[17].The modularity is,up to a multiplicative constant,the number of edges falling within groups mi-nus the expected number in an equivalent network with edges placed at random.(A precise mathematical formu-lation is given below.)The modularity can be either positive or negative,with positive values indicating the possible presence of com-munity structure.Thus,one can search for community structure precisely by looking for the divisions of a net-work that have positive,and preferably large,values of the modularity[18].The evidence so far suggests that this is a highly effective way to tackle the problem.For instance, Guimer`a and Amaral[12]and later Danon et al.[8]op-timized modularity over possible partitions of computer-generated test networks using simulated annealing.In di-rect comparisons using standard measures,Danon et al. 
found that this method outperformed all other methods for community detection of which they were aware,in most cases by an impressive margin.On the basis of con-siderations such as these we consider maximization of the modularity to be perhaps the definitive current method of community detection,being at the same time based on sensible statistical principles and highly effective in practice.Unfortunately,optimization by simulated annealing is not a workable approach for the large network problems facing today’s scientists,because it demands too much computational effort.A number of alternative heuris-tic methods have been investigated,such as greedy algo-rithms[18]and extremal optimization[19].Here we take a different approach based on a reformulation of the mod-ularity in terms of the spectral properties of the network of interest.Suppose our network contains n vertices.For a par-ticular division of the network into two groups let s i=1 if vertex i belongs to group1and s i=−1if it belongs to group2.And let the number of edges between ver-tices i and j be A ij,which will normally be0or1,al-though larger values are possible in networks where mul-tiple edges are allowed.(The quantities A ij are the el-ements of the so-called adjacency matrix.)At the same time,the expected number of edges between vertices i and j if edges are placed at random is k i k j/2m,where k i and k j are the degrees of the vertices and m=14m ijA ij−k i k j4m s T Bs,(1)where s is the vector whose elements are the s i.The leading factor of1/4m is merely conventional:it is in-cluded for compatibility with the previous definition of modularity[17].We have here defined a new real symmetric matrix B with elementsk i k jB ij=A ij−FIG.2:Application of our eigenvector-based method to the “karate club”network of Ref.[23].Shapes of vertices indi-cate the membership of the corresponding individuals in the two known factions of the network while the dotted line indi-cates the split found by the 
algorithm,which matches the fac-tions exactly.The shades of the vertices indicate the strength of their membership,as measured by the value of the corre-sponding element of the eigenvector.groups,but to place them on a continuous scale of“how much”they belong to one group or the other.As an example of this algorithm we show in Fig.2the result of its application to a famous network from the so-cial science literature,which has become something of a standard test for community detection algorithms.The network is the“karate club”network of Zachary[23], which shows the pattern of friendships between the mem-bers of a karate club at a US university in the1970s. This example is of particular interest because,shortly after the observation and construction of the network, the club in question split in two as a result of an inter-nal dispute.Applying our eigenvector-based algorithm to the network,wefind the division indicated by the dotted line in thefigure,which coincides exactly with the known division of the club in real life.The vertices in Fig.2are shaded according to the val-ues of the elements in the leading eigenvector of the mod-ularity matrix,and these values seem also to accord well with known social structure within the club.In partic-ular,the three vertices with the heaviest weights,either positive or negative(black and white vertices in thefig-ure),correspond to the known ringleaders of the two fac-tions.Dividing networks into more than two communities In the preceding section we have given a simple matrix-based method forfinding a good division of a network into two parts.Many networks,however,contain more than two communities,so we would like to extend our method tofind good divisions of networks into larger numbers of parts.The standard approach to this prob-lem,and the one adopted here,is repeated division into two:we use the algorithm of the previous sectionfirst to divide the network into two parts,then divide those parts,and so forth.In doing this it is 
crucial to note that it is not correct, after first dividing a network in two, to simply delete the edges falling between the two parts and then apply the algorithm again to each subgraph. This is because the degrees appearing in the definition, Eq. (1), of the modularity will change if edges are deleted, and any subsequent maximization of modularity would thus maximize the wrong quantity. Instead, the correct approach is to define for each subgraph g a new $n_g \times n_g$ modularity matrix $\mathbf{B}^{(g)}$, where $n_g$ is the number of vertices in the subgraph. The correct definition of the element of this matrix for vertices i, j is

$$B^{(g)}_{ij} = A_{ij} - \frac{k_i k_j}{2m} - \delta_{ij}\left[k_i^{(g)} - k_i\,\frac{d_g}{2m}\right], \qquad (4)$$

where $k_i^{(g)}$ is the degree of vertex i within subgraph g and $d_g$ is the sum of the (total) degrees $k_i$ of the vertices in the subgraph. Then the subgraph modularity $Q_g = \mathbf{s}^T \mathbf{B}^{(g)} \mathbf{s}$ correctly gives the additional contribution to the total modularity made by the division of this subgraph. In particular, note that if the subgraph is undivided, $Q_g$ is correctly zero, because every row of $\mathbf{B}^{(g)}$ sums to zero. Note also that for the network as a whole Eq. (4) reduces to the previous definition of the modularity matrix, Eq. (2), since $k_i^{(g)} \to k_i$ and $d_g \to 2m$ in that case.

In repeatedly subdividing our network, an important question we need to address is at what point to halt the subdivision process. A nice feature of our method is that it provides a clear answer to this question: if there exists no division of a subgraph that will increase the modularity of the network, or equivalently that gives a positive value for $Q_g$, then there is nothing to be gained by dividing the subgraph and it should be left alone; it is indivisible in the sense of the previous section. This happens when there are no positive eigenvalues of the matrix $\mathbf{B}^{(g)}$, and thus our leading eigenvalue provides a simple check for the termination of the subdivision process: if the leading eigenvalue is zero, which is the smallest value it can take (the vector $(1, 1, \ldots, 1)$ is always an eigenvector of $\mathbf{B}^{(g)}$ with eigenvalue zero), then the subgraph is indivisible.

Note, however, that while the absence of positive eigenvalues is a
sufficient condition for indivisibility, it is not a necessary one. In particular, if there are only small positive eigenvalues and large negative ones, the terms in Eq. (3) for negative $\beta_i$ may outweigh those for positive. It is straightforward to guard against this possibility, however: we simply calculate the modularity contribution for each proposed split directly and confirm that it is greater than zero.

Thus our algorithm is as follows. We construct the modularity matrix for our network and find its leading (most positive) eigenvalue and eigenvector. We divide the network into two parts according to the signs of the elements of this vector, and then repeat for each of the parts. If at any stage we find that the proposed split makes a zero or negative contribution to the total modularity, we leave the corresponding subgraph undivided. When the entire network has been decomposed into indivisible subgraphs in this way, the algorithm ends.

One immediate corollary of this approach is that all “communities” in the network are, by definition, indivisible subgraphs. A number of authors have in the past proposed formal definitions of what a community is [9, 16, 24]. The present method provides an alternative, first-principles definition of a community as an indivisible subgraph.

Further techniques for modularity maximization

In this section we briefly describe another method we have investigated for dividing networks in two by modularity optimization, which is entirely different from our spectral method. Although not of especial interest on its own, this second method is, as we will shortly show, very effective when combined with the spectral method.

Let us start with some initial division of our vertices into two groups: the most obvious choice is simply to place all vertices in one of the groups and no vertices in the other. Then we proceed as follows. We find among the vertices the one that, when moved to the other group, will give the biggest increase in the modularity of the complete network, or
the smallest decrease if no increase is possible. We make such moves repeatedly, with the constraint that each vertex is moved only once. When all n vertices have been moved, we search the set of intermediate states occupied by the network during the operation of the algorithm to find the state that has the greatest modularity. Starting again from this state, we repeat the entire process iteratively until no further improvement in the modularity results. Those familiar with the literature on graph partitioning may find this algorithm reminiscent of the Kernighan–Lin algorithm [25], and indeed the Kernighan–Lin algorithm provided the inspiration for our method.

Despite its simplicity, we find that this method works moderately well. It is not competitive with the best previous methods, but it gives respectable modularity values in the trial applications we have made. However, the method really comes into its own when it is used in combination with the spectral method introduced earlier. It is a common approach in standard graph partitioning problems to use spectral partitioning based on the graph Laplacian to give an initial broad division of a network into two parts, and then refine that division using the Kernighan–Lin algorithm. For community structure problems we find that the equivalent joint strategy works very well. Our spectral approach based on the leading eigenvector of the modularity matrix gives an excellent guide to the general form that the communities should take, and this general form can then be fine-tuned by our vertex moving method to reach the best possible modularity value. The whole procedure is repeated to subdivide the network until every remaining subgraph is indivisible, and no further improvement in the modularity is possible.

Typically, the fine-tuning stages of the algorithm add only a few percent to the final value of the modularity, but those few percent are enough to make the difference between a method that is merely good and one that is, as we will
see, exceptional.

Example applications

In practice, the algorithm developed here gives excellent results. For a quantitative comparison between our algorithm and others we follow Duch and Arenas [19] and compare values of the modularity for a variety of networks drawn from the literature. Results are shown in Table I for six different networks, the exact same six as used by Duch and Arenas. We compare modularity figures against three previously published algorithms: the betweenness-based algorithm of Girvan and Newman [10], which is widely used and has been incorporated into some of the more popular network analysis programs (denoted GN in the table); the fast algorithm of Clauset et al. [26] (CNM), which optimizes modularity using a greedy algorithm; and the extremal optimization algorithm of Duch and Arenas [19] (DA), which is arguably the best previously existing method, by standard measures, if one discounts methods impractical for large networks, such as exhaustive enumeration of all partitions or simulated annealing.

The table reveals some interesting patterns. Our algorithm clearly outperforms the methods of Girvan and Newman and of Clauset et al. for all the networks in the task of optimizing the modularity. The extremal optimization method, on the other hand, is more competitive.
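To make the procedure concrete, the following is a minimal pure-Python sketch of the pipeline described in the preceding sections: the subgraph modularity matrix of Eq. (4), a split by the signs of the leading eigenvector, fine-tuning by the vertex-moving method, and repeated bisection with the direct $Q_g > 0$ check. It is an illustration under stated assumptions, not the author's implementation. The function names (modularity_matrix, leading_eigenpair, refine, communities) are hypothetical. The graph is assumed to be simple, undirected and unweighted, stored as a dict of neighbour sets. The leading eigenvector is found by shifted power iteration, which is swapped in here for whatever eigensolver was actually used, and the iteration count and tolerance eps are arbitrary choices. Dense $O(n^2)$ matrices make the sketch suitable only for small graphs, not for networks of the size in Table I.

```python
import random


def modularity_matrix(adj, group, m):
    """B^(g) of Eq. (4) for the vertex ids in `group` (equal to B for the whole graph)."""
    members = set(group)
    d_g = sum(len(adj[i]) for i in group)  # d_g: sum of total degrees k_i over g
    B = []
    for a, i in enumerate(group):
        k_i = len(adj[i])
        row = [(1.0 if j in adj[i] else 0.0) - k_i * len(adj[j]) / (2 * m) for j in group]
        k_in = sum(1 for j in adj[i] if j in members)  # k_i^(g): degree inside g
        row[a] -= k_in - k_i * d_g / (2 * m)  # the delta_ij correction term
        B.append(row)
    return B


def quad_form(B, x):
    """x^T B x; for a +/-1 vector s this is Q_g = s^T B^(g) s."""
    n = len(x)
    return sum(x[a] * B[a][b] * x[b] for a in range(n) for b in range(n))


def leading_eigenpair(B, iters=1000, seed=0):
    """Power iteration on B + cI with c >= |lambda|, so the most positive eigenvalue wins."""
    n = len(B)
    c = max(sum(abs(x) for x in row) for row in B)  # row-sum bound on the spectral radius
    rng = random.Random(seed)  # random start: almost surely not orthogonal to the target
    v = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    for _ in range(iters):
        w = [sum(B[a][b] * v[b] for b in range(n)) + c * v[a] for a in range(n)]
        norm = sum(x * x for x in w) ** 0.5
        if norm == 0.0:
            return 0.0, v
        v = [x / norm for x in w]
    return quad_form(B, v), v  # Rayleigh quotient of a unit vector


def refine(B, s):
    """Kernighan-Lin-style vertex moving: flip each vertex once per pass, taking the
    largest gain (or smallest loss), keep the best state seen, repeat while it improves."""
    n, best = len(s), quad_form(B, s)
    while True:
        cur, moved, score = list(s), set(), best
        pass_best, pass_s = best, list(s)
        for _ in range(n):
            # flipping s_a changes s^T B s by -4 s_a * sum_{b != a} B_ab s_b
            gain = {a: -4 * cur[a] * sum(B[a][b] * cur[b] for b in range(n) if b != a)
                    for a in range(n) if a not in moved}
            a = max(gain, key=gain.get)
            cur[a], score = -cur[a], score + gain[a]
            moved.add(a)
            if score > pass_best + 1e-12:
                pass_best, pass_s = score, list(cur)
        if pass_best <= best + 1e-12:
            return s
        best, s = pass_best, pass_s


def communities(adj, eps=1e-8):
    """Repeated bisection; a subgraph is left whole when no split gives Q_g > 0."""
    m = sum(len(nbrs) for nbrs in adj.values()) / 2
    pending, done = [sorted(adj)], []
    while pending:
        group = pending.pop()
        if len(group) > 1:
            B = modularity_matrix(adj, group, m)
            lam, v = leading_eigenpair(B)
            if lam > eps:  # a positive eigenvalue exists, so a split may help
                s = refine(B, [1 if x >= 0 else -1 for x in v])  # spectral split, fine-tuned
                part_a = [i for i, si in zip(group, s) if si > 0]
                part_b = [i for i, si in zip(group, s) if si < 0]
                if part_a and part_b and quad_form(B, s) > eps:  # direct check Q_g > 0
                    pending += [part_a, part_b]
                    continue
        done.append(group)  # indivisible subgraph
    return done


def modularity(adj, parts):
    """Standard Q = sum_c [L_c/m - (d_c/2m)^2] over the communities c of a partition."""
    m = sum(len(nbrs) for nbrs in adj.values()) / 2
    q = 0.0
    for part in parts:
        members = set(part)
        internal = sum(1 for i in part for j in adj[i] if j in members) / 2  # L_c
        q += internal / m - (sum(len(adj[i]) for i in part) / (2 * m)) ** 2
    return q


if __name__ == "__main__":
    # Hypothetical toy graph: two 4-cliques joined by the single edge (3, 4).
    adj = {i: set() for i in range(8)}
    for a, b in [(a, b) for base in (0, 4) for a in range(base, base + 4)
                 for b in range(a + 1, base + 4)] + [(3, 4)]:
        adj[a].add(b)
        adj[b].add(a)
    parts = communities(adj)
    print(sorted(sorted(p) for p in parts), round(modularity(adj, parts), 4))
```

Run as a script on this toy graph (which is not one of the Table I networks), it should print [[0, 1, 2, 3], [4, 5, 6, 7]] 0.4231. The first bisection separates the two cliques. Neither clique is split further, because its $\mathbf{B}^{(g)}$ has zero as its largest eigenvalue. The resulting modularity is $Q = 2(6/13 - 1/4) = 11/26$.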
For the smaller networks, up to around a thousand vertices, there is essentially no difference in performance between our method and extremal optimization; the modularity values for the divisions found by the two algorithms differ by no more than a few parts in a thousand for any given network. For larger networks, however, our algorithm does better than extremal optimization, and furthermore the gap widens as network size increases, to a maximum modularity difference of about 6% for the largest network studied. For the very large networks that have been of particular interest in the last few years, therefore, it appears that our method for detecting community structure may be the most effective of the methods considered here.

The modularity values given in Table I provide a useful quantitative measure of the success of our algorithm when applied to real-world problems. It is worthwhile, however, also to confirm that it returns sensible divisions of networks in practice. We have given one example demonstrating such a division in Fig. 2. We have also checked our method against many of the example networks used in previous studies [10, 17]. Here we give two more examples, both involving network representations

Table I: modularity Q obtained by the GN, CNM, and DA algorithms and by the method of this paper, for six networks of sizes n = 34, 198, 453, 1133, 10680, and 27519 vertices.

maximal value of the quantity known as modularity over possible divisions of a network. We have shown that this problem can be rewritten in terms of the eigenvalues and eigenvectors of a matrix we call the modularity matrix, and by exploiting this transformation we have created a new computer algorithm for community detection that demonstrably outperforms the best previous general-purpose algorithms in terms of both quality of results and speed of execution. We have applied our algorithm to a variety of real-world network data sets, including social and biological examples, showing it to give both intuitively reasonable divisions of networks and quantitatively better results as measured by the
modularity.

Acknowledgments

The author would like to thank Lada Adamic, Alex Arenas, and Valdis Krebs for providing network data and for useful comments and suggestions. This work was funded in part by the National Science Foundation under grant number DMS–0234188 and by the James S. McDonnell Foundation.

[1] D. J. Watts and S. H. Strogatz, Collective dynamics of ‘small-world’ networks. Nature 393, 440–442 (1998).
[2] A.-L. Barabási and R. Albert, Emergence of scaling in random networks. Science 286, 509–512 (1999).
[3] R. Milo, S. Shen-Orr, S. Itzkovitz, N. Kashtan, D. Chklovskii, and U. Alon, Network motifs: Simple building blocks of complex networks. Science 298, 824–827 (2002).
[4] R. Albert and A.-L. Barabási, Statistical mechanics of complex networks. Rev. Mod. Phys. 74, 47–97 (2002).
[5] S. N. Dorogovtsev and J. F. F. Mendes, Evolution of networks. Advances in Physics 51, 1079–1187 (2002).
[6] M. E. J. Newman, The structure and function of complex networks. SIAM Review 45, 167–256 (2003).
[7] M. E. J. Newman, Detecting community structure in networks. Eur. Phys. J. B 38, 321–330 (2004).
[8] L. Danon, J. Duch, A. Diaz-Guilera, and A. Arenas, Comparing community structure identification. J. Stat. Mech. p. P09008 (2005).
[9] G. W. Flake, S. Lawrence, C. L. Giles, and F. M. Coetzee, Self-organization and identification of Web communities. IEEE Computer 35, 66–71 (2002).
[10] M. Girvan and M. E. J. Newman, Community structure in social and biological networks. Proc. Natl. Acad. Sci. USA 99, 7821–7826 (2002).
[11] P. Holme, M. Huss, and H. Jeong, Subnetwork hierarchies of biochemical pathways. Bioinformatics 19, 532–538 (2003).
[12] R. Guimerà and L. A. N. Amaral, Functional cartography of complex metabolic networks. Nature 433, 895–900 (2005).
[13] U. Elsner, Graph partitioning—a survey. Technical Report 97-27, Technische Universität Chemnitz (1997).
[14] P.-O. Fjällström, Algorithms for graph partitioning: A survey. Linköping Electronic Articles in Computer and Information Science 3(10) (1998).
[15] H. C. White, S. A. Boorman, and R. L. Breiger, Social structure from multiple networks: I. Blockmodels of roles and positions. Am. J. Sociol. 81, 730–779 (1976).
[16] S. Wasserman and K. Faust, Social Network Analysis. Cambridge University Press, Cambridge (1994).
[17] M. E. J. Newman and M. Girvan, Finding and evaluating community structure in networks. Phys. Rev. E 69, 026113 (2004).
[18] M. E. J. Newman, Fast algorithm for detecting community structure in networks. Phys. Rev. E 69, 066133 (2004).
[19] J. Duch and A. Arenas, Community detection in complex networks using extremal optimization. Phys. Rev. E 72, 027104 (2005).
[20] F. R. K. Chung, Spectral Graph Theory. Number 92 in CBMS Regional Conference Series in Mathematics, American Mathematical Society, Providence, RI (1997).
[21] M. Fiedler, Algebraic connectivity of graphs. Czech. Math. J. 23, 298–305 (1973).
[22] A. Pothen, H. Simon, and K.-P. Liou, Partitioning sparse matrices with eigenvectors of graphs. SIAM J. Matrix Anal. Appl. 11, 430–452 (1990).
[23] W. W. Zachary, An information flow model for conflict and fission in small groups. Journal of Anthropological Research 33, 452–473 (1977).
[24] F. Radicchi, C. Castellano, F. Cecconi, V. Loreto, and D. Parisi, Defining and identifying communities in networks. Proc. Natl. Acad. Sci. USA 101, 2658–2663 (2004).
[25] B. W. Kernighan and S. Lin, An efficient heuristic procedure for partitioning graphs. Bell System Technical Journal 49, 291–307 (1970).
[26] A. Clauset, M. E. J. Newman, and C. Moore, Finding community structure in very large networks. Phys. Rev. E 70, 066111 (2004).
[27] P. Gleiser and L. Danon, Community structure in jazz. Advances in Complex Systems 6, 565–573 (2003).
[28] H. Jeong, B. Tombor, R. Albert, Z. N. Oltvai, and A.-L. Barabási, The large-scale organization of metabolic networks. Nature 407, 651–654 (2000).
[29] H. Ebel, L.-I. Mielsch, and S. Bornholdt, Scale-free topology of e-mail networks. Phys. Rev. E 66, 035103 (2002).
[30] X. Guardiola, R. Guimerà, A. Arenas, A. Diaz-Guilera, D. Streib, and L. A. N. Amaral, Macro- and micro-structure of trust networks. Preprint cond-mat/0206240 (2002).
[31] M. E. J. Newman, The structure of scientific collaboration networks. Proc. Natl. Acad. Sci. USA 98, 404–409 (2001).
[32] L. A. Adamic and N. Glance, The political blogosphere and the 2004 U.S. election. In Proceedings of the WWW-2005 Workshop on the Weblogging Ecosystem (2005).