Metastability and dispersive shock waves in Fermi-Pasta-Ulam system


JOURNAL OF COMPUTATIONAL PHYSICS 126, 202–228 (1996)
ARTICLE NO. 0130

Efficient Implementation of Weighted ENO Schemes*

GUANG-SHAN JIANG† AND CHI-WANG SHU

Division of Applied Mathematics, Brown University, Providence, Rhode Island 02912

Received August 14, 1995; revised January 3, 1996

In this paper, we further analyze, test, modify, and improve the high order WENO (weighted essentially non-oscillatory) finite difference schemes of Liu, Osher, and Chan. It was shown by Liu et al. that WENO schemes constructed from the $r$th order (in $L^1$ norm) ENO schemes are $(r+1)$th order accurate. We propose a new way of measuring the smoothness of a numerical solution, emulating the idea of minimizing the total variation of the approximation, which results in a fifth-order WENO scheme for the case $r = 3$, instead of the fourth-order with the original smoothness measurement by Liu et al. This fifth-order WENO scheme is as fast as the fourth-order WENO scheme of Liu et al. and both schemes are about twice as fast as the fourth-order ENO schemes on vector supercomputers and as fast on serial and parallel computers. For Euler systems of gas dynamics, we suggest computing the weights from pressure and entropy instead of the characteristic values to simplify the costly characteristic procedure. The resulting WENO schemes are about twice as fast as the WENO schemes using the characteristic decompositions to compute weights and work well for problems which do not contain strong shocks or strong reflected waves. We also prove that, for conservation laws with smooth solutions, all WENO schemes are convergent. Many numerical tests, including the 1D steady state nozzle flow problem and 2D shock entropy wave interaction problem, are presented to demonstrate the remarkable capability of the WENO schemes, especially the WENO scheme using the new smoothness measurement in resolving complicated shock and flow structures. We have also applied Yang's artificial compression method to the WENO schemes to sharpen contact discontinuities. © 1996 Academic Press, Inc.

*Research supported by ARO Grant DAAH04-94-G-0205, NSF Grants ECS-9214488 and DMS-9500814, NASA Langley Grant NAG-1-1145 and Contract NAS1-19480 while the second author was in residence at ICASE, NASA Langley Research Center, Hampton, VA 23681-0001, and AFOSR Grant 95-1-0074.

†Current address: Department of Mathematics, UCLA, Los Angeles, CA 90024.

0021-9991/96 $18.00 Copyright © 1996 by Academic Press, Inc. All rights of reproduction in any form reserved.

1. INTRODUCTION

In this paper, we further analyze, test, modify, and improve the WENO (weighted essentially non-oscillatory) finite difference schemes of Liu, Osher, and Chan [9] for the approximation of hyperbolic conservation laws of the type

$$u_t + \operatorname{div} f(u) = 0, \tag{1.1}$$

or perhaps with a forcing term $g(u, x, t)$ on the right-hand side. Here $u = (u_1, \ldots, u_m)$, $f = (f_1, \ldots, f_d)$, $x = (x_1, \ldots, x_d)$, and $t > 0$.

WENO schemes are based on ENO (essentially non-oscillatory) schemes, which were first introduced by Harten, Osher, Engquist, and Chakravarthy [5] in the form of cell averages. The key idea of ENO schemes is to use the "smoothest" stencil among several candidates to approximate the fluxes at cell boundaries to a high order accuracy and at the same time to avoid spurious oscillations near shocks. The cell-averaged version of ENO schemes involves a procedure of reconstructing point values from cell averages and could become complicated and costly for multi-dimensional problems. Later, Shu and Osher [14, 15] developed the flux version of ENO schemes which do not require such a reconstruction procedure. We will formulate the WENO schemes based on this flux version of ENO schemes. The WENO schemes of Liu et al. [9] are based on the cell-averaged version of ENO schemes.

For applications involving shocks, second-order schemes are usually adequate if only relatively simple structures are present in the smooth part of the solution (e.g., the shock tube problem). However, if a problem contains rich structures as well as shocks (e.g., the shock entropy wave interaction problem in Example 4, Section 8.3), high order shock capturing schemes (order of at least three) are more efficient than low order schemes in terms of CPU time and memory requirements.

ENO schemes are uniformly high order accurate right up to the shock and are very robust to use. However, they also have certain drawbacks. One problem is with the freely adaptive stencil, which could change even by a round-off perturbation near zeroes of the solution and its derivatives. Also, this free adaptation of stencils is not necessary in regions where the solution is smooth. Another problem is that ENO schemes are not cost effective on vector supercomputers such as CRAY C-90 because the stencil-choosing step involves heavy usage of logical statements, which perform poorly on such machines. The first problem could reduce the accuracy of ENO schemes for certain functions [12]; however, this can be remedied by embedding certain parameters (e.g., threshold and biasing factor) into the stencil choosing step so that the preferred linearly stable stencil is used in regions away from discontinuities. See [1, 3, 13].

The WENO scheme of Liu, Osher, and Chan [9] is another way to overcome these drawbacks while keeping the robustness and high order accuracy of ENO schemes. The idea is the following: instead of approximating the numerical flux using only one of the candidate stencils, one uses a convex combination of all the candidate stencils. Each of the candidate stencils is assigned a weight which determines the contribution of this stencil to the final approximation of the numerical flux. The weights can be defined in such a way that in smooth regions they approach certain optimal weights to achieve a higher order of accuracy (an $r$th-order ENO scheme leads to a $(2r-1)$th-order WENO scheme in the optimal case), while in regions near discontinuities, the stencils which contain the discontinuities are assigned a nearly zero weight. Thus essentially non-oscillatory property is achieved by emulating ENO schemes around discontinuities and a higher order of accuracy is obtained by emulating upstream central schemes with the optimal weights away from the discontinuities. WENO schemes completely remove the logical statements that appear in the ENO stencil choosing step. As a result, the WENO schemes run at least twice as fast as ENO schemes (see Section 7) on vector machines (e.g., CRAY C-90) and are not sensitive to round-off errors that arise in actual computation. Atkins [1] also has a version of ENO schemes using a different weighted average of stencils.

Another advantage of WENO schemes is that their flux is smoother than that of the ENO schemes. This smoothness enables us to prove convergence of WENO schemes for smooth solutions using Strang's technique [18]; see Section 6. According to our numerical tests, this smoothness also helps the steady state calculations; see Example 4 in Section 8.2.

In [9], the order of accuracy shown in the error tables (Tables 1–5 in [9]) seemed to suggest that the WENO schemes of Liu et al. are more accurate than what the truncation error analysis indicated. In Section 2, we carry out a more detailed error analysis for the WENO schemes and find that this "super-convergence" is indeed superficial: the "higher" order is caused by larger error on the coarser grids instead of smaller error on the finer grids. Our error analysis also suggests that the WENO schemes can be made more accurate than those in [9].

Since the weight on a candidate stencil has to vary according to the relative smoothness of this stencil to the other candidate stencils, the way of evaluating the smoothness of a stencil is crucial in the definition of the weight. In Section 3, we introduce a new way of measuring the smoothness of the numerical solution which is based upon minimizing the $L^2$ norm of the derivatives of the reconstruction polynomials, emulating the idea of minimizing the total variation of the approximations. This new measurement gives the optimal fifth-order accurate WENO scheme when $r = 3$ (the smoothness measurement in [9] gives a fourth-order accurate WENO scheme for $r = 3$).

Although the WENO schemes are faster than ENO schemes on vector supercomputers, they are only as fast as ENO schemes on serial computers. In Section 4, we present a simpler way of computing the weights for the approximation of Euler systems of gas dynamics. The simplification is aimed at reducing the floating point operations in the costly but necessary characteristic procedure and is motivated by the following observation: the only nonlinearity of a WENO scheme is in the computation of the weights. We suggest using pressure and entropy to compute the weights, instead of the local characteristic quantities. In this way one can exploit the linearity of the rest of the scheme. The resulting WENO schemes ($r = 3$) are about twice as fast as the original WENO scheme which uses local characteristic quantities to compute the weights (see Section 7). The same idea can also be applied to the original ENO schemes. Namely, we can use the undivided differences of pressure and entropy to replace the local characteristic quantities to choose the ENO stencil. This has been tested numerically but the results are not included in this paper since the main topic here is the WENO schemes.

WENO schemes have the same smearing at contact discontinuities as ENO schemes. There are mainly two techniques for sharpening the contact discontinuities for ENO schemes. One is Harten's subcell resolution [4] and the other is Yang's artificial compression (slope modification) [20]. Both were introduced in the cell average context. Later, Shu and Osher [15] translated them into the point value framework. In one-dimensional problems, the subcell resolution technique works slightly better than the artificial compression method. However, for two or higher dimensional problems, the latter is found to be more effective and handy to use [15]. We will highlight the key procedures of applying the artificial compression method to the WENO schemes in Section 5.

In Section 8, we test the WENO schemes (both the WENO schemes of Liu et al. and the modified WENO schemes) on several 1D and 2D model problems and compare them with ENO schemes to examine their capability in resolving shock and complicated flow structures. We conclude this paper with a brief summary in Section 9.

The time discretization of WENO schemes will be implemented by a class of high order TVD Runge–Kutta-type methods developed by Shu and Osher [14]. To solve the ordinary differential equation

$$\frac{du}{dt} = L(u), \tag{1.2}$$

where $L(u)$ is a discretization of the spatial operator, the third-order TVD Runge–Kutta is simply

$$
\begin{aligned}
u^{(1)} &= u^n + \Delta t\, L(u^n),\\
u^{(2)} &= \tfrac{3}{4} u^n + \tfrac{1}{4} u^{(1)} + \tfrac{1}{4} \Delta t\, L(u^{(1)}),\\
u^{n+1} &= \tfrac{1}{3} u^n + \tfrac{2}{3} u^{(2)} + \tfrac{2}{3} \Delta t\, L(u^{(2)}).
\end{aligned} \tag{1.3}
$$

Another useful, although not TVD, fourth-order Runge–Kutta scheme is

$$
\begin{aligned}
u^{(1)} &= u^n + \tfrac{1}{2} \Delta t\, L(u^n),\\
u^{(2)} &= u^n + \tfrac{1}{2} \Delta t\, L(u^{(1)}),\\
u^{(3)} &= u^n + \Delta t\, L(u^{(2)}),\\
u^{n+1} &= \tfrac{1}{3}\left(-u^n + u^{(1)} + 2u^{(2)} + u^{(3)}\right) + \tfrac{1}{6} \Delta t\, L(u^{(3)}).
\end{aligned} \tag{1.4}
$$

This fourth-order Runge–Kutta scheme can be made TVD by an increase of operation counts [14]. We will mainly use these two Runge–Kutta schemes in our numerical tests in Section 8. The third-order TVD Runge–Kutta scheme will be referred to as "RK-3" while the fourth-order (non-TVD) Runge–Kutta scheme will be referred to as "RK-4."

2. THE WENO SCHEMES OF LIU, OSHER, AND CHAN

In this section, we use the flux version of ENO schemes as our basis to formulate WENO schemes of Liu et al. and analyze their accuracy in a different way from that used in [9]. We use one-dimensional scalar conservation laws (i.e., $d = m = 1$ in (1.1)) as an example:

$$u_t + f(u)_x = 0. \tag{2.1}$$

Let us discretize the space into uniform intervals of size $\Delta x$ and denote $x_j = j\Delta x$. Various quantities at $x_j$ will be identified by the subscript $j$. The spatial operator of the WENO schemes, which approximates $-f(u)_x$ at $x_j$, will take the conservative form

$$L = -\frac{1}{\Delta x}\left(\hat f_{j+1/2} - \hat f_{j-1/2}\right), \tag{2.2}$$

where the numerical flux $\hat f_{j+1/2}$ approximates $h_{j+1/2} = h(x_{j+1/2})$ to a high order with $h(x)$ implicitly defined by [15]

$$f(u(x)) = \frac{1}{\Delta x}\int_{x-\Delta x/2}^{x+\Delta x/2} h(\xi)\, d\xi. \tag{2.3}$$

We can actually assume $f'(u) \ge 0$ for all $u$ in the range of our interest. For a general flux, i.e., $f'(u) \not\geq 0$, one can split it into two parts either globally or locally,

$$f(u) = f^+(u) + f^-(u), \tag{2.4}$$

where $df^+(u)/du \ge 0$ and $df^-(u)/du \le 0$. For example, one can define

$$f^\pm(u) = \tfrac{1}{2}\left(f(u) \pm \alpha u\right), \tag{2.5}$$

where $\alpha = \max |f'(u)|$ and the maximum is taken over the whole relevant range of $u$. This is the global Lax–Friedrichs (LF) flux splitting. For other flux splittings, especially the Roe flux splitting with entropy fix (RF), see [15] for details.

Let $\hat f^+_{j+1/2}$ and $\hat f^-_{j+1/2}$ be, respectively, the numerical fluxes obtained from the positive and negative parts of $f(u)$; we then have

$$\hat f_{j+1/2} = \hat f^+_{j+1/2} + \hat f^-_{j+1/2}. \tag{2.6}$$

Here we will only describe how $\hat f^+_{j+1/2}$ is computed in [9] on the basis of flux version of ENO schemes. For simplicity, we will drop the "+" sign in the superscript. The formulas for the negative part of the split flux are symmetric (with respect to $x_{j+1/2}$) and will not be shown.

As we know, the $r$th-order (in $L^1$ sense) ENO scheme chooses one "smoothest" stencil from $r$ candidate stencils and uses only the chosen stencil to approximate the flux $h_{j+1/2}$. Let us denote the $r$ candidate stencils by $S_k$, $k = 0, 1, \ldots, r-1$, where

$$S_k = (x_{j+k-r+1}, x_{j+k-r+2}, \ldots, x_{j+k}).$$

If the stencil $S_k$ happens to be chosen as the ENO interpolation stencil, then the $r$th-order ENO approximation of $h_{j+1/2}$ is

$$\hat f_{j+1/2} = q^r_k(f_{j+k-r+1}, \ldots, f_{j+k}), \tag{2.7}$$

where

$$q^r_k(g_0, \ldots, g_{r-1}) = \sum_{l=0}^{r-1} a^r_{k,l}\, g_l. \tag{2.8}$$

Here $a^r_{k,l}$, $0 \le k, l \le r-1$, are constant coefficients. For later use, we provide these coefficients for $r = 2, 3$ in Table I.

TABLE I
Coefficients $a^r_{k,l}$

r    k    l = 0    l = 1    l = 2
2    0    -1/2     3/2
2    1    1/2      1/2
3    0    1/3      -7/6     11/6
3    1    -1/6     5/6      1/3
3    2    1/3      5/6      -1/6

To just use the one smoothest stencil among the $r$ candidates for the approximation of $h_{j+1/2}$ is very desirable near discontinuities because it prohibits the usage of information on discontinuous stencils. However, it is not so desirable in smooth regions because all the candidate stencils carry equally smooth information and thus can be used together to give a higher order (higher than $r$, the order of the base ENO scheme) approximation to the flux $h_{j+1/2}$. In fact, one could use all the $r$ candidate stencils, which all together contain $(2r-1)$ grid values of $f$, to give a $(2r-1)$th-order approximation of $h_{j+1/2}$:

$$\hat f_{j+1/2} = q^{2r-1}_{r-1}(f_{j-r+1}, \ldots, f_{j+r-1}), \tag{2.9}$$

which is just the numerical flux of a $(2r-1)$th-order upstream central scheme. As we know, high order upstream central schemes (in space), combined with high order Runge–Kutta methods (in time), are stable and dissipative under appropriate CFL numbers and thus are convergent, according to Strang's convergence theory [18] when the solution of (1.1) is smooth (see Section 6). The above facts suggest that one could use the $(2r-1)$th-order upstream central scheme in smooth regions and only use the $r$th-order ENO scheme near discontinuities.

As in (2.7), each of the stencils can render an approximation of $h_{j+1/2}$. If the stencil is smooth, this approximation is $r$th-order accurate; otherwise, it is less accurate or even not accurate at all if the stencil contains a discontinuity. One could assign a weight $\omega_k$ to each candidate stencil $S_k$, $k = 0, 1, \ldots, r-1$, and use these weights to combine the $r$ different approximations to obtain the final approximation of $h_{j+1/2}$ as

$$\hat f_{j+1/2} = \sum_{k=0}^{r-1} \omega_k\, q^r_k(f_{j+k-r+1}, \ldots, f_{j+k}), \tag{2.10}$$

where $q^r_k(f_{j+k-r+1}, \ldots, f_{j+k})$ is defined in (2.8). To achieve essentially non-oscillatory property, one then requires the weight to adapt to the relative smoothness of $f$ on each candidate stencil such that any discontinuous stencil is effectively assigned a zero weight. In smooth regions, one can adjust the weight distribution such that the resulting approximation of the flux $\hat f_{j+1/2}$ is as close as possible to that given in (2.9).

Simple algebra gives the coefficients $C^r_k$ such that

$$q^{2r-1}_{r-1}(f_{j-r+1}, \ldots, f_{j+r-1}) = \sum_{k=0}^{r-1} C^r_k\, q^r_k(f_{j+k-r+1}, \ldots, f_{j+k}) \tag{2.11}$$

and $\sum_{k=0}^{r-1} C^r_k = 1$ for all $r \ge 2$. For $r = 2, 3$, these coefficients are given in Table II.

TABLE II
Optimal Weights $C^r_k$

         k = 0    k = 1    k = 2
r = 2    1/3      2/3      --
r = 3    1/10     6/10     3/10

Comparing (2.11) with (2.10), we get

$$\hat f_{j+1/2} = q^{2r-1}_{r-1}(f_{j-r+1}, \ldots, f_{j+r-1}) + \sum_{k=0}^{r-1} (\omega_k - C^r_k)\, q^r_k(f_{j+k-r+1}, \ldots, f_{j+k}). \tag{2.12}$$

Recalling (2.9), we see that the first term on the right-hand side of the above equation is a $(2r-1)$th-order approximation of $h_{j+1/2}$. Since $\sum_{k=0}^{r-1} C^r_k = 1$, if we require $\sum_{k=0}^{r-1} \omega_k = 1$, the last summation term can be written as

$$\sum_{k=0}^{r-1} (\omega_k - C^r_k)\left(q^r_k(f_{j+k-r+1}, \ldots, f_{j+k}) - h_{j+1/2}\right). \tag{2.13}$$

Each term in the last summation can be made $O(h^{2r-1})$ if

$$\omega_k = C^r_k + O(h^{r-1}) \tag{2.14}$$

for $k = 0, 1, \ldots, r-1$. Here, $h = \Delta x$. Thus $C^r_k$ will bear the name of optimal weight.

The question now is how to define the weight such that (2.14) is satisfied in smooth regions while essentially non-oscillatory property is achieved. In [9], the weight $\omega_k$ for stencil $S_k$ is defined by

$$\omega_k = \frac{\alpha_k}{\alpha_0 + \cdots + \alpha_{r-1}}, \tag{2.15}$$

where

$$\alpha_k = \frac{C^r_k}{(\epsilon + IS_k)^p}, \qquad k = 0, 1, \ldots, r-1. \tag{2.16}$$

Here $\epsilon$ is a positive real number which is introduced to avoid the denominator becoming zero (in our later tests, we will take $\epsilon = 10^{-6}$; our numerical tests indicate that the result is not sensitive to the choice of $\epsilon$, as long as it is in the range of $10^{-5}$ to $10^{-7}$); the power $p$ will be discussed in a moment; $IS_k$ in (2.16) is a smoothness measurement of the flux function on the $k$th candidate stencil. It is easy to see that $\sum_{k=0}^{r-1} \omega_k = 1$. To satisfy (2.14), it suffices to have (through a Taylor expansion analysis)

$$IS_k = D\,(1 + O(h^{r-1})) \tag{2.17}$$

for $k = 0, 1, \ldots, r-1$, where $D$ is some non-zero quantity independent of $k$.

As we know, an ENO scheme chooses the "smoothest" ENO stencil by comparing a hierarchy of undivided differences. This is because these undivided differences can be used to measure the smoothness of the numerical flux on a stencil. In [9], $IS_k$ is defined as

$$IS_k = \sum_{l=1}^{r-1} \sum_{i=1}^{r-l} \frac{\left(f[j+k+i-r,\, l]\right)^2}{r-l}, \tag{2.18}$$

where $f[\cdot, \cdot]$ is the $l$th undivided difference:

$$
\begin{aligned}
f[j, 0] &= f_j,\\
f[j, l] &= f[j+1, l-1] - f[j, l-1], \qquad l = 1, \ldots, r-1.
\end{aligned}
$$

For example, when $r = 2$, we have

$$IS_k = \left(f[j+k-1, 1]\right)^2, \qquad k = 0, 1. \tag{2.19}$$

When $r = 3$, (2.18) gives

$$IS_k = \tfrac{1}{2}\left(\left(f[j+k-2, 1]\right)^2 + \left(f[j+k-1, 1]\right)^2\right) + \left(f[j+k-2, 2]\right)^2, \qquad k = 0, 1, 2. \tag{2.20}$$

In smooth regions, Taylor expansion analysis of (2.18) gives

$$IS_k = (f'h)^2\,(1 + O(h)), \qquad k = 0, 1, \ldots, r-1, \tag{2.21}$$

where $f' = f'(u_j)$. Note the $O(h)$ term is not the $O(h^{r-1})$ that we would want to have (see (2.17)). Thus in smooth monotone regions, i.e., $f' \ne 0$, we have

$$\omega_k = C^r_k + O(h), \qquad k = 0, 1, \ldots, r-1. \tag{2.22}$$

Recalling (2.12), we see that the WENO schemes with the smoothness measurement given by (2.18) are $(r+1)$th-order accurate in smooth monotone regions of $f(u(x))$. This result was proven in [9] using a different approach. For $r = 2$, this is optimal in the sense that the third-order upstream central scheme is approximated in most smooth regions. However, this is not optimal for $r = 3$, for which this measurement can only give fourth-order accuracy while the optimal upstream central scheme is fifth-order accurate. We will introduce a new measurement in the next section which will result in an optimal order accurate WENO scheme for the $r = 3$ case.

When $r = 3$, Taylor expansion of (2.20) gives

$$IS_0 = \tfrac{1}{2}\left(\left(f'h - \tfrac{3}{2} f''h^2\right)^2 + \left(f'h - \tfrac{1}{2} f''h^2\right)^2\right) + (f''h^2)^2 + O(h^5), \tag{2.23}$$

$$IS_1 = \tfrac{1}{2}\left(\left(f'h - \tfrac{1}{2} f''h^2\right)^2 + \left(f'h + \tfrac{1}{2} f''h^2\right)^2\right) + (f''h^2)^2 + O(h^5), \tag{2.24}$$

$$IS_2 = \tfrac{1}{2}\left(\left(f'h + \tfrac{1}{2} f''h^2\right)^2 + \left(f'h + \tfrac{3}{2} f''h^2\right)^2\right) + (f''h^2)^2 + O(h^5). \tag{2.25}$$

We can see that the second-order terms are different from stencil to stencil. Thus (2.22) is no longer valid at critical points of $f(u(x))$, which implies that the WENO scheme of Liu et al. for $r = 3$ is only third-order accurate at these points. In fact, the weights computed from the smoothness measurement (2.18) diverge far away from the optimal weights near critical points (see Fig. 1 in the next section) on coarse grids (10 to 80 grid points per wave). But on fine grids, since the smoothness measurements $IS_k$ for all $k$ are relatively smaller than the non-zero constant $\epsilon$ in (2.16), the weights become close to the optimal weights. Therefore the "super-convergence" phenomena that appeared in Tables 1–5 in [9] are caused by large error commitment on coarse grids and less error commitment on finer grids when using the errors of the fifth-order central scheme as reference (see Tables III and IV).

At discontinuities, it is typical that one or more of the $r$ candidate stencils reside in smooth regions of the numerical solution while other stencils contain the discontinuities. The size of the discontinuities is always $O(1)$ and does not change when the grid is refined. So we have for a smooth stencil $S_k$,

$$IS_k = O(h^2) \tag{2.26}$$

and for a non-smooth stencil $S_l$,

$$IS_l = O(1). \tag{2.27}$$

From the definition of the weights (2.15), we can see that, for this non-smooth stencil $S_l$, the corresponding weight $\omega_l$ satisfies

$$\omega_l = O(h^{2p}). \tag{2.28}$$

Therefore for small $h$ and any positive integer power $p$, the weight assigned to the non-smooth stencil vanishes as $h \to 0$. Note, if there is more than one smooth stencil in the $r$ candidates, from the definition of the weights in (2.15), we expect each of the smooth stencils will get a weight which is $O(1)$. In this case, the weights do not exactly resemble the "ENO digital weights." However, if a stencil is smooth, the information that it contains is useful and should be utilized. In fact, in our extensive numerical experiments, we find the WENO schemes in [9] work very well at shocks. We also find that $p = 2$ is adequate to obtain essentially non-oscillatory approximations at least for $r = 2, 3$, although it is suggested in [9] that $p$ should be taken as $r$, the order of the base ENO schemes. We will use $p = 2$ for all our numerical tests. Notice that, in discussing accuracy near discontinuities, we are simply concerned with spatial approximation error. The error due to time evolution is not considered.

In summary, WENO schemes of Liu et al. defined by (2.10), (2.15), and (2.18) have the following properties:

1. They involve no logical statements which appear in the base ENO schemes.

2. The WENO scheme based on the $r$th-order ENO scheme is $(r+1)$th-order accurate in smooth monotone regions, although this is still not as good as the optimal order $(2r-1)$.

3. They achieve essentially non-oscillatory property by emulating ENO schemes at discontinuities.

4. They are smooth in the sense that the numerical flux $\hat f_{j+1/2}$ is a smooth function of all its arguments (for a general flux, this is also true if a smooth flux splitting method is used, e.g., global Lax–Friedrichs flux splitting).

3. A NEW SMOOTHNESS MEASUREMENT

In this section, we present a new way of measuring the smoothness of the numerical solution on a stencil which can be used to replace (2.18) to form a new weight. As we know, on each stencil $S_k$, we can construct a $(r-1)$th-order interpolation polynomial, which if evaluated at $x = x_{j+1/2}$, renders the approximation of $h_{j+1/2}$ given in (2.7). Since total variation is a good measurement for smoothness, it would be desirable to minimize the total variation for the approximation. Consideration for a smooth flux and for the role of higher order variations leads us to the following measurement for smoothness: let the interpolation polynomial on stencil $S_k$ be $q_k(x)$; we define

$$IS_k = \sum_{l=1}^{r-1} \int_{x_{j-1/2}}^{x_{j+1/2}} h^{2l-1} \left(q_k^{(l)}\right)^2 dx, \tag{3.1}$$

where $q_k^{(l)}$ is the $l$th-derivative of $q_k(x)$. The right-hand side of (3.1) is just a sum of the $L^2$ norms of all the derivatives of the interpolation polynomial $q_k(x)$ over the interval $(x_{j-1/2}, x_{j+1/2})$. The term $h^{2l-1}$ is to remove $h$-dependent factors in the derivatives of the polynomials. This is similar to, but smoother than, the total variation measurement based on the $L^1$ norm. It also renders a more accurate WENO scheme for the case $r = 3$, when used with (2.15) and (2.16).

When $r = 2$, (3.1) gives the same measurement as (2.18). However, they become different for $r \ge 3$. For $r = 3$, (3.1) gives

$$IS_0 = \tfrac{13}{12}\left(f_{j-2} - 2f_{j-1} + f_j\right)^2 + \tfrac{1}{4}\left(f_{j-2} - 4f_{j-1} + 3f_j\right)^2, \tag{3.2}$$

$$IS_1 = \tfrac{13}{12}\left(f_{j-1} - 2f_j + f_{j+1}\right)^2 + \tfrac{1}{4}\left(f_{j-1} - f_{j+1}\right)^2, \tag{3.3}$$

$$IS_2 = \tfrac{13}{12}\left(f_j - 2f_{j+1} + f_{j+2}\right)^2 + \tfrac{1}{4}\left(3f_j - 4f_{j+1} + f_{j+2}\right)^2. \tag{3.4}$$

In smooth regions, Taylor expansion of (3.2)–(3.4) gives, respectively,

$$IS_0 = \tfrac{13}{12}(f''h^2)^2 + \tfrac{1}{4}\left(2f'h - \tfrac{2}{3} f'''h^3\right)^2 + O(h^6), \tag{3.5}$$

$$IS_1 = \tfrac{13}{12}(f''h^2)^2 + \tfrac{1}{4}\left(2f'h + \tfrac{1}{3} f'''h^3\right)^2 + O(h^6), \tag{3.6}$$

$$IS_2 = \tfrac{13}{12}(f''h^2)^2 + \tfrac{1}{4}\left(2f'h - \tfrac{2}{3} f'''h^3\right)^2 + O(h^6), \tag{3.7}$$

where $f''' = f'''(u_j)$. If $f' \ne 0$, then

$$IS_k = (f'h)^2\,(1 + O(h^2)), \qquad k = 0, 1, 2, \tag{3.8}$$

which means the weights (see (2.15)) resulting from this measurement satisfy (2.17) for $r = 3$; thus we obtain a fifth-order (the optimal order for $r = 3$) accurate WENO scheme.

Moreover, this measurement is also more accurate at critical points of $f(u(x))$. When $f' = 0$, we have

$$IS_k = \tfrac{13}{12}(f''h^2)^2\,(1 + O(h^2)), \qquad k = 0, 1, 2, \tag{3.9}$$

which implies that the weights resulting from the measurement (3.1) are also fifth-order accurate at critical points.

To illustrate the different behavior of the two measurements (i.e., (2.18) and (3.1)) for $r = 3$ in smooth monotone regions, near critical points or near discontinuities, we compute the weights $\omega_0$, $\omega_1$, and $\omega_2$ for the function

$$
f_j = \begin{cases}
\sin 2\pi x_j & \text{if } 0 \le x_j \le 0.5,\\
1 - \sin 2\pi x_j & \text{if } 0.5 < x_j \le 1,
\end{cases} \tag{3.10}
$$

at all half grid points $x_{j+1/2}$, where $x_j = j\Delta x$ and $x_{j+1/2} = x_j + \Delta x/2$. We display the weights $\omega_0$ and $\omega_1$ in Fig. 1 ($\omega_2 = 1 - \omega_0 - \omega_1$ is omitted in the picture). Note the optimal weight for $\omega_0$ is $C^3_0 = 0.1$ and for $\omega_1$ is $C^3_1 = 0.6$. We can see that the weights computed with (2.18) (referred to as the original measurement in Fig. 1) are far less optimal than those with the new measurement, especially around the critical points $x = \tfrac{1}{4}, \tfrac{3}{4}$. However, near the discontinuity $x = \tfrac{1}{2}$, the two measurements behave similarly; the discontinuous stencil always gets an almost-zero weight. Moreover, for the grid point immediately to the left of the discontinuity, $\omega_0 \approx \tfrac{1}{7}$ and $\omega_1 \approx \tfrac{6}{7}$, which means, when only one of the three stencils is non-smooth, the other two stencils get $O(1)$ weights. Unfortunately, these weights do not approximate a fourth-order scheme at this point. A similar situation happens to the point just to the right of the discontinuity.

FIG. 1. A comparison of the two smoothness measurements.

For simplicity of notation, we use WENO-X-3 to stand for the third-order WENO scheme (i.e., $r = 2$, for which the original and new smoothness measurement coincide), where X = LF, Roe, RF refer, respectively, to the global Lax–Friedrichs flux splitting, Roe's flux splitting, and Roe's flux splitting with entropy fix. The accuracy of this scheme has been tested in [9]. We will use WENO-X-4 to represent the fourth-order WENO scheme of Liu et al. (i.e., $r = 3$ with the original smoothness measurement of Liu et al.) and WENO-X-5 to stand for the fifth-order WENO scheme resulting from the new smoothness measurement. In later sections, we will also use ENO-X-Y to denote conventional ENO schemes of "Y"th order with "X" flux splitting. We caution the reader that the orders here are in $L^1$ sense. So ENO-RF-4 in our notation refers to the same scheme as ENO-RF-3 in [15].

In the following we test the accuracy of WENO schemes on the linear equation:

$$u_t + u_x = 0, \qquad -1 \le x \le 1, \tag{3.11}$$

$$u(x, 0) = u_0(x), \qquad \text{periodic}. \tag{3.12}$$

In Table III, we show the errors of the two schemes at $t = 1$ for the initial condition $u_0(x) = \sin(\pi x)$ and compare them with the errors of the fifth-order upstream central scheme (referred to as CENTRAL-5 in the following tables). We can see that WENO-RF-4 is more accurate than WENO-RF-5 on the coarsest grid ($N = 10$) but becomes less accurate than WENO-RF-5 on the finer grids. Moreover, WENO-RF-5 gives the expected order of accuracy starting at about 40 grid points. In this example and the one for Table IV, we have adjusted the time step to $\Delta t \sim (\Delta x)^{5/4}$ so that the fourth-order Runge–Kutta in time is effectively fifth-order.

In Table IV, we show errors for the initial condition $u_0(x) = \sin^4(\pi x)$. Again we see that WENO-RF-4 is more accurate than WENO-RF-5 on the coarsest grid ($N = 20$) but becomes less accurate than WENO-RF-5 on finer grids. The order of accuracy for WENO settles down later than in the previous example. Notice that this is the example for which ENO schemes lose their accuracy [12].

4. A SIMPLE WAY FOR COMPUTING WEIGHTS FOR EULER SYSTEMS

For system (1.1) with $d > 1$, the derivatives $\partial f_i/\partial x_i$, $i = 1, \ldots, d$, are approximated dimension by dimension; for

TABLE III
Accuracy on $u_t + u_x = 0$ with $u_0(x) = \sin(\pi x)$

Method       N     L∞ error    L∞ order   L1 error    L1 order
WENO-RF-4    10    1.31e-2     --         7.93e-3     --
             20    3.00e-3     2.13       1.32e-3     2.59
             40    4.27e-4     2.81       1.56e-4     3.08
             80    5.17e-5     3.05       1.13e-5     3.79
             160   4.99e-6     3.37       6.88e-7     4.04
             320   3.44e-7     3.86       2.74e-8     4.65
WENO-RF-5    10    2.98e-2     --         1.60e-2     --
             20    1.45e-3     4.36       7.41e-4     4.43
             40    4.58e-5     4.99       2.22e-5     5.06
             80    1.48e-6     4.95       6.91e-7     5.01
             160   4.41e-8     5.07       2.17e-8     4.99
             320   1.35e-9     5.03       6.79e-10    5.00
CENTRAL-5    10    4.98e-3     --         3.07e-3     --
             20    1.60e-4     4.96       9.92e-5     4.95
             40    5.03e-6     4.99       3.14e-6     4.98
             80    1.57e-7     5.00       9.90e-8     4.99
             160   4.91e-9     5.00       3.11e-9     4.99
             320   1.53e-10    5.00       9.73e-11    5.00
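The fifth-order construction of Section 3 can be sketched in a few lines. The code below is a minimal illustration in plain Python, not the authors' implementation. It combines the coefficients of Table I, the optimal weights of Table II, the weights (2.15)–(2.16) with $\epsilon = 10^{-6}$ and $p = 2$, and the smoothness measurement (3.2)–(3.4), whose coefficients are 13/12 and 1/4. The function names `smoothness`, `weno5_weights`, and `weno5_flux` are hypothetical, and only the positive part of a split flux is handled.

```python
# Table I, r = 3: coefficients a^3_{k,l} of the three candidate stencils.
A3 = (
    (1 / 3, -7 / 6, 11 / 6),
    (-1 / 6, 5 / 6, 1 / 3),
    (1 / 3, 5 / 6, -1 / 6),
)
# Table II, r = 3: optimal weights C^3_k.
C3 = (0.1, 0.6, 0.3)


def smoothness(fm2, fm1, f0, fp1, fp2):
    """Smoothness measurements IS_0, IS_1, IS_2 of (3.2)-(3.4)."""
    is0 = 13 / 12 * (fm2 - 2 * fm1 + f0) ** 2 + 0.25 * (fm2 - 4 * fm1 + 3 * f0) ** 2
    is1 = 13 / 12 * (fm1 - 2 * f0 + fp1) ** 2 + 0.25 * (fm1 - fp1) ** 2
    is2 = 13 / 12 * (f0 - 2 * fp1 + fp2) ** 2 + 0.25 * (3 * f0 - 4 * fp1 + fp2) ** 2
    return is0, is1, is2


def weno5_weights(f, eps=1e-6, p=2):
    """Weights omega_k of (2.15)-(2.16); f = (f_{j-2}, ..., f_{j+2})."""
    alpha = [c / (eps + s) ** p for c, s in zip(C3, smoothness(*f))]
    total = sum(alpha)
    return [a / total for a in alpha]


def weno5_flux(f, eps=1e-6, p=2):
    """Numerical flux f_hat_{j+1/2} of (2.10) for r = 3."""
    w = weno5_weights(f, eps, p)
    fhat = 0.0
    for k in range(3):
        window = f[k:k + 3]  # values on S_k = (x_{j+k-2}, x_{j+k-1}, x_{j+k})
        fhat += w[k] * sum(a * g for a, g in zip(A3[k], window))
    return fhat


if __name__ == "__main__":
    # Samples of x^2 at x = -2..2: a critical point at x_j = 0.
    print(weno5_weights((4.0, 1.0, 0.0, 1.0, 4.0)))
    # A unit jump between x_j and x_{j+1}.
    print(weno5_weights((0.0, 0.0, 0.0, 1.0, 1.0)))
```

For the samples of $x^2$ the three measurements coincide, so the weights reduce to the optimal weights of Table II, in line with (3.9). For the step data only $S_0$ is smooth, and it receives almost all of the weight.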

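The third-order TVD Runge–Kutta scheme (1.3) used for the time discretization is short enough to write out directly. The sketch below is illustrative only: it stores the solution as a plain Python list and takes the spatial operator $L$ as a callable. The names `rk3_step`, `integrate`, and `decay_error` are hypothetical, and the test problem $du/dt = -u$ is an assumption chosen because its exact solution is known.

```python
import math


def rk3_step(u, dt, L):
    """One step of the third-order TVD Runge-Kutta scheme (1.3)."""
    u1 = [a + dt * b for a, b in zip(u, L(u))]
    u2 = [0.75 * a + 0.25 * b + 0.25 * dt * c for a, b, c in zip(u, u1, L(u1))]
    return [a / 3 + 2 * b / 3 + 2 * dt * c / 3 for a, b, c in zip(u, u2, L(u2))]


def integrate(u0, t_end, n_steps, L):
    """Advance du/dt = L(u) from t = 0 to t_end with n_steps equal steps."""
    u = list(u0)
    dt = t_end / n_steps
    for _ in range(n_steps):
        u = rk3_step(u, dt, L)
    return u


def decay_error(n_steps):
    """Error at t = 1 for du/dt = -u, u(0) = 1 (exact solution exp(-1))."""
    u = integrate([1.0], 1.0, n_steps, lambda v: [-x for x in v])
    return abs(u[0] - math.exp(-1.0))


if __name__ == "__main__":
    for n in (10, 20, 40):
        print(n, decay_error(n))
```

Halving the step size should reduce the error by a factor close to eight, which is the signature of third-order accuracy. In a WENO code, `L` would be the conservative spatial operator (2.2) instead of the scalar test operator used here.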
Communicative Competence ScaleWiemann (1977) created the Communicative Competence Scale (CCS) to measure communicative competence, an ability "to choose among available communicative behaviors" to accomplish one's own "interpersonal goals during an encounter while maintaining the face and line" of "fellow interactants within the constraints of the situation" (p. 198). Originally, 57 Likert-type items were created to assess five dimensions of interpersonal competence (General Competence, Empathy Affiliation/Support, Behavioral Flexibility, and Social Relaxation) and a dependent measure- (interaction Management). Some 239 college students used the scale to rate videotaped confederates enacting one of four role-play interaction management conditions (high, medium, low, rude). The 36 items that discriminated the best between conditions were used in the final instrument. Factor analysis resulted in two main factors-general and relaxation-indicating that the subjects did not differentiate among the dimensions as the model originally predicted.Subjects use the CCS to assess another person's communicative competence by responding to 36 items using Likert scales that range from strongly agree (5) to strongly disagree (1). The scale takes less than 5 minutes to complete. Some researchers have adapted the other-report format to self-report and partner-report. These formats are available from the author.RELIABILITYThe CCS appears to be internally consistent. Wiemann (1977) reported a .96 coefficient alpha (and .74 magnitude of experimental effect) for the 36item revised instrument. McLaughlin and Cody (1982) used a 30-item version for college students to rate their partners after 30 minutes of conversation and reported an alpha of .91. Jones and Brunner (1984) had college students rate audio-taped interactions and reported an overall alpha of .94 to .95; subscale scores had alphas ranging from .68 to .82. 
Street, Mulac, and Wiemann (1988) had college students rate each other on communicative competence and reported an alpha of .84. The 36-item self-report format version is also reliable: Cupach and Spitz berg (1983) reported an alpha of .90, Hazleton and Cupach (1986) reported an alpha of .91, Cegala, Savage, Brunner, and Conrad (1982) reported an alpha of .85, and Query, Parry, and Flint (1992) reported an alpha of .86,Profile by Rebecca R. Rubin.VALIDITYTwo studies found evidence of construct validity. First, McLaughlin and Cody (1982) found that interactants in conversations in which there were multiple lapses of time rated each other lower on communicative competence. Second, Street et al. (1988) found that conversants' speech rate, vocal back channeling, duration of speech, and rate of interruption were related to their communicative competence scores; they also found that conversants rated their partners significantly more favorably than did observers.Various studies have provided evidence of concurrent validity. Cupach and Spitzberg (1983) used the dispositional self-report format and found that the CCS was strongly correlated with two other dispositions: communication adaptability and trait self-rated competence. The CCS was also modestly related to situational, conversation-specific measures of feeling good and self-rated competence. Hazleton and Cupach (1986) found a moderate relationship between communicative competence and both ontological knowledge about interpersonal communication and interpersonal communication apprehension. Backlund (1978) found communicative competence was related to social insight and open-mindedness. Douglas (1991) reported inverse relationships between communication competence and uncertainty and apprehension during initial meetings, And Query et al. (1992) found that nontraditional students, those high in communication competence, had more social supports and were more satisfied with these supports.In addition, Cegala et al. 
(1982) compared 326 college students' CCS and Interaction Involvement Scale scores. All three dimensions of interaction involvement were positively correlated with the CCS, but only perceptiveness correlated significantly with all five dimensions for both men and women. Responsiveness was related to behavioral flexibility, affiliation/support, and social relaxation, and attentiveness was related to impression management.

COMMENTS

Although this scale has existed for a number of years and the original article has been cited numerous times, relatively few research studies have actually used the CCS. As reported by Perotti and DeWine (1987), problems with the factor structure and the Likert-type format may be reasons why. They suggested that the instrument be used as a composite measure of communicative competence rather than breaking the scale into subscales, and this appears to be good advice. Spitzberg (1988, 1989) viewed the instrument as well conceived, suitable for observant or conversant rating situations, and aimed at "normal" adolescent or adult populations, yet Backlund (1978) found little correlation between peer-perceived competence and expert-perceived competence when using the CCS. The scale has been used only with college student populations.

LOCATION

Wiemann, J. M. (1977). Explication and test of a model of communicative competence. Human Communication Research, 3, 195-213.

REFERENCES

Backlund, P. M. (1978). Speech communication correlates of perceived communication competence (Doctoral dissertation, University of Denver, 1977). Dissertation Abstracts International, 38, 3800A.
Cegala, D. J., Savage, G. T., Brunner, C. C., & Conrad, A. B. (1982). An elaboration of the meaning of interaction involvement: Toward the development of a theoretical concept. Communication Monographs, 49, 229-248.
Cupach, W. R., & Spitzberg, B. H. (1983). Trait versus state: A comparison of dispositional and situational measures of interpersonal communication competence.
Western Journal of Speech Communication, 47, 364-379.
Douglas, W. (1991). Expectations about initial interaction: An examination of the effects of global uncertainty. Human Communication Research, 17, 355-384.
Hazleton, V., Jr., & Cupach, W. R. (1986). An exploration of ontological knowledge: Communication competence as a function of the ability to describe, predict, and explain. Western Journal of Speech Communication, 50, 119-132.
Jones, T. S., & Brunner, C. C. (1984). The effects of self-disclosure and sex on perceptions of interpersonal communication competence. Women's Studies in Communication, 7, 23-37.
McLaughlin, M. L., & Cody, M. J. (1982). Awkward silences: Behavioral antecedents and consequences of the conversational lapse. Human Communication Research, 8, 299-316.
Perotti, V. S., & DeWine, S. (1987). Competence in communication: An examination of three instruments. Management Communication Quarterly, 1, 272-287.
Query, J. L., Parry, D., & Flint, L. J. (1992). The relationship among social support, communication competence, and cognitive depression for nontraditional students. Journal of Applied Communication Research, 20, 78-94.
Spitzberg, B. H. (1988). Communication competence: Measures of perceived effectiveness. In C. H. Tardy (Ed.), A handbook for the study of human communication: Methods and instruments for observing, measuring, and assessing communication processes (pp. 67-105). Norwood, NJ: Ablex.
Spitzberg, B. H. (1989). Handbook of interpersonal competence research. New York: Springer-Verlag.
Street, R. L., Jr., Mulac, A., & Wiemann, J. M. (1988). Speech evaluation differences as a function of perspective (participant versus observer) and presentational medium. Human Communication Research, 14, 333-363.

Communicative Competence Scale*

Instructions: Complete the following questionnaire/scale with the subject (S) in mind.
Write in one of the sets of letters before each numbered question based upon whether you: strongly agree (SA), agree (A), are undecided or neutral (?), disagree (D), or strongly disagree (SD). Always keep the subject in mind as you answer.

______ 1. S finds it easy to get along with others.
______ 2. S can adapt to changing situations.
______ 3. S treats people as individuals.
______ 4. S interrupts others too much.
______ 5. S is "rewarding" to talk to.
______ 6. S can deal with others effectively.
______ 7. S is a good listener.
______ 8. S's personal relations are cold and distant.
______ 9. S is easy to talk to.
______ 10. S won't argue with someone just to prove he/she is right.
______ 11. S's conversation behavior is not "smooth."
______ 12. S ignores other people's feelings.
______ 13. S generally knows how others feel.
______ 14. S lets others know he/she understands them.
______ 15. S understands other people.
______ 16. S is relaxed and comfortable when speaking.
______ 17. S listens to what people say to him/her.
______ 18. S likes to be close and personal with people.
______ 19. S generally knows what type of behavior is appropriate in any given situation.
______ 20. S usually does not make unusual demands on his/her friends.
______ 21. S is an effective conversationalist.
______ 22. S is supportive of others.
______ 23. S does not mind meeting strangers.
______ 24. S can easily put himself/herself in another person's shoes.
______ 25. S pays attention to the conversation.
______ 26. S is generally relaxed when conversing with a new acquaintance.
______ 27. S is interested in what others have to say.
______ 28. S doesn't follow the conversation very well.
______ 29. S enjoys social gatherings where he/she can meet new people.
______ 30. S is a likeable person.
______ 31. S is flexible.
______ 32. S is not afraid to speak with people in authority.
______ 33. People can go to S with their problems.
______ 34. S generally says the right thing at the right time.
______ 35. S likes to use his/her voice and body expressively.
______ 36. S is sensitive to others' needs of the moment.

Note. Items 4, 8, 11, 12, and 28 are reverse-coded before summing the 36 items. For the "Partner" version, "S" is replaced by "My partner" and by "my long-standing relationship partner" in the instructions. For the "Self-Report" version, "S" is replaced by "I" and statements are adjusted for first-person singular.
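
The scoring rule in the note can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `score_ccs` and the dictionary layout are hypothetical, the 5-to-1 coding follows the description of the scale (strongly agree = 5, strongly disagree = 1), and reverse-coding is taken to mean 6 minus the raw value.

```python
# Illustrative scoring sketch; names and data layout are hypothetical.
LIKERT = {"SA": 5, "A": 4, "?": 3, "D": 2, "SD": 1}
REVERSED_ITEMS = {4, 8, 11, 12, 28}  # items listed in the note
N_ITEMS = 36


def score_ccs(responses):
    """Sum the 36 item scores after reverse-coding the negatively worded items.

    responses maps each item number (1..36) to one of SA, A, ?, D, SD.
    """
    if set(responses) != set(range(1, N_ITEMS + 1)):
        raise ValueError("expected one response for each of items 1..36")
    total = 0
    for item, letters in responses.items():
        value = LIKERT[letters]
        if item in REVERSED_ITEMS:
            value = 6 - value  # assumed reverse-coding: 5 <-> 1, 4 <-> 2
        total += value
    return total


# A rater who answers "undecided" throughout lands at the scale midpoint.
neutral = {item: "?" for item in range(1, N_ITEMS + 1)}
print(score_ccs(neutral))  # 108
```

Under these assumptions the composite ranges from 36 to 180, which fits the recommendation above to use the CCS as a single composite measure instead of subscales.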

Fabry-Perot Fundamental-Mode Resonance (English)

The Fabry-Perot Resonance

Optics, the study of light and its properties, has been a subject of fascination for scientists and researchers for centuries. One of the fundamental phenomena in optics is the Fabry-Perot resonance, named after the French physicists Charles Fabry and Alfred Perot, who first described it in the late 19th century. This resonance effect has numerous applications in various fields, ranging from telecommunications to quantum physics, and its understanding is crucial in the development of advanced optical technologies.

The Fabry-Perot resonance occurs when light is reflected multiple times between two parallel, partially reflective surfaces, known as mirrors. This creates a standing wave pattern within the cavity formed by the mirrors, where the light waves interfere constructively and destructively to produce a series of sharp peaks and valleys in the transmitted and reflected light intensity. The specific wavelengths at which the constructive interference occurs are known as the resonant wavelengths of the Fabry-Perot cavity.

The resonant wavelengths of a Fabry-Perot cavity are determined by the distance between the mirrors, the refractive index of the material within the cavity, and the wavelength of the incident light. When the round-trip optical path length, which is twice the product of the refractive index and the physical distance between the mirrors, is an integer multiple of the wavelength of the incident light (at normal incidence), the light waves interfere constructively, resulting in a high-intensity transmission through the cavity. Conversely, when the round-trip optical path length is not an integer multiple of the wavelength, the light waves interfere destructively, leading to a low-intensity transmission.

The sharpness of the resonant peaks in a Fabry-Perot cavity is determined by the reflectivity of the mirrors. Highly reflective mirrors result in a higher finesse, which is a measure of the ratio of the spacing between the resonant peaks to their width. This high finesse allows for the creation of narrow-linewidth, high-resolution optical filters and laser cavities, which are essential components in various optical systems.

One of the key applications of the Fabry-Perot resonance is in the field of optical telecommunications. Fiber-optic communication systems often utilize Fabry-Perot filters to select specific wavelength channels for data transmission, enabling the efficient use of the available bandwidth in fiber-optic networks. These filters can be tuned by adjusting the mirror separation or the refractive index of the cavity, allowing for dynamic wavelength selection and reconfiguration of the communication system.

Another important application of the Fabry-Perot resonance is in the field of laser technology. Fabry-Perot cavities are commonly used as the optical resonator in various types of lasers, providing the necessary feedback to sustain the lasing process. The high finesse of the Fabry-Perot cavity allows for the generation of highly monochromatic and coherent light, which is crucial for applications such as spectroscopy, interferometry, and precision metrology.

In the realm of quantum physics, the Fabry-Perot resonance plays a crucial role in the study of cavity quantum electrodynamics (cQED). In cQED, atoms or other quantum systems are placed inside a Fabry-Perot cavity, where the strong interaction between the atoms and the confined electromagnetic field can lead to the observation of fascinating quantum phenomena, such as the Purcell effect, vacuum Rabi oscillations, and the generation of nonclassical states of light.

Furthermore, the Fabry-Perot resonance has found applications in the field of optical sensing, where it is used to detect small changes in physical parameters, such as displacement, pressure, or temperature. The high sensitivity and stability of Fabry-Perot interferometers make them valuable tools in various sensing and measurement applications, ranging from seismic monitoring to the detection of gravitational waves.

The Fabry-Perot resonance is a fundamental concept in optics that has enabled the development of numerous advanced optical technologies. Its versatility and importance in various fields of science and engineering have made it a subject of continuous research and innovation. As the field of optics continues to advance, the Fabry-Perot resonance will undoubtedly play an increasingly crucial role in shaping the future of optical systems and applications.
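
The resonance condition and the role of mirror reflectivity described in this essay can be made concrete with a short numerical sketch. It assumes an idealized cavity that the essay does not spell out: two identical lossless mirrors of intensity reflectivity R, normal incidence, and a uniform refractive index n. Under those assumptions the resonant wavelengths are 2nL/m for integer m, the transmitted intensity follows the Airy function, and the finesse is approximately pi*sqrt(R)/(1 - R). The function names are illustrative only.

```python
import math


def resonant_wavelengths(n, length_m, m_min, m_max):
    """Wavelengths (m) where the round trip 2*n*L equals m whole wavelengths."""
    return [2.0 * n * length_m / m for m in range(m_min, m_max + 1)]


def transmission(wavelength_m, n, length_m, reflectivity):
    """Airy transmission of an ideal lossless cavity at normal incidence."""
    delta = 4.0 * math.pi * n * length_m / wavelength_m  # round-trip phase
    coeff = 4.0 * reflectivity / (1.0 - reflectivity) ** 2
    return 1.0 / (1.0 + coeff * math.sin(delta / 2.0) ** 2)


def finesse(reflectivity):
    """Approximate ratio of peak spacing to peak width for reflectivity R."""
    return math.pi * math.sqrt(reflectivity) / (1.0 - reflectivity)


# Example: a 1 mm air-spaced cavity (n = 1) with 99 % reflective mirrors.
n, length_m, R = 1.0, 1.0e-3, 0.99
on_resonance = resonant_wavelengths(n, length_m, 1290, 1290)[0]
off_resonance = 2.0 * n * length_m / 1290.5  # halfway between two peaks
print(f"resonant wavelength: {on_resonance * 1e9:.2f} nm")
print(f"T on resonance:  {transmission(on_resonance, n, length_m, R):.4f}")
print(f"T off resonance: {transmission(off_resonance, n, length_m, R):.6f}")
print(f"finesse: {finesse(R):.1f}")
```

Raising R in this sketch narrows the peaks and deepens the valleys between them, which is the behavior the essay attributes to highly reflective mirrors.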

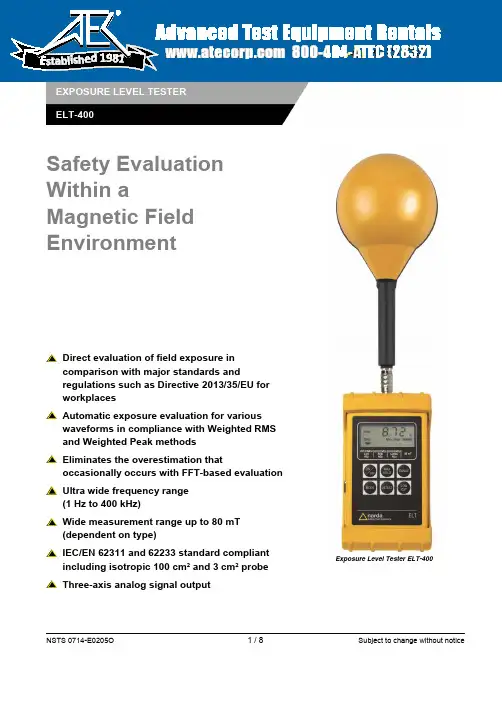

Safety Evaluation Within a Magnetic Field Environment

Direct evaluation of field exposure in comparison with major standards and regulations such as Directive 2013/35/EU for workplaces
Automatic exposure evaluation for various waveforms in compliance with Weighted RMS and Weighted Peak methods
Eliminates the overestimation that occasionally occurs with FFT-based evaluation
Ultra wide frequency range (1 Hz to 400 kHz)
Wide measurement range up to 80 mT (dependent on type)
IEC/EN 62311 and 62233 standard compliant, including isotropic 100 cm² and 3 cm² probe
Three-axis analog signal output

Exposure Level Tester ELT-400

NSTS 0714-E0205O 1 / 8 Subject to change without notice

APPLICATIONS

The ELT-400 is an innovative exposure level meter for measuring magnetic fields in the workplace and in public spaces. The model is designed for health and safety professionals in industry, the insurance business and service industries.

The instrument can simply and precisely handle practically any level measurement required in the low and medium-frequency range. It is comparable to the sound level meters that are commonly used in the assessment of noise at the workplace.

Production Areas

The ELT-400 is useful for checking fields caused by various types of manufacturing equipment, including induction heating, melting and hardening equipment. Thanks to its extremely low frequency limit and high power capability, it can also be used to check most magnetic stirrers.

Special demands often occur with machinery in production areas where non-sinusoidal signals are common, e.g. in industrial applications that use resistance welding machinery (pulse waveform, phase angle control) with traditional 50/60 Hz systems, as well as in newer medium-frequency switching units.

General Environment

The different types of electronic article surveillance systems generate complex fields in public spaces.
Most electromagnetic and magneto-acoustic gates operate within the frequency range of the ELT-400.

EMC Test House

The magnetic fields generated by household appliances or other electrical devices have become the focus of increased attention. Some new standards such as IEC/EN 62233 describe how to investigate such products. The ELT-400 is the ideal measuring device when it comes to compliance with these standards. Benefits include the perfectly matched frequency range and implementation of the specified transfer function.

The ELT-400 greatly simplifies the assessment process. With EXPOSURE STD (Shaped Time Domain) mode, the instrument achieves a new standard in simple but reliable measurement of magnetic fields, whether in straightforward or in very complex field environments. The easily misinterpreted, time-consuming measurements with a spectrum analyzer or scope are rendered obsolete. Detailed knowledge about the evaluation procedure or the field waveform or frequency is no longer needed. The results are reliable, and speed and ease of use are significantly better than all traditional methods.

Figure captions: Industrial melting furnace. Resistance welding machinery in operation. Magneto-acoustic gate used for product surveillance.

BASIC OPERATION

The ELT-400 covers the wide frequency range of 1 Hz to 400 kHz. The measurement range of the ELT-400 is far wider than the reference limits of common guidelines. The instrument has an external isotropic magnetic field probe with a 100 cm² cross-sectional area. This is suitable for standards-compliant measurement even in non-homogeneous fields. The ELT-400 has a rugged housing and is easy to operate using only six buttons.
The measurement result and the instrument settings are clearly displayed on a backlit LCD panel.

The optional probe extension cable is specially designed for low influence on the frequency response and sensitivity of the instrument. The cable is a good choice in cases where the probe and instrument must be handled separately. Variants of the ELT-400 are available with different operating mode combinations, e.g. "Exposure STD" or "Field Strength". Please refer to the Ordering Information section for details.

EXPOSURE STD (SHAPED TIME DOMAIN) MODE

Signal-Shape-Independent Field Evaluation

In EXPOSURE STD mode, the level of the magnetic (B) field is directly displayed as a "Percent of Standard" regardless of the signal shape and frequency. The numeric result clearly reflects the current situation and the remaining safety margin. The method employed can be compared to sound level meters that are commonly used to determine noise in the workplace.

The variation with frequency specified in the standard is normalized by means of an appropriate filter. Users no longer need to know the frequency or the frequency-dependent limits. The standard is easily selected by pressing just one button. Multi-frequency signals are just as easy to measure as single frequencies.

Figure captions: Compliance testing of household appliances. Coupling factors can be determined in compliance with IEC/EN 62233 by use of the optional 3 cm² probe.

The newer safety standards and guidelines also specify waveform-specific evaluation procedures. For example, stationary sinusoidal and pulsed fields are differentiated. With the ELT-400 the waveform is automatically taken into account. Users no longer need any knowledge about the waveform or the duty cycle. Measurements on pulsed signals are also possible. Different evaluation patterns are occasionally specified in the standard for certain pulse waveforms.
These patterns (valid for all imaginable waveforms) are directly handled by EXPOSURE STD mode. This completely eliminates the need to analyze the waveform in the time domain using a scope.

Even when faced with pulses that include DC fields, the EXPOSURE STD method provides valuable results. The ELT-400 covers all the signal components down to 1 Hz that are relevant in assessing such a situation.

Occasionally both the RMS value and the peak value are critical for assessing exposure in the low-frequency range. Both detector types are provided (Weighted RMS and Weighted Peak), and are simultaneously activated in the default setting. Depending on the incoming signal and standard selected, the most suitable detector is automatically employed at all times. The necessary weighting factors are also taken into account. The detectors may also be selected independently for further interpretation of the signal.

Detailed knowledge of the field, the test equipment and other auxiliary conditions is necessary to obtain insight into the degree of exposure when using traditional analysis instruments. The exposure level is derived through extensive calculation. Results can be easily misinterpreted or other problems may occur. For example, FFT spectrum analysis tends to overestimate results for the ICNIRP standard.

The ELT-400 continuously monitors the field, and the results are constantly updated. Any change in the field, e.g. due to a power reduction, can be evaluated immediately.

Proper evaluation in a personal safety context is achieved quickly and reliably using the STD technique.
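
The remark that FFT spectrum analysis tends to overestimate can be illustrated with a small numerical sketch. This is not the ELT-400's internal algorithm, which the datasheet does not disclose. It only contrasts two textbook ways of combining spectral components: adding each component's ratio to its limit without regard to phase, and taking the peak of the weighted time-domain signal. The limit curve `reference_level_ut` is a hypothetical placeholder, not a value from ICNIRP or any other standard, and the phase response of the weighting filter is ignored.

```python
import math


def reference_level_ut(freq_hz):
    """Hypothetical RMS limit in microtesla (placeholder, not from a standard)."""
    return 5000.0 / freq_hz


def component_ratio(freq_hz, peak_ut):
    """Peak amplitude of one component relative to its (peak) limit."""
    return peak_ut / (math.sqrt(2.0) * reference_level_ut(freq_hz))


def spectral_sum(components):
    """Phase-blind evaluation: add the ratios of all spectral components."""
    return sum(component_ratio(f, amp) for f, amp, _ in components)


def weighted_peak(components, samples=20000):
    """Time-domain evaluation: weight each component, add with phase, take the peak.

    Assumes every frequency is an integer multiple of the lowest one,
    so one period of the lowest frequency covers the whole waveform.
    """
    period = 1.0 / min(f for f, _, _ in components)
    peak = 0.0
    for k in range(samples):
        t = period * k / samples
        value = sum(
            component_ratio(f, amp) * math.cos(2.0 * math.pi * f * t + phase)
            for f, amp, phase in components
        )
        peak = max(peak, abs(value))
    return peak


# (frequency in Hz, peak amplitude in microtesla, phase in radians)
signal = [(50.0, 60.0, 0.0), (150.0, 20.0, math.pi)]
print(f"spectral sum:  {100.0 * spectral_sum(signal):.1f} % of limit")
print(f"weighted peak: {100.0 * weighted_peak(signal):.1f} % of limit")
```

Because the two components in this example never reach their maxima at the same instant, the phase-blind sum comes out higher than the time-domain peak. That is the kind of overestimation the text attributes to FFT-based evaluation.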
Figure captions: In Exposure STD mode the result is displayed directly as a percentage of the permitted limit. Exposure STD automatically sets the prescribed detector applicable for the selected standard.

FIELD STRENGTH MODE

Broadband Field Strength Measurements

If the field under test is essentially a single frequency component, broadband mode is also a good choice. The ELT-400 provides an ultra wideband, flat frequency response. The measurement range can handle extremely high field strength levels. Both detectors, RMS and Peak, are available for broadband measurement. The field strength result is displayed in "Tesla".

ACTIVE FIELD PROBE

Three-Axis Analog Signal Output

For scientific studies or advanced signal-shape / frequency analysis, a scope or an FFT analyzer can be connected to the analog output. The output signal ensures proper phase within the three axes and covers the full bandwidth of the instrument. The buffered output provides an adequate voltage swing to allow for simple operation.

Figure captions: Broadband measurement in "mT" with RMS detector. The oscilloscope display shows the welding current when using the analog signal output of the ELT-400.

a Unless otherwise stated, these specifications apply for the reference condition: ambient temperature 23±3 °C, relative air humidity 40 % to 60 %, continuous wave signal (CW) and RMS detection
b Depends on type; see Ordering Information
c Detection: Automatic according to selected standard, for IEC/EN 62233 based on ICNIRP limit values
d Includes flatness, isotropy, absolute and linearity variations (frequency range: 1 Hz to 400 kHz or 10 Hz to 400 kHz). The uncertainty increases at the frequency band limits to ±1 dB based on the nominal frequency response.
e For frequency range 10 Hz to 400 kHz and 30 Hz to 400 kHz only.

® Names and Logo are registered trademarks of Narda Safety Test Solutions GmbH and L3 Communications Holdings, Inc. Trade names are trademarks of the owners.

Narda Safety Test Solutions GmbH
Sandwiesenstrasse 7
72793 Pfullingen, Germany
Phone: +49 7121 9732 0
Fax: +49 7121 9732 790
E-Mail: ***************************

Narda Safety Test Solutions
435 Moreland Road
Hauppauge, NY 11788, USA
Phone: +1 631 231-1700
Fax: +1 631 231-1711
E-Mail: *******************

Narda Safety Test Solutions Srl
Via Leonardo da Vinci, 21/23
20090 Segrate (Milano) - Italy
Phone: +39 02 2699871
Fax: +39 02 26998700
E-mail: **************************
www.narda-sts.it