A comparison of text and shape matching for retrieval of online 3D models

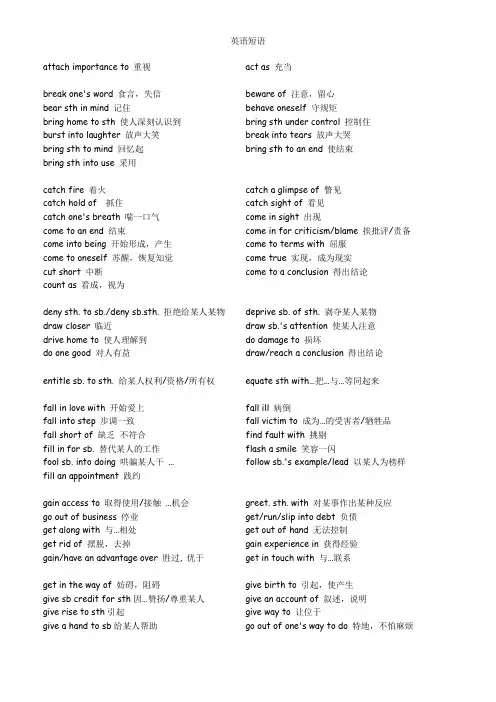

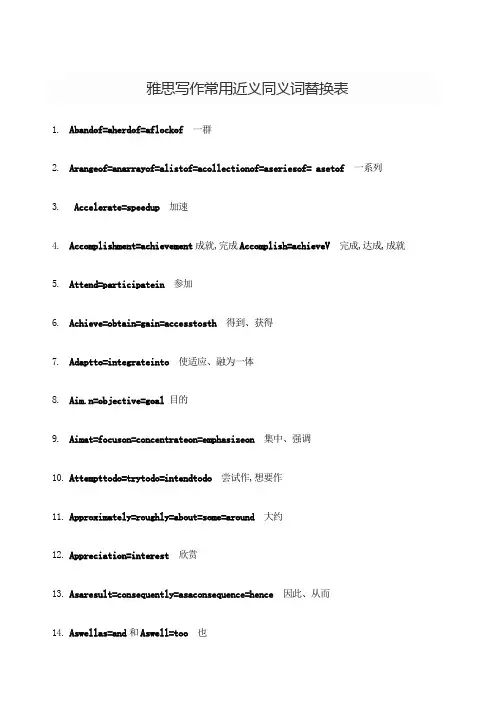

Appendix A: English Source Text

Speaker Recognition

By Judith A. Markowitz, J. Markowitz Consultants

Speaker recognition uses features of a person's voice to identify or verify that person. It is a well-established biometric with commercial systems that are more than 10 years old and deployed non-commercial systems that are more than 20 years old. This paper describes how speaker recognition systems work and how they are used in applications.

1. Introduction

Speaker recognition (also called voice ID and voice biometrics) is the only human-biometric technology in commercial use today that extracts information from sound patterns. It is also one of the most well-established biometrics, with deployed commercial applications that are more than 10 years old and non-commercial systems that are more than 20 years old.

2. How Do Speaker-Recognition Systems Work?

Speaker-recognition systems use features of a person's voice and speaking style to:

● attach an identity to the voice of an unknown speaker
● verify that a person is who she/he claims to be
● separate one person's voice from other voices in a multi-speaker environment

The first operation is called speaker identification or speaker recognition; the second has many names, including speaker verification, speaker authentication, voice verification, and voice recognition; the third is speaker separation or, in some situations, speaker classification. This paper focuses on speaker verification, the most highly commercialized of these technologies.

2.1 Overview of the Process

Speaker verification is a biometric technology used for determining whether a person is who she or he claims to be. It should not be confused with speech recognition, a non-biometric technology used for identifying what a person is saying. Speech recognition products are not designed to determine who is speaking.

Speaker verification begins with a claim of identity (see Figure A1).
Usually, the claim entails manual entry of a personal identification number (PIN), but a growing number of products allow spoken entry of the PIN and use speech recognition to identify the numeric code. Some applications replace manual or spoken PIN entry with bank cards, smartcards, or the number of the telephone being used. PINs are also eliminated when a speaker-verification system contacts the user, an approach typical of systems used to monitor home-incarcerated criminals.

Figure A1.

Once the identity claim has been made, the system retrieves the stored voice sample (called a voiceprint) for the claimed identity and requests spoken input from the person making the claim. Usually, the requested input is a password. The newly input speech is compared with the stored voiceprint, and the results of that comparison are measured against an acceptance/rejection threshold. Finally, the system accepts the speaker as the authorized user, rejects the speaker as an impostor, or takes another action determined by the application. Some systems report a confidence level or other score indicating how confident they are about the decision.

If the verification is successful, the system may update the acoustic information in the stored voiceprint. This process is called adaptation. Adaptation is an unobtrusive solution for keeping voiceprints current and is used by many commercial speaker verification systems.

2.2 The Speech Sample

As with all biometrics, before verification (or identification) can be performed the person must provide a sample of speech, a step called enrolment. The sample is used to create the stored voiceprint.

Systems differ in the type and amount of speech needed for enrolment and verification. The basic divisions among these systems are:

● text dependent
● text independent
● text prompted

2.2.1 Text Dependent

Most commercial systems are text dependent. Text-dependent systems expect the speaker to say a pre-determined phrase, password, or ID.
By controlling the words that are spoken, the system can look for a close match with the stored voiceprint. Typically, each person selects a private password, although some administrators prefer to assign passwords. Passwords offer extra security, requiring an impostor to know the correct PIN and password and to have a matching voice. Some systems further enhance security by not storing a human-readable representation of the password.

A global phrase may also be used. In its 1996 pilot of speaker verification, Chase Manhattan Bank used 'Verification by Chemical Bank'. Global phrases avoid the problem of forgotten passwords, but lack the added protection offered by private passwords.

2.2.2 Text Independent

Text-independent systems ask the person to talk. What the person says is different every time. It is extremely difficult to accurately compare utterances that are totally different from each other, particularly in noisy environments or over poor telephone connections. Consequently, commercial deployment of text-independent verification has been limited.

2.2.3 Text Prompted

Text-prompted systems (also called challenge response) ask speakers to repeat one or more randomly selected numbers or words (e.g. "43516", "27, 46", or "Friday, computer"). Text prompting adds time to enrolment and verification, but it enhances security against tape recordings. Since the items to be repeated cannot be predicted, it is extremely difficult to play a recording. Furthermore, there is no problem of forgetting a password, even though the PIN, if used, may still be forgotten.

2.3 Anti-speaker Modelling

Most systems compare the new speech sample with the stored voiceprint for the claimed identity. Other systems also compare the newly input speech with the voices of other people. Such techniques are called anti-speaker modelling.
The underlying philosophy of anti-speaker modelling is that under any conditions a voice sample from a particular speaker will be more like other samples from that person than like voice samples from other speakers. If, for example, the speaker is using a bad telephone connection and the match with the speaker's voiceprint is poor, it is likely that the scores for the cohorts (or world model) will be even worse.

The most common anti-speaker techniques are:

● discriminate training
● cohort modelling
● world models

Discriminate training builds the comparisons into the voiceprint of the new speaker using the voices of the other speakers in the system. Cohort modelling selects a small set of speakers whose voices are similar to that of the person being enrolled. Cohorts are, for example, always the same sex as the speaker. When the speaker attempts verification, the incoming speech is compared with his/her stored voiceprint and with the voiceprints of each of the cohort speakers. World models (also called background models or composite models) contain a cross-section of voices. The same world model is used for all speakers.

2.4 Physical and Behavioural Biometrics

Speaker recognition is often characterized as a behavioural biometric. This description is set in contrast with physical biometrics, such as fingerprinting and iris scanning. Unfortunately, its classification as a behavioural biometric promotes the misunderstanding that speaker recognition is entirely (or almost entirely) behavioural. If that were the case, good mimics would have no difficulty defeating speaker-recognition systems. Early studies determined this was not the case and identified mimic-resistant factors. Those factors reflect the size and shape of a speaker's speaking mechanism (called the vocal tract).

The physical/behavioural classification also implies that performance of physical biometrics is not heavily influenced by behaviour.
This misconception has led to the design of biometric systems that are unnecessarily vulnerable to careless and resistant users. This is unfortunate because it has delayed good human-factors design for those biometrics.

3. How is Speaker Verification Used?

Speaker verification is well-established as a means of providing biometric-based security for:

● telephone networks
● site access
● data and data networks

and monitoring of:

● criminal offenders in community release programmes
● outbound calls by incarcerated felons
● time and attendance

3.1 Telephone Networks

Toll fraud (theft of long-distance telephone services) is a growing problem that costs telecommunications services providers, government, and private industry US$3-5 billion annually in the United States alone. The major types of toll fraud include the following:

● Hacking CPE
● Calling card fraud
● Call forwarding
● Prisoner toll fraud
● Hacking 800 numbers
● Call sell operations
● 900 number fraud
● Switch/network hits
● Social engineering
● Subscriber fraud
● Cloning wireless telephones

Among the most damaging are theft of services from customer premises equipment (CPE), such as PBXs, and cloning of wireless telephones. Cloning involves stealing the ID of a telephone and programming other phones with it. Subscriber fraud, a growing problem in Europe, involves enrolling for services, usually under an alias, with no intention of paying for them.

Speaker verification has two features that make it ideal for telephone and telephone network security: it uses voice input and it is not bound to proprietary hardware. Unlike most other biometrics that need specialized input devices, speaker verification operates with standard wireline and/or wireless telephones over existing telephone networks. Reliance on input devices created by other manufacturers for a purpose other than speaker verification also means that speaker verification cannot expect the consistency and quality offered by a proprietary input device.
Speaker verification must overcome differences in input quality and the way in which speech frequencies are processed. This variability is produced by differences in network type (e.g. wireline vs. wireless), unpredictable noise levels on the line and in the background, transmission inconsistency, and differences in the microphones in telephone handsets. Sensitivity to such variability is reduced through techniques such as speech enhancement and noise modelling, but products still need to be tested under expected conditions of use.

Applications of speaker verification on wireline networks include secure calling cards, interactive voice response (IVR) systems, and integration with security for proprietary network systems. Such applications have been deployed by organizations as diverse as the University of Maryland, the Department of Foreign Affairs and International Trade Canada, and AMOCO. Wireless applications focus on preventing cloning but are being extended to subscriber fraud. The European Union is also actively applying speaker verification to telephony in various projects, including Caller Verification in Banking and Telecommunications, COST250, and Picasso.

3.2 Site Access

The first deployment of speaker verification more than 20 years ago was for site access control. Since then, speaker verification has been used to control access to office buildings, factories, laboratories, bank vaults, homes, pharmacy departments in hospitals, and even access to the US and Canada. Since April 1997, the US Immigration and Naturalization Service (INS) and other US and Canadian agencies have been using speaker verification to control after-hours border crossings at the Scobey, Montana port-of-entry.
The INS is now testing a combination of speaker verification and face recognition in the commuter lane of other ports-of-entry.

3.3 Data and Data Networks

Growing threats of unauthorized penetration of computing networks, concerns about security of the Internet, and increases in off-site employees with data access needs have produced an upsurge in the application of speaker verification to data and network security.

The financial services industry has been a leader in using speaker verification to protect proprietary data networks, electronic funds transfer between banks, access to customer accounts for telephone banking, and employee access to sensitive financial information. The Illinois Department of Revenue, for example, uses speaker verification to allow secure access to tax data by its off-site auditors.

3.4 Corrections

In 1993, there were 4.8 million adults under correctional supervision in the United States, and that number continues to increase. Community release programmes, such as parole and home detention, are the fastest growing segments of this industry. It is no longer possible for corrections officers to provide adequate monitoring of those people.

In the US, corrections agencies have turned to electronic monitoring systems. Since the late 1980s speaker verification has been one of those electronic monitoring tools. Today, several products are used by corrections agencies, including an alcohol breathalyzer with speaker verification for people convicted of driving while intoxicated and a system that calls offenders on home detention at random times during the day.

Speaker verification also controls telephone calls made by incarcerated felons. Inmates place a lot of calls. In 1994, US telecommunications services providers made $1.5 billion on outbound calls from inmates. Most inmates have restrictions on whom they can call.
Speaker verification ensures that an inmate is not using another inmate's PIN to make a forbidden contact.

3.5 Time and Attendance

Time and attendance applications are a small but growing segment of the speaker-verification market. SOC Credit Union in Michigan has used speaker verification for time and attendance monitoring of part-time employees for several years. Like many others, SOC Credit Union first deployed speaker verification for security and later extended it to time and attendance monitoring for part-time employees.

4. Standards

This paper concludes with a short discussion of application programming interface (API) standards. An API contains the function calls that enable programmers to use speaker verification to create a product or application. Until April 1997, when the Speaker Verification API (SV API) standard was introduced, all available APIs for biometric products were proprietary. SV API remains the only API standard covering a specific biometric. It is now being incorporated into proposed generic biometric API standards. SV API was developed by a cross-section of speaker-recognition vendors, consultants, and end-user organizations to address a spectrum of needs and to support a broad range of product features. Because it supports both high-level functions (e.g. calls to enrol) and low-level functions (e.g. choices of audio input features), it facilitates development of different types of applications by both novice and experienced developers.

Why is it important to support API standards? Developers using a product with a proprietary API face difficult choices if the vendor of that product goes out of business, fails to support its product, or does not keep pace with technological advances. One of those choices is to rebuild the application from scratch using a different product. Given the same events, developers using an SV API-compliant product can select another compliant vendor and need perform far fewer modifications.
Consequently, SV API makes development with speaker verification less risky and less costly. The advent of generic biometric API standards further facilitates integration of speaker verification with other biometrics. All of this helps speaker-verification vendors because it fosters growth in the marketplace. In the final analysis, active support of API standards by developers and vendors benefits everyone.

Appendix B: Chinese Translation

Speaker Recognition

By Judith A. Markowitz, J. Markowitz Consultants

Speaker recognition uses the features of a person's voice to identify or verify that person.

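The accept/reject step from Section 2.1 of Appendix A, combined with the cohort comparison from Section 2.3, can be illustrated with a small sketch. Everything concrete here is an assumption made for illustration and does not come from the paper: voiceprints are treated as plain feature vectors, cosine similarity stands in for the comparison, the cohort is used by subtracting its mean score, and the names `cosine_similarity`, `Decision`, and `verify` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Similarity in [-1, 1]; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class Decision:
    accepted: bool
    score: float  # claimed-speaker score minus the mean cohort score


def verify(
    sample: Sequence[float],
    claimed_voiceprint: Sequence[float],
    cohort_voiceprints: Sequence[Sequence[float]],
    threshold: float,
) -> Decision:
    """Compare new speech with the claimed voiceprint and with a cohort.

    Assumption: the cohort is used by subtracting its mean score, so a poor
    channel that lowers every score affects both terms alike.
    """
    claimed_score = cosine_similarity(sample, claimed_voiceprint)
    cohort_scores = [cosine_similarity(sample, c) for c in cohort_voiceprints]
    cohort_mean = sum(cohort_scores) / len(cohort_scores) if cohort_scores else 0.0
    score = claimed_score - cohort_mean
    # Measure the result against the acceptance/rejection threshold.
    return Decision(accepted=score >= threshold, score=score)


# Toy vectors: the genuine sample matches the claimed voiceprint exactly,
# while the impostor sample matches a cohort speaker instead.
genuine = verify([1.0, 0.0], [1.0, 0.0], [[0.0, 1.0]], threshold=0.5)
impostor = verify([0.0, 1.0], [1.0, 0.0], [[0.0, 1.0]], threshold=0.5)
print(genuine.accepted, impostor.accepted)
```

Returning the score alongside the decision mirrors the paper's remark that some systems report a confidence level. A deployed system would tune the threshold on test data collected under the expected conditions of use.
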
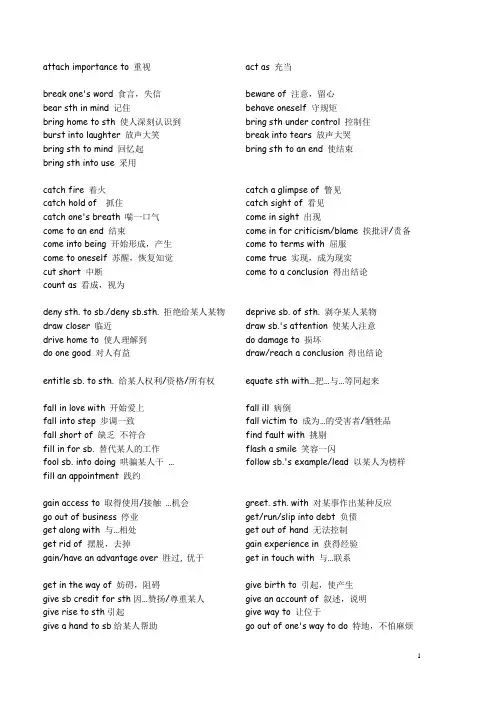

Part 1A A band of= a herd of=a flock of 一群A range of=an array of= a list of= a collection of=a series of=a set of一系列Accelerate=speed up加速Accomplishment=achievement 成就,完成Accomplish=achieve (V)完成,达成,成就Attend=participate in 参加Achieve=obtain=gain=access to sth 得到、获得Adapt to=integrate into使适应、融为一体=objective=goal 目的Aim at=focus on=concentrate on=emphasize on 集中、强调An array of=a list of =a set of=a series of 一系列Attempt to do=try to do=intend to do尝试作,想要作Approximately=roughly=about=some=around 大约Appreciation=interest 欣赏As a result=consequently=as a consequence=hence因此、从而As well as=and和As well=too也Associate with=connect with=link with=relate to=involve in与……相关联Attach to=connect to=link to=serve与……相连接、附着在……上At will=at random=by chance=by accident=accidentally随意地Automatically ensure=guarantee确保B Be similar to=closely resembled =have something in common 相似Be used to=be devoted to专门用于Be equivalent to=be equal to 等同于Be taken aback=be surprised 吃惊Briskly =rapidly=swiftly快速地C Calculate=measure 衡量Catch up with=keep pace with跟上步伐Chief=major=dominant=principle主要的Chronic=long term 慢性病的Complain=illness 病症Concern n. =focus关心的问题Concern v. 
=about 与……有关Concept =conception=notion=idea=understanding概念、理解Condition=regulate 调节Confront=encounter=in the face of面临Concern = care关心Consist of=comprise由什么组成Concur with sb=agree with sb 同意某人Contaminate=pollute=poison 污染Contend=assert=cliam断言Contribute to=result in=lead to=link to=responsible for导致Cooperate with=work with 与……协作Convert to=turn into 转为、转变为Crop up=crop out=come up 出现D Degenerated=underdeveloped退化Distract from= can not concentrate 使精力分散Deadly=fateful 致命的Diminish=decrease=shrink 减少Distort reality=far from reality=dishonest不真实的Distinction=difference=nuance 不同之处Distinct=distinguish=different=unique不同的Distinct variety of =different kind of=separate kind of不同的Distinguish=tell辨别Due to=because of由于Drawback=defect=ill缺点E Eat=feed 喂食,进食Endanger=threaten 威胁Engage in=participate in=join in 参与Establish=set up建立Establish=find out=figure out=work out查明Evolvement=development=progress发展、发育Emphasize=stress 强调(v)Embark upon=start=launch开始Enlighten=illuminate=instruct=educate启蒙、给予知识Entire=whole 全部Exceptional=extremely 特别(特别好)F Fail to do=failure to do=can not do没有能够做Far from=not at all根本不Fault=flaw=defect错误Form=shape=mould形成Foundation=basis基础Finance=sponsor=fund 提供赞助(金钱)Free=release=set free释放Frequently=often 经常GGreat milestone=impact=effect=influence对……具有很重要的影响力Grant=give授予Gas=petrol石油H Hold=harbor=embrace拥有Halt=stop=hamper(v.) 阻止、使停滞Hurdle=barrier(n.) 
阻碍I Identical=the same一模一样Imitate=impersonate模仿Incline to do=tend to do倾向于做某事Indicate=show=suggest=signify=identify表明Identify=recognize鉴别In reaction to=respond to 对……起反应Interpret=illustrate解释Introduce to=import to引入K Key (a.)=important=significant=crucial=essential=play avital/ an important part/role in重要的,关键的Key (n.)=approach=means=method=technique方法LLikelihood=possibility可能性Link-up=connection 联系M Master=grasp 掌握Match sth to=assign sth to把……和……搭配起来Misconception=misunderstanding=mistaken view错误的理解Modify=change=alter修改、改变Manifestation=demonstration 显示、表明N Nature=character=characteristics本质、特点Non-toxic= less poisonous=safe 无毒的、安全的Novel a.=fresh=new=up to date 新潮的、创新的Numerous= a number of 许多O Owing to=thanks to =contribute to多亏……,有赖于……P Pattern=format形式,模式Pattern=design图案Pore over=study 研究Poisonous=toxic 有毒的Portray=describe描写塑造(v.)Portrait=description描写塑造(n.)Preserve=store 储存Prevailing=popular=prevalent 盛行的Present day=modern society 现代社会、现在时代Prodigious effort=enormous effort重大的努力Produce=trigger=generate=spawn=bringabout=result=breed=spawn激发、产生Provide=offer 提供Protect A from B =shelter A from B 使A不受B的侵害Q Questionnaire=survey 问卷调查R Readily=easily=accessible 容易地Reflect=consider考虑Relate to=understand=appreciate理解Rely on=depend on 依靠……Remarkable=extraordinary 特别突出的(好的事物)Replace=take the place of 取代Residue=remains=relics=relicts=ruins剩余物、废墟Resemble=like=as像S Show=reflect表现Significance=importance 重要性State=Situation=circumstance 状况,状态,情形Striking=noticeable值得注意地Struggle for=strive for 努力地争取Substantial=considerable 想当可观的Success=achievement=great outcome=great progress=huge development成功、成就Surprising=unexpectedly uncommon令人吃惊的T Take to=be used to=get used to 对……习惯Tale=story=fairytale 故事Talent=gift 天赋Tuition=formal teaching正式的学校教育Turn to=consult 寻求帮助、求助于Toxic=poisonous 有毒的U Unbiased=without prejudice 无立场、不带任何偏见的Up to=depend on/upon=rely on 由……决定Universal=popular普遍V Virtue=advantage=strength 优点好处W Work out=figure out=reckon 计算出、作出Part 21解决: Solve, deal with, cope with, handle, 
resolve, address, tackle2损害:Damage, hurt, injure, harm, impair, undermine, jeopardize3给与:Give, offer, render, impart, provide, supply, afford4培养::Develop, cultivate, foster5优势:Advantage, merit, virtue, benefit, upside, strength6缺陷:Disadvantage, demerit, drawback, downside, weakness7使迷惑:Puzzle, bewilder, perplex, baffle8重要的:Key, crucial, critical, important, significant, vital, substantial, indispensable, imperative, essential9认为:Think, believe, insist, maintain, assert, conclude, deem, hold, argue, be convinced, be firmly convinced, be fully convinced10保护:Protect, conserve, preserve11确保:Assure, ensure, guarantee, pledge12有害的:Bad, baneful evil, harmful, detrimental13要求:Request, demand, needs, requisition14消除:Eliminate, clear, remove, clear up, take away, smooth away15导致:Lead to, bring about, result in, cause, spark off, conduce to, procure, induce, generate16因此:So, therefore, thus, hence, consequently, as a consequence, accordingly, as a result, because of this, as a result of this17增长至:Grow to,rise to,increase to,go up to,climb to,ascend to,jump to,shoot to18降低至:Dip to,fall to,decline to,decrease to,drop to,go down to,reduce to,slump to,descend to,sink to,slide to 19 保持稳定:Level out,do not change,remain stable,remain still,remain steady,be stable,maintain the same level,remain unchanged,be still,remain the same level,stay constant,keep at the same level,level off,stabilize,keep its stability,even out20急剧的:Dramatically,drastically,sharply,hugely,enormously,steeply,substantially,considerably,significantly,markedly,surprisingly,strikingly,radically,remarkably,vastly,noticeably21平稳地:Steadily,smoothly,slightly,slowly,marginally,gradually,moderately,mildly22宣称:Allege, assert, declare, claim23发生:Happen, occur, take place24原因:Reason, factor, cause25发展:Development, advance, progress26有益的:Useful, helpful, beneficial, profitable, rewarding,advantageous27影响:Influence, impact, effect28明显的:Clear, obvious, evident, self-evident, manifest, apparent, crystal-clear29占:Comprise, take 
up, account for, constitute, consist of, make up, occupy, hold, compose30与…相比:Compared with,compared to,in comparison with,in comparison to,by comparison with,by comparison to31对比而言:By contrast,in contrast,on the other hand,on the contrary=,conversely32展示:Show, reveal, illustrate, demonstrate, depict, present, represent, describe33大约:Approximately,almost,about,around,nearly,roughly 34波动:Fluctuate,go ups and downs,display a fluctuation,demonstrate a fluctuation35事实上:Practically,in practice,essentially,in essence,in reality,in effect,in fact,as a matter of fact,it is a fact that36换言之:Namely,that is to say,in other words,to put it like this,to put it differently,to put it from another way,to put it from another anglePart 31. Concern with = relate to 与有关2. grow= increase= riseconcentrate on = focus on3. Under the title=name achievement =success4. tend to do sth = plane to do sth/ want to do sth5. be associated with = be connected with = be linked to 与……有关联6. approach = method = way = key intensely= extremely = very7. establish= found = set up establishment = organization8. offer sb sth =supply sb with sth =provide sb with sth= grant9. argument= disagreementwork = materpiece 著作10. contract = agreement合同,合约no= without = have no 没有11. chaos = mess 混乱monument= landmark里程碑12. help sb with = assist sb in doing sth=reach a hand to sb 帮助某人作某事13. draw from = take fromleading = outstanding14. competitor= rival recognize as = look/assume asPart 41. dislike=distaste2. test = examine3. effect= impact=influencefrom…to = range from…to…4. ignore = neglectas per=according to5. unfold= carry on be reluctant to do=reject=refuse6. before = prior to7. predict = estimate =anticipateobey= abide by = comply with8. account for= explain= elucidate=illustrate in essence = in fact=indeed9. discrepancy=difference=shade forgo=give up10. context =environment=sircumstance=situation11. radical different=extremely different put another way=in other words12. 
sort out= find out= figure out plausible = reasonable12. fear=worrypollute = contaminate13. predict= anticipate=forecastoverstate = exaggerate14. in great number of = in _____ number ofactually=indeed15. disappear= extinct灭绝seem to do =assume to do16. solve = cope with=deal with解决accelerate = speed up17. impact = effect =influenceresponse to = react to18. opinion poll= survey reason = factor 原因、因素19. entitle= name overwhelming=dominant20. field =domain 学科领域oppose=against21. for example=such as=for instance=sayaltruistic=non-selfish22. lead to=result in 导致 extremely=seriously 严重地23. perception = view 观点、看法 association=society协会24. encounter with = face=confront面对postpone to=delay25. pressing=urgent紧急、急迫measure=solution=method解决方法26. key=crucial =significant=importmant关键、重要27. but=yet enormous importance=great/significant28. launch=startderive from = get from29. revert to= get back todistinction=identity30. immense =greatin the domain of=in the field of31. couple with = along withamass dollar=accumulate dollar32. essential feature = principleseize=grasp33. innovative=fresh drab=dull/single34. entirely=totallybe restricted to=be limited to35. needs=demand36. dub=call=name reflex=reaction/response37. result from=originate frombe critical for = be important to38. demanding=difficultretune=adjust39. seek for =search foroutlook=view40. figure out = find outtrack=follow41. in response to=respond to = react to42. prominence=importance revolution=invention43. in relation to= in association withsociety = association44. treatise=workfeature=characteristics45. commercial exploitation =commercializedo with=dueto=because of46. objective = impersonal=impartialfoster=develop47.account=explanation=illustrationsubstantial=consider=eno ugh48. be acknowledged=be realizedpilot program=trial program49. configuration=structurephenomenal=surprising50. make stride=make progressregardless of=despite of51. perpetuate=keep=maintainaccess to sth=get sth52. 
vital importance=extremely important53. rest=depositartificial=manmade54. make a comeback=return=re-enterbig=popular55. ignite=trigger a field of=a domain of56. encompass=unifyimply=indicate57. breakthrough=milestonearound the corner=is coming58. materialize=realizetide=trend59. agency=organizationreckon=deem60. approach=methodspot=find out61. boost=promoteprospect=future62. feature=portray elusive=pessimistic63. match up to sth=equal with impressive=great64. impact=effect=influencefragile=vulnerable65. indigenous people=native peopletend to=look after66. undermine=destroy=damageinevitable=unavoidable67. renaissance=revive for instance=for example68. operate=run69. failure=incidentrare=unusually70. tension=pressurecompression=pressure71. prime=get ready to doinclusion=impurity72. occur=happenanalyze=closely examine73. detect=examine74. trigger=produceinduce=encourage75. regardless=in spite of considerable=substantial76. important =crucial77. opinon=perspective, standpoint78. fame=prestige79. relieve=alleviate(alleviate means you make pain or sufferings less intense or severe)80. force=coerces into(coerce means you make someone do something s/he does not want to),compel81. enlarge=magnify(magnify means make something larger than it really is)82. Lonely=solitary(if someone is solitary, there is no one near him/her83. small=minuscule(very small), minute84. praise=extol(stronger than praise), compliment(polite and political)85. hard-working=assiduous(someone who is assiduous works hard or does things very thoroughly86. difficult=arduous(if something is arduous, it is difficult and tiring, and involves a lot of efforts)87. show=demonstrate(to demonstrate a fact means tp make it clear to people.)88. avoid=shun(if someone shuns something, s/he deliberately avoid that something or keep away from it89. fair=impartial(someone who is impartial is able to givea fair opinion or decision on something.)90. always=invariably(the same as always, but better than always)91. 
forever=perpetual(a perpetual state never changes), immutable(something immutable will neverchange or be changed)92. quiet=tranquil(calm and peaceful), serene(calm and quiet)93. expensive=exorbitant(it means too expensive that it should be)94. respect=esteem95. worry=fret(if you fret about something, you worry about it)96. cold=chilly(unpleasantly cold), icy(extremely cold)97. dangerous=perilous(very dangerous, hazardous(dangerous, especially to people's safety and health)98. nowadays=currently99. stop=cease(if something ceases, it stops happening or existing)100. part=component(the components of something are the parts that it is made of)101. difficult=formidable102. strange=eccentric(if some one is eccentric, s/he behaves in a strange way, or his/her opinion is different from most people)103. rich=affluent(if you are affluent, you have a lot of money)104. approximately将近-nearly, almost105. dubious=skeptical(if you are skeptical about something, you have doubts on it.)106. scholarship=fellowship107. attractive=appealing(pleasing and attractive),absorbing(something absorbing can attract you a great deal) 108. diverse=miscellaneous(a miscellaneous groups consists of many different kinds of things)109. rapid=meteoric(ATTENTION: meteoric is only used to describe someone achieves success quickly)110. despite=not withstanding(FORMAL)111. best=optimal(used to describe the best level something can achieve)112. sharp=acute(severe and intense)113. unbelievable=inconceivable(if you deem something inconceivable, you think it very unlike to happen114. famous=distinguished(used to describe people who are successful in their career)115. ancient=archaic(extremely old and extremely old-fashioned)116. possible=feasible(if something is feasible, it can be done, made or achieved)117. of=necessary to118. without=free from119. about=concerning=related to120. 在…方面:in …ways=in …aspects/respects/perspectives 121. 用…方法:in…ways=in…fashion=in …manner122. 
rich = wealthy = affluent = well-to-do = well-off 123. poor = needy = impoverished124. good = conducive = beneficial=advantageous125. bad = detrimental = baneful =undesirable126. healthy = robust = sound = wholesome127. surprising = amazing = extraordinary = miraculous 128. energetic = dynamic = vigourous =animated129. 难的:difficult=troublesome=burdensome=thorny130. 不可或缺的:necessary=indispensable=inevitable=irreplaceable=unavoidabl e131. 重要的:important=crucial, critical, essential, vital 132. =be importance=be of cruciality=be of criticality= be of essentiality=be of vitality133. 巨大的:large=enormous, tremendous, considerable, appreciable134. 正确的(correct)=仔细的(careful)=arduous=assiduous 135. 有道理的:reasonable=rational and plausible136. 吸引人的:attractive=inviting and appealing137. 足够的:adequate and sufficient138. 日常:daily = day-to-day = everyday139. improve = enhance= promote = strengthen = optimize140. solve =resolve =address = tackle =cope with = deal with 141. destroy = tear down = knock down = eradicate142. develop = cultivate = foster = nurture143. encourage = motivate = stimulate = spur144. complete = fulfill = accomplish= achieve145. destroy = impair = undermine = jeopardize146. influence= impact147. danger = perils =hazards148. human beings= mankind = humane race149. old people= the old = the elderly = the aged = senior citizens150. teachers = instructors = educators = lecturers151. education = schooling = family parenting = upbringing 152. young people = youngsters = youths = adolescents153. responsibility = obligation = duty = liability154. 因素:cause, reason=factor, determinant155. 联系:relationship(relate A to B) =connection(connect A to B ) =association(associate A to B)156. 优点:advantage=benefit=superiority=merit = favorable aspects/respects/perspectives=undesirableaspects/respects/perspectives157.disadvantage=detriment=inferiority=defect=deficiency=unfavo rable aspects/respects/perspectives=undesirable aspects/respects/perspectives158. 
enjoyment = pastimes = recreation= entertainment159. children = offspring = descendant = kidPart 51. 充满了:be filled with = be awash with = be inundate with = be saturated with2. 努力:struggle for = aspire after = strive for = spare no efforts for3. 从事: embark on = take up = set about = go in for4. 在当代: in contemporary society = in present-day society= in this day and age5. a host of = a multitude of = a vast number of = a vast amountof6. 对…有益处:be good for.=do good to=be beneficial to=be advantageous to=be conducive to7. 对…有害处:be harmful/bad for =be detrimental to =be disadvantageous to =be pernicious to8. 集中精力于:focus on= concentrate on= concentrate/ fix/ focus one’s attention ondevote oneself todedicate oneself tocommit oneself toengage oneself inemploy oneself in9. 认为:think = argue, assert, contend, claim, maintain, note hold the viewpoint thathold the standpoint thathold the perspective thatharbour an idea/opinion thatembrace a view that10. 反对:be against=reject, object, oppose =be opposed to=become hostile to11. 支持:support=take sides with =advocate =lend support to=sing high praise of12. 导致:lead to=trigger =result in, bring about, contributeto=give rise to, give birth to, be responsible for13. (归咎于:be ascribed to=be attributed to)14. 发展(develop),提高(increase),改善(improve)=A 及物动词组promote, advance, enhance, reinforce名词 promotion, advancement, enhancement, reinforcement=B 不及物动词rocket, boom, flourish, prosper15. 有:负面含义:suffer, run high risk of正面含义:boast, embrace16. 中性含义:face=be faced with=be possessed of面临,面对:be faced with=be encountered with=be poised on the brink of17. 破坏:destroy, damage=A decompose, destruct=B endanger, threaten=C impair, decimate, deteriorate=D exercise/practice/perform damage/danger…upon18. 阻碍:limit=A restrict, restrain, constrict, constrain =B obstacle, barricade=C smother, curb=D hinder, impede19. 
to conclude: make a conclusion = draw a conclusion that = reach a conclusion that = arrive at a conclusion that = hammer a conclusion that = crystallize a conclusion that
20. to use: use = employ, apply, adopt = resort to
21. innovate / invent
22. attend to / take care of
23. balanced / equitable
24. virtual (love) / simulate
25. small-scale (limited in scope) / marginal (at the edge)
26. dialect (regional language variety) / accent (manner of pronunciation)
27. look like / resemble
28. chronic (long-lasting, persistent) / long-term (long-lasting)
29. on-line / net / cyber
30. solely / merely / only
31. deplete (mine out, use up) / exhaust
32. category-categorize / class-classify

Multi-modal retrieval of trademark images using global similarity

S. Ravela, R. Manmatha
Multimedia Indexing and Retrieval Group
Center for Intelligent Information Retrieval
University of Massachusetts, Amherst, MA 01003
Email: ravela,manmatha@

Abstract

In this paper a system for multi-modal retrieval of trademark images is presented. Images are characterized and retrieved using associated text and visual appearance. A user initiates retrieval for similar trademarks by typing a text query. Subsequent searches can be performed by visual appearance or using both appearance and text information. Textual information associated with trademarks is searched using the INQUERY search engine. Images are searched visually using a method for global image similarity by appearance developed in this paper. Images are filtered with Gaussian derivatives and geometric features are computed from the filtered images. The geometric features used here are curvature and phase. Two images may be said to be similar if they have similar distributions of such features. Global similarity may, therefore, be deduced by comparing histograms of these features. This allows for rapid retrieval. The system's performance on a database of 2000 trademark images is shown. A trademark database obtained from the US Patent and Trademark Office containing 63,000 design-only trademark images and text is used to demonstrate scalability of the image search method and multi-modal retrieval.

1 Introduction

Retrieval of similar trademarks is an interesting application for multimedia information retrieval. Consider the following example. The US Patent and Trademark Office has a repository that has to be searched for conflicting (similar) trademarks before one can be awarded to a company or individual. There are several issues that make this task attractive for multi-modal information retrieval techniques. First, current searches are labour intensive. The number of trademarks stored is enormous and examiners have to leaf through a large number of trademarks before making
a decision.Second,there is a distinct notion of visual similarity used to compare trademarks.This is usually a decisive factor in an award decision.Third,there is readily available text information describing and categorizing a trademark.A system that automates these functions and helps the examiner decide faster would be immensely valuable.Clearly trademarks need to be searched both by text and image content.Text retrieval is a better understood problem,and there are several search engines that are applicable.However,the indexing and retrieval of images using their content is a difficult problem.A person using an image retrieval system usually seeks tofind semantically relevant information.For example,a person may be looking for a picture of a leopard from a certain viewpoint.Or alternatively,the user may require a picture of Abraham Lincoln from a particular viewpoint.Since the automatic segmentation of an image into objects is a difficult and unsolved problem in computer vision,inferring semantic information from image content is difficult to do.However,many image attributes like color,texture,shape and “appearance”are often directly correlated with the semantics of the problem.For example,logos or product packages(e.g.,a box of Tide)have the same color wherever they are found.The coat of a leopard has a unique texture while Abraham Lincoln’s appearance is uniquely defined.These image attributes can often be used to index and retrieve images.In this paper,a system for multi-modal retrieval combining textual information and visual appearance is presented.The system combines text search using INQUERY[2]and image search.The image search was originally developed for general(heterogeneous)grey-level image collections[18].Here,it is applied to trademark images.Trademark images are large binary images rather than grey-level images.Trademark images may consist of geometric designs,more realistic pictures(for example,animals and people)as well as abstract images making 
them a challenging domain.Trademark images are also an example of a domain where there is an actual user need tofind“similar”trademarks to avoid conflicts.Trademarks for this paper were obtained from the US Patent and Trademark office.The63000design trademarks used here contain images of trademarks and associated text describing the trademark.Multi-modal retrieval begins with a user requesting trademarks that match a text query.The INQUERY search engine is used tofind trademarks whose associated text match the query.The images associated with these trademarks are then displayed.Once an initial query is processed subsequent searches can be carried out by selecting the returned images and submitting them for retrieval by visual appearance or a combination of visual appearance and associated text.INQUERY is a well known search engine for retrieving text which is based on a probabilistic retrievalmodel called an inference net The reader is referred to[2]for details about the INQUERY engine.The current paper focuses on visual appearance representation,its quantitative evaluation with respect to trade-marks,scalability to a large collection,and feasibility to multi-modal retrieval.The visual appearance of an image is characterized here using the shape of the intensity surface.The images arefiltered with Gaussian derivatives and geometric features are computed from thefiltered im-ages.The geometric features used here are the image shape index(which is a ratio of curvatures of the three dimensional intensity surface)and the local orientation of the gradient.Two images are said to be similar if they have similar distributions of such features.The images are,therefore,ranked by compar-ing histograms of these features.Recall/Precision results with this method is tabulated with a database of about2000trademark images.Then multi-modal retrieval is demonstrated on a collection of63000 trademark images.The rest of the paper is organized as follows.Section2provides some background 
on the image retrieval area as well as on the appearance matching framework used in this paper.Section3surveys related work in the literature.In section4,the notion of appearance is developed further and characterized using Gaussian derivativefilters and the derived global representation is discussed.Section5shows how the representation may be scaled for multi-modal retrieval from a database of about63,000trademark images.A discussion and conclusion follows in Section6.2Motivation and BackgroundThe different image attributes like color,texture,shape and appearance have all been used in a variety of systems for retrieving images similar to a query image(see3for a review).Systems like QBIC[6]and Virage[5]allow users to combine color,texture and shape to retrieve a database of general images.One weakness of such a system is that attributes like color do not have direct semantic correlates when applied to a database of general images.For example,say a picture of a red and green parrot is used to retrieve images based on their similarity in color with it.The retrievals may include other parrots and birds as well as redflowers with green stems and other images.While this is a reasonable result when viewed as a matching problem,clearly it is not a reasonable result for a retrieval system.The problem arises because color does not have a good correlation with semantics when used with general images.However,if the domain or set of images is restricted to sayflowers,then color has a direct semantic correlate and is useful for retrieval(see[3]for an example).Some attempts have been made to retrieve objects using their shape[6,22].For example,the QBIC system[6],developed by IBM,matches binary shapes.It requires that the database be segmented into objects.Since automatic segmentation is an unsolved problem,this requires the user to manually outline the objects in the database.Clearly this is not desirable or practical.Except for certain special domains,all methods based on shape 
are likely to have the same problem. An object’s appearance depends not only on its three dimensional shape,but also on the object’s albedo, the viewpoint from which it is imaged and a number of other factors.It is non-trivial to separate the different factors constituting an object’s appearance and it is usually not possible to separate an object’s three dimensional shape from the other factors.For example,the face of a person has a unique appearance that cannot just be characterized by the geometric shape of the’component parts’.In this paper a char-acterization of the shape of the intensity surface of imaged objects is used for retrieval.The experiments conducted show that retrieved objects have similar visual appearance,and henceforth an association is made between’appearance’and the shape of the intensity surface.Similarity can be computed using either local or global methods.In local similarity,a part of the query is used to match a part of a database image or images.One approach to computing local similarity[18]is to have the user outline the salient portions of the query(eg.the wheels of a car or the face of a person) and match the outlined portion of the query with parts of images in the database.Although,the technique works well in extracting relevant portions of objects embedded against backgrounds it is slow.The slow speed stems from the fact that the system must not only answer the question”is this image similar”but also the question”which part of the image is relevant”.This paper focuses on a representation for computing global similarity.That is,the task is tofind images that,as a whole,appear visually similar.The utility of global similarity retrieval is evident,for example, infinding similar scenes or similar faces in a face database.Global similarity also works well when the object in question constitutes a significant portion of the image.2.1Appearance based retrievalThe image intensity surface is robustly characterized using features obtained 
from responses to multi-scale Gaussian derivativefilters.Koenderink[14]and others[7]have argued that the local structure of an image can be represented by the outputs of a set of Gaussian derivativefilters applied to an image.That is, images arefiltered with Gaussian derivatives at several scales and the resulting response vector locally de-scribes the structure of the intensity surface.By computing features derived from the local response vector and accumulating them over the image,robust representations appropriate to querying images as a whole (global similarity)can be generated.One such representation uses histograms of features derived from the multi-scale Gaussian derivatives.Histograms form a global representation because they capture the distribution of local features(A histogram is one of the simplest ways of estimating a non parametric dis-tribution).This global representation can be efficiently used for global similarity retrieval by appearance and retrieval is very fast.The choice of features often determines how well the image retrieval system performs.Here,the task is to robustly characterize the3-dimensional intensity surface.A3-dimensional surface is uniquely de-termined if the local curvatures everywhere are known.Thus,it is appropriate that one of the features be local curvature.The principal curvatures of the intensity surface are invariant to image plane rotations, monotonic intensity variations and further,their ratios are in principle insensitive to scale variations of the entire image.However,spatial orientation information is lost when constructing histograms of curvature (or ratios thereof)alone.Therefore we augment the local curvature with local phase,and the representation uses histograms of local curvature and phase.Local principal curvatures and phase are computed at several scales from responses to multi-scale Gaus-sian derivativefilters.Then histograms of the curvature ratios[13,4]and phase are generated.Thus,the image is represented 
by a single vector(multi-scale histograms).During run-time the user presents an example image as a query and the query histograms are compared with the ones stored,and the images are then ranked and displayed in order to the user.2.2The choice of domainThere are two issues in building a content based image retrieval system.Thefirst issue is technological, that is,the development of new techniques for searching images based on their content.The second issue is user or task related,in the sense of whether the system satisfies a user need.While a number of content based retrieval systems have been built([6,5]),it is unclear what the purpose of such systems is and whether people would actually search in the fashion described.In this paper we describe how the techniques described here may be scaled to retrieve images from a database of about63000trademark images provided by the US Patent and Trademark Office.This database consists of all(at the time the database was provided)the registered trademarks in the United States which consist only of designs(i.e.there are no words in them).Trademark images are a good domain with which to test image retrieval.First,there is an existing user need:trademark examiners do have to check for trademark conflicts based on visual appearance.That is,at some stage they are required to look at the images and check whether the trademark is similar to an existing one.Second,trademark images may consist of simple geometric designs,pictures of animals or even complicated designs.Thus, they provide a test-bed for image retrieval algorithms.Third,there is text associated with every trademark and the associated text maybe used in a number of ways.One of the problems with many image retrieval systems is that it is unclear where the example or query image will come from.In this paper,the associated text is used to provide an example or query image.In addition associated text can also be combined with image ing trademark images does have some 
limitations.First,we are restricted to binary images(albeit large ones).As shown later in the paper,this does not create any problems for the algorithms described here.Second,in some cases the use of abstract images makes the task more difficult.Others have attempted to get around it by restricting the trademark images to geometric designs[9].3Related WorkSeveral authors have tried to characterize the appearance of an object via a description of the intensity surface.In the context of object recognition[21]represent the appearance of an object using a parametric eigen space description.This space is constructed by treating the image as afixed length vector,and then computing the principal components across the entire database.The images therefore have to be size and intensity normalized,segmented and trained.Similarly,using principal component representations described in[11]face recognition is performed in[26].In[24]the traditional eigen representation is augmented by using most discriminant features and is applied to image retrieval.The authors apply eigen representation to retrieval of several classes of objects.The issue,however,is that these classes are manually determined and training must be performed on each.The approach presented in this paper is different from all the above because eigen decompositions are not used at all to characterize appearance. 
Further,the method presented uses no learning and,does not require constant sized images.It should be noted that although learning significantly helps in such applications as face recognition,however,it may not be feasible in many instances where sufficient examples are not available.This system is designed to be applied to a wide class of images and there is no restriction per se.In earlier work we showed that local features computed using Gaussian derivativefilters can be used for local similarity,i.e.to retrieve parts of images[18].Here we argue that global similarity can be determined by computing local features and comparing distributions of these features.This technique gives good results,and is reasonably tolerant to view variations.Schiele and Crowley[23]used such a technique for recognizing objects using grey-level images.Their technique used the outputs of Gaussian derivatives as local features.A multi-dimensional histogram of these local features is then computed.Two images are considered to be of the same object if they had similar histograms.The difference between this approach and the one presented by Schiele and Crowley is that here we use1D histograms(as opposed to multi-dimensional)and further use the principal curvatures as the primary feature.The use of Gaussian derivativefilters to represent appearance is motivated by their use in describing the spatial structure[14]and its uniqueness in representing the scale space of a function[15,12,28, 25]The invariance properties of the principal curvatures are well documented in[7].Nastar[20],has independently used the image shape index to compute similarity between images.However,in his work curvatures were computed only at a single scale.This is insufficient.In the context of global similarity retrieval it should be noted that representations using moment in-variants have been well studied[19].In these methods global representation of appearance may involve computing a few numbers over the entire 
image. Two images are then considered similar if these numbers are close to each other (say using an L2 norm). We argue that such representations are not able to really capture the "appearance" of an image, particularly in the context of trademark retrieval where moment invariants are widely used. In other work [18] we compared moment invariants with the technique presented here and found that moment invariants work best for a single binary shape without holes in it, and, in general, fare worse than the method presented here. Jain and Vailaya [10] used edge angles and invariant moments to prune trademark collections and then used template matching to find similarity within the pruned set. Their database was limited to 1100 images.

Texture based image retrieval is also related to the appearance based work presented in this paper. Using Wold modeling, in [16] the authors try to classify the entire Brodatz texture and in [8] attempt to classify scenes, such as city and country. Of particular interest is work by [17] who use Gabor filters to retrieve texture similar images.

The earliest general image retrieval systems were designed by [6,22]. In [6] the shape queries require prior manual segmentation of the database which is undesirable and not practical for most applications.
4 Global representation of appearance

Three steps are involved in computing global similarity. First, local derivatives are computed at several scales. Second, derivative responses are combined to generate local features, namely the principal curvatures and phase, and their histograms are generated. Third, the 1D curvature and phase histograms generated at several scales are matched. These steps are described next.

A. Computing local derivatives: Computing derivatives using finite differences does not guarantee stability of derivatives. In order to compute derivatives stably, the image must be regularized, or smoothed or band-limited. A Gaussian filtered image obtained by convolving the image $I$ with a normalized Gaussian is a band-limited function. Its high frequency components are eliminated and derivatives will be stable. In fact, it has been argued by Koenderink and van Doorn [14] and others [7] that the local structure of an image $I$ at a given scale can be represented by filtering it with Gaussian derivative filters (in the sense of a Taylor expansion), and they term it the N-jet.

However, the shape of the smoothed intensity surface depends on the scale at which it is observed. For example, at a small scale the texture of an ape's coat will be visible. At a large enough scale, the ape's coat will appear homogeneous. A description at just one scale is likely to give rise to many accidental mismatches. Thus it is desirable to provide a description of the image over a number of scales, that is, a scale space description of the image. It has been shown by several authors [15,12,28,25,7] that, under certain general constraints, the Gaussian filter forms a unique choice for generating scale-space. Thus local spatial derivatives are computed at several scales.

B. Feature Histograms: The normal and tangential curvatures of a 3-D surface (X, Y, Intensity) are defined as [7]:

$$N(p,\sigma) = \left[\frac{I_x^2 I_{yy} + I_y^2 I_{xx} - 2 I_x I_y I_{xy}}{\left(I_x^2 + I_y^2\right)^{3/2}}\right](p,\sigma)$$

$$T(p,\sigma) = \left[\frac{\left(I_x^2 - I_y^2\right) I_{xy} + \left(I_{yy} - I_{xx}\right) I_x I_y}{\left(I_x^2 + I_y^2\right)^{3/2}}\right](p,\sigma)$$

where $I_x(p,\sigma)$ and $I_y(p,\sigma)$ are the local derivatives of image $I$ around point $p$ using Gaussian derivatives at scale $\sigma$. Similarly, $I_{xx}$, $I_{xy}$ and $I_{yy}$ are the corresponding second derivatives. The normal curvature $N$ and tangential curvature $T$ are then combined [13] to generate a shape index as follows:

$$C(p,\sigma) = \arctan\left[\frac{N + T}{N - T}\right](p,\sigma)$$

The index value $C$ is $\pi/2$ when $N = T$ and is undefined when $N$ and $T$ are both zero, and is, therefore, not computed. This is interesting because very flat portions of an image (or ones with constant ramp) are eliminated. For example in Figure 1, the background in most of these images does not contribute to the curvature histogram. The curvature index or shape index is rescaled and shifted to the range $[0,1]$ as is done in [4]. A histogram is then computed of the valid index values over an entire image.

The second feature used is phase. The phase is simply defined as $P(p,\sigma) = \operatorname{atan2}\left(I_y(p,\sigma), I_x(p,\sigma)\right)$. Note that $P$ is defined only at those locations where $C$ is, and is ignored elsewhere. As with the curvature index, $P$ is rescaled and shifted to lie in the interval $[0,1]$.

At different scales different local structures are observed and, therefore, multi-scale histograms are a more robust representation. Consequently, a feature vector is defined for an image $I$ as the vector

$$V_I = \left\langle H_c(\sigma_1), \ldots, H_c(\sigma_n), H_p(\sigma_1), \ldots, H_p(\sigma_n) \right\rangle$$

where $H_c$ and $H_p$ are the curvature and phase histograms respectively. We found that using 5 scales gives good results and the scales are in steps of half an octave.

C. Matching feature histograms: Two feature vectors are compared using normalized cross-covariance defined as

$$d_{ij} = \frac{V_i^{(m)} \cdot V_j^{(m)}}{\left\|V_i^{(m)}\right\| \, \left\|V_j^{(m)}\right\|}$$

where $V_i^{(m)} = V_i - \operatorname{mean}(V_i)$.

Retrieval is carried out as follows. A query image is selected and the query histogram vector is correlated with the database histogram vectors using the above formula. Then the images are ranked by their correlation score and displayed to the user. In this implementation, and for evaluation purposes, the ranks are computed in advance, since every query image is also a database image.

4.1 Experiments

The curvature-phase method is evaluated on a small database of 2048 images obtained from the US Patent and Trademark Office (PTO). The images obtained from the PTO are large and binary, and are converted to gray-level and reduced for the experiments. This smaller set is used because relevance judgments can be obtained relatively easily. In the following
experiments an image is selected and submitted as a query. The objective of this query is stated and the relevant images are decided in advance. Then the retrieval instances are gauged against the stated objective. In general, objectives of the form 'extract images similar in appearance to the query' will be posed to the retrieval algorithm. A measure of the performance of the retrieval engine can be obtained by examining the recall/precision table for several queries. Briefly, recall is the proportion of the relevant material actually retrieved and precision is the proportion of retrieved material that is relevant [27]. It is a standard widely used in the information retrieval community and is one that is adopted here.

Figure 1: Trademark retrieval using Curvature and Phase

Queries were submitted for the purpose of computing recall/precision. The judgment of relevance is qualitative. For each query in both databases the relevant images were decided in advance. These were restricted to 48. The top 48 ranks were then examined to check the proportion of retrieved images that were relevant. All images not retrieved within 48 were assigned a rank equal to the size of the database. That is, they are not considered retrieved. These ranks were used to interpolate and extrapolate precision at all recall points. In the case of assorted images relevance is easier to determine and more similar for different people. However in the trademark case it can be quite difficult and therefore the recall-precision can be subject to some error. The recall/precision results are summarized in Table 1 and both databases are individually discussed below.

Table 1: Precision at standard recall points for six Queries

| Recall | 10 | 30 | 50 | 70 | 90 | Average |
|---|---|---|---|---|---|---|
| Precision (trademark) % | 93.2 | 85.2 | 74.5 | 45.5 | 9.0 | 61.1% |
| Precision (assorted) % | 92.6 | 88.3 | 86.8 | 65.9 | 12.0 | 66.3% |

Figure 1 shows the performance of the algorithm on the trademark images. Each strip depicts the top 8 retrievals, given the leftmost as the query. Most of the shapes have roughly the same structure as the query. Note
that outline and solid figures are treated similarly (see rows one and two in Figure 1). Six queries were submitted for the purpose of computing recall-precision in Table 1.

Tests were also carried out with an assorted collection of 1561 grey-level images. These results are discussed elsewhere [1], and the recall/precision table is shown in Table 1.

While the queries presented here are not "optimal" with respect to the design constraints of global similarity retrieval, they are, however, realistic queries that can be posed to the system. Mismatches can and do occur. The first is the case where the global appearance is very different. Second, mismatches can occur at the algorithmic level. Histograms coarsely represent spatial information and therefore will admit images with non-trivial deformations. The recall/precision presented here compares well with text retrieval. The time per retrieval is of the order of milliseconds. In the next section we discuss the application of the presented technique to a database of 63,000 images.

5 Trademark Retrieval

The system indexes 63,718 trademarks from the US Patent and Trademark Office in the design-only category. These trademarks are binary images. In addition, associated text consists of a design code that designates the type of trademark, the goods and services associated with the trademark, a serial number and a short descriptive text.

The system for browsing and retrieving trademarks is illustrated in Figure 2. The Netscape/Java user interface has two searchable parts. On the left a panel is included to initiate search using text. Any or all of the fields can be used to enter a query. In this example, the text "Merriam Webster" is entered. All images associated with it are retrieved using the INQUERY [2] text search engine. The user can then use any of the example pictures to search for images that are similar visually or restrict it to images with […]

Table 2: Fields supporting the text query

Field
- The business this trademark is used in
- All are of type DESIGN ONLY
- An assigned code category
- Serial number assigned to trademark
- Date trademark application was filed
- Number assigned to trademark
- Date trademark was registered
- Owner of the trademark
- A textual description of the trademark
- Section 44
- Type of mark
- Register
- Affidavit text
- Live/dead

[…] (1,4,8). A histogram descriptor of the image is obtained by concatenating all the individual histograms across scales and regions. These two steps are conducted off-line.

Execution: The image search server begins by loading all the histograms into memory. Then it waits on a port for a query. A CGI client transmits the query to the server. Its histograms are matched with the ones in the database. The match scores are ranked and the top requested retrievals are returned.

5.1 Examples

Figure 2: Retrieval in response to a "Merriam Webster" query

In Figure 2, the user typed in Merriam Webster in the text window. The system searches for trademarks which have either Merriam or Webster in the associated text and displays them. Here, the first two trademarks (first two images in the left window) belong to Merriam Webster. In this example, the user has chosen to 'click' the second image and search for images of similar trademarks. This search is based entirely on the image and the results are displayed in the right window in rank order. Retrieval takes a few seconds and is done by comparing histograms of all 63,718 trademarks on the fly.
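
The execution step described above (keep the stored histograms in memory, match the query against them, rank the scores) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the bin count and the toy histogram values are hypothetical, and normalized cross-covariance is taken in its usual form (subtract each vector's mean, then divide the dot product by the product of the norms).

```python
import math


def histogram(values, bins=8):
    """Count feature values in [0, 1] into equal-width bins.

    None marks pixels where the feature is undefined (for example flat
    regions); such pixels are skipped rather than counted.
    """
    counts = [0] * bins
    for v in values:
        if v is None:
            continue
        # A value of exactly 1.0 belongs to the last bin.
        counts[min(int(v * bins), bins - 1)] += 1
    return counts


def feature_vector(curvature_by_scale, phase_by_scale, bins=8):
    """Concatenate curvature histograms, then phase histograms, over all scales."""
    vec = []
    for values in curvature_by_scale:
        vec.extend(histogram(values, bins))
    for values in phase_by_scale:
        vec.extend(histogram(values, bins))
    return vec


def normalized_cross_covariance(u, v):
    """Mean-subtract both vectors and return their cosine similarity."""
    mean_u = sum(u) / len(u)
    mean_v = sum(v) / len(v)
    du = [a - mean_u for a in u]
    dv = [b - mean_v for b in v]
    norm_u = math.sqrt(sum(a * a for a in du))
    norm_v = math.sqrt(sum(b * b for b in dv))
    if norm_u == 0 or norm_v == 0:
        # A constant vector carries no shape information; score it as 0.
        return 0.0
    return sum(a * b for a, b in zip(du, dv)) / (norm_u * norm_v)


def rank(query_vec, database, top_k=8):
    """Return the names of the top_k database entries, best match first."""
    scored = [
        (normalized_cross_covariance(query_vec, vec), name)
        for name, vec in database.items()
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [name for _, name in scored[:top_k]]


if __name__ == "__main__":
    # Toy histogram vectors standing in for precomputed trademark descriptors.
    database = {
        "mark_a": [4, 0, 1, 3],
        "mark_b": [0, 4, 3, 1],
        "mark_c": [4, 1, 1, 3],
    }
    print(rank(database["mark_a"], database, top_k=3))
```

Because each comparison is a single pass over two short vectors, a linear scan of every stored descriptor stays cheap, which fits the paper's remark that all histograms are loaded into memory and compared on the fly.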