CUDA C GETTING STARTED GUIDE FOR MICROSOFT WINDOWS

- 格式:pdf

- 大小:939.08 KB

- 文档页数:14

CUDA Developer Guide for NVIDIA Optimus PlatformsReference GuideTable of ContentsChapter 1. Introduction to Optimus (1)Chapter 2. CUDA Applications and Optimus (2)Chapter 3. Querying for a CUDA Device (3)3.1. Applications without Graphics Interoperability (3)3.2. Applications with Graphics Interoperability (5)3.3. CUDA Support with DirecX Interoperability (6)3.4. CUDA Support with OpenGL Interoperability (7)3.5. Control Panel Settings and Driver Updates (8)Chapter 1.Introduction to OptimusNVIDIA® Optimus™ is a revolutionary technology that delivers great battery life and great performance, in a way that simply works. It automatically and instantaneously uses the best tool for the job – the high performance NVIDIA GPU for GPU-Compute applications, video, and 3D games; and low power integrated graphics for applications like Office, Web surfing, or email.The result is long lasting battery life without sacrificing great graphics performance, delivering an experience that is fully automatic and behind the scenes.When the GPU can provide an increase in performance, functionality, or quality over theIGP for an application, the NVIDIA driver will enable the GPU. When the user launches an application, the NVIDIA driver will recognize whether the application being run can benefit from using the GPU. If the application can benefit from running on the GPU, the GPU is powered up from an idle state and is given all rendering calls.Using NVIDIA’s Optimus technology, when the discrete GPU is handling all the rendering duties, the final image output to the display is still handled by the Intel integrated graphics processor (IGP). In effect, the IGP is only being used as a simple display controller, resulting in a seamless, flicker-free experience with no need to reboot.When the user closes all applications that benefit from the GPU, the discrete GPU is powered off and the Intel IGP handles both rendering and display calls to conserve power and provide the highest possible battery life.The beauty of Optimus is that it leverages standard industry protocols and APIs to work. From relying on standard Microsoft APIs when communicating with the Intel IGP driver, to utilizing the PCI-Express bus to transfer the GPU’s output to the Intel IGP, there are no proprietary hoops to jump through NVIDIA.This document provides guidance to CUDA developers and explains how NVIDIA CUDA APIs can be used to query for GPU capabilities in Optimus systems. It is strongly recommendedto follow these guidelines to ensure CUDA applications are compatible with all notebooks featuring Optimus.Chapter 2.CUDA Applications andOptimusOptimus systems all have an Intel IGP and an NVIDIA GPU. Display heads may be electrically connected to the IGP or the GPU. When a display is connected to a GPU head, all rendering and compute on that display happens on the NVIDIA GPU just like it would on a typical discrete system. When the display is connected to an IGP head, the NVIDIA driver decides if an application on that display should be rendered on the GPU or the IGP. If the driver decides to run the application on the NVIDIA GPU, the final rendered frames are copied to the IGP’s display pipeline for scanout. Please consult the Optimus white paper for more details on this behavior: /object/optimus_technology.html.CUDA developers should understand this scheme because it affects how applications should query for GPU capabilities. For example, a CUDA application launched on the LVDS panel of an Optimus notebook (which is an IGP-connected display), would see that the primary display device is the Intel's graphic adapter – a chip not capable of running CUDA. In this case, it is important for the application to detect the existence of a second device in the system – the NVIDIA GPU – and then create a CUDA context on this CUDA-capable device even when it is not the display device.For applications that require use of Direct3D/CUDA or OpenGL/CUDA interop, there are restrictions that developers need to be aware of when creating a Direct3D or OpenGL context that will interoperate with CUDA. CUDA Support with DirecX Interoperability and CUDA Support with OpenGL Interoperability in this guide discuss this topic in more detail.Chapter 3.Querying for a CUDA DeviceDepending on the application, there are different ways to query for a CUDA device.3.1. Applications without GraphicsInteroperabilityFor CUDA applications, finding the best CUDA capable device is done through the CUDA API. The CUDA API functions cudaGetDeviceProperties (CUDA runtime API), and cuDeviceComputeCapability (CUDA Driver API) are used. Refer to the CUDA Sample deviceQuery or deviceQueryDrv for more details.The next two code samples illustrate the best method of choosing a CUDA capable device with the best performance.// CUDA Runtime API Versioninline int cutGetMaxGflopsDeviceId(){int current_device = 0, sm_per_multiproc = 0;int max_compute_perf = 0, max_perf_device = 0;int device_count = 0, best_SM_arch = 0;int arch_cores_sm[3] = { 1, 8, 32, 192 };cudaDeviceProp deviceProp;cudaGetDeviceCount( &device_count );// Find the best major SM Architecture GPU devicewhile ( current_device < device_count ) {cudaGetDeviceProperties( &deviceProp, current_device );if (deviceProp.major > 0 && deviceProp.major < 9999) {best_SM_arch = max(best_SM_arch, deviceProp.major);}current_device++;}// Find the best CUDA capable GPU devicecurrent_device = 0;while( current_device < device_count ) {cudaGetDeviceProperties( &deviceProp, current_device );if (deviceProp.major == 9999 && deviceProp.minor == 9999) {sm_per_multiproc = 1;} else if (deviceProp.major <= 3) {sm_per_multiproc = arch_cores_sm[deviceProp.major];} else { // Device has SM major > 3sm_per_multiproc = arch_cores_sm[3];}int compute_perf = deviceProp.multiProcessorCount *sm_per_multiproc * deviceProp.clockRate;if( compute_perf > max_compute_perf ) {// If we find GPU of SM major > 3, search only theseif ( best_SM_arch > 3 ) {// If device==best_SM_arch, choose this, or else passif (deviceProp.major == best_SM_arch) {max_compute_perf = compute_perf;max_perf_device = current_device;}} else {max_compute_perf = compute_perf;max_perf_device = current_device;}}++current_device;}cudaGetDeviceProperties(&deviceProp, max_compute_perf_device);printf("\nDevice %d: \"%s\"\n", max__perf_device,);printf("Compute Capability : %d.%d\n",deviceProp.major, deviceProp.minor);return max_perf_device;}// CUDA Driver API Versioninline int cutilDrvGetMaxGflopsDeviceId(){CUdevice current_device = 0, max_perf_device = 0;int device_count = 0, sm_per_multiproc = 0;int max_compute_perf = 0, best_SM_arch = 0;int major = 0, minor = 0, multiProcessorCount, clockRate;int arch_cores_sm[3] = { 1, 8, 32, 192 };cuInit(0);cuDeviceGetCount(&device_count);// Find the best major SM Architecture GPU devicewhile ( current_device < device_count ) {cuDeviceComputeCapability(&major, &minor, current_device));if (major > 0 && major < 9999) {best_SM_arch = MAX(best_SM_arch, major);}current_device++;}// Find the best CUDA capable GPU devicecurrent_device = 0;while( current_device < device_count ) {cuDeviceGetAttribute( &multiProcessorCount,CU_DEVICE_ATTRIBUTE_MULTIPROCESSOR_COUNT, current_device ) );cuDeviceGetAttribute( &clockRate,CU_DEVICE_ATTRIBUTE_CLOCK_RATE,current_device ) );if (major == 9999 && minor == 9999) {sm_per_multiproc = 1;} else if (major <= 3) {sm_per_multiproc = arch_cores_sm[major];} else {sm_per_multiproc = arch_cores_sm[3];}int compute_perf = multiProcessorCount * sm_per_multiproc *clockRate;if( compute_perf > max_compute_perf ) {// If we find GPU with SM major > 2, search only theseif ( best_SM_arch > 2 ) {// If our device==dest_SM_arch, choose this, or else passif (major == best_SM_arch) {max_compute_perf = compute_perf;max_perf_device = current_device;}} else {max_compute_perf = compute_perf;max_perf_device = current_device;}}++current_device;}char name[100];cuDeviceGetName(name, 100, max_perf_device);cuDeviceComputeCapability(&major, &minor, max_perf_device);printf("\nDevice %d: "%s\n", max_perf_device, name);printf(" Compute Capability : %d.%d\n", major, minor);return max_perf_device;}3.2. Applications with GraphicsInteroperabilityFor CUDA applications that use the CUDA interop capability with Direct3D or OpenGL, developers should be aware of the restrictions and requirements to ensure compatibility with the Optimus platform. For CUDA applications that meet these descriptions:1.Application requires CUDA interop capability with either Direct3D or OpenGL.2.Application is not directly linked against cuda.lib or cudart.lib or LoadLibrary todynamically load the nvcuda.dll or cudart*.dll and uses GetProcAddress to retrieve function addresses from nvcuda.dll or cudart*.dll.A Direct3D or OpenGL context has to be created before the CUDA context. The Direct3D or OpenGL context needs to pass this into the CUDA. See the sample calls below in red below. Your application will need to create the graphics context first. The sample code below does not illustrate this.Refer to the CUDA Sample simpleD3D9 and simpleD3D9Texture for details.// CUDA/Direct3D9 interop// You will need to create the D3D9 context firstIDirect3DDevice9 * g_pD3D9Device; // Initialize D3D9 rendering device// After creation, bind your D3D9 context to CUDAcudaD3D9SetDirect3DDevice(g_pD3D9Device);Refer to the CUDA Sample simpleD3D10 and simpleD3D10Texture for details.// CUDA/Direct3D10 interop// You will need to create a D3D10 context firstID3D10Device * g_pD3D10Device; // Initialize D3D10 rendering device// After creation, bind your D3D10 context to CUDAcudaD3D10SetDirect3DDevice(g_pD3D10Device);Refer to the CUDA Sample simpleD3D11Texture for details.// CUDA/Direct3D11 interop// You will need to first create the D3D11 context firstID3D11Device * g_pD3D11Device; // Initialize D3D11 rendering device// After creation, bind your D3D11 context to CUDAcudaD3D11SetDirect3DDevice(g_pD3D11Device);Refer to the CUDA Samples simpleGL and postProcessGL for details.// For CUDA/OpenGL interop// You will need to create the OpenGL Context first// After creation, bind your D3D11 context to CUDAcudaGLSetGLDevice(deviceID);On an Optimus platform, this type of CUDA application will not work properly. If a Direct3Dor OpenGL graphics context is created before any CUDA API calls are used or initialized, the Graphics context may be created on the Intel IGP. The problem here is that the Intel IGP does not allow Graphics interoperability with CUDA running on the NVIDIA GPU. If the Graphics context is created on the NVIDIA GPU, then everything will work.The solution is to create an application profile in the NVIDIA Control Panel. With an application profile, the Direct3D or OpenGL context will always be created on the NVIDIA GPU when the application gets launched. These application profiles can be created manually through the NVIDIA control Panel (see Control Panel Settings and Driver Updates Section 5 for details). Contact NVIDIA support to have this application profile added to the drivers, so future NVIDIA driver releases and updates will include it.3.3. CUDA Support with DirecXInteroperabilityThe following steps with code samples illustrate what is necessary to initialize your CUDA application to interoperate with Direct3D9.Note: An application profile may also be needed.1.Create a Direct3D9 Context:// Create the D3D objectif ((g_pD3D = Direct3DCreate9(D3D_SDK_VERSION))==NULL )return E_FAIL;2.Find the CUDA Device that is also a Direct3D device// Find the first CUDA capable device, may also want to check// number of cores with cudaGetDeviceProperties for the best// CUDA capable GPU (see previous function for details)for(g_iAdapter = 0;g_iAdapter < g_pD3D->GetAdapterCount();g_iAdapter++){D3DCAPS9 caps;if (FAILED(g_pD3D->GetDeviceCaps(g_iAdapter,D3DDEVTYPE_HAL, &caps)))// Adapter doesn't support Direct3Dcontinue;D3DADAPTER_IDENTIFIER9 ident;int device;g_pD3D->GetAdapterIdentifier(g_iAdapter,0, &ident);cudaD3D9GetDevice(&device, ident.DeviceName);if (cudaSuccess == cudaGetLastError() )break;}3.Create the Direct3D device// Create the D3DDeviceif (FAILED( g_pD3D->CreateDevice( g_iAdapter, D3DDEVTYPE_HAL,hWnd, D3DCREATE_HARDWARE_VERTEXPROCESSING, &g_d3dpp, &g_pD3DDevice ) ) )return E_FAIL;4.Bind the CUDA Context to the Direct3D device:// Now we need to bind a CUDA context to the DX9 devicecudaD3D9SetDirect3DDevice(g_pD3DDevice);3.4. CUDA Support with OpenGLInteroperabilityThe following steps with code samples illustrate what is needed to initialize your CUDA application to interoperate with OpenGL.Note: An application profile may also be needed.1.Create an OpenGL Context and OpenGL window// Create GL contextglutInit(&argc, argv);glutInitDisplayMode(GLUT_RGBA | GLUT_ALPHA |GLUT_DOUBLE | GLUT_DEPTH);glutInitWindowSize(window_width, window_height);glutCreateWindow("OpenGL Application");// default initialization of the back bufferglClearColor(0.5, 0.5, 0.5, 1.0);2.Create the CUDA Context and bind it to the OpenGL context// Initialize CUDA context (ontop of the GL context)int dev, deviceCount;cudaGetDeviceCount(&deviceCount);cudaDeviceProp deviceProp;for (int i=0; i<deviceCount; i++) {cudaGetDeviceProperties(&deviceProp, dev));}cudaGLSetGLDevice(dev);3.5. Control Panel Settings and DriverUpdatesThis section is for developers who create custom application-specific profiles. It describes how end users can be sure that their CUDA applications run on Optimus and how they can receive the latest updates on drivers and application profiles.‣Profile updates are frequently sent to end user systems, similar to how virus definitions work. Systems are automatically updated with new profiles in the background with no user intervention required. Contact NVIDIA developer support to create the appropriate driver application profiles. This will ensure that your CUDA application is compatible on Optimus and included in these automatic updates.‣End users can create their own application profiles in the NVIDIA Control panel and set when switch and not to switch to the NVIDIA GPU per application. The screenshot below shows you where to find these application profiles.‣NVIDIA regularly updates the graphics drivers through the NVIDIA Verde Driver Program. End users will be able to download drivers which include application-specific Optimus profiles for NVIDIA-powered notebooks from: /object/notebook_drivers.htmlNoticeThis document is provided for information purposes only and shall not be regarded as a warranty of a certain functionality, condition, or quality of a product. NVIDIA Corporation (“NVIDIA”) makes no representations or warranties, expressed or implied, as to the accuracy or completeness of the information contained in this document and assumes no responsibility for any errors contained herein. NVIDIA shall have no liability for the consequences or use of such information or for any infringement of patents or other rights of third parties that may result from its use. This document is not a commitment to develop, release, or deliver any Material (defined below), code, or functionality.NVIDIA reserves the right to make corrections, modifications, enhancements, improvements, and any other changes to this document, at any time without notice. Customer should obtain the latest relevant information before placing orders and should verify that such information is current and complete.NVIDIA products are sold subject to the NVIDIA standard terms and conditions of sale supplied at the time of order acknowledgement, unless otherwise agreed in an individual sales agreement signed by authorized representatives of NVIDIA and customer (“Terms of Sale”). NVIDIA hereby expressly objects to applying any customer general terms and conditions with regards to the purchase of the NVIDIA product referenced in this document. No contractual obligations are formed either directly or indirectly by this document.OpenCLOpenCL is a trademark of Apple Inc. used under license to the Khronos Group Inc.TrademarksNVIDIA and the NVIDIA logo are trademarks or registered trademarks of NVIDIA Corporation in the U.S. and other countries. Other company and product names may be trademarks of the respective companies with which they are associated.Copyright© 2013-2022 NVIDIA Corporation & affiliates. All rights reserved.NVIDIA Corporation | 2788 San Tomas Expressway, Santa Clara, CA 95051。

Micrium Tools Training: µC/Probe and SystemViewLabsMicrium’s µC/Probe and SEGGER’s SystemView can be invaluable for developers whose projects are based on the Micrium OS. The lab exercises described in this document will familiarize you with these two tools. The instructions for the lab assume that you have a Mighty Gecko Wireless Starter Kit (SLWSTK6000B), and that you’ve already insta lled µC/Probe and SystemView, as well as the Simplicity Studio IDE, on your PC. Download links for all of these tools are provided below.Download LinksSimplicity Studio: /products/development-tools/software/simplicity-studioThe Micrium OS example project prepared for these labs was developed using SimplicityStudio. You’ll need the IDE in ord er to build and run the project.µC/ProbeA visit to Micrium’s Web site to download µC/Probe is not necessary, since the tool was recently integrated into Simplicity Studio. However, you may need to use the IDE’s Package Manager to update your install and gain access to µC/Probe. Within the Package Manager, which you can find by clicking the button with the green, downward-facing arrow appearing near the upper-left corner of Simplicity Studio in the Launcher perspective, the link for installing µC/Probe is located on the Tools page, as indicated in the below screenshot. The Educational Edition of µC/Probe referenced by the link can be used without a license. If you’d like access to the full-featured Professional Edition of the tool, you can contact Micrium to get a one-year FAE license at no cost.SystemView: https:///downloads/free_tools/Setup_SystemView_V242.exe SystemView is a free tool from SEGGER. In order for the tool t o work properly, you’ll need the latest J-Link software, which is also available from SEGGER: https:///downloads/jlink/JLink_Windows_V614c.exe.Lab 1: Building and Running the Example ProjectIn this lab, you’ll build and run a Simplicity Studio example proj ect incorporating the Micrium OS. You’ll use this project in Lab 2 and Lab 3 to explore the features of µC/Probe and SystemView.Procedure:1.Your hardware platform for the labs is the Mighty Gecko Wireless Starter Kit. Youshould now connect one of your k it’s main boards (BRD4001A) to a radio board(BRD4161A). You should then establish a USB connection between your PC and thecombined boards. Your main board features a built-in J-Link debugger, and the USB connection will allow you to leverage this debugger within Simplicity Studio.2.If you’re not al ready running Simplicity Studio, you should start it now. You shouldmake sure that you’re in the Simplicity IDE perspective, which you can open by selecting Window>Perspective>Simplicity IDE.3.The example project for the labs is delivered in a zip file named Micrium-Tools-Lab.zip.You should now extract the contents of this zip file to a folder of your choice. To avoid any possible issues with long path names, it is recommended to keep the zip file contents relatively close to your root folder.4.You’ll now need to import the example project that was delivered in Micrium-Tools-Lab.zip. You should start the import process in Simplicity Studio by selecting File>Import…. On the dialog that subsequently appears, you should expand General and select Existing Projects into Workspace, as indicated in the below screenshot. You should then click the Next button.5.Within the second Import dialog, you should ensure that Select root directory is chosenand you should then click the corresponding Browse button. You must then navigate to the location of the project folder (named Tools-Lab-1) that was provided in Micrium-Tools-Lab.zip. Before clicking the Finish button, you should ensure that the Tools-Lab-1 is checked in the Projects field and that Copy projects into workspace is likewise checked, as indicated in the below screenshot.6.The example project should now appear in your Project Explorer, as indicated below.To clean any build artifacts that might exist for the project, you should right-click its name, Tools-Lab-1, and select Clean Project from the menu that appears. If the clean operation was successful, you should then attempt to build the project by again right-clicking the project name and this time selecting Build Project.7.The build operation for your project should have completed without any errors. (Thereshould have been one warning associated with deprecated interrupt code in the BSP.) In the case of a successful build, you should now attempt to download your project’s code to your board by right-clicking Tools-Lab-1in the Project Explorer and then selecting Debug As>1 Silicon Labs ARM Program.8.Simplicity Studio should automatically switch to the Debug perspective as a result ofyour actions in the previous step. Within this pers pective’s editor area, the tool should indicate that execution of your project is halted on the first line of the main() function. You should now start execution of the project’s code by clicking the Resume button shown below. LED0and LED1should then begin alternately blinking on yourboard.9.After confirming that your code is running and your LEDs are blinking, you can terminateyour debug session by right-clicking the Silicon Labs ARM MCU item that, as indicated inthe below screenshot, should appear in the Debug window. You should selectTerminate from the subsequent pop-up menu. Your LEDs should continue to blink afterthe debug session has ended, since your project’s code now resides in Flash memory.Lab 2: µC/ProbeIn this lab, you’ll use µC/Probe to visualize the internals of the project that you built in Lab 1. You’ll first monitor one of the project’s C variables, and you’ll then use µC/Probe’s kernel-awareness capabilities to view statistics output by the µC/OS 5 kernel on which the project is based.Procedure:1.You should now run µC/Probe. You can actually start the tool within Simplicity Studio byclicking the button shown in the below screenshot.2.µC/Probe makes it possible to view the values of an application’s variables as codeactually runs. In order to offer this capability, it needs information on the variables’ locations. It typically gets this information from an ELF file. When you built the Micrium OS example project following the procedure given in the previous section of this document, an ELF file, Tools-Lab-1.axf, should have been generated by Simplicity Studio and placed at the path listed below. In this path, <project_location> is the folder where your project resides. If you’re uncertain of this location, you should right-click the project’s name in Project Explorer and select Properties. If you then select Resource from the categories listed on the left side of the Properties dialog, you’ll be able to read your project folder from the Location field in the upper half of the dialog.<project_location>\GNU ARM v4.9.3 - Debug3.You can pass an ELF file to µC/Probe using the Symbol Browser located at the bottom ofthe tool’s main window. You should now click the Symbol Broswer’s ELF button, which is shown in the below screen shot. In the ensuing dialog, you should simply browse to the ELF file described above and then click the Open button.4.µC/Probe will be able to use your board’s built-in J-Link debugger to access variables inyour running example code. In order to establish J-Link as your preferred communication interface, you’ll need to select it in the Settings dialog. You should now open this dialog by clicking the Settings button shown below.5.As indicated in the below screenshot, the lower left corner of the Settings dialogcontains a list of Debug Interfaces containing just one item: J-Link. You should confirm that J-Link is selected, and you should then ensure that Don’t Change has been specified for the Interface Mode. Before clicking OK, you should choose Silicon Labs as the Manufacturer, and, using the provided table, select EFR32MG12PxxxF1024as your Device.6.µC/Probe provides a variety of graphical components that can be used for displaying thevalues of variables. You can access these components via the Toolbox located on the left side of the main program window. You should now instantiate a new component—a gauge—by first clicking Angular Gauges in the lower half of the Toolbox and thendragging and dropping the Semicircle 3gauge onto the data screen in the middle of µC/Probe’s program window. The data screen should then appear as shown in thebelow screenshot.7. A graphical component is not of much use until it has been associated with a variable, orsymbol. The Symbol Browser will be your means of associating a variable with your new gauge. If the Symbol Browser is not already displaying a list of C files corresponding to the code that you built in the first part of the lab, you can make such a list appear by expanding the entry for Tools-Lab-1.axf that appeared when you specified the path of this file in Step 2. Once the list is visible, you should expand the entry for the C file ex_main.c in order to make the variables contained in that file appear. You should then drag and drop the variable Ex_MainSpeed from the Symbol Browser to your gauge. As indicated below, the variable name will appear below thegauge to indicate that the two are associated.8.You should now click µC/Probe's Run button, which, as indicated below, is located in theupper left-hand corner of the tool's main program window. If you’re using the Educational Edition of the tool, you’ll be presented with a dialog indicating that you won’t have access to µC/Probe’s full set of features unless you upg rade to the Professional Edition. You can simply click this dialog’s Close button. You will then enter µC/Probe’s Run-Time mode. The dial on your gauge should begin moving from 0 to 100 and then back to 0. It should repeat this pattern indefinitely. Keep in mind that, with the Educational Edition, you will only be able to remain in Run-Time mode for one minute before a time-out occurs and another dialog prompting you to upgrade appears.9.µC/Probe can be used with nearly any embedded system—including those not based ona real-time kernel. However, the tool is especially helpful when paired with projectsthat incorporate Micrium’s embedded software modules, in part because of its kernel-awareness capabilities. These capabilities are implemented via a number of pre-populated data screens that show the values of different kernel variables. You should now stop µC/Probe (by clicking the Stop button located in the upper left-hand corner of the main program window) and add the kernel-awareness screens for µC/OS 5, the kernel featured in the example project, to your workspace. You can add the screens by first clicking Project1in µC/Probe's Workspace Explorer window and then clicking Screens>Micriµm OS Kernel (µC/OS-5), as indicated in the below screenshot. After making this change, you can again click Run to prompt µC/Probe to begin updating the new screens with kernel statistics.10. Within the main µC/OS 5 kernel-awareness screen, there are a number of subordinatescreens, including one for Task(s). As shown below, this screen displays the status of each task running in your project. You should now take a moment to look over the screen and familiarize yourself with the various tasks that the example incorporates.The percentage of CPU cycles consumed by the tasks is provided in the CPU Usage column, and you should note that, after the kernel’s Idle Task—which runs when no application tasks are ready—it is the Tick Task—the kernel task responsible for processing periodic tick interrupts—that consumes the most CPU cycles, with a usage of approximately 5.5%.11.After you’ve looked over Task(s), you should stop µC/Probe (via the Stop buttonmentioned in Step 9). You should then return to Simplicity Studio to make a simple change to the example project’s code. Within the IDE, you should open the file ex_main.c. You’ll be able to find this file by expanding the Micrium folder within the Project Explorer, and then similarly expanding Micrium_OS_V5.00 and examples. You should add the below two lines to ex_main.c, placing the new code at line 156, just below the variable declaration in the main() function. The result of your addition will be to change the frequency of tick interrupts received by the kernel, from 1 kHz to 100 Hz.OS_TASK_CFG Ex_MainCfgTick = {DEF_NULL, 256u, 4u, 100u};OS_ConfigureTickTask(&Ex_MainCfgTick);12.You should now build and run your modified project, following the same procedure thatyou used in Lab 1. Afterward, you should return to µC/Probe and again run your workspace. With tick interrupts now occurring at 1/10 of their original frequency, you should confirm that the overhead of the kernel’s Tick Task has experienced a similar decrease, meaning that the task’s CPU usage should be around 0.6%.Lab 3: SystemViewThis lab w ill walk you through the steps needed to use SEGGER’s SystemView with the example project featured in the previous two labs. With SystemView, you’ll be able to see the cont ext switches, interrupts, and kernel function calls that occur as the project runs.Procedure:1.Because the latest Micrium OS is a relatively new software module, it is not yetsupported by the version of SystemView available from the SEGGER Web site.Fortunately, though, it is fairly easy to update SystemView so that it will recognize applications based on MicriumOS. To make the update, you should now copy SYSVIEW_Micrium OS Kernel.txt, which was provided in Micrium-Tools-Lab.zip, and paste this file into the Description folder in the SystemView install path—Program Files (x86)\SEGGER\SystemView_V242.2.You should now run SystemView by clicking the tool’s entry in the Windows Start menu.3.When you run SystemView for the first time, you’ll be presented with the below dialog,asking whether you’d like to load a sample recording. You can simply click this dialog’s No button.4.In order to begin analyzing the behavior of your example project’s code, you’ll need torecord the code’s activity. To initiate recording, you should select Target>Start Recording within SystemView. You will then be presented with a Configuration dialog.Once you’ve verified that the contents of this dialog match the screenshot shown below, you should click the OK button to initiate recording.5.Shortly after you’ve started to record, you may be presented with the dia log shown inthe below screenshot. This dialog indicates that some of the data that would otherwise be displayed by SystemView was lost, because the buffer used to temporarily store that data on your target experienced overflows. You can simply click OK to close the dialog.For information on how to limit the potential for overflows, you can consult the last section in this document.6.Once you’ve gathered a few second’s worth of data, you should click the Stop Recordingbutton shown in the first of the two screenshots shown below. As the second screenshot indicates, the Timeline located near the center of the SystemView main program window should subsequently display all of the task and ISR activity that occurred during the period the recording was active. You now can begin investigating the execution of the example project’s code in detail.7.If you adjust the zoom on the timeline, you should be able to see, every 40 ms, thepattern of events depicted in the below screenshot. The example project incorporates three application tasks, in addition to a number of kernel, or system, tasks. One of the application’s tasks, labeled Post Task, peridocially performs a post operation on a semaphore, and that is what is shown in the screenshot. In the next couple of steps, you’ll adjust the period of the Post Task and then make an additional SystemViewrecording to confirm the results.8.The period of the Post Task is established by a variable in the same file that youmanipulated in Step 11 of the previous lab, ex_main.c. The variable is named Ex_MainDelayPostms and you have two different options for changing its value.One is to simply replace the variable’s initialization on line 371 of ex_main.c with thebelow code. You’l l then need to rebuild the example project and again download its code to your board. The second option involves µC/Probe. In addition to allowing you to read variables, µC/Probe offers a number of Writable Controls for changing variable values. You can drag and drop one of these controls—a Horizontal Slider, for example—into your workspace from Lab 2, and use the new control to adjust the value of ExMainDelayPostms.Ex_MainDelayPostms = 20;9.With the value of the variable adjusted, you should return to SystemView and make anew recording. Now the post operation described in Step 6 should be visible every 20 ms, as opposed to 40 ms. If time permits, you can make further adjustments to the code, changing, for example, the priority of the Post Task (established by the #define EX_MAIN_POST_TASK_PRIO), and you can use SystemView to observe the results of the changes.Limiting OverflowsThere are a few steps that you can take to limit overflows and maximize the amount of data recorded by SystemView. One of these is to increase the size of the buffer used to temporarily store SystemView data on your target before it is passed to the PC application. The buffer size is established by the #define SEGGER_SYSVIEW_RTT_BUFFER_SIZE in the file SEGGER_SYSVIEW_Conf.h. This file is contained in Micrium/Tools/SystemView/Config within the example project. The provided copy of SEGGER_SYSVIEW_Conf.h uses a buffer size of 4096, but you’re free to increase to any size that the hardware can accomodate.An additional measure, recommended by SEGGER for reducing the number of overflows, is to ensure that SystemView is the only tool using your J-Link. In other words, if you were previously running µC/Probe or the Simplicity Studio debugger while recording data, you should stop the other tools and try a new recording. With exclusive access to the J-Link, SystemView should have fewer impediments to capturing all of the example project’s events.。

GETTING STARTED WITH THE NVIDIA DRIVE AGX ORIN DevKitFOR DRIVE AGX SDK DEVELOPER PROGRAM MEMBERSCovers:Intro to the NVIDIA DRIVE AGX Orin™platformStep by step guide to register your deviceInstructions on how to join the NVIDIA DRIVE AGX™SDK Developer programA navigation through the Start pageLink to Welcome to the DRIVE AGX PlatformREGISTRATIONFirst things first –register your DevKit on the Registration Page. This will ensure an optimal experience for you and help us to provide support.Link to Registration PageSTART PAGEUp next, visit the Start Page. It is your gateway to explore the DRIVE AGX Platform.Link to Start PageKEY WEBSITES FOR DRIVE AGX ORIN developer /drive/startDevKit Start Page How to Navigate DRIVE Developer Page developer /drive/setupDevKit Setup PageStep by step guide to setup your DevKit developer /drive/registerDevKit Register PageStep by step guide to register your DevKitKEY WEBSITES FOR DRIVE AGX ORIN developer /drive/downloadsDownloads Link to access software releases forums.developer/c/autonomous-vehicles/drive-agx-orin/developer /drive/documentationDocs Comprehensive documentation Forum Ask questions or browse threads Hyperion Sensors For additional supported sensors, please refer to DRIVE AGX Orin Sensors and Accessoriesdeveloper /drive/ecosystem-hw-swRESOURCE OVERVIEWHardware SetupTrainingSDK Need Help?HARDWARE SETUPCovers:Product featuresLink to Product BriefHARDWARE QUICK START GUIDECovers:Components listSystem ConnectorsDevKit versionsSteps required to run the DevKit for the first time Link to Hardware Quick Start GuideMECHANICAL & INSTALLATION GUIDECovers:Mechanical dimensionsMounting considerationsInterface connectionsEnvironmental requirementsElectrical installationLink to Mechanical and Installation guideHardware for DRIVE AGX Orin that is supported by NVIDIA and our partnersCovers:CamerasLidarsRadarsIMU / GNSS devicesUSS/RCSLink to DRIVE Hyperion 8.1 Sensors and AccessoriesSDKDRIVE OS AND DRIVEWORKS INTROThe DRIVE SDK website shows architecture and major components of the SDKThe DRIVE OS website provides more details on the DRIVE OS modules and toolsThe DRIVEWORKS website shares insights on eachmodule under its architectureLink to DRIVE OSLink to DriveWorksProvides access to all relevant DRIVE SDK releases, including Release Summary, Installation Guides, Release Notes, etc.Note: DRIVE OS 6.0.4 supports installation viaDocker containers and SDK Manager.Link to DRIVE Downloads SiteLink to Details on NVIDIA DRIVE Platform Docker Containers Link to Details on NVIDIA SDK ManagerLink to Details on DRIVE OS DockerA collection of documentation that helps you to develop with your DRIVE AGX Orin DevKit, includes: Developer Kit documentsSensors & AccessoriesDRIVE OS software documentationDeveloper T oolsLicensesLink to DRIVE DocumentationDRIVE OS 6.0INSTALLATION GUIDE A step-by-step guide introducing the Drive OS 6.0A guide for how to download the DRIVE OS using either SDK Manager or DockerSome tips for building & Running sample applications for DRIVE OS 6.x on linuxLink to DRIVE OS 6.0 Installation GuideSDK MANAGERProvides an end-to-end development environment setup solution for NVIDIA DRIVE®Link to NVIDIA SDK ManagerLink to DRIVE SDK Manager download & RunNGC DOCKERA quick intro to the NVIDIA Docker ContainersconceptLink to NVIDIA DRIVE Platform Docker ContainersLink to DRIVE OS 6 Linux SDK Developer Guide Board Setup & Configuration Components & Interfaces System Programming Mass Storage Partition Configuration NVIDIA DRIVE UtilititesDRIVE OS 6.0DEVELOPER GUIDE NVIDIA DRIVE OS is the reference operating system and software stack for developing and deploying AV applications on DRIVE AGXImportant documentation sections:Link to DriveWorks Documentation Getting Started Modules: Functional Components Sample Code Guide for porting from previous releases DRIVEWORKS DOCUMENTATION The DriveWorks SDK provides an extensive set of fundamental capabilities, including processing modules, tools and frameworks for advanced AV developmentImportant documentation sections:TRAININGNVIDIA TRAININGNVIDIA provides a wide list of learning tools to help in your development journeyNVIDIA has the following verticals that can help you, GTC talksDRIVE Videos / DRIVE LabsWebinarsDeep Learning institute coursesLink to DRIVE TrainingGTC SESSIONSThroughout the GPU Technology Conference (GTC)Relevant research such as state-of-the-art algorithms are showcasedCustomers show their work on top of the DRIVE platform The NVIDIA DRIVE team provides update on the DRIVE hardware and softwareLink to GTC22 March DRIVE Developer DayLink to GTC22 March AutomotiveA comprehensive list to increase your learning 35+ Video-Webinars all focused on DRIVE Requires NVIDIA Developer LoginLink to DRIVE WebinarsDRIVE VIDEOSThere are numerous videos that showcase applications that can be developed on top of the DRIVE platform DRIVE Labs videosare short-form videos that dive into specific self-driving algorithmsDRIVE Dispatch videosprovide Brief updates from our AV fleet,highlighting new breakthroughsLink to DRIVE VideosDEEP LEARNING INSTITUTE (DLI) COURSESNumerous self-paced and instructor-led courses,Some recommendations:Integrating Sensors with NVIDIA DRIVEFundamentals of Accelerated Computingwith CUDA C/C++Optimization and Deployment of TensorFlowModels with TensorRTDeep Learning at Scale with HorovodLink to Deep Learning InstituteLink to Course Catalog PDFNEED HELP?GOT STUCK? TRY TO…Check Out the DRIVE OS and DriveWorks DocumentationComprehensive documentation that includes many samples that illustrate how to leverage the DRIVE SDKBrowse the Support ForumThe Forum contains 1000+ experiences of other users with answers by our support team. If your question is not already covered —feel free to raise itSubmit a BugRaise a bug if suggested by the Forum Support team or via NVONLINE if applicable.Our tech teams will support with information and guidanceContact your Distributor or NVIDIA RepresentativeThe issue persists? Contact your Developer Relations Manager or Account ManagerSUPPORT FORUMThe Forum contains an ever-evolving collection of customer questions and answers by our support team. If your question is not already covered—feel free to raise itThe Forum team usually replies within 24hRaising questions in the Forum requires Developer LoginLink to DRIVE AGX Orin ForumIF FORUM CAN’T HELP Reporting a Bug on NVIDIA Developer (aka DevZone) for confidential contentLogin to https:///drive In upper right user picture, click the down arrow Select “Account”In the left navigation menu, select “My Bugs”Select “Submit a New Bug” (in upper right green box, or within text of bounded green box)Fill in the details of your feedback, request or issueIMPORTANT:When Filing a Bug, be sure to include the Platform Name —e.g. [DRIVE AGX Orin] in the summary, and Select DRIVE [Autonomous Driving] for Relevant AreaIf you have any issues, please contact **********************Request: Create one bug per issue: do not file multiple issues in the same reportReport a BugDRIVE AGX SDK Developer ProgramNVONLINE Report a Bug on NVONLINELogin to https:///In upper left, select BUGS > Report a BugFill in the details of your feedback, request or issueIMPORTANT: When filing Bug, under ProjectClick ProjectSelect DRIVEIf you do not have this project, please contact **********************Request: Create one bug per issue; do not file multiple issues in the same report Tracking a Bug (track status, provide additional information)In upper left, select BUGS > View Bug Status Report a BugNVONLINEFILE A NVBUG—DETAILS(1/2)FILE A NVBUG—DETAILS(2/2)。

Ubuntu 14.04 系统下安装Gromacs 4.6.5 对于初学者来说安装软件比较麻烦,在网上找到安装教程往往安装步骤不全,不能完全安装,导致安装失败,我是刚入门小白,把安装经验记录下来供大家参考。

本文描述了在Ubuntu 14.04 系统下安装Gromacs 4.6.5(CPU 版和GPU版)的详细步骤。

第一步.安装CUDA (在线安装,下载速度有点慢,要有耐心啊)转自/xizero00/article/details/43227019首先验证你是否有nvidia的显卡(/cuda-gpus这个网站查看你是否有支持gpu的显卡):$ lspci | grep -i nvidia查看你的linux发行版本(主要是看是64位还是32位的):$ uname -m && cat /etc/*release看一下gcc的版本:$ gcc --version首先下载nvidia cuda的仓库安装包(我的是ubuntu 14.0464位,所以下载的是ubuntu14.04的安装包,如果你是32位的可以参看具体的地址,具体的地址是https:///cuda-downloads)wget /compute/cuda/repos/ubuntu1404/x86_64/cuda-repo-ubuntu1404_6.5-14_amd64.deb下载完成之后可以使用如下命令安装它,注意文件名修改为cuda-repo-ubuntu1404_6.5-14_amd64.debsudo dpkg -i cuda-repo-<distro>_<version>_<architecture>.deb安装好仓库之后,就可以更新你的本地仓库。

sudo apt-get update最后开始安装cuda以及显卡驱动(安装cuda的同时就会把显卡驱动也全部安装好,这个真的很方便。

但是下载的时间有点长。

cuda中文手册【原创版】目录1.CUDA 简介2.CUDA 安装与配置3.CUDA 编程模型4.CUDA 应用实例5.CUDA 的未来发展正文一、CUDA 简介CUDA(Compute Unified Device Architecture,统一计算设备架构)是 NVIDIA 推出的一种通用并行计算架构,旨在利用 GPU 的强大计算能力进行高性能计算。

CUDA 允许开发人员使用 NVIDIA 的 GPU 来执行计算密集型任务,如大规模数据处理、复杂数学计算和物理仿真等。

二、CUDA 安装与配置1.硬件要求:要运行 CUDA,首先需要一台配备 NVIDIA GPU 的计算机。

此外,还需要支持 CUDA 的操作系统,如 Windows、Linux 和 macOS 等。

2.安装过程:从 NVIDIA 官网下载 CUDA Toolkit,根据操作系统和GPU 型号选择合适的版本进行安装。

安装过程中需要配置 CUDA 的驱动程序和工具。

3.配置环境变量:安装完成后,需要将 CUDA 的安装路径添加到系统环境变量中,以便在编写 CUDA 程序时能够正确识别 CUDA 库。

三、CUDA 编程模型CUDA 提供了一种类似于 C 的语言扩展,称为 CUDA C 编程语言。

CUDA C 允许开发人员在 C 代码中使用 CUDA API,将计算任务分配给GPU。

CUDA 编程模型主要包括以下几个方面:1.主从线程模型:CUDA C 程序由一个主机线程和多个设备线程组成。

主机线程负责管理设备线程,将任务分配给 GPU 并从 GPU 读取结果。

2.设备变量与共享内存:CUDA C 提供了设备变量和共享内存,用于在 GPU 上存储和传输数据。

3.核函数:CUDA C 中的核函数是 GPU 上执行的计算任务。

通过将数据传递给核函数,可以实现对 GPU 的并行计算。

四、CUDA 应用实例CUDA 广泛应用于各个领域,如深度学习、图形渲染、流体力学仿真等。

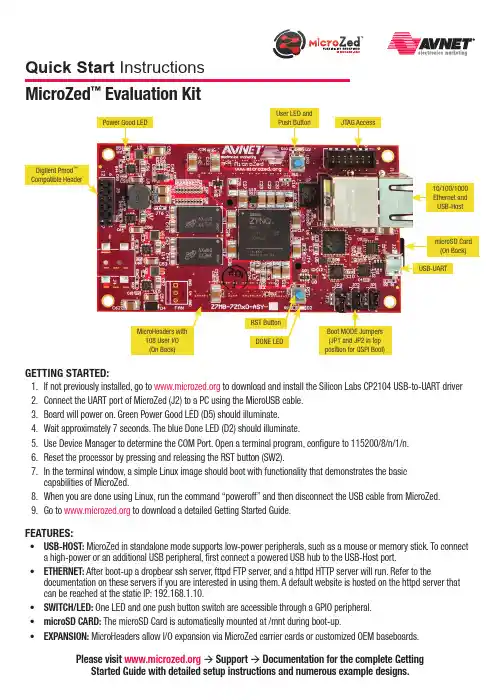

GettinG Started:1. If not previously installed, go to to download and install the Silicon Labs CP2104 USB-to-UART driver2. Connect the UART port of MicroZed (J2) to a PC using the MicroUSB cable.3. Board will power on. Green Power Good LED (D5) should illuminate.4. Wait approximately 7 seconds. The blue Done LED (D2) should illuminate.5. Use Device Manager to determine the COM Port. Open a terminal program, configure to 115200/8/n/1/n.6. Reset the processor by pressing and releasing the RST button (SW2).7.In the terminal window, a simple Linux image should boot with functionality that demonstrates the basic capabilities of MicroZed.8. When you are done using Linux, run the command “poweroff” and then disconnect the USB cable from MicroZed.9. Go to to download a detailed Getting Started Guide.FeatureS:• uSB-HOSt: MicroZed in standalone mode supports low-power peripherals, such as a mouse or memory stick. To connect a high-power or an additional USB peripheral, first connect a powered USB hub to the USB-Host port.• etHernet: After boot-up a dropbear ssh server, fttpd FTP server, and a httpd HTTP server will run. Refer to thedocumentation on these servers if you are interested in using them. A default website is hosted on the httpd server that can be reached at the static IP: 192.168.1.10.• SWitCH/Led: One LED and one push button switch are accessible through a GPIO peripheral.• microSd Card: The microSD Card is automatically mounted at /mnt during boot-up.• eXPanSiOn: MicroHeaders allow I/O expansion via MicroZed carrier cards or customized OEM baseboards.Please visit à Support à documentation for the complete Getting Started Guide with detailed setup instructions and numerous example designs.MicroZed ™ evaluation KitQuick Start InstructionsCopyright © 2013, Avnet, Inc. All rights reserved. Published by Avnet Electronics Marketing, a group of Avnet, Inc. Avnet, Inc. disclaims any proprietary interest or right in any trademarks, service marks, logos, domain names, company names, brands, product names, or other form of intellectual property other than its own. AVNET and the AV logo are registered trademarks of Avnet, Inc. Pn: QSC-Z7MB-7Z010-G-v1LiCenSe aGreeMentTHE AVNET DESIGN KIT (“DESIGN KIT” OR “PRODUCT”) AND ANY SUPPORTING DOCUMENTATION (“DOCUMENTATION” OR “PRODUCT DOCUMENTATION”) IS SUBJECT TO THIS LICENSE AGREEMENT (“LICENSE”). USE OF THE PRODUCT OR DOCUMENTATION SIGNIFIES ACCEPTANCE OF THE TERMS AND CONDITIONS OF THIS LICENSE. The terms of this license Agreement are in addition to the Avnet Customer Terms and Conditions, which can be viewed at . THE TERMS OF THIS LICENSE AGREEMENT WILL CONTROL IN THE EVENT OF A CONFLICT.1.Limited License. Avnet grants You, the Customer, (“You” “Your” or “Customer”) a limited, non-exclusive, non-transferable, license to: (a) use the Product for Your own internal testing, evaluation and design efforts at a single Customer site; (b) create a single derivative work based on theProduct using the same semiconductor supplier product or product family as used in the Product; and (c) make, use and sell the Product in a single production unit. No other rights are granted and Avnet and any other Product licensor reserves all rights not specifically granted in this License Agreement. Except as expressly permitted in this License, neither the Design Kit, Documentation, nor any portion may be reverse engineered,disassembled, decompiled, sold, donated, shared, leased, assigned, sublicensed or otherwise transferred by Customer. The term of this License is in effect until terminated. Customer may terminate this license at any time by destroying the Product and all copies of the Product Documentation.2. Changes. Avnet may make changes to the Product or Product Documentation at any time without notice. Avnet makes no commitment to update or upgrade the Product or Product Documentation and Avnet reserves the right to discontinue the Product or Product Documentation at any time without notice.3.Limited Warranty. ALL products and documentation are provided “AS IS” WITHOUT WARRANTY OF ANY KIND. AVNET MAKES NO WARRANTIES, EITHER EXPRESS OR IMPLIED, WITH RESPECT TO THE PRODUCTS AND DOCUMENTATION PROVIDED HEREUNDER. AVNET SPECIFICALLY DISCLAIMS THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE AND ANY WARRANTY AGAINST INFRINGEMENT OF ANY INTELLECTUAL PROPERTY RIGHT OF ANY THIRD PARTY WITH REGARD TO THE PRODUCTS AND DOCUMENTATION.4.LIMITATIONS OF LIABILITY . CUSTOMER SHALL NOT BE ENTITLED TO AND AVNET WILL NOT LIABLE FOR ANY INDIRECT, SPECIAL, INCIDENTAL OR CONSEQUENTIAL DAMAGES OF ANY KIND OR NATURE, INCLUDING, WITHOUT LIMITATION, BUSINESS INTERRUPTION COSTS, LOSS OF PROFIT OR REVENUE, LOSS OF DATA, PROMOTIONAL OR MANUFACTURING EXPENSES, OVERHEAD, COSTS OR EXPENSES ASSOCIATED WITH WARRANTY OR INTELLECTUAL PROPERTY INFRINGEMENT CLAIMS, INJURY TO REPUTATION OR LOSS OF CUSTOMERS, EVEN IF AVNET HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES. THE PRODUCTS AND DOCUMENTATION ARE NOT DESIGNED, AUTHORIZED OR WARRANTED TO BE SUITABLE FOR USE IN MEDICAL, MILITARY , AIR CRAFT, SPACE OR LIFE SUPPORT EQUIPMENT NOR IN APPLICATIONS WHERE FAILURE OR MALFUNCTION OF THE PRODUCTS CAN REASONABLY BE EXPECTED TO RESULT IN A PERSONAL INJURY , DEATH OR SEVERE PROPERTY OR ENVIRONMENTAL DAMAGE. INCLUSION OR USE OF PRODUCTS IN SUCH EQUIPMENT OR APPLICATIONS, WITHOUT PRIOR AUTHORIZATION IN WRITING OF AVNET, IS NOT PERMITTED AND IS AT CUSTOMER’S OWN RISK. CUSTOMER AGREES TO FULLY INDEMNIFY AVNET FOR ANY DAMAGES RESULTING FROM SUCH INCLUSION OR USE.5. LIMITATION OF DAMAGES. CUSTOMER’S RECOVERY FROM AVNET FOR ANY CLAIM SHALL NOT EXCEED CUSTOMER’S PURCHASE PRICE FOR THEPRODUCT GIVING RISE TO SUCH CLAIM IRRESPECTIVE OF THE NATURE OF THE CLAIM, WHETHER IN CONTRACT, TORT, WARRANTY , OR OTHERWISE.6. INDEMNIFICATION. AVNET SHALL NOT BE LIABLE FOR AND CUSTOMER SHALL INDEMNIFY , DEFEND AND HOLD AVNET HARMLESS FROM ANYCLAIMS BASED ON AVNET’S COMPLIANCE WITH CUSTOMER’S DESIGNS, SPECIFICATIONS OR IN S TRUCTIONS, OR MODIFICATION OF ANY PRODUCT BY PARTIES OTHER THAN AVNET, OR USE IN COMBINATION WITH OTHER PRODUCTS.7. U.S. Government Restricted Rights. The Product and Product Documentation are provided with “RESTRICTED RIGHTS.” If the Product and ProductDocumentation and related technology or documentation are provided to or made available to the United States Government, any use, duplication, or disclosure by the United States Government is subject to restrictions applicable to proprietary commercial computer software as set forth in FAR 52.227-14 and DFAR 252.227-7013, et seq., its successor and other applicable laws and regulations. Use of the Product by the United States Government constitutes acknowledgment of the proprietary rights of Avnet and any third parties. No other governments are authorized to use the Product without written agreement of Avnet and applicable third parties.8. Ownership. Licensee acknowledges and agrees that Avnet or Avnet’s licensors are the sole and exclusive owner of all Intellectual Property Rightsin the Licensed Materials, and Licensee shall acquire no right, title, or interest in the Licensed Materials, other than any rights expressly granted in this Agreement.9. Intellectual Property. All trademarks, service marks, logos, slogans, domain names and trade names (collectively “Marks”) are the properties of theirrespective owners. Avnet disclaims any proprietary interest in Marks other than its own. Avnet and AV design logos are registered trademarks and service marks of Avnet, Inc. Avnet’s Marks may be used only with the prior written permission of Avnet, Inc. 10. General. The terms and conditions set forth in the License Agreement or at will apply notwithstanding any conflicting, contraryor additional terms and conditions in any purchase order, sales acknowledgement confirmation or other document. If there is any conflict, the terms of this License Agreement will control. This License may not be assigned by Customer, by operation of law, merger or otherwise, without the prior written consent of Avnet and any attempted or purported assignment shall be void. Licensee understands that portions of the Licensed Materials may have been licensed to Avnet from third parties and that such third parties are intended beneficiaries of the provisions of thisAgreement. In the event any of the provisions of this Agreement are for any reason determined to be void or unenforceable, the remaining provisions will remain in full effect.This constitutes the entire agreement between the parties with respect to the use of this Product, and supersedes all prior or contemporaneous understandings or agreements, written or oral, regarding such subject matter. No waiver or modification is effective unless agreed to in writing and signed by authorized representatives of both parties. The obligations, rights, terms and conditions shall be binding on the parties and their respective successors and assigns. The License Agreement is governed by and construed in accordance with the laws of the State of Arizona excluding any law or principle, which would apply the law of any other jurisdiction. The United Nations Convention for the International Sale of Goods shall not apply.。

Getting Started GuideGetting started with Active Learn PrimaryWhat is Active Learn Primary?Active Learn Primary is an online learning world where you will find all of our online teaching and learning services. For teachers, it’s a giant repository of teaching material, with in-depth planning, assessment and reporting built in. For children, it’s where they can log in from the classroom and home to find allocated books, games and activities – and earn rewards as they go.Getting started with Active Learn PrimaryActive Learn Primary: In order to help you get up and running, and acquainted with Active Learn Primary as quickly and efficiently as possible, we have created some very short ‘how to’ videos. There are support videos to give you an overview of how to use Active Learn Primary and more specific, product-related videos, for example to show you how to use our Bug Club reading programme.Select from the products in this link to see product-specific overview videos, or see the below summaries of each product.Select from the videos below for a generic overview of Active Learn Primary or click on this link to see all available videos and guidance:•How to login in to Active Learn Primary [watch video]•Overview of products available on Active Learn Primary[watch video]•Overview of Classroom Support area [watch video]•How to add teachers to Active Learn Primary so that they can use the resources [watch video]•How to add pupils to Active Learn Primary so that they can use the resources [watch video]Helpful guidance to send to parents/carersMany parents and carers will be new to the world of online learning, so we have also created some short‘how to’ videos to introduce them to Active Learn Primary. Please do send these links out to parents/carers as necessary.•What is Active Learn Primary and how to log in [watch video]• A quick tour of the pupil world [watch video]•How do rewards work? [watch video]•The grown-ups area [watch video]The Bug Club FamilyThe Bug Club family contains a suite of reading and teaching programmes for children aged 4-11. It covers all reading needs from early reading and phonics through to guided and independent reading, development of deeper text comprehension, and spelling, punctuation and grammar resources.As well as a fantastic online reading world for children, Bug Club also contains a clever online toolkit for teachers. This includes access to all of the eBooks and activities as well as integrated assessment.Find detailed guidance on how to set-up and use Bug Club in this document: (PDF 3.27 MB)Bug Club PhonicsBug Club Phonics is based on a seven-year study in Clackmannanshire, UK, that’s proven systematic phonics to be the most effective way to teach children to read.Bug Club Phonics contains everything you need to teach synthetic phonics to your littlest learners. It isfast-paced – children start to read after learning just eight phonemes – and combines fun, 100% decodable books with BBC CBeebies videos and whole-class teaching software to give you a range of aural, visual and kinaesthetic phonic activities to appeal to all the children in your class.You can:•Search for and allocate resources (eBooks and activities) to classes, groups of children or individual children [watch video ].•Encourage parents to use the parental guidance notes on the inside front and back covers of the eBooks for an enhanced experience, and follow the audio instructions within each phonics game.•Use the activity reporting area to see at a glance how each child performs on the embeddedactivities, whether they enjoyed their reading, and get insights into their progress [watch video ].Parents can:•Learn all about Bug Club Phonics [watch video ].•Learn how to use the Bug Club Phonics eBooks and activities at home [watch video ].•Use the parental guidance notes on the inside front and back covers of the eBooks for an enhanced experience, and follow the audio instructions within each phonics game.Bug Club for Guided Independent Reading (4-11)Bug Club is an engaging, imaginative and finely levelled reading solution for your whole school. We have created some ‘how to’ videos to help you understand the product and enable you to start using it with your class at home.All of the eBooks are levelled according to Letters and Sounds. They also all fit within traditional book band levels. This makes it easy for you to choose which level books to allocate to the children in your class for read-ing practice.You can:•Search for and allocate individual eBooks to classes, groups of children or individual children [watch video ].•Search for and allocate entire book bands of eBooks to classes, groups of children or individual children and sign up for notifications when children need more books [watch video ].•Encourage parents to use the parental guidance notes on the inside front and back covers of the eBooks for an enhanced learning experience for their child.•Use the activity reporting area to see at a glance how each child performs on the embeddedcomprehension activities, whether they enjoyed their reading, and get insights into their progress[watch video ].•If you wish to teach remote Guided Reading lessons with small groups of children, perhaps via anonline video chat service, you can do this with Bug Club. Each eBook has a linked guided reading card which you can work through with children over a number of days. The guided reading cards areavailable as links from each individual eBook within Active Learn Primary. Please note that you cannot currently allocate these for use by parents with children at home.Bug Club KS2 ComprehensionBug Club Comprehension is a fresh approach that helps every child master deep comprehension. It uses a powerful and proven talk-based, mastery approach to help children develop a deeper understanding of texts, regardless of decoding ability.You can:•Use the planning area and allocate resources to children [watch video ].•If you wish to teach remote Guided Reading lessons with your class, allocate eBooks to your class chapter by chapter. Then use the teaching cards for daily discussion.For all Bug Club reading programmes, parents can:•Learn all about Bug Club and the benefits of using it [watch video ]•Learn how to use the Bug Club eBooks [watch video ].•Use the parental guidance notes on the inside front and back covers of the eBooks for an enhanced learning experience for their child.Grammar and Spelling BugGrammar and Spelling Bug is a completely online programme that brings spelling, grammar andpunctuation lessons to life for children aged 5-11, and follows the national curriculum for England. There are comprehensive lesson plans, activities and assessments available atthe click of a button.Built to find the fun in learning grammar, punctuation and spelling, Grammar and Spelling Bug is bursting with online practice games and video tutorials that you can allocate to your class to help them masteressential skills and continue their learning at home. Your children will time travel from Aztec to Victorian times completing exciting practice games again and again, learning key skills at every step without even knowing it!You can:•Learn all about Grammar and Spelling Bug and the benefits of using it [watch video ]•Find and allocate resources to your class [watch video ]Parents can:•Access Grammar and Spelling Bug activities by navigating to the Grammar and Spelling Bug tab inthe My Stuff section of the pupil world [watch video ]Power English: Writing (7-11)Power English: Writing is a new, dynamic KS2 writing approach, which is genre-focused and encourages children to develop writing using their own interests and ideas. Using an evidence-based process which can be applied to all writing, Power English: Writing places a strong emphasis on creativity alongside the technical aspects of writing.Resources available to you on Active Learn Primary are as follows:•An interactive planner, containing lesson plans for individual writing projects along with lesson plans for grammar, punctuation and writing process mini-lessons.•Allocatable, child-facing genre booklets which contain examples and explanations of genre con-ventions as well as helpful prompt questions and hints and tips for generating ideas for writing, drafting, revising, editing and publishing pieces of writing.•Helpful checklists and planning grids which can be allocated to children.•Videos from real-life authors discussing aspects of the genres in which they write.You can:•Use the interactive planner to access lesson plans for individual writing projects and mini-lessons.These lesson plans are especially helpful if you wish to teach online writing lessons with your class.•Learn about the different resources available within Power English: Writing and allocate them to children in your class based on which writing project you would like them to undertake []•Choose a genre you would like your class to focus on, for example fairy tales, and allocate genre booklets to the children to help them create their own fairy tales at home.Parents can:•Access Power English: Writing resources by navigating to the Power English: Writing tab in the My Stuff section of the pupil world [watch video]WordsmithWordsmith is a digital programme for reading comprehension, speaking and listening, grammar and writing, designed to help every child progress. It brings together everything you need to plan, allocate and assess all in one place and includes units on fiction, non-fiction and poetry with detailed, editable lesson plans, resources and e-books.You can:•Allocate resources to a child to look at or work on from home []•Find detailed guidance on how to use Wordsmith in this document: Wordsmith Getting Started Guide (PDF 1.43 MB).•Fiction texts: Try allocating a chapter a week to your students. Look at some of the ideas suggested in the planning or just think of a couple of questions that will encourage them to think about what they have read. For younger students consider getting them to draw pictures, design posters or draw mind maps rather than writing their responses.•Non-fiction texts: These are interactive eBooks that encourage students to explore a ‘Big Question’ in an exciting and dynamic way. Each non-fiction eBook sets out to answer a big question, e.g. in theYear 2 eBook called All About Orang-utans, the students explore the Big Question: Could you keep an orang-utan as a pet? Having explored the eBook, which includes videos and animations, the intention is that they will then have the necessary knowledge to make an informed decision. If you have time, take a look at the planning, which accompanies each book, for suggestion questions, written tasksand challenges. If you prefer not to follow the planning, consider asking the children to keep a logor record (in any format they like) of those facts which they found interesting and didn’t know before.Could those who have access to an iPhone do a 2-3 min recording of what they liked most abouttheir favourite book? Could these be emailed to you and uploaded to your school website?•Poetry: Allocate an age appropriate poem to either be learned off by heart or illustrated. The accompanying lesson plans include lots of suggested activities and links to interactive teachingpages: videos, audio files, drag and drop activities and short compositions. Try using the searchfacility to look for a favourite author, such as Michael Rosen, and allocating the poem and the videoof Michael reading the poem, to your students. Set them the challenge of learning this off by heart,to be delivered with as much expression as Michael. Search for I’m the Youngest in Our House as an example of a poem being read by Michael.Parents can:•Access Wordsmith resources by navigating to the Wordsmith tab in the My Stuff section of the pupil world [watch video].The Rapid FamilyThe Rapid Family is a complete reading, writing and maths solution that is perfect for English Language learners. It consists of an online reading world for children where they can access carefully-levelled eBooks allocated to them by their teacher, as well as a reporting area to help teachers monitor each pupil’s progress remotely.Rapid PhonicsRapid Phonics is based on the successful Sound Discovery phonics catch up programme, and it has been proven in independent studies to help children make accelerated progress in reading and spelling. Rapid Phonics online programme contains a set of fully decodable eBooks that follow the Rapid Phonics progression.Printed books, printed Teaching Guides, phonics flashcards and a phonics wall chart are all available to purchase separately.You can:•Search for and allocate eBooks to groups of children or individual children [].•Encourage parents to help their child practise the key sounds in the book using the activity at the beginning of each book.•Encourage pupils to click on the Rapid Boy or Rapid Girl icons when they appear in the book and follow the audio instructions to complete the activities within the eBooks.•Use the reporting area to see at a glance how each child performs on the embedded activities, whether they have finished the book, and get insights into their progress [watch video].•Learn all about Rapid [watch video].Parents can:•Learn how to use the Rapid Phonics eBooks and activities at home [watch video]•Use the first activity within each book to help their child practise the key sounds they will meet in the book.•Encourage their child to read aloud.•Give their child plenty of praise and encouragement to build their confidence.Rapid ReadingRapid Reading has been proven to help children to make accelerated progress in their word reading and comprehension skills.Rapid Reading online consists of hundreds of eBooks that are all carefully levelled and matched to children’s reading age. The books include fiction and non-fiction texts and cover a range of high-interest topics that will engage even the most reluctant readers. Each eBook includes interactive activities that help children build their word-reading, spelling and comprehension skills.Printed books and printed Teaching Guides are available to purchase separately.Find detailed guidance on how to set-up and use Rapid in this document: Rapid Getting Started Guide (PDF 16.6 MB)You can:•Search for and allocate eBooks to groups of children or individual children [].•Encourage parents to go through the ‘Before Reading’ page of each eBook with their child before beginning the book, although full audio support is included for children who need to access theeBook independently.•Encourage pupils to click on the Rapid Boy or Rapid Girl icons when they appear in the book and follow the audio instructions to complete the activities within the eBooks.•Use the activity reporting area to see at a glance how each child performs on the embedded activities, whether they have finished the book, and get insights into their progress [watch video].•Learn all about Rapid [watch video].Parents can:•Learn how to use the Rapid Reading eBooks and activities at home [watch video].•Use the ‘Before reading’ page of each book to ensure their child has the confidence they need to begin reading.•Encourage their child to read aloud and to complete the activities within each eBook.•Give their child plenty of praise and encouragement to build their confidencePower MathsPower Maths is a mastery programme designed to spark curiosity and excitement in maths. It is recommended by the Department for Education in England and is fully aligned with the White Rose Maths schemes of work, having been developed in partnership.To help teachers set work for children during school closures, we have added free Power Maths content to the Active Learn Secondary website including Textbooks and Practice Book units. Parents/children can access the content via links without signing in. If you are a current Power Maths user, your school will have received an email providing the links.If you do not use Power Maths already, you can get access to the links by completing the form here.These ‘how to’ videos explain how to access and use the content:••For parents and carersAbacusAbacus is a maths scheme for children from Reception to Year 6 (ages 4-11). It has been built by a team of expert authors and teachers to inspire a genuine love of maths and help every child master mathematical concepts. It is written for the primary national curriculum in England and has been built with the outcome of Mastery in mind.Abacus is built around a spiral curriculum so children have the opportunity to revise and practise what they have learnt throughout the year.The planning area contains Long-term, Medium-term, Weekly and Daily plans, which you can export to Word and edit to suit your class.As well as plans, it contains hundreds of resources that you can allocate to the children in your class for practising core skills and improving fluency. For example, there are fun interactive games and pupil videos [watch video], homework sheets and practice powerpointsFind detailed guidance on how to set-up and use Abacus in this document:(PDF 5.29 MB)You can:•Use the planning area to access plans [watch video]•Search for and allocate resources to children in your class [watch video]•Access individual pages from the textbooks (KS2) and workbooks (KS1) in the Resources area•If you are teaching maths lessons remotely, there are links in the plans (online and in Word)to resources relevant to the lessons, such as tools, practice powerpoints and resource sheets.You may want to allocate these to your class in advance of your lesson.Parents can:•Learn all about Abacus [watch video]•Play allocated activities with their children, especially the 414 Talking and Listening activities.Science Bug InternationalScience Bug International is a challenging and complete curriculum programme fromYear 1 to Year 6 (5-11). It contains:•Inspiring plans, hands-on activities, videos, animations and fun activities that children will love.•Detailed support and guidance for every aspect of the UK curriculum ensure retention and mastery of the curriculum standards.•assessment opportunities to help you assess children’s progress and attainment goals. You can:•Learn about the product and start using it with your class at home.•Get an overview of the Science Bug planning area [watch video].Parents can:•Access allocated resources in the Pupil World [watch video].。

QUAKE II RTX Getting Started tQUAKE II RTX - Getting StartedIntroductionThis guide provides further information about the real-time ray tracing enhancements, and other advanced features, that NVIDIA has implemented to create Q uake II RTX. Most noticeably, we have introduced high-fidelity, real-time path-traced reflections, ambient occlusion, lighting, and shadows, which is a world’s first for any game. For an explanation, c heck out this video:https:///watch?v=p7RniXWvYhYQuake II RTX includes the first 3 levels of the game with our full suite of enhancements. P urchase the full game to get access to all the levels, as well as multiplayer with path-tracing.System RecommendationThe following system configuration, or better, is recommended:●GPU:NVIDIA GeForce RTX 2060 or higher●OS:Windows 10 64 bit or Ubuntu 18.04 LTS 64-bit●CPU:Intel Core i3-3220, or AMD equivalent●RAM:8 GB RAMGetting Started1.)Ensure you have the recommended system specifications2.)Have the latest G ame Ready Driver installed.3.)Install Q uake II RTX.a.)Please see installer instructions below4.)Launch Q uake II RTX via desktop shortcut.5.)Set Recommended Settingsa.)Go to Video -> Set desired Global Illumination Setting (R ecommended: Medium)b.)Set desired resolution (R ecommended: 1080p)c.)Go back and click Game -> Choose desired difficulty.Installer Instructions1.)Windows (Demo)a.)Launch the Q uake II RTX installer executable, or download and run from S teamb.)Make sure that "Quake II Shareware Demo" and "Desktop Shortcut" are both selectedc.)The Installer will install the RTX Demo and leave a shortcut on your desktop.2.)Linux (Demo - Debian / Ubuntu Package)a.)Install the Quake II RTX.deb packageb.)The Installer will install the RTX Demo and leave an icon in your applications menu.3.)Linux (Demo - Steam)a.)Download and run Quake II RTX from SteamEnable Ray Tracing for the Full Game1)Purchase the full game2)Launch the Q uake II RTX installer executable, or download and run from S team3)The installer will detect the Quake II executable location.4)The installer will implement RTX with the full game and leave a shortcut on your desktop.Playing the DemoQuake II RTX is a remaster of the classic game.Quake II R TX i s fully ray-traced (using path tracing), delivering highly realistic lighting, shadows, reflections, global illumination and more.●Start game○Game > Easy●Movement & Weapons○Left Mouse Button = Fire/Attack/Action○W = Forward○ A = Strafe Left○S = Backward○ D = Strafe Right○SPACE = Jump○CTRL = (Holding) = Crouch○SHIFT = Walk (default is run)○ F = Flare gun○G = Grenades●Give weapons and ammo○Press ~ (tilda) button to open the console○/give all = All weapons, ammo, items, keys○/dmflags 8192 = Unlimited Ammo●Show RTX ON/OFF○Show RTX OFF■RTX OFF: V ideo > Renderer > OpenGL■RTX ON: Video > Renderer > rtxMultiplayer SupportQuake II RTX comes with multiplayer support.There are multiple options to play this game in multiplayer mode:1.Join an existing public or private Quake II server online.2.Start a server directly from the game.3.Start a dedicated server and join it.Joining a serverThere are two ways to join an existing server:through the server catalog on ,and manually. The catalog is available through the Multiplayer menu:To join an unlisted server, open the console and type “connect <address>”.If the server is password protected,open the console and type“password<password>”before joining the server.Note that when playing on non-Q2RTX servers, you may observe some compatibility issues: -Rockets and other items may disappear in certain pools of water,which were opaque in the original Quake II;-Flare gun will not be available;-Gameplay mods or custom maps may be incompatible with Q2RTX.Starting a server from the gameIn the multiplayer menu, select “start server”. The following dialog will appear:Starting a dedicated serverQuake II RTX ships with a separate dedicated server executable, “q2rtxded.exe” (on Windows). This is a lightweight version of the game that can only act as a server, but doesn’t allow you to play it directly and doesn’t have any graphics.Simply launching the dedicated server will make it start a deathmatch session on the first available map, which is “base1”. You can change the map and other game settings using the server console, or you can put those commands into a config file and execute that file on server startup using the command line. For example, you can put something like this in a file called “server.cfg” in the baseq2 directory:set password <password>set rcon_password <another_password>set deathmatch 1set coop 0set sv_maplist q2dm1 q2dm2 q2dm3 q2dm4set timelimit 20set fraglimit 20map q2dm1Then start the server using command line:q2rtxded.exe +exec server.cfgServer configurationMany of the console variables useful for dedicated server administration are documented in the Q2PRO server manual- since Quake II RTX is derived from Q2PRO, these variables still apply. We have added some more variables to control RTX specific features:sv_flaregun: controls whether players spawn with the flare gun and ammo for it. 0 means no flare gun, 1 means spawn with the flare gun but no ammo, 2 means spawn with the flare gun and 5 grenades (because the flare gun uses grenades).sv_restrict_rtx: if set to 1, makes the server available only to clients playing Quake II RTX; other clients like the regular Quake II or Q2PRO will be rejected.sv_savedir: path to the directory w ithin baseq2where the server will save game state in cooperative play mode. If multiple dedicated cooperative servers are started from the same installation directory, they should use different directories to save game state.The s v_flaregun and s v_restrict_rtx settings have been added mostly for compatibility reasons. Quake II RTX uses the regular Quake II network protocol (version 36 from Q2PRO), but the flare gun is an extension to the protocol. If both Q2RTX and other clients participate in a multiplayer game, and theQ2RTX user fires a flare gun, other clients that see the flare will disconnect because they do not understand what that means. So it is advised to either disable the flare gun on MP servers, or to make the servers only available to Q2RTX players.© 1997 id Software LLC, a ZeniMax Media company. QUAKE, id, id Software, id Tech and related logos are registered trademarks or trademarks of id Software LLC in the U.S. and/or other countries. Bethesda, Bethesda Softworks, ZeniMax and related logos are registered trademarks or trademarks of ZeniMax Media Inc. in the U.S. and/or other countries. All Rights Reserved.This product is based on or incorporates materials from the sources listed below (third party IP). Such licenses and notices are provided for informational purposes only.Quake II: Copyright (C) 1997-2001 Id Software, Inc. Licensed under the terms of the GPLv2.Q2VKPT: Copyright © 2018 Christoph Schied. Licensed under the terms of the GPLv2.Quake2MaX "A Modscape Production":TexturesfromQuake2MaxusedinQuake2XP.Copyright©*******************************************.SubjecttoCreative Commons license version 1.0. Roughness and specular channels were adjusted in texture maps to work with the Q uake II RTX engine.Q2XP Mod Pack: Used with permission from Arthur Galaktionov.Q2Pro: Copyright © 2003-2011 Andrey Nazarov. Licensed under the terms of the GPLv2.。